Windows Azure and Cloud Computing Posts for 4/10/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Access Control, Identity and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

My (@rogerjenn) Using Excel 2010 and the Hive ODBC Driver to Visualize Hive Data Sources in Apache Hadoop on Windows Azure updated 4/12/2012 begins:

Introduction

Microsoft’s Apache Hadoop on Windows Azure Community Technical Preview (Hadoop on Azure CTP) includes a Hive ODBC driver and Excel add-in, which enable 32-bit or 64-bit Excel to issue HiveQL Queries against Hive data sources running in the Windows Azure cloud. Hadoop on Azure is a private CTP, which requires an invitation for its use. You can request an invitation by completing a Microsoft Connect survey here. Hadoop on Azure’s Elastic MapReduce (EMR) Console enables users to create and use Hadoop clusters in the following sizes at no charge:

The Apache Software Foundation’s Hive™ is a related data warehousing and ad hoc querying component of Apache Hadoop v1.0. According to the Hive Wiki:

Hive is a data warehouse system for Hadoop that facilitates easy data summarization, ad-hoc queries, and the analysis of large datasets stored in Hadoop compatible file systems. Hive provides a mechanism to project structure onto this data and query the data using a SQL-like language called HiveQL. At the same time this language also allows traditional map/reduce programmers to plug in their custom mappers and reducers when it is inconvenient or inefficient to express this logic in HiveQL.

The following two earlier OakLeaf blog posts provide an introduction to Hadoop on Azure in general and its Interactive Hive Console in particular:

- Introducing Apache Hadoop Services for Windows Azure (4/2/2012)

- Using Data from Windows Azure Blobs with Apache Hadoop on Windows Azure CTP (4/9/2012)

This demonstration requires creating a flightdata_asv Hive data source table from downloadable tab-delimited text files as described in the second article and the following section. You design and execute an aggregate query against the Hive table and then visualize the query result set with an Excel bar chart. …

and ends with the steps required to create this worksheet and bar chart:

Jo Maitland (@JoMaitlandSF) asserted Hadoop cloud services don’t go far enough in a 4/12/2012 research report for GigaOm Pro (requires registration for a free trial subscription):

GoGrid and Sungard join a long list of service providers offering Hadoop in the cloud, but these services are missing a key ingredient at the top of the stack that will appeal to the mainstream. …

Microsoft’s Hive ODBC driver and add-in are a candidate for Jo’s “key ingredient,” as noted in the preceding article.

Datanami (@Datanami) asked Half the World’s Data to Touch Hadoop by 2015? on 4/12/2012:

It’s no secret that Hortonworks has placed its bets on Apache Hadoop, but the company has extended its view of the long-term success of the open source goldmine.

According to Shaun Connolly, VP of the company’s corporate strategy, Hadoop with touch or process 50 percent of the world’s data by 2015, a staggering figure, especially if the multi-zettabyte prediction for world data volumes is true for that year.

Connolly says that the fundamental drivers leading the interest in Hadoop and the adoption of Hadoop really are rooted in estimates like those from analyst group IDC, which estimates that the amount of data that enterprise data centers will be processing will grow by 50x.

Beyond data volume, however, is an equally important issue. Connolly says that if you look at data flow and what enterprise data centers, there is a gap in terms of how data is being handled. He states that 80% of that data flowing through enterprise data centers will be required to touch in some form or fashion--users may not store it, but it will flow through the business.

What this means, says Connolly, is that volume, the velocity, and the variety of data flowing through enterprises are key elements. He notes that if one is to look at traditional key application architectures today, they’re really not set up for that challenge, and it’s a challenge that requires a fundamental rethink of architectures overall. This is where Hadoop comes in…

The Hortonworks business lead claims that Hadoop can address these weaknesses because it was purpose-built to address those three V’s of big data (volume and the variety and velocity). It can store unstructured data, semi-structured data very well across commodity hardware, but says Connolly, it’s able to do it in an economically viable way, which makes it a standout technology option.

Hortonworks says that to stay ahead of the curve and prepare the Hadoop era, broad availability to technologies is step one—a fact that they say is covered by their commitment to open source software.

The second element of the equation is creating a broad and vibrant ecosystem in and around that, and enabling that ecosystem. As Connolly says, “It sort of gives us hand and glove with the open source technology, providing open APIs around that platform, but really focusing at Hortonworks as a business. When enabling sort of the key vendors and solution providers and platform vendors up and down the data stack, to be able to integrate their solutions that may already be within the enterprise today. Integrate them very tightly with this next generation data platform, so they’re able to offer more value to their existing enterprise customers."

Related Stories

Hortonworks has a contract to assist Microsoft with implementing its Apache Hadoop on Windows Azure CTP.

Carl Nolan (@carl_nolan) described a Framework for Composing and Submitting .Net Hadoop MapReduce Jobs in a 4/10/2012 post:

If you have been following my blog you will see that I have been putting together samples for writing .Net Hadoop MapReduce jobs; using Hadoop Streaming. However one thing that became apparent is that the samples could be reconstructed in a composable framework to enable one to submit .Net based MapReduce jobs whilst only writing Mappers and Reducers types.

To this end I have put together a framework that allows one to submit MapReduce jobs using the following command line syntax:

MSDN.Hadoop.Submission.Console.exe -input "mobile/data/debug/sampledata.txt" -output "mobile/querytimes/debug"

-mapper "MSDN.Hadoop.MapReduceFSharp.MobilePhoneQueryMapper, MSDN.Hadoop.MapReduceFSharp"

-reducer "MSDN.Hadoop.MapReduceFSharp.MobilePhoneQueryReducer, MSDN.Hadoop.MapReduceFSharp"

-file "%HOMEPATH%\MSDN.Hadoop.MapReduce\bin\Release\MSDN.Hadoop.MapReduceFSharp.dll"Where the mapper and reducer parameters are .Net types that derive from a base Map and Reduce abstract classes. The input, output, and files options are analogous to the standard Hadoop streaming submissions. The mapper and reducer options (more on a combiner option later) allow one to define a .Net type derived from the appropriate abstract base classes.

Under the covers standard Hadoop Streaming is being used, where controlling executables are used to handle the StdIn and StdOut operations and activating the required .Net types. The “file” parameter is required to specify the DLL for the .Net type to be loaded at runtime, in addition to any other required files.

As an aside the framework and base classes are all written in F#; with sample Mappers and Reducers, and abstract base classes being provided both in C# and F#. The code is based off the F# Streaming samples in my previous blog posts. I will cover more of the semantics of the code in a later post, but I wanted to provide some usage samples of the code.

As always the source can be downloaded from:

http://code.msdn.microsoft.com/Framework-for-Composing-af656ef7

Mapper and Reducer Base Classes

The following definitions outline the abstract base classes from which one needs to derive.

C# Base

- public abstract class MapReduceBase

- {

- protected MapReduceBase();

- public override void Setup();

- }

C# Base Mapper

- public abstract class MapperBaseText : MapReduceBase

- {

- protected MapperBaseText();

- public override IEnumerable<Tuple<string, object>> Cleanup();

- public abstract override IEnumerable<Tuple<string, object>> Map(string value);

- }

C# Base Reducer

- public abstract class ReducerBase : MapReduceBase

- {

- protected ReducerBase();

- public override void Cleanup();

- public abstract override object Reduce(string value, IEnumerable<string> value);

- }

F# Base

- [<AbstractClass>]

- type MapReduceBase() =

- abstract member Setup: unit -> unit

- default this.Setup() = ()

F# Base Mapper

- [<AbstractClass>]

- type MapperBaseText() =

- inherit MapReduceBase()

- abstract member Map: string -> IEnumerable<string * obj>

- abstract member Cleanup: unit -> IEnumerable<string * obj>

- default this.Cleanup() = Seq.empty

F# Base Reducer

- [<AbstractClass>]

- type ReducerBase() =

- inherit MapReduceBase()

- abstract member Reduce: string -> IEnumerable<string> -> obj

- abstract member Cleanup: unit -> unit

- default this.Cleanup() = ()

The objective in defining these base classes was to not only support creating .Net Mapper and Reducers but also to provide a means for Setup and Cleanup operations to support In-Place Mapper optimizations, utilize IEnumerable and sequences for publishing data from the Mappers and Reducers, and finally provide a simple submission mechanism analogous to submitting Java based jobs.

For each class a Setup function is provided to allow one to perform tasks related to the instantiation of each Mapper and/or Reducer. The Mapper’s Map and Cleanup functions return an IEnumerable consisting of tuples with a a Key/Value pair. It is these tuples that represent the mappers output. Currently the types of the key and value’s are respectively a String and an Object. These are then converted to strings for the streaming output.

The Reducer takes in an IEnumerable of the Object String representations, created by the Mapper output, and reduces this into a single Object value.

Combiners

The support for Combiners is provided through one of two means. As is often the case, support is provided so one can reuse a Reducer as a Combiner. In addition explicit support is provided for a Combiner using the following abstract class definition:

C# Base Combiner

- [AbstractClass]

- public abstract class CombinerBase : MapReduceBase

- {

- protected CombinerBase();

- public override void Cleanup();

- public abstract override object Combine(string value, IEnumerable<string> value);

- }

F# Base Combiner

- [<AbstractClass>]

- type CombinerBase() =

- inherit MapReduceBase()

- abstract member Combine: string -> IEnumerable<string> -> obj

- abstract member Cleanup: unit -> unit

- default this.Cleanup() = ()

Using a Combiner follows exactly the same pattern for using mappers and reducers, as example being:

-combiner "MSDN.Hadoop.MapReduceCSharp.MobilePhoneMinCombiner, MSDN.Hadoop.MapReduceCSharp"

The prototype for the Combiner is essentially the same as that of the Reducer except the function called for each row of data is Combine, rather than Reduce.

Binary and XML Processing

In my previous posts on Hadoop Streaming I provided samples that allowed one to perform Binary and XML based Mappers. The composable framework also provides support for submitting jobs that support Binary and XML based Mappers. To support this the following additional abstract classes have been defined:

C# Base Binary Mapper

- [AbstractClass]

- public abstract class MapperBaseBinary : MapReduceBase

- {

- protected MapperBaseBinary();

- public override IEnumerable<Tuple<string, object>> Cleanup();

- public abstract override IEnumerable<Tuple<string, object>> Map(string value, Stream value);

- }

C# Base XML Mapper

- [AbstractClass]

- public abstract class MapperBaseXml : MapReduceBase

- {

- protected MapperBaseXml();

- public abstract override string MapNode { get; }

- public override IEnumerable<Tuple<string, object>> Cleanup();

- public abstract override IEnumerable<Tuple<string, object>> Map(XElement value);

- }

F# Base Binary Mapper

- [<AbstractClass>]

- type MapperBaseBinary() =

- inherit MapReduceBase()

- abstract member Map: string -> Stream -> IEnumerable<string * obj>

- abstract member Cleanup: unit -> IEnumerable<string * obj>

- default this.Cleanup() = Seq.empty

F# Base Binary Mapper

- [<AbstractClass>]

- type MapperBaseXml() =

- inherit MapReduceBase()

- abstract member MapNode: string with get

- abstract member Map: XElement -> IEnumerable<string * obj>

- abstract member Cleanup: unit -> IEnumerable<string * obj>

- default this.Cleanup() = Seq.empty

To support using Mappers and Reducers derived from these types a “format” submission parameter is required. Supported values being Text, Binary, and XML; the default value being “Text”.

To submit a binary streaming job one just has to use a Mapper derived from the MapperBaseBinary abstract class and use the binary format specification:

-format Binary

In this case the input into the Mapper will be a Stream object that represents a complete binary document instance.

To submit an XML streaming job one just has to use a Mapper derived from the MapperBaseXml abstract class and use the XML format specification, along with a node to be processed within the XML documents:

-format XML –nodename Node

In this case the input into the Mapper will be an XElement node derived from the XML document based on the nodename parameter.

Samples

To demonstrate the submission framework here are some sample Mappers and Reducers with the corresponding command line submissions:

C# Mobile Phone Range (with In-Mapper optimization)

- namespace MSDN.Hadoop.MapReduceCSharp

- {

- public class MobilePhoneRangeMapper : MapperBaseText

- {

- private Dictionary<string, Tuple<TimeSpan, TimeSpan>> ranges;

- private Tuple<string, TimeSpan> GetLineValue(string value)

- {

- try

- {

- string[] splits = value.Split('\t');

- string devicePlatform = splits[3];

- TimeSpan queryTime = TimeSpan.Parse(splits[1]);

- return new Tuple<string, TimeSpan>(devicePlatform, queryTime);

- }

- catch (Exception)

- {

- return null;

- }

- }

- /// <summary>

- /// Define a Dictionary to hold the (Min, Max) tuple for each device platform.

- /// </summary>

- public override void Setup()

- {

- this.ranges = new Dictionary<string, Tuple<TimeSpan, TimeSpan>>();

- }

- /// <summary>

- /// Build the Dictionary of the (Min, Max) tuple for each device platform.

- /// </summary>

- public override IEnumerable<Tuple<string, object>> Map(string value)

- {

- var range = GetLineValue(value);

- if (range != null)

- {

- if (ranges.ContainsKey(range.Item1))

- {

- var original = ranges[range.Item1];

- if (range.Item2 < original.Item1)

- {

- // Update Min amount

- ranges[range.Item1] = new Tuple<TimeSpan, TimeSpan>(range.Item2, original.Item2);

- }

- if (range.Item2 > original.Item2)

- {

- //Update Max amount

- ranges[range.Item1] = new Tuple<TimeSpan, TimeSpan>(original.Item1, range.Item2);

- }

- }

- else

- {

- ranges.Add(range.Item1, new Tuple<TimeSpan, TimeSpan>(range.Item2, range.Item2));

- }

- }

- return Enumerable.Empty<Tuple<string, object>>();

- }

- /// <summary>

- /// Return the Dictionary of the Min and Max values for each device platform.

- /// </summary>

- public override IEnumerable<Tuple<string, object>> Cleanup()

- {

- foreach (var range in ranges)

- {

- yield return new Tuple<string, object>(range.Key, range.Value.Item1);

- yield return new Tuple<string, object>(range.Key, range.Value.Item2);

- }

- }

- }

- public class MobilePhoneRangeReducer : ReducerBase

- {

- public override object Reduce(string key, IEnumerable<string> value)

- {

- var baseRange = new Tuple<TimeSpan, TimeSpan>(TimeSpan.MaxValue, TimeSpan.MinValue);

- return value.Select(stringspan => TimeSpan.Parse(stringspan)).Aggregate(baseRange, (accSpan, timespan) =>

- new Tuple<TimeSpan, TimeSpan>((timespan < accSpan.Item1) ? timespan : accSpan.Item1, (timespan > accSpan.Item2) ? timespan : accSpan.Item2));

- }

- }

- }

MSDN.Hadoop.Submission.Console.exe -input "mobilecsharp/data" -output "mobilecsharp/querytimes"

-mapper "MSDN.Hadoop.MapReduceCSharp.MobilePhoneRangeMapper, MSDN.Hadoop.MapReduceCSharp"

-reducer "MSDN.Hadoop.MapReduceCSharp.MobilePhoneRangeReducer, MSDN.Hadoop.MapReduceCSharp"

-file "%HOMEPATH%\MSDN.Hadoop.MapReduceCSharp\bin\Release\MSDN.Hadoop.MapReduceCSharp.dll"C# Mobile Min (with Mapper, Combiner, Reducer)

- namespace MSDN.Hadoop.MapReduceCSharp

- {

- public class MobilePhoneMinMapper : MapperBaseText

- {

- private Tuple<string, object> GetLineValue(string value)

- {

- try

- {

- string[] splits = value.Split('\t');

- string devicePlatform = splits[3];

- TimeSpan queryTime = TimeSpan.Parse(splits[1]);

- return new Tuple<string, object>(devicePlatform, queryTime);

- }

- catch (Exception)

- {

- return null;

- }

- }

- public override IEnumerable<Tuple<string, object>> Map(string value)

- {

- var returnVal = GetLineValue(value);

- if (returnVal != null) yield return returnVal;

- }

- }

- public class MobilePhoneMinCombiner : CombinerBase

- {

- public override object Combine(string key, IEnumerable<string> value)

- {

- return value.Select(timespan => TimeSpan.Parse(timespan)).Min();

- }

- }

- public class MobilePhoneMinReducer : ReducerBase

- {

- public override object Reduce(string key, IEnumerable<string> value)

- {

- return value.Select(timespan => TimeSpan.Parse(timespan)).Min();

- }

- }

- }

MSDN.Hadoop.Submission.Console.exe -input "mobilecsharp/data" -output "mobilecsharp/querytimes"

-mapper "MSDN.Hadoop.MapReduceCSharp.MobilePhoneMinMapper, MSDN.Hadoop.MapReduceCSharp"

-reducer "MSDN.Hadoop.MapReduceCSharp.MobilePhoneMinReducer, MSDN.Hadoop.MapReduceCSharp"

-combiner "MSDN.Hadoop.MapReduceCSharp.MobilePhoneMinCombiner, MSDN.Hadoop.MapReduceCSharp"

-file "%HOMEPATH%\MSDN.Hadoop.MapReduceCSharp\bin\Release\MSDN.Hadoop.MapReduceCSharp.dll"F# Mobile Phone Query

- // Extracts the QueryTime for each Platform Device

- type MobilePhoneQueryMapper() =

- inherit MapperBaseText()

- // Performs the split into key/value

- let splitInput (value:string) =

- try

- let splits = value.Split('\t')

- let devicePlatform = splits.[3]

- let queryTime = TimeSpan.Parse(splits.[1])

- Some(devicePlatform, box queryTime)

- with

- | :? System.ArgumentException -> None

- // Map the data from input name/value to output name/value

- override self.Map (value:string) =

- seq {

- let result = splitInput value

- if result.IsSome then

- yield result.Value

- }

- // Calculates the (Min, Avg, Max) of the input stream query time (based on Platform Device)

- type MobilePhoneQueryReducer() =

- inherit ReducerBase()

- override self.Reduce (key:string) (values:seq<string>) =

- let initState = (TimeSpan.MaxValue, TimeSpan.MinValue, 0L, 0L)

- let (minValue, maxValue, totalValue, totalCount) =

- values |>

- Seq.fold (fun (minValue, maxValue, totalValue, totalCount) value ->

- (min minValue (TimeSpan.Parse(value)), max maxValue (TimeSpan.Parse(value)), totalValue + (int64)(TimeSpan.Parse(value).TotalSeconds), totalCount + 1L) ) initState

- box (minValue, TimeSpan.FromSeconds((float)(totalValue/totalCount)), maxValue)

MSDN.Hadoop.Submission.Console.exe -input "mobile/data" -output "mobile/querytimes"

-mapper "MSDN.Hadoop.MapReduceFSharp.MobilePhoneQueryMapper, MSDN.Hadoop.MapReduceFSharp"

-reducer "MSDN.Hadoop.MapReduceFSharp.MobilePhoneQueryReducer, MSDN.Hadoop.MapReduceFSharp"

-file "%HOMEPATH%\MSDN.Hadoop.MapReduceFSharp\bin\Release\MSDN.Hadoop.MapReduceFSharp.dll"F# Store XML (XML in Samples)

- // Extracts the QueryTime for each Platform Device

- type StoreXmlElementMapper() =

- inherit MapperBaseXml()

- override self.MapNode = "Store"

- override self.Map (element:XElement) =

- let aw = "http://schemas.microsoft.com/sqlserver/2004/07/adventure-works/StoreSurvey"

- let demographics = element.Element(XName.Get("Demographics")).Element(XName.Get("StoreSurvey", aw))

- seq {

- if not(demographics = null) then

- let business = demographics.Element(XName.Get("BusinessType", aw)).Value

- let sales = Decimal.Parse(demographics.Element(XName.Get("AnnualSales", aw)).Value) |> box

- yield (business, sales)

- }

- // Calculates the Total Revenue of the store demographics

- type StoreXmlElementReducer() =

- inherit ReducerBase()

- override self.Reduce (key:string) (values:seq<string>) =

- let totalRevenue =

- values |>

- Seq.fold (fun revenue value -> revenue + Int32.Parse(value)) 0

- box totalRevenue

MSDN.Hadoop.Submission.Console.exe -input "stores/demographics" -output "stores/banking"

-mapper "MSDN.Hadoop.MapReduceFSharp.StoreXmlElementMapper, MSDN.Hadoop.MapReduceFSharp"

-reducer "MSDN.Hadoop.MapReduceFSharp.StoreXmlElementReducer, MSDN.Hadoop.MapReduceFSharp"

-file "%HOMEPATH%\MSDN.Hadoop.MapReduceFSharp\bin\Release\MSDN.Hadoop.MapReduceFSharp.dll"

-nodename Store -format XmlF# Binary Document (Word and PDF Documents)

- // Calculates the pages per author for a Word document

- type OfficePageMapper() =

- inherit MapperBaseBinary()

- let (|WordDocument|PdfDocument|UnsupportedDocument|) extension =

- if String.Equals(extension, ".docx", StringComparison.InvariantCultureIgnoreCase) then

- WordDocument

- else if String.Equals(extension, ".pdf", StringComparison.InvariantCultureIgnoreCase) then

- PdfDocument

- else

- UnsupportedDocument

- let dc = XNamespace.Get("http://purl.org/dc/elements/1.1/")

- let cp = XNamespace.Get("http://schemas.openxmlformats.org/package/2006/metadata/core-properties")

- let unknownAuthor = "unknown author"

- let authorKey = "Author"

- let getAuthorsWord (document:WordprocessingDocument) =

- let coreFilePropertiesXDoc = XElement.Load(document.CoreFilePropertiesPart.GetStream())

- // Take the first dc:creator element and split based on a ";"

- let creators = coreFilePropertiesXDoc.Elements(dc + "creator")

- if Seq.isEmpty creators then

- [| unknownAuthor |]

- else

- let creator = (Seq.head creators).Value

- if String.IsNullOrWhiteSpace(creator) then

- [| unknownAuthor |]

- else

- creator.Split(';')

- let getPagesWord (document:WordprocessingDocument) =

- // return page count

- Int32.Parse(document.ExtendedFilePropertiesPart.Properties.Pages.Text)

- let getAuthorsPdf (document:PdfReader) =

- // For PDF documents perform the split on a ","

- if document.Info.ContainsKey(authorKey) then

- let creators = document.Info.[authorKey]

- if String.IsNullOrWhiteSpace(creators) then

- [| unknownAuthor |]

- else

- creators.Split(',')

- else

- [| unknownAuthor |]

- let getPagesPdf (document:PdfReader) =

- // return page count

- document.NumberOfPages

- // Map the data from input name/value to output name/value

- override self.Map (filename:string) (document:Stream) =

- let result =

- match Path.GetExtension(filename) with

- | WordDocument ->

- // Get access to the word processing document from the input stream

- use document = WordprocessingDocument.Open(document, false)

- // Process the word document with the mapper

- let pages = getPagesWord document

- let authors = (getAuthorsWord document)

- // close document

- document.Close()

- Some(pages, authors)

- | PdfDocument ->

- // Get access to the pdf processing document from the input stream

- let document = new PdfReader(document)

- // Process the pdf document with the mapper

- let pages = getPagesPdf document

- let authors = (getAuthorsPdf document)

- // close document

- document.Close()

- Some(pages, authors)

- | UnsupportedDocument ->

- None

- if result.IsSome then

- snd result.Value

- |> Seq.map (fun author -> (author, (box << fst) result.Value))

- else

- Seq.empty

- // Calculates the total pages per author

- type OfficePageReducer() =

- inherit ReducerBase()

- override self.Reduce (key:string) (values:seq<string>) =

- let totalPages =

- values |>

- Seq.fold (fun pages value -> pages + Int32.Parse(value)) 0

- box totalPages

MSDN.Hadoop.Submission.Console.exe -input "office/documents" -output "office/authors"

-mapper "MSDN.Hadoop.MapReduceFSharp.OfficePageMapper, MSDN.Hadoop.MapReduceFSharp"

-reducer "MSDN.Hadoop.MapReduceFSharp.OfficePageReducer, MSDN.Hadoop.MapReduceFSharp"

-combiner "MSDN.Hadoop.MapReduceFSharp.OfficePageReducer, MSDN.Hadoop.MapReduceFSharp"

-file "%HOMEPATH%\MSDN.Hadoop.MapReduceFSharp\bin\Release\MSDN.Hadoop.MapReduceFSharp.dll"

-file "C:\Reference Assemblies\itextsharp.dll" -format BinaryOptional Parameters

To support some additional Hadoop Streaming options a few optional parameters are supported.

-numberReducers X

As expected this specifies the maximum number of reducers to use.

-debug

The option turns on verbose mode and specifies a job configuration to keep failed task outputs.

To view the the supported options one can use a help parameters, displaying:

Command Arguments:

-input (Required=true) : Input Directory or Files

-output (Required=true) : Output Directory

-mapper (Required=true) : Mapper Class

-reducer (Required=true) : Reducer Class

-combiner (Required=false) : Combiner Class (Optional)

-format (Required=false) : Input Format |Text(Default)|Binary|Xml|

-numberReducers (Required=false) : Number of Reduce Tasks (Optional)

-file (Required=true) : Processing Files (Must include Map and Reduce Class files)

-nodename (Required=false) : XML Processing Nodename (Optional)

-debug (Required=false) : Turns on Debugging OptionsUI Submission

The provided submission framework works from a command-line. However there is nothing to stop one submitting the job using a UI; albeit a command console is opened. To this end I have put together a simple UI that supports submitting Hadoop jobs.

This simple UI supports all the necessary options for submitting jobs.

Code Download

As mentioned the actual Executables and Source code can be downloaded from:

http://code.msdn.microsoft.com/Framework-for-Composing-af656ef7

The source includes, not only the .Net submission framework, but also all necessary Java classes for supporting the Binary and XML job submissions. This relies on a custom Streaming JAR which should be copied to the Hadoop lib directory.

To use the code one just needs to reference the EXE’s in the Release directory. This folder also contains the MSDN.Hadoop.MapReduceBase.dll that contains the abstract base class definitions.

Moving Forward

Moving forward there a few considerations for the code, that I will be looking at over time:

Currently the abstract interfaces are all based on Object return types. Moving forward it would be beneficial if the types were based on Generics. This would allow a better serialization process. Currently value serialization is based on string representation of an objects value and the key is restricted to s string. Better serialization processes, such as Protocol Buffers, or .Net Serialization, would improve performance.

Currently the code only supports a single key value, although the multiple keys are supported by the streaming interface. Various options are available for dealing with multiple keys which will next be on my investigation list.

In a separate post I will cover what is actually happening under the covers.

If you find the code useful and/or use this for your MapReduce jobs, or just have some comments, please do let me know.

Avkash Chauhan (@avkashchauhan) explained Programmatically retrieving Task ID and Unique Reducer ID in MapReduce in a 4/10/2012 post:

For each Mapper and Reducer you can get [both] Task attempt id and Task ID. This can be done when you set up your map using the Context object. You may also know that when setting a Reducer, an unique reduce ID is used inside reducer class setup method. You can get this ID as well.

There are multiple ways you can get this info:

1. Using JobConf Class.

- JobConf.get("mapred.task.id") will provide most of the info related with Map and Reduce task along with attempt id.

2. You can use Context Class and use as below:

- To get task attempt ID - context.getTaskAttemptID()

- Reducer Task ID - Context.getTaskAttemptID().getTaskID()

- Reducer Number - Context.getTaskAttemptID().getTaskID().getId()

<Return to section navigation list>

SQL Azure Database, Federations and Reporting

Wayne Berry returns to the Windows Azure blog with his SQL Azure Database Query Usage (CPU) Graph in the Management Portal post of 4/10/2012:

Within the SQL Azure Management Portal, customers can check their service usage with the help of various graphs. One of these graphs is the SQL Azure Database Query Usage (CPU) graph, which provides information on the amount of execution time for all queries running on a SQL Azure database over a trailing three month period.

In some cases, this graph was not rendering properly for customers, displaying an error message instead. This error was purely an issue with the portal and not with the underlying SQL Azure databases. We have removed the graph from the management portal, and are looking for alternative ways to surface the same data in a reliable and stable manner. We do apologize for any inconvenience this change has caused.

To learn more about the SQL Azure Management Portal, you can view this video.

Cihan Biyikoglu (@cihangirb) described AzureWatch for Monitoring Federated Databases in SQL Azure in a 4/9/2012 post:

AzureWatch has a great new experience now for watching Federations.

With AzureWatch it gets easier to monitor the whole deployment in an aggregate view for the following resource dimensions;

Database Size

- Number of open transactions

- Number of open connections

- Number of blocking queries

- Number of federated members (root database only)

Igor writes about the support in-depth here;

<Return to section navigation list>

MarketPlace DataMarket, Social Analytics, Big Data and OData

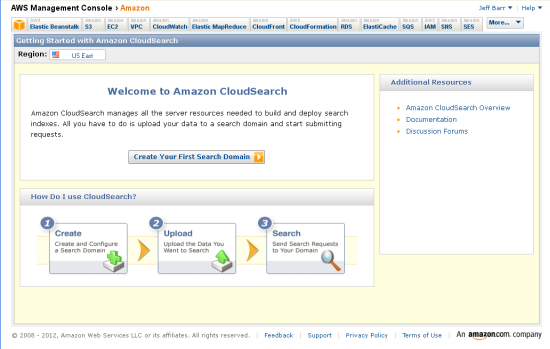

Mary Jo Foley (@maryjofoley) asserted “Microsoft is moving its Bing Search programming interface to the Windows Azure Marketplace and turning it into a paid subscription service” in a deck for her Microsoft's answer to Amazon's CloudSearch: Bing on Azure Marketplace post of 4/12/2012 to ZDNet’s All About Microsoft blog:

The same day that Amazon announced the beta of its new CloudSearch technology, Microsoft announed its counterstrike: Moving the Bing Search application programming interface (API) to the Windows Azure Marketplace.

The Windows Azure Marketplace is the site where Microsoft and third party vendors can sell (or offer for free) their data, apps and services.

Microsoft officials said the Bing API Marketplace transition will “begin in several weeks and take a few months to complete.” Via a post to the Bing Developer blog on April 12, officials did say that Microsoft plans to make the API available on a monthly subscription basis.

“Developers can expect subscription pricing to start at approximately $40 (USD) per month for up to 20,000 queries each month,” according to the post. However, “(d)uring the transition period, developers will be encouraged to try the Bing Search API for free on the Windows Azure Marketplace, before we begin charging for the service.”

In the interim, Microsoft is advising developers that they can continue to use the Bing Search API 2.0 for free. After the transition, it will no longer be free for public use and will be available from the Azure Marketplace only.

“We understand that many of you are using the API as an important element in your websites and applications, and we will continue to share details with you through the Bing Developer Blog as we approach the transition,” the Softies added.

Amazon CloudSearch, which went to beta today, is Amazon’s new managed search service in the cloud designed to allow developers to add search to their applications. Amazon officials blogged today that customers can set it up and start processing queries in less than an hour for less than $100 per month. The service relies on a set of CloudSearch APIs that can be managed through the Amazon Web Services console.

Alex James (@adjames) concluded his WCF Actions series with Actions in WCF Data Services – Part 3: A sample provider for the Entity Framework on 4/12/2012:

This post is the last in a series on Actions in WCF Data Services. The series was started with an example experience for defining actions (Part 1) and how IDataServiceActionProvider works (Part 2). In this post we’ll go ahead and walk through a sample action provider implementation that delivers the experience outlined in part 1 for the Entity Framework.

If you want to walk through the code while reading this you’ll find the code here: http://efactionprovider.codeplex.com.

Implementation Strategy

The center of the implementation is the EntityFrameworkActionProvider class that derives from ActionProvider. The EntityFrameworkActionProvider is specific to EF, whereas the ActionProvider provides a generic starting point for an implementation of IDataServiceActionProvider that enables the experience outlined in Part 1.

There is quite a bit going on in the sample code, so I can’t walk through all of it in a single blog post, instead I’ll focus on the most interesting bits of the code:Finding Actions

Data Service providers can be completely dynamic, producing a completely different model for each request. However with the built-in Entity Framework provider the model is static, basically because the EF model is static, also the actions are static too, because they are defined in code using methods with attributes on them. This all means one thing – our implementation can do a single reflection pass to establish what actions are in the model and cache that for all requests.

So every time the EntityFrameActionProvider is constructed, it first checks a cache of actions defined on our data source, which in our case is a class derived from EF’s DBContext. If a cache look up it successful great, if not it uses the ActionFactory class to go an construct ServiceActions for every action declared on the DBContext.

The algorithm for the ActionFactory is relatively simple:

- It is given the Type of the class that defines all the Actions. For us that is the T passed to DataService<T>, which is a class derived from EF’s DBContext.

- It them looks for methods with one of these attributes [Action], [NonBindableAction] or [OccasionallyBindableAction], all of which represent different types of actions.

- For each method it finds it then uses the IDataServiceMetadataProvider it was with constructed with to convert the parameters and return types into ResourceTypes.

- At this point it can construct a ServiceAction for each.

Parameter Marshaling

When an action is actually invoked we need to convert any parameters from WCF Data Services internal representation into objects that the end users methods can actually handle. It is likely that marshaling will be quite different for different underlying providers (EF, Reflection, Custom etc), so the ActionProvider uses an interface IParameterMarshaller, whenever it needs to convert parameters. The EF’s parameter marshaled looks like this:

public class EntityFrameworkParameterMarshaller: IParameterMarshaller

{

static MethodInfo CastMethodGeneric = typeof(Enumerable).GetMethod("Cast");

static MethodInfo ToListMethodGeneric = typeof(Enumerable).GetMethod("ToList");public object[] Marshall(DataServiceOperationContext operationContext, ServiceAction action, object[] parameters)

{

var pvalues = action.Parameters.Zip(parameters, (parameter, parameterValue) => new { Parameter = parameter, Value = parameterValue });

var marshalled = pvalues.Select(pvalue => GetMarshalledParameter(operationContext, pvalue.Parameter, pvalue.Value)).ToArray();return marshalled;

}

private object GetMarshalledParameter(DataServiceOperationContext operationContext, ServiceActionParameter serviceActionParameter, object value)

{

var parameterKind = serviceActionParameter.ParameterType.ResourceTypeKind;

// Need to Marshall MultiValues i.e. Collection(Primitive) & Collection(ComplexType)

if (parameterKind == ResourceTypeKind.EntityType)

{// This entity is UNATTACHED. But people are likely to want to edit this...

IDataServiceUpdateProvider2 updateProvider = operationContext.GetService(typeof(IDataServiceUpdateProvider2)) as IDataServiceUpdateProvider2;

value = updateProvider.GetResource(value as IQueryable, serviceActionParameter.ParameterType.InstanceType.FullName);

value = updateProvider.ResolveResource(value); // This will attach the entity.

}

else if (parameterKind == ResourceTypeKind.EntityCollection)

{

// WCF Data Services constructs an IQueryable that is NoTracking...

// but that means people can't just edit the entities they pull from the Query.

var query = value as ObjectQuery;

query.MergeOption = MergeOption.AppendOnly;

}

else if (parameterKind == ResourceTypeKind.Collection)

{

// need to coerce into a List<> for dispatch

var enumerable = value as IEnumerable;

// the <T> in List<T> is the Instance type of the ItemType

var elementType = (serviceActionParameter.ParameterType as CollectionResourceType).ItemType.InstanceType;

// call IEnumerable.Cast<T>();

var castMethod = CastMethodGeneric.MakeGenericMethod(elementType);

object marshalledValue = castMethod.Invoke(null, new[] { enumerable });

// call IEnumerable<T>.ToList();

var toListMethod = ToListMethodGeneric.MakeGenericMethod(elementType);

value = toListMethod.Invoke(null, new[] { marshalledValue });

}

return value;

}

}This is probably the hardest part of the whole sample because it involves understanding what is necessary to make the parameters you pass to the service authors action methods updatable (remembers Actions generally have side-effects).

For example when Data Services see’s something like this: POST http://server/service/Movies(1)/Checkout

It builds a query to represent the Movie parameter to the Checkout action, however when Data Services is building queries, it doesn’t need the Entity Framework to track the results – because all it is doing is serializing the entities and then discarding them. However in this example, we need to take the query and actually retrieve the object in such as way that it is tracked by EF, so that if it gets modified inside the action EF will notice and push changes back to the Database during SaveChanges.

Delaying invocation

As discussed in Part 2, we need to delay actual invocation of the action until SaveChanges(), to do this we return an implementation of an interface called IDataServiceInvokable:

public class ActionInvokable : IDataServiceInvokable

{

ServiceAction _serviceAction;

Action _action;

bool _hasRun = false;

object _result;public ActionInvokable(DataServiceOperationContext operationContext, ServiceAction serviceAction, object site, object[] parameters, IParameterMarshaller marshaller)

{

_serviceAction = serviceAction;

ActionInfo info = serviceAction.CustomState as ActionInfo;

var marshalled = marshaller.Marshall(operationContext,serviceAction,parameters);info.AssertAvailable(site,marshalled[0], true);

_action = () => CaptureResult(info.ActionMethod.Invoke(site, marshalled));

}

public void CaptureResult(object o)

{

if (_hasRun) throw new Exception("Invoke not available. This invokable has already been Invoked.");

_hasRun = true;

_result = o;

}

public object GetResult()

{

if (!_hasRun) throw new Exception("Results not available. This invokable hasn't been Invoked.");

return _result;

}

public void Invoke()

{

try

{

_action();

}

catch {

throw new DataServiceException(

500,

string.Format("Exception executing action {0}", _serviceAction.Name)

);

}

}

}As you can see this does a couple of things, it creates an Action (this time a CLR one - just to confuse the situation i.e. a delegate that returns void), that actually calls the method on your DBContext via reflection passing the marshaled parameters. It also has a few guards, one to insure that Invoke() is only called once, and another to make sure GetResult() is only called after Invoke().

Actions that are bound *sometimes*

Part 1 and 2 introduce the concept of occasionally bindable actions, but basically an action might not always be available in all states, for example you might not always be able to checkout a movie (perhaps you already have it checked out).

The ActionInfo class has an IsAvailable(…) method which is used by the ActionProvider whenever WCF Data Services need to know if an Action should be advertised or not. The implementation of this will call the method specified in the [OccasionallyBindableAction] attribute. The code is complicated because it supports always return true without actually checking if the actual availability method is too expensive to call repeatedly. This is indicated using the [SkipCheckForFeeds] attribute.

Getting the Sample Code

The sample is on codeplex made up of two projects:

- ActionProviderImplementation – the actual implementation of the Entity Framework action provider.

- MoviesWebsite – a sample MVC3 website that exposes a simple OData Service

Summary

For most of you downloading the samples and using them to create a OData Service with Actions over the Entity Framework is all you’ll need.

However if you are actually considering tweaking the example or building your own from scratch hopefully you now have enough context.

Alex James (@adjames) concluded his WCF Actions series with Actions in WCF Data Services – Part 2: How IDataServiceActionProvider works on 4/11/2012:

In this post we will explorer the IDataServiceActionProvider interface, which must be implemented to add Actions to a WCF Data Service.

However if you are simply creating an OData Service and you can find an implementation of IDataServiceActionProvider that works for you (I’ll post sample code with Part 3) then you can probably skip this post.

Now before we continue, to understand this post fully you’ll need to be familiar with Custom Data Service Providers and a good place to start is here.

IDataServiceActionProvider

Okay so lets take a look at the actions interface:

public interface IDataServiceActionProvider

{

bool AdvertiseServiceAction(

DataServiceOperationContext operationContext,

ServiceAction serviceAction,

object resourceInstance,

bool resourceInstanceInFeed,

ref ODataAction actionToSerialize);

IDataServiceInvokable CreateInvokable(

DataServiceOperationContext operationContext,

ServiceAction serviceAction,

object[] parameterTokens);

IEnumerable<ServiceAction> GetServiceActions(

DataServiceOperationContext operationContext);

IEnumerable<ServiceAction> GetServiceActionsByBindingParameterType(

DataServiceOperationContext operationContext,

ResourceType bindingParameterType);

bool TryResolveServiceAction(

DataServiceOperationContext operationContext,

string serviceActionName,

out ServiceAction serviceAction);

}

You’ll notice that every method either takes or returns a ServiceAction. ServiceAction is the metadata representation of an Action, that includes information like the Action name, its parameters, its ReturnType etc.When you implement IDataServiceActionProvider you are augmenting the metadata for your service which is defined by your services implementation of IDataServiceMetadataProvider with Actions and handling dispatch to those actions as appropriate.

We added this new interface rather than creating a new version of IDataServiceMetadataProvider because we didn’t have time to add an Action implementation for the built-in Entity Framework and Reflection provider, but we still wanted you be able to add actions when using those providers. This separation of concerns allows you to use the built-in providers and layer in support for Actions on the side.

However one problem remains: to create a new Action you will need access to the ResourceTypes in the your service, so you can create Action parameters and specify Action ReturnTypes. Previously you couldn’t get at the ResourceTypes unless you created a completely custom provider. So to give you access to the ResourceTypes we added an implementation of IServiceProvider to the DataServiceOperationContext class which is passed to every one of the above methods.

Now anywhere you have one of these operationContexts you can get the current implementation of IDataServiceMetadataProvider (and thus the ResourceTypes) like this:

var metadata = operationContext.GetService(typeof(IDataServiceMetadataProvider)) as IDataServiceMetadataProvider;

Exposing ServiceActions

There are 3 methods that the Data Services Server uses to learn about actions:

- GetServiceActions(DataServiceOperationContext) – returns every ServiceAction in the service, and is used when we need all the metadata, i.e. if someone goes to $metadata

- GetServiceActionsByBindingParameterType(DataServiceOperationContext,ResourceType) – returns every ServiceAction that can be bound to the ResourceType specified. This is used when we are returning an entity and we want to include information about Actions that can be invoked against that entity. The contract here is you should only include Actions that take the specified ResourceType exactly (i.e. no derived types) as the binding parameter to the action. We will call this method once for each ResourceType we encounter during serialization.

- TryResolveServiceAction(DataServiceOperationContext,serviceActionName,out serviceAction) – return true if a ServiceAction with the specified name is found.

Now you could clearly implement both GetServiceActionsByBindingParameterType(..) and TryResolveServiceAction(..) by calling GetServiceActions(..), but Data Services tries to avoid loading all the metadata at once wherever possible, so you get the opportunity to provide more efficient targeted implementations.

Basically 99% of the time Data Services doesn’t need every ServiceAction, so it won’t ask for all of them most of the time.

To expose an Action you simply create a ServiceAction and return it from these methods as appropriate. For example to create a ServiceAction that corresponds to this C# signature (where Movie is an entity):

void Rate(Movie movie, int rating)

You would do something like this:

ServiceAction movieRateAction = new ServiceAction(

“Rate”, // name of the action

null, // no return type i.e. void

null, // no return type means we don’t need to know the ResourceSet so use null.

OperationParameterBindingKind.Always, // i.e this action is always bound to an Movie entities

// other options are Never and Always.

new [] {

new ServiceActionParameter(“movie”, movieResourceType),

new ServiceActionParameter(“rating”, ResourceType.GetPrimitiveType(typeof(int)))

}

);As you can see nothing too tricky here.

Advertizing ServiceActions

If you looked at the first post you’ll remember that some Actions are available only in certain states. This is configured when you create your the ServiceAction, something like this:

ServiceAction checkoutMovieAction = new ServiceAction(

“Checkout”, // name of the action

ResourceType.GetPrimitiveType(typeof(bool)), // Edm.Boolean is the returnType

null, // the returnType is a bool, so it doesn’t have a ResourceSet

OperationParameterBindingKind.Sometimes, // You can’t always checkout a movie

new [] { new ServiceActionParameter(“Movie”, movieResourceType) }

);Notice in this example the OperationParameterBindingKind is set to Sometimes which means the Checkout Action is not available for every Movie. So when DataServices returns a Movie it will check with the ActionProvider to see if the Action is currently available. Which it does by calling:

bool AdvertiseServiceAction(

DataServiceOperationContext operationContext,

ServiceAction serviceAction, // the action that the server knows MAY be bound.

object resourceInstance, // the entity which MAY allow the action to be bound.

bool resourceInstanceInFeed, // whether the server is serializing a single entity or a feed (expect multiple calls).

ref ODataAction actionToSerialize); // modifying this parameter allows you to do customize things like the URL

// the client will POST to to invoke the action.For example you might check if the current user (i.e. HttpContext.Current.User) has a Movie checked out already, to decide whether they can Checkout that Movie or not.

The resourceInstanceInFeed parameter needs a special mention. Sometimes working out whether an Action is available is time or resource intensive, for example if you have to do a separate database query. Generally this isn’t a problem if you are returning just one Entity, but if you are returning a whole feed of entities it is clearly undesirable. The OData protocol says that in situations like this you should err by exposing actions that aren’t actually available (and fail later if they are invoked). WCF Data Services doesn’t know if it is expensive to establish action availability, so to help you decide whether to do the check it lets you know whether you are in a feed or not. This way your Action provider can just return true, it if knows it is costly to calculate and it is in a feed.

Invoking ServiceActions

When an action is invoked, your implementation of this is called:

IDataServiceInvokable CreateInvokable(

DataServiceOperationContext operationContext,

ServiceAction serviceAction,

object[] parameterTokens);Notice you don’t actually invoke the action immediately instead you return an implementation of IDataServiceInvokable, which looks like this:

public interface IDataServiceInvokable

{

object GetResult();

void Invoke();

}As you can see this is a simple interface, but why do we delay calling the action?

Well actions generally have side-effects so they need to work in conjunction with the UpdateProvider (or IDataServiceUpdateProvider2), to actually save those changes to disk. To support Actions you need an Update Provider like the built-in Entity Framework provider that implements the new IDataServiceUpdateProvider2 interface:

public interface IDataServiceUpdateProvider2 : IDataServiceUpdateProvider, IUpdatable

{

void ScheduleInvokable(IDataServiceInvokable invokable);

}This allows WCF Data Services to schedule arbitrary work to happen during IDataServiceUpdateProvider.SaveChanges(..), which allows update providers and action providers to be written independently. Which is great because if you are using the Entity Framework you really don’t want to have to write an update provider from scratch.

Now when you implement IDataServiceInvokable you are responsible for 3 things:

- Capturing and potentially marshaling the parameters.

- Dispatching the parameters to the code that actually implements the Action when Invoke() is called.

- Storing any results from Invoke() so they can be retrieved using GetResult()

The parameters themselves are passed as tokens. This is because it is possible to write a Data Service Provider that works with tokens that represent resources, if this is the case you may need to convert these token into actual resources before dispatching to the actual action. What is required depends 100% on the rest of the provider code, so it is impossible to say exactly what you need to do here. However in part 3 well explore doing this for the Entity Framework.

If the first parameter to the action is a binding parameter (i.e. an EntityType or a Collection of EntityTypes) it will be passed as an un-enumerated IQueryable. Most of the time this isn’t too interesting but it does mean you can do nifty tricks like write an action that doesn’t actually retrieve the entities from the database if appropriate.

Summary

This post walked you through the design of IDataServiceActionProvider and the expectations for people implementing this interface. While this is quite a tricky interface to implement, it is low level code and hopefully you will be able to find an existing implementation that works for you. Indeed in Part 3 we will share and walk through an sample implementation for the Entity Framework designed to deliver the Service Author Experience we introduced in Part 1.

Andrew Brust (@andrewbrust, pictured below) asked “A Technical Fellow at Microsoft says we’re headed for an in-memory database tipping point. What does this mean for Big Data?” in a deck for his In-memory databases at Microsoft and elsewhere post of 4/11/2012:

Yesterday, Microsoft’s Dave Campbell, a Technical Fellow on the SQL Server team, posted to the SQL Server team blog on the subject of in-memory database technology. Mary Jo Foley, our “All About Microsoft” blogger here at ZDNet, provided some analysis on Campbell’s thoughts in a post of her own. I read both, and realized there’s an important Big Data side to this story.

In a nutshell

In his post, Campbell says in-memory is about to hit a tipping point and, rather than leaving that assertion unsubstantiated, he provided a really helpful explanation as to why.

Campbell points out that there’s been an interesting confluence in the database and computing space:

- Huge advances in transistor density (and, thereby, in memory capacity and multi-core ubiquity)

- As-yet untranscended limits in disk seek times (and access latency in general)

This combination of factors is leading — and in some cases pushing — the database industry to in-memory technology. Campbell says that keeping things closer to the CPU, and avoiding random fetches from electromechanical hard disk drives, are the priorities now. That means bringing entire databases, or huge chunks of them, into memory, where they can be addressed quickly by processors.

Compression and column stores

Compression is a big part of this and, in the Business Intelligence world, so are column stores. Column stores keep all values for a column (field) next to each other, rather than doing so with all the values in a row (record). In the BI world, this allows for fast aggregation (since all the values you’re aggregating are typically right next to each other) and high compression rates.

Microsoft’s xVelocity technology (branded as “VertiPaq” until quite recently) uses in-memory column store technology. The technology manifested itself a few years ago as the engine behind PowerPivot, a self-service BI add-in for Excel and SharePoint. With the release of SQL Server 2012, this same engine has been implemented inside Microsoft’s full SQL Server Analysis Services component, and had been adapted for use as a special columnstore index type in the SQL Server relational database as well.

The BD Angle

How does this affect Big Data? I can think of a few ways:

- As I’ve said in a few posts here, Massively Parallel Processing (MPP) data warehouse appliances are Big Data products. A few of them use columnar, in-memory technology. Campbell even said that columnstore indexes will be added to Microsoft’s MPP product soon. So MPP has already started to go in-memory.

- Some tools that can connect to Hadoop and can provide analysis and data visualization services for its data, may use in-memory technology as well. Tableau is one example of a product that does this.

- Databases used with Hadoop, like HBase, Cassandra and HyperTable, fall into the “wide column store” category of NoSQL databases. While NoSQL wide column stores and BI column store databases are not identical, their technologies are related. That creates certain in-memory potential for HBase and other wide column stores, as their data is subject to high rates of compression.

Keeping Hadoop in memory

Hadoop’s MapReduce approach to query processing, to some extent, combats disk latency though parallel computation. This seems ripe for optimization though. Making better use of multi-core processing within a node in the Hadoop cluster is one way to optimize. I’ve examined that in a recent post as well.

- Also Read: Making Hadoop optimizations Pervasive

Perhaps using in-memory technology in place of disk-based processing is another way to optimize Hadoop. Perhaps we could even combine the approaches: Campbell points out in his post that the low latency of in-memory technology allows for better utilization of multi-cores.

Campbell also says in-memory will soon work its way into transactional databases and their workloads. That’s neat, and I’m interested in seeing it. But I’m also interested in seeing how in-memory can take on Big Data workloads.

Perhaps the Hadoop Distributed File System (HDFS) might allow in-memory storage to be substituted in for disk-based storage. Or maybe specially optimized solid state disks will be built that have performance on par with RAM (Random Access Memory). Such disks could then be deployed to nodes in a Hadoop cluster.

No matter what, MapReduce, powerful though it is, leaves some low hanging fruit for the picking. The implementation of in-memory technology might be one such piece of fruit. And since Microsoft has embraced Hadoop, maybe it will take a run at making it happen.

Addendum

For an approach to Big Data that does use in-memory technology but does not use Hadoop, check out JustOneDB. I haven’t done much due diligence on them, but I’ve talked to their CTO, Duncan Pauly, about the product. He and the company seem very smart and have some fairly breakthrough ideas about databases today and how they need to change.

The current Apache Hadoop on Windows Azure CTP requires all data used by Hadoop clusters to be held in memory.

The WCF Data Services Team explained What happened to application/json in WCF DS 5.0? in a 4/11/2012 post:

The roadmap for serialization formats

We have been talking for a while about a more efficient format for JSON serialization. The new serialization format will be part of the OData v3 protocol, and we believe that the much-improved JSON format should be the default response when requesting application/json.

You may notice that when you upgrade to WCF DS 5.0, requesting the old JSON format is a bit different. To get the old JSON format, you must now explicitly request application/json;odata=verbose or set the MaxDataServiceVersion header to 2.0.

When will I get a 415 response and what can I do about it?

WCF Data Services 5.0 will return a 415 in response to a request for application/json if the service configuration allows a v3 response and if the client does not specify a MaxDataServiceVersion header or specifies a MaxDataServiceVersion header of 3.0. Clients should always send a value for the MaxDataServiceVersion header to ensure forward compatibility.

There are two ways to get a response formatted with JSON verbose:

1. Set the MaxDataServiceVersion header to 2.0 or less. For instance:

GET http://odata.example.org/Items?$top=5 HTTP/1.1

Host: odata.example.org

Connection: keep-alive

User-Agent: Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.152 Safari/535.19

Accept: application/json

Accept-Encoding: gzip,deflate,sdch

Accept-Language: en-US,en;q=0.8

Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.3

MaxDataServiceVersion: 2.0

2. Request a content type of application/json;odata=verbose. For instance:

GET http://odata.example.org/Items?$top=5 HTTP/1.1

Host: odata.example.org

Connection: keep-alive

User-Agent: Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.152 Safari/535.19

Accept: application/json;odata=verbose

Accept-Encoding: gzip,deflate,sdch

Accept-Language: en-US,en;q=0.8

Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.3

MaxDataServiceVersion: 3.0

It’s possible to modify the headers with jQuery as follows:

$.ajax(url, {

dataType: "json", // this tells jquery that you are expcting a json object and so it will parse the data for you.

beforeSend: function (xhr) {

xhr.setRequestHeader("Accept", "application/json;odata=verbose");

xhr.setRequestHeader("MaxDataServiceVersion", "3.0");

},

success: function (data) {

// do something interesting with the data you get back.

}

});

Why did WCF DS 5.0 ship before JSON light?

We had a set of features that were ready to be released as part of WCF DS 5.0. The more efficient JSON implementation is currently in progress and will be released sometime later this year.

I would have rather seen the resources devoted to WCF Actions be concentrated on JSON formatting.

Rob Collie (@PowerPivotPro) posted DataMarket Revisited: The Truth is Out There on 4/10/2012:

How many discoveries are right under our noses,

if only we cross-referenced the right data sets?Convergence of Multiple “Thought Streams”

Yeah, I love quoting movies. And tv shows. And song lyrics. But it’s not the quoting that I enjoy – it’s the connection. Taking something technical, for instance, and spotting an intrinsic similarity in something completely unrelated like a movie – I get a huge kick out of that.

That tendency to make connections kinda flows through my whole life – sometimes, it’s even productive and not just entertaining.

Anyway, I think I am approaching one of those aha/convergence moments. It’s actually a convergence moment “squared,” because it’s a convergence moment about… convergence. Here are the streams that are coming together in my head:

1) “Expert” thinking is too often Narrow thinking

I’ve read a number of compelling articles and anecdotes about this in my life, most recently this one in the New York Times. Particularly in science and medicine, you have to develop so many credentials just to get in the door that it tends to breed a rigid and less creative environment.

And the tragedy is this: a conundrum that stumps a molecular cancer scientist might be solvable, at a glance, by the epidemiologist or the mathematician in the building next door. Similarly, the molecular scientist might breeze over a crucial clue that would literally leap off the page at a graph theorist like my former professor Jeremy Spinrad.

2) Community cross-referencing of data/problems is a longstanding need

Flowing straight out of problem #1 above is this, need #2. And it’s been a recognized need for a long time, by many people.

Swivel and ManyEyes Both Were Attempts at this Problem

I remember being captivated, back in 2006-2007, with a website called Swivel.com. It’s gone now – and I highly recommend reading this “postmortem” interview with its two founders – but the idea was solid: provide a place for various data sets to “meet,” and to harness the power of community to spot trends and relationships that would never be found otherwise. (Apparently IBM did something similar with a project called ManyEyes, but it’s gone now, too).

There is, of course, even a more mundane use than “community research mashups” – our normal business data would benefit a lot by being “mashed up” with demographics and weather data (just to point out the two most obvious).

I’ve been wanting something like this forever. As far back as 2001, when we were working on Office 2003, I was trying to launch a “data market” type of service for Office users. (An idea that never really got off the drawing board – our VP killed it. And, at the time, I think that was the right call).

3) Mistake: Swivel was a BI tool and not just a data marketplace

When I discovered that Swivel was gone, before I read the postmortem, I forced myself to think of reasons why they might have failed. And my first thought was this: Swivel forced you to use THEIR analysis tools. They weren’t just a place where data met. They were also a BI tool.

And as we know, BI tools take a lot of work. They are not something that you just casually add to your business model.

In the interview, the founders acknowledge this, but their choice of words is almost completely wrong in my opinion:

- Check out the two sections I highlighted. The interface is not that important. And people prefer to use what they already have. That gets me leaning forward in my chair.

- YES! People prefer to use the analysis/mashup toolset they already use. They didn’t want to learn Swivel’s new tools, or compensate for the features it lacked. I agree 100%.

- But to then utter the words “the interface is not that important” seems completely wrong to me. The interface, the toolset, is CRITICAL! What they should have said in this interview, I think, is “we should not have tried to introduce a new interface, because interface is critical and the users already made up their mind.”

4) PowerPivot is SCARY good at mashups

I’m still surprised at how simple and magical it feels to cross-reference one data set against another in PowerPivot. I never anticipated this when I was working on PowerPivot v1 back at Microsoft. The features that “power” mashups – relationships and formulas – are pretty… mundane. But in practice there’s just something about it. It’s simple enough that you just DO it. You WANT to do it.

Remember this?

OK, it’s pretty funny. But it IS real data. And it DOES tell us something surprising – I did NOT know, going in, that I would find anything when I mashed up UFO sightings with drug use. And it was super, super, super easy to do.

When you can test theories easily, you actually test them. If it was even, say, 50% more work to mash this up than it actually was, I probably never would have done it. And I think that’s the important point…

PowerPivot’s mashup capability passes the critical human threshold test of “quick enough that I invest the time,” whereas other tools, even if just a little bit harder, do not. Humans prioritize it off the list if it’s even just slightly too time consuming.

Which, in my experience, is basically the same difference as HAVING a capability versus having NO CAPABILITY whatsoever. I honestly think PowerPivot might be the only data mashup tool worth talking about. Yeah, in the entire world. Not kidding.

5) “Export to Excel” is not to be ignored

Another thing favoring PowerPivot as the world’s only practically-useful mashup tool: it’s Excel.

I recently posted about how every data application in the world has an Export to Excel button, and why that’s telling.

Let’s go back to that quote from one of the Swivel founders, and examine one more portion that I think reflects a mistake:

Can I get a “WTF” from the congregation??? R and SAS but NO mention of Excel! Even just taking the Excel Pro, pivot-using subset of the Excel audience (the people who are reading this blog), Excel CRUSHES those two tools, combined, in audience. Crushes them.

Yeah, the mundane little spreadsheet gets no respect. But PowerPivot closes that last critical gap, in a way that continues to surprise even me. Better at business than anything else. Heck, better at science too. Ignore it at your peril.

6) But Getting the Data Needs to be Just as Simple!

So here we go. Even in the UFO example, I had to be handed the data. Literally. Our CEO already HAD the datasets, both the UFO sightings and the drug use data. He gave them to me and said “see if you can do something with this.”

There is no way I EVER would have scoured the web for these data sets, but once they were conveniently available to me, I fired up my convenient mashup tool and found something interesting.

7) DataMarket will “soon” close that last gap

In a post last year I said that Azure DataMarket was falling well short of its potential, and I meant it. That was, and is, a function of its vast potential much more so than the “falling short” part. Just a few usability problems that need to be plugged before it really lights things up, essentially.

On one of my recent trips to Redmond, I had the opportunity to meet with some of the folks behind the scenes.

Without giving away any secrets, let me say this: these folks are very impressive. I love, love, LOVE the directions in which they are thinking. I’m not sure how long it’s going to take for us to see the results of their current thinking.

But when we do, yet another “last mile” problem will be solved, and the network effect of combining “simple access to vast arrays of useful data sets” with “simple mashup tool” will be transformative. (Note that I am not prone to hyperbole except when I am saying negative things, so statements like this are rare from me.)

In the meantime…

While we wait for the DataMarket team’s brainstorms to reach fruition, I am doing a few things.

- I’ve added a new category to the blog for Real-World Data Mashups. Just click here.

- I’m going to do share some workbooks that make consumption of DataMarket simple. Starting Thursday I will be providing some workbooks that are pre-configured to grab interesting data sets from Data Market. Stay tuned.

- I’m likely to run some contests and/or solicit guest posts on DataMarket mashups.

- I’m toying with the idea of Pivotstream offering some free access to certain DataMarket data sets in our Hosted PowerPivot offering.

Rob is CTO of Pivotstream, former co-founder of the PowerPivot team and leader of Excel BI team at MS.

Alex James (@adjames) started a series with Actions in WCF Data Services – Part 1: Service Author Code on 4/10/2012:

If you read our last post on Actions you’ll know that Actions are now in both OData and WCF Data Services and that they are cool:

“Actions will provide a way to inject behaviors into an otherwise data-centric model without confusing the data aspects of the model, while still staying true to the resource oriented underpinnings of OData."

Our key design goal for RTM was to allow you or third party vendors to create an Action Provider that adds Action support to an existing WCF Data Services Provider. Adding Actions support to the built-in Entity Framework Provider for example.

This post is the first of a series that will in turn introduce:

- The Experience we want to enable, i.e. the code the service author writes.

- The Action Provider API and why it is structured as it is.

- A sample implementation of an Action Provider for the Entity Framework

Remember if you are an OData Service author, happy to use an Action Provider written by someone else, all you need worry about is (1), and that is what this post will cover.

Scenario

Imagine that you have the following Entity Framework model

public class MoviesModel: DbContext

{

public DbSet<Actor> Actors { get; set; }

public DbSet<Director> Directors { get; set; }

public DbSet<Genre> Genres { get; set; }

public DbSet<Movie> Movies { get; set; }

public DbSet<UserRental> Rentals { get; set; }

public DbSet<Tag> Tags { get; set; }

public DbSet<User> Users { get; set; }

public DbSet<UserRating> Ratings { get; set; }

}Which you expose as using WCF Data Services configured like this:

config.SetEntitySetAccessRule("*", EntitySetRights.AllRead);

config.SetEntitySetAccessRule("EdmMetadatas", EntitySetRights.None);

config.SetEntitySetAccessRule("Users", EntitySetRights.None);

config.SetEntitySetAccessRule("Rentals", EntitySetRights.None);

config.SetEntitySetAccessRule("Ratings", EntitySetRights.None);

Notice that some of the Entity Framework data is completely hidden (Users,Rentals,Ratings and of course EdmMetadatas) and the rest is marked as ReadOnly. This means in this service people can see information about Movies, Genres, Actors, Directors and Tags, but they currently can’t edit this data through the service. Essentially some of the database is an implementation detail you don’t want the world to see. With Action for the first time it is easy to create a real distinction between your Data Model and your Service Model.Now imagine you have a method on your DbContext that looks something like this:

public void Rate(Movie movie, int rating)

{

var userName = GetUsername();

var movieID = movie.ID;// Retrieve the existing rating by this user (or null if not found).

var existingRating = this.Ratings.SingleOrDefault(r =>

r.UserUsername == userName &&

r.MovieID == movieID

);if (existingRating == null)

{

this.Ratings.Add(new UserRating { MovieID = movieID, Rating = rating, UserUsername = userName });

}

else

{

existingRating.Rating = rating;

}

}This code allows a user to rate a movie, simply by providing a rating and a movie. It uses ambient context to establish who is making the request (i.e. HttpContext.Current.User.Identity.Name), and looks for a rating by that User for that Movie (a user can only rate a movie once), if it finds one it gets modified otherwise a new rating is created.

Target Experience

Now imagine you want to expose this as an action. The first step would be to make your Data Service implement IServiceProvider like this:

public class MoviesService : DataService<MoviesModel>, IServiceProvider

{

public object GetService(Type serviceType)

{

if (typeof(IDataServiceActionProvider) == serviceType)

{

return new EntityFrameworkActionProvider(CurrentDataSource);

}

return null;

}

…

}Where EntityFrameworkActionProvider is the custom action provider you want to use, in this case a sample provider that we’ll put up on codeplex when Part 3 of this series is released (sorry to tease).

Next configure your service to expose Actions, in the InitializeService method:

config.SetServiceActionAccessRule("*", ServiceActionRights.Invoke);

config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V3;As per usual * means all Actions, but you could just as easy expose just the actions you want by name.

Finally with that groundwork done, expose your action by adding the [Action] attribute:

[Action]

public void Rate(Movie movie, int rating)

{With this done the action will show up in $metadata, like this:

<FunctionImport Name="Rate" IsBindable="true">

<Parameter Name="movie" Type="MoviesWebsite.Models.Movie" />

<Parameter Name="rating" Type="Edm.Int32" />

</FunctionImport>

And it will be advertised in Movie entities like this (in JSON format):

{

"d": {

"__metadata": {

…

"actions": {

"http://localhost:15238/MoviesService.svc/$metadata#MoviesModel.Rate": [

{

"title": "Rate",

"target": "http://localhost:15238/MoviesService.svc/Movies(1)/Rate"

}

]

}

},

…

"ID": 1,

"Name": "Avatar"

}

}This action can be invoked using the WCF Data Services Client like this:

ctx.Invoke(

new Uri(“http://localhost:15238/MoviesService.svc/Movies(1)/Rate”),

new BodyParameter(“rating”,4)

);or, if you prefer being closer to the metal, more directly like this:

POST http://localhost:15238/MoviesService.svc/Movies(1)/Rate HTTP/1.1

Content-Type: application/json

Content-Length: 20

Host: localhost:15238{

"rating": 4

}All very easy don’t you think?

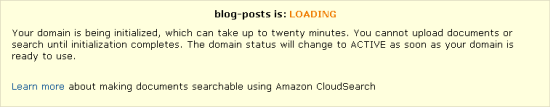

Actions whose availability depends upon Entity State