Introducing Apache Hadoop Services for Windows Azure

The SQL Server Team (@SQLServer) announced Apache Hadoop Services for Windows Azure, a.k.a. Apache Hadoop on Windows Azure or Hadoop on Azure, at the Professional Association for SQL Server (PASS) Summit in October 2011.

• Update 8/23/2012 for Service Update 3 (SU3), released to the Web on 8/21/2012: SU3 updated Hadoop Common, MapReduce, and HDFS to v1.0.1, Hive to 0.8.1, Pig to 0.9.3, and Sqoop to 1.4.2. Versions of Mahout and CMU Pegasus are unchanged from Service Update 2 (SU2). SU3 delivers 3-node clusters; REST management APIs for submitting Hadoop jobs, inquiring about their progress, and killing them; a C# SDK v1.0; PowerShell cmdlets; and direct browser access to a cluster. Stay tuned for a post to Red Gate Software’s ACloudyPlace blog about SU3.

Update 4/2/2012: Updated the Samples Gallery screen capture in step 3 to show five samples added in March 2012, added a note about Apache Sqoop becoming a Top-Level Project, and added links from the TOC to its sections.

Table of Contents

- Introduction

- Tutorial: Running the 10GB GraySort Sample’s TeraGen Job

- Tutorial: Running the 10GB GraySort Sample’s TeraSort Job

- Tutorial: Running the 10GB GraySort Sample’s TeraValidate Job

- Apache Hadoop on Windows Azure Resources

Introduction

Val Fontama’s Availability of Community Technology Preview (CTP) of Hadoop based Service on Windows Azure post of 12/14/2011 described Apache Hadoop on Windows Azure and how to obtain an invitation to the CTP:

In October at the PASS Summit 2011, Microsoft announced expanded investments in “Big Data”, including a new Apache Hadoop™ based distribution for Windows Server and service for Windows Azure. In doing so, we extended Microsoft’s leadership in BI and Data Warehousing, enabling our customers to glean and manage insights for any data, any size, anywhere. We delivered on our promise this past Monday, when we announced the release of the Community Technology Preview (CTP) of our Hadoop based service for Windows Azure.

Today this preview is available to an initial set of customers. Those interested in joining the preview may request to do so by filling out this survey. Microsoft will issue a code that will be used by the selected customers to access the Hadoop based Service. We look forward to making it available to the general public in early 2012. Customers will gain the following benefits from this preview:

- Broader access to Hadoop through simplified deployment and programmability. Microsoft has simplified setup and deployment of Hadoop, making it possible to setup and configure Hadoop on Windows Azure in a few hours instead of days. Since the service is hosted on Windows Azure, customers only download a package that includes the Hive Add-in and Hive ODBC Driver. In addition, Microsoft has introduced new JavaScript libraries to make JavaScript a first class programming language in Hadoop. Through this library JavaScript programmers can easily write MapReduce programs in JavaScript, and run these jobs from simple web browsers. These improvements reduce the barrier to entry, by enabling customers to easily deploy and explore Hadoop on Windows.

- Breakthrough insights through integration with Microsoft Excel and BI tools. This preview ships with a new Hive Add-in for Excel that enables users to interact with data in Hadoop from Excel. With the Hive Add-in customers can issue Hive queries to pull and analyze unstructured data from Hadoop in the familiar Excel. Second, the preview includes a Hive ODBC Driver that integrates Hadoop with Microsoft BI tools. This driver enables customers to integrate and analyze unstructured data from Hadoop using award winning Microsoft BI tools such as PowerPivot and PowerView. As a result customers can gain insight on all their data, including unstructured data stored in Hadoop.

- Elasticity, thanks to Windows Azure. This preview of the Hadoop based service runs on Windows Azure, offering an elastic and scalable platform for distributed storage and compute.

We look forward to your feedback! Learn more at www.microsoft.com/bigdata.

Val Fontama

Senior Product Manager

SQL Server Product Management

…

9. AzureHadoop (or is it HadoopAzure?): Microsoft made available the preview bits for the Hadoop distribution for Windows Azure in December 2011. The final release is slated for March 2012. (Microsoft and partner Hortonworks are also working on an on-premises Hadoop on Windows Server distribution.) Hadoop on Windows Azure is interesting because it combines Microsoft’s big-data plans and products with its cloud platform. The idea Microsoft will be pushing in 2012 is that Hadoop on Azure will give users of Microsoft’s analytics tools, including plain-old Excel, a way to make use of the growing number of data sets stored on Windows Azure.

…

Tutorial: Running the 10GB GraySort Sample’s TeraGen Job

Following is a step-by-step tutorial for running the first process of the 10GB GraySort sample project:

1. After you receive your invitation code, navigate to https://www.hadooponazure.com/ and log in with your Windows Live ID and invitation code to open the Account page with the Request a New Cluster content active. Type a globally unique DNS Name for your cluster (hadoop1 for this example), select a Cluster Size (Large for this example), and type an administrative Username, Password, and password confirmation:

• Update 8/23/2012 for SU3: No-charge cluster size is now fixed at 3 nodes with 1.5 TB disk space with a five-day lifetime, non-renewable. Users who want m

Note: There is no charge for Windows Azure resources used during the CTP, so you don’t need to provide a credit card to create your cluster.

2. When your cluster is provisioned, the Account page’s content changes to include tiles to create a new job as well as access your cluster by different methods:

Note: You must renew your cluster every two days. It’s clear that faux-Metro UIs are de rigueur at Microsoft these days.

3. Click the Samples tile to open the Account/Sample Gallery page, which describes the currently available samples as of 4/2/2012.

Note: The above screen capture includes five additional sample projects added in March 2012. The Apache Software Foundation described Sqoop as an “Open Source big data tool used for efficient bulk transfer between Apache Hadoop and structured datastores” in its The Apache Software Foundation Announces Apache Sqoop as a Top-Level Project post of 4/2/2012.

4. The GraySort MapReduce sample is a useful starting point because it runs in a reasonably short time (about 4 minutes with a Large cluster), so click the 10GB GraySort tile to open its Account/SampleDetails page, which describes the sample:

5. Click the Deploy to Your Cluster button to automatically populate text boxes with values for the TeraGen program, which generates 10 GB of data:

Note: If you have tried SQL Azure Labs’ Microsoft Codename “Cloud Numerics” CTP, you’ll notice that creating the Hadoop cluster and executing the first MapReduce job is much more automated than the process described in my Deploying “Cloud Numerics” Sample Applications to ... post of 1/28/2012 (updated 1/30/2012).

5. Click the Execute Job button to run the TeraGen program, which initially displays this Job Info page:

6. After a few seconds, the program begins adding lines of Debug Output for the 50 maps in increments of close to 1 percent:

Note: Hadoop automatically repairs the failures reported above, but it’s surprising that lines for 78 and 79 percent are missing.

7. When processing completes, click the left-arrow button to return to the Account page with a tile for the TeraGen process added:

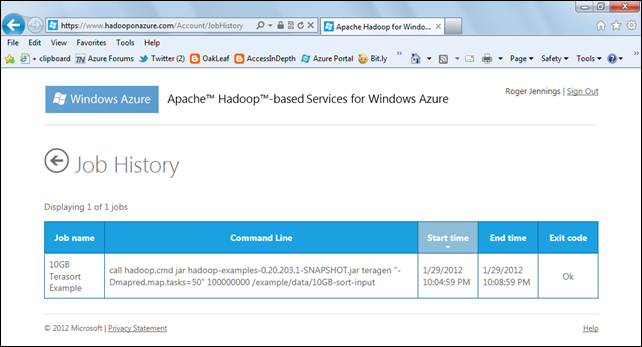

8. Click the Job History tile to display a summary of the preceding operation, which confirms successful completion with an Exit Code = Ok cell:

9. Click the left-arrow button to return to the main Account page and click the Manage Cluster tile to display total storage used (30 GB) and other data source options (Data Market, Windows Azure blob storage, and Amazon S3):
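The TeraGen numbers above can be sanity-checked with a little arithmetic. The following Python sketch assumes decimal units (1 GB = 10**9 bytes), TeraGen’s fixed 100-byte rows, and an even split of rows across the 50 maps with any remainder going to the last map (an illustrative assumption). It also assumes HDFS’s default replication factor of 3, which would make the 30 GB in step 9 consistent with 10 GB of data stored three times. I haven’t confirmed the cluster’s replication setting, and step 16’s additional 10 GB for TeraSort suggests its output isn’t replicated the same way, so treat the 3× reading as plausible rather than confirmed. The function names are hypothetical.

```python
from __future__ import annotations

# Assumptions (not stated in the tutorial): decimal units, TeraGen's
# 100-byte rows, and HDFS's default dfs.replication value of 3.
ROW_BYTES = 100
DEFAULT_REPLICATION = 3


def teragen_rows(total_bytes: int, row_bytes: int = ROW_BYTES) -> int:
    """Number of fixed-size rows needed to produce total_bytes of output."""
    return total_bytes // row_bytes


def rows_per_map(total_rows: int, num_maps: int) -> list[int]:
    """Equal share per map task; the last map also takes any remainder."""
    share = total_rows // num_maps
    splits = [share] * (num_maps - 1)
    splits.append(total_rows - share * (num_maps - 1))
    return splits


def raw_storage_bytes(logical_bytes: int,
                      replication: int = DEFAULT_REPLICATION) -> int:
    """Disk space used when every HDFS block is stored `replication` times."""
    return logical_bytes * replication


if __name__ == "__main__":
    data_bytes = 10 * 10**9                 # the sample's 10 GB
    rows = teragen_rows(data_bytes)
    splits = rows_per_map(rows, 50)         # mapred.map.tasks=50
    print(f"rows={rows:,} per-map={splits[0]:,}")
    print(f"raw storage={raw_storage_bytes(data_bytes) / 10**9:.0f} GB")
```

Run as a script, it reports 100,000,000 rows, 2,000,000 rows per map, and 30 GB of raw storage under the stated assumptions.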

Tutorial: Running the 10GB GraySort Sample’s TeraSort Job

10. Return to the Account page, click the Samples tile to open the Account/Samples page (see step 3), click the 10GB GraySort tile to open its Account/SampleDetails page (see step 4), and click the Deploy to Your Cluster button to open the Create Job page.

11. Replace teragen with terasort in the Parameter 1 text box, add a space and -Dmapred.reduce.tasks=25 as a suffix to the existing Parameter 2 text box value, and change the Parameter 3 text box’s value to /example/data/10GB-sort-input /example/data/10GB-sort-out:

12. Click the top, middle, and bottom arrow symbols adjacent to the three text boxes to validate the three parameter values:

Note: If you receive Invalid instead of OK for any of the parameter values and you’ve verified the content is identical to the above, click the garbage can symbols next to the offending text boxes to remove them, click the Add Parameter button three times to recreate them, retype the parameter values shown above, and click the validation arrows again.

The Final Command should read:

Hadoop jar hadoop-examples-0.20.203.1-SNAPSHOT.jar terasort "-Dmapred.map.tasks=50 -Dmapred.reduce.tasks=25" /example/data/10GB-sort-input /example/data/10GB-sort-out

13. Click the Execute Job button to start the sorting process:

14. About 45 minutes after you start the job, the StdOutput and StdError results appear:

Notice that Reduce operations don’t occur until Mapping is ~80% complete.

15. Click the Job History tile to display the summary for the TeraSort option:

16. Return to the Account page and click the Manage Cluster tile to determine the additional storage space used (10 GB) by the TeraSort operation:
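Step 11’s -Dmapred.reduce.tasks=25 splits the sort across 25 reducers. As I understand TeraSort, it samples the input to choose cut points so that each reducer receives a contiguous key range; concatenating the reducers’ sorted outputs in order then yields one globally sorted data set. The Python sketch below illustrates that general technique (sample-based range partitioning) on in-memory keys. The function names and the sample size are hypothetical, and TeraSort’s actual partitioner is more elaborate.

```python
from __future__ import annotations

import bisect
import random


def choose_split_points(sample: list[bytes], num_reducers: int) -> list[bytes]:
    """Pick num_reducers - 1 cut points evenly spaced through the sorted sample."""
    ordered = sorted(sample)
    if not ordered:
        return []                          # no sample: everything goes to reducer 0
    step = len(ordered) / num_reducers
    return [ordered[int(step * i)] for i in range(1, num_reducers)]


def partition(key: bytes, split_points: list[bytes]) -> int:
    """Reducer index for a key: how many cut points are <= the key."""
    return bisect.bisect_right(split_points, key)


def range_partitioned_sort(keys: list[bytes], num_reducers: int,
                           sample_size: int = 1000,
                           seed: int = 0) -> list[list[bytes]]:
    """Route keys to reducers by key range, then sort each reducer's share."""
    rng = random.Random(seed)
    sample = rng.sample(keys, min(sample_size, len(keys)))
    cuts = choose_split_points(sample, num_reducers)
    buckets: list[list[bytes]] = [[] for _ in range(num_reducers)]
    for key in keys:                       # map side: send each key to its range
        buckets[partition(key, cuts)].append(key)
    return [sorted(b) for b in buckets]    # reduce side: sort within each range


if __name__ == "__main__":
    gen = random.Random(42)
    keys = [gen.randbytes(10) for _ in range(10_000)]  # 10-byte keys, like TeraGen's
    parts = range_partitioned_sort(keys, num_reducers=25)
    merged = [k for part in parts for k in part]
    print(len(parts), merged == sorted(keys))          # prints: 25 True
```

Because partition() never sends a larger key to a lower-numbered reducer, no merge step is needed across reducers; the sample only has to be representative enough to keep the 25 ranges roughly equal in size.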

Tutorial: Running the 10GB GraySort Sample’s TeraValidate Job

17. Return to the Account page, click the Samples tile to open the Account/Samples page (see step 3), click the 10GB GraySort tile to open its Account/SampleDetails page (see step 4), and click the Deploy to Your Cluster button to open the Create Job page.

18. Delete the parameters and add three new empty parameters. Type teravalidate in the Parameter 1 text box and click the arrow to validate it, type "-Dmapred.map.tasks=50 -Dmapred.reduce.tasks=25" in the Parameter 2 text box and validate it, and type /example/data/10GB-sort-out /example/data/10GB-sort-validate in the Parameter 3 text box and validate it:

The Final Command should read:

Hadoop jar hadoop-examples-0.20.203.1-SNAPSHOT.jar teravalidate "-Dmapred.map.tasks=50 -Dmapred.reduce.tasks=25" /example/data/10GB-sort-out /example/data/10GB-sort-validate

19. Click the Execute Job button to start the task:

20. After about five minutes, the task completes with the following (partial) Debug Output:

Note: It’s not clear from the debug output above how to determine the result of the validation task.

21. Return to the Account page and click the Job History tile to verify the outcome:

Note: Job #3’s failure was due to a mismatch in the input file name, as emphasized above.
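On the question raised in step 20’s note: as I understand Hadoop 1.x’s TeraValidate, it checks that keys are in order within each TeraSort output file and across the boundaries between consecutive files, and it writes any misordered keys to its output directory, so an empty /example/data/10GB-sort-validate output suggests the sort succeeded. Verify that against the TeraValidate source for the release you use. The Python sketch below shows the same two-level check on in-memory partitions; validate_partitions() is a hypothetical illustration, not TeraValidate’s code.

```python
from __future__ import annotations


def validate_partitions(partitions: list[list[bytes]]) -> list[str]:
    """Check sort order within and across partitions; [] means globally sorted."""
    errors: list[str] = []
    prev_idx, prev_last = None, None       # last non-empty partition seen so far
    for i, part in enumerate(partitions):
        # Within a partition: each key must be >= the key before it.
        for j in range(1, len(part)):
            if part[j] < part[j - 1]:
                errors.append(f"misorder in partition {i} at record {j}")
        if not part:
            continue                       # empty outputs can't break ordering
        # Across partitions: the first key must be >= the previous
        # non-empty partition's last key.
        if prev_last is not None and part[0] < prev_last:
            errors.append(f"misorder between partition {prev_idx} and {i}")
        prev_idx, prev_last = i, part[-1]
    return errors


if __name__ == "__main__":
    good = [[b"a", b"b"], [b"c"], [], [b"d"]]
    bad = [[b"c"], [], [b"b", b"a"]]
    print(validate_partitions(good))   # []
    print(validate_partitions(bad))    # ['misorder in partition 2 at record 1', 'misorder between partition 0 and 2']
```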

Apache Hadoop on Windows Azure Resources

Download the Apache Hadoop-based Services for Windows Azure How-To and FAQ whitepaper in PDF or *.docx format.

Wesley McSwain posted an Apache Hadoop Based Services for Windows Azure How To Guide, which is similar (but not identical) to the above document, to the TechNet wiki on 12/13/2011. The latest update when this post was written was 1/18/2012. Here’s its content:

This content is a work in progress for the benefit of the Hadoop Community. Please feel free to contribute to this wiki page based on your expertise and experience with Hadoop.

If you have any questions, please use the groups DL http://tech.groups.yahoo.com/group/hadooponazurectp/

How-Tos

- Setup your Hadoop cluster

- Running Sample Jobs

- Writing your own Job and running on Cluster

- Job Administration

- Interactive Console:

  - Tasks with the Interactive JavaScript Console

  - Tasks with Hive on the Interactive Console

- Remote Desktop

- Connecting Windows Azure Blob Storage from Hadoop Cluster

- Open Ports

- Manage Data

  - Import Data from Data Market

  - Setup ASV - Use your Windows Azure Blob Storage Account

  - Setup S3 - Use your Amazon S3 account

- Apache Hadoop on Windows Azure:

  - Scenarios

* Does not include details for TeraSort or TeraValidate options

FAQs

More Information

Blogs / Twitter to Follow

Below are some blogs to follow on Hadoop on Azure [links added]

- Alexander Stojanovic (Founder and [General Manager] of Hadoop on Azure and Windows), @stojanovic, http://conceptualorigami.blogspot.com/

- Dave Vronay, @davevr, http://dvronay.blogspot.com/2011/12/design-of-portal-for-hadooponazurecom.html

- Brad Sarsfield, @bradoop

- Denny Lee, @dennylee, http://dennyglee.com/

- Avkash Chauhan, @avkashchauhan, http://blogs.msdn.com/b/avkashchauhan/
