Importing Windows Azure Marketplace DataMarket DataSets to Apache Hadoop on Windows Azure’s Hive Databases

Table of Contents

- Introduction

- Prerequisites

- Obtaining Values Required by the Interactive Hive Console

- Loading the Hive Table with Data from the Utility Rate Service

- Querying the UtilityRateService Hive Database

- Viewing Job History

- Attempting to Load ContentItem Records from the Microsoft Codename “Social Analytics” DataSet

- Conclusion

• Updated 8/18/2012 to reflect unavailability of SQL Labs’ Microsoft Codename “Social Analytics” data set and availability of the Air Carrier Flight Delay data set from the Windows Azure Marketplace DataMarket.

Introduction

From the Apache Hive documentation:

The Apache HiveTM data warehouse software facilitates querying and managing large datasets residing in distributed storage. Built on top of Apache HadoopTM, it provides

- Tools to enable easy data extract/transform/load (ETL)

- A mechanism to impose structure on a variety of data formats

- Access to files stored either directly in Apache HDFSTM or in other data storage systems such as Apache HBaseTM

- Query execution via MapReduce

Hive defines a simple SQL-like query language, called QL, that enables users familiar with SQL to query the data. At the same time, this language also allows programmers who are familiar with the MapReduce framework to be able to plug in their custom mappers and reducers to perform more sophisticated analysis that may not be supported by the built-in capabilities of the language. QL can also be extended with custom scalar functions (UDF's), aggregations (UDAF's), and table functions (UDTF's).

Hive does not mandate read or written data be in the "Hive format"---there is no such thing. Hive works equally well on Thrift, control delimited, or your specialized data formats. Please see File Format and SerDe in the Developer Guide for details.

Hive is not designed for OLTP workloads and does not offer real-time queries or row-level updates. It is best used for batch jobs over large sets of append-only data (like web logs). What Hive values most are scalability (scale out with more machines added dynamically to the Hadoop cluster), extensibility (with MapReduce framework and UDF/UDAF/UDTF), fault-tolerance, and loose-coupling with its input formats.

TechNet’s How to Import Data to Hadoop on Windows Azure from Windows Azure Marketplace wiki article of 1/18/2012, last revised 1/19/2012, appears to be out of date and doesn’t conform to the current Apache Hadoop on Windows Azure and MarketPlace DataMarket portal implementations. This tutorial is valid for the designs as of 4/3/2012 and will be updated for significant changes thereafter.

Prerequisites

• Updated 8/18/2012 to reflect availability of OakLeaf Systems’ Air Carrier Flight Delay data set from the Windows Azure Marketplace DataMarket. See my Accessing the US Air Carrier Flight Delay DataSet on Windows Azure Marketplace DataMarket and “DataHub” post of 5/15/2012. My Creating An Incremental SQL Azure Data Source for OakLeaf’s U.S. Air Carrier Flight Delays Dataset of 5/8/2012 describes how I created the final 864-MB, 2,427,284-row dataset:

You also need an Windows Azure Marketplace Data Market account with subscriptions for one or more (preferably free) OData datasets, such as Microsoft’s Utility Rate Service. To subscribe to this service:

1. Navigate to the DataMarket home page, log in with your Windows Live ID and click the My Account link to confirm your User Name (email address) and obtain your Primary Account Key:

2. Open Notepad, copy the Primary Account Key to the clipboard, and paste it to Notepad’s window for use in step ? below.

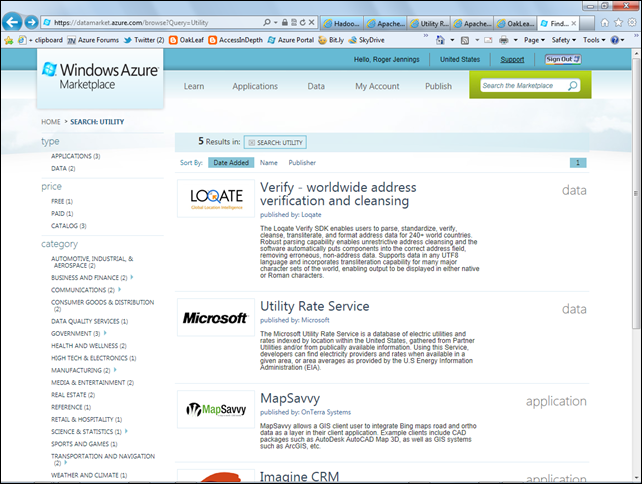

3. Search for utility, which displays the following screen:

4. Click the Utility Rate Service to open its Data page:

5. Click the Sign Up button to open the Subscription page, mark the Accept the Offer Terms and Privacy Policy check box:

6. Click the Sign Up button to display the Purchase page:

Obtaining Values Required by the Interactive Hive Console

The Interactive Hive Console requires your DataMarket User ID, Primary Account Key, and text of the OData query.

1. If you’re returning to the DataMarket, confirm your User ID and save your Primary Account Key as described in step 1 of the preceding section.

2. If you’re returning to the DataMarket, click the My Data link under the My Account link to display the page of the same name and click the Use button to open the interactive DataMarket Service Explorer.

If you’ve continuing from the preceding section, click the Explore This DataSet link to open the interactive DataMarket Service Explorer.

3. In the DataMarket Service Explorer, accept the default CustomerPlans query and click Run Query to display the first 100 records:

4. Click the Develop button to display the OData query URL to retrieve the first 100 records to a text box:

5. Copy the query text and paste it to the Notepad window with your Primary Account Key.

Loading the Hive Table with Data from the Utility Rate Service

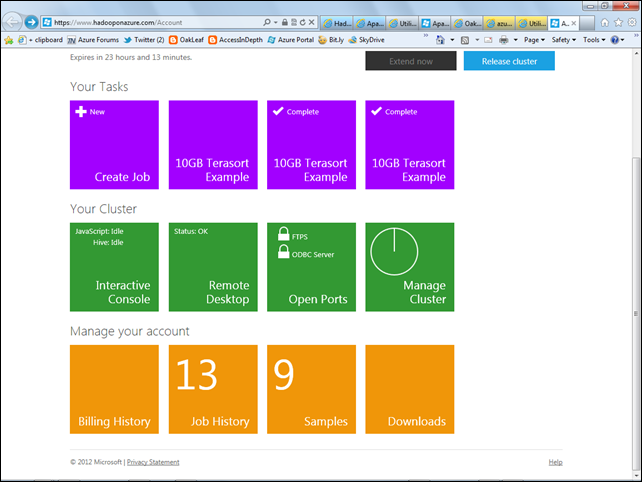

1. Navigate to the Apache Hadoop on Windows Azure portal, log in with the Windows Live ID you used in your application for an invitation code, and scroll to the bottom of the Welcome Back page:

Note: By default, your cluster’s lifespan is 48 hours. You can extend it’s life when 6 hours or fewer remain by clicking the Extend Cluster button.

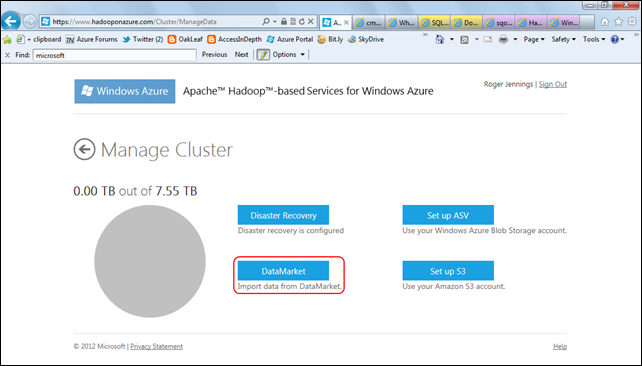

2. Click the Manage Cluster tile to open that page:

3. Click the DataMarket button to open the Import from DataMarket page. Fill in the first three text boxes with your Live ID and the data you saved in the preceding section; remove the $top=100 parameter from the query to return all rows, and type UtilityRateService as the Hive Table Name:

4. Click the Import Data button to start the import process and wait until import completes, as indicated by the DataLoader progress = 100[%] message:

The text of the generated data import Command with its eight required arguments is:

c:\apps\dist\bin\dataloader.exe

-s datamarket

-d ftp

-u "roger_jennings@compuserve.com"

-k "2ZPQEl2CouvtQw+..............................Qud0E="

-q "https://api.datamarket.azure.com/Data.ashx/Microsoft/UtilityRateService/ Prices?"

-f "ftp://d5061cb5f84c9ea089bfa052f1a0a3f2:da835ebe965c87b428923032057014f7@

localhost:2222/uploads/UtilityRateService/UtilityRateService"

-h "UtilityRateService"

Querying the UtilityRateService Hive Database

Hive QL query syntax is similar, but not identical, to ANSI SQL. Hive translates QL queries into MapReduce job(s) and executes them in the cluster. This causes Hive query batches to exhibit substantial latency.

Following are a few of the basic similarities and differences in Hive and T-SQL DQL statements:

- Hive supports basic SQL SELECT syntax but not IN, EXISTS or subqueries in the WHERE clause.

- LIMIT n with a DESC sort substitutes for T-SQL’s TOP(n) modifier.

- Hive supports LEFT, LEFT SEMI, RIGHT, FULL and OUTER equality JOINS between two or more tables but not inequality joins.

- Hive offers a wide variety of aggregate functions, including count(col), sum(col), avg(col), min(col), max(col), variance(col), var_pop(col), var_samp(col), stddev_pop(col), stddev_samp(col), and many others

To execute a few sample DQL queries with Hive QL, do the following:

1. Return to the home (Welcome Back) page and click the Interactive Console tile to open the JavaScript console and click the Hive button.

2. Wait a few seconds for the Table and Column list boxes to populate and select the utilityrateservice table, which populates the the Columns list with the first column name.

3. To verify that DataMarket’s default interactive page size of 100 records doesn’t apply to DataMarket API queries, type SELECT Count(*) FROM utilityrateservice in the text box (Hive QL isn’t case-sensitive).

4. Click the Evaluate button, and wait about a minute for the query to complete, which displays the result and Hive history:

5. Click the Clear screen button, type SELECT * FROM utilityrateservice LIMIT 5 in the text box and click the Evaluate button to display five random rows:

Note: Evaluating SELECT * FROM utilityrateservice ORDER BY id DESC LIMIT 5 takes 15 or more minutes because all rows must be loaded, sorted and then the top five displayed. Returned data sets larger than 1 MB are truncated for display.

6. To display the table schema with data types, evaluate the DESCRIBE utilityrateservice statement:

7. To add detailed information about the table, evaluate DESCRIBE EXTENDED utilityrateservice:

Viewing Job History

To view the history, execution time and status of the MapReduce jobs you’ve run, return to the home page and click the Job History tile:

Attempting to Load ContentItem Records from the Microsoft Codename “Social Analytics” DataSet

• Updated 8/18/2012: SQL Labs’ Microsoft Codename “Social Analytics” data set is no longer available because the “Social Analytics” lab was terminated in July 2012. According to the Lab’s site:

The Microsoft Codename "Social Analytics" lab phase is complete.

Here are our learning goals for this lab:

How useful do customers find this scenario?

API prioritization: We wanted to understand which features developers needed to implement first and how easy it is to implement these (for example, content item display, how to modify filters, etc.).

API usability: Were our APIs hard to use, easy to use, consistent with existing methods, or did they add to the concept count?

We have received a lot of useful feedback which will shape our future direction. We would like to thank everybody who participated for their valuable feedback!

Microsoft Codename “Social Analytics” is an SQL Azure Labs incubator project that is “aimed at developers who want to integrate social web information into business applications. This lab includes:

-

The Engagement Client, used to browse and view analytics on a social dataset

-

Two pre-defined, actively streaming datasets which include data from top social sources such as Twitter, Facebook, blogs and forums

-

The Social Analytics API, based on the Open Data Protocol and delivered through the DataMarket on the Windows Azure Marketplace” [Emphasis added.]

The “Social Analytics” team maintains a blog here.

Interactive queries attempted as described in the earlier Obtaining Values Required by the Interactive Hive Console section above don’t appear to work.

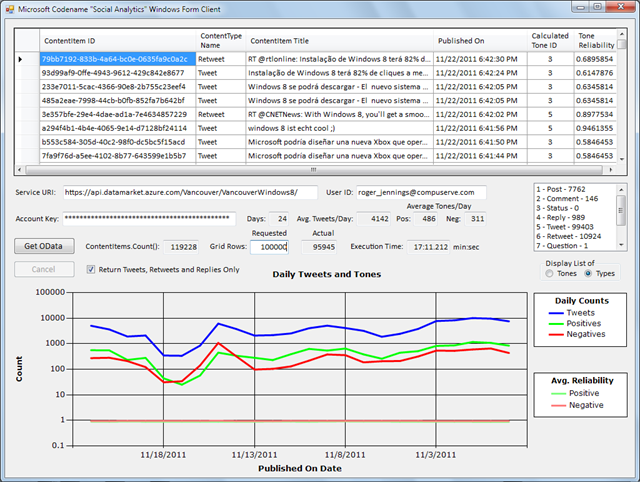

My Codename “Social Analytics” WinForms Client sample application summarizes consumer engagement with and sentiment about Windows 8 based on a combination of Twitter tweets, retweets, replies, and direct messages, as well as messages from a few other social Web sites. This screen capture shows data for about four weeks in October/November 2011:

The ContentItems dataset contained 1,674,190 rows on 4/3/2012 at 11:00 AM PDT.

A C# LINQ query generates the following OData URL query (captured by Fiddler2) to return selected columns of the ContentItems table in 500-row batches:

https://api.datamarket.azure.com/Vancouver/VancouverWindows8/ContentItems()?$filter=CalculatedToneId%20ne%20null&$orderby=PublishedOn%20desc&$skip=0&$top=500&$expand=ContentItemType&$select=Id,ContentItemTypeId, ContentItemType/Name,Title,PublishedOn,CalculatedToneId,ToneReliability, Summary,HtmlUrl

Pasting my User Name, Account Key, and the above query into the Import from DataMarket page to create a Hive ContentItems table, as shown here:

Clicking the Import Data button results in the following error message:

The Debug Output is:

Caused by: The remote server returned an error: (400) Bad Request.

Stack trace:

at System.Net.HttpWebRequest.GetResponse()

at Microsoft.Hadoop.DataLoader.ODataSource.ExportSchema()

at Microsoft.Hadoop.DataLoader.DataLoaderMediator.StartDataTransfer(IDataSource source, IHdfsChannel destination)

at Microsoft.Hadoop.DataLoader.DataLoaderProgram.Main(String[] args)

I’ve notified the Codename “Social Analytics” team of this problem and will update this article if and when I receive information from them.

For more details about (the departed) Codename “Social Analytics” Lab, see:

- Twitter Sentiment Analysis: A Brief Bibliography of 2/17/2012

- More Features and the Download Link for My Codename “Social Analytics” WinForms Client Sample App of 12/26/2011

- New Features Added to My Microsoft Codename “Social Analytics” WinForms Client Sample App of 11/21/2011

- My Microsoft Codename “Social Analytics” Windows Form Client Detects Anomaly in VancouverWindows8 Dataset of 11/19/2011

- Querying Microsoft’s Codename “Social Analytics” OData Feeds with LINQPad of 11/5/2011

- Problems Browsing Codename “Social Analytics” Collections with Popular OData Browsers of 11/4/2011

- Using the Microsoft Codename “Social Analytics” API with Excel PowerPivot and Visual Studio 2010 of 11/2/2011

- SQL Azure Labs Unveils Codename “Social Analytics” Skunkworks Project of 11/1/2011

Conclusion

Data import from the Windows Azure Marketplace DataMarket to user-specified Hive tables is one of three prebuilt data import options for Hadoop on Azure. The other two on the Manage Cluster page are:

- ASV (Windows Azure blob storage)

- S3 (Amazon Simple Storage Service)

Import of simple DataMarket datasets is a relatively easy process. As demonstrated by the problems with the VancouverWindows8 dataset’s ContentItems table, more complex datasets might fail for unknown reasons.

Writing Java code for MapReduce jobs isn’t for novices or faint-hearted code jockeys. The interactive Hive console and the HiveQL syntax simplify the query process greatly.

0 comments:

Post a Comment