Windows Azure and Cloud Computing Posts for 4/2/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

Updated 4/5/2012 at 2:15 PM PST: See new Windows Azure Security documentation in the Cloud Security and Governance section and the official locations of two new Windows Azure data centers in the Windows Azure Infrastructure and DevOps section.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Access Control, Identity and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Avkash Chauhan (@avkashchauhan) explained Why it is important to set proper content-type HTTP header for blobs in Azure Storage in a 4/4/2012 post:

When you try to consume a content from Windows Azure blob storage you might see that sometime the content does not render correctly to browser or played correctly by the plugin used. After a few issues I worked on, I found this is mostly because the proper content-type HTTP header is not set with the blob content itself. Most of the browser Tag and plugin depends on HTTP header types and adding content-type becomes important in this regard.

For example, when you upload an audio MP3 blob to Windows Azure storage you must have the content-type header set to the blob otherwise the content will not be played correctly by the HTML5 audio element. Here is how you can do it correctly:

First I have uploaded a Kalimba.mp3 at my Windows Azure Blob Storage which is in publicly accessible container name “mp3”:

Let’s check the HTTP header type for the http://happybuddha.blob.core.windows.net/mp3/Kalimba.mp3 blob:

Now create a very simple video.html as below to play the MP3 content in HTML5 supported browser using “Audio” tag:

<!DOCTYPE html> <html xmlns="http://www.w3.org/1999/xhtml"> <head id="Head1" runat="server"> <head> <body> <audio ID="audio" controls="controls" autoplay="autoplay" src="http://happybuddha.blob.core.windows.net/mp3/Kalimba.mp3"></audio> </body> </html>Now play the video on Chrome or IE9 browser (which supports HTML5 Audio Tag):

Now let’s change the blob HTTP header type to include “Content-Type=<the_correct_content_type>”:

Finally open the Video.html in HTML5 supported browser and you can see the results:

So when you are bulk uploading your blobs, you can add proper content-type HTTP header programmatically to resolve such issues.

Avkash Chauhan (@avkashchauhan) explained Processing already sorted data with Hadoop Map/Reduce jobs without performance overhead in a 4/3/2012 post:

While working with Map/Reduce jobs in Hadoop, it is very much possible that you have got “sorted data” stored in HDFS. As you may know the “Sort function” exists not only after map process in map task but also with merge process during reduce task, so having sorted data to sort again would be a big performance overhead. In this situation you may want to have your Map/Reduce job not to sort the data.

Note: If you have tried changing map.sort.class to no-op, it would haven’t work as well.

So the question comes:

- if it is possible to force Map/Reduce to not to sort the data again (as it is already sorted) after map phase?

- Or “how to run Map/Reduce jobs in a way that you can control how do you want to results, sorted or unsorted”?

So if you do not need result be sorted the following Hadoop patch would be great place to start:

- Support no sort dataflow in map output and reduce merge phrase :https://issues.apache.org/jira/browse/MAPREDUCE-3397

Note: Before using above Patch the I would suggest reading the following comment from Robert about this patch:

- Combiners are not compatible with mapred.map.output.sort. Is there a reason why we could not make combiners work with this, so long as they must follow the same assumption that they will not get sorted input? If the algorithms you are thinking about would never get any benefit from a combiner, could you also add the check in the client. I would much rather have the client blow up with an error instead of waiting for my map tasks to launch and then blow up 4+ times before I get the error.

- In your test you never validate that the output is what you expected it to be. That may be hard as it may not be deterministic because there is no sorting, but it would be nice to have something verify that the code did work as expected. Not just that it did not crash.

- mapred-default.xml Please add mapred.map.output.sort to mapred-default.xml. Include with it a brief explanation of what it does.

- There is no documentation or examples. This is a new feature that could be very useful to lots of people, but if they never know it is there it will not be used. Could you include in your patch updates to the documentation about how to use this, and some useful examples, preferably simple. Perhaps an example computing CTR would be nice.

- Performance. The entire reason for this change is to improve performance, but I have not seen any numbers showing a performance improvement. No numbers at all in fact. It would be great if you could include here some numbers along with the code you used for your benchmark and a description of your setup. I have spent time on different performance teams, and performance improvement efforts from a huge search engine to an OS on a cell phone and the one thing I have learned is that you have to go off of the numbers because well at least for me my intuition is often wrong and what I thought would make it faster slowed it down instead.

- Trunk. This patch is specific to 0.20/1.0 line. Before this can get merged into the 0.20/1.0 lines we really need an equivalent patch for trunk, and possibly 0.21, 0.22, and 0.23. This is so there are no regressions. It may be a while off after you get the 1.0 patch cleaned up though.

<Return to section navigation list>

SQL Azure Database, Federations and Reporting

SearchSQLServer.com published My (@rogerjenn) Manage, query SQL Azure Federations using T-SQL on 4/5/2012. It begins:

SQL Azure Federations is Microsoft’s new cloud-based scalability feature. It enhances traditional partitioning by enabling database administrators (DBAs) and developers to use their Transact-SQL (T-SQL) management skills with “big data” and the new fan-out query tool to emulate MapReduce summarization and aggregation features.

Highly scalable NoSQL databases for big data analytics is a hot topic these days, but organizations can scale out and scale up traditional relational databases by horizontally partitioning rows to run on multiple server instances, a process also known as sharding. SQL Azure is a cloud-based implementation of customized SQL Server 2008 R2 clusters that run in Microsoft’s worldwide data center network. SQL Azure offers high availability with a 99.9% service-level agreement provided by triple data replication and eliminates capital investment in server hardware required to handle peak operational loads.

The SQL Azure service released Dec. 12 increased the maximum size of SQL Azure databases from 50 GB to 150 GB, introduced an enhanced sharding technology called SQL Azure Federations and signaled monthly operating cost reductions ranging from 45% to 95%, depending on database size. Federations makes it easier to redistribute and repartition your data and provides a routing layer that can handle these operations without application downtime.

How can DBAs and developers leverage their T-SQL management skills with SQL Azure and eliminate the routine provisioning and maintenance costs of on-premises database servers? The source of the data to be federated is a subset of close to 8 million Windows Azure table rows of diagnostic data from the six default event counters: Network Interface Bytes Sent/Sec and Bytes Received/Sec, ASP.NET Applications Requests/Sec, TCPv4 Connections Established, Memory Available Bytes and % Processor Time. The SQL Server 2008 R2 Service Pack 1 source table (WADPerfCounters) has a composite, clustered primary key consisting of PartitionKey and RowKey values. The SQL Azure destination tables are federated on an added CounterID value of 1 through 6, representing the six event counters. These tables add CounterID to their primary key because Federation Distribution Key values must be included.

T-SQL for creating federations, adding tables

SQL Server Management Studio (SSMS) 2012 supports writing queries with the new T-SQL [CREATE | ALTER | DROP | USE] federations keywords. You can download a trial version of SQL Server 2012 and the Express version of SSMS 2012 only here.To create a new SQL Azure Federation in the database that contains the table you want to federate, select it in SSMS’ Available Databases list, open a new Query Editor window and whereDataType is int, bigint, uniqueidentifier or varbinary(n) type, the following:

CREATE FEDERATION FederationName (DistributionKeyName DataType RANGE)

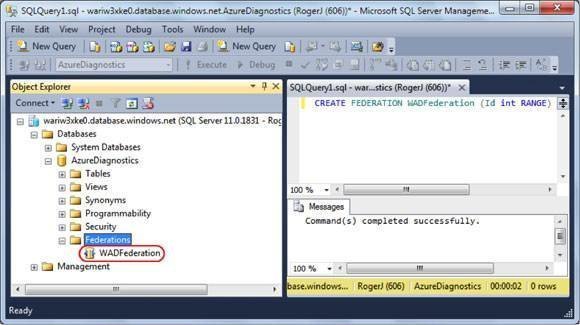

Then click Execute. For example, to create a Federation named WADDiagnostics with Id (from CounterId) as the Distribution Key Name, type this:

CREATE FEDERATION WADFederation (ID int RANGE)

GOThe RANGE keyword indicates that the initial table will contain all ID values.

When you refresh the Federations node, the new WADFederation node appears, as shown in Figure 1.

Figure 1. SQL Server Management Studio 2012 Express and higher editions support writing T-SQL queries against SQL Azure Federations.

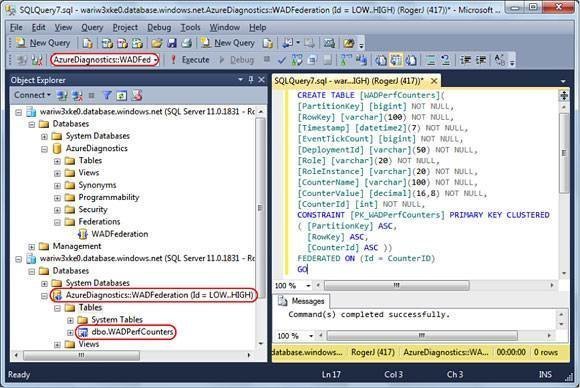

Adding a table to a Federation requires appending the FEDERATED ON(DistributionKeyName = SourceColumnName) clause to the CREATE TABLEstatement. For example, to create an initial WADPerfCounters table into which you can load data, double-click the WADFederation node to add the AzureDiagnostics::WADFederation federated database node, select it in the Available Databases list, open a new query and type the following:

CREATE TABLE [WADPerfCounters](

[PartitionKey] [bigint] NOT NULL,

[RowKey] [varchar](100) NOT NULL,

[Timestamp] [datetime2](7) NOT NULL,

[EventTickCount] [bigint] NOT NULL,

[DeploymentId] [varchar](50) NOT NULL,

[Role] [varchar](20) NOT NULL,

[RoleInstance] [varchar](20) NOT NULL,

[CounterName] [varchar](100) NOT NULL,

[CounterValue] [decimal](16,8) NOT NULL,

[CounterId] [int] NOT NULL,

CONSTRAINT [PK_WADPerfCounters] PRIMARY KEY CLUSTERED

( [PartitionKey] ASC,

[RowKey] ASC,

[CounterId] ASC ))

FEDERATED ON (Id = CounterID)

GOThen execute. Refresh the lower Tables list to display the first federated table (see Figure 2).

Figure 2. The CREATE TABLE instruction for SQL Azure Federations requires use of the FEDERATED ON keywords. …

Read more in the Adding Rows with Data Migration Wizard section.

Gregory Leake posted Announcing SQL Azure Data Sync Preview Update on 4/3/2012:

Today we are pleased to announce that the SQL Azure Data Sync preview release has been upgraded to Service Update 4 (SU4) within the Windows Azure data centers. SU4 is now running live. This update adds the most -requested feature: users can edit an existing Sync Group to accommodate changes they have made to their database schemas, without having to re-create the Sync Group. In order to use the new Edit Sync Group feature, you must install the latest release of the local agent software, available here. Existing Sync Groups do not need to be re-created, they will work with the new features automatically.

The complete list of changes are listed below:

- Users can now modify a sync group without having to recreate it. See the MSDN topicEdit a Sync Group for details.

- New information in the portal helps customers more easily manage sync groups:

- The portal displays the upgrade and support status of the user’s local agent software: the local agent version is shown with a recommendation to upgrade if your client agent is not the latest, a warning if support for your agent version expires soon and an error if your agent is no longer supported.

- The portal displays a warning for sync groups that may become out-of-date if changes fail to apply for 60 or more days.

- The update fixes issues that affected ORM (Object Relational Model) frameworks such as Entity Framework and NHibernate working with Data Sync.

- The service provides better error and status messages.

If you are using SQL Azure and are not familiar with SQL Azure Data Sync, you can watch this online video demonstration.

The ability to edit an existing Sync Group enables some common customer scenarios, and we have listed some of these below as examples.

Scenario 1: User adds a table and a column to a sync group

- William uses Data Sync to keep the products databases in his branch offices up to date.

- He decides to add a new Material attribute to his products to indicate whether the product is made from Animal, Vegetable or Mineral.

- He adds a Material column to his Products table and a Material table to contain the range of material values.

- He modifies his sync group to include the new Material table and the new Material column in the Product table.

- The Data Sync service adjusts the sync configuration in William’s databases and begins synchronizing the new data.

- William categorizes his products using a Category ID in his Products table, which references records in a Category table in his database.

- He decides to discontinue the use of the Category attribute in favor of a set of descriptive tags which apply to each product.

- He modifies his application to use the descriptive tags in place of the Category attribute.

- William modifies the Sync Group to exclude the Category attribute for his Products table.

- He modifies the Sync Group to exclude the Category table.

- The Data Sync service adjusts the sync configuration in William’s databases and no longer synchronizes the Category data.

- William maintains a set of attributes for his products that include Notes and Thumbnail images.

- William decides to increase the length of the columns he uses to store these attributes:

- He changes the Notes column from CHAR(32) to CHAR(128) to accommodate more verbose notes.

- He changes the Thumbnail column from BINARY(1000) to BINARY(MAX) to hold higher resolution thumbnail images.

- William modifies his application to use the expanded attributes.

- William modifies his database by altering the Notes and Thumbnail columns to the larger sizes.

- He updates his Sync Group with the new column lengths for the Notes and Thumbnail columns.

- The Data Sync service adjusts the sync configuration in William’s databases and synchronizes the expanded columns.

Scenario 2: User removes a table and a column from a sync group

- William categorizes his products using a Category ID in his Products table, which references records in a Category table in his database.

- He decides to discontinue the use of the Category attribute in favor of a set of descriptive tags which apply to each product.

- He modifies his application to use the descriptive tags in place of the Category attribute.

- William modifies the Sync Group to exclude the Category attribute for his Products table.

- He modifies the Sync Group to exclude the Category table.

- The Data Sync service adjusts the sync configuration in William’s databases and no longer synchronizes the Category data.

Scenario 3: User changes the length of a column in a sync group

- William maintains a set of attributes for his products that include Notes and Thumbnail images.

- William decides to increase the length of the columns he uses to store these attributes:

- He changes the Notes column from CHAR(32) to CHAR(128) to accommodate more verbose notes.

- He changes the Thumbnail column from BINARY(1000) to BINARY(MAX) to hold higher resolution thumbnail images.

- William modifies his application to use the expanded attributes.

- William modifies his database by altering the Notes and Thumbnail columns to the larger sizes.

- He updates his Sync Group with the new column lengths for the Notes and Thumbnail columns.

- The Data Sync service adjusts the sync configuration in William’s databases and synchronizes the expanded columns.

Sharing Your Feedback

For community-based support, post a question to the SQL Azure MSDN forums. The product team will do its best to answer any questions posted there.

To suggest new SQL Azure Data Sync features or vote on existing suggestions, click here.

To log a bug in this release, use the following steps:

- Navigate to https://connect.microsoft.com/SQLServer/Feedback.

- You will be prompted to search our existing feedback to verify your issue has not already been submitted.

- Once you verify that your issue has not been submitted, scroll down the page and click on the orange Submit Feedback button in the left-hand navigation bar.

- On the Select Feedback form, click SQL Server Bug Form.

- On the bug form, select Version = SQL Azure Data Sync Preview

- On the bug form, select Category = SQL Azure Data Sync

- Complete your request.

- Click Submit to send the form to Microsoft.

If you have any questions about the feedback submission process or about accessing the new SQL Azure Data Sync preview, please send us an email message: sqlconne@microsoft.com.

<Return to section navigation list>

MarketPlace DataMarket, Social Analytics, Big Data and OData

My (@rogerjenn) Importing Windows Azure Marketplace DataMarket DataSets to Apache Hadoop on Windows Azure’s Hive Databases article of 4/3/2012 begins:

Introduction

From the Apache Hive documentation:

The Apache HiveTM data warehouse software facilitates querying and managing large datasets residing in distributed storage. Built on top of Apache HadoopTM, it provides

Tools to enable easy data extract/transform/load (ETL)

- A mechanism to impose structure on a variety of data formats

- Access to files stored either directly in Apache HDFSTM or in other data storage systems such as Apache HBaseTM

- Query execution via MapReduce

Hive defines a simple SQL-like query language, called QL, that enables users familiar with SQL to query the data. At the same time, this language also allows programmers who are familiar with the MapReduce framework to be able to plug in their custom mappers and reducers to perform more sophisticated analysis that may not be supported by the built-in capabilities of the language. QL can also be extended with custom scalar functions (UDF's), aggregations (UDAF's), and table functions (UDTF's).

Hive does not mandate read or written data be in the "Hive format"---there is no such thing. Hive works equally well on Thrift, control delimited, or your specialized data formats. Please see File Format and SerDe in the Developer Guide for details.

Hive is not designed for OLTP workloads and does not offer real-time queries or row-level updates. It is best used for batch jobs over large sets of append-only data (like web logs). What Hive values most are scalability (scale out with more machines added dynamically to the Hadoop cluster), extensibility (with MapReduce framework and UDF/UDAF/UDTF), fault-tolerance, and loose-coupling with its input formats.

TechNet’s How to Import Data to Hadoop on Windows Azure from Windows Azure Marketplace wiki article of 1/18/2012, last revised 1/19/2012, appears to be out of date and doesn’t conform to the current Apache Hadoop on Windows Azure and MarketPlace DataMarket portal implementations. This tutorial is valid for the designs as of 4/3/2012 and will be updated for significant changes thereafter.

Prerequisites

The following demonstration of the Interactive Hive console with datasets from the DataMarket requires an invitation code to gain access to the portal. If you don’t have access, complete this Microsoft Connect survey to obtain an invitation code and follow the instructions in the welcoming email to gain access to the portal. Read the Windows Azure Deployment of Hadoop-based services on the Elastic Map Reduce (EMR) Portal for a detailed description of the signup process (as of 2/23/2012).

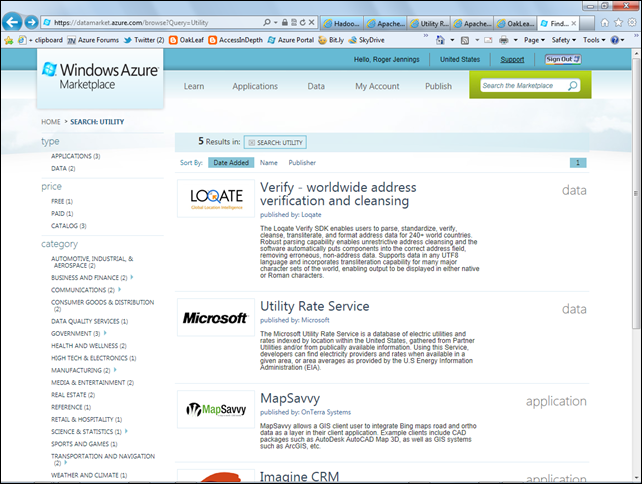

You also need an Windows Azure Marketplace Data Market account with subscriptions for one or more (preferably free) OData datasets, such as Microsoft’s Utility Rate Service. To subscribe to this service:

1. Navigate to the DataMarket home page, log in with your Windows Live ID and click the My Account link to confirm your User Name (email address) and obtain your Primary Account Key:

2. Open Notepad, copy the Primary Account Key to the clipboard, and paste it to Notepad’s window for use in step ? below.

3. Search for utility, which displays the following screen:

4. Click the Utility Rate Service to open its Data page:

5. Click the Sign Up button to open the Subscription page, mark the Accept the Offer Terms and Privacy Policy check box:

6. Click the Sign Up button to display the Purchase page:

Obtaining Values Required by the Interactive Hive Console

The Interactive Hive Console requires your DataMarket User ID, Primary Account Key, and text of the OData query.

1. If you’re returning to the DataMarket, confirm your User ID and save your Primary Account Key as described in step 1 of the preceding section.

2. If you’re returning to the DataMarket, click the My Data link under the My Account link to display the page of the same name and click the Use button to open the interactive DataMarket Service Explorer.

If you’ve continuing from the preceding section, click the Explore This DataSet link to open the interactive DataMarket Service Explorer.

3. In the DataMarket Service Explorer, accept the default CustomerPlans query and click Run Query to display the first 100 records:

4. Click the Develop button to display the OData query URL to retrieve the first 100 records to a text box:

5. Copy the query text and paste it to the Notepad window with your Primary Account Key.

Loading the Hive Table with Data from the Utility Rate Service

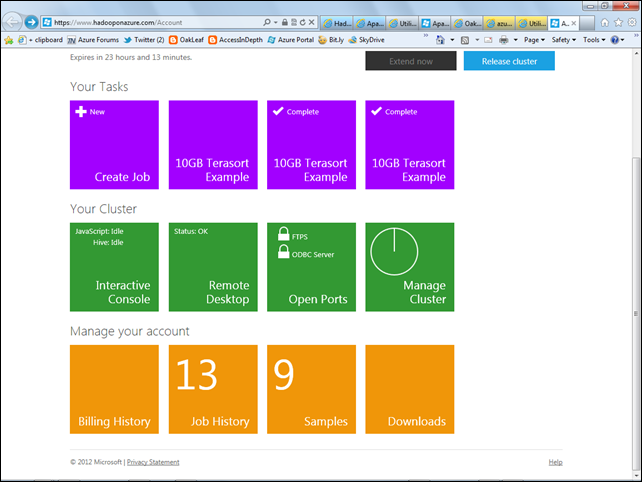

1. Navigate to the Apache Hadoop on Windows Azure portal, log in with the Windows Live ID you used in your application for an invitation code, and scroll to the bottom of the Welcome Back page:

Note: By default, your cluster’s lifespan is 48 hours. You can extend it’s life when 6 hours or fewer remain by clicking the Extend Cluster button.

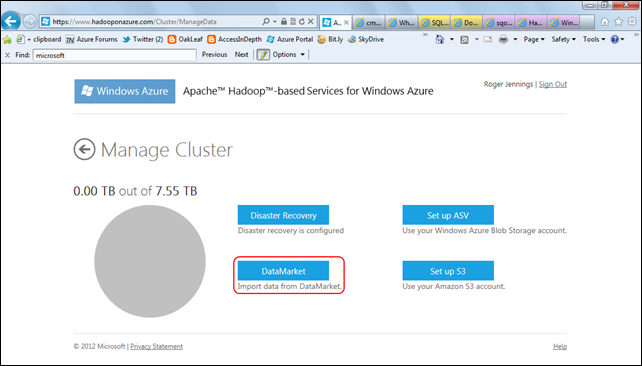

2. Click the Manage Cluster tile to open that page:

3. Click the DataMarket button to open the Import from DataMarket page. Fill in the first three text boxes with your Live ID and the data you saved in the preceding section; remove the $top=100 parameter from the query to return all rows, and type UtilityRateService as the Hive Table Name:

4. Click the Import Data button to start the import process and wait until import completes, as indicated by the DataLoader progress = 100[%] message:

The text of the generated data import Command with its eight required arguments is:

c:\apps\dist\bin\dataloader.exe

-s datamarket

-d ftp

-u "roger_jennings@compuserve.com"

-k "2ZPQEl2CouvtQw+..............................Qud0E="

-q "https://api.datamarket.azure.com/Data.ashx/Microsoft/UtilityRateService/ Prices?"

-f "ftp://d5061cb5f84c9ea089bfa052f1a0a3f2:da835ebe965c87b428923032057014f7@

localhost:2222/uploads/UtilityRateService/UtilityRateService"

-h "UtilityRateService" …

The post continues with “Querying the UtilityRateService Hive Database”, “Viewing Job History”, and “Attempting to Load ContentItem Records from the Microsoft Codename ‘Social Analytics’ DataSet” sections and concludes:

Conclusion

Data import from the Windows Azure Marketplace DataMarket to user-specified Hive tables is one of three prebuilt data import options for Hadoop on Azure. The other two on the Manage Cluster page are:

- ASV (Windows Azure blob storage)

- S3 (Amazon Simple Storage Service)

Import of simple DataMarket datasets is a relatively simple process. As demonstrated by the problems with the VancouverWindows8 dataset’s ContentItems table, more complex datasets might fail for unknown reasons.

Writing Java code for MapReduce jobs isn’t for novices or faint-hearted code jockeys. The interactive Hive console and the HiveQL syntax simplify the query process greatly.

My (@rogerjenn) Analyze Years of Air Carrier Flight Arrival Delays in Minutes with the Windows Azure HPC Scheduler article of 4/3/2012 for Red Gate Software’s ACloudyPlace blog begins:

The Microsoft Codename “Cloud Numerics” Lab from SQL Azure Labs provides a Visual Studio template to enable configuring, deploying and running numeric computing SaaS applications on High Performance Computing (HPC) clusters in Microsoft data centers.

Apache Hadoop, MapReduce, and other Hadoop subcomponents get most of the attention from big-data journalists, but there are many special-purpose alternatives that offer shortcuts to specific data analysis techniques. For example, Microsoft’s Codename “Cloud Numerics” Lab offers a prebuilt .NET runtime, as well as sets of common mathematical, statistical, and signal processing functions for execution on local or cloud-based Windows HPC Server 2008 R2 clusters (see Figure 1.) Microsoft announced a preview version of the Windows Azure HPC Scheduler at the //BUILD/ conference in September 2011 and released the commercial version on November 11. The Windows Azure HPC Scheduler SDK, together with a set of sample applications, for use with the Windows Azure SDK v1.6 followed on December 14 and the “Cloud Numerics” team released its Lab v0.1 on January 10, 2012.

Figure 1: The Microsoft “Cloud Numerics” components

The Microsoft “Cloud Numerics” Lab provides a Visual Studio 2010 C# project template and deployment utility, .NET 4 runtime, and .NET native and system libraries for numeric analysis in a single downloadable package. (Diagram based on Microsoft’s The “Cloud Numerics” Programming and runtime execution model documentation.)

Why Use the “Cloud Numerics” Lab and Windows HPC Scheduler?

The “Cloud Numerics” Lab is designed for developers of computationally intensive applications that involve large datasets. It specifically targets projects that benefit from “bursting” computational work from desktop PCs to small-scale supercomputers running Windows HPC Server 2008 R2 in Microsoft data centers. Few small businesses or medium-size enterprises can afford the capital investment or administrative and operating costs of dedicated, on-premises HPC clusters that might be used for only a few hours or minutes per week. Although Microsoft’s new Apache Hadoop on Windows Azure developer preview offers similar advantages, Hadoop and its subcomponents are Java-centric. This means Microsoft shops can incur substantial training costs to bring their .NET developers up-to-speed with Hadoop, HDFS, MapReduce, Hive, Pig, Sqoop, and other open-source software.

Installing the “Cloud Numerics” package from Connect.Microsoft.com adds a customizable Microsoft Cloud Numerics Application C# template to Visual Studio 2010 that most .NET developers will be able to use out-of-the-box without a significant learning curve. The template generates a solution with these projects automatically:

- AppConfigure – Publishes a fully-configured Windows HPC Server cluster to Windows Azure aided by a graphical Cloud Numerics Deployment Utility

- HeadNode – Provides failover and mediates access to the cluster resources as the single point of management and job scheduling for the cluster (see Figure 2)

- ComputeNode – Carries out the computation tasks assigned to it by the Scheduler

- FrontEnd – Provides a Web-accessible endpoint and UI for the Windows Azure HPC Schedule Web Portal, which display job status and diagnostic messages

- AzureSampleService – Defines roles for and number of HeadNode, ComputeNode, and FrontEnd instances; generates ServiceConfiguration.Local.csfg, ServiceConfigure.Cloud.csfg, and ServiceDefinition.csdef files

- MSCloudNumericsApp – Provides replaceable C# code stubs for specifying and reading data sources, defining computational functions, and determining output format and destination (usually a blob in Windows Azure storage)

Figure 2: The Microsoft Cloud Numerics Application template generates and deploys these components to local Windows HPC Server 2008 R2 runtime instances or Windows Azure HPC hosted services.

The template includes a simple MSCloudNumericsApp project for random-number arrays that developers can run locally to verify correct installation and, optionally, deploy with a Windows Azure HPC Scheduler to a service hosted in a Microsoft data center. My initial Introducing Microsoft Codename “Cloud Numerics” from SQL Azure Labs and Deploying “Cloud Numerics” Sample Applications to Windows Azure HPC Clusters tutorials describe these operations in detail.

Like Hadoop/MapReduce projects, “Cloud Numerics” apps are batch processes. They split data into chunks, run operations on the chunks in parallel and then combine the results when computation completes. “Cloud Numerics” apps operate on distributed numeric arrays, also called matrices. They primarily deliver aggregate numeric values, not documents, and aren’t intended to perform “selects” or other relational data operations. .NET developers without a background in statistics, linear algebra, or matrix operations probably will need some assistance from their more mathematically inclined colleagues to select and apply appropriate analytic functions. On the other hand, math majors will require minimal programming guidance to modify the MSCloudNumericsApp code to define their own jobs and deploy them to Windows Azure independently. …

The article continues with “Creating and Running the Air Carrier Flight Arrival Delays Solution” and “Test-Drive Microsoft Codename ‘Data Numerics’” sections.

For more details about the Microsoft Codename “Data Numerics” PaaS Lab, see my two tutorials linked above.

<Return to section navigation list>

Windows Azure Service Bus, Access Control, Identity and Workflow

Vittorio Bertocci (@vibronet) described Authenticating Users from Passive IPs in Rich Client Apps – via ACS in a 4/4/2012 post:

It’s been a couple of years that we released the first samples showing how to take advantage of ACS from Windows Phone 7 applications; the iOS samples, released in the Summer, and the Windows8 Metro sample app last Fall demonstrated that the pattern applies to just any type of rich clients.

Although we explored the general idea at length, and provided many building blocks which enable you to put it in practice without knowing all that much about the gory details, apparently we never really provided a detailed description of what is going on. The general developer populace (rightfully) won’t care about the mechanics, but I am receiving a lot of details questions from some of the identirati, who might end up carrying this approach even further, to everybody’s advantage.

I am currently crammed in a small seat in cattle class, inclusive of crying babies and high-pitch barking dogs (yes, plural), on a Seattle-Paris flight that is bringing me to a 1-week vacation at home. It’s hardly the best situation to type a lengthy post, but if I don’t do it I know this will but me for the entire week and I don’t want it to distract me from the mega family reunion and the 20th anniversary party of my alma mater so my dear readers, wear confortable clothes and gather around, because in the next few hours (for the writer, at least) we are going to shove our hands deep into the guts of this pattern and shine our bright spotlight pretty much in every fold. Thank you Ping Identity for the noise-cancelling headset you got me during last Cloud Identity Summit, without which this post would not have been possible.

The General Idea

Rich clients. How do they handle authentication? Let’s indulge our example bias and pick some notable case from the traditional world.

Take an email client, like Outlook: in the most general case, Outlook won’t play games and will simply ask you for your credentials. The same goes for SQL Management Studio, Live Writer, FileZilla, Messenger. That’s because your mail server, SQL engine, blog engine, FTP server and Live ID all have programmatic endpoints that accept raw credentials.

A slightly less visible case is the use of Office apps within the context of your domain, in which you are silently authenticated and authorized: however I assure that you are not using your midichlorians to submit that file share to your will. It’s simply that you are operating in one environment fully handled by your network software, where there is an obvious, implicit authority (the KDC) which is automatically consulted whenever you do anything that requires some privilege. There is an unbroken chain of authentication which starts when you sign in the workstation and unfolds all the way to your latest request, and it is all supported by programmatic authentication endpoints.

All this has worked pretty well for few decades, and still enjoys very wide application: however it does not cover all the authentication needs of today’s scenarios. Here there’s a short list of situations in which the approach falls short:

- No endpoints for non-interactive authentication

Nowadays some of the most interesting user populations can be found among the users of social networks, web applications and similar. Those guys built a business on people navigating with their browsers to their pages, or on people navigating with their browsers to others’ pages which end up calling their API. Although the meteoric rise of the mobile app is shifting things around, the basic premises are sound. Those applications and web sites might provide endpoints accepting raw credentials, but more often than not they will expose their authentication capabilities via browser-based flows: that works well when you are integrating with them from a web application, as the uses would consume it from a browser. Things get less clear when the calling application is a rich client.:Manufacturing web requests and scraping the responses is technically feasible, but extremely brittle and above all a very likely a glaring violation of the terms and conditions of the target IP. My advice is, don’t even try it. There are other solutions.- Changing or hard requirements on authentication mechanics

When you drive authentication with raw credentials from a rich client, you bear the burden of making things happen: creating the credential gathering experience, dispatching the credentials t the IP according to the protocol of choice & interpret the response, and so on. If you just hurl passwords from A to B, or you take advantage of an underlying Kerberos infrastructure, that’s not that bad: but if you need to do more complicated stuff, things get tougher. Say that your industry regulations impose you to use, in addition to username and password, a one-time key sent to the user’s mobile phone": now you need to create the appropriate UI elements, drive the challenge/response experience, and adapt the protocol bits accordingly. What happens if in 1 month the law changes, and now your IP imposes you to use a hard token or a smart card as well? That means writing new infra code, and redeploy it to all your clients. Not the happiest place to be.- Consent experiences

More and more often, the authentication experience is compounded with some kind of consent experience: not only the user needs to authenticate with the IP, he or she also needs to decide what information and resources should be made available to the application triggering the authentication flow.

Needless to say, each IP can have completely different needs here: for example, different resource types require different privileges. Icing on the cake, those things are as fluid as mercury and will change several times per year. Creating a general-purpose rich UI to accommodate for all that is perhaps not impossible, but would require a gargantuan all-up effort for which there’s simply no pressure for. Why bother, when a browser can easily render your latest consent experience no matter how often you change it?- Chaining authorities

This is subtler. Say that the service you want to call from your rich client trusts A; however your users do not have an account with A. They do have an account with B, and A trusts B. Great! The transitive closure of trust relationship will pull you out of trouble, right? B can act as federation provider, A as identity provider, and the user gets to access the service. Only, with rich clients it’s not that simple. In the passive case, you can take the user to a rise and redirect him/her as many times as needed, each leg rendering its own UI, gathering its own specific set of info using whatever protocol flavor it deems appropriate, without requiring changes in the browser or in the service. In the rich client you have to muscle through every leg: every call to an STS entails knowing exactly the protocol options they use, every new credential type must be accounted for in the client code: doing dynamic stuff is possible (some of the oldest readers know what I am talking about) but certainly non-trivial.Ready for some good news? All of the above can be solved, or at least mitigated, by a bit of inventiveness. Rich clients render their UI with their own code, rather than feeding it to a browser: but if the browser works so well for the authentication scenarios depicted above, why not opening one just when needed for driving he authentication phase?

The idea is sound, but as usual the devil is in the details. With some exceptions, the protocols used for browser based authentication aim at delivering a token (or equivalent) to one application on a server; if we want this impromptu browser trick to work, we need to find a way of “hijacking” the token toward the rich client app before it gets sent to the server.

The good news keep coming. ACS offers a way for you to use it from within a browser driven by a rich client, and faithfully deliver tokens to the calling client app while still maintaining the usual advantages it offers: outsourcing of trust relationships, support for many identity provider types, claims transformation rules, lightweight token formats suitable for REST calls, and so on.

If all you care is knowing that such method exists, and you are fine with using the samples we provide without tweaking them, you should stop reading now.

If you decide to push farther, here there’s what to expect. The way in which you use ACS from a browser in a rich client is based on a relatively minor tweak on how we handle WS-Federation and home realm discovery. Since I can’t assume that you are all familiar with the innards of WS-Federation (which you would, if you would have taken advantage of yesterday’s 50% off promotion from O’Reilly) I am going to give you a refresher of the relevant details. Done then, I’ll explain how the method works in term of the delta in respect to traditional ws-fed.

An ACS & WS-Federation Refresher

The figure below depicts what append during a classic browser-based authentication flow taking advantage of ACS.

In order to keep things manageable I omitted the initial phase, in which an unauthenticated request to the web app results in a redirect to the home realm discovery page: I start the flow directly from there. Furthermore, instead of showing a proper HTML HRD page I use a JSON feed, assuming that the browser would somehow render it for the user so that its corresponding entries will be clickable. Hopefully things will get clearer once I get in the details.

Home Realm Discovery

Let’s say that you want to help the user to authenticate with a given web application, protected by ACS. ACS knows which identity providers the application is willing to accept users from, and knows how to integrate with those. How do you take advantage of that knowledge? You have two easy ways:

- you can rely on one page that ACS automatically generates for you, which presents to the user a list of the acceptable identity providers. That’s super-easy, and that’s also what I show in the diagram above in step 1. However it is not exactly the absolute best in term of experience; ACS renders the bare minimum for the user to make a choice, and the visuals are – how should I put it? – a bit rough. And that’s by design.

- Another alternative is to obtain the list of identity providers programmatically. ACS offers a special endpoint you can use to obtain a JSON feed of identity providers trusted for a given relying party, inclusive of all the coordinates required for engaging each of them in one authentication flow. Once you have that list, you can use that info to create whatever authentication experience and/or look&feel you deem appropriate.

The second alternative is actually pretty clever! Let’s take a deeper look. How do you obtain the JSON feed for a given RP? You just GET the following:

https://w8kitacs.accesscontrol.windows.net:443/v2/metadata/IdentityProviders.js?protocol=wsfederation&realm=http%3a%2f%2flocalhost%3a7777%2f&reply_to=http%3a%2f%2flocalhost%3a7777%2f&context=&request_id=&version=1.0&callback=

The first part is the resource itself, the IP feed; it follows the customary rule for constructing ACS endpoints, the namespace identifier (bold) followed by the ACS URL structure. The green part specifies that we want to integrate ACS and our app using WS-Federation. The last highlighted section identifies which specific RP (among the ones described in the target namespace) we want to deal with. What do we get back? The following:

[

{

"Name": "Windows Live™ ID",

"LoginUrl": "https://login.live.com/login.srf?wa=wsignin1.0&wtrealm=https%3a%2f%2faccesscontrol.windows.net%2f&wreply=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fwsfederation&wp=MBI_FED_SSL&wctx=pr%3dwsfederation%26rm%3dhttp%253a%252f%252flocalhost%253a7777%252f%26ry%3dhttp%253a%252f%252flocalhost%253a7777%252f",

"LogoutUrl": "https://login.live.com/login.srf?wa=wsignout1.0",

"ImageUrl": "", "EmailAddressSuffixes": []

},

{

"Name": "Yahoo!",

"LoginUrl": "https://open.login.yahooapis.com/openid/op/auth?openid.ns=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0&openid.mode=checkid_setup&openid.claimed_id=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.identity=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.realm=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid&openid.return_to=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid%3fcontext%3dpr%253dwsfederation%2526rm%253dhttp%25253a%25252f%25252flocalhost%25253a7777%25252f%2526ry%253dhttp%25253a%25252f%25252flocalhost%25253a7777%25252f%26provider%3dYahoo!&openid.ns.ax=http%3a%2f%2fopenid.net%2fsrv%2fax%2f1.0&openid.ax.mode=fetch_request&openid.ax.required=email%2cfullname%2cfirstname%2clastname&openid.ax.type.email=http%3a%2f%2faxschema.org%2fcontact%2femail&openid.ax.type.fullname=http%3a%2f%2faxschema.org%2fnamePerson&openid.ax.type.firstname=http%3a%2f%2faxschema.org%2fnamePerson%2ffirst&openid.ax.type.lastname=http%3a%2f%2faxschema.org%2fnamePerson%2flast",

"LogoutUrl": "",

"ImageUrl": "",

"EmailAddressSuffixes": []

},

{

"Name": "Google",

"LoginUrl": "https://www.google.com/accounts/o8/ud?openid.ns=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0&openid.mode=checkid_setup&openid.claimed_id=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.identity=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.realm=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid&openid.return_to=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid%3fcontext%3dpr%253dwsfederation%2526rm%253dhttp%25253a%25252f%25252flocalhost%25253a7777%25252f%2526ry%253dhttp%25253a%25252f%25252flocalhost%25253a7777%25252f%26provider%3dGoogle&openid.ns.ax=http%3a%2f%2fopenid.net%2fsrv%2fax%2f1.0&openid.ax.mode=fetch_request&openid.ax.required=email%2cfullname%2cfirstname%2clastname&openid.ax.type.email=http%3a%2f%2faxschema.org%2fcontact%2femail&openid.ax.type.fullname=http%3a%2f%2faxschema.org%2fnamePerson&openid.ax.type.firstname=http%3a%2f%2faxschema.org%2fnamePerson%2ffirst&openid.ax.type.lastname=http%3a%2f%2faxschema.org%2fnamePerson%2flast",

"LogoutUrl": "",

"ImageUrl": "",

"EmailAddressSuffixes": []

},

{

"Name": "Facebook",

"LoginUrl": "https://www.facebook.com/dialog/oauth?client_id=194667703936106&redirect_uri=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%2fv2%2ffacebook%3fcx%3dcHI9d3NmZWRlcmF0aW9uJnJtPWh0dHAlM2ElMmYlMmZsb2NhbGhvc3QlM2E3Nzc3JTJmJnJ5PWh0dHAlM2ElMmYlMmZsb2NhbGhvc3QlM2E3Nzc3JTJm0%26ip%3dFacebook&scope=email",

"LogoutUrl": "",

"ImageUrl": "",

"EmailAddressSuffixes": []

}

]Well, that’s clearly meant to be consumed by machines: however JSON is clear enough for us to take this guy apart and understand what’s there.

First of all, the structure: every IP gets a name (useful for presentation purposes), a login URL (more about that later), a rarely populated logout URL, the URL of one image (again useful for presentation purposes) and a list of email suffixes (longer conversation, however: useful if you know the email of your user and you want to use it to automatically pair him/her to the corresponding IP, instead of showing all the list).

The login URL is the most interesting property. Let’s take the first one:

https://login.live.com/login.srf?wa=wsignin1.0&wtrealm=https%3a%2f%2faccesscontrol.windows.net%2f&wreply=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fwsfederation&wp=MBI_FED_SSL&wctx=pr%3dwsfederation%26rm%3dhttp%253a%252f%252flocalhost%253a7777%252f%26ry%3dhttp%253a%252f%252flocalhost%253a7777%252f

This is the URL used to sign in using Live. The yellow part, together with various other hints (wreply, wctx, etc) suggests that the integration with Live is also based on WS-Federation. This is what is often referred to as a deep link: it contains all the info required to go to the IP and then get back to the ACS address that will take care of processing the incoming token and issue a new token for the application. The part highlighted green shows such endpoint. Note the wsfederation entry in the wctx context parameter, it will come in useful later. You don’t need to grok al the details here: suffice to say that if the user clicks on this link he’ll be transported in a flow where he will authenticate with live id, will be bounced back to ACS and will eventually receive the token needed to authenticate with the application. Al with a simple click on a link.

Want to try another one? let’s take a look at the URL for Yahoo:

https://open.login.yahooapis.com/openid/op/auth?openid.ns=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0&openid.mode=checkid_setup&openid.claimed_id=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.identity=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.realm=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid&openid.return_to=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid%3fcontext%3dpr%253dwsfederation%2526rm%253dhttp%25253a%25252f%25252flocalhost%25253a7777%25252f%2526ry%253dhttp%25253a%25252f%25252flocalhost%25253a7777%25252f%26provider%3dYahoo!&openid.ns.ax=http%3a%2f%2fopenid.net%2fsrv%2fax%2f1.0&openid.ax.mode=fetch_request&openid.ax.required=email%2cfullname%2cfirstname%2clastname&openid.ax.type.email=http%3a%2f%2faxschema.org%2fcontact%2femail&openid.ax.type.fullname=http%3a%2f%2faxschema.org%2fnamePerson&openid.ax.type.firstname=http%3a%2f%2faxschema.org%2fnamePerson%2ffirst&openid.ax.type.lastname=http%3a%2f%2faxschema.org%2fnamePerson%2flast

Now that’s a much longer one! I am sure that many of you will recognize the OpenID/attribute exchange syntax, which happens to be the redirect-based protocol that ACS uses to integrate with Yahoo. The value of the return_to parameter hints at how ACS processes the flow: once again, notice the wsfederation string; and once again, all it takes to authenticate is a simple click on the link.

Google also integrates with ACS via OpenID, hence we can skip it. How about Facebook, though?

https://www.facebook.com/dialog/oauth?client_id=194667703936106&redirect_uri=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%2fv2%2ffacebook%3fcx%3dcHI9d3NmZWRlcmF0aW9uJnJtPWh0dHAlM2ElMmYlMmZsb2NhbGhvc3QlM2E3Nzc3JTJmJnJ5PWh0dHAlM2ElMmYlMmZsb2NhbGhvc3QlM2E3Nzc3JTJm0%26ip%3dFacebook&scope=email

Yet another integration protocol: this time it’s OAuth2. The flow leverages one Facebook app I created for the occasion, as it’s standard procedure with ACS. Another link type, same behavior: for the user, but also for the web app developer, it’s just a matter of following a link and eventually an ACS-issued token comes back.

Getting Back a Token

Alrighty, let’s say that the user clicks on one of the login URL links. In the diagram that’s step 2. In this case I am showing a generic IP1. As you know by now, the IP can use whatever protocol ACS and the IP agreed upon. In the diagram I am using WS-Federation, which is what you’d see if IP1 would be an ADFS2 or Live ID.

WS-Federation uses an interesting way of returning a token upon successful authentication. It basically sends back a form, containing the token and various ancillary parameters; it also sends a Javascript fragment that will auto-post that form to the requesting RP. That’s what happens in step 2 (IP to ACS) and 3 (ACS to the web application). Let’s take a closer look to the response returned by ACS:

1: <![CDATA[2: <html><head><title>Working...</title></head><body>3: <form method="POST" name="hiddenform" action="http://localhost:7777/">4: <input type="hidden" name="wa" value="wsignin1.0" />5: <input type="hidden" name="wresult" value="&lt;t:RequestSecurityTokenResponse6: Context=&quot;rm=0&amp;amp;7: id=passive&amp;amp;ru=%2fdefault.aspx%3f&quot;8: xmlns:t=&quot;http://schemas.xmlsoap.org/ws/2005/02/trust&quot;><t:Lifetime>9: <wsu:Created xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd">10: 2012-03-28T19:19:56.488Z</wsu:Created>11: <wsu:Expires xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd">2012-03-28T19:29:56.488Z</wsu:Expires>12: </t:Lifetime>13: <wsp:AppliesTo xmlns:wsp="http://schemas.xmlsoap.org/ws/2004/09/policy"><EndpointReference xmlns="http://www.w3.org/2005/08/addressing"><Address>http://localhost:7777/</Address></EndpointReference>14: </wsp:AppliesTo>15: <t:RequestedSecurityToken>16: <Assertion ID="_906f33bd-11ca-4d32-837b-71f8a3a1569c"17: IssueInstant="2012-03-28T19:19:56.504Z"18: Version="2.0" xmlns="urn:oasis:names:tc:SAML:2.0:assertion">19: <Issuer>https://w8kitacs.accesscontrol.windows.net/</Issuer>20: <ds:Signature [...]21: lt;/Assertion></t:RequestedSecurityToken>22: [....]23: lt;/t:RequestSecurityTokenResponse>" />24: <input type="hidden" name="wctx" value="rm=0&id=passive&ru=%2fdefault.aspx%3f" />25: <noscript><p>Script is disabled. Click Submit to continue.</p><input type="submit" value="Submit" />26: </noscript>27: </form>28: <script language="javascript">29: window.setTimeout('document.forms[0].submit()', 0);30: </script></body></html>31: ]]>Now THAT’s definitely not meant to be read by humans. I did warn you at the beginning of the post, didn’t I.

Come on, it’s not that bad: let me be your Virgil here. As I said above, in WS-Federation tokens are returned to the target RP by sending back a form with autopost: and that’s exactly what we have here. Lines 3-27 contain a form, which features a number of input fields used to do things such as signaling to the RP the nature of the operation (line 4: we are signing in) and transmitting the actual bits of the requested token (line 5 onward).

Lines 28-30 contain the by-now-famous autoposter script. And that’s it! The browser will receive the above, sheepishly (as in passively) execute the script and POST the content as appropriate.

The RP will likely have interceptors like WIF which will recognize the POST for what it is (a signin message), find the token, mangle it as appropriate and authenticate (or not) the user. All as described in countless introductions to claims-based identity.

Congratulations, you now know much more about how ACS implements HRD and how WS-Fed works than you’ll ever actually need. Unless, of course, you want to understand in depth how you can pervert that flow into something that can help with rich clients. …not from a Jedi

The Javascriptnotify Flow

Let’s get back to the rich client problem. We already said that we can pop out a browser from our rich client when we need to – that is to say when we have to authenticate the user - and close it once we are done. The flow we have just examined seems almost what we need, both for what concerns the HRD (more about that later) and the authentication flow. The only thing that does not work here is the last step.

Whereas in the ws-fed flow the ultimate recipient of the token issuance process is the entity that requires it for authentication purposes, that is to say the web site, in the rich client case it is the client itself that should obtain the token and store it for later use (securing calls to a web service). It is a bit if in the WS-Federation case the token would stop at the browser, instead of being posted to the web site. Here, let me steal my own thunder: we make that happen by providing in ACS an endpoint which is almost WS-Federation, but in fact provides a mechanism for getting the token where/when we need it in a rich client flow. We call it javascriptnotify. Take a look at the diagram below.

That looks pretty similar to the other one, with some important differences:

- there is a new actor, the rich client application. Although the app is active before and after the authentication phase, here we represent things only form the moment in which the browser pops out (identified in the diagram by the browser control entry, which can be embedded or popped out as dialog according to the style of the apps in the target platform) to the moment in which the authentication flow terminates

- the diagram does not show the intended audience of the token, the web service. Here we focus just on the token acquisition rather than use (which comes later in the app flow), whereas in the passive case the two are inextricably entangled.

Here I did in step 1 the same HRD feed/page simplification I did above; I’ll get back to it at the end of the post. The URl we use for getting the feed, though, has an important difference:

https://w8kitacs.accesscontrol.windows.net/v2/metadata/IdentityProviders.js?protocol=javascriptnotify&realm=urn:testservice&version=1.0

The URI of the resource is the same, and the realm of the target service is obviously different; the interesting bit here is the protocol parameter, which now says “javascriptnotify” instead of “wsfederation”. Let’s see if that yields to differences in the actual feed:

{

"Name": "Windows Live™ ID",

"LoginUrl": "https://login.live.com/login.srf?wa=wsignin1.0&wtrealm=https%3a%2f%2faccesscontrol.windows.net%2f&wreply=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fwsfederation&wp=MBI_FED_SSL&wctx=pr%3djavascriptnotify%26rm%3durn%253atestservice",

"LogoutUrl": "https://login.live.com/login.srf?wa=wsignout1.0",

"ImageUrl": "",

"EmailAddressSuffixes": []

},

{

"Name": "Yahoo!",

"LoginUrl": "https://open.login.yahooapis.com/openid/op/auth?openid.ns=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0&openid.mode=checkid_setup&openid.claimed_id=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.identity=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.realm=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid&openid.return_to=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid%3fcontext%3dpr%253djavascriptnotify%2526rm%253durn%25253atestservice%26provider%3dYahoo!&openid.ns.ax=http%3a%2f%2fopenid.net%2fsrv%2fax%2f1.0&openid.ax.mode=fetch_request&openid.ax.required=email%2cfullname%2cfirstname%2clastname&openid.ax.type.email=http%3a%2f%2faxschema.org%2fcontact%2femail&openid.ax.type.fullname=http%3a%2f%2faxschema.org%2fnamePerson&openid.ax.type.firstname=http%3a%2f%2faxschema.org%2fnamePerson%2ffirst&openid.ax.type.lastname=http%3a%2f%2faxschema.org%2fnamePerson%2flast",

"LogoutUrl": "",

"ImageUrl": "",

"EmailAddressSuffixes": []

},

{

"Name": "Google",

"LoginUrl": "https://www.google.com/accounts/o8/ud?openid.ns=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0&openid.mode=checkid_setup&openid.claimed_id=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.identity=http%3a%2f%2fspecs.openid.net%2fauth%2f2.0%2fidentifier_select&openid.realm=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid&openid.return_to=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%3a443%2fv2%2fopenid%3fcontext%3dpr%253djavascriptnotify%2526rm%253durn%25253atestservice%26provider%3dGoogle&openid.ns.ax=http%3a%2f%2fopenid.net%2fsrv%2fax%2f1.0&openid.ax.mode=fetch_request&openid.ax.required=email%2cfullname%2cfirstname%2clastname&openid.ax.type.email=http%3a%2f%2faxschema.org%2fcontact%2femail&openid.ax.type.fullname=http%3a%2f%2faxschema.org%2fnamePerson&openid.ax.type.firstname=http%3a%2f%2faxschema.org%2fnamePerson%2ffirst&openid.ax.type.lastname=http%3a%2f%2faxschema.org%2fnamePerson%2flast",

"LogoutUrl": "",

"ImageUrl": "",

"EmailAddressSuffixes": []

},

{

"Name": "Facebook",

"LoginUrl": "https://www.facebook.com/dialog/oauth?client_id=194667703936106&redirect_uri=https%3a%2f%2fw8kitacs.accesscontrol.windows.net%2fv2%2ffacebook%3fcx%3dcHI9amF2YXNjcmlwdG5vdGlmeSZybT11cm4lM2F0ZXN0c2VydmljZQ2%26ip%3dFacebook&scope=email",

"LogoutUrl": "",

"ImageUrl": "",

"EmailAddressSuffixes": []

}

]The battery is starting to suffer, so I have to accelerate a bit. I am not extracting the Login URLs of the various IPs, but I highlighted the places where ws-federation has been substituted by javascriptnotify (facebook follows a different approach, more about is some other time: but you can see that the two redirect_uri are different).

The integration between the IP and ACS – step 2 - goes as usual, modulo a different value in some context parameter; I didn’t show it in details earlier, I won’t show it now. What is different, though, is that coming back from the IP there’s something in the URL which tells ACS that the token should not be issued via WS-Federation, but using Javascriptnotify. That will influence how ACS sends back a token, that is to say the return portion of leg 3. Earlier we got a form containing the token, and an autoposter script; let’s see what we get now.

1: <html xmlns="http://www.w3.org/1999/xhtml">2: <head>3: <title>Loading </title>4: <script type="text/javascript">1:2: try {3: window.external.notify(4: '{5: "appliesTo":"urn:testservice",6: "context":null,7: "created":1332868436,8: "expires":1332869036,9: "securityToken":"<?xml version="1.0" encoding="utf-16"?\u003e<wsse:BinarySecurityToken wsu:Id="uuid:0ebe45bb-c8f5-4b58-b6fd-20cc54667721" ValueType="http://schemas.xmlsoap.org/ws/2009/11/swt-token-profile-1.0" EncodingType="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-soap-message-security-1.0#Base64Binary" xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd" xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd"\u003eaHR0cCUzYSUyZiUyZnNjaGVtYXMueG1sc29hcC5vcmclMmZ3cyUyZjIwMDUlMmYwNSUyZmlkZW50aXR5JTJmY2xhaW1zJTJmbmFtZWlkZW50aWZpZXI9MWJOdnROc2c5cmolMmIxdTVwTFlwTkE5VndveFFVUEx4TmtMNGJnMmZYWk13JTNkJmh0dHAlM2ElMmYlMmZzY2hlbWFzLm1pY3Jvc29mdC5jb20lMmZhY2Nlc3Njb250cm9sc2VydmljZSUyZjIwMTAlMmYwNyUyZmNsYWltcyUyZmlkZW50aXR5cHJvdmlkZXI9dXJpJTNhV2luZG93c0xpdmVJRCZBdWRpZW5jZT11cm4lM2F0ZXN0c2VydmljZSZFeHBpcmVzT249MTMzMjg2OTAzNiZJc3N1ZXI9aHR0cHMlM2ElMmYlMmZ3OGtpdGFjcy5hY2Nlc3Njb250cm9sLndpbmRvd3MubmV0JTJmJkhNQUNTSEEyNTY9blpPWE1pN3k2VkE4QlEyVXJWQWlpTDZXSkQ4OHJjb3ZvanBVb2t3dmklMmY4JTNk</wsse:BinarySecurityToken\u003e",10: "tokenType":"http://schemas.xmlsoap.org/ws/2009/11/swt-token-profile-1.0"}');11: }12: catch (err) {13: alert("Error ACS50021: windows.external.Notify is not registered.");14: }15:</script>16: </head>17: <body>18: </body>19: </html>I hope you’ll find in you to forgive the atrocious formatting I am using, hopefully that does not get in the way of making my point.

As you can see above, the response from ACS is completely different; it’s a script which substantially passes a string to whomever in the container implements a handler for the notify event. And the string contains.. surprise surprise, the token we requested. If our rich client provided a handler for the notify event, it will now receive the token bits for storage and future use. Mission accomplished: the browser control can now be closed and the rich native experience can resume, ready to securely invoke services with the newfound token.

The notifi-ed string also contains some parameter about the token itself (audience, validity interval, type, etc). Those parameters can come in handy to know what the token is good for, without the need for the client to actually parse and understand the token format (which can be stored and used as amorphous blob, nicely decoupling the client from future updates in the format or changes in policy).

A bit more on token formats. ACS allows you to define which token format should be used for which RP, regardless of the protocol. When obtaining tokens for REST services, you normally want to get tokens in a lightweight format (as you’ll likely need to use them in places – like the HTTP headers - where long tokens risk being clipped). In this example, in fact, I decided to use SWT tokens for my service urn: testservice. However ACS would have allowed me to send back a SAML token just as well. One more point in favor of keeping the client format-agnostic, given that the service might change policy at any moment.

That’s it for the flow! I might be biased, but IMHO it’s not that hard; in any case, I am glad that the vast majority of developers will never have to know things at this level of detail.

If you are interested in taking a look at code that handles the notify, I’d suggest getting the ACS2+WP7 lab and taking a look at Labs\ACS2andWP7\Source\Assets\SL.Phone.Federation\Controls\AccessControlServiceSignIn.xaml.cs, and specifically to SignInWebBrowserControl_ScriptNotify.Now that you know what it’s supposed to do, I am sure you’ll find it straightforward.

A Note About HRD on Rich Clients

Before closing and getting some sleep, here there’s a short digression on home realm discovery and rich clients.

At the beginning of the WS-Federation refresher I mentioned that web site developers have the option of relying on the precooked HRD page, provided by ACS for development purposes, or take the matter in their own hands and use the HRD JSON feed to acquire the IPs coordinates programmatically and integrate tem in their web site’s experience.

A rich client developer has even more options, which can be summarized in the following;

- Provide a native HRD experience. This is what we demonstrate in all of our rich client +ACS samples such as the WP7 and the Windows8 Metro ones: we acquire the JSON IP feed and we use it to display the possible IPs using the style and controls afforded by the target platform. When the user makes a choice, we open a browser (or conceptual equivalents, let’s not get too fiscal here) and point it to the LoginUrl associated to the IP of choice.

There are many nice things to be said about this approach, the main one being that you have the chance of leveraging the strengths of your platform of choice. The other side of the coin is that you might need to write quite a lot of code. Also: if you are writing multiple versions of your client, targeting multiple platforms, you’ll have to rethink the code of the HRD experience just as many times.- Drive the HRD experience from the browser. Of course you can always decide to just follow the passive case more closely, and handle the HRD experience in the browser as well. There are multiple ways of doing so, from driving it with a page served from some hosted location to generating the HTML from the JSON IPs feed directly on the client side. The advantage is that the structure of your app can be significantly simplified, with all the authentication code nicely ring-fenced in the browser control sub-system. The down side is that you’ll likely not blend with the target platform as well as you could if you’d use its visual elements & primitives directly.

Well folks, I have a confession to make. Right now I am still writing from a plane, and there are still babies crying around, but this time it’s the Paris-Seattle: the vacation is over and I am coming back. I didn’t finish this post on my way in, the magic noise cancelling headset fought bravely but in the end the babies-dogs combined attack could not be contained. Believe it or not, I actually managed to enjoy my vacation without thinking about this: however as soon as I got on the return plane I ALT-TABbed my way to Live Writer (left open for the whole week) and finalized. Good, because I caught few bugs that eluded [m]y stressed self of one week ago but were super-evident (“risplenda come un croco in polveroso prato” – from memory! No internet on transatlantic flights) to the rested self of time present.

I am not sure how generally useful this post is going to be. Once again, to be sure; this is NOT for the general-purpose developer. However I do know that this will provide some answers to very specific questions I got; and If you got this far, however, something tells me that “general-purpose” is not the right label for you.

As usual: if you have questions, write away!

Brian Hitney (@bhitney) described Getting Started with the Windows Azure Cache in a 4/4/2012 post to the US Cloud Connection blog:

Windows Azure has a great caching service that allows applications (whether or not they are hosted in Azure) to share in-memory cache as a middle tier service. If you’ve followed the ol’ Velocity project, then you’re likely aware this was a distributed cache service you could install on Windows Server to build out a middle tier cache. This was ultimately rolled into the Windows Server AppFabric, and is (with a few exceptions) the same that is offered in Windows Azure.

The problem with a traditional in-memory cache (such as, the ASP.NET Cache) is that it doesn’t scale – each instance of an application maintains their own version of a cached object. While this has a huge speed advantage, making sure data is not stale across instances is difficult. Awhile back, I wrote a series of posts on how to do this in Windows Azure, using the internal HTTP endpoints as a means of syncing cache.

On the flip side, the problem with building a middle tier cache is the maintenance and hardware overhead, and it introduces another point of failure in an application. Offering the cache as a service alleviates the maintenance and scalability concerns.

The Windows Azure cache offers the best of both worlds by providing the in-memory cache as a service, without the maintenance overhead. Out of the box, there are providers for both the cache and session state (the session state provider, though, requires .NET 4.0). To get started using the Windows Azure cache, we’ll configure a namespace via the Azure portal. This is done the same way as setting up a namespace for Access Control and the Service Bus:

Selecting new (upper left) allows you to configure a new namespace – in this case, we’ll do it just for caching:

Just like setting up a hosted service, we’ll pick a namespace (in this case, ‘evangelism’) and a location. Obviously, you’d pick a region closest to your application. We also need to select a cache size. The cache will manage its size by flushing the least used objects when under memory pressure.

To make setting up the application easier, there’s a “View Client Configuration” button that creates cut and paste settings for the web.config:

In the web application, you’ll need to add a reference to Microsoft.ApplicationServer.Caching.Client and Microsoft.ApplicationServer.Caching.Core. If you’re using the cache for session state, you’ll also need to reference Microsoft.Web.DistributedCache (requires .NET 4.0), and no additional changes (outside of the web.config) need to be done. For using the cache, it’s straightforward:

using (DataCacheFactory dataCacheFactory =

new DataCacheFactory())

{

DataCache dataCache = dataCacheFactory.GetDefaultCache();

dataCache.Add("somekey", "someobject", TimeSpan.FromMinutes(10));

}If you look at some of the overloads, you’ll see that some features aren’t supported in Azure:

That’s it! Of course, the big question is: what does it cost? The pricing, at the time of this writing, is:

One additional tip: if you’re using the session state provider locally in the development emulator with multiple instances of the application, be sure to add an applicationName to the session state provider:

<sessionState mode="Custom" customProvider="AppFabricCacheSessionStoreProvider">

<providers>

<add name="AppFabricCacheSessionStoreProvider"

type="Microsoft.Web.DistributedCache.DistributedCacheSessionStateStoreProvider,

Microsoft.Web.DistributedCache"

cacheName="default" useBlobMode="true" dataCacheClientName="default"

applicationName="SessionApp"/>

</providers>

</sessionState>The reason is because each website, when running locally in IIS, generates a separate session identifier for each site. Adding the applicationName ensures the session state is shared across all instances.

Alan Smith continued his Service Bus series with Transactional Messaging in the Windows Azure Service Bus with a 4/2/2012 post:

Introduction

I’m currently working on broadening the content in the Windows Azure Service Bus Developer Guide. One of the features I have been looking at over the past week is the support for transactional messaging. When using the direct programming model and the WCF interface some, but not all, messaging operations can participate in transactions. This allows developers to improve the reliability of messaging systems. There are some limitations in the transactional model, transactions can only include one top level messaging entity (such as a queue or topic, subscriptions are no top level entities), and transactions cannot include other systems, such as databases.

As the transaction model is currently not well documented I have had to figure out how things work through experimentation, with some help from the development team to confirm any questions I had. Hopefully I’ve got the content mostly correct, I will update the content in the e-book if I find any errors or improvements that can be made (any feedback would be very welcome). I’ve not had a chance to look into the code for transactions and asynchronous operations, maybe that would make a nice challenge lab for my Windows Azure Service Bus course.

Transactional Messaging

Messaging entities in the Windows Azure Service Bus provide support for participation in transactions. This allows developers to perform several messaging operations within a transactional scope, and ensure that all the actions are committed or, if there is a failure, none of the actions are committed. There are a number of scenarios where the use of transactions can increase the reliability of messaging systems.

Using TransactionScope

In .NET the TransactionScope class can be used to perform a series of actions in a transaction. The using declaration is typically used de define the scope of the transaction. Any transactional operations that are contained within the scope can be committed by calling the Complete method. If the Complete method is not called, any transactional methods in the scope will not commit.

// Create a transactional scope. using (TransactionScope scope = new TransactionScope()) { // Do something. // Do something else. // Commit the transaction. scope.Complete(); }In order for methods to participate in the transaction, they must provide support for transactional operations. Database and message queue operations typically provide support for transactions.

Transactions in Brokered Messaging

Transaction support in Service Bus Brokered Messaging allows message operations to be performed within a transactional scope; however there are some limitations around what operations can be performed within the transaction.

In the current release, only one top level messaging entity, such as a queue or topic can participate in a transaction, and the transaction cannot include any other transaction resource managers, making transactions spanning a messaging entity and a database not possible.

When sending messages, the send operations can participate in a transaction allowing multiple messages to be sent within a transactional scope. This allows for “all or nothing” delivery of a series of messages to a single queue or topic.

When receiving messages, messages that are received in the peek-lock receive mode can be completed, deadlettered or deferred within a transactional scope. In the current release the Abandon method will not participate in a transaction. The same restrictions of only one top level messaging entity applies here, so the Complete method can be called transitionally on messages received from the same queue, or messages received from one or more subscriptions in the same topic.

Sending Multiple Messages in a Transaction

A transactional scope can be used to send multiple messages to a queue or topic. This will ensure that all the messages will be enqueued or, if the transaction fails to commit, no messages will be enqueued.

An example of the code used to send 10 messages to a queue as a single transaction from a console application is shown below.

QueueClient queueClient = messagingFactory.CreateQueueClient(Queue1); Console.Write("Sending"); // Create a transaction scope. using (TransactionScope scope = new TransactionScope()) { for (int i = 0; i < 10; i++) { // Send a message BrokeredMessage msg = new BrokeredMessage("Message: " + i); queueClient.Send(msg); Console.Write("."); } Console.WriteLine("Done!"); Console.WriteLine(); // Should we commit the transaction? Console.WriteLine("Commit send 10 messages? (yes or no)"); string reply = Console.ReadLine(); if (reply.ToLower().Equals("yes")) { // Commit the transaction. scope.Complete(); } } Console.WriteLine(); messagingFactory.Close();The transaction scope is used to wrap the sending of 10 messages. Once the messages have been sent the user has the option to either commit the transaction or abandon the transaction. If the user enters “yes”, the Complete method is called on the scope, which will commit the transaction and result in the messages being enqueued. If the user enters anything other than “yes”, the transaction will not commit, and the messages will not be enqueued.

Receiving Multiple Messages in a Transaction

The receiving of multiple messages is another scenario where the use of transactions can improve reliability. When receiving a group of messages that are related together, maybe in the same message session, it is possible to receive the messages in the peek-lock receive mode, and then complete, defer, or deadletter the messages in one transaction. (In the current version of Service Bus, abandon is not transactional.)

The following code shows how this can be achieved.

using (TransactionScope scope = new TransactionScope()) { while (true) { // Receive a message. BrokeredMessage msg = q1Client.Receive(TimeSpan.FromSeconds(1)); if (msg != null) { // Wrote message body and complete message. string text = msg.GetBody<string>(); Console.WriteLine("Received: " + text); msg.Complete(); } else { break; } } Console.WriteLine(); // Should we commit? Console.WriteLine("Commit receive? (yes or no)"); string reply = Console.ReadLine(); if (reply.ToLower().Equals("yes")) { // Commit the transaction. scope.Complete(); } Console.WriteLine(); }Note that if there are a large number of messages to be received, there will be a chance that the transaction may time out before it can be committed. It is possible to specify a longer timeout when the transaction is created, but It may be better to receive and commit smaller amounts of messages within the transaction.

It is also possible to complete, defer, or deadletter messages received from more than one subscription, as long as all the subscriptions are contained in the same topic. As subscriptions are not top level messaging entities this scenarios will work.

The following code shows how this can be achieved.

try { using (TransactionScope scope = new TransactionScope()) { // Receive one message from each subscription. BrokeredMessage msg1 = subscriptionClient1.Receive(); BrokeredMessage msg2 = subscriptionClient2.Receive(); // Complete the message receives. msg1.Complete(); msg2.Complete(); Console.WriteLine("Msg1: " + msg1.GetBody<string>()); Console.WriteLine("Msg2: " + msg2.GetBody<string>()); // Commit the transaction. scope.Complete(); } } catch (Exception ex) { Console.WriteLine(ex.Message); }Unsupported Scenarios