Windows Azure and Cloud Computing Posts for 4/6/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Access Control, Identity, Workflow, EAI and EDI

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue and Hadoop Services

My (@rogerjenn) Using Data from Windows Azure Blobs with Apache Hadoop on Windows Azure CTP post of 4/9/2012 begins:

Contents:

- Introduction

- Creating the Azure Blob Source Data

- Configuring the ASV Data Source

- Scenario 1: Working with ASV Files in the Hadoop Command Shell via RDP

- Scenario 2: Working with ASV Files in the Interactive JavaScript Console

- Scenario 3: Working with ASV Files in the Interactive Hive Console

- Using the Interactive JavaScript Console to Create a Simple Graph from Histogram Tail Data

- Viewing Hadoop/MapReduce Job and HDFS Head Node Details

- Understanding Disaster Recovery for Hadoop on Azure

Introduction

Hadoop on Azure Team members Avkash Chauhan (@avkashchauhan) and Denny Lee (@dennylee) have written several blog posts about the use of Windows Azure blobs as data sources for the Apache Hadoop on Windows Azure Community Technical Preview (CTP). Both authors assume readers have some familiarity with Hadoop, Hive or both and use sample files with only a few rows to demonstrate Hadoop operations. The following tutorial assumes familiarity with Windows Azure blob storage techniques, but not with Hadoop on Azure features. Each of this tutorial’s downloadable sample flightdata text files contains about 500,000 rows. An alternative flighttest_2012_01.txt file with 100 rows is provided to speed tests with Apache Hive in the Interactive Hive console.

Note: As mentioned later, an initial set of these files is available from my SkyDrive account here.

My (@rogerjenn) Introducing Apache Hadoop Services for Windows Azure article, updated 4/2/2012, provides an overview of the many facets of Microsoft’s recent replacement for its original big-data analytics offerings from Microsoft Research: Dryad, DryadLINQ and LINQ to HPC.

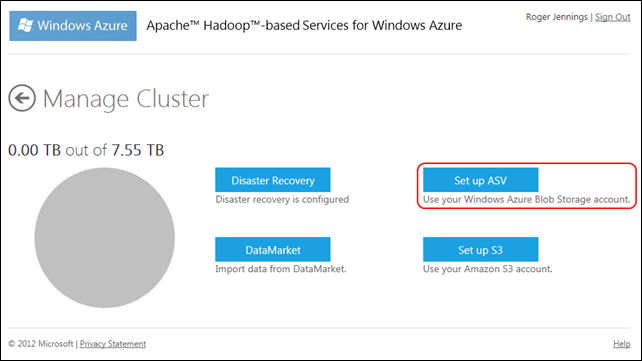

The Hadoop On Azure Elastic Map Reduce (EMR) portal’s Manage Cluster page offers two methods for substituting remote blob storage for Hadoop Distributed File System (HDFS) storage:

- Enabling the ASV protocol to connect to a Windows Azure Blob storage account or

- Setting up a connection to an Amazon Simple Storage Services (S3) account

Figure 1. The Hadoop on Azure EMR portal’s Manage Cluster page automates substituting Windows Azure or Amazon S3 storage for the HDFS database.

Note: My Importing Windows Azure Marketplace DataMarket DataSets to Apache Hadoop on Windows Azure’s Hive Databases tutorial of 4/3/2012 describes how to take advantage of the option to import data from the Windows Azure Marketplace DataMarket. For more information about Disaster Recovery, see the Understanding Disaster Recovery for Hadoop on Azure section at the end of this post.

This tutorial uses the ASV protocol in the following three scenarios:

- Hadoop/MapReduce with the Remote Desktop Protocol (RDP) and Hadoop Command Shell

- Hadoop/MapReduce with the Interactive JavaScript console

- Apache Hive with the Interactive Hive console

Note: According to Denny Lee, ASV is an abbreviation for Azure Storage Vault.

Scenarios 1 and 2 use the Hadoop FileSystem (FS) Shell Commands to display and manipulate files. According to the Guide for these commands:

The FileSystem (FS) shell is invoked by bin/hadoop fs <args>. All the FS shell commands take path URIs as arguments. The URI format is scheme://authority/path.

- For HDFS the scheme is hdfs

- For the local filesystem the scheme is file

- [For Azure blobs, the scheme is asv]

The scheme and authority are optional. If not specified, the default scheme specified in the configuration is used. An HDFS file or directory such as /parent/child can be specified as hdfs://namenodehost/parent/child or simply as /parent/child (given that your configuration is set to point to hdfs://namenodehost). Most of the commands in FS shell behave like corresponding Unix commands. Differences are described with each of the commands. Error information is sent to stderr and the output is sent to stdout.
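To make the scheme://authority/path rule concrete, here is a minimal Java sketch that uses only java.net.URI, not Hadoop’s own Path or FileSystem classes. It assumes hdfs://namenodehost (the example from the quoted guide) as the configured default file system and handles absolute paths only. The asv://mycontainer/... path is a hypothetical container name used just to show how a scheme and authority parse; it is not a statement of the exact ASV URI format.

```java
import java.net.URI;

// Sketch only: splits an FS shell path argument into scheme, authority and path,
// and fills in the configured default when no scheme is given. Not Hadoop's Path class.
final class FsPathResolver {
    private final URI defaultFs; // stands in for the configured default, e.g. hdfs://namenodehost

    FsPathResolver(String defaultFs) {
        this.defaultFs = URI.create(defaultFs);
    }

    // Returns a fully qualified URI; scheme and authority come from the default when absent.
    URI qualify(String pathArg) {
        URI u = URI.create(pathArg);
        if (u.getScheme() != null) {
            return u; // already qualified, e.g. file:///tmp/x or hdfs://host/a
        }
        if (!u.getPath().startsWith("/")) {
            // Hadoop resolves relative paths against a working directory, which this sketch omits
            throw new IllegalArgumentException("relative path not supported: " + pathArg);
        }
        String authority = defaultFs.getAuthority() == null ? "" : defaultFs.getAuthority();
        return URI.create(defaultFs.getScheme() + "://" + authority + u.getPath());
    }

    public static void main(String[] args) {
        FsPathResolver resolver = new FsPathResolver("hdfs://namenodehost");
        String[] samples = {
            "/parent/child",
            "hdfs://namenodehost/parent/child",
            "file:///tmp/flightdata.txt",
            "asv://mycontainer/flightdata.txt" // hypothetical container name
        };
        for (String arg : samples) {
            URI q = resolver.qualify(arg);
            System.out.println(arg + " -> scheme=" + q.getScheme()
                + ", authority=" + q.getAuthority() + ", path=" + q.getPath());
        }
    }
}
```

Both /parent/child and hdfs://namenodehost/parent/child resolve to the same URI, which is the equivalence the guide describes.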

Scenario 3 executes Apache Hive’s CREATE EXTERNAL TABLE instruction to generate a Hive table having Windows Azure blobs, rather than HDFS, as its data source.

Note: The technical support forum for Apache Hadoop on Windows Azure is the Apache Hadoop on Azure CTP Yahoo! Group.

And continues with the remaining topics in the preceding table of contents.

Andrew Brust (@andrewbrust) reported Hadoop 2.0: MapReduce in its place, HDFS all grown-up in a 4/6/2012 post to ZDNet’s Big on Data blog:

What are some of the cool things in the 2.0 release of Hadoop? To start, how about a revamped MapReduce? And what would you think of a high availability (HA) implementation of the Hadoop Distributed File System (HDFS)? News of these new features has been out there for a while, but most people are still coming up the basic Hadoop learning curve, so not a lot of people understand the 2.0 stuff terribly well. I think a look at the new release is in order.

Honestly, I didn’t really grasp HA-HDFS or the new MapReduce stuff myself until recently. That changed on Thursday, when I had the pleasure of speaking to a real Hadoop Pro: Todd Lipcon of Cloudera. Todd is one of the people working on HA-HDFS, and in addition to his engineering knowledge, he possesses the rare skill of explaining complicated things rather straightforwardly. Consider him my ghost writer for this post.

What’s the story, Jerry?

To me, Hadoop 2.0 is an Enterprise maturity turning point for Hadoop, and thus for Big Data:

- HDFS loses its single point of failure through a secondary standby “name node.” This is good.

- Hadoop can process data without using MapReduce as its algorithm. This is potentially game-changing.

If you wanted an executive summary of Hadoop 2.0, you’re done. If you’d like to understand a bit better how it works, read on.

But how?

The big changes in Hadoop 2.0 come about from a combination of a modularization of the engine and some common sense:

- Modularization: The “cluster scheduler” part of Hadoop MapReduce (the thing that schedules the map and reduce tasks) has become its own component

- MapReduce is now just a computation layer on top of the cluster scheduler, and it can be swapped out

- Common Sense: HDFS, which has a “name node” and a bunch of “worker nodes,” now features a standby copy of the name node

- The standby name node can take over in case of failure of, or planned maintenance to, the active one. (Lipcon told me the maintenance scenario is the far more common use case and pain point for Hadoop users)

Ramifications

The scale-out infrastructure of Hadoop can now be used without the MapReduce computational approach. That’s good, because MapReduce solutions don’t always work well, even for legitimate Big Data solutions. And in my conversation with Pervasive’s CTO Mike Hoskins, I learned that even if you subscribe to the MapReduce paradigm, Hadoop’s particular implementation can be improved upon:

- Also Read: Making Hadoop optimizations Pervasive

With this new setup, I can see a whole ecosystem of Hadoop plug-in algorithms evolving. Certain Data Mining engines have used that to particular advantage before, and the Hadoop community is very large, so this has major potential. We may also see optimized algorithms evolve for specific hardware implementations of Hadoop. That could be good (in terms of the performance wins) or bad (in terms of fragmentation). But either way, for Enterprise software, it’s probably a requirement.

And with new algorithms comes the ability to use languages other than Java, making Hadoop more interesting to more developers. In my last post, I explained why Hadoop’s current alternate-language mechanism, its “Streaming” interface, is less than optimal.

- Also Read: MapReduce, streaming beyond Java

Pluggable algorithms in MapReduce will transcend these problems with Streaming. They’ll make Hadoop more a platform and less an environment. That’s the only way to go in the software world these days.

The High Availability implementation of HDFS makes Hadoop more useful in mission-critical workloads. Honestly, this may be more about perception than substance. But who cares, really? If it enhances corporate adoption and confidence in Hadoop, it helps the platform. Technology breakthroughs aren’t always capability-based.

A note on versions

When can people start using this stuff? Right now, in reality; but more like “soon” in practice. To help clear this up, let’s get some Hadoop version and nomenclature voodoo straight.

Hadoop pros like to speak of the “0.20 branch” of the product’s source code and the “0.23 branch” too. But each of those pre-release-sounding version numbers got a makeover, to v1.0 and 2.0 respectively. The pros working closely with this stuff may still use the decimal designations even now, so keep in mind that discussions of 0.23 really reference 2.0.

I should also point out that version 4 of Cloudera’s CDH (“Cloudera’s Distribution Including Apache Hadoop”), which is based on Hadoop 2.0, is in beta. As such, I think of Hadoop 2.0 as beta software, even if you can pull the code down from Apache and easily put it in production. It’s still more of an early adopter release, so proceed with caution.

MapReduce in Hadoop 2.0, by the way, is sometimes referred to as MapReduce 2.0, other times as MRv2 and, in still different circles, as YARN (Yet Another Resource Negotiator).

Cloudera’s take

Cloudera has blog posts on both HA-HDFS and MRv2 that cover the features in almost whitepaper-level technical detail. Beyond technical explication, Cloudera’s being pretty prudent in its implementation: CDH4 actually contains both the 1.0 and 2.0 implementations of Hadoop MapReduce (you pick one or the other when you set up your cluster). This will allow older MapReduce jobs to run on the new distro, with HA-HDFS. In effect, Cloudera is shipping MRv1 as a deprecated, yet supported, feature in CDH 4. That’s a good call.

Your take-away

Hadoop is growing up, and it’s not backing off. Learn it, get good at it and use it. If you want, you can download a working VMware image of CDH3 or component tarballs of CDH4 right now.


Avkash Chauhan (@avkashchauhan) explained Programmatically setting the number of reducers with MapReduce job in Hadoop Cluster in a 4/5/2012 post (missed when published):

When you submit a MapReduce job to a Hadoop cluster, you can specify the number of map tasks for the job, and the number of reducers created depends on the mappers’ input and the Hadoop cluster’s capacity. Alternatively, you can simply submit the job and let the MapReduce framework adjust it to the cluster configuration, so setting the total number of reducers usually isn’t required or something to worry about. However, if you hard-code the number of reducers in your MapReduce job, that number of reducers is used no matter how many nodes your cluster has.

If you want a fixed number of reducers at run time, you can specify it when you submit the MapReduce job from the command line: passing “-D mapred.reduce.tasks” with the desired number spawns that many reducers at run time.

Setting the number of reducers programmatically is important when you use a partitioner in a MapReduce implementation. With a partitioner, it becomes very important to ensure that the number of reducers is at least equal to the total number of possible partitions, because the total number of partitions is usually the total number of nodes in the Hadoop cluster.
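The reducer/partition arithmetic can be illustrated without a cluster. The following Java sketch reproduces the formula used by Hadoop’s default HashPartitioner, (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks, and adds a hypothetical custom partitioner that routes each key to a fixed partition number (for example, one per node). It is standalone code rather than the Hadoop API, and the routing table and key names are invented for illustration; it shows why a custom partitioner needs at least as many reducers as it has possible partitions.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

// Standalone illustration of reducer/partition arithmetic; no Hadoop classes are used.
final class PartitionDemo {
    // Same formula as Hadoop's default HashPartitioner: masking the sign bit keeps
    // negative hash codes from producing a negative partition number.
    static int hashPartition(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Hypothetical custom partitioner that sends each key to a fixed partition (e.g. one per node).
    static int customPartition(String key, Map<String, Integer> routing, int numReduceTasks) {
        int partition = routing.get(key);
        if (partition < 0 || partition >= numReduceTasks) {
            // Hadoop also treats a partition number outside [0, numReduceTasks) as an error
            throw new IllegalArgumentException(
                "Illegal partition " + partition + " for " + numReduceTasks + " reducers");
        }
        return partition;
    }

    public static void main(String[] args) {
        Map<String, Integer> routing = new HashMap<>();
        for (int node = 0; node < 4; node++) {
            routing.put("node" + node, node); // four possible partitions
        }
        int[] counts = new int[3];
        for (int i = 0; i < 1000; i++) {
            counts[hashPartition("key" + i, 3)]++; // spread of keys over three reducers
        }
        System.out.println("hash spread over 3 reducers: " + Arrays.toString(counts));

        System.out.println("node3 with 4 reducers -> " + customPartition("node3", routing, 4));
        try {
            customPartition("node3", routing, 2); // fewer reducers than possible partitions
        } catch (IllegalArgumentException e) {
            System.out.println("node3 with 2 reducers -> " + e.getMessage());
        }
    }
}
```

In a real job, the reducer count would be set with JobConf.setNumReduceTasks(int) (or Job.setNumReduceTasks(int) in the newer API), or with the -D option described above.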

When you need to get cluster details programmatically at run time, you can use the ClusterStatus API:

http://mit.edu/~mriap/hadoop/hadoop-0.13.1/docs/api/org/apache/hadoop/mapred/ClusterStatus.html


SQL Azure Database, Federations and Reporting

Shirley Wang announced SQL Azure Data Sync Service Update 4 Now Live! on 4/6/2012:

A new service update (SU4) for the SQL Azure Data Sync service is now live! The most noteworthy new feature in SU4 is the ability to edit existing sync groups to accommodate schema changes. For step-by-step guidance on how to edit a sync group for different kinds of schema changes, please refer to this post on the Windows Azure blog: http://blogs.msdn.com/b/windowsazure/archive/2012/04/03/announcing-sql-azure-data-sync-preview-update.aspx

The ability to update sync groups to accommodate schema changes was the highest-voted feature on the Data Sync feature voting site: http://www.mygreatwindowsazureidea.com/forums/44459-sql-azure-data-sync-feature-voting. Go there today to add or vote for your favorite Data Sync feature!


Marketplace DataMarket, Social Analytics, Big Data and OData

Avi Kovarski of the Microsoft Codename “Data Hub” Team announced in a 4/10/2012 email to me that “the project was released this week in the pre-release [SQL Azure] Lab[s] version.” From SQL Azure Labs’ Microsoft Codename "Data Hub" page:

An Online Service for Data Discovery, Distribution and Curation

Information drives today’s business environment. Enable your knowledge workers to spend less time searching for the data they need and more time analyzing and sharing it. Create your own enterprise "Data Hub" and empower your knowledge workers to more efficiently get the information they need to drive your business to the next level.

Give your company the competitive advantage it needs with "Data Hub".

"Data Hub" enables:

Easy Publication and Distribution of Data

"Data Hub" enables quick and easy publication by data owners of their data sets and data artifacts such as reports, templates, models and visualizations.

Seamless Data Discovery and Consumption

Knowledge workers can easily search the "Data Hub" to discover and consume cleansed and certified enterprise data as well as community driven data. Annotations, semantics, and visualizations make the data useful and relevant. "Data Hub" will also support social collaboration.

Flexible Data Management and Monitoring

IT can easily provision a "Data Hub", manage its content and access permissions. Usage telemetry enables IT to monitor consumption.

Register here; download a PDF datasheet here. The project currently is limited to datasets stored in SQL Azure databases.

Andrew Brust (@andrewbrust) asked Why is Big Data Revolutionary? in a 4/10/2012 post to ZDNet’s Big on Data blog:

Last week, Dan Kusnetzky and I participated in a ZDNet Great Debate titled “Big Data: Revolution or evolution?” As you might expect, I advocated for the “revolution” position. The fact is I probably could have argued either side, as sometimes I view Big Data products and technologies as BI (business intelligence) in we-can-connect-to-Hadoop-too clothing.

But in the end, I really do see Big Data as different and significantly so. And the debate really helped me articulate my position, even to myself. So I present here an abridged version of my debate assertions and rebuttals.

Big Data’s manifesto: don’t be afraid

Big Data is unmistakably revolutionary. For the first time in the technology world, we’re thinking about how to collect more data and analyze it, instead of how to reduce data and archive what’s left. We’re no longer intimidated by data volumes; now we seek out extra data to help us gain even further insight into our businesses, our governments, and our society.

The advent of distributed processing over clusters of commodity servers and disks is a big part of what’s driving this, but so too is the low and falling price of storage. While the technology (and indeed the need) to collect, process and analyze Big Data has been with us for quite some time, doing so hasn’t been efficient or economical until recently. And therein lies the revolution: everything we always wanted to know about our data but were afraid to ask. Now we don’t have to be afraid.

A Big Data definition

My primary definition of Big Data is the area of tech concerned with procurement and analysis of very granular, event-driven data. That involves Internet-derived data that scales well beyond Web site analytics, as well as sensor data, much of which we’ve thrown away until recently. Data that used to be cast off as exhaust is now the fuel for deeper understanding about operations, customer interactions and natural phenomena. To me, that’s the Big Data standard.

Event-driven data sets are too big for transactional database systems to handle efficiently. Big Data technologies like Hadoop, complex event processing (CEP) and massively parallel processing (MPP) systems are built for these workloads. Transactional systems will improve, but there will always be a threshold beyond which they were not designed to be used.

2012: Year of Big Data?

Big Data is becoming mainstream…it’s moving from specialized use in science and tech companies to Enterprise IT applications. That has major implications, as mainstream IT standards for tooling, usability and ease of setup are higher than in scientific and tech company circles. That’s why we’re seeing companies like Microsoft get into the game with cloud-based implementations of Big Data technology that can be requested and configured from a Web browser.

The quest to make Big Data more Enterprise-friendly should refine the technology and lower the cost of operating it. Right now, Big Data tools have a lot of rough edges and require expensive, highly specialized technologists to implement and operate them. That is changing, though, which is further proof of its revolutionary quality.

Spreadmarts aren’t Big Data, but they have a role

Is Big Data any different from the spreadsheet models and number crunching we’ve grown accustomed to? What the spreadsheet jocks have been doing can legitimately be called analytics, but certainly not Big Data, as Excel just can’t accommodate Big Data sets as defined earlier. It wasn’t until 2007 that Excel could even handle more than 65,536 rows per spreadsheet. It can’t handle larger operational data loads, much less Big Data loads.

But the results of Big Data analyses can be further crunched and explored in Excel. In fact, Microsoft has developed an add-in that connects Excel to Hive, the relational/data warehouse interface to Hadoop, the emblematic Big Data technology. Think of Big Data work as coarse editing and Excel-based analysis as post-production. [Emphasis added.]

The fact that BI and DW are complementary to Big Data is a good thing. Big Data lets older, conventional technologies provide insights on data sets that cover a much wider scope of operations and interactions than they could before. The fact that we can continue to use familiar tools in completely new contexts makes something seemingly impossible suddenly accessible, even casual. That is revolutionary.

Natural language processing and Big Data

There are solutions for carrying out Natural Language Processing (NLP) with Hadoop (and thus Big Data). One involves taking the Python programming language and a set of libraries called NLTK (Natural Language Toolkit). Another example is Apple’s Siri technology on the iPhone. Users simply talk to Siri to get answers from a huge array of domain expertise.

Sometimes Siri works remarkably well; at other times it’s a bit klunky. Interestingly, Big Data technology itself will help to improve natural language technology as it will allow greater volumes of written works to be processed and algorithmically understood. So Big Data will help itself become easier to use.

Big Data specialists and developers: can they all get along?

We don’t need to make this an either/or question. Just as there have long been developers and database specialists, there will continue to be call for those who build software and those who specialize in the procurement and analysis of data that software produces and consumes. The two are complementary.

But in my mind, people who develop strong competency in both will have very high value indeed. This will be especially true as most tech professionals seem to self-select as one or the other. I’ve never thought there was a strong justification for this, but I’ve long observed it as a trend in the industry. People who buck that trend will be rare, and thus in demand and very well-compensated.

The feds and Big Data?

The recent $200 million investment in Big Data announced by the U.S. Federal government received lots of coverage, but how important is it, really? It has symbolic significance, but I also think it has flaws. $200 million is a relatively small amount of money, especially when split over numerous Federal agencies.

But when the administration speaks to the importance of harnessing Big Data in the work of the government and its importance to society, that tells you the technology has power and impact. The US Federal Government collects reams of data; the Obama administration makes it clear the data has huge latent value.

Big Data and BI are separate, but connected

Getting back to my introductory point, is Big Data just the next generation of BI? Big Data is its own subcategory and will likely remain there. But it’s part of the same food chain as BI and data warehousing, and these categories will exist along a continuum more than they will as discrete and perfectly distinct fields.

That’s exactly where things have stood for more than a decade with database administrators and modelers versus BI and data mining specialists. Some people do both; others specialize in one or the other. They’re not mutually exclusive, nor is one merely a newer manifestation of the other.

And so it will be with Big Data: an area of data expertise with its own technologies, products and constructs, but with an affinity to other data-focused tech specializations. Connections exist throughout the tech industry and computer science, and yet distinctions are still legitimate, helpful and real.

Where does this leave us?

In the debate, we discussed a number of scenarios where Big Data ties into more established database, Data Warehouse, BI and analysis technologies. The tie-ins are numerous indeed, which may make Big Data’s advances seem merely incremental. After all, if we can continue to use established tools, how can the change be “Big?”

But the revolution isn’t televised through these tools. It’s happening away from them. We’re taking huge amounts of data, much of it unstructured, and sifting it using cheap servers and disks. And then we’re on-boarding that sifted data into our traditional systems. We’re answering new, bigger questions, and a lot of them. We’re using data we once threw away, because storage was too expensive, processing too slow and, going further back, broadband was too scarce. Now we’re working with that data in familiar ways, with little re-tooling or disruption. This is empowering and unprecedented, but at the same time, it feels intuitive.

That’s revolutionary.

Marcelo Lopez Ruiz (@mlrdev) announced New WCF Data Services Release on 4/9/2012:

WCF Data Services 5.0 for OData v3 is out today. Read all about it here, and get it while it's hot. Actions, geospatial support, and an assortment of other goodies, not the least of which is support for the Any and All operators. Their absence has been a restriction for a long time, and it's great to see that workarounds are no longer required.

Glenn Gailey (@ggailey777) described My Favorite Things in OData v3 and WCF Data Services 5.0 in a 4/9/2012 post:

Great news…WCF Data Services 5.0 was released today!

This new product release provides client and server support for OData v3 for the .NET Framework and Silverlight. Previous versions of WCF Data Services were part of the .NET Framework and Silverlight, but this out-of-band version is its own product, designed specifically to support OData v3. As such, I wanted to call out my favorite new features supported in this release (and, of course, in OData v3).

New Feature Raves

Here is the short list of new features and functionality that I have been looking forward to…

Navigation Properties on Derived Types

This might be the single most asked for feature in this release. At least, it was number one on the wish list, with 495 votes. While I’ve only had to work around this limitation once so far, I’m glad to see it’s finally here!

Named Streams

It used to be that you could only have one resource stream per entity type. Now with named streams, you can have as many properties of Stream type defined on the entity as you need. This is great if you need to support, say, multiple image resolutions on the same entity or an image and other kinds of blobs.

For more information, see Streaming Provider (WCF Data Services).

Any and All

I’ve created dozens of service operations to enable my OData client apps to traverse many-to-many (*:*) associations. I sure am glad to now be able to write queries like this:

```csharp
var filteredEmployees = from e in context.Employees
                        where e.Territories
                            .Any(t => t.TerritoryDescription.Contains(territory))
                        select e;
```

This was number three on the wish list. For more information, see LINQ Considerations (WCF Data Services).
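Over the wire, a LINQ Any() query like this becomes an OData v3 $filter that uses the any lambda operator. The Java sketch below builds a roughly equivalent request URL by hand, assuming that Contains() maps to OData v3’s substringof() function. The service root https://example.com/Northwind.svc is a placeholder, and the exact expression the WCF Data Services client emits (range variable name, spacing) may differ. It needs Java 10 or later for URLEncoder.encode(String, Charset).

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Sketch of an OData v3 query URL roughly equivalent to the LINQ Any() query above.
// The service root and the exact filter text are assumptions, not captured client output.
final class ODataAnyFilter {
    // OData string literals are single-quoted; an embedded quote is escaped by doubling it.
    static String literal(String value) {
        return "'" + value.replace("'", "''") + "'";
    }

    // any(t: ...) is OData v3's lambda operator; substringof(s1, s2) is true when s1 occurs in s2.
    static String anyFilter(String territory) {
        return "Territories/any(t: substringof(" + literal(territory) + ", t/TerritoryDescription))";
    }

    static String queryUrl(String serviceRoot, String territory) {
        // URLEncoder produces form encoding ('+' for spaces); switch to %20 for a URL query string.
        // A literal '+' in the input is encoded as %2B, so every remaining '+' was a space.
        String encoded = URLEncoder.encode(anyFilter(territory), StandardCharsets.UTF_8)
            .replace("+", "%20");
        return serviceRoot + "/Employees?$filter=" + encoded;
    }

    public static void main(String[] args) {
        System.out.println(anyFilter("Boston"));
        System.out.println(queryUrl("https://example.com/Northwind.svc", "New York"));
    }
}
```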

My Other Votes

Here are some of my other votes that made it into this release:

- Support DbContext as an EF provider context

- Auto generate methods on the client to call service operations (this is provided by the new OData T4 C# template)

- Annotations

For a list of all the new features and behaviors in WCF Data Services 5.0, see What's New in WCF Data Services.

Still Missing and Not Quite There

And, in the interest of fairness, here are the things that I still wish were in WCF Data Services and maybe also the OData protocol. Some of these are still on the wish list.

JSON Support in the Client

It wasn’t until I got into mobile device development that I really saw the value of JSON versus Atom in reducing bandwidth, and the OData protocol supports both. While WCF Data Services has always supported JSON in the data service (with this caveat), the client has only ever supported Atom. (As you can see in Pablo’s post, Atom is hugely more verbose than JSON.) Interestingly, in this release the client has been revised to leverage the OData Library (ODataLib) for serialization, as has the server, and ODataLib supports JSON. This means that the client should also have the ability to “speak” JSON, so please, OData folks, turn it on!

Enums

It looks like the Entity Framework team has already added enum type support to the forthcoming EF version 5. As such, I would have loved to see this support added to OData as well. Plus, I hate to mention it, but Support Enums as Property Types on Entities is a close second in votes with 491.

Collection Support for the Entity Framework Provider

Support for collection properties has been added to the OData protocol and is available when using custom data service providers. However, there is no way to make this work with the Entity Framework provider. I understand the difficulty with mapping an unordered collection of types into a relational database, but it still makes me sad. I am not going to implement a custom provider just to use collections (plus I want enums more).

Property-Level Change Tracking on the Client

This is not such a problem, but it’s just a little irritating that while OData supports property-level updates with a MERGE request, the client has never supported this. It would be a good way to reduce some network bandwidth. A workaround for this does exist, but as I mention in this post, you must essentially write a wrapper for DataServiceContext that does property-level tracking and rewrite outgoing MERGE requests.
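A wrapper of that kind boils down to remembering each entity’s original property values and sending only the ones that differ. The following Java sketch shows that bookkeeping with a hypothetical TrackedEntity class; it is not part of WCF Data Services or any OData library, and it stops at computing the property map a MERGE payload would carry rather than serializing or sending a request. The sample property values are made up.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical client-side wrapper that tracks property-level changes so an update
// could carry only the changed properties in a MERGE request. Not a WCF Data Services API.
final class TrackedEntity {
    private final Map<String, Object> original; // values as of the last load or successful save
    private final Map<String, Object> current;  // values as edited by the application

    TrackedEntity(Map<String, Object> loaded) {
        original = new LinkedHashMap<>(loaded);
        current = new LinkedHashMap<>(loaded);
    }

    void set(String property, Object value) {
        current.put(property, value);
    }

    // Properties whose current value differs from the original snapshot.
    Map<String, Object> mergePayload() {
        Map<String, Object> changed = new LinkedHashMap<>();
        for (Map.Entry<String, Object> e : current.entrySet()) {
            boolean isNew = !original.containsKey(e.getKey());
            if (isNew || !Objects.equals(original.get(e.getKey()), e.getValue())) {
                changed.put(e.getKey(), e.getValue());
            }
        }
        return changed;
    }

    // Call after the service accepts the update so later payloads start empty again.
    void acceptChanges() {
        original.clear();
        original.putAll(current);
    }

    public static void main(String[] args) {
        Map<String, Object> loaded = new LinkedHashMap<>();
        loaded.put("FirstName", "Nancy"); // sample values, made up for illustration
        loaded.put("Title", "Sales Representative");
        TrackedEntity employee = new TrackedEntity(loaded);
        employee.set("Title", "Sales Manager");
        System.out.println("MERGE would send: " + employee.mergePayload());
    }
}
```

Comparing against a snapshot, rather than flagging every setter call, means that setting a property back to its original value drops it from the payload.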

Functions

Well, in this release we now have service actions, although I still find them very hard to both conceptualize and implement for an EF provider (akin to custom data service providers). What I really want is service functions, which are in OData v3 but have yet to be implemented by the WCF Data Services product. Hopefully, when we get functions, they will be a bit easier to implement than actions for the Entity Framework provider.

Updates to the Async Clients

This release also includes an updated client for Silverlight that supports OData v3. Now we just need an updated OData v3 client for Windows Phone 7.5, which is not included in this release. Also, and perhaps more importantly, there is as of yet no publicly available client for Windows 8 Metro apps. We really need this Metro client support in Visual Studio 11, so let’s all hope it makes it in there by RTM.

Oh, and did I mention that all the clients need to support JSON, especially the Windows Phone client? That said, this new discussion about a lighter JSON format for OData gives me hope for more JSON-centric clients in the future.

Anyway…be sure to try out OData v3 and WCF Data Services 5.0.


Windows Azure Service Bus, Access Control, Identity, Workflow, EAI and EDI

Alan Smith (@alansmith) described his Windows Azure Service Bus Splitter and Aggregator patterns in a 4/10/2012 post:

This article will cover basic implementations of the Splitter and Aggregator patterns using the Windows Azure Service Bus. The content will be included in the next release of the “Windows Azure Service Bus Developer Guide”, along with some other patterns I am working on.

I’ve taken the pattern descriptions from the book “Enterprise Integration Patterns” by Gregor Hohpe. I bought a copy of the book in 2004 and recently dusted it off when I started to look at implementing the patterns on the Windows Azure Service Bus. Gregor also presented a session in 2011, “Enterprise Integration Patterns: Past, Present and Future,” which is well worth a look.

I’ll be covering more patterns in the coming weeks; I’m currently working on Wire-Tap and Scatter-Gather. There will no doubt be a section on implementing these patterns in my “SOA, Connectivity and Integration using the Windows Azure Service Bus” course.

There are a number of scenarios where a message needs to be divided into a number of sub messages, and also where a number of sub messages need to be combined to form one message. The splitter and aggregator patterns provide a definition of how this can be achieved. This section will focus on the implementation of basic splitter and aggregator patterns using the Windows Azure Service Bus direct programming model.

In BizTalk Server, receive pipelines are typically used to implement the splitter pattern, with sequential convoy orchestrations often used to aggregate messages. In the current release of the Service Bus, there is no functionality in the direct programming model that implements these patterns, so it is up to the developer to implement them in the applications that send and receive messages.

Splitter

A message splitter takes a message and splits it into a number of sub messages. As there are different scenarios for how a message can be split into sub messages, message splitters are implemented using different algorithms.

The Enterprise Integration Patterns book describes the splitter pattern as follows:

How can we process a message if it contains multiple elements, each of which may have to be processed in a different way?

Use a Splitter to break out the composite message into a series of individual messages, each containing data related to one item.

The Enterprise Integration Patterns website provides a description of the Splitter pattern here.

In some scenarios a batch message could be split into the sub messages that are contained in the batch. The splitting of a message could be based on the message type of sub-message, or the trading partner that the sub message is to be sent to.
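To make the idea concrete, here is a minimal C# sketch of a content-based splitter that breaks a batch into groups of sub messages, one group per trading partner. The "partner|payload" line format, the partner names and the SplitByPartner helper are hypothetical, and plain strings stand in for Service Bus messages; it is written as a C# 9 top-level program so it can run on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical batch: each line is "partner|payload" (an illustrative format,
// not something defined by the Service Bus or BizTalk).
string[] batch =
{
    "Contoso|PO-1001",
    "Fabrikam|PO-1002",
    "Contoso|PO-1003",
};

// Split the batch into sub messages, grouped by the trading partner
// each sub message is to be sent to. Order within a group is preserved.
Dictionary<string, List<string>> SplitByPartner(IEnumerable<string> lines) =>
    lines
        .Select(line => line.Split('|'))
        .GroupBy(parts => parts[0], parts => parts[1])
        .ToDictionary(group => group.Key, group => group.ToList());

var subMessages = SplitByPartner(batch);
foreach (var entry in subMessages)
{
    Console.WriteLine($"{entry.Key}: {string.Join(", ", entry.Value)}");
}
```

Splitting on message type instead would only change the key selector passed to GroupBy.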

Aggregator

An aggregator takes a stream of related messages and combines them to form one message.

The Enterprise Integration Patterns book describes the aggregator pattern as follows:

How do we combine the results of individual, but related messages so that they can be processed as a whole?

Use a stateful filter, an Aggregator, to collect and store individual messages until a complete set of related messages has been received. Then, the Aggregator publishes a single message distilled from the individual messages.

The Enterprise Integration Patterns website provides a description of the Aggregator pattern here.
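The "stateful filter" in that definition can be sketched in a few lines of C#. This is an illustration under stated assumptions, not Alan's implementation: the pending dictionary, the Aggregate helper, the correlation IDs and the assumption that the expected number of parts is known up front are all hypothetical, and strings stand in for messages.

```csharp
using System;
using System.Collections.Generic;

// Aggregator state: the parts received so far, keyed by a correlation ID.
var pending = new Dictionary<string, List<string>>();

// Store one part; return the combined message once all expected parts for
// that correlation ID have arrived, otherwise return null.
string? Aggregate(string correlationId, string part, int expectedCount)
{
    if (!pending.TryGetValue(correlationId, out var parts))
    {
        parts = new List<string>();
        pending[correlationId] = parts;
    }
    parts.Add(part);
    if (parts.Count < expectedCount)
    {
        return null; // the set is not complete yet
    }
    pending.Remove(correlationId);  // release the state held for this set
    return string.Join(";", parts); // publish a single distilled message
}

Console.WriteLine(Aggregate("order-42", "line1", 3) ?? "(waiting)");
Console.WriteLine(Aggregate("order-42", "line2", 3) ?? "(waiting)");
Console.WriteLine(Aggregate("order-42", "line3", 3) ?? "(waiting)");
```

The three calls print "(waiting)" twice and then "line1;line2;line3". Removing a completed set from pending keeps the aggregator's state from growing without bound.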

A common example of the need for an aggregator is in scenarios where a stream of messages needs to be combined into a daily batch to be sent to a legacy line-of-business application. The BizTalk Server EDI functionality provides support for batching messages in this way using a sequential convoy orchestration.

Scenario

The scenario for this implementation of the splitter and aggregator patterns is the sending and receiving of large messages using a Service Bus queue. In the current release, the Windows Azure Service Bus supports a maximum message size of 256 KB, with a maximum header size of 64 KB. This leaves a safe maximum body size of 192 KB.

The BrokeredMessage class itself supports messages larger than 256 KB; in fact, the Size property is of type long, implying that very large messages may be supported at some point in the future. The 256 KB size restriction is enforced by the Service Bus components that are deployed in the Windows Azure data centers.

One of the ways of working around this size restriction is to split large messages into a sequence of smaller sub messages in the sending application, send them via a queue, and then reassemble them in the receiving application. This scenario will be used to demonstrate the pattern implementations.
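The core of that workaround can be sketched without any Service Bus code at all. The C# below is a hypothetical sketch, not the LargeMessageSender/LargeMessageReceiver classes: it splits a byte array into chunks of at most 192 KB (the safe body size quoted above, used here as an assumption) and reassembles them. In a real sender each chunk would become the body of its own brokered message; here the chunks are plain byte arrays, and the 500,000-byte random body is made-up test data.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Splitter: break a large body into sub message bodies of at most maxChunkSize bytes.
List<byte[]> Split(byte[] body, int maxChunkSize)
{
    var chunks = new List<byte[]>();
    for (int offset = 0; offset < body.Length; offset += maxChunkSize)
    {
        int length = Math.Min(maxChunkSize, body.Length - offset);
        var chunk = new byte[length];
        Array.Copy(body, offset, chunk, 0, length);
        chunks.Add(chunk);
    }
    return chunks;
}

// Aggregator: concatenate the chunks, which must be supplied in their original order.
byte[] Aggregate(IEnumerable<byte[]> chunks)
{
    using var stream = new MemoryStream();
    foreach (var chunk in chunks)
    {
        stream.Write(chunk, 0, chunk.Length);
    }
    return stream.ToArray();
}

var original = new byte[500_000];
new Random(1).NextBytes(original);

var parts = Split(original, 192 * 1024);
var restored = Aggregate(parts);
Console.WriteLine($"{parts.Count} sub messages, identical: {original.SequenceEqual(restored)}");
// prints "3 sub messages, identical: True"
```

Because Aggregate simply concatenates, it relies on the sub messages arriving in order, which is exactly the assumption the synchronous send in the original implementation preserves.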

Implementation

The splitter and aggregator will be used to provide functionality to send and receive large messages over the Windows Azure Service Bus. In order to make the implementations generic and reusable they will be implemented as a class library. The splitter will be implemented in the LargeMessageSender class and the aggregator in the LargeMessageReceiver class. A class diagram showing the two classes is shown below.

Alan continues with source code listings and concludes:

In order to test the application, the sending application is executed, which will use the LargeMessageSender class to split the message and place it on the queue. The output of the sender console is shown below.

The console shows that the body size of the large message was 9,929,365 bytes, and the message was sent as a sequence of 51 sub messages.
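As a quick sanity check on those numbers, the sketch below computes how many sub messages a 9,929,365-byte body needs when each sub message carries at most 192 KB of body data. The source does not state the chunk size the sender actually uses; 192 KB is an assumption taken from the safe maximum body size mentioned earlier. Any chunk size from 194,694 to 198,587 bytes would also give 51.

```csharp
using System;

// Assumption: each sub message carries at most 192 KB of body data.
const long MaxSubMessageBody = 192 * 1024; // 196,608 bytes
const long LargeBody = 9_929_365;          // body size reported by the sender console

// Ceiling division: a partial final chunk still needs its own sub message.
long SubMessageCount(long bodySize, long chunkSize) => (bodySize + chunkSize - 1) / chunkSize;

Console.WriteLine(SubMessageCount(LargeBody, MaxSubMessageBody)); // 51
```

Fifty full chunks account for 9,830,400 bytes, leaving 98,965 bytes for the 51st sub message.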

When the receiving application is executed the results are shown below.

The console application shows that the aggregator has received the 51 messages from the message sequence that was created in the sending application. The messages have been aggregated to form a message with a body of 9,929,365 bytes, which is the same as the original large message. The message body is then saved as a file.

Improvements to the Implementation

The splitter and aggregator patterns in this implementation were created in order to show the usage of the patterns in a demo, which they do quite well. When implementing these patterns in a real-world scenario there are a number of improvements that could be made to the design.

Copying Message Header Properties

When sending a large message using these classes, it would be great if the message header properties in the message that was received were copied from the message that was sent. The sending application may well add information to the message context that will be required in the receiving application.

When the sub messages are created in the splitter, the header properties in the first message could be set to the values in the original large message. The aggregator could then use the values from this first sub message to set the properties in the message header of the large message during the aggregation process.
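A minimal sketch of that improvement, assuming plain dictionaries stand in for the message property bags (on a BrokeredMessage this would be its Properties dictionary). The SplitHeaders and RestoreHeaders helpers and the OrderId/Customer properties are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Header properties the sending application set on the original large message.
var originalProperties = new Dictionary<string, object>
{
    ["OrderId"] = 42,
    ["Customer"] = "Contoso",
};

// Splitter side: only the first sub message carries a copy of the header properties.
List<Dictionary<string, object>> SplitHeaders(IDictionary<string, object> source, int subMessageCount) =>
    Enumerable.Range(0, subMessageCount)
        .Select(i => i == 0
            ? new Dictionary<string, object>(source)
            : new Dictionary<string, object>())
        .ToList();

// Aggregator side: rebuild the large message's properties from the first sub message.
Dictionary<string, object> RestoreHeaders(IReadOnlyList<Dictionary<string, object>> received) =>
    new Dictionary<string, object>(received[0]);

var subHeaders = SplitHeaders(originalProperties, 3);
var restoredProperties = RestoreHeaders(subHeaders);
Console.WriteLine($"OrderId={restoredProperties["OrderId"]}, Customer={restoredProperties["Customer"]}");
// prints "OrderId=42, Customer=Contoso"
```

Copying the properties only once keeps the per-sub-message header small, which matters given the 64 KB header limit.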

Using Asynchronous Methods

The current implementation uses the synchronous send and receive methods of the QueueClient class. It would be much more performant to use the asynchronous methods; however, doing so may well affect the sequence in which the sub messages are enqueued, which would require the implementation of a resequencer in the aggregator to restore the correct message sequence.
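A resequencer can be sketched as a buffer that releases sub messages strictly in order. This C# sketch assumes the splitter stamps each sub message with its own zero-based index (for example in a custom header property); the broker-assigned sequence number would reflect enqueue order rather than the original order. The Accept helper and the sample data are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Out-of-order sub messages, keyed by the splitter-assigned index.
var buffer = new Dictionary<int, string>();
int nextExpected = 0;

// Accept one sub message; return every sub message that can now be released in order.
List<string> Accept(int sequence, string body)
{
    var released = new List<string>();
    if (sequence < nextExpected)
    {
        return released; // duplicate of a sub message that was already released
    }
    buffer[sequence] = body;
    while (buffer.TryGetValue(nextExpected, out var next))
    {
        released.Add(next);
        buffer.Remove(nextExpected);
        nextExpected++;
    }
    return released;
}

// Sub messages arriving out of order, as could happen with asynchronous sends.
foreach (var (seq, payload) in new[] { (1, "B"), (0, "A"), (3, "D"), (2, "C") })
{
    Console.WriteLine($"received {seq}: released [{string.Join(", ", Accept(seq, payload))}]");
}
```

This prints "received 1: released []", "received 0: released [A, B]", "received 3: released []" and "received 2: released [C, D]"; the aggregator would then append the released bodies in that order.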

Handling Exceptions

To keep the code readable, no exception handling was added to the implementations. In a real-world scenario, exceptions should be handled appropriately.

Harish Agarwal posted Announcing the Refresh of Service Bus EAI & EDI Labs on 4/9/2012:

As part of our continuous innovation on Windows Azure, today we are excited to announce the refresh of Windows Azure Service Bus EAI & EDI Labs release. The first labs release was done in Dec 2011 and you can read more about it here. As with former labs releases, we are sharing some early thinking on possible feature additions to Windows Azure and are committed to gaining your feedback right from the beginning.

The capabilities showcased in this release enable two key scenarios on Windows Azure:

- Enterprise Application Integration (EAI) which provides rich message processing capabilities and the ability to connect private cloud assets to the public cloud.

- Electronic Data Interchange (EDI) targeted at business-to-business (B2B) scenarios in the form of a finished service built for trading partner management.

Signing up for the labs is easy and free of charge. All you need to do to check out the new capabilities is:

- Download and install the SDK

- Sign in to the labs environment using a Windows Live ID

We encourage you to ask questions and provide feedback on the Service Bus EAI & EDI Labs Release forum.

You can read more on how to use the new capabilities in the MSDN Documentation.

Please keep in mind that there is no SLA promise for labs, so you should not use these capabilities for any production needs. We will endeavor to provide advanced notice of changes and updates to the release via the forum above but we reserve the right to make changes or end the lab at any point without prior notice.

EAI & EDI capabilities enhancements include:

- The bridge has been enhanced to process both positional and delimited flat files along with XML messages. It can also pull a message from your existing FTP server and then process it further

- Flow of messages within a bridge is no longer a black box: we have exposed the operational tracking of messages within it along with its metadata

- Creating and editing schemas has become simpler and easier using the schema editor we have added as a first-class experience in our Visual Studio project. Yes, we heard your feedback ☺. Fetching schemas from another service is also simpler using an integrated wizard experience

- In addition to UTF-8, you can now send messages to the bridge in UTF-16, UTF-16LE and UTF-16BE

- We have further enriched the Mapper functionality to support number formatting, time zone manipulations and different ways to generate unique IDs. To handle errors and null data, we let users configure the behavior of the runtime

- The Visual Studio Server Explorer experience to create, configure and deploy LOB entities on-premises has become simpler using a new wizard which is much easier to use

- From the EDI Portal you can delete agreements to reduce the clutter. You can also change agreement settings and redeploy agreements

- EDI messages can be tracked for one or more agreements and the view is exposed through the EDI portal. The view also supports search and correlation of messages and acknowledgements

- We now support out-of-the-box archiving in EDI. All EDI messages can be archived and downloaded from the EDI portal

- We have added preliminary support for send side batching in EDI based on message count. This would be enhanced to include more batching criteria in future releases.

- We have also improved the EDI Portal performance and made improvements to the error messages

- There are UX enhancements all across which should make your experience smoother

Further capabilities and enhancements will come as part of future refreshes. Stay tuned!

Richard Seroter (@rseroter) provided a third-party view of the new EAI and EDI features, as well as StreamInsight and Tier 3’s Iron Foundry PaaS offering, in his Three Software Updates to be Aware Of post of 4/6/2012:

In the past few days, there have been three sizable product announcements that should be of interest to the cloud/integration community. Specifically, there are noticeable improvements to Microsoft’s CEP engine StreamInsight, Windows Azure’s integration services, and Tier 3’s Iron Foundry PaaS.

First off, the Microsoft StreamInsight team recently outlined changes that are coming in their StreamInsight 2.1 release. This is actually a pretty major update with some fundamental modifications to the programmatic object model. I can attest that it can be a challenge to build up the host/query/adapter plumbing necessary to get a solution rolling, and the StreamInsight team has acknowledged this. The new object model will be a bit more straightforward. Also, we’ll see IEnumerable and IObservable become more first-class citizens in the platform. Developers are going to be encouraged to use IEnumerable/IObservable in lieu of adapters in both embedded AND server-based deployment scenarios. In addition to changes to the object model, we’ll also see improved checkpointing (failure recovery) support. If you want to learn more about StreamInsight, and are a Pluralsight subscriber, you can watch my course on this product.

Next up, Microsoft released the latest CTP for its Windows Azure Service Bus EAI and EDI components. As a refresher, these are “BizTalk in the cloud”-like services that improve connectivity, message processing and partner collaboration for hybrid situations. I summarized this product in an InfoQ article written in December 2011. So what’s new? Microsoft issued a description of the core changes, but in a nutshell, the components are maturing. The tooling is improving, the message processing engine can handle flat files or XML, the mapping and schema designers have enhanced functionality, and the EDI offering is more complete. You can download this release from the Microsoft site.

Finally, those cats at Tier 3 have unleashed a substantial update to their open-source Iron Foundry (public or private) .NET PaaS offering. The big takeaway is that Iron Foundry is now feature-competitive with its parent project, the wildly popular Cloud Foundry. Iron Foundry now supports a full suite of languages (.NET as well as Ruby, Java, PHP, Python, Node.js), multiple backend databases (SQL Server, Postgres, MySQL, Redis, MongoDB), and queuing support through RabbitMQ. In addition, they’ve turned on the ability to tunnel into backend services (like SQL Server) so you don’t necessarily need to apply the monkey business that I employed a few months back. Tier 3 has also beefed up the hosting environment so that people who try out their hosted version of Iron Foundry can have a stable, reliable experience. A multi-language, private PaaS with nearly all the services that I need to build apps? Yes, please.

Each of the above releases is interesting in its own way and to me, they have relationships with one another. The Azure services enable a whole new set of integration scenarios, Iron Foundry makes it simple to move web applications between environments, and StreamInsight helps me quickly make sense of the data being generated by my applications. It’s a fun time to be an architect or developer!

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Jialiang Ge (@jialge) posted [Sample of Apr 10th] Increase ASP.NET temp folder size in Windows Azure to the Microsoft All-In-One Code Framework blog on 4/10/2012:

Sample Download: http://code.msdn.microsoft.com/CSAzureIncreaseTempFolderSi-d58c604d

By default the ASP.NET temp folder size in a Windows Azure web role is limited to 100 MB. This is sufficient for the majority of applications, but some applications may require more storage space for temp files. The sample demonstrates how to increase the ASP.NET temp folder size.

The sample was written by Microsoft Escalation Engineer Narahari Dogiparthi.

You can find more code samples that demonstrate the most typical programming scenarios by using the Microsoft All-In-One Code Framework Sample Browser or the Sample Browser Visual Studio extension. They give you the flexibility to search samples, download samples on demand, manage the downloaded samples in a centralized place, and be notified automatically about sample updates. If this is the first time you have heard about the Microsoft All-In-One Code Framework, please watch the introduction video on Microsoft Showcase, or read the introduction on our homepage http://1code.codeplex.com/.

Introduction

By default the ASP.NET temporary folder size in a Windows Azure web role is limited to 100 MB. This is sufficient for the vast majority of applications, but some applications may require more storage space for temporary files. In particular, this happens with very large applications that generate a lot of dynamic code, or with applications that use controls which rely on the temporary folder, such as the standard FileUpload control. If you run out of temporary folder space, you will get error messages such as OutOfMemoryException or ‘There is not enough space on the disk.’

Building the Sample

This sample can be run as-is without making any changes to it.

Running the Sample

- Open the sample on a machine where Visual Studio 2010 and Windows Azure SDK 1.6 are installed.

- Right-click the cloud service project (CSAzureIncreaseTempFolderSize) and choose Publish.

- Follow the steps in the Publish wizard to choose subscription details, deployment slot, etc., and enable Remote Desktop for all roles.

- After a successful publish, log in to the Windows Azure VM via RDP and verify that IIS is using the newly created AspNetTemp1GB folder for temporary files instead of the default ASP.NET temporary folder.

Using the Code

1) In the ServiceDefinition.csdef create one LocalStorage resource in the Web Role, and set the Runtime executionContext to elevated. The elevated executionContext allows us to use the ServerManager class to modify the IIS configuration during role startup.

```xml
<WebRole name="IncreaseAspnetTempFolderSize" vmsize="Small">
  <Runtime executionContext="elevated" />
  <Sites>
    <Site name="Web">
      <Bindings>
        <Binding name="Endpoint1" endpointName="Endpoint1" />
      </Bindings>
    </Site>
  </Sites>
  <Endpoints>
    <InputEndpoint name="Endpoint1" protocol="http" port="80" />
  </Endpoints>
  <LocalResources>
    <LocalStorage name="AspNetTemp1GB" sizeInMB="1000" />
  </LocalResources>
  <Imports>
    <Import moduleName="Diagnostics" />
  </Imports>
</WebRole>
```

2) Add a reference to the Microsoft.Web.Administration assembly (location: <systemdrive>\system32\inetsrv) and add the following using directive to your project:

```csharp
using Microsoft.Web.Administration;
```

3) Add the following code to the OnStart method in WebRole.cs. This code configures the website to point to the AspNetTemp1GB LocalStorage resource.

```csharp
public override bool OnStart()
{
    // For information on handling configuration changes
    // see the MSDN topic at http://go.microsoft.com/fwlink/?LinkId=166357.

    // Get the location of the AspNetTemp1GB resource
    Microsoft.WindowsAzure.ServiceRuntime.LocalResource aspNetTempFolder =
        Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment.GetLocalResource("AspNetTemp1GB");

    // Instantiate the IIS ServerManager (it is IDisposable, so release it when done)
    using (ServerManager iisManager = new ServerManager())
    {
        // Get the website. Note that "_Web" is the name of the site in the ServiceDefinition.csdef,
        // so make sure you change this code if you change the site name in the .csdef
        Application app = iisManager.Sites[RoleEnvironment.CurrentRoleInstance.Id + "_Web"].Applications[0];

        // Get the web.config for the site
        Configuration webHostConfig = app.GetWebConfiguration();

        // Get a reference to the system.web/compilation element
        ConfigurationSection compilationConfiguration = webHostConfig.GetSection("system.web/compilation");

        // Set the tempDirectory property to the AspNetTemp1GB folder
        compilationConfiguration.Attributes["tempDirectory"].Value = aspNetTempFolder.RootPath;

        // Commit the changes
        iisManager.CommitChanges();
    }

    return base.OnStart();
}
```

More Information

For more information about the ASP.NET Temporary Folder see http://msdn.microsoft.com/en-us/magazine/cc163496.aspx

Hovannes Avoyan described how to Configure the Diagnostic Monitor from within a Windows Azure Role in a 4/10/2012 post:

All systems require a degree of monitoring. We monitor on-premises installations for usage, performance, outages, tracing, and a multitude of other reasons. Services deployed on premises operate in a controlled environment: the organisation’s IT team knows exactly which systems are running on the organisation’s servers and can manage that environment itself. Cloud environments, by contrast, tend to be disruptive by nature, so monitoring them is crucial.

In this article we will walk through the steps required to:

- configure a Windows Azure application for the gathering of data for diagnostic purposes;

- configure the application to persist the gathered data in a Windows Azure Storage Account;

- read the data and post it to your Monitis Account.

Configure the Diagnostic Monitor from within a Windows Azure Role.

As depicted in Figure 1 below, a Diagnostic Monitor is associated with each Windows Azure Role. By default, the Diagnostic Monitor gathers trace logs, infrastructure logs, and IIS logs. The diagnostic data is stored in the local storage of the Windows Azure instance. Local storage is not persistent across deployments of an application, so the diagnostic data should be moved to persistent storage, such as a Windows Azure Storage Account, where it can be accessed at will. We will give examples below.

Figure 1 – Windows Azure Role

For the purpose of this example we will create a simple Windows Azure Application within Microsoft Visual Studio 2010.

Step 1 – Create a Windows Azure Project using C#.

Select Cloud from the list of installed templates.

Figure 2 – Create a Windows Azure Project

Step 2 – Select ASP.Net Web Role

Figure 3 – Select ASP.NET Web Role

Step 3 – Rename the Web Role to MonitisWebRole1

By default the project will contain a Web Role. A Web Role can be used to deliver the user interface elements of an application. Rename WebRole1 to MonitisWebRole1.

Figure 4 – A default project has been created. Note that the project contains 1 Web Role

The renamed Web Role is displayed below

Figure 5 – Web Role has been renamed

…

Read more.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Brice Lambson (@brice_lambson) of the ADO.NET Team announced EF Power Tools Beta 2 Available in a 4/9/2012 post:

The Entity Framework Power Tools provide additional design-time tools in Visual Studio to help you develop using the Entity Framework. Today we are releasing Beta 2 of the Power Tools.

Where do I get it?

The Entity Framework Power Tools are available on the Visual Studio Gallery.

You can also install them directly from Visual Studio by selecting 'Tools -> Extension Manager...' then searching for "Entity Framework Power Tools" in the Online Gallery.

If you encounter an error while upgrading from an older version of EF Power Tools, you will need to uninstall the older version first then install EF Power Tools Beta 2. This is due to a bug in Visual Studio that blocks the upgrade because the certificate used to sign Beta 2 has a different expiry date than Beta 1.

What's new in Beta 2?

Beta 2 improves the quality of the previous release, and also adds new functionality.

- Code generated by reverse engineer can be customized using T4 templates.

- Fixed many reverse engineer bugs relating to the code that we generate.

- Generate Views (formerly Optimize Entity Data Model) is now available for database-first and model-first. Right-click on an EDMX file to access the command.

- Enhanced DbContext and database discovery. We fixed many bugs around how we locate your DbContext and the database it connects to.

For a complete list of the features included in the Power Tools see the What does it add to Visual Studio section.

Compatibility

The Power Tools are compatible with Visual Studio 2010 and Entity Framework 4.2 or later.

You can also install the Power Tools on Visual Studio 11 Beta, but you may receive the error "A constructible type deriving from DbContext could not be found in the selected file." This is caused by a bug in the Visual Studio 11 unit test tools where an older version of EntityFramework.dll is being loaded. We are working closely with that team to resolve the issue. As a workaround, you can delete the HKEY_CURRENT_USER\Software\Microsoft\VisualStudio\11.0_Config\BindingPaths\{BFC24BF4-B994-4757-BCDC-1D5D2768BF29} registry key. Be aware, however, that this will cause the Unit Test commands to stop working. Thank you, early adopters, for your superhuman patience with prerelease software.

Support

This release is a preview of features that we are considering for a future release and is designed to allow you to provide feedback on the design of these features. EF Power Tools Beta 2 is not intended or licensed for use in production.

If you have a question, ask it on Stack Overflow using the entity-framework tag.

What does it add to Visual Studio?

EF Power Tools Beta 2 is for Code First, model-first, and database-first development and adds the following context menu options to an 'Entity Framework' sub-menu inside Visual Studio.

When right-clicking on a C# project

- Reverse Engineer Code First

This command allows one-time generation of Code First mappings for an existing database. This option is useful if you want to use Code First to target an existing database as it takes care of a lot of the initial coding. The command prompts for a connection to an existing database and then reverse engineers POCO classes, a derived DbContext, and Code First mappings that can be used to access the database.

- If you have not already added the EntityFramework NuGet package to your project, the latest version will be downloaded as part of running reverse engineer.

- The reverse engineer process by default produces a complete mapping using the fluent API. Items such as column name will always be configured, even when they would be correctly inferred by conventions. This allows you to refactor property/class names etc. without needing to manually update the mapping.

- The Customize Reverse Engineer Templates command (see below) lets you customize how code is generated.

- A connection string is added to the App/Web.config file and is used by the context at runtime. If you are reverse engineering to a class library, you will need to copy this connection string to the configuration file of the consuming application(s).

- This process is designed to help with the initial coding of a Code First model. You may need to adjust the generated code if you have a complex database schema or are using advanced database features.

- Running this command multiple times will overwrite any previously generated files, including any changes that have been made to generated files.

- Customize Reverse Engineer Templates

Adds the default reverse engineer T4 templates to your project for editing. After updating these files, run the Reverse Engineer Code First command again to reverse engineer POCO classes, a derived DbContext, and Code First mappings using your project's customized templates.

- The templates are added to your project under the CodeTemplates\ReverseEngineerCodeFirst folder.

When right-clicking on a code file containing a derived DbContext class

- View Entity Data Model (Read-only)

Displays the Code First model in the Entity Framework designer.

- This is a read-only representation of the model; you cannot update the Code First model using the designer.

- View Entity Data Model XML

Displays the EDMX XML representing the Code First model.

- View Entity Data Model DDL SQL

Displays the DDL SQL to create the database targeted by the Code First model.

- Generate Views

Generates pre-compiled views used by the EF runtime to improve start-up performance. Adds the generated views file to the containing project.

- View compilation is discussed in the Performance Considerations article on MSDN.

- If you change your model then you will need to re-generate the pre-compiled views by running this command again.

When right-clicking on an Entity Data Model (*.edmx) file

- Generate Views

This is the same as the Generate Views command above except that, instead of generating pre-compiled views for a derived DbContext class, it generates them for your database-first or model-first entity data model.

Jan Van der Haegen (@janvanderhaegen) described How to make your required fields NOT have a bold label using Extensions Made Easy… (By Kirk Brownfield) on 4/8/2012:

I’ll be honest, I haven’t spent much time on Extensions Made Easy lately. I have good excuses though: I’m writing a “getting started with LightSwitch eBook” (link on the way), I founded a “LightSwitch exclusive startup“, I’m writing about LightSwitch for MSDN magazine, I’m working on my next extension called “LightSwitch Power Productivity Studio“, but mainly because I consider EME in its current form to be complete, in the way that it can do all that I intended it to do, all that I felt was missing/not easy enough in the LightSwitch RTM, and maybe even a bit more, like deep linking in a LightSwitch application…

Ironically, just when I stopped writing about EME, other people started discovering the power behind the lightweight extension, and have been happily sharing…

- Jewel Lambert, a new but quickly rising LightSwitch hacker, called me a LightSwitch giant for EME alone…

- Keith Craigo, who won the LightSwitch Star Contest grand prize back in January, recently posted on how he uses EME to make it “look pretty”… (Thanks a million for the article by the way!)

- Bala Somasundaram has been EME’s #1 fan since it was first published; there are too many links to post

- Kirk Brownfield posted on the LightSwitchHelpWebsite on how to make your LightSwitch buttons have rounded corners with EME… Yes, it’s in VB.NET!

Kirk recently mailed me with another question: “how can one make the required fields NOT have a bold label”? For those of you that haven’t noticed, when you use the LightSwitch default shell & theme, required properties will have a bold label like in the screenshot below:

By the way, my apologies for the crappy graphics in my MSDN screenshots. I turned ClearType off on my machine, as requested in the MSDN Magazine writer guidelines. A screenshot taken with ClearType off looks crappy on-screen but much better when printed. Bad move for the MSDN Magazine web edition, obviously…

Before I could even answer his mail, a second mail came in from Kirk with the answer to his own question. Kirk later happily granted me the privilege of posting the answer…

Firstly, set up your LightSwitch project so you can create a theme inside your LightSwitch application. (Everyone hates the “Extension” debugging mess…) A sample of how to do this can be found here.

Next up, since you want to do some control styling, not just mess with the colors and the fonts a bit, you need to export a new control style to the LightSwitch framework. I wrote about this earlier, and Keith posted the VB.Net version!

The question, of course, is which control you need to style, and for this you need to understand a bit about LightSwitch’s metadata-driven MVVM implementation (MV3). Unfortunately, the joke is on you here, because there’s little to no documentation about the subject (hold your breath for exactly 7 days and there will be…). The very short preview: the LightSwitch default theme & shell provide Views that bind to ViewModelMetaData, which in turn binds to your ViewModels and Models. The XAML (View) part that shows the label is called an AttachedLabelPresenter.

Kirk actually found the control to style, by looking at the source code of the LightSwitch Metro Theme, reposted here for your convenience…

```xml
<ResourceDictionary
  xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
  xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
  xmlns:framework="clr-namespace:Microsoft.LightSwitch.Presentation.Framework;assembly=Microsoft.LightSwitch.Client"
  xmlns:internalstyles="clr-namespace:Microsoft.LightSwitch.Presentation.Framework.Styles.Internal;assembly=Microsoft.LightSwitch.Client"
  xmlns:internalconverters="clr-namespace:Microsoft.LightSwitch.Presentation.Framework.Converters.Internal;assembly=Microsoft.LightSwitch.Client"
  xmlns:converters="clr-namespace:MetroThemeExtension.Presentation.Converters"
  xmlns:windows="clr-namespace:System.Windows;assembly=System.Windows">

  <internalstyles:RequiredFontStyleFontWeightConverter x:Key="RequiredFontStyleFontWeightConverter"/>
  <converters:TextToUpperConverter x:Key="ToUpperCaseConverter"/>

  <!-- The attached label presenter is the control that places labels next to controls. It is restyled here to put the label text in upper case. The style is applied using implicit styles, so do not give the style a key -->
  <Style TargetType="framework:AttachedLabelPresenter">
    <Setter Property="IsTabStop" Value="False" />
    <Setter Property="Template">
      <Setter.Value>
        <ControlTemplate TargetType="framework:AttachedLabelPresenter">
          <TextBlock x:Name="TextBlock"
                     Text="{Binding DisplayNameWithPunctuation, RelativeSource={RelativeSource TemplatedParent}, Converter={StaticResource ToUpperCaseConverter}}"
                     VerticalAlignment="Top" HorizontalAlignment="Left" TextWrapping="Wrap"
                     FontWeight="{Binding Converter={StaticResource RequiredFontStyleFontWeightConverter}}">
            <windows:VisualStateManager.VisualStateGroups>
              <windows:VisualStateGroup x:Name="AttachedLabelPositionStates">
                <windows:VisualState x:Name="None"/>
                <windows:VisualState x:Name="LeftAligned">
                  <Storyboard>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="Margin" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0">
                        <DiscreteObjectKeyFrame.Value>
                          <windows:Thickness>0,3,5,0</windows:Thickness>
                        </DiscreteObjectKeyFrame.Value>
                      </DiscreteObjectKeyFrame>
                    </ObjectAnimationUsingKeyFrames>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="HorizontalAlignment" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0" Value="Left"/>
                    </ObjectAnimationUsingKeyFrames>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="VerticalAlignment" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0" Value="Top"/>
                    </ObjectAnimationUsingKeyFrames>
                  </Storyboard>
                </windows:VisualState>
                <windows:VisualState x:Name="RightAligned">
                  <Storyboard>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="Margin" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0">
                        <DiscreteObjectKeyFrame.Value>
                          <windows:Thickness>0,3,5,0</windows:Thickness>
                        </DiscreteObjectKeyFrame.Value>
                      </DiscreteObjectKeyFrame>
                    </ObjectAnimationUsingKeyFrames>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="HorizontalAlignment" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0" Value="Right"/>
                    </ObjectAnimationUsingKeyFrames>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="VerticalAlignment" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0" Value="Top"/>
                    </ObjectAnimationUsingKeyFrames>
                  </Storyboard>
                </windows:VisualState>
                <windows:VisualState x:Name="Top">
                  <Storyboard>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="Margin" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0">
                        <DiscreteObjectKeyFrame.Value>
                          <windows:Thickness>0,0,0,5</windows:Thickness>
                        </DiscreteObjectKeyFrame.Value>
                      </DiscreteObjectKeyFrame>
                    </ObjectAnimationUsingKeyFrames>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="HorizontalAlignment" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0" Value="Left"/>
                    </ObjectAnimationUsingKeyFrames>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="VerticalAlignment" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0" Value="Bottom"/>
                    </ObjectAnimationUsingKeyFrames>
                  </Storyboard>
                </windows:VisualState>
                <windows:VisualState x:Name="Bottom">
                  <Storyboard>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="Margin" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0">
                        <DiscreteObjectKeyFrame.Value>
                          <windows:Thickness>0,5,0,0</windows:Thickness>
                        </DiscreteObjectKeyFrame.Value>
                      </DiscreteObjectKeyFrame>
                    </ObjectAnimationUsingKeyFrames>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="HorizontalAlignment" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0" Value="Left"/>
                    </ObjectAnimationUsingKeyFrames>
                    <ObjectAnimationUsingKeyFrames Storyboard.TargetName="TextBlock" Storyboard.TargetProperty="VerticalAlignment" Duration="0">
                      <DiscreteObjectKeyFrame KeyTime="0" Value="Top"/>
                    </ObjectAnimationUsingKeyFrames>
                  </Storyboard>
                </windows:VisualState>
              </windows:VisualStateGroup>
            </windows:VisualStateManager.VisualStateGroups>
          </TextBlock>
        </ControlTemplate>
      </Setter.Value>
    </Setter>
  </Style>
</ResourceDictionary>
```

The interesting bits are all near the top. The first thing to notice is the comment:

<!-- The attached label presenter is the control that places labels next to controls. It is restyled here to put the label text in upper case. The style is applied using implicit styles, so do not give the style a key -->

OK, so that confirms what we thought: the AttachedLabelPresenter is the control that places labels next to controls. Good naming (nomen est omen)!
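The remark about implicit styles is worth unpacking, because it is what lets this restyling reach every label. A Style that has a TargetType but no x:Key is applied automatically to every element of that type within the dictionary's scope, whereas a Style with an x:Key applies only to elements that explicitly reference it. The sketch below contrasts the two forms, assuming the xmlns:framework prefix declared in the ResourceDictionary above; the key name MyLabelStyle is hypothetical and used only for illustration. Because LightSwitch creates its AttachedLabelPresenter instances itself, there is usually nowhere to add such a reference, which is presumably why the comment warns against giving the style a key.

```xml
<!-- Implicit style: no x:Key, so it is applied automatically to every
     framework:AttachedLabelPresenter within the scope of this dictionary.
     Assumes the xmlns:framework prefix declared in the ResourceDictionary above. -->
<Style TargetType="framework:AttachedLabelPresenter">
  <Setter Property="IsTabStop" Value="False" />
</Style>

<!-- Keyed style (hypothetical key "MyLabelStyle"): NOT applied automatically;
     each element would have to opt in with Style="{StaticResource MyLabelStyle}". -->
<Style x:Key="MyLabelStyle" TargetType="framework:AttachedLabelPresenter">
  <Setter Property="IsTabStop" Value="False" />
</Style>
```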

Also, the Metro theme puts all labels in upper case, and for this exercise we only wanted to get rid of the bold. Let’s revert that by removing the ToUpperCaseConverter a couple of lines below that comment line:

Text="{Binding DisplayNameWithPunctuation, RelativeSource={RelativeSource TemplatedParent},Converter={StaticResource ToUpperCaseConverter}}"

Nice! By following all the links posted in this blog post and removing that converter, we have a LightSwitch application whose labels look exactly like those of the standard theme but are completely under our control. One thing we can now do is get rid of the behavior that gives required fields a bold label. To accomplish this, remove the following attribute completely (not just the converter!), but keep the closing > of the TextBlock start tag:

FontWeight="{Binding Converter={StaticResource RequiredFontStyleFontWeightConverter}}">

Awesomesauce, another mission accomplished!
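For reference, here is roughly what the TextBlock start tag inside the AttachedLabelPresenter template looks like once both changes are made. This is a sketch that assumes the rest of the resource dictionary is left as-is; the two converter declarations at the top of the dictionary can stay, since a resource that nothing references does no harm.

```xml
<!-- Text binding without the ToUpperCaseConverter, so the display name is shown unmodified.
     FontWeight binding removed, so required-field labels no longer get bold text
     from RequiredFontStyleFontWeightConverter.
     The ">" that closes the start tag is still there. -->
<TextBlock x:Name="TextBlock"
           Text="{Binding DisplayNameWithPunctuation, RelativeSource={RelativeSource TemplatedParent}}"
           VerticalAlignment="Top" HorizontalAlignment="Left" TextWrapping="Wrap">
```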

As you might have suspected, I wrote this blog post myself, but titled it “By Kirk Brownfield” since he basically came up with the solution before I could even read his first mail.

He promised to send me information about some other LightSwitch hacking adventures he’s been undertaking. I’m personally looking forward to reading about them and sharing them with you!

Windows Azure Infrastructure and DevOps

Channel9 posted video archives of presentations at Lang.NEXT 2012 on 4/9/2012:

Lang.NEXT 2012 is a cross-industry conference for programming language designers and implementers, held on the Microsoft campus in Redmond, Washington. With three days of talks, panels and discussion on leading programming language work from industry and research, Lang.NEXT is the place to learn, share ideas and engage with fellow programming language design experts and enthusiasts. Native, functional, imperative, object-oriented, static, dynamic, managed, interpreted... It's a programming language geek fest.

Learn more about Lang.NEXT from the event organizers:

We had a great cast of characters speaking at this event. Experts and iconoclasts included:

Andrei Alexandrescu, Facebook

Andrew Black, Portland State University

Andy Gordon, Microsoft Research and University of Edinburgh

Andy Moran, Galois

Bruce Payette, Microsoft

Donna Malayeri, Microsoft

Dustin Campbell, Microsoft

Erik Meijer, Microsoft

Gilad Bracha, Google

Herb Sutter, Microsoft

Jeff Bezanson, MIT

Jeroen Frijters, Sumatra Software

John Cook, M.D. Anderson Cancer Center

John Rose, Oracle

Kim Bruce, Pomona College

Kunle Olukotun, Stanford

Luke Hoban, Microsoft

Mads Torgersen, Microsoft

Martin Odersky, EPFL, Typesafe

Martyn Lovell, Microsoft

Peter Alvaro, University of California at Berkeley

Robert Griesemer, Google

Stefan Karpinski, MIT

Walter Bright, Digital Mars

Sessions were recorded and Channel9 interviews took place!

Several of the presentations involved Windows Azure and cloud computing.

Brian Swan (@brian_swan) posted Pie in the Sky (April 6, 2012) to the [Windows Azure’s] Silver Lining Blog on 4/6/2012:

As Larry mentioned last week, we’ve both been heads down working on updates for the Windows Azure platform, hence the relatively quiet blog of late. This week, however, we have managed to pick up our reading pace a bit…sharing the good stuff we’ve come across here. Enjoy (and learn!)…

- How to setup deployments in Azure so that they use different databases depending on the environment? This Stack Overflow thread has some good ideas about how to do testing in Windows Azure.

- Business Continuity for Windows Azure: This document explains how to think about and plan for availability in three categories when using Windows Azure: 1) failure of individual servers, devices, or network connectivity; 2) corruption, unwanted modification, or deletion of data; and 3) widespread loss of facilities.

- https://www.hadooponazure.com/: The second preview of the Hadoop-based service for Windows Azure is now available with expanded capacity and more.

- Bill Wilder does Hadoop on Azure: Carl and Richard of DotNetRocks.com interview Bill Wilder about Hadoop on Azure.

- Getting Acquainted with Node.js on Windows Azure: A good getting started guide.

- Announcing New Datacenter Options for Windows Azure: "Effective immediately, compute and storage resources are now available in “West US” and “East US”, with SQL Azure coming online in the coming months."

- Getting Acquainted with NoSQL on Windows Azure: Starts with an overview of NoSQL, then moves into guidance about using various NoSQL options in Windows Azure.

- Yahoo!’s Mojito framework is now open source: This is an MVC framework that runs on both the client and the server side. It can, for example, render the first page load on the server and then handle subsequent renders of portions of the page on the client side.

- Startups, this is how design works: A resource for understanding what good design is.

- Practical Load Testing of REST APIs: You are doing load testing of your APIs, right?

- Identifying the Tenant in Multi-Tenant Azure Applications – Part 1: Contrary to the Part 1 in the title, this is actually part 3. This article discusses approaches to identifying the tenant at runtime.

- CQRS on Windows Azure: A discussion of implementing Command Query Responsibility Segregation on Windows Azure in .NET. Sample included.

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Keith Combs (@Keith_Combs) posted a lengthy Planning Guide for Infrastructure as a Service (IaaS) to TechNet on 4/5/2012 (missed when posted). It begins:

Infrastructure as a Service, or IaaS, is the industry term used to describe the capability to provide computing infrastructure resources in a well-defined manner, similar to what is seen with public utilities. These resources include server resources that provide compute capability, network resources that provide communications capability between server resources and the outside world, and storage capability that provides persistent data storage. Each of these capabilities has unique characteristics that influence the class of service that each capability can provide. For example, server resources may be classified as Small, Medium and Large, representing their capability to handle compute-bound or I/O-intensive workloads, while storage resources may be classified by access speed and/or resiliency. These unique characteristics follow a service provider's approach and are made available on a utility-like, pay-as-you-go (also known as "metered") basis.

The utility approach is analogous to other utilities that provide service to your home or business. You negotiate an agreement with a service provider to deliver a capability. That capability may be electric power, heating or cooling, water or an IT service. Over time you consume resources from each provider to run your home or business, and each month you receive a statement outlining your consumption and the cost associated with that consumption. You therefore pay for what you consume, no more and no less. The cost for each service is derived from a formula that is all-inclusive of the provider's costs to deliver that service in a profitable manner. That formula also likely includes costs associated with future development, maintenance and upgrade of the service beyond the initial investment.

The consumer of a service may not necessarily know, nor desire to know, how the provider implements the capability to provide each service. In spite of this, the consumer should understand the ramifications of service failure when something does go wrong. A failure will happen at some point; the key questions are how often failures happen and for what duration. When they do happen, what has been done to provide service continuity in my home or business? For a clear analogy, have you ever driven through a town after a significant storm and noticed that the street lights, traffic lights and building lights are all out? Then you come across a single building that is lit up among a field of darkness and conclude that its owners must have had a plan in place to provide service continuity in the event of a utility failure.

When a utility provides a service to consumers, these consumers expect that service to be delivered in a reliable and efficient manner. To do this, the utility must implement service monitoring throughout the service delivery chain to monitor the load on the system during peaks and schedule routine maintenance during off-peak periods. The utility must also develop processes for capacity management, routine maintenance and incident management to respond to events that happen during the course of providing the service. In a private cloud, we share many common practices with the utility model. IT expects the systems that make up a private cloud to be managed and monitored so that peak periods are flagged early, and expects these changes and routine problems to be handled with proven, commonly accepted incident management processes that prevent service downtime or degradation and restore services in a well-defined manner when failures occur.

Cloud Security and Governance

Michael Collier (@MichaelCollier) posted Understanding Windows Azure Security on 4/10/2012:

Whenever I talk with clients about Windows Azure or lead a training class on Windows Azure, security is always one of the first, and most passionate, topics discussed. People want, even need, to feel comfortable that their data and application logic are going to be safe when they give up physical control of that data or logic (the “secret sauce”). When it comes to cloud computing, there is a lot of FUD about security. To feel comfortable and knowledgeable about Windows Azure security, it’s important to spend some time educating yourself on the security aspects of the platform.