Creating a Private Data Marketplace with Microsoft Codename “Data Hub”

•• Updated 5/11/2012 with change of dataset name from US Air Carrier Flight Delays, Monthly to US Air Carrier Flight Delays to reflect the change of the SQL Azure dataset to a new multi-month/year format. (See my Creating An Incremental SQL Azure Data Source for OakLeaf’s U.S. Air Carrier Flight Delays Dataset post of 5/8/2012 for more information about the new dataset.)

• Updated 4/30/2012 with link to OakLeaf’s new US Air Carrier Flight Delays, Monthly (free) data set on the public Windows Azure Marketplace DataMarket.

Contents:

- Introduction

- Obtaining CTP Invitations

- Creating Your “Data Hub” Marketplace

- Provisioning a SQL Azure Database to Store *.csv Data

- Understanding the FAA’s On_Time_Performance.csv Files

- Uploading *.csv Files to Your Data Source

- Publishing the New Data Source

- Previewing and Approving the Marketplace Submission

- Publishing the Offering

- Exploring the User’s Experience

Introduction

SQL Server Labs describes their recent Codename “Data Hub” Community Technology Preview (CTP) as “An Online Service for Data Discovery, Distribution and Curation.” At its heart, “Data Hub” is a private version of the public Windows Azure Marketplace DataMarket that runs as a Windows Azure service. The publishing process is almost identical to that of the public version, except for usage charges and payment transfers. “Data Hub” enables data users and developers, as well as DBAs, to:

- Make data in SQL Azure discoverable and accessible in OData (AtomPub) format by an organization’s employees

- Enable data analysts and business managers to view and manipulate data from the Marketplace with Service Explorer, Excel, and Excel PowerPivot

- Publish datasets for further curation and collaboration with other users in the organization

- Federate data from the Windows Azure Marketplace DataMarket for the organization’s employees (in addition to the organization’s uploaded data)

The initial CTP supports the preceding features but is limited to SQL Azure as the data source and OData (AtomPub) as the distribution format. Microsoft is considering other data sources and distribution formats.

•• Note: OakLeaf’s US Air Carrier Flight Delays, Monthly data sets are publicly accessible at https://oakleaf.clouddatahub.net/ by clicking the Government or Transportation category link. To issue a query with Data Explorer, do this:

- Click the Sign In button at the top right of the page

- Log in with your Windows Live ID

- Click the US Air Carrier Flight Delays link on the landing page

- Click the Add to Collection button

- Click the US Air Carrier Flight Delays link in the My Collection page

- Click the Explore Data button to open the Data Explorer

- Click the Run Query button to display the first 23 rows of data:

For a more detailed description, see the Exploring the User’s Experience section at the end of this post.

•• Alternatively, you can register with the public Windows Azure Marketplace DataMarket and then subscribe to OakLeaf’s new US Air Carrier Flight Delays data set, which contains the same data. After you subscribe to the free dataset, you can also use it as a data source for Hive tables in Apache Hadoop on Windows Azure.

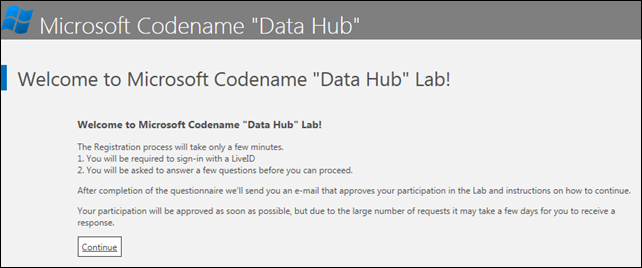

Obtaining CTP Invitations

The “Data Hub” and “Data Transfer” CTPs are invitation-only. You can request an invitation by clicking the Start Here link for “Data Hub” to open the Welcome page:

Complete the questionnaire and wait for the e-mail that advises you’ve been approved as a user. At present, users are limited to use of the CTP for three weeks.

“Data Hub” integrates “Data Transfer” for uploading comma-separated-value (*.csv) data files to existing or new SQL Azure database tables. As noted below, I found that using “Data Transfer” independently of “Data Hub” worked for some large files that “Data Hub” wouldn’t process. Therefore, I recommend that you also apply for the “Data Transfer” CTP by clicking the Start Here button on the landing page:

My Test-Drive SQL Azure Labs’ New Codename “Data Transfer” Web UI for Copying *.csv Files to SQL Azure Tables or Azure Blobs post of 11/30/2011 was an early tutorial. I’ll post an updated tutorial on using Codename “Data Transfer” with On_Time_Performance_YYYY_MM.csv files shortly.

Creating Your “Data Hub” Marketplace

After you receive your “Data Hub” invitation, follow the instructions in the e-mail and complete the Create Your Marketplace page. The domain prefix, oakleaf for this example, must be unique within the clouddatahub.net domain. Specify the Start and End IP Addresses for your expected users; 0.0.0.0 and 255.255.255.255 admit all users, making your Marketplace public:
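The Start and End IP Addresses presumably act as an inclusive range filter on client addresses, much like SQL Azure firewall rules. The following Python sketch illustrates that comparison under this assumption; the is_admitted() helper and the sample addresses are hypothetical and aren't part of any “Data Hub” API.

```python
import ipaddress

def is_admitted(client_ip: str, start_ip: str, end_ip: str) -> bool:
    """Hypothetical check: is client_ip inside the inclusive [start_ip, end_ip] range?"""
    client = ipaddress.IPv4Address(client_ip)
    return ipaddress.IPv4Address(start_ip) <= client <= ipaddress.IPv4Address(end_ip)

# 0.0.0.0 through 255.255.255.255 covers every IPv4 address (a public Marketplace).
print(is_admitted("203.0.113.7", "0.0.0.0", "255.255.255.255"))  # True
# A narrower range admits only addresses inside it.
print(is_admitted("203.0.113.7", "192.0.2.0", "192.0.2.255"))    # False
```

Comparing ipaddress.IPv4Address objects, rather than strings, avoids the lexical-ordering trap in which "10.0.0.9" would sort after "10.0.0.10".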

Click Create Marketplace to take ownership of the subdomain:

After the creation process completes, your homepage (at https://oakleaf.clouddatahub.net for this example) appears as shown here:

Your Account Key is equivalent to a password for “Data Hub” administration by users with Live IDs other than the administrator’s.

Provisioning a SQL Azure Database to Store *.csv Data

Click the Publish Data menu link to open the Welcome to the Publishing Portal page, which has Connect, Publish, Approve, Federate and View Marketplace menu links.

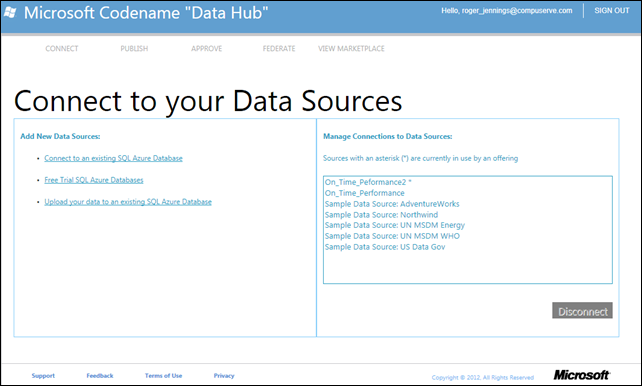

Click the Connect link to open the Connect to your Data Sources page, which offers five prebuilt sample data sources, AdventureWorks … US Data Gov:

I previously provisioned the On_Time_Performance data source from one of the four Free Trial SQL Azure Databases you receive with your invitation, and the On_Time_Performance2 data source from an existing database created with the “Data Transfer” CTP.

To use one of the four free SQL Azure databases, click the Free Trial SQL Azure Databases link in the left pane of the preceding page to open the Create New Database page. Type a unique name for your database (On_Time_Performance_Test for this example):

Click Create to add the database to the Data Sources list and display the Upload a File page.

Understanding the FAA’s On_Time_Performance.csv Files

The Creating the Azure Blob Source Data section of my Using Data from Windows Azure Blobs with Apache Hadoop on Windows Azure CTP post of 4/6/2012 described the data set I wanted to distribute via a publicly accessible, free Windows Azure DataMarket dataset. The only differences between it and the tab-delimited *.txt files uploaded to blobs that served as the data source for an Apache Hive table were:

- Inclusion of column names in the first row

- Addition of a formatted date field (Hive tables don’t have a native date or datetime datatype, so Year, Month and DayOfMonth fields were required.)

- Field delimiter character (comma instead of tab)
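The three differences listed above amount to a simple row transformation. The following Python sketch converts headerless, tab-delimited rows into comma-delimited rows with a header and a formatted date column. The eight-column layout in TXT_COLUMNS, the FlightDate column name and its position, and the YYYY-MM-DD date format are all assumptions for illustration; adjust them to match the actual files.

```python
import csv
import io

# Hypothetical column order for the headerless, tab-delimited Hive *.txt files.
TXT_COLUMNS = ["Year", "Month", "DayOfMonth", "Carrier", "Origin",
               "Dest", "DepDelayMinutes", "ArrDelayMinutes"]
# The *.csv layout adds an assumed FlightDate column after DayOfMonth.
CSV_COLUMNS = TXT_COLUMNS[:3] + ["FlightDate"] + TXT_COLUMNS[3:]

def txt_to_csv(txt_text: str) -> str:
    """Convert tab-delimited rows to comma-delimited rows, adding a header
    row and a FlightDate column formatted as YYYY-MM-DD (an assumed format)."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(CSV_COLUMNS)
    for fields in csv.reader(io.StringIO(txt_text), delimiter="\t"):
        if not fields:
            continue  # skip blank lines
        row = dict(zip(TXT_COLUMNS, fields))
        row["FlightDate"] = "{:04d}-{:02d}-{:02d}".format(
            int(row["Year"]), int(row["Month"]), int(row["DayOfMonth"]))
        writer.writerow([row[name] for name in CSV_COLUMNS])
    return out.getvalue()

sample = "2012\t1\t5\tWN\tLAX\tOAK\t0\t12\n"
print(txt_to_csv(sample), end="")
```

Running the reverse direction (dropping the header and FlightDate and switching to tabs) would produce Hive-ready *.txt files from the *.csv files.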

Following is a screen capture of the first 20 data rows of the ~500,000-row On_Time_Performance_2012_1.csv file:

You can download sample On_Time_Performance_YYYY_MM.csv files from the OnTimePerformanceCSV folder of my Windows Live SkyDrive account. The files are narrowed versions of the On_Time_On_Time_Performance_YYYY_MM.csv files from the Research and Innovative Technology Administration’s Bureau of Transportation Statistics site. For more information about these files, see The FAA On_Time_Performance Database’s Schema and Size section of my Analyzing Air Carrier Arrival Delays with Microsoft Codename “Cloud Numerics” article of 3/26/2012. Each original *.csv file has 83 columns, about 500,000 rows and an average size of about 225 MB.
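Narrowing an 83-column source file to a handful of columns is a streaming column projection. The following Python sketch shows one way to do it with the standard csv module; the narrow_csv() helper is hypothetical, and the column names in the demo are placeholders rather than the actual source headers.

```python
import csv
import io

def narrow_csv(src, dst, keep: list[str]) -> int:
    """Copy only the `keep` columns (in that order) from a CSV stream with a
    header row to dst, writing a new header. Returns the data-row count.
    Rows are streamed, so a ~225 MB source file needn't fit in memory."""
    reader = csv.DictReader(src)
    header = reader.fieldnames or []
    missing = [name for name in keep if name not in header]
    if missing:
        raise KeyError(f"columns not in source header: {missing}")
    writer = csv.DictWriter(dst, fieldnames=keep, extrasaction="ignore",
                            lineterminator="\n")
    writer.writeheader()
    count = 0
    for row in reader:
        writer.writerow(row)  # columns not in `keep` are ignored
        count += 1
    return count

# Tiny in-memory demo; for real files, open both files with newline="".
src = io.StringIO("A,B,C,D\n1,2,3,4\n5,6,7,8\n")
dst = io.StringIO()
print(narrow_csv(src, dst, ["D", "A"]))  # 2
print(dst.getvalue(), end="")
```

Failing fast on a missing column name catches header typos before a long copy starts.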

On_Time_Performance_2012_1.csv has 486,133 data rows and nine columns, and weighs in at 16.5 MB. Other On_Time_Performance_YYYY_MM.csv files with similar row counts and sizes are being added daily. The On_Time_Performance_Test subfolder also contains truncated versions of the files with 100, 1,000, 10,000, 100,000, 150,000 and 200,000 rows, which I used to diagnose the large-file *.csv upload problem with “Data Hub”.
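Truncated test files like these keep the header row and the first N data rows. The following Python sketch shows that operation; the truncate_csv() helper is hypothetical, and it works line by line, so it assumes no quoted field contains an embedded line break (true of simple numeric and code columns, but not guaranteed in general).

```python
import io
import itertools

def truncate_csv(src, dst, max_rows: int) -> int:
    """Copy the header line plus at most max_rows data lines from src to dst.
    Assumes one record per line (no line breaks inside quoted fields)."""
    dst.write(src.readline())  # header row
    written = 0
    for line in itertools.islice(src, max_rows):
        dst.write(line)
        written += 1
    return written

src = io.StringIO("Carrier,Dest\nWN,OAK\nAA,DFW\nUA,SFO\n")
dst = io.StringIO()
print(truncate_csv(src, dst, 2))  # 2
```

To build a set like the one described, call truncate_csv() once for each row count (100 through 200,000), opening the source and destination files with newline="".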

Tab-delimited sample On_Time_Performance_YYYY_MM.txt files (without the first row of column names and formatted date) for use in creating blobs to serve as the data source for Hive databases are available from my Flight Data Files for Hadoop on Azure SkyDrive folder.

Providing the files through a private Azure DataMarket service was intended to supplement the SkyDrive downloads. I also plan to provide the full files in a free submission to the Windows Azure Marketplace DataMarket site in early May.

Uploading *.csv Files to Your Data Source

Download one of the smaller test files from my SkyDrive On_Time_Performance_Test subfolder to your local machine. Choose the 10,000-row file if you have a DSL connection with slow upload speed.

Click the Upload a File page’s Browse button and navigate to the *.csv file you downloaded:

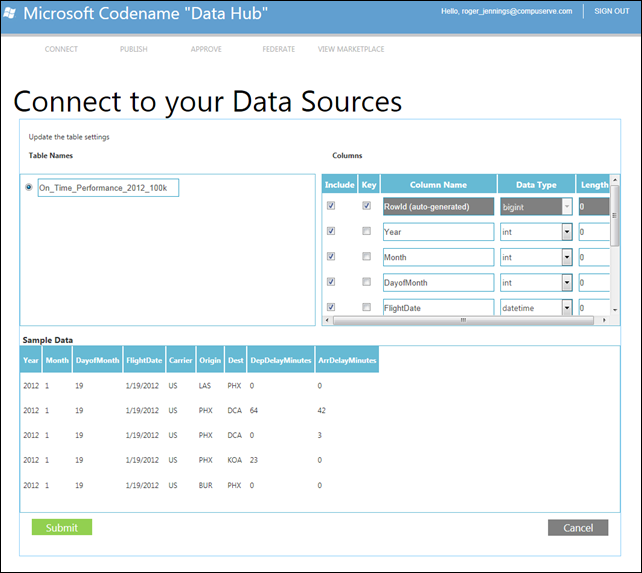

Click the Upload button to start uploading the file to an Azure blob. You receive no feedback on the upload’s progress until the Update the Table Settings page opens:

All data rows are useful, so accept the default Include settings and click Submit to transfer the data from an Azure blob to an SQL Azure table named for the *.csv file, which replaces the above page with a Loading File to Database message. It often takes longer to load the file to the database than to upload it to a blob.
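You don't script the blob-to-table step yourself in “Data Hub”, but its result, a table named for the *.csv file with one row per data line, can be illustrated locally. The following Python sketch uses the standard sqlite3 module as a stand-in for SQL Azure; the load_csv() helper is hypothetical, creates every column as TEXT, and doesn't reproduce whatever type inference “Data Hub” performs.

```python
import csv
import io
import os
import sqlite3

def load_csv(conn: sqlite3.Connection, file_name: str, csv_file) -> str:
    """Create a table named for the *.csv file and insert every data row.
    Sketch only: all columns are TEXT, and identifiers are assumed trusted
    (they are wrapped in double quotes, not escaped)."""
    table = os.path.splitext(os.path.basename(file_name))[0]
    reader = csv.reader(csv_file)
    header = next(reader)
    columns = ", ".join(f'"{name}" TEXT' for name in header)
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    placeholders = ", ".join("?" for _ in header)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', reader)
    conn.commit()
    return table

conn = sqlite3.connect(":memory:")
data = io.StringIO("Carrier,Dest\nWN,OAK\nAA,DFW\n")
table = load_csv(conn, "On_Time_Performance_2012_1.csv", data)
print(table)  # On_Time_Performance_2012_1
print(conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0])  # 2
```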

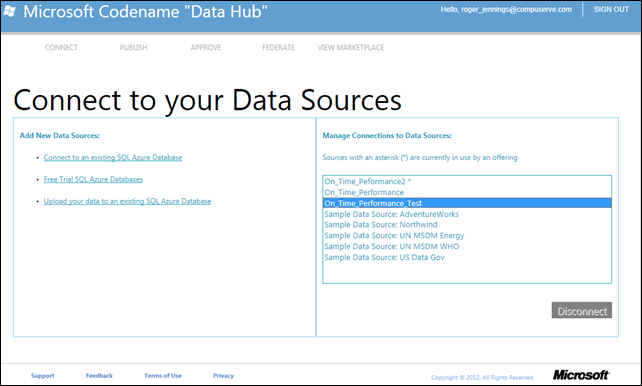

When the Success message appears, click the Connect menu link to verify that the new database appears in the Data Sources list:

Publishing the New Data Source

Click the Publish menu link to open the My Offerings page and click the Add Offering button to open the 1. Data Source page.

Select the table(s) you want to include in the data source, type a Friendly Name for the column, click the Columns button for the table to display the column list and clear the columns that you don’t want to be queryable:

Indexes are required on all queryable columns. Month and Year don’t need to be queryable because they are the same for all rows in the table.
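Because every queryable column needs an index, it can help to generate the index DDL rather than type it. The following Python sketch emits one T-SQL CREATE NONCLUSTERED INDEX statement per queryable column. The IX_ naming convention, the dbo schema, and the choice of Carrier and Dest (the two columns filtered on later in this post) are assumptions for illustration.

```python
def index_statements(table: str, queryable: list[str], schema: str = "dbo") -> list[str]:
    """Build one T-SQL CREATE NONCLUSTERED INDEX statement per queryable column.
    Names are bracket-quoted; the IX_<table>_<column> pattern is a convention."""
    return [
        f"CREATE NONCLUSTERED INDEX [IX_{table}_{column}] "
        f"ON [{schema}].[{table}] ([{column}]);"
        for column in queryable
    ]

for statement in index_statements("On_Time_Performance_2012_1", ["Carrier", "Dest"]):
    print(statement)
```

Run the generated statements against the SQL Azure database before marking the columns as queryable in the 1. Data Source page.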

Click the 2. Contacts button to save the Data Source information and open the Contacts page. Type your Name, Email alias and Phone Number:

Click the 3. Details button to open the Details page. Complete the required data, select from one to four categories, open the Documentation list and add links to appropriate documentation URLs, and add a logo image:

Click the 4. Status/Review menu link to open the Status/Review page. Click the Request Approval button to send a request to the Data Hub team to approve the entry.

Note: I will post a tutorial on federating content from the public Windows Azure Marketplace DataMarket after Microsoft approves my pending submission, which is identical to this private Marketplace entry.

Previewing and Approving the Marketplace Submission

Click the Approve menu link to open the My Approvals page. Click the Approve/Decline button to open the message pane, mark the Approve option, type an optional message to the requester, and click the Display Actions button to open Preview Offering choices:

Click Preview Offering in Marketplace to verify that the offering details appear as expected:

Click the Explore This Dataset link to open the dataset in the Service Explorer and click the Run Query button to display the first 22 of 100 rows with this default URL query https://api-oakleaf.clouddatahub.net/Data.ashx/default/US_Air_Carrier_Flight_Delays_Monthly/preview/On_Time_Performance_2012_1?$top=100:
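The default preview URL breaks down into the Marketplace prefix, the offering name, the table name and an OData $top option. The following Python sketch rebuilds it from those parts. The path pattern is inferred from this single example URL and may not hold for other offerings; the preview_query_url() helper is hypothetical.

```python
def preview_query_url(prefix: str, offering: str, table: str, top: int = 100) -> str:
    """Build a preview query URL using the path layout seen in the example
    URL (an inference, not a documented "Data Hub" contract)."""
    base = (f"https://api-{prefix}.clouddatahub.net/Data.ashx/default/"
            f"{offering}/preview/{table}")
    return f"{base}?$top={top}"

print(preview_query_url("oakleaf", "US_Air_Carrier_Flight_Delays_Monthly",
                        "On_Time_Performance_2012_1"))
```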

Type a carrier code, such as WN, in the Carrier text box and an airport code, such as OAK, in the Dest[ination] text box to return data for Southwest Airlines flights to Oakland with this URL query: https://api-oakleaf.clouddatahub.net/Data.ashx/default/US_Air_Carrier_Flight_Delays_Monthly/preview/On_Time_Performance_2012_1?$filter=Carrier%20eq%20%27WN%27%20and%20Dest%20eq%20%27OAK%27&$top=100:
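The text boxes translate into an OData $filter expression of ANDed equality tests, which is then percent-encoded. The following Python sketch reproduces the filtered URL, assuming “Data Hub” accepts standard OData $filter syntax as the example suggests. The helper names are hypothetical; doubling embedded single quotes is the OData rule for string literals.

```python
from urllib.parse import quote

BASE = ("https://api-oakleaf.clouddatahub.net/Data.ashx/default/"
        "US_Air_Carrier_Flight_Delays_Monthly/preview/On_Time_Performance_2012_1")

def odata_string(value: str) -> str:
    """Quote a value as an OData string literal; embedded single quotes are doubled."""
    return "'" + value.replace("'", "''") + "'"

def filtered_query_url(base: str, top: int = 100, **equals: str) -> str:
    """Append an ANDed set of `field eq 'value'` conditions plus $top to base.
    quote(..., safe='') encodes spaces as %20 and quotes as %27."""
    expression = " and ".join(
        f"{field} eq {odata_string(value)}" for field, value in equals.items())
    return f"{base}?$filter={quote(expression, safe='')}&$top={top}"

print(filtered_query_url(BASE, Carrier="WN", Dest="OAK"))
```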

You also can visualize and export data, as well as click the Develop button to open a text box containing the current URL query. Click the XML button to display formatted OData (AtomPub) content:
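In OData AtomPub content, each row is an Atom entry whose content element holds an m:properties element with one d:-prefixed child per column. The following Python sketch flattens such a feed into dictionaries with the standard xml.etree.ElementTree module. The namespace URIs are the standard OData v1 to v3 ones, but the SAMPLE feed is a hand-written, trimmed illustration rather than captured “Data Hub” output, and the feed_rows() helper is hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
}

def feed_rows(atom_xml: str) -> list[dict[str, str]]:
    """Return one {column: text} dict per Atom entry; m:null values become ''."""
    root = ET.fromstring(atom_xml)
    rows = []
    for props in root.findall("atom:entry/atom:content/m:properties", NS):
        row = {}
        for prop in props:
            column = prop.tag.split("}", 1)[1]  # strip the "{namespace}" prefix
            row[column] = prop.text or ""
        rows.append(row)
    return rows

SAMPLE = """<feed xmlns="http://www.w3.org/2005/Atom"
      xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
      xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices">
  <entry>
    <content type="application/xml">
      <m:properties>
        <d:Carrier>WN</d:Carrier>
        <d:Dest>OAK</d:Dest>
        <d:ArrDelayMinutes m:null="true" />
      </m:properties>
    </content>
  </entry>
</feed>"""

print(feed_rows(SAMPLE))
```

Typed values (for example, m:type="Edm.Int32") are left as strings in this sketch; convert them as needed.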

Return to the My Approvals page and click the Send button to notify the originator of the approval and clear the My Approvals counts.

Publishing the Offering

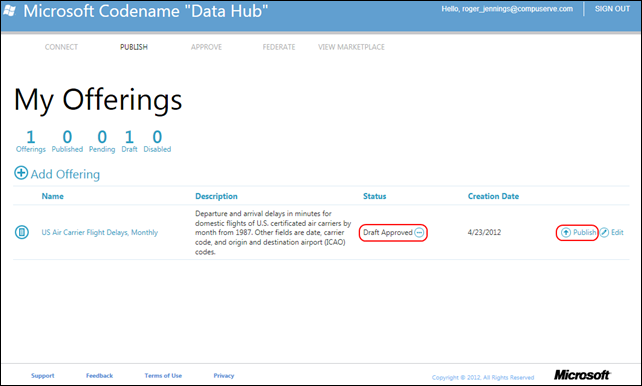

Click the Publish menu link to display the offering with Draft Approved as its status and the Publish option enabled:

Click the Publish button to publish your offering for others to use:

Exploring the User’s Experience

Click the Marketplace menu link to return to the landing page, and type a search term (OakLeaf for this example) to find your offering:

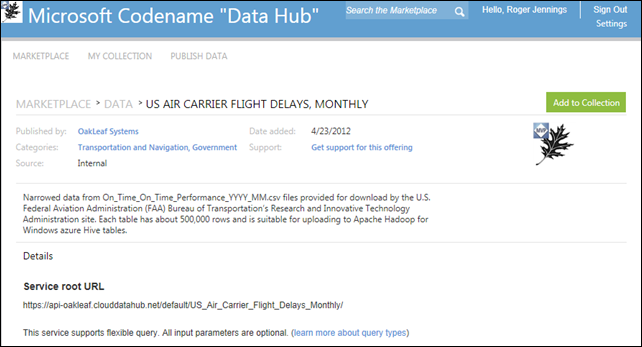

Click the offering name link to open it as an ordinary user and display the Add to Collection button:

Click Add to Collection to add the offering to the My Collection list:

When you sign out and navigate to the default URL, https://oakleaf.clouddatahub.net/, the landing page appears as shown here:

The Science & Statistics item is the default Data.gov offering. Clicking the Government or Transportation and Navigation link opens the user view of the offering’s landing page with a Sign In to Add to Your Collection button:

The service URL is: https://api-oakleaf.clouddatahub.net/default/US_Air_Carrier_Flight_Delays_Monthly/. Navigating to this URL and clicking the Show All Data button displays the default collections (for an earlier data source):
