Windows Azure and Cloud Computing Posts for 10/27/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available for download at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

David Pallman reported the availability of Azure Storage Explorer 4 Beta Refresh on 10/27/2010:

Last week we released Azure Storage Explorer 4. We have released several refreshes since then, in order to respond to feedback from the first several hundred downloaders. Some of these refreshes are important because they fix a bug or add a valuable feature. If you get the latest refresh (Beta 1 Refresh 4 or later), Azure Storage Explorer 4 will now notify you when there is a new version so you won't have to remember to check back on the CodePlex site for updates. If you're on Azure Storage Explorer 4, please update to the refresh so that you'll have the best experience.

Here are some of the features added in the refreshes:

- Blob uploads now automatically set ContentType based on file type

- CSV download/upload now preserves column types

- New download/upload formats supported: Plain XML and AtomPub XML

- UI improvements

- Private/public folder icons indicate whether blob containers are public or not

- Automatically checks for a new software version

- Checks for and corrects blob containers with old (outdated) permissions

- Attributes and updates to current Azure standard

- Preserves window position and size between sessions

- Allows culture to be set

Wally B. McClure posted ASP.NET Podcast Show #143 - Windows Azure Part I - Web Roles on 10/25/2010:

This show is on Web Roles in Azure, Blob Storage, and the Visual Studio 2010 Azure tools.

Jerry Huang compares Rackspace CloudFiles vs. Amazon S3 vs. Azure blob storage in this 10/25/2010 post to his Gladinet blog:

As usual, when the support of a new cloud storage service provider is added to the Gladinet platform, we will do basic speed test against supported service providers to see how they compare to each other.

This time we will compare Rackspace CloudFiles with Amazon S3 and Windows Azure Blob Storage.

The Test

Gladinet Cloud Desktop is used for the test. CloudFiles, Amazon S3 and Azure storage accounts are mounted into Windows Explorer as virtual folders.

The test is simple: from Windows Explorer, a 28 MB file is dragged and dropped into each service provider’s virtual folder. A stopwatch is used to time how long each transfer (upload) takes to complete.

After that, the Gladinet Cloud Desktop cache is cleared. The same 28 MB file is dragged and dropped from the cloud storage virtual folder into a local folder. The transfer (download) time is also captured.

The Result

| Service Provider | Upload Time (Sec) | Download Time (Sec) |
| --- | --- | --- |
| Rackspace CloudFiles | 194 | 37 |
| Amazon S3 East | 204 | 34 |
| Amazon S3 West | 203 | 45 |
| Windows Azure Blob | 197 | 76 |

The upload speed is around 150 KBytes/sec for the Rackspace transfer. The download speed is around 800 KBytes/sec.
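The quoted speeds follow from dividing the file size by the elapsed time. Here is a minimal Python sketch of that arithmetic, assuming "28M" means 28 MB with 1 MB = 1024 KB (the post does not say which convention it uses); the function names are illustrative only. Under that assumption, Rackspace works out to roughly 148 KB/s up and 775 KB/s down, consistent with the rounded figures quoted.

```python
# Timings in seconds (upload, download), copied from the results in the post.
TIMINGS = {
    "Rackspace CloudFiles": (194, 37),
    "Amazon S3 East": (204, 34),
    "Amazon S3 West": (203, 45),
    "Windows Azure Blob": (197, 76),
}

# Assumption: the "28M" test file is 28 MB, with 1 MB = 1024 KB.
FILE_SIZE_KB = 28 * 1024


def throughput_kb_per_sec(size_kb, seconds):
    """Average transfer rate in KB/s for one timed transfer."""
    return size_kb / seconds


def summarize(timings, size_kb=FILE_SIZE_KB):
    """Map each provider to its (upload KB/s, download KB/s) pair."""
    return {
        provider: (
            throughput_kb_per_sec(size_kb, up_seconds),
            throughput_kb_per_sec(size_kb, down_seconds),
        )
        for provider, (up_seconds, down_seconds) in timings.items()
    }


if __name__ == "__main__":
    for provider, (up, down) in summarize(TIMINGS).items():
        print(f"{provider}: upload {up:.0f} KB/s, download {down:.0f} KB/s")
```

Because each provider was timed once with a stopwatch, differences of a few seconds between upload times are probably within measurement noise.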

As a reference point, here is a bandwidth test with the Internet connection. The pipe to the Internet has decent speed to conduct this test.

Conclusion

It looks like Rackspace compares head to head with Amazon S3. While Rackspace has the fastest upload time, S3 East has the fastest download time.

Grab a copy of Gladinet Cloud Desktop and test it yourself!

Related Post: Windows Azure Blob Storage vs. Amazon S3

The comparisons would have been more interesting (and possibly more accurate) if Jerry identified the Azure data center he used and provided data for Microsoft’s South Central (San Antonio) and North Central (Chicago) data centers.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Steve Yi of the SQL Azure Team posted Video: Interacting with a SQL Azure Database on 10/27/2010:

In the first video, Your First SQL Azure Database, we created a new blank SQL Azure database in the cloud [see article below]. In this second part, learn how to provision your database with tables and basic queries using Transact-SQL and additional tools including Visual Studio Web Developer Express and SQL Server Management Studio.

[D]irect link to Microsoft Showcase Site: Interacting with a SQL Azure Database

Steve Yi posted a link to a Video: SQL Azure: Creating Your First Database on 10/27/2010:

This video is a brief tutorial that walks through the three steps to create a SQL Azure database and provision a simple database.

Alternative direct link to Microsoft Showcase Site: Creating Your First Database.

See the Steve Marx (@smarx) described Building a Mobile-Browser-Friendly List of PDC 2010 Sessions with Windows Azure and OData item in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

Gregg Duncan updated the OData Primer wiki on 10/27/2010:

The OData Primer wiki provides a large collection of links to OData-related articles in the following categories:

- List of Articles about Consuming OData Services

- List of Articles about Producing OData Services

- List of Podcasts about OData

- List of Screencasts, Videos, Slide Decks and Presentations about OData

- Where to get your questions answered?

- OData Feeds to Use and Test (Free)

- OData Tools

- Open Discussions

- OData Open Source/Source Available Projects and Code Samples

Ahmed Moustafa posted a Walkthrough: OData client for Windows Live Services to the WCF Data Services site on 10/26/2010:

As described recently on the Windows Live team blog, programming against your Windows Live artifacts (Calendar, Photos, etc) is now possible using OData. This blog post will walk through how to use our recently released WCF Data Services CTP to interact with the Windows Live OData endpoint.

In general the Windows Live OData endpoint is just like any other OData endpoint, so the good news is you already pretty much know how to use it :). This post will cover the basics of using some of the new features in our latest WCF Data Services CTP and some of the Windows Live specific aspects you need to know such as:

- Security: The Windows Live OData endpoint uses OAuth for security and, as such, requires the user to sign in with their Windows Live account before any operation against the user’s data (contacts, photos, etc.) can be done.

- Different base addresses for Collections: In other words, your Contacts & Photos data may not share a common base URI, as can be seen in the snippet below from the Windows Live OData endpoint Service Document. We’ve added a new feature in this CTP called an “Entity Set Resolver” (which we’ll describe in detail in a subsequent blog post) that makes it easy to work with partitioned collections in an OData service:

<service xmlns:xml="http://www.w3.org/XML/1998/namespace" xmlns:atom="http://www.w3.org/2005/Atom" xmlns="http://www.w3.org/2007/app">
  <workspace xml:base="http://apis.live.net/V4.1/">
    <collection href="http://contacts.apis.live.net/V4.1/">
      <atom:title name="text">Contacts</atom:title>
      <accept>application/atomsvc+xml</accept>
      <accept>application/json</accept>
      <accept>text/xml</accept>
      <accept>binary/xml</accept>
      <categories fixed="no"/>
    </collection>
    <collection href="http://photos.apis.live.net/V4.1/">
      <atom:title name="text">Photos</atom:title>
      <accept>application/atomsvc+xml</accept>
      <accept>application/json</accept>
      <accept>text/xml</accept>
      <accept>binary/xml</accept>
      <categories fixed="no"/>
    </collection>
  </workspace>
</service>

Listing: Sample Live Service Document
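To make the idea of per-collection base URIs concrete, here is a rough Python sketch that reads an abbreviated copy of the Service Document above and maps each collection title to its base URI. This is not the WCF Data Services "Entity Set Resolver" API; the function names and the fallback behavior are assumptions made purely for illustration.

```python
import xml.etree.ElementTree as ET

# Abbreviated copy of the sample Service Document (accept/categories elements omitted).
SERVICE_DOCUMENT = """<service xmlns:atom="http://www.w3.org/2005/Atom" xmlns="http://www.w3.org/2007/app">
  <workspace xml:base="http://apis.live.net/V4.1/">
    <collection href="http://contacts.apis.live.net/V4.1/">
      <atom:title name="text">Contacts</atom:title>
    </collection>
    <collection href="http://photos.apis.live.net/V4.1/">
      <atom:title name="text">Photos</atom:title>
    </collection>
  </workspace>
</service>"""

NAMESPACES = {
    "app": "http://www.w3.org/2007/app",
    "atom": "http://www.w3.org/2005/Atom",
}


def base_uris_from_service_document(xml_text):
    """Return a {collection title: base URI} mapping parsed from a service document."""
    root = ET.fromstring(xml_text)
    uris = {}
    for collection in root.findall("app:workspace/app:collection", NAMESPACES):
        title = collection.find("atom:title", NAMESPACES)
        if title is not None and title.text:
            uris[title.text.strip()] = collection.get("href")
    return uris


def resolve_entity_set(name, base_uris, default_base):
    """Hypothetical resolver: pick the base URI for a collection, else fall back."""
    return base_uris.get(name, default_base)


if __name__ == "__main__":
    uris = base_uris_from_service_document(SERVICE_DOCUMENT)
    print(resolve_entity_set("Photos", uris, "http://apis.live.net/V4.1/"))
```

A client that did not perform this lookup would send Photos requests to the workspace base address instead of the partition that actually hosts them.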

Walkthrough

Let’s walk through the sequence of steps needed to create a simple application that displays albums & the photos associated with those albums for a given user. Please note that all files referenced in this post are part of the attached Visual Studio solution.

1. To retrieve the authentication token for a given user refer to the live blog post here to get the code needed to produce the sign in window, using the appropriate Client Application ID, Client Secret key live application information and auth token.

2. To get a Client Application ID and Client key tied to your Live user account please visit manage.dev.live.com. In the attached sample they are specified in the LiveDataServiceContext partial class, in the clientId & clientSecret fields. You will need to replace the values of these fields with the ones tied to your Live account.

3. To integrate the sign-in process and auth token retrieval with the construction of a DataServiceContext class, please see LiveDataServiceContext.cs:

    // Runs when the context is created: start the asynchronous sign-in.
    partial void OnContextCreated()
    {
        messengerConnectSigninHelper = new MessengerConnectSigninHelper();
        messengerConnectSigninHelper.SignInCompleted += new MessengerConnectSigninHelper.SignInCompletedEventHandler(SignInCompletedEventHandler);
        messengerConnectSigninHelper.GetTokenAsync(LiveApplicationInformation, null);
    }

    // Runs after sign-in: keep the auth token, then fetch the collection base URIs.
    void SignInCompletedEventHandler(SignInCompletedEventArgs e)
    {
        this.AuthToken = messengerConnectSigninHelper.AuthorizationToken;
        this.entitySetResolver.ParseServiceDocumentComplete += new ParseServiceDocumentComplete(EntitySetResolver_ParseServiceDocumentComplete);
        this.entitySetResolver.GetBaseUrisFromServiceDocumentAsync(this.BaseUri);
    }

At this point the user is signed in & we have the Auth token to use in subsequent requests.

4. The next order of business is to retrieve the Live Service Document so that we can retrieve the base URIs for all the collections (Photos, Albums, etc) exposed by the OData service. This step is required because the collections in the Windows Live OData service are partitioned and available from different base URIs. Refer to LiveEntitySetResolver.GetBaseUrisFromServiceDocumentAsync() in the attached project for details.

5. Now that we have base URIs for every collection (Albums, Photos, etc) described by the Service Document we need to register a delegate with the DataServiceContext that will be invoked each time the Data Services client needs the base URI for a Collection. Refer to the LiveEntitySetResolver constructor for details:

ctx.ResolveEntitySet = this.ESR; // hook up the “Entity Set Resolver”

6. To include the authentication token retrieved in step 1 in subsequent requests to the OData service, you need to register a SendingRequest event handler with the data services context:

dataServicesctx.SendingRequest += new EventHandler<SendingRequestEventArgs>(context_SendingRequest);

Before every request the authorization header needs to be included in the request:

    public void context_SendingRequest(object sender, SendingRequestEventArgs e)
    {
        e.RequestHeaders[MessengerConnectConstants.AuthorizationHeader] = authToken;
    }

7. At this point the DataServiceContext is ready to use to retrieve albums & photos. For example, the snippet from MainPage.liveCtx_Initalized() below uses the DataServiceContext to execute a LINQ query to retrieve non-empty Albums.

    albumQuery = new DataServiceCollection<Album>(liveCtx);
    var query = from p in liveCtx.Albums
                where p.Size > 0
                select p;
    albumQuery.LoadCompleted += new EventHandler<LoadCompletedEventArgs>(dsc_LoadCompleted);
    albumQuery.LoadAsync(query);

8. Given that a metadata endpoint is not yet available for the Windows Live OData endpoint the easiest way to get started is to reuse the types (Album, Photo, etc) in the attached sample project. Once the metadata endpoint is up and running you will be able to generate the client types by using the “Add Service Reference” gesture in Visual Studio or the DataSvcUtil.exe command line tool just as you would with any OData metadata endpoint.
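On the wire, an OData client expresses a condition such as the `where p.Size > 0` clause in step 7 as a `$filter` query option. The Python sketch below builds such a request URI. The base URI is a placeholder (the post does not list the Albums collection's address) and the helper name is hypothetical, so treat it as an illustration of the URI shape rather than the client library's exact output.

```python
from urllib.parse import quote, urlencode, urljoin

# Placeholder base URI: the real one would come from the Service Document.
HYPOTHETICAL_ALBUMS_BASE = "http://example.invalid/V4.1/"


def odata_query_url(base_uri, entity_set, filter_expr):
    """Build an OData request URI with a $filter query option."""
    # Keep "$" literal in the option name; percent-encode spaces in the expression.
    query = urlencode({"$filter": filter_expr}, quote_via=quote, safe="$")
    return urljoin(base_uri, entity_set) + "?" + query


if __name__ == "__main__":
    # "Size gt 0" is the OData spelling of the LINQ condition p.Size > 0.
    print(odata_query_url(HYPOTHETICAL_ALBUMS_BASE, "Albums", "Size gt 0"))
```

Filtering on the server this way means only non-empty albums are returned to the client.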

Azure Support posted SQL Azure Performance – Query Optimization on 10/26/2010:

SQL Azure performance tuning is a much tougher task than tuning SQL Server; as I noted in the Azure Report Card, SQL Azure lacks some of the basic tools available to SQL Server DBAs. There really isn’t much tuning to do on your SQL Azure instance, as almost all the settings available for SQL Server are automatically managed by the SQL Azure platform. However, there is still some performance tuning to be done, mostly in the execution of the operations performed by the database. A major tool missing from SQL Azure is SQL Server Profiler. Despite this, there is still some optimization that can be performed on your SQL Azure queries, using either SSMS or SET STATISTICS.

SQL Azure Query Optimization Using SSMS

The first step to query optimization is determining the efficiency of the query. In SSMS, the Execution Plan graphically shows the cost of executing a query. To see the Execution Plan, first toggle on “Include Actual Execution Plan” by selecting Query > Include Actual Execution Plan or from the toolbar as shown below:

Then when you execute the query, you will also be presented with an Execution Plan tab besides the Results and Messages tabs:

A good resource on reading Execution Plans is the MSDN resource here.

In the above query I have made the most basic mistake: reading more data than is required. It is rare that we need to return all the columns in a table, and it’s also rare that we need every row in a table (so always use WHERE to minimize the rows that are read).

SQL Azure Query Optimization Using SET STATISTICS

The T-SQL SET STATISTICS command will monitor the execution of a query and then provide statistics on either the time taken to execute the query or the I/O expense of the query.

To test the time of the query execution, use the command SET STATISTICS TIME ON at the start of a query. This will then output the parse, compile and execution times for the query to the Messages tab:

To test the I/O expense of the query execution, use the command SET STATISTICS IO ON at the start of a query. This will then output the IO performance for the query to the Messages tab:

To learn about using this data to optimize the performance of your SQL Azure queries, a great resource is Simple Query tuning with STATISTICS IO and Execution plans.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Dave Kearns asserted “[It’s] imperative that identity services be readily available and easy to use for those creating the code to run the cloud services” in a deck for his Keeping the cloud within reach post of 10/26/2010 to NetworkWorld’s Security blog:

In looking back 10 years to what was in this newsletter in October 2000 I see that it was the first time I mentioned a phrase which, for many years, I tried to repeat as often as possible when talking about directories and their use by programmers. But as we approach the age of cloud-based services, the phrase is equally applicable to identity services and their use by programmers.

What I said was: "To be useful, the directory must be pervasive and ubiquitous." Some people think the two words are synonymous, but there is a difference.

By pervasive, I mean that it's available anywhere and every time we want to use it. By ubiquitous, I mean it's available everywhere and any time we want to use it. See the difference?

For a programmer to make use of a service (rather than create his own) he has to know that it will always be available ("every time" and "any time") wherever ("anywhere" and "everywhere") he needs it. If there are places or times that the data isn't available, and the program needs it, then the programmer will build a separate structure that is available any time and every time, anywhere and everywhere.

That's what led to the success of Active Directory -- even organizations using something else as their enterprise directory had some Windows servers so AD was always there -- programmers could count on it. You couldn't say that about Netscape's directory or Novell's.

As we move into the cloud-services era, it is imperative that identity services be readily available and easy to use for those creating the code to run the services. There's no single service that can be relied on, though, so it's going to take a feature-rich set of standard protocols to make it happen.

The discussion arose as almost an aside in a three part look at "Essential qualities of directory services," qualities that are just as important today as they were in 2000: a well-designed directory service needs the ability to be distributed, replicated and partitioned. Ten years ago, we were only looking at the needs of the enterprise but today the identity datastore (i.e., the directory) is going global so these attributes are needed more than ever. We'll look into that more closely next time.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Steve Marx (@smarx) described Building a Mobile-Browser-Friendly List of PDC 2010 Sessions with Windows Azure and OData in this 10/26/2010 post:

I learned today that there’s an OData feed of all the PDC 2010 sessions. I couldn’t help but build a Windows Azure application that consumes that feed and provides a simple web page that works well on mobile browsers. You can use it at http://pdc10sessions.cloudapp.net.

I’m enjoying the ease of use of OData in .NET. OData is the protocol that Windows Azure tables uses, and I’m also starting to use that to expose data in other applications I write. To share the joy, I thought I’d share how I built this app.

All I did was add a service reference to http://odata.microsoftpdc.com/ODataSchedule.svc and write a simple controller method. Here’s the controller method:

    // cache for thirty seconds (OutputCache's Duration is expressed in seconds)
    [OutputCache(Duration = 30, VaryByParam = "*")]
    public ActionResult Index()
    {
        var svc = new ScheduleModel(new Uri("http://odata.microsoftpdc.com/ODataSchedule.svc"));

        // prefetch presenters to join locally
        var presenters = svc.Speakers.ToDictionary(p => p.Id);

        // prefetch timeslots to join locally
        var timeslots = svc.TimeSlots.ToDictionary(t => t.Id);

        var sessions =
            from session in svc.Sessions.Expand("Presenters").ToList()
            where session.TimeSlotId != Guid.Empty // skip the recorded sessions
            let timeslot = timeslots[session.TimeSlotId]
            let presenter = session.Presenters.Count > 0 ? presenters[session.Presenters[0].Id] : null
            orderby timeslot.Start
            // tuple because I'm too lazy to make a class :)
            select Tuple.Create(session, presenter,
                timeslot.Start == DateTime.MaxValue ? "recorded session" : timeslot.Start.ToString());

        return View(sessions);
    }

And here’s the ASP.NET MVC page:

    <%@ Import Namespace="PDCSchedule_WebRole.ScheduleService" %>
    <%@ Page Language="C#" MasterPageFile="~/Views/Shared/Site.Master" Inherits="System.Web.Mvc.ViewPage<IEnumerable<System.Tuple<Session, Speaker, string>>>" %>
    <asp:Content ID="Content1" ContentPlaceHolderID="TitleContent" runat="server">
        PDC 2010 Session Schedule
    </asp:Content>
    <asp:Content ID="Content2" ContentPlaceHolderID="MainContent" runat="server">
    <% foreach (var tuple in Model) {
           var session = tuple.Item1;
           var speaker = tuple.Item2;
           var startTime = tuple.Item3; %>
        <div class="session">
            <h1 class="title">
                <%: session.FullTitle %>
                <%: speaker != null ? string.Format(" ({0})", speaker.FullName) : string.Empty %>
            </h1>
            <p class="location">
                <%: startTime %><%: !string.IsNullOrEmpty(session.Room) ? string.Format(" ({0})", session.Room) : string.Empty %>
            </p>
            <p class="description"><%: session.FullDescription %></p>
        </div>
    <% } %>
    </asp:Content>

The only other important thing I did was add a meta tag to get mobile browsers to use the right viewport size:

<meta name="viewport" content="width=320" />

I’ll be using this at PDC this Thursday and Friday to find the sessions I’m interested in. If you’ll be attending PDC in person, I hope you find this useful too.

Steve’s app isn’t likely to win any design awards, but it’s quick. Here’s the opening screen in VS2010’s WP7 emulator:

For more background about PDC2010’s OData feed, see The Professional Developers Conference 2010 team delivered on 10/26/2010 a complete OData feed of PDC10’s schedule at http://odata.microsoftpdc.com/ODataSchedule.svc/ article in the OData section of my Windows Azure and Cloud Computing Posts for 10/25/2010+ post.

See the mobile version of my manually created PDC 2010 session list at Windows Azure, SQL Azure, AppFabric and OData Sessions at PDC 2010:

Ryan Cain posted source code for his PDC 2010 OData Feed iPhone App on 10/26/2010:

I spent a few hours today and hacked, and I do mean hacked, together a quick iPhone App to consume the OData feed for PDC 2010. Since the turnaround is way too quick to push something like this through the app store, I've pushed the code up to github for anyone to download and play with.

You can access it at:

http://github.com/oofgeek/PDC10-OData-Viewer.

I will in no way claim that this code is good, that it manages memory well or does anything other than "work". But if you're heading to PDC10, and have an iPhone and an Apple Dev account download the code and party on! Also note that I'm fairly new to iPhone development, and coming from over 8 years with .NET I'm still definitely cutting my teeth on this new platform.

In order to get the code to compile you will need the OData iPhone SDK, which you can download at:

http://www.odata.org/developers/odata-sdk.

Follow the install instructions that come with the SDK to re-configure the project to point to your install of the SDK. The header and library paths on the Project info are currently pointing to a folder on my machine, as well as the binary for the library.

Also this is my first time pushing out code to github, so hopefully I didn't royally screw something up.

Have fun and see you in Redmond!

The Windows Azure Team published Reaching New Heights: Lockheed Martin’s Windows Azure Infographic on 10/27/2010:

The original Lockheed Martin Merges Cloud Agility with Premises Control to Meet Customer Needs case study of 7/19/2010 offers this abstract:

Headquartered in Bethesda, Maryland, Lockheed Martin is a global security company that employs about 136,000 people worldwide and is principally engaged in the research, design, development, manufacture, integration, and sustainment of advanced technology systems, products, and services. The company wanted to help its customers obtain the benefits of cloud computing, while balancing security, privacy, and confidentiality concerns.

The company used the Windows Azure platform to develop the Thundercloud™ design pattern, which integrates on-premises infrastructure with compute, storage, and application services in the cloud. Now, Lockheed Martin can provide its customers with vast computing power, enhanced business agility, and reduced costs of application infrastructure, while maintaining full control of their data and security processes.

Patrick Butler Monterde posted Microsoft Patterns and Practices: Cloud Development on 10/27/2010:

I was quite thrilled to contribute to both Cloud Development releases by the Microsoft Patterns and Practices team. These two books contain great insights into how to develop and migrate applications to Windows Azure.

Summary

How do you build applications to be scalable and have high availability? Along with developing the applications, you must also have an infrastructure that can support them. You may need to add servers or increase the capacities of existing ones, have redundant hardware, add logic to the application to handle distributed computing, and add logic for failovers. You have to do this even if an application is in high demand for only short periods of time.

The cloud offers a solution to this dilemma. The cloud is made up of interconnected servers located in various data centers. However, you see what appears to be a centralized location that someone else hosts and manages. By shifting the responsibility of maintaining an infrastructure to someone else, you're free to concentrate on what matters most: the application.

Link: http://msdn.microsoft.com/en-us/library/ff898430.aspx

Active Releases:

- Moving Applications to the Cloud on the Microsoft Windows Azure™ Platform

- Developing Applications for the Cloud on the Microsoft Windows Azure™ Platform

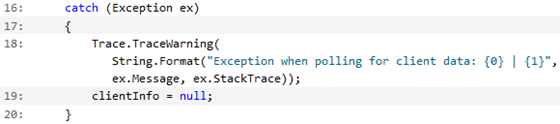

Jim O’Neil continued his series with Azure@home Part 10: Worker Role Run Method (continued) on 10/27/2010:

This post is part of a series diving into the implementation of the @home With Windows Azure project, which formed the basis of a webcast series by Developer Evangelists Brian Hitney and Jim O’Neil. Be sure to read the introductory post for the context of this and subsequent articles in the series.

In the last post, we started looking at the primary method of every worker role implementation: Run. Run is typically implemented as an infinite loop, and its manifestation in Azure@home is no exception.

I covered the implementation of SetupStorage (Line 4) already – this is where the Folding@home client executable is copied to the local storage within the Azure virtual machine hosting the WorkerRole instance. Once that’s done, the main loop takes over:

- checking to see if a client has provided his or her name and location via the default.aspx web page (Line 9), and then

- launching work units through the Folding@home client to carry out protein folding simulations (Line 12).

If you’re wondering about the break statement at Line 13, it’s there to enable the Run loop to exit – and therefore recycle the role instance.

Why? The LaunchFoldingClientProcess method (Line 12) also includes a loop and will continually invoke the Folding@home client application – until there’s an uncaught exception or the client record is removed externally. These are both somewhat unexpected events for which a reset is rather appropriate. It’s not the only way to handle these situations, but it was a fairly straightforward mechanism that didn’t add a lot of additional exception handling code. Remember this is didactic, not production code!

GetFoldingClientData Implementation

Recall that the workflow for Azure@home involves storing client information (Folding@home user name, latitude and longitude) in a table aptly named client in Azure storage (this was described in Part 4 of this series). GetFoldingClientData is where the WorkerRole reads the information left by the WebRole:

ClientInformation is the same class – extending TableServiceEntity – that I described earlier in this series (Part 3), so the code here should look rather familiar. There are really three distinct scenarios that can occur when this code is executed (keep in mind it’s executed as part of a potentially infinite loop):

- Record in client table exists,

- Record in client table does not exist, or

- Client table itself does not exist.

Record in client table exists

A record will exist in the client table after the user submits the default.aspx page (via which are provided the Folding@home user name and location). At this point, Line 14 above will return the information provided in that entity – the entity will not be null – and so the Run loop (reprised below) can proceed passing in the client information to the LaunchFoldingClientProcess method (Line 12). As we’ll see later, that method also checks the client table to determine if it should continue looping itself. (The loop below will actually terminate if LaunchFoldingClientProcess ever returns – as mentioned in the callout above.)

Record in client table does not exist

In this scenario, the line below will return a null object (‘default’) to clientInfo, so the Run loop will simply sleep for 10 seconds before checking again. This is essentially a polling implementation, where the WorkerRoles are waiting for the end user to enter information via the default.aspx page.

Client table itself does not exist

The WorkerRole is strictly a reader (consumer) of the client table and doesn’t have the responsibility for creating it – that falls to the processing in default.aspx (as described in an earlier post). There’s a very good chance then that the WorkerRole instances, which start polling right away, will make a request against a table that doesn’t yet exist. This is where the exception handling below comes in:

The exception handling is a bit coarse: the exception caught in this circumstance is a DataServiceQueryException, with the rather generic message:

An error occurred while processing this request.

You have to dig a bit further, into the InnerException (of type DataServiceClientException), to get the StatusCode (404) and Message:

<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<error xmlns="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">
  <code>ResourceNotFound</code>
  <message xml:lang="en-US">The specified resource does not exist.</message>
</error>

which indicate that the resource was not found. The “resource” is the client table, as can be seen in the RESTful RequestUri generated by the call to FirstOrDefault<ClientInformation> in Line 14:

http://snowball.cloudapp.net/client()?$top=1

The exception message and stack trace are written to the Azure logs via the TraceWarning call in Line 18 (and the configuration of a DiagnosticMonitorTraceListener as described in my post on Worker Role and Azure Diagnostics).
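The three scenarios can be summarized in a small polling sketch. This is Python rather than the project's C#, and it is not Jim's code: `TableNotFound`, `wait_for_client` and the injected `fetch_client` callable are hypothetical stand-ins, and treating a missing table the same as "no record yet" is an assumption drawn from the description above.

```python
import time


class TableNotFound(Exception):
    """Hypothetical stand-in for the 404 ResourceNotFound error described above."""


def wait_for_client(fetch_client, poll_seconds=10, sleep=time.sleep, log=print):
    """Poll until fetch_client() returns a client record, then return it."""
    while True:
        try:
            # Scenario 1 returns a record; scenario 2 returns None.
            client = fetch_client()
        except TableNotFound as exc:
            # Scenario 3: the table does not exist yet. Log a warning and keep polling.
            log(f"warning: {exc}")
            client = None
        if client is not None:
            return client
        sleep(poll_seconds)  # wait before checking the table again
```

Passing `sleep` and `log` as parameters keeps the loop easy to exercise without real delays, for example by handing in `list.append` for both.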

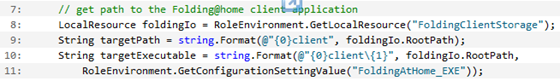

LaunchFoldingClientProcess Implementation

The entire implementation of LaunchFoldingClientProcess is provided below for context, and then beyond that I’ll break out the major sections of the implementation, eventually repeating each segment of this code:

77 lines of C# code excised for brevity.

Creating Configuration File

The Folding@home console client application (Folding@home-Win32-x86.exe) can prompt for the information it needs to run – user name, team number, passkey, size of work unit, etc. – or it can run using a configuration file, client.cfg. Since the executable is running in the cloud, it can’t be interactive, so the WriteConfigFile code sets up this configuration file (in local storage). You can read more about the console application’s configuration options on the Stanford site. In the interest of space, I’m leaving out the WriteConfigFile implementation, but you can download all the code from the distributed.cloudapp.net site for self-study.

Constructing Path to Folding@home application

This section of code constructs the full path to the Folding@home client application as installed in local storage on the VM housing the WorkerRole instance. Code here should look quite similar to that of my previous blog post. Note that FoldingAtHome_EXE (Line 11) is a configuration variable set in the ServiceConfiguration.cscfg file and points to the name of Stanford’s console client (default: Folding@home-Win32-x86.exe).

Setting Reporting Interval

Recall from the architecture diagram (right), that progress on each work unit is reported to the workunit table in your own Azure storage account (labeled 7 in the diagram) and to the distributed.cloudapp.net application (labeled 8).

Using development storage, you may want to report status frequently for testing and debugging. During production though, you’re charged for storage transactions and potentially bandwidth (if your deployment is not collocated in the same data center as distributed.cloudapp.net). Add to that the fact that the progress on most work units is rather slow – some work units take days to complete – and it’s easy to conclude that the polling interval doesn’t need to be subsecond! In Azure@home, the configuration includes the AzureAtHome_PollingInterval value (in minutes), and that’s the value being configured here. If the parameter doesn’t exist, the default is 15 minutes (Line 18). …
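The fallback described above can be sketched roughly as follows. This is not the excised Azure@home code: the class `PollingInterval` and its `FromSetting` method are hypothetical, the raw setting is passed in as a string so the sketch stays independent of the Azure runtime, and treating unparsable or non-positive values like a missing setting is an assumption beyond what the post states.

```csharp
using System;

// Hypothetical helper illustrating a "default to 15 minutes" lookup.
public static class PollingInterval
{
    // Default taken from the post; everything else here is illustrative.
    public const int DefaultMinutes = 15;

    // rawValue is the text of the AzureAtHome_PollingInterval setting,
    // or null when the setting does not exist.
    public static TimeSpan FromSetting(string rawValue)
    {
        int minutes;
        // Assumption: bad or non-positive values also fall back to the default.
        if (!int.TryParse(rawValue, out minutes) || minutes <= 0)
        {
            minutes = DefaultMinutes;
        }
        return TimeSpan.FromMinutes(minutes);
    }
}
```

A worker loop could then sleep for `PollingInterval.FromSetting(value)` between status reports, which keeps storage transactions proportional to the configured interval.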

Jim continues with more source code.

… In the next post, we’ll delve into how progress is reported both to the local instance of Azure storage and to the main Azure@home application (distributed.cloudapp.net).

See the David Pallman reported the availability of Azure Storage Explorer 4 Beta Refresh article in the Azure Blob, Drive, Table and Queue Services section above.

<Return to section navigation list>

Visual Studio LightSwitch

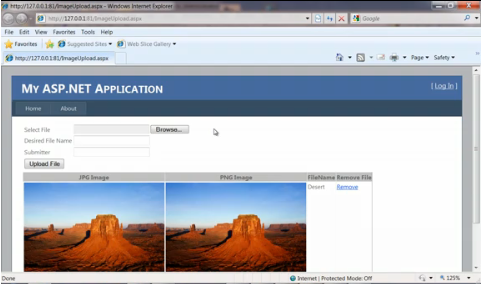

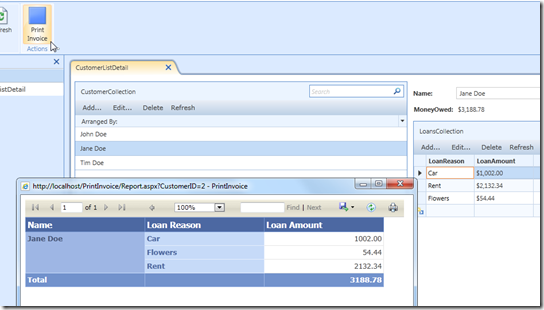

Michael Washington described Printing Sql Server Reports (.rdlc) With LightSwitch in this illustrated 10/26/2010 tutorial:

Printing in LightSwitch was previously covered here:

http://lightswitch.adefwebserver.com/Blog/tabid/61/EntryId/3/Printing-With-LightSwitch.aspx

The disadvantages of that approach are:

- You have to make a custom control / You have to be a programmer to make reports

- Printing in Silverlight renders images that are slightly fuzzy

In this article, we will demonstrate creating and printing reports in LightSwitch using Microsoft Report Viewer Control.

Note, you will need Visual Studio Professional, or higher, to use the method described here.

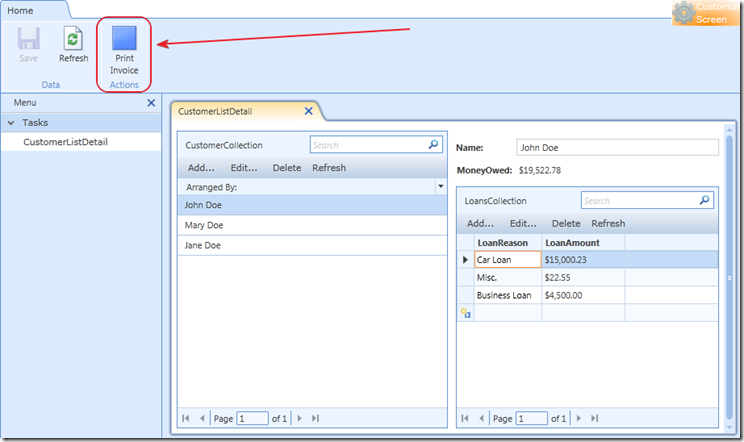

We remove the Silverlight custom control that we previously used for printing an invoice, but we want to keep the Print Invoice Button.

We want this Button to open the Report (that we will create later) and pass the currently selected Customer (so the Report will know which Customer to show).

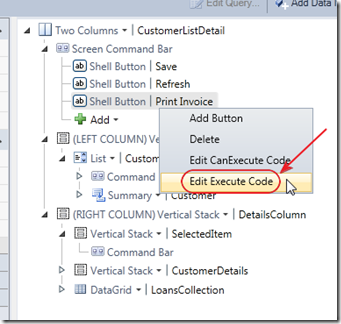

We replace the previous Print Invoice Button code with the following code:

    partial void Button_Execute()
    {
        // Only run if there is a Customer selected
        if (this.CustomerCollection.SelectedItem != null)
        {
            Microsoft.LightSwitch.Threading.Dispatchers.Main.BeginInvoke(() =>
            {
                System.Text.StringBuilder codeToRun = new System.Text.StringBuilder();
                codeToRun.Append("window.open(");
                codeToRun.Append("\"");
                codeToRun.Append(string.Format("{0}", String.Format(@"{0}/Report.aspx?CustomerID={1}",
                    GetBaseAddress(), this.CustomerCollection.SelectedItem.Id.ToString())));
                codeToRun.Append("\",");
                codeToRun.Append("\"");
                codeToRun.Append("\",");
                codeToRun.Append("\"");
                codeToRun.Append("width=680,height=640");
                codeToRun.Append(",scrollbars=yes,menubar=no,toolbar=no,resizable=yes");
                codeToRun.Append("\");");

                try
                {
                    HtmlPage.Window.Eval(codeToRun.ToString());
                }
                catch (Exception ex)
                {
                    MessageBox.Show(ex.Message, "Error", MessageBoxButton.OK);
                }
            });
        }
    }

    #region GetBaseAddress
    private static Uri GetBaseAddress()
    {
        // Get the web address of the .xap that launched this application
        string strBaseWebAddress = HtmlPage.Document.DocumentUri.AbsoluteUri;
        // Find the position of the ClientBin directory
        int PositionOfClientBin =
            HtmlPage.Document.DocumentUri.AbsoluteUri.ToLower().IndexOf(@"/default");
        // Strip off everything after the ClientBin directory
        strBaseWebAddress = Strings.Left(strBaseWebAddress, PositionOfClientBin);
        // Create a URI
        Uri UriWebService = new Uri(String.Format(@"{0}", strBaseWebAddress));
        // Return the base address
        return UriWebService;
    }
    #endregion

Deploy The Application and Add The Report

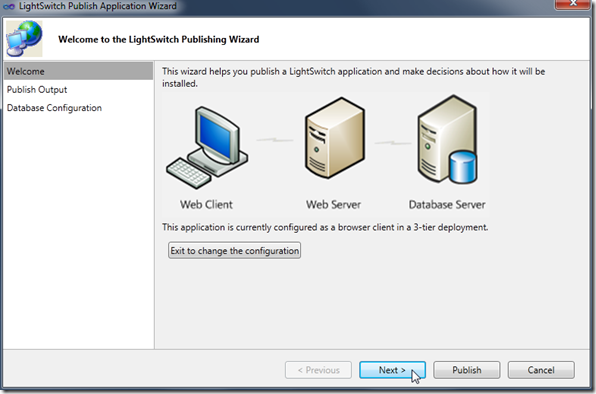

This article explains how to Deploy a LightSwitch Application:

It is recommended that you simply deploy the application to your local computer and then copy it to the final server after you have added the report(s).

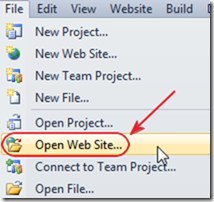

After we have Deployed the application, we open the Deployed application in Visual Studio.

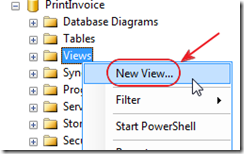

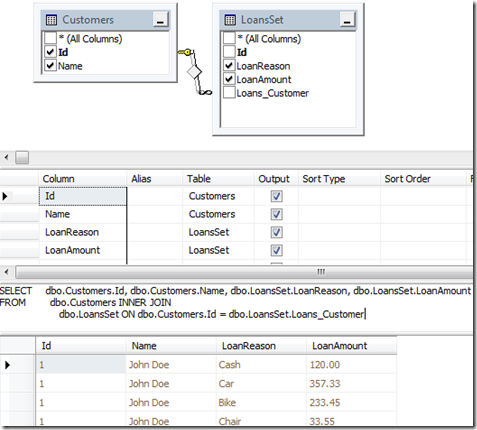

When the application is Deployed, a database is created (or updated). We will open this Database and add a View.

We design a View and save it.

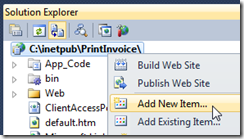

In Visual Studio we Add New Item…

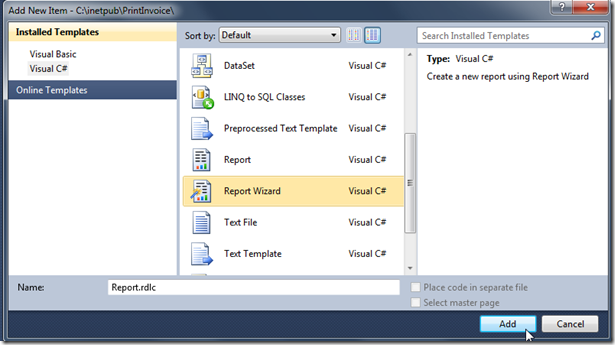

We add a Report to the project. …

Michael continues with the process to add the report.

Steve Lange posted on 10/26/2010 the slide deck for his Turn It On! My Visual Studio LightSwitch (Beta1) Presentation:

Tonight I presented to the Denver Visual Studio .NET User Group on Visual Studio LightSwitch. Thank you to those who attended!

For those of you who missed it, or just want the content anyway, below are links to my presentation.

You can find it on SkyDrive, on my profile at SlideShare, or directly at Visual Studio LightSwitch (Beta 1) Overview.


Below are a few links to get you started as well:

Lastly, a few of you asked for the funny looping slide deck I used during the break. That’s on SkyDrive HERE.

<Return to section navigation list>

Windows Azure Infrastructure

Michael Vizard explained How Mobile and Cloud Computing Drive Each Other in a 10/27/2010 post to ITBusinessEdge.com’s ITUnmasked blog:

The killer application for cloud computing will be mobile computing; and vice versa the killer application for mobile computing is going to be the cloud.

There’s a tendency to talk about mobile and cloud computing as two distinct trends. In reality, the adoption of one is driving the other. If you look at mobile computing, all the data being used is almost invariably stored in a cloud. As these devices become more prevalent throughout the enterprise, they will be accessing federated clouds of resources running on both public and private infrastructure. The more mobile devices there are, the greater the demand for cloud services. Of course, without cloud computing services, mobile computing devices couldn't do much, so in reality mobile and cloud computing are really two ends of the same continuum.

A new survey conducted by the developerWorks group of IBM finds that most IT professionals are firmly convinced of the dominance of mobile computing, but only half are as solidly convinced about the future of cloud computing. Drew Clark, director of strategy for the IBM Venture Capital Group, says the combination of mobile and cloud computing will clearly change the way IT resources are deployed and managed throughout the enterprise.

But the most interesting thing about all this, says Clark, is how it changes the way IT people see themselves five years from now. As IT continues to mature into a set of services rather than something IT professionals build, IT people are increasingly appreciating the value of domain knowledge in specific vertical industries.

That means that as IT evolves, says Clark, the value that IT people will bring to the business equation in the future is not necessarily how the technology works, but rather how best to apply it.

For more information about and links to the IBM survey, see the “Windows Azure Infrastructure” section of my Windows Azure and Cloud Computing Posts for 10/8/2010+ post (scroll down or search for IBM).

Ian Gotts asserted “You've heard of public clouds, private clouds and hybrid clouds, but is there room for yet another word in the cloud computing lexicon, a stealth cloud? That is, a cloud without the support of the IT department?” as a preface to his A New Cloud: The Stealth Cloud? post to CIO.com of 10/27/2010:

What is the Stealth Cloud?

The term "Cloud Computing" seems to have struck a chord in a way that ASP, OnDemand, SaaS and all the previous incarnations never have. Every analyst and journalist is blogging and tweeting about it, there are a slew of conferences and events, and a surprising number of books have already been published.

With the explosion of cloud computing, there is now more than one sort of cloud as well. There are already public clouds, private clouds, community clouds, and hybrid clouds. In addition to these, I would like to propose that a new term, "stealth cloud", should be added to the lexicon. As the name suggests it does its job — quietly, unseen, and unnoticed. Essentially, the stealth cloud refers to services being consumed by business users without the knowledge, permission or support of the CIO and the IT department.

Consumers are Business People Too

Business people are embracing the ideas of cloud computing like never before. They can see immediate value to their business from the applications and services being offered. As the technology becomes easier to develop, there seems to be no limit to what is being provided in the cloud, much of which is packaged in a very compelling, slick user experience.

When the business user is provided with these elegant services as a consumer it is inevitable that they bring them to work. With services such as online backup, project management, CRM, collaboration and social networking all available through a browser, is it any surprise business users are signing up and ignoring the (seemingly) staid and boring applications provided by the IT department?

A while back a large U.K. central Government organization surveyed the IT infrastructure and discovered over 2,500 unsupported business-created applications on PCs and servers; MSAccess databases, spreadsheets, custom apps, on and on. Of the 2,500 that were discovered, a staggering 500 were mission critical. With the stealth cloud it is impossible to discover which applications or services are being used except by getting every user to "fess-up" to the IT department. Now why should they do that?

Why is it an Issue, and for Whom?

Stealth cloud computing sounds like a perfect way of reducing the IT workload and backlog of requests for systems as a form of "crowdsourcing." Thousands of innovative entrepreneurs are providing solutions, often quite niche, to business problems at little or no cost to the business. IT departments should see cloud computing as an ally, because embracing it will make them appear far more responsive to the business; however, stealth cloud computing seems to be having the reverse effect.


James Urquhart examined 'Moving to' versus 'building for' cloud computing in a 10/26/2010 post to CNet’s The Wisdom of Clouds blog:

Microsoft Chief Software Architect Ray Ozzie (pictured below) has written a memo that has generated tremendous buzz among the cloud-computing community. Microsoft CEO Steve Ballmer announced Ozzie's impending retirement from the company last week, and Ozzie took that opportunity to write "Dawn of a New Day," which outlines a future for computing that is both a challenge and opportunity for the software maker.

Don Reisinger has excellent analysis of that post, so I won't break it down in detail for you here. However, Ozzie's post is interesting to me because it highlights a key nuance of cloud adoption that I think IT organizations need to think about carefully: the difference between "moving to" and "building for" the cloud.

I, and others, have noted several times before that one of the key challenges for enterprises adopting cloud is the fact that they are not "greenfield" cloud opportunities. They have legacy applications--lots of them--and those applications must remain commercially viable, available, and properly integrated with the overall IT ecosystem for the enterprise to operate and thrive.

As Ozzie notes, however, the future introduces a "post-PC" era, in which software becomes "continuously available" and devices evolve into "appliance-like connected devices." That new future sounds simple enough, but there are huge challenges in making that a seamless, scalable computing model.

You can't just "port" existing applications into a new model like that. Software just doesn't work that way. While data formats and application metaphors may remain relatively consistent, key fundamentals such as user interface components (e.g. touch screens), data management (e.g. nonrelational data stores and new multidevice synchronization schemes), and even programming styles (e.g. "fail-ready" software) mean existing code is unlikely to be ready-made for a true cloud-computing model.

That's not to say that existing apps shouldn't be moved to cloud environments where it makes sense to do so. Development and testing, for example, are two legacy computing environments that benefit greatly from the dynamic, pay-as-you-go model of cloud. I am hearing reports that even relatively complicated legacy applications, such as SAP implementations, can benefit from cloud adoption if there is a dynamic usage model that meets the criteria laid out in Joe Weinman's "cloudonomics" work.

However, today IT organizations have to begin consciously thinking about where they are taking their business with respect to cloud. Not just technology, but the entire business. Are you going to look at the cloud as a data center alternative for your existing computing model, or are you going to architect your business to take advantage of the cloud?

A great example of the latter is online movie-streaming leader Netflix. In recent talks, Adrian Cockcroft, Netflix's cloud architect, outlined the company's decisive move away from private data centers to public cloud computing and content delivery networks. Making heavy use of the entire Amazon Web Services portfolio, Netflix has designed not only its IT systems for the cloud, but its online business model has evolved to make the company more "cloud ready."

The point is that you can't simply move your existing IT to an "infrastructure as a service" and declare yourself ready for a cloud-based future. Yes, you should move legacy systems to public or private cloud systems when it makes economic sense to do so, but you need to begin to evaluate all of your business systems--and, likely, business models--to determine if they will win or even survive in a continuous service, always-connected world.

There is a huge difference between "moving to" the cloud and "building for" the cloud. Are you prepared to invest enough in both?

Jay Fry explained Using cloud to test deployment scenarios you didn't think you could in a 10/26/2010 post to his Data Center Dialog blog:

Often, IT considers cloud computing for things you were doing anyway, with the hope of doing them much cheaper. Or, more likely from what I’ve heard, much faster. But last week a customer reminded me about one of the more important implications of cloud: you can do things you really wouldn’t have done otherwise.

And what a big, positive benefit for your IT operations that can be.

A customer’s real, live cloud computing experience at Gartner Symposium

The occasion was last week’s Gartner Symposium and ITXpo conference in Orlando. I sat in on the CA Technologies session that featured Adam Famularo, the general manager of our cloud business (and my boss), and David Guthrie, CTO of Premiere Global Services, Inc. (PGi). It probably isn’t a surprise that Guthrie’s real-world experiences with cloud computing provided some really interesting tidbits of advice.

Guthrie talked about how PGi, a $600 million real-time collaboration business that provides audio & web conferencing, has embraced cloud computing (you’ve used their service if you’ve ever heard “Welcome to Ready Conference” and other similar conferencing and collaboration system greetings).

What kicked off cloud computing for PGi? Frustration. Guthrie told the PGi story like this: a developer was frustrated with the way PGi previously went about deploying business services. From his point of view, it was way too time-consuming: each new service required a procurement process, the set-up of new servers at a co-location facility, and the like. That developer tracked down 3Tera AppLogic (end of sales pitch) and PGi began to put it to use as a deployment platform for cloud services.

What does PGi use cloud computing for? Well, everything that’s customer facing. “All the services that we deliver for our customers are in the cloud,” said Guthrie. That means they use a cloud infrastructure for their audio and web collaboration meeting services. They use it for their pgi.com web site, sales gateways, and customer-specific portals as well.

Guthrie stressed the benefits of speed that come from cloud computing. “It’s all about getting technology to our customers faster,” said Guthrie. “Ramp time is one of the biggest benefits. It’s about delivering our services in a more effective way – not just from the cost perspective, but also from the time perspective.”

Cloud computing changes application development and deployment. Cloud, Guthrie noted in his presentation, “really changed our entire app dev cycle. We now have developers closer to how things are being deployed.” He said it helped fix consistency problems between applications across dev, QA, and production.

During his talk, Guthrie pointed to some more fundamental differences they've been seeing, too. He described how the cloud-based approach has been helping his team be more effective. “I’ve definitely saved money by getting things to market quicker,” Guthrie said. But, more importantly, said Guthrie, “it makes you make better applications.” “I was also able to add redundancy where I previously wouldn’t have considered it” thanks to both cost efficiencies and the ease with which cloud infrastructure (at least, in his CA 3Tera AppLogic-based environment) can be built up and torn down.

“You can test scenarios that in the past were really impractical,” said Guthrie. He cited doing side-by-side testing of ideas and configurations that never would have been possible before to see what makes the most sense, rolling out the one that worked best, and discarding those that didn't. Except in this case, "discarding" doesn't mean tossing out dedicated silos of hardware (and hard-wired) infrastructure that you had to manually set up just for this purpose.

Practical advice, please: what apps are cloud-ready? At the Gartner session, audience members were very curious about the practicalities of using cloud computing, asking Guthrie to make some generalizations from his experience on everything from what kind of applications work best in a cloud environment, to how he won over his IT operations staff. (I don’t think last week’s audience is unique in wanting to learn some of these things.)

“Some apps aren’t ready for this kind of infrastructure,” said Guthrie, while “some are perfectly set for this.” And, unlike the assumption many in the room expressed, it’s not generally true that packaged apps are more appropriate to bring to a cloud environment than home-grown applications.

Guthrie’s take? You should decide which apps to take to the cloud not by whether they are packaged or custom, but with a different set of criteria. For example, stateless applications that live on the web are perfect for this kind of approach, he believes.

Dealing with skepticism about cloud computing from IT operations.

One of the more interesting questions brought out what Guthrie learned about getting the buy-in and support of the operations team at PGi. “I don’t want you to think I’ve deployed these without a lot of resistance. I don’t want to act like this was just easy. There were people that really resisted it,” he said.

Earning the buy-in of his IT operations people required training for the team, time, and even quite a bit of evangelism, according to Guthrie. He also laid down an edict at one point: for everything new, he required that “you have to tell me why it shouldn’t go on the cloud infrastructure.” What seemed draconian at first actually turned out to be the right strategic choice. “As the ops team became familiar [with cloud computing], they began to embrace it,” Guthrie said.

Have you had similar real-world experiences with the cloud? Or even contradictory ones? I’m eager to hear your thoughts on PGi’s approach and advice (as you might guess, I much prefer real-world discussions to theoretical ones any day of the week). Leave comments here or contact me directly; I’ll definitely continue this discussion in future posts.

CloudHarmony posted Introducing Web Services for Cloud Performance Metrics on 10/24/2010:

Over the past year we've amassed a large repository of cloud benchmarks and metrics. Today, we are making most of that data available via web services. This data includes the following:

- Available Public Clouds: What public clouds are around and which cloud services they offer including:

  - Cloud Servers/IaaS: e.g. EC2, GoGrid

  - Cloud Storage: e.g. S3, Google Storage

  - Content Delivery Networks/CDNs: e.g. Akamai, MaxCDN, Edgecast

  - Cloud Platforms: e.g. Google AppEngine, Microsoft Azure, Heroku

  - Cloud Databases: e.g. SimpleDB, SQL Azure

  - Cloud Messaging: e.g. Amazon SQS, Azure Message Queue

- Cloud Servers: What instance sizes, server configurations and pricing are offered by public clouds. For example, Amazon's EC2 comes in 10 different instance sizes ranging from micro to 4xlarge. Our cloud servers pricing data includes typical hourly, daily, monthly pricing as well as complex pricing models such as spot pricing (dynamically updated) and reserve pricing where applicable

- Cloud Benchmark Catalog: This includes names, descriptions and links to the benchmarks we run. Our benchmarks cover both system and network performance metrics

- Cloud Benchmark Results: Access to our repository of 6.5 million benchmarks including advanced filtering, aggregation and comparisons. We are continually conducting benchmarks so this data is constantly being updated

We are releasing this data in hopes of improving transparency and making the comparison of cloud services easier. There are many ways that this data might be used. In this post, we'll go through a few examples to get you started and let you take it from there.

Our web services API provides both RESTful (HTTP query request and JSON or XML response) and SOAP interfaces. The API documentation and SOAP WSDLs are published here: http://cloudharmony.com/ws/api

The sections below are separated into individual examples. This is not intended to be comprehensive documentation for the web services, but rather a starting point and a reference for using them. More comprehensive technical documentation is provided for each web service on our website.

Example 1: Lookup Available Clouds

In this first example we'll use the getClouds web service to look up all available public clouds. The table at the top of the web service documentation describes the data structure that is used by this web service call.

Request URI: JSON Response

http://cloudharmony.com/ws/getClouds

Request URI: XML Response

The post continues with 21 examples of increasing complexity and specificity.
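As a rough illustration of the Example 1 request above, the sketch below fetches the JSON response and summarizes it without assuming anything about its schema, since the excerpt does not show the response. The class `CloudHarmonySketch` and its members are hypothetical names, and the endpoint is the one quoted in the post, which may no longer respond.

```csharp
using System;
using System.Net.Http;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical wrapper; only the URI comes from the post.
public static class CloudHarmonySketch
{
    public const string GetCloudsUri = "http://cloudharmony.com/ws/getClouds";

    // Downloads the raw JSON text returned by the getClouds web service.
    public static async Task<string> FetchCloudsJsonAsync(HttpClient http)
    {
        return await http.GetStringAsync(GetCloudsUri);
    }

    // Describes the top-level shape of a JSON payload. No field names are
    // assumed because the response structure is not shown in the excerpt.
    public static string DescribeRoot(string json)
    {
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            JsonElement root = doc.RootElement;
            switch (root.ValueKind)
            {
                case JsonValueKind.Array:
                {
                    return "array with " + root.GetArrayLength() + " item(s)";
                }
                case JsonValueKind.Object:
                {
                    int count = 0;
                    foreach (JsonProperty member in root.EnumerateObject())
                    {
                        count++;
                    }
                    return "object with " + count + " member(s)";
                }
                default:
                {
                    return root.ValueKind.ToString();
                }
            }
        }
    }
}
```

Inspecting the top-level shape first is a cautious way to explore an unfamiliar web service before binding its fields to typed classes.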

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA)

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Jon Shende asked “Can traditional GRC practices adapt to the cloud computing environment?” in a preface to his GRC and the Cloud - Governance, Risk Management & Compliance post of 10/27/2010:

As we become more technology dependent, more so in today's "cloud"-driven environment, IT security needs to evolve from the traditional sense of digital security.

We should see the advent and acceptance of a more holistic, flexible and adaptive model of security that focuses more on managing information security, people and processes in a natural evolution from the traditional model of implementation, monitoring and updating.

According to Teubner and Feller [1] "Governance is understood as securing a responsible corporate management, having its roots in value-based management."

With regard to Risk Management, Marshall Krantz said it best:

"Faced with threats from all quarters - recession and credit crunch, heated global competition, continuing Sarbanes-Oxley pressures - companies are making intensive risk management a top priority "[2]

We can also assert that Compliance constraints aim to ensure that an enterprise satisfies all pertinent legal reporting responsibilities and regulatory transparency demands.

With technologies evolving as rapidly as they are, combined with complexities caused by:

- The constant drumbeat of global integration

- Possibility of vendor conflicts of interest

- Increasing demands for transparency

- Multinational or country-specific regulations

and new organizational threats cropping up faster than mitigation measures can be implemented, enterprises must take appropriate steps to integrate governance, risk management and compliance as part of their modus operandi. A main source of contention within IT governance is the use of multiple vendors for IT resources, where the objectives of vendors may not necessarily align with those of an enterprise; this can lead to notable governance issues for an enterprise.

According to a SETLabs briefing [3] "Governance Risk and Compliance (GRC) is fast emerging as the next biggest business requirement that is most likely to ensconce itself in the psyche of enterprises."

The briefing also mentioned the concept of Integrated GRC, the aim of which is to improve collaboration between all identified stakeholders, as well as increase the level of GRC integration. This in turn will improve on legacy siloed GRC systems, which have been shown to decrease transparency and agility in governance.

Its objective is directed at increasing market competitiveness, reducing risk and improving compliance.

From this we can gather that the traditionally accepted progression of GRC will also need to adapt and evolve; more so as enterprises engage more services within the sphere of cloud computing - in other words an adaptive approach to GRC.

It is understood that in terms of risk management, IT security, etc., the board of directors holds ultimate responsibility when it comes to safeguarding enterprise assets. This does not mean that other individuals are exempt from responsibility. Far from it: there should be a top-down approach to ownership and accountability when it comes to ensuring the safety of company assets.

Edward Humphreys [4] stated that "security incidents occur because of flaws, gaps or vulnerabilities somewhere in management framework, chain of policy, direction and implementation."

As technologies evolve and cloud computing becomes more accepted and implemented one can question whether there will be a blurring of responsibilities between the technical and non technical aspects of information security management and by extension GRC.

Von Solms [5] made a statement a few years ago that "a separation between operational and compliance management of information security becomes essential." But will this work in today's environment, or do we need to adapt to evolving technologies that will span outside the traditional realm of GRC and IT Security Management?

My quest for an answer, at least relating to GRC and the cloud computing ecosystem, led me to a company called Agiliance and a conversation with their CEO, Joe Fantuzzi, about their RiskVision OpenGRC™ platform. Accordingly, this platform's foundation supports six integrated applications and over 100,000 controls via standards and regulatory content. It also allows a user to create target reports and to import and export files seamlessly while preserving file structure; has SQL and web services APIs for I/O with any applications, databases, files and systems; and gives a user access to up-to-date laws and regulations with 100,000+ controls and sub-controls.

What caught my attention however was his explanation of their "Cloud Risk Management - RiskVision Cloud Risk Software Services."

This service made allowances for ensuring an accountable implementation of enterprise policy and security assessments within the cloud, as well as implementing compliance and risk scores with incident management that measure and test enterprise risk, compliance posture, threat levels, vendor risk and policy conformity.

Fantuzzi also spoke about the company's vision for deployment into the cloud and defined their take on Cloud Risk Governance Stages, where he demonstrated their target assessment for both public and private cloud environments in terms of cloud adoption states of readiness, operations and audit.

From an observational viewpoint, its Cloud Risk Operational Monitoring portion appeared to be effectively positioned for application within the cloud ecosystem.

Walking through the product, Fantuzzi also demonstrated allowances for seamless functional operations within several virtual environments, with threat/vulnerability connectors that were aligned with ongoing technical and vulnerability checks.

One purpose was to ensure continuous compliance checks as well as maintain ongoing monitoring for potential attacks - zero day or otherwise.

He also mentioned that an objective of this product is to provide a user with prioritized risk actions and a 360-degree view of risk posture. These are important factors when it comes to ensuring proper governance and management of risk in our compliance-driven environments.

I am certain that those of you familiar with NIST's Standards Acceleration to Jumpstart Adoption of Cloud Computing (SAJACC) can see this product aligning and growing with SAJACC as the cloud ecosystem evolves, and possibly see it as a good utility for both public and private cloud environments as a step toward cloud GRC management.

References

[1] Teubner, A. and Feller, T. 2008. Information Technology, Governance and Compliance. Wirtschaftsinformatik. 50, 5 (2008), 400-406.

[2] Krantz, M. 2008. Survival in the Age of Risk. http://www.cfo.com/article.cfm/11917608/1/c_2984351?f=related

[3] SETLabs Briefings Vol 6 No 3 2008, p. 39

[4] Edward Humphreys: Information Security Management Standard: Compliance, governance and risk management. Elsevier Information Security Technical Report 13 (2008) 247-255

[5] Information Security Governance - Compliance Management vs operational management - S.H. (Basie) von Solms: Elsevier Computers and Security (2005) 24, 443-447

[6] Joe Fantuzzi CEO Agiliance. www.agiliance.com

Rob Sanders (@AusRob) posted Microsoft Security Report on Botnets on 10/26/2010:

What is a Botnet?

Well, in a nutshell, a Botnet is an internetworked series of computers which are running distributed software.

The more notorious form of Botnet (and the topic of this post) typically infects other computers using a variety of attacks and vulnerabilities, increasing its overall size and computing power. You might hear the term “zombie computer” associated with a malicious Botnet – this refers to an unwilling participant (computer) in a Botnet, controlled remotely.

Tell me more

As you might know, there has been a massive increase in the scale and complexity of Botnets over the past few years. Recently, Microsoft Security has published a fairly comprehensive report on the nature of Botnets and also how to defend your IT assets from becoming part of a Botnet.

More on the report published by Microsoft:

“This is the first time that Microsoft has released this depth of intelligence on botnets. Over the years, there have been plenty of industry security reports published on botnets, but this report is based on data from 600 million systems worldwide and some of the busiest online services on the Internet like Bing and Hotmail. Microsoft cleaned botnet infections from 6.5 million systems in just 90 days in 2010, helping to free the owners of those systems who, unwittingly and unknowingly, were potentially being used by cyber criminals to perpetrate cybercrimes.”

This is not just a high level report for the casual IT professional; it contains much more and is worth setting aside some time to review. There is a section dedicated to some suggested ways to fight back against Botnets including detection, analysis and even a section on honeypots and darknets (of which this author has a decent amount of knowledge, I might add).

If you have anything to do with network security or distributed software on the Internet, this is one report worth the read.

Lori MacVittie (@lmacvittie) posted The Impact of Security on Infrastructure Integration to F5’s DevCentral blog on 10/27/2010:

Automation implies integration. Integration implies access. Access requires authentication and authorization. That’s where things start to get interesting…

Discussions typically associated with application integration – particularly when integrating applications that are deployed off-premise – are going to happen in the infrastructure realm. It’s just a matter of time. That’s because many of the same challenges the world of enterprise application integration (EAI) has already suffered through (and is still suffering, right now, please send them a sympathy card) will rear up and meet the world of enterprise infrastructure integration head on (we’ll send you a sympathy card, as well).

I’m not trying to be fatalistic but rather realistic and, perhaps this one time, to get ahead of the curve. Automation and the complex system of scripts and daemons and event-driven architectures required to achieve the automated data center of tomorrow are necessarily going to raise some alarm bells with someone in the organization; if not now then later. And trust me, trying to insert an authentication and authorization system into an established system is no walk in the park.

If you don’t recall why this integration is crucial to a fully dynamic (automated) data center, check out The New Network and then come back. Go ahead, I’ll wait. See what I mean? With all the instruction and sharing going on, you definitely want to have some kind of security in place. At least that’s what folks seem to bring up. “Sounds good, but what about security? Who is authorizing all these automatic changes to my router/switch/load balancer?”

THE CHALLENGE

The challenge with implementing such a system – whether it’s integrated as part of the component itself or provided by an external solution – is maintaining performance. In the past we haven’t really been all that concerned with the speed with which configuration changes in the network and application delivery network infrastructure occurred because such modifications occurred during maintenance windows with known downtime. But today, in on-demand and real-time environments, we expect such events to occur as fast as possible (and that’s when we aren’t frustrated the system didn’t read our minds and perform the actions on our behalf in the first place).

Consider the performance impact and potential fragility of a process comprising a chain of components, each needing a specific configuration modification. Each component must authenticate and then authorize whatever or whoever is attempting to make the change before actually executing the change. In a multi-tenant infrastructure or a very large enterprise architecture this almost necessarily implies integration of all components with a centrally managed identity management system. That means each component must:

- Receive a request

- Extract the credentials

- Authenticate credentials

- Authorize access/execution

- Perform/execute the requested action

- Write it to a log (auditing, people, AUDITING)

- Respond to the request with status
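The seven steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Component` and `Request` classes, the `X-Credential` header name, and the in-memory dictionaries standing in for a centrally managed identity system are all hypothetical and do not come from any vendor's API.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Request:
    headers: dict   # transport headers carrying the credential
    action: str     # the configuration change being requested


@dataclass
class Component:
    """Hypothetical infrastructure component (router, switch, load balancer)."""
    name: str
    identities: dict    # credential -> principal; stands in for the identity store
    permissions: dict   # principal -> set of actions that principal may perform
    audit_log: list = field(default_factory=list)

    def handle(self, request: Request) -> dict:
        # Step 1: receive the request (this call).
        # Step 2: extract the credentials.
        credential = request.headers.get("X-Credential")
        # Step 3: authenticate the credentials.
        principal = self.identities.get(credential)
        if principal is None:
            return self._finish(request, None, 401, "authentication failed")
        # Step 4: authorize access/execution.
        if request.action not in self.permissions.get(principal, set()):
            return self._finish(request, principal, 403, "not authorized")
        # Step 5: perform the requested action (simulated here).
        detail = f"{self.name}: applied {request.action}"
        return self._finish(request, principal, 200, detail)

    def _finish(self, request, principal, status, detail):
        # Step 6: write an audit record, for failures as well as successes.
        self.audit_log.append({
            "time": time.time(),
            "principal": principal,
            "action": request.action,
            "status": status,
        })
        # Step 7: respond to the request with status.
        return {"status": status, "detail": detail}
```

In a real deployment steps 3 and 4 would be calls to an external identity system, which is where the latency and the extra point of failure come from.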

The interdependencies between data center components grow exponentially as every component must be integrated with some central identity management system as well as with each other and the management console (or script) from which such actions are initiated. That’s all in addition to doing what it was intended to do in the data center, which is some networking or application delivery networking task. Each integration necessarily introduces (a) a point of failure and (b) process execution latency. That means performance will be impacted, even if only slightly. Chain enough of these integrations in a row and real-time becomes near-time, which perhaps becomes some-time. And failure on any single component can cascade through the system, causing disruption at best and outages at worst.
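To put rough numbers on the chaining effect, here is a small back-of-the-envelope sketch. The function names and the per-component figures are illustrative assumptions, not measurements; it also assumes the components are called one after another and fail independently.

```python
import math


def chain_latency_ms(per_hop_ms):
    """Total added latency when each hop's auth check runs in sequence."""
    return sum(per_hop_ms)


def chain_availability(per_hop_availability):
    """Probability that every hop in the chain succeeds, assuming
    independent failures (the whole change fails if any hop fails)."""
    return math.prod(per_hop_availability)


# Assumed figures: ten components, each adding 15 ms for authentication
# and authorization, each available 99.9% of the time.
hops = 10
total_ms = chain_latency_ms([15] * hops)
availability = chain_availability([0.999] * hops)
```

Under those assumptions the chain adds 150 ms to a change that spans all ten components, and the chance that the whole chain succeeds drops from 99.9% for one component to roughly 99.0% for ten.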

But consider the impact of not ensuring that requests are coming from an authorized source. Yeah, potential chaos. No way to really track who is doing what. It’s a compliance and infosec nightmare, to say the least. We’re at an impasse of sorts, at the moment. We need the automation and integration to move forward and onward but the security risks may be too high for many organizations to accept.

API KEYS MAY HOLD the KEY

Most Web 2.0 applications and cloud computing management frameworks leverage an API key to authorize a specific action. Given the fact that Infrastructure 2.0 is largely driven by a need to automate via open, standards-based APIs, it seems logical that, rather than continue to use the old username-password or SSL client certificate methods of the past, infrastructure vendors would move toward API key usage as well.

Consider the benefits, especially when attempting to normalize usage of infrastructure with more traditional components. While it’s certainly true that cloud computing providers build out frameworks of their own to manage, meter, and ultimately bill customers for usage, they still need a way to interface with the infrastructure providing services in such a way as to make it possible to meter and bill out that usage as well. Wouldn’t it be fantastic if infrastructure supported the same methods of authentication as the cloud computing and dynamic data center environments they enable?

But Lori, you’re thinking, the use of API keys to authenticate requests doesn’t really address any of the challenges.

Au contraire mon frère, but it does!

Consider that instead of needing to authenticate a user by extracting a username and password and validating them against an identity store, you can simply verify that the API key is valid (along with some secret verification code, like the security code on your credit card) and away you go. You don’t need to verify the caller, just that the call itself is valid and legitimate based on the veracity of the API key and security code, much in the way that credit cards are validated today.
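One way such a check could work, sketched here purely as an assumption and not as a scheme the post specifies, is to derive the security code from the API key with an HMAC and a secret provisioned to each component. The header names `X-Api-Key` and `X-Api-Code` and the secret value are hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret provisioned to every component in the data center.
SHARED_SECRET = b"example-provisioning-secret"


def security_code_for(api_key: str, secret: bytes = SHARED_SECRET) -> str:
    """Derive the security code that accompanies an API key."""
    return hmac.new(secret, api_key.encode("utf-8"), hashlib.sha256).hexdigest()


def is_valid_call(headers: dict, secret: bytes = SHARED_SECRET) -> bool:
    """Validate the call locally, with no lookup against an identity store."""
    api_key = headers.get("X-Api-Key", "")
    code = headers.get("X-Api-Code", "")
    if not api_key:
        return False
    expected = security_code_for(api_key, secret)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode("utf-8"), code.encode("utf-8"))
```

The component only recomputes a hash, so the check adds no network round trip. The trade-off is that the shared secret itself now has to be distributed and protected.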

While this doesn’t eliminate the need to verify credentials per se, it does do three things:

1. Decreases the time necessary to extract and validate. If we assume that the API key and associated security code are passed along in, say, the HTTP headers, extraction should be fast and simple for just about every network component in the data center (I am assuming an SSL/TLS-encrypted transport layer here to keep prying eyes from discovering the combination). Passing the same information in the full payload is possible, of course, but more time consuming to extract as the stream has to be buffered, the data found and extracted, and then formatted in a way that it can be verified.

2. Normalizes credentials across the infrastructure. If there were, say, some infrastructure standard that specified the way in which such API keys were generated, then it would be possible to share a single API key across the infrastructure. Normalization would enable correlation and metering in a consistent way and if it is only the security code that changes per user, we can then leverage that as the differentiator for authorization of specific actions within the environment.

Imagine that we take this normalization further and centrally log using a custom format that includes the API key and service invoked. A management solution could then use those aggregated logs and, indexing on the API key, compile a list of all services invoked by a given customer and from that generate – even in real-time – a current itemized billing scheme.

3. Eliminates dependency on third-party identity stores. By leveraging a scheme that is self-verifiable, there is no need to require validation against a known identity store. That means any piece of infrastructure supporting such a scheme can immediately validate the key without making an external call, which reduces the latency associated with such an act and it eliminates another potential point of failure. It also has the effect of removing a service that itself must be scaled, managed, and secured which reduces complexity for cloud computing providers and organizations implementing private cloud computing environments.
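The centralized logging idea under item 2 can be sketched as a small aggregation. The log line format (`<timestamp> <api_key> <service>`), the service names, and the per-call prices in cents are all assumptions made up for this illustration.

```python
from collections import Counter, defaultdict


def parse_line(line: str):
    """Parse one line of the assumed format '<timestamp> <api_key> <service>'."""
    _timestamp, api_key, service = line.split(maxsplit=2)
    return api_key, service.strip()


def itemize(log_lines, prices_cents):
    """Index aggregated log lines by API key and build an itemized bill.

    Returns {api_key: {"items": {service: (calls, cost)}, "total": cost}}.
    Services missing from the price list are counted but billed at zero.
    """
    usage = defaultdict(Counter)
    for line in log_lines:
        if not line.strip():
            continue  # skip blank lines in the aggregated log
        api_key, service = parse_line(line)
        usage[api_key][service] += 1

    bills = {}
    for api_key, counts in usage.items():
        items = {
            service: (calls, calls * prices_cents.get(service, 0))
            for service, calls in counts.items()
        }
        bills[api_key] = {
            "items": items,
            "total": sum(cost for _calls, cost in items.values()),
        }
    return bills


log = [
    "2010-10-27T10:00:00Z key-a load-balancing",
    "2010-10-27T10:00:05Z key-a load-balancing",
    "2010-10-27T10:00:09Z key-b ssl-offload",
    "2010-10-27T10:01:00Z key-a ssl-offload",
]
bills = itemize(log, {"load-balancing": 2, "ssl-offload": 5})
```

Because every component writes the same key into the same format, the billing job needs no per-device parsing, which is the point of normalizing the credential.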

THE INTERSECTION of INFOSEC and INFRASTRUCTURE INTEGRATION

Traditional enterprise application integration methods of addressing the challenge of managing credentials internally often leverage credential mapping or a single, “master” set of credentials to authenticate and authorize applications. This method has worked in the past, but it imposes additional burdens on the long-term maintenance and management of credentials, introduces performance problems, and does not support a multi-tenant architecture well.

An API key-based scheme may not be “the” solution, but something has to be done regarding security and its impact on infrastructure that necessarily needs to turn on a dime and potentially support multiple tenants. Security is an integral part of an enterprise architecture (or should be) and there are alternative methods to the traditional username/password credential systems we’ve been leveraging for applications for what feels like eons now. It’s not just a matter of improving performance, which is little more than a positive side effect in this case; it’s about ensuring that there exists a security model that’s feasible and flexible enough to fit into emerging data center models in a way that’s more aligned with current integration practices.

Infrastructure 2.0 has the potential to change the way in which we architect our networks, but in order to do so we may have to change the way in which we view authentication and authorization to those network and application network components that are so critical to achieving a truly automated data center.


Cloud Computing Events

Wade Wegner doesn’t really answer What have I been doing for PDC10? in this 10/27/2010 post:

While I’ve been involved with the Professional Developers Conference (PDC) in the past (keynote in ‘08, breakout in ‘08, and breakout in ‘09), I’ve never been as personally vested and involved as I am this year. Whether you look at it as a perk or a curse, it’s one of the jobs I signed up for when I took the role as a Technical Evangelist for the Windows Azure Platform (personally, I love it!).

So, what have I been working on?

Sadly, there’s not much I can talk about at the moment. As a Technical Evangelist, my job is to work with the engineering teams and help them bring their technology to you. I’ve spent a significant amount of time in Building 18 the last few months digging into a lot of new stuff and preparing for PDC and beyond. All of the content I’ve been focused on – keynote, sessions, hands-on labs, interviews, etc. – is embargoed until after the keynote presentation. You can bet that I’ll post about everything after the keynote, but until then let me drop a few teasers:

- Don’t miss the keynote, especially Bob Muglia. We’re making some key announcements, and you’ll get a chance to see an application I’ve spent a significant amount of time working on.

- Two weeks ago I recorded a session for PDC that will not get published until after the keynote tomorrow. Watch for it.

[Emphasis added.]

- Don’t miss the following sessions:

- Composing Applications with AppFabric Services, Karandeep Anand

- Connecting Cloud & On-Premises Apps with the Windows Azure Platform, Yousef Khalidi

- Identity & Access Control in the Cloud, Vittorio Bertocci

- Building High Performance Web Applications with the Windows Azure Platform, Matthew Kerner

Of course there are a lot of other great sessions, but don’t miss these here!

If you are fortunate enough to attend PDC10 this year, look me up! In addition to participating in and attending the sessions above, I’ll be at the Welcome Reception, Ask-the-Experts, and the Attendee Party. I’ll also be at the PDC10 Workshop this Saturday, where I’ll give a chalk talk, help proctor HOLs, and engage in 1:1s with attendees.

Drew Robbins posted Frameworks and Tools at PDC10 on 10/26/2010:

PDC10 kicks off this week on the Microsoft Campus in Redmond, Washington. We’ve been pretty busy over the last few months getting ready for this week. It’s great to see the conference finally here and have our investment of time pay off.

My role in PDC10 was organizing the Frameworks & Tools theme across the several product teams at Microsoft that are represented. The theme description was the following:

Frameworks & Tools – go deep on various topics related to languages, frameworks, runtimes and tools. Learn how to build the most cutting edge applications using the full power and flexibility of the Microsoft platform.

Planning a PDC theme is a lot of fun because you get to ask the product teams what message they want to carry to developers. We get to be some of the first to hear how the story of our platform will evolve.