Windows Azure and Cloud Computing Posts for 10/15/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

•• Updated 10/17/2010: Articles marked ••

• Updated 10/16/2010: Articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as HTTP downloads at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

• Bill McColl asserted “Architectures such as MapReduce and Hadoop are good for batch processing of big data, but bad for realtime processing” as a preface to his Hadoop and Realtime Cloud Computing post of 10/15/2010:

Big data is creating a massive disruption for the IT industry. Faced with exponentially growing data volumes in every area of business and the web, companies around the world are looking beyond their current databases and data warehouses for new ways to handle this data deluge.

Taking a lead from Google, a number of organizations have been exploring the potential of MapReduce, and its open source clone Hadoop, for big data processing. The MapReduce/Hadoop approach is based around the idea that what's needed is not database processing with SQL queries, but rather dataflow computing with simple parallel programming primitives such as map and reduce.

As Google and others have shown, this kind of basic dataflow programming model can be implemented as a coarse-grain set of parallel tasks that can be run across hundreds or thousands of machines, to carry out large-scale batch processing on massive data sets. Google themselves have been using MapReduce for batch processing for over six years, and others, such as Facebook, eBay and Yahoo have been using Hadoop for the same kind of batch processing for several years now. So today, parallel dataflow is firmly established as an alternative to databases and data warehouses for offline batch processing of big data. But now the game is changing again...

In recent months, Google has realized that the web is now entering a new era, the realtime era, and that batch processing systems such as MapReduce and Hadoop cannot deliver performance anywhere near the speed required for new realtime services such as Google Instant. Google noted that

- "MapReduce isn't suited to calculations that need to occur in near real-time"

- "You can't do anything with it that takes a relatively short amount of time, so we got rid of it"

Other industry leaders, such as Jeff Jonas, Chief Scientist for Analytics at IBM, have made similar remarks in recent weeks. In his recent video "Big Thoughts on Big Data", Jonas notes that with only batch processing tools to handle it, organizations grappling with a relentless avalanche of realtime data will get dumber over time rather than getting smarter.

- "The idea of waiting for a batch job to run doesn't cut it. Instead, how can an organization make sense of what it knows, as a transaction is happening, so that it can do something about it right then"

- "I'm not a big fan of batch processes... I've never seen a batch system grow up and become a realtime streaming system, but you can take a realtime streaming system and make it eat batches all day long"

- "I like Hadoop but it's meant for batch activities. That's not the kind of back-end you would use for realtime sense-making systems"

So coarse-grain dataflow architectures such as Hadoop are good for batch, but bad for realtime.

To power realtime big data apps we need a completely new type of fine-grain dataflow architecture. An architecture that can, for example, continuously analyze a stream of events at a rate of say one million events per second per server, and deliver results with a maximum latency of five seconds between data in and analytics out. At Cloudscale we set out to crack this major technical problem, and to build the world's first "realtime data warehouse". The linearly scalable Cloudscale parallel dataflow architecture not only delivers game-changing realtime performance on commodity hardware, but also, as Jeff Jonas notes above "can eat batches all day long" like a traditional MapReduce or Hadoop architecture. There isn't really an established name yet for such a system. I guess we could call it a "Redoop" architecture (Realtime Dataflow on Ordinary Processors, or Realtime Hadoop).
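The contrast between batch and realtime processing can be made concrete with a small sketch. The code below is not Cloudscale's architecture; it is a minimal, single-process illustration of the idea in the Jonas quote, assuming events arrive with non-decreasing timestamps. The class name `SlidingWindowCounter` and its methods are hypothetical. Each event updates the result as it arrives, and a batch is handled by replaying it through the same per-event path.

```python
from collections import deque


class SlidingWindowCounter:
    """Counts events per key over the last `window_seconds`, updated per event.

    Assumes timestamps arrive in non-decreasing order (an illustrative
    simplification, not something the article specifies).
    """

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.events = deque()  # (timestamp, key) in arrival order
        self.counts = {}       # key -> number of events still inside the window

    def add(self, timestamp, key):
        """Ingest one event, then evict everything that fell out of the window."""
        self.events.append((timestamp, key))
        self.counts[key] = self.counts.get(key, 0) + 1
        cutoff = timestamp - self.window_seconds
        while self.events and self.events[0][0] <= cutoff:
            _, old_key = self.events.popleft()
            self.counts[old_key] -= 1
            if self.counts[old_key] == 0:
                del self.counts[old_key]

    def add_batch(self, batch):
        """A batch is just a stream replayed through the same code path."""
        for timestamp, key in batch:
            self.add(timestamp, key)


counter = SlidingWindowCounter(window_seconds=5)
counter.add(0, "login")
counter.add(1, "login")
counter.add_batch([(2, "purchase"), (7, "login")])
print(counter.counts)
```

After the event at time 7, everything at or before time 2 has left the five-second window, so only the latest login remains in the counts. A batch-only system would have no answer until the whole job finished.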

Bill is Founder & CEO, Cloudscale Inc.

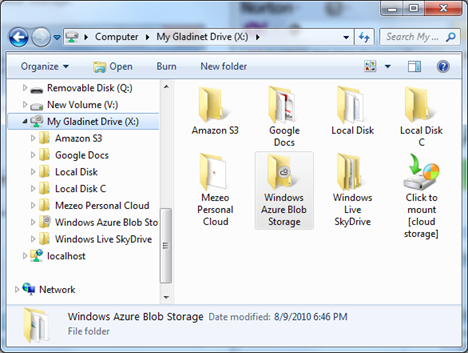

• Jerry Huang touts the Gladinet Cloud Desktop and CloudAFS in his Windows Azure Storage Desktop Integration post of 10/12/2010:

When you hear of Windows Azure Storage, you may be thinking it is a developer’s storage. First of all, you may see it in action from Visual Studio 2010. Second, you may be hearing it together with a geeky term – Azure Blob Storage. Since you are not a developer, you dismiss it and walk on.

Now wait a second; clear your mind; picture Windows Azure Storage as an external drive, hosted offsite by Microsoft, with drag-and-drop integration from Windows Explorer. Would you still ignore it?

After all, Windows Azure Storage is just one of many cloud storage services, such as Amazon S3, Google Storage for Developers, AT&T Synaptic Storage, Rackspace CloudFiles and so on.

As soon as Windows Azure Storage is integrated into your desktop, you can put it to use with the familiar Windows user experience.

Scenario A – Direct Desktop Integration

It is like buying your own USB key drive and plugging it into your PC or laptop: you can have a mapped network drive to Windows Azure directly from your desktop. With Gladinet Cloud Desktop, you have a network drive, a desktop sync folder and backup functionality, all backed by Windows Azure Storage.

Scenario B – Gateway Access Through File Server

Windows Azure Storage can be mounted on the file server side. When you are at work, you can map a network drive to the file server, indirectly using Windows Azure Storage. The benefit is that you and your colleagues can share access to the file server.

The IT admin can install Gladinet CloudAFS on the file server and mount Windows Azure Storage as tier2 storage.

On your desktop side, you will need to map a network drive.

In scenario A, you use Windows Azure Storage as your own personal storage from the cloud. In scenario B, you use Windows Azure Storage as your company’s extended storage supported by the cloud.

Either way, you have Windows Azure Storage integrated to your desktop.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

• Katka Vaughan describes part of her Netflix Browser for Windows Phone 7 - Part 1 in a very detailed post of 10/15/2010 to The Code Project:

Learn how to use the Pivot and Panorama controls, page navigation, OData and more! [Download the source code from a link on the app’s Code Project page. Requires site registration.]

I have created a video of the application.

Contents

- Introduction

- Background

- The Demo Application

- What Will be Covered

- Prerequisites

- Understanding the Pivot and Panorama Controls

- The Demo Application: Getting Started

- Panorama Control

- String Format Converter

- Pivot Control

- Conclusion

- History

Introduction

With the growing excitement around Windows Phone 7 and Daniel writing Windows Phone 7 Unleashed, I couldn’t resist getting involved in the space. After all, I have the advantage of an early preview of Daniel’s book. So I downloaded the tools and started playing around. I wanted to explore the Panorama and Pivot controls, and came up with a neat demo application that uses Netflix data, and showcases the Pivot and Panorama controls as well as OData, page navigation, WrapPanel and more. This article will be in part an overview of the Panorama and Pivot controls, and in part a walkthrough of the demo application.

Note: As I worked through the article, the number of pages kept growing and so I have split the article into two parts.

Background

The final Windows Phone Developer Tools have been released, and with that, among other things, we have the official release of two navigation controls: the Pivot & Panorama. These controls are vital to the visual user experience on Windows Phone, and the phone's built-in applications use them extensively. Thus, undoubtedly, you will find yourself using them a lot. This is not to say, however, that they are a suitable choice for all applications. As these controls are quite new and unique to Windows Phone (at least currently), there may be some confusion in understanding them. There are several resources available. The particularly useful ones include: Windows Phone Design Days – Pivot and Panos video presented by Amy Alberts and Chad Roberts, and the UI design and interaction guide for Windows Phone 7. I will be drawing on these resources in the overview below.

Prior to the official Microsoft release of these controls, there were some alternatives such as Stephane Crozatier’s Panorama & Pivot controls available on Codeplex. For those who built their applications with these early controls, the advice is to migrate to the official controls. Fortunately Stephane Crozatier has written a transition guide.

What Will be Covered

- Panorama Control

- Pivot Control

- String Formatting

- Consuming OData (in part 2)

- Page Navigation (in part 2)

- Progress Bar (in part 2)

- WrapPanel (in part 2)

The Demo Application

The demo application is a Panorama application that allows users to browse through Netflix data. At the top layer the user is presented with a list of genres, new releases and highest rated movies. The user can then drill down into each movie to get movie details. Selecting a genre from the genre list will take the user to a Pivot, which lists movies for the selected genre, representing the data in three different ways: all movies for that genre, movies sorted by year, and movies sorted by average rating. Ideally, the genre list would show only the top-level genres instead of a very long list of genres and subgenres, but there wasn’t an easy way of querying this.

Prerequisites

- Windows Vista or Windows 7

- Windows Phone 7 Developers Tools which includes Visual Studio Express 2010 for Windows Phone, Windows Phone Emulator, Expression Blend 4 for Windows Phone, and XNA Game Studio 4.0.

- Visual Studio Express 2010 and Expression Blend 4 will only be installed if you do not already have them installed.

- If you have previously installed the Beta Tools, you will have to uninstall them before installing the final tools. Note that the installation may take some time. Leave the installation running; it will eventually complete.

- Silverlight for Windows Phone Toolkit

- OData Client Library for Windows Phone 7 (The one currently available is from March 2010)

Understanding the Pivot and Panorama Controls

The Pivot and Panorama are two layout controls that help you represent data on the phone in a unique way. They have several things in common. They both hold a number of horizontally arranged individual views (sections). They are visually quite similar; both show a bit of the off-screen content, have titles and section titles (see Figures 1 and 2). Both controls have built-in touch navigation that allows the user to navigate left to right between the different sections using the pan and flick touch-gestures, and up and down touch gestures for when the section’s content doesn’t fit into the height of the screen boundaries. The left to right navigation is cyclical (i.e. after the last section, the first section appears).

The controls are also quite different. This is mainly because they each serve a different purpose. The Panorama is meant to entice the user to explore, whereas the Pivot is about tasks and getting something done. As Amy Alberts and Chad Roberts say in their presentations, Pivots are efficient, focused, habitual whereas the Panorama is expansive, dynamic, inviting exploration. We can see this differentiation in Figures 1 and 2, where the Panorama offers a visually appealing experience, enticing the user with an attractive background, large title, variety of UI controls, animation, thumbnails, etc., while the Pivot appears less appealing. There is also one other difference, and that is the content width of each view. The Panorama views usually span over screen boundaries, whereas the Pivot content is confined to the screen width, fitting within the screen. Users can navigate through the pivot sections not only via the left to right gestures, but also by directly selecting the Pivot Title.

Panorama

The Panorama is a wide horizontal canvas that spans over screen boundaries. The canvas is sliced into several different views and uses layered animations and UI controls to represent data in different ways, i.e. lists, grids, buttons etc. For usability reasons the number of these sections should be limited to four, but the width and height of each section can vary. The panorama tends to be explorative, essentially working as the top layer to several other experiences. As the Panorama is intended to coax the user to explore, it should show content that is interesting, tailored for that user; it should not feel generic. We also should not overwhelm the user with too much data. As Jeff Wilcox says “Panorama is meant to be the starting space, think white space not tons of data”. The Panorama contains data, and links that take the user to more detailed pages of the content, for example to the Pivot. The user then transitions from the exploratory mode of the Panorama to the more focused mode of the Pivot.

Figure 1: Panorama

As you can see in Figure 1, some pixels of the next section are showing to the right. This is to hint to the user that there is more content to explore. A Panorama application should offer a visually appealing experience. This can be achieved with an attractive background. It can be either a single colour or a background image. According to the design guidelines the image size should be 800 pixels high and 480 to 1000 pixels wide. The image could possibly be wider but no more than 2000 px as the image will be clipped at this width. Note that an image with a height of less than 800 pixels will be stretched to that height without constraining proportions. The background image is the bottom layer of the Panorama with the different sections overlaying on top of it. You should not attempt to have the background dependent on the content because they are not going to match up. As you can see in the video, the Panorama title moves at a different rate than its section titles as the user moves through the Panorama.
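The sizing rules in the paragraph above are easy to check before an image ships. The following is a minimal sketch of such a check; the function name `check_panorama_background` and the warning wording are hypothetical, and the thresholds are only the ones quoted above from the design guidelines.

```python
def check_panorama_background(width, height):
    """Return a list of warnings for a Panorama background image (sizes in pixels)."""
    warnings = []

    # Height: the guideline is 800 px; shorter images get stretched.
    if height < 800:
        warnings.append("height below 800 px: image will be stretched to 800 px "
                        "without constraining proportions")
    elif height > 800:
        warnings.append("height above the 800 px guideline")

    # Width: 480 to 1000 px is recommended; anything past 2000 px is clipped.
    if width > 2000:
        warnings.append("width above 2000 px: image will be clipped at 2000 px")
    elif width > 1000:
        warnings.append("width above the recommended 1000 px")
    elif width < 480:
        warnings.append("width below the recommended 480 px")

    return warnings


for size in [(1000, 800), (2400, 600)]:
    print(size, check_panorama_background(*size))
```

An empty list means the image fits the recommended range; anything else is worth fixing in the source image rather than relying on the control to stretch or clip it.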

Pivot

Some regard the Pivot as being a lot like a TabControl for the phone. The Pivot also has several different views (sections). It is recommended to limit the number of sections to 7 to ensure users can comprehend what is presented to them.

Unlike the Panorama, where the content may go beyond the screen boundaries, the Pivot content is confined to the screen width. Also unlike the Panorama, the Pivot doesn’t display the few pixels of the content of the next section, but rather gives users an indication of how the data is filtered through the section titles at the top of the screen (See Figure 2).

Figure 2: Pivot

As we said before, Pivots are about getting something done, and so Pivots are suited to have an ApplicationBar. This is contrary to Panorama based applications which should not show an ApplicationBar. Therefore, if your page needs an application bar use a Pivot instead of a Panorama. Pivots are designed to represent data or items of similar type. For example, pivots can be used as filters for similar content around the same task flow. A good example of a pivot is the Email application, which shows all emails in one view, flagged emails in another, unread email in another, etc.

Things to Avoid

You should not place a Pivot inside a Panorama, or nest Pivots. Also you should not attempt to use either the Pivot or Panorama for creating a wizard flow. As Amy Alberts says, the user sees Panoramas and Pivots as distinct areas of data being surfaced to them, and not as a flow of UI that the user walks through. So for a wizard use a page flow instead. It is also recommended not to use a map control inside a Pivot or Panorama. You should also avoid animating the panorama title or section titles as they are going to be moving as the user moves through the control. Also avoid dynamically changing the Panorama title. The UI guidelines also state not to override the horizontal pan and flick functionality because it collides with the interaction design of the controls. Also keep to the recommended number of views, seven for Pivot and four for Panorama. And keep in mind that the user should never lose his or her place inside the Panorama, and should always know how to get back. You would also not want to have a Panorama, and every link on the Panorama, taking you to a Pivot. …
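As a rough aid, the guidelines above can be written down as a checklist in code. This is a sketch only: the `View` type and `lint_view` function are hypothetical and have nothing to do with the Silverlight API; they simply encode the section limits, the ApplicationBar rule, and the nesting and map-control advice from the article.

```python
from dataclasses import dataclass

# Recommended maximum number of sections, as stated in the article.
MAX_SECTIONS = {"panorama": 4, "pivot": 7}


@dataclass
class View:
    kind: str                      # "panorama" or "pivot"
    sections: int                  # number of sections in the control
    nested_controls: tuple = ()    # kinds of controls placed inside, e.g. ("map",)
    has_application_bar: bool = False


def lint_view(view):
    """Return the guideline violations found for one Pivot or Panorama."""
    problems = []
    limit = MAX_SECTIONS[view.kind]
    if view.sections > limit:
        problems.append(f"{view.kind} has {view.sections} sections; "
                        f"recommended maximum is {limit}")
    if view.kind == "panorama" and view.has_application_bar:
        problems.append("panorama should not show an ApplicationBar; use a pivot")
    if "pivot" in view.nested_controls:
        problems.append(f"pivot nested inside a {view.kind}")
    if "map" in view.nested_controls:
        problems.append(f"map control inside a {view.kind}")
    return problems


print(lint_view(View("pivot", 3, has_application_bar=True)))
print(lint_view(View("panorama", 5, ("pivot", "map"), True)))
```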

Katka continues with detailed, step-by-step instructions and source code for creating the app.

Bio: Katka has several years of experience working in software development in the areas of market research and e-commerce. She has wide ranging experience in developing Java, ASP.Net MVC, ASP.Net, WPF and Silverlight applications. She is particularly excited about Silverlight. Katka is an Aussie/Czech girl currently based in Geneva, Switzerland.

Tip: Unblock the NetFlixBrowser.sln and the \bin\debug\System.Data.Services.Client.dll files before building and running the project. Here are captures of my favorite Brazilian movies and guitarist from Katka’s app (click for full-size captures):

Bravo Katka! Stay tuned for part 2.

•• Brendan Forster (@shiftkey), Aaron Powell (@slace) and Tatham Oddie (@tathamoddie) developed and demoed The Open Conference Protocol with a Windows Phone 7 app on 10/16/2010:

Open Conference Protocol

The Open Conference Protocol enables you to expose conference data in a machine readable way, all through your existing conference website. Most of the 'protocol' is actually just the existing hCalendar, hCard and Open Graph standards. We're just defining how they should all work together.

Updating your website to include this meta data benefits everyone - not just clients of the Open Conference Protocol.

How do I make my website Open Conference compatible?

When an application wants to query your conference data, they'll start by requesting your homepage. From there, here's how they find the data…

Conference Name

Where we find it (first match wins):

- content attribute of the <meta property="og:title" content="…" /> tag (see Open Graph protocol spec)

- value of the <title> tag

What you need to do for an optimal app experience:

Usually nothing - the <title> tag is generally sufficient.

Adding the <meta property="og:title" content="…" /> tag will also improve the experience for users posting your conference link on Facebook.

Conference Logo

Where we find it (first match wins):

- value of the <meta property="og:image" content="…" /> tag (see Open Graph protocol spec)

- src attribute of the first <img src="…" /> tag on the page where the src attribute contains "logo"

If neither approach yields a logo, client implementations will display their own placeholder.
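The "first match wins" lookups for the conference name and logo can be sketched with Python's standard `html.parser` module. This is an illustrative client, not the authors' implementation: the class and function names are hypothetical, the sample page is invented, and matching "logo" case-sensitively is an assumption.

```python
from html.parser import HTMLParser


class ConferenceMetaParser(HTMLParser):
    """Collects Open Graph tags, the <title> text, and the first logo-like <img>."""

    def __init__(self):
        super().__init__()
        self.og = {}          # Open Graph property -> content (first occurrence)
        self.title = None
        self.logo_img = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        prop = attrs.get("property") or ""
        if tag == "meta" and prop.startswith("og:"):
            self.og.setdefault(prop, attrs.get("content"))
        elif tag == "title":
            self._in_title = True
        elif tag == "img" and self.logo_img is None:
            src = attrs.get("src") or ""
            if "logo" in src:  # assumption: case-sensitive match
                self.logo_img = src

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title = (self.title or "") + data


def conference_name_and_logo(html):
    """Apply the lookup order: Open Graph tag first, then the fallback."""
    parser = ConferenceMetaParser()
    parser.feed(html)
    name = parser.og.get("og:title") or (parser.title or "").strip() or None
    logo = parser.og.get("og:image") or parser.logo_img
    return name, logo  # logo is None when the client should show a placeholder


# Invented sample page for illustration only.
SAMPLE = """<html><head>
<title>Demo Conference 2010</title>
<meta property="og:title" content="Demo Conference" />
</head><body>
<img src="/images/banner.png" /><img src="/images/conf-logo.png" />
</body></html>"""

print(conference_name_and_logo(SAMPLE))
```

Here the name comes from the og:title tag because it outranks the title element, while the logo falls back to the first image whose src contains "logo" because no og:image tag is present.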

What you need to do for an optimal app experience:

- create a version of your conference logo that is 100px square

- link to it using a <meta property="og:image" content="…" /> tag

Adding the <meta property="og:image" content="…" /> tag will also improve the experience for users posting your conference link on Facebook.

Session Data

Where we find it:

The session listing is loaded from the URL specified by a <link rel="conference-sessions" href="…" /> tag in the <head> section.

If the <link> tag doesn't exist, the homepage will be used as the session listing.

Individual session data is loaded from the session listing using the hCalendar microformat. The following mapping is used:

- Session Code: uid

- Title: summary

- Time: dtstart and dtend

- Room: location

- Abstract: description

- Speakers: contact (specified once per speaker)

What you need to do for an optimal app experience:

- Add the <link rel="conference-sessions" href="…" /> tag to your homepage

- Mark up your session listing page with the hCalendar microformat
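To show how the session mapping above might be read by a client, here is a minimal hCalendar reader built on Python's standard `html.parser`. It is a sketch under several assumptions: properties are not nested inside one another, `abbr` elements carry their value in the title attribute, and the markup and field names (`session_code`, `room`, and so on) are invented for illustration. A production client would more likely use a dedicated microformats parser.

```python
from html.parser import HTMLParser

# hCalendar class name -> session field, following the mapping listed above.
FIELD_FOR_CLASS = {
    "uid": "session_code", "summary": "title", "dtstart": "start",
    "dtend": "end", "location": "room", "description": "abstract",
    "contact": "speakers",
}


class HCalendarParser(HTMLParser):
    def __init__(self):
        super().__init__()
        self.sessions = []
        self._open = []           # stack of (tag, marker) for open elements
        self._session = None      # the vevent currently being read
        self._prop = None         # property whose text is being captured
        self._text = []
        self._title_value = None  # value taken from <abbr title="...">

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        marker = None
        if "vevent" in classes:
            marker = "vevent"
            self._session = {"speakers": []}
        elif self._session is not None and self._prop is None:
            marker = next((c for c in classes if c in FIELD_FOR_CLASS), None)
            if marker is not None:
                self._prop = marker
                self._text = []
                self._title_value = attrs.get("title") if tag == "abbr" else None
        self._open.append((tag, marker))

    def handle_data(self, data):
        if self._prop is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; tolerates unclosed void elements.
        while self._open:
            open_tag, marker = self._open.pop()
            if marker == "vevent":
                self.sessions.append(self._session)
                self._session, self._prop = None, None
            elif marker is not None:
                self._store(marker)
            if open_tag == tag:
                break

    def _store(self, marker):
        value = self._title_value or " ".join("".join(self._text).split())
        field = FIELD_FOR_CLASS[marker]
        if field == "speakers":
            self._session["speakers"].append(value)  # one contact per speaker
        else:
            self._session[field] = value
        self._prop, self._title_value = None, None


def parse_sessions(html):
    parser = HCalendarParser()
    parser.feed(html)
    parser.close()
    return parser.sessions


# Invented sample markup; times are illustrative.
SAMPLE = """
<div class="vevent">
  <span class="uid">WEB304</span>
  <h3 class="summary">All about jQuery</h3>
  <abbr class="dtstart" title="2010-10-16T10:15">10:15</abbr> to
  <abbr class="dtend" title="2010-10-16T10:45">10:45</abbr>
  in <span class="location">Room G04</span>
  <p class="description">Let's talk about how awesome jQuery is.</p>
  <span class="contact">Bob Munro</span>
  <span class="contact">Jackson Watson</span>
</div>
"""

print(parse_sessions(SAMPLE))
```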

Speaker Data

Where we find it:

The speaker listing is loaded from the URL specified by a <link rel="conference-speakers" href="…" /> tag in the <head> section.

If the <link> tag doesn't exist, the homepage will be used as the speaker listing.

Individual speaker data is loaded from the speaker listing using the hCard microformat. The following mapping is used:

- Name: fn

- Bio: note

- Photo: photo

- Website: url

- Twitter Handle: nickname

- Email:

- Company: org

- Job Title: role

What you need to do for an optimal app experience:

- Add the <link rel="conference-speakers" href="…" /> tag to your homepage

- Mark up your speaker listing page with the hCard microformat
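The discovery step shared by the session and speaker listings (follow the link tag if present, otherwise use the homepage) can be sketched as follows. The names `LinkRelParser` and `listing_urls` are hypothetical, the example URLs are invented, and two details are assumptions rather than part of the protocol text: relative href values are resolved against the homepage URL, and the parser does not check that the link tag sits inside the head section.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkRelParser(HTMLParser):
    """Records the href of each <link> tag, keyed by its rel value."""

    def __init__(self):
        super().__init__()
        self.links = {}  # rel value -> href (first occurrence wins)

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        for rel in (attrs.get("rel") or "").split():
            self.links.setdefault(rel, href)


def listing_urls(homepage_url, homepage_html):
    """Return the URLs a client would fetch for sessions and speakers."""
    parser = LinkRelParser()
    parser.feed(homepage_html)

    def resolve(rel):
        # An empty href makes urljoin return the homepage URL itself,
        # which is the fallback the protocol describes.
        return urljoin(homepage_url, parser.links.get(rel, ""))

    return {
        "sessions": resolve("conference-sessions"),
        "speakers": resolve("conference-speakers"),
    }


# Invented homepage: only the sessions link is present.
HOMEPAGE = """<html><head>
<link rel="stylesheet" href="site.css" />
<link rel="conference-sessions" href="sessions.html" />
</head><body></body></html>"""

print(listing_urls("http://example.org/conf/", HOMEPAGE))
```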

How do I query Open Conference data easily?

Being an open protocol, it's totally possible to implement it yourself. Of course, this isn't the most interesting code to write. To help you focus on building your client application instead of the protocol, we've implemented a JSON API bridge.

The API lives at:

http://openconferenceprotocol.org/api/conference-data?uri=[conference]

For example, to get all of the conference data for Web Directions South 2010 you'd call:

http://openconferenceprotocol.org/api/conference-data?uri=http://south10.webdirections.org

We've posted a demo conference site with full meta data here:

http://openconferenceprotocol.org/demo-conf

Go ahead, try it. [See below]

Who built this?

The Open Conference Protocol, the JSON API bridge and the Windows Phone 7 client were all built by Brendan Forster (@shiftkey), Aaron Powell (@slace) and Tatham Oddie (@tathamoddie) for the Amped hackday. We built all of this in 10 hours on 16th October 2010.

Check out the YouTube video of the trio’s prize-winning presentation here:

Fiddler2 shows the following request header and response header/JSON payload for the preceding test:

GET http://openconferenceprotocol.org/api/conference-data?uri=http://openconferenceprotocol.org/demo-conf HTTP/1.1

Accept: image/gif, image/jpeg, image/pjpeg, application/x-ms-application, application/vnd.ms-xpsdocument, application/xaml+xml, application/x-ms-xbap, application/vnd.ms-excel, application/vnd.ms-powerpoint, application/x-shockwave-flash, application/x-silverlight-2-b2, application/x-silverlight, application/msword, */*

Referer: http://openconferenceprotocol.org/

Accept-Language: en-us

User-Agent: Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; GTB6.5; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.5.21022; .NET CLR 3.5.30428; .NET CLR 3.5.30729; OfficeLivePatch.1.3; .NET CLR 3.0.30729; OfficeLiveConnector.1.4; MS-RTC LM 8)

Accept-Encoding: gzip, deflate

Host: openconferenceprotocol.org

Connection: Keep-Alive

HTTP/1.1 200 OK

Date: Sun, 17 Oct 2010 17:14:13 GMT

Server: Microsoft-IIS/6.0

X-Powered-By: ASP.NET

X-AspNet-Version: 4.0.30319

X-AspNetMvc-Version: 3.0

Cache-Control: private

Content-Type: application/json; charset=utf-8

Content-Length: 844

{"Title":"Demo Conference","LogoUri":"http://openconferenceprotocol.org/custom-logo.gif","Sessions":[{"SessionCode":"WEB304","Title":"All about jQuery","StartTime":"\/Date(1287224100000)\/","EndTime":"\/Date(1287225900000)\/","Room":"Room G04","Abstract":"Let\u0027s talk about how awesome jQuery is and how much we all love it.","Speakers":[{"Name":"Bob Munro","Website":"http://openconferenceprotocol.org/","Photo":"http://openconferenceprotocol.org/photo.png","Twitter":"TwitterHandle","Email":"contact@email.com","Bio":"This is the bio","JobTitle":"Job Title","Company":"CompanyName"},{"Name":"Jackson Watson","Website":"http://openconferenceprotocol.org/","Photo":"http://openconferenceprotocol.org/photo.png","Twitter":"TwitterHandle","Email":"contact@email.com","Bio":"This is the bio","JobTitle":"Job Title","Company":"CompanyName"}]}]}
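A client consuming this payload mostly needs to handle the date fields, which arrive in the ASP.NET "/Date(milliseconds)/" form, where the number counts milliseconds since the Unix epoch in UTC. The sketch below parses an abridged copy of the response above with Python's standard library; the helper name `parse_ms_date` is hypothetical, and the payload is trimmed to the fields used here.

```python
import json
import re
from datetime import datetime, timezone

DATE_PATTERN = re.compile(r"^/Date\((-?\d+)\)/$")


def parse_ms_date(value):
    """Convert a "/Date(ms)/" string to a timezone-aware UTC datetime."""
    match = DATE_PATTERN.match(value)
    if not match:
        raise ValueError(f"not a /Date(ms)/ value: {value!r}")
    return datetime.fromtimestamp(int(match.group(1)) / 1000, tz=timezone.utc)


# Abridged from the response shown above. The raw string keeps the JSON
# "\/" escapes intact; json.loads turns them into plain "/".
PAYLOAD = r'''{"Title":"Demo Conference","Sessions":[{"SessionCode":"WEB304",
"Title":"All about jQuery","StartTime":"\/Date(1287224100000)\/",
"EndTime":"\/Date(1287225900000)\/","Room":"Room G04",
"Speakers":[{"Name":"Bob Munro"},{"Name":"Jackson Watson"}]}]}'''

conference = json.loads(PAYLOAD)
for session in conference["Sessions"]:
    start = parse_ms_date(session["StartTime"])
    end = parse_ms_date(session["EndTime"])
    speakers = ", ".join(s["Name"] for s in session["Speakers"])
    print(session["SessionCode"], session["Title"], start.isoformat(),
          end - start, speakers)
```

For the demo session the two timestamps decode to 10:15 and 10:45 UTC on 16 October 2010, a 30-minute slot. Converting to the conference's local time zone is left to the client.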

Here’s the Web Directions South logo, reduced in size:

The Open Conference Protocol project appears to compete with Falafel Software’s OData-based EventBoard Windows Phone 7 app described in my Windows Azure and SQL Azure Synergism Emphasized at Windows Phone 7 Developer Launch in Mt. View post of 10/14/2010. I’m sure EventBoard took longer than 30 man-hours to develop; according to John Watters, it took two nights just to port the data source to Windows Azure. OData implementations have the advantage that you can query the data. Hopefully, the PDC10 team will provide an OData feed of session info eventually.

Sudhir Hasbe (pictured below) gave props on 10/15/2010 to Dallas on PHP (via WebMatrix) by Jim O’Neil:

Jim [O’Neil] has written an awesome post on how you could connect to Dallas from PHP.

Frequent readers know that I’ve been jazzed about Microsoft Codename “Dallas” ever since it was announced at PDC last year (cf. my now dated blog series), so with the release of CTP3 and my upcoming talk at the Vermont Code Camp, I thought it was time for another visit.

Catching Up With CTP3

I typically introduce “Dallas” with an alliterative (ok, cheesy) elevator pitch: “Dallas Democratizes Data,” and while that characterization has always been true – the data transport mechanism is HTTP and the Atom Publishing Protocol – it’s becoming ‘truer’ with the migration of “Dallas” services to OData (the Open Data Protocol).

OData is an open specification for data interoperability on the web, so it’s a natural fit for “Dallas,” which itself is a marketplace for data content providers like NASA, Zillow, the Associated Press, Data.gov, and many others.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Pedro Felix posted his Webday 2010 slides (in English) on 10/15/2010:

The slides for my Azure AppFabric session at the Portuguese WebDay event are published here.

Slide decks for the Azure AppFabric are uncommon and Pedro’s are a good read. An example:

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Rob Garfoot described how to program Simulated Push Notifications on Windows Phone 7 in this 10/5/2010 article (missed when posted):

I’m currently prepping for my TechEd Europe WP7 session and I like to prepare for the worst. My demo relies on some cloud services and also on WP7 push notifications. Push notifications are nice but they have one drawback for demos: I need an internet connection.

Now in the real world my application would indeed need an internet connection or it would actually be kind of useless, but in demoland I don’t need one, as I can run the cloud services portion in the Azure DevFabric. That is nice in case the internet connectivity in the demo room goes down: I have a backup.

The same isn’t true of push notifications. They are managed by Microsoft, and if I can’t get to them I can’t run my demo even though my own cloud services are running locally. In order to get around this I wrote a simple WCF service that also runs in my Azure DevFabric along with my other services and can act as a push notification endpoint. It’s not totally seamless but it works quite nicely for me, as I now have a fallback for push notifications as well as for my own cloud services.

The Service

The service is a REST based WCF service that has two methods on its rather simple interface.

The service then queues any incoming messages until the client gets them. The notify endpoint will quite happily take push notification requests from my existing Azure services without modification.

The Client

The WP7 application isn’t quite as lucky. I could probably have done something that faked getting the notifications a little better but the simplest solution was to just have a background timer that polls my service and gets the notifications. It then processes these using the normal code path for push notifications in my application.
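The queue-and-poll arrangement described above can be sketched independently of WCF and Silverlight. The code below is not Rob's service; it is a minimal in-memory illustration in Python, and every name in it (`FakePushService`, `notify`, `get_notifications`, `PollingClient`, the channel string) is hypothetical. The point it shows is that the fake endpoint queues whatever the cloud services send, and the client's poll hands each message to the same handler real push notifications would use.

```python
from collections import deque


class FakePushService:
    """Stands in for the push endpoint: queue on notify, drain on poll."""

    def __init__(self):
        self._pending = {}  # channel id -> deque of queued messages

    def notify(self, channel, message):
        """Called by the cloud services exactly as they would call the real endpoint."""
        self._pending.setdefault(channel, deque()).append(message)

    def get_notifications(self, channel):
        """Called by the client; returns and clears everything queued so far."""
        return list(self._pending.pop(channel, deque()))


class PollingClient:
    """The phone side: a timer would call poll_once() every few seconds."""

    def __init__(self, service, channel, on_notification):
        self.service = service
        self.channel = channel
        self.on_notification = on_notification  # the normal push-notification handler

    def poll_once(self):
        for message in self.service.get_notifications(self.channel):
            self.on_notification(message)


received = []
service = FakePushService()
client = PollingClient(service, "demo-phone", received.append)

service.notify("demo-phone", "Order 42 shipped")
service.notify("demo-phone", "Order 43 shipped")
client.poll_once()   # delivers both queued messages, in order
client.poll_once()   # nothing new, so the handler is not called
print(received)
```

Keeping the handler identical for real and simulated notifications is what lets the demo switch between the two without touching the rest of the application.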

I really should probably use the reactive extensions (RX) to manage my push notifications in the client as that would make them nice and mockable too but for now this works as a solution and keeps the client code reasonably understandable for demo purposes.

All in all it works quite nicely and means I don’t have to have an internet connection to now demo my application, even with push notifications.

• My First Hack at a Blogger Template for the New Oakleaf Mobile Blog post of 10/14/2010 offers a preview of the new OakLeaf Mobile blog:

I’ve started learning Windows Phone 7 and Silverlight programming, but Blogger doesn’t offer mobile templates. So I’m working on a template for the new OakLeaf Mobile blog. Here’s the Windows Phone 7 Emulator displaying the first shot at the template (click for full-size capture):

I removed the NavBar with the old <!--<body></body> - -> trick (see Mobiforge’s Blogger Mobile post), set the body width to 800 px, and increased the date-header font size to 22px. You need to double-click (tap) a couple of times to set the displayed width correctly.

The OakLeaf Mobile blog will concentrate on data-centric Windows Phone 7 applications and infrastructure, with an emphasis on Windows Azure and SQL Azure data sources.

Obviously, I’ll need to adjust my blogging style to much shorter posts for mobile users. In the meantime, I’m hoping that Blogger wakes up and provides professionally-designed mobile-compliant templates for its service.

The original version of the preceding post is here.

• Katrien De Graeve posted to Katrien's Channel9 Corner on 10/16/2010 a 01:10:34 REMIX10 - Building for the cloud: integrating an application on Windows Azure video presentation by Maarten Balliauw:

It’s time to take advantage of the cloud! In this session Maarten builds further on the application created during Gill Cleeren’s Silverlight session [below]. The campaign website that was developed in Silverlight 4 still needs a home. Because the campaign will only run for a short period of time, the company chose for cloud computing on the Windows Azure platform. Learn how to leverage flexible hosting with automated scaling on Windows Azure, combined with the power of a cloud hosted SQL Azure database to create a cost-effective and responsive web application.

• Katrien also posted on 10/13/2010 Gill Cleeren’s 01:13:54 REMIX10 - Building a Silverlight 4 Application End to End about the app that Maarten used in the above presentation:

When you’re asked to build a new Silverlight application from scratch, it may be a bit hard to know where to start exactly. What would you say if you spend 75 minutes of your day and I show you the steps involved in creating a complete business application? Sounds neat, doesn’t it?! In the scenario, we are following a company that wants to set up a campaign site in Silverlight 4 that makes it possible to send Christmas cards. Among others, we’ll be spending some time with printing and webcam interaction, data binding, COM interop, out-of-browser features and data service access. Indeed, Christmas does come early this year!

• Mark Seemann posted Windows Azure Migration Sanity Check on 10/14/2010:

Recently I attended a workshop where we attempted to migrate an existing web application to Windows Azure. It was a worthwhile workshop that left me with a few key takeaways related to migrating applications to Windows Azure.

The first and most important point is so self-evident that I seriously considered not publishing it. However, apparently it wasn’t self-evident to all workshop participants, so someone else might also benefit from this general advice:

Before migrating to Windows Azure, make sure that the application scales to at least two normal servers.

It’s as simple as that – still, lots of web developers never consider this aspect of application architecture.

Why is this important in relation to Azure? The Windows Azure SLA only applies if you deploy two or more instances, which makes sense since the hosting servers occasionally need to be patched etc.

Unless you don’t care about the SLA, your application must be able to ‘scale’ to at least two servers. If it can’t, fix this issue first, before attempting to migrate to Windows Azure. You can test this locally by simply installing your application on two different servers and putting them behind a load balancer (you can use virtual machines if you don’t have the hardware). Only if it works consistently in this configuration should you consider deploying to Azure.

Here are the most common issues that may prevent the application from ‘scaling’:

- Keeping state in memory. If you must use session state, use one of the out-of-process session state store providers.

- Using the file system for persistent storage. The file system is local to each server.

Making sure that the application ‘scales’ to at least two servers is such a simple sanity check that it should go without saying, but apparently it doesn’t.

Please note that I put ‘scaling’ in quotes here. An application that runs on only two servers has yet to prove that it’s truly scalable, but that’s another story.

Also note that this sanity check in no way guarantees that the application will run on Azure. However, if the check fails, it most likely will not.
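The first issue in the list above (state kept in memory) can be reproduced without any real servers. The following is a minimal sketch, not code from Mark's post: two hypothetical `Server` objects stand in for load-balanced instances, a round-robin loop stands in for the load balancer, and a shared dictionary stands in for an out-of-process session state store. All type and member names are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for one web server instance behind a load balancer.
class Server
{
    // State kept in this server's own memory; invisible to other servers.
    private readonly Dictionary<string, int> localState = new Dictionary<string, int>();

    // Stand-in for an out-of-process store shared by every server.
    private readonly Dictionary<string, int> sharedStore;

    public Server(Dictionary<string, int> sharedStore)
    {
        this.sharedStore = sharedStore;
    }

    // Counts a user's requests using in-memory state only.
    public int HitInMemory(string user)
    {
        int count;
        localState.TryGetValue(user, out count);
        localState[user] = count + 1;
        return count + 1;
    }

    // Counts a user's requests using the shared store.
    public int HitShared(string user)
    {
        int count;
        sharedStore.TryGetValue(user, out count);
        sharedStore[user] = count + 1;
        return count + 1;
    }
}

static class ScaleSanityCheck
{
    static void Main()
    {
        var store = new Dictionary<string, int>();
        var servers = new[] { new Server(store), new Server(store) };

        int inMemory = 0, shared = 0;
        for (int request = 0; request < 4; request++)
        {
            // Round-robin: alternate requests between the two servers.
            Server target = servers[request % servers.Length];
            inMemory = target.HitInMemory("alice");
            shared = target.HitShared("alice");
        }

        Console.WriteLine("In-memory count after 4 requests: " + inMemory);
        Console.WriteLine("Shared-store count after 4 requests: " + shared);
    }
}
```

With two servers, the in-memory count ends at 2 (each server saw only half of the requests), while the shared-store count ends at the expected 4. An application that behaves like the first counter would fail the two-server check.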

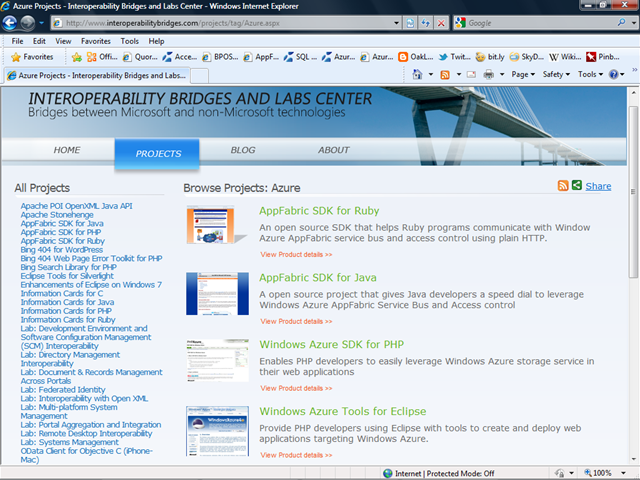

• Microsoft’s Interoperability Strategy Group hosts the Interoperability Bridges and Labs Center site, shown here with a Windows Azure filter applied. The site “is dedicated to technical collaborative work between Microsoft, customers, partners and open sources communities to improve interoperability between Microsoft and non-Microsoft technologies.”

The Center is run by the Microsoft Interoperability Strategy Group working with many other teams at Microsoft, with customers input and with the community at large to build technical bridges, labs and solutions to improve interoperability in mixed IT environments.

In this site, you will find a live directory of these technical and freely downloadable interoperability Bridges with related content such as demos, technical articles, helpful best practices from the projects leads and sharing technical guidance. You will also find Labs, which contain technical guidance explaining how to best achieve interoperability in specific product scenarios.

The vast majority of the projects are run as Open Source projects with third party and community members and released under a broad BSD license, or other licenses such as MS-PL or Apache, so that our customers, partners and the community can use them in many open and broad reaching scenarios.

We welcome feedback and discussions on the Interoperability Strategy Group blog at Interoperability @ Microsoft.

Nick Eaton reported Sony Ericsson to introduce Windows Phone 7 in 2011 in a 10/15/2010 post to the SeattlePI blog:

Sony Ericsson, Sony Corp.'s handset division, will introduce Microsoft Windows Phone 7 devices next year as it dumps Nokia's Symbian mobile operating system, CEO Bert Nordberg said today.

The company, which estimated it has a 19 percent revenue share of the Google Android market, plans to diversify its mobile platform offerings, Bloomberg reported. During Sony Ericsson's third-quarter earnings conference call, Nordberg said handset sales were disappointing as profit failed to meet Wall Street analysts' expectations.

He also said there is a shortage of smart-phone circuit boards and LED screens, Bloomberg reported. The components are in high demand as the mobile market continues to explode.

On Monday, Microsoft announced nine Windows Phone 7 devices for the upcoming launch of its new mobile OS. Sony Ericsson was not among the manufacturers, and Nordberg said his company has no plans to deliver Windows Phone 7 handsets for the holiday shopping season.

A list of the Windows Phone 7 devices and their features is available here. In an unscientific seattlepi.com reader poll -- which is still open -- as of today the HTC HD7 was getting the most votes for the "coolest" Windows Phone 7 handset.

Peter Burrows and Dina Bass asserted “If its new excursion into mobile is a hit, Microsoft stays at the center of computing. If it flops, uh-oh” as the deck to their Microsoft Is Pinning Its Hopes on Windows Phone 7 story of 10/14/2010 for Bloomberg BusinessWeek:

In an interview shortly after he unveiled Microsoft's (MSFT) new Windows Phone 7 mobile software on Oct. 11, Chief Executive Officer Steve Ballmer declared a new era for Microsoft. "This is a big launch for us—a big, big launch," he boomed.

Ballmer, never known for understatement, may be lowballing this one. Gartner (IT) expects smartphone sales to surpass PCs in 2012. Microsoft remains immensely profitable thanks to its aging PC monopoly, and it will remain so even if it never figures out the smartphone market. Yet the stakes go beyond numbers—it's about staying at the center of computing while the world's information moves away from PCs and into the cloud.

People get e-mail, music, and Season Two of Mad Men on smartphones and other mobile devices—essentially pocket computers—and that information is warehoused at and delivered from far-off data centers, not PC hard drives. "If Microsoft gets this right, the stock is really, really cheap," says Michael F. Holland, founder of investment firm Holland & Co. "It would be an indication they've been able to evolve into a 21st century company."

By almost any measure, Microsoft is nearly out of the mobile game. Its market share fell to 5 percent from 22 percent in 2004, says Gartner. Customer satisfaction of Windows smartphones is 24 percent, according to ChangeWave Research; it's 74 percent for iPhones and 65 percent for handsets powered by Google's (GOOG) Android. There are a few hundred apps for Windows mobile, vs. 250,000 for Apple (AAPL) and 70,000 for Android. Asked about Windows Phone 7's chances, Google Android chief Andy Rubin has said: "The world doesn't need another platform."

Unless, of course, Windows Phone 7 handsets, on sale Oct. 21 in Europe and Nov. 8 in the U.S., blow away consumers as the iPhone did back in 2007. Today, consumers load their smartphones with apps. Rather than tap between separate programs, Windows Phone 7 users will choose from a few larger icons—Microsoft calls them tiles—that aggregate information from related apps. For example, a "People" tile lets users contact friends via phone, text, or Facebook without having to click on any of those apps. An "Office" tile opens a screen to edit and send a PowerPoint deck or Word file, no attachments necessary. This is the biggest step forward since Android, says Jonathan Sasse, senior marketing vice-president of Internet music company Slacker.

Microsoft mobile chief Andy Lees says Windows Phone 7 reflects his group's new approach to design. In the past, the company wrote the software and left it to licensees to ensure great products. This time, Microsoft set strict rules. All Windows Phone 7 handsets must come with three buttons (home, search, and back) and a camera with at least five megapixels of resolution. "In some cases that meant saying no to some of our largest partners," says Lees. He adds that Microsoft doesn't need to have as many apps as Apple or Android, just the most popular ones. That way Microsoft can assure the quality of Windows handsets. "Ours is a structured ecosystem," says Lees.

The Windows Azure Team posted Adoption Program Insights: Sending Emails from Windows Azure (Part 2 of 2) on 10/15/2010:

The Adoption Program Insights series describes experiences of Microsoft Services consultants involved in the Windows Azure Technical Adoption Program who assist customers in deploying solutions on the Windows Azure platform. This post is by Norman Sequeira and Tom Hollander.

This is the second part of a two-part series describing options for sending emails from Windows Azure applications. In part 1 we described a pattern that uses a custom on-premise Email Forwarder Service to send mails on behalf of a Windows Azure application. In this post we describe how to use your email server's web services APIs to accept messages directly from a Windows Azure application.

Pattern 2: Using Email Server's Web Services APIs

This pattern leverages the web services capability of Exchange Server 2007 or Exchange Server 2010 to send email out directly from the Windows Azure platform. This approach could also be used with other email servers that provide web service interfaces; however, this post only describes the implementation for Microsoft Exchange Server.

Since both web and worker roles can make outbound connections to Internet resources via HTTP or HTTPS, it is possible for the web/worker roles to communicate with the web services hosted on Exchange Server. Both Exchange Server 2007 and Exchange Server 2010 support EWS (Exchange Web Services) as an interface for automation. Exchange Web Services offers a rich feature set that supports the most common mail automation scenarios, such as sending email, adding attachments, checking a user's mailbox, and configuring delegate access. For a full list of features supported by EWS, please check out this MSDN article.

If your organisation has opted to use Exchange Online instead of an on-premise email server, the same EWS based approach can be used from Windows Azure without any code changes since both Exchange Online Standard and Exchange Online Dedicated offer EWS support. Exchange Online is a part of the Microsoft Business Productivity Online Suite (BPOS) which is a set of messaging and collaboration solutions offered by Microsoft.

The following diagram shows how a Windows Azure application could leverage EWS to send emails on its behalf:

Sample Code

The EWS Managed API provides strongly typed .NET interfaces for interacting with the Exchange Web Services. Once the Exchange Web Services Managed API is installed, your first step is to add a reference to the Microsoft.Exchange.WebServices.dll in your existing Windows Azure project in Visual Studio:

Using the EWS Managed API against a Test Server

Test servers are deployed with self-signed certificates, which are not trusted by the Microsoft .NET Framework. If you use the EWS Managed API against a test server, you might receive the following error:

To resolve this issue, you can write code to disable certificate validation in test scenarios. You should use #if DEBUG or similar techniques to ensure this code is not used for production scenarios!

using System.Net.Security;

using System.Security.Cryptography.X509Certificates;

// Hook up the cert callback.

System.Net.ServicePointManager.ServerCertificateValidationCallback =

delegate(

Object obj,

X509Certificate certificate,

X509Chain chain,

SslPolicyErrors errors)

{

// Validate the certificate and return true or false as appropriate.

// Note that it is not a good practice to always return true because not

// all certificates should be trusted.

#if DEBUG

// Test scenarios only: accept the test server's self-signed certificate.

return true;

#else

return errors == SslPolicyErrors.None;

#endif

};

Creating an Instance of the ExchangeService Object

Next it's time to connect to the server by creating and configuring an ExchangeService instance. Note the use of Autodiscover to determine the service's URL based on an e-mail address:

ExchangeService service = new ExchangeService(ExchangeVersion.Exchange2007_SP1);

//Retrieve the credential settings from the Azure configuration

string userName = RoleEnvironment.GetConfigurationSettingValue("EWSUserName");

string password = RoleEnvironment.GetConfigurationSettingValue("EWSPassword");

string domain = RoleEnvironment.GetConfigurationSettingValue("EWSDomain");

service.Credentials = new WebCredentials(userName, password, domain);

// In case the EWS URL is not known, then the EWS URL can also be derived automatically by the EWS Managed API

// using the email address of a mailbox hosted on the Exchange server

string emailAddress = RoleEnvironment.GetConfigurationSettingValue("emailAddress");

service.AutodiscoverUrl(emailAddress);

Sending Email

Once an instance of the ExchangeService object has been created and initialized, it can be used for sending/receiving mails.

EmailMessage message = new EmailMessage(service);

message.ToRecipients.Add("someone@server.com");

message.From = new EmailAddress("someone@azureapp.com");

message.Subject = "Sending mail from Windows Azure";

message.Body = new MessageBody(BodyType.HTML, "This is the body of the mail sent out from Windows Azure environment");

//Code for sending attachments. attachment1 is assumed to be a byte[] holding the file contents, loaded elsewhere.

//The AddFileAttachment method takes in a 'display name' and 'byte array' as parameters

message.Attachments.AddFileAttachment("Attachment1", attachment1);

//The following property 'ContentId' is used in case the attachment needs to be referenced from the body of the mail

message.Attachments[0].ContentId = "Attachment1";

// The following method sends the mail and also saves a copy in the 'Sent Items' folder

message.SendAndSaveCopy();

Architectural Considerations

It is important to understand the architectural implications for any solution. Some of the considerations for the Email Server Web Service APIs approach include:

- Cost: Outbound data flow from Windows Azure to EWS will add cost to the overall solution. The impact will vary with the email volume and bandwidth usage of each solution, and must be studied carefully before implementing this pattern.

- Performance: Large email attachments may degrade performance, since they need to be serialized and transferred to the Exchange servers through EWS (on-premise or online). This must also be considered carefully.

Summary

Even though Windows Azure does not include its own native SMTP server, there are several options for building applications that send emails. If you have an on-premise email server, you can use Windows Azure storage to send messages to a custom Email Forwarder Service which then forwards them onto your email server. If your email server exposes a web services API, you can use this to communicate directly instead of using a custom service. And if you have direct access to a SMTP server (including a commercial service) you can configure your Windows Azure app to use that.

One final comment - no matter which of these approaches you use, keep in mind that if your application needs to send out a very large number of emails (for example, for user registrations) you may run into problems if your email server is configured to limit email volume or if the recipients' servers are likely to reject messages as spam. In this case you will need to choose or configure your email server carefully to ensure your messages make it to their final destination.

Ryan Dunn (@dunnry) posted Load Testing on Windows Azure and a link to source code on 10/15/2010:

For the 30th Cloud Cover show, Steve and I talked about coordinating work. It is not an entirely new topic as there have been a couple variations on the topic before. However, the solution I coded is actually different than both of those. I chose to simply iterate through all the available instances and fire a WCF message to each one. I could have done this in parallel or used multi-casting (probably more elegant), but for simplicity's sake: it just works.
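The "iterate and send" approach described above can be sketched as follows. This is not Ryan's code (his source is linked from the post); it is a minimal stand-in in which `IWorkerChannel`, `ConsoleChannel`, and `Controller.Broadcast` are hypothetical names and the endpoint strings are made up. In a real deployment, the endpoint list would presumably come from the role environment and each channel would be a WCF client proxy.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical contract for a command sent to one worker instance.
// In a real solution this would be a WCF service contract.
interface IWorkerChannel
{
    void Send(string command);
}

// Stand-in channel that prints instead of calling a remote worker.
class ConsoleChannel : IWorkerChannel
{
    private readonly string endpoint;

    public ConsoleChannel(string endpoint)
    {
        this.endpoint = endpoint;
    }

    public void Send(string command)
    {
        Console.WriteLine("-> " + endpoint + ": " + command);
    }
}

static class Controller
{
    // Sends the command to every worker, one at a time. A failure on one
    // worker is recorded and does not stop delivery to the rest.
    public static List<string> Broadcast(
        IEnumerable<string> endpoints,
        Func<string, IWorkerChannel> openChannel,
        string command)
    {
        var failed = new List<string>();
        foreach (string endpoint in endpoints)
        {
            try
            {
                openChannel(endpoint).Send(command);
            }
            catch (Exception)
            {
                failed.Add(endpoint);
            }
        }
        return failed;
    }

    static void Main()
    {
        // Made-up internal endpoints for three worker instances.
        var workers = new[] { "10.0.0.4:8080", "10.0.0.5:8080", "10.0.0.6:8080" };

        List<string> failed = Broadcast(
            workers,
            endpoint => new ConsoleChannel(endpoint),
            "start-load-test");

        Console.WriteLine("Workers not reached: " + failed.Count);
    }
}
```

The sequential loop trades speed for simplicity: with a handful of instances the delay between the first and last worker receiving the command is small, and collecting failures per endpoint makes it easy to retry only the workers that were missed.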

The reason I needed to coordinate work was in context of load testing a site. Someone had asked me about load testing in Windows Azure. The cloud is a perfect tool to use for load testing. Where else can you get a lot of capacity for a short period of time? With that thought in mind, I searched around to find a way to generate a lot of traffic quickly.

I built a simple 2 role solution where a controller could send commands to a worker and the worker in turn would spawn a load testing tool. For this sample, I chose a tool called ApacheBench. You should watch the show to understand why I chose ApacheBench instead of the more powerful WCAT.

Ryan Dunn (@dunnry) and Steve Marx (@smarx) produced a 00:35:22 Cloud Cover Episode 30 - Coordinating Work and Load Testing video episode for Channel9 on 10/15/2010:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow.

In this episode:

- We talk about coordinating work across worker roles.

- Demonstrate a tool to generate web traffic for load testing websites.

- Talk about the gotchas of running certain types of applications in the cloud today.

Show Links:

New Online Demo: Introducing FabrikamShipping SaaS

Windows Azure Platform University Helps Partners Tap Into Business Opportunities with Windows Azure

Cerebrata Powershell Cmdlets

Using the Windows Azure Tools for Eclipse with PHP

SQL Azure Whitepapers Available

Microsoft Sync Framework: Windows Azure Sync Service Sample

Chris Koenig delivered Important Info for WP7 Application Developers in this 10/15/2010 post:

If you are developing an application for Windows Phone 7 and are hoping to get your application published into the marketplace – then you need to read this post!

Straight from the WP7 Marketplace gurus, here are the top things to consider before submitting an application for certification to help increase the chances the application will pass testing the first time. This list is based on some of the experiences we’ve had working with some of our early adoption partners who are busy building the applications you’ve seen us talk about at some of our Bootcamps and Launch Events.

1) READ the docs!!! Understand the application policies that represent the requirements all applications need to meet in order to pass certification testing. Here are links for your convenience:

- Windows Phone 7 Series UI Design and Interaction Guide [PDF]

This document is all about the design of your application. Do you have a Pivot Control stacked inside a Panorama Control? That would be bad. Are you using the Windows Phone 7 built-in styles to display text and highlights? If not, it could raise some additional questions. PLEASE read this first one thoroughly (it’s a long read) to make sure you understand what METRO is all about, and what the design checkers will be looking for.

- Windows Phone 7 Application Certification Requirements [PDF]

This document is a short, easy to read explanation of the application certification process and the requirements behind it. At less than 30 pages, this one is one you also want to start off with because there are some very specific requirements that you’ll have to include in your applications depending on what your application does. Using the Location Sensor? Do you ask the user if that’s OK to do? Do you have a way to turn it off? Will your application “work” with the Location Sensor turned off? This is the kind of information in this document and it’s critical that you read it before you submit your application.

2) Know your iconography.

- Test Case 4.6 - Screen shots should encompass the full 480 x 800 dimension, must be a direct capture of the phone screen or emulator and needs to represent the correct aspect ratio.

- Test Case 4.5 – Avoid using the default Windows Mobile icons.

- Including a panorama background image is optional, but recommended. This will enable Microsoft to potentially feature your panorama image on the Marketplace catalog to help improve your application’s visibility with the likely result of more downloads.

3) Support Information – Test Case 5.6.

- Until 10/31/2010, it is recommended that applications include the version number or support information (for example a URL or email), which is easily discoverable by end-users.

- Modify your applications now to help plan for 11/1/2010 when this test case will be enforced.

4) Toast Notification – Test Case 6.2

- There must be the ability for the user to disable toast notification.

- On first use of the HttpNotificationChannel.BindToShellToast method, the application must ask the user for explicit permission to receive a toast notification.

5) Applications Running Under a Locked Screen – Test Case 6.3

- This only applies to applications that continue to execute when running under the locked screen and does not apply to applications in a suspended state.

- Prompt the user for explicit permission to run under a locked screen upon first use of ApplicationIdleDetectionMode.

6) Back Button – Test Case 5.2.4

- Back button behavior is one of the most typical failures.

- A common failure is that pressing the back button during application runtime exits the application, instead of returning the application to a previous page or closing the presented menu or dialog.

7) Themes – Test Case 5.1.1. Avoid controls and text washing-out by testing applications with the Theme Background set to “light”.

8) Languages. Be sure that the application description and the text the application displays to end users is localized appropriately in the target language.

9) Failures upon Upload to the Marketplace. There is a validation tool that assesses your application upon upload to the Marketplace. Some common failures are:

- Error 1029 – Your XAP is missing an interop manifest. Make sure the interop syntax is specified in the manifest file. If the account does not have permissions to run interop, this error message will also be generated.

10) Windows Phone Developer Tools. Be sure to use the RTM version of the Windows Phone Developer Tools as applications built on previous tool versions will fail testing.

- There were some important changes between the two versions, so make sure you re-test your application on the RTM bits to make sure everything is cool.

Chris is a Microsoft Developer Evangelist in Dallas, TX.

The HPC In The Cloud blog posted Accenture Announces Deployment of Digital Diagnostics Solution to Azure Cloud on 10/14/2010:

Accenture is deploying its digital diagnostics engine, which scans websites to identify performance problems and structural deficiencies, into the Windows Azure platform. Accenture is deploying the diagnostic engine through Accenture Interactive, the company’s digital marketing, marketing analytics and media management business.

The announcement was made at the third annual Microsoft 2010 Asia High Tech Summit in Tokyo. According to Accenture Interactive, placing the Accenture Digital Diagnostics Engine (ADDE) on the Windows Azure platform will increase the speed and efficiency of websites by reducing problems that limit performance.

Many organizations’ websites continue to be plagued by implementation problems that undermine performance, user experience and compliance. Examples of such problems include poor site indexing and searchability attributes, lack of analytic tag integrity, redundant content, pages that are slow to download and poor link integrity, along with risky accessibility and data security violations.

By combining the data gathered from ADDE website scans with Accenture Interactive consulting services, solutions can be applied to client Web environments, often before critical business or security problems arise, providing organizations with a higher performing online capability. ADDE also is designed to deliver improved visitor experiences, increase traffic volume and contribute to more conversions and higher value per conversion while reducing corporate and brand liabilities.

“As the digital ecosystem becomes increasingly complex, organizations realize that point solutions are not the answer to Web site governance,” said Dr. Stephen Kirkby, managing director of research and platform development for Accenture Interactive. “Development, deployment and management of Web standards provide the foundation for an agile and intelligent digital operation, and ADDE now offers those capabilities from the cloud for more effective management of the Web environment.”

The Windows Azure platform allows customers to run solutions in the cloud or on-site and change that mix as their needs change. Offering ADDE on this Microsoft platform allows customers to leverage and extend their investments, data centers and applications. Customers can also transition aspects of their data analysis operations to the cloud to reduce costs, enhance existing capabilities and build transformative applications that can lead to new business opportunities.

“The launch of the Accenture Interactive Digital Diagnostics solution on the Windows Azure platform enables customers to accelerate the time required to realize a return on their IT investment,” said Sanjay Ravi, worldwide managing director, Discrete Industry, Microsoft Corp. “To successfully compete, enterprises must act now to gain control of their complex Web environments to create more agile and intelligent digital environments. Purchasing software as a service enables companies to rapidly take advantage of new technologies at a lower total cost of ownership.”

To demonstrate ADDE capabilities at the 2010 Asia High Tech Summit, Accenture and Microsoft will offer attendees a free assessment and detailed analysis of their websites. The findings of these assessments can be used in conjunction with Accenture consulting services to help improve the performance of web platforms for clients, reduce the cost of their operational environments and help them continually improve the process around the implementation of web delivery standards.

“Our primary objective is to deliver world-class digital solutions to our clients to enable them to continually improve their customers’ online interactions and experiences,” said Peter Proud, managing director of Strategic Accounts and Alliances for Accenture Interactive.

According to Accenture, competing successfully in the high-tech and electronics industry requires enterprises to assemble and effectively integrate globally connected networks of partners, customers and end consumers in totally new ways. In keeping with the theme of this year's Asia High Tech Summit, “Applying Innovation in a Connected World,” moving Accenture Digital Diagnostics onto the cloud will support these emerging connected networks by enabling Web sites to deliver better connected customer experiences and improved digital marketing results.

The Windows Azure Team posted a Real World Windows Azure: Interview with Scott Houston, Chief Executive Officer at InterGrid case study on 10/14/2010:

As part of the Real World Windows Azure series, we talked to Scott Houston, Chief Executive Officer at InterGrid, about using the Windows Azure platform to deliver the company's GreenButton service. Here's what he had to say:

MSDN: Tell us about InterGrid and the services you offer.

Houston: As a Microsoft Registered Partner, we offer cost-effective solutions for companies that need occasional bursts of computing power for complex, intensive computing processes, such as computer-aided drafting and rendering of high-quality, three-dimensional images.

MSDN: What was the biggest challenge InterGrid faced prior to implementing the Windows Azure platform?

Houston: Our customers, including automobile manufacturers, often render photorealistic designs that require significant computer processing power and can take hours to process. We found that companies occasionally want to render designs much more quickly, such as when they're with a client and making changes to a design. To put in place an infrastructure that would deliver the high-performance computing power needed for those complex processes would be cost-prohibitive and unrealistic.

MSDN: Describe the solution you built with the Windows Azure platform.

Houston: GreenButton is an application programming interface (API) that can be embedded in any software application so that an employee can simply click the GreenButton icon to use Windows Azure compute power on demand. When an employee clicks GreenButton, the job is sent to a Windows Communication Foundation web service, which is hosted in a Windows Azure Web role. It is then sent through the Windows Azure AppFabric Service Bus and split into multiple tasks. Each task is then sent to Queue storage in Windows Azure, where it is picked up and processed by Windows Azure Worker roles. Once the processing is complete, users can download the rendered image or animation file.

By using GreenButton, which is embedded in the user interface of a software application such as Deep Exploration by Right Hemisphere, users can render high-quality stills and animation in a matter of minutes.

MSDN: What makes your solution unique?

Houston: With the GreenButton API, any developer who is creating a software application can enable on-demand, high-performance computing. Developers can embed GreenButton into their applications without worrying about provisioning the infrastructure to support the compute processes.

MSDN: Have you reached new markets since implementing the Windows Azure platform?

Houston: We have identified a list of more than 1,000 applications in five industries across 100 million potential users of GreenButton that could immediately recognize benefits of on-demand computing. The future is very bright, especially with a global, stable player in the cloud, Microsoft, behind us.

MSDN: What benefits have you seen since implementing the Windows Azure platform?

Houston: Aside from opening new opportunities, we're able to deliver tremendous value to independent software vendors and their customers. With GreenButton embedded in an application, software users can use the on-demand scalability and processing power of Windows Azure on a pay-as-you-go basis, giving them the ability to cost-effectively render high-quality images in a matter of minutes, but without making heavy investments in server infrastructure.

Read the full story at: www.microsoft.com/casestudies/Case_Study_Detail.aspx?CaseStudyID=4000007883

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

Peter Galli reported a new CTP of Windows Azure Tools for Eclipse/PHP Ships in this 10/12/2010 post to the Port25 blog:

The Microsoft Interoperability Technical Strategy team is shipping a new Community Technology Preview of the Windows Azure Tools for Eclipse for PHP, which gives PHP developers using Eclipse the tools they need to create and deploy web applications targeting Windows Azure.

This news follows on the announcement a few weeks ago of a series of new and updated tools/SDK for PHP developers targeting Windows Azure, which included the Windows Azure Tools for Eclipse/PHP.

This CTP update refines the new features we announced in the upcoming version 2, which should be ready next month, and includes the ability for developers to do one-click deployment of PHP Applications from Eclipse directly to Windows Azure; to use Windows Azure Diagnostics; the integration of the Open Source AppFabric SDK for PHP Developers; support for Multiple Web Roles and Worker Roles; and support of Windows Azure Drive for PHP developers.

For more information, you can read the full post on the Interoperability Blog, and take a look at Brian Swan's comprehensive tutorial called Using the Windows Azure Tools For Eclipse with PHP, where he shows developers how to take advantage of Windows Azure using these tools.

Peter is the Open Source Community Manager for Microsoft's Platform Strategy Group.

The HPC in the Cloud blog reported Microsoft Research Makes Microsoft Biology Foundation and MODISAzure-Based Environmental Service Available to Scientists and Researchers on 10/12/2010:

With more than 200 researchers in attendance at the seventh annual eScience Workshop, Microsoft Research showcases two technologies that facilitate data-driven research: the Microsoft Biology Foundation (MBF) and a MODISAzure-based environmental service.

Programmers and developers in bioscience now have access to the first version of MBF, part of the Microsoft Biology Initiative. With this platform, Microsoft Research is bringing new technology and tools to the area of bioinformatics and biology, empowering scientists with the resources needed to advance their research. This programming-language-neutral bioinformatics toolkit built as an extension to the Microsoft .NET Framework serves as a library of commonly used bioinformatics functions. MBF implements a range of parsers for common bioinformatics file formats; a range of algorithms for manipulating DNA, RNA and protein sequences; and a set of connectors to biological Web services such as National Center for Biotechnology Information BLAST.

"Biologists face a number of issues today, such as detecting correlations between human genome sequencing or identifying the likelihood for a patient to develop a certain disease," said Tony Hey, corporate vice president of Microsoft External Research. "The MBF aims to provide healthcare research facilities with the tools needed to help scientists advance their research and ensure data accuracy."

Several universities and companies are already using MBF as a foundation for a wide range of experimental tools that could enable scientists and clinicians with the technologies needed to make critical advancements in healthcare.

The Informatics Group at Johnson & Johnson Pharmaceutical Research and Development leveraged MBF to extend its Advanced Biological & Chemical Discovery informatics platform to seamlessly integrate small and large molecule discovery data.

"The bioinformatics features and functionality within the MBF equipped us with pre-existing functions so we didn't have to re-invent the wheel," said Jeremy Kolpak, senior analyst at Johnson & Johnson Pharmaceutical Research and Development. "Ultimately, it saved us a tremendous amount of time, allowing us to focus on the development of higher-level analysis and visualization capabilities, and delivering them faster to our scientists, thus improving their ability to make data-driven discoveries and critical diagnoses."

Another service available for researchers leverages MODISAzure and was created by Dennis Baldocchi, biometeorologist at U.C. Berkeley, Youngryel Ryu, biometeorologist at Harvard University, and Catharine van Ingen, Microsoft eScience researcher. This MODISAzure-based environmental service combines state-of-the-art biophysical modeling with a rich cloud-based dataset of satellite imagery and ground-based sensor data to support global-scale carbon-climate science synthesis analysis.

Using this research, scientists from different disciplines can share data and algorithms to better understand and visualize how ecosystems behave as climate change occurs. This service is built on MODISAzure, an image-processing pipeline on the Microsoft Windows Azure cloud computing platform.

"To study Earth science we need to have systems that are everywhere, all of the time, and today with our MODISAzure-based environmental service, we have taken a giant step toward that goal," Baldocchi said.

Available under an open source license, the MBF is freely downloadable at http://research.microsoft.com/bio.

Alyson Behr asked Ballmer banks on Windows Phone 7; should developers follow suit? in a 10/12/2010 post to SDTimes on the Web:

Steve Ballmer appeared on the Today Show yesterday morning, touting new cell phones running Windows Phone 7. He was reminded that his bonuses had not been what they could be because of problems in the cell-phone area. Matt Lauer asked if they had fixed it. Ballmer responded: “Look at these beautiful new Windows phones!”

On display was the Samsung Focus, and Ballmer said there would be a choice of smartphones depending on what users wanted.

“The phones will be individual," he said. "People have different needs. Some people will want keyboards, some people will want very thin and light, some people will want music, sound, different kinds of cameras.” The hub is key to the phones because it lays out all the key functionalities and their associated apps.

This is good news for Windows developers because now there will be multiple platforms for them to target. Microsoft has been taking it on the chin (as have development shops) after years of declining sales of phones based on Microsoft's Windows Mobile software. The new handsets' chief competition will be Apple's iPhone and the growing market of phones running Google's Android operating system.

The Samsung Focus, priced at US$200, will hit AT&T stores on Nov. 8, according to Microsoft. Two more phones for AT&T, one made by HTC and one by LG Electronics, and one for T-Mobile USA (also made by HTC), will be close behind. In all, Microsoft announced nine phones for the U.S. market, including one from Dell.

In May, Microsoft launched a family of smartphones, the Kin, which was a failure. But the company is bringing to bear full support for the Windows Phone 7. Additionally, it has lined up 60 carriers in 30 countries to carry Windows Phone 7, which helps make it an attractive OS for developers to get behind. The Android and iPhone are more application-centered. That said, companies such as Motorola have designed overlay software for Android that's similar to Windows Phone 7's hub idea. The software works with Microsoft's bread-and-butter Office app and connects to Xbox Live, but it does not support text copy and paste. Microsoft said that feature will appear in an update in early 2011.

Words of caution: Besides winning over hardware manufacturers and carriers, Microsoft has to win over software developers for Windows Phone 7 to be successful. Windows Mobile apps won't work on Windows Phone 7, so Microsoft has to attract a new base of developers, and they may not want to risk their own skin in the game while waiting for Windows Phone 7 to gain traction in the market.

Alyson Behr is an SD Times contributing editor and founder of Behr Communications, a Los Angeles-based content provider and Web development firm.

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@BethMassi) announced yet another New LightSwitch Video on Deployment Just Released on 10/15/2010:

We just released another new “How Do I” video on the LightSwitch Developer Center:

#12 - How Do I: Deploy a Visual Studio LightSwitch Application?

This video complements the following articles:

Deployment Guide: How to Configure a Machine to Host a 3-tier LightSwitch Beta 1 Application

Implementing Security in a LightSwitch Application

If you missed the rest of the video series, check out the rest that are available on the LightSwitch Developer Center Learn page:

- #1 - How Do I: Define My Data in a LightSwitch Application?

- #2 - How Do I: Create a Search Screen in a LightSwitch Application?

- #3 - How Do I: Create an Edit Details Screen in a LightSwitch Application?

- #4 - How Do I: Format Data on a Screen in a LightSwitch Application?

- #5 - How Do I: Sort and Filter Data on a Screen in a LightSwitch Application?

- #6 - How Do I: Create a Master-Details (One-to-Many) Screen in a LightSwitch Application?

- #7 - How Do I: Pass a Parameter into a Screen from the Command Bar in a LightSwitch Application?

- #8 - How Do I: Write business rules for validation and calculated fields in a LightSwitch Application?

- #9 - How Do I: Create a Screen that can Both Edit and Add Records in a LightSwitch Application?

- #10 - How Do I: Create and Control Lookup Lists in a LightSwitch Application?

- #11 - How Do I: Set up Security to Control User Access to Parts of a Visual Studio LightSwitch Application?

Enjoy!

<Return to section navigation list>

Windows Azure Infrastructure

• Steve “Planky” Plank (pictured below at right) posted on 10/16/2010 a 00:09:40 interview with Microsoft’s Doug Hauger as Video: General Manager of Windows Azure answers some “tougher” questions:

While he was visiting the UK, I managed to get some time with the General Manager of Windows Azure and put the questions to him that customers put to me. It’s captured on video. If you’ve ever had an unexpectedly large credit-card bill for your Azure usage, or see Azure as something for the enterprise rather than the start-up – watch as Doug Hauger [pictured below] explains what Microsoft have done to address these issues and a few more.

In case you can’t find the free Windows Azure usage URLs he mentions, here they are:

Hope you enjoy the video.

Rubel Khan posted Are You New to Windows Azure? – Cloud Computing on 10/15/2010:

Would you like to see how others are leveraging Windows Azure? Try out these short Learning Snack videos.

Learning Snacks

“What is Windows Azure?” “How do I get started on the Windows Azure platform?” “How have organizations benefited by using Windows Azure?” These are some of the questions answered by Microsoft’s new series of Windows Azure Learning Snacks. Time-strapped? You can learn something new in less than five minutes:

- Introduction to Windows Azure Platform

- Windows Azure Platform and Interoperability

- Cloud Computing and Microsoft Windows Azure

- Customer Success Stories with Microsoft Windows Azure

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA)

Jay Fry (@jayfry3), pictured below, quotes Bert Armijo and James Staten in a The first 200 servers are the easy part: private cloud advice and why IT won’t lose jobs to the cloud post of 10/13/2010:

The recent CIO.com webcast that featured Bert Armijo of CA Technologies and James Staten of Forrester Research offered some glimpses into the state of private clouds in large enterprises at the moment. I heard both pragmatism and some good, old-fashioned optimism -- even when the topic turned to the impact of cloud computing on IT jobs.

Here are some highlights worth passing on, including a few juicy quotes (always fun):

- Cloud has executive fans, and cloud decisions are being made at a relatively high level. In the live polling we did during the webcast, we asked who was likely to be the biggest proponent of cloud computing in attendees’ organizations. 53% said it was their CIO or senior IT leadership. 23% said it was the business executives. Forrester’s James Staten interpreted this to mean that business folks are demanding answers, often leaning toward the cloud, and the senior IT team is working quickly to bring solutions to the table, often including the cloud as a key piece. I suppose you could add: “whether they wanted to or not.”

- Forrester’s Staten gave a run-down of why many organizations aren’t ready for an internal cloud – but gave lots of tips for changing that. If you’ve read James’ paper on the topic of private cloud readiness (reg required), you’ve heard a lot of these suggestions. There were quite a few new tidbits, however:

- On creating a private cloud: “It’s not as easy as setting up a VMware environment and thinking you’re done.” Even if this had been anyone’s belief at one point, I think the industry has matured enough (as have cloud computing definitions) for it not to be controversial any more. Virtualization is a good step on the way, but isn’t the whole enchilada.

- “Sharing is not something that organizations are good at.” James is right on here. I think we all learned this on the playground early in life, but it’s still true in IT. IT’s silos aren’t conducive to sharing things. James went farther, actually, and said, “you’re not ready for private cloud if you have separate virtual resource pools for marketing…and HR…and development.” Bottom line: the silos have got to go.

- So what advice did James give for IT organizations to help speed their move to private clouds? One thing they can do is “create a new desired state with separate resources, that way you can start learning from that [cloud environment].” Find a way to deliver a private cloud quickly (I can think of at least one)