Windows Azure and Cloud Computing Posts for 10/4/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

![AzureArchitecture2H_thumb3113[3] AzureArchitecture2H_thumb3113[3]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEji9RZJJmM8Tf-56Kvio8snvnSfpY4Srt4Gg5X3Bjfp4MPJkom4KiWMPsDxfDNZnRv7EWzpIaND4cI3oAJi9Et_7a7AaV3TFvQSuz_EP8y43XRk_QjobhSPF4U6-rzmbSTST-EHe_CO/?imgmax=800)

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Jim O’Neil continued his series with Azure@home Part 7: Asynchronous Table Storage Pagination of 10/5/2010:

This post is part of a series diving into the implementation of the @home With Windows Azure project, which formed the basis of a webcast series by Developer Evangelists Brian Hitney and Jim O’Neil. Be sure to read the introductory post for the context of this and subsequent articles in the series.

In the last post of this series, I morphed the original code into something that would incorporate pagination across Azure table storage – specifically for displaying completed Folding@home work units in the GridView of status.aspx.

That implementation focuses on the use of DataServiceQuery and requires some code to specifically handle continuation tokens. In this post, we’ll look at using CloudTableQuery instead, which handles continuation tokens ‘under the covers,’ and we’ll go one step further to incorporate asynchronous processing, which can increase the scalability of an Azure application.

DataServiceQuery versus CloudTableQuery

If you’ve worked with WCF Data Services (or whatever it was called at the time: ADO.NET Data Services or “Astoria”), you’ll recognize DataServiceQuery as “the way” to access a data service – like Azure Table Storage. DataServiceQuery, though, isn’t aware of the nuances of the implementation of cloud storage, specifically the use of continuation tokens and retry logic. That’s where CloudTableQuery comes in – a DataServiceQuery can be adapted into a CloudTableQuery via the AsTableServiceQuery extension method or via a constructor.

RetryPolicy

Beyond this paragraph, I won’t delve into the topic of retry logic in this series. The collocation of the Azure@home code and storage in the same data center definitely minimizes latency and network unpredictability; nevertheless, retry logic is something to keep in mind for building a robust service (yes, even if everything is housed in the same data center). There are a few built-in retry policies you can use, including none or retrying n times with a constant interval or with an exponential backoff scheme. Since RetryPolicy is a delegate, you can even build your own fancy logic. Note, Johnny Halife provides some food for thought regarding scalability impact, and he even recommends using no retry policy and just handling the failures yourself.
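Because a retry policy is just a delegate, custom logic boils down to a function that decides whether to try again and how long to wait. The sketch below illustrates that idea without the Storage Client library. It assumes a hypothetical policy shape, `Func<int, Exception, TimeSpan?>` (retries so far, last exception, delay or null to give up), and a hypothetical `ExecuteWithRetry` helper; the library's own RetryPolicy delegate signature is different, so check its documentation before adapting this.

```csharp
using System;
using System.Threading;

// Hypothetical policy shape (not the Storage Client's RetryPolicy signature):
// given the number of retries so far and the last exception, return the delay
// before the next attempt, or null to stop retrying.
Func<int, Exception, TimeSpan?> retryTwiceConstant =
    (retryCount, lastException) => retryCount < 2 ? TimeSpan.FromMilliseconds(10) : (TimeSpan?)null;

// Usage: an operation that fails twice with a simulated transient error, then succeeds.
int attempts = 0;
string result = ExecuteWithRetry(() =>
{
    attempts++;
    if (attempts < 3) throw new TimeoutException("simulated transient failure");
    return "ok";
}, retryTwiceConstant);
Console.WriteLine($"{result} after {attempts} attempts"); // ok after 3 attempts

// Hypothetical helper: runs the operation, consulting the policy after each failure.
static T ExecuteWithRetry<T>(Func<T> operation, Func<int, Exception, TimeSpan?> policy)
{
    int retryCount = 0;
    while (true)
    {
        try
        {
            return operation();
        }
        catch (Exception ex)
        {
            TimeSpan? delay = policy(retryCount, ex);
            if (delay is null)
                throw; // the policy gave up: rethrow the last failure to the caller
            Thread.Sleep(delay.Value);
            retryCount++;
        }
    }
}
```

A policy that always returns null behaves like "no retry": the first failure propagates immediately, which is roughly the "handle the failures yourself" option mentioned above.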

If you’re wondering what the default retry policy is, I didn’t find it documented and actually would have assumed it was NoRetry, but empirically it looks like RetryExponential is used with a retry count of 3, a minBackoff of 3 seconds, a maxBackoff of 90 seconds, and a deltaBackoff of 2 seconds. The backoff calculation is:

minBackoff + (2^currentRetry - 1) * random(deltaBackoff * 0.8, deltaBackoff * 1.2)

so if I did my math right, the attempt will be retried up to three times: the first retry 4.6 to 5.4 seconds after the initial attempt, the second 7.8 to 10.2 seconds later, and the last 14.2 to 19.8 seconds after that. Keep in mind that these values don’t appear to be documented, so they could presumably change.
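To double-check that arithmetic, the sketch below evaluates the formula above at both ends of the random interval for each retry, using the empirically observed defaults (which, as noted, are undocumented). Capping the result at maxBackoff is an assumption on my part; with these defaults the cap is never reached. `BackoffRangeSeconds` is a hypothetical helper, not a Storage Client API.

```csharp
using System;

// Empirically observed defaults described above (undocumented, subject to change).
const int RetryCount = 3;
const double MinBackoff = 3, MaxBackoff = 90, DeltaBackoff = 2; // seconds

for (int retry = 1; retry <= RetryCount; retry++)
{
    var (low, high) = BackoffRangeSeconds(retry, MinBackoff, MaxBackoff, DeltaBackoff);
    Console.WriteLine($"retry {retry}: {low:0.0} to {high:0.0} seconds after the previous attempt");
}

// minBackoff + (2^currentRetry - 1) * random(deltaBackoff * 0.8, deltaBackoff * 1.2),
// evaluated at both ends of the random interval. The maxBackoff cap is assumed.
static (double Low, double High) BackoffRangeSeconds(
    int currentRetry, double minBackoff, double maxBackoff, double deltaBackoff)
{
    double multiplier = Math.Pow(2, currentRetry) - 1;
    double low = minBackoff + multiplier * deltaBackoff * 0.8;
    double high = minBackoff + multiplier * deltaBackoff * 1.2;
    return (Math.Min(low, maxBackoff), Math.Min(high, maxBackoff));
}
```

The loop reproduces the three ranges quoted above: 4.6 to 5.4, 7.8 to 10.2, and 14.2 to 19.8 seconds.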

Query Execution Methods

You may have noticed that DataServiceQuery has both the synchronous Execute method and an analogous asynchronous pair (BeginExecute/EndExecute), so the implementation described in my last post could technically be made asynchronous without using CloudTableQuery. But where’s the fun in that, especially since CloudTableQuery exposes some methods that handle the continuation token logic more transparently?

CloudTableQuery similarly has an Execute method, which will generate multiple HTTP requests and traverse whatever continuation tokens are necessary to fulfill the original query request. As such, you don’t really get (or need) direct visibility into continuation tokens as you do via the QueryOperationResponse for a DataServiceQuery. There is a drawback, though. In our pagination scenario, for instance, a single Execute would return the first page of rows (with page size defined by the Take extension method), but there seems to be no convenient way to access the continuation token to tell a subsequent Execute where to start getting the next page of rows.

BeginExecuteSegmented and EndExecuteSegmented do provide the level of control we’re looking for, but with a bit more ceremony required. A call to this method pair will get you a segment of results formatted as a ResultSegment instance. Each segment maintains a continuation token (class ResultContinuation) and encapsulates a collection of Results corresponding to the query; however, that collection may or may not be the complete set of data!

For the sake of example, consider a page size of 1200 rows. As you may recall from the last post, there are occasions where you will not get all of the entities in a single request. There’s a hard limit of 1000 entities per response, and there are additional scenarios that have similar consequences and involve far fewer rows, such as execution timeouts or results crossing partition boundaries.

At the very least, due to the 1000-entity limit, the ResultSegment you get after the asynchronous call to BeginExecuteSegmented will contain only 1000 rows in its Results collection (ideally, at least; see the Storage Client bug noted below), but it will have HasMoreResults set to true, because it knows the desired request has not been fulfilled: another 200 entities are needed.

That’s where GetNext (and its asynchronous analogs) come in. You use GetNext to make repeated requests to flush all results for the query, here 1200 rows. Once HasMoreResults is false, the query results have been completely retrieved, and a request for the next page of 1200 rows would then require another call to BeginExecuteSegmented, passing in the ContinuationToken stored in the previous ResultSegment as the starting point.

Think of it as a nested loop; pseudocode for accessing the complete query results would look something like this:

```
token = null
repeat
    call Begin/EndExecuteSegmented with token
    while HasMoreResults
        call GetNext to retrieve additional results
    set token = ContinuationToken
until token is null
```

There’s a bug in the Storage Client (I'm using Version 1.2) where the entity limit is set to 100 versus 1000, so if you are running a tool such as Fiddler against the scenario above, you’ll actually see 12 requests and 12 responses of 100 entities returned. The logic to traverse the segments is still accurate, just the maximum segment size is off by an order of magnitude.
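The nested loop in the pseudocode can be made concrete with an in-memory simulation. This is a sketch under stated assumptions, not Storage Client code: a hypothetical `FetchSegment` stands in for one HTTP round trip capped at a server-side entity limit, a hypothetical `GetPage` plays the roles of Begin/EndExecuteSegmented (first fetch) and GetNext (each further fetch), and the continuation token is modeled as a nullable row index rather than a ResultContinuation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory table of 2,500 entities (just their row numbers).
int[] table = Enumerable.Range(0, 2500).ToArray();
int requestCount = 0;

// Outer loop of the pseudocode: fetch 1,200-row pages until the token is null.
var pageSizes = new List<int>();
int? continuation = null;
do
{
    var (page, nextToken) = GetPage(continuation, pageSize: 1200, serverLimit: 1000);
    pageSizes.Add(page.Count);
    continuation = nextToken;
} while (continuation != null);
Console.WriteLine($"pages: {string.Join(", ", pageSizes)}; requests: {requestCount}");
// pages: 1200, 1200, 100; requests: 5

// Simulates one HTTP round trip: at most serverLimit rows starting at `start`,
// plus a continuation token (next row index), or null when the table is exhausted.
(List<int> Rows, int? Token) FetchSegment(int start, int maxRows, int serverLimit)
{
    requestCount++;
    List<int> rows = table.Skip(start).Take(Math.Min(maxRows, serverLimit)).ToList();
    int next = start + rows.Count;
    return (rows, next < table.Length ? next : (int?)null);
}

// One page: the first FetchSegment plays the role of Begin/EndExecuteSegmented,
// and each further iteration the role of GetNext while more results remain.
(List<int> Page, int? Token) GetPage(int? token, int pageSize, int serverLimit)
{
    var page = new List<int>();
    int? next = token ?? 0; // a null token means "start from the beginning"
    while (next is int start && page.Count < pageSize)
    {
        var (rows, nextToken) = FetchSegment(start, pageSize - page.Count, serverLimit);
        page.AddRange(rows);
        next = nextToken;
    }
    return (page, next);
}
```

Each 1200-row page costs two round trips (1000 + 200), and the last page needs only one. Passing `serverLimit: 100` instead mimics the version 1.2 bug: a single 1200-row page then takes 12 requests, matching what Fiddler shows. Because the inner loop only relies on the token, it also copes with short segments caused by timeouts or partition boundaries.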

What’s essentially happening is that the “server” acknowledges your request for a bunch of data, but decides that it must make multiple trips to get it all to you. It’s kind of like ordering five glasses of water for your table in a restaurant: the waiter gets your order for five, but having only two hands (and no tray) makes three trips to the bar to fulfill your request. BeginExecuteSegmented represents your order, and GetNext represents the waiter’s subsequent trips to fulfill it.

If all this is still a bit fuzzy, stick with me; hopefully it will become clearer as we apply this to the Azure@home sample.

Updating Azure@home

The general structure of the new status.aspx implementation using CloudTableQuery will be roughly the same as what we created last time; I’ll call the page statusAsync.aspx from here on out to avoid confusion.

AsyncPageData class

As before, session state is used to retain the lists of completed and in-progress work units, as well as the continuation token marking the current page of results. Last time the class was PageData, and this time I’ll call it AsyncPageData:

```csharp
protected class AsyncPageData
{
    // In-progress work units
    public List<WorkUnit> InProgressList = new List<WorkUnit>();
    // Completed work units
    public List<CompletedWorkUnit> CompletedList = new List<CompletedWorkUnit>();
    // Continuation token marking the current page of results
    public ResultContinuation ContinuationToken = null;
    // Whether the query results have been completely retrieved
    public Boolean QueryResultsComplete = false;
}
```

The only difference from PageData is the use of the ResultContinuation class to track the continuation token, versus individual string values for the partition key and row key. That continuation token is captured from the ResultSegment described earlier.

RetrieveCompletedUnits

If you review the refactoring we did in the last post, you’ll see that the logic to return one page of data is already well encapsulated in the RetrieveCompletedUnits method; in fact, the only code change strictly necessary is in that method. Here’s an updated implementation, which works but, as you’ll read later in this post, is not ideal.

54 lines of C# code excised for brevity.

Now this certainly works, but we can do better.

The ASP.NET Page Lifecycle Revisited

Recall in my last post, I laid out a graphic showing the page lifecycle. That lifecycle all occurs on an ASP.NET thread, specifically a worker thread from the CLR thread pool. The size of the thread pool then determines the maximum number of concurrent ASP.NET requests at any given time. In ASP.NET 3.5 and 4, the default size of the thread pool is 100 worker threads per processor.

Now consider what happens with the implementation I just laid out above. The page request, and the execution of RetrieveCompletedUnits, occurs on a thread pool worker thread. At line 22 of that listing, an asynchronous request begins, using an I/O completion port under the covers, and the worker thread is left blocking at line 48, just waiting for the asynchronous request to complete. If there are (n x CPUs, where n is the per-processor thread count) such requests being processed when a request comes in for a quick and simple ASP.NET page, what happens? Chances are the server will respond with a “503 Service Unavailable” message, even though all the web server may be doing is waiting for external I/O processes – like an HTTP request to Azure table storage – to complete. The server is busy… busy waiting!

So what has the asynchronous implementation achieved? Not much! First of all, the page doesn’t return to the user any quicker, and in fact may be marginally (though probably imperceptibly) slower because of the threading choreography. And then, as described above, we haven’t really done anything to improve scalability of our application. So why bother?

Well, the idea of using asynchronous calls was the right one, but the implementation was flawed. In ASP.NET 2.0, asynchronous page support was added to the ASP.NET page lifecycle. This support allows you to register “begin” and “end” event handlers to be processed asynchronously at a specific point in the lifecycle known as the “async point” – namely between PreRender and PreRenderComplete.

Since the asynchronous I/O is handled via I/O completion ports (which underlie the familiar BeginExecute/EndExecute pattern in .NET), the ASP.NET worker thread can be returned to the pool as soon as the I/O request starts. That worker thread is then free to service potentially many additional requests instead of sitting around waiting for the I/O on the current page to complete. When the asynchronous I/O operation is complete, the next available worker thread can pick right up and resume the rest of the page creation.

Now here’s the key part to why this improves scalability: the thread listening on the I/O completion port is an I/O thread versus a worker thread, so it’s not cannibalizing a thread that could be servicing another page request. Additionally, a single I/O completion port (and therefore I/O thread) could be handling multiple outstanding I/O requests, so with I/O threads there isn’t necessarily a one-to-one correspondence to ASP.NET page requests as there is with worker threads.

To summarize: by using the asynchronous page lifecycle, you’re deferring the waiting to specialized non-worker threads, so you can put the worker threads to use servicing additional requests versus just waiting around for some long running, asynchronous event to complete.

Implementing ASP.NET Asynchronous Pages

Implementing ASP.NET asynchronous pages is much easier than explaining how they work! First off, you need to indicate the page will perform some asynchronous processing by adding the Async property to the @ Page directive. You can also set an AsyncTimeout property so that page processing will terminate if it hasn’t completed within the specified duration (defaulting to 45 seconds).

```
<%@ Page Language="C#" AutoEventWireup="true" CodeBehind="StatusAsync.aspx.cs"
    Inherits="WebRole.StatusAsync" EnableViewState="true" Async="true" %>
```

Setting Async to true is important! If not set, the tasks registered to run asynchronously will still run, but the main thread for the page will block until all of the asynchronous tasks have completed, and then that main thread will resume the page generation. That, of course, negates the benefit of releasing the request thread to service other clients while the asynchronous tasks are underway.

There are two different mechanisms to specify the asynchronous methods: using PageAsyncTask or invoking AddOnPreRenderCompleteAsync. In the end, they accomplish the same thing, but PageAsyncTask provides a bit more flexibility:

- enabling you to specify a callback method if the page times out,

- retaining the HttpContext across threads, including things like User.Identity,

- allowing you to pass in state to the asynchronous method, and

- allowing you to queue up multiple operations.

I like flexibility, so I’m opting for that approach. If you want to see AddOnPreRenderCompleteAsync in action, check out Scott Densmore’s blog post, which similarly deals with paging in Windows Azure.

Registering Asynchronous Tasks

Each PageAsyncTask specifies up to three event handlers: the method to call to begin the asynchronous task, the method to call when the task completes, and optionally a method to call when the task does not complete within a time-out period (specified via the AsyncTimeout property of the @Page directive). Additionally, you can specify whether or not multiple asynchronous tasks can run in parallel.

When you register a PageAsyncTask via Page.RegisterAsyncTask, it will be executed at the ‘async point’ described above – between PreRender and PreRenderComplete. Multiple tasks will be executed in parallel if the ExecuteInParallel property of each task is set to true via the constructor.

In the current implementation of status.aspx, there are two synchronous methods defined to retrieve the work unit data, RetrieveInProgressUnits and RetrieveCompletedUnits. For the page to execute those tasks asynchronously, each invocation of those methods (there are several) must be replaced by registering a corresponding PageAsyncTask. …

Jim continues with more C# examples.

Wrapping it up

I’ve bundled the complete code for the implementation of the asynchronous page retrieval as an attachment to this blog post. I also wanted to provide a few additional resources that I found invaluable in understanding how asynchronous paging in general works in ASP.NET:

Asynchronous Pages in ASP.NET 2.0, Jeff Prosise

ASP.NET Thread Usage on IIS 7.0 and 6.0, Thomas Marquardt

Performing Asynchronous Work, or Tasks, in ASP.NET Applications, Thomas Marquardt

Multi-threading in ASP.NET, Robert Williams

Improve scalability in ASP.NET MVC using Asynchronous requests, Steve Sanderson

For the next post, I’ll be moving on (finally!) to the Web Role implementation in Azure@home.

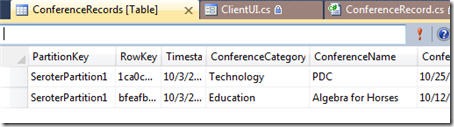

Richard Seroter continued his table storage comparison series with Comparing AWS SimpleDB and Windows Azure Table Storage – Part II on 10/3/2010:

In my last post, I took an initial look at the Amazon Web Services (AWS) SimpleDB product and compared it to the Microsoft Windows Azure Table storage. I showed that both solutions are relatively similar in that they embrace a loosely typed, flexible storage strategy and both provide a bit of developer tooling. In that post, I walked through a demonstration of SimpleDB using the AWS SDK for .NET.

In this post, I’ll perform a quick demonstration of the Windows Azure Table storage product and then conclude with a few thoughts on the two solution offerings. Let’s get started.

Windows Azure Table Storage

First, I’m going to define a .NET object that represents the entity being stored in the Azure Table storage. Remember that, as pointed out in the previous post, the Azure Table storage is schema-less, so this new .NET object is just a representation used for creating and querying the Azure Table. It has no bearing on the underlying Azure Table structure. However, accessing the Table through a typed object differs from AWS SimpleDB, which has a fully typeless .NET API model.

I’ve built a new WinForm .NET project that will interact with the Azure Table. My Azure Table will hold details about different conferences that are available for attendance. My “conference record” object inherits from TableServiceEntity.

Notice that I have both a partition key and row key value. The PartitionKey attribute is used to identify and organize data entities. Entities with the same PartitionKey are physically co-located, which, in turn, helps performance. The RowKey attribute uniquely identifies a row within a given partition. The PartitionKey + RowKey must be a unique combination.
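The uniqueness rule can be modeled in memory with a dictionary keyed by the (PartitionKey, RowKey) pair. This is only an illustration: the real service enforces the rule itself and rejects a duplicate insert with an error (HTTP 409 Conflict) rather than returning false. The `TryInsert` helper, the use of the category as the partition key, and the sample conference names are all hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// In-memory stand-in for a table: each entity is keyed by its (PartitionKey, RowKey) pair.
var table = new Dictionary<(string PartitionKey, string RowKey), string>();

TryInsert("Technology", "1", "Conference A");
TryInsert("Technology", "2", "Conference B"); // same partition, different RowKey: allowed
TryInsert("Business", "1", "Conference C");   // same RowKey, different partition: allowed
bool duplicateAccepted = TryInsert("Technology", "1", "Conference D"); // same pair: rejected

// Entities that share a PartitionKey form one group (co-located by the service).
Dictionary<string, int> rowsPerPartition = table.Keys
    .GroupBy(key => key.PartitionKey)
    .ToDictionary(group => group.Key, group => group.Count());

Console.WriteLine($"duplicate accepted: {duplicateAccepted}; Technology rows: {rowsPerPartition["Technology"]}");
// duplicate accepted: False; Technology rows: 2

// Hypothetical helper: refuses to overwrite an existing (PartitionKey, RowKey) pair.
bool TryInsert(string partitionKey, string rowKey, string conferenceName) =>
    table.TryAdd((partitionKey, rowKey), conferenceName);
```

Note that RowKey "1" appears twice without conflict because the pairs differ; only the repeated ("Technology", "1") pair is refused.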

Next up, I built a table context class which is used to perform operations on the Azure Table. This class inherits from TableServiceContext and has operations to get, add and update ConferenceRecord objects from the Azure Table.

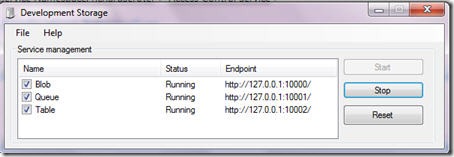

In my WinForm code, I have a class variable of type CloudStorageAccount which is used to interact with the Azure account. When the “connect” button is clicked on my WinForm, I establish a connection to the Azure cloud. This is where Microsoft’s tooling is pretty cool. I have a local “fabric” that represents the various Azure storage options (table, blob, queue) and can leverage this fabric without ever provisioning a live cloud account.

Next, I connect to my development storage through the CloudStorageAccount.

After connecting to the local (or cloud) storage, I can create a new table using the ConferenceRecord type definition, URI of the table, and my cloud credentials.

Now I instantiate my table context object which will add new entities to my table.

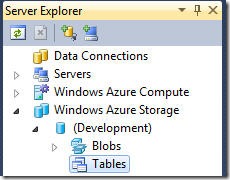

Another nice tool built into Visual Studio 2010 (with the Azure extensions) is the Azure viewer in the Server Explorer window. Here I can connect to either the local fabric or the cloud account. Before I run my application for the first time, we can see that my Table list is empty.

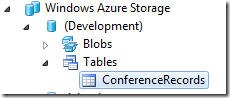

If I start up my application and add a few rows, I can see my new Table.

I can do more than just see that my table exists. I can right-click that table and choose to View Table, which pulls up all the entities within the table.

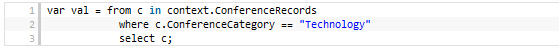

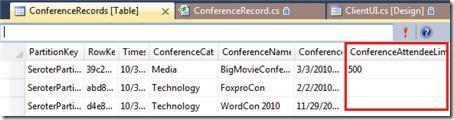

Performing a lookup from my Azure Table via code is fairly simple: I can either loop through all the entities via a “foreach” and a conditional, or I can use LINQ. Here I grab all conference records whose ConferenceCategory is equal to “Technology”.

Now, let’s prove that the underlying storage is indeed schema-less. I’ll go ahead and add a new attribute to the ConferenceRecord object type and populate its value in the WinForm UI. A ConferenceAttendeeLimit of type int was added to the class and then assigned a random value in the UI. Sure enough, my underlying table was updated with the new “column” and data value.

I can also update my LINQ query to look for all conferences where the attendee limit is greater than 100, and only my latest record (the one with the new column) is returned.
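Richard's query code isn't reproduced here, so the sketch below runs the two LINQ queries described above against an in-memory array with LINQ to Objects; against the real table, the data services client translates the query into a request to the service instead. ConferenceCategory and ConferenceAttendeeLimit come from the text, while ConferenceName and the sample values are hypothetical. A null limit models older entities written before the new property existed.

```csharp
using System;
using System.Linq;

// Hypothetical in-memory stand-ins for ConferenceRecord entities.
var conferences = new[]
{
    new { ConferenceName = "Conference A", ConferenceCategory = "Technology", ConferenceAttendeeLimit = (int?)null },
    new { ConferenceName = "Conference B", ConferenceCategory = "Business",   ConferenceAttendeeLimit = (int?)null },
    new { ConferenceName = "Conference C", ConferenceCategory = "Technology", ConferenceAttendeeLimit = (int?)250 },
};

// Query 1: all conferences in the "Technology" category.
var technology = from c in conferences
                 where c.ConferenceCategory == "Technology"
                 select c.ConferenceName;

// Query 2: conferences whose attendee limit exceeds 100. A null limit compares
// as false, so only the record written after the property was added matches.
var large = from c in conferences
            where c.ConferenceAttendeeLimit > 100
            select c.ConferenceName;

Console.WriteLine(string.Join(", ", technology)); // Conference A, Conference C
Console.WriteLine(string.Join(", ", large));      // Conference C
```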

Summary of Part II

In this second post of the series, we’ve seen that the Windows Azure Table storage product is relatively straightforward to work with. I find the AWS SimpleDB documentation to be better (and more current) than the Windows Azure storage documentation, but the Visual Studio-integrated tooling for Azure storage is really handy. AWS has a lower cost of entry as many AWS products don’t charge you a dime until you reach certain usage thresholds. This differs from Windows Azure where you pretty much pay from day one for any type of usage.

All in all, both of these products are useful for high-performing, flexible data repositories. I’d definitely recommend getting more familiar with both solutions.

David Tesar described Data Security in Azure Part 1 of 2 in this recent 00:13:59 video segment from TechNet Edge:

Windows Azure provides the extensible and highly manageable platform to create, run, scale, upgrade and retire your applications. In this interview, Charlie Kaufman talks about various methods and tools for securing your application data in Windows Azure. The talk covers methods for securing your Azure Storage accounts and your data while moving it to the cloud, protocols for securing requests to and responses from Azure Storage, platform-provided methods for ensuring data integrity, and the distribution of cryptographic public keys between Azure roles and the Azure Fabric Controller.

Explore Windows Azure options at http://bit.ly/TryAzure

I find it strange that Microsoft still hasn’t standardized the pronunciation of “Azure.”


SQL Azure Database, Codename “Dallas” and OData

No significant OData articles so far today.

Steve Yi posted Video: Extending SQL Azure data to SQL Compact using Sync Framework 2.1 to the SQL Azure Team blog on 10/4/2010:

This week we will be focusing on the Sync Framework, and to kick it off, Liam Cavanagh has posted a webcast entitled "Extending SQL Azure data to SQL Compact using Sync Framework 2.1". In this video he shows you how to modify the code from the original webcast to allow a SQL Compact database to synchronize with a SQL Azure database.

In the corresponding blog post he includes the main code (program.cs) associated with this console application, which allows him to synchronize the Customer and Product tables from the SQL Azure AdventureWorks databases to SQL Compact.

I wonder what happened to Walter Wayne Berry. Steve Yi appears to have taken his place as chief SQL Azure blog spokesperson.

Jonathan Lewis performs a detailed investigation of SQL Server clustered indexes in his Oracle to SQL Server: Putting the Data in the Right Place article of 9/17/2010 (missed when posted):

Jonathan Lewis is an Oracle expert who is recording his exploration of SQL Server in a series of articles. In this fourth part, he turns his attention to clustered indexes, and the unusual requirement SQL Server seems to have for the regular rebuilding of indexes.

In the previous article in this series, I investigated SQL Server's strategy for free space management in heap tables, and concluded that SQL Server does not allow us to specify the equivalent of a fill factor for a heap table. This means that updates to heap tables will probably result in an unreasonable amount of row movement, and so lead to an unreasonable performance overhead when we query the data.

In this article, it's time to contrast this behaviour with that observed for clustered indexes, and to find out how (or whether) clustered indexes avoid the update threat suffered by heap tables. There is an important reason behind this investigation – and it revolves round a big (possibly cultural) difference between SQL Server and Oracle. Although there are always special cases (and a couple of bug-driven problems), indexes in Oracle rarely need to be rebuilt – but in the SQL Server world I keep getting told that it’s absolutely standard (and necessary) practice to rebuild lots of indexes very regularly.

Ultimately I want to find out if regular index rebuilding really is necessary, why it’s necessary, and when it can be avoided. I'm fairly certain that I’m not going to be able to find the answers in the space of a single article – but I might be able to raise a few interesting questions. …

Jonathan continues with an analysis of fill factor and index utilization, which might be of interest to developers working with large SQL Azure tables.


AppFabric: Access Control and Service Bus

Vittorio Bertocci (@vibronet) reported that the New Update of the Windows Azure Training Kit Contains a new ACS HOL in a 10/5/2010 post:

We are finally out with one update of the Windows Azure Training Kit! You can download it from here, as usual.

The kit contains tons of news; you can read all about it in James’ update. My contribution this time is the introductory lab to the new ACS, which will show up in the next update to the identity training kit as well.

This time we wanted to keep things super-simple, hence we focused on the absolute minimum: handling multiple IPs, basic claims transformation and customization of the sign-in experience.

This is by far the simplest identity lab we’ve ever produced, and gives just a taste of the basic new capabilities in the labs release of ACS; subsequent labs will get deeper and tackle more advanced scenarios. In the meanwhile… have fun!

David Kearns reviewed a Cyber-Ark white paper and VibroNet’s new WIF book in his Book, white paper tackle IdM issues post of 10/1/2010 to Network World’s Security blog:

There's a new book and a new white paper I want to tell you about today, and both should be of interest to most of you.

First, on the cloud computing front, is a new white paper from the folks at Cyber-Ark called "Secure Your Cloud and Outsourced Business with Privileged Identity Management". Note that you will need to register to download and view the paper -- but it's worth it.

According to PR guru Liz Campbell (from Fama PR on behalf of Cyber-Ark): "As outsourcing and the various instantiations of cloud-based services become increasingly essential to business operations, enterprises need to find ways to exercise governance over their critical assets and operations by extending control over privilege, both internally and externally. Service providers, for their part, must be prepared to address their clients' privilege/risk management requirements to maintain a strong competitive position in the market. This paper reviews the '10 steps for securing the extended enterprise'."

It talks (in depth) about privileged access, how it's incorporated (or should be) in the cloud and how to discover if your cloud service provider understands the benefits and problems of privileged account management and privileged user management. Very useful.

On the book front, my good friend Vittorio Bertocci (he's a senior architect evangelist with Microsoft) has just released "Programming Windows Identity Foundation". This is the book that corporate programmers, and IT code dabblers, need to bring identity services into their home-grown services and applications. As the blurb states: "Get hands-on guidance designed to help you put the newest .NET Framework component -- Windows Identity Foundation, the identity and access logic for all on-premises and cloud development -- to work." And that's true.

Peter Kron, who's a principal software developer on the Windows Identity Foundation team (and admits to keeping a well-thumbed proof copy on his desk), said this:

"In this book, Vittorio takes the reader through basic scenarios and explains the power of claims. He shows how to quickly create a simple claims-based application using WIF. Beyond that, he systematically explores the extensibility points of WIF and how to use them to handle more sophisticated scenarios such as Single Sign-on, delegation, and claims transformation, among others.

"Vittorio goes on to detail the major classes and methods used by WIF in both passive browser-based applications and active WCF services. Finally he explores using WIF as your applications move to cloud-based Windows Azure roles and RIA futures."

If you're working with identity, services and applications in a Windows environment you need this book.

I agree with David’s review of Vittorio’s book; haven’t read the white paper yet.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bruno Terkaly explained How to teach cloud computing – The Windows Azure Platform – Step 1 (Getting your environment ready) by leveraging the newly updated Windows Azure Training Kit in this 10/5/2010 post:

Huge Opportunity For Developers

IDC predicts that the cloud service market will grow to $55 billion by 2014 at a CAGR (Compound Annual Growth Rate) of 29%. That's right - about 30%. If you aren't learning about cloud computing, you are going to miss [a big] opportunity.

Traditional software is expected to grow at a CAGR of 5%, to a market size of $460 billion. Even though the cloud market will be only about 12% of the traditional software market by 2014, its accelerated growth rate means there will be a time when cloud takes the leadership position in the marketplace.
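The "there will be a time" claim follows from compounding. The sketch below checks the 12% figure and, under the strong assumption (not made in the source) that both CAGRs stay constant indefinitely, finds the first year in which the cloud market would be the larger one. `CrossoverYear` is a hypothetical helper written for this illustration.

```csharp
using System;

// IDC figures quoted above: 2014 market sizes (billions of US dollars) and CAGRs.
const double Cloud2014 = 55, CloudCagr = 0.29;
const double Traditional2014 = 460, TraditionalCagr = 0.05;

double cloudShare = Cloud2014 / Traditional2014; // about 0.12, the "12%" above
int crossover = CrossoverYear(Cloud2014, CloudCagr, Traditional2014, TraditionalCagr, 2014);
Console.WriteLine($"2014 cloud share: {cloudShare:P1}; first year cloud leads: {crossover}");

// Assumption: both growth rates hold constant every year.
// Compounds each market yearly until the cloud market is the larger one.
static int CrossoverYear(double cloud, double cloudRate, double traditional, double traditionalRate, int year)
{
    while (cloud <= traditional)
    {
        cloud *= 1 + cloudRate;
        traditional *= 1 + traditionalRate;
        year++;
    }
    return year;
}
```

Under that assumption the crossover lands in 2025; real growth rates rarely stay fixed for a decade, so treat this as an illustration of the compounding argument rather than a forecast.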

Everybody agrees the cloud makes sense because the cloud offers:

- Pay-per-use

- Easy/fast deployment

The challenges remain:

- Security

- Availability

- Performance and Cost

Learn to Teach Cloud Computing

If your goal is to teach cloud computing, then the next several posts will jump-start you and get you in front of a group teaching Windows Azure.

What is Azure?

Windows Azure provides developers with on-demand compute and storage to host, scale and manage Web applications on the Internet through Microsoft data centers.

How about an excellent and to-the-point FAQ? Well then, try http://www.microsoft.com/windowsazure/faq/.

These posts speak to my heart – it is what I am (or was) – a teacher, an instructor for many years. I’ve been a developer for many years as well.

I’ve taught all kinds of classes in many, many countries and dozens of cities throughout the US, Canada, and Mexico. I’ve taught in Asia (Korea, Taiwan, Japan, and Singapore). I’ve taught all over Latin America, including Costa Rica, Brazil, Mexico. My point is simple. I want to continue teaching, and better yet, teach you how to teach Azure.

Teach Azure Now

The point of my series of posts is to help individuals and companies teach cloud computing. It will start from the beginning and the posts will help you teach others how to code for Azure. I need this for myself, as an evangelist, to do my job.

I will walk you through some of the labs in the training kit and even talk about a couple of cloud projects I’ve done, some of which I’ve blogged about already.

The audience I’m targeting is basic .NET programmers who know C#.

Windows Azure Platform Training Kit

The point is to leverage any resources you can to teach Azure. Let’s start with some training kits.

The link is here: http://www.microsoft.com/downloads/details.aspx?FamilyID=413E88F8-5966-4A83-B309-53B7B77EDF78&displaylang=en

The first thing we want to do is look at the training kit and get started. Notice that we have a lot of great sections to look at:

- Home

- Hands-on

- Labs

- Demos

- Samples

- Presentations

- Prerequisites

- Online Resources

Also, install the Windows Azure AppFabric SDK V1.0

This is the end of Step 1 – Getting your environment ready.

David Aiken (@TheDavidAiken) reported the availability of an Updated Windows Azure Training Kit on 10/5/2010:

Yesterday my old team (yes, I’ve changed jobs) released the latest version of the Windows Azure platform training kit. The kit now comes in two versions, one for Visual Studio 2008 developers and one for Visual Studio 2010 developers. You can download the kit from http://www.microsoft.com/downloads/en/details.aspx?FamilyID=413E88F8-5966-4A83-B309-53B7B77EDF78&displaylang=en.

Here is what is new in the training kit:

- Updated all hands-on labs and demo scripts for Visual Studio 2010, the .NET Framework 4, and the Windows Azure Tools for Visual Studio version 1.2 release

- Added a new hands-on lab titled "Introduction to the AppFabric Access Control Service (September 2010 Labs Release)"

- Added a new hands-on lab "Debugging Applications in Windows Azure"

- Added a new hands-on lab "Asynchronous Workload Handling"

- Added a new exercise to the "Deploying Applications in Windows Azure" hands-on lab to show how to use the new tools to directly deploy from Visual Studio 2010.

- Added a new exercise to the "Introduction to the AppFabric Service Bus" hands-on lab to show how to connect a WCF Service in IIS 7.5 to the Service Bus

- Updated the "Introduction to AppFabric Service Bus" hands-on lab based on feedback and split the lab into 2 parts

- All of the presentations have also been updated and refactored to provide content for a 3 day training workshop.

- Updated the training kit navigation pages to include a 3 day agenda, a change log, and an improved setup process for hands-on labs.

So go download the kit right now and keep your skills sharp.


Maarten Balliauw claimed “Designing applications and solutions for cloud computing and Windows Azure requires a completely different way of considering the operating costs” in his Windows Azure: Cost Architecting for Windows Azure article for the October 2010 issue of TechNet Magazine:

Cloud computing and platforms like Windows Azure are billed as “the next big thing” in IT. This certainly seems true when you consider the myriad advantages to cloud computing.

Computing and storage become an on-demand story that you can use at any time, paying only for what you effectively use. However, this also poses a problem. If a cloud application is designed like a regular application, chances are that its costs will not turn out as expected.

Different Metrics

In traditional IT, one would buy a set of hardware (network infrastructure, servers and so on), set it up, go through the configuration process and connect to the Internet. It’s a one-time investment, with an employee to turn the knobs and bolts—that’s it.

With cloud computing, a cost model replaces that investment model. You pay for resources like server power and storage based on their effective use. With a cloud platform like Windows Azure, you can expect the following measures to calculate your monthly bill:

- Number of hours you’ve reserved a virtual machine (VM)—meaning you pay for a deployed application, even if it isn’t currently running

- Number of CPUs in a VM

- Bandwidth, measured per GB in / out

- Amount of GB storage used

- Number of transactions on storage

- Database size on SQL Azure

- Number of connections on Windows Azure platform AppFabric

You can find all the pricing details on the Windows Azure Web site at microsoft.com/windowsazure/offers. As you can see from the list here, that’s a lot to consider.

Limiting Virtual Machines

Here’s how it breaks down from a practical perspective. While limiting the number of VMs you have running is a good way to save costs, for Web Roles it makes sense to have at least two VMs for availability and load balancing. Use the Windows Azure Diagnostics API to measure CPU usage, the number of HTTP requests and memory usage in these instances, and scale your application down when appropriate.

Every instance of any role running on Windows Azure adds its own hours to the monthly bill: running two instances for a month costs twice as much as running one. For example, having three role instances running on average (sometimes two, sometimes four) will be 25 percent cheaper than running four instances all the time with almost no workload.
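The 25 percent figure follows directly from counting instance-hours. This is a minimal sketch. It assumes a hypothetical load profile of half the month at two instances and half at four, and uses the 720-hour month that appears later in this article.

```python
HOURS_PER_MONTH = 720  # 30 days x 24 hours

def monthly_instance_hours(instances_per_hour):
    """Sum billed instance-hours: every deployed instance bills for every hour."""
    return sum(instances_per_hour)

# Hypothetical load profile: half the month at 2 instances, half at 4 (average 3)
elastic = [2] * (HOURS_PER_MONTH // 2) + [4] * (HOURS_PER_MONTH // 2)
# Always running 4 instances, regardless of load
fixed = [4] * HOURS_PER_MONTH

saving = 1 - monthly_instance_hours(elastic) / monthly_instance_hours(fixed)
print(monthly_instance_hours(elastic), monthly_instance_hours(fixed), f"{saving:.0%}")
```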

For Worker Roles, it also makes sense to have at least two role instances to do background processing. This will help ensure that when one is down for updates or a role restart occurs, the application is still available. Out of the box, the Windows Azure tooling configures a Worker Role to do just one task.

If you’re running a photo-sharing Web site, for example, you’ll want to have a Worker Role for resizing images and another for sending out e-mail notifications. Using the “at least two instances” rule would mean running four Worker Role instances, which would result in a sizeable bill. Resizing images and sending out e-mails don’t actually require that amount of CPU power, so having just two Worker Roles for doing both tasks should be enough. That’s a savings of 50 percent on the monthly bill for running Windows Azure. It’s also fairly easy to implement a threading mechanism in a Worker Role, where each thread performs its dedicated piece of work.
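The threading idea can be sketched as one Worker Role process that runs a dedicated thread per task. This is a language-neutral illustration, not the article's code. Python's `threading` and `queue` modules stand in for .NET threads and Windows Azure queues, and the handlers `resize_image` and `send_email` are hypothetical.

```python
import queue
import threading

# Stand-ins for two Windows Azure queues (hypothetical; a real Worker Role would poll storage queues)
image_jobs = queue.Queue()
email_jobs = queue.Queue()
results = []
results_lock = threading.Lock()

def resize_image(name):   # hypothetical task handler
    return f"resized {name}"

def send_email(address):  # hypothetical task handler
    return f"mailed {address}"

def worker_loop(jobs, handler):
    """Process jobs from one queue until a None sentinel arrives."""
    while True:
        job = jobs.get()
        if job is None:
            break
        outcome = handler(job)
        with results_lock:
            results.append(outcome)

# One Worker Role instance, two dedicated threads instead of two separate roles
threads = [
    threading.Thread(target=worker_loop, args=(image_jobs, resize_image)),
    threading.Thread(target=worker_loop, args=(email_jobs, send_email)),
]
for t in threads:
    t.start()

for photo in ["a.jpg", "b.jpg"]:
    image_jobs.put(photo)
email_jobs.put("user@example.com")

# Signal shutdown; each queue is FIFO, so the sentinel arrives after the real jobs
image_jobs.put(None)
email_jobs.put(None)
for t in threads:
    t.join()

print(sorted(results))
```

Running two such instances gives the "at least two" availability rule for both tasks, at the cost of two Worker Roles instead of four.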

Windows Azure offers four sizes of VM: small, medium, large and extra large. The differences between each size are the number of available CPUs, the amount of available memory and local storage, and the I/O performance. It’s best to think about the appropriate VM size before actually deploying to Windows Azure. You can’t change it once you’re running your application.

When you receive your monthly statement, you’ll notice all compute hours are converted into small instance hours when presented on your bill. For example, one clock hour of a medium compute instance would be presented as two small compute instance hours at the small instance rate. If you have two medium instances running, you’re billed for 720 hours x 2 x 2.

Consider this when sizing your VMs. You can realize almost the same compute power using small instances. Let’s say you have four of them billed at 720 hours x 4. That price is the same. You can scale down to two instances when appropriate, bringing you to 720 hours x 2. If you don’t need more CPUs, more memory or more storage, stick with small instances because they can scale on a more granular basis than larger instances.
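The small-instance-hour conversion can be written out explicitly. The medium multiplier of 2 comes from the text above. The large (4) and extra large (8) multipliers follow the 2010 rate card's doubling pattern and should be treated as an assumption to verify.

```python
HOURS_PER_MONTH = 720

# Small-instance-hour multiplier per VM size (large/extra large values are an assumption)
SMALL_HOUR_MULTIPLIER = {"small": 1, "medium": 2, "large": 4, "extralarge": 8}

def billed_small_hours(size, instances, hours=HOURS_PER_MONTH):
    """Instance-hours as they appear on the bill, expressed in small-instance hours."""
    return hours * instances * SMALL_HOUR_MULTIPLIER[size]

two_medium = billed_small_hours("medium", 2)  # 720 x 2 x 2
four_small = billed_small_hours("small", 4)   # 720 x 4, same price
two_small = billed_small_hours("small", 2)    # after scaling down in a quiet period
print(two_medium, four_small, two_small)
```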

Billing for Windows Azure services starts once you’ve deployed an application, and occurs whether the role instances are active or turned off. You can easily see this on the Windows Azure portal. There’s a large image of a box. “When the box is gray, you’re OK. When the box is blue, a bill is due.” (Thanks to Brian H. Prince for that one-liner.)

When you’re deploying applications to staging or production and turning them off after use, don’t forget to un-deploy the application as well. You don’t want to pay for any inactive applications. Also remember to scale down when appropriate. This has a direct effect on monthly operational costs.

When scaling up and down, it’s best to have a role instance running for at least an hour because you pay by the hour. Spin up multiple worker threads in a Worker Role. That way a Worker Role can perform multiple tasks instead of just one. If you don’t need more CPUs, more memory or more storage, stick with small instances. And again, be sure to un-deploy your applications when you’re not using them.

Bandwidth, Storage and Transactions

Bandwidth and transactions are two tricky metrics. There’s currently no good way to measure these, except by looking at the monthly bill. There’s no real-time monitoring you can consult and use to adapt your application. Bandwidth is the easier of the two to reason about: the less traffic you put over the wire, the lower the cost. It’s as simple as that.

Things get trickier when you deploy applications over multiple Windows Azure regions. Let’s say you have a Web Role running in the “North America” region and a storage account in the “West Europe” region. In this situation, bandwidth for communication between the Web Role and storage will be billed.

If the Web Role and storage were located in the same region (both in “North America,” for example), there would be no bandwidth bill for communication between the Web Role and storage. Keep in mind that when designing geographically distributed applications, it’s best to keep coupled services within the same Windows Azure region.
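The region rule can be expressed as a tiny cost function. This is a simplified sketch. The $0.15/GB rate is an assumed figure, not a quoted price, and the model treats all cross-region traffic at a single rate.

```python
# Assumed per-GB rate for cross-region traffic; check the current price list before relying on it
CROSS_REGION_RATE_PER_GB = 0.15

def inter_service_bandwidth_cost(role_region, storage_region, gb_per_month,
                                 rate=CROSS_REGION_RATE_PER_GB):
    """Role-to-storage traffic: free within one region, billed across regions."""
    if role_region == storage_region:
        return 0.0
    return gb_per_month * rate

same = inter_service_bandwidth_cost("North America", "North America", 100)
split = inter_service_bandwidth_cost("North America", "West Europe", 100)
print(same, split)
```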

When using the Windows Azure Content Delivery Network (CDN), you can take advantage of another interesting cost-reduction measure. CDN is metered in the same way as blob storage, meaning per GB stored per month. Once you initiate a request to the CDN, it will grab the original content from blob storage (including bandwidth consumption, thus billed) and cache it locally.

If you set your cache expiration headers too short, it will consume more bandwidth because the CDN cache will update itself more frequently. When cache expiration is set too long, Windows Azure will store content in the CDN for a longer time and bill per GB stored per month. Think of this for every application so you can determine the best cache expiration time.

The Windows Azure Diagnostics Monitor also uses Windows Azure storage (tables and blobs) for diagnostic data such as performance counters, trace logs, event logs and so on. It transfers this data to your storage account at a specified interval. Transferring every minute will increase the transaction count on storage, leading to extra costs. Setting a longer interval, such as every 15 minutes, will result in fewer storage transactions. The drawback, however, is that the diagnostics data can be up to 15 minutes old.
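The effect of the transfer interval is easy to estimate. In the .NET diagnostics API this interval is set per data buffer through a `ScheduledTransferPeriod` setting. The sketch counts scheduled transfers only and assumes each transfer costs at least one storage transaction, so real counts will be higher. The two instances and three data sources are hypothetical.

```python
MINUTES_PER_MONTH = 30 * 24 * 60

def monthly_transfers(interval_minutes, instances, data_sources):
    """Scheduled diagnostics transfers per month (a lower bound on storage transactions)."""
    transfers_per_source = MINUTES_PER_MONTH // interval_minutes
    return transfers_per_source * instances * data_sources

# Hypothetical deployment: 2 instances, 3 data sources (perf counters, trace logs, event logs)
every_minute = monthly_transfers(1, instances=2, data_sources=3)
every_15 = monthly_transfers(15, instances=2, data_sources=3)
print(every_minute, every_15, every_minute // every_15)
```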

Also, the Windows Azure Diagnostics Monitor doesn’t clean up its data. If you don’t do this yourself, there’s a chance you’ll be billed for a lot of storage containing nothing but old, expired diagnostic data.

Transactions are billed per 10,000. That may sound like a lot, but in practice you’ll use them up quickly, because every operation on a storage account is a transaction. Creating a blob container, listing the contents of a blob container, storing data in a table on table storage and peeking for messages in a queue are all transactions. When storing a blob, for example, you would first check whether the blob container exists. If not, you would have to create it and then store the blob. That’s at least two, possibly three, transactions.

The same counts for hosting static content on blob storage. If your Web site hosts 40 small images on one page, this means 40 transactions. This can add up quickly with high-traffic applications. By simply ensuring a blob container exists at application startup and skipping that check on every subsequent operation, you can cut the number of transactions by almost 50 percent. Be smart about this and you can lower your bill.
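The "almost 50 percent" saving can be demonstrated with a counting stand-in for blob storage. The `CountingBlobStore` class is hypothetical and in-memory. It is not the Windows Azure storage client API; it only counts one transaction per call.

```python
class CountingBlobStore:
    """Hypothetical in-memory stand-in for blob storage that counts billable calls."""

    def __init__(self):
        self.containers = set()
        self.blobs = {}
        self.transactions = 0

    def container_exists(self, name):
        self.transactions += 1
        return name in self.containers

    def create_container(self, name):
        self.transactions += 1
        self.containers.add(name)

    def put_blob(self, container, name, data):
        self.transactions += 1
        self.blobs[(container, name)] = data

def upload_checking_every_time(store, uploads):
    for name, data in uploads:
        if not store.container_exists("photos"):  # extra transaction on every upload
            store.create_container("photos")
        store.put_blob("photos", name, data)

def upload_checking_at_startup(store, uploads):
    if not store.container_exists("photos"):      # done once, at application startup
        store.create_container("photos")
    for name, data in uploads:
        store.put_blob("photos", name, data)

uploads = [(f"img{i}.jpg", b"...") for i in range(1000)]
naive, smart = CountingBlobStore(), CountingBlobStore()
upload_checking_every_time(naive, uploads)
upload_checking_at_startup(smart, uploads)
print(naive.transactions, smart.transactions)
```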

Indexes Can Be Expensive

SQL Azure is an interesting product. You can have a database of 1GB, 5GB, 10GB, 20GB, 30GB, 40GB, or 50GB at an extremely low monthly price. It’s safe and sufficient to just go for a SQL Azure database of 5GB at first. If you’re only using 2GB of that capacity, though, you’re not really in a pay-per-use model, right?

In some situations, it can be more cost-effective to distribute your data across different SQL Azure databases, rather than having one large database. For example, you could have a 5GB and a 10GB database, instead of a 20GB database with 5GB of unused capacity. Splitting data this way can lower your bill if you do it carefully and if your data model allows it.
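The 5GB + 10GB versus 20GB comparison can be priced out. The prices below are the 2010 SQL Azure list prices as best recalled and are an assumption; verify them against the current price list.

```python
# Assumed 2010 SQL Azure monthly prices by database size (GB -> $); verify before use
PRICE = {1: 9.99, 5: 49.95, 10: 99.99, 20: 199.98, 30: 299.97, 40: 399.96, 50: 499.95}

def monthly_cost(sizes_gb):
    """Total monthly price for a set of databases of the given sizes."""
    return round(sum(PRICE[s] for s in sizes_gb), 2)

one_big = monthly_cost([20])   # one 20GB database holding 15GB of data
split = monthly_cost([5, 10])  # the same 15GB spread over two databases
print(one_big, split, round(one_big - split, 2))
```

The catch is that queries spanning both databases must be handled in application code, which is why the split only pays off when the data model allows it.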

Every object consumes storage. Indexes and tables can consume a lot of database storage capacity. Large tables may occupy 10 percent of a database, and some indexes may consume 0.5 percent of a database.

If you divide the monthly cost of your SQL Azure subscription by the database size, you’ll have the cost per unit of storage. Think about the objects in your database. If index X costs you 50 cents per month and doesn’t add much performance, simply throw it away. Half a dollar is not that much, but if you eliminate some tables and some indexes, it can add up. An interesting example of this can be found in the SQL Azure team blog post, “The Real Cost of Indexes” (blogs.msdn.com/b/sqlazure/archive/2010/08/19/10051969.aspx).
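The cost-per-storage-unit reasoning is a simple proportion. This sketch uses hypothetical figures: a 10GB database at an assumed $99.99 per month, and an index occupying 0.5 percent of it (0.05GB). Under those assumptions it reproduces the article's "50 cents" example.

```python
def object_monthly_cost(db_monthly_cost, db_size_gb, object_size_gb):
    """Apportion the database's monthly price to one object by the storage it occupies."""
    cost_per_gb = db_monthly_cost / db_size_gb
    return cost_per_gb * object_size_gb

# Hypothetical: 10GB database at an assumed $99.99/month, index using 0.5 percent (0.05GB)
index_cost = object_monthly_cost(99.99, 10, 0.05)
print(f"${index_cost:.2f} per month")
```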

There is a strong movement in application development to no longer use stored procedures in a database. Instead, the trend is to use object-relational mappers and perform a lot of calculations on data in the application logic.

There’s nothing wrong with that, but it does get interesting when you think about Windows Azure and SQL Azure. Performing data calculations in the application may require extra Web Role or Worker Role instances. If you move these calculations to SQL Azure, you’re saving on a role instance in this situation. Because SQL Azure is metered on storage and not CPU usage, you actually get free CPU cycles in your database.

Developer Impact

The developer who’s writing the code can have a direct impact on costs. For example, when building an ASP.NET Web site that Windows Azure will host, you can share session state across role instances using the Windows Azure storage-backed session state provider. This provider stores session data in the Windows Azure table service, where the amount of storage used, the amount of bandwidth used and the transaction count are all measured for billing. Consider the following code snippet that’s used for determining a user’s language on every request:

```csharp
if (Session["culture"].ToString() == "en-US") { /* .. set to English ... */ }
if (Session["culture"].ToString() == "nl-BE") { /* .. set to Dutch ... */ }
```

Nothing wrong with that? Technically not, but you can optimize this by 50 percent from a cost perspective:

```csharp
string culture = Session["culture"].ToString();
if (culture == "en-US") { /* .. set to English ... */ }
if (culture == "nl-BE") { /* .. set to Dutch ... */ }
```

Both snippets do exactly the same thing. The first snippet reads session data twice, while the latter reads session data only once. This means a 50 percent cost win in bandwidth and transaction count. The same is true for queues. Reading one message at a time 20 times will be more expensive than reading 20 messages at once.
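The queue comparison can be quantified by counting calls. This sketch assumes one transaction per get-messages call, a maximum of 32 messages per call, and a separate delete call for each processed message. Batching reduces the gets but not the deletes.

```python
import math

def dequeue_transactions(message_count, batch_size, max_batch=32):
    """Storage transactions to receive and delete message_count messages."""
    per_call = min(batch_size, max_batch)        # a single get returns at most 32 messages
    gets = math.ceil(message_count / per_call)
    deletes = message_count                      # each processed message is deleted individually
    return gets + deletes

one_at_a_time = dequeue_transactions(20, 1)
batched = dequeue_transactions(20, 20)
print(one_at_a_time, batched)
```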

As you can see, cloud computing introduces different economics and pricing details for hosting an application. While cloud computing can be a vast improvement in operational costs versus a traditional datacenter, you have to pay attention to certain considerations in application architecture when designing for the cloud.

Maarten Balliauw is a technical consultant in Web technologies at RealDolmen, one of Belgium’s biggest ICT companies. His interests are ASP.NET MVC, PHP and Windows Azure. He’s a Microsoft Most Valuable Professional (MVP) for ASP.NET and has published many articles in both PHP and .NET literature such as MSDN Magazine, Belgium, and PHP Architect. Balliauw is a frequent speaker at various national and international events. Find his blog at blog.maartenballiauw.be.

See also Jonathan Lewis’s detailed investigation of SQL Server clustered indexes in his Oracle to SQL Server: Putting the Data in the Right Place article of 9/17/2010 in the SQL Azure Database, Codename “Dallas” and OData section above.

Esther Schindler claimed “Web developers who want to (or must) embrace cloud computing need to learn more than a few new tools. Experts explain the skills you need to hone” in the deck for her feature-length Programming for Cloud Computing: What's Different article of 10/4/2010 for IT World:

The "cloud computing" label is applied to several technologies, and is laden with vendor and industry hype. Yet underneath it all, most of us acknowledge that there's something to all this stuff about utility computing and whatever-as-a-service.

The distinction is especially important to software developers, who know that the next-big-thing is apt to have an impact on their employability. Savvy, career-minded programmers are always trying to improve their skill sets, preparing for the next must-have technology.

What's different about cloud computing, compared to "regular" web development? If a programmer wants to equip herself to take advantage of the cloud in any of its myriad forms -- Software as a Service (SaaS), Platform as a Service (PaaS), or Infrastructure as a Service (IaaS) -- to what technologies should she pay attention? If your company has traditionally done web development but is planning to adopt the cloud in a big way, what unique skill sets should you look for? The learning curve can be even more bewildering because the concerns of a SaaS developer may be very different from those of one who's using a virtual development environment, yet the industry treats "the cloud" as if it's one monolithic technology.

"Developing applications in the cloud is a little like visiting Canada from America. Most everything is the same, but there are small differences that you notice and need to accommodate." -- David J. Jilk, Standing Cloud

For advice, I turned to experienced developers and vendors (the techies who lie awake at night agonizing about these issues, not the marketing people).

Here, therefore, is an overview of the things to pay attention to as you explore the cloud, including tools, scalability, security, architecture design, and expanding infrastructure knowledge.

Know Your Tools

Let's get the easy item out of the way first. Moving to the cloud probably requires you to learn new APIs, such as those for the Google App Engine, SalesForce.com, or whichever software your application will depend on. For most developers, learning a new API, poking at a new database tool, or exploring a new open source platform is a regular occurrence, though you do need to budget time for it.

And, while some development tools have extensions to permit deployment in the cloud, programmers have to learn those features. "In the case of Microsoft SQL Azure, there are technical challenges beyond a 'regular' web development environment," says Alpesh Patel, director of engineering at Ektron. You might have to come up to speed on sparse columns, extended stored procedures, Service Broker, or Common Language Runtime (CLR) and CLR User-Defined Types.

SQL Azure doesn’t support extended stored procedures, Service Broker, the Common Language Runtime (CLR) or CLR User-Defined Types.

Unosquare announced Microsoft hires Unosquare for Azure Research in a 10/3/2010 post to its company blog:

This month, Unosquare started a research project on how to run a popular open source PHP application on Windows Azure and SQL Azure. Most developers agree that running a PHP application in the cloud is not rocket science. Initial research shows it is certainly no walk in the park, either. One of the first obstacles in such a task is packaging PHP and its extensions correctly. Doing so requires a deep understanding of both the file structure of deployed web roles and the php.ini file.

The second challenge is to migrate all SQL server calls using the commonly available MSSQL extension to the less common, native SQLSRV extension. Yet another problem is the migration of session handlers to Azure table storage services. And if these difficulties were not enough, a full implementation of a stream wrapper that is capable of simulating folders in blob storage is also required.

At this point, it is not known if Microsoft will release the results of this research, but it is very probable they will. We will certainly keep you posted.

Johan Hedberg wrote To learn or not to learn [Windows Azure] - it’s about delivering business value on 9/19/2010 (missed when posted):

For a developer, Windows Azure is an opportunity. But it is also an obstacle. It represents a new learning curve much like the ones presented to us by the .NET Framework over the last couple of years (most notably with WF, WCF and WPF/Silverlight). The nice thing about it, though, is that it’s still .NET (if you prefer). There are new concepts – like “tables”, queues and blobs, web and worker roles, cloud databases and service buses – but it also reuses things we have been working with for years, like .NET, SQL, WCF and REST (if you want to).

You might hear that Azure is something that you must learn. You might hear that you are a dinosaur if you don’t embrace the Windows Azure cloud computing paradigm and learn its APIs and Architectural patterns.

Don’t take it literally. Read between the lines and be your own judge based on who you are and what role you hold or want to achieve. In the end it comes down to delivering business value – which often comes down to revenue or cost efficiency.

For the CxO, the cloud should be something to consider. For the architect, Azure should be something grasped and explored. For the Lead Dev, Azure should be something to spend time on. For the Joe Devs of the world, Azure is something you should be prepared for, because it might very well be there in your next project, and if it is, you will be the one who knows it and excels.

As far as developers embracing Windows Azure, I see a lot of parallels with WCF when that launched. Investments were made in marketing it as the new way of developing (in that case primarily services or interprocess communication). At one point developers were told that if they were not among the ones who understood it and did it, they were among the few left behind. Today I see some of the same movement around Azure, and in some cases the same kind of sentiment is brought forward.

I disagree. Instead, my sentiment around this is: it depends. Not everyone needs to learn it today. But you will need to learn it eventually. After all, a few years later, who among us would choose ASMX web services over WCF today? Things change, regardless of how you feel about it. Evolution is funny that way.

Because of the development and breadth of the .NET Framework, together with the diverse offerings surrounding it, a wide range of roles is needed. In my opinion the “One Architect” no longer exists. Much the same with the “One Developer”. Instead, roles exist for different areas, products and technologies, in and around .NET. Specialization has become the norm. I believe Azure adds to this.

I give myself the role of architect (within my field). But I would no sooner take on the task of architecting a Silverlight application than I would pick, as my first choice for a new member of our integration team, someone who has been (solely) a Silverlight developer for the last couple of years.

How is Azure still different, though? Azure (cloud) will, given time, affect almost all of Microsoft’s (and others’) products and technologies (personal opinion, not quoting an official statement). It’s not just a new specialization; it will affect you regardless of your specialization.

You have to learn. You have to evolve. Why not start today?

Abel Avram posted Patterns for Building Applications for Windows Azure to the InfoQ News blog on 9/15/2010 (missed when posted):

J.D. Meier, a Principal Program Manager for the patterns & practices group at Microsoft, has listed a number of ASP.NET application patterns for Windows Azure, showing how the components work in the cloud. He also gave an example of mapping a standard web application to the cloud.

The Canonical Windows Azure Application pattern contains Web Roles taking requests from the web and Worker Roles servicing these requests. Web Roles and Worker Roles are decoupled by the Queue Services as shown in the following figure:

A simpler version of this pattern is ASP.NET Forms Auth to Azure Tables:

Meier lists ten more patterns for building ASP.NET applications in the Azure cloud, some of them using forms authentication, others using claims-based authentication, and others using WCF:

- Pattern #1 - ASP.NET Forms Auth to Azure Tables

- Pattern #2 - ASP.NET Forms Auth to SQL Azure

- Pattern #3 - ASP.NET to AD with Claims

- Pattern #4 - ASP.NET to AD with Claims (Federation)

- Pattern #5 - ASP.NET to WCF on Azure

- Pattern #6 - ASP.NET On-Site to WCF on Azure

- Pattern #7 - ASP.NET On-Site to WCF on Azure with Claims

- Pattern #8 - REST with AppFabric Access Control

- Pattern #9 - ASP.NET to Azure Storage

- Pattern #10 - ASP.NET to SQL Azure

- Pattern #11 - ASP.NET On-Site to SQL Azure Through WCF

The Web Application non-cloud pattern (see Microsoft Application Architecture Guide) describes a browser accessing a server application built on three basic layers – presentation, business, and data:

According to Meier, this pattern can be applied to Azure by incorporating the above mentioned layers into a Web Role, having the option to use Azure Storage for data and Azure Web Services for services:

The CodePlex patterns & practices - Windows Azure Guidance project contains more guidelines for moving, developing and integrating applications in the Microsoft cloud.

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@BethMassi) produced #8 - How Do I: Write business rules for validation and calculated fields in a LightSwitch Application? in the latest MSDN 00:19:13 video segment for LightSwitch:

In this video you will learn how to use the Entity Designer to specify declarative validation rules for fields as well as how to write complex business logic that runs on both the client and the server.

Beth Massi (@BethMassi) described #7 - How Do I: Pass a Parameter into a Screen from the Command Bar in a LightSwitch Application? in a recent 00:10:25 MSDN video segment:

In this video you will learn how to use the Screen and Query Designers to pass parameters into a screen and use parameters in queries. You will also see how to open a screen in code from the application command bar.

Beth Massi (@BethMassi) explained #6 - How Do I: Create a Master-Details (One-to-Many) Screen in a LightSwitch Application? in a recent 00:09:00 MSDN video segment:

In this video you will learn how to create a master-details (one-to-many) edit screen in Visual Studio LightSwitch. You will see how to use the Screen Designer to add child collections to a screen as well as how to create a new default edit screen with an editable child grid.

<Return to section navigation list>

Windows Azure Infrastructure

Charles Arthur claimed to be “Liveblogging what's being said in London by the chief executive of Microsoft” as a lead-in to his Live at the LSE: Steve Ballmer on the cloud and the future of Microsoft's business post of 10/5/2010 for the guardian.co.uk:

8.59am: We're here at the LSE writing about Steve Ballmer, who is giving a speech entitled "Seizing the Opportunity of the Cloud: the Next Wave of Business Growth"

We're already about half an hour in (so that my battery wouldn't die - LSE has dispensed with power sockets in its lecture theatre).

So far Ballmer has given a brief outline of how IT has developed. He sounds like someone who is constantly straining to make his voice heard in a crowded noisy room. But he also looks less bulky than one might expect.

9.02am: UK is a country where there's early adoption of tech - which is part of why the Move software for Xbox will be launched here first.

These are terrible slides. He's no Steve Jobs.

...and now he's finished his speech. Eh? I thought we were in for an hour's disquisition. Nope, half an hour of vague stuff was all.

9.04am: Reuters asks what makes Microsoft's cloud good. Computer Weekly asks ...."I was once at an event where you were introduced as Bill Gates.."

"We look alike," Ballmer says.

Stuxnet - is that something that heralds a new threat? (We suspect Ballmer hasn't heard of it.)

"I love where we are in the cloud. On the business side feel like we are ahead of whoever the closest second rival is. Where we are v the other competitors...

"On the platform side, Windows Azure, we have competitors like Amazon, and who are doing only the private cloud like Oracle." No mention of the G-word.

9.06am: "On the consumer side we have opportunities to improve our market share... [still no G-oogle word]... and the Kinect stuff... cloud, TV connectivity thing, it's really early, but if you look at what you can do with an Xbox this holiday it's ahead of our competitors."

Pause.

"Oh. Stuxnet."

Pause.

"The degree to which inevitably society commits to the back end infrastructure is a big deal. We can all do a great job respecting privacy.. there will be bad guys in the world.. we need to design infrastructures.. that we get the same sort of protection ... that people expect in the prior world. That isn't going to be easy, and certainly we're hard at work at it.

"As our nation states work against this... don't expect government harmonisation on this any time soon." [Don't think he knows what it is yet.]

9.09am: Colleague in the press says: "I don't understand a bloody word he says."

What's his favourite Xbox game? Ballmer into his element. "BEACH VOLLEYBALL BABY!!!" he yells. "My kids would tell you, THAT'S A LOT OF AIR UNDER YOUR FEET DADDY!"

9.10am: Complicated question from a student (they always are - students need to learn how to ask direct questions a la Paxman) about how technology will help people at the bottom of the economic pile.

"This will drive productivity, advance.. the size of the world's pie will be expanded by what we're doing... most of what I've talked about has the prospect both of helping people at the bottom of the economic pyramid and has the potential of making technology more affordable not less... because don't have to build proprietary infrastructure for each process."

9.12am: The Register asks if the operating system is dead. Goldman Sachs downgraded you. What does it mean for Windows 8. And his view on Chrome OS - Google's operating system.

"What? His what? It what?" Ballmer plays with her.

I ask: you talk about cloud computing, but you've lost a pile of money in Online Services. Can you make money from it, and can it replace any lost revenue in the cloud?

9.14am: Ballmer: "how are we doing? Pretty darn good. Could be better... we're going to make about $26bn in profit pre tax, only one company does better..

"We're making money in our online services, except in our search service we have made very deliberate decision to invest for the long run, if you believe that it's the right thing to do, we had a round in the early 2000s people were saying it was wrong to do the Xbox, I don't think it was wrong.

"Does that mean there aren't things we could have done differently.. will we have more competition.. of course. If you're in a business with cool things happening, of course you'll have competition."

..."In terms of how the IT business is made up by our count there's 20-25m people who work directly somehow in the IT business, in a vendor or IT department of a company. There's maybe 10-15% whose jobs will be automated in new ways as a consequence of the move to the cloud.

"On the other hand most IT shops have a backlog of things they want to build that is super long. [Bill Gates-ism, that 'super-' prefix.]"

9.18am: "What's your take on tablet computing and the cloud and the growth of Android in the cloud and how it's hindering the growth of Windows in this area?" (Student question. Ask structured questions, folks. That's how you get the useful answers.)

Another question: "....strategy... competition... operating systems..."

Another question from someone who says he's a "patent lawyer and a judge": "does the patent system help or hinder you, and with the cloud with all companies having different bits will it get in the way?"

Ballmer takes the last first: "Real good question." (It is.) "Is the patent system perfect - it's not. We think patent law ought to be reformed to reflect modern times. In general are we better with today's patent or none, we're better with today's. Patent reform needs to be taken up on this side and the US, but getting rid of the patent system in some way would not impede technological progress."

"There's ways you want to weed out frivolous innovation... there's negotiation between substandard companies, not extralegally, where companies try to work these things through privately; I believe the small inventor should have a seat at the table, some companies feel under the gun, but they should.

"Patent reform should be taken up because the pharma business and the IT, software business, neither existed when patent law was written, and there is reform which could help it do more than it could.

"And this is from a company that's paid more out than we've taken in licensing patents."

9.23am: E-government and savings: "the fundamental advance that will help the UK government and others save money is the automation of tasks that today take labour.

"We have been a force for price reduction, but that misses the big picture: software helps automate things that people do and reduce the hardware that it requires, because both those are bigger in the food chain in terms of costs."

9.24am: On phones and tablets: "we're going to be able to afford to have phones and tablets in our pocket. Big screens are great for a big demo - we saw that with the Kinect demo.

"On the pocket side, we got an early jump, we've got competition that I'm ... not happy about, but we've got competition. It's my belief that with Windows Phone people are going to say 'wow', yesterday a kid wanted to take a picture of me with ONE OF OUR COMPETITORS' PHONES and I told him he could have a free Xbox if he got RID OF THAT UGLY PHONE and got one of our PRETTY phones." [Getting the hang of the Ballmer EMPHASES now. They leap out at you.]

"The people typing on a keyboard look happier... than those who aren't.. I can tell you how many computers, how many Macs, how many iPads..

"You'll see slates, but if you want most of the benefits of what a PC can offer, creating, a form factor that has been tuned over years, you'll see us expand the footprint of what Windows can target..

"But we have to get back into phones.. and I love my Windows Phone 7."

9.27am: Chinese student question: why has piracy become such a problem in China but not India? And which is a bigger threat in the future - blocking, or piracy?

Another question: what's his take on privacy issues relating to cloud computing?

Final question: what would it take to bring about the demise of Microsoft?

Ballmer: "I'M GOING TO START WITH THE THIRD QUESTION!!!"

9.29am: Ballmer: "The demise of Microsoft would require our complicit behaviour because we'd not be getting our job done. Companies' futures are in their own hands but they're not assured.

"We gotta invent, we gotta create, we gotta do new things. Because our past can be a help and a hindrance."

[Believe me, this is far better than the lecture. That was dull.]

"We can see companies almost disappear and then burst back.. I put Apple in that category..

"I kinda like what we're doing. That's about as good a job as I can do of not answering your question."

9.31am: Privacy: Ballmer says "We built something into Internet Explorer so that you could browse privately... it was a little controversial inside Microsoft when we did it, and there's a whole ecosystem on the web that's not happy about.

"My privacy I care a lot about. I gave you my email address (steveb@microsoft.com, if you're interested) but I'm not going to be your friend on Facebook.

"My son, he's 15, he doesn't care much about his privacy, but he wants something back for giving it away - he says 'why don't they just pay me $25 per month, they can track me everywhere'."

9.33am: On privacy still: "It's got to be a contract with the user."

Piracy: "Piracy in China is 8 times worse than in India, 20 times worse than in UK. Enforcement of the law needs to be stepped up. If you look at the environment there's a lot more than in India, in Russia.

"I think the Chinese government hears the message, it's more of a problem for Chinese companies - they need to have IP, it's to the disadvantage even more of the Chinese companies. It would be worth a lot to us; China is the No.2 market in the world, will be the No.1 market in the world soon for smartphones and PCs.

"Don't know how you'd control it.

"As you move to the cloud there will be regulations coming from the government, and that could be a problem, I'm a little nervous about that, particularly in the Chinese case, but you'll have to wait and see how it works out."

9.35am: ..winding up now. Apparently he's going to get an LSE baseball cap. He looks like the perfect Little League father.

And we're done. Well, it was interesting, at least on patents - and China.

Stay tuned for a link to the official transcript from Microsoft PressPass.

Tim Anderson (@timanderson) reported Steve Ballmer ducks questions at the London School of Economics in this 10/5/2010 post:

This morning Microsoft CEO Steve Ballmer spoke at the London School of Economics on the subject of Seizing the opportunity of the Cloud: the next wave of business growth. Well, that was supposed to be the topic; but as it happened the focus was vague – maybe that is fitting given the subject. Ballmer acknowledged that nobody was sure how to define the cloud and did not want to waste time attempting to do so, “cloud blah blah blah”, he said.

It was a session of two halves. Part one was a talk with some generalisations about the value of the cloud, the benefits of shared resources, and that the cloud needs rather than replaces intelligent client devices. “That the cloud needs smart devices was controversial but is now 100% obvious,” he said. He then took the opportunity to show a video about Xbox Kinect, the controller-free innovation for Microsoft’s games console, despite its rather loose connection with the subject of the talk.

Ballmer also experienced a Windows moment as he clicked and clicked on the Windows Media Player button to start the video; fortunately for all of us it started on the third or so attempt.

Just when we were expecting some weighty concluding remarks, Ballmer abruptly finished and asked for questions. These were conducted in an unusual manner, with several questions from the audience being taken together, supposedly to save time. I do not recommend this format unless the goal is to leave many of the questions unanswered, which is what happened.

Some of the questions were excellent. How will Microsoft compete against Apple iOS and Google Android? Since it loses money in cloud computing, how will it retain its revenues as Windows declines? What are the implications of Stuxnet, a Windows worm that appears to be in use as a weapon?

Ballmer does such a poor job with such questions, when he does engage with them, that I honestly do not think he is the right person to answer them in front of the public and the press. He is inclined to retreat into saying, well, we could have done better but we are working hard to compete. He actually undersells the Microsoft story. On Stuxnet, he gave a convoluted answer that left me wondering whether he was up-to-date on what it actually is. The revenue question he did not answer at all.

There were a few matters to which he gave more considered responses. One was about patents. “We’re better off with today’s patent system than with no patent system”, he said, before acknowledging that patent law as it stands is ill-equipped to cope with the IT or pharmaceutical industries, which hardly existed when the laws were formed.

Another was about software piracy in China. Piracy is rampant there, said Ballmer, twenty times worse than it is in the UK. "Enforcement of the law in China needs to be stepped up," he said, though without giving any indication of how this goal might be achieved.

He spoke in passing about Windows Phone 7, telling us that it is a great device, and added that we will see slates with Windows on the market before Christmas. He said that he is happy with Microsoft’s Azure cloud offering in relation to the Enterprise, especially the way it includes both private and public cloud offerings, but admits that its consumer cloud is weaker. [Emphasis added.]

Considering the widespread perception that Microsoft is in decline – its stock was recently downgraded to neutral by Goldman Sachs – this event struck me as a missed opportunity to present cogent reasons why Microsoft’s prospects are stronger than they appear, or to clarify the company’s strategy from cloud to device, in front of some of the UK’s most influential technical press.

I must add though that a couple of students I spoke to afterwards were more impressed, and saw his ducking of questions as diplomatic. Perhaps those of us who have followed the company’s activities for many years are harder to please.

Update: Charles Arthur has some more extensive quotes from the session in his report here.


David Linthicum posted The Office Political Side of Cloud Computing to ebizQ’s Where SOA Meets Cloud blog on 10/5/2010:

There seems to be a side effect of cloud computing, that is the political football that cloud computing has become within most enterprises. While you would think that technology is something that's apolitical, cloud computing seems to be a lot different considering the far reach of this technology, and the way that it may change the business.

Core to this issue are the former cloud computing skeptics who now see cloud computing as a way to grab some power and some career momentum. You know the guys: just last year they were stating, "We'll never run any core business applications outside of our firewall." However, the same guys have recently changed their tune in light of the hype and momentum, and when questioned about their past statements they suddenly get selective amnesia.

The truth of the matter is that cloud computing has been coming from the bottom up, not the top down. Developers, architects, and other rank-and-file IT staffers saw the benefits of cloud computing early on and have been slowly and quietly moving data and applications to the cloud, in many instances under the radar of IT leadership.

These days cloud computing has been getting boardroom attention, considering that it's on all the business news channels and in the mainstream business press. In reaction to the hype, boards are calling IT leadership on the carpet about how they are approaching cloud computing, which explains the recent change in attitude.

Those who are looking for a power grab are quickly positioning themselves as cloud computing thought leaders within the enterprise, and in many cases grabbing projects away from those lower in the organization who have been promoting the use of cloud computing all along. So these political animals are leading the cloud computing charge in many instances, not because it's the right technology to leverage or because they understand it, but because it's good career positioning to do so. Unfortunately, in many cases they are not the right people to promote the use of cloud computing within the enterprise.

Not sure there is any cure for this.

David Linthicum claimed “Oracle's potshot at Salesforce.com is counterproductive -- and a sign of things to come” in the intro to his Let the cloud-to-cloud sniping begin post to InfoWorld’s Cloud Computing Blog of 10/5/2010:

CEO Larry Ellison is trying to define the essence of the cloud, which is a marked departure from his past rage against the cloud computing machine. As InformationWeek reported, "[Ellison] said Oracle's new 'cloud in a box' is different from Salesforce.com, which is not really cloud computing because its applications aren't virtualized. Furthermore, he contended, Salesforce.com's use of multitenancy represents 'a weak security model' and threat to the privacy of its customer's data."

First, the facts for those in the enterprise looking at the cloud to solve real business problems: SaaS systems don't have to use virtualization to be secure; in many instances, virtualization is not only unnecessary but also counterproductive. The architecture and enabling technology, such as virtualization, depends on what type of cloud you're building. There is no mandatory technology checklist. Perhaps Ellison is trying to push down the price of Salesforce.com's stock so that Oracle can purchase Salesforce.com on the cheap. Or perhaps he hasn't spent much time in the real world of cloud computing