Windows Azure and Cloud Computing Posts for 10/9/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Updated 10/10/2010 with articles marked •. Tip: Paste the bullet into the Ctrl+F search box.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Supergadgets rated my Cloud Computing with the Windows Azure Platform among the Best Windows Azure Books in a recent HubPages post:

… Cloud Computing with the Windows Azure Platform

I prefer Wrox's IT books as well because they are mostly written by very experienced authors. The Cloud Computing with Azure book is an excellent work with the best code samples I have seen for the Azure platform. Although the book is a bit old and some Azure concepts have evolved in a different way, it is still one of the best about Azure. Starting with the concept of cloud computing, the author takes the reader on a progressive journey into the depths of Azure: data center architecture, the Azure product fabric, upgrade and failure domains, the hypervisor (whose details some books neglect), the Azure application lifecycle, EAV tables usage, and blobs. Plus, we learn how to use C# in .NET 3.5 for Azure programming. Cryptography and AES topics in the security section are also well explained. Chapter 6 is also important for learning about Azure Worker management issues.

Overall, another good reference on Windows Azure which I can suggest reading to the end.

P.S.: Yes, the author has written over 30 books on different MS technologies.

Book Highlights from Amazon

- Addresses numerous challenges you might encounter when moving from on-premises to cloud-based applications (for example, security, privacy, regulatory compliance, and backup and data recovery)

- Shows how you can adapt ASP.NET authentication and role management to Azure web roles

- Reveals the advantages of offloading computing services to one or more WorkerRoles when moving to Windows Azure

- Shows you how to pick the optimal combination of PartitionKey and RowKey values for sharding Azure tables

- Discusses methods to enhance the scalability and overall performance of Azure tables …

Azure Blob, Drive, Table and Queue Services

• Clemens Vasters (@clemensv) released Blobber – A trivial little [command-line] tool for uploading/listing/deleting Windows Azure Blob Storage files on 10/9/2010:

There must be dozens of these things, but I didn’t find one online last week and I needed a tool like this to prep a part of my keynote demo in Poland last week – and thus I wrote one. It’s a simple file management utility that works with the Windows Azure Blob store. No whistles, no bells, 344 lines of code if you care to look, both exe and source downloads below, MS-PL license.

Examples:

List all files from the 'images' container:

Blobber.exe -o list -c images -a MyAcct -k <key>

List all files matching *.jpg from the 'images' container:

Blobber.exe -o list -c images -a MyAcct -k <key> *.jpg

List all files matching *.jpg from the 'images' container (case-insensitive):

Blobber.exe -o list -l -c images -a MyAcct -k <key> *.jpg

Delete all files matching *.jpg from the 'images' container:

Blobber.exe -o deletefile -l -c images -a MyAcct -k <key> *.jpg

Delete 'images' container:

Blobber.exe -o deletectr -c images -a MyAcct -k <key>

Upload all files from the c:\pictures directory into 'images' container:

Blobber.exe -o upload -c images -a MyAcct -k <key> c:\pictures\*.jpg

Upload like above and include all subdirectories:

Blobber.exe -o upload -s -c images -a MyAcct -k <key> c:\pictures\*.*

Upload like above and convert all file names to lower case:

Blobber.exe -o upload -l -s -c images -a MyAcct -k <key> c:\pictures\*.*

Upload all files from the c:\pictures directory into 'images' container on dev storage:

Blobber.exe -o upload -l -s -c images -d c:\pictures\*.*

Arguments:

-o <operation> upload, deletectr, deletefile, list (optional, default:'list')

-o list [options] -c <container> <relative-uri-suffix-pattern> (* and ? wildcards)

-o upload [options] [-s] -c <container> <local-path-file-pattern>

-o deletefile [options] -c <container> <relative-uri-suffix-pattern> (* and ? wildcards)

-o deletectr [options] -c <container>

-c <container> Container (optional, default:'files')

-s Include local file subdirectories (optional, upload only)

-p Make container public (optional)

-l Convert all paths and file names to lower case (optional)

-d Use the local Windows Azure SDK Developer Storage (optional)

-b <baseUri> Base URI (optional override)

-a <accountName> Account Name (optional if specified in config)

-k <key> Account Key (optional if specified in config)

You can also specify your account information in blobber.exe.config and omit the -a/-k arguments.
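Blobber itself is written in C#, and the post doesn't say exactly how its * and ? patterns are evaluated. As a rough illustration only, the following Python sketch shows one way such a pattern could select blob names, with an optional lower-case fold loosely modeled on the -l switch. The helper name match_blobs and the sample blob names are hypothetical.

```python
from fnmatch import fnmatchcase


def match_blobs(blob_names, pattern, lower_case=False):
    """Return the blob names that match a * / ? wildcard pattern.

    Hypothetical helper: with lower_case=True both the pattern and the
    names are folded to lower case, loosely mirroring Blobber's -l option.
    """
    if lower_case:
        pattern = pattern.lower()
    matches = []
    for name in blob_names:
        candidate = name.lower() if lower_case else name
        # fnmatchcase never applies OS-specific case folding, so results
        # are the same on Windows and elsewhere. Note that * also matches "/".
        if fnmatchcase(candidate, pattern):
            matches.append(name)
    return matches


if __name__ == "__main__":
    names = ["beach.jpg", "Sunset.JPG", "notes.txt", "2010/party.jpg"]
    print(match_blobs(names, "*.jpg"))
    print(match_blobs(names, "*.jpg", lower_case=True))
```

Because blob names can contain "/", a pattern like *.jpg here also matches names in virtual subdirectories; a real tool may choose to treat "/" differently.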

Executable: Blobber-exe.zip (133.4 KB)

Source: Blobber-src.zip (5.41 KB)

[Update: I just found that I unintentionally used the same name as a similar utility from Codeplex: http://blobber.codeplex.com/. Sorry.]

Neil MacKenzie posted Performance in Windows Azure and Azure Storage on 10/9/2010 to his new WordPress blog:

The purpose of this post is to provide links to various posts about the performance of Azure and, in particular, Azure Storage.

Azure Storage Team

The Azure Storage Team blog – obligatory reading for anyone working with Azure Storage – has a number of posts about performance.

The Windows Azure Storage Abstractions and their Scalability Targets post documents limits for storage capacity and performance targets for Azure blobs, queues and tables. The post describes a scalability target of 500 operations per second for a single partition in an Azure Table and a single Azure Queue. There is an additional scalability target of a “few thousand requests per second” for each Azure storage account. The scalability target for a single blob is “up to 60 MBytes/sec.”
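The per-partition and per-blob targets lend themselves to quick back-of-the-envelope sizing. The sketch below uses only the 2010 figures quoted above; the helper names are hypothetical, and the account-wide target of a "few thousand requests per second" still caps the aggregate no matter how many partitions you add.

```python
import math

# Scalability targets quoted in the Azure Storage Team post (2010 figures).
PARTITION_OPS_PER_SEC = 500   # single table partition or single queue
BLOB_MBYTES_PER_SEC = 60      # single blob, "up to"


def min_partitions(target_ops_per_sec):
    """Smallest number of table partitions (or queues) whose combined
    per-partition targets cover the requested request rate."""
    return math.ceil(target_ops_per_sec / PARTITION_OPS_PER_SEC)


def seconds_to_read_blob(blob_mbytes):
    """Lower bound on the time to read one blob at the per-blob target."""
    return blob_mbytes / BLOB_MBYTES_PER_SEC


if __name__ == "__main__":
    print(min_partitions(2000))       # 4
    print(seconds_to_read_blob(600))  # 10.0
```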

The Nagle’s Algorithm is Not Friendly towards Small Requests post describes issues pertaining to how TCP/IP handles small messages (less than 1460 bytes). It transpires that Azure Storage performance may be improved by turning Nagle off. The post shows how to do this.
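In .NET, Nagle's algorithm for HTTP requests is governed by the ServicePointManager.UseNagleAlgorithm property. At the socket level the same switch is the TCP_NODELAY option, which this small Python sketch sets to show the mechanism in isolation. It is not the Azure storage client's code; open_no_delay_socket is a hypothetical helper.

```python
import socket


def open_no_delay_socket():
    """Create a TCP socket with Nagle's algorithm disabled (TCP_NODELAY)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # TCP_NODELAY = 1 sends small writes immediately instead of holding
    # them back to coalesce with later data, which is what Nagle does.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock


if __name__ == "__main__":
    s = open_no_delay_socket()
    print(s.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0)  # True
    s.close()
```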

Rob Gillen

Rob Gillen (@argodev) has done a lot of testing of Azure Storage performance and, in particular, on maximizing throughput for uploading and downloading of Azure Blobs. He has documented this in a series of posts: Part 1, Part 2, Part 3. The most surprising observation is that, when operating completely inside Windows Azure, it is not worth doing parallel downloads of a blob because the overhead of reconstructing the blob is too high.

He has another post, External File Upload Optimizations for Windows Azure, that documents his testing of using various block sizes for uploads of blobs from outside an Azure datacenter. He suggests that choosing a 1MB block size may be an appropriate rule-of-thumb choice. These uploads can, of course, be performed in parallel.
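Block uploads work by sending each block with a Put Block request and then committing the ordered list of block IDs with Put Block List. Block IDs must be Base64-encoded and all IDs for one blob must have the same length. The following Python sketch only plans the blocks for a 1MB block size (no HTTP calls); plan_blocks and its six-digit zero-padded ID scheme are assumptions for illustration.

```python
import base64

BLOCK_SIZE = 1024 * 1024  # 1 MB, Rob Gillen's suggested rule of thumb


def plan_blocks(total_bytes, block_size=BLOCK_SIZE):
    """Split an upload of total_bytes into (block_id, offset, length) tuples.

    Block IDs are Base64-encoded, zero-padded sequence numbers, so every
    ID for the blob has the same length.
    """
    blocks = []
    for index, offset in enumerate(range(0, total_bytes, block_size)):
        length = min(block_size, total_bytes - offset)
        raw_id = f"{index:06d}".encode("ascii")
        block_id = base64.b64encode(raw_id).decode("ascii")
        blocks.append((block_id, offset, length))
    return blocks


if __name__ == "__main__":
    for block_id, offset, length in plan_blocks(2_500_000):
        print(block_id, offset, length)
```

Each tuple can be uploaded independently (and therefore in parallel); the final commit must list the IDs in offset order.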

University of Virginia

A research group at the University of Virginia presented the results of its investigations into the performance of Windows Azure at the 2010 ACM International Symposium on High Performance Distributed Computing. The document is downloadable from the ACM Library or (somewhat cheaper) from the webpage of one of the researchers. There is also a PowerPoint version for those with a short attention span.

The paper covers both Windows Azure and Azure Storage. The researchers used up to 192 instances at a time to investigate both the times taken for instance-management tasks and the maximum storage throughput as the number of instances varied. There is a wealth of performance information which deserves the attention of anyone developing scalable services in Windows Azure. Who would have guessed, for example, that inserting a 1KB entity into an Azure Table is 26 times faster than updating the same entity, while inserting a 64KB entity is only 4 times faster than updating it?

This work is credited to: Zach Hill, Jie Li, Ming Mao, Arkaitz Ruiz-Alvarez, and Marty Humphrey – all of the University of Virginia.

Microsoft eXtreme Computing Group

The Microsoft eXtreme Computing Group has a website, Azurescope, that documents benchmarking and guidance for Windows Azure. The Azurescope website has a page describing Best Practices for Developing on Windows Azure and a set of pages with code samples demonstrating various optimal techniques for using Windows Azure Storage services. Note that Rob Gillen and the University of Virginia group are also involved in the Azurescope project.

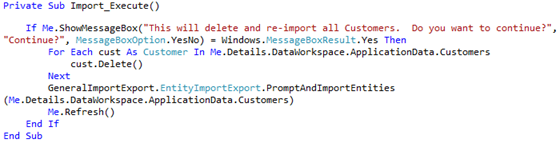

Bill Wilder asked Why Don't Windows Azure Libraries Show Up In Add Reference Dialog when Using .NET Framework Client Profile? on 10/9/2010:

You are writing an application for Windows – perhaps a Console App or a WPF Application – or maybe an old-school Windows Forms app. Everything is humming along. Then you want to interact with Windows Azure storage. Easy, right? So you right-click on the References list in Visual Studio, pop up the trusty old Add Reference dialog box, and search for Microsoft.WindowsAzure.StorageClient in the list of assemblies.

But it isn’t there!

You already know you can’t use the .NET Managed Libraries for Windows Azure in a Silverlight app, but you just know it is okay in a desktop application.

You double-check that you have installed Windows Azure Tools for Microsoft Visual Studio 1.2 (June 2010) (or at least Windows Azure SDK 1.2, which was refreshed in September 2010 with a couple of bug fixes).

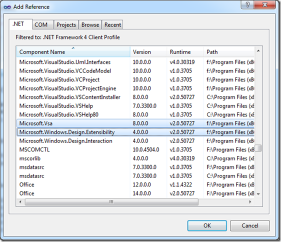

You sort the list by Component Name, then leveraging your absolute mastery of the alphabet, you find the spot in the list where the assemblies ought to be, but they are not there. You see the one before in the alphabet, the one after it in the alphabet, but no Microsoft.WindowsAzure.StorageClient assembly in sight. What gives?

Look familiar? Where is the Microsoft.WindowsAzure.StorageClient assembly?

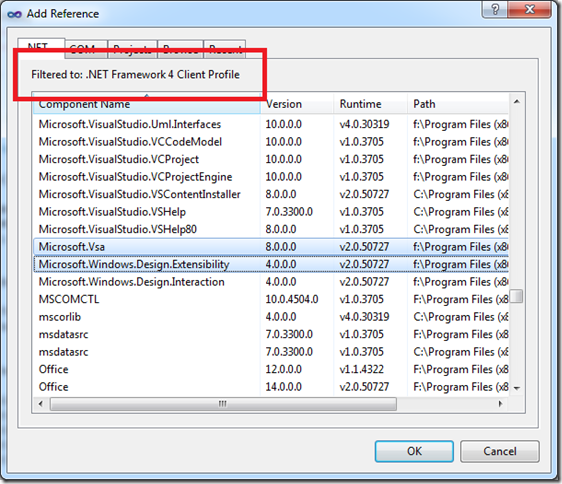

Azure Managed Libraries Not Included in .NET Framework 4 Client Profile

If your eyes move a little higher in the Add Reference dialog box, you will see the problem. You are using the .NET Framework 4 Client Profile. Nothing wrong with the Client Profile – it can be a friend if you want a lighter-weight version of the .NET framework for deployment to desktops where you can’t be sure your .NET platform bits are already there – but Windows Azure Managed Libraries are not included with the Client Profile.

Bottom line: Windows Azure Managed Libraries are simply not supported in the .NET Framework 4 Client Profile.

How Did This Happen?

It turns out that in Visual Studio 2010, the default for many common project types is the .NET Framework 4 Client Profile. There are some good reasons behind this, but it is something you need to know about. It is very easy to create a project that uses the Client Profile because the profile is not visible on the Add Project dialog box, and there is no apparent option to change it there; all you see is .NET Framework 4.0:

The “Work-around” is Simple: Do Not Use .NET Framework 4 Client Profile

While you are not completely out of luck, you just can’t use the Client Profile in this case. And, as the .NET Framework 4 Client Profile documentation states:

If you are targeting the .NET Framework 4 Client Profile, you cannot reference an assembly that is not in the .NET Framework 4 Client Profile. Instead you must target the .NET Framework 4.

So let’s use the (full) .NET Framework 4.

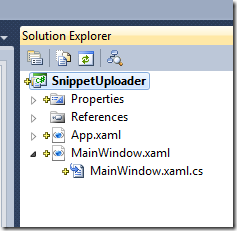

Changing from .NET Client Profile to Full .NET Framework

To move your project from Client Profile to Full Framework, right-click on your project in Solution Explorer (my project here is called “SnippetUploader”):

From the bottom of the pop-up list, choose Properties.

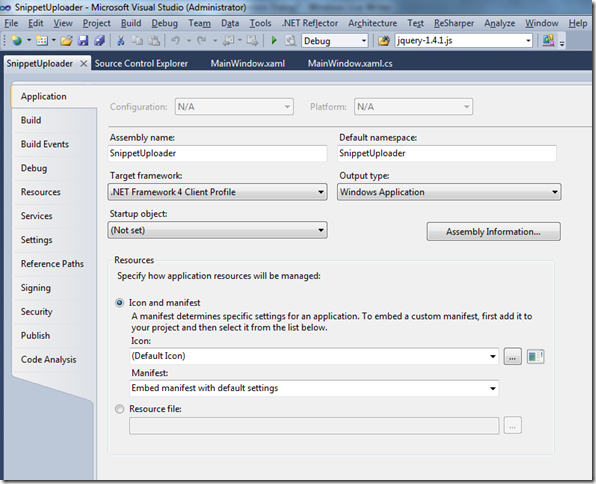

This will bring up the Properties window for your application. It will look something like this:

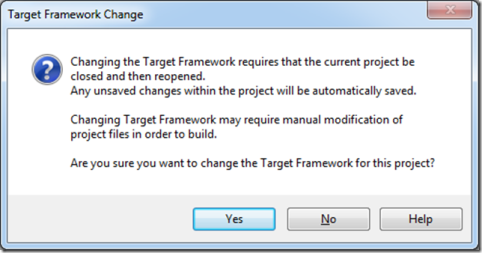

Of course, by now you probably see the culprit in the screen shot: change the “Target framework:” from “.NET Framework 4 Client Profile” to “.NET Framework 4” (or an earlier version) and you have one final step:

Now you should be good to go, provided you have Windows Azure Tools for Microsoft Visual Studio 1.2 (June 2010) installed. Note, incidentally, that the Windows Azure tools for VS mention support for

…targeting either the .NET 3.5 or .NET 4 framework.

with no mention of support for the .NET Client Profile. So stop expecting it to be there!
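Changing the Target framework in the Properties window edits the project file: in Visual Studio 2010 C# projects the Client Profile is recorded as a TargetFrameworkProfile element set to Client. If you have many projects to fix, a script can remove that element. The following Python sketch is only an illustration (the function name and sample XML are hypothetical); ElementTree drops comments and the XML declaration, so back up your .csproj files, and the Properties window remains the normal way to make this change.

```python
import xml.etree.ElementTree as ET

MSBUILD_NS = "http://schemas.microsoft.com/developer/msbuild/2003"


def use_full_framework(csproj_xml):
    """Return csproj XML with every TargetFrameworkProfile element removed."""
    # Keep the MSBuild namespace as the default so output has no ns0: prefix.
    ET.register_namespace("", MSBUILD_NS)
    root = ET.fromstring(csproj_xml)
    ns = {"msb": MSBUILD_NS}
    for group in root.findall("msb:PropertyGroup", ns):
        for profile in group.findall("msb:TargetFrameworkProfile", ns):
            group.remove(profile)
    return ET.tostring(root, encoding="unicode")


SAMPLE = f"""<Project ToolsVersion="4.0" xmlns="{MSBUILD_NS}">
  <PropertyGroup>
    <TargetFrameworkVersion>v4.0</TargetFrameworkVersion>
    <TargetFrameworkProfile>Client</TargetFrameworkProfile>
  </PropertyGroup>
</Project>"""

if __name__ == "__main__":
    print(use_full_framework(SAMPLE))
```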

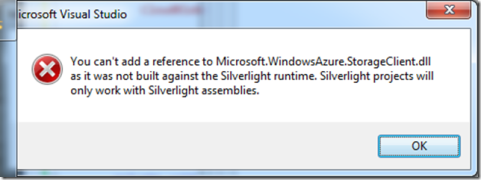

Bill Wilder reported You can't add a reference to Microsoft.WindowsAzure.StorageClient.dll as it was not built against the Silverlight runtime on 10/8/2010:

Are you developing Silverlight apps that would like to talk directly to Windows Azure APIs? That is perfectly legal, using the REST API. But if you want to use the handy-dandy Windows Azure Managed Libraries – such as Microsoft.WindowsAzure.StorageClient.dll to talk to Windows Azure Storage – then that’s not available in Silverlight.

As you may know, Silverlight assembly format is a bit different than straight-up .NET, and attempting to use Add Reference from a Silverlight project to a plain-old-.NET assembly just won’t work. Instead, you’ll see something like this:

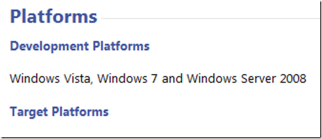

If you pick a class from the StorageClient assembly – let’s say, CloudBlobClient – and check the documentation, it will tell you where this class is supported:

Okay – so maybe it doesn’t exactly – the Target Platforms list is empty – presumably an error of omission. But going by the Development Platforms list, you wouldn’t expect it to work in Silverlight.

There’s Always REST

As mentioned, you are always free to directly do battle with the Azure REST APIs for Storage or Management. This is a workable approach. Or, even better, expose the operations of interest as Azure services – abstracting them as higher level activities. You have heard of SOA, haven’t you?
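Going the REST route means composing the storage URIs yourself. As a small example, the List Blobs operation is a GET on the container URI with restype=container and comp=list; an anonymous request only succeeds for a container with public container-level access, otherwise it must carry a Shared Key signature (not shown). The Python sketch below only builds the URI; list_blobs_url and the account and container names are hypothetical.

```python
from urllib.parse import urlencode


def list_blobs_url(account, container, prefix=None):
    """Build the List Blobs REST URI for a container.

    Anonymous GETs only work when the container allows public
    container-level read access; otherwise the request must be signed.
    """
    query = {"restype": "container", "comp": "list"}
    if prefix:
        # Optional server-side filter on the start of the blob name.
        query["prefix"] = prefix
    return (f"https://{account}.blob.core.windows.net/"
            f"{container}?{urlencode(query)}")


if __name__ == "__main__":
    print(list_blobs_url("myacct", "images", prefix="2010/"))
```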


SQL Azure Database, Codename “Dallas” and OData

• David Ebbo (@DavidEbbo) tweeted on 10/9/2010:

We have a preliminary #nupack feed using oData at http://bit.ly/dkczL7. You'll need to build newest client to use it.

Here’s what the first entry looks like:

See Kelly Fiveash’s Microsoft lovingly open sources .NET package manager Register article of 10/7/2010 for more information about NuPack.
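An OData feed like this one can be narrowed with the standard system query options $filter, $orderby and $top appended to an entity set URI. The Python sketch below composes such a URI; the base URL, the entity set name Packages and the Id property are hypothetical placeholders, not the actual NuPack feed schema.

```python
from urllib.parse import quote, urlencode


def odata_query(base_url, entity_set, filter_expr=None, orderby=None, top=None):
    """Compose an OData query URI from standard system query options."""
    options = {}
    if filter_expr:
        options["$filter"] = filter_expr
    if orderby:
        options["$orderby"] = orderby
    if top is not None:
        options["$top"] = str(top)
    url = f"{base_url.rstrip('/')}/{entity_set}"
    if options:
        # quote (not quote_plus) encodes spaces as %20; "$" and "'" are left
        # readable because they are part of OData's own syntax.
        url += "?" + urlencode(options, quote_via=quote, safe="$'")
    return url


if __name__ == "__main__":
    print(odata_query("http://example.org/feed/", "Packages",
                      filter_expr="Id eq 'NUnit'", top=5))
```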

• Microsoft’s Campbell Gunn announced “no plans in Version 1 to support/extend oData” in a 10/9/2010 reply to apacifico’s Lightswitch dataprovider support and odata question of 10/9/2010 in the LightSwitch Extensibility forum. See the Visual Studio LightSwitch section below.

• Nikos Makris explained Transforming Latitude and Longitude into Geography SQL Server Type in this brief post of 10/9/2010:

SQL Server 2008 provides support for spatial data and many accompanying functions. SQL Server Reporting Services 2008 R2 has built-in support for maps (SHP files or even Bing Maps). There is a handy wizard in Report Builder 3.0 that will guide you through the report creation process with a map. If you want to use Bing Maps you have to have a Geography type in your SQL data source somewhere.

I recently faced a situation where the customer had the latitude and longitude coordinates of the spots to be plotted on a map. To convert the latitude and longitude into a Geography type, use the following T-SQL:

geography::STPointFromText('POINT(' + CAST([Longitude] AS VARCHAR(20)) + ' ' +
    CAST([Latitude] AS VARCHAR(20)) + ')', 4326)

Then you have the option of using Bing Maps in your SSRS reports.

4326 refers to the EPSG:4326 Geographic longitude-latitude projection. You can learn more about EPSG:4326 here and read Microsoft Research’s Adding the EPSG:4326 Geographic Longitude-Latitude Projection to TerraServer publication by Siddharth Jain and Tom Barclay of August 2003. The latter might tell you more than you want to know about EPSG:4326.
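Two details of the T-SQL above are easy to get wrong: Well-Known Text lists longitude before latitude, and a narrow string conversion can truncate or overflow the coordinates. (SQL Server 2008 also offers geography::Point(Latitude, Longitude, 4326), which avoids string building entirely.) If you prepare the WKT in client code instead, a minimal Python sketch could look like this; wkt_point is a hypothetical helper.

```python
def wkt_point(latitude, longitude):
    """Build the WKT text that geography::STPointFromText expects.

    WKT lists longitude first, then latitude; repr() keeps full float
    precision instead of truncating the coordinates.
    """
    if not -90.0 <= latitude <= 90.0:
        raise ValueError("latitude must be between -90 and 90")
    if not -180.0 <= longitude <= 180.0:
        raise ValueError("longitude must be between -180 and 180")
    return f"POINT({longitude!r} {latitude!r})"


if __name__ == "__main__":
    print(wkt_point(47.6062, -122.3321))  # POINT(-122.3321 47.6062)
```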

My (@rogerjenn) Patrick Wood Requests Votes for Access 2010 ADO Connections to SQL Azure post to the AccessInDepth blog of 10/9/2010 follows up on an earlier post:

In his You Can Vote to Get ADO for Microsoft Access® to Connect to SQL Azure™ post of 10/9/2010, Patrick Wood wrote:

Microsoft has put much more emphasis lately on listening to developers and users when they ask for Features to be added to Microsoft Software. This has led to many improvements in new software releases and updates. Microsoft is really listening to us but you have to know where to give them your requests.

A few days ago on the SQL Azure Feature Voting Forum I put in a request for “Microsoft Access ADO–Enable Microsoft Access ADO 2.x Connections to SQL Azure.” My comment was “This will enable more secure connections to SQL Azure and provide additional functionality. ODBC linked tables and queries expose your entire connection string including your server, username, and password.”

On Friday Roger Jennings posted about this on his Roger Jennings’ Access Blog, “I agreed and added three votes.” He also wrote about it on his software consulting organization blog, OakLeaf Systems, and added a link to The #sqlazure Daily, which I have found to be a great place to keep up with the very latest tweets, articles, and news about SQL Azure. Roger Jennings has written numerous books about programming with Microsoft Software, a number of which have been about Microsoft Access. His books are among the very best about Access and contain a wealth of helpful detailed information. I have never had a conversation or any correspondence with him before now, but I greatly appreciate his support in this matter.

Why do we need ADO (this is not ADO.Net) when we have ODBC to link tables and queries to SQL Azure? If you are just planning to use SQL Azure for your own use you can get by fine without it. But if you distribute Access Databases that use SQL Azure as a back end then you have to be very careful because an ODBC DSN is a plain text file that contains your server web address, your user name, and your password. If you use DSN-less linked tables and queries another programmer can easily read all your connection information through the TableDef.Connect or QueryDef.Connect properties even if you save your database as an accde or mde file.
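To see why exposed Connect strings matter, note how little code it takes to pull the credentials out once the string has been read from a TableDef or QueryDef. The Python sketch below parses a hypothetical DSN-less connect string; the server, user name and password are made up, and the parser ignores edge cases such as semicolons inside braces.

```python
def parse_connect_string(connect):
    """Split an Access ODBC Connect property into a dict of key/value pairs."""
    parts = {}
    for item in connect.split(";"):
        # The leading "ODBC" token has no "=" and is skipped.
        if "=" in item:
            key, _, value = item.partition("=")
            parts[key.strip().upper()] = value.strip()
    return parts


# Hypothetical DSN-less connect string of the kind stored in TableDef.Connect.
EXAMPLE = ("ODBC;DRIVER={SQL Server Native Client 10.0};"
           "SERVER=tcp:myserver.database.windows.net;"
           "UID=appuser@myserver;PWD=Secret123;DATABASE=Orders;Encrypt=yes")

if __name__ == "__main__":
    info = parse_connect_string(EXAMPLE)
    print(info["SERVER"], info["UID"], info["PWD"])
```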

ADO allows you to use code to connect to SQL Server and if we had the same capability with SQL Azure this would allow us to keep all of our connection information in code. Then using accde or mde files will provide us with better security. ADO also provides additional functionality which can make developing with SQL Azure easier.

We have already picked up a few votes for ADO but we need a lot more to let Microsoft know there are enough of us developers who want ADO to make it worthy of their attention.

You can vote for ADO here. You can use 3 votes at a time and your support is greatly appreciated.

You can read more about SQL Azure and Microsoft Access at my Gaining Access website.

Happy computing,

Patrick (Pat) Wood

Gaining Access

Dan Jones explains Moving Databases Between SQL Azure Servers with a .dacpac in a 10/9/2010 post:

This week I had to move a couple of databases between two SQL Azure accounts. I needed to decommission one account and thus get the important stuff out before nuking it. My goal was straightforward: move two databases from one SQL Azure server (and account) to another SQL Azure server (and account). The applications have a fairly high tolerance for downtime, which meant I didn’t have to concern myself with maintaining continuity.

For the schema I had two options to choose from: script the entire database (using Management Studio) or extract the database as a .dacpac. For the data I also had two options: include the data as part of the script or use the Import/Export Wizard to copy the data. As a side note, I always thought this tool was named backwards; shouldn’t it be the Export/Import tool?

I opted to go the .dacpac route for two simple reasons: first, I wanted to get the schema moved over first and validate it before moving the data and second, I wanted to have the schema in a portable format that wasn’t easily altered. Think of this as a way of preserving the integrity of the schema. Transact-SQL scripts are too easy to change without warning.

I connected Management Studio to the server being decommissioned and from each database I created a .dacpac – I did this by right-clicking the database in Object Explorer, selecting Tasks –> Extract Data-tier Application… I followed the prompts in the wizard, accepting all of the defaults. Neither schema is very complex, so the process was extremely fast for both.

Once I had the two .dacpacs on my local machine I connected Management Studio to the new server. I expanded the Management node, right-clicked on Data-tier Applications and selected Deploy Data-tier Application. This launched the deployment wizard. I followed the wizard, accepting the defaults. I repeated this for the second database.

Now that I had the schema moved over, and since I used the Data-tier Application functionality, I had confidence everything moved correctly because I didn’t receive any errors! It was time to move the data.

I opted to use the Import/Export Wizard for this. It was simple and straightforward. I launched the wizard, pointed to the original database, pointed to the new database, selected my tables and let ‘er rip! It was fast (neither database is very big) and painless. One thing to keep in mind when doing this is that it’s not performing a server-to-server copy; it brings the data locally and then pushes it back up to the cloud.

The final step was to re-enable the logins. For security reasons passwords are not stored in the .dacpac. When SQL logins are created during deployment, each SQL login is created as a disabled login with a strong random password and the MUST_CHANGE clause. Thereafter, users must enable and change the password for each new SQL login (using the ALTER LOGIN command, for example). A quick little Transact-SQL script and my logins were back in business.
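Dan doesn't show his script. As an illustration only, the Python sketch below generates the kind of ALTER LOGIN statements described (set a new password, then enable the login), quoting identifiers and string literals safely. On SQL Azure, logins live in the master database, so the output would be run there. The helper names and login names are hypothetical, and the generated passwords must be stored somewhere safe before the script is run.

```python
import secrets
import string

SYMBOLS = "!#%*-_"


def quote_name(name):
    """Bracket-quote a T-SQL identifier, doubling any closing brackets."""
    return "[" + name.replace("]", "]]") + "]"


def new_password(length=20):
    """Generate a random password containing every character class."""
    alphabet = string.ascii_letters + string.digits + SYMBOLS
    while True:
        pwd = "".join(secrets.choice(alphabet) for _ in range(length))
        if (any(c.islower() for c in pwd) and any(c.isupper() for c in pwd)
                and any(c.isdigit() for c in pwd)
                and any(c in SYMBOLS for c in pwd)):
            return pwd


def reenable_logins(logins):
    """Return T-SQL that resets the password of, then enables, each login."""
    statements = []
    for login, password in logins.items():
        literal = password.replace("'", "''")  # escape quotes in the literal
        statements.append(
            f"ALTER LOGIN {quote_name(login)} WITH PASSWORD = '{literal}';")
        statements.append(f"ALTER LOGIN {quote_name(login)} ENABLE;")
    return "\n".join(statements)


if __name__ == "__main__":
    print(reenable_logins({"appuser": new_password(),
                           "reporting": new_password()}))
```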

The entire process took me about 15 minutes to complete (remember my databases are relatively small) – it was awesome!

Every time I use SQL Azure I walk away with a smile on my face. It’s extremely cool that I have all this capability at my fingertips and I don’t have to worry about managing a service, applying patches, etc.

Dan is a Principal Program Manager at Microsoft on the SQL Server Manageability team.

Pablo M. Cibraro (@cibrax) explained ASP.NET MVC, WCF REST and Data Services – When to use what for RESTful services on 10/8/2010:

Disclaimer: This post only contains personal opinions about this subject

The way I see it, REST mainly comes into play in two scenarios: when you need to expose some AJAX endpoints for your web application, or when you need to expose an API to external applications through well-defined services.

For AJAX endpoints, the scenario is clear: you want to expose some data to be consumed by different web pages with client scripts. At this point, what you expose is either HTML, text, plain XML, or JSON, which is the most common, compact and efficient format for dealing with data in JavaScript. Client libraries like jQuery or MooTools already come with built-in support for JSON. This data is not intended to be consumed by clients other than the pages running in the same web domain. In fact, some companies add custom logic in these endpoints to respond only to requests originating in the same domain.
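The "same domain only" logic Pablo mentions usually boils down to comparing the Origin (or Referer) header against the site's own origin. The Python sketch below shows that comparison; the function names and URLs are hypothetical. Keep in mind that non-browser clients can send any header value, so this guards against casual cross-site use, not against a determined caller.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin_of(url):
    """Reduce a URL to its (scheme, host, port) origin triple."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    return (scheme, parts.hostname, parts.port or DEFAULT_PORTS.get(scheme))


def is_same_origin(origin_header, site_url):
    """True only when the request's Origin/Referer matches the site origin."""
    if not origin_header:
        # Missing header: treat the request as foreign rather than trusted.
        return False
    return origin_of(origin_header) == origin_of(site_url)


if __name__ == "__main__":
    site = "https://www.example.com"
    print(is_same_origin("https://www.example.com/orders", site))  # True
    print(is_same_origin("https://evil.example.net", site))        # False
```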

For RESTful services, the scenario is completely different: you want to expose data or business logic to many different client applications through a well-known API. Good examples of REST services are Twitter, the Windows Azure storage services or the Amazon S3 services, to name a few.

Smart client applications implemented with Silverlight, Flex or Adobe AIR also fall into this category, with some restrictions: they cannot make cross-domain calls to HTTP services by default unless you override the cross-domain policies.

A common misconception is to think that REST services only mean CRUD for data, which is one of the most common scenarios, but business workflows can also be exposed through this kind of service, as shown in the article “How to GET a cup of coffee”.

When it comes to the .NET world, you have three options for implementing REST services (I am not considering third-party frameworks or open-source projects in this post):

- ASP.NET MVC

- WCF REST

- WCF Data Services (OData)

ASP.NET MVC

This framework represents the implementation of the popular model-view-controller (MVC) pattern for building web applications in the .NET space. The reason for moving traditional web application development toward this pattern is to produce more testable components by having a clean separation of concerns. The three components in this architecture are the model, which only represents data that is shared between the view and the controller; the view, which knows how to render output results for different web agents (i.e., a web browser); and finally the controller, which coordinates the execution of a use case and is where all the main logic associated with the application lives. Testing ASP.NET Web Forms applications was pretty hard, as the implementation of a page usually mixed business logic with rendering details; the view and the controller were tied together, and therefore you did not have a way to focus testing efforts on the business logic only. You could prepare your ASP.NET Web Forms application to use the MVC or MVP (Model-View-Presenter) patterns to keep all that logic separated, but the framework itself did not enforce that.

On the other hand, in ASP.NET MVC, the framework itself drives the design of the web application to follow an MVC pattern. Although it is not common, developers can still make terrible mistakes by putting business logic in the views, but in general, the main logic for the application will be in the controllers, and those are the ones you will want to test.

In addition, controllers are TDD friendly, and the ASP.NET MVC team has done a great job of making sure that all the ASP.NET intrinsic objects like the HTTP context, Session, Request or Response can be mocked in a unit test.

While this framework represents a great addition for building web applications on top of ASP.NET, the API and some components in the framework (like view engines) do not necessarily make a lot of sense when building standalone services. I am not saying that you could not build REST services with ASP.NET MVC, but you will have to leverage some of the framework extensibility points to support some of the scenarios you might want to use with this kind of service.

Content negotiation is a good example of a scenario not supported by default in the framework, but something you can implement on your own using some of the available extensions. For example, if you do not want to tie your controller method implementation to a specific content type, you should return a specific ActionResult implementation that knows how to handle and serialize the response in all the supported content types. The same goes for request messages: you might need to implement model binders for mapping different content types to the objects actually expected by the controller.
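The core decision such a custom ActionResult has to make is picking a response format from the request's Accept header. The Python sketch below illustrates only that decision, not an ASP.NET MVC API; it is simplified (it sorts by q value and ignores the finer precedence rules of the HTTP specification), and the function names and supported types are assumptions.

```python
def parse_accept(header):
    """Parse an Accept header into (media_type, q) pairs, highest q first."""
    ranges = []
    for item in header.split(","):
        pieces = [p.strip() for p in item.split(";")]
        media_type, q = pieces[0].lower(), 1.0
        for param in pieces[1:]:
            name, _, value = param.partition("=")
            if name.strip() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        if media_type:
            ranges.append((media_type, q))
    # sorted() is stable, so equal q values keep the client's order.
    return sorted(ranges, key=lambda r: r[1], reverse=True)


def negotiate(accept_header, supported=("application/json", "application/xml")):
    """Pick the first supported media type the client accepts, or None."""
    if not accept_header:
        return supported[0]  # no preference stated: use the default format
    for media_type, q in parse_accept(accept_header):
        if q <= 0:
            continue  # q=0 means "not acceptable"
        for candidate in supported:
            main = candidate.split("/")[0]
            if media_type in (candidate, f"{main}/*", "*/*"):
                return candidate
    return None  # a real service would answer 406 Not Acceptable


if __name__ == "__main__":
    print(negotiate("application/xml;q=0.9, application/json"))
```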

The public API for the controller also exposes methods that are more oriented to build web applications rather than services. For instance, you have methods for rendering javascript content, or for storing temporary data for the views.

If you are already developing a website with ASP.NET MVC, and you need to expose some AJAX endpoints (which actually represent UI-driven services) to be consumed in the views, probably the best thing you can do is to implement them with MVC too, as operations in the controller. It does not make sense at this point to bring in WCF to implement those, as it would only complicate the overall architecture of the application. WCF would only make sense if you need to implement some business-driven services that need to be consumed by your MVC application and by other client applications as well.

This framework also introduced a new routing mechanism for mapping URLs to controller actions in ASP.NET, making it possible to have friendly URLs for exposing the different resources in the API.

As this framework is layered on top of ASP.NET, one thing you might find complicated to implement is security. Security in ASP.NET is commonly tied to a web application, so it is hard to support schemes where you need different authentication mechanisms for your services. For example, if you have basic authentication enabled for the web application hosting the services, it would be complicated to support other authentication mechanisms like OAuth. You can develop custom modules for handling these scenarios, but that is something else you need to implement.

WCF REST

The WCF Web HTTP programming model was first introduced as part of .NET Framework 3.5 SP1 for building non-SOAP HTTP services that may or may not follow the different REST architectural constraints. This new model brought some new capabilities to WCF: new attributes in the service model ([WebGet] and [WebInvoke]) for routing messages to service operations through URIs and HTTP methods, behaviors for doing some basic content negotiation and exception handling, a new WebOperationContext static object for accessing and controlling different HTTP headers and messages, and finally a new binding, WebHttpBinding, for handling some underlying details related to the HTTP protocol.

The WCF team later released a REST Starter Kit on CodePlex with new features on top of this web programming model to help developers produce more RESTful services. This starter kit also included a combination of examples and Visual Studio templates showing how some of the REST constraints could be implemented in WCF, as well as a couple of interesting features to support help pages, output caching, intercepting request messages and a very useful HTTP client library for consuming existing services (HttpClient).

Many of those features were finally included in the latest WCF release, 4.0, along with the ability to route messages to the services with friendly URLs using the same ASP.NET routing mechanism that ASP.NET MVC uses.

Because services are first-class citizens in WCF, you have exclusive control over security, message size quotas and throttling for each individual service, rather than for all services running in a host, as happens with ASP.NET. In addition, WCF provides its own hosting infrastructure, which does not depend on ASP.NET, so it is possible to self-host services in any regular .NET application, such as a Windows service.

When hosting services in ASP.NET with IIS, previous versions of WCF (3.0 and 3.5) relied on a file with the “.svc” extension to activate the service host when a new message arrived. WCF 4.0 now supports file-less activation for services hosted in ASP.NET, which relies on a configuration setting, and also a mechanism based on HTTP routing equivalent to what ASP.NET MVC provides, making it possible to support friendly URLs. However, there is a slight difference in the way this last one works compared to ASP.NET MVC. In ASP.NET MVC, a route specifies the controller and also the action (method) that should handle a message. In WCF, the route is associated with a factory that knows how to create new instances of the service host for the service, and the URI templates attached to the [WebGet] and [WebInvoke] attributes on the operations take care of the final routing. In my view this approach works much better, as you can create a more resource-oriented URI scheme and route messages based on HTTP verbs without needing to define additional routes.
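A sketch of route-based activation, assuming .NET Framework 4 and an ASP.NET web application; the ProductCatalog service and the "products" prefix are hypothetical. ServiceRoute requires ASP.NET compatibility mode, so the service allows it with AspNetCompatibilityRequirements and web.config must set aspNetCompatibilityEnabled="true" on the serviceHostingEnvironment element.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Activation;
using System.ServiceModel.Web;
using System.Web;
using System.Web.Routing;

// Hypothetical service; ServiceRoute only works when ASP.NET compatibility is allowed.
[ServiceContract]
[AspNetCompatibilityRequirements(RequirementsMode = AspNetCompatibilityRequirementsMode.Allowed)]
public class ProductCatalog
{
    // The template is relative to the route prefix, so this answers GET /products/{id}.
    [OperationContract]
    [WebGet(UriTemplate = "{id}")]
    public string GetProduct(string id)
    {
        return "Product " + id;
    }

    // Same URI, different verb: DELETE /products/{id} needs no additional route.
    [OperationContract]
    [WebInvoke(Method = "DELETE", UriTemplate = "{id}")]
    public void DeleteProduct(string id)
    {
        // Remove the product here.
    }
}

// Global.asax code-behind: one route per service, pointing at a host factory.
public class Global : HttpApplication
{
    protected void Application_Start(object sender, EventArgs e)
    {
        RouteTable.Routes.Add(new ServiceRoute("products",
            new WebServiceHostFactory(), typeof(ProductCatalog)));
    }
}
```

The route decides only which service host handles "products/..."; choosing between GetProduct and DeleteProduct is left to the URI templates and HTTP methods on the operations.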

The support for doing TDD at this point is somewhat limited by the fact that services rely on the static context class (WebOperationContext) for getting and setting HTTP headers, which makes it very difficult to initialize that context in a test or to mock it for setting expectations.
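One common workaround, sketched here under assumptions, is to keep the operation logic free of the static context and pass in the few HTTP details it needs. The delegate-based seam and the GetProduct logic are hypothetical, and the sketch uses C# 9 top-level statements so it runs without WCF.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical operation logic with no reference to WebOperationContext.Current:
// the delegates are its only way to read request headers or set the status code.
static string? GetProduct(string id, Func<string, string?> readHeader, Action<int> setStatusCode)
{
    // Conditional GET: the client already holds version "v1", so answer 304 with no body.
    if (readHeader("If-None-Match") == "\"v1\"")
    {
        setStatusCode(304);
        return null;
    }
    setStatusCode(200);
    return "Product " + id;
}

// Test double: headers come from a dictionary and the status code is captured.
var headers = new Dictionary<string, string> { ["If-None-Match"] = "\"v1\"" };
int status = 0;
string? body = GetProduct("42", h => headers.TryGetValue(h, out var v) ? v : null, s => status = s);
Console.WriteLine($"{status} {body ?? "(no body)"}");

// Without the header, the same logic returns the resource with 200.
int freshStatus = 0;
string? freshBody = GetProduct("42", h => null, s => freshStatus = s);
Console.WriteLine($"{freshStatus} {freshBody}");
```

Inside the WCF operation, a thin adapter could call GetProduct(id, h => WebOperationContext.Current.IncomingRequest.Headers[h], s => WebOperationContext.Current.OutgoingResponse.StatusCode = (HttpStatusCode)s), so only that one line depends on the static context.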

The content negotiation story was improved in WCF 4.0, but it still needs some tweaks to be complete, as you might need to extend the default WebContentTypeMapper class to support custom media types other than the standard “application/xml” for XML and “application/json” for JSON.
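A minimal sketch of such an extension, assuming .NET Framework 4; the media type application/vnd.example.product+json and the class names are hypothetical. WebContentTypeMapper only decides how the body of an incoming request is decoded; it does not choose the media type of the response.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Channels;

// Maps a request Content-Type that WCF does not recognize by default onto one of
// the formats its web message encoder understands.
public class VendorJsonContentTypeMapper : WebContentTypeMapper
{
    public override WebContentFormat GetMessageFormatForContentType(string contentType)
    {
        // contentType may carry parameters such as "; charset=utf-8", hence StartsWith.
        if (contentType != null &&
            contentType.StartsWith("application/vnd.example.product+json", StringComparison.OrdinalIgnoreCase))
        {
            return WebContentFormat.Json;
        }
        // Default lets WCF apply its built-in mapping (application/xml, application/json, ...).
        return WebContentFormat.Default;
    }
}

public static class VendorBindings
{
    // Use this binding with ServiceHost.AddServiceEndpoint plus a WebHttpBehavior.
    public static WebHttpBinding CreateVendorAwareBinding()
    {
        return new WebHttpBinding { ContentTypeMapper = new VendorJsonContentTypeMapper() };
    }
}
```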

The WCF team is seriously considering improving these last two aspects and adding other capabilities to the stack in a future version.

WCF Data Services

WCF Data Services, formerly called ADO.NET Data Services, was introduced in the .NET stack as a way of exposing any IQueryable data source to the world through a REST API. Although a WCF Data Service sits on top of the WCF Web programming model, and is therefore a regular WCF service, I wanted to make a distinction here because this kind of service exposes metadata to consumers and also adds some restrictions on the URIs and the types that can be exposed in the service. All these features and restrictions have been documented and published as a separate specification known as OData.

The framework includes a set of providers or extensibility points that you can customize to make your model writable, extend the available metadata, intercept messages, or support different paging and querying schemes.

A WCF Data Service basically uses URI segments as the mechanism for expressing queries that can be translated to an underlying LINQ provider, making it possible to execute the queries on the data source itself rather than in memory. The result of executing those queries is what is finally returned as the response from the service. Therefore, WCF Data Services use URI segments to express many of the supported LINQ operators and the entities that need to be retrieved. This capability, of course, is what limits the URI space that you can use in your service, as any URI that does not follow the OData standard will result in an error.
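To illustrate the idea, here is a toy translator for a tiny, hypothetical subset of OData query options; it is not the WCF Data Services parser. Each option becomes a LINQ operator on an IQueryable, so the whole request ends up as one expression tree that a provider such as Entity Framework could translate to SQL. The data and the supported option forms are assumptions, and because the source here is in memory, the tree is evaluated locally.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// In-memory stand-in for an entity set.
var products = new List<(string Name, decimal Price)>
{
    ("Konbu", 6m), ("Ikura", 31m), ("Chang", 19m), ("Chai", 18m),
}.AsQueryable();

// Toy translation of a tiny subset of OData query options:
//   $filter=Price gt N  ->  Where(p => p.Price > N)
//   $orderby=Name       ->  OrderBy(p => p.Name)
//   $top=N              ->  Take(N)
static IQueryable<(string Name, decimal Price)> ApplyOptions(
    IQueryable<(string Name, decimal Price)> source, IDictionary<string, string> options)
{
    // Anything outside the supported subset is rejected, much as a data service
    // rejects URIs that do not follow its conventions.
    foreach (var key in options.Keys)
        if (key != "$filter" && key != "$orderby" && key != "$top")
            throw new NotSupportedException("Unsupported option: " + key);

    var query = source;
    // Options are applied in a fixed order ($filter, $orderby, $top), not in URI order.
    if (options.TryGetValue("$filter", out var filter))
    {
        var parts = filter.Split(' ');
        if (parts.Length != 3 || parts[0] != "Price" || parts[1] != "gt")
            throw new NotSupportedException("Unsupported filter: " + filter);
        decimal limit = decimal.Parse(parts[2], CultureInfo.InvariantCulture);
        query = query.Where(p => p.Price > limit);
    }
    if (options.TryGetValue("$orderby", out var orderBy))
    {
        if (orderBy != "Name")
            throw new NotSupportedException("Unsupported orderby: " + orderBy);
        query = query.OrderBy(p => p.Name);
    }
    if (options.TryGetValue("$top", out var top))
        query = query.Take(int.Parse(top, CultureInfo.InvariantCulture));
    return query;
}

// Roughly what /Products?$filter=Price gt 10&$orderby=Name&$top=2 asks for.
var requestOptions = new Dictionary<string, string>
{
    ["$top"] = "2", ["$orderby"] = "Name", ["$filter"] = "Price gt 10",
};
var result = ApplyOptions(products, requestOptions).ToList();
foreach (var product in result)
    Console.WriteLine($"{product.Name} {product.Price}");
```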

Content negotiation is also limited to two media types, JSON and Atom (XML), and the content payload itself is restricted to the specific types defined in the OData specification.

Besides those two limitations, WCF Data Services is still extremely useful for exposing a complete data set with query capabilities through a REST interface with just a few lines of code. JSON and Atom are two widely accepted formats nowadays, which makes this technology very appealing for exposing data that can easily be consumed by any existing client platform, and even by web browsers.

Also, for web applications with Ajax and for smart client applications, you do not need to reinvent the wheel and create a complete set of services just to expose data through a CRUD interface. You take your existing data model, configure in the data service itself some views or filters for the data you want to expose, and that is all.
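A sketch of that "few lines of code" claim, assuming .NET Framework 4 with references to System.Data.Services, System.ServiceModel and System.ServiceModel.Web. To stay self-contained it uses the reflection provider over an in-memory list instead of an Entity Framework model; SalesData, Order, the Orders set and the address are hypothetical. SetEntitySetAccessRule controls what is exposed, and a QueryInterceptor acts as a server-side filter.

```csharp
using System;
using System.Collections.Generic;
using System.Data.Services;
using System.Data.Services.Common;
using System.Linq;
using System.Linq.Expressions;

// Entity type; the reflection provider treats a property named <TypeName>ID as the key.
public class Order
{
    public int OrderID { get; set; }
    public string ShipCountry { get; set; }
}

// Each public IQueryable<T> property becomes an entity set (here: Orders).
public class SalesData
{
    private static readonly List<Order> orders = new List<Order>
    {
        new Order { OrderID = 1, ShipCountry = "USA" },
        new Order { OrderID = 2, ShipCountry = "Germany" },
    };

    public IQueryable<Order> Orders
    {
        get { return orders.AsQueryable(); }
    }
}

public class SalesDataService : DataService<SalesData>
{
    public static void InitializeService(DataServiceConfiguration config)
    {
        // Only listed entity sets are exposed; everything else stays hidden.
        config.SetEntitySetAccessRule("Orders", EntitySetRights.AllRead);
        config.SetEntitySetPageSize("Orders", 50);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
    }

    // Server-side filter applied to every query against Orders.
    [QueryInterceptor("Orders")]
    public Expression<Func<Order, bool>> OnQueryOrders()
    {
        return o => o.ShipCountry == "USA";
    }
}

public static class Program
{
    public static void Main()
    {
        var host = new DataServiceHost(typeof(SalesDataService),
            new[] { new Uri("http://localhost:8001/sales") });
        host.Open();
        Console.WriteLine("Press Enter to stop.");
        Console.ReadLine();
        host.Close();
    }
}
```

Browsing to http://localhost:8001/sales/Orders should return an Atom feed containing only order 1, because the interceptor filters out the rest; sending Accept: application/json requests the JSON representation instead.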

Steve Yi posted Model First For SQL Azure to the SQL Azure Team blog on 9/30/2010 (missed when posted)

One of the great uses for ADO.NET Entity Framework 4.0, which ships with .NET Framework 4.0, is the model first approach to designing your SQL Azure database. Model first means that the first thing you design is the entity model, using Visual Studio and the Entity Framework designer; the designer then creates the Transact-SQL for you that will generate your SQL Azure database schema. The part I really like is that the Entity Framework designer gives me a great WYSIWYG experience for the design of my tables and their inter-relationships. Plus, as a huge bonus, you get a middle-tier object layer to call from your application code that matches the model and the database on SQL Azure.

Visual Studio 2010

The first thing to do is open Visual Studio 2010, which includes version 4.0 of the Entity Framework; this version works especially well with SQL Azure. If you don’t have Visual Studio 2010, you can download the Express version for free; see the Get Started Developing with the ADO.NET Entity Framework page.

Data Layer Assembly

At this point you should have a good idea of what your data model is; however, you might not know what type of application you want to build: ASP.NET MVC, ASP.NET Web Forms, Silverlight, etc. So let’s put the entity model and the objects that it creates in a class library. This will allow us to reference the class library, as an assembly, from a variety of different applications. For now, create a new Visual Studio 2010 solution with a single class library project.

Here is how:

- Open Visual Studio 2010.

- On the File menu, click New Project.

- Choose either Visual Basic or Visual C# in the Project Types pane.

- Select Class Library in the Templates pane.

- Enter ModelFirst for the project name, and then click OK.

The next step is to add an ADO.NET Entity Data Model item to the project. Here is how:

- Right click on the project and choose Add then New Item.

- Choose Data and then ADO.NET Entity Data Model

- Click on the Add Button.

- Choose Empty Model and press the Finish button.

Now you have an empty model view to add entities (I still think of them as tables).

Designing Your Data Structure

The Entity Framework designer lets you drag and drop items from the toolbox directly into the designer pane to build out your data structure. For this blog post I am going to drag and drop an Entity from the toolbox into the designer. Immediately I am curious about how the Transact-SQL will look from just the default entity.

To generate the Transact-SQL that creates a SQL Azure schema, right-click in the designer pane and choose Generate Database From Model. Since the Entity Framework needs to know what the data source is in order to generate the schema with the right syntax and semantics, it asks us to enter connection information in a set of wizard steps.

Since I need a new connection, I press the Add Connection button on the first wizard page. Here I enter connection information for a new database called ModelFirst that I created on SQL Azure, which you can do from the SQL Azure Portal. The portal also gives me other information I need for the Connection Properties dialog, such as my Administrator account name.

Now that I have the connection created in Visual Studio’s Server Explorer, I can continue with the Generate Database Wizard. I want to uncheck the box that saves my connection string in the app.config file. Because this is a class library, the app.config isn’t relevant: .config files go in the assembly that calls the class library.

The Generate Database Wizard creates an Entity Framework connection string that is then passed to the Entity Framework provider to generate the Transact-SQL. The connection string isn’t stored anywhere; however, it is needed to connect to SQL Azure to find out the database version.

Finally, I get the Transact-SQL that generates the table in SQL Azure representing the entity.

```sql
-- --------------------------------------------------
-- Creating all tables
-- --------------------------------------------------

-- Creating table 'Entity1'
CREATE TABLE [dbo].[Entity1] (
    [Id] int IDENTITY(1,1) NOT NULL
);
GO

-- --------------------------------------------------
-- Creating all PRIMARY KEY constraints
-- --------------------------------------------------

-- Creating primary key on [Id] in table 'Entity1'
ALTER TABLE [dbo].[Entity1]
ADD CONSTRAINT [PK_Entity1]
    PRIMARY KEY CLUSTERED ([Id] ASC);
GO
```

This Transact-SQL is saved to a .sql file, which is included in my project. The full project looks like this:

I am not going to run this Transact-SQL on a connection to SQL Azure; I just wanted to see what it looked like. The table looks much as I expected, and the Entity Framework was smart enough to create a clustered index, which is a requirement for SQL Azure.

Summary

Watch for our upcoming video and interview with Faisal Mohamood of the Entity Framework team, which will demonstrate a start-to-finish example of Model First: from modeling the entities, through generating the SQL Azure database, all the way to inserting and querying data using the Entity Framework.

Make sure to check back, or subscribe to the RSS feed to be alerted as we post more information. Do you have questions, concerns, comments? Post them below and we will try to address them.

C|Net Download posted a link to Krueger Systems, Inc.’s OData Browser 1.0 for iPhone recently:

From Krueger Systems, Inc.:

OData Browser enables you to query and browse any OData source. Whether you're a developer or an uber geek who wants access to raw data, this app is for you. It comes with the following sources already configured:

- Netflix - A huge database of movies and TV shows

- Open Government Initiative - Access to tons of data published by various US government branches

- Vancouver Data Service - Huge database that lists everything from parking lots to drinking fountains

- Nerd Dinner - A social site to meet other nerds

- Stack Overflow, Super User, and Server Fault - Expert answers for your IT needs

- Anything else!

If you use SharePoint 2010, IBM WebSphere, or Microsoft Azure, you can use this app to browse that data. The app features:

- Support for data relationship following

- Built-in map if any of the data specifies a longitude and latitude

- Built-in browser to navigate URLs and view HTML

- Query editor that lists all properties for feeds

Use this app to query your own data or to learn about OData. There is a vast amount of data available today, and data is now being collected and stored at a rate never seen before. Much, if not most, of this data, however, is locked into specific applications or formats and is difficult to access or to integrate into new uses. The Open Data Protocol (OData) is a Web protocol for querying and updating data that provides a way to unlock your data and free it from silos that exist in applications today.

Preconfigured sources are the usual suspects.

Hilton Giesenow produced an undated 00:12:26 Use SQL Azure to build a cloud application with data access video segment for MSDN:

Microsoft SQL Azure provides a suite of great relational-database-in-the-cloud features. In this video, join Hilton Giesenow, host of The Moss Show SharePoint Podcast, as he explores how to sign up and get started creating a Microsoft SQL Azure database. In this video we also look at how to connect to the SQL Azure database using the latest release of Microsoft SQL Server Management Studio Express (R2), as well as how to configure an existing on-premises ASP.NET application to speak to SQL Azure.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Juval Lowy wrote AppFabric Service Bus Discovery for MSDN Magazine’s October 2010 issue:

In my January 2010 article, “Discover a New WCF with Discovery” (msdn.microsoft.com/magazine/ee335779), I presented the valuable discovery facility of Windows Communication Foundation (WCF) 4. WCF Discovery is fundamentally an intranet-oriented technique, as there’s no way to broadcast address information across the Internet.

Yet the benefits of dynamic addresses and decoupling clients and services on the address axis would apply just as well to services that rely on the service bus to receive client calls.

Fortunately, you can use the events relay binding as a substitute for User Datagram Protocol (UDP) multicast requests and provide for discovery and announcements. This enables you to combine the benefit of easy deployment of discoverable services with the unhindered connectivity of the service bus. This article walks through a small framework I wrote to support discovery over the service bus, bringing it on par with the built-in support for discovery in WCF, along with my set of helper classes. It also serves as an example of rolling your own discovery mechanism.

AppFabric Service Bus Background

If you’re unfamiliar with the AppFabric Service Bus, you can read these past articles:

- “Working With the .NET Service Bus,” April 2009 (msdn.microsoft.com/magazine/dd569756)

- “Service Bus Buffers,” May 2010 (msdn.microsoft.com/magazine/ee336313)

Solution Architecture

For the built-in discovery of WCF, there are standard contracts for the discovery exchange. Unfortunately, these contracts are defined as internal. The first step in a custom discovery mechanism is to define the contracts used for discovery request and callbacks. I defined the IServiceBusDiscovery contract as follows:

```csharp
[ServiceContract]
public interface IServiceBusDiscovery
{
   [OperationContract(IsOneWay = true)]
   void OnDiscoveryRequest(string contractName,string contractNamespace,
                           Uri[] scopesToMatch,Uri replayAddress);
}
```

The single-operation IServiceBusDiscovery is supported by the discovery endpoint. OnDiscoveryRequest allows the clients to discover service endpoints that support a particular contract, as with regular WCF. The clients can also pass in an optional set of scopes to match.

Services should support the discovery endpoint over the events relay binding. A client fires requests at services that support the discovery endpoint, requesting the services call back to the client’s provided reply address.

The services call back to the client using the IServiceBusDiscoveryCallback, defined as:

```csharp
[ServiceContract]
public interface IServiceBusDiscoveryCallback
{
   [OperationContract(IsOneWay = true)]
   void DiscoveryResponse(Uri address,string contractName,
                          string contractNamespace, Uri[] scopes);
}
```

The client provides an endpoint supporting IServiceBusDiscoveryCallback whose address is the replayAddress parameter of OnDiscoveryRequest. The binding used should be the one-way relay binding to approximate unicast as much as possible. Figure 1 depicts the discovery sequence.

Figure 1 Discovery over the Service Bus

The first step in Figure 1 is a client firing a discovery request event at the discovery endpoint supporting IServiceBusDiscovery. Thanks to the events binding, this event is received by all discoverable services. If a service supports the requested contract, it calls back to the client through the service bus (step 2 in Figure 1). Once the client receives the address of the service endpoint (or endpoints), it proceeds to call the service as with a regular service bus call (step 3 in Figure 1).

Discoverable Host

Obviously a lot of work is involved in supporting such a discovery mechanism, especially for the service. I was able to encapsulate that with my DiscoverableServiceHost, defined as:

See article for longer C# source code examples.

Besides discovery, DiscoverableServiceHost always publishes the service endpoints to the service bus registry. To enable discovery, just as with regular WCF discovery, you must add a discovery behavior and a WCF discovery endpoint. This is deliberate: it avoids adding yet another control switch and keeps a single, consistent configuration in one place where you turn all modes of discovery on or off.

You use DiscoverableServiceHost like any other service relying on the service bus:

See article for longer C# source code examples.

Note that when using discovery, the service address can be completely dynamic.

Figure 2 provides the partial implementation of pertinent elements of DiscoverableServiceHost.

Figure 2 Implementing DiscoverableServiceHost (Partial)

See article for longer C# source code examples.

The helper property IsDiscoverable of DiscoverableServiceHost returns true only if the service has a discovery behavior and at least one discovery endpoint. DiscoverableServiceHost overrides the OnOpening method of ServiceHost. If the service is to be discoverable, OnOpening calls the EnableDiscovery method.

EnableDiscovery is the heart of DiscoverableServiceHost. It creates an internal host for a private singleton class called DiscoveryRequestService (see Figure 3).

Figure 3 The DiscoveryRequestService Class (Partial)

See article for longer C# source code examples.

The constructor of DiscoveryRequestService accepts the service endpoints for which it needs to monitor discovery requests (these are basically the endpoints of DiscoverableServiceHost).

EnableDiscovery then adds to the host an endpoint implementing IServiceBusDiscovery, because DiscoveryRequestService actually responds to the discovery requests from the clients. The address of the discovery endpoint defaults to the URI “DiscoveryRequests” under the service namespace. However, you can change that before opening DiscoverableServiceHost to any other URI using the DiscoveryAddress property. Closing DiscoverableServiceHost also closes the host for the discovery endpoint.

Figure 3 lists the implementation of DiscoveryRequestService.

See article for longer C# source code examples.

OnDiscoveryRequest first creates a proxy to call back the discovering client. The binding used is a plain NetOnewayRelayBinding, but you can control that by setting the DiscoveryResponseBinding property. Note that DiscoverableServiceHost has a corresponding property just for that purpose. OnDiscoveryRequest then iterates over the collection of endpoints provided to the constructor. For each endpoint, it checks that the contract matches the requested contract in the discovery request. If the contract matches, OnDiscoveryRequest looks up the scopes associated with the endpoint and verifies that those scopes match the optional scopes in the discovery request. Finally, OnDiscoveryRequest calls back the client with the address, contract and scope of the endpoint.
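The matching loop can be sketched as follows. This is not Lowy's code, which the excerpt omits; the endpoint tuples, the callback delegate and the scope rule (every requested scope must appear among the endpoint's scopes, compared by exact URI equality, which is simpler than WCF's own scope-matching options) are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical endpoints a discoverable host monitors.
var endpoints = new List<(Uri Address, string ContractName, string ContractNamespace, Uri[] Scopes)>
{
    (new Uri("sb://ns/Calc"), "ICalculator", "http://tempuri.org/",
        new[] { new Uri("http://example.com/scopes/math") }),
    (new Uri("sb://ns/Orders"), "IOrders", "http://tempuri.org/", new Uri[0]),
};

// No requested scopes means "any"; otherwise each requested scope must be one of the
// endpoint's scopes (exact URI equality, a deliberate simplification).
static bool ScopesMatch(Uri[] endpointScopes, Uri[] scopesToMatch) =>
    scopesToMatch == null || scopesToMatch.Length == 0 ||
    scopesToMatch.All(requested => endpointScopes.Contains(requested));

// Filter by contract, then by scope, and call back with each matching endpoint.
void OnDiscoveryRequest(string contractName, string contractNamespace, Uri[] scopesToMatch,
    Action<Uri, string, string, Uri[]> discoveryResponse)
{
    foreach (var endpoint in endpoints)
    {
        if (endpoint.ContractName != contractName ||
            endpoint.ContractNamespace != contractNamespace) continue;
        if (!ScopesMatch(endpoint.Scopes, scopesToMatch)) continue;
        discoveryResponse(endpoint.Address, endpoint.ContractName,
            endpoint.ContractNamespace, endpoint.Scopes);
    }
}

var mathScope = new[] { new Uri("http://example.com/scopes/math") };
var calcHits = new List<Uri>();
OnDiscoveryRequest("ICalculator", "http://tempuri.org/", mathScope,
    (address, name, ns, scopes) => calcHits.Add(address));

// IOrders exists but is not configured with the math scope, so it does not answer.
var ordersHits = new List<Uri>();
OnDiscoveryRequest("IOrders", "http://tempuri.org/", mathScope,
    (address, name, ns, scopes) => ordersHits.Add(address));

Console.WriteLine($"ICalculator: {calcHits.Count}, IOrders with math scope: {ordersHits.Count}");
```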

Discovery Client

For the client, I wrote the helper class ServiceBusDiscoveryClient, defined as:

I modeled ServiceBusDiscoveryClient after the WCF DiscoveryClient, and it’s used much the same way, as shown in Figure 4.

Figure 4 Using ServiceBusDiscoveryClient

See article for longer C# source code examples.

ServiceBusDiscoveryClient is a proxy for the IServiceBusDiscovery discovery events endpoint. Clients use it to fire the discovery request at the discoverable services. The discovery endpoint address defaults to “DiscoveryRequests,” but you can specify a different address using any of the constructors that take an endpoint name or an endpoint address. It will use a plain instance of NetOnewayRelayBinding for the discovery endpoint, but you can specify a different binding using any of the constructors that take an endpoint name or a binding instance. ServiceBusDiscoveryClient supports cardinality and discovery timeouts, just like DiscoveryClient.

Figure 5 shows partial implementation of ServiceBusDiscoveryClient.

Figure 5 Implementing ServiceBusDiscoveryClient (Partial)

See article for longer C# source code examples.

The Find method needs to have a way of receiving callbacks from the discovered services. To that end, every time it’s called, Find opens and closes a host for an internal synchronized singleton class called DiscoveryResponseCallback. Find adds to the host an endpoint supporting IServiceBusDiscoveryCallback. The constructor of DiscoveryResponseCallback accepts a delegate of the type Action<Uri,Uri[]>. Every time a service responds back, the implementation of DiscoveryResponse invokes that delegate, providing it with the discovered address and scope. The Find method uses a lambda expression to aggregate the responses in an instance of FindResponse. Unfortunately, there’s no public constructor for FindResponse, so Find uses the CreateFindResponse method of DiscoveryHelper, which in turn uses reflection to instantiate it. Find also creates a waitable event handle. The lambda expression signals that handle when the cardinality is met. After calling DiscoveryRequest, Find waits for the handle to be signaled, or for the discovery duration to expire, and then it aborts the host to stop processing any discovery responses in progress.
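The cardinality-and-timeout part of Find can be sketched without the service bus. In this hypothetical version a List<Uri> stands in for FindResponse, an Action<Uri, Uri[]> stands in for the DiscoveryResponseCallback delegate, simulated services answer from the thread pool, and a closed flag plays the role of aborting the callback host so that late responses are dropped.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

static List<Uri> Find(int maxResults, TimeSpan duration,
    Action<Action<Uri, Uri[]>> fireDiscoveryRequest)
{
    var found = new List<Uri>();
    var gate = new object();
    bool closed = false;
    using var cardinalityMet = new ManualResetEvent(false);

    // Aggregate each response and signal the handle once the cardinality is met.
    void OnResponse(Uri address, Uri[] scopes)
    {
        lock (gate)
        {
            // After Find stops listening (the equivalent of aborting the callback host),
            // or once enough results have arrived, further responses are ignored.
            if (closed || found.Count >= maxResults) return;
            found.Add(address);
            if (found.Count == maxResults) cardinalityMet.Set();
        }
    }

    fireDiscoveryRequest(OnResponse);

    // Wait for the cardinality to be met or for the discovery duration to expire.
    cardinalityMet.WaitOne(duration);
    lock (gate)
    {
        closed = true;
        return new List<Uri>(found);
    }
}

// Simulated discoverable services that answer after 50, 100 and 150 ms.
static void SimulateServices(Action<Uri, Uri[]> callback)
{
    string[] addresses = { "sb://ns/A", "sb://ns/B", "sb://ns/C" };
    for (int i = 0; i < addresses.Length; i++)
    {
        var address = new Uri(addresses[i]);
        int delay = 50 * (i + 1);
        ThreadPool.QueueUserWorkItem(_ =>
        {
            Thread.Sleep(delay);
            callback(address, new Uri[0]);
        });
    }
}

var firstTwo = Find(2, TimeSpan.FromSeconds(5), SimulateServices); // returns as soon as 2 arrive
var upToFive = Find(5, TimeSpan.FromSeconds(2), SimulateServices); // only 3 exist: times out
Console.WriteLine($"{firstTwo.Count} then {upToFive.Count}");
```

The first call returns early because the cardinality is met; the second has to wait out the full duration, which is why a cardinality of one (as in DiscoverAddress<T>) usually yields the fastest discovery.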

More Client-Side Helper Classes

Although I wrote ServiceBusDiscoveryClient to be functionally identical to DiscoveryClient, it would benefit from a streamlined discovery experience offered by my ServiceBusDiscoveryHelper:

```csharp
public static class ServiceBusDiscoveryHelper
{
   public static EndpointAddress DiscoverAddress<T>(
                   string serviceNamespace,string secret,Uri scope = null);

   public static EndpointAddress[] DiscoverAddresses<T>(
                   string serviceNamespace,string secret,Uri scope = null);

   public static Binding DiscoverBinding<T>(
                   string serviceNamespace,string secret,Uri scope = null);
}
```

DiscoverAddress<T> discovers a service with a cardinality of one, DiscoverAddresses<T> discovers all available service endpoints (cardinality of all) and DiscoverBinding<T> uses the service metadata endpoint to discover the endpoint binding. Much the same way, I defined the class ServiceBusDiscoveryFactory:

```csharp
public static class ServiceBusDiscoveryFactory
{
   public static T CreateChannel<T>(string serviceNamespace,string secret,
                                    Uri scope = null) where T : class;

   public static T[] CreateChannels<T>(string serviceNamespace,string secret,
                                       Uri scope = null) where T : class;
}
```

CreateChannel<T> assumes cardinality of one, and it uses the metadata endpoint to obtain the service’s address and binding used to create the proxy. CreateChannels<T> creates proxies to all discovered services, using all discovered metadata endpoints.

Announcements

To support announcements, you can again use the events relay binding as a substitute for UDP multicast. First, I defined the IServiceBusAnnouncements announcement contract:

```csharp
[ServiceContract]
public interface IServiceBusAnnouncements
{
   [OperationContract(IsOneWay = true)]
   void OnHello(Uri address, string contractName,
                string contractNamespace, Uri[] scopes);

   [OperationContract(IsOneWay = true)]
   void OnBye(Uri address, string contractName,
              string contractNamespace, Uri[] scopes);
}
```

As shown in Figure 6, this time, it’s up to the clients to expose an event binding endpoint and monitor the announcements.

Figure 6 Availability Announcements over the Service Bus

The services will announce their availability (over the one-way relay binding) providing their address (step 1 in Figure 6), and the clients will proceed to invoke them (step 2 in Figure 6).

Service-Side Announcements

My DiscoverableServiceHost supports announcements:

```csharp
public class DiscoverableServiceHost : ServiceHost,...
{
   public const string AnnouncementsPath = "AvailabilityAnnouncements";

   public Uri AnnouncementsAddress
   {get;set;}

   public NetOnewayRelayBinding AnnouncementsBinding
   {get;set;}

   // More members
}
```

However, on par with the built-in WCF announcements, by default it won’t announce its availability. To enable announcements, you need to configure an announcement endpoint with the discovery behavior. In most cases, this is all you’ll need to do. DiscoveryRequestService will fire its availability events on the “AvailabilityAnnouncements” URI under the service namespace. You can change that default by setting the AnnouncementsAddress property before opening the host. The events will be fired by default using a plain one-way relay binding, but you can provide an alternative using the AnnouncementsBinding property before opening the host. DiscoveryRequestService will fire its availability events asynchronously to avoid blocking operations during opening and closing of the host. Figure 7 shows the announcement-support elements of DiscoveryRequestService.

Figure 7 Supporting Announcements with DiscoveryRequestService

See article for longer C# source code examples.

The CreateAvailabilityAnnouncementsClient helper method uses a channel factory to create a proxy to the IServiceBusAnnouncements announcements events endpoint. After opening and before closing DiscoveryRequestService, it fires the notifications. DiscoveryRequestService overrides both the OnOpened and OnClosed methods of ServiceHost. If the host is configured to announce, OnOpened and OnClosed call CreateAvailabilityAnnouncementsClient to create a proxy and pass it to the PublishAvailabilityEvent method to fire the event asynchronously. Because the act of firing the event is identical for both the hello and bye announcements, and the only difference is which method of IServiceBusAnnouncements to call, PublishAvailabilityEvent accepts a delegate for the target method. For each endpoint of DiscoveryRequestService, PublishAvailabilityEvent looks up the scopes associated with that endpoint and queues up the announcement to the Microsoft .NET Framework thread pool using a WaitCallback anonymous method. The anonymous method invokes the provided delegate and then closes the underlying target proxy.
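A sketch of the delegate-plus-thread-pool pattern that PublishAvailabilityEvent follows, under assumptions: the endpoint tuples are hypothetical, local functions stand in for the OnHello and OnBye proxy calls, and signaling a CountdownEvent stands in for closing the proxy after each call.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical endpoints of the host: address, contract name, namespace, scopes.
var endpoints = new List<(Uri Address, string ContractName, string ContractNamespace, Uri[] Scopes)>
{
    (new Uri("sb://ns/Calc"), "ICalculator", "http://tempuri.org/", new Uri[0]),
    (new Uri("sb://ns/Orders"), "IOrders", "http://tempuri.org/", new Uri[0]),
};

var log = new List<string>();
var logLock = new object();
// Two announcements (hello and bye) per endpoint.
using var fired = new CountdownEvent(endpoints.Count * 2);

// Stand-ins for the OnHello/OnBye operations of an IServiceBusAnnouncements proxy.
void OnHello(Uri address, string contractName, string contractNamespace, Uri[] scopes)
{
    lock (logLock) log.Add("hello " + contractName);
}
void OnBye(Uri address, string contractName, string contractNamespace, Uri[] scopes)
{
    lock (logLock) log.Add("bye " + contractName);
}

// One routine for both announcements: the caller passes the target operation.
// Each endpoint's announcement is queued to the thread pool, so the caller never blocks.
void PublishAvailabilityEvent(Action<Uri, string, string, Uri[]> notification)
{
    foreach (var endpoint in endpoints)
    {
        ThreadPool.QueueUserWorkItem(new WaitCallback(_ =>
        {
            notification(endpoint.Address, endpoint.ContractName,
                endpoint.ContractNamespace, endpoint.Scopes);
            fired.Signal(); // where the real code would close the proxy
        }));
    }
}

PublishAvailabilityEvent(OnHello); // after the host opens
PublishAvailabilityEvent(OnBye);   // before the host closes
bool allFired = fired.Wait(TimeSpan.FromSeconds(5));
lock (logLock) Console.WriteLine(string.Join(", ", log));
```

Because each announcement is queued independently, nothing orders them: in this sketch a bye can overtake a hello, so a receiver should tolerate out-of-order announcements.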

Receiving Announcements

I could have mimicked the WCF-provided AnnouncementService, as described in my January article, but there’s a long list of things I’ve improved upon with my AnnouncementSink<T>, and I didn’t see a case where you would prefer to use AnnouncementService in favor of AnnouncementSink<T>. I also wanted to leverage and reuse the behavior of AnnouncementSink<T> and its base class.

Therefore, for the client, I wrote ServiceBusAnnouncementSink<T>, defined as:

```csharp
[ServiceBehavior(UseSynchronizationContext = false,
                 InstanceContextMode = InstanceContextMode.Single)]
public class ServiceBusAnnouncementSink<T> : AnnouncementSink<T>,
                                             IServiceBusAnnouncements where T : class
{
   public ServiceBusAnnouncementSink(string serviceNamespace,string secret);
   public ServiceBusAnnouncementSink(string serviceNamespace,string owner,
                                     string secret);

   public Uri AnnouncementsAddress {get;set;}

   public NetEventRelayBinding AnnouncementsBinding {get;set;}
}
```

The constructors of ServiceBusAnnouncementSink<T> require the service namespace.

ServiceBusAnnouncementSink<T> supports IServiceBusAnnouncements as a self-hosted singleton. ServiceBusAnnouncementSink<T> also publishes itself to the service bus registry. ServiceBusAnnouncementSink<T> subscribes by default to the availability announcements on the “AvailabilityAnnouncements” URI under the service namespace. You can change that (before opening it) by setting the AnnouncementsAddress property. ServiceBusAnnouncementSink<T> uses (by default) a plain NetEventRelayBinding to receive the notifications, but you can change that by setting the AnnouncementsBinding before opening ServiceBusAnnouncementSink<T>. The clients of ServiceBusAnnouncementSink<T> can subscribe to the delegates of AnnouncementSink<T> to receive the announcements, or they can just access the address in the base address container. For an example, see Figure 8.

Figure 8 Receiving Announcements

See article for longer C# source code examples.

Figure 9 shows the partial implementation of ServiceBusAnnouncementSink<T> without some of the error handling.

Figure 9 Implementing ServiceBusAnnouncementSink<T> (Partial)

See article for longer C# source code examples.

The constructor of ServiceBusAnnouncementSink<T> hosts itself as a singleton and saves the service namespace. When you open ServiceBusAnnouncementSink<T>, it adds to its own host an endpoint supporting IServiceBusAnnouncements. The implementation of the event handling methods of IServiceBusAnnouncements creates an AnnouncementEventArgs instance, populating it with the announced service address, contract and scopes, and then calls the base class implementation of the respective announcement methods, as if it were called using regular WCF discovery. This both populates the base class of the AddressesContainer<T> and fires the appropriate events of AnnouncementSink<T>. Note that to create an instance of AnnouncementEventArgs, you must use reflection due to the lack of a public constructor.
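The net effect on the client side can be modeled with a small, hypothetical sink that keeps an address container and raises hello and bye notifications. It skips the reflection-based AnnouncementEventArgs construction and the AnnouncementSink<T> base class; filtering on a single expected contract name is an assumption standing in for the generic parameter T.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical contract the sink was created for (T in ServiceBusAnnouncementSink<T>).
const string ExpectedContract = "ICalculator";

// Address container plus subscriber lists for the two notifications.
var addresses = new List<Uri>();
var helloHandlers = new List<Action<Uri>>();
var byeHandlers = new List<Action<Uri>>();

// Handlers for IServiceBusAnnouncements-style calls arriving over the events binding.
void OnHello(Uri address, string contractName, string contractNamespace, Uri[] scopes)
{
    if (contractName != ExpectedContract) return; // not our contract: ignore
    if (!addresses.Contains(address)) addresses.Add(address);
    foreach (var handler in helloHandlers) handler(address);
}

void OnBye(Uri address, string contractName, string contractNamespace, Uri[] scopes)
{
    if (contractName != ExpectedContract) return;
    addresses.Remove(address);
    foreach (var handler in byeHandlers) handler(address);
}

// A client either subscribes to the notifications or just reads the container.
int helloCount = 0, byeCount = 0;
helloHandlers.Add(a => helloCount++);
byeHandlers.Add(a => byeCount++);

OnHello(new Uri("sb://ns/CalcA"), "ICalculator", "http://tempuri.org/", new Uri[0]);
OnHello(new Uri("sb://ns/CalcB"), "ICalculator", "http://tempuri.org/", new Uri[0]);
OnHello(new Uri("sb://ns/Orders"), "IOrders", "http://tempuri.org/", new Uri[0]);
OnBye(new Uri("sb://ns/CalcA"), "ICalculator", "http://tempuri.org/", new Uri[0]);

Console.WriteLine($"{helloCount} hello(s), {byeCount} bye(s); available: {string.Join(", ", addresses)}");
```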

The Metadata Explorer

Using my support for discovery for the service bus, I extended the discovery feature of the Metadata Explorer tool (presented in previous articles) to support the service bus. If you click the Discover button (see Figure 10), for every service namespace you have already provided credentials for, the Metadata Explorer will try to discover metadata exchange endpoints of discoverable services and display the discovered endpoints in the tree.

Figure 10 Configuring Discovery over the Service Bus

The Metadata Explorer will default to using the URI “DiscoveryRequests” under the service namespace. You can change that path by selecting Service Bus from the menu, then Discovery, to bring up the Configure AppFabric Service Bus Discovery dialog (see Figure 10).

For each service namespace of interest, the dialog lets you configure the desired relative path of the discovery events endpoint in the Discovery Path text box.

The Metadata Explorer also supports announcements of service bus metadata exchange endpoints. To enable receiving the availability notification, bring up the discovery configuration dialog box and check the Enable checkbox under Availability Announcements. The Metadata Explorer will default to using the “AvailabilityAnnouncements” URI under the specified service namespace, but you can configure for each service namespace any other desired path for the announcements endpoint.

The support in the Metadata Explorer for announcements makes it a simple, practical and useful service bus monitoring tool.

Juval Lowy is a software architect with IDesign providing .NET and architecture training and consulting. This article contains excerpts from his recent book, “Programming WCF Services 3rd Edition” (O’Reilly, 2010). He’s also the Microsoft regional director for the Silicon Valley. Contact Lowy at idesign.net.

Thanks to the following technical expert for reviewing this article: Wade Wegner

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Steve Evans (@scevans) posted Windows Azure Staging Model on 10/10/2010:

One of my favorite features of Windows Azure is their Production/Staging model. I think the best way to explain why I think this is so well implemented is to walk you through the processes.

Here we have Test1 running in Production and Test2 running in Staging. Clients that go to your production URL are routed to your production code. Clients that go to your staging URL are routed to your staging code. This allows you to have your customers use your production code, while you can test your new code running in staging.

Now that I’ve tested my staging code, I am ready to move it to production. I click the Swap button located between my two versions, and Test2 becomes production while Test1 is moved to staging. Behind the scenes, the load balancer (managed by the Azure platform) starts directing requests for your production URL to servers running the Test2 code base, and requests for your staging URL to servers running the Test1 code base. This process literally takes a few seconds, since the Test2 code base is already running on servers. It also gives you the advantage of being able to switch back to your old code base immediately if something goes wrong.

Now we have updated our code again and have pushed up Test3 to our staging area. We now have Test2 still running in production, and can do testing on Test3 in staging.

Now that we have tested our Test3 code and are ready to move it to production, we hit the Swap button again: Test3 becomes production, and Test2 is moved to staging, ready to be moved back into production at a moment’s notice.
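The swap sequence above can be modeled as a toy Python sketch: two fixed URL slots, each pointing at a running deployment, where a swap only exchanges the routing. The `HostedService` class and its methods are hypothetical stand-ins for the platform's load balancer; this is not the Windows Azure management API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HostedService:
    """Toy model of a hosted service with fixed production and staging URLs."""

    production: Optional[str] = None  # build served at the production URL
    staging: Optional[str] = None     # build served at the staging URL

    def deploy_to_staging(self, build: str) -> None:
        # New code lands in staging; production traffic is untouched.
        self.staging = build

    def swap(self) -> None:
        # Only the routing is exchanged. Both builds keep running, which is
        # why a swap is fast and can be undone by swapping again.
        self.production, self.staging = self.staging, self.production


svc = HostedService(production="Test1", staging="Test2")
svc.swap()                      # Test2 -> production, Test1 -> staging
svc.deploy_to_staging("Test3")  # Test3 replaces Test1 in staging
svc.swap()                      # Test3 -> production, Test2 -> staging
print(svc)
```

Because nothing is redeployed during a swap, rolling back is just another call to `swap()`.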

One thing to note is that the Web Site URLs for Production and Staging never changed. Unfortunately, neither of them is a URL you want your customers to see or that you would want to work with. What you want to do is create two DNS CNAME records. In the example I’m using here, you would create these two records (alias first, then the cloudapp.net name it points to):

test.serktools.com CNAME serktools-stagetest.cloudapp.net

stage.test.serktools.com CNAME e7e3f38589d04635a6d0d0aee22bd842.cloudapp.net

• The VAR Guy interviewed Allison Watson in a 00:04:03 Microsoft’s Allison Watson: SaaS and Cloud, Part 2 video segment on 3/10/2010:

The VAR Guy FastChat Video interviews Microsoft Channel Chief Allison Watson about cloud opportunities for partners. This is part II, covering how VARs can embrace Windows Azure and Business Productivity Online Suite (BPOS), plus SaaS pricing questions.

Prior to assuming her current role in 2010, Watson spent eight years as head of the Worldwide Partner Group, directing Microsoft's worldwide strategy for the diverse ecosystem of more than 640,000 independently owned-and-operated partner companies that support Microsoft and its customers in 170 countries.

• The VAR Guy interviewed Allison Watson on 3/10/2010 in a 00:04:19 Microsoft’s Allison Watson: SaaS and Cloud, Part 1 video segment:

The VAR Guy FastChat Video interviews Microsoft Channel Chief Allison Watson about Microsoft's cloud strategy for partners; SaaS competition with Google and Salesforce.com; plus Microsoft Business Productivity Online Suite (BPOS) and Windows Azure.

These interviews might be dated, but they express Microsoft’s marketing approach to Windows Azure and BPOS at about the time of the Microsoft Worldwide Partners Conference in the Spring of 2010.

On Windows claimed “Descartes' Global Logistics Network (the GLN) is being extended using Windows Azure, Microsoft’s cloud computing platform for Descartes' software-as-a-service (SaaS) 3.0 solutions” as a lead-in to its Microsoft, Descartes use Azure cloud article of 10/8/2010:

Descartes unveiled its new SaaS 3.0 strategy to extend its GLN SaaS technology platform with cloud-computing capabilities.

"We're excited to work with Microsoft, one of the leaders in cloud-computing technology, to federate with our GLN. This gives our customers increased enterprise agility by providing component based solutions in the cloud," said Frank Hamerlinck, senior vice president of research and development at Descartes.

"By uniting the GLN services with the Windows Azure Platform, our customers and partners can have robust and reliable solutions that are easily accessible and open for integration,” added Hamerlinck. “This model results in an accelerated time-to-value for our customers, ultimately driving improvements in their logistics operations."

"Descartes is moving ahead with a well thought out hybrid approach to cloud computing. Windows Azure will allow Descartes to quickly extend its GLN based on customer demand, while keeping costs low," said Nicole Denil, Director of Americas for the Windows Azure Platform at Microsoft.

The two companies are scheduled to discuss the details of this relationship and the Descartes technology vision and research and development methodology at its Global User Group Conference in Florida on 5-7 October.

Dan Tohatan (@pavethemoon) evaluates the cost of cloud hosting for his SaaS project in a Cloud Computing Adoption: Slow, but Why? post of 10/8/2010:

It is a fairly well-established fact that cloud computing has so far occupied a very niche market. Certainly, no company that I've worked at (and I've worked at more than 3 companies in the last 4 years) has implemented cloud computing. They are all talking about it, but they have yet to turn words into actions.

I am now looking for the reasons behind the slow adoption of cloud computing, mainly because I myself am developing my first cloud-driven application (Vmana). One blogger says that "Windows Azure is too expensive for small apps." I tend to agree. Most websites and web apps run on low-budget shared servers. My entire domain (dacris.com) runs perfectly fine in a shared hosting environment. Why would I ever consider running a cloud computing environment that would cost me at least 4 TIMES more money?

The Pros

Here are some uses that I believe are legitimate for cloud computing:

1. Part-time server, for prototyping and demos

Say you want to spin up a server for 20 hours to demonstrate an app to your clients. Would you go and get a hosting account, pre-pay 3 months, and end up spending around $50? Or, would you prefer spending $2 on a cloud server with a greater degree of performance and flexibility? I would certainly opt for the latter.

2. Large-scale data center for public data

Suppose I have a Digg-like site that gets millions of views per day and I need a large-scale data center to host it. Would I be inclined to build a data center myself, or outsource that work to a third-party provider? At that scale, it's likely that from a cost perspective the outsourcing option makes more sense.

Notice one thing: there is no middle ground. You either need a large, Facebook-size data center, or a part-time server for demo and prototyping use.

The Cons

The main concerns with cloud computing seem to be as follows:

1. What if I want to run my server full time?

You can, but you won't get much bang for the buck out of it. In fact, you'll be overspending on hardware you probably don't need, at a higher price than either VPS or shared hosting, which is more than enough for 99% of sites out there. I mean, VPS starts at $20/month. You get the same flexibility and almost the same performance as cloud computing, for a quarter of the cost. This comes about because it turns out that all cloud computing providers charge by the CPU-hour. For a month consisting of 720 hours, that usually adds up to at least $80/month.

2. What about security?

The question you have to ask yourself is: is your data sensitive enough that you can't trust a third-party provider (be it Microsoft, GoDaddy, or whatever) with it? There is an argument to be made that smaller, more local providers are more trustworthy from a security standpoint, because even if the data leaks internally, not many eyeballs will see it or be likely to leak it. With a provider like Microsoft, which has thousands of employees, it's much easier to find a rogue hacker within the company who would love to expose your data to the public. Beyond that, if you can't trust anyone but insiders with your data, then really your only option is to build an internal data center.

Conclusions

Any good article has to have some conclusions. So here we go. I think the security issue is not really a concern because hosting providers have roughly the same level of security as cloud computing providers. However, the cost issue is a clear barrier to entry. Why should I pay $80 for the exact same service that I can go next door and pay $20 for? I think where cloud computing has gone wrong is in the idea that users would be willing to pay retail markup for essential back-end services. At this point, you can bet your bottom dollar Microsoft is paying only $0.01 per hour of CPU time internally to provide Windows Azure to you at $0.12 per hour.
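The cost argument reduces to simple arithmetic. This Python sketch assumes the $0.12-per-CPU-hour rate and $20/month VPS price quoted above, a single instance, and a 720-hour month, and it ignores storage, bandwidth, and any discounted offers; the constant and function names are illustrative.

```python
HOURS_PER_MONTH = 720  # 30 days x 24 hours, as in the post
CLOUD_RATE = 0.12      # $ per CPU-hour, the rate quoted above
VPS_MONTHLY = 20.00    # $ per month, the entry-level VPS price quoted above


def cloud_cost(hours: float, rate: float = CLOUD_RATE) -> float:
    """Pay-per-hour cost of one instance, rounded to cents."""
    return round(hours * rate, 2)


def break_even_hours(flat_monthly: float = VPS_MONTHLY, rate: float = CLOUD_RATE) -> float:
    """Monthly hours at which hourly billing costs the same as a flat fee."""
    return flat_monthly / rate


full_time = cloud_cost(HOURS_PER_MONTH)
print(f"Full-time instance: ${full_time:.2f}/month, {full_time / VPS_MONTHLY:.1f}x the VPS")
print(f"20-hour demo server: ${cloud_cost(20):.2f}")
print(f"Break-even: {break_even_hours():.0f} hours/month")
```

Under these assumptions a full-time instance costs $86.40 a month, about 4.3 times the VPS, while the 20-hour demo server costs $2.40. The break-even point is roughly 167 hours, so hourly billing only comes out ahead when an instance runs for less than about a quarter of the month.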

At this time, unless I'm looking to build an Amazon-like site or need a prototyping server with root access, I would not consider cloud computing. I would much rather go with VPS or shared hosting. This is probably what millions of other people are thinking as well.

<Return to section navigation list>

Visual Studio LightSwitch

• Microsoft’s Campbell Gunn announced “no plans in Version 1 to support/extend oData” in a 10/9/2010 reply to apacifico’s Lightswitch dataprovider support and odata question of 10/9/2010 in the LightSwitch Extensibility forum:

A very bad decision, which needs revisiting.

• Peter Kellner explained Building [a] Job Ads Management Module With LightSwitch Beta 1 For Silicon Valley Code Camp in a 9/30/2010 post (missed when posted):

The End Result

Motivation

As you can imagine, the Silicon Valley Code Camp web site has lots of “back end” functions that need to be done: things like doing mailings, assigning roles to users, making schedules, allocating rooms and times, and literally hundreds of other tasks like that. Over the 5 years of Code Camp, I’ve always built simple protected ASP.NET web pages to do this, using the simplest ASP.NET controls I could find, such as GridView, DetailsView, DropDownList, and SqlDataSource. The interfaces basically work, but they are very clumsy and lacking in both functionality and aesthetics.

Why Now

I’ve seen lots of short demos of LightSwitch for Visual Studio and recently read on someone else’s blog that they are now building all their simple applications using LightSwitch. Also, my friend Beth Massi has been running around the world espousing the greatness of this product, and I knew that if I ran into any dumb issues she’d bail me out. (I’m the king of running into dumb issues. I’ve found that given two choices that seem right, I always pick the wrong one, which is what actually happened here along the way, and Beth did bail me out.)

First Blood

The first thing to do (after installing LightSwitch) is to choose File/New/Project. My plan is to add this project right off my SV Codecamp solution. So, here goes.

So far so good. The next step is to choose to attach to an external database.

Continuing, but don’t get tripped up here like I did. You will be using WCF RIA Services under the covers, but you don’t want to select that choice. You want to say that you want to connect to a database and let LightSwitch do the work for you.

Pick your database and connection strings.

Now, pick the tables you plan on working with. If this were LINQ to SQL, I’d be choosing them all, but now that I’m in RIA Services land, I’m hoping I can have separate “Domains” and not have to reference all the tables all the time. The jury is still out on that one, but for now I’m following the advice of the team and just picking the tables I want to manage now.

And, I’m going to name the Data Source “svcodecampDataJobs”. I’ll have to see how this goes and report later. I’m doing this live so I really don’t know where I’ll end up.

Click Finish, then Rebuild All, and it all works. It comes up with this screen showing me the relationship between the tables: a Company table with a link to a JobListing table, which is what I have. Here is what LightSwitch shows me.

The reality of my database is that I also have a JobListingDates table that is not shown here. Taking a step back to explain my database: I have a simple Company table; each company has a detail table associated with it called JobListings, and the JobListings table has a detail table associated with it called JobListingDates. That is, a company may run an ad for 30 days, take it down for 30, and bring it back up again.
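The Company, JobListings, and JobListingDates hierarchy described above is two chained one-to-many relationships. This Python sketch recreates it in an in-memory SQLite database so it runs anywhere; the real database is SQL Server, and the column names, types, and sample rows here are hypothetical. The query shows why the dates live in their own table: one listing can have several separate active periods.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

conn.executescript("""
CREATE TABLE Company (
    Id   INTEGER PRIMARY KEY,
    Name TEXT NOT NULL
);
-- Each company can have many job listings.
CREATE TABLE JobListings (
    Id        INTEGER PRIMARY KEY,
    CompanyId INTEGER NOT NULL REFERENCES Company(Id),
    Title     TEXT NOT NULL
);
-- Each listing can run for several separate periods.
CREATE TABLE JobListingDates (
    Id           INTEGER PRIMARY KEY,
    JobListingId INTEGER NOT NULL REFERENCES JobListings(Id),
    StartDate    TEXT NOT NULL,  -- ISO 8601 dates compare correctly as text
    EndDate      TEXT NOT NULL
);
""")

conn.execute("INSERT INTO Company (Id, Name) VALUES (1, 'Example Co')")
conn.execute("INSERT INTO JobListings (Id, CompanyId, Title) VALUES (1, 1, 'Developer')")
# Up for 30 days, down for 30, then back up again.
conn.executemany(
    "INSERT INTO JobListingDates (JobListingId, StartDate, EndDate) VALUES (?, ?, ?)",
    [(1, "2010-09-01", "2010-09-30"), (1, "2010-10-31", "2010-11-29")],
)


def is_running(listing_id: int, day: str) -> bool:
    """True if the listing has a period covering the given ISO date."""
    row = conn.execute(
        "SELECT EXISTS (SELECT 1 FROM JobListingDates "
        "WHERE JobListingId = ? AND ? BETWEEN StartDate AND EndDate)",
        (listing_id, day),
    ).fetchone()
    return bool(row[0])


print(is_running(1, "2010-09-15"), is_running(1, "2010-10-15"), is_running(1, "2010-11-10"))
```

The foreign keys are what LightSwitch picks up as the links between the entities; with them enforced, a listing cannot reference a company that does not exist.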

Here is what that schema actually looks like in SqlManager from EMS.

One thing I like about great software is that it has discoverable features. Just now, I double-clicked on the little table called JobListings, and the view changed to show JobListing as primary; if you look at the bottom right, it shows JobListingDate. Very cool. I have no idea where this is all going, but I’m starting to get excited. Here is what I’m looking at now.

Building a Screen

So now, let’s push the “Screen” button and see what happens (while looking at the Company View).

This is nice, I get a list of sample screens. How about if we build an Editable Grid Screen with the hope of editing and adding new Companies. Notice that I’m naming it EditableGridCompany and choosing the Company for the data in the dropdown.

You now get a screen that is a little scary looking, so rather than actually try to understand it, I thought “maybe I’m done, maybe this will just run”. So, here goes: Debug/Start Without Debugging. Here is the scary screen, followed by what happens after the run.

And the Run:

Wow! Paging, fancy editing including date pickers, exporting to Excel, and inserts, updates and deletes on the Company table. This is amazing. Let me add another grid so that I can add JobListings to the company. To do that, go back to Solution Explorer and choose Add Screen.

Then again, I have choices.

I choose Details Screen and check Company in the dropdown, Company Details and Company JobListings for the additional data.

Another intimidating screen, but simply choose Debug/Start Debugging.