Windows Azure and Cloud Computing Posts for 10/20/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Update 10/21/2010: Articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

• See my Windows Azure, SQL Azure, AppFabric and OData Sessions at PDC 2010 post of 10/21/2010, which lists all PDC 2010 sessions related to Windows Azure, SQL Azure, Windows Azure AppFabric, Codename “Dallas” and OData, in the Cloud Computing Events section below.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

• The SQL Azure Team announced SQL Azure Sessions at PDC 2010 in this 10/20/2010 post:

The session list for the sold-out, two-day PDC 2010 conference in Redmond has been posted here. The SQL Azure sessions announced on the PDC 2010 web site are listed below.

Building Offline Applications using the Sync Framework and SQL Azure

By: Nina Hu

In this session you will learn how to build a client application that operates against locally stored data and uses synchronization to keep up-to-date with a SQL Azure database. See how Sync Framework can be used to build caching and offline capabilities into your client application, making your users productive when disconnected and making your user experience more compelling even when a connection is available. See how to develop offline applications for Windows Phone 7 and Silverlight, plus how the services support any other client platform, such as iPhone and HTML5 applications, using the open web-based sync protocol.

Building Scale-Out Database Solutions on SQL Azure

By: Lev Novik

SQL Azure provides an information platform that you can easily provision, configure, and use to power your cloud applications. In this session we will explore the patterns and practices that help you develop and deploy applications that can exploit the full power of the elastic, highly available, and scalable SQL Azure Database service. The talk will detail modern scalable application design techniques such as sharding and horizontal partitioning and dive into future enhancements to SQL Azure Databases.
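As a rough illustration of the sharding technique the session abstract mentions, the sketch below routes each row to one of several databases by hashing its partition key. The shard names and the `shard_for` helper are hypothetical; nothing here comes from the session itself or from a SQL Azure API.

```python
import hashlib

# Hypothetical database names; a real deployment would map each one to a
# connection string for a separate SQL Azure database.
SHARDS = [
    "customers_shard_0",
    "customers_shard_1",
    "customers_shard_2",
    "customers_shard_3",
]


def shard_for(partition_key: str, shards=SHARDS) -> str:
    """Pick a shard by hashing the key, so the same key always lands on the same shard."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an unsigned integer, then wrap
    # it into the range of available shards.
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]
```

Simple modulo routing like this keeps lookups cheap, but changing the number of shards remaps most keys, so a production design would need a plan for rebalancing.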

• See my Windows Azure, SQL Azure, AppFabric and OData Sessions at PDC 2010 post of 10/21/2010, which lists all PDC 2010 sessions related to Windows Azure, SQL Azure, Windows Azure AppFabric, Codename “Dallas” and OData, in the Cloud Computing Events section below.

• Abel Avram posted The Future of WCF Is RESTful to the InfoQ blog on 10/21/2010:

Glenn Block, a Windows Communication Foundation (WCF) Program Manager, said during an online webinar entitled “WCF, Evolving for the Web” that Microsoft’s framework for building service-oriented applications is going to be refactored radically, the new architecture being centered around HTTP.

Block started the online session by summarizing the current trends in the industry:

- a move to cloud-based computing

- a migration away from SOAP

- a shift towards browsers running on all sorts of devices

- an increase in the adoption of REST

- emerging standards like OAuth, WebSockets

He mentioned that the current architecture of WCF is largely based on SOAP as shown in this slide:

While it supports communication with many types of services, the current WCF architecture is complex and does not scale well, so Block is looking forward to seeing simpler communication between services based on HTTP, as depicted in the following slide:

HTTP support was introduced in .NET 3.5, allowing the creation of services accessed via HTTP, but “it does not give access to everything HTTP has to offer, and it is a very flat model, RPC oriented, whereas the Web is not. The Web is a very rich set of resources,” according to Block. Instead of retrofitting the current WCF to work over HTTP, Block believes WCF should be re-architected with HTTP in mind, using a RESTful approach.

WCF will contain helper APIs for pre-processing HTTP requests and responses, parsing and manipulating arguments, and encapsulating the HTTP information in objects that can later be passed on for further processing. This will relieve users of dealing with HTTP internals directly unless they want to. The feature will also provide a plug-in capability for media-type formatters for data formats such as JSON, Atom and OData. WCF will support some of them out of the box, but users will be able to add their own formatters.
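To make the idea of pluggable media-type formatters concrete, here is a minimal, language-neutral sketch written in Python. It is not the WCF API, which had not been published at the time of the article; the `register_formatter` decorator, the `FORMATTERS` registry and the `render` function are all hypothetical names, and the Accept-header handling deliberately ignores q-values.

```python
import json
from typing import Callable, Dict, Tuple

# Hypothetical registry that maps a media type to a function which
# serializes a resource (a plain dict here) into a response body.
FORMATTERS: Dict[str, Callable[[dict], str]] = {}


def register_formatter(media_type: str):
    """Decorator that plugs a formatter into the registry."""
    def decorator(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        FORMATTERS[media_type] = fn
        return fn
    return decorator


@register_formatter("application/json")
def to_json(resource: dict) -> str:
    return json.dumps(resource, sort_keys=True)


@register_formatter("text/plain")
def to_text(resource: dict) -> str:
    return "\n".join(f"{key}: {value}" for key, value in sorted(resource.items()))


def render(resource: dict, accept: str, default: str = "application/json") -> Tuple[str, str]:
    """Return (media_type, body) for the first Accept entry that has a formatter."""
    for part in accept.split(","):
        media_type = part.split(";")[0].strip()  # drop parameters such as q=0.9
        if media_type in FORMATTERS:
            return media_type, FORMATTERS[media_type](resource)
    # Nothing matched, so fall back to the default formatter.
    return default, FORMATTERS[default](resource)
```

A caller adds support for another format by registering one more function; the dispatch code in `render` does not change.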

The new WCF is already being built, and Block demoed sample code that uses it, but he mentioned that the feature set and the final shape of WCF are not set in stone. The team will publish an initial version of the framework on CodePlex in the near future so the community can test it and react, shaping the future of WCF. More details are to come during PDC 2010.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

• See my Windows Azure, SQL Azure, AppFabric and OData Sessions at PDC 2010 post of 10/21/2010, which lists all PDC 2010 sessions related to Windows Azure, SQL Azure, Windows Azure AppFabric, Codename “Dallas” and OData, in the Cloud Computing Events section below.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• John Hagel III and John Seely Brown published Learning From The Cloud: Cloud computing's new vistas, like research to Forbes.com on 10/20/2010:

It's easy to see why startups love the idea of cloud computing. When you're burning through expensive early-stage capital, no one wants to use dedicated, perishable resources like computing power, bandwidth, storage and software when you can get what you need, when you need it, from the cloud. Larger enterprises are coming onto the cloud more slowly, lured by the prospect of lower IT operating costs as well as the ability to easily and cheaply handle unexpected fluctuations in demand.

Even the government is adopting cloud computing. As its budget experiences painful cuts, the city of Miami is using Microsoft's Windows Azure platform to host a computing-intensive mapping application that visualizes calls to the city's 311 municipal information service. The approach costs 75% less than in-house servers, and dramatically increases the transparency and awareness of previously hidden municipal problems.

As these projects show, cloud computing is, at the moment, largely viewed as a low-cost form of IT outsourcing. That's all well and good. But organizations focusing only on this one perspective on the cloud will be missing the next wave of potential for cloud computing: using the cloud as a platform for experimentation and learning.

Largely unnoticed by analysts, a few companies of all sizes have begun to appreciate the ability of cloud computing to spur broader experimentation. Because cloud computing reduces the cost of technology initiatives, cloud-aware organizations are more open to launching multiple prototypes, testing pilot ideas in parallel. Projects that take off can scale up more quickly, while those that stall at the gate can be painlessly dialed back. As a result, learning accelerates.

For example, Varian, a scientific-instruments company, uses the cloud to run intensive "Monte Carlo" computer simulations of design prototypes, leading to more rapid feedback cycles. A design for a mass spectrometer that would have required six weeks with internal processors took only a day on Amazon's Elastic Compute Cloud (EC2). Varian reports that running a calculation on one machine for 100 hours costs the same as using 100 machines for an hour.
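The cost equivalence Varian describes follows directly from per-machine-hour billing. The sketch below works through the arithmetic; the hourly rate is a hypothetical figure, and the model assumes the workload parallelizes perfectly with no coordination overhead.

```python
def job_cost(machines: int, hours_each: int, rate_per_machine_hour: float) -> float:
    """Total charge when every machine is billed for each hour it runs."""
    return machines * hours_each * rate_per_machine_hour


RATE = 0.10  # hypothetical price per machine-hour, in dollars

serial_cost = job_cost(machines=1, hours_each=100, rate_per_machine_hour=RATE)
parallel_cost = job_cost(machines=100, hours_each=1, rate_per_machine_hour=RATE)

# Both runs consume 100 machine-hours, so the bill is the same,
# but the elapsed time drops from 100 hours to 1 hour.
serial_elapsed_hours = 100
parallel_elapsed_hours = 1
```

Because the bill depends only on total machine-hours, the faster feedback from running in parallel comes at no extra compute cost under these assumptions.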

The forms of processor-intensive research being shifted to the cloud run the gamut from analyzing molecular data to mapping the Milky Way. In Palo Alto, Calif., a nanotech startup that's still in stealth mode rents time remotely over the Internet for experiments using sophisticated electron microscopes--devices it could never have afforded before.

Software code-testing is also shifting to the cloud, as are simulations in the financial-services industry. Semiconductor companies are experimenting with cloud computing for the electronic design automation that speeds up chip design. Processes with relatively light computing requirements, such as software regression testing, already fit well with cloud computing. More intensive processes, like simulations and high-end design tools, are starting to migrate to the cloud.

Even more interesting are the experiments using cloud computing to accelerate learning from customers, speeding up both time-to-market and customer feedback. For instance, Amazon uses its own cloud-based Relational Database Service to much more quickly and cheaply manipulate the tremendous amount of data it generates from simulations of its 98 million active customers.

And 3M in May launched an inexpensive Web-based application that uses Microsoft data centers to give any designer anywhere in the world the ability to upload a design--a logo, a packaging concept, a store environment--and to then use complex algorithms to immediately analyze its effectiveness. 3M's "Visual Attention Service" maps the "hot spots" on a design where people's eyes naturally go and evaluates the image's "visual saliency." In future versions, 3M plans to allow customers to create entire databases of test images and experiment with a variety of designs. The cloud tool significantly reduces the time and cost of design iterations, while improving the overall impact of the designs.

John Hagel III is co-chairman and John Seely Brown is independent co-chairman of the Deloitte Center for the Edge. Their books include The Power of Pull, The Only Sustainable Edge, Out of the Box, Net Worth, and Net Gain.

• See my Windows Azure, SQL Azure, AppFabric and OData Sessions at PDC 2010 post of 10/21/2010, which lists all PDC 2010 sessions related to Windows Azure, SQL Azure, Windows Azure AppFabric, Codename “Dallas” and OData, in the Cloud Computing Events section below.

The Windows Azure Team published a Real World Windows Azure: Interview with Richard Godfrey, Cofounder and CEO, KoodibooK case study on 10/21/2010:

As part of the Real World Windows Azure series, we talked to Richard Godfrey, cofounder and CEO of KoodibooK, about using the Windows Azure platform to develop the company's unique self-publish service, which lets customers design and publish personalized photo albums in minutes. Here's what he had to say:

MSDN: Can you please tell us about KoodibooK?

Godfrey: KoodibooK provides a unique "Create Once, Publish Anywhere" experience for people looking to capture memorable moments in their lives with a personalized photo album.

MSDN: What were the company's main goals in designing and building the KoodibooK publishing application?

Godfrey: Our primary mission in developing the KoodibooK service was to make it the fastest way for consumers to design, build, and publish their own photo albums. We wanted to give our customers the chance to build and start enjoying their book in as little as 10 minutes.

MSDN: Can you describe how the Windows Azure platform helped you meet those goals?

Godfrey: The Windows Azure platform is ideal to meet our needs. The simplicity of the architecture model of Windows Azure made it extremely easy to develop services to handle the publishing workflow from start to finish.

MSDN: Can you describe how the KoodibooK solution uses other Microsoft technologies, together with Windows Azure?

Godfrey: The actual book design application (the templates and tools that people use to organize their photos, create page layouts, and add effects) is built on the Windows Presentation Foundation. Customers download the application from our site and run it on their computer.

As the images are written to Windows Azure Blob storage, all reference data about individual publishing projects is simultaneously stored in Microsoft SQL Azure databases. To give users a high-fidelity, interactive viewing experience online, we use the Microsoft Silverlight browser plug-in to handle the presentation of the published book. Users can see a full-scale version of their book, flip through pages, use the Deep Zoom feature of Silverlight to see incredible detail, and much more.

MSDN: What makes the KoodibooK photo album solution unique?

Godfrey: With our solution, people can create their own custom album in minutes instead of hours, mainly because our design solution uses a client application, instead of relying on a web-based system. And we let people pull in content from just about anywhere: from online photo storage locations, such as their Facebook account, from Flickr, from blogs, or from local drives. This helps simplify our service and opens it up to a wider range of user preferences. And then we give people lots of options in terms of publishing their finished book. They can print a bound version from one of our professional print vendors, or they can share their book online so that people can view it on a PC or their mobile computing device.

With the KoodibooK publishing tool, customers can interact with a 3-D preview of their personalized photo album before printing.

MSDN: Can you describe the benefits KoodibooK has gained through the use of Windows Azure, along with Windows Presentation Foundation and Microsoft Silverlight?

Godfrey: As a startup with just a handful of employees, we simply couldn't allocate a lot of time or budget to building and managing the infrastructure. Setting up and configuring services to run in Windows Azure is such a straightforward process. We just created a cloud service project in Visual Studio, published it to Windows Azure, and it worked.

Because of the interoperability of Microsoft technologies, we've been able to reuse code from the client application to optimize server-side components. This means we can quickly develop and deploy new functionality that works throughout all of the different parts of the solution. So we've been able to roll out product improvements on a consistent basis, which is a critical part of our growth strategy.

Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000008355

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

• Mary Jo Foley (@maryjofoley) reported Microsoft starts moving more of its own services onto Windows Azure on 10/18/2010:

Up until recently, relatively few of Microsoft’s own products or services were running on the company’s Windows Azure operating system.

Some of Live Mesh was on it. The Hohm energy-tracking app was an Azure-hosted service. Pieces of its HealthVault solution were on Azure. But Hotmail, CRM Online, the Business Productivity Online Suite (BPOS [now Office 365]) of hosted enterprise apps? Nope, nope and nope.

I asked the Softies earlier this year if the lack of internal divisions hosting on top of Azure could be read as a lack of faith in Microsoft’s cloud OS. Was it just too untried and unproven for “real” apps and services?

The Azure leaders told me to watch for new and next-generation apps for both internal Microsoft use and external customer use to debut on Azure in the not-too-distant future. It looks like that’s gradually starting to happen.

Microsoft Research announced on October 18 a beta version of WikiBhasha, “a multilingual content creation tool for Wikipedia, codeveloped by Wikipedia and Microsoft.” The beta is an open-source MediaWiki extension, available under the Apache License 2.0, as well as in user-gadget and bookmarklet form. It’s the bookmarklet version that is hosted on Azure.

Gaming is another area where Microsoft has started relying on Azure. According to a case study Microsoft published last week, the “Windows Gaming Experience” team built social extensions into Bing Games on Azure, enabling that team to create in five months a handful of new hosting and gaming services. (It’s not the games themselves hosted on Azure; it’s complementary services like secure tokens, leaderboard scores, gamer-preferences settings, etc.) The team made use of Azure’s hosting, compute and storage elements to build these services that could be accessed by nearly two million concurrent gamers at launch in June — and that can scale up to “support five times the amount of users,” the Softies claim.

Microsoft also is looking to Azure as it builds its next-generation IntelliMirror product/service, according to an article Microsoft posted to its download center. Currently, IntelliMirror is a set of management features built into Windows Server. In the future (around the time of Windows 8, as the original version of the article said), some of these services may actually be hosted in the cloud.

An excerpt from the edited, October 15 version of the IntelliMirror article:

“The IntelliMirror service management team, like many commercial customers of Microsoft, is evaluating the Windows Azure cloud platform to establish whether it can offer an alternative solution for the DPM (data protection manager) requirements in IntelliMirror. The IntelliMirror service management team sees the flexibility of Windows Azure as an opportunity to meet growing user demand for the service by making the right resources available when and where they are needed.

“The first stage of the move toward the cloud is already underway. Initially, IntelliMirror service management team plans to set up a pilot on selective IntelliMirror and DPM client servers by early 2011, to evaluate the benefits of on-premises versus the cloud for certain parts of the service.”

There’s still no definitive timeframe as to when — or even if — Microsoft plans to move things like Hotmail, Bing or BPOS onto Azure. For now, these services run in Microsoft’s datacenters but not on servers running Azure.

Brent Stineman (@brentcodemonkey) pled for free or low cost Windows Azure instances for developers in his Its about driving adoption post of 10/20/2010:

So I exchanged a few tweets with Buck Woody yesterday. For those not familiar, Buck is an incredibly passionate SQL Server guy with Microsoft who recently moved over to their Azure product family. It’s obvious from some of the posts that Buck has made that he was well versed in ‘the cloud’ before the move, but he hasn’t let this stop him from being very vocal about sharing his excitement about the possibilities. But I digress…

The root of the exchange was regarding access to hosted Azure services for developer education/training. Now before I get off on my rant, I want to be a bit positive. MSFT has taken some great steps to making Azure available for developers. The CTP was nice and long and there were few restrictions (at least in the US) to participation. They also gave out initial MSDN and BizSpark Azure subscription benefits that were fair and adequate. They have even set up free labs for SQL Azure and the Azure AppFabric. And of course, there’s the combination of free downloads and the local development fabric.

All in all, it’s a nice set of tools that allow for initial learning on the Windows Azure platform. The real restriction remains the ability to actually deploy hosted services and test them in a “production environment”. Now the Dev Fabric is great and all, but as anyone that’s spent any time with Azure will tell you, you still need to test your apps in the cloud. There’s simply no substitute. And unfortunately, there is no Windows Azure lab.

Effective November 1st 2010, several of the aforementioned benefits are being either removed (AzureUSAPass) or significantly reduced. Now I fully understand that it costs money for MSFT to provide these benefits and I am grateful for what I’ve gotten. But I’m passionate about the platform and I’m concerned that with these changes, it will be even more difficult to help “spread the faith” as it were.

So consider this my public WTF. Powers that be, please consider extending these programs and the current benefit levels indefinitely. I get questions on a weekly basis from folks about “how can I learn about Azure”. I’d hate to have to start telling them they need to have a credit card. Many of these folks are the grass roots types that are doing this on their own time and dime but can help influence LARGE enterprises. Furthermore, you have HUGE data centers with excess capacity available. I’m certain that a good portion of that capacity is kept in an up state and as such is consuming resources. So why not put it to good use and help equip an army. An army of developers all armed with the promise and potential of cloud computing.

The more of these soldiers we have, the easier it will be to tear down the barriers that are blocking cloud adoption and overcome the challenges that these solutions face.

Ok… my enthusiasm about this is starting to make me sound like a revolutionary. So before I end up on another watch list I’ll cut this tirade short. Just please, either extend these programs or give us other options for exploring and learning your platform.

I’ve been lobbying for lowering developers’ entry cost for Windows Azure, SQL Azure and Azure AppFabric for more than a year with only moderate success.

• See Amazon Web Services announced a new AWS Free Usage Tier on 10/21/2010 in the Other Cloud Computing Platforms and Services section below.

Bruce Kyle asked Want to Know When You Approach Your Azure Limit? in a 10/20/2010 post to the US ISV Evangelist blog:

One issue customers have had in the past is that they would exceed their predefined limits on Windows Azure Platform without notification (especially when using MSDN offerings and such). Now we have a way to notify you.

We are now sending customers email notifications when their compute hour usage exceeds 75%, 100% and 125% of the number of compute hours included in their plan.
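The threshold rule is simple enough to express in a few lines. The sketch below is only an illustration of the arithmetic, not Microsoft's billing logic; the `crossed_thresholds` helper is hypothetical, and treating usage that lands exactly on a threshold as crossing it is an assumption.

```python
# Notification thresholds from the post, as fractions of the included hours.
THRESHOLDS = (0.75, 1.00, 1.25)


def crossed_thresholds(hours_used: float, hours_included: float) -> list:
    """Return every notification threshold that current usage has reached."""
    if hours_included <= 0:
        raise ValueError("hours_included must be positive")
    ratio = hours_used / hours_included
    # Assumption: usage exactly at a threshold counts as having reached it.
    return [t for t in THRESHOLDS if ratio >= t]
```

For a hypothetical plan with 750 included compute hours, 600 hours of usage reaches only the 75% threshold, while 1,000 hours reaches all three.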

You can also get a weekly email summarizing your consumption for the first 13 weeks of your subscription. After that, you’ll receive alerts when you reach certain thresholds.

For more information, see the Windows Azure team blog post Announcing Compute Hours Notifications for Windows Azure Customers.

You can also view your current usage and unbilled balance at any time on the Microsoft Online Services Customer Portal.

This isn’t news, but a reminder might be helpful to those who missed the first post.

Karsten Januszewski revealed The Latest Twitpocolypse in a 10/19/2010 post:

There’s another Twitpocolypse on the horizon. If you’ve developed against the Twitter API—and especially if you’re using a JSON parser for deserialization—you’d better read up.

In summary, there’s a serialization issue now that Twitter is moving to 64-bit signed integers, since JavaScript can’t exactly represent integers larger than 53 bits. Twitter passes the tweet id as a number, not a string, so they’ve introduced a new property that passes the id as a string. This means you’ll have to change your code to parse the id_str property, which is passed as a string, as opposed to the id property:

{"id": 10765432100123456789, "id_str": "10765432100123456789"}

If you’re using .NET and deserializing into an int instead of a long or a string, you could be at risk. So check your code.
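The precision loss is easy to reproduce. The sketch below uses Python rather than JavaScript or .NET: Python's own integers are arbitrary precision, so it emulates a JavaScript-style parser by forcing every JSON integer through a 64-bit float. The payload is the example shown above; the `id_survives_double` helper is a hypothetical name introduced for illustration.

```python
import json

payload = '{"id": 10765432100123456789, "id_str": "10765432100123456789"}'

# Default parse: Python integers are arbitrary precision, so the id is exact.
exact = json.loads(payload)

# Emulate a parser that stores every number as an IEEE 754 double,
# which is how JavaScript represents numbers.
lossy = json.loads(payload, parse_int=float)

# Largest integer a double can hold without gaps between neighbours.
MAX_SAFE_INTEGER = 2**53 - 1


def id_survives_double(n: int) -> bool:
    """True if n round-trips through a 64-bit float unchanged."""
    return int(float(n)) == n


# The numeric id is silently rounded; the string id is untouched.
id_was_corrupted = int(lossy["id"]) != exact["id"]
id_str_is_intact = int(lossy["id_str"]) == exact["id"]
```

Reading `id_str` and keeping it as a string (or converting it to a wide enough integer type) avoids the problem regardless of how the parser treats numbers.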

Fortunately, both Archivist Web and Archivist Desktop are immune to this issue. In the case of Archivist Web, which uses the most excellent TweetSharp library, ids are cast to long variables. In the case of Archivist Desktop, which uses a custom-built deserialization framework, ids are cast to string variables.

Interesting to watch as Twitter continues to scale and deal with id generation issues. You can see an earlier example of issues around id generation in this lab note.

See examples of The Archivist’s tweet histories in the left frame of the OakLeaf Systems blog.

Nicole Hemsoth described Cloud-Driven Tools from Microsoft Research Target Earth, Life Sciences in a 10/19/2010 post to the HPC in the Cloud blog:

Following its eScience Workshop at the University of California, Berkeley last week, Microsoft made a couple of significant announcements to over 200 attendees about new toolsets available to aid in ecological and biological research.

At the heart of its two core news items is a new ecological research tool called MODISAzure coupled with the announcement of the Microsoft Biology Foundation, both of which are tied to Microsoft's Azure cloud offering, which until relatively recently has not been on the scientific cloud computing radar to quite the same degree as Amazon's public cloud resource.

While the company's Biology Foundation announcement relies on the cloud less for processing power than as a platform for collaboration and information-sharing, the ecological research tool provides a sound use case of scientific computing in the cloud. All of the elements of what is useful about the cloud for researchers are present: dynamic scalability, processing power equivalent to or greater than that of local clusters, and the ability for researchers to shed some of the programming and cluster management challenges in favor of on-demand access.

MODISAzure and Flexible Ecological Research

Studies of ecosystems, even on the minute, local scale are incredibly complex undertakings due to the fact that any ecosystem is comprised of a large number of elements; from water, climate and plant cycles to external influences, including human interference, the list of constituent parts that factor into the broader examination of an ecosystem seems almost endless. Each element doubles onto itself, forming a series of sub-factors that must be considered -- a task that requires supercomputer assistance, or at least used to.

Last week at its annual eScience Workshop, Microsoft Research teamed up with the University of California, Berkeley to announce a new research tool that simplifies complex data analysis that creators claim will focus on "the breathing of the biosphere." Notice how the word "breathing" here implies that there will be a near real-time implication to the way data is collected and analyzed, meaning that researchers will be able to see the ecosystem as it exists in each moment -- or as it "breathes" or exists in a particular moment.

In order to monitor the breathing of a biosphere, data from satellite images from the over 500 FLUXNET towers are analyzed in minute detail, often down to what the team describes as a single-kilometer-level, or, if needed, on a global scale. The FLUXNET towers themselves, which are akin to a network of sensor arrays that measure fluctuations in carbon dioxide and water vapor levels, can provide data that can then be scaled over time, meaning that researchers can either get a picture of the present via the satellite images or can take the data and look for patterns that stretch back over a ten-year period if needed.

It is in this flexibility of timelines that researchers have to draw from that the term "breathing" comes into play. According to Catharine van Ingen, a partner architect on the project from Microsoft Research, "You see more different things when you can look big and look small. The ability to have that kind of living, breathing dataset ready for science is exciting. You can learn more and different things at each scale."

To be more specific, as Microsoft stated in its release, the system "combines state-of-the-art biophysical modeling with a rich cloud-based dataset of satellite imagery and ground-based sensor data to support carbon-climate science synthesis analysis on a global scale."

This system is based on MODISAzure, which Microsoft describes as a "pipeline for downloading, processing and reducing diverse satellite imagery." This satellite imagery, which is collected from the network of FLUXNET towers, employs the Windows Azure platform to gain the scalable boost it needs to deliver the results to researchers' desktops.

What this means, in other words, is that in theory, scientists who study the complex interaction of forces in an ecosystem, and who would otherwise rely on supercomputing capacity to handle such tasks, are now granted a maintenance- and hassle-free research tool via the power of Microsoft's cloud offering.

The Azure Forum Support Team posted a list of chapters to date of their Bring the clouds together: Azure + Bing Maps series on 8/11/2010 (missed when posted):

We are starting a set of article series under the name "Bring the clouds together". These series contain articles, sample applications, and live demonstrations that show how you can combine Microsoft cloud computing solutions with related technologies. We will provide both source code and written guidance to help you design and develop cloud applications of your own.

This first series will focus on how to combine Windows Azure, SQL Azure, and Bing Maps. You can find a preview of the live demonstration at http://sqlazurebingmap.cloudapp.net/. Because this is a preview, it may contain some bugs. As we go through this series, we will release the source code and fix the issues in the live demonstration.

Chapters list

The chapters list will grow as new articles become available.

| Topic | Description |
| Chapter 1: Introducing the Plan My Travel application | Describes the sample application, including its features, and high-level overviews of the implementation. |
| Chapter 2: Choosing the right platform and technology | Discusses how to make decisions when architecting a typical cloud-based, consumer-oriented application. |
| Chapter 3: Designing a scalable cloud database | Discusses how to design a scalable cloud database. |
| Chapter 4: Working with spatial data | Discusses how to work with spatial data in SQL Azure. |
| Chapter 5: Accessing spatial data with Entity Framework | Discusses how to access spatial data in a .NET application, and a few considerations you must take into account when working with SQL Azure. |
| Chapter 6: Expose data to the world with WCF Data Services | Discusses how to expose data to the world using WCF Data Services. In particular, it walks through how to create a reflection provider for WCF Data Services. |

Earlier, the team posted:

- Cloud + Device: Combine the power of Windows Azure, IE 9, and Windows Phone 7 (Part 3) of 09-19-2010: In the last post, we focused on the device side. We created a graphic rich web application using HTML 5 canvas, and browsed it in IE 9. This post focuses on the integration. That is, how to connect client applications to cloud services. You can see the...

- Cloud + Device: Combine the power of Windows Azure, IE 9, and Windows Phone 7 (Part 2) of 09-19-2010: In the last post, we talked about how IE 9 powers Windows 7 devices, how Silverlight/XNA powers Windows Phone 7 devices, and how Windows Azure cloud connects those devices. This post focuses on the device side. We create a graphic rich web application...

- Cloud + Device: Combine the power of Windows Azure, IE 9, and Windows Phone 7 of 09-19-2010: Earlier this week, we released two important products: Internet Explorer 9 Beta and Windows Phone 7 Developer Tools RTM. At first glance, these products have nothing to do with Windows Azure. But if you remember the 5 dimensions of the cloud, you'll...

<Return to section navigation list>

Visual Studio LightSwitch

• Matt Thalman explained Query Reuse in Visual Studio LightSwitch on 10/21/2010:

One of the features available in Visual Studio LightSwitch is the ability to model queries that can be reused in other queries that you model. This allows developers to write a potentially complex query once and then define other queries that reuse that logic. In V1 of LightSwitch, this query reuse is exposed through the concept of a query source.

All queries that you create have a source. The source of a query defines the set of entities on which the query will operate. By default, any new query that you create will have its source set to the entity set of the table for which you created the query. So if I create a query for my Products table, the source of the query will be the Products entity set. You can think of the entity set as being similar to a SELECT * FROM TABLE for the table. The entity set is always the root; it does not have a source. Developers have the ability to change a query’s source. The source of a query can be set to another query that has already been defined. (You can do this as long as both queries are returning the same entity type. You can’t, for example, define a query for customers and a query for products and define the source of the products query to be the customer query.) This effectively creates a query chain where the results of one query are fed to the next query where the results are further restricted.

To illustrate how this works, let’s start with a sample set of Product data to work with:

I’ve defined a query named ProductsByCategory that returns the products of a given category:

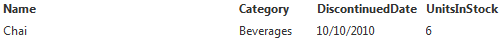

This query will return the following data when “Beverages” is passed as the Category parameter:

Now this query can be reused for any other query which wants to retrieve data for a specific category but also wants to further refine the results. In this case, I’ll define a query named DiscontinuedProducts that returns all discontinued products for a given category.

Notice the red bounding box which I’ve added to indicate that this query’s source is set to the ProductsByCategory query. You’ll also notice that this query “inherits” the Category parameter from its source query. This allows the DiscontinuedProducts query to consume that parameter in its filter should it need to do so. The result of this query is the following:

It may be helpful to think of object inheritance in the context of query sources. In other words, the DiscontinuedProducts query inherits the logic of ProductsByCategory and extends that logic with its own.
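The chain described above can be sketched in a few lines of Python. This is only an illustration of the idea, not LightSwitch's generated code: the `Product` class, the sample rows and the function names are assumptions made for the example, and each "query" is simply a function whose source is the function it calls.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass(frozen=True)
class Product:
    name: str
    category: str
    discontinued: bool


# Hypothetical sample rows; the article's actual data set is not reproduced here.
PRODUCTS = [
    Product("Chai", "Beverages", False),
    Product("Guarana Fantastica", "Beverages", True),
    Product("Aniseed Syrup", "Condiments", False),
    Product("Chef Anton's Gumbo Mix", "Condiments", True),
]


def products_entity_set() -> Iterator[Product]:
    """Root of every chain: all rows, comparable to SELECT * FROM Products."""
    return iter(PRODUCTS)


def products_by_category(category: str) -> Iterator[Product]:
    """Source: the Products entity set."""
    return (p for p in products_entity_set() if p.category == category)


def discontinued_products(category: str) -> Iterator[Product]:
    """Source: products_by_category. The category parameter is passed through."""
    return (p for p in products_by_category(category) if p.discontinued)


print([p.name for p in discontinued_products("Beverages")])
```

Because `discontinued_products` never touches the entity set directly, any change to the category filter is picked up automatically, which is the reuse benefit the post describes.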

It should be noted that any sorts that are defined for queries are only applicable for the query that is actually being executed. So if ProductsByCategory sorted its products by name in descending order and DiscontinuedProducts sorted its products by name in ascending order, then the results of the DiscontinuedProducts query will be in ascending order. In other words, the sorting defined within base queries is ignored.
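A small model may make the sorting rule easier to see. The `Query` class below is hypothetical (it is not a LightSwitch type): filters accumulate along the source chain, while only the sort of the query being executed is applied.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Query:
    """Hypothetical stand-in for a modeled query: a filter, an optional sort, an optional source."""
    predicate: Callable[[dict], bool]
    sort_descending: Optional[bool] = None  # None means no sort is defined
    source: Optional["Query"] = None

    def filters(self) -> List[Callable[[dict], bool]]:
        # Collect predicates from the whole chain, root first.
        inherited = self.source.filters() if self.source else []
        return inherited + [self.predicate]

    def execute(self, rows: List[dict]) -> List[dict]:
        result = [r for r in rows if all(f(r) for f in self.filters())]
        # Only the executed query's own sort is applied;
        # sorts defined on source queries are ignored.
        if self.sort_descending is not None:
            result.sort(key=lambda r: r["name"], reverse=self.sort_descending)
        return result


rows = [
    {"name": "Chai", "category": "Beverages", "discontinued": True},
    {"name": "Guarana", "category": "Beverages", "discontinued": True},
    {"name": "Chang", "category": "Beverages", "discontinued": False},
]

by_category = Query(lambda r: r["category"] == "Beverages", sort_descending=True)
discontinued = Query(lambda r: r["discontinued"], sort_descending=False, source=by_category)

print([r["name"] for r in by_category.execute(rows)])   # descending by name
print([r["name"] for r in discontinued.execute(rows)])  # ascending by name
```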

• Kunal Chowdhury posted a detailed, fully illustrated Beginners Guide to Visual Studio LightSwitch (Part–1) on 10/20/2010:

Visual Studio LightSwitch is a new tool for building data-driven Silverlight applications using the Visual Studio IDE. It automatically generates the user interface for a data source without requiring you to write any code. You can also write a small amount of code to meet your own requirements.

Recently, I got some time to explore Visual Studio LightSwitch. I created a small DB application with a proper data-entry UI in a short amount of time (without any XAML or C# code).

In this article, I will guide you through it with the help of a small application. More articles on this topic will follow regularly. Read the complete article to learn about creating a Silverlight data-driven application with the help of Visual Studio LightSwitch.

Setting up LightSwitch Environment

Microsoft Visual Studio LightSwitch Beta 1 is a flexible business application development tool that allows developers of all skill levels to quickly build and deploy professional-quality desktop and Web business applications. To start with LightSwitch application development, you need to install Visual Studio LightSwitch on your development machine. To do so, follow the steps below:

- Install Visual Studio 2010

- Install Visual Studio LightSwitch

The LightSwitch installation will install all other components to your PC one by one including SQL Express, Silverlight 4, LightSwitch Beta server etc.

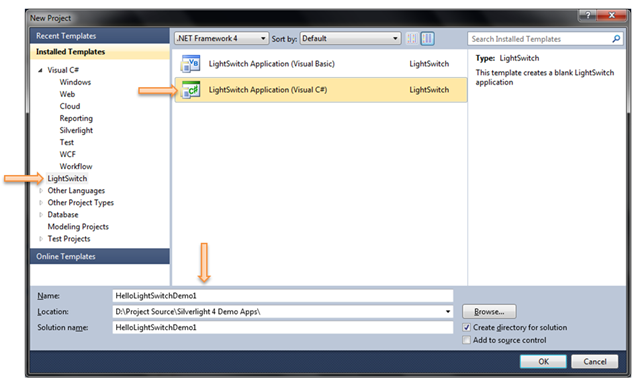

You can download the LightSwitch Beta 1 from here: Microsoft Download Center (Visual Studio LightSwitch Beta 1)

Creating a LightSwitch Project

Once you have installed Visual Studio LightSwitch, run the product to create a new project. Go to File –> New –> Project or press Ctrl + Shift + N to open the “New Project” dialog. From the left panel, select “LightSwitch”. The right pane will then show only the LightSwitch project templates, which come in VB and C# versions. Select the one you prefer. Here I will use the C# version.

In the above dialog window, enter the name of the project, select a location for the project and click “Ok”. Visual Studio 2010 will then create a blank LightSwitch project for you. Project creation takes some time, so be patient.

Create a Database Table

After the project has been created by the IDE, it will open up the following screen:

You can see that it has two options in the UI: you can create a new table for your application, or you can attach an external database. If you open the Solution Explorer, you will see that the project is totally empty. It has only two folders named “Data Sources” and “Screens”.

“Data Sources” stores your application data i.e. Database Tables. On the other side, the “Screens” folder stores the UI screens created by you. I will describe them later in this tutorial.

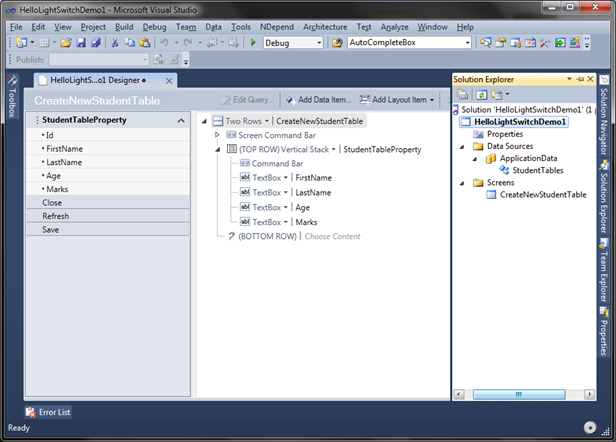

Let’s create a new Table for our application. Click on the “Create new table” to continue. This will bring up the following screen in your desktop:

In the above page, you can design your table structure as you would in SQL Server while creating a new table. Each table has an “Id” column of type “Int32”, which is the primary key of the table. Add some additional columns to the table.

In the above snapshot, you can see that several column types (data types) are available in a LightSwitch application. For our sample application, we will create 4 additional columns called “FirstName (String)”, “LastName (String)”, “Age (Int16)” and “Marks (Decimal)”. Change the title of the table from “Table1Item” to “StudentTable”. This name will be used while saving the table. Save the table now. If you want to change the name of the table later, just rename the table header and save it. This will automatically update the table name.
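For readers who think in code, the table just designed can be pictured as the following Python sketch. It is not what LightSwitch generates; the class, the `missing_required` and `validate` helpers and the snake_case field names are assumptions chosen to mirror the four columns, the Int32 key, and the article's advice to mark every field as required.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import List, Optional


@dataclass
class StudentTable:
    # Columns follow the article: FirstName, LastName, Age (Int16), Marks (Decimal).
    first_name: Optional[str] = None
    last_name: Optional[str] = None
    age: Optional[int] = None
    marks: Optional[Decimal] = None
    id: Optional[int] = None  # Int32 primary key, assigned on save rather than by the user

    def missing_required(self) -> List[str]:
        """Names of required fields that are still empty."""
        required = ("first_name", "last_name", "age", "marks")
        return [name for name in required if getattr(self, name) in (None, "")]

    def validate(self) -> None:
        missing = self.missing_required()
        if missing:
            raise ValueError("Required fields missing: " + ", ".join(missing))
        if not -32768 <= self.age <= 32767:
            raise ValueError("age does not fit in an Int16")


student = StudentTable(first_name="Ada", last_name="Lovelace", age=17)
print(student.missing_required())  # marks has not been entered yet
```

A data entry screen built on such an entity would refuse to save until `validate()` passes, which is roughly the behavior the required-field setting provides.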

Be sure to mark all the fields as “Required”. This will help with validating the data, as I will show later.

Create a Data Entry Screen

Once you are done with structuring your database table columns, you will need to create a UI screen for your application to insert records. Click on the “Screen…” button from the top panel as shown in the below snapshot:

This will open the “Add New Screen” dialog window. Select “New Data Screen” from the Screen Template list, provide a Screen Name in the right panel and choose the database table as Screen Data from the dropdown.

Click “Ok” to continue. This will create a new UI screen that lets the end user insert new data records. Now, in the Solution Explorer you can see that the “Data Sources” folder has one database named “ApplicationData”, which has a table called “StudentTables”. In the “Screens” folder you can find the recently created data entry screen named “CreateNewStudentTable”.

You can change the design of the UI from the below screen:

You can add or delete fields or controls. You can also rearrange the controls in the UI. For our first sample application, we will go with the default layout controls.

See the Application in Action

Woo!!! Our application is ready. We will be able to insert new records in our database table from our application. No need to write a single line of code. What? You don’t agree with me? Let’s run the application by pressing F5. This will build your solution, which takes some time. Once it builds successfully, it will open the following UI on your desktop:

It is a Silverlight OOB (out-of-browser) application. If you want to confirm, right-click on the application and you will see the Silverlight context menu pop up on the screen.

OMG!!! We didn’t do anything to design the above UI! Visual Studio LightSwitch automatically created the screen for you with “Save” and “Refresh” buttons. You can see a collapsible “Menu” panel at the left of the screen. On the right side, you will see a tabular panel containing the labels and TextBox controls required to insert data into your application database.

In the top right corner of the screen, you will see a “Customize Screen” button. Clicking it pops up a child window that lets you customize the application screen at runtime. This button will not be visible once you deploy the application. We will cover it in a later article.

Kunal continues with

- Validation of Fields

- More on Save

- Customize the Screen

- End Note

<Return to section navigation list>

Windows Azure Infrastructure

• Joe Panettieri analyzed Microsoft’s Cloud Strategy: Three Reasons to Worry in a 10/20/2010 post to the MSPMentor blog:

When Microsoft announced plans to re-brand its SaaS platform from BPOS to Office 365, I took a day or so to digest the news. No doubt, Microsoft has made some solid SaaS and cloud computing moves in the past year. But I believe this week’s rebranding efforts reveal that Microsoft’s cloud initiatives are experiencing considerable turbulence. Here’s why.

Consider two recent moves:

1. Exit, Stage Left: Microsoft earlier this week revealed that Chief Software Architect Ray Ozzie will be leaving the company. CEO Steve Ballmer put a positive spin on Ozzie’s departure, claiming Microsoft’s “progress in services and the cloud” was “now full speed ahead in all aspects of our business.”

2. What’s In A Name?: On October 19, Microsoft announced plans to dump the BPOS (Business Productivity Online Suite) brand, in favor of a new Office 365 brand. Matt Weinberger, over on The VAR Guy, likes the branding move and he also applauds Microsoft’s SMB cloud efforts. But I’m not sure I fully agree.

When it debuts in 2011, Microsoft says Office 365 will include Microsoft Exchange Online, Microsoft SharePoint Online, Microsoft Lync Online and the latest version of Microsoft Office Professional Plus desktop suite. Translation: Microsoft is taking one of its strongest brands — Office — and linking it to the cloud. (Here’s a comprehensive Q&A from Microsoft.)

Three Signs of Trouble?

What is Microsoft really saying here? A few potential answers, based purely on my speculation:

1. The BPOS Brand was stalled: I suspect many customers and partners could not follow YATAFR (yet another technology acronym from Redmond). Perhaps similarly, Microsoft may have faced challenges with Office Communications Server, which is now rebranded as Microsoft Lync.

2. The BPOS Brand was tarnished: Recent BPOS outages cast a bit of a cloud over Microsoft and its SaaS strategy. By changing the brand to Office 365, Microsoft is suggesting that (A) the company’s SaaS strategy is ubiquitous like Microsoft Office and (B) Microsoft’s SaaS platform is reliable and online 365 days out of the year. But the new name is hardly original. Just ask Seagate’s i365 storage as a service business.

3. Perhaps Ray Wasn’t Really the Man: No doubt, Microsoft has some promising cloud platforms. I hear positive buzz about Windows Azure, which continues to attract more and more ISV interest. Moreover, offerings like SharePoint Online and Exchange Online are widely popular in the SaaS world.

But something just wasn’t clicking with Microsoft’s All In cloud efforts. A few examples: Exchange 2010 has been widely available on-premise for nearly a year but it’s not yet widely available in Microsoft BPOS. That’s a real head-scratcher. Moreover, SaaS service providers like Intermedia have long offered hosted Exchange 2010, meaning that Microsoft trails its own Exchange partners in the cloud market.

Are the items above Ray Ozzie’s fault? I doubt it. Was it a coincidence that Microsoft announced Ray Ozzie’s imminent departure the same week that the company announced rebranding plans for BPOS? Perhaps.

But something doesn’t add up…

• Bob Warfield recommended PaaS Strategy: Sell the Condiments, Not the Sandwiches in this 10/20/2010 essay:

This is part two of a two-part series I’ve wanted to do about strategy for PaaS (Platform-as-a-Service) vendors. The overall theme is that Platforms as a Service require too much commitment from customers. They are Boil the Ocean answers to every problem under the sun. That’s great, but it requires tremendous trust and commitment for customers to accept such solutions. I want to explore alternate paths that have lower friction of adoption and still leave the door open in the long run for the full solution. PaaS vendors need to offer a little dating before insisting on marriage and community property.

In Part one of this PaaS Strategy series, I covered the idea of focusing on getting the customer’s data over before trying to get too much code. We talked about Analytics, Integration with other Apps, Aggregation, and similar services as being valuable PaaS offerings that wouldn’t require the customer to rewrite their software from the ground up to start getting value from your PaaS. Now I want to talk about the idea of starting to get some code to come over to the PaaS without having to have all of it. My fundamental premise is to create a series of packages that can be adopted into the architecture of a product without forcing the product to be wholesale re-architected. I use the term “packages” very deliberately.

Consider Ruby on Rails Gems as a typical packaging system. A gem can be anything from a full blown RoR application to a library intended to be used as part of an application. We’re more focused on the latter. The SaaS/PaaS world has a pretty good handle on packaging applications in the form of App Stores, but it needs to take the next step. A proper PaaS Store (we’re gonna need a better name!) would include not only apps built on the platform, but libraries usable by other apps and data too. Harkening back to my part one article, data is valuable and the PaaS vendor should make it possible to share and monetize the data. Companies like Hoovers and LinkedIn make it very clear that there is data that is valuable and would add value if you could link your data to that data.

What are other packages that a PaaS PackageStore might offer?

I am fond of saying that when you set out to write a piece of software, 70% of the code written adds no differentiated business advantage for your effort at all. It’s just stuff you have to get done. Stuff like your login and authentication subsystem. You’re not really going to try to build a better login and authentication system, are you? You just want it to work and follow the industry best practices. A Login system is a pretty good example of a useful PaaS package for a variety of reasons:

- It doesn’t have a lot of UI, and what UI it does have is pretty generic. Packages with a lot of UI are problematic because they require a lot of customization to make them compatible with your product’s look and feel. That’s not to say it can’t be done, just that it’s a nuisance.

- It adds a lot of value and it has to be done right. As a budding young company, I’d pay some vendor who can point to much larger companies who use their login package. It would make my customers feel better to know this critical component was done well.

- It involves a fair amount of work to get one done. There is a fair amount of code and it has to be tested very carefully.

- I can’t really add unique value with it, it just has to work, and everyone expects it to work the same way.

- It is a divisible subsystem with a well-defined API and a pretty solid “bulkhead” interface. What goes on the other side of the bulkhead is something my architecture can largely ignore. It doesn’t have to spread its tendrils too far and wide through my system in order to add value. Therefore, it doesn’t perturb my architecture, and if I had to, I could replace it pretty easily.
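The "bulkhead" idea in the last bullet can be illustrated with a short Python sketch. Everything here is an assumption made for illustration: the `Authenticator` interface, the `InMemoryAuthenticator` class standing in for a vendor-supplied package, and the `login` function standing in for application code. The point is only that the application sees the narrow interface and nothing behind it.

```python
import hashlib
import hmac
import os
from typing import Dict, Protocol, Tuple


class Authenticator(Protocol):
    """The bulkhead: the only surface the application is allowed to see."""

    def register(self, username: str, password: str) -> None: ...

    def verify(self, username: str, password: str) -> bool: ...


class InMemoryAuthenticator:
    """Hypothetical stand-in for a vendor-supplied login package."""

    def __init__(self, iterations: int = 100_000) -> None:
        self._iterations = iterations
        self._users: Dict[str, Tuple[bytes, bytes]] = {}

    def _hash(self, password: str, salt: bytes) -> bytes:
        # Salted PBKDF2 from the standard library; a real package would choose its own scheme.
        return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, self._iterations)

    def register(self, username: str, password: str) -> None:
        salt = os.urandom(16)
        self._users[username] = (salt, self._hash(password, salt))

    def verify(self, username: str, password: str) -> bool:
        record = self._users.get(username)
        if record is None:
            return False
        salt, expected = record
        return hmac.compare_digest(expected, self._hash(password, salt))


def login(auth: Authenticator, username: str, password: str) -> str:
    """Application code depends only on the Authenticator interface."""
    return "welcome" if auth.verify(username, password) else "denied"


auth = InMemoryAuthenticator(iterations=1_000)
auth.register("alice", "correct horse")
print(login(auth, "alice", "correct horse"))
print(login(auth, "alice", "wrong guess"))
```

Because `login` only knows about `Authenticator`, the in-memory class could be swapped for a hosted service without touching application code, which is the replaceability the bullet argues for.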

These are all great qualities for such a package. What are some others? Think about the Open Source libraries you’ve used for software in the past, because all of that is legitimate territory:

- Search: Full text search as delivered by packages like Lucene is a valuable adjunct.

- Messaging: Adobe and Amazon both have messaging services available.

- Mobile: A variety of services could be envisioned for mobile ranging from making it easy to deal with voice delivered to and from telephones to SMS messages to full blown platforms that facilitate delivering transparent access to your SaaS app on a smartphone.

- Billing: There are companies like Zuora out there focused on exactly this area. Billing and payment processing comes in all shapes and sizes, and many businesses need access to it. You needn’t have full-blown Zuora to add value.

- Attachments: Many apps like to have a rich set of attachments. There’s a whole series of problems that have to be solved to make that happen, and most of it adds no value at all to your solution. Doing a great job of storing, searching, viewing, and editing attachments would be an ideal PaaS package. There are loads of interesting special cases too. Photos and Videos, for example.

I want to touch on an interesting point of competitive differentiation and selling. Let’s pick one of these that has a lot of potential for richness like Photos. Photos are a world unto themselves as you start adding facilities like resizing, cropping, and other image processing chores, not to mention face recognition. There’s tons of functionality there that the average startup might never get to, but that their users might think was pretty neat. After a while, the PaaS can set the bar for what’s expected when an app deals with photos. They do this when their Photos package is plush enough and adopted widely enough that people come to expect its features. When it reaches the point where the features are expected, but a startup can’t begin to write them from scratch, the PaaS vendor wins big. The PaaS customers can win big too, because in the early days, before that plushness becomes the norm, a good set of packages can really add depth to the application being built.

PaaS vendors that want to take advantage of this for their own marketing should focus on packages that deliver sizzle. I can imagine a PaaS package vendor that totally focuses on sizzle. They’ve got photos, video, maps, charts (bar charts to Gantt charts), calendars, Social Media integrations, mobile, messaging, all the stuff that when it appears in the demo, delivers tons of sizzle and conveys a slick User Experience.

Before moving on, I want to briefly consider the opposite end of the spectrum from sizzle. There’s a lot of really boring stuff that has to get done in an application too. We’ve touched on login/authentication. SaaS Operations is another area that I predict a PaaS could penetrate with good success. Ops covers a whole lot of territory and the ops needs of SaaS can be quite a bit different than the ops needs of typical on-prem software. For one thing, it needs to scale cheaply. You can’t throw bodies at it. For another, you have to diagnose and manage problems remotely. One of the most common complaints at SaaS companies I’ve worked with is the customer saying performance is terrible. Is it a problem with the servers? Is it a problem in the Internet between your servers and the customer? Is it a problem inside their firewall? Or is it a problem on their particular machine? Having a PaaS subsystem that instrumented every leg of the journey, and made it easy to diagnose and report all that would be another valuable, though not particularly sexy offering.
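As a rough illustration of what "instrumenting every leg of the journey" could feed into, here is a minimal Python sketch. The leg names and the sample timings are hypothetical; a real offering would have to collect these measurements itself, which is the hard part.

```python
from typing import Dict, Tuple

# Hypothetical leg names, mirroring the four questions in the paragraph above.
LEGS = ("client_machine", "customer_network", "internet", "server")


def slowest_leg(timings_ms: Dict[str, float]) -> Tuple[str, float]:
    """Return the leg with the largest latency and its share of the total time."""
    missing = [leg for leg in LEGS if leg not in timings_ms]
    if missing:
        raise ValueError("no measurement for: " + ", ".join(missing))
    total = sum(timings_ms[leg] for leg in LEGS)
    worst = max(LEGS, key=lambda leg: timings_ms[leg])
    share = timings_ms[worst] / total if total else 0.0
    return worst, share


# Made-up numbers for one slow request.
sample = {"client_machine": 40.0, "customer_network": 900.0, "internet": 120.0, "server": 140.0}
leg, share = slowest_leg(sample)
print(f"{leg} accounts for {share:.0%} of the request time")
```

With numbers like these, support staff could tell the customer that the delay sits inside their own firewall rather than on the provider's servers.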

I hope I have shown that there is a lot of evolution left for the PaaS world. It started out with boil the ocean solutions that demand an application be completely rewritten before it can gain advantage from the platform. I believe the future is in what I’ll call “Incremental PaaS”. This is PaaS that adds value without the rewrite. It’s still a service, and you don’t have to touch code beyond the APIs to access the packages, but it adds value and simplifies the process of creating new Cloud Applications of all kinds.

Read Part one of Bob’s essay.

See my Windows Azure, SQL Azure, AppFabric and OData Sessions at PDC 2010 post of 10/21/2010, which lists all PDC 2010 sessions related to Windows Azure, SQL Azure, Windows Azure AppFabric, Codename “Dallas” and OData, in the Cloud Computing Events section below.

• David Lemphers reviewed Gartner’s “Strategic Technologies” list in an Oooh, Cloud + Mobile + Analytics post of 10/20/2010:

So Gartner released its Top 10 Strategic Technologies for 2011 yesterday, and I had to jump on the back with some of my own observations.

Now, the lens I’m looking at Gartner’s list with is heavily based on my last 30 days at PwC. When we think about Cloud Computing @ PwC, which happens to be #1 on Gartner’s list, we tend to think about it in a slightly different way to most.

See, Cloud to us is an instrument, a technique, a mindset, I mean, let’s go all out, it’s a lifestyle. We take some of the smartest folks in leading business verticals, like Finance or Media & Entertainment for example, and challenge them to identify new ways of doing business where cloud computing (and other leading technologies by the way) is a key enabler. This leads to some amazing ideas and business outcomes!

When I look at the Gartner list, three things jump out at me. Cloud obviously, and I think Gartner is right on the money when they suggest a lot of the action will happen in understanding how to select a cloud provider and how to maintain cloud services from a governance and policy perspective. Mobile is a logical extension of the cloud paradigm shift; if you start moving massive computing to the utility domain, then more immersive, intimate mobile devices are key. Business and lifestyle now collapse onto each other, and you need a mobile computing platform that can not only provide you with a meaningful social experience, but also one that is cognizant and capable from a business point of view.

And finally, analytics. Well, my time at Live Labs and Windows Azure taught me one thing, if you can get lots of machines, talking to lots of other services (like Twitter, Bing, etc), and use that to correlate your own information needs, you’re on a winner. Being able to qualify a factoid against the web and social corpus, at real-time speed, directly from your palm, is infinitely powerful.

I’m also going to add in Interactive Experiences as a leading area, I mean, Kinect creates amazing possibilities in this area.

I’m super excited about where Cloud, Mobile and Analytics will intersect in 2011. What about you?

• Gartner claimed “Analysts Examine Latest Industry Trends During Gartner Symposium/ITxpo, October 17-21, in Orlando” and put cloud computing in the first position of its Gartner Identifies the Top 10 Strategic Technologies for 2011 of 10/19/2010:

Gartner, Inc. today highlighted the top 10 technologies and trends that will be strategic for most organizations in 2011. The analysts presented their findings during Gartner Symposium/ITxpo, being held here through October 21.

Gartner defines a strategic technology as one with the potential for significant impact on the enterprise in the next three years. Factors that denote significant impact include a high potential for disruption to IT or the business, the need for a major dollar investment, or the risk of being late to adopt.

A strategic technology may be an existing technology that has matured and/or become suitable for a wider range of uses. It may also be an emerging technology that offers an opportunity for strategic business advantage for early adopters or with potential for significant market disruption in the next five years. As such, these technologies impact the organization's long-term plans, programs and initiatives.

“Companies should factor these top 10 technologies in their strategic planning process by asking key questions and making deliberate decisions about them during the next two years,” said David Cearley, vice president and distinguished analyst at Gartner.

“Sometimes the decision will be to do nothing with a particular technology,” said Carl Claunch, vice president and distinguished analyst at Gartner. “In other cases, it will be to continue investing in the technology at the current rate. In still other cases, the decision may be to test or more aggressively deploy the technology.”

The top 10 strategic technologies for 2011 include:

- Cloud Computing. Cloud computing services exist along a spectrum from open public to closed private. The next three years will see the delivery of a range of cloud service approaches that fall between these two extremes. Vendors will offer packaged private cloud implementations that deliver the vendor's public cloud service technologies (software and/or hardware) and methodologies (i.e., best practices to build and run the service) in a form that can be implemented inside the consumer's enterprise. Many will also offer management services to remotely manage the cloud service implementation. Gartner expects large enterprises to have a dynamic sourcing team in place by 2012 that is responsible for ongoing cloudsourcing decisions and management.

- Mobile Applications and Media Tablets. Gartner estimates that by the end of 2010, 1.2 billion people will carry handsets capable of rich, mobile commerce providing an ideal environment for the convergence of mobility and the Web. Mobile devices are becoming computers in their own right, with an astounding amount of processing ability and bandwidth. There are already hundreds of thousands of applications for platforms like the Apple iPhone, in spite of the limited market (only for the one platform) and need for unique coding.

The quality of the experience of applications on these devices, which can apply location, motion and other context in their behavior, is leading customers to interact with companies preferentially through mobile devices. This has led to a race to push out applications as a competitive tool to improve relationships and gain advantage over competitors whose interfaces are purely browser-based. …

Read about the remaining eight strategic technologies here.

Robert Duffner introduced himself to the Windows Azure community in his Hi, I'm Robert post of 10/20/2010:

I'm Robert Duffner, director of Product Management for Windows Azure, and I'm kicking off a new series of blog posts where I will be interviewing thought leaders in cloud computing. I'll be reaching out broadly across the globe to industry, public sector, and academia. You definitely don't want to miss these intellectually stimulating and thought provoking discussions on the cloud. My first interview is with Chris C. Kemp, NASA's first chief technology officer for IT and the driving force behind the Nebula cloud computing pilot.

Whether I'm engaging senior IT executives or developers, I try to focus on amplifying the voice of the customer with the various advocacy programs I'm currently driving. This is all in an effort to help shape the future product direction of the Windows Azure Platform. Some of these include leading the Windows Azure Platform Customer Advisory Board as well as driving the Windows Azure Technology Adoption Program (TAP) to help our early adopter customers and partners validate the platform and shape the final product by providing early and deep feedback. I also stay engaged with the top Windows Azure experts in the world as the community leader for the Windows Azure Most Valued Professionals (MVP) program.

Outside of work I'm an explorer and anthropologist who enjoys traveling anywhere. You can see some of my exploits here. I've actually been to all 7 continents, including Antarctica! But lately, I love to hike in the Pacific Northwest - the Cascades, the Olympics, and Mount Rainier. More details about my experience and background can be found here.

Thanks for your interest in Windows Azure and stay tuned!

David Linthicum warns “With the increased success of cloud computing, we're bound to create a few monsters that will bedevil a dependent IT” in a deck for his The danger of the coming 'big cloud' monopolies post of 10/20/2010 to InfoWorld’s Cloud Computing blog:

Fast-forward five years: The Senate convenes a meeting to discuss recent price hikes by the three largest cloud computing providers. Businesses are up in arms because cloud computing subscription prices are tied directly to their IT spending and, thus, their bottom line. Also, we've grown so dependent on these large cloud computing providers that moving to other clouds or to internal data centers is just not practical. In other words, we work in a functional monopoly where a few providers control the public cloud market. We all know Big Oil and Big Tobacco. How about Big Cloud?

We've been here before with commodity markets such as energy and food, but never with IT technology. In light of the cloud's rising trajectory as an efficient and cheap way of doing computing, cloud monopolies are nearly guaranteed to pop up at some point.

The logic behind this is clear. Cloud computing providers need many points of presence (local data centers) to deliver the reliability, compliance, and performance that most businesses will demand and governments will mandate. The ultimate spending on infrastructure to support this will easily creep past $1 billion.

To get there, cloud providers will have to combine assets, merging and merging again until they rival GM, BP, Archer Daniels Midland, Monsanto, DuPont, AT&T, Koch Industries, Apple, and Microsoft in terms of size and revenue. In many cases, the existing big technology and/or cloud providers will combine, or groups of cloud providers will merge just to survive -- all of this to live up to the infrastructure needs required by the market.

The trouble with this scenario, as with any other functional monopoly that has come along over the years, is that too much control is in the hands of a few. Thus, businesses are left vulnerable to shifting priorities and costs.

Of course, we have our government to check in on these matters, but as we've seen many times before, a Senate hearing with our elected representatives jumping uglies on some CEO typically does not lead to productive outcomes. Instead, we're left hoping the market will eventually right the wrongs. In fact, U.S. businesses -- and Silicon Valley in particular -- usually discourage the government from getting involved in market activities until disaster strikes, as in the cases of Enron and the recent banking scandals. The market almost always rules.


<Return to section navigation list>

Windows Azure Platform Appliance (WAPA)

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Lori MacVittie (@lmacvittie) asserted “Authentication is not enough. Authorization is a must for all integrated services – whether infrastructure components, applications, or management frameworks” as a preface to her Authorization is the New Black for Infosec post of 10/20/2010 to F5’s DevCentral blog:

If you’ve gone through the process of allowing an application access to Twitter or Facebook then you’ve probably seen OAuth in action. Last week a mini-storm was a brewing over such implementations, primarily regarding the “overly-broad permission structure” implemented by Twitter.

Currently Twitter application developers are given 2 choices when registering their apps – they can either request “read-only access” or “read & write” access. For Twitter “read & write” means being able to do anything through the API on a user’s behalf.

…Twitter’s overly-broad permission structure amplifies the concern around OAuth token security because of what those tokens allow apps to do.

Reading that blog post and the referenced articles led to one of those “aha” moments when you realize something is a much larger problem than it first appears. After all, many folks as a general rule do not allow any external applications access to their Facebook or Twitter accounts, so they aren’t concerned by such broad permission structures. But if you step back and think about the problem in more generalized terms, in terms of how we integrate applications and (hopefully) infrastructure to enable IT as a Service, you might start seeing that we have a problem, Houston, and we need to address it sooner rather than later.

Interestingly enough, as I was getting ready to get my thoughts down on this subject I was also keeping track of Joe Weinman and his awesome stream of quotes coming out of sessions he attended at the Enterprise Cloud Summit held at Interop NY. He did not disappoint as suddenly he started tweeting quotes from a talk entitled, “Infrastructure and Platforms: A Combined Strategy”, given by Nimbula co-founder and vice-president of products, Willem van Biljon.

The sentiment exactly mirrored my thoughts on the subject: traditional permissions are “no longer enough” and are highly dependent “on the object being acted upon” as well as the actor. It’s contextual, requiring that the strategic point of control enforcing and applying policies does so on a granular level and in the context of the actor, the object acted upon (the API call, anyone?) and even location. While it may be permissible for an admin to delete an object whilst doing so from within the recognized organization management network, it may not be permissible for the same action to be carried out from an iPad that’s located in Paris. Because that may be indicative of a breach, especially when you don’t have an office in Paris.

More disturbing, if you think about it, is the nature of infrastructure integration via an API, a la Infrastructure 2.0. Like Twitter’s implementation and many integration solutions that were designed with internal-to-the-organization only use cases in mind, one need only authenticate to the component in order to gain complete access to all functions possible through the API.

The problem is, of course, that just because you want to grant User/Application A access to everything, you might not want to allow User/Application B to have access to the entire system. Application delivery infrastructure solutions, for example, have a very granular set of API calls that allow third-party applications to control everything. Once authenticated a user/application can as easily modify the core networking configuration as they can read the status of a server.

There is no real authorization scheme, only authentication. That’s obviously a problem.

THE FIVE Ws of CONTEXT-AWARE AUTHORIZATION

What’s needed is the ability to “tighten” security around an API because at the core of most management consoles is the concept of integration with the components they manage via an API. Organizations need to be able to apply granular authorization – access to specific API calls/methods based on user or at the very least role – so that only those who should be able to tweak the network configuration can do so. This is not only true for infrastructure; it’s especially important for applications with APIs, the purpose of which is application integration across the Internet.

Merely obtaining credentials and authenticating them is not enough. Yes, that’s Joe Bob from the network team, that’s nice. What we need to know is what he’s allowed to do and to what and perhaps from where and even when and perhaps how. We need to have the ability to map out these variables in a way that allows organizations to be as restrictive – or open – as needs be to comply with organizational security policies and any applicable regulations. Perhaps Joe Bob can modify the network configuration of component A but only from the web management console and only during specified maintenance windows, say Saturday night. Most organizations would be fine with less detail – Joe Bob can modify the network from any internal device/client at any time, but Mary Jo can only read status information and health check data and she can do so from any location.
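
The who/what/where/when variables in that paragraph can be expressed as data. The following is a minimal Python sketch, not anything from the original post: the Request and Rule types, the user and component names, the "web-console" source label and the Saturday 22:00 to midnight window are all hypothetical, chosen only to mirror the Joe Bob and Mary Jo example. Anything no rule allows is denied.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, FrozenSet, Optional


@dataclass(frozen=True)
class Request:
    who: str        # authenticated user
    what: str       # API call being attempted
    target: str     # component acted upon
    where: str      # client or location the call comes from
    when: datetime  # time of the call


@dataclass(frozen=True)
class Rule:
    who: str
    what: FrozenSet[str]
    target: Optional[FrozenSet[str]] = None            # None = any component
    where: Optional[FrozenSet[str]] = None             # None = any client/location
    when: Optional[Callable[[datetime], bool]] = None  # None = any time

    def allows(self, req: Request) -> bool:
        return (
            req.who == self.who
            and req.what in self.what
            and (self.target is None or req.target in self.target)
            and (self.where is None or req.where in self.where)
            and (self.when is None or self.when(req.when))
        )


def saturday_night(ts: datetime) -> bool:
    # Hypothetical maintenance window: Saturday from 22:00 to midnight.
    return ts.weekday() == 5 and ts.hour >= 22


# Hypothetical policy mirroring the example in the text.
RULES = [
    Rule(
        who="joe.bob",
        what=frozenset({"modify_network"}),
        target=frozenset({"component-a"}),
        where=frozenset({"web-console"}),
        when=saturday_night,
    ),
    Rule(who="mary.jo", what=frozenset({"read_status", "read_health"})),
]


def is_authorized(req: Request, rules=RULES) -> bool:
    # Default deny: a request passes only if some rule explicitly allows it.
    return any(rule.allows(req) for rule in rules)
```

Leaving a field as None is what makes a rule "less detailed": Mary Jo's rule constrains only the calls she may make, while Joe Bob's rule constrains every dimension.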

Whether we’re talking fine-grained or broad-grained permissions at the API call level is less the issue than simply having some form of authorization scheme beyond system-wide “read/write/execute” permissions, which is where we are today with most infrastructure components and most Web 2.0 sites. We need to drill down into the APIs and start examining authorization on a per-call basis (or per-grouping-of-calls basis, at a minimum).
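
Authorization on a per-grouping-of-calls basis might look like the short Python sketch below. The group names, API call names and roles are invented for illustration and do not come from any real product's API; the point is only that a role is granted groups of calls rather than system-wide read/write access.

```python
# Hypothetical groupings of API calls.
CALL_GROUPS = {
    "monitoring": {"get_server_status", "get_health_check"},
    "network_config": {"set_vlan", "set_route"},
}

# Hypothetical roles, each granted zero or more call groups.
ROLE_GRANTS = {
    "operator": {"monitoring"},
    "network_admin": {"monitoring", "network_config"},
}


def allowed_calls(role: str) -> set:
    """Expand a role's granted groups into the individual calls it may make."""
    calls = set()
    for group in ROLE_GRANTS.get(role, ()):
        calls |= CALL_GROUPS[group]
    return calls


def authorize_call(role: str, call: str) -> bool:
    # Unknown roles and unlisted calls are denied by default.
    return call in allowed_calls(role)
```

Per-call authorization is the same check with one call per group; grouping simply keeps the policy table short.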

And in the case of cloud computing and multi-tenant architectures, we further need to recognize that it’s not just the API-layer that needs authorization but also the “tenant”. After all, we don’t want Joe Bob from Tenant A messing with the configuration for Tenant B, unless of course Joe Bob is part of the operations team for the provider and needs that broad level of access.
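
The tenant condition can be sketched the same way. In this hypothetical Python fragment, the Principal type and its provider_ops flag are assumptions standing in for whatever identity attributes a real provider would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    name: str
    tenant: str
    provider_ops: bool = False  # True for the provider's own operations team


def can_configure(principal: Principal, resource_tenant: str) -> bool:
    """Allow configuration changes only within the caller's own tenant,
    unless the caller belongs to the provider's operations team."""
    if principal.provider_ops:
        return True
    return principal.tenant == resource_tenant
```

A check like this would run in addition to, not instead of, the per-call authorization: the caller must be allowed to make the call and must be making it against a resource in a tenant they may touch.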

AN INFOSEC STRETCH GOAL

The goal to provide a completely automated and authorization-enabled data center is certainly a “stretch” goal, and one that will likely remain a “work in progress” for the foreseeable future. Many, many components in data centers today are not API-enabled, and there’s no guarantee that they will be any time in the future. Those that are currently so enabled are not necessarily multi-tenant, and there’s no guarantee that they will be any time in the future. And many organizations simply do not have the skills required to perform the integration work necessary to build out a collaborative, dynamic infrastructure. And there’s no guarantee that they will any time in the future. But we need to start planning and viewing security with an eye toward authorization as the goal, recognizing that the authentication schemes of the past were great when access was to an operator or admin and only from a local console or machine. The highly distributed nature of new data center architectures and the increasing interest in IT as a Service make it necessary to consider not just who can manage infrastructure but what they can manage and from where.

The challenges to addressing the problem of authorization and security are many but not insurmountable. The first step is to recognize that it is necessary and develop a strategic architectural plan for moving forward. Then infosec professionals can assist in developing the incremental tactics necessary to implement such a plan. Just as a truly dynamic data center takes time and will almost certainly evolve through stages, so too will implementing an authorization-focused identity management strategy for both applications and the infrastructure required to deliver them.

Jonathan Penn described Why Cloud Radically Changes The Face Of The Security Market in a 10/20/2010 post to his Forrester blog:

When does a shift create a new market? When you have to develop new products, sell them to different people than before who serve different roles, have a different value proposition for your solutions, and they're sold with different pricing and profitability models - well, that in my view is a different market.

Cloud represents such a disruption for security. And it's going to be a $1.5 billion market by 2015. I discuss the nature of this trend and its implications in my latest report, "Security And The Cloud".

Most of the discussion about cloud and security solutions has been about security SaaS: the delivery model for security shifting from on-premise to cloud-based. That's missing the forest for the trees. Look at how the rest of IT (which is about 30 times the size of the security market) is moving to the cloud. What does that mean in terms of how we secure these systems, applications, and data? The report details how the security market will change to address this challenge and what we're seeing of that today.

Vendors have finally started to come to market with solutions, though as you'll see from the report, we're still at the early stages with far more to go. And developing solutions for cloud environments requires a lot more than scaling up and supporting multi-tenancy. But heightened pressure by cloud customers and prospects is fueling the rapid evolution of solutions. How rapid and radical an evolution? By 2015, security will shift from being the #1 inhibitor of cloud to one of the top enablers and drivers of cloud services adoption.

<Return to section navigation list>

Cloud Computing Events

• My Windows Azure, SQL Azure, AppFabric and OData Sessions at PDC 2010 post of 10/21/2010 lists all PDC 2010 sessions related to Windows Azure, SQL Azure, Windows Azure AppFabric, Codename “Dallas” and OData. The list was gathered from all session channels (tracks), because the Cloud channel is missing many Azure-related presentations.

The PDC site runs on Windows Azure, by the way.

• Cloudcor, Inc. announced its hybrid Up 2010 Cloud Computing Conference to be held 11/15/2010 in San Francisco, CA, 11/18/2010 in Islandia, NY, and 11/16, 11/17 and 11/19/2010 on the Internet as a virtual conference. Following are Microsoft’s conference sessions:

Headline Keynote

The Move is On: Cloud Strategies for Businesses

by Doug Hauger - General Manager, Cloud Infrastructure Services Product Management group at Microsoft.

Keynote Session

PaaS Lessons from cloud pioneers running enterprise scale solutions

by Shawn Murray – Microsoft Incubation Sales

Keynote Session

Microsoft’s PaaS Solution: The Windows Azure Platform

by Zane Adam - General Manager, Marketing and Product Management, Azure and Integration Services at Microsoft.

Keynote Session

Windows Azure Platform Security Essentials for TDMs

by Yousef Khalidi, Distinguished Engineer at Microsoft.

Keynote Session

The Future of SaaS, and Why Kentucky Board of Education Moved 700k Seats into the Cloud, in One Weekend

by Danny Kim, CTO, FullArmor.

Microsoft was the sole “Diamond Sponsor” of the conference when this article was posted.

• Geva Perry announced the speaker roster for the QCon San Francisco 2010 Cloud Track on 10/21/2010:

Back in July I wrote that I am hosting the QCon San Francisco Cloud Computing track this year and made an informal call for speakers.

Well, I wanted to update on the awesome line-up of speakers that we have for this event and offer my blog readers a $100 discount on registering to the conference, which takes place November 1-5 at the Westin San Francisco. The cloud track takes place all in one day on Friday, November 5.

To receive the $100 discount, sign up here with registration code PERR100.

Here's the agenda. [Click the links for detailed abstracts and speaker bios.] Hope to see you all there!

Track: Real Life Cloud Architectures; Host: Geva Perry

This track covers how a variety of applications are using Cloud computing today, with a focus on mature organizations who have merged some aspect of cloud into their offerings rather than new/small companies which started out in the cloud. The aim of this track is to provide concrete takeaways for how to start using cloud computing in-house when you have an existing application to work with.

Sessions start at 10:35, 12:05, 14:05, 15:35 and 16:50; the speakers are Amr Awadallah, Roger Bodamer, Simon Guest, Damien Katz and Razi Sharir.

• Wes Yanaga invited ISVs (and others) on 10/20/2010 to Attend PDC10 Live Event at Microsoft Silicon Valley Campus:

Missed your opportunity to attend PDC10 in Redmond? If so, you can still join in on the excitement via the live stream and in-person delivered sessions. Attend this October 28th event at the Microsoft Silicon Valley Campus - this year’s content will focus on the next generation of Cloud Services, client & devices, and framework & tools. You can get the highlights of PDC without heading to Redmond.

The event features a welcome keynote by Dan’l Lewin (CVP, Strategic and Emerging Business Development for Microsoft) and two in-person sessions on OData & Windows Phone 7. Join us for an opportunity to receive limited edition t’s, enter to win an XBOX w/Kinect, Windows Phone 7 and more! Register here: 1032464622

• Andrew R. Hickey reported Cloud Computing Shines At Interop New York in this 10/20/2010 article for CRN:

Cloud computing is taking center stage at this week's Interop New York 2010, with dozens of cloud players showcasing their latest and greatest solutions to make the leap to the cloud a smooth one.