Windows Azure and Cloud Computing Posts for 10/25/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 10/26/2010 with articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Steve Marx (@smarx) announced in a 10/25/2010 tweet:

I built my own TwitPic with a Windows Phone 7 client. Let's see if it works

Here’s an early diagram:

Stay tuned for further developments.

Rainer Stropek posted a tutorial about Windows Azure Storage to the Time Cockpit blog on 10/25/2010:

The Windows Azure platform offers different mechanisms to store data permanently. In this article I would like to introduce the storage types of Windows Azure and demonstrate their use by showing an example application.

Storage Types In Windows Azure

If you want to store data in Windows Azure you can choose from four different data stores:

- Queues

- SQL Azure

- Blob Storage

- Table Storage

Windows Azure Queues

The first one is easy to explain. I am sure that every developer is used to the concept of FIFO queues. Azure queues can be used to communicate between different applications or application roles. Additionally, Azure queues offer some quite unique features that are extremely handy whenever you use them to hand off work from an Azure web role to an Azure worker role. I want to draw your attention especially to the following two:

- Auto-reappearance of messages

If a receiver takes a message out of the queue and crashes while handling it, it is likely that the receiver will not be able to reschedule the work before dying. To handle such situations Azure queues let you specify a time span when getting an element out of the queue. If you do not delete the received message within that time span, Azure will automatically add the message to the queue again so that another instance can pick it up.

- Dequeue counter

The dequeue count is closely related to the previously mentioned auto-reappearance feature. It can help detect "poisoned" messages. Imagine an invalid message that kills the process that has received it. Because of auto-reappearance another instance will pick up the message - and will also be killed. After some time all your workers will be busy dying and restarting. The dequeue counter tells you how often the message has already been taken out of the queue. If it exceeds a certain number you could remove the message without further processing (maybe logging would be a good idea in such a situation).

Before we move to the next type of storage mechanism in Azure let me give you some tips & tricks concerning queues:

- Azure queues have not been built to transport large messages (message size must not be larger than 8KB). Therefore you should not include the messages' payload in the queue messages. Store the payload in any of the other storages (see below) and use the queue to pass a reference.

- Write applications that are tolerant of system failures and therefore make your message processing idempotent.

- Do not rely on a certain message delivery order.

- If you need really high throughput package multiple logical messages (e.g. tasks) into a single physical Azure queue message or use multiple queues in parallel.

- Add poisoned message handling (see description above)

- If you use your Azure queues to pass work from your web roles to your worker roles, write some monitoring code that checks the queue length. If it gets too long you could implement a mechanism to automatically start new worker instances. Similarly you can shut down instances if your queue remains empty or short for a longer period of time.
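The auto-reappearance and dequeue-count behavior described above can be sketched with a small in-memory model. This is not the Windows Azure SDK: the `VisibilityQueue` class, the `MAX_DEQUEUE_COUNT` threshold and the 30-second timeout are hypothetical names and values chosen for illustration, and the clock is passed in explicitly so the behavior is easy to follow.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Message:
    body: str
    msg_id: int
    dequeue_count: int = 0
    visible_at: float = 0.0  # time at which the message may be received again


class VisibilityQueue:
    """In-memory stand-in for a queue with visibility timeouts (not the Azure SDK)."""

    def __init__(self):
        self._messages = []
        self._ids = itertools.count(1)

    def put(self, body):
        self._messages.append(Message(body, next(self._ids)))

    def get(self, now, visibility_timeout):
        """Return the first visible message and hide it for visibility_timeout seconds."""
        for msg in self._messages:
            if msg.visible_at <= now:
                msg.visible_at = now + visibility_timeout
                msg.dequeue_count += 1
                return msg
        return None

    def delete(self, msg):
        self._messages = [m for m in self._messages if m.msg_id != msg.msg_id]


MAX_DEQUEUE_COUNT = 3  # hypothetical poison-message threshold


def process_next(queue, now, handler, poisoned):
    """Handle one message; park it in `poisoned` once it has been retried too often."""
    msg = queue.get(now, visibility_timeout=30)
    if msg is None:
        return False
    if msg.dequeue_count > MAX_DEQUEUE_COUNT:
        poisoned.append(msg.body)  # log and drop instead of failing again
        queue.delete(msg)
        return True
    try:
        handler(msg.body)
    except Exception:
        return True  # simulated crash: no delete, so the message reappears later
    queue.delete(msg)  # delete only after successful processing
    return True
```

Because a message is deleted only after the handler succeeds, the handler may see the same message more than once, which is why the tips above ask for idempotent processing.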

SQL Azure

Yes, SQL Azure is a SQL Server in the cloud. No, SQL Azure is not just another SQL Server in the cloud. In creating SQL Azure, Microsoft did much more than buy some servers, put Hyper-V on them and let the virtual machines run SQL Server 2008 R2. It is correct that behind the scenes SQL Server is doing the heavy lifting deep down in the dark corners of Azure's data centers. However, a lot of things are happening before you get access to your server.

The first important thing to note is that SQL Azure comes with a firewall/load balancer that you can configure e.g. through Azure's management portal. You can configure which IP addresses should be able to establish a connection to your SQL Azure instance.

If you have passed the first firewall you get connected to SQL Azure's Gateway Layer. I will not go into all details about the gateways because this is not a SQL Azure deep dive. The gateway layer is on the one hand a proxy (it finds the SQL Server nodes that are dedicated to your SQL Azure account) and on the other hand a stateful firewall. "Stateful firewall" means that the gateway understands TDS (Tabular Data Stream, SQL Server's native communication protocol) and checks TDS packets before they hit the underlying SQL Servers. Only if the gateway layer finds everything ok with the TDS packets (e.g. right order, user and password ok, encrypted, etc.) are your requests handed over to the SQL Servers.

The beauty of SQL Azure is that you as a developer can work with SQL Azure just like you work with a SQL Server that stands in your own data center. SQL Azure supports the majority of programming features that you are used to. You can access it using ADO.NET, Entity Framework or any other data access technology that you like. However, there are some limitations to SQL Azure because of security and scalability reasons. Please check MSDN for details about the restrictions.

Again some tips & tricks that could help when you start working with SQL Azure:

- Use SQL Server Management Studio 2008 R2 in order to be able to manage your SQL Azure instances in your Object Explorer.

- Never forget that SQL Azure always is a database cluster behind the scenes (you get three nodes for every database). Therefore you have to follow all Microsoft guidelines for working with database clusters (e.g. implement auto-reconnect in case of failures, auto-retry, etc.; check MSDN for details).

- Don't forget to estimate costs for SQL Azure before you start to use it. SQL Azure can be extremely cost-efficient for your applications. There are situations (especially if you have very large databases or a lot of very small ones) in which SQL Azure can get expensive.
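The auto-retry advice in the tips above might look roughly like the following sketch. It is deliberately independent of any database driver: `TransientError` is a hypothetical stand-in for whatever exception your data access library raises on a dropped connection or failover, and the attempt count and delays are illustrative assumptions, not Microsoft guidance.

```python
import time


class TransientError(Exception):
    """Hypothetical stand-in for a dropped connection or a failover."""


def run_with_retry(operation, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call operation(); on TransientError wait and retry with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == attempts:
                raise  # give up after the last attempt
            sleep(base_delay * 2 ** (attempt - 1))
```

In a real application `operation` would open a fresh connection and run the command, so that a retry does not reuse a broken connection.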

Windows Azure Blob Storage

Windows Azure has been built to scale. Therefore typical Azure applications consist of many instances (e.g. web farm, farm of worker machines, etc.). As a consequence there is a need for a kind of file system that can be shared by all computers participating in a certain system (clients and servers!). Azure Blob Storage is the solution for that.

Natively Azure Blob Storage speaks a REST-based protocol. If you want to read or write data from and to blobs you have to send http requests. Don't worry, you do not have to deal with all the nasty REST details. The Windows Azure SDK hides them from you.

Similarly to SQL Azure I will not go into all details of Azure Blob Storage here. You will see how to access blobs in the example shown below. Let me just give you the following tips & tricks about what you can do with Azure Blobs:

- Azure Blob Storage has been built to store massive amounts of data. Don't be afraid of storing terabytes in your blob store if you need to. Even a single blob can hold up to 1TB (page blobs).

- Azure distinguishes between block blobs (streaming + commit-based writes) and page blobs (random read/write). Maybe I should write a blog post about the differences... Until then please check MSDN for details.

- Blobs are organized into containers. All the blobs in a container can be structured in a kind of directory system similar to the directory system that you know from your on-premise disk storage. You can specify access permissions on container and blob level.

- You can programmatically ask for a shared access signature (i.e. signed URL) for any blob in your Azure Blob store. With this URL a user can directly access the blob's content (if necessary you can restrict the time until when the URL will be valid). Therefore you can e.g. generate a confirmation document, put it into blob store and send the user a direct link to it without having to write a single line of code for providing its content (btw - this also means less load on your web roles).
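To illustrate the general idea behind a time-limited signed URL, here is a minimal sketch using an HMAC over the URL and its expiry time. It does not reproduce the actual string-to-sign or query parameters of Azure's shared access signatures; the key, the parameter names `expires` and `sig`, and the account name in the usage example are assumptions for illustration only.

```python
import hashlib
import hmac
from urllib.parse import parse_qs, urlencode, urlparse

SECRET_KEY = b"demo-account-key"  # hypothetical key, known only to the server


def sign_url(url, expires_at, key=SECRET_KEY):
    """Append an expiry time and an HMAC signature to the URL."""
    payload = f"{url}\n{expires_at}".encode()
    sig = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return f"{url}?{urlencode({'expires': expires_at, 'sig': sig})}"


def is_valid(signed_url, now, key=SECRET_KEY):
    """Check that the signature matches and that the URL has not expired."""
    parts = urlparse(signed_url)
    query = parse_qs(parts.query)
    try:
        expires_at = int(query["expires"][0])
        sig = query["sig"][0]
    except (KeyError, ValueError):
        return False
    base = f"{parts.scheme}://{parts.netloc}{parts.path}"
    payload = f"{base}\n{expires_at}".encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(sig, expected) and now <= expires_at


# Usage with a hypothetical blob URL and expiry time (seconds since the epoch):
link = sign_url("https://myaccount.blob.core.windows.net/docs/confirmation.pdf", 1288051200)
```

Anyone holding the link can read the blob until the expiry time passes, and changing the path or the expiry invalidates the signature.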

Windows Azure Table Storage

Azure Table Storage is not your father's database. It is a NoSQL data store. Just like with Azure Blob Storage you have to use REST to access Azure tables (if you use the Windows Azure SDK you use WCF Data Services to access Table Storage).

Every row in an Azure table consists of the following parts:

- Partition Key

The partition key is similar to the table name in a RDBMS like SQL Server. However, every record can consist of a different set of properties even if the records have the same partition key (i.e. no fixed schema, just storing key/value pairs).

- Row Key

The row key identifies a single row inside a partition. Partition key + row key have to be unique throughout your whole Table Storage service.

- Timestamp

Used to implement optimistic locking.

At the time of writing this article Azure Table Storage supports the following data types: String, binary, bool, DateTime, GUID, int, int64 and double.
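The row structure described above can be modelled in a few lines. This is an in-memory sketch, not the Table Storage API: the `Table` class, its method names and the counter standing in for the server-assigned timestamp are all hypothetical. It shows schemaless rows keyed by partition key and row key, and an update that is rejected when the row changed since it was read.

```python
import itertools


class ConflictError(Exception):
    """Raised when a row exists already or was changed since it was read."""


class Table:
    def __init__(self):
        self._rows = {}
        self._clock = itertools.count(1)  # stand-in for the server timestamp

    def insert(self, partition_key, row_key, **properties):
        key = (partition_key, row_key)
        if key in self._rows:
            raise ConflictError("entity already exists")
        self._rows[key] = {"Timestamp": next(self._clock), **properties}

    def get(self, partition_key, row_key):
        return dict(self._rows[(partition_key, row_key)])

    def update(self, partition_key, row_key, seen_timestamp, **properties):
        """Replace the row only if nobody changed it since seen_timestamp."""
        current = self._rows[(partition_key, row_key)]
        if current["Timestamp"] != seen_timestamp:
            raise ConflictError("entity was changed by someone else")
        self._rows[(partition_key, row_key)] = {
            "Timestamp": next(self._clock),
            **properties,
        }


table = Table()
table.insert("customers", "1", name="Contoso")
table.insert("customers", "2", name="Fabrikam", city="Linz")  # different property set
```

Two writers that read the same row both hold the same timestamp; the first update wins and the second gets a `ConflictError` and has to re-read.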

So when to use what - SQL Azure or Azure Tables? Here are some guidelines that could help you to choose what's right for your application:

- In SQL Azure storage is quite expensive while transactions are free. In Azure tables storage is very cheap but you have to pay for every single transaction. So if you have small data that is frequently accessed, use SQL Azure; if you have large amounts of data that has to be stored but is seldom accessed, use Azure tables. If you find both scenarios in your application you could combine both storage technologies (this is what we do in our program time cockpit).

- At the time of writing SQL Azure offers only a single (rather small) machine size for databases. Because of this SQL Azure does not really scale. If you need more performance you have to build your own scaling mechanisms (e.g. distribute data across multiple SQL Azure databases using for instance SyncFramework). This is different for Azure tables. They scale very well. Azure will store different partitions (remember the partition key I mentioned before) on different servers in case of heavy load. This is done automatically! If you need and want automatic scaling you should prefer Azure tables over SQL Azure.

- Azure Table Storage is not good when it comes to complex queries. If you need and want all the great features that T-SQL offers you, you should stick to SQL Azure instead of Azure tables.

- The amount of data you can store in SQL Azure is limited whereas Azure tables have been built to store terabytes of data. …
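One simple way to build the do-it-yourself scaling mentioned in the guidelines above is to route each record to one of several databases by hashing a key. The sketch below shows that routing step only; it is not the Sync Framework approach the article names, and the database names and the choice of the customer id as key are hypothetical.

```python
import hashlib

DATABASES = ["db0", "db1", "db2"]  # hypothetical SQL Azure database names


def shard_for(partition_key, shard_count):
    """Map a key to a shard index that is stable across processes and restarts."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def database_for(customer_id):
    """Pick the database that holds all rows for the given customer."""
    return DATABASES[shard_for(customer_id, len(DATABASES))]
```

A cryptographic hash is used here only because Python's built-in `hash()` for strings differs between processes. Note that changing the number of databases moves most keys to a different shard, so a real system needs a migration plan.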

Rainer continues with detailed “Azure Storage In Action” source code examples.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

•• The Professional Developers Conference 2010 team delivered on 10/26/2010 a complete OData feed of PDC10’s schedule at http://odata.microsoftpdc.com/ODataSchedule.svc/. Here’s the root:

Here’s the session entry for the first, “Building High Performance Web Applications” session:

The format is similar to that used by Falafel Software for their EventBoard app, which I described in my Windows Azure and SQL Azure Synergism Emphasized at Windows Phone 7 Developer Launch in Mt. View post of 10/14/2010.

•• Thanks to Jamie Thompson (@jamiet) for the heads up for the preceding in his PDC schedule published as OData, but where's the iCalendar feed? post of the same date:

Chris Sells announced on twitter earlier today that the schedule for the upcoming Professional Developers' Conference (PDC) has been published as an OData feed at: http://odata.microsoftpdc.com/ODataSchedule.svc

Whoop-de-doo! Now we can, get this, view the PDC schedule as raw XML rather than on a web page or in Outlook or on our phone, how cool is THAT? (conveying sarcasm in the written word is never easy but hopefully I've managed it here!)

Seriously, I admire Microsoft's commitment to OData, both in their Creative Commons licensing of it and support of it in a myriad of products but advocating its use for things that it patently should not be used for is verging on irresponsible and using OData to publish schedule information is a classic example.

A standard format for publishing schedule information over the web already exists, it's called iCalendar (RFC5545). The beauty of iCalendar is that it is supported today in many tools (e.g. Outlook, Google Calendar, Hotmail Calendar, Apple iCal) so I can subscribe to an iCalendar feed and see that schedule information alongside, and intertwined with, my personal calendar and any other calendars that I happen to subscribe to. Moreover the beauty of subscribing versus importing is that any changes to the schedule will automatically get propagated to me. Can any of that be achieved with an OData feed? No!
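For readers who have not seen the format, a minimal iCalendar document for one session could be generated as in the following sketch. The session title is taken from the schedule mentioned earlier, but the UID, times and PRODID are hypothetical values, all datetimes are assumed to be in UTC, and the folding of long lines that RFC 5545 calls for is left out.

```python
from datetime import datetime, timezone

FMT = "%Y%m%dT%H%M%SZ"  # UTC date-time form; inputs are assumed to be UTC


def escape_text(value):
    """Escape the characters that are special inside iCalendar TEXT values."""
    return (value.replace("\\", "\\\\").replace(";", "\\;")
                 .replace(",", "\\,").replace("\n", "\\n"))


def ics_event(uid, summary, start, end, stamp):
    return [
        "BEGIN:VEVENT",
        f"UID:{uid}",
        f"DTSTAMP:{stamp.strftime(FMT)}",
        f"DTSTART:{start.strftime(FMT)}",
        f"DTEND:{end.strftime(FMT)}",
        f"SUMMARY:{escape_text(summary)}",
        "END:VEVENT",
    ]


def ics_calendar(events):
    lines = ["BEGIN:VCALENDAR", "VERSION:2.0",
             "PRODID:-//example//schedule sketch//EN"]
    for event in events:
        lines.extend(event)
    lines.append("END:VCALENDAR")
    return "\r\n".join(lines) + "\r\n"  # iCalendar lines end with CRLF


session = ics_event(
    uid="session-1@example.invalid",  # hypothetical identifier
    summary="Building High Performance Web Applications",
    start=datetime(2010, 10, 28, 16, 0, tzinfo=timezone.utc),  # hypothetical time
    end=datetime(2010, 10, 28, 17, 0, tzinfo=timezone.utc),
    stamp=datetime(2010, 10, 26, 12, 0, tzinfo=timezone.utc),
)
feed = ics_calendar([session])
```

Serving text like `feed` from a stable URL is what lets calendar clients subscribe and pick up schedule changes.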

On the off-chance that anyone in the PDC team is reading this I implore you, please, publish the schedule in a format that makes it useful. OData is not that format.

As an aside, I am an avid proponent of iCalendar and have a strong belief that adoption of it both in our work and home lives could have significantly positive repercussions for all of us. With that in mind I actively canvass people to publish their data in iCalendar format and also contribute to Jon Udell's Elmcity project which you can read more about at Elmcity Project FAQ. I encourage you to contribute.

As I mentioned in my Brendan Forster (@shiftkey), Aaron Powell (@slace) and Tatham Oddie (@tathamoddie) developed and demoed The Open Conference Protocol with a Windows Phone 7 app article in the “SQL Azure Database, Codename “Dallas” and OData” section of my Windows Azure and Cloud Computing Posts for 10/15/2010+ post (scroll down):

The Open Conference Protocol project[, which uses existing hCalendar, hCard and Open Graph standards] appears to compete with Falafel Software’s OData-based EventBoard Windows Phone 7 app described in my Windows Azure and SQL Azure Synergism Emphasized at Windows Phone 7 Developer Launch in Mt. View post of 10/14/2010. I’m sure EventBoard took longer than 30 man-hours to develop; according to John Watters, it took two nights just to port the data source to Windows Azure. OData implementations have the advantage that you can query the data. Hopefully, the PDC10 team will provide an OData feed of session info eventually.
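The point that OData feeds can be queried is easiest to see in the URL itself. The helper below builds a query URL with the standard `$filter`, `$orderby` and `$top` system query options against the PDC10 service root quoted earlier. The `Sessions` entity set and the `Title` property in the usage example are assumptions, since the feed's metadata is not reproduced here.

```python
from urllib.parse import quote

SERVICE_ROOT = "http://odata.microsoftpdc.com/ODataSchedule.svc"


def odata_url(entity_set, filter_expr=None, orderby=None, top=None):
    """Build an OData query URL from the standard system query options."""
    options = []
    if filter_expr:
        options.append("$filter=" + quote(filter_expr, safe="()',"))
    if orderby:
        options.append("$orderby=" + quote(orderby, safe=","))
    if top is not None:
        options.append(f"$top={int(top)}")
    url = f"{SERVICE_ROOT}/{entity_set}"
    return url + ("?" + "&".join(options) if options else "")


# Hypothetical query: the first five sessions whose title mentions Azure.
url = odata_url("Sessions", "substringof('Azure',Title)", "Title", 5)
```

Pasting such a URL into a browser returns only the matching entries, which is the kind of ad hoc filtering a static calendar file does not offer.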

PDC10’s OData feed must have been available to some earlier than today, because Craig Dunn announced his Conf for PDC10 on Windows Phone 7 app on 10/26/2010:

A first-cut of the PDC10 schedule can now be downloaded for the Windows Phone 7 version of Conf - now available on Marketplace (search for Conf/look for this tile).

To download new conference data in Conf

- Start on the first panel of the Panorama

- Scroll down to other conferences... and touch Download more...

- When the list downloads from the server, touch PDC10

- PDC10 should appear in the list - if not, switch between the conferences until it does :-s

This is what the app looks like with PDC10 data loaded:

The iPhone version of Conf is currently awaiting AppStore approval - fingers crossed for Thursday!

It appears to me that OData is gaining traction in the event reporting arena.

Wayne Walter Berry posted Gaining Performance Insight into SQL Azure to the TechNet Wiki on 10/25/2010:

Understanding query performance in SQL Azure can be accomplished by utilizing SQL Server Management Studio or the SET STATISTICS Transact-SQL commands. Since SQL Server Profiler isn’t currently supported with SQL Azure, this article will discuss some alternatives that provide database administrators insight into exactly what Transact-SQL statements are being submitted to the server, and how the server accesses the database to return result sets.

SQL Server Management Studio

Utilizing SQL Server Management Studio you can view the Actual Execution Plan on a query. This gives insight into the indexes that SQL Azure is using to query the data, the number of rows returned at each step, and which steps are taking the longest.

Here is how to get started:

- Open SQL Server Management Studio 2008 R2; this version easily connects to SQL Azure.

- Open a New Query Window.

- Copy/Paste Your Query into the New Query Window.

- Click on the toolbar button to enable the Actual Execution Plan.

Or, choose Include Actual Execution Plan from the menu bar.

- Once you have included your plan, run the query. This will give you another results tab that looks like this:

Reading an execution plan is the same in SQL Server 2008 R2 as it is in SQL Azure, and how to read one is beyond the scope of this blog post. To find out more about execution plans, read: Reading the Graphical Execution Plan Output. One of the things I use execution plans for is to develop covered indexes to improve the performance of the query. For more information about covered indexes, read this blog post.

USING “SET STATISTICS”

SET STATISTICS is a Transact-SQL command you can run in the query window of SQL Server Management Studio to get back statistics about your query's execution. There are a couple of variants of this command, one of which is SET STATISTICS TIME ON. The TIME command returns the parse, compile and execution times for your query.

Here is an example of the Transact SQL that turns on the statistic times:

    SET STATISTICS TIME ON

    SELECT *
    FROM SalesLT.Customer
    INNER JOIN SalesLT.SalesOrderHeader ON
        SalesOrderHeader.CustomerId = Customer.CustomerId

I executed the example on the Adventure Works database loaded into SQL Azure, and got these results:

SET STATISTICS will give you some “stop watch” metrics about your queries. As you optimize them, you can rerun them with SET STATISTICS TIME ON to determine if they are getting faster.
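If you want to compare runs without reading the Messages tab by eye, a few lines of script can total the timings. The sketch below assumes the message text has been copied into a string and that it follows the "CPU time = N ms, elapsed time = N ms" wording that Management Studio usually prints. The sample text and its numbers are made up for illustration and are not the results of the query above.

```python
import re

# Matches lines such as "CPU time = 16 ms,  elapsed time = 72 ms."
_TIMES = re.compile(r"CPU time = (\d+) ms,\s+elapsed time = (\d+) ms")

# Hypothetical message text; the numbers are illustrative only.
SAMPLE = """SQL Server parse and compile time:
   CPU time = 0 ms, elapsed time = 4 ms.

 SQL Server Execution Times:
   CPU time = 16 ms,  elapsed time = 72 ms.
"""


def total_times(messages):
    """Sum all CPU and elapsed times (in ms) found in SET STATISTICS TIME output."""
    cpu = elapsed = 0
    for cpu_ms, elapsed_ms in _TIMES.findall(messages):
        cpu += int(cpu_ms)
        elapsed += int(elapsed_ms)
    return cpu, elapsed
```

Calling `total_times` on the output of two variants of a query gives a pair of totals for each that can be compared directly.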

Another flavor of SET STATISTICS is SET STATISTICS IO ON. This variant will give you information about the IO performance of the query in SQL Azure. My example query looks like this:

    SET STATISTICS IO ON

    SELECT *
    FROM SalesLT.Customer
    INNER JOIN SalesLT.SalesOrderHeader ON
        SalesOrderHeader.CustomerId = Customer.CustomerId

And the output looks like this:

We have covered I/O performance in SQL Azure in an earlier blog post, so I will not go into detail again here.

Observing running queries

With SQL Server you can utilize SQL Profiler to show all the queries running in real-time. In SQL Azure, you can still get access to the running queries and their execution count, via the Procedure cache, with a Transact-SQL query similar to this:

    SELECT q.text, s.execution_count
    FROM sys.dm_exec_query_stats AS s
    CROSS APPLY sys.dm_exec_sql_text(plan_handle) AS q
    ORDER BY s.execution_count DESC

For more information about how the procedure cache works in SQL Azure, see this blog post.

Glad to see Wayne is posting again!

Beth Massi wrote Add Some Spark to Your OData: Creating and Consuming Data Services with Visual Studio and Excel 2010 for the Sep/Oct 2010 issue of Code Magazine:

The Open Data Protocol (OData) is an open REST-ful protocol for exposing and consuming data on the web. Microsoft's implementation, previously known as Astoria and then ADO.NET Data Services, is now officially called WCF Data Services in the .NET Framework. There are also SDKs available for other platforms like JavaScript and PHP. Visit the OData site at www.odata.org.

With the release of .NET Framework 3.5 Service Pack 1, .NET developers could easily create and expose data models on the web via REST using this protocol. The simplicity of the service, along with the ease of developing it, make it very attractive for CRUD-style data-based applications to use as a service layer to their data. Now with .NET Framework 4 there are new enhancements to data services, and as the technology matures more and more data providers are popping up all over the web. Codename “Dallas” is an Azure cloud-based service that allows you to subscribe to OData feeds from a variety of sources like NASA, Associated Press and the UN. You can consume these feeds directly in your own applications or you can use PowerPivot, an Excel Add-In, to analyze the data easily. Install it at www.powerpivot.com.

As .NET developers working with data every day, the OData protocol and WCF data services in the .NET Framework can open doors to the data silos that exist not only in the enterprise but across the web. Exposing your data as a service in an open, easy, secure way provides information workers access to Line-of-Business data, helping them make quick and accurate business decisions. As developers, we can provide users with better client applications by integrating data that was never available to us before or was clumsy or hard to access across networks.

In this article I’ll show you how to create a WCF data service with Visual Studio 2010, consume its OData feed in Excel using PowerPivot, and analyze the data using a new Excel 2010 feature called sparklines. I’ll also show you how you can write your own Excel add-in to consume and analyze OData sources from your Line-of-Business systems like SQL Server and SharePoint.

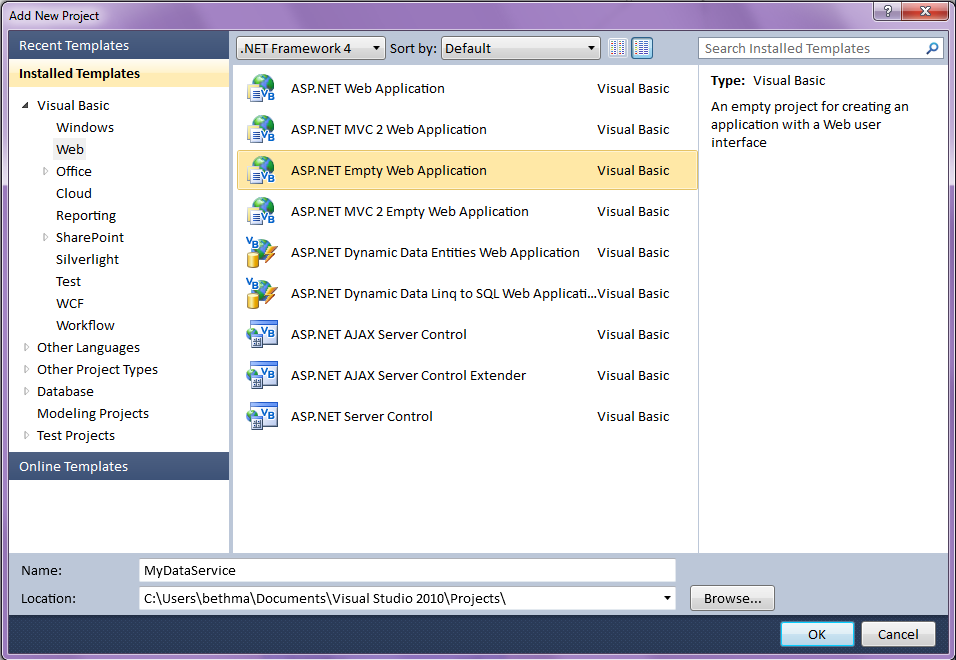

Creating a Data Service Using Visual Studio 2010

Let’s quickly create a data service using Visual Studio 2010 that exposes the AdventureWorksDW data warehouse. You can download the AdventureWorks family of databases here: http://sqlserversamples.codeplex.com/. Create a new Project in Visual Studio 2010 and select the Web node. Then choose ASP.NET Empty Web Application as shown in Figure 1. If you don’t see it, make sure your target is set to .NET Framework 4. This is a new handy project template to use in VS2010 especially if you’re creating data services.

Figure 1: Use the new Empty Web Application project template in Visual Studio 2010 to set up a web host for your WCF data service.

Click OK and the project is created. It will only contain a web.config. Next add your data model. I’m going to use the Entity Framework so go to Project -> Add New Item, select the Data node and then choose ADO.NET Entity Data Model. Click Add and then you can create your data model. In this case I generated it from the AdventureWorksDW database and accepted the defaults in the Entity Model Wizard. In Visual Studio 2010 the Entity Model Wizard by default will include the foreign key columns in the model. You’ll want to expose these so that you can set up relationships easier in Excel.

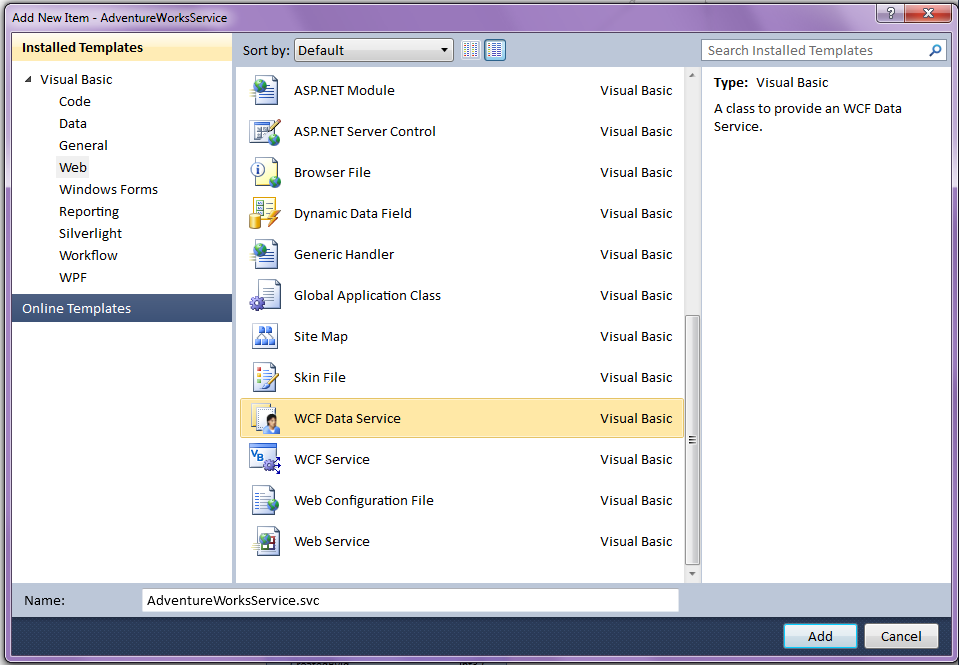

Next, add the WCF Data Service (formerly known as ADO.NET Data Service in Visual Studio 2008) as shown in Figure 2. Project -> Add New Item, select the Web node and then scroll down and choose WCF Data Service. This item template is renamed for both .NET 3.5 and 4 Framework targets so keep that in mind when trying to find it.

Figure 2: Select the WCF Data Service template in Visual Studio 2010 to quickly generate your OData service.

Now you can set up your entity access. For this example I’ll allow read access to all my entities in the model:

    Public Class AdventureWorksService
        Inherits DataService(Of AdventureWorksDWEntities)

        ' This method is called only once to
        ' initialize service-wide policies.
        Public Shared Sub InitializeService(
            ByVal config As DataServiceConfiguration)

            ' TODO: set rules to indicate which
            ' entity sets and service operations
            ' are visible, updatable, etc.
            config.SetEntitySetAccessRule("*",
                EntitySetRights.AllRead)
            config.DataServiceBehavior.MaxProtocolVersion =
                DataServiceProtocolVersion.V2
        End Sub
    End Class

You could add read/write access to implement different security on the data in the model or even add additional service operations depending on your scenario, but this is basically all there is to it on the development side of the data service. Depending on your environment this can be a great way to expose data to users because it is accessible anywhere on the web (i.e., your intranet) and doesn’t require separate database security setup. This is because users aren’t connecting directly to the database, they are connecting via the service. Using a data service also allows you to choose only the data you want to expose via your model and/or write additional operations, query filters, and business rules. For more detailed information on implementing WCF Data Services, please see the MSDN library.

You could deploy this to a web server or the cloud to host for real or you can keep it here and test consuming it locally for now. Let’s see how you can point PowerPivot to this service and analyze the data a bit.
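Before pointing a client tool at the service, it can help to see what a consumer receives. A data service at protocol version 2 returns Atom feeds by default, with each entity's values inside an `m:properties` element. The following sketch parses such a feed with the Python standard library; the sample document and its property names (`ProductKey`, `EnglishProductName`) are hypothetical stand-ins for what the AdventureWorksDW service would return.

```python
import xml.etree.ElementTree as ET

NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
}

# Hypothetical, heavily trimmed response for one entity set.
SAMPLE = """<feed xmlns="http://www.w3.org/2005/Atom"
      xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices"
      xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">
  <entry>
    <content type="application/xml">
      <m:properties>
        <d:ProductKey m:type="Edm.Int32">1</d:ProductKey>
        <d:EnglishProductName>Sample product</d:EnglishProductName>
      </m:properties>
    </content>
  </entry>
</feed>"""


def entries(feed_xml):
    """Return one dict of property name -> text value per Atom entry."""
    root = ET.fromstring(feed_xml)
    rows = []
    for entry in root.findall("atom:entry", NS):
        props = entry.find("atom:content/m:properties", NS)
        if props is None:
            continue
        # Tags look like "{namespace}Name"; keep only the local name.
        rows.append({child.tag.split("}", 1)[1]: child.text for child in props})
    return rows


rows = entries(SAMPLE)
```

All values come back as text here; a fuller client would use the `m:type` attribute to convert them.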

Read more: Article Pages 2, 3 - Next Page: 'Using PowerPivot to Analyze OData Feeds' >>

- Page 1: Add Some Spark to Your OData: Creating and Consuming Data Services with Visual Studio and Excel 2010

- Page 2: Using PowerPivot to Analyze OData Feeds

- Page 3: Consuming SharePoint 2010 Data Services

The San Francisco SQL Server Users Group will feature a Consuming Odata Services for Business Applications presentation by Beth Massi on 11/10/2010 6:30 PM at the Microsoft San Francisco office, 835 Market Street Suite 700, San Francisco, CA 94103:

Speaker: Beth Massi of Microsoft

The Open Data Protocol (OData) is a REST-ful protocol for exposing and consuming data on the web and is becoming the new standard for data-based services.

In this session you will learn how to easily create these services using WCF Data Services in Visual Studio 2010 and will gain a firm understanding of how they work as well as what new features are available in .NET 4 Framework.

You’ll also see how to consume these services and connect them to other public data sources in the cloud to create powerful BI data analysis in Excel 2010 using the PowerPivot add-in.

Finally, we'll build our own Office add-ins that consume OData services exposed by SharePoint 2010.

Speaker: Beth Massi is a Senior Program Manager on the Visual Studio BizApps team at Microsoft and a community champion for business application developers. She has over 15 years of industry experience building business applications and is a frequent speaker at various software development events. You can find Beth on a variety of developer sites including MSDN Developer Centers, Channel 9, and her blog www.BethMassi.com

Follow her on Twitter @BethMassi

Sounds to me as if Beth’s presentation will be based on her Code Magazine article (above).

1989Poster published .NET Data Access Essential [Training] with links to download the entire eight parts on 10/24/2010:

The Microsoft .NET Framework is a robust development platform with an enriched ecosystem of tools, components and features enabling developers to enhance their skill sets and create compelling solutions. Learn about the flexibility that this Framework provides for accessing data in your applications. ADO.NET is a set of computer software components that programmers can use to access data and data services. It is a part of the base class library that is included with the Microsoft .NET Framework.

1. Introduction to LINQ

2. A Closer Look at LINQ to SQL

3. Intro to WCF Data Services & OData

4. Getting Started with ADO.NET Entity Framework

5. Deeper Look at ADO.NET Entity Framework

6. Azure Data Storage Options

<Return to section navigation list>

AppFabric: Access Control and Service Bus

•• Clint Warriner of the Microsoft CRM Team announced Windows Azure Web Role Hosted Service + Microsoft Dynamics CRM Online Impersonation on 10/21/2010 (missed when posted):

We have had a lot of demand for some direction on how to impersonate a Microsoft Dynamics CRM Online user from a Windows Azure hosted service (Web Role). I have completed a walkthrough that will guide you through this process using the default Cloud Web Role project in VS 2008/2010.

This walkthrough uses Windows Identity Foundation instead of RPS due to some limitations I had with RPS. You can find the documentation and sample code on http://code.msdn.microsoft.com/crmonlineforazure.

Clemens Vasters (@clemensv) published Windows Azure AppFabric Datacenter IP ranges on 10/25/2010:

We know that there’s a number of you out there who have outbound firewall rules in place on your corporate infrastructures that are based on IP address whitelisting. So if you want to make Service Bus or Access Control work, you need to know where our services reside.

Below is the current list of where the services are deployed as of today, but be aware that it’s in the nature of cloud infrastructures that things can and will move over time. An IP address whitelisting strategy isn’t really the right thing to do when the other side is a massively multi-tenant infrastructure such as Windows Azure (or any other public cloud platform, for that matter).

- Asia (SouthEast): 207.46.48.0/20, 111.221.16.0/21, 111.221.80.0/20

- Asia (East): 111.221.64.0/22, 65.52.160.0/19

- Europe (West): 94.245.97.0/24, 65.52.128.0/19

- Europe (North): 213.199.128.0/20, 213.199.160.0/20, 213.199.184.0/21, 94.245.112.0/20, 94.245.88.0/21, 94.245.104.0/21, 65.52.64.0/20, 65.52.224.0/19

- US (North/Central): 207.46.192.0/20, 65.52.0.0/19, 65.52.48.0/20, 65.52.192.0/19, 209.240.220.0/23

- US (South/Central): 65.55.80.0/20, 65.54.48.0/21, 65.55.64.0/20, 70.37.48.0/20, 70.37.64.0/18, 65.52.32.0/21, 70.37.160.0/21
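If you need to check an address from a firewall log against ranges like these, the Python standard library's `ipaddress` module does the CIDR arithmetic. The sketch below copies two of the regions from the list above as a sample; the function name and the dictionary layout are illustrative choices, and since the ranges can change over time the data should not be treated as current.

```python
import ipaddress

# Two regions copied from the list above; extend with the others as needed.
RANGES = {
    "Asia (East)": ["111.221.64.0/22", "65.52.160.0/19"],
    "Europe (West)": ["94.245.97.0/24", "65.52.128.0/19"],
}


def region_for(address, ranges=RANGES):
    """Return the first region whose CIDR ranges contain the address, else None."""
    ip = ipaddress.ip_address(address)
    for region, cidrs in ranges.items():
        if any(ip in ipaddress.ip_network(cidr) for cidr in cidrs):
            return region
    return None
```

For example, `region_for("94.245.97.200")` falls inside 94.245.97.0/24 and so resolves to the Europe (West) entry.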

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

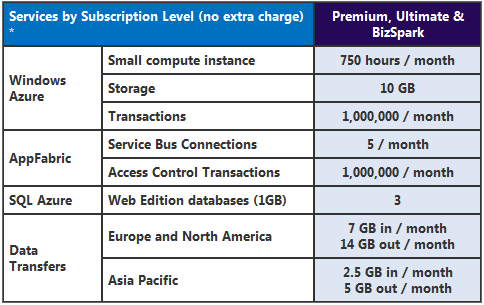

•• The Windows Azure Team announced Windows Azure Platform Benefits for MSDN Subscribers “have been extended to 16 months!” in a 10/25/2010 update:

The Windows Azure Platform is an internet-scale cloud computing and services platform hosted in Microsoft data centers. The platform provides a range of functionality to build applications from consumer web to enterprise scenarios. MSDN subscribers can take advantage of this platform to build and deploy their applications.

Introductory 16-month Offer Available Today

Estimated savings: $2,518 (USD)

What to Know Before You Sign Up

The first phase of this introductory offer will last for 8 months from the time you sign up, then you will renew once for another 8 months. After that, you'll cancel this introductory account and sign up for the ongoing MSDN benefit (see below) based on your subscription level. Here's how to manage your account settings after you've signed up:

- Sign in to the Microsoft Online Services Customer Portal.

- Click on the Subscriptions tab and find the subscription called “Windows Azure Platform MSDN Premium”.

- Under the Actions section, make sure one of the options is “Opt out of auto renew”. This ensures your benefits will extend automatically. If you see “Opt in to auto renew” instead, select it and click Go to ensure your benefits continue for another 8 months.

- After your first 8 months of benefits have elapsed (you can check your start date by hovering over the “More…” link under “Windows Azure Platform MSDN Premium” on this same page), you will need to come back to this page and choose “Opt out of auto renew” so that your account will close at the end of the 16-month introductory benefit period. If you keep this account active after 16 months, all usage will be charged at the normal “consumption” rates.

You'll need your credit card to sign up. If you use more than the amount of services included with your MSDN subscription, we'll bill your card for these overages. You can visit the Microsoft Online Services Customer Portal to look up your usage at any time.

* Available for signup today in the countries listed. Not available to Empower for ISV members; only available to the 3 Technical Contacts for Certified Partners and Gold Certified Partners.

Future Subscriber Benefits (starting November 2010 or later)

** Not available to subscribers currently participating in the introductory offer. Not available to Empower for ISV members; only available to the 3 Technical Contacts for Certified Partners and Gold Certified Partners.

Rob Conery prefaced his Introducing the New Authorize.NET SDK post of 10/25/2010 with “I've never had an easy time with payment gateways. Their APIs tend to be ridiculously verbose and written by engineers who like ... writing verbose APIs. When I was approached to design and build the new Authorize.NET SDK - I jumped at the chance. I do hope you'll like it.”:

The Money Shot

Rather than drag you through pre-amble, here's all you need to do to charge your customers:

This is a console application - and it's showing you how Authorize.NET's Advanced Integration Method (or AIM) works. I managed to get the meat of the transaction complete in 3 lines of code - pretty slick if you ask me!

I worked with the crew at LabZero and it was an all-out "platform blitz": PHP, Ruby and Rails, Java, and .NET. We had some fun and friendly competition to see who could roll the cleanest SDK - and I think I topped the Ruby one pretty handily :). Anyway - back to the code.

Design Principle One: Testability

Payment system SDKs and sample code usually don't take testing into account and I wanted to change that. I don't like how invasive gateway code can be - so I kept it as light and nimble as possible.

There are 3 core interfaces that you work against - only one that you need to know about (the gateway itself). The first is IGateway:

This interface logs you into Authorize.NET and sends off an IGatewayRequest, returning an IResponse. There are 4 IGatewayRequests:

- AuthorizationRequest: This is the main request that you'll use. It authorizes and optionally captures a credit card transaction.

- CaptureRequest: This request will run a capture (transacting the money) on a previously authorized request.

- CreditRequest: Refunds money.

- VoidRequest: Voids an authorization.

The essential flow is:

- Create the Gateway

- Create your Request

- Send() your request through the Gateway, and get an IResponse back

- The IResponse will tell you all about your transaction, including any errors …
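As a rough illustration of the flow above, and of why coding against a small gateway interface helps testability, here is a sketch in Python rather than the SDK's own C#. Every name in it (`Gateway`, `AuthorizationRequest`, `Response`, `FakeGateway`, `charge`) is hypothetical and is not the Authorize.NET SDK's actual API; the fake gateway never touches the network.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Protocol


@dataclass
class AuthorizationRequest:
    """Hypothetical stand-in for a request object: card data plus an amount."""
    card_number: str
    expiration: str
    amount: Decimal


@dataclass
class Response:
    """Hypothetical stand-in for a response object: outcome plus a message."""
    approved: bool
    message: str


class Gateway(Protocol):
    """The one interface calling code depends on."""
    def send(self, request: AuthorizationRequest) -> Response: ...


class FakeGateway:
    """Test double: records requests and approves any positive amount."""
    def __init__(self):
        self.sent = []

    def send(self, request):
        self.sent.append(request)
        if request.amount <= 0:
            return Response(False, "Amount must be positive")
        return Response(True, "Approved")


def charge(gateway, card_number, expiration, amount):
    """Create the request, send it through the gateway, return the response."""
    request = AuthorizationRequest(card_number, expiration, amount)
    return gateway.send(request)
```

Because `charge` depends only on the `send` method, a unit test can pass in `FakeGateway()` and inspect its `sent` list, while production code would pass in an object that talks to the real payment service.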

Rob continues with “Design Principle Two: Smaller Method Calls,” “Design Principle Three: Love the Dev With Helpers,” “ApiFields: An On-The-Fly Reference API,” “ASP.NET MVC Reference Application,” and:

Obligatory Hedge

This isn't a "grand release" with a ton of hoopla. It's the first drop, and I will be refining things and working out bugs as we move on. I'm also building on some more functionality - so drops in the future are coming. If you do find an issue - you want to be sure to report it to the support forums (not me - LabZero is tracking all of this stuff rather tightly).

Hope you enjoy.

Sacha Dawes reported Windows Azure Monitoring Management Pack Release Candidate (RC) Now Available For Download in a 10/25/2010 post to TechNet’s Nexus SC: The System Center Team blog:

We are pleased to announce the availability of the Release Candidate (RC) of the Monitoring Management Pack (MP) for Windows Azure (version 6.1.7686.0), which can be immediately deployed by customers running System Center Operations Manager 2007 and Operations Manager 2007 R2.

As more customers adopt Windows Azure to develop and deploy applications, we know that integrating the management of those applications into their existing console is an important requirement. This management pack enables Operations Manager customers to monitor the availability and performance of applications that are running on Windows Azure.

Customers should head to the MP page on Microsoft Pinpoint to find more information on the MP, as well as download the bits (due to replication times, some customers may have to wait up to 24 hours from this notice before being able to download the bits). Customers who download the MP will be supported by Microsoft in their production deployments.

To answer some immediate questions on the management pack and its capabilities:

Q. What does the Windows Azure MP do?

A. The Windows Azure MP includes the following capabilities:

- Discovery of Windows Azure applications.

- Status of each role instance.

- Collection and monitoring of performance information.

- Collection and monitoring of Windows events.

- Collection and monitoring of the .NET Framework trace messages from each role instance.

- Grooming of performance, event, and .NET Framework trace data from the Windows Azure storage account.

- Change the number of role instances.

Q. Why is the Azure Management Pack a Release Candidate (RC) and not RTW?

A. We have released this as an RC as we work to implement new monitoring scenarios in the cloud.

Q. When will the RTW version of the Azure Management Pack be available?

A. We expect to make it available in H1 CY2011.

Q. Is this MP supported in production deployments?

A. Yes, the Release Candidate of the Azure Management Pack will be supported for customers using it in production deployments.

Roberto Bonini continued his Windows Azure Feedreader Episode 7: User Subscriptions series on 10/25/2010:

Episode 7 is up. I somehow found time to do it over several days.

There are some audio issues, but they are minor. There are some humming noises for most of the show. I’ve yet to figure out where they came from. Apologies for that.

This week we

- clear up our HTML Views

- implement user subscriptions

We aren’t finished with user subscriptions by any means. We need to modify our OPML handling code to take account of the logged in user.

Enjoy:

Remember, you can head over to vimeo.com to see the show in all its HD glory.

Next week we’ll finish up that user subscriptions code in the OPML handling code.

And we’ll start our update code as well.

Chirag Mehta (@chirag_mehta) analyzes The Future Of BI In The Cloud in this 10/25/2010 essay:

Actual numbers vary based on whom you ask, but the general consensus is that Business Intelligence (BI) and analytics in the cloud is a fast-growing market. IDC expects a compound annual growth rate (CAGR) of 22.4% through 2013. This growth is primarily driven by two kinds of SaaS applications. The first kind is a purpose-specific, analytics-driven application for business processes such as financial planning, cost optimization, and inventory analysis. The second kind is a self-service horizontal analytics application or tool that allows customers and ISVs to analyze data and create, embed, and share analyses and visualizations.

The category that is still nascent and would require significant work is traditional general-purpose BI on large data warehouses (DW) in the cloud. For most enterprises, not only are all the DWs on-premise, but the majority of the business systems that feed data into these DWs are on-premise as well. If these enterprises were to adopt BI in the cloud, it would mean moving all the data, warehouses, and associated processes such as ETL into the cloud. But then, the biggest opportunities to innovate in the cloud exist outside of it. I see significant potential to build black-box, appliance-style systems that sit on-premise and encapsulate the on-premise complexity – ETL, lifecycle management, and integration – in moving the data to the cloud.

Assuming that enterprises succeed in moving data to the cloud, I see a couple of challenges that, if treated as opportunities, will spur the most BI innovation in the cloud.

Traditional OLAP data warehouses don’t translate well into the cloud:

The majority of on-premise data warehouses run on some flavor of a relational or a columnar database. Most BI tools use SQL to access data from these DWs. These databases are not inherently designed to run natively on the cloud. On top of that, the optimizations performed on these DWs, such as sharding, indices, and compression, don’t translate well into the cloud either, since the cloud is a horizontally elastic scale-out platform and not a vertically integrated, scale-up system.

Organizations are rethinking their persistence options, as well as their access languages and algorithms, while moving their data to the cloud. Recently, Netflix started moving its systems into the cloud. It’s not a BI system, but it has similar characteristics, such as a high volume of read-only data and a few index-based look-ups. The new system uses S3 and SimpleDB instead of Oracle (on-premise). During this transition, Netflix picked availability over consistency. Eventual consistency is certainly an option that BI vendors should consider in the cloud. I have also started seeing DWs in the cloud that use HDFS, Dynamo, and Cassandra. Not all the relational and columnar DW systems will translate well into NoSQL, but I cannot overemphasize the importance of re-evaluating persistence store and access options when you decide to move your data into the cloud.

Hive, a DW infrastructure built on top of Hadoop, is a MapReduce-meets-SQL approach. Facebook has 15 petabytes of data in its DW running Hive to support its BI needs. Very few companies would require such a scale, but the best thing about this approach is that you can grow linearly, technologically as well as economically.
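To make the "MapReduce meets SQL" idea concrete, here is a toy, single-process sketch in Python of how a query such as `SELECT region, SUM(amount) FROM sales GROUP BY region` can be expressed as a map step, a shuffle, and a reduce step. The table, column names, and function names are invented for illustration. This is not Hive's implementation, which compiles queries into Hadoop jobs that run across many machines.

```python
from collections import defaultdict

# Invented sample rows standing in for a "sales" table.
sales = [
    {"region": "EMEA", "amount": 120},
    {"region": "APAC", "amount": 75},
    {"region": "EMEA", "amount": 30},
    {"region": "AMER", "amount": 200},
    {"region": "APAC", "amount": 25},
]


def map_phase(rows):
    """Map: emit one (key, value) pair per row, keyed by the GROUP BY column."""
    for row in rows:
        yield row["region"], row["amount"]


def shuffle(pairs):
    """Shuffle: collect all values that share a key into one list."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: apply the aggregate (here SUM) to each key's values."""
    return {key: sum(values) for key, values in groups.items()}


totals = reduce_phase(shuffle(map_phase(sales)))
```

On a real cluster the map and reduce steps run in parallel on different nodes, which is where the linear growth described above comes from: adding machines adds capacity for both steps.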

The cloud is not a good platform for I/O-intensive applications such as BI:

One of the major issues with large data warehouses is, well, the data itself. Any kind of complex query typically involves I/O-intensive computation. But I/O virtualization in the cloud simply does not work for large data sets. Remote I/O, due to its latency, is not a viable option. Block I/O is a popular approach for I/O-intensive applications. Amazon EC2 does have block I/O for each instance, but it obviously can’t hold all the data and it’s still a disk-based approach.

For BI in the cloud to be successful, what we really need is the ability to scale out block I/O, just like scale-out computing. The good news is that there is at least one company that I know of, Solidfire, working on it. I met Dave, the founder, at the Structure conference reception. He explained to me what he is up to. Solidfire has a software solution that uses solid state drives (SSD) as scale-out block I/O. I see huge potential in how this can be used for BI applications.

When you put all the pieces together, it makes sense. The data is distributed across the cloud on a number of SSDs that are available to the processors as block I/O. You run some flavor of NoSQL to store and access this data, leveraging modern algorithms and, more importantly, a horizontally elastic cloud platform. What you get is commodity, blazingly fast BI at a fraction of the cost with a pay-as-you-go subscription model.

Now, that’s what I call the future of BI in the cloud.

Chirag says:

In my current role I am helping SAP explore, identify, and execute the growth opportunities by leveraging the next generation technology platform - in-memory computing, cloud computing, and modern application frameworks - to build simple, elegant, and effective applications to meet the latent needs of the customers.

Marketwire published on 10/25/2010 a Zuora Enables ISV to Rapidly Deploy, Meter, Price, and Bill for Windows Azure Platform Cloud Solution press release that also describes the Zuora Toolkit for Windows Azure:

Company News

Zuora, the leader in subscription billing, today announced that sharpcloud has successfully implemented the Zuora Z-Commerce for the Cloud platform in 20 days. sharpcloud provides visual, social roadmapping which is used by customers to manage knowledge and networks around their strategic planning and innovation programs. sharpcloud is developed and hosted on Windows Azure.

sharpcloud leveraged Windows Azure's platform and tools to bring their solution to life, and quickly set about the task of monetizing, namely, enabling sharpcloud for subscriptions. It quickly became clear that building such a complicated solution in-house was not an option.

Through the Microsoft Windows Azure and BizSpark One programs, sharpcloud was able to get started quickly with the Zuora team, which was already well versed and integrated into the Windows Azure ecosystem.

sharpcloud is now using Zuora to enable billing, payments and subscription management for its Windows Azure-based application, specifically:

- Multiple pricing plans to sell to individuals and businesses

- Support for multiple currencies -- GBP, USD, and Euros

- Online commerce capabilities

- Support for credit cards; PCI compliance

- Seamless integration to PayPal for payment processing

- A platform to handle subscription orders from different partners, including Fujitsu

sharpcloud deployed the Zuora Toolkit for Windows Azure to dramatically accelerate time-to-market and automate the pricing and web orders for its Windows Azure platform cloud-based application with seamless integration to PayPal.

Commentary

"Microsoft and BizSpark One have given us the tools, platform and program to research, develop and release to web our social business application," said Sarim Khan, CEO and Co-Founder, sharpcloud. "Zuora has filled the missing link by enabling billing, payments and subscription management for our application, giving us the flexibility to price and package and in the future expand to new markets."

"Cloud computing has dramatically improved the way that ISVs can develop, deliver and support innovative business solutions," said Michael Maggs, senior director, Windows Azure partner strategy at Microsoft. "Zuora enables Windows Azure developers to rapidly monetize those applications with the right billing infrastructure so they can spend more effort on building successful cloud businesses."

"Billing is the lifeblood of ISVs in the cloud, and Zuora is the leader in helping companies like sharpcloud rapidly monetize their solutions on Windows Azure," said Shawn Price, president at Zuora. "Zuora is committed to working closely with Microsoft to deliver on the cloud commerce capabilities that the more than 10,000 Windows Azure developers and customers require."

Enabling the Cloud Computing Business Model on Windows Azure

Based on Zuora's industry-leading on-demand subscription billing and commerce platform, Z-Commerce for the Cloud enables the new business models introduced by cloud computing, such as usage and pay-as-you-go pricing, that providers' existing infrastructures, built for one-time and perpetual pricing models, could not support. Prior to Zuora, cloud providers and ISVs could not deliver on the need to meter, price, and bill for cloud services -- the heart of the new cloud business model.
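For readers unfamiliar with usage-based pricing, the meter, price, and bill steps can be sketched in a few lines of Python. This is only an illustration of the business model: the plan, its rates, the customer names, and the function names are all invented, and nothing here reflects Zuora's actual API or pricing.

```python
from decimal import Decimal

# Hypothetical plan: a flat monthly fee that includes some usage,
# plus a per-unit rate for anything beyond the included amount.
PLAN = {
    "monthly_fee": Decimal("49.00"),
    "included_units": 1000,
    "overage_rate": Decimal("0.02"),
}


def meter(usage_events):
    """Meter: total the units recorded in (customer, units) events."""
    return sum(units for _customer, units in usage_events)


def price(total_units, plan):
    """Price: flat fee plus overage for units beyond the included amount."""
    overage_units = max(0, total_units - plan["included_units"])
    return plan["monthly_fee"] + overage_units * plan["overage_rate"]


def bill(customer, usage_events, plan=PLAN):
    """Bill: build a simple invoice record for one customer."""
    own_events = [event for event in usage_events if event[0] == customer]
    total_units = meter(own_events)
    return {
        "customer": customer,
        "units": total_units,
        "amount_due": price(total_units, plan),
    }
```

With events `[("acme", 600), ("acme", 700), ("globex", 100)]`, the hypothetical customer "acme" has used 1,300 units, so the invoice is the 49.00 fee plus 300 overage units at 0.02 each.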

At this week's Microsoft VC Summit, Zuora CEO Tien Tzuo will be presenting a session that illustrates this shift entitled "Azure: Microsoft Cloud Computing Strategy - How to Successfully Build, Deploy and Monetize the Windows Azure Platform" that will describe how cloud computing is creating significant opportunities for ISVs to build and monetize a range of new applications with Zuora and Microsoft technologies.

Zuora Toolkit for Windows Azure

- To drive success and adoption for the Windows Azure ecosystem, Zuora has delivered the Zuora Toolkit for Windows Azure in conjunction with Microsoft to enable developers and ISVs to easily automate commerce from within their Windows Azure application and/or website in a matter of minutes. With the Zuora Toolkit for Windows Azure, developers and ISVs can:

- create flexible price plans and packages;

- support usage and pay-as-you-go pricing models;

- initiate a subscription order online;

- accept credit cards with PCI Level 1 compliance; and

- manage the customers, recurring subscriptions, and invoicing.

- The Zuora Toolkit for Windows Azure can be accessed at http://developer.zuora.com/. Zuora is also providing a Z-Commerce for the Cloud code sample for developers to embed in their cloud solutions at http://developer.zuora.com/samplecode.html. …

Elizabeth White asserted “Global Alliance With Microsoft Enables Innovative Delivery of Banking Services” in a deck to her Misys Collaborates with Microsoft to Extend Financial Apps to the Cloud post of 10/25/2010:

Misys plc, the global application software and services company, announces a new strategic alliance with Microsoft Corp. This new initiative builds on last year's mission-critical applications development alliance and will deliver Misys' banking and capital markets applications via the Windows Azure cloud platform. The technical collaboration with Microsoft, announced at Sibos 2010 in Amsterdam, will provide financial institutions with the choice and flexibility they need to maximise the return on their IT investment and deliver innovative services to their customers more rapidly.

Financial institutions typically depend on a multitude of applications and systems that are integrated with customers, partners and external financial networks. Running these applications requires complex data centre and support structures that are expensive to operate. Cloud computing, and specifically Windows Azure, enables banks to move from a capital intensive cost model to one which is based on the consumption of technology. No longer will banks need to over-order computing resources because the scale of the Azure platform allows high volume workloads such as end-of-day processing to be consumed on demand.

Misys and Microsoft have successfully deployed instances of the Misys BankFusion Universal Banking solution to the Windows Azure platform. The Misys solution is built on state-of-the-art BankFusion technology, which adheres to a rigorous set of standards but is unconstrained by proprietary infrastructure, which makes it possible to run the solution in the cloud. Both companies have received significant interest from banks looking to reduce complexity and operational risks by running their banking systems in the cloud.

"The combination of BankFusion, the most advanced financial services platform on the market today, and the innovative Windows Azure cloud computing infrastructure is world-beating," said Al-Noor Ramji, EVP and General Manager, Misys. "New banking solutions must reduce operational costs. By making our solutions available in the cloud, we are enabling our clients to benefit from increased agility with lower TCO and risk, while simultaneously providing them with unprecedented speed and flexibility with access to the latest solutions. The initiative lets banks concentrate once again on the business of banking."

"This is a very exciting time for the financial services industry," said Karen Cone, general manager, Worldwide Financial Services, Microsoft. "Our enterprise cloud computing expertise, coupled with the industry-leading solutions from Misys, brings a unique value proposition to the sector. Through strategic engineering alliances with industry leaders such as Misys, we are focused on delivering both the on-premise and cloud-based solutions that our customers need to gain the benefits of cloud services on their own terms. They are able to leverage and extend their existing IT investments to take advantage of cloud computing, resulting in a reduction of cost and the ability to enhance operations through cloud-based improvements and build transformative applications that create new business opportunities."

"Globally, large banks face increasing demands for innovative, scalable services across business units to meet both competitive forces and marketplace expectations. At the same time, smaller banks are beginning to understand the value of investing in operations, processes, and technologies that make them more flexible and nimble," said Rodney Nelsestuen, Senior Research Director, TowerGroup. "Whether large or small, today's financial institutions must seek to improve operations while not losing sight of the need to manage costs closely. "To that end, TowerGroup has witnessed a growing interest in new variable cost models and on demand service models such as those emerging in cloud computing, or newer forms of managed and shared services, and outsourcing across a variety of technologies and services. These emerging approaches offer large banks the opportunity to leverage scale while smaller banks can compete effectively through shorter time to market and lower upfront investment."

The collaboration between Microsoft and Misys demonstrates that the financial services industry is now moving to the next generation of banking platforms. Many financial institutions already run finished services in the cloud such as Microsoft Exchange, Microsoft Office and Microsoft Dynamics CRM Online solutions. This news extends the Microsoft cloud capability to banking applications.

Tim Anderson (@timanderson) published An honest assessment of Windows Phone 7 on 10/25/2010:

I’ve been using Windows Phone 7 for a week and a half now, in the shape of an HTC Mozart on Orange. So what do I think?

I am not going to go blow-by-blow through the features – others have done that, and while it is important to do, it does not convey well what the phone is like to use. Instead, this is my first impression of the phone together with some thoughts on its future.

First, it is a decent smartphone. Take no notice of comments about the ugliness of the user interface. Although it looks a little boxy in pictures, in practice it is fun to use.

Some things take a bit of learning. For example, there is a camera button on the phone, and a full press on this activates the camera from almost anywhere. Within the camera, a full press takes a picture, but a half press or a press and hold activates autofocus. I did not find this behaviour immediately intuitive, but it is something you get used to.

There is plenty to like about the phone. This includes the dynamically updating tiles; the picture hub and the ability to auto-upload pictures to Skydrive, Microsoft’s free cloud storage; and neat touches such as the music controls which appear over the lock screen when you activate the screen during playback; or the Find your Phone feature which can ring your phone loudly even if it is set to silent, or lock the phone and add an “if found” message.

The People hub is fabulous if you use Facebook. I don’t use Facebook much, but even with my limited use, I noticed that as soon as I linked with Facebook, the phone felt deeply personalised to me, with little pictures of people I know in the People tile. The ability to link two profiles to one contact is good.

I also like the Office hub which includes Sharepoint workspace mobile – useful for synching content. Microsoft should push this hard, especially as Office 365, which includes hosted Exchange and Sharepoint, gains users.

There are some excellent design touches. For example, many apps have a menu bar with icons at the foot of the screen. There are no captions, which saves space, but by tapping a three-dot icon you can temporarily display captions. In time you learn them and no longer need to.

The pros and cons of hubs

Microsoft has addressed what is a significant issue in other smartphones: how to declutter the user interface. Windows Phone 7 hubs collect several related apps and features (between which there is no sharp difference) into a multi-page view. There are really six hubs:

- People

- Pictures (includes the camera)

- Music and videos

- Marketplace

- Office

- Games

I like the hubs in general; but there are a few issues. Of the hubs listed above, four of them work well: People, Pictures, Music/Videos, and Games. Marketplace is not really a hub any more than “phone” is a hub – it is just a way to access a single feature. Office is handy but it is not a hub gathering all the apps that address a particular area; it is a Microsoft brand. If I made a word processor app I could not add it to the Office hub.

Further, operators and OEMs can add their own hubs, but will most likely make bad decisions. There is a pointless HTC hub on my device which combines weather and featured apps. It also features a dizzying start-up animation which soon gets tired. I have no idea what the HTC hub is meant to do, other than to promote the HTC brand.

Speaking of brands, I have deliberately left the home screen on my Mozart as supplied by Orange. As you can see from the picture above, Orange decided we would rather see four Orange apps occupy 50% of the home screen (before you scroll down), than other features such as web browsing, music and video, pictures and so on. Why isn’t Orange a hub so that at least all this stuff is in one place?

The user can modify the home screen easily enough, and largely remove the Orange branding. But to get back to my point about hubs: it is not clear to me what a hub is meant to be. It is not really a category, because you cannot create hubs or add and remove apps from them, and because of the special privileges given to OEMs we get nonsense like the HTC hub, alongside works of art like the Pictures hub.

There is still more good than bad in the hub concept, but it needs work.

Not enough features?

I have no complaint about lack of features in this first release of Windows Phone 7. Yes, I would like tethering. Yes, I would like the ability to copy a URL from the web browser to the Twitter client. But I am happy with the argument that Microsoft was more concerned with getting the foundation right, than with supplying every possible feature in version one.

I am less happy with the notion that Microsoft can afford for the initial devices to be a bit hopeless, and fix it up in later versions. I am not sure how much time the company has, before the world at large just presumes it cannot match iPhone or Android and forgets Microsoft as a smartphone company.

Is it a bit hopeless, or very good at what it does? I am still not sure, mainly because I seem to have had more odd behaviour than some other early adopters. Example: licence error after downloading from marketplace; apps that don’t open or which give an error and inform me that they have to close; black screens. A few times I’ve had to restart; once I had to remove the battery – thank you HTC Notes, which has been updated and now does not work at all. It is possible that there is some issue with my review device, such as faulty RAM, or maybe the amount of memory in a Mozart is inadequate. I am going to assume the former, but await other reports with interest.

The one area where Windows Phone 7 is weak is in app availability. I would like a WordPress app, for example. Clearly this will fix itself if the device is popular, though there are some issues facing third-party developers which will impede this somewhat.

App Development and the Marketplace

The development platform for third parties is meant to be Silverlight and XNA, two frameworks based on .NET which address general apps and games respectively. These are strong platforms, backed up by Visual Studio and the C# programming language, so not a bad development story as far as it goes.

That said, there are a couple of significant issues here. One is that third-party apps do not have access to all the features of the phone and cannot multi-task. Switch away from an app and it dies. This can result in a terrible user experience. For example, I fire up the impressive game The Harvest. Good though it is, it takes a while to load. Finally it loads and play resumes from where I got to last time. I’m just wondering what to tap, when the lock screen kicks in – since I have not tapped anything for a bit (because the game was loading), the device has decided to lock. I flick back the lock. Unfortunately the game has been killed, and starts over with resume and a long loading process.

The other area of uncertainty relates to native code development. C/C++ and native code is popular for mobile apps. It is efficient, which is good for devices with constrained resources; and while native code is by definition not cross-platform, large chunks of the code for one platform will likely port OK to another.

Third party developers cannot do native code development for Windows Phone 7. Or can they? Frankly, I have heard conflicting reports on this from Microsoft, from developers, and even from other journalists.

At the beginning, when the Windows Phone 7 development platform was announced at the Mix conference last year, it was stated that the only third-party developers allowed to use native code were Adobe, because Microsoft wants Flash on the device, operators and OEM hardware vendors. At the UK reviewer’s workshop, I was assured by a Microsoft spokesperson that this is still the case, and that no other third parties have been given special privileges.

I am sceptical though. I expect important third parties like Spotify will use native code for their apps, and/or get access to additional APIs. If you have a good enough relationship with Microsoft, or an important enough app, it will be negotiable.

In fact, I hope this is the case; and I also expect that there will be an official, public native code SDK for the device within a year or two.

As it is, the situation is unsatisfactory. I dislike the idea that only operators and OEMs can use native code – especially as this group does not have the best track record for creating innovative and useful apps. I have more confidence in third party developers to come up with compelling apps than operators or hardware vendors – who all too often just want to plug their brand.

I also think the Marketplace needs work. If I search marketplace, I want it filtered to apps only by default, but for some reason the search covers music and video as well, so if I search for a Twitter client, I get results including a song called Hit me up on Twitter. That’s nonsense.

I wonder if the submission process is too lax at the moment, because Microsoft is so anxious to fill Marketplace with apps. I suppose there will always be too many lousy apps in there, on this and other platforms. Still, while nobody likes arbitrary rejections, I suspect Microsoft would win support if it were more rigorous about enforcing standards in areas like how well apps resume after they are killed by the operating system, and in their handling of the back button, two areas which seem lacking at the moment.

Complaints and annoyances

One persistent annoyance with the HTC Mozart is the proximity of the menu bar, which appears at the bottom of many apps, to the “hardware” buttons for back, start, and search which are compulsory on all Windows Phone 7 devices. The problem is that on the Mozart, these buttons are the same as app buttons, triggered by a light touch. So I accidentally hit back, start or search instead of one of the menu buttons. I have similar issues with the onscreen keyboard. I’m learning to be very very careful where I tap in that region, which makes using the device less enjoyable.

Another annoyance is the unpredictability of the back button. I am often unsure whether this is going to navigate me back within an app, or kick me out of the app.

Some of the apps are poor or not quite done. This will sort itself out, presuming the phone is not a complete flop. For example, in Twozaic, when typing a tweet, the post button is almost entirely hidden by the keyboard. I would like an Android style close keyboard button (update: though the back button should do this consistently).

I have already mentioned problems with bugs and crashes, which I am hoping are specific to my device.

It seems to me that Microsoft has taken a look at Apple’s extraordinarily profitable approach to devices and thought “We want some of that.” The device is as locked down as an iPhone – except that in Apple’s case there are no OEMs to disrupt the user experience with half-baked apps, and operators are also prevented from interfering. With Windows Phone we kind-of have the worst of both worlds: operators and OEMs can spoil the phone’s usability – though this is constrained in that clued-up users can get rid of what they do not want – but we are still restricted from doing things like attaching the phone as USB storage.

Still not completely fixed – the OEM problem

My final reflection (for now) is that Windows Phone 7 still reflects Microsoft’s OEM problem. This device matters more to Microsoft than it does either to the operators or the OEM hardware vendors – who have plenty to be getting on with on other mobile operating systems. In consequence, the launch devices do not do justice to the capabilities of Windows Phone 7, and in some cases let it down badly. I do not much like the HTC Mozart, and suspect that HTC just has not given the phone the attention that it needed.

One solution would be for Microsoft to make its own device. Another would be for some hardware vendor to come up with a superb device that would make us re-evaluate the platform. Those with long memories will recall that HTC did this for Windows CE, with the original iPAQ, the first device using that operating system which performed satisfactorily.

HTC could do it again, but has not delivered with the Mozart, or I suspect with its other launch devices.

I have also noted issues with the way Orange has customised my device, which is another part of the same overall issue.

Despite Microsoft’s moves to mitigate its OEM problem, by enforcing consistency of hardware and by (mostly) retaining control over the user interface, it is still an area of concern.

Clearly, the success of WP7 will have a major influence on new Windows Azure and SQL Azure deployments as mobile clients become increasingly important consumers of cloud-based apps.

Avkash Chauhan explained How to change the VM size for your Windows Azure Service in a 10/25/2010 post:

As you may know, Azure VM size is set in 4 different categories:

- Small - 250GB

- Medium - 500GB

- Large - 1TB

- ExtraLarge - 2TB

After your service is running, you might need to tune it, either adding more space to the Azure VM or reducing the VM size to save cost if the resources exceed what your service currently needs. This concept is known as "elastic scale", and Windows Azure handles it very well.

Here are a few ways you can accomplish it:

1. Auto-Scale using the Azure Service Management API:

There is a great blog on how to accomplish it: http://blogs.msdn.com/b/gonzalorc/archive/2010/02/07/auto-scaling-in-azure.aspx

2. In Place Upgrade for your Azure Service:

- You can dynamically upload a new package with a higher VM size to the Staging slot first

- Perform a VIP swap so your service runs on the deployment with the higher VM size

- Delete the deployment in the Staging slot; otherwise you may pay for both slots.
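
Whichever route you take, the package you upload has to declare the new size. Here is a minimal sketch in Python, assuming the size is declared through the `vmsize` attribute on the role element in `ServiceDefinition.csdef`. The `set_vm_size` helper, the sample definition and the role name `WebRole1` are hypothetical illustrations, not part of the original post:

```python
import xml.etree.ElementTree as ET

# XML namespace of the service definition file (assumed for this sketch).
CSDEF_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"

# The four size categories listed in the post.
VALID_SIZES = ("Small", "Medium", "Large", "ExtraLarge")

# Hypothetical, stripped-down service definition used only for illustration.
SAMPLE_CSDEF = f"""<ServiceDefinition name="MyService" xmlns="{CSDEF_NS}">
  <WebRole name="WebRole1" vmsize="Small" />
</ServiceDefinition>"""


def set_vm_size(csdef_xml: str, role_name: str, new_size: str) -> str:
    """Return a copy of the service definition with the role's vmsize changed."""
    if new_size not in VALID_SIZES:
        raise ValueError(f"unknown VM size: {new_size!r}")

    # Keep the default namespace unprefixed when the XML is written back out.
    ET.register_namespace("", CSDEF_NS)
    root = ET.fromstring(csdef_xml)

    role_tags = {f"{{{CSDEF_NS}}}WebRole", f"{{{CSDEF_NS}}}WorkerRole"}
    for element in root.iter():
        if element.tag in role_tags and element.get("name") == role_name:
            element.set("vmsize", new_size)
            return ET.tostring(root, encoding="unicode")

    raise LookupError(f"role not found: {role_name!r}")


if __name__ == "__main__":
    print(set_vm_size(SAMPLE_CSDEF, "WebRole1", "Large"))
```

After editing the definition you would rebuild the package, upload it to the Staging slot, and continue with the swap steps above.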

Eric Knorr asserted “Even as Microsoft rolls out its Office 365 cloud offering, the company stubbornly doubles down on the desktop” as a deck for his What Office 365 says about Microsoft post of 10/25/2010 to InfoWorld’s Modernizing IT blog:

On the day Microsoft announced Office 365, Kurt DelBene, president of the Microsoft Office Division, said: "This resets the bar for what people will expect of productivity applications in the cloud."

Oh, Microsoft. Why must you say these things?

We all know that Office 365 is basically an upgrade and repackaging of BPOS (Business Productivity Online Suite), which consists of Microsoft-hosted versions of SharePoint, Exchange, and Office Communications. The obvious difference is that 365 adds -- drumroll, please -- Office Web Apps.

In the heat of last Tuesday's announcement, some people jumped to the conclusion that the addition of Web Apps meant, at long last, that Microsoft had an answer to Google Apps. The truth: Not any more than it already did. You can already use Office Web Apps and SkyDrive for free on the Office Live site; BPOS is available separately for $10 per user per month. Office 365 wraps the two together -- so how exactly is the bar being "reset"?

Cloudy with a chance of misinformation

To be fair, the private beta program for Office 365 has just started, and Office 365 will probably be considerably better than BPOS, with online 2010 versions of SharePoint, Exchange, and Office Communications -- the last renamed Lync Server and, according to InfoWorld contributor J. Peter Bruzzese, vastly improved.

But guess what else is part of Office 365? Office Professional Plus, a desktop product. So not only does Office 365 fail to "reset the bar" for productivity applications in the cloud, its main productivity applications aren't in the cloud at all. As before, Office Web Apps are intended to be browser-based extensions to the desktop version of Office.

Office 365 will actually come in two flavors: an enterprise version that includes Office Professional Plus and a small-business version that does not. Wait, does that mean you can use the small-business version of Office 365 without a locally installed version of Office? Nope. Check the system requirements for the small-business version and you will find the following note: "Office 2007 SP2 or Office 2010."

SharePoint Server 2010 in the cloud is needed to make Microsoft Access 2010 Web Databases accessible at reasonable cost to a few hundred or so users.

Jeffrey Schwartz posted Cloud Credo to Microsoft Partners on 10/22/2010 to his Schwartz Report blog for the Redmond Channel Partner newsletter:

Why should Microsoft's partners who sell software to customers feel inclined to sacrifice those revenues and margins in favor of the company's cloud services?

"If you don't do it, you will be irrelevant in the next four or five years," said Vahé Torossian, corporate vice president of Microsoft's Worldwide Small and Midmarket Solutions and Partners (SMS&P) Group. Jon Roskill, corporate vice president of the Microsoft Worldwide Partner Group, reports to Torossian, and Torossian reports to Chief Operating Officer Kevin Turner.

Torossian spoke to a group of about 60 partners yesterday at this month's local International Association of Microsoft Channel Partners (IAMCP) meeting held at Microsoft's New York office. "Believe me, I'm a very polite guy, I don't want to be blunt for the sake of being blunt, I am saying that because it starts with us."

Microsoft's competitors, the likes of Google and Salesforce.com, are making this push without the legacy of a software business to protect. Torossian told partners that he believes 30 percent of all of its customers will transition their IT operations to the cloud with or without Microsoft.

"I think it's important that you start to lead the transition and assuming that your customers have already been meeting with your competitor," he said. "We are giving you the opportunity to have the discussion with your customers [where you can say] if you're really interested in the cloud, you'll be able to get there. We want to position ourselves as a leader in the cloud."

It should come as no surprise to those who have been following Microsoft's "we're all in" cloud proclamations these days. But the fact that he responded so bluntly underscores how unabashed Microsoft is in trying to get its message out to partners.

"I think he was just being blatantly honest, I don't think there was any softer way to say it, it's the truth," said Mark Mayer, VP of sales and marketing at Aspen Technology Solutions, a Hopatcong, N.J.-based solution provider. "His most important point, is his competition doesn't have a legacy business. He's not competing apples to apples."

Howard Cohen, president of the New York IAMCP chapter, said he initially thought Torossian was joking, but quickly realized he was serious. "I think it was refreshing," Cohen said. "He wasn't saying 'you should do this because we want you to,' he was saying 'we're doing this because we have to. The market demands it and you should come along with us.' I think that's an accurate message. I too believe if you ignore cloud services you won't be doing much."

The challenge is for solution providers and partners to deal with the change in a way that allows them to transition and maintain a profitable business, said Neil Rosenberg, president and CEO of Quality Technology Solutions Inc., a Parsippany, N.J.-based partner.

A $50,000 Exchange deployment might translate to a $15,000 consulting engagement to deploy Exchange Online. "Making up the volume is challenging," Rosenberg said.

So what's he doing about it? He's starting to focus more on the application stack, getting involved in more SharePoint and business intelligence development and consulting, for example.

"On the flip side, I don't think infrastructure is going to go away, it's going to gradually reduce in terms of what customers are looking to have in house, and there's going to be a whole separate set of services around management and planning of the infrastructure," Rosenberg said. "Stuff still needs to be managed in the cloud, just in a different way."

As Office 365 applications migrate to the Windows Azure Platform, they will become an integral part of many Windows Azure and SQL Azure projects.

<Return to section navigation list>

Visual Studio LightSwitch

Bob Baker posted Microsoft Visual Studio LightSwitch ONETUG Presentations and Sample posted on 10/23/2010:

I had a great time at both the September and October meetings presenting A Lap around Microsoft Visual Studio LightSwitch (currently in Beta), a new and exciting Rapid Application Development environment for business analysts and Silverlight developers.

Microsoft Visual Studio LightSwitch gives you a simpler and faster way to create professional-quality business applications for the desktop, the web, and the cloud. LightSwitch is a new addition to the Visual Studio family. Visit this page often to learn more about this exciting product.

I have posted a zip file containing the two PowerPoint presentations, a database backup, and the LightSwitch solution shown at the meetings, with some brief instructions, on my SkyDrive (which you can get from the link below). I hope you find these resources useful. As always, feel free to contact me if you have any further questions.

Download the sample code from SkyDrive here.

<Return to section navigation list>

Windows Azure Infrastructure

Microsoft, IBM and Oracle share Gartner’s Magic Quadrant for Application Infrastructure for Systematic SOA-Style Application Projects according to this 10/21/2010 report:

Figure 1. Magic Quadrant for Application Infrastructure for Systematic SOA-Style Application Projects

Source: Gartner (October 2010)

Market Overview

In recent years, Gartner has identified a trend in enterprise IT projects away from the best-of-breed middleware selection and toward selecting a sole, or at least a primary, provider of enabling technology for the planned project type. Thus, we have noted the emergence of a new type of market, defined by the requirements of a particular type of IT project, rather than by the taxonomy of vendor offerings (the traditional type of technology markets).

While continuing to analyze markets for specialized products — for example, enterprise application servers, horizontal portals, business process management suites and business intelligence tools — Gartner is also providing analysis of the overall application infrastructure market through the lens of some prevailing use patterns (see "Application Infrastructure Magic Quadrants Reflect Evolving IT Demands"). Buyers in such markets are not looking to invest in a grand, all-encompassing application infrastructure technology stack, but rather are looking for a vendor that understands and supports the kind of project requirements they face.