Windows Azure and Cloud Computing Posts for 9/29/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

••• Updated 10/4/2010: New Azure MVPs •••

•• Updated 10/3/2010: Articles marked ••

• Updated 10/2/2010: Articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

•• Richard Seroter blogged Comparing AWS SimpleDB and Windows Azure Table Storage – Part I on 9/30/2010:

We have a multitude of options for storing data in the cloud. If you are looking for a storage mechanism for fast access to non-relational data, then both the Amazon Web Services (AWS) SimpleDB product and the Microsoft Windows Azure Table storage are viable choices. In this post, I’m going to do a quick comparison of these two products, including how to leverage the .NET API provided by both.

First, let’s do a comparison of these two.

| Feature | Amazon SimpleDB | Windows Azure Table |
|---|---|---|
| Storage metaphor | Domains are like worksheets, items are rows, attributes are column headers, values are each cell | Tables; properties are columns, entities are rows |
| Schema | None enforced | None enforced |
| “Table” size | Domain up to 10GB, 256 attributes per item, 1 billion attributes per domain | 255 properties per entity, 1MB per entity, 100TB per table |
| Cost (excluding transfer) | Free up to 1GB and 25 machine hours (time used for interactions); $0.15/GB/month up to 10TB, $0.14 per machine hour | $0.15/GB/month |
| Transactions | Conditional put/delete for attributes on a single item | Batch transactions in the same table and partition group |
| Interface mechanism | REST, SOAP | REST |
| Development tooling | AWS SDK for .NET | Visual Studio.NET, Development Fabric |

These platforms are relatively similar in features and functions, and each platform also leverages aspects of its sister products (e.g., AWS EC2 for SimpleDB), which could sway your choice as well.

Both products provide a toolkit for .NET developers and here is a brief demonstration of each.

Amazon Simple DB using AWS SDK for .NET

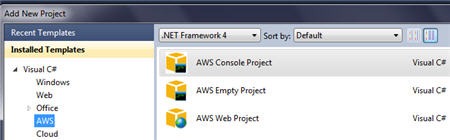

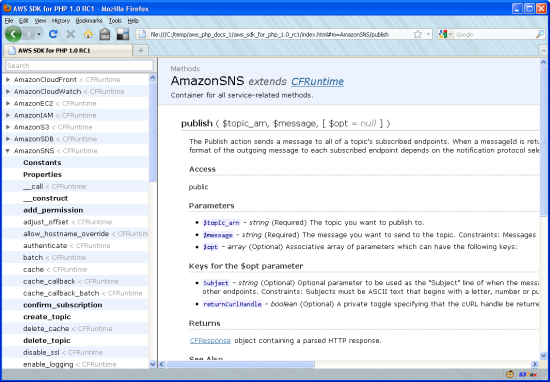

You can download the AWS SDK for .NET from the AWS website. You get some assemblies in the GAC, and also some Visual Studio.NET project templates.

In my case, I just built a simple Windows Forms application that creates a domain, adds attributes and items and then adds new attributes and new items.

After adding a reference to the AWSSDK.dll in my .NET project, I added the following “using” statements in my code:

Then I defined a few variables which will hold my SimpleDB domain name, AWS credentials and SimpleDB web service container object.

I next read my AWS credentials from a configuration file and pass them into the AmazonSimpleDB object.

Now I can create a SimpleDB domain (table) with a simple command.

Deleting domains looks like this:

And listing all the domains under an account can be done like this:

To create attributes and items, we use a PutAttributesRequest object. Here, I’m creating two items, adding attributes to them, and setting the value of the attributes. Notice that we use a very loosely typed process and don’t work with typed objects representing the underlying items.

If we want to update an item in the domain, we can do another PutAttributesRequest and specify which item we wish to update, and with which new attribute/value.

Querying the domain is done with a familiar SQL-like Select syntax. In this case, I’m asking for all items in the domain where the ConferenceType attribute equals ‘Technology’.
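To make the schema-less model concrete without depending on the AWS SDK, here is a minimal in-memory sketch in Python of a SimpleDB-style domain. It is not the AWS API. The class `InMemoryDomain` and its methods are hypothetical stand-ins that loosely mimic PutAttributes (add values, or replace them when `replace=True`) and a `select * from <domain> where ConferenceType = 'Technology'` style equality query. Values are stored as strings, as SimpleDB does.

```python
from collections import defaultdict


class InMemoryDomain:
    """Hypothetical stand-in for a SimpleDB domain.

    Each item maps attribute names to a set of string values, so an item
    can gain new attributes at any time without touching other items.
    """

    def __init__(self, name):
        self.name = name
        self.items = defaultdict(lambda: defaultdict(set))

    def put_attributes(self, item_name, attributes, replace=False):
        """Add (name, value) pairs to an item, creating the item if needed.

        With replace=True, existing values of the named attributes are
        discarded first, loosely mirroring PutAttributes' Replace flag.
        """
        item = self.items[item_name]
        if replace:
            for attr, _ in attributes:
                item[attr] = set()
        for attr, value in attributes:
            item[attr].add(str(value))

    def select_where_equals(self, attr, value):
        """Return sorted names of items whose `attr` includes `value`,
        like: select * from <domain> where attr = 'value'."""
        return sorted(
            name for name, item in self.items.items()
            if str(value) in item.get(attr, ())
        )


domain = InMemoryDomain("Conferences")
domain.put_attributes("ConfA", [("ConferenceType", "Technology"), ("City", "Seattle")])
domain.put_attributes("ConfB", [("ConferenceType", "Music")])
# Adding a brand-new attribute to one item affects no other item.
domain.put_attributes("ConfA", [("Year", 2010)])
print(domain.select_where_equals("ConferenceType", "Technology"))
```

The sample item names and values are made up. The point is that `Year` exists only on `ConfA`, and no schema had to change to add it.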

Summary of Part I

Easy stuff, eh? Because of the non-existent domain schema, I can add a new attribute to an existing item (or new one) with no impact on the rest of the data in the domain. If you’re looking for fast, highly flexible data storage with high redundancy and no need for the rigor of a relational database, then AWS SimpleDB is a nice choice. In part two of this post, we’ll do a similar investigation of the Windows Azure Table storage option.

• Johnny Halife announced that v1.0.2 of his Windows Azure Storage for Ruby gem is available from GitHub:

Windows Azure Storage library: simple gem for accessing WAZ’s Storage REST API

A simple implementation of the Windows Azure Storage API for Ruby, inspired by the S3 gems and my own experience of dealing with queues. The major goal of the whole gem is to enable Ruby developers [like me =)] to leverage Windows Azure Storage features and have another option for cloud storage.

The whole gem is implemented from Microsoft’s specifications of the underlying service description and communication protocol (REST). The API is for Ruby developers, built by a Ruby developer. I’m trying to follow Ruby idioms, patterns and a fluent style of API design.

This work isn’t related at all to the StorageClient sample shipped with the Microsoft SDK and written in .NET; the whole API is based on my own understanding, experience and values of elegance and Ruby development.

Full documentation for the gem is available at waz-storage.heroku.com

How does this differ from waz-queues and waz-blobs work?

Well, this is a summing-up of the whole experience of writing those gems and getting them to work together to simplify the end-user experience. Although there are some breaking changes, it’s pretty backward compatible with the existing gems.

What’s new in the 1.0.2 version?

- Completed Blobs API migration to 2009-09-19, _fully supporting_ what third-party tools do (e.g. Cerebrata) [thanks percent20]

Neil MacKenzie offered Examples of the Windows Azure Storage Services REST API in this 8/18/2010 post to his Convective blog (missed when posted):

In the Windows Azure MSDN Azure Forum there are occasional questions about the Windows Azure Storage Services REST API. I have occasionally responded to these with some code examples showing how to use the API. I thought it would be useful to provide some examples of using the REST API for tables, blobs and queues – if only so I don’t have to dredge up examples when people ask how to use it. This post is not intended to provide a complete description of the REST API.

The REST API is comprehensively documented (other than the lack of working examples). Since the REST API is the definitive way to address Windows Azure Storage Services I think people using the higher level Storage Client API should have a passing understanding of the REST API to the level of being able to understand the documentation. Understanding the REST API can provide a deeper understanding of why the Storage Client API behaves the way it does.

Fiddler

The Fiddler Web Debugging Proxy is an essential tool when developing using the REST (or Storage Client) API since it captures precisely what is sent over the wire to the Windows Azure Storage Services.

Authorization

Nearly every request to the Windows Azure Storage Services must be authenticated; the exception is access to blobs with public read access. The supported authentication schemes for blobs, queues and tables are described here. Each authenticated request must carry an Authorization header containing a hash-based message authentication code (HMAC) computed with SHA-256.

The following is an example of computing the HMAC-SHA256 signature for the Authorization header:

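// Requires: using System; using System.Globalization; using System.Security.Cryptography;
// AzureStorageConstants.Key must be the Base64-decoded account key (a Byte[]).
private String CreateAuthorizationHeader(String canonicalizedString)
{
    String signature = String.Empty;
    // Sign the UTF-8 bytes of the canonicalized string with HMAC-SHA256
    using (HMACSHA256 hmacSha256 = new HMACSHA256(AzureStorageConstants.Key))
    {
        Byte[] dataToHmac = System.Text.Encoding.UTF8.GetBytes(canonicalizedString);
        signature = Convert.ToBase64String(hmacSha256.ComputeHash(dataToHmac));
    }
    // Header value format: "<scheme> <account>:<signature>"
    String authorizationHeader = String.Format(
        CultureInfo.InvariantCulture,
        "{0} {1}:{2}",
        AzureStorageConstants.SharedKeyAuthorizationScheme,
        AzureStorageConstants.Account,
        signature);
    return authorizationHeader;
}

This method is used in all the examples in this post.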

AzureStorageConstants is a helper class containing various constants. Key is the secret key for the Windows Azure Storage Services account specified by Account. In the examples given here, SharedKeyAuthorizationScheme is SharedKey.
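For readers working outside .NET, the same signing step can be sketched in Python with only the standard library. This is a hedged equivalent of `CreateAuthorizationHeader`, not code from Neil's post. The function name and parameters are illustrative, and the sketch assumes the account key arrives Base64-encoded (as the portal displays it) and must be decoded before it is used as the HMAC key.

```python
import base64
import hashlib
import hmac


def create_authorization_header(string_to_sign, account, base64_key, scheme="SharedKey"):
    """Return an Authorization header value of the form '<scheme> <account>:<signature>'."""
    key = base64.b64decode(base64_key)  # the HMAC key is the decoded account key
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"{scheme} {account}:{signature}"


# Hypothetical account name and key, for illustration only.
demo_key = base64.b64encode(b"not-a-real-key").decode("ascii")
print(create_authorization_header("GET\n\n\n\n/myaccount/Music()", "myaccount", demo_key))
```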

The trickiest part in using the REST API successfully is getting the correct string to sign. Fortunately, in the event of an authentication failure the Blob Service and Queue Service respond with the string to sign they used, and this can be compared with the string used to generate the Authorization header. This has greatly simplified the use of the REST API.
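The sketch below illustrates what the Table service expects to be signed, hedged to the Shared Key format as I understand it for the 2009-09-19 version. It assembles the verb, Content-MD5, Content-Type and date, each on its own line, followed by the canonicalized resource (`/account/path`, plus `?comp=` when that parameter is present). The function name is hypothetical, so check the authoritative documentation before relying on it. Printing the result with `repr()` makes it easy to spot a missing empty line when comparing against a string a service reports.

```python
def table_string_to_sign(verb, content_md5, content_type, date, account, path, comp=None):
    """Build a Table service Shared Key string-to-sign (sketch; verify against the docs)."""
    resource = f"/{account}/{path.lstrip('/')}"
    if comp is not None:
        # Only the comp query parameter is part of the canonicalized resource.
        resource += f"?comp={comp}"
    # Empty header values still contribute an empty line.
    return "\n".join([verb.upper(), content_md5, content_type, date, resource])


sts = table_string_to_sign(
    "POST", "", "application/atom+xml",
    "Mon, 04 Oct 2010 12:00:00 GMT", "myaccount", "Music",
)
print(repr(sts))
```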

Table Service API

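The Table Service API supports the following table-level operations:

- Query Tables

- Create Table

- Delete Table

The Table Service API supports the following entity-level operations:

- Query Entities

- Insert Entity

- Update Entity

- Merge Entity

- Delete Entity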

These operations are implemented using the appropriate HTTP verb:

- DELETE – delete

- GET – query

- MERGE – merge

- POST – insert

- PUT – update
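The mapping from entity operations to verbs can be sketched as follows, together with the way an individual entity is addressed in the URI by its PartitionKey and RowKey. The account and table names are hypothetical, and the helper is an illustration rather than part of any SDK. Update, Merge and Delete also need an If-Match header (an ETag, or `*` for an unconditional operation), which this sketch does not build.

```python
from urllib.parse import quote

VERBS = {
    "Query Entities": "GET",
    "Insert Entity": "POST",
    "Update Entity": "PUT",
    "Merge Entity": "MERGE",
    "Delete Entity": "DELETE",
}


def odata_string(value):
    """Quote a key value as an OData string literal (single quotes are doubled)."""
    return "'" + value.replace("'", "''") + "'"


def entity_request(operation, account, table, partition_key=None, row_key=None):
    """Return (verb, url) for a Table service entity operation (illustrative sketch)."""
    base = f"https://{account}.table.core.windows.net/{table}"
    if operation in ("Update Entity", "Merge Entity", "Delete Entity"):
        # A single entity is addressed by its full key.
        key = f"(PartitionKey={odata_string(partition_key)},RowKey={odata_string(row_key)})"
        url = base + quote(key, safe="(),='")
    elif operation == "Query Entities":
        url = base + "()"  # all entities; append $filter and similar options as needed
    else:
        url = base  # Insert Entity POSTs to the table itself
    return VERBS[operation], url


print(entity_request("Merge Entity", "myaccount", "Music", "Rock", "001"))
```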

This section provides examples of the Insert Entity and Query Entities operations.

Insert Entity

The InsertEntity() method listed in this section inserts an entity with two String properties, Artist and Title, into a table. The entity is submitted as an ATOM entry in the body of a request POSTed to the Table Service. In this example, the ATOM entry is generated by the GetRequestContentInsertXml() method. The date must be supplied in RFC 1123 format in the x-ms-date header, and the same value is used in the canonicalized string that is signed to create the Authorization header. Note that the storage service version is set to “2009-09-19”, which requires the DataServiceVersion and MaxDataServiceVersion headers to be set appropriately.
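The Python sketch below shows the kind of ATOM entry that a method like GetRequestContentInsertXml() produces. It builds an entry whose `m:properties` element carries PartitionKey, RowKey and the two String properties, and it formats an RFC 1123 date for x-ms-date. The function names, the sample Artist/Title values and the `1.0;NetFx` DataServiceVersion values are assumptions for illustration, not taken from Neil's post.

```python
import xml.etree.ElementTree as ET
from email.utils import formatdate

ATOM = "http://www.w3.org/2005/Atom"
D = "http://schemas.microsoft.com/ado/2007/08/dataservices"
M = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
ET.register_namespace("", ATOM)
ET.register_namespace("d", D)
ET.register_namespace("m", M)


def rfc1123_date(timestamp=None):
    """Format a POSIX timestamp (default: now) as an RFC 1123 date in GMT."""
    return formatdate(timestamp, usegmt=True)


def insert_entity_body(partition_key, row_key, properties, updated):
    """Build an ATOM entry for Insert Entity; all property values are strings."""
    entry = ET.Element(f"{{{ATOM}}}entry")
    ET.SubElement(entry, f"{{{ATOM}}}title")
    ET.SubElement(entry, f"{{{ATOM}}}updated").text = updated
    author = ET.SubElement(entry, f"{{{ATOM}}}author")
    ET.SubElement(author, f"{{{ATOM}}}name")
    ET.SubElement(entry, f"{{{ATOM}}}id")
    content = ET.SubElement(entry, f"{{{ATOM}}}content", {"type": "application/xml"})
    props = ET.SubElement(content, f"{{{M}}}properties")
    for name, value in [("PartitionKey", partition_key), ("RowKey", row_key), *properties.items()]:
        ET.SubElement(props, f"{{{D}}}{name}").text = value
    return ET.tostring(entry, encoding="unicode")


headers = {
    "x-ms-date": rfc1123_date(),
    "x-ms-version": "2009-09-19",
    "DataServiceVersion": "1.0;NetFx",     # assumed value
    "MaxDataServiceVersion": "1.0;NetFx",  # assumed value
    "Content-Type": "application/atom+xml",
}
body = insert_entity_body("Rock", "001", {"Artist": "Sample Artist", "Title": "Sample Title"},
                          "2010-10-04T12:00:00Z")
print(headers["x-ms-date"])
print(body)
```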

Neil continues with details and code snippets of the remaining methods of the Table Service API and sections for the Blob and Queue Services APIs.


SQL Azure Database, Codename “Dallas” and OData

•• Francois Lascelles compared RESTful Web services and signatures in WS-* (SOAP), OAuth v1 and v2 in a 10/2/2010 post:

A common question relating to REST security is whether or not one can achieve message level integrity in the context of a RESTful web service exchange. Security at the message level (as opposed to transport level security such as HTTPS) presents a number of advantages and is essential for achieving a number of advanced security related goals.

When faced with the question of how to achieve message level integrity in REST, the typical reaction of an architect with a WS-* background is to incorporate an XML digital signature in the payload. Technically, including an XML dSig inside a REST payload is certainly possible. After all, XML dSig can be used independently of WS-Security. However, there are a number of reasons why this approach is awkward. First, REST is not bound to XML. XML signatures only sign XML, not JSON or the other content types popular with RESTful web services. Also, it is practical to separate the signatures from the payload. This is why WS-Security defines signatures located in SOAP headers as opposed to using enveloped signatures. And most importantly, a REST ‘payload’ by itself has limited meaning without its associated network level entities such as the HTTP verb and the HTTP URI. This is a fundamental difference between REST and WS-*; let me explain further.

Below, I illustrate a REST message and a WS-* (SOAP) message. Notice how the SOAP message has its own SOAP headers in addition to transport-level headers such as HTTP headers.

The reason is simple: WS-* specifications go out of their way to be transport independent. You can take a SOAP message and send it over HTTP, FTP, SMTP, JMS, whatever. The ‘W’ in WS-* does stand for ‘Web’ but this etymology does not reflect today’s reality.

In WS-*, the SOAP envelope can be isolated. All the necessary information needed is in there including the action. In REST, you cannot separate the payload from the HTTP verb because this is what defines the action. You can’t separate the payload from the HTTP URI either because this defines the resource which is being acted upon.

Any signature based integrity mechanism for REST needs to have the signature not only cover the payload but also cover those HTTP URIs and HTTP verbs as well. And since you can’t separate the payload from those HTTP entities, you might as well include the signature in the HTTP headers.

This is what is achieved by a number of proprietary authentication schemes today. For example Amazon S3 REST authentication and Windows Azure Platform both use HMAC based signatures located in the HTTP Authorization header. Those signatures cover the payload as well as the verb, the URI and other key headers.

OAuth v1 also defined a standard signature-based token which does just this: it covers the verb, the URI, the payload, and other crucial headers. This is an elegant way to achieve integrity for REST. Unfortunately, OAuth v2 dropped this signature component of the specification. Bearer type tokens are also useful but, as explained by Eran Hammer-Lahav in this post, dropping payload signatures completely from OAuth is very unfortunate.
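For reference, an OAuth 1.0 signature can be sketched in Python following RFC 5849 (section 3.4). The signature base string concatenates the upper-cased HTTP method, the normalized base URI and the sorted, percent-encoded request parameters, and HMAC-SHA1 signs it with the client and token secrets. One caveat: RFC 5849 folds the body into the base string only when it is form-encoded, so other payloads such as JSON are not covered by the core signature. The function names and sample values are illustrative; a production client should use a maintained OAuth library.

```python
import base64
import hashlib
import hmac
from urllib.parse import parse_qsl, quote, urlsplit


def pct(value):
    """RFC 3986 percent-encoding: only ALPHA, DIGIT, '-', '.', '_' and '~' stay literal."""
    return quote(value, safe="~")


def base_string_uri(url):
    """Lower-case scheme and host, drop default ports, keep the path, drop the query."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    default_port = {"http": 80, "https": 443}.get(scheme)
    netloc = host if parts.port in (None, default_port) else f"{host}:{parts.port}"
    return f"{scheme}://{netloc}{parts.path or '/'}"


def signature_base_string(method, url, oauth_params, form_body=""):
    """Combine method, base URI and normalized parameters (RFC 5849, 3.4.1)."""
    params = parse_qsl(urlsplit(url).query, keep_blank_values=True)
    params += parse_qsl(form_body, keep_blank_values=True)  # form-encoded bodies only
    params += [(k, v) for k, v in oauth_params.items() if k != "oauth_signature"]
    normalized = "&".join(f"{k}={v}" for k, v in sorted((pct(k), pct(v)) for k, v in params))
    return "&".join([method.upper(), pct(base_string_uri(url)), pct(normalized)])


def hmac_sha1_signature(base_string, client_secret, token_secret=""):
    """HMAC-SHA1 keyed with the encoded client secret, '&', and the encoded token secret."""
    key = f"{pct(client_secret)}&{pct(token_secret)}".encode("ascii")
    digest = hmac.new(key, base_string.encode("ascii"), hashlib.sha1).digest()
    return base64.b64encode(digest).decode("ascii")


get_base = signature_base_string("get", "https://Example.com/r?b=2&a=1", {"oauth_nonce": "n"})
post_base = signature_base_string("post", "https://Example.com/r?b=2&a=1", {"oauth_nonce": "n"})
print(get_base)
# Changing only the verb changes the signature.
print(hmac_sha1_signature(get_base, "secret") != hmac_sha1_signature(post_base, "secret"))
```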

You might be interested also in Francois’ related Enterprise SaaS integration using REST and OAuth (9/17/2010) and OAuth-enabling the enterprise (8/5/2010) posts.

See •• Azret Botash of DevExpress will present Introduction to OData on 10/5/2010 at 10:00 AM to 11:00 AM PDT according to a GoToMeeting.com post of 10/3/2010 in the Cloud Computing Events section below.

•• David Ramel posted Using WebMatrix with PHP, OData, SQL Azure, Entity Framework and More to Redmond Developer News’ Data Driver blog on 9/30/2010:

I've written before about Microsoft's overtures to the PHP community, with last month's release of PHP Drivers for SQL Server being the latest step in an ongoing effort to provide interoperability between PHP and Microsoft technologies.

With a slew of other new products and services released (relatively) recently, such as SQL Azure, OData and WebMatrix, I decided to see if they all work together.

Amazingly, they do. Well, amazing that I got them to work, anyway. And as I've said before, if I can do it, anyone can do it. But that's the whole point: WebMatrix is targeted at noobs, and I wanted to see if a hobbyist could use it in conjunction with some other new stuff.

WebMatrix is a lightweight stack or tool that comes with stripped-down IIS and SQL Server components, packaged together so pros can do quick deployments and beginners can get started in Web development.

WebMatrix is all over PHP. It provides PHP code syntax highlighting (though not IntelliSense). It even includes a Web Gallery that lets you install popular PHP apps such as WordPress (see Ruslan Yakushev's tutorial). Doing so installs and configures PHP and the MySQL database.

I chose to install PHP myself and configure it for use in WebMatrix (see Brian Swan's tutorial).

After doing that, I tested the new PHP Drivers for SQL Server. The August release added support for PDO (PHP Data Objects), an object-oriented data-access interface.

I used the PDO driver to access the AdventureWorksLTAZ2008R2 test database I have hosted in SQL Azure. After creating a PDO connection object, I could query the database and loop over the results in different ways, such as with the PDO FETCH_ASSOC constant:

while ($row = $sqlquery->fetch(PDO::FETCH_ASSOC))

or with just the connection object:

foreach ($conn->query($sqlquery) as $row)

which both basically return the same results. You can see those results as a raw array on a site I created and deployed on one of the WebMatrix hosting partners, Applied Innovations, which is offering its services for free throughout the WebMatrix beta, along with several other providers. (By the way, Applied Innovations provided great service when I ran into a problem of my own making, even though the account was free.)

Having tested successfully with a regular SQL Azure database, I tried using PHP to access the same database enabled as an OData feed, courtesy of SQL Azure Labs. That eventually worked, but was a bit problematic in that this was my first exposure to PHP and I haven't worked that much with OData's Atom-based XML feed that contains namespaces, which greatly complicated things.

It was simple enough to grab the OData feed ($xml = file_get_contents ("ODataURL"), for one example), but to get down to the Customer record details buried in namespaces took a lot of investigation and resulted in ridiculous code such as this:

echo $xmlfile->entry[(int)$customerid]
    ->children('http://www.w3.org/2005/Atom')->content
    ->children('http://schemas.microsoft.com/ado/2007/08/dataservices/metadata')
    ->children('http://schemas.microsoft.com/ado/2007/08/dataservices')
    ->CompanyName->asXML();

just to display the customer's company name. I later found that registering an XPath Namespace could greatly reduce that monstrous line, but did require a couple more lines of code. There's probably a better way to do it, though, if someone in the know would care to drop me a line (see below).

Anyway, the ridiculous code worked, as you can see here.
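As for the better way David asks about, you can register the prefixes once and use them in a path expression, much like the XPath namespace registration he mentions, which keeps the lookup to a single line. The sketch below shows the idea in Python with the standard library's ElementTree rather than PHP. The tiny feed is made-up sample data that follows the Atom/OData layout, and `company_names` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Prefixes are arbitrary local names; only the namespace URIs must match the feed.
NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
}

SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom"
      xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
      xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices">
  <entry><content type="application/xml"><m:properties>
    <d:CustomerID>1</d:CustomerID><d:CompanyName>Contoso Bikes</d:CompanyName>
  </m:properties></content></entry>
  <entry><content type="application/xml"><m:properties>
    <d:CustomerID>2</d:CustomerID><d:CompanyName>Fabrikam Sports</d:CompanyName>
  </m:properties></content></entry>
</feed>"""


def company_names(feed_xml):
    """Return every d:CompanyName value in an OData Atom feed."""
    root = ET.fromstring(feed_xml)
    path = "atom:entry/atom:content/m:properties/d:CompanyName"
    return [el.text for el in root.findall(path, NS)]


print(company_names(SAMPLE_FEED))
```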

I also checked out the OData SDK for PHP. It generates proxy classes that you can use to work with OData feeds. It worked fine on my localhost Web server, but I couldn't get it to work on my hosted site. Microsoft Developer Evangelist Jim O'Neil, who used the SDK to access the "Dallas" OData feed repository, suggested I needed "admin privileges to configure the php.ini file to add the OData directory to the include variable and configure the required extensions" on the remote host. I'm sure that could be done easily enough, but I didn't want to bother the Applied Innovations people any further about my free account.

So I accessed OData from a WebMatrix project in two different ways. But that was using PHP files. At first, I couldn't figure out how to easily display the OData feed in a regular WebMatrix (.cshtml) page. I guess I could've written a bunch of C# code to do it, but WebMatrix is supposed to shield you from having to do that. Which it does, in fact, with the OData Helper, one of several "helpers" for tasks such as using Windows Azure Storage or displaying a Twitter feed (you can see an example of the latter on my project site). You can find more helpers online.

The OData Helper made it trivial to grab the feed and display it in a WebMatrix "WebGrid" using the Razor syntax:

@using Microsoft.Samples.WebPages.Helpers
@{
    // Fetch the OData feed and show four of its columns in a WebGrid
    var odatafeed = OData.Get("[feedurl]");
    var grid = new WebGrid(odatafeed, columnNames: new[] { "CustomerID",
        "CompanyName", "FirstName", "LastName" });
}
@grid.GetHtml()

which results in this display.

Of course, using the built-in SQL Server Compact to access an embedded database was trivial:

@{
    // Open the embedded SQL Server Compact database and query it
    var db = Database.OpenFile("MyDatabase.sdf");
    var query = "SELECT * FROM Products ORDER BY Name";
    var result = db.Query(query);
    var grid = new WebGrid(result);
}
@grid.GetHtml()

which results in this.

Finally, I tackled the Entity Framework, to see if I could do a regular old query on the SQL Azure database. I clicked the button to launch the WebMatrix project in Visual Studio and built an Entity Data Model that I used to successfully query the database with both the ObjectQuery and IQueryable approaches. Both of the queries' code and resulting display can be seen here.

Basically everything I tried to do actually worked. You can access all the example results from my Applied Innovations guest site. By the way, it was fairly simple to deploy my project to the Applied Innovations hosting site. That kind of thing is usually complicated and frustrating for me, but the Web Deploy feature of WebMatrix really streamlined the process.

Of course, WebMatrix is in beta, and the Web Deploy quit working for me after a while. I just started FTPing all my changed files, which worked fine.

I encountered other little glitches along the way, but no showstoppers. For example, the WebMatrix default file type, .cshtml, stopped showing up as an option when creating new files. In fact, the options available for me seemed to differ greatly from those I found in various tutorials. That's quite a minor problem, but I noticed it.

As befits a beta, there are a lot of known issues, which you can see here.

Overall, as with LightSwitch, I was impressed with WebMatrix. It allows easy ASP.NET Web development and deployment for beginners and accommodates further exploration by those with a smattering of experience.

David is Features Editor for MSDN Magazine, part of 1105 Media.

Glenn Berry delivered A Small Selection of SQL Azure System Queries in a 9/29/2010 post:

I had a question come up during my “Getting Started with SQL Azure” presentation at SQLSaturday #52 in Denver last Saturday that I did not know the answer to. It had to do with how you could see what your data transfer usage was in and out of a SQL Azure database. This is pretty important to know, since you will be billed at the rate of $0.10/GB In and $0.15/GB Out (for the North American and European data centers). Knowing this information will help prevent getting an unpleasant surprise when you receive your monthly bill for SQL Azure.

SQL Server MVP Bob Beauchemin gave me a nudge in the right direction, and I was able to put together these queries, which I hope you find useful:

-- SQL Azure System Queries
-- Glenn Berry
-- September 2010
-- http://glennberrysqlperformance.spaces.live.com/
-- Twitter: GlennAlanBerry

-- You must be connected to the master database to run these

-- Get bandwidth usage by database by hour (for billing)
SELECT database_name, direction, class, time_period, quantity AS [KB Transferred], [time]
FROM sys.bandwidth_usage
ORDER BY [time] DESC;

-- Get number of databases by SKU for this SQL Azure account (for billing)
SELECT sku, quantity, [time]
FROM sys.database_usage
ORDER BY [time] DESC;

-- Get all SQL logins (which are the only kind) for this SQL Azure "instance"
SELECT * FROM sys.sql_logins;

-- Get firewall rules for this SQL Azure "instance"
SELECT id, name, start_ip_address, end_ip_address, create_date, modify_date
FROM sys.firewall_rules;

-- Perhaps a look at future where you will be able to clone/backup a database?
SELECT database_id, [start_date], modify_date, percent_complete, error_code, error_desc,
       [error_severity], [error_state]
FROM sys.dm_database_copies
ORDER BY [start_date] DESC;

-- Very unclear what this is for, beyond what you can infer from the name
SELECT instance_id, instance_name, [type_name], type_version, [description], type_stream,
       date_created, created_by, database_name
FROM dbo.sysdac_instances;
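To turn the rows from sys.bandwidth_usage into a rough bill, you can convert the quantity column (in KB) to GB and multiply it by the rates Glenn quotes ($0.10/GB in, $0.15/GB out). The Python sketch below makes three assumptions that you should check against your own data and the current price list: the direction column reports `Ingress`/`Egress`, only `External` class traffic is billed, and 1 GB = 1,048,576 KB.

```python
from decimal import Decimal, ROUND_HALF_UP

KB_PER_GB = Decimal(1024 * 1024)  # assumption: binary gigabytes
RATE_PER_GB = {"Ingress": Decimal("0.10"), "Egress": Decimal("0.15")}  # rates quoted above


def estimate_transfer_cost(rows):
    """rows: iterable of (direction, class, quantity_kb) tuples from sys.bandwidth_usage."""
    total = Decimal("0")
    for direction, traffic_class, quantity_kb in rows:
        if traffic_class != "External":  # assumption: internal traffic is not billed
            continue
        total += Decimal(quantity_kb) / KB_PER_GB * RATE_PER_GB[direction]
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


sample_rows = [
    ("Ingress", "External", 1048576),  # 1 GB in
    ("Egress", "External", 2097152),   # 2 GB out
    ("Egress", "Internal", 5242880),   # ignored
]
print(estimate_transfer_cost(sample_rows))  # 0.40
```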

•• Adam Mokan showed how to create a Windows Server AppFabric Monitoring oData Service in a 9/29/2010 post:

I recently caught an excellent blog post [Windows Server AppFabric Monitoring - How to create operational analytics reports with AppFabric Monitoring and Excel PowerPivot] by Emil Velenov about how to pull data from the Windows Server AppFabric monitoring database and create some really nice dashboard-like charts in Excel 2010 using the PowerPivot feature. At my current job, we are not yet using the Office 2010 suite, but Emil’s post sparked my interest in publishing the data from AppFabric somehow. I decided to spend an hour and create a WCF Data Service and publish the metrics using the oData format.

I’m not going to get into every detail because the aforementioned blog post does a much better job at that. However, I will note that in my case, I only had a need to pull data from two of AppFabric’s database views. I am currently only tracking WCF services and focused on the ASEventSources and ASWcfEvents views in the monitoring database. I did turn off the data aggregation option in the server’s web.config before writing my query; Emil’s post goes into detail on this step. Here is the query I wrote to get the data I felt was important. It gives me a nice, simple breakdown of the site my service was running under (I have a couple of different environments on this server for various QA and test setups), the name of the service, the service virtual path (I am using “svc-less” configuration), the operation name, the event type (either “success” or “error”), the date/time the service was called, and finally the duration.

Query Results

Using this query, I created a view in the AppFabric database called “WcfStats”. Obviously, you could skip that portion and use the query in a client app, create a stored proc, use an ORM, and so-on. I went with a view, created a new SQL Server user account with read-only access and then started working on my WCF Data Service and utilized EF4 to create an entity based off this view.

I will say that I’ve not had the chance to work with a WCF Data Service up until now, so I’m sure I’m missing a “best practice” or something. Drop me a comment, if so.

Data Service Code

After publishing the service, I was instantly able to interact with it using LINQPad.

Results from querying the oData service in LINQPad

URL generated by LINQPad showing the query
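Other consumers of such a feed do not need LINQPad; they can compose the same kind of URL by hand with OData's system query options. In the Python sketch below, the service address, the `WcfStats` entity set name and the `EventType`/`EventTime` property names are hypothetical stand-ins for whatever Adam's view and EF model actually expose.

```python
from urllib.parse import quote

SAFE = "',"  # keep OData string-literal quotes and commas readable


def odata_query_url(service_root, entity_set, **options):
    """Append OData system query options ($filter, $orderby, $top, ...) to an entity set URL.

    Keyword names are given without the leading '$' (filter=..., top=...).
    Spaces become %20 rather than '+'.
    """
    pairs = [f"${name}={quote(str(value), safe=SAFE)}" for name, value in options.items()]
    url = f"{service_root.rstrip('/')}/{entity_set}"
    return f"{url}?{'&'.join(pairs)}" if pairs else url


url = odata_query_url(
    "http://appserver/Monitoring.svc", "WcfStats",
    filter="EventType eq 'error'", orderby="EventTime desc", top=10,
)
print(url)
```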

Now I have a nice oData service out on my intranet for other developers to consume, to write reports against, and whatever else they can think of. Another developer is already working on a Silverlight 4 monitor which consumes this data. Once Office 2010 is rolled out, its native support for oData will make it trivial to pull this into Excel.

Hopefully this quick experiment will help inspire you to come up with some cool new ways to interact with AppFabric’s monitoring features.

• Ryan Dunn (@dunnry, right) and Steve Marx (@smarx, center) bring you Cloud Cover Episode 28 - SQL Azure with David Robinson (left) Part 11 on 10/1/2010:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow.

In this episode:

- Listen to David Robinson explain how to migrate your Access databases to SQL Azure.

- Learn about deploying SQL Azure and Windows Azure services together

Show Links:

Windows Azure Domain Name System Improvements

Maximizing Throughput in Windows Azure - Part 3 (of 11)

EpiServer CMS on Windows Azure Part 1 (of 11)

F# and Windows Azure with Don Syme Part 3 (of 11)

• Benko posted Finding Sasquatch and other data mysteries with Jonathan Carter on MSDN Radio on 10/1/2010:

This week we explore the mountains of data around us by looking at the Open Data Protocol (OData). Jonathan Carter is a Technology Evangelist who helps people explore the possible with these new technologies, including how to build client and server-side solutions to implement and expose data endpoints. Join us and find out how you can leverage these technologies. This show is hosted by Mike Benkovich and Mithun Dhar.

But the question remains…who is Jonathan Carter [pictured at right]? We know he’s a frequent contributor to Channel 9 and speaks regularly at conferences around the world. Join us as we ask the hard questions. Register at http://bit.ly/msdnradio-20 to join the conversation. See you on Monday!

• Steve Yi posted Demo: Using Entity Framework to Create and Query a SQL Azure Database In Less Than 10 Minutes to the SQL Azure Team blog on 9/30/2010:

As we were developing this week's posts on utilizing Entity Framework with SQL Azure we had an opportunity to sit down and talk with Faisal Mohamood, Senior Program Manager of the Entity Framework team.

As we've shared in earlier posts this week, one of the beautiful things about EF is the Model First feature, which lets developers visually create an entity model within Visual Studio. The designer then creates the T-SQL to generate the database schema. In fact, EF gives developers the opportunity to create and query SQL Azure databases all within the Visual Studio environment without having to write a line of T-SQL.

In the video below, I discuss with Faisal Mohamood some of the basics of Entity Framework (EF) and its benefits as the primary data access framework for .NET developers.

In the second video Faisal provides a real-time demonstration of Model First and creates a rudimentary SQL Azure database without writing any code. Then we insert and query records utilizing Entity Framework, accomplishing all this in about ten minutes and ten lines of code. To see all the details, click the "full screen" button in the lower right of the video player. If you have a fast Internet connection you should be able to see everything in full fidelity without pixelation.

Summary

Hopefully the videos in this post illustrate how easy it is to do database development in SQL Azure by utilizing Entity Framework. Let us know what other topics you'd like us to explore in more depth. And let us know if video content like this is interesting to you - drop a comment, or use the "Rate This" feature at the beginning of the post.

If you're interested in learning more, there are some outstanding walkthroughs and learning resources in MSDN, located here.

Steve Yi of the SQL Azure Team posted Model First For SQL Azure on 9/29/2010:

One of the great uses for ADO.NET Entity Framework 4.0 that ships with .NET Framework 4.0 is to use the model first approach to design your SQL Azure database. Model first means that the first thing you design is the entity model using Visual Studio and the Entity Framework designer; then the designer creates the Transact-SQL for you that will generate your SQL Azure database schema. The part I really like is that the Entity Framework designer gives me a great WYSIWYG experience for the design of my tables and their inter-relationships. Plus, as a huge bonus, you get a middle tier object layer to call from your application code that matches the model and the database on SQL Azure.

Visual Studio 2010

The first thing to do is open Visual Studio 2010, which includes version 4.0 of the Entity Framework; this version works especially well with SQL Azure. If you don’t have Visual Studio 2010, you can download the Express version for free; see the Get Started Developing with the ADO.NET Entity Framework page.

Data Layer Assembly

At this point you should have a good idea of what your data model is; however, you might not know what type of application you want to make: ASP.NET MVC, ASP.NET WebForms, Silverlight, etc. So let’s put the entity model and the objects that it creates in a class library. This will allow us to reference the class library, as an assembly, from a variety of different applications. For now, create a new Visual Studio 2010 solution with a single class library project.

Here is how:

- Open Visual Studio 2010.

- On the File menu, click New Project.

- Choose either Visual Basic or Visual C# in the Project Types pane.

- Select Class Library in the Templates pane.

- Enter ModelFirst for the project name, and then click OK.

The next step is to add an ADO.NET Entity Data Model item to the project. Here is how:

- Right click on the project and choose Add then New Item.

- Choose Data and then ADO.NET Entity Data Model

- Click on the Add Button.

- Choose Empty Model and press the Finish button.

Now you have an empty model view to add entities (I still think of them as tables).

Designing Your Data Structure

The Entity Framework designer lets you drag and drop items from the toolbox directly into the designer pane to build out your data structure. For this blog post I am going to drag and drop an Entity from the toolbox into the designer. Immediately I am curious about how the Transact-SQL will look from just the default entity.

To generate the Transact-SQL to create a SQL Azure schema, right-click in the designer pane and choose Generate Database From Model. Because the Entity Framework needs to know what the data source is in order to generate the schema with the right syntax and semantics, it asks us to enter connection information in a set of wizard steps.

Since I need a new connection, I press the Add Connection button on the first wizard page. Here I enter connection information for a new database called ModelFirst that I created on SQL Azure, which you can do from the SQL Azure Portal. The portal also gives me other information I need for the Connection Properties dialog, like my Administrator account name.

Now that I have the connection created in Visual Studio’s Server Explorer, I can continue with the Generate Database Wizard. I uncheck the box that saves my connection string in the app.config file. Because this is a class library, the app.config isn’t relevant: .config files go in the assembly that calls the class library.

The Generate Database Wizard creates an Entity Framework connection string that is then passed to the Entity Framework provider to generate the Transact-SQL. The connection string isn’t stored anywhere; however, it is needed to connect to SQL Azure to find out the database version.

Finally, I get the Transact-SQL that generates the table in SQL Azure that represents the entity.

-- --------------------------------------------------
-- Creating all tables
-- --------------------------------------------------

-- Creating table 'Entity1'
CREATE TABLE [dbo].[Entity1] (
    [Id] int IDENTITY(1,1) NOT NULL
);
GO

-- --------------------------------------------------
-- Creating all PRIMARY KEY constraints
-- --------------------------------------------------

-- Creating primary key on [Id] in table 'Entity1'
ALTER TABLE [dbo].[Entity1]
ADD CONSTRAINT [PK_Entity1]
    PRIMARY KEY CLUSTERED ([Id] ASC);
GO

This Transact-SQL is saved to a .sql file that is included in my project. The full project looks like this:

I am not going to run this Transact-SQL on a connection to SQL Azure; I just wanted to see what it looked like. The table looks much as I expected, and Entity Framework was smart enough to create a clustered index, which SQL Azure requires.
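Because SQL Azure requires a clustered index on each table, a generated script can be scanned before it is run for tables that never get one. The helper below is a hypothetical, regex-based sketch, not part of Entity Framework; it assumes the `[schema].[table]` naming and the `ALTER TABLE ... CLUSTERED` / `CREATE CLUSTERED INDEX` statement shapes seen in the designer output, and it will miss other forms.

```python
import re
from typing import List


def tables_missing_clustered_index(script: str) -> List[str]:
    """Return tables created in a T-SQL script that never receive a clustered index."""
    # Every table created with CREATE TABLE [schema].[name]
    created = re.findall(r"CREATE\s+TABLE\s+\[\w+\]\.\[(\w+)\]", script, re.IGNORECASE)
    # ALTER TABLE [schema].[name] ... CLUSTERED within the same statement
    # (\b keeps NONCLUSTERED from matching)
    clustered = set(re.findall(
        r"ALTER\s+TABLE\s+\[\w+\]\.\[(\w+)\][^;]*?\bCLUSTERED\b",
        script, re.IGNORECASE))
    # CREATE [UNIQUE] CLUSTERED INDEX name ON [schema].[name]
    clustered |= set(re.findall(
        r"CREATE\s+(?:UNIQUE\s+)?CLUSTERED\s+INDEX\s+\[?\w+\]?\s+ON\s+\[\w+\]\.\[(\w+)\]",
        script, re.IGNORECASE))
    return [name for name in created if name not in clustered]


generated = """CREATE TABLE [dbo].[Entity1] (
    [Id] int IDENTITY(1,1) NOT NULL
);
GO
ALTER TABLE [dbo].[Entity1]
ADD CONSTRAINT [PK_Entity1]
    PRIMARY KEY CLUSTERED ([Id] ASC);
GO"""
print(tables_missing_clustered_index(generated))
```

An empty list means every created table has a clustered key in the same script.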

Summary

Watch for our upcoming video and interview with Faisal Mohamood of the Entity Framework team, which will demonstrate a start-to-finish example of Model First: from modeling the entities and generating the SQL Azure database, all the way to inserting and querying data using Entity Framework.

Make sure to check back, or subscribe to the RSS feed to be alerted as we post more information. Do you have questions, concerns, comments? Post them below and we will try to address them.

Abhishek Sinha of the SQL Server Team announced SQL Server 2008 SP2 is available for download today! on 9/29/2010:

Microsoft is pleased to announce the availability of SQL Server 2008 Service Pack 2. Both the Service Pack and Feature Pack updates are now ready for download on the Microsoft Download Center. Service Pack 2 for SQL Server 2008 includes new compatibility features with SQL Server 2008 R2, product improvements based on requests from the SQL Server community, and hotfix solutions provided in SQL Server 2008 SP1 Cumulative Update 1 to 8.

Key improvements in Microsoft SQL Server 2008 Service Pack 2 are:

- Reporting Services in SharePoint Integrated Mode. SQL Server 2008 SP2 provides updates for Reporting Services integration with SharePoint products. SQL Server 2008 SP2 report servers can integrate with SharePoint 2010 products. SQL Server 2008 SP2 also provides a new add-in to support the integration of SQL Server 2008 R2 report servers with SharePoint 2007 products. This now enables SharePoint Server 2007 to be used with SQL Server 2008 R2 Report Server. For more information see the “What’s New in SharePoint Integration and SQL Server 2008 Service Pack 2 (SP2)” section in What's New (Reporting Services).

- SQL Server 2008 R2 Application and Multi-Server Management Compatibility with SQL Server 2008.

- SQL Server 2008 Instance Management. With SP2 applied, an instance of the SQL Server 2008 Database Engine can be enrolled with a SQL Server 2008 R2 Utility Control Point as a managed instance of SQL Server. SQL Server 2008 SP2 enables organizations to extend the value of the Utility Control Point to instances of SQL Server 2008 SP2 without having to upgrade those servers to SQL Server 2008 R2. For more information, see Overview of SQL Server Utility in SQL Server 2008 R2 Books Online.

- Data-tier Application (DAC) Support. Instances of the SQL Server 2008 Database Engine support all DAC operations delivered in SQL Server 2008 R2 after SP2 has been applied. You can deploy, upgrade, register, extract, and delete DACs. SP2 does not upgrade the SQL Server 2008 client tools to support DACs. You must use the SQL Server 2008 R2 client tools, such as SQL Server Management Studio, to perform DAC operations. A data-tier application is an entity that contains all of the database objects and instance objects used by an application. A DAC provides a single unit for authoring, deploying, and managing the data-tier objects. For more information, see Designing and Implementing Data-tier Applications. …

Thanks to all the customers who downloaded Microsoft SQL Server 2008 SP2 CTP and provided feedback.

Abhishek Sinha, Program Manager, SQL Server Sustained Engineering

Related Downloads & Documents:

- SQL Server 2008 SP2: http://go.microsoft.com/fwlink/?LinkId=196550

- SQL Server 2008 SP2 Express: http://go.microsoft.com/fwlink/?LinkId=196551

- SQL Server 2008 SP2 Feature Packs: http://go.microsoft.com/fwlink/?LinkId=202815

- SQL Server 2008 SP1 CU’s Released: http://support.microsoft.com/kb/970365

- Knowledge Base Article For Microsoft SQL Server 2008 SP2: http://support.microsoft.com/kb/2285068.

- Overview of SQL Server Utility

- Designing and Implementing Data-tier Applications.

- What's New (Reporting Services)

Scott Klein posted SQL Azure, OData, and Windows Phone 7 on 9/29/2010:

One of the things I really wanted to do lately was to get SQL Azure, OData, and Windows Phone 7 working together; in essence, expose SQL Azure data using the OData protocol and consume that data on a Windows Phone 7 device. This post explains how to do just that. This example is also covered in a bit more detail in our SQL Azure book, but with the push for WP7 I thought I'd give a sneak peek here.

You will first need to download and install a couple of things, the first of which is the OData client Library for Windows Phone 7 Series CTP which is a library for consuming OData feeds on the Windows Phone 7 series devices. This library has many of the same capabilities as the ADO.NET Data Services client for Silverlight. The install will simply extract a few files to the directory of your choosing.

The next item to download is the Windows Phone Developer Tools, which installs the Visual Studio Windows Phone application templates and associated components. These tools provide integrated Visual Studio design and testing for your Windows Phone 7 applications.

Our goal is to enable OData on a SQL Azure database so that we can expose our data and make it available for the Windows Phone 7 application to consume. OData is a REST-based protocol that standardizes the querying and updating of data over the Web. The first step, then, is to enable OData on the SQL Azure database by going to the SQL Azure Labs site and enabling OData. You will be required to log in with your Windows Live account; once in the SQL Azure Labs portal, select the SQL Azure OData Service tab. As the home page states, SQL Azure Labs is in Developer Preview.
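Because OData queries are ordinary HTTP GETs, the LINQ query used later in this post (Docs where AuthorId == 113) corresponds to a URI carrying a `$filter` system query option. The minimal Python sketch below only builds that URI; `odata_filter_url` is a hypothetical helper, and the service root is the `servername` placeholder URI from the post's C# sample, not a live endpoint.

```python
from urllib.parse import quote


def odata_filter_url(service_root: str, entity_set: str, filter_expr: str) -> str:
    """Build an OData query URI that applies a $filter expression to an entity set."""
    # $filter is an ordinary query-string parameter; its expression uses OData
    # operators (eq, ne, gt, and, or, ...) and is percent-encoded for HTTP.
    return f"{service_root.rstrip('/')}/{entity_set}?$filter={quote(filter_expr)}"


# Placeholder service root; 'servername' stands in for your own server name.
url = odata_filter_url(
    "https://odata.sqlazurelabs.com/OData.svc/v0.1/servername/TechBio",
    "Docs",
    "AuthorId eq 113",
)
print(url)
```

The client library generates an equivalent request for you; seeing the raw URI makes it easier to test a feed in a browser first.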

The key here is the URI at the bottom of the page in the User Mapping section. I'll blog another time on what the User Mapping is, but for now, highlight and copy the URI to the clipboard. You'll be using it later.

Once OData is enabled on the selected SQL Azure database, you are ready to start building the Windows Phone application. In Visual Studio 2010, you will notice newly installed Windows Phone templates in the New Project dialog. For this example, select Windows Phone Application.

Once the project is created, you will need to add a reference to the OData Client Library installed earlier. Browse to the directory to which you extracted the OData Client Library and add the System.Data.Services.Client.dll library.

The next step is to create the proxy classes needed to access a data service from a .NET Framework client application. You can generate them with the DataSvcUtil tool, a command-line tool that consumes an OData feed and generates the client data service classes. Use the following image as an example to generate the appropriate data service classes. Notice the /uri: parameter. This is the same URI listed in the first image above, and it is what DataSvcUtil uses to generate the necessary proxy classes.

Once the proxy class is generated, add it to the project. Next, add a new class to your project and add the following namespaces, which provide the additional functionality needed to query the OData source and work with collections.

using System;
using System.Linq;
using System.ComponentModel;
using System.Collections.Generic;
using System.Diagnostics;
using System.Text;
using System.Windows;
using System.Windows.Data;
using TechBioModel;
using System.Data.Services.Client;
using System.Collections.ObjectModel;

Next, add the following code to the class. The LoadData method first creates a TechBio instance of the generated proxy class, passing it the URI of the OData service. A LINQ query then selects the data you want, and the results are loaded into the Docs DataServiceCollection.

public class TechBioModel
{
    public TechBioModel()
    {
        LoadData();
    }

    void LoadData()
    {
        // TechBio is the proxy class generated by DataSvcUtil
        TechBio context = new TechBio(new Uri("https://odata.sqlazurelabs.com/OData.svc/v0.1/servername/TechBio"));

        var qry = from u in context.Docs
                  where u.AuthorId == 113
                  select u;

        var dsQry = (DataServiceQuery<Doc>)qry;

        dsQry.BeginExecute(r =>
        {
            try
            {
                var result = dsQry.EndExecute(r);
                if (result != null)
                {
                    // The callback may run on a background thread; marshal to the UI thread
                    Deployment.Current.Dispatcher.BeginInvoke(() =>
                    {
                        Docs.Load(result);
                    });
                }
            }
            catch (Exception ex)
            {
                // UI calls such as MessageBox must also run on the UI thread
                Deployment.Current.Dispatcher.BeginInvoke(() =>
                {
                    MessageBox.Show(ex.Message);
                });
            }
        }, null);
    }

    DataServiceCollection<Doc> _docs = new DataServiceCollection<Doc>();

    public DataServiceCollection<Doc> Docs
    {
        get { return _docs; }
        private set { _docs = value; }
    }
}

I learned from a Shawn Wildermuth blog post that you need the Dispatcher because the callback is not guaranteed to execute on the UI thread; the Dispatcher ensures that the call runs on the UI thread. Next, add the following code to the App class (App.xaml.cs). The static TBModel property creates the TechBioModel the first time it is accessed.

private static TechBioModel tbModel = null;

public static TechBioModel TBModel
{
    get
    {
        if (tbModel == null)
            tbModel = new TechBioModel();
        return tbModel;
    }
}

To call the code above, add the following OnNavigatedTo override to the main page (MainPage.xaml.cs):

protected override void OnNavigatedTo(System.Windows.Navigation.NavigationEventArgs e)
{
    base.OnNavigatedTo(e);
    if (DataContext == null)
        DataContext = App.TBModel;
}

Lastly, you need to go to the UI of the phone and add a ListBox and then tell the ListBox where to get its data. Here we are binding the ListBox to the Docs DataServiceCollection.

<ListBox Height="611" HorizontalAlignment="Left" Name="listBox1"
         VerticalAlignment="Top" Width="474"
         ItemsSource="{Binding Docs}" />

You are now ready to test. Run the project; when it is deployed to the phone and runs, data from the SQL Azure database is queried and displayed on the phone.

This post showed a simple example of how to consume, in a Windows Phone 7 application, an OData feed that gets its data from a SQL Azure database.

Channel9 completed its SQL Azure lesson series with Exercise 4: Supportability– Usage Metrics near the end of September 2010:

Upon commercial availability for Windows Azure, the following simple consumption-based pricing model for SQL Azure will apply:

There are two editions of SQL Azure databases: Web Edition, with a 1 GB data cap, is offered at USD $9.99 per month; Business Edition, with a 10 GB data cap, is offered at USD $99.99 per month.

Bandwidth across Windows Azure, SQL Azure and .NET Services will be charged at $0.10/GB for ingress data and $0.15/GB for egress data.

Note: For more information on the Microsoft Windows Azure cloud computing pricing model, refer to:

- Windows Azure Platform Pricing: http://www.microsoft.com/windowsazure/pricing/

- Confirming Commercial Availability and Announcing Business Model: http://blogs.msdn.com/windowsazure/archive/2009/07/14/confirming-commercial-availability-and-announcing-business-model.aspx
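The pricing above reduces to simple arithmetic: database fees per edition plus per-GB charges for data in and out. The Python sketch below hard-codes the 2010 rates quoted in this exercise (check current pricing before reusing them) and treats each database as billed for a full month, which is a simplifying assumption; `monthly_cost` is a hypothetical helper, not part of the Channel9 lab.

```python
from decimal import Decimal
from typing import Dict

# Rates quoted above (USD, 2010); Decimal avoids floating-point rounding on money.
DB_MONTHLY_RATE = {"web": Decimal("9.99"), "business": Decimal("99.99")}
INGRESS_PER_GB = Decimal("0.10")
EGRESS_PER_GB = Decimal("0.15")


def monthly_cost(databases: Dict[str, int], ingress_gb: Decimal, egress_gb: Decimal) -> Decimal:
    """Estimate a month's bill from database counts per edition and GB transferred."""
    db_cost = sum((DB_MONTHLY_RATE[edition] * count
                   for edition, count in databases.items()), Decimal("0"))
    bandwidth_cost = ingress_gb * INGRESS_PER_GB + egress_gb * EGRESS_PER_GB
    return (db_cost + bandwidth_cost).quantize(Decimal("0.01"))


# One Web Edition database, 5 GB in, 10 GB out: 9.99 + 0.50 + 1.50
print(monthly_cost({"web": 1}, Decimal("5"), Decimal("10")))  # 11.99
```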

In this exercise, we will walk through how to programmatically calculate bandwidth and database costs in these steps: …

Herve Roggero explained How to implement fire-and-forget SQL Statements in SQL Azure in this 9/28/2010 post:

Implementing a Fire Hose for SQL Azure

While I was looking around various blogs, I saw that someone was looking for a way to insert records into SQL Azure as fast as possible. Performance mattered so much that transactional consistency did not. So after thinking about it for a few minutes, I designed a small class that provides extremely fast queuing of SQL commands, and a background task that performs the actual work. The class implements a fire-hose, fire-and-forget approach to executing statements against a database.

As mentioned, the approach consists of queuing SQL commands in memory, in an asynchronous manner, using a class designed for this purpose (SQLAzureFireHose). The AddAsynch method inserts commands into the queue asynchronously, so the client thread doesn't even spend time on the insertion itself. In the background, the class uses a timer to execute a batch of commands (hard-coded to 100 at a time in this sample code). Note that while this was done for SQL Azure, the class could very well be used for SQL Server. You could also enhance the class to make it transaction-aware and roll back the transaction on error.

Sample Client Code

First, here is the client code. A variable of type SQLAzureFireHose is declared at the top. The client code adds each command to execute by calling the AddAsynch method, which by design executes quickly. In fact, it should take about 1 millisecond (or less) to insert 100 items.

SQLAzureFireHose Class

The SQLAzureFireHose class implements the timer (which fires every second) and the asynchronous add method. Every second, the timer event takes 100 SQL statements queued in the _commands object, builds a single SQL string and sends it to SQL Azure (or SQL Server). This also minimizes round trips and reduces the chattiness of the application. …

C# class code omitted for brevity.
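The batching behavior described above can be sketched in Python as a rough analog. This is not Herve's C# class: `FireHose` and its members are hypothetical names, the queue is a lock-protected deque rather than an asynchronous add, and `execute` is an assumed callback that sends one batch string to the database (for example through a DB-API cursor).

```python
import threading
from collections import deque
from typing import Callable, Deque, Optional


class FireHose:
    """Fire-and-forget batching queue: add() returns at once; a timer sends batches."""

    def __init__(self, execute: Callable[[str], None], batch_size: int = 100,
                 interval: float = 1.0) -> None:
        self._execute = execute            # sends one batch string to the database
        self._batch_size = batch_size
        self._interval = interval
        self._commands: Deque[str] = deque()
        self._lock = threading.Lock()
        self._timer: Optional[threading.Timer] = None
        self._running = False

    def add(self, sql: str) -> None:
        """Queue a statement; no database round trip happens here."""
        with self._lock:
            self._commands.append(sql)

    def flush_once(self) -> int:
        """Send one batch of up to batch_size statements; return how many were sent."""
        with self._lock:
            count = min(self._batch_size, len(self._commands))
            batch = [self._commands.popleft() for _ in range(count)]
        if batch:
            # T-SQL accepts newline-separated statements in one batch,
            # so 100 inserts cost one round trip instead of 100.
            self._execute("\n".join(batch))
        return len(batch)

    def start(self) -> None:
        """Flush one batch every `interval` seconds on a daemon timer thread."""
        self._running = True
        self._schedule()

    def stop(self) -> None:
        self._running = False
        if self._timer is not None:
            self._timer.cancel()

    def _schedule(self) -> None:
        if self._running:
            self._timer = threading.Timer(self._interval, self._tick)
            self._timer.daemon = True
            self._timer.start()

    def _tick(self) -> None:
        try:
            self.flush_once()              # a failed batch is dropped, not retried
        finally:
            self._schedule()
```

Usage: create `FireHose(send_batch)`, call `start()`, then call `add()` as fast as the client likes; anything still queued when the process exits is lost, which is the price of fire-and-forget.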


AppFabric: Access Control and Service Bus

•• Valery Mizonov described Implementing Reliable Inter-Role Communication Using Windows Azure AppFabric Service Bus, Observer Pattern & Parallel LINQ in this 9/30/2010 post to the Windows Server AppFabric Customer Advisory Team blog:

The following post is intended to walk you through the implementation of a loosely coupled communication pattern between processes and role instances running on the Windows Azure platform. The scenario discussed and implemented in this paper is based on a real-world customer project and built upon a powerful combination of Windows Azure AppFabric Service Bus, the Observer design pattern and Parallel LINQ (PLINQ).

Background

In the world of highly distributed applications, information exchange often occurs between a variety of tiers, layers and processes that need to communicate with each other, either across disparate computing environments or within given local boundaries. Over the years, communication paradigms and messaging patterns have supported many different scenarios for inter- and intra-process data exchange; some proved durable and successful, while others had a limited lifespan.

The design patterns for high-scale cloud computing may require breaking up what could once have been a monolithic application, whose parts communicated through direct method invocation, into more granular, isolated entities that run in different processes, on different machines, in different geographical locations. Along those lines, the Windows Azure platform brings its own unique and interesting challenges for efficiently implementing cross-process communication in the cloud, so that web and worker roles can be connected in a seamless and secure fashion.

In this paper, we take a look at how the Windows Azure platform AppFabric Service Bus helps address data exchange requirements between loosely coupled cloud application services running on Windows Azure. We explore how multicast datagram distribution and one-way messaging enable one to easily build a fully functional publish/subscribe model for Azure worker roles to be able to exchange notification events with peers regardless of their actual location.

For the sake of simplicity, the messaging concept discussed in this paper will be referred to as “inter-role communication,” although it should be understood as communication between Azure role instances, given how a “role” is defined in the Windows Azure platform’s vocabulary.

The Challenge

Inter-role communication between Windows Azure role instances is not a brand-new challenge. It had been on the agenda of many Azure solution architects for some time until support for internal endpoints was released. An internal endpoint in a Windows Azure role is essentially the internal IP address automatically assigned to a role instance by the Windows Azure fabric. This IP address, along with a dynamically allocated port, creates an endpoint that is accessible only from within the hosting datacenter, with some further visibility restrictions. Once registered in the service configuration, the internal endpoint can be used to spin up a WCF service host that makes a communication contract accessible to the other role instances.

Consider the following example where an instance of the Process Controller worker role needs to exchange units of work and other processing instructions with other roles. In this example, the internal endpoints are being utilized. The Process Controller worker role needs to know the internal endpoints of each individual role instance which the process controller wants to communicate with.

Figure 1: Roles exchanging resource-intensive workload processing instructions via internal endpoints.

Internal endpoints provide a simple mechanism for getting one role instance to communicate with the others in a 1:1 or 1:many fashion. This raises a fair question: where is the real challenge? Simply put, there isn’t one. However, there are constraints and special considerations that may devalue internal endpoints and shift the focus to a more flexible alternative. Below are the most significant “limitations” of internal endpoints:

Internal endpoints must be defined ahead of time – these are registered in the service definition and locked down at design time;

The discoverability of internal endpoints is limited to a given deployment – the role environment doesn’t have explicit knowledge of all other internal endpoints exposed by other Azure hosted services;

Internal endpoints are not reachable across hosted service deployments – this could render itself as a limiting factor when developing a cloud application that needs to exchange data with other cloud services deployed in a separate hosted service environment even if it’s affinitized to the same datacenter;

Internal endpoints are only visible within the same datacenter environment – a complex cloud solution that takes advantage of a true geo-distributed deployment model cannot rely on internal endpoints for cross-datacenter communication;

The event relay via internal endpoints cannot scale as the number of participants grows – internal endpoints are useful only when the number of participating role instances is limited, and because the underlying messaging pattern is still a point-to-point connection, role instances cannot take advantage of multicast messaging via internal endpoints.

In summary, once a complexity threshold is reached, one may need to reconsider whether internal endpoints are a durable choice for inter-role communication and what alternatives are available. This brings us to an alternative solution that scales, is sufficiently elastic and compensates for the constraints highlighted above.

The Solution

Among other powerful communication patterns supported by the AppFabric Service Bus, the one-way messaging between publishers and subscribers presents a special interest for inter-role communication. It supports multicast datagrams which provide a mechanism for sending a single message to a group of listeners that joined the same multicast group. Simply put, this communication pattern enables communicating a notification to a number of subscribers interested in receiving these notifications.

The support for the “publish/subscribe” model in the AppFabric Service Bus makes it a natural choice for loosely coupled communication between Azure roles. The role instances authenticate and register themselves on a Service Bus service namespace and choose the path in the global hierarchical naming system on which they will listen for incoming events. The Service Bus creates all the underlying transport plumbing and multicasts published events to the active listener registrations on a given rendezvous service endpoint (also known as a topic).

Figure 2: Roles exchanging resource-intensive workload processing instructions via Service Bus using one-way multicast (publish/subscribe).

The one-way multicast eventing is available through the NetEventRelayBinding WCF extension provided by the Azure Service Bus. When compared against internal endpoints, the inter-role communication that uses Service Bus’ one-way multicast benefits from the following:

Subscribers can dynamically register themselves at runtime – there is no need to pre-configure the Azure roles to be able to send or receive messages (with an exception of access credentials that are required for connectivity to a Service Bus namespace);

Discoverability is no longer an issue, as all subscribers that listen on the same rendezvous endpoint (topic) will equally be able to receive messages, irrespective of whether these subscribers belong to the same hosted service deployment;

Inter-role communication across datacenters is no longer a limiting factor - Service Bus makes it possible to connect cloud applications regardless of the logical or physical boundaries surrounding these applications;

Multicast is a highly scalable messaging paradigm, although at the time of writing this paper, the number of subscribers for one-way multicast is constrained to fit departmental scenarios with fewer than 20 concurrent listeners (this limitation is subject to change).
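To make the publish/subscribe contract concrete, here is an in-memory Python model of a one-way multicast topic combined with the Observer pattern. It is not the Service Bus API (which is exposed to .NET through the WCF NetEventRelayBinding); `EventRelay` and the topic path are hypothetical, and the thread pool is only a rough stand-in for the Parallel LINQ named in the article's title.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventRelay:
    """Every handler subscribed to a topic receives each event published to it."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # Observers register at runtime; no per-role endpoint is declared up front.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Multicast an event to the topic's current subscribers; return how many got it."""
        handlers = list(self._subscribers.get(topic, []))
        if handlers:
            # Notify observers concurrently; the publisher ignores any return values (one-way).
            with ThreadPoolExecutor(max_workers=len(handlers)) as pool:
                list(pool.map(lambda h: h(event), handlers))
        return len(handlers)
```

The publisher never needs to know who is listening, which is the property that removes the discoverability and pre-configuration limits of internal endpoints listed earlier.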

Now that all the key benefits are evaluated, let’s jump to the technical implementation and see how a combination of the Service Bus one-way multicast messaging and some value-add capabilities found in the .NET Framework 4.0 help create a powerful communication layer for inter-role data exchange.

Note that the sample implementation below has been intentionally simplified to fit the scope of a demo scenario for this paper.

Defining Application Events

To follow along, download the full sample code from the MSDN Code Gallery. …

Valery continues with a detailed analysis of the sample source code.

• Alik Levin announced Windows Identity Foundation (WIF)/Azure AppFabric Access Control Service (ACS) Survival Guide Published to the TechNet Wiki on 10/2/2010:

I have just published Windows Identity Foundation (WIF) and Azure AppFabric Access Control (ACS) Service Survival Guide.

It has the following structure:

Problem Scope

- What is it?

- How does it fit?

- How To Make It Work?

- Case Studies

- WIF/ACS Anatomy

- Architecture

- Identification (how a client identifies itself)

- Authentication (how the client's credentials are validated)

- Identity flow (how the token flows through the layers/tiers)

- Authorization (how relying party - application or service - decides to grant or deny access)

- Monitoring

- Administration

- Quality Attributes

- Supportability

- Testability

- Interoperability

- Performance

- Security

- Flexibility

- Content Channels

- MSDN/Technet

- Codeplex

- Code.MSDN

- Blogs

- Channel9

- SDK

- Books

- Conventions

- Forums

- Content Types

- Explained

- Architecture scenarios

- Guidelines

- How-to's

- Checklists

- Troubleshooting cheat sheets

- Code samples

- Videos

- Slides

- Documents

- Related Technology

- Industry

- Additional Q&A

Constructive feedback on how to improve it is much appreciated.


• Alik Levin posted Azure AppFabric Access Control Service (ACS) v 2.0 High Level Architecture – REST Web Service Application Scenario to his MSDN blog on 9/30/2010:

This is a follow-up to a previous post, Azure AppFabric Access Control Service (ACS) v 2.0 High Level Architecture – Web Application Scenario. This post outlines the high-level architecture for a scenario in which Azure AppFabric Access Control Service (ACS) v2 is involved in the authentication and identity flow between a client and a RESTful Web Service. A good description of the scenario, including visuals and a solution summary, can be found here - App Scenario – REST with AppFabric Access Control. The sequence diagram can be found here - Introduction (skip to Web Service Scenario).

In this case no end user is involved, so the User Experience part is irrelevant here.

It is important to know which credential to use for token signing. As per Token Signing:

- Add an X.509 certificate signing credential if you are using the Windows Identity Foundation (WIF) in your relying party application.

- Add a 256-bit symmetric signing key if you are building an application that uses OAuth WRAP.

These keys or certificates are used to protect tokens from tampering while in transit. They are not used for authentication; rather, they help maintain trust between Azure AppFabric Access Control Service (ACS) and the Web Service.
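The tamper protection a 256-bit symmetric key provides can be illustrated with HMAC-SHA256. The sketch is loosely modeled on the Simple Web Token (SWT) format used with OAuth WRAP, in which the HMAC of the form-encoded token body is appended as a final `HMACSHA256` parameter; the claim names and values are illustrative, and this is not the ACS SDK.

```python
import base64
import hashlib
import hmac
import os
from urllib.parse import quote, unquote

SIG_PARAM = "&HMACSHA256="


def sign_token(body: str, key: bytes) -> str:
    """Append a base64, URL-encoded HMAC-SHA256 of the form-encoded body."""
    mac = hmac.new(key, body.encode("utf-8"), hashlib.sha256).digest()
    return body + SIG_PARAM + quote(base64.b64encode(mac).decode("ascii"), safe="")


def verify_token(token: str, key: bytes) -> bool:
    """Recompute the HMAC over everything before the signature and compare."""
    body, sep, sig = token.rpartition(SIG_PARAM)
    if not sep:
        return False                      # unsigned token
    try:
        received = base64.b64decode(unquote(sig), validate=True)
    except ValueError:                    # binascii.Error subclasses ValueError
        return False
    expected = hmac.new(key, body.encode("utf-8"), hashlib.sha256).digest()
    return hmac.compare_digest(expected, received)  # constant-time comparison


key = os.urandom(32)                      # 256-bit symmetric signing key
token = sign_token("role=reader&ExpiresOn=1286000000", key)
print(verify_token(token, key))           # True
```

Changing any claim, for example `role=reader` to `role=admin`, makes verification fail, which is exactly the in-transit tampering the key guards against; the key proves nothing about who the caller is.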

Try it yourself using the bootstrap samples available here:

- ASP.NET Simple Service: A very simple ASP.NET web service that uses ACS for authentication and simple authorization

- WCF Username Authentication: A very simple WCF service that authenticates with ACS using a username and password

- WCF Certificate Authentication: A very simple WCF service that authenticates with ACS using a certificate

Related Books

- Programming Windows Identity Foundation (Dev - Pro)

- Developing More-Secure Microsoft ASP.NET 2.0 Applications (Pro Developer)

- Ultra-Fast ASP.NET: Build Ultra-Fast and Ultra-Scalable web sites using ASP.NET and SQL Server

- Advanced .NET Debugging

- Debugging Microsoft .NET 2.0 Applications

More Info

- Azure AppFabric Access Control Service (ACS) v 2.0 High Level Architecture – Web Application

- Windows Identity Foundation (WIF) Explained – Web Browser Sign-In Flow (WS-Federation Passive Requestor Profile)

- Protocols Supported By Windows Identity Foundation (WIF)

- Windows Identity Foundation (WIF) By Example Part I – How To Get Started.

- Windows Identity Foundation (WIF) By Example Part II – How To Migrate Existing ASP.NET Web Application To Claims Aware

- Windows Identity Foundation (WIF) By Example Part III – How To Implement Claims Based Authorization For ASP.NET Application

- Identity Developer Training Kit

- A Guide to Claims-Based Identity and Access Control – Code Samples

- A Guide to Claims-Based Identity and Access Control — Book Download

Lori MacVittie (@lmacvittie) claimed Enterprise developers and architects beware: OAuth is not the double rainbow it is made out to be. It can be a foundational technology for your applications, but only if you’re aware of the risks in an introduction to her Mashable Sees Double Rainbows as Google Goes Gaga for OAuth essay of 9/29/2010:

OAuth has been silently growing as the favored mechanism for cross-site authentication in the Web 2.0 world. The ability to leverage a single set of credentials across a variety of sites reduces the number of username/password combinations a user must remember. It also inherently provides for a granular authorization scheme.

Google’s announcement that it now offers OAuth support for Google Apps APIs was widely mentioned this week, including Mashable’s declaration that Google’s adoption implies all applications must follow suit. Now. Stop reading, get to it. It was made out to sound like that much of an imperative.

Google’s argument that OAuth is more secure than the ClientLogin model was a bit of a stretch considering the primary use of OAuth at this time is to integrate Web 2.0 and social networking sites – all of which rely upon a simple username/password model for authentication. True, it’s safer than sharing passwords across sites, but the security of data can still be compromised by a single username/password pair.

OAUTH on the WEB RELIES on a CLIENT-LOGIN MODEL

The premise of OAuth is that the credentials of another site are used for authentication but not shared or divulged. Authorization for actions and access to data is determined by the user on an app-by-app basis. Anyone familiar with SAML assertions or Kerberos will recognize strong similarities in the documentation and the underlying OAuth protocol. Similar to SAML and Kerberos, OAuth tokens (like SAML assertions or Kerberos tickets) can be set to expire, which is one of the core reasons Google claims it is “more” secure than the ClientLogin model.

OAuth has been a boon to Web 2.0 and increased user interaction across sites because it makes the process of authenticating and managing access to a site simple for users. Users can choose a single site to be the authoritative source of identity across the web and leverage it at any site that supports OAuth and the site they’ve chosen (usually a major brand like Facebook or Google or Twitter). That means every other site they use that requires authentication is likely using one central location to store their credentials and identifying data.

One central location that, ultimately, requires a username and a password for authentication.

Yes, ultimately, OAuth is relying on the security of the same ClientLogin model it claims is less secure than OAuth. Which means OAuth is not more secure than the traditional model, because any security mechanism is only as strong as the weakest link in the chain. In other words, it is quite possible that a user’s entire Internet identity is riding on one username and password at one site. If that doesn’t scare you, it should. Especially if that user is your mom or your child, who may not have quite got the hang of choosing secure passwords or changing them regularly.

When all your base are protected by a single username and password, all your base are eventually belong to someone else.

Now, as far as the claim that the ability to expire tokens makes OAuth more secure, I’m not buying it. There is nothing in the ClientLogin model that disallows expiration of credentials. Nothing. It’s not inherently a part of the model, but it’s not disallowed in any way. Developers can, and probably should, include credential expiration in any site, but they generally don’t unless they’re developing for the enterprise, where organizational security policies require it. Neither OAuth nor the ClientLogin model is more secure merely because credentials can be expired. Argue that the use of a nonce makes OAuth less susceptible to replay attacks and I’ll agree. Argue that OAuth’s use of tokens makes it less susceptible to eavesdropping-based attacks and I’ll agree. Argue that expiring tokens is the basis for OAuth’s superior security model and I’ll shake my head.
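The nonce argument is the stronger one. In OAuth 1.0 (RFC 5849, section 3.3), each signed request carries an `oauth_timestamp` and an `oauth_nonce`, and the nonce must be unique across all requests with the same timestamp, client credentials and token. A minimal in-memory sketch of that server-side check follows; the `ReplayGuard` name and the 300-second window are assumptions, signature verification is omitted, and a real deployment would need storage shared across servers.

```python
class ReplayGuard:
    """In-memory nonce/timestamp check in the style of OAuth 1.0.

    The 300-second window is an assumption; RFC 5849 leaves it to the server.
    """

    def __init__(self, window_seconds=300):
        self.window = window_seconds
        # (client_key, token, timestamp, nonce) tuples already accepted
        self.seen = set()

    def accept(self, client_key, token, timestamp, nonce, now):
        self._evict(now)
        # Stale or far-future timestamps are rejected outright.
        if abs(now - timestamp) > self.window:
            return False
        key = (client_key, token, timestamp, nonce)
        if key in self.seen:
            return False  # identical request seen before: treat as a replay
        self.seen.add(key)
        return True

    def _evict(self, now):
        # Forget entries whose timestamps already fall outside the window.
        self.seen = {k for k in self.seen if abs(now - k[2]) <= self.window}

guard = ReplayGuard()
print(guard.accept("app", "tok", 1000, "a1b2", now=1000))  # True
print(guard.accept("app", "tok", 1000, "a1b2", now=1001))  # False: replayed
```

Note that an intercepted request replayed within the window is still rejected, which is exactly the protection a bare username/password exchange does not provide on its own.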

SECURITY through DISTRIBUTED OBSCURITY

The trick with OAuth is that the security is inherently contained within the distributed, hidden nature of the credentials. A single site might leverage two, three, or more external authoritative sources (OAuth providers) to authenticate users and authorize access. From any other site, it is impossible to tell which provider a user has designated as their primary authoritative source, and users do not necessarily designate the same authoritative source across external sites (though they likely do; users are creatures of habit). Thus, a site relying on OAuth for authorization distributes the responsibility for securing credentials to the external, authorizing site. Neat trick, isn’t it? Exclusively using OAuth effectively surrounds a site with an SEP field. It’s Somebody Else’s Problem if a user’s credentials are compromised or permissions are changed, because the responsibility for securing that information lies with some other site. You are effectively abrogating responsibility, and thus control. You can’t enforce policy on any data you don’t control.

The distributed, user-choice aspect of OAuth obscures the authoritative source of those credentials, making it more difficult to determine which site should be attacked to gain access. It’s security through distributed obscurity, hiding the credential store “somewhere” on the web.

CAVEAT AEDIFICATOR

That probably comes as a shock at this point, but honestly the point here is not to lambast OAuth as an authentication and authorization model. Google’s choice to allow OAuth and stop the sharing of passwords across sites is certainly laudable, given that most users tend to use the same username and password across every site they access. OAuth has reduced the number of places in which that particular password is stored and therefore might be compromised. OAuth is scriptable, as Google pointed out, and while the username/password model is also scriptable, doing so almost certainly violates about a hundred and fifty basic security precepts. Google is doing something to stop that, and for that they should be applauded.

OAuth can be a good choice for application authentication and authorization. It naturally carries with it a more granular permission (authorization) model, which means developers don’t have to invent one from scratch. Leveraging granular permissions is certainly a good idea given the nature of APIs and applications today. OAuth is also a good way to integrate partners into your application ecosystem without managing their identities on-site: allowing partners to identify their own OAuth provider as an authoritative source for your applications can reduce the complexity of supporting and maintaining their identities. But it isn’t more secure, at least not from an end-user perspective, especially when the authoritative source does not enforce strict username and password policies. It is that end-user perspective that must be considered when deciding to leverage OAuth for an application that might have access to sensitive data. An application that has access to sensitive data should not rely on the security of a user’s Facebook/Twitter/<insert social site here> password. Users may create strong passwords at work because corporate policy forces them to. They don’t necessarily carry that practice into their “personal” world, so relying on them to do so would likely be a mistake.
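Granular authorization in OAuth typically takes the form of scopes granted to a token, with each API operation checking for the scope it needs. A minimal deny-by-default sketch follows; the operation names and scope strings are hypothetical, and real providers, Google included, publish their own scope identifiers.

```python
class InsufficientScope(Exception):
    """Raised when a token lacks the scope an operation requires."""

# Hypothetical mapping from API operations to the scope each one requires.
REQUIRED_SCOPE = {
    "list_documents": "docs.read",
    "edit_document": "docs.write",
    "read_contacts": "contacts.read",
}

def authorize(granted_scopes, operation):
    """Raise InsufficientScope unless the token grants the operation's scope."""
    required = REQUIRED_SCOPE.get(operation)
    if required is None:
        # Deny by default: unknown operations are never authorized.
        raise InsufficientScope(f"unknown operation: {operation}")
    if required not in granted_scopes:
        raise InsufficientScope(f"{operation} requires {required}")

granted = {"docs.read", "contacts.read"}
authorize(granted, "list_documents")  # allowed: returns None
try:
    authorize(granted, "edit_document")
except InsufficientScope as err:
    print(err)  # edit_document requires docs.write
```

The permission model here is only as good as the identity behind the token: a stolen provider password yields a token carrying whatever scopes the user granted.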

Certainly an enterprise architect can make good use of Google’s support for OAuth if s/he is cautious: the authoritative source should be (a) under the control of IT and (b) integrated into an identity store back-end capable of enforcing organizational authentication policies, such as password expiration and strength. When developing applications using Google Apps and deciding to leverage OAuth, it behooves the developer to be very cautious about which third-party sites/applications can be used for authentication and authorization, because the only real point of control over the sharing of that data is in the choices allowed, i.e. which OAuth providers are supported. Building out applications that leverage an OAuth foundation internally will enable single sign-on capabilities without the need for a more complex architecture. It also supports the notion of “as a service” that can be leveraged later when moving toward the “IT as a Service” goal.
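The "choices allowed" control described above amounts to an allowlist of OAuth providers, optionally tightened for sensitive data to providers that meet criteria (a) and (b). The provider names and `ProviderPolicy` fields below are hypothetical placeholders, sketched only to show the shape of such a policy check.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ProviderPolicy:
    name: str
    managed_by_it: bool             # criterion (a): under the control of IT
    enforces_password_policy: bool  # criterion (b): expiry/strength enforced

# Hypothetical allowlist; provider names are placeholders.
SUPPORTED_PROVIDERS = {
    p.name: p
    for p in (
        ProviderPolicy("corp-idp", managed_by_it=True, enforces_password_policy=True),
        ProviderPolicy("social-site", managed_by_it=False, enforces_password_policy=False),
    )
}

def provider_allowed(provider_name, sensitive=False):
    """Accept only listed providers; sensitive data also requires (a) and (b)."""
    policy = SUPPORTED_PROVIDERS.get(provider_name)
    if policy is None:
        return False  # unlisted providers are never trusted
    if sensitive:
        return policy.managed_by_it and policy.enforces_password_policy
    return True

print(provider_allowed("social-site"))                  # True
print(provider_allowed("social-site", sensitive=True))  # False
print(provider_allowed("corp-idp", sensitive=True))     # True
```

Keeping the policy as data rather than scattered conditionals makes the allowlist itself the auditable point of control.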

OAuth is not necessarily “more” secure than the model upon which it ultimately relies, though it certainly is more elegant from an architectural and end-user perspective. The strength of an application’s security when using OAuth is relative to the strength of the authoritative source of authentication and authorization. Does OAuth have advantages over the traditional model, as put forth by Google in its blog? Yes. Absolutely. But security of any system is only as strong as the weakest link in the chain and with OAuth today that remains in most cases a username/password combination.

That ultimately means the strength or weakness of OAuth security remains firmly in the hands (literally) of the end-user. For enterprise developers that translates into a “Caveat aedificator” or in plain English, “Let the architect beware.”

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• My (@rogerjenn) Windows Azure Uptime Report: OakLeaf Table Test Harness for September 2010 (99.54%) post of 10/3/2010 shows that Windows Azure met its SLA for two months in a row with a single instance.

•• Björn Axéll reported on 10/3/2010 the release of Windows Azure Application Monitoring Management Pack v6.1.7686.0 of 10/1/2010:

The Windows Azure Management Pack enables you to monitor the availability and performance of applications that are running on Windows Azure.

Feature Summary

After configuration, the Windows Azure Management Pack offers the following functionality:

- Discovers Windows Azure applications.

- Provides status of each role instance.

- Collects and monitors performance information.

- Collects and monitors Windows events.

- Collects and monitors the .NET Framework trace messages from each role instance.

- Grooms performance, event, and .NET Framework trace data from the Windows Azure storage account.

- Changes the number of role instances via a task.

NOTE! The management group must be running Operations Manager 2007 R2 Cumulative Update 3 [of 10/1/2010].

Download Windows Azure Application Monitoring Management Pack [v6.1.7686.0] from Microsoft

• The Windows Azure Team posted an Update on the ASP.NET Vulnerability on 10/1/2010:

A security update has been released which addresses the ASP.NET vulnerability we blogged about previously. This update is included in Guest OS 1.7, which is currently being rolled out to all Windows Azure data centers globally. Some customers will begin seeing Guest OS 1.7 as early as today, and broad rollout will complete within the next two weeks.

If you’ve configured your Windows Azure application to receive automatic OS upgrades, no action is required to receive the update. If you don’t use automatic OS upgrades, we recommend manually upgrading to Guest OS 1.7 as soon as it’s available for your application. For more information about configuring OS upgrades, see “Configuring Settings for the Windows Azure Guest OS.”
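For services that are not on automatic upgrades, one quick way to see where a deployment stands is to inspect its service configuration file. The sketch below assumes the SDK-era convention of an `osVersion` attribute on the root `ServiceConfiguration` element, with `*` meaning automatic OS upgrades and a value such as `WA-GUEST-OS-1.7_201009-01` pinning a specific release; the service and role names in the sample are placeholders, and "Configuring Settings for the Windows Azure Guest OS" remains the authority.

```python
import xml.etree.ElementTree as ET

# Configuration value of Guest OS 1.7 (Release 201009-01), which includes MS10-070.
PATCHED_VERSION = "WA-GUEST-OS-1.7_201009-01"

def guest_os_status(cscfg_text: str) -> str:
    """Report how a ServiceConfiguration document selects its Guest OS.

    Assumption: the root element's osVersion attribute is "*" for automatic
    upgrades, or a specific configuration value when pinned manually.
    """
    root = ET.fromstring(cscfg_text)
    version = root.get("osVersion")  # unprefixed attribute, so no namespace
    if version is None:
        return "osVersion not set: check the platform default"
    if version == "*":
        return "automatic upgrades: no action needed"
    if version == PATCHED_VERSION:
        return "pinned to Guest OS 1.7: update applied"
    return f"pinned to {version}: confirm it is Guest OS 1.7 or later"

# Placeholder service and role names.
sample = """<ServiceConfiguration serviceName="MyService"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"
    osVersion="*">
  <Role name="WebRole1">
    <Instances count="1" />
  </Role>
</ServiceConfiguration>"""

print(guest_os_status(sample))  # automatic upgrades: no action needed
```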

Until your application has the update, remember to review Scott Guthrie’s blog post to determine whether your application is vulnerable and to learn how to apply a workaround for additional protection.

The Windows Azure Team added Windows Azure Guest OS 1.7 (Release 201009-01) to the MSDN Library on 9/30/2010:

Updated: September 30, 2010

The following table describes release 201009-01 of the Windows Azure Guest OS 1.7:

| Property | Value |
|---|---|
| Friendly name | Windows Azure Guest OS 1.7 (Release 201009-01) |
| Configuration value | WA-GUEST-OS-1.7_201009-01 |
| Release date | October 1, 2010 |

Features:

- Stability and Security patch fixes applicable to Windows Azure OS

- Latest security update to resolve a publicly disclosed vulnerability in ASP.NET

Security Patches

The Windows Azure Guest OS 1.7 includes the following security patches, as well as all of the security patches provided by previous releases of the Windows Azure Guest OS:

| Bulletin ID | Parent KB | Vulnerability Description |
|---|---|---|
| MS10-047 | 981852 | Vulnerabilities in Windows Kernel could allow Elevation of Privilege |
| MS10-048 | 2160329 | Vulnerabilities in Windows Kernel-Mode Drivers Could Allow Elevation of Privilege |
| MS10-049 | 980436 | Vulnerabilities in SChannel could allow Remote Code Execution |
| MS10-051 | 2079403 | Vulnerability in Microsoft XML Core Services Could Allow Remote Code Execution |
| MS10-053 | 2183461 | Cumulative Security Update for Internet Explorer |
| MS10-054 | 982214 | Vulnerabilities in SMB Server Could Allow Remote Code Execution |
| MS10-058 | 978886 | Vulnerabilities in TCP/IP could cause Elevation of Privilege |
| MS10-059 | 982799 | Vulnerabilities in the Tracing Feature for Services Could Allow an Elevation of Privilege |
| MS10-060 | 983589 | Vulnerabilities in the Microsoft .NET Common Language Runtime and in Microsoft Silverlight Could Allow Remote Code Execution |
| MS10-070 | 2418042 | Vulnerability in ASP.NET Could Allow Information Disclosure |

Windows Azure Guest OS 1.7 is substantially compatible with Windows Server 2008 SP2, and includes all Windows Server 2008 SP2 security patches through August 2010.

Note:

When a new release of the Windows Azure Guest OS is published, it can take several days for it to fully propagate across Windows Azure. If your service is configured for auto-upgrade, it will be upgraded sometime after the release date indicated in the table above, and you’ll see the new guest OS version listed for your service. If you are upgrading your service manually, the new guest OS will be available for you to upgrade your service once the full roll-out of the guest OS to Windows Azure is complete.


• SpiveyWorks (@michael_spivey, @windowsazureapp) announced on 10/2/2010 a Body Mass Index Calculator running on Windows Azure:

Click Join In on the live page or here for more information about SpiveyWorks Notes.

• Gunther Lenz posted Introducing the Nasuni Filer 2.0, powered by Windows Azure to the US ISV Evangelism blog on 10/1/2010:

Nasuni CEO Andres Rodriguez and Microsoft Architect Evangelist Gunther Lenz present Nasuni Filer 2.0. Now supporting Hyper-V, Windows Azure and VFS, the Nasuni Filer turns cloud storage into a file server with unlimited storage, snapshot technology and end-to-end encryption.


• Bill Hilf posted Windows Azure and Info-Plosion: Technical computing in the cloud for scientific breakthroughs to the Official Microsoft Blog on 9/30/2010:

Today Microsoft and Japan’s National Institute of Informatics (NII) announced a joint program that will give university researchers free access to Windows Azure cloud computing resources for the “Info-Plosion Project.” This project is aimed at developing new and better ways to retrieve information and follows a similar agreement with the National Science Foundation to provide researchers with Windows Azure resources for scientific technical computing.