Flurry of Outages on 4/19/2012 for my Windows Azure Tables Demo in the South Central US Data Center

•• Updated 4/30/2012 with root cause analysis (RCA) details.

• Updated 4/26/2012 with details of similar outage in the North Central U.S. data center on 4/25/2012 and another South Central U.S. data center outage on the same date. See items marked • near the end of this post.

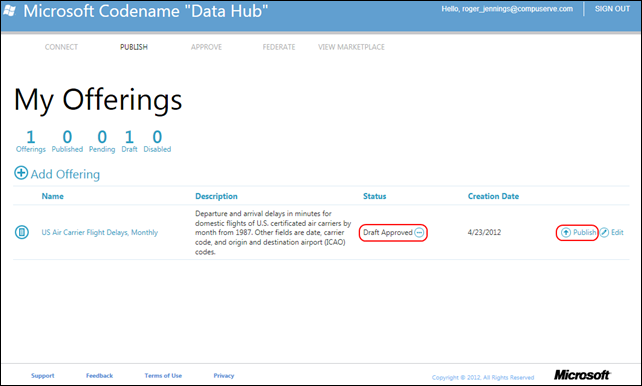

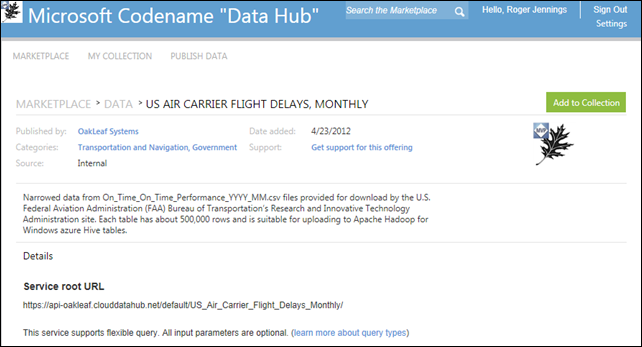

My OakLeaf Systems Azure Table Services Sample Project (Tools v1.4 with Azure Storage Analytics) demo application, which runs on two Windows Azure compute instances in Microsoft’s South Central US (San Antonio) data center, incurred an extraordinary number of compute outages on 4/19/2012. Following is the Pingdom report for that date:

The Mon.itor.us monitoring service showed similar downtime.

This application, which I believe is the longest (almost) continuously running Azure application in existence, usually runs within Microsoft’s Compute Service Level Agreement for Windows Azure: “Internet facing roles will have external connectivity at least 99.95% of the time.”
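To put the 99.95% figure in perspective, the following minimal sketch converts an availability percentage into a monthly downtime budget and back. The function names are hypothetical, and the calculation assumes availability is measured over a calendar month of wall-clock minutes; the SLA's own measurement rules may differ, and third-party monitors such as Pingdom or Mon.itor.us are not the official measure.

```python
MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(sla_percent: float, days_in_month: int) -> float:
    """Downtime budget, in minutes, for a month at the given availability."""
    total_minutes = days_in_month * MINUTES_PER_DAY
    return (1 - sla_percent / 100) * total_minutes


def uptime_percent(downtime_minutes: float, days_in_month: int) -> float:
    """Availability, in percent, for a month with the given total downtime."""
    total_minutes = days_in_month * MINUTES_PER_DAY
    return 100 * (1 - downtime_minutes / total_minutes)


if __name__ == "__main__":
    # A 30-day month (such as April) at 99.95% connectivity.
    budget = allowed_downtime_minutes(99.95, 30)
    print(f"Downtime budget: {budget:.1f} minutes")

    # Illustrative input: a single 30-minute outage in a 30-day month.
    print(f"Uptime with 30 minutes down: {uptime_percent(30, 30):.3f}%")
```

Under these assumptions, a 30-day month allows about 21.6 minutes of downtime, so a single 30-minute outage is enough to drop the month below 99.95%.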

The following table from my Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: March 2012 post of 4/3/2012 lists outages and response times from Pingdom for the last 10 months:

The Windows Azure Service Dashboard reported Service Management Degradation in the Status History but not problems with existing hosted services:

[RESOLVED] Partial Service Management Degradation

19-Apr-12

11:11 PM UTC We are experiencing a partial service management degradation in the South Central US sub region. At this time some customers may experience failed service management operations in this sub region. Existing hosted services are not affected and deployed applications will continue to run. Storage accounts in this sub region are not affected either. We are actively investigating this issue and working to resolve it as soon as possible. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

20-Apr-12

12:11 AM UTC We are still troubleshooting this issue and capturing all the data that will allow us to resolve it. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

1:11 AM UTC The incident has been mitigated for new hosted services that will be deployed in the South Central US sub region. Customers with hosted services already deployed in this sub region may still experience service management operations failures or timeouts. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

1:47 AM UTC The repair steps have been executed and successfully validated. Full service management functionality has been restored in the affected cluster in the South Central US sub-region. We apologize for any inconvenience this has caused our customers.

However, Windows Azure Worldwide Service Management did not report problems:

I have requested a Root Cause Analysis from the Operations Team and will update this post when I receive a reply. See below.

• Mon.itor.us reported that my OakLeaf Systems Azure Table Services Sample Project (Tools v1.4 with Azure Storage Analytics) demo suffered another 30-minute outage on 4/25/2012, starting at 8:10 PM.

•• Avkash Chauhan, a Microsoft Sr. Escalation Engineer and frequent Windows Azure and Hadoop on Azure blogger, reported the root cause of the outage as follows:

At approximately 6:45 AM on April 19th, 2012 two network switches failed simultaneously in the South Central US sub region. One role instance in your ‘oakleaf’ compute deployment was behind each of the affected switches. While the Windows Azure environment is segmented into fault domains to protect from hardware failures, in rare instances, simultaneous faults may occur.

As this was a silent and intermittent failure, detection mechanisms did not alert our engineering teams of the issue. We are taking action to correct this at platform and network layers to ensure efficient response to such issues in the future. Further, we will be building additional intelligence into the platform to handle such failures in an automated fashion.

We apologize for any inconvenience this issue may have caused.

Thank you,

The Windows Azure team

• The Windows Azure Operations Team reported [Windows Azure Compute] [North Central US] [Yellow] Windows Azure Service Management Degradation on 4/24/2012:

Apr 24 2012 10:00PM We are experiencing a partial service management degradation with Windows Azure Compute in the North Central US sub region. At this time some customers may experience errors while deploying new hosted services. There is no impact to any other service management operations. Existing hosted services are not affected and deployed applications will continue to run. Storage accounts in this region are not affected either. We are actively investigating this issue and working to resolve it as soon as possible. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

Apr 24 2012 11:30PM We are still troubleshooting this issue, and capturing all the data that will allow us to resolve it. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

Apr 25 2012 1:00AM We are working on the repair steps in order to address the issue. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

Apr 25 2012 1:40AM The repair steps have been executed successfully, the partial service management degradation has been addressed and the resolution verified. We apologize for any inconvenience this caused our customers.

Following is a snapshot of the Windows Azure Service Dashboard details pane on 4/25/2012 at 9:00 AM PDT:

The preceding report was similar to the one I reported for the South Central US data center above. That problem affected hosted services (including my Windows Azure tables demo app). I also encountered problems creating new Hadoop clusters on 4/24/2012. Apache Hadoop on Windows Azure runs in the North Central US (Chicago) data center, so I assume services hosted there were affected, too.

• Update 4/26/2012: Microsoft’s Brad Sarsfield reported in a reply to my Can't Create a New Hadoop Cluster thread of 4/25/2012 on the HadoopOnAzureCTP Yahoo! Group:

Things should be back to normal now. Sorry for the hiccup. We experienced a few hours of deployment unreliability on Azure.

Update 4/25/2012 9:30 AM PDT: The problem continues with this notice:

Apr 25 2012 3:12PM We are experiencing a partial service management degradation with Windows Azure Compute in the North Central US sub region. At this time some customers may experience errors while carrying out service management operations on existing hosted services. There is no impact to creation of new hosted services. Deployed applications will continue to run. Storage accounts in this region are not affected either. We are actively investigating this issue and working to resolve it as soon as possible. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.
