Windows Azure and Cloud Computing Posts for 5/24/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Updated 5/25/2011 with new articles marked • by [please]with(c), Jesse Liberty, Beth Massi, Brian Swan, Jonathan Rozenblit, James Hamilton, JP Morgenthal, Lori MacVittie, Jeff Barr, AppFabric Team, David Strom, Steve Yi, David Rubenstein, Wade Wegner, Marcelo Lopez Ruiz, Vittorio Bertocci and Windows Azure Team. Many of the new articles cover WP7 Mango features related to Windows Azure and SQL Azure.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting, SQL Compact

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting, SQL Compact

• [please]with(c) (@pleasewithc) discussed issues with Distributing a SQL CE database in a WP7 Mango application in a 5/24/2011 post to his Arsanth blog:

Possible! But – the creation of such a database sounds a little unconventional. Well it’s a bit of a hack, to be honest. Clearly there’s no tooling for this yet, so this is how one is obliged to roll.

Sean McKenna gave a talk at MIX11 about data, where he discussed two different types of local storage available to your WP7 application. First is the traditional isolated storage area (similar to what’s available in desktop Silverlight), and the other is an “App storage” area, a read-only area that basically consists of your .xap deployment. In his talk, Sean suggests you can read a database from the read-only App storage area, which in turn implies you can distribute an .sdf file with your app.

Around 37 minutes into the talk, he mentions that for reference data, you’ll want to “pre-populate that information; do that offline, on your desktop, and include that in your [.xap]”. The accompanying slide outlines the process in more detail:

- Create a simple project to pre-populate data in the emulator

- Pull out database file using Isolated Storage explorer

Whether or not you must do it this way, I don’t know; whether you could use any existing .sdf file I don’t know. I assume better tooling for modeling data will come sometime further down the road, but for the time being Mango SQL CE database creation is strictly a code-first affair.

Jesse Liberty provides an O/RM approach to using SQL Server CE with WP7 Mango in the article below. The question for today is “Will WP7 Mango support SQL Azure Data Sync?”

• Jesse Liberty (@JesseLiberty) asserted Coming in Mango–SQL Server CE in a 5/10/2011 post to his Windows Phone Geek blog (missed when posted):

With the forthcoming Mango release of Windows Phone (tools to be released this month) you can store structured data in a SQL Server Compact Edition (CE) database file in isolated storage (called a local database).

You don’t actually interact directly with the database, but instead you use LINQ to SQL (see my background article on LINQ). LINQ to SQL allows your application to use LINQ to speak to the local (relational) database.

Geek Details: LINQ to SQL consists of an object model and a runtime.

The object model is encapsulated in the Data Context object (an object that inherits from System.Data.Linq.DataContext).

The runtime mediates between objects (the DataContext ) and relational data (the local database). Thus, LINQ To SQL acts as an ORM: an Object Relational Mapping framework.

To get started, let’s build a dead-simple example that hardwires two objects and places them into the database, and then extracts them and shows their values in a list box.

The program will store information about Books, including the Author(s) and the Publisher, and then display that information on demand.

The initial UI consists of a button that will create two books, a button that will retrieve the two books and a list box that will display the two books:

Note that for now, I’ve left out the details of the DataTemplate; we’ll return to that shortly.

The DataContext

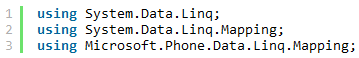

We begin the work of managing the data by creating a Data Context, which is, as noted above, an object that derives from DataContext. Add a reference to System.Data.Linq and these three using statements to the top of every related file:

With these in place we can create the BooksDataContext, which will consist of three Tables and a constructor that passes its connection string to the base class:

As written the program will not compile as we have not yet defined the Book, Author and Publisher classes. Let’s do so now, and we’ll do so with an understanding of relational data and normalization: that is, we’ll have the Book class keep an ID for each author and for each publisher, rather than duplicating that information in each instance of Book.

This approach is informed by relational database theory but is good class design in any case.

Note: to keep this first example simple, we’ll make the fatuous assumption that every book has but a single author.

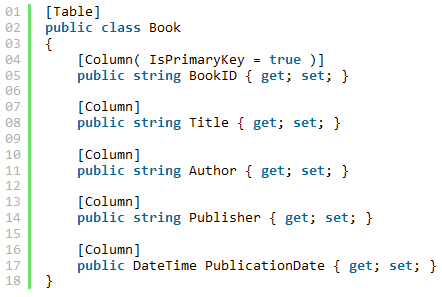

The following code appears in Book.cs,

Table and Column are Linq to SQL mapping attributes (there are many others). We’ll return, in future tutorials, to the details of these attributes and to how you can index additional properties (the primary key is automatically indexed) and create relationships.

For completeness, here are the contents of Author.cs and Publisher.cs,

We create an instance of the database in MainPage.xaml.cs, in the constructor, where we also wire up event handlers for the two buttons,

Notice the syntax for storing our database file (bookDB.sdf) inside of isolated storage. With the DataContext we get back we create the new database.

When CreateBook is clicked the event handler is called, and at this time we want to create two books. Since our books have a publisher and different authors, we must create those objects first (or we’ll refer to a publisher or author ID that doesn’t exist). Let’s start with the creation of the publisher:

This code gets a new reference to the same database, and then instantiates a Publisher object, initializing all the Publisher fields. It then instructs the database context to insert this record when the Submit command is called. Before calling that command, however, we’ll create a few author records:

With these four records ready to be submitted, we call SubmitChanges on the databaseContext,

We’re now ready to create the two book objects. Once again we instantiate the C# object and initialize its fields, and once again we mark each instance to be inserted on submission,

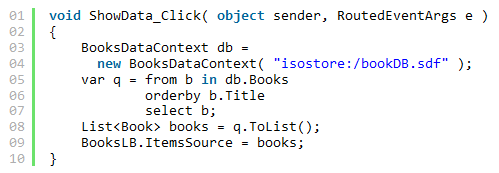

The two books are now in the database, and we can prove that to ourselves by implementing the event handler for the ShowData button,

Once again we create a new DataContext but pointing to the same local database. We now execute a LINQ Query against the database, obtaining every record in the Books table, ordered by title. We convert the result to an enumerable (a List<>) and set that as the ItemsSource property for the BooksListBox. This then becomes the datacontext for the databinding in the DataTemplate of the list box.
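The listings for this walkthrough did not survive in this excerpt. As a rough, language-neutral illustration of the same flow (not Jesse's C# code), here is a minimal Python sketch in which the standard-library `sqlite3` module stands in for the SQL CE local database. The table and column names, the IDs, and the sample titles are all hypothetical placeholders. It stores the publisher and three authors first, commits them, then stores two books that refer to them by ID, and finally reads the books back ordered by title.

```python
import sqlite3


def create_database(path=":memory:"):
    """Create the three tables; sqlite3 stands in for the SQL CE local database."""
    conn = sqlite3.connect(path)
    conn.executescript(
        """
        CREATE TABLE IF NOT EXISTS Publishers (PublisherId TEXT PRIMARY KEY, Name TEXT);
        CREATE TABLE IF NOT EXISTS Authors (AuthorId TEXT PRIMARY KEY, Name TEXT);
        CREATE TABLE IF NOT EXISTS Books (
            BookId TEXT PRIMARY KEY,
            Title TEXT,
            AuthorId TEXT,     -- the book stores only the author's ID
            PublisherId TEXT   -- and only the publisher's ID
        );
        """
    )
    return conn


def create_books(conn):
    """Insert one publisher and three authors, commit, then insert two books."""
    with conn:  # commits on success, loosely analogous to SubmitChanges
        conn.execute("INSERT INTO Publishers VALUES (?, ?)", ("P1", "Example Press"))
        conn.executemany(
            "INSERT INTO Authors VALUES (?, ?)",
            [("A1", "Author One"), ("A2", "Author Two"), ("A3", "Author Three")],
        )
    with conn:  # second commit: the books refer to IDs that now exist
        conn.executemany(
            "INSERT INTO Books VALUES (?, ?, ?, ?)",
            [
                ("B1", "Zebra Patterns", "A1", "P1"),
                ("B2", "Apple Recipes", "A2", "P1"),
            ],
        )


def show_data(conn):
    """Return every row of Books ordered by title, ready to bind to a list."""
    query = "SELECT Title, AuthorId, PublisherId FROM Books ORDER BY Title"
    return conn.execute(query).fetchall()
```

Because no relationships are defined between the tables yet, the rows returned by `show_data` carry the author and publisher IDs rather than their names, which matches the limitation noted in the next paragraph.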

There is much more to do to turn this into anything approaching a useful application (e.g., creating the relationships between the tables so that we see the Author and Publisher’s names rather than their ID). All of that is to come in future tutorials.

The take-away from this first tutorial, however, is, I hope, that working with the database in Mango is straightforward and that the semantics (and to a large degree the syntax) are pretty much what you would expect.


Jesse is a Senior Community Program Manager in Microsoft Developer Guidance, focused on Windows Phone 7.

Mauricio Rojas reported The Mango is close!!! in a 5/24/2011 post:

Hehehe. Well, if you are a phone developer, either an iOS guy who is thinking of offering his/her apps in the MS Marketplace or just someone with a great interest in Windows Phone, then you should know what Mango is.

Mango is a new update for the Windows Phone, and it will mean a lot for developers, because if you already liked WP7 you will love MANGO.

Click here to watch a 00:10:45 Channel9 video about WP7 by Joe Belfiore.

- Mango means HTML5 yes!

- Mango means Multitasking!

- Mango means hands-free messaging! Did you ever want to send an SMS without using your fingers? Now you can!

I don’t think it is mentioned in this video, but Mango also means SQL CE, a great alternative for Android and iPhone developers who were using SQLite.

So far, I haven’t seen any indication of a SQL Azure Data Sync version for WP7’s new SQL CE feature, which presumably will support HTML5’s IndexedDB feature. For more details about Microsoft’s early implementation of IndexedDB, a wrapper around SQL Server Compact, see my Testing IndexedDB with the Trial Tool Web App and Microsoft Internet Explorer 8 or 9 Beta post of 1/7/2011, Testing IndexedDB with the SqlCeJsE40.dll COM Server and Microsoft Internet Explorer 8 or 9 Beta of 1/6/2011, and Testing IndexedDB with the Trial Tool Web App and Mozilla Firefox 4 Beta 8 (updated 1/6/2011).

See also my Claudio Caldato reported The IndexedDB Prototype Gets an Update in a 2/4/2011 post to the Interoperability @ Microsoft blog article here (scroll down).

You’ll find additional WP7 Mango details in Sharon Chan’s WP: Mango update coming to Windows Phone in the fall article in The Seattle Times’ Microsoft Pri0 column:

NEW YORK -- A bunch of new features will be coming in a release code-named Mango to Windows Phone this fall, Microsoft said Tuesday.

Andy Lees, president of Microsoft's Mobile Communications Business, said the changes are designed to "make the smartphone smarter and easier. ... This lays out the focus of the Mango release, smarter and easier for communications, applications and the Internet."

Microsoft launched Windows Phone in October. Microsoft gave no update today on how many people have bought Windows Phone devices.

Microsoft did say that Nokia's first phones will run the Mango release, and Microsoft is already testing it on Nokia phones in labs in Redmond.

New handset partners Acer, Fujitsu and ZTE will make phones that run the Mango version of Windows Phone. Samsung, LG and HTC will also make new phones that run Mango. Microsoft did not say when Nokia, a new partner, will ship its first phones running Microsoft's mobile operating system.

Microsoft said there are now more than 18,000 apps for the Windows Phone.

"For the ecosystem, the stars are aligning," Lees said. "We think Windows Phone Mango will be a tipping point of opportunity."

Microsoft had some problems with its last update for Windows Phone called NoDo, and many phone owners complained that it was late and confusing when they would receive it.

Here are some areas of improvement for Windows Phone in the Mango release.

Mashing Facebook, Twitter and texts in the address book. Each contact in the address book will show a communication history, including Facebook chats, tweets and previous emails, texts and voice mails. You can group people together in the address book in lists and launch Facebook messages to the group.

Text messages can be read aloud by the phone, and the phone can convert speech into text messages.

Applications will get three-dimensional graphics. The company showed a British Airways app with a seat chooser that lets the person do a virtual walk through of the plane. The app also pushed a QR code for a boarding pass to the home page of the phone screen. Users will see these new features only if developers take advantage of them in building new apps.

Bing will be able to do visual search with the phone's camera, such as snapping a photo of a book cover and connecting the phone to a Bing search about the book. Quick cards from Bing will pull together relevant information on a restaurant or concert venue and link to relevant apps.

Internet Explorer 9 will be coming in a mobile version.

Microsoft said the update will include more than 500 new features.

Join us back here at noon Tuesday for a Seattle Times live chat about all the new features in Windows Phone.

• Steve Yi posted TechEd 2011 Highlights: Highlighted [SQL Azure] Articles on TechEd 2011 on 5/25/2011:

TechEd wound to a close last Friday. Our engineering leaders put together some great demos and presentations that help developers take advantage of cloud data with SQL Azure, as well as preview what’s coming next on the roadmap. The blogosphere has been very active with articles from people who have been excited to share their insights and experiences from the conference. Below are a few that deserve a closer look:

Mary-Jo Foley wrote a great post that summarized the SQL Azure announcements at TechEd, especially our initiatives in event-processing by putting StreamInsight in the cloud: All About Microsoft: “Microsoft readies 'Austin,' an Azure-hosted event-processing service"

Summary: Microsoft is progressively moving SQL Server capabilities into the cloud and turning them into service solutions. Foley’s article details one of Microsoft’s latest solutions: Codename “Austin”. In addition to providing technical insight on “Austin”, the article elaborates on some of the possible scenarios in which the solution could be implemented, and highlights other SQL Azure news worth reading about.

Robert Wahbe, Corporate Vice President of Server & Tools, authored a guest post on The Official Microsoft Blog, titled “Charting your Course into the Cloud”

Summary: The article summarizes the keynote talk that he delivered to developers and other IT professionals at TechEd. During the conference, Wahbe highlighted evolving trends in technologies like cloud computing, the changes in the device landscape, the economics of cloud computing and how Microsoft is helping partners manage this new environment. The article references several Microsoft press releases, a whitepaper and a case study, which makes this a valuable read.

Author Paul Thurrott, who writes for Windows IT Pro, wrote this post on TechEd: “What I’ve Learned (So Far) at TechEd 2011”

Summary: This article was written after Day 1 of TechEd, but it highlights some of the exciting technologies that were featured in just one day. Thurrott’s article highlights solutions such as MultiPoint Server 2011 and Windows Phone 7.5 “Mango.” He has also written an article here, which summarizes Microsoft’s case for cloud offerings during the TechEd keynote speech.

Susan Inbach blogs in the Canadian Solution Developer and wrote this piece recently on TechEd: “Get Excited About Your Job Again- TechEd”

Summary: This article is more of an interesting lifestyle piece about what it’s like to be at a developer conference. Inbach highlights some of the networking tactics that she used at TechEd to make new contacts and get exposed to new ideas. It really got her engaged in the entire experience. I think that this is a fun piece that really highlights why, if you get the opportunity, you should go to a developer’s conference like TechEd.

There were so many things to write about TechEd this year. If you weren’t able to view the demonstrations and other presentations from TechEd 2011 that were reviewed in the articles above, take a look at them all here at Channel 9.

• Steve Yi (pictured below) posted Real World SQL Azure: Interview with Kai Boo Lee, Business Development Director, aZaaS on 5/24/2011:

As part of the Real World SQL Azure series, we recently talked to Kai Boo Lee, Business Development Director at aZaaS, about using Microsoft SQL Azure and Windows Azure to host the aZaaS Cloud Application Engine, a business application that customers can tailor to their needs. Here’s what he had to say:

MSDN: Where did you come up with the name aZaaS?

Lee: Our company name—aZaaS—means “anything from A to Z as a service.” We provide software-as-a-service solutions that make it possible for customers to enjoy the benefits of technology and automation without the need to purchase, install, or maintain software, hardware, and data centers.

MSDN: Tell me more about aZaaS.

Lee: aZaaS has been providing solutions to global customers of all sizes since 2009. During our first year of operations, we won the Partner of the Year award from Microsoft and we’ve been a Microsoft Gold Certified Partner since 2010. We also participated in Microsoft BizSpark, a program that supports the early stages for technology startup companies with software, training, support, and marketing resources. We have 40 employees who work in China, Taiwan, and Singapore. Microsoft has honored two of our employees with expert designations: One is a Microsoft Regional Director and another is a Microsoft Most Valuable Professional. We specialize in cloud solutions based on Microsoft technologies and are recognized experts with Microsoft Silverlight, a development platform for creating rich media applications and business applications.

MSDN: What made you decide to build aZaaS Cloud Application Engine and make it customizable?

Lee: We developed a cloud-based application that features functionality for human resources management. Our customers around the world were frequently asking for customizations. A key factor for all our customers is time-to-market. We decided to build a platform that includes ready-made modules that could be customized with minimal effort by anyone. It had to be flexible enough that customers could easily develop solutions based on their own business models and processes.

MSDN: Why did you choose SQL Azure?

Lee: We’re at the point where we have a lot more customers—200 and growing—and as the volume of transactions on each customer’s application increases, we need to be able to scale on demand. We decided to go with SQL Azure, the cloud-based relational and self-managed database service. It’s part of the Windows Azure platform, a general-purpose cloud platform that makes complicated tasks simple across a dynamic environment. We needed the relational capabilities in SQL Azure to build complex relationships between the data that customers use. At the moment, SQL Azure has the only fully fledged relational database management services available for the cloud.

MSDN: How does the aZaaS Cloud Application Engine work?

Lee: The aZaaS Cloud Application Engine consists of two parts: Application Designer and Application Workspace. We developed the Application Designer to be used by people who are experts in their business domains. That is, business users who are familiar with their organizations’ work processes can easily design the various modules—and create forms or define reporting mechanisms—to meet internal requirements. After customizing the application, users can then publish their updates to the cloud. From the Application Workspace, users can operate the customized aZaaS Cloud Application Engine in the familiar Windows environment.

MSDN: What did you have to do to build the database functionality with SQL Azure?

Lee: aZaaS uses the relational cloud database, which is built on Microsoft SQL Server technologies, to provide a fully automated and highly available multitenant database service on the aZaaS Cloud Application Engine. We are capitalizing on the scale-out database features of SQL Azure. We are also taking advantage of the streamlined SQL Azure features for consolidating databases in the cloud and quickly provisioning databases. We partition the aZaaS Cloud Application Engine database for each tenant. Each tenant can access only those portions of the database that belong to them. It helps that SQL Azure has very granular access control, so we were able to achieve multitenancy quite easily.

MSDN: What benefits have you and your customers realized?

Lee: For any customer that’s making use of web-based applications—which is basically everyone—we believe that the Windows Azure platform is the way to go. It speeds time-to-market, for starters. We can deploy a new tenant to SQL Azure in less than one day. The entire deployment process is at least nine times faster than it is with an on-premises model. Plus, the cost of hosting the SQL Azure solution on the Windows Azure platform is about 20 percent of the cost of hosting it in a customer’s own data center. For aZaaS, we can provide subscription-based services to a larger portion of our customers at a much reduced cost. This gives us a more consistent revenue base. Also, we can add functionality and services without interrupting a customer’s business processes.

Read the full story at: www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000010017

To read more about SQL Azure, visit www.SQLAzure.com.

<Return to section navigation list>

Marketplace DataMarket and OData

• Marcelo Lopez Ruiz reported a Netflix + OData + datajs sample released on 5/25/2011:

Check out the sample and code walkthrough at http://kashyapas.com/2011/05/releasing-netflix-catalog-using-htmlodatadatajsjquery/.

Some highlights:

- All the app components run in the browser!

- Clever use of the datajs cache - those values aren't changing anytime soon, and it definitely speeds things up to avoid round-trips. Nice way of reducing the page startup time.

- Templates are stored as plain text files on the server, then retrieved at runtime when the page needs them - again, clever!

- A server that opens up its data for cross-domain access enables some very, very powerful scenarios.

The code is available on CodePlex and is short & sweet. Enjoy!

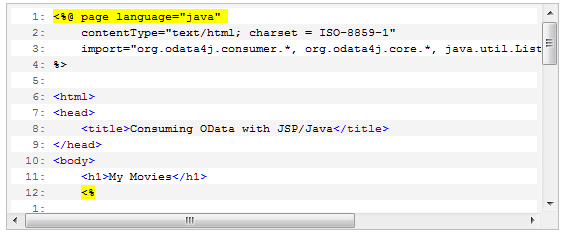

• Brian Swan explained Consuming OData via JSP in Windows Azure in a 5/24/2011 post:

A colleague recently asked me if I knew anything about consuming OData from a Java deployment in Windows Azure. My answer at the time was “no”, but with a quick pointer to http://code.google.com/p/odata4j/ I knew the answer would soon change to “yes”. In this short post, I’ll show you how I used this tutorial, Deploying a Java Application to Windows Azure with Command-line Ant, to quickly consume OData from Java running in Windows Azure.

Note: This post is not an investigation of the odata4j API. It is simply a look at how to deploy odata4j to Windows Azure. However, I will note that I didn’t see any functionality in the odata4j API for generating classes from an OData feed (unlike the OData SDK for PHP). This means that you need to know the structure of the feed you are consuming when writing code.

As I mentioned in the intro, to get my OData/JSP page running in Windows Azure, I basically followed this tutorial, written by Ben Lobaugh. Using Ben’s tutorial as a guide, you really only need to change a few things:

1. Download and install odata4j. You can download odata4j here: http://code.google.com/p/odata4j/. After you have downloaded and unzipped the archive, move the odata4j-bundle-0.4.jar file to the /lib/ext directory of your Java installation.

2. Create your jre6.zip archive with the odata4j files included. In the Select the Java Runtime Environment section of Ben’s tutorial, you are directed to create a .zip archive from your Java installation. Make sure you have added the odata4j-bundle-0.4.jar file to the /lib/ext directory of your Java installation before creating the archive.

3. Change the HelloWorld.jsp code to use the odata4j API. In the Preparing your Java application section of Ben’s tutorial, you need to write code that uses the odata4j API. Here’s a very simple example:

Note the references to org.odata4j.consumer and org.odata4j.core at the top of the page.

That’s it. If you follow the rest of Ben’s tutorial for testing your application in the Compute Emulator and deploying it to Windows Azure, you should have your application up and running quickly.

Next up, the Restlet OData extension. :-)

Glenn Gailey (@ggailey777) described OData Updates in Windows Phone “Mango” in a 5/24/2011 post:

Today, the Windows Phone team announced the next version of Windows Phone, code-named “Mango.” You can read more about Mango in this morning’s blog post from the Windows Phone team. There is also a developer tools update for this Mango beta release, which now includes the OData client library for Windows Phone; you can get it from here.

Here’s a list of new OData client library functionality in Mango:

- OData Client Library for Windows Phone Is Included in the Windows Phone SDK

In this release, the OData client library for Windows Phone is included in the Windows Phone Developer SDK. It no longer requires a separate download.

- Add Service Reference Integration

You can now generate the client data classes used to access an OData service simply by using the Add Service Reference tool in Visual Studio. For more information, see How to: Consume an OData Service for Windows Phone.

- Support for LINQ

You can now compose Language Integrated Query (LINQ) queries to access OData resources instead of having to determine and compose the URI of the resource yourself. (URIs are still supported for queries.)

- Tombstoning Improvements

New methods have been added to the DataServiceState class that improve performance and functionality when storing client state. You can now serialize nested binding collections as well as any media resource streams that have not yet been sent to the data service.

- Client Authentication

You can now provide credentials, in the form of a login and password, that are used by the client to authenticate to an OData service.

For more information on these new OData library behaviors, see the topic Open Data Protocol (OData) Overview for Windows Phone in the Mango beta release documentation.
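To make the “Support for LINQ” item above more concrete, here is a minimal Python sketch of what composing a resource URI by hand involves, which is the chore the LINQ support removes. This is an illustration only, not the Windows Phone client library: `build_odata_uri`, the service root, and the `Titles` entity set with its properties are hypothetical names. The `$filter`, `$orderby` and `$top` system query options are standard OData.

```python
from urllib.parse import quote


def build_odata_uri(service_root, entity_set, **options):
    """Compose an OData resource URI from system query options.

    Each keyword argument becomes a "$name=value" pair, for example
    filter="..." becomes "$filter=...". Values are percent-encoded.
    """
    base = f"{service_root.rstrip('/')}/{entity_set}"
    query = "&".join(
        f"${name}={quote(str(value))}" for name, value in options.items()
    )
    return f"{base}?{query}" if query else base


# Hypothetical feed: the caller must already know the entity set and its properties.
uri = build_odata_uri(
    "http://example.com/Catalog.svc",
    "Titles",
    filter="ReleaseYear eq 2010",
    orderby="Name",
    top=5,
)
```

The sketch shows why hand-built URIs are error-prone: the entity set name, property names and filter syntax are all plain strings that nothing checks until the request reaches the service.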

Note: These enhancements essentially make the OData client library for Windows Phone functionally equivalent to the WCF Data Services library for Silverlight, which is nice for folks porting Silverlight apps to Windows Phone.

See also the Beth Massi (@bethmassi) posted her Trip Report: TechEd North America, Atlanta Georgia on 5/14/2011 item about her Creating and Consuming Open Data Protocol (OData) Services Tech*Ed North America 2011 session in the Visual Studio LightSwitch section below.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

• Vittorio Bertocci (@vibronet) explained Storing Encrypted Tokens with the Windows Phone Developer Tools 7.1 in a 5/25/2011 post:

If you went through the hands-on lab for using ACS in your Windows Phone 7 application, you already know that sparing the user the hassle of re-authenticating every time entails a security tradeoff. Here’s what I wrote in the lab’s instructions:

Saving a token on the phone’s storage is not very secure. The isolated storage may prevent other applications from stealing the persisted token, but it does not prevent somebody with physical access to the device to eventually get to it. Any form of encryption would not solve the issue if the decryption key resides on the phone, no matter how well it is hidden. You could require the user to enter a PIN at every application run, and use the PIN to decrypt the token, however the approach presents obvious usability and user acceptance challenges.

While we wait for a better solution to emerge, it may be of some consolation to consider that saving a token is much better than saving direct credentials such as a username/password pair. A token is typically scoped to be used just with a specific service, and it has an expiration time: this somewhat limits what an attacker can do with a token, whereas no such restrictions would be present should somebody acquire username and password.

Well, guess what: a better solution is emerging, and as of yesterday you can get a sneak peek of it with the Windows Phone Developer Tools 7.1.

The solution happens to be very simple, too: in a nutshell, it consists of making DPAPI available on the device.

In “Mango” you have access to ProtectedData, which you may recognize as the class that provides you access to DPAPI for encrypting and decrypting data via static methods Protect and Unprotect.

The ProtectedData class available on the device differs from the one in “big .NET” in the way in which it handles the scope.

- In .NET you can choose to encrypt your data with the machine key or with the current user key. See MSDN.

- On the device every application gets its own key, which is created on first use. Calls to Protect and Unprotect from the application code will implicitly use the application key, ensuring that all data remain private to the app itself: in fact, the scope parameter is not even present in the method’s signature. From what I understand, the key will survive subsequent application updates.

Back to the problem of saving tokens on the device: how do we modify the flow in the current samples in order to take advantage of this new feature?

Very straightforward. In step 12 of task 5 of the ACS+WP7 lab you hook up your app to a store façade, RequestSecurityTokenResponseStore, which is responsible for persisting in isolated storage the RSTR messages received from ACS (you can find it in SL.Phone.Federation/RequestSecurityTokenResponseStore). All you need to do is ensure that you use Protect and Unprotect when putting stuff in and out of isolated storage.

Note: Why the entire RSTR instead of just the token? Mainly because we can extract the expiration directly from there, whereas the token itself may be encrypted for the destination service hence opaque to the client. We use that info just as an optimization – we can save a roundtrip to the service if we already know that the token expired – but the service is still on point for validating incoming tokens hence errors on this are not too costly.
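The shape of such a store can be sketched in a few lines. The Python below is a hypothetical illustration, not the lab's RequestSecurityTokenResponseStore: `ProtectedTokenStore` and its methods are invented names, a dictionary stands in for isolated storage, and `demo_protect`/`demo_unprotect` are trivial placeholders that provide no security at all (on the phone their role would be played by ProtectedData's Protect and Unprotect). It assumes the caller has already parsed the expiration time out of the RSTR.

```python
import json
import time


def demo_protect(data: bytes) -> bytes:
    """Placeholder only: reverses the bytes. This is NOT encryption."""
    return data[::-1]


def demo_unprotect(blob: bytes) -> bytes:
    """Inverse of demo_protect."""
    return blob[::-1]


class ProtectedTokenStore:
    """Hypothetical store: protects RSTR messages at rest and drops expired ones."""

    def __init__(self, protect, unprotect, clock=time.time):
        self._protect = protect
        self._unprotect = unprotect
        self._clock = clock   # injectable so expiry can be tested
        self._storage = {}    # stands in for isolated storage

    def save(self, name, rstr, expires_at):
        """Protect the RSTR together with its expiration before persisting it."""
        record = json.dumps({"rstr": rstr, "expires_at": expires_at})
        self._storage[name] = self._protect(record.encode("utf-8"))

    def load(self, name):
        """Return the stored RSTR, or None if it is missing or already expired."""
        blob = self._storage.get(name)
        if blob is None:
            return None
        record = json.loads(self._unprotect(blob).decode("utf-8"))
        if record["expires_at"] <= self._clock():
            # Known to be expired: skip the wasted roundtrip to the service.
            del self._storage[name]
            return None
        return record["rstr"]
```

Checking the expiration locally is only the optimization described in the note: the service still validates every incoming token, so a wrong answer here costs one extra request at worst.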

As we progressively refresh our ACS+WP7 content to take advantage of the new Mango features (with its occasional road bumps) we’ll incorporate those new features, but I wanted to make sure that you know ASAP how to solve the token-persistence-on-the-device problem. Don’t you feel better already?

• Vittorio Bertocci (@vibronet) described “Mango” and the ACS+Phone Samples in a 5/25/2011 post:

Yesterday we released the Beta of the Windows Phone Developer Tools 7.1, with a boatload of new awesome features (I’ll write about one of those in the next post).

Beta versions are likely to have some known issues, and this one is no exception. In fact, there is a bug that you are likely to encounter if you go through the ACS+WP7 hands-on lab, the Windows Azure Toolkit for Windows Phone 7 (which still targets Windows Phone 7.0, see Wade’s post here) and the phone sample on the ACS site.

The WebBrowser control in the emulator periodically resets the scale and position of the page being rendered, making it challenging to enter your credentials on IP authentication pages that are not designed for mobile. It is still definitely possible, but you may need to do a bit of chasing of the username and password fields on the screen (or type without having the fields in view, using the page up combination for entering values in the emulator via the PC’s keyboard).

While we wait for a release of the tools in which the bug has been fixed, here are a couple of workarounds you may want to consider if your Windows Phone app scenario requires ACS:

- Use the mobile version of the IP authentication pages. Many providers offer mobile-friendly versions of their authentication pages, that will render well on the phone’s screen without requiring you to zoom in and pan through the page. In that case the bug will just make the page flicker a bit, but you’ll be able to enter your data without issues.

This entails taking control of the home realm discovery experience, as the default pages used by ACS for contacting the preconfigured IPs are not the mobile-ready versions. For ideas on how to do it I suggest taking a look at the code of the phone client generated by the template in the latest Windows Azure Toolkit for Windows Phone 7.

- Keep the Windows Phone Tools 7.0 on some of your machines. I am keeping a couple of my machines on 7.0: not only because of this specific issue, but also because AFAIK with 7.1 Beta you cannot publish apps to the marketplace and I need to be able to do so (if I finally find the time to update my poor neglected Chinese & Japanese dictionary apps).

If neither of the above works for you, let me stress that entering your credentials is still perfectly possible: it just requires a bit more effort from you. Those are the joys of prerelease software.

• The AppFabric Team reported AppFabric Service Bus on NuGet and CodePlex on 5/25/2011:

Today, the Service Bus team is happy to announce a couple of initiatives to make it even easier to get started learning and using the Service Bus: the AppFabric Service Bus NuGet Package and the Service Bus Samples CodePlex Site. Both our Samples and SDK are still available as a download from MSDN, but we're hopeful that these new choices might be simpler and more convenient.

Service Bus on NuGet

NuGet is an open source package manager for .NET which is integrated into Visual Studio and makes it simple to install and update libraries. While traditionally you would need to download and install an SDK before using it within your projects, NuGet adds a new 'Add Library Package Reference' option to Visual Studio which downloads, configures and updates the references you select automatically. The Service Bus NuGet Package contains two DLL files and configuration information needed to use the AppFabric May CTP - you can use this package instead of needing to download and install the Windows Azure AppFabric SDK v2.0 CTP May 2011 Update. Give it a try and let us know what you think in the AppFabric CTP Forums.

Service Bus Samples on CodePlex

We're also excited today to offer you a new way to find and browse our library of sample code at our new CodePlex site. We'll be using the CodePlex site to help get more samples, tools and utilities out into your hands early and often. You can Browse the Source Code online or Download a copy if you prefer. Our CodePlex site licenses sample code to you under the open Apache 2.0 License - we're hopeful that makes it easier to re-use, re-distribute and share our sample code and tools. We'll be adding more code to the site regularly and would love to hear your feedback on the things you'd like to see us share.

As always, we're constantly hoping to hear from you about how we're doing - are CodePlex and NuGet useful delivery vehicles for you, or do you prefer to download and install the SDK and Samples? Please let us know on the forums!

• The AppFabric Team posted An Introduction to Service Bus Topics on 5/25/2011:

In the May CTP of Service Bus, we’ve added a brand-new set of cloud-based, message-oriented-middleware technologies including reliable message queuing and durable publish/subscribe messaging. Last week I posted the Introduction to Service Bus Queues blog entry. This post follows on from that and provides an introduction to the publish/subscribe capabilities offered by Service Bus Topics. Again, I’m not going to cover all the features in this article, I just want to give you enough information to get started with the new feature. We’ll have follow-up posts that drill into some of the details.

I’m going to continue with the retail scenario that I started in the queues blog post. Recall that sales data from individual Point of Sale (POS) terminals needs to be routed to an inventory management system which uses that data to determine when stock needs to be replenished. Each POS terminal reports its sales data by sending messages to the DataCollectionQueue where they sit until they are received by the inventory management system as shown below:

Now let’s evolve this scenario. A new requirement has been added to the system: the store owner wants to be able to monitor how the store is performing in real-time.

To address this requirement we need to take a “tap” off the sales data stream. We still want each message sent by the POS terminals to be sent to the Inventory Management System as before but we want another copy of each message that we can use to present the dashboard view to the store owner.

In any situation like this, where you need each message to be consumed by multiple parties, you need the Service Bus Topic feature. Topics provide the publish/subscribe pattern in which each published message is made available to each subscription registered with the Topic. Contrast this with the queue where each message is consumed by a single consumer. That’s the key difference between the two models.

Messages are sent to a topic in exactly the same way as they are sent to a queue, but they aren’t received from the topic directly; instead, they are received from subscriptions. You can think of a topic subscription like a virtual queue that gets copies of the messages that are sent to the topic. Messages are received from a subscription in exactly the same way as they are received from a queue.

So, going back to the scenario, the first thing to do is to switch out the queue for a topic and add a subscription that will be used by the Inventory Management System. So, the system would now look like this:

The above configuration would perform identically to the previous queue-based design. That is, messages sent to the topic would be routed to the Inventory subscription from where the Inventory Management System would consume them.

Now, in order to support the management dashboard, we need to create a second subscription on the topic as shown below:

Now, with the above configuration, each message from the POS terminals will be made available to both the Dashboard and Inventory subscriptions.
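To make the "virtual queue" idea concrete, here is a small in-memory Python model of a topic with two subscriptions. It is only a sketch of the publish/subscribe behavior described above, not the Service Bus API: the `Topic` class and its method names are invented for illustration.

```python
from collections import deque


class Topic:
    """Toy topic: every subscription keeps its own queue of message copies."""

    def __init__(self):
        self.subscriptions = {}

    def add_subscription(self, name):
        self.subscriptions[name] = deque()

    def send(self, message):
        # Each subscription receives an independent copy of the message.
        for queue in self.subscriptions.values():
            queue.append(dict(message))

    def receive(self, name):
        # Receiving from one subscription never affects the others.
        queue = self.subscriptions[name]
        return queue.popleft() if queue else None


topic = Topic()
topic.add_subscription("Inventory")
topic.add_subscription("Dashboard")
topic.send({"StoreName": "Redmond", "MachineID": "POS_1"})

inventory_copy = topic.receive("Inventory")
dashboard_copy = topic.receive("Dashboard")
```

Both subscriptions end up with their own copy of the single message that was sent, which is the difference from a queue, where only one consumer would have seen it.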

Show Me the Code

I described how to sign-up for a Service Bus account and create a namespace in the queues blog post so I won’t cover that again here. Recall that to use the Service Bus namespace, an application needs to reference the AppFabric Service Bus DLLs, namely Microsoft.ServiceBus.dll and Microsoft.ServiceBus.Messaging.dll. You can find these as part of the SDK download.

Creating the Topic and Subscriptions

Management operations for Service Bus messaging entities (queues and topics) are performed via the ServiceBusNamespaceClient which is constructed with the base address of the Service Bus namespace and the user credentials. The ServiceBusNamespaceClient provides methods to create, enumerate and delete messaging entities. The snippet below shows how the ServiceBusNamespaceClient is used to create the DataCollectionTopic.

    // Base address of the Service Bus namespace plus placeholder credentials.
    Uri baseAddress = ServiceBusEnvironment.CreateServiceUri("sb", "ingham-blog", string.Empty);
    string name = "owner";
    string key = "abcdefghijklmopqrstuvwxyz";

    ServiceBusNamespaceClient namespaceClient = new ServiceBusNamespaceClient(
        baseAddress,
        TransportClientCredentialBase.CreateSharedSecretCredential(name, key));

    // Create the topic that the POS terminals will publish to.
    Topic dataCollectionTopic = namespaceClient.CreateTopic("DataCollectionTopic");

Note that there are overloads of the CreateTopic method that allow properties of the topic to be tuned, for example, to set the default time-to-live to be applied to messages sent to the topic. Next, let’s add the Inventory and Dashboard subscriptions.

    dataCollectionTopic.AddSubscription("Inventory");
    dataCollectionTopic.AddSubscription("Dashboard");

Sending Messages to the Topic

As I mentioned earlier, applications send messages to a topic in the same way that they send to a queue so the code below will look very familiar if you read the queues blog post. The difference is the application creates a TopicClient instead of a QueueClient.

For runtime operations on Service Bus entities, i.e., sending and receiving messages, an application first needs to create a MessagingFactory. The base address of the ServiceBus namespace and the user credentials are required.

    // Same namespace address and placeholder credentials as before.
    Uri baseAddress = ServiceBusEnvironment.CreateServiceUri("sb", "ingham-blog", string.Empty);
    string name = "owner";
    string key = "abcdefghijklmopqrstuvwxyz";

    MessagingFactory factory = MessagingFactory.Create(
        baseAddress,
        TransportClientCredentialBase.CreateSharedSecretCredential(name, key));

From the factory, a TopicClient is created for the particular topic of interest, in our case, the DataCollectionTopic.

    TopicClient topicClient = factory.CreateTopicClient("DataCollectionTopic");

A MessageSender is created from the TopicClient to perform the send operations.

    MessageSender ms = topicClient.CreateSender();

Messages sent to, and received from, Service Bus topics (and queues) are instances of the BrokeredMessage class which consists of a set of standard properties (such as Label and TimeToLive), a dictionary that is used to hold application properties, and a body of arbitrary application data. An application can set the body by passing in any serializable object into CreateMessage (the example below passes in a SalesData object representing the sales data from the POS terminal) which will use the DataContractSerializer to serialize the object. Alternatively, a System.IO.Stream can be provided.

    BrokeredMessage bm = BrokeredMessage.CreateMessage(salesData);
    bm.Label = "SalesReport";
    bm.Properties["StoreName"] = "Redmond";
    bm.Properties["MachineID"] = "POS_1";
    ms.Send(bm);

Receiving Messages from a Subscription

Just like when using queues, messages are received from a subscription using a MessageReceiver. The difference is that the MessageReceiver is created from a SubscriptionClient rather than a QueueClient. Everything else remains the same including support for the two different receive modes (ReceiveAndDelete and PeekLock) that I discussed in the queues blog post.

So, first we create the SubscriptionClient, passing the name of the topic and the name of the subscription as parameters. Here I’m using the Inventory subscription.

    SubscriptionClient subClient = factory.CreateSubscriptionClient("DataCollectionTopic", "Inventory");

Next we create the MessageReceiver and receive a message.

    MessageReceiver mr = subClient.CreateReceiver();
    BrokeredMessage receivedMessage = mr.Receive();
    try
    {
        ProcessMessage(receivedMessage);
        receivedMessage.Complete();
    }
    catch (Exception e)
    {
        receivedMessage.Abandon();
    }

Subscription Filters

So far, I’ve said that all messages sent to the topic are made available to all registered subscriptions. The key phrase there is “made available”. While Service Bus subscriptions see all messages sent to the topic, it is possible to only copy a subset of those messages to the virtual subscription queue. This is done using subscription filters. When a subscription is created, it’s possible to supply a filter expression in the form of a SQL92 style predicate that can operate over the properties of the message, both the system properties (e.g., Label) and the application properties, such as StoreName in the above example.

Let’s evolve the scenario a little to illustrate this. A second store is to be added to our retail scenario. Sales data from all of the POS terminals from both stores still need to be routed to the centralized Inventory Management System but a store manager using the Dashboard tool is only interested in the performance of her store. We can use subscription filtering to achieve this. Note that when the POS terminals publish messages, they set the StoreName application property on the message. Now that we have two stores, let’s say Redmond and Seattle, the POS terminals in the Redmond store stamp their sales data messages with a StoreName of Redmond while the Seattle store POS terminals use a StoreName of Seattle. The store manager of the Redmond store only wishes to see data from its POS terminals. Here’s how the system would look:

To set this routing up, we need to make a simple change to how we’re creating the Dashboard subscription as follows:

    dataCollectionTopic.AddSubscription("Dashboard", new SqlFilterExpression("StoreName = 'Redmond'"));

With this subscription filter in place, only messages with the StoreName property set to Redmond will be copied to the virtual queue for the Dashboard subscription.
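The routing effect of that filter can be pictured with a few lines of Python. This is a simplified stand-in, not how Service Bus evaluates filters: the real feature takes a SQL92-style expression, whereas here plain Python predicates play that role, and the `route` helper is a hypothetical name.

```python
def route(properties, subscriptions):
    """Return the names of the subscriptions whose filter accepts the message."""
    return [name for name, accepts in subscriptions.items() if accepts(properties)]


subscriptions = {
    # No filter: the Inventory subscription takes every message.
    "Inventory": lambda props: True,
    # Stand-in for the filter expression StoreName = 'Redmond'.
    "Dashboard": lambda props: props.get("StoreName") == "Redmond",
}

redmond_targets = route({"StoreName": "Redmond"}, subscriptions)
seattle_targets = route({"StoreName": "Seattle"}, subscriptions)
```

A Redmond message is copied to both subscriptions, while a Seattle message reaches only Inventory.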

There is a bigger story to tell around subscription filtering. Applications have an option to have multiple filter rules per subscription and there’s also the ability to modify the properties of a message as it passes in to a subscription’s virtual queue. We’ll cover these advanced topics in a separate blog post.

Wrapping up

Hopefully this post has shown you how to get started with the topic-based publish/subscribe feature being introduced in the new May CTP of Service Bus.

It’s worth noting that all of the reasons for using queuing that I mentioned in the introduction to queuing blog post also apply to topics, namely:

Temporal decoupling – message producers and consumers do not have to be online at the same time.

Load leveling – peaks in load are smoothed out by the topic allowing consuming applications to be provisioned for average load rather than peak load.

Load balancing – just like with a queue, it’s possible to have multiple competing consumers listening on a single subscription with each message being handed off to only one of the consumers, thereby balancing load.

Loose coupling – it’s possible to evolve the messaging network without impacting existing endpoints, e.g., adding subscriptions or changing filters to a topic to accommodate new consumers.
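The load balancing point in the list above can be sketched in Python as follows. It is a deliberately simplified picture: real competing consumers pull messages whenever they are ready, while this hypothetical `drain` helper just takes turns, which is enough to show that each message goes to exactly one consumer.

```python
from collections import deque
from itertools import cycle


def drain(subscription_queue, consumer_names):
    """Hand each queued message to exactly one consumer, taking turns."""
    handled = {name: [] for name in consumer_names}
    for name in cycle(consumer_names):
        if not subscription_queue:
            break
        handled[name].append(subscription_queue.popleft())
    return handled


work = drain(
    deque(["sale-1", "sale-2", "sale-3", "sale-4", "sale-5"]),
    ["worker-a", "worker-b"],
)
```

No message is processed twice, and the work on the single subscription is split across the two consumers.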

We’ve only really just scratched the surface here; we’ll go in to more depth in future posts.

Finally, remember one of the main goals of our CTP release is to get feedback on the service. We’re interested to hear what you think of the Service Bus messaging features. We’re particularly keen to get your opinion of the API. So, if you have suggestions, critique, praise, or questions, please let us know at http://social.msdn.microsoft.com/Forums/en-US/appfabricctp/. Your feedback will help us improve the service for you and other users like you.

Tim Anderson (@timanderson) reported Cracks appear in Microsoft’s bundled installers for Visual Studio 2010 as I try ADFS on 5/24/2011:

I am trying out Microsoft Active Directory Federation Services (ADFS), chasing the dream of single sign-on between on-premise Active Directory and the cloud.

Oddly, although ADFS has been around for a while, it feels more bleeding edge than it should. ADFS is critical to Microsoft’s cloud platform play, and it needs to build this stuff right into Windows Server and .NET rather than making it a downloadable add-on.

The big problem with installers, whether on Windows or elsewhere, is dependencies and versions. You get some variant of DLL Hell, when A requires the latest version of B, and C requires an old version of B, and you need both A and C installed. The issue on Windows has reduced over the years, partly because of more side-by-side installations where multiple versions co-exist, and partly because Microsoft has invested huge effort into its installers.

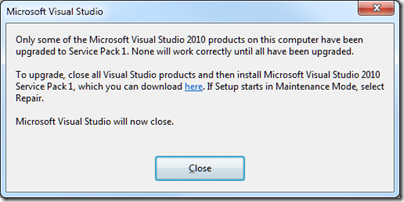

There are still issues though, and I ran into a few of them when trying ADFS. I have Visual Studio 2010 installed on Windows 7 64-bit, and it is up-to-date with Service Pack 1, released in April. However, after installing the Windows Identity Foundation (WIF) runtime and SDK, I got this error when attempting to start Visual Studio:

Only some of the Microsoft Visual Studio 2010 products on this computer have been upgraded to Service Pack 1. None will work correctly until all have been upgraded.

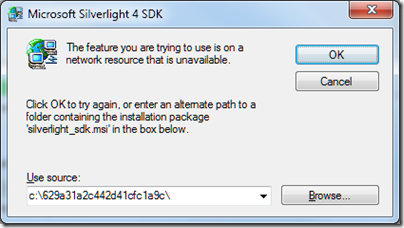

I’m guessing that the WIF components have not been updated to take account of SP1 and broke something. Never mind, I found my Visual Studio SP1 .ISO (I avoid the web installs where possible), ran setup, and chose to reapply the service pack. It trundled along until it decided that it needed to run or query the Silverlight 4 SDK setup:

A dialog asked for silverlight_sdk.msi. I wasted some time over this. Why is the installer looking for silverlight_sdk.msi in a location that does not exist? I’d guess because the Silverlight SDK installer is wrapped as an executable that unpacked the MSI there, ran it and then deleted it. Indeed, I discovered that both the Silverlight 4 SDK and the Silverlight 4 Tools for Visual Studio are .EXE files that wrap zip archives. You can rename them with a .zip or .7z extension and extract them with the open source 7 Zip, but not for some reason with the ZIP extractor built into Windows. Then you can get hold of silverlight_sdk.msi.

I did this, but then discovered that silverlight_sdk.msi is also on the Visual Studio SP1 ISO. All I needed to do was to point the installer there, though it is odd that it cannot find the file of its own accord.

It also seems to me that this scenario should not occur. If the MSI for installation A might be needed later by installation B, it should not be put into a temporary location and then deleted.

The SP1 repair continued, and I got a reprise of the same issue but with the Visual C++ runtimes. The following dialog appeared twice for x86, and twice for x64:

These files are also on the SP1 .ISO, so I pointed the installer there once again and setup continued.

Unfortunately something else was wrong. After a lengthy install, the SP1 installer started rollback without so much as a warning dialog, and then exited declaring that a fatal error had occurred. I looked at the logs

I rebooted, tried again, same result.

I was about to trawl the forums, but thought I should try running Visual Studio 2010 again, just in case. Everything was fine.

Logic tells me that the SP1 “rollback” was not quite a rollback, since it fixed the problem. Then again, bear in mind that it was rolling back the reapplication of the service pack which is different from the usual rollback scenario.

Visual Studio, .NET, myriad SDKs that each get updated at different times, developers who download and install these in an unpredictable order … it is not surprising that it goes wrong sometimes; in fact it is surprising that it does not go wrong more often. So I guess I should not beat up Microsoft too much about this. Even so this was an unwelcome reminder of a problem I have not seen much in the last few years, other than with beta installs which play by different rules.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Wade Wegner (@wadewegner) announced WPDT 7.1 Beta Support Added to the Windows Azure Toolkit for Windows Phone 7 in a 5/24/2011 post:

At this point I’m sure you’ve heard that the Windows Phone Developer Tools for "Mango" released today (also called the Windows Phone Developer Tools 7.1 Beta). To learn about these tools I suggest you review the following:

- Download & release notes: Windows Phone Developer Tools for Mango

- What’s New in Windows Phone Developer Tools

- Code Samples for Windows Phone

To support the WPDT 7.1 beta, we just released the Windows Azure Toolkit for Windows Phone 7 V1.2.2. This release provides support for the beta tools so that you can use these new tools and still use the toolkit. Additionally, we have updated the dependency checker wizard so that we can ensure you have all the prerequisites to successfully use the toolkit.

One thing I want to make clear is that the toolkit is specifically designed to build Windows Phone 7.0 applications, not applications for the new Windows Phone OS 7.1 or “Mango” platform. Consequently, our project templates continue to target Windows Phone 7.0 application projects and not Windows Phone 7.1 application projects for Windows Phone.

Regardless of whether you have downloaded or installed the new tools, the experience hasn’t changed – the dependency checker will still check and ensure that dependencies and prerequisites are installed on your machine. The only difference is that you’ll now see an optional download for the Windows Phone Developer Tools 7.1 Beta:

You can click the Download link to download and install the WPDT 7.1 Beta. Once you have successfully installed the WPDT 7.1 beta you can run the wizard again and it should complete without a problem.

• Wade Wegner (@wadewegner) described Deploying Your Services from the Windows Azure Toolkit for Windows Phone 7 in a 5/24/2011 post:

One of the most common requests I’ve received for the Windows Azure Toolkit for Windows Phone 7 has been a tutorial for deploying the services to Windows Azure. It’s taken longer than it should have (my humblest apologies), but I’ve finally put one together. You’ll need to follow the steps outlined below:

- Create a Certificate (used for SSL)

- Export the PFX File

- Upload the PFX File to Windows Azure

- (optional) Supporting Apple Push Notifications

- Update and Deploy to Windows Azure

- Update Your Windows Phone Project

If you have any problems or confusion regarding the steps below, please let me know.

Create a Certificate

- Open the Visual Studio Command Prompt window as an administrator.

- Change the directory to the location where you want to save the certificate file.

- Type the following command, making sure to replace <CertificateName> with your hosted service URL:

    makecert -sky exchange -r -n "CN=<CertificateName>" -pe -a sha1 -len 2048 -ss My "<CertificateName>.cer"

For example:

Note: The makecert tool will both create the .cer file and register the certificate in your Personal Certificates store. For more information, check the following article: http://msdn.microsoft.com/en-us/library/gg432987.aspx.

Export the PFX File

- Open the Certificate Manager snap-in for the management console by typing certmgr.msc in the Start menu textbox.

- The new certificate was automatically added to the personal certificate store. Export the certificate by right-clicking it, pointing to All Tasks, and then clicking Export.

- On the Export Private Key page, make sure to select Yes, export the private key.

- Choose a name and export the file to a .pfx file.

- Finish the wizard.

Note: You now have a copy of the certificate (.pfx) with the private key. For more information, check the following article: http://msdn.microsoft.com/en-us/library/gg432987.aspx.

Upload the PFX File to Windows Azure

- Open a browser, navigate to the Windows Azure Platform Management Portal at https://windows.azure.com and log in with your credentials.

- Select Hosted Services, Storage Accounts & CDN and then Hosted Services.

- Expand your hosted service project, and select the Certificates folder.

- Click Add Certificate.

- Click Browse and select the PFX file you saved. Enter the password, and click Create.

- Once the certificate has been uploaded and created, copy the Thumbprint for later use.
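If you want to cross-check the thumbprint you copied, the following Python sketch recomputes it from the .cer file. A certificate thumbprint is the SHA-1 hash of the DER-encoded certificate; the sketch assumes your .cer file is in binary DER form rather than Base64 text, and both helper names are hypothetical.

```python
import hashlib


def thumbprint(der_bytes):
    """SHA-1 hash of the DER-encoded certificate, as uppercase hex."""
    return hashlib.sha1(der_bytes).hexdigest().upper()


def normalize(thumbprint_text):
    """Drop spaces and any other non-hex characters picked up while copying."""
    return "".join(ch for ch in thumbprint_text.upper() if ch in "0123456789ABCDEF")
```

For instance, reading the file in binary mode and comparing `thumbprint(data)` with `normalize(copied_value)` should give the same 40-character string if the right certificate was uploaded.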

Supporting Apple Push Notifications

During the project creation through the project template, you are given the option to support Apple Push Notifications through the Azure Web role.

If you chose to support Apple Push Notifications, please follow these additional steps:

- In order to use the toolkit to send Apple push notifications, you should have obtained the appropriate SSL certificate for your iOS application. You can find more information on how to obtain an Apple development certificate in the Provisioning and Development article in the Local and Push Notification Programming Guide.

- Upload the same Apple development certificate selected during the project template wizard to your hosted service. To do this, you can follow the same steps required to upload the SSL certificate described in the previous section.

Update and Deploy to Windows Azure

- In the Windows Azure Project, double-click the role (e.g. WP7CloudApp1.Web) to open the role properties.

- Select the Settings tab and update the DataConnectionString and Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString to point to your production storage account.

- Select the Certificates tab, and set the SslCertificate property value to the Thumbprint you saved from the Windows Azure portal. This will replace the thumbprint for the localhost certificate with the thumbprint related to the certificate you uploaded into your hosted service.

- Add the .cer file to your web application project. This way, you can point your phone users to download the certificate and install into the emulator. Right-click on the Web application project, choose Add Existing Item, select the .cer file you previously created, and add it to the project.

- Open the .cer file properties and make sure that the Build Action is set to Content.

- Now you can publish your Windows Azure project to your hosted service. You can do this from the Windows Azure Platform Management Portal or directly from Visual Studio 2010.

Update Your Windows Phone Project to Consume Your Windows Azure Hosted Service

In your Windows Phone project, open the App.xaml file. Update the following resources:

- To test against the deployed services, right-click the Windows Phone project, navigate to the Debug context menu, and click on Start new instance

• The Windows Azure Team reported Windows Azure Selected by CA Technologies to Deliver Backup and Recovery Solution

CA Technologies recently announced that its industry-leading CA ARCserve Backup and Recovery technology will be available to customers as a software-as-a-service (SaaS) offering based on the Windows Azure platform.

The new Windows Azure-based solution is scheduled for availability in the second half of 2011. Customers will find this solution attractive in situations where conventional, internally deployed backup-and-restore systems and software implementation are not feasible or practical from a financial or operational perspective. Small businesses or remote offices that lack the necessary on-site IT staff are some examples of where this solution could be applied.

The new solution is designed to meet the needs of CA Technologies and Microsoft partners—especially those who want to incorporate its data protection functionality into their broader cloud-based IT management offerings.

Please click here to read the press release.

• David Rubinstein described Bridging Java and .NET, in the clouds and on the ground in a 5/25/2011 post to the SDTimes on the Web blog:

Not all APIs are created equal, neither RESTful nor SOAP-based. This can cause problems when trying to integrate applications from multiple platforms across clouds, or from clouds to the ground.

Java-to-.NET integration software provider JNBridge today has released JNBridgePro 6.0, a platform that provides tools and adapters for interoperability while removing the complexity of creating those connections, according to Wayne Citrin, CTO of JNBridge.

“The whole idea of using the cloud is mixing and matching,” he said. “Web services standards and REST don’t work for everything. Not all useful APIs are REST-based. Lots of legacy apps will have direct-access APIs, not SOAP or REST.”

Cloud-based integration hubs and brokers may have some adapters for software to hook in, but not all software, Citrin said. JNBridgePro 6.0 is used to create custom adapters for hubs and brokers around such things as .NET, PHP or Ruby, he added.

JNBridgePro 6.0 supports Java and .NET interoperability in the same process, in different processes on the same machine, and on different machines across a network, Citrin explained.

When using a Java client to access an application in a Microsoft Windows Azure cloud, for example, complexities arise because the Azure cloud drive API is not part of the Windows Azure Tools for Java kit that Microsoft offers, he said. JNBridgePro can be used to create a proxy between the client and cloud drive for direct interoperability, he said. Further, he said, “A lot of the complications Microsoft hasn’t addressed in its documentation regarding deploying Java in an Azure cloud. We provide additional information around that.”

JNBridgePro addresses another of the cloud’s complexities, that of persistence, Citrin said. In theory, an instance can disappear anytime when it’s based in the cloud, so JNBridge had to remove its licensing information from a registry and make it an Internet-based mechanism that imposes licensing limits on elasticity, he said.

“It can accommodate instances up to certain limits. If those are about to be exceeded, you can go to the website and sign up for more

• The Windows Azure Team described Upcoming Academy Live Sessions Cover Partner Solutions Powered by Windows Azure in a 5/24/2011 post:

Don’t miss these FREE upcoming Academy Live sessions to learn more about partner solutions that can help you build and monetize your applications and keep your website secure on the Windows Azure platform. Click on each title to learn more and to register.

- Monetizing the Cloud, Software licensing on Windows Azure with InishTech

  - Wednesday, May 25, 2011, 8:00 AM PDT

  - Join InishTech for this overview session to learn how ISVs can monetize their Windows Azure applications using the Software Licensing and Protection Services for ISVs.

- Website security testing on Windows Azure with Cenzic

  - Wednesday, June 1, 2011, 8:00 AM PDT

  - Attend this session with Cenzic to learn how Cenzic’s ClickToSecure Cloud web testing service and Windows Azure can help protect your website from hacker attacks.

- Monetizing ISV Applications On Windows Azure With Metanga

  - Friday, June 3, 2011, 8:00 AM PDT

  - In this session, Metanga will demonstrate their SaaS billing application and show how it helps ISVs bring applications quickly to market and monetize them on Windows Azure.

Each session is 60 minutes.

• Jonathan Rozenblit (@jrozenblit, pictured below) recommended Rob Tiffany’s Connecting Windows Phones and Slates to Windows Azure TechEd session in a 5/24/2011 post:

In the words of Rob Tiffany (@robtiffany), Mobility Architect at Microsoft, “the combination of mobile and cloud computing is like peanut butter and chocolate. Nobody does it better than Microsoft when Windows Phones and Slates are connected to Windows Azure over wireless.” If you haven’t had a chance to see how Windows Azure offers you, the mobile developer, an easy way to add redundant storage, compute power, database access, queuing, caching, and web services that are accessible by any mobile platform, check out this session from TechEd North America last week here.

In this session, Rob helps you make sense of wireless-efficient web services and data transports, such as JSON. You’ll learn how to scale out processing through multiple Web and Worker role instances. Next, you’ll learn how to partition your data with Windows Azure Tables to dramatically increase your scalability via parallel reads. You’ll also learn about providing loose-coupling for inbound data from devices through the use of Azure Queues and Worker Roles. Finally, you’ll combine all this with distributed AppFabric Caching and Output Caching to provide a responsive mobile experience at Internet scale.

Did this make you think of cool ideas for the two? I’d love to hear them, so please share. Are you doing something with Windows Phone 7 and Windows Azure? I’d love to hear how you’re using it. Share the coolness in this LinkedIn discussion.

Igor Papirov posted Auto scaling strategies for Windows Azure, Amazon's EC2 and other cloud platforms to his Paraleap Technologies blog on 5/23/2011:

The strategies discussed in this article can be applied to any cloud platform that can dynamically provision compute resources, even though I rely on examples from the AzureWatch auto scaling and monitoring service for Windows Azure.

The topic of auto scaling is an extremely important one when it comes to architecting cloud-based systems. The major premise of cloud computing is its utility-based approach to on-demand provisioning and de-provisioning of resources while paying only for what has been consumed. It only makes sense to give the matter of dynamic provisioning and auto scaling a great deal of thought when designing your system to live in the cloud. Implementing a cloud-based application without auto scaling is like installing an air conditioner without a thermostat: one either needs to constantly monitor and manually adjust the needed temperature or pray that the outside temperature never changes.

Many cloud platforms such as Amazon's EC2 or Windows Azure do not automatically adjust compute power dedicated to applications running on their platforms. Instead, they rely upon various tools and services to provide dynamic auto scaling. For applications running in the Amazon cloud, auto scaling is offered by Amazon itself via its CloudWatch service as well as by third-party vendors such as RightScale. Windows Azure does not have its own auto scaling engine, but third-party vendors such as AzureWatch can provide auto scaling and monitoring.

Before deciding on when to scale up and down, it is important to understand when and why changes in demand occur. In general, demand on your application can vary due to planned or unplanned events. Thus it is important to initially divide your scaling strategies into these two broad categories: Predictable and Unpredictable demand.

The goal of this article is to describe scaling strategies that gracefully handle unplanned and planned spikes in demand. I'll use AzureWatch to demonstrate specific examples of how these strategies can be implemented in a Windows Azure environment. Important note: even though this article will mostly talk about scale-up techniques, do not forget to think about matching scale-down techniques. In some cases, it may help to think about building an auto scaling strategy in a way similar to building a thermostat.
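The thermostat analogy can be made concrete with a small sketch. It is illustrative only and is not AzureWatch's rule syntax: the function name, the two thresholds, and the instance limits are all assumptions. The point it shows is that scale-up and scale-down use different thresholds, so the instance count does not flap when load hovers around a single value.

```python
def thermostat_step(instances, avg_cpu, upper=75.0, lower=30.0,
                    min_instances=2, max_instances=10):
    """Return the next instance count for one evaluation cycle.

    All thresholds and limits are hypothetical values chosen for
    illustration, not defaults of any real product.
    """
    # Scale up by one instance when load is above the upper threshold.
    if avg_cpu > upper and instances < max_instances:
        return instances + 1
    # Scale down by one instance when load is below the lower threshold.
    if avg_cpu < lower and instances > min_instances:
        return instances - 1
    # Between the two thresholds (the "dead band"), do nothing.
    return instances


hot = thermostat_step(3, 90.0)    # above the upper threshold
mild = thermostat_step(3, 50.0)   # inside the dead band
cold = thermostat_step(3, 10.0)   # below the lower threshold
```

The gap between `upper` and `lower` is the design choice that matters here: the wider it is, the less often the system changes size, at the cost of reacting later.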

Unpredictable demand

Conceptualizing use cases of rarely occurring unplanned spikes in demand is rather straightforward. Demand on your app may suddenly increase for a number of reasons, such as:

- an article about your website was published on a popular website (the Slashdot effect)

- the CEO of your company just ordered a number of complex reports before a big meeting with shareholders

- your marketing department just ran a successful ad campaign and forgot to tell you about the possible influx of new users

- a large overseas customer signed up overnight and started consuming a lot of resources

Whatever the case may be, having an insurance policy that deals with such unplanned spikes in demand is not just smart; it may help save your reputation and that of your company. However, gracefully handling unplanned spikes in demand can be difficult because you are reacting to events that have already happened. There are two recommended ways of handling unplanned spikes:

Strategy 1: React to unpredictable demand

When utilization metrics indicate high load, simply react by scaling up. Such metrics usually include CPU utilization, number of requests per second, number of concurrent users, number of bytes transferred, or amount of memory used by your application. In AzureWatch you can configure scaling rules that aggregate such metrics over some amount of time and across all servers in the application role and then issue a scale-up command when some set of averaged metrics is above a certain threshold. When multiple metrics indicate a change in demand, it may also be a good idea to find a "common scaling unit" that unifies all relevant metrics into one number.
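A minimal sketch of this reactive rule follows. It assumes that samples for one metric have already been collected per instance over the aggregation window; the instance names, sample values, and the 70% threshold are made up for illustration and do not come from AzureWatch.

```python
from statistics import mean


def average_metric(samples_by_instance):
    """Average one metric over every sample from every instance in the role."""
    all_samples = [
        sample
        for samples in samples_by_instance.values()
        for sample in samples
    ]
    return mean(all_samples)


def should_scale_up(samples_by_instance, threshold):
    """True when the role-wide average exceeds the (hypothetical) threshold."""
    return average_metric(samples_by_instance) > threshold


# Hypothetical CPU readings (percent) gathered over the aggregation window.
cpu_window = {
    "web_0": [62.0, 71.0, 80.0],
    "web_1": [68.0, 75.0, 88.0],
}
role_average = average_metric(cpu_window)
decision = should_scale_up(cpu_window, threshold=70.0)
```

Averaging across both time and instances smooths out a single busy server or a momentary spike, which is why the rule looks at the aggregate rather than at any one reading.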

Strategy 2: React to rate of change in unpredictable demand

Since scale-up and scale-down events take some time to execute, it may be better to interrogate the rate of increase or decrease of demand and start scaling ahead of time: when moving averages indicate acceleration or deceleration of demand. As an example, in AzureWatch's rule-based scaling engine, such an event can be represented by a rule that compares Average CPU utilization over a short period of time with Average CPU utilization over a longer period of time.

(Fig: scale up when Average CPU utilization for the last 20 minutes is 20% higher than Average CPU utilization over the last hour and Average CPU utilization is already significant by being over 50%)
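The rule in the figure can be sketched in a few lines. This is an interpretation, not AzureWatch's implementation: it assumes one CPU sample per minute, reads "20% higher" as a relative increase (short average above 1.2 times the long average), and applies the 50% floor to the short-window average.

```python
from statistics import mean


def scale_up_on_acceleration(cpu_per_minute, short_window=20, long_window=60,
                             ratio=1.2, floor=50.0):
    """Compare a short moving average against a longer one.

    cpu_per_minute holds one CPU reading (percent) per minute, oldest first.
    The window sizes, ratio, and floor mirror the figure; treating the
    20% as relative is an assumption.
    """
    if len(cpu_per_minute) < long_window:
        return False  # not enough history to compare the two windows
    short_avg = mean(cpu_per_minute[-short_window:])
    long_avg = mean(cpu_per_minute[-long_window:])
    return short_avg > ratio * long_avg and short_avg > floor


steady = [55.0] * 60                   # busy but flat: no acceleration
ramping = [30.0] * 40 + [75.0] * 20    # quiet, then a sharp climb
```

With these hypothetical series, `steady` does not trigger the rule because the two averages are equal, while `ramping` does because the last 20 minutes average 75% against an hourly average of 45%.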

Also, it is important to keep in mind that scaling events with this approach may trigger at times when they are not really needed: a high rate of increase will not always turn into actual demand that justifies scaling up. However, in many instances it may be worth it to be on the safe side rather than on the cheap side.

Predictable demand

While reacting to changes in demand may be a decent insurance policy for websites with potential for unpredictable bursts in traffic, actually knowing when capacity is going to be needed before it is really needed is the best way to handle auto scaling. There are two very different ways to predict an increase or decrease in load on your application. One way follows a pattern of demand based on historical performance and is usually schedule-based, while another is based on some sort of a "processing queue".

Strategy 3: Predictable demand based on time of day

There are frequently situations when load on the application is known ahead of time. Perhaps it is between 7am and 7pm when a line-of-business (LOB) application is accessed by employees of a company, or perhaps it is during lunch and dinner times for an application that processes restaurant orders. Whichever it may be, the more you know at what times during the day the demand will spike, the better off your scaling strategy will be. AzureWatch handles this by allowing you to attach schedules to the execution of scaling rules.
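A schedule-based rule can be as simple as a lookup table. The sketch below uses the 7am to 7pm LOB example from the paragraph above; the instance counts and the table layout are assumptions for illustration and are unrelated to how AzureWatch stores its schedules.

```python
from datetime import time

# (start, end, instance_count); the counts are hypothetical.
SCHEDULE = [
    (time(7, 0), time(19, 0), 6),   # business hours for the LOB application
]
DEFAULT_INSTANCES = 2               # baseline outside any scheduled window


def instances_for(now):
    """Return the instance count that should be running at a time of day."""
    for start, end, count in SCHEDULE:
        if start <= now < end:      # the end of the window is exclusive
            return count
    return DEFAULT_INSTANCES


midday = instances_for(time(12, 30))
late_evening = instances_for(time(22, 0))
```

In practice the scale-up would be scheduled somewhat before the window opens, since new instances take time to start.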

Strategy 4: Predictable demand based on amount of work left to do

While schedule-based demand predictions are great if they exist, not all applications have consistent times of day when demand changes. If your application utilizes some sort of a job-scheduling approach where the load on the application can be determined by the number of jobs waiting to be processed, setting up scaling rules based on such a metric may work best. Asynchronous or batch job execution, where heavy-duty processing is off-loaded to back-end servers, not only provides responsiveness and scalability to your application; the number of jobs waiting to be processed can also serve as an important leading metric that lets you scale with better precision. In Windows Azure, the preferred supported job-scheduling mechanism is via Queues based on Azure Storage. AzureWatch provides an ability to create scaling rules based on the number of messages waiting to be processed in such a queue. For those not using Azure Queues, AzureWatch can also read custom metrics through a special XML-based interface.
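As a rough sketch of queue-based scaling, the number of worker instances can be derived from the backlog and a target time to drain it. The throughput figure, the drain target, and the instance limits below are hypothetical; reading the actual queue length from Azure Storage is left out.

```python
import math


def target_instances(queue_length, jobs_per_instance_per_minute,
                     drain_minutes, min_instances=1, max_instances=20):
    """Instances needed to clear the backlog within drain_minutes.

    All parameters are illustrative assumptions; measure real worker
    throughput before relying on numbers like these.
    """
    capacity_per_instance = jobs_per_instance_per_minute * drain_minutes
    needed = math.ceil(queue_length / capacity_per_instance)
    # Clamp to the allowed range so an empty queue keeps a minimum
    # footprint and a huge backlog cannot scale without bound.
    return max(min_instances, min(max_instances, needed))


backlog = target_instances(1200, jobs_per_instance_per_minute=10, drain_minutes=15)
idle = target_instances(0, jobs_per_instance_per_minute=10, drain_minutes=15)
```

Because the queue length is known before the work is done, this target leads the load instead of lagging behind it, which is the advantage the paragraph above describes.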

Combining strategies

In the real world, implementing a combination of more than one of the above scaling strategies may be prudent. Application administrators likely have some known patterns for their applications' behavior that would define predictable bursting scenarios, but having an insurance policy that would handle unplanned bursts of demand may be important as well. Understanding your demand and aligning scaling rules to work together is key to a successful auto scaling implementation.
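One simple way to make several rules work together is to let each strategy propose an instance count and run the largest proposal. The sketch below is only one possible policy under that assumption; the parameter names and limits are hypothetical.

```python
def combined_target(scheduled_floor, queue_based, reactive_bump, current,
                    min_instances=1, max_instances=20):
    """Merge proposals from several strategies into one instance count.

    scheduled_floor: count suggested by a time-of-day schedule
    queue_based:     count suggested by the backlog of waiting jobs
    reactive_bump:   True when utilization metrics call for scaling up
    current:         instances running now
    """
    proposals = [scheduled_floor, queue_based]
    if reactive_bump:
        proposals.append(current + 1)   # the insurance policy adds one more
    # The most demanding proposal wins, within the allowed range.
    return max(min_instances, min(max_instances, max(proposals)))


scheduled_wins = combined_target(6, 3, False, current=6)
queue_wins = combined_target(2, 8, False, current=2)
reactive_wins = combined_target(2, 1, True, current=4)
scale_down = combined_target(2, 1, False, current=4)
```

Taking the maximum errs on the side of capacity; when no rule asks for the current size any more, the result drops back to the scheduled floor, which gives the matching scale-down behavior.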

The Anime News Network reported Microsoft Launches Cloud Girl Manga for Windows Azure on 5/23/2011:

Engineer Claudia pitches cloud services to solve Windows 7 mascot Nanami's problems

Microsoft Japan launched the first of four chapters from Cloud Girl - Mado to Kumo to Aoi Sora- (Cloud Girl: Windows, Clouds, and Azure Skies), an "epic manga" that explains and promotes Microsoft's Windows Azure cloud services system. The Microsoft Developer Network already posted a preview of the manga, in which the cloud services are personified by the character "Claudia." The manga includes a cameo by Nanami Madobe, the formerly unofficial blue-haired mascot character who was created to promote the Microsoft Windows 7 operating system.

In the story, the novice engineer Claudia visits the personal computer parts shop where her cousin Nanami works part-time. Trouble ensues when the shop launches a promotional campaign on Twitter, and the shop's retail site goes down due to the overwhelming hits. It is up to Claudia to make things right again. Along the way, Claudia pitches Windows Azure as a hassle-free way to avoid dealing with issues like security patches, upgrading and swapping parts, and manually backing up data.

In cloud services, applications, data, and other resources are hosted on the network instead of stored on each computer. Microsoft claims that Windows Azure will help developers and administrators by getting rid of the exhaustive, redundant work that goes into hardware maintenance, patches, and the latest operating system upgrades for multiple computers.

Image © 2011 Microsoft

<Return to section navigation list>

Visual Studio LightSwitch

• Beth Massi (@bethmassi) reported the availability of Contoso Construction - LightSwitch Advanced Development Sample in a 5/25/2011 post:

Last week I demonstrated a more advanced sample at TechEd and showed different levels of customization that you can do to your LightSwitch applications as a professional developer by putting your own code into the client and server tiers. You can watch the session where I went through it here: Extending Microsoft Visual Studio LightSwitch Applications (also check out additional resources from my trip report I posted yesterday).

Download the Contoso Construction Sample

This sample demonstrates some of the more advanced code, screen, and data customizations you can do with Visual Studio LightSwitch Beta 2 as a professional developer (you get paid to write code). If you are not a professional developer or do not have any experience with LightSwitch, please see the Getting Started section of the LightSwitch Developer Center for step-by-step walkthroughs and How-to videos. Also please make sure you read the setup instructions below.

Features of this sample include:

- A “Home screen” with static images and text similar to the Course Manager sample

- Personalization with My Appointments displayed on log in

- “Show Map..” links under the addresses in data grids

- Picture editors

- Reporting via COM interop to Word

- Import data from Excel using the Excel Importer Extension

- Composite LINQ queries to retrieve/aggregate data

- Custom report filter using the Advanced Filter Control

- Emailing appointments via SMTP using iCal format in response to events on the save pipeline

Building the Sample

You will need Visual Studio LightSwitch Beta 2 installed to run this sample. Before building the sample you will need to set up a few things so that all the pieces work. Once you complete the following steps, press F5 to run the application in debug mode.

1. Install Extensions

You will need the following extensions installed to load this application:

http://code.msdn.microsoft.com/Filter-Control-for-Visual-90fb8e93

http://code.msdn.microsoft.com/Excel-Importer-for-Visual-61dd4a90

And the Bing Map control from the Training Kit:

http://go.microsoft.com/?linkid=9741442

These are .VSIX packages and are also located in the root folder of this sample. Close Visual Studio and then double-click them to install.

2. Set Up Bing Map Control

In order to use the Bing Maps Control and the Bing Maps Web Services, you need a Bing Maps Key. Getting the key is a free and straightforward process you can complete by following these steps:

- Go to the Bing Maps Account Center at https://www.bingmapsportal.com.

- Click Sign In, to sign in using your Windows Live ID credentials.

- If you haven’t got an account, you will be prompted to create one.

- Enter the requested information and then click Save.

- Click the "Create or View Keys" link on the left navigation bar.

- Fill in the requested information and click "Create Key" to generate a Bing Maps Key.

- In the ContosoConstruction application open the MapScreen screen.

- Select the Bing Map control and enter the key in the Properties window.

3. Set Up Email Server Settings

When you create, update or cancel an appointment in the system between a customer and an employee, emails can be sent. In order for the emailing of appointments to work, you must add the correct settings for your SMTP server in the ServerGenerated project's Web.config:

- Open the ContosoConstruction project and in the solution explorer select "File View".

- Expand the ServerGenerated project and open the Web.config file.

- You will see the following settings that you must change to valid values:

<add key="SMTPServer" value="smtp.mydomain.com" />

<add key="SMTPPort" value="25" />

<add key="SMTPUserId" value="admin" />

<add key="SMTPPassword" value="password" />

- Run the application and open the employees screen, select Test User and specify a valid email address. When you select this user on appointments, emails will be sent here.
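If emails do not arrive, it can help to check the SMTP values outside the application first. The following Python sketch is not part of the sample: it assumes the four keys sit inside an `appSettings` element (only the `add` lines are shown above), and the helper names are hypothetical. Whether your server needs TLS or accepts a login on port 25 depends on the server, so `send_test_message` is a starting point rather than a guaranteed check.

```python
import smtplib
import xml.etree.ElementTree as ET
from email.message import EmailMessage

# Assumed wrapper element; the key names and values are the placeholders
# shown in the steps above.
APP_SETTINGS_XML = """
<appSettings>
  <add key="SMTPServer" value="smtp.mydomain.com" />
  <add key="SMTPPort" value="25" />
  <add key="SMTPUserId" value="admin" />
  <add key="SMTPPassword" value="password" />
</appSettings>
"""


def read_smtp_settings(xml_text):
    """Collect every <add key=... value=...> pair into a dictionary."""
    root = ET.fromstring(xml_text.strip())
    return {node.get("key"): node.get("value") for node in root.iter("add")}


def build_test_message(sender, recipient):
    """Create a plain-text message for a delivery check."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "SMTP settings check"
    msg.set_content("If you can read this, the SMTP settings work.")
    return msg


def send_test_message(settings, msg):
    """Connect with the configured values and send msg (not called here)."""
    with smtplib.SMTP(settings["SMTPServer"], int(settings["SMTPPort"])) as server:
        server.login(settings["SMTPUserId"], settings["SMTPPassword"])
        server.send_message(msg)


settings = read_smtp_settings(APP_SETTINGS_XML)
message = build_test_message("admin@mydomain.com", "test.user@mydomain.com")
```

Calling `send_test_message(settings, message)` with real values attempts an actual delivery, so run it only against a server and address you control.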

Additional Setup Notes:

Personalization:

The system is set to Forms Authentication, but if you change it to Windows Authentication, then in order for the "My Appointments" feature to work you will need to add yourself into the Employees table and specify your domain name as the user name. Make sure to specify a valid email address if you want to email appointments.

Excel Import: