Windows Azure and Cloud Computing Posts for 5/5/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Updated 5/7/2011 with new articles marked • by Beth Massi, Michael Washington, Richard Waddell, Bill Zack, Yung Chow, Marcelo Lopez Ruiz, Doug Rehnstrom, Chris Hoff, Wall Street & Technology, Avkash Chauhan, James Hamilton, Neil MacKenzie, Rob Tiffany, Max Uritsky, the Windows Azure Connect Team, and the Windows Azure AppFabric Labs Team.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

• Marcelo Lopez Ruiz reported tracking down a Windows Azure Storage Emulator returning 503 - Service Unavailable problem in a 5/6/2011 post:

Today's post is the result of about four hours of tracking down a tricky problem, so hopefully this will help others.

My problem began when I was testing an Azure project with the storage emulator. The code that was supposed to work with the blob service would fail any request with a "503 - Service Unavailable" error. All other services seemed to be working correctly.

Looking at the headers in the Response object of the exception, I could see that this was produced by the HTTP Server library by the telling Server header (Microsoft-HTTPAPI/2.0 in my case). So this wasn't really a problem with the storage emulator - something was failing earlier on.

Looking at the error log at %SystemRoot%\System32\LogFiles\HTTPERR\httperr1.log in an Administrator command prompt, I found almost no details, so I had to look around more to figure out what was wrong.

Turns out that some time ago I had configured port 10000 on my machine to self-host WCF services according to the instructions on Configuring HTTP and HTTPS, using http://+:10000. The storage emulator currently sets itself up as http://127.0.0.1:10000/. According to the precedence rules, "+" trumps an explicit host name, so that was routed to first, but there was no service registered for "+" at the moment, so http.sys was correctly returning 503 - Service Unavailable.
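The precedence rule Marcelo describes can be modeled in a few lines. The following is only a rough sketch, not how http.sys is implemented: it assumes the ordering strong wildcard ("+"), then explicit host, then weak wildcard ("*"), ignores path matching and IP-bound wildcards, and the function names are made up for illustration.

```python
# Simplified model of host-specifier precedence for URL reservations.
# Assumed order (lower wins): "+" strong wildcard, explicit host, "*" weak wildcard.
PRECEDENCE = {"+": 0, "explicit": 1, "*": 2}


def split_prefix(prefix):
    """Split 'scheme://host:port/path' into (host, port); host may be '+' or '*'."""
    rest = prefix.split("://", 1)[1]
    authority = rest.split("/", 1)[0]
    host, port = authority.rsplit(":", 1)
    return host, int(port)


def pick_reservation(prefixes, request_host, request_port):
    """Return the reservation that would win for a request, or None."""
    candidates = []
    for prefix in prefixes:
        host, port = split_prefix(prefix)
        if port != request_port:
            continue
        if host in ("+", "*"):
            candidates.append((PRECEDENCE[host], prefix))
        elif host.lower() == request_host.lower():
            candidates.append((PRECEDENCE["explicit"], prefix))
    return min(candidates)[1] if candidates else None


reservations = ["http://127.0.0.1:10000/", "http://+:10000/"]
winner = pick_reservation(reservations, "127.0.0.1", 10000)
print(winner)
```

With both reservations present, the "+" entry wins even though the request names 127.0.0.1 explicitly, which is the situation that produced the 503 when nothing was listening on the "+" reservation.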

To verify, I can simply run this command from an Administrator command prompt:

C:\Windows\system32>netsh http show urlacl

URL Reservations:
-----------------

    Reserved URL : http://*:2869/
        User: NT AUTHORITY\LOCAL SERVICE
            Listen: Yes
            Delegate: No
            SDDL: D:(A;;GX;;;LS)

    Reserved URL : http://+:80/Temporary_Listen_Addresses/
        User: \Everyone
            Listen: Yes
            Delegate: No
            SDDL: D:(A;;GX;;;WD)

... (an entry for http://+:10000/ was among these!) ...

The fix was a simple one-liner, again from an Administrator command prompt, to delete that bit of configuration I didn't need anymore, and the storage server is up and running again.

netsh http delete urlacl url=http://+:10000/
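For readers who want to check for this kind of conflict without reading the listing by eye, here is a minimal sketch that scans `netsh http show urlacl` output for wildcard reservations on a given port. The sample text is abbreviated from the listing in the post; the fields of the http://+:10000/ entry were not shown there, so only its "Reserved URL" line is included. The function names are illustrative.

```python
import re

# Abbreviated sample; the +:10000 entry's remaining fields are not in the post.
SAMPLE_OUTPUT = """\
URL Reservations:
-----------------

    Reserved URL : http://*:2869/
        User: NT AUTHORITY\\LOCAL SERVICE
            Listen: Yes
            Delegate: No
            SDDL: D:(A;;GX;;;LS)

    Reserved URL : http://+:10000/
"""

RESERVED_URL = re.compile(r"^\s*Reserved URL\s*:\s*(\S+)\s*$")


def reserved_urls(netsh_output):
    """Collect every reserved URL prefix listed in the output."""
    urls = []
    for line in netsh_output.splitlines():
        match = RESERVED_URL.match(line)
        if match:
            urls.append(match.group(1))
    return urls


def wildcard_conflicts(netsh_output, port):
    """Return wildcard ('+' or '*') reservations bound to the given port."""
    pattern = re.compile(r"^https?://[+*]:%d/" % port)
    return [url for url in reserved_urls(netsh_output) if pattern.match(url)]


print(wildcard_conflicts(SAMPLE_OUTPUT, 10000))
```

On a real machine the text could come from `subprocess.run(["netsh", "http", "show", "urlacl"], capture_output=True, text=True).stdout` instead of the sample string.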

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi recommended Andrew Brust’s Whitepaper on NoSQL and the Windows Azure Platform in a 5/4/2011 post to the SQL Azure Team blog:

I wanted to bring to your attention a great whitepaper on “NoSQL and the Windows Azure platform” written by Andrew Brust, a frequently quoted technology commentator and consultant.

This paper is important because there is a renewed interest in certain non-relational data stores which are sometimes collectively referred to as “NoSQL” technologies and this is a category which certainly has its niche of avid supporters. NoSQL databases often inherently use approaches like distributed horizontal scale-out and do away with some of the ACID characteristics of traditional relational databases in favor of flexibility and performance especially for certain workloads like scalable web applications. This in turn provokes a number of questions as to which scenarios NoSQL is appropriate for, whether it is a favored technology for the cloud and how it relates to cloud offerings like the Windows Azure platform.

I really enjoyed reading Andrew’s whitepaper because it does a great job of educating users on NoSQL and its major subcategories; understanding the NoSQL technologies already available in the Windows Azure platform; and on evaluating NoSQL and relational database approaches.

We have long believed that SQL Azure is unique because it has proven relational database technologies but is architected from the ground up for the scale, simplicity and ease of use of the cloud. But whether you are using SQL Azure or not, if you are working on cloud technologies you will likely at some point be confronted with decisions that relate to NoSQL and Andrew’s perspectives on this topic help to bring greater clarity to a topic which can be quite confusing at first.

<Return to section navigation list>

MarketPlace DataMarket, WCF and OData

Wes Yanaga posted Windows Azure Marketplace–DataMarket News on 5/5/2011:

Highlights

ISV Applications: Does your application leverage DataMarket data feeds? We are very interested in helping to promote the many applications that use data from DataMarket. If you have built an application using one or more DataMarket feeds, please send an email with the details of your application and which feeds you use to datamarketisv@microsoft.com. Your application could be featured on our blogs, in this newsletter and on our website.

World Dashboards by ComponentArt: ComponentArt's mission is to deliver the world's most sophisticated data presentation technology. ComponentArt has built a set of amazing World Data Digital Dashboards to show developers how they can build rich visualizations based on DataMarket data. Check out the dashboards at http://azure.componentart.com/.

Content Update

World Life Networks: The Social Archive - Micro-Blog Data (200+ Sources) by Keyword, Date, Location, Service—The Social Archive provides data from 200+ microblogs based on keyword, date, location, and/or service. UTF8 worldwide data on Entertainment, Geography, History, Lifestyle, Music, News, Science, Shopping, Technology, Transportation, etc. Updated every 60 seconds.

Microsoft:

Microsoft Utility Rate Service—The Microsoft Utility Rate Service is a database of electric utilities and rates indexed by location within the United States, gathered from Partner Utilities and/or from publically available information. Using this Service, developers can find electricity providers and rates when available in a given area, or area averages as provided by the US Energy Information Administration (EIA).

Government of the United Kingdom:

UK Foreign and Commonwealth Office Travel Advisory Service—Travel advice and tips for British travellers on staying safe abroad and what help the FCO can provide if something goes wrong.

United Nations:

UNSD Demographic Statistics—United Nations Statistics Division—The United Nations Demographic Yearbook collects, compiles and disseminates official statistics on a wide range of topics such as population size and composition, births, deaths, marriage and divorce. Data have been collected annually from national statistical authorities since 1948.

Indian Stock Market information available free on DataMarket:

StockViz Capital Market Analytics for India—StockViz brings the power of data & technology to the individual investor. It delivers ground-breaking financial visualization, analysis and research for the Indian investor community. The StockViz dataset is being made available through DataMarket to allow users to access the underlying data and analytics through multiple interfaces.

ParcelAtlas Data from Boundary Solutions Inc available for purchase on DataMarket:

ParcelAtlas BROWSE Parcel Image Tile Service—National Parcel Layer composed of 80 million parcel boundary polygons across nearly 1,000 counties, suitable for incorporating a national parcel layer into existing geospatial data models. Operations are restricted to display of parcel boundaries as graphic tiles.

ParcelAtlas LOCATE—National Parcel Layer composed of parcel boundary polygons supported by situs address, property characteristics and owner information. Three different methods for finding an address expedite retrieving and displaying a desired parcel and its assigned attributes. Surrounding parcels are graphic tile display only.

ParcelAtlas REPORTS—National Parcel Layer composed of parcel boundary polygons supported by situs address, property characteristics and owner information.

UPC Datasets from Gregg London Consulting available for building rich apps on various platforms:

U.P.C. Data for Spirits—Point of Sale—U.P.C. Data for Spirits includes Basic Product Information for Items in the following Categories: Beer, Wine, Liquor, and Mixers.

U.P.C. Data—Full Food—U.P.C. Data for Food includes Full Product Information and Attributes.

U.P.C. Data for Tobacco—Extended—U.P.C. Data for Tobacco includes Basic plus Extended Product Information for the following Categories: Cigarettes, Cigars, Other Tobacco Products, and Roll Your Own.

U.P.C. Data for Spirits—Extended—U.P.C. Data for Spirits includes Basic plus Extended Product Information for Items in the following Categories: Beer, Wine, Liquor, and Mixers.

U.P.C. Data for Tobacco—Point of Sale—U.P.C. Data for Tobacco includes Basic Product Information for the following Categories: Cigarettes, Cigars, Other Tobacco Products, and Roll Your Own.

U.P.C. Data for Vending Machines—U.P.C. Data for Food, for the Vending Machine Industry, to maintain compliance with Section 2572 of Health Care Bill H.R. 3962.

U.P.C. Data—Ingredients for Food—U.P.C. Data for Food includes Full Ingredient Information.

U.P.C. Data—Nutrition for Food—U.P.C. Data for Food includes Full Nutrition Information.

ISV Partners

DataMarket helps Dundas contextualize BI data in the most cost effective way: Learn how Dundas uses DataMarket to bring in reliable, trusted public domain and premium commercial data into Dashboard without a lot of development time and costs. This is only possible because DataMarket takes a standardized approach, with a REST-based model for consuming services and data views that are offered in tabular form or as OData feeds. Without having a standard in place, Dundas would be forced to write all kinds of web services and retrieval mechanisms to get data from the right places and put it into the proper format for processing.

DataMarket powers Presto, JackBe's flagship Real-Time Intelligence (RTI) Solution: Hear Dan Malks, Vice President for App Platform Engineering at JackBe, talk about how his company is taking advantage of DataMarket to power Presto, the company's flagship Real-Time Intelligence (RTI) solution. He adds that DataMarket speeds the decision-making process by providing a central place where they can find whatever data they need for different purposes, saving time and increasing efficiency.

Tableau uses DataMarket to add premium content as a data source option in its data visualization software: Ellie Fields, Director of Product Marketing at Tableau Software, talks about using DataMarket, to tame the Wild West of data. She adds, "Now, when customers use Tableau, they see DataMarket as a data source option. They simply provide their DataMarket account key for authentication and then find the data sets they want to use. Customers can import the data into Tableau and combine that information with their own corporate data for deep business intelligence."

Resources

- Marketplace: http://datamarket.azure.com/

- Blog: http://blogs.msdn.com/datamarket

- Developer Documentation: http://msdn.microsoft.com/en-us/library/gg315539.aspx

- Partner Testimonials: http://www.microsoft.com/showcase/en/us/details/421d10b0-89ea-4b90-be59-21052ee700d3

- Facebook: http://www.facebook.com/azuredatamarket

- YouTube: http://www.youtube.com/azuredatamarket

There was very little, if anything, new in the preceding post. However, the Windows Azure Marketplace DataMarket hasn’t received much attention recently.

• Marcelo Lopez Ruiz asserted Anecdotally, datajs delivers quiet success in a 5/4/2011 post:

Most of the people I've discussed datajs with describe their experience as simply getting the code, using it and having it work on the first go. "It just works", and people get on with building the rest of their web app.

So far it seems that the simple API resonates well with developers, and we're hitting the right level of simplicity with control, but I'm interested in hearing more of course. If you've tried datajs, what was your experience like? Good things, bad things, difficult things? If you haven't, is there something that's getting in your way or something that could change that would make the library more appealing to you?

If you want to be heard, just comment on this post or drop me a message - thanks in advance for your time!

Marcelo also described What we're up to with datajs in a 5/3/2011 post:

It's been a while since I last blogged about datajs, so in the interest of transparency I thought I'd give a quick update on what we're up to.

There are three things that we're working on.

- Cache implementation. Alex is doing some awesome work here, but the changes are pretty deep, which is why we haven't uploaded code for a while; we want to make sure that the codebase gets more stable over time at this moment.

- Bug fixes. A few minor things here and there, nothing too serious has come up so far.

- Responding to feedback. There are a couple of tweaks in design that we're considering to help with some scenarios. This is a great time to contribute ideas, so if you're interested, just go over to the Discussions page and post - we're listening.

Pedro Ardila described Spatial Types in the Entity Framework as a precursor to their incorporation in OData feeds in a 5/4/2011 post to the Entity Framework Design blog:

One of the highly-anticipated features coming in the next version of Entity Framework is Spatial support. The team has been hard at work designing a compelling story for Spatial, and we would love to get your feedback on it. In this post we will cover:

- The basics of SQL Server’s spatial support

- Design goals

- Walkthrough of design: CLR types, Metadata, Usage, WCF Serialization, Limitations

- Questions (we want to hear from you!)

This entry will not cover the tools experience as we want to focus on what is happening under the hood. We will blog about the tools design at a later time. For now, be sure that we plan on shipping Spatial with Tools support.

The Basics of Spatial

For those of you that are new to Spatial, let’s briefly describe what it’s all about: There are two basic spatial types called Geometry and Geography which allow you to perform geospatial calculations. An example of a geospatial calculation is figuring out the distance between Seattle and New York, or calculating the size (i.e. area) of a state, where the state has been described as a polygon.

Geometry and Geography are a bit different. Geometry deals with planar data. Geometry is well documented under the Open Geospatial Consortium (OGC) Specification. Geography deals with ellipsoidal data, taking into account the curvature of the earth when performing any calculations. SQL introduced spatial support for these two types in SQL Server 2008. The SQL implementation supports all of the standard functions outlined in the OGC spec.
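To make the planar (Geometry) side of the "area of a polygon" example concrete, here is a minimal sketch using the shoelace formula. It is purely illustrative: it is not how SQL Server computes areas, the function name is made up, and it is only valid for flat coordinates; a Geography area has to account for the curvature of the earth.

```python
def planar_area(ring):
    """Area of a simple polygon given as a list of (x, y) vertices.

    Uses the shoelace formula. The first vertex may or may not be repeated
    at the end; a repeated closing vertex contributes nothing to the sum.
    """
    total = 0.0
    count = len(ring)
    for i in range(count):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % count]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


# A 4 x 3 rectangle in unit-less planar coordinates.
rectangle = [(0, 0), (4, 0), (4, 3), (0, 3)]
print(planar_area(rectangle))
```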

Programming Experience in SQL

The query below returns all the stores within half a mile of the address of a person whose ID is equal to 2. In the where clause we multiply the result of STDistance by 0.00062 to convert meters to miles.

    DECLARE @dist sys.geography
    SET @dist = (SELECT p.Address FROM dbo.People as p WHERE p.PersonID = 2)
    SELECT [Store].[Name], [Store].[Location].Lat, [Store].[Location].Long
    FROM [dbo].[Stores] AS [Store]
    WHERE (([Store].[Location].STDistance(@dist)) * cast(0.00062 as float(53))) <= .5

In the sample below, we change the person’s address to a different coordinate. Note that STGeomFromText takes two parameters: the point, and a Spatial Reference Identifier (SRID). The value of 4326 maps to the WGS 84 which is the standard coordinate system for the Earth.

    update [dbo].[People]
    set [Address] = geography::STGeomFromText('POINT(-122.206834 57.611421)', 4326)
    where [PersonID] = 2

Note that when listing a point, the longitude is listed before the latitude.
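The half-mile filter can be mimicked outside the database to see what the arithmetic is doing. This is a hedged sketch only: it swaps in the haversine formula on a sphere rather than the ellipsoidal calculation that STDistance performs, the store names and coordinates are invented, and only the 0.00062 conversion factor and the longitude-before-latitude ordering come from the post.

```python
import math

EARTH_RADIUS_M = 6371008.8  # mean Earth radius in meters (spherical approximation)
METERS_TO_MILES = 0.00062   # same rounded factor the query uses


def haversine_m(lon1, lat1, lon2, lat2):
    """Great-circle distance in meters between two (lon, lat) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def stores_within(stores, person, max_miles=0.5):
    """Names of stores whose distance to the person is at most max_miles."""
    lon, lat = person
    return [
        name
        for name, (store_lon, store_lat) in stores
        if haversine_m(lon, lat, store_lon, store_lat) * METERS_TO_MILES <= max_miles
    ]


# Hypothetical data; points are (longitude, latitude), as in WKT.
person = (-122.206834, 47.611421)
stores = [
    ("near", (-122.206834, 47.615)),
    ("far", (-122.206834, 47.63)),
]
print(stores_within(stores, person))
```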

Design

Goals

The goals for spatial are the following:

- To provide a first class support for spatial in EDM

- Rich Programming experience in LINQ and Entity SQL against Spatial types

- Code-first, Model-first, and Database-first support

- Tooling (not covered in this blog)

We have introduced two new primitive EDM Types called Geometry and Geography. This allows us to have spatial-typed properties in our Entities and Complex Types. As with every other primitive type in EDM, Geometry and Geography will be associated with CLR types. In this case, we have created two new types named DbGeometry and DbGeography which allow us to provide a first-class programming experience against these types in LINQ and Entity SQL.

One can describe these types in the CSDL in a straightforward fashion:

    <EntityType Name="Store">
      <Key>
        <PropertyRef Name="StoreId" />
      </Key>
      <Property Name="StoreId" Type="Int32" Nullable="false" />
      <Property Name="Name" Type="String" Nullable="false" />
      <Property Name="Location" Type="Geometry" Nullable="false" />
    </EntityType>

Representing in SSDL is very simple as well:

    <EntityType Name="Stores">
      <Key>
        <PropertyRef Name="StoreId" />
      </Key>
      <Property Name="StoreId" Type="int" Nullable="false" />
      <Property Name="Name" Type="nvarchar" Nullable="false" MaxLength="50" />
      <Property Name="Location" Type="geometry" Nullable="false" />
    </EntityType>

Be aware that spatial types cannot be used as entity keys, cannot be used in relationships, and cannot be used as discriminators.

Usage

Here are some scenarios and corresponding queries showing how simple it is to write spatial queries in LINQ:

Keep in mind that spatial types are immutable, so they can’t be modified after creation. Here is how to create a new location of type DbGeography:

    s.Location = DbGeography.Parse("POINT(-122.206834 47.611421)");
    db.SaveChanges();

Spatial Functions

Our Spatial implementation relies on the underlying database implementation of any of the spatial functions such as Distance, Intersects, and others. To make this work, we have created the most common functions as canonical functions on EDM. As a result, Entity Framework will defer the execution of the function to the server.

Client-side Behavior

DbGeometry and DbGeography internally use one of two implementations of DbSpatialServices for client side behavior which we will make available:

One implementation relies on Microsoft.SqlServer.Types.SqlGeography and Microsoft.SqlServer.Types.SqlGeometry being available to the client. If these two types are available, then we delegate all spatial operations down to the SQL assemblies. Note that this implementation introduces a dependency.

Another implementation provides limited services such as serialization and deserialization, but does not allow performing non-trivial spatial operations. We create these whenever you explicitly create a spatial value or deserialize one from a web service.

DataContract Serialization

Our implementation provides a simple wrapper that is serializable, which allows spatial types to be used in multi-tier applications. To provide maximum interoperability, we have created a class called DbWellKnownSpatialValue which contains the SRID, WellKnownText (WKT), and WellKnownBinary (WKB) values. We will serialize SRID, WKT and WKB.
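As a rough illustration of what a wrapper carrying SRID, WKT and WKB might hold, here is a small sketch for a single point. The class below is a hypothetical stand-in, not the Entity Framework's DbWellKnownSpatialValue; the binary layout follows the OGC well-known binary encoding for a 2D point (a byte-order flag, a 32-bit type code of 1, then two 64-bit floats).

```python
import struct
from dataclasses import dataclass

WKB_POINT = 1  # OGC geometry type code for Point


@dataclass(frozen=True)
class WellKnownSpatialValue:
    """Hypothetical immutable carrier for the three serialized fields."""
    srid: int
    wkt: str
    wkb: bytes


def point_value(lon, lat, srid=4326):
    """Build the SRID/WKT/WKB triple for a point (longitude first)."""
    wkt = "POINT(%r %r)" % (lon, lat)
    # "<" = little-endian, no padding: flag byte, uint32 type, two float64 values.
    wkb = struct.pack("<BIdd", 1, WKB_POINT, lon, lat)
    return WellKnownSpatialValue(srid, wkt, wkb)


def point_from_wkb(wkb):
    """Decode a 2D point from WKB, honoring the byte-order flag."""
    order = "<" if wkb[0] == 1 else ">"
    geometry_type, x, y = struct.unpack(order + "Idd", wkb[1:21])
    if geometry_type != WKB_POINT:
        raise ValueError("not a 2D point")
    return x, y


value = point_value(-122.206834, 47.611421)
print(value.srid, value.wkt, len(value.wkb))
```

Carrying both text and binary forms is redundant, but it lets a receiving tier pick whichever representation its own spatial library reads most easily.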

Questions

We want to hear from you. As we work through this design, it is vital to hear what you think about our decisions, and that you chime in with your own ideas. Here are a few questions, please take some time to answer them in the comments:

- In order to have client-side spatial functionality, EF relies on a spatial implementation supplied by the provider. As a default, EF uses the SQL Spatial implementation. Do you foresee this being problematic for hosted applications which may or may not have access to a spatial implementation?

- Do you feel as though you will need richer capabilities on the client side?

- In addition to Geometry and Geography types, do you need to have constrained types like Point, Polygon?

- Do you foresee using heterogeneous columns of spatial values in your application?

Spatial data is ubiquitous now thanks to the widespread use of GPS-enabled mobile devices. We are very excited about bringing spatial type support to the Entity Framework. We encourage you to leave your thoughts on this design below.

Be sure to read the comments.

Arlo Belshee posted Geospatial data support in OData to the OData blog on 5/3/2011:

This is a strawman proposal. Please challenge it in the OData mailing list.

OData supports geospatial data types as a new set of primitives. They can be used just like any other primitives - passed in URLs as literals, as types and values for properties, projected in $select, and so on. Like other primitives, there is a set of canonical functions that can be used with them.

Why are we leaning towards this design?

Boxes like these question the design and provide the reasoning behind the choices so far.

This is currently a living document. As we continue to make decisions, we will adjust this document. We will also record our new reasons for our new decisions.

The only restriction, as compared to other primitives, is that geospatial types may not be used as entity keys (see below).

The rest of this spec goes into more detail about the geospatial type system that we support, how geospatial types are represented in $metadata, how their values are represented in Atom and JSON payloads, how they are represented in URLs, and what canonical functions are defined for them.

Modeling

Primitive Types

Our type system is firmly rooted in the OGC Simple Features geometry type system. We diverge from their type system in only four ways.

Figure 1: The OGC Simple Features Type Hierarchy

Why a subset?

Our primary goal with OData is to get everyone into the ecosystem. Thus, we want to make it easy for people to start. Reducing the number of types and operations makes it easier for people to get started. There are extensibility points for those with more complex needs.

First, we expose a subset of the type system and a subset of the operations. For details, see the sections below.

Second, the OGC type system is defined for only 2-dimensional geospatial data. We extend the definition of a position to be able to handle a larger number of dimensions. In particular, we handle 2d, 3dz, 3dm, and 4d geospatial data. See the section on Coordinate Reference Systems (CRS) for more information.

Why separate Geometrical and Geographical types?

They actually behave differently. Assume that you are writing an application to track airplanes and identify when their flight paths intersect, to make sure planes don't crash into each other.

Assume you have two flight plans. One flies North, from (0, 0) to (0, 60). The other flies East, from (-50, 58) to (50, 58). Do they intersect?

In geometric coordinates they clearly do. In geographic coordinates, assuming these are latitude and longitude, they do not.

That's because geographic coordinates are on a sphere. Airplanes actually travel in arcs. The eastward-flying plane actually takes a path that bends up above the flight path of the northward plane. These planes miss each other by hundreds of miles.

Obviously, we want our crash detector to know that these planes are safe.

The two types may have the same functions, but they can have very different implementations. Splitting these into two types makes it easier for function implementers. They don't need to check the CRS in order to pick their algorithm.
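The flight-path example can be checked numerically. The sketch below assumes the coordinates are (longitude, latitude) pairs, treats the earth as a perfect sphere, and uses the symmetry of the eastbound route: its great-circle arc crosses longitude 0 at its midpoint. The function names are illustrative.

```python
import math


def planar_crossing_lat(lon_a, lat_a, lon_b, lat_b, lon):
    """Latitude where the straight flat-map segment A-B crosses meridian lon."""
    t = (lon - lon_a) / (lon_b - lon_a)
    return lat_a + t * (lat_b - lat_a)


def great_circle_midpoint_lat(lon_a, lat_a, lon_b, lat_b):
    """Latitude of the midpoint of the great-circle arc A-B on a sphere."""
    def unit(lon, lat):
        lam, phi = math.radians(lon), math.radians(lat)
        return (math.cos(phi) * math.cos(lam),
                math.cos(phi) * math.sin(lam),
                math.sin(phi))

    ax, ay, az = unit(lon_a, lat_a)
    bx, by, bz = unit(lon_b, lat_b)
    mx, my, mz = ax + bx, ay + by, az + bz  # direction of the arc midpoint
    return math.degrees(math.atan2(mz, math.hypot(mx, my)))


NORTHBOUND_MAX_LAT = 60.0  # northbound plane flies along lon 0 from lat 0 to lat 60

planar_lat = planar_crossing_lat(-50, 58, 50, 58, 0)
geographic_lat = great_circle_midpoint_lat(-50, 58, 50, 58)

planar_hit = 0.0 <= planar_lat <= NORTHBOUND_MAX_LAT
geographic_hit = 0.0 <= geographic_lat <= NORTHBOUND_MAX_LAT
print(planar_lat, planar_hit)
print(round(geographic_lat, 2), geographic_hit)
```

On the flat map the routes cross at latitude 58. On the sphere the eastbound arc reaches the prime meridian at a bit over 68 degrees north, roughly 8 degrees beyond where the northbound route ends, so the two paths never meet.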

Third, the OGC type system is designed for flat-earth geospatial data (termed geometrical data hereafter). OGC does not define a system that handles round-earth geospatial data (termed geographical data). Thus, we duplicate the OGC type system to make a parallel set of types for geographic data.

We refer to the geographical vs geometrical distinction as the topology of the type. It describes the shape of the space that includes that value.

Some minor changes in representation are necessary because geographic data is in a bounded surface (the spheroid), while geometric data is in an infinite surface (the plane). This shows up, for example, in the definition of a Polygon. We make as few changes as possible; see below for details. Even when we make changes, we follow prior art wherever possible.

Finally, like other primitives in OData, the geospatial primitives do not use inheritance and are immutable. The lack of inheritance, and the subsetting of the OGC type system, give us a difficulty with representing some data. We resolve this with a union type that behaves much like the OGC's base class. See the Union types section below for more details.

Coordinate Reference Systems

Although we support many Coordinate Reference Systems (CRS), there are several limitations (as compared to the OGC standard):

- We only support named CRS designated by an SRID. This should be an official SRID. In particular, we don't support custom CRS defined in the metadata, as does GML.

- Thus, some data will be inexpressible. For example, there are hydrodynamics readings data represented in a coordinate system where each point has coordinates [lat, long, depth, time, pressure, temperature]. This lets them do all sorts of cool analysis (eg, spatial queries across a surface defined in terms of the temperature and time axes), but is beyond the scope of OData.

- There are also some standard coordinate systems that don't have codes. So we couldn't represent those. Ex: some common systems in New Zealand & northern Europe.

- The CRS is part of the static type of a property. Even if that property is of the base type, that property is always in the same CRS for all instances.

- The CRS is static under projection. The above holds even between results of a projection.

- There is a single "undefined" SRID value. This allows a service to explicitly state that the CRS varies on a per-instance basis.

- Geometric primitives with different CRS are not type-compatible under filter, group, sort, any action, or in any other way. If you want to filter an entity by a point defined in Stateplane, you have to send us literals in Stateplane. We will not transform WGS84 to Stateplane for you.

- There are client-side libraries that can do some coordinate transforms for you.

- Servers could expose coordinate transform functions as non-OGC canonical function extensions. See below for details.

Nominally, the Geometry/Geography type distinction is redundant with the CRS. Each CRS is inherently either round-earth or flat-earth. However, we are not going to automatically resolve this. Our implementation will not know which CRS match which model type. The modeler will have to specify both the type & the CRS.

There is a useful default CRS for Geography (round earth) data: WGS84. We will use that default if none is provided.

The default CRS for Geometry (flat earth) data is SRID 0. This represents an arbitrary flat plane with unit-less dimensions.
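The defaulting and type-compatibility rules in this section can be summarized in a short sketch. Everything here is illustrative rather than part of the proposal: the class and function names are made up, and SRID 4326 is used for WGS84 as in the Entity Framework post quoted earlier.

```python
from dataclasses import dataclass
from typing import Optional

# Default SRID per topology: WGS84 (4326) for round earth, 0 for the flat plane.
DEFAULT_SRID = {"geography": 4326, "geometry": 0}


@dataclass(frozen=True)
class SpatialType:
    """Static type of a spatial property: topology plus CRS."""
    topology: str
    srid: int


def make_type(topology, srid: Optional[int] = None):
    """Build a spatial type, falling back to the topology's default SRID."""
    if topology not in DEFAULT_SRID:
        raise ValueError("unknown topology: %s" % topology)
    return SpatialType(topology, DEFAULT_SRID[topology] if srid is None else srid)


def comparable(left, right):
    """Values can be filtered or sorted together only if topology and CRS match."""
    return left == right


bus_stop = make_type("geography")           # defaults to 4326
floor_plan = make_type("geometry")          # defaults to 0
surveyed = make_type("geometry", srid=1234) # arbitrary SRID for illustration
print(comparable(bus_stop, make_type("geography", 4326)))
print(comparable(floor_plan, surveyed))
```

Because no coordinate transform is attempted, a mismatch in either topology or SRID simply makes the two values incomparable, which mirrors the "we will not transform WGS84 to Stateplane for you" rule.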

The Point types - Edm.Point and Edm.GeometricPoint

Why the bias towards the geographic types?

Mobile devices are happening now. A tremendous amount of new data and new applications will be based on the prevalence of these devices. They all use WGS84 for their spatial data.

Mobile device developers also tend to be more likely to try to copy some code from a blog or just follow intellisense until something works. Hard-core developers are more likely to read the docs and think things through. So we want to make the obvious path match the mobile developers.

"Point" is defined as per the OGC. Roughly, it consists of a single position in the underlying topology and CRS. Edm.Point is used for points in the round-earth (geographic) topology. Edm.GeometricPoint is a point in a flat-earth (geometric) topology.

These primitives are used for properties with a static point type. All entities of this type will have a point value for this property.

Example properties that would be of type point or geometric point include a user's current location or the location of a bus stop.

The LineString types - Edm.LineString and Edm.GeometricLineString

"LineString" is defined as per the OGC. Roughly, it consists of a set of positions with linear interpolation between those positions, all in the same topology and CRS, and represents a path. Edm.LineString is used for geographic LineStrings; Edm.GeometricLineString is used for geometric ones.

These primitives are used for properties with a static path type. Example properties would be the path for a bus route entity, or the path that I followed on my jog this morning (stored in a Run entity).

The Polygon types - Edm.Polygon and Edm.GeometricPolygon

"Polygon" is defined as per the OGC. Roughly, it consists of a single bounded area which may contain holes. It is represented using a set of LineStrings that follow specific rules. These rules differ for geometric and geographic topologies.

These primitives are used for properties with a static single-polygon type. Examples include the area enclosed in a single census tract, or the area reachable by driving for a given amount of time from a given initial point.

Some things that people think of as polygons, such as the boundaries of states, are not actually polygons. For example, the state of Hawaii includes several islands, each of which is a full bounded polygon. Thus, the state as a whole cannot be represented as a single polygon. It is a Multipolygon, and can be represented in OData only with the base types.

The base types - Edm.Geography and Edm.Geometry

The base type represents geospatial data of an undefined type. It might vary per entity. For example, one entity might hold a point, while another holds a multi-linestring. It can hold any of the types in the OGC hierarchy that have the correct topology and CRS.

Although core OData does not support any functions on the base type, a particular implementation can support operations via extensions (see below). In core OData, you can read & write properties that have the base types, though you cannot usefully filter or order by them.

The base type is also used for dynamic properties on open types. Because these properties lack metadata, the server cannot state a more specific type. The representation for a dynamic property MUST contain the CRS and topology for that instance, so that the client knows how to use it.

Therefore, spatial dynamic properties cannot be used in $filter, $orderby, and the like without extensions. The base type does not expose any canonical functions, and spatial dynamic properties are always the base type.

Edm.Geography represents any value in a geographic topology and given CRS. Edm.Geometry represents any value in a geometric topology and given CRS.

Each instance of the base type has a specific type that matches an instantiable type from the OGC hierarchy. The representation for an instance makes clear the actual type of that instance.

Thus, there are no instances of the base type. It is simply a way for the $metadata to state that the actual data can vary per entity, and the client should look there.

Spatial Properties on Entities

Zero or more properties in an entity can have a spatial type. The spatial types are regular primitives. All the standard rules apply. In particular, they cannot be shredded under projection. This means that you cannot, for example, use $select to try to pull out the first control position of a LineString as a Point.

For open types, the dynamic properties will all effectively be of the union type. You can tell the specific type for any given instance, just as for the union type. However, there is no static type info available. This means that dynamic properties need to include the CRS & topology.

Spatial-Primary Entities (Features)

This is a non-goal. We do not think we need these as an intrinsic. We believe that we can model this with a pass-through service using vocabularies.

Communicating

Metadata

We define new types: Edm.Geography, Edm.Geometry, Edm.Point, Edm.GeometricPoint, Edm.Polygon, Edm.GeometricPolygon. Each of them has a facet that is the CRS, called "coordinate_system".

Entities in Atom

What should we use?

Unknown.

To spark discussion, and because it is perhaps the best of a bad lot, the strawman proposal is to use the same GML profile as Sql Server uses. This is an admittedly hacked-down simplification of full GML, but seems to match the domain reasonably well.

Here are several other options, and some of the problems with each:

GeoRSS only supports some of the types.

Full GML supports way too much - and is complex because of it.

KML is designed for spatial data that may contain embedded non-spatial data. This allows creating data that you can't then easily query with OData. We would prefer that people use full OData entities to express this metadata, so that it can be used by clients that do not support geospatial data.

Another option would be an extended WKT. This isn't XML. This isn't a problem for us, but may annoy other implementers(?). More critically, WKT doesn't support 3d or 4d positions. We need those in order to support full save & load for existing data. The various extensions all disagree on how to extend for additional dimensions. I'd prefer not to bet on any one WKT implementation, so that we can wait for another standards body to pick the right one.

PostGIS does not seem to have a native XML format. They use their EWKT.

Finally, there's the SqlServer GML profile. It is a valid GML profile, and isn't quite as much more than we need as is full GML. I resist it mostly because it is a Microsoft format. Of course, if there is no universal format, then perhaps a Microsoft one is as good as we can get.

Entities in JSON

Why GeoJSON?

It flows well in a JSON entity, and is reasonably parsimonious. It is also capable of supporting all of our types and coordinate systems.

It is, however, not an official standard. Thus, we may have to include it by copy, rather than by reference, in our official standard.

Another option is to use ESRI's standard for geospatial data in JSON. Both are open standards with existing ecosystems. Both seem sufficient to our needs. Anyone have a good reason to choose one over the other?

We will use GeoJSON. Technically, since GeoJSON is designed to support entire geometry trees, we are only using a subset of GeoJSON. We do not allow the use of types "Feature" or "FeatureCollection." Use entities to correlate a geospatial type with metadata.

Why the ordering constraint?

This lets us distinguish a GeoJSON primitive from a complex type without using metadata. That allows clients to parse a JSON entity even if they do not have access to the metadata.

This is still not completely unambiguous. Another option would be to recommend an "__type" member as well as a "type" member. The primitive would still be valid GeoJSON, but could be uniquely distinguished during parsing.

We believe the ordering constraint is lower impact.
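As a rough illustration of why member order helps, here is a minimal Python sketch of a client that spots GeoJSON primitives in an entry without consulting metadata. It assumes the constraint is "`type` first, then `coordinates`"; the helper name `is_geojson_primitive` and the sample entry are hypothetical, not part of the proposal.

```python
import json

def is_geojson_primitive(value):
    """Return True if a decoded JSON object looks like a GeoJSON primitive.

    Relies only on member order: "type" first, "coordinates" second.
    """
    if not isinstance(value, dict):
        return False
    keys = list(value)  # dicts keep the order in which members appeared
    return keys[:2] == ["type", "coordinates"]

# Hypothetical entry: one spatial property and one ordinary complex type.
entry = json.loads(
    '{"ID": "Neco447",'
    ' "FavoriteLocation": {"type": "Point", "coordinates": [-127.89, 45.59]},'
    ' "Address": {"street": "1 Main St", "type": "home"}}'
)
spatial = [name for name, v in entry.items() if is_geojson_primitive(v)]
print(spatial)  # ['FavoriteLocation']
```

A complex type that happens to declare `type` and `coordinates` as its first two members would still be misclassified, which is the residual ambiguity noted above.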

Furthermore, "type" SHOULD be ordered first in the GeoJSON object, followed by coordinates, then the optional properties.

This looks like:

    {
      "d": {
        "results": [
          {
            "__metadata": {
              "uri": "http://services.odata.org/Foursquare.svc/Users('Neco447')",
              "type": "Foursquare.User"
            },
            "ID": "Neco447",
            "Name": "Neco Fogworthy",
            "FavoriteLocation": {
              "type": "Point",
              "coordinates": [-127.892345987345, 45.598345897]
            },
            "LastKnownLocation": {
              "type": "Point",
              "coordinates": [-127.892345987345, 45.598345897],
              "crs": {
                "type": "name",
                "properties": { "name": "urn:ogc:def:crs:OGC:1.3:CRS84" }
              },
              "bbox": [-180.0, -90.0, 180.0, 90.0]
            }
          },
          { /* another User Entry */ }
        ],
        "__count": "2"
      }
    }

Dynamic Properties

Geospatial values in dynamic properties are represented exactly as they would be for static properties, with one exception: the CRS is required. The client will not be able to examine metadata to find this value, so the value must specify it.

Querying

Geospatial Literals in URIs

Why only 2d?

Because OGC only standardized 2d, different implementations differ on how they extended to support 3dz, 3dm, and 4d. We may add support for higher dimensions when they stabilize. As an example, here are three different representations for the same 3dm point:

- PostGIS: POINTM(1 2 3)

- Sql Server: POINT(1 2 NULL 3)

- ESRI: POINT M (1 2 3)

The standard will probably eventually settle near the PostGIS or ESRI version, but it makes sense to wait and see. The cost of choosing the wrong one is very high: we would split our ecosystem in two, or be non-standard.
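To make the divergence concrete, the following Python sketch renders one 3dm point in each of the three vendor styles listed above, with coordinates separated by spaces as in the URI examples. The function name `point_m_literal` and the dialect keys are hypothetical labels chosen for illustration.

```python
def point_m_literal(x, y, m, dialect):
    """Render a 3dm point (x, y, measure) in one vendor's WKT extension."""
    if dialect == "postgis":
        return f"POINTM({x} {y} {m})"
    if dialect == "esri":
        return f"POINT M ({x} {y} {m})"
    if dialect == "sqlserver":
        # SQL Server fills the unused Z slot with NULL.
        return f"POINT({x} {y} NULL {m})"
    raise ValueError(f"unknown dialect: {dialect}")

for d in ("postgis", "sqlserver", "esri"):
    print(d, point_m_literal(1, 2, 3, d))
```

A server would need a separate parser branch for each of these, which is the ecosystem split the proposal is trying to avoid by staying with 2d for now.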

There are at least 3 common extensions to WKT (PostGIS, ESRI, and Sql Server), but all use the same extension to include an SRID. As such, they all use the same representation for values with 2d coordinates. Here are some examples:

    /Stores?$filter=Category/Name eq "coffee" and distanceto(Location, POINT(-127.89734578345 45.234534534)) lt 900.0

    /Stores?$filter=Category/Name eq "coffee" and intersects(Location, SRID=12345;POLYGON((-127.89734578345 45.234534534,-127.89734578345 45.234534534,-127.89734578345 45.234534534,-127.89734578345 45.234534534)))

    /Me/Friends?$filter=distance_to(PlannedLocations, SRID=12345;POINT(-127.89734578345 45.234534534)) lt 900.0 and PlannedTime eq datetime'2011-12-12T13:36:00'

If OData were to support 3dm, then that last one could be exposed and used something like one of the following (depending on which standard we go with):

PostGIS:

    /Me/Friends?$filter=distance_to(PlannedLocations, SRID=12345;POINTM(-127.89734578345 45.234534534 2011.98453)) lt 900.0

ESRI:

    /Me/Friends?$filter=distance_to(PlannedLocations, SRID=12345;POINT M (-127.89734578345 45.234534534 2011.98453)) lt 900.0

Sql Server:

    /Me/Friends?$filter=distance_to(PlannedLocations, SRID=12345;POINT(-127.89734578345 45.234534534 NULL 2011.98453)) lt 900.0

Why not GeoJSON?

GeoJSON actually makes a lot of sense. It would reduce the number of formats used in the standard by 1, making it easier for people to add geospatial support to service endpoints. We are also considering using JSON to represent entity literals used with Functions. Finally, it would enable support for more than 2 dimensions.

However, JSON has a lot of nesting brackets, and they are prominent in GeoJSON. This is fine in document bodies, where you can use linebreaks to make them readable. However, it is a problem in URLs. Observe the following example (EWKT representation is above, for comparison):

    /Stores?$filter=Category/Name eq "coffee" and intersects(Location, {"type":"Polygon", "coordinates":[[[-127.892345987345, 45.598345897], [-127.892345987345, 45.598345897], [-127.892345987345, 45.598345897], [-127.892345987345, 45.598345897]]]})

Not usable everywhere

Why not?

Geospatial values are neither equality comparable nor partially-ordered. Therefore, the results of these operations would be undefined.

Furthermore, geospatial types have very long literal representations. This would make it difficult to read a simple URL that navigates along a series of entities with geospatial keys.

If your entity concurrency control needs to incorporate changes to geospatial properties, then you should probably use some sort of Entity-level version tracking.

Geospatial primitives MAY NOT be compared using lt, eq, or similar comparison operators.

Geospatial primitives MAY NOT be used as keys.

Geospatial primitives MAY NOT be used as part of an entity's ETag.

Distance Literals in URLs

Some queries, such as the coffee shop search above, need to represent a distance.

Distance is represented the same in the two topologies, but interpreted differently. In each case, it is represented as a float scalar. The units are interpreted by the topology and coordinate system for the property with which it is compared or calculated.

Because a plane is uniform, we can simply define distances in geometric coordinates to be in terms of that coordinate system's units. This works as long as each axis uses the same unit for its coordinates, which is the general case.

Geographic topologies are not necessarily uniform. The distance between longitude -125 and -124 is not the same at all points on the globe. It goes to 0 at the poles. Similarly, the distance between 30 and 31 degrees of latitude is not the same as the distance between 30 and 31 degrees of longitude (at least, not everywhere). Thus, the underlying coordinate system measures position well, but does not work for describing a distance.
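A quick way to see the non-uniformity is to measure one degree of longitude and one degree of latitude at several latitudes. The Python sketch below uses the haversine formula on a sphere of radius 6371 km; both the spherical model and the radius are simplifying assumptions for illustration, not something a particular CRS prescribes.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; spherical approximation (assumption)

def haversine_km(lon1, lat1, lon2, lat2):
    """Great-circle distance between two lon/lat points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

for lat in (0, 30, 60, 89):
    one_deg_lon = haversine_km(-125, lat, -124, lat)
    one_deg_lat = haversine_km(-125, lat, -125, lat + 1)
    print(f"lat {lat:2d}: 1 deg lon = {one_deg_lon:6.1f} km, "
          f"1 deg lat = {one_deg_lat:6.1f} km")
```

The latitude step stays at roughly 111 km throughout, while the longitude step shrinks toward zero near the pole, so a difference in degrees cannot serve as a distance.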

For this reason, each geographic CRS also defines a unit that will be used for distances. For most CRSs, this is meters. However, some use US feet, Indian feet, German meters, or other units. In order to determine the meaning of a distance scalar, the developer must read the reference for the CRS involved.

New Canonical Functions

Each of these canonical functions is defined on certain geospatial types. Thus, each geospatial primitive type has a set of corresponding canonical functions. An OData implementation that supports a given geospatial primitive type SHOULD support using the corresponding canonical functions in $filter. It MAY support using the corresponding canonical functions in $orderby.

Are these the right names?

We might consider defining these canonical functions as Geo.distance, etc. That way, individual server extensions for standard OGC functions would feel like core OData. This works as long as we explicitly state (or reference) the set of functions allowed in Geo.

distance

Distance is a canonical function defined between points. It returns a distance, as defined above. The two arguments must use the same topology & CRS. The distance is measured in that topology. Distance is one of the corresponding functions for points. Distance is defined as equivalent to the OGC ST_Distance method for their overlapping domain, with equivalent semantics for geographical points.

intersects

Intersects identifies whether a point is contained within the enclosed space of a polygon. Both arguments must be of the same topology & CRS. It returns a Boolean value. Intersects is a corresponding function for any implementation that includes both points and polygons. Intersects is equivalent to OGC's ST_Intersects in their area of overlap, extended with the same semantics for geographic data.
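For geometric (planar) data, a server that is not backed by a spatial database could implement this check with even-odd ray casting. The Python sketch below is one such approach under stated assumptions: rings are closed GeoJSON-style coordinate lists, the first ring is the outer boundary, and later rings are holes. The function names are hypothetical, points lying exactly on a boundary are not treated specially, and geographic data would need different math.

```python
def _in_ring(point, ring):
    """Even-odd ray casting: is the point inside a closed planar ring?"""
    x, y = point
    inside = False
    for (x1, y1), (x2, y2) in zip(ring, ring[1:]):
        # Does a horizontal ray from the point cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def point_intersects_polygon(point, polygon):
    """polygon is [outer_ring, hole1, hole2, ...] in GeoJSON coordinate order."""
    outer, *holes = polygon
    return _in_ring(point, outer) and not any(_in_ring(point, h) for h in holes)

# A square with one square hole (illustrative coordinates).
square_with_hole = [
    [[-127.0, 45.0], [-127.0, 45.4], [-127.4, 45.4], [-127.4, 45.0], [-127.0, 45.0]],
    [[-127.1, 45.1], [-127.1, 45.3], [-127.3, 45.3], [-127.3, 45.1], [-127.1, 45.1]],
]
print(point_intersects_polygon((-127.05, 45.2), square_with_hole))  # True: between outer ring and hole
print(point_intersects_polygon((-127.2, 45.2), square_with_hole))   # False: inside the hole
print(point_intersects_polygon((-126.0, 45.2), square_with_hole))   # False: outside the outer ring
```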

length

Length returns the total path length of a linestring. It returns a distance, as defined above. Length is a corresponding function for linestrings. Length is equivalent to the OGC ST_Length operation for geometric linestrings, and is extended with equivalent semantics to geographic data.
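For a geometric linestring the computation is just the sum of its segment lengths, expressed in the coordinate system's own units. A minimal Python sketch follows; `planar_length` is a hypothetical name, and geographic linestrings would instead need a distance measured in the CRS's distance unit.

```python
import math

def planar_length(linestring):
    """Sum of straight-segment lengths, in the coordinate system's own units."""
    return sum(math.dist(p, q) for p, q in zip(linestring, linestring[1:]))

route = [(0, 0), (3, 4), (3, 10)]
print(planar_length(route))  # 11.0
```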

Why this subset?

It matches the two most common scenarios: find all the interesting entities near me, and find all the interesting entities within a particular region (such as a viewport or an area a user draws on a map).

Technically, linestrings and length are not needed for these scenarios. We kept them because the spec felt jagged without them.

All other OGC functions

We don't support these, because we want to make it easier to stand up a server that is not backed by a database. Some are very hard to implement, especially in geographic coordinates.

A provider that is capable of handling OGC Simple Features functions MAY expose those as Functions on the appropriate geospatial primitives (using the new Function support).

We are reserving a namespace, "Geo," for these standard functions. If the function matches a function specified in Simple Features, you SHOULD place it in this namespace. If the function does not meet the OGC spec, you MUST NOT place it in this namespace. Future versions of the OData spec may define more canonical functions in this namespace. The namespace is reserved to allow exactly these types of extensions without breaking existing implementations.

In the SQL version of the Simple Features standard, the function names all start with ST_ as a way to provide namespacing. Because OData has real namespaces, it does not need this pseudo-namespace. Thus, the name SHOULD NOT include the ST_ when placed in the Geo namespace. Similarly, the name SHOULD be translated to lowercase, to match other canonical functions in OData. For example, OGC's ST_Buffer would be exposed in OData as Geo.buffer. This is similar to the Simple Features implementation on CORBA.

All other geospatial functions

Any other geospatial operations MAY be exposed by using Functions. These functions are not defined in any way by this portion of the spec. See the section on Functions for more information, including namespacing issues.
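Returning to the naming rule for the reserved Geo namespace described earlier (drop the ST_ prefix, lowercase the rest), a provider could derive the OData name mechanically. The Python helper below is a hypothetical sketch of that mapping and nothing more.

```python
def odata_geo_name(ogc_sql_name):
    """Map a Simple Features SQL function name to its name in the Geo namespace."""
    prefix = "ST_"
    if not ogc_sql_name.upper().startswith(prefix):
        raise ValueError(f"not an ST_-prefixed OGC function name: {ogc_sql_name}")
    return "Geo." + ogc_sql_name[len(prefix):].lower()

print(odata_geo_name("ST_Buffer"))  # Geo.buffer
```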

Examples

Find coffee shops near me

    /Stores?$filter=/Category/Name eq "coffee" and distanceto(/Location, POINT(-127.89734578345 45.234534534)) lt 0.5&$orderby=distanceto(/Location, POINT(-127.89734578345 45.234534534))&$top=3

Find the nearest 3 coffee shops, by drive time

This is not directly supported by OData. However, it can be handled by an extension. For example:

    /Stores?$filter=/Category/Name eq "coffee"&$orderby=MyNamespace.driving_time_to(POINT(-127.89734578345 45.234534534), Location)&$top=3

Note that while distanceto is symmetric in its args, MyNamespace.driving_time_to might not be. For example, it might take one-way streets into account. This would be up to the data service that is defining the function.

Compute distance along routes

    /Me/Runs?$orderby=length(Route) desc&$top=15

Find all houses close enough to work

For this example, let's assume that there's one OData service that can tell you the drive time polygons around a point (via a service operation). There's another OData service that can search for houses. You want to mash them up to find houses in your price range from which you can get to work in 15 minutes.

First,

    /DriveTime(to=POINT(-127.89734578345 45.234534534), maximum_duration=time'0:15:00')

returns

    {
      "d": {
        "results": [
          {
            "__metadata": {
              "uri": "http://services.odata.org/DriveTime(to=POINT(-127.89734578345 45.234534534), maximum_duration=time'0:15:00')",
              "type": "Edm.Polygon"
            },
            "type": "Polygon",
            "coordinates": [
              [[-127.0234534534, 45.089734578345], [-127.0234534534, 45.389734578345], [-127.3234534534, 45.389734578345], [-127.3234534534, 45.089734578345], [-127.0234534534, 45.089734578345]],
              [[-127.1234534534, 45.189734578345], [-127.1234534534, 45.289734578345], [-127.2234534534, 45.289734578345], [-127.2234534534, 45.189734578345], [-127.1234534534, 45.189734578345]]
            ]
          }
        ],
        "__count": "1"
      }
    }

Then, you'd send the actual search query to the second endpoint:

    /Houses?$filter=Price gt 50000 and Price lt 250000 and intersects(Location, POLYGON((-127.0234534534 45.089734578345,-127.0234534534 45.389734578345,-127.3234534534 45.389734578345,-127.3234534534 45.089734578345,-127.0234534534 45.089734578345),(-127.1234534534 45.189734578345,-127.1234534534 45.289734578345,-127.2234534534 45.289734578345,-127.2234534534 45.189734578345,-127.1234534534 45.189734578345)))

Is there any way to make that URL shorter? And perhaps do this in one query?

This is actually an overly-simple polygon for a case like this. This is just a square with a single hole in it. A real driving polygon would contain multiple holes and a lot more boundary points. So that polygon in the final query would realistically be 3-5 times as long in the URL.
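The glue step in the mash-up above, turning the GeoJSON polygon returned by the first service into the WKT literal used in the second query, might be sketched in Python as follows. The function name `polygon_to_wkt` is hypothetical, the sample triangle is made up, and the sketch handles 2d coordinates only.

```python
def polygon_to_wkt(geojson_polygon):
    """Convert a GeoJSON Polygon object into a 2d WKT POLYGON literal."""
    if geojson_polygon.get("type") != "Polygon":
        raise ValueError("expected a GeoJSON Polygon")
    rings = []
    for ring in geojson_polygon["coordinates"]:
        # repr() keeps every digit of each float so the literal round-trips.
        rings.append("(" + ",".join(f"{x!r} {y!r}" for x, y in ring) + ")")
    return "POLYGON(" + ",".join(rings) + ")"

drive_time = {
    "type": "Polygon",
    "coordinates": [[[-127.0, 45.0], [-127.0, 45.4], [-127.4, 45.4], [-127.0, 45.0]]],
}
literal = polygon_to_wkt(drive_time)
print("/Houses?$filter=intersects(Location, " + literal + ")")
```

Every boundary point adds two full-precision numbers to the URL, which is why a realistic drive-time polygon inflates the query so quickly.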

It would be really nice to support reference arguments in URLs (with cross-domain support). Then you could represent the entire example in a single query:

    /Houses?$filter=Price gt 50000 and Price lt 250000 and intersects(Location, Ref("http://drivetime.services.odata.org/DriveTime(to=POINT(-127.89734578345 45.234534534), maximum_duration=time'0:15:00')"))

However, this is not supported in OData today.

OK, but isn't there some other way to make the URL shorter? Some servers can't handle this length!

We are looking at options. The goal is to maintain GET-ability and cache-ability. A change in the parameters should be visible in the URI, so that caching works correctly.

The current front-runner idea is to allow the client to place parameter values into a header. That header contains a JSON dictionary of name/value pairs. If it does so, then it must place the hashcode for that dictionary in the query string. The resulting request looks like:

    GET /Houses?$filter=Price gt 50000 and Price lt 250000 and intersects(Location, @drive_time_polygon)&$orderby=distanceto(@microsoft)&$hash=HASHCODE
    Host: www.example.com
    X-ODATA-QUERY: { "microsoft": SRID=1234;POINT(-127.2034534534 45.209734578345), "drive_time_polygon": POLYGON((-127.0234534534 45.089734578345,-127.0234534534 45.389734578345,-127.3234534534 45.389734578345,-127.3234534534 45.089734578345,-127.0234534534 45.089734578345),(-127.1234534534 45.189734578345,-127.1234534534 45.289734578345,-127.2234534534 45.289734578345,-127.2234534534 45.189734578345,-127.1234534534 45.189734578345)) }

Of course, nothing about this format is at all decided. For example, should that header value be fully in JSON (using the same formatting as in a JSON payload)? Should it be form encoded instead of JSON? Perhaps the entire query string should go in the header, with only a $query=HASHCODE in the URL? And there are a lot more options.
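To give the strawman some shape, here is one way the client side could be sketched in Python. Everything beyond the `X-ODATA-QUERY` header name and the `$hash` option is an assumption made for illustration: the hash is taken to be a SHA-256 hex digest, parameter values are carried as JSON strings, and `build_request` is a hypothetical helper.

```python
import hashlib
import json
from urllib.parse import quote

def build_request(path, query, parameters):
    """Return (request_target, headers) for the header-parameter strawman."""
    # Canonical JSON (sorted keys, fixed separators) so that equal
    # dictionaries always produce the same hash.
    body = json.dumps(parameters, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
    target = f"{path}?{quote(query, safe='=&$@(),')}&$hash={digest}"
    return target, {"X-ODATA-QUERY": body}

target, headers = build_request(
    "/Houses",
    "$filter=Price lt 250000 and intersects(Location, @drive_time_polygon)",
    {"drive_time_polygon": "POLYGON((0 0,0 1,1 1,0 0))"},
)
print(target)
print(headers["X-ODATA-QUERY"])
```

Because the digest is part of the URI, any change to a parameter value changes the cache key, which keeps the request GET-able and cacheable as the stated goal requires.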

This entry was written by Arlo Belshee and posted on Tuesday, May 03, 2011.


Justin Metzgar described a workaround for the WCF scales up slowly with bursts of work problem in a 5/4/2011 post to the .NET Endpoint blog:

A few customers have noticed an issue with WCF scaling up when handling a burst of requests. Fortunately, there is a very simple workaround for this problem that is covered in KB2538826 (thanks to David Lamb for the investigation and write up). The KB article provides a lot of good information about when this would apply to your application and what to do to fix it. In this post, I want to explore exactly what's happening.

The best part is that there is an easy way to reproduce the problem. To do this, create a new WCF service application with the .NET 4 framework:

Then trim the default service contract down to only the GetData method:

    using System.ServiceModel;

    [ServiceContract]
    public interface IService1
    {
        [OperationContract]
        string GetData(int value);
    }

And fill out the GetData method in the service code:

    using System;
    using System.Threading;

    public class Service1 : IService1
    {
        public string GetData(int value)
        {
            Thread.Sleep(TimeSpan.FromSeconds(2));
            return string.Format("You entered: {0}", value);
        }
    }

The two second sleep is the most important part of this repro. We'll explore why after we create the client and observe the effects. But first, make sure to set the throttle in the web.config:

    <configuration>
      <system.web>
        <compilation debug="true" targetFramework="4.0" />
      </system.web>
      <system.serviceModel>
        <behaviors>
          <serviceBehaviors>
            <behavior>
              <serviceMetadata httpGetEnabled="true"/>
              <serviceDebug includeExceptionDetailInFaults="false"/>
              <serviceThrottling maxConcurrentCalls="100"/>
            </behavior>
          </serviceBehaviors>
        </behaviors>
        <serviceHostingEnvironment multipleSiteBindingsEnabled="true" />
      </system.serviceModel>
      <system.webServer>
        <modules runAllManagedModulesForAllRequests="true"/>
      </system.webServer>
    </configuration>

The Visual Studio web server would probably suffice, but I changed my web project to use IIS instead. This makes it more of a realistic situation. To do this go to the project properties, select the Web tab, and switch to IIS.

Be sure to hit the Create Virtual Directory button. In my case, I have SharePoint installed and it's taking up port 80, so I put the default web server on port 8080. Once this is done, build all and browse to the service to make sure it's working correctly.

To create the test client, add a new command prompt executable to the solution and add a service reference to Service1. Change the client code to the following:

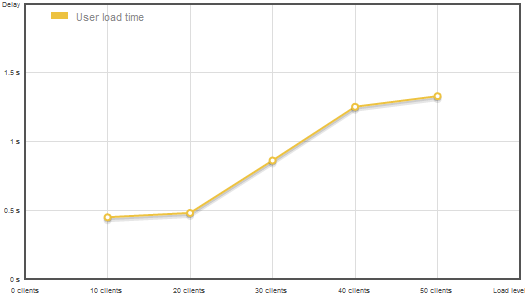

    using System;
    using System.Threading;
    using ServiceReference1;

    class Program
    {
        private const int numThreads = 100;
        private static CountdownEvent countdown;
        private static ManualResetEvent mre = new ManualResetEvent(false);

        static void Main(string[] args)
        {
            string s = null;
            Console.WriteLine("Press enter to start test.");
            while ((s = Console.ReadLine()) == string.Empty)
            {
                RunTest();
                Console.WriteLine("Allow a few seconds for threads to die.");
                Console.WriteLine("Press enter to run again.");
                Console.WriteLine();
            }
        }

        static void RunTest()
        {
            mre.Reset();
            Console.WriteLine("Starting {0} threads.", numThreads);
            countdown = new CountdownEvent(numThreads);
            for (int i = 0; i < numThreads; i++)
            {
                Thread t = new Thread(ThreadProc);
                t.Name = "Thread_" + i;
                t.Start();
            }

            // Wait for all threads to signal.
            countdown.Wait();
            Console.Write("Press enter to release threads.");
            Console.ReadLine();
            Console.WriteLine("Releasing threads.");
            DateTime startTime = DateTime.Now;
            countdown = new CountdownEvent(numThreads);

            // Release all the threads.
            mre.Set();

            // Wait for all the threads to finish.
            countdown.Wait();
            TimeSpan ts = DateTime.Now - startTime;
            Console.WriteLine("Total time to run threads: {0}.{1:0}s.", ts.Seconds, ts.Milliseconds / 100);
        }

        private static void ThreadProc()
        {
            Service1Client client = new Service1Client();
            client.Open();

            // Signal that this thread is ready.
            countdown.Signal();

            // Wait for all the other threads before making service call.
            mre.WaitOne();
            client.GetData(1337);

            // Signal that this thread is finished.
            countdown.Signal();
            client.Close();
        }
    }

The code above uses a combination of a ManualResetEvent and a CountdownEvent to coordinate the calls to all happen at the same time. It then waits for all the calls to finish and determines how long it took. When I run this on a dual core machine, I get the following:

    Press enter to start test.
    Starting 100 threads.
    Releasing threads.
    Total time to run threads: 14.0s.
    Allow a few seconds for threads to die.
    Press enter to run again.

If each individual request only sleeps for 2 seconds, why would it take 100 simultaneous requests 14 seconds to complete? It should only take 2 seconds if they're all executing at the same time. To understand what's happening, let's look at the thread count in perfmon. First, open Administrative Tools -> Performance Monitor. In the tree view on the left, pick Monitoring Tools -> Performance Monitor. It should have the % Processor counter in there by default. We don't need that counter so select it in the bottom and press the delete key. Now click the add button to add a new counter:

In the Add Counters dialog, expand the Process node:

Under this locate the Thread Count counter and then find the w3wp instance and press the Add >> button.

If you don't see the w3wp instance, it is most likely because it wasn't running when you opened the Add Counters dialog. To correct this, close the dialog, ping the Service1.svc service, and then click the Add button again in perfmon.

Now you can run the test and you should see results similar to the following:

In this test, I had pinged the service to get the metadata to warm everything up. When the test ran, you can see it took several seconds to add another 21 threads. The reason these threads are added is because when WCF runs your service code, it uses a thread from the IO thread pool. Your service code is then expected to do some work that is either quick or CPU intensive or takes up a database connection. But in the case of the test, the Thread.Sleep means that no work is being done and the thread is being held. A real world scenario where this pattern could occur is if you have a WCF middle tier that has to make calls into lower layers and then return the results.

For the most part, server load is assumed to change slowly. This means you would have a fairly constant load and the thread pool manager would pick the appropriate size for the thread pool to keep the CPU load high but without too much context switching. However, in the case of a sudden burst of requests with long-running, non-CPU-intensive work like this, the thread pool adapts too slowly. You can see in the graph above that the number of threads drops back down to 30 after some time. The thread pool has recognized that a thread has not been used for 15 seconds and therefore kills it because it is not needed.

To understand the thread pool a bit more, you can profile this and see what's happening. To get a profile, go to the Performance Explorer, usually pinned on the left side in Visual Studio, and click the button to create a new performance session:

Right click on the new performance session and select properties. In the properties window change the profiling type to Concurrency and uncheck the "Collect resource contention data" option.

Before we start profiling, let's first go to the Tools->Options menu in Visual Studio. Under Debugging, enable the Microsoft public symbol server.

Also, turn off the Just My Code option under Performance Tools:

The next step is to make the test client the startup project. You may need to ping the Service1.svc service again to make sure the w3wp process is running. Now, attach to the w3wp process with the profiler. There is an attach button at the top of the performance explorer window or you can right click on the performance session and choose attach.

Give the profiler a few seconds to warm up and then hit Ctrl+F5 to execute the test client. After the test client finishes a single run, pause and detach the profiler from the w3wp process. You can also hit the "Stop profiling" option but it will kill the process.

After you've finished profiling, it will take some time to process the data. Once it's up, switch to the thread view. In the figure below, I've rearranged the threads to make it easier to see the relationships:

Blue represents sleep time. This matches what we expect to see, which is a lot of new threads all sleeping for 2 seconds. At the top of the sleeping threads is the gate thread. It checks every 500ms to see if the number of threads in the thread pool is appropriate to handle the outstanding work. If not, it creates a new thread. You can see in the beginning that it creates 2 threads and then 1 thread at a time in 500ms increments. This is why it takes 14 seconds to process all 100 requests. So why are there two threads that look like they're created at the same time? If you look more closely, that's actually not the case. Let's zoom in on that part:

Here again the highlighted thread is the gate thread. Before it finishes its 500ms sleep, a new thread is created to handle one of the WCF service calls which sleeps for 2 seconds. Then when the gate thread does its check, it realizes that there is a lot of work currently backed up and creates another new thread. On a dual core machine like this, the default minimum IO thread pool setting is 2: 1 per core. One of those threads is always taken up by WCF and functions as the timer thread. You can see that there are other threads created up top. This is most likely ASP.Net creating some worker threads to handle the incoming requests. You can see that they don't sleep because they're passing off work to WCF and getting ready to handle the next batch of work.
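The arithmetic behind the 14-second figure can be approximated with a small simulation. This is a toy model written in Python, not the CLR's actual algorithm: it assumes two threads are available at the start, the gate thread adds exactly one thread every 500 ms while requests are queued, and each request holds its thread for 2 seconds. The function and parameter names are hypothetical.

```python
import heapq

def burst_completion_time(requests=100, service_time=2.0,
                          initial_threads=2, inject_interval=0.5):
    """Time at which the last request finishes under the toy injection model."""
    free_at = [0.0] * initial_threads   # times at which existing threads free up
    heapq.heapify(free_at)
    next_inject = inject_interval       # next moment the gate thread adds a thread
    finish = 0.0
    for _ in range(requests):
        # The next queued request starts as soon as either an existing thread
        # frees up or the gate thread injects a new one, whichever is first.
        if free_at and free_at[0] <= next_inject:
            start = heapq.heappop(free_at)
        else:
            start = next_inject
            next_inject += inject_interval
        done = start + service_time
        finish = max(finish, done)
        heapq.heappush(free_at, done)
    return finish

print(burst_completion_time())                     # 14.5
print(burst_completion_time(initial_threads=100))  # 2.0
```

Under these assumptions the burst drains in 14.5 seconds, in the same range as the 14.0 seconds measured above, while a pool that already has 100 threads available finishes in the 2 seconds one would naively expect.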

The most obvious thing to do then would be to increase the minimum number of IO threads. You can do this in two ways: use ThreadPool.SetMinThreads or use the <processModel> tag in the machine.config. Here is how to do the latter:

    <system.web>
      <!--<processModel autoConfig="true"/>-->
      <processModel autoConfig="false" minIoThreads="101" minWorkerThreads="2" maxIoThreads="200" maxWorkerThreads="40" />

Be sure to turn off the autoConfig setting or the other options will be ignored. If we run this test again, we get a much better result. Compare the previous snapshot of perfmon with this one:

And the resulting output of the run is:

    Starting 100 threads.
    Press enter to release threads.
    Releasing threads.
    Total time to run threads: 2.2s.
    Allow a few seconds for threads to die.
    Press enter to run again.

This is an excellent result. It is exactly what you would want to happen if a sudden burst of work comes in. But customers were saying that this wasn't happening for them. David Lamb told me that if you run this for a long time, like 2 hours, it would eventually stop quickly adding threads and behave as if the min IO threads was not set.

One of the things we can do is modify the test code to give enough time for the threads to have their 15 second timeout and just take out all the Console.ReadLine calls:

    using System;
    using System.Threading;
    using ServiceReference1;

    class Program
    {
        private const int numThreads = 100;
        private static CountdownEvent countdown;
        private static ManualResetEvent mre = new ManualResetEvent(false);

        static void Main(string[] args)
        {
            while (true)
            {
                RunTest();
                Thread.Sleep(TimeSpan.FromSeconds(25));
                Console.WriteLine();
            }
        }

        static void RunTest()
        {
            mre.Reset();
            Console.WriteLine("Starting {0} threads.", numThreads);
            countdown = new CountdownEvent(numThreads);
            for (int i = 0; i < numThreads; i++)
            {
                Thread t = new Thread(ThreadProc);
                t.Name = "Thread_" + i;
                t.Start();
            }

            // Wait for all threads to signal.
            countdown.Wait();
            Console.WriteLine("Releasing threads.");
            DateTime startTime = DateTime.Now;
            countdown = new CountdownEvent(numThreads);

            // Release all the threads.
            mre.Set();

            // Wait for all the threads to finish.
            countdown.Wait();
            TimeSpan ts = DateTime.Now - startTime;
            Console.WriteLine("Total time to run threads: {0}.{1:0}s.", ts.Seconds, ts.Milliseconds / 100);
        }

        private static void ThreadProc()
        {
            Service1Client client = new Service1Client();
            client.Open();

            // Signal that this thread is ready.
            countdown.Signal();

            // Wait for all the other threads before making service call.
            mre.WaitOne();
            client.GetData(1337);

            // Signal that this thread is finished.
            countdown.Signal();
            client.Close();
        }
    }

Then just let it run for a couple hours and see what happens. Luckily, it doesn't take 2 hours and we can reproduce the weird behavior pretty quickly. Here is a perfmon graph showing several runs:

You can see that the first two bursts had quick scale up with the thread count. After that, it went back to being slow. After working with the CLR team, they determined that there is a bug in the IO thread pool. This bug causes the internal count of IO threads to get out-of-sync with reality. Some of you may be asking the question as to what min really means in terms of the thread pool. Because if you specify you want a minimum of 100 threads, why wouldn't there just always be 100 threads? For the purposes of the thread pool, the min is supposed to be the threshold that the pool can scale up to before it starts to be metered. The default working set cost for one thread is a half MB. So that is one reason to keep the thread count down.

Some customers have found that Juval Lowy's thread pool doesn't have the same problem and handles these bursts of work much better. Here is a link to his article: http://msdn.microsoft.com/en-us/magazine/cc163321.aspx

Notice that Juval has created a custom thread pool and relies on the SynchronizationContext to switch from the IO thread that WCF uses to the custom thread pool. Using a custom thread pool in your production environment is not advisable. Most developers do not have the resources to properly test a custom thread pool for their applications. Thankfully, there is an alternative and that is the recommendation made in the KB article. The alternative is to use the worker thread pool. Juval has some code for a custom attribute that you can put on your service to set the SynchronizationContext to use his custom thread pool and we can just modify that to put the work into the worker thread pool. The code for this change is all in the KB article, but I'll reiterate here along with the changes to the service itself.

using System;
using System.Threading;
using System.ServiceModel.Description;
using System.ServiceModel.Channels;
using System.ServiceModel.Dispatcher;

[WorkerThreadPoolBehavior]
public class Service1 : IService1
{
    public string GetData(int value)
    {
        Thread.Sleep(TimeSpan.FromSeconds(2));
        return string.Format("You entered: {0}", value);
    }
}

public class WorkerThreadPoolSynchronizer : SynchronizationContext
{
    public override void Post(SendOrPostCallback d, object state)
    {
        // WCF almost always uses Post
        ThreadPool.QueueUserWorkItem(new WaitCallback(d), state);
    }

    public override void Send(SendOrPostCallback d, object state)
    {
        // Only the peer channel in WCF uses Send
        d(state);
    }
}

[AttributeUsage(AttributeTargets.Class)]
public class WorkerThreadPoolBehaviorAttribute : Attribute, IContractBehavior
{
    private static WorkerThreadPoolSynchronizer synchronizer =
        new WorkerThreadPoolSynchronizer();

    void IContractBehavior.AddBindingParameters(
        ContractDescription contractDescription,
        ServiceEndpoint endpoint,
        BindingParameterCollection bindingParameters)
    {
    }

    void IContractBehavior.ApplyClientBehavior(
        ContractDescription contractDescription,
        ServiceEndpoint endpoint,
        ClientRuntime clientRuntime)
    {
    }

    void IContractBehavior.ApplyDispatchBehavior(
        ContractDescription contractDescription,
        ServiceEndpoint endpoint,
        DispatchRuntime dispatchRuntime)
    {
        dispatchRuntime.SynchronizationContext = synchronizer;
    }

    void IContractBehavior.Validate(
        ContractDescription contractDescription,
        ServiceEndpoint endpoint)
    {
    }
}

The areas highlighted above show the most important bits for this change. There really isn't that much code to this. With this change essentially what is happening is that WCF recognizes that when it's time to execute the service code, there is an ambient SynchronizationContext. That context then pushes the work into the worker thread pool with QueueUserWorkItem.

The only other change to make is to the <processModel> node in the machine.config to configure the worker thread pool for a higher min and max.

<system.web>
  <!--<processModel autoConfig="true"/>-->
  <processModel autoConfig="false" minWorkerThreads="100" maxWorkerThreads="200" />

Running the test again, you will see a much better result:

All … test runs finished in under 2.5 seconds.
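For readers who want to see the handoff in isolation, the following is a rough Python analogue of the Post and Send split in the synchronizer shown earlier. It is only a sketch of the pattern, not WCF code: the `WorkerPoolDispatcher` class, its method names, and the pool size of 4 are all hypothetical, and Python's `ThreadPoolExecutor` stands in for the .NET worker thread pool.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class WorkerPoolDispatcher:
    """Hypothetical analogue of a synchronization context backed by a worker pool."""

    def __init__(self, max_workers):
        self._pool = ThreadPoolExecutor(max_workers=max_workers)

    def post(self, callback, state):
        # Asynchronous handoff: queue the callback so it runs on a pool
        # thread, and return a future to the caller right away.
        return self._pool.submit(callback, state)

    def send(self, callback, state):
        # Synchronous path: run the callback inline on the calling thread.
        return callback(state)

    def shutdown(self):
        self._pool.shutdown(wait=True)


def current_thread_name(_state):
    return threading.current_thread().name


dispatcher = WorkerPoolDispatcher(max_workers=4)
posted_on = dispatcher.post(current_thread_name, None).result()
sent_on = dispatcher.send(current_thread_name, None)
dispatcher.shutdown()

print("post ran on:", posted_on)
print("send ran on:", sent_on)
```

The posted callback reports a pool thread while the sent callback reports the caller's own thread, which mirrors the idea that the service code moves off the thread that received the request.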

We cannot make a recommendation on this alone though. There are other questions like how does this affect performance and does it continue to work this way for hours, days, weeks, etc. Our testing showed that for at least 72 hours, this worked without a problem. The performance runs showed some caveats though. These are also pointed out in the KB article. There is a cost for switching from one thread to another. This would be the same with the worker thread pool or a custom thread pool. That overhead can be negligible for large amounts of work. In the case that applies here with 2 seconds of blocking work, the context switch is definitely not a factor. But if you've got small messages and fast work, then it's likely to hurt your performance.
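The trade-off described above can be put into rough numbers. The Python sketch below is illustrative arithmetic only: the helper name `handoff_overhead_fraction` and the 50-microsecond handoff cost are assumptions, not measurements from the test project.

```python
def handoff_overhead_fraction(work_seconds, handoff_seconds):
    """Return the share of total request time spent on the thread handoff."""
    total = work_seconds + handoff_seconds
    if total <= 0:
        raise ValueError("total time must be positive")
    return handoff_seconds / total


ASSUMED_HANDOFF = 50e-6  # hypothetical cost of one thread switch, in seconds

# Two seconds of blocking work, as in the test service above.
slow_call = handoff_overhead_fraction(2.0, ASSUMED_HANDOFF)
# A fast call doing 100 microseconds of work.
fast_call = handoff_overhead_fraction(100e-6, ASSUMED_HANDOFF)

print("slow call overhead: {:.4%}".format(slow_call))
print("fast call overhead: {:.1%}".format(fast_call))
```

With these assumed figures the handoff is a vanishing fraction of the 2-second call but about a third of the fast call, which is why the change should be measured against your own message sizes before adopting it.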

The test project is included with this post.

I wasn’t able to find a link to the test project and left Dustin a comment to that effect.

John Spurlock posted a downloadable odata4j v0.4 complete archive to Google Code on 4/18/2011 (missed when posted):

There is a vast amount of data available today and data is now being collected and stored at a rate never seen before. Much of this data is managed by Java server applications and difficult to access or integrate into new uses.

The Open Data Protocol (OData) is a Web protocol for querying and updating data that provides a way to unlock this data and free it from silos that exist in applications today. OData does this by applying and building upon Web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON to provide access to information from a variety of applications, services, and stores.
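Because OData queries are ordinary HTTP URLs, a query can be composed with nothing more than string handling. The Python sketch below assumes a placeholder service root and a hypothetical `Titles` entity set with a `Rating` property; only the `$filter` and `$top` query options come from the OData URI conventions. The helper name `odata_query_url` is made up for this example and is not part of any library.

```python
from urllib.parse import quote


def odata_query_url(service_root, entity_set, **options):
    """Compose an OData query URI: service root, entity set, $-prefixed options."""
    base = service_root.rstrip("/") + "/" + quote(entity_set)
    if not options:
        return base
    # Sort the options so the resulting URL is deterministic.
    query = "&".join(
        "$" + name + "=" + quote(str(value), safe="")
        for name, value in sorted(options.items())
    )
    return base + "?" + query


url = odata_query_url(
    "http://pathto/service.svc/",  # placeholder service root
    "Titles",                      # hypothetical entity set
    top=5,
    filter="Rating eq 'PG'",       # hypothetical property and value
)
print(url)
```

Issuing an HTTP GET against a URL of this shape is what a consumer library does on your behalf. The response typically comes back as an Atom feed or JSON, depending on what the producer supports.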

Project Info

odata4j is a new open-source toolkit for building first-class OData producers and first-class OData consumers in Java.

Goals and Guidelines

- Build on top of existing Java standards (e.g. JAX-RS, JPA, StAX) and use top shelf Java open-source components (jersey, Joda Time)

- OData consumers should run equally well under constrained Java client environments, specifically Android

- OData producers should run equally well under constrained Java server environments, specifically Google AppEngine

Consumers - Getting Started

- Download the latest archive zip

- Add odata4j-bundle-x.x.jar to your build path (or odata4j-clientbundle-x.x.jar for a smaller client-only bundle)

- Create a new consumer using ODataConsumer.create("http://pathto/service.svc/") and use the consumer for client scenarios

Consumers - Examples

(All of these examples work on Android as well)

- Basic consumer query & modification example using the OData Read/Write Test service: ODataTestServiceReadWriteExample.java

- Simple Netflix example: NetflixConsumerExample.java

- List all entities for a given service: ServiceListingConsumerExample.java

- Use the Dallas data service to query AP news stories (requires Dallas credentials): DallasConsumerExampleAP.java

- Use the Dallas data service to query UNESCO data (requires Dallas credentials): DallasConsumerExampleUnescoUIS.java

- Basic Azure table-service table/entity manipulation example (requires Azure storage credentials): AzureTableStorageConsumerExample.java

- Test against the sample odata4j service hosted on Google AppEngine: AppEngineConsumerExample.java

Producers - Getting Started

- Download the latest archive zip

- Add odata4j-bundle-x.x.jar to your build path (or odata4j-nojpabundle-x.x.jar for a smaller bundle with no JPA support)

- Choose or implement an ODataProducer

- Use InMemoryProducer to expose POJOs as OData entities using bean properties. Example: InMemoryProducerExample.java

- Use JPAProducer to expose an existing JPA 2.0 entity model: JPAProducerExample.java

- Implement ODataProducer directly

- Take a look at odata4j-appengine for an example of how to expose AppEngine's Datastore as an OData endpoint

- Hosting in Tomcat: http://code.google.com/p/odata4j/wiki/Tomcat

Status

odata4j is still in its early days. Here is a quick summary of what's implemented:

- Fairly complete expression parser (pretty much everything except complex navigation property literals)

- URI parser for producers

- Complete EDM metadata parser (and model)

- Dynamic entity/property model (OEntity/OProperty)

- Consumer api: ODataConsumer

- Producer api: ODataProducer

- ATOM transport

- Non-standard behavior (e.g. Azure authentication, Dallas paging) via client extension API

- Transparent server-driven paging for consumers

- Cross-domain policy files for Silverlight clients

- Free WADL for your OData producer thanks to jersey. e.g. odata4j-sample application.wadl

- Tested with current OData client set (.NET, Silverlight, LINQPad, PowerPivot)

Todo list, in a rough priority order

- DataServiceVersion negotiation

- Better error responses

- gzip compression

- Access control

- Authentication

- Flesh out InMemory producer

- Flesh out JPA producer: map filters to query api, support complex types, etc

- Bring expression model to 100%, query by expression via consumer

- Producer model: expose functions

Disregard Google’s self-serving and untrue “Your version of Internet Explorer is not supported. Try a browser that contributes to open source, …” message. It appears to me that this message disqualifies Google Code as “open source.”

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

• The Windows AppFabric Team announced Windows Azure AppFabric LABS Scheduled Maintenance and Breaking Changes Notification (5/12/2011) on 5/6/2011:

Due to upgrades and enhancements we are making to Windows Azure AppFabric LABS environment, scheduled for 5/12/2011, users will have NO access to the AppFabric LABS portal and services during the scheduled maintenance downtime. [Emphasis added.]

When:

- START: May 12, 2011, 10am PST

- END: May 12, 2011, 5pm PST

- Service Bus will be impacted until May 16, 2011, 10am PST

Impact Alert:

- AppFabric LABS Service Bus, Access Control and Caching services, and the portal, will be unavailable during this period.

Additional impacts are described below.

Action Required:

- Access to portal, Access Control and Caching will be available after the maintenance window and all existing accounts/namespaces for these services will be preserved.

- However, Service Bus will not be available until May 16, 2011, 10am PST. Furthermore, Service Bus namespaces will be preserved following the maintenance, BUT existing connection points and durable queues will not be preserved. [Emphasis added.]

- Users should back up Service Bus configuration in order to be able to restore following the maintenance. [Emphasis added.]

Thanks for working in LABS and providing us valuable feedback.

For any questions or to provide feedback please use our Windows Azure AppFabric CTP Forum.

Once the maintenance is over we will post more details on the blog.

Steve Peschka continued his Federated SAML Authentication with SharePoint 2010 and Azure Access Control Service Part 2 series with a 5/6/2011 post:

In the first post in this series (http://blogs.technet.com/b/speschka/archive/2011/05/05/federated-saml-authentication-with-sharepoint-2010-and-azure-access-control-service-part-1.aspx) [see below] I described how to configure SharePoint to establish a trust directly with the Azure Access Control (ACS) service and use it to federate authentication between ADFS, Yahoo, Google and Windows Live for you and then use that to get into SharePoint. In part 2 I’m going to take a similar scenario, but one which is really implemented almost backwards to part 1 – we’re going to set up a typical trust between SharePoint and ADFS, but we’re going to configure ACS as an identity provider in ADFS and then use that to get redirected to login, and then come back in again to SharePoint. This type of trust, at least between SharePoint and ADFS, is one that I think more SharePoint folks are familiar with and I think for today plugs nicely into a more common scenario that many companies are using.

As I did in part 1, I’m not going to describe the nuts and bolts of setting up and configuring ACS – I’ll leave that to the teams that are responsible for it. So, for part 2, here are the steps to get connected:

1. Set up your SharePoint web application and site collection, configured with ADFS.

- First and foremost you should create your SPTrustedIdentityTokenIssuer, a relying party in ADFS, and a SharePoint web application and site collection. Make sure you can log into the site using your ADFS credentials. Extreme details on how this can be done are described in one of my previous postings at http://blogs.technet.com/b/speschka/archive/2010/07/30/configuring-sharepoint-2010-and-adfs-v2-end-to-end.aspx.

2. Open the Access Control Management Page

- Log into your Windows Azure management portal. Click on the Service Bus, Access Control and Caching menu in the left pane. Click on Access Control at the top of the left pane (under AppFabric), click on your namespace in the right pane, and click on the Access Control Service button in the Manage portion of the ribbon. That will bring up the Access Control Management page.

3. Create a Trust Between ADFS and ACS

- This step is where we will configure ACS as an identity provider in ADFS. To begin, go to your ADFS server and open up the AD FS 2.0 Management console

- Go into the AD FS 2.0…Trust Relationships…Claims Provider Trusts node and click on the Add Claims Provider Trust… link in the right pane

- Click the Start button to begin the wizard

- Use the default option to import data about the relying party published online. The Url you need to use is in the ACS management portal. Go back to your browser that has the portal open, and click on the Application Integration link under the Trust relationships menu in the left pane

- Copy the Url it shows for the WS-Federation Metadata, and paste that into the Federation metadata address (host name or URL): edit box in the ADFS wizard, then click the Next button

- Type in a Display name and optionally some Notes then click the Next button

- Leave the default option of permitting all users to access the identity provider and click the Next button.

- Click the Next button so it creates the identity provider, and leave the box checked to open up the rules editor dialog. The rest of this section is going to be very similar to what I described in this post http://blogs.technet.com/b/speschka/archive/2010/11/24/configuring-adfs-trusts-for-multiple-identity-providers-with-sharepoint-2010.aspx about setting up a trust between two ADFS servers:

You need to create rules to pass through all of the claims that you get from the IP ADFS server. So in the rules dialog, for each claim you want to send to SharePoint you're going to do the following:

- Click on Add Rule.

- Select the Pass Through or Filter an Incoming Claim in the Claim Rule Template drop down and click the Next button.