Windows Azure and Cloud Computing Posts for 5/28/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

•• Updated 5/30/2011 with articles marked •• from Rob Tiffany, Maarten Balliauw, Jay Heiser, Juan De Abreu, the Silverlight Show, Steve Yi, Avkash Chauhan, and Riccardo Becker.

• Updated 5/29/2011 with articles marked • from James Podgorski, Pinal Dave, David Robinson, Christian Leinsberger, Roger Mall, István Novák, Claire Rogers, Tom Rizzo and Microsoft TechEd North America 2011 Team.

U.S. Memorial Day weekend catch-up issue. Will be updated 5/29 and 5/30/2011.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, SQL Compact and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF, Cache and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database, SQL Compact and Reporting

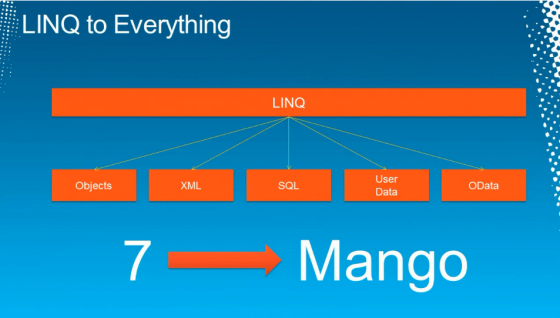

•• Rob Tiffany posted Video > New Windows Phone Mango Data Access Features @ Tech Ed North America 2011 on 5/27/2011:

If you are a Windows Phone developer, this sample-filled video shows you how to create, manage, and access structured data that is locally stored on the phone via SQL Server Compact. This new functionality will be available in the next version of Windows Phone Mango later this year. This session is a “must” if your application works with a lot of local structured data. Come and get a deep dive and learn how to use these capabilities.

From the Channel9 description:

If you are a Windows Phone developer, this sample-filled session shows you how to create, manage and access structured data that is locally stored on the phone. This new functionality will be available in the next version of Windows Phone later this year. This session is a "must" if your application works with a lot of local structured data. Come and get a deep dive and learn how to use these capabilities.

Watch the Channel9 WPH304 video and download slides here.

•• Lynn Langit (@llangit) posted Getting Started With SQL Azure Development on 5/29/2011:

**This is an update to the article published late last year in MSDN Magazine – it includes information current as of May 29th, including the TechEd 2011 SQL Azure announcements**

In addition to the information in this article, I recently did a series of presentations on SQL Azure for the SSWUG – [above] is a video preview.

Microsoft Windows Azure offers several choices for data storage. These include Windows Azure storage and SQL Azure. You may choose to use one or both in your particular project. Windows Azure storage currently contains three types of storage structures: tables, queues or blobs (which can optionally be virtual machines).

SQL Azure is a relational data storage service in the cloud. One benefit of this offering is the ability to use a familiar relational development model that includes most of the standard SQL Server language (T-SQL), tools and utilities. Working with well-understood relational structures in the cloud, such as tables, views and stored procedures, also increases developer productivity on this new platform type. Other benefits include a reduced need for physical database administration tasks such as server setup, maintenance and security, as well as built-in support for reliability, high availability and scalability.

I won’t cover Windows Azure storage or make a comparison between the two storage modes here. You can read more about these storage options in the July 2010 Data Points column. It is important to note that Windows Azure tables are NOT relational tables. Another way to think about the two storage offerings is that Windows Azure includes Microsoft’s NoSQL cloud solutions and SQL Azure is the RDBMS cloud offering. The focus of this article is on understanding the capabilities included in SQL Azure.

In this article I will explain the differences between SQL Server and SQL Azure. You need to understand these differences in detail so that you can appropriately leverage your current knowledge of SQL Server as you work on projects that use SQL Azure as a data source. This article was originally published in September 2010. I have updated it as of June 2011.

If you are new to cloud computing you’ll want to do some background reading on Windows Azure before reading this article. A good place to start is the MSDN Developer Cloud Center.

Getting Started with SQL Azure

To start working with SQL Azure, you’ll first need to set up an account. If you are an MSDN subscriber, you can use up to three SQL Azure databases (maximum size 1 GB each) for up to 16 months (details) as a developer sandbox. You may prefer to sign up for a regular SQL Azure account (storage and data transfer fees apply); to do so, go here. Yet another option is to get a 30-day trial account (no credit card required). To do the latter, go here and use signup code DPEWR02.

After you’ve signed up for your SQL Azure account, the simplest way to initially access it is via the web portal at windows.azure.com. You must first sign in with the Windows Live ID that you’ve associated to your Windows Azure account. After you sign in, you can create your server installation and get started developing your application. The number of servers and / or databases you are allowed to create will be dependent on the type of account you’ve signed up for.

An example of the SQL Azure web management portal is shown in Figure 1. Here you can see a server and its associated databases. You’ll note that there is also a tab on this portal for managing the Firewall Settings for this particular SQL Azure installation.

Figure 1 Summary Information for a SQL Azure Server

As you initially create your SQL Azure server installation, it will be assigned a random string for the server name. You’ll generally also set the administrator username, password, geographic server location and firewall rules at the time of server creation. You can select the physical (data center) location for your SQL Azure installation at the time of server creation. You will be presented with a list of locations to choose from. As of this writing, Microsoft has 6 physical data centers, located world-wide to select from. If your application front-end is built in Windows Azure, you have the option to locate both that installation and your SQL Azure installation in the same geographic location by associating the two installations together by using an Affinity Group.

By default there is no client access to your newly-created server, so you’ll first have to create firewall rules for all client IPs. SQL Azure uses port 1433, so make sure that that port is open for your client application as well. When connecting to SQL Azure you’ll use the username@servername format for your username. SQL Azure supports SQL Authentication only; Windows authentication is not supported. Multiple Active Result Set (MARS) connections are supported.

Open connections will time out after 30 minutes of inactivity. Connections can also be dropped for long-running queries or transactions, or for excessive resource usage. Because of these behaviors, the best practices for connections in your applications are to open, use and then close connections manually, to include retry logic for dropped connections, and to avoid caching connections. Another best practice is to encrypt your connection string to prevent man-in-the-middle attacks. For best practices and code samples for SQL Azure connections (including a suggested library that includes patterned connection retry logic), see this TechNET blog post.
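The open, use, close and retry pattern described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the library from the TechNET post: `TransientError`, `with_retries` and the back-off values are hypothetical names and numbers chosen for the example.

```python
import time


class TransientError(Exception):
    """Hypothetical stand-in for a dropped-connection error raised by a database driver."""


def with_retries(operation, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Run operation(), retrying on TransientError with exponential back-off.

    operation is expected to open a fresh connection, do its work and close
    the connection on every call, so that no stale connection is reused.
    """
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == attempts:
                raise  # out of retries: surface the error to the caller
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5 s, 1 s, 2 s, ...
```

Passing `sleep` as a parameter keeps the helper easy to test; a real application would also want to cap the total wait time and log each retry.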

You will be connected to the master database by default if you don’t specify a database name in the connection string. In SQL Azure the T-SQL statement USE is not supported for changing databases, so you will generally specify the database you want to connect to in the connection string (assuming you want to connect to a database other than master). Figure 2 below shows an example of an ADO.NET connection:

Figure 2 Format for SQL Azure connection string
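As a rough text equivalent of the connection requirements described above, the following Python sketch assembles an ADO.NET-style connection string. The function name and the sample values are hypothetical; the key names follow the format commonly shown for SQL Azure, and the exact string offered by the portal may differ.

```python
def build_connection_string(server, database, user, password):
    """Return an ADO.NET-style connection string for a SQL Azure database.

    server is the short server name assigned at creation time; the login
    takes the username@servername form described in the text.
    """
    parts = [
        f"Server=tcp:{server}.database.windows.net,1433",  # SQL Azure uses port 1433
        f"Database={database}",      # name the database here; USE is not available
        f"User ID={user}@{server}",  # username@servername format
        f"Password={password}",
        "Trusted_Connection=False",  # SQL authentication only
        "Encrypt=True",              # request an encrypted connection
    ]
    return ";".join(parts) + ";"
```

For example, `build_connection_string("abc123", "Sales", "lynn", "secret")` names the `Sales` database explicitly, so the connection does not land in master.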

Setting up Databases

After you’ve successfully created and connected to your SQL Azure server, you’ll usually want to create one or more databases. Although you can create databases using the SQL Azure portal, you may prefer to do so using some of the other tools, such as SQL Server Management Studio 2008 R2. By default, you can create up to 149 databases for each SQL Azure server installation; if you need more databases than that, you must call the Azure business desk to have this limit increased.

When creating a database you must select the maximum size. The current options for sizing (and billing) are Web or Business Edition. Web Edition, the default, supports databases of 1 or 5 GB total. Business Edition supports databases of up to 50 GB, sized in increments of 10 GB – in other words, 10, 20, 30, 40 and 50 GB. Currently, both editions are feature-equivalent.

You set the size limit for your database when you create it by using the MAXSIZE keyword. You can change the size limit or the edition (Web or Business) after the initial creation using the ALTER DATABASE statement. If you reach your size or capacity limit for the edition you’ve selected, then you will see the error code 40544. The database size measurement does NOT include the master database, or any database logs. For more detail about sizing and pricing, see this link. Although you set a maximum size, you are billed based on actual storage used.
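To make the edition and size rules concrete, here is a small Python sketch that validates an edition/size pair and formats the corresponding T-SQL. The helper names are hypothetical, the size table mirrors the values given above, and the database name is assumed to be a trusted identifier rather than user input.

```python
# Sizes (in GB) allowed per edition, as described in the text above.
ALLOWED_SIZES_GB = {"web": (1, 5), "business": (10, 20, 30, 40, 50)}


def _options(edition, maxsize_gb):
    """Validate the edition/size pair and format the T-SQL option list."""
    edition = edition.lower()
    if edition not in ALLOWED_SIZES_GB:
        raise ValueError(f"unknown edition: {edition!r}")
    if maxsize_gb not in ALLOWED_SIZES_GB[edition]:
        raise ValueError(f"{maxsize_gb} GB is not a valid size for the {edition} edition")
    return f"(MAXSIZE = {maxsize_gb} GB, EDITION = '{edition}')"


def create_database_sql(name, edition="web", maxsize_gb=1):
    """Format a CREATE DATABASE statement with an explicit size limit."""
    return f"CREATE DATABASE [{name}] {_options(edition, maxsize_gb)};"


def alter_database_sql(name, edition, maxsize_gb):
    """Format an ALTER DATABASE statement that changes size limit or edition."""
    return f"ALTER DATABASE [{name}] MODIFY {_options(edition, maxsize_gb)};"
```

Rejecting an invalid pair up front, such as 10 GB on the Web edition, gives a clearer failure than sending the statement and waiting for the server to refuse it.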

It’s important to realize that when you are creating a new database on SQL Azure, you are actually creating three replicas of that database. This is done to ensure high availability. These replicas are completely transparent to you. Currently, these replicas are in the same data center. The new database appears as a single unit for your purposes. Failover is transparent, and part of the service you are paying for is an SLA of 99.9% uptime.

After you’ve created a database, you can quickly get the connection string information for it by selecting the database in the list on the portal and then clicking the ‘Connection Strings’ button. You can also test connectivity via the portal by clicking the ‘Test Connectivity’ button for the selected database. For this test to succeed you must enable the ‘Allow Microsoft Services to Connect to this Server’ option on the Firewall Rules tab of the SQL Azure portal.

Creating Your Application

After you’ve set up your account, created your server, created at least one database and set a firewall rule so that you can connect to the database, then you can start developing your application using this data source.

Unlike with Windows Azure data storage options such as tables, queues or blobs, when you are using SQL Azure as a data source for your project, there is nothing to install in your development environment. If you are using Visual Studio 2010, you can just get started – no additional SDKs, tools or anything else are needed.

Although many developers will choose to use a Windows Azure front-end with a SQL Azure back-end, this configuration is NOT required. You can use ANY front-end client with a supported connection library such as ADO.NET or ODBC. This could include, for example, an application written in Java or PHP. Of note is that connecting to SQL Azure via OLE DB is currently not supported.

If you are using Visual Studio 2010 to develop your application, then you can take advantage of the included ability to view or create many types of objects in your selected SQL Azure database installation directly from the Visual Studio Server Explorer View. These objects are Tables, Views, Stored Procedures, Functions or Synonyms. You can also see the data associated with these objects using this viewer. For many developers using Visual Studio 2010 as their primary tool to view and manage SQL Azure data will be sufficient. The Server Explorer View window is shown in Figure 3. Both a local installation of a database and a cloud-based instance are shown. You’ll note that the tree nodes differ slightly in the two views. For example there is no Assemblies node in the cloud installation because custom assemblies are not supported in SQL Azure.

Figure 3 Viewing Data Connections in Visual Studio

Of note also in Visual Studio is that using the Entity Framework with SQL Azure is supported. Also you may choose to use Data-Tier application packages (or DACPACs) in Visual Studio. You can create, import and / or modify DACPACS for SQL Azure schemas in VS2010.

Another developer tool that you can now use to create applications which use SQL Azure as a data source is Visual Studio Light Switch. This is a light-weight developer environment, based on the idea of ‘data and screens’, created for those who are tasked with part-time coding, most especially those who create ‘departmental applications’. To try out the beta version of Visual Studio Light Switch go to this location. Shown below (Figure 4) is connecting to a SQL Azure data source using the Light Switch IDE.

Figure 4 Connecting to SQL Azure in Visual Studio Light Switch

If you wish to use SQL Azure as a data source for Business Intelligence projects, then you’ll use Visual Studio Business Intelligence Development Studio 2008 (R2 version needed to connect to SQL Azure). In addition, Microsoft has begun a limited (invite-only) customer beta of SQL Azure Reporting Services, a version of SQL Server Reporting Services for Azure. Microsoft has announced that on the longer-term roadmap for SQL Azure, they are working to cloud-enable versions of the entire BI stack, that is, Analysis Services, Integration Services and Reporting Services.

More forward-looking, Microsoft has announced that in vNext of Visual Studio the BI toolset will be integrated into the core product with full SQL Azure compatibility and IntelliSense. This project is code-named ‘Juneau’ and is expected to go into public beta later this year. For more information (and demo videos of Juneau) see this link.

As I mentioned earlier, another tool you may want to use to work with SQL Azure is SQL Server Management Studio 2008 R2. With SSMS, you actually have access to a fuller set of operations for SQL Azure databases than in Visual Studio 2010. I find that I use both tools, depending on which operation I am trying to complete. An example of an operation available in SSMS (and not in Visual Studio 2010) is creating a new database using a T-SQL script. Another example is the ability to easily perform index operations (create, maintain, delete and so on). An example is shown in Figure 5 below.

Although working with SQL Azure databases in SSMS 2008 R2 is quite similar to working with an on-premises SQL Server instance, tasks and functionality are NOT identical. This is mostly due to product differences. For example, you may remember that in SQL Azure the USE statement to CHANGE databases is NOT supported. A common way to change databases when working in SSMS is to right-click an open query window, click ‘Connection’ > ‘Change connection’ on the context-sensitive menu, and then enter the next database connection information in the ‘Connect to Database Engine’ dialog box that pops up.

Generally when working in SSMS, if an option isn’t supported in SQL Azure, either you simply can’t see it (for example, folders are not present in the Explorer tree, or context-sensitive menu options are not available when connected to a SQL Azure instance), or you are presented with an error when you try to execute a command that isn’t supported in this version of SQL Server. You’ll also note that many of the features available with GUI interfaces for SQL Server with SSMS are exposed only via T-SQL script windows for SQL Azure. These include common features such as CREATE DATABASE, CREATE LOGIN, CREATE TABLE and CREATE USER.

One tool that SQL Server DBAs often ‘miss’ in SQL Azure is SQL Server Agent. This functionality is NOT supported. However, there are 3rd party tools as well as community projects, such as the one on CodePlex here which provide examples of using alternate technologies to create ‘SQL-Agent-like’ functionality for SQL Azure.

Figure 5 Using SQL Server Management Studio 2008 R2 to Manage SQL Azure

As mentioned in the discussion of Visual Studio 2010 support, newly released in SQL Server 2008 R2 is the data-tier application, or DAC. DAC pacs are objects that combine SQL Server or SQL Azure database schemas and objects into a single entity.

You can use either Visual Studio 2010 (to build) or SQL Server 2008 R2 SSMS (to extract) to create a DAC from an existing database. If you wish to use Visual Studio 2010 to work with a DAC, then you’d start by selecting the SQL Server Data-Tier Application project type in Visual Studio 2010. Then, on the Solution Explorer, right-click your project name and click ‘Import Data Tier Application’. A wizard opens to guide you through the import process. If you are using SSMS, start by right-clicking on the database you want to use in the Object Explorer, click Tasks, and then click ‘Extract Data-tier Application’ to create the DAC. The generated DAC is a compressed file that contains multiple T-SQL and XML files. You can work with the contents by right-clicking the .dacpac file and then clicking Unpack. SQL Azure supports deleting, deploying, extracting, and registering DAC pacs, but does not support upgrading them. Figure 6 below shows the template in Visual Studio 2010 for working with DACPACs.

Figure 6 The ‘SQL Server Data-tier Application’ template in Visual Studio 2010 (for DACPACs)

Also of note is that Microsoft has released a CTP version of enhanced DACPACs, called BACPACs, that support import/export of schema AND data (via BCP). Find more information here. Another name for this set of functionality is the import/export tool for SQL Azure.

Another tool you can use to connect to SQL Azure is the Silverlight-based web tool called the SQL Azure Web Management tool, shown in Figure 7 below. It’s intended as a zero-install client to manage SQL Azure installations. To access this tool, navigate to the main Azure portal here, then click on the ‘Database’ node in the tree view on the left side. Next, click on the database that you wish to work with and then click on the ‘Manage’ button on the ribbon. This will open the login box for the web client. After you enter the login credentials, a new web page will open which will allow you to work with that database’s Tables, Views, Queries and Stored Procedures.

Figure 7 Using the Silverlight Web Portal to manage a SQL Azure Database

Of course, because the portal is built on Silverlight, you can view, monitor and manage the exposed aspects of SQL Azure with any browser using the web management tool. Shown below in Figure 8 is the portal running on a MacOS with Google Chrome.

Figure 8 Using the Silverlight Web Portal to manage a SQL Azure Database on a Mac with Google Chrome

Still another tool you can use to connect to a SQL Azure database is SQLCMD (more information here). Of note is that even though SQLCMD is supported, the OSQL command-line tool is not supported by SQL Azure.

Using SQL Azure

So now you’ve connected to your SQL Azure installation and have created a new, empty database. So what exactly can you do with SQL Azure? Specifically, you may be wondering what the limits on creating objects are. And after those objects have been created, how do you populate them with data? As I mentioned at the beginning of this article, SQL Azure provides relational cloud data storage, but it does have some subtle feature differences from an on-premises SQL Server installation. Starting with object creation, let’s look at some of the key differences between the two.

You can create the most commonly used objects in your SQL Azure database using familiar methods. The most commonly used relational objects (which include tables, views, stored procedures, indices, and functions) are all available. There are some differences around object creation though. I’ll summarize the differences in the next paragraph.

SQL Azure tables MUST contain a clustered index. Non-clustered indices CAN be subsequently created on selected tables. You CAN create spatial indices; you can NOT create XML indices. Heap tables are NOT supported. The geo-spatial CLR types (Geography and Geometry) ARE supported. Support for the HierarchyID data type IS also included. Other CLR types are NOT supported. View creation MUST be the first statement in a batch. Also view (or stored procedure) creation with encryption is NOT supported. Functions CAN be scalar, inline or multi-statement table-valued functions, but can NOT be any type of CLR function.

There is a complete reference of partially supported T-SQL statements for SQL Azure on MSDN here.

Before you get started creating your objects, remember that you will connect to the master database if you do not specify a different one in your connection string. In SQL Azure, the USE (database) statement is not supported for changing databases, so if you need to connect to a database other than the master database, then you must explicitly specify that database in your connection string as shown earlier.

Data Migration and Loading

If you plan to create SQL Azure objects using an existing, on-premises database as your source data and structures, then you can simply use SSMS to script an appropriate DDL to create those objects on SQL Azure. Use the Generate Scripts Wizard and set the ‘Script for the database engine type’ option to ‘for SQL Azure’.

An even easier way to generate a script is to use the SQL Azure Migration Wizard, available as a download from CodePlex here. With this handy tool you can generate a script to create the objects and can also load the data via bulk copy using bcp.exe.
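If you want to drive the bulk-copy step yourself, the command line can be assembled as in the Python sketch below. It only builds the argument list and runs nothing; the function name and sample values are hypothetical, it assumes bcp.exe is installed and on the PATH, and it is not a reproduction of what the Migration Wizard does internally.

```python
def bcp_import_args(table, data_file, server, database, user, password):
    """Build the argument list for a bcp.exe bulk import into SQL Azure.

    table is schema-qualified (for example dbo.Customers); the database
    name is prefixed so the import does not target master.
    """
    return [
        "bcp", f"{database}.{table}", "in", data_file,
        "-S", f"tcp:{server}.database.windows.net",  # fully qualified server name
        "-U", f"{user}@{server}",                    # username@servername login
        "-P", password,
        "-n",                                        # native data format
    ]
```

The resulting list can be handed to `subprocess.run(args, check=True)`; passing a list rather than a single shell string avoids quoting problems with passwords and file paths.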

You could also design a SQL Server Integration Services (SSIS) package to extract and run a DML or DDL script. If you are using SSIS, you’d most commonly design a package that extracts the DDL from the source database, scripts that DDL for SQL Azure and then executes that script on one or more SQL Azure installations. You might also choose to load the associated data as part of this package’s execution path. For more information about working with SSIS, see here.

Also of note regarding DDL creation and data migration is the CTP release of SQL Azure Data Sync Services (here). You can also see this service in action in a Channel 9 video here. Currently SQL Azure Data Sync Services works via Synchronization Groups (HUB and MEMBER servers) and then via scheduled synchronization at the level of individual tables in the databases selected for synchronization. For even more about Data Sync, listen in to this recent MSDN geekSpeak show by new SQL Azure MVP Ike Ellis on his experiences with SQL Azure Data Sync.

You can use the Microsoft Sync Framework Power Pack for SQL Azure to synchronize data between a data source and a SQL Azure installation. As of this writing, this tool is in CTP release and is available here. If you use this framework to perform subsequent or ongoing data synchronization for your application, you may also wish to download the associated SDK.

What if your source database is larger than the maximum size for the SQL Azure database installation? This could be greater than the absolute maximum of 50 GB for the Business Edition or some smaller limit based on the other program options.

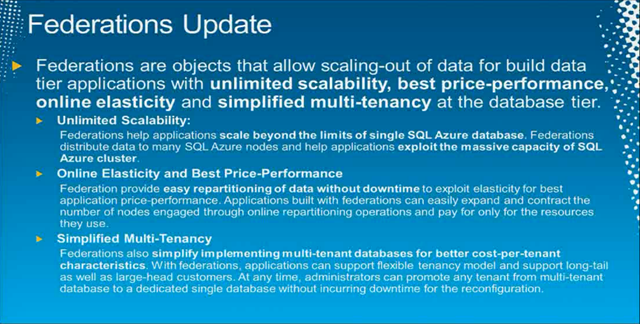

Currently, customers must partition (or shard) their data manually if their database size exceeds the program limits. Microsoft has announced that it will be providing a federation (or auto-partitioning utility) for SQL Azure in the future. For more information about how Microsoft plans to implement federation, read here. To support federations new T-SQL syntax will be introduced. From the blog post referenced above, Figure 9, below, shows a conceptual representation of that new syntax.

Figure 9 SQL Azure Federation (conceptual syntax)

As of this writing, the SQL Azure Federation customer beta program has been announced. To sign up, go here.

It’s important to note that T-SQL table partitioning is NOT supported in SQL Azure. There is also a free utility called Enzo SQL Shard (available here) that you can use for partitioning your data source.
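Until federation arrives, manual sharding usually comes down to a routing function in the application. The Python sketch below shows one simple approach, hashing a key to pick a database; the shard names and the choice of a customer ID as the key are hypothetical, and this is neither the planned federation syntax nor how Enzo SQL Shard works.

```python
import hashlib

# Hypothetical shard databases, each kept under the per-database size limit.
SHARDS = ["Orders_0", "Orders_1", "Orders_2", "Orders_3"]


def shard_for(customer_id, shards=SHARDS):
    """Map a key to one shard database using a stable hash.

    hashlib is used instead of the built-in hash() so that the mapping is
    identical across processes and machines.
    """
    digest = hashlib.sha256(str(customer_id).encode("utf-8")).digest()
    index = int.from_bytes(digest[:4], "big") % len(shards)
    return shards[index]
```

The returned name would then go into the connection string for that request. Note that with plain modulo hashing, adding a shard remaps most keys, so a scheme like this suits a fixed shard count or needs a data-movement plan.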

You’ll want to take note of some other differences between SQL Server and SQL Azure regarding data loading and data access. Added recently is the ability to copy a SQL Azure database via the Database copy command. The syntax for a cross-server copy is as follows:

CREATE DATABASE DB2A AS COPY OF Server1.DB1A

The T-SQL INSERT statement IS supported (with the exceptions of updating with views or providing a locking hint inside of an INSERT statement). Related further to data migration is that T-SQL DROP DATABASE and other DDL commands have additional limits when executed against a SQL Azure installation. Also the T-SQL RESTORE and ATTACH DATABASE commands are not supported. Finally, the T-SQL statement EXECUTE AS (login) is not supported.

If you are migrating from a data source other than SQL Server, there are also some free tools and wizards available to make the job easier. Specifically there is an Access to SQL Azure Migration wizard and a MySQL to SQL Azure Migration wizard. Both work similarly to the SQL Azure Migration wizard in that they allow you to map the source schema to a destination schema, then create the appropriate DDL, then they allow you to configure and to execute the data transfer via bcp. A screen from the MySQL to SQL Azure Migration wizard is shown in Figure 10 below.

Here are links for some of these tools:

1) Access to SQL Azure Migration Wizard – here

2) MySQL to SQL Azure Migration Wizard – here

3) Oracle to SQL Server Migration Wizard (you will have to manually set the target version to ‘SQL Azure’ for appropriate DDL script generation) – here

Figure 10 Migration from MySQL to SQL Azure wizard screen

For even more information about migration, you may want to listen in to a recently recorded 90-minute webcast with more details (and demos!) for migration scenarios to SQL Azure - listen in here. Joining me on this webcast is the creator of the open-source SQL Azure Migration Wizard, George Huey. I also posted a version of this presentation (both slides and screencast) on my blog – here.

Data Access and Programmability

Now let’s take a look at common programming concerns when working with cloud data.

First you’ll want to consider where to set up your development environment. If you are an MSDN subscriber and can work with a database under 1 GB, then it may well make sense to develop using only a cloud installation (sandbox). In this way there will be no issue with migration from local to cloud. Using a regular (i.e. not MSDN subscriber) SQL Azure account you could develop directly against your cloud instance (most probably using a cloud-located copy of your production database). Of course developing directly from the cloud is not practical for all situations.

If you choose to work with an on-premises SQL Server database as your development data source, then you must develop a mechanism for synchronizing your local installation with the cloud installation. You could do that using any of the methods discussed earlier, and tools like Data Sync Services and Sync Framework are being developed with this scenario in mind.

As long as you use only the supported features, the method for having your application switch from an on-premise SQL Server installation to a SQL Azure database is simple – you need only to change the connection string in your application.

Regardless of whether you set up your development installation locally or in the cloud, you’ll need to understand some programmability differences between SQL Server and SQL Azure. I’ve already covered the T-SQL and connection string differences. In addition all tables must have a clustered index at minimum (heap tables are not supported). As previously mentioned, the USE statement for changing databases isn’t supported. This also means that there is no support for distributed (cross-database) transactions or queries, and linked servers are not supported.

Other options not available when working with a SQL Azure database include:

- Full-text indexing

- CLR custom types (however the built-in Geometry and Geography CLR types are supported)

- RowGUIDs (use the uniqueidentifier type with the NEWID function instead)

- XML column indices

- Filestream datatype

- Sparse columns

Default collation is always used for the database. To make collation adjustments, set the column-level collation to the desired value using the T-SQL COLLATE clause. And finally, you cannot currently use SQL Profiler or the Database Tuning Wizard on your SQL Azure database.

Some important tools that you CAN use with SQL Azure for tuning and monitoring are the following:

- SSMS Query Optimizer to view estimated or actual query execution plan details and client statistics

- Select Dynamic Management views to monitor health and status

- Entity Framework to connect to SQL Azure after the initial model and mapping files have been created by connecting to a local copy of your SQL Azure database.

Depending on what type of application you are developing, you may be using SSAS, SSRS, SSIS or Power Pivot. You CAN also use any of these products as CONSUMERS of SQL Azure database data. Simply connect to your SQL Azure server and selected database using the methods already described in this article.

Another developer consideration is in understanding the behavior of transactions. As mentioned, only local (within the same database) transactions are supported. Also it is important to understand that the only transaction isolation level available for a database hosted on SQL Azure is READ COMMITTED SNAPSHOT. Using this isolation level, readers get the latest consistent version of data that was available when the statement STARTED. SQL Azure does not detect update conflicts. This is also called an optimistic concurrency model, because lost updates, non-repeatable reads and phantoms can occur. Of course, dirty reads cannot occur.
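The lost-update case mentioned above is easier to see with numbers. The following is a toy model in plain Python, not a reproduction of database behavior: two hypothetical sessions each read a committed value, compute a new one, and write it back with no conflict detection in between.

```python
def run_lost_update():
    """Simulate two sessions doing read-modify-write without conflict detection."""
    committed = {"balance": 100}

    # Each session reads the last committed value; neither blocks the other.
    seen_by_a = committed["balance"]
    seen_by_b = committed["balance"]

    # Both compute a new value from what they read, then write it back.
    committed["balance"] = seen_by_a + 10  # session A commits 110
    committed["balance"] = seen_by_b + 25  # session B overwrites with 125

    return committed["balance"]
```

Run one after the other, the two changes would give 135; here session A's change is silently lost and the result is 125. A common way to avoid this is to let the database do the arithmetic in a single `UPDATE` statement that sets `balance = balance + 10`, rather than reading the value into the application first.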

Yet another method of accessing SQL Azure data programmatically is via OData. This capability is currently in CTP and available here; you can try out exposing SQL Azure data via an OData interface by configuring this at the CTP portal. For a well-written introduction to OData, read here. Shown in Figure 11 below is one of the (CTP) configuration screens for exposing SQL Azure data as OData.

Figure 11 SQL OData (CTP) configuration

Database Administration

Generally when using SQL Azure, the administrator role becomes one of logical installation management. Physical management is handled by the platform. From a practical standpoint this means there are no physical servers to buy, install, patch, maintain or secure. There is no ability to physically place files, logs, tempdb and so on in specific physical locations. Because of this, there is no support for the T-SQL commands USE <database>, FILEGROUP, BACKUP, RESTORE or SNAPSHOT.

There is no support for the SQL Agent on SQL Azure. Also, there is no ability (or need) to configure replication, log shipping, database mirroring or clustering. If you need to maintain a local, synchronized copy of SQL Azure schemas and data, then you can use any of the tools discussed earlier for data migration and synchronization – they work both ways. You can also use the DATABASE COPY command. Other than keeping data synchronized, what are some other tasks that administrators may need to perform on a SQL Azure installation?

Most commonly, there will still be a need to perform logical administration. This includes tasks related to security and performance management. Of note is that SQL Azure alone has two new database roles in the master database, which are intended for security management. These roles are dbmanager (similar to SQL Server’s dbcreator role) and loginmanager (similar to SQL Server’s securityadmin role). Also, certain common usernames are not permitted. These include ‘sa’, ‘admin’, ‘administrator’, ‘root’ and ‘guest’. Finally, passwords must meet complexity requirements. For more, read Kalen Delaney’s TechNet article on SQL Azure security here.
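As a small illustration of the username restriction, the following Python sketch pre-checks a proposed login name against the names listed above before you attempt to create it. This is only a client-side convenience, not the service's own validation logic; the function name is hypothetical, and treating the comparison as case-insensitive is an assumption.

```python
# Names the article lists as not permitted. Comparing case-insensitively
# is an assumption of this sketch, not documented behavior.
RESERVED_LOGIN_NAMES = {"sa", "admin", "administrator", "root", "guest"}


def is_reserved_login_name(name):
    """Return True if the proposed login name is on the reserved list."""
    return name.strip().lower() in RESERVED_LOGIN_NAMES


for candidate in ("sa", "Admin", "reporting_user"):
    print(candidate, is_reserved_login_name(candidate))
```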

Additionally, you may be involved in monitoring for capacity usage and associated costs. To help you with these tasks, SQL Azure provides a public Status History dashboard here that shows current service status and recent history (an example of history is shown in Figure 12).

Figure 12 SQL Azure Status History

There is also a new set of error codes that both administrators and developers should be aware of when working with SQL Azure. These are shown in Figure 13 below. For a complete set of error codes for SQL Azure see this MSDN reference. Also, developers may want to take a look at this MSDN code sample on how to programmatically decode error messages.

Figure 13 SQL Azure error codes

SQL Azure provides a high security bar by default. It forces SSL encryption with all permitted (via firewall rules) client connections. Server-level logins and database-level users and roles are also secured. There are no server-level roles in SQL Azure. Encrypting the connection string is a best practice. Also, you may wish to use Windows Azure certificates for additional security. For more detail read here.

In the area of performance, SQL Azure includes features such as automatically killing long-running transactions and idle connections (over 30 minutes). Although you cannot use SQL Profiler or trace flags for performance tuning, you can use SQL Query Optimizer to view query execution plans and client statistics. A sample query to SQL Azure with Query Optimizer output is shown in Figure 15 below. You can also perform statistics management and index tuning using the standard T-SQL methods.

Figure 15 SQL Azure query with execution plan output shown

There is a select list of dynamic management views (covering database, execution or transaction information) available for database administration as well. These include sys.dm_exec_connections, sys.dm_exec_requests, sys.dm_exec_sessions, sys.dm_tran_database_transactions, sys.dm_tran_active_transactions and sys.dm_db_partition_stats. For a complete list of supported DMVs for SQL Azure see here.

There are also some new views such as sys.database_usage and sys.bandwidth_usage. These show the number, type and size of the databases and the bandwidth usage for each database so that administrators can understand SQL Azure billing. Also this blog post gives a sample of how you can use T-SQL to calculate estimated cost of service. Here is yet another MVP’s view of how to calculate billing based on using these views. A sample is shown in Figure 16. In this view, quantity is listed in KB. You can monitor space used via this command:

    SELECT SUM(reserved_page_count) * 8192 FROM sys.dm_db_partition_stats

Figure 16 Bandwidth Usage in SQL Query
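The space query above multiplies a page count by 8192, so its result is in bytes. The short Python sketch below shows the same arithmetic and a conversion to megabytes; the page count used is a made-up value for illustration, not output from a real database.

```python
PAGE_SIZE_BYTES = 8192  # the multiplier used in the T-SQL query above


def reserved_bytes(reserved_page_count):
    """Bytes reserved for a given number of pages."""
    return reserved_page_count * PAGE_SIZE_BYTES


def bytes_to_mb(size_in_bytes):
    """Convert bytes to megabytes (1 MB = 1024 * 1024 bytes)."""
    return size_in_bytes / (1024 * 1024)


pages = 12800  # hypothetical SUM(reserved_page_count)
print(reserved_bytes(pages), bytes_to_mb(reserved_bytes(pages)))
```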

Turning to SQL Azure performance monitoring, Microsoft has released an installable tool which will help you to better understand performance. It produces reports on ‘longest running queries’, ‘max CPU usage’ and ‘max IO usage’. Shown in Figure 17 below is a sample report screen for the first metric. You can download this tool from this location.

Figure 17 Top 10 CPU consuming queries for a SQL Azure workload

You can also access the current charges for the SQL Azure installation via the SQL Azure portal by clicking on the Billing link at the top-right corner of the screen. Below in Figure 18 is an example of a bill for SQL Azure.

Figure 18 Sample Bill for SQL Azure services

Learn More and Roadmap

Product updates announced at TechEd US / May 2011 are as follows:

- SQL Azure Management REST API – a web API for managing SQL Azure servers.

- Multiple servers per subscription – create multiple SQL Azure servers per subscription.

- JDBC Driver – updated database driver for Java applications to access SQL Server and SQL Azure.

- DAC Framework 1.1 – making it easier to deploy databases and in-place upgrades on SQL Azure.

For deeper technical details you can read more in the MSDN documentation here.

Microsoft has also announced that it is working to implement database backup and restore, including point-in-time restore for SQL Azure databases. This is a much-requested feature for DBAs and Microsoft has said that they are prioritizing the implementation of this feature set due to demand.

To learn more about SQL Azure, I suggest you download the Windows Azure Training Kit. This includes SQL Azure hands-on learning, whitepapers, videos and more. The training kit is available here. There is also a project on CodePlex which includes downloadable code, sample videos and more here. Also you will want to read the SQL Azure Team Blog here, and check out the MSDN SQL Azure Developer Center here.

If you want to continue to preview upcoming features for SQL Azure, then you’ll want to visit SQL Azure Labs here. Shown below in Figure 19 is a list of our current CTP programs. As of this writing, those programs include OData, Data Sync and Import/Export. SQL Azure Federations has been announced, but is not open to invited customers.

Figure 19 SQL Azure CTP programs

A final area you may want to check out is the Windows Azure Data Market. This is a place for you to make data sets that you choose to host on SQL Azure publicly available. This can be at no cost or for a fee. Access is via Windows Live ID. You can connect via existing clients, such as the latest version of the Power Pivot add-in for Excel, or programmatically. In any case, this is a place for you to ‘advertise’ (and sell) access to data you’ve chosen to host on SQL Azure.

Conclusion

Are you still reading? Wow! You must be really interested in SQL Azure. Are you using it? What has your experience been? Are you interested, but NOT using it yet? Why not? Are you using some other type of cloud-data storage (relational or non-relational)? What is it, how do you like it? I welcome your feedback.

• David Robinson presented the COS310: Microsoft SQL Azure Overview: Tools, Demos and Walkthroughs of Key Features session at TechEd North America 2011. From Channel9’s description:

This session is jam-packed with hands-on demonstrations lighting up SQL Azure with new and existing applications. We start with the steps to creat[e] a SQL Azure account and database, then walk through the tools to connect to it. Then we open Microsoft Visual Studio to connect to Microsoft .NET applications with EF and ADO.NET. Finally, plug in new services to sync data with SQL Server.

Outtake: Slide 10 at 00:21:28: Repowering SQL Azure with SQL Server Denali Engine

Outtake: Slide 16 at 00:33:43: SQL Azure sharding with Federations

As I noted in the SQL Azure Database and Reporting section of my earlier Windows Azure and Cloud Computing Posts for 5/26/2011+ post:

SQL Server Denali’s new Sequence object or its equivalent will be required to successfully shard SQL Azure Federation members with bigint identity primary key ranges. Microsoft’s David Robinson announced in slide 10 of his COS310: Microsoft SQL Azure Overview: Tools, Demos and Walkthroughs of Key Features TechEd North America 2011 session that “RePowering SQL Azure with SQL Server Denali Engine” is “coming in Next [SQL Azure] Service Release.”

For more details about sharding SQL Azure databases, read my Build Big-Data Apps in SQL Azure with Federation cover story for Visual Studio Magazine’s March 2011 issue.

• Pinal Dave described SQL Azure Throttling and Decoding Reason Codes in a 5/29/2011 post to his SQL Authority blog:

I was recently reading about SQL Azure Throttling and Decoding Reason Codes and ended up reading the article over here. What I really liked is the explanation of the subject with a graphic. I have never seen a better explanation of this subject.

I really liked this diagram. However, based on the reason code, one has to adjust one's resource usage. I now wonder whether there is any tool available that can directly analyze the reason codes and report what kind of throttling is happening. One idea I immediately had was to write a stored procedure or function to which I pass this error code and which gives me back right away the throttling mode and resource type based on the above algorithm.

Does anyone have the T-SQL code available for the same?

Following is the content of the “Decoding Throttling Codes” section from the cited Error Messages (SQL Azure Database) topic from the MSDN Library:

This section describes how to decode the reason codes that are returned by error code 40501 "The service is currently busy. Retry the request after 10 seconds. Code: %d.". The reason code (Code: %d) is a decimal number that contains the throttling mode and the exceeded resource type(s). The throttling mode enumerates the rejected statement types. The resource type specifies the exceeded resources. Throttling can happen on multiple resource types concurrently, such as CPU and IO.

The following diagram demonstrates how to decode the reason codes.

To obtain the throttling mode, apply modulo 4 to the reason code. The modulo operation returns the remainder of one number divided by another. To obtain the throttling type and resource type, divide the reason code by 256 as shown in step 1. Then, convert the quotient of the result to its binary equivalent as shown in steps 2 and 3. The diagram lists all the throttling types and resource types. Compare your throttling type with the resource type bits as shown in the diagram.

The following table provides a list of the throttling modes.

As an example, use 131075 as a reason code. To obtain the throttling mode, apply modulo 4 to the reason code. 131075 % 4 = 3. The result 3 means the throttling mode is "Reject All".

To obtain the throttling type and resource type, divide the reason code by 256. Then, convert the quotient of the result to its binary equivalent. 131075 / 256 = 512 (decimal) and 512 (decimal) = 10 00 00 00 00 (binary). This means the database was Hard-Throttled (10) due to CPU (Resource Type 4).
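Pinal Dave asked for T-SQL; as a language-neutral illustration, here is a minimal Python sketch of the decoding steps quoted above (modulo 4 for the mode, integer division by 256, then reading the quotient two bits at a time). The function and dictionary names are hypothetical. Only the labels that appear in the quoted example are filled in; reading the bit pair "01" as soft throttling is an assumption, and other modes and resource types are reported by number rather than guessed.

```python
# Only "Reject All" (mode 3) is named in the quoted example; other modes
# are reported by number.
THROTTLING_MODE_NAMES = {3: "Reject All"}

# "10" is hard throttling in the quoted example. Reading "01" as soft
# throttling is an assumption of this sketch.
THROTTLING_TYPE_NAMES = {0b01: "soft", 0b10: "hard"}

# Only resource type 4 (CPU) is named in the quoted example.
RESOURCE_TYPE_NAMES = {4: "CPU"}


def decode_reason_code(reason_code):
    """Split a 40501 reason code into (mode, {resource_index: throttling_type})."""
    mode = reason_code % 4
    quotient = reason_code // 256  # integer division, as in step 1
    resources = {}
    index = 0
    while quotient:
        pair = quotient & 0b11  # lowest two bits describe one resource type
        if pair:
            resources[index] = THROTTLING_TYPE_NAMES.get(pair, "unknown")
        quotient >>= 2
        index += 1
    return mode, resources


mode, resources = decode_reason_code(131075)
print(THROTTLING_MODE_NAMES.get(mode, mode))
for index, kind in resources.items():
    print(RESOURCE_TYPE_NAMES.get(index, "resource type %d" % index), kind)
```

For the example code 131075 this reproduces the result in the quoted text: mode 3 and a hard throttle on resource type 4.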

David Robinson presented the SQL Azure Advanced Administration: Backup, Restore and Database Management Strategies for Cloud Databases session at TechEd North America 2011 on 5/17/2011. From the Channel9 session description:

The cornerstone of any enterprise application is the ability to backup and restore data. In this session we focus on the various options available to application developers and administrators of SQL Azure applications for archiving and recovering database data.

We look at the various scenarios in which backup or restore is necessary, and discuss the requirements driven by those scenarios. Attendees also get a glimpse of future plans for backup/restore support in SQL Azure. The session is highly interactive, and we invite the audience to provide feedback on future requirements for backup/restore functionality in SQL Azure.

Dave is the Technical Editor of my Cloud Computing with the Windows Azure Platform book (WROX/Wiley, 2010).

<Return to section navigation list>

Marketplace DataMarket and OData

•• Steve Yi announced the availability of an 00:11:24 Video How-To: Extending SQL Azure to Microsoft Applications [with OData] Webcast in a 5/30/2011 post:

This walkthrough demonstrates how easy it is to extend, share, and integrate SQL Azure data with Microsoft applications via an OData service. The video starts by reviewing the benefits of using SQL Azure, then goes on to show you how to enable a cloud application to expose the data via an OData service.

By utilizing OData, SQL Azure data is made available to a variety of new user scenarios and client applications such as Windows Phone, Excel, and JavaScript. We also include a lot of additional resources that offer further support.

Watch the video, and follow along by downloading the source code, all available on our page on CodePlex.

Take a look and if you have any questions leave a comment. Thanks!

• Christian Leinsberger and Roger Mall presented the COS307 Building Applications with the Windows Azure DataMarket session at TechEd North America 2011 on 5/18/2011. From the Channel9 description:

This session shows you how to build applications that leverage DataMarket as part of Windows Azure Marketplace. We are going to introduce the development model for DataMarket and then immediately jump into code to show how to extend an existing application with free and premium data from the cloud. Together we will build an application from scratch that leverages the Windows Phone platform, data from DataMarket and the location APIs, to build a compelling application that shows data around the end-user. The session will also show examples of how to use JavaScript, Silverlight and PHP to connect with the DataMarket APIs.

Elisa Flasko posted Jovana Taylor’s An Introduction to DataMarket with PHP guest article to the Windows Azure Marketplace DataMarket blog on 5/27/2011:

Hi! I’m Jovana, and I’m currently interning on the DataMarket team. I come from sunny Western Australia, where I’ve almost finished a degree in Computer Science and Mechatronics Engineering. When I came here I noticed that there wasn’t too much available in the way of tutorials for users who wanted to use DataMarket data in a project, but weren’t C# programmers. I’d written a total of one function in C# before coming here, so I’d definitely classify myself in that category. The languages I’m most familiar with are PHP, Python and Java, so over the next few weeks I’ll do a series of posts giving a basic introduction to consuming data from DataMarket using these languages. I’ll refer to the 2006 – 2008 Crime in the United States (Data.gov) dataset for these posts, which is free to subscribe to, and allows unlimited transactions.

In this post I’ll outline two methods for using PHP to query DataMarket: using the PHP OData SDK, and using cURL to read and then parse the XML data feed. For either method, you’ll first need to subscribe to a dataset, and make a note of your DataMarket account key. Your account key can be found by clicking “My Data” or “My Account” near the top of the DataMarket webpage, then choosing “Account Keys” in the sidebar.

The PHP OData SDK

DataMarket uses the OData protocol to query data, a relatively new format released under the Microsoft Open Specification Promise. One of the ways to query DataMarket with PHP is to use the PHP OData SDK, developed by Persistent Systems Ltd. This is freely available from CodePlex; unfortunately, however, there seems to be little developer activity on the project since its release in March 2010, and users report that they need to make some source code modifications to get it to work on Unix systems. Setting up the SDK also involves making some basic changes to the PHP configuration file, potentially a problem on some hosted web servers.

A word of warning: not all DataMarket datasets can be queried with the PHP OData SDK! DataMarket datasets can have one of two query types, fixed or flexible. To check which type a particular set is, click on the “Details” tab in the dataset description page. The SDK only supports datasets with flexible queries. Another way to check is to take a look at the feed’s metadata. Copy the service URL, also found under the “Details” tab, into your browser’s address bar and add $metadata after the trailing slash. Some browsers have trouble rendering the metadata; if you get an error, save the page and open it up in Notepad. Look for the tag containing <schema xmlns=”…”> (there will probably be other attributes, such as namespace, in this tag). The PHP OData SDK will only work with metadata documents specifying their schema xmlns ending in one of “/2007/05/edm”, “/2006/04/edm” or “/2008/09/edm”.
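The metadata check described above can be sketched in a few lines of Python, shown here as a language-neutral illustration rather than part of the SDK. The function names and the example service URL are hypothetical; the three namespace suffixes are the ones listed in the paragraph above.

```python
# Schema namespace suffixes the article says the PHP OData SDK accepts.
SUPPORTED_EDM_SUFFIXES = ("/2007/05/edm", "/2006/04/edm", "/2008/09/edm")


def metadata_url(service_url):
    """Append $metadata after the service URL's trailing slash."""
    return service_url.rstrip("/") + "/$metadata"


def sdk_supports_schema(schema_xmlns):
    """Return True if the schema xmlns ends in one of the supported suffixes."""
    return schema_xmlns.endswith(SUPPORTED_EDM_SUFFIXES)


# Hypothetical service URL, used only to show the string handling.
print(metadata_url("https://example.invalid/Crimes/"))
print(sdk_supports_schema("http://schemas.microsoft.com/ado/2008/09/edm"))
```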

Generating a Proxy Class

The PHP OData SDK comes with a PHP utility to generate a proxy class for a given OData feed. The file it generates is essentially a PHP model of the feed. The command to generate the file is

    php PHPDataSvcUtil.php /uri=[Dataset’s service URL] /out=[Name of output file]

Once generated, check that the output file was created successfully. The file should contain at least one full class definition. Below is a snippet of the class generated for the Data.gov Crime dataset. The full class is around 340 lines long.

    /**
     * Function returns DataServiceQuery reference for
     * the entityset CityCrime
     * @return DataServiceQuery
     */
    public function CityCrime()
    {
        $this->_CityCrime->ClearAllOptions();
        return $this->_CityCrime;
    }

Using the Proxy class

With the hardest part complete, you are now ready to start consuming data! Insert a reference to the proxy class at the top of your PHP document.

require_once "datagovCrimesContainer.php";

Now you are ready to load the proxy. You’ll also need to pass in your account key for authentication.

    $key = [Your Account Key];
    $context = new datagovCrimesContainer();
    $context->Credential = new WindowsCredential("key", $key);

The next step is to construct and run the query. There are a number of query functions available; these are documented with examples in the user guide. Keep in mind that queries can’t always be filtered by any of the parameters; for this particular dataset we can specify ROWID, State, City and Year. The valid input parameters can be found under the dataset’s “Details” tab. Note that some datasets have mandatory input parameters.

    try
    {
        $query = $context->CityCrime()->Filter("State eq 'Washington' and Year eq 2007");
        $result = $query->Execute();
    }
    catch (DataServiceRequestException $e)
    {
        echo "Error: " . $e->Response->getError();
    }
    $crimes = $result->Result;

(If you get a warning message from cURL that isn’t relevant to the current environment, try adding @ in front of $query to suppress warnings.)

In this example we’ll construct a table to display some of the result data.

    echo "<table>";
    foreach ($crimes as $row)
    {
        echo "<tr><td>" . htmlspecialchars($row->City) . "</td>";
        echo "<td>" . htmlspecialchars($row->Population) . "</td>";
        echo "<td>" . htmlspecialchars($row->Arson) . "</td></tr>";
    }
    echo "</table>";

DataMarket will return up to 100 results for each query, so if you expect more than 100 results you’ll need to execute several queries. We simply need to wrap the execute command in some logic to determine whether all results have been returned yet.

    $nextCityToken = null;
    while (($nextCityToken = $result->GetContinuation()) != null)
    {
        $result = @$context->Execute($nextCityToken);
        $crimes = array_merge($crimes, $result->Result);
    }

The documentation provided with the SDK outlines a few other available query options, such as sorting. Some users have reported bugs arising if certain options are used together, so be sure to test that your results are what you expect.
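The continuation loop shown earlier in this section follows a general paging pattern: keep asking for the next page until the service stops returning a continuation token. Here is a minimal Python sketch of that pattern, offered as a language-neutral illustration. It uses a hypothetical in-memory set of pages instead of a real DataMarket feed, and the page layout (`rows`, `next`) and city names are invented for the example.

```python
def fetch_all(first_page, fetch_next):
    """Collect rows from every page by following continuation tokens."""
    rows = list(first_page["rows"])
    token = first_page["next"]
    while token is not None:
        page = fetch_next(token)
        rows.extend(page["rows"])
        token = page["next"]
    return rows


# Hypothetical in-memory "service": three pages keyed by continuation token.
PAGES = {
    "start": {"rows": ["Aberdeen", "Auburn"], "next": "p2"},
    "p2": {"rows": ["Bellevue", "Bothell"], "next": "p3"},
    "p3": {"rows": ["Seattle"], "next": None},
}

cities = fetch_all(PAGES["start"], lambda token: PAGES[token])
print(cities)
```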

Using cURL/libcurl

If the PHP OData SDK isn’t suitable for your purpose, another option is to assemble the URL to the data you are after, then send a request for it using cURL and parse the XML result. DataMarket’s built-in query explorer can help you out here: add any required parameters to the fields on the left, then click on the blue arrow to show the URL that corresponds to the query. Remember that any ampersands or other special characters will need to be escaped.

The cURL request

We use cURL to request the XML feed that corresponds to the query URL from DataMarket. Although there are a number of options that can be set, the following are all that is required for requests to DataMarket.

    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, $queryUrl);
    // The account key is sent as the password, with an empty user name
    curl_setopt($ch, CURLOPT_USERPWD, ":" . $key);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    // Turns off SSL certificate verification; convenient for testing only
    curl_setopt($ch, CURLOPT_SSL_VERIFYPEER, false);
    $response = curl_exec($ch);
    curl_close($ch);

The $response variable now contains the XML result for the query.
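Two details of the request are easy to get wrong: escaping the query expression, and sending the account key as the password half of a user:password pair with an empty user name. The Python sketch below, a language-neutral illustration, prepares both without making a network call. The function names, the service URL and the key are placeholders, and building the URL by hand like this is an assumption; the query explorer mentioned above will show you the exact URL for your dataset.

```python
import base64
from urllib.parse import quote


def build_query_url(service_url, entity_set, filter_expression):
    """Assemble a query URL, percent-escaping the $filter expression."""
    return "{}/{}?$filter={}".format(
        service_url.rstrip("/"), entity_set, quote(filter_expression, safe="")
    )


def basic_auth_header(account_key):
    """Basic auth header with an empty user name and the key as password."""
    token = base64.b64encode((":" + account_key).encode("utf-8")).decode("ascii")
    return {"Authorization": "Basic " + token}


# Placeholder values for illustration only.
url = build_query_url(
    "https://example.invalid/Crimes/", "CityCrime",
    "State eq 'Washington' and Year eq 2007",
)
print(url)
print(basic_auth_header("YOUR_ACCOUNT_KEY"))
```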

Parsing the response

Before using the data, you’ll need to parse the XML. Because each XML feed is different, each dataset needs a parser tailored especially to it. There are a number of methods of putting together a parser; the example below uses xml_parser.

The first step is to create a new class to model each row in the result data.

    class CityCrime
    {
        var $City;
        var $Population;
        var $Arson;

        public function __construct()
        {
        }
    }

I’m also going to wrap all the parser functions in a class of their own. Its constructor will be called with the account key and query URI. Firstly I’ll give it some class variables to store the data that has been parsed.

    class CrimeParser
    {
        var $entries = array();
        var $count = 0;
        var $currentTag = "";
        var $key = "";
        var $uri = "";

        public function __construct($key, $uri)
        {
            $this->key = $key;
            $this->uri = $uri;
        }
    }

The parser requires OpenTag and CloseTag functions to specify what should happen when it reaches an open tag or close tag in the XML. In this case, we append or remove the tag name from the $currentTag string.

    private function OpenTag($xmlParser, $data)
    {
        $this->currentTag .= "/$data";
    }

    private function CloseTag($xmlParser, $data)
    {
        $tagKey = strrpos($this->currentTag, '/');
        $this->currentTag = substr($this->currentTag, 0, $tagKey);
    }

Now we are ready to write a handler function. Firstly declare the tags of all the keys that you wish to store. One method of finding the tags is to run the code using a basic handler function that simply prints out all tags as they are encountered.

    private function DataHandler($xmlParser, $data)
    {
        switch ($this->currentTag)
        {
            default:
                print "$this->currentTag <br/>";
                break;
        }
    }

The switch statement in the handler needs a case for each key. We also need to let it know when it reaches a new object. From running the code with the previous handler, I knew that the properties for each row started and finished with the tag /FEED/ENTRY/CONTENT, so I’ll add a class variable to keep track of when the handler comes across that tag. Every second time it comes across it, I know that the result row has been fully processed.

    var $contentOpen = false;
    const rowKey = '/FEED/ENTRY/CONTENT';
    const cityKey = '/FEED/ENTRY/CONTENT/M:PROPERTIES/D:CITY';
    const populationKey = '/FEED/ENTRY/CONTENT/M:PROPERTIES/D:POPULATION';
    const arsonKey = '/FEED/ENTRY/CONTENT/M:PROPERTIES/D:ARSON';

    private function DataHandler($xmlParser, $data)
    {
        switch (strtoupper($this->currentTag))
        {
            case strtoupper(self::rowKey):
                if ($this->contentOpen)
                {
                    $this->count++;
                    $this->contentOpen = false;
                }
                else
                {
                    $this->entries[$this->count] = new CityCrime();
                    $this->contentOpen = true;
                }
                break;
            case strtoupper(self::cityKey):
                $this->entries[$this->count]->City = $data;
                break;
            case strtoupper(self::populationKey):
                $this->entries[$this->count]->Population = $data;
                break;
            case strtoupper(self::arsonKey):
                $this->entries[$this->count]->Arson = $data;
                break;
            default:
                break;
        }
    }

Now we create the parser, and parse the result from the cURL query.

    $xmlParser = xml_parser_create();
    xml_set_element_handler($xmlParser, "self::OpenTag", "self::CloseTag");
    xml_set_character_data_handler($xmlParser, "self::DataHandler");
    if (!(xml_parse($xmlParser, $xml)))
    {
        die("Error on line " . xml_get_current_line_number($xmlParser));
    }
    xml_parser_free($xmlParser);

After the call to xml_parse, the $entries will be populated. A table of the data can now be printed using the same foreach code as the SDK example, or manipulated in any way you see fit.
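For comparison, and as a language-neutral illustration, here is how the same extraction might look in Python using the standard library's ElementTree rather than an event-driven parser. The sample feed below is hand-written and its values are invented; a real DataMarket response will contain more elements. The namespace URIs are the standard Atom and OData (data services) ones, and the function name is hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
}

# Hand-written sample with invented values, for illustration only.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom"
      xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
      xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices">
  <entry>
    <content type="application/xml">
      <m:properties>
        <d:City>Exampleville</d:City>
        <d:Population>1000</d:Population>
        <d:Arson>2</d:Arson>
      </m:properties>
    </content>
  </entry>
</feed>"""


def parse_city_crimes(xml_text):
    """Return one dict per feed entry with the City, Population and Arson values."""
    root = ET.fromstring(xml_text)
    rows = []
    for props in root.findall("atom:entry/atom:content/m:properties", NS):
        rows.append({
            "City": props.findtext("d:City", namespaces=NS),
            "Population": props.findtext("d:Population", namespaces=NS),
            "Arson": props.findtext("d:Arson", namespaces=NS),
        })
    return rows


print(parse_city_crimes(SAMPLE_FEED))
```

Walking the tree by path avoids the need to track open and close tags by hand, at the cost of loading the whole response into memory.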

Final Thoughts

The two methods of consuming data from DataMarket with PHP both have their strengths and weaknesses. A proxy class generated from the OData SDK is very easy to add to existing code, but setting up the library can be tedious, and there is not much support available for it. Using cURL and parsing the XML provides slightly more flexibility, but requires much more coding to set up.

Since it only requires a URL and an account key, opening the connection to DataMarket is very straightforward, whichever method is chosen. If the dataset you’re connecting to is free, I suggest opening Service Explorer and trying out various queries to get a feel for the data. Both methods shown above will result in the dataset’s conversion to an associative array, from which data can be manipulated using any of the PHP functions available.

At this stage, if you want to access a flexible query dataset, and are able to modify your PHP configuration file, the PHP OData SDK is a good tool for accessing OData feeds. However, if you want access to a fixed query dataset, or are unable to modify the configuration file, using cURL and parsing the result is straightforward enough to still be a valid option.

Eric White (@ericwhitedev) explained Consuming External OData Feeds with SharePoint BCS in a 5/23/2011 post (missed when posted):

I wrote an MSDN Magazine article, Consuming External OData Feeds with SharePoint BCS, which was published in April, 2011. Using BCS, you can connect up to SQL databases and web services without writing any code, but if you have more exotic data sources, you can write C# code that can grab the data from anywhere you can get it. Knowing how to write an assembly connector is an important skill for pro developers who need to work with BCS. This article shows how to write a .NET Assembly Connector for BCS. It uses as its data source an OData feed. Of course, as you probably know, SharePoint 2010 out-of-the-box exposes lists as OData feeds. The MSDN article does a neat trick – you write a .NET assembly connector that consumes an OData feed for a list that is on the same site. While this, by itself, is not very useful, it means that it is super easy to walk through the process of writing a .NET assembly connector.

I wrote the article and the code for the 2010 Information Worker Demonstration and Evaluation Virtual Machine (RTM). Some time ago, I wrote a blog post, How to Install and Activate the IW Demo/Evaluation Hyper-V Machine.

The MSDN article contains detailed instructions on how to create a read-only external content type that consumes an OData feed. This is an interesting exercise, but advanced developers will want to create .NET assembly connectors that can create/read/update/delete records. The procedures for doing all of that were too long for the MSDN magazine article, so of necessity, the article is limited to creating a read-only ECT.

However, I have recorded a screen-cast that walks through the entire process of creating a CRUD capable ECT. It is 25 minutes long, so some day when you feel patient, you can follow along step-by-step-by-step, creating a CRUD capable ECT that consumes an OData feed. Here is the video:

Walks through the process of creating a .NET connector for an ECT that consumes an OData feed.

The procedure requires code for two source code modules: Customer.cs, and CustomerService.cs.

Here is the code for Customer.cs:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;

    namespace Contoso.Crm
    {
        public partial class Customer
        {
            public string CustomerID { get; set; }
            public string CustomerName { get; set; }
            public int Age { get; set; }
        }
    }

Here is the code for CustomerService.cs:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Net;
    using System.Text;

    namespace Contoso.Crm
    {
        public class CustomerService
        {
            public static Customer ReadCustomer(string customerID)
            {
                TeamSiteDataContext dc =
                    new TeamSiteDataContext(
                        new Uri("http://intranet.contoso.com/_vti_bin/listdata.svc"));
                dc.Credentials = CredentialCache.DefaultNetworkCredentials;
                var customers =
                    from c in dc.Customers
                    where c.CustomerID == customerID
                    select new Customer()
                    {
                        CustomerID = c.CustomerID,
                        CustomerName = c.CustomerName,
                        Age = (int)c.Age,
                    };
                return customers.FirstOrDefault();
            }

            public static IEnumerable<Customer> ReadCustomerList()
            {
                TeamSiteDataContext dc =
                    new TeamSiteDataContext(
                        new Uri("http://intranet.contoso.com/_vti_bin/listdata.svc"));
                dc.Credentials = CredentialCache.DefaultNetworkCredentials;
                var customers =
                    from c in dc.Customers
                    select new Customer()
                    {
                        CustomerID = c.CustomerID,
                        CustomerName = c.CustomerName,
                        Age = (int)c.Age,
                    };
                return customers;
            }

            public static void UpdateCustomer(Customer customer)
            {
                TeamSiteDataContext dc =
                    new TeamSiteDataContext(
                        new Uri("http://intranet.contoso.com/_vti_bin/listdata.svc"));
                dc.Credentials = CredentialCache.DefaultNetworkCredentials;
                var query =
                    from c in dc.Customers
                    where c.CustomerID == customer.CustomerID
                    select new Customer()
                    {
                        CustomerID = c.CustomerID,
                        CustomerName = c.CustomerName,
                        Age = (int)c.Age,
                    };
                var customerToUpdate = query.FirstOrDefault();
                customerToUpdate.CustomerName = customer.CustomerName;
                customerToUpdate.Age = customer.Age;
                dc.UpdateObject(customerToUpdate);
                dc.SaveChanges();
            }

            public static void DeleteCustomer(string customerID)
            {
                TeamSiteDataContext dc =
                    new TeamSiteDataContext(
                        new Uri("http://intranet.contoso.com/_vti_bin/listdata.svc"));
                dc.Credentials = CredentialCache.DefaultNetworkCredentials;
                var query =
                    from c in dc.Customers
                    where c.CustomerID == customerID
                    select new Customer()
                    {
                        CustomerID = c.CustomerID,
                        CustomerName = c.CustomerName,
                        Age = (int)c.Age,
                    };
                var customerToDelete = query.FirstOrDefault();
                dc.DeleteObject(customerToDelete);
                dc.SaveChanges();
            }

            public static void CreateCustomer(Customer customer,
                out Customer returnedCustomer)
            {
                // create item
                TeamSiteDataContext dc =
                    new TeamSiteDataContext(
                        new Uri("http://intranet.contoso.com/_vti_bin/listdata.svc"));
                dc.Credentials = CredentialCache.DefaultNetworkCredentials;
                CustomersItem newCustomer = new CustomersItem();
                newCustomer.CustomerID = customer.CustomerID;
                newCustomer.CustomerName = customer.CustomerName;
                newCustomer.Age = customer.Age;
                dc.AddToCustomers(newCustomer);
                dc.SaveChanges();
                returnedCustomer = customer;
            }
        }
    }
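Behind the LINQ queries in CustomerService.cs, the data context issues HTTP requests against the list's OData feed. As a rough, language-neutral illustration of what a lookup by CustomerID amounts to on the wire, the Python sketch below builds a $filter URL for the Customers entity set. This is an approximation under stated assumptions: the function name is hypothetical, and the URL the generated client actually sends is not shown in the article and may carry further query options.

```python
from urllib.parse import quote

# Service root taken from the C# code above.
SERVICE_ROOT = "http://intranet.contoso.com/_vti_bin/listdata.svc"


def customer_by_id_url(customer_id):
    """Approximate the feed URL for 'where c.CustomerID == customerID'."""
    # OData string literals are single-quoted; embedded quotes are doubled.
    literal = "'" + customer_id.replace("'", "''") + "'"
    expression = "CustomerID eq " + literal
    return SERVICE_ROOT + "/Customers?$filter=" + quote(expression, safe="")


print(customer_by_id_url("C001"))
```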

Lewis Benge (@lewisbenge) described Consuming OData on Windows Phone 7 in a 5/22/2011 post (missed when posted):

OData is a great concept: it combines the strongly typed data formats of SOAP-based services with the low payload and high flexibility of REST-based services. It also brings its own twists, such as URL-based operators that allow for SQL-like querying, sorting, and filtering. This lightweight data protocol has seen great adoption in both Microsoft and non-Microsoft products, on both the client and the server, and also throughout popular sites such as Netflix, eBay, and StackOverflow.

The construct and design of the OData protocol also make it a great solution for mobile application development, and Microsoft have even created client SDKs and code generator tools for all of the major mobile platforms, including their very own Windows Phone 7. The use of OData on Windows Phone is, however, very much a confusing story at this point in time. The Windows Phone API, as you more than likely know, is built upon the .NET Compact Framework running a cut-down version of Silverlight. Because of this it does not have full-fledged support for the .NET Framework, which has caused some issues with the OData APIs being ported across. If you fired up Visual Studio and created a new ASP.NET application to access OData, you could simply use the built-in Add Service Reference code generator (or, via the command line, DataSvcUtil.exe) and you’d automatically be presented with strongly typed entities (in the same fashion as a SOAP-based service) and a fluent LINQ-style data context you can use for data entry. Unfortunately, within Windows Phone 7 development we don’t have these luxuries (yet), which has meant guides to using the OData client are misleading and sometimes even wrong (this is because early CTPs and betas of the WP7 client SDK were different from the released version). So I’d like to take some time out to create a basic demo and show you how easy(ish) it is to get up and running with OData on Windows Phone 7.

Getting Set-up with MVVM Light

So open up Visual Studio and create a new Windows Phone 7 Silverlight project (if you don’t have this template you can download it from here).

Once you have done that, we need to create the framework skeleton for our phone application. As this is a Silverlight project we are going to use Model View ViewModel (MVVM), and currently I prefer the MVVMLight framework, so I’m going to add it using NuGet.

Once MVVMLight has installed itself, you’ll notice it has created a ViewModel folder and added two classes, MainViewModel.cs and ViewModelLocator.cs. We’ll need to wire these up to our application, so first open up App.xaml and add the following code:

    <Application.Resources>
        <!--Global View Model Locator-->
        <vm:ViewModelLocator x:Key="Locator" d:IsDataSource="True" />
    </Application.Resources>

Then open up the code-behind (App.xaml.cs) and add the following:

    private void Application_Launching(object sender, LaunchingEventArgs e)
    {
        DispatcherHelper.Initialize();
    }

You will also need to add the namespace for the ViewModel (vm) in App.xaml:

    xmlns:vm="clr-namespace:ODataApplication.ViewModel"

Next you need to wire up the ViewModel to the View, so open up MainPage.xaml and add the following to the phone:PhoneApplicationPage node:

    DataContext="{Binding Main, Source={StaticResource Locator}}"

Getting OData

Now that we have our MVVM framework wired up, we need to get our data plugged in. First create a directory within your project called Service; this is where we will keep the OData classes. Next we need to download the OData Windows Phone 7 client SDK. You’ll find this on CodePlex here. There are a few different flavours of the download, but the one you want is ODataClient_BinariesAndCodeGenToolForWinPhone.zip, which contains both the necessary assemblies and a customised version of the code generator DataSvcUtil.exe for use with the WP7 Silverlight runtime.

Once downloaded, add both System.Data.Services.Client.dll and System.Data.Services.Design.dll as references to your project (remember to unblock the assemblies first).

Next open up a command prompt and add the location of your downloaded copy of DataSvcUtil.exe to the path. Now change directory to your Visual Studio project and the Service directory you just created. From the command prompt run DataSvcUtil.exe, which takes the following arguments:

    /in:<file>               The file to read the conceptual model from
    /out:<file>              The file to write the generated object layer to
    /language:CSharp         Generate code using the C# language
    /language:VB             Generate code using the VB language
    /Version:1.0             Accept CSDL documents tagged with m:DataServiceVersion=1.0 or lower
    /Version:2.0             Accept CSDL documents tagged with m:DataServiceVersion=2.0 or lower
    /DataServiceCollection   Generate collections derived from DataServiceCollection
    /uri:<URL>               The URI to read the conceptual model from
    /help                    Display the usage message (short form: /?)
    /nologo                  Suppress copyright message

We are going to use the following combination (NB: for this example we are using the Netflix OData Service):

    datasvcutil /uri:http://odata.netflix.com/Catalog /out:Model.cs /version:2.0 /DataServiceCollection

You will see the application execute, and it should complete with no errors or warnings.

Now open up Visual Studio, click the Show All Files icon in Solution Explorer, find the newly created file (Model.cs) and include it in your project.

Using OData Models

The file that was just created actually contains multiple objects, including a data context for accessing data and model representations of the OData entities. As we used the /DataServiceCollection switch, all of our models have been created to support the INotifyPropertyChanged interface, so we can expose them all the way through to our View. What we’ll do next is set up the data binding between the Model –> ViewModel –> View. Open up your ViewModel (MainViewModel.cs) and create the following property:

    private ObservableCollection<Genre> _genres;

    public ObservableCollection<Genre> Genres
    {
        get { return _genres; }
        set
        {
            _genres = value;
            RaisePropertyChanged("Genres");
        }
    }

Next (for simplicity) we’ll wire up this property to the MainPage.xaml file using a ListBox that repeats for each genre retrieved from the Netflix service and lists the name out in a text block. Here is the XAML we need to implement:

    <!--ContentPanel - place additional content here-->
    <Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">
        <ListBox ItemsSource="{Binding Genres, Mode=OneWay}">
            <ListBox.ItemTemplate>
                <DataTemplate>
                    <TextBlock Text="{Binding Name, Mode=OneWay}" />
                </DataTemplate>
            </ListBox.ItemTemplate>
        </ListBox>
    </Grid>

Connecting OData EndPoint to a ViewModel

The last thing we need to do in our project is to populate our ViewModel with data. To do this we are going to create a new class within our Service directory, called NetflixServiceAgent.cs. For simplicity we’ll create a single method called GetGenres(Action<ObservableCollection<Genre>> success), which takes a single argument: a callback Action used to pass the data back to our ViewModel once the asynchronous load completes. Populate the method with the following code:

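    public void GetGenres(Action<ObservableCollection<Genre>> success)
    {
        // The data context handles all interaction with the OData service.
        var ctx = new NetflixCatalog.Model.NetflixCatalog(new Uri("http://odata.netflix.com/Catalog/"));
        var collection = new DataServiceCollection<Genre>(ctx);

        collection.LoadCompleted += (s, e) =>
        {
            if (e.Error != null)
            {
                throw e.Error;
            }
            else if (collection.Continuation != null)
            {
                // The service paged the results; request the next page.
                collection.LoadNextPartialSetAsync();
            }
            else
            {
                // Everything has been loaded; hand the collection back to the caller.
                success(collection);
            }
        };

        // The URI is relative to the service root passed to the data context.
        collection.LoadAsync(new Uri(@"Genres/", UriKind.Relative));
    }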

What we are doing here is creating a new data context, which acts as an aggregate handling all of the interaction with the OData service. Next we create a new DataServiceCollection (of the type of the entity model we wish to return) and pass the context in to its constructor. DataServiceCollection is actually derived from ObservableCollection (the collection type we use in Silverlight data binding), but it also contains methods for synchronous and asynchronous data retrieval, as well as continuation for cases where the OData service paginates its results. As with everything in Silverlight, we use the asynchronous data retrieval mechanism, and we attach an anonymous method to the LoadCompleted event that checks that no error has been returned, requests the next page while a continuation exists, and then returns the collection. The LoadAsync method itself accepts an argument of type Uri. This URI should be relative to the one we’ve already passed into the data context, and it represents both the entity set and the query we want to run. This is the primitive form of the fluent LINQ API we have in the full .NET Framework, so if, for instance, we wanted to apply query options to the OData request, we would change the URI to reflect this, such as “Genres?$skip=1&$top=5”.
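OData system query options such as $skip, $top and $filter are appended to the entity set after a "?" and joined with "&". As a minimal, language-neutral sketch (written in Python purely for illustration; the helper name odata_query is hypothetical and not part of any OData SDK), composing the relative URI that would be handed to LoadAsync might look like this:

```python
from urllib.parse import quote

# Characters left unescaped so OData expressions stay readable (an assumption
# made for this sketch, not a rule taken from the client SDK).
_SAFE = "$,'()/"


def odata_query(entity_set, **options):
    """Build a relative OData URI, e.g. 'Genres?$skip=1&$top=5'."""
    if not options:
        return entity_set
    parts = []
    for name, value in options.items():
        # System query options are prefixed with '$'.
        parts.append("$" + name + "=" + quote(str(value), safe=_SAFE))
    return entity_set + "?" + "&".join(parts)


print(odata_query("Genres", skip=1, top=5))
print(odata_query("Genres", filter="Name eq 'Comedy'"))
```

The first call yields a paging query and the second a filter; either string could replace the plain "Genres/" path in the relative Uri.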

Once we have created our service agent, our final task to get us up and running is to call it from within the ViewModel. So open up MainViewModel.cs and within the constructor add the following:

    var repo = new NetflixServiceAgent();
    repo.GetGenres((success) => DispatcherHelper.CheckBeginInvokeOnUI(() =>
        { Genres = success; }));

And that’s it. By running the application we’ll see the not overly fancy list of genres being populated from the OData service.

Over the next few weeks I’ll upload some more posts around OData clients and WCF Data Services to try and expand on this example some more.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF, Cache and Service Bus

•• Riccardo Becker (@riccardobecker) described Windows Azure AppFabric Cache next steps in a 5/30/2011 post:

A very straightforward use of the Windows Azure AppFabric Cache is to store records from a SQL Azure table (or another source, of course).

Get access to your data cache (assuming your config settings are fine, see previous post).

    // List<string> is assumed here: the type argument is missing from the original listing.
    List<string> lookUpItems = null;
    DataCache myDataCache = CacheFactory.GetDefaultCache();
    lookUpItems = myDataCache.Get("MyLookUpItems") as List<string>;

    if (lookUpItems != null) // there is something in cache obviously
    {
        lookUpItems.Add("got these lookups from myDataCache, don't pick me");
    }
    else // get my items from my datasource and save them in cache
    {
        LookUpEntities myContext = new LookUpEntities(); // EF
        var query = from lookupitem in myContext.LookUpItems
                    select lookupitem.Value;
        lookUpItems = query.ToList();

        /* Assuming my static table with lookup items might change only once a day or so,
           set the expiration to 1 day. One day after the item is added, it expires
           and Get returns null again. The key must match the one used in Get. */
        myDataCache.Add("MyLookUpItems", lookUpItems, TimeSpan.FromDays(1));
    }

Easy to use and very effective. The more complex and time-consuming the query to your data source (wherever and whatever it is), the more your performance will benefit from this approach. But still consider the price you have to pay! The natural attitude when developing for Azure is always: consider the costs of your approach and try to minimize bandwidth and storage transactions.
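The pattern described above (look in the cache first, fall back to the data source on a miss, then store the result with an expiry) can be sketched independently of AppFabric. The following is a minimal illustration in Python under stated assumptions: TtlCache is a hypothetical in-memory stand-in for the distributed cache, and get_lookup_items and load are made-up names, not AppFabric APIs.

```python
import time


class TtlCache:
    """Tiny in-memory stand-in for a cache with per-item expiry (hypothetical)."""

    def __init__(self, clock=time.monotonic):
        self._items = {}
        self._clock = clock

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Expired items behave like a miss.
            del self._items[key]
            return None
        return value

    def add(self, key, value, ttl_seconds):
        self._items[key] = (value, self._clock() + ttl_seconds)


def get_lookup_items(cache, load_from_source, key="MyLookUpItems",
                     ttl_seconds=24 * 60 * 60):
    """Return the cached items, querying the data source only on a miss."""
    items = cache.get(key)
    if items is None:
        items = load_from_source()
        cache.add(key, items, ttl_seconds)
    return items


source_calls = []


def load():
    # Placeholder for the expensive query against the real data source.
    source_calls.append(1)
    return ["red", "green"]


demo_cache = TtlCache()
get_lookup_items(demo_cache, load)
get_lookup_items(demo_cache, load)
print("data source queried", len(source_calls), "time(s)")
```

Note that the same key is used for both the read and the write; a mismatch in spelling or casing would make every read a miss.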

Use local caching for speed

You can use local client caching to truly speed up lookups. Remember that changing an item held in the local cache actually changes the cached item itself, and therefore also changes the items shown in, for example, your combo boxes.
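The caveat about local caching comes down to reference semantics: if an in-process cache hands back the stored object itself rather than a copy, any mutation by one caller is visible to every other consumer. A minimal sketch in Python, assuming a hypothetical LocalCache class (not the AppFabric client) that behaves this way:

```python
import copy


class LocalCache:
    """Hypothetical in-process cache that stores live object references."""

    def __init__(self):
        self._items = {}

    def put(self, key, value):
        self._items[key] = value

    def get(self, key):
        # Returns the stored object itself, not a copy.
        return self._items.get(key)

    def get_copy(self, key):
        # Defensive alternative: callers receive an independent copy.
        return copy.deepcopy(self._items.get(key))


cache = LocalCache()
cache.put("colors", ["red", "green"])

shared = cache.get("colors")
shared.append("blue")          # mutates the cached list as well
print(cache.get("colors"))     # ['red', 'green', 'blue']

private = cache.get_copy("colors")
private.append("black")        # the cached list is unaffected
print(cache.get("colors"))     # ['red', 'green', 'blue']
```

Copying on read trades some of the speed benefit for isolation, so treating locally cached lookup data as read-only is usually the simpler choice.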

• James Podgorski explained AppFabric Cache – Compressing at the Client in a 5/29/2011 post to the AppFabric CAT blog:

Introduction

The Azure AppFabric Cache price for a particular cache size carries three quotas, each prorated over a one-hour period: the number of transactions, the network bandwidth as measured in bytes, and the number of concurrent connections. The three quotas are bundled in basket form, where each basket size corresponds to the total cache size purchased. As the basket choices grow in size, so do the transaction, network throughput and concurrent connection limits. See here for pricing details.

It is apparent that if one ‘squeezes’ the data before putting it into the AppFabric Cache, one would be able to stuff more objects into the cache for a given usage quota. Interestingly enough, the Azure AppFabric Cache API has a property on the DataCache class called IsCompressionEnabled, which implies that the Azure AppFabric Cache provides this capability out of the box. Unfortunately that’s not the case, and an inspection with Red Gate .NET Reflector will show that before the object is serialized, the IsCompressionEnabled property is set to false and compression is not applied.

But what is the impact of compressing the data before placing it into Azure AppFabric Cache? Compression algorithms are available in the .NET Framework; the tools to ascertain this impact are accessible to any .NET developer.

In this blog post, we will look at that very topic; inquiring minds (customers) have asked our team, and I am curious as well. We will take the AdventureWorks database, add some compressed and non-compressed Product and ProductCategory data into the cache, and perform the associated retrieval. Measurements will be taken to compare the baseline non-compressed data size and access durations with those of the compressed data. In general, an educated guess would be that the total cache used would probably be less, but that the overall duration of the operations would be longer due to the overhead imposed by compressing the CLR objects. Let’s find out.

Implementation

Note: These tests are not meant to provide exhaustive coverage, but rather a probing of the feasibility of compression to shave bytes from the data before it is sent to AppFabric Cache.

To keep things simple, static methods were created to perform serialization of the data similar to the paradigms implemented by AppFabric Cache and compress the data using the standard compression algorithms exposed by the DeflateStream class. In short, the CLR object was serialized and then compressed.
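The serialize-then-compress idea can be illustrated outside .NET. The sketch below is in Python and is only an analogy, not the author's code: pickle stands in for the NetDataContractSerializer, zlib in raw DEFLATE mode stands in for DeflateStream, and the product records are made-up sample data rather than AdventureWorks rows.

```python
import pickle
import zlib

RAW_DEFLATE = -15  # negative window bits: raw DEFLATE stream without a zlib header


def serialize_and_compress(obj):
    """Serialize an object, then DEFLATE the bytes; returns (raw, packed)."""
    raw = pickle.dumps(obj)
    compressor = zlib.compressobj(6, zlib.DEFLATED, RAW_DEFLATE)
    packed = compressor.compress(raw) + compressor.flush()
    return raw, packed


def decompress_and_deserialize(packed):
    """Reverse of serialize_and_compress: inflate, then deserialize."""
    raw = zlib.decompress(packed, RAW_DEFLATE)
    return pickle.loads(raw)


# Made-up, repetitive sample records (hypothetical, for illustration only).
products = [
    {"ProductID": i, "Name": "Product %d" % i, "Category": "Bikes"}
    for i in range(200)
]

raw_bytes, packed_bytes = serialize_and_compress(products)
restored = decompress_and_deserialize(packed_bytes)

print("serialized bytes:", len(raw_bytes))
print("compressed bytes:", len(packed_bytes))
print("round trip intact:", restored == products)
```

How much is saved depends heavily on how repetitive the payload is, and the extra CPU time for compressing and inflating is the cost being weighed against the smaller cache footprint.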

Serialization

The Azure AppFabric cache API utilizes the NetDataContractSerializer to serialize any CLR object marked with the Serializable attribute. The XmlDictionaryWriter and XmlDictionaryReader pair will encode/decode serialized data to/from an internal byte stream using binary XML format. The combination of the NetDataContractSerializer and binary XML formatting provides the flexibility of shared types and performance of binary encoding. On the other hand, byte arrays bypass these serialization techniques and are written directly to an internal stream.

Armed with this knowledge, the following static methods were created to serialize and deserialize the objects so as to be able to compare the relative size of encoded objects before and after the bytes are passed to the compression algorithm.

Serialization is performed by the NetDataContractSerializer and encoded in binary XML format using the XmlDictionaryWriter.