Windows Azure and Cloud Computing Posts for 1/11/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Rob Tiffany posted Reducing SQL Server Sync I/O Contention :: Tip 3 on 1/11/2011:

GUIDs and Clustered Indexes

Uniqueness is a key factor when synchronizing data between SQL Server/Azure and multiple endpoints like Slates and Smartphones. With data simultaneously created and updated on servers and clients, ensuring rows are unique to avoid key collisions is critical. As you know, each row is uniquely identified by its Primary Key.

When creating Primary Keys, it’s common to use a compound key based on things like account numbers, insert time and other appropriate business items. Judging by what I see from my customers, it’s even more popular to create Identity Columns for the Primary Key using an Int or BigInt data type. When you designate one or more columns as a Primary Key, SQL Server by default makes it a Clustered Index. Clustered indexes are faster than normal indexes for sequential values because the B-Tree leaf nodes are the actual data pages on disk, rather than just pointers to data pages.

While Identity Columns work well in most database situations, they often break down in a data synchronization scenario since multiple clients could find themselves creating new rows using the same key value. When these clients sync their data with SQL Server, key collisions would occur. Merge Replication includes a feature that hands out blocks of Identity Ranges to each client to prevent this.
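The Identity Range idea can be illustrated with a short Python sketch. This is not how Merge Replication is implemented; the class names `IdentityRange` and `RangeAllocator` and the block size of 1,000 are hypothetical, chosen only to show why handing each client its own non-overlapping block of keys rules out collisions at sync time.

```python
from dataclasses import dataclass


@dataclass
class IdentityRange:
    """A half-open block of integer keys [start, stop) owned by one client."""
    start: int
    stop: int
    next_value: int = -1

    def __post_init__(self) -> None:
        # Each client begins at the first key of its own block.
        self.next_value = self.start

    def take(self) -> int:
        """Return the next unused key, or raise when the block is used up."""
        if self.next_value >= self.stop:
            raise RuntimeError("range exhausted; request a new block from the server")
        value = self.next_value
        self.next_value += 1
        return value


class RangeAllocator:
    """Server-side bookkeeping that hands out non-overlapping key blocks."""

    def __init__(self, block_size: int, first_key: int = 1) -> None:
        self.block_size = block_size
        self.next_start = first_key

    def allocate(self) -> IdentityRange:
        block = IdentityRange(self.next_start, self.next_start + self.block_size)
        self.next_start += self.block_size
        return block


allocator = RangeAllocator(block_size=1000)
phone = allocator.allocate()  # hypothetical client 1
slate = allocator.allocate()  # hypothetical client 2

# Both clients create rows while offline; their keys never overlap.
phone_keys = [phone.take() for _ in range(3)]
slate_keys = [slate.take() for _ in range(3)]
print(phone_keys, slate_keys)
```

The cost of this approach is that a client that exhausts its block must contact the server for a new one before it can create more rows.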

When using other Microsoft sync technologies like the Sync Framework or RDA, no such Identity Range mechanism exists and therefore I often see GUIDs utilized as Primary Keys to ensure uniqueness across all endpoints. In fact, I see this more and more with Merge Replication too since SQL Server adds a GUID column to the end of each row for tracking purposes anyway. Two birds get killed with one Uniqueidentifier stone.

Using the Uniqueidentifier data type is not necessarily a bad idea. Despite the tradeoff of reduced join performance vs. integers, the solved uniqueness problem allows sync pros to sleep better at night. The primary drawback with using GUIDs as Primary Keys goes back to the fact that SQL Server automatically gives those columns a Clustered Index.

I thought Clustered Indexes were a good thing?

They are a good thing when the values found in the indexed column are sequential. Unfortunately, GUIDs generated with the default NEWID() function are completely random and therefore create a serious performance problem. All those mobile devices uploading captured data means lots of Inserts for SQL Server. Inserting random key values like GUIDs can cause fragmentation in excess of 90% because new pages have to be allocated with rows pushed to the new page in order to insert the record on the existing page. This performance-killing, space-wasting page splitting wouldn’t happen with sequential Integers or Datetime values since they actually help fill the existing page.
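A toy model makes the difference between sequential and random keys easier to see. The Python sketch below is only an illustration under simplifying assumptions: it is not SQL Server's storage engine, the 50-row page capacity and the `simulate_inserts` function are made up for the example, and it reports page splits and average page fullness rather than the fragmentation percentage SQL Server itself reports.

```python
import bisect
import random


def simulate_inserts(keys, page_capacity=50):
    """Insert keys into a toy clustered-index leaf level.

    Returns (page_splits, average_page_fill), where fill is between 0 and 1.
    """
    pages = [[]]                # leaf pages kept in key order
    lows = [float("-inf")]      # lowest key each page accepts
    splits = 0
    total = 0
    for key in keys:
        total += 1
        idx = bisect.bisect_right(lows, key) - 1
        page = pages[idx]
        if len(page) < page_capacity:
            bisect.insort(page, key)
        elif idx == len(pages) - 1 and key > page[-1]:
            # Appending past the end of a full last page: start a fresh page.
            pages.append([key])
            lows.append(key)
        else:
            # Full page inside the key range: move the upper half of its
            # rows to a new page, then place the new row.
            mid = len(page) // 2
            right = page[mid:]
            del page[mid:]
            pages.insert(idx + 1, right)
            lows.insert(idx + 1, right[0])
            splits += 1
            bisect.insort(right if key >= right[0] else page, key)
    return splits, total / (len(pages) * page_capacity)


ROWS = 20_000
rng = random.Random(2011)

# Ever-increasing integers stand in for Identity values.
seq_splits, seq_fill = simulate_inserts(range(ROWS))

# Random 128-bit integers stand in for NEWID()-style GUIDs.
rnd_splits, rnd_fill = simulate_inserts(rng.getrandbits(128) for _ in range(ROWS))

print(f"sequential: splits={seq_splits}, fill={seq_fill:.0%}")
print(f"random:     splits={rnd_splits}, fill={rnd_fill:.0%}")
```

In this model the sequential run never splits a page and leaves every page full, while the random run splits pages constantly and leaves them partly empty, which is the space-wasting behavior described above.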

What about NEWSEQUENTIALID()?

Generating your GUIDs on SQL Server with this function will dramatically reduce fragmentation and wasted space since it guarantees that each GUID will be sequential. Unfortunately, this isn’t bulletproof. If your Windows Server is restarted for any reason, your GUIDs may start from a lower range. They’ll still be globally unique, but your fragmentation will increase and performance will decrease. Also keep in mind that all the devices synchronizing with SQL Server will be creating their own GUIDs which blows the whole NEWSEQUENTIALID() strategy out of the water.

Takeaway

If you’re going to use the Uniqueidentifier data type for your Primary Keys and you plan to sync your data with RDA, the Sync Framework or Merge Replication, ensure that Create as Clustered == No for better performance. You’ll still get fragmentation, but it will be closer to the ~30% range instead of almost 100%.

See Cash Coleman (@cash_coleman) posted a .NET API Client Getting Started Guide for ClearDB on 1/11/2011 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

Steve Yi reminded SQL Azure developers by Announcing: The DataMarket Contest from The Code Project on 1/11/2011:

The Code Project is hosting a contest asking developers to build an innovative cloud application on the Windows Azure Platform utilizing DataMarket datasets. Contestants can win one of eight Intel Core i7 laptops plus the chance to win the Grand Prize of an Xbox 360 with Kinect.

Windows Azure Marketplace allows you to share, buy and sell building block components, training, premium data sets plus finished services and applications. DataMarket provides a one-stop shop for trusted public domain and premium datasets from leading data providers in the industry. DataMarket has various free datasets from providers like the United Nations, Data.gov, World Bank, Weather Bug, Weather Central, Alteryx, STATS, and Wolfram Alpha etc.

To make it easier to get started, all USA Code Project members also get a FREE Windows Azure platform 30-day pass, so you can put Windows Azure and SQL Azure through their paces. No credit card required: enter promo code: CP001.

The contest is a rolling contest running from January 3, 2011 thru March 31, 2011.

- January Contest Period: 3 Toshiba – Core i7 Satellite Laptops

- February Contest Period: 3 Toshiba – Core i7 Satellite Laptops

- March Contest Period: 2 Toshiba – Core i7 Satellite Laptops

- March Grand Prize: Xbox 360 with Kinect

The Rules:

- Submit application URL to contest@codeproject.com

- Monthly finalists will be determined by The Code Project

- The grand prize winner will be selected by The Code Project at the contest completion on March 31st, 2011

Prizes are awarded based on how tightly an entry* adhered to the conditions of entry, including: Focus and scope, overall quality, coherence, and structure. Learn more about the contest here.

* This competition is open to software development professionals & enthusiasts who are of the age of majority in their jurisdiction of residence; however, residents of Quebec and of the following countries are ineligible to participate due to legal constraints: Cuba, Iran, Iraq, Libya, North Korea, Sudan, and Syria.

I commented previously about lumping PQ with Cuba, Iran, Iraq, Libya, North Korea, Sudan, and Syria.

<Return to section navigation list>

Marketplace DataMarket and OData

Kurt Mackie reported Microsoft Dynamics AX '6' Coming This Year in a 1/10/2011 post to Microsoft Certified Professional Magazine Online:

Microsoft plans to roll out the next version of its Dynamics AX enterprise resource planning (ERP) solution in the latter part of this year, the company announced today.

That version, called Microsoft Dynamics AX code-named "6," will succeed the currently available Dynamics AX 2009 flagship product. Microsoft is promising that the new release will have an improved architecture plus the usual integration with other Microsoft products, such as Microsoft Office 2010, SharePoint 2010, SQL Server 2008 R2 and Visual Studio 2010.

With the new release, Microsoft is highlighting a "model-driven layered architecture" in Dynamics AX 6 that will benefit developers and independent software vendors (ISVs).

"Dynamics AX 6 has a model-driven-layer architecture that will accelerate the application development process for our partners, enabling them to write more quickly, to do less coding and to deliver the solution more quickly," said Crispin Read, general manager of Microsoft Dynamics ERP, in a phone interview. "They [developers] are modifying models vs. writing code -- that's a big new capability, a very significant capability in AX 6."

Read noted that ISVs will be able to use this modeling capability to better extend their products to additional markets. He claimed that the new model-driven layered architecture approach was "unusual" in the ERP software industry. Traditional ERP software products have tended to drift more toward "spaghetti code" when it came to product upgrades and expansions, he claimed.

Earlier versions of Dynamics AX have been based on a layered architecture, but they have not included this modeling capability. The modeling is based on a SQL Server-based model store, Read explained.

"The layered element is really intended to separate the work that is done by the different parties involved providing a customer solution," Read said. "That's very unique, but it's been a fundamental architectural attribute of AX for some time. We certainly improved it; certainly extended it, and we made it much more fine grained in AX 6 through layering. Now, it's model driven in the sense that we now have a SQL Server-based model store, so that there'll be fewer cases where you need to write code to modify or provide application functionality. There will be more cases where you can do that by tweaking the models themselves."

"Application modeling tools enable developers to customize an application using specialized languages that are simpler than a full programming language," explained Robert Helm, managing vice president at the Directions on Microsoft consultancy, in an e-mailed response. "Modeling can make writing customizations simpler than programming, which is how it's done today. Modeling can also make customizations easier to deploy, update and migrate to new application versions than code would be."

Microsoft dropped pushing through its Oslo repository for modeling, according to a September announcement. Instead, it now leans toward each of its solutions having its own model and data store, with Open Data Protocol (ODATA) and Entity Data Model (EDM) used for sharing and federation, explained Mike Ehrenberg, a Microsoft technical fellow. (Emphasis added.)

"Consistent with that, Microsoft Dynamics AX '6' does provide its own model store -- and per the announcement, a very sophisticated one," Ehrenberg stated in an e-mailed response. "First, the model store has moved from the file system to SQL Server in this release, improving scalability, model reporting, and deployment. Layering in the model store allows efficient support of a base model, extended for localization, industry specialization, and on top of that, ISV vertical specialization, reseller and customer specialization, with the ability to model very granular changes and effectively manage the application deployment lifecycle from ISV through to customer and the upgrade process. We provide a service interface to the model store, and it is possible to layer ODATA or EDM on that service."

Read cited three main benefits for ISVs that will come with the Dynamics AX 6 release. Products will be able to get to market faster, ISVs will be able to expand their marketing and they'll be able to reduce their lifecycle investment costs, he said. Read explained that Microsoft Dynamics AX is targeted toward addressing five markets, including retail, distribution, manufacturing, services and the public sector. The solution initially will be designed for 38 countries.

Microsoft plans to hold a technical conference in about a week to show Dynamics AX 6 to its partners, Read said. A community technical preview is planned for February. General availability of Dynamics AX 6 is expected in the third quarter of this year.

Possible for whom to “layer ODATA or EDM on that service”? Microsoft or ISVs?

Kurt Mackie is online news editor, Enterprise Group, at 1105 Media Inc.

For more background on Microsoft Dynamics AX code-named "6," see the Microsoft Previews Next-Generation ERP press release of 1/11/2011 on Microsoft PressPass. It’s surprising that there’s no mention of Windows Azure or “cloud” in either Kurt’s article or the official press release. Will Ballmer apply the hatchet to Dynamics AX management?

Full disclosure: I’m a contributing editor for 1105 Media’s Visual Studio Magazine.

See Steve Yi reminded SQL Azure developers by Announcing: The DataMarket Contest from The Code Project on 1/11/2011 in the SQL Azure Database and Reporting section above.

See GigaOM announced on 1/11/2011 that their Structure: Big Data 2011 conference will be held on 3/23/2011 in New York, NY in the Cloud Computing Events section below.

I’m surprised that there’s no one representing the Windows Azure MarketPlace DataMarket as of today in the speakers roster.

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

No significant articles today.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bill Zack reminded erstwhile Windows Azure developers about The Windows Azure Platform 30-Day Pass - Free trial - No Credit Card Required! on 1/11/2011:

We're offering a Windows Azure platform 30-day pass, so you can put Windows Azure and SQL Azure through their paces. No credit card is required.

With the Windows Azure platform you pay only for what you use, scale up when you need capacity, and pull back when you don't. Plus, get no-cost technical support with Microsoft Platform Ready.

The easiest way to get started with Windows Azure. Includes a fixed amount of services at no cost after which you just pay at standard consumption rates.

See here for this offer and use the promo code MPR001. No credit card will be required. Also see this site for additional offers.

The 30-day pass lets you create a candidate for Microsoft Platform Ready testing and certification so that you can qualify for a one-year subscription to the Cloud Essentials Pack, as described in my Windows Azure Compute Extra-Small VM Beta Now Available in the Cloud Essentials Pack and for General Use post of 1/9/2011.

Note: A single Windows Live ID can be used for only one 30-day trial offer; attempting to use the same user and company name with another Live ID doesn’t work either. However, my first 30-day pass, which I initiated on 11/10/2010, has been automatically rejuvenated twice; it now doesn’t expire until 2/10/2011. The instance runs a second copy of my OakLeaf Systems Azure Table Services Sample Project.

Cash Coleman (@cash_coleman) posted a .NET API Client Getting Started Guide for ClearDB on 1/11/2011:

.NET API Client Getting Started Guide

Introduction

Welcome to the ClearDB .NET Client Getting Started Guide! This guide will walk you through the steps of connecting your .NET powered app or website to your ClearDB database. Let's get started!

Prerequisites

This guide presumes that you are working on creating a .NET-powered website or app. As such, it also presumes that you have prior .NET experience, as well as a recent version of the .NET framework (minimum .NET Framework 2.0 is required).

It also presumes that you have already created your App ID and API Key so that you can communicate with ClearDB using a ClearDB App Client.

Installation

You can download the .NET App client from https://www.cleardb.com/v1/client/cdb_dotnet_client-1.01.zip.

Getting Started

Start by copying the app client libs into your project folder, then add a reference to it from your Visual Studio project. Note: you will also need to add a reference to the JSON library that is included in the download. This is so your code can properly work with JSON objects that are returned from the ClearDB API.

Now that ClearDB has been added to your project, let's begin by creating an instance of the ClearDB client. Figure 10.0 demonstrates how to initialize your client and make a basic query to ClearDB, as well as iterate through a simple result.

    using System;
    using System.Collections.Generic;
    using System.Text;
    using ClearDB;
    using Newtonsoft.Json.Linq;

    namespace ClearDBAppClientTest
    {
        class Program
        {
            static void Main(string[] args)
            {
                ClearDBAppClient client = new ClearDBAppClient(
                    "4ffeab8b43c6f04bb807d69712b5943c",
                    "0240b2b14441a96e904fa4ba21c575435b8066a0");
                JObject users = client.query("SELECT first_name FROM users");
                Console.WriteLine("Result: {0}", users["result"]);
                foreach (JObject user in users["response"])
                {
                    Console.WriteLine("User: {0}", user["first_name"]);
                }
            }
        }
    }

Figure 10.0: Including, and instantiating a new instance of the ClearDB API client, with the App ID and API Key pre-loaded, along with a basic query and result iteration.

If you have not yet created your App ID and API Key, you'll need to do that before continuing. See https://www.cleardb.com/api.html (Login Required) to set up your App, API Key, and Access Rules to define the security model for the API Key you will be using here.

The guide continues with a “Working with Transactions” topic. Adding a .NET API might bring ClearDB into the cloud database mainstream. Stay tuned for a post about my initial tests of the ClearDB API for .NET.

For more background on ClearDB see my Windows Azure and Cloud Computing Posts for 12/28/2010+ post (scroll down).

The Windows Azure Team announced New Windows Azure and PHP Tutorials and Tools Now Available on 1/10/2011:

Looking for the latest information about PHP and Windows Azure? Then look no further, below are links to the latest tutorials and tools to help you get started. You can also read more about these on the Interoperability @ Microsoft blog here.

New Tutorials:

- Using Worker Roles for Simple Background Processing

- Getting the Windows Azure Pre-Requisites via the Microsoft Web Platform Installer 2.0

- Getting the Windows Azure Pre-Requisites Via Manual Installation

- Deploying Your First PHP Application with the Windows Azure Command Line Tools for PHP

- Deploying a PHP application to Windows Azure

Updated Windows Azure Tools for PHP (Compatible with the Windows Azure 1.3 SDK):

<Return to section navigation list>

Visual Studio LightSwitch

<Return to section navigation list>

Windows Azure Infrastructure

David Linthicum claimed “With recent price drops and better offerings, Amazon.com seems unstoppable as a cloud infrastructure provider” in a deck for his Cloud infrastructure: Soon there'll be just one that counts article of 1/11/2011 for InfoWorld’s Cloud Computing blog:

One company dominates the infrastructure services cloud space: Amazon.com through its Amazon Web Services (AWS). When you talk to other infrastructure services providers, they describe their position in the market relative to AWS and even mimic the way AWS deploys its technology.

Amazon.com is further taunting the other infrastructure services providers by rolling out two premium support tiers and announcing a 50 percent price cut on existing premium plans. As a result, rivals such as Rackspace and GoGrid will have to chop pricing as well.

AWS is to infrastructure services what Salesforce.com is to software as a service (SaaS). It leads the market, and the remaining providers are left way behind. It has an excellent brand name in the cloud computing space and has made only a few missteps thus far. AWS appears to be unstoppable.

The reality is that the infrastructure services business is a much more commoditized space than most people understand. Because storage and compute services are their core offerings, infrastructure services rarely give the sense of a product behind the scenes -- they don't have an interface that demonstrates richness and value as with SaaS. Indeed, you could go with one infrastructure services provider one day and another the next, and the users wouldn't have a clue.

I believe that Amazon.com understands the commodity nature of the infrastructure services marketplace, so it is looking to grab share now, with the goal of making profits later. The end result is that Amazon.com will outmarket and outsell others in the infrastructure services space. After all, for a user, AWS is the No. 1 brand and innovator in the infrastructure services space -- and it is now cheaper than the rest.

A single company -- Amazon.com -- is rising quickly to the top of the infrastructure services market. It won't be a group of providers running neck and neck for your business.

It will be interesting to see if the Windows Azure Platform becomes the Amazon Web Services of PaaS under the new Server and Tools Business president that Steve Ballmer will hire to replace Bob Muglia this summer. My 1/10/2011 tweet reflects my sentiments:

Still in shock over Steve #Ballmer's removal of Bob #Muglia as president of the Server & Tools Business #Azure #SQLAzure #SQLServer #Hyper-V

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

David Pallman reported about Cloud Camp LA and Cloud Camp San Diego on 1/11/2011:

I'm looking forward to Cloud Camp LA the evening of Tuesday 1/11/11. This event is sold out, so if you're just hearing about it now it's too late to get in. However, not too far away is Cloud Camp San Diego on 2/09/11. [Emphasis added.]

I attended the first Cloud Camp LA in 2009 and it was interesting for several reasons. First, it was a chance to interact with not just Windows Azure people but also people who use other cloud platforms such as Amazon. Second, the conference is run in an "unconference" format where there are no pre-determined sessions or speakers. Instead, the people who show up decide on the spot what they want to talk about and who will facilitate discussions. I was skeptical of this idea going in but it actually worked well. However, you do need to set proper expectations. The impromptu format means you will not have the structured presentations with slides and demos a prepared session would have. What you do get are interesting discussions, and a chance to share with others / learn from others.

Cloudera, Infobright, MongoDB and VoltDB will sponsor a BigData Online Summit to be held on 1/18/2011 from 10:00 to 11:00 AM:

Columnar, NoSQL, Hadoop, OLTP...these are the hot new technologies that are helping companies manage their big data challenges. Join the experts from Cloudera, 10gen, Infobright and VoltDB as they discuss these technologies and show - through customer use cases - where they best fit in addressing the challenges of analyzing large volumes of data.

Register today and find out how these solutions can deliver the business insights you need faster and easier - while lowering costs.

VoltDB added the following in a 1/11/2011 message:

At 2:00pm EDT, Dr. Daniel Abadi, Yale professor and database thought leader will be leading a discussion on topics related to the CAP theorem. The talk is entitled CAP, PACELC and Determinism, and will introduce an adaptation of CAP that incorporates latency into CAP's formulation. Registration details can be found here.

For more information about Dr. Abadi and his position on the CAP theorem, see his Problems with CAP, and Yahoo’s little known NoSQL system post to his DBMS Musings blog of 4/13/2010.

GigaOM announced on 1/11/2011 that their Structure: Big Data 2011 conference will be held on 3/23/2011 in New York, NY:

Big Data Means Big Money

That "fire hose" of information pouring through your company's databases and systems? That data, whether real-time or historic, presents an enormous opportunity to gain business insights to maximize profits and improve products.

Big Data, GigaOM's newest conference, is focused on helping technology executives develop a big data strategy. Turn your company's information into your next big revenue opportunity. Register today and save $400 (standard price is $1195).

Topics:

- Hadoop. What is Hadoop; what does it mean for the enterprise; and how does it power so many innovative solutions out there?

- Real Time Data. How can you prepare yourself to drink from a fire hose of data?

- MapReduce 101. Before you make MapReduce a core part of your data strategy, understand better what it is and what it can do.

- Commodity Scaling. Can you scale your storage needs quickly and cheaply?

- Security and Integrity. How do you know that petabyte of data you uploaded to the cloud is still there without downloading it again?

- Data Consistency. You have data everywhere around the world. How do you make sure it’s always up-to-date and consistent?

- Operational Policy. Where do you keep your data? What should you keep and what should you throw away?

- Visualization. “A picture is worth a thousand words,” they say. Will that be true in the enterprise?

- Algorithms and Analysis. Will your new competitive advantage arise from how you gain insights from your data?

Speakers:

In its charter year, we promise another landmark GigaOM conference with speakers representing the major technology companies as well as startups with significant new ideas in the storage, intelligence and discovery spaces. New speakers are being added weekly; take a look to the right to see a list of confirmed speakers to date. Register today and save $400 (standard price is $1195).

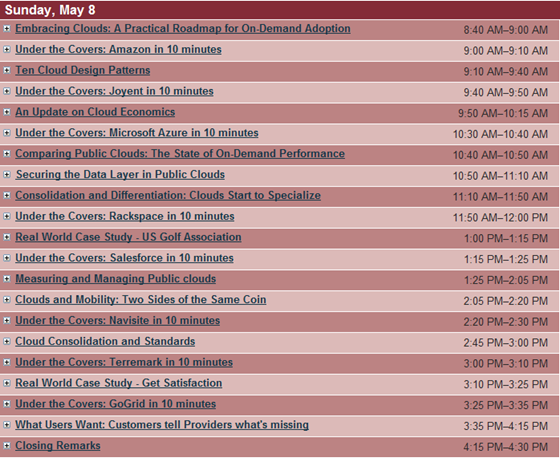

Interop Las Vegas 2011 posted in January 2011 details about their Cloud Computing Conference to be held 5/8 through 5/11/2011 at the Mandalay Bay Resort in Las Vegas, NV:

Track Chair: Alistair Croll, Founder, BitCurrent

Interop's Cloud Computing track brings together cloud providers, end users, and IT strategists. It covers the strategy of cloud implementation, from governance and interoperability to security and hybrid public/private cloud models. Sessions include candid one-on-one discussions with some of the cloud's leading thinkers, as well as case studies and vigorous discussions with those building and investing in the cloud. We take a pragmatic look at clouds today, and offer a tantalizing look at how on-demand computing will change not only enterprise IT, but also technology and society in general.

The conference also includes the two-day Enterprise Cloud Summit:

Enterprise Cloud Summit

In just a few years, cloud computing has gone from a fringe idea for startups to a mainstream tool in every IT toolbox. The Enterprise Cloud Summit will show you how to move from theory to implementation.

Learn about practical cloud computing designs, as well as the standards, infrastructure decisions, and economics you need to understand as you transform your organization's IT.

Enterprise Cloud Summit – Public Clouds

In Day One of Enterprise Cloud Summit, we'll review emerging design patterns and best practices. We'll hear about keeping data private in public places. We'll look at the economics of cloud computing and learn from end users' actual experience with clouds. Finally, in a new addition to the Enterprise Cloud Summit curriculum, major public clouds will respond to our shortlist questionnaire, giving attendees a practical, side-by-side comparison of public cloud offerings.

Enterprise Cloud Summit – Private Clouds

On Day Two of Enterprise Cloud Summit, we'll turn our eye inward to look at how cloud technologies, from big data and turnkey cloud stacks, are transforming private infrastructure. We'll discuss the fundamentals of cloud architectures, and take a deep dive into the leading private cloud stacks. We'll hear from more end users, tackle the "false cloud" debate, and look at the place of Platform-as-a-Service clouds in the enterprise.

Click here for interactive links with session descriptions.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

SD Times Newswire reported The Apache Software Foundation Announces Apache Cassandra 0.7 on 1/11/2011:

The Apache Software Foundation (ASF), the all-volunteer developers, stewards, and incubators of nearly 150 Open Source projects and initiatives, today announced Apache Cassandra v0.7, the highly-scalable, second generation Open Source distributed database.

"Apache Cassandra is a key component in cloud computing and other applications that deal with massive amounts of data and high query volumes," said Jonathan Ellis, Vice President of Apache Cassandra. "It is particularly successful in powering large web sites with sharp growth rates."

Apache Cassandra is successfully deployed at organizations with active data sets and large server clusters, including Cisco, Cloudkick, Digg, Facebook, Rackspace, and Twitter. The largest Cassandra cluster to date contains over 400 machines.

"Running any large website is a constant race between scaling your user base and scaling your infrastructure to support it," said David King, Lead Developer at Reddit. "Our traffic more than tripled this year, and the transparent scalability afforded to us by Apache Cassandra is in large part what allowed us to do it on our limited resources. Cassandra v0.7 represents the real-life operations lessons learned from installations like ours and provides further features like column expiration that allow us to scale even more of our infrastructure."

Among the new features in Apache Cassandra v0.7 are:

- Secondary Indexes, an expressive, efficient way to query data through node-local storage on the client side;

- Large Row Support, up to two billion columns per row;

- Online Schema Changes – automated online schema changes from the client API allow adding and modifying object definitions without requiring a cluster restart.

Oversight and Availability

Apache Cassandra is available under the Apache Software License v2.0, and is overseen by a Project Management Committee (PMC), who guide its day-to-day operations, including community development and product releases.

Lydia Leong described The cloud/hosting Magic Quadrant audience in a 1/10/2011 post to her Cloud Pundit blog:

Simon Ellis over at LabSlice has posted a blog entry in which he notes that the recent Cloud IaaS and Web Hosting Magic Quadrant has, in his words, “an obvious bias towards delivering ‘enterprise’ cloud services”. He goes on to say, “Security, availability and professional services — Gartner is clearly responding to dot-points mentioned to them by the large corporates that consume their material. And I daresay that these companies may not be needing cloud IaaS, but just want to be part of the hype.”

I think his point is worth addressing, because it’s true that the MQ is written to an audience of such companies and is very explicit in the fact that we are rating enterprise-grade services, but not true that these companies just want to be part of the hype. That seems to be part of the confusion over what cloud IaaS is about, too. At Gartner, we’re excited by the whole consumerization-of-IT trend that intertwines with the cloud computing phenomenon. But we’re not writing for the individual dude, or the garage developers, or the rebels who want IT stuff without IT people. We’re writing for the corporate IT guy.

Our target audience for a Magic Quadrant is IT buyers — specifically, our end-user clients. They typically work in mid-sized businesses and large enterprises, but we also serve technology companies of all sizes. However, the typical IT buyer who uses this kind of research is the kind of person who turns to an analyst firm for help, which means that they are somewhat on the conservative and risk-averse side. It’s not that they’re not willing to be early adopters or to take risks, mind you. And they’ve embraced the cloud with a surprising enthusiasm. But they’re cautious. Our surveys and polls show that overwhelmingly, security is their top cloud concern, for instance — far and above anything else.

We expect that the IT buyer in our client audience is typically going to be sourcing cloud IaaS on behalf of his organization. He is not an individual developer looking to grab infrastructure with a credit card; in fact, he is probably somebody who is explicitly interested in preventing his developers from doing that. He might work for a start-up that’s looking for a cost-effective way to get infrastructure with no capex, but chances are he’s post-funding or post-revenue if his company can afford to be a Gartner client, so he shares many so-called “enterprise” concerns — security, availability, performance, and so forth. Managed services options play into a lot of thinking, too — for established companies more in an “offload my hassle” way, and for start-ups more in an “I don’t have an operations team, really, so I’d like to deal with ops as little as possible” way. And yes, professional services can be a help — tons of our clients are head-scratching figuring out what’s the best way to move their infrastructure into the cloud.

Simon Ellis seems to think, from his blog post, that these corporate guys don’t actually need cloud, they just want to be a part of the hype. I think he’s wrong. The sheer number of actual sourcing discussions we’re having with our IT buyer clients — vendor short-listing, requirements, RFPs, contracts, etc. — makes it very clear that they’re buying, right now. And they’re buying for real. Production websites / SaaS / etc., production “virtual data centers”, large-scale test-and-development virtual lab environments, cloud-based disaster recovery… This is real business, so to speak.

It’s not an overnight revolution. This is a transition. It usually represents only a portion of their overall IT infrastructure — usually a tiny percentage, although it grows over time. They usually don’t want to make any application changes in the process. They almost certainly aren’t designing to fail. Most of them aren’t building cloud-native apps — although an increasing number of them are beginning to deliberately try to build new applications with cloud-friendly design in mind. Few of them are really taking advantage of the on-demand infrastructure capabilities of the cloud. What they are doing, in other words, is not especially sexy. But they are spending money on the cloud, and that’s what matters in the market right now.

And so in the end, the Magic Quadrant is written to emphasize what we see our clients asking about right now. It’s not cloudy idealism, that’s for sure. And most deals contain some level of managed services, which is why there’s significant weighting on them in the Magic Quadrant. (Fully self-managed is nevertheless a crucial market segment that deserves its own MQ, later this year, although I think the interesting note there will turn out to be the upcoming Critical Capabilities, which is wholly focused on feature-set. Though it should be noted that even a cloud-only MQ is going to emphasize an enterprise-oriented feature-set, not a developer-centric one.)

When we were setting the evaluation criteria for this most recent MQ, we decided that the Execution axis would be wholly focused upon the things we were hearing our clients demand right now, and the Vision axis would be primarily focused upon where we thought cloud services would be going.

The immediate demands are probably most easily summed up as “improved agility at a comparable or lower cost, with no change to our applications, limited impact on IT operations, and limited risks to our business”. The ultimate compliment that one of our clients can give a cloud IaaS provider seems to be, “It works just like my virtualized infrastructure in my own data center, but it’s less hassle.” That’s what they say to each other at our end-user roundtables at conferences when they’re trying to convince non-adopters to adopt.

I’m a pragmatist in most of my published research. I think that as an analyst, it’s fun to prognosticate on the future, but I’m cognizant that what ultimately pays my salary is an IT buyer coming to me for advice, and feeling afterwards like I helped him think through making the choice that was right for him. Not necessarily the ideal choice, almost certainly not the most forward-thinking choice, but the choice that would deliver the best results given the environment he has to deal with. Ditto for a vendor who comes to me for advice — I want to reflect what I think the market evolution will be and not what I think it should be.

I’m not here to cheerlead for the cloud. It doesn’t mean that I don’t believe in the promise of the cloud, or that I’m not interested in the segments of the market that aren’t represented by Gartner’s IT buyer clients (I’ve certainly been keeping an eye on developer-centric clouds, for instance), or that I don’t understand or believe in the fundamental transformations going on. But we’ve got to get there from here, and that’s doubly true when you’re talking to corporate buyers about what cloud-like stuff they want now.

<Return to section navigation list>
