Windows Azure and Cloud Computing Posts for 1/5/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

No significant articles today.

<Return to section navigation list>

Marketplace DataMarket and OData

Justin Lee explained CRUD operations on Microsoft SharePoint 2010 oData Lists with cURL on 1/5/2011:

I’ll get straight to the point. cURL is a command line tool for transferring data with URL syntax, supporting HTTP, HTTPS, etc. oData is a Web protocol for querying and updating data, built upon Web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON.

Microsoft SharePoint 2010 exposes their Lists using the oData protocol which you can access from the following URL – http://<sharepoint>/_vti_bin/ListData.svc.

Here’s how you can do all 4 CRUD (Create, Read, Update, Delete) operations on your Microsoft SharePoint 2010 Lists using cURL, which you can easily translate to JSON and jQuery once you understand what’s going on.

Setting up

Basic syntax (for our purpose):

curl --ntlm --netrc http://sharepoint/_vti_bin/[moreurl]

where:

.netrc (mode 600)

machine sharepoint login user password pwd

Create

In order to create an entry into the list, you have to pass in the data as shown below with the command “-d”. I’m passing in JSON in this case as set with the command “-H”, but you can pass in an XML string if you wish too. I find JSON shorter and more concise. You use the POST method request in order to create an entry or item.

curl --ntlm --netrc \
  -d "{ Title: 'January Team Meeting', StartTime: '2011-01-15T17:00:00', EndTime: '2011-01-15T18:00:00' }" \
  -H content-type:application/json \
  -X POST http://sharepoint/_vti_bin/ListData.svc/Calendar

Notice I did not set all the fields from the Calendar List. You do need to set all the fields that are required, but those not required and not specified will be set to NULL or the default value.

You will get back a reply with the oData entry if successful.
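The request body in the cURL example uses unquoted keys and single-quoted strings, which is not strict JSON. The following is a minimal Python sketch of the same Create request in which a standard encoder produces the body. The function name `build_create_request` is hypothetical, the host `sharepoint` is the placeholder used in the post, and the sketch only builds the request parts; it does not send anything or handle NTLM authentication.

```python
import json

# Placeholder service root taken from the post; replace with a real host.
LIST_SERVICE = "http://sharepoint/_vti_bin/ListData.svc"


def build_create_request(list_name, fields):
    """Return (method, url, headers, body) for creating one list item.

    Hypothetical helper: it assembles the pieces that the cURL
    options -X, -H and -d supply, but does not perform the call.
    """
    url = f"{LIST_SERVICE}/{list_name}"
    headers = {"Content-Type": "application/json"}
    body = json.dumps(fields)  # strict JSON: double-quoted keys and strings
    return "POST", url, headers, body


method, url, headers, body = build_create_request(
    "Calendar",
    {
        "Title": "January Team Meeting",
        "StartTime": "2011-01-15T17:00:00",
        "EndTime": "2011-01-15T18:00:00",
    },
)
print(method, url)
print(body)
```

Letting an encoder build the body avoids hand-escaping quotes inside the shell string, which is the fiddly part of the cURL version.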

Read

Getting data is as simple as doing a normal GET method request from the List itself.

curl --ntlm --netrc http://sharepoint/_vti_bin/ListData.svc/Calendar

Here is a quick list of what kind of filters you can do.

Syntax:

http://sharepoint/_vti_bin/ListData.svc/{Entity}[({identifier})]/[{Property}]

Example to get budget hours for Project #4:

http://sharepoint/_vti_bin/ListData.svc/Projects(4)/BudgetHours

Example to get Projects for Clients in Chicago:

http://sharepoint/_vti_bin/ListData.svc/Projects?$filter=Client/City eq 'Chicago'

Example to get a Project and its related Client:

http://sharepoint/_vti_bin/ListData.svc/Projects?$expand=Client

This returns each Project together with the parent Client it is associated with, inline in the XML.

QueryString parameters for REST:

- $filter

- $expand

- $orderby

- $skip

- $top

- $metadata (will bring back all the XML metadata about the object. Think of it like WSDL for your REST call)

You can stack these parameters by joining them with &.
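The URL syntax and the stacking of query options can be sketched in Python as follows. The helper name `odata_url` and its keyword-to-`$option` mapping are assumptions made for illustration, as is the decision to percent-encode spaces; the entity and property names come from the examples above.

```python
from urllib.parse import quote

# Placeholder service root taken from the post.
BASE = "http://sharepoint/_vti_bin/ListData.svc"


def odata_url(entity, key=None, prop=None, **options):
    """Build {Entity}[({identifier})][/{Property}] plus stacked $-options.

    Hypothetical helper: keyword names map to query options,
    for example filter -> $filter and top -> $top.
    """
    path = f"{BASE}/{entity}"
    if key is not None:
        path += f"({key})"
    if prop:
        path += f"/{prop}"
    if not options:
        return path
    parts = []
    for name, value in options.items():
        # Keep "/" and "'" readable; spaces become %20.
        encoded = quote(str(value), safe="/'")
        parts.append(f"${name}={encoded}")
    return path + "?" + "&".join(parts)


print(odata_url("Projects", key=4, prop="BudgetHours"))
print(odata_url("Projects", filter="Client/City eq 'Chicago'", expand="Client", top=10))
```

The second call stacks three options in one query string, in the order they were passed.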

Update

There are 2 ways of updating Microsoft SharePoint 2010 oData items. Notice the URL is pointing to the specific entry, in this case the entry with id=2 in the Calendar list.

One way is to use the PUT method request, which essentially replaces the entry with an entirely new version of the data. This is similar to creating an entry or item – You do need to set all the fields that are required, but those not required and not specified will be set to NULL or the default value.

curl --ntlm --netrc \
  -d "{ Title: 'January 2011 Team Meeting', StartTime: '2011-01-15T17:00:00', EndTime: '2011-01-15T18:00:00' }" \
  -H content-type:application/json \
  -H "If-Match: *" \
  -X PUT "http://sharepoint/_vti_bin/ListData.svc/Calendar(2)"

Notice that you need the “If-Match:” header for the update to happen. This prevents concurrent updates from silently overwriting each other. The right way is to read the data first, take the ETag value that comes back, which looks something like W/"X'000000000000D2F3'", and send it in the “If-Match:” header when you update. In this case, however, If-Match: * essentially ignores all concurrency problems and just tells the oData service to update.

The other way is to modify only the changes made, keeping all the other fields with data intact. You use the MERGE method request instead as seen below.

curl --ntlm --netrc \
  -d "{ Title: 'January 2011 Team Meeting' }" \
  -H content-type:application/json \
  -H "If-Match: *" \
  -X MERGE "http://sharepoint/_vti_bin/ListData.svc/Calendar(2)"

It will return 200 (OK), 201 (Created) or 202 (Accepted) if successful. (I can’t remember which at the moment)
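The difference between the two update styles and the role of If-Match can be sketched with Python's standard library. This is an illustration under stated assumptions: `build_update` is a hypothetical helper, the request is only constructed and never sent, and NTLM authentication (which the standard library does not provide) is left out.

```python
import json
import urllib.request


def build_update(url, fields, etag=None, merge=False):
    """Build, but do not send, an update request for one list item.

    etag  -- concurrency token read from the entry earlier; when it is
             None the sketch falls back to "*", which skips the check.
    merge -- True sends only the changed fields (MERGE); False replaces
             the whole entry (PUT).
    """
    request = urllib.request.Request(
        url,
        data=json.dumps(fields).encode("utf-8"),
        method="MERGE" if merge else "PUT",
    )
    request.add_header("Content-Type", "application/json")
    request.add_header("If-Match", etag if etag else "*")
    return request


item_url = "http://sharepoint/_vti_bin/ListData.svc/Calendar(2)"

# Unconditional partial update, as in the MERGE example above.
blind = build_update(item_url, {"Title": "January 2011 Team Meeting"}, merge=True)

# Conditional full replacement using a previously read token.
guarded = build_update(
    item_url,
    {
        "Title": "January 2011 Team Meeting",
        "StartTime": "2011-01-15T17:00:00",
        "EndTime": "2011-01-15T18:00:00",
    },
    etag="W/\"X'000000000000D2F3'\"",
)

# urllib stores header names in capitalized form, hence "If-match".
print(blind.get_method(), blind.get_header("If-match"))
print(guarded.get_method(), guarded.get_header("If-match"))
```

With a real token in If-Match, the service can reject the update if someone else changed the entry after it was read, which is the protection the wildcard gives up.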

Delete

Deleting is as simple as using the DELETE method request.

curl --ntlm --netrc \
  -X DELETE "http://sharepoint/_vti_bin/ListData.svc/Calendar(2)"

A successful delete will return a 200 (OK) status code.

Summary

So that’s how you do CRUD operations with low-level HTTP method requests. Create – POST, Read – GET, Update – PUT or MERGE, Delete – DELETE. Remember, in order to update, you need the “If-Match:” header when you send a PUT or MERGE method request; otherwise it will fail.

Tony Bailey (@tonybai2010, a.k.a. tbtechnet) claimed Mine the Data and the Data is mine in a 1/5/2011 post to TechNet’s WebTech blog:

Glad to see tbtechnet finally identified himself with a bio and photo. Tony works as a Senior Marketing Manager at Microsoft. His background includes product management for network security and network infrastructure. He holds bachelor's and doctorate degrees from the University of Birmingham, U.K.

Windows Azure Marketplace DataMarket is a fairly simple premise.

- Enable content providers to more easily market and sell their services and data

- Allow data consumers to acquire simpler to use content from a single location

I pulled this from the Windows Azure Marketplace DataMarket whitepaper:

DataMarket helps simplify all the steps associated with discovering, exploring, and acquiring information.

Content providers get:

- Easy publication of data.

- A scalable Microsoft cloud computing platform that handles delivery, billing, and reporting.

Developers get:

- Trial subscriptions that let you investigate content and develop applications without paying data royalties.

- Simple transaction and subscription models that support pay as you grow access to multi-million-dollar datasets.

Information workers get:

- Simple, predictable licensing models for acquiring content.

The Code Project also just kicked off their Windows Azure Marketplace DataMarket contest.

Wes Yanaga recommended that you Register to Attend Web Camps in Silicon Valley or Redmond in this 1/5/2011 post to the US ISV Evangelism blog:

If you are a US developer based in either the SF Bay Area/Silicon Valley or Seattle/Redmond don’t miss the opportunity to attend Web Camp! The agenda at Web Camp will cover the following technologies: ASP.NET MVC, jQuery, OData, Visual Studio 2010 and more. [Emphasis added.]

If you are unfamiliar with Web Camps, these events are a great opportunity to learn in the classroom and in hands-on labs. These events are staffed by subject matter experts to help answer questions. There is no cost to register, but seating is limited.

For More Information please visit Doris Chen’s Blog or register at the links below:

Guy Barrette announced on 1/5/2011 the Montreal Web Camp will be held 2/5/2011 from 9:00 AM to 4:30 PM:

The first Montreal Web Camp will take place on Saturday February 5th from 9AM to 4:30PM. This event is organized by Microsoft Canada in collaboration with the Montreal .NET Community.

You want to learn about MVC, OData and JQuery? This is the right place! It’s free and lunch is even included! [Emphasis added.]

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

Alik Levin reported on 1/5/2011 the availability of a Video: Windows Azure AppFabric Access Control Service (ACS) v2 Prerequisites segment:

My colleague, Gayana, produced a nice short video that outlines the prerequisites for working with Windows Azure AppFabric Access Control Service (ACS) v2.

I recommend watching it in full screen: Video (~3 min): Windows Azure AppFabric ACS v2 Prerequisites

Related videos:

- Video: What’s Windows Azure AppFabric Access Control Service (ACS) v2?

- Video: What Windows Azure AppFabric Access Control Service (ACS) v2 Can Do For Me?

- Video: Windows Azure AppFabric Access Control Service (ACS) v2 Key Components and Architecture

Slides are here.

The Claims-Based Identity blog announced Single Sign-On to Windows Azure using WIF and ADFS whitepaper now available on 1/5/2011:

We have published a whitepaper on how to enable Single Sign-On to Windows Azure using WIF and ADFS.

Here is the abstract:

This paper contains step-by-step instructions for using Windows® Identity Foundation, Windows Azure, and Active Directory Federation Services (AD FS) 2.0 for achieving SSO across web applications that are deployed both on premises and in the cloud. Previous knowledge of these products is not required for completing the proof of concept (POC) configuration. This document is meant to be an introductory document, and it ties together examples from each component into a single, end-to-end example.

Download it here!

The 36-page white paper of 12/16/2010 was authored by Vittorio Bertocci (as you'd expect) and David Mowers.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, RDP and CDN

See Microsoft Events announced on 1/5/2011 that Neudesic’s David Pallman will conduct a Webinar entitled What's New from PDC - Windows Azure AppFabric and Windows Azure Connect on 1/7/2011 at 10:00 to 11:00 AM PST in the Cloud Computing Events section below.

See Mari Yi announced a Selling the Windows Azure Platform- PDC Features Partner Training Live Meeting on January 10, 11:00 am–12:00 pm EST in a 1/5/2011 post to the Canada Partner Learning Blog in the Cloud Computing Events section below.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bruno Terkaly offered free onsite training in his Windows Azure Hands On Labs Soon to be Released post of 1/5/2011:

Free training available from me

Are you a potential user of the Windows Azure Platform? Well, while you were taking sleigh rides during the holidays, I was busy creating hands on labs that cover the major pillars of the Windows Azure Platform. I will post some of these over the next few weeks.

The labs cover:

| Topic | Details |
| --- | --- |
| Windows Azure | Web Roles, Worker Roles, Storage (Tables, Blobs, Queues) |
| SQL Azure | Migration, connection, utilization from Windows Phone 7 and Web applications |
| AppFabric | Connecting computers behind firewalls, routers, NAT devices. Also, publish/subscribe scenarios. The ultimate bridging of on-premise to the cloud. |

Little ‘r’ me if you are interested in free training

I will not charge a fee for hands-on, all day training. I show up at your company and we code all day. I bring the labs, some free test accounts and a lot of passion to get you up and running in the cloud in the shortest possible amount of time.

Contact me at bterkaly@microsoft.com

Sounds like a bargain to me. I wonder how many other Microsoft cloud evangelists are offering free onsite training.

Avkash Chauhan explained Handling problem: Diagnostics Monitor is not sending diagnostics logs to Azure Storage after upgrading Windows Azure SDK from 1.2 to 1.3 in this 1/4/2011 post:

When you upgrade your service from Windows Azure SDK 1.2 to Windows Azure SDK 1.3, it is possible that your application running in Windows Azure stops sending diagnostics logs. We have seen a few cases where this problem occurred. Not everyone has encountered the problem; however, if you do encounter it, you need to collect the logs that contain MonAgentHost entries. You could potentially see something like the following:

[Diagnostics]: Checking for configuration updates 11/30/2010 02:19:09 AM.

[MonAgentHost] Error: MA EVENT: 2010-11-30T07:21:35.149Z

[MonAgentHost] Error: 2

[MonAgentHost] Error: 4492

[MonAgentHost] Error: 8984

[MonAgentHost] Error: NetTransport

[MonAgentHost] Error: 0

[MonAgentHost] Error: x:\rd\rd_fun_stable\services\monitoring\shared\nettransport\src\netutils.cpp

[MonAgentHost] Error: OpenHttpSession

[MonAgentHost] Error: 686

[MonAgentHost] Error: 0

[MonAgentHost] Error: 57

[MonAgentHost] Error: The parameter is incorrect.

[MonAgentHost] Error: WinHttpOpen: Failed to open manually set proxy <null>; 87

[MonAgentHost] Error: MA EVENT: 2010-11-30T07:21:35.249Z

[MonAgentHost] Error: 2

[MonAgentHost] Error: 4492

[MonAgentHost] Error: 8984

[MonAgentHost] Error: NetTransport

[MonAgentHost] Error: 0

[MonAgentHost] Error: x:\rd\rd_fun_stable\services\monitoring\shared\nettransport\src\netutils.cpp

[MonAgentHost] Error: OpenHttpSession

[MonAgentHost] Error: 686

[MonAgentHost] Error: 0

[MonAgentHost] Error: 57

[MonAgentHost] Error: The parameter is incorrect.

[MonAgentHost] Error: WinHttpOpen: Failed to open manually set proxy <null>; 87

[Diagnostics]: Checking for configuration updates 11/30/2010 02:20:11 AM.

The above errors are logged by DiagnosticsAgent.exe (MonAgentHost.exe in Windows Azure SDK 1.2) every time the Diagnostic Monitor tries to transfer the logs to Table Storage. So the problem actually occurs when the logs are due to be transferred by the diagnostics monitor, as determined by your *.ScheduledTransferPeriod setting.
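When sifting through collected logs for this symptom, a small script can pick out the transfer attempts that hit the proxy error. The sketch below assumes the log has exactly the line layout shown above; the function name `find_transfer_failures` is hypothetical and the sample text is an abbreviated copy of the excerpt.

```python
PREFIX = "[MonAgentHost] Error:"
EVENT_MARKER = "MA EVENT:"
PROXY_ERROR = "WinHttpOpen: Failed to open manually set proxy"


def find_transfer_failures(log_text):
    """Return timestamps of MA EVENT records that contain the proxy error."""
    failures = []
    current_event = None
    for line in log_text.splitlines():
        line = line.strip()
        if not line.startswith(PREFIX):
            continue  # skip [Diagnostics] lines and blanks
        message = line[len(PREFIX):].strip()
        if message.startswith(EVENT_MARKER):
            # A new event record starts; remember its timestamp.
            current_event = message[len(EVENT_MARKER):].strip()
        elif PROXY_ERROR in message and current_event:
            failures.append(current_event)
    return failures


sample = """
[Diagnostics]: Checking for configuration updates 11/30/2010 02:19:09 AM.
[MonAgentHost] Error: MA EVENT: 2010-11-30T07:21:35.149Z
[MonAgentHost] Error: NetTransport
[MonAgentHost] Error: OpenHttpSession
[MonAgentHost] Error: The parameter is incorrect.
[MonAgentHost] Error: WinHttpOpen: Failed to open manually set proxy <null>; 87
[MonAgentHost] Error: MA EVENT: 2010-11-30T07:21:35.249Z
[MonAgentHost] Error: NetTransport
[MonAgentHost] Error: WinHttpOpen: Failed to open manually set proxy <null>; 87
[Diagnostics]: Checking for configuration updates 11/30/2010 02:20:11 AM.
"""

print(find_transfer_failures(sample))
```

Comparing the gaps between the reported timestamps with the configured transfer period is one way to confirm that each scheduled transfer is failing.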

To solve this problem you will need to disable the <Sites> </Sites> section in the ServiceDefinition.csdef, as highlighted below:

<?xml version="1.0" encoding="utf-8"?>

<ServiceDefinition name="Your_Service_Name" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">

<WebRole name="MyWebRole" enableNativeCodeExecution="true">

<!--<Sites>

<Site name="Web">

<Bindings>

<Binding name="Endpoint1" endpointName="Endpoint1" />

</Bindings>

</Site>

</Sites>-->

<Endpoints>

<InputEndpoint name="Endpoint1" protocol="http" port="80" />

</Endpoints>

<Imports>

<Import moduleName="Diagnostics" />

</Imports>

</WebRole>

</ServiceDefinition>

Be aware that when you comment out or remove the above <Sites> </Sites> section from the service definition (csdef), your application runs in HWC (Hostable Web Core), that is, within the WaWebHost.exe process instead of the full IIS w3wp.exe process. Having the <Sites> </Sites> section in the service definition (csdef) lets you run your application in a full IIS Web Role.

Note also that commenting out the <Sites> </Sites> section in the service definition (csdef) means your application runs as a legacy (Windows Azure SDK 1.2) Web Role.
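To check which hosting mode a given service definition implies, the presence of a Sites element under each WebRole can be tested programmatically. This is a rough sketch: `web_role_hosting` is a hypothetical helper, the sample XML is a trimmed copy of the definition above, and the only rule applied is the one described in the post (Sites present means full IIS, absent or commented out means HWC).

```python
import xml.etree.ElementTree as ET

# Namespace taken from the ServiceDefinition element shown above.
CSDEF_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"


def web_role_hosting(csdef_xml):
    """Map each WebRole name to "Full IIS" or "HWC".

    The default parser drops XML comments, so a commented-out
    <Sites> section counts as absent.
    """
    root = ET.fromstring(csdef_xml)
    result = {}
    for role in root.findall(f"{{{CSDEF_NS}}}WebRole"):
        has_sites = role.find(f"{{{CSDEF_NS}}}Sites") is not None
        result[role.get("name")] = "Full IIS" if has_sites else "HWC"
    return result


sample = """<ServiceDefinition name="Your_Service_Name" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="MyWebRole" enableNativeCodeExecution="true">
    <!--<Sites><Site name="Web" /></Sites>-->
    <Endpoints>
      <InputEndpoint name="Endpoint1" protocol="http" port="80" />
    </Endpoints>
  </WebRole>
</ServiceDefinition>"""

print(web_role_hosting(sample))
```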

Dom Green (@domgreen) updated details about MCS, Windows Azure & Transport for London on 1/4/2011:

Microsoft Consulting Services in the UK have been working together with Transport for London (TfL) to move the train prediction web services from on premise into the Windows Azure Platform.

I have been working on this project for the past month to help design and implement the solution in the cloud, and it has proved a very challenging yet fun project. We have been able to allow the TfL APIs to scale out from their original web service that was released earlier in the year, to deal with the large volumes of traffic that the service attracts.

With moving the TfL API to Windows Azure we have been able to build a system that can handle in excess of 7 million requests per day and scale up during peak periods where customers have a heightened interest in the transport network, such as during the current snowy season.

To learn more about the TfL service and how you could use the real-time tube data within your applications, visit the TfL developer page here.

You can also view press coverage of the launch at the following sites:

TfL’s live Tube information returns, empowers developers

They’re baaack: the live map of London Underground tube trains returns

Transport for London has yoked its API to Windows Azure systems that will be able to deal with millions of calls per day as it offers new data services

Transport for London routes real-time data to developers via Microsoft Azure

London Tries Microsoft Azure for Hosting Data Feeds

London’s agency for public transportation is using Microsoft Azure to disseminate data feeds for third party applications

Alex Handy asserted The future of dynamic languages is less than crystal clear in a 1/5/2011 post to SDTimes on the Web:

Lessons learned from the technology hype cycle dictate that dynamic languages should be in the trough of disillusionment by now. For the past three years, businesses have awakened to PHP, Python and Ruby as viable tools on the Web and in the server, and after extensive growth for all manner of non-Java, non-C languages, many businesses are now taking stock to see if all those promises have been fulfilled.

Mike Gualtieri, principal analyst at Forrester, said that those promises have not been fulfilled. He said he used to receive numerous phone calls asking about PHP, Python and Ruby from enterprises evaluating the technology. But he said that this year those questions have been replaced by new ones about mobile development and JavaScript.

“I think it's been proven to be false that you can build applications quicker with Ruby on Rails," he said. "The typical Ruby demo was, 'Look, I can put my data in and it creates the forms and the database for me, and I can update it and it changes that.' The problem is it's like the 1980s all over again. It breaks down when you start trying to do a real application."

Tom Mornini, CTO of Engine Yard, isn't so sure that this is the case, however. He admits that enterprises are not replacing core Java applications with Ruby versions, but he also said that Ruby on Rails remains a popular and compelling enterprise choice for smaller Web applications, like marketing sites and social engagement applications.

“Gartner says [Ruby] is being used considerably, but not for core competency applications," said Mornini. "We're starting to get uptake in training from large enterprises. The No. 1 thing our customers ask us about is, 'Do you know any Ruby developers?' The demand is off the charts. They're commanding very high rates of pay. Engine Yard is growing at multi-digit percentages a month. Java collapsing probably isn't hurting either,” he said, referring to the current kerfuffle within the JCP.

Mornini said Ruby on Rails is important for software development as a whole, and added that this is why it remains an appealing platform. “David Heinemeier Hansson's [the creator of Rails] philosophy of convention over configuration and simplification for Rails are going to pay back for generations,” he said.

This article might explain why Microsoft deemphasized support for IronPython and IronRuby in 2010 (see Open source Ruby, Python hit rocky ground at Microsoft of 8/9/2010.)

David Linthicum advised “Take these steps to enhance your cloud experience -- thus, making yourself more valuable and your business work better” in the deck for his How to enhance your career with cloud computing post of 1/5/2011 to InfoWorld’s Cloud Computing blog:

Many practitioners in IT are ill-prepared for the continued emergence of cloud computing. Although this ignorance was almost cute in 2010, it will be career-limiting this year.

At its core, cloud computing, despite being hyped to death in 2010, has been largely misunderstood in terms of its true value to the enterprise and how IT needs to approach it. This needs to change. I have a few suggestions on how you can use cloud computing to enhance your career.

First, focus on education. I'm talking about cloud basics, such as the difference between infrastructure, software, and platform services, as well as when and where to use each. Much of the tech coverage has been a mile wide and an inch deep on the cloud basics. However, there are many good books on cloud computing, including mine, that have much more substance and practical advice. Also, make sure you check out InfoWorld's Cloud Computing Deep Dive, which has an excerpt of my book. While educating yourself is obvious advice, it's the single element that causes confusion around cloud adoption.

Next, create a cloud computing strategy. Creating a cloud computing strategy not only makes you look up to date and proactive, it also enhances the fundamentals around your IT planning. How? By marrying the use of the cloud with all aspects of IT resources, including providing business cases, road maps, and budgets. But watch out -- these resources are political footballs in many enterprises. Make sure you use this strategizing as an opportunity to work and play well with others.

Finally, encourage experimentation. This means creating an infrastructure-as-a-service or platform-as-a-service prototype to better understand the values that cloud computing can -- and can't -- bring. Make sure you dial information from this experimentation back into your planning.

<Return to section navigation list>

Visual Studio LightSwitch

Robert Green posted Using Both Remote and Local Data in a LightSwitch Application to the Visual Studio LightSwitch Team blog on 1/5/2011:

Hi. I’m Robert Green. You may remember me from such past careers as Developer Tools Product Manager/Visual Basic Program Manager at Microsoft and Sr. Consultant at MCW Technologies. After five and a half years on “the other side” at MCW, I have returned to Microsoft. I am now a Technical Evangelist in the Developer Platform and Evangelism (DPE) group. I am focused on Visual Studio, including LightSwitch and the next version of Visual Studio. I am very happy to be back and very excited to be working with LightSwitch. This product is cooler and more powerful than many people realize and over the coming months, I will do my best to prove that assertion. Starting right now.

The first post in my blog (Adventures in DeveloperLand) is up now. In it I show how to build a LightSwitch application that uses both remote and local data. This will be a typical scenario. You build an application that works with existing data in a SQL Server database, and now you want to add additional data, in this case customer visits and notes. You can’t add new tables or columns to the existing database. Instead you can use local tables and accomplish the same thing.

Check it out and let me know what you think: Using Both Remote and Local Data in a LightSwitch Application [see below].

Here’s Robert’s post:

In LightSwitch, you can work with remote data and with local data. To use remote data, you can connect to a SQL Server or SQL Azure database, to a SharePoint site, and to a WCF RIA service. If you use local data, LightSwitch creates tables in a SQL Server Express database. When you deploy the application, you can choose to keep this local data in a SQL Server Express database or use SQL Server or SQL Azure.

In an application, you can use data from one or all of these data sources. I’m currently building a demo that uses local and remote data. A sales rep for a training company wants an application to manage customers and the courses they order. This data already exists in a SQL Server database. So I attached to the Courses, Customers and Orders tables in the TrainingCourses database. I then created screens to work with this data.

If you are new to LightSwitch, you can learn more about how I got to this point by checking out the How to: Connect to Data and How to: Create a New Screen help topics. You can also watch the first three videos in Beth Massi’s most excellent How Do I Video series.

Creating Relationships Between Local and Attached Data

In addition to managing this information, I want to keep track of customer visits. I also want to keep notes on customers. If I owned the SQL Server database, I could just add a Visits table and then add a Note column to the Customers table. But I don’t own the database, so I will use local tables in my LightSwitch application. I right-click on Data Sources in the Solution Explorer and select Add Table. I then create the Visits table.

Notice that the remote data is in the TrainingCoursesData node and the local data is in the ApplicationData node.

I need a one-to-many relationship between customers and visits, so I create that.

Notice that LightSwitch adds a Customer property to my Visits table. This represents the customer. It also adds the customer id (the Customer_CustomerId property), which is the foreign key. I don’t want this to show up on screens so I uncheck Is Visible On Screen in the Properties window.

Stop for a minute and consider the complete coolness of this. I just created a cross-data source relationship. I have a one to many relationship between customers in the remote SQL Server database and visits in my local SQL Server Express database. In a future post, I will add SharePoint data to this application and have a relationship between SQL data and SharePoint data. Federating multiple data sources is a unique and compelling feature of LightSwitch.

I can now create a master-detail screen with Customers and Visits. I won’t discuss that here because Beth covered that in episode 6 of the How Do I Video series.

What I want to talk about here is adding customer notes. I want the ability to add a note for each customer. My first thought is I will just add a Note property to the Customer entity. I can add the property, but I see that it is a computed property. That’s because LightSwitch does not allow changing the schema of attached data sources.

So I’ll create a local CustomerNotes table with a Note property. I will then create a one to zero or one relationship between Customers and CustomerNotes. Every note has a customer and each customer can have a note.

LightSwitch adds the foreign key, Customer_CustomerId to CustomerNote and I uncheck Is Visible On Screen for that.

Adding Customer Notes for Both New and Existing Customers

I am using the CustomerDetail screen both for adding and editing customers. (See episode 9 of the How Do I Video series.) I want to add customer notes to the screen. When I open it in design-mode, I see that CustomerNote shows up as part of the query that populates the screen. All I have to do is drag it and drop it into the vertical stack.

It shows up as a Modal Window Picker, but I can change that to a Vertical Stack, which by default includes a TextBox for the note and a Modal Window Picker for the customer. I already know who the customer is, so I can delete the Modal Window Picker and leave the TextBox.

Now when I view a customer, there is a place to see and edit notes. So far so good!

Note: I added the Delete button myself to this screen. I will discuss that in an upcoming post.

If the customer does not have a note or if it is a new customer, the Note text box is read only because there is no record in the CustomerNotes table. I need to automatically add a new note record for any customer that doesn’t already have one.

To make that happen for new customers, I add the following code to the Customer_Created method of the Customer entity:

Me.CustomerNote = New CustomerNote()
Me.CustomerNote.Customer = Me

Me represents the customer. Since I added the relationship between customers and notes, the Customer class has a CustomerNote property, which represents the customer’s note. In this code, I set that to a new instance of the CustomerNote class. The Customer property of the CustomerNote class represents the customer to which each note belongs. I set that to Me and the new customer now has a related note.

Now when I create a new customer in the CustomerDetails screen, I can add a note at the same time. When I save the new customer, I save the note as well.

The code above runs when a new customer is created. What about existing customers? I want this code to run for existing customers, but only if they don’t currently have a note. When the screen loads, I can check if an existing customer has a note. If not, I will create one. So I add the inner If block shown below to the CustomerDetail_Loaded method:

If Me.CustomerId.HasValue Then
    Me.Customer = Me.CustomerQuery
    If Me.Customer.CustomerNote Is Nothing Then
        Me.Customer.CustomerNote = New CustomerNote()
        Me.Customer.CustomerNote.Customer = Me.Customer
    End If
Else
    Me.Customer = New Customer
    Me.FindControl("DeleteButton").IsVisible = False
End If

In this code, Me represents the screen. Me.CustomerId represents a screen level property. If it has a value, then this is an existing customer. If it has no value, this is a new customer. Again, see episode 9 of the How Do I Video series for the full details behind this. There is also code in there to hide the Delete button when adding a new customer.

Note that I could put the four lines of the inner If block after the outer End If and then I wouldn’t need the code I put in the Customer_Created method earlier. However, if I did that, notes would only be created for new customers if the customer were added in this screen. I want to tie creating a new note to creating a new customer at the data level, not at the UI level, so the code exists in both places.
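The reasoning for keeping the note-creation logic in two places can be modeled outside LightSwitch. The Python sketch below is only an analogy and does not use any LightSwitch API: the class and function names are hypothetical stand-ins for the Customer and CustomerNote entities, the Customer_Created method, and the CustomerDetail_Loaded method, and "Contoso" and "Fabrikam" are made-up customer names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CustomerNote:
    # Back-reference excluded from repr/compare to avoid recursion.
    customer: "Customer" = field(repr=False, compare=False)
    note: str = ""


@dataclass
class Customer:
    name: str
    customer_note: Optional[CustomerNote] = None


def on_customer_created(customer):
    """Data-level hook: every newly created customer gets an empty note."""
    customer.customer_note = CustomerNote(customer=customer)


def on_detail_screen_loaded(customer):
    """Screen-level hook: back-fill a note only when one is missing."""
    if customer.customer_note is None:
        customer.customer_note = CustomerNote(customer=customer)


# An existing customer that predates the notes table has no note yet.
existing = Customer("Contoso")
on_detail_screen_loaded(existing)

# A new customer gets its note at creation time, whichever screen adds it.
new = Customer("Fabrikam")
on_customer_created(new)
on_detail_screen_loaded(new)  # no-op: the note already exists

print(existing.customer_note.note == "", new.customer_note.customer is new)
```

The screen-level hook is idempotent, so running both hooks for the same customer never creates a second note, which is what the one to zero-or-one relationship requires.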

Summary

With one new table and a little bit of code, I have essentially added a local property to a remote database table. Nice! I can work with additional data even without the ability to add it to the main database.

Of course, I do need to remember that right now, the notes data only lives on my computer. Nobody else can see the customer notes I add and I can’t see the notes others add. And my notes aren’t backed up when the SQL Server database is backed up. The same is true for visits.

I should also mention that I am seeing some odd behavior. Right after adding a customer, if I select it in the customer list, I get an object reference not set error. When I close the application and come back in, the customer and note are there. I am hoping this is related to the following comment from The Anatomy of a LightSwitch Application Series Part 2 – The Presentation Tier:

In Beta 1, the screen's Save command operates over N data sources in an arbitrary order. This is not the final design. LightSwitch does not enforce correct ordering or updates for multiple data sources, nor do we handle compensating transactions for update failures. We are leaning toward selecting a single updatable data source per screen, where all other data sources represent non-updatable reference data. You can expect to see changes after Beta 1.

Beth Massi (@bethmassi) wrote Build Business Applications Quickly with Visual Studio LightSwitch for Code Magazine’s January/February 2011 issue:

LightSwitch is a new development tool and extensible application framework for building data-centric business applications. LightSwitch simplifies the development process because it lets you concentrate on the business logic and does a lot of the remaining work for you. With LightSwitch, an application can be designed, built, tested, and in your user’s hands quickly. LightSwitch is perfect for small business or departmental productivity applications that need to get done fast.

LightSwitch applications are based on Silverlight and a solid .NET application framework using well known patterns and best practices like n-tier application layering and MVVM as well as technologies like Entity Framework and RIA Services (Figure 1). The LightSwitch team made sure not to invent new core technologies like a new data access or UI layer; instead we created an application framework and development environment around these existing .NET technologies that a lot of developers are already building upon today.

Figure 1: LightSwitch applications are based on proven n-tier architecture patterns and .NET technologies developers are already building upon today.

This architecture allows LightSwitch applications to be deployed as desktop applications, giving them the ability to integrate with hardware devices such as a scanner or bar code reader as well as other applications like Microsoft Word and Excel, or they can be deployed as browser-based applications when broader reach is required.

Users expect certain features like search, the ability to sort and rearrange grids, and the ability to export data. With every LightSwitch application those features are already built in. You don’t have to write any code for navigation, toolbars/ribbons, dirty checking or database concurrency handling. Common data operations such as adding, updating, deleting are also built in, as well as basic data validation logic. You can just set some validation properties or write some simple validation code based on your business rules and you’re good to go. We strived to make it so that the LightSwitch developer only writes code that only they could write; the business logic. All the plumbing is handled by the LightSwitch application framework.

…

Beth continues with detailed instructions for developing a LightSwitch application. A second article about deploying a LightSwitch application is premium content which requires a Code Magazine subscription.

Windows Azure Infrastructure

David Pallman posted Taking a Fresh Look at Windows Azure on 1/4/2011:

In this post I'll take you through an updated tour of the Windows Azure platform. It's 2011, and the Windows Azure platform is coming up on the first anniversary of its commercial release. Much has been added in the last year, especially with the end-of-year 1.3 update. This will give you a good overview of what's in the platform now. Note, a few of these services are still awaiting release. This is an excerpt from my upcoming book, The Azure Handbook.

WINDOWS AZURE: CORE SERVICES

The Windows Azure area of the platform includes many core services you will use nearly every time you make use of the cloud, such as application hosting and basic storage. Currently, Windows Azure provides these services:

• Compute Service: application hosting

• Storage Service: non-database storage

• CDN Service: content delivery network

• Windows Azure Connect: virtual network

• DataMarket: marketplace for buying or selling reference data

Windows Azure Compute Service

The Compute service allows you to host your applications in a cloud data center, providing virtual machines on which to execute and a controlled, managed environment. Windows Azure Compute is different from all of the other platform services: your application doesn’t merely consume the service, it runs in the service.

The most common type of applications to host in the cloud are Internet-oriented, such as web sites and web services, but it’s possible to host other kinds of applications such as batch processes. You choose the size of virtual machine and the number of instances, which can be freely changed.

Here’s an example of how you might use the Windows Azure Compute Service. Let’s say you have a public-facing ASP.NET web site that you currently host in your enterprise’s perimeter network (DMZ). You determine that moving the application to the Windows Azure platform has some desirable benefits such as reduced cost. You update your application code to be compatible with the Windows Azure Compute Service, requiring only minor changes. You initially update and test the solution locally using the Windows Azure Simulation Environment. When you are ready for formal testing, you deploy the solution to a staging area of the Windows Azure data center nearest you. When you are satisfied the application is ready, you promote it to a production area of the data center and take it live.

Windows Azure Storage Service

The Storage service provides you with persistent non-database storage. This storage is external to your farm of VM instances (which can come and go). Data you store is safely stored with triple redundancy, and synchronization and failover are completely automatic and not visible to you.

Windows Azure Storage provides you with 3 kinds of storage: blobs, queues, and tables. Each of these has an enterprise counterpart: blobs are similar to files, queues are similar to enterprise queues, and tables are similar to database tables but lack relational database features. In each case however there are important differences to be aware of. All 3 types of storage can scale to a huge level; for example a blob can be as large as 1 terabyte and a table can hold billions of records.

Blobs can be made accessible as Internet URLs which makes it possible for them to be referenced by web sites or Silverlight applications. This is useful for dynamic content such as images, video, and downloadable files. This use of blobs can be augmented with the Windows Azure CDN service for global high-performance caching based on user locale.

Here’s an example of how you might use the Windows Azure Storage service. You have a cloud-hosted web site that needs to serve up images of real estate properties. You principally keep property information in a database but put property images in Windows Azure blob storage. Your web pages reference the images from blob storage.

Windows Azure CDN Service

The Content Delivery Network (CDN) Service provides high performance distribution of content through a global network of edge servers and caching. The CDN currently has about 24 edge servers worldwide and is being regularly expanded.

A scenario for which you might consider using the CDN is a web site that serves up images, audio, or video that is accessed across a large geography. For example, a hotel chain web site could use the CDN for images and videos of its properties and amenities.

As of this writing, the CDN service serves up blob storage only but additional capabilities are on the way. At the PDC 2010 conference, Microsoft announced new CDN features planned for 2011 including dynamic content caching, secure SSL/TLS channels, and expansion of the edge server network. Dynamic content caching in particular is of interest because it will allow your application to create content on the fly that can be distributed through the CDN, a feature found in many other CDN services.

Windows Azure Connect

Windows Azure Connect provides virtual networking capability, allowing you to link your cloud and on-premise IT assets with VPN technology. You can also join your virtual machines in the cloud to your domain, making them subject to its policies. Many scenarios that might otherwise be a poor fit for cloud computing become feasible with virtual networking.

Here’s an example of how you might use Windows Azure Connect. Suppose you have a web application that you want to host in the cloud, but the application depends on a database server you cannot move off-premise. Using Windows Azure Connect, the web site in the cloud can still access the database server on-premise, without compromising security.

This service is not yet released commercially but is available for technical preview.

Windows Azure Marketplace DataMarket

The Windows Azure Marketplace is an online marketplace where you can find (or advertise) partners, solutions, and data. In the case of data, the marketplace is also a platform service you can access called DataMarket. You can explore DataMarket interactively at http://datamarket.azure.com.

The DataMarket service allows you to subscribe to reference data. The cost of this data varies and some data is free of charge. There are open-ended subscriptions and subscriptions limited to a certain number of transactions. You can also sell your own reference data through the DataMarket service. You are in control of the data, pricing, and terms.

The data you subscribe to is accessed in a standard way using OData, a standard based on AtomPub, HTTP, and JSON. Because the data is standardized, it is easy to mash up and feed to visualization programs.

Here’s an example of how you might use the DataMarket service. Suppose you generate marketing campaign materials on a regular basis and wish to customize the content for a neighborhood’s predominant income level and language. You subscribe to demographic data from the DataMarket service that lets you retrieve this information based on postal code.

SQL AZURE: RELATIONAL DATA SERVICES

The SQL Azure area of the platform includes services for working with relational data. Currently, SQL Azure provides these services:

• SQL Azure Database: relational database

• SQL Azure Reporting: database reporting

• SQL Azure Data Sync: database synchronization

• SQL Azure OData Service: data access service

SQL Azure Database

The SQL Azure Database provides core database functionality. SQL Azure is very similar to SQL Server to work with and leverages the same skills, tools, and programming model, including SQL Server Management Studio, T-SQL, and stored procedures.

With SQL Azure, physical management is taken care of for you: you don’t have to configure and manage a cluster of database servers, and your data is protected through replicated copies.

Here’s an example of how you might use SQL Azure Database. You have a locally-hosted web site and SQL Server database and conclude it makes better sense in the cloud. You convert the web site to a Windows Azure Compute service and the database to a SQL Azure database. Now both the application and its database are in the cloud side-by-side.

SQL Azure Reporting

SQL Azure Reporting provides reporting services for SQL Azure databases in the same way that SQL Server Reporting Services does for SQL Server databases. As with SSRS, you create reports in Business Intelligence Development Studio and they can be visualized in web pages.

Here’s an example of how you might use SQL Azure Reporting. You’ve traditionally been using SQL Server databases and SQL Server Reporting Services but are now starting to also use SQL Azure databases in the cloud. For reporting against your SQL Azure databases, the SQL Azure Reporting service is the logical choice.

This service is not yet released commercially but is available for technical preview.

SQL Azure Data Sync Service

The SQL Azure Data Sync service synchronizes databases, bi-directionally. One use for this service is to synchronize between an on-premise SQL Server database and an in-cloud SQL Azure database. Another use is to keep multiple SQL Azure databases in sync, even if they are in different data center locations.

Here’s an example of how you might use the SQL Azure Data Sync service. You need to create a data warehouse that consolidates information that is sourced from multiple SQL Server databases belonging to multiple branch offices. You decide SQL Azure is a good neutral place to put the data warehouse. Using SQL Azure Data Sync you keep the data warehouse in sync with its source databases.

This service is not yet released commercially but is available for technical preview.

SQL Azure OData Service

The SQL Azure OData service is a data access service: it allows applications to query and update SQL Azure databases. You can use the OData service instead of developing and hosting your own web service for data access.

OData is an emerging protocol that allows both querying and updating of data over the web; it is highly interoperable because it is based on the HTTP, REST, AtomPub, and JSON standards. OData can be easily consumed by web sites, desktop applications, and mobile devices.
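Because OData queries are plain HTTP URLs, composing one takes very little code. The following is a minimal sketch in Python, not part of the original article: the service root and the Products entity set are hypothetical placeholders, while `$filter`, `$orderby`, `$top` and `$format` are standard OData query options.

```python
from urllib.parse import quote, urlencode


def build_odata_url(service_root, entity_set, filter_expr=None,
                    order_by=None, top=None, fmt=None):
    """Compose an OData query URL from a service root and query options."""
    options = []
    if filter_expr is not None:
        options.append(("$filter", filter_expr))
    if order_by is not None:
        options.append(("$orderby", order_by))
    if top is not None:
        options.append(("$top", top))
    if fmt is not None:
        options.append(("$format", fmt))

    url = service_root.rstrip("/") + "/" + entity_set
    if options:
        # Percent-encode spaces as %20 and leave the leading "$" readable.
        url += "?" + urlencode(options, quote_via=quote, safe="$")
    return url


# Hypothetical service root and entity set, for illustration only.
url = build_odata_url(
    "https://example.invalid/odata.svc",
    "Products",
    filter_expr="Price lt 20",
    order_by="Name",
    top=5,
    fmt="json",
)
print(url)
```

Any HTTP client on a web site, desktop application or mobile device could then issue a GET request against a URL of this shape.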

Here’s an example of how you might use the SQL Azure OData service. Let’s say you have data in a SQL Azure database that you wish to access from both a web site and a mobile device. You consider that you could create and host a custom web service in the cloud for data access but realize you can avoid that work by using the SQL Azure OData service instead.

This service is not yet released commercially but is available for technical preview.

WINDOWS AZURE APPFABRIC: ENTERPRISE SERVICES

The AppFabric area of the platform includes services that facilitate enterprise-grade performance caching, communication, and federated security. Currently, AppFabric provides these services:

• AppFabric Cache Service: distributed memory cache

• AppFabric Service Bus: publish-subscribe communication

• AppFabric Access Control Service: federated security

AppFabric Cache Service

The Cache service is a distributed memory cache. Using it, applications can improve performance by keeping session state or application data in memory. This service is a cloud analogue to Windows Server AppFabric Caching for the enterprise (code-named Velocity) and has the same programming model.

Here’s an example of using the AppFabric Cache service. An online store must retrieve product information as it is used by customers, but in practice some products are more popular than others. Using the Cache service to keep frequently-accessed products in memory improves performance significantly.
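The online-store scenario is commonly handled with a cache-aside lookup: check the cache first and go to the data store only on a miss. The sketch below is an illustration in Python under stated assumptions, not the AppFabric Cache API: an in-memory dictionary stands in for the distributed cache, and `ProductCache` and `load_product` are hypothetical names.

```python
class ProductCache:
    """Cache-aside wrapper: look in memory first, fall back to the data store."""

    def __init__(self, load_from_store):
        self._load_from_store = load_from_store  # slow lookup, e.g. a database query
        self._items = {}                         # stand-in for the distributed cache
        self.hits = 0
        self.misses = 0

    def get(self, product_id):
        if product_id in self._items:
            self.hits += 1
            return self._items[product_id]
        # Cache miss: load from the store, then remember the result.
        self.misses += 1
        product = self._load_from_store(product_id)
        self._items[product_id] = product
        return product


store_calls = []


def load_product(product_id):
    """Hypothetical slow lookup that records each call it receives."""
    store_calls.append(product_id)
    return {"id": product_id, "name": "Product %d" % product_id}


cache = ProductCache(load_product)
for product_id in [1, 2, 1, 1, 3, 1]:  # product 1 is the popular one
    cache.get(product_id)

print(cache.hits, cache.misses, store_calls)
```

In this run the popular product is loaded from the store once and served from memory afterwards. A real cache would also need an expiration or invalidation policy, which the sketch omits.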

This service is not yet released commercially but is available for technical preview.

AppFabric Service Bus

The Service Bus uses the cloud as a relay for communication, supporting publish-subscribe conversations that can have multiple senders and receivers. Uses for the service bus range from general communication between programs to connecting up software components that normally have no way of reaching each other. The Service Bus supports traditional client-server style communication as well as multicasting.

The Service Bus is adept at traversing firewalls, NATs, and proxies which makes it particularly useful for business-to-business scenarios. All communication looks like outgoing port 80 browser traffic so IT departments don’t need to perform any special configuration such as opening up a port; it just works. The Service Bus can be secured with the AppFabric Access Control Service.

Here’s an example of how you might use the Service Bus. You and your supply chain partners want to share information about forecasted and actual production activity with each other. Using the Service Bus, each party can publish event notification messages to all of the other parties.

Access Control Service

The Access Control Service is a federated security service. It allows you to support a diverse and expanding number of identity schemes without having to implement them individually in your code. For example, your web site could allow users to sign in with their preferred Google, Yahoo!, Facebook, or Live ID identities. The ACS also supports domain security through federated identity servers such as ADFS, allowing cloud-hosted applications to authenticate enterprise users.

The ACS uses claims-based security and supports modern security protocols and artifacts such as SAML and SWT. Windows applications typically use Windows Identity Foundation to interact with the ACS. The ACS decouples your application code from the implementation of a particular identity system. Instead, your application just talks to the ACS and the ACS in turn talks to one or more identity providers. This approach allows you to change or expand identity providers without having to change your application code. You use rules to normalize the claims from different identity providers into one scheme your application expects.
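The idea of normalizing claims with rules can be pictured as a lookup table from (identity provider, incoming claim type) to the claim type the application expects. The following Python sketch only illustrates that idea; it does not show how ACS rules are actually configured, and every provider name and claim type in it is hypothetical.

```python
# Each rule maps (identity provider, incoming claim type) to the claim type
# the application expects. All names here are hypothetical.
RULES = {
    ("google", "email"): "user_id",
    ("yahoo", "email"): "user_id",
    ("adfs", "upn"): "user_id",
    ("adfs", "group"): "role",
}


def normalize_claims(provider, claims):
    """Return only the claims covered by a rule, renamed to the app's scheme."""
    normalized = []
    for claim_type, value in claims:
        target = RULES.get((provider, claim_type))
        if target is not None:
            normalized.append((target, value))
    return normalized


google_claims = [("email", "ann@example.com"), ("locale", "en-US")]
adfs_claims = [("upn", "bob@contoso.example"), ("group", "Purchasing")]

print(normalize_claims("google", google_claims))
print(normalize_claims("adfs", adfs_claims))
```

With this arrangement, supporting another identity provider means adding rules rather than changing the application code that reads `user_id` and `role`.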

Here’s an example of how you might use the ACS. Your manufacturing company has corporate clients across the country who need to interact with your online ordering, support, and repair systems, but you don’t want the burden of administering each of their employees as users. With the ACS, each client can authenticate using their preferred, existing identity scheme. One customer authenticates with their Active Directory, another uses IBM Tivoli, another uses Yahoo! identities. Claims from these identity providers are normalized into one scheme which is all your applications have to support.

As you can see, the Windows Azure platform has come a long way in a short time--and there's plenty more innovation ahead.

Tom Hollander described how to manage Windows Azure scale-out and scale-back activity in his Responding to Role Topology Changes post of 1/4/2011 to the Windows Azure blog:

The Adoption Program Insights series describes experiences of Microsoft Services consultants involved in the Windows Azure Technology Adoption Program helping customers deploy solutions on the Windows Azure Platform. This post is by Tom Hollander.

In the past, if you had an application running in a web farm and you needed more capacity, you would have needed to buy, install and configure additional physical machines - a process which could take months and potentially cost thousands of dollars. In contrast, if you deploy your application to Windows Azure this same process involves a simple configuration change and in minutes you can have additional instances deployed, and you only pay incremental hourly charges while these instances are in use. For applications with variable or growing load, this is a tremendous advantage of the Windows Azure platform.

If your role instances have been designed to be stateless and independent, you generally won't need to write any code to handle the times when your roles are scaled up or down (known in Azure as a topology change) - Windows Azure handles the configuration of the environment and as soon as any new instances are available (or old ones removed), the load balancer is reconfigured and your application continues to run as per normal. However, in some advanced scenarios, you may need your instances to be aware of the overall context in which they are running, and they may need to perform certain tasks when the role topology changes.

This post will help you write applications that can respond to topology changes by describing how Windows Azure raises events and communicates information about the Role Environment during these changes. This guidance applies whether you're scaling your application manually through the web portal, via the Service Management API or using automatic performance-based scaling.

Role Environment Methods and Events

There are five main places where you can write code to respond to environment changes. Two of these, OnStart and OnStop, are methods on the RoleEntryPoint class which you can override in your main role class (which is called WebRole or WorkerRole by default). The other three are events on the RoleEnvironment class which you can subscribe to: Changing, Changed and Stopping.

The purpose of these methods is pretty clear from their names:

- OnStart gets called when the instance is first started.

- Changing gets called when something about the role environment is about to change.

- Changed gets called when something about the role environment has just been changed.

- Stopping gets called when the instance is about to be stopped.

- OnStop gets called when the instance is being stopped.

In all cases, there's nothing your code can do to prevent the corresponding action from occurring, but you can respond to it in any way you wish. In the case of the Changing event, you can also choose whether the instance should be recycled to deal with the configuration change by setting e.Cancel = true.

Why aren't Changing and Changed firing in my application?

When I first started exploring this topic, I observed the following unusual behaviour in both the Windows Azure Compute Emulator (previously known as the Development Fabric[*]) and in the cloud:

- The Changing and Changed events did not fire on any instance when I made configuration changes.

- RoleEnvironment.CurrentRoleInstance.Role.Instances.Count always returned 1, even when there were many instances in the role.

It turns out that this is the expected behaviour when a role has no internal endpoints defined, as documented in this MSDN article. So the solution is simply to define an internal endpoint in your ServiceDefinition.csdef file like this:

<Endpoints>

<InternalEndpoint name="InternalEndpoint1" protocol="http" />

</Endpoints>

Which Events Fire Where and When?

Even though the names of the events seem pretty self-explanatory, the exact behaviour when scaling deployments up and down is not necessarily what you might expect. The following diagram shows which events fire in an example scenario containing a single role. 2 instances are deployed initially, the deployment is then scaled to 4 instances, then back down to 3, and finally the deployment is stopped.

There are several interesting things to note from this diagram:

1. The Changing and Changed events only fire for the instances that aren't starting or stopping. If you're adding instances, these events don't fire on the new instances, and if you're removing instances, these events don't fire on the ones being shut down.

2. In the Changing event, RoleEnvironment.CurrentRoleInstance.Role.Instances returns the original role instances, not the target role instances. There is no way of finding out the target role instances at this time.

3. In the Changed event, RoleEnvironment.CurrentRoleInstance.Role.Instances returns the target role instances, not the original role instances. If you need to know about the original instances, you can save this information when the Changing event fires and access it from the Changed event (since these events are always fired in sequence).

4. When instances are started, RoleEnvironment.CurrentRoleInstance.Role.Instances returns the target role instances, even if many of them are not yet started.

5. When instances are stopped, RoleEnvironment.CurrentRoleInstance.Role.Instances returns the original role instances. There is no way of finding out about the target instances at this time. Also note that there's no way that any instance can determine which instances are being shut down (it won't necessarily be the instances with the highest ID number). If Stopping and OnStop get called, it's you. If Changing gets called, it's not!
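The save-and-compare approach from the third point can be sketched independently of the Azure API. The Python below is a model of the logic only, under the assumption that each handler is given the list of instance IDs visible at that moment; `TopologyTracker` and its method names are hypothetical, and the instance IDs are example values.

```python
class TopologyTracker:
    """Remember the instance list seen in Changing and diff it in Changed."""

    def __init__(self):
        self._before = None

    def on_changing(self, current_instance_ids):
        # In Changing, the instance list still reflects the original topology.
        self._before = set(current_instance_ids)

    def on_changed(self, current_instance_ids):
        # In Changed, the instance list reflects the target topology.
        after = set(current_instance_ids)
        before = self._before if self._before is not None else after
        self._before = None
        added = sorted(after - before)
        removed = sorted(before - after)
        return added, removed


tracker = TopologyTracker()

# Scale up from 2 to 4 instances.
tracker.on_changing(["IN_0", "IN_1"])
scale_up = tracker.on_changed(["IN_0", "IN_1", "IN_2", "IN_3"])

# Scale down from 4 to 3; the removed instance need not have the highest ID.
tracker.on_changing(["IN_0", "IN_1", "IN_2", "IN_3"])
scale_down = tracker.on_changed(["IN_0", "IN_2", "IN_3"])

print(scale_up, scale_down)
```

The difference only becomes available once Changed fires, which matches the observation that the target topology cannot be discovered during Changing.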

The above example assumed that the Changing event was not cancelled (with e.Cancel = true, which results in the instance being restarted before the configuration changes are applied). If you do choose to do this, the events that fire are quite different - Changed does not fire at all, but Stopping, OnStop and OnStart do. The following diagram shows what happens to instance IN_0 during a scale-up operation if the Changing event is cancelled.

One final note on these events: Although I didn't show it in either diagram, if you have multiple roles in your service and make a topology change in a single role, the Changing and Changed events will fire across all roles, even those where the number of instances did not change. You can tell from the event data whether the topology change occurred for the current role or a different one using code similar to this:

private void RoleEnvironmentChanging(object sender, RoleEnvironmentChangingEventArgs e)

{

var changes = from ch in e.Changes.OfType<RoleEnvironmentTopologyChange>()

where ch.RoleName == RoleEnvironment.CurrentRoleInstance.Role.Name

select ch;

if (changes.Any())

{

// Topology change occurred in the current role

}

else

{

// Topology change occurred in a different role

}

}

Getting More Information

While the RoleEnvironment and the events listed above provide a lot of good information about changes to a service, there can be times when you need more information than the API provides. For example, I once worked on an Azure application where each instance needed to know which other instances had already started, and what their IP Addresses were. I chose to leverage an Azure table to record key information about the running instances. Every time an instance started or stopped, it was responsible for recording these details in the table, which could be read by all other instances. While this solution worked well, it required some careful and defensive coding to deal with cases where the table may have contained stale or incorrect data due to ungraceful shut downs. As such, you should only build solutions like this if absolutely necessary.
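One defensive technique for the stale-data problem is to have each instance refresh a timestamp periodically and to ignore rows that have not been refreshed recently. The Python sketch below illustrates that idea under stated assumptions: the heartbeat scheme is not described in the post, a dictionary stands in for the Azure table, and `InstanceRegistry` and the IP addresses are hypothetical.

```python
from datetime import datetime, timedelta


class InstanceRegistry:
    """Shared record of running instances that tolerates ungraceful shutdowns."""

    def __init__(self, stale_after):
        self._rows = {}                # stand-in for the shared table
        self._stale_after = stale_after

    def heartbeat(self, instance_id, ip_address, now):
        # Called on start and periodically afterwards by each instance.
        self._rows[instance_id] = {"ip": ip_address, "last_seen": now}

    def unregister(self, instance_id):
        # Called on a graceful stop; a crashed instance never gets here.
        self._rows.pop(instance_id, None)

    def live_instances(self, now):
        # Ignore rows whose heartbeat is older than the staleness window.
        cutoff = now - self._stale_after
        return {
            instance_id: row["ip"]
            for instance_id, row in self._rows.items()
            if row["last_seen"] >= cutoff
        }


t0 = datetime(2011, 1, 5, 12, 0, 0)
registry = InstanceRegistry(stale_after=timedelta(minutes=2))
registry.heartbeat("IN_0", "10.0.0.4", t0)
registry.heartbeat("IN_1", "10.0.0.5", t0)
registry.heartbeat("IN_2", "10.0.0.6", t0)

registry.unregister("IN_1")                                        # graceful stop
registry.heartbeat("IN_0", "10.0.0.4", t0 + timedelta(minutes=3))  # still alive
# IN_2 crashed: it neither unregisters nor sends another heartbeat.

live = registry.live_instances(t0 + timedelta(minutes=3))
print(live)
```

The trade-off is that a crashed instance still looks alive until its last heartbeat ages out, so the staleness window has to be chosen with that delay in mind.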

Conclusion

The ability to scale applications as needed is one of the great benefits of Windows Azure and the Fabric Controller is able to provide detailed information about the current status of, and changes to, the role environment through RoleEntryPoint methods and RoleEnvironment events. For most applications you won't need to put in any fancy code to handle scaling operations but if you're dealing with more complex applications, we hope this information will help you understand how topology changes can be handled effectively by your applications.

* Note: In case you missed this momentous event, Windows Azure’s Development Fabric morphed to Windows Azure Compute Emulator in November 2010. See MSDN’s Overview of the Windows Azure Compute Emulator updated 11/22/2010. Google Blog Search for the last month returned 48 hits on “Development Fabric” Azure, while “Compute Emulator” Azure returned only 10. Despite the new names (“Compute Emulator” and “Storage Emulator”) being used by the Windows Azure SDK v1.3 tools, the new moniker hasn’t gained much traction with blog writers. (If Bing has a similar Blog Search feature, I haven’t been able to find it.)

The PrivateCloud.com site reprinted on 1/5/2011 James Urquhart’s The top 12 gifts of cloud from 2010 article of 12/20/2010 for C|Net News’ The Wisdom of Clouds blog (missed when published):

What a year 2010 has been for cloud computing.

We've seen an amazing year of innovation, disruption, and adoption--one I personally think will go down in history as one of the most significant years in computing history. Without a doubt, a significant new tool has been added to the IT toolbox, and it's one that will eventually replace most of the tools we know today.

Don't agree with me? Well, with the help of my generous Twitter community--and in the spirit of the season here in the US--I've assembled 12 innovations and announcements from 2010 that had big impact on the IT market. Take a look at these with an open mind and ponder just how much cloud computing changed the landscape through the course of the year:

1. The growth of cloud and cloud capacity

The number of cloud computing data centers skyrocketed in 2010, with massive investment by both existing and new cloud providers creating a huge burst in available cloud capacity. You might have noticed new services from Verizon, IBM, Terremark, and others. Tom Lounibos, CEO of "test in the cloud" success story SOASTA, notes that the number of data centers offered by Amazon, Microsoft, and IBM alone grew from four to 10, with that number slated to grow to more than 20 in the coming year.

2. The acceptance of the cloud model

A number of studies showed a dramatic switch in the acceptance of the cloud model by enterprise IT between 2009 and 2010. Two that I often quote are one by the Yankee Group that noted that 60 percent of "IT influencers" now consider cloud computing services an enabler, versus 40 percent who considered it "immature"--a complete reversal from 2009. The second, published by Savvis, claimed that 70 percent of IT influencers were using or planning to use cloud services within two years. Since then, most studies show cloud computing becoming an increasingly high priority for CIOs everywhere.

3. Private cloud debated...to a truce

Heated debates between representatives of public cloud computing companies and more traditional IT computing vendors raged over the summer of 2010, with the former arguing that there is no such thing as a "private cloud," and the latter arguing that there definitely is, and it should be an option in every IT arsenal. The argument died down, however, when both sides realized nobody was listening, and various elements of the IT community were pursuing one or the other--or both--options whether or not it was "right."

4. APIs in--and out--of focus

As we started 2010, there were something like 18 APIs being proposed to define cloud operations interfaces. As the year wore on, it appeared that the AWS EC2 and S3 APIs were the de facto standards for compute and storage, respectively. However, VMware's vCloud, Red Hat's Delta Cloud, and Rackspace's Cloud Servers and Cloud Files APIs each still have legitimate chances to survive in the wild, as it were, and without Amazon's express support for implementations of their APIs by others, standardization around their offerings remains a risk.

5. Cloud legal issues come to the forefront

Toward the end of the year, Amazon's decision to unilaterally shut down WikiLeaks' presence on their site demonstrated one of the true risks of the public cloud today: if a customer builds their business on a public cloud environment, and the provider terminates that relationship without warning or recourse (with or without cause), what are that customer's rights? The scenario was repeated in a different form with the shutdown of low-cost content-distribution provider SimpleCDN by a reseller of a third-party hosting environment. The issue here isn't whether the terminated party was doing right or wrong but what rights the law establishes for all parties in a public cloud operations scenario.

6. Cloud economics defined

Two seminal works of cloud economic analysis had significant impact on our understanding of the forces behind cloud computing adoption. Joe Weinman's "Mathematical Proof of the Inevitability of Cloud Computing," published in November 2009, treated us to a carefully thought out "proof" of why pay-per-use pricing will eventually capture such a large chunk of our application workloads. James Hamilton's "Cloud Computing Economies of Scale" analyzes the economics of the data center, and why a large scale provider with a wide customer base has tremendous advantages over a smaller provider with a smaller customer base.

7. The rise of DevOps

Those of us studying cloud computing for some time have noted that cloud computing models are both the result of and driver for changes in the way we design, deploy, and operate applications in a virtualized model. We've seen a shift away from server-centric models to application-centric alternatives, and a rapid change from manual processes to automated operations. This, in turn, has driven several software methods that combine development and operations planning and execution. The result is automation packaged *with* the application at deployment time, rather than developed after-the-fact in a reactive fashion.

8. Open source both challenged and engaged

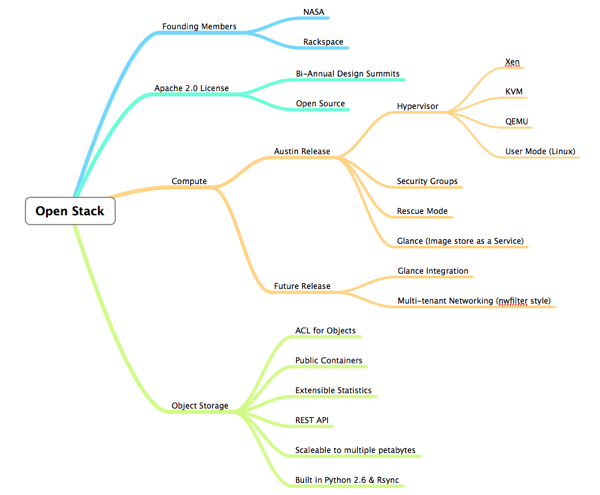

At the beginning of the year, open-source developers were watching developments in the cloud computing space with a wary eye and rightfully so. For infrastructure projects (one of the most successful classes of open-source software), the model threatens to change the community nature of open source. However, the year also showed us that open source plays a critical role in both cloud provider infrastructure and the software systems we build and run in the cloud. All in all, OSS seems to have found peace with the cloud, though much has yet to be worked out.

9. Introducing OpenStack

One of the great success stories in open source for the cloud this year was that of the partnership between cloud provider Rackspace and NASA, producing the popular OpenStack project for compute and storage services. Drawing more than 300 attendees to its last design summit, OpenStack is quickly attracting individual developers, cloud start-ups, and established IT companies to its contributor list. While the long-standing poster child of open-source cloud infrastructure, Eucalyptus, seemed threatened initially, I think there is some truth to the argument that Eucalyptus is tuned for the enterprise, while OpenStack is being built more with public cloud providers in mind.

10. Amazon Web Services marches on

Amazon Web Services continues to push an entirely new model for IT service creation, delivery, and operation. Key examples of what Amazon Web Services has introduced this year include: an Asia-Pacific data center; free tiers for SQS, EC2, S3 and others; cluster compute instances (for high-performance computing); and the recently announced VMDK import feature. Not to mention the continuous stream of improvements to existing features, and additional support for well-known application environments.

11. Platform as a Service steps up its game

VMware announced its Cloud Application Platform. Salesforce.com introduced Database.com and its acquisition of Ruby platform Heroku. Google saw demand for developers with App Engine experience skyrocket. Platform as a Service is here, folks, and while understanding and adoption of these services by enterprise IT still lags that of the Web 2.0 community, these services are a natural evolutionary step for software development in the cloud.

12. Traditional IT vendors take their best shots

2010 saw IBM introduce new services for development and testing, genome research and . HP entered the cloud services game with its CloudStart offering. Oracle jumped into the "complete stack" game with Exalogic (despite Ellison's derision of cloud in 2009). My employer, Cisco Systems, announced a cloud infrastructure service stack for service providers and enterprises. Even Dell acquired technologies in an attempt to expand its cloud market share. Of course, none of these offerings pushed the boundaries for cloud consumers, but for those building cloud infrastructures, there are now many options to choose from, including options that don't directly involve any of these vendors.

Of course, these are just a few of the highlights from cloud's 2010. Not mentioned here was the plethora of start-ups in the space, covering everything from application management to cloud infrastructure to data management to...well, you get the idea. What does 2011 hold for cloud? I don't know, but I would hazard a guess that it will be at least as interesting as cloud's historic 2010.

Read more: http://news.cnet.com/8301-19413_3-20026113-240.html#ixzz1ABdCVNiu


Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

Robert Duffner posted a Thought Leaders in the Cloud: Talking with Barton George, Cloud Computing Evangelist at Dell interview on 1/5/2011:

Barton George (pictured at right) joined Dell in 2009 as the company's cloud computing evangelist. He acts as Dell's ambassador to the cloud computing community and works with analysts and the press. He is responsible for messaging as well as blogging and tweeting on cloud topics. Prior to joining Dell, Barton spent 13 years at Sun Microsystems in a variety of roles that ranged from manufacturing to product and corporate marketing. He spent his last three years with Sun as an open source evangelist, blogger, and driver of Sun's GNU/Linux strategy and relationships.

In this interview, we discuss:

- Just do it - While some people are hung up arguing about what the cloud is, others are just using it to get stuff done

- Evolving to the cloud - Most organizations don't have the luxury to start from scratch

- Cloud security - People were opposed to entering their credit card in the early days of the internet, but now it's common. Cloud security perceptions will follow a similar trajectory

- Cost isn't king - For many organizations, it's the "try something quickly and fail fast" agility that's drawing people to the cloud, not just cost savings

- The datacenter ecosystem - The benefits of looking at the datacenter as a holistic system and not individual pieces

Robert Duffner: Could you take a minute to introduce yourself and tell us a little bit about your experience with cloud computing?

Barton George: I joined Dell a little over a year ago as cloud evangelist, and I work with the press, analysts, and customers talking about what Dell is doing in the cloud. I also act as an ambassador for Dell to the cloud computing community. So I go out to different events, and I do a lot of blogging and tweeting.

I got involved with the cloud when I was at a small company right before Dell called Lombardi, which has since been purchased by IBM. Lombardi was a business process management company that had a cloud-based software service called Blueprint.

Before that, I was with Sun for 13 years, doing a whole range of things from operations management to hardware and software product management. Eventually, I became Sun's open source evangelist and Linux strategist.

Robert: You once observed that if you asked 10 people to define cloud, you'd get 15 answers. [laughs] How would you define it?

Barton: We talk about it as a style of computing where dynamically scalable and often virtualized resources are provided as a service. To simplify that even further, we talk about it as IT provided as a service. We define it that broadly to avoid long-winded discussions akin to how many angels can dance on the head of a pin. [laughs]

You can really spend an unlimited amount of time arguing over what the true definition of cloud is, what the actual characteristics are, and the difference between a private and a public cloud. I think you do need a certain amount of language agreement so that you can move forward, but at a certain point there are diminishing returns. You need to just move forward and start working on it, and worry less about how you're defining it.

Robert: There are a lot of granular definitions you can put into it, but I think you're right. And that's how we look at it here at Microsoft, as well. It's fundamentally about delivering IT as a service. You predict that traditional, dedicated physical servers and virtual servers will give way to private clouds. What's led you to that opinion?

Barton: I'd say that there's going to be a transition, but I wouldn't say that those old models are going to go away. We actually talk about a portfolio of compute models that will exist side by side. So you'll have traditional compute, you'll have virtualized compute, you'll have private cloud, and you'll have public cloud.

What's going to shift over time is the distribution between those four big buckets. Right now, for most large enterprises, there is a more or less equal distribution between traditional and virtualized compute models. There really isn't much private cloud right now, and there's a little bit of flirting with the public cloud. The public cloud stuff comes in the form of two main buckets: sanctioned and unsanctioned.

"Sanctioned" includes things like Salesforce, payroll, HR, and those types of applications. The "unsanctioned" bucket consists of people in the business units who have decided to go around their IT departments to get things done faster or with less red tape.

Looking ahead, you're going to have some traditional usage models for quite a while, because some of that stuff is cemented to the floor, and it just doesn't make sense to try and rewrite it or adapt it for virtualized servers or the cloud.

But what you're going to see is that a lot of these virtualized offerings are going to be evolved into the private cloud. Starting with a virtualized base, people are going to layer on capabilities such as dynamic resource allocation, metering, monitoring, and billing.

And slowly but surely, you'll see that there's an evolution from virtualization to private cloud. And it's less important to make sure you can tick off all the boxes to satisfy some definition of the private cloud than it is to make continual progress at each step along the way, in terms of greater efficiency, agility, and responsiveness to the business.

In three to five years, the majority of folks will be in the private cloud space, with still pretty healthy amounts in the public and virtualized spaces, as well.

Robert: As you know, Dell's Data Center Solutions Group provides hardware to cloud providers like Microsoft and helps organizations building their own private clouds. How do you see organizations deciding today between using an existing cloud or building their own?

Barton: Once again, there is a portfolio approach, rather than an either-or proposition. One consideration is the size of the organization. For example, it's not unusual for a startup to use entirely cloud-based services. More generally, decisions about what parts a business keeps inside are often driven by keeping sensitive data and functionality that is core to the business in the private cloud. Public cloud is more often used for things that are more public facing and less core to the business.

We believe that the IT department needs to remake itself into a service provider. And as a service provider, they're going to be looking at this portfolio of options, and they're going to be doing "make or buy" decisions. In some cases, the decision will be to make it internally, say, in the case of private cloud. Other times, it will be a buy decision, which will imply outsourcing it to the public cloud.

The other thing I'd say is that we believe there are two approaches to getting to the cloud: one is evolutionary and the other one is revolutionary. The evolutionary model is what I was just talking about, where you've made a big investment in infrastructure and enterprise apps, so it makes sense to evolve toward the private cloud.

There are also going to be people who have opportunities to start from ground zero. They are more likely to take a revolutionary approach, since they're not burdened with legacy infrastructure or software architecture. Microsoft Azure is a good example. We consider you guys a revolutionary customer, because you're starting from the ground up. You're building applications that are designed for the cloud, designed to scale right from the very beginning.

Some organizations will primarily follow one model, and some will follow the other. I would say that right now, 95% of large enterprises are taking the evolutionary approach, and only 5% are taking a revolutionary approach.

People like Microsoft Azure and Facebook that are focused on large scale-out solutions with a revolutionary approach are in a small minority. Over time, though, we're going to see more and more of the revolutionary approach, as older infrastructure is retired.

Robert: Let me switch gears here a little bit. You guys just announced the acquisition of Boomi. Is there anything you can share about that?

Barton: I don't know any more than what I've read in the press, although I do know that the Boomi acquisition is targeted to small and medium-sized businesses. We target that other 95% on the evolutionary side with what we call Virtual Integrated System. That's the idea of starting with the already existing virtualized infrastructure and building capabilities on top of it.

Robert: The White House recently rolled out Cloud Security Guidelines. At Microsoft and Dell, we've certainly spent a lot of time dealing with technology barriers. How much of the resistance has to do with regulation, policy, and just plain fear? And how much do things like cloud security guidelines and accreditation do to alleviate these types of concerns?

Barton: To address those issues, I think you have to look at specific customer segments. For example, HIPAA regulations preclude the use of public cloud in the medical field. Government also has certain rules and regulations that won't let them use public clouds for certain things. But as they put security guidelines in place, that's going to, hopefully, make it possible for the government to expand its use of public cloud.

I know that Homeland Security uses the public cloud for their public-facing things, although obviously, a lot of the top secret stuff that they're doing is not shared out on the public cloud. If you compare cloud computing to a baseball game, I think we're maybe in the bottom of the second inning. There's still quite a bit that's going to happen.