Windows Azure and Cloud Computing Posts for 1/20/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

CA Technologies ERwin offered a How Data Modeling Supports Database as a Service (DaaS) Implementations white paper from SQL Server Magazine on 1/20/2011:

Cloud Computing is an emerging market, and an increasing number of companies are implementing the cloud model, products and services. However, despite the popularity of large-scale decision support systems, data analysis tasks, and application data marts, the majority of these applications have not yet embraced the Cloud Computing paradigm. Support for dynamic scalability, heterogeneous environments, or interfaces to business intelligence products calls for a class of solutions that satisfies the paradigm. In this scenario, Data Modeling supports cloud computing, since interaction among the Cloud Service Provider, Cloud Administration and Customer needs to include a well-thought-out data architecture before a DBMS offering designed for cloud computing is deployed.

Data modeling is even more important if you’re planning to federate the database(s) you migrate to SQL Azure.


Marketplace DataMarket and OData

Elisa Flasko (@eflasko) reported January DataMarket Content Update in a 1/20/2011 post to the new DataMarket Team blog:

Today we are announcing the release of a number of exciting new offerings on DataMarket – both public and commercial! Take a look at some of the great new data we have:

D&B

- D&B Business Lookup - Use this data to identify the DUNS number of the business you wish to obtain more detailed information on. You use this service to get the DUNS number prior to using other D&B offerings, all of which require this DUNS number & Country code as input parameters.

- D&B Corporate Linkage Packet - Use this data to identify who the immediate domestic and global ultimate holding companies are of the business in question. Identify a company and its location with corporate linkage information such as parent/headquarter, domestic & global ultimate companies

- D&B Enterprise Risk Management packet - This packet of data provides globally consistent information from around the world in real time, enabling you to improve the timeliness and consistency of your credit decisions and help prioritize collection efforts by matching your company's credit policies and conditions with D&B credit scores and ratings.

- D&B Risk Management Business Verification Packet - Use this data to identify & verify a company and its location with background information such as primary name, address, phone number, SIC code, branch indicator and D&B® D-U-N-S® number.

- D&B Risk Management Decision Support Packet - This packet of data provides consistent information in real time, enabling you to improve the timeliness and consistency of your credit decisions and help prioritize collection efforts by matching your company's credit policies and conditions with D&B credit scores and ratings. Information includes company identification, payment trends and delinquencies, public record filings, high level Financials and key risk factors, including the commercial credit score and the financial stress score.

- D&B Risk Management Delinquency Score packet - This packet of data provides consistent information in real time, enabling you to increase the speed and accuracy of your organization's decision making. Use this data to help predict the likelihood of a company paying your invoices in a severely delinquent manner (90+ days past terms) over the next 12 months so that you can make faster, more informed decisions on whether to accept, set terms, or reject an account.

- D&B Risk Management Financial Standing packet - Use this data to assess a company's financial strength with data such as sales volumes, net worth, assets and liabilities. Information includes company identification, firmographic information, public filings indicators, equity, assets, liabilities and the D&B® Rating

- D&B Risk Management Quick Check packet - This packet of information delivers comprehensive risk insight from D&B's database. Use this information to perform high level credit assessments and pre-screen prospects with D&B's core credit evaluation data. Information includes company identification, payment activity summary, public filings indicators, high level Equity and the D&B® Rating

- D&B Vendor Management, Enrichment Data Packet - This packet of information delivers comprehensive supplier insight from D&B's database. Included are company identity and demographic information such as name, address, telephone, DUNS number, SIC Code, employees and years in business. Also delivered are 5 of D&B's risk ratings.

- D&B Vendor Qualification with Linkage and Diversity Data Packet - This packet of information delivers Supplier Qualification insight from D&B's database. Included are company identity and demographic information such as legal name, address, telephone number, DUNS number, Congressional District, SIC Code and Years In Business. Also delivered are 2 of D&B's key risk components.

- D&B Vendor Qualification with Linkage, Enrichment Data Packet - This packet of information delivers Supplier Qualification insight from D&B's database. Included are company identity and demographic information such as legal name, address, telephone number, DUNS number, SIC Code and Years In Business. Also delivered are 2 of D&B's key risk components.

- D&B Vendor Qualification, Enrichment Data Packet - This packet of information delivers Supplier Qualification insight from D&B's database. Included are company identity and demographic information such as legal name, address, telephone number, DUNS number, SIC Code and Years In Business. Also delivered are 2 of D&B's key risk components.

ESRI

- 2010 Key US Demographics by ZIP Code, Place and County - Esri 2010 Key US Demographics by ZIP Code, Place, and County Data is a select offering of the demographic data required to understand a market. ‘Esri 2010 Key Demographics’ contains current-year updates for 14 key demographic variables. Click here to see a trial version of this offer.

StrikeIron

- Sales and Use Tax Rates Complete - Uses a USA ZIP code or Canadian postal code to get the general sales and use tax rate levels for the state, county, city, MTA, SPD and more, including multiple tax jurisdictions within a single ZIP code (where county boundaries cross a ZIP code for example), and also includes multiple levels of tax rates such as MTA and SPD data, and 4 'other' tax rate fields.

- US Address Verification - This service corrects, completes, and enhances US addresses utilizing data from the United States Postal Service. It adds ZIP+4 data, provides delivery point verification, gives congressional districts, carrier routes, latitude, and longitude, and much more. Every element of an address is inspected to ensure its validity using sophisticated matching and data standardization technology.

United Nations

- National Accounts Official Country Data - United Nations Statistics Division - The database contains detailed official national accounts statistics in national currencies as provided by the National Statistical Offices. Data is available for most of the countries or areas of the world. For the majority of countries data is available from 1970 up to the year t-1.

As we post more datasets to the site we will be sure to update you here on our blog… and be sure to check the site regularly to learn more about the latest data offerings.

- The DataMarket Team

Elisa Flasko (@eflasko) published Real World DataMarket: Interview with Ellie Fields, Director of Product Marketing, Tableau Software on 1/20/2011:

As part of the Real World DataMarket series, we talked to Ellie Fields, Director of Product Marketing at Tableau Software, about using DataMarket, part of the Windows Azure Marketplace, to add premium content as a data source option in its data visualization software. Here’s what she had to say:

MSDN: Can you tell us more about Tableau Software and the products you offer?

Fields: Tableau Software offers “rapid-fire business intelligence.” Tableau has proprietary technology developed at Stanford University that enables users to drag and drop data from data sources to quickly transform text into rich data visualizations.

MSDN: What were the biggest challenges that Tableau Software faced prior to adopting DataMarket?

Fields: Data is proliferating everywhere, but it can be difficult and time-consuming for customers to find, purchase, and format data to augment their existing data sources. It’s like the Wild West of data out there, with data available everywhere and in any imaginable format. We’re always looking to improve our service offerings, but it’s a fine line—we want to deliver valuable services, but we don’t want to take our focus away from what we do best, which is data visualization.

MSDN: Can you describe how Tableau Software is using DataMarket to help tame the Wild West of data?

Fields: After the 5.0 release of Tableau Software, one of our developers coded a basic integration into DataMarket during one of our “hackathons.” We were so impressed with the opportunity to access premium content via DataMarket that we decided to include DataMarket as a data source option in our products. Now, when customers use Tableau, they see DataMarket as a data source option. They simply provide their DataMarket account key for authentication and then find the data sets they want to use. Customers can import the data into Tableau and combine that information with their own corporate data for deep business intelligence.

Figure 1: When using Tableau, customers see DataMarket as a data source option.

MSDN: What makes your solution unique and how does DataMarket play a role in that unique quality?

Fields: Unlike other data visualization software, Tableau Software gives customers the ability to simply drag and drop data to create business intelligence. Using DataMarket supports that same idea by offering premium content that is already structured and formatted, and easily available. Customers don’t have to spend valuable time seeking out and formatting the data before integrating it with their own data for rich visualizations. For example, they can add population data to their sales data to assess regions for growth.

MSDN: What kinds of benefits is Tableau Software realizing with DataMarket?

Fields: We have been able to add a valuable service for our customers—the data sets that customers can access in DataMarket are of tremendous value for creating very rich business intelligence. What’s really great is that we were able to very quickly and easily add access to DataMarket in the Tableau line of products, thanks to the DataMarket API, and did so while maintaining laser-sharp focus on our core business.

Read the full story at:

www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000009036

To learn more, visit:

https://datamarket.azure.com


Windows Azure AppFabric: Access Control and Service Bus

No significant articles today.


Windows Azure Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Sebastian W (@qmiswax) posted Windows Azure AppFabric and CRM2011 online on 1/20/2011:

A long time ago I tried to set up Windows Azure AppFabric integration with the CRM 2011 Online beta, but without success. That was due to some problems in the beta version. Anyway, CRM 2011 Online is ready (officially today), so I thought it must work now, and yes, it does. Setup is easy, but some of the parts are not clear, so I’ve decided to share how to do it.

- First we need a Dynamics CRM 2011 Online account; you can sign up for a free 30-day trial here.

- Then, after you log in, get the authentication certificate. To obtain the certificate go to Settings-> Customizations->Developer Resources->Download Certificate

We also need the new plugin registration tool (part of the CRM 2011 SDK; I’ve used the version from December 2010). The plugin registration tool comes as a solution with source code, so it has to be compiled with VS2010.

- Time to log in to the Azure portal and create a service namespace for our connections. (You need an Azure account; details on how to get one are here.) Use Service Bus connection packs (5 is OK for testing). My service namespace is called crm2011online; we will need that namespace during configuration. From appfabric.azure.com we will also need the “Current Management Key” and “Management Key Name”.

- Next step is to run plugin registration tool, and register new endpoint

- Next dialog will allow us to setup endpoint

Name is any string we choose for identification. Solution Namespace is our service namespace name (in my case crm2011online). Path is the final part of the service bus URL, i.e. RemoteService in https://crm2011online.servicebus.windows.net/RemoteService. Contract in my case is one-way only, from CRM Online to my on-premises listener.
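As a rough illustration of how the Solution Namespace and Path fields combine into the endpoint address, here is a minimal Python sketch. The helper name `service_bus_url` is hypothetical and is not part of the CRM SDK or the plugin registration tool; the host pattern is simply read off the example URL in this post.

```python
def service_bus_url(namespace: str, path: str) -> str:
    """Join a service namespace and an endpoint path into a Service Bus URL.

    Hypothetical helper: the host pattern is copied from the example URL
    in the post, not taken from any SDK.
    """
    host = f"{namespace}.servicebus.windows.net"
    # Strip stray slashes so "RemoteService" and "/RemoteService" behave alike.
    return f"https://{host}/{path.strip('/')}"


if __name__ == "__main__":
    print(service_bus_url("crm2011online", "RemoteService"))
```

The namespace and path values are the ones used in the walkthrough; substitute your own when registering the endpoint.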

After clicking Save & Configure ACS, the ACS Configuration dialog box appears. Now it is time to configure AppFabric ACS (Access Control).

Management Key is our Current Management Key from the Azure portal.

Certificate file is the path to the certificate downloaded from our CRM Online.

Issuer is the name of the issuer. This name must be the same name that was used to configure Microsoft Dynamics CRM for AppFabric integration. For the Online version you can find that name in Settings-> Customizations->Developer Resources. Enter the appropriate data values into the form fields and then click Configure ACS.

- Click Close and then click Save & Verify Authentication in the Service Endpoint Details dialog box. After the verification finishes successfully you can register a new step.

- Step registration is the same as for assemblies. I’ve tested with the “create” message for account.

After step registration, the system will send a message to the service bus. To test that without a listener, go to Settings in CRM and check “System Jobs”. They should look like the screen below.

In the next post I’ll publish the second part of the solution, which will be the on-premises listener, so watch this space.

The Windows Azure Team posted Real World Windows Azure: Interview with David MacLaren, President and Chief Executive Officer at VRX Studios on 1/20/2011:

As part of the Real World Windows Azure series, we talked to David MacLaren, President and CEO at VRX Studios about using the Windows Azure platform for its enterprise-class digital asset management system, MediaValet. Here's what he had to say:

MSDN: Tell us about VRX Studios.

MacLaren: VRX Studios is a Microsoft Registered Partner that provides content production, management, distribution, and licensing services to over 10,000 hotels across the globe, including brands such as Hilton, Wyndham, Choice, Best Western, Fairmont, and Hyatt.

MSDN: What were the biggest challenges that you faced prior to implementing the Windows Azure platform?

MacLaren: Our biggest challenge came from managing hundreds of thousands of media assets for dozens of large hotel brands that each has thousands of hotels and tens of thousands of users. The digital asset management system that we were using was server based and developed in-house a decade ago. As a result, it could no longer support the scale and increasing growth rate of our image archive, severely limiting our ability to grow our business and provide services to our global clientele.

MSDN: Can you describe the digital asset management system you built with the Windows Azure platform to address your need for scalability?

MacLaren: After determining we needed to build an entirely new digital asset management system, we looked to the cloud to take advantage of many of its native traits: scalability, redundancy, and global reach. We considered cloud offerings from Amazon, Google, and several smaller providers, but none provided the development platform, support, and programs that we needed to be successful; Microsoft and the Windows Azure platform did.

Our new digital asset management system, MediaValet, uses Blob storage to store all media assets and the Content Delivery Network to cache blobs at the nearest locations to users; we use Table storage to store metadata, which aids in searching media assets; and we use Queue storage to manage events, such as when media assets are added and version control is required. In the near future, we plan to implement the Windows Azure AppFabric and Windows Azure Marketplace DataMarket.

MSDN: What makes MediaValet unique?

MacLaren: MediaValet is one of the first 100 percent cloud-based, enterprise-class, globally-accessible, digital asset management systems. Prior to building our own digital asset management system, our research found that existing systems were severely limited by their server-based architecture. By building MediaValet on the Windows Azure platform, our digital asset management system is fully managed, massively scalable, and accessible from anywhere in the world, from any browser; it's highly secure and reliable. This translates to our ability to easily meet the needs of any company, no matter what industry it's in, where its offices are located, how fast it's growing, how much content it has, or how many user accounts it requires.

MSDN: What kinds of benefits have you realized with the Windows Azure platform?

MacLaren: We were able to build MediaValet faster and with 40 percent fewer resources than was previously possible. We eliminated the majority, if not all, of the capital expenses traditionally required for developing and deploying large-scale software offerings, and reduced our hosting costs by up to 40 percent. This alone made our project feasible. Beyond this, the Windows Azure platform enabled us to build a stronger, more scalable, and resilient piece of software. Today, having built MediaValet on the Windows Azure platform, we can now quickly, cost effectively and reliably scale to meet the needs of any of our clients.

Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000008848

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

Paul Krill asserted “Cobol applications now can be deployed to the Java Virtual Machine as well as to Windows Azure for the first time” as a deck for his Micro Focus extends Cobol to Java and the cloud in a 1/20/2011 article for InfoWorld (via NetworkWorld):

Micro Focus on Thursday announced it is extending its Cobol platform to Java and the Microsoft Windows Azure cloud platform, with the launch of Micro Focus Visual Cobol R3.

With the R3 version, Cobol applications can be deployed to the Java Virtual Machine as well as to Azure for the first time, Micro Focus said. Developers can work in either the Microsoft Visual Studio 2010 or Eclipse IDEs and deploy Cobol applications to multiple platforms from a single source without having to perform platform-specific work.

"Visual Cobol R3 combines the productivity and innovation of the industry's leading development environments with Cobol's business-tested performance. New recruits to Cobol can learn it in hours, not days, helping them extend the life of business-critical applications and develop new, high-powered applications using Cobol -- which many may not even [have] considered possible before," said Stuart McGill, CTO of Micro Focus, in a statement released by the company.

The Cobol release features C# and Java-like constructs to make the language easier to learn for new and existing customers, Micro Focus said.

Also featured in Visual Cobol R3 is Visual Cobol Development Hub, a development tool for remote Linux and Unix servers. Developers can use Cobol on the desktop to remotely compile and debug code. Having the tool within Visual Cobol R3 reduces user on-boarding time, increases developer productivity, and ensures that users can quickly deploy Cobol applications to multiple platforms, Micro Focus said.

Approximately 5 billion new lines of Cobol code are added to live systems every year, Micro Focus said. An estimated 200 billion lines of Cobol code are being used in business and finance applications today, the company said.

Avkash Chauhan describes a workaround for Windows Azure VM Role - Handling Error : The chain of virtual hard disk is broken... in a 1/19/2011 post:

One of the best things about the Windows Azure VM Role is that updating and servicing your VM Role is relatively easier than setting up the original VHD. Your initial VM Role VHD would be around 4GB+, so if you happen to update this VHD locally, you might need to update the VHD on the Windows Azure Portal. The solution is to create a DIFF VHD between your original and the updated one and use the CSUPLOAD tool to upload the VHD using the following command line:

csupload Add-VMImage -Connection "SubscriptionId=<YOUR-SUBSCRIPTION-ID>; CertificateThumbprint=<YOUR-CERTIFICATE-THUMBPRINT>" -Description "UpdatedVMImage" -LiteralPath "C:\Applications\Azure\VMRoleApp\DiffImage\baseimagediff.vhd" -Name baseimagediff.vhd -Location "South Central US"

After the diff VHD image is uploaded, you will need to set the parent-child relationship between the original main VHD and the newly uploaded diff VHD using the command line below:

csupload Set-Parent -Connection "SubscriptionId=<YOUR-SUBSCRIPTION-ID>; CertificateThumbprint=<YOUR-CERTIFICATE-THUMBPRINT>" -Child baseimagediff.vhd -Parent baseimage.vhd

The above two steps are explained in great detail at the link below:

However, when you upload the diff VHD you might encounter the following error with the CSUPLOAD command:

csupload Add-VMImage -Connection "SubscriptionId=<YOUR-SUBSCRIPTION-ID>; CertificateThumbprint=<YOUR-CERTIFICATE-THUMBPRINT>" -Description "UpdatedVMImage" -LiteralPath "C:\Applications\Azure\VMRoleApp\DiffImage\baseimagediff.vhd" -Name baseimagediff.vhd -Location "South Central US"

Windows(R) Azure(TM) Upload Tool 1.3.0.0 for Microsoft(R) .NET Framework 3.5

Copyright (c) Microsoft Corporation. All rights reserved.

An error occurred trying to attach to the vhd.

Detail: The chain of virtual hard disk is broken. The system cannot locate the parent virtual hard disk for the differencing disk.

Detached C:\Applications\Azure\VMRoleApp\DiffImage\baseimagediff.vhd

VM Role Agent not found.

Verification tests failed.

Error while preparing Vhd 'C:\Applications\Azure\VMRoleApp\DiffImage\baseimagediff.vhd'.

The possible reason for this problem is that your difference VHD does not have the parent VHD path in it. When you create the differencing disk, it contains both the absolute and relative path to the corresponding parent disk in it. When you hit this error, it simply means, the parent disk information could not be found.

To solve this problem, I suggest the following:

Please use "Inspect Disk" option in the Hyper-V Actions menu on your Windows Server 2008 box as below:

In the dialog box please select the differencing disk and open it. Now please open the disk property window and you will see the "Parent" info will be described as "The differencing chain is broken."

You will also see a "Reconnect" button at bottom right corner on the same dialog box as in above image. Please use the "Reconnect" button to fix the parent path in the differencing disk. This should fix your problem.

Finally, I am not a big fan of using the -skipverify option with CSUPLOAD. If there is any issue with my VHD and I upload it without verifying it, I might encounter some other problem and all my work to upload may go in vain. That's why I prefer not to use the "-skipverify" option with the CSUPLOAD command and instead solve each problem I encounter during CSUPLOAD without "-skipverify". However, you can certainly try using "-skipverify" with CSUPLOAD if you have no other way to move forward.
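The two CSUPLOAD invocations described in this post can be assembled programmatically, which helps avoid stray spaces in paths and misspelled locations. The following Python sketch is only an illustration under stated assumptions: the function names are hypothetical, the flags are limited to the ones that appear in the commands above, and nothing is executed; the code only builds and prints the command lines.

```python
import subprocess


def connection_string(subscription_id: str, thumbprint: str) -> str:
    # Same "SubscriptionId=...; CertificateThumbprint=..." shape as in the post.
    return f"SubscriptionId={subscription_id}; CertificateThumbprint={thumbprint}"


def add_vm_image_args(connection, vhd_path, name, location, description):
    """Argument list for uploading the differencing VHD (hypothetical helper)."""
    return ["csupload", "Add-VMImage",
            "-Connection", connection,
            "-Description", description,
            "-LiteralPath", vhd_path,
            "-Name", name,
            "-Location", location]


def set_parent_args(connection, child, parent):
    """Argument list for linking the uploaded diff VHD to its parent image."""
    return ["csupload", "Set-Parent",
            "-Connection", connection,
            "-Child", child,
            "-Parent", parent]


if __name__ == "__main__":
    conn = connection_string("<YOUR-SUBSCRIPTION-ID>", "<YOUR-CERTIFICATE-THUMBPRINT>")
    upload = add_vm_image_args(
        conn,
        r"C:\Applications\Azure\VMRoleApp\DiffImage\baseimagediff.vhd",
        "baseimagediff.vhd",
        "South Central US",
        "UpdatedVMImage",
    )
    link = set_parent_args(conn, "baseimagediff.vhd", "baseimage.vhd")
    # list2cmdline applies Windows-style quoting to arguments containing spaces.
    print(subprocess.list2cmdline(upload))
    print(subprocess.list2cmdline(link))
```

Keeping the arguments in a list, rather than one long string, makes it harder to introduce the kind of whitespace errors that break the path or location values.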

David Aiken (@TheDavidAiken) explained Running Azure startup tasks as a real user in a 1/19/2011 post:

In my post about enabling PowerShell, I mentioned I got blocked for a while and would explain later why and what happened.

Problem: Windows Azure runs startup tasks as localsystem. Some startup tasks need to be running in the context of a user.

Solution: Use the task scheduler in Windows Server to execute the command.

A few people have already asked how to apply the technique to other things so here goes.

Let’s take a look at the original startup task I was trying to execute.

netsh advfirewall firewall add rule name="Windows Remote Management (HTTP-In)" dir=in action=allow service=any enable=yes profile=any localport=5985 protocol=tcp
Powershell -Command Enable-PSRemoting -Force

This doesn’t work because the powershell command “Enable-PSRemoting” doesn’t work unless it runs as an elevated user that belongs to the administrators group. LocalSystem doesn’t belong to this group.

In my original blog post, I showed how you could enable this using winrm. But sometimes you want something like this to happen when the role starts.

To make a task run as a user account as a startup task simply create a new task for the Windows Scheduler to execute as shown below.

netsh advfirewall firewall add rule name="Windows Remote Management (HTTP-In)" dir=in action=allow service=any enable=yes profile=any localport=5985 protocol=tcp
net user scheduser Secr3tC0de /add
net localgroup Administrators scheduser /add
schtasks /CREATE /TN "EnablePS-Remoting" /SC ONCE /SD 01/01/2020 /ST 00:00:00 /RL HIGHEST /RU scheduser /RP Secr3tC0de /TR "powershell -Command Enable-PSRemoting -Force" /F
schtasks /RUN /TN "EnablePS-Remoting"

Works like a charm. Hopefully you can figure out how to run your own commands instead of PowerShell.

It is safe to say that you probably want to secure the username and password that is created. I think the ideal would be a wrapper that can read a username and encrypted password from config, create all the scheduled tasks, and then execute them. Maybe that is a job for next time.
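A minimal sketch of that wrapper idea, written in Python purely for illustration. It assumes the settings arrive as a plain dictionary with hypothetical keys (`user`, `password`, `task_name`, `command`); decrypting the password is deliberately left out, and the function only builds the same `net` and `schtasks` command lines shown above rather than running them.

```python
import subprocess


def startup_commands(config: dict) -> list:
    """Build the commands that create a user and run a task as that user.

    Hypothetical helper: `config` keys are illustrative, and the password is
    assumed to have been decrypted already by some other mechanism.
    """
    user = config["user"]
    password = config["password"]
    task = config["task_name"]
    return [
        # Create the account and add it to the Administrators group.
        ["net", "user", user, password, "/add"],
        ["net", "localgroup", "Administrators", user, "/add"],
        # Register a one-off task that runs elevated as that account.
        ["schtasks", "/CREATE", "/TN", task, "/SC", "ONCE",
         "/SD", "01/01/2020", "/ST", "00:00:00", "/RL", "HIGHEST",
         "/RU", user, "/RP", password, "/TR", config["command"], "/F"],
        # Trigger the task immediately.
        ["schtasks", "/RUN", "/TN", task],
    ]


if __name__ == "__main__":
    example = {
        "user": "scheduser",
        "password": "Secr3tC0de",
        "task_name": "EnablePS-Remoting",
        "command": "powershell -Command Enable-PSRemoting -Force",
    }
    # Print lines suitable for pasting into a startup .cmd file.
    for args in startup_commands(example):
        print(subprocess.list2cmdline(args))
```

Generating the lines from one place means a different command can be scheduled by changing only the `command` and `task_name` values.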

THIS POSTING IS PROVIDED “AS IS” WITH NO WARRANTIES, AND CONFERS NO RIGHTS


Visual Studio LightSwitch

Beth Massi (@bethmassi) posted Tips and Tricks on Controlling Screen Layouts in Visual Studio LightSwitch on 1/20/2011:

Visual Studio LightSwitch has a bunch of screen templates that you can use to quickly generate screens. They give you good starting points that you can customize further. When you add a new screen to your project you see a set of screen templates that you can choose from. These templates lay out all the related data you choose to put on a screen automatically for you. And don’t underestimate them; they do a great job of laying out controls in a smart way. For instance, a tab control will be used when you select more than one related set of data to display on a screen. However, you’re not limited to taking the layout as is. In fact, the screen designer is pretty flexible and allows you to create stacks of controls in a variety of configurations. You just need to visualize your screen as a series of containers that you can lay out in rows and columns. You then place controls or stacks of controls into these areas to align the screen exactly how you want.

Let’s start with a simple example. I have already designed my data entities for a simple order tracking system similar to the Northwind database. I also have added a Search Data Screen to search my Products already. Now I will add a new Details Screen for my Products and make it the default screen via the “Add New Screen” dialog:

The screen designer picks a simple layout for me based on the single entity I chose, in this case Product. Hit F5 to run the application, select a Product on the search screen to open the Product Details Screen. Notice that it’s pretty simple because my entity is simple. Click the “Customize” button in the top right of the screen so we can start tweaking it.

The left side of the screen shows the containership of controls and data bindings (called the content tree) and the right side shows the live preview with data. Notice that we have a simple layout of two rows but only one row is populated (with a vertical stack of controls in this case). The bottom row is empty. You can envision the screen like this:

Each container will display a group of data that you select. For instance in the above screen, the top row is set to a vertical stack control and the group of data to display is coming from Product. So when laying out screens you need to think in terms of containers of controls bound to groups of data. To change the data to which a container is bound, select the data item next to the container:

You can select the “New Group” item in order to create more containers (or controls) within the current container. For instance to totally control the layout, select the Product in the top row and hit the delete key. This will delete the vertical stack and therefore all the controls on the screen. The content tree will still have two rows, but the rows are now both empty.

If you want a layout of four containers (two rows and two columns) then select “New Group” for the data item and then change the vertical stack control to “Two Columns” for both of the rows as shown here:

You can keep going on and on by selecting new groups and choosing between rows or columns. Here’s a layout with 8 containers, 4 rows and 2 columns:

And here is a layout with 7 content areas; one row across the top of the screen and three rows with two columns below that:

When you select Choose Content and select a data item like Product, it will populate all the controls within the container (row or column in a vertical stack). However, you have complete control over what to display within each group. You can delete fields you don’t want to display and/or change their controls. You can also change the size of controls and how they display by changing the settings in the properties window. If you are in the Screen Designer (and not the customization mode like we are here) you can also drag-drop data items from the left-hand side of the screen to the content tree. Note, however, that not all areas of the tree will allow you to drop a data item if there is a binding already set to a different set of data. For instance you can’t drop a Customer ID into the same group as a Product if they originate from different entities. To get around this, all you need to do is create a new group and content area as shown above.
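One way to reason about these layouts is to treat the content tree as nested groups, where a group with no children is a single content area. The Python sketch below is only a mental model; the `Group` class and `grid` helper are hypothetical names and have nothing to do with the LightSwitch API. It reproduces the container counts of the layouts described above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Group:
    """A container in the content tree, laid out as rows or columns."""
    layout: str = "rows"
    children: List["Group"] = field(default_factory=list)

    def content_areas(self) -> int:
        # An empty group is itself one area that controls can be placed in.
        if not self.children:
            return 1
        return sum(child.content_areas() for child in self.children)


def grid(rows: int, cols: int) -> Group:
    """Rows that are each split into the given number of columns."""
    return Group("rows", [
        Group("columns", [Group() for _ in range(cols)])
        for _ in range(rows)
    ])


if __name__ == "__main__":
    default = Group("rows", [Group(), Group()])   # the initial two-row screen
    two_by_two = grid(2, 2)
    four_by_two = grid(4, 2)
    # One full-width row on top, then three rows of two columns each.
    banner = Group("rows", [Group()] + grid(3, 2).children)
    print(default.content_areas())      # 2
    print(two_by_two.content_areas())   # 4
    print(four_by_two.content_areas())  # 8
    print(banner.content_areas())       # 7
```

Each "New Group" choice in the designer corresponds to adding a child group in this model, and switching a vertical stack to "Two Columns" corresponds to giving a row two children.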

Let’s take a more complex example that deals with more than just product. I want to design a complex screen that displays Products and their Category, as well as all the OrderDetails for which that product is selected. This time I will create a new screen and select List and Details, select the Products screen data, and include the related OrderDetails. However I’m going to totally change the layout so that a Product grid is at the top left and below that is the selected Product detail. Below that will be the Category text fields and image in two columns. On the right side I want the OrderDetails grid to take up the whole right side of the screen. All this can be done in customization mode while you’re debugging the application.

To do this, I first deleted all the content items in the tree and then re-created the content tree as shown in the image below. I also set the image to be larger and the description textbox to be 5 rows using the property window below the live preview. I added the green lines to indicate the containers and show how they map to the content tree:

I hope this demystifies the screen designer a little bit. Remember that screen templates are excellent starting points – you can take them as-is or customize them further. It takes a little fooling around with customizing screens to get them to do exactly what you want but there are a ton of possibilities once you get the hang of it. Stay tuned for more information on how to create your own screen templates that show up in the “Add New Screen” dialog.

Robert Green described a LightSwitch Gotcha: How To Break Your LightSwitch Application in Less Than 30 Seconds on 1/20/2011:

This is the first in a series of LightSwitch Gotcha posts. I have no idea how many there will be, but I suspect there will be plenty as I run into things myself or hear about things that happened to other people.

To see this first gotcha in action, and to perform this trick yourself, build a very simple LightSwitch application. All you need is one table with a handful of properties. Then add a New Data Screen based on that table. Run the application and open the new data screen. You can add a new record.

Exit the application and roll up your sleeves to prove there is nothing up them. In the Solution Explorer, right-click on the screen and select View Screen Code. You will see code like the following (the C# code looks very similar):

```vb
Private Sub CreateNewEmployee_BeforeDataInitialize()
    ' Write your code here.
    Me.EmployeeProperty = New Employee()
End Sub

Private Sub CreateNewEmployee_Saved()
    ' Write your code here.
    Me.Close(False)
    Application.Current.ShowDefaultScreen(Me.EmployeeProperty)
End Sub
```

Delete this code. Why would you do that? Perhaps it is days, weeks or months later and you have many screens in the application, as well as lots of data validation and other code. You open this screen code and you don’t recognize the code above. You assume you wrote it a while ago and that it is not needed. You also miss the warning sign, which is the “Write your code here” comment.

Run the application and open the new screen. Notice the controls are all disabled and the default values do not appear.

What happened? The code in the BeforeDataInitialize method creates a new Employee object and then assigns it to Me.EmployeeProperty, which represents the screen’s data. At this point, a light bulb goes on (get it?). Deleting that code is equivalent to dragging a table from the Data Sources window onto a Windows Form and then going to the form’s Load event handler and deleting the code that fills the DataSet. If you don’t retrieve data, you can’t populate a data entry screen!

No doubt many of you looked at that code and knew pretty quickly what it did. So when I said delete it, you knew why it would break the application. I am also willing to guess that many of you did not immediately know this, or at least could wind up in a situation where months later you did not recognize that code and immediately understand why you shouldn’t delete it.

The simple moral of the story? Understand that LightSwitch generates code for you. Most of it is behind the scenes, but not all of it. You need to be aware of that and be careful when you look at code. A best practice might be to put the LightSwitch-generated code in its own region if you aren’t going to touch it. Or add a comment to identify it.
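As a rough sketch of the region idea, the snippet below wraps a generated-style handler in a Visual Basic #Region and tags it with a comment. It is a standalone console program, not real LightSwitch code: the Employee and CreateNewEmployee classes are hypothetical stand-ins for the types LightSwitch generates, the handler is made Public only so that Main can call it, and the region name is arbitrary. The Saved handler is left out because it depends on the LightSwitch runtime.

```vb
Imports System

Module RegionDemo

    ' Hypothetical stand-in for the entity LightSwitch generates from the table.
    Class Employee
    End Class

    ' Hypothetical stand-in for the New Data Screen class.
    Class CreateNewEmployee

        ' Represents the screen's data, as EmployeeProperty does in the post.
        Public Property EmployeeProperty As Employee

#Region "LightSwitch-generated code (do not delete)"
        ' GENERATED: creates the new record that the screen's controls bind to.
        ' Without this assignment the screen has no data to show.
        Public Sub CreateNewEmployee_BeforeDataInitialize()
            Me.EmployeeProperty = New Employee()
        End Sub
#End Region

    End Class

    Sub Main()
        Dim screen As New CreateNewEmployee()

        ' Before the initializer runs there is no record behind the screen.
        Console.WriteLine("Has data before init: " & (screen.EmployeeProperty IsNot Nothing).ToString())

        screen.CreateNewEmployee_BeforeDataInitialize()

        ' After the initializer runs the screen has a record to edit.
        Console.WriteLine("Has data after init: " & (screen.EmployeeProperty IsNot Nothing).ToString())
    End Sub

End Module
```

In an actual screen code file, only the #Region and #End Region lines and the identifying comment would be added around the existing handlers; collapsing the region in the editor then keeps the generated code out of the way.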

On a personal note, I am now rooting for the Chicago Bears in the NFL playoffs, since the teams from my wife’s and my other current or previous home towns (Boston, Baltimore, Seattle) are all out of it. Go Bears!!

Steve Fenton explained Using Visual Studio LightSwitch To Create An Application In Under Four Minutes in this 1/14/2011 post (missed when published):

Download the Free Create An Application In LightSwitch In Under 4 Minutes PDF.

Visual Studio LightSwitch is a mind-blowing new offering from Microsoft, which is currently available as a public beta. The idea behind LightSwitch is to create data-backed applications without having to write any code. This sounded too good to be true, so I dedicated some time to trying it out. Three minutes and twenty seconds to be precise!

Here is a screen shot of the application I created - it's a simple email / phone directory for internal use within a company. I figured you'd want to store each person's name, department, email and some phone numbers.

Remember, the entire application took 3:20 to write from scratch - just look at this search screen. I've got my data persisted to a SQL Express database for free. I've got paged results for free, I've got a search box that searches multiple fields for free. I've got the option to export to Excel for free. Also note that I've added a "Create New Contact" page and also a "Details" page (you click on the first name) to edit existing records. I did those inside of that 3:20 as well.

What other features do you get for free... well, there is form validation to make sure people enter all the required information when adding a new record and there is even dirty-data checking for free.

So how did I write this application? Here are the details...

Step 1 - What do you want to store

This is the first screen you get. You type in the fields that you want to use in your application. The "Type" is a drop down list that contains handy options like "PhoneNumber" and "EmailAddress" as well as the more traditional number types and strings.

Step 2 - Add a screen

From the view of the data model, you just hit the "Add Screen" button and select from the five available templates. The search data screen is the one I selected for the main view in my application. Then you give it a "Screen Name" and select the "Screen Data" and click on OK.

At this point, you are actually finished - although I repeated this step to add a "Create Contact" screen and a "Details" screen (which also lets you edit the record).

Run up the example and what you have is a fully functional application, persisting its data to a database and validating user input. It's a Silverlight application, so you can run it on the desktop or via a browser.

More Information

You can find out more about LightSwitch on the official Microsoft LightSwitch site.

Screen Shots

Here are a couple more screen shots that show some of the stuff you get for free when using LightSwitch, like validation messages and dirty-data warnings. Even the theme of the application is free, with its tabbed interface and simple ribbon bar menu.

Validation

<Return to section navigation list>

Windows Azure Infrastructure

Doug Rehnstrom explained Getting Started With Windows Azure – Setting up a Development Environment for Free in a 1/20/2011 post to the Learning Tree blog:

To use Windows Azure, you’ll want to set up Microsoft Visual Studio, and install the Windows Azure tools and SDK. If you don’t have Visual Studio, you may be thinking this will be expensive. Actually, it’s free! This article will walk you through it step-by-step.

Installing Visual Web Developer Express Edition

Windows Azure allows you to host massively scalable, zero-administration Web applications in the cloud, without buying any hardware. First, you need to create the Web application though, and for that you need a development tool. Microsoft offers a free version of Visual Studio that is perfect for this task. Go to this link, http://www.microsoft.com/windowsazure, to install it. (If you already have Visual Studio, you can skip this step and go right to installing Microsoft Windows Azure Tools for Visual Studio, see below.)

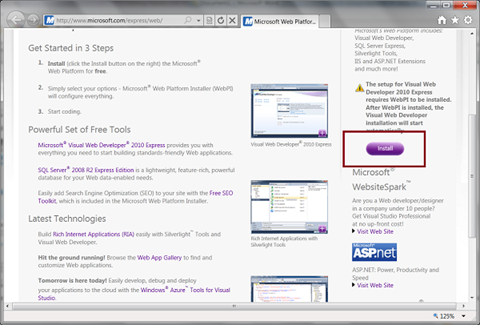

Click on the “Get tools and SDK” button, and you will be taken to the Windows Azure Developer Services page shown below. Scroll down a bit and click on the Download Visual Web Developer 2010 Express link.

Read the page shown below, and click on the “Install” button.

The Microsoft Web Platform Installer will start, as shown below. At the top, click on “Products”. Then, make sure Visual Web Developer 2010 Express is added. You may also want to install other products like SQL Server Express, IIS and so on. Click the “Install” button and run through the wizard. The installer should detect any dependencies your system requires. A reboot may also be required during the setup process.

Installing the Windows Azure Tools for Microsoft Visual Studio

After you have Visual Studio installed, go back to the Windows Azure Developer Services page, but this time click on the “Get tools and SDK” button, as shown below. You will be prompted to download and run an installation program. Do so, and the Windows Azure tools will be installed on your machine and the Windows Azure templates will be added to Visual Studio.

At this point, you should be able to create an Azure application. Start Visual Web Developer 2010 Express (or Visual Studio if you have it). Select the “File | New Project” menu. The New Project dialog will start as shown below. Under Installed Templates, expand the tree for your preferred language (Visual Basic or Visual C#). Select the Cloud branch. If you see the Windows Azure Project template, then it must have worked.

In another post, I’ll walk you through creating your first project.

You will also need to set up an account and subscription to use Windows Azure. See my earlier post, Getting Started with Windows Azure – Subscriptions and Users.

To learn more about Windows Azure, come to Learning Tree course 2602, Windows Azure Platform Introduction: Programming Cloud-Based Applications.

Dilip Tinnelvelly claimed “Ninety percent of U.S. & UK IT managers surveyed in Kelton Research's new report will implement new mobile applications in 2011” in a preface to his Mobile Cloud Computing Gathering Interest as Apps Continue Upward Trend post of 1/20/2011:

Kelton Research's new survey [for Sybase] reveals that this year 90 percent of IT managers are planning to implement new mobile applications that include both hosted and on-premise mobility solutions. Nearly half of them believe that successful management of mobile applications will be at the top of their priority list this year.

The massive popularity of smartphones and tablets among business users has resulted in an unavoidable push for mission-critical services that cater to this mobile workforce and thereby drive productivity. While consumers have strongly subscribed to location-based services (LBS) and navigation applications for quite some time now, the need for on-demand collaborative business applications beyond standard email seems to be the driving force behind the current mobile application development momentum.

As the complexity and scope of enterprise demands increase, companies have no choice but to migrate their computing power and data storage into the cloud for their business-to-employee solutions. The advantages are many. They will be able to encompass a broader network of mobile subscribers, even those in emerging countries who have phones with limited memory and power capabilities. They can decrease dependency on network operators and in-house servers while avoiding writing a pile of code to get around platform fragmentation. In fact, about 82 percent of the managers in the Kelton survey think that it would be beneficial to host more of their mobile applications in the cloud.

We have seen key cloud industry players like Google and Salesforce.com embrace this strategy by doling out mobile cloud services. Earlier this month Amazon announced Kindle apps for Android and Windows-based tablets after debuting a similar iPad app last year, allowing its digital content to be accessed from all these outside platforms. Initiatives such as the GSM Association's "OneAPI" standard, which provides a collaborative set of cross-industry network APIs to overcome operator integration problems, and HTML5's on-device caching capabilities with superior video publishing technology will only continue to spur activity in this segment.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

The privatecloud.com blog reposted Robert Duffner’s Thought Leaders in the Cloud: Talking with Barton George, Cloud Computing Evangelist at Dell interview on 1/20/2011:

Barton George joined Dell in 2009 as the company’s cloud computing evangelist. He acts as Dell’s ambassador to the cloud computing community and works with analysts and the press. He is responsible for messaging as well as blogging and tweeting on cloud topics. Prior to joining Dell, Barton spent 13 years at Sun Microsystems in a variety of roles that ranged from manufacturing to product and corporate marketing. He spent his last three years with Sun as an open source evangelist, blogger, and driver of Sun’s GNU/Linux strategy and relationships.

In this interview, we discuss:

- Just do it – While some people are hung up arguing about what the cloud is, others are just using it to get stuff done

- Evolving to the cloud – Most organizations don’t have the luxury to start from scratch

- Cloud security – People were opposed to entering their credit card in the early days of the internet, now it’s common. Cloud security perceptions will follow a similar trajectory

- Cost isn’t king – For many organizations, it’s the “try something quickly and fail fast” agility that’s drawing people to the cloud, not just cost savings

- The datacenter ecosystem – The benefits of looking at the datacenter as a holistic system and not individual pieces

Robert Duffner: Could you take a minute to introduce yourself and tell us a little bit about your experience with cloud computing?

Barton George: I joined Dell a little over a year ago as cloud evangelist, and I work with the press, analysts, and customers talking about what Dell is doing in the cloud. I also act as an ambassador for Dell to the cloud computing community. So I go out to different events, and I do a lot of blogging and tweeting.

I got involved with the cloud when I was at a small company right before Dell called Lombardi, which has since been purchased by IBM. Lombardi was a business process management company that had a cloud-based software service called Blueprint.

Before that, I was with Sun for 13 years, doing a whole range of things from operations management to hardware and software product management. Eventually, I became Sun’s open source evangelist and Linux strategist.

Robert: You once observed that if you asked 10 people to define cloud, you’d get 15 answers. [laughs] How would you define it?

Barton: We talk about it as a style of computing where dynamically scalable and often virtualized resources are provided as a service. To simplify that even further, we talk about it as IT provided as a service. We define it that broadly to avoid long-winded discussions akin to how many angels can dance on the head of a pin. [laughs]

You can really spend an unlimited amount of time arguing over what the true definition of cloud is, what the actual characteristics are, and the difference between a private and a public cloud. I think you do need a certain amount of language agreement so that you can move forward, but at a certain point there are diminishing returns. You need to just move forward and start working on it, and worry less about how you’re defining it.

Robert: There are a lot of granular definitions you can put into it, but I think you’re right. And that’s how we look at it here at Microsoft, as well. It’s fundamentally about delivering IT as a service. You predict that traditional, dedicated physical servers and virtual servers will give way to private clouds. What’s led you to that opinion?

Barton: I’d say that there’s going to be a transition, but I wouldn’t say that those old models are going to go away. We actually talk about a portfolio of compute models that will exist side by side. So you’ll have traditional compute, you’ll have virtualized compute, you’ll have private cloud, and you’ll have public cloud.

What’s going to shift over time is the distribution between those four big buckets. Right now, for most large enterprises, there is a more or less equal distribution between traditional and virtualized compute models. There really isn’t much private cloud right now, and there’s a little bit of flirting with the public cloud. The public cloud stuff comes in the form of two main buckets: sanctioned and unsanctioned.

“Sanctioned” includes things like Salesforce, payroll, HR, and those types of applications. The “unsanctioned” bucket consists of people in the business units who have decided to go around their IT departments to get things done faster or with less red tape.

Looking ahead, you’re going to have some traditional usage models for quite a while, because some of that stuff is cemented to the floor, and it just doesn’t make sense to try and rewrite it or adapt it for virtualized servers or the cloud.

But what you’re going to see is that a lot of these virtualized offerings are going to be evolved into the private cloud. Starting with a virtualized base, people are going to layer on capabilities such as dynamic resource allocation, metering, monitoring, and billing.

And slowly but surely, you’ll see that there’s an evolution from virtualization to private cloud. And it’s less important to make sure you can tick off all the boxes to satisfy some definition of the private cloud than it is to make continual progress at each step along the way, in terms of greater efficiency, agility, and responsiveness to the business.

In three to five years, the majority of folks will be in the private cloud space, with still pretty healthy amounts in the public and virtualized spaces, as well.

Robert: As you know, Dell’s Data Center Solutions Group provides hardware to cloud providers like Microsoft and helps organizations building their own private clouds. How do you see organizations deciding today between using an existing cloud or building their own?

Barton: Once again, there is a portfolio approach, rather than an either-or proposition. One consideration is the size of the organization. For example, it’s not unusual for a startup to use entirely cloud-based services. More generally, decisions about what parts a business keeps inside are often driven by keeping sensitive data and functionality that is core to the business in the private cloud. Public cloud is more often used for things that are more public facing and less core to the business.

We believe that the IT department needs to remake itself into a service provider. And as a service provider, they’re going to be looking at this portfolio of options, and they’re going to be doing “make or buy” decisions. In some cases, the decision will be to make it internally, say, in the case of private cloud. Other times, it will be a buy decision, which will imply outsourcing it to the public cloud.

The other thing I’d say is that we believe there are two approaches to getting to the cloud: one is evolutionary and the other one is revolutionary. The evolutionary model is what I was just talking about, where you’ve made a big investment in infrastructure and enterprise apps, so it makes sense to evolve toward the private cloud.

There are also going to be people who have opportunities to start from ground zero. They are more likely to take a revolutionary approach, since they’re not burdened with legacy infrastructure or software architecture. Microsoft Azure is a good example. We consider you guys a revolutionary customer, because you’re starting from the ground up. You’re building applications that are designed for the cloud, designed to scale right from the very beginning.

Some organizations will primarily follow one model, and some will follow the other. I would say that right now, 95% of large enterprises are taking the evolutionary approach, and only 5% are taking a revolutionary approach.

People like Microsoft Azure and Facebook that are focused on large scale-out solutions with a revolutionary approach are in a small minority. Over time, though, we’re going to see more and more of the revolutionary approach, as older infrastructure is retired.

Robert: Let me switch gears here a little bit. You guys just announced the acquisition of Boomi. Is there anything you can share about that?

Barton: I don’t know any more than what I’ve read in the press, although I do know that the Boomi acquisition is targeted to small and medium-sized businesses. We target that other 95% on the evolutionary side with what we call Virtual Integrated System. That’s the idea of starting with the already existing virtualized infrastructure and building capabilities on top of it.

Robert: The White House recently rolled out Cloud Security Guidelines. At Microsoft and Dell, we’ve certainly spent a lot of time dealing with technology barriers. How much of the resistance has to do with regulation, policy, and just plain fear? And how much do things like cloud security guidelines and accreditation do to alleviate these types of concerns?

Barton: To address those issues, I think you have to look at specific customer segments. For example, HIPAA regulations preclude the use of public cloud in the medical field. Government also has certain rules and regulations that won’t let them use public clouds for certain things. But as they put security guidelines in place, that’s going to, hopefully, make it possible for the government to expand its use of public cloud.

I know that Homeland Security uses the public cloud for their public-facing things, although obviously, a lot of the top secret stuff that they’re doing is not shared out on the public cloud. If you compare cloud computing to a baseball game, I think we’re maybe in the bottom of the second inning. There’s still quite a bit that’s going to happen.

One of the key areas where we will make a lot of progress in the next several years is security, and I think people are going to start feeling more and more comfortable.

I liken it to when the Internet first entered broad use, and people said, “I would never put my credit card out on the Internet. Anyone could take it and start charging up a big bill.” Now, the majority of us don’t think twice about buying something off of the web with our credit cards, and I think we’re going to see analogous change in the use of the cloud.

Robert: Regardless of whether you have a public or private cloud, what are your thoughts on infrastructure as a service and platform as a service? What do you see as key scenarios for each of those kinds of clouds?

Barton: I think infrastructure as a service is a great way to get power, particularly for certain things that you don’t need all the time. For example, I was meeting with a customer just the other day. They have a web site that lets you upload a picture of your room and try all kinds of paint colors on it. The site renders it all for you.

They just need capacity for a short period of time, so it’s a good example of something that’s well suited to the public cloud. They use those resources briefly and then release them, so it makes excellent sense for them.

There’s also a game company we’ve heard about that does initial testing of some of their games on Amazon. They don’t know if it’s going to be hit or not, but rather than using their own resources, they can test on the public cloud, and if it seems to take off, they then can pull it back in and do it on their own.

I think the same thing happens with platform as a service. Whether you have the platform internal or external, it allows developers to get access to resources and develop quickly. It allows them to use resources and then release them when they’re not needed, and only pay for what they use.

Robert: In an article titled, “Cloud Computing: the Way Forward for Business?,” Gartner was quoted as predicting that cloud computing will become mainstream in two to five years, due mainly to cost pressures. When organizations look past the cost, though, what are some of the opportunities you think cloud providers should really be focusing on?

Barton: I think it’s more about agility than cost, and that ability to succeed or fail quickly. To go back to that example of the game company, it gives them an inexpensive testing environment they can get up and going easily. They can test it without having to set up something in their own environment that might take a lot more time. A lot of the opportunity is about agility when companies develop and launch new business services.

The amount of time that it takes to provision an app going forward should, hopefully, decrease with the cloud, providing faster time to revenue and the ability to experiment with less of a downside.

Robert: Gartner also recently said that many companies are confused about the benefits, pitfalls, and demands of cloud computing. What are some of the biggest misconceptions that you still run into?

Barton: Gartner themselves put cloud at the very top of the hype cycle for emerging technologies last year, and then six weeks later, they turned around and named it the number one technology for 2010. There are a lot of misconceptions because people have seen the buzz and want to sprinkle the cloud pixie dust on what they offer.

This is true both for vendors, who want to rename things as cloud, and for internal IT, who, when asked about cloud by their CIO, say, “Oh, yes. We’ve been doing that for years.”

I do think people should be wary of security, and there are examples where regulations will prohibit you from using the cloud. At the same time, you also have to look at how secure your existing environment is. You may not be starting from a perfectly secure environment, and the cloud may be more secure than what you have in your own environment.

Robert: Those are the prepared questions I have. Is there anything interesting that you’d like to add?

Barton: Cloud computing is a very exciting place to be right now, whether you’re a customer, an IT organization, or a vendor. As I mentioned before, we are in the very early days of this technology, and we’re going to see a lot happening going forward.

In much the same way that we really focused on distinctions between Internet, intranet, and extranet in the early days of those technologies, there is perhaps an artificial level of distinction between virtualization, private cloud, and public cloud. As we move forward, these differences are going to melt away, to a large extent.

That doesn’t mean that we’re not going to still have private cloud or public cloud, but we will think of them as less distinct from one another. It’s similar to the way that today, we keep certain things inside our firewalls on the Internet, but we don’t make a huge deal of it or regard those resources inside or outside as being all that distinct from each other.

I think that in general, as the principles of cloud grab hold, the whole concept of cloud computing as a separate and distinct entity is going to go away, and it will just become computing as we know it.

I see cloud computing as giving IT a shot in the arm and allowing it to increase in a stair-step fashion, driving what IT’s always been trying to drive, which is greater responsiveness to the business while at the same time driving greater efficiencies.

Robert: One big trend that we believe is going to fuel the advance of cloud computing is the innovation happening at the data center level. It’s one thing to go and either build a cloud operating system or try to deploy one internally, but it’s another thing to really take advantage of all the innovations that come with being able to manage the hardware, network connections, load balancers, and all the components that make up a data center. Can you comment a little bit about how you see Dell playing into this new future?

Barton: That’s really an area where we excel, and that’s actually why our Data Center Solutions Group was formed. We started four or five years ago when we noticed that some of our customers, rather than buying our PowerEdge servers, were all of a sudden looking at these second-tier, specialized players like Verari or Rackable. Those providers had popped up and identified the needs of these new hyperscale providers that were really taking the whole idea of scale-out and putting it on steroids.

Dell had focused on scale starting back in 2004, but this was at a whole other level, and it required us to rethink the way we approach the problem. We took a step back and realized that if we want to compete in this space of revolutionary cloud building, we needed to take a custom approach.

That’s where we started working with people like Microsoft Azure, Facebook, and others, sitting down with customers and focusing on the applications they are trying to run and the problems they are trying to solve, rather than starting with talking about what box they need to buy. And then we work together with that customer to design a system.

We learned early on that customers saw the system as distinct from the data center environment. Their orientation was to say, “Don’t worry about the data center environment. That’s where we have our expertise. You just deliver great systems and the two will work together.” But what we found is if you really want to gain maximum efficiencies, you need to look at the whole data center as one giant ecosystem.

For example, with one customer, we have decided to remove all the fans from the systems and the rack itself and put gigantic fans in the data center, so that the data center becomes the computer in and of itself. We have made some great strides thinking of it in that kind of a holistic way. Innovation when developing data centers is very crucial to the overall excellence in this area.

We’ve been working with key partners to deliver this modular data center idea to a greater number of people, so this revolutionary view of the data center can take shape more quickly. And then, because they’re modular, like giant Lego blocks, you can expand these sites quickly. But once again, the whole thing has to be looked at as an ecosystem.

<Return to section navigation list>

Cloud Security and Governance

Bob Violino reported Study: Cloud breaches show need for stronger authentication in a 1/19/2011 article for NetworkWorld’s Data Center blog:

As organizations increase their reliance on cloud-based services and collaboration tools and enable users to access networks, the number of security breaches is on the rise. A new study by Forrester Research shows that more than half of the 306 companies surveyed (54 percent) reported a data breach in the previous year.

Even with the growing security threats, most enterprises continue to rely on the traditional username and password sign-on to verify a user's identity, rather than strong authentication, according to the study.

The report, "Enhancing Authentication to Secure the Open Enterprise," was conducted by Forrester late in 2010 on behalf of Symantec Corp. The vendor wanted to evaluate how enterprises are evolving their authentication and security practices in response to changing business and IT needs as exemplified by cloud and software-as-a-service (SaaS) adoption, the business use of Web 2.0 services, and user mobility trends.

Password issues are the top access problem in the enterprise, according to the study. Policies on password composition, expiration, and lockout that are put in place to mitigate risk have become a major burden to users, impeding their ability to be productive. They also result in help desk costs due to forgotten passwords.

The Forrester study recommends that organizations implement strong authentication throughout the enterprise, not just for select applications.

Mauricio Angee, VP and information security manager at Mercantil Commercebank N.A., agrees that passwords have become a problem.

"Today, there is a high percentage of calls and service requests related to password resets in our environment," Angee says. "Two-factor authentication has been implemented for network sign-ons, in addition to the deployment of single-sign-on, which has helped us [reduce] the amount of password management."

The concern with passwords, Angee says, "is that we have given the user the responsibility to change passwords, remember long complex pass-phrases, secure PINs, carry tokens, etc. This is a practice that has proved to be a huge weakness to keep our environments secure, not to mention the huge challenge to information security professionals who have to enforce policies and maintain an expected level of security."

Moving the entire infrastructure to strong authentication requires time and resources dedicated to assessment, analysis and testing systems and applications in order to determine if these systems have the capability to be integrated, Angee says. "Often, constraints are found, mostly with legacy systems, which has been the major [reason] to avoid moving forward with strong authentication. This is definitely an initiative we will be focusing our efforts to determine the feasibility, impact, and the ROI."

<Return to section navigation list>

Cloud Computing Events

Roman Irani invites developers to Demo Your Product for Free at GlueCon in a 1/20/2011 post to the ProgrammableWeb blog:

We live in a world of APIs, data formats and web applications. Integrating all of these services is one of the foremost challenges facing organizations as we move into the next stage of cloud adoption. If you have a product offering that addresses the challenge of web application integration in a unique way, you have a great chance to showcase it at Gluecon 2011 for free.

Gluecon, the conference that is devoted to addressing the web application integration challenge, is scheduled to be held on May 25-26 in Broomfield, Colorado. The conference is all about APIs, connectors, meta-data, standards and anything that can help “glue” together the infinite number of web applications that are out there.

As part of Alcatel-Lucent (parent company of ProgrammableWeb) becoming the Community Underwriter for Gluecon, 15 companies will be selected to have completely free demo space at Gluecon. The Demo Pod also includes passes to the show, signage and internet access.

To apply, you need to submit your details here by April 1. A panel of judges comprising a healthy mix of technical folks, analysts, journalists and top executives will review the submissions and choose the 15 companies. Check out more details on the process.

Gluecon 2011 promises to be a great conference for developers who are interested in APIs and the Cloud. If you’re planning to attend, get in on the early bird discount. The span of topics that are going to be discussed at Glue is vast and includes APIs, Protocols, Languages, Frameworks, Open Data, Data Storage, Platforms and all the bits and pieces that glue all of them together.

Murali M at Inspions reported Two Microsoft .NET Developer Events in DFW, You Must Attend on 2/16 to 2/17/2011 and 3/4 to 3/5/2011:

If you are a budding .NET developer looking to kick start your career in 2011 or advance your career on Microsoft .NET technologies, here are two great events right here in DFW that you must attend.

Windows Azure 2 Day BootCamp : 16th and 17th February 2011

This is a 2-day deep dive program to prepare you to deliver solutions on the Windows Azure Platform. We have worked to bring the region’s best Azure experts together to teach you how to work in the cloud. Each day will be filled with training, discussion, reviewing real scenarios, and hands-on labs.

Dallas Day of Dot Net : 4th and 5th of March 2011

With Phil Haack kicking off the 2-day event with ASP.NET MVC 3, it offers 2 days of immersive .NET programs including but not limited to “Windows Phone, Azure, C# 4.0, HTML 5, ASP.NET MVC 3, WPF, Silverlight”. The event is priced at $200, but all proceeds go towards helping a cancer patient.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Alex Popescu posted Comparing NoSQL Databases with Object-Oriented Databases to his myNoSQL Blog on 1/20/2011:

Don White* in an interview on odbms.org:

The new data systems are very data centric and are not trying to facilitate the melding of data and behavior. These new storage systems present specific model abstractions and provide their own specific storage structure. In some cases they offer schema flexibility, but it is basically used to just manage data and not for building sophisticated data structures with type specific behavior.

Decoupling data from behavior allows both to evolve separately. Or differently put, it allows one to outlive the other.

Another interesting quote from the interview:

[…] why would you want to store data differently than how you intend to use it? I guess the simple answer is when you don’t know how you are going to use your data, so if you don’t know how you are going to use it then why is any data store abstraction better than another?

I guess this explains the 30-year dominance of relational databases. Not in the sense that we never knew how to use data, but rather that we always wanted to make sure we can use it in various ways.

And that explains also the direction NoSQL databases took:

To generalize it appears the newer stores make different compromises in the management of the data to suit their intended audience. In other words they are not developing a general purpose database solution so they are willing to make tradeoffs that traditional database products would/should/could not make. […] They do provide an abstraction for data storage and processing capabilities that leverage the idiosyncrasies of their chosen implementation data structures and/or relaxations in strictness of the transaction model to try to make gains in processing.

*Don White is senior development manager at Progress Software Inc., responsible for all feature development and engineering support for ObjectStore.

David Linthicum claimed “EMC's video sniping at Oracle makes both companies look silly -- and neither actually delivers on the cloud promise” in a deck for his The YouTube food fight over the nonexistent 'cloud in a box' post of 1/20/2011 to InfoWorld’s Cloud Computing blog:

On behalf of its Greenplum Data Computing Appliance "cloud in a box," EMC has been taking some cheap shots at Oracle's Exadata, with a YouTube video that shows an actor who looks a lot like Larry Ellison with a gift box attached to his fly. The topic is "cloud in a box," and this is a single video in EMC's Greenplum series, all focusing on Oracle.

Don't get me wrong: I'm all for having technology companies take innovative approaches to marketing, such as stealing premises from Apple and hiring actors that look like billionaire software executives and attaching props to their crotches. However, EMC cloud is missing the core message and, thus, any potential customers it is trying to reach.

The truth is that both EMC and Oracle have done little to promote the value of cloud computing. Instead, they're selling very expensive software and hardware that may not bring the promise of cloud computing any closer to the enterprise. Although cloud computing can provide remotely accessible services that enterprises can use to make critical systems -- such as data warehousing -- much more affordable and efficient, that's not what EMC and Oracle are offering. Instead, they are trying to sell you more hardware and software for your data center. Why? Because they make money by selling hardware and software, not by selling cloud computing services.

The core message is one of confusion. Cloud computing is about using IT assets in much more efficient and effective ways, and not about layering another couple of million dollars in hardware and software technology in the existing data center just because somebody has associated the "cloud" label with it. There is no "cloud in a box" -- there are no shortcuts to cloud computing. No matter how many clever marketing videos you make, nothing will change that.

It appears to me that EMC took David’s complaint seriously.

Bob Warfield takes on Matt McAdams’ post in Database.com, nice name, shame about the platform (Huh?) in his 1/19/2011 post to the Enterprise Irregulars blog:

Matt McAdams writes an interesting guest post for Phil Wainewright’s ZDNet blog, Software as Services. I knew when I read the snarky title, “Database.com, nice name, shame about the platform,” I had to check it out.

The key issue McAdams seems to have with Database.com (aside from the fact his own company’s vision of PaaS is much different, more in a minute on that) is latency. He contends that if you separate the database from the application layer, you’re facing many more round trips between the application and the database. Given the long latency of such trips, your application’s performance may degrade until your app “will run at perhaps one tenth the speed (that is, page loads will take ten times longer) than a web app whose code and data are colocated.” McAdams calls this a non-starter.
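The round-trip argument can be sketched with back-of-the-envelope arithmetic. The following is only an illustration, not anything from McAdams' or Warfield's posts: it assumes database calls are made sequentially, and every number in it (compute time, queries per page, latencies) is hypothetical.

```python
def page_load_ms(compute_ms, round_trips, latency_ms):
    """Estimate page load time as app-layer compute plus sequential DB round trips."""
    return compute_ms + round_trips * latency_ms


# All figures below are hypothetical, chosen only to illustrate the argument.
COMPUTE_MS = 100        # application-layer work per page
QUERIES_PER_PAGE = 20   # sequential database calls per page
LOCAL_LATENCY_MS = 1    # application and database colocated
REMOTE_LATENCY_MS = 50  # application and database in different data centers

colocated = page_load_ms(COMPUTE_MS, QUERIES_PER_PAGE, LOCAL_LATENCY_MS)
remote = page_load_ms(COMPUTE_MS, QUERIES_PER_PAGE, REMOTE_LATENCY_MS)
# Same remote latency, but the 20 queries are combined into 2 round trips.
batched = page_load_ms(COMPUTE_MS, 2, REMOTE_LATENCY_MS)

print(f"colocated: {colocated} ms")
print(f"remote:    {remote} ms ({remote / colocated:.1f}x slower)")
print(f"remote, batched into 2 round trips: {batched} ms")
```

With these assumed figures the remote case comes out roughly nine times slower than the colocated one, close to the tenfold slowdown McAdams describes. Cutting the number of round trips recovers most of the difference without moving the database.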

If using Database.com or similar database-in-the-cloud services results in a 10x slower app, then McAdams is right, but there are already ample existence proofs that this need not be the case. There are also some other interesting considerations that may make it not the case for particular database-in-the-cloud services (hereafter DaaS to avoid all that typing!).

Let’s start with the latter. Salesforce’s offering is, of course, done from their data centers. From that standpoint, you’re going to pay whatever the datacenter-to-datacenter latency is to access it if your application logic is in some other Cloud, such as Amazon. That’s a bit of a setback, to be sure. But what if your DaaS provider is in your Cloud? I bring this up because some are. Of course some Clouds, such as Amazon, offer DaaS services of various kinds. In addition, it’s worth looking at whether your DaaS vendor hosts from their own datacenter, or from a publicly accessible Cloud like Amazon. I know from having talked to ClearDB’s CEO, Cashton Coleman, that their service is in the Amazon Cloud.

This is an important issue when you’re buying your application infrastructure as a service rather than having it hosted in your own datacenter. It also creates a network effect for Clouds, which is something I’ve had on my mind for a long time and written about before as being a tremendous advantage for Cloud market leaders like Amazon. These network effects will be an increasing issue for PaaS vendors of all kinds, and one that bears looking at if you’re considering a PaaS. The next time you are contemplating using some web service as infrastructure, no matter what it may be, you need to look into where it’s hosted and consider whether it makes sense to prefer a vendor who is colocated in the same Cloud as your own applications and data. Consider for example even simple things like getting backups of your data or bulk loading. When you have a service in the same Cloud, for example like ClearDB, it becomes that much cheaper and easier.

Okay, so latency can be managed by being in the same Cloud. In this case, Database.com is not in the same Cloud, so what’s next? Before leaving the latency issue, if I were calling the shots at Salesforce, I’d think about building a very high bandwidth pipe with lower latency into the Amazon Cloud. This has been done before and is an interesting strategy for maximizing an affinity with a particular vendor. For example, I wrote some time ago about Joyent’s high speed connection to Facebook.

Getting back to how to deal with latency, why not write apps that don’t need all those round trips? It helps to put together some kind of round-trip minimization in any event, even to make your own datacenter more efficient. There are architectures that are specifically predicated on this sort of thing, and I’m a big believer in one whose ultimate incarnation I’ve taken to calling “Fat SaaS”. A pure Fat SaaS application minimizes its dependency on the Cloud and moves as much processing as possible into the client. Ideally, the Cloud winds up being just a data repository (that’s what Database.com and ClearDB are) along with some real-time messaging capabilities to facilitate cross-client communication. The technology is available today to build SaaS applications using Fat SaaS. There are multiple DaaS offerings to serve as the data stores and many of them are capable of serious scalability while they’re at it. There are certainly messaging services of various kinds available. And lastly, there is technology available for the client, such as the Adobe AIR ecosystem. It’s amazing what can be done with such a simple collection of components: Rich UX, very fast response, and all the advantages of SaaS. The fast response is courtesy of not being bound to the datastore for each and every transaction since you have capabilities like SQLite. Once you get used to the idea, it’s quite liberating, in fact.
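The client-side idea described above, answering reads from a local SQLite store and syncing to the Cloud in batches, might look roughly like the following. This is a minimal sketch under stated assumptions and not Warfield's design: the `LocalStore` class, its `items` table and the `send_batch` callback (a stand-in for whatever call a real DaaS would expose) are all hypothetical names, and conflict handling and error recovery are left out.

```python
import json
import sqlite3


class LocalStore:
    """Hypothetical client-side cache: reads are local, writes sync in batches."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS items ("
            "key TEXT PRIMARY KEY, value TEXT NOT NULL, dirty INTEGER NOT NULL)"
        )

    def put(self, key, value):
        # Write locally and mark the row as unsynced; no network call is made.
        self.db.execute(
            "INSERT OR REPLACE INTO items (key, value, dirty) VALUES (?, ?, 1)",
            (key, json.dumps(value)),
        )
        self.db.commit()

    def get(self, key):
        # Reads never leave the client.
        row = self.db.execute(
            "SELECT value FROM items WHERE key = ?", (key,)
        ).fetchone()
        return json.loads(row[0]) if row else None

    def flush(self, send_batch):
        """Push all unsynced rows in one call to send_batch; return the row count."""
        rows = self.db.execute(
            "SELECT key, value FROM items WHERE dirty = 1 ORDER BY key"
        ).fetchall()
        if not rows:
            return 0
        # send_batch is an assumed stand-in for a single remote round trip.
        send_batch([(key, json.loads(value)) for key, value in rows])
        self.db.execute("UPDATE items SET dirty = 0 WHERE dirty = 1")
        self.db.commit()
        return len(rows)
```

A caller would make any number of `put` and `get` calls at local speed and then call `flush` once, for example on a timer or when the user saves, so the cost of the remote round trip is paid per batch instead of per transaction.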