Windows Azure and Cloud Computing Posts for 1/26/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

The Microsoft Case Studies Team posted Real World Windows Azure: Interview with Carl Ryden, Chief Technology Officer at MarginPro on 1/26/2011:

As part of the Real World Windows Azure series, we talked to Carl Ryden, CTO at MarginPro, about using the Windows Azure platform for its loan pricing and profitability system. Here's what he had to say:

MSDN: Tell us about MarginPro and the services you offer.

Ryden: MarginPro develops loan and deposit pricing and profitability management software for community banks and credit unions. Our solution takes the intuition and guesswork out of loan structures and risk assessments to help banks increase their net interest margin and be as competitive as possible when competing for the best borrowers.

MSDN: What were the biggest challenges that you faced prior to implementing the Windows Azure platform?

Ryden: From the beginning, we developed MarginPro for the cloud; allocating resources to an on-premises server infrastructure would have been a non-starter for us, especially considering that we have to have a certifiably secure data center. We hosted MarginPro at a third-party hosting provider, GoGrid, where we maintained virtual application servers and a database server. However, maintaining those servers took a substantial percentage of my time each day. At times it was all-consuming, and worse yet, more often than not I had to perform this work during off hours, such as late nights and weekends. That's time that I could be devoting to our core business. Customers don't pay me to manage servers; they pay me to deliver world-class loan pricing software.

MSDN: Can you describe the solution you built with the Windows Azure platform to address your need for a highly secure, low-maintenance cloud solution?

Ryden: Our web-based application is built on Microsoft ASP.NET MVC 2 and uses the Microsoft Silverlight 4 browser plug-in. We host that in Windows Azure web roles. We also use Queue storage and worker roles to handle some of our complex computing needs.

All of our data is stored in Microsoft SQL Azure, which is deployed in a multitenant environment. We also cache that relational data in Table storage in Windows Azure, so each time a customer requests data from the application, it is pulled from the tables and doesn't have to hit the database. When we need to deploy updates, we create new instances, use MSBuild [Microsoft Build Engine] to deploy the updates to the new instances, and then flip a switch to go live. Now we don't have to take down virtual servers and risk interrupting our service.
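Keeping SQL Azure as the system of record while serving reads from Table storage is the cache-aside pattern. The sketch below is illustrative only and is not MarginPro's code: a plain dictionary stands in for Table storage, and `load_from_database` is a hypothetical callable standing in for a SQL Azure query. The interview doesn't say how the cached copy is refreshed, so the `invalidate` method is an assumption.

```python
from typing import Any, Callable, Dict, Hashable


class CacheAside:
    """Cache-aside reads: try the fast store first, fall back to the database."""

    def __init__(self, load_from_database: Callable[[Hashable], Any]) -> None:
        # Hypothetical loader standing in for a SQL Azure query.
        self._load = load_from_database
        # A plain dict stands in for Windows Azure Table storage.
        self._cache: Dict[Hashable, Any] = {}
        self.database_hits = 0

    def get(self, key: Hashable) -> Any:
        if key in self._cache:
            return self._cache[key]  # served from the cache, no database round trip
        self.database_hits += 1
        value = self._load(key)      # cache miss: query the database once
        self._cache[key] = value
        return value

    def invalidate(self, key: Hashable) -> None:
        # Assumed refresh hook: call after the underlying row changes
        # so the next read reloads it from the database.
        self._cache.pop(key, None)


if __name__ == "__main__":
    rates = {"auto-loan": 0.055}  # hypothetical data
    cache = CacheAside(lambda key: rates[key])
    cache.get("auto-loan")
    cache.get("auto-loan")
    print(cache.database_hits)  # 1
```

The second `get` is answered from the dictionary, which is the point of the pattern: repeated reads stop costing a database round trip until the entry is invalidated.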

MSDN: How does the Windows Azure platform help you better serve your customers?

Ryden: MarginPro integrates with our customers' core banking systems and enables customers to update the strategic value of their accounts for optimum loan pricing. This is a computationally heavy process. Without Windows Azure, we'd have to run these processes sequentially for each customer, but now we can run them in parallel by spinning up new computing instances. The integration module wouldn't be possible without Windows Azure.
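The move from sequential to parallel per-customer runs can be sketched with Python's standard thread pool. This is a single-machine analogy under stated assumptions: in Windows Azure the fan-out would happen across worker role instances (for example, fed by queue messages), and `recalculate` is a hypothetical placeholder for the real integration work.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, TypeVar

T = TypeVar("T")


def process_all(customers: Iterable[str],
                work: Callable[[str], T],
                max_workers: int = 4) -> Dict[str, T]:
    """Run `work` for every customer concurrently instead of one after another."""
    customers = list(customers)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with customers.
        results = pool.map(work, customers)
        return dict(zip(customers, results))


if __name__ == "__main__":
    # Hypothetical stand-in for one customer's integration run.
    def recalculate(customer: str) -> int:
        return len(customer)

    print(process_all(["bank-a", "credit-union-b"], recalculate))
    # {'bank-a': 6, 'credit-union-b': 14}
```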

MSDN: What kinds of benefits are you realizing with the Windows Azure platform?

Ryden: A lot of times, benefits come down to money. For us, it's not that simple. The biggest benefit for us is that we don't have to worry about maintaining an infrastructure. We have a SAS 70 Type II-compliant infrastructure that is maintained by Microsoft, which leaves us to focus on what we do best. We can let Microsoft take care of what they do best, we can focus on creating world-class loan pricing software, and our customers can focus on their core business, too.

Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000008935

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

Richard Jones (@richard_jones) described Building Cross Platform Mobile Online/Offline Application with Sync. Framework in a 1/26/2011 post:

So what happens when you need to build an application that runs on a variety of mobile platforms and needs to work offline from a backend database?

This is the situation I found myself in while working on a proof-of-concept application that needs to use a common data store on SQL Azure. The mobile application needs to periodically synchronise with this data store but, importantly, also work offline.

Microsoft have done just this with the Microsoft Sync Framework 4.0 CTP.

Just watch this [PDC 2010 session]: http://player.microsoftpdc.com/Session/28830999-dd97-45c4-9bb4-bb16ba21a6b0

iPhone, Windows Mobile and Silverlight application examples are all included.

I’m pleased to report it works fantastically.

I have a Windows Mobile and iOS sample application working quite happily with a common backend database.

Marketplace DataMarket and OData

William Vambenepe (@vambenepe) continued his REST API critique of 1/25/2011 with The REST bubble of 1/26/2011:

Just yesterday I was writing about how Cloud APIs are like military parades. To some extent, their REST rigor is a way to enforce implementation discipline. But a large part of it is mostly bling aimed at showing how strong (for an army) or smart (for an API) the people in charge are.

Case in point: APIs that have very simple requirements and yet make a big deal of the fact that they are perfectly RESTful.

Just today, I learned (via the ever-informative InfoQ) about the JBoss SteamCannon project (a PaaS wrapper for Java and Ruby apps that can deploy on different host infrastructures like EC2 and VirtualBox). The project looks very interesting, but the API doc made me shake my head.

The very first thing you read is three paragraphs telling you that the API is fully HATEOAS (Hypermedia as the Engine of Application State) compliant (our URLs are opaque, you hear me, opaque!) and an invitation to go read Roy’s famous take-down of those other APIs that unduly call themselves RESTful even though they don’t give HATEOAS any love.
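In practice, HATEOAS means a client starts from one entry-point URL and discovers every other URL from links in the responses, which is why the URLs can stay opaque. A minimal sketch follows; the JSON shape, the `links`/`rel`/`href` field names and the canned responses are all hypothetical and are not taken from the SteamCannon API.

```python
import json
from typing import Callable, Dict, List


def follow(start_url: str, rels: List[str], fetch: Callable[[str], str]) -> Dict:
    """Start at the entry point and follow link relations by name.

    The client never assembles URLs itself; it only uses hrefs found in
    the responses, so the server is free to keep them opaque.
    """
    doc = json.loads(fetch(start_url))
    for rel in rels:
        links = {link["rel"]: link["href"] for link in doc.get("links", [])}
        if rel not in links:
            raise KeyError(f"response has no link with rel={rel!r}")
        doc = json.loads(fetch(links[rel]))
    return doc


# Canned responses standing in for an HTTP server (hypothetical shapes).
RESPONSES = {
    "/api": json.dumps({"links": [{"rel": "apps", "href": "/x9f2"}]}),
    "/x9f2": json.dumps({"apps": ["shop"],
                         "links": [{"rel": "deploy", "href": "/q71"}]}),
    "/q71": json.dumps({"status": "ready"}),
}

if __name__ == "__main__":
    print(follow("/api", ["apps", "deploy"], RESPONSES.__getitem__))
    # {'status': 'ready'}
```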

So here I am, a developer trying to deploy my WAR file on SteamCannon and that’s the API document I find.

Instead of the REST finger-wagging, can I have a short overview of what functions your API offers? Or maybe an example of a request call and its response?

I don’t mean to pick on SteamCannon specifically; it just happens to be a new Cloud API that I discovered today (all the Cloud APIs out there also spend too much time telling you how RESTful they are and not enough time showing you how simple they are). But when an API document starts with a REST lesson, and when PowerPoint-waving sales reps pitch “RESTful APIs” to executives, you know this REST thing has gone way beyond anything related to “the fundamentals”.

We have a REST bubble on our hands.

Again, I am not criticizing REST itself. I am criticizing its religious and ostentatious application rather than its practical use based on actual requirements (this was my take on its practical aspects in the context of Cloud APIs).

Related posts:

- Amazon proves that REST doesn’t matter for Cloud APIs

- REST in practice for IT and Cloud management (part 3: wrap-up)

- REST in practice for IT and Cloud management (part 2: configuration management)

- Square peg, REST hole

- Dear Cloud API, your fault line is showing

- REST in practice for IT and Cloud management (part 1: Cloud APIs)

Directions Magazine published Esri’s Updated Demographics Data Now Available in the Windows Azure Marketplace on 1/25/2011:

Variables from Esri's Updated Demographics (2010/2015) data are now available from the Windows Azure Marketplace DataMarket. Demographic data categories such as population, income, and households enable market analysts to accurately research small areas and make better, more informed decisions.

Developed with industry-leading, benchmarked methodologies, Esri's Updated Demographics data identifies areas of high unemployment, activity in the housing market, rising vacancy rates, reduced consumer spending, changes in income, and increased population diversity. The data variables ensure that analysts can conduct their research with the most accurate information available, particularly for fast-changing areas.

Esri created Updated Demographics data to give market analysts the most accurate small-area analysis at any geographic level. The accuracy of this data helped Esri identify the beginning of the bursting housing bubble and subprime mortgage crisis a full two years before the market collapsed.

"Esri pays close attention to economic and social trends and how they influence the needs of businesses, consumers, and citizens," says Lynn Wombold, chief demographer and manager for data development at Esri. "The challenge of the current market underscores the importance of using accurate information for analyses."

ArcGIS technology used in the Windows Azure Marketplace DataMarket is based on ArcGIS API for Silverlight.

For more information, visit esri.com/azure/datamarket. For more information about Esri's Updated Demographics, visit esri.com/demographicdata.


Windows Azure AppFabric: Access Control and Service Bus

Bart Wullems explained Azure Durable Message Buffers in a 1/26/2011 post:

Last year at PDC 2010, the Azure AppFabric team made a number of important announcements. One of the improvements they released was a significantly enhanced version of the Azure AppFabric Service Bus Message Buffers feature.

What’s the difference between the current Message Buffers and the new Durable Message Buffers?

The current version of Message Buffers provides a small in-memory store inside the Azure Service Bus where up to 50 messages, each no larger than 64 KB, can be stored for up to ten minutes. Each message can be retrieved by only a single receiver. This makes the range of scenarios where this is useful somewhat limited. The new version of Message Buffers enhances this feature so it could have much wider appeal: Message Buffers are now durable and look much more like a queuing system. This makes it possible to start using Durable Message Buffers as an alternative to Azure Queues.
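To make the old limits concrete, here is a toy, in-process model of the pre-PDC 2010 Message Buffer as described above: at most 50 messages, 64 KB per message, a ten-minute lifetime, and destructive reads so only one receiver gets each message. It is a sketch, not the Service Bus API; in particular, raising an exception when the buffer is full is an assumption about behavior at capacity.

```python
import time
from collections import deque
from typing import Callable, Deque, Optional, Tuple


class InMemoryMessageBuffer:
    """Toy model of the original, non-durable Message Buffer limits."""

    MAX_MESSAGES = 50
    MAX_BYTES = 64 * 1024
    TTL_SECONDS = 10 * 60

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._items: Deque[Tuple[float, bytes]] = deque()  # (enqueue time, body)

    def _expire(self) -> None:
        now = self._clock()
        # Messages older than ten minutes are silently dropped.
        while self._items and now - self._items[0][0] > self.TTL_SECONDS:
            self._items.popleft()

    def send(self, body: bytes) -> None:
        if len(body) > self.MAX_BYTES:
            raise ValueError("message larger than 64 KB")
        self._expire()
        if len(self._items) >= self.MAX_MESSAGES:
            # Assumed behavior at capacity: reject the new message.
            raise OverflowError("buffer already holds 50 messages")
        self._items.append((self._clock(), body))

    def receive(self) -> Optional[bytes]:
        # Destructive read: once one receiver takes a message, it is gone.
        self._expire()
        return self._items.popleft()[1] if self._items else None
```

Nothing survives a process restart here, which is exactly the gap the durable version closes.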

Eugenio Pace (@Eugenio_Pace) posted A year’s balance–next project on 1/26/2011:

A little bit late for a year's balance, since the year has already started, or so I’m told. Anyway, as we prepare for the next project, I reflected on my team’s work over the last 18 months. 18 months is more than a year, so you might wonder why I am doing a year's balance on work done over 18 months. Good question.

Does content that nobody sees and uses really exist? Think of the tree falling where nobody can hear it: does it make a sound? I figured that what you care about most is the stuff that was available to you, not what we actually do here in the remote American northwest and keep to ourselves.

Let’s say then, that the output of that last 18 months was available to you in the last 12 months. Makes sense?

And here it is:

A Guide to Claims based Identity

This guide, published a year ago, laid the foundations for all subsequent books. We heavily reused the core designs explained in this little book. We also explored a new format and a new style for our books. This book inaugurated the “tubemap”: the diagram we have used ever since to explain the entire scope of the content in a single picture.

Credits to our brilliant partner, Matias Woloski. Little-known secret: VS 2010 samples of the book are available on CodePlex.

Moving Applications to the Cloud

Our first book specifically focused on Windows Azure. We explored the considerations of migrating (and optimizing) applications for the cloud. As a colorful anecdote, this book includes an Excel spreadsheet with dollar amounts! A technical book with economics! Some were very opposed to it, but $ is a big deal in PaaS (and SaaS, and IaaS, and XaaS)! Money is a big motivator, and every month you get direct feedback on how you are using the platform. Other nuggets worth mentioning: MSBuild tasks to automate deployment, and setting up multiple environments (think “Dev”, “Test”, etc.). It’s quite a popular book: it made it to the top 25 in O’Reilly this week.

Developing Applications for the Cloud

Our second book on the Windows Azure Platform, this time with no legacy constraints. Ah! The joy of building something from scratch using the latest and greatest! Notice the “1” and “2” on the covers of this and the other book? Well, it turns out people had quite some difficulty telling one from the other, so we redesigned the covers with the numbers. Yes, we learned quite a bit about book design too.

In addition to the end-to-end scenario (the surveys application we use as the “case study”), a popular chapter has been: troubleshooting Windows Azure apps. My personal favorite content is the little framework we wrote for asynchronous processing with queues and workers. Seems like a common pattern and I’ve already used it in many places.
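Pace doesn't show the queue-and-worker framework here, so the following is only a generic sketch of the pattern, not the guide's code. Python's `queue.Queue` and threads stand in for a Windows Azure queue and worker role instances, and the retry limit with a "poison" list (similar in spirit to checking a queue message's dequeue count) is an assumption added for illustration.

```python
import queue
import threading
from typing import Callable, List, Tuple


def run_workers(messages: List[str], handler: Callable[[str], None],
                workers: int = 2, max_attempts: int = 3) -> List[str]:
    """Process messages with a pool of worker threads.

    A message whose handler raises is re-queued until it has been tried
    max_attempts times; after that it is set aside as a "poison" message.
    Returns the poison messages.
    """
    q: "queue.Queue[Tuple[str, int] | None]" = queue.Queue()
    for m in messages:
        q.put((m, 1))
    poison: List[str] = []
    lock = threading.Lock()

    def worker() -> None:
        while True:
            item = q.get()
            if item is None:          # stop signal
                q.task_done()
                return
            msg, attempt = item
            try:
                handler(msg)
            except Exception:
                if attempt < max_attempts:
                    q.put((msg, attempt + 1))  # make it visible again for a retry
                else:
                    with lock:
                        poison.append(msg)
            finally:
                q.task_done()

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    q.join()                  # wait until every message, including retries, is done
    for _ in threads:
        q.put(None)           # one stop signal per worker
    for t in threads:
        t.join()
    return poison
```

Re-queuing happens before `task_done`, so `q.join()` cannot return while a retry is still pending.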

Windows Phone 7 Developer Guide

The yin and yang of the Microsoft platform in one single book: small, portable devices on one end and the massive, infinitely scalable datacenter in the backend: Windows Phone 7 and Windows Azure. The most controversial aspects of the book? Use of the MVVM pattern is by far the top of the list. Second in the rankings? Use of Rx (Reactive Extensions) for asynchronous communications. Rx is like LINQ in its beginnings: mind-twisting at first, confusing perhaps, too much “black magic”, but impossible to live without afterwards. Glad to see the framework broadly available now: .NET, JavaScript, Silverlight, etc.

With regard to MVVM, well… Silverlight might not be fully optimized for this pattern, but we still believe it is worth considering, and worth paying the extra cost, if things like testability are important to you. Testability is important to us, so we decided to implement it and show you how. We ported Prism to the phone and replaced Unity with Funq for increased efficiency. But of course you don’t have to use any of that. We do expect you to slice and dice the guide as you see fit. Go ahead, rip it off.

So, what’s next? What’s next comes in detail in the next post, but here it is in short: we’d like to extend the identity guide to include some really compelling and in-demand scenarios (federation with social identity providers, SharePoint, Access Control Service, etc.). What do you think? Are you passionate about tokens and STSs and claims and WS-Federation, etc.? Do you have strong opinions on all of these? I’d love to know.

The Windows Azure AppFabric Team Blog reported on 1/26/2011 that the Windows Azure AppFabric LABS [will be] undergoing scheduled maintenance on Thursday 1/27:

- START: Thursday, January 27, 2011, 3 pm PST

- END: Thursday, January 27, 2011, 4 pm PST

Impact Alert:

- Customers of Service Bus CTP in LABS environment will experience intermittent failures for the duration of this maintenance.

Action Required:

- None

The Windows Azure AppFabric Team

Steve Plank (@plankytronixx) posted Whiteboard video: How ADFS and the Microsoft Federation Gateway work together up in the Office 365 Cloud on 1/25/2011:

Another “whiteboard video” that gives a quick overview of the flows of data and serves as a handy companion to my previous video, which showed how to set it all up when you want to federate your Active Directory with Office 365.

This video shows the browser case. I’ll do another for when you use an Outlook client to access Office 365.

How ADFS and the Microsoft Federation Gateway work together up in the Office 365 Cloud. from Steve Plank on Vimeo.

Hope this helps if you ever find yourself down in the guts of Fiddler trying to work out the data flows…

Windows Azure Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Alex Williams offered 5 Tips for Creating Cost Effective Windows Azure Applications in a 1/26/2011 post to the ReadWriteCloud blog:

Paraleap is a company that optimizes the compute power for Windows Azure customers with a service coming out of beta today called AzureWatch.

It's the type of service we expect to see more of as companies move infrastructure to the cloud.

Here's why:

- The cloud promises scaling up and down according to usage, but it is often a manual exercise.

- Cloud management is still nascent. We are just beginning to understand how to optimize cloud environments.

- We're still in transition. Apps are being ported to the cloud but how to optimize is still a bit of an art and a science.

According to Paraleap's Web site, AzureWatch "dynamically adjusts the number of compute instances dedicated to your Azure application according to real time demand." It provides user-defined rules to specify when to scale up or down.

Illustrating the complexities of this is a blog post by Paraleap's founder Igor Papirov [see below]. In it he covers five tips for creating cost-effective Windows Azure applications:
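A user-defined scaling rule of this kind can be as simple as a pair of CPU thresholds with a floor and a ceiling. The sketch below is hypothetical (the thresholds, instance limits and one-instance step are illustrative choices) and does not reflect how AzureWatch's engine actually evaluates its rules.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ScalingRule:
    # Hypothetical thresholds; real products let users define their own rules.
    scale_up_above: float = 70.0    # average CPU % that triggers adding an instance
    scale_down_below: float = 25.0  # average CPU % that triggers removing one
    min_instances: int = 2
    max_instances: int = 10


def desired_instances(current: int, cpu_samples: Sequence[float],
                      rule: ScalingRule) -> int:
    """Return the instance count a simple threshold rule would ask for."""
    if not cpu_samples:
        return current  # no data: leave the deployment alone
    avg = sum(cpu_samples) / len(cpu_samples)
    if avg > rule.scale_up_above:
        target = current + 1
    elif avg < rule.scale_down_below:
        target = current - 1
    else:
        target = current
    # Never drop below the floor or exceed the ceiling.
    return max(rule.min_instances, min(rule.max_instances, target))
```

Averaging over a window of samples, rather than reacting to a single reading, keeps one CPU spike from adding an instance.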

Here they are:

Tip 1 - Avoid crossing data center boundaries

Data that does not leave Microsoft's data center is not subject to transfer charges. Keep your communication between compute nodes, SQL Azure and Table Storage within the same data center as much as possible. This is especially important for applications distributed among multiple geo-locations.

Tip 2 - Minimize the number of compute hours by using auto scaling

Total compute hours are the biggest cost of using Azure. Auto scaling can minimize that cost by scaling the number of nodes on Azure up or down depending on demand.
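The saving from auto scaling comes down to instance-hours. The sketch below compares running enough instances for the peak all day with running exactly what each hour needs; the demand profile is hypothetical, and the model ignores scaling lag and billing granularity. Multiplying either figure by your hourly compute rate gives a cost.

```python
from typing import Sequence, Tuple


def instance_hours(hourly_demand: Sequence[int]) -> Tuple[int, int]:
    """Compare instance-hours for fixed peak provisioning vs. matching demand.

    hourly_demand[i] is the number of instances needed during hour i.
    Returns (fixed_provisioning_hours, demand_matched_hours).
    """
    if not hourly_demand:
        return 0, 0
    fixed = max(hourly_demand) * len(hourly_demand)  # run enough for the peak all day
    scaled = sum(hourly_demand)                      # run only what each hour needs
    return fixed, scaled


if __name__ == "__main__":
    # Hypothetical day: 8 busy hours needing 6 instances, 16 quiet hours needing 2.
    day = [6] * 8 + [2] * 16
    fixed, scaled = instance_hours(day)
    print(fixed, scaled)  # 144 80
```

In that hypothetical day, matching demand uses 64 fewer instance-hours, roughly 44% less than provisioning for the peak.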

Tip 3 - Use both Azure Table Storage (ATS) and SQL Azure

Papirov:

Try to not limit yourself to a choice between ATS or SQL Azure. Instead, it would be best to figure out when to use both together to your advantage. This is likely to be one of the tougher decisions that architects will need to make, as there are many compromises between relational storage of SQL Azure and highly scalable storage of ATS. Neither technology is perfect for every situation.

Tip 4 - ATS table modeling

This gets into the nitty-gritty, so I will leave it to Papirov to explain. Essentially, it requires careful modeling of table storage. By modeling, you will "not only speed up your transactions and minimize the amount of data transferred in and out of ATS, but also reduce the burden on your compute nodes that will be required to manipulate data and directly translate into cost savings across the board."

Tip 5 - Data purging in ATS

This is where Azure can be very tricky. In ATS, you are charged for every gigabyte stored. Issues arise with data purging because ATS is not a relational database: purging each row from an ATS table requires two transactions. As a result, purging data row by row can be expensive, inefficient and slow. "A better way would be to partition a single table into multiple versions (e.g. Sales2010, Sales2011, Sales2012, etc.) and purge obsolete data by deleting a version of a table at a time."
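Papirov's suggestion amounts to routing each row to a table named for its period, so that purging becomes dropping whole tables. The following toy model uses in-memory lists rather than the Table storage API; the class name, the `Sales` prefix and the operation counter are illustrative assumptions, and a dropped table is counted as a single operation.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List


class YearPartitionedStore:
    """Toy stand-in for ATS showing the "one table per period" purge pattern."""

    def __init__(self, prefix: str = "Sales") -> None:
        self.prefix = prefix
        self.tables: Dict[str, List[dict]] = defaultdict(list)
        self.operations = 0  # counts simulated storage calls

    def table_for(self, day: date) -> str:
        # Rows land in a table named after their year: Sales2010, Sales2011, ...
        return f"{self.prefix}{day.year}"

    def insert(self, day: date, row: dict) -> None:
        self.tables[self.table_for(day)].append(row)
        self.operations += 1

    def purge_before(self, year: int) -> List[str]:
        """Drop every table for years earlier than `year`, one call per table."""
        obsolete = [name for name in self.tables
                    if int(name[len(self.prefix):]) < year]
        for name in obsolete:
            del self.tables[name]  # whole table at once, however many rows it holds
            self.operations += 1
        return sorted(obsolete)
```

Purging a year then costs one operation regardless of row count, instead of a retrieve-and-delete for every row.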

The complexities of cloud computing will be a front and center issue this year. We expect it will continue to show why cloud management technologies will become critically important for any enterprise working to optimize its presence in the cloud.

Igor Papirov asserted As Windows Azure matures, independent software vendors are starting to provide extra value to Azure customers. Paraleap Technologies created a unique Elasticity-as-a-Service offering designed to help applications running in Azure capitalize on the pay-for-use model of Microsoft's cloud technology as a deck for his Microsoft Windows Azure Cloud's Elasticity is Enhanced by Chicago-based Paraleap Technologies press release of 1/26/2011:

Paraleap Technologies, a Chicago-based software company specializing in cloud-computing solutions, is proud to announce the long anticipated release of its flagship product AzureWatch, designed to provide dynamic scaling to applications running on Microsoft Windows Azure cloud platform.

After nearly a year of development and testing, AzureWatch is released with the purpose of delivering on the true value of cloud computing: on-demand elasticity and just-in-time provisioning of compute resources for applications running in the cloud. By automatically scaling Azure compute nodes up or down to match real-time demand, AzureWatch gives customers confidence that their applications will automatically handle spikes and valleys in usage, and eliminates the need for incessant manual monitoring of compute resource utilization.

"Elasticity of compute resources is one of the primary driving forces behind the cloud computing revolution," says Igor Papirov, Founder and CEO of Paraleap Technologies. "Not only can our customers take advantage of this awesome benefit of the cloud by using our technology, but they can do so in under 10 minutes and without writing a single line of code."

Built with a focus on simplicity and ease of use, AzureWatch can be set up in just minutes. While architected with a powerful and scalable engine to enable elasticity, AzureWatch also provides monitoring, alerts and comprehensive reporting services. Offered as a hybrid software-as-a-service, AzureWatch is available at http://www.paraleap.com and comes with a simple and affordable pricing model, including a free 14-day trial period.

About [the] Company

Founded in 2010, Paraleap Technologies is a Microsoft BizSpark startup specializing in software services for enabling cloud computing technologies. AzureWatch is Paraleap’s flagship product, designed to add dynamic scalability and monitoring to applications running on Microsoft Windows Azure cloud platform.

Christian Weyer explained Fixing Windows Azure SDK 1.3 Full IIS diagnostics and tracing bug with a startup task (a grain of salt) in a 1/26/2011 post:

We have been doing quite a few Azure consulting gigs lately. All in all Windows Azure looks quite OK with the SDK 1.3 and the features available.

But there is one big issue with web roles and Full IIS since SDK 1.3. I have hinted at it and my friend and colleague Dominick also blogged about the problem. The diagnostics topic seems like a never-ending story in Azure, but it got even worse with the bug introduced in SDK 1.3 – quoting the SDK release notes:

Some IIS 7.0 logs not collected due to permissions issues - The IIS 7.0 logs that are collected by default for web roles are not collected under the following conditions:

- When using Hostable Web Core (HWC) on instances running guest operating system family 2 (which includes operating systems that are compatible with Windows Server 2008 R2).

- When using full IIS on instances running any guest operating system (which is the default for applications developed with SDK 1.3).

The root cause to both of these issues is the permissions on the log files. If you need to access these logs, you can work around this issue by doing one of the following:

- To read the files yourself, log on to the instance with a remote desktop connection. For more information, see Setting Up a Remote Desktop Connection for a Role.

- To access the files programmatically, create a startup task with elevated privileges that manually copies the logs to a location that the diagnostic monitor can read.

Wonderful.

But I really needed this to work, to get my log files written in my web roles automatically collected by the diagnostics monitor.

Well, startup tasks to the rescue. I decided to change the ACLs on the said folder from the Azure local resources via a PowerShell script.

The script will be spawned through a .cmd file like this:

    powershell -ExecutionPolicy Unrestricted .\FixDiagFolderAccess.ps1

And here is the beginning of the PowerShell code. It tries to register the Windows Azure-specific CommandLets:

    Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime
    $localresource = Get-LocalResource "TraceFiles"
    # … some more stuff omitted …

I tried it locally and it seemed to work, great! Deployed it to the Cloud… didn’t work… Then I got in touch with Steve Marx. He played around a bit and found out that there is some kind of timing issue with registering the CommandLets… argh…

So, with that knowledge the working PS script now looks like this:

    # Registering the snap-in can fail right after startup, so retry until it succeeds.
    Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime
    while (!$?) {
        echo "Failed, retrying after five seconds..."
        sleep 5
        Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime
    }
    echo "Added WA snapin."

    # Resolve the local resource folder that holds the trace files.
    $localresource = Get-LocalResource "TraceFiles"
    $folder = $localresource.RootPath

    # Grant access so the diagnostics monitor can read the files.
    $acl = Get-Acl $folder
    $rule1 = New-Object System.Security.AccessControl.FileSystemAccessRule(
        "Administrators", "FullControl", "ContainerInherit, ObjectInherit",
        "None", "Allow")
    $rule2 = New-Object System.Security.AccessControl.FileSystemAccessRule(
        "Everyone", "FullControl", "ContainerInherit, ObjectInherit",
        "None", "Allow")
    $acl.AddAccessRule($rule1)
    $acl.AddAccessRule($rule2)
    Set-Acl $folder $acl
    echo "Done changing ACLs."

Yes, I do realize that I am adding Everyone with full access – mea culpa. I need to further investigate to actually cut down the ACLs. And let’s hope that Microsoft fixes this annoying bug quickly with a new SDK release.

So, how do we get things working properly now?

Another new feature since SDK 1.3 with Full IIS is that we now have two processes in the game: w3wp.exe for your web application and WaIISHost.exe for the RoleEntryPoint-based code. For this reason, if you also want to trace in your WebRole.cs, you need to register stuff in OnStart:

    using System;
    using System.Diagnostics;
    using Microsoft.WindowsAzure.Diagnostics;
    using Microsoft.WindowsAzure.ServiceRuntime;

    public class WebRole : RoleEntryPoint
    {
        public override bool OnStart()
        {
            base.OnStart();
            Trace.WriteLine("Entering OnStart in WebRole...");

            // Ship the TraceFiles local resource folder to the "traces" blob container.
            var traceResource = RoleEnvironment.GetLocalResource("TraceFiles");
            var config = DiagnosticMonitor.GetDefaultInitialConfiguration();
            config.Directories.DataSources.Add(
                new DirectoryConfiguration
                {
                    Path = traceResource.RootPath,
                    Container = "traces",
                    DirectoryQuotaInMB = 100
                });
            config.Directories.ScheduledTransferPeriod = TimeSpan.FromMinutes(1);

            DiagnosticMonitor.Start(
                "Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString",
                config);

            return true;
        }
    }

In addition, let’s use my custom trace listener I demonstrated earlier. This goes into the WaIISHost.exe.config file so it is picked up for tracing in your WebRole.cs (which will be compiled into WebRole.dll):

    <configuration>
      <system.diagnostics>
        <trace autoflush="true">
          <listeners>
            <add type="Thinktecture.Diagnostics.Azure.LocalStorageXmlWriterTraceListener, AzureXmlWriterTraceListener, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null"
                 name="AzureDiagnostics"
                 initializeData="TraceFiles\web_role_trace.svclog" />
          </listeners>
        </trace>
      </system.diagnostics>
    </configuration>

Last, but not least, also configure tracing for your web application, most likely in global.asax:

    using System;
    using System.Diagnostics;
    using Microsoft.WindowsAzure.Diagnostics;
    using Microsoft.WindowsAzure.ServiceRuntime;

    public class Global : System.Web.HttpApplication
    {
        void Application_Start(object sender, EventArgs e)
        {
            Trace.WriteLine("Application_Start...");

            var traceResource = RoleEnvironment.GetLocalResource("TraceFiles");
            var config = DiagnosticMonitor.GetDefaultInitialConfiguration();
            config.Directories.DataSources.Add(
                new DirectoryConfiguration
                {
                    Path = traceResource.RootPath,
                    Container = "traces",
                    DirectoryQuotaInMB = 100
                });
            config.Directories.ScheduledTransferPeriod = TimeSpan.FromMinutes(1);
        }
    }

And the appropriate entries in web.config:

    <configuration>
      <system.diagnostics>
        <trace autoflush="true">
          <listeners>
            <add type="Thinktecture.Diagnostics.Azure.LocalStorageXmlWriterTraceListener, AzureXmlWriterTraceListener, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null"
                 name="AzureDiagnostics"
                 initializeData="TraceFiles\web_trace.svclog" />
          </listeners>
        </trace>
      </system.diagnostics>
    </configuration>

With all that set up, I can see my trace (.svclog) files in my blob storage after the configured transfer period. Hooray!

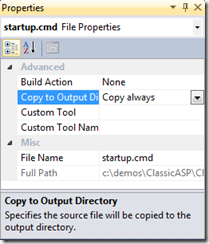

FYI, this is what my sample solution looks like in VS 2010, with the .cmd and .ps1 files in place (make sure they are set to Copy always!):

Oh yeah: it finally also works as expected in the real Cloud

Note: make sure to read through Steve’s tips and tricks for using startup tasks.

Andy Cross (@andybareweb) described Windows Azure AppPool Settings Programmatic Modification in a 1/26/2011 post:

There are times when using IIS that you need to modify settings for the Application Pool that your project runs in. In Windows Azure from v1.3, your WebRoles usually run in full IIS, so some settings that affect your software (say, Recycling) are configured outside the scope of your application. In a traditional deployment you might consider modifying the settings manually in IIS Manager, but this is not an option in Azure, since the roles may restart and would then lose your settings. You might also consider modifying applicationHost.config, which is certainly feasible but depends upon a startup task outside of the standard web programming model.

This post will show how it is possible to programmatically modify the settings for your Application Pool (appPool). The reasons for choosing this approach are compelling: at other seemingly opportune moments (such as the aforementioned startup tasks), your site has not yet been created by Windows Azure’s Compute services.

Run as Elevated

The first thing we must configure is to start each role with Elevated permissions. This gives the Startup methods more access than they would otherwise have; indeed, they may make any modification to the system that they are running on. This initially strikes many people as a dangerous move, but actually the only code that runs Elevated in this scenario is the RoleEntryPoint class, so usually only the OnStart method (and anything you call from it), as a little-known tip from Steve Marx points out:

To clarify, running a web role elevated only runs your RoleEntryPoint code elevated, and that happens before the role goes out of the Busy state (so before you’re receiving external traffic). Assuming you only override OnStart, all the code that runs elevated stops before the website is even up. I don’t view that as a significant increase in the attack surface.

To do this, we simply modify our ServiceDefinition.csdef to add a node under our WebRole describing the runtime execution context:

    <WebRole name="WebHost">
      <Runtime executionContext="elevated" />
      <!-- remaining WebRole elements unchanged -->
    </WebRole>

Microsoft.Web.Administration

The guts of our work is to be performed by an assembly called Microsoft.Web.Administration. It is a very useful tool that allows for programmatic control of IIS:

The Microsoft.Web.Administration namespace contains classes that a developer can use to administer IIS Manager. With the classes in this namespace, an administrator can read and write configuration information to ApplicationHost.config, Web.config, and Administration.config files.

Using this library, we will determine our application’s Application Pool name, and begin setting values as we desire. I must thank Wade Wegner for his excellent post on changing appPool identity, as this forms the basis of my approach.

First, instantiate a ServerManager, then find our application by building a string that matches our web site’s name in IIS (the current role instance ID, an underscore, and the site name held in webApplicationProjectName):

using (ServerManager serverManager = new ServerManager())
{
    var siteApplication = serverManager.Sites[RoleEnvironment.CurrentRoleInstance.Id + "_" + webApplicationProjectName].Applications.First();
    var appPoolName = siteApplication.ApplicationPoolName;
    var appPool = serverManager.ApplicationPools[appPoolName];
    // more ...
}

With this new instance variable “appPool” we can then access and set many properties of the Application Pool, just as we might in IIS Manager. For instance, we can change the way the Application Pool recycles itself:

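// TimeSpan.Zero turns off the idle-timeout shutdown...
appPool.ProcessModel.IdleTimeout = TimeSpan.Zero;
// ...and the time-based periodic recycle.
appPool.Recycling.PeriodicRestart.Time = TimeSpan.Zero;

Once we are happy with our modifications, we can commit these changes using: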

serverManager.CommitChanges();

This makes our OnStart method in total (don’t forget the directive using Microsoft.Web.Administration;):

public override bool OnStart()
{
    // For information on handling configuration changes
    // see the MSDN topic at http://go.microsoft.com/fwlink/?LinkId=166357.
    // Requires: using System; using System.Linq; using Microsoft.Web.Administration;
    //           using Microsoft.WindowsAzure.ServiceRuntime;
    var webApplicationProjectName = "Web";
    using (ServerManager serverManager = new ServerManager())
    {
        // Site key as built here: role instance ID + "_" + site name.
        var siteApplication = serverManager.Sites[RoleEnvironment.CurrentRoleInstance.Id + "_" + webApplicationProjectName].Applications.First();
        var appPoolName = siteApplication.ApplicationPoolName;
        var appPool = serverManager.ApplicationPools[appPoolName];
        appPool.ProcessModel.IdleTimeout = TimeSpan.Zero;
        appPool.Recycling.PeriodicRestart.Time = TimeSpan.Zero;
        serverManager.CommitChanges();
    }
    return base.OnStart();
}

Running this, either locally or in Azure, we can load inetmgr and see the result on the Application Pool:

ProcessModel changes once applied

Recycling Changes when applied
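For comparison, the same two settings can be applied by hand from an elevated command prompt (for example over RDP) with IIS’s appcmd tool. The Python sketch below only builds that command line. It assumes the standard appcmd location (%windir%\system32\inetsrv\appcmd.exe), the `set apppool` syntax with dotted attribute paths, and IIS’s [d.]hh:mm:ss time format; “MyAppPool” is a hypothetical pool name, since in Azure the real one is read from ApplicationPoolName as OnStart does. Check `appcmd set apppool /?` before relying on it. This is not the author’s method, and because the site does not exist yet when startup tasks run, it does not replace the OnStart approach.

```python
from datetime import timedelta

# Assumed standard location of IIS 7's command-line tool (expanded by cmd.exe).
APPCMD = r"%windir%\system32\inetsrv\appcmd.exe"


def iis_timespan(span: timedelta) -> str:
    """Format a timedelta the way IIS writes TimeSpans: [d.]hh:mm:ss."""
    total = int(span.total_seconds())
    days, rest = divmod(total, 86400)
    hours, rest = divmod(rest, 3600)
    minutes, seconds = divmod(rest, 60)
    hms = f"{hours:02d}:{minutes:02d}:{seconds:02d}"
    return f"{days}.{hms}" if days else hms


def appcmd_command(pool_name: str,
                   idle_timeout: timedelta = timedelta(0),
                   periodic_restart: timedelta = timedelta(0)) -> str:
    """Build an appcmd line mirroring the two OnStart assignments.

    A zero span disables the idle timeout and the time-based periodic
    recycle, just as TimeSpan.Zero does in the C# code.
    """
    return (f'{APPCMD} set apppool "{pool_name}"'
            f" /processModel.idleTimeout:{iis_timespan(idle_timeout)}"
            f" /recycling.periodicRestart.time:{iis_timespan(periodic_restart)}")


if __name__ == "__main__":
    # "MyAppPool" is a hypothetical name; look up the real one as OnStart does.
    print(appcmd_command("MyAppPool"))
```

For reference, IIS 7’s defaults are a 20-minute idle timeout and a 1,740-minute recycle interval, which this helper renders as 00:20:00 and 1.05:00:00.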

Conclusion

This technique is a very powerful one, giving you a way to configure the heart of your application’s execution context straight from .NET. I hope you find this useful.

I have uploaded a basic project source here: AppPoolProgrammaticModification

Steve Marx (@smarx) posted Windows Azure Startup Tasks: Tips, Tricks, and Gotchas on 1/25/2011:

In my last post, I gave a brief introduction to Windows Azure startup tasks and how to build one. The reason I’ve been thinking lately about startup tasks is that I’ve been writing them and helping other people write them. In fact, this blog is currently running on Ruby in Windows Azure, and this is only possible because of an elevated startup task that installs Application Request Routing (to use as a reverse proxy) and Ruby.

Through the experience of building some non-trivial startup tasks, I’ve learned a number of tips, tricks, and gotchas that I’d like to share, in the hopes that they can save you time. Here they are, in no particular order.

Batch files and Visual Studio

I mentioned this in my last post [see below], but it appears that text files created in Visual Studio include a byte order mark that makes it impossible to run them. To work around this, I create my batch files in notepad first. (Subsequently editing in Visual Studio seems to be fine.) This isn’t really specific to startup tasks, but it’s a likely place to run into this gotcha.

Debug locally with “start /w cmd”

This is a tip Ryan Dunn shared with me, and I’ve found it helpful. If you put

start /w cmd

in a batch file, it will pop up a new command window and wait for it to exit. This is useful when you’re testing your startup tasks locally, as it gives you a way to try out commands in exactly the same context as the startup task itself. It’s a bit like setting a breakpoint and using the “immediate” panel in Visual Studio. Remember to take this out before you deploy to the cloud, as it will cause the startup task to never complete.

Make it a background task so you can use Remote Desktop

“Simple” startup tasks (the default task type) are generally what you want, because they run to completion before any of your other code runs. However, they also run to completion before Remote Desktop is set up (also via a startup task). That means that if your startup task never finishes, you don’t have a chance to use RDP to connect and debug.

A tip that will save you lots of debugging frustration is to set your startup task’s taskType to “background” (just during development/debugging), which means RDP will still get configured even if your startup task fails to complete.

Log to a file

Sometimes (particularly for timing issues), it’s hard to reproduce an error in a startup task. You’ll be much happier if you log everything to a local file, by doing something like this in your startup task batch file:

command1 >> log.txt 2>> err.txt
command2 >> log.txt 2>> err.txt
...

Then you can RDP into the role later and see what happened. (Bonus points if you configure Windows Azure Diagnostics to copy these log files off to blob storage!)
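If your startup logic lives in a script rather than a batch file, the same habit carries over. A minimal Python sketch, assuming Python is available on the role (you would have to install it yourself) and that the file names log.txt and err.txt from the batch example suit you: each command’s output is appended to one file and its errors to another, and a failing command does not stop the ones after it, just as in the batch file.

```python
import os
import subprocess
import sys
import tempfile


def run_logged(commands, log_path="log.txt", err_path="err.txt"):
    """Run each command in turn, like `command >> log.txt 2>> err.txt`.

    stdout is appended to log_path and stderr to err_path; a failing command
    does not stop the later ones. Returns the list of exit codes.
    """
    codes = []
    with open(log_path, "a") as out, open(err_path, "a") as err:
        for cmd in commands:
            result = subprocess.run(cmd, stdout=out, stderr=err)
            codes.append(result.returncode)
    return codes


if __name__ == "__main__":
    # Demo in a temporary folder, using the current interpreter as the "command".
    folder = tempfile.mkdtemp()
    log = os.path.join(folder, "log.txt")
    err = os.path.join(folder, "err.txt")
    codes = run_logged([
        [sys.executable, "-c", "print('step 1 done')"],
        [sys.executable, "-c", "import sys; sys.exit('step 2 failed')"],
    ], log, err)
    print(codes, log, err)
```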

Executing PowerShell scripts

To execute an unsigned PowerShell script (the sort you’re likely to include as a startup task), you need to configure PowerShell first to allow this. In PowerShell 2.0, you can simply launch PowerShell from a batch file with

powershell -ExecutionPolicy Unrestricted ./myscript.ps1

This will work fine in Windows Azure if you’re running with osFamily="2", which gives you an operating system image based on Windows Server 2008 R2. If you’re using osFamily="1", though, you’ll have PowerShell 1.0, which doesn’t include this handy command-line argument. For PowerShell 1.0, the following one-liner should tell PowerShell to allow any scripts, so run this in your batch file before calling PowerShell:

reg add HKLM\Software\Microsoft\PowerShell\1\ShellIds\Microsoft.PowerShell /v ExecutionPolicy /d Unrestricted /f

(I haven’t actually tested that code yet… but I found it on the internet, so it must be right.)
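The choice above boils down to a small decision on osFamily. Here is a Python sketch that returns the batch-file lines for each case. The command strings are copied from the text, ./myscript.ps1 is the placeholder used there, invoking the script as `powershell ./myscript.ps1` after the registry change is an assumption, and (as noted) the osFamily="1" registry line is untested.

```python
# Registry key from the text above; the PowerShell 1.0 path is untested.
POLICY_KEY = r"HKLM\Software\Microsoft\PowerShell\1\ShellIds\Microsoft.PowerShell"


def powershell_batch_lines(script: str, os_family: str) -> list:
    """Return the batch-file lines that run an unsigned PowerShell script."""
    if os_family == "2":
        # Windows Server 2008 R2 ships PowerShell 2.0: override per invocation.
        return [f"powershell -ExecutionPolicy Unrestricted {script}"]
    if os_family == "1":
        # PowerShell 1.0 lacks -ExecutionPolicy, so change the machine policy first.
        return [
            f"reg add {POLICY_KEY} /v ExecutionPolicy /d Unrestricted /f",
            f"powershell {script}",  # assumed invocation after the policy change
        ]
    raise ValueError(f"unexpected osFamily {os_family!r}")


if __name__ == "__main__":
    for family in ("1", "2"):
        print(f'osFamily="{family}":')
        for line in powershell_batch_lines("./myscript.ps1", family):
            print("  " + line)
```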

Using the Service Runtime from PowerShell

In Windows Azure, you’ll find a PowerShell snap-in that lets you interact with the service runtime APIs. There’s a gotcha with using it, though, which is that the snap-in is installed asynchronously, so it’s possible for your startup task to run before the snap-in is available. To work around that, I suggest the following code (from one of my startup scripts), which simply loops until the snap-in is available:

Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime
while (!$?) {
    sleep 5
    Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime
}

(That while loop condition amuses me greatly. It means “Did the previous command fail?”)
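The same wait-and-retry idea, as a generic Python sketch: `action` stands in for the Add-PSSnapin call (any callable that raises until it succeeds), and the 5-second delay matches the script above. The optional `max_attempts` cap is an addition not in the original script; leave it as None to loop forever, as the PowerShell version does. The `sleep` parameter exists only so the loop can be tested without real waiting.

```python
import time


def retry_until_success(action, delay_seconds=5, max_attempts=None, sleep=time.sleep):
    """Call action() until it stops raising, sleeping between attempts.

    With max_attempts=None this loops forever, like the PowerShell while loop;
    otherwise the last exception is re-raised after max_attempts failures.
    """
    attempt = 0
    while True:
        attempt += 1
        try:
            return action()
        except Exception:
            if max_attempts is not None and attempt >= max_attempts:
                raise
            sleep(delay_seconds)
```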

Using WebPICmdline to run 32-bit installers

[UPDATE 1/25/2011 11:47pm] Interesting timing! WebPI Command Line just shipped. If you scroll to the bottom of the announcement, you’ll see instructions for running it in Windows Azure. There’s an AnyCPU version of the binary that you should use. However, it doesn’t address the problem I’m describing here.

Using WebPICmdline (in CTP now, but about to ship) is a nice way to install things (particularly PHP), but running in the context of an elevated Windows Azure startup task, it has a surprising gotcha that’s difficult to debug. Elevated startup tasks run as NT AUTHORITY\SYSTEM, which is a special user in a number of ways. One way it’s special is that its user profile is under the system32 directory. This matters because on 64-bit machines (like all VMs in Windows Azure), 64-bit processes see the system32 directory, but 32-bit processes see the SysWOW64 directory instead.

When the Web Platform Installer (via WebPICmdline or otherwise) downloads something to execute locally, it stores it in the current user’s “Local AppData,” which is typically under the user profile. This will end up under system32 in our elevated startup task, because WebPICmdline is a 64-bit process. The gotcha comes when WebPI executes that code. If that code is a 32-bit self-extracting executable, it will try to read itself (to unzip) and search in the SysWOW64 directory instead. This doesn’t work, and will typically pop up a dialog box (that you can’t see) and hang until you dismiss it (which you can’t do).

This is a weird combination of things, but the point is that many applications or components that you install via WebPICmdline will run into this issue. The good news is that there are a couple of simple fixes. One fix is to use a normal user (instead of system) to run WebPICmdline. David Aiken has a blog post called “Running Azure startup tasks as a real user,” which describes exactly how to do this.

I have a different solution, which I find simpler if all you need to do is work around this issue. I simply change the location of “Local AppData.” Here’s how to do that. (I inserted line breaks for readability. This should be exactly four lines long.)

md "appdata"
reg add "hku\.default\software\microsoft\windows\currentversion\explorer\user shell folders" /v "Local AppData" /t REG_EXPAND_SZ /d "%~dp0appdata" /f
webpicmdline /Products:PHP53 >>log.txt 2>>err.txt
reg add "hku\.default\software\microsoft\windows\currentversion\explorer\user shell folders" /v "Local AppData" /t REG_EXPAND_SZ /d %%USERPROFILE%%\AppData\Local /f

Use PsExec to try out the SYSTEM user

PsExec is a handy tool from Sysinternals for starting processes in a variety of ways. (And I’m not just saying that because Mark Russinovich now works down the hall from me.) One thing you can do with PsExec is launch a process as the system user. Here’s how to launch a command prompt in an interactive session as system:

psexec -s -i cmd

You can only do this if you’re an administrator (which you are when you use remote desktop to connect to a VM in Windows Azure), so be sure to elevate first if you’re trying this on your local computer.

Steve Marx (@smarx) started a two-part series with his Introduction to Windows Azure Startup Tasks on 1/25/2011:

Startup tasks are a simple way to run some code during your Windows Azure role’s initialization. Ryan and I gave a basic introduction to startup tasks in Cloud Cover Episode 31. The basic idea is that you include some sort of executable code in your role (usually a batch file), and then you define a startup task to execute that code.

The purpose of a startup task is generally to set something up before your role actually starts. The example Ryan and I used was running Classic ASP in a web role. That’s quite a simple trick with a startup task. Add a batch file called startup.cmd to your role. Be sure to create it with notepad or another ASCII text editor… batch files created in Visual Studio seem to have a byte order mark at the top that makes them fail when executed. Believe it or not, this is the entirety of the batch file:

start /w pkgmgr /iu:IIS-ASP

Mark the batch file as “Copy to Output Directory” in Visual Studio. This will make sure the batch file ends up in the bin folder of your role, which is where Windows Azure will look for it.

Create the startup task by adding this code to ServiceDefinition.csdef in the web role:

<Startup>
  <Task commandLine="startup.cmd" executionContext="elevated" />
</Startup>

Note that this startup task is set to run elevated. Had I chosen the other option (“limited”), the startup task would run as a standard (non-admin) user. Choosing an execution context of “elevated” means the startup task will run as NT AUTHORITY\SYSTEM instead, which gives you plenty of permission to do whatever you need.

There’s another attribute on the <Task /> element, which I haven’t defined here. That’s the taskType attribute, which specifies how the startup task runs with respect to the rest of the role lifecycle. The default is a “simple” task, which runs synchronously, in that nothing else runs until the startup task completes. The other two task types, “background” and “foreground,” run concurrently with other startup tasks and with the rest of your role’s execution. The difference between the two is analogous to the difference between background and foreground threads in .NET. In practice, I have yet to see the “foreground” task type used.

The above code actually works. Add a default.asp, and you’ll see Classic ASP running in Windows Azure.

NOTE: In testing this code today, it seems that this only works if you’ve specified osFamily="2" in your ServiceConfiguration.cscfg. (With osFamily="1", the behavior I was seeing was that pkgmgr never exited. I’m not sure why this is, and it could be something unrelated that I changed, but I thought I’d point it out.)

Download the Code

If you want to try Classic ASP in Windows Azure, you can download a full working Visual Studio solution here: http://cdn.blog.smarx.com/files/ClassicASP_source.zip

More Information

Wade Wegner (Windows Azure evangelist and new cohost of Cloud Cover) has also been working with startup tasks recently, so he and I decided to do a show dedicated to sharing what we’ve learned. We’ll record that show tomorrow and publish it on Channel 9 this Friday. Be sure to check out that episode.
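To recap the <Task /> attributes described above, here is a small Python sketch (standard library only) that emits the <Startup> fragment and rejects values other than the ones the post names: commandLine, executionContext (“limited” or “elevated”) and the optional taskType (“simple”, “background” or “foreground”). The function name is hypothetical, and it produces only the fragment that goes inside the role element in ServiceDefinition.csdef, without the schema namespace. Per the debugging tip in the Tips, Tricks, and Gotchas post above, passing task_type="background" during development lets Remote Desktop come up even if the task hangs.

```python
import xml.etree.ElementTree as ET

EXECUTION_CONTEXTS = {"limited", "elevated"}
TASK_TYPES = {"simple", "background", "foreground"}


def startup_fragment(command_line, execution_context="elevated", task_type=None):
    """Build a <Startup> element for ServiceDefinition.csdef as a string.

    task_type=None leaves the attribute out, so the default ("simple") applies.
    """
    if execution_context not in EXECUTION_CONTEXTS:
        raise ValueError(f"executionContext must be one of {sorted(EXECUTION_CONTEXTS)}")
    startup = ET.Element("Startup")
    task = ET.SubElement(startup, "Task",
                         commandLine=command_line,
                         executionContext=execution_context)
    if task_type is not None:
        if task_type not in TASK_TYPES:
            raise ValueError(f"taskType must be one of {sorted(TASK_TYPES)}")
        task.set("taskType", task_type)
    # Attribute order follows insertion order on Python 3.8+.
    return ET.tostring(startup, encoding="unicode")


if __name__ == "__main__":
    print(startup_fragment("startup.cmd"))
    print(startup_fragment("startup.cmd", task_type="background"))
```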

Avkash Chauhan described a workaround for Microsoft.WindowsAzure.StorageClient.CloudDriveException was unhandled by user code Message = ERROR_UNSUPPORTED_OS in a 1/25/2011 post:

I had an ASP.NET application based on Windows Azure SDK 1.2 that used a cloud drive within the Web role and was working fine. I decided to upgrade the exact same application to Windows Azure SDK 1.3, and while testing it in the cloud, I immediately hit the following error:

Microsoft.WindowsAzure.StorageClient.CloudDriveException was unhandled by user code
Message=ERROR_UNSUPPORTED_OS
Source=Microsoft.WindowsAzure.CloudDrive
StackTrace:
   at Microsoft.WindowsAzure.StorageClient.CloudDrive.InitializeCache(String cachePath, Int32 totalCacheSize)

I kept trying and found that the problem is intermittent and does not happen 100% of the time. Because my application was working fine with Windows Azure SDK 1.2, I decided to run my SDK 1.3 based application in legacy (HWC) mode. I commented out the <Sites> section in ServiceDefinition.csdef and uploaded the package again. The results were the same: the problem occurred just as it had with full IIS. I tried a couple of times, and the "ERROR_UNSUPPORTED_OS" error was intermittent in HWC mode as well.

At least now I had a theory: this problem might be related to the CloudDrive service not yet being available when the role started and made a CloudDrive call. I decided to RDP to my Windows Azure VM to track this issue internally. During investigation I found that the problem was indeed related to timing: the Azure CloudDrive service is started by the Windows Azure OS, whereas the role is started by the WinAppAgent.exe process.

Further investigation showed that the problem could affect the following CloudDrive APIs as well:

- CloudDrive.Create()

- CloudDrive.Mount()

- CloudDrive.InitializeCache()

- CloudDrive.GetMountedDrives()

The Windows Azure Team do know about this issue and suggested the following workaround:

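We recommend working around the issue by retrying the first CloudDrive API your service calls. The following is an example of code that retries the CloudDrive.InitializeCache() operation.

// Requires: using System.Threading; using Microsoft.WindowsAzure.StorageClient;
// localCache is a LocalResource (Microsoft.WindowsAzure.ServiceRuntime) obtained earlier.
for (int i = 0; i < 30; i++)
{
    try
    {
        CloudDrive.InitializeCache(localCache.RootPath, localCache.MaximumSizeInMegabytes);
        break;
    }
    catch (CloudDriveException ex)
    {
        // Retry only the transient error, and give up after the 30th attempt.
        if (!ex.Message.Equals("ERROR_UNSUPPORTED_OS") || i == 29)
            throw;
        Thread.Sleep(10000); // wait 10 seconds before retrying
    }
}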
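This pattern differs from an open-ended retry loop in two ways: it retries only the one transient error, and it gives up after a fixed number of attempts. A Python sketch of the same control flow, where CloudDriveError is a hypothetical stand-in for CloudDriveException (only its message is inspected) and `operation` stands in for the first CloudDrive call, such as InitializeCache; the defaults of 30 attempts and 10 seconds mirror the C# above, and `sleep` is injectable only for testing.

```python
import time


class CloudDriveError(Exception):
    """Hypothetical stand-in for CloudDriveException; only str(ex) is used."""


def call_with_startup_retry(operation, attempts=30, delay_seconds=10,
                            transient_message="ERROR_UNSUPPORTED_OS",
                            sleep=time.sleep):
    """Retry operation() while it fails with the transient message.

    Any other CloudDriveError, or the transient one on the final attempt,
    is re-raised, like the `throw;` in the C# loop.
    """
    for i in range(attempts):
        try:
            return operation()
        except CloudDriveError as ex:
            if str(ex) != transient_message or i == attempts - 1:
                raise
            sleep(delay_seconds)
```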

The new Avkash picture is much nicer than the previous cartoon character, no?

Wes Yanaga reported Free Windows Azure Training on Zune & iTunes in a 1/25/2011 post to the US ISV Evangelism blog:

Thanks to the Born to Learn blog for posting the “Building Cloud Applications using the Windows Azure Platform” series. These HD videos are a 12-session, in-depth illustration of how to leverage the Cloud using the Windows Azure Platform.

All of these HD-quality videos are available on both Zune and iTunes for free (you can also subscribe to the Zune RSS Feed). You can watch each of these sessions here as well.

If you’re new to the Windows Azure platform, you can get a no-cost 30-day trial by visiting this link and using this promo code:

Promo Code: DPWE01 [for] Windows Azure platform 30 day pass (US Developers)

At present, a single Windows Live ID can take advantage of only one Windows Azure 30-Day Pass, regardless of the promo code.

<Return to section navigation list>

Visual Studio LightSwitch

<Return to section navigation list>

Windows Azure Infrastructure

Tim Anderson (@timanderson) asked and answered How is Windows Azure doing? Few mission critical apps says Microsoft on 1/26/2011:

I attended an online briefing given by Azure marketing man Prashant Ketkar. He said that Microsoft is planning to migrate its own internal systems to Azure, “causing re-architecture of apps,” and spoke of the high efficiency of the platform. There are thousands of servers being managed by very few people he said – if you visit a Microsoft datacenter, “you will be struck by the absence of people.” Some of the efficiency is thanks to what he called a “containerised model”, where a large number of servers is delivered in a unit with all the power, networking and cooling systems already in place. “Just add water, electricity and bandwidth,” he said, making it sound a bit like an instant meal from the supermarket.

But how is Azure doing? I asked for an indication of how many apps were deployed on Azure, and statistics for data traffic and storage. “For privacy and security reasons we don’t disclose the number of apps that are running on the platform,” he said, though I find that rationale hard to understand. He did add that there are more than 10,000 subscribers and said it is “growing pretty rapidly,” which is marketing speak for “we’re not saying.”

I was intrigued though by what Ketkar said about the kinds of apps that are being deployed on Azure. “No enterprise is talking about taking a tier one mission critical application and moving it to the cloud,” he said. “What we see is a lot of marketing campaigns, we see a lot of spiky workloads moving to the cloud. As the market start[s] to get more and more comfortable, we will see the adoption patterns change.”

I also asked whether Microsoft has any auto-scaling features along the lines of Amazon’s Elastic Beanstalk planned. Apparently it does. After acknowledging that there is no such feature currently in the platform, though third-party solutions are available, he said that “we are working on truly addressing the dynamic scaling issues – that is engineering work that is in progress currently.”


Tad Anderson posted Applied Architecture Patterns on the Microsoft Platform Book Review on 1/25/2011:

This is a book that would be good for anyone that wants to get a snapshot of the current Microsoft technology stack.

It gives decent primers on Windows Communication Foundation (WCF) 4.0 and Windows Workflow (WF) 4.0, Windows Server AppFabric, BizTalk, SQL Server, and Windows Azure.

The primers are thorough enough to give you a decent understanding of each technology.

The book then covers common scenarios found in most enterprise level applications. The scenarios include Simple Workflow, Content-based Routing, Publish-Subscribe, Repair/Resubmit with Human Workflow, Remote Message Broadcasting, Debatching Bulk Data, Complex Event Processing, Cross-Organizational Supply Chain, Multiple Master Synchronization, Rapid Flexible Scalability, Low-Latency Request-Reply, Handling Large Session and Reference Data, and Website Load Burst and Failover.

Each topic has a complete chapter dedicated to it.

The chapters start off by describing the requirements, presenting a pattern, and then they list several candidate architectures giving the good and bad aspects of each. They then pick the best one and implement a solution.

Most of the chapters were pretty good. The only one I found really lacking was the one on Multiple Master Synchronization. It included SSIS, Search Server Express, and Microsoft's Master Data Services as part of the solution. The details of the solutions were way to[o] vague to give any real insight into how to implement the suggested architecture.

All in all I found this book a very interesting read. It gave some great insight into Microsoft's current technology stack. I definitely recommend grabbing a copy.

Tad is a .NET software architect from Mt. Joy, Pennsylvania

Kurt Mackie posted Microsoft Unveils Online Services Support Lifecycle Policy to the Redmond Channel Partner Online blog on 1/25/2011:

Microsoft announced today that it has rolled out a new support lifecycle policy for its Online Services offerings.

The online policy differs from Microsoft's support lifecycle policies for its customer premises-installed business products, which generally have two support periods of five years each (called "mainstream support" and "extended support"). Online Services represent a different support scenario because the hosted applications are updated and maintained by Microsoft, on an ongoing basis, at its server farms. Consequently, the support lifecycle policy for Online Services varies from that of the installed products.

A Microsoft blog post explained that the support lifecycle for Online Services mostly becomes a consideration when Microsoft plans to deliver a so-called "disruptive change."

"Disruptive change broadly refers to changes that require significant action whether in the form of administrator intervention, substantial changes to the user experience, data migration or required updates to client software," the blog explains. Security updates delivered by Microsoft aren't considered by the company to be disruptive changes.

Under the Online Services support lifecycle policy, Microsoft plans to notify Online Services customers 12 months in advance "before implementing potentially disruptive changes that may result in a service disruption," the blog states.

The Online Services support lifecycle policy doesn't alter policies associated with Microsoft's premises-installed products. Customers using a hybrid approach, combining premises-installed Microsoft software plus services provided by Microsoft, will have to update those premises-installed products. In some cases, those on-premises updates must be applied to ensure continued integration with the online services.

The policy also spells out what happens when Microsoft ends a service. Microsoft will provide 12 months advance notice to the customer before ending a "Business and Developer-oriented Online Service," the blog explains. There's a 30-day holding period before removing customer data from Microsoft's server farms.

Microsoft's announcement today constitutes its debut of the new Online Services support lifecycle policy. For those organizations already subscribed to Microsoft's Online Services, implementation of the policy will take place this year or the next, depending on the contract, according to the Microsoft Online Services support lifecycle FAQ. Microsoft will deliver any notices about disruptive changes to the administrative contact established on the account.

Microsoft Online Services includes Windows Azure, Microsoft's platform in the cloud, along with various software-as-a-service offerings such as Office 365, which combines productivity, unified communications and collaboration apps. Microsoft also plans to roll out Windows Intune, a PC management service, sometime this year. Earlier this month, Microsoft announced the availability of Dynamics CRM 2011 Online, a hosted customer relationship management app. Microsoft's current Online Services offerings are described at this page. [Emphasis added.]

Kurt is online news editor, Enterprise Group, at 1105 Media Inc.

Full disclosure: I’m a contributing editor to 1105 Media’s Visual Studio Magazine.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

Andrew R. Hickey (@andrewrhickey) asked Dell Plots Public Cloud Provider Push, Does It Have The Chops? in a 1/25/2011 post to CRN:

Dell is mounting a major cloud computing offensive to take on Amazon and other public cloud providers with the launch of its own end-to-end public cloud computing play.

In a not-so-subtle tweet, Logan McLeod, Dell's cloud strategy director, vaulted Dell into the public cloud space and hinted that Dell is moving beyond its private cloud and server roots to become a full-on public cloud service provider.

"Dell as a public cloud end-to-end service provider? Yes. IaaS [and] PaaS. Coming soon. Dell DC near you," McLeod wrote from his Twitter feed about Dell throwing its hat into the public cloud ring. McLeod offered no additional information.

Dell would not clarify McLeod's tweet, but in a statement e-mailed to CRN the company said it "has already disclosed our plans to support Microsoft Azure to develop and deliver public and private cloud services to customers. We look forward to sharing more information at the appropriate time." Dell did not provide additional information.

Under Dell's partnership with Microsoft, Dell Services will implement the limited production release of the Windows Azure platform appliance to host public and private clouds for its customers and provide advisory services, application migration, and integration and implementation services. Dell will also work with Microsoft to develop a Windows Azure platform appliance for large enterprise, public and hosting customers to deploy in their own data centers, which will leverage infrastructure from Dell combined with the Windows Azure platform.

Dell's partnership with Microsoft, however, is not a full, end-to-end Dell public cloud solution complete with IaaS and PaaS as McLeod's tweet promises. Becoming a full-fledged public cloud and hosting provider with true Infrastructure-as-a-Service and Platform-as-a-Service in addition to its current private cloud placement would be somewhat of a departure for Dell, which has largely played in private clouds, and solution providers and industry watchers wonder if the tech superpower can hack it in a market that, while young, is already fairly established by the likes of Amazon, HP, IBM, Rackspace and myriad others.

"On the surface, it just seems like a me-too kind of play," said Tony Safoian, CEO of North Hollywood cloud solution provider SADA Systems.

If Dell were to launch a full set of public cloud services and hosting offerings it would be under intense pressure to differentiate itself from the pack. And Safoian said he doesn't see any clear differentiators.

Safoian added that a Dell public cloud service could be a move on Dell's part to recoup some of the services revenue that is slipping away as companies shift to the cloud.

"This is a play that could increase services revenue that the cloud is eating into," Safoian said. "It's sort of a me-too to stay relevant and, if successful, bring back some revenue they're losing to the cloud paradigm shift."

But Safoian said Dell is already at a major disadvantage; getting into the public cloud late in the game is a daunting task. Just ask some of Dell's competitors. "Even Microsoft is seeing how it'll be tough for them," he said.


Cath Everett prefaced her Private cloud: Key steps for migrating your organisation post of 1/20/2011 to the Silicon.com blog with “All the issues, from lock-in to chargebacks and organisational and governance challenges...”:

ANALYSIS

Moving to private cloud services need not be as traumatic as moving house. Putting in the right foundations now eases the transition and helps when it comes to the shift to public cloud services. Cath Everett reports.

For the next couple of years, organisations will invest more in private cloud services than they will in public cloud offerings, but ultimately will use the private cloud as a stepping stone to the public cloud, according to Gartner.

Even so, the analyst firm believes that many organisations will operate a hybrid model that comprises a mix of private, public and non-cloud services for a number of years to come. This approach means that a primary responsibility for IT departments in future will be to source the most appropriate IT service for the job.

However, despite such predictions, it appears the wholesale adoption of private cloud is still some way off. Joe Tobolski, senior director of cloud assets and architectures at Accenture, explains: "The notion of private cloud is aspirational at best today and is in the very early days. There are two reasons for this - the hype is at an all-time high right now and there are very real commercial and architectural challenges involved."

To add to the situation, many organisations have also delayed much of the necessary internal application and infrastructure renewal work as a result of the still difficult economic climate.

But while some are claiming to run private clouds after having introduced a rack of virtualised servers, "99.99 per cent of datacentres are working in traditional ways. So rather than being private clouds, they're really islands of automation", Tobolski says.

The sectors that are showing most interest in the concept are those where workload volumes peak and trough and tend to be cyclical, such as retail, distribution, some global manufacturers and the public sector.

1. What is private cloud?

A starting point when defining private cloud is stating what it is not. And what it is not is simply the virtualisation and automation of existing IT. As Derek Kay, director of cloud services at Deloitte, points out, while genuine private cloud implementations do exist, some have simply been "sprayed with the private cloud spray" when they are in reality just big automated server farms. "That will get you part of the way there, but really it's about a complete transformation programme. If you think of it as just a technical programme, you'll only get a fraction of the benefit, but for a small amount of additional focus and a willingness to adopt more widespread change, you can derive much more value at little extra cost," he says.

So one definition of private cloud is that it comprises IT services that are delivered to consumers via a browser, using internet protocols and technology. Underlying platforms are shared, scalability is unlimited and users can obtain either more or less capacity depending on their requirements. Finally, pricing is based on consumption and usage and is likely to include some form of chargeback mechanism.

One organisation that has gone a good way down the private cloud route, for example, is Reed Specialist Recruitment. It has centralised, standardised and virtualised its back-end infrastructure using EMC's VMware and rewritten most of its applications so that they can be accessed via a browser.

The firm, which employs 3,500 staff globally, 3,300 of whom are based in the UK, has also adopted ITIL to provide a service-management approach to IT delivery and introduce more rigour into its change-management processes, while likewise introducing a chargeback mechanism for payment.

But rather than use internet-based technology as its key means of...


<Return to section navigation list>

Cloud Security and Governance

Lori MacVittie (@lmacvittie) claimed Cloning. Boomeranging. Trojan clouds. Start up CloudPassage takes aim at emerging attack surfaces but it’s still more about process than it is product as a preface to her Get Your Money for Nothing and Your Bots for Free post of 1/26/2011 to F5’s DevCentral blog:

Before we go one paragraph further let’s start out by setting something straight: this is not a “cloud is insecure” or “cloud security – oh noes!” post.

Cloud is involved, yes, but it’s not necessarily the source of the problem; that would be virtualization and processes (or a lack thereof). Emerging attack methods and botnet propagation techniques can be just as problematic for a virtualization-based private cloud as for public cloud. That’s because the problem isn’t necessarily with cloud; it’s with an underlying poor server security posture that is made potentially many times more dangerous by the ease with which vulnerabilities can be propagated and migrated across and between environments.

That said, a recent discussion with a cloud startup called CloudPassage has given me pause to reconsider some of the ancillary security issues being discovered as a result of the use of cloud computing. Are there security issues with cloud computing? Yes. Are they enabled (or made worse) by cloud computing models? Yes. Does that mean cloud computing is off-limits? No. It still comes down to extending proper security practices into the cloud and, potentially, to new architecture-based solutions for addressing the limitations imposed by today’s compute-on-demand-focused offerings. The question is whether proper security practices and processes can be automated, through new solutions or through devops tools like Chef and Puppet, or whether they require manual adherence to secure processes.

CLOUD SECURITY ISN’T the PROBLEM, IT’S THE WAY WE USE IT (AND WHAT WE PUT IN IT)

We’ve talked about “cloud” security before and I still hold to two things: first, there is no such thing as “cloud” security and second, cloud providers are, in fact, acting on security policies designed to secure the networks and services they provide.

That said, there are in fact several security-related issues that arise from the way in which we might use public cloud computing, and it is those issues we need to address. These issues are not peculiar to public cloud computing per se, but some are specifically related to the way in which we might use – and govern – cloud computing within the enterprise. These emerging attack methods may give some credence to fears about cloud security. Unfortunately for those who might think that adds another checkmark to the “con” list for cloud, it isn’t all falling on the shoulders of the cloud or the provider; in fact, a large portion of the problem falls squarely in the lap of IT professionals.

CLONING

One often-cited benefit of virtualization is the ability to easily propagate a “gold image”. That’s true, but what happens when that “gold image” contains a rootkit? A trojan? A botnet controller? Trojans, malware, and viruses are propagated via virtualization just as easily as web and application servers and configuration are. The bad guys make a lot of money these days by renting out botnet controllers, and if they can enlist your cloud-hosted services in that endeavor to do most of the work for them, they’re making money for nothing and getting bots for free.

Solution: Constant vigilance and server vulnerability management. Ensure that guest operating system images are free of vulnerabilities, hardened, patched, and up to date. Include identity management issues, such as accounts created for development that should not be active in production or those accounts assigned to individuals who no longer need access.
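As a rough illustration of the identity-management part of that vigilance, the following Python sketch audits the /etc/passwd lines taken from a guest image and flags interactive, non-system accounts that are not on an approved list, such as a leftover development login. The approved list, the UID cutoff of 1000, and the set of non-login shells are assumptions that vary by distribution, and privileged system accounts such as root are outside the scope of this check; it is a sketch, not a complete hardening audit.

```python
from typing import Iterable, List, Set

# Shells that block interactive logins (assumption: common Linux defaults).
NON_LOGIN_SHELLS = {"/usr/sbin/nologin", "/sbin/nologin", "/bin/false"}


def flag_unapproved_accounts(
    passwd_lines: Iterable[str], approved: Set[str], min_uid: int = 1000
) -> List[str]:
    """Return interactive, non-system accounts in /etc/passwd that are not approved."""
    flagged: List[str] = []
    for raw in passwd_lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(":")
        if len(fields) != 7 or not fields[2].isdigit():
            continue  # skip malformed entries
        name, uid, shell = fields[0], int(fields[2]), fields[6]
        if uid < min_uid or shell in NON_LOGIN_SHELLS:
            continue  # system accounts, and service accounts without a login shell
        if name not in approved:
            flagged.append(name)  # e.g. a development account left in the image
    return flagged
```

Run against every image before it is cloned, a non-empty result would be a reason to stop and clean the image first.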

BOOMERANGING

Public cloud computing is also often touted as a great way to reduce the time and costs associated with development. Just fire up a cloud instance, develop away, and when it’s ready you can migrate that image into your data center production environment. So what if that image is compromised while it’s in the public cloud? Exactly – the compromised image, despite all your security measures, is now inside your data center, ready to propagate itself.

Solution: Same as with cloning, but with additional processes that require a vulnerability scan of an image before it’s placed into the production environment, and vice versa.
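One way to enforce that gate is to tie each scan result to the image’s exact contents, so an image that changed after it was scanned, whether in the cloud or in the data center, cannot cross the boundary in either direction. The sketch below is hypothetical: `ScanResult`, `may_promote`, and the zero-critical-findings threshold are illustrative names and policy, not part of any product, and a real pipeline would hash large images in chunks rather than in memory.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ScanResult:
    """Outcome of a vulnerability scan, keyed to the exact image contents."""
    image_sha256: str
    critical_findings: int


def image_digest(image_bytes: bytes) -> str:
    return hashlib.sha256(image_bytes).hexdigest()


def may_promote(image_bytes: bytes, scans: Dict[str, ScanResult], max_critical: int = 0) -> bool:
    """Allow an image to move between cloud and data center only if these exact bytes passed a scan."""
    result = scans.get(image_digest(image_bytes))
    if result is None:
        return False  # never scanned, or modified since the last scan
    return result.critical_findings <= max_critical
```

Keying on the digest rather than on an image name is the design choice that matters here: a boomeranged image that picked up anything while it was away simply has no passing scan on record.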

SERVER VULNERABILITIES

How the heck could an image in the public cloud be compromised, you ask? Vulnerabilities in the base operating system used to create the image. It is as easy as point and click to create a new server in a public cloud such as EC2, but that server – the operating system – may not be hardened, patched, or anywhere near secured when it’s created. That’s your job. Public cloud computing implies a shared customer-provider security model, one in which you, as the customer, must actively participate.

“…the customer should assume responsibility and management of, but not limited to, the guest operating system... and associated application software...”

“it is possible for customers to enhance security and/or meet more stringent compliance requirements with the addition of… host based firewalls, host based intrusion detection/prevention, encryption and key management.” [emphasis added]

-- Amazon Web Services: Overview of Security Processes (August 2010)

Unfortunately, most public cloud providers’ terms of service prohibit actively scanning servers in the public cloud for such vulnerabilities. No, you can’t just run Nessus out there, because the scanning process is likely to have a negative impact on the performance of other customers’ services sharing that hardware. So you’re left with a potentially vulnerable guest operating system – the security of which you are responsible for according to the terms of service, yet which you cannot adequately explore with traditional security assessment tools. Wouldn’t you like to know, after all, whether the default guest operating system configuration allows or disallows null passwords for SSH? Wouldn’t you like to know whether vulnerable Apache modules – like python – are installed? Patched? Up to date?
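The SSH question is the kind of thing software running on the host can answer by reading local configuration rather than probing over the network. The sketch below is one assumed way to do that, not how CloudPassage does it: it reads OpenSSH sshd_config text and reports whether PermitEmptyPasswords is enabled. It relies on sshd using the first value it obtains for a keyword and on the documented default of "no", and it deliberately ignores Match blocks and Include directives.

```python
import re
from typing import Dict, Iterable

# Keyword and value may be separated by whitespace or by an "=" with optional whitespace.
_SPLIT = re.compile(r"\s*=\s*|\s+")


def global_sshd_options(config_lines: Iterable[str]) -> Dict[str, str]:
    """Collect global sshd_config options, keeping the first value seen for each keyword."""
    options: Dict[str, str] = {}
    for raw in config_lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = _SPLIT.split(line, maxsplit=1)
        keyword = parts[0].lower()  # keywords are case-insensitive
        if keyword == "match":
            break  # conditional blocks are out of scope for this sketch
        value = parts[1].strip() if len(parts) > 1 else ""
        options.setdefault(keyword, value)  # first obtained value wins
    return options


def allows_empty_passwords(config_lines: Iterable[str]) -> bool:
    opts = global_sshd_options(config_lines)
    # OpenSSH's default for PermitEmptyPasswords is "no".
    return opts.get("permitemptypasswords", "no").lower() == "yes"
```

Because it only reads a file, a check like this generates no scan traffic toward the provider’s shared hardware.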

Catch-22, isn’t it? Especially if you’re considering migrating that image back into the data center at some point.

IT’S STILL a CONTROL THING

One aspect of these potential points of exploitation is that organizations can’t necessarily extend all the security practices of the data center into the public cloud.

If an organization routinely scans and hardens server operating systems – even if they are virtualized – it can’t continue that practice into the cloud environment. A lack of control over the topology (architecture), combined with the limitations of modern security infrastructure (such components often are not capable of handling the dynamic IP addressing inherent in cloud computing), makes deploying a security infrastructure in a public cloud computing environment today nearly impossible.

There is also a lack of control over processes. The notion that developers can simply fire up an image “out there” and later bring it back into the data center without any type of governance is, in fact, a problem. This is the other side of devops – the side where developers are expected to step into operations and not only find but also address vulnerabilities in the server images they may be using in the cloud.

Joe McKendrick, discussing the latest survey regarding cloud adoption from CA Technologies, writes:

The survey finds members of security teams top the list as the primary opponents for both public and private clouds (44% and 27% respectively), with sizeable numbers of business unit leaders/managers also sharing that attitude (23% and 18% respectively).

Overall, 53% are uncomfortable with public clouds, and 31% are uncomfortable with private clouds. Security and control remain perceived barriers to the cloud. Executives are primarily concerned about security (68%) and poor service quality (40%), while roughly half of all respondents consider risk of job loss and loss of control as top deterrents.

-- Joe McKendrick, “Cloud divide: senior executives want cloud, security and IT managers are nervous”

Control, intimately tied to the ability to secure and properly manage performance and availability of services regardless of where they may be deployed, remains high on the list of cloud computing concerns.

One answer is found in Amazon’s security white paper above – deploy host-based solutions. But the lack of topological control and inability of security infrastructure to deal with a dynamic environment (they’re too tied to IP addresses, for one thing) make that a “sounds good in theory, fails in practice” solution.

A startup called CloudPassage, coming out of stealth today, has a workable solution. It’s host-based, yes, but it’s a new kind of host-based solution – one that was developed specifically to address the restrictions of a public cloud computing environment that prevent that control as well as the challenges that arise from the dynamism inherent in an elastic compute deployment.

CLOUDPASSAGE

If you’ve seen the movie “Robots” then you may recall that the protagonist, Rodney, was significantly influenced by the mantra, “See a need, fill a need.” That’s exactly what CloudPassage has done; it “fills a need” for new tools to address cloud computing-related security challenges. The need is real, and while there may be many other ways to address this problem – including tighter governance by IT over public cloud computing use and tried-and-true manual operational deployment processes – CloudPassage presents a compelling way to “fill a need.”

CloudPassage is trying to fill that need with two new solutions designed to help discover and mitigate many of the risks associated with vulnerable server operating systems deployed in and moving between cloud computing environments. Its first solution – focused on server vulnerability management (SVM) – comprises three components:

Halo Daemon

The Halo Daemon is added to the operating system. Because it is tightly integrated, it can perform tasks such as server vulnerability assessment without violating public cloud computing terms of service regarding scanning. It runs silently – no ports are open, no APIs, there is no interface. It communicates periodically via a secured, message-based system residing in the second component: Halo Grid.

Halo Grid

The “Grid” collects data from and sends commands to the Halo Daemon(s). It allows for centralized management of all deployed daemons via the third component, the Halo Portal.

Halo Portal

The Halo Portal, powered by a cloud-based farm of servers, is where operations can scan deployed servers for vulnerabilities and implement firewalling rules to further secure inter- and intra-server communications.

Technically speaking, CloudPassage is a SaaS provider that leverages a small-footprint daemon, integrated into the guest operating system, to provide centralized vulnerability assessments and configuration of host-based security tools in “the cloud” (where “the cloud” is private, public, or hybrid). The use of a message-based, queuing-style integration system was intriguing. Discussions around how, exactly, Infrastructure 2.0 and Intercloud-based integration could be achieved have often come down to a similar thought: message-queuing based architectures. It will be interesting to see how well CloudPassage’s Grid scales out and whether it can maintain performance and timeliness of configuration under heavier load, common concerns regarding queuing-based architectures.
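The “no open ports” design works because the daemon initiates all communication: it pulls commands from the grid and pushes results back, so nothing on the server has to listen for inbound connections. The in-process sketch below uses Python’s queue module as a stand-in for a real message broker. `MessageGrid` and `Agent` are hypothetical names, and transport security, authentication, and persistence, which a real system would need, are left out.

```python
import queue
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class MessageGrid:
    """Hypothetical broker: one command queue per agent plus a shared results queue."""
    commands: Dict[str, queue.Queue] = field(default_factory=dict)
    results: queue.Queue = field(default_factory=queue.Queue)

    def register(self, agent_id: str) -> None:
        self.commands.setdefault(agent_id, queue.Queue())

    def send(self, agent_id: str, command: str) -> None:
        self.commands[agent_id].put(command)


class Agent:
    """Never listens on a port: it only pulls commands and pushes results."""

    def __init__(self, agent_id: str, grid: MessageGrid) -> None:
        self.agent_id = agent_id
        self.grid = grid
        grid.register(agent_id)

    def poll_once(self) -> int:
        """Drain pending commands; a real agent would call this on a timer."""
        inbox = self.grid.commands[self.agent_id]
        handled = 0
        while True:
            try:
                command = inbox.get_nowait()
            except queue.Empty:
                break
            self.grid.results.put((self.agent_id, command, self.execute(command)))
            handled += 1
        return handled

    def execute(self, command: str) -> str:
        # Placeholder for a local check such as a configuration audit.
        return f"done:{command}"
```

The polling interval is where the scaling and timeliness concerns mentioned above show up: the longer agents wait between polls, the lighter the load on the grid but the slower a configuration change reaches every server.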

The second solution from CloudPassage is its Halo Firewall. The firewall is deployed like any other host-based firewall, but it can also be managed via the Halo Daemon. One of the exciting facets of this firewall and its management method is that it eliminates the need to “touch” every server on which the firewall is deployed. It allows you to group-manage host-based firewalls in a simple way through the Halo Portal. Using a simple GUI, you can easily create groups, define firewall policies, and deploy them to all servers assigned to a group. What’s happening under the covers is the generation of iptables rules and a push of that configuration to all firewall instances in a group.
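To make the group-to-iptables idea concrete, here is a hypothetical sketch that expands one group’s policy into iptables arguments, one line per `iptables` invocation. The `Rule` structure and the `group:<name>` source syntax are assumptions for illustration only; CloudPassage’s actual policy model and generated rules are not described in the post.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Rule:
    proto: str   # "tcp" or "udp"
    port: int
    source: str  # a CIDR such as "203.0.113.0/24", or "group:<name>" (hypothetical syntax)


def render_iptables(rules: List[Rule], groups: Dict[str, List[str]]) -> List[str]:
    """Expand one group's policy into iptables argument strings (one per invocation)."""
    lines = [
        "-A INPUT -i lo -j ACCEPT",
        "-A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
    ]
    for rule in rules:
        if rule.source.startswith("group:"):
            # A group reference becomes one /32 rule per current member address.
            members = groups.get(rule.source[len("group:"):], [])
            sources = [f"{ip}/32" for ip in sorted(members)]
        else:
            sources = [rule.source]
        for src in sources:
            lines.append(f"-A INPUT -p {rule.proto} -s {src} --dport {rule.port} -j ACCEPT")
    # Default-deny goes last so the accept rules exist before inbound traffic is dropped.
    lines.append("-P INPUT DROP")
    return lines
```

Expanding group references into concrete addresses at render time is what ties the rules to IP addresses, which is exactly why they have to be regenerated whenever group membership changes.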

What ought to make ops and security folks even happier about such a solution is that cloning a server triggers an automatic update. The cloned server is secured automatically based on the group from which its parent was derived, and all rules that might be affected by that addition to the group are automagically updated. This piece of the puzzle is what’s missing from most modern security infrastructure – the ability to automatically update or modify configuration based on the current operating environment: context, if you will. This is yet another dynamic data center concern that has long eluded many: how to automatically identify and incorporate newly provisioned applications/virtual machines into the application delivery flow. Security, load balancing, acceleration, authentication: all these “things” that are part of application delivery must be updated when an application is provisioned – or shut down. Part of the core problem with extending security practices into dynamic environments like cloud computing is that so many security infrastructure solutions are not Infrastructure 2.0-enabled; they are still tightly coupled to IP addresses and require a static network topology, something not assured in dynamically provisioned environments. CloudPassage has implemented an effective means of more easily addressing at least a portion of the security side of the application delivery concerns raised by extreme dynamism.
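The clone-handling behavior boils down to two bookkeeping steps: put the new instance in its parent’s group, then work out which policies reference that group and therefore need their rules regenerated and re-pushed. The registry below is a hypothetical sketch of that bookkeeping, not CloudPassage’s implementation; the class, attribute, and method names are made up for illustration.

```python
from typing import Dict, List, Set


class FirewallGroups:
    """Hypothetical registry of group membership and which groups each policy trusts."""

    def __init__(self) -> None:
        self.members: Dict[str, Dict[str, str]] = {}  # group -> {server_id: ip}
        self.server_group: Dict[str, str] = {}        # server_id -> group
        self.trusts: Dict[str, Set[str]] = {}         # group -> groups allowed as sources

    def add_server(self, group: str, server_id: str, ip: str) -> None:
        self.members.setdefault(group, {})[server_id] = ip
        self.server_group[server_id] = group

    def on_clone(self, parent_id: str, clone_id: str, clone_ip: str) -> List[str]:
        """Place a clone in its parent's group; return the groups whose rules must be re-pushed."""
        group = self.server_group[parent_id]
        self.add_server(group, clone_id, clone_ip)
        affected = {group}  # the clone needs its group's rules pushed to it
        # Any policy that trusts this group now has a new source address to allow.
        affected |= {g for g, sources in self.trusts.items() if group in sources}
        return sorted(affected)
```

The same lookup run in reverse (removing the server and recomputing the affected groups) would cover the shutdown case mentioned above.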

As of launch, CloudPassage supports Linux-based operating systems with plans to expand to Windows-based offerings in the future.

No significant articles today.

Chris Hoff (@Beaker) posted George Carlin, Lenny Bruce & The Unspeakable Seven Dirty Words of Cloud Security on 1/26/2011 (That’s Carlin, not Hoff below):