Windows Azure and Cloud Computing Posts for 9/3/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform was published on 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Fabrice Marguerie announced Sesame: Spatial OData on Maps, Service Operations, HTTP Basic Authentication new features on 9/3/2010:

Sesame Data Browser has just been updated to offer the following features for OData feeds:

- Maps

- Improved Service operations (FunctionImport) support

- HTTP Basic Authentication support

- Microsoft Dallas support

Maps

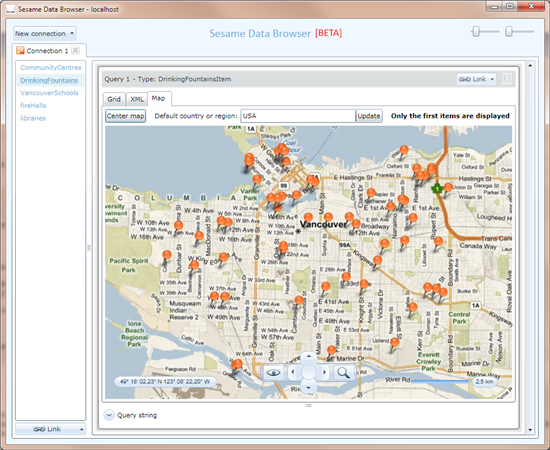

Sesame now automatically displays items on a map if spatial information is available in the data.

This works when latitude and longitude pairs are provided, and also with postal addresses.

Here, for example, is a map of drinking fountains in Vancouver:

This comes from DrinkingFountains in http://vancouverdataservice.cloudapp.net/v1/vancouver, which provides latitude/longitude for each fountain.

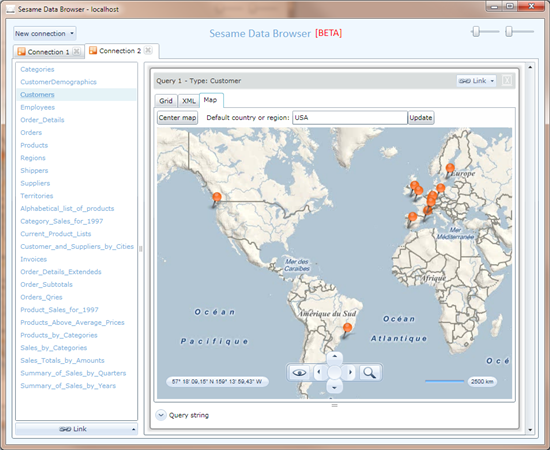

Here is another example, without latitude/longitude this time:

This is a map of the customers from the Northwind database, which are located based on their country, postal code, city, and street address.

Service operations (FunctionImport)

Support for service operations (aka FunctionImports) has been improved. Until now, only functions without parameters were supported.

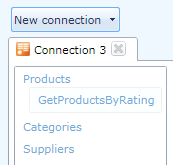

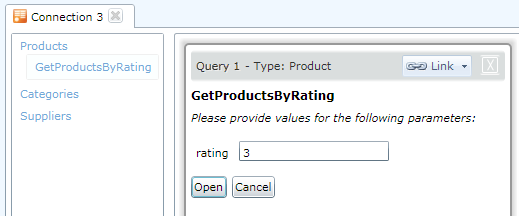

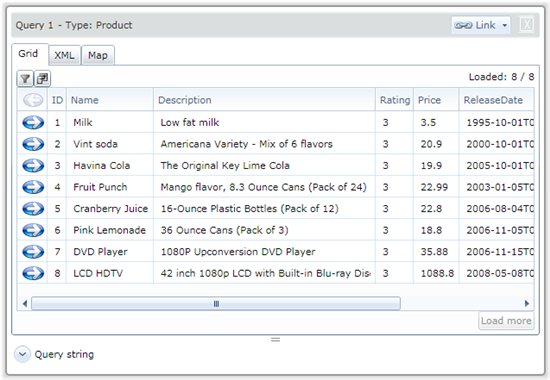

It's now possible to use service operations that take input parameters. Let's take the GetProductsByRating function from http://services.odata.org/OData/OData.svc as an example.

This function is attached to Products, as you can see below:

A "rating" parameter is expected in order to open the function:

After clicking Open, you'll get data as usual:
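To make the parameter passing concrete, here is a minimal Python sketch that builds the URL for invoking a service operation. It assumes, as in WCF Data Services, that the operation's parameters travel as query string options (for example `GetProductsByRating?rating=4`). The helper name `service_operation_url` and the rating value are illustrative, and this is not how Sesame itself is implemented. Only a numeric parameter is shown; string parameters are normally written as quoted OData literals (such as `city='London'`), which this sketch does not add.

```python
from urllib.parse import urlencode


def service_operation_url(service_root: str, operation: str, **params: object) -> str:
    """Build the URL that invokes an OData service operation (FunctionImport).

    Parameters are appended as query string options, e.g. ?rating=4.
    String values would additionally need OData literal quoting, not done here.
    """
    url = f"{service_root.rstrip('/')}/{operation}"
    if params:
        url += "?" + urlencode(params)
    return url


print(service_operation_url(
    "http://services.odata.org/OData/OData.svc", "GetProductsByRating", rating=4))
# prints http://services.odata.org/OData/OData.svc/GetProductsByRating?rating=4
```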

HTTP Basic Authentication

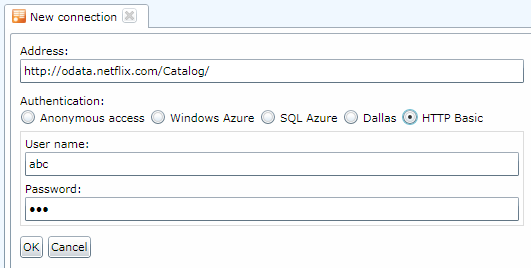

New authentication options have been added: HTTP Basic and Dallas (more on the latter in the next post).

HTTP Basic authentication just requires a user name and a password, and is simple to implement.
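As a rough illustration of why Basic authentication is simple to implement, the sketch below builds the `Authorization` header value: the user name and password are joined with a colon and Base64-encoded. Encoding the credentials as UTF-8 is an assumption, and because Base64 is an encoding rather than encryption, such credentials should only travel over HTTPS. The function name is hypothetical; the Aladdin / open sesame pair is the sample credential used in the HTTP Basic specification.

```python
import base64


def basic_auth_header(user_name: str, password: str) -> str:
    """Return the value for an HTTP Basic 'Authorization' request header."""
    if ":" in user_name:
        # The scheme separates user name and password with the first colon.
        raise ValueError("user name must not contain a colon")
    credentials = f"{user_name}:{password}".encode("utf-8")  # UTF-8 is an assumption
    return "Basic " + base64.b64encode(credentials).decode("ascii")


print(basic_auth_header("Aladdin", "open sesame"))
# prints Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==
```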

Microsoft Dallas support

All of the above features enabled support for Microsoft Dallas. See this other post about Dallas support in Sesame.

Please give Sesame Data Browser a try. As always, your feedback and suggestions are welcome!

There’s no question that Fabrice’s Sesame is a full-featured data browser.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

The CloudSpace Team posted Demonstrating federation interop with CA, IBM, and Sun products on 9/3/2010:

Microsoft’s Patterns & Practices group recently wrote about three labs demonstrating federation interoperability between WIF and AD FS 2.0 and three other vendor products – specifically, CA SiteMinder 12.0, IBM Tivoli Federated Identity Manager 6.2, and Sun OpenSSO 8.0.

First, the team took the samples from the Claims Identity Guide and deployed them in a lab. They then configured the lab to use IBM, Computer Associates & Sun identity providers. Finally, they captured videos of demos for each configuration.

You can read about each of the labs here:

Ron Jacobs offers a taste of WCF troubleshooting in endpoint.tv - Troubleshooting WCF - Bad Address on Client, a 00:07:59 embedded Silverlight video segment:

Don't you hate it when things go wrong? Most of the time on endpoint.tv, I show you when everything is working properly. On this episode, however, I'm going to show you some tools and techniques for troubleshooting what happens when you invoke a proxy with the wrong service address.

Vittorio Bertocci (@vibronet) posted The Table of Content of Programming Windows Identity Foundation on 9/3/2010:

Various readers asked me to provide the table of contents of Programming Windows Identity Foundation, so here it is. The formatting is not perfect, but I wanted to keep the page numbers and indentation so that you can assess how much space is dedicated to any given topic you want to study.

I won’t repeat here what I wrote in the book intro (available also in this Microsoft Press post), but I do want to add a couple of notes.

- Although the topics covered by the book are a superset of the ones in the training kit, it's hard to make comparisons. The book packs information at a much higher density and goes significantly deeper than the kit. Apart from some material in Part I, there are no step-by-step instructions, as you would expect from a title in the Developer Pro References series.

- Apart from the parts explaining protocols and patterns, the entire book is firmly anchored in code and gives very concrete guidance on how to implement the topic at hand. The only exception is Chapter 7: that chapter covers topics for which there are no official bits yet, and providing code would have meant filling page after page with custom tactical code that could soon have become obsolete. What you get in Chapter 7 is an introduction to the topics (for example, there are swimlane diagrams for OAuth 2 and similar protocols), which helps you wrap your head around the issue should you have to cope with it before official solutions arise. The exception to the exception is the part about MVC, where I do provide the code of a very simple and elegant solution (I wasn't the one who came up with it :-)) that integrates really well with the MVC model.

And now, without further ado, the TOOOC ♪♬

Table of Contents

- Foreword xi

- Acknowledgments xiii

- Introduction xvii

Part I Windows Identity Foundation for Everybody

1 Claims-Based Identity 3

- What Is Claims-Based Identity? 3

- Traditional Approaches to Authentication 4

- Decoupling Applications from the Mechanics of Identity and Access 8

- WIF Programming Model 15

- An API for Claims-Based Identity 16

- WIF’s Essential Behavior 16

- IClaimsIdentity and IClaimsPrincipal 18

- Summary 21

2 Core ASP.NET Programming 23

- Externalizing Authentication 24

- WIF Basic Anatomy: What You Get Out of the Box 24

- Our First Example: Outsourcing Web Site Authentication to an STS 25

- Authorization and Customization 33

- ASP.NET Roles and Authorization Compatibility 36

- Claims and Customization 37

- A First Look at <microsoft.identityModel> 39

- Basic Claims-Based Authorization 41

- Summary 46

Part II Windows Identity Foundation for Identity Developers

3 WIF Processing Pipeline in ASP.NET 51

- Using Windows Identity Foundation 52

- WS-Federation: Protocol, Tokens, Metadata 54

- WS-Federation 55

- The Web Browser Sign-in Flow 57

- A Closer Look to Security Tokens 62

- Metadata Documents 69

- How WIF Implements WS-Federation 72

- The WIF Sign-in Flow 74

- WIF Configuration and Main Classes 82

- A Second Look at <microsoft.identityModel> 82

- Notable Classes 90

- Summary 94

4 Advanced ASP.NET Programming 95

- More About Externalizing Authentication 96

- Identity Providers 97

- Federation Providers 99

- The WIF STS Template 102

- Single Sign-on, Single Sign-out, and Sessions 112

- Single Sign-on 113

- Single Sign-out 115

- More About Sessions 122

- Federation 126

- Transforming Claims 129

- Pass-Through Claims 134

- Modifying Claims and Injecting New Claims 135

- Home Realm Discovery 135

- Step-up Authentication, Multiple Credential Types, and Similar Scenarios 140

- Claims Processing at the RP 141

- Authorization 142

- Authentication and Claims Processing 142

- Summary 143

5 WIF and WCF 145

- The Basics 146

- Passive vs. Active 146

- Canonical Scenario 154

- Custom TokenHandlers 163

- Object Model and Activation 167

- Client-Side Features 170

- Delegation and Trusted Subsystems 170

- Taking Control of Token Requests 179

- Summary 184

6 WIF and Windows Azure 185

- The Basics 186

- Packages and Config Files 187

- The WIF Runtime Assembly and Windows Azure 188

- Windows Azure and X.509 Certificates 188

- Web Roles 190

- Sessions 191

- Endpoint Identity and Trust Management 192

- WCF Roles 195

- Service Metadata 195

- Sessions 196

- Tracing and Diagnostics 201

- WIF and ACS 204

- Custom STS in the Cloud 205

- Dynamic Metadata Generation 205

- RP Management 213

- Summary 213

7 The Road Ahead 215

- New Scenarios and Technologies 215

- ASP.NET MVC 216

- Silverlight 223

- SAML Protocol 229

- Web Identities and REST 230

- Conclusion 239

Index 241

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Microsoft’s patterns & practices Team released volume 2 in their Windows Azure series, Developing Applications for the Cloud on the Microsoft Windows Azure Platform, on 9/3/2010:

Summary

How can a company create an application that has truly global reach and that can scale rapidly to meet sudden, massive spikes in demand? Historically, companies had to invest in building an infrastructure capable of supporting such an application themselves and, typically, only large companies would have the available resources to risk such an enterprise. Building and managing this kind of infrastructure is not cheap, especially because you have to plan for peak demand, which often means that much of the capacity sits idle for much of the time. The cloud has changed the rules of the game: by making the infrastructure available on a "pay as you go" basis, creating a massively scalable, global application is within the reach of both large and small companies.

The cloud platform provides you with access to capacity on demand, fault tolerance, distributed computing, data centers located around the globe, and the capability to integrate with other platforms. Someone else is responsible for managing and maintaining the entire infrastructure, and you only pay for the resources that you use in each billing period. You can focus on using your core domain expertise to build and then deploy your application to the data center or data centers closest to the people who use it. You can then monitor your applications, and scale up or scale back as and when the capacity is required.

Yes, by moving applications to the cloud, you're giving up some control and autonomy, but you're also going to benefit from reduced costs, increased flexibility, and scalable computation and storage. This guide shows you how to do this. …

Overview

This book is the second volume in a planned series about the Windows Azure™ technology platform. Volume 1, Moving Applications to the Cloud on the Windows Azure Platform, provides an introduction to Windows Azure, discusses the cost model and application life cycle management for cloud-based applications, and describes how to migrate an existing ASP.NET application to the cloud. This book demonstrates how you can create from scratch a multi-tenant, Software as a Service (SaaS) application to run in the cloud by using the latest versions of the Windows Azure tools and the latest features of the Windows Azure platform.

Common Scenarios

"The Tailspin Scenario" introduces you to the Tailspin company and the Surveys application. It provides an architectural overview of the Surveys application; the following chapters provide more information about how Tailspin designed and implemented the Surveys application for the cloud. Reading this chapter will help you understand Tailspin's business model, its strategy for adopting the cloud platform, and some of its concerns.

"Hosting a Multi-Tenant Application on Windows Azure" discusses some of the issues that surround architecting and building multi-tenant applications to run on Windows Azure. It describes the benefits of a multi-tenant architecture and the trade-offs that you must consider. This chapter provides a conceptual framework that helps the reader understand some of the topics discussed in more detail in the subsequent chapters.

"Accessing the Surveys Application" describes some of the challenges that the developers at Tailspin faced when they designed and implemented some of the customer-facing components of the application. Topics include the choice of URLs for accessing the surveys application, security, hosting the application in multiple geographic locations, and using the Content Delivery Network to cache content.

"Building a Scalable, Multi-Tenant Application for Windows Azure" examines how Tailspin ensured the scalability of the multi-tenant Surveys application. It describes how the application is partitioned, how the application uses worker roles, and how the application supports on-boarding, customization, and billing for customers.

"Working with Data in the Surveys Application" describes how the application uses data. It begins by describing how the Surveys application stores data in both Windows Azure tables and blobs, and how the developers at Tailspin designed their storage classes to be testable. The chapter also describes how Tailspin solved some specific problems related to data, including paging through data and implementing session state. Finally, this chapter describes the role that the SQL Azure™ technology platform plays in the Surveys application.

"Updating a Windows Azure Service" describes the options for updating a Windows Azure application and how you can update an application with no interruption in service.

"Debugging and Troubleshooting Windows Azure Applications" describes some of the techniques specific to Windows Azure applications that will help you to detect and resolve issues when building, deploying, and running Windows Azure applications. It includes descriptions of how to use Windows Azure Diagnostics and how to use Microsoft IntelliTrace™ with applications deployed to Windows Azure.

Audience Requirements

The book is intended for any architect, developer, or information technology (IT) professional who designs, builds, or operates applications and services that run on or interact with the cloud. Although applications do not need to be based on the Microsoft® Windows® operating system to work in Windows Azure, this book is written for people who work with Windows-based systems. You should be familiar with the Microsoft .NET Framework, Microsoft Visual Studio® development system, ASP.NET MVC, and Microsoft Visual C#® development tool.

System Requirements

These are the system requirements for running the scenarios:

- Microsoft Windows Vista SP1, Windows 7, or Microsoft Windows Server® 2008 (32-bit or 64-bit)

- Microsoft Internet Information Services (IIS) 7.0

- Microsoft .NET Framework 4 or later

- Microsoft Visual Studio 2010

- Windows Azure Tools for Microsoft Visual Studio 2010

- ASP.NET MVC 2.0

- Windows Identity Foundation

- Microsoft Anti-Cross Site Scripting Library

- Moq (to run the unit tests)

- Enterprise Library Unity Application Block (binaries included in the samples)

Design Goals

Tailspin is a fictitious startup ISV company of approximately 20 employees that specializes in developing solutions using Microsoft® technologies. The developers at Tailspin are knowledgeable about various Microsoft products and technologies, including the .NET Framework, ASP.NET MVC, SQL Server®, and Microsoft Visual Studio® development system. These developers are aware of Windows Azure but have not yet developed any complete applications for the platform.

The Surveys application is the first of several innovative online services that Tailspin wants to take to market. As a startup, Tailspin wants to develop and launch these services with a minimal investment in hardware and IT personnel. Tailspin hopes that some of these services will grow rapidly, and the company wants to have the ability to respond quickly to increasing demand. Similarly, it fully expects some of these services to fail, and it does not want to be left with redundant hardware on its hands.

The Surveys application enables Tailspin's customers to design a survey, publish the survey, and collect the results of the survey for analysis. A survey is a collection of questions, each of which can be one of several types such as multiple-choice, numeric range, or free text. Customers begin by creating a subscription with the Surveys service, which they use to manage their surveys and to apply branding by using styles and logo images. Customers can also select a geographic region for their account, so that they can host their surveys as close as possible to the survey audience. The Surveys application allows users to try out the application for free, and to sign up for one of several different packages that offer different collections of services for a monthly fee.

Customers who have subscribed to the Surveys service (or who are using a free trial) access the Subscribers website that enables them to design their own surveys, apply branding and customization, and collect and analyze the survey results. Depending on the package they select, they have access to different levels of functionality within the Surveys application. Tailspin expects its customers to be of various sizes and from all over the world, and customers can select a geographic region for their account and surveys.

The architecture of the Surveys application is straightforward and one that many other Windows Azure applications use. The core of the application uses Windows Azure web roles, worker roles, and storage. The figure below shows the three groups of users who access the application: the application owner, the public, and subscribers to the Surveys service (in this example, Adatum and Fabrikam).

It also highlights how the application uses the SQL Azure™ technology platform to provide a mechanism for subscribers to dump their survey results into a relational database to analyze the results in detail. This guide discusses the design and implementation in detail and describes the various web and worker roles that comprise the Surveys application.

Some of the specific issues that the guide covers include how Tailspin implemented the Surveys application as a multi-tenant application in Windows Azure and how the developers designed the application to be testable. The guide describes how Tailspin handles the integration of the application's authentication mechanism with a customer's own security infrastructure by using a federated identity with multiple partners model. The guide also covers the reasoning behind the decision to use a hybrid data model that uses both Windows Azure Storage and SQL Azure. Other topics covered include how the application uses caching to ensure the responsiveness of the Public website for survey respondents, how the application automates the on-boarding and provisioning process, how the application leverages the Windows Azure geographic location feature, and the customer-billing model adopted by Tailspin for the Surveys application.

Community

This guide, like many patterns & practices deliverables, is associated with a community site. On this community site, you can post questions, provide feedback, or connect with other users for sharing ideas. Community members can also help Microsoft plan and test future guides, and download additional content such as extensions and training material.

Future Plans

An extension to this scenario is being developed for mobile users using Windows Phone 7 devices. Early versions of this are available here: http://wp7guide.codeplex.com

A third part is planned to cover integration scenarios as well as new capabilities. Check the community site for updates.

Feedback and Support

Questions? Comments? Suggestions? To provide feedback about this guide, or to get help with any problems, please visit the Windows Azure guidance Community site. The message board on the community site is the preferred feedback and support channel because it allows you to share your ideas, questions, and solutions with the entire community. This content is a guidance offering, designed to be reused, customized, and extended. It is not a Microsoft product. Code-based guidance is shipped "as is" and without warranties. Customers can obtain support through Microsoft Support Services for a fee, but the code is considered user-written by Microsoft support staff.

Authors and Contributors

This guide was produced by the following individuals:

- Program and Product Management: Eugenio Pace

- Architect: David Hill

- Subject Matter Experts: Dominic Betts, Scott Densmore, Ryan Dunn, Steve Marx, and Matias Woloski

- Development: Federico Boerr (Southworks), Scott Densmore, Matias Woloski (Southworks)

- Test team: Masashi Narumoto, Kirthi Royadu (Infosys Ltd.), Lavanya Selvaraj (Infosys Ltd.)

- Edit team: Dominic Betts, RoAnn Corbisier, Alex Homer, and Tina Burden

- Book design and illustrations: Ellen Forney, John Hubbard (eson), Katie Niemer and Eugenio Pace.

- Release Management: Richard Burte

We want to thank the customers, partners, and community members who have patiently reviewed our early content and drafts. Among those, we want to highlight the exceptional contributions of David Aiken, Graham Astor (Avanade), Edward Bakker (Inter Access), Vivek Bhatnagar (Microsoft), Patrick Butler Monterde (Microsoft), Shy Cohen, James Conard, Brian Davis (Longscale), Aashish Dhamdhere (Windows Azure, Microsoft), Andreas Erben (DAENET), Giles Frith, Eric L. Golpe (Microsoft), Johnny Halife (Southworks), Alex Homer, Simon Ince, Joshy Joseph, Andrew Kimball, Milinda Kotelawele (Longscale), Mark Kottke (Microsoft), Chris Lowndes (Avanade), Dianne O'Brien (Windows Azure, Microsoft), Steffen Vorein (Avanade), Brad Wilson (ASP.NET Team), and Michael Wood (Strategic Data Systems).

Related Titles

Thanks to Eugenio Pace (@eugenio_pace) for the heads-up on the guide’s availability.

Ryan Dunn and Steve Marx describe Cloud Cover Episode 24 - Routing in Windows Azure in this 00:42:48 Channel9 Webcast:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow.

In this episode:

- Listen as we discuss the benefits and patterns of routing in Windows Azure.

- Learn how to route to stateful instances within your Windows Azure services.

- Watch us demo a simple router for 'sticky' HTTP sessions.
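The episode's router isn't reproduced here, but the core idea of "sticky" routing can be sketched: derive a stable hash from a session identifier and use it to pick the same instance for every request in that session. The Python sketch below assumes a fixed list of instance IDs (the names are hypothetical) and simple modulo hashing. If the number of instances changes, most sessions move to a different instance, which is one reason routers often use consistent hashing or an explicit session table instead.

```python
import hashlib


def pick_instance(session_id: str, instances: list[str]) -> str:
    """Map a session ID to the same instance every time (hypothetical helper)."""
    if not instances:
        raise ValueError("no instances available")
    # Use a stable hash: Python's built-in hash() of str is randomized per process.
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(instances)
    return instances[index]


# Hypothetical instance IDs; a real router would discover these at run time.
instances = ["WebRole_IN_0", "WebRole_IN_1", "WebRole_IN_2"]
print(pick_instance("session-42", instances))
```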

Show Links:

Ag.AzureDevelopmentStorageProxy

SQL Server to SQL Azure Synchronization using Sync Framework 2.1

Umbraco CMS on Windows Azure

Getting Started with the Windows Azure CDN

Security Resources for Windows Azure

Executing Native Code in Windows Azure and the Development Environment

The On Windows Team presented a 00:03:22 Case Study: Chicago Tribune embraces Azure video on 9/3/2010:

The Chicago, Illinois–based Tribune Company, which operates eight newspapers, 23 television stations, and a variety of news and information Web sites, needed to adapt its business to thrive in a changing market. Specifically, it wanted to make it possible for consumers to choose the content most relevant to them.

Tribune quickly centralised the content in its many data centres into a single repository using cloud computing on the Windows Azure platform.

The Azure Forum Support Team continues its Azure/Bing Maps series with an Azure + Bing Maps: Working with spatial data tutorial posted 9/1/2010:

This is the 4th article in our "Bring the clouds together: Azure + Bing Maps" series.

You can find a live demonstration at http://sqlazurebingmap.cloudapp.net/.

For a list of articles in the series, please refer to http://blogs.msdn.com/b/windows-azure-support/archive/2010/08/11/bring-the-clouds-together-azure-bing-maps.aspx.

Introduction

The Plan My Travel sample stores the location information as spatial data. If you are already familiar with SQL Server spatial data, you can skip this post. Because we assume most developers are not yet familiar with spatial data, we'll briefly introduce it here.

What is spatial data

If you haven't heard of the term spatial data, do not worry. It is simple. Spatial data is the data that represents geometry or geography information.

We all learned in middle-school geography that a location on the earth is described by its latitude and longitude. In many cases, it is easy enough to store the latitude and longitude separately, in two columns of a database table.

However, many scenarios require more than that. For example, how do you store the boundary of a country (which can be considered a polyline)? How do you calculate the distance between two points on the earth? In addition, even if you're working with a single location, it is often easier to treat the data as a point.

Traditionally, different solutions have been created for different applications. Each has its own advantages and disadvantages. But none is perfect, and multiple solutions make it difficult for different applications to interoperate with each other.

Solving these problems requires embracing an open standard. Today the most widely adopted standard is OpenGIS, created by the Open Geospatial Consortium (OGC). Using OpenGIS, geometry and geography data can be represented in several ways, such as Well-known binary (WKB), Well-known text (WKT), and Geography Markup Language (GML).

WKB

WKB provides an efficient format for storing spatial data. Because the data is stored in binary, it usually takes little space, and it is very easy for computers to perform calculations over it.

In SQL Azure (as well as SQL Server and several popular third-party databases such as Oracle and DB2), WKB is the internal representation of the stored spatial data. This makes it easy to interoperate among different database providers.

However, WKB is not easy to read: it is machine friendly but not human friendly. We will not discuss the binary format in this article; you can always refer to the standards if you're interested. Other formats have been developed to make the data more human friendly.

WKT

WKT is much more human friendly compared to WKB. For example:

- POINT(2 3)

- LINESTRING(2 3, 7 8)

- POLYGON((2 3, 7 8, 3 5, 2 3))

As you can see, the data is self-descriptive.

However, because the data is represented as text, it is less efficient for computers to perform calculations on it, and it usually takes more space to store (each character requires at least 8 bits). Fortunately, SQL Azure provides built-in features to convert between WKB and WKT, so in most cases the data is stored using WKB, while WKT is used to present the data to humans.

For more information about WKT, please refer to the Wiki article as well as the standards.
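When building WKT strings in application code (for example, the @GeoLocation parameter passed to the stored procedures later in this post), one detail trips people up: WKT lists longitude before latitude, so Shanghai at roughly 31°N, 121°E becomes POINT(121 31). The hypothetical helpers below, a minimal Python sketch, take (latitude, longitude) pairs and emit coordinates in WKT order; they do not validate coordinate ranges.

```python
def point_wkt(latitude: float, longitude: float) -> str:
    """Format one location as a WKT POINT: longitude first, then latitude."""
    return f"POINT({longitude} {latitude})"


def linestring_wkt(points: list[tuple[float, float]]) -> str:
    """Format (latitude, longitude) pairs as a WKT LINESTRING."""
    if len(points) < 2:
        raise ValueError("a LINESTRING needs at least two points")
    coords = ", ".join(f"{lon} {lat}" for lat, lon in points)
    return f"LINESTRING({coords})"


print(point_wkt(31, 121))                  # prints POINT(121 31)
print(linestring_wkt([(3, 2), (8, 7)]))    # prints LINESTRING(2 3, 7 8)
```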

GML

GML is another string representation of spatial data. It is usually larger than WKT, but it builds on another open standard, XML, so it is easier for programs to parse the data using XML libraries (such as LINQ to XML). We will not discuss GML in detail in this article. Please refer to http://en.wikipedia.org/wiki/Geography_Markup_Language if you're interested.
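To show what parsing GML with a general-purpose XML library looks like, here is a minimal Python sketch using the standard `xml.etree.ElementTree` module in place of LINQ to XML. It assumes a GML Point with a single `<pos>` child in the `http://www.opengis.net/gml` namespace; the sample string is hand-written, not captured from SQL Azure. Because axis order depends on the coordinate reference system, the hypothetical helper returns the two numbers in document order without interpreting them.

```python
import xml.etree.ElementTree as ET

GML_NS = "http://www.opengis.net/gml"


def gml_point_coordinates(gml: str) -> tuple[float, float]:
    """Return the two numbers in a GML Point's <pos> element, in document order."""
    root = ET.fromstring(gml)
    if root.tag != f"{{{GML_NS}}}Point":
        raise ValueError("expected a GML Point element")
    pos = root.find(f"{{{GML_NS}}}pos")
    if pos is None or not pos.text:
        raise ValueError("the Point has no <pos> element")
    values = [float(v) for v in pos.text.split()]
    if len(values) != 2:
        raise ValueError("expected exactly two coordinates")
    return values[0], values[1]


sample = '<Point xmlns="http://www.opengis.net/gml"><pos>31 121</pos></Point>'
print(gml_point_coordinates(sample))  # prints (31.0, 121.0)
```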

Working with spatial data in SQL Azure

Create table and column

SQL Server 2008 introduced two new data types for spatial data: Geometry and Geography. They're fully supported in SQL Azure. To get started, please walk through the spatial data related tutorials in the SQL Server training course.

Spatial data types are just data types, so when creating a table column, you can use them just like nvarchar(50). As you saw in our last post, this is the Travel table:

```sql
CREATE TABLE [dbo].[Travel](
    [PartitionKey] [nvarchar](200) NOT NULL,
    [RowKey] [uniqueidentifier] NOT NULL,
    [Place] [nvarchar](200) NOT NULL,
    [GeoLocation] [geography] NOT NULL,
    [Time] [datetime] NOT NULL,
    CONSTRAINT [PK_Travel] PRIMARY KEY CLUSTERED ([PartitionKey] ASC, [RowKey] ASC),
    CONSTRAINT [IX_Travel] UNIQUE NONCLUSTERED ([Place] ASC, [Time] ASC)
)
```

Please note that the type of the GeoLocation column is geography.

Construct the data

The data is stored in binary. However, you don't need to understand the binary representation. You can construct the data using types defined in the Microsoft.SqlServer.Types.dll assembly. This is a SQL CLR assembly, which means it can be used in both managed languages and T-SQL.

For example, to create a geography object with a single point in C#:

```csharp
SqlGeography sqlGeography = SqlGeography.Point(latitude, longitude, 4326);
```

In the above code, 4326 is the spatial reference identifier (SRID) for WGS 84, the geographic coordinate system used by GPS.

To create an object from WKT in T-SQL:

```sql
Geography::STGeomFromText(@GeoLocation, 4326)
```

You can use whichever programming language you prefer. Generally speaking, however, if the constructed object is only needed temporarily (such as a temporary object used to calculate the distance between two locations), the code usually runs in the application itself, in a managed language, without a round trip to the database, especially if the database is on a cloud server rather than a local server.
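As an example of such a temporary, application-side calculation, the following Python sketch computes the great-circle distance between two (latitude, longitude) points with the haversine formula. It assumes a spherical Earth with a mean radius of 6,371 km. SQL Server's geography STDistance method measures on the ellipsoid of the column's SRID (WGS 84 for 4326) and returns meters, so its results will differ slightly. The function name is hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against rounding error for near-antipodal points.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


# One degree of longitude along the equator.
print(round(haversine_km(0, 0, 0, 1), 3))  # prints 111.195
```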

If, however, the data needs to be stored in a database, a stored procedure written in T-SQL can help to increase performance. In addition, keep in mind that currently Entity Framework doesn't support spatial data directly. So if you choose Entity Framework as the technology for your data access layer, you must create a stored procedure and invoke it from the EF code.

Below is the InsertIntoTravel stored procedure used in the Plan My Travel application. Pay attention to the STGeomFromText method:

```sql
CREATE PROCEDURE [dbo].[InsertIntoTravel]
    @PartitionKey nvarchar(200),
    @Place nvarchar(200),
    @GeoLocation varchar(max),
    @Time datetime
AS
BEGIN
    Insert Into Travel
    Values(@PartitionKey, NEWID(), @Place, Geography::STGeomFromText(@GeoLocation, 4326), @Time)
END
```

As discussed in our last post, the PartitionKey represents the user who creates this record, so we pass it as a parameter. The RowKey, however, is just a GUID, so we simply call NEWID to generate it automatically.

Now we can give the stored procedure a quick test:

exec dbo.InsertIntoTravel @PartitionKey='TestPartition', @Place='Shanghai', @GeoLocation='POINT(121 31)', @Time='2010-09-01 00:00:00.000'

Pay attention to the GeoLocation parameter. Its value is a WKT string, which lists longitude before latitude: POINT(121 31) means longitude 121, latitude 31.
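Because SqlGeography.Point takes latitude first while WKT lists longitude first, it is easy to swap the two when building the @GeoLocation string in application code. Here is a minimal Python sketch of a hypothetical helper (point_wkt is not part of any library) that makes the order explicit and rejects out-of-range values:

```python
def point_wkt(latitude: float, longitude: float) -> str:
    """Build a WKT POINT for a geography value; WKT lists longitude first."""
    if not -90.0 <= latitude <= 90.0:
        raise ValueError(f"latitude out of range: {latitude}")
    if not -180.0 <= longitude <= 180.0:
        raise ValueError(f"longitude out of range: {longitude}")
    # '.15g' keeps full useful precision and drops trailing zeros (121.0 -> '121')
    return f"POINT({longitude:.15g} {latitude:.15g})"


# Usage: reproduces the value passed to InsertIntoTravel for Shanghai
wkt = point_wkt(latitude=31, longitude=121)   # "POINT(121 31)"
```

Passing the arguments by keyword at the call site is a cheap way to keep the two orders from being confused.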

Querying the table shows that the data was inserted successfully.

Figure 1: Query the inserted data

Similarly, the UpdateTravel stored procedure:

CREATE PROCEDURE [dbo].[UpdateTravel] @PartitionKey nvarchar(200), @RowKey uniqueidentifier, @Place NVarchar(200), @GeoLocation NVarchar(max), @Time datetime AS BEGIN Update [dbo].[Travel] Set [Place] = @Place, [GeoLocation] = Geography::STGeomFromText(@GeoLocation, 4326), [Time] = @Time Where PartitionKey = @PartitionKey AND RowKey = @RowKey END

And the DeleteFromTravel stored procedure:

CREATE PROCEDURE [dbo].[DeleteFromTravel] @PartitionKey nvarchar(200), @RowKey uniqueidentifier AS BEGIN Delete From Travel Where PartitionKey = @PartitionKey AND RowKey = @RowKey END

For more information about working with spatial data, please refer to http://msdn.microsoft.com/en-us/library/bb933876.aspx.

Conclusion

This post introduced how to work with spatial data in SQL Azure. The same approach works for a regular SQL Server database as well (SQL Server 2008 or later is required). The next post will discuss how to access the data from an application.


Previous articles in this series:

- Azure + Bing Maps: Working with spatial data

- Azure + Bing Maps: Designing a scalable cloud database

- Azure + Bing Maps: Choosing the right platform and technology

<Return to section navigation list>

Visual Studio LightSwitch

Paul Patterson (@PaulPatterson) posted a Microsoft LightSwitch – Creating Table Relationships tutorial on 9/3/2010:

… In previous LightSwitch posts I have been able to demonstrate stuff by using one table, a Customer table. I’ve only had to manage a relatively simple set of attributes (fields) about a customer. Things like the customer name, contact name, and whether or not they flip for the bill at lunch meetings are all very simple characteristics of a customer. One table is all I need for that kind of information.

Now that I am looking down the road, though, I don’t think just one table is going to work. The overall vision for my application is to store more than just simple customer information. I am going to want to do much more, like keeping track of appointments, orders, and invoices. I know that one customer is going to have any number of appointments, orders, or invoices. I need to design my data storage so that the data is intuitive and manageable, and that will take more than just one table.

Relation[al] Databases

The topic of relational database concepts is a big one, too big for this blog post, but it is important to understand when creating LightSwitch applications. If you really want to dive into the deep end of the topic pool, just Google “what is a relational database”. I think you’ll get a few hits on that one.

For clarity’s sake, however, here is a condensed Coles Notes version…

The relation[al] database concept has been around since the ’70s, maybe even earlier. In essence, relational databases separate information into chunks of data in a way that makes each bit of that data unique. Relationships are created between these chunks so that the data is persisted as pieces of information that are related to each other.

For example, a company has a bunch of customers. Each of those customers may have orders, and each of those orders will have details about the orders on them. Putting all that information into just one place would make managing it very expensive and ineffective. So, rather than putting all that data into one spot, like a giant spreadsheet, relational databases separate the data into related pieces. This may mean that customer information is in one place, order information is in another, and details of the orders are in their own spots.

Understanding the concept of relational databases is fundamental when it comes to defining requirements for, and creating, robust and manageable LightSwitch solutions. LightSwitch takes most, if not all, of the work out of creating and enforcing the relationships for a database application. But before LightSwitch can do that, the developer must define the design of the data and the relationships between the data using simple relational database design concepts, which end up being mostly common sense anyway.

Really, the goal is a design that makes sense, is easy to manage, and is inexpensive to maintain. We don’t want a design that resembles the congenital relationships between the banjo-playing family members from Deliverance. A good design with relationships that make sense will pay big dividends in any future development effort. Trust me on this. I’ve inherited a few whopper spreadsheet conversions in my day.

Creating a Relationship

So let’s get into the nitty gritty (is “nitty” even a word?) of this LightSwitch table relationship stuff.

Using what I did in my last post, I open up my LightSwitch project and take a look at my customer table.

My Customer Table

What I want to do is add some simple scheduling to my application. I want to be able to schedule when I am going to visit a customer, or maybe schedule a time when I am going to do something specific for a customer, like take them to lunch (or rather, invite them to take me to lunch). I need to be able to manage schedule-specific information in my application.

I figure a customer may have any number of scheduled items assigned to them. Adding all this scheduling information to my customer table is unreasonable. How can I add scheduling information to the customer table when I don’t even know how many scheduled items there will be? Considering I want to keep track of each scheduled item’s start time, end time, and description, it only makes sense that the scheduling information should be in its own place.

I create a new table named Schedule. In my new table I create three fields (attributes) that I think will help me define my schedule item:

- StartDateTime – a DateTime type field to store the date and time for the start of the scheduled event.

- EndDateTime – another DateTime type field to store when the scheduled event item will end.

- Description – a description of the scheduled event.

The new Schedule table

I’ve configured the Is Required property for each of these fields as true, because I want to ensure that I have all this information for every schedule record. I also make some edits to the Display Name property for each so that the labels on the screens I create for this entity will look nicer.

Now that I have a table to store schedule-specific information, I need to somehow relate a schedule item to a customer. How easy is this? I am glad you asked. Let me show you…

On the top menu bar of the Schedule table designer I click the Relationship… item…

Click the Relationship... menu item.

Clicking the Relationship… menu item opens the Add New Relationship window.

The Add New Relationship window

The Add New Relationship window contains a grid where the relationship can be defined. The window also displays a graphical representation of the relationship. By default, the window displays my Schedule table as the Name value in the From column. The graphical display also shows the Schedule table.

I want to create a relationship between my schedule and the customers that the schedule items are for. So, I select my Customer table from the dropdown box in the Name row of the To column.

Selecting the Customer table in the To column

After selecting the Customer table for the Name value in the To column, LightSwitch defaults the remaining values for my relationship.

Defaulted relationship properties

I already had a general idea of how the relationship between a schedule and a customer should work, but LightSwitch literally switched the light on in my head. The relationship designer presents me with not only a graphical representation of the suggested relationship but also a textual representation of the same relationship: the bottom of the window displays the rules for the relationship.

Working from the bottom up, I take a look at the textual description of the relationship:

Yes, a ‘Schedule’ must have a ‘customer’.

Yes, a ‘Customer’ can have many ‘Schedule’ instances.

A ‘Customer’ cannot be deleted if there are related ‘Schedule’ instances? Sure, that makes sense.

Looking up at the graphical representation, it makes sense and seems to represent each line of the above.

The relationship picture

I recognize the notation used in the image. It is a common data modelling technique to annotate relationships between data entities – not that you would really care, but it does make me sound like I know what I am talking about.

…hey look at that; it’s a double rainbow, OMG, Damn!
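The three textual rules LightSwitch listed map directly onto ordinary relational constraints. As a rough illustration only (LightSwitch generates and manages its own storage, so this is not what it emits), the following Python sketch uses the built-in sqlite3 module with hypothetical table, column, and customer names: a NOT NULL foreign key on the Schedule side and ON DELETE RESTRICT on that key.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.execute("CREATE TABLE Customer (Id INTEGER PRIMARY KEY, Name TEXT NOT NULL)")
conn.execute("""
    CREATE TABLE Schedule (
        Id          INTEGER PRIMARY KEY,
        Description TEXT NOT NULL,
        -- NOT NULL: a Schedule must have a Customer
        -- key on the 'many' side: a Customer can have many Schedules
        -- RESTRICT: a Customer with Schedules cannot be deleted
        CustomerId  INTEGER NOT NULL REFERENCES Customer(Id) ON DELETE RESTRICT
    )""")

conn.execute("INSERT INTO Customer (Id, Name) VALUES (1, 'Contoso')")
conn.executemany("INSERT INTO Schedule (Description, CustomerId) VALUES (?, 1)",
                 [("Site visit",), ("Lunch meeting",)])


def rejected(sql: str) -> bool:
    """Return True if SQLite refuses the statement because of a constraint."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True


orphan_rejected = rejected("INSERT INTO Schedule (Description) VALUES ('No customer')")
delete_rejected = rejected("DELETE FROM Customer WHERE Id = 1")
```

Both statements are refused, while the two schedule rows for the single customer are accepted, which mirrors the three rules in the Add New Relationship window.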

Relationship Properties

Looking back at the grid in the Add New Relationship window, I examine each of the available properties of the relationship.

Name

The Name is obvious; I want a relationship to exist from the Schedule table to the Customer table. If I had more tables to choose from, then they would also show up in the drop down lists for the name property.

Multiplicity

Multiplicity specifies how many instances of one entity can be related to an instance of the other entity. In most relational databases, there are different types of relationships that can be created.

Perhaps I want a customer to have only one schedule item, and a schedule item to have only one customer. This is considered a One-to-One relationship, which probably is not a good example to use because LightSwitch does not (yet?) support One-to-One relationships. Similarly, LightSwitch does not support Many-to-Many relationships.

In my case, I want a schedule item to be related to only one customer, and that customer can have many schedule items. This is a Many-to-One relationship (many schedules to one customer).

Multiplicity in my relationship

On Delete Behavior

Most relational database management system (RDBMS) software can enforce a rule that, when a record from one table is deleted, the related records in another table are also deleted. In LightSwitch, this is managed by setting the On Delete Behavior property.

Currently, the On Delete Behavior in my relationship is set to Restricted. This means that LightSwitch will not let me delete a customer record if there are any existing schedule records for it. I would be forced to go in and delete each related schedule record before I could delete the customer record.

Thinking about this a bit, do I really need to worry about schedule information for a customer if I delete the customer from my application? Probably not, so I change the On Delete Behavior setting to “Cascade Delete”.

Changing the On Delete Behavior to "Cascade delete"

Now the relationship is set up so that if I delete a customer record, all of that customer’s schedule records will also be deleted.
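As a rough relational illustration of Cascade Delete (hypothetical table, column, and customer names; LightSwitch manages its own storage, so this is not its generated schema), here is a Python sketch using the built-in sqlite3 module. Deleting one customer removes only that customer's schedule rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # required for SQLite to honour ON DELETE actions
conn.execute("CREATE TABLE Customer (Id INTEGER PRIMARY KEY, Name TEXT NOT NULL)")
conn.execute("""
    CREATE TABLE Schedule (
        Id          INTEGER PRIMARY KEY,
        Description TEXT NOT NULL,
        CustomerId  INTEGER NOT NULL REFERENCES Customer(Id) ON DELETE CASCADE
    )""")

conn.executemany("INSERT INTO Customer (Id, Name) VALUES (?, ?)",
                 [(1, "Contoso"), (2, "Fabrikam")])
conn.executemany("INSERT INTO Schedule (Description, CustomerId) VALUES (?, ?)",
                 [("Site visit", 1), ("Lunch meeting", 1), ("Demo", 2)])

# Deleting customer 1 also deletes its two schedule rows; customer 2 is untouched
conn.execute("DELETE FROM Customer WHERE Id = 1")

remaining = conn.execute("SELECT Description FROM Schedule ORDER BY Id").fetchall()
```

The trade-off is the same one the post weighs: Restricted protects against accidental data loss, while Cascade Delete saves the manual cleanup when the child rows have no value without their parent.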

Navigation Property

If I am not mistaken, the Navigation Property is simply a name that references each end point of the relationship. If I change the value, I see that the name also changes in the graphical representation. Instead of changing these values, I leave them as is, because the names make sense as singular or pluralized versions of the table names.

So, with all that, here is my finalized relationship…

The finalized relationship

I click the OK button to save the relationship.

Back in the table designer for my Schedule table, LightSwitch now displays my new relationship, linked to my Customer table.

The relationship showing in the table designer.

If I open the table designer for my Customer table, I also see the relationship. Pretty cool.

So now I want to start using this relationship, and start scheduling some customer meetings and visits – and start making note of who is generous in their lunches!

In my Solution Explorer I right-click the Screens folder and select Add Screen…

Add Screen...

Keeping it simple, I am going to create a screen that will let me add and edit schedule items from a grid. So, in the Add New Screen window, I select the Editable Grid Screen template. I select Schedules from the Screen Data drop-down, and then edit the Screen Name value to read CustomerScheduleGrid.

Creating the CustomerScheduleGrid screen

Clicking OK in the Add New Screen window opens the screen designer for my new CustomerScheduleGrid screen. The only thing I am going to change here is the screen’s Display Name property. I change the display name value to Schedule so that the screen name looks nicer when the application runs.

Groovy. I hit the F5 key to start the application in debug mode…and, ah la peanut butter sandwiches…the application opens.

I click the Schedule menu item and my new CustomerScheduleGrid screen opens.

Schedule Screen

For giggles, I add a couple of schedule items. When adding the items, I see that the new relationship is enforced because the Add New Schedule window forces me to select a customer for the schedule item.

Adding a schedule record forces me to select a customer.

Super! I can now start managing my schedule a little better by entering some simple appointments into my application.

Well that’s enough for now. My tummy is growling. In my next post I’ll fine tune my time management processing a bit by messing with some screen designs, as well as build a query to only show what I need to see from my schedule.

Ayende Rahien’s (@ayende) The Law of Conservation of Tradeoffs post of 9/3/2010 appears to me (and some commenters) to be directed to Visual Studio LightSwitch:

Every so often I see a group of people or a company come up with a new Thing. That new Thing is supposed to solve a set of problems. The common set of problems that people keep trying to solve are:

- Data access with relational databases

- Building applications without needing developers

And every single time that I see this, I know that there is going to be a catch involved. For the most part, I can usually even tell you what the catches are going to be.

It isn’t because I am smart, and I am certainly not omniscient. It is a matter of knowing the problem set that the tool sets out to solve.

If we take data access as a good example, there aren’t that many ways that you can approach it, when all is said and done. There is a set of competing tradeoffs that you have to make; simplicity vs. usability would probably be the best way to describe it. For example, you can create a very simple data access layer, but you’ll give up automatic change tracking. If you want change tracking, then you need to have an Identity Map (even data sets had that, in the sense that every row represented a single row :-) )

When I need to evaluate a new data access tool, I don’t really need to go too deeply into how it does things; all I need to do is look at the set of tradeoffs that the tool made. Because you have to make those tradeoffs, and because I know the playing field, it is very easy for me to tell what is actually going on.

It is pretty much the same thing when we start talking about the options for building applications without developers (a dream that the industry has chased, unsuccessfully, for the last 30 to 40 years or so). The problem isn’t a lack of trying; the amount of resources invested in the matter is staggering. But again you come into the realm of tradeoffs.

The best that a system for non-developers can give you is CRUD. Which is important, certainly, but for developers, CRUD is mostly a solved problem. If we want plain CRUD screens, we can utilize a whole host of tools and approaches to do them, but beyond the simplest departmental apps, the parts of the application that really matter aren’t really CRUD. For one application, the major point was being able to assign people to their proper slot, a task with significant algorithmic complexity. In another, it was fine-tuning the user experience so users would have a seamless journey into the annals of the organization’s decision-making processes.

And here we get to the same tradeoffs that you have to make. Developer-friendly CRUD systems exist in abundance; ASP.Net MVC support for Editor.For(model) is one such example. And they are developer friendly because they give you the bare bones of functionality you need, allow you to define broad swaths of the application in general terms, but allow you to fine-tune the system easily where you need it. They are also totally incomprehensible if you aren’t a developer.

A system that is aimed at paradevelopers focuses a lot more on visual tooling to help the paradeveloper achieve their goal. The problem is that in order to do that, we give up the ability to do things in broad strokes, and have to do pretty much everything from scratch. That is acceptable for a paradeveloper, who works without the concepts of reuse and DRY, but those same features that make it so good for a paradeveloper would be a thorn in a developer’s side, because they would mean doing the same thing over & over & over again.

Tradeoffs, remember?

And you can’t really create a system that satisfies both. Oh, you can try, but you are going to fail. And you are going to fail because the requirement set of a developer and the requirement set of a paradeveloper are so different as to be totally opposed to one another. For example, one of the things that developers absolutely require is good version control support. And by good version control support I mean that you can diff between two versions of the application and get a meaningful result from the diff.

A system for paradevelopers, however, is going to be so chock-full of metadata describing what is going on that, even if the metadata is in a format that is possible to diff, the diff is unlikely to be meaningful (and all too often the metadata is located in some database, in a format that makes it utterly impossible to work with using source control tools).

Paradeveloper systems encourage you to write what amounts to Button1_Click handlers, if they give you even that, because the paradevelopers they are meant for have no notion of things like architecture. When developers take that approach, the result is obviously unmaintainable.

And so on, and so on.

Whenever I see a new system cropping up in a field that I am familiar with, I evaluate it based on the tradeoffs that it must have made. And that is why I tend to be suspicious of the claims made about the new tool around the block, whatever that tool is at any given week.
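Ayende’s post has no code, but the Identity Map he mentions is Martin Fowler’s pattern: a session hands out at most one object per database row, which is what makes automatic change tracking practical, because every edit lands on the one tracked instance. Here is a minimal Python sketch, assuming a hypothetical load_row callable in place of a real database query and a hypothetical Customer class; the snapshot comparison is one simple way to detect changes, not the only one.

```python
from dataclasses import dataclass, astuple
from typing import Callable, Dict, List


@dataclass
class Customer:              # hypothetical entity for illustration
    id: int
    name: str


class IdentityMap:
    """Keeps at most one in-memory Customer per primary key, plus a snapshot
    of its loaded state so that changes can be detected later."""

    def __init__(self, load_row: Callable[[int], dict]) -> None:
        self._load_row = load_row                  # stands in for a database query
        self._objects: Dict[int, Customer] = {}
        self._snapshots: Dict[int, tuple] = {}
        self.loads = 0                             # counts simulated round trips

    def get(self, key: int) -> Customer:
        obj = self._objects.get(key)
        if obj is None:                            # first request: load and remember
            self.loads += 1
            row = self._load_row(key)
            obj = Customer(id=row["id"], name=row["name"])
            self._objects[key] = obj
            self._snapshots[key] = astuple(obj)
        return obj                                 # later requests: same instance

    def dirty_keys(self) -> List[int]:
        """Keys whose objects differ from the state they were loaded with."""
        return [k for k, o in self._objects.items() if astuple(o) != self._snapshots[k]]


# Usage with an in-memory dict standing in for a table
rows = {1: {"id": 1, "name": "Contoso"}}
session = IdentityMap(lambda k: rows[k])
a, b = session.get(1), session.get(1)
b.name = "Contoso Ltd."
```

Because a and b are the same object, the rename is visible through both and dirty_keys() reports key 1. Without the map, two separate copies of the row could disagree, and there would be no single object to track, which is the tradeoff Ayende describes.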

Michael K. Campbell asked Visual Studio LightSwitch and WebMatrix: Are They Good for Professional Developers? in this 8/5/2010 post to the DevPro Connections blog (missed when posted):

Microsoft recently released WebMatrix, a web development tool for non-professional developers. Microsoft also just announced a new version of Visual Studio targeted at non-developers: Visual Studio LightSwitch. Response from the developer community has been mixed, with some developers excited about these tools and others confused or annoyed.

Vision: The Argument for LightSwitch and WebMatrix

Something is driving a new push at Microsoft towards addressing the development needs of non-professional developers. My guess is that Microsoft has done a lot of number crunching and market analysis to determine that when folks need new business solutions, they’re going with something OTHER than solutions built upon the Microsoft stack. And my guess is that Visual Studio’s steep learning curve is a huge turn-off to most business users when they need a simple solution.

Consequently, I’m glad that Microsoft is showing real vision when it comes to figuring out how they can encourage more businesses to use the Microsoft stack for their development needs. As I pointed out in my previous article about WebMatrix, I think this move will create more demand for professional Microsoft services and development, which should be a win for all of us.

There are, of course, strong arguments about the dumb things that newbies will be able to do with “Fisher Price” versions of Visual Studio. But as I mentioned previously, those newbie mistakes will be executed in a friendly environment, where pros like us will eventually be able to correct those issues if the applications become successful enough that they need to be rewritten for scalability, security, and maintainability. Although it’s easy to argue that newbies can do dumb things with these new non-professional-level tools, it’s also easy to argue that pros and newbies can do REALLY dumb things with professional-grade tools. In other words, there’s no true protection from dumb; nor is dumb a function of toolsets.

Sloth: My Only Argument Against WebMatrix and LightSwitch

My only argument against new tools really ISN’T an argument against them at all. Instead it’s more of a realization that Microsoft has finite developer resources when it comes to creating tooling and platforms. In fact, it’s not even so much of an argument as it is a worry.

And that worry is this: because I’m a professional developer with a long list of things I’d like to see addressed, fixed, or corrected in Visual Studio, a big part of me worries that developers (and program managers) at Microsoft might be getting tired of dealing with the technical debt and other hoops that exist in Visual Studio, and are eager to just go solve new problems without shackles or overhead.

In other words, I’m sure that a large number of the developers (and PMs) at Microsoft are there because they like to build complex and capable frameworks, tools, servers, and solutions. And I’m sure that plenty of those ambitious, motivated developers would rather work on some shiny new project than keep working with the bugs and technical debt they’re forced to deal with in Visual Studio.

Case in point: in a previous article, I mentioned my dislike of Visual Studio’s ‘bouncy’ toolbars, and I pointed out that I’d welcome the Office Ribbon to Visual Studio as a way to get around that. The problem, however, with fixing ‘bouncy’ toolbars or putting in a Ribbon toolbar is all of the technical debt involved. For example, there are literally thousands of plugins out there right now for Visual Studio. What happens to something like Resharper or CodeRush when Microsoft switches to a Ribbon? The integration of those tools has to be rewritten to use new Ribbon APIs for the new version of Visual Studio (instead of the convoluted approach now that involves ctc files and other evil). Even worse: What happens to a great plugin you’re using when its developer doesn’t have time to upgrade to the new version of Ribboning in Visual Studio vNext?

On the other hand, notice that WebMatrix employs (you guessed it): Ribbon toolbars. Obviously, that’s because there was no technical debt to contend with, so the developers of WebMatrix were working with a clean slate.

Ergo, my worry is that Microsoft developers and PMs will be more drawn to these new, clean solutions than to fixing existing problems and concerns. And if you doubt that technical debt is a problem when it comes to addressing bugs or problems, look at a bug I ran into yesterday, where Microsoft refused to fix a bona fide bug because the “cost” was too high.

My Hope for the Future

In the end, I don’t have a problem with Microsoft pursuing non-professional developers. In fact, I really hope that they succeed with that effort.

Consequently, my only hope is that Microsoft’s developers and project managers aren’t getting tired of dealing with the technical debt that they’ve been amassing with their current tooling in Visual Studio to the point where they’d rather work on new applications rather than solve some of the hard technical problems that continue to be an issue for professional developers.

<Return to section navigation list>

Windows Azure Infrastructure

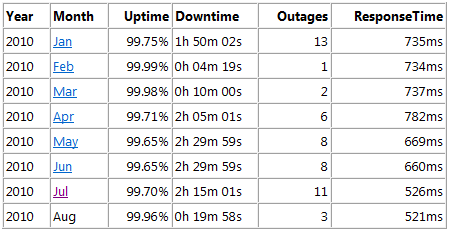

My Windows Azure Uptime Report: OakLeaf Table Test Harness for August 2010 (99.96%) post of 9/3/2010 shows that Microsoft’s South Central US data center is meeting its Azure SLA with only a single Web instance:

Following is the Pingdom monthly report for a single instance of the OakLeaf Systems Windows Azure Table Test Harness running in Microsoft’s South Central US (San Antonio) data center:

The August 2010 downtime data is similar to that for the preceding three months of Azure’s commercial operation, which began on January 4, 2010:

Note that the OakLeaf application is not subject to Microsoft’s 99.9% availability Service Level Agreement (SLA), which requires two running instances. …

One of the three outages appears to have been planned for fabric operating system maintenance. Lack of system upgrades during February and March would explain the higher uptime percentage.

It will be interesting to see if the 28.5% reduction in response time from the first quarter to August continues into autumn.

Note: September 2010 got off to a bad start with 2 hr 15 min of downtime on 9/2/2010. This outage did not appear in the status history as of 9/3/2010:

![image](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjw15Wmr4CAk5Wp0m-bEQh6VBXion-OP_lJCJjK1FIVi7kM0LfTHgS9UCORFdwn-ZRqux1K2T0inboFrb6Awth1iitoUNowWYkcf5Rocm5LbqxOjoVtKLTIagThDlhQ9MkhJoq4CuAq/?imgmax=800)
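To put these percentages in minutes, here is a small Python sketch of plain wall-clock arithmetic. It ignores how the SLA actually measures availability and simply uses the 99.9% figure quoted above; the function names are hypothetical. A 31-day August has 44,640 minutes, so 99.9% allows about 44.6 minutes of downtime, and 99.96% corresponds to roughly 18 minutes (the reported percentage is rounded, so the exact figure can't be recovered from it). The 2 hr 15 min (135 min) outage on 9/2/2010 alone caps a 30-day September at 99.6875% even if nothing else goes wrong.

```python
MINUTES_PER_DAY = 24 * 60


def uptime_pct(downtime_min: float, days: int) -> float:
    """Percentage of wall-clock minutes in the period that were not downtime."""
    total = days * MINUTES_PER_DAY
    return 100.0 * (total - downtime_min) / total


def downtime_budget_min(target_pct: float, days: int) -> float:
    """Minutes of downtime a period can absorb while still meeting target_pct."""
    return days * MINUTES_PER_DAY * (100.0 - target_pct) / 100.0


august_budget = downtime_budget_min(99.9, 31)    # 99.9% of a 31-day month
august_implied = downtime_budget_min(99.96, 31)  # downtime implied by 99.96%
september_cap = uptime_pct(2 * 60 + 15, 30)      # 9/2 outage alone, 30-day month
print(f"{august_budget:.1f} min, {august_implied:.1f} min, {september_cap:.4f}%")
```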

Alex Williams gave Azure’s version the nod in his Cloud Calculators: A Sign of Slick Marketing in the Cloud post of 9/3/2010 to the ReadWriteCloud blog:

As the cloud computing market gets more crowded, a number of Web-based calculators are popping up to lure customers.

These online calculators deserve their fair share of scrutiny. For the most part, they are there for the vendor to tell their own story in a way that shows the benefits of their service. Huge savings and incredible returns are pretty much what you are given when you pop in your numbers, requirements and company information. In the end, what you get is barely insightful. What the vendor gets is far more.

At their best, these cloud computing calculators provide a thumbnail view of the market. At their worst, they are slick tools for generating sales leads.

Here are three that we looked at. None of these calculators should ever be used to decide how to use cloud computing services. There are just too many factors to consider when making such a decision. It's a complex undertaking for any established company. It's why cloud management companies do so well. They provide a full gamut of services to help companies decide what should be in the cloud and what should not.

Astadia

Astadia developed a cloud calculator based on the data it collected from the integrations it did for its customers. Its main purpose is to show the return on the Google, Amazon Web Services and Force.com platforms.

The calculator is heavily biased. SearchCloudComputing.com observes that the calculator itself was built on the Force.com platform. Astadia develops marketing and sales apps, and many of the apps it develops are created on Force.com.

Google Calculator

This one stinks. The Google Cloud Calculator is hardly a calculator at all. It's an advertisement and a lead generator.

The calculator asks for your company name and then the number of employees. Input two employees and the calculator says you will save about $31,000. Put in 15 employees and you will also save about $31,000. So, either Google is inflating the numbers for a two employee company or is vastly underestimating the savings for a 15-person company.

Google does not have to promote itself in this manner. Google Apps is an excellent service; it can stand on its own. Cost comparisons are fine, but calling this a calculator is a bit far-fetched.

Windows Azure

The Windows Azure cloud calculator is better than the Google calculator advertisement. It still requires a high dose of skepticism, especially considering that it was built by a marketing firm.

Before you launch the calculator, they issue a disclaimer. That at least removes a bit of the marketing gleam.

The calculator asks a series of questions. SearchCloudComputing.com makes the point that the calculator is no doubt collecting hordes of marketing information. Again, it’s more of a marketing ploy than anything else. Still, Microsoft at least tries to show some integrity:

"You should not view the results of this report as a substitute for engaging with a third party expert to independently evaluate you or your company's specific computing needs. The analysis report you will receive is for informational purposes only," it reads. The calculator also assumes a rough estimate of $20,000 per year to manage a server on-premises, and about $4,000 for the Azure equivalent.

There are plenty of other cloud calculators. Rackspace has one as does Amazon Web Services. Our advice is to treat these calculators like you would any marketing information. They are simply tidbits of information that should provide nothing else but a snapshot of the market.

David Linthicum asserted “Apple TV and Google TV aren't just entertainment options; they also signify cloud computing's growing dependence on media” as deck for his Content is driving cloud computing's real expansion post of 9/3/2010 to InfoWorld’s Cloud Computing blog:

This week, we tuned in to learn about Apple's newest releases, including an upgrade to Apple TV. It joins the ranks of Google TV, as well as Web content clients from Amazon, Netflix, and YouTube included on many DVRs, DVD players, and high-def TVs. The clear trend is that we'll be consuming a lot more video and audio content over the Internet in the very near future.

All of this content will come from huge, new facilities, such as the monster data center Apple is building in Maiden, NC. The structure, which is nearly five times the size of Apple's data center in Newark, Calif., could not be used as anything other than a huge content server. Apple is making a great deal of money from that iTunes business, and the video and audio has to originate somewhere.

New content servers are being deployed all the time, but in much more distributed and less obvious ways. Companies such as Apple and Facebook may build data centers so large they get Greenpeace on their trail, but most businesses are taking a different approach to the expansion of content delivery capacity. Google and Microsoft, for example, are establishing servers in various locales.

The cloud's real expansion is centered on content these days, and these emerging cloud computing behemoths will lead the way for other more business-oriented types of applications. As Apple and Google become better at storing and serving up petabytes of video and audio, we can leverage those best practices for business data and applications. Moreover, you can count on these content delivery systems to morph into true cloud computing systems, supporting IaaS, SaaS, and PaaS, as the revenue opportunities become more apparent. Many cable companies are already moving in this direction, following the lead of Google and Microsoft.

Michael K. Campbell posted Azure and the Future of .NET Development to the DevPro Connections blog on 9/1/2010:

Microsoft is still aggressively touting the benefits of its Windows Azure platform. And while the big benefit of Azure today is that developers can push their applications into the cloud, I’ve long suspected that more good would come from Azure than just the immediate, and obvious, benefits that are currently available. I’ll share some of my suspicions and hopes. And look at how they might be starting to take shape with Microsoft’s “top secret” project Orleans.

A Missed Opportunity

A few weeks ago I was going to write about what I thought the addition of Mark Russinovich to the Azure team would mean (long-term) to .NET developers. I had the article drafted, but decided at the last minute to write about Visual Studio LightSwitch instead.

In thinking about Russinovich and Azure, I had outlined what I saw as current pain points with .NET development (in terms of scalability and availability). I was hoping that the addition of Russinovich (and other heavy hitters) to the Azure team would help bring about a fundamental change to the way application workloads are handled in truly “distributed” environments. I was hoping that .NET development would finally see some “grid-like” improvements or changes.

Then Mary Jo Foley broke the news of a new Microsoft R&D project she had discovered, called project Orleans. When I quickly reviewed what she had learned about the project, it looked like a dead ringer for the fundamental changes I was planning to write about. So by not writing out my ideas a few weeks ago, it appears that I missed an opportunity to look smart and predict things before they happened.

But the truth is that anyone who’s been developing with both .NET and SQL Server for the past few years could probably come to the same conclusions that I had. I’m excited to see what Orleans will mean for .NET developers, specifically in terms of how Orleans might address some of the pain-points that were on my mind.

SQL Server and SQL Azure Lead the Way

Just as I opined a few months ago about how SQL Azure would (eventually) bring game-changing improvements to future, non-cloud, versions of SQL Server, I’m convinced that Windows Azure will bring fundamental changes to the way that developers write applications.

Case in point: concurrency. In 2005, Herb Sutter published his article “The Free Lunch Is Over,” about how software developers needed to make a fundamental shift towards concurrency. For developers, this was a bit of a wake-up call confirming that applications would no longer be able to rely upon faster and faster processors to defray the cost of increasing complexity (and bloat) within their code and applications.

However, if you’re familiar with SQL Server, you know that it has been multi-processor aware for more than a decade now. More specifically, SQL Server has been able to “magically” take advantage of multiple processors to quickly perform operations without any real need for control or monitoring by developers. In fact, SQL Server’s ability to take advantage of multiple processors is so seamlessly implemented that the vast majority of SQL Server users probably don’t even realize that there’s a way (MAXDOP and processor affinity) to control how many processors SQL Server will use to complete a query or operation.

Too bad that’s not the case with .NET development today, because even with the parallel programming support in .NET 4.0, multi-threading remains non-trivial to implement. (Of course, that isn’t to say that leveraging multiple processors or cores from within SQL Server always works flawlessly; in rare cases DBAs need to tweak, configure, and constrain SMP operations, but in the vast majority of cases, multi-threading within SQL Server just works.)

Concurrency, Availability, and Scalability

It’s important to remember that concurrency (the ability to increase performance by throwing more processors at a problem) is just one consideration when it comes to .NET development. Two other big considerations are availability and scalability. And, interestingly enough, those two considerations are also the hallmarks of cloud computing. (Without the ability to make your applications or solutions more redundant, or fault-tolerant, and without the ability to easily scale your solutions, all you have are hosted solutions, which are not the same as cloud solutions.)

Because Azure is truly a cloud-based service or offering, it’s only natural to assume that it will address business and developer needs for applications that are fault-tolerant and can more readily scale.

The only question for developers, then, is whether that’s all we’ll get, or whether the lessons the Azure team learns about abstracting workloads away from their physical hosts will be rolled back into future changes and directions for .NET development in general. I’m of the opinion that it’s going to be the latter, because Microsoft has already placed such a huge amount of emphasis on addressing concurrency, scalability, and availability over the years.

Clustering Services, the Cloud, and the Need for Orleans

In my estimation, Microsoft has actually done a great job of addressing availability and scalability with clustering. Load-balancing and fault tolerance are key components that have made clustering such a viable option for so many enterprises. The problem with clustering, though, is that it’s really non-trivial to implement and maintain. Plus, clustering is quite expensive. Consequently, I’m hoping that since Azure needs to address these same issues of fault-tolerance and the ability to easily scale, we’ll start to see NEW solutions and paradigms for addressing these pain points from an Azure team that’s working to solve these problems in a real, viable, cloud.

Then, of course, I’m hoping that these insights make their way back into .NET development in general, giving the development of the .NET Framework itself a new area of focus. And, frankly, this looks like exactly what project Orleans aims to address.

Project Orleans

If you haven’t done so already, take a quick peek at Mary Jo’s overview of what Orleans is shaping up to be. You’ll see that it’s built with increased fault-tolerance and scalability in mind and that these considerations are addressed through indirection or abstraction. More importantly, it looks like the abstraction in question will actually be a new platform, one that I hope will be written from the ground up to support .NET solutions.

Think about that for a minute.

And, with that, here’s hoping that the Orleans runtime is everything it looks like it could be: distributed, manageable, versatile, scalable, fault-tolerant, and written specifically with .NET developers (and their apps) in mind.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA)

Sadagopan posted VMworld: Private Cloud & Random Musings to the Enterprise Irregulars blog on 9/1/2010:

I went to VMworld 2010 in San Francisco to catch up with friends coming from around the world and ended up meeting some up-and-coming technology players as well. In the course of my discussions, I sensed a lot more energy in the cloud ecosystem, with big and small, private and public cloud service providers, and enterprises seriously exploring adoption opportunities.

First, on VMware: the most important message coming across clearly is VMware’s ambition to become a key player across the stack, covering server and desktop virtualization and application platforms. This comes at a time when the number of virtualized servers set up this year exceeded the number of actual physical servers set up, and this trend is expected to continue; we have seen projections showing that the installed base of virtual machines will grow 5x in three years.

One can see in VMware’s strategy a broadly outlined roadmap for internal Clouds built on the server virtualization hypervisor layer, with an integrated management (including security) backplane directly as part of the platform. Clearly, it is time for more established infrastructure players like BMC, CA, and HP to be concerned. I liked the announcement of more robust support and agility for porting between internal and external Clouds; this move makes VMware a stronger player in the cloud ecosystem. VMware is building a new application platform for next-gen cloud applications, leveraging SpringSource and partnering with Salesforce.com through VMforce.

Since it’s VMworld, it always raises the question of what comes next for VMware’s customers. After all, the percentage of new servers running virtualization as the primary boot option will approach 90 percent by 2012, according to analysts. For many, moving apps into the cloud becomes the next logical step. The movement toward private clouds, after all, started with the virtualized data center and virtualized desktops. The movement toward broader cloud computing began for the enterprise with data center virtualization and consolidation of server, storage, and network resources to reduce redundancy and wasted space and equipment, with measured planning of both architecture (including facilities allocation and design) and process.

Depending on the maturity of their adoption cycle, enterprises end up adopting different flavors of the cloud. I always maintain that businesses would embrace the right cloud at the right time, be it private, hybrid or public, but that the eventual goal would be to move everything into the public cloud to leverage true cloud benefits. While this may look logical, the path has not always been a direct one for those embarking on the journey. Many approaches are being tried. The most ambitious is the introduction of publicly shared core services, much like domain name system (DNS) and peering services, into carrier and service provider networks, which will enable a more loosely coupled relationship between the customer and the cloud provider. With such infrastructure and services enabled, enterprises will be able to choose among service providers, and federated service providers will be able to share service loads. Implication: such a looser relationship will increase the elasticity of the cloud market and create a single, public, open cloud internetwork: the intercloud.

Now, with federation and application portability as the cornerstones of the intercloud, businesses will be able to achieve business process freedom and innovate, and users will experience choice and faster, better services. Obviously a journey like this involves careful planning and coordination, and it provides leverageable opportunities across the board. Consulting majors have defined approaches to help businesses move along this path as painlessly as possible.

Let’s begin at the beginning to re-emphasize the perspective on cloud computing. The cloud computing model ought to provide resources and services abstracted from the underlying infrastructure, delivered on demand and at scale in a multi-tenant environment. In addition to its on-demand quality and its scalability, cloud computing can provide the enterprise with key advantages such as:

- Global deployment & support capabilities, with policy-based control by geography and other factors

- Operational efficiency from consistent infrastructure, policy-based automation, and trusted multi-tenant operations

- Integrated service management interfaces (catalog management, provisioning, etc.) native to the cloud

- Regulatory compliance (both global and local) through automated data policies

- Better TCO and increased ease of operations

If we examine cloud computing, particularly private clouds, through this prism, it throws up some interesting insights. Different enterprises are getting ready to embrace the cloud, but they have different starting points and are weighing many trade-offs as to the best direction or computing model. To add to the confusion, I saw at VMworld a number of players (some promising, I should say) talk about helping set up private clouds in a manner suggesting a simple switch to the cloud. I also know that some cloud service players offer ready-made solutions to make private clouds faster and easier for businesses to adopt. This is startling, to say the least; a ready-made solution may be far-fetched. (A clear analogy: a decade ago, some were promising to make enterprises web-ready easily. We all know how difficult it was to web-enable a business; no ready-made solutions or shortcuts could have worked there.)

Migration, workload balancing, and integration are all pressing issues that must be resolved, and certainly not minor things to be glossed over. Private clouds should be differentiated from hybrid clouds. (Note: A hybrid cloud uses both external (under the control of a vendor) and internal (under the control of the enterprise) cloud capabilities to meet the needs of an application system. A private cloud lets the enterprise choose, and control the use of, both types of resources.)

Evidently, the shift to the cloud is not an easy journey. The greatest barrier to cloud adoption is making the switch itself, which means working through many issues, ranging from excitement to fear and uncertainty. This is a paradigm shift, not just an incremental change, and as such it requires planning, coordination and leadership to traverse the path. Initially, the administrative change will be far more pronounced as the shift happens, and if the shift is entrusted to an external vendor, it will call for serious planning and training, because the new environment will be dramatically different from what existed in the past.