Windows Azure and Cloud Computing Posts for 9/17/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

•• Update 9/19/2010: Updated for Maarten Balliauw’s Introducing Windows Azure Companion – Cloud for the masses? in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section and other articles marked ••.

• Update 9/18/2010: Items marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

• David Pallman began a new series with Stupid Cloud Tricks #1: Hosting a Web Site Completely from Windows Azure Storage on 9/17/2010:

Can you host a web site in Windows Azure without using Windows Azure Compute? Sure you can: you can ‘host’ an entire web site in Windows Azure Storage, 100% of it, if the web site is static. I myself am currently running several web sites using this approach. Whether this is a good idea is a separate discussion. Welcome to “Stupid Cloud Tricks” #1. Articles in this series will share interesting things you can do with the Windows Azure cloud that may be non-obvious and whose value may range from “stupid” to “insightful” depending on the context in which you use them.

If you host a web site in Windows Azure the standard way, you’re making use of Compute Services to host a web role that runs on a server farm of VM instances. It’s not uncommon in this scenario to also make use of Windows Azure blob storage to hold your web site assets such as images or videos. The reason you’re able to do this is that blob storage containers can be marked public or private, and public blobs are accessible as Internet URLs. You can thus have HTML <IMG> tags or Silverlight <Image> tags in your application that reference images in blob storage by specifying their public URLs.

Let’s imagine we put all of the files making up a web site in blob storage, not just media files. The fact that Windows Azure Storage is able to serve up blob content means there is inherent web serving in Windows Azure Storage. And this in turn means you can put your entire web site there, if it’s of the right kind: static or generated web sites that serve up content but don’t require server-side logic. You can, however, make use of browser-side logic using JavaScript, Ajax, or Silverlight.
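To make the storage-hosting idea concrete, here is a minimal Python sketch of the bookkeeping a storage-hosted site needs: mapping each file to its public blob URL and choosing the Content-Type to set on upload, because the blob service returns the stored Content-Type and browsers need text/html or image/png (not a generic binary type) to render pages inline. It assumes the public URL form http://<account>.blob.core.windows.net/<container>/<blob> and a container already marked public; the account and container names are hypothetical, and the upload step itself isn't shown.

```python
import mimetypes
from urllib.parse import quote


def public_blob_url(account: str, container: str, blob_name: str) -> str:
    """Public URL of a blob in a container whose access level is public."""
    # '/' in a blob name acts as a virtual folder separator, so leave it unescaped.
    return f"http://{account}.blob.core.windows.net/{container}/{quote(blob_name, safe='/')}"


def content_type_for(blob_name: str) -> str:
    """Content-Type to set on upload so browsers render the file inline."""
    guessed, _encoding = mimetypes.guess_type(blob_name)
    return guessed or "application/octet-stream"


def upload_plan(account: str, container: str, relative_paths):
    """(blob name, public URL, Content-Type) for each file of a static site."""
    return [(p, public_blob_url(account, container, p), content_type_for(p))
            for p in relative_paths]
```

For example, upload_plan("mysite", "web", ["index.html", "images/logo.png"]) pairs each file with the URL a browser would request and the header value to store with the blob.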

How does ‘hosting’ a static web site out of Windows Azure Storage compare to hosting it through Windows Azure Compute?

- With the standard Windows Azure Compute approach, a single VM of the smallest variety @$0.12/hr will cost you about $88/month, and you need at least 2 servers if you want the 3 9's SLA. In addition, you’ll pay storage fees for the media files you keep in Windows Azure storage as well as bandwidth fees.

- If you put your entire site in Windows Azure storage, you avoid the Compute Services charge altogether but you will now have more storage to pay for. As a reminder, storage charges include a charge for the amount of storage @$0.15/GB/month as well as a transaction fee of $0.01 per 10,000 transactions. Bandwidth charges also apply but should be the same in either scenario.

So which costs more? It depends on the size of your web site files. In the Compute Services scenario the biggest chunk of your bill is likely the hosting charges, which are a fixed cost. In the storage-hosted scenario you’re converting this aspect of your bill to a charge for storage, which is not fixed: it’s based on how much storage you are using. It’s thus possible for your ‘Storage-hosted’ web site charges to be higher or lower than those of the Compute-hosted approach, depending on the size of the site. In most cases the storage scenario is going to cost less than the Compute Services scenario.

As noted, this is only useful for a limited set of scenarios. It’s not clear what this technique might cost you in terms of SLA or Denial of Service protection, for example. Still, it’s interesting to consider the possibilities given that Windows Azure Storage is inherently a web server. The reverse is also true: Windows Azure Compute inherently comes with storage, but that’s another article.

It appears to me that the “entire site in Windows Azure storage” approach would be less expensive in almost every case. The only exception might be a site with no static content, which is difficult to imagine.
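Pallman’s figures make the comparison easy to rough out. The following Python sketch uses the 2010 prices quoted above (Small instance at $0.12/hour, storage at $0.15/GB/month, $0.01 per 10,000 transactions), assumes a 730-hour month, and ignores bandwidth, which applies to both approaches. The function names are illustrative only.

```python
HOURS_PER_MONTH = 730   # 8,760 hours per year / 12, an approximation
GB_MONTH_RATE = 0.15    # $ per GB stored per month
RATE_PER_10K = 0.01     # $ per 10,000 storage transactions


def compute_hosted_monthly(instances: int = 2, rate_per_hour: float = 0.12) -> float:
    """Fixed hosting charge for Small compute instances (storage and bandwidth excluded)."""
    return instances * rate_per_hour * HOURS_PER_MONTH


def storage_hosted_monthly(site_gb: float, transactions: float) -> float:
    """Storage capacity charge plus per-transaction charge (bandwidth excluded)."""
    return site_gb * GB_MONTH_RATE + transactions / 10_000 * RATE_PER_10K


def breakeven_transactions(site_gb: float, instances: int = 2) -> float:
    """Monthly storage transactions at which storage hosting costs as much as Compute."""
    remaining = compute_hosted_monthly(instances) - site_gb * GB_MONTH_RATE
    return max(remaining, 0.0) / RATE_PER_10K * 10_000
```

For a 1 GB site, two instances come to about $175 a month, and breakeven_transactions(1) is roughly 175 million transactions a month. Because every blob GET counts as a storage transaction, a page that pulls ten uncached files would need on the order of 17.5 million page views a month before storage hosting costs more. The sketch also ignores the blob storage and transactions the Compute scenario incurs for its own media files, so the real breakeven point is somewhat higher.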

Brian Swan explained Accessing Windows Azure Table Data as OData via PHP in this 9/16/2010 post:

Did you know that data stored in Windows Azure Table storage can be accessed through an OData feed? Does that question even make sense to you? If you answered no to either of those questions and you are interested in learning more, then read on. In this post I’ll show you how to use the OData SDK for PHP to retrieve, insert, update, and delete data stored in Windows Azure Table storage. If you are new to either Windows Azure Table storage or the OData protocol, I suggest reading either (or both) of these posts (among other things, they will describe what Azure Table storage and OData are, and walk you through set up of Azure Table storage and the OData SDK for PHP):

Once you have set up Azure Table storage and installed the OData SDK for PHP, writing code to leverage Azure Table storage is easy…

Set Up

To use the OData SDK for PHP with Azure tables, we must define a class that describes the table structure. And, for the SDK to extract table metadata from the class, the class must…

Inherit from the TableEntry class

All the properties that are to be stored in the Azure table must have the attribute @Type:EntityProperty

The table entry must have the following attributes:

@Class:name_of_table_Entry_class

@Key:PartitionKey

@Key:RowKey

So, the class I’m using (in a file called Contacts.php) describes a contact (note that the name of the class must be the name of the table in Windows Azure):

```php
<?php
/**
 *@Class:Contacts
 *@Key:PartitionKey
 *@Key:RowKey
 */
class Contacts extends TableEntry
{
    /**
     *@Type:EntityProperty
     */
    public $Name;

    /**
     *@Type:EntityProperty
     */
    public $Address;

    /**
     *@Type:EntityProperty
     */
    public $Phone;
}
```

I’ll use “business” or “personal” as the value for the partition key, and an e-mail address for the value of the row key (the partition key and row key together define a unique key for each table entry). With that class in place we are ready to start using the SDK. In the code below, I create a new ObjectContext (which manages in-memory table data and provides functionality for persisting the data) and set the Credential property:

```php
<?php
require_once 'Context/ObjectContext.php';
require_once 'Contacts.php';

// Replace these placeholders with your storage account's values.
define("AZURE_SERVICE_URL", 'http://your_azure_account_name.table.core.windows.net');
define("AZURE_ACCOUNT_NAME", "your_azure_account_name");
define("AZURE_ACCOUNT_KEY", 'your_azure_account_key');

// The ObjectContext tracks in-memory entities and persists changes.
$svc = new ObjectContext(AZURE_SERVICE_URL);
$svc->Credential = new AzureTableCredential(AZURE_ACCOUNT_NAME, AZURE_ACCOUNT_KEY);
```

Creating a Table

To create a table, we use the Tables class that is included in the SDK. Note that the table name must match the name of my class above:

```php
$table = new Tables();
$table->TableName = "Contacts";
$svc->AddObject("Tables", $table);
$svc->SaveChanges();
```

Inserting an Entity

Inserting an entity simply means creating a new Contacts object, setting its properties, and using the AddObject and SaveChanges methods on the ObjectContext class:

```php
$tableEntity = new Contacts();
$tableEntity->PartitionKey = "business";
$tableEntity->RowKey = "Brian@address.com";
$tableEntity->Name = "Brian Swan";
$tableEntity->Address = "One Microsoft Way, Redmond WA";
$tableEntity->Phone = "555-555-5555";
$svc->AddObject("Contacts", $tableEntity);
$svc->SaveChanges();
```

Retrieving Entities

The following code executes a query that returns all entities in the Contacts table. Note that all contacts come back as instances of the Contacts class.

```php
$query = new DataServiceQuery("Contacts", $svc);
$response = $query->Execute();

foreach ($response->Result as $result)
{
    // Each result is a Contacts object!
    echo $result->Name."<br/>";
    echo $result->Address."<br/>";
    echo $result->Phone."<br/>";
    echo $result->PartitionKey."<br/>";
    echo $result->RowKey."<br/>";
    echo "----------------<br/>";
}
```

You can filter entities by using the Filter method on the DataServiceQuery object. For example, by replacing $response = $query->Execute(); with this code…

```php
$response = $query->Filter("PartitionKey eq 'business'")->Execute();
```

…you retrieve all “business” contacts. Or, this code will return all entities whose Name property matches the given string:

```php
$response = $query->Filter("Name eq 'Brian Swan'")->Execute();
```

I’ve only demonstrated the equals (eq) condition in filters here. The SDK supports these conditions too: ne (not equal), lt (less than), le (less than or equal), gt (greater than), and ge (greater than or equal).
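Filter strings like these are easy to get wrong by hand, especially when a value contains an apostrophe. Here is a minimal Python sketch, independent of the PHP SDK, that builds filter expressions with the six operators listed above and shows the Table service query URL they end up in. It assumes the REST form http://<account>.table.core.windows.net/<table>()?$filter=<expression> and OData’s rule that single quotes inside string literals are doubled; the helper names are hypothetical.

```python
from urllib.parse import quote

# Comparison operators mentioned in the post.
OPERATORS = {"eq", "ne", "lt", "le", "gt", "ge"}


def odata_literal(value) -> str:
    """Render a Python value as an OData filter literal."""
    if isinstance(value, str):
        # String literals are single-quoted; embedded single quotes are doubled.
        return "'" + value.replace("'", "''") + "'"
    if isinstance(value, bool):  # check bool before int (bool is an int subclass)
        return "true" if value else "false"
    if isinstance(value, int):   # plain 32-bit integers only in this sketch
        return str(value)
    raise TypeError(f"unsupported literal type: {type(value).__name__}")


def comparison(prop: str, op: str, value) -> str:
    if op not in OPERATORS:
        raise ValueError(f"unsupported operator: {op}")
    return f"{prop} {op} {odata_literal(value)}"


def all_of(*clauses: str) -> str:
    """Join clauses with 'and', parenthesizing each one."""
    return " and ".join(f"({c})" for c in clauses)


def table_query_url(account: str, table: str, filter_expr: str) -> str:
    """Table service query URL with a URL-encoded $filter option."""
    encoded = quote(filter_expr, safe="()'")
    return f"http://{account}.table.core.windows.net/{table}()?$filter={encoded}"
```

all_of(comparison("PartitionKey", "eq", "business"), comparison("RowKey", "eq", "Brian@address.com")) produces the same combined filter string that the update and delete examples use.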

Updating an Entity

To update an entity, we have to first retrieve the entity to be updated. Once the entity is in memory, we can update its non-key properties. The PartitionKey and RowKey cannot be updated; to change a key property, the entity must be deleted and re-created with the correct keys. The following code updates the Phone property of an entity:

```php
// Retrieve the entity to update by its PartitionKey and RowKey.
$query = new DataServiceQuery("Contacts", $svc);
$filter = "(PartitionKey eq 'business') and (RowKey eq 'Brian@address.com')";
$response = $query->Filter($filter)->Execute();
$tableEntity = $response->Result[0];

// Change a non-key property, mark the entity as modified, then persist.
$tableEntity->Phone = "999-999-9999";
$svc->UpdateObject($tableEntity);
$svc->SaveChanges();
```

Deleting an Entity

To delete an entity, we have to retrieve the entity to be deleted, mark it for deletion, then persist the deletion:

```php
// Retrieve the entity to delete by its PartitionKey and RowKey.
$query = new DataServiceQuery("Contacts", $svc);
$filter = "(PartitionKey eq 'business') and (RowKey eq 'Brian@address.com')";
$response = $query->Filter($filter)->Execute();
$tableEntity = $response->Result[0];

// Mark it for deletion, then persist the change.
$svc->DeleteObject($tableEntity);
$svc->SaveChanges();
```

Deleting a Table

And finally, to delete a table…

```php
// Tables are queried like entities in the "Tables" collection.
$query = new DataServiceQuery('Tables', $svc);
$response = $query->Filter("TableName eq 'Contacts'")->Execute();
$table = $response->Result[0];
$svc->DeleteObject($table);
$svc->SaveChanges();
```

That’s it. If you want to play with the code above, you can download the files attached to this post. Let me know if this information is interesting and/or useful.
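The key rules in Brian’s walkthrough (PartitionKey plus RowKey form each entity’s unique key, only non-key properties can be updated, and changing a key means deleting and re-creating the entity) can be modeled with a toy in-memory table. This Python sketch is purely illustrative; the class and method names are hypothetical and don’t correspond to the OData SDK for PHP or the Table service API. Inserting under the new key before deleting the old one is my own choice, so a failed insert doesn’t lose the entity.

```python
KEY_PROPERTIES = {"PartitionKey", "RowKey"}


class InMemoryTable:
    """Toy model: each entity is unique by its (PartitionKey, RowKey) pair."""

    def __init__(self):
        self._entities = {}  # (partition_key, row_key) -> dict of non-key properties

    @staticmethod
    def _reject_key_properties(props):
        if any(name in KEY_PROPERTIES for name in props):
            raise ValueError("PartitionKey and RowKey cannot be set as properties")

    def insert(self, partition_key, row_key, **props):
        self._reject_key_properties(props)
        key = (partition_key, row_key)
        if key in self._entities:
            raise KeyError(f"entity {key} already exists")
        self._entities[key] = dict(props)

    def get(self, partition_key, row_key):
        return dict(self._entities[(partition_key, row_key)])

    def update(self, partition_key, row_key, **props):
        # Only non-key properties can change in place.
        self._reject_key_properties(props)
        self._entities[(partition_key, row_key)].update(props)

    def delete(self, partition_key, row_key):
        del self._entities[(partition_key, row_key)]

    def change_keys(self, old_pk, old_rk, new_pk, new_rk):
        # "Changing" a key means re-creating under the new key, then deleting the old entity.
        props = self.get(old_pk, old_rk)
        self.insert(new_pk, new_rk, **props)
        self.delete(old_pk, old_rk)
```

For instance, moving Brian’s contact from the “business” to the “personal” partition is a change_keys call rather than an update.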


SQL Azure Database, Codename “Dallas” and OData

Wayne Walter Berry (@WayneBerry) reported Nick Bowyer interviews David Robinson about SQL Azure at Tech.Ed New Zealand 2010:

Visit the site for an embedded player.

Patrick Butler Monterde announced the new SQL Azure Website launched! on 9/17/2010:

We are pleased to announce the launch of the new SQL Azure website -- bookmark this now! www.microsoft.com/sqlazure

Highlights of the new SQL Azure site:

Easy to navigate new SQL Azure home page

The main SQL Azure home page highlights the most popular content categories and recent blog posts for enhanced discovery and a richer experience throughout the site. The dynamic evidence box highlights top quotes and new success stories worldwide.

Seamless experience across Windows Azure platform service home pages

Customers landing on the Windows Azure platform home page will have a seamless transition between the new SQL Azure and Windows Azure platform home pages.

New Videos page

We have a new resource page dedicated to training videos on SQL Azure for customers to ramp up. New videos are coming soon! Also, a more interactive and richer videos page will be available in v2 of the site!

Updated FAQs:

All of the SQL Azure FAQs have been updated.

New Community page

Dynamic blogs and all of the relevant external social media sites are easily accessible in this new community landing environment.

Link: MSDN SQL Azure

• Chris Woodruff (@cwoodruff, a.k.a. “Woody”) reported a new public baseball stats OData service on the OData Mailing List:

I created a new feed for people to use that contains baseball stats from 1864 to 2009: http://www.baseball-stats.info/OData/baseballstats.svc/

Enjoy!

Here’s most of the metadata:

and the properties from the entry for Hank Aaron returned by http://www.baseball-stats.info/OData/baseballstats.svc/Player('aaronha01'). Don’t forget to turn off IE8’s Feed Reading view:

Haris Kurtagic reported a presentation about OData and GeoREST in the OData Mailing List on 9/16/2010:

I would like to inform you about [our] work and presentation regarding OData.

Together with Geof Zeiss, we did [a] presentation at FOSS4G (http://2010.foss4g.org/ ). I presented [on] OData and how to create [an] OData producer with GeoREST (www.georest.org).

GeoREST enables creation of OData service[s] from spatial data. GeoREST extends OData filtering with spatial filters and adds GML and GeoJSON to entries.
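Haris doesn’t show GeoREST’s spatial filter syntax, so the following Python sketch only illustrates the simplest kind of spatial filter, a latitude/longitude bounding-box test, using the corners of the sample data’s extent given in the next paragraph. The feature dictionaries and helper names are hypothetical; a real spatial filter would run on the server against each entry’s geometry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BBox:
    """Axis-aligned latitude/longitude box (no antimeridian handling)."""
    min_lat: float
    min_lon: float
    max_lat: float
    max_lon: float

    def contains(self, lat: float, lon: float) -> bool:
        return self.min_lat <= lat <= self.max_lat and self.min_lon <= lon <= self.max_lon


def filter_by_bbox(features, bbox):
    """Keep features (dicts with 'lat' and 'lon' keys) that fall inside bbox."""
    return [f for f in features if bbox.contains(f["lat"], f["lon"])]


# Corners of the sample-data extent quoted in the post.
SAMPLE_EXTENT = BBox(45.116952, 8.071929, 45.726084, 9.896158)
```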

I did [a] live demo with use of tools like [an] OData browser, Excel PowerPivot and Sesame browser connecting to GeoREST OData service. There is also [a] sample server here (http://www.sl-king.com/rest/odata.svc/), which enables an OData service from spatial data.

The sample data consists of the location and population of 500 small Italian towns between 45.116952, 8.071929 and 45.726084, 9.896158 in the Piedmont region (province of Torino, TO), east and southeast of Torino. Here’s the first entry for Brozolo:

and its Bing map:

Following is the abstract of the GeoREST: Open Web Access to Public Geodata Based on Atom Publishing presentation:

Open data is increasingly being seen as a way of promoting citizen engagement, providing transparency, and contributing to the economy through re-use of public data for commercial purposes. Public agencies worldwide are making geospatial data available at low cost and with unrestricted re-use licensing. In his “Government Data Design Issues”, Tim Berners-Lee proposes that the first priority is to get data onto the web in raw form.

Recently, we have seen many examples of the availability of open raw geospatial data, for example, the UK government (data.gov.uk), the US Federal Government (data.gov), Natural Resources Canada (geogratis.gc.ca) and local governments like the City of Vancouver (data.vancouver.ca). However, a barrier remains between the public and public data because it is difficult to use standard Web tools to search for, retrieve and manipulate raw geospatial data. We suggest that the simplest way to overcome this challenge is to publish raw geospatial data using open Web standards: HTTP, HTML, MIME, and Atom. For example, raw geodata made available as HTML and MIME is searchable by any Web search engine, such as Google or Bing.

HTML/MIME web pages mean users can search for and find raw geospatial data in the same familiar way they find anything else on the web. We describe an open source project (www.geoREST.org) that provides an interface that relies on Atom to provide access to geodata. We describe how government agencies are publishing their geodata, which may be in shape files, Oracle Spatial, SQL Server, MySQL and many other formats, as HTML, GeoJSON, KML, and other Web-friendly formats using the GeoREST open source project. We will discuss how this has been implemented at sites in Europe and Canada, and show how this strategy of publishing feature-level representations of government data has enabled direct access by the public to government information.

• Haris responded to a request for the presentation itself in the OData Mailing list on 9/17/2010:

Conference organizers promised to publish presentations very soon. I will also post link here when it happens.


AppFabric: Access Control and Service Bus

The Windows Azure AppFabric team announced Windows Azure AppFabric LABS September release now available on 9/16/2010 at 10:00 AM, but it didn’t show up in their blog for me until 9/17/2010:

Today we deployed an incremental update to the Access Control Service in the Labs environment. It’s available here: http://portal.appfabriclabs.com. Keep in mind that there is no SLA around this release, but accounts and usage of the service are free while it is in the labs environment.

This release builds on the prior August release of the Access Control Service, and adds the following features:

- Support for OAuth 2.0 Web Server and assertion profiles (http://tools.ietf.org/html/draft-ietf-oauth-v2-10)

- Additional support for X.509 certificate authentication via WS-Trust and Service Identities

- The ability to map Identity Providers to Relying Parties

Several updates to the Management Web Portal:

- Upload a WS-Fed Metadata file through the portal

- Additional fields for uploading encrypting and decrypting certificates

- Expanded support for machine keys (password, symmetric key, and X.509) and creation of valid usage dates

We’ve also added some more documentation and updated samples on our CodePlex project: http://acs.codeplex.com.

For more information about the Access Control Service, see the Channel 9 video at http://channel9.msdn.com/shows/Identity/Introducing-the-new-features-of-the-August-Labs-release-of-the-Access-Control-Service.

Vittorio Bertocci (@vibronet) adds his commentary about ACS updates in Say Hello to the September LABS Release of ACS of 9/16/2010:

Barely one month after the start of the ACS renaissance, here’s a new update for you.

The September release brings a number of enhancements here and there, all the way from the small-but-useful to the Big Deal. Here’s a brief list:

- Miscellaneous portal enhancements. For example, now you can upload the federation metadata documents of the WS-Federation IPs you want to federate with: in the first release of the portal you had to expose the metadata docs on one internet-addressable endpoint (though it was possible to use the management APIs to obtain the same result)

- More documentation, more samples. Some capabilities already present in the August release weren’t too discoverable, such as for example the WS-Trust endpoints. The next release addresses this, although there are still tons of functionalities just waiting to be discovered

- X.509 certs as credential type for the WS-Trust endpoint

- OAuth2! Web server and autonomous (née assertion) profiles, Draft 10 to be exact. That’s a veeery interesting arena. There’s a lot of work going on around there, and the new feature in ACS could not have been more timely. Stay tuned…

- …and many others

You can read everything about it at the ACS home on Codeplex, in the announcement post and on Justin’s blog.

Rrrrock on!

Justin Smith’s last post appears to me to be about the August (not the September) Lab release.

Peter Kron announced “Programming Windows Identity Foundation” is available! in a 9/17/2010 post to the Claims-Based Identity blog:

My name is Peter Kron and I’m a Principal Software Developer on the Windows Identity Foundation team. Over the last year it has been my pleasure to work with Vittorio Bertocci as the technical reviewer for his latest book, Programming Windows Identity Foundation. Many of you will recognize Vittorio from his engaging sessions at PDC, TechEd, IDWorld and other conferences, or follow his popular blog, Vibro.NET. He has also authored or co-authored other books for Microsoft Press.

Vittorio is a Senior Architect Evangelist with Microsoft and over the past five years has been active (and if you know Vittorio, you know that is very active) in helping customers develop SOA based on WCF and, most recently, Identity.

His experience working through real-world scenarios with numerous developers makes him an ideal choice to write this book. He knows the issues they have faced and how Microsoft technologies like WCF and WIF can be brought to bear on them. In this book, Vittorio takes the reader through basic scenarios and explains the power of claims. He shows how to quickly create a simple claims-based application using WIF. Beyond that, he systematically explores the extensibility points of WIF and how to use them to handle more sophisticated scenarios such as Single Sign-on, delegation, and claims transformation, among others.

Vittorio goes on to detail the major classes and methods used by WIF in both passive browser-based applications and active WCF services. Finally he explores using WIF as your applications move to cloud-based Windows Azure roles and RIA futures.

I think you’ll find this book a valuable tool for learning how to build claims-based web applications and services. Or you will keep a copy handy for reference, as I do. The book is available now from Microsoft Press, and all of the sample code described in the book is available for download.

All of us on the WIF team are happy to see this in print (and e-book)!

My copy arrived yesterday!

David Kearns introduces an interesting OpenID kink with his “Access to one's data is hindered when identity providers close up shop” preface to his Disappearing identity providers pose problem post of 9/14/2010 to Network World’s Security blog:

Former Network World colleague John Fontana is now writing about IdM issues for Ping Identity. He recently commented upon an issue that may arise more and more in the future as identity providers (specifically OpenID Identity Providers) disappear.

Later this month (on Sept. 30), Six Apart will officially shut down VOX, a blogging site and an OpenID provider. How does this affect people using VOX as their OpenID Identity Provider (IDP)? Fontana explains: "If you have associated your VOX OpenID with services that you regularly use and where you store data, then there will be no one to validate that VOX OpenID." In effect, "you don't exist, and worse yet, you have no access rights to your stuff."

Another friend from Ping, Pam Dingle, outlines how this scenario unfolds:

"It's Oct. 1, you go to log into, say LiveJournal, with your VOX OpenID. LiveJournal cannot validate you as a user because the VOX service is no longer online. You get a log-in failed message.

"Now you have no access to your account and you will have to go through some sort of help desk hell trying to validate you are who you say you are or you'll never see your data, photos, etc. ever again.

"While this event isn't likely to crush a huge number of users under its wheel, it does start to expose some of the issues around OpenID. Can they be solved? Perhaps. The simple solution may be that OpenID IDPs consolidate into a handful of providers such as Google, which doesn't appear to be going anywhere soon.

"The VOX situation is the kind of scenario that has not yet been in the hype churn of OpenID, but is one that corporate users should be pondering in any evaluation of consumer ID technologies."

In fact, this scenario has been scaring off customers of OpenID since the service was first started. The generally accepted workaround is to (where possible) have two separate logins to a service, both accessing all of your data. If one OpenID IDP fails you still have the second to fall back on. Of course that assumes that the service provider allows two separate logins to have administrative access to the single set of data, which is not always true.

This relates directly to OpenID, but would hold true for almost any third-party single sign-on process, especially one that relies on services away from your home domain, or in the cloud. It's a serious issue which needs to be addressed by any identity provider service you might be thinking of working with.
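The workaround Kearns describes (two independent logins that both reach the same data) amounts to trying each linked identity until one whose provider is still online succeeds. This Python sketch models only that fallback logic; the identifiers use hypothetical .example domains, and treating an identifier’s host as its provider is a simplification, since OpenID discovery can delegate to a provider on a different host.

```python
from urllib.parse import urlparse


def provider_host(openid_identifier: str) -> str:
    """Simplification: treat the identifier URL's host as its identity provider."""
    return urlparse(openid_identifier).hostname or ""


def first_usable_identity(linked_identities, reachable_providers):
    """Return the first linked OpenID whose provider is still reachable, or None."""
    for identifier in linked_identities:
        host = provider_host(identifier)
        for provider in reachable_providers:
            if host == provider or host.endswith("." + provider):
                return identifier
    return None  # every linked provider is gone: the help-desk scenario
```

With only one linked identity, losing its provider leaves first_usable_identity returning None, which is exactly the VOX problem.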


Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Vijay Rajagopalan reported Windows Azure Platform gets easier for PHP developers to write modern cloud applications in a post of 9/19/2010 to the Interoperability @ Microsoft blog:

This week, I’m attending the Open Source India conference, in Chennai, India where I had the chance to participate in the opening keynote. During my talk, I gave a quick overview of the Interoperability Elements of a Cloud Platform, and I illustrated some elements through a series of demos. I used this opportunity to unveil a new set of developer tools and Software Development Kits (SDKs) for PHP developers who want to build modern cloud applications targeting Windows Azure Platform:

- Windows Azure Companion – September 2010 Community Technology Preview (CTP) – is a new tool that aims to provide a seamless experience when installing and configuring PHP platform-elements (PHP runtime, extensions) and Web applications running on Windows Azure. This first CTP focuses on PHP, but it may be extended to be used for deploying any open source component or application that runs on Windows Azure. Read below for more details.

- Windows Azure Tools for Eclipse for PHP – September 2010 Update – is a plug-in for PHP developers using the Eclipse development environment, which provides tools to create, test and deploy Web applications targeting Windows Azure.

- Windows Azure Command-line Tools for PHP – September 2010 Update – is a command-line tool, which offers PHP developers a simple way to package PHP based applications in order to deploy to Windows Azure.

- Windows Azure SDK for PHP – Version 2.0 – enables PHP developers to easily extend their applications by leveraging Windows Azure services (like blobs, tables and queues) in their Web applications whether they run on Windows Azure or on another cloud platform.

These pragmatic examples are good illustrations demonstrating Windows Azure interoperability. Keep in mind that Microsoft’s investment and participation in these projects is part of our ongoing commitment to openness, which spans the way we build products, collaborate with customers, and work with others in the industry.

A comprehensive set of tools and building blocks to pick and choose from

We’ve come a long way since we released the first Windows Azure SDK for PHP in May 2009, by adding complementary solutions with the Eclipse plug-in and the command line tools.

The Windows Azure SDK for PHP gives PHP developers a speed dial to easily extend their applications by leveraging Windows Azure services (like blobs, tables and queues), whether they run on Windows Azure or on another cloud platform. Maarten Balliauw, from RealDolmen, today released version 2.0 of the SDK. Check out the new features on the project site: http://phpazure.codeplex.com/.

An example of how this SDK can be used is the Windows Azure Storage for WordPress, which allows developers running their own instance of WordPress to take advantage of the Windows Azure Storage services, including the Content Delivery Network (CDN) feature. It provides a consistent storage mechanism for WordPress Media in a scale-out architecture where the individual Web servers don't share a disk.

Today we are also announcing updates to the Windows Azure Tools for Eclipse for PHP and the Windows Azure Command-line Tools for PHP.

Developed by Soyatec, the Windows Azure Tools for Eclipse plug-in offers PHP developers a series of wizards and utilities that allow them to write, debug, configure, and deploy PHP applications to Windows Azure. For example, the plug-in includes a Windows Azure storage explorer that allows developers to browse data contained in Windows Azure tables, blobs, or queues. The September 2010 Update includes many new features, such as enabling Windows Azure Drives, providing the PHP runtime of your choice, deploying directly to Windows Azure (without going through the Azure Portal), and integration of SQL CRUD for PHP, just to name a few. We will publish detailed information shortly, and in the meantime, check out the project site: http://www.windowsazure4e.org/.

We know that PHP developers use various development environments (or none at all!), which is why we built the Windows Azure Command-line Tools, which let you easily package and deploy PHP applications to Windows Azure using a simple command-line tool. The September 2010 Update includes more deployment options, like new support for the Windows Azure Web & Worker roles.

So you might ask: from the PHP developer’s point of view, am I covered for writing and deploying cloud applications for Windows Azure? The answer is both yes and no!

Yes, because these tools cover most scenarios where developers are building and deploying one application at a time. But what if you want to deploy open source PHP SaaS applications on the same Windows Azure service? Or what if you are more of a Web applications administrator, and just want to deploy pre-built applications and simply configure them? This is where the Windows Azure Companion comes into the picture.

A seamless experience when deploying PHP apps to Windows Azure

The Windows Azure Companion – September 2010 CTP – is a new tool that aims to provide a seamless experience when installing and configuring PHP platform-elements (PHP runtime, extensions) and Web applications running on Windows Azure. This early version focuses on PHP, but it may be extended for deploying any open source component or application that runs on Windows Azure. Read below for more details.

It is designed for developers and Web application administrators who want to more efficiently “manage” the deployment, configuration and execution of their PHP platform-elements and applications.

The Windows Azure Companion can be seen as an installation engine that is running on your Windows Azure service. It is fully customizable through a feed which describes what components to install. Getting started is an easy three-step process:

- Download the Windows Azure Companion package & set your custom feed

- Deploy Windows Azure Companion package to your Windows Azure account

- Using the Windows Azure Companion and your custom feed, deploy the PHP runtime, frameworks, and applications that you want
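Because each feed entry lists its dependencies (the OData SDK for PHP entry in the feed sample depends on PHP_Runtime), an installation engine has to install prerequisites before the products that need them. The post doesn’t say how the Companion resolves this internally, so here is a generic Python sketch using a topological sort (graphlib, Python 3.9+). It assumes dependencies are available as a list per productId; the Example_App and Example_DB_Driver IDs in the usage note are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def install_order(requested: str, dependencies: dict) -> list:
    """Return the requested productId plus its transitive dependencies, prerequisites first.

    dependencies maps a productId to the productIds it depends on.
    Raises graphlib.CycleError if the feed contains circular dependencies.
    """
    graph = {}
    pending = [requested]
    while pending:
        product = pending.pop()
        if product in graph:
            continue  # already visited
        deps = list(dependencies.get(product, []))
        graph[product] = deps
        pending.extend(deps)
    # static_order() yields each node only after all of its predecessors.
    return list(TopologicalSorter(graph).static_order())
```

For example, install_order("OData_SDK_for_PHP", {"OData_SDK_for_PHP": ["PHP_Runtime"]}) returns ["PHP_Runtime", "OData_SDK_for_PHP"].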

So, how did we build the Windows Azure Companion? The Windows Azure Companion itself is a Web application built in ASP.NET/C#. Why C#? Why not PHP? The answer is simple: the application is doing some low-level work with the Windows Azure infrastructure. In particular, it spins up the Windows Azure Hosted Web Core Worker Role in which the PHP engine and applications are started and then executed. Doing these low level tasks in PHP would be much more difficult, so we chose C# instead. The source and the installable package (.cspkg & config files) are available on the MSDN Code Gallery: http://code.msdn.microsoft.com/azurecompanion. And from a PHP developer perspective, all you need is the installable package, and you don’t have to worry about the rest unless you are interested!

All you need is in the feed

The Windows Azure Companion Web application uses an ATOM feed as the data-source to display the platform-elements and Web applications that are available for installation. The feed provides detailed information about the platform element or application, such as production version, download location, and associated dependencies. The feed must be hosted on an Internet-accessible location that is available to the Windows Azure Companion Web application. The feed conforms to the standard ATOM schema with one or more product entries as shown below:

```xml
<?xml version="1.0" encoding="utf-8"?>
<feed xmlns="http://www.w3.org/2005/Atom">
  <version>1.0.1</version>
  <title>Windows Azure platform Companion Applications Feed</title>
  <link href="http://a_server_on_the_internet.com/feed.xml" />
  <updated>2010-08-09T12:00:00Z</updated>
  <author>
    <name>Interoperability @ Microsoft</name>
    <uri>http://www.interoperabilitybridges.com/</uri>
  </author>
  <id>http://a_server_on_the_internet.com/feed.xml</id>
  <entry>
    <productId>OData_SDK_for_PHP</productId>
    <productCategory>SDKs</productCategory>
    <installCategory>Frameworks and SDKs</installCategory>
    <updated>2010-08-09T12:00:00Z</updated>
    <!-- UI elements shown in Windows Azure platform Companion -->
    <title>OData SDK for PHP</title>
    <tabName>Platform</tabName>
    <summary>OData SDK for PHP</summary>
    <licenseURL>http://odataphp.codeplex.com/license</licenseURL>
    <!-- Installation Information -->
    <installerFileChoices>
      <!-- "&" must be escaped as "&amp;" inside an XML attribute value -->
      <installerFile version="2.0" url="http://download.codeplex.com/Project/Download/FileDownload.aspx?ProjectName=odataphp&amp;DownloadId=111099&amp;FileTime=129145681693270000&amp;Build=17027">
        <installationProperties>
          <installationProperty name="downloadFileName" value="OData_PHP_SDK.zip" />
          <installationProperty name="applicationPath" value="framework" />
        </installationProperties>
      </installerFile>
    </installerFileChoices>
    <!-- Product dependencies -->
    <dependencies>PHP_Runtime</dependencies>
  </entry>
</feed>
```

If you want to see a sample feed in action and the process for building it, I invite you to check Maarten Balliauw’s blog: Introducing Windows Azure Companion – Cloud for the masses? [below]. He has assembled a custom feed with interesting options to play with. And of course, the goal is to let you design the feed that contains the options and applications you need.
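To make the feed structure concrete, here is a minimal Python sketch of how a client could read such a feed with the standard library's ElementTree. It is not the Companion's own (C#) code. The trimmed SAMPLE_FEED, the PHP_Runtime entry's version and both example.com URLs are hypothetical, and the comma-separated form for multiple dependencies is an assumption; the point it shows is that the custom elements such as productId live in the default Atom namespace, so every lookup has to be namespace-qualified.

```python
import xml.etree.ElementTree as ET

# The custom elements (productId, tabName, installerFile, ...) sit in the feed's
# default Atom namespace, so every lookup must be namespace-qualified.
ATOM = {"a": "http://www.w3.org/2005/Atom"}

# Trimmed, illustrative feed; the PHP_Runtime entry and both URLs are hypothetical.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Sample Companion feed</title>
  <entry>
    <productId>PHP_Runtime</productId>
    <title>PHP Runtime</title>
    <tabName>Platform</tabName>
    <installerFileChoices>
      <installerFile version="5.3" url="http://example.com/php.zip" />
    </installerFileChoices>
  </entry>
  <entry>
    <productId>OData_SDK_for_PHP</productId>
    <title>OData SDK for PHP</title>
    <tabName>Platform</tabName>
    <installerFileChoices>
      <installerFile version="2.0" url="http://example.com/odata.zip?a=1&amp;b=2" />
    </installerFileChoices>
    <dependencies>PHP_Runtime</dependencies>
  </entry>
</feed>"""


def parse_feed(xml_text):
    """Map each productId to its title, tab, offered versions and dependencies."""
    root = ET.fromstring(xml_text)
    products = {}
    for entry in root.findall("a:entry", ATOM):
        pid = entry.findtext("a:productId", default="", namespaces=ATOM).strip()
        deps = entry.findtext("a:dependencies", default="", namespaces=ATOM)
        products[pid] = {
            "title": entry.findtext("a:title", default="", namespaces=ATOM),
            "tab": entry.findtext("a:tabName", default="", namespaces=ATOM),
            "versions": [
                f.get("version")
                for f in entry.findall("a:installerFileChoices/a:installerFile", ATOM)
            ],
            # Assumption: several dependencies would be comma-separated.
            "dependencies": [d.strip() for d in deps.split(",") if d.strip()],
        }
    return products


if __name__ == "__main__":
    for pid, info in parse_feed(SAMPLE_FEED).items():
        print(pid, info["versions"], "needs", info["dependencies"] or "nothing")
```

Because the parser only walks direct children of each entry, the feed-level title is never confused with an entry's title.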

We are on a journey

Like I said earlier, we’ve come a long way in the past 18 months, understanding how to best enable various technologies on Windows Azure. We’re on a journey and there’s a lot more to accomplish. But I have to say that I’m very excited by the work we’re doing, and equally eager to hear your feedback.

Vijay Rajagopalan, Principal Architect

•• Maarten Balliauw posted Introducing Windows Azure Companion – Cloud for the masses? on 9/18/2010:

At OSIDays in India, the Interoperability team at Microsoft has made an interesting series of announcements related to PHP and Windows Azure. To summarize: Windows Azure Tools for Eclipse for PHP has been updated and is on par with Visual Studio tooling (which means you can deploy a PHP app to Windows Azure without leaving Eclipse!). The Windows Azure Command-line Tools for PHP have been updated, and there’s a new release of the Windows Azure SDK for PHP and a Windows Azure Storage plugin for WordPress built on that.

What’s most interesting in the series of announcements is the Windows Azure Companion – September 2010 Community Technology Preview (CTP). In short, compare it with the Web Platform Installer, but targeted at Windows Azure. It allows you to install a set of popular PHP applications on a Windows Azure instance, like WordPress or phpBB.

This list of applications seems a bit limited, but it’s not. It’s just a standard Atom feed from which the Companion gets its information. Feel free to create your own feed, or use a sample feed I created that contains the following applications, which I know work well on Windows Azure:

- PHP Runtime

- PHP Wincache Extension

- Microsoft Drivers for PHP for SQL Server

- Windows Azure SDK for PHP

- PEAR Archive Tar

- phpBB

- WordPress

- eXtplorer File Manager

Obtaining & installing Windows Azure Companion

There are three steps involved. The first one is: go get yourself a Windows Azure subscription. I recall there is a free, limited version where you can use a virtual machine for 25 hours. Not much, but enough to try out Windows Azure Companion. Make sure to completely undeploy the application afterwards if you don’t want to be billed.

Next, get the Windows Azure Companion – September 2010 Community Technology Preview (CTP). There is a source code download that you can compile yourself using Visual Studio, and there is also a “cspkg” version that you can just deploy onto your Windows Azure account and get running. I recommend the latter if you want to be up and running fast.

The third step, of course, is deploying. Before doing this, edit the “ServiceConfiguration.cscfg” file. It needs your Windows Azure storage credentials and an administrative username/password so that only you can log on to the Companion.

This configuration file also contains a reference to the application feed, so if you want to create one yourself this is the place where you can reference it.

Installing applications

Getting a “Running” state and a green bullet on the Windows Azure portal? Perfect! Then browse to http://yourchosenname.cloudapp.net:8080 (mind the port number!), this is where the administrative interface resides. Log in with the credentials you specified in “ServiceConfiguration.cscfg” before and behold the Windows Azure Companion administrative user interface.

As a side note: this screenshot was taken with a custom feed I created which included some other applications with SQL Server support, like the Drupal 7 alpha releases. Because these are alphas, I decided not to include them in the sample feed that you can use. I am confident that more supported applications will come in the future, though.

Go to the platform tab, select the PHP runtime and other components followed by clicking “Next”. Pick your favorite version numbers and proceed with installing. After this has been finished, you can install an application from the applications tab. How about WordPress?

In this last step you can choose where an application will be installed. Under the root of the website or under a virtual folder, anything you like. Afterwards, the application will be running at http://yourchosenname.cloudapp.net.
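Installing the platform components before the applications is, in effect, a dependency ordering: each feed entry can name products that must already be present (the sample feed’s OData SDK entry lists PHP_Runtime). The Python sketch below shows one way to compute such an order with a topological sort from the standard library’s graphlib (Python 3.9+). It is an illustration only, not how the Companion resolves dependencies internally, and the WordPress and Windows_Azure_SDK_for_PHP product IDs are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def install_order(dependencies):
    """Return product IDs ordered so every dependency precedes its dependents.

    `dependencies` maps a product ID to the product IDs it needs installed first.
    Raises graphlib.CycleError if the feed describes a circular dependency.
    """
    sorter = TopologicalSorter()
    for product, needs in dependencies.items():
        # add(node, *predecessors): predecessors are emitted before the node
        sorter.add(product, *needs)
    return list(sorter.static_order())


if __name__ == "__main__":
    # Hypothetical IDs, except PHP_Runtime and OData_SDK_for_PHP from the sample feed.
    plan = {
        "WordPress": ["PHP_Runtime", "Windows_Azure_SDK_for_PHP"],
        "Windows_Azure_SDK_for_PHP": ["PHP_Runtime"],
        "OData_SDK_for_PHP": ["PHP_Runtime"],
    }
    print(install_order(plan))
    try:
        install_order({"A": ["B"], "B": ["A"]})
    except CycleError as err:
        print("circular dependency:", err.args[1])
```

A topological sort also surfaces a broken feed early: a circular dependency raises an error instead of leaving the installer waiting forever.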

More control with eXtplorer

The sample feed I provide includes eXtplorer, a web-based file management solution. When installing this, you get full control over the applications folder on your Windows Azure instance, enabling you to edit files (configuration files) but also enabling you to upload *any* application you want to host on Windows Azure Companion. Here is me creating a highly modern homepage: and the rendered version of it:

Administrative options

As with any web server, you want some administrative options. Windows Azure Companion provides you with logging of both Windows Azure and PHP. You can edit php.ini, restart the server, see memory and CPU usage statistics and create a backup of your current instance in case you want to start messing things up and want a “last known good” instance of your installation.

Note: If you are a control freak, just stop your application on Windows Azure, download the virtual hard drive (.vhd) file from blob storage and make some modifications, upload it again and restart the Windows Azure Companion. I don’t recommend this as you will have to download and upload a large .vhd file but in theory it is possible to fiddle around.

Internet Explorer 9 jumplist support

A cool feature included is the IE9 jumplist support. IE9 beta is out and it seems all teams at Microsoft are adding support for it. If you drag the Windows Azure Companion administration tab to your Windows 7 taskbar, you get the following nifty shortcuts when right-clicking:

Scalability

The current preview release of Windows Azure Companion cannot provide scale-out. It can scale up to more CPUs, memory and storage, but not to multiple role instances. This is because Windows Azure drives cannot be shared in read/write mode across multiple machines. On the other hand, if you deploy two instances, install the same apps on them, use the same SQL Azure database backend and use round-robin DNS, you can achieve scale-out at this time. Not the way you'd want it, but it should work. Then again, I don’t think that Windows Azure Companion has been created with very large sites in mind, as such sites will benefit more from a completely optimized version for “regular” Windows Azure.

Conclusion

I’m impressed with this series of releases, especially the Windows Azure Companion. It clearly shows Microsoft is not just focusing on its own platform but also treating PHP as an equal citizen for Windows Azure. The Companion, in my opinion, also lowers the barrier to cloud computing: it’s really easy to install and use and may attract more people to the Windows Azure platform (especially if they would add a basic, entry-level subscription with low capacity and a low price, pun intended :-))

Windows Azure Companion made headlines in India’s English-language press (The Hindu) on 9/19/2010:

• Ryan Dunn (@dunnry) and Steve Marx (@smarx) produced Cloud Cover Episode 26 - Dynamic Workers for Channel9 while seated on 9/18/2010:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow

In this episode:

- Discover how to get the most out of your Worker Roles using dynamic code.

- Learn how to enable multiple admins on your Windows Azure account.

Show Links:

Maximizing Throughput in Windows Azure – Part 1

Calling a Service Bus HTTP Endpoint with Authentication using WebClient

Requesting a Token from Access Control Service in C#

Two New Nodes for the Windows Azure CDN Enhance Service Across Asia

The Channel9 site has a new design, which I like (so far), and the show has its own studio. Steve needs a 6-inch platform under his chair.

Brent Stineman (@BrentCodeMonkey) listed new happenings in the Windows Azure Platform product line in his Microsoft Digest for September 17th, 2010. Here’s an important item not reported by this blog:

And lastly, my friend (I hope he doesn’t mind me claiming him as such) Rob Gillen of the Oak Ridge National Laboratory has a nice part 1 article on maximizing throughput when using Windows Azure. At this point, I think Rob knows more about high-throughput scenarios in Azure than the development team does!

My last item for Rob Gillen was in Windows Azure and Cloud Computing Posts for 4/26/2010+. Subscribed.

The Windows Azure Team uploaded on 9/16/2010 a Dynamic List of Assemblies Running on Windows Azure for verifying the presence of .NET Assemblies for developers’ Web and Worker Roles in the Windows Azure Managed Library. Here’s the default page:

The About page explains how to use the list:

If your Windows Azure role relies on any assembly that is not part of the .NET Framework 3.5 or the Windows Azure managed library (listed here), you must explicitly include that assembly in the service package.

Before you build and package your service, verify that the Copy Local property is set to True for each referenced assembly in your project that is not listed here as part of the Windows Azure SDK or the .NET Framework 3.5, if you are using Visual Studio.

If you are not using Visual Studio, you must specify the locations for referenced assemblies when you call CSPack. See CSPack Command-Line Tool for more information.

The Upload page enables browsing for a Worker or Web Role’s project file (*.csproj or *.vbproj) on the developer’s computer and uploading it to the Azure project for testing.
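The Copy Local check described above can also be approximated offline. In a Visual Studio (MSBuild) project file, a reference’s Copy Local setting is stored as a `<Private>` child of its `<Reference>` element. The Python sketch below lists references that are neither in a caller-supplied set of assemblies known to be available nor explicitly marked `<Private>True</Private>`. It is only a sketch: the `available` set is a tiny hypothetical stand-in for the published list, the two “Hypothetical…” assemblies are made up, and references without `<Private>` are flagged because their default Copy Local behavior depends on whether the assembly is in the GAC.

```python
import xml.etree.ElementTree as ET

MSBUILD = {"m": "http://schemas.microsoft.com/developer/msbuild/2003"}

# Illustrative project file; the two "Hypothetical..." assemblies are made up.
SAMPLE_CSPROJ = r"""<Project ToolsVersion="4.0" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <ItemGroup>
    <Reference Include="System" />
    <Reference Include="Microsoft.WindowsAzure.ServiceRuntime" />
    <Reference Include="HypotheticalCompany.Utilities, Version=1.0.0.0, Culture=neutral">
      <Private>True</Private>
    </Reference>
    <Reference Include="HypotheticalVendor.Charting">
      <HintPath>..\lib\HypotheticalVendor.Charting.dll</HintPath>
    </Reference>
  </ItemGroup>
</Project>"""


def references_to_check(csproj_xml, available):
    """List referenced assemblies that are neither in `available` nor explicitly
    marked Copy Local (<Private>True</Private>), so may be missing from the package."""
    root = ET.fromstring(csproj_xml)
    flagged = []
    for ref in root.findall("m:ItemGroup/m:Reference", MSBUILD):
        # Include may hold a full strong name such as "Name, Version=..., Culture=...".
        name = ref.get("Include", "").split(",")[0].strip()
        copy_local = ref.findtext("m:Private", default="", namespaces=MSBUILD)
        if name and name not in available and copy_local.strip().lower() != "true":
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    # Tiny hypothetical stand-in for the published list of available assemblies.
    available = {"System", "Microsoft.WindowsAzure.ServiceRuntime"}
    print(references_to_check(SAMPLE_CSPROJ, available))
```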

Brent Stineman (@BrentCodeMonkey) explained how to get free trial versions of the tools required to host applications on Windows Azure, but warned users about credit cards being dinged for usage charges, in his There’s No Such Thing as a Free Lunch post of 9/16/2010.

<Return to section navigation list>

Visual Studio LightSwitch

Deepak Chitnis announced the availability of five new LightSwitch tutorials in his Microsoft Visual Studio LightSwitch: The Basics post of 9/15/2010:

I have been writing about and creating tutorial videos for Microsoft LightSwitch for a couple of weeks now, covering the basics of creating an application using this platform. It is probably the right time to consolidate the effort in one place for easier reference.

The tutorials start with downloading Microsoft Visual Studio LightSwitch and end with deploying a completed application. This series covers the basics and so is not very technical; it is ideal for non-technical people who want to get a quick application going based on their domain expertise. Following are the links to the tutorials:

- How to download and setup Microsoft LightSwitch Beta 1

- Getting Started with Microsoft Visual Studio LightSwitch

- Customizing the Microsoft LightSwitch generated application

- Securing a Microsoft LightSwitch application

- Deploying a Microsoft LightSwitch Application

Hope you find this series useful. After a short break, I plan on starting up a series on advanced usage of Microsoft Visual Studio LightSwitch. Stay tuned.

Deepak is Sr. Director - Architecture & Development at Universal Music Group

<Return to section navigation list>

Windows Azure Infrastructure

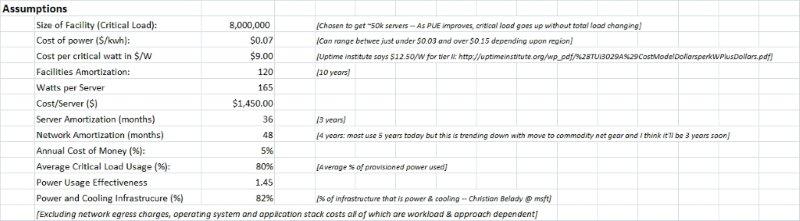

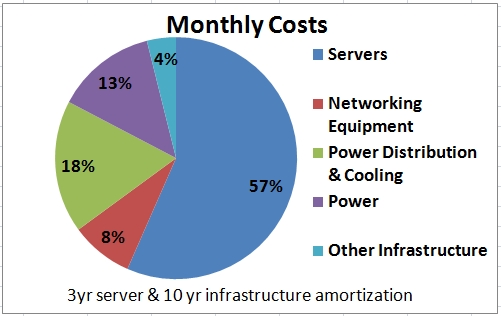

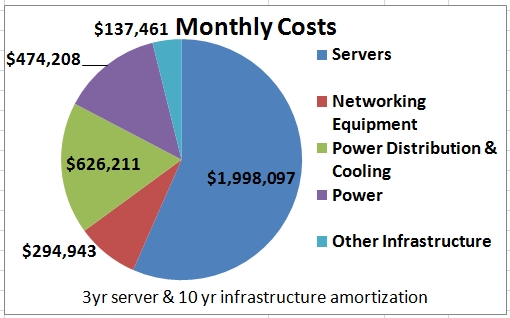

•• James Hamilton examined Overall Data Center Costs on 9/18/2010:

A couple of years ago, I did a detailed look at where the costs are in a modern, high-scale data center. The primary motivation behind bringing all the costs together was to understand where the problems are and find those easiest to address. Predictably, when I first brought these numbers together, a few data points just leapt off the page: 1) at scale, servers dominate overall costs, and 2) mechanical system cost and power consumption seem unreasonably high. Both of these areas have proven to be important technology areas to focus upon, and there has been considerable industry-wide innovation, particularly in cooling efficiency, over the last couple of years.

I posted the original model at the Cost of Power in Large-Scale Data Centers. One of the reasons I posted it was to debunk the often repeated phrase “power is the dominant cost in a large-scale data center”. Servers dominate, with mechanical systems and power distribution close behind. It turns out that power is incredibly important, but it’s not the utility kWh charge that makes power important. It’s the cost of the power distribution equipment required to consume power and the cost of the mechanical systems that take the heat away once the power is consumed. I referred to this as fully burdened power.

Measured this way, power is the second most important cost. Power efficiency is highly leveraged when looking at overall data center costs, it plays an important role in environmental stewardship, and it is one of the areas where substantial gains continue to look quite attainable. As a consequence, this is where I spend a considerable amount of my time – perhaps the majority – but we have to remember that servers still dominate the overall capital cost.

This last point is a frequent source of confusion. When server and other IT equipment capital costs are directly compared with data center capital costs, the data center portion actually is larger. I’ve frequently heard “how can the facility cost more than the servers in the facility – it just doesn’t make sense.” I don’t know whether or not it makes sense but it actually is not true at this point. I could imagine the infrastructure costs one day eclipsing those of servers as server costs continue to decrease but we’re not there yet. The key point to keep in mind is the amortization periods are completely different. Data center amortization periods run from 10 to 15 years while server amortizations are typically in the three year range. Servers are purchased 3 to 5 times during the life of a datacenter so, when amortized properly, they continue to dominate the cost equation.

In the model below, I normalize all costs to a monthly bill by taking consumables like power and billing them monthly by consumption, and by taking capital expenses like servers, networking or datacenter infrastructure and amortizing them over their useful lifetime using a 5% cost of money and, again, billing monthly. This approach allows us to compare non-comparable costs such as data center infrastructure with servers and networking gear, each with different lifetimes. The model includes all costs “below the operating system” but doesn’t include software licensing costs, mostly because open source is dominant in high-scale centers and partly because licensing costs can vary so widely. Administrative costs are not included for the same reason. At scale, hardware administration, security, and other infrastructure-related people costs disappear into the single digits, with the very best services down in the 3% range. Because administrative costs vary so greatly, I don’t include them here. On projects with which I’ve been involved, they are insignificantly small, so they don’t influence my thinking much. I’ve attached the spreadsheet in source form below so you can add in factors such as these if they are more relevant in your environment.
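The monthly normalization of capital costs can be illustrated with the standard level-payment (annuity) formula, the same one behind Excel’s PMT function apart from its sign convention. Whether the original spreadsheet uses exactly this formula is an assumption, and the dollar amounts below are hypothetical, chosen only to show the amortization-period effect described above: a server purchase half the size of the facility investment still produces the larger monthly bill, because it is amortized over 3 years instead of 15.

```python
def monthly_payment(principal, annual_rate, years):
    """Level monthly payment that repays `principal` over `years` at an annual
    cost of money `annual_rate` (annuity formula, as in Excel's PMT without the sign)."""
    n = int(years * 12)   # number of monthly payments
    r = annual_rate / 12  # monthly interest rate
    if r == 0:
        return principal / n
    return principal * r / (1 - (1 + r) ** -n)


if __name__ == "__main__":
    # Hypothetical capital costs at the model's 5% cost of money.
    facility = monthly_payment(100e6, 0.05, 15)  # infrastructure, 15-year life
    servers = monthly_payment(50e6, 0.05, 3)     # servers, 3-year life
    print(f"facility ${facility:,.0f}/month, servers ${servers:,.0f}/month")
```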

Late last year I updated the model for two reasons: 1) there has been considerable infrastructure innovation over the last couple of years and costs have changed dramatically during that period, and 2) because of the importance of networking gear to the cost model, I factored out networking from overall IT costs. We now have IT costs with servers and storage modeled separately from networking. This helps us understand the impact of networking on overall capital cost and on IT power.

When I redo these data, I keep the facility server count in the 45,000 to 50,000 server range. This makes it a reasonable-scale facility – big enough to enjoy the benefits of scale but nowhere close to the biggest data centers. Two years ago, 50,000 servers required a 15MW facility (25MW total load). Today, due to increased infrastructure efficiency and reduced individual server power draw, we can support 46k servers in an 8MW facility (12MW total load). The current rate of innovation in our industry is substantially higher than it has been at any time in the past, with much of this innovation driven by mega service operators.

Keep in mind, I’m only modeling those techniques well understood and reasonably broadly accepted as good quality data center design practices. Most of the big operators will be operating at efficiency levels far beyond those used here. For example, in this model we’re using a Power Usage Effectiveness (PUE) of 1.45, but Google, for example, reports PUE across the fleet of under 1.2: Data Center Efficiency Measurements. Again, the spreadsheet source is attached below, so feel free to change the PUE used by the model as appropriate.
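PUE is total facility power divided by IT equipment power, so the total load for a given IT load is simply IT load × PUE. The Python sketch below applies that definition to compare the model’s 1.45 with a PUE of 1.2. The 8 MW IT load and the $0.07/kWh price are hypothetical illustrative inputs, not values taken from the spreadsheet, and the result covers only the utility energy charge, not the amortized distribution and mechanical equipment that make up the rest of fully burdened power.

```python
HOURS_PER_MONTH = 8760 / 12  # 730 hours in an average month


def total_facility_mw(it_load_mw, pue):
    """PUE = total facility power / IT equipment power, so total = IT load x PUE."""
    return it_load_mw * pue


def monthly_utility_cost(it_load_mw, pue, dollars_per_kwh):
    """Utility energy charge only; excludes the amortized power-distribution and
    mechanical equipment that make up the rest of fully burdened power."""
    kwh = total_facility_mw(it_load_mw, pue) * 1000 * HOURS_PER_MONTH
    return kwh * dollars_per_kwh


if __name__ == "__main__":
    price = 0.07  # hypothetical $/kWh
    for pue in (1.45, 1.2):
        print(pue, round(total_facility_mw(8, pue), 2), "MW",
              f"${monthly_utility_cost(8, pue, price):,.0f}/month")
```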

These are the assumptions used by this year’s model:

Using these assumptions we get the following cost structure:

For those of you interested in playing with different assumptions, the spreadsheet source is here: http://mvdirona.com/jrh/TalksAndPapers/PerspectivesDataCenterCostAndPower.xls

If you choose to use this spreadsheet directly or the data above, please reference the source and include the URL to this posting.

David Linthicum pondered “Do SOA and enterprise architecture now mean the same thing?” and answered “Yes they do” in this 9/17/2010 post to ebizQ’s Where SOA Meets Cloud blog:

I want to thank Joe McKendrick, who is a fellow blogger and thought leader for recalling the statement that I made several years ago that SOA is good enterprise architecture (EA), and perhaps EA should be SOA. "Dave Linthicum predicted a couple of years back that SOA would be absorbed into EA. SOA is just good EA, he said. Has Dave's vision come to pass? Is any discussion of SOA automatically a discussion of EA, and perhaps even the other way around as well?"

While it's a bit scary to have postings/statements from two years ago brought up again, I still stand by that one. Many consider EA as a management discipline, and a way of planning and controlling, and SOA as a way of doing or a simple architectural pattern. However, I view EA as a path to good strategic architecture, where the technology aligns with the business. You can't do that without a good approach to dealing with the technology, and SOA is clearly the best practice there. Thus, good EA and SOA are no different in my mind, and SOA and EA are bound to merge conceptually. I think that is coming to pass today.

This kind of thinking tends to fly in the face of traditional notions around EA. You know how it goes, some enterprise architect sits up in an ivory tower and creates presentation after presentation around platform standards, governance, data standards, MDM, ..., you get the idea. Typically without any budgetary authority, which means no ability to control anything. The end result is architecture-by-business-emergency, where stovepipe systems are tossed into the architectural mix, as the business needs them, with no overall strategy around how all of this will work and play well together over time. Agility dies a slow death.

So, traditional EA is dead, but long live EA with the concepts around SOA, where there is some deep thinking around how enterprise IT assets will be addressed as sets of services that can be configured and reconfigured to solve specific and changing business problems. This gets to the value of agility, where IT can actually move to solve core business problems in a reasonable amount of time. SOA is good architecture, and good architecture should be a core notion of EA. Thus, EA should be SOA, and SOA should be EA. I'll stand by that one.

David Lemphers described the Basic Elements of a Cloud Service in this 9/17/2010 post to his new personal blog:

I’m not sure about most folks, but when I hear the topic Cloud, and I consider all the different variations and permutations that constitute a Cloud, I always find it useful to relate what I’m hearing back to a simple service model.

So let me offer one up for consideration. This is based purely on my own experience, and while most of my hands-on experience is based on the Microsoft platform and more specifically, Windows Azure, I think the abstract concepts are pretty versatile.

I always start by thinking about the service infrastructure that makes most Clouds, well, Clouds. If we boil it down to the most common elements, there is:

A hosting layer:

Key elements of the hosting layer are:

- The ability to receive incoming traffic (known as ingress) and the ability to transmit outgoing traffic (known as egress).

- The ability to execute a workload:

- This could be a piece of code running within a pre-determined environment, like it is in Windows Azure and Google App Engine currently, or

- This could be a virtual machine, like it is with Amazon EC2

These key elements are also the primary metrics that you get charged for, for example:

- How much traffic came into your service and how much traffic went out of your service. This is generally measured in gigabytes.

- How long did your service run for, and how many “instances” did you use. This is generally a function of hours multiplied by instances. Instances is really how many machines did you spin up for your service. If you ran your service for 8 hours and used 3 instances for fault tolerance and capacity, then you’ll get billed for 24 hours of usage at whatever the per hour rate is.
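The hosting charges described above reduce to two terms: instance-hours times an hourly rate, plus ingress and egress gigabytes times their per-GB rates. Here is a minimal Python sketch of that arithmetic. The rates are hypothetical placeholders rather than any provider’s actual prices; the 8-hour, 3-instance case is the post’s own example.

```python
from dataclasses import dataclass


@dataclass
class HostingRates:
    per_instance_hour: float  # $ per instance-hour (hypothetical)
    per_gb_in: float          # $ per GB of ingress (hypothetical)
    per_gb_out: float         # $ per GB of egress (hypothetical)


def instance_hours(hours_running, instances):
    """Compute is billed per instance-hour: hours multiplied by instances."""
    return hours_running * instances


def hosting_bill(hours_running, instances, gb_in, gb_out, rates):
    """Compute charge plus traffic charge for one billing period."""
    compute = instance_hours(hours_running, instances) * rates.per_instance_hour
    traffic = gb_in * rates.per_gb_in + gb_out * rates.per_gb_out
    return compute + traffic


if __name__ == "__main__":
    rates = HostingRates(per_instance_hour=0.12, per_gb_in=0.10, per_gb_out=0.15)
    print(instance_hours(8, 3))  # 24 instance-hours, as in the example above
    print(round(hosting_bill(8, 3, gb_in=2, gb_out=5, rates=rates), 2))
```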

A storage layer:

Key elements of the storage layer are:

- The ability to receive incoming traffic (again, known as ingress), however, not in the same way as the hosting layer. The main difference here is that you don’t really execute anything inside the storage layer, this is generally a system all unto its own, with baked in security (auth, identity), load balancing, etc. What you do is send it data that you want it to store.

- The ability to transmit outgoing traffic (again, known as egress), and again, not the same as the hosting layer. When we think about egress for a storage layer, given the system is closed, it’s really about how much data is being sent out from the storage system from your “account”. For example, how many times did someone request and download a particular image or media element that you uploaded as part of a web app.

- The ability to execute a storage function. Most Cloud based storage systems have a predefined interface, a set of functions that you use to store and retrieve data. These generally map to some in-built metaphor, such as a table or a blob. So, you can do things like, AddTable, or AddRow. These functions may also have requirements, such as requiring a username or identity token.

Just like the hosting layer, the elements above dictate the way you pay for the service.

- Traffic is the same as it is for hosting, and this is primarily because the underlying infrastructure is network based, and not really value-add (as in, it’s a requirement to doing business, not something added to create value for the user), so the charging of it is fairly rudimentary.

- Accessing the storage system and storing and retrieving data is charged based on the relation of these functions against the underlying infrastructure. For example, if the storage system deploys the interface part of the system on a separate set of resources than the storage part of the system, then each time you call an interface function, you are using a resource that needs to have its cost recovered. Same with the storage. As these resources (network hardware, storage hardware) are generally different, the pricing is different too. So you might get charged a fraction of a cent for each function call you make, you might get charged a fraction of a dollar for every gigabyte of data you store. Considering how “chatty” your potential software clients (web, desktop, mobile) are starts to become a key design point, to keep costs down.
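To see why client “chattiness” matters, the Python sketch below models a monthly storage bill as a capacity charge plus a per-transaction charge and compares a client that writes one row per call with one that batches rows. All rates, the 50 GB capacity and the batch size of 100 rows per call are hypothetical assumptions for illustration, not published prices or limits of any particular storage service.

```python
import math


def storage_bill(gb_stored, transactions, per_gb_month, per_10k_transactions):
    """Monthly storage bill = capacity charge + per-transaction charge."""
    return gb_stored * per_gb_month + transactions / 10_000 * per_10k_transactions


def transactions_needed(rows, rows_per_call):
    """Storage calls required to write `rows` rows when each call carries `rows_per_call`."""
    return math.ceil(rows / rows_per_call)


if __name__ == "__main__":
    GB, PER_GB, PER_10K = 50, 0.15, 0.01  # hypothetical capacity and rates
    rows = 1_000_000
    chatty = storage_bill(GB, transactions_needed(rows, 1), PER_GB, PER_10K)
    batched = storage_bill(GB, transactions_needed(rows, 100), PER_GB, PER_10K)
    print(f"one row per call: ${chatty:.2f}, 100 rows per call: ${batched:.2f}")
```

The capacity charge is identical in both cases; only the transaction term changes, which is why call patterns become a design point.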

A connectivity layer:

This is something that is not available in all the Cloud offerings today in the market, but certainly something that the larger players either offer or are on the cusp of offering. It has one essential feature, the ability to seamlessly integrate resources behind a corporate firewall with resources in the cloud.

Some would argue that this is a critical feature when selecting a Cloud platform, for the real power of the Cloud is the ability to exploit the dynamic, scalable nature of the Cloud with secure, managed corporate resources.

A couple of things I left out of my post are things like:

- Developer tools – This is fundamental to any Cloud platform, but is a very broad topic, so one I’m going to save for another post.

- Monitoring and Operations support – Again, like developer tools, and somewhat tightly integrated; monitoring and ops is a very broad topic, so one I’m going to leave for later.

Now this is by no means a complete treatise on Cloud, but it is definitely a starting point. I’ll definitely revisit this topic and go deeper in future posts, and in the meantime, if you want me to take a stab at any other Cloud topics, just drop me a line.

David Lemphers answered What is my new day job? at PricewaterhouseCoopers on 9/17/2010:

Let’s start with where I am working. I work for PwC, a.k.a. PricewaterhouseCoopers. PwC has a long and successful history in consulting, primarily in the accounting area (tax, risk, that kind of thing), but also in the technology space (Oracle, SAP, etc).

Within PwC, there is a group of folks who focus purely on Technology. It makes so much sense really, I mean, there can be no successful adoption of technology without considering the business impact, so who better to advise on technology than a group of people who come from a strong business heritage.

And within that group of people, there is a group of people focusing purely on the Cloud. Now, in the limited days I’ve spent with PwC, and the small group of customers and partners I’ve had the opportunity to meet, the first reaction I get is, “You work for PwC… doing Cloud… really?”. It’s a completely fair reaction/response, I mean, Cloud is so new and shiny, that it is generally the big tech companies that spring to mind when you think Cloud. But what PwC has cooking is what got my attention in the first place.

See, like most folks who have been working on, in, and around the Cloud, sooner or later you’re going to realize that the technology bits and bytes are actually just one part of a bigger set of challenges. Once you’ve made the decision to explore the Cloud as a key piece of technology, the implications on the way you do business start to pop to the forefront. I’d even go as far as to say, if you’re thinking of doing “Cloud”, and you’re only thinking about technology, you’re going to find yourself in a quandary just before you launch your “thing”, as you realize the impact of your “Cloud” “thing” is pretty considerable in relation to your business. What am I talking about specifically? Let me give you just one example. Say you sell software. And you used to sell all your software out of a particular state, mainly for tax purposes. Now you move some of your software functions to the Cloud, and start selling that to your customers. If the datacenter that serves out your service is located in a state other than the one you used to sell out of, and that new datacenter’s state conflicts somehow with your tax strategy (say it has a higher sales tax), you’ve created a tax problem for yourself that you’ll need to address to keep yourself out of hot water. What’s more, the effort and investment you’ve put into your “Cloud” “thing” will need to be refactored, which could cost time and money. There are many more examples, such as how do you audit your billing pipeline to ensure you are charging customers the right amount for their use of your service, or, how do you ensure your SLA is watertight and not open to mischief? These are exactly the kinds of things that a mashup of PwC business talent and Cloud skills can help with.

So back to my job. Well, I kind of summed it up above. I’ve spent a lot of time on the technology side, designing, building and developing a Cloud, and now I’m going to spend a lot of time helping customers plan and adopt Cloud(s) as a key technology investment.

Dave is Director of Cloud Computing for PwC, focusing on how customers transform their businesses to take advantage of the cloud.

• Phil Wainwright posted Goodbye Windows, hello IE9 and the cloud on 9/16/2010 to his Software as Services ZDNet blog:

It seems mightily ironic that it should be a new browser release from Microsoft, of all people, that finally treats websites as applications. It’s been a long time coming — a decade at least — but I felt a sense of quiet satisfaction when I read Ed Bott’s review of the IE9 beta release, especially the second page on treating websites as apps.

I still remember back in 1999-2000 being shown a number of so-called web operating systems, which attempted to turn the browser into a workspace that both emulated and sought to replace the Windows desktop. Now Microsoft itself is conspiring to turn the browser into an application windowing system that, while tuned to take advantage of the underlying client environment, is at the same time independent of it.

IE9 effaces itself by adopting a minimalist frame and giving users the option of pinning shortcuts to individual websites to the desktop taskbar, treating them as if they were applications in their own right. Those shortcuts open a new browser window that’s branded with the website’s favicon and its dominant color, but which can still have other sites open in tabs within it. Ed explains the advantage of this:

“It didn’t take long for me to begin creating groups of three or four related tabs for a common activity. For example, I have my blog’s home page pinned to the Taskbar, and I usually open Google Analytics and the WordPress dashboard for the site. Keeping those three tabs in a single group makes it easy for me to click the ZDNet icon on my Taskbar and find one of those tasks, which previously were scattered among dozens of open tabs.”

This is a welcome convenience that underlines how dependent many of us now are on web-based applications in our daily routines. But as more and more applications shift to the cloud, we’ll want much deeper integration between them than this (the phrase ‘lipstick on a pig’ comes to mind). IE9 will make it marginally easier to find your Salesforce.com contact list when a prospect email arrives in your Gmail inbox, but it’s hardly going to transform the way you work. For that, you’ll want much deeper integration of both data and process, all of which will take place in the cloud or the browser. IE9 moves the focus up off the desktop into the browser, and in doing so concedes the supremacy of cloud computing.

Jim White published on 9/7/2010 (missed when posted) the slide deck from his 10 Architectural/Design Considerations for Running in the Azure Cloud presentation to the Windows Azure [Online] Users Group of 9/7/2010. Here’s slide 3:


Windows Azure Platform Appliance (WAPA)

Rich Miller quoted Microsoft’s Elliott Curtis estimate of 12 to 18 months for more WAPA availability in a Microsoft to Hosters: Move Up The Value Chain post of 9/16/2010 to the Data Center Knowledge blog:

Microsoft’s push into online services has required a balancing act in its relationship with the hosting industry. On the one hand, Microsoft has far-reaching ambitions to deliver cloud services to consumers and businesses. At the same time, Microsoft sells nearly $1 billion a year in licenses and services to web hosting providers.

So is Microsoft a partner or rival for its hosting customers? In addressing the Hosting & Cloud Transformation Summit on Tuesday, Microsoft’s Elliot Curtis emphasized that Microsoft doesn’t view itself as competing with its customers in the cloud computing realm – and, Curtis said, hosting providers shouldn’t either.

Big Enough Pie For All

“We believe the market is large enough for us, but also for our service provider partners,” said Curtis, Microsoft’s Director of North American Hosting Partners. “We believe the market is enormous and there are many customers who will not want to provision services (directly) from Microsoft.”

Curtis sought to position Microsoft’s relationship with hosters as one presenting opportunity, not risk. “We’re a global provider with our Azure platform, but we’ll also take the learnings and innovation from that and deliver them to the marketplace to provide building blocks for our customers,” he said.

But he also predicted that some hosters may struggle with the pace of change and infrastructure requirements for the expected cloud transition. “We understand that hosters have been pioneers in this space,” he said. “We get that. You really have been leaders. But make no mistake: this business is accelerating.”

Curtis said Microsoft believes as many as 30 million servers may move into the cloud, requiring hundreds of billions of dollars in investment in infrastructure to support these workloads. “Are you in the hosting business, or in the IT service delivery business?” Curtis asked. “Think about moving up the value chain and delivering IT as a service. Our goal is to evaluate what we do well, and also what our partners do well.”

12 to 18 Months on Azure Appliances

While predicting a fast-moving transition to the cloud, Curtis reported deliberate progress on a key component of Microsoft’s Azure-based offerings for service provider partners, the Windows Azure Appliance. “It’s a container we will put in a service provider’s data center,” said Curtis. “It’s still a service we’re delivering, even though it’s in a customer’s data center. We will operate that Azure environment and do the patching and updating.

“We are in very, very early days with our appliance,” he said. “We expect that over the next 12-18 months it will become more available.”

The HPC in the Clouds blog reported Microsoft China Cloud Innovation Center Announced in Shanghai on 9/16/2010:

September 16, 2010, Shanghai, China - Microsoft China today announced the opening of the Microsoft China Cloud Innovation Center in Shanghai. The announcement was made at the company's Greater China Region Cloud Partner Summit. The company characterized the announcement as delivering on its commitment to building a healthy IT ecosystem through collaboration with the government, customers and partners in China.

Bob Muglia, President of the Server and Tools Business division (STB) at Microsoft said at the summit: "Microsoft is providing the industry's broadest range of cloud computing solutions to customers and partners world-wide. Today about 70 percent of Microsoft engineers are doing work related to the cloud, including people in Microsoft's Asia-Pacific Research and Development Group. We expect the number to continue to grow in the future."

Muglia said that China is strategically significant to the company and that through the Microsoft China Cloud Innovation Center, the company will be able to better serve the China market, helping government, partners and customers boost their global competitiveness.

"We will staff the Cloud Innovation Center with a team of expert engineers who will work closely with customers and partners to help them realize the benefits of Microsoft's Server and Services Platform," Muglia said. Microsoft China Cloud Innovation Center's engineering teams will also focus on delivering products and technologies that are specific to China's Cloud Computing needs.

Dr. Shao ZhiQing, Deputy Director of Shanghai Municipal Commission of Economy and Informatization extended a warm welcome to the Cloud Innovation Center: "Cloud computing will fundamentally transform the way of obtaining and disseminating knowledge. By increasing its investment in China and setting up the Microsoft China Cloud Innovation Center in Shanghai, Microsoft is demonstrating its commitment to providing local governments and enterprises with advanced technologies and solutions that will help Shanghai achieve the strategic objectives of promoting the cloud computing industry."

Cloud computing is transforming business by delivering IT as a service, and, as the world's leading software and solutions provider, Microsoft has stated that it is 'All In' in the cloud. By leveraging its proven software platform, deep experience in Internet services and diversified business models, Microsoft provides customers with a full range of cloud computing solutions in secure, open and flexible computing environments.

Microsoft Asia-Pacific Research and Development Group (ARD) will continue to play a key development role in building many of Microsoft's global Cloud products, and contributes key components to global Cloud projects, such as Bing, Windows Live, Live Messenger, Microsoft Dynamics Online, and Commerce Transaction Platform. Server & Tools Business (STB) China, a key division of ARD, is working on core platform products such as Windows Server, SQL Server, Windows HPC Server, Visual Studio and System Center for both Private and Public Clouds.

Microsoft's cloud computing solutions are quickly gaining market share worldwide. In China, Microsoft has been deploying cloud technologies for several years. In November 2008, CNSaaS.com, a SaaS service platform established jointly by Microsoft, Suzhou Industrial Park and Jiangsu Feng Yun Network Service Co., Ltd., went online. Meanwhile, Microsoft established a cloud computing development and training platform in Hangzhou to help enterprises improve software R&D innovation capabilities and cut production costs. At the end of 2009, Microsoft signed an MOU with Taiwan-based Chunghwa Telecom in which the two companies will cooperate in the application of on-premise cloud services.

About Microsoft

Founded in 1975, Microsoft (NASDAQ: MSFT) is the worldwide leader in software, services and solutions that help people and businesses realize their full potential. For more information about Microsoft in China, please visit http://www.microsoft.com/China.


Cloud Security and Governance

•• Avi Rosenthal analyzed Cloud Computing and the Security Paradox in this 9/17/2010 post:

On September 14th I participated in a local IBM conference titled Smarter Solutions for a Smarter Business. One of the most interesting and practical presentations was Moises Navarro's presentation on Cloud Computing.

He quoted an IBM survey about suitable and unsuitable workload types for implementation in the Cloud. The ten leading suitable workloads included many Infrastructure Services and Desktop Services. The unsuitable workloads list included ERP as well as other Core Applications, as I would expect (for example, read my previous post SaaS is Going Mainstream).

However, it also included Security Services as one of the most unsuitable workloads. On one hand, this is not a surprising finding, because Security concerns are Cloud Computing inhibitors; on the other hand, Security Services are part of Infrastructure Services and therefore could be a good fit for implementation in the Cloud.

A recent Aberdeen Group Research Note titled Web Security in the Cloud: More Secure! Compliant! Less Expensive! (May 2010) supports the view that implementing Security Services in the Cloud may provide significant benefits.

The study reveals that applying e-mail Security as a Service in the Cloud is more efficient and secure than applying e-mail On Premise Security. The Aberdeen study was based on 36 organizations using On Premise Web Security solutions and 22 organizations using Cloud Computing based solutions.

Cloud-based solutions reported significantly fewer Security incidents in every incident category checked. The categories were Data Loss, Malware Infections, Web-Site Compromise, Security-related Downtime and Audit Deficiencies.

As far as efficiency is concerned, Aberdeen Group found that users of Cloud-based Web Security solutions realized a 42% reduction in associated Help Desk calls compared to users of On Premise solutions.

The findings may not be limited to Web Security and e-mail Security: Aberdeen Group identifies a convergence process among Web Security, e-mail Security and Data Loss Prevention (DLP).

The paradox is that most Security threats are internal, while most Security concerns are about External threats. For example, approximately 60% of Security breaches in banks were Internal. Usually insiders can do more harm than outsiders.

The Cloud is not an exception to that paradoxical rule: there are many Security concerns about Cloud-based implementations and about Cloud-based Security Services, yet relatively fewer Security breaches and more efficient implementation of Security Services in the Cloud.

Avi’s positions held include CTO for one of the largest software houses in Israel as well as the CTO position for one of the largest ministries of the Israeli government.

The CloudVenture.biz Team posted Cloud Enterprise Architecture on 9/16/2010:

Consultancy firm Deloitte has asked ‘does Cloud make Enterprise Architecture irrelevant?’

This prompted a compelling discussion on the topic in a LinkedIn group, where I suggested that actually Cloud is Enterprise Architecture.

Yes, “the Cloud” is a place that people point to with a vague hand-waving motion, implying it’s really far away and quite ethereal, but Cloud Computing is now also a practice.

Cloud EA – Maturity model

Due to the convergence of enterprise IT and Internet 2.0 standards, and the expansion via Private Cloud, the field now represents a design approach to IT systems in general as well as hosted applications and infrastructure.

Cloud is actually becoming an excellent source of EA best practices. Standards work like Cloud Management from the DMTF now provides a fairly generalized set of blueprints for enterprise IT architecture that an organization could use as design assets independent of using any Cloud providers.

Cloud Identity Management

Of course, it’s highly likely they will use Cloud providers, and so the reason Cloud EA will be so valuable and powerful is that it can cope with this new world as well as leverage it for better internal practices.

For example, one key area tackled in the DMTF architecture is ‘Cloud Identity’, stating that Cloud providers should utilize existing Identity Management standards to streamline their own apps, and should ideally integrate with corporate identity systems like Active Directory.

Catering for these types of needs is a great context for driving new business start-ups too. For example, Cloud Identity meets these needs and helps quantify the activities in this section of the model.