Windows Azure and Cloud Computing Posts for 9/7/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

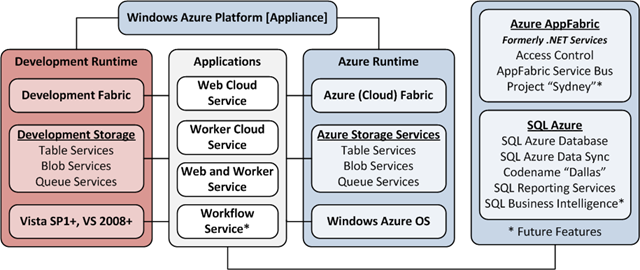

Ayende Rahien discussed excessive normalization and its effect on domain models in his Normalization is from the devil post of 9/7/2010:

The title of this post is a translation of an Arabic saying that my father quoted me throughout my childhood.

I have been teaching my NHibernate course these past few days, and I had come to realize that my approach for designing RDBMS based applications has undergone a drastic change recently. I think that the difference in my view was brought home when I started getting angry about this model:

I mean, it is pretty much a classic, isn’t it? But what really annoyed me was that all I had to do was look at this and know just how badly this is going to end up when someone tries to show an order with its details. We are going to have, at least initially, 3 + N(order lines) queries. And even though this is a classic model, loading it efficiently is actually not that trivial. I actually used this model to show several different ways of eager loading. And remember, this model is actually a highly simplified representation of what you’ll need in real projects.
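To make the 3 + N count concrete, here is a minimal Python sketch using the standard-library sqlite3 module. The schema, table names, and sample rows are assumptions made for illustration (the original diagram is not reproduced here), and lazy loading is simulated by hand rather than by NHibernate.

```python
import sqlite3

# Hypothetical "classic" order schema; names and rows are illustrative only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers   (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE products    (id INTEGER PRIMARY KEY, name TEXT, price REAL);
    CREATE TABLE orders      (id INTEGER PRIMARY KEY, customer_id INTEGER);
    CREATE TABLE order_lines (id INTEGER PRIMARY KEY, order_id INTEGER,
                              product_id INTEGER, quantity INTEGER);
    INSERT INTO customers VALUES (1, 'Ada');
    INSERT INTO products VALUES (1, 'Thingamajig', 9.5);
    INSERT INTO products VALUES (2, 'Widget', 3.0);
    INSERT INTO products VALUES (3, 'Gizmo', 12.0);
    INSERT INTO orders VALUES (1, 1);
    INSERT INTO order_lines VALUES (1, 1, 1, 2);
    INSERT INTO order_lines VALUES (2, 1, 2, 1);
    INSERT INTO order_lines VALUES (3, 1, 3, 4);
""")

counter = {"queries": 0}

def run(sql, params=()):
    """Execute one statement and count it as one round trip to the database."""
    counter["queries"] += 1
    return conn.execute(sql, params).fetchall()

def show_order_lazily(order_id):
    """Load an order one association at a time, as naive lazy loading would."""
    order = run("SELECT id, customer_id FROM orders WHERE id = ?", (order_id,))[0]
    customer = run("SELECT name FROM customers WHERE id = ?", (order[1],))[0]
    lines = run(
        "SELECT product_id, quantity FROM order_lines WHERE order_id = ? ORDER BY id",
        (order_id,),
    )
    items = []
    for product_id, quantity in lines:
        # One extra query per order line: this is the "+ N" part.
        product = run("SELECT name FROM products WHERE id = ?", (product_id,))[0]
        items.append((product[0], quantity))
    return customer[0], items

customer_name, items = show_order_lazily(1)
print(customer_name, items, "queries:", counter["queries"])
```

With three order lines the counter reaches 6, that is 3 + N. A single statement that joins the four tables would bring it down to one round trip, at the cost of a wider result set.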

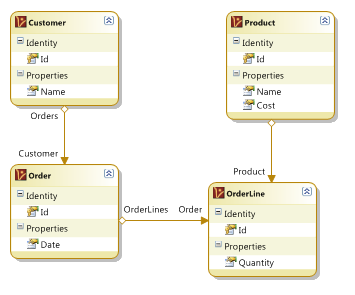

I then came up with a model that I felt was much more palatable to me:

And looking at it, I had an interesting thought. My problem with the model started because I got annoyed by how many tables were involved in dealing with “Show Order”, but the end result also reminded me of something: Root Aggregates in DDD. Now, since my newfound sensitivity about this has been based on my experiences with RavenDB, I found it amusing that I explicitly modeled documents in RavenDB after Root Aggregates in DDD, then went the other way (reducing queries -> Root Aggregates) with modeling in RDBMS.

The interesting part is that once you start thinking like this, you end up with a lot of additional reasons why you actually want that. (If the product price changed, it doesn’t affect the order, for example).

If you think about it, normalization in RDBMS had such a major role because storage was expensive. It made sense to try to optimize this with normalization. In essence, normalization is compressing the data, by taking the repeated patterns and substituting them with a marker. There is also another issue: when normalization came out, the applications being built were far different from the type of applications we build today in terms of number of users, time that you had to process a single request, concurrent requests, amount of data that you had to deal with, etc.

Under those circumstances, it actually made sense to trade off read speed for storage. In today’s world? I don’t think that it holds as much.

The other major benefit of normalization, which took extra emphasis when the reduction in storage became less important as HD sizes grew, is that when you state a fact only once, you can modify it only once.

Except… there is a large set of scenarios where you don’t want to do that. Take invoices as a good example. In the case of the order model above, if you changed the product name from “Thingamajig” to “Foomiester”, that is going to be mighty confusing for me when I look at that order and have no idea what that thing was. What about the name of the customer? Think about the scenarios in which someone changes their name (marriage is the most common one, probably). If a woman orders a book under her maiden name, then changes her name after she married, what is supposed to show on the order when it is displayed? If it is the new name, that person didn’t exist at the time of the order.

Obviously, there are counter examples, which I am sure the comments will be quick to point out.

But it does bear thinking about, and my default instinct to apply 3rd normal form has been muted once I realized this. I now have a whole set of additional questions that I ask about every piece of information that I deal with.

SQL Azure Database, Codename “Dallas” and OData

My (@rogerjenn) SQL Azure Portal Database Provisioning Outage on 9/7/2010 post of 9/7/2010 described:

At least some users, including me, were unable to provision SQL Azure Databases for three to four hours on Tuesday, 9/7/2010 starting at about 7:00 AM PDT. As of 9:30 AM, when I posted this article, I was unable to find an acknowledgement of the problem by Microsoft.

Update 9/9/2010, 10:00 AM PDT: The SQL Azure Provisioning feature is now operable.

1. I went to the Windows Azure Developer Portal to provision a SQL Azure database from the Windows Azure One-Month Pass I received this morning. I clicked the SQL Azure tab and the default ASP.NET Runtime Error message opened:

2. The Windows Azure Service Dashboard doesn’t report any problems with SQL Azure Database:

3. SQL Server 2008 R2 Management Studio had no problem connecting to my SQL Azure instances in the South Central US (San Antonio) data center:

4. I subscribe to Dashboard RSS 2.0 feeds for Windows Azure Compute and SQL Azure Database for the North Central US and South Central US data centers. The last message from the North Central data center for SQL Azure Database was:

and from the South Central data center:

The preceding messages indicate that users can expect warnings about scheduled maintenance that affects server provisioning, but no such message was posted for this provisioning outage. Windows Azure Compute messages about scheduled instance provisioning interruptions are similar.

5. I’ll update this post if and when the SQL Azure Team acknowledges the problem on their blog or Twitter and when the SQL Azure Database provisioning returns to service.

The upshot: Microsoft should report in a timely manner unscheduled problems, in addition to scheduled maintenance operations, that affect provisioning in the RSS 2.0 feeds for all regions affected.

See the original post for Twitter and SQL Azure Forum traffic about the issue.

Wayne Walter Berry (@WayneBerry) posted the first of two Securing Your Connection String in Windows Azure: Part 1 tutorials to the SQL Azure Team blog on 9/7/2010:

One of the challenges you face in running a highly secure SQL Azure environment is to keep your connection string to SQL Azure secure. If you are running Windows Azure you need to provide this connection string to your Windows Azure code; however, you might not want to provide the production database password to the development staff creating the Windows Azure package. In this series of blog posts I will talk about how to secure your SQL Azure connection string in the Windows Azure environment.

Scoping the Scenario

Without any security measures your connection string appears in plain text, and includes your login and password to your production database. The goal is to allow the code running on Windows Azure to read your connection string, while the developers making the package for Windows Azure deployment should not be able to read it. In order to do that, Windows Azure needs to know the “secret” to unlock the connection string; however the developers that are making the package should not. How is this accomplished when they have access to all the files in the package?

Using the Windows Azure Certificate Store

The way that we have a secret that only Windows Azure “knows” is to pre-deploy a certificate to the Windows Azure Certificate Store. This certificate contains the private key of a public/private key pair. Windows Azure then uses this private key to decrypt an encrypted connection string that is deployed in the configuration files of the Windows Azure package. The public key is passed out to anyone needing to encrypt a connection string. Users with the public key can use it to encrypt the connection string, but it doesn’t allow them to read the encrypted connection string.

In this technique there are three different types of users:

- The private key holder (the Windows Azure administrator): a very secure user who generates the private/public key pair, stores the private key backup, and puts the private key in place on Windows Azure. Only he and Windows Azure can decode the connection string.

- The SQL Administrator: he knows the connection string and uses the public key (obtained from the Windows Azure Administrator) to encode the connection string. The encoded bytes are given to the web developers for the web.config.

- Web developers who make the Windows Azure package: they know the public key (everyone has access to it); however, they don’t know the connection string or the private key, so they can’t get the SQL password.
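The split between the three roles rests on one property of public-key cryptography: whoever holds the public key can encrypt, but only the private key holder can decrypt. The toy Python sketch below illustrates that property with textbook RSA and deliberately tiny primes. It is an assumption-laden illustration, not the certificate-based mechanism Windows Azure uses, and the connection string in it is made up; never use code like this for real secrets.

```python
# Toy textbook RSA with tiny primes, for illustration only.
P, Q = 61, 53
N = P * Q                    # public modulus
PHI = (P - 1) * (Q - 1)
E = 17                       # public exponent
D = pow(E, -1, PHI)          # private exponent (modular inverse, Python 3.8+)

PUBLIC_KEY = (E, N)          # handed out to anyone who needs to encrypt
PRIVATE_KEY = (D, N)         # kept by the administrator and the deployed service

def encrypt(text, public_key):
    """Role of the SQL administrator: encrypt byte by byte with the public key."""
    e, n = public_key
    return [pow(byte, e, n) for byte in text.encode("utf-8")]

def decrypt(blocks, private_key):
    """Role of the private key holder: recover the original text."""
    d, n = private_key
    return bytes(pow(block, d, n) for block in blocks).decode("utf-8")

# Hypothetical connection string, not a real server or credential.
connection_string = "Server=tcp:example;User ID=demo;Password=secret"
ciphertext = encrypt(connection_string, PUBLIC_KEY)

# Web developers only ever see the ciphertext and the public key.
print(ciphertext[:5])
print(decrypt(ciphertext, PRIVATE_KEY) == connection_string)
```

In practice the key pair lives in an X.509 certificate and the encryption uses a vetted library with proper padding; the sketch only shows why handing out the public key does not expose the password.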

Creating a Certificate

The first thing the Windows Azure administrator (private key holder) needs to do is use their local machine to create a certificate. In order to do this they will need Visual Studio 2008 or 2010 installed. The technique that I usually use to create a private/public key pair is with a program called makecert.exe.

Here are the steps to create a self-signed certificate in .pfx format.

- Open a Visual Studio command prompt (Run as administrator). You will find the command prompt in the Start menu under Visual Studio Tools.

- Execute this command:

makecert -r -pe -n "CN=azureconfig" -sky exchange "azureconfig.cer" -sv "azureconfig.pvk"

- You will be prompted for a password to secure the private key three times. Enter a password of your choice.

- This will generate an azureconfig.cer (the public key certificate) and an azureconfig.pvk (the private key file) file.

- Then enter the following command to create the .pfx file (this format is used to import the private key to Windows Azure). After the -pi switch, enter the password you chose.

pvk2pfx -pvk "azureconfig.pvk" -spc "azureconfig.cer" -pfx "azureconfig.pfx" -pi password-entered-in-previous-step

- You can verify that the certificate has been created by looking in the current directory in the Visual Studio command prompt.

Summary

In part two of the blog series I will show how the Windows Azure Administrator imports the private key (.pfx file) into Windows Azure.

Jim O’Neill tackled Dallas on PHP (via WebMatrix) on 9/7/2010:

Frequent readers know that I’ve been jazzed about Microsoft Codename “Dallas” ever since it was announced at PDC last year (cf. my now dated blog series), so with the release of CTP3 and my upcoming talk at the Vermont Code Camp, I thought it was time for another visit.

Catching Up With CTP3

I typically introduce “Dallas” with an alliterative (ok, cheesy) elevator pitch: “Dallas Democratizes Data,” and while that characterization has always been true – the data transport mechanism is HTTP and the Atom Publishing Protocol – it’s becoming ‘truer’ with the migration of “Dallas” services to OData (the Open Data Protocol). OData is an open specification for data interoperability on the web, so it’s a natural fit for “Dallas,” which itself is a marketplace for data content providers like NASA, Zillow, the Associated Press, Data.gov, and many others.

Exposing these datasets as OData in CTP3 is a significant development on two fronts:

It enables a more flexible query capability. For example, you can now use the OData $select system query option to indicate what columns of data you’re interested in, $orderby to sort, and $inlinecount to return the number of rows retrieved. All of that happens now on the server, reducing bandwidth and the time it takes to get just the specific data you want.

The developer experience is vastly improved. If you’re using Visual Studio, you can leverage the Add Service Reference functionality and work with the LINQ abstractions over the OData (WCF Data Services) protocol. As you might recall from my previous post, previously there was a rather manual process of generating C# service classes that, to boot, didn’t automatically support the asynchronous invocation required by Silverlight.
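As a rough illustration of the query options mentioned above, the following Python sketch assembles an OData URI with $select, $orderby, and $inlinecount. The service root is the one quoted later in Jim's post; the entity set name and the column names are assumptions chosen for the example, not taken from the actual dataset.

```python
from urllib.parse import quote

# Service root as quoted in the post; everything below it is illustrative.
SERVICE_ROOT = "https://api.sqlazureservices.com/Data.ashx/InfoUSA/HistoricalBusinesses"

def build_odata_url(service_root, entity_set, select=None, orderby=None, inlinecount=False):
    """Compose an OData query URI from a few system query options."""
    options = []
    if select:
        # $select takes a comma-separated list of property names.
        options.append("$select=" + quote(",".join(select), safe=","))
    if orderby:
        # $orderby takes a property name, optionally followed by asc or desc.
        options.append("$orderby=" + quote(orderby))
    if inlinecount:
        # "allpages" asks the server to include the total count in the response.
        options.append("$inlinecount=allpages")
    url = service_root + "/" + entity_set
    if options:
        url += "?" + "&".join(options)
    return url

# "NewBusiness", "BusinessName" and "City" are hypothetical names.
url = build_odata_url(
    SERVICE_ROOT,
    "NewBusiness",
    select=["BusinessName", "City"],
    orderby="BusinessName desc",
    inlinecount=True,
)
print(url)
```

Because the options travel in the URI, the projection and sorting happen on the server, which is the bandwidth saving the post describes.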

If you’re not using .NET or Visual Studio, the presence of numerous OData SDKs (Java, Ruby, PHP, iPhone, etc.) now makes accessing “Dallas” data a snap from many other applications and platforms as well. That’s the premise of this post, where I’ll share my experiences in accessing a “Dallas” data service via a PHP web page.

Getting Your “Dallas” Account

“Dallas” is in a CTP phase, which stands for “Community Technology Preview” (but which means ‘free’!), and all you need is a Live ID to get started. If you don’t yet have a “Dallas” account, go to http://www.sqlazureservices.com, and click on the Subscriptions tab. You’ll be prompted to login via a Live ID and then see a short form to fill out and submit. You’ll immediately get an account key and be able to subscribe to the various data services.

In the CTP all the feeds are free, so it’s a good time to explore what’s out there via the Catalog tab. You’ll see both CTP3 and CTP2 services in the catalog; the CTP3 ones are OData-enabled, and the CTP2 ones are being updated to support OData, after which they’ll be decommissioned. The bulk of this article applies only to CTP3 services.

The CTP3 dataset I’ll use in this blog post comes from Infogroup and contains data about new businesses that have formed in the past two years in the US. Actually, since the CTP is gated, the data available now is only for Seattle, and query results are limited to 100 records, but it’s good enough for our purposes.

As with any of the datasets in “Dallas,” once you subscribe, you can query the data directly via the “Dallas” Explorer by selecting the Click here to explore the dataset link found with each dataset listed on your Subscriptions tab. Each dataset exposes the same generic grid display and query parameter interface to give you a quick glimpse into the fields and data values that are part of the dataset. This functionality, by the way, has been a part of “Dallas” since day one.

With CTP3, as you experiment with your queries, the OData URI corresponding to the query is shown in the bottom left of the “Dallas” Explorer, and you can view that URL in a browser to see the raw Atom format! Basic Authentication is now used, so you’ll need to supply your “Dallas” account key as the password (and anything you want as the username) when prompted.
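Outside the browser, the same Basic Authentication scheme amounts to one request header. Here is a minimal Python sketch, assuming a placeholder account key and an arbitrary username; it only prepares the request and leaves the actual network call to the reader.

```python
import base64
import urllib.request

def basic_auth_header(account_key, username="anyuser"):
    """Build an HTTP Basic Authorization value; the account key is the password."""
    credentials = f"{username}:{account_key}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")

def build_request(url, account_key):
    """Prepare (but do not send) a request for the raw Atom feed."""
    request = urllib.request.Request(url)
    request.add_header("Authorization", basic_auth_header(account_key))
    request.add_header("Accept", "application/atom+xml")
    return request

# Placeholder key; substitute the account key from your own subscription.
request = build_request(
    "https://api.sqlazureservices.com/Data.ashx/InfoUSA/HistoricalBusinesses",
    "YOUR-ACCOUNT-KEY",
)
print(request.get_header("Authorization"))
# To fetch the feed: urllib.request.urlopen(request).read()
```

The username is ignored by the service according to the post, so any non-empty value should do.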

Setting Up PHP and the OData SDK

If you aren’t familiar with the Web Platform Installer (aka WebPI), it’s time you were! It’s a lightweight, smart client application that you can use to discover, install, and configure all sorts of web development tools and technologies, from ASP.NET to Visual Web Developer Express, from Windows Server AppFabric to WCF RIA Services, and from PHP to numerous open source offerings, like WordPress, Joomla!, Drupal, Umbraco, Moodle, and more. I use it whenever I refresh my machine – which is frequently in order to get rid of numerous trial and beta bits!

In my case, I already had PHP set up via WebPI, so I went straight to the instructions for installing the OData PHP SDK. The ZIP file contains everything you need, including a handy README file to set things up.

Caveat: One of the requirements (Step 6 in the README) is the installation of the PHP XSL extension, but that extension is not installed via the Web Platform Installer. If you don’t find php_xsl.dll in your PHP extensions directory, you can grab just that file from the distribution at http://windows.php.net and update your php.ini file to enable the extension. As of this writing, the Web Platform Installer installs the non-thread safe version 5.2.14. It’s important you get that right, or you’ll get some subtle failures later on.

Generating the PHP Proxy Class

With CTP3 services, .NET developers can use the familiar Add Service Reference functionality to generate the proxy plumbing needed to invoke “Dallas” services. The proxy code hides the underlying HTTP request/response goo and allows the developer to access the service and its data via a data context and LINQ operators, just as they might use LINQ to SQL or the ADO.NET Entity Framework.

Visual Studio’s “Add Service Reference” UI is actually just a nice wrapper for the WCF Data Service Client Utility, so it stands to reason that there be a similar utility for generating analogous PHP classes. It’s called PHPDataSvcUtil.php and is included with the PHP OData SDK you installed in the step above. The utility has a number of switches, but the most important one is /uri, via which you specify the “Dallas” service’s metadata endpoint (shown in the image above). For the service we’re interested in, the following command line (sans line breaks) will generate the desired PHP proxy class:

php PHPDataSvcUtil.php
/uri=https://api.sqlazureservices.com/Data.ashx/InfoUSA/HistoricalBusinesses

Since I didn’t specify an output file, the file is placed in the same directory as PHPDataSvcUtil, and that file includes two significant class types:

A container class that serves the same function as a DataContext or ObjectContext in .NET. It handles the connection and authentication to the service and exposes query properties for the various data series exposed by the “Dallas” service. For the selected Infogroup service, the container class is InfoUSAHistoricalBusinessesContainer.

Classes for each series exposed by the service. Each class includes properties corresponding to the ‘columns’ of data in that series as well as a few utility methods accessed by the container context. The service being used here has three series, and so three classes:

- Historical_Business,

- NewBusiness, and

- Nixie

Jim continues his tutorial with Writing Some PHP Code and Running Your Code (No, it doesn’t work the first time!) topics.

AppFabric: Access Control and Service Bus

Scott Hanselman interviewed Andrew Arnott in Hanselminutes Podcast 229 - OpenID and OpenAuth with DotNetOpenAuth open source programmer Andrew Arnott on 9/7/2010:

Scott talks to Andrew Arnott about OpenID and OpenAuth. What are these two protocols, how do they relate to each other and what do we as programmers need to know about them? Do you use Twitter? Then you use OpenAuth and may not realize it. Andrew works at Microsoft and works on the side on his open source project DotNetOpenAuth.

Links from the Show

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Zhiming Xue advertised ARCast.TV - David Aiken on Azure Architecture Patterns on 9/9/2010:

David Aiken (@TheDavidAiken) is a Technical Evangelist for Windows Azure. David is responsible for:

- Bid Now Sample at http://code.msdn.microsoft.com/BidNowSample.

- Windows Azure Platform training kit and Channel 9 learning course.

- Windows Azure MMC

- Windows Azure Table Browser

- Windows Azure Management PowerShell CmdLets

In this episode David talks to Bob Familiar about the architecture patterns that lend themselves to building scalable services for the cloud.

Ron Jacobs shows you How to Unit Test WF4 Workflows in this 9/7/2010 post to the .NET Endpoints blog:

Recently I asked you to vote on topics you want to see on endpoint.tv. Coming in at #3 was this question on how to Unit Test WF4 Workflows.

Because Workflows range from very simple activities to long and complex multi-step processes this can be very challenging. I found after writing many activities that I began to see opportunities to create a library of test helpers which became the WF4 Workflow Test Helper library.

In this post I’ll show you how you can test a simple workflow using one of the helpers from the library.

Testing Activity Inputs / Outputs

If you have a simple activity that returns out arguments and does not do any long-running work (no messaging activities or bookmarks) then the easiest way to test it is with WorkflowInvoker.

The Workflow we are testing in this case contains an activity that adds two Int32 in arguments and returns an out argument named sum.

The easiest way to test such an activity is by using WorkflowInvoker:

[TestMethod]
public void ShouldAddGoodSum()
{
    // Run the activity synchronously and capture its out arguments.
    var output = WorkflowInvoker.Invoke(new GoodSum() {x=1, y=2});

    Assert.AreEqual(3, output["sum"]);
}

While this test is ok it assumes that the out arguments collection contains an argument of type Int32 named “sum”. There are actually several possibilities here that we might want to test for.

- There is no out argument named “sum” (names are case sensitive)

- There is an argument named “sum” but it is not of type Int32

- There is an argument named “sum” and it is an Int32 but it does not contain the value we expect

Any time you are testing out arguments for values you have these possibilities. Our test code will detect all three of these cases but to make it very clear exactly what went wrong I create a test helper called AssertOutArgument.

Now let’s consider what happens if you simply changed the name of the out argument from “sum” to “Sum”. From the activity point of view “Sum” is the same as “sum” because VB expressions are not case sensitive.

But our test code lives in the world of C# where “Sum” != “sum”. When I make this change the version of the test that uses Assert.AreEqual gets this error message:

Test method TestProject.TestSumActivity.ShouldAddBadSumArgName threw exception:
System.Collections.Generic.KeyNotFoundException: The given key was not present in the dictionary.

If you have some amount of experience with Workflow and arguments you know that the out arguments are a dictionary of name/value pairs. This exception simply means it couldn’t find the key – but what key?

Using WorkflowTestHelper

- Download the binaries for WorkflowTestHelper

- Add a reference to the WorkflowTestHelper assemblies

- Add a using WorkflowTestHelper statement

To use AssertOutArgument we change the test code to look like this:

[TestMethod]
public void ShouldAddGoodSumAssertOutArgument()
{
    var output = WorkflowInvoker.Invoke(new GoodSum() { x = 1, y = 2 });

    // Checks that "sum" exists, is an Int32, and equals 3.
    AssertOutArgument.AreEqual(output, "sum", 3);
}

Now the error message we get is this:

AssertOutArgument.AreEqual failed. Output does not contain an argument named <sum>.

Wrong Type Errors

What if you changed the workflow so that “sum” exists but it is the wrong type? To test this I made “sum” of type string and changed the assignment activity expression to convert the result to a string.

The Assert.AreEqual test gets the following result

Assert.AreEqual failed. Expected:<3 (System.Int32)>. Actual:<3 (System.String)>.

This is ok but you do look twice – Expected:<3 then Actual:<3 but then you notice the types are different.

AssertOutArgument is more explicit

AssertOutArgument.AreEqual failed. Wrong type for OutArgument <sum>. Expected Type: <System.Int32>. Actual Type: <System.String>.

Summing It Up

AssertOutArgument covers three things: it makes sure the argument exists, makes sure it is the correct type, and makes sure it has the correct value. Of course there is much more to the WF4 Workflow Test Helper that I will cover in future posts.
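The three checks are easy to picture outside of WF4 as well. The Python sketch below is an analogue written for illustration only; it is not the WorkflowTestHelper API, and the function name and message wording are assumptions modeled on the error messages quoted above.

```python
def assert_out_argument_equal(output, name, expected):
    """Check existence, then type, then value, failing with a distinct message each time."""
    if name not in output:
        raise AssertionError(
            f"Output does not contain an argument named <{name}>."
        )
    actual = output[name]
    if type(actual) is not type(expected):
        raise AssertionError(
            f"Wrong type for OutArgument <{name}>. "
            f"Expected Type: <{type(expected).__name__}>. "
            f"Actual Type: <{type(actual).__name__}>."
        )
    if actual != expected:
        raise AssertionError(f"Expected: <{expected}>. Actual: <{actual}>.")

# The happy path passes silently.
assert_out_argument_equal({"sum": 3}, "sum", 3)

# A renamed argument now produces a message that names the missing key.
try:
    assert_out_argument_equal({"Sum": 3}, "sum", 3)
except AssertionError as error:
    print(error)
```

Ordering the checks from most basic to most specific means a failure always reports the first thing that went wrong, rather than a generic dictionary or equality error.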

Visual Studio LightSwitch

Matt Thalman explained Filtering data based on current user in LightSwitch apps on 9/7/2010:

In many applications, you need to filter data that is only relevant to the particular user that is logged in. For example, a personal information manager application may only want users to view their own tasks and not the tasks of other users. Here’s a walkthrough of how you can setup this kind of data filtering in Visual Studio LightSwitch.

I’ll first create a Task table which has two fields: one for the task description and another to store the user name of the user who created the task.

Next, I’ll need to write some code so that whenever a Task is created, it will automatically have its CreatedBy field set to the current user. To do this, I can use the Write Code drop-down on the table designer to select the Created method.

Here’s the code:

partial void Task_Created()
{
    // Stamp each new task with the name of the user who created it.
    this.CreatedBy = this.Application.User.Name;
}

Now we’re at the data filtering step. What I’d really like to do is have all queries for Tasks be filtered according to the current user. So even if I model a new query for Tasks then it will automatically get this filtering behavior. That way I only have to write this code once and it will be applied whenever tasks are queried. LightSwitch provides a built-in query for each table that returns all instances within that table. The name of this query is TableName_All. All other queries for that table are based on that All query. So if I can modify the behavior of that All query, then every other query that queries the same table will also get that behavior. LightSwitch just so happens to provide a way to modify the default behavior of the All query. This can be done through the PreprocessQuery method. This method is also available through the Write Code drop-down.

The PreprocessQuery method allows developers to modify the query before it is executed. In my case, I want to add a restriction to it so that only tasks created by the current user are returned.

partial void Tasks_All_PreprocessQuery(ref IQueryable<Task> query)
{
    // Restrict every Tasks query to rows created by the current user.
    query = query.Where(t => t.CreatedBy == this.Application.User.Name);
}

And that’s all I need to do. Now, whenever any query is made for tasks it will add this restriction.
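The idea of filtering once at the base query and letting every derived query inherit the restriction is not specific to LightSwitch. Here is a small Python sketch of the same pattern; the class and method names are hypothetical and only mirror the roles of the All query and PreprocessQuery.

```python
from dataclasses import dataclass

@dataclass
class Task:
    description: str
    created_by: str

class TaskStore:
    """In-memory stand-in for a table with a base query and derived queries."""

    def __init__(self, tasks, current_user):
        self._tasks = list(tasks)
        self.current_user = current_user

    def _preprocess(self, tasks):
        # Plays the role of PreprocessQuery: applied to the base query only.
        return (task for task in tasks if task.created_by == self.current_user)

    def all(self):
        # Base query that every other query builds on.
        return list(self._preprocess(self._tasks))

    def search(self, text):
        # Derived query: inherits the user filter because it starts from all().
        return [t for t in self.all() if text.lower() in t.description.lower()]

store = TaskStore(
    [
        Task("Write report", "alice"),
        Task("Review report", "bob"),
        Task("Book travel", "alice"),
    ],
    current_user="alice",
)
print([t.description for t in store.search("report")])
```

Bob's "Review report" task never shows up for alice, even though the search method itself contains no user check.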

Windows Azure Infrastructure

Windows Azure Compute, North Central US reported a [Yellow Alert] Windows Azure networking issue in North Central US on 9/7/2010:

- Sep 7 2010 4:15PM A networking issue in the North Central US region might cause a very limited set of customers to see their application deployed in the Windows Azure developer portal but not accessible via the Internet. The team is actively working on the repair steps.

- Sep 7 2010 10:57PM The team is still actively working on repairing the networking issue.

Lori MacVittie (@lmacvittie) claimed Knowing the algorithms is only half the battle, you’ve got to understand a whole lot more to design a scalable architecture. in a preface to her Choosing a Load Balancing Algorithm Requires DevOps Fu post to F5’s DevCentral blog:

Citrix’s Craig Ellrod has a series of blog posts on the basic (industry standard) load balancing algorithms. These are great little posts for understanding the basics of load balancing algorithms like round robin, least connections, and least (fastest) response time. Craig’s posts are accurate in their description of the theoretical (designed) behavior of the algorithms. The thing that’s missing from these posts (and maybe Craig will get to this eventually) is context. Not the context I usually talk about, but the context of the application that is being load balanced and the way in which modern load balancing solutions behave, which is not as a simple load balancer.

Different applications have different usage patterns. Some are connection heavy, some are compute intense, some return lots of little responses while others process larger incoming requests. Choosing a load balancing algorithm without understanding the behavior of the application and its users will almost always result in an inefficient scaling strategy that leaves you constantly trying to figure out why load is unevenly distributed or SLAs are not being met or why some users are having a less than stellar user experience.

One of the most misunderstood aspects of load balancing is that a load balancing algorithm is designed to choose from a pool of resources and that an application is or can be made up of multiple pools of resources. These pools can be distributed (cloud balancing) or localized, and they may all be active or some may be designated as solely existing for failover purposes. Ultimately this means that the algorithm does not actually choose the pool from which a resource will be chosen – it only chooses a specific resource. The relationship between the choice of a pool and the choice of a single resource in a pool is subtle but important when making architectural decisions – especially those that impact scalability. A pool of resources is (or should be) a set of servers serving similar resources. For example, the separation of image servers from application logic servers will better enable scalability domains in which each resource can be scaled individually, without negatively impacting the entire application.
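The distinction between choosing a pool and choosing a resource within it can be sketched in a few lines of Python. The pool names and server names below are made up, and the two classes are simplified textbook versions of round robin and least connections rather than any vendor's implementation.

```python
import itertools

class RoundRobinPool:
    """Hands out servers in a fixed rotation."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def choose(self):
        return next(self._cycle)

class LeastConnectionsPool:
    """Hands out the server that currently has the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def choose(self):
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1

# Two pools serving different kinds of resources (hypothetical names).
pools = {
    "images": RoundRobinPool(["img1", "img2"]),
    "app": LeastConnectionsPool(["app1", "app2"]),
}

# Step 1 happens outside the algorithm: something decides which pool to use.
pool = pools["app"]
# Step 2 is the algorithm's job: pick one resource inside that pool.
first = pool.choose()
second = pool.choose()
pool.release(first)
third = pool.choose()
print(first, second, third)
```

Each pool can be sized and tuned on its own, which is the scalability-domain argument made above: adding image servers changes nothing about how the application pool behaves.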

To make the decision more complex, there are a variety of factors that impact the way in which a load balancing algorithm actually behaves in contrast to how it is designed to act. The most basic of these factors is the network layer at which the load balancing decision is being made.

THE IMPACT of PROTOCOL

There are two layers at which applications are commonly load balanced: TCP (transport) and HTTP (application). The layer at which load balancing is performed has a profound effect on the architecture and capabilities.

Layer 4 (Connection-oriented) Load Balancing

When load balancing at layer 4 you are really load balancing at the TCP or connection layer. This means that a connection (user) is bound to the server chosen on the initial request. Basically a request arrives at the load balancer and, based on the algorithm chosen, is directed to a server. Subsequent requests over the same connection will be directed to that same server.

Unlike Layer 7-related load balancing, in a layer 4 configuration the algorithm is usually the routing mechanism for the application.

Layer 7 (Connection-oriented) Load Balancing

When load balancing at Layer 7 in a connection-oriented configuration, each connection is treated in a manner similar to Layer 4 Load Balancing with the exception of how the initial server is chosen. The decision may be based on an HTTP header value or cookie instead of simply relying on the load balancing algorithm. In this scenario the decision being made is which pool of resources to send the connection to. Subsequently the load balancing algorithm chosen will determine which resource within that pool will be assigned. Subsequent requests over that same connection will be directed to the server chosen.

Layer 7 connection-oriented load balancing is most useful in a virtual hosting scenario in which many hosts (or applications) resolve to the same IP address.
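As a rough sketch of that two-step decision, the Python below first picks a pool from the HTTP `Host` header and then lets the algorithm pick a server inside that pool. The class name, host names and server names are made up for illustration, and round robin is an assumed algorithm.

```python
from itertools import cycle


class Layer7ConnectionBalancer:
    """Chooses a pool from the Host header, then a server within that pool."""

    def __init__(self, pools):
        # pools maps a host name to the servers in that pool (hypothetical data).
        self._pools = {host: cycle(servers) for host, servers in pools.items()}
        self._bindings = {}  # connection id -> chosen server

    def route(self, connection_id, headers):
        if connection_id not in self._bindings:
            pool = self._pools[headers["Host"]]         # step 1: header picks the pool
            self._bindings[connection_id] = next(pool)  # step 2: algorithm picks the server
        # Later requests on the same connection reuse the first decision.
        return self._bindings[connection_id]


lb7 = Layer7ConnectionBalancer({
    "images.example.com": ["img-1", "img-2"],
    "www.example.com": ["web-1"],
})
routed = [
    lb7.route("c1", {"Host": "images.example.com"}),
    lb7.route("c1", {"Host": "www.example.com"}),  # same connection, so still img-1
    lb7.route("c2", {"Host": "images.example.com"}),
]
print(routed)
```

The second call shows the connection-oriented limitation: once `c1` is bound, a request for a different host on that connection is still sent to the server chosen first.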

Layer 7 (Message-oriented) Load Balancing

Layer 7 load balancing in a message-oriented configuration is the most flexible in terms of the ability to distribute load across pools of resources based on a wide variety of variables. This can be as simple as the URI or as complex as the value of a specific XML element within the application message. Layer 7 message-oriented load balancing is more complex than its Layer 7 connection-oriented cousin because a message-oriented configuration also allows individual requests – over the same connection – to be load balanced to different (virtual | physical) servers. This flexibility allows the scaling of message-oriented protocols such as SIP that leverage a single, long-lived connection to perform tasks requiring different applications. This makes message-oriented load balancing a better fit for applications that also provide APIs as individual API requests can be directed to different pools based on the functionality they are performing, such as separating out requests that update a data source from those that simply read from a data source.

Layer 7 message-oriented load balancing is also known as “request switching” because it is capable of making routing decisions on every request even if the requests are sent over the same connection. Message-oriented load balancing requires a full proxy architecture as it must be the end-point to the client in order to intercept and interpret requests and then route them appropriately.
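Request switching might look something like the following Python sketch, in which every request is inspected and routed on its own, even when all of them arrive over one connection. The pool names, the `/images/` prefix rule and the read/write split by HTTP method are illustrative assumptions, not rules from any particular product.

```python
from itertools import cycle

READ_METHODS = {"GET", "HEAD"}  # assumed to be read-only for this sketch


class RequestSwitch:
    """Routes each request independently, regardless of the connection it used."""

    def __init__(self, image_pool, read_pool, write_pool):
        self._images = cycle(image_pool)
        self._reads = cycle(read_pool)
        self._writes = cycle(write_pool)

    def route(self, method, uri):
        if uri.startswith("/images/"):
            pool = self._images   # static content has its own scalability domain
        elif method.upper() in READ_METHODS:
            pool = self._reads    # requests that only read the data source
        else:
            pool = self._writes   # requests that update the data source
        return next(pool)


switch = RequestSwitch(["img-1"], ["read-1", "read-2"], ["write-1"])
requests_on_one_connection = [
    ("GET", "/images/logo.png"),
    ("GET", "/api/orders/1"),
    ("POST", "/api/orders"),
    ("GET", "/api/orders/2"),
]
targets = [switch.route(method, uri) for method, uri in requests_on_one_connection]
print(targets)
```

Because no binding is kept per connection, the four requests fan out across three pools, which is the behavior that requires the load balancer to act as a full proxy.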

OTHER FACTORS to CONSIDER

If that were not confusing enough, there are several other factors to consider that will impact the way in which load is actually distributed (as opposed to the theoretical behavior based on the algorithm chosen).

Application Protocol

HTTP 1.0 acts differently than HTTP 1.1. Primarily the difference is that HTTP 1.0 implies a one-to-one relationship between a request and a connection. Unless the HTTP Keep-Alive header is included with an HTTP 1.0 request, each request will incur the processing costs to open and close the connection. This has an impact on the choice of algorithm because there will be many more connections open and in progress at any given time. You might think there’s never a good reason to force HTTP 1.0 if the default is more efficient, but consider the case in which a request is for an image – you want to GET it and then you’re finished. Using HTTP 1.0 and immediately closing the connection is actually better for the efficiency and thus capacity of the web server because it does not maintain an open connection waiting for a second or third request that will not be forthcoming. An open, idle connection that will eventually simply time out wastes resources that could be used by some other user. Connection management is a large portion of resource consumption on a web or application server, thus anything that increases that number and rate decreases the overall capacity of each individual server, making it less efficient.
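The trade-off can be made concrete with some back-of-the-envelope arithmetic. The Python sketch below is a deliberately simplified model: the function name, the one-second service time and the 15-second idle timeout are all assumptions chosen for illustration, and the CPU cost of opening and closing each connection is counted rather than timed.

```python
def connection_cost(requests, service_seconds, keep_alive, idle_timeout_seconds):
    """Return (connections opened, seconds a server connection slot is held)."""
    if keep_alive:
        # One connection serves every request, then sits idle until it times out.
        return 1, requests * service_seconds + idle_timeout_seconds
    # Without keep-alive, every request opens and closes its own connection.
    return requests, requests * service_seconds


# A single image fetch: closing immediately frees the slot 15 seconds sooner.
single_image_close = connection_cost(1, 1, False, 15)
single_image_keep = connection_cost(1, 1, True, 15)

# Ten requests from one client: keep-alive saves nine connection setups
# but still holds the slot through the idle timeout.
page_close = connection_cost(10, 1, False, 15)
page_keep = connection_cost(10, 1, True, 15)

print(single_image_close, single_image_keep, page_close, page_keep)
```

Under these assumed numbers the single-image case favors closing the connection, while the ten-request case trades nine extra connection setups against fifteen seconds of an idle slot.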

HTTP 1.1 is standard (though unfortunately not ubiquitous) and re-uses client-initiated connections, making it more resource efficient but introducing questions regarding architecture and algorithmic choices. Obviously if a connection is reused to request both an image resource and a data resource but you want to leverage fault tolerant and more efficient scale design using scalability domains this will impact your choice of load balancing algorithms and the way in which requests are routed to designated pools.

Configuration Settings

A little-referenced configuration setting in all web and application servers (and load balancers) is the maximum number of requests per connection. This impacts the distribution of requests because once the configured maximum number of requests has been sent over the same connection it will be closed and a new connection opened. This is particularly impactful on AJAX and other long-lived connection applications. The choice of algorithm can have a profound effect on availability when coupled with this setting. For example, suppose an application issues an AJAX update at a regular interval. Furthermore, the application requests made by that updating request require access to session state, which implies some sort of persistence is required. The first request determines the server, and a cookie (as one method) subsequently ensures that all further requests for that update are sent to the same server. Upon reaching the maximum number of requests, the load balancer must re-establish that connection – and because of the reliance on session state it must send the request back to the original server. In the meantime, however, another user has requested a resource and been assigned to that same server, and that user has caused the server to reach its maximum number of connections (also configurable). The original user is unable to be reconnected to his session and regardless of whether the request is load balanced to a new resource or not, “availability” is effectively lost as the current state of the user’s “space” is now gone.

You’ll note that a combination of factors is at work here: (1) the load balancing algorithm chosen, (2) configuration options and (3) application usage patterns and behavior.
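The failure scenario above can be reproduced with a small simulation. The Python below is only a sketch under stated assumptions: the classes `Server` and `PersistentBalancer`, the user names, the limits (two requests per connection, one connection per server) and the least-connections choice for new users are all hypothetical values picked to make the interaction visible.

```python
class Server:
    def __init__(self, name, max_connections):
        self.name = name
        self.max_connections = max_connections
        self.open_connections = 0

    def try_connect(self):
        # Refuse new connections once the configured maximum is reached.
        if self.open_connections >= self.max_connections:
            return False
        self.open_connections += 1
        return True

    def disconnect(self):
        self.open_connections -= 1


class PersistentBalancer:
    def __init__(self, servers, max_requests_per_connection):
        self.servers = servers
        self.max_requests = max_requests_per_connection
        self.sticky = {}         # user -> server holding that user's session state
        self.requests_sent = {}  # user -> requests sent on the current connection

    def request(self, user):
        """Return the name of the server that handled the request, or None if refused."""
        server = self.sticky.get(user)
        if user not in self.requests_sent:  # no open connection for this user
            if server is None:
                # New user: least connections (an assumed algorithm) picks the server.
                server = min(self.servers, key=lambda s: s.open_connections)
            if not server.try_connect():
                return None  # session state lives on that server only
            self.sticky[user] = server
            self.requests_sent[user] = 0
        self.requests_sent[user] += 1
        if self.requests_sent[user] >= self.max_requests:
            # Maximum requests per connection reached: close the connection.
            server.disconnect()
            del self.requests_sent[user]
        return server.name


balancer = PersistentBalancer(
    [Server("app-1", max_connections=1), Server("app-2", max_connections=1)],
    max_requests_per_connection=2,
)
results = [
    balancer.request("alice"),  # first request binds alice to app-1
    balancer.request("alice"),  # second request hits the per-connection limit
    balancer.request("bob"),    # bob takes app-1's only connection slot
    balancer.request("alice"),  # alice cannot get back to her session
]
print(results)
```

No single setting is wrong in isolation here; the lost session only appears when the algorithm, the two limits and the usage pattern combine.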

Lori continued with an “IT JUST ISN’T ENOUGH to KNOW HOW the ALGORITHMS BEHAVE” topic.

Michael Coté posted a Data integration in a cloud world with Joe Dwyer – make all #009 podcast on 9/7/2010:

A cloud-based application usually has to integrate with many data sources – in this make all episode, I talk with Joe Dwyer about how his company, PropelWare, architects that solution.

In this discussion we do a good job of pulling out some best practices and tips for others who’re in similar architectural circumstances.

Download the episode directly right here, or subscribe to the feed in iTunes or other podcatcher to have episodes downloaded automatically.

Show Notes

- PropelWare’s solutions integrate with many accounting services, PaaS’s, SaaS’s, and other “cloud-based” services. One of their primary integrations is with Intuit’s Partner Platform, giving them access to QuickBooks.

- We talk about how it fits in and what services are used, etc.

- I ask about the rise in mobile usage and what it ends up looking like for their customers.

- I test one of my pet theories: does simple API mean interop matters less?

- Are there common models between these accounting things?

- Feelings about switch to SaaS usage among customers? Are they afraid, happy – do they even notice?

- Azure usage for Office Manager.

- Their experience in the mobile world: what are some lessons learned?

Disclosure: this episode is sponsored by Intuit, who is a client.


Windows Azure Platform Appliance (WAPA)

Eric Knorr asserted “The definition of the private cloud may be fuzzy, but it's all part of the age-old quest for greater efficiency in IT” in a preface to his What the 'private cloud' really means post of 9/6/2010 to InfoWorld’s Cloud Computing blog:

Cloud computing has two distinctly different meanings. The first is simple: The use of any commercial service delivered over the Internet in real time, from Amazon's EC2 to software as a service offered by the likes of Salesforce or Google.

The second meaning of cloud computing describes the architecture and technologies necessary to provide cloud services. The hot trend of the moment -- judging by last week's VMworld conference, among other indicators -- is the so-called private cloud, where companies in effect "try cloud computing at home" instead of turning to an Internet-based service. The idea is that you get all the scalability, metering, and time-to-market benefits of a public cloud service without ceding control, security, and recurring costs to a service provider.

I've ridiculed the private cloud in the past, for two reasons. First, because all kinds of existing technologies and approaches can be grandfathered into the definition. And second, because it smacks of the mythical "lights out" data center, where you roll out a bunch of automation, lay off your admins, and live happily ever after with vastly reduced costs. Not even the goofiest know-nothing believes that one anymore.

Yet, despite natural skepticism, the private cloud appears to be taking shape. The technologies underlying it are pretty obvious, beginning with virtualization (for easy scalability, flexible resource management, and maximum hardware utilization) and data center automation (mainly for auto-provisioning of physical hosts). Chargeback metering keeps business management happy (look, we can see the real cost of IT!), and identity-based security helps ensure only authorized people get access to the infrastructure and application resources they need.


Cloud Security and Governance

See Wayne Walter Berry (@WayneBerry) posted the first of two Securing Your Connection String in Windows Azure: Part 1 tutorials to the SQL Azure Team blog on 9/7/2010 in the SQL Azure Database, Codename “Dallas” and OData section above.


Cloud Computing Events

Robin Shahan (@robindotnet) will deliver a Migrating to Microsoft Azure from a Traditional Hosted Environment session to the East Bay .NET Users Group on 9/8/2010, 6:00 PM, at the University of Phoenix, 2481 Constitution Drive, Livermore, CA:

I have a desktop client application that talks to public web services (asmx) that talk to private web services (asmx) behind a firewall that talk to the SQLServer database with the servers all residing in a standard hosted environment. In this session, I'll discuss the whys and wherefores and hows of migrating from this old-school environment to Microsoft Azure.

Robin has over 20 years of experience developing complex, business-critical applications. She is currently the Director of Engineering for GoldMail, a small company based in San Francisco whose product provides voice-over-visual messaging. Robin is a moderator in the MSDN Forum for ClickOnce and Setup & Deployment projects, and vows to learn Windows Installer some day. She is also an MVP, Client App Dev.


Other Cloud Computing Platforms and Services

Rick Vanover asked Is a Single Vendor Solution the Right Approach to Cloud? in a 9/7/2010 post to the HPC in the Cloud blog:

During VMworld 2010 in San Francisco, I had a number of opportunities to meet new people and see new technologies. This year’s show was materially different in the message from VMware. As expected, cloud computing is part of the message that is being delivered to customers. Also as expected, there is a great sense of skepticism and cautious embrace of cloud technologies by the customer base.

What I do like about VMworld is that aside from the core focus of the show, there are a number of supporting technologies and organizations that roll into the larger experience. I had a chance to meet some members of the HP Cloud Advisors panel, which specializes in fast-tracking an organization to cloud technologies. During our briefing, HP presented the most systematic and business-process-focused approach to embracing cloud solutions that I have seen to date.

The panel was a good mix of experts from HP who bring a wealth of experience and a great vision for HP’s cloud message, so I figured it was time to ask some hard questions. I explained to the panel that usually a solution or offering is piloted or developed with a customer’s needs in mind, and they agreed. Then I asked them to describe a time when they had to say “no” to one or more of a customer’s requirements during the design phase.

Lee Kedrie, HP’s Chief Brand Officer & Evangelist of HP Technology Consulting, said that there are a number of occasions where it may seem like chairs will be thrown across the room over the gap between how the customer wants a design and HP’s view of a cloud solution design. The basic rules of separation and networking were the most common examples of where customers who embrace HP’s message are being challenged to rethink their approach.

What was clear is that HP is adopting cloud technologies from the solution perspective that puts the work back on technology as a whole. I’ve long thought that application design makes virtualization and other technologies easier to manage, and that is not even taking into account availability requirements such as disaster recovery.

Of course this is an emerging, integrated offering that rolls in hard goods from HP such as their performance optimized datacenter and professional services. Yet, I did appreciate their ability to identify the areas of an organization’s IT behavior and visualize the path to cloud computing. How successful a fully-integrated solution will be is yet to be determined, but for larger organizations I expect it will be a natural choice to go for a single solution provider for cloud endeavors.

Andrew asserted AWS Price Reductions Relentless in this 9/7/2010 post to the Cloudscaling blog:

Last week, amidst the din of VMworld and people up in arms that OpenStack is implementing Rackspace’s APIs, Amazon’s Web Services Blog announced a significant price reduction on High-Memory Double Extra Large and High-Memory Quadruple Extra Large instances.

This reduction is indicative of Amazon’s efforts to drive down cost (and likely to level their workloads). We went through the AWS blog and pulled out all the announcements that reduced costs for AWS users.

AWS has made changes that reduced prices on some part of the service 10 times in the last 18 months:

- Reserved Instances

- Lowered Reserved Instance Pricing

- Lowered EC2 Pricing

- Lowered S3 and EU Windows Pricing

- Spot Instances

- Lowered Data Transfer Pricing

- Combined Bandwidth Pricing

- Lowered CloudFront Pricing

- Free Tier and Increased SQS Limits

- Lowered High Memory Instance Pricing

We have outlined Amazon’s rate of innovation in the past. The pattern of these price reductions highlights Amazon’s commitment to not just innovate, but to also lower costs and pass that savings to their customers.

Amazon’s core competency is delivering goods at high volume for low margins. AWS was born from 2 decades of experience operating a massive web infrastructure and relentlessly driving down costs in the datacenter, with better processes and automation on commodity hardware and open source software.

Amazon is not resting on their proverbial cloud laurels, and will make sure no one else can either.

Alan Zeichick reported Red Hat turns its eyes to the cloud in this 9/7/2010 article for SDTimes on the Web:

There’s always another way to make money from an open-source platform, and offering Platform-as-a-Service looks to be lucrative. No hardware, no installations, no rack of space at a collocation service. If it works for the enterprise and ISV, then it works for Red Hat, one of the latest companies to jump onto the cloud bandwagon.

In late August, the company unveiled what it calls Cloud Foundations, a PaaS based on the JBoss enterprise middleware stack. Red Hat intends Cloud Foundations to simplify the development of new simple Web applications as well as complex transactional applications, and to offer an integration point back to the enterprise data center.

According to Red Hat, the system will let developers build applications in Groovy, GWT, Java EE, POJO, Ruby, Seam, Spring or Struts. The company’s Eclipse-based JBoss Developer Studio will also have plug-ins that allow applications to be deployed directly into a JBoss platform instance within a public or private cloud.

Those JBoss platform instances, the company said, will be available through a variety of public and private clouds, including Amazon EC2, Red Hat Enterprise Linux, Red Hat Enterprise Virtualization, Windows Hyper-V and others. The system will include containers, transactions, messaging, data services, rules, presentation experience and integration services.

“With growing interest in the benefits of cloud computing, enterprises are looking to leverage cloud deployment of existing applications, as well as to develop new applications in the cloud,” said Craig Muzilla, vice president and general manager of the Middleware Business Unit at Red Hat. “We believe that Red Hat PaaS will be ideally suited to deliver the flexibility required by CIOs to respond to business needs with rapid development and deployment, and simplified management.”
