Windows Azure and Cloud Computing Posts for 9/15/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as HTTP downloads at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Alan Smith posted a 00:15:15 Webcast: Azure in Action: Large File Transfer using Azure Storage on 9/16/2010:

This webcast is based on a real world scenario using Windows Azure Storage Blobs and Queues to transfer 15 GB of files between two laptops located behind firewalls. The use of Queues and Blobs resulted in a simple but very effective solution that supported load balancing on the download clients and automatic recovery from file transfer errors. The two client applications took about 30 minutes to develop, the transfer took a total of three hours, and the total cost for bandwidth was under $4.00.
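The queue-and-blob pattern described above can be sketched in a few lines. The following Python example is only an in-memory illustration and is not code from the webcast: the `blobs` dictionary and `work_queue` stand in for Azure Blob and Queue storage, and the names `upload_file`, `download_worker` and `transfer` are hypothetical. It shows how queuing one message per chunk lets several download clients share the work, and how putting a failed message back on the queue gives automatic recovery.

```python
import queue
import threading

blobs = {}                    # stand-in for a blob container: name -> bytes
work_queue = queue.Queue()    # stand-in for a storage queue of blob names


def upload_file(name, data, chunk_size):
    """Split data into chunks, store each as a 'blob', and queue its name."""
    for index in range(0, len(data), chunk_size):
        blob_name = f"{name}.{index // chunk_size:06d}"
        blobs[blob_name] = data[index:index + chunk_size]
        work_queue.put(blob_name)


def download_worker(received, lock, fail_once):
    """Take blob names from the queue until it is empty."""
    while True:
        try:
            blob_name = work_queue.get_nowait()
        except queue.Empty:
            return
        try:
            if blob_name in fail_once:          # simulate one transfer error
                fail_once.discard(blob_name)
                raise IOError("simulated transfer failure")
            with lock:
                received[blob_name] = blobs[blob_name]
        except IOError:
            work_queue.put(blob_name)           # make the message available again


def transfer(data, chunk_size=4, workers=3):
    """Upload data in chunks, download it with several workers, reassemble it."""
    upload_file("payload", data, chunk_size)
    received, lock = {}, threading.Lock()
    fail_once = {"payload.000001"}              # this chunk fails the first time
    threads = [
        threading.Thread(target=download_worker, args=(received, lock, fail_once))
        for _ in range(workers)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    # Zero-padded chunk names sort in upload order.
    return b"".join(received[name] for name in sorted(received))
```

In this sketch the worker re-queues a failed message explicitly; a real storage queue would more likely rely on the message becoming visible again when it is not deleted in time.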

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Wayne Walter Berry (@WayneBerry) shows you how to Create a Numbers Table in SQL Azure in his 9/16/2010 post to the SQL Azure Team blog:

Often you may require a numbers table for various purposes, such as parsing a CSV file into a table, string manipulation, or finding missing identities (see example below). Numbers tables are used to increase performance (by avoiding cursors) and simplify queries. Because SQL Azure requires all tables to have clustered indexes, you’ll need to do things slightly differently than you would with SQL Server or SQL Express (see the blog post entitled SELECT INTO With SQL Azure). Here is a short Transact-SQL script that will create a numbers table in SQL Azure.

SET NOCOUNT ON
CREATE TABLE Numbers (n bigint PRIMARY KEY)
GO
DECLARE @numbers table(number int);
WITH numbers(number) as
(
    SELECT 1 AS number
    UNION all
    SELECT number+1 FROM numbers WHERE number<10000
)
INSERT INTO @numbers(number)
SELECT number FROM numbers OPTION(maxrecursion 10000)
INSERT INTO Numbers(n) SELECT number FROM @numbers

You can easily do the same thing for date ranges. The script looks like this:

SET NOCOUNT ON
CREATE TABLE Dates (n datetime PRIMARY KEY)
GO
DECLARE @dates table([date] datetime);
WITH dates([date]) as
(
    SELECT CONVERT(datetime,'10/4/1971') AS [date]
    UNION all
    SELECT DATEADD(d, 1, [date]) FROM dates WHERE [date] < '10/3/2060'
)
INSERT INTO @dates(date)
SELECT date FROM dates OPTION(maxrecursion 32507)
INSERT INTO Dates(n) SELECT [date] FROM @dates

Now that you have created a numbers table, you can use it to find identity gaps in a primary key. Here is an example:

-- Example Table
CREATE TABLE Incomplete
(
    ID INT IDENTITY(1,1),
    CONSTRAINT [PK_Source] PRIMARY KEY CLUSTERED ( [ID] ASC)
)
-- Fill It With Data
INSERT Incomplete DEFAULT VALUES
INSERT Incomplete DEFAULT VALUES
INSERT Incomplete DEFAULT VALUES
INSERT Incomplete DEFAULT VALUES
INSERT Incomplete DEFAULT VALUES
INSERT Incomplete DEFAULT VALUES
-- Remove A Random Row
DELETE Incomplete WHERE ID = (SELECT TOP 1 ID FROM Incomplete ORDER BY NEWID())
GO
-- Find Out What That Row Is
SELECT n FROM dbo.Numbers
WHERE n NOT IN (SELECT ID FROM Incomplete)
AND n < (SELECT MAX(ID) FROM Incomplete)
ORDER BY n
-- if you need only the first available
-- integer value, change the query to
-- SELECT MIN(n) or TOP 1 n
-- Clean Up The Example Table
DROP TABLE Incomplete
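If you don't have a SQL Azure database at hand, the same gap-finding idea can be tried locally. The following Python sketch uses the standard library's `sqlite3` module, so it runs against SQLite rather than SQL Azure, and the function name `find_identity_gaps` is made up for this illustration. It deletes nothing at random; instead you pass in the IDs that remain, which makes the result repeatable.

```python
import sqlite3


def find_identity_gaps(ids, limit=10000):
    """Return the values missing from ids, below max(ids), using a numbers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Numbers (n INTEGER PRIMARY KEY)")
    # A recursive CTE generates 1..limit, much like the T-SQL script above.
    conn.execute(
        """
        WITH RECURSIVE numbers(number) AS (
            SELECT 1
            UNION ALL
            SELECT number + 1 FROM numbers WHERE number < ?
        )
        INSERT INTO Numbers(n) SELECT number FROM numbers
        """,
        (limit,),
    )
    conn.execute("CREATE TABLE Incomplete (ID INTEGER PRIMARY KEY)")
    conn.executemany("INSERT INTO Incomplete(ID) VALUES (?)", [(i,) for i in ids])
    rows = conn.execute(
        """
        SELECT n FROM Numbers
        WHERE n NOT IN (SELECT ID FROM Incomplete)
          AND n < (SELECT MAX(ID) FROM Incomplete)
        ORDER BY n
        """
    ).fetchall()
    conn.close()
    return [row[0] for row in rows]
```

For example, `find_identity_gaps([1, 2, 4, 5, 6])` reports that ID 3 is missing.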

Woody Woodruff and John Papa announced an updated Baseball All Stars OData feed according to Brian Henderson on 9/15/2010:

Here’s most of the default metadata display at http://www.baseball-stats.info/OData/baseballstats.svc/ in IE8:

Here are the AllstarFull entries at http://www.baseball-stats.info/OData/baseballstats.svc/AllstarFull with IE8 configured to not display Atom/RSS feeds:

And Hank Aaron’s detailed properties from http://www.baseball-stats.info/OData/baseballstats.svc/Player('aaronha01'):
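The three addresses above follow the usual OData URI pattern: a service root, an entity set, and optionally a key in parentheses. As a rough sketch, the Python helpers below (the names `entity_set_url` and `entity_url` are hypothetical) build such addresses without making any network request. The `$top` option used in the usage note is a standard OData system query option; whether this particular feed honors it is an assumption that hasn't been checked here.

```python
from urllib.parse import quote, urlencode

SERVICE_ROOT = "http://www.baseball-stats.info/OData/baseballstats.svc"


def entity_set_url(entity_set, **options):
    """Build a URL for an entity set, with optional OData query options."""
    url = f"{SERVICE_ROOT}/{entity_set}"
    if options:
        # OData system query options are prefixed with '$' (for example $top).
        query = urlencode(
            {f"${name}": value for name, value in options.items()},
            safe="$'",
            quote_via=quote,
        )
        url = f"{url}?{query}"
    return url


def entity_url(entity_set, key):
    """Build a URL for a single entity addressed by a string key."""
    # A single quote inside a string key is escaped by doubling it.
    escaped = quote(key.replace("'", "''"), safe="'")
    return f"{SERVICE_ROOT}/{entity_set}('{escaped}')"
```

Calling `entity_url("Player", "aaronha01")` reproduces the Hank Aaron address shown above, and `entity_set_url("AllstarFull", top=5)` appends `?$top=5` to the AllstarFull address.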

Marcelo Lopez Ruiz posted a Missing function for OData and Office on 9/15/2010:

The last entry of Consuming OData with Office VBA series had a small glitch - one of the helper functions is missing. The code isn't too tricky to write, but it's a handy little thing to have in your VBA toolkit. It simply takes a collection of dictionaries, and returns a new collection with all the keys, with duplicates removed.

Function GetDistinctKeys(ByVal objCollection As Collection) As Collection
    Dim objResult As Collection
    Dim objDictionary As Dictionary
    Dim objKeys As Dictionary
    Dim objKey As Variant

    ' Gather each key.
    Set objKeys = New Dictionary
    For Each objDictionary In objCollection
        For Each objKey In objDictionary.Keys
            If Not objKeys.Exists(objKey) Then
                objKeys.Add objKey, Nothing
            End If
        Next
    Next

    Set objResult = New Collection

    ' Put all keys in a collection.
    For Each objKey In objKeys
        objResult.Add objKey
    Next

    Set GetDistinctKeys = objResult
End Function

Thanks to Matt Chappel of www.coretech.net for spotting this one, and enjoy!

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Waiting for details of the September release promised for 9/16/2010.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Buck Woody explained The Cloud and Platform Independence – and Someplace Really Warm You Can Now Ice-Skate with an iPad client for an Azure app in this 9/16/2010 post:

A couple of days ago I posted a blog entry about a conversation I had with someone about a new way of working, and the fact that this was a cool thing. In the midst of the conversation, I mentioned an iPad – a computing tablet from Apple. Now, I’ve taken my shots at Apple in the past (all in fun), but it should be kept in mind that Microsoft is one of the largest sellers of software on the Mac platform (Microsoft Office) and has several hundred Apple developers here in Redmond – more than many shops around the world. I’m actually still registered as a Mac Developer, from my days when I did that sort of thing. I’ve used just about everything from PIC systems to OS9, OSX, Linux, Sun, Tru64, just about any OS in mass production. I even owned an Amiga!

But someone took issue with my statements. One of the responders to the blog said that “that Azure stuff” wouldn’t run on an iPad, but this gentleman got a little mixed up on his information. As I teach my daughter, if you're willing to learn, even your critics can be a great ally, even when they are wrong. What I derived from this person’s general statement was something I think a lot of people might believe right now – that things made by Microsoft are only for Microsoft technologies, Apple things run only on Apples and so on.

But the cloud is more – much more. It’s the whole promise of being platform independent that I like. Sure, you can code things running Silverlight or Flash that won’t run on an iPad, but that’s not an artifact of Windows Azure – it’s a front-end design choice. If you code your application correctly, it can run anywhere. And you can even code Windows Azure in PHP, Java, or C++ if you want. It’s just back-end stuff - the front end is up to the developer.

So knowing that we’ll have this perception going forward, I put my money where my mouth is. I won a contest not long ago that gave me some money. So I took that money, drove to an Apple store, and bought – an iPad. That’s right, I’ve joined the “cool kids” in owning one, which I’m sure will shock some folks. It’s an interesting little device, and makes a decent enough web browser, but I doubt it will be my primary computing device any time soon. But I bought it with one thing in mind: I’ll demo Windows Azure on it.

There are lots of sites using Azure right now – one of them is NASA. They federate the Mars rover data (for free) using Azure. So I logged in, signed up, and hit the site – notice the platform for the data: http://pinpoint.microsoft.com/en-us/applications/nasa-mars-exploration-rover-mission-images-12884902246

From there, I opened – gasp – a Windows Azure platform-based site on an iPad – here’s the proof:

So does Azure “run” on an iPad? No! The point is, it doesn’t have to – the application runs on Azure, and the data is delivered to a web browser. The front-end of the application is truly separated from the back-end, so that I am indeed platform-independent, at least on this application.

As time goes on, I plan to write an application (and blog about it) that uses Windows Azure, Blob Storage, Table Storage, Queues, the App Fabric, and SQL Azure, as well as a local SQL Server database. I’ll explain what I’m doing, what I learn – warts and all – as I go. And I’ll also make sure it works on my new iPad.

The Windows Azure Team posted another Real World Windows Azure: Interview with Shekar Chandrasekaran, Chief Technology Officer at Transactiv case study on 9/16/2010:

As part of the Real World Windows Azure series, we talked to Shekar Chandrasekaran, Chief Technology Officer at Transactiv about using the Windows Azure platform to deliver its e-commerce transaction solution. Here's what he had to say:

MSDN: Tell us about Transactiv and the services you offer.

Chandrasekaran: Transactiv is a startup company that facilitates online transactions between buyers and sellers through social networking platforms. Our software enables three transaction models: business-to-business, business-to-consumer, and social commerce with affiliate model. We help make commerce easy for companies that may not have the resources to acquire, implement, and maintain existing commerce systems.

MSDN: What were the biggest challenges that Transactiv faced prior to implementing the Windows Azure platform?

Chandrasekaran: When we set out to build Transactiv, we knew that we wanted an infrastructure that offered high levels of scalability to manage unpredictable demand. In the early stages of our vision, we considered an on-premises, Linux/Apache/MySQL/PHP (LAMP) based architecture. However, it quickly became clear that as a small startup, we needed to avoid the cost-prohibitive capital expenses and IT management costs associated with on-premises servers.

MSDN: Can you describe the solution you built with the Windows Azure platform to address your need for cost-effective scalability?

Chandrasekaran: We developed Transactiv from the ground up for Windows Azure, after ruling out both Google Apps and Amazon Elastic Compute Cloud. We use Web roles and Worker roles in Windows Azure for computational processing, and we can quickly add new roles to scale up as we need to handle a growing list of customers and transactions. Transactiv uses Microsoft SQL Azure for its relational database needs and, to optimize its storage footprint, uses Blob storage in Windows Azure for product images, which it can quickly scale up without impacting relational storage. Transactiv also uses Table storage in Windows Azure to capture and store application log data and performance metrics.

Transactiv combines e-commerce and social networking, and enables customers to create catalogs of products for purchase on Facebook.

MSDN: What makes your solution unique?

Chandrasekaran: By using Transactiv, anyone can establish a transaction-based online presence through the popular social site, Facebook. For instance, a small company can set up a Facebook page with product and pricing details and allow customers to make purchases without using complicated and costly e-commerce solutions.

MSDN: Are you offering Transactiv to any new customer segments or niche markets?

Chandrasekaran: We primarily target small and midsize businesses, but anyone can use Transactiv to facilitate online commerce transactions.

MSDN: What kinds of benefits is Transactiv realizing with Windows Azure?

Chandrasekaran: The biggest benefit is that we were able to launch Transactiv to the market with the confidence that we have a scalable infrastructure that we can quickly and cost-effectively change to meet demand. By using Windows Azure, we were able to launch our service without buying any physical server hardware and over a three-year period, we will save 54.6 percent of our costs compared to procuring, managing, and maintaining an on-premises infrastructure.

Read the full story at: www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000008004

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

The Internet Explorer 9 Team dogfooded their Beauty of the Web site by using Windows Azure to tout the public beta on 9/15/2010:

<Return to section navigation list>

Visual Studio LightSwitch

Eric Erhardt of the VS LightSwitch Team explained How Do I: Create and Use Global Values In a Query in this 9/16/2010 post:

Visual Studio LightSwitch has a powerful query subsystem that enables developers to build the queries their applications need in order to display business data to end users. Developers can easily model a query that will filter and sort the results. They can also model parameters that can be used in the filters. And where the designer falls short, developers can drop into code and use LINQ to build up the query to get the job done. Read more about how to extend a query using code.

One scenario that often comes up in business applications (and probably most applications) is the ability to use contextual information, what we call Global Values in LightSwitch, when filtering data. For example, I want my screen to display the SalesOrders that were created today. Or I want to display the Invoices that are 30 days past due.

Visual Studio LightSwitch allows you to use some built-in Global Values in your query easily through the query designer. It also allows you to add your own Global Values. Global Values are very powerful and in this post I’ll show you how to use the built-in ones as well as how to add your own.

Using Global Values in a Query

As previously stated, LightSwitch defines some built-in Global Values that can be used out of the box. These values are all based on the Date and DateTime types:

- Now

- Today

- End of Day

- Start of Week

- End of Week

- Start of Month

- End of Month

- Start of Quarter

- End of Quarter

- Start of Year

- End of Year

In order to use one of these Global Values, create a table named “SalesOrder” with a CreatedDate property of type “Date”. Add a new Query on the SalesOrder table either by clicking the add Query button at the top of the designer, or by right-clicking the SalesOrders table in the Solution Explorer and selecting Add Query. Name the query “SalesOrdersCreatedToday”. Add a Filter and select CreatedDate for the property. In order to access the Global Values list, you need to select a different type of right value in the filter. To do this, drop down the combo box that has the ‘abl’ icon in it and select “Global”.

Since there are Global Values defined for the Date and DateTime types, anytime you create a filter on a property of these types, the “Global” option is available in this combo box. For types that do not have Global Values defined, the “Global” option won’t show up.

Now that you selected “Global”, you can pick from the built-in values for the value. Open the combo box at the right and choose “Today”.

You now have a query that returns only the SalesOrders that were created on the day that the query is executed. To display the query results on a screen, create a new screen named “NewOrders” and in the “Screen Data” picker, choose the SalesOrdersCreatedToday query.

When you open that screen, only the SalesOrders that were created today will be displayed.

Note: Date and DateTime properties work differently because DateTime also stores the time. So if the property you are using is of type DateTime, using a query filter ‘MyDateTime = Today’ will not work like it does for a Date type. Today really means System.DateTime.Now.Date – which equates to 12:00:00 AM of the current day. For a DateTime, Today really means Start of Day. So in order to create an equivalent query using a DateTime property, you would need to use the “is between” operator as shown below.
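The difference is easy to see outside LightSwitch. Here is a minimal Python sketch, using only the standard `datetime` module, that contrasts an equality test against midnight with a range test from the start to the end of the day. The function names are invented for this illustration, and treating End of Day as the last microsecond of the day is an assumption, not LightSwitch's documented definition.

```python
from datetime import date, datetime, time, timedelta


def created_today_naive(created, today):
    """Equality test: only matches records stamped exactly at midnight."""
    return created == datetime.combine(today, time.min)


def created_today_between(created, today):
    """Range test: matches any time from the start to the end of the day."""
    start_of_day = datetime.combine(today, time.min)
    end_of_day = start_of_day + timedelta(days=1) - timedelta(microseconds=1)
    return start_of_day <= created <= end_of_day
```

An order stamped at 2:30 PM today fails the equality test but passes the range test, which is why a DateTime property needs the “is between” operator.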

Defining a Global Value

Although the built-in Global Values that LightSwitch provides enable a lot of scenarios, there are times when a developer would want to create their own Global Value to use in their applications. The example I gave above, Invoices that are 30 days or more overdue, could be one of those times. Imagine I have an Invoice table with a “Closed” Boolean property and a “DueDate” Date property. I want to define a query named OverdueInvoices that returns all Invoices that have not been closed and were due more than 30 days ago. Creating the first filter is very easy:

But defining the second filter cannot be done using the built-in Global Values. There isn’t a way to say “Today – 30” in the value picker. So instead, I would create a new Global Value named “ThirtyDaysAgo”. (You could also click on the “Edit Additional Query Code” link in the properties window to code this filter into the query. But some values are used so often, in different parts of the app, that you don’t want to write code every time you use them.)

To define a new Global Value, you will need to open your project’s ApplicationDefinition.lsml file using an xml editor and manually add a snippet of xml. There wasn’t enough time to create a visual designer for this scenario, so the only way to define new Global Values is to manually edit the xml. If you are unfamiliar with editing xml, you may want to create a back-up copy of the ApplicationDefinition.lsml file, in case you make a mistake and the designer is no longer able to load.

To open the file, click the view selector button at the top of the Solution Explorer and select “File View”.

Under the “Data” folder, you will find a file named “ApplicationDefinition.lsml”. Right-click that file and select “Open With…” and choose “XML (Text) Editor”. This will open the xml that the LightSwitch designer uses to model your application. Directly under the xml document’s root “ModelFragment” element, add the highlighted GlobalValueContainerDefinition element from the following snippet:

<?xml version="1.0" encoding="utf-8" ?>
<ModelFragment
  xmlns="http://schemas.microsoft.com/LightSwitch/2010/xaml/model"
  xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml">
  <GlobalValueContainerDefinition Name="GlobalDates">
    <GlobalValueDefinition Name="ThirtyDaysAgo" ReturnType=":DateTime">
      <GlobalValueDefinition.Attributes>
        <DisplayName Value="30 Days Ago" />
        <Description Value ="Gets the date that was 30 days ago." />
      </GlobalValueDefinition.Attributes>
    </GlobalValueDefinition>
  </GlobalValueContainerDefinition>

You can name your GlobalValueContainerDefinition anything you want, it doesn’t need to be named GlobalDates. Also, you can define more than one GlobalValueDefinition in a GlobalValueContainerDefinition.

Now the only thing that is left to do for this Global Value is to write the code that returns the requested value. To do this, in the Solution Explorer right click on the “Common” project and select “Add" –> “Class…”. Name the class the exact same name as you named the GlobalValueContainerDefinition xml element above, i.e. “GlobalDates”. The class needs to be contained in the root namespace for your application. So if your application is named “ContosoSales”, the full name of the class must be “ContosoSales.GlobalDates”.

Now, for each GlobalValueDefinition element you added to the xml, add a Shared/static method with the same name and return type as the GlobalValueDefinition. These methods cannot take any parameters. Here is my implementation of ThirtyDaysAgo:

VB:

Namespace ContosoSales ' The name of your application
    Public Class GlobalDates ' The name of your GlobalValueContainerDefinition
        Public Shared Function ThirtyDaysAgo() As Date ' The name of the GlobalValueDefinition
            Return Date.Today.AddDays(-30)
        End Function
    End Class
End Namespace

C#:

using System;

namespace ContosoSales // The name of your application
{
    public class GlobalDates // The name of your GlobalValueContainerDefinition
    {
        public static DateTime ThirtyDaysAgo() // The name of the GlobalValueDefinition
        {
            return DateTime.Today.AddDays(-30);
        }
    }
}

The Global Value is now defined and ready for use. Next, we need to hook our OverdueInvoices query up to this newly defined Global Value. Since we manually edited the ApplicationDefinition.lsml file, the designer needs to be reloaded to pick up the new model definitions. To do this, switch back to the “Logical View” using the view selector button at the top of the Solution Explorer. Right-click on the top-level application node and select “Reload Designer”.

Open the OverdueInvoices query and add a new filter using the Global Value we just defined:

That’s it! Now you can use this query on a screen or in your business logic and it will return all open Invoices that are 30 days past due.
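To make the combined filter concrete, here is a small Python sketch of what the OverdueInvoices query is described as returning: invoices that are not closed and whose due date falls before the ThirtyDaysAgo value. It is an illustration only, not LightSwitch code; the `Invoice` class and function names are hypothetical, and the strict “earlier than” comparison is an assumption because the filter screenshot isn't reproduced here.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Invoice:
    number: int
    due_date: date
    closed: bool


def thirty_days_ago(today):
    """Counterpart of the ThirtyDaysAgo Global Value, with 'today' passed in."""
    return today - timedelta(days=30)


def overdue_invoices(invoices, today):
    """Return open invoices that were due more than 30 days before 'today'."""
    cutoff = thirty_days_ago(today)
    return [inv for inv in invoices if not inv.closed and inv.due_date < cutoff]
```

Passing `today` as a parameter, rather than reading the system clock inside the function, keeps the sketch easy to check with fixed dates.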

Note: You can define a Global Value that returns a type other than DateTime. For example, you could make a “Current User” Global Value that returns the string name of the currently logged-in user by returning “Application.Current.User.Name” from the Shared/static method.

The Silverlight Show quoted Michael Washington about LightSwitch in this 9/16/2010 post:

In this post, Michael Washington explains why he believes that LightSwitch is "The One".

Source: Open Light Group

“Ok I’m calling it, I have felt this way for a while now, but now that I have had a chance to put together a strong case. Take a look at this walk-thru of an end to end application created in about 20 minutes: LightSwitch Student Information System.

The key “take away” is that all the fields are properly validated, there is full referential integrity, and… The UI is consistent. All the buttons and paging work properly. Dare I say it has LESS bugs than if it was coded manually, not to mention it was coded 900% faster?”

Beth Massi (@BethMassi) announced two new LightSwitch “How Do I” Videos on the Developer Center (Beth Massi) in this 9/15/2010 post:

If you haven’t been checking the Learn section of the LightSwitch Developer Center you’re missing out on a great series of step-by-step videos that show how to build applications with Visual Studio LightSwitch. The “How Do I” videos are short 5-15 minute lessons that walk you through a variety of topics as we build a sample application for order entry. Check them out:

- #1 - How Do I: Define My Data in a LightSwitch Application?

- #2 - How Do I: Create a Search Screen in a LightSwitch Application?

- #3 - How Do I: Create an Edit Details Screen in a LightSwitch Application?

- #4 - How Do I: Format Data on a Screen in a LightSwitch Application?

- #5 - How Do I: Sort and Filter Data on a Screen in a LightSwitch Application?

- #6 - How Do I: Create a Master-Details (One-to-Many) Screen in a LightSwitch Application?

- #7 - How Do I: Pass a Parameter into a Screen from the Command Bar in a LightSwitch Application?

- #8 - How Do I: Write business rules for validation and calculated fields in a LightSwitch Application?

- #9 - How Do I: Create a Screen that can Both Edit and Add Records in a LightSwitch Application?

- #10 - How Do I: Create and Control Lookup Lists in a LightSwitch Application?

[#9 and #10 are new]

We’ve also added a couple new features to the video pages which allow you to rate the video (5 stars being the best) as well as a related videos section so you can see the most watched videos in the series. I still recommend you watch them in order because the videos build upon each other.

I’ll be working on more so check the Learn section of the LightSwitch Developer Center. Next ones I’m working on will be on Security and Deployment so stay tuned.

Enjoy,

-Beth Massi, Visual Studio Community

<Return to section navigation list>

Windows Azure Infrastructure

The HPC in the Cloud blog reported 2,000 New Words and Phrases Added to the New Oxford Dictionary. And Cloud Computing is One of Them on 9/16/2010:

Full announcement below, but in case lexicon is not your thing, here's the one you're looking for:

cloud computing n. the practice of using a network of remote servers hosted on the Internet to store, manage, and process data, rather than a local server or a personal computer.

Not exactly the NIST definition, eh?

Lori MacVittie (@lmacvittie) claimed Infrastructure 2.0 ≠ cloud computing ≠ IT as a Service. There is a difference between Infrastructure 2.0 and cloud. There is also a difference between cloud and IT as a Service. But they do go together, like a parfait. And everybody likes a parfait… as a preface to her Infrastructure 2.0 + Cloud + IT as a Service = An Architectural Parfait post of 9/15/2010 to F5’s DevCentral blog:

The introduction of the newest member of the cloud computing buzzword family is “IT as a Service.” It is understandably causing some confusion because, after all, isn’t that just another way to describe “private cloud”? No, actually it isn’t. There’s a lot more to it than that, and it’s very applicable to both private and public models.

Furthermore, equating “cloud computing” to “IT as a Service” does both as big a disservice as making synonyms of “Infrastructure 2.0” and “cloud computing.” These three [ concepts | models | technologies ] are highly intertwined and in some cases even interdependent, but they are not the same.

In the simplest explanation possible: infrastructure 2.0 enables cloud computing which enables IT as a service.

Now that we’ve got that out of the way, let’s dig in.

ENABLE DOES NOT MEAN EQUAL TO

One of the core issues seems to be the rush to equate “enable” with “equal”. There is a relationship between these three technological concepts but they are in no wise equivalent nor should they be treated as such. Like SOA, the differences between them revolve primarily around the level of abstraction and the layers at which they operate. Not the layers of the OSI model or the technology stack, but the layers of a data center architecture.

Let’s start at the bottom, shall we?

INFRASTRUCTURE 2.0

At the very lowest layer of the architecture is Infrastructure 2.0. Infrastructure 2.0 is focused on enabling dynamism and collaboration across the network and application delivery network infrastructure. It is the way in which traditionally disconnected (from a communication and management point of view) data center foundational components are imbued with the ability to connect and collaborate. This is primarily accomplished via open, standards-based APIs that provide a granular set of operational functions that can be invoked from a variety of programmatic methods such as orchestration systems, custom applications, and via integration with traditional data center management solutions. Infrastructure 2.0 is about making the network smarter both from a management and a run-time (execution) point of view, but in the case of its relationship to cloud and IT as a Service the view is primarily focused on management.

Infrastructure 2.0 includes the service-enablement of everything from routers to switches, from load balancers to application acceleration, from firewalls to web application security components to server (physical and virtual) infrastructure. It is, distilled to its core essence, API-enabled components.

CLOUD COMPUTING

Cloud computing is the closest to SOA in that it is about enabling operational services in much the same way as SOA was about enabling business services. Cloud computing takes the infrastructure layer services and orchestrates them together to codify an operational process that provides a more efficient means by which compute, network, storage, and security resources can be provisioned and managed. This, like Infrastructure 2.0, is an enabling technology. Alone, these operational services are generally discrete and are packaged up specifically as the means to an end – on-demand provisioning of IT services.

Cloud computing is the service-enablement of operational services and also carries along the notion of an API. In the case of cloud computing, this API serves as a framework through which specific operations can be accomplished in a push-button like manner.

IT as a SERVICE

At the top of our technology pyramid, as it is likely obvious at this point we are building up to the “pinnacle” of IT by laying more aggressively focused layers atop one another, we have IT as a Service. IT as a Service, unlike cloud computing, is designed not only to be consumed by other IT-minded folks, but also by (allegedly) business folks. IT as a Service broadens the provisioning and management of resources and begins to include not only operational services but those services that are more, well, businessy, such as identity management and access to resources.

IT as a Service builds on the services provided by cloud computing, which is often called a “cloud framework” or a “cloud API” and provides the means by which resources can be provisioned and managed. Now that sounds an awful lot like “cloud computing” but the abstraction is a bit higher than what we expect with cloud. Even in a cloud computing API we are still interacting more directly with operational and compute-type resources. We’re provisioning, primarily, infrastructure services but we are doing so at a much higher layer and in a way that makes it easy for both business and application developers and analysts to do so.

An example is probably in order at this point.

THE THREE LAYERS in the ARCHITECTURAL PARFAIT

Let us imagine a simple “application” which itself requires only one server and which must be available at all times.

That’s the “service” IT is going to provide to the business.

In order to accomplish this seemingly simple task, there’s a lot that actually has to go on under the hood, within the bowels of IT.

LAYER ONE

Consider, if you will, what fulfilling that request means. You need at least two servers and a load balancer, you need a server and some storage, and you need – albeit unknown to the business user – firewall rules to ensure the application is only accessible to those whom you designate. So at the bottom layer of the stack (Infrastructure 2.0) you need a set of components that match these functions and they must all be enabled with an API (or at a minimum be able to be automated via traditional scripting methods). Now the actual task of configuring a load balancer is not just a single API call. Ask RackSpace, or GoGrid, or Terremark, or any other cloud provider. It takes multiple steps to authenticate and configure – in the right order – that component. The same is true of many components at the infrastructure layer: the APIs are necessarily granular enough to provide the flexibility necessary to be combined in a way as to be customizable for each unique environment in which they may be deployed. So what you end up with is a set of infrastructure services that comprise the appropriate API calls for each component based on the specific operational policies in place.

LAYER TWO

At the next layer up you’re providing even more abstract frameworks. The “cloud API” at this layer may provide services such as “auto-scaling” that require a great deal of configuration and registration of components with other components. There’s automation and orchestration occurring at this layer of the IT Service Stack, as it were, that is much more complex but narrowly focused than at the previous infrastructure layer. It is at this layer that the services become more customized and able to provide business and customer specific options. It is also at this layer where things become more operationally focused, with the provisioning of “application resources” comprising perhaps the provisioning of both compute and storage resources. This layer also lays the foundation for metering and monitoring (cause you want to provide visibility, right?) which essentially overlays, i.e. makes a service of, multiple infrastructure resource monitoring services.
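As a rough illustration of what an "auto-scaling" service at this layer might orchestrate, the following Python sketch reconciles the number of running servers against the current load. The names `desired_servers` and `AutoScaler`, the capacity figures, and the injected `start_server` and `stop_server` callables are all hypothetical; the minimum of two servers simply mirrors the availability requirement in the example above.

```python
import math


def desired_servers(current_load, capacity_per_server, minimum=2, maximum=10):
    """Return how many servers the load calls for, clamped to policy limits."""
    needed = math.ceil(current_load / capacity_per_server)
    return max(minimum, min(maximum, needed))


class AutoScaler:
    """Orchestrates lower-layer services it is handed as plain callables."""

    def __init__(self, start_server, stop_server, capacity_per_server,
                 minimum=2, maximum=10):
        self.start_server = start_server  # infrastructure service: returns a server id
        self.stop_server = stop_server    # infrastructure service: takes a server id
        self.capacity_per_server = capacity_per_server
        self.minimum = minimum
        self.maximum = maximum
        self.servers = []

    def reconcile(self, current_load):
        """Start or stop servers until the pool matches the target size."""
        target = desired_servers(current_load, self.capacity_per_server,
                                 self.minimum, self.maximum)
        while len(self.servers) < target:
            self.servers.append(self.start_server())
        while len(self.servers) > target:
            self.stop_server(self.servers.pop())
        return len(self.servers)
```

The scaler knows nothing about how a server is started or registered with a load balancer; it only sequences the services beneath it, which is where the narrower focus of this layer shows up.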

LAYER THREE

At the top layer is IT as a Service, and this is where systems become very abstracted and get turned into the IT King “A La Carte” Menu that is the ultimate goal according to everyone who’s anyone (and a few people who aren’t). This layer offers an interface to the cloud in such a way as to make self-service possible. It may not be Infrabook or even very pretty, but as long as it gets the job done cosmetics are just enhancing the value of what exists in the first place. IT as a Service is the culmination of all the work done at the previous layers to fine-tune services until they are at the point where they are consumable – in the sense that they are easy to understand and require no real technical understanding of what’s actually going on. After all, a business user or application developer doesn’t really need to know how the server and storage resources are provisioned, just in what sizes and how much it’s going to cost.

IT as a Service ultimately enables the end-user – whoever that may be – to easily “order” IT services to fulfill the application specific requirements associated with an application deployment. That means availability, scalability, security, monitoring, and performance.
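A self-service "menu" of this kind could be sketched in Python as follows. The catalog entries, sizes, and prices are invented purely for illustration, and `price_order` is a hypothetical helper; costs are kept in whole cents to avoid floating-point rounding.

```python
# Hypothetical catalog: every size and price here is made up for illustration.
CATALOG = {
    "small": {"cpu_cores": 1, "storage_gb": 50, "hourly_cents": 10},
    "medium": {"cpu_cores": 2, "storage_gb": 100, "hourly_cents": 20},
    "large": {"cpu_cores": 4, "storage_gb": 250, "hourly_cents": 40},
}


def price_order(size, servers, hours):
    """Quote an order using only what the requester cares about: size and cost."""
    if size not in CATALOG:
        raise ValueError(f"unknown size: {size}")
    item = CATALOG[size]
    return {
        "size": size,
        "servers": servers,
        "cpu_cores": item["cpu_cores"] * servers,
        "storage_gb": item["storage_gb"] * servers,
        "total_cents": item["hourly_cents"] * servers * hours,
    }
```

For example, `price_order("medium", servers=2, hours=24)` quotes two servers for a day without exposing anything about how they would be provisioned.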

A DYNAMIC DATA CENTER ARCHITECTURE

One of the first questions that should come to mind is: why does it matter? After all, one could cut out the “cloud computing” layer and go straight from infrastructure services to IT as a Service. While that’s technically true it eliminates one of the biggest benefits of a layered and highly abstracted architecture: agility. By presenting each layer to the layer above as services, we are effectively employing the principles of a service-oriented architecture and separating the implementation from the interface. This provides the ability to modify the implementation without impacting the interface, which means less down-time and very little – if any – modification in layers above the layer being modified. This translates into, at the lowest level, vendor agnosticism and the ability to avoid vendor lock-in. If two components, say a Juniper switch and a Cisco switch, are enabled with the means by which they can be enabled as services, then it becomes possible to switch the two at the implementation layer without requiring the changes to trickle upward through the interface and into the higher layers of the architecture.

It’s polymorphism applied to a data center operation rather than a single object’s operations, to put it in developer’s terms. It’s SOA applied to a data center rather than an application, to put it in an architect’s terms.
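In developer's terms, the switch-swapping idea might look like the Python sketch below. `SwitchService`, `CiscoSwitch`, `JuniperSwitch`, and `isolate_application` are hypothetical names, and the command strings are illustrative placeholders only, not verified device configuration.

```python
from abc import ABC, abstractmethod
from typing import List


class SwitchService(ABC):
    """The interface the higher layers depend on."""

    @abstractmethod
    def create_vlan(self, vlan_id: int, name: str) -> List[str]:
        """Return the vendor-specific steps needed to create a VLAN."""


class CiscoSwitch(SwitchService):
    def create_vlan(self, vlan_id: int, name: str) -> List[str]:
        # Illustrative two-step form; an assumption, not checked against a device.
        return [f"vlan {vlan_id}", f"name {name}"]


class JuniperSwitch(SwitchService):
    def create_vlan(self, vlan_id: int, name: str) -> List[str]:
        # Illustrative one-step form; an assumption, not checked against a device.
        return [f"set vlans {name} vlan-id {vlan_id}"]


def isolate_application(switch: SwitchService, app: str, vlan_id: int) -> List[str]:
    """Higher-layer service: written against the interface, never the vendor."""
    return switch.create_vlan(vlan_id, f"{app}-net")
```

Passing `CiscoSwitch()` or `JuniperSwitch()` to `isolate_application` changes the steps that get generated but not the caller, which is the implementation-versus-interface separation described above.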

It’s an architectural parfait and, as we all know, everybody loves a parfait, right?

Vinnie Mirchandani cited Windows Azure in his The Perfect Storm for IT Outsourcing post of 9/15/2010 to the Enterprise Irregulars blog:

As I present to outsourcing audiences on the book tour, they pick up on several new services markets the book outlines – Accenture’s Mobility Operated Services. Cognizant being involved in the clinical tests for the high-profile H1N1 vaccine last year. Appirio’s various Social CRM projects. Wipro’s product engineering services as “buyer” organizations embed more and more technology in their products. BPO combined with SaaS as in the Genpact relationship with NetSuite, and others.

They also need to be aware that 3 “systems” described in the book are rising to create a perfect storm which will decimate traditional IT outsourcing markets:

a) Clouds are reshaping expectations of infrastructure services: As they read Mike Manos describing the Azure infrastructure he built for Microsoft (he is now at Nokia), outsourcers should be in awe and fear. He describes modern day “pyramids” – new-age data centers costing several hundred millions of dollars and containing 200,000+ servers. The scale of the capex budgets is way beyond what most outsourcing firms are culturally used to. The energy and tax efficiency of these centers puts most outsourcing data centers to shame. Finally, outsourcing firms are used to 5 year contracts – watch them struggle to adjust to the model Amazon Web Services is defining where they happily sell you compute and storage in hourly units! [Emphasis added.]

b) SaaS is reshaping expectations of application management services: Read about salesforce in the book and how they support 70,000+ customers in a multi-tenant model with a fraction of the staff even the most efficient offshore firms need to support applications. And talk about 5 minute upgrades compared to 2-3-4 month SAP and Oracle upgrades the service markets have been accustomed to.

c) Agile is reshaping expectations of systems integration services: The case study around an agile project – with its iterative design/development and minimal planning project in a regulation heavy environment at GE Healthcare should open some eyes. The logical question is why it is not more mainstream in the SI world in less compliance sensitive industries. And by the way, that is still a tame form of “agile”. There are plenty of talent pools like the one I recently wrote about in Toronto which is into “extreme” development models.

Interesting times ahead for the industry…

R “Ray” Wang reported “Cloud deployments enter [his Five Factors for Vendor Selection Chart] at (47.38%)” in his Tuesday’s Tip: Customers Seek Turnkey Applications From Their Technology Provider post of 9/15/2010:

Customers Expect Their Software Solutions To Be Turnkey

The recent Q2 2010 update to the Software Insider Vendor Selection Survey highlights some changing expectations of 401 respondents in the vendor selection process. Business technologists were asked to rate their top 5 selection criteria. Key findings demonstrate how functionality, ownership experience, and ecosystem feedback categories dominate the decision making process much more than market execution and corporate vision (see Figure 1).

Figure 1. Vendor Selection Criteria Reflect Core Insider Insights™ Categories

Major changes from the previous survey include a drop in priorities for Microsoft Office integration, regulatory requirements, and middleware technologies. The main driver for a decrease in Microsoft Office integration from 83.38% (Q4 2009) to 54.61% (Q2 2010) stems from improved options delivered by vendors and Microsoft (See Figure 2). Regulatory requirements dropped from 53% (Q4 2009) to 32.67% (Q2 2010) as key regulatory functionality met customer concerns. Meanwhile, middleware technologies dropped as a key selection factor from 26.87% (Q4 2009) to 4.74% (Q2 2010) due to the decline in interest for SOA and related technologies.

Consequently, four of the five enterprise software mega-trends for 2010 (i.e. Social, Mobile, Cloud, Analytics, and Gaming) appear in the new survey results. Analytics continues as the second most popular requirement. Cloud deployments enter at (47.38%). Mobile device options and form factors increase 8% from 37.40% (Q4 2009) to 45.39% (Q2 2010). Social starts as a priority at 9.98% among respondents.

Ray continues with additional details about shifts from 2009 and “The Bottom Line For Buyers (Users) – Plan For The Five Mega Trends In Your Apps Strategy.”

Michael Biddick prepared 12 Steps Toward Making Cloud Computing Work for You as an InformationWeek 500 2010 Cloud Computing PowerPoint (*.pptx) presentation (site registration required):

Michael Biddick is president and CTO of Fusion PPT and an InformationWeek Analytics contributor. He has worked with hundreds of government and telecommunications service providers in the development of operational management solutions. Most recently he has supported the Department of Homeland Security and the U.S. Department of Defense in the deployment of ITIL-based processes that are utilized to make their organizations more transparent and cost effective. Certified in several ITIL lifecycle service areas, Michael is also able to leverage over a decade of operational tool design and implementation experience with service desks, network management systems and consolidated management portals in making enterprise architecture decisions.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA)

David Linthicum asserted “The traditional data center is in for some changes as cloud computing continues to spread” in his Cloud computing's role in the evolving data center post of 9/16/2010 to InfoWorld’s Cloud Computing blog:

This week I'm at the Hosting & Cloud Transformation Summit, aimed at analysts looking to bring together traditional data centers and cloud computing -- not a bad concept.

So what's new here? The unmistakable understanding that the data center is changing forever, and you'll either get on board or the train will leave you behind.

The data center players in attendance want to learn how the transformation needs to occur. Here's what they found out:

Many enterprise data centers will focus more on hosting private clouds in the future and, as a result, will need to retool to accommodate the required infrastructure. For example, intra-data-center bandwidth can no longer support server-to-server communications while driving massive amounts of virtualized servers. Moreover, they'll see a much larger number of cores per square foot, which have their own power and management requirements. Also, they'll need to consider different approaches for security and governance.

Many commercial data centers, such as colocated systems, are being overhauled to host public cloud providers and will need many of the features discussed above. However, they will be only a part of the offerings. Larger public clouds will be scattered all over the world, using numerous data centers owned by various entities.

Many data centers will have to consolidate in order to survive, including enterprise, government, and commercial systems. Never a high-margin business, many will become a part of a cloud provider or pool resources with their competition. Each must revamp and prep itself for the cloud, as few data centers are currently ready for the shift.

The data center is not dead, but it's going to morph into something much more efficient and effective than the current state of affairs. It's about time, if you ask me.

Liam Eagle reported Microsoft’s Cloud Vision for Hosting Providers – Elliot Curtis for Web Host Industry Review on 9/14/2010:

Elliot Curtis, director of the North American partner hosting channel for the communications sector, delivered the second session Tuesday morning at the Hosting & Cloud Transformation Summit, during which he set out to discuss Microsoft’s feeling and approach about the cloud, generally.

What has changed in the last 12 months or so, he says, is that the cloud has really captured the imagination of the IT industry.

A big part of that is looking at the way that customers – including small business, mid-sized companies, and enterprises – deal with the companies to which they outsource their IT services. Microsoft has a philosophy we’ve discussed before about becoming the “trusted advisor” to your customers (which may apply more directly in the smaller customer space).

Curtis displayed a chart showing the dropping cost of hosting, which ended, of course, at zero – the current price of hosting, from a certain point of view.

He, like Tier1’s Antonio Piraino earlier in the morning, illustrated his vision of the cloud landscape, showing where he feels global providers like Google, Salesforce and Amazon lie, according to their product sets, and where VMware lies, along the service provider and customer spectrum.

Microsoft’s approach, he says, is to cover all that ground – being a global provider, but also working specifically with the service provider and customer segments. Most importantly (to the service providers in attendance), Microsoft is working hard on working with service providers in the cloud hosting space.

Microsoft thinks about the cloud business in terms of what Microsoft is delivering, what it is enabling service providers to deliver, and what it enables customers to deliver. The company believes, he says, that the market is large enough to allow room for Microsoft, as well as the company’s service provider customers, to play in the space.

He distinguishes the “Microsoft cloud,” which generally includes Windows Azure and SQL Azure, and is delivered directly to the developer type customer, from the “Partner Cloud,” which is built on Windows Server and SQL Server (as well as a few other products) and is managed, arranged and updated by the partner.

But Azure can be used by hosting providers to build out solutions for their own customers. He gives the example of a hosting provider who built a hybrid backup solution using the Azure platform and as a result was able to get it up and running very quickly.

Curtis discusses the Azure Appliance somewhat, emphasizing that the idea is an Azure architecture in a container, delivered to the customer’s data center, but that it is still a service. Microsoft, in this case, remains responsible for the operation and functioning of the device. But the customer has more control over how that platform operates. [Emphasis added.]

So, Microsoft’s vision for the cloud includes a platform (Azure) that Microsoft delivers as a service, a piece of the platform (Azure Appliance) that Microsoft distributes to partners, who use it to deliver their own services, and software that Microsoft or its partners deliver as a service.

In his wrap-up, Curtis touches on a question he raised earlier in the presentation – what business are you in? From Microsoft’s perspective, you have to figure out how to be in the IT business (versus the “hosting” business), which he says is the best way to communicate specific value to customers or potential customers.

<Return to section navigation list>

Cloud Security and Governance

K. Scott Morrison quoted David Linthicum in The Increasing Importance of Cloud Governance post of 9/14/2010:

David Linthicum [pictured right] published a recent article in eBizQ noting the Rise of Cloud Governance. As CTO of Blue Mountain Labs, Dave is in a good position to see industry trends take shape. Lately he’s been noticing a growing interest in cloud management and governance tools. In his own words:

This is a huge hole that cloud computing has had. Indeed, without strong governance and management strategy, and enabling technology, the path to cloud computing won’t be possible.

It’s nice to see that he explicitly names Layer 7 Technologies as one of the companies that is offering solutions today for Cloud Governance.

It turns out that cloud governance, while a logical evolution of SOA governance, has a number of unique characteristics all its own. One of these is the re-distribution of roles and responsibilities around provisioning, security, and operations. Self-service is a defining attribute of cloud computing. Cloud governance solutions need to embrace this and provide value not just for administrators, but for the users who take on a much more active role in the full life cycle of their applications.

Effective cloud governance promotes agility, not bureaucracy. And by extension, good cloud governance solutions should acknowledge the new roles and solve the new problems cloud users face.

K. Scott Morrison is the Chief Technology Officer and Chief Architect at Layer 7 Technologies.

Onuora Amobi reported Trusted Computing Group eyes cloud security framework on 9/13/2010 (missed when posted):

The Trusted Computing Group Monday announced a working group aimed at publishing an open standards framework for cloud computing security that could serve as a blueprint for service providers, their customers and vendors building security products.

Known as the Trusted Multi-Tenant Infrastructure Work Group, there are about 50 TCG members participating, including HP, IBM, AMD and Microsoft.

The group also will receive input from U.S. Defense Department representatives and the U.K. government, according to sources. TCG has in all about 110 members that have worked over the years on standards-based initiatives in the area of trusted computing, including “Trusted Network Connect” and the “Trusted Platform Module.”

The latest plan is intended to put forward a security framework for cloud computing, including private, public and hybrid cloud environments as well as virtualized and non-virtualized ones. “Multi-tenant security is really an end-to-end security requirement,” says Eric Visnyak, information assurance architect at BAE Systems, which is participating in the new group. “We need to validate policy requirements in dedicated and shared infrastructure.”

The Trusted Multi-Tenant Infrastructure Work Group will make use of existing open standards to define end-to-end security, both virtual and physical, in a cloud-computing environment, including capabilities such as encryption and integrity monitoring, Visnyak says. TCG wants to publish its framework document later this year in draft form to receive public input, so that a final revision can be in place early next year.

The goal is to create a framework document for cloud-computing security that will not only serve as a baseline for security compliance and auditing, but might also encourage the introduction of new products.

Joe McKendrick asserted “By 2020, IT departments will be about governance, not hands-on technology” as a pullquote in his Outsourcing and cloud-based IT: Has the great offloading begun? post to his Service Oriented ZDNet blog on 9/13/2010 (missed when posted):

I got some interesting reactions to my most recent post on ZapThink’s 2020 vision, which included warnings about the potential “collapse of enterprise IT.”

Jason Bloomberg, author of the 2020 vision statement, provided some additional clarifications, noting that he was not recommending outsourcing IT, but issuing a warning about such practices gone too far: “Rather, many enterprises will be reaching a ‘crisis point’ as they seek to outsource IT, and if they don’t understand the inherent risks involved, then they will suffer the negative consequences that Michael Poulin and others fear,” he says.

The ZapThink 2020 vision was written to pull together multiple trends and delineates the interrelationships among them. Ultimately, the IT department of 2020 will not be about hands-on technology, but about governance, Jason explains. “One of the most closely related trends is the increased formality and dependence on governance, as organizations pull together the business side of governance (GRC, or governance, risk, and compliance), with the technology side of governance (IT governance, and to an increasing extent, SOA governance). Over time, CIOs become CGOs (Chief Governance Officers), as their focus shifts away from technology.”

“At some point you won’t think of them as an IT department at all,” he says.

While Jason and ZapThink foresee IT executives handling less technology, ironically, another observer sees non-technical executives and managers getting more involved in technology. Prashanth Rai of the CIO Weblog picked up on my observation that most technological resources needn’t reside within the organization as long as sufficiently technical strategic thinkers continue to do so.

However, Prashanth then goes on to say that the “technical strategic thinkers” that are emerging won’t necessarily be CIOs or IT managers. “A very short jump to believing that the IT strategy for most organizations will become a part of the purview of the CEO or COO. Those offices have already evolved markedly in their appreciation for the capabilities of transformative and competitive uses of technology; indeed, in many cases, they have outstripped the ranks of IT architects and CIOs who have been trained by years of limitations and industry best practices to be cautious and reactive.”

Jason also says any collapse of enterprise IT departments won’t entirely be due to outsourcing — cloud computing will drive much of it as well. “Outsourcing is a part of the story, but so is cloud computing,” he says. “We also see a shift in the approach to enterprise architecture that better abstracts the locations of IT resources.”

Jason delineates between outsourcing and cloud as separate categories, but with plenty of overlap. “Many Cloud initiatives outsource certain aspects of their infrastructure to a cloud provider, but not necessarily, private clouds being the primary exception. And outsourcing in general may involve various services that have nothing to do with cloud computing.”

Also, reader prdmarican provided a great comment to my recent post titled “Is IT losing its relevance?” Here is what he said to that point — and perhaps also a good counter-argument to the points made in the above post as well:

“I have a great idea… maybe this next Friday, all of us irrelevant IT workers should just shut all of our equipment off and take a three day weekend… and see how relevant we all seem on Monday when none of us are there…

“Outsourcing IT doesn’t make anything less relevant, it only shifts the work to another location - either to a company that will cost you lots more, or one that employs folks in some far away land that make $2 a day in wages. And while some things may make sense to move to the “cloud”, nobody wants the wait or the expense of having to call an outsider in for routine IT work.

“And the same folks that think that ‘IT’ is standing in the way of progress (please - what other group of folks love technology and gadgets more than us?) are the same ones who cry foul whenever some new virus or Trojan takes over their office - yeah, the ones that they refused to be under the IT standards, because they know more than the IT department.”

“I’ve been in this business over 30 years now. Outsourcing may solve some problems, but it brings with it just as many if not more.”

“Choose wisely.”

<Return to section navigation list>

Cloud Computing Events

Mary Jo Foley (@maryjofoley) reported Microsoft adds 65 mini PDC events aimed at cloud, phone developers in a 9/15/2010 post to her All About Microsoft blog for ZDNet:

Microsoft may be limiting (severely) attendance at its upcoming Professional Developers Conference (PDC) in Redmond, but it is adding 65 regional “mini” PDC events in the U.S. and other countries worldwide to compensate.

At the various free events, Microsoft will be webcasting keynotes and sessions from the main PDC on big screens. It also will be offering training and access to various members of the Azure, Windows Phone 7, Windows and other teams to those who attend the regional events, company officials said.

Mini PDCs will be held in Beijing, Shanghai, Guangzhou, Hyderabad, Mumbai, Delhi, Bangalore, Tokyo, Mountain View, Calif., and multiple other cities in Europe, South America and Australia. Microsoft is updating the regional event map regularly. Those interested in attending a local event can register via the regional event site. [Emphasis added.]

The U.S. PDC is on Microsoft’s campus in Redmond this year and will be a two-day event at the end of October. Microsoft is expected to focus primarily on developer news around its Azure and smart phone platforms at the event. The company has yet to post information on any of the sessions planned for the confab.

The Mountain View venue is one of the few locations with no date posted. Beijing, China and suburban Paris, France, were the only locations for which you could register on 9/16/2010.

SysCon announced early bird admission pricing to Cloud Expo 2010 West (11/1 to 11/4/2010 at the Santa Clara, CA Convention Center) expires tomorrow (9/17/2010):

Cloud Expo Silicon Valley will feature 200+ technical breakout sessions and General Sessions across four days from a rock star conference faculty drawn from the leading Cloud industry players in the world. We also have a packed program of Special Events, including the ever-popular Cloud Computing Bootcamp, CloudCamp @ Cloud Expo, and also "SOA in the Cloud."

All the main layers of the Cloud ecosystem will be represented in the 7th International Cloud Computing Conference & Expo - the infrastructure players, the platform providers, and those offering applications. The high-energy event is a must-attend for senior technologists including CIOs, CTOs, directors of infrastructure, VPs of technology, IT directors and managers, network and storage managers, network engineers, enterprise architects, and communications and networking specialists.

Microsoft is a “Platinum Sponsor.”

<Return to section navigation list>

Other Cloud Computing Platforms and Services

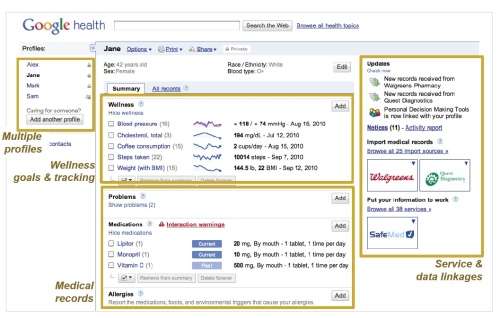

John Moore claimed Google Hits Reset Button on Google Health in his 9/15/2010 post to the Chilmark Research blog:

Google Health has seemingly been stuck in neutral almost from the start. Despite the fanfare of Google’s Eric Schmidt speaking at the big industry confab, HIMSS a couple of years back, an initial beta release with healthcare partner Cleveland Clinic and a host of partners announced once the service was opened to the public in May 2008, Google Health just has not seemed to live up to its promise. Chilmark has looked on with dismay as follow-on announcements and updates from Google Health were modest at best and not nearly as compelling as Google’s chief competitor in this market, Microsoft and its corresponding HealthVault. Most recently we began to hear rumors that Google had all but given up on Google Health, something that did not come as a surprise, but was not a welcomed rumor here at Chilmark for markets need competitors to drive innovation. If Google pulled out, what was to become of HealthVault or any other such service?

Thus, when Google contacted Chilmark last week to schedule a briefing in advance of a major announcement, we were somewhat surprised and welcomed the opportunity. Yesterday, we had that thorough briefing and Chilmark is delighted to report that Google Health is still in the game having made a number of significant changes to its platform.

Moving to Health & Wellness

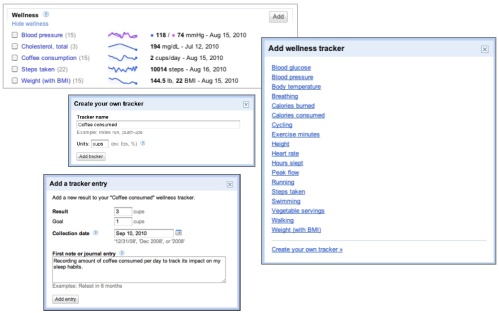

Today, Google is announcing a complete rebuild of Google Health with a new user interface (UI), a refocusing on health & wellness, and the signing on of additional partners and data providers. Google told Chilmark that the new UI is based upon significant user feedback and a number of usability studies that they have performed over the last several months. Rather than a fairly static UI (the previous version), the new UI takes advantage of common portal technologies that allow the consumer to create a personalized dashboard presenting information that is most pertinent to a consumer’s specific health and wellness interests and needs. So rather than focusing on common, basic PHR-type functions, e.g., view immunization records, med lists, procedures and the like, the new UI focuses on the tracking of health and wellness metrics. This is not unlike what Microsoft is attempting to do with MSN Health and their health widgets that subsequently link into a consumer’s HealthVault account, though first impressions lead us to give a slight edge to Google Health’s new UI for tracking health metrics.

A particularly nice feature in the new Google Health is the consumer’s ability to choose from a number of pre-configured wellness tracking metrics such as blood pressure, caloric intake, exercise, weight, etc. Once a given metric is chosen, the user can set personal goals and track and trend results over time. There is also the ability to add notes to particular readings, thereby keeping a personal journal of what may have led to specific results. And if one cannot find a specific health metric they would like to track, the new platform provides one the ability to create their own, for example the one in the figure below to measure coffee consumption. Nice touch Google.

On the partnership front, Google is also announcing partnerships with healthcare organizations Lucile Packard Children’s Hospital, UPMC and Sharp Healthcare and has added some additional pharmacy chains such as Hannaford and Food Lion among others. On the device side, Google has the young Massachusetts start-up fitbit (novel pedometer that can also monitor sleep patterns) and the WiFi scale company, Withings. On the mobile front, Google has added what they say is the most popular personal trainer Android app, CardioTrainer and mPHR solution provider ZipHealth (full disclosure, I’m an advisor to the creators of ZipHealth, Applied Research Works) which has one of the better mPHR apps in the market.

If any metric is a sign of pent-up consumer demand for what Google Health will now offer it may be CardioTrainer. In our call yesterday, the new head of Google Health, Aaron Brown stated that they did a soft launch of CardioTrainer on Google Health by just putting a simple upload button in CardioTrainer that would move exercise data to a Google Health account. In two weeks, over 50K users have uploaded their data to Google Health. Pretty incredible.

Challenges Remain

Chilmark is delighted with what Google has done with Google Health. The new interface and focus takes Google Health in a new direction, one that focuses on the far larger segment of the market, those that are not sick and want to keep it that way through health and wellness activities. Today, within the employer market, there is a major transition occurring with employers focusing less on disease management and looking towards health and wellness solutions that keep their employees healthy, productive and out of the hospital. Google may be able to capitalize on this trend provided it strikes the right partnership deals with those entities that currently serve the employer market (payers and third party administrators). Chilmark will not be holding its breath though as to date, Google has not had much success in the enterprise market for virtually any of its services.

And that is one of many challenges Google will continue to face in this market.

First, how will Google readily engage the broad populace to use Google Health? Google has struggled in the enterprise market, regardless of sector, and will likewise struggle in health as well, be it payers, providers or employers. Without these entities encouraging consumers to use Google Health (especially providers as consumers have the greatest trust in them), Google Health will continue to face significant challenges in gaining broad adoption and use of its platform. But as the previous example of CardioTrainer points out, Google may have a card up its sleeve in gaining traction by going directly to the consumer through its partners, but it will need far more partners than it has today to make this happen.

Second, the work that Google has done to re-architect the interface and focus on wellness, particularly the tracking and trending of biometric or self-entered data is a step in the right direction, but Google has not been aggressive enough in signing on device manufacturers that can automatically dump biometric data into a consumer’s Google Health account. Yes, Google is a member of the Continua Alliance but Continua and its members have been moving painfully slowly in bringing consumer-centric devices to market. HealthVault, with its Connection Center, is leaps and bounds beyond where Google is today and where Google needs to be to truly support its new health and wellness tracking capabilities. Google’s ability to attract and retain new partners across the spectrum of health and wellness will be pivotal to long-term success.

Third, Google has chosen not to update its support of standards and remains dedicated to its modified version of CCR. While CCR is indeed a standard that has seen some uptake in the market, Chilmark is seeing most large healthcare enterprises devoting their energies to the support of the CCD standard. In our conversation with Google yesterday we mentioned this issue and Google stated that they are hoping the VA/CMS Blue Button initiative will take hold and provide a new mechanism by which consumers retrieve their healthcare data and upload it to Google Health. The Blue Button is far from a done deal and has its fair share of challenges as well. Google is taking quite a risk here and would be better off swallowing the CCD pill.

In closing, Chilmark is quite excited to see what Google has done with its floundering Google Health. The company has truly hit the reset button, has a new team in place and is refocusing its efforts on a broader spectrum of the market. These are welcome changes and it is our hope that with this new focus, this new energy, Google will begin to show the promise that we at Chilmark have always seen in this company to help consumers better manage their health.

My wife and I are satisfied users of Microsoft HealthVault.

Larry Dignan reported Teradata, Cloudera team up on Hadoop data warehousing in a 9/15/2010 post to ZDNet’s Between the Lines blog:

Teradata and Cloudera on Wednesday announced plans to collaborate on Hadoop-powered enterprise analytics and data warehousing projects.

In a nutshell, Teradata customers will be able to use Cloudera’s Hadoop distribution to analyze unstructured data collected from various sources. This information can then be funneled into a Teradata data warehouse.

The partnership is notable since Hadoop is viewed by companies as a way to work around data warehousing systems. Yahoo is a big champion of Hadoop as a way to handle large-scale data analytics.

Teradata and Cloudera say that the partnership will make it easier to query large data pools to develop insights. Teradata is pitching a hybrid Hadoop-data warehousing approach. The company said:

Parallel processing frameworks, such as Hadoop, have a natural affinity to parallel data warehouses, such as the powerful Teradata analytical database engine. Although designed for very different types of data exploration, together the two approaches can be more valuable in mining massive amounts of data from a broad spectrum of sources. Companies deploying both parallel technologies are inventing new applications, discovering new opportunities, and can realize a competitive advantage, according to an expert in very large data solutions.

In other words, Hadoop and data warehousing aren’t a zero-sum game. The two technologies will co-exist. Teradata will bundle a connector (the Teradata Hadoop Connector) to its systems with Cloudera Enterprise at no additional cost. Cloudera will provide support for the connector as part of its enterprise subscription. The two parties will also jointly market the connector.
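
The hybrid pattern described above (a parallel framework reduces unstructured data to structured rows, which are then loaded into a warehouse) can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the sample log lines, the `page_hits` table, and the function names are hypothetical, the map and reduce steps run in a single process rather than on a Hadoop cluster, and an in-memory SQLite database stands in for the data warehouse. It does not use or represent the Teradata Hadoop Connector.

```python
import re
import sqlite3
from collections import defaultdict

# Hypothetical raw web-log lines standing in for "unstructured data".
RAW_LOGS = [
    '10.0.0.1 - - [15/Sep/2010:10:00:01] "GET /products HTTP/1.1" 200',
    '10.0.0.2 - - [15/Sep/2010:10:00:02] "GET /cart HTTP/1.1" 500',
    '10.0.0.1 - - [15/Sep/2010:10:00:03] "GET /products HTTP/1.1" 200',
    'corrupted line that does not parse',
]

# Extracts the request path and status code from the end of a log line.
LINE_RE = re.compile(r'"GET (?P<path>\S+) HTTP/1\.1" (?P<status>\d{3})$')


def map_line(line):
    """Map step: emit ((path, status), 1) for each parseable line."""
    match = LINE_RE.search(line)
    if match:
        yield (match.group("path"), int(match.group("status"))), 1


def reduce_counts(pairs):
    """Reduce step: sum the counts emitted for each key."""
    totals = defaultdict(int)
    for key, count in pairs:
        totals[key] += count
    return dict(totals)


def load_into_warehouse(conn, totals):
    """Load the structured aggregates into a table (SQLite as a stand-in)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS page_hits "
        "(path TEXT, status INTEGER, hits INTEGER)"
    )
    rows = [(path, status, hits) for (path, status), hits in sorted(totals.items())]
    conn.executemany("INSERT INTO page_hits VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(lines):
    """Run map, reduce, and load; return the open database connection."""
    pairs = (pair for line in lines for pair in map_line(line))
    totals = reduce_counts(pairs)
    conn = sqlite3.connect(":memory:")
    load_into_warehouse(conn, totals)
    return conn


if __name__ == "__main__":
    conn = run_pipeline(RAW_LOGS)
    query = "SELECT path, status, hits FROM page_hits ORDER BY path"
    for row in conn.execute(query):
        print(row)
```

The split mirrors the division of labor in the announcement: the map and reduce functions do the heavy parsing of messy input, and only compact, structured aggregates reach the SQL side, where they can be queried alongside other warehouse tables.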

Audrey Watters reported Heroku Extends Platform to Third Party Add-Ons in a 9/15/2010 post to the ReadWriteCloud:

Platform-as-a-service startup Heroku has launched an Add-On Provider Program that will provide a self-service portal and development kit for third-party service providers. The program provides a complete suite of technologies, business processes, and services necessary to build Heroku Add-ons.

Heroku offers a Ruby platform-as-a-service that focuses on ease of use, automation, and reliability for web app builders. The third-party add-ons allow developers to easily integrate additional functionality into the apps they build on the Heroku platform. The catalog of add-ons already available includes full-text search services, NoSQL and SQL data stores, analytics tools, video transcoding technologies, and performance management services.

Heroku's new Add-on Provider Program will provide a process for bringing new add-ons from beta through release. The development kit includes a provisioning API, manifest format, single sign-on mechanism, and testing tools for integration with the Heroku platform. In exchange for the development kit and billing service, Heroku splits the revenue with the third-party developers.

Heroku has developed this new program in conjunction with the add-on providers it already has in place. The company says it's dedicated to building a neutral marketplace of cloud services and anticipates that the program will help strengthen the Heroku platform. "A true platform is much broader than any single vendor - building a sustainable ecosystem of technologies, services, and resources is a key part of the Heroku vision," says James Lindenbaum, co-founder of Heroku.

Heroku says this is the first comprehensive third-party app development offering by a Cloud PaaS provider - a move that makes sense in terms of strengthening the Heroku market and ecosystem.

Joe Panettieri asked Verizon’s Computing as a Service for SMBs: No Partners Needed? in this 9/14/2010 post to the MSPMentor blog:

When Verizon launched its Computing as a Service (CaaS) SMB strategy today, I was curious to know if the big service provider plans to work with smaller channel partners. No doubt, Verizon’s cloud leverages partnerships with VMware and Terremark. But what’s the message to smaller MSPs? Perhaps the answer is for MSPs to dial Rackspace, The Planet or another channel-friendly cloud partner. Here’s why.

No doubt, Verizon’s CaaS SMB services will attract some small and midsize business customers. The CaaS SMB system offers virtual servers, storage, networking and other services to SMB customers.

A Matter of Trust

But here’s the twist: Within our own small business, I’m responsible for how our Web site infrastructure evolves and grows. Instead of choosing hosting partners and cloud partners on my own, I depend on our Web integrator for regular advice on all IT matters.

And generally speaking, it doesn’t sound like Verizon CaaS SMB is doing much to win the hearts and minds of Web integrators, VARs and MSPs (the hosting industry’s key influencers). Instead, Verizon seems to be pursuing a direct sales model into the SMB space.

Reality Check

That may work for some companies. But I suspect most of MSPmentor’s readers are looking to partner up with hosting companies and cloud providers that have partner programs.

Rackspace, for one, has recruited channel veterans from AMD and Extreme Networks to build out its partner program. The result: Rackspace’s cloud seems to be gaining critical mass among the readers I hear from. Similarly, The Planet continues to enhance its channel partner programs even as the big hosting provider merges with SoftLayer.

It looks like VMware and Terremark will potentially profit from Verizon’s SMB cloud efforts. Now, it’s time for Verizon to open its doors a little wider to the broader IT channel.

<Return to section navigation list>
