Windows Azure and Cloud Computing Posts for 9/23/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

•• Update 9/26/2010: Articles marked ••

• Update 9/25/2010: Articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure, Codenames “Dallas” and “Houston,” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage,” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

•• Ryan Dunn (@dunnry) and Steve Marx (@smarx) produced a 00:41:10 Cloud Cover Episode 27 - Combining Roles and Using Scratch Disk on 9/26/2010:

Join Steve and Ryan [above] each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow.

In this episode:

- Learn how to effectively use the local disk (scratch disk) on your running instances.

- Discover how to combine your roles in your Windows Azure services.

Show Links:

Announcing the 'Powered by Windows Azure' logo program

Announcing Compute Hours Notifications for Windows Azure Customers

ASP.NET Security Vulnerability

Windows Azure Companion

Maximizing Throughput in Windows Azure Part 2 [by Rob Gillen]

Web Image Capture in Windows Azure

Windows Azure’s local disk (scratch disk, not persistent) isn’t the same as Windows Azure Drive (persistent), but this seemed to me to be the appropriate location for the article.


SQL Azure, Codenames “Dallas” and “Houston,” and OData

•• Eyal Vardi updated the CodePlex download of his OData Viewer Tool on 9/23/2010:

Project Description

I buil[t] this tool to help me build OData URL quer[ies] and see the result.

(For example, http://e4d.com/Courses.svc/Courses?$orderby=Date/). Please send feedback...

- URL IntelliSense

- URL Tooltip

- Data Grid View

- XML Atom View

- Data Service Metadata View

Data Grid View

XML Atom View

MetaData View

Source code is available in a separate download: Src - OData Viewer Tool.

• Kevin Blakey provided on 9/25/2010 the slide deck and sample code for his South West Code Camp - Presentation on OData:

This is the contents of my presentation from the South West Code Camp in Estoro. The presentation is on Building and Consuming OData Custom Providers. In this presentation I demo a basic OData provider using the Entity Framework provider. I show how to construct the provider and then show how easy it is to consume the data via Silverlight. In the second half of the presentation, I demonstrate how to create a provider using the reflection capabilities of .NET. This demo[nstrate]s how to create a provider for data that is not in an EF-compatible database.

Download: OData.zip

• Dan Jones explains frequent release scheduling for SQL Server Management Studio (SSMS) and preventing DOS attacks on Project “Houston” in this 9/24/2010 post to TechNet’s SQL Server Experts blog:

Since my team started work on Project Houston, hardly a day goes by that I’m not in some discussion about the Cloud. It also hits me when I go through my RSS feeds each day: cloud this and cloud that. There is obviously a significant new trend here; it may even qualify as a wave, as in the Client/Server Wave, the Internet Wave or the SOA Wave, to name a few (OK, to name all the ones I can name). Almost 100% of what I read is focused on how the Cloud Wave impacts IT and the “business” in general. Don’t get me wrong, I completely agree it’s real and it’s going to have a profound impact. I think this posting by my friend Buck Woody (and fellow BIEB blogger) sums it up pretty succinctly. The primary point is that it doesn’t matter what size your business is today or tomorrow; the Cloud will impact you in a very meaningful way.

What I don’t see much discussion about is how the Cloud Wave (is it just me or does that sound like a break dancing move?) changes the way software vendors (ISVs) develop software. In addition to Project Houston my team is responsible for keeping SQL Server Management Studio (SSMS) up with the SQL Azure feature set. If we step back for a second and look at what we have, we have a product that runs as a service on Windows Azure (running in multiple data centers across the globe) and the traditional thick client application that is downloaded, installed, and run locally. These are very different beasts but they are both challenged with keeping pace with SQL Azure.

SSMS has traditionally been on the release rhythm of the boxed product. This means a release every three years. The engineering system we use to develop software is finely tuned to the three-year release cycle. The way we package up and distribute the software is also tuned to the three-year release cycle. It’s a pretty good machine and by and large it works. When I went to the team who manages our release cycle and explained to them that I needed to release SSMS more frequently, as in at least once a quarter if not more often, they didn’t know what to say. This isn’t to say these aren’t smart people; they are. But they had never thought about how to adjust the engineering system to release a capability like SSMS more often than the boxed product, let alone every quarter. I hate to admit it, but it took a couple of months of discussion just to figure out how we could do this. It challenged almost every assumption made about how we develop, package and release software. But the team came through, and now we’re able to release SSMS almost any time we want. There are still challenges, but at least we have the backing of the engineering system. I’m pretty confident we would have eventually arrived at this solution even without SQL Azure. But given the rapid pace of innovation in the Cloud, we were forced to arrive at it sooner.

Project Houston is entirely different. There is no download for Project Houston; it only runs in the Cloud. The SQL Azure team runs a very different engineering system (although it is a derivation) than what we run for the boxed product. It’s still pretty young and not as tuned, but it’s tailored to suit the needs of a service offering. When we first started Project Houston, we tried to use our current engineering system. During development it worked pretty well. However, when we got to our first deployment, it was a complete mess. We had no idea what we were doing. We worked with an Azure deployment team and we spoke completely different languages. It took a few months of constant discussion and troubleshooting to figure out what we were doing wrong and how we needed to operate to be successful. Today we snap more closely with the SQL Azure engineering system, and we leverage release resources on their side to bridge the gap between our dev team and the SQL Azure operations team. It used to take us weeks to get a deployment completed. Now we can do it, should we have to, in a matter of hours. That’s a huge accomplishment by my dev team, the Azure ops team, and our release managers.

There’s another aspect to this as well. Releasing a product that runs as a service introduces an additional set of requirements. One in particular completely blindsided us. Once I tell you, it’ll seem obvious, but it caught my team completely off guard. As we get close to releasing any software (pre-release or GA), we do a formal security review. We have a dedicate[d] team that leads this. It’s a very thorough investigation of the design in an attempt to identify problems. And it works; let me just leave it at that. In the case of Project Houston, we hit a situation no one anticipated. The SQL Azure gateway has built-in functionality to guard against DOS (Denial of Service) attacks. Project Houston runs on the opposite side of the gateway from SQL Azure, on the Windows Azure platform. Since Project Houston handles multiple users connecting to different servers and databases, there’s an opportunity for a DOS attack. During the security review, the security team asked how we were guarding against a DOS. As you can imagine, our response was a blank stare, and the words D-O-what were uttered a few times.

We had been heads down for 10 months with never a mention of handling a DOS. We were getting really close to releasing the first CTP. We could barely spell D-O-S, much less design and implement a solution in a matter of a few weeks. The team jumped on it, calling on experts from across the company. We reviewed 5 or 6 different designs, each with its own set of pros and cons. The team finally landed on a design and got it implemented. We did miss the original CTP date, but not by much.

You’re probably an IT person wondering why this is relevant to you. The point in all this is simple. When you’re dealing with a vendor who claims their product is optimized for the Cloud or designed for the Cloud, do they really know what they’re talking about, or did the marketing team simply change the product name and redesign the logo and graphics to make it appear Cloud-enabled? Moving from traditional boxed software to the Cloud is easy. Doing it right is hard; I know, I’m living through it every day.

I’d be interested in the details of the DOS-prevention design and implementation for Codename “Houston” and I’m sure many others would be, too.

• Jani Järvinen suggested “Learn about the OData protocol and how you can combine SQL Azure databases with WCF Data Services to create OData endpoints that utilize a cloud-based database” in his Working with the OData protocol and SQL Azure article of 9/24/2010 for the Database Journal:

OData is an interesting new protocol that allows you to expose relational database data over the web using a REST-based interface. In addition to just publishing data over an XML presentation format, OData allows querying database data using filters. These filters allow you to work with portions of the database data at a time, even if the underlying dataset is large.

Although these features – web-based querying and filtering – alone would be very useful for many applications, there is more to OData. In fact, OData allows you to also modify, delete and insert data, if you wish to allow this to your users. Security is an integral part of OData solutions.

In ASP.NET applications, OData support is implemented using WCF Data Services. This technology takes, for instance, an ADO.NET Entity Framework data model and exposes it to the web with the settings you specify. Then, using either an interactive web browser or a suitable client application, you can access the database data over HTTP.

Although traditional on-premises ASP.NET and WCF applications will continue to be workhorses for many years, cloud-based applications are galloping into view. When working with Microsoft technologies, cloud computing means the Azure platform. The good news is that you can also expose data sources using OData from within your Azure applications.

This article explores the basics of OData and, more importantly, how you can expose OData endpoints from within your applications. Code shown is written in C#. To develop the applications walked through in this article, you will need Visual Studio 2010 and .NET 4.0, an Azure account, and the latest Azure SDK, currently version 1.2 (which requires Windows Vista or Windows 7). See the Links section for download details.

Introduction to OData

OData (“Open Data”) is, as its name implies, an open standard for sharing database data over the Internet. Technically speaking, it uses the HTTP protocol to allow users and applications to access the data published through the OData endpoint. As you learned earlier, OData allows intelligent querying of data directly with filters specified in the URL.

In addition to reading, the OData protocol also allows modifying data on the server, according to specified user rights. Applications like Excel 2010 (with its PowerPivot feature) and most web browsers can already access OData directly, and so can libraries for Silverlight 4, PHP and Windows Phone 7, for instance.
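OData expresses reads and writes with ordinary HTTP verbs: GET to read, POST to an entity-set URL to insert, PUT to replace an entity, MERGE (the OData version 2 verb for a partial update) and DELETE against a single entity's URL. The following Python sketch only builds, and does not send, the requests a client might issue. The helper name `build_request` and the use of JSON payloads are illustrative assumptions. Note also that the service built later in this article grants read-only access (`EntitySetRights.AllRead`), so it would reject the write requests.

```python
import json
import urllib.request
from typing import Optional

# Operation -> HTTP verb, following the OData (version 2) conventions.
VERBS = {"read": "GET", "insert": "POST", "replace": "PUT",
         "merge": "MERGE", "delete": "DELETE"}


def build_request(op: str, url: str,
                  entity: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) an HTTP request for an OData operation.

    Inserts target an entity-set URL (e.g. .../Customers); replace, merge
    and delete target one entity's URL (e.g. .../Customers('ALFKI')).
    """
    headers = {"Accept": "application/json"}
    data = None
    if entity is not None:
        # Assumption: send the entity body as JSON rather than Atom XML.
        data = json.dumps(entity).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(url, data=data, headers=headers,
                                  method=VERBS[op])


req = build_request("delete",
                    "http://myserver/NorthwindDataService.svc/Customers('ALFKI')")
print(req.get_method(), req.full_url)
```

Sending the request (for example with `urllib.request.urlopen`) is left out so the sketch runs without a live service.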

All this sounds good, but how do OData based systems tick? Take a look at Figure 1, where a WCF Data Service application written with .NET 4.0 shows an OData endpoint in a browser.

Figure 1: An OData endpoint exposed by a WCF Data Service in a web browser

The application exposes the Northwind sample database along with its multiple tables. For instance, to retrieve all customer details available in the Customers table, you could use the following URL:

http://myserver/NorthwindDataService.svc/Customers

This is a basic query that fetches all records from the table. The data is published in the XML-based Atom format, which is commonly used for blog and news feeds, for example. Here is a shortened example to show the bare essentials:

```xml
<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<feed xml:base="http://myserver/NorthwindDataService.svc/"
      xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices"
      xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
      xmlns="http://www.w3.org/2005/Atom">
  <title type="text">Customers</title>
  <id>http://myserver/NorthwindDataService.svc/Customers</id>
  <updated>2010-09-18T04:12:14Z</updated>
  ...
    <content type="application/xml">
      <m:properties>
        <d:CustomerID>ALFKI</d:CustomerID>
        <d:CompanyName>Alfreds Futterkiste</d:CompanyName>
        <d:ContactName>Maria Anders</d:ContactName>
        <d:ContactTitle>Sales Representative</d:ContactTitle>
        <d:Address>Obere Str. 57</d:Address>
        <d:City>Berlin</d:City>
        <d:Region m:null="true" />
        <d:PostalCode>12209</d:PostalCode>
        <d:Country>Germany</d:Country>
        <d:Phone>030-0074321</d:Phone>
        <d:Fax>030-0076545</d:Fax>
      </m:properties>
    </content>
  ...
```

Using an XML-based format means that the downloaded data is quite verbose, using much more bandwidth than, for example, SQL Server’s own TDS (Tabular Data Stream) protocol. However, interoperability is the key with OData.
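Because the payload is plain Atom XML, a client needs nothing more than an XML parser to read it. The following Python sketch uses only the standard library's ElementTree to turn each entry's `d:*` properties into a dictionary. It assumes the namespace URIs shown in the feed above, treats `m:null="true"` as a missing value, and returns every other value as a string (it does not convert types using `m:type` attributes).

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Optional

# Namespace URIs taken from the feed shown above.
NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
}
M_NULL = "{%s}null" % NS["m"]  # m:null attribute in ElementTree's {uri}name form


def parse_entries(feed_xml: str) -> List[Dict[str, Optional[str]]]:
    """Return one dict per Atom <entry>, mapping each d:* property to its text."""
    root = ET.fromstring(feed_xml)
    rows = []
    for entry in root.findall("atom:entry", NS):
        props = entry.find("atom:content/m:properties", NS)
        if props is None:
            continue  # skip entries without inline properties
        row: Dict[str, Optional[str]] = {}
        for prop in props:
            name = prop.tag.split("}", 1)[1]  # drop the "{namespace}" prefix
            row[name] = None if prop.get(M_NULL) == "true" else prop.text
        rows.append(row)
    return rows


SAMPLE = """<feed xmlns="http://www.w3.org/2005/Atom"
      xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices"
      xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">
  <entry>
    <content type="application/xml">
      <m:properties>
        <d:CustomerID>ALFKI</d:CustomerID>
        <d:City>Berlin</d:City>
        <d:Region m:null="true" />
      </m:properties>
    </content>
  </entry>
</feed>"""

print(parse_entries(SAMPLE))
```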

If the Customers table contained hundreds or thousands of records, retrieving all of them with a single query would become impractical. To filter the data and, for example, return all customers whose company names start with the letter A, use the following URL:

http://myserver/NorthwindDataService.svc/Customers?$filter=startswith(CompanyName, 'A') eq true

In addition to simple queries like this, you can also select, for instance, the top five or ten records, sort on a given column (ascending or descending), and use logical operators such as NOT, AND and OR. This gives you a lot of flexibility in working with data sources exposed through the OData protocol.
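Because these options travel in the URL, the spaces in an expression such as the `$filter` above must be percent-encoded before a client sends the request. The following Python sketch builds such URLs with `urllib.parse.quote`. The helper name `odata_query_url` and the choice to leave parentheses, commas and quotes unencoded (for readability) are assumptions, not requirements of any particular OData client library.

```python
from urllib.parse import quote


def odata_query_url(base: str, entity_set: str, **options: str) -> str:
    """Build an entity-set URL with OData system query options.

    Pass option names without the '$', e.g. filter=..., orderby=..., top="10".
    """
    url = base.rstrip("/") + "/" + entity_set
    parts = [
        # Percent-encode spaces and other unsafe characters, but keep the
        # parentheses, commas and quotes that OData expressions use.
        "$" + name + "=" + quote(value, safe="(),'/")
        for name, value in options.items()
    ]
    return url + ("?" + "&".join(parts) if parts else "")


base = "http://myserver/NorthwindDataService.svc"
print(odata_query_url(base, "Customers",
                      filter="startswith(CompanyName, 'A') eq true"))
print(odata_query_url(base, "Customers",
                      filter="Country eq 'USA'", orderby="City", top="10"))
```

Keyword arguments keep their order, so the options appear in the URL in the order you pass them.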

Different Ways to Work with OData on the Cloud

When speaking of OData and the cloud, you should be aware that there is no single architecture that fits all needs. In fact, you can easily come up with different options; Figure 2 shows three of them. The two right-most options have one thing in common: they run the actual data source (the SQL Server database) inside the cloud, but the location of the WCF Data Service varies.

Figure 2: Three common architectures for working with OData

That is, the WCF Data Service can run on your own servers and fetch data from the database in the cloud. This works as long as there is a TCP/IP connection from your location to Microsoft’s data centers. Keep this in mind, because you cannot guarantee connection speeds or latencies when working over the open Internet.

To create a proof-of-concept (POC) application, you could start with the hybrid option shown in the previous figure. In this option, the database sits in the cloud, and your application runs on your own computers or servers. To develop such an application, you will need an active Azure subscription. Using the web management console for SQL Azure (sql.azure.com), create a suitable database to which you want to connect, and then migrate some data to the newly created database (see another recent article, “Migrating your database applications to SQL Azure” by Jani Järvinen at DatabaseJournal.com, for tips on migration issues).

While you are working with the SQL Azure management portal, take note of the connection string to your database. You can find it on the Server Administration web management page by selecting the database of your choice and clicking the Connection Strings button at the bottom. It usually looks like the following:

Server=tcp:nksrjisgiw.database.windows.net;Database=Northwind;User ID=username@nksrjisgiw;Password=mysecret;Trusted_Connection=False;Encrypt=True;

Inside Visual Studio, you can use, for instance, the Server Explorer window to test access to your SQL Azure database. Or, you could use SQL Server Management Studio to do the same (Figure 3). With an interactive connection, it is easy to make sure everything (such as Azure firewall policies) is set up correctly.
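The connection string above is a semicolon-separated list of Key=Value pairs, so a few common mistakes can be caught before you even try to connect. The following Python sketch is only an illustration: the checks it performs (a `*.database.windows.net` host, a User ID in the `login@server` form used in the sample string, and `Encrypt=True`) are assumptions drawn from that sample, not an official validation rule, and the parser ignores quoted values containing `;` or `=`.

```python
from typing import Dict, List


def parse_connection_string(conn: str) -> Dict[str, str]:
    """Split an ADO.NET-style 'Key=Value;Key=Value;' string into a dict.

    Keys are lower-cased. Simplification: quoted values that contain ';'
    or '=' are not handled.
    """
    result: Dict[str, str] = {}
    for part in conn.split(";"):
        if not part.strip():
            continue
        key, _, value = part.partition("=")
        result[key.strip().lower()] = value.strip()
    return result


def check_sql_azure(conn: str) -> List[str]:
    """Return a list of likely problems in a SQL Azure connection string."""
    cs = parse_connection_string(conn)
    problems: List[str] = []
    host = cs.get("server", "")
    if host.lower().startswith("tcp:"):
        host = host[4:]            # "tcp:name.database.windows.net" -> host name
    host = host.split(",")[0]      # drop an optional ",1433" port suffix
    short_name = host.split(".")[0]
    if not host.endswith(".database.windows.net"):
        problems.append("Server is not a *.database.windows.net host")
    if not cs.get("user id", "").endswith("@" + short_name):
        problems.append("User ID should use the login@" + short_name + " form")
    if cs.get("encrypt", "").lower() != "true":
        problems.append("Encrypt should be True")
    return problems


conn = ("Server=tcp:nksrjisgiw.database.windows.net;Database=Northwind;"
        "User ID=username@nksrjisgiw;Password=mysecret;"
        "Trusted_Connection=False;Encrypt=True;")
print(check_sql_azure(conn))  # []
```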

Figure 3: SQL Server Management Studio 2008 R2 can be used to connect to SQL Azure

Creating a WCF Data Service Wrapper for an SQL Azure Database

Creating an OData endpoint using WCF technologies is easiest using the latest Visual Studio version 2010. Start or open an ASP.NET web project of your choice, for instance a traditional ASP.NET web application or a newer ASP.NET MVC 2 application. A WCF Data Service, which in turn is able to publish an OData endpoint of the given data source, requires a suitable data model within your application.

Although you can use, for instance, LINQ to SQL as your data model for a WCF Data Service, an ADO.NET Entity Framework data model is a common choice today. An Entity Framework model works well for the sample application, so you should continue by adding an Entity Framework model to your ASP.NET project. Since you want to connect to your SQL Azure database, simply let Visual Studio use the connection string that you grabbed from the SQL Azure web management portal. After this, you should have a suitable data model inside your project (Figure 4).

Figure 4: A simple ADO.NET Entity Framework data model (entity data model) in Visual Studio

After the entity data model has been successfully created, you can publish it through a WCF Data Service. From Visual Studio’s Project menu, choose the Add New Item command; in the dialog box that opens, navigate to the Web category on the left and choose the WCF Data Service icon (the one with a person in it). After you click OK, Visual Studio adds a set of files and references to your project. Visual Studio also opens a skeleton code file, which you then must edit.

Basically, you must reference your data model in the class definition and then set various options, such as the protocol version, security and access control. Once edited, the finished code file should look like the following:

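```csharp
using System.Data.Services;          // DataService<T>, DataServiceConfiguration, EntitySetRights
using System.Data.Services.Common;   // DataServiceProtocolVersion
using System.Linq;
using System.ServiceModel.Web;
using System.Web;

namespace SqlAzureODataWebApplication
{
    // NorthwindEntities is assumed to be the name of the Entity Framework
    // context generated from the SQL Azure database; use your model's name.
    public class NorthwindDataService : DataService<NorthwindEntities>
    {
        // Called once by WCF Data Services to configure the service.
        public static void InitializeService(DataServiceConfiguration config)
        {
            // Grant read-only access to every entity set ("*").
            config.SetEntitySetAccessRule("*", EntitySetRights.AllRead);
            // Use version 2 of the OData protocol.
            config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
        }
    }
}
```

At this point, your application is ready to be tested. Run your application, and your web browser should automatically launch. In your browser, you should see an XML presentation of your data, coming straight from the SQL Azure cloud database (Figure 5)!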

Figure 5: An example OData endpoint showing data coming directly from SQL Azure [You must turn off Feed Reading in Tools | Options | Content to view OData documents in IE’s XML stylesheet.]

Also be sure to test URLs like the following (note that IE cannot display the first URL directly; view the source of the resulting page to see the details):

http://localhost:1234/NorthwindDataService.svc/Customers('ALFKI')

http://localhost:1234/NorthwindDataService.svc/Customers?$filter=Country eq 'USA'

http://localhost:1234/NorthwindDataService.svc/Customers?$orderby=City&$top=10

Query strings can have lots of power, can’t they?
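The first URL above addresses a single entity by its key. A string key is written as an OData string literal in single quotes, and any single quote inside the key is doubled; numeric keys, such as Orders(10248), are not quoted. The hypothetical helper below sketches this in Python and also percent-encodes characters such as spaces.

```python
from urllib.parse import quote


def entity_url(base: str, entity_set: str, key: str) -> str:
    """Address a single entity by its string key, e.g. Customers('ALFKI')."""
    # In OData string literals, an embedded single quote is written twice.
    literal = "'" + key.replace("'", "''") + "'"
    # Percent-encode anything unsafe (such as spaces) but keep the quotes.
    encoded = quote(literal, safe="'")
    return base.rstrip("/") + "/" + entity_set + "(" + encoded + ")"


base = "http://localhost:1234/NorthwindDataService.svc"
print(entity_url(base, "Customers", "ALFKI"))
# http://localhost:1234/NorthwindDataService.svc/Customers('ALFKI')
```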

Conclusion

OData is a new protocol for sharing data over the web. Although still very much in its infancy, the protocol has lots of potential, especially on Microsoft platforms. For developers, OData gives a cross-platform and cross-language way of sharing relational data using web-friendly technologies such as HTTP, JSON and XML/Atom.

For .NET developers, OData is easy to work with. Publishing an OData endpoint is done using WCF Data Services technology, and with Visual Studio 2010, this doesn’t require many clicks. Inside your application, you will need to use an object-relational data access technology, such as LINQ to SQL or ADO.NET Entity Framework.

On the client side, OData-published data can be explored in multiple ways. The good news is that .NET developers have many options: Silverlight 4 contains direct support for WCF Data Services, and in any other .NET application type, you can import an OData endpoint directly as a web service reference. This allows you to use technologies such as LINQ to query the data. Excel 2010 can do the trick for end-users.

Combining OData endpoints with a cloud-based database can give you several benefits. Firstly, excellent scalability allows you to publish large datasets over the OData protocol, meaning that you are not limited to data size of just a gigabyte or two. Secondly, decreased maintenance costs and the possibility to rapidly create databases for testing purposes are big pluses.

In this article, you learned about the OData protocol and how you can combine SQL Azure databases with WCF Data Services to create OData endpoints that use a cloud-based database. You also saw that Visual Studio 2010 is a great tool for building these kinds of modern applications. It no longer takes many clicks to use cloud databases in your applications and to publish that data over the web. Happy hacking!

Links

- OData protocol pages: http://www.odata.org/

- Azure SDK 1.2 download page: http://www.microsoft.com/downloads/en/details.aspx?FamilyID=21910585-8693-4185-826e-e658535940aa&displaylang=en

- List of supported OData client applications: http://www.odata.org/consumers

- OData Protocol URI Conventions: http://www.odata.org/developers/protocols/uri-conventions

- The Atom Publishing Protocol: http://www.odata.org/media/6655/[mc-apdsu][1].htm

- Sample Northwind OData endpoint: http://services.odata.org/Northwind/Northwind.svc/

• Channel9 offers an Intro to Dallas course as part of its Windows Azure Training Course with team members David Aiken (@thedavidaiken), Ryan Dunn (@dunnry), Vittorio Bertocci (@vibronet), and Zack Skyles Owens (@ZackSkylesOwens):

In this lab we will preview Microsoft Codename “Dallas”. Dallas is Microsoft’s Information Service offering, which allows developers and information workers to find, acquire and consume published datasets and web services. Users subscribe to datasets and web services of interest and can integrate the information into their own applications via a standardized set of APIs. Data can also be analyzed online using the Dallas Service Explorer or externally using the PowerPivot Add-In for Excel.

Objectives

In this Hands-On Lab, you will learn how to:

- Explore the Dallas Developer Portal and Service Explorer

- Query a Dallas dataset using a URL

- Access and consume a Dallas dataset and Service via Managed Code

- Use the PowerPivot Add-In for Excel to consume and analyze data from Dallas datasets

Exercises

This Hands-On Lab comprises the following exercises:

- Exploring the Dallas Developer Portal and Service Explorer

- Querying Dallas Datasets via URLs

- Consuming Dallas Data and Services via Managed Code

- Consuming Dallas Data via PowerPivot

Estimated time to complete this lab: 60 minutes.

Steve Yi announced that patterns & practices’ Developing Applications for the Cloud on Azure online book is now available in this 9/24/2010 post to the SQL Azure team blog:

The Microsoft Patterns & Practices group has published the second free online book volume about Developing Applications for the Cloud on the Microsoft Windows Azure Platform. This book demonstrates how you can create a multi-tenant, Software as a Service (SaaS) application to run in the cloud by using the Windows Azure platform.

The book is intended for any architect, developer, or information technology (IT) professional who designs, builds, or operates applications and services that run on or interact with the cloud.

The Working with Data in the Surveys Application chapter is especially interesting to me as it pertains to SQL Azure. Do you have questions, concerns or comments? Post them below and we will try to address them.

Liam Cavanagh (@liamca) posted How to Sync Large SQL Server Databases to SQL Azure to the Sync Framework Team blog on 9/24/2010:

Over the past few days I have seen a number of posts from people who have been looking to synchronize large databases to SQL Azure and have been having issues. In many of these posts, people have looked to use tools like SSIS, BCP and Sync Framework and have run into issues such as SQL Azure closing the transaction because of connection throttling (when applying the data takes too long) and occasional local out-of-memory issues as the data is sorted.

For today’s post, I wanted to spend some time discussing the subject of synchronizing large data sets from SQL Server to SQL Azure using Sync Framework. By large databases, I mean databases larger than 500MB. If you are synchronizing smaller databases, you may still find some of these techniques useful, but for the most part you should be fine with the simple method I explained here.

For this situation, I believe there are three very useful capabilities within the Sync Framework:

1) MemoryDataCacheSize: This helps to limit the amount of memory that is allocated to Sync Framework during the creation of the data batches and data sorting. This typically helps to fix any out-of-memory issues. As a starting point, I typically allocate 100MB (100000, because the value is in KB) to this parameter, but if you have larger or smaller amounts of free memory, or if you still run out of memory, you can adjust this number a bit.

RemoteProvider.MemoryDataCacheSize = 100000;

2) ApplicationTransactionSize (MATS): This tells the Sync Framework how much data to apply to the destination database (SQL Azure in this case) at one time. We typically call this batching. Batching helps us to work around the issue where SQL Azure starts to throttle (or disconnect) us if it takes too long to apply a large set of data changes. MATS also lets me tell sync to pick up where it left off if a connection drops (I will talk more about this in a future post), and it lets me add progress events to track how much data has been applied. Best of all, it does not seem to affect sync performance. I typically set this parameter to 50MB (50000), as it is a good amount of data that SQL Azure can commit easily, yet small enough that if I need to resume sync after a network disconnect, I do not have too much data to resend.

RemoteProvider.ApplicationTransactionSize = 50000;

3) ChangesApplied Progress Event: The Sync Framework database providers have an event called ChangesApplied. Although this does not help to improve performance, it does help in the case where I am synchronizing a large data set. When used with ApplicationTransactionSize I can tell my application to output whenever a batch (or chunk of data) has been applied. This helps me to track the progress of the amount of data that has been sent to SQL Azure and also how much data is left.

RemoteProvider.ChangesApplied += new EventHandler<DbChangesAppliedEventArgs>(RemoteProvider_ChangesApplied);
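To make the batching, resume and progress ideas above concrete, here is a small Python sketch. It is not how Sync Framework implements ApplicationTransactionSize or the ChangesApplied event; it only illustrates the pattern. The change-record shape, the `apply_batch` callback (assumed to commit one batch and to raise `ConnectionError` on a dropped connection) and the `on_applied` progress callback are hypothetical.

```python
from typing import Callable, Iterable, List, Optional, Sequence, Tuple

# Hypothetical change record: (row key, approximate size in KB).
Change = Tuple[str, int]


def make_batches(changes: Iterable[Change], max_batch_kb: int) -> List[List[Change]]:
    """Group changes so each batch totals at most max_batch_kb.

    A single change larger than the limit still gets a batch of its own.
    """
    batches: List[List[Change]] = []
    current: List[Change] = []
    current_kb = 0
    for change in changes:
        size_kb = change[1]
        if current and current_kb + size_kb > max_batch_kb:
            batches.append(current)
            current, current_kb = [], 0
        current.append(change)
        current_kb += size_kb
    if current:
        batches.append(current)
    return batches


def apply_batches(
    batches: Sequence[List[Change]],
    apply_batch: Callable[[List[Change]], None],
    start_at: int = 0,
    on_applied: Optional[Callable[[int, int], None]] = None,
) -> int:
    """Apply batches in order, starting at index start_at.

    on_applied(done, remaining) is called after each successful batch, like a
    progress event. Returns the index of the next batch to apply, which a
    caller can persist and pass back as start_at to resume after a failure.
    """
    next_index = start_at
    for i in range(start_at, len(batches)):
        try:
            apply_batch(batches[i])
        except ConnectionError:
            break  # batches before i were applied; resume from i later
        next_index = i + 1
        if on_applied is not None:
            on_applied(next_index, len(batches) - next_index)
    return next_index


batches = make_batches([("a", 30), ("b", 30), ("c", 10), ("d", 60)], max_batch_kb=50)
apply_batches(batches, lambda batch: None,
              on_applied=lambda done, left: print(f"{done} applied, {left} left"))
```

Keeping each batch small bounds both the work lost when a connection drops and the data that must be resent, which is the trade-off described above for the 50MB setting.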

When I combine all of this together, I get the following new code that I can use to create a command line application to sync data from SQL Server to SQL Azure. Please make sure to update the connection strings and the tables to be synchronized.

100+ lines of C# code omitted for brevity.

Liam Cavanagh currently works as a Sr. Program Manager for Microsoft in the Cloud Data Services group. In this group he works on the Data Sync Service for SQL Azure – enabling organizations to extend on-premises data to the cloud as well as to remote offices and mobile workers to remain productive regardless of network availability.

Zane Adam from the SQL Azure Team described New Features in SQL Azure on 9/23/2010:

As part of our continued commitment to provide regular and frequent updates to SQL Azure, I am pleased to announce Service Update 4 (SU4). SU4 is now live with three new updates: database copy, an improved help system, and deployment of our web-based tool Code-Named "Houston" to multiple data centers. Here are the details:

- Support for database copy: Database copy allows you to make a real-time complete snapshot of your database into a different server in the data center. This new copy feature is the first step in backup support for SQL Azure, allowing you to get a complete backup of any SQL Azure database before making schema or database changes to the source database. The ability to snapshot a database easily is our top requested feature for SQL Azure. Note that this feature is in addition to our automated database replication, which keeps your data always available. The relevant MSDN documentation, Copying Databases in SQL Azure, is available here.

- Additional MSDN Documentation: MSDN has created a new section called Development: How-to Topics (SQL Azure Database) which has links to information about how to perform common programming tasks with Microsoft SQL Azure Database.

- Update on "Houston": Microsoft Project Code-Named "Houston" is a lightweight web-based database management tool for SQL Azure. Houston, which runs on top of Windows Azure, is now available in multiple data centers, reducing the latency between the application and your SQL Azure database.

Steve Yi posted Creating Tables with Project Houston as his first contribution to the SQL Azure blog on 9/23/2010:

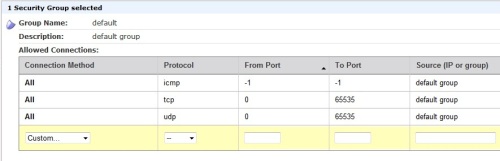

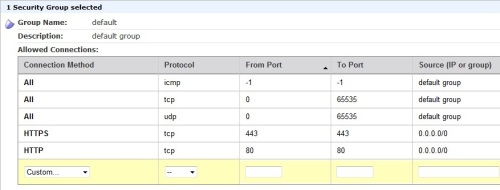

This is the second post in a series about getting started with Microsoft Project Code-Named “Houston” (Houston) (Part 1). In part 1 we covered the basics, logging in and navigation. In this post we will cover how to use the table designer in Project Houston. As a quick reminder, Microsoft Project Code-Named “Houston” (Houston) is a lightweight database management tool for SQL Azure and is a community technology preview (CTP). Houston can be used for basic database management tasks like authoring and executing queries, designing and editing a database schema, and editing table data.

Currently, SQL Server Management Studio 2008 R2 doesn’t have a table designer implemented for SQL Azure. If you want to create tables in SQL Server Management Studio 2008 R2, you have to type a CREATE TABLE script and execute it as a query. However, Project Houston has a fully implemented web-based table designer.

You can start using Houston by going to: http://www.sqlazurelabs.com/houston.aspx (the SQL Azure Labs site hosts projects that are either in CTP or incubation form). Once you have reached the site, log in to your server and database to start designing a table. For more information on logging in and navigation in Houston, see the first blog post.

Designing a Table

Once you have logged in, the database navigation bar appears in the top left of the screen.

To create a new table:

- Click on “New Table”

- At this point, the navigation bar changes to the Table navigation.

- A new table will appear in the main window displaying the design mode for the table.

The star next to the title indicates that the table is new and unsaved. Houston automatically adds three columns to your new table. You can rename them or modify the types depending on your needs.

If you want to delete one of the newly added columns, just click on the column name so that the column is selected and press the Delete button in the Columns section of the ribbon bar.

If you want to add another column, just click on the + Column button or the New button in the Columns section of the ribbon bar.

Here is a quick translation from Houston terms to what we are used to with SQL Server tools:

- Is Required? means that data in this column cannot be null. There must be data in the column.

- Is Primary Key? means that the column will be a primary key and that a clustered index will be created for this column.

- Is Identity? means that the column is an auto-incrementing identity column, usually associated with the primary key. It is only available on the bigint, int, decimal, float, smallint, and tinyint data types.
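Because SSMS 2008 R2 needs a hand-typed CREATE TABLE script for SQL Azure, it helps to see what each Houston check box corresponds to in T-SQL. The Python sketch below is an illustration, not Houston's actual output: the `Column` and `create_table_sql` names are hypothetical, it restricts IDENTITY to the integer types as a conservative choice, and, like the CTP, it rejects multi-column primary keys. The clustered primary key matters because SQL Azure requires every table to have a clustered index before rows can be inserted.

```python
from dataclasses import dataclass
from typing import List

# Conservative set of types this sketch accepts for IDENTITY columns.
IDENTITY_TYPES = {"tinyint", "smallint", "int", "bigint"}


@dataclass
class Column:
    name: str
    sql_type: str
    is_required: bool = False      # Houston "Is Required?"    -> NOT NULL
    is_primary_key: bool = False   # Houston "Is Primary Key?" -> PRIMARY KEY CLUSTERED
    is_identity: bool = False      # Houston "Is Identity?"    -> IDENTITY(1,1)


def create_table_sql(table: str, columns: List[Column]) -> str:
    """Emit the CREATE TABLE script you would otherwise type by hand in SSMS."""
    if sum(c.is_primary_key for c in columns) > 1:
        raise ValueError("this sketch only supports a single-column primary key")
    definitions = []
    for col in columns:
        parts = ["[" + col.name + "]", col.sql_type]
        if col.is_identity:
            if col.sql_type.lower() not in IDENTITY_TYPES:
                raise ValueError("IDENTITY is not allowed on " + col.sql_type + " here")
            parts.append("IDENTITY(1,1)")
        # A primary key column can never be NULL, even if not marked required.
        parts.append("NOT NULL" if (col.is_required or col.is_primary_key) else "NULL")
        if col.is_primary_key:
            parts.append("PRIMARY KEY CLUSTERED")
        definitions.append("    " + " ".join(parts))
    return "CREATE TABLE [" + table + "] (\n" + ",\n".join(definitions) + "\n);"


print(create_table_sql("Customers", [
    Column("CustomerID", "int", is_required=True, is_primary_key=True, is_identity=True),
    Column("Name", "nvarchar(50)", is_required=True),
    Column("Notes", "nvarchar(max)"),
]))
```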

Saving

When you are ready to commit your table to SQL Azure you need to save the table using the Save button in the ribbon bar.

We’re aware of a few limitations in the current offering: for example, creating a table with a multi-column primary key fails, and renaming some tables and columns causes errors when saving. As with any CTP, we are looking for feedback and suggestions; please log any bugs you find.

Feedback or Bugs?

Again, because Project “Houston” is a CTP, it is not supported by standard Microsoft support services. For community-based support, post a question to the SQL Azure Labs MSDN forums. The product team will do its best to answer any questions posted there.

To log a bug about Project “Houston” in this release, use the following steps:

- Navigate to Https://connect.microsoft.com/SQLServer/Feedback.

- You will be prompted to search our existing feedback to verify that your issue has not already been submitted.

- Once you verify that your issue has not been submitted, scroll down the page and click on the orange Submit Feedback button in the left-hand navigation bar.

- On the Select Feedback form, click SQL Server Bug Form.

- On the feedback form, select Version = Houston build – CTP1 – 10.50.9700.8.

- On the feedback form, select Category = Tools (Houston).

- Complete your request.

- Click Submit to send the form to Microsoft.

To provide a suggestion about Project “Houston” in this release, use the following steps:

- Navigate to Https://connect.microsoft.com/SQLServer/Feedback.

- You will be prompted to search our existing feedback to verify that your issue has not already been submitted.

- Once you verify that your issue has not been submitted, scroll down the page and click on the orange Submit Feedback button in the left-hand navigation bar.

- On the Select Feedback form, click SQL Server Suggestion Form.

- On the feedback form, select Category = Tools (Houston).

- Complete your request.

- Click Submit to send the form to Microsoft.

If you have any questions about the feedback submission process or about accessing the portal, send us an e-mail message: sqlconne@microsoft.com.

Summary

This is just the beginning of our Microsoft Project Code-Named “Houston” (Houston) blog posts; make sure to subscribe to the RSS feed to be alerted as we post more information.

Kathleen Richards reported Microsoft Kills Key Components of the 'Oslo' Modeling Platform in favor of OData and Entity Data Model v4 in this 9/22/2010 article for Redmond Developer News:

Microsoft is announcing today that key components of its "Oslo" modeling platform are no longer part of its model-driven development strategy. In the on-going battle of competing data platform technologies at Microsoft, the company is focusing its efforts on the Open Data Protocol (OData) and the Entity Data Model, which underlies the Entity Framework and other key technologies.

Announced in October 2007, the Oslo modeling platform consisted of the 'M' modeling language, a "Quadrant" visual designer and a common repository based on SQL Server. The technology was initially targeting developers, according to Microsoft, with an eye towards broadening tools like Quadrant to other roles such as business analysts and IT. Alpha bits of some of the components were first made available at the Professional Developers Conference in October 2008. Oslo was renamed SQL Server Modeling technologies in November 2009. The final community technology preview was released that same month and required Visual Studio 2010/.NET Framework 4 Beta 2.

The Quadrant tool and the repository, part of SQL Server Modeling Services after the name change, are no longer on the roadmap. Microsoft's Don Box, a distinguished engineer and part of the Oslo development team, explained the decision in the Model Citizen blog on MSDN:

"Over the past year, we’ve gotten strong and consistent feedback from partners and customers indicating they prefer a more loosely-coupled approach; specifically, an approach based on a common protocol and data model rather than a common store. The momentum behind the Open Data Protocol (OData) and its underlying data model, Entity Data Model (EDM), shows that customers are acting on this preference."

The end of Oslo is not surprising based on the project's lack of newsworthy developments as it was bounced around from the Connected Services division to the Developer division to the Data Platform team. The delivery vehicle for the technology was never disclosed, although it was expected to surface in the Visual Studio 2010 and SQL Server wave of products.

The "M" textual modeling language, originally described as three languages (MGraph, MGrammar and MSchema), has survived, for now. Microsoft's Box explained:

"While we used "M" to build the "Oslo" repository and "Quadrant," there has been significant interest both inside and outside of Microsoft in using "M" for other applications. We are continuing our investment in this technology and will share our plans for productization once they are concrete."

The Oslo platform was too complex for the benefits that it offered, said Roger Jennings, principal consultant of OakLeaf Systems. "The Quadrant and 'M' combination never gained any kind of developer mindshare."

More and more people are climbing on the OData bandwagon, which is a very useful and reasonably open protocol, agreed Jennings. A Web protocol under the Open Specification Promise that builds on the Atom Publishing Protocol, OData can apply HTTP and JavaScript Object Notation (JSON), among other technologies, to access data from multiple sources. Microsoft "Dallas", a marketplace for data- and content-subscription services hosted on the Windows Azure platform, is driving some of the developer interest in OData, according to Jennings.
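To make the "plain HTTP" point concrete: an OData query is just a URL built from system query options such as `$filter`, `$select` and `$top`, fetched with an HTTP GET. The Python sketch below only composes such a URL; the service root, entity set and property names are hypothetical. Where a service supports it, sending an `Accept: application/json` header asks for JSON instead of the default Atom feed.

```python
from typing import Optional, Sequence
from urllib.parse import quote, urlencode


def odata_query_url(service_root: str, entity_set: str,
                    filter_expr: Optional[str] = None,
                    select: Optional[Sequence[str]] = None,
                    top: Optional[int] = None) -> str:
    """Compose an OData query URL from standard system query options."""
    options = {}
    if filter_expr:
        options["$filter"] = filter_expr
    if select:
        options["$select"] = ",".join(select)
    if top is not None:
        options["$top"] = str(top)
    url = service_root.rstrip("/") + "/" + entity_set
    if options:
        # Percent-encode spaces and quotes, but keep "$" and "," readable.
        url += "?" + urlencode(options, safe="$,", quote_via=quote)
    return url


url = odata_query_url("https://example.com/Northwind.svc", "Customers",
                      filter_expr="Country eq 'Germany'",
                      select=["CompanyName", "City"], top=5)
print(url)
# https://example.com/Northwind.svc/Customers?$filter=Country%20eq%20%27Germany%27&$select=CompanyName,City&$top=5
```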

Developers may run into problems with overhead when using OData feeds, however. "XML is known as a high-overhead protocol, but OData takes that to an extreme in some cases," said Jennings, who is testing OData feeds with Microsoft's Dynamics CRM 2011 Online beta, the first product to offer a full-scale OData provider[*]. Jennings blogs about OData explorations, including his experiences with the Dynamics CRM Online beta, in his OakLeaf Systems blog.

*The last paragraph needs a minor clarification: Dynamics CRM 2011 is the first version of the Dynamics CRM product to offer a full-scale OData provider. Many full-scale OData providers predate Dynamics CRM 2011.
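One way to see the overhead Jennings describes is to serialize the same record both ways and compare byte counts. The Python sketch below is simplified and hypothetical: it builds a bare-bones Atom entry using the OData `d:` and `m:` namespaces and a JSON body shaped like `{"d": {...}}`, omitting links, categories and `__metadata`. It therefore shows the trend rather than the sizes a real feed, such as Dynamics CRM's, would produce.

```python
import json
import xml.etree.ElementTree as ET

ATOM = "http://www.w3.org/2005/Atom"
D = "http://schemas.microsoft.com/ado/2007/08/dataservices"
M = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
ET.register_namespace("", ATOM)
ET.register_namespace("d", D)
ET.register_namespace("m", M)


def atom_entry(entry_id: str, record: dict) -> str:
    """Serialize one record roughly the way an OData Atom entry carries it."""
    entry = ET.Element("{%s}entry" % ATOM)
    ET.SubElement(entry, "{%s}id" % ATOM).text = entry_id
    ET.SubElement(entry, "{%s}title" % ATOM, type="text")
    ET.SubElement(entry, "{%s}updated" % ATOM).text = "2010-09-23T00:00:00Z"
    author = ET.SubElement(entry, "{%s}author" % ATOM)
    ET.SubElement(author, "{%s}name" % ATOM)
    content = ET.SubElement(entry, "{%s}content" % ATOM, type="application/xml")
    props = ET.SubElement(content, "{%s}properties" % M)
    for key, value in record.items():
        # Each property becomes <d:Key>value</d:Key>, so every name appears twice.
        ET.SubElement(props, "{%s}%s" % (D, key)).text = str(value)
    return ET.tostring(entry, encoding="unicode")


record = {"CustomerID": "ALFKI", "CompanyName": "Alfreds Futterkiste", "City": "Berlin"}
xml_text = atom_entry("https://example.com/Northwind.svc/Customers('ALFKI')", record)
json_text = json.dumps({"d": record})
print(len(xml_text.encode("utf-8")), len(json_text.encode("utf-8")))
```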

Full disclosure: I’m a contributing editor for Visual Studio Magazine, which is published by 1105 Media. 1105 Media is the publisher of Redmond Developer News.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

The Windows Azure AppFabric team adds more details in its Windows Azure AppFabric SDK September Release available for download post of 9/24/2010:

As part of the Windows Azure AppFabric September Release we are now providing both 32- and 64-bit versions of the Windows Azure AppFabric SDK.

In addition to addressing several deployment scenarios for 64-bit computers, Windows Azure AppFabric now enables integration of WCF services that use AppFabric Service Bus endpoints in IIS 7.5, with Windows® Server AppFabric. You can leverage Windows® Server AppFabric functionality to configure and monitor method invocations for the Service Bus endpoints.

Note that the name of the installer has changed:

- Old Name: WindowsAzureAppFabricSDK.msi

- New names: WindowsAzureAppFabricSDK-x64.msi and WindowsAzureAppFabricSDK-x86.msi

Any automated deployment scripts you might have built that use the previous name will need to be updated to use the new naming. No other installer option has changed.
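A deployment script that used to hard-code WindowsAzureAppFabricSDK.msi now has to pick one of the two new files. Here is a minimal Python sketch of that choice. The helper names are hypothetical, the architecture string is assumed to come from the caller (for example, from the PROCESSOR_ARCHITECTURE environment variable on Windows), and the msiexec switches are the standard /i and /quiet options rather than anything specific to this SDK.

```python
from typing import List

# New installer names introduced with the September release.
INSTALLERS = {
    "x64": "WindowsAzureAppFabricSDK-x64.msi",
    "x86": "WindowsAzureAppFabricSDK-x86.msi",
}


def installer_for(architecture: str) -> str:
    """Map an architecture string such as "AMD64" or "x86" to the matching installer."""
    arch = architecture.lower()
    if arch in ("amd64", "x86_64", "x64"):
        return INSTALLERS["x64"]
    if arch in ("x86", "i386", "i686"):
        return INSTALLERS["x86"]
    raise ValueError("no AppFabric SDK installer for architecture %r" % architecture)


def install_command(architecture: str) -> List[str]:
    # msiexec /i installs the package; /quiet runs it without any UI, as scripts usually do.
    return ["msiexec", "/i", installer_for(architecture), "/quiet"]


print(" ".join(install_command("AMD64")))
# msiexec /i WindowsAzureAppFabricSDK-x64.msi /quiet
```

Failing loudly on an unknown architecture is deliberate: a script that silently installs the wrong package is harder to diagnose than one that stops.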

The Windows Azure AppFabric SDK September Release is available here for download.

Wes Yanaga announced Windows Azure AppFabric SDK V1.0–September Update Released in a 9/24/2010 post to the US ISV Evangelism blog:

Windows Azure AppFabric provides common building blocks required by .NET applications when extending their functionality to the cloud, and specifically, the Windows Azure platform. The Windows Azure AppFabric is a key component of the Windows Azure Platform. It includes two services: AppFabric Access Control and AppFabric Service Bus.

This SDK includes API libraries for building connected applications with the Windows Azure AppFabric. It spans the entire spectrum of today’s Internet applications – from rich connected applications with advanced connectivity requirements to Web-style applications that use simple protocols such as HTTP to communicate with the broadest possible range of clients.

As part of the Windows Azure AppFabric September Release, we are now providing both 32- and 64-bit versions of the Windows Azure AppFabric SDK.

In addition to addressing several deployment scenarios for 64-bit computers, Windows Azure AppFabric now enables integration of WCF services that use AppFabric Service Bus endpoints in IIS 7.5, with Windows® Server AppFabric. You can leverage Windows® Server AppFabric functionality to configure and monitor method invocations for the Service Bus endpoints.

The Windows Azure AppFabric SDK September Release is available here for download.

The downloadable file is dated 9/23/2010.

Zane Adam posted Announcing BizTalk Server 2010 RTM and General Availability date on 9/23/2010:

Today we are excited to announce that we have Released to Manufacturing (RTM) BizTalk Server 2010 and it will be available for purchase starting October 1st, 2010.

BizTalk Server 2010 is the seventh major release of our flagship enterprise integration product, which includes new support for Windows Server AppFabric to provide pre-integrated support for developing new composite applications. This allows customers to maximize the value of existing Line of Business (LOB) systems by integrating and automating their business processes, and putting real-time, end-to-end enterprise integration within reach of every customer. All this is coupled with the confidence of a proven mission-critical integration infrastructure that is available to companies of all sizes at a fraction of the cost of other competing products.

According to Steven Smith, President and Chief Executive Officer at GCommerce, “GCommerce has bet our mission-critical value-chain functionality on BizTalk Server 2010 which we use to automate the secure and reliable exchange of information with our trading partners. Additionally, the Windows Azure Platform allows us to extend our existing business-to-business process into new markets such as our cloud-based inventory solution called the Virtual Inventory Cloud (VIC), which is based upon Windows Azure and SQL Azure. This extension to our trading platform allows us to connect our buyers experience from the purchasing process within VIC all the way through to the on-premises business systems built around BizTalk. This new GCommerce capability drives both top-line revenue as well as reduces bottom line costs, by making special orders faster than before.” Details are available in the GCommerce case study. [Emphasis added.]

The new BizTalk Server 2010 release enables businesses to:

Maximize existing investments by using pre-integrated BizTalk Server 2010 support with both Windows Server AppFabric and SharePoint Server 2010 to enable new composite application scenarios;

Further reduce total cost of ownership and enhance performance for large and mission-critical deployments through a new pre-defined dashboard that enables efficient performance tuning and streamlining deployments across environments, along with pre-integration with System Center;

Achieve efficient B2B integration with highly-scalable Trading Partner Management and advanced capabilities for complex data mapping.

BizTalk Server 2010 also delivers updated platform support for Windows Server 2008 R2, SQL Server 2008 R2, .NET Framework 4 and Visual Studio 2010.

BizTalk Server is the most widely deployed product in the enterprise integration space with over 10,000 customers and 81% of the Global 100.

This release is another step in the on-going investments we have made in our application infrastructure technologies. Along with the releases earlier this calendar year of .NET Framework 4, Windows Server AppFabric and Windows Azure AppFabric, BizTalk Server 2010 makes it easier for customers to build composite applications that span both on-premises LOB systems and new applications deployed onto the public cloud.

To learn more regarding the BizTalk Server 2010 release and download our new free developer edition, please visit BizTalk Server website; more detailed product announcements about BizTalk Server 2010 are also contained on the BizTalk Server product team blog. You can learn more about Microsoft’s Application Infrastructure capabilities by exploring on-demand training at www.appinfrastructure.com.

Brian Loesgen adds download locations for BizTalk Server 2010 Evaluation and Developer editions in this 9/23/2010 post:

The BizTalk team announced today that the latest version of BizTalk Server, BizTalk Server 2010, has been released to manufacturing. It will be available for purchase starting October 1, 2010.

The Developer and Evaluation Editions are available effective today at the links below.

For those of you who were working with the public beta, your results may vary, but I was able to do an in-place upgrade from the beta to the RTM with no issues at all; all BizTalk applications, settings, artifacts, BAM, etc. were maintained. Nice!

The Developer Edition (which is now FREE) download is available here:

The Evaluation Edition download is available here:

http://www.microsoft.com/downloads/en/details.aspx?FamilyID=8b1069cf-202b-462b-8d10-bec65d315c65

I won’t re-hash what the team said, you can read *all* about the new release at the official BizTalk team blog post here:

Summary by Charles Young, one of my co-authors on BizTalk Server 2010 Unleashed, is here. http://geekswithblogs.net/cyoung/archive/2010/09/23/biztalk-server-2010-rtm.aspx

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Bart Wullems posted on 9/25/2010 a reminder about the Layered Architecture Sample for Azure on CodePlex:

When browsing through CodePlex, I found the following interesting sample: Layered Architecture Sample for Azure. It is a layered .NET application ported to the Windows Azure platform to demonstrate how a carefully designed layered application can be easily deployed to the cloud. It also illustrates how layering concepts can be applied in the cloud environment.

•• Jeff Sandquist announced that Channel9 v5 uses Windows Azure, SQL Azure, Azure Table Storage, and memcache in his Welcome to the all new Channel 9 post of 9/17/2010 (missed when posted):

Welcome to the all new Channel 9. This is the fifth major release of Channel 9 since our original launch back on April 6, 2004.

With this major release we focused on your top requests in the forums, along with telemetry data, to guide our design. We've made it easier to find content through our browse section and did a dramatic redesign across the board for our popular Channel 9 Shows Area, the forums and learning.

Behind the scenes, this release is a complete rewrite of our code and a rebuild of our infrastructure and development methodology.

This fifth edition of Channel 9 is built using [emphasis added]:

- ASP.NET MVC

- SparkView engine

- jQuery

- Silverlight 4

- Windows Azure, SQL Azure, Azure Table Storage, and memcache

- ECN for the Content Delivery Network (videos)

For a deeper dive into how we built the site, watch Mike Sampson and Charles Torre Go Deep on Rev 9. All of these code improvements have resulted in our page load times improving dramatically and a greater simpli[fi]cation of our server environment too.

After well over a year of planning, development and testing, today is the day for us to make the change over to the new Channel 9. We have been humbled by your never ending support, feedback and enthusiasm for Channel 9.

If you have feedback where we can make this a better place for all of us, please leave your feedback over on our Connect Site. We're listening.

On behalf of the entire Channel 9 Team, welcome to Rev 9.

Jeff Sandquist

Note: “Rev 9” appears to be a typo. The current version is Channel9 v5.

John C. Stame reminded readers about Microsoft Patterns and Practices – Moving Applications to the Cloud on Windows Azure:

Microsoft Patterns & Practices provides Microsoft’s applied engineering guidance and includes both production-quality source code and documentation. It’s a great resource with mounds of architectural guidance around Microsoft platforms.

Back in June, Patterns & Practices released a new book on Windows Azure, titled “Moving Applications to the Cloud”. I recently came across it and found it to be a great resource for my customers and architects who are looking at moving existing workloads in their enterprise to Windows Azure. Download it (it’s free) and check it out.

Maarten Balliauw described Windows Azure Diagnostics in PHP and his Windows Azure Diagnostics Manager for PHP on 9/23/2010:

When working with PHP on Windows Azure, chances are you may want to have a look at what’s going on: log files, crash dumps, performance counters, … All this is valuable information when investigating application issues or doing performance tuning.

Diagnostics on Windows Azure work slightly differently than in a regular web application. Usually, you log into a machine via remote desktop or SSH and inspect the log files: management tools (remote desktop or SSH) and data (log files) are all on the same machine. This approach also works with 2 machines, maybe even with 3. However, on Windows Azure you may scale beyond that, and you would have a hard time seeing what is happening in your application with that approach. A solution for this? Meet the Diagnostics Monitor.

The Windows Azure Diagnostics Monitor is a separate process that runs on every virtual machine in your Windows Azure deployment. It collects log data, traces, performance counter values and such. This data is copied into a storage account (blobs and tables) where you can read and analyze it. This is useful because all the diagnostics information from your 300 virtual machines is consolidated in one place and can easily be analyzed with tools like the one Cerebrata has to offer.
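Once every instance's data sits in one storage account, analysis becomes a simple aggregation. The Python sketch below assumes the rows have already been read from the performance-counter table; the column names RoleInstance, CounterName and CounterValue follow the WADPerformanceCountersTable table that Windows Azure Diagnostics writes, but treat that schema, the instance names and the sample values as assumptions.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable


def summarize(rows: Iterable[dict]) -> Dict[str, dict]:
    """Collapse per-instance counter samples into one summary per counter."""
    values = defaultdict(list)
    instances = defaultdict(set)
    for row in rows:
        name = row["CounterName"]
        values[name].append(row["CounterValue"])
        instances[name].add(row["RoleInstance"])
    return {
        name: {"instances": len(instances[name]), "avg": mean(vals), "max": max(vals)}
        for name, vals in values.items()
    }


# Hypothetical samples from three instances, already copied to central storage.
CPU = r"\Processor(_Total)\% Processor Time"
rows = [
    {"RoleInstance": "WebRole_IN_0", "CounterName": CPU, "CounterValue": 40.0},
    {"RoleInstance": "WebRole_IN_1", "CounterName": CPU, "CounterValue": 60.0},
    {"RoleInstance": "WebRole_IN_2", "CounterName": CPU, "CounterValue": 80.0},
]
print(summarize(rows))
```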

Configuring diagnostics

Configuring diagnostics can be done using the Windows Azure Diagnostics API if you are working with .NET. For PHP there is also support in the latest version of the Windows Azure SDK for PHP. Both work on an XML-based configuration file that is stored in a blob storage account associated with your Windows Azure solution.

The following is an example of how you can subscribe to a Windows performance counter:

```php
<?php
/** Microsoft_WindowsAzure_Storage_Blob */
require_once 'Microsoft/WindowsAzure/Storage/Blob.php';

/** Microsoft_WindowsAzure_Diagnostics_Manager */
require_once 'Microsoft/WindowsAzure/Diagnostics/Manager.php';

$storageClient = new Microsoft_WindowsAzure_Storage_Blob();
$manager = new Microsoft_WindowsAzure_Diagnostics_Manager($storageClient);

// Read the current diagnostics configuration for this role instance
$configuration = $manager->getConfigurationForCurrentRoleInstance();

// Subscribe to \Processor(*)\% Processor Time
$configuration->DataSources->PerformanceCounters->addSubscription('\Processor(*)\% Processor Time', 1);

// Write the updated configuration back to blob storage
$manager->setConfigurationForCurrentRoleInstance($configuration);
```

Introducing: Windows Azure Diagnostics Manager for PHP

Just for fun (and yes, I have a crazy definition of “fun”), I started working on a more user-friendly approach for configuring your Windows Azure deployment’s diagnostics: Windows Azure Diagnostics Manager for PHP. It only handles configuration, and you still have to know how performance counters work, but it saves you a lot of coding.

The application is packed into one large PHP file and coded against every best-practice around, but it does the job. Simply download it and add it to your application. Once deployed (on dev fabric or Windows Azure), you can navigate to diagnostics.php, log in with the credentials you specified and start configuring your diagnostics infrastructure. Easy, no?

Here’s the download: diagnostics.php (27.78 kb)

(note that it is best to get the latest source code commit for the Windows Azure SDK for PHP if you want to configure custom directory logging)

The Windows Azure Team posted Real World Windows Azure: Interview with David Ruiz, Vice President of Products at Ravenflow on 9/23/2010:

As part of the Real World Windows Azure series, we talked to David Ruiz, Vice President of Products at Ravenflow, about using the Windows Azure platform to deliver the company's cloud-based process analysis and visualization solution. Here's what he had to say:

MSDN: Tell us about Ravenflow and the services you offer.

Ruiz: Ravenflow is a Microsoft Certified Partner that uses a patented natural language technology, called RAVEN, to turn text descriptions into business process diagrams. Our customers use RAVEN to quickly analyze and visualize their business processes, application requirements, and system engineering needs.

MSDN: What were the biggest challenges that Ravenflow faced prior to implementing the Windows Azure platform?

Ruiz: We already offered a desktop application but wanted to create a web-based version to give customers anywhere, anytime access to our unique process visualization capabilities. What we really wanted to do was expand the market for process visualization and make Ravenflow easily available for more customers. At the same time, we wanted to deliver a rich user experience while using our existing development skills and deep experience with the Microsoft .NET Framework.

MSDN: Can you describe some of the technical aspects of the solution you built by using the Windows Azure platform to help you reach more customers?

Ruiz: After evaluating other cloud platforms, we chose the Microsoft-hosted Windows Azure platform and quickly created a scalable new service: RAVEN Cloud. It has a rich, front-end interface that we developed by using the Microsoft Silverlight 3 browser plug-in. Once a customer enters a narrative into the interface, Web roles in Windows Azure place the narrative in Queue storage. From there, Worker roles access the narrative and coordinate and perform the RAVEN language analysis. The results are placed in Blob storage where they are collected by a Worker role, aggregated back together, and then returned to the Web role for final processing. RAVEN Cloud also takes advantage of Microsoft SQL Azure to store application logs as well as user account and tracking information.

RAVEN Cloud uses the patented RAVEN natural language technology to generate accurate process diagrams from the text that users enter through a website.
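The fan-out/fan-in flow Ruiz describes (queue in, parallel workers, partial results in blob storage, one aggregating step) can be sketched in a single Python process. Everything here is a hypothetical stand-in: `queue.Queue` plays Queue storage, a dictionary plays Blob storage, `analyze` replaces the RAVEN engine, and splitting the narrative by sentence is an assumption, since the interview does not say how the work is partitioned.

```python
import queue
import threading
from typing import Dict


def analyze(fragment: str) -> str:
    """Hypothetical stand-in for the RAVEN natural language analysis."""
    return "step(" + fragment + ")"


def run_pipeline(narrative: str, workers: int = 3) -> str:
    work = queue.Queue()          # stands in for Windows Azure Queue storage
    blobs: Dict[str, str] = {}    # stands in for Blob storage
    lock = threading.Lock()

    # Web role side: put the work on the queue. Splitting the narrative into
    # sentences first is an assumption made for this sketch.
    fragments = [s.strip() for s in narrative.split(".") if s.strip()]
    for index, fragment in enumerate(fragments):
        work.put((index, fragment))

    def worker() -> None:
        # Worker roles: dequeue, analyze, and store each partial result.
        while True:
            try:
                index, fragment = work.get_nowait()
            except queue.Empty:
                return
            result = analyze(fragment)
            with lock:
                blobs["result-%d" % index] = result

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    # Aggregating worker: reassemble partial results in order for the web role.
    return "; ".join(blobs["result-%d" % i] for i in range(len(fragments)))


print(run_pipeline("Customer submits order. Clerk checks stock. Clerk ships order."))
```

Numbering the work items before they are queued is what lets the aggregating step restore the original order, whichever worker finishes first.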

MSDN: What makes your solution unique?

Ruiz: The natural language engine behind RAVEN Cloud and its ability to generate diagrams from text is what makes it really stand out. Natural language analysis and visualization is a complex mathematical operation, and the Windows Azure platform is a natural fit for such compute-heavy processes. The elastic scalability features of Windows Azure allow us to scale with ease as the number of users grows.

MSDN: Are you offering RAVEN Cloud to any new customer segments or niche markets?

Ruiz: Since launching RAVEN Cloud in May 2010, we have served more than 1,000 customers each week, a number that continues to grow. While there is no specific industry segment that stands out, we are finding a very significant audience of business analysts who struggle with process modelling and like the fact that RAVEN Cloud helps them do that automatically.

MSDN: What kinds of benefits is Ravenflow realizing with Windows Azure?

Ruiz: We were able to quickly develop and deploy our natural language engine to the cloud as a service, and we look forward to improved time-to-market for new features and products in the future. By offering our software as a service, we have not only opened new business opportunities and extended the market reach for our process visualization solutions, but we can also maintain high levels of performance for our CPU-intensive application while minimizing operating costs.

Read the full story at: www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000008080

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

John C. Stame described David Chou’s Building Highly Scalable Java Apps on Windows Azure presentation to the JavaOne conference in this 9/23/2010 post:

One of my brilliant colleagues and friends, David Chou (Architect Evangelist) at Microsoft, just posted the slide deck from his JavaOne talk on SlideShare.net. David is a former Java architect and has been at Microsoft for several years talking about our platform, and now specifically about our Windows Azure Platform.

I have embedded his presentation [link] and you can also click here to take you to SlideShare, where you can find more of David’s presentations.

Steve Marx (@smarx) explained Web Page Image Capture in Windows Azure in a CloudCover video episode according to this 9/23/2010 post:

In this week’s upcoming episode of Cloud Cover [see below], Ryan and I will show http://webcapture.cloudapp.net, a little app that captures images of web pages, like the capture of http://silverlight.net you see on the right.

When I’ve seen people on the forum or in email asking about how to do this, they’re usually running into trouble using IECapt or the .NET WebBrowser object. This is most likely due to the way applications are run in Windows Azure (inside a job object, where a number of UI-related things don’t work). I’ve found that CutyCapt works great, so that’s what I used.

Using Local Storage

The application uses CutyCapt, a Qt- and WebKit-based web page rendering tool. Because that tool writes its output to a file, I’m using local storage on the VM to save the image and then copying the image to its permanent home in blob storage.
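The write-locally-then-copy pattern described above does not depend on C#. Here is a minimal Python sketch of it under stated assumptions: a temporary directory stands in for the role's local storage (in a .NET role that path would come from RoleEnvironment.GetLocalResource(...).RootPath), a dictionary stands in for the blob container, and `render_to_blob` and `fake_render` are hypothetical names.

```python
import os
import tempfile
from typing import Callable, Dict


def render_to_blob(render: Callable[[str], None], blob_store: Dict[str, bytes], name: str) -> bool:
    """Let a file-only tool write to scratch space, then copy the result to durable storage."""
    # The temporary directory stands in for a Windows Azure local storage resource.
    with tempfile.TemporaryDirectory() as scratch:
        output_path = os.path.join(scratch, name + ".png")
        render(output_path)                      # e.g. an external renderer such as CutyCapt
        if not os.path.exists(output_path):      # the tool may fail without raising
            return False
        with open(output_path, "rb") as f:
            blob_store[name] = f.read()          # stands in for uploading to blob storage
        return True                              # scratch files are deleted on exit


def fake_render(path: str) -> None:
    """Hypothetical renderer used only for this example."""
    with open(path, "wb") as f:
        f.write(b"\x89PNG placeholder")


store: Dict[str, bytes] = {}
print(render_to_blob(fake_render, store, "capture-1"), sorted(store))
```

Checking that the output file exists, rather than trusting the tool's exit, mirrors the `File.Exists` check in the original backend code.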

This is the meat of the backend processing:

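```csharp
// Runs inside Run(); url, outputPath (a file in local storage), container and guid are set earlier.
// RoleRoot holds the role's root drive (for example "E:") with no trailing backslash.
var proc = new Process()
{
    StartInfo = new ProcessStartInfo(
        Environment.GetEnvironmentVariable("RoleRoot") + @"\approot\CutyCapt.exe",
        string.Format(@"--url=""{0}"" --out=""{1}""", url, outputPath))
    {
        UseShellExecute = false
    }
};
proc.Start();
proc.WaitForExit();

// CutyCapt writes the PNG to local storage; copy it to blob storage, then delete the local file.
if (File.Exists(outputPath))
{
    var blob = container.GetBlobReference(guid);
    blob.Properties.ContentType = "image/png";
    blob.UploadFile(outputPath);
    File.Delete(outputPath);
}
```

Combining Roles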

Typically, this sort of architecture (a web UI which creates work that can be done asynchronously) is accomplished in Windows Azure with two roles. A web role will present the web UI and enqueue work on a queue, and a worker role will pick up messages from that queue and do the work.
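The split described here hinges on the queue: the front end only enqueues, and a long-running loop dequeues. The Python sketch below runs both halves in one process to show that they share nothing but the queue, which is what allows the loop to live either in a worker role or in a web role's Run() override. The queue, dictionary and function names are hypothetical stand-ins, not Windows Azure APIs.

```python
import queue
import threading
import uuid

jobs = queue.Queue()   # stands in for the Windows Azure queue
results = {}           # stands in for blob storage


def handle_request(url: str) -> str:
    """Front end: enqueue the work and return an ID right away."""
    job_id = uuid.uuid4().hex
    jobs.put((job_id, url))
    return job_id


def run(stop: threading.Event) -> None:
    """Back end: the loop a role's Run() override would contain."""
    while not stop.is_set():
        try:
            job_id, url = jobs.get(timeout=0.1)
        except queue.Empty:
            continue
        results[job_id] = "capture of " + url   # hypothetical stand-in for the real work
        jobs.task_done()


stop = threading.Event()
backend = threading.Thread(target=run, args=(stop,), daemon=True)
backend.start()
job = handle_request("http://silverlight.net")
jobs.join()                  # wait until the back end has processed the job
stop.set()
backend.join()
print(results[job])          # capture of http://silverlight.net
```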

For this application, I decided to combine those two things into a single role. It’s a web role, and the UI part of it looks like anything else (ASP.NET MVC for the UI, and a queue to track the work). The interesting part is in WebRole.cs. Most people don’t realize that the entire role instance lifecycle is available in web roles just as it is in worker roles. Even though the template you use in Visual Studio doesn’t do it, you can simply override Run() as you do in a worker role and put all your work there. The code that I pasted above is in the Run() method in WebRole.cs. If I later want to separate the front-end from the back-end, I can just copy the code from Run() into a new worker role.

Get the Code

You can download the full source code here: http://cdn.blog.smarx.com/files/WebCapture_source.zip, but note that it’s missing CutyCapt.exe. You can download the most recent version of CutyCapt.exe here: http://cutycapt.sourceforge.net. Just drop it in the root of the web role, and everything should build and run properly.

Watch Cloud Cover!

Be sure to watch the Cloud Cover episode about http://webcapture.cloudapp.net, as well as all of our other fantastic episodes.

If you have ideas about other things you’d like to see covered on the show, be sure to ping us on Twitter (@cloudcovershow) to let us know.

Ryan Dunn (@dunnry) and Steve Marx (@smarx) produced a 00:29:47 Cloud Cover Episode 26 - Dynamic Workers on 9/19/2010:

In this episode:

- Discover how to get the most out of your Worker Roles using dynamic code.

- Learn how to enable multiple admins on your Windows Azure account.

Show Links:

Maximizing Throughput in Windows Azure – Part 1

Calling a Service Bus HTTP Endpoint with Authentication using WebClient

Requesting a Token from Access Control Service in C#

Two New Nodes for the Windows Azure CDN Enhance Service Across Asia

Steve Sfartz, Vijay Rajagopalan and Yves Yang updated their Windows Azure SDK for Java Developers to v2.0.0.20100913 on 9/13/2010 (missed when updated):

Windows Azure SDK for Java enables Java developers to take advantage of the Microsoft Cloud Services Platform – Windows Azure. It provides Java APIs for Windows Azure Storage (blobs, tables and queues) and helper classes for HTTP transport, REST and error management.

Kapil Mehra posted Announcing the [Windows Azure] Group Policy Search Service on 6/24/2010 to TechNet’s Ask Directory Devices Team blog (missed when posted):

Hello, Kapil here. I am a Product Quality PM for Windows here in Texas [i.e. someone who falls asleep cuddling his copy of Excel - Ned]. Finding a group policy when starting at the "is there even a setting?" ground zero can be tricky, especially in operating systems older than Vista that do not include filtering. A solution that we’ve recently made available is a new service in the cloud:

With the help of Group Policy Search you can easily find existing Group Policies and share them with your colleagues. The site also contains a Search Provider for Internet Explorer 7 and Internet Explorer 8 as well as a Search Connector for Windows 7. We are very interested in hearing your feedback (as responses to this blog post) about whether this solution is useful to you or if there are changes we could make to deliver more value.

Note - the Group Policy search service is currently an unsupported solution. At this time the maintenance and servicing of the site (to update the site with the latest ADMX files, for example) will be best-effort only.

Using GPS

In the search box you can see my search string “wallpaper” and below that are the search suggestions coming from the database.

In the lower left corner you see the search results, and the first result is automatically displayed on the right-hand side. The search phrase is highlighted, and in the GP tree the displayed policy is marked in bold.

Note: Users often overlook the language selector in the upper right corner, where one can switch the policy results (not the whole GUI itself) to “your” language (sorry for having only UK English and not US English ;-) …

Using the “Tree” menu item you can switch to the “registry view”, where you can see the corresponding registry key/value, or you can reset the whole tree to the beginning view:

In the “Filter” menu, you can specify which products you want to search for. If you select IE7, for example, it finds all policies that are usable with IE7, not only those available exclusively for IE7 (and not for IE6 or IE8); this is done using the policies’ “supported on” values:

In the “copy” menu you can select the value from the results that you want to copy. Usually “URL” or “Summary” is used (of course you can easily select and CTRL+C the text from the GUI as well):

In the “settings” menu you can add the search provider and/or Connector.
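The “Filter” behavior described above is an inclusive match on each policy’s “supported on” list. The following Python sketch illustrates that rule only; it is not GPS code, and the `Policy` type, the policy names and the product sets are hypothetical (real “supported on” values are descriptive strings, simplified here to sets of product names). An exclusive variant is included for contrast.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    # Hypothetical record: a policy name plus the products named in its
    # "supported on" value, simplified to a set of product labels.
    name: str
    supported_on: set = field(default_factory=set)


def filter_by_product(policies, product):
    """Inclusive match (the GPS behavior described above): keep every policy
    usable with `product`, even if it also supports other products."""
    return [p for p in policies if product in p.supported_on]


def filter_exclusive(policies, product):
    """Exclusive match, for contrast: keep only policies supported on
    `product` and nothing else."""
    return [p for p in policies if p.supported_on == {product}]


policies = [
    Policy("Policy A", {"IE7", "IE8"}),
    Policy("Policy B", {"IE7"}),
    Policy("Policy C", {"IE8"}),
]

print([p.name for p in filter_by_product(policies, "IE7")])  # ['Policy A', 'Policy B']
print([p.name for p in filter_exclusive(policies, "IE7")])   # ['Policy B']
```

Selecting IE7 therefore also returns a policy that applies to both IE7 and IE8, which is what the inclusive rule is meant to capture.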

Upcoming features (planned for the next release)

“Favorites” menu, where you can get a list of some “interesting” things like “show me all new policies IE8 introduced”:

“Extensions” menu:

We will introduce a help page that describes how to use GPS.

GPS was written by Stephanus Schulte and Jean-Pierre Regente, both MS Internet Explorer Support Engineers in Germany. Yep, this tool was written by Support for you. :-)

The cool part – it’s all running in [Windows Azure]:

Kapil “pea queue” Mehra

I would have loved to have had this tool when writing Admin911: Windows 2000 Group Policy 10 years ago:

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi delivered a 20-foot, lavishly illustrated Deployment Guide: How to Configure a Machine to Host a 3-tier LightSwitch Beta 1 Application on 9/24/2010. Following are the first few feet:

A lot of people have been asking in the forums about how to deploy a LightSwitch application, and there are some really great tutorials out there, such as Deploy and Update a LightSwitch (Beta 1) 3-tier Application.

There’s also a lot of information in the official documentation on Deployment:

- Deploying LightSwitch Applications

- How to: Deploy a LightSwitch Application

- How to: Change the Deployment Topology and Application Type

Deploying a LightSwitch application on the same machine you develop on is pretty easy because all the prerequisites are installed for you with Visual Studio LightSwitch, including SQL Server Express. In this post I’d like to walk you through configuring a clean machine, one without the development environment installed, to host a 3-tier LightSwitch application.

Before I begin please note: There is NO “go live” license for the LightSwitch Beta. You can deploy your LightSwitch applications to IIS for testing purposes ONLY. Also, the Beta currently supports only IIS 7, so you can use only Windows 7 or Windows Server 2008 (not 2003) to test deployment for Beta 1 LightSwitch applications. As you read through this guide I’ll note the sections where the team is still fixing bugs or changing experiences for the final release (RTM). Please be aware that the deployment experience will be much easier and full-featured for RTM.

In this post we will walk through details of configuring a web server first and then move onto deployment of a LightSwitch Beta 1 application. (BTW, a lot of this information should be useful even if you are creating other types of .NET web applications or services that connect to databases.)

Configuring the server

- Installing Beta 1 Prerequisites

- Verifying IIS Settings and Features

- Configuring Your Web Site for Network Access

- Configuring an Application Pool and Test Site

- Add User Accounts to the Database Server

Deploying and testing your LightSwitch application

- Publishing a LightSwitch Beta 1 Application

- Installing the LightSwitch Application Package on the Server

- Using Windows Integrated Security from the Web Application to the Database

- Launching the LightSwitch Application

So let’s get started!

Installing LightSwitch Beta 1 Prerequisites

You can use the Web Platform Installer to set up a Windows web server fast. It allows you to select IIS 7, the .NET Framework 4 and a whole bunch of other free applications and components, even PHP. All the LightSwitch prerequisites are there as well including SQL Server Express and the LightSwitch middle-tier framework. This makes it super easy to set up a machine with all the components you need.

NOTE: The team is looking at simplifying this process and possibly making the LightSwitch server component pre-reqs go away so this process will likely change for RTM.

To get started, on the Web Platform tab select the Customize link under Tools and check the Visual Studio LightSwitch Beta Server Prerequisites. This will install IIS 7, .NET Framework, SQL Server Express 2008 and SQL Server Management Studio for you so you DO NOT need to select these components again on the main screen.

If you already have one or more of these components installed then the installer will skip those. Here's the breakdown of the important dependencies that get installed:

- .NET Framework 4

- Middle-tier components for the LightSwitch runtime, for Beta 1 these are installed in the Global Assembly Cache (GAC)

- IIS 7 with the correct features turned on like ASP.NET, Windows Authentication, Management Services

- Web Deployment Tool 1.1 so you can deploy directly from the LightSwitch development environment to the server

- SQL Server Express 2008 (engine & dependencies) and SQL Server Management Studio (for database administration). (Note: LightSwitch will also work with SQL Server 2008 R2, but you will need to install that version manually if you want it.)

- WCF RIA Services Toolkit (middle-tier relies on this)

Click the "I Accept" button at the bottom of the screen and then you'll be prompted to create a SQL Server Express administrator password. Next the installer will download all the features and start installing them. Once the .NET Framework is installed you'll need to reboot the machine and then the installation will resume.

Once you get to the SQL Server Management Studio 2008 setup you may get this compatibility message:

If you do, then just click "Run Program" and after the install completes, install SQL Server 2008 Service Pack 1.

Plan on about an hour to get everything downloaded (on a fast connection) and installed.

In the next couple sections I'm going to take you on a tour of some important IIS settings, talk you through Application Pools & Identities and how to get a simple test page to show up on another networked computer. Feel free to skip to the end if you know all this already and just want to see how to actually package and deploy a LightSwitch application. :-) …

Beth continues with more details.
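A quick way to confirm the “simple test page on another networked computer” step is to request the page from a second machine. The following Python sketch is not part of Beth’s guide; the function name is hypothetical, and you would substitute your own server name and test page URL. It distinguishes a server that answers (with any HTTP status) from one that can’t be reached at all, which typically points to a firewall, name-resolution or IIS binding problem.

```python
import urllib.error
import urllib.request


def check_test_page(url, timeout=5.0):
    """Request `url` and return its HTTP status code, or None if no HTTP
    response came back at all (connection refused, timeout, DNS failure)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status  # e.g. 200 when the test page is served
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 401 or 404.
        return err.code
    except OSError:
        # URLError and socket timeouts are both OSError subclasses.
        return None


# Example (hypothetical host name; run it from a second machine):
# print(check_test_page("http://myserver/"))
```

A 401 here usually means the site is reachable but authentication still needs attention, whereas None means the request never got an HTTP answer.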

I’m glad to see someone else challenge me for the longest-blog-post-in-history prize.

Update 9/24/2010, 4:50 PM PDT Beth replies:

<Return to section navigation list>

Windows Azure Infrastructure

• Dan Grabham asserted “Integrated mobile apps mean a new dawn in automotive design” as he asked Will [auto] sat navs all be cloud-based by 2020? in a 9/24/2010 article for TechRadar.com:

A leading automotive analyst says that by the end of the decade all navigation will be cloud-based. Phil Magney, vice president of Automotive Research at analyst iSuppli, spoke about how mobile apps and the cloud are revolutionising in-car HMI (Human Machine Interface) design.

[Image] The BMW Station will be launched at the Paris Motor Show in October

"What do I use? I use my Android phone. The content is just more relevant. In five years half the navigation users will be cloud-based... by the end of the decade everything will be cloud-based. The general telematics trend is moving [towards having] open platforms and app stores." [Emphasis added.]

"On-board resources are going out in favour of cloud-based resources. No matter what you say, it's all moving to the cloud."

Magney was speaking about the changing times in HMI design at the SVOX Forum in Zurich. SVOX is a provider of text-to-speech systems and has been working on more natural speech recognition for in-car use – its partners include Clarion, Microsoft Auto and the Open Handset Alliance (Android). [Emphasis added.]

"TTS (Text To Speech) is very, very important with the emphasis on bringing messaging and email into the car", said Magney. "This heightens the need for TTS."

Mobile apps running on smartphones can provide information or even a skin which runs on the head unit. Mini Connected is an iPhone app which enables you to listen to internet radio through your iPhone but using the controls of your Mini's HMI.

The stage on from that is to have apps running on the head unit itself, with a smartphone OS like Android inside the car – however, iSuppli warns that would require work on how the apps can be distributed and who gets a share in the revenue.

Connectivity and bandwidth will, however, surely be a major stumbling block with any of these systems. Magney was vague as to how this would be paid for. "I presume they'll go to a tiered pricing plan," he tamely suggested.

Likewise, Magney was also questioned about the quality of service on mobile networks while driving. "I guess it's my belief that LTE comes along and takes care of the issues with regard to bandwidth."

In another talk, BMW's Alexandre Saad said that mobile apps have to be well designed to succeed in-car, not least because of the cycle of car design. "A head unit could be four years old... the apps are not known at the design stage. Applications should be developed independently from car production cycles and other car technology."

Phil Magney also talked about the example of the BMW Station – pictured above – which enables an iPhone to effectively be embedded into the dashboard and - via a BMW app due in early 2011 – control in-car systems. We've also previously seen Audi's Google-based system at CES while Mercedes Benz has also shown a cloud-based head unit.


The Windows Azure team reported Windows Azure Domain Name System Improvements on 9/24/2010:

The Windows Azure Domain Name System (DNS) is moving to a new globally distributed infrastructure, which will increase performance and improve reliability of DNS resolution. In particular, users who access Windows Azure applications from outside the United States will see a decrease in the time it takes to resolve the applications' DNS names. This change will take effect on October 5th, at midnight UTC. Customers don't need to do anything to get these improvements, and there will be no service interruption during the changeover.

Because of the inherently distributed nature of this new global infrastructure, the creation of new DNS names associated with a Windows Azure application can take up to two minutes to be fully addressable on a global basis. During this propagation time, new DNS records will resolve as NXDOMAIN. If you attempt to access the new DNS name during this time window, most client computers will cache these negative responses for up to 15 minutes, causing "DNS Not Found" messages. Under most circumstances you will not notice this delay, but if you promote a Windows Azure staging deployment to production for a brand new hosted service, you may observe a delay in availability of DNS resolution.
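To make the worst case concrete, the following Python sketch combines the two figures quoted above: up to two minutes of propagation and up to 15 minutes of negative caching. It assumes both limits are hit in full, and the function name and timestamps are hypothetical. A client that looks the name up one minute after it is created may not resolve it until about 16 minutes after creation, whereas a client that waits past the two-minute window never caches the NXDOMAIN answer.

```python
from datetime import datetime, timedelta

# Figures from the announcement: new names can take up to two minutes to
# propagate, and most clients cache an NXDOMAIN answer for up to 15 minutes.
PROPAGATION_DELAY = timedelta(minutes=2)
NEGATIVE_CACHE_TTL = timedelta(minutes=15)


def worst_case_resolvable_at(created, first_lookup):
    """Latest time at which a client whose first lookup happened at
    `first_lookup` can expect the new name to resolve, assuming the full
    propagation delay and the full negative-cache lifetime."""
    propagated = created + PROPAGATION_DELAY
    if first_lookup >= propagated:
        # The record is already live, so no negative answer gets cached.
        return first_lookup
    # Too early: the client caches NXDOMAIN and keeps failing until the
    # cached answer expires (and the record must also have propagated).
    return max(propagated, first_lookup + NEGATIVE_CACHE_TTL)


created = datetime(2010, 10, 5, 0, 0)
print(worst_case_resolvable_at(created, created + timedelta(minutes=1)))  # 2010-10-05 00:16:00
print(worst_case_resolvable_at(created, created + timedelta(minutes=3)))  # 2010-10-05 00:03:00
```

In practice, waiting a couple of minutes after creating a new hosted service before browsing to it avoids caching a negative answer in the first place.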

James Urquhart asked What is the 'true' cloud journey? in a 9/24/2010 post to C|Net’s The Wisdom of Clouds blog:

The adoption of cloud computing is happening today, or so say a wide variety of analysts, vendors, and even journalists. The surveys show greatly increased interest in cloud computing concepts, and even increased usage of both public and private cloud models by developers of new application systems.

(Credit: Flickr/thomas_sly)

But does your IT organization really understand its cloud journey?

Friend, colleague, and cloud blogger Chris Hoff wrote a really insightful post today that digs into the reality--worldwide--of where most companies are with cloud adoption today...at least in terms of internal "private cloud" infrastructure. [See the Cloud Security and Governance section below.] In it, he describes the difficult options that are on the table for such deployments: