Windows Azure and Cloud Computing Posts for 9/14/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Update 9/18/2010: Updated Joe Weinman’s “A Market for Melons,” a detailed economic study of cloud computing (in the Windows Azure Infrastructure section, click and scroll down). Other articles marked • have been added.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services


No significant articles today.


SQL Azure Database, Codename “Dallas” and OData

Liam Cavanagh explained Extending SQL Azure data to SQL Compact using Sync Framework 2.1 in this 9/13/2010 post to the Sync Framework Team blog:

I have just posted a webcast entitled "Extending SQL Azure data to SQL Compact using Sync Framework 2.1". This is part 2 to my original webcast where I showed how to use the Sync Framework to synchronize between an on-premises SQL Server database and a SQL Azure database. In this video I show you how to modify the code from the original webcast to allow a SQL Compact database to synchronize with a SQL Azure database.

Below I have included the main code (program.cs) associated with this console application that allows me to synchronize the Customer and Product tables from the SQL Azure AdventureWorks database to SQL Compact.

Make sure to update it with your own connection information and add references to the Sync Framework components.

See Liam’s post for the 100+ lines of C# code.

See Beth Massi’s (@BethMassi) Time to Register for Silicon Valley Code Camp! post of 9/14/2010 in the Cloud Computing Events section below.


AppFabric: Access Control and Service Bus

The Windows Azure AppFabric Team posted Windows Azure AppFabric Labs September release – announcement and scheduled maintenance on 9/14/2010; the maintenance was scheduled for 9/16/2010:

The next update to the Windows Azure AppFabric LABS environment is scheduled for September 16, 2010 (Thursday). Users will have NO access to the AppFabric LABS portal and services during the scheduled maintenance down time.

When:

- START: September 16, 2010, 10am PST

- END: September 16, 2010, 6pm PST

Impact Alert: The AppFabric LABS environment (Service Bus, Access Control and portal) will be unavailable during this period. Additional impacts are described below.

Action Required: Existing accounts and Service Namespaces will be available after the services are deployed.

However, ACS Identity Providers, Rule Groups, Relying Parties, Service Keys, and Service Identities will NOT be persisted and restored after the maintenance. Users will need to back up their data independently if they wish to restore it after the Windows Azure AppFabric LABS September Release.

Thanks for working in LABS and giving us valuable feedback. Once the update becomes available, we’ll post the details via this blog.

Stay tuned for the upcoming LABS release!

The Windows Azure AppFabric Team

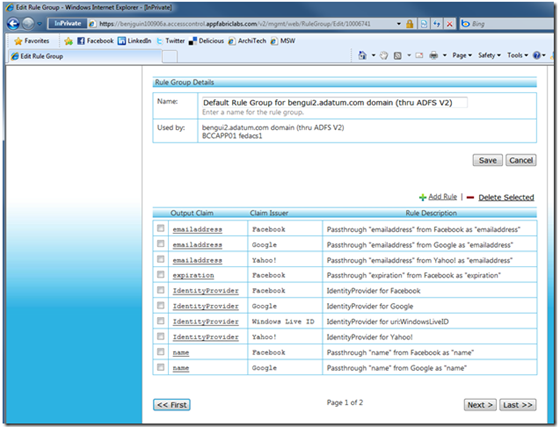

Benjamin Guinebertière posted a bilingual Fédération d’identité: ACS V2 via ADFS V2 | Federated Identity: ACS V2 via ADFS V2 analysis of Access Control Services and Active Directory Federation Services to his MSDN blog on 9/14/2010. Here is the English content:

In August 2010, the Windows Azure AppFabric team released a Community Technology Preview (CTP) of Access Control Service (ACS) V2. There were blog posts and videos about the new features of this release. Here are a few links:

- portal.appfabriclabs.com: the portal where the CTP is available

- http://channel9.msdn.com/posts/justinjsmith/ACS-Labs-Walkthrough/: demo

- http://blogs.msdn.com/b/justinjsmith/archive/2010/08/04/major-update-to-acs-now-available.aspx

- http://blogs.msdn.com/b/vbertocci/archive/2010/08/05/new-labs-release-of-acs-marries-web-identities-and-ws-blows-your-mind.aspx

- http://channel9.msdn.com/shows/Identity/Introducing-the-new-features-of-the-August-Labs-release-of-the-Access-Control-Service: Vittorio Bertocci interviewing Justin Smith

- http://blogs.msdn.com/b/vbertocci/: Vittorio Bertocci’s blog, where he has been writing a lot about ACS V2 lately

Suppose we have a relying party (RP), a Web site that already uses ADFS V2 as its security token service (STS).

Typically, users who have Active Directory credentials can access that Web site, even when it is not hosted inside the domain. For example, the Web site may be hosted in Windows Azure or anywhere else on the Internet.

In order to be able to authenticate other users (without AD credentials), one of the possible topologies is the following:

A motivation for this topology would be to have ADFS V2 controlling all the claims that are provided to the RP, as well as the list of available identity providers for the RP.

Each component contributes the following:

- ACS V2 translates OpenID (e.g., Yahoo!), Facebook Connect and other protocols into the WS-Federation protocol, which ADFS V2 understands.

- ADFS V2 can generate claims from Windows authentication as well as from external STSs such as ACS V2, in a unified way (in terms of administration).

Here is how we can configure ADFS.

There are two providers: Active Directory, and Access Control Services V2:

Here are the rules for the Active Directory identity provider:

These are the rules for the RP (the application residing in Windows Azure in this example):

ACS V2 is configured in the following way: It has Facebook, Google, Windows Live ID and Yahoo! as identity providers.

ADFS V2 is an RP for ACS V2:

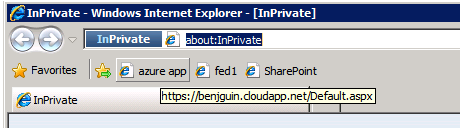

Let’s now test from a user session within the domain (user test-a).

The application redirects the browser to the only STS it knows: ADFS V2. Because ADFS V2 has several possible identity providers, it asks the user which one to use. The user keeps the default (AD).

The RP application displays the claims it received (the page comes from a WIF template).

Because the user was authenticated through Windows authentication, ADFS V2 added a custom role called FromADFS-Administrator, so the application lets the user access more features.

This was done by a rule inside ADFS V2. As for any authenticated user, another rule also added the FromADFS-Public role.

Let’s close the browser and test again. This time, the user chooses ACS V2 as the identity provider.

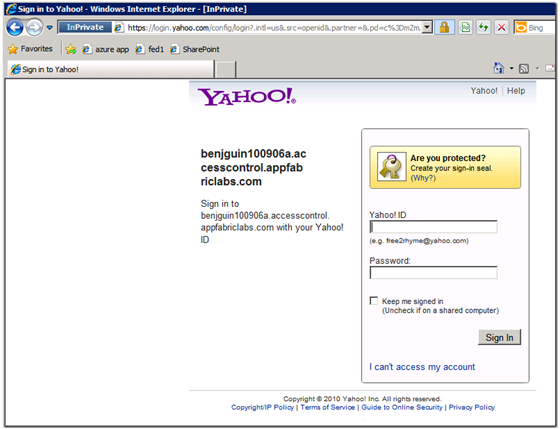

Because ACS V2 also has several possible identity providers, it asks the user to choose one. In this scenario, the user chooses Yahoo!:

Yahoo! asks the user to agree to send information to ACS V2. The user agrees.

Because the user was not authenticated through Windows authentication, ADFS V2 did not add FromADFS-Administrator. As for any authenticated user, another rule added the FromADFS-Public role.
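The effect of the two role rules in both test sessions can be simulated in a few lines. This is a minimal Python sketch, not the ADFS V2 claim rule language: the `IncomingToken` type, the provider labels "Active Directory" and "ACS V2", and the plain `role` key are hypothetical stand-ins for the real claim types and rule configuration.

```python
from dataclasses import dataclass, field


@dataclass
class IncomingToken:
    # Hypothetical labels for the two claims providers configured in ADFS V2
    identity_provider: str  # "Active Directory" or "ACS V2"
    claims: dict = field(default_factory=dict)


def apply_issuance_rules(token: IncomingToken) -> dict:
    """Simulate the RP issuance rules: pass incoming claims through, then add roles."""
    issued = dict(token.claims)  # pass incoming claims through unchanged
    roles = ["FromADFS-Public"]  # rule: any authenticated user
    if token.identity_provider == "Active Directory":
        roles.append("FromADFS-Administrator")  # rule: Windows-authenticated users only
    issued["role"] = roles
    return issued


if __name__ == "__main__":
    ad_user = IncomingToken("Active Directory", {"name": "test-a"})
    yahoo_user = IncomingToken("ACS V2", {"email": "someone@example.com"})
    print(apply_issuance_rules(ad_user)["role"])     # ['FromADFS-Public', 'FromADFS-Administrator']
    print(apply_issuance_rules(yahoo_user)["role"])  # ['FromADFS-Public']
```

Keying the administrator role on the identity provider, rather than on anything the external provider asserts, keeps that decision inside ADFS V2, which matches the motivation stated for this topology.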

With the roles and other claims it receives, the RP has all the information it needs for authorization and personalization.

Because ACS V2 is a cloud service, if other popular identity providers or even protocols emerge on the Internet, it may be easy to add them from the ACS V2 portal without changing ADFS V2 rules or application code.

Benjamin has filled a longtime need for a fully illustrated example of ACS/ADFS V2 authentication. Thanks!

Anil John posted IIW East Session on Role of Government as Identity Oracle (Attribute Provider) on 9/12/2010:

My proposal of this session at IIW East was driven by the following context:

- We are moving into an environment where dynamic, contextual, policy driven mechanisms are needed to make real time access control decisions at the moment of need

- The inputs to these decisions are attributes/claims that reside in multiple authoritative sources

- The authoritativeness/relevance of these attributes is based on the closeness of the relationship that the keeper/data-steward of the source has with the subject. I would highly recommend reading the Burton Group paper (FREE) by Bob Blakley, “A Relationship Layer for the Web . . . and for Enterprises, Too,” which provides very cogent and relevant reasoning as to why the authoritativeness of attributes is driven by the relationship between the subject and the attribute provider

- There is a set of attributes that the Government maintains throughout its lifecycle, on behalf of citizens, that have significant value in multiple transactions a citizen conducts. As such, is there a need for the government to provide these attributes for use, and is there a market that could build value on top of what the government can offer?

Some of the vocal folks at this session, in no particular order, included (my apologies to folks I may have missed):

- Dr. Peter Alterman, NIH

- Ian Glazer, Gartner

- Gerry Beuchelt, MITRE

- Nishant Kaushik, Oracle

- Laura Hunter, Microsoft

- Pamela Dingle, Ping Identity

- Mary Ruddy, Meristic

- Me, Citizen

We started out the session converging on (an aspect of) an Identity Oracle as something that provides an answer to a question but not an attribute. The classic example is someone who wishes to buy alcohol, which is age-restricted in the US. The question asked of an Oracle would be "Is this person old enough to buy alcohol?" and the answer that comes back is "Yes/No," with the Oracle handling all of the heavy lifting on the backend regarding state laws that may differ, preservation of Personally Identifiable Information (PII), etc. Contrast this with an Attribute Provider, which you would ask "What is this person's Birthday?" and which releases PII.
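The difference between the two interfaces can be made concrete with a small Python sketch. Everything in it is hypothetical: the subject ID, the stored birth date, and the per-state minimum-age table stand in for whatever the authoritative source and the oracle actually hold. The point is only that the Attribute Provider returns the birth date itself, while the Oracle returns a boolean and keeps both the PII and the jurisdiction rules on its side.

```python
from datetime import date

# Hypothetical data held by an authoritative source (not real records).
_BIRTH_DATES = {"citizen-42": date(1990, 6, 15)}

# Illustrative policy table the oracle maintains so relying parties don't have to.
_MINIMUM_DRINKING_AGE = {"WA": 21, "CA": 21}


def attribute_provider_birth_date(subject_id: str) -> date:
    """Attribute Provider: answers 'What is this person's birthday?' (releases PII)."""
    return _BIRTH_DATES[subject_id]


def _age_on(birth: date, today: date) -> int:
    """Whole years elapsed between the birth date and today."""
    had_birthday = (today.month, today.day) >= (birth.month, birth.day)
    return today.year - birth.year - (0 if had_birthday else 1)


def oracle_is_old_enough_to_buy_alcohol(subject_id: str, state: str, today: date) -> bool:
    """Identity Oracle: answers yes/no; the birth date never leaves this function."""
    minimum_age = _MINIMUM_DRINKING_AGE[state]
    return _age_on(_BIRTH_DATES[subject_id], today) >= minimum_age


if __name__ == "__main__":
    print(oracle_is_old_enough_to_buy_alcohol("citizen-42", "WA", date(2010, 9, 14)))  # False
    print(oracle_is_old_enough_to_buy_alcohol("citizen-42", "WA", date(2011, 6, 15)))  # True
```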

It was noted that the Government (Federal/State/Local/Tribal) is authoritative for only a finite number of attributes such as Passport #, Citizenship, Driver's License, Social Security Number etc and that the issue at present is that there does not exist an "Attribute Infrastructure" within the Government. The Federal ICAM Backend Attribute Exchange (BAE) is seen as a mechanism that will move the Government along on this path, but while there is clarity around the technical implementation, there are still outstanding governance issues that need to be resolved.

There was significant discussion about Attribute Quality, Assurance Levels and Authoritativeness. In my own mind, I split them up into Operational Issues and Governance Principles. In the Operational Issues arena, existing experiences with attribute providers have shown the challenges around data quality and service level agreements, which need to be worked out and defined as part of a multi-party agreement rather than bilateral agreements. On the Governance Principles side, there are potentially two philosophies for how to deal with authoritativeness:

- A source is designated as authoritative or not and what needs to be resolved from the perspective of an attribute service is how to show the provenance of that data as coming from the authoritative source

- There are multiple sources of the same attribute and there needs to be the equivalent of a Level of Assurance that can be associated with each attribute

At this point, I am very much in the first camp, but as pointed out at the session, this does NOT preclude the existence of second-party attribute services that add value on top of the services provided by the authoritative sources. An example of this is an organization's desire to do due diligence checks on potential employees. As part of this process, it may find value in contracting a service provider that aggregates attributes from multiple sources (some gov't provided and others not) and delivers them in an "Attribute Contract" that satisfies its business need. Contrast this with the organization having to build the infrastructure, capabilities and business agreements with multiple attribute providers itself. The second-party provider may offer higher availability and a more targeted Attribute Contract, but with the caveat that some of the attributes it provides may be 12-18 hours out-of-date, etc. Ultimately, it was noted that all decisions are local, and decisions about factors such as authoritativeness and freshness are driven by the policies of the organization.
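A local policy that combines the first philosophy (provenance must trace to the designated authoritative source) with a freshness limit might look like the following Python sketch. The attribute name, the source identifier `gov:passport-office`, and the 12-hour limit are hypothetical values chosen for illustration; real deployments would express such policy in whatever authorization system they use.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AttributeAssertion:
    name: str
    value: str
    source: str             # provenance: who the value originally came from
    retrieved_at: datetime  # when the provider last refreshed the value


# Hypothetical local policy: the designated authoritative source and the
# maximum acceptable staleness for each attribute this organization relies on.
POLICY = {
    "citizenship": {"authoritative_source": "gov:passport-office", "max_age": timedelta(hours=12)},
}


def accept(assertion: AttributeAssertion, now: datetime) -> bool:
    """Return True only if the assertion satisfies the local policy."""
    rule = POLICY.get(assertion.name)
    if rule is None:
        return False  # no policy for this attribute: reject by default
    if assertion.source != rule["authoritative_source"]:
        return False  # first camp: provenance must trace to the authoritative source
    return now - assertion.retrieved_at <= rule["max_age"]  # freshness check
```

For example, with `now = datetime(2010, 9, 14, 12, 0)`, an assertion from `gov:passport-office` refreshed two hours earlier is accepted, while one refreshed 18 hours earlier is rejected as stale, matching the caveat that aggregated attributes may lag behind the source.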

In a lot of ways, in this discussion we moved away from the perspective of the Government as an Identity Oracle and focused on it more as an Attribute Provider. A path forward seemed to be encouraging an eco-system that leverages attribute providers (Gov't and Others) to offer "Oracle Services," whether from the Cloud or not. As such, the Oracle on one end has a business relationship with the Government, which is the authoritative source of attributes (because of its close relationship with the citizen), and on the other end has a close contractual relationship with organizations, such as financial service institutions, that leverage its services. This, I think, puts the relationship one step removed from what was originally envisioned as an Identity Oracle. Nishant brought this up after the session in a sidebar with Ian and myself. I hope there is further conversation on this topic.

My takeaway from this session was that there is value and a business need in the Government being an attribute provider, technical infrastructure is being put into place that could enable this, and while many issues regarding governance and data quality still remain to be resolved, there is a marketplace and opportunity for Attribute Aggregators/Oracles that would like to participate in this emerging identity eco-system.

Raw notes from the session can be found here courtesy of Ian Glazer.

For more about IIW, see http://www.internetidentityworkshop.com/.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Case Studies Team reported Game Developer [Kobojo] Meets Demand and Prepares for Success with Scalable Cloud Solution on 9/14/2010:

Kobojo is a young company that develops online, casual games for social networks, such as Facebook. It is not unusual for social games to reach millions of users in a short period of time. After the tremendous success of one of the company’s games, the 10-server infrastructure that Kobojo had in place was nearly at capacity. Kobojo needed a highly scalable infrastructure—one that it could quickly scale up to respond to unpredictable game demand.

The company decided to adopt Windows Azure to host and manage its latest game, RobotZ. By using Windows Azure, Kobojo now has in place an infrastructure that it can rapidly scale up, a component that is critical to the company’s success and future growth. Also, by relying on Microsoft data centers, Kobojo simplified IT management while avoiding capital expenses, helping the company focus its efforts on creating compelling games.

Business Situation

Knowing that its infrastructure would not meet long-term capacity needs, Kobojo needed to implement an infrastructure that could rapidly scale to meet the unpredictable demand of social games.

Solution

After evaluating many cloud platforms, including Amazon Elastic Compute Cloud (EC2), Kobojo implemented the Windows Azure platform and quickly developed and deployed its latest online game: RobotZ.

Benefits

- Improved scalability

- Simplified IT maintenance

- Avoided capital expenses

- Prepared for future growth

Software and Services

- Windows Azure

- Microsoft SQL Server 2008

- Microsoft Visual Studio 2008

- Microsoft .NET Framework

CloudBasic claimed it released “a new Application Platform that aims to provide to the cloud application environment the same level of productivity gains that Visual Basic provided in the client-server model” in its CloudBasic™ Revolutionizes the Development of Business Applications for Windows AZURE™ at DEMO Fall 2010 press release of 9/14/2010:

CloudBasic™, the leader in Rapid Application Development tools for Windows AZURE™, today announced the release of the CloudBasic Application Platform, a fully integrated and easily extendable cloud application environment. Announced at the DEMO Fall 2010 conference, the CloudBasic™ Application Platform for Windows AZURE™ provides the estimated 10 Million .NET developers worldwide with an immediate alternative to the future release of the VMForce enterprise cloud.

Purposely built and optimized for cloud application development, CloudBasic™ is a high performance platform that avoids the inherent inefficiencies and complexities of legacy cloud-enabled solutions. The designers' decision to intentionally stick with familiar and well understood technologies, like the .NET Framework and the SQL language, provides for a great ease of development and a very short learning curve.

A primary focus of CloudBasic™ is to simplify and speed up the adoption of cloud computing and especially Windows AZURE by organizations with limited IT resources. A patent-pending technology, called CloudBasic AppSheets™, allows nontechnical users to create within minutes fully functional, ready for enterprise integration applications based on existing spreadsheet designs. All formatting, references and calculations are carried over to preserve the look-and-feel of the original spreadsheet and facilitate a simple and elegant transition. For organizations that have discovered the benefits of cloud computing and have migrated from EXCEL to Google Docs, CloudBasic AppSheets™ for Windows AZURE™ provides the platform to scale up their spreadsheet based processes to enterprise class cloud applications.

To avoid the creation of isolated data silos and to address the needs of enterprise customers, CloudBasic™ offers the CloudBasic™ Integration process manager. It allows organizations to easily automate specific business processes such as data extraction and transformation, as well as integration with customers and partners. CloudBasic Integration comes with a Visual Workflow Designer, built-in scheduling and monitoring tools and takes full advantage of the underlying CloudBasic™ Application Platform to facilitate easy development and seamless cross-team interactions.

Availability and pricing

For the availability of the CloudBasic™ Application Platform on Windows AZURE™ and the upcoming Windows AZURE™ Appliance contact CloudBasic, Inc. at (877) 396-9447. Custom solutions and implementation consulting services are available upon request.

About CloudBasic

CloudBasic is intensely focused on developing cloud application tools that provide unparalleled productivity and ease-of-use to the .NET community. The innovative CloudBasic Application Platform allows organizations to take existing spreadsheet based processes and within minutes scale them up to fully functioning cloud applications. For more information, please visit http://www.cloudbasic.net.

About DEMO

Produced by the IDG Enterprise events group, the worldwide DEMO conferences focus on emerging technologies and new product innovations, which are hand selected from across the spectrum of the technology marketplace. The DEMO conferences have earned their reputation for consistently identifying cutting-edge technologies and helping entrepreneurs secure venture funding and establish critical business. For more information on the DEMO conferences, visit http://www.demo.com.

How do you become “the leader in Rapid Application Development tools for Windows AZURE™” on the day you release the product? I’d say VS 2010 was the leader for Windows Azure.

You can find more details about CloudBasic in these brochures: CloudBasic Platform, CloudBasic AppSheets, and CloudBasic Integration.

ClickDimensions claimed it “has partnered with ExactTarget to provide Marketing Automation for Microsoft Dynamics CRM” in its ClickDimensions launches Windows Azure Marketing Automation solution for Microsoft Dynamics CRM press release of 9/14/2010:

ClickDimensions, a Microsoft BizSpark startup, launched its Marketing Automation for Microsoft Dynamics CRM solution today at Connections ’10, ExactTarget’s annual interactive marketing conference. ExactTarget will host a webcast on September 28 at 4pm EST to showcase the ClickDimensions solution and partnership. Register for the webcast at https://exacttargettraining.webex.com/exacttargettraining/onstage/g.php?t=a&d=964181814&sourceid=prweb.

The solution, which is 100% Software-as-a-Service and developed on the Microsoft Windows Azure platform, is built natively into Microsoft Dynamics CRM and leverages ExactTarget’s market leading email marketing and interactive marketing platform.

Through Web Tracking, Lead Scoring, Social Discovery, Form Capture and integrated and embedded ExactTarget Email Marketing, ClickDimensions enables marketers to generate and qualify high quality leads while providing sales people the ability to prioritize the best leads and opportunities. Using ClickDimensions, Microsoft CRM customers can see what their prospects are viewing, entering and downloading on their website so they may better prioritize prospects and more effectively engage them. Because ClickDimensions data is stored within Microsoft Dynamics CRM, it can be combined with any other CRM data, features and functionality to trigger emails when a prospect has reached a certain lead score, create sales follow ups when a visitor has completed a web form, provide a list of Leads that originated from a specific landing page and countless other scenarios.
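The "trigger emails when a prospect has reached a certain lead score" scenario amounts to a running score compared against a threshold. The Python sketch below illustrates that idea only; the activity names, point values and threshold are hypothetical and are not ClickDimensions' actual scoring model, which the release does not describe.

```python
# Hypothetical point values and threshold; not ClickDimensions' actual scoring model.
ACTIVITY_POINTS = {"page_view": 1, "download": 5, "form_submit": 10}
EMAIL_THRESHOLD = 20


def lead_score(activities: list[str]) -> int:
    """Sum points for each tracked activity; unknown activities score zero."""
    return sum(ACTIVITY_POINTS.get(activity, 0) for activity in activities)


def should_trigger_email(activities: list[str], already_emailed: bool) -> bool:
    """Send at most one email: only if the score meets the threshold and none was sent yet."""
    return not already_emailed and lead_score(activities) >= EMAIL_THRESHOLD
```

A prospect with five page views, one download and one completed form scores 5 + 5 + 10 = 20 and would trigger the email on first evaluation; the caller records `already_emailed=True` afterward to avoid repeats.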

“We are very excited about the partnership between ClickDimensions and ExactTarget,” commented Barry Givens, Global Channel Development Manager for CRM at Microsoft Corporation. “We’ve already seen many of our customers benefit from the high level of productivity delivered through the integration between Microsoft Dynamics CRM and ExactTarget. Many of our Microsoft Dynamics CRM customers can also benefit from the advanced marketing automation capabilities provided by ClickDimensions which is backed by ExactTarget’s high quality messaging solution and Microsoft’s high scale Windows Azure platform.”

“ClickDimensions Marketing Automation brings together the power of Microsoft Dynamics and ExactTarget’s interactive marketing technology in a single solution to help marketers easily create more targeted and relevant messaging campaigns,” said Scott Roth, senior director of partner marketing and alliances at ExactTarget. “As a member of our growing network of partners around the globe, we look forward to future innovation with ClickDimensions and continuing to deliver marketers integrated technologies to build effective interactive marketing programs.”

“Our solution allows our customers to more intelligently and efficiently qualify, target and close opportunities by bringing web tracking, lead scoring, social and web form capture data into Microsoft CRM and connecting that data with Microsoft CRM Lead, Contact and Campaign records,” commented ClickDimensions co-founder and Chief Executive Officer John Gravely. “This additional information allows marketers to measure the quality of leads they are generating, and provides sales people the information they need to more accurately tailor their offerings based on prospect attributes and interests. Because messaging quality and deliverability are among the most important components of a marketing automation solution, we’ve partnered with ExactTarget to ensure that our customers receive the highest quality messaging available in our solution.”

The news of ClickDimensions’ latest innovation comes in the midst of ExactTarget’s annual user conference Connections ’10, the industry’s largest gathering of interactive marketers. More than 2,000 are attending the three-day conference that features addresses by Sir Richard Branson, Twitter Chief Operating Officer Dick Costolo and more than 35 breakout sessions ranging from marketing best practices and industry-specific sessions to day-long “how to” workshops for beginners, experts and developers.

About ClickDimensions

ClickDimensions’ Marketing Automation for Microsoft Dynamics CRM empowers marketers to generate and qualify high quality leads while providing sales the ability to prioritize the best leads and opportunities. Providing Web Tracking, Lead Scoring, Social Discovery, Form Capture and Email Marketing, ClickDimensions allows organizations to discover who is interested in their products, quantify their level of interest and take the appropriate actions. A 100% Software-as-a-Service (SaaS) solution built on the Microsoft Windows Azure platform and built into Microsoft Dynamics CRM, ClickDimensions allows companies to track their prospects from click to close. For more information about ClickDimensions visit http://www.clickdimensions.com, read our blog at http://blog.clickdimensions.com, follow us on Twitter at http://www.twitter.com/clickdimensions or email press(at)clickdimensions(dot)com.


Visual Studio LightSwitch


Windows Azure Infrastructure

Devindra Hardawar posted DEMO: Microsoft cloud computing guru Matt Thompson on Windows Azure and the importance of data ([handheld] video) to the DemoBeat blog on 9/14/2010:

Microsoft’s Matt Thompson, general manager of developer and platform evangelism, hit the DEMO Fall 2010 stage this afternoon to chat about the company’s commitment to cloud computing with Windows Azure, as well as the ever-present value of data.

Thompson reiterated Microsoft’s position on Azure, which remains practically unchanged since it launched in October 2008: The company sees cloud computing as its next major computing platform beyond Windows and the internet. It’s planning to have cloud computing aspects built into everything they ship, and it’s also aiming to be more open with Windows Azure than any other Microsoft platform. “We believe we have the most open cloud on the planet,” Thompson said.

Microsoft foresees a world where users can run applications across its Azure platform and their own servers — as well as public clouds that we’ll see from government and healthcare organizations.

Thompson, who also spent 18 years at Sun Microsystems pursuing cloud computing initiatives, was mum on any major news announcements regarding Windows Azure. He did mention Microsoft’s Project Dallas — the company’s Information Services business which would create a marketplace for datasets. “Data will always have value, unlocking that data will always be an opportunity,” he said.

Though Microsoft has been slow to adopt recent computing trends like post-iPhone smartphones, it’s clearly looking forward to the next major computing zeitgeist with Azure and its increased focus on cloud computing. Azure is powering its new web-based Office applications, and there’s also the potential for it to be a major component in Windows Phone 7 services. Below is a video excerpt from Thompson’s talk with VentureBeat’s Matt Marshall.

Photo via DEMO’s Flickr, and Stephen Brashear Photography

John Hagel III and John Seely Brown posted Cloud Computing's Stormy Future to the Harvard Business Review’s blog:

Our latest book, The Power of Pull, describes a Big Shift that is profoundly re-shaping our global business landscape. Digital technology infrastructures are continuing to advance at a dizzying pace, creating both challenges and opportunities for businesses. In the next two blog posts, we will focus on two key building blocks of these new infrastructures — cloud computing and social software. Let's start with cloud computing.

Most non-technology executives are wary of cloud computing. They have been through technology fads before. While they will acknowledge that cloud computing is interesting in terms of potential to reduce IT costs, they harbor a suspicion that this might be just a lower cost form of IT outsourcing. If that is all it is, then it is important for the CIO to take care of, but there is no compelling need for the rest of the C-suite to get deeply involved in the technology.

In fact, this is a much too narrow view of cloud computing and puts the firm at risk. Cloud computing has the potential to generate a series of disruptions that will ripple out from the tech industry and ultimately transform many industries around the world. This is definitely a technology that deserves serious discussion from the entire senior leadership team of a company.

Accepted definitions of cloud computing quickly narrow the focus to distinctive delivery of technology resources. For example, a Gartner analyst describes a model characterized by location-independent resource pooling, accessibility through ubiquitous networks, on-demand self-service, rapid elasticity and pay-per-use pricing. Enough to lull the average C-level executive to sleep.

What seems to be missing in most discussions of cloud computing is the potential for new IT architectures and the evolution of the cloud into distinct layers of capabilities — from infrastructure to platform to application to business — each delivered "as-a-service." Rather than focus on how it can do what your IT already does — just cheaper or scaling faster — look for cloud computing to do the things that your IT never has been able to do, look to the unmet needs and then look for the disruptions that will sprout up across industry after industry.

The disruptive potential of cloud computing will likely play out in four distinctive but overlapping waves as described more fully in our working paper, "Storms on the Horizon." (pdf)

- The first wave of disruption focuses on new ways of delivering IT capability to enterprises that will be very challenging for traditional enterprise IT firms to embrace. This disruption will be largely confined to the technology industry itself, although it creates room for large companies in adjacent industries to enter the cloud computing arena as large scale providers of services.

- The second wave of disruption concentrates on the emergence of fundamentally new IT architectures designed to address unmet needs of enterprises as they seek to coordinate activities across scalable networks of business partners. These new IT architectures enable new ways of doing business that will be very disruptive in global markets. Entrepreneurial companies like Rearden Commerce and TradeCard are already implementing elements of these new architectures.

- The third wave of disruption will be a restructuring of the technology industry as vertically integrated cloud service providers begin to focus on different layers of the technology stack. Companies that target control points in this new industry structure will be in a powerful position to shape the entire technology industry.

- The fourth, and most powerful, wave of disruption plays out as companies begin to harness cloud computing platforms to disrupt an expanding array of industries with fundamentally new value propositions that will be very hard for incumbents to replicate. Among the industries that will be in the bull's-eye for disruptive plays are media, healthcare, financial services and energy.

All four of these waves are already starting to play out — there are early examples of activity in all four waves, even though the critical mass of movement is still largely concentrated in the first wave of disruption.

How does this all relate to The Power of Pull? Cloud computing can enhance the ability to scale all three levels of pull described in our book.

- New architectures emerging from cloud computing providers will help orchestrators like Li & Fung significantly scale their ability to access highly specialized business providers on a global basis.

- As these cloud platforms scale, companies will likely find themselves unexpectedly encountering resources and companies that they did not even know existed, driving a second level of pull: attracting people and resources.

- Cloud computing can also provide a much more robust foundation for the creation spaces that we suggest will drive the third level of pull: achieving our full potential as individuals and institutions.

There's another way that cloud computing will play out in the Big Shift. This technology platform will help empower participants on various edges to scale their innovative practices much more rapidly to challenge the core of economic activity. As we have discussed before, edges take many different forms and are quite different from fringes that merely lead a precarious existence on the periphery of the core. Cloud computing helps to provide people with limited resources access to sophisticated computing, storage and networking capability that previously would have been beyond their reach.

This has the potential to fundamentally alter the dynamics of edge-core relationships, especially within the enterprise. In the past, conventional wisdom regarding change management in large institutions held that you funded experimental initiatives on the edge and then folded them back into the core in the hope that they would be catalysts for change in the core. In reality, most of these initiatives died horrible deaths as antibodies in the core rapidly overwhelmed the brave edge participants who ventured into the core.

Now, really for the first time, there is potential for the edge to scale much more rapidly through access to pull based resources like cloud computing. Rather than feeling the need to return to the core, edge participants now can grow in far more leveraged ways and increasingly pull more and more people and resources out of the core into the edge. Complementary edges can also use cloud computing to connect with each other and further amplify their impact.

The net result? Cloud computing is poised to significantly compress the cycle in which edges emerge and overtake the earlier core as the focus of economic activity. Companies will increasingly need to identify emerging edges earlier and invest appropriately to support these edges so that they can avoid being blindsided by disruptions.

Given this perspective, it is essential for senior management to actively engage in understanding the capabilities of cloud computing and anticipating its ability to transform the way business is conducted. Every company should go through the exercise of defining scenarios of likely industry evolution over the next ten years, given evolving cloud computing platforms, and tracing out the implications for what capabilities it will need to acquire or develop to compete effectively in this changing environment.

What do you think? Have we convinced you that there's more to the cloud than simple IT outsourcing? And if we have, what does that mean to the typical executive? How does this change the way you would think about leveraging cloud computing for your business?

See also Shape Serendipity, Understand Stress, Reignite Passion of 8/20/2010 in the HBR Blog by the same authors about The Power of Pull.

David Linthicum claimed “Here are a few facts to keep in mind if you plan to implement a cloud computing project” as he regurgitated Five facts every cloud computing pro should know in this 9/14/2010 post to InfoWorld’s Cloud Computing blog:

Though cloud computing is widely defined, it's difficult for those working with the technology to reach a common understanding around concepts that are important to the success of their projects. Here are five important ideas -- or perhaps just misunderstandings -- that those looking to move to cloud computing should understand.

1. Cloud computing is not virtualization. This seems to be a common misunderstanding, so it's worth stating: Virtualized servers do not constitute a cloud. Cloud computing means auto provisioning, use-based accounting, and advanced multitenancy, capabilities well beyond most virtualization solutions. Many of you need to clip this paragraph out and put it in your wallets or purses as a reminder.

2. Cloud computing requires APIs. Those who put up websites and call them "cloud sites" need to understand that part of the value of cloud computing is API access to core cloud services, in both public and private clouds. Without an API, it's hardly a cloud.

3. Moving to a cloud is not a fix for bad practices. There is no automatic fix for bad architecture or bad application design when moving to cloud computing. Those issues need to be addressed before the migration.

4. Security is what you make of it, cloud or no cloud. While many are pushing back on cloud computing due to security concerns, cloud computing is, in fact, as safe as or safer than most on-premises systems. You must design your system with security, as well as data and application requirements, in mind, then support those requirements with the right technology. You can do that in public or private clouds, as well as in traditional systems.

5. There are no "quick cloud" solutions. While many companies are selling so-called cloud-in-a-box type solutions, few out-of-the-box options will suit your client computing needs without requiring a load of customizations and integration to get some value out of them.

Sorry to burst any bubbles.

Wolfgang Gentzsch interviews Dan Reed in a Technical Clouds: Seeding Discovery - An Interview with Microsoft's Dan Reed post of 9/14/2010 to the High-Performance Computing blog:

Dan Reed helps to drive Microsoft’s long-term technology vision and the associated policy engagement with governments and institutions around the world. He is also responsible for the company’s R&D on parallel and extreme scale computing. Before joining Microsoft, Dan held a number of strategic positions, including Head of the Department of Computer Science and Director of the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign (UIUC), Chancellor's Eminent Professor at the University of North Carolina (UNC) at Chapel Hill and Founding Director of UNC's Renaissance Computing Institute (RENCI).

In addition to his pioneering career in technology, Dan has also been deeply involved in policy initiatives related to the intersection of science, technology and societal challenges. He served as a member of the U.S. President's Council of Advisors on Science and Technology (PCAST) and chair of the computational science subcommittee of the President's Information Technology Advisory Committee (PITAC). Dr. Reed received his Ph.D. in computer science from Purdue University.

In my role as Chairman of the ISC Cloud Conference in Frankfurt, Germany, October 28-29, I interviewed Dan, who will present the keynote on Technical Clouds: Seeding Discovery.

Wolfgang: Dan, three years ago you joined Microsoft, and you are now the Corporate Vice President of Technology Strategy and Policy & Extreme Computing. What was your main reason for leaving research in academia, and what was the greatest challenge you faced when moving to industry?

Dan: It was an opportunity to tackle problems at truly large scale, create new technologies and build radical new hardware/software prototypes. Cloud data centers are far larger than anything we have built in the HPC world to date, and they bring many of the same challenges in novel hardware and software. I have found myself working with many of the same researchers, industry leaders and government officials that I did in academia, but I am also able to see the direct impact of the ideas realized across Microsoft and the industry, as well as in academia and government.

As for challenges, there really were not any. As part of Microsoft Research, I have a chance to work with a world-class team of computer scientists, just as I did in academia. Moreover, I had spent many years in university leadership roles and in national and international science policy, and the technology strategy aspects have many of the same attributes. On the technology strategy front, my job is to envision the future and educate the community about the technology trends and their societal, government and business implications.

Wolfgang: You are our keynote speaker at the ISC Cloud Conference at the end of October in Frankfurt. Would you briefly summarize the key message you want to deliver?

Dan: I’d like to focus on two key messages.

First, let scientists be scientists. We want scientists to focus on science, not on technology infrastructure construction and operation. The great advantage of inexpensive hardware and software has been the explosive growth in computing capabilities, but we have turned many scientists and students into system administrators. The purpose of computing is insight, not numbers, as Dick Hamming used to say. The reason for using computing systems in research is to accelerate innovation and discovery.

Second, the cloud phenomenon offers an opportunity to fundamentally rethink how we approach scientific discovery, just as the switch from proprietary HPC systems to commodity clusters did. It’s about simplifying and democratizing access, focusing on science, discovery and usability. As with any transition, there are issues to be worked out, behavioral models to adapt and technologies to be optimized. However, the opportunities are enormous.

Cloud computing has the potential to provide massively scalable services directly to users which could transform how research is conducted, accelerating scientific exploration, discovery and results.

Wolfgang: What are the software structures and capabilities that best exploit cloud capabilities and economics while providing application compatibility and community continuity?

Dan: Scientists and engineers are confronted with the data deluge that is the result of our massive on-line data collections, massive simulations, and ubiquitous instrumentation. Large-scale data center clouds were designed to support data mining, ensemble computations and parameter sweep studies. But they are also very well suited to host on-line instances of easy-to-use desktop tools – simplicity and ease of use again.

Wolfgang: How do we best balance ease of use and performance for research computing?

Dan: I believe our focus has been too skewed toward the very high end of the supercomputing spectrum. While this apex of computing is very important, it only addresses a small fraction of working researchers. Most scientists do small scale computing, and we need to support them and let them do science, not infrastructure.

Wolfgang: What are the appropriate roles of public clouds relative to local computing systems, private clouds and grids?

Dan: Both have a role. Public clouds provide elasticity. This pay-as-you-go cost model is better for those who do not want to bear the expense of acquiring and maintaining private clusters. It also supports those who do not want to know how infrastructure works or who want to access large, public data. Access to scalable computing on demand from anywhere on the Internet also has the effect of democratizing research capability. For a wide class of large computations, one doesn’t need local computing infrastructure. If the cloud were a simple extension of one’s laptop, one wouldn’t face a steep supercomputing learning curve, which could completely change a very large and previously neglected part of the research community.

Private clouds are ideal for many scenarios where long-term, dedicated usage is needed. Supercomputing facilities typically fit into this category. Grids are also about interoperability and collaboration, and some cloud-like capability has been deployed on top of a few of the successful grids.

Wolfgang: In a world where massive amounts of experimental and computational data are produced daily, how do we best extract insights from this data, both within and across disciplines, via clouds?

Dan: There are two things we must do. First we need to ensure that the data collected can be easily accessed. Data collections must be designed from the ground up with this concept in mind, because moving massive amounts of data is still very hard. Second, we must make the analysis applications easy to access on the web, easy to use and easy to script. Again, make the scalable analytics an extension of one’s everyday computing tools. Keep it simple. Make it easy to share data and results across distributed collaborations.

-----

Dr. Wolfgang Gentzsch is the General Chair for ISC Cloud'10, taking place October 28-29, in Frankfurt, Germany. ISC Cloud'10 will focus on practical solutions by bridging the gap between research and industry in cloud computing. Information about the event can be found at the ISC Cloud event website. HPC in the Cloud is a proud media partner of ISC Cloud'10.

Tim Anderson “noticed that of 892 Azure jobs at Indeed.com, 442 of the vacancies are in Redmond” in his 9/14/2010 Latest job stats on technology adoption – Flash, Silverlight, iPhone, Android, C#, Java post:

It is all very well expressing opinions on which technologies are hot and which are struggling, but what is happening in the real world? It is hard to get an accurate picture – surveys tend to have sampling biases of one kind or another, and vendors rarely release sales figures. I’ve never been happy with the TIOBE approach, counting mentions on the Internet; it is a measure of what is discussed, not what is used.

Another approach is to look at job vacancies. This is not ideal either; the number of vacancies might not be proportionate to the numbers in work, keyword searches are arbitrary and can include false positives and omit relevant ads that happen not to mention the keywords. Still, it is a real-world metric and worth inspecting along with the others. The following table shows figures as of today at indeed.com (for the US) and itjobswatch (for the UK), both of which make it easy to get stats.

Update – for the UK I’ve added both permanent and contract jobs from itjobswatch. I’ve also added C, C++, Python and F# (which hardly registers). For C, I searched Indeed.com for “C programming”.

| Technology | Indeed.com (US) | itjobswatch (UK permanent) | itjobswatch (UK contract) |
|---|---|---|---|
| Java | 97,890 | 17,844 | 6,919 |
| Flash | 52,616 | 2,288 | 723 |
| C++ | 48,816 | 8,440 | 2,470 |
| C# | 46,708 | 18,345 | 5,674 |
| Visual Basic | 35,412 | 3,332 | 1,061 |
| C | 27,195 | 7,225 | 3,137 |
| ASP.NET | 25,613 | 10,353 | 2,628 |
| Python | 17,256 | 1,970 | 520 |
| Ruby | 9,757 | 968 | 157 |
| iPhone | 7,067 | 783 | 335 |
| Silverlight | 5,026 | 2,162 | 524 |
| Android | 4,755 | 585 | 164 |
| WPF | 4,441 | 3,088 | 857 |
| Adobe Flex | 2,920 | 1,143 | 579 |
| Azure | 892 | 76 | 5 |
| F# | 36 | 66 | 1 |

A few quick comments. First, don’t take the figures too seriously – it’s a quick snapshot of a couple of job sites and there could be all sorts of reasons why the figures are skewed.

Second, there are some surprising differences between the two sites in some cases, particularly for Flash; this may be because indeed.com covers design jobs while itjobswatch mostly does not. The difference for Ruby surprises me, but it is a common word and may be overstated at Indeed.com.

Third, I noticed that of 892 Azure jobs at Indeed.com, 442 of the vacancies are in Redmond.

Fourth, I struggled to search for Flex at Indeed.com. A search for Flex on its own pulls in plenty of jobs that have nothing to do with Adobe, while narrowing with a second word understates the figure.

The language stats probably mean more than the technology stats. There are plenty of ads that mention C# but don’t regard it as necessary to state “ASP.NET” or “WPF” – but that C# code must be running somewhere.

Conclusions? Well, Java is not dead. Silverlight is not unseating Flash, though it is on the map. iPhone and Android have come from nowhere to become significant platforms, especially in the USA. Beyond that I’m not sure, though I’ll aim to repeat the exercise in six months and see how it changes.
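Tim's percentages are easy to reproduce. The short Python sketch below copies a few rows of the table (the Indeed.com US and itjobswatch UK permanent columns) and computes the Redmond share of Azure ads plus a rough UK-to-US ratio per technology. The helper names `share` and `uk_to_us_ratio` are illustrative, and the ratio inherits every caveat above about keyword matching.

```python
# Counts copied from the table above: (Indeed.com US, itjobswatch UK permanent).
# Only a few rows are included; the helper names are illustrative.
JOB_ADS = {
    "Java": (97_890, 17_844),
    "Flash": (52_616, 2_288),
    "C#": (46_708, 18_345),
    "Ruby": (9_757, 968),
    "Azure": (892, 76),
}


def share(part: int, whole: int) -> float:
    """Fraction of `whole` represented by `part`."""
    return part / whole


def uk_to_us_ratio(tech: str) -> float:
    """UK permanent ads per US Indeed.com ad for one technology."""
    us, uk = JOB_ADS[tech]
    return share(uk, us)


if __name__ == "__main__":
    # 442 of the 892 US Azure ads were in Redmond, per the post.
    print(f"Azure ads in Redmond: {share(442, 892):.1%}")
    for tech in JOB_ADS:
        print(f"{tech:<6} UK/US ratio: {uk_to_us_ratio(tech):.3f}")
```

442 of 892 works out to about 49.6%, so roughly half of the US Azure ads were in Redmond. Flash's UK/US ratio (about 0.04) is far below Java's (about 0.18), which is the gap Tim attributes to Indeed.com covering design jobs.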

The SearchCloudComputing.com staff described a Cloud brainiac authors expansive pro-cloud report in this 9/13/2010 post:

Cloud computing: a market for melons?

Serial deep thinker, ex-VP of global portfolio strategy for AT&T Business Solutions and amateur economist Joe Weinman has released an 82-page formal dissertation on the economics behind the long-term shift to cloud computing that seems to drive a nail in the coffin of cloud haters.

Entitled "The Market for Melons," it's a twist on the classic economic explanation of information uncertainty taken from "The Market for Lemons: Quality Uncertainty and the Market Mechanism" by George Akerlof. Akerlof uses the used car market to show how suckers (aka used car buyers) buying lousy cars (lemons) create an incentive for lower-quality goods to be exchanged. The used car dealers know which cars are junk and the buyers don't, so they aim to sell more junky cars.
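Akerlof's unraveling argument can be made concrete with a toy simulation. This is the standard textbook version of the lemons mechanism, not Weinman's melon model, and the seller valuation rule, the `buyer_premium` parameter and the 1 to 100 quality scale are illustrative assumptions: buyers offer the average quality of the cars still for sale, and any seller whose car is worth more than the offer withdraws it.

```python
from statistics import mean


def unravel(qualities, buyer_premium=1.0, max_rounds=100):
    """Toy "market for lemons" unraveling (illustrative assumptions).

    Each seller values a car at its quality q. Buyers cannot observe q, so
    they offer buyer_premium times the average quality of the cars still for
    sale. Sellers whose q exceeds the offer withdraw, which lowers the
    average and therefore the next offer.
    Returns (cars still for sale, [(offer, cars_for_sale), ...]).
    """
    for_sale = sorted(qualities)
    history = []
    for _ in range(max_rounds):
        if not for_sale:
            break
        offer = buyer_premium * mean(for_sale)
        history.append((offer, len(for_sale)))
        accepting = [q for q in for_sale if q <= offer]
        if len(accepting) == len(for_sale):
            break  # every remaining seller accepts: the market is stable
        for_sale = accepting
    return for_sale, history


if __name__ == "__main__":
    remaining, rounds = unravel(range(1, 101))  # hypothetical qualities 1..100
    for offer, n in rounds:
        print(f"offer {offer:6.2f} -> {n:3d} cars for sale")
    print("cars left:", remaining)
```

With qualities 1 to 100 and no buyer premium, the offer falls from 50.5 to 1.0 over eight rounds and only the worst car remains on the market.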

Weinman's twist on this for cloud computing is that cloud providers are in the exact opposite position; they know less and less about what their users are buying IT for, and therefore can't rip them off. Providers are forced to compete on quality, thus driving innovation in the market. The pay-per-use breakthrough of Amazon Web Services is used as the sterling example everyone is scrambling to keep up with.

Or so we think. The SearchCloudComputing.com staff is not a team of economists, but we are impressed.

Weinman’s report is a bit lengthy, but if you’re an amateur (or professional) economist, it makes an interesting read.

• Update 9/18/2010: In a 9/8/2010 tweet, Joe Weinman pointed to his ComplexModels web site, which also offers an animated simulation model of the Market for Melons. Joe announced in this 9/9/2010 tweet: “Just finished my last official act for AT&T, a closing keynote here in London. I'm retiring to start a new job on the 20th.” I wished Joe good luck with his new job on Twitter.

Petri I. Salonen completes his series with Law #10 of Bessemer’s Top 10 Cloud Computing Laws and the Business Model Canvas – Cloudonomics requires that you plan your fuel stops very carefully of 9/13/2010:

I have so far reviewed nine of Bessemer’s Top 10 laws, and this last one is almost a summary of the other nine. The reality of SaaS-based companies is that their biggest questions are how to finance growth, how to ensure that Committed Monthly Recurring Revenue (CMRR) will be enough to fuel that growth, and how to plan capital infusions over both the short and long term. Every ISV leader needs to understand the financial drivers of the operations, and I wrote about this in my blog entry by referring to the 6C’s of Cloud Finance that Bessemer has defined on its site.

Law #10: Cloudonomics requires that you plan your fuel stops very carefully

What makes a SaaS company so different from a more traditional software company? To put it bluntly, a SaaS company needs to be better funded than a traditional software company. The overall capital need is not necessarily different, but the funding is needed specifically during the first few years, when the company is building its revenue stream. The reason is obvious when analyzing the financial model.

A SaaS company has to fund research, development, sales and marketing well up front without receiving the payback immediately, as was possible in the “good old days”. It was not unheard of to get 300k software deals that funded additional development and marketing for a small company, but now this money comes gradually, and only if the SaaS vendor is able to retain its clients. I did this in the companies I worked with, my colleagues did this, and this is why one could build a nice and sustainable software business without having huge amounts of cash. What was needed was a good idea, a couple of good programmers and a product that was sold at a hefty price with a traditional licensing model.

A healthy inflow of CMRR is what will keep the company afloat, but as Bessemer puts it in its blog entry, it could take four or more years to get a SaaS company to positive cash flow. Bessemer concludes that SaaS companies typically need multiple rounds of financing; some well-known examples are NetSuite ($126m), Salesforce.com ($61m) and SuccessFactors ($45m). These investments are obviously considerable by typical startup standards, but the lesson we can take from them is that, regardless of ambitions, the SaaS business model requires more up-front investment than the traditional model, and reaching positive cash flow takes longer.
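The cash-flow gap described above can be illustrated with a deliberately simple model. The sketch below compares one up-front license of 300k (the deal size mentioned earlier) with a subscription from a single customer. The monthly fee of 6,000 and the retention rates are hypothetical, not Bessemer figures, and the model ignores costs, discounting and new-customer growth.

```python
def months_to_match_license(license_price, monthly_fee,
                            monthly_retention=1.0, horizon_months=240):
    """Months until expected subscription receipts reach a one-time license price.

    Hypothetical model: one customer pays monthly_fee in every month it is
    still subscribed, and stays for another month with probability
    monthly_retention. Returns None if the license price is not reached
    within horizon_months.
    """
    cumulative = 0.0
    p_subscribed = 1.0  # probability the customer is still paying this month
    for month in range(1, horizon_months + 1):
        cumulative += monthly_fee * p_subscribed
        if cumulative >= license_price:
            return month
        p_subscribed *= monthly_retention
    return None


if __name__ == "__main__":
    LICENSE = 300_000  # the "300k" up-front deal size mentioned in the post
    FEE = 6_000        # hypothetical monthly subscription fee
    for retention in (1.0, 0.99, 0.98):
        print(retention, months_to_match_license(LICENSE, FEE, retention))
```

Under these assumptions the subscription matches the license after 50 months at full retention and after 69 months at 99% monthly retention, while at 98% it never gets there, because expected lifetime receipts approach but never reach 6,000 / 0.02 = 300,000. That is one way to see why retention, not just bookings, drives how long a SaaS vendor must be financed.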

Summary of our findings in respect to Business Model Canvas

As in my previous blog entries, the objective of this blog was to view the current Bessemer law through Dr. Osterwalder's Business Model Canvas. As I stated earlier in this blog entry, this law affects each and every Business Model Canvas building block. However, if we need to pick one or two, they would be Revenue Streams (RS), which dictates how well we run our business from a revenue perspective, and Key Activities (KA), which measures how well we understand and run our core business. It is also very important to understand that SaaS will change the Channel (C) structure as well; ISVs can’t expect to have the same channel structure they had in the past. Vendors such as Microsoft will open commercial app stores (the Dynamics Marketplace) for development platforms such as Microsoft Dynamics CRM 2011, which has a landing page to learn more. Similar objectives exist for the upcoming Windows Phone 7 and its app store, as reported by MobileCrunch.

The question each ISV will face is how to use these app stores and what impact they have on existing channels. In many cases, solutions are complex and require system integrators to integrate them with back-end systems. In other cases the apps might be lightweight and deployable without any external help. Should ISVs in these cases rely purely on their own resources and marketing to end-user clients, or should they still try to build a channel? I think it will be a combination of both, which is nothing new. Traditional software channels need to see the success of the software vendor; there is no way around it. SaaS is just another way to deliver the solution, and it gives ISVs broader exposure because the whole world can find it through the Internet.

This blog entry concludes my journey through Bessemer’s Top 10 Cloud Computing Laws and the Business Model Canvas. This series of blog entries has been very educational even for me, as it involved a good deal of research into what is known about these topics and what people have written about them. I do have to admit that there are so many variables in the SaaS world, specifically from a financial perspective, that I recommend every manager in an ISV business spend time on these topics. It is worth the investment and can save you from many mistakes, some of which could be financially disastrous.


Windows Azure Platform Appliance (WAPA)

No significant articles today.


Cloud Security and Governance

Tanya Forsheit asked Do Your Due Diligence-is the Forecast Cloudy or Clear? in a 9/13/2010 post to the Info Law Group blog:

Dave and I recently spoke with BNA's Daily Report for Executives about the importance of due diligence and planning for organizations entering into (or considering) enterprise cloud computing arrangements. The article is reproduced here with permission from Daily Report for Executives, 168 DER C-1 (Sept. 1, 2010). Copyright 2010 by The Bureau of National Affairs, Inc. (800-372-1033) http://www.bna.com. You can find the article, ‘Cloud’ Customers Facing Contracts With Huge Liability Risks, Attorneys Say, here.

As you can probably tell, the attorneys of InfoLawGroup have been quite busy of late. We promise to bring you new posts very soon on recent developments in breach notification, cloud, and even ethics. Stay tuned.

Tanya also posted about The Connecticut Insurance Department Bulletin on Breach Notification on 9/14/2010.


Cloud Computing Events

Beth Massi (@BethMassi) said it’s Time to Register for Silicon Valley Code Camp! in this 9/14/2010 post:

It’s that time of year again! Silicon Valley Code Camp [to be held 10/9 and 10/10/2010 at Foothill College, Los Altos, CA] is one of the biggest code camps there is, spanning many different technologies and drawing over 1,000 attendees. There are currently 165 beginner to advanced sessions submitted. This year I’ll be speaking on OData with Office & LightSwitch:

Introducing Visual Studio LightSwitch

Level: Beginner

Visual Studio LightSwitch is the simplest way to build business applications for the desktop and cloud. LightSwitch simplifies the development process by letting you concentrate on the business logic, while LightSwitch handles the common tasks for you. In this demo-heavy session, you will see, end-to-end, how to build and deploy a data-centric business application using LightSwitch.

Visual Studio LightSwitch – Beyond the Basics

Level: Intermediate

LightSwitch is a new product in the Visual Studio family aimed at developers who want to easily create business applications for the desktop or the cloud. In this session we’ll go beyond the basics of creating simple screens over data and demonstrate how to create screens with more advanced capabilities. You’ll see how to extend LightSwitch applications with your own Silverlight custom controls and RIA services. We’ll also talk about the architecture and additional extensibility points that are available to professional developers looking to enhance the LightSwitch developer experience.

Creating and Consuming OData Services

Level: Intermediate

The Open Data Protocol (OData) is a REST-ful protocol for exposing and consuming data on the web and is becoming the new standard for data-based services. In this session you will learn how to easily create these services using WCF Data Services in Visual Studio 2010 and will gain a firm understanding of how they work, as well as what new features are available in .NET Framework 4. You’ll also see how to consume these services and connect them to other public data sources in the cloud to create powerful BI data analysis in Excel 2010 using the PowerPivot add-in. Finally, we will build our own Excel and Outlook add-ins that consume OData services exposed by SharePoint 2010.
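OData services are consumed through ordinary HTTP URLs whose system query options, such as `$filter`, `$orderby`, `$top`, `$skip` and `$select`, are defined by the OData protocol (`gt` is its greater-than operator in filter expressions). The Python sketch below only assembles such a URL; the service root `https://example.com/odata/Products` and the `UnitPrice` and `ProductName` properties are hypothetical, and nothing here is specific to WCF Data Services or SharePoint.

```python
from urllib.parse import quote, urlencode


def build_odata_url(resource_url, *, filter_expr=None, orderby=None,
                    top=None, skip=None, select=None):
    """Append OData system query options to a resource URL.

    Only options that are not None are included, in a fixed order.
    """
    options = {
        "$filter": filter_expr,
        "$orderby": orderby,
        "$top": top,
        "$skip": skip,
        "$select": select,
    }
    query = {name: value for name, value in options.items() if value is not None}
    if not query:
        return resource_url
    # quote (not quote_plus) encodes spaces as %20; keep "$" and "," readable.
    return resource_url + "?" + urlencode(query, safe="$,", quote_via=quote)


if __name__ == "__main__":
    # Hypothetical service root and properties; no request is sent.
    url = build_odata_url(
        "https://example.com/odata/Products",
        filter_expr="UnitPrice gt 20",
        orderby="ProductName",
        top=5,
    )
    print(url)
```

The example prints `https://example.com/odata/Products?$filter=UnitPrice%20gt%2020&$orderby=ProductName&$top=5`. Spaces are encoded as `%20` rather than `+` because `quote` is passed as `quote_via`.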

Please register here: http://siliconvalley-codecamp.com/Register.aspx

Hope to see you there!

Internet Identity Workshop (IIW) #11 will take place on 11/2 to 11/4 at the Computer History Museum, Mountain View, CA, according to this post:

The Internet Identity Workshop (IIW) is a working group of the Identity Commons and has been convened in California semi-annually since 2005. The 10th IIW was held this past May and had the largest attendance thus far.

Internet Identity Workshops focus on “user-centric identity” addressing the technical and adoption challenge of how people can manage their own identity across the range of websites, services, companies and organizations with which they interact.

We will be posting more information about specific topics here.

About IIW

Unlike other identity conferences, IIW’s focus is on identity management approaches that are based on open standards and protect privacy. It is a unique blend of technology and policy discussions where people from a diverse range of projects doing the real work of making this vision happen gather and work intensively for two days. It is the best place to meet and participate with all the key people and projects in the field. The event has a unique format: the agenda is created live the day of the event. This allows for discussion of key issues and projects, and a lot of interactive opportunities with key industry leaders.


How does it work?

After the brief introduction on the first day, there are no formal presentations, no keynotes and no panels. We start with a blank wall and, in less than an hour, with a facilitator guiding the process, attendees create a full-day, multi-track conference agenda that is relevant and inspiring to everyone there. All are welcome to put forward presentations and propose conversations. We do this in part because the field is moving so rapidly that it doesn’t make sense to predetermine the presentation schedule months before the event. We do know great people who will be there, and it is the attendees who have a passion to learn and contribute who will make the event.

The event compiles a book of proceedings with all the notes from the conference. Here are the proceedings from IIW7, IIW8, IIW9 & IIW10. BTW, these documents are your key to convincing your employer that this event will be valuable.

James Hamilton reported DataCloud 201[1]: Workshop on Data Intensive Computing in the Clouds Call for Papers on 9/14/2010:

For those of you writing about your work on high-scale cloud computing (and for those interested in a great excuse to visit Anchorage, Alaska), consider submitting a paper to the Workshop on Data Intensive Computing in the Clouds (DataCloud 2011). The call for papers is below.

--jrh

*** Call for Papers ***

WORKSHOP ON DATA INTENSIVE COMPUTING IN THE CLOUDS (DATACLOUD 2011), in conjunction with IPDPS 2011, May 16, Anchorage, Alaska

http://www.cct.lsu.edu/~kosar/datacloud2011

The First International Workshop on Data Intensive Computing in the Clouds (DataCloud2011) will be held in conjunction with the 25th IEEE International Parallel and Distributed Processing Symposium (IPDPS 2011), in Anchorage, Alaska.

Applications and experiments in all areas of science are becoming increasingly complex and more demanding in terms of their computational and data requirements. Some applications generate data volumes reaching hundreds of terabytes and even petabytes. As scientific applications become more data intensive, the management of data resources and dataflow between the storage and compute resources is becoming the main bottleneck. Analyzing, visualizing, and disseminating these large data sets has become a major challenge, and data intensive computing is now considered the “fourth paradigm” in scientific discovery after theoretical, experimental, and computational science.

DataCloud2011 will provide the scientific community a dedicated forum for discussing new research, development, and deployment efforts in running data-intensive computing workloads on Cloud Computing infrastructures.

The DataCloud2011 workshop will focus on the use of cloud-based technologies to meet the new data intensive scientific challenges that are not well served by the current supercomputers, grids or compute-intensive clouds. We believe the workshop will be an excellent place to help the community define the current state, determine future goals, and present architectures and services for future clouds supporting data intensive computing.

Topics of interest include, but are not limited to:

- Data-intensive cloud computing applications, characteristics, challenges

- Case studies of data intensive computing in the clouds

- Performance evaluation of data clouds, data grids, and data centers

- Energy-efficient data cloud design and management

- Data placement, scheduling, and interoperability in the clouds

- Accountability, QoS, and SLAs

- Data privacy and protection in a public cloud environment

- Distributed file systems for clouds

- Data streaming and parallelization

- New programming models for data-intensive cloud computing

- Scalability issues in clouds

- Social computing and massively social gaming

- 3D Internet and implications

- Future research challenges in data-intensive cloud computing

IMPORTANT DATES:

- Abstract submission: December 1, 2010

- Paper submission: December 8, 2010

- Acceptance notification: January 7, 2011

- Final papers due: February 1, 2011

PAPER SUBMISSION:

DataCloud2011 invites authors to submit original and unpublished technical papers. All submissions will be peer-reviewed and judged on correctness, originality, technical strength, significance, quality of presentation, and relevance to the workshop topics of interest. Submitted papers may not have appeared in or be under consideration for another workshop, conference or journal, nor may they be under review or submitted to another forum during the DataCloud2011 review process. Submitted papers may not exceed 10 single-spaced, double-column pages using a 10-point font on 8.5x11 inch pages (IEEE conference style), including figures, tables, and references. DataCloud2011 also requires submission of a one-page (~250 words) abstract one week before the paper submission deadline.

The call for papers continues with a lengthy list of program chairs and committee members.

Steve Plank revised details of the UK Windows Azure Online Conference date change - now 8th October in this 9/13/2010 post:

An online conference: "Microsoft Online Cloud Conference: the TechDays team goes online" has had a date change. In my first post about the conference, I said it was running on the 20th September. Well, the registration site was only created this morning and so to give people enough notice of the registration, the date has been changed. It is now running on the 8th October.

Here are the registration details:

- Event ID: 1032459728

- Language(s): English.

- Product(s): Windows Azure.

- Duration: 300 Minutes

- Date/Time: 08 October 2010 09:30 GMT, London

Here are a few details on top of what it says on the registration site's blurb. We've said it's a UK conference but that's kind of irrelevant seeing as it's online. Plus - we have presenters from Holland and Germany...

Track 1: Cirrus – the high level stuff

- 09:30 Welcome and Intro to Windows Azure: Steve Plank

- 10:30 Lap around Windows Azure: Simon Davies

- 11:30 Lap around SQL Azure: Keith Burns

- 12:30 Lunch

- 13:00 Lap around App Fab: David Gristwood

- 14:00 Windows Azure: the commercial details: Simon Karn

- 15:00 Q&A Panel: All presenters

- 15:30 Close

Track 2: Altocumulus – the mid level stuff (case studies)

- 10:30 Case Study: TheWorldCup.com - Brian Norman, Earthware

- 11:30 Case Study: eTV – Dan Scarfe, DotNet Solutions

- 12:30 Lunch

- 13:00 Case Study: Mobile Ventures Kenya – Mark Hirst, ICS

- 14:00 Case Study: IM Group Experiences – Jeremy Neal, IM Group

- 15:00 Q&A panel: All presenters

- 15:30 Close

Track 3: Stratocumulus – the low level stuff (deep tech)

- 10:30 Taking care of a cloud environment: Maarten Balliauw, Realdolmen

- 11:30 Windows Azure Guidance Project: Dominic Betts, ContentMaster

- 12:30 Lunch

- 13:00 Azure Table Service – getting creative with Microsoft’s NoSQL datastore: Mark Rendle, DotNet Solutions

- 14:00 Release the Hounds: Josh Twist, Microsoft

- 15:00 Q&A panel: All presenters

- 15:30 Close …

Steve continues with an abstract for each session.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Mike Hamblen reported “No minimum commitment; service can be secured with a credit card” in his Verizon launches cloud service for SMBs post of 9/14/2010 to ComputerWorld’s News blog:

Verizon today announced that it will offer a cloud computing service for small and midsize U.S. businesses to supplement a cloud service for large companies that it launched globally last year.

The company's new offering -- called Computing as a Service, SMB -- will be available to organizations with up to 1,000 employees. It will start at 3.7 cents per hour for a single virtual server with limited RAM and storage, said Patrick Sullivan, director of midtier products at Verizon's business unit.

The usage-based service can be secured with a credit card after a short online registration process, he said. There is no minimum commitment, he said.

The SMB service is housed on servers controlled by Verizon partner Terremark; the earlier-announced enterprise service is housed in Verizon's data center, Sullivan said. Using Terremark's servers helped Verizon bring the SMB service to market faster, he said, but added, "We still have a good amount of control."

Sullivan said Verizon expects its prices to be "very competitive" with those of other providers of cloud computing services to small and midsize businesses. The other providers include Amazon and Rackspace.
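To put the quoted 3.7-cent entry price in monthly terms, here's a rough back-of-the-envelope sketch. It assumes a 730-hour month (8,760 hours / 12) and counts only the base server-hour rate; the article doesn't price storage, bandwidth or additional RAM, so those charges are deliberately left out, and the `utilization` parameter is a hypothetical knob for servers that don't run around the clock.

```python
# Rough monthly cost estimate for Verizon's CaaS SMB entry-level server.
# Assumptions (not from the article): a month is 730 hours (8,760 / 12),
# and only the quoted base rate of 3.7 cents per server-hour is billed;
# storage, bandwidth and extra-RAM charges are ignored.

HOURLY_RATE_USD = 0.037       # quoted starting price per server-hour
HOURS_PER_MONTH = 8760 / 12   # average hours in a month (730)


def monthly_cost(servers: int = 1, utilization: float = 1.0) -> float:
    """Return the estimated monthly bill in US dollars, rounded to cents.

    utilization is the fraction of the month each server is running
    (1.0 = always on); it is a hypothetical parameter for illustration.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return round(servers * HOURLY_RATE_USD * HOURS_PER_MONTH * utilization, 2)


print(monthly_cost())                           # 27.01
print(monthly_cost(servers=2, utilization=0.5))
```

Because the service is usage-based with no minimum commitment, a server that runs only half the month costs half as much, so two half-time servers land at roughly the same figure as one always-on server.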

John Treadway claimed Fixed Instance Sizes Are Dumb in a 9/14/2010 post to his CloudBzz blog:

After my recent post on EC2 Micro instances, I received a great comment from Robert Jenkins over at CloudSigma regarding the “false construct” of fixed instance sizes. There’s no reason why an EC2-small has to have 1.7GB RAM, 1 VPU and 160GB of local storage. The underlying virtualization technology allows for fairly open configurability of instances. What if I want 2.5GB of RAM, 2 VPUs and 50GB of local storage? I can’t get that from Amazon, but the Xen hypervisor they use doesn’t prohibit this. You’re never going to use exactly 160GB of storage, and Amazon is counting on most customers not using more than 50 or 60GB, which shows you how much of a deal you get for something they never have to provide.

The same is true for most cloud providers. Rackspace allows you to go down to 256MB RAM, 10GB disk and a 10Mbps bandwidth limit. You can use more bandwidth and disk; you just pay for it.

Perhaps customers like the “value meal” approach with pre-configured instance types and they sell better. Perhaps Amazon likes being able to release a new instance type every quarter as a way to generate news and blog posts. Perhaps their ecommerce billing systems can’t handle the combinatorial complexity of variable memory, storage, bandwidth and VPUs. Whatever the reason, these fixed instance types limit user choice.

They’re dumb because they’re unnecessary.
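As an illustration of the alternative Treadway describes, here's a minimal sketch of per-resource ("à la carte") pricing, in which RAM, virtual CPUs and local storage are billed independently rather than bundled into fixed sizes. The unit rates and the `InstanceConfig` type are hypothetical placeholders, not any provider's actual prices or API; only the two configurations come from the post.

```python
from dataclasses import dataclass

# Hypothetical unit rates in USD per hour; NOT any real provider's price list.
RATE_PER_GB_RAM = 0.02
RATE_PER_VCPU = 0.03
RATE_PER_GB_DISK = 0.0002


@dataclass(frozen=True)
class InstanceConfig:
    """A freely configurable instance (hypothetical illustration)."""
    ram_gb: float
    vcpus: int
    disk_gb: int

    def hourly_price(self) -> float:
        """Bill each resource separately instead of by fixed instance size."""
        return (self.ram_gb * RATE_PER_GB_RAM
                + self.vcpus * RATE_PER_VCPU
                + self.disk_gb * RATE_PER_GB_DISK)


# Treadway's desired configuration versus the EC2-small bundle he cites.
custom = InstanceConfig(ram_gb=2.5, vcpus=2, disk_gb=50)
bundle = InstanceConfig(ram_gb=1.7, vcpus=1, disk_gb=160)

print(f"custom: ${custom.hourly_price():.4f}/hr")
print(f"bundle: ${bundle.hourly_price():.4f}/hr")
```

Under this model the customer pays only for the 50GB of disk actually requested, rather than for 160GB of storage that, as the post notes, most users never fill.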

Audrey Watters described Xeround, the Cloud Database, in her A SQL Database Built for the Cloud post of 9/14/2010 to the ReadWriteCloud blog:

The challenges that MySQL faces in the cloud - questions of elasticity, synchronization, scalability - are often referenced as part of the arguments in support of NoSQL database alternatives. But today, Xeround announces a solution to the problems of MySQL in the cloud that is actually a SQL solution - a SQL database for the cloud.

Xeround launches its new database that is, according to CEO Razi Sharir, "the best of both worlds." In other words, Xeround's new database technology promises both the transactional and query capabilities of relational databases alongside the scalability of NoSQL ones.

Xeround was built on MySQL's Storage Engine Architecture and acts as a pluggable storage engine. As such, it provides many of MySQL's features, but was designed as a virtual database, optimized for the cloud. The database handles multi-tenancy and auto-scaling and is self-healing. It guarantees always-on service during schema changes, resource modifications, and the scaling process. And for those currently working with MySQL databases, it does all this, according to Sharir, "without changing a line of code."

For those in its private beta, Xeround will host its "Database-as-a-Service" in its private data center or on Amazon EC2. Support for other cloud service providers will be available soon. The DBaaS implementation simply requires uploading the database's schema and pointing apps to the new location. The offering will be priced competitively with other RDBMS offerings, including Amazon's database offering.

James Hamilton posted Web-Scale Database on 9/14/2010:

I’m dragging myself off the floor as I write this having watched this short video: MongoDB is Web Scale.

It won’t improve your datacenter PUE, your servers won’t get more efficient, and it won’t help you scale your databases but, still, you just have to check out that video.

Thanks to Andrew Certain of Amazon for sending it my way.

And thanks to Alex Popescu for posting it to his myNoSQL blog.

<Return to section navigation list>
