Windows Azure and Cloud Computing Posts for 8/29/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 8/30/2012 5:00 PM PDT with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Chris Talbot (@ajaxwriter) reported CloudBerry Cloud Migrator Supports Cloud Data Migration in an 8/30/2012 post to the TalkinCloud blog:

CloudBerry Lab has sent a cloud storage migration tool into beta testing. CloudBerry Cloud Migrator is a service that aims to make it easy for customers to transfer files and data between cloud storage providers.

Transferring files from one cloud storage provider to another is not always the easiest of tasks, but CloudBerry hopes to make it easier for customers to shift files between Amazon S3, Windows Azure Blob Storage, Rackspace Cloud Files and FTP servers. Not exactly an exhaustive list of cloud storage providers, but CloudBerry has hit the big services, which is where it’s likely to find most of its customers.

Cloud Migrator is being offered as a service. Because it’s purely a web application, it gives end users the ability to transfer files between the different cloud storage services without the need to install any additional software. It executes all copy operations within a cloud.

Users will be able to copy files between different locations or accounts within a single cloud storage service or move them out to a different service.

For channel partners facing customer data migrations from one cloud service to another, this sounds like a good tool for making migration as easy as possible. At the same time, it also supports FTP for moving files from FTP to any of the three supported cloud storage services.

It’s a safe assumption that many customers and partners are already moving to or at least evaluating the new Amazon Glacier service. In that respect, Cloud Migrator isn’t going to be of any help — at least not yet. CloudBerry took note of the launch of the new Amazon archival storage service and said it plans to add Amazon Glacier to its list of supported cloud storage services in future versions of the service.

Will CloudBerry round out its supported services list with other cloud storage services, including the recently announced Nirvanix/TwinStrata cloud storage starter kit and Quantum’s Q-Cloud? We’ll have to wait and see.


Denny Lee (@dennylee) posted A Quick HBase Primer from a SQLBI Perspective on 8/29/2012:

One of the questions I’m often asked – especially from a BI perspective – is how a BI person should look at HBase. After all, HBase is often described quickly as an in-memory column store database – isn’t that what SSAS Tabular is? Yet calling HBase an in-memory column store database isn’t quite right because in this case, the terms column, database, tables, and rows do not quite mean the same thing as one would expect from a relational database point of view.

Setting the Context

How I usually start off is by providing a completely different context before I go back to BI. The best way to kick this off is to know that HBase is an integral part of Facebook’s messaging system. Facebook’s New Real-time Messaging System: HBase to Store 135+ Billion Messages a Month is a great blog post providing you the architecture details on how HBase allows Facebook to deal with excessively large volumes of volatile messages. This isn’t something you would typically see in the BI world, eh?!

Understanding HBase and BigTable

With the context set, let’s go back to understanding more about HBase by reviewing Google’s BigTable. Understanding HBase and BigTable is a must-read; the blog’s key concepts are noted below, but attribution goes to Jim R. Wilson.

As a refresher, the definition of BigTable is:

A BigTable is a sparse, distributed, persistent multidimensional sorted map. The map is indexed by a row key, column key, and a timestamp; each value in the map is an uninterpreted array of bytes.

With the keywords here being:

map | persistent | distributed | sorted | multidimensional

Following JSON notation, a map can be seen as:

    {
      "zzzzz" : "woot",
      "xyz" : "hello",
      "aaaab" : "world",
      "1" : "x",
      "aaaaa" : "y"
    }

while a sorted map is

    {
      "1" : "x",
      "aaaaa" : "y",
      "aaaab" : "world",
      "xyz" : "hello",
      "zzzzz" : "woot"
    }

and a multidimensional sorted map looks like

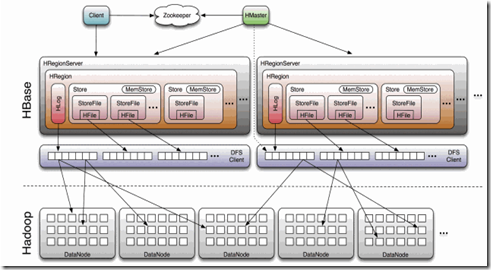

    {
      "1" : { "A" : "x", "B" : "z" },
      "aaaaa" : { "A" : "y", "B" : "w" },
      "aaaab" : { "A" : "world", "B" : "ocean" },
      "xyz" : { "A" : "hello", "B" : "there" },
      …
    }

As for the description of persistent and distributed, the best way to see this is through a picture from Lars George’s great post: HBase Architecture 101 – Storage

Some of the key concepts here are:

- HBase is extremely efficient at Random Reads/Writes

- Distributed, large scale data store

- Availability and Distribution are defined by Regions (more info at: http://hbase.apache.org/book/regions.arch.html)

- Utilizes Hadoop for persistence

- Both HBase and Hadoop are distributed

If the concepts are still a little vague, please do read Jim R. Wilson’s post Understanding HBase and BigTable and then read it again!
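To make the "sorted, multidimensional map" idea above a little more concrete, here is a minimal JavaScript sketch. It is a toy in-memory model only, not how HBase or BigTable are implemented; the class name `SortedMultiMap` and its methods are hypothetical names chosen for illustration. It models row key → column key → timestamp → value and keeps row keys in sorted order so that a range scan is possible.

```javascript
// Toy model of a BigTable-style map (illustrative only, not HBase's API):
// row key -> column key -> timestamp -> value.
class SortedMultiMap {
  constructor() {
    this.rows = new Map();
  }

  // Store one version of a cell.
  put(rowKey, columnKey, timestamp, value) {
    if (!this.rows.has(rowKey)) this.rows.set(rowKey, new Map());
    const columns = this.rows.get(rowKey);
    if (!columns.has(columnKey)) columns.set(columnKey, new Map());
    columns.get(columnKey).set(timestamp, value);
  }

  // Return the value with the highest timestamp, or undefined if absent.
  get(rowKey, columnKey) {
    const columns = this.rows.get(rowKey);
    const versions = columns && columns.get(columnKey);
    if (!versions || versions.size === 0) return undefined;
    const latest = Math.max(...versions.keys());
    return versions.get(latest);
  }

  // Row keys in lexicographic order (the "sorted" part of the definition).
  sortedRowKeys() {
    return [...this.rows.keys()].sort();
  }

  // Row keys in the half-open range [startRow, stopRow).
  scan(startRow, stopRow) {
    return this.sortedRowKeys().filter(k => k >= startRow && k < stopRow);
  }
}

const table = new SortedMultiMap();
table.put('xyz', 'A', 1, 'hello');
table.put('1', 'A', 1, 'x');
table.put('aaaab', 'A', 1, 'world');
table.put('aaaaa', 'A', 1, 'y');
table.put('aaaaa', 'A', 2, 'y (newer)');

console.log(table.sortedRowKeys());       // [ '1', 'aaaaa', 'aaaab', 'xyz' ]
console.log(table.get('aaaaa', 'A'));     // y (newer)
console.log(table.scan('aaaaa', 'xyz'));  // [ 'aaaaa', 'aaaab' ]
```

Because rows are kept in key order, neighbouring keys such as "aaaaa" and "aaaab" end up next to each other, which is what makes range scans cheap in this kind of store.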

So what about Analytics?

With all this terminology and architecture in mind, can HBase do analytics? The answer is yes, but its design is not about creating star schemas in the relational sense; it is about creating column families, which fit nicely into real-time analytics. A great way to dive into this is to check out Dani Abel Rayan’s:

There are many other excellent references here and this is hardly an exhaustive post. But the key thing here is that while there are many similarities, HBase analytics and SQL Business Intelligence have different contexts. Because of its in-memory column families, it’s easy to adapt HBase for real-time analytics (in addition to its ability to handle volatile messages). On the other hand, BI is about the ability to slice and dice using familiar BI tools against immense amounts of historical data. Over time we may see the concepts merge – Google BigQuery comes immediately to mind – but it’ll be a while. [See Derrick Harris’s BigQuery story in the Other Cloud Computing Platforms and Services section below.]

Meanwhile, I encourage folks that are comfortable with SQLBI to stretch the envelope a bit into the world of NoSQL like HBase. It’s a paradigm shift in some ways,… yet it also isn’t!

References

- Apache HBase Reference http://hbase.apache.org/book.html

- HBase: The Definitive Guide by Lars George http://www.amazon.com/HBase-Definitive-Guide-Lars-George/dp/1449396100

- HBase @ Facebook – The technology behind messages by Kannan Muthukkaruppan.

The Datanami Staff (@datanami) asserted Facebook Sees Hadoop Through Prism in an 8/28/2012 post:

A prism is used to separate a ray of light into its constituent frequencies, spreading them out into a rainbow. Maybe Facebook’s Prism will not make rainbows, but it will have the ability to split Hadoop clusters across the world.

This development, along with another Facebook project, Corona, which solves the single point of failure in MapReduce, was recently outlined with an emphasis on the role of the project for the larger Hadoop ecosystem.

Splitting a Hadoop cluster is difficult, explains Facebook’s VP of Engineering Jay Parikh. “The system is very tightly coupled,” he said, explaining why splitting Hadoop clusters had not been possible, “and if you introduce tens of milliseconds of delay between these servers, the whole thing comes crashing to a halt.”

So if a Hadoop cluster works fine when located in one data center and it is so difficult to change that, why go to the trouble? For a simple reason, according to Parikh. “It lets us move data around, wherever we want. Prineville, Oregon. Forest City, North Carolina. Sweden.”

This mobility mirrors that of Google Spanner, which moved data around its different centers to account for power usage and data center failure. Perhaps, with Prism, Facebook is preparing for data shortages/failures on what they call the world’s largest Hadoop cluster. That would be smart. Their 900 million users have, to this point, generated 100 petabytes of data that Facebook has to keep track of. According to Metz, they analyze about 105 terabytes every thirty minutes. That data is not shrinking, either.

Indeed, Parikh has an eye toward power usage and data failures. “It allows us to physically separate this massive warehouse of data but still maintain a single logical view of all of it. We can move the warehouses around, depending on cost or performance or technology…. We’re not bound by the maximum amount of power we wire up to a single data center.” Essentially, like Google Spanner, Prism will automatically copy and move data to other data centers when problems are detected or if needed elsewhere.

Facebook also improved Hadoop’s MapReduce system by introducing a workable solution that utilizes multiple job trackers. Facebook had already recently engineered a solution that takes care of the Hadoop File System which resulted in Hadoop’s HA NameNode, and Corona supplements that with a MapReduce fix. “In the past,” Parikh notes, “if we had a problem with the job tracker, everything kinda died, and you had to restart everything. The entire business was affected if this one thing fell over. Now, there’s lots of mini-job-trackers out there, and they’re all responsible for their own tasks.”

While both Corona and Prism seem like terrific additions to Hadoop, neither of these products has yet been released and Parikh gives no timetable for when that will happen. Further, according to Metz, MapR claims it has a solution similar to Facebook’s, but nothing has hit the open source Hadoop.

Either way, there exist few companies who have as much at stake as Facebook when it comes to big data analytics. Improvements to Hadoop mean improvements to how Facebook handles their ridiculous amount of data. Hopefully, through Prism and Corona, they have found ways to split clusters among multiple data centers along with introducing backup trackers.


The Seattle Times reported Microsoft releases SkyDrive app for Android on 8/28/2012:

Microsoft announced today that a SkyDrive app for Android is now available for download.

The move comes about eight months after the company released SkyDrive apps for iPhone and Windows Phone.

The Android app is available for Android phone users with access to Google Play, and works best with Android 4.0 (Ice Cream Sandwich), though it's fully functional on Android 2.3 and above, according to the official Microsoft SkyDrive blog.

(Screenshot of SkyDrive for Android app from Microsoft SkyDrive blog)

I just installed the SkyDrive app on my Nexus 7 and it works like a champ!


Windows Azure SQL Database, Federations and Reporting, Mobile Services

• Paul Krill (@pjkrill) asserted “Windows Azure Mobile Services connects Windows 8-based client and mobile apps to scalable cloud; will include Android and iOS” in a deck for his Microsoft links Windows 8 apps to Azure article of 8/30/2012 for InfoWorld:

Microsoft is adding mobile connectivity between Windows applications and the Windows Azure cloud platform with the introduction this week of Windows Azure Mobile Services.

The technology will enable users to connect client and mobile applications based on Windows 8 to a scalable cloud back end, said Scott Guthrie, corporate vice president in the Microsoft Server and Tools Business, in a blog post. "It allows you to easily store structured data in the cloud that can span both devices and users, integrate it with user authentication, and send out updates to clients via push notifications." Windows 8 is due to ship on October 26.

Plans call for Windows Azure Mobile Services to soon add support for Windows Phone, Apple iOS, and Google Android devices. Users can get started by signing up for a 90-day free trial of Windows Azure, whereupon they enable their account to support the Mobile Services preview.

"Once you have the mobile services preview enabled, log into the Windows Azure Portal, click the 'New' button, and choose the new 'Mobile Services' icon to create your first mobile back end," Guthrie said. Once the back end is created, users will see a quick-start page with instructions on connecting the mobile service to an existing or new Windows 8 client application. Azure users can as many as 10 mobile services in a free, multitenant hosting environment.

"When you create a Windows Azure Mobile Service, we automatically associate it with a SQL database inside Windows Azure. The Windows Azure Mobile Service backend then provides built-in support for enabling remote apps to securely store and retrieve data from it (using secure REST end-points utilizing a JSON-based ODATA format) -- without you having to write or deploy any custom server code. Built-in management support is provided within the Windows Azure portal for creating new tables, browsing data, setting indexes, and controlling access permissions," Guthrie said.

• Gaurav Mantri (@gmantri) explained How I Built an “Awesome Chat” application for Windows 8 with Windows Azure Mobile Service in a 24-styled 8/30/2012 post:

In this blog post, I’m going to talk about how I built a Windows 8 application using Windows Azure Mobile Service. Windows Azure Mobile Service is the newest Windows Azure service that you can use to provide backend services for your mobile applications (currently Windows 8, more coming soon). You can learn more and sign up for a preview of this service here: http://www.windowsazure.com/en-us/home/scenarios/mobile-services/. More information about this service can be found in Scott Guthrie’s blog post here: http://weblogs.asp.net/scottgu/archive/2012/08/28/announcing-windows-azure-mobile-services.aspx. The service looked really promising and I thought I should give it a try and, trust me, you’re not going to be disappointed. All in all, it took me about 4 hours to build the application (and that included installing Windows 8, VS 2012, signing up for a marketplace account etc.). Since I’m quite excited about this service and the work I was able to accomplish in such a short amount of time, I’m going to dramatize this blog post a bit! I am a big fan of the TV series 24, so I will use that for dramatization. Without further ado, let me start!

Timeline

The following takes place between 5:00 PM and 10:00 AM. All events occur in real time.

No employees were hurt during the implementation of this project.

5:00 PM – Meeting with Boss

- Boss: We need a chat application for our company.

- Me: Ummm, OK. Let’s get Skype on everybody’s computer.

- Boss: No. No Skype.

- Me: OK. How about other chat applications?

- Boss: No. We need to build our own. It has to be a Windows 8 application.

- Me: OK. What else?

- Boss: It should scale up to thousands of users.

- Me: (Thinking) Dude, we only got 7 people in the office … but whatever.

- Me: What else?

- Boss: Should be cheap. I don’t want to pony up large moolah upfront.

- Me: Understood. What else?

- Boss: That should do it for now.

- Me: Let me think about it.

5:15 – 5:30 PM – In my thinking chair

I’ve heard of node.js and I think this would be the right choice for this kind of an application. I can then host the application in Windows Azure. The problem is I don’t know node.js (I’m just a front-end developer). How would I write a scalable application? I hear Glenn Block is in China. Maybe I can convince my boss to bring him to India for a day or two. He sure can build this app in no time.

5:30 PM – Back in Boss’s office

- Me: I think we should use node.js for this application. From what I have read, it would certainly fit the bill.

- Boss: OK.

- Me: But I don’t know how to program in node.js. Can I get Glenn Block? He’s in China nowadays. We can fly him to India over the weekend.

- Boss: You don’t listen, do you? I said, I want it cheap.

- Me: How am I supposed to do it? I’m just a front-end developer. I know a bit of XAML and C#. I don’t know how to build massively scalable applications.

- Boss: Like I care. And one more thing …

- Me: Yeah.

- Boss: I need it by tomorrow morning.

- Me: Huh!!! and how am I going to do that?

- Boss: You don’t listen, do you? I said “Like I care …”

5:45 PM – Back in my thinking chair

Suddenly I had an epiphany: the Windows Azure team has just released Windows Azure Mobile Services. Maybe I should look at that. So I watched some videos, read some blog posts and was convinced that I could use this service to build the application. There were so many things I liked there:

- Since it’s in preview mode, I don’t pay anything just yet. Once it goes Live, I will pay only for the resources I consume.

- It’s massively scalable (after all it is backed by Windows Azure). So if I (ever) need to support thousands of employees in my company, I can just scale my service.

- I really don’t need to do any server-side coding. The service provides me with enough hooks to perform the CRUD operations needed for my application. And if I do need to do work on the server, I can take advantage of the nifty server-side scripts feature. It supports Push Notifications, which is something I would certainly need for my chat application. It also supports User Authentication, which I can use later on.

- My current need is to build for Windows 8 which the service supports. I’m told that support for other mobile platforms like iOS and Android is coming in near future so I’m covered on that part as well.

All in all it looked like a winning solution to me. With that, I signed up for Windows Azure Mobile Service Preview and got approved in less than 10 minutes. And then I went home and completely forgot about it.

11:00 PM – Realized I have an application to deliver

Man, I completely forgot that I had an application to deliver next morning. Forget sleep! Time to do some work.

11:00 PM – 11:30 PM – Install, Install, Install

Then I realized that I didn’t have the necessary software installed. Luckily I had most of the things downloaded already. I started by installing Windows 8, then Visual Studio 2012, and finally the Windows Azure Mobile Services SDK; everything was done by 11:30 PM.

11:30 PM – 00:30 AM – Build basic application

With the help of excellent tutorials and samples, I was able to build a basic chat application. All the application did at this time was save my messages in the backend database. Didn’t know (really didn’t want to know) how the data was saved but as long as I can see the data in the portal, I was happy.

Next I needed to worry about push notifications. Again tutorials to the rescue! After reading the tutorials, I realized I needed to sign up for Windows 8 Developer account first.

00:30 AM – 2:00 AM – Signing, Paying, Paying and more Paying

I spent the next hour and a half figuring out how to sign up for a Windows 8 Developer account. Man, this process can certainly be improved. They’re not taking individual developer account registrations, so I ended up signing up for a corporate account. Again, when signing up for a corporate account, I cannot list my email address as both developer and approver. What if I am a single-person shop right now? Anyway, I got through that process and managed to get all the things I needed for push notification (namely the client secret and package SID). Back to coding again!

2:00 AM – 2:30 AM – Implementing push notification

Again, excellent tutorial and code sample came in handy. I just copied the code and pasted it in proper places and I was getting push notifications in my application. Yay!!!

2:30 AM – 3:00 AM – Wrapping it up

The only thing I needed to do now was enable push notification for multiple users, so I thought I would take care of it in the morning, as I needed somebody to chat with me. I tweeted about the application I built and then got retweeted by none other than @scottgu. My day could not have ended on a better note.

9:00 AM – 9:30 AM – Implementing push notification for multiple users

Again, excellent tutorial and code sample came in handy. I just copied the code and pasted it in proper places and I was getting push notifications in my application from other users. Couldn’t be happier and badly wanted to show it off to my boss!!!

10:00 AM – Outside Boss’s office

- Me: Is boss in?

- Boss’s assistant: He has gone on a 10 day vacation. Didn’t he tell you that?

- Me: WTF!

Anyways, the point I am trying to make here is that it is insanely simple to build mobile applications without really worrying too much about the server side coding using this service.

Technical Details

Enough kidding! Now let’s see some code. Here’s how I built the application:

Prerequisites

I’m assuming that you have already signed up for the preview services and have created the service and the database required for storing data. So I will not explain those.

Create table to store chat data

First thing you would need to create is a table which will store chat data. This is done on the portal itself. One interesting thing here is that even though the database used behind the scenes is SQL Azure (which is SQL Server), you can really have flexible schema. In fact, if you want you can just define the table and not define any columns (when a table is created, a column by the name “id” is created automatically for you). When the data is inserted the first time, the service automatically creates necessary columns for you. For this, make sure that dynamic schema is enabled as shown in the screenshot below:

Create model

Next thing you would want to do is create model for the data that you want to move back and forth between the devices. Since we’re building a chat application, we kept the model simple.

    using System.Runtime.Serialization; // needed for [DataMember]

    public class ChatMessage
    {
        public int Id { get; set; }

        [DataMember(Name = "From")]
        public string From { get; set; }

        [DataMember(Name = "Content")]
        public string Content { get; set; }

        [DataMember(Name = "DateTime")]
        public string DateTime { get; set; }

        [DataMember(Name = "channel")]
        public string Channel { get; set; }

        // Format used when a message is appended to the chat window.
        public override string ToString()
        {
            return string.Format("[{0}] {1} Says: {2}", DateTime, From, Content);
        }
    }

Create XAML page

Again because our application was really simple, we created a single page application. This is what our XAML file looks like.

    <Page
        x:Class="awesome_chat.MainPage"
        IsTabStop="false"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:local="using:awesome_chat"
        xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
        xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
        mc:Ignorable="d">
        <Grid Background="White">
            <Grid.RowDefinitions>
                <RowDefinition Height="70"/>
                <RowDefinition/>
            </Grid.RowDefinitions>
            <TextBlock Grid.Row="0" Margin="5" FontSize="48" VerticalAlignment="Center" Text="Awesome Chat!"/>
            <Grid Grid.Row="1" x:Name="GridRegister" Visibility="Visible">
                <Grid.RowDefinitions>
                    <RowDefinition/>
                    <RowDefinition Height="40"/>
                    <RowDefinition Height="40"/>
                    <RowDefinition/>
                </Grid.RowDefinitions>
                <TextBlock Grid.Row="1" Margin="5" FontSize="16" FontWeight="Bold" VerticalAlignment="Center" Text="Your name please:"/>
                <Grid Grid.Row="2">
                    <Grid.ColumnDefinitions>
                        <ColumnDefinition/>
                        <ColumnDefinition Width="100"/>
                    </Grid.ColumnDefinitions>
                    <TextBox x:Name="TextBoxName" Margin="5" VerticalAlignment="Center"/>
                    <Button Grid.Column="1" x:Name="ButtonLetsGo" HorizontalAlignment="Center" VerticalAlignment="Center" Content="Let's Go!" Click="ButtonLetsGo_Click_1"/>
                </Grid>
            </Grid>
            <Grid Grid.Row="1" x:Name="GridChat" Visibility="Collapsed">
                <Grid>
                    <Grid.RowDefinitions>
                        <RowDefinition Height="50"/>
                        <RowDefinition Height="40"/>
                        <RowDefinition/>
                    </Grid.RowDefinitions>
                    <Grid>
                        <Grid.ColumnDefinitions>
                            <ColumnDefinition/>
                            <ColumnDefinition Width="75"/>
                        </Grid.ColumnDefinitions>
                        <TextBox Grid.Column="0" Name="TextBoxMessage" Margin="5" MinWidth="100"/>
                        <Button Grid.Column="1" Name="ButtonContent" Content="Send" HorizontalAlignment="Center" Click="ButtonContent_Click_1"/>
                    </Grid>
                    <TextBlock Grid.Row="1" Text="Chat Messages:" Margin="5" VerticalAlignment="Center" FontSize="16" FontWeight="Bold"/>
                    <TextBox x:Name="TextBoxChatMessages" Grid.Row="2" AcceptsReturn="True" Margin="5" TextWrapping="Wrap"/>
                </Grid>
            </Grid>
        </Grid>
    </Page>

Implement CRUD operations in your code

Since we’re doing a simple chat application where all we are doing is inserting the data, we only implemented “insert” functionality. Depending on your application requirements, you may need to implement read / update / delete functionality as well.

    // "name" and "chatTable" are members of the page class declared elsewhere.
    private void ButtonContent_Click_1(object sender, RoutedEventArgs e)
    {
        if (!string.IsNullOrWhiteSpace(TextBoxMessage.Text))
        {
            var chatMessage = new ChatMessage()
            {
                From = name,
                Content = TextBoxMessage.Text.Trim(),
                DateTime = DateTime.UtcNow.ToString("yyyy-MM-dd HH:mm:ss"),
                Channel = App.CurrentChannel.Uri
            };
            InsertChatMessage(chatMessage);
        }
        ReadyForNextMessage();
    }

    // Echo the message locally, then store it in the Mobile Service table.
    private async void InsertChatMessage(ChatMessage message)
    {
        TextBoxChatMessages.Text += string.Format("{0}\n", message.ToString());
        await chatTable.InsertAsync(message);
        ReadyForNextMessage();
    }

    // Clear the input box and put the cursor back in it.
    private void ReadyForNextMessage()
    {
        TextBoxMessage.Text = "";
        TextBoxMessage.Focus(Windows.UI.Xaml.FocusState.Programmatic);
    }

Implement CRUD operations in server side scripts

Head back to Windows Azure Portal and select the table, and then click on “SCRIPT” tab. From the dropdown, you can select the operation for which you wish to create the script. Windows Azure Portal gives you the basic script to start with and then you can add more functionality if you like.

Currently this is what the “insert” script of our application looks like:

    function insert(item, user, request) {
        request.execute({
            success: function() {
                request.respond();
                sendNotifications();
            }
        });

        function sendNotifications() {
            var channelTable = tables.getTable('Channel');
            channelTable.read({
                success: function(channels) {
                    channels.forEach(function(channel) {
                        push.wns.sendToastText04(channel.uri, {
                            text1: item.DateTime,
                            text2: item.From,
                            text3: item.Content,
                        }, {
                            success: function(pushResponse) {
                                console.log("Sent push:", pushResponse);
                            }
                        });
                    });
                }
            });
        }
    }

Run the application

That’s pretty much it! Really. At this time, your application will be able to insert records into the database. You won’t be able to chat with anybody but that’s what we’ll cover next. To view the data, just click on “BROWSE” tab for your table.
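If you want to see what the “insert” script does without deploying it, one option is to run the same flow against hand-written stand-ins. The sketch below is only an approximation under stated assumptions: `makeStubs` and the recorded `log` object are hypothetical helpers, the stubs mimic just the calls the script makes (`request.execute`, `request.respond`, `tables.getTable(...).read`, `push.wns.sendToastText04`), and the runtime globals are passed in as parameters so that it runs in plain Node.js. It says nothing about how the real service behaves beyond what the script above already shows.

```javascript
// Hypothetical stand-ins for the objects the Mobile Services runtime provides.
function makeStubs(channelRows) {
  const log = { responded: 0, toasts: [] };
  const request = {
    execute(options) { options.success(); },   // pretend the row was stored
    respond() { log.responded += 1; }          // count responses to the client
  };
  const tables = {
    getTable(name) {
      // Every table returns the same canned rows in this sketch.
      return { read(options) { options.success(channelRows); } };
    }
  };
  const push = {
    wns: {
      sendToastText04(uri, payload, options) {
        log.toasts.push({ uri, payload });     // record instead of sending
        options.success({ statusCode: 200 });
      }
    }
  };
  return { log, request, tables, push };
}

// Same flow as the insert script, with its globals passed in explicitly.
function insert(item, user, request, tables, push) {
  request.execute({
    success() {
      request.respond();
      tables.getTable('Channel').read({
        success(channels) {
          channels.forEach(channel => {
            push.wns.sendToastText04(channel.uri, {
              text1: item.DateTime,
              text2: item.From,
              text3: item.Content
            }, { success() {} });
          });
        }
      });
    }
  });
}

const stubs = makeStubs([
  { uri: 'https://channel.example/one' },
  { uri: 'https://channel.example/two' }
]);
insert({ DateTime: '2012-08-30 09:15:00', From: 'Me', Content: 'Hello' },
       null, stubs.request, stubs.tables, stubs.push);
console.log(stubs.log.responded);      // 1
console.log(stubs.log.toasts.length);  // 2
```

The point of the exercise is to show the fan-out: one inserted row produces one response to the sender and one toast per registered channel.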

Enable push notification

Before we enable push notification, we need to sign up for Windows Store first. You may find this link useful for that purpose: http://www.windowsazure.com/en-us/develop/mobile/tutorials/get-started-with-push-dotnet/. I followed the steps as is and was able to get push notifications for my messages.

Enable push notification for all users

Again, check out this tutorial: http://www.windowsazure.com/en-us/develop/mobile/tutorials/push-notifications-to-users-dotnet/. Follow this to a “T” and your code will work just fine. After this you should be able to receive notifications from other users and other users be able to receive notifications from you as well.
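The server script fills three text fields of a toast (date/time, sender, content), and the client reads the first three text elements back in the same order. The sketch below illustrates that round trip in plain JavaScript. It assumes the toast payload has the usual ToastText04 shape with three `text` elements; `buildToastText04` and `parseToast` are hypothetical helper names, and the regular-expression parsing is a simplification for illustration, not what the Windows runtime does.

```javascript
// Escape the characters that would break element content.
function escapeXml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Reverse of escapeXml; "&amp;" is handled last to avoid double unescaping.
function unescapeXml(value) {
  return value
    .replace(/&lt;/g, '<')
    .replace(/&gt;/g, '>')
    .replace(/&amp;/g, '&');
}

// Assumed payload shape for a ToastText04 toast: three text elements.
function buildToastText04(text1, text2, text3) {
  const texts = [text1, text2, text3]
    .map((t, i) => `<text id="${i + 1}">${escapeXml(t)}</text>`)
    .join('');
  return `<toast><visual><binding template="ToastText04">${texts}</binding></visual></toast>`;
}

// Read the text elements back in order: date/time, sender, content.
function parseToast(xml) {
  const texts = [];
  const pattern = /<text id="\d+">([^<]*)<\/text>/g;
  let match;
  while ((match = pattern.exec(xml)) !== null) {
    texts.push(unescapeXml(match[1]));
  }
  return { DateTime: texts[0], From: texts[1], Content: texts[2] };
}

const xml = buildToastText04('2012-08-30 09:15:00', 'Me', 'Is 1 < 2 & 3 > 2?');
console.log(parseToast(xml).Content);  // Is 1 < 2 & 3 > 2?
```

Since the mapping is purely positional, changing the order of the fields on the server side means changing the order in which the client reads them as well.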

Code

I actually downloaded ToDoSample from the website and then modified the code for that. Here’re the modified files.

App.xaml.cs

    using System;
    using System.Collections.Generic;
    using System.IO;
    using System.Linq;
    using Microsoft.WindowsAzure.MobileServices;
    using Windows.ApplicationModel;
    using Windows.ApplicationModel.Activation;
    using Windows.Foundation;
    using Windows.Foundation.Collections;
    using Windows.UI.Xaml;
    using Windows.UI.Xaml.Controls;
    using Windows.UI.Xaml.Controls.Primitives;
    using Windows.UI.Xaml.Data;
    using Windows.UI.Xaml.Input;
    using Windows.UI.Xaml.Media;
    using Windows.UI.Xaml.Navigation;
    using Windows.Networking.PushNotifications;
    using System.Globalization;
    using System.Collections.ObjectModel;

    namespace awesome_chat
    {
        /// <summary>
        /// Provides application-specific behavior to supplement the default Application class.
        /// </summary>
        sealed public partial class App : Application
        {
            // This MobileServiceClient has been configured to communicate with your Mobile Service's url
            // and application key. You're all set to start working with your Mobile Service!
            public static MobileServiceClient MobileService = new MobileServiceClient(
                "https://<yourapplicationname>.azure-mobile.net/",
                "<your application's secret key>"
            );

            public static ObservableCollection<ChatMessage> Messages = null;

            /// <summary>
            /// Initializes the singleton application object. This is the first line of authored code
            /// executed, and as such is the logical equivalent of main() or WinMain().
            /// </summary>
            public App()
            {
                this.InitializeComponent();
                this.Suspending += OnSuspending;
                Messages = new ObservableCollection<ChatMessage>();
            }

            public static PushNotificationChannel CurrentChannel { get; private set; }

            private async void AcquirePushChannel()
            {
                CurrentChannel = await PushNotificationChannelManager.CreatePushNotificationChannelForApplicationAsync();
                IMobileServiceTable<Channel> channelTable = App.MobileService.GetTable<Channel>();
                var channel = new Channel { Uri = CurrentChannel.Uri };
                await channelTable.InsertAsync(channel);
                CurrentChannel.PushNotificationReceived += CurrentChannel_PushNotificationReceived;
            }

            void CurrentChannel_PushNotificationReceived(PushNotificationChannel sender, PushNotificationReceivedEventArgs args)
            {
                lock (this)
                {
                    var messageContent = args.ToastNotification.Content;
                    var textElements = messageContent.GetElementsByTagName("text");
                    var xml = textElements.ElementAt(0).GetXml();
                    var msgDateTime = textElements.ElementAt(0).InnerText;
                    var msgFrom = textElements.ElementAt(1).InnerText;
                    var msgContent = textElements.ElementAt(2).InnerText;
                    var chatMessage = new ChatMessage()
                    {
                        Content = msgContent,
                        From = msgFrom,
                        DateTime = msgDateTime,
                    };
                    Messages.Add(chatMessage);
                    //args.Cancel = true;
                }
            }

            /// <summary>
            /// Invoked when the application is launched normally by the end user. Other entry points
            /// will be used when the application is launched to open a specific file, to display
            /// search results, and so forth.
            /// </summary>
            /// <param name="args">Details about the launch request and process.</param>
            protected override void OnLaunched(LaunchActivatedEventArgs args)
            {
                AcquirePushChannel();

                // Do not repeat app initialization when already running, just ensure that
                // the window is active
                if (args.PreviousExecutionState == ApplicationExecutionState.Running)
                {
                    Window.Current.Activate();
                    return;
                }

                if (args.PreviousExecutionState == ApplicationExecutionState.Terminated)
                {
                    //TODO: Load state from previously suspended application
                }

                // Create a Frame to act navigation context and navigate to the first page
                var rootFrame = new Frame();
                if (!rootFrame.Navigate(typeof(MainPage)))
                {
                    throw new Exception("Failed to create initial page");
                }

                // Place the frame in the current Window and ensure that it is active
                Window.Current.Content = rootFrame;
                Window.Current.Activate();
            }

            /// <summary>
            /// Invoked when application execution is being suspended. Application state is saved
            /// without knowing whether the application will be terminated or resumed with the contents
            /// of memory still intact.
            /// </summary>
            /// <param name="sender">The source of the suspend request.</param>
            /// <param name="e">Details about the suspend request.</param>
            private void OnSuspending(object sender, SuspendingEventArgs e)
            {
                var deferral = e.SuspendingOperation.GetDeferral();
                //TODO: Save application state and stop any background activity
                deferral.Complete();
            }
        }
    }

MainPage.xaml

<Page x:Class="awesome_chat.MainPage" IsTabStop="false"
      xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
      xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
      xmlns:local="using:awesome_chat"
      xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
      xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
      mc:Ignorable="d">
    <Grid Background="White">
        <Grid.RowDefinitions>
            <RowDefinition Height="70"/>
            <RowDefinition/>
        </Grid.RowDefinitions>
        <TextBlock Grid.Row="0" Margin="5" FontSize="48" VerticalAlignment="Center" Text="Awesome Chat!"/>
        <Grid Grid.Row="1" x:Name="GridRegister" Visibility="Visible">
            <Grid.RowDefinitions>
                <RowDefinition/>
                <RowDefinition Height="40"/>
                <RowDefinition Height="40"/>
                <RowDefinition/>
            </Grid.RowDefinitions>
            <TextBlock Grid.Row="1" Margin="5" FontSize="16" FontWeight="Bold" VerticalAlignment="Center" Text="Your name please:"/>
            <Grid Grid.Row="2">
                <Grid.ColumnDefinitions>
                    <ColumnDefinition/>
                    <ColumnDefinition Width="100"/>
                </Grid.ColumnDefinitions>
                <TextBox x:Name="TextBoxName" Margin="5" VerticalAlignment="Center"/>
                <Button Grid.Column="1" x:Name="ButtonLetsGo" HorizontalAlignment="Center" VerticalAlignment="Center" Content="Let's Go!" Click="ButtonLetsGo_Click_1"/>
            </Grid>
        </Grid>
        <Grid Grid.Row="1" x:Name="GridChat" Visibility="Collapsed">
            <Grid>
                <Grid.RowDefinitions>
                    <RowDefinition Height="50"/>
                    <RowDefinition Height="40"/>
                    <RowDefinition/>
                </Grid.RowDefinitions>
                <Grid>
                    <Grid.ColumnDefinitions>
                        <ColumnDefinition/>
                        <ColumnDefinition Width="75"/>
                    </Grid.ColumnDefinitions>
                    <TextBox Grid.Column="0" Name="TextBoxMessage" Margin="5" MinWidth="100"/>
                    <Button Grid.Column="1" Name="ButtonContent" Content="Send" HorizontalAlignment="Center" Click="ButtonContent_Click_1"/>
                </Grid>
                <TextBlock Grid.Row="1" Text="Chat Messages:" Margin="5" VerticalAlignment="Center" FontSize="16" FontWeight="Bold"/>
                <TextBox x:Name="TextBoxChatMessages" Grid.Row="2" AcceptsReturn="True" Margin="5" TextWrapping="Wrap"/>
            </Grid>
        </Grid>
    </Grid>
</Page>

MainPage.xaml.cs

using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Runtime.Serialization;
using Microsoft.WindowsAzure.MobileServices;
using Windows.Foundation;
using Windows.Foundation.Collections;
using Windows.UI.Xaml;
using Windows.UI.Xaml.Controls;
using Windows.UI.Xaml.Controls.Primitives;
using Windows.UI.Xaml.Data;
using Windows.UI.Xaml.Input;
using Windows.UI.Xaml.Media;
using Windows.UI.Xaml.Navigation;
using System.Text;

namespace awesome_chat
{
    public class ChatMessage
    {
        public int Id { get; set; }

        [DataMember(Name = "From")]
        public string From { get; set; }

        [DataMember(Name = "Content")]
        public string Content { get; set; }

        [DataMember(Name = "DateTime")]
        public string DateTime { get; set; }

        [DataMember(Name = "channel")]
        public string Channel { get; set; }

        public override string ToString()
        {
            return string.Format("[{0}] {1} Says: {2}", DateTime, From, Content);
        }
    }

    public class Channel
    {
        public int Id { get; set; }

        [DataMember(Name = "uri")]
        public string Uri { get; set; }
    }

    public sealed partial class MainPage : Page
    {
        private string name = string.Empty;
        private IMobileServiceTable<ChatMessage> chatTable = App.MobileService.GetTable<ChatMessage>();
        StringBuilder messages = null;

        public MainPage()
        {
            this.InitializeComponent();
            App.Messages.CollectionChanged += Messages_CollectionChanged;
            messages = new StringBuilder();
        }

        void Messages_CollectionChanged(object sender, System.Collections.Specialized.NotifyCollectionChangedEventArgs e)
        {
            if (e.Action == System.Collections.Specialized.NotifyCollectionChangedAction.Add)
            {
                foreach (var item in e.NewItems)
                {
                    var chatMessage = item as ChatMessage;
                    App.Messages.Remove(chatMessage);
                    if (chatMessage.From != name)
                    {
                        this.Dispatcher.RunAsync(Windows.UI.Core.CoreDispatcherPriority.Normal, () =>
                        {
                            TextBoxChatMessages.Text += string.Format("{0}\n", chatMessage.ToString());
                        });
                    }
                }
            }
        }

        private void ButtonLetsGo_Click_1(object sender, RoutedEventArgs e)
        {
            if (!string.IsNullOrWhiteSpace(TextBoxName.Text))
            {
                name = TextBoxName.Text.Trim();
                GridRegister.Visibility = Windows.UI.Xaml.Visibility.Collapsed;
                GridChat.Visibility = Windows.UI.Xaml.Visibility.Visible;
                ReadyForNextMessage();
            }
        }

        private void ButtonContent_Click_1(object sender, RoutedEventArgs e)
        {
            if (!string.IsNullOrWhiteSpace(TextBoxMessage.Text))
            {
                var chatMessage = new ChatMessage()
                {
                    From = name,
                    Content = TextBoxMessage.Text.Trim(),
                    DateTime = DateTime.UtcNow.ToString("yyyy-MM-dd HH:mm:ss"),
                    Channel = App.CurrentChannel.Uri
                };
                InsertChatMessage(chatMessage);
            }
            ReadyForNextMessage();
        }

        private async void InsertChatMessage(ChatMessage message)
        {
            TextBoxChatMessages.Text += string.Format("{0}\n", message.ToString());
            await chatTable.InsertAsync(message);
            ReadyForNextMessage();
        }

        private void ReadyForNextMessage()
        {
            TextBoxMessage.Text = "";
            TextBoxMessage.Focus(Windows.UI.Xaml.FocusState.Programmatic);
        }
    }
}

Summary

As you saw in this blog post, it is really easy to build a Windows 8 application that uses Windows Azure Mobile Services for the heavy-duty server-side work. Obviously some features are still missing, but remember that the service is still in its “Preview” phase at the time of writing. I’m actually quite excited about the possibilities it opens up. For more reading, please visit the Windows Azure website at http://www.windowsazure.com/en-us/develop/mobile/. I hope you’ve found this information useful.

Acknowledgement

My sincere thanks to Glenn Block for being such a sport and playing a cameo in my 24 and also for the early feedback on the post. It’s much appreciated. I sincerely wish to have you visit India sometime soon.

• Mike Taulty (@mtaulty) described Windows 8 Development–When Your UI Thread is not Your Main Thread on 8/30/2012:

This is something that someone raised with me today, and I thought I’d write it down. I noticed it when I first came to developing with .NET on Windows 8 and WinRT, and it threw me for about an hour or so, so perhaps this post can save you from spending an hour on it in the future.

Thinking from a .NET perspective, if you go into Visual Studio and make a new project as in;

and you let Visual Studio instantiate that template then build the project (CTRL+SHIFT+B) and then use the Solution Explorer (CTRL+ALT+L) to use the “Show All Files” button;

and then have a look in your debug\obj folder for a file called App.g.i.cs;

and jump to that method Main and set a breakpoint;

Now open up your App.xaml.cs file and find its method OnLaunched;

and put a breakpoint there and finally open up your MainPage.xaml.cs file and find its method OnNavigatedTo;

and put a breakpoint there. Run the application with F5 and when you land on the first breakpoint, use the Debug->Windows->Threads menu option to ensure that you can see what your threads are doing.

Here’s my breakpoint in Main;

and there’s not a lot there that’s going to surprise you – I have a single main thread that has called Main and it’s all as expected.

Here’s my breakpoint in OnLaunched;

and there is something there that might surprise you. We are not on our Main Thread but, instead, we are on a Worker Thread (8428) which is different from what you’d expect to see (I think) if you were coming from a WPF or Windows Forms background.

If we have a look at the current SynchronizationContext in the Watch window;

then we’ll notice that this is set up so that (by default) any await work that we do can make use of that SynchronizationContext, and if we continue on to our next breakpoint in OnNavigatedTo we’ll notice that we are on the same Worker Thread (8428) that we were on in OnLaunched.

So…take a little care when you’re debugging these applications, because the assumption that I used to make, which was Main Thread == UI Thread, is not necessarily the case here. Don’t let that lead you off down the wrong path as it did with me when I first saw it.
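The same observation can be made without the Threads window by logging the managed thread ID and the current SynchronizationContext from each method. The following is a minimal sketch, not code from the post: `ThreadProbe` and `Describe` are hypothetical names, and the `Main` shown here is a plain console program (where `SynchronizationContext.Current` is normally null) so that the block stands on its own.

```csharp
using System;
using System.Threading;

public static class ThreadProbe
{
    // Builds a one-line description of the calling thread and its SynchronizationContext.
    // "where" is just a label supplied by the caller (for example "OnLaunched").
    public static string Describe(string where)
    {
        SynchronizationContext context = SynchronizationContext.Current;
        string contextName = context == null ? "(none)" : context.GetType().Name;
        return string.Format(
            "{0}: managed thread {1}, SynchronizationContext = {2}",
            where, Environment.CurrentManagedThreadId, contextName);
    }

    public static void Main()
    {
        // In a console program there is usually no SynchronizationContext installed.
        Console.WriteLine(Describe("Main"));
    }
}
```

In a Windows 8 app, calling something like `System.Diagnostics.Debug.WriteLine(ThreadProbe.Describe("OnLaunched"))` from Main, OnLaunched and OnNavigatedTo should make it visible in the Output window whether the last two share a thread that differs from the one that ran Main.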

Of interest to developers working with Windows Azure Mobile Services.

• Stacey Higginbotham (@gigastacey) reported Microsoft joins startups in building the new app infrastructure stack in an 8/28/2012 post to GigaOm’s Cloud blog:

Microsoft is joining several startups in trying to entice developers to use its cloud as a specialized backend for their mobile applications. Microsoft’s Windows Azure Mobile Services joins offerings from Parse, Kinvey and Apigee in trying to establish a new infrastructure for the growing mobile ecosystem.

Microsoft just announced Windows Azure Mobile Services, a cloud offering that joins the ranks of Parse, Apigee and Kinvey in establishing a backend as a service designed for the mobile ecosystem. The goal of such a service is to provide a platform for mobile developers that will allow them to worry less about their infrastructure and only about their app.

If in the last four or five years the question for a promising startup has been whether to use your own servers or use Amazon Web Services, that calculus is changing. Now, startups should ask themselves, “Why architect your app for Amazon when you could forget having to architect an app at all?”

The development of these mobile infrastructure backends and the ecosystem for mobile developers is a topic that I’ll be discussing with Kevin Lacker, CTO at Parse, at our Mobilize conference on Sept. 20 and 21 in San Francisco. Parse, though, is just one of the vendors offering a mobile backend as a service.

What many of these vendors think developers really need is a way to build apps that perform flawlessly and scale rapidly up from a few to thousands (or millions) of users without requiring years worth of operations knowledge. The rise of startups like Parse is a response to the growing complexity of building out a mobile app and supporting it through spotty connections, delivering offline access to apps, and keeping an app up after a tweet or a “like” from someone famous enough to send millions of users to a service.

Microsoft’s new service envisions it as a platform as a service (hosted by Microsoft) attached to a SQL database also hosted in Microsoft’s data center. Today, Azure Mobile Services are available for Windows 8 apps, but later releases will support iOS, Android and Windows Phone.

Meanwhile, over at Kinvey, CEO Sravish Sridhar sees value in letting developers host their apps on their choice of cloud, hook into their choice of database, and basically serving as the glue bringing those underlying choices together.

Apigee has a different view that focuses on the pipeline that API calls travel as its key value add. Sam Ramji, head of strategy at Apigee, argues that in many cases APIs are the real value in today’s apps because they provide the channels that data can run through to be amalgamated on the other end in the form of services, mashups or whatever else the developer wants to do – and developers shouldn’t have to mess with the back end infrastructure at all.

Either way, all of these players are recognizing a fundamental shift in the type of infrastructure needed to host mobile applications. Expect more competitors to pile on.


Full disclosure: I’m a registered GigaOm Analyst.

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

• Denny Lee (@dennylee) posted What are thou Big Data? Asked the SQLBI Arbiter on 8/30/2012:

Over the last few days, I’ve been pinged the question:

What is Big Data?

Go figure, I actually have an answer of sorts – from a SQL BI perspective (since that’s my perspective, eh?!)

Above the cloud: Big Data and BI from Denny Lee

There are two blog posts that go with the above slides that provide the details. Concerning the concepts of Scaling Up or Scaling Out, check out Scale Up or Scale Out your Data Problems? A Space Analogy.

Concerning the concepts of data movement, check out Moving data to compute or compute to data? That is the Big Data question.

The WCF Data Services (a.k.a. Astoria) Team reported WCF Data Service 5.0.2 Released on 8/29/2012:

We’re happy to announce the release of WCF Data Services 5.0.2.

What’s in this release

This release contains a number of bug fixes:

- Fixes NuGet packages to have explicit version dependencies

- Fixes a bug where WCF Data Services client did not send the correct DataServiceVersion header when following a nextlink

- Fixes a bug where projections involving more than eight columns would fail if the EF Oracle provider was being used

- Fixes a bug where a DateTimeOffset could not be materialized from a v2 JSON Verbose value

- Fixes a bug where the message quotas set in the client and server were not being propagated to ODataLib

- Fixes a bug where WCF Data Services client binaries did not work correctly on Silverlight hosted in Chrome

- Allows "True" and "False" to be recognized Boolean values in ATOM (note that this is more relaxed than the OData spec, but there were known cases where servers were serializing "True" and "False")

- Fixes a bug where nullable action parameters were still required to be in the payload

- Fixes a bug where EdmLib would fail validation for attribute names that are not SimpleIdentifiers

- Fixes a bug where the FeedAtomMetadata annotation wasn't being attached to the feed even when EnableAtomMetadataReading was set to true

- Fixes a race condition in the WCF Data Services server bits when using reflection to get property values

- Fixes an error message that wasn't getting localized correctly

Getting the release

The release is only available on NuGet. To install this prerelease NuGet package, you can use the Package Manager GUI or one of the following commands from the Package Manager Console:

- Install-Package <PackageId>

- Update-Package <PackageId>

Our NuGet package ids are:

- Microsoft.Data.Services.Client (WCF Data Services Client)

- Microsoft.Data.Services (WCF Data Services Server)

- Microsoft.Data.OData (ODataLib)

- Microsoft.Data.Edm (EdmLib)

- System.Spatial (System.Spatial)

Call to action

If you have experienced one of the bugs mentioned above, we encourage you to try out these bits. As always, we’d love to hear any feedback you have!

Bin deploy guidance

If you are bin deploying WCF Data Services, please read through this blog post [below].

The WCF Data Services (a.k.a. Astoria) Team posted OData 101: Bin deploying WCF Data Services on 8/29/2012:

TL;DR: If you’re bin-deploying WCF Data Services you need to make sure that the .svc file contains the right version string (or no version string).

The idea for this post originated from an email I received yesterday. The author of the email, George Tsiokos (@gtsiokos), complained of a bug where updating from WCF Data Services 5.0 to 5.0.1 broke his WCF Data Service. Upon investigation it became clear that there was an easy fix for the short-term, and perhaps something we can do in 5.1.0 to make your life easier in the long-term.

What’s Bin Deploy?

We’ve been blogging about our goals for bin deploying applications for a while now. In a nutshell, bin deploy is the term for copying and pasting a folder to a remote machine and expecting it to work without any other prerequisites. In our case, we clearly still have the IIS prerequisite, but you shouldn’t have to run an installer on the server that GACs the Microsoft.Data.Services DLL.

Bin deploy is frequently necessary when dealing with hosted servers, where you may not have rights to install assemblies to the GAC. Bin deploy is also extremely useful in a number of other environments as it decreases the barriers to hosting a WCF Data Service. (Imagine not having to get your ops team to install something in order to try out WCF Data Services!)

Replicating the Problem

First, let’s walk through what’s actually happening. I’m going to do this in Visual Studio 2012, but this would happen similarly in Visual Studio 2010.

First, create an Empty ASP.NET Web Application. Then right-click the project and choose Add > New Item. Select WCF Data Service, assign the service a name, and click Add. (Remember that in Visual Studio 2012, the item template actually adds the reference to the NuGet package on your behalf. If you’re in Visual Studio 2010, you should add the NuGet package now.)

Stub enough code into the service to make it load properly. In our case, this is actually enough to load the service (though admittedly it’s not very useful):

using System.Data.Services;

namespace OData101.UpdateTheSvcFileWhenBinDeploying
{
    public class BinDeploy : DataService<DummyContext> { }

    public class DummyContext { }
}

Press F5 to debug the project. While the debugger is attached, open the Modules window (Ctrl+D,M). Notice that Microsoft.Data.Services 5.0.0.50627 is loaded:

Now update your NuGet package to 5.0.1 or some subsequent version. (In this example I updated to 5.0.2.) Debug again, and look at the difference in the Modules window:

In this case we have two versions of Microsoft.Data.Services loaded. We pulled 5.0.2 from the bin deploy folder and we still have 5.0.0.50627 loaded from the GAC. Were you to bin deploy this application as-is, it would fail on the server with an error similar to the following:

Could not load file or assembly 'Microsoft.Data.Services, Version=5.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35' or one of its dependencies. The located assembly's manifest definition does not match the assembly reference. (Exception from HRESULT: 0x80131040)

So why is the service loading both assemblies? If you look at your .svc file (you need to right-click it and choose View Markup), you’ll see something like the following line in it:

<%@ ServiceHost Language="C#" Factory="System.Data.Services.DataServiceHostFactory, Microsoft.Data.Services, Version=5.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" Service="OData101.UpdateTheSvcFileWhenBinDeploying.BinDeploy" %>

That 5.0.0.0 string is what is causing the GACed version of Microsoft.Data.Services to be loaded.

Resolving the Problem

For the short term, you have two options:

- Change the version number to the right number. The first three digits (major/minor/patch) are the significant digits; assembly resolution will ignore the final digit.

- Remove the version number entirely. (You should remove the entire name/value pair and the trailing comma.)

Either of these changes will allow you to successfully bin deploy the service.
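The second option can be illustrated with a small sketch that removes the Version name/value pair from the directive text. This is only an illustration of the edit, not tooling shipped with WCF Data Services; `SvcDirective` and `StripVersion` are hypothetical names, and the regular expression removes the pair together with its leading comma, which leaves the same result as removing it with its trailing comma.

```csharp
using System;
using System.Text.RegularExpressions;

public static class SvcDirective
{
    // Removes ", Version=x.y.z.w" from an assembly-qualified name so that
    // assembly resolution is no longer pinned to a specific version.
    public static string StripVersion(string directive)
    {
        return Regex.Replace(directive, @",\s*Version=[0-9.]+", string.Empty);
    }

    public static void Main()
    {
        string before =
            "<%@ ServiceHost Language=\"C#\" " +
            "Factory=\"System.Data.Services.DataServiceHostFactory, Microsoft.Data.Services, " +
            "Version=5.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35\" " +
            "Service=\"OData101.UpdateTheSvcFileWhenBinDeploying.BinDeploy\" %>";

        // Prints the directive with the Version pair removed and everything else untouched.
        Console.WriteLine(StripVersion(before));
    }
}
```

For a single service it is of course simpler to make the same edit by hand in the .svc markup; the sketch only shows exactly which characters go away.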

A Permanent Fix?

We’re looking at what we can do to provide a better experience here. We believe that we will be able to leverage the PowerShell script functionality in NuGet to at least advise, if not outright change, that value.

So what do you think? Would you feel comfortable with a package update making a change to your .svc file? Would you rather just have a text file pop up with a message that says you need to go update that value manually? Can you think of a better solution? We’d love to hear your thoughts and ideas in the comments below.

The WCF Data Services (a.k.a. Astoria) Team continued its roll with OData 101: Using the [NotMapped] attribute to exclude Enum properties on 8/29/2012:

TL;DR: OData does not currently support enum types, and WCF Data Services throws an unclear exception when using the EF provider with a model that has an enum property. Mark the property with a [NotMapped] attribute to get around this limitation.

In today’s OData 101, we’ll take a look at a problem you might run into if you have an Entity Framework provider and are using Entity Framework 5.0+.

The Problem

The problem lies in the fact that Entity Framework and WCF Data Services use a common format to describe the data model: the Entity Data Model (EDM). In Entity Framework 5.0, the EF team made some modifications to MC-CSDL, the specification that codifies CSDL, the best-known serialization format for an EDM. Among other changes, the EnumType element was added to the specification. An EF 5.0 model in a project that targets .NET 4.5 will allow developers to add enum properties. However, WCF Data Services hasn’t yet done the work to implement support for enum properties. (And the OData protocol doesn’t have a mature understanding of how enums should be implemented yet; this is something we’re working through with OASIS.)

If you are trying to use an EF model that includes an enum property with WCF Data Services, you’ll get the following error:

The server encountered an error processing the request. The exception message is 'Value cannot be null. Parameter name: propertyResourceType'. See server logs for more details. The exception stack trace is:

at System.Data.Services.WebUtil.CheckArgumentNull[T](T value, String parameterName)
at System.Data.Services.Providers.ResourceProperty..ctor(String name, ResourcePropertyKind kind, ResourceType propertyResourceType)
at System.Data.Services.Providers.ObjectContextServiceProvider.PopulateMemberMetadata(ResourceType resourceType, IProviderMetadata workspace, IDictionary`2 knownTypes, PrimitiveResourceTypeMap primitiveResourceTypeMap)
at System.Data.Services.Providers.ObjectContextServiceProvider.PopulateMetadata(IDictionary`2 knownTypes, IDictionary`2 childTypes, IDictionary`2 entitySets)
at System.Data.Services.Providers.BaseServiceProvider.PopulateMetadata()
at System.Data.Services.Providers.BaseServiceProvider.LoadMetadata()
at System.Data.Services.DataService`1.CreateMetadataAndQueryProviders(IDataServiceMetadataProvider& metadataProviderInstance, IDataServiceQueryProvider& queryProviderInstance, BaseServiceProvider& builtInProvider, Object& dataSourceInstance)
at System.Data.Services.DataService`1.CreateProvider()
at System.Data.Services.DataService`1.HandleRequest()
at System.Data.Services.DataService`1.ProcessRequestForMessage(Stream messageBody)
at SyncInvokeProcessRequestForMessage(Object , Object[] , Object[] )
at System.ServiceModel.Dispatcher.SyncMethodInvoker.Invoke(Object instance, Object[] inputs, Object[]& outputs)
at System.ServiceModel.Dispatcher.DispatchOperationRuntime.InvokeBegin(MessageRpc& rpc)
at System.ServiceModel.Dispatcher.ImmutableDispatchRuntime.ProcessMessage5(MessageRpc& rpc)
at System.ServiceModel.Dispatcher.ImmutableDispatchRuntime.ProcessMessage41(MessageRpc& rpc)
at System.ServiceModel.Dispatcher.ImmutableDispatchRuntime.ProcessMessage4(MessageRpc& rpc)
at System.ServiceModel.Dispatcher.ImmutableDispatchRuntime.ProcessMessage31(MessageRpc& rpc)
at System.ServiceModel.Dispatcher.ImmutableDispatchRuntime.ProcessMessage3(MessageRpc& rpc)
at System.ServiceModel.Dispatcher.ImmutableDispatchRuntime.ProcessMessage2(MessageRpc& rpc)
at System.ServiceModel.Dispatcher.ImmutableDispatchRuntime.ProcessMessage11(MessageRpc& rpc)
at System.ServiceModel.Dispatcher.ImmutableDispatchRuntime.ProcessMessage1(MessageRpc& rpc)
at System.ServiceModel.Dispatcher.MessageRpc.Process(Boolean isOperationContextSet)

Fortunately, this error is reasonably easy to work around.

Workaround

The first and most obvious workaround should be to consider removing the enum property from the EF model until WCF Data Services provides proper support for enums.

If that doesn’t work because you need the enum in business logic, you can use the [NotMapped] attribute from System.ComponentModel.DataAnnotations.Schema to tell EF not to expose the property in EDM.

For example, consider the following code:

using System;
using System.ComponentModel.DataAnnotations.Schema;

namespace WcfDataServices101.UsingTheNotMappedAttribute
{
    public class AccessControlEntry
    {
        public int Id { get; set; }

        // An Enum property cannot be mapped to an OData feed, so it must be
        // explicitly excluded with the [NotMapped] attribute.
        [NotMapped]
        public FileRights FileRights { get; set; }

        // This property provides a means to serialize the value of the FileRights
        // property in an OData-compatible way.
        public string Rights
        {
            get { return FileRights.ToString(); }
            set { FileRights = (FileRights)Enum.Parse(typeof(FileRights), value); }
        }
    }

    [Flags]
    public enum FileRights
    {
        Read = 1,
        Write = 2,
        Create = 4,
        Delete = 8
    }
}

In this example, we’ve marked the enum property with the [NotMapped] attribute and have created a manual serialization property which will help to synchronize an OData-compatible value with the value of the enum property.

Implications

There are a few implications of this workaround:

- The [NotMapped] attribute also controls whether EF will try to create the value in the underlying data store. In this case, our table in SQL Server would have an nvarchar column called Rights that stores the value of the Rights property, but not an integer column that stores the value of FileRights.

- Since the FileRights column is not stored in the database, LINQ-to-Entities queries that use that value will not return the expected results. This is a non-issue if your EF model is only queried through the WCF Data Service.

Result

The result of this workaround is an enumeration property that can be used in business logic in the service code, for instance, in a FunctionImport. Since the [NotMapped] attribute prevents the enum value from serializing, your OData service will continue to operate as expected and you will be able to benefit from enumerated values.

In the example above, there is an additional benefit of constraining the possible values for Rights to some combination of the values of the FileRights enum. An invalid value (e.g., Modify) would be rejected by the server since Enum.Parse will throw an ArgumentException.
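The constraint described above can be tried outside of any service. The sketch below reuses the FileRights enum from the example and wraps the same Enum.Parse call that the Rights setter performs; `RightsParsingDemo` and `TryParseRights` are hypothetical names introduced only for this illustration.

```csharp
using System;

[Flags]
public enum FileRights
{
    Read = 1,
    Write = 2,
    Create = 4,
    Delete = 8
}

public static class RightsParsingDemo
{
    // Performs the same conversion as the Rights setter and reports whether it succeeded.
    public static bool TryParseRights(string value, out FileRights rights)
    {
        try
        {
            rights = (FileRights)Enum.Parse(typeof(FileRights), value);
            return true;
        }
        catch (ArgumentException)
        {
            // Thrown when the text does not name a member of the enum.
            rights = default(FileRights);
            return false;
        }
    }

    public static void Main()
    {
        FileRights rights;

        Console.WriteLine(TryParseRights("Read, Write", out rights)); // True
        Console.WriteLine(rights);                                    // Read, Write

        Console.WriteLine(TryParseRights("Modify", out rights));      // False
    }
}
```

One caveat worth knowing: Enum.Parse also accepts numeric strings, so a value such as "16" parses without an exception even though no member has that value. If that matters for your model, an additional check on the parsed value would be needed.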

No significant articles today.

<Return to section navigation list>

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

• Haishi Bai (@haishibai2010) recommended developers Sign out cleanly from Identity Providers when using ACS in an 8/29/2012 post:

The problem

Since you’ve searched and found this post, I assume you are facing the problem that your application is not completely signed out from the Identity Provider until you close your browser window, even if you’ve implemented sign out code using WIF like this:

WSFederationAuthenticationModule fam = FederatedAuthentication.WSFederationAuthenticationModule;
FormsAuthentication.SignOut();
fam.SignOut(true);

Although your application will prompt you to log in again when you try to access a protected resource, you immediately regain access because the Identity Provider session state is not cleared. You may also have tried:

WSFederationAuthenticationModule.FederatedSignOut(new Uri("https://login.live.com/login.srf?wa=wsignout1.0"), new Uri("{your app URL}"));

This signs you out from the IP (in this case Windows Live), but the browser stays on www.msn.com – not all Identity Providers honor the wreply parameter in a wsignout1.0 request.

Now what do we do to cleanly sign out from the Identity Provider and return to our own pages?

A “partial” (but easy) solution

Here I’m presenting a “partial” solution. First, it’s not “the” solution, but merely one of several feasible ways to get it working. Second, it’s incomplete, as there are some missing parts (more details below) that you need to fill in. In addition, this solution is based on ASP.NET MVC, though it can be easily adapted for ASP.NET Web Forms.

Let’s assume you’ve got an ASP.NET MVC application that has a logout button that invokes the sign-out logic above. Here’s what you need to do:

- Add a new View named LogOff under Views\Account folder. This will be the custom sign-out page. Here I’m presenting a sample with auto sign-out experience, but you can provide a manual sign-out experience using this page as well.

- Change the code of the view to this:

@model System.String[]
<img src="@Model[0]" onerror="window.location='/';" />

There are two nifty (or dirty?) tricks here – the view loads a URL passed in via the view model, and when the image fails to load, redirects browser back to the home page. For simplicity there’s no error check, so if you pass in an empty array it will bark.

- Now we modify the LogOff() method of our AccountController:

public ViewResult LogOff()
{
    WSFederationAuthenticationModule fam = FederatedAuthentication.WSFederationAuthenticationModule;
    FormsAuthentication.SignOut();
    fam.SignOut(true);
    return View("LogOff", new string[]{"https://login.live.com/login.srf?wa=wsignout1.0"});
}

What’s going on here? We give the Windows Live sign-out URL to our LogOff view, which will try to load it to the img tag. The image will not load (because the URL doesn’t point to an image!), hence onerror is triggered and we are brought back to the home page with a clear session.

Why this is a “partial” solution

First of all, in the above code we hard-coded the sign-out URL. What you should do instead is get the sign-out URL from ACS, which provides an endpoint for you to retrieve home-realm discovery information of trusted Identity Providers. However, that’s still not all. How do you know which Identity Provider the user has picked? There could be better ways, but one possible way is to provide your own customized home-realm discovery page and remember the user’s selection, so that when the user logs out, you know which sign-out URL to use.
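The lookup half of that idea might be sketched as follows. It assumes you have already downloaded the provider list from ACS and that each entry carries a name and a logout URL, and that you stored the name of the provider the user picked at sign-in. `IdentityProviderInfo`, `SignOutUrlResolver` and the second sample provider are hypothetical and exist only for this illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, trimmed-down shape of one entry in the provider list.
public class IdentityProviderInfo
{
    public string Name { get; set; }
    public string LogoutUrl { get; set; }
}

public static class SignOutUrlResolver
{
    // Returns the sign-out URL of the provider the user picked, or null if it is unknown.
    public static string Resolve(IEnumerable<IdentityProviderInfo> providers, string selectedName)
    {
        IdentityProviderInfo match = providers.FirstOrDefault(p =>
            string.Equals(p.Name, selectedName, StringComparison.OrdinalIgnoreCase));
        return match == null ? null : match.LogoutUrl;
    }

    public static void Main()
    {
        // Sample data only; in a real application this list would come from ACS.
        var providers = new List<IdentityProviderInfo>
        {
            new IdentityProviderInfo { Name = "Windows Live", LogoutUrl = "https://login.live.com/login.srf?wa=wsignout1.0" },
            new IdentityProviderInfo { Name = "Example IdP", LogoutUrl = "https://idp.example.com/signout" }
        };

        // The selected name would be whatever was remembered when the user signed in.
        Console.WriteLine(Resolve(providers, "windows live"));
    }
}
```

The resolved URL could then be passed to the LogOff view in place of the hard-coded one; a null result is the case where you would fall back to a local-only sign-out.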

There you go. That’s my solution to the problem. Before I end this post, let’s reflect on why we are in this situation.

Why we are in this situation (in my understanding)?

In an ideal world, when we tell ACS that we want to sign out, ACS will contact all Identity Providers and tell them to sign out, then return to our application and tell our application to clean up. However, this is something that is hard for ACS to do. What ACS needs to deliver to you is a reliable claim that says “Now you’ve been signed out from all Identity Providers”. This is a hard assertion to make. First, not all identity providers support federated sign out – they just don’t. Second, WS-Federation doesn’t mandate that an identity provider send any response to a wsignout1.0 request, so ACS won’t know whether sign-out was successful or not. It can wait for wsignoutcleanup1.0 requests back from the Identity Provider, but again that request is not mandatory in WS-Federation either. This is my understanding of the situation. I could be wrong, and I never lose hope that someday this problem will be addressed once and for all. Maybe, just maybe, we can get the next best thing from ACS, which is to tell us “Hey, I’ve tried to tell the Identity Providers to sign you out. Some of them did within the timeout window you provided. What you do now is up to you.”

Anton Staykov (@astaykov) described Creating a custom Login page for federated authentication with Windows Azure ACS in an 8/29/2012 post to the ACloudyPlace blog:

Since we already know how to delegate the login/authentication process to an Identity Provider using Windows Azure ACS (Online Identity Management via Windows Azure ACS and Unified Identity for web apps – the easy way ), let’s see how to create a custom login page and provide users with a seamless experience in our web application.

I will use the WaadacDemo demo code as a base to extend it to host its own login page. The complete code with custom login page is here.

Windows Azure ACS is a really flexible Identity Provider. Besides the default login page, it also provides a number of ways for us to customize the login experience for our users. All the ways are found under Application Integration menu in the Login Pages section:

Once there, we have to choose the Relying Party application for which we would like customization. Now we have a number of options:

Option 1 is the default option we were already using, it’s the ACS hosted login page. What we are interested in is Option 2 – hosting the login page ourselves.

There are two approaches here – the client side and the server side. Both rely on a JSON list of identity providers; the link to the JSON feed URL is displayed in the last text area. If you are a JavaScript/DOM person, I highly encourage you to download the Example Login Page and play around with the client code provided. In the following lines I’ll describe how to create the Login page with server code.

As already mentioned, both approaches rely on a JSON array of identity providers. Since I still don’t like dynamic, I will use a custom type on the server to deserialize my JSON array to. Here is my tiny IdentityProvider class:

    public class IdentityProvider
    {
        public List<string> EmailAddressSuffixes { get; set; }
        public string ImageUrl { get; set; }
        public string LoginUrl { get; set; }
        public string LogoutUrl { get; set; }
        public string Name { get; set; }
    }

Now I don’t want to reinvent the wheel, and will use a popular JSON deserializer – NewtonSoft JSON.NET. A simple WebClient call is needed to download the JSON string and pass it to the JSON deserializer:

    // Needs: System.Collections.Generic, System.Configuration, System.Net, Newtonsoft.Json
    private List<IdentityProvider> GetIdentityProvidersFromAcs()
    {
        // URL of the ACS JSON feed, kept in the appSettings section of web.config
        var idPsUrl = ConfigurationManager.AppSettings["IdentityProvidersUrl"];
        using (var webClient = new WebClient())
        {
            // Explicit UTF-8, so that the ™ in the Live ID provider name survives
            webClient.Encoding = System.Text.Encoding.UTF8;
            var jsonList = webClient.DownloadString(idPsUrl);
            return JsonConvert.DeserializeObject<List<IdentityProvider>>(jsonList);
        }
    }

You might already have noticed that I use the appSettings section in web.config to keep the URL of the identity providers list. A small gotcha is to explicitly set the text encoding of WebClient to UTF-8, because the Live ID identity provider has a small trademark symbol (™) in its name and that cannot be changed. If you do not use UTF-8 encoding, you will get some strange characters instead of the ™ symbol.
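For reference, the appSettings entry read by the method above might look like the following sketch. The key name comes from the code; the value is only a placeholder (an assumption), to be replaced with the JSON feed URL shown on the ACS Login Pages screen:

```xml
<appSettings>
  <!-- Placeholder value (assumption): paste the JSON feed URL shown in the ACS portal -->
  <add key="IdentityProvidersUrl" value="{JSON feed URL from the ACS portal}" />
</appSettings>
```

Because web.config is XML, any & characters in the feed URL's query string have to be written as &amp;amp; in the value attribute.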

What do we do with the identity providers once we have them? Well, I’ve created a BaseMaster class from which all of my MasterPages will inherit. This BaseMaster class has a property IdentityProviders:

    public List<IdentityProvider> IdentityProviders
    {
        get
        {
            List<IdentityProvider> idPs = Cache.Get("idps") as List<IdentityProvider>;
            if (idPs == null)
            {
                idPs = this.GetIdentityProvidersFromAcs();
                // Absolute expiration after 20 minutes, no sliding expiration
                Cache.Add("idps", idPs, null, DateTime.Now.AddMinutes(20),
                    TimeSpan.Zero, System.Web.Caching.CacheItemPriority.Normal, null);
            }
            return idPs;
        }
    }

I also keep the retrieved list in the local application cache, since it is not very likely to change often. Still, I leave it in the cache for just 20 minutes so that it is refreshed from time to time.

Now the tricky part. In order to have our own login page we have to combine the forces of Forms Authentication and Windows Identity Foundation with its WSFederationAuthenticationModule. If you happen to use an ASP.NET MVC application, then it is fairly easy and much discussed. I suggest that you go through Dominick Baier’s blog post on that subject (and the details). Note that we will not mix Forms and Claims authentication. We will use claims, but will have Forms Authentication help us with that task.

Let’s go through the changes needed in the Web.config file. First we set the passiveRedirectEnabled attribute of wsFederation to false:

    <wsFederation passiveRedirectEnabled="false"
                  issuer="https://asdemo.accesscontrol.windows.net/v2/wsfederation"
                  realm="http://localhost:2700/"
                  requireHttps="false" />

Then we change the authentication mode from None to Forms:

    <authentication mode="Forms">
      <forms loginUrl="~/Login.aspx" />
    </authentication>

And now we add some special locations that allow all users: Styles, Login.aspx and authenticate.aspx. We need to do that to prevent Forms Authentication from redirecting requests for these URLs. Styles, of course, must be accessible to all users. Login.aspx is our custom login page, and authenticate.aspx is a custom authentication page which we will use as the Return URL for the relying party application configured in ACS:
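A minimal sketch of those entries, assuming standard ASP.NET location elements placed directly under the configuration element and the three paths named above (the exact entries in the original project may differ):

```xml
<!-- Assumed sketch: one <location> per path that must bypass the Forms login redirect -->
<location path="Styles">
  <system.web>
    <authorization>
      <allow users="*" />
    </authorization>
  </system.web>
</location>
<location path="Login.aspx">
  <system.web>
    <authorization>
      <allow users="*" />
    </authorization>
  </system.web>
</location>
<location path="authenticate.aspx">
  <system.web>
    <authorization>
      <allow users="*" />
    </authorization>
  </system.web>
</location>
```

The users="*" rule admits both anonymous and authenticated users, which is what keeps the FormsAuthenticationModule from redirecting requests for these paths to Login.aspx.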

Here is the code for authenticate.aspx.cs:

    protected void Page_Load(object sender, EventArgs e)
    {
        if (HttpContext.Current.Request.Form[WSFederationConstants.Parameters.Result] != null)
        {
            // This is a response from the ACS - you can further inspect the message if you want
            SignInResponseMessage message = WSFederationMessage.CreateFromNameValueCollection(
                WSFederationMessage.GetBaseUrl(HttpContext.Current.Request.Url),
                HttpContext.Current.Request.Form) as SignInResponseMessage;
            FormsAuthentication.SetAuthCookie(this.User.Identity.Name, false);
            Response.Redirect("~/Default.aspx");
        }
        else
        {
            Response.Redirect("~/Login.aspx");
        }
    }

We check whether the request contains a SignInResponseMessage, which would come from ACS. If so, everything is fine and we must tell Forms Authentication that the user is logged in by calling FormsAuthentication.SetAuthCookie(). If not, we redirect the user to the login page.

Finally we will create our custom login page. Just add it as a web form using a Master Page. Select the Site.Master (which inherits from BaseMaster). The simplest way of showing Identity Providers I can think of is the Repeater control:

    <h2>Choose Login Method</h2>
    <asp:Repeater runat="server" ID="rptIdentityProviders">
      <ItemTemplate>
        <asp:HyperLink runat="server"
          NavigateUrl='<%#DataBinder.Eval(Container.DataItem, "LoginUrl")%>'
          Text='<%#DataBinder.Eval(Container.DataItem, "Name")%>' />
        <br />
        <hr />
      </ItemTemplate>
    </asp:Repeater>

I bind it to the list of identity providers which I got from the BaseMaster:

    protected void Page_Load(object sender, EventArgs e)
    {
        this.rptIdentityProviders.DataSource = ((BaseMaster)this.Master).IdentityProviders;
        this.rptIdentityProviders.DataBind();
    }

Let me visually summarize the process and why it works:

If a user requests protected content, the FormsAuthenticationModule kicks in and redirects the user to our Login.aspx page. There we show a list of identity providers with which one can authenticate. When the user authenticates successfully with the chosen IdP, a token is sent back to the Access Control Service. The ACS processes that token with the configured rules and relying party. If everything passes without errors, the ACS sends a new token to the relying party’s Return URL. In our case this is http://localhost:2700/authenticate.aspx. Since we added this URI as a special location in web.config, no FormsAuth redirect kicks in here. The WSFederationAuthenticationModule intercepts the incoming token and creates the ClaimsIdentity based on that token. It also creates the FederatedAuthentication cookie, which will be used in subsequent requests to build up the ClaimsIdentity again. Now is the moment to also tell the FormsAuthenticationModule that the user is already authenticated, so that it doesn’t redirect to Login.aspx.

I hope you liked it and discovered that it is easier than you thought!

Full disclosure: I’m a paid contributor to Red Gate Software’s ACloudyPlace blog.

Haishi Bai (@haishibai2010) posted a Walkthrough: Setting up development environment for Service Bus for Windows Server on 8/28/2012:

In this walkthrough I’ll guide you through the steps of configuring a development environment for Service Bus for Windows Server (Service Bus 1.0 Beta) and creating a simple application. This post is a condensed version of the content at the previous link. If you’d rather read the whole document, you are welcome to do so. On the other hand, if you prefer a shorter version with step-by-step guidance, please read on! Throughout the post we’ll also touch upon key concepts you need to understand to manage and use Service Bus. So, if you learn better with hands-on experiments, this is a perfect post (I hope) for you to get started.

Service Bus for Windows Server brings loosely-coupled, message-driven architecture to on-premises servers as well as development environments. There’s great parity between Service Bus for Windows Server and Service Bus on Windows Azure in terms of API and development experience. As a developer, you can use client classes such as QueueClient and BrokeredMessage just as you do with Service Bus on Windows Azure. The main difference in code lies in where namespaces are managed.

Prerequisites

- Windows 7 SP1 x64 or Windows 8 x64 (used in this post).

- SQL Server 2008 R2 SP1, SQL Server 2008 R2 SP1 Express, SQL Server 2012, or SQL Server 2012 Express (used in this post)

- .Net 4.0 or .Net 4.5 (used in this post)

- PowerShell 3.0

- Visual Studio 2010 or Visual Studio 2012 (used in this post)

Note: Service Bus for Windows Server supports Windows Server 2008 SP1 x64 and Windows Server 2012 x64 as production systems. However, you can install it on Windows 7 SP1 x64 and Windows 8 x64 for development purposes.

1. Installation

Click on the contextual installation link to launch the installer. Follow the wizard to complete the installation. If you encounter errors, please refer to the release notes page for known issues and tips on fixing them.

2. Configuration

On Windows 8, search for “Service Bus PowerShell” and launch the PowerShell host for Service Bus. On Windows 7, use Start->All Programs->Windows Azure Service Bus 1.0->Service Bus PowerShell menu.

- Create a new Service Bus Farm. A Service Bus Farm is a group of Service Bus Hosts. It provides a highly available and scalable hosting cluster for your messaging infrastructure. To create a new Service Bus Farm, use command:

    $SBCertAutoGenerationKey = ConvertTo-SecureString -AsPlainText -Force -String "{your strong password}"
    New-SBFarm -FarmMgmtDBConnectionString 'Data Source=localhost; Initial Catalog=SBManagementDB; Integrated Security=True' `
        -PortRangeStart 9000 -TcpPort 9354 -RunAsName {user}@{domain} -AdminGroup 'BUILTIN\Administrators' `
        -GatewayDBConnectionString 'Data Source=localhost; Initial Catalog=SBGatewayDatabase; Integrated Security=True' `
        -CertAutoGenerationKey $SBCertAutoGenerationKey `
        -ContainerDBConnectionString 'Data Source=localhost; Initial Catalog=ServiceBusDefaultContainer; Integrated Security=True';

This is a bit scary, isn’t it? Looking closer, you’ll see the script creates three databases, along with other security settings. In the script you can see references to a Gateway and a default Container. What are those? To explain, we’ll have to briefly review the Service Bus architecture. If you want a more detailed description, read this page. Here’s a shortened version:

Each server on a Service Bus Farm runs three processes: a Gateway, a Message Broker, and a Windows Fabric host. Windows Fabric hosts form a highly available hosting environment. They coordinate load-balancing and fail-over by communicating with each other. Message Brokers register themselves with the Fabric hosts and they in turn host Containers, which can be queues, topics, or subscriptions. Each Container is backed by persistent storage (SQL Server). When a client sends or receives a message, the request is sent to the Gateway first, and the Gateway routes it to the appropriate Message Broker. In the case of Net.TCP interaction, the Gateway can also redirect the client to a Message Broker so that subsequent requests are handled directly by the broker.

- Now we are ready to add a member server (called “host”) to the farm:

    Add-SBHost -certautogenerationkey $SBCertAutoGenerationKey -FarmMgmtDBConnectionString "Data Source=localhost; Integrated Security=True"

The command will prompt for your RunAs password. Enter the password of the account you chose to use (the {user}@{domain} part in the script above) to continue. Once the command completes, you should see a Service Bus Gateway service, a Service Bus Message Broker service, and a Windows Fabric Host Service running on your machine.

- To check the status of the farm, use the command:

    Get-SBFarmStatus

You should see output similar to this:

3. Create a new namespace

You can use the New-SBNamespace command to create a new Service Bus namespace:

    New-SBNamespace -Name SBDemo -ManageUsers {your domain}\{your account}

Once the namespace is created, you should see output similar to this:

4. Now we are ready to write some code!

- Launch Visual Studio and create a new Windows Console application targeting .NET Framework 4.0.

- In NuGet package manager, search for “ServiceBus 1.0” (include prerelease), locate and install the package.

- Replace the Main() method with the following code. Again, the code is an (over)simplified version of the MSDN sample: