Windows Azure and Cloud Computing Posts for 7/30/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

•• Updated 8/1/2012 10:20 AM PDT with new articles marked ••, including the new Windows Azure Active Directory Authentication Library (WAADAL) in the Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow section.

• Updated 7/31/2012 3:00 PM PDT with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

See David Pallman’s Introducing azureQuery: the JavaScript-Windows Azure Bridge for client-side JavaScript with Windows Azure blobs article in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

Rohit Bakashi and Mike Flasko presented a Hortonworks and Microsoft Bring Apache Hadoop to Windows webinar on 7/25/2012 (video archive requires registration):

Microsoft and Hortonworks announced a strategic relationship earlier this year to accelerate and extend the delivery of Apache Hadoop-based distributions for Windows Server and Windows Azure.

Join us in this 60-minute webcast with Rohit Bakashi, Product Manager at Hortonworks, and Mike Flasko, Sr. Program Manager at Microsoft, to discuss the work that has been done since the announcement, including:

- Hortonworks Data Platform and Microsoft’s Big Data solutions.

- A demo of HDP on both Windows Server and Windows Azure.

- Real-world use cases that leverage Microsoft Big Data solutions to unlock business insights from structured and unstructured data.

See Chris Talbot’s Cloudera, HP To Simplify Hadoop Cluster Management report in a 7/30/2012 post to the TalkinCloud blog in the Other Cloud Computing Platforms and Services section below.


SQL Azure Database, Federations and Reporting

Himanshu Singh (@himanshuks, pictured below) posted Fault-tolerance in Windows Azure SQL Database by Tony Petrossian to the Windows Azure blog on 7/30/2012:

A few years ago when we started building Windows Azure SQL Database, our Cloud RDBMS service, we assumed that fault-tolerance was a basic requirement of any cloud database offering. Our cloud customers have a diverse set of needs for storage solutions, but our focus was to address the needs of the customers who needed an RDBMS for their application. For example, one of our early adopters was building a massive ticket reservation system in Windows Azure. Their application required relational capabilities with concurrency controls and transactional guarantees with consistency and durability.

To build a true RDBMS service we had to be fault-tolerant while ensuring that all atomicity, consistency, isolation and durability (ACID) characteristics of the service matched those of a SQL Server database. In addition, we wanted to provide elasticity and scale capabilities for customers to create and drop thousands of databases without any provisioning friction. Building a fault-tolerant (FT) system at cloud scale required a good deal of innovation.

We began by collecting a lot of data on various failure types and for a while we reveled in the academic details of the various system failure models. Ultimately, we simplified the problem space to the following two principles:

- Hardware and software failures are inevitable

- Operational staff make mistakes that lead to failures

There were two driving factors behind the decision to simplify our failure model. First, a fault-tolerant system requires us to deal with low-frequency failures, planned outages, as well as high-frequency failures. Second, at cloud scale, the low-frequency failures happen every week if not every day.

Our designs for fault tolerance started to converge around a few solutions once we assumed that all components are likely to fail and that it was not practical to have a different FT solution for every component in the system. For example, if all components in a computer are likely to fail, then we might as well have redundant computers instead of investing in redundant components, such as power supplies and RAID.

We finally decided that we would build fault-tolerant SQL databases at the highest level of the stack instead of building fault-tolerant systems that run database servers that host databases. Last but not least, the FT functionality would be an inherent part of the offering, requiring no configuration or administration by operators or customers.

Fault-Tolerant SQL Databases

Customers are most interested in the resiliency of their own databases and less interested in the resiliency of the service as a whole. 99.9% uptime for a service is meaningless if “my database” is part of the 0.1% of databases that are down. Each and every database needs to be fault-tolerant and fault mitigation should never result in the loss of a committed transaction. There are two major technologies that provide the foundation for the fault-tolerant databases:

- Database Replication

- Failure Detection & Failover

Together, these technologies allow the databases to tolerate and mitigate failures automatically, without human intervention, while ensuring that committed transactions are never lost from users’ databases.

Database Fault-Tolerance in a Nutshell

Windows Azure SQL Database maintains multiple copies of all databases on different physical nodes located across fully independent physical sub-systems, such as server racks and network routers. At any one time, Windows Azure SQL Database keeps three replicas of each database: one primary replica and two secondary replicas. Windows Azure SQL Database uses a quorum-based commit scheme in which data is written to the primary and one secondary replica before we consider the transaction committed. If any component fails on the primary replica, Windows Azure SQL Database detects the failure and fails over to a secondary replica. If a replica is physically lost, Windows Azure SQL Database creates a new replica automatically. Therefore, there are at least two replicas of each database that have transactional consistency in the data center. Other than the loss of an entire data center, all failures are mitigated by the service.
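The quorum rule above can be illustrated with a small Python sketch. It assumes a simple majority quorum over the replica set, with the primary counting toward it. That matches the three-replica case described here: the primary plus one secondary. The class and node names are hypothetical and are not part of the service.

```python
from dataclasses import dataclass, field


def quorum_size(replica_count: int) -> int:
    """Smallest majority of a replica set: 2 of 3, 3 of 5, and so on."""
    return replica_count // 2 + 1


@dataclass
class ReplicaSet:
    """Hypothetical model of one database: a primary plus its secondaries."""
    primary: str
    secondaries: list
    acks: set = field(default_factory=set)

    def ack(self, replica: str) -> None:
        """Record that `replica` has received the transaction's data."""
        if replica != self.primary and replica not in self.secondaries:
            raise ValueError(f"unknown replica {replica!r}")
        self.acks.add(replica)

    def is_committed(self) -> bool:
        # The primary counts toward the quorum, so with three replicas the
        # primary plus any one secondary is enough.
        return len(self.acks) >= quorum_size(1 + len(self.secondaries))


rs = ReplicaSet(primary="node-A", secondaries=["node-B", "node-C"])
rs.ack("node-A")
print(rs.is_committed())  # False: only the primary has the data
rs.ack("node-C")
print(rs.is_committed())  # True: primary + one secondary = 2 of 3
```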

The replication, failure detection and failover mechanisms of Windows Azure SQL Database are fully automated and operate without human intervention. This architecture is designed to ensure that committed data is never lost and that data durability takes precedence over all else.

The Key Customer Benefits:

- Customers get the full benefit of replicated databases without having to configure or maintain complicated hardware, software, OS or virtualization environments

- Full ACID properties of relational databases are maintained by the system

- Failovers are fully automated without loss of any committed data

- Routing of connections to the primary replica is dynamically managed by the service with no application logic required

- The high level of automated redundancy is provided at no extra charge

If you are interested in additional details, the next two sections provide more information about the internal workings of our replication and failover technologies.

Windows Azure SQL Database Replication Internals

Redundancy is the key to fault tolerance in Windows Azure SQL Database. Redundancy is maintained at the database level, so each database is made physically and logically redundant. Redundancy for each database is enforced throughout the database’s lifecycle: every database is replicated before it is even provided to a customer, and the replicas are maintained until the customer drops the database. Each of the three replicas of a database is stored on a different node. Replicas of each database are scattered across nodes such that no two copies reside in the same “failure domain,” e.g., under the same network switch or in the same rack. Replicas of each database are assigned to nodes independently of the assignment of other databases to nodes, even if the databases belong to the same customer. That is, the fact that replicas of two databases are stored on the same node does not imply that other replicas of those databases are also co-located on another node.
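Here is a minimal Python sketch of the placement constraint described above. It assumes that each node is labeled with a single failure domain, such as a rack, and that a database's replicas are chosen at random among distinct domains. The function name and the random choice are illustrative assumptions, not the service's actual placement algorithm.

```python
import random
from typing import Dict, List, Optional


def place_replicas(nodes: Dict[str, str], copies: int = 3,
                   rng: Optional[random.Random] = None) -> List[str]:
    """Pick `copies` nodes for one database, no two in the same failure domain.

    `nodes` maps node name -> failure domain (e.g. a rack or network switch).
    Each call chooses independently, so two databases that happen to share
    one node are not forced to share their other replicas.
    """
    rng = rng or random.Random()
    by_domain: Dict[str, List[str]] = {}
    for node, domain in nodes.items():
        by_domain.setdefault(domain, []).append(node)
    if len(by_domain) < copies:
        raise ValueError("not enough independent failure domains")
    domains = rng.sample(sorted(by_domain), copies)
    return [rng.choice(sorted(by_domain[d])) for d in domains]


cluster = {"n1": "rack1", "n2": "rack1", "n3": "rack2",
           "n4": "rack2", "n5": "rack3", "n6": "rack4"}
db1 = place_replicas(cluster, rng=random.Random(1))
db2 = place_replicas(cluster, rng=random.Random(2))
print(db1, db2)
```

Seeding the generator only makes the example repeatable. Any two calls may or may not overlap on a node, but each result always spans three different racks.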

For each database, at each point in time one replica is designated to be the primary. A transaction executes using the primary replica of the database (or simply, the primary database). The primary replica processes all query, update, and data definition language operations. It ships its updates and data definition language operations to the secondary replicas using the replication protocol for Windows Azure SQL Database. The system currently does not allow reads of secondary replicas. Since a transaction executes all of its reads and writes using the primary database, the node that directly accesses the primary partition does all the work against the data. It sends update records to the database’s secondary replicas, each of which applies the updates. Since secondary replicas do not process reads, each primary has more work to do than its secondary replicas. To balance the load, each node hosts a mix of primary and secondary databases. On average, with 3-way replication, each node hosts one primary database for every two secondary replicas. Obviously, two replicas of a database are never co-located on the same physical node.

Another benefit of having each node host a mix of primary and secondary databases is that it allows the system to spread the load of a failed node across many live nodes. For example, suppose a node S hosts three primary databases PE, PF, and PG. If S fails and secondary replicas for PE, PF, and PG are spread across different nodes, then the new primary database for PE, PF, and PG can be assigned to three different nodes.
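The node S example can be sketched in Python. The sketch assumes a hypothetical model in which each database's replica list is ordered primary first. As a simplification, it promotes the first surviving secondary; the leader-based selection of the most up-to-date secondary is described later in the article.

```python
from typing import Dict, List


def fail_over(placement: Dict[str, List[str]], failed: str) -> Dict[str, List[str]]:
    """Return the replica lists after node `failed` goes down.

    `placement` maps database -> replica nodes, primary first. The failed
    node is removed from every list, so for databases whose primary lived on
    it, the first surviving secondary becomes the new primary.
    """
    result = {}
    for db, replicas in placement.items():
        survivors = [n for n in replicas if n != failed]
        if not survivors:
            raise RuntimeError(f"all replicas of {db} lost")
        result[db] = survivors
    return result


# Node S hosts the primaries of PE, PF and PG; their secondaries live elsewhere.
placement = {"PE": ["S", "N1", "N2"],
             "PF": ["S", "N3", "N4"],
             "PG": ["S", "N5", "N6"]}
after = fail_over(placement, "S")
print({db: reps[0] for db, reps in after.items()})
# {'PE': 'N1', 'PF': 'N3', 'PG': 'N5'}: three different nodes absorb the load
```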

The replication protocol is specifically built for the cloud to operate reliably while running on a collection of hardware and software components that are assumed to be unreliable (component failures are inevitable). The transaction commitment protocol requires that only a quorum of the replicas be up. A consensus algorithm, similar to Paxos, is used to maintain the set of replicas. Dynamic quorums are used to maintain availability in the face of multiple failures.

The propagation of updates from primary to secondary is managed by the replication protocol. A transaction T’s primary database generates a record containing the after-image of each update by T. Such update records serve as logical redo records, identified by table key but not by page ID. These update records are streamed to the secondary replicas as they occur. If T aborts, the primary sends an ABORT message to each secondary, which deletes the updates it received for T. If T issues a COMMIT operation, then the primary assigns to T the next commit sequence number (CSN), which tags the COMMIT message that is sent to secondary replicas. Each secondary applies T’s updates to its database in commit-sequence-number order within the context of an independent local transaction that corresponds to T and sends an acknowledgment (ACK) back to the primary. After the primary receives an ACK from a quorum of replicas (including itself), it writes a persistent COMMIT record locally and returns “success” to T’s COMMIT operation. A secondary can send an ACK in response to a transaction T’s COMMIT message immediately, before T’s corresponding commit record and update records that precede it are forced to the log. Thus, before T commits, a quorum of nodes has a copy of the commit.
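To make the message flow above concrete, here is a simplified, single-process Python sketch of the commit path:

- Update records are streamed as they occur.
- ABORT discards them.
- COMMIT is tagged with the next CSN.
- Secondaries apply commits in CSN order and acknowledge.
- The primary records COMMIT once a majority, counting itself, has acknowledged.

Networking, durability and failure recovery are omitted. Every class and method name is a hypothetical stand-in rather than the service's protocol implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Secondary:
    name: str
    up: bool = True
    data: dict = field(default_factory=dict)
    pending: dict = field(default_factory=dict)  # txn -> [(key, after-image)]
    ready: dict = field(default_factory=dict)    # csn -> txn awaiting its turn
    last_csn: int = 0

    def on_update(self, txn, key, value):
        # Logical redo record: identified by key, not by page.
        self.pending.setdefault(txn, []).append((key, value))

    def on_abort(self, txn):
        self.pending.pop(txn, None)  # discard updates received for txn

    def on_commit(self, txn, csn):
        """Apply committed transactions strictly in CSN order, then ACK."""
        if not self.up:
            return False
        self.ready[csn] = txn
        while self.last_csn + 1 in self.ready:
            self.last_csn += 1
            for key, value in self.pending.pop(self.ready.pop(self.last_csn), []):
                self.data[key] = value
        return True  # in this sketch nothing is forced to disk before the ACK


class Primary:
    def __init__(self, secondaries):
        self.secondaries = secondaries
        self.next_csn = 1
        self.data = {}
        self.txns = {}
        self.log = []  # persistent COMMIT records (modeled as a list)

    def update(self, txn, key, value):
        self.txns.setdefault(txn, []).append((key, value))
        for s in self.secondaries:  # streamed as the updates occur
            if s.up:
                s.on_update(txn, key, value)

    def abort(self, txn):
        self.txns.pop(txn, None)
        for s in self.secondaries:
            if s.up:
                s.on_abort(txn)

    def commit(self, txn):
        csn, self.next_csn = self.next_csn, self.next_csn + 1
        acks = 1 + sum(s.on_commit(txn, csn) for s in self.secondaries)  # 1 = itself
        if acks < (1 + len(self.secondaries)) // 2 + 1:
            raise RuntimeError("no quorum; a real system would wait or reconfigure")
        for key, value in self.txns.pop(txn, []):
            self.data[key] = value
        self.log.append(("COMMIT", txn, csn))
        return True  # "success" returned to T's COMMIT operation


b, c = Secondary("B"), Secondary("C")
p = Primary([b, c])
p.update("T1", "seat-12", "booked")
ok1 = p.commit("T1")  # primary + 2 ACKs
p.update("T2", "seat-13", "booked")
p.abort("T2")         # secondaries discard T2's updates
c.up = False
p.update("T3", "seat-14", "booked")
ok3 = p.commit("T3")  # primary + B still form a quorum of 2
print(ok1, ok3, b.data == p.data, c.data)  # True True True {'seat-12': 'booked'}
```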

Update records are eventually flushed to disk by primary and secondary replicas. Their purpose is to minimize the delta between primary and secondary replicas in order to reduce any potential data loss during a failover event.

Updates for committed transactions that are lost by a secondary (e.g., due to a crash) can be acquired from the primary replica. The recovering replica sends to the primary the commit sequence number of the last transaction it committed. The primary replies by either sending the queue of updates that the recovering replica needs or telling the recovering replica that it is too far behind to be caught up. In the latter case, the recovering replica can ask the primary to transfer a fresh copy. A secondary promptly applies updates it receives from the primary node, so it is always nearly up-to-date. Thus, if it needs to become the primary due to a configuration change (e.g., due to load balancing or a primary failure), such reassignment is almost instantaneous. That is, secondary replicas are hot standbys and provide very high availability.
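The catch-up exchange can be sketched as follows. The sketch assumes the primary keeps only a bounded queue of recent committed transactions; the capacity and all names are illustrative assumptions. A replica whose last CSN is still covered by the queue replays the tail. Otherwise it receives a fresh copy.

```python
from collections import deque


class UpdateQueue:
    """Hypothetical primary-side queue of the most recent committed transactions."""

    def __init__(self, capacity):
        self.entries = deque(maxlen=capacity)  # (csn, [(key, value), ...]); oldest dropped

    def append(self, csn, updates):
        self.entries.append((csn, updates))

    def catch_up(self, last_csn):
        """Entries after `last_csn`, or None if the replica is too far behind."""
        if self.entries and last_csn + 1 < self.entries[0][0]:
            return None  # the missing transactions were already trimmed
        return [e for e in self.entries if e[0] > last_csn]


def recover(data, last_csn, queue, primary_data):
    """Bring a recovering secondary up to date; return (data, last_csn)."""
    missing = queue.catch_up(last_csn)
    if missing is None:  # too far behind: ask for a fresh copy
        return dict(primary_data), queue.entries[-1][0]
    for csn, updates in missing:
        for key, value in updates:
            data[key] = value
        last_csn = csn
    return data, last_csn


q = UpdateQueue(capacity=3)
primary = {}
for csn in range(1, 6):  # five committed transactions; the queue keeps 3..5
    primary[f"k{csn}"] = csn
    q.append(csn, [(f"k{csn}", csn)])

a_data, a_csn = recover({"k1": 1, "k2": 2, "k3": 3}, 3, q, primary)  # replays 4 and 5
b_data, b_csn = recover({"k1": 1}, 1, q, primary)                    # gets a fresh copy
print(a_csn, b_csn, a_data == primary == b_data)  # 5 5 True
```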

Failure Detection & Failover Internals

A large-scale distributed system needs a highly reliable failure detection system that can detect failures reliably, quickly, and as close as possible to the customer. The Windows Azure SQL Database distributed fabric is paired with the SQL engine so that it can detect failures within a neighborhood of databases.

Centralized health monitoring of a very large system is inefficient and unreliable. The Windows Azure SQL Database failure detection is completely distributed so that any node in the system can be monitored by several of its neighbors. This topology allows for an extremely efficient, localized, and fast detection model that avoids the usual ping storms and unnecessarily delayed failure detections.
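The article does not spell out the monitoring topology. The following Python sketch therefore assumes one plausible arrangement:

- Nodes sit on a logical ring.
- Each node is watched by its next k neighbors.
- A node is reported failed only when a majority of its watchers have missed its heartbeats.

The ring, k, the timeout and the majority rule are all assumptions made for illustration.

```python
def monitors(nodes, k):
    """Assign each node k watchers: its successors on a logical ring."""
    n = len(nodes)
    return {nodes[i]: [nodes[(i + j) % n] for j in range(1, k + 1)] for i in range(n)}


def detect_failures(watchers, last_heartbeat, now, timeout):
    """Report a node as failed when a majority of its watchers missed its heartbeat.

    last_heartbeat[(watcher, target)] is when `watcher` last heard from `target`.
    """
    failed = []
    for target, ws in watchers.items():
        misses = sum(1 for w in ws
                     if now - last_heartbeat.get((w, target), float("-inf")) > timeout)
        if misses > len(ws) // 2:
            failed.append(target)
    return failed


nodes = [f"n{i}" for i in range(6)]
watchers = monitors(nodes, k=3)
# n2 went silent at t=0; every other node was last heard from at t=9.
hb = {(w, t): (0 if t == "n2" else 9) for t, ws in watchers.items() for w in ws}
print(detect_failures(watchers, hb, now=10, timeout=5))  # ['n2']
```

Because each node reports only on a few neighbors, detection work stays local and grows with k rather than with cluster size.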

Although we collect detailed component-level failure telemetry for subsequent analysis, we use only high-level failure signatures detected by the fabric to make failover decisions. Over the years we have improved our ability to fail fast and recover so that the degraded conditions of an unhealthy node do not persist.

Because the failover unit in Windows Azure SQL Database is the database, each database’s health is carefully monitored and failed over when required. Windows Azure SQL Database maintains a global map of all databases and their replicas in the Global Partition Manager (GPM). The global map contains the health, state, and location of every database and its replicas. The distributed fabric maintains the global map. When a node in Windows Azure SQL Database fails, the distributed fabric reliably and quickly detects the node failure and notifies the GPM. The GPM then reconfigures the assignment of primary and secondary databases that were present on the failed node.

Since Windows Azure SQL Database only needs a quorum of replicas to operate, availability is unaffected by failure of a secondary replica. In the background, the system simply creates a new replica to replace the failed one.

Replicas that are unavailable only for short periods of time are simply caught up with the small number of transactions they missed. The recovering replica’s node asks an operational replica to send it the tail of the update queue that it missed while it was down. Quickly synchronizing temporarily unavailable secondary replicas is an optimization that avoids completely recreating replicas when that is not strictly necessary.

If a primary replica fails, one of the secondary replicas must be designated as the new primary, and all of the operational replicas must be reconfigured according to that decision. The first step in this process relies on the GPM to choose a leader to rebuild the database’s configuration. The leader attempts to contact the members of the entire replica set to ensure that there are no lost updates. The leader determines which secondary has the latest state, and that most up-to-date secondary propagates the missing changes to the other replicas.
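The leader's job described above can be sketched in Python. The sketch assumes each surviving replica can report its last commit sequence number and serve its recent update log. The data layout and function name are hypothetical, and the real protocol also has to contact the full replica set and cope with concurrent failures.

```python
def reconfigure(replicas):
    """Hypothetical leader step after the primary fails.

    `replicas` maps each surviving replica -> {"csn": last CSN, "log": {csn: update}}.
    The most up-to-date replica becomes the new primary and sends every other
    replica the transactions it is missing. Returns the new primary's name.
    """
    new_primary = max(replicas, key=lambda name: replicas[name]["csn"])
    source = replicas[new_primary]
    for name, rep in replicas.items():
        if name == new_primary:
            continue
        for csn in range(rep["csn"] + 1, source["csn"] + 1):
            rep["log"][csn] = source["log"][csn]
        rep["csn"] = source["csn"]
    return new_primary


survivors = {
    "B": {"csn": 7, "log": {5: "u5", 6: "u6", 7: "u7"}},
    "C": {"csn": 6, "log": {5: "u5", 6: "u6"}},
}
print(reconfigure(survivors), survivors["C"])
# B {'csn': 7, 'log': {5: 'u5', 6: 'u6', 7: 'u7'}}
```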

All connections to Windows Azure SQL Database databases are managed by a set of load-balanced Gateway processes. A Gateway is responsible for accepting inbound database connection requests from clients and binding them to the node that currently hosts the primary replica of a database. The Gateways coordinate with the distributed fabric to locate the primary replica of a customer’s databases. In the event of a fail-over, the Gateways renegotiate the connection binding of all connections bound to the failed primary to the new primary as soon as it is available.

The combination of connection Gateways, distributed fabric, and the GPM can detect and mitigate failures using the database replicas maintained by Windows Azure SQL Database.
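The Gateway behavior described above can be sketched as a small Python class. The sketch assumes the gateway has a lookup table, standing in for the distributed fabric, that maps each database to the node hosting its primary. The class, its method names and the table are hypothetical.

```python
class Gateway:
    """Hypothetical gateway that binds client connections to primary replicas."""

    def __init__(self, directory):
        self.directory = dict(directory)  # database -> node hosting its primary
        self.bindings = {}                # connection id -> (database, node)
        self._next_id = 1

    def connect(self, database):
        """Accept a client connection and bind it to the current primary."""
        conn = self._next_id
        self._next_id += 1
        self.bindings[conn] = (database, self.directory[database])
        return conn

    def on_failover(self, database, new_primary):
        """Rebind every connection of `database` to its new primary."""
        self.directory[database] = new_primary
        for conn, (db, _node) in list(self.bindings.items()):
            if db == database:
                self.bindings[conn] = (db, new_primary)


gw = Gateway({"sales": "node-A", "hr": "node-B"})
c1, c2 = gw.connect("sales"), gw.connect("hr")
gw.on_failover("sales", "node-C")
print(gw.bindings)  # {1: ('sales', 'node-C'), 2: ('hr', 'node-B')}
```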

Paras Doshi (@paras_doshi) summarized SQL Azure’s Journey from Inception in Year 2008 to June 2012! in a 7/24/2012 post (missed when published):

SQL Azure has been evolving at an amazing pace. Here is a list that summarizes its evolution through June 2012:

SQL Azure, June 2012 (During Meet Windows Azure event)

- SQL Azure Reporting is now generally available and backed by an SLA

- SQL Azure now called Windows Azure SQL Database

- Run SQL Server on Azure VM role

SQL Azure, May 2012

SQL Azure Data SYNC, April 2012

SQL Azure, February 2012:

SQL Azure Labs, February 2012:

- SQL Azure security services

- Microsoft Codename “Trust Services”

SQL Azure Labs, January 2012:

- Project codenamed “Cloud Numerics”

- Project codenamed “SQL Azure Compatibility Assessment”

Service Update 8, December 2011:

- Increased Max size of Database [Previous: 50 GB. Now: 150 GB]

- SQL Azure Federations

- SQL Azure import/export updated

- SQL Azure management portal gets a facelift.

- Expanded support for user defined collations

- There is no additional cost above 50 GB, so a 150 GB database costs the same as a 50 GB database (about $500 per month)

SQL Azure Labs, November 2011:

Upcoming SQL Azure Q4 2011 service release announced

- SQL Azure federations

- 150 GB database

- SQL Azure management portal will get a facelift with metro styled UI among other great additions


SQL Azure Labs, October 2011:

- Data Explorer preview

- Social Analytics preview

- New registrations for SQL Azure OData stopped.

News at SQLPASS, October 2011:

- SQL Azure reporting services CTP is now open for all!

- SQL Azure Data Sync CTP is now open for all!

- Upcoming: 150 GB database!

- Upcoming: SQL Azure Federations

- SQL Server Developer Tools, Codename “Juneau” supports SQL Azure

The following updates were not included in July 2011 and were added later, in September 2011:

- New SQL Azure Management Portal

- Foundational updates for scalability and performance

Service Update 7, July 2011:

- Creating SQL Azure firewall rules with IP detect

- SQL Azure Co-Administrator support

- Enhanced spatial data types support

Service Update 6, May 2011:

- Support for Multiple servers per subscription

- SQL Azure database management REST API

- JDBC driver

- Support for Upgrading DAC packages

Service Update 5, October 2010:

- Support for SQL Azure sync

- Support for SQL Azure reporting

- SQL Azure Error messages

- Support for sp_tableoption system stored procedure

Service Update 4, August 2010:

- Update on Project Houston, a Silverlight-based app to manage SQL Azure databases

- Support for TSQL command to COPY databases

- Books Online: Added How-To topics for SQL Azure

Service Update 3, June 2010:

- Support for database sizes up to 50 GB

- Support for spatial datatype

- Added East Asia and Western Europe datacenters

Service Update 2, April 2010:

- Support for renaming databases

- DAC (Data Tier Applications) support added

- Support for SQL Server management studio (SSMS) and Visual Studio (VS)

Service Update 1, February 2010:

- Support for new DMVs

- Support for Alter Database Editions

- Support for longer running Queries

- Idle session timeouts increased from 5 min to 30 min

SQL Server Data Services / SQL Data Services got a new name: SQL Azure, July 2009

SQL Server Data Services was Announced, April 2008


Erik Ejlskov (@ErikEJ) described The state and (near) future of SQL Server Compact in a 7/30/2012 post:

I recently got asked about the future of SQL Server Compact, and in this blog post I will elaborate a little on this and the present state of SQL Server Compact.

Version 4.0 is the default database in ASP.NET-based WebMatrix projects, and WebMatrix 2 has just been released.

There is full tooling support for version 4.0 in Visual Studio 2012, and the “Local Database” project item is a version 4.0 database (not LocalDB). In addition, Visual Studio 2012, coming in August, will include 4.0 SP1, so 4.0 is being actively maintained. Entity Framework version 6.0 is now open source and includes full support for SQL Server Compact 4.0. (Entity Framework 6.0 will be released “out of band” after the release of Visual Studio 2012.)

The latest release (build 8088) of version 3.5 SP2 is fully supported for Merge Replication with SQL Server 2012 (note that "LocalDB" cannot act as a Merge Replication subscriber), and Merge Replication with Windows Embedded CE 7.0 is also enabled.

On Windows Phone, version 3.5 is alive and well, and will of course also be included with the upcoming Windows Phone 8 platform. Windows Phone 8 will also include support for SQLite, mainly to make it easier to reuse projects between Windows Phone 8 and Windows 8 Metro.

On WinRT (Windows 8 Metro Style Apps), there is no SQL Server Compact support, and Microsoft is currently offering SQLite as an alternative (I doubt that will change). See also Matteo Pagani’s blog post: http://wp.qmatteoq.com/using-sqlite-in-your-windows-8-metro-style-applications

So, SQL Server Compact is currently available on the following Microsoft platforms: Windows XP and later (including ASP.NET), Windows Phone, and Windows Mobile/Embedded CE.

On the other hand, SQL Server Compact is not supported with: Silverlight (with exceptions), WinRT (Windows 8 Metro Style Apps).

So I think it is fair to conclude that SQL Server Compact is alive and well. In some scenarios, SQL Server "LocalDB" is a very viable alternative; note, however, that LocalDB currently requires administrator access to install (so no "private deployment"). See my comparison here.


Marketplace DataMarket, Social Analytics, Big Data and OData

• Edmund Leung described the Sencha Touch oData Connector and Samples for SAP in a 7/30/2012 post:

Today we’re releasing the Sencha Touch oData Connector for SAP, available on the Sencha Market. We’ve partnered with SAP to make it easier for SAP customers to build HTML5 applications using Sencha Touch. Sencha Touch is often used to build apps that mobilize enterprise applications, and using the oData Connector, Touch developers can now connect to a variety of SAP mobile solutions such as Netweaver Gateway, the Sybase Unwired Platform, and more. We announced our partnership with SAP earlier this year and have been working actively with SAP to build this shared capability to make it easy for developers to quickly build rich mobile enterprise applications.

The download comes with a getting started guide, a sample application, and the Sencha Touch oData Connector for SAP. The sample application, Flights Unlimited, connects to a hosted demo SAP Netweaver server and uses the oData Connector to query flight times, passenger manifests, pricing information and more. The application is built with Sencha Touch, so it’s pure HTML5 and can be deployed to the web, or hybridized with Sencha Touch Native Packaging for distribution to an app store. It’s a complete and comprehensive application that shows how developers can leverage the power of Sencha Touch and SAP mobility solutions together.

If you want to learn more, SAP hosted a webinar from SAP Labs in Palo Alto in which we talked with SAP about how we’re enabling the oData capability and showed off the Flights Unlimited demo. The recording of the webinar is available for streaming online.

We’re excited to see what our global development community will build with the new oData for SAP connector!

Mike Wheatley reported Census Bureau Unveils Public API For Apps Developers in a 7/30/2012 post to the SiliconANGLE blog:

The US Census Bureau has just made its wealth of demographic, socio-economic and housing data more accessible than ever before, with the launch of its first public API. Launched last Thursday, the API will surely lead to endless possibilities for developers of mobile and web applications.

Developers will be able to access two Census Bureau statistical databases through the API: the 2010 Census, which provides up-to-date information on age, population, race, sex and home ownership statistics in the US, and the 2006-2010 American Community Survey, which offers a more diverse range of socio-economic data, covering areas including employment, income and education.

As well as the API, the Census Bureau has also created a new “app gallery”, which will display all of the apps that have been built using US Census data.

Developers are being encouraged to design applications that can be customized for consumers’ needs, and are also invited to share and discuss new ideas on the Census Bureau’s new Developer’s Forum.

So far, two apps have already been built using the API – Poverty Map, developed by Cornell University researchers, which lets you view poverty statistics across different parts of New York State; and Age Finder, which can be used to measure population across different age groups over multiple years. The results can be filtered according to various demographics, such as sex, age and location.

In its official press release, the Census Bureau said that the idea behind the API is to make its data available to a wider audience, whilst ensuring greater transparency about its role.

Robert Groves, Director of the Census Bureau, said that he hopes to see many apps being developed on the back of the API:

“The API gives data developers in research, business and government the means to customize our statistics into an app that their audiences and customers need,” explained Groves.

Census Bureau Unveils Public API For Apps Developers is a post from: SiliconANGLE


Nicole Hemsoth reported Pew Points to Troubles Ahead for Big Data in a 7/30/2012 post to the Datanami blog:

Admittedly, we bear the same mallets as the rest of the tech media that has been steadily beating its drums to the big data beat, but our ears are always open for a chance to halt the parade for a moment and reflect on the tune.

No matter how we crane our necks to offset the hype cycle’s bell curve, it’s fair to say that there has been a lot of general talk about the value of big data but the cries of those who worry about what it all portends are often drowned out by the din of excitement.

While there are numerous advocacy and protection groups with specific focus on consumer and constituent concerns, other important issues bubble to the surface, including the matter of over-reliance on data mining algorithms and forecasting, as just one example.

The Pew Research Center recently delved into the task of finding the good, the bad and the ugly sides of the big data conversation. The group released a study that was compiled as part of the fifth “Future of the Internet” survey from the Pew Research Center’s Internet and American Life Project and Elon University’s Imagining the Internet Center.

While many of the respondents (which included experts in systems, communications and other areas) felt that the new sources of exploitable information (and new frameworks and platforms to allow this) could enable further insight for business and research, there were some notable reservations about the risk of swimming in such a deep sea of information.

While just over half of the respondents expressed favorable opinions of the state of data and its use in 2020, we wanted to take a look at why 38 percent of respondents weren’t quite as optimistic. This group says that, new tools and algorithms aside, big data will cause more problems than it solves by 2020. They agreed that “the existence of huge data sets for analysis will engender false confidence in our predictive powers and will lead many to make significant and hurtful mistakes.” They also suggest that governments and companies could ultimately misuse this data in extreme ways, which would represent a “big negative for society in nearly all aspects.”

The following are some insights broken down by categories that represent the major concern areas for big data in the future as reported by Pew….


Achmad Zaenuri described how to Check your Windows Azure Datamarket remaining quota in a 7/25/2012 post to his How To blog (missed when published):

In my previous post [see below], I show[ed] you how to use Windows Azure Datamarket to create a Bing Search Engine Position checker. I told you that a free account allows only 5,000 requests per month; paid subscriptions have higher limits.

This time I’ll show you how to check the remaining monthly quota for all of your Windows Azure Datamarket subscriptions without logging in to Azure Datamarket. This is the main function:

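```php
error_reporting(E_ALL ^ E_NOTICE);

// Returns the remaining monthly quota of every Datamarket dataset
// that the account key is subscribed to.
function check_bing_quota($key)
{
    $ret = array();
    // Datamarket uses HTTP Basic authentication; the account key
    // serves as both the user name and the password.
    $context = stream_context_create(array(
        'http' => array(
            'request_fulluri' => true,
            'header' => "Authorization: Basic " . base64_encode($key . ":" . $key)
        )
    ));
    // Single quotes keep "$format" literal (it is an OData query option).
    $end_point = 'https://api.datamarket.azure.com/Services/My/Datasets?$format=json';
    $response = file_get_contents($end_point, false, $context);
    $json_data = ($response === false) ? null : json_decode($response);
    if (!isset($json_data->d->results)) {
        return $ret; // request failed or returned an unexpected body
    }
    foreach ($json_data->d->results as $res) {
        $ret[] = array(
            'title'       => $res->Title,
            'provider'    => $res->ProviderName,
            'entry_point' => $res->ServiceEntryPointUrl,
            'remaining'   => $res->ResourceBalance
        );
    }
    return $ret;
}
```

How to call it: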

```php
$ret = check_bing_quota('put_your_real_account_key_here');
```

Example result:

```
array(2) {
  [0]=>
  array(4) {
    ["title"]=>
    string(36) "Bing Search API – Web Results Only"
    ["provider"]=>
    string(4) "Bing"
    ["entry_point"]=>
    string(57) "https://api.datamarket.azure.com/Data.ashx/Bing/SearchWeb"
    ["remaining"]=>
    int(4981)
  }
  [1]=>
  array(4) {
    ["title"]=>
    string(15) "Bing Search API"
    ["provider"]=>
    string(4) "Bing"
    ["entry_point"]=>
    string(54) "https://api.datamarket.azure.com/Data.ashx/Bing/Search"
    ["remaining"]=>
    int(4997)
  }
}
```

Fully working demo: http://demo.ahowto.net/bing_quota/

Example output formatted as table

Notes:

- The function checks ALL of your Windows Azure Datamarket subscriptions

- Checking your quota doesn’t consume any of it

- Your PHP version must be at least 5.2, and the PHP OpenSSL module must be enabled
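The same quota check can be sketched in Python for readers not using PHP. This is a minimal sketch. It assumes the endpoint and JSON shape used by the PHP function above: a `d.results` array whose items carry `Title`, `ProviderName`, `ServiceEntryPointUrl` and `ResourceBalance`. The helper names are hypothetical. Parsing is kept separate from the HTTPS request so it can be tried offline.

```python
import base64
import json
import urllib.request

# Endpoint taken from the PHP example; $format=json asks for a JSON response
QUOTA_URL = "https://api.datamarket.azure.com/Services/My/Datasets?$format=json"


def basic_auth_header(account_key: str) -> str:
    """Datamarket accepts the account key as both user name and password."""
    token = base64.b64encode(f"{account_key}:{account_key}".encode("utf-8"))
    return "Basic " + token.decode("ascii")


def parse_quota(response_text: str) -> list:
    """Extract one summary dict per subscription from the JSON payload."""
    data = json.loads(response_text)
    return [
        {
            "title": item["Title"],
            "provider": item["ProviderName"],
            "entry_point": item["ServiceEntryPointUrl"],
            "remaining": item["ResourceBalance"],
        }
        for item in data["d"]["results"]
    ]


def check_quota(account_key: str) -> list:
    """Fetch and parse the quota list (performs a real HTTPS request)."""
    request = urllib.request.Request(
        QUOTA_URL, headers={"Authorization": basic_auth_header(account_key)}
    )
    with urllib.request.urlopen(request) as response:
        return parse_quota(response.read().decode("utf-8"))
```

Because `parse_quota` only needs the response text, you can check it against a saved payload before spending any requests.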

Achmad Zaenuri posted New Bing SERP checker using Windows Azure Datamarket [php] in a 7/14/2012 post (missed when published):

So, I got an email from the Bing Developer Team informing me that Bing Search API 2.0 is going away and moving to a new platform: Windows Azure Datamarket. My previous Bing SERP checker project uses Bing Search API 2.0, which means that code will stop working after August 1.

Windows Azure Datamarket provides a trial package and a free package limited to 5,000 requests per month, more than enough for toying with a Bing SERP checker.

Get your Account Key (formerly the Application ID) at https://datamarket.azure.com/

This is the revised main function, updated to work with the new Windows Azure Datamarket web service:

```php
<?php
error_reporting(E_ALL ^ E_NOTICE);

// This is the main function: returns the position, title and URL of the first
// Bing web result (within the top 100) whose host contains $site, or NULL
function b_serp($keyword, $site, $market, $api_key)
{
    $ret = array();
    $limit = 1; // page counter; each page holds 50 results
    $site = str_replace(array('http://'), '', $site);
    $context = stream_context_create(array(
        'http' => array(
            'request_fulluri' => true,
            'header' => "Authorization: Basic " . base64_encode($api_key . ":" . $api_key)
        )
    ));
    $pos = 1;
    while ($pos <= 100) {
        $skip = ($limit - 1) * 50;
        // This is the end point of Microsoft Azure Datamarket that we should call -- only take data from web results
        $end_point = 'https://api.datamarket.azure.com/Data.ashx/Bing/SearchWeb/v1/Web?Query='
            . urlencode("'" . $keyword . "'")
            . '&Market=' . urlencode("'" . $market . "'")
            . '&$format=JSON&$top=50&$skip=' . $skip;
        // I'm using generic file_get_contents because cURL CURLOPT_USERPWD didn't work (no idea why)
        $response = file_get_contents($end_point, false, $context);
        if ($response === false) {
            return NULL; // request failed
        }
        $json_data = json_decode($response);
        if (empty($json_data->d->results)) {
            return NULL; // no more results; stop instead of looping forever
        }
        foreach ($json_data->d->results as $res) {
            $theweb = parse_url($res->Url);
            if (substr_count(strtolower($theweb['host']), $site)) {
                $ret['position'] = $pos;
                $ret['title'] = $res->Title;
                $ret['url'] = $res->Url;
                return $ret;
            }
            $pos++;
        }
        $limit++;
    }
    return NULL; // not found in the top 100 results
}
```

How to call it:

```php
$account_key = 'put your account key here';
$res = b_serp('how to upload mp3 to youtube', 'mp32u.net', 'en-US', $account_key);
```

Example output:

```
array(3) {
  ["position"]=>
  int(3)
  ["title"]=>
  string(70) "MP32U.NET - Helping independent artist getting acknowledged in the ..."
  ["url"]=>
  string(21) "http://www.mp32u.net/"
}
```

Fully working demo: http://demo.ahowto.net/new_bserp/

Bing supports many more search markets than the few I listed in the demo as examples. Download this document for the complete list of Bing’s supported search markets: http://www.bing.com/webmaster/content/developers/ADM_SCHEMA_GUIDE.docx

Notes:

- Your PHP version must be PHP 5.2 or higher (because of the json_decode function)

- You must enable the PHP OpenSSL module (php_openssl), because file_get_contents must reach the web service endpoint via HTTPS
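The paging logic of `b_serp` can be sketched in Python as well. This sketch assumes the endpoint and query parameters shown in the PHP function (50 results per page via `$top`/`$skip`, stopping after the top 100). The function names are hypothetical, and fetching is left out. `find_position` accepts any iterable of per-page result-URL lists, so a generator that requests `page_url(...)` and reads each `Url` from `d.results` could feed it lazily.

```python
from typing import Iterable, List, Optional
from urllib.parse import quote_plus, urlparse

# Endpoint and paging values mirror the PHP example
SEARCH_WEB_URL = "https://api.datamarket.azure.com/Data.ashx/Bing/SearchWeb/v1/Web"
PAGE_SIZE = 50      # $top
MAX_POSITION = 100  # stop after the top 100 results


def page_url(keyword: str, market: str, page: int) -> str:
    """Build the query for a zero-based page; string values are wrapped in single quotes."""
    return (
        SEARCH_WEB_URL
        + "?Query=" + quote_plus("'" + keyword + "'")
        + "&Market=" + quote_plus("'" + market + "'")
        + f"&$format=JSON&$top={PAGE_SIZE}&$skip={page * PAGE_SIZE}"
    )


def find_position(pages: Iterable[List[str]], site: str) -> Optional[int]:
    """Return the 1-based rank of the first result whose host contains site."""
    site = site.replace("http://", "").lower()
    position = 1
    for urls in pages:
        if not urls:  # ran out of results
            return None
        for url in urls:
            if site in (urlparse(url).hostname or ""):
                return position
            position += 1
        if position > MAX_POSITION:
            return None
    return None
```

Keeping URL construction and ranking apart from the HTTP calls makes the position arithmetic testable without using up any of the monthly request quota.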

Update (2012-07-20): the endpoint URL has changed.

<Return to section navigation list>

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

•• Alex Simons posted Introducing a New Capability in the Windows Azure AD Developer Preview: the Windows Azure Authentication Library (AAL) on 8/1/2012:

Last month we announced the Developer Preview of Windows Azure Active Directory (Windows Azure AD) which kicked off the process of opening up Windows Azure Active Directory to developers outside of Microsoft. You can read more about that initial release here.

Today we are excited to introduce the Windows Azure Authentication Library (referred to as AAL in our documentation), a new capability in the Developer Preview which gives .NET developers a fast and easy way to take advantage of Windows Azure AD in additional, high value scenarios, including the ability to secure access to your application’s APIs and the ability to expose your service APIs for use in other native client or service based applications.

The AAL Developer Preview offers an early look into our thinking in the native client and API protection space. It consists of a set of NuGet packages containing the library bits, a set of samples which will work right out of the box against pre-provisioned tenants, and essential documentation to get started.

Before we start, I want to note that developers can of course write directly to the standards-based protocols we support in Windows Azure AD (WS-Fed and OAuth today and more to come). That is a fully supported approach. The library is another option we are making available for developers who are looking for a faster and simpler way to get started using the service.

In the rest of the post we will describe in more detail what’s in the preview, how you can get involved and what the future might look like.

What’s in the Developer Preview of the Windows Azure Authentication Library

The library takes the form of a .NET assembly, distributed via NuGet package. As such, you can add it to your project directly from Visual Studio (you can find more information about NuGet here).

AAL contains features for both .NET client applications and services. On the client, the library enables you to:

- Prompt the user to authenticate against Windows Azure AD directory tenants, AD FS 2.0 servers and all the identity providers supported by Azure AD Access Control (Windows Live ID, Facebook, Google, Yahoo!, any OpenID provider, any WS-Federation provider)

- Take advantage of username/password or the Kerberos ticket of the current workstation user for obtaining tokens programmatically

- Leverage service principal credentials for obtaining tokens for server to server service calls

The first two features can be used for securing solutions such as WPF applications or even console apps. The third feature can be used for classic server to server integration.
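As the post notes, developers can also write directly to the protocols. For the server-to-server case, that means an OAuth 2.0 token request. The sketch below builds (but does not send) a client credentials request as defined in RFC 6749, section 4.4. The token endpoint URL is a placeholder, and the `resource` parameter is an assumption about how the target service is identified. Neither is taken from AAL or from the Windows Azure AD preview documentation.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical token endpoint; substitute the one published for your tenant
TOKEN_ENDPOINT = "https://example-tenant.example.com/oauth2/token"


def build_client_credentials_request(client_id: str, client_secret: str,
                                     resource: str) -> urllib.request.Request:
    """Prepare (but do not send) an OAuth 2.0 client credentials token request."""
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",  # RFC 6749, section 4.4
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": resource,  # assumption: identifies the service to be called
    }).encode("ascii")
    return urllib.request.Request(
        TOKEN_ENDPOINT,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def read_access_token(response_body: bytes) -> str:
    """A successful token response is JSON containing access_token."""
    return json.loads(response_body)["access_token"]
```

Sending the request is a call to `urllib.request.urlopen(...)`. AAL's point is that it hides these details and picks the right endpoint from what the tenant already knows.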

The Windows Azure Authentication Library gives you access to the feature sets from both Windows Azure AD Access Control namespaces and Directory tenants. All of those features are offered through a simple programming model. Your Windows Azure AD tenant already knows about many aspects of your scenario: the service you want to call, the identity providers that the service trusts, the keys that Windows Azure AD will use for signing tokens, and so on. AAL leverages that knowledge, saving you from having to deal with low level configurations and protocol details. For more details on how the library operates, please refer to Vittorio Bertocci’s post here. [See post below.]

To give you an idea of the amount of code that the Windows Azure Authentication Library can save you, we’ve updated the Graph API sample from the Windows Azure AD Developer Preview to use the library to obtain the token needed to invoke the Graph. The original release of the sample contained custom code for that task, which accounted for about 700 lines of code. Thanks to the Windows Azure Authentication Library, those 700 lines are now replaced by 7 lines of calls into the library.

On the service side, the library offers you the ability to validate incoming tokens and return the identity of the caller in form of ClaimsPrincipal, consistent with the behavior of the rest of our development platform.
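The following minimal Python sketch shows what validating an incoming token involves when no library does it for you. It uses the Simple Web Token (SWT) format, one of the formats ACS namespaces could issue. It assumes the SWT layout: form-encoded claims (`Issuer`, `Audience`, `ExpiresOn` in seconds since the epoch) followed by an `HMACSHA256` signature over the preceding bytes, computed with a shared symmetric key. This is not AAL's API, which returns a ClaimsPrincipal. The function names and sample values are hypothetical.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Dict, Optional

SIGNATURE_MARKER = "&HMACSHA256="


def sign_swt(claims: Dict[str, str], key: bytes) -> str:
    """Issuer side (handy for testing): append an HMAC-SHA256 signature."""
    body = urllib.parse.urlencode(claims)
    digest = hmac.new(key, body.encode("utf-8"), hashlib.sha256).digest()
    signature = urllib.parse.quote(base64.b64encode(digest).decode("ascii"), safe="")
    return body + SIGNATURE_MARKER + signature


def validate_swt(token: str, key: bytes, issuer: str, audience: str,
                 now: Optional[float] = None) -> Dict[str, str]:
    """Return the claims if signature, issuer, audience and expiry all check out."""
    body, marker, signature = token.rpartition(SIGNATURE_MARKER)
    if not marker:
        raise ValueError("token is not signed")
    expected = hmac.new(key, body.encode("utf-8"), hashlib.sha256).digest()
    actual = base64.b64decode(urllib.parse.unquote(signature))
    if not hmac.compare_digest(expected, actual):  # constant-time comparison
        raise ValueError("bad signature")
    claims = {name: values[0] for name, values in urllib.parse.parse_qs(body).items()}
    if claims.get("Issuer") != issuer:
        raise ValueError("untrusted issuer")
    if claims.get("Audience") != audience:
        raise ValueError("token was issued for a different audience")
    current = time.time() if now is None else now
    if int(claims.get("ExpiresOn", "0")) <= current:
        raise ValueError("token has expired")
    return claims
```

The checks run from cheapest-to-forge to most specific: signature first, so none of the claims are trusted until the token is known to come from the key holder.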

Together with the library, we are releasing a set of samples which demonstrate the main scenarios you can implement with the Windows Azure Authentication Library. The samples are all available as individual downloads on MSDN Code Gallery via the following links:

- Native Application to REST Service – Authentication via Browser Popup

- Native Application to REST Service – Authentication via User Credentials

- Server to Server Authentication

- Windows Azure AD People Picker – Updated with AAL

To make it easy to try these samples, all are configured to work against pre-provisioned tenants. They are complemented by comprehensive readme documents, which detail how you can reconfigure Visual Studio solutions to take advantage of your own Directory tenants and Windows Azure AD Access Control namespaces.

If you visit the Windows Azure Active Directory node in the MSDN documentation, you will find that it has been augmented with essential documentation on AAL.

What You Can Expect Going Forward

As we noted earlier, the Developer Preview of AAL offers an early look into our thinking in the native client and API protection space. To ensure you have the opportunity to explore and experiment with native apps and API scenarios at an early stage of development, we are making the Developer Preview available to you now. You can provide feedback and share your thoughts with us by using the Windows Azure AD MSDN Forums. Of course, because it is a preview some things will change moving forward. Here are some of the changes we have planned:

More Platforms

The Developer Preview targets .NET applications, however we know that there are many more platforms that could benefit from these libraries.

For client applications, we are working to develop platform-specific versions of AAL for WinRT, iOS, and Android. We may add others as well. For service side capabilities, we are looking to add support for more languages. If you have feedback on which platforms you would like to see first, this is the right time to let us know!

Convergence of Access Control Namespace and Directory Tenant Capabilities

As detailed in the first Developer Preview announcement, as of today there are some differences between Access Control namespaces and Directory tenants. The programming model is consistent across tenant types: you don’t need to change your code to account for it. However, you do get different capabilities depending on the type of tenant. Moving forward, you will see those differences progressively disappear.

Library Refactoring

The assembly released for the Developer Preview is built around a native core. The reason for its current architecture is that it shares some code with other libraries we are using for adding claims based identity capabilities to some of our products. The presence of the native core creates some constraints on your use of the Developer Preview of the library in your .NET applications. For example, the “bitness” of your target architecture (x86 or x64) is a relevant factor when deciding which version of the library should be used. Please refer to the release notes in the samples for a detailed list of the known limitations. Future releases of the Windows Azure Authentication Library for .NET will no longer contain the native core.

Furthermore, in the Developer Preview, AAL contains both client and service side features. Moving forward, the library will contain only client capabilities. Service side capabilities such as token validation, handling of OAuth2 authorization flows and similar features will be delivered as individual extensions to Windows Identity Foundation, continuing the work we began with the WIF extensions for OAuth2.

Windows Azure Authentication Library and Open Source

The simplicity of the Windows Azure Authentication Library programming model in the Developer Preview also means that advanced users might not be able to tweak things to the degree they want. To address that, we are planning to release future drops of AAL under an open source license, so you will be able to fork the code, change things to fit your needs and, if you so choose, contribute your improvements back to the mainline code.

The Developer Preview of AAL is another step toward equipping you with the tools you need to fully take advantage of Windows Azure AD. Of course we are only getting started, and have a lot of work left to do. We hope that you’ll download the library samples and NuGets, give them a spin and let us know what you think!

Finally, thanks to everyone who has provided feedback so far! We really appreciate the time you’ve taken and the depth of feedback you’ve provided. It’s crucial for us to ensure we are evolving Windows Azure AD in the right direction!

•• Vittorio Bertocci (@vibronet) posted Windows Azure Authentication Library: a Deep Dive on 8/1/2012:

We are very excited today to announce our first developer preview of the Windows Azure Authentication Library (AAL).

For an overview, please head to Alex’s announcement post on the Windows Azure blog. In a nutshell, the Windows Azure Authentication Library (AAL) makes it very easy for developers to add to their client applications the logic for authenticating users to Windows Azure Active Directory or their providers of choice, and for obtaining access tokens for securing API calls. Furthermore, AAL helps service authors secure their APIs by providing validation logic for incoming tokens.

That’s the short version: below I will give you (much) more details. Although the post is a bit long, I hope you’ll be pleasantly surprised by how uncharacteristically simple it is going to be.

Not a Protocol Library

Traditionally, the identity features of our libraries are mainly projections of protocol artifacts in CLR types. Starting with the venerable Web Services Enhancements, going through WCF to WIF, you’ll find types representing tokens, headers, keys, crypto operations, messages, policies and so on. The tooling we provide protects you from having to deal with this level of detail when performing the most common tasks (good example here), but if you want to code directly against the OM there is no way around it.

Sometimes that’s exactly what you want, for example when you want full control over fine grained details on how the library handles authentication. Heck, it is even fairly common to skip the library altogether and code directly against endpoints, especially now that authentication protocols are getting simpler and simpler.

Other times, though, you don’t really feel like savoring the experience of coding the authentication logic; you just want to get the auth work out of your way ASAP and move on with developing your app’s functionality. In those cases, the same knobs and switches that make fine grained control possible will likely seem like noise, making it harder to choose what to use and how.

With AAL we are trying something new. We considered some of the common tasks that developers need to perform when handling authentication; we thought about what we can reasonably expect a developer to know and understand about his or her scenario, without assuming deep protocol knowledge; finally, we made the simplifying assumption that most of the scenario details are captured and maintained in a Windows Azure AD tenant. Building on those considerations, we created an object model that – I’ll go out on a limb here - is significantly simpler than anything we have ever done in this space.

Let’s take a look at the simplest model a developer might have in mind for a client application that needs to securely invoke a service and relies on an identity-as-a-service offering such as Windows Azure AD:

- I know I want to call service A, and I know how to call it

- Without knowing other details, I know that to call A I need to present a “token” representing the user of my app

- I know that Windows Azure AD knows all the details of how to do authentication in this scenario, though I might be a bit blurry about what those details might be

…and that’s pretty much it. With the current API, developers must acquire a much deeper understanding of the solution’s details to implement that scenario: for example, implementing the client scenario from scratch would require understanding the concepts covered in a lengthy post, implementing them in detail, creating UI elements to integrate them into the app’s experience, and so on.

On the server side, developers of services might go through a fairly similar thought process:

- I know which service I am writing (my endpoint, the style I want to implement, etc)

- I know I need to find out who is calling me before granting access; and I know I receive that info in a “token”

- I know that Windows Azure AD knows all the details of the type of token I need, from where, and so on

Wouldn’t it be great if this level of knowledge would be enough to make the scenario work?

Hence our challenge: how can we enable a developer to express in code what he or she knows about the scenario and make it work without having to go any deeper than that? And can we do that without relying on tooling?

What Can you Do with AAL?

If you are in a hurry and care more about what AAL can do for you than how it works, here are some spoilers: we’ll resume the deep dive in a moment.

The Windows Azure Authentication Library is meant to help rich client developers and API authors. In essence, it helps your rich client applications obtain a token from Windows Azure Active Directory, and it helps you tell whether a token actually comes from Windows Azure Active Directory. Here are some practical examples. With AAL you can:

- Add to your rich client application the ability to prompt your users (as in, generate the UI and walk the user through the experience) to authenticate, enter credentials and express consent against Windows Azure AD directory tenants, ADFS2 instances (or any WS-Federation compliant authority), OpenID providers, Google, Yahoo!, Windows Live ID and Facebook

- Add to your rich client application the ability to establish at run time which identity providers are suitable for authenticating with a given service, and generate an experience for the user to choose the one he or she prefers

- Drive authentication between server-side applications via 2-legged OAuth flows

- If you have access to raw user credentials, use them to obtain access tokens without having to deal with any of the mechanics of adapting to different identity providers, negotiating with intermediaries and other obscure protocol level details

- If you are an API author, easily validate incoming tokens and extract the caller’s info without having to deal with low level crypto or tools

Those are certainly not all the things you might want to do with rich clients and APIs, but we believe they are a solid start: in my experience, those scenarios make up the fat part of the Pareto distribution. We are looking into adding new scenarios in the future, and you can steer that with your feedback.

All of the things I just listed are perfectly achievable without AAL, as long as you are willing to work at the protocol level or with general purpose libraries: it will simply require you to work much harder.

The Windows Azure Authentication Library

The main idea behind AAL is very simple. If in your scenario there is a Windows Azure AD tenant somewhere, chances are that it already contains most of the things that describe all the important moving parts in your solution and how they relate to each other. It knows about services, and the kind of tokens they accept; the identity providers that the service trusts; the protocols they support, and the exact endpoints at which they listen for requests; and so on. In a traditional general-purpose protocol library, you need to first learn about all those facts by yourself; then, you are supposed to feed that info to the library.

With AAL you don’t need to do that: rather, you start by telling AAL which Windows Azure AD tenant knows about the service you want to invoke. Once you have that, you can ask the Windows Azure AD tenant directly to get you a token for that service. If you already know something more about your scenario, such as which identity provider you want to use or even the user credentials, you can feed that info into AAL; but even if all you know is the identifier of the service you want to call, AAL will help the end user figure out how to authenticate and will deliver back to your code the access token you need.

Object Model

Let’s get more concrete. The Windows Azure Authentication Library developer preview is a single assembly, Microsoft.WindowsAzure.ActiveDirectory.Authentication.dll.

It contains both features you would use in native (rich client) applications and features you’d use on the service side. It offers a minimalistic collection of classes, which in turn feature the absolute essential programming surface for accomplishing a small, well defined set of common tasks.

Believe it or not, if you exclude stuff like enums and exception classes, most of the AAL classes are depicted in the diagram below.

Pretty small, eh? And the best thing is that most of the time you are going to work with just two classes, AuthenticationContext and AssertionCredential, and just a handful of methods. …

Vittorio continues with more implementation details. Read more.

• Tim Huckaby (@TimHuckaby) interviewed Eric D. Boyd (@EricDBoyd) in a Bytes by MSDN July 31 Eric D. Boyd webcast of 7/31/2012:

Join Tim Huckaby, Founder/Chairman of InterKnowlogy and Founder/CEO of Actus Interactive Software, and Eric D. Boyd, Founder and CEO of responsiveX, as they discuss Windows Azure. Eric talks about Windows Azure Access Control Service (ACS), a federated identity service in the cloud that is unique to Windows Azure and works with identity providers such as Facebook, Windows Live ID and Active Directory. He also shares insights on how using Windows Azure can help you deal with scalability challenges in a cost effective manner. Great interview with invaluable information!

Get Free Cloud Access: Windows Azure MSDN Benefits | 90 Day Azure Trial

Clemens Vasters (@clemensv) described Transactions in Windows Azure (with Service Bus) – An Email Discussion in a 7/30/2012 post:

I had an email discussion late last weekend and through this weekend on the topic of transactions in Windows Azure. One of our technical account managers asked me, on behalf of a client, how that client could migrate their solution to Windows Azure without having to make very significant changes to their error management strategy – a.k.a. transactions. In the respective solution, the customer has numerous transactions that are interconnected by queuing, and they’re looking for a way to preserve the model of taking data from a queue or elsewhere, performing an operation on a data store and writing to a queue as a result, all as one atomic operation.

I’ve boiled down the question part of the discussion into single sentences and edited out the customer specific pieces, but left my answers mostly intact, so this isn’t written as a blog article.

The bottom line is that Service Bus, specifically with its de-duplication features for sending and with its reliable delivery support using Peek-Lock (which we didn’t discuss in the thread, but see here and also here) is a great tool to compensate for the lack of coordinator support in the cloud. I also discuss why using DTC even in IaaS may not be an ideal choice:

Q: How do I perform distributed, coordinated transactions in Windows Azure?

2PC in the cloud is hard for all sorts of reasons. 2PC as implemented by DTC effectively depends on the coordinator and its log and connectivity to the coordinator to be very highly available. It also depends on all parties cooperating on a positive outcome in an expedient fashion. To that end, you need to run DTC in a failover cluster, because it’s the Achilles heel of the whole system and any transaction depends on DTC clearing it.

In cloud environments, it’s a very tall order to create a cluster that’s designed in a way similar to what you can do on-premises by putting a set of machines side-by-side and interconnecting them redundantly. Even then, use of DTC still puts you into a CAP-like tradeoff situation as you need to scale up.

Since the systems will be running in a commoditized environment where the clustered assets may quite well be subject to occasional network partitions or at least significant congestion and the system will always require – per 2PC rules – full consensus by all parties about the transaction outcome, the system will inevitably grind to a halt whenever there are sporadic network partitions. That risk increases significantly as the scale of the solution and the number of participating nodes increases.
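The unanimity requirement, and why it gets worse with scale, can be made concrete with a toy model. The sketch below models only the voting phase of two-phase commit, treating an unreachable participant as a "no" vote. It also computes the chance that every participant is reachable, under the simplifying assumption that each one is independently reachable with probability p. The function names are hypothetical and this is not how DTC is implemented.

```python
from typing import Callable, List


def two_phase_commit(prepare_calls: List[Callable[[], bool]]) -> str:
    """Phase 1 of 2PC: every participant must vote yes before anyone may commit."""
    for prepare in prepare_calls:
        try:
            vote = prepare()
        except ConnectionError:
            # A partitioned participant cannot vote, so the only safe outcome is abort
            vote = False
        if not vote:
            return "abort"
    # Phase 2 (not modeled) broadcasts "commit"; prepared participants hold
    # their locks until they hear the outcome from the coordinator
    return "commit"


def all_reachable_probability(p_reachable: float, participants: int) -> float:
    """Chance that every participant is reachable, assuming independent failures."""
    return p_reachable ** participants
```

With 99% per-participant reachability, ten participants are all reachable about 90% of the time (0.99^10 ≈ 0.904), while a hundred are all reachable only about 37% of the time. That is the scaling risk described above.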

There are two routes out of the dilemma. The first is to localize any 2PC work onto a node and scale up, which lets you stay in the classic model, but will limit the benefits of using the cloud to having externalized hosting. The second is to give up on 2PC and use per-resource transaction support (i.e. transactions in SQL or transactions in Service Bus) as a foundation and knit components together using reliable messaging, sagas/compensation for error management and, with that, scale out.

Q: Essentially you are saying that there is absolutely no way of building a coordinator in the cloud?

I’m not saying it’s absolutely impossible. I’m saying you’d generally be trading a lot of what people expect out of cloud (HA, scale) for a classic notion of strong consistency unless you do a lot of work to support it.

The Azure storage folks implement their clusters in a very particular way to provide highly-scalable, highly-available, and strongly consistent storage – and they are using a quorum based protocol (Paxos) rather than a classic atomic TX protocol to reach consensus. And they do so while having special clusters that are designed specifically for that architecture – because they are part of the base platform. The paper explains that well.

Since neither the storage system nor any of the other components trusts external parties to be in control of its internal consistency model and operations – which would be the case if they enlisted in distributed transactions – any architecture built on top of those primitives will either have to follow a similar path to what the storage folks have done, or start making trades.

You can stick to the classic DTC model with IaaS; but you will have to give up using the PaaS services that do not support it, and you may face challenges around availability traded for consistency as your resources get distributed across the datacenter and fault domains for – ironically – availability. So ultimately you’ll be hosting a classic workload in IaaS without having the option of controlling the hardware environment tightly to increase intra-cluster reliability.

The alternative is to do what the majority of large web properties do and that is to deal with these constraints and achieve reliability by combining per-resource transactions, sagas, idempotency, at-least-once messaging, and eventual consistency.
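A minimal sketch of the saga idea in Python: each step is a local (per-resource) transaction paired with a compensating action. If a later step fails, the compensations of the steps that already completed run in reverse order. The `run_saga` name and the reserve/charge steps in the usage note are hypothetical. Real sagas usually persist their progress so that compensation can resume after a crash.

```python
from typing import Callable, List, Tuple

# (action, compensation); each action is one local transaction
Step = Tuple[Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> bool:
    """Run each step; on failure, undo the completed ones in reverse order."""
    completed: List[Callable[[], None]] = []
    for action, compensate in steps:
        try:
            action()
        except Exception:
            for undo in reversed(completed):
                undo()  # compensations should be idempotent so they can be retried
            return False
        completed.append(compensate)
    return True
```

For example, a "reserve stock, then charge card, then ship" saga whose shipping step fails would refund the charge and then release the reservation. Each store stays locally consistent and no coordinator is needed.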

Q: What are the chances that you will build something that will support at least transactional handoffs between Service Bus and the Azure SQL database?

We can’t directly couple a SQL DB and Service Bus because SQL, like storage, doesn’t allow transactions that span databases for the reasons I cited earlier.

But there is a workaround using Service Bus that gets you very close. If the customer’s solution DB had a table called “outbox” and the transactions would write messages into that table (including the destination queue name and the desired message-id), they can get full ACID around their DB transactions. With storage, you can achieve a similar model with batched writes into singular partitions.

We can’t make that “outbox” table, because it needs to be in the solution’s own DB and inside their schema. A background worker can then poll that table (or get post-processing handoff from the transaction component) and then replicate the message into SB.

If SB has duplicate detection turned on, even intermittent send failures or commit issues on deleting sent messages from the outbox won’t be a problem, so this simple message transfer doesn’t require 2PC since the message is 100% replicable including its message-id and thus the send is idempotent towards SB – while sending to SB in the context of the original transaction wouldn’t have that.

With that, they can get away without compensation support, but they need to keep the transactions local to SQL and the “outbox” model gives the necessary escape hatch to do that.

Q: How does that work with the duplicate detection?

The message-id is a free-form string that the app can decide on and set as it likes. So that can be an order-id, some contextual transaction identifier or just a Guid. That id needs to go into the outbox as the message is written.

If the duplicate detection in Service Bus is turned on for the particular Queue, we will route the first message and drop any subsequent message with the same message-id during the duplicate detection time window. The respective message(s) is/are swallowed by Service Bus and we don’t specifically report that fact.

With that, you can make the transfer sufficiently robust.

Duplicate Detection Sample: http://code.msdn.microsoft.com/windowsazure/Brokered-Messaging-c0acea25#content
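The duplicate detection behavior described above can be modeled in memory: the first message with a given message-id is accepted, and later ones inside the time window are silently dropped. This sketch is not the Service Bus API. The class name, the window length and the injectable clock are assumptions made so the behavior can be tested. Expired ids are never purged here, which a real implementation would need to do.

```python
import time
from collections import deque
from typing import Callable, Deque, Dict


class DedupQueue:
    """In-memory stand-in for a queue with duplicate detection turned on."""

    def __init__(self, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.window = window_seconds
        self.clock = clock
        self.seen: Dict[str, float] = {}  # message-id -> time first accepted
        self.messages: Deque[str] = deque()

    def send(self, message_id: str, body: str) -> None:
        now = self.clock()
        first_seen = self.seen.get(message_id)
        if first_seen is not None and now - first_seen < self.window:
            return  # duplicate inside the window: dropped, and no error is reported
        self.seen[message_id] = now
        self.messages.append(body)
```

Because the sender cannot tell an accepted send from a swallowed duplicate, retrying with the same message-id is always safe within the window.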

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

•• My (@rogerjenn) Configuring Windows Azure Services for Windows Server post of 8/1/2012 begins:

Introduction

The Microsoft Hosting site describes a new multi-tenanted IaaS offering for hosting service providers that the Windows Server team announced at the Worldwide Partners Conference (WPC) 2012, held in Houston, TX on 7/8 through 7/12/2012:

The new elements of Windows Azure Services for Windows Server 2008 R2 or 2012 (WAS4WS) are the Service Management Portal and API (SMPA); Web Sites and Virtual Machines are features of Windows Azure Virtual Machines (WAVM), the IaaS service that the Windows Azure team announced at the MEET Windows Azure event in San Francisco, CA held on 6/7/2012.

Licensing Requirements

Although Hosting Service Providers are the target demographic for WAS4WS, large enterprises should consider the service for on-site, self-service deployment of development and production computing resources to business units in a private or hybrid cloud. SMPA emulates the new Windows Azure Management Portal Preview, which also emerged on 6/7/2012.

When this post was written, WAS4WS required a Service Provider Licensing Agreement:

Licensing links:

Note: WAS4WS isn’t related to the elusive Windows Azure Platform Appliance (WAPA), which Microsoft introduced in July, 2010 and later renamed the Windows Azure Appliance (see Windows Azure Platform Appliance (WAPA) Announced at Microsoft Worldwide Partner Conference 2010 of 6/7/2010 for more details.) To date, only Fujitsu has deployed WAPA to a data center (see Windows Azure Platform Appliance (WAPA) Finally Emerges from the Skunk Works of 6/7/2011.) WAS4WS doesn’t implement Windows Azure Storage (high-availability tables and blobs) or other features provided by the former Windows Azure App Fabric, but is likely to be compatible with the recently announced Service Bus for Windows Server (Service Bus 1.0 Beta.)

Prerequisites

System Requirements

From the 43-page Getting started Guide: Web Sites, Virtual Machines, Service Management Portal and Service Management API July 2012 Technical Preview:

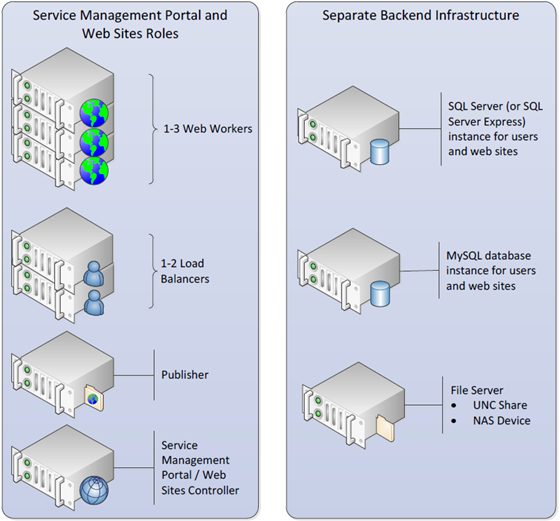

The Technical preview is intended to run on a single Hyper-V host with 7 virtual machines. In addition to the virtual machines required for the software, it is expected that there will be a separate server (or servers) in the datacenter running Microsoft SQL Server, MySQL Server, and a File Server (Windows UNC) or NAS device hosting web content.

Hyper-V Host server for Service Management Portal and Web Sites VMs:

- Dual Processor Quad Core

- Operating System: Windows Server 2008 R2 SP1 Datacenter Edition With Hyper-V (64bit) / Windows Server 2012 with Hyper-V (64 bit)

- RAM: 48 GB

- 2 Volumes:

  - First Volume: 40GB or greater (host OS).

  - Second Volume: 100GB or greater (VHDs).

- Separate SQL server(s) for Web Sites configuration databases and users/web sites databases running Microsoft SQL Server 2008 R2.

- Separate MySQL server version 5.1 for users/web sites databases.

- Either a Windows UNC share or a NAS device acting as a File server to host web site content.

Note: The SQL Server, MySQL Server, and File Server can coexist with each other, and the Hyper-V host machine, but should not be installed in the same VMs as other Web Sites roles. Use separate SQL Server computers, or separate SQL instances, on the same SQL Server computer to isolate the Web Sites configuration databases from user/web sites databases.

A system meeting the preceding requirements is needed to support the high end (three Web workers and two load balancers) of the Web Sites architecture.

Service Management Portal and Web Sites-specific server role descriptions:

- Web Workers – Web Sites-specific version of IIS web server, which processes clients’ web requests.

- Load Balancer(s) – IIS web server with a Web Sites-specific version of ARR, which accepts web requests from clients, routes requests to Web Workers and returns web worker responses to clients.

- Publisher – The public version of WebDeploy and a Web Sites-specific version of FTP, which provide transparent content publishing for WebMatrix, Visual Studio and FTP clients.

- Service Management Portal / Web Sites Controller – server which hosts several functions:

  - Management Service - Admin Site: where administrators can create Web Sites clouds, author plans and manage user subscriptions.

  - Management Service - Tenant Site: where users can sign up and create web sites, virtual machines and databases.

  - Web Farm Framework to provision and manage server roles.

  - Resource Metering service to monitor web servers and site resource usage.

- Public DNS Mappings. (DNS management support for the software is coming in a future release. The recommended configuration for this technical preview is to use a single domain. All user-created sites would have unique host names on the same domain.)

  - For a given domain such as TrialCloud.com, you would create the following DNS A records:

Software Requirements

- Download (as 1 *.exe and 19 *.rar files), expand to a 20+ GB VHD, and install System Center 2012 Service Pack 1 CTP2 – Virtual Machine Manager – Evaluation (VHD) on a Windows Server 2008 R2 (64-bit) or Windows Server 2012 host with the Hyper-V role enabled.

- Download and install System Center 2012 Service Pack 1 Community Technology Preview 2 - Service Provider Foundation Update for Service Management API to the VM created from the preceding VHD.

- Download and run the Web Platform Installer (WebPI, Single-Machine Mode) for the 60 additional components required to complete the VM configuration.

Note: This preview doesn’t support Active Directory for VMs; leave the VMs you create as Workgroup members.

Tip: Before downloading and running the WebPI, click the Configure SQL Server (do this first) button on the desktop (see below) and install SQL Server 2008 R2 Evaluation Edition with mixed-mode (Windows and SQL Server authentication) by giving the sa account a password. Logging in to SQL Server as sa is required later in the installation process (see step 1 in the next section).

…

The guide continues with a detailed and fully illustrated Configuring the Service Management Portal/Web Sites Controller section.

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Himanshu Singh (@himanshuks) announced New Java Resources for Windows Azure! in a 7/31/2012 post:

Editor’s Note: Today’s post comes from Walter Poupore [@walterpoupore], Principal Programming Writer in the Windows Azure IX team. This post outlines valuable resources for developing Java apps on Windows Azure.

Make the Windows Azure Java Developer Center your first stop for details about developing and deploying Java applications on Windows Azure. We continue to add content to that site, and we’ll describe some of the recent additions in this blog.

Using Virtual Machines for your Java Solutions

We rolled out Windows Azure Virtual Machines as a preview service last month; if you’d like to see how to use Virtual Machines for your Java solutions, check out these new Java tutorials.

- How to run a Java Application Server on a Virtual Machine - This tutorial shows you how to create a Windows Azure Virtual Machine and then how to configure it to run a Java application server, in effect showing you how you can move your Java applications to the cloud. You can choose either Windows Server or Linux for your Virtual Machine, configure it, and then focus on your application development.

- How to Run a Compute-intensive Task in Java on a Virtual Machine - This tutorial shows you how to set up a Virtual Machine, run a workload application deployed as a Java JAR on the Virtual Machine, and then use the Windows Azure Service Bus in a separate client computer to receive results from the Virtual Machine. This is an effective way to distribute work to a Virtual Machine and then access the results from a separate machine.

By the way, since we’re mentioning Windows Azure Service Bus, it is one of several services provided by Windows Azure, some of which can be used independently of Windows Azure Virtual Machines. For example, you can incorporate Windows Azure Service Bus, Windows Azure SQL Database, or Windows Azure Storage in your existing Java applications, even if your applications are not yet deployed on Windows Azure.

New in Access Control

Included in the June 2012 Windows Azure release is an update to the Windows Azure Plugin for Eclipse with Java (by Microsoft Open Technologies). One of the new features is the Access Control Service Filter, which enables your Java web application to seamlessly take advantage of ACS authentication using various identity providers, such as Google, Live.com, and Yahoo!. You won’t need to write authentication logic yourself; just configure a few options and let the filter do the heavy lifting of enabling users to sign in using ACS. You can focus on writing the code that gives users access to resources based on their identity. Here’s a how-to guide with an example:

How to Authenticate Web Users with Windows Azure Access Control Service Using Eclipse - While the example uses Windows Live ID for the identity provider, a similar technique could be used for other identity providers, such as Google or Yahoo!

We hope you find these Java resources useful as you build on Windows Azure. Tell us what you think; give us your feedback in the comments section below, the Windows Azure MSDN Forums, Stack Overflow, or Twitter @WindowsAzure.

• Clemens Vasters (@clemensv) posted "I want to program my firewall using IP ranges to allow outbound access only to my cloud apps" on 7/31/2012:

We get a ton of inquiries along the lines of “I want to program my firewall using IP ranges to allow outbound access only to my cloud-based apps”. If you (or the IT department) insist on doing this with Windows Azure, there is even a downloadable and fairly regularly updated list of the IP ranges on the Microsoft Download Center in a straightforward XML format.

Now, we do know that there are a lot of customers who keep insisting on using IP address ranges for that purpose, but that strategy is not a recipe for success.

The IP ranges shift and expand very frequently and cover all of the Windows Azure services. Thus, a customer will open their firewall for traffic to the entire multitenant range of Azure, which means that the customer’s environment can reach their own apps and the backend services for the “Whack A Panda” game just the same. With apps in the cloud, there is no actual security gain from these sorts of constraints. Pretty much all the advantages of automated, self-service cloud environments stem from shared resources, including shared networking and shared gateways, and from the ability to do dynamic failover, including cross-DC failover, which means that there aren’t any reservations at the IP level that last forever.
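To make the point concrete: an IP-range rule only asks whether a destination address falls inside a CIDR prefix, so every tenant behind that prefix passes equally. The following C# sketch shows that membership test. The class name `CidrRule` is hypothetical, and the prefixes and addresses used with it are illustrative documentation ranges, not entries from Microsoft's published list.

```csharp
using System;
using System.Net;

// Hypothetical helper: the membership test an IP-range firewall rule performs.
public static class CidrRule
{
    // Returns true when 'address' lies inside the CIDR block 'cidr' (e.g. "203.0.113.0/24").
    public static bool IsInRange(string address, string cidr)
    {
        string[] parts = cidr.Split('/');
        byte[] network = IPAddress.Parse(parts[0]).GetAddressBytes();
        byte[] candidate = IPAddress.Parse(address).GetAddressBytes();
        int prefixLength = int.Parse(parts[1]);

        // An IPv4 address never matches an IPv6 prefix, and vice versa.
        if (network.Length != candidate.Length) return false;
        if (prefixLength < 0 || prefixLength > network.Length * 8)
            throw new ArgumentOutOfRangeException(nameof(cidr), "Invalid prefix length.");

        int fullBytes = prefixLength / 8;     // bytes that must match exactly
        int remainingBits = prefixLength % 8; // leading bits of the next byte that must match

        for (int i = 0; i < fullBytes; i++)
        {
            if (network[i] != candidate[i]) return false;
        }

        if (remainingBits > 0)
        {
            int mask = (0xFF << (8 - remainingBits)) & 0xFF;
            if ((network[fullBytes] & mask) != (candidate[fullBytes] & mask)) return false;
        }

        // Note: nothing here checks *whose* service owns the address.
        return true;
    }
}
```

With a rule for `203.0.113.0/24`, `CidrRule.IsInRange("203.0.113.10", "203.0.113.0/24")` and `CidrRule.IsInRange("203.0.113.200", "203.0.113.0/24")` both return `true`, whether those addresses host your app or someone else's app in the same shared range.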

The best way to handle this is to do the exact inverse of what’s being tried with these rules, and rather limit access to outside resources to a constrained set of services based on the services’ or users’ identity as it is done on our Microsoft corporate network. At Microsoft, you can’t get out through the NAT/Proxy unless you have an account that has external network privileges. If you are worried about a service or user abusing access to the Internet, don’t give them Internet. If you think you need to have tight control, make a DMZ – in the opposite direction of how you usually think about a DMZ.

Using IP-address based outbound firewall access rules constraining access to public cloud computing resources is probably getting a box on a check-list ticked, but it doesn’t add anything from a security perspective. It’s theater. IMHO.

• Karl Ots (@fincooper) explained How to install Windows Azure Toolkit (v1.3.2) for Windows Phone in a 7/31/2012 post:

The Windows Azure Toolkit for Windows Phone is a must-have set of tools and templates that makes it easier for you to build mobile applications that leverage cloud services running in Windows Azure. Its latest update is from November 2011, which makes it a bit difficult to install alongside the latest tools.

This is the error message you see when trying to run the WATWP setup with WPI 4.0

The root of the error is the Web Platform Installer tool, which the WATWP setup program uses to check for dependencies. The documented system requirements assume you have Web Platform Installer 3.0 installed, rather than the current default, Web Platform Installer 4.0. Once you’ve uninstalled version 4.0 and installed the older 3.0 version, the setup error should go away.

Visual Studio 2010 Service Pack 1

You might receive the following error when installing Windows Phone SDK 7.1:

The mysterious error indicating that you’ll have to install Visual Studio 2010 SP1 in order to continue.

If the error occurs, you probably haven’t installed Visual Studio 2010 SP1 yet. You can install it from the Web Platform Installer. Once it’s installed, you can refresh the WATWP setup and continue its installation.

• Dave Savage (@davidsavagejr) described Extending ELMAH on Windows Azure Table Storage in a 7/30/2012 post:

ELMAH [Error Logging Modules and Handlers] and Windows Azure go together like peanut butter and jelly. If you’ve been using both, you’re probably familiar with a NuGet package that hooks ELMAH up to Table Storage. But you may hit a snag with large exceptions. In this post, I’ll take you through how to get ELMAH and Table Storage to settle some of their differences.

If you’ve been building ASP.NET apps for Windows Azure, you may recognize ELMAH. It’s a fantastic logging add-on for any application. If you’ve never heard of it, check it out: it makes catching unhandled (and handled) exceptions a breeze.

This is especially helpful when you start deploying applications to Windows Azure, where easy access to exceptions makes your life much less stressful when something inevitably goes wrong. Thankfully, Wade Wegner (@wadewegner) has made it super easy to get this working in Azure with his package, which uses Table Storage to store exceptions. Wade’s write-up covers it in more detail.

A Little Problem

Logging the exceptions works great; however, you may encounter the following exception at some point:

The property value is larger than allowed by the Table Service.

This is because the Table Storage service limits the size of any string property value to 64 KB. If you examine the ErrorEntity class further, you will find that exceptions with large stack traces can easily exceed this limit: the error caught by ELMAH is encoded to an XML string and saved to the SerializedError property.
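A minimal sketch of a pre-flight check for this limit, assuming (per the Table service data model) that string properties are UTF-16 encoded and capped at 64 KiB, so the effective ceiling is about 32K characters rather than 64K. The `TablePropertyLimits` class is hypothetical; it is not part of the storage client library or of Wade's package.

```csharp
using System.Text;

// Hypothetical helper; not part of the Windows Azure storage client library.
public static class TablePropertyLimits
{
    // Table service string properties are stored as UTF-16 and limited to 64 KiB.
    public const int MaxStringPropertyBytes = 64 * 1024;

    // Returns true if 'value' fits in a single string property.
    public static bool FitsInStringProperty(string value)
    {
        if (value == null) return true; // an absent value cannot exceed the limit
        // Encoding.Unicode is UTF-16 little-endian: 2 bytes per BMP character.
        return Encoding.Unicode.GetByteCount(value) <= MaxStringPropertyBytes;
    }
}
```

For example, `FitsInStringProperty(new string('a', 32768))` returns `true` (exactly 65,536 bytes), while a 32,769-character string returns `false`, even though both are far below 64K characters.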

Have No Fear

My solution to this is to store the serialized error in Blob Storage and add a key (pointer) to it on the ErrorEntity. So, let’s get right to the code…

public class ErrorEntity : TableServiceEntity

{

public string BlobId { get; set; }

private Error OriginalError { get; set; }

public ErrorEntity() { }

public ErrorEntity(Error error) : base(…)

{

this.OriginalError = error;

}

public Error GetOriginalError()

{

return this.OriginalError;

}

}

I’ve added two new properties: BlobId, which will be our pointer to the blob record, and OriginalError, which we will need later. You will also notice that SerializedError is gone; I will get to this a little later.

The other important things to note are that we assign the Error that the ErrorEntity was constructed with to our new property, and that we add a method to retrieve it later. The key here is that we mark the OriginalError property private. This prevents the property from being persisted as part of the Table Storage entity.

The Dirty Work

Now comes the fun part: getting the error into blob storage. Let’s start with where we save the entity to Table Storage.

public override string Log(Error error)

{

var entity = new ErrorEntity(error);

var context = CloudStorageAccount.Parse(connectionString).CreateCloudTableClient().GetDataServiceContext();

entity.BlobId = SerializeErrorToBlob(entity);

context.AddObject("elmaherrors", entity);

context.SaveChangesWithRetries();

return entity.RowKey;

}

What we are doing here is simply calling a function (which we will define in a second) that persists our error to blob storage and gives us back an ID we can use to retrieve it later.

private string SerializeErrorToBlob(ErrorEntity error)

{

string id = Guid.NewGuid().ToString();

string xml = ErrorXml.EncodeString(error.GetOriginalError());

var container = CloudStorageAccount.Parse(this.connectionString).CreateCloudBlobClient().GetContainerReference("elmaherrors");

var blob = container.GetBlobReference(id);

blob.UploadText(xml);

return id;