Windows Azure and Cloud Computing Posts for 7/20/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 7/21/2012 8:00 AM with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Nathan Totten (@ntotten) posted CloudCover Episode 84 - Windows Azure Storage Updates and a Preview of the Client Storage Library vNext on 7/20/2012:

Join Nate and Nick each week as they cover Windows Azure. You can follow and interact with the show at @CloudCoverShow.

In this episode, we are joined by Jai Haridas — Principal SDE Manager for Windows Azure Storage — and Joe Giardino — SDE for Windows Azure Storage — who show us the new features available in Windows Azure Storage. Additionally, Joe gives us a preview of the next release of the Windows Azure Storage libraries. Joe demonstrates how to use the future release of the storage libraries in a Windows 8 Metro App.

In the News:

No significant articles today.


SQL Azure Database, Federations and Reporting

Cihan Biyikoglu (@cihangirb) asked EF (Entity Framework) Finally Open Source! Anyone up for contributing the Federations Support in EF? on 7/20/2012:

Finally, EF is open source and anyone can contribute. Many folks have been asking for EF to support federations. EF is the first to take this open source route in our data APIs and federations support is your chance to make your mark. You up for it?

http://weblogs.asp.net/scottgu/archive/2012/07/19/entity-framework-and-open-source.aspx


Marketplace DataMarket, Social Analytics, Big Data and OData

• Peter Horsman posted a Windows Azure Marketplace Update: New Features, Availability in 12 New Countries on 7/20/2012:

We are excited to announce the latest update to Windows Azure Marketplace, which brings some exciting new features, and commercial availability to 12 new countries. Now ISVs in 38 countries can benefit from the Windows Azure Marketplace.

New Features

- Integration of a self-publish app catalog in the Seller Dashboard,

- Availability of the Bing API exclusively through the Marketplace,

- Expanded offers through the introduction of Sample Data, and

- Integration of PowerPivot.

New Countries

Currently, the Windows Azure Marketplace serves approximately 50,000 users and more than 70,000 subscriptions.

The new release expands commercial availability to the following countries: Brazil, Chile, Colombia, Costa Rica, Greece, Israel, Luxembourg, Malaysia, Peru, Romania, South Korea, and Trinidad and Tobago.

Seller Dashboard Integration

Now ISVs can self-publish catalog apps through the Seller Dashboard. This more direct approach improves ease of use and supports faster time-to-market for your apps.

Exclusive Availability of Bing API

We recently made the Bing API available through the Marketplace, and already there are thousands of users generating millions of transactions. Beginning 1 August 2012, Windows Azure Marketplace will be the only way to access the Bing API. Currently all Bing API offers include a free trial amount of 5,000 transactions to help you get started.

Introducing Sample Data

New functionality has been enabled that allows all Anonymous and Authenticated users to see Sample Data for selected data offers.

Sample Data is a small subset of a data offer published through the Marketplace. Its purpose is to give you better insight into the shape and meaning of data offers, and to reduce the number of steps needed to get meaningful data views. It also gives publishers a great new way to promote and advertise their data.

Sample Data is available from the catalog and details pages of these offers:

- US Air Carrier Flight Delays[*]

- ESRI - 2010 Key US Demographics by ZIP Code, Place and County (Trial)

- 2006 - 2008 Crime in the United States (Data.gov)

- London Borough Profiles

- European Greenhouse Gas Emissions

PowerPivot Makes BI Stronger

Microsoft Office has launched the Public Preview of Excel 2013! For the Windows Azure Marketplace this means new PowerPivot integration that a broad range of customers are sure to find very appealing to serve their Business Intelligence needs. Find a data set, build a query, pull it in and add the power of World Data to PowerPivot!

Make the most of this update! Explore the Windows Azure Marketplace and take advantage of these great new features.

Peter is a Sr. Partner & Channel Marketing Manager, Windows Azure Marketplace

* OakLeaf published the US Air Carrier Flight Delays dataset to the Windows Azure Marketplace DataMarket.

• Mark Stafford (@markdstafford) explained OData 101: What is JSON Light? in a 7/20/2012 post:

TL;DR: Tested against a real-world service, JSON Light results in a payload that is an order of magnitude smaller.

In this OData 101, we’ll take a short detour from code to understand the newest serialization format in the OData family: JSON Light.

What is JSON Light?

JSON Light is a new serialization format that is being added to the OData specification. Existing formats contain a lot of redundancy that can be optimized out. For example, consider the following real payloads:

JSON Light Format:

    {
      "odata.metadata":"http://services.odata.org/Experimental/OData/OData.svc/$metadata#ODataDemo.DemoService/Products/@Element",
      "ID":0,
      "Name":"Bread",
      "Description":"Whole grain bread",
      "ReleaseDate":"1992-01-01T00:00:00",
      "DiscontinuedDate":null,
      "Rating":4,
      "Price":"2.5"
    }

(Note: this is the JSON Light format as it is today, in prerelease bits. This format may change slightly before the bits are promoted to production.)

Atom Format:

    <entry xml:base="http://services.odata.org/Experimental/OData/OData.svc/"
           xmlns="http://www.w3.org/2005/Atom"
           xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices"
           xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
           xmlns:georss="http://www.georss.org/georss"
           xmlns:gml="http://www.opengis.net/gml">
      <id>http://services.odata.org/Experimental/OData/OData.svc/Products(0)</id>
      <category term="ODataDemo.Product" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
      <link rel="edit" title="Product" href="Products(0)" />
      <link rel="http://schemas.microsoft.com/ado/2007/08/dataservices/related/Category" type="application/atom+xml;type=entry" title="Category" href="Products(0)/Category" />
      <link rel="http://schemas.microsoft.com/ado/2007/08/dataservices/related/Supplier" type="application/atom+xml;type=entry" title="Supplier" href="Products(0)/Supplier" />
      <title type="text">Bread</title>
      <summary type="text">Whole grain bread</summary>
      <updated>2012-07-16T19:07:34Z</updated>
      <author>
        <name />
      </author>
      <link rel="http://schemas.microsoft.com/ado/2007/08/dataservices/relatedlinks/Category" type="application/xml" title="Category" href="Products(0)/$links/Category" />
      <link rel="http://schemas.microsoft.com/ado/2007/08/dataservices/relatedlinks/Supplier" type="application/xml" title="Supplier" href="Products(0)/$links/Supplier" />
      <content type="application/xml">
        <m:properties>
          <d:ID m:type="Edm.Int32">0</d:ID>
          <d:ReleaseDate m:type="Edm.DateTime">1992-01-01T00:00:00</d:ReleaseDate>
          <d:DiscontinuedDate m:null="true" />
          <d:Rating m:type="Edm.Int32">4</d:Rating>
          <d:Price m:type="Edm.Decimal">2.5</d:Price>
        </m:properties>
      </content>
    </entry>

JSON Verbose Format:

    {
      "d":{
        "__metadata":{
          "id":"http://services.odata.org/Experimental/OData/OData.svc/Products(0)",
          "uri":"http://services.odata.org/Experimental/OData/OData.svc/Products(0)",
          "type":"ODataDemo.Product",
          "properties":{
            "Category":{
              "associationuri":"http://services.odata.org/Experimental/OData/OData.svc/Products(0)/$links/Category"
            },
            "Supplier":{
              "associationuri":"http://services.odata.org/Experimental/OData/OData.svc/Products(0)/$links/Supplier"
            }
          }
        },
        "Category":{
          "__deferred":{
            "uri":"http://services.odata.org/Experimental/OData/OData.svc/Products(0)/Category"
          }
        },
        "Supplier":{
          "__deferred":{
            "uri":"http://services.odata.org/Experimental/OData/OData.svc/Products(0)/Supplier"
          }
        },
        "ID":0,
        "Name":"Bread",
        "Description":"Whole grain bread",
        "ReleaseDate":"1992-01-01T00:00:00",
        "DiscontinuedDate":null,
        "Rating":4,
        "Price":"2.5"
      }
    }

Why so much ceremony?

OData depends heavily upon sufficient metadata to describe payloads. This is evident in the Atom format, where each property has type information associated with it and multiple links are returned for each navigation property. There is also a lot of ceremony in JSON Verbose, though most of that ceremony is related to predictable and repetitive absolute URIs.

With JSON Light our goal was to reduce the ceremony as much as possible. We saw two clear places where ceremony could be removed:

- Type information; if the client already has a representation of the model, we don’t need to include the type information in the payload unless there is some ambiguity.

- Calculable information; most OData services follow a predictable set of conventions. If the service is following conventions, we should be able to calculate the URIs for many things, such as navigation links.
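The second point can be made concrete. Here is a minimal sketch, assuming the URL conventions visible in the Atom and JSON Verbose payloads above (`Products(0)/Category` and `Products(0)/$links/Category`). The `ConventionalLinks` class and its method names are hypothetical and not part of any OData library:

```csharp
using System;

// Hypothetical helper: computes links a client could derive on its own
// when a service follows the default OData URL conventions.
public static class ConventionalLinks
{
    // Builds <root>/<EntitySet>(<key>); only integer keys are handled in this sketch.
    public static string EditLink(string serviceRoot, string entitySet, int key)
    {
        return serviceRoot.TrimEnd('/') + "/" + entitySet + "(" + key + ")";
    }

    // Builds <editLink>/<NavigationProperty>, the link to the related entity.
    public static string NavigationLink(string editLink, string navigationProperty)
    {
        return editLink + "/" + navigationProperty;
    }

    // Builds <editLink>/$links/<NavigationProperty>, the link to the relationship itself.
    public static string AssociationLink(string editLink, string navigationProperty)
    {
        return editLink + "/$links/" + navigationProperty;
    }
}
```

Calling `EditLink` with the service root, `"Products"` and `0` reproduces the `Products(0)` URI that the verbose formats spell out in full, which is the kind of value a JSON Light payload can leave out.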

The odata.metadata URI

For an average payload, that reduces our ceremony to one field: the `odata.metadata` URI. The `odata.metadata` URI appears once per payload and helps the client to disambiguate the payload. For instance, http://services.odata.org/OData/OData.svc/$metadata#ODataDemo.DemoService/Products/@Element means that the payload is a single Product entity, whereas http://services.odata.org/OData/OData.svc/$metadata#ODataDemo.DemoService/Products/ means that the payload is a feed of Product entities. For clients that correlate requests and responses or have a sufficiently advanced heuristic to read the payload, even this small amount of ceremony may be removed by asking the service not to return any metadata.

Other metadata in JSON Light payloads

In addition to the odata.metadata URI, some JSON Light payloads will contain data that deviates from conventions, such as custom edit links. If a calculable field deviates from conventions, the server should include that field in the JSON Light payload.
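The way a client might use the `odata.metadata` URI to tell a single entity from a feed can be sketched in a few lines. This is an illustration only: the `ODataMetadataUri` class is hypothetical, and it assumes the prerelease URI shapes quoted in this post (a fragment ending in `/@Element` for a single entity, and the entity set name otherwise), which may change before release.

```csharp
using System;

// Hypothetical helper: classifies a payload from its odata.metadata URI.
public static class ODataMetadataUri
{
    private const string ElementSuffix = "/@Element";

    public static string Describe(string metadataUri)
    {
        // Everything after '#' describes the payload; everything before it is $metadata.
        int hash = metadataUri.IndexOf('#');
        if (hash < 0)
        {
            return "unknown";
        }

        string fragment = metadataUri.Substring(hash + 1).TrimEnd('/');
        if (fragment.EndsWith(ElementSuffix, StringComparison.Ordinal))
        {
            string entitySetPath = fragment.Substring(0, fragment.Length - ElementSuffix.Length);
            return "single entity from " + LastSegment(entitySetPath);
        }

        return "feed of " + LastSegment(fragment);
    }

    // Returns the text after the last '/', e.g. "Products" from "ODataDemo.DemoService/Products".
    private static string LastSegment(string path)
    {
        int slash = path.LastIndexOf('/');
        return slash < 0 ? path : path.Substring(slash + 1);
    }
}
```

A real client would resolve the fragment against the model rather than match strings, so treat this only as a picture of the rule.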

Real-World Savings

So what does this mean in the real world? Based on the Northwind sample service:

Or represented visually:

The bytes-on-the-wire savings of JSON Light are dramatic! Even with these relatively small feeds, the performance benefit would be substantial. The really exciting part is thinking about the bigger feeds, where the number of records is in the hundreds of thousands or millions of rows.

In summary, JSON Light brings a significantly more efficient wire format that matches beautifully with OData’s powerful URI queries. I’m looking forward to measuring the difference on the Netflix feed once it supports JSON Light!

• Mark Stafford (@markdstafford) described OData 101: Building our first OData consumer in a 7/19/2012 post:

In this OData 101, we will build a trivial OData consumption app that displays some titles from the Netflix OData feed along with some of the information that corresponds to those titles. Along the way, we will learn about:

- Adding service references and how adding a reference to an OData service is different in Visual Studio 2012

- NuGet package management basics

- The LINQ provider in the WCF Data Services client

Getting Started

First we need to create a new solution in Visual Studio 2012. I’ll just create a simple C# Console Application:

From the Solution Explorer, right-click the project or the References node in the project and select Add Service Reference:

This will bring up the Add Service Reference dialog. Paste http://odata.netflix.com/Catalog in the Address textbox, click Go and then replace the contents of the Namespace textbox with Netflix:

Notice that the service is recognized as a WCF Data Service (see the message in the Operations pane).

Managing NuGet Packages

Now for the exciting part: if you check the installed NuGet packages (right-click the project in Solution Explorer, choose Manage NuGet Packages, and select Installed from the left nav), you’ll see that the Add Service Reference wizard also added a reference to the Microsoft.Data.Services.Client NuGet package!

This is new behavior in Visual Studio 2012. Any time you use the Add Service Reference wizard or create a WCF Data Service from an item template, references to the WCF Data Services NuGet packages will be added for you. This means that you can update to the most recent version of WCF Data Services very easily!

NuGet is a package management system that makes it very easy to pull in dependencies on various libraries. For instance, I can easily update the packages added by ASR (the 5.0.0.50403 versions) to the most recent version by clicking on Updates on the left or issuing the Update-Package command in the Package Manager Console:

NuGet has a number of powerful management commands. If you aren’t familiar with NuGet yet, I’d recommend that you browse their documentation. Some of the most important commands are:

- `Install-Package Microsoft.Data.Services.Client -Pre -Version 5.0.1-rc`: installs a specific prerelease version of Microsoft.Data.Services.Client

- `Uninstall-Package Microsoft.Data.Services.Client -RemoveDependencies`: removes Microsoft.Data.Services.Client and all of its dependencies

- `Update-Package Microsoft.Data.Services.Client -IgnoreDependencies`: updates Microsoft.Data.Services.Client without updating its dependencies

LINQ Provider

Last but not least, let’s write the code for our simple application. What we want to do is select some of the information about a few titles.

The WCF Data Services client includes a powerful LINQ provider for working with OData services. Below is a simple example of a LINQ query against the Netflix OData service.

    using System;
    using System.Linq;

    namespace OData101.BuildingOurFirstODataConsumer
    {
        internal class Program
        {
            private static void Main()
            {
                var context = new Netflix.NetflixCatalog(new Uri("http://odata.netflix.com/Catalog"));
                var titles = context.Titles
                    .Where(t => t.Name.StartsWith("St") && t.Synopsis.Contains("of the"))
                    .OrderByDescending(t => t.AverageRating)
                    .Take(10)
                    .Select(t => new { t.Name, t.Rating, t.AverageRating });
                Console.WriteLine(titles.ToString());
                foreach (var title in titles)
                {
                    Console.WriteLine("{0} ({1}) was rated {2}", title.Name, title.Rating, title.AverageRating);
                }
            }
        }
    }

In this sample, we start with all of the titles, filter them down using a compound where clause, order the results, take the top ten, and create a projection that returns only portions of those records. Then we write `titles.ToString()` to the console, which outputs the URL used to query the OData service. Finally, we iterate the actual results and print relevant data to the console:

Summary

Here’s what we learned in this post:

- It’s very easy to use the Add Service Reference wizard to add a reference to an OData service

- In Visual Studio 2012, the Add Service Reference wizard and the item template for a WCF Data Service add references to our NuGet packages

- Shifting our distribution vehicle to NuGet allows people to easily update their version of WCF Data Services simply by using the Update-Package NuGet command

- The WCF Data Services client includes a powerful LINQ provider that makes it easy to compose OData queries

Sample source is attached; I’d encourage you to try it out!
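For reference, the LINQ query in the sample corresponds to a handful of OData query options (`$filter`, `$orderby`, `$top` and `$select`). The sketch below builds such a URL by hand. The `ODataQueryUrl` helper is hypothetical, and the exact string that the WCF Data Services client prints from `titles.ToString()` may differ in ordering or escaping.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: assembles an OData query URL from its individual query options.
public static class ODataQueryUrl
{
    public static string Build(string serviceRoot, string entitySet,
                               string filter, string orderBy, int top, string select)
    {
        // Each option value is escaped so that spaces and quotes survive in the query string.
        var options = new List<string>
        {
            "$filter=" + Uri.EscapeDataString(filter),
            "$orderby=" + Uri.EscapeDataString(orderBy),
            "$top=" + top,
            "$select=" + Uri.EscapeDataString(select)
        };

        return serviceRoot.TrimEnd('/') + "/" + entitySet + "?" + string.Join("&", options);
    }
}
```

Passing `startswith(Name,'St') and substringof('of the',Synopsis)` as the filter, `AverageRating desc` as the ordering, `10` as the top count and `Name,Rating,AverageRating` as the projection mirrors the `Where`, `OrderByDescending`, `Take` and `Select` calls in the sample.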

• Mark Stafford (@markdstafford) explained OData 101: Constructing HTTP requests with Fiddler in a 7/19/2012 post:

TL;DR: Fiddler is an extremely useful tool for making ad-hoc OData requests. This tool is a must-have for any Web data developer’s arsenal.

Fiddler is a Web debugging proxy that makes it very easy to manipulate HTTP requests and responses. It’s an incredibly useful tool for debugging any client-side HTTP request/response on a Windows operating system. We will be using Fiddler in this instance to construct and manipulate requests to an OData service.

Note: Fiddler is capable of debugging HTTPS sessions as well, but it requires a little bit of setup. This page has more details on how to configure Fiddler to decrypt HTTPS.

Cleaning up a session

When Fiddler launches, it will automatically begin capturing. We don’t need the capturing for this post, so let’s turn it off by going to File > Capture Traffic or pressing F12:

We can then delete whatever traffic Fiddler has already captured by using the Remove sessions toolbar button:

Composing a request

Now we can issue our first request. We will start by composing the request from scratch. Fiddler contains a request composer, which you can get to by going to View > Composer or pressing F9:

I like to give the request composer its own window by going to the Options tab and clicking Tear Off:

(This makes more sense to me as the main Fiddler window is now exclusively an HTTP sniffing tool.)

Now let’s execute our first request. Ensure that your request type is set to GET, enter a valid OData URL in the text box next to the request type (http://odata.netflix.com/v2/Catalog will return the Netflix service document) and click Execute.

If you used the URL above, you should see two responses pop up in the Web Sessions list. The first response is simply a 301 redirecting you to the full URL, http://odata.netflix.com/v2/Catalog/. Had we started with that URL, the 301 would not have been returned.

Note: If you need to brush up on your HTTP status codes, the official spec is a great place to start and is surprisingly easy to read for a spec.

Reading a response

The 200 response is the one we care about. If you click on it in the Web Sessions list, you’ll see the details of the request/response appear in the pane to the right (you’ll need to have the Inspectors tab selected – or press F8 – to see the information):

I tend to use the Raw view most frequently, but I also use Headers, JSON and XML quite frequently. If I’m dealing with an XML or HTML payload, SyntaxView is also very useful:

Note the Find… and View In Notepad controls at the bottom of the request and response panes.

Manipulating request headers

With OData, it’s very important to be able to modify the request headers. We recommend that all OData clients always set a value for the Accept header and the MaxDataServiceVersion header. Fiddler allows us to add these headers in the Request Headers textbox:

Note that if a server is unable to respond with the appropriate payload version, it will return an error – but this is better than causing a potentially serious parsing error on the client.
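The same two headers can also be set from code. Here is a minimal sketch using `HttpRequestMessage` from `System.Net.Http`. The `ODataRequests` helper name is hypothetical, and the media type and version values passed in the usage note are illustrative assumptions rather than recommendations from this post:

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper: creates a GET request that carries both recommended OData headers.
public static class ODataRequests
{
    public static HttpRequestMessage CreateGet(string url, string accept, string maxDataServiceVersion)
    {
        var request = new HttpRequestMessage(HttpMethod.Get, url);

        // Accept tells the service which payload format the client can parse.
        request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue(accept));

        // MaxDataServiceVersion tells the service the highest payload version the client understands.
        request.Headers.Add("MaxDataServiceVersion", maxDataServiceVersion);

        return request;
    }
}
```

For example, `ODataRequests.CreateGet("http://odata.netflix.com/v2/Catalog/", "application/atom+xml", "3.0")` builds a request that could then be sent with `HttpClient.SendAsync`.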

Takeaways

- Fiddler is a powerful tool for constructing ad-hoc HTTP requests

- Always set a value for the Accept header and the MaxDataServiceVersion header on OData requests

Mark Stafford (@markdstafford) posted OData 101: Enabling JSON Light from the WCF DS 5.1.0-rc1 client on 7/20/2012:

TL;DR: You can tell the 5.1.0-rc1 version of Microsoft.Data.Services.Client to use JSON Light by providing an implementation of `Func<ModelResolverArgs, ModelResolverResult>` as shown in this gist.

In this OData 101 we’ll build a JSON Light enabled client. JSON Light is the newest format in the OData family. (For an overview of the benefits of JSON Light, check out this blog post.)

The need for a model

OData requires an EDM model in order to understand type information, relationships, and other attributes of the model. Many OData Services expose this model through a serialization format known as Conceptual Schema Definition Language (CSDL). The CSDL-serialized model is commonly made available as the Service Metadata Document.

In many cases `$metadata` (aka the Service Metadata Document) is used to generate proxy classes for accessing the service. These proxy classes are not strictly required, but greatly simplify the process of consuming an OData service.

JSON Light benefits greatly from having a representation of the model client-side. It is possible to use JSON Light even if you don’t have the model, but we’ll cover that in a future blog post. For now, let’s assume that the model is required on the client.

Disclaimers

Before we get into the sample code, let’s cover a few disclaimers:

- This is sample code. It’s not intended to be production quality and there are a number of optimizations we’re forgoing for readability’s sake.

- We plan to ship some model resolvers out of the box; we just haven’t gotten there yet. For now you will need to supply the model resolver.

Code

We’ll walk through what the code is doing below, but let’s take a look at the code first:

    using System;
    using System.Collections.Generic;
    using System.Data.Services.Client;
    using System.Linq;
    using System.Text;
    using System.Xml;
    using Microsoft.Data.Edm;
    using Microsoft.Data.Edm.Csdl;
    using Microsoft.Data.Edm.Validation;
    using Scratch.NW;

    namespace Scratch
    {
        internal class Program
        {
            private static readonly Dictionary<Uri, IEdmModel> models = new Dictionary<Uri, IEdmModel>();

            private static void Main()
            {
                var context = new NorthwindEntities(new Uri("http://services.odata.org/Experimental/Northwind/Northwind.svc/"));
                // Note that for the release, code gen will likely provide a much friendlier alternative to this example
                context.Format.UseJson(args =>
                {
                    Uri metadataUri = args.MetadataDocumentUri;
                    if (!models.ContainsKey(metadataUri))
                    {
                        var xmlTextReader = new XmlTextReader(metadataUri.ToString());
                        IEdmModel edmModel = null;
                        IEnumerable<EdmError> errors = null;
                        if (EdmxReader.TryParse(xmlTextReader, out edmModel, out errors))
                        {
                            models[metadataUri] = edmModel;
                        }
                        else
                        {
                            var errorMessageBuilder = new StringBuilder("Model creation failed; please resolve the following errors in the metadata document:");
                            foreach (EdmError error in errors)
                            {
                                errorMessageBuilder.AppendLine(String.Format("\t{0}", error.ErrorMessage));
                            }
                            throw new Exception(errorMessageBuilder.ToString());
                        }
                    }
                    return new ModelResolverResult
                    {
                        ServiceModel = models[metadataUri]
                    };
                });

                Category category = context.Categories.First();
                Console.WriteLine(category.CategoryName);
                foreach (Sales_Totals_by_Amount item in context.Sales_Totals_by_Amounts)
                {
                    Console.WriteLine(item.SaleAmount);
                }
            }
        }
    }

Walkthrough

The interesting code starts on line 22 with the call to `context.Format.UseJson()`. This is the call that enables JSON Light. Currently the API requires callers to pass the method an argument of type `Func<ModelResolverArgs, ModelResolverResult>`. This function will be used to get the model for a given URI. (We’ll come back to this part later.)

The function that I’ve implemented in this sample will take the `MetadataDocumentUri` from the `ModelResolverArgs`, do a Web request to `$metadata`, and then parse that value into an EDM model that is returned on the `ModelResolverResult`.

Line 25 does some basic sanity checking to see whether or not we’ve already resolved the model. The model resolver func will be called every time a request is issued to an OData service, so we want to make sure we aren’t doing a new Web request to `$metadata` every time we send a request to the service.

Lines 27-42 contain boilerplate code for parsing `$metadata` into an EDM model with EdmLib. On line 30 we check whether or not we were able to parse the model. If we were, we cache the model (on line 32). If model parsing failed, we throw (lines 36-41).

On line 44 we return a new `ModelResolverResult` populated with the cached EDM model.

Trying it out

Now that we’ve walked through what the code does, let’s try it out and see it in action. Place a breakpoint on line 44 and start the application in debug mode. Examine the model in the `models` dictionary and the `metadataUri`.

Next, remove the breakpoint and fire up Fiddler. Run your application. You should see something like this in Fiddler:

The first request was the request for the data. When the client receives the response, it looks at the `odata.metadata` URI in the response and sees that the response is of type http://services.odata.org/Experimental/Northwind/Northwind.svc/$metadata#ODataWebExperimental.Northwind.Model.NorthwindEntities/Sales_Totals_by_Amounts. The client will then call our model resolver func, passing in the part of the URI that can be passed to the server (everything before the #). Our model resolver then makes a request for `$metadata` (since we don’t have it cached yet), which you can see highlighted above.

Writing a good model resolver is important for the prerelease bits. The model resolver above is a reasonable starting point, but clearly not issuing a Web request at all would be ideal.
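The cache-by-URI idea in the resolver can be isolated from EdmLib. This is a minimal sketch, not the approach the WCF Data Services team plans to ship: the `ModelCache<TModel>` class is hypothetical, it swaps the sample's plain `Dictionary` for a `ConcurrentDictionary` of `Lazy<T>` values so that concurrent callers share one load, and the loader delegate stands in for whatever fetches or embeds the model.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical cache: loads the model for a metadata URI at most once.
public sealed class ModelCache<TModel>
{
    private readonly ConcurrentDictionary<Uri, Lazy<TModel>> cache =
        new ConcurrentDictionary<Uri, Lazy<TModel>>();
    private readonly Func<Uri, TModel> loader;

    public ModelCache(Func<Uri, TModel> loader)
    {
        if (loader == null)
        {
            throw new ArgumentNullException("loader");
        }
        this.loader = loader;
    }

    public TModel Get(Uri metadataUri)
    {
        // Defensive: drop any "#fragment" so every payload type of a service shares one entry.
        Uri key = new Uri(metadataUri.GetLeftPart(UriPartial.Query));

        // Lazy<T> ensures the loader runs once per key even if two threads race on GetOrAdd.
        return cache.GetOrAdd(key, k => new Lazy<TModel>(() => loader(k))).Value;
    }
}
```

A resolver built on this would call `Get(args.MetadataDocumentUri)` and wrap the result in a `ModelResolverResult`. A loader that reads a model shipped with the application would avoid the Web request altogether.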

Conclusion

JSON Light significantly improves the efficiency of OData wire formats. For JSON Light to work in an OData world, the client must have the model to parse the response properly. The model is currently provided to the client through the model resolver func, `Func<ModelResolverArgs, ModelResolverResult>`. One such implementation is included above; however, we plan to provide a better alternative in the full release.

Please take the time to try out JSON Light and share your feedback!


Windows Azure Service Bus, Access Control, Caching, Active Directory and Workflow

• My (@rogerjenn) Windows Azure Active Directory enables single sign-on with cloud apps article of 7/19/2012 for TechTarget’s SearchCloudComputing.com Website begins:

Microsoft’s Windows Azure Active Directory (WAAD) Developer Preview provides simple user authentication and authorization for Windows Azure cloud services. The preview delivers online demonstrations of Web single sign-on (SSO) services for multi-tenanted Windows Azure .NET, Java and PHP applications, and programmatic access to WAAD objects with a RESTful graph API and OData v3.0.

The preview extends the choice of IPs to include WAAD, the cloud-based IP for Office 365, Dynamics CRM Online and Windows Intune. It gives Windows Azure developers the ability to synchronize and federate with an organization’s on-premises Active Directory.

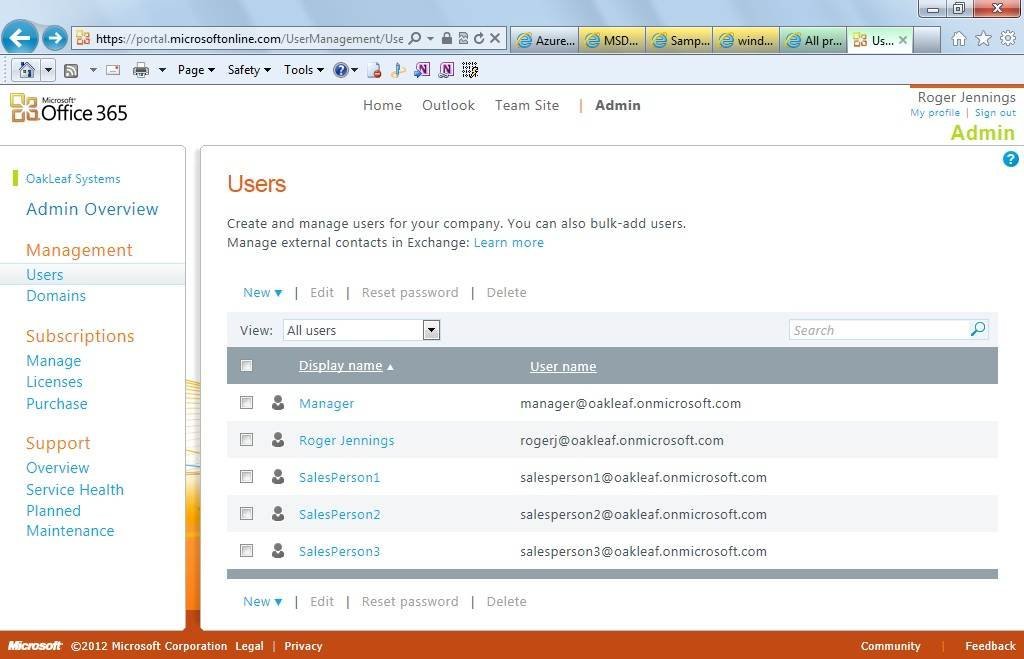

Figure 1. The Users page of the Office 365 Administrative portal enables adding detailed user accounts to an organization’s domain, oakleaf.onmicrosoft.com for this example.

Traditionally, developers provided authentication for ASP.NET Web applications with claims-based identity through Windows Azure Access Control Services (WA-ACS), formerly Windows Azure AppFabric Access Control Services.

According to Microsoft, WA-ACS integrates with Windows Identity Foundation (WIF); supports Web identity providers (IPs) including Windows Live ID, Google, Yahoo and Facebook; supports Active Directory Federation Services (AD FS) 2.0; and provides programmatic access to ACS settings through an Open Data Protocol (OData)-based management service. A management portal also enables administrative access to ACS settings.

Running online Windows Azure Active Directory demos

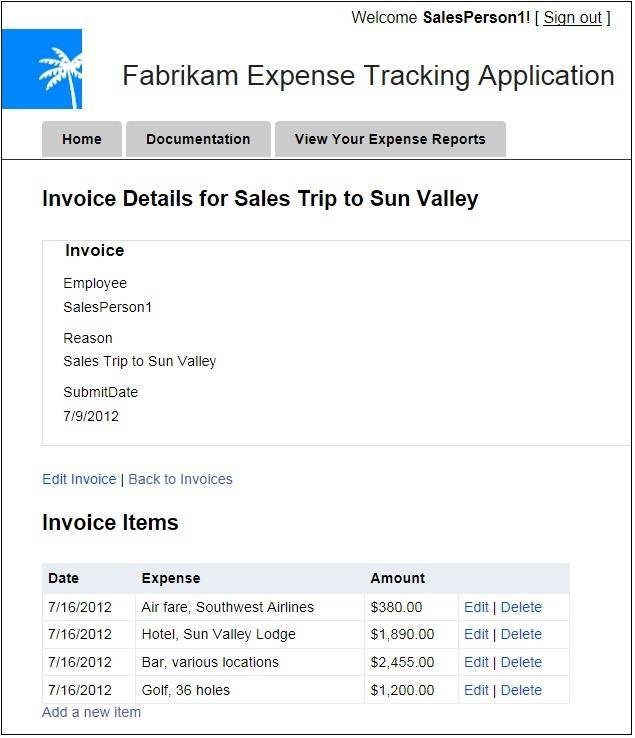

Taking full advantage the preview’s two online demonstration apps requires an Office 365 subscription with a few sample users (Figure 1). Members of the Microsoft Partner Network get 25 free Office 365 Enterprise licenses from the Microsoft Cloud Essentials benefit; others can subscribe to an Office 365 plan for as little as $6.00 per month. According to online documentation, the WAAD team plans to add a dedicated management portal to the final version to avoid reliance on Office 365 subscriptions. Note: The preview does not support Windows 8, so you’ll need to use Windows 7 or Windows Server 2008 R2 for the demo.Figure 2. Use the Fabrikam demo to add or edit detail items of an expense report.

The preview also requires users to download an updated Microsoft Online Services Module for Windows PowerShell v1.0 for 32-bit or 64-bit systems. You’ll also need to download and save a prebuilt PowerShell authorization script, which you execute to extract the application’s identifier (Application Principal ID), as well as the tenant identifier (Company ID) for the subscribing organization.

The Fabrikam Expense report demo is a tool used to show interactive cloud Web apps to prospective Windows Azure users (Figure 2). The preview also includes open source code developers can download from GitHub and use under an Apache 2.0 license. Working with the source code in Visual Studio 2010 or later requires the Windows Azure SDK 1.7, MVC3 Framework, WIF runtime and SDK, as well as Windows Communication Foundation (WCF) Data Services 5.0 for OData v3 and .NET 4.0 or higher. With a bit of tweaking, this ASP.NET MVC3 app could manage expense reports for small- and medium-sized companies. …

Full Disclosure: I’m a paid contributor to TechTarget’s SearchCloud… .com Websites.

• Dan Plastina let Tejas Patel describe how to Configure SharePoint Online Preview to use Windows Azure AD Rights Management in a 7/19/2012 post to the Active Directory RMS blog:

Hi folks,

Here is the follow-up post to our earlier post where you can learn more about how to quickly enable Rights management capabilities within the SharePoint Online preview.

I'll let Tejas Patel provide more details.

Thanks,

Dan

Hi, I'm Tejas, a program manager on the Windows Azure AD Rights Management team, and I just want to provide some quick "jump start" steps for how you can try out and get a feel for using Windows Azure AD Rights Management.

Yesterday, we discussed how to enable Rights management capabilities in the Exchange Online Preview. Today, I'll be discussing how to enable Rights management in SharePoint Online Preview via Windows Azure AD Rights Management. If you haven’t read this post, you should do so before trying the steps to enable Rights management features in SharePoint Online Preview.

Enable SharePoint Online Preview rights management capabilities

- Log in to the SharePoint Administration Center, click settings, and then select Use the IRM service specified in your configuration.

- Create a new site or go to an existing site and then click Documents, then the Library tab, and then Library Settings.

- Click on Information Rights Management and configure the Document library for rights management.

- You can now go back to the document library, add a new Word document to the library, and then upon opening the document in Word 2013 (if configured using the steps from this post), the document will be rights protected.

As always, please let us know if there are any questions or feedback. I'll be doing blog posts next week to help show you some of the other ways you can use Windows Azure AD Rights Management with the Office 365 preview.

See the Windows Azure Active Directory Rights Management Preview now available post of 7/14/2012 below to learn why you might be interested in WAAD RMS.

• Dan Plastina let Tejas Patel explain how to Configure Exchange Online Preview to use Windows Azure AD Rights Management in a 7/18/2012 post:

Hi folks,

Here is the follow-up post to our earlier post where you can learn more about how to quickly enable Rights management capabilities within the Exchange Online preview. The below might appear complicated at first blush, but will be replaced with a single check-box at release.

I'll let Tejas Patel provide more details.

Thanks,

Dan

Hi, I'm Tejas, a program manager on the Windows Azure AD Rights Management team, and I just want to provide some quick "jump start" steps for how you can try out and get a feel for using Windows Azure AD Rights Management.

Yesterday, we discussed how to enable Rights management capabilities in Windows Azure AD Rights Management and Office 2013 Preview. Today, I'll be discussing how to enable Rights management in Exchange Online Preview via Windows Azure AD Rights Management. If you haven’t read this post, you should do so before trying the steps to enable Rights management features in Exchange Online Preview.

Here are the steps:

Enable Exchange Online Preview rights management capabilities

1. Connect to your Exchange Online account by using Windows PowerShell.

2. Log in with this command:

- $LiveCred = Get-Credential

3. Begin configuration of Exchange Online:

- $Session = New-PSSession -ConfigurationName Microsoft.Exchange -ConnectionUri https://ps.outlook.com/powershell/ -Credential $LiveCred -Authentication Basic -AllowRedirection

- Import-PSSession $Session

- Enable-OrganizationCustomization

4. Run the following commands to enable Rights management within Exchange Online:

- Set-IRMConfiguration -RMSOnlineKeySharingLocation "https://sp-rms.na.aadrm.com/TenantManagement/ServicePartner.svc"

- Import-RMSTrustedPublishingDomain -RMSOnline -name "RMS Online"

- Set-IRMConfiguration -InternalLicensingEnabled $true

For regions outside North America, substitute .eu. (European Union) or .ap. (Asia) for .na. in the URL, e.g.:

https://sp-rms.eu.aadrm.com/TenantManagement/ServicePartner.svc

https://sp-rms.ap.aadrm.com/TenantManagement/ServicePartner.svc

5. Optionally test the configuration by running the following command:

- Test-IRMConfiguration -sender user@company.onmicrosoft.com
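The regional substitution described above can be sketched as a small helper that builds the key-sharing URL and the matching Set-IRMConfiguration command line. This is only an illustration: the function names are made up for this sketch, and the three region codes are the ones mentioned in the post.

```python
# URL pattern taken from the post; only the region segment changes.
KEY_SHARING_TEMPLATE = "https://sp-rms.{region}.aadrm.com/TenantManagement/ServicePartner.svc"

# Region codes mentioned in the post: North America, European Union, Asia.
REGIONS = {"na", "eu", "ap"}


def key_sharing_location(region: str) -> str:
    """Return the RMS Online key-sharing URL for a region code (hypothetical helper)."""
    code = region.strip().lower()
    if code not in REGIONS:
        raise ValueError(f"unknown region {region!r}; expected one of {sorted(REGIONS)}")
    return KEY_SHARING_TEMPLATE.format(region=code)


def set_irm_command(region: str) -> str:
    """Build the Set-IRMConfiguration command line shown in step 4 for a region."""
    return f'Set-IRMConfiguration -RMSOnlineKeySharingLocation "{key_sharing_location(region)}"'


if __name__ == "__main__":
    print(set_irm_command("eu"))
```

Generating the command this way keeps the region code in one place, which helps if the same script is reused across tenants in different regions.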

Start using IRM functionality in Outlook Web Access

- Log in to Outlook Web Access.

- Create a new mail message and add a recipient.

- Click the ellipsis (“…”) beside the “INSERT” option in the tool strip and then select set permissions.

- Select the “Do Not Forward” template.

- Send the message to a recipient that is using the Office 365 Preview.

- The recipient will be able to open the message in Outlook Web Access or Outlook 2013 Preview (if they have configured their computer for Rights management using the steps I provided in yesterday's post).

As always, please let us know if there are any questions or feedback. I'll be doing other blog posts later this week to help show you some of the other ways you can use Windows Azure AD Rights Management with the Office 365 preview. Tomorrow, I will be discussing how to enable SharePoint Online Preview rights management capabilities.

• Dan Plastina let Tejas Patel explain Enabling Windows Azure AD Rights Management in Office 365 Enterprise Preview in a 7/17/2012 post:

As promised, here is the follow-up post to our earlier announcement about the Windows Azure AD Rights Management preview where you can learn more about how to quickly enable Rights management capabilities within the Office 365 Enterprise preview. And yes, these steps will be simpler/fewer in the final release of Azure AD Rights Management.

I'll let Tejas Patel, our resident expert, give you the inside track here.

Thanks,

Dan

Hi, I'm Tejas, a program manager on the Windows Azure AD Rights Management team, and I just want to provide some quick "jump start" steps for how you can try out and get a feel for using Windows Azure AD Rights Management.

As Dan mentioned, our service is available as a part of the Office 365 Enterprise Preview. Once you have signed up for the preview, enabling Rights management capabilities within the Office 365 preview just takes a few additional steps.

Today, I'll be discussing how to enable Rights management and then how you can configure Office 2013 Preview to begin using its capabilities.

Quick Start for using Windows Azure AD Rights Management within Office 2013 Professional Plus

- Enable Windows Azure AD Rights Management for your organization:

- Download the Windows Azure AD Rights Management administration module (WindowsAzureADRightsManagementAdministration.exe) for Windows PowerShell from here.

- From the local folder where you downloaded and saved the Rights Management installer file, double-click WindowsAzureADRightsManagementAdministration.exe to launch installation of the Rights Management administration module

- Open Windows PowerShell

- Type the following commands:

- Import-Module AADRM

- Connect-AadrmService -Verbose

- Enter your Office 365 Preview credentials when prompted, for example “user@company.onmicrosoft.com”

- Type the following commands:

- Enable-Aadrm

- Disconnect-AadrmService

- Configure Office 2013 Preview for Windows Azure AD Rights Management

- Install Office Professional Plus 2013 Preview from the download site.

- From an elevated command prompt, run the following registry update command:

- reg add "HKEY_CURRENT_USER\SOFTWARE\Microsoft\Office\15.0\Common\DRM" /t REG_DWORD /v "UseRMSOnline" /d 1 /f

- This step will be necessary on each machine with Office 2013 that you will use for Rights management.

- Start using IRM functionality

- Open Word 2013 Preview

- Sign in to your Office applications using your Microsoft Office 365 credentials.

- Create a new document

- Go to “File”, “Protect Document”, “Restrict Access” and then to “Connect to Digital Rights Management Servers and get templates”

- After this operation completes you are bootstrapped to use IRM functionality

- You can now share files that are IRM protected with other individuals that have configured their machines and signed up for the Office 365 Preview

As always, please let us know if there are any questions or feedback. I'll be doing other blog posts later this week to help show you some of the other ways you can use Windows Azure AD Rights Management with the Office 365 preview.

If you're curious what's coming next, here's a look ahead:

- Tomorrow, I will be discussing enabling Exchange Online Preview rights management capabilities.

- Later this week on Thursday, I will be discussing how to enable SharePoint Online Preview rights management capabilities.

• Dan Plastina announced Windows Azure Active Directory Rights Management Preview now available in a 7/16/2012 post (missed when published):

We’re delighted to bring information protection and rights management to Microsoft’s Office 365 Preview cloud offering with today’s beta launch of Windows Azure Active Directory Rights Management. With Windows Azure AD Rights Management, customers can protect their data by encrypting and managing access rights, including Office documents, Exchange email, and SharePoint document libraries across Office 365 preview services and applications. The technology is highly integrated into Office 2013 Preview, Exchange Online Preview, and SharePoint Online Preview, and offers a seamless experience for both end users and administrators in document authoring, email, and SharePoint publishing.

Some of the benefits include:

Safeguarded sensitive information

- Users can protect their data directly using the Office Suite and ISV rights-management enabled applications. No additional steps are required – authoring documents, sending email, and publishing to SharePoint offer a consistent data protection experience.

Protection travels with the data

- Customers remain in control of who has access to their data, whether in the cloud, existing IT infrastructure, or at the user’s desktop. Customers can choose to encrypt their data and restrict access according to their business requirements.

Integrated with Office 2013 Preview and Office 365 Preview services

- Consistent rights management policy and data protection is applied and enforced throughout the Office 365 services and Office applications. Users can author, access, and manage their information safely, whether from a mobile device, Windows desktop, file share, email, or SharePoint libraries. Customers can choose to collaborate and share their data securely with other Office 365 users, and know their policies are enforced consistently and their information remains protected.

Default information protection policies

- Administrators and users can use standard policies for many common business scenarios: "Company Confidential –Read Only" and "Do Not Forward." A rich set of usage rights are supported such as read, copy, print, save, edit, and forward to allow flexibility in defining custom usage rights.

Using Windows Azure AD Rights Management, all these scenarios are seamlessly enabled with no additional administrator installation or deployments required. Learn more about Windows Azure AD Rights Management at our TechNet site.

Check back shortly for a follow-on post from Tejas Patel, a program manager on the Windows Azure Active Directory Rights Management team, for detailed steps on how you can enable this with the Office 365 Preview.

• Vittorio Bertocci (@vibronet) posted Inside the Windows Azure Active Directory Web SSO Sample for Java on 7/17/2012:

By now I am sure you heard that the Windows Azure Active Directory Developer Preview is out.

We announced so much interesting news that you might just have missed an interesting fact: as part of this release we made available a couple of samples developed on… something other than Visual Studio. Namely, I am referring to the Windows Azure AD SSO sample for Java and the Windows Azure AD SSO sample for PHP.

We provided detailed instructions on how to operate the Java sample, and I am told that the PHP one won’t take long to bring out; however I think it would be interesting to provide you with some insights on how the projects are structured and how they make the magic of claims-based identity happen. Add to it that my wife is out, and that if I watched the series finale of Eureka without her I’d be in deep trouble… and you’ve got yourself a blog post.

Let’s start with the Java sample. One word of warning: I already wrote a tl; dr (thanks Ryan for labeling it that way) post on how web SSO works in the developer preview, and I am not going to repeat any of it here as most of it (modulo syntactic sugar) holds regardless of the language you use.

The Project

We wrote the Java sample as a JBoss project. Before you come down with a case of apophenia and read who-knows-what in it: when we first started to work on the code we were in contact with one partner who wanted to connect with Windows Azure Active Directory from a JBoss application (on Solaris!), hence it was natural for us to go that way. However it should be pretty easy for you (assuming that you know Java better than I do) to port the code to any other application server. Also, this would be a great time for me to thank the usual Southworks for their help on this project. Thanks guys!

We worked with JBoss Studio, from where I’ll take most of the following screenshots. Also, we leveraged Maven for handling the many dependencies on the project.

The project has two main components: a library you can reuse across different projects, and a sample JSP web application that shows those in action.

The package com.microsoft.samples.federation provides you with the basic building blocks for handling claims-based identity via WS-Federation in your Java applications. Here, I’ll tell you a secret: the main hard rock when dealing with those scenarios is the SAML token handling itself, crypto verification, canonicalization, the whole brouhaha. Everything else is just a matter of triggering validation at the right times and in the right places; even WS-Federation itself is really not that hard. In that light, com.microsoft.samples.federation does the easy part of the job: we’ll see it in detail later. For the heavy lifting – the SAML token processing – why reinvent the wheel? We use OpenSAML, a well-known Java and C++ library for handling (I *absolutely* loathe the expression “cracking a token”) SAML tokens.

The package com.microsoft.samples.waad.federation augments the basic federation capabilities in the former package with elements that are specific to Windows Azure Active Directory, such as the SPN-based realm validation described in the SSO deep dive.

Handling Web SSO

How does the sample really work? In a nutshell: we put a blanket filter in front of all pages; that filter intercepts requests, redirects the unauthenticated ones to a login page and restores the claims from the session for the authenticated ones. The login page performs HRD and generates the proper signin message, taking care of indicating as ultimate return URL the address of a servlet which is equipped to extract tokens from wresult and process them as appropriate. Most of the token & session processing logic is provided by the class ConfigurableFederatedLoginManager and its ancestor FederatedLoginManager.

Too fast? OK, let’s go through the same flow in more detail. Here is the structure of the web application:

The web site is really minimal, there’s one resource (index.jsp) and one page to host the HRD experience (login.jsp).

The WEB-INF/web.xml carries the config: you can see part of it on the right side of the screenshot. From the top:

- We define the FederationServlet, associated with the homonym class, that will take care of handling incoming tokens.

- We define a filter, FederationFilter, which has direct knowledge of the URL of the HRD page. The filter also defines a series of exceptions, most notably login.jsp and the servlet endpoint.

…and now comes the fun part. Aided by a good glass of Duvel, I debated a bit with myself about what would be the best way of walking you through the various classes and explaining what they contribute to the authentication flow. I concluded that walking through the files would end up forcing me to create a full reference documentation suite, which I don’t have time to write (after all, a Eureka episode lasts barely one hour). Hence, the most concise way appeared to be creating a diagram of what happens from the first unauthenticated resource request to the moment in which the caller finally gains access, and explaining what happens at every stage. So, here you go:

Don’t be scared, you’ll see that it is in fact pretty straightforward. Let’s dive in!

1. A client browser requests index.jsp. The request gets intercepted by the FederationFilter.

2. The filter passes the request to the ConfigurableFederatedLoginManager, the handyman of this sample. ConfigurableFederatedLoginManager indicates that the request is not authenticated (as in, there is no session with claims in it), hence the filter redirects to login.jsp (but not before augmenting the request with a return URL pointing to index.jsp, so that we can get back to it upon successful authentication).

3. login.jsp queries a repository of trusted issuers, which plays the same role as TrustedIssuers.xml in the .NET sample, and uses the list of trusted IdPs to create a series of links which contain the correct WS-Federation signin requests for each of the IdPs. NOTE: the requests are all designed to redirect authentication tokens back to the FederationServlet endpoint (https://localhost:8443/sample/wsfed-saml in this sample).

4. The user clicks on a link, and the Windows Azure Active Directory user authentication dance begins.

5. Assuming that the user authentication took place successfully, the browser POSTs the resulting token to the servlet endpoint as instructed by step #3. The servlet also instantiates ConfigurableFederatedLoginManager and feeds the incoming request to its authenticate method. The method uses the trusted issuers repository for retrieving the necessary validation coordinates, then proceeds to validate the incoming token (using various other classes in the process).

6. Upon successful authentication, the ConfigurableFederatedLoginManager saves the resulting principal (i.e. the claims) in the session.

7. ConfigurableFederatedLoginManager raises the event OnAuthenticationSucceed for a FederatedAuthenticationListener. This is a bit like implementing the SignedIn() event in WIF: in this sample we really don’t do anything with this, but that’s substantially a way of offering a place in the pipeline where you can add custom processing logic outside of the application proper.

8. Once all the validations are done, ConfigurableFederatedLoginManager retrieves from the request context (wctx) the URL of the resource originally requested (index.jsp, in our case) and redirects to it.

9. Here we get through the same flow as #2; however this time ConfigurableFederatedLoginManager finds a session and successfully retrieves the associated claims, hence…

10. …the request finally reaches index.jsp.

…and that’s the core of it! The rest is largely filling in the blanks with proper WS-Federation syntax, handling all the Windows Azure Active Directory-specific things I described in the deep dive, and so on.
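To make the flow above concrete without the servlet plumbing, here is a minimal, language-neutral sketch in Python. It is not the sample's code: the function names, the "returnUrl" parameter and the dictionary-based session are assumptions made for illustration. Only login.jsp, index.jsp, the wsfed-saml endpoint, wresult and wctx come from the post, and real token validation (the OpenSAML part) is replaced by a caller-supplied function.

```python
from urllib.parse import urlencode

LOGIN_PAGE = "/sample/login.jsp"          # HRD page named in the post
TOKEN_ENDPOINT = "/sample/wsfed-saml"     # FederationServlet endpoint from the post
EXCLUDED = {LOGIN_PAGE, TOKEN_ENDPOINT}   # the filter's exceptions


def federation_filter(path, session):
    """Steps 1-2 and 9-10: serve authenticated requests, redirect the rest to login."""
    if path in EXCLUDED or "claims" in session:
        return ("serve", path)
    # "returnUrl" is a made-up parameter name for this sketch.
    return ("redirect", LOGIN_PAGE + "?" + urlencode({"returnUrl": path}))


def federation_servlet(form, session, validate_token):
    """Steps 5-8: validate the posted token, keep the claims, return to the resource."""
    claims = validate_token(form["wresult"])  # expected to raise on an invalid token
    session["claims"] = claims                # step 6: principal saved in the session
    return ("redirect", form["wctx"])         # step 8: wctx carries the original URL


if __name__ == "__main__":
    session = {}
    print(federation_filter("/sample/index.jsp", session))   # redirected to login
    federation_servlet(
        {"wresult": "<token/>", "wctx": "/sample/index.jsp"},
        session,
        lambda token: {"name": "alice"},                      # stand-in validator
    )
    print(federation_filter("/sample/index.jsp", session))   # now served
```

The point of the sketch is the split of responsibilities: the filter only checks for claims in the session, while everything token-related stays behind the single servlet endpoint.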

Summary

This is not a complete reference, but hopefully it explains enough of the flow that it will help you to find your way as you explore the sample source. Also note: if you observe the flow and the various artifacts, you’ll see how it hints at some interesting potential extensions. Say that in the future there will be another redirect-based protocol you want to support, like SAML-P or OpenID Connect: all you’d need to do would be to add a servlet for the new protocol, enhance the FederatedLoginManager to recognize and enforce the protocol-specific flows, and orchestrate calls to whatever other support classes (such as for different token formats) you’d want to onboard. Pretty neat.

Of course I fully expect that the Java experts among you will have a ton of feedback about how we could have done this more efficiently, and we look forward to hearing from you: but the hope is that this will help you to connect to Windows Azure Active Directory in a way that is closer to the practices and tools you are used to working with.

Well, the Eureka series finale still awaits in the DVR downstairs: however it is now awfully late, hence I successfully avoided watching it… for tonight. I guess that for tomorrow I’ll have to find something else to write about.

In the meanwhile, please send us your feedback on the dev preview of Windows Azure Active Directory! We already got very interesting observations from many of you guys (thank you!) but the game has just begun!

My (@rogerjenn) Designating Managers and Adding Direct Reports with Exchange in Office 365 Enterprise (E-3) Preview post updated 7/20/2012 begins:

Updated 7/20/2012: Added “Alternative Method for Adding the Manager/ReportsTo Property Value” section at the end of this post and the following paragraph:

Thanks to John Palmer of Citrius for his assistance on resolving an issue with the DirectReports list. (See the Can't Add Users as DirectReports in the Exchange Admin Portal thread in the Using the Office 365 Enterprise Preview forum.)

If you don’t have a current Office 365 subscription and would rather use the free Office 365 Enterprise (E-3) Preview version, substitute the following steps for those in the A Guided Tour of the Windows Azure Active Directory Developer Preview Sample Application post’s “Designating Managers and Adding Direct Reports with Exchange” section:

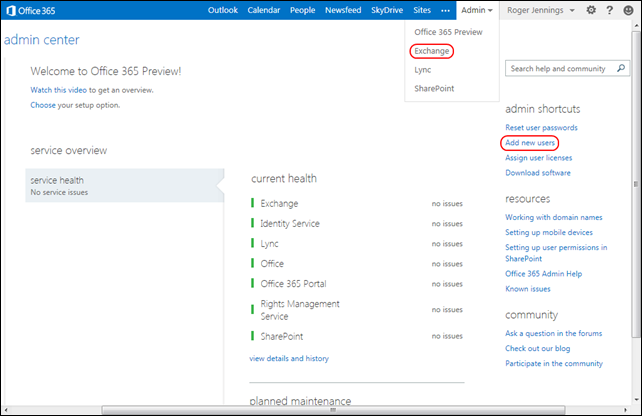

1. Open the Office 365 Preview Admin Center:

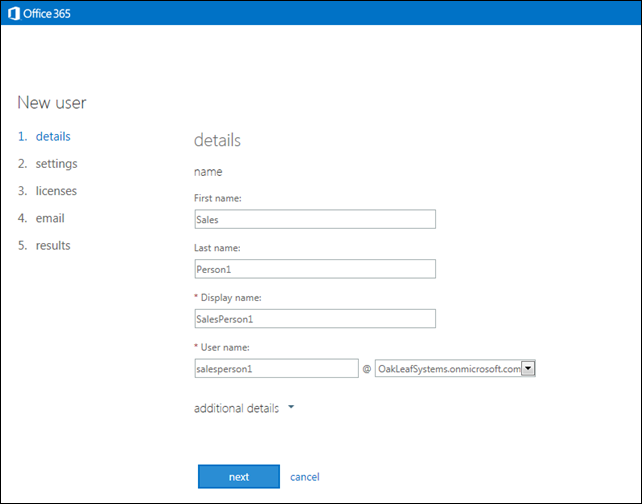

2. Click Add New Users to open the New User Details section and type employee information:

3. Click Next to display the Settings page, accept the No option, and select the country in which the user is located, United States for this example:

4. Click Next to display the Licenses page and accept the default selection of all available features:

5. Click Next to display the Email page and accept the default Administrator’s email account:

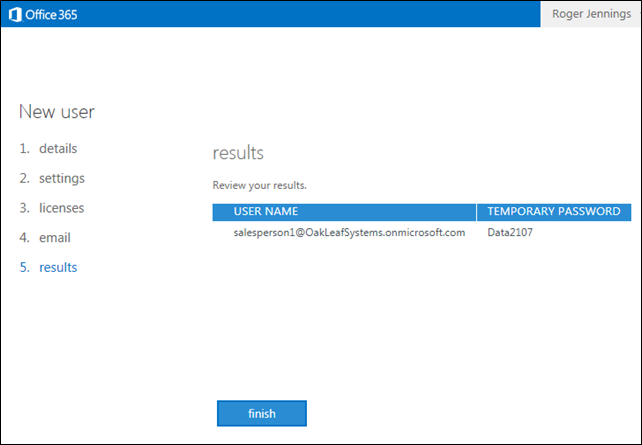

6. Click Create to display the Results page:

7. Repeat steps 2 through 6 for SalesPerson2 through SalesPerson4 and Manager. Click the + (Add) button in the Manage Users and Groups page to add the second and later users.

8. Optionally assign the Manager user the User Management Administrator role:

9. The users list appears as follows when you’re done:

Tip: See the “Alternative Method for Adding the Manager/ReportsTo Property Value to User Accounts” section near the end of this post. …

My (@rogerjenn) Adding Your Domain to a Windows Azure Active Directory in Office 365 Preview Enterprise (E3) Edition of 7/20/2012 begins:

If you have a custom domain registered for use with your Office 365 subscription, follow these steps to assign it to the Preview:

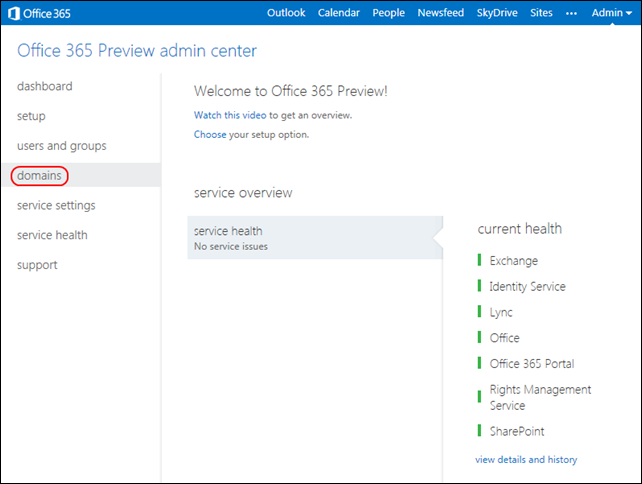

1. Open the Office 365 Preview Enterprise (E3) Edition administrative portal:

2. Click the Domains button in the navigation frame to open the Domains page and mark the current domain’s option button:

Following is the full text cut off above:

Your Microsoft Online Services account comes with a domain name — contoso.onmicrosoft.com — but if you have your own domain name already, you can use that domain name with Microsoft Online Services services too. To add your domain, click Add a domain.

If you don't already own a domain name, you can purchase one from a domain registrar, and then come back to add it to Microsoft Online Services.

3. Click the Add a Domain button to open the Specify a Domain page:

4. Click Next to display the Verify Domain page, select your domain registrar (Register.com for this example) from the first list and choose Add a TXT record in the Select a Verification Method list:

Note: Steps 5 through 9 are based on the instructions cut off above. Adding a TXT or MX record at other registrars’ sites follows a similar process. The Office 365 Team recommends using a TXT rather than a Mail Exchange (MX) record so as not to interfere with email operations. …

Brent Stineman (@BrentCodeMonkey) posted Service Bus and “pushing” notifications on 7/18/2012:

So the last several projects I’ve worked on, I’ve wanted to have a push notification system that I could use to send messages to role instances so that they could take actions. There are several push notification systems out there, but I was after something simple that would be included as part of my Windows Azure services. I’ve put a version of this concept into several proposals, but this week finally received time to create a practical demo of the idea.

For this demo, I’ve chosen to use Windows Azure Service Bus Topics. Topics, unlike Windows Azure Storage queues, give me the capability to have multiple subscribers each receive a copy of a message. This was also an opportunity to dig into a feature of Windows Azure I haven’t worked with in over a year. Given how much the API has changed in that time, it was a frustrating, yet rewarding exercise.

The concept is fairly simple. Messages are sent to a centralized topic for distribution. Each role instance then creates its own subscriber with the appropriate filter on it so it receives the messages it cares about. This solution allows for multiple publishers and subscribers and will give me a decent amount of scale. I’ve heard reports/rumors of issues when you get beyond several hundred subscribers, but for this demo, we’ll be just fine.

Now for this demo implementation, I want to keep it simple. It should be a central class that can be used by workers or web roles to create their subscriptions and receive notifications with very little effort. And to keep this simplicity going, give me just as easy a way to send messages back out.

NotificationAgent

We’ll start by creating a class library for our centralized class, adding references to it for Microsoft.ServiceBus (so we can do our brokered messaging) and Microsoft.WindowsAzure.ServiceRuntime (for access to the role environment). I’m also going to create my NotificationTopic class.

Note: there are several supporting classes in the solution that I won’t cover in this article. If you want the full code for this solution, you can download it here.

The first method we’ll add to this is a constructor that takes the parameters we’ll need to connect to our service bus namespace as well as the name/path for the topic we’ll be using to broadcast notifications on. Its first job is to create a namespace manager so I can create topics and subscriptions, and a messaging factory that I’ll use to receive messages. I’ve split this out a bit so that my class can support being passed a TokenProvider (I hate demos that only use the service owner). But here are the important lines:

TokenProvider tokenProvider = TokenProvider.CreateSharedSecretTokenProvider(issuerName, issuerKey);

Uri namespaceAddress = ServiceBusEnvironment.CreateServiceUri("sb", baseAddress, string.Empty);

this.namespaceManager = new NamespaceManager(namespaceAddress, tokenProvider);

this.messagingFactory = MessagingFactory.Create(namespaceAddress, tokenProvider);

We create a URI and a security token to use for interaction with our service bus namespace. For the sake of simplicity I’m using the issuer name (owner) and the service administration key. I’d never recommend this for a production solution, but it’s fine for demonstration purposes. We use these to create a NamespaceManager and MessagingFactory.

Now we need to create the topic, if it doesn’t already exist.

try

{

// doesn’t always work, so wrap it

if (!namespaceManager.TopicExists(topicName))

this.namespaceManager.CreateTopic(topicName);

}

catch (MessagingEntityAlreadyExistsException)

{

// ignore, timing issues could cause this

}

Notice that I check to see if the topic exists, but I also trap for the exception. That’s because I don’t want to assume the operation is single threaded. With this block of code running in many role instances, it’s possible that another instance creates the topic between the existence check and the create call. So I like to wrap them in a try/catch. You can also just catch the exception, but I’ve long liked to avoid the overhead of unnecessary exceptions.
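The check-then-create race is easy to reproduce outside Service Bus. The sketch below uses a small in-memory stand-in (FakeNamespace and EntityAlreadyExists are invented for this illustration, not Service Bus types) to show why the existence check alone is not enough when several instances start at once, and why the exception still has to be tolerated.

```python
import threading


class EntityAlreadyExists(Exception):
    """Stand-in for MessagingEntityAlreadyExistsException in this sketch."""


class FakeNamespace:
    """In-memory substitute for a namespace manager; not a real Service Bus API."""

    def __init__(self):
        self._topics = set()
        self._lock = threading.Lock()

    def topic_exists(self, name):
        with self._lock:
            return name in self._topics

    def create_topic(self, name):
        with self._lock:
            if name in self._topics:
                raise EntityAlreadyExists(name)
            self._topics.add(name)


def ensure_topic(namespace, name):
    """Check first to avoid needless exceptions, but still tolerate losing the race."""
    try:
        if not namespace.topic_exists(name):
            # Another caller may create the topic between the check and this call.
            namespace.create_topic(name)
    except EntityAlreadyExists:
        pass  # someone else created it first; the topic exists, which is all we need


if __name__ == "__main__":
    ns = FakeNamespace()
    workers = [threading.Thread(target=ensure_topic, args=(ns, "notifications")) for _ in range(8)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    print(ns.topic_exists("notifications"))
```

Whichever caller wins, every caller leaves ensure_topic with the topic in place, which is the guarantee the constructor relies on.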

Finally, I’ll create a TopicClient that I’ll use to send messages to the topic.

So by creating an instance of this class, I can properly assume that the topic exists, and I have all the items I need to send or receive messages.

Sending Messages

Next up, I create a SendMessage method that accepts a string message payload, the type of message, and a TimeSpan value that indicates how long the message should live. In this method we first create a BrokeredMessage giving it an object that represents my notification message. We use the lifespan value that is passed in and set the type as a property. Finally, we send the message using the TopicClient we created earlier and do appropriate exception handling and cleanup.

try

{

bm = new BrokeredMessage(msg);

bm.TimeToLive = msgLifespan;

// used for filtering

bm.Properties[MESSAGEPROPERTY_TYPE] = messageType.ToString();

topicClient.Send(bm);

success = true;

}

catch (Exception)

{

success = false;

// TODO: do something

}

finally

{

if (bm != null) // if was created successfully

bm.Dispose();

}

Now the important piece here is the setting of a BrokeredMessage property. It’s this property that can be used later on to filter the messages we want to receive. So let’s not forget that. You’ll also notice I have a TODO left to add some intelligent exception handling, like logging the issue.

Start Receiving

This is when things get a little more complicated. Now the experts (meaning the folks I know/trust that responded to my inquiry), recommend that instead of going “old school” and having a thread that’s continually polling for responses, we instead leverage async processing. So we’re going to make use of delegates.

First we need to define a delegate for the callback method:

public delegate bool RecieverCallback(NotificationMessage message, NotificationMessageType type);

We then reference the new delegate in the method signature for the message receiving starter:

public void StartReceiving(RecieverCallback callback, NotificationMessageType msgType = NotificationMessageType.All)

Now inside this method we first need to create our subscriber. Since I want to have one subscriber for each role instance, I’ll need to get this from the Role Environment.

// need to parse out deployment ID

string instanceId = Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment.CurrentRoleInstance.Id;

subscriptionName = instanceId.Substring(instanceId.IndexOf('.') + 1);

SubscriptionDescription tmpSub = new SubscriptionDescription(topicName, subscriptionName);

Now is the point where we’ll add in a filter using the property that we set on the notification when we created it.

{

Filter tmpFilter = new SqlFilter(string.Format("{0} = '{1}'", MESSAGEPROPERTY_TYPE, msgType));

subscriptionClient.AddRule(SUBFILTER, tmpFilter);

}

I’m keeping it simple and using a SqlFilter using the property name we assigned when sending. So this subscription will only receive messages that match our filter criteria.
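The two string manipulations above (deriving the subscription name from the role instance ID and building the filter expression) can be checked in isolation. The following sketch mirrors them in Python; the sample instance ID and the "MessageType" property name are assumptions, since the post shows neither an actual ID nor the value of MESSAGEPROPERTY_TYPE.

```python
def subscription_name(instance_id: str) -> str:
    """Mirror instanceId.Substring(instanceId.IndexOf('.') + 1): drop the leading deployment part."""
    # str.find returns -1 when there is no dot, so the whole string is kept,
    # which matches the C# behaviour (Substring(0)).
    return instance_id[instance_id.find(".") + 1:]


def type_filter(property_name: str, message_type: str) -> str:
    """Build the SqlFilter expression text, e.g. MessageType = 'Alert'."""
    return "{0} = '{1}'".format(property_name, message_type)


if __name__ == "__main__":
    # Hypothetical instance ID, used only to exercise the parsing.
    print(subscription_name("deployment(123).MyService.WorkerRole_IN_0"))
    # "MessageType" is an assumed property name for this sketch.
    print(type_filter("MessageType", "Alert"))
```

Because each role instance gets a distinct name, each one ends up with its own subscription and therefore its own copy of every matching message.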

Now that all the setup is done, we’ll delete the subscription if it already exists (this gets rid of any messages and allows us to start clean) and create it new using the NamespaceManager we instantiated in the class constructor. Then we start our async operation to retrieve messages:

asyncresult = subscriptionClient.BeginReceive(waittime, ReceiveDone, subscriptionClient);

Now in this, ReceiveDone is the callback method for the operation. This method is pretty straightforward. We make sure we’ve gotten a message (in case the operation simply timed out) and that we can get the payload. Then, using the delegate we set up earlier, we hand the message to the caller. We end by starting another async call to get another message.

if (result != null)

{

SubscriptionClient tmpClient = result.AsyncState as SubscriptionClient;

BrokeredMessage brokeredMessage = tmpClient.EndReceive(result);

// brokeredMessage.Complete(); // not really needed because your receive mode is ReceiveAndDelete

if (brokeredMessage != null)

{

NotificationMessage tmpMessage = brokeredMessage.GetBody<NotificationMessage>();

// do some type mapping here

recieverCallback(tmpMessage, tmpType);

}

}

// do receive for next message

asyncresult = subscriptionClient.BeginReceive(ReceiveDone, subscriptionClient);

Now I’ve added two null checks in this method just to help out in case a receive operation fails. Even then, I won’t guarantee this works for all situations. In my tests, when I set the lifespan of a message to less than 5 seconds, I still had some issues (I’m still sorting those out, but wanted to get this sample out).
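The shape of that receive loop (begin a receive, handle the completion, then immediately begin the next receive) can be sketched without Service Bus. In the Python illustration below, FakeSubscription and Receiver are invented stand-ins; complete(None) plays the role of a receive that timed out with no message.

```python
class FakeSubscription:
    """Invented stand-in for a subscription client; holds at most one pending receive."""

    def __init__(self):
        self._pending = None

    def begin_receive(self, on_done):
        # Register the completion callback, like BeginReceive(ReceiveDone, ...).
        self._pending = on_done

    def complete(self, message):
        """Simulate the broker finishing the pending receive; None means it timed out."""
        on_done, self._pending = self._pending, None
        if on_done is not None:
            on_done(message)

    def has_pending_receive(self):
        return self._pending is not None


class Receiver:
    """Callback-driven loop: every completion re-arms the next receive."""

    def __init__(self, subscription, callback):
        self.subscription = subscription
        self.callback = callback

    def start(self):
        self.subscription.begin_receive(self._receive_done)

    def _receive_done(self, message):
        if message is not None:          # skip timeouts that carry no message
            self.callback(message)
        # Re-arm so the next message (or timeout) is handled the same way.
        self.subscription.begin_receive(self._receive_done)


if __name__ == "__main__":
    received = []
    sub = FakeSubscription()
    Receiver(sub, received.append).start()
    sub.complete("first")
    sub.complete(None)      # timeout: nothing delivered, loop keeps going
    sub.complete("second")
    print(received)
```

One design point worth noting: in this sketch, as in the snippet above, an exception thrown by the callback would prevent the re-arm, so a production version would probably want to guard the callback invocation.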

Client side implementation

Whew! Lots of setup there. This is where our hard work pays off. We define a callback method we’re going to hand into our notification helper class using the delegate we defined. We’ll keep it super simple:

private bool NotificationRecieved(NotificationMessage message, NotificationMessageType type)

{

Console.WriteLine("Received Notification");

return true;

}

Now we need to instantiate our helper class and start the process of receiving messages. We can do this with a private variable to hold our object and a couple of lines in the role’s OnStart.

tmpNotifier = new NotificationTopic(ServiceNamespace, IssuerName, IssuerKey, TopicName);

tmpNotifier.StartReceiving(new NotificationTopic.RecieverCallback(NotificationRecieved), NotificationMessageType.All);

Now if we want to clean things up, we can also add some code to the role’s OnStop.

try

{

if (tmpNotifier != null)

tmpNotifier.StopReceiving();

}

catch (Exception e)

{

Console.WriteLine("Exception during OnStop: " + e.ToString());

}

base.OnStop();

And that’s all we need.

In Closing

So that’s it for our basic implementation. I’ve uploaded the demo for you to use at your own risk. You’ll need to update the WebRole, WorkerRole, and NotifierSample project with the information about your Service Bus namespace. To run the demo, you will want to set the cloud service project as the startup project, and launch it. Then right click on the NotifierSample project and start debugging on it as well.

While this demo may work fine for certain applications, there is definitely room for enhancement. We can tweak our message lifespan, wait timeouts, and even how many messages we retrieve at one time. And it’s also not the only way to accomplish this. But I think it’s a solid starting point if you need this kind of simple, self-contained notification service.

PS – As configured, this solution will require the ability to send outbound traffic on port 9354.

Matias Woloski (@woloski) described Simpler WIF integration for ASP.NET web application using NuGet and cloud friendly appSettings in a 7/19/2012 post to his Auth10 blog:

Last week we spent some time contributing to Jabbr (the open source chat app based on SignalR). David Fowler, the main dev behind the project, said on Twitter that it would be great to have enterprise sign-on support in Jabbr so that it could be used as a chat system in the enterprise.

Because Jabbr is a single-page application, we thought it would be a good idea to integrate it with Windows Identity Foundation (WIF), learn a couple of things to make this scenario much simpler, and bring that back to Auth10 while also contributing to Jabbr. We went ahead and forked the Jabbr repo, and within a couple of hours we had it working and a pull request was on its way.

If you are curious, we hosted a version of Jabbr using WIF on AppHarbor: http://jabbr-auth10.apphb.com/

We extracted what we learnt from this experience and packaged it into a couple of NuGet packages: Auth10.AspNet.SimpleConfig and Auth10.AspNet.SimpleConfig.WindowsAzureAD.IdentitySelector.

Screencast

What we’ve learnt from Jabbr

- There is a single page with the page structure and the rest is in JavaScript.

- Jabbr stores configuration items in appSettings to be cloud-friendly. Clouds like Windows Azure Web Sites or AppHarbor allow you to override appSettings values, but you can’t change complex config sections.

- Jabbr has its own mechanism to track a logged-in user; it doesn’t use IPrincipal.

- Jabbr has two authentication mechanisms: user and password, or social identity providers (via JanRain).

- Once the user is logged in it will use SignalR in a trusted subsystem (i.e. trusting the cookie set on login)

- The Jabbr code is very clean and well structured!

In this scenario we needed code that was non-intrusive, easy to integrate, and had a minimal footprint, so that we wouldn’t break things and could adapt to whatever structure the application already has.

Less complexity, less footprint, less intrusive

Sometimes frameworks hide complexity away and leave us developers with systems that are hard to debug and extend. So we thought of doing this WIF integration using a least-common-denominator approach that everyone could understand.

We’ve spent some time packaging that in a seamless experience using NuGet.

Install-Package Auth10.AspNet.SimpleConfig

The NuGet package will add the following settings to <appSettings>:

<add key="fedauth.identityProviderUrl" value="https://auth10-preview.accesscontrol.windows.net/v2/wsfederation" />
<add key="fedauth.realm" value="urn.....ample-app" />
<add key="fedauth.replyUrl" value="" />
<add key="fedauth.certThumbprint" value="B538E6F6....B529F716" />
<add key="fedauth.requireSsl" value="true" />
<add key="fedauth.enableManualRedirect" value="false" />

The WIF SDK provides the Add STS Reference wizard; we provide an equivalent in the form of a NuGet cmdlet. From the NuGet Package Manager console, run the following:

Set-FederationParametersFromWindowsAzureActiveDirectory -realm urn...ample-app -serviceNamespace auth10-preview

That cmdlet will read the FederationMetadata and fill in the values of the configuration settings. We also provide more generic cmdlets:

Set-FederationParametersFromFederationMetadataUrl and Set-FederationParametersFromFederationMetadataFile

The NuGet package will also inject a slightly customized version of the WIF modules using App_Start WebActivator (or, if it’s .NET 3.5, the package will add them under <httpModules>).

public static void PreAppStart()
{
    DynamicModuleUtility.RegisterModule(typeof(CustomWSFederationAuthenticationModule));
    DynamicModuleUtility.RegisterModule(typeof(CustomSessionAuthenticationModule));
}

It will add the request validator that will allow tokens to be posted to the application.

<httpRuntime requestValidationMode="2.0" requestValidationType="$rootnamespace$.FederatedIdentity.Infrastructure.AllowTokenPostRequestValidator" />

It will set the authentication mode to none and deny access to anonymous users. This protects the whole site, but it can be changed to use the [Authorize] attribute on MVC or another authorization mechanism.

<authentication mode="None" />
<authorization>
  <deny users="?" />
</authorization>

It will add a static helper class with a few methods that trigger the login process programmatically instead of relying on the modules. It also provides logoff methods that make the logoff implementation more explicit.

FederatedIdentityHelper.LogOn([issuer], [realm], [homeRealm]);
FederatedIdentityHelper.LogOff();
FederatedIdentityHelper.FederatedLogOff(idpSignoutUrl, [replyUrl]);

Adding an identity provider selector

Another thing we’ve extracted from this experience is the concept of the identity selector. If you install the following NuGet package, it will add to your application a small JavaScript component that queries Windows Azure Active Directory and builds a list of the identity providers that are configured for your application.

Install-Package Auth10.AspNet.SimpleConfig.WindowsAzureAD.IdentitySelector

This NuGet package provides a small snippet that you can add wherever you want in your app to show the selector.

<script src="/Scripts/waad.selector.js" type="text/javascript"></script>
<script type="text/javascript">
  $("#logon").click(function () {
    window.waadSelector('auth10-preview', 'urn.....ample-app', $("#identityProviderSelector"));
    // use jQuery UI to show the modal dialog (or any other javascript library)
    $("#identityProviderSelector").dialog({ modal: true });
    return false;
  });
</script>

The markup generated by the selector looks like this. We generate a CSS class with the pattern “selector-identityProvider” so that you can customize each entry, for instance with a logo set as a CSS background image.

<div id="identityProviderSelector">
  <ul>
    <li>
      <a href="https://www.google.com/...." alt="Login with Google" class="selector-Google">Google</a>
    </li>
    <li>
      <a href="https://..." alt="Login with Contoso AD" class="selector-Contoso-AD">Contoso AD</a>
    </li>
  </ul>
</div>

This is a screenshot from the screencast showing the selector. This is using jQuery UI and a simple ul/li but you could customize it the way you want (like we did on https://auth10.com/account/logon).
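The class-name pattern can be sketched in a few lines of JavaScript. This is not the code inside waad.selector.js; it is a hypothetical illustration that assumes provider names map to classes by replacing whitespace with hyphens, which is what the two examples in the markup (Google, Contoso AD) suggest. The function names and the `loginUrl` property are made up for the example.

```javascript
// Hypothetical helper: derive the CSS class for a provider name,
// assuming runs of whitespace become single hyphens ("Contoso AD" -> "selector-Contoso-AD").
function selectorClassName(providerName) {
  return "selector-" + providerName.trim().replace(/\s+/g, "-");
}

// Escape text before placing it in HTML attributes or element content.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical renderer: build a ul/li list similar to the markup shown in the post.
// Each provider is an object with a `name` and a `loginUrl` (both illustrative).
function renderSelector(providers) {
  const items = providers.map(function (p) {
    return '<li><a href="' + escapeHtml(p.loginUrl) + '" class="' +
      escapeHtml(selectorClassName(p.name)) + '">' + escapeHtml(p.name) + "</a></li>";
  });
  return "<ul>" + items.join("") + "</ul>";
}
```

A stylesheet can then target a class such as `.selector-Google` to attach a provider logo.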

Conclusion

Auth10’s mission is to democratize federated identity, making it simpler and easier. We know the only way to do that is by helping you, developers, with the least intrusive, most transparent and cleanest integrations. Go ahead and try the NuGet packages and the Auth10 dashboard. Please let us know what you think and how we can help you.

Haishi Bai (@HaishiBai2010) suggested that you Get Started with Windows Azure Active Directory (Developer Preview) in a 7/19/2012 post:

Guide: Greetings! Just like going to most tourist attractions, where you can get a bunch of brochures about historical context, cultural significance, ticket prices and so on, here’s a list of online resources (in no particular order) I recommend reading if you want to gain a deeper understanding of the topic. Read them, then come back to continue with the free tour.

- Kim Cameron: Identity Management as a Service

- John Shewchuk: Reimagining Active Directory for the Social Enterprise (Part 1), (Part 2)

- Stuart Kwan: A Lap Around Windows Azure Active Directory (TechEd US 2012 session recording)

- Edward Wu: Directory Graph API: Drill Down (TechEd US 2012 session recording)

- Vittorio Bertocci: Single Sign On with Windows Azure Active Directory: a Deep Dive (where I stole some contents that you’ll see in the guided tour part)

What is Windows Azure Active Directory and why you need it

Guide: Welcome back! It took me almost a day to go through the above content; I hope it didn’t take you as long. Here’s something I want to say about the service. If you’ve read enough, jump forward to the fun part!

Windows Azure Active Directory is Active Directory as a service. The concept is simple: if you choose to, Microsoft will keep your organization’s Active Directory running in the cloud so it’s highly scalable and highly available, without you needing to worry about maintaining the infrastructure. Now, the question is, why would you choose to host your Active Directory on Windows Azure? From IT’s perspective, cost, scalability and availability are very good reasons to switch to Windows Azure Active Directory. For application designers and architects, it’s important to realize how Windows Azure Active Directory can improve your Software Relevancy. First, services and applications are now consumed in various contexts, and users expect a consistent experience whether they are on the corporate network or on the public Internet. Second, as users consume more and more services from different providers, they need an SSO experience so they can move among services freely and, furthermore, orchestrate the services for business needs. Both requirements call for a mechanism that lets users carry the concept of “me” around the world, not just within corporate networks. As your applications and services are used in a much wider scope, it’s critical to make user identities adapted for the wild so that your software can remain relevant to the users.

Key differences in programming model (compared to traditional LDAP programming)

Guide: Before I became a tour guide I was a programmer who happened to work with digital identities and WIF for several years. Here’s a list of things I want to call out for you developers (or us developers – I’m a bit confused about whether I can still call myself a developer. Is there a once-a-developer-always-a-developer thing?)

- Instead of LDAP, you use a RESTful API (called the Directory Graph API) and OData 3.0 for advanced query scenarios. Yep. No LDAP.

- Use OAuth 2.0 for authentication when you access your directory. Yep. No Kerberos.

- Your application needs to be “registered” with your Windows Azure Active Directory tenant to gain access to it; this is a common practice for accessing protected services. You do this by creating Service Principals (see the next section for details).
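To make the first point concrete, here is a minimal sketch of how an OData query URL might be assembled in JavaScript. The service root, tenant name, `Users` entity set and `DisplayName` property are hypothetical placeholders, not the documented preview endpoint; only the `$filter` and `$top` query options are standard OData. Authentication (the OAuth 2.0 token from the second point) is left out.

```javascript
// Build an OData query URL from a service root, an entity set and optional query options.
// $filter and $top are standard OData system query options; everything else
// (root URL, entity set, property names) is supplied by the caller and is illustrative here.
function buildODataUrl(serviceRoot, entitySet, options) {
  const opts = options || {};
  const parts = [];
  if (opts.filter) {
    // The filter expression must be URL-encoded (spaces become %20).
    parts.push("$filter=" + encodeURIComponent(opts.filter));
  }
  if (typeof opts.top === "number") {
    parts.push("$top=" + opts.top);
  }
  // Drop any trailing slashes on the root before appending the entity set.
  const base = serviceRoot.replace(/\/+$/, "") + "/" + entitySet;
  return parts.length ? base + "?" + parts.join("&") : base;
}

// Placeholder root: ".invalid" is a reserved TLD, so this never resolves to a real service.
const exampleUrl = buildODataUrl(
  "https://directory.example.invalid/contoso.onmicrosoft.com",
  "Users",
  { filter: "DisplayName eq 'Adam'", top: 5 }
);
```

Here `exampleUrl` ends in `/Users?$filter=DisplayName%20eq%20'Adam'&$top=5`. A real request would send this URL over HTTPS with the appropriate authorization header.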

Limitations of preview environment

Guide: Last warning: this is a preview area, with many moving parts that could change over time. Enter at your own risk!

- Read access to Directory Graph API only.

- Admin access only. You either have access to everything or nothing. There’s no fine-grained control.

- No production SLA.

- Interfaces may change.

Part I: Create a new Service Principal

- First, you have to become a valid Office 365 tenant. You can go to this page to get a 30-day free trial (you can pick either the Small business plan or the Midsize business and enterprise plan). When you do this, Microsoft automatically creates a new Windows Azure Active Directory that is associated with your Office 365 account. After you’ve got your free trial, explore the Office 365 page to try out features such as creating users and groups, assigning users to roles, and creating online Office documents.

- Second, you need to create a Service Principal that applications can use to authenticate themselves when they try to access your directory. To do this, you need to get the Office 365 cmdlets, which can be downloaded here. Follow the instructions on the page to complete installation (you need both the Sign-In Assistant and the PowerShell cmdlets).

- Open a PowerShell window, and import required modules:

import-module MSOnlineExtended
import-module MSOnline

- Connect to your Office 365 tenant. When you issue the following command, you’ll be prompted with a logon window. Enter the administrator credentials you used when creating your Office 365 tenant (such as admin@xxx.onmicrosoft.com and password):

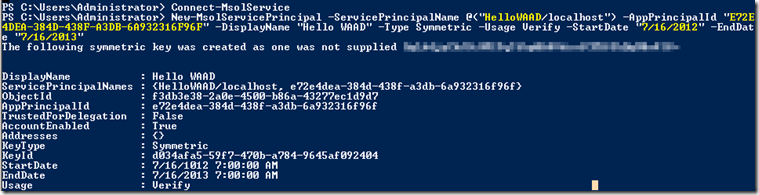

Connect-MsolService

- Create a new Service Principal (you need to replace all {} parameters; see the screenshot below for an example. If you are curious about the syntax of the -ServicePrincipalName parameter, refer to Vittorio’s post):