Windows Azure and Cloud Computing Posts for 7/16/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Service Bus, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Brad Calder (@CalderBrad) announced Windows Azure Storage – 4 Trillion Objects and Counting in a 7/18/2012 post to the Windows Azure Team blog:

Windows Azure Storage has had an amazing year of growth. We have over 4 trillion objects stored, process an average of 270,000 requests per second, and reach peaks of 880,000 requests per second.

About a year ago we hit the 1 trillion object mark. Over the past 12 months, we saw an impressive 4x increase in the number of objects stored, and a 2.7x increase in average requests per second.
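To express the 4x and 2.7x year-over-year figures in monthly terms, the short Python sketch below computes the compound monthly growth rate that each one implies. It assumes smooth month-over-month compounding. The post does not claim this, and the actual curve in the graph may be lumpier.

```python
def monthly_rate(growth_factor: float, months: int = 12) -> float:
    """Compound per-month growth rate implied by `growth_factor` over `months`."""
    return growth_factor ** (1 / months) - 1

# Figures from the post: 4x objects stored and 2.7x average requests/sec in 12 months.
objects_rate = monthly_rate(4.0)
requests_rate = monthly_rate(2.7)
print(f"objects: {objects_rate:.1%}/month, requests: {requests_rate:.1%}/month")
# prints: objects: 12.2%/month, requests: 8.6%/month
```

That works out to roughly 12% more objects and roughly 9% more average requests per second each month.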

The following graph shows the number of stored objects in Windows Azure Storage over the past year. The number of stored objects is counted on the last day of the month shown. The object count is the number of unique user objects stored in Windows Azure Storage, so the counts do not include replicas.

The following graph shows the average and peak requests per second. The average requests per second is the average over the whole month shown, and the peak requests per second is the peak for the month shown.

We expect this growth rate to continue, especially since we just lowered the cost of requests to storage by 10x. It now costs $0.01 per 100,000 requests regardless of request type (same cost for puts and gets). This makes object puts and gets to Windows Azure Storage 10x to 100x cheaper than other cloud providers.
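As a worked example of the new request pricing, the sketch below prices a hypothetical workload of 100 requests per second sustained over a 30-day month. It prorates partial blocks of 100,000 requests, which is an assumption about billing granularity. The previous rate ($0.01 per 10,000 requests) is inferred only from the post's statement that the cost fell by 10x.

```python
from decimal import Decimal

# Rate quoted in the post: $0.01 per 100,000 requests, regardless of request type.
PRICE_PER_BLOCK = Decimal("0.01")
REQUESTS_PER_BLOCK = 100_000

def request_cost(requests: int, requests_per_block: int = REQUESTS_PER_BLOCK) -> Decimal:
    """Dollar cost of `requests` storage requests (prorated; an assumption)."""
    return PRICE_PER_BLOCK * Decimal(requests) / Decimal(requests_per_block)

# Hypothetical workload: 100 requests/second sustained for a 30-day month.
monthly_requests = 100 * 60 * 60 * 24 * 30        # 259,200,000 requests
new_cost = request_cost(monthly_requests)           # $25.92
old_cost = request_cost(monthly_requests, 10_000)   # 10x higher: $259.20
print(f"{monthly_requests:,} requests: ${new_cost:.2f} now vs ${old_cost:.2f} before")
```

`Decimal` is used instead of `float` so that the cent amounts come out exact.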

In addition, we now offer two types of durability for your storage – Locally Redundant Storage (LRS) and Geo Redundant Storage (GRS). GRS is the default storage that we have always provided, and now we are offering a new type of storage called LRS. LRS is offered at a discount and provides locally redundant storage, where we maintain an equivalent 3 copies of your data within a given location. GRS provides geo-redundant storage, where we maintain an equivalent 6 copies of your data spread across 2 locations at least 400 miles apart from each other (3 copies are kept in each location). This allows you to choose the desired level of durability for your data. And of course, if your data does not require the additional durability of GRS you can use LRS at a 23% to 34% discounted price (depending on how much data is stored). In addition, we also employ a sophisticated erasure coding scheme for storing data that provides higher durability than just storing 3 (for LRS) or 6 (for GRS) copies of your data, while at the same time keeping the storage overhead low, as described in our USENIX paper.
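The LRS/GRS arithmetic in the paragraph above fits in a few lines. This is a sketch. The copy counts are the "equivalent" copies the post describes, since erasure coding changes the physical layout. The GRS price of $0.10 per GB-month is a hypothetical placeholder, not a published rate.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class Redundancy:
    name: str
    locations: int
    copies_per_location: int = 3  # "3 copies are kept in each location"

    @property
    def equivalent_copies(self) -> int:
        # Logical copy count; erasure coding changes the physical layout.
        return self.locations * self.copies_per_location

LRS = Redundancy("LRS", locations=1)
GRS = Redundancy("GRS", locations=2)

def lrs_price_range(grs_price: float) -> Tuple[float, float]:
    """(lowest, highest) LRS price implied by the 23%-34% discount off GRS."""
    return grs_price * (1 - 0.34), grs_price * (1 - 0.23)

# 0.10 is a hypothetical GRS price per GB-month, not a published rate.
low, high = lrs_price_range(0.10)
print(LRS.equivalent_copies, GRS.equivalent_copies, round(low, 4), round(high, 4))
```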

We are also excited about our recent release of Windows Azure Virtual Machines, where the persistent disks are stored as objects (blobs) in Windows Azure Storage. This allows the OS and data disks used by your VMs to leverage the same LRS and GRS durability provided by Windows Azure Storage. With that release we also provided access to Windows Azure Storage via easy-to-use client libraries for many popular languages (.NET, Java, Node.js, PHP, and Python), as well as REST.

Windows Azure Storage uses a unique approach of storing different object types (Blobs, Disks/Drives, Tables, Queues) in the same store, as described in our SOSP paper. The total number of blobs (disk/drives are stored as blobs), table entities, and queue messages stored account for the 4+ trillion objects in our unified store. By blending different types of objects across the same storage stack, we have a single stack for replicating data to keep it durable, a single stack for automatic load balancing and dealing with failures to keep data available, and we store all of the different types of objects on the same hardware, blending their workloads, to keep prices low. This allows us to have one simple pricing model for all object types (same cost in terms of GB/month, bandwidth, as well as transactions), so customers can focus on choosing the type of object that best fits their needs, instead of being forced to use one type of object over another due to price differences.

We are excited about the growth ahead and continuing to work with customers to provide a quality service. Please let us know if you have any feedback, questions or comments! If you would like to learn more about Windows Azure, click here.


SQL Azure Database, Federations and Reporting

No significant articles today.


Marketplace DataMarket, Social Analytics, Big Data and OData

No significant articles today.


Windows Azure Service Bus, Caching, Active Directory and Workflow

My (@rogerjenn) A Guided Tour of the Windows Azure Active Directory Developer Preview Sample Application post updated 7/19/2012 begins:

Contents:

- Introduction

- Logging in to a Tenant’s Office 365 Active Directory

- Adding Users to a Tenant

- Adding Expense Reports as a User

- Submitting and Approving or Rejecting an Expense Report

- Conclusion

• Update 7/19/2012 7:30 AM PDT: You cannot use an Office 365 Enterprise (E-3) Preview subscription, which includes 25 user licenses, in lieu of a paid production Office 365 subscription for this walkthrough. See the Logging in to a Tenant’s Office 365 Active Directory section for details.

Introduction

Alex Simon posted Announcing the Developer Preview of Windows Azure Active Directory to the Windows Azure Team blog on 7/12/2012:

Today we are excited to announce the Developer Preview of Windows Azure Active Directory.

As John Shewchuk discussed in his blog post Reimagining Active Directory for the Social Enterprise, Windows Azure Active Directory (AD) is a cloud identity management service for application developers, businesses and organizations. Today, Windows Azure AD is already the identity system that powers Office 365, Dynamics CRM Online and Windows Intune. Over 250,000 companies and organizations use Windows Azure AD today to authenticate billions of times a week. With this Developer Preview we begin the process of opening Windows Azure AD to third parties and turning it into a true Identity Management as a Service.

Windows Azure AD provides software developers with a user-centric cloud service for storing and managing user identities, coupled with a world-class, secure and standards-based authorization and authentication system. With support for .NET, Java and PHP, it can be used on all the major devices and platforms software developers use today.

Just as important, Windows Azure AD gives businesses and organizations their own cloud-based directory for managing access to their cloud-based applications and resources. Windows Azure AD also synchronizes and federates with their on-premises Active Directory, extending the benefits of Windows Server Active Directory into the cloud.

Today’s Developer Preview release is the first step in realizing that vision. We’re excited to be able to share our work here with you and we’re looking forward to your feedback and suggestions!

The Windows Azure AD Developer Preview provides two new capabilities for developers to preview:

- Graph API

- Web Single Sign-On

This Preview gives developers early access to new REST APIs, a set of demonstration applications, a way to get a trial Windows Azure AD tenant and the documentation needed to get started. With this preview, you can build cloud applications that integrate with Windows Azure AD providing a Single Sign-on experience across Office 365, your application and other applications integrated with the directory. These applications can also access Office 365 user data stored in Windows Azure AD (assuming the app has the IT admin and/or user’s permission to do so). …

Read more: Announcing the Developer Preview of Windows Azure Active Directory

The walkthrough of the Web Single Sign-On (WebSSO) preview follows. My A Guided Tour of the Graph API Preview’s Graph Explorer Application post provides a detailed look at the Graph API preview. The Graph Explorer requires the WAAD authorization credentials you obtain in this walkthrough.

Kim Cameron (@Kim_Cameron) gave an updated graphical introduction to Windows Azure Active Directory (WAAD) in his Diagram 2.0: No hub. No center. post of 7/2/2012 to his Identity Weblog:

As I wrote here, Mary Jo Foley’s interpretation of one of the diagrams in John Shewchuk’s second WAAD post made it clear we needed to get a lot crisper visually about what we were trying to show. So I promised that we’d go back to the drawing board. John put our next version out on Twitter, got more feedback (see comments below) and ended up with what Mary Jo christened “Diagram 2.0”. Seriously, getting feedback from so many people who bring such different experiences to bear on something like this is amazing. I know the result is infinitely clearer than what we started with.

In the last frame of the diagram, any of the directories represented by the blue symbol could be an on-premises AD, a Windows Azure AD, something hybrid, an OpenLDAP directory, an Oracle directory or anything else. Our view is that having your directory operated in the cloud simplifies a lot. And we want WAAD to be the best possible cloud directory service, operating directories that are completely under the control of their data owners: enterprises, organizations, government departments and startups.

Further comments welcome.

Vittorio Bertocci (@vibronet) and Stuart Kwan presented A Lap Around Windows Azure Active Directory at TechEd North America 2012 on 6/11/2012. From Channel9’s description:

Windows Azure Active Directory provides easy-to-use, multi-tenant identity management services for applications running in the cloud and on any device and any platform. In this session, developers, administrators, and architects will take an end to end tour of Windows Azure Active Directory to learn about its capabilities, interfaces and supported scenarios, and understand how it works in concert with Windows Server Active Directory.

Logging in to a Tenant’s Office 365 Active Directory

You’ll need an Office 365 subscription with a few user accounts to make full use of the multi-tenanted Fabrikam Expense Tracking Application sample running in Windows Azure. The following walkthrough assumes you have an Office 365 subscription and are willing to add a subscription or two for the limited time required to take this walkthrough:

• Update 7/19/2012 7:30 AM PDT: You cannot use an Office 365 Enterprise (E-3) Preview subscription, which includes 25 user licenses, in lieu of a paid production Office 365 subscription for this walkthrough. The Enterprise Edition is required to obtain Exchange and SharePoint instances in the 2013 version. During my testing of a new https://oakleafsyst.onmicrosoft.com E-3 Preview account, I discovered that the Exchange 2013 Admin feature has a bug that prevents browsing the users list to add Direct Reports. (A thread about the problem in the Office 365 Preview forum starts here.) It’s clear from its ugly appearance that the Exchange 2013 Admin Portal isn’t fully cooked. Perhaps the Exchange team will perform an on-the-fly update to the feature.

The following actions are required to authorize access to an existing tenant’s (organization’s) Office 365 subscription:

- Download the latest Microsoft Online Services Module for Windows PowerShell 32-bit version / 64-bit version and install it on your machine.

- Download the PowerShell authorization script here. The following steps describe how to run the script.

Note: If you have an earlier version of the Microsoft Online Services PowerShell Module v1.0, you must remove it before installing the update to v1.0.

Following are the additional prerequisites for running the Fabrikam sample code, which is available from GitHub:

Tip: Don’t save your Office 365 administrative credentials for reuse. If the WAAD team hasn’t yet enabled logging out of the sample application and you can’t log out, you must delete all your cookies.

1. Launch the Fabrikam Expense Tracking Application at https://aadexpensedemo.cloudapp.net/.

2. Click the Sign Up button to open page 1 of Authorizing Your Applications, which covers the Office 365 subscription step, and then click Step 2 at the bottom to open page 2:

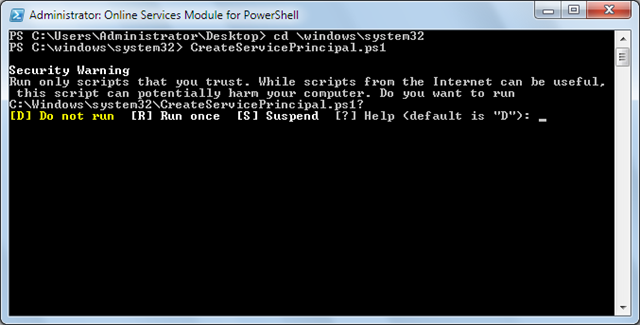

3. Review the Detailed Walkthrough section, then copy the CreateServicePrincipal.ps1 script from your Download folder to a Windows folder with a shorter path, such as \windows\system32.

4. Launch the Microsoft Online Services Module for Windows PowerShell from its desktop icon, change the directory to the script’s location, type CreateServicePrincipal.ps1 at the command prompt and press Enter:

5. Type r and press Enter to start the script, type a name for the Service Principal to create, waaddemo for this example, and press Enter: …

The post continues with a step-by-step walkthrough. Read more.

My (@rogerjenn) A Guided Tour of the Graph API Preview’s Graph Explorer Application post of 7/19/2012 begins:

The Graph API Preview’s Graph Explorer application for Windows Azure Active Directory (WAAD) reads the graph of the specified WAAD tenant (company), including its Users and their Managers, which WAAD exposes in OData v3 format.

Note: For more information about WAAD, including how to obtain authorization credentials for an Office 365 subscription, see my A Guided Tour of the Windows Azure Active Directory Developer Preview Sample Application post, updated 7/19/2012.

The Graph API’s Graph Explorer is modeled after Facebook’s Graph API Explorer application:

Edward Wu presented Directory Graph API: Drill Down at TechEd North America 2012 on 6/14/2012. From the video archive’s description:

This session introduces the new Directory Graph API, a REST-based API that enables access to Windows Azure Active Directory (Directory for Office 365 Tenants and Azure customers). We review the data directory model, the Graph API protocol (based on the OData v3 protocol), how authentication and authorization are managed, and demonstrate an end-to-end scenario. We walk through sample code calling the Directory Graph API. A roadmap is also reviewed.

MSDN’s Windows Azure Active Directory Graph topic provides detailed documentation for the Graph API.

The WAAD graph’s schema is defined by the metadata resource at https://directory.windows.net/$metadata:

Note: The preceding screen capture displays about 10% of the total metadata lines.
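Because the $metadata resource is a standard OData CSDL (EDMX) document, its EntitySets can be listed programmatically. The sketch below parses a hypothetical, heavily trimmed metadata fragment that contains only the Users and TenantDetails sets mentioned in this post. It does not fetch the live document. Matching on the local element name keeps it independent of the EDMX/EDM namespace version.

```python
import xml.etree.ElementTree as ET
from typing import List

def entity_set_names(metadata_xml: str) -> List[str]:
    """Return the Name of every EntitySet element in an EDMX $metadata document."""
    root = ET.fromstring(metadata_xml)
    names = []
    for elem in root.iter():
        # Strip any "{namespace}" prefix so the match ignores the schema version.
        if elem.tag.rsplit("}", 1)[-1] == "EntitySet":
            names.append(elem.get("Name"))
    return names

# Hypothetical, heavily trimmed $metadata fragment for illustration only.
SAMPLE = """<edmx:Edmx Version="1.0" xmlns:edmx="http://schemas.microsoft.com/ado/2007/06/edmx">
  <edmx:DataServices>
    <Schema Namespace="Example" xmlns="http://schemas.microsoft.com/ado/2009/11/edm">
      <EntityContainer Name="Container">
        <EntitySet Name="Users" EntityType="Example.User"/>
        <EntitySet Name="TenantDetails" EntityType="Example.TenantDetail"/>
      </EntityContainer>
    </Schema>
  </edmx:DataServices>
</edmx:Edmx>"""

print(entity_set_names(SAMPLE))  # ['Users', 'TenantDetails']
```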

Following are detailed instructions for using the Graph Explorer to display a raw OData feed for the sample OakLeafSystems tenant described in the A Guided Tour of the Windows Azure Active Directory Developer Preview Sample Application post:

1. Launch the Graph Explorer, which runs under Windows Azure, at https://graphexplorer.cloudapp.net/, click the Use Demo Company link to add GraphDir1.OnMicrosoft.com/ to the URL, and click the Get button to display a list of the available EntitySets (collections):

2. If you have an Office 365 subscription, click the Sign Out button to return to the default resource, and add its domain name, oakleaf.onmicrosoft.com for this example, as a suffix to the default URL:

3. Add a virgule (/) and one of the EntitySet names, such as TenantDetails, as a query suffix to the resource text box (look ahead to step 4) and click the Get button to open the resource page with the Company text box populated. Copy the Principal Id and App Principal Secret values from the PowerShell script’s command window (see the A Guided Tour of the Windows Azure Active Directory Developer Preview Sample Application post) and paste them into the Principal Id and Symmetric Key text boxes, respectively:

Note: You should keep the Principal Id value confidential; a default Symmetric Key/App Principal Secret value applies to all Preview demo logins.

4. Click the Log In button to display the query results for the selected EntitySet, TenantDetails for the Company ObjectType:

Note: The updated Office 365 AssignedPlans collection includes a ServiceInstance for AccessControlServiceS2S/NA, in addition to the standard three items for Exchange, SharePoint and Lync. …
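The URL-building rule in steps 1 through 3 (resource root, tenant domain, a virgule, then an EntitySet name) can be written as a small helper. The `resource_url` function is hypothetical. The root `https://directory.windows.net/` is an assumption taken from the $metadata URL above, and the Graph Explorer's own default URL may differ.

```python
from urllib.parse import quote

# Assumption: the resource root shown in the $metadata URL earlier in this post.
GRAPH_ROOT = "https://directory.windows.net/"

def resource_url(tenant_domain: str, entity_set: str = "") -> str:
    """Hypothetical helper: root + tenant domain + "/" + optional EntitySet name."""
    url = GRAPH_ROOT + quote(tenant_domain) + "/"
    if entity_set:
        url += quote(entity_set)
    return url

print(resource_url("GraphDir1.OnMicrosoft.com"))
print(resource_url("oakleaf.onmicrosoft.com", "TenantDetails"))
```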

The post continues with more Graph Explorer examples. Read more.

My (@rogerjenn) Windows Azure Active Directory enables single sign-on with cloud apps article of 7/19/2012 for TechTarget’s SearchCloudComputing.com begins:

Microsoft’s Windows Azure Active Directory (WAAD) Developer Preview provides simple user authentication and authorization for Windows Azure cloud services. The preview delivers online demonstrations of Web single sign-on (SSO) services for multi-tenanted Windows Azure .NET, Java and PHP applications, and programmatic access to WAAD objects with a RESTful graph API and OData v3.0.

The preview extends the choice of identity providers (IPs) to include WAAD, the cloud-based IP for Office 365, Dynamics CRM Online and Windows Intune. It gives Windows Azure developers the ability to synchronize and federate with an organization’s on-premises Active Directory.

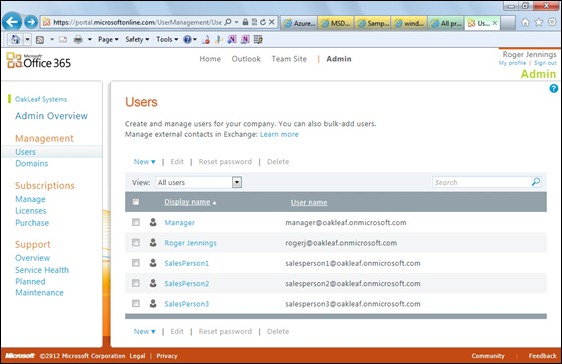

Figure 1. The Users page of the Office 365 Administrative portal enables adding detailed user accounts to an organization’s domain, oakleaf.onmicrosoft.com for this example.

Traditionally, developers provided authentication for ASP.NET Web applications with claims-based identity through Windows Azure Access Control Services (WA-ACS), formerly Windows Azure AppFabric Access Control Services.

According to Microsoft, WA-ACS integrates with Windows Identity Foundation (WIF); supports Web identity providers (IPs) including Windows Live ID, Google, Yahoo and Facebook; supports Active Directory Federation Services (AD FS) 2.0; and provides programmatic access to ACS settings through an Open Data Protocol (OData)-based management service. A management portal also enables administrative access to ACS settings.

Running online Windows Azure Active Directory demos

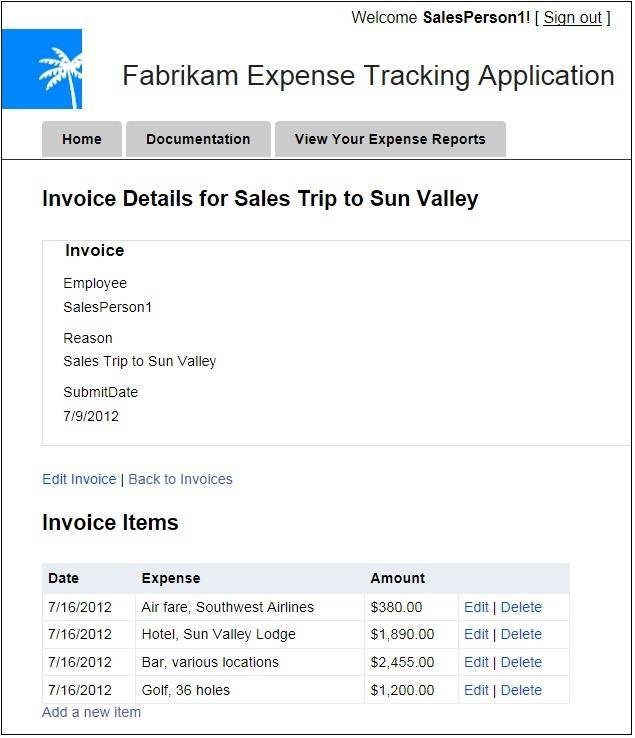

Taking full advantage the preview’s two online demonstration apps requires an Office 365 subscription with a few sample users (Figure 1). Members of the Microsoft Partner Network get 25 free Office 365 Enterprise licenses from the Microsoft Cloud Essentials benefit; others can subscribe to an Office 365 plan for as little as $6.00 per month. According to online documentation, the WAAD team plans to add a dedicated management portal to the final version to avoid reliance on Office 365 subscriptions. Note: The preview does not support Windows 8, so you’ll need to use Windows 7 or Windows Server 2008 R2 for the demo.Figure 2. Use the Fabrikam demo to add or edit detail items of an expense report.

The preview also requires users to download an updated Microsoft Online Services Module for Windows PowerShell v1.0 for 32-bit or 64-bit systems. You’ll also need to download and save a prebuilt PowerShell authorization script, which you execute to extract the application’s identifier (Application Principal ID), as well as the tenant identifier (Company ID) for the subscribing organization.

The Fabrikam Expense Report demo is a tool used to show interactive cloud Web apps to prospective Windows Azure users (Figure 2). The preview also includes open source code developers can download from GitHub and use under an Apache 2.0 license. Working with the source code in Visual Studio 2010 or later requires the Windows Azure SDK 1.7, MVC3 Framework, WIF runtime and SDK, as well as Windows Communication Foundation (WCF) Data Services 5.0 for OData v3 and .NET 4.0 or higher. With a bit of tweaking, this ASP.NET MVC3 app could manage expense reports for small- and medium-sized companies. …

Full Disclosure: I’m a paid contributor to TechTarget’s SearchCloud… .com blogs.

Mary Jo Foley (@maryjofoley) asserted “The Microsoft Windows Azure Active Directory developer preview is available for download, as is a beta of the Microsoft Azure Service Bus for Windows Server” in the deck for her Microsoft's Active Directory in the cloud: Test bits available report of 7/17/2012:

Microsoft has made available to testers the promised Windows Azure Active Directory (WAAD) bits.

WAAD is Microsoft's project to bring the Active Directory technology in Windows Server to the cloud. Microsoft already is using WAAD to manage and federate identities in Office 365, Windows Intune and Dynamics CRM Online. According to Microsoft, some select third-party developers also are using it to provide single sign-on and identity management for their Azure-hosted applications.

Here's Microsoft's latest graphical representation of how WAAD works:

Microsoft announced the planned developer preview for WAAD on June 7. This preview would provide the ability to connect and use information in the directory through a REST interface, officials said. It also would enable third-party developers to connect to the SSO the way Microsoft's own apps do.

As of July 12, Microsoft delivered two new components in its WAAD Developer Preview release. These are the graph application programming interface and Web single sign-on. The preview also includes access to new REST programming interfaces, a set of demonstration applications, a way to get a trial Azure AD tenant and related documentation.

"With this preview, you can build cloud applications that integrate with Windows Azure AD providing a Single Sign-on experience across Office 365, your application and other applications integrated with the directory. These applications can also access Office 365 user data stored in Windows Azure AD (assuming the app has the IT admin and/or user’s permission to do so)," explained Microsoft officials in a blog post about the preview.

Microsoft is working to provide symmetry across its Windows Azure cloud and Windows Server back down on earth. WAAD is an example of a Windows Server capability being replicated in the cloud. But Microsoft also is doing the reverse: Moving a number of its cloud capabilities back down to Windows Server, such as the recently announced Linux and Windows Server virtual machine hosting; its Web-site hosting; and its management portal and framework.

Microsoft also just announced it is making a subset of its Azure Service Bus -- i.e., its messaging platform -- available on Windows Server. The Service Bus 1.0 beta is available for download by testers who want to use it on Windows Server 2008 R2.

Sam Vanhoutte (@SamVanhoutte) described Service Bus for Windows Server, the New Features in a 7/16/2012 post:

The announcement

Because of the launch of the new Office version (read more here), there was also some interesting news for everyone interested in Microsoft middleware. It was obviously not mentioned during the launch (which was, of course, consumer-oriented), but some interesting infrastructure components shipped as part of the new release. The Service Bus and Workflow teams have shipped new bits that are available on premises and will be used by the new SharePoint version. This blog post dives deeper into the bits of the Service Bus for Windows Server. (read here)

Codit has been actively involved as a TAP (technology adoption program) customer for the Service Bus for Windows Server and we can say that we now have full symmetry for Codit Integration Cloud with Codit Integration Server. The exact same configuration and modules that are running in the cloud are also running on premises, giving our customers the choice to deploy locally, in the cloud or in a hybrid model.

Symmetry on the Microsoft cloud platform

Over the past few years, we’ve seen Microsoft build a lot of things on the Windows Azure platform that they already had on premises. Some examples:

| Cloud | On premises |
|---|---|
| Office 365 | SharePoint & Exchange |
| CRM Online | CRM 2011 Server |
| SQL Azure | SQL Server |
| Windows Azure | Windows Server |
| AppFabric caching | Windows Azure Storage Cache |
| … | … |

In all of the above cases, Microsoft brought capabilities that had been running on the Windows Server platform for years from on premises to their cloud platform. And they always tried to make things as symmetric as possible (especially the development model). But after reading the recent announcements that they are bringing Azure web sites, virtual machines and the management capabilities on premises (read more here), we now also see the first move in the other direction. And with the recent Office announcements, Microsoft has also released Service Bus for Windows Server, a symmetric on-premises version of the powerful Windows Azure Service Bus messaging. This post digs deeper into the details of this beta release, but this move shows that Microsoft wants to be the software company that provides software and services, running on the environment of your choice, in the business model of your choice. This will also give a lot of flexibility to ISVs and companies with a very distributed environment.

One of the biggest unknowns at this time is how Microsoft will maintain symmetry on the server side. The release cadence of server products is just too different from that of cloud-based services. New features are being added to the various services all the time, and they also have to ship to the server installations over time. I’m curious to find out what the release cycle will be for these updates.

What's in the release

The announced release is the first public beta for Service Bus on Windows Server. It is called beta 2. The release of Service Bus for Windows server only contains the messaging capabilities of the Windows Azure Service Bus. This means there are no relaying capabilities (nor an on premises ACS service) added to this version. If you want to learn more about queues & topics/subscriptions, don’t hesitate to read more here:

Scenarios

This server product can be used in a variety of scenarios.

Durable messaging only scenarios

If you need to exchange messages in a local, messaging-only scenario, you can use Service Bus for Windows Server to deliver messages between applications and services in a durable and reliable fashion.

Store & forward scenarios

The Service Bus for Windows Server release lets you define ForwardTo subscriptions on topics, so that messages matching a subscription's rules are automatically forwarded to the defined messaging entity. At this point it’s not possible to configure a ForwardTo that points to a remote entity, but you can work around that with a subscriber that listens to a local ForwardTo entity and sends these messages to a public entity.
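The workaround described above, a local subscriber that relays ForwardTo output to a public entity, comes down to a receive, send, complete loop. The sketch below models it with in-memory stand-ins: a `deque` plays the local entity and a callable plays the remote send. `relay` is a hypothetical name. A real implementation would use the Service Bus client library's receive and complete operations instead.

```python
from collections import deque
from typing import Callable, Deque, List

def relay(local_entity: Deque[str], send_to_public: Callable[[str], None]) -> int:
    """Move every message from a local ForwardTo destination to a public entity.

    Each message is removed locally only after the remote send returns, so a
    failed send leaves it queued for a retry (at-least-once delivery).
    """
    relayed = 0
    while local_entity:
        message = local_entity[0]   # peek, like a peek-lock receive
        send_to_public(message)     # may raise; the message then stays queued
        local_entity.popleft()      # "complete" the local message
        relayed += 1
    return relayed

local: Deque[str] = deque(["order-1", "order-2"])
public: List[str] = []
print(relay(local, public.append), public)  # 2 ['order-1', 'order-2']
```

Completing the local message only after the remote send succeeds means a crash can produce duplicates but never silent loss. The public side should therefore tolerate duplicate messages.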

Distributed scenarios

A lot of organizations have different business units or plants that need to be interconnected. In a lot of companies (often after mergers and acquisitions), the technology that is used in these different plants is different. Therefore, it could be good to have Service Bus being used as the gateway to exchange messages between the different units, while every unit can use its standard of choice (REST, SOAP, .NET, AMQP…) to connect to that gateway.

Installation

The bits are available in the Web Platform Installer (Service Bus 1.0 beta and Workflow 1.0 beta).

Prerequisites

- Service Bus for Windows server requires Windows Server 2008 R2 SP1 (x64) or Windows Server 2012 (x64). It also installs on Windows 7.

- SQL Server is needed to host the different entities & configurations. SQL Server 2008 R2 SP1 and SQL Server 2012 are supported (also with their Express editions).

- Only Windows Authentication is supported, meaning Active Directory is required in a multi-machine scenario.

- The Windows Fabric gets installed on the Windows system.

Everything is described in detail in the installation guide.

Topologies

There are two typical topologies. A third is also possible: a dedicated SQL Server machine with a single Service Bus server.

Single box

This is perfect for development purposes and lightweight installations. Even with everything on a single box, you can still use virtualization to get better availability.

Farm for high availability

To get high availability, you need at least three Service Bus instances, due to the new concepts of the Windows Fabric. This is important when making estimates or designing system topologies.
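The post doesn't say why three is the minimum. A common reason in clustered systems is majority quorum: the cluster keeps running only while a strict majority of its nodes is up. That is an assumption here, not a documented Windows Fabric rule. The sketch shows why, under that rule, a second node adds no fault tolerance while a third lets the cluster survive one failure.

```python
def tolerated_failures(nodes: int) -> int:
    """How many nodes may fail while a strict majority (quorum) stays up."""
    quorum = nodes // 2 + 1
    return nodes - quorum

for n in (1, 2, 3, 5):
    print(f"{n} node(s): quorum {n // 2 + 1}, tolerates {tolerated_failures(n)} failure(s)")
```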

Configuration

To configure the Service Bus for Windows server, we needed to use PowerShell in the private beta. The following commands create a new service bus farm with a single server.

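```powershell
# Certificate auto-generation key; replace <Password> with your own passphrase
$mycert = ConvertTo-SecureString -String "<Password>" -Force -AsPlainText
# Create a new Service Bus farm whose management database lives on the local SQL Server instance
New-SBFarm -FarmMgmtDBConnectionString "data source=(local);integrated security=true" -CertAutoGenerationKey $mycert
# Join this machine to the farm as a Service Bus host
Add-SBHost -CertAutoGenerationKey $mycert -FarmMgmtDBConnectionString "data source=(local); integrated security=true"
# Shows three services running: Service Bus Gateway, Service Bus Message Broker, FabricHostSvc
Get-SBFarmStatus
```

Create a Service Bus namespace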

You create a namespace with the following statement, providing an administrative user and the name of the namespace:

```powershell
New-SBNamespace -Name CoditBlog -ManageUsers Administrator
```

As a result, you can see the following information:

```
Name                  : CoditBlog
AddressingScheme      : Path
CreatedTime           : 10/07/2012 15:21:03
IssuerName            : CoditBlog
IssuerUri             : CoditBlog
ManageUsers           : {Administrator}
Uri                   :
ServiceIdentifier     :
PrimarySymmetricKey   : vnAJ7rHp###REPLACE FOR SECURITY####MOvH8Yk=
SecondarySymmetricKey :
```

New features

Check for matching subscriptions with the PreFiltering feature

Everyone who ever tried to build a BizTalk application has to be familiar with the following error description: “Could not find a matching subscription for the message”. This was a very common exception, indicating that a message was delivered to the BizTalk messaging agent, while no one was going to pick up that message. The fact that this error was thrown really made sure we would not lose messages in a black hole.

Looking at the publish & subscribe capabilities that came with Windows Azure Service Bus, this was one of the first things I missed. If you submit a message to a topic that does not have subscriptions that match, the message does not get deadlettered, the client does not get a warning or error code and the message is lost. While in certain scenarios this can be desired, a lot more scenarios would find this dangerous. The good thing is that now we get a choice with the Service Bus Server.

A new feature, PreFiltering, takes care of this. To enable it, you configure it on the TopicDescription. Notice one of the longer property names in the Service Bus object model:

```csharp
// nsManager is a NamespaceManager for the Service Bus namespace
nsManager.CreateTopic(new TopicDescription("PrefilterTopic") { EnableFilteringMessagesBeforePublishing = true });
```

If we now submit a message to this topic that does not have a matching subscription configured, we get the following exception:

```
Type: Microsoft.ServiceBus.Messaging.NoMatchingSubscriptionException
Message: There is no matching subscription found for the message with MessageId %1%..TrackingId:%2%,TimeStamp:%3%.
```

As you can see, we can only configure this on a topic. I would have preferred to have the choice to configure this at the sender level, so that, even when sending to the same topic, the client could decide whether or not to have routing failures checked.
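To make the PreFiltering behavior concrete, the toy model below contrasts a topic that silently drops unmatched messages with one that rejects them. `Topic` and `NoMatchingSubscriptionError` are hypothetical in-memory stand-ins, not the Service Bus API. The `prefilter` flag plays the role of EnableFilteringMessagesBeforePublishing.

```python
from typing import Callable, Dict, List

Message = Dict[str, object]

class NoMatchingSubscriptionError(Exception):
    """Stand-in for Microsoft.ServiceBus.Messaging.NoMatchingSubscriptionException."""

class Topic:
    """Toy in-memory topic; not the Service Bus API."""

    def __init__(self, prefilter: bool = False) -> None:
        self.prefilter = prefilter  # plays the role of EnableFilteringMessagesBeforePublishing
        self.rules: Dict[str, Callable[[Message], bool]] = {}
        self.delivered: Dict[str, List[Message]] = {}

    def add_subscription(self, name: str, rule: Callable[[Message], bool]) -> None:
        self.rules[name] = rule
        self.delivered[name] = []

    def send(self, message: Message) -> None:
        matches = [name for name, rule in self.rules.items() if rule(message)]
        if not matches and self.prefilter:
            raise NoMatchingSubscriptionError(f"no subscription matches {message['id']}")
        # Without prefiltering, an unmatched message is silently discarded.
        for name in matches:
            self.delivered[name].append(message)

plain = Topic()
plain.add_subscription("EU", lambda m: m["region"] == "EU")
plain.send({"id": 1, "region": "US"})  # no error, but the message is lost

strict = Topic(prefilter=True)
strict.add_subscription("EU", lambda m: m["region"] == "EU")
try:
    strict.send({"id": 2, "region": "US"})
except NoMatchingSubscriptionError as err:
    print("rejected:", err)
```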

The complete sample code for this article can be downloaded at the bottom of this page.

ForwardTo: auto-forwarding of messages to another entity

In our scenarios, we sometimes need to archive or audit incoming messages across all topics in our namespace. Until now, we did that by creating a MatchAllFilter (1=1) on every topic and a consumer for each of those subscriptions. That of course takes up more threads and more messaging transactions. The new ForwardTo feature solves this for us: it lets us define an automatic forwarding action (transactional, within the same namespace) to another entity.

It’s important to understand that a subscription with ForwardTo enabled acts only as a ‘passthrough’ subscription, and that you cannot read from it. Messages are automatically forwarded to the destination queue or topic. If you try to read from the subscription, you get the following exception:

Can not create a message receiver on an entity with auto-forwarding enabled.

To specify ForwardTo on a subscription, set the ForwardTo string property of the SubscriptionDescription to the path of the destination entity, as in the following snippet:

nsManager.CreateTopic(new TopicDescription("ForwardToTopic"));
nsManager.CreateSubscription(new SubscriptionDescription("ForwardToTopic", "ForwardAll") { ForwardTo = "AuditQueue" }, new SqlFilter("1=1"));

This feature comes in very handy for audit/tap scenarios and aggregated message handling.
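The passthrough behavior can be sketched with another small in-memory model. Again this is hypothetical: `Queue`, `Subscription`, and `ReceiverNotAllowed` are illustrative names, not Service Bus types. It assumes one match-all subscription per topic, each forwarding to a single shared audit queue, which mirrors the audit scenario described above.

```python
from collections import deque


class ReceiverNotAllowed(Exception):
    """Stands in for the 'Can not create a message receiver...' error."""


class Queue:
    def __init__(self):
        self.messages = deque()

    def send(self, message):
        self.messages.append(message)

    def receive(self):
        return self.messages.popleft() if self.messages else None


class Subscription:
    def __init__(self, predicate, forward_to=None):
        self.predicate = predicate
        self.forward_to = forward_to  # destination Queue, or None for a normal subscription
        self.messages = deque()

    def deliver(self, message):
        if not self.predicate(message):
            return
        if self.forward_to is not None:
            # Passthrough: the message is handed straight to the destination.
            self.forward_to.send(message)
        else:
            self.messages.append(message)

    def receive(self):
        if self.forward_to is not None:
            raise ReceiverNotAllowed("auto-forwarding is enabled on this entity")
        return self.messages.popleft() if self.messages else None


if __name__ == "__main__":
    audit = Queue()
    # One match-all ("1=1") subscription per topic, all forwarding to one audit queue.
    forward_all = {topic: Subscription(lambda m: True, forward_to=audit)
                   for topic in ("Orders", "Invoices", "Shipments")}
    for topic, sub in forward_all.items():
        sub.deliver(f"{topic}-message")
    print(list(audit.messages))
```

Only the audit queue needs a consumer, which is the thread and transaction saving the text describes.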

Sending messages in batch

Service Bus already offered EnableBatchedOperations to optimize ‘transmission performance’. With this release, we can now send a set of messages to a Service Bus entity in a single batch, all in one transaction. The following code writes a batch of messages to a topic whose only subscription accepts every message with a number other than 8. Because PreFiltering is enabled (see “no matching subscription” above), we can easily simulate an exception in the batch by changing the loop bounds. When the loop stops before number 8, all messages are delivered successfully; when it includes number 8 (as in the sample), we get the NoMatchingSubscriptionException and not a single message is delivered.
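The all-or-nothing behavior described above can be modeled as a two-phase send: route every message first, and deliver only if all of them are routable. This is a hypothetical simulation (`send_batch` and `NoMatchingSubscription` are illustrative names), not how Service Bus implements its transaction internally.

```python
class NoMatchingSubscription(Exception):
    pass


def send_batch(batch, subscriptions, prefilter=True):
    """All-or-nothing batch send (simulation).

    batch: list of dicts of message properties.
    subscriptions: subscription name -> predicate over a message's properties.
    Returns subscription name -> list of delivered messages.
    """
    routed = []
    # Phase 1: route every message, delivering nothing yet.
    for msg in batch:
        targets = [name for name, pred in subscriptions.items() if pred(msg)]
        if not targets and prefilter:
            # One unroutable message aborts the whole batch.
            raise NoMatchingSubscription(f"MessageNumber {msg['MessageNumber']}")
        routed.append((msg, targets))
    # Phase 2: commit, reached only if every message was routable.
    delivered = {name: [] for name in subscriptions}
    for msg, targets in routed:
        for name in targets:
            delivered[name].append(msg)
    return delivered


if __name__ == "__main__":
    subs = {"NoNumberEight": lambda m: m["MessageNumber"] != 8}
    ok = send_batch([{"MessageNumber": i} for i in range(8)], subs)
    print(len(ok["NoNumberEight"]), "messages delivered")
    try:
        send_batch([{"MessageNumber": i} for i in range(11)], subs)
    except NoMatchingSubscription as err:
        print("batch rejected, nothing delivered:", err)
```

With `prefilter=False`, message 8 would simply be dropped and the other ten delivered, which is the silent-loss case from the PreFiltering section.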

All of this is achieved by using the SendBatch method of the MessageSender class.

string topicName = "PrefilterBatchTopic";
// Create the topic with PreFiltering enabled, plus a subscription that accepts every message except number 8
if (!nsManager.TopicExists(topicName))
{
    nsManager.CreateTopic(new TopicDescription(topicName) { EnableFilteringMessagesBeforePublishing = true });
    var preFilterSubscription = nsManager.CreateSubscription(new SubscriptionDescription(topicName, "NoNumberEight"), new SqlFilter("MessageNumber<>8"));
}
var batchSender = msgFactory.CreateMessageSender(topicName);
// Create list of messages numbered 0 to 10; number 8 has no matching subscription
List<BrokeredMessage> messageBatch = new List<BrokeredMessage>();
for (int i = 0; i < 11; i++)
{
    BrokeredMessage msg = new BrokeredMessage("Test for subscription " + i.ToString());
    msg.Properties.Add("MessageNumber", i);
    messageBatch.Add(msg);
}
// Send all messages in one transaction: either all are delivered or none are
batchSender.SendBatch(messageBatch);

Receiving messages in batch

Just like we can send messages in a batch, we can also receive them in a batch. For this, we need to use the ReceiveBatch method of the MessageReceiver class. There are three overloads for this:

msgReceiver.ReceiveBatch(int messageCount);
msgReceiver.ReceiveBatch(IEnumerable<long> sequenceNumbers);
msgReceiver.ReceiveBatch(int messageCount, TimeSpan serverWaitTime);

The first overload gets a batch of at most messageCount messages. If there are 7 messages on the queue and messageCount is 10, the method returns immediately with a batch of all 7 messages; it won’t wait until there are 10 messages on the queue. The third overload is much the same, except that you can specify a TimeSpan to wait. If no messages arrive during that time span, an empty Enumerable is returned.

The second overload is used to get specific messages (for example, messages that have been deferred) from the entity.
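The count-and-wait behavior of the first and third overloads can be approximated with Python's standard `queue` module. This is a sketch under stated assumptions, not the Service Bus implementation: `receive_batch` is a hypothetical helper, it waits at most `wait_seconds` for the first message and then takes whatever is already queued, and because the text does not say how long the first overload waits on an empty entity, this sketch simply does not wait when no wait time is given.

```python
import queue


def receive_batch(q, max_count, wait_seconds=0.0):
    """Return up to max_count messages from q (simulation, not the Service Bus API).

    Assumed semantics: wait at most wait_seconds for the first message, then
    take whatever else is already queued without waiting for the batch to fill.
    """
    if max_count <= 0:
        return []
    batch = []
    try:
        # Block only when a wait time is given (like the third overload).
        first = q.get(timeout=wait_seconds) if wait_seconds > 0 else q.get_nowait()
    except queue.Empty:
        return batch  # nothing arrived in time: empty batch
    batch.append(first)
    while len(batch) < max_count:
        try:
            batch.append(q.get_nowait())
        except queue.Empty:
            break  # don't wait for the batch to fill up
    return batch


if __name__ == "__main__":
    q = queue.Queue()
    for i in range(7):
        q.put(f"message {i}")
    print(len(receive_batch(q, 10)))                    # all 7 available messages
    print(receive_batch(q, 10, wait_seconds=0.5))       # empty after the wait
```

Returning a partial batch as soon as something is available keeps latency low; callers that want full batches have to loop themselves.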

The following code reads the messages that have been written in the previous sample.

MessageReceiver msgReceiver = msgFactory.CreateMessageReceiver("PrefilterBatchTopic/Subscriptions/NoNumberEight", ReceiveMode.ReceiveAndDelete);
var messagesReceived = msgReceiver.ReceiveBatch(10, TimeSpan.FromSeconds(3));
Console.WriteLine("Batch received: " + messagesReceived.Count());

Some new properties

The TopicDescription and QueueDescription have some new properties available, aside from the ones discussed above.

- Authorization: contains all rules for authorization on the topic or queue. (not on SubscriptionDescription)

- AccessedAt, CreatedAt, UpdatedAt: DateTime values that indicate when the corresponding action last occurred on the queue. (In my private beta version, however, AccessedAt was still DateTime.MinValue.)

- IsAnonymousAccessible: indicates whether the queue allows anonymous access (not on SubscriptionDescription)

- Status: the status of the queue (Active, Disabled, Restoring)

- UserMetadata: a property you can use to attach custom metadata to a queue or topic.

Conclusion

With all the recent announcements about symmetry between the cloud and the data center, we can fairly say that Microsoft is the only vendor that is strong on both levels and gives its customers the choice to run their software in the best way possible. We truly believe in that vision, and that's why, since the beginning of Integration Cloud, we have made the effort to keep the architecture flexible and modular enough to support this symmetry.

With Service Bus for Windows Server, we now have the same features available in a server-only solution. This also gives me the chance to develop against Service Bus while disconnected.

Sam Vanhoutte

Updates

- Removed IIS from the topology diagrams. While IIS will often be installed and used in combination with Service Bus, it's not a requirement.


Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

No significant articles today.


Visual Studio LightSwitch and Entity Framework 4.1+

The Entity Framework Team posted Entity Framework and Open Source to the ADO.NET blog on 7/19/2012:

It’s been a busy year on the Entity Framework team. A little over a year ago our team released EF4.1, which included the DbContext API and Code First support. Since then we’ve also delivered Code First Migrations. Our team is also in the final stages of wrapping up the EF5 release, which introduces enum support, spatial data types, table-valued function support and some serious performance improvements. The RTM of EF5 will be available alongside the RTM of Visual Studio 2012. The EF Designer in Visual Studio 2012 includes support for multiple diagrams per model, improvements for batch-importing stored procedures, and a new UI coming in the final release. We also released the EF Power Tools, which preview some features we are considering for inclusion in the EF Designer.

One of the things we’ve done throughout the EF4 and EF5 development cycles has been to involve the community early as we make design decisions and solicit as much feedback as possible. Going forward with EF6 we are looking to take this to the next level by moving to an open development model.

Today the Entity Framework source code is being released under an open source license (Apache 2.0), and the code repository will be hosted on CodePlex to further increase development transparency. This will enable everyone in the community to engage and provide feedback on code check-ins, bug fixes, and new feature development, and to build and test the product on a daily basis using the most up-to-date version of the source code and tests. Community contributions will also be welcomed, so you can help shape and build Entity Framework into an even better product. You can find all the details on the Entity Framework CodePlex Site.

EF isn’t the only product to make this move: last December the Windows Azure SDK was released with a similar development model, and in March this year ASP.NET MVC, ASP.NET Web API, and ASP.NET Web Pages with Razor also became available on the ASP.NET CodePlex Site. These products have all found the open development approach to be a great way to build a tighter feedback loop with the community, resulting in better products. Our teams have been working together to keep the contribution process, source structure, etc. as simple as possible across all these products.

Same Support, Same Developers, More Investment

Very importantly – Microsoft will continue to ship official builds of Entity Framework as a fully supported Microsoft product both standalone as well as part of Visual Studio (the same as today). It will continue to be staffed by the same Microsoft developers that build it today, and will be supported through the same Microsoft support mechanisms. Our goal with today’s announcement is to increase the development feedback loop even more, allowing us to deliver an even better product.

The team is delighted to move to this more open development model. You’ll see plenty of commits coming soon as we work on some exciting new features.

EF6

The online code repository will be used to ship EF 6 and future releases, and much of the implementation of the async feature is already checked in.

Here are some of the features the team wants to tackle first for the Entity Framework 6 release. Feature specifications for these will be published shortly. You can check out the roadmap page on the CodePlex site for more details.

- Task-based async - Allowing EF to take advantage of .NET 4.5 async support with async queries, updates, etc.

- Stored Procedures & Functions in Code First - Allow mapping to stored procs and database functions using the Code First APIs.

- Custom Code First conventions - Allowing custom conventions to be written and registered with Code First.

Learn More

Head over to the Entity Framework CodePlex Site to learn more and get involved. You can also read about the new Microsoft Open Tech Hub and some of the process changes we are making to help enable this and other collaborations with the open source community.

More evidence that Microsoft will open source many more no-charge frameworks that it offers .NET, Java, PHP, and Ruby developers.

Beth Massi (@bethmassi) reported a New and Improved Office Integration Pack Extension for LightSwitch in a 7/18/2012 post:

I’ve been a big fan of Office development for a few years and have been keeping tabs on the free Office Integration Pack extension for LightSwitch from Grid Logic since it was released almost a year ago. In fact, I used it in the latest VS 2012 version of my Contoso Construction sample application. Back in May they moved the source code onto CodePlex, and a couple of weeks ago they released version 1.03. I finally had some time to play with it yesterday and WOW, there are a lot of cool new features.

Monday I was glued to the computer watching the Office 2013 preview announcements and keynote, and boy am I impressed by where Office and SharePoint development is headed! But realizing that many developers are building business apps for the “here and now” with Office 2010 (and earlier), I decided to take a tour of the new Office Integration Pack, which allows you to automate Excel, Word and Outlook in a variety of ways to import and export data, create documents and PDFs, as well as work with email and appointments. The extension works with Office 2010 and LightSwitch desktop applications in both Visual Studio 2010 and Visual Studio 2012.

Get it here: http://officeintegration.codeplex.com/

First off, since they moved to CodePlex, everything is much more organized, including the Documentation and the Sample Application (available in both VB and C#). It’s easy to pinpoint the current release and download the extension VSIX, all from here. The sample application is quick to run and easy to learn from, and they have improved it since prior releases as well. There is now a series of separate screens that demonstrate each of the features, from simple importing and exporting of data to more complex reporting scenarios.

There are a ton of features, so I encourage you to download the sample and play around. Here are some of my favorite features I’d like to call out:

1. Import data from any range in Excel into LightSwitch screens

Similar to our Excel Importer sample extension, the Office Integration Pack will allow you to import data from Excel directly into LightSwitch screens. It lets the user pick a workbook and looks on Sheet1 for data. If the Excel column names (the first row) are different than the LightSwitch entity property names, a window will pop up that lets the user map the fields.

The Office Integration Pack can do this plus a lot more. You can automate everything. You can map specific fields to import into entity properties and you can specify the specific workbook and range all in code.

Dim map As New List(Of ColumnMapping)
map.Add(New ColumnMapping("Name", "LastName"))
map.Add(New ColumnMapping("Name2", "FirstName"))
Excel.Import(Me.Customers, "C:\Users\Bethma\Documents\Book1.xlsx", "Sheet2", "A1:A5", map)

2. Export any collection of data to any range in an Excel Worksheet

When I say “any collection of data” I mean any IEnumerable collection of any Object. This means you can use data collections from screen queries, modeled server-side queries, or any in memory collection like those produced from LINQ statements. This makes exporting data super flexible and really easy. You also have many options to specify the workbook, sheet, range, and columns you want to export.

To export a screen collection:

Excel.Export(Me.Books)

To export a modeled query (not on the screen):

Excel.Export(Me.DataWorkspace.ApplicationData.LightSwitchBooks)

To export a collection from an in-memory LINQ query:

Dim results = From b In Me.Books Where b.Title.ToLower.Contains("lightswitch") Select Author = b.Author.DisplayName, b.Title, b.Price Order By Author
Excel.Export(results)

This is super slick! Of course, you can specify exactly which fields you want to export, but if you don’t, it will reflect over the objects in the collection and output all the properties it finds. In the case of the LINQ query above, this produces the Excel columns “Author”, “Title”, and “Price”. Notice how you can traverse up the navigation path to get at the parent properties as well.
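The "reflect over the objects and output all the properties" idea can be sketched in a few lines of Python. This is only an analogue of the concept, not the Office Integration Pack's code: `to_rows` is a hypothetical helper, `SimpleNamespace` plays the role of the anonymous type produced by the LINQ projection, and the book data is made up for the demo.

```python
from types import SimpleNamespace


def to_rows(items, columns=None):
    """Flatten a collection of objects into spreadsheet-style rows (header first).

    With no explicit column list, reflect over the first object's attributes,
    similar in spirit to exporting "all the properties it finds".
    """
    items = list(items)
    if not items:
        return []
    if columns is None:
        columns = list(vars(items[0]))  # attribute names, in definition order
    return [list(columns)] + [[getattr(item, col) for col in columns] for item in items]


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    books = [
        SimpleNamespace(author="Adams", title="LightSwitch Basics", price=29.99),
        SimpleNamespace(author="Baker", title="Office Automation", price=34.50),
        SimpleNamespace(author="Clark", title="Advanced LightSwitch", price=39.99),
    ]
    # Rough analogue of the LINQ query: filter, project to new names, order by Author.
    results = sorted(
        (SimpleNamespace(Author=b.author, Title=b.title, Price=b.price)
         for b in books if "lightswitch" in b.title.lower()),
        key=lambda r: r.Author,
    )
    for row in to_rows(results):
        print(row)
```

Passing an explicit `columns` list corresponds to choosing the fields yourself; omitting it falls back to reflection.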

3. Format data any way you want on Export

Not only can you export raw data from collections, you can also format it as it’s being exported by specifying a format delegate. For example, to format the title as upper case and the price as money, you create a couple of lambda expressions (a fancy name for a function without a name) and specify them in the ColumnMapping class.

Dim formatPrice = Function(x As Decimal) As String
                      Return Format(x, "c2")
                  End Function
Dim formatTitle = Function(x As String) As String
                      Return x.ToUpper()
                  End Function
Dim map As New List(Of ColumnMapping)
map.Add(New ColumnMapping("Author", "Author"))
map.Add(New ColumnMapping("Title", "Title", FormatDelegate:=formatTitle))
map.Add(New ColumnMapping("Price", "Price", FormatDelegate:=formatPrice))
Excel.Export(Me.Books, "C:\Users\Bethma\Documents\Book1.xlsx", "Sheet2", "C5", map)

4. Export hierarchical data, including images, to Word to provide template-based reports

With these enhancements we can now navigate the relationship hierarchy through our data collections much more easily in order to create complex template-based reports with Word. In addition to formatting support, you can also export static values. Data ends up in content controls and bookmarked tables that you define in the document, in the specific locations where you want it to appear. They also added the ability to export image data into image content controls. This enables you to create complex reports using a “data merge” directly from LightSwitch. Here’s a snippet from the sample application which demonstrates creating a book report from hierarchical data:

'Book fields = Content Controls (See BookReport.docx)
Dim mapContent As New List(Of ColumnMapping)
mapContent.Add(New ColumnMapping("Author", "Author"))
mapContent.Add(New ColumnMapping("Title", "Title", FormatDelegate:=formatTitle))
mapContent.Add(New ColumnMapping("Description", "Description"))
mapContent.Add(New ColumnMapping("Price", "Price", FormatDelegate:=formatPrice))
mapContent.Add(New ColumnMapping("Category", "Category", FormatDelegate:=formatCategory))
mapContent.Add(New ColumnMapping("PublicationDate", "PublicationDate", FormatDelegate:=formatDate))
mapContent.Add(New ColumnMapping("FrontCoverThumbnail", "FrontCoverThumbnail"))
'Author (parent) fields
mapContent.Add(New ColumnMapping("Email", "Email", StaticValue:=Me.Books.SelectedItem.Author.Email))
Dim goodReviews = From b In Me.Books.SelectedItem.BookReviews Where b.Rating > 3
'Book reviews (child collection) = Bookmarked Tables
Dim mapTable As New List(Of ColumnMapping)
mapTable.Add(New ColumnMapping("Rating", "Rating"))
mapTable.Add(New ColumnMapping("Comment", "Comment", FormatDelegate:=formatTitle))
Dim doc As Object = Word.GenerateDocument("BookReport.docx", Me.Books.SelectedItem, mapContent)
Word.Export(doc, "ReviewTable", 2, False, Me.Books.SelectedItem.BookReviews, mapTable)
Word.Export(doc, "GoodReviewTable", 1, True, goodReviews, mapTable)
'Save as PDF and open it
Word.SaveAsPDF(doc, "BookReport.pdf", True)

And here’s the resulting PDF:

As you can see the Office Integration Pack has got a lot of great features in this release. It’s one of the most downloaded extensions on the VS gallery so others definitely agree that it’s a useful extension – and it’s FREE.

THANK YOU Grid Logic for supporting the LightSwitch community! If any of you have questions or feedback, start a discussion on the CodePlex project site. And if you want to help build the next version, join the development team!


Windows Azure Infrastructure and DevOps

No significant articles today.


Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds


Cloud Security and Governance


Cloud Computing Events

No significant articles today.


Other Cloud Computing Platforms and Services

My (@rogerjenn) Google Compute Engine: A Viable Public Cloud Infrastructure as a Service Competitor article for Red Gate Software’s ACloudyPlace blog begins:

The public cloud-based Infrastructure as a Service (IaaS) marketplace heated up in June 2012. Microsoft announced new Windows Azure Virtual Machines, Virtual Networks, and Web Sites as a complete IaaS package at a Meet Windows Azure street festival in San Francisco’s Mission District on June 7. Derrick Harris outed budget-priced Go Daddy Cloud Servers in a June 16 post to GigaOM’s Structure blog. Finally, Google made the expected announcement of its new Compute Engine (GCE) service at its I/O 2012 Conference on June 28. Measured by press coverage, the GCE announcement made the biggest waves. Breathless tech journalists produced articles, such as the Cloud Times’ Google Compute Engine: 5 Reasons Why This Will Change the Industry, asserting GCE would be an IaaS game changer.

Au contraire. GCE is an immature, bare-bones contender in a rapidly maturing, crowded commodity market. As of mid-2012, Google Cloud Services consist of a limited GCE preview, Google BigQuery (GBQ), Google Cloud Storage (GCS, launched with BigQuery in May 2010) and Google App Engine (GAE, a PaaS offering that became available as a preview in April 2008). Market leader Amazon Web Services (AWS), as well as Windows Azure and Go Daddy, offer Linux and Windows Server images; GCE offers only Ubuntu Linux and CentOS images.

GCE Is a Limited Access, Pay-As-You Go Beta Service

Google is on-boarding a limited number of hand-picked early adopters who must specify the project they intend to run on GCE in a signup form text box. GCE is currently targeting compute-intensive, big data projects, such as the Institute for Systems Biology’s Genome Explorer featured in I/O 2012’s Day 2 Keynote (see Figure 1).

Figure 1 Genome Explorer Demo

Google Senior Vice President of Engineering (Infrastructure), Urs Hölzle, demonstrated the Institute for Systems Biology’s 10,000-core graphical Genome Explorer during Google I/O 2012’s day two keynote at 00:41:11 in the video archive. A transcript of Hölzle’s GCE announcement is available here.

Google is famous for lengthy preview periods. According to Wikipedia, Gmail had a five-year incubation as a preview, emerging as a commercial product in July 2009, and App Engine exited an almost three-and-one-half-year preview period in September 2011. Microsoft’s new IaaS and related offerings also are paid Community Technology Previews (CTPs), but Windows Azure CTPs most commonly last about six months. AWS is the IaaS price setter because of its dominant market position, and both Windows Azure and GCE prices approximate AWS charges for similarly scaled features (see Table 1.) Go Daddy’s Cloud Servers are priced to compete with other Web site hosters, such as Rackspace, and aren’t considered by most IT managers to be an enterprise IaaS option. …

Read more and see Tables 1 and 2. I like Google’s new Compute Engine logo.

Full disclosure: I’m a paid contributor to ACloudyPlace.com.

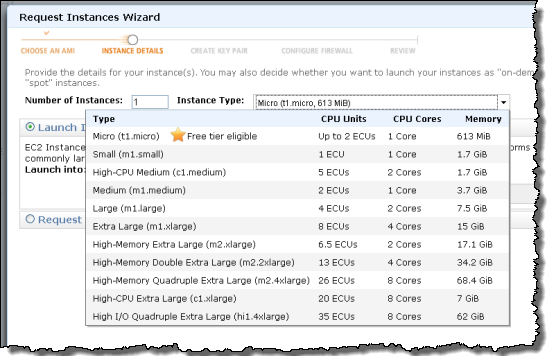

Jeff Barr (@jeffbarr) announced a New High I/O EC2 Instance Type - hi1.4xlarge - 2 TB of SSD-Backed Storage on 7/18/2012:

The Plot So Far

As the applications that you build with AWS grow in scale, scope, and complexity, you haven't been shy about asking us for more locations, more features, more storage, or more speed.

Modern web and mobile applications are often highly I/O dependent. They need to store and retrieve lots of data in order to deliver a rich, personalized experience, and they need to do it as fast as possible in order to respond to clicks and gestures in real time.

In order to meet this need, we are introducing a new family of EC2 instances that are designed to run low-latency, I/O-intensive applications, and are an exceptionally good host for NoSQL databases such as Cassandra and MongoDB.

High I/O EC2 Instances

The first member of this new family is the High I/O Quadruple Extra Large (hi1.4xlarge in the EC2 API) instance. Here are the specs:

- 8 virtual cores, clocking in at a total of 35 ECU (EC2 Compute Units).

- HVM and PV virtualization.

- 60.5 GB of RAM.

- 10 Gigabit Ethernet connectivity with support for cluster placement groups.

- 2 TB of local SSD-backed storage, visible to you as a pair of 1 TB volumes.

The SSD storage is local to the instance. Using PV virtualization, you can expect 120,000 random read IOPS (Input/Output Operations Per Second) and between 10,000 and 85,000 random write IOPS, both with 4K blocks. For HVM and Windows AMIs, you can expect 90,000 random read IOPS and 9,000 to 75,000 random write IOPS. By way of comparison, a high-performance disk drive spinning at 15,000 RPM will deliver 175 to 210 IOPS.
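To put the comparison in proportion, the snippet below works out the ratio of the quoted SSD IOPS to the quoted 175 to 210 IOPS of a 15,000 RPM disk. The figures come from the paragraph above; the ratio framing (worst case = SSD low end over disk high end) is this sketch's own choice. For PV random reads it works out to roughly 570 to 690 times the spinning disk.

```python
# IOPS figures quoted in the post (4K blocks), as (low, high) ranges.
SSD_IOPS = {
    "PV random read": (120_000, 120_000),
    "PV random write": (10_000, 85_000),
    "HVM/Windows random read": (90_000, 90_000),
    "HVM/Windows random write": (9_000, 75_000),
}
DISK_15K_RPM_IOPS = (175, 210)  # high-performance spinning disk


def ratio_range(ssd, disk=DISK_15K_RPM_IOPS):
    """Worst-case and best-case SSD-to-disk IOPS ratio."""
    ssd_low, ssd_high = ssd
    disk_low, disk_high = disk
    return ssd_low / disk_high, ssd_high / disk_low


if __name__ == "__main__":
    for workload, iops in SSD_IOPS.items():
        low, high = ratio_range(iops)
        print(f"{workload}: {low:,.0f}x to {high:,.0f}x a 15K RPM disk")
```

Even the slowest write case (about 9,000 IOPS) is still dozens of times a single spinning disk.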

Why the range? Write IOPS performance to an SSD is dependent on something called the LBA (Logical Block Addressing) span. As the number of writes to diverse locations grows, more time must be spent updating the associated metadata. This is (very roughly speaking) the SSD equivalent of seek time for a rotating device, and represents per-operation overhead.

This is instance storage, and it will be lost if you stop and then later start the instance. Just like the instance storage on the other EC2 instance types, this storage is failure resilient, and will survive a reboot, but you should back it up to Amazon S3 on a regular basis.

You can launch these instances alone, or you can create a Placement Group to ensure that two or more of them are connected with non-blocking bandwidth. However, you cannot currently mix instance types (e.g. High I/O and Cluster Compute) within a single Placement Group.

You can launch High I/O Quadruple Extra Large instances in US East (Northern Virginia) and EU West (Ireland) today, at On-Demand prices of $3.10 and $3.41 per hour, respectively. You can also purchase Reserved Instances, but you cannot acquire them via the Spot Market. We plan to make this new instance type available in several other AWS Regions before the end of the year.
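For budgeting, a rough monthly figure follows directly from the two On-Demand prices. This sketch assumes the prices are USD per instance-hour, a 30-day month (720 hours) of continuous running, and ignores data transfer, S3 backups, and Reserved Instance pricing; under those assumptions it comes to about $2,232 in US East and $2,455.20 in EU West.

```python
# On-Demand prices quoted in the post, assumed here to be USD per instance-hour.
HOURLY_RATE_USD = {
    "US East (Northern Virginia)": 3.10,
    "EU West (Ireland)": 3.41,
}
HOURS_PER_MONTH = 24 * 30  # assumption: a 30-day month of continuous running


def on_demand_cost(rate_per_hour, hours):
    """Instance-hours only: ignores data transfer, storage backups, and Reserved pricing."""
    return round(rate_per_hour * hours, 2)


if __name__ == "__main__":
    for region, rate in HOURLY_RATE_USD.items():
        print(f"{region}: ${on_demand_cost(rate, HOURS_PER_MONTH):,.2f} for a 30-day month")
```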

Watch and Learn

I interviewed Deepak Singh, Product Manager for EC2, to learn more about this new instance type. Here's what he had to say:

And More

Here are some other resources that you might enjoy:

- Adrian Cockcroft wrote about Benchmarking High Performance I/O with SSD for Cassandra on AWS for the Netflix Tech Blog.

- Werner Vogels went into detail on the differences between magnetic disk and SSD, and talks about ways to use this new instance type for databases in his post, Expanding The Cloud – High Performance I/O Instances for Amazon EC2.

- The Hacker News discussion has a lot of interesting commentary.

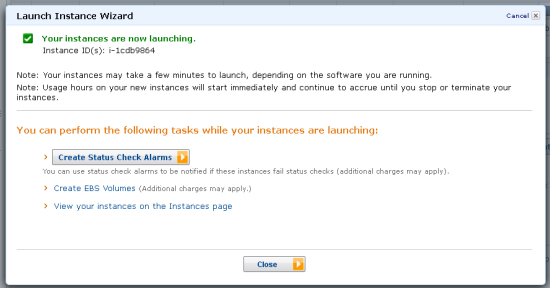

Jeff Barr (@jeffbarr) reported the availability of EC2 Instance Status Metrics in a 7/18/2012 post:

We introduced a set of EC2 instance status checks at the beginning of the year. These status checks are the results of automated tests performed by EC2 on every running instance that detect hardware and software issues. As described in my original post, there are two types of tests: system status checks and instance status checks. The test results are available in the AWS Management Console and can also be accessed through the command line tools and the EC2 APIs.

New Metrics

In order to make it even easier for you to monitor and respond to the status checks, we are now making them available as Amazon CloudWatch metrics at no charge. There are three metrics for each instance, each updated at 5 minute intervals:

- StatusCheckFailed_Instance is "0" if the instance status check is passing and "1" otherwise.

- StatusCheckFailed_System is "0" if the system status check is passing and "1" otherwise.

- StatusCheckFailed is "0" if neither of the above values is "1", or "1" otherwise.
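Put another way, the combined StatusCheckFailed metric is the logical OR of the two individual checks. The tiny helper below is a hypothetical illustration of that rule (it is not part of any AWS SDK) and prints the full truth table.

```python
def status_check_failed(instance_failed, system_failed):
    """Combined metric: 1 if either underlying check is failing, else 0 (logical OR)."""
    for value in (instance_failed, system_failed):
        if value not in (0, 1):
            raise ValueError("status check metrics are either 0 or 1")
    return max(instance_failed, system_failed)


if __name__ == "__main__":
    for inst in (0, 1):
        for system in (0, 1):
            combined = status_check_failed(inst, system)
            print(f"Instance={inst} System={system} -> StatusCheckFailed={combined}")
```

An alarm on the combined metric therefore fires for either kind of failure, while alarms on the two individual metrics let you tell them apart.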

For more information about the tests performed by each check, read about Monitoring Instances with Status Checks in the EC2 documentation.

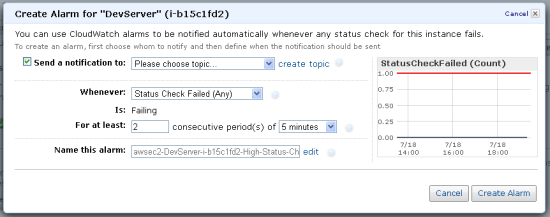

Setting Alarms

You can create alarms on these new metrics when you launch a new EC2 instance from the AWS Management Console. You can also create alarms for them on any of your existing instances. To create an alarm on a new EC2 instance, simply click the "Create Status Check Alarm" button on the final page of the launch wizard. This will let you configure the alarm and the notification:

To create an alarm on one of your existing instances, select the instance and then click on the Status Check tab. Then click on the "Create Status Check Alarm" button:

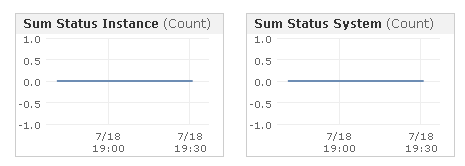

Viewing Metrics

You can view the metrics in the AWS Management Console, as usual:

And there you have it! The metrics are available now, and you can use them to keep closer tabs on the status of your EC2 instances.

Eric Knorr (@EricKnorr) described his Shining a light on Oracle Cloud article of 7/16/2012 for InfoWorld as “An interview with Senior Vice President Abhay Parasnis offers insight on the inner workings, ultimate intent of Oracle Cloud:”

It's been over a month since Larry Ellison strutted across the stage and unveiled Oracle Cloud and its first three enterprise applications: CRM, human capital management, and enterprise social networking. Plus, Ellison took the wraps off cloud versions of WebLogic and Oracle Database itself.

All told, Oracle's fearless leader said that more than 100 applications would be available, including ERP eventually -- typically the last application category enterprise customers consider trusting to the cloud.

Quite a few responses to the announcement have been cynical: another golden opportunity for Oracle lock-in. Or: The same old stuff, now available by subscription through the cloud at rates Oracle hasn't even seen fit to announce yet. Some even questioned whether it was a cloud offering at all, since Oracle touted the fact that each customer would get its own instance of the software.

But it's too early to pass judgment, precisely because so many questions about Oracle Cloud remain. At this early stage, you won't find many customers willing to talk, since Oracle is onboarding them one by one -- basically, you register and get in the queue.

To gain more insight into Oracle Cloud, InfoWorld Executive Editor Doug Dineley and I spent an hour interviewing Abhay Parasnis, senior vice president of Oracle Cloud. First of all, we wondered, why the highly controlled rollout?

Oracle won't disclose the current number of customers, but Parasnis said this was not because the applications themselves were unfinished. He gave a different explanation:

A lot of the customer base that is interested, as you can imagine, is the enterprise-class customer base, which is used to a certain level of stability and platform robustness when dealing with Oracle. Not to diminish some of the other players in the market, but they're not doing this as "let's just try out a toy application somewhere." I think the expectation, regardless of how slow or fast they start, is an enterprise-grade platform.

This cautious approach indicates perhaps the biggest difference between Oracle Cloud and other SaaS and PaaS (platform as a service) offerings. Salesforce.com, for example, has been proud of "sneaking" its SaaS apps into the enterprise; lines of business decide they can't get what they want from IT and turn to a SaaS provider instead. By contrast, Oracle seems intent on walking in the front door and selling to upper enterprise management of existing Oracle customers.

This focus on enterprise needs extends to the way Oracle will push out upgrades. As Parasnis explained:

When there comes a time when the vendor is ready to either upgrade or release new functionality, there are two broad schools of thought in the market today. For you to get the benefits of SaaS, where somebody else is going to manage your application, you must sign up to give up control in terms of when and how and what cadence you will actually upgrade your users to the new version, new workflows, or new capabilities. For some companies, or for some applications, that may be acceptable. For most enterprise users, ideally what they want are the benefits that cloud upholds in terms of somebody else managing the footprint and the lifecycle of applications, hopefully a lower cost structure. But more importantly, they don't want to lose the control.

To accommodate that desire for control, said Parasnis, Oracle will provide upgrade windows and work with customers to determine a convenient time to make the transition to new functionality or new versions. Now, of course, this is possible only because Oracle Cloud applications are not multitenanted. Instead of one huge instance of an application, to which all customers subscribe, Oracle deploys one instance per customer, which from a security standpoint supports greater isolation among customer data stores as well. …

Google’s also taking their time onboarding customers for Google Compute Engine.

Read more: 2

