Windows Azure and Cloud Computing Posts for 7/2/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 7/4/2012 3:00 PM PDT with articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Service Bus, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Richard Conway (@azurecoder) posted Copying Azure Blobs from one subscription to another with API 1.7.1 on 7/4/2012:

I read Gaurav Mantri’s excellent blog post on copying blobs from S3 to Azure Storage and realised that this was the feature we’d been looking for ourselves for a while. The new 1.7.1 API enables copying from one subscription to another, within a storage account, between data centres or, as Gaurav has shown, between Azure and another storage repository accessible via HTTP. Before this was enabled, the alternative was to write a tool that read the blob into a local store and then uploaded it to another account, incurring transfer charges and adding an unwanted third wheel. You need to get the library from Azure on GitHub, as it has not yet been released as a NuGet package. So go here, clone the repo and compile the source.

More on code later but for now let’s consider how this is being done.

Imagine we have two storage accounts, elastaaccount1 and elastaaccount2, in different subscriptions, and we need to copy a package from one (elastaaccount1) to the other (elastaaccount2) using the new method described above.

Initially the API uses an HTTP PUT method with the new HTTP header x-ms-copy-source, which allows elastaaccount2 to specify an endpoint to copy the blob from. In this instance we’re assuming that there is no security on the source and that its ACL is opened up to the public. Where that isn’t the case, a Shared Access Signature should be used; it can be generated fairly easily in code from the source account and appended to the URL to allow the copy to proceed on a non-publicly accessible blob.

    PUT http://elastaaccount2.blob.core.windows.net/vanilla/mypackage.zip?timeout=90 HTTP/1.1
    x-ms-version: 2012-02-12
    User-Agent: WA-Storage/1.7.1
    x-ms-copy-source: http://elastaaccount1.blob.core.windows.net/vanilla/mypackage.zip
    x-ms-date: Wed, 04 Jul 2012 16:39:19 GMT
    Authorization: SharedKey elastaaccount2:<my shared key>
    Host: elastaaccount2.blob.core.windows.net

This operation returns a 202 Accepted. Because the copy is queued, the API then polls asynchronously, using the HEAD method to determine the status. The product team note on their blog that there is currently no SLA, so a copy can sit in the queue without an acknowledgement from Microsoft, but in all our tests it has been very, very quick within the same data centre.
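As a rough illustration of the request above, the following Python sketch assembles the method, URL and headers of a copy call and optionally appends a Shared Access Signature to the source URL. The function name `build_copy_request` and its parameters are assumptions made for this example; the sketch deliberately leaves out the SharedKey Authorization header and sends nothing over the wire.

```python
from email.utils import formatdate
from urllib.parse import quote


def build_copy_request(dest_account, container, blob_name, source_url, sas_token=None):
    """Return (method, url, headers) for a copy request sent to dest_account.

    Hypothetical helper: it only assembles the request described in the text.
    """
    if sas_token:
        # Append the signature so that a non-public source blob can be read.
        separator = "&" if "?" in source_url else "?"
        source_url = source_url + separator + sas_token.lstrip("?")
    url = "http://{0}.blob.core.windows.net/{1}/{2}".format(
        dest_account, container, quote(blob_name))
    headers = {
        "x-ms-version": "2012-02-12",
        "x-ms-copy-source": source_url,
        # RFC 1123 date in GMT, the same shape as the x-ms-date value in the trace.
        "x-ms-date": formatdate(usegmt=True),
        # The Authorization (SharedKey) header is intentionally omitted here.
    }
    return "PUT", url, headers
```

Keeping the request assembly separate from the signing and sending steps makes it easy to inspect exactly what the destination account is being asked to pull.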

    HEAD http://elastaaccount1.blob.core.windows.net/vanilla/mypackage.zip?timeout=90 HTTP/1.1
    x-ms-version: 2012-02-12
    User-Agent: WA-Storage/1.7.1
    x-ms-date: Wed, 04 Jul 2012 16:32:16 GMT
    Authorization: SharedKey elastaaccount1:<mysharedkey>
    Host: elastaaccount1.blob.core.windows.net

Bless the Fabric – this is an incredibly useful feature. Here is a class that might help save you some time now.

    using System;
    using System.Threading;
    // The storage types used below (CloudBlob, CloudBlobClient, CloudStorageAccount, CopyStatus)
    // come from the 1.7.1 storage client library compiled from GitHub.

    /// <summary>
    /// Used to define the properties of a blob which should be copied to or from
    /// </summary>
    public class BlobEndpoint
    {
        /// <summary>
        /// The storage account name
        /// </summary>
        private readonly string _storageAccountName = null;
        /// <summary>
        /// The container name
        /// </summary>
        private readonly string _containerName = null;
        /// <summary>
        /// The storage key which is used to access the storage account
        /// </summary>
        private readonly string _storageKey = null;

        /// <summary>
        /// Used to construct a blob endpoint
        /// </summary>
        public BlobEndpoint(string storageAccountName, string containerName = null, string storageKey = null)
        {
            _storageAccountName = storageAccountName;
            _containerName = containerName;
            _storageKey = storageKey;
        }

        /// <summary>
        /// Used to copy a blob to a particular blob destination endpoint - this is a blocking call
        /// </summary>
        public int CopyBlobTo(string blobName, BlobEndpoint destinationEndpoint)
        {
            var now = DateTime.Now;
            // get all of the details for the source blob
            var sourceBlob = GetCloudBlob(blobName, this);
            // get all of the details for the destination blob
            var destinationBlob = GetCloudBlob(blobName, destinationEndpoint);
            // start the copy from the destination side, which pulls the blob from the source
            destinationBlob.StartCopyFromBlob(sourceBlob);
            // make this call block so that we can check the time it takes to pull back the blob
            // this is a regional copy so should be very quick even though it's queued, but still make this defensive
            const int seconds = 120;
            int count = 0;
            while (count < (seconds * 2))
            {
                // refresh the blob's attributes so that CopyState reflects the current server-side status
                destinationBlob.FetchAttributes();
                // if we succeed we want to drop out of this straight away
                if (destinationBlob.CopyState.Status == CopyStatus.Success)
                    break;
                Thread.Sleep(500);
                count++;
            }
            // calculate the time taken and return
            return (int)DateTime.Now.Subtract(now).TotalSeconds;
        }

        /// <summary>
        /// Used to determine whether the blob exists or not
        /// </summary>
        public bool BlobExists(string blobName)
        {
            // get the cloud blob
            var cloudBlob = GetCloudBlob(blobName, this);
            try
            {
                // this is the only way to test
                cloudBlob.FetchAttributes();
            }
            catch (Exception)
            {
                // we should check for a variant of this exception but chances are it will be okay otherwise - that's defensive programming for you!
                return false;
            }
            return true;
        }

        /// <summary>
        /// The storage account name
        /// </summary>
        public string StorageAccountName
        {
            get { return _storageAccountName; }
        }

        /// <summary>
        /// The name of the container the blob is in
        /// </summary>
        public string ContainerName
        {
            get { return _containerName; }
        }

        /// <summary>
        /// The key used to access the storage account
        /// </summary>
        public string StorageKey
        {
            get { return _storageKey; }
        }

        /// <summary>
        /// Used to pull back the cloud blob that should be copied from or to
        /// </summary>
        private static CloudBlob GetCloudBlob(string blobName, BlobEndpoint endpoint)
        {
            string blobClientConnectString = String.Format("http://{0}.blob.core.windows.net", endpoint.StorageAccountName);
            CloudBlobClient blobClient = null;
            if (endpoint.StorageKey == null)
                blobClient = new CloudBlobClient(blobClientConnectString);
            else
            {
                var account = new CloudStorageAccount(new StorageCredentialsAccountAndKey(endpoint.StorageAccountName, endpoint.StorageKey), false);
                blobClient = account.CreateCloudBlobClient();
            }
            return blobClient.GetBlockBlobReference(String.Format("{0}/{1}", endpoint.ContainerName, blobName));
        }
    }

The class itself should be fairly self-explanatory. It should only be used with public ACLs, although the modification to support private blobs is trivial.
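The blocking wait inside CopyBlobTo is a plain poll-until-done loop. A minimal Python sketch of the same idea follows; it assumes the caller supplies a `get_status` callable that returns the copy status as one of the strings `pending`, `success`, `aborted` or `failed`. The function name, the parameters and the choice to return early on any non-pending status are assumptions for illustration, not part of the class above.

```python
import time


def wait_for_copy(get_status, timeout_seconds=120.0, poll_interval=0.5, sleep=time.sleep):
    """Poll get_status() until the copy leaves the 'pending' state.

    Returns the final status string, or raises TimeoutError if the copy is
    still pending once timeout_seconds have elapsed.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        if status != "pending":
            # success, aborted and failed are all terminal, so stop polling.
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError("copy still pending after %s seconds" % timeout_seconds)
        sleep(poll_interval)
```

Returning on failed or aborted copies, rather than only on success, avoids waiting out the full two minutes when the copy can no longer complete.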

A simple test would be as follows:

    var copyToEndpoint = new BlobEndpoint("elastaaccount2", ContainerName, "<secret primary key>");
    var endpoint = new BlobEndpoint("elastaaccount1", ContainerName);
    bool existsInSource = endpoint.BlobExists(BlobName);
    bool existsFalse = copyToEndpoint.BlobExists(BlobName);
    endpoint.CopyBlobTo(BlobName, copyToEndpoint);
    bool existsTrue = copyToEndpoint.BlobExists(BlobName);

Andrew Brust (@andrewbrust) asserted “June was a big month for the cloud and for Hadoop. It was also a big month for the intersection of the two” in a deck for his Hadoop in the Cloud with Amazon, Google[, Microsoft] and MapR article of 7/2/2012 for ZDNet’s Big on Data blog:

June of 2012 was a big month for the cloud. On June 7th, Microsoft re-launched its Windows Azure cloud platform, which now features a full-fledged Infrastructure as a Service (IaaS) offering that accommodates both Windows and Linux. Then on June 28, Google, at its I/O conference, launched Google Compute Engine, its own IaaS cloud platform.

June was also a big month for Hadoop. The Hadoop Summit took place in San Jose, CA on June 13th and 14th. Yahoo offshoot Hortonworks used the event as the launchpad for its own Hadoop distribution, dubbed the Hortonworks Data Platform (HDP). Hortonworks will now work to achieve the same prominence for HDP as Cloudera has achieved for its Cloudera Distribution including Apache Hadoop (CDH).

Don't forget MapR

The Hadoop world isn't all about CDH and HDP though. Another important distribution out there is the one from MapR, which seeks to make the Hadoop Distributed File System more friendly and addressable as a Network File Storage volume. This HDFS-to-NFS translation gives HDFS files the read/write random access taken for granted with conventional file systems.

MapR trails Cloudera's distribution by quite a lot, however. And Hortonworks' distro will probably overtake it quickly, as well. But don't write MapR off just yet, because in the last couple of weeks it has emerged as an important piece of the Hadoop cloud puzzle.

MapR-ing the cloud

On June 16th, Amazon announced that in addition to its own Hadoop distribution, it now provides the option to use MapR's distro on the temporary clusters provisioned through its Elastic MapReduce service, hosted within its Elastic Compute Cloud (EC2). Customers can use the "M3" open source community edition of MapR or the Enterprise edition, known as "M5." M5 carries a non-trivial surcharge but offers Enterprise features like mirrored clusters and the ability to create snapshot-style backups of your cluster.

MapR didn't stop there. Instead, it continued its June cloud crusade, by announcing on June 28th at Google I/O a private beta of its Hadoop distribution running on the Google Compute Engine cloud. Suddenly the Hadoop distro that many considered an also-ran has become the poster child for Big Data in the cloud.

Cloud Hadoop by Microsoft, or even by yourself

Is that enough Hadoop in the cloud for you? If not, don't forget the Microsoft-Hortonworks Hadoop distro for Windows, now available through an invitation-only beta on its Azure cloud. And if you're still not satisfied, check out the Apache Whirr project, which lets you deploy virtually any Hadoop distribution to clusters built from cloud servers on Amazon Web Services or Rackspace Cloud Servers.

Hadoop in the cloud isn't always easy, especially since most cloud platforms have their own BLOB storage facilities that only a cloud vendor-tuned distribution can typically handle. But Hadoop in the cloud makes a great deal of sense: the elastic resource allocation that cloud computing is premised on works well for cluster-based data processing infrastructure used on varying analyses and data sets of indeterminate size.

Hadoop in the cloud is likely to get increasingly popular. In the future it will be interesting to see if the Hadoop distribution vendors, or the cloud platform vendors, will be the ones to lead the charge.

Lynn Langit (@lynnlangit) wrote a Hadoop on Windows Azure post for MSDN Magazine’s July 2012 issue. From the introduction:

There’s been a lot of buzz about Hadoop lately, and interest in using it to process extremely large data sets seems to grow day by day. With that in mind, I’m going to show you how to set up a Hadoop cluster on Windows Azure. This article assumes basic familiarity with Hadoop technologies. If you’re new to Hadoop, see “What Is Hadoop?” As of this writing, Hadoop on Windows Azure is in private beta. To get an invitation, visit hadooponazure.com. The beta is compatible with Apache Hadoop (snapshot 0.20.203+).

What Is Hadoop?

Hadoop is an open source library designed to batch-process massive data sets in parallel. It’s based on the Hadoop distributed file system (HDFS), and consists of utilities and libraries for working with data stored in clusters. These batch processes run using a number of different technologies, such as Map/Reduce jobs, and may be written in Java or other higher-level languages, such as Pig. There are also languages that can be used to query data stored in a Hadoop cluster. The most common language for query is HQL via Hive. For more information, visit hadoop.apache.org. …
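To make the Map/Reduce idea mentioned above a little more concrete, here is a tiny in-memory word count in Python. It only imitates the map, group and reduce steps on a single machine; it does not use Hadoop, and the function names are made up for this illustration.

```python
from collections import defaultdict


def map_words(line):
    """Map step: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def reduce_counts(word, counts):
    """Reduce step: collapse all the counts emitted for one word into a total."""
    return word, sum(counts)


def run_job(lines):
    """Run map, group the pairs by key, then reduce each group."""
    grouped = defaultdict(list)
    for line in lines:
        for word, count in map_words(line):
            grouped[word].append(count)
    return dict(reduce_counts(word, counts) for word, counts in grouped.items())
```

On a real cluster the map and reduce steps run in parallel on different nodes and the grouping happens in the framework's shuffle phase.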

Lynn goes on to describe “Setting Up Your Cluster” and related topics.

Carl Nolan (@carl_nolan) continued his series with Framework for .Net Hadoop MapReduce Job Submission Json Serialization on 7/1/2012:

A while back one of the changes made to the “Generic based Framework for .Net Hadoop MapReduce Job Submission” code was to support Binary Serialization from Mapper, in and out of Combiners, and out from the Reducer. Whereas this change was needed to support the Generic interfaces there were two downsides to this approach. Firstly the size of the output was dramatically increased, and to a lesser extent the data could no longer be visually inspected and was not interoperable with non .Net code. The other subtle problem was that to support textual output from the Reducer, ToString() was being used; not an ideal solution.

To fix these issues I have changed the default Serializer to be based on the DataContractJsonSerializer. For the most part this will result in no changes to the running code. However this approach does allow one to better control the serialization of the intermediate and final output.

As an example consider the following F# Type, that is used in one of the samples:

type MobilePhoneRange = { MinTime:TimeSpan; AverageTime:TimeSpan; MaxTime:TimeSpan }

This now results in the following output from the Streaming job; where the key is a Device Platform string and the value is the query time range in JSON format:

Android {"AverageTime@":"PT12H54M39S","MaxTime@":"PT23H59M54S","MinTime@":"PT6S"}

RIM OS {"AverageTime@":"PT13H52M56S","MaxTime@":"PT23H59M58S","MinTime@":"PT1M7S"}

Unknown {"AverageTime@":"PT10H29M27S","MaxTime@":"PT23H52M36S","MinTime@":"PT36S"}

Windows Phone {"AverageTime@":"PT12H38M31S","MaxTime@":"PT23H55M17S","MinTime@":"PT32S"}

iPhone OS {"AverageTime@":"PT11H51M53S","MaxTime@":"PT23H59M50S","MinTime@":"PT1S"}

Not only is this output readable but it is a lot smaller in size than the corresponding binary output.
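The time values in this output are ISO 8601 durations (for example PT1M7S is one minute and seven seconds). As a hedged illustration of the line format, the Python sketch below renders a key and a set of durations in the same shape. It is not the framework's serializer: the helper names are invented, a tab is assumed as the key/value separator, and only durations shorter than one day are handled.

```python
import json
from datetime import timedelta


def iso8601_duration(delta):
    """Format a non-negative timedelta shorter than one day, e.g. PT1M7S."""
    total = int(delta.total_seconds())
    hours, remainder = divmod(total, 3600)
    minutes, seconds = divmod(remainder, 60)
    parts = []
    if hours:
        parts.append("%dH" % hours)
    if minutes:
        parts.append("%dM" % minutes)
    if seconds or not parts:
        # Always emit at least the seconds part so that zero becomes PT0S.
        parts.append("%dS" % seconds)
    return "PT" + "".join(parts)


def streaming_line(key, record):
    """Render one key/value output line with a compact JSON value."""
    value = {name: iso8601_duration(delta) for name, delta in record.items()}
    return key + "\t" + json.dumps(value, sort_keys=True, separators=(",", ":"))
```

Sorting the keys keeps the field order stable from one record to the next, which makes the text output easier to compare by eye.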

If one wants further control over the serialization one can now use the DataContract and DataMember attributes; such as in this C# sample class definition, again used in the samples:

    [DataContract]
    public class MobilePhoneRange
    {
        [DataMember] public TimeSpan MinTime { get; set; }
        [DataMember] public TimeSpan MaxTime { get; set; }

        public MobilePhoneRange(TimeSpan minTime, TimeSpan maxTime)
        {
            this.MinTime = minTime;
            this.MaxTime = maxTime;
        }
    }

This will result in the Streaming job output:

Android {"MaxTime":"PT23H59M54S","MinTime":"PT6S"}

RIM OS {"MaxTime":"PT23H59M58S","MinTime":"PT1M7S"}

Unknown {"MaxTime":"PT23H52M36S","MinTime":"PT36S"}

Windows Phone {"MaxTime":"PT23H55M17S","MinTime":"PT32S"}

iPhone OS {"MaxTime":"PT23H59M50S","MinTime":"PT1S"}

Most types which support binary serialization can be used as Mapper and Reducer types. One case that warrants a quick mention is serializing Generic Lists in F#. If one wants to use a Generic List, one must define a simple List type that inherits from List<T>. Using the following definition one can now use a List of ProductQuantity types as part of any output.

    type ProductQuantity = { ProductId:int; Quantity:float }

    type ProductQuantityList() =
        inherit List<ProductQuantity>()

If one still wants to use Binary Serialization one can specify the optional output format parameter:

-outputFormat Binary

This submission attribute is optional and if absent the default value of Text is assumed; meaning the output will be in text format using the JSON serializer.

Hopefully this change will be transparent but will result in better performance due to the dramatically reduced file sizes, along with better data readability and interoperability.


SQL Azure Database, Federations and Reporting

Richard Conway (@azurecoder) described Tricks with IaaS and SQL: Part 1 – Installing SQL Server 2012 VMs using Powershell in a 6/30/2012 post:

This blog post has been a long time coming. I’ve sat on IaaS research since the morning of the 8th June. Truth is I love it. Forget the comparisons with EC2 and the maturity of Windows Azure’s offering. IaaS changes everything. PaaS is cool, we’ve built a stable HPC cluster management tool using web and worker roles and plugins – we’ve really explored everything PaaS has to offer in terms of services over the last four years. What IaaS does is change the nature of the cloud.

- With IaaS you can build your own networks in the cloud easily

- You can virtualise your desktop environment and personally benefit from the raw compute power of the cloud

- You can hybridise your networks by renting cloud ready resources to extend your network through secure virtual networking

- Most importantly – you can make use of combinations of PaaS and IaaS deployments

The last point is an important one because coupling the two lets well-groomed applications access services that PaaS does not provide out-of-the-box. For example, there is a lot of buzz about using IaaS to host SharePoint or SQL Server as part of an extended domain infrastructure.

This three-part blog entry will look at the benefits of hosting SQL Server 2012 using the gallery template provided by Microsoft. I’ll draw on our open source library Azure Fluent Management and PowerShell to show how easy it is to deploy SQL Server, and look at how you can tweak SQL Server should you need to set up mixed mode authentication, which is not the default.

So the first question is – why use SQL Server when you have SQL Azure, which already provides all of the resilience and scalability you would need in a production application? Well … SQL Azure is a fantastic and very economical use of SQL Server which provides a logical model that Microsoft manages, but it’s not without its problems.

- Firstly, it’s a shared resource so you can end up competing for resources. Microsoft’s predictive capability hasn’t proved to be that accurate with SQL Azure, so it does sometimes have latency issues, which are the kinds of things that developers and DBAs go to great pains to avoid in a production application.

- Secondly, being on a contended shared infrastructure leads to transient faults, which can be more frequent than you would like, so you have to think about transient fault handling (Topaz is an Enterprise Application Block library which works pretty much out-of-the-box with your existing .NET System.Data codebase).

- Thirdly, and most importantly, SQL Azure is a subset of SQL Server and doesn’t provide an all-encompassing set of services. For example, SQL Azure has no support for XML, certain system stored procedures or synonyms, and you can’t have linked servers, so you have to use multiple schemas to compensate. These are just a few things to worry about. In fact, whilst most databases can be updated, some can’t without a significant migration effort and a lot of thought and planning.
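The transient fault handling raised in the second point usually comes down to retrying an operation with a growing delay between attempts. The Python sketch below shows that pattern in its simplest form. It is not the application block mentioned above; `TransientError` and `with_retries` are hypothetical names, and deciding which real exceptions count as transient is left to the caller.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for faults that are worth retrying."""


def with_retries(operation, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call operation(), retrying on TransientError with exponential backoff."""
    for attempt in range(attempts):
        try:
            return operation()
        except TransientError:
            if attempt == attempts - 1:
                # Out of attempts: let the caller see the failure.
                raise
            # Double the delay each time and add a little jitter so that many
            # clients do not retry in lockstep.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, 0.1))
```

Capping the number of attempts matters as much as the backoff itself, because a fault that never clears should surface to the caller instead of being retried forever.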

IaaS offers an alternative now. In one fell swoop we can create a SQL Server instance, load some data and connect to it as part of our effort. We’ll do exactly that here and in the second part using our fluent management library. The third part will describe how we can use failover by configuring an Active-Passive cluster between two SQL Server nodes.

Let’s start with some powershell now.

If you haven’t already done so, download the WAPP PowerShell CmdLets. The best reference on this is Michael Washam’s blog. If you were at our conference in London on June 22nd you would have seen Michael speak about this and will have some idea of how to use PowerShell and IaaS. Follow the setup instructions and then import your subscription settings from a .publishsettings file that you’ve previously downloaded. The CmdLet to do the import is as below:

    Import-AzurePublishSettingsFile

This will import the certificate from the file and associate its context with the PowerShell session and each of the subscriptions.

If you haven’t downloaded a .publishsettings file for any reason use:

    Get-AzurePublishSettingsFile

and enter your Live ID online. Save the file in a well-known location; behind the scenes the fabric has associated the management certificate in the file with the subscriptions that your Live ID is associated with (billing, service or co-admin).

A full view of the CmdLets is available here:

http://msdn.microsoft.com/en-us/library/windowsazure/hh689725.aspx

In my day-to-day I tend to use Cerebrata CmdLets over WAPP so when I list CmdLets it gives me an extended list. Microsoft WAPP CmdLets load themselves in as a module whereas Cerebrata are a snap-in. In order to get rid of all of the noise you’ll see with the CmdLets and get a list of only the WAPP CmdLets enter the following:

    Get-Command -Module Microsoft.WindowsAzure.Management

Since most of my work with WAPP is test only and I use Cerebrata for production, I tend to remove most of the subscriptions I don’t need. You can do this via:

    Remove-AzureSubscription -SubscriptionName <subscription name>

If there is no default subscription then you may need to select a subscription before starting:

    Select-AzureSubscription -SubscriptionName <subscription name>

Next we’ll have to set some variables which will enable us to deploy the image and set up a VM. From top to bottom, let’s describe the parameters. $img contains the name of the gallery image which will be copied across to our storage account. Remember this is an OS image which is 30GB in size. When the VM is created we’ll end up with a C: and a D: drive as a result; C: is durable and D: is volatile. It’s important to note that a 30GB page blob is copied to your storage account for your OS disk and locked: an infinite blob lease is used to lock the drive so that only your VM image can write to it with the requisite lease ID. $machinename (non-mandatory), $hostedservice (cloud service), $size and $password (your default Windows password) should be self-explanatory. $medialink relates to the storage location of the blob.

    $img = "MSFT__Sql-Server-11EVAL-11.0.2215.0-05152012-en-us-30GB.vhd"
    $machinename = "ELASTASQLVHD"
    $hostedservice = "elastavhd"
    $size = "Small"
    $password = "Password900"
    $medialink = "http://elastacacheweb.blob.core.windows.net/vhds/elastasql.vhd"

In this example we won’t be looking at creating a data disk (durable drive E); however, we can have up to 15 data disks with our image, each up to 1TB. With SQL Server it is better to create a data disk to persist the database files and allow the database to grow beyond the size of the OS page blob. In part two of this series we’ll be looking at using Fluent Management to create the SQL Server, which will create a data disk to store the .mdf/.ldf SQL files.

More information can be found here about using data disks:

http://blogs.msdn.com/b/windowsazure/archive/2012/06/28/data-series-exploring-windows-azure-drives-disks-and-images.aspx

What the article doesn’t tell you is that if you inadvertently delete your virtual machine (guilty) without detaching the drive first, you won’t be able to delete the drive because of the infinite lease. If you get into this situation, which is easy to do, you can delete the disk via PowerShell with the following CmdLets.

    Get-AzureDisk | Select DiskName

This gives you the name of the attached data or OS disks. If you use Cerebrata’s Cloud Storage Studio you should be able to see these blobs themselves, but the names differ. The names are actually pulled back from a disks catalog associated with the subscription via the Service Management API.

The resulting output from the above CmdLet can be fed into the following CmdLet to delete an OS or data disk.

    Remove-AzureDisk -DiskName bingbong-bingbong-0-20120609065137 -DeleteVHD

The only thing necessary now is to actually run the command to create the virtual machine. Michael Washam has added a -Verbose switch to the CmdLet which helps us understand how the VM is formed. The XML is fairly self-explanatory here. First set up the VM config, then set up the Windows OS config and finally pipe everything into the New-AzureVM CmdLet, which will create a new cloud service or use an existing one and create an isolated role for the VM instance (each virtual machine lives in a separate role).

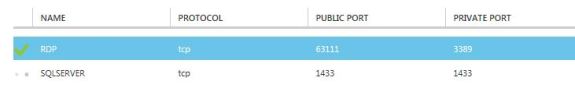

    New-AzureVMConfig -Name $machinename -InstanceSize $size -ImageName $img -MediaLocation $medialink -Verbose |
        Add-AzureProvisioningConfig -Windows -Password $password -Verbose |
        New-AzureVM -ServiceName $hostedservice -Location "North Europe" -Verbose

By default Remote Desktop is enabled with a random public port assigned and a load-balanced redirect to a private port of 3389.

Remote Desktop Endpoint

In order to finalise the installation we need to set up another external endpoint which forwards requests to port 1433, the port used by SQL Server. This is only half of the story because I should also add a firewall rule to enable access to this port via TCP, as per the image.

SQL Server Endpoint

Opening a firewall port for SQL Server

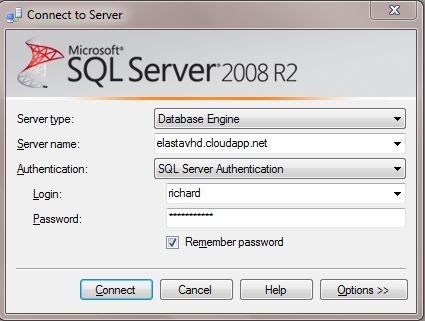
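Once both the endpoint and the firewall rule are in place, a quick way to confirm that the port is reachable from outside is a plain TCP connection attempt. The Python sketch below does only that; the helper name `port_is_open` is an assumption for this example, and a successful connection shows that something is listening on the port, not that SQL Server logins will work.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False
```

Run it against the public address of the cloud service together with whichever public port was mapped to 1433.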

You would have probably realised by now that the default access to SQL Server is via Windows credentials. Whilst DBAs will be over the moon with this, for my simple demo I’d like you to connect to this SQL instance via SSMS (SQL Server Management Studio). To do so we’ll need to update a registry key via regedit to enable mixed mode authentication:

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQLServer

And update the LoginMode (DWORD) to 2.

Once that’s done I should be able to start up SSMS on the virtual machine and create a new login called “richard” with password “Password_900”, and remove all of the password rules and expiries so that I can test this. In addition I will need to ensure that my user is placed in the appropriate role (sysadmin is the easy way to prove the concept) so that I can then go ahead and connect.

Logging into SQL Server

I then create a new database called “icanconnect” and can set this as the default for my new user.

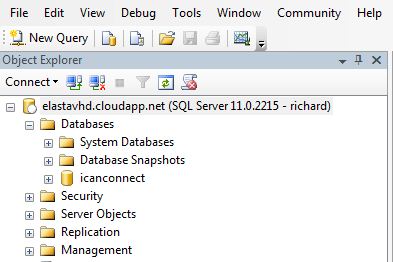

When I try to connect through my SSMS on my laptop I should be able to see the following as per the image below.

Seeing your database in SSMS

There are a lot of separate activities here which we’ll add to a PowerShell script in the second part of this series. I realise that this is getting quite long and I was keen to highlight the steps involved without information overload. In part two we’ll look at how Azure Fluent Management can be used to do the same thing and how we can also script the various activities which we’ve described here to shrink everything into a single step.

Doug Mahugh (@dmahugh) published Doctrine supports SQL Database Federations for massive scalability on Windows Azure to the Interoperability @ Microsoft blog on 6/29/2012:

Symfony and Doctrine are a popular combination for PHP developers, and now you can take full advantage of these open source frameworks on Windows Azure. We covered in a separate post the basics of getting started with Symfony on Windows Azure, and in this post we’ll take a look at Doctrine’s support for sharding via SQL Database Federations, which is the result of ongoing collaboration between Microsoft Open Technologies and members of the Symfony/Doctrine community.

SQL Database Federations

My colleague Ram Jeyaraman covered in a blog post last December the availability of the SQL Database Federations specification. This specification covers a set of commands for managing federations as objects in a database. Just as you can use SQL commands to create a table or a stored procedure within a database, the SQL Database Federations spec covers how to create, use, or alter federations with simple commands such as CREATE FEDERATION, USE FEDERATION, or ALTER FEDERATION.

If you’ve never worked with federations before, the concept is actually quite simple. Your database is partitioned into a set of federation members, each of which contains a set of related data (typically grouped by a range of values for a specified federation distribution key).

This architecture can provide for massive scalability in the data tier of an application, because each federation member only handles a subset of the traffic and new federation members can be added at any time to increase capacity. And with the approach used by SQL Database Federations, developers don’t need to keep track of how the database is partitioned (sharded) across the federation members – the developer just needs to do a USE FEDERATION command and the data layer handles those details without any need to complicate the application code with sharding logic.
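To picture how a range-based distribution key maps rows onto federation members, here is a small Python sketch. It is a toy model only: the `Federation` class, its method names and the convention that each range includes its lower bound are assumptions made for illustration, and nothing here talks to a database.

```python
import bisect


class Federation:
    """Toy model of a federation partitioned by ranges of a distribution key."""

    def __init__(self, split_points):
        # Sorted lower bounds at which a new member starts; member 0 holds
        # every key below the first split point.
        self.split_points = sorted(split_points)

    def member_for(self, key):
        """Return the index of the member whose range contains key."""
        return bisect.bisect_right(self.split_points, key)

    def split_at(self, boundary):
        """Add a member by splitting an existing range at boundary."""
        bisect.insort(self.split_points, boundary)
```

With split points at 100 and 200 there are three members, and splitting again at 150 adds a fourth without the calling code needing to know how the ranges are laid out.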

You can find a detailed explanation of sharding in the SQL Database Federations specification, which is a free download covered by the Microsoft Open Specification Promise. Questions or feedback on the specification are welcome on the MSDN forum for SQL Database.

Doctrine support for SQL Database Federations

The Doctrine Project is a set of open-source libraries that help ease database development and persistence logic for PHP developers. Doctrine includes a database abstraction layer (DBAL), object relational mapping (ORM) layer, and related services and APIs.

As of version 2.3 the Doctrine DBAL includes support for sharding, including a custom implementation of SQL Database Federations that’s ready to use with SQL Databases in Windows Azure. Instead of having to create Federations and schema separately, Doctrine does it all in one step. Furthermore, the combination of Symfony and Doctrine gives PHP developers seamless access to blob storage, Windows Azure Tables, Windows Azure queues, and other Windows Azure services.

The online documentation on the Doctrine site shows how easy it is to instantiate a ShardManager interface (the Doctrine API for sharding functionality) for a SQL Database.

The Doctrine site also has an end-to-end tutorial on how to do Doctrine sharding on Windows Azure, which covers creation of a federation, inserting data, repartitioning the federation members, and querying the data.

Doctrine’s sharding support gives PHP developers a simple option for building massively scalable applications and services on Windows Azure. You get the ease and flexibility of Doctrine Query Language (DQL) combined with the performance and durability of SQL Databases on Windows Azure, as well as access to Windows Azure services such as blob storage, table storage, queues, and others.

Doug Mahugh

Senior Technical Evangelist

Microsoft Open Technologies, Inc.

See also Doug’s Symfony on Windows Azure, a powerful combination for PHP developers post in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.


Marketplace DataMarket, Social Analytics, Big Data and OData

• The WCF Data Services Team described Trying out the prerelease OData Client T4 template in a 7/2/2012 post:

We recently prereleased an updated OData Client T4 template to NuGet. The template will codegen classes for working with OData v3 services, but full support has not been added for all v3 features.

What is the OData Client T4 template?

The OData Client T4 template is a T4 template that generates code to facilitate consumption of OData services. The template will generate a DataServiceContext and classes for each of the entity types and complex types found in the service description. The template should produce code that is functionally equivalent to the Add Service Reference experience. The T4 template provides some compelling benefits:

- Developers can customize the T4 template to generate exactly the code they desire

- The T4 template can be executed at build time, ensuring that service references are always fully up-to-date (and that any discrepancies cause a build error rather than a runtime error)

In this blog post, we will walk through how to obtain and use the OData Client T4 template. We will not be covering other details of T4; much of that information is available on MSDN.

OData Client T4 template walkthrough

Let’s begin by creating a new Console Application project.

Next we need to use NuGet to get the T4 template. We can do this by right-clicking the project in the Solution Explorer and choosing Manage NuGet Packages. NOTE: You may need to get the NuGet Package Manager if you are using VS 2010.

In the Package Explorer window, choose Online from the left nav, ensure that the prerelease dropdown is set to Include Prerelease (not Stable Only), and search for Microsoft.Data.Services.Client.T4. You should see the OData Client T4 package appear; click the Install button to install the package.

When the OData Client T4 package has been added to the project, you should see a file called Reference.tt appear in the Solution Explorer. (NOTE: It’s perfectly fine at this point to change the name of the file to something more meaningful for your project.) Expand Reference.tt using the caret to the left of the file and double-click to open Reference.cs. Note that the contents of Reference.cs were generated from the T4 template and that they provide further instructions on how to use the template.

Double-click Reference.tt to open the T4 template. On line 30, add the following:

MetadataUri = "http://odata.netflix.com/Catalog/$metadata";

NOTE: The T4 template does not currently support actions; if you specify a $metadata that contains actions, code generation will fail.

Save Reference.tt and expand Reference.cs in the Solution Explorer to see the newly generated classes. (NOTE: If you’re using VS 2010, you’ll need to open Reference.cs and browse the code in the file.)

Finally, add the following code to Program.cs.

Press Ctrl+F5 to see the result.

Why should I use the T4 template?

Currently the Add Service Reference and DataSvcUtil experiences are both based on a DLL that does not allow for customizable code generation. We believe that in the future both of these experiences will use the T4 template internally, with the hope that you would be able to customize the output of either experience. (Imagine the simplicity of ASR with the power of T4!) To do that, we need to get the T4 template to a quality level equal to or better than the ASR experience.

Feedback, please!

We’d love to hear from you! Specifically, we would really benefit from answers to the following questions:

- Did the T4 template work for you? Why or why not?

- What customizability should be easier in the T4 template?

- Do you use DataSvcUtil today? For what reason?

- If you only use DataSvcUtil for msbuild chaining, could you chain a T4 template instead?

- Do you want the T4 template to generate C# or VB.NET?

For political correctness, I want the T4 template to generate either C# or VB.NET.

Clint Edmonson asserted Windows Azure Recipe: Big Data in a 7/2/2012 post to Microsoft’s US DPE Azure Connection blog:

As the name implies, what we’re talking about here is the explosion of electronic data that comes from huge volumes of transactions, devices, and sensors being captured by businesses today. This data often comes in unstructured formats and/or too fast for us to effectively process in real time. Collectively, we call these the 4 big data V’s: Volume, Velocity, Variety, and Variability. These qualities make this type of data best managed by NoSQL systems like Hadoop, rather than by conventional relational database management systems (RDBMSs).

We know that there are patterns hidden inside this data that might provide competitive insight into market trends. The key is knowing when and how to leverage these NoSQL tools in combination with traditional business tools such as SQL-based relational databases and warehouses and other business intelligence tools.

Drivers

- Petabyte scale data collection and storage

- Business intelligence and insight

Solution

The sketch below shows one of many big data solutions using Hadoop’s unique highly scalable storage and parallel processing capabilities combined with Microsoft Office’s Business Intelligence Components to access the data in the cluster.

Ingredients

- Hadoop – this big data industry heavyweight provides both large scale data storage infrastructure and a highly parallelized map-reduce processing engine to crunch through the data efficiently. Here are the key pieces of the environment:

- Pig - a platform for analyzing large data sets that consists of a high-level language for expressing data analysis programs, coupled with infrastructure for evaluating these programs.

- Mahout - a machine learning library with algorithms for clustering, classification and batch based collaborative filtering that are implemented on top of Apache Hadoop using the map/reduce paradigm.

- Hive - data warehouse software built on top of Apache Hadoop that facilitates querying and managing large datasets residing in distributed storage. Directly accessible to Microsoft Office and other consumers via add-ins and the Hive ODBC data driver.

- Pegasus - a Peta-scale graph mining system that runs in parallel, distributed manner on top of Hadoop and that provides algorithms for important graph mining tasks such as Degree, PageRank, Random Walk with Restart (RWR), Radius, and Connected Components.

- Sqoop - a tool designed for efficiently transferring bulk data between Apache Hadoop and structured data stores such as relational databases.

- Flume - a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data to HDFS.

- Database – directly accessible to Hadoop via the Sqoop based Microsoft SQL Server Connector for Apache Hadoop, data can be efficiently transferred to traditional relational data stores for replication, reporting, or other needs.

- Reporting – provides easily consumable reporting when combined with a database being fed from the Hadoop environment.
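To make the map/reduce paradigm named in the ingredients above a little more concrete, here is a minimal single-process sketch in Python. It is not Hadoop code and does not use any Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample records are made up for illustration. A real cluster would run the map and reduce steps in parallel across many nodes.

```python
from collections import defaultdict


def map_phase(record):
    """Hypothetical mapper: emit a (word, 1) pair for every word in one record."""
    for word in record.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group values by key, which is what the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Hypothetical reducer: combine all values collected for one key."""
    return key, sum(values)


def word_count(records):
    """Run map, shuffle and reduce in sequence over an iterable of text records."""
    pairs = (pair for record in records for pair in map_phase(record))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


# Made-up sample input standing in for log or sensor records.
sample = ["sensor A reading", "sensor B reading", "sensor A alert"]
counts = word_count(sample)
print(counts)
```

The point of the split is that mappers never need to see each other's data and reducers only see one key at a time, which is what lets the work be spread across a cluster.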

Training

These links point to online Windows Azure training labs where you can learn more about the individual ingredients described above.

Hadoop Learning Resources (20+ tutorials and labs)

Huge collection of resources for learning about all aspects of Apache Hadoop-based development on Windows Azure and the Hadoop and Windows Azure Ecosystems

Microsoft SQL Azure delivers on the Microsoft Data Platform vision of extending the SQL Server capabilities to the cloud as web-based services, enabling you to store structured, semi-structured, and unstructured data.

See my Windows Azure Resource Guide for more guidance on how to get started, including links to web portals, training kits, samples, and blogs related to Windows Azure.

<Return to section navigation list>

Windows Azure Service Bus, Caching, Active Directory and Workflow

• Brent Stineman (@BrentCodeMonkey) described Session State with Windows Azure Caching Preview in a 7/3/2012 post:

I’m working on a project for a client and was asked to pull together a small demo using the new Windows Azure Caching preview. This is the “dedicated” or better yet, “self hosted” solution that’s currently available as a preview in the Windows Azure SDK 1.7, not the Caching Service that was made available early last year. So starting with a simple MVC 3 application, I set out to enable the new memory cache for session state. This is only step 1 and the next step is to add a custom cache based on the LocalStorage feature of Windows Azure Cloud Services.

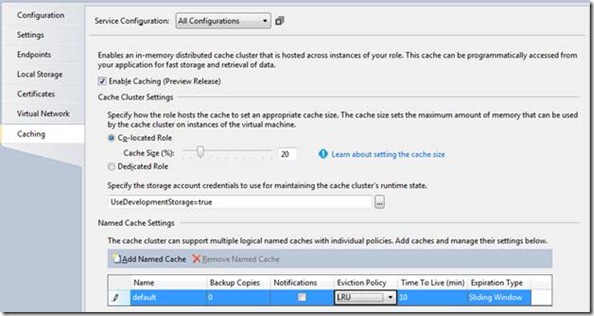

Enabling the self-hosted, in-memory cache

After creating my template project, I started by following the MSDN documentation for enabling the cache co-hosted in my MVC 3 web role. I opened up the properties tab for the role (right-clicking on the role in the cloud service via the Solution Explorer) and moved to the Caching tab. I checked “Enable Caching” and set my cache to Co-located (it’s the default) and the size to 20% of the available memory.

Now because I want to use this for session state, I’m also going to change the Expiration Type for the default cache from “Absolute” to “Sliding”. In the current preview, we only have one eviction type, Least Recently Used (LRU) which will work just fine for our session demo. We save these changes and take a look at what’s happened with the role.
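The difference between absolute and sliding expiration, and what LRU eviction does when the cache is full, can be sketched in a few lines of Python. This is a toy model only, not the Windows Azure Caching implementation or its API; the class name `SlidingLruCache`, its parameters, and the injectable `clock` are all assumptions made for the example.

```python
import time
from collections import OrderedDict


class SlidingLruCache:
    """Toy cache with sliding expiration and least-recently-used eviction."""

    def __init__(self, max_items, ttl_seconds, clock=time.monotonic):
        self.max_items = max_items
        self.ttl = ttl_seconds
        self.clock = clock               # injectable so the demo can fake time
        self._items = OrderedDict()      # key -> (value, expires_at), oldest use first

    def put(self, key, value):
        self._items[key] = (value, self.clock() + self.ttl)
        self._items.move_to_end(key)             # a write counts as a use
        while len(self._items) > self.max_items:
            self._items.popitem(last=False)      # evict the least recently used entry

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._items[key]                 # expired: treat as a miss
            return None
        # Sliding expiration: every successful read restarts the timer.
        self._items[key] = (value, self.clock() + self.ttl)
        self._items.move_to_end(key)
        return value


# Demo with a fake clock so no real waiting is needed.
now = [0.0]
cache = SlidingLruCache(max_items=2, ttl_seconds=10, clock=lambda: now[0])
cache.put("session-a", {"viewcount": 1})
now[0] = 8
cache.get("session-a")                           # read at t=8 moves expiry to t=18
now[0] = 15
still_alive = cache.get("session-a") is not None
print(still_alive)
```

With absolute expiration the entry written at t=0 would have been gone at t=10 regardless of reads, which is why sliding expiration is the better fit for session state that should live as long as the user stays active.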

There are three changes that I could find:

- A new module, Caching, is imported in the ServiceDefinition.csdef file

- A new local resource “Microsoft.WindowsAzure.Plugins.Caching.FileStore” is declared

- Four new configuration settings are added, all related to the cache: NamedCaches (a JSON list of named caches), LogLevel, CacheSizePercentage, and ConfigStoreConnectionString

Yeah PaaS! A few options clicked and the Windows Azure Fabric will handle spinning up the resources for me. I just have to make the changes to leverage this new resource. That’s right, now I need to set up my cache client.

Note: While you can rename the “default” cache by editing the cscfg file, the default will always exist. There’s currently no way I found to remove or rename it.

Client for Cache

I could configure the cache manually, but folks keep telling me I need to learn this NuGet stuff. So let’s do it with the NuGet packages instead. After a bit of fumbling to fix a previously botched NuGet install (Note: you must be running VS as Admin to manage plug-ins), I right-clicked on my MVC 3 web role and selected “Manage NuGet Packages”; then, following the great documentation at MSDN, I searched for windowsazure.caching and installed the “Windows Azure Caching Preview” package.

This handles updating my project references for me, adding at least 5 of them that I saw at a quick glance, as well as updating the role’s configuration file (the web.config in my case) which I now need to update with the name of my role:

<dataCacheClient name="default">

<autoDiscover isEnabled="true" identifier="WebMVC" />

<!--<localCache isEnabled="true" sync="TimeoutBased" objectCount="100000" ttlValue="300" />-->

</dataCacheClient>

Now if you’re familiar with using caching in .NET, this is all I really need to do to start caching. But I want to take another step and change my MVC application so that it will use this new cache for session state. This is simply a matter of replacing the default provider “DefaultSessionProvider” in my web.config with the AppFabricCacheSessionStoreProvider. Below are both for reference:

Before:

<add name="DefaultSessionProvider"

type="System.Web.Providers.DefaultSessionStateProvider, System.Web.Providers, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"

connectionStringName="DefaultConnection"

applicationName="/" />

After:

<add name="AppFabricCacheSessionStoreProvider"

type="Microsoft.Web.DistributedCache.DistributedCacheSessionStateStoreProvider, Microsoft.Web.DistributedCache"

cacheName="default"

useBlobMode="true"

dataCacheClientName="default" />

It’s important to note that I’ve set the cacheName attribute to match the name of the named cache I set up previously, in this case “default”. If you set up a different named cache, set the value appropriately or expect issues.

But we can’t stop there, we also need to update the sessionState node’s attributes, namely mode and customProvider as follows:

<sessionState mode="Custom" customProvider="AppFabricCacheSessionStoreProvider">

Demo Time

Of course, all this does nothing unless we have some code that shows the functionality at work. So let’s increment a user specific page view counter. First, I’m going to go into the home controller and add in some (admittedly ugly) code in the Index method:

// create the session value if we’re starting a new session

if (Session.IsNewSession)

    Session.Add("viewcount", 0);

// increment the viewcount

Session["viewcount"] = (int)Session["viewcount"] + 1;

// set our values to display

ViewBag.Count = Session["viewcount"];

ViewBag.Instance = RoleEnvironment.CurrentRoleInstance.Id.ToString();

The first section just sets up the session value and handles incrementing it. The second block pulls the value back out to be displayed. Then alter the associated Index.cshtml page to render the values back out. So just insert the following wherever you’d like it to go.

Page view count: @ViewBag.Count<br />

Instance: @ViewBag.Instance

Now if we’ve done everything correctly, you’ll see the view count increment consistently regardless of which instance handles the request.
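Why the counter only stays consistent when session state lives in a shared cache can be shown with a small simulation. The sketch below is plain Python with no Azure or ASP.NET APIs; the `Instance` class, the `simulate` helper, the instance names, and the round-robin dispatch are all assumptions standing in for role instances behind a load balancer.

```python
import itertools


class Instance:
    """Stand-in for one web role instance; `store` plays the session store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def handle(self, session_id):
        # Same logic as the page view counter: read, increment, write back.
        count = self.store.get(session_id, 0) + 1
        self.store[session_id] = count
        return self.name, count


def simulate(instances, session_id, requests):
    """Send requests round-robin, roughly as a load balancer might."""
    rotation = itertools.cycle(instances)
    return [next(rotation).handle(session_id) for _ in range(requests)]


# In-process session state: each instance keeps its own private dict.
local = simulate([Instance("IN_0", {}), Instance("IN_1", {})], "user1", 4)

# Shared cache: both instances read and write the same store.
shared_store = {}
shared = simulate(
    [Instance("IN_0", shared_store), Instance("IN_1", shared_store)], "user1", 4
)

print(local)   # counts repeat because each instance counts on its own
print(shared)  # counts increase steadily regardless of instance
```

This ignores concurrency and serialization, both of which a real distributed session provider has to deal with.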

Session.Abandon

Now there’s some interesting stuff I’d love to dive into a bit more if I had time, but I don’t today. So instead, let’s just be happy with the fact that after more than 2 years, Windows Azure finally has a “built in” session provider that is pretty darned good. I’m certain it still has its capacity limits (I haven’t tried testing to see how far it will go yet), but to have something this simple we can use for most projects is simply awesome. If you want my demo, you can snag it from here.

Oh, one last note. Since Windows Azure Caching does require Windows Azure Storage to maintain some information, don’t forget to update the connection string for it before you deploy to the cloud. If not, you’ll find instances may not start properly (not the best scenario admittedly). So be careful.

Clemens Vasters (@clemensv) described A Smart Thermostat on the Service Bus in MSDN Magazine’s July 2012 issue. From the introduction:

Here’s a bold prediction: Connected devices are going to be big business, and understanding these devices will be really important for developers not too far down the road. “Obviously,” you say. But I don’t mean the devices on which you might read this article. I mean the ones that will keep you cool this summer, that help you wash your clothes and dishes, that brew your morning coffee or put together other devices on a factory floor.

In the June issue of MSDN Magazine (msdn.magazine.com/magazine/jj133825), I explained a set of considerations and outlined an architecture for how to manage event and command flows from and to embedded (and mobile) devices using Windows Azure Service Bus. In this article, I’ll take things a step further and look at code that creates and secures those event and command flows. And because a real understanding of embedded devices does require looking at one, I’ll build one and then wire it up to Windows Azure Service Bus so it can send events related to its current state and be remotely controlled by messages via the Windows Azure cloud.

Until just a few years ago, building a small device with a power supply, a microcontroller, and a set of sensors required quite a bit of skill in electronics hardware design as well as in putting it all together, not to mention good command of the soldering iron. I’ll happily admit that I’ve personally been fairly challenged in the hardware department—so much so that a friend of mine once declared if the world were attacked by alien robots he’d send me to the frontline and my mere presence would cause the assault to collapse in a grand firework of electrical shorts. But due to the rise of prototyping platforms such as Arduino/Netduino or .NET Gadgeteer, even folks who might do harm to man and machine swinging a soldering iron can now put together a fully functional small device, leveraging existing programming skills.

To stick with the scenario established in the last issue, I’ll build an “air conditioner” in the form of a thermostat-controlled fan, where the fan is the least interesting part from a wiring perspective. The components for the project are based on the .NET Gadgeteer model, involving a mainboard with a microcontroller, memory and a variety of pluggable modules.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

• Avkash Chauhan (@avkashchauhan) described How to update CentOS kernel to CentOS Plus using Yum in a 7/3/2012 post:

When you run “sudo yum update”, your CentOS box is updated [with all] packages:

Installed:

kernel.x86_64 0:2.6.32-220.23.1.el6

But if you want to update CentOS to the CentOS Plus kernel, you need to do the following:

First, edit your yum repo configuration for CentOS in the [centosplus] section as follows:

[root@hadoop ~]# vi /etc/yum.repos.d/CentOS-Base.repo

[centosplus]

enabled=1

includepkgs=kernel*

Verify the changes as follows:

[root@hadoop ~]# cat /etc/yum.repos.d/CentOS-Base.repo

# CentOS-Base.repo

#

# The mirror system uses the connecting IP address of the client and the

# update status of each mirror to pick mirrors that are updated to and

# geographically close to the client. You should use this for CentOS updates

# unless you are manually picking other mirrors.

#

# If the mirrorlist= does not work for you, as a fall back you can try the

# remarked out baseurl= line instead.

#

#

[base]

name=CentOS-$releasever - Base

mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=os

#baseurl=http://mirror.centos.org/centos/$releasever/os/$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

#released updates

[updates]

name=CentOS-$releasever - Updates

mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=updates

#baseurl=http://mirror.centos.org/centos/$releasever/updates/$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

#additional packages that may be useful

[extras]

name=CentOS-$releasever - Extras

mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=extras

#baseurl=http://mirror.centos.org/centos/$releasever/extras/$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

#additional packages that extend functionality of existing packages

[centosplus]

name=CentOS-$releasever - Plus

mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=centosplus

#baseurl=http://mirror.centos.org/centos/$releasever/centosplus/$basearch/

gpgcheck=1

enabled=1

includepkgs=kernel*

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

#contrib - packages by Centos Users

[contrib]

name=CentOS-$releasever - Contrib

mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=contrib

#baseurl=http://mirror.centos.org/centos/$releasever/contrib/$basearch/

gpgcheck=1

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

[root@hadoop ~]# sudo yum update

Loaded plugins: fastestmirror, security

Loading mirror speeds from cached hostfile

* base: mirror.stanford.edu

* centosplus: centos.mirrors.hoobly.com

* extras: mirrors.xmission.com

* updates: mirror.san.fastserv.com

base | 3.7 kB 00:00

centosplus | 3.5 kB 00:00

centosplus/primary_db | 1.7 MB 00:00

extras | 3.5 kB 00:00

updates | 3.5 kB 00:00

Setting up Update Process

Resolving Dependencies

--> Running transaction check

---> Package kernel.x86_64 0:2.6.32-220.23.1.el6.centos.plus will be installed

---> Package kernel-firmware.noarch 0:2.6.32-220.23.1.el6 will be updated

---> Package kernel-firmware.noarch 0:2.6.32-220.23.1.el6.centos.plus will be an update

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

kernel x86_64 2.6.32-220.23.1.el6.centos.plus centosplus 25 M

Updating:

kernel-firmware noarch 2.6.32-220.23.1.el6.centos.plus centosplus 6.3 M

Transaction Summary

================================================================================

Install 1 Package(s)

Upgrade 1 Package(s)

Total download size: 31 M

Is this ok [y/N]: y

Downloading Packages:

(1/2): kernel-2.6.32-220.23.1.el6.centos.plus.x86_64.rpm | 25 MB 00:03

(2/2): kernel-firmware-2.6.32-220.23.1.el6.centos.plus.n | 6.3 MB 00:00

--------------------------------------------------------------------------------

Total 7.2 MB/s | 31 MB 00:04

Running rpm_check_debug

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Updating : kernel-firmware-2.6.32-220.23.1.el6.centos.plus.noarch 1/3

Installing : kernel-2.6.32-220.23.1.el6.centos.plus.x86_64 2/3

Cleanup : kernel-firmware-2.6.32-220.23.1.el6.noarch 3/3

Installed:

kernel.x86_64 0:2.6.32-220.23.1.el6.centos.plus

Updated:

kernel-firmware.noarch 0:2.6.32-220.23.1.el6.centos.plus

Complete!
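The manual repo edit in this post (setting enabled=1 and includepkgs=kernel* in the [centosplus] section) could also be scripted. The following is a minimal sketch using Python's standard `configparser`; the helper name `enable_centosplus_kernel` and the trimmed sample text are assumptions for illustration, and the function works on a string rather than touching /etc/yum.repos.d directly. Note that `configparser` drops comment lines when it writes the file back out, so this is not a drop-in replacement for editing by hand.

```python
import configparser
import io


def enable_centosplus_kernel(repo_text):
    """Return repo_text with [centosplus] enabled for kernel packages only."""
    # interpolation=None keeps yum variables such as $releasever untouched.
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(repo_text)
    if not parser.has_section("centosplus"):
        raise ValueError("no [centosplus] section found")
    parser["centosplus"]["enabled"] = "1"
    parser["centosplus"]["includepkgs"] = "kernel*"
    out = io.StringIO()
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()


# Trimmed, made-up sample of a repo file with centosplus disabled.
sample = """\
[centosplus]
name=CentOS-$releasever - Plus
mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=centosplus
gpgcheck=1
enabled=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6
"""
updated = enable_centosplus_kernel(sample)
print(updated)
```

Restricting the repo with includepkgs=kernel* is what keeps a later yum update from pulling every CentOS Plus package, not just the kernel.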

• Craig Landis started a Unable to Capture Image: "Endpoint Not found: There was no endpoint listening at..." thread on 7/3/2012 in the Windows Azure Platform > Windows Azure – CTPs, Betas & Labs Forums> Windows Azure Virtual Machines for Windows (and Linux) forums:

An issue has been identified that results in the inability to capture an image. We will update this post when the fix has been implemented.

When attempting to capture an image in the Windows Azure management portal you may receive the following error message:

Endpoint Not found: There was no endpoint listening at https://management.core.windows.net:8443/4321d1e8-da89-46f6-a36f-879c82a4321f/services/hostedservices/myvm/deployments/myvm/roleInstances/myvm/Operations that could accept the message. This is often caused by an incorrect address or SOAP action. See InnerException, if present, for more details.

Using the Save-AzureVMImage PowerShell cmdlet, you may receive error:

PS C:\> save-azurevmimage -Name myvm -NewImageName myimage -NewImageLabel myimage -ServiceName mycloudservice

save-azurevmimage : HTTP Status Code: NotFound - HTTP Error Message:

https://management.core.windows.net/4321d1e8-da89-46f6-a36f-879c82a4321f/services/hostedservices/myvm/deployments/myvm/roleInstances/myvm/Operations does not exist.

Operation ID: 5d2f45a33ca54ed39886f42b05f2b71c

At line:1 char:1

+ save-azurevmimage -Name myvm -NewImageName myimage -NewImageLabel ...

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : CloseError: (:) [Save-AzureVMImage], CommunicationException

+ FullyQualifiedErrorId : Microsoft.WindowsAzure.Management.ServiceManagement.IaaS.SaveAzureVMImageCommand

Using the cross-platform Azure command-line tool, you may receive this error:

C:\>azure vm capture myvm myimage --delete

info: Executing command vm capture

verbose: Fetching VMs

verbose: Capturing VM

error: The deployment name 'myvm' does not exist.

error: vm capture command failed

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Robin Shahan (@RobinDotNet) described How to set up an HTTPS endpoint for a Windows Azure web role in a 7/2/2012 post. From the introduction:

At GoldMail, all of our WCF services have https endpoints. How do you do this? First, you need a domain. Then you need to CNAME what your service URL will be to your Azure service. For example, my domain is goldmail.com, and I have CNAMEd robintest.goldmail.com to robintest.cloudapp.net.

Next, you need a real SSL certificate from a Certificate Authority like VeriSign, purchased for your domain. A self-signed certificate won’t work. A certificate from a CA validates that you are who you say you are, and somebody visiting that domain can be sure it is you. You can buy an SSL certificate that is just for yourcompany.com, but if you buy one that is *.yourcompany.com, you can use it for mywebapp.yourcompany.com, myotherwebapp.yourcompany.com, myservice.yourcompany.com, iworktoomuch.yourcompany.com, etc., just as mine, noted above, is robintest.goldmail.com.
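As a rough illustration of what a wildcard certificate name covers, the sketch below checks host names against a pattern such as *.goldmail.com. It is a simplification written for this example, not the matching code of any TLS library: the function name `wildcard_matches` is hypothetical, and it only models the common rule that the wildcard stands for exactly one left-most label.

```python
def wildcard_matches(pattern, hostname):
    """True if hostname is covered by a certificate name such as *.example.com."""
    p_labels = pattern.lower().split(".")
    h_labels = hostname.lower().split(".")
    if len(p_labels) != len(h_labels):
        return False  # the wildcard stands for exactly one label
    if p_labels[0] == "*":
        # The left-most label may be anything non-empty; the rest must match.
        return h_labels[0] != "" and p_labels[1:] == h_labels[1:]
    return p_labels == h_labels


covered = wildcard_matches("*.goldmail.com", "robintest.goldmail.com")
bare_domain = wildcard_matches("*.goldmail.com", "goldmail.com")
nested = wildcard_matches("*.goldmail.com", "a.b.goldmail.com")
print(covered, bare_domain, nested)
```

Under this rule the bare domain and deeper subdomains are not covered by the wildcard name alone, which is worth checking before choosing the service URL you CNAME to the cloud service.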

Next, you need to upload the certificate to the Azure portal for the cloud service you are going to use to host the Azure web role. Then you need to assign the certificate to the web role and set up an https endpoint in the Azure configuration in Visual Studio. …

Robin continues with an illustrated, step-by-step tutorial.

• Robin Shahan (@RobinDotNet) continued her HTTPS series with How to debug a service with an HTTPS endpoint in the Azure compute emulator on 7/2/2012:

My previous post showed how to use your SSL certificate, set up an HTTPS endpoint for your service, and change your service to use HTTPS. If you now run this service in the Compute Emulator, you will see this:

And you will find if you put a breakpoint in the code of the service, and then try to call it from the client, it won’t let you debug into it because the certificate is invalid.

This was really frustrating for me, because I didn’t want to expose an HTTP endpoint, and I didn’t want to keep changing back and forth between HTTP and HTTPS. There’s always a chance I’ll miss something when changing it back, and we’ll publish it to staging and it won’t work right. (Don’t ask.) So I went looking for a way to get around this restriction.

When you run your service in the compute emulator, Windows Azure creates a certificate for 127.0.0.1 and installs it in the machine certificate store. This certificate is not trusted, which is why you get the security error when running your service locally.

To resolve this problem, you have to take that certificate and either copy it into the Trusted Root Certification store for the local machine, or copy it into your user account’s Trusted Root Certification store. This is a security hole on your computer if you trust any HTTPS websites with any valuable information. For this reason, I installed it in my own certificate store rather than the machine certificate store, so it would only endanger my own data. It also makes it easier to remove and re-install when you need to. …

Robin continues with another illustrated, step-by-step tutorial for the Compute Emulator.

Charlie Osborne (@ZDNetCharlie) asserted “Electronics giant Samsung Electronics has chosen to integrate Microsoft's Azure platform with its Smart TV infrastructure” in a deck for her Electronics giant Samsung Electronics has chosen to integrate Microsoft's Azure platform with its Smart TV infrastructure report of 7/3/2012 for ZDNet:

Electronics giant Samsung Electronics has chosen to implement Microsoft cloud technology through integration with its Smart TV infrastructure.

Samsung announced on Monday its decision to use Windows Azure technology to manage the Smart TV system through cloud-based technology. The company cited a reduction in costs, increased productivity and a flexible, scalable model which can be expanded to meet its growing customer base.

Samsung’s Smart TV service is currently operating in 120 countries -- and the technology giant intends to expand this further. With this in mind, Samsung said it chose Windows Azure in order to stem any concerns for reliability, as well as the ability to support a continual increase in traffic. Through testing, the company found the platform offered a greater speed than competitors, mainly within the Asia region.

Scalability was one concern, but Samsung has also said that Windows Azure allowed the company to quickly secure and store resources, and focus on expanding their business rather than on security issues. Bob Kelly, corporate vice president of Windows Azure at Microsoft said:

"Windows Azure gives Samsung the ability to focus on its business rather than having its technical team deal with problem-solving and troubleshooting issues."

Windows Azure is a modern Microsoft cloud computing platform used to build, host and scale web applications through data centers hosted by the company. The platform consists of various services including the Windows Azure operating system, SQL Azure, and Windows Azure AppFabric.

I have a two-month-old Samsung 46" LED 1080p 120Hz HDTV UN46D6050 Smart TV but ordinarily use my Sony Blu-ray Disc player or a laptop in the living room for Internet access. Both have wired connections to the Internet via my AT&T DSL connection. The laptop connects to one of the TV’s four HDMI ports. By the way, I highly recommend Samsung HDTVs for their excellent image quality and many HDMI ports.

Read more: Microsoft’s Samsung Smart TV Tunes In to Windows Azure press release of 7/2/2012.

Shahrokh Mortazavi described Python on Windows Azure – a Match Made in the Clouds! in a 7/2/2012 post to the Windows Azure blog:

I’m very excited to talk about Python on Windows, which has enjoyed a resurgence at Microsoft over the past couple of years, culminating in the release of Python Tools for Visual Studio and Python on Windows Azure. Let’s dive in to see what we have. But first, a little background…

What is Python & Who Uses It?

Python is a modern, easy to use, concise language that has become an indispensable tool in the modern developer’s arsenal. It’s consistently been in the top 10 TIOBE index, and has become the CS101 language at many universities. But partly due to its vast and deep suite of libraries, it also spans the CS PhD and Mission Critical app zip codes as well. Who exactly uses Python these days? Here’s a snapshot; these names represent some of the highest trafficked sites and popular desktop and networked applications.

Python is also pretty popular. Here are the results of a popularity poll Hacker News recently ran:

Another key aspect of the language is that it’s cross-platform. In fact we had to do very little to get the Python Windows Azure Client Libraries to work on MacOS and Linux.

Python on Windows Azure

In our initial offering, Python on Windows Azure provides the following:

- Python Client Libraries

- Django & Cloud Services

- Visual Studio Integration for Python /Azure

- Python on Windows or Linux VM’s

- IPython

Python Client Libraries - The Client Libraries are a set of Pythonic, OS-independent wrappers for the Windows Azure services, such as Storage, Queues, etc. You can use them on Mac or Linux machines as well, locally or on VM’s, to tap into Windows Azure’s vast computation and storage services:

Upload to blob:

myblob = open(r'task1.txt', 'r').read()

blob_service.put_blob('mycontainer', 'myblob', myblob, x_ms_blob_type='BlockBlob')

List the blobs:

blobs = blob_service.list_blobs('mycontainer')

for blob in blobs:

    print(blob.name)

    print(blob.url)

Download blobs:

blob = blob_service.get_blob('mycontainer', 'myblob')

with open(r'out-task1.txt', 'w') as f:

    f.write(blob)

Django - For web work, we’ve provided support for Django initially – this includes server side support via IIS and deployment via Python Tools for Visual Studio (PTVS). On Windows you can use WebPI to install everything you need (Python, Django, PTVS, etc.) and be up and running with your first website in 15 minutes. Once deployed, you can use Windows Azure’s scalability and region support to manage complex sites efficiently:

Preparing the app for deployment to Windows Azure in PTVS:

Python Tools for Visual Studio - There are a number of good IDE’s, including web-based ones for writing Python. We’re a bit biased toward PTVS, which is a free and OSS (Apache license) plug-in for Visual Studio. If you’re already a VS user, it’s just a quick install. If you’re not, you can install the Integrated Shell + PTVS to get going – this combo gives you a free “Python Express” essentially. The key features of PTVS are:

- Support for CPython, IronPython

- Intellisense

- Local & remote Debugging

- Profiling

- Integrated REPL with inline graphics

- Object Browser

- Django

- Parallel Computing

This video provides a quick overview of basic Editing features of PTVS.

Debugging Django templates:

Intellisense support for Django templates:

Check out this video to get a feel for deploying a Django site on Windows Azure (Cloud Service mode). You can also deploy from a Mac/Linux machine into a Linux VM.

PTVS also has advanced features such as high-performance computing (including debugging MPI code), which we’ll enable on Windows Azure in the future.

IPython - We’re also very excited about IPython notebook, which is a web-based general purpose IDE for developing Python, and more importantly, prototyping “ideas”. You can run the notebook on any modern browser that supports websockets (Chrome, Safari, IE10 soon, …) and the “Engine” itself runs on Windows Azure in a VM (Linux or Windows):

This powerful paradigm means instant access to the vast and deep Python ecosystem in a highly productive environment, backed by solid availability and scalability of Windows Azure. Whether you’re a modeler, teacher or researcher, this opens up a whole set of new possibilities, especially with prototyping, sharing and collaboration. And best of all, no installs! Check out this video of IPython, which describes some of its features.

Summary & Roadmap

As you can see, there’s a lot to like about Python on Windows Azure:

- It’s a great language, with awesome libraries and a very active community of developers, estimated at around 1.5 million

- Django & Flask are robust web frameworks with excellent Windows support

- PTVS with Windows Azure support turns VS into a world-class Python IDE & best of all it’s FREE (and OSS)

- IPython, backed by Windows Azure, turns any browser on any OS into a powerful exploration, analysis and visualization environment

- The Windows Azure Client Libraries provide easy, Pythonic access to Windows Azure services from Windows, MacOS and Linux

- Persistent Linux and Windows VMs enable running any Python stack you desire

I should also emphasize that the members of the Python team are huge OSS fans and would love to see you get involved and contribute as well. The Windows Azure Client Libraries are OSS on GitHub. PTVS is OSS on CodePlex. If there’s a bug or feature you’d like to work on, please let us know!

We’re also considering support for Big Data (via Hadoop) and High Performance Computing on Windows Azure. Do you have any ideas on what we should work on? Please let us know!

I assume Shahrokh means “Considering support” for Python by Apache Hadoop for Windows Azure.

Bruce Kyle reported an ISV Video: Lingo Jingo Language Learning Software Powered By Windows Azure in a 7/2/2012 post to Microsoft’s US ISV Evangelism blog:

The Lingo Jingo app is powered by Windows Azure to deliver a social language learning experience that integrates with Facebook, Twitter and LinkedIn to share progress on lessons and exercises. Web roles host its MVC-based main marketing site and language learning app, SQL Azure stores user data, sessions and lesson contexts, and Azure blob storage holds lesson images and videos.

Video link: Lingo Jingo Language Learning Software Powered By Windows Azure

In this video, Lingo Jingo's co-founder, Christian Hasker, speaks with Microsoft Architect Evangelist Allan Naim. Allan and Christian discuss why Lingo Jingo chose to build on Windows Azure, and Christian explains the benefits realized from building on the Azure platform.

About Lingo Jingo

Lingo Jingo was founded with one goal in mind – to make it easier for the world to communicate with one another in different languages. If you've ever tried to speak a few words of a foreign language when you are on vacation you'll know how making a little effort to say "Hello, how are you?" can open up a whole new world of experiences.

If you know 500 words in any language, you'll be able to understand rudimentary conversations and make yourself understood. We'll help you gain that confidence.

Joseph Fultz explained Mixing Node.js into Your Windows Azure Solution in his “Forecast: Cloudy” column for MSDN Magazine’s July 2012 issue. From the introduction:

Node.js has been getting a lot of press as of late, and it’s highly touted for its asynchronous I/O model, which releases the main thread to do other work while waiting on I/O responses. The overarching rule of Node.js is that I/O is expensive, and it attempts to mitigate the expense by forcing an asynchronous I/O model. I’ve been thinking about how it might be incorporated into an already existing framework. If you’re starting from scratch, it’s relatively easy to lay out the technology choices and make a decision, even if the decision is pretty much based on cool factor alone. However, if the goal is to perform a technology refresh on one part of a solution, the trick is picking something that’s current, has a future, doesn’t come with a lot of additional cost and will fit nicely with the existing solution landscape.
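The asynchronous I/O idea described above is not specific to Node.js. As a rough illustration in Python (the language used for code elsewhere in this post), the sketch below uses the standard library's `asyncio` to start three simulated I/O waits at once. `fake_io`, the task names and the 0.2-second delay are made up for illustration, and `asyncio.sleep` stands in for a real network or disk call. Because each wait hands control back to the event loop, the three waits overlap rather than run back to back.

```python
import asyncio
import time


async def fake_io(name, delay):
    # Stand-in for a slow I/O call (assumption): while this coroutine
    # is waiting, the event loop is free to run other coroutines.
    await asyncio.sleep(delay)
    return name


async def main():
    start = time.monotonic()
    # Start all three waits together; gather returns results in call order.
    results = await asyncio.gather(
        fake_io("a", 0.2),
        fake_io("b", 0.2),
        fake_io("c", 0.2),
    )
    elapsed = time.monotonic() - start
    return results, elapsed


results, elapsed = asyncio.run(main())
print(results, "finished in about %.1f seconds" % elapsed)
```

Run one after another, the same three waits would take roughly 0.6 seconds. Node.js applies the same principle through callbacks on a single main thread.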

That’s exactly what I’m going to demonstrate in this column. I’ll take an existing solution that allows viewing of documents in storage but requires a shared access signature to download them. To that solution I’ll add a simple UI using Node.js. To help with that implementation, I’ll take advantage of some commonly used frameworks for Node.js. The solution, therefore, will include:

- Node.js—the core engine

- Express—a Model-View-Controller (MVC)-style framework

- Jade—a rendering and templating engine

Together these three tools will provide a rich framework from which to build the UI, much like using ASP.NET MVC 3 and Razor.

Tarun Arora (@arora_tarun) described Visual Studio Load Testing using Windows Azure in a 7/1/2012 post:

In my opinion the biggest adoption barrier in performance testing on smaller projects is not the tooling but the high infrastructure and administration cost that comes with this phase of testing. If only a reusable solution were possible and infrastructure management weren’t as expensive, adoption would certainly spike. It certainly is possible if you bring Visual Studio and Windows Azure into the equation.

It is possible to run your test rig in the cloud without getting tangled in SCVMM or Lab Management. All you need is an active Azure subscription, a Windows Azure endpoint-enabled developer workstation running Visual Studio Ultimate on premises, and Windows Azure endpoint-enabled worker roles on Azure compute instances set up to run as test controllers and test agents. My test rig is running SQL Server 2012 and Visual Studio 2012 RC agents. The beauty is that the solution is reusable: you can open the Azure project, change the subscription and certificate, click publish and *BOOM*, in less than 15 minutes you could have your own test rig running in the cloud.

In this blog post I intend to show you how you can use the power of Windows Azure to effectively abstract the administration cost of infrastructure management and lower the total cost of Load & Performance Testing. As a bonus, I will share a reusable solution that you can use to automate test rig creation for both VS 2010 agents as well as VS 2012 agents.

Introduction

The slide show below should help you understand the high-level details of what we are trying to achieve...

Leveraging Azure for Performance Testing

View more PowerPoint from Avanade

Scenario 1 – Running a Test Rig in Windows Azure

To start off with the basics, in the first scenario I plan to discuss how to:

- Automate deployment & configuration of Windows Azure Worker Roles for Test Controller and Test Agent

- Automate deployment & configuration of the SQL database on the Test Controller Worker Role

- Scaling Test Agents on demand

- Creating a Web Performance Test and a simple Load Test

- Managing Test Controllers right from Visual Studio on Premise Developer Workstation

- Viewing results of the Load Test

- Cleaning up

- Have the above work in the shape of a reusable solution for both VS2010 and VS2012 Test Rig

Scenario 2 – The scaled out Test Rig and sharing data using SQL Azure

A scaled-out version of this implementation would involve multiple test rigs running in the cloud. In this scenario I will show you how to sync the load test databases from these distributed test rigs into one SQL Azure database using Azure sync. The selling point for this scenario is being able to collate the load test efforts from across the organization into one data store.

- Deploy multiple test rigs using the reusable solution from scenario 1

- Set up and configure Windows Azure Sync

- Test SQL Azure Load Test result database created as a result of Windows Azure Sync

- Cleaning up

- Have the above work in the shape of a reusable solution for both VS2010 and VS2012 Test Rig

The Ingredients

With an active MSDN Ultimate subscription you would already have access to everything listed here and more, but essentially you will need the following to try out the scenarios:

- Windows Azure Subscription

- Windows Azure Storage – Blob Storage

- Windows Azure Compute – Worker Role

- SQL Azure Database

- SQL Data Sync

- Windows Azure Connect – End points

- SQL 2012 Express or SQL 2008 R2 Express

- Visual Studio All Agents 2012 or Visual Studio All Agents 2010

- A developer workstation set up with Visual Studio 2012 – Ultimate or Visual Studio 2010 – Ultimate

- Visual Studio Load Test Unlimited Virtual User Pack.

Walkthrough

To set up the test rig in the cloud, the test controller, test agent and SQL Express installers need to be available when the worker role setup starts. The easiest and most efficient way is to pre-upload the required software into Windows Azure Blob storage. SQL Express, the test controller and the test agent expose various switches that we can take advantage of, including the quiet install switch. Once all three have been installed, the test controller needs to be registered with the test agents and the SQL database needs to be associated with the test controller. By enabling Windows Azure Connect on the machines in the cloud and on the on-premises developer workstation, we create a virtual network among the machines that enables two-way communication. All of the above can be done programmatically; let’s see how, step by step…
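The ordering constraints in the paragraph above can be written down as a small dependency graph. The sketch below is only an illustration of that ordering, not the author's solution: the step names are hypothetical labels, no installer commands or switches are shown because the post does not list them, and enabling Windows Azure Connect is left out because the post gives no ordering for it. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later) to produce one valid execution order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Illustrative step labels (assumptions), not commands from the post.
UPLOAD = "upload_installers_to_blob_storage"
INSTALLS = {
    "install_sql_express",
    "install_test_controller",
    "install_test_agent",
}

# Each key is a step; its value is the set of steps that must finish first.
rig_steps = {UPLOAD: set()}
for install in INSTALLS:
    # Installers must already be in blob storage when setup starts.
    rig_steps[install] = {UPLOAD}

# Both follow-up steps happen only once all three installs have finished.
rig_steps["register_controller_with_agents"] = set(INSTALLS)
rig_steps["associate_database_with_controller"] = set(INSTALLS)

# static_order() yields every step after all of its prerequisites.
order = list(TopologicalSorter(rig_steps).static_order())
for position, step in enumerate(order, start=1):
    print(position, step)
```

The three installs have no dependencies on one another, so a deployment script could run them in any order, or in parallel, before the two final steps.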

Scenario 1

Video Walkthrough–Leveraging Windows Azure for performance Testing

Scenario 2

Work in progress, watch this space for more…

Solution

If you are still reading and are interested in the solution, drop me an email with your Windows Live ID. I’ll add you to my TFS Preview project, which has a reusable solution for both VS 2010 and VS 2012 test rigs as well as guidance and demo performance tests.

Conclusion

Other posts and resources available here.

Possibilities…. Endless!

Doug Mahugh (@dmahugh) posted Symfony on Windows Azure, a powerful combination for PHP developers to the Interoperability @ Microsoft blog on 6/29/2012:

Symfony, the popular open source web application framework for PHP developers, is now even easier to use on Windows Azure thanks to Benjamin Eberlei’s Azure Distribution Bundle project. You can find the source code and documentation on the project’s GitHub repo.

Symfony is a model-view-controller (MVC) framework that takes advantage of other open-source projects including Doctrine (ORM and database abstraction layer), PHP Data Objects (PDO), the PHPUnit unit testing framework, Twig template engine, and others. It eliminates common repetitive coding tasks so that PHP developers can build robust web apps quickly.

Symfony and Windows Azure are a powerful combination for building highly scalable PHP applications and services, and the Azure Distribution Bundle is a free set of tools, code, and documentation that makes it very easy to work with Symfony on Windows Azure. It includes functionality for streamlining the development experience, as well as tools to simplify deployment to Windows Azure.

Features that help streamline the Symfony development experience for Windows Azure include changes to allow use of the Symfony Sandbox on Windows Azure, functionality for distributed session management, and a REST API that gives Symfony developers access to Windows Azure services using the tools they already know best. On the deployment side, the Azure Distribution Bundle adds some new commands that are specific to Windows Azure to Symfony’s PHP app/console that make it easier to deploy Symfony applications to Windows Azure:

- windowsazure:init – initializes scaffolding for a Symfony application to be deployed on Windows Azure

- windowsazure:package – packages the Symfony application for deployment on Windows Azure

Benjamin Eberlei, lead developer on the project, has posted a quick-start video that shows how to install and work with the Azure Distribution Bundle. His video takes you through prerequisites, installation, and deployment of a simple sample application that takes advantage of the SQL Database Federations sharding capability built into the SQL Database feature of Windows Azure:

Whether you’re a Symfony developer already, or a PHP developer looking to get started on Windows Azure, you’ll find the Azure Distribution Bundle to be easy to use and flexible enough for a wide variety of applications and architectures. Download the package today – it includes all of the documentation and scaffolding you’ll need to get started. If you have ideas for making Symfony development on Windows Azure even easier, you can join the project and make contributions to the source code, or you can provide feedback through the project site or right here.

Symfony and Doctrine are often used in combination, as shown in the sample application mentioned above. For more information about working with Doctrine on Windows Azure, see the blog post Doctrine supports SQL Database Federations for massive scalability on Windows Azure.