Windows Azure and Cloud Computing Posts for 1/30/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure Access Control, Service Bus, and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue and Hadoop Services

Added TeraSort and TeraValidate jobs on 1/30 and 1/31/2012 to my (@rogerjenn) Introducing Apache Hadoop Services for Windows Azure post. From the preface:

The SQL Server Team (@SQLServer) announced Apache Hadoop Services for Windows Azure, a.k.a. Apache Hadoop on Windows Azure or Hadoop on Azure, at the Professional Association for SQL Server (PASS) Summit in October 2011.

Update 1/31/2012: Added steps 15 and 16 with Job History and Cluster Management for the TeraSort job.

Update 1/30/2012: Added TeraSort job, steps 10 through 14, and TeraValidate job, steps 17 through 19. Waiting for response regarding interpretation of TeraValidate results.

- Introduction

- Tutorial: Running the 10GB GraySort Sample’s TeraGen Job

- Tutorial: Running the 10GB GraySort Sample’s TeraSort Job

- Tutorial: Running the 10GB GraySort Sample’s TeraValidate Job

- Apache Hadoop on Windows Azure Resources

Denny Lee (@dennylee) asked Moving data to compute or compute to data? That is the Big Data question in a 1/31/2012 post:

… As noted in the previous post Scale Up or Scale Out your Data Problems? A Space Analogy, the decision to scale up or scale out your data problem is a key facet of your Big Data problem. The ability to distribute the data across commodity hardware matters, and the movement of data is just as important.

Latencies (i.e., slower performance) are introduced when you need to move data from one location to another. Within the data world, you can solve this problem either by making it faster to move the data (e.g., compression, delta transfer, faster connectivity) or by designing a system that reduces the need to move the data in the first place (i.e., moving data to compute or compute to data).
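The following back-of-the-envelope calculation puts rough numbers on the cost of moving data. It assumes, purely for illustration, 1 TB of data, a dedicated 1 Gbit/s link, decimal units, and no protocol overhead:

```latex
t = \frac{1\,\text{TB} \times 8\,\text{bits/byte}}{1\,\text{Gbit/s}}
  = \frac{8 \times 10^{12}\,\text{bits}}{10^{9}\,\text{bits/s}}
  = 8000\,\text{s} \approx 2.2\,\text{h}
```

Compression and delta transfer shrink the numerator, and faster connectivity grows the denominator. Moving the compute to the data avoids most of the transfer altogether.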

Scaling Up the Problem / Moving Data to Compute

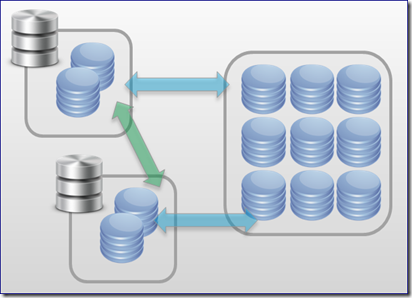

To help describe the problem, the diagram above represents a traditional scale-up RDBMS. The silver database boxes on the left represent the database servers (each with blue platters representing local disks). The box with 9 blue platters represents a disk array (e.g., SAN, DAS). The blue arrows represent fiber channel connections between the servers and the disk array, and the green arrows represent network connectivity.

In an optimized scale-up RDBMS, we will often set up DAS or SANs to quickly transfer data from the disk array to the RDBMS server or compute node. The compute node's local disk is often allocated to hold temp/backup/cache files. This works well as long as you can ensure low latencies.

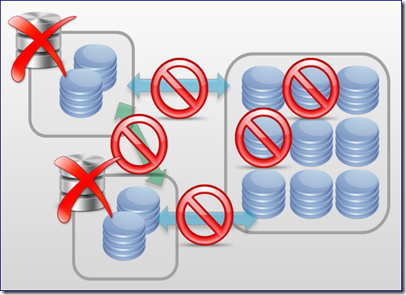

And this is where things can get complicated. If you were to lose disks on the array and/or fiber channel connectivity to the disk array, the RDBMS would go offline. But as described in the above diagram, perhaps you set up active clustering so the secondary RDBMS can take over.

Yet, if you were to lose network connectivity (e.g. the secondary RDBMS is not aware the primary is offline) or lose fiber channel connectivity, you would also lose the secondary.

The Importance of ACID

It is important to note that many RDBMS systems have features or designs that work around these problems. But ensuring availability and redundancy often requires more expensive hardware to work around the problematic network and disk failure points.

Nor is this to say that RDBMSs are badly designed. They are designed with ACID in mind (atomicity, consistency, isolation, and durability) to guarantee the reliability and robustness of database transactions (for more info, check out the Wikipedia entry: ACID).

Scaling Out the Problem / Moving Compute to Data

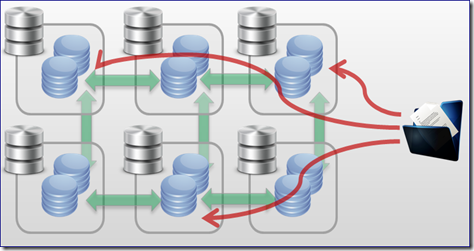

In a scale-out or distributed solution, the idea is to have many commodity servers. There are many points of failure, but there are also many paths to success.

Key to a distributed system is that as data comes in (the blue file icon on the right represents data such as web logs), the data is distributed and replicated in chunks to many nodes within the cluster. In the case of Hadoop, files are broken into 64 MB chunks (blocks), and each of these chunks is placed in three different locations (if you set the replication factor to 3).

Replicating the data uses more disk space. In return, once the data is in the system, it is stored redundantly.
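The minimal C# sketch below makes the chunking and replication concrete. It assumes a 64 MB block size and a replication factor of 3. The node names, the 200 MB file and the round-robin placement are all hypothetical. Real HDFS placement is rack-aware and decided by the NameNode, so treat this only as an illustration of the idea.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Illustrative assumptions: 64 MB blocks (the classic HDFS default) and 3 replicas.
const long BlockSize = 64L * 1024 * 1024;
const int ReplicationFactor = 3;

// Number of blocks needed for a file; the last block may be only partly filled.
static long BlockCount(long fileSizeBytes, long blockSize) =>
    (fileSizeBytes + blockSize - 1) / blockSize;

// Hypothetical placement: give each block `replicas` distinct nodes, rotating
// the starting node per block (simple round-robin, not HDFS's rack-aware policy).
static List<string[]> PlaceReplicas(long blocks, string[] nodes, int replicas)
{
    if (replicas > nodes.Length)
        throw new ArgumentException("Need at least as many nodes as replicas.");

    var placement = new List<string[]>();
    for (long b = 0; b < blocks; b++)
    {
        placement.Add(Enumerable.Range(0, replicas)
            .Select(r => nodes[(int)((b + r) % nodes.Length)])
            .ToArray());
    }
    return placement;
}

var cluster = new[] { "node1", "node2", "node3", "node4", "node5" };
long fileSize = 200L * 1024 * 1024; // hypothetical 200 MB web log
long blockCount = BlockCount(fileSize, BlockSize);
var layout = PlaceReplicas(blockCount, cluster, ReplicationFactor);

for (int i = 0; i < layout.Count; i++)
    Console.WriteLine($"block {i}: {string.Join(", ", layout[i])}");
Console.WriteLine($"Block replicas stored: {blockCount * ReplicationFactor}");
```

The 200 MB file needs four blocks, and three copies of each means it occupies roughly three times its size in raw disk space. That is the trade-off described above.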

What is great about these types of distributed systems is that they are designed from the beginning to handle latency issues. These range from disk or network connectivity problems to outright losing a node. In the above diagram, a user is requesting data, but some disks and some network connections have been lost.

Nevertheless, other nodes still have network connectivity, and because the data has been replicated, it is still available. Systems designed to scale out and distribute data, like Hadoop, can ensure the availability of the data and will complete the query as long as the data exists (it may take longer if nodes are lost, but the query will be completed).
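A query can still complete after these failures because each block only needs one live replica. The C# sketch below checks that condition. The block map, node names and failed nodes are hypothetical sample data. Real Hadoop schedulers do considerably more, for example preferring data-local replicas.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
#nullable enable

// Hypothetical block map: block id -> nodes holding a replica (replication factor 3).
var blockMap = new Dictionary<string, string[]>
{
    ["blk_0"] = new[] { "node1", "node2", "node3" },
    ["blk_1"] = new[] { "node2", "node3", "node4" },
    ["blk_2"] = new[] { "node3", "node4", "node5" },
    ["blk_3"] = new[] { "node4", "node5", "node1" },
};

// For each block, pick the first replica on a node that has not failed;
// a block whose replicas are all on failed nodes maps to null.
static Dictionary<string, string?> ChooseReplicas(
    Dictionary<string, string[]> map, ISet<string> failedNodes) =>
    map.ToDictionary(
        kv => kv.Key,
        kv => kv.Value.FirstOrDefault(node => !failedNodes.Contains(node)));

// The query can finish only if every block still has a live replica.
static bool CanComplete(Dictionary<string, string?> choice) =>
    choice.Values.All(node => node != null);

var failed = new HashSet<string> { "node3", "node4" };
var plan = ChooseReplicas(blockMap, failed);
foreach (var kv in plan)
    Console.WriteLine($"{kv.Key} -> {kv.Value ?? "UNAVAILABLE"}");
Console.WriteLine($"Query can complete: {CanComplete(plan)}");
```

With node3 and node4 down, every block still resolves to a live replica. Losing node1, node2 and node3 together would strand blk_0, because all three of its replicas live on those nodes.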

The Importance of BASE

By using many commodity boxes, you distribute and replicate your data to multiple systems. But as there are many moving parts, distributed systems like these cannot ensure the reliability and robustness of database transactions. Instead, they fall under the domain of eventual consistency where over a period of time (i.e. eventually) the data within the entire system will be consistent (e.g. all data modifications will be replicated throughout the cluster). This concept is also known as BASE (as opposed to ACID) – Basically Available, Soft State, Eventually Consistent. For more information, check out the Wikipedia reference: Eventual Consistency.
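A toy model makes eventual consistency easier to see. The C# sketch below uses last-writer-wins timestamps and a pairwise anti-entropy merge. This is only one simple convergence technique among several, and it is not how HDFS or any particular product replicates data. Two replicas accept writes independently, briefly disagree, and converge once they exchange state.

```csharp
using System;
using System.Collections.Generic;

// Record a write on a single replica: key -> (value, logical timestamp).
static void Write(Dictionary<string, (string Value, long Ts)> replica,
                  string key, string value, long ts) =>
    replica[key] = (value, ts);

// Anti-entropy step: copy entries from `source` into `target` when they are newer.
// Equal timestamps keep the target's value; a real system needs a deterministic
// tie-breaker (e.g., replica id), which this sketch omits.
static void Merge(Dictionary<string, (string Value, long Ts)> target,
                  Dictionary<string, (string Value, long Ts)> source)
{
    foreach (var kv in source)
    {
        if (!target.TryGetValue(kv.Key, out var current) || kv.Value.Ts > current.Ts)
            target[kv.Key] = kv.Value;
    }
}

var key = "homepage";
var a = new Dictionary<string, (string Value, long Ts)>();
var b = new Dictionary<string, (string Value, long Ts)>();

Write(a, key, "hits=10", ts: 1);
Write(b, key, "hits=12", ts: 2); // a later write that only reached replica b

// Soft state: until the replicas exchange data, readers may see different values.
Console.WriteLine($"before: a={a[key].Value}, b={b[key].Value}");

// Eventually consistent: after merging in both directions, both replicas agree.
Merge(a, b);
Merge(b, a);
Console.WriteLine($"after:  a={a[key].Value}, b={b[key].Value}");
```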

Discussion

Similar to the post Scale Up or Scale Out your Data Problems? A Space Analogy, choosing between ACID and BASE is not a matter of which one to use, but which one to use when. For example, as noted in the post What’s so BIG about “Big Data”?, the Yahoo! Analysis Services cube is 24 TB. That is certainly a case of moving data to compute, given my obsession with random IO in SSAS. The source of this cube is 2 PB of data from a huge Hadoop cluster (moving compute to data).

Each one has its own set of issues – scaling out increases the complexity of maintaining so many nodes, scaling up becomes more expensive to ensure availability and reliability, etc. It will be important to understand the pros/cons of each type – often it will be a combination of these two. Another great example can be seen in Dave Mariani (@mariani)’s post: Big Data, Bigger Brains at Klout’s blog.

ACID and BASE each have their own set of problems; the good news is that mixing them together often neutralizes the problems.

…


SQL Azure Database, Federations and Reporting

The SQL Azure Labs Team (@SQLAzureLabs) announced Microsoft Codename "SQL Azure Security Services" on 1/30/2012:

Scan your databases for security vulnerabilities

Microsoft Codename "SQL Azure Security Services" is an easy-to-use Web application that enables you to assess the security state of one or all of the databases on your SQL Azure server. The service scans databases for security vulnerabilities and provides you a report. You can use the recommendations and best practices provided in the report to improve the security state of your databases. The steps are simple:

1. Provide your SQL Azure account credentials to the service.

2. Choose the target for the report: display in your browser or send to Windows Azure Blob storage.

3. View the report and get details from supporting documentation.

4. Take the corrective steps recommended in the report to secure your data.

5. Provide feedback on the data protection services you want in SQL Azure.

"SQL Azure Security Services" is an early prototype for solving the problem of securing your data in the cloud: no matter where it is, whatever the capacity and scale. Your feedback is very valuable. We look forward to hearing from you!

You will need a Windows Azure and SQL Azure subscription to use the service. If you don’t have one, click here to sign up for a free trial.

Click here to get started with this lab.

The SQL Server Team (@SQLServer) announced Microsoft SQL Server Migration Assistant 5.2 is Now Available in a 1/30/2012 post:

SQL Server Migration Assistant (SSMA) v5.2 is now available. SSMA simplifies the database migration process from Oracle, Sybase, MySQL and Microsoft Access to SQL Server and SQL Azure. SSMA automates all aspects of migration, including migration assessment analysis, schema and SQL statement conversion, data migration, and migration testing. This reduces the cost and risk of your database migration project.

The new version, SSMA 5.2, provides the following major enhancements:

- Support conversion of Oracle %ROWTYPE parameters with NULL default

- Support conversion of Sybase’s Rollback Trigger

- Better user credential security to support Microsoft Access Linked Tables

Reduce Cost and Risk of Competitive Database Migration

Does your customer have Oracle, Sybase, MySQL or Access databases that you would like to migrate to SQL Server or SQL Azure? SQL Server Migration Assistant (SSMA) automates all aspects of migration, including migration assessment analysis, schema and SQL statement conversion, data migration, and migration testing. The tool provides the following functionality:

- Database Migration Analyzer: Assess and report the complexity of the source database for migration to SQL Server. The generated report includes detailed information on the database schema and the percentage of schema objects that can be converted by the tool. It also estimates the hours needed to manually migrate the schema objects that cannot be converted automatically. You can use this information to decide on and plan the migration. Visit the SSMA team site for a video demonstration of this feature.

- Schema Converter: Automate conversion of schema objects (including programming code inside packages, procedures and functions) into equivalent SQL Server objects and T-SQL dialect. The tool provides the ability to customize the conversion with hundreds of project settings according to your specific business requirements. At the end of conversion, a report is generated for any object or statement not supported for automated conversion. You can drill down to each migration issue and get a side-by-side comparison of the original and converted source code. You can also make any necessary modifications directly from the SSMA user interface. Visit the SSMA team site for a video demonstration of this feature.

- Data Migrator: Migrate data from the source database to SQL Server using the same conversion logic and type mapping specified in the project settings during schema conversion. Visit the SSMA team site for a video demonstration of this feature.

- Migration Tester: Facilitate unit testing of converted programs in SQL Server.

Simplify Migration to SQL Azure

Customers can simplify their move to the cloud with SSMA. You can migrate from a competing database directly to SQL Azure. The tool reports possible migration issues, converts the schema, and migrates data to the SQL Azure database. SSMA supports SQL Azure migration from Microsoft Access, MySQL, and Sybase.

Receive FREE Technical Support and Migration Resources

Microsoft Customer Service and Support (CSS) provides free email technical support for SSMA. The SSMA product web site and the SSMA team blog provide many resources to help customers reduce the cost and risk of database migration.

Download SQL Server Migration Assistant (SSMA) v5.2

Launch the download of the SSMA for Oracle.

Launch the download of the SSMA for Sybase.

Launch the download of the SSMA for MySQL.

Launch the download of the SSMA for Access.

Cihan Biyikoglu (@cihangirb) posted a brief PHP and Federations in SQL Azure - Sample Code from Brian article on 1/29/2012:

Brian has a ton[n]e of samples on PHP and Federations in this post right here... Another great one!

http://blogs.msdn.com/b/silverlining/archive/2012/01/18/using-sql-azure-federations-via-php.aspx


Marketplace DataMarket, Social Analytics and OData

The Social Analytics Team announced Enhanced Analytics and Sentiment Analysis Arrive in Social Analytics in a 1/31/2012 post to the Microsoft Codename “Social Analytics” blog:

We are proud to announce an update to the Microsoft Codename “Social Analytics” Lab. This update builds on the services we announced in October by providing the following new capabilities:

- Enhanced analytics in our API

- Sample usage of the enhanced analytics in the Engagement Client - 5 new analytic widgets

- Improved sentiment analysis for Tweets

1. Enhanced Analytics:

Our existing API now provides richer analytics views of social data. This makes it easier to find the top conversations, contributors, keywords and sources related to any filter with a simple OData query. The query returns the conversations related to these calculations to your application.

Here’s a sample* OData query returning the top 5 keywords found in a filter for the past 7 days:

You can start investigating the details of the API by going to this link. If you are new to Social Analytics, check out our API overview here.

* Note: You will need to use your existing credentials for the lab to use this sample code. (Look at the API section here for details)

2. New Analytics Widgets in the Engagement Client:

See the new enhanced analytics in use in our sample Silverlight UI – the Engagement Client. We built these 5 analytic widgets using the new analytic enhancements to our API. These widgets provide the following analytic views for any filter:

- Top Conversations

- Top Contributors

- Top Keywords

- Volume

- Sources

Here's an illustration of the new analytics in the sample Silverlight client:

3. Improved Sentiment Analysis for Tweets

In this release, we snuck in a little bonus! We updated the sentiment engine with minor enhancements to improve the analysis of sentiment in Tweets.

That's what's new in this release! In a few short months, we've made major progress on this experimental cloud service.

Now it's time for you to try the updated lab and let us know what you think. We're already working on the top feature request from our fall release - giving you the ability to define your own topics. We plan on making that functionality part of a future release.

For those new to this Lab, please check out our initial blog post for an overview of Microsoft Codename "Social Analytics". Here are some additional links from that post for your convenience:

- Get started today

- Visit our official homepage and Connect Site for more information

- Connect with us in our forums

- Stay tuned to our product blog for future updates

Update 2/1/2012: The Social Analytics Team promised in a comment “more details for the upgrade to the sentiment engine, as well as a description of the technology/algorithm(s) behind” its upgrade to the Microsoft Research sentiment code in the next post to the team’s blog.

Here’s my Microsoft Codename “Social Analytics” Windows Form Client about halfway through a test of 200,000 of the latest Tweets, posts, and other items:

Miguel Llopis (@mllopis) of the Microsoft Codename “Data Explorer” team posted Crunching Big Data with “Data Explorer” and “Social Analytics” on 1/30/2012:

Last week an interesting article was published in SearchCloudComputing which shows how to consume feeds from the “Social Analytics” Lab in “Data Explorer”, then perform some filter, transform and grouping operations on this data using Data Explorer’s intuitive UI and finally publish the resulting dataset as a snapshot so the contents at a given point in time can be accessed later on. You can take a look at the article following this link.

The author of this article, Roger Jennings, has also published a few other blog posts about Data Explorer on his blog. We particularly recommend following the “41-step illustrated tutorial on creating a mashup”.

If you haven’t already done so, you can sign up to try the Data Explorer cloud service today!

Thanks for the kind words, Miguel.

Glenn Gailey (@ggailey777) posted More on the New OData T4 Template: Service Operations on 1/30/2012:

I’ve long missed support for calling service operations by using the proxy client code-generated by WCF Data Services, and I’ve described some workarounds in the post Calling Service Operations from the WCF Data Services Client. This is why I was excited to discover that the new T4 template, which I introduced in my previous post New and Improved T4 Template for OData Client and Local Database, now supports calling service operations as a first class behavior.

For example, the following T4-generated method on the NorthwindEntities context calls a GetOrdersByCity GET service operation:

    public global::System.Data.Services.Client.DataServiceQuery<Order> GetOrdersByCity(global::System.String city)
    {
        return this.CreateQuery<Order>("GetOrdersByCity")
            .AddQueryOption("city", "'" + city + "'");
    }

And here’s another generated method that returns a collection of strings:

    public System.Collections.Generic.IEnumerable<string> GetCustomerNames()
    {
        return (System.Collections.Generic.IEnumerable<string>)this.Execute<string>(
            new global::System.Uri("GetCustomerNames", global::System.UriKind.Relative),
            Microsoft.Data.OData.HttpMethod.Get, false);
    }

Note that these methods use essentially the same techniques described in Calling Service Operations from the WCF Data Services Client.

Before Getting Started with the T4 Template

Before we get started, I should point out that there is currently a bug (which I reported) in line 868 of the Reference.tt template. You can fix it by changing that line of code as follows:

    parameters = string.Concat(parameters, "global::System." + GetNameFromFullName(p.Type.FullName()));

(You might also want to do a global replace of "refrence" with "reference", if you care about such things.)

Also, remember that this T4 template requires the current Microsoft WCF Data Services October 2011 CTP release for the upcoming OData release. To install this new T4 template into your project:

- Make sure that you have NuGet installed. You can install it from here: https://nuget.org/.

- If you haven’t already done so, use the Add Service Reference tool in Visual Studio to add a reference to the OData service. (The template needs the service.edmx file generated by the tool.)

- In your project, use the NuGet Package Manager Console to download and install the ODataT4-CS package:

    PM> Install-Package ODataT4-CS

- Open the Reference.tt template file and edit line 868 to fix the bug described above.

- In the Reference.tt template file, change the value of the MetadataFilepath property in the TransformContext constructor to the location of the .edmx file generated by the service reference and update the Namespace property to a namespace that doesn’t collide with the one generated by the service reference.

Now let’s compare the ease of using the client proxy generated by this new T4 template against the examples from the topic Calling Service Operations (WCF Data Services).

Calling a Service Operation that Returns a Collection of Entities

For example, here’s the previously difficult-to-compose URI-based query to call the GetOrdersByCity operation:

    string queryString = string.Format("GetOrdersByCity?city='{0}'", city)
        + "&$orderby=ShippedDate desc"
        + "&$expand=Order_Details";
    var results = context.Execute<Order>(new Uri(queryString, UriKind.Relative));

With the new template, this becomes a much nicer LINQ query:

    var results = from o in context.GetOrdersByCity(city).Expand("Order_Details")
                  orderby o.ShippedDate descending
                  select o;

Because this service operation returns an IQueryable<T> collection of entities, you can compose further queries against it and get support for all the nice LINQ operations. And, as you would expect with DataServiceQuery<T>, the request to the service operation is made when the result is assigned or enumerated.
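A string parameter passed to a service operation must be formatted correctly. OData string literals in a URI are delimited by single quotes, and a single quote inside the value is escaped by doubling it. So a city such as O'Fallon has to be sent as 'O''Fallon'. As written, both the hand-built query string and the generated GetOrdersByCity method in this post simply concatenate quotes around the value. The minimal C# sketch below shows the escaping step. The helper names are hypothetical and are not part of the T4 template or WCF Data Services.

```csharp
using System;

// Hypothetical helper: render a .NET string as an OData string literal by
// wrapping it in single quotes and doubling any embedded single quotes.
static string ToODataStringLiteral(string value) =>
    "'" + value.Replace("'", "''") + "'";

// Hypothetical helper: build a relative service operation URI that passes one
// string parameter, percent-encoding the literal for the query string.
static Uri ServiceOperationUri(string operation, string parameter, string value) =>
    new Uri(operation + "?" + parameter + "=" +
            Uri.EscapeDataString(ToODataStringLiteral(value)),
            UriKind.Relative);

Console.WriteLine(ToODataStringLiteral("London"));   // 'London'
Console.WriteLine(ToODataStringLiteral("O'Fallon")); // 'O''Fallon'
Console.WriteLine(ServiceOperationUri("GetOrdersByCity", "city", "O'Fallon"));
```

The resulting Uri could be passed to context.Execute<Order>() in the same way as the hand-built query string above. Before relying on either approach, check whether AddQueryOption in the CTP you are using performs any quote escaping of its own.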

Calling a Service Operation that Returns a Single Entity

The benefits of this new client support for service operations are even more evident when calling a service operation that returns a single entity, which used to look like this:

    string queryString = "GetNewestOrder";
    Order order = context.Execute<Order>(new Uri(queryString, UriKind.Relative))
        .FirstOrDefault();

Which can now be simplified to this one clean line of code:

    Order order = context.GetNewestOrder();

Note that in this case, the request is sent when the method is called.

Calling a Service Operation by using POST

It was a pet peeve of many folks that you couldn’t call a POST service operation from the client, even though it’s perfectly legal in OData. In this new version, you can now call DataServiceContext.Execute() and select the request type (GET or POST). This means that the new T4 template enables you to call POST service operations directly from methods:

    // Call a POST service operation that returns a collection of customer names.
    IEnumerable<string> customerNames = context.GetCustomerNamesPost();

(Please don’t give me grief over having a POST operation named Get…; it’s just for demo purposes.) As before, you can only upload data by using parameters, which is still an OData requirement.

The Remaining Examples

Here are the new versions of the remaining service operation examples (called synchronously) using the new service operation methods on the client. In a way, they become almost trivial.

Calling a Service Operation that Returns a Collection of Primitive Types

    var customerNames = context.GetCustomerNames();

Calling a Service Operation that Returns a Single Primitive Type

    int numOrders = context.CountOpenOrders();

Calling a Service Operation that Returns Void

    context.ReturnsNoData();

Calling a Service Operation Asynchronously

OK, so it’s now super easy to synchronously call service operations using this new template-generated proxy client, but what about asynchronous calls? Well, there is a way to do this with the generated proxy, but it only works for service operations that return a collection of entities. In this case, it works because the service operation execution is represented as a DataServiceQuery<T> instance, which has its own async execution methods. For this kind of service operation, you can make the execution work asynchronously, like this:

    public static void GetOrdersByCityAsync()
    {
        // Define the service operation query parameter.
        string city = "London";

        // Create the DataServiceContext using the service URI
        // (svcUri is a static field holding the service root URI, defined elsewhere).
        NorthwindEntities context = new NorthwindEntities(svcUri);

        // Define a query for orders based on calling GetOrdersByCity.
        var query = (from o in context.GetOrdersByCity(city).Expand("Order_Details")
                     orderby o.ShippedDate descending
                     select o) as DataServiceQuery<Order>;

        // Asynchronously execute the service operation that returns
        // all orders for the specified city; pass the query as the state.
        query.BeginExecute(OnAsyncExecutionComplete, query);
    }

    private static void OnAsyncExecutionComplete(IAsyncResult result)
    {
        // Get the query back from the stored state.
        var query = result.AsyncState as DataServiceQuery<Order>;

        try
        {
            // Complete the execution and write out the results.
            foreach (Order o in query.EndExecute(result))
            {
                Console.WriteLine(string.Format("Order ID: {0}", o.OrderID));
                foreach (Order_Detail item in o.Order_Details)
                {
                    Console.WriteLine(string.Format("\tItem: {0}, quantity: {1}",
                        item.ProductID, item.Quantity));
                }
            }
        }
        catch (DataServiceQueryException ex)
        {
            QueryOperationResponse response = ex.Response;
            Console.WriteLine(response.Error.Message);
        }
    }

Since the other types of execution rely on calling the synchronous DataServiceContext.Execute() method, I’m not sure how I would make this work with the existing APIs; I need to think more about it.

Service Operations Won’t Work on Asynchronous Clients

And don’t even bother trying to make any of this work with the Windows Phone client. First, there isn’t currently a Windows Phone client available that supports OData v3. (In fact, I removed the service operations code altogether from my hybrid T4 template, just to prevent any errors.) The October CTP did include a Silverlight client, but there is a bigger problem. Under the covers, most of the generated methods call DataServiceContext.Execute(), a synchronous method that doesn’t exist in the asynchronous clients. If you leave the T4 template code in place for a Silverlight client, every generated service operation method that calls DataServiceContext.Execute() will cause a compiler error.

Just to be clear… on these async clients, only the generated service operation methods are broken. The rest of the T4 template code works fine.

I’ve been providing feedback to the author of this excellent new template, so I have high hopes that much of this can be addressed.


Windows Azure Access Control, Service Bus and Workflow

Mick Badran (@mickba) explained Azure ServiceBus: Fixing the dreaded ‘The X.509 certificate CN=servicebus.windows.net chain building failed’ error in a 1/30/2012 post:

Scotty and I have been fighting this error for over 2 weeks now, and have tried many options, settings, registry keys, reboots and so on.

(We have had this on 2 boxes now that are *not* directly connected to the internet. They are locked-down servers with only the required services accessible through the firewall.)

Generally you’ll encounter this error if you install Azure SDK v1.6. Some people who hit this error have reverted to the Azure SDK v1.5, and that seems to fix most of their problems.

Here I’m using netTcpRelayBinding and BizTalk 2010, but this could just as easily have been IIS or your own app.

Finding the outbound ports and Azure datacenter address space is always the challenge. Ports 80, 443, 9351 and 9352 are the main ones, with the remote addresses being the network segments of your Azure datacenter.

The problem: “Oh it’s a chain validation thing, I’ll just go and turn off Certificate checking…” let me see the options.

(This is what we thought 2+ weeks ago.) Here I have a BizTalk screenshot of the transportClientEndpointBehaviour with the Authentication node set to NoCheck and None (you would set these from code or a config file outside of BizTalk).

We found that these currently have NO BEARING whatsoever…2 weeks we’ll never get back.

Don’t get drawn in here; it’s a long, winding path and you’ll most likely come up short.

I am currently waiting to hear back from the folks on the product team to see what the answer is on this – BUT for now as a workaround we sat down with a network sniffer to see the characteristics.

Workaround:

1. Add some hosts file entries.

2. Create a dummy site so the checker is fooled into grabbing local CRLs.

Add these entries to your hosts file:

    127.0.0.1 www.public-trust.com
    127.0.0.1 mscrl.microsoft.com
    127.0.0.1 crl.microsoft.com
    127.0.0.1 corppki

Download and extract these directories to your DEFAULT WEB SITE (i.e., the one that answers to http://127.0.0.1/…..).

This is usually under C:\inetpub\wwwroot (even if you have SharePoint installed).

-------------------- The nasty error -------------------

The Messaging Engine failed to add a receive location "<receive location>" with URL "sb://<rec url>" to the adapter "WCF-Custom". Reason: "System.ServiceModel.Security.SecurityNegotiationException: The X.509 certificate CN=servicebus.windows.net chain building failed. The certificate that was used has a trust chain that cannot be verified. Replace the certificate or change the certificateValidationMode. The revocation function was unable to check revocation because the revocation server was offline.

---> System.IdentityModel.Tokens.SecurityTokenValidationException: The X.509 certificate CN=servicebus.windows.net chain building failed. The certificate that was used has a trust chain that cannot be verified. Replace the certificate or change the certificateValidationMode. The revocation function was unable to check revocation because the revocation server was offline.

at Microsoft.ServiceBus.Channels.Security.RetriableCertificateValidator.Validate(X509Certificate2 certificate)

at System.IdentityModel.Selectors.X509SecurityTokenAuthenticator.ValidateTokenCore(SecurityToken token)

at System.IdentityModel.Selectors.SecurityTokenAuthenticator.ValidateToken(SecurityToken token)

at System.ServiceModel.Channels.SslStreamSecurityUpgradeInitiator.ValidateRemoteCertificate(Object sender, X509Certificate certificate, X509Chain chain, SslPolicyErrors sslPolicyErrors)

at System.Net.Security.SecureChannel.VerifyRemoteCertificate(RemoteCertValidationCallback remoteCertValidationCallback)
at System.Net.Security.SslState.CompleteHandshake()
at System.Net.Security.SslState.CheckCompletionBeforeNextReceive(ProtocolToken message, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartSendBlob(Byte[] incoming, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.ProcessReceivedBlob(Byte[] buffer, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartReceiveBlob(Byte[] buffer, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartSendBlob(Byte[] incoming, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.ProcessReceivedBlob(Byte[] buffer, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartReceiveBlob(Byte[] buffer, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartSendBlob(Byte[] incoming, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.ProcessReceivedBlob(Byte[] buffer, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartReceiveBlob(Byte[] buffer, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartSendBlob(Byte[] incoming, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.ForceAuthentication(Boolean receiveFirst, Byte[] buffer, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.ProcessAuthentication(LazyAsyncResult lazyResult)
at System.ServiceModel.Channels.SslStreamSecurityUpgradeInitiator.OnInitiateUpgrade(Stream stream, SecurityMessageProperty& remoteSecurity)
--- End of inner exception stack trace ---
at System.ServiceModel.Channels.SslStreamSecurityUpgradeInitiator.OnInitiateUpgrade(Stream stream, SecurityMessageProperty& remoteSecurity)
at System.ServiceModel.Channels.StreamSecurityUpgradeInitiatorBase.InitiateUpgrade(Stream stream)
at System.ServiceModel.Channels.ConnectionUpgradeHelper.InitiateUpgrade(StreamUpgradeInitiator upgradeInitiator, IConnection& connection, ClientFramingDecoder decoder, IDefaultCommunicationTimeouts defaultTimeouts, TimeoutHelper& timeoutHelper)
at System.ServiceModel.Channels.ClientFramingDuplexSessionChannel.SendPreamble(IConnection connection, ArraySegment`1 preamble, TimeoutHelper& timeoutHelper)
at System.ServiceModel.Channels.ClientFramingDuplexSessionChannel.DuplexConnectionPoolHelper.AcceptPooledConnection(IConnection connection, TimeoutHelper& timeoutHelper)
at System.ServiceModel.Channels.ConnectionPoolHelper.EstablishConnection(TimeSpan timeout)
at System.ServiceModel.Channels.ClientFramingDuplexSessionChannel.OnOpen(TimeSpan timeout)
at System.ServiceModel.Channels.CommunicationObject.Open(TimeSpan timeout)
at Microsoft.ServiceBus.RelayedOnewayTcpClient.RelayedOnewayChannel.Open(TimeSpan timeout)
at Microsoft.ServiceBus.RelayedOnewayTcpClient.GetChannel(Uri via, TimeSpan timeout)
at Microsoft.ServiceBus.RelayedOnewayTcpClient.ConnectRequestReplyContext.Send(Message message, TimeSpan timeout, IDuplexChannel& channel)
at Microsoft.ServiceBus.RelayedOnewayTcpListener.RelayedOnewayTcpListenerClient.Connect(TimeSpan timeout)
at Microsoft.ServiceBus.RelayedOnewayTcpClient.EnsureConnected(TimeSpan timeout)
at Microsoft.ServiceBus.Channels.CommunicationObject.Open(TimeSpan timeout)
at Microsoft.ServiceBus.Channels.RefcountedCommunicationObject.Open(TimeSpan timeout)
at Microsoft.ServiceBus.RelayedOnewayChannelListener.OnOpen(TimeSpan timeout)
at Microsoft.ServiceBus.Channels.CommunicationObject.Open(TimeSpan timeout)
at System.ServiceModel.Dispatcher.ChannelDispatcher.OnOpen(TimeSpan timeout)
at System.ServiceModel.Channels.CommunicationObject.Open(TimeSpan timeout)
at System.ServiceModel.ServiceHostBase.OnOpen(TimeSpan timeout)
at System.ServiceModel.Channels.CommunicationObject.Open(TimeSpan timeout)
at Microsoft.ServiceBus.SocketConnectionTransportManager.OnOpen(TimeSpan timeout)
at Microsoft.ServiceBus.Channels.TransportManager.Open(TimeSpan timeout, TransportChannelListener channelListener)
at Microsoft.ServiceBus.Channels.TransportManagerContainer.Open(TimeSpan timeout, SelectTransportManagersCallback selectTransportManagerCallback)
at Microsoft.ServiceBus.SocketConnectionChannelListener`2.OnOpen(TimeSpan timeout)
at Microsoft.ServiceBus.Channels.CommunicationObject.Open(TimeSpan timeout)
at System.ServiceModel.Dispatcher.ChannelDispatcher.OnOpen(TimeSpan timeout)
at System.ServiceModel.Channels.CommunicationObject.Open(TimeSpan timeout)
at System.ServiceModel.ServiceHostBase.OnOpen(TimeSpan timeout)
at System.ServiceModel.Channels.CommunicationObject.Open(TimeSpan timeout)
at Microsoft.BizTalk.Adapter.Wcf.Runtime.WcfReceiveEndpoint.Enable()
at Microsoft.BizTalk.Adapter.Wcf.Runtime.WcfReceiveEndpoint..ctor(BizTalkEndpointContext endpointContext, IBTTransportProxy transportProxy, ControlledTermination control)
at Microsoft.BizTalk.Adapter.Wcf.Runtime.WcfReceiver`2.AddReceiveEndpoint(String url, IPropertyBag adapterConfig, IPropertyBag bizTalkConfig)".

Scott Densmore (@scottdensmore) announced a Fluent API for Windows Azure ACS Management in a 1/29/2012 post:

The guys at SouthWorks have been hard at work again. We have been using the same setup program for our Windows Azure projects to configure ACS for a while now. It is a bit rough around the edges, but we have not had time to invest in a little upgrade. Well, leave it to these guys to go above and beyond: Jorge Rowies has created a fluent API for setting things up. Looks great. Check it out and start forking on GitHub.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

MarketWire (@Marketwire) asserted “Further Investment in Microsoft's Cloud Platform Brings Greater Agility to Redknee's Cloud-Based Converged Billing Solutions” in an introduction to a Redknee Expands Cloud Offering With Windows Azure press release of 1/31/2012:

Redknee Solutions, Inc., a leading provider of business-critical billing, charging and customer care software and solutions for communications service providers, is pleased to announce that it is expanding its strategic alliance with Microsoft Corp. to support Redknee's strategy to deliver on-premise, private cloud, and public cloud-based converged billing solutions. The integration of Redknee's real-time converged billing platform to Windows Azure will reinforce the commitment by Redknee and Microsoft to develop solutions that deliver greater scalability and agility while reducing capital expenditure for communications service providers across the world.

Redknee's expanded cloud offering will enable service providers in competitive and fast-growing markets to invest in a real-time converged billing and customer care solution based on the open and scalable Windows Azure public cloud. It will bring additional flexibility to Redknee's multi-tenant converged billing solution, which enables service providers to scale as required with a 'pay as you grow' model and minimizes risk. Redknee's cloud solution is supporting the growth of group operators, Tier 1 sub-brands, MVNOs, and MVNEs by enabling them to reduce CAPEX, standardize their billing operations, and launch into new markets quickly and effectively.

Lucas Skoczkowski, Redknee's CEO, commented: "Redknee's commitment to invest in Windows Azure reiterates our common goal with Microsoft to deliver solutions for communication service providers that empower them with greater business agility. This joint solution provides exceptional flexibility for service providers that lower the barriers to entry for next generation converged billing solutions by taking advantage of the benefits of the cloud. At Redknee, we continue to invest in developing cloud-based and on-premise monetization solutions to enable service providers to increase revenues, improve the customer experience and grow profitability."

The pre-integration of Windows Azure follows earlier investment by Redknee to pre-integrate its converged billing solution with Microsoft SQL Server 2012, formerly code-named "Denali," and Microsoft Dynamics CRM 2011.

Walid Abu-Hadba, Corporate Vice President of the Developer & Platform Evangelism Group at Microsoft, commented: "We are delighted to see Redknee launching a cloud strategy for the communications service provider community, with Windows Azure at its core. Microsoft's cloud platform delivers greater business agility to service providers, while significantly reducing capital expenditure so that they can focus on their core business. With this commitment to Windows Azure, Redknee advances its vision of creating a suite of agile, flexible solutions for communications service providers. Redknee's adoption of Windows Azure highlights the scalability, the reliability and the performance of the Microsoft cloud platform. Together we are ready to meet the most demanding needs of telecommunications service providers around the world."

For more information about Redknee and its solutions, please go to www.redknee.com.

About Redknee

Redknee is a leading global provider of innovative communication software products, solutions and services. Redknee's award-winning solutions enable operators to monetize the value of each subscriber transaction while personalizing the subscriber experience to meet mainstream, niche and individual market segment requirements. Redknee's revenue generating solutions provide advanced converged billing, rating, charging and policy for voice, messaging and new generation data services to over 90 network operators in over 50 countries. Established in 1999, Redknee Solutions Inc. is the parent of the wholly-owned operating subsidiary Redknee Inc. and its various subsidiaries. References to Redknee refer to the combined operations of those entities.

Roope Astala explained “Cloud Numerics” Example: Statistics Operations to Azure Data in a 1/30/2012 post to the Codename “Cloud Numerics” blog:

This post demonstrates how to use the Microsoft.Numerics C# API to perform statistical operations on data in Windows Azure blob storage. We go through the steps of loading data using the IParallelReader interface, performing distributed statistics operations, and saving results to blob storage. As we go through the steps, we highlight code samples from the application.

Note!

You will need to download and install the “Cloud Numerics” lab in order to run this example. To begin that process, click here.

Before You Run the Sample Application

Before you run the sample “Cloud Numerics” statistics application, complete the instructions in the “Cloud Numerics” Getting Started wiki post to:

- Create a Windows Azure account (if you do not have one already).

- Install “Cloud Numerics” on your local computer where you build and develop applications with Visual Studio.

- Configure and deploy a cluster in Azure (only if you have not done so already).

- Submit the sample C# “Cloud Numerics” program to Windows Azure as a test that your cluster is running properly.

- Download the project file and source code for the sample “Cloud Numerics” statistics application.

Blob Locations and Sample Application Download

You can download the sample application from the Microsoft Connect site (connect.microsoft.com). If you have not already registered for the lab, you can do that here. Registering for the lab provides you access to the “Cloud Numerics” lab materials (installation package, reference documentation, and sample applications).

Note!

If you are signed into Microsoft Connect, and you have already registered for your invitation to the “Cloud Numerics” lab, you can access the various sample applications using this link.

For your convenience, we have staged sample datasets of pseudorandom numbers in Windows Azure Blob Storage. You can access the small and medium datasets at their respective links:

- http://cloudnumericslab.blob.core.windows.net/smalldata

- http://cloudnumericslab.blob.core.windows.net/mediumdata

These datasets are intended merely as examples to get you started. Also, feel free to customize the sample application code to suit your own datasets.
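The staged containers can be inspected before you run anything. The following Python sketch is not part of the sample application: it builds the Blob service's List Blobs request (`restype=container&comp=list`) for one of the container URLs above and pulls the blob names out of the `EnumerationResults` XML response. It assumes the container's public access level permits anonymous listing; if it only permits blob-level reads, the listing request will be refused and you would need your own account credentials. The function names are illustrative.

```python
import urllib.parse
import xml.etree.ElementTree as ET


def list_blobs_url(container_url: str) -> str:
    """Build an anonymous List Blobs request URL for a public container."""
    query = urllib.parse.urlencode({"restype": "container", "comp": "list"})
    return f"{container_url.rstrip('/')}?{query}"


def blob_names(list_response_xml: str) -> list[str]:
    """Extract blob names from a List Blobs <EnumerationResults> response."""
    root = ET.fromstring(list_response_xml)
    # Each blob appears as <Blobs><Blob><Name>...</Name>...</Blob></Blobs>.
    return [name.text for name in root.findall("./Blobs/Blob/Name")]
```

To try it, something like `blob_names(urllib.request.urlopen(list_blobs_url("http://cloudnumericslab.blob.core.windows.net/smalldata")).read().decode("utf-8-sig"))` should return the slab names, if anonymous listing is enabled on that container.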

Choosing the Mode: Run on Local Development Machine or on Windows Azure

To run the application on your local workstation:

- Set StatisticsCloudApplication as your StartUp project within Visual Studio. (From Solution Explorer in the Visual Studio IDE, right-click the StatisticsCloudApplication subproject and select Set as Startup Project.) Although your application will run on your local workstation, it will continue to use Windows Azure storage for data input and output.

- Change Start Option paths for the project properties to reflect your local machine.

a. Right click the StatisticsCloudApplication subproject, and select Properties.

b. Click the Debug tab

c. In the Start Options section of the Debug tab, edit the following fields to reflect the paths on your local development machine:

- For the Command line arguments field, change:

c:\users\roastala\documents\visual studio 2010\Projects… to

c:\users\<YourUsername>\documents\visual studio 2010\Projects…

- For the Working directory field, change:

c:\users\roastala\documents\… to

c:\users\<YourUsername>\documents\…

where c:\users\<YourUsername>\ reflects the home directory of the user on the local development machine where you installed the “Cloud Numerics” software.

To submit the application to Windows Azure (run on Windows Azure rather than locally):

Set AppConfigure as the StartUp project. (From Solution Explorer in the Visual Studio IDE, right click the AppConfigure subproject and select Set as Startup Project).

Note!

If you have already deployed your cluster or if it was pre-deployed by your site administrator, do not deploy it again. Instead, you only need to build the application and submit the main executable as a job.

Step 1: Supply Windows Azure Storage Account Information for Output

To build the application you must have a Windows Azure storage account for storing the output. Replace the string values “myAccountKey” and “myAccountName” with your own account key and name.

static string outputAccountKey = "myAccountKey";
static string outputAccountName = "myAccountName";

The application creates a public blob for the output under this storage account. See Step 4 for details.

Step 2: Read in Data from Blob Storage Using IParallelReader Interface

Let us take a look at the code in the AzureArrayReader.cs file.

The input array in this example is in Azure blob storage, where each blob contains a subset of columns of the full array. By using the Microsoft.Numerics.Distributed.IO.IParallelReader interface, we can read the blobs in a distributed fashion and concatenate the slabs of columns into a single large distributed array.
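To make the partitioning concrete, here is a minimal Python sketch of the arithmetic the reader's ComputeAssignment method performs: each rank receives a contiguous `(first_blob, count)` range of at most ceil(blobCount / nranks) slabs, and trailing ranks can end up with zero. The function name and tuple representation are illustrative only; in the C# sample the pair is an `int[]` and the blob count comes from `ListBlobs()`.

```python
import math


def compute_assignment(blob_count: int, nranks: int) -> list[tuple[int, int]]:
    """Assign contiguous (first_blob, count) ranges of slabs to each rank."""
    # Same ceiling division as the C# (double) arithmetic.
    max_per_rank = math.ceil(blob_count / nranks)
    assignments = []
    current = 0
    for _ in range(nranks):
        # Never hand out more blobs than remain; trailing ranks may get zero.
        step = max(0, min(max_per_rank, blob_count - current))
        assignments.append((current, step))
        current += step
    return assignments
```

For example, `compute_assignment(10, 4)` yields `[(0, 3), (3, 3), (6, 3), (9, 1)]`, while `compute_assignment(2, 4)` leaves the last two ranks with empty `(2, 0)` assignments, which is why the reader has to cope with ranks that receive no blobs.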

First, we implement the ComputeAssignment method, which assigns blobs to the MPI ranks of our distributed computation.

public object[] ComputeAssignment(int nranks)
{
    Object[] blobs = new Object[nranks];
    var blobClient = new CloudBlobClient(accountName);
    var matrixContainer = blobClient.GetContainerReference(containerName);
    var blobCount = matrixContainer.ListBlobs().Count();
    int maxBlobsPerRank = (int)Math.Ceiling((double)blobCount / (double)nranks);
    int currentBlob = 0;
    for (int i = 0; i < nranks; i++)
    {
        int step = Math.Max(0, Math.Min(maxBlobsPerRank, blobCount - currentBlob));
        blobs[i] = new int[] { currentBlob, step };
        currentBlob = currentBlob + step;
    }
    return (object[])blobs;
}

Next, we implement the property DistributedDimension, which in this case is initialized to 1 so that slabs will be concatenated along the column dimension.

public int DistributedDimension
{
    get { return 1; }
    set { }
}

The ReadWorker method:

- Reads the blob metadata that describes the number of rows and columns in a given slab.

- Checks that the slabs have an equal number of rows so they can be concatenated columnwise.

- Reads the binary data from blobs.

- Constructs a local NumericDenseArray.

public msnl.NumericDenseArray<double> ReadWorker(Object assignment)
{
    var blobClient = new CloudBlobClient(accountName);
    var matrixContainer = blobClient.GetContainerReference(containerName);
    int[] blobs = (int[])assignment;
    long i, j, k;
    msnl.NumericDenseArray<double> outArray;
    var firstBlob = matrixContainer.GetBlockBlobReference("slab0");
    firstBlob.FetchAttributes();
    long rows = Convert.ToInt64(firstBlob.Metadata["dimension0"]);
    long[] columnsPerSlab = new long[blobs[1]];
    if (blobs[1] > 0)
    {
        // Get blob metadata, validate that each piece has equal number of rows
        for (i = 0; i < blobs[1]; i++)
        {
            var matrixBlob = matrixContainer.GetBlockBlobReference("slab" + (blobs[0] + i).ToString());
            matrixBlob.FetchAttributes();
            if (Convert.ToInt64(matrixBlob.Metadata["dimension0"]) != rows)
            {
                throw new System.IO.InvalidDataException("Invalid slab shape");
            }
            columnsPerSlab[i] = Convert.ToInt64(matrixBlob.Metadata["dimension1"]);
        }

        // Construct output array
        outArray = msnl.NumericDenseArrayFactory.Create<double>(new long[] { rows, columnsPerSlab.Sum() });

        // Read data
        long columnCounter = 0;
        for (i = 0; i < blobs[1]; i++)
        {
            var matrixBlob = matrixContainer.GetBlobReference("slab" + (blobs[0] + i).ToString());
            var blobData = matrixBlob.DownloadByteArray();
            for (j = 0; j < columnsPerSlab[i]; j++)
            {
                for (k = 0; k < rows; k++)
                {
                    outArray[k, columnCounter] = BitConverter.ToDouble(blobData, (int)(j * rows + k) * 8);
                }
                columnCounter = columnCounter + 1;
            }
        }
    }
    else
    {
        // If a rank was assigned zero blobs, return empty array
        outArray = msnl.NumericDenseArrayFactory.Create<double>(new long[] { rows, 0 });
    }
    return outArray;
}

When an instance of the reader is passed to the Microsoft.Numerics.Distributed.IO.Loader.LoadData method, the ReadWorker instances are executed in parallel on each rank, and LoadData automatically takes care of concatenating the local pieces produced by the ReadWorkers.
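The decoding and concatenation steps can be mirrored in a few lines of Python. This is a sketch under stated assumptions, not the sample's code: each slab is taken to be `rows * columns` little-endian IEEE 754 doubles stored column by column (offset `(j * rows + k) * 8`, as in ReadWorker; little-endian is what `BitConverter` produces on x86/x64 Windows), and plain nested lists stand in for NumericDenseArray. The hypothetical `concat_columns` helper shows the column-wise join that ReadWorker performs across a rank's slabs and that LoadData then performs across ranks.

```python
import struct


def decode_slab(blob_data: bytes, rows: int, columns: int) -> list[list[float]]:
    """Decode a slab of little-endian doubles stored column by column.

    Returns a list of rows; element [k][j] comes from byte offset
    (j * rows + k) * 8, matching the indexing in ReadWorker.
    """
    if len(blob_data) < rows * columns * 8:
        raise ValueError("blob is shorter than rows * columns doubles")
    values = struct.unpack_from(f"<{rows * columns}d", blob_data)
    return [[values[j * rows + k] for j in range(columns)] for k in range(rows)]


def concat_columns(slabs: list[list[list[float]]]) -> list[list[float]]:
    """Join slabs side by side; every slab must have the same row count."""
    rows = len(slabs[0])
    if any(len(slab) != rows for slab in slabs):
        raise ValueError("Invalid slab shape")
    return [[v for slab in slabs for v in slab[k]] for k in range(rows)]
```

For instance, six doubles `1..6` decoded as a 2 x 3 slab give the rows `[1, 3, 5]` and `[2, 4, 6]`, because consecutive values fill a column before moving to the next one.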

Step 3: Compute Statistics Operations on Distributed Data

The source code in the Statistics.cs file implements the statistics operations performed on distributed data.

The sample data is stored at:

static string inputAccountName = @"http://cloudnumericslab.blob.core.windows.net";

This is the storage account for our data. It contains the samples of random numbers in publicly readable containers named “smalldata” and “mediumdata.”

At the beginning of the main entry point of the application, we initialize the Microsoft.Numerics distributed runtime. This allows us to execute distributed operations by calling Microsoft.Numerics library methods.

Microsoft.Numerics.NumericsRuntime.Initialize();

Next, we instantiate the array reader described earlier, and read data from blob storage.

var dataReader = new AzureArrayReader.AzureArrayReader(inputAccountName, arraySize);
var x = msnd.IO.Loader.LoadData<double>(dataReader);

The output x is a columnwise distributed array loaded with the sample data. We then compute the statistics of the data (min, max, mean, median and percentiles) and write the results to an output string.

// Compute summary statistics: max, min, mean, median
output.AppendLine("Summary statistics\n");
var xMin = ArrayMath.Min(x);
output.AppendLine("Minimum, " + xMin);
var xMax = ArrayMath.Max(x);
output.AppendLine("Maximum, " + xMax);
var xMean = Descriptive.Mean(x);
output.AppendLine("Mean, " + xMean);
var xMedian = Descriptive.Median(x);
output.AppendLine("Median, " + xMedian);

// Compute 10% quantiles
var tenPercentQuantiles = Descriptive.QuantilesExclusive(x, 10, 0).ToLocalArray();

As x is a distributed array, the overloaded variant of QuantilesExclusive that distributes processing over the nodes of the Azure cluster is used. Note that the result of the quantiles operation is a distributed array; we copy it to a local array in order to write the result to an output string.
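For a quick local cross-check of the numbers, the same summary can be computed on a small flat sample with Python's standard `statistics` module. This swaps in `statistics.quantiles(..., method="exclusive")` as a stand-in for `Descriptive.QuantilesExclusive`; its interpolation rule is assumed, not confirmed, to match Microsoft.Numerics, and the sketch treats the data as one flat list rather than a distributed two-dimensional array.

```python
import statistics


def summary_statistics(values: list[float]) -> dict:
    """Min, max, mean, median and the nine 10% cut points of a flat sample."""
    return {
        "Minimum": min(values),
        "Maximum": max(values),
        "Mean": statistics.fmean(values),
        "Median": statistics.median(values),
        # Nine cut points splitting the data into ten equal-probability groups.
        "Deciles": statistics.quantiles(values, n=10, method="exclusive"),
    }
```

Running it on a few thousand values pulled from one slab is a cheap way to sanity-check the cluster's output before scaling up.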

Step 4: Write Results to Blob Storage as a .csv File

By default, the application writes the result to the file system of the virtual cluster. This storage is not permanent; the file is removed when you delete the cluster. To keep the result, the application also creates a public blob in the Azure storage account you named at the beginning of the application.

// Write output to blob storage
var storageAccountCredential = new StorageCredentialsAccountAndKey(outputAccountName, outputAccountKey);
var storageAccount = new CloudStorageAccount(storageAccountCredential, true);
var blobClient = storageAccount.CreateCloudBlobClient();
var resultContainer = blobClient.GetContainerReference(outputContainerName);
resultContainer.CreateIfNotExist();
var resultBlob = resultContainer.GetBlobReference(outputBlobName);

// Make result blob publicly readable,
// so it can be accessed using URI
// https://<accountName>.blob.core.windows.net/statisticsresult/statisticsresult
var resultPermissions = new BlobContainerPermissions();
resultPermissions.PublicAccess = BlobContainerPublicAccessType.Blob;
resultContainer.SetPermissions(resultPermissions);
resultBlob.UploadText(output.ToString());

You can then view and download the results by using a web browser to open the blob. For example, the syntax for the URI would be:

https://<accountName>.blob.core.windows.net/statisticsresult/statisticsresult

where <accountName> is the name of the storage account you supplied in Step 1 (outputAccountName).
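The result location and report layout can be sketched as follows. The container and blob names default to `statisticsresult`, taken from the URI in the code comment; `format_report` is a hypothetical helper that only approximates the sample's `StringBuilder` output (one `Name, value` line per statistic) and is not part of the application.

```python
def result_blob_url(account_name: str,
                    container: str = "statisticsresult",
                    blob: str = "statisticsresult") -> str:
    """Public URL of the result blob (container access level: Blob)."""
    return f"https://{account_name}.blob.core.windows.net/{container}/{blob}"


def format_report(stats: dict[str, float]) -> str:
    """Render a heading, a blank line, then one 'Name, value' line per statistic."""
    lines = ["Summary statistics", ""]
    lines += [f"{name}, {value}" for name, value in stats.items()]
    return "\n".join(lines) + "\n"
```

Because the container permission is `BlobContainerPublicAccessType.Blob`, reading the uploaded text with `urllib.request.urlopen(result_blob_url("myaccount")).read().decode("utf-8")` should not need credentials; replace `myaccount` with your own storage account name.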

For more background on Codename “Cloud Numerics,” see my Introducing Microsoft Codename “Cloud Numerics” from SQL Azure Labs and Deploying “Cloud Numerics” Sample Applications to Windows Azure HPC Clusters posts of 1/28/2012.

Scott Densmore (@scottdensmore) described Creating a SSL Certificate for the Cloud Ready Packages for the iOS Windows Azure Toolkit:

With the iOS Windows Azure Toolkit, you can use the ready-made Windows Azure packages that use ACS or Membership to manage user access to Windows Azure Storage. These packages require an SSL certificate. More than likely, you are running on a Mac.

This is pretty straightforward. You will need to create a certificate and then create a PKCS12 (.pfx) file for it. You can generate the certificate from Terminal using openssl with the following steps:

- open a terminal window

- enter the command: openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout iOSWAToolkit.pem -out iOSWAToolkit.pem

- iOSWAToolkit is the name of the file you want and can be anything you like.

- This will create a certificate that you can use to create the PKCS12 certificate. The command prompts you for the information to include in the certificate.

- enter the command: openssl pkcs12 -export -out iOSWAToolkit.pfx -in iOSWAToolkit.pem -name "iOSWAToolkit"

- iOSWAToolkit is the name of the file you want and can be anything you like.

- This will ask you for a password that you need to remember so you can enter it when uploading to Windows Azure.

- enter the command: openssl x509 -outform der -in iOSWAToolkit.pem -out iOSWAToolkit.cer

- This will export a certificate containing the public key (but not the private key), which is what you will need for the service config file.
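Windows Azure service configuration files identify certificates by SHA-1 thumbprint (the `thumbprint` attribute of a `<Certificate>` element). As a sketch, assuming you want to check that value yourself rather than copy it from the portal, the Python below extracts the CERTIFICATE block from the combined .pem created above, decodes it to DER (which should be the same bytes `openssl x509 -outform der` writes to the .cer file), and hashes it. The function names are illustrative.

```python
import base64
import hashlib
import re


def pem_certificate_to_der(pem_text: str) -> bytes:
    """Return the DER bytes of the first CERTIFICATE block in PEM text.

    The combined .pem may also hold the private key; only the
    CERTIFICATE section is decoded.
    """
    match = re.search(
        r"-----BEGIN CERTIFICATE-----(.+?)-----END CERTIFICATE-----",
        pem_text,
        re.DOTALL,
    )
    if match is None:
        raise ValueError("no CERTIFICATE block found")
    # Strip line breaks before base64-decoding the body.
    return base64.b64decode("".join(match.group(1).split()))


def sha1_thumbprint(der_bytes: bytes) -> str:
    """Uppercase hex SHA-1 digest of the DER-encoded certificate."""
    return hashlib.sha1(der_bytes).hexdigest().upper()
```

Usage: `sha1_thumbprint(pem_certificate_to_der(open("iOSWAToolkit.pem").read()))`. As a cross-check, `openssl x509 -in iOSWAToolkit.pem -noout -fingerprint -sha1` prints the same hex digits separated by colons.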

You will have two certificates that you will need to get the Cloud Ready Package(s) deployed. This is really easy when you use the Cloud Configuration Utility.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Beth Massi (@bethmassi) described Calling Web Services to Validate Data in Visual Studio LightSwitch in a 1/30/2012 post:

Very often in business applications we need to validate data through another service. I’m not talking about validating the format of data entered (this is very simple to do in LightSwitch); I’m talking about validating the meaning of the data. For instance, you may want to validate not just the format of an email address (which LightSwitch handles automatically for you) but also verify that the email address is real. Another common example is physical address validation, which makes sure that a postal address is real before you send packages to it.

In this post I’m going to show you how you can call web services when validating LightSwitch data. I’m going to use the Address Book sample and implement an Address validator that calls a service to verify the data.

Where Do We Call the Service?

In Visual Studio LightSwitch there are a few places where you can place code to validate entities. There are Property_Validate methods and there are Entity_Validate methods. Property_Validate methods run first on the client and then on the server and are good for checking the format of data entered, doing any comparisons to other properties, or manipulating the data based on conditions stored in the entity itself or its related entities. Usually you want to put your validation code here so that users get immediate feedback of any errors before the data is submitted to the server. These methods are contained on the entity classes themselves. (For more detailed information on the LightSwitch Validation Framework see: Overview of Data Validation in LightSwitch Applications)

The Entity_Validate methods run only on the server and are contained in the ApplicationDataService class. This is the perfect place to call an external validation service because it avoids having clients call external services directly; instead, the LightSwitch middle tier makes the call. This gives you finer control over your network traffic: client applications can be limited to your intranet, while external traffic is allowed only to the server, which manages the external connection in one place.

Calling Web Services

There are a lot of services out there for validating all sorts of data, and each service has a different set of requirements. Typically I prefer RESTful services so that you can make a simple HTTP request (GET) and get some data back. However, you can also add service references to ASMX and WCF services. It all depends on the service you use, so you’ll need to refer to its specific documentation.

To add a service reference to a LightSwitch application, first flip to File View in the Solution Explorer, right-click on the Server project and then select Add Service Reference…

Enter the service URL and the service proxy classes will be generated for you. You can then call these from server code you write on the ApplicationDataService just like you would in any other application that has a service reference. In the case of calling REST-ful services that return XML feeds, you can simply construct the URL to call and examine the results. Let’s see how to do that.

Address Book Example

In this sample we have an Address table where we want to validate the physical address when the data is saved. There are a few address validation services to choose from, but for this example I chose to sign up for a free trial of an address validation service from ServiceObjects. They’ve got some nice, simple APIs and support REST web requests. Once you sign up, they give you a License Key that you need to pass to the service.

A sample request looks like this:

http://trial.serviceobjects.com/av/AddressValidate.asmx/ValidateAddress?Address=One+Microsoft+Way&Address2=&City=Redmond&State=WA&PostalCode=98052&LicenseKey=12345

Which gives you back the result:

<?xml version="1.0" encoding="UTF-8"?>
<Address xmlns="http://www.serviceobjects.com/" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema">
  <Address>1 Microsoft Way</Address>
  <City>Redmond</City>
  <State>WA</State>
  <Zip>98052-8300</Zip>
  <Address2/>
  <BarcodeDigits>980528300997</BarcodeDigits>
  <CarrierRoute>C012</CarrierRoute>
  <CongressCode>08</CongressCode>
  <CountyCode>033</CountyCode>
  <CountyName>King</CountyName>
  <Fragment/>
</Address>

If you enter a bogus address or forget to specify the City+State or PostalCode, then you will get an error result:

<?xml version="1.0" encoding="UTF-8"?>
<Address xmlns="http://www.serviceobjects.com/" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema">
  <Error>
    <Desc>Please input either zip code or both city and state.</Desc>
    <Number>2</Number>
    <Location/>
  </Error>
</Address>

So in order to interact with this service, we’ll first need to add some assembly references to the Server project. Right-click the Server project (as shown above), select “Add Reference”, and add references to System.Web and System.Xml.Linq.

Next, flip back to Logical View and open the Address entity in the Data Designer. Drop down the Write Code button to access the Addresses_Validate method. (You could also just open the Server\UserCode\ApplicationDataService code file if you are in File View).

First we need to import some namespaces as well as the default XML namespace that is returned in the response. (For more information on XML in Visual Basic please see: Overview of LINQ to XML in Visual Basic and articles here on my blog.) Then we can construct the URL based on the entity’s Address properties and query the result XML for either errors or the corrected address. If we find an error, we tell LightSwitch to display the validation result to the user on the screen.

Imports System.Xml.Linq
Imports System.Web.HttpUtility
Imports <xmlns="http://www.serviceobjects.com/">

Namespace LightSwitchApplication
    Public Class ApplicationDataService

        Private Sub Addresses_Validate(entity As Address, results As EntitySetValidationResultsBuilder)
            Dim isValid = False
            Dim errorDesc = ""

            'Construct the URL to call the web service
            Dim url = String.Format("http://trial.serviceobjects.com/av/AddressValidate.asmx/ValidateAddress?" &
                                    "Address={0}&Address2={1}&City={2}&State={3}&PostalCode={4}&LicenseKey={5}",
                                    UrlEncode(entity.Address1),
                                    UrlEncode(entity.Address2),
                                    UrlEncode(entity.City),
                                    UrlEncode(entity.State),
                                    UrlEncode(entity.ZIP),
                                    "12345")
            Try
                'Call the service and load the XML result
                Dim addressData = XElement.Load(url)

                'Check for errors first
                Dim err = addressData...<Error>
                If err.Any Then
                    errorDesc = err.<Desc>.Value
                Else
                    'Fill in corrected address values returned from service
                    entity.Address1 = addressData.<Address>.Value
                    entity.Address2 = addressData.<Address2>.Value
                    entity.City = addressData.<City>.Value
                    entity.State = addressData.<State>.Value
                    entity.ZIP = addressData.<Zip>.Value
                    isValid = True
                End If
            Catch ex As Exception
                'Trace.TraceError expects a string message
                Trace.TraceError(ex.ToString())
            End Try

            If Not (isValid) Then
                results.AddEntityError("This is not a valid US address. " & errorDesc)
            End If
        End Sub

    End Class
End Namespace

Run it!

Now that I’ve got this code implemented, let’s enter some addresses on our contact screen. Here I’ve entered three addresses: the first two are valid and the last one is not. Also notice that I’ve specified only partial addresses.

If I try to save this screen, an error will be returned from the service on the last row. LightSwitch won’t let us save until the address is fixed.

If I delete the bogus address and save again, you will see that the other addresses were verified and all the fields are updated with complete address information.
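Outside LightSwitch, the same request/response round trip can be sketched in Python, which may help when testing a license key or inspecting responses before wiring up Addresses_Validate. The endpoint, query parameter names, and XML namespace come from the sample request and responses above; the license key `12345` is the post's placeholder, and the function names are hypothetical.

```python
import urllib.parse
import xml.etree.ElementTree as ET

NS = {"so": "http://www.serviceobjects.com/"}
BASE_URL = "http://trial.serviceobjects.com/av/AddressValidate.asmx/ValidateAddress"


def build_request_url(address, address2, city, state, postal_code, license_key):
    """URL-encode the address fields into a ValidateAddress GET request."""
    query = urllib.parse.urlencode({
        "Address": address, "Address2": address2, "City": city,
        "State": state, "PostalCode": postal_code, "LicenseKey": license_key,
    })
    return f"{BASE_URL}?{query}"


def parse_response(xml_text):
    """Return (is_valid, error_description, corrected_fields)."""
    root = ET.fromstring(xml_text)
    error = root.find(".//so:Error", NS)
    if error is not None:
        return False, error.findtext("so:Desc", default="", namespaces=NS), {}
    # Direct children of the root <Address> element hold the corrected values.
    fields = {tag: root.findtext(f"so:{tag}", default="", namespaces=NS)
              for tag in ("Address", "Address2", "City", "State", "Zip")}
    return True, "", fields
```

Fetching the response would be `urllib.request.urlopen(url).read()`; on success, the corrected fields map back onto the entity just as the VB code copies them.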

Wrap Up

I hope this gives you a good idea of how to implement web service calls in the LightSwitch validation pipeline. Even though each service you use will have different requirements on how to call it and what it returns, the LightSwitch validation pipeline gives you the necessary hooks to implement complex entity validation easily.

Jan Van der Haegen (@janvanderhaegen) asked Dude, where’s my VB.Net code? on 1/30/2012:

It came to my attention that a large part of the LightSwitch community prefers VB.NET over C#. I always thought that C# and VB.NET are close enough in semantics that any VB.NET developer can read C# and vice versa. However, I must admit that when I looked at the VB.NET versions of my own samples, once generics and optional parameters are involved, the VB.NET version becomes significantly different from its C# brother. To overcome this language barrier, thank god there are online tools like “Google Translate”, which, for .NET code, is provided to us for free by Telerik.

A special thanks to Telerik, Todd Anglin in particular, for providing us with this awesome translating power!

I promise to do my best to post my samples in both C# and VB.NET in the future.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

InformationWeek::Reports posted Wall Street & Technology: February 2012 on 1/31/2012:

EMBRACING CLOUD: Facing declining revenues and increasing pressure on capital expenditures, many Wall Street organizations are warming up to semi-public cloud offerings.

Table of Contents

Cloud Watching: As Wall Street continues its wild ride, capital markets firms are looking to cut spending in order to prop up the bottom line. Targeting the enormous costs of building and maintaining data centers and server farms, more and more Wall Street organizations are looking to outsource parts of their infrastructures to the cloud. And increasingly, financial services firms are warming up to private clouds run outside their firewalls.

What Went Wrong? Breaking Down Thomson Reuters Eikon: Tabbed as the future of the company, Thomson Reuters' next-generation market data platform, Eikon, has been off to a sluggish start, and several executives have paid the price. But Philip Brittan, the man in charge of the Eikon group, says the company's commitment to the product has never waned.

Anatomy of A Data Center (Photo Gallery): DFT's NJ1 facility in Piscataway, N.J., comprises 360,000 square feet of LEED-certified, energy-efficient data center space. Home to a growing number of financial industry clients, including the NYSE, the data center is designed to lower costs to tenants. WS&T takes you along for a behind-the-scenes look at the facility.

Securing Advent's Legacy: Founder Stephanie DiMarco will step down at the end of June as Advent's CEO. In an exclusive interview, she explains why she's stepping down, reflects on the company's impact on the investment management industry over the past 30 years and discusses her plans for the future.

PLUS:

Wall Street IT Pros In Strong Demand

How to Measure IT Productivity

Larry Tabb's Suggestions for Fixing the Futures Market

The Cloud's Time Is Now

And more ...

Lori MacVittie (@lmacvittie) asserted “While web applications aren’t sensitive to jitter, business processes are” in an introduction to her Performance in the Cloud: Business Jitter is Bad post of 1/30/2012 to F5’s DevCentral blog:

One of the benefits of web applications is that they are generally transported via TCP, which is a connection-oriented protocol designed to assure delivery. TCP has a variety of native mechanisms through which delivery issues can be addressed – from window sizes to selective acks to idle time specification to ramp up parameters. All these technical knobs and buttons serve as a way for operators and administrators to tweak the protocol, often at run time, to ensure the exchange of requests and responses upon which web applications rely. This is unlike UDP, which is more of a “fire and forget” protocol in which the server doesn’t really care if you receive the data or not.

Now, voice and streaming video and audio over the web have always leveraged UDP and thus have always been highly sensitive to jitter. Jitter is, without getting into layer one (physical) jargon, an undesirable delay in the otherwise consistent delivery of packets. It causes packets to be delayed and sometimes lost outright, which users experience as pauses, skips, or jumps in multimedia content.
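For readers who want to put a number on the jitter described above, RTP (RFC 3550, section 6.4.1) defines a standard interarrival jitter estimator: it compares the spacing of consecutive packets on arrival with their spacing on departure and smooths the absolute difference with a gain of 1/16. The Python function below is a minimal sketch of that estimator, assuming per-packet send and receive timestamps in the same units; it is not code from the original post.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate following the RFC 3550 formula.

    send_times / recv_times: per-packet timestamps in the same units (e.g. ms).
    """
    if len(send_times) != len(recv_times):
        raise ValueError("send_times and recv_times must have equal length")
    jitter = 0.0
    for i in range(1, len(send_times)):
        # D(i-1, i): change in transit time between consecutive packets.
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        # Exponential smoothing with gain 1/16, as RFC 3550 specifies.
        jitter += (abs(d) - jitter) / 16.0
    return jitter
```

Packets sent every 20 ms that all arrive with the same 5 ms transit time yield a jitter of 0: only variation in transit time, not delay itself, raises the estimate.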

While the same root causes of delay (network congestion, routing changes, timeout intervals) also affect TCP, they generally only delay the communication and, other than an uncomfortable wait for the user, do not harm the content itself. The content is eventually delivered because TCP guarantees delivery; UDP does not.

However, this does not mean that the performance issues that may plague web applications have no negative impacts (other than trying the patience of users), particularly for the growing number of applications that live out there in the nebulous “cloud”. Delays are effectively business jitter and have a real impact on the ability of the business to perform its critical functions, and that includes generating revenue.

BUSINESS JITTER and the CLOUD

David Linthicum summed up the issue with performance of cloud-based applications well and actually used the terminology “jitter” to describe the unpredictable pattern of delay:

Are cloud services slow? Or fast? Both, it turns out -- and that reality could cause unexpected problems if you rely on public clouds for part of your IT services and infrastructure.

When I log performance on cloud-based processes -- some that are I/O intensive, some that are not -- I get results that vary randomly throughout the day. In fact, they appear to have the pattern of a very jittery process. Clearly, the program or system is struggling to obtain virtual resources that, in turn, struggle to obtain physical resources. Also, I suspect this "jitter" is not at all random, but based on the number of other processes or users sharing the same resources at that time.

-- David Linthicum, “Face the facts: Cloud performance isn't always stable”

But what the multitude of articles published over the past year or so about the performance of cloud services has largely ignored is the very real and often measurable impact on business processes. The jitter that occurs at the protocol and application layers trickles up to become jitter in the business process; a process that may be critical to servicing customers (and thus affects satisfaction and brand) as well as the bottom line. Unhappy customers forced to wait for “slow computers”, as the problem is so often described by the technically less adept customer service representatives employed by many organizations, may take to the social media airwaves to express displeasure, cancel an order, or simply refuse to do business with the organization in the future because of delays caused by unpredictable cloud performance.

Business jitter can also manifest as decreased business productivity measures, which it turns out can be measured mathematically if you put your mind to it.
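The post doesn't spell out the math, but one simple way to quantify business jitter is to log response times for a business process and report their spread alongside their average. The sketch below uses Python's standard statistics module; choosing the population standard deviation, the coefficient of variation, and the 95th percentile as the measures is an assumption for illustration, not a method from the original post.

```python
import statistics


def variability_summary(latencies_ms):
    """Summarize how jittery a series of logged response times is.

    latencies_ms: response times in milliseconds for one business process.
    """
    if len(latencies_ms) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.mean(latencies_ms)
    stdev = statistics.pstdev(latencies_ms)
    return {
        "mean_ms": mean,
        "stdev_ms": stdev,
        # Coefficient of variation: spread relative to the average.
        "cv": stdev / mean if mean else float("inf"),
        # 95th percentile: the slow tail that users actually notice.
        "p95_ms": statistics.quantiles(latencies_ms, n=20)[18],
    }
```

A process that always completes in 100 ms has a coefficient of variation of 0; the higher the value, the jitterier the process, even when the average looks acceptable.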

Understanding the variability of cloud performance is important for two reasons:

- You need to understand the impact on the business and quantify it before embarking on any cloud initiative so it can be factored into the overall cost-benefit analysis. It may be that the cost savings from public cloud are much greater than the potential loss of revenue and/or productivity, and thus the benefits of a cloud-based solution outweigh the risks.

- Understanding the variability and where it comes from will help guide you to choose not only the right provider but also the right solutions to normalize or mitigate that variability. If the primary source of business jitter is your WAN, for example, then choosing a provider that supports your ability to deploy WAN optimization solutions would be an appropriate strategy. Similarly, if the variability in performance stems from capacity issues, then choosing a provider that allows greater latitude in load balancing algorithms or the deployment of a virtual (soft) ADC would likely be the best strategy.
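To make the cost-benefit point concrete, here is a hypothetical back-of-envelope model that is not from the post: net benefit equals annual cloud savings minus the revenue lost when delayed transactions are abandoned. Every parameter name and figure below is an illustrative assumption; a real analysis would use your own measured delay and abandonment rates.

```python
def net_cloud_benefit(annual_savings, transactions_per_year,
                      fraction_delayed, abandonment_rate, revenue_per_txn):
    """Hypothetical model: cloud savings minus revenue lost to business jitter.

    fraction_delayed: share of transactions that hit a noticeable delay.
    abandonment_rate: share of delayed transactions the customer abandons.
    """
    lost_revenue = (transactions_per_year * fraction_delayed
                    * abandonment_rate * revenue_per_txn)
    return annual_savings - lost_revenue
```

With $500,000 in annual savings, 1,000,000 transactions a year, 5% of them delayed, 2% of delayed customers walking away, and $50 of revenue per transaction, the lost revenue is 1,000,000 × 0.05 × 0.02 × $50 = $50,000, leaving a net benefit of $450,000. A negative result would suggest the jitter costs more than the cloud saves.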

It seems clear from testing and empirical (as well as anecdotal) evidence that cloud performance is highly variable and, as David puts it, unstable. This should not necessarily be seen as a deterrent to adopting cloud services – unless your business is so highly sensitive to latency that even milliseconds can be financially damaging – but rather it should be a reality that factors into your decision making process with respect to your choice of provider and the architecture of the solution you’ll be deploying (or subscribing to, in the case of SaaS) in the cloud.

Knowing is half the battle to leveraging cloud successfully. The other half is strategy and architecture.

I’ll be at CloudConnect 2012 and we’ll discuss the subject of cloud and performance a whole lot more at the show!

Abel B. Cruz reported Cloud Computing Fueling Global Economic Growth: London School of Economics study on 1/30/2012:

The development of cloud computing will promote economic growth, increase productivity and shift the type of jobs and skills required by businesses, according to a new study by the London School of Economics and Political Science.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

David Linthicum (@DavidLinthicum) asserted “Amazon Storage Gateway is a prime example of using an old-fashioned data center approach in the cloud -- which seems to miss the point” in a deck for his Cloud appliances: The cloud that isn't the cloud post of 1/31/2012 to InfoWorld’s Cloud Computing blog:

Last week Amazon.com let it be known that it has launched a public beta test of Amazon Storage Gateway -- my colleague Matt Prigge has taken an in-depth, hands-on look at it. This software appliance stores data on local hardware and uploads backup instances to Amazon Web Services' S3 (Simple Storage Service). The idea is to provide low-latency access to local data while keeping snapshots of that data in the AWS cloud.

Cloud appliances are nothing new -- we've been using them for years to maintain software control within the firewall. Their applications include caching data as it moves to and from the cloud to enhance performance, local storage that is replicated to the cloud (as is the case with the AWS gateway), and protocol mediation services for systems that are not port 80-compliant.

However, the larger question here is obvious: If cloud computing is really about eliminating the cost of local hardware and software, why are cloud computing providers selling hardware and software?

There are practical reasons for using cloud appliances, such as those I just cited. However, the larger advantage may be more perceptual than technical. IT managers want their data in some box in their data center that they can see and touch. They also want to tell people that they are moving to cloud computing. The cloud appliance provides the best of both worlds, and I suspect that we'll see other IaaS providers follow if Amazon.com is successful.

However, I can't help thinking that we're just trading an internal path to complexity and increasing costs for a cloud path to complexity and increasing costs. That's antithetical to the cloud's notions of reducing costs through efficiency and scale and of reducing complexity through abstraction. But yet here we are.
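One of the practical roles Linthicum lists for cloud appliances is caching data as it moves to and from the cloud. That idea can be sketched as a read-through cache with a time-to-live. In the sketch below, `fetch_remote` is a hypothetical stand-in for whatever cloud storage API sits behind the appliance, and the design is illustrative only; it is not how Amazon Storage Gateway is implemented.

```python
import time
from typing import Callable, Dict, Tuple


class ReadThroughCache:
    """Local cache in front of a slower remote (cloud) store.

    fetch_remote: hypothetical callable that retrieves a value from the cloud.
    """

    def __init__(self, fetch_remote: Callable[[str], bytes], ttl_seconds: float = 60.0):
        self._fetch_remote = fetch_remote
        self._ttl = ttl_seconds
        # key -> (time cached, value)
        self._entries: Dict[str, Tuple[float, bytes]] = {}

    def get(self, key: str) -> bytes:
        now = time.monotonic()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # fresh local copy: no WAN round trip
        value = self._fetch_remote(key)  # miss or stale entry: go to the cloud
        self._entries[key] = (now, value)
        return value
```

The trade-off is the one Linthicum questions: the cache hides cloud latency, but it is one more piece of local software to run, size, and keep consistent.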

The Windows Server and Cloud Platform Team reported System Center Cloud Services Management Pack RC Now Available! on 1/30/2012:

Check out Travis Wright’s blog and learn more about the System Center Cloud Services Management Pack Release Candidate that is now available for download.

The System Center Cloud Services Process Pack offers a self-service experience to facilitate private cloud capacity requests from your business unit IT application owners and end users, including the flexibility to request additional capacity as business demands increase. Download the Management Pack today!

<Return to section navigation list>

Cloud Security and Governance

Chris Hoff (@Beaker) posted Building/Bolting Security In/On – A Pox On the Audit Paradox! on 1/31/2012:

My friend and skilled raconteur Chris Swan (@cpswan) wrote an excellent piece a few days ago titled “Building security in – the audit paradox.”

This thoughtful piece was constructed in order to point out the challenges involved in providing auditability, visibility, and transparency in service — specifically cloud computing — in which the notion of building in or bolting on security is debated.

I think this is timely. I have thought about this a couple of times with one piece aligned heavily with Chris’ thoughts:

Chris’ discussion really contrasted the delivery/deployment models against the availability and operationalization of controls:

- If we’re building security in, then how do we audit the controls?

- Will platform as a service (PaaS) give us a way to build security in such that it can be evaluated independently of the custom code running on it?

Further, as part of some good examples, he points out the notion that with separation of duties, the ability to apply “defense in depth” (hate that term), and the ability to respond to new threats, the “bolt-on” approach is useful — if not siloed:

There lies the issue – bolt on security is easy to audit. There’s a separate thing, with a separate bit of config (administered by a separate bunch of people) that stands alone from the application code.

…versus building secure applications:

Code security is hard. We know that from the constant stream of vulnerabilities that get found in the tools we use every day. Auditing that specific controls implemented in code are present and effective is a big problem, and that is why I think we’re still seeing so much bolting on rather than building in.

I don’t disagree with this at all. Code security is hard. People look for gap-fillers. The notion that Chris finds limited options for bolting security on versus integrating security (building it in) programmatically as part of the security development lifecycle leaves me a bit puzzled.

This identifies both the skills gap and the cultural gap between where we are with security and how cloud changes our process, technology, and operational approaches, but there are many options we should discuss.

Thus what was interesting (read: I disagree with) is what came next wherein Chris maintained that one “can’t bolt on in the cloud”:

One of the challenges that cloud services present is an inability to bolt on extra functionality, including security, beyond that offered by the service provider. Amazon, Google etc. aren’t going to let me or you show up to their data centre and install an XML gateway, so if I want something like schema validation then I’m obliged to build it in rather than bolt it on, and I must confront the audit issue that goes with that.

While it’s true that CSPs may not enable/allow you to show up to their DC and “…install an XML gateway,” they are pushing the security deployment model toward virtual networking hooks and the guest-based approach within the VMs, and toward leveraging both the security and service models of cloud itself to solve these challenges.

I allude to this below, but as an example, there are now cloud services which can sit “in-line” or in conjunction with your cloud application deployments and deliver security as a service…application, information (and even XML) security as a service are here today and ramping!
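Chris Swan's “build it in” case, where schema validation that might have lived in an XML gateway has to move into the application, can be illustrated in code. The sketch below keeps the check in one small, separately testable function applied by a decorator, so the control can be reviewed apart from the business logic. The required element names are hypothetical, and the check is a minimal structural stand-in built on Python's standard xml.etree.ElementTree; full XSD schema validation would need a schema-aware library.

```python
import functools
import xml.etree.ElementTree as ET

# Hypothetical "schema": the expected root element and its required children.
REQUIRED_ROOT = "order"
REQUIRED_CHILDREN = ("customerId", "amount")


class ValidationError(ValueError):
    pass


def validate_order_xml(payload: str) -> ET.Element:
    """Minimal structural check standing in for full XSD validation.

    Note: the Python docs warn that xml.etree is not secure against
    maliciously constructed data.
    """
    try:
        root = ET.fromstring(payload)
    except ET.ParseError as exc:
        raise ValidationError(f"malformed XML: {exc}") from exc
    if root.tag != REQUIRED_ROOT:
        raise ValidationError(f"unexpected root element {root.tag!r}")
    for name in REQUIRED_CHILDREN:
        if root.find(name) is None:
            raise ValidationError(f"missing required element {name!r}")
    return root


def validated(handler):
    """Apply the control in one place so it can be audited on its own."""
    @functools.wraps(handler)
    def wrapper(payload: str):
        return handler(validate_order_xml(payload))
    return wrapper


@validated
def handle_order(order: ET.Element) -> str:
    # Business logic only ever sees payloads that passed validation.
    return order.findtext("customerId")
```

Keeping the control in a single named function does not make code security easy, but it gives an auditor one place to inspect and test, which is part of what makes bolt-on controls attractive in the first place.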