Windows Azure and Cloud Computing Posts for 6/24/2013+

Top News This Week:

- Scott Guthrie summarized Windows Azure announcements during the //BUILD/ 2013 second-day keynote. See the Cloud Computing Events section below.

- Steven Martin posted Announcing the General Availability of Windows Azure Mobile Services, Web Sites and continued Service innovation to the Windows Azure Team blog during the //BUILD/ 2013 Conference Thursday keynote. See the Windows Azure SQL Database, Federations and Reporting, Mobile Services section for the full post.

- Scott Guthrie announced improvements to Windows Azure Active Directory at the //BUILD/ conference on 6/27/2013 at 9:47 AM PDT. See the Windows Azure Access Control, Active Directory, and Identity section.

- Satya Nadella announced General Availability (GA) for Windows Azure Web Sites at the //BUILD/ conference on 6/27/2013 at 9:30 AM PDT. See the Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN section.

- Craig Kitterman reported on 6/26/2013 that autoscaling is now built into Windows Azure (in preview). See the Windows Azure Infrastructure and DevOps section. Scott Guthrie confirmed it during the //BUILD/ keynote at 9:47 AM.

- The TechNet Evaluation Center lets you “Put Microsoft’s Cloud OS vision to work today” by downloading updated 2012 R2 components starting 6/24/2013. See the Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds section.

- Satya Nadella announced Microsoft and Oracle are “Partners in the Cloud” on 6/24/2013. See the Windows Azure Infrastructure and DevOps section.

- The //BUILD/ 2013 Conference starts in San Francisco on 6/26/2013. See the Cloud Computing Events section.

| A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

•• Updated 6/29/2013 with new articles marked ••.

‡ Updated 6/28/2013 with a caveat about Visual Studio 2013 Preview not supporting the current Windows Azure SDK for .NET and new articles marked ‡

• Updated 6/27/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight, Hadoop and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight, Hadoop and Media Services

‡‡ Isaac Lopez (@_IsaacLopez) reported Yahoo! Spinning Continuous Computing with YARN in a 6/28/2013 post to the Datanami blog:

YARN was the big news this week, with the announcement that the Hadoop resource manager is finally hitting the streets as part of the Hortonworks Data Platform (HDP) “Community Preview.”

According to Bruno Fernandez-Ruiz, who spoke at Hadoop Summit this week, Yahoo! has been able to leverage YARN to transform the processing in their Hadoop cluster from simple, stodgy MapReduce to a nimble micro-batch processing engine – a change which they refer to as “continuous computing.”

The problem with MapReduce, explained Fernandez-Ruiz, VP of Platforms at Yahoo!, is that once the processing ship has launched, new data is left at the dock. This creates a problem in the age of connected devices and real-time ad serving, especially when the network you’re running is trying to make sense of 21 billion events per day, and you’ve got users and advertisers counting on you to be right.

With MapReduce batch jobs taking anywhere from two to six hours, Yahoo! wasn’t getting the fidelity it needed in its moment-to-moment information for things such as its Right Media services, its personalization algorithms, and its machine learning models. Problems such as this became an impetus for Yahoo!’s involvement with Hortonworks and the Apache Hadoop community to develop YARN, said Fernandez-Ruiz.

“We figured out that we had to change the model, and this is what we’re calling ‘continuous computing,’” he explained. “How do you take MapReduce and change this notion of [going from] big long windows of several hours to running in small continuous incremental iterations – whether that is 5 minutes, or 5 seconds, or a half a second. How do you move that batch job from being long running, to being micro-batch?”
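
A toy micro-batcher in JavaScript makes the contrast between long batch windows and small incremental ones concrete. This is a minimal sketch and not Yahoo!’s or Storm’s implementation. The event shape `{ ts, value }` (timestamps in milliseconds) and the `microBatch` function are hypothetical, and the sketch assumes events arrive sorted by time. Each window gets its own summary. Shrinking `windowMs` from hours to seconds therefore yields many small, timely results instead of one large, late one.

```javascript
// Group time-stamped events into consecutive fixed-length windows
// ("micro-batches") and summarize each window separately.
// Hypothetical illustration; not Yahoo!'s or Storm's API.
function microBatch(events, windowMs) {
  const batches = [];
  let current = null;
  // Assumes events are sorted by timestamp (ts, in milliseconds).
  for (const e of events) {
    const start = Math.floor(e.ts / windowMs) * windowMs;
    if (!current || current.start !== start) {
      // A new window begins; windows with no events are simply skipped.
      current = { start, count: 0, sum: 0 };
      batches.push(current);
    }
    current.count += 1;
    current.sum += e.value;
  }
  return batches;
}

const events = [
  { ts: 100, value: 2 },
  { ts: 900, value: 3 },
  { ts: 1200, value: 5 },
  { ts: 5100, value: 1 },
];

// One-second micro-batches instead of one multi-hour batch.
console.log(microBatch(events, 1000));
```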

One of the solutions they’ve turned to, says Fernandez-Ruiz, is to leverage YARN to move certain processes from big batch jobs to streaming. To accomplish this they turned to Storm, the open source distributed real-time computation system.

Pivoting off of YARN, Yahoo! was able to use Storm to shrink a processing window that previously ran 45 minutes (and as long as an hour and a half) to under 5 seconds, correcting a problem they had with unintentional client over-spend on their Right Media exchange.

Fernandez-Ruiz says that they currently have Storm running in this implementation on 320 nodes of their Hadoop cluster, processing 133,000 events per second, corresponding roughly to 500 processes running 12,000 threads. (Fernandez-Ruiz says that their port of Storm to YARN has been submitted to the Storm distribution and is now available for anyone to use.)
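
The quoted figures imply a few useful ratios. The snippet below is plain arithmetic on numbers from this article: 21 billion events per day, 133,000 events/sec on 320 nodes, 12,000 threads across 500 processes, and a 45-minute window cut to about 5 seconds. The derived values are not figures Yahoo! reported. The window is “sub-5 seconds” and was sometimes as long as 90 minutes, so the 540x ratio is a conservative estimate.

```javascript
// Back-of-the-envelope ratios derived from figures quoted in the article.
const eventsPerDay = 21e9;          // "21 billion events per day"
const secondsPerDay = 24 * 60 * 60; // 86,400 seconds
const avgEventsPerSec = eventsPerDay / secondsPerDay;

const stormEventsPerSec = 133000;   // Storm-on-YARN throughput
const stormNodes = 320;
const eventsPerNode = stormEventsPerSec / stormNodes;

const threadsPerProcess = 12000 / 500;

const oldWindowSec = 45 * 60;       // 45-minute processing window
const newWindowSec = 5;             // "sub-5 seconds"
const windowRatio = oldWindowSec / newWindowSec;

console.log(Math.round(avgEventsPerSec)); // 243056 events/sec across the network
console.log(eventsPerNode.toFixed(1));    // 415.6 events/sec per Storm node
console.log(threadsPerProcess);           // 24 threads per process
console.log(windowRatio);                 // 540
```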

He explained that they are also using YARN to run Spark, UC Berkeley AMPLab’s cluster computing framework for data analytics, in conjunction with MapReduce to help with personalization on their network. According to Fernandez-Ruiz, Yahoo! is using long-running MapReduce jobs to calculate the probabilities of an individual user’s interests (he used the example of a user with a fashion emphasis). While the batch runs, Spark continues to score the user.

“If you actually send an email, perform a search query, or you have been clicking on a number of articles, we can infer really quickly if you happen to be a fashion emphasis today,” he said. They then take the data and feed it into a scoring function, which flags the individual according to their interests for the next few minutes, or even 12 hours, delivering personalized content to them on the Yahoo! network.

According to Fernandez-Ruiz, this Spark deployment currently runs on a much smaller cluster of 40 nodes, and the continuous training of these personalization algorithms has seen a threefold speedup. (Again, Spark has been ported to YARN and is currently available for download.)

The final use case he discussed uses HBase, Spark, and Storm running on 19,000 nodes for machine learning to train their personalization models. With data constantly accumulating, Yahoo! looks towards using the flood to calibrate and train their models and classifiers.

“The problem is that every time you load all that data…it’s a long running MapReduce job – by the time you finish it, you’re basically changing maybe 1% of the data. Ninety-nine percent of the data is the same, so why are you running the MapReduce job again on the same amount of data? It would be better to actually do it iteratively and incrementally, so we started to use Spark to train those models at the same time we’re using Storm.”
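
Rerunning a job when 99% of the data is unchanged is wasteful, and a running statistic that folds in only new records shows why. This is a minimal sketch. The hypothetical `IncrementalMean` class stands in for model state. It is not Spark’s API or Yahoo!’s training code. It only contrasts incremental updates with full recomputation.

```javascript
// Maintain a running mean that is updated with only the new records,
// instead of recomputing over the full (mostly unchanged) data set.
class IncrementalMean {
  constructor() {
    this.count = 0;
    this.mean = 0;
  }

  // Fold a batch of new values into the existing state (online mean update).
  update(values) {
    for (const v of values) {
      this.count += 1;
      this.mean += (v - this.mean) / this.count;
    }
    return this.mean;
  }
}

// Full recomputation, for comparison: touches every value every time.
function fullMean(values) {
  return values.reduce((a, b) => a + b, 0) / values.length;
}

const history = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10];
const newData = [11];

const inc = new IncrementalMean();
inc.update(history); // initial pass over historical data
inc.update(newData); // later: process only the new record

console.log(inc.mean);                          // 6
console.log(fullMean(history.concat(newData))); // 6
```

The incremental path touches one value on the second update, while the full path rereads all eleven. The gap grows with the size of the unchanged history.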

He says that Hadoop 2.0 is changing the way that they view Hadoop altogether. “It’s no longer just these long-running MapReduce jobs – it’s actually the MapReduce jobs together with an ability to process in very low latency the streaming signals that we get in. To not have that window of processing, but actually have a very small window of processing together with the ability to not have to reload all the data set in memory…to go and iteratively and incrementally do those micro-batch jobs.”

All that, he says, is possible thanks to YARN, and the new development of splitting the resource manager.


‡ Brad Calder (@CalderBrad) and Jai Haridas (@jaiharidas) posted Windows Azure Storage BUILD Talk - What’s Coming, Best Practices and Internals to the Windows Azure Storage Team blog on 6/28/2013:

At Microsoft’s Build conference we spoke about Windows Azure Storage internals, best practices and a set of exciting new features that we have been working on. Before we talk about the exciting new features in our pipeline, let us reminisce a little about the past year. It has been almost a year since we blogged about the number of objects and average requests per second we serve.

This past year once again has proven to be great for Windows Azure Storage, with many external customers and internal products like Xbox, Skype, SkyDrive, Bing, SQL Server, Windows Phone, etc., driving significant growth for Windows Azure Storage and making it their choice for storing and serving critical parts of their service. As a result, Windows Azure Storage now hosts more than 8.5 trillion unique objects and serves over 900K requests/sec on average (that’s over 2.3 trillion requests per month). This is a 2x increase in the number of objects stored and a 3x increase in average requests/sec since we last blogged about it a year ago!
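
The monthly figure follows directly from the average rate. The sketch below assumes a 30-day month. With a 31-day month the total is about 2.41 trillion, so “over 2.3 trillion” holds either way.

```javascript
// Convert the quoted average request rate into a monthly total.
const requestsPerSec = 900e3;              // "over 900K requests/sec on average"
const secondsPerMonth = 30 * 24 * 60 * 60; // assumes a 30-day month (2,592,000 s)
const requestsPerMonth = requestsPerSec * secondsPerMonth;

console.log(requestsPerMonth);                     // 2332800000000
console.log((requestsPerMonth / 1e12).toFixed(2)); // 2.33 (trillion)
```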

In the talk, we also spoke about a variety of new features in our pipeline. Here is a quick recap on all the features we spoke about.

Queue Geo-Replication: we are pleased to announce that all queues are now geo-replicated for Geo Redundant Storage accounts. This means that all data for Geo Redundant Storage accounts (Blobs, Tables and Queues) is now geo-replicated.

By the end of CY ’13, we are targeting the release of the following features:

- Secondary read-only access: we will provide a secondary endpoint that can be used to read an eventually consistent copy of your geo-replicated data. In addition, we will provide an API to retrieve the current replication lag for your storage account. Applications will be able to use the secondary endpoint as another source for computing over the account’s data, as well as a fallback option if the primary is not available.

- Windows Azure Import/Export: we will preview a new service that allows customers to ship terabytes of data in/out of Windows Azure Blobs by shipping disks.

- Real-Time Metrics: we will provide near real-time, per-minute aggregates of storage metrics for Blobs, Tables and Queues. These metrics will provide more granular information about your service, which hourly metrics tend to smooth out.

- Cross Origin Resource Sharing (CORS): we will enable CORS for the Azure Blob, Table and Queue services. This enables our customers to use JavaScript in their web pages to access storage directly. It removes the need for a proxy service that routes storage requests to get around browsers’ restrictions on cross-domain access.

- JSON for Azure Tables: we will enable the OData v3 JSON protocol, which is much lighter and more performant than AtomPub. In particular, the JSON protocol has a NoMetadata option, which is very efficient in terms of bandwidth.
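
A toy series shows what hourly aggregates smooth out and per-minute aggregates reveal. This is a minimal sketch. The sample shape `{ minute, requests }` and the `aggregate` function are hypothetical and do not reflect the Storage Analytics metrics schema.

```javascript
// Aggregate per-minute request counts into buckets of `bucketMinutes`
// and report the average requests per minute within each bucket.
function aggregate(samples, bucketMinutes) {
  const buckets = new Map();
  for (const s of samples) {
    const key = Math.floor(s.minute / bucketMinutes);
    const b = buckets.get(key) || { total: 0, n: 0 };
    b.total += s.requests;
    b.n += 1;
    buckets.set(key, b);
  }
  return [...buckets.values()].map(b => b.total / b.n);
}

// One hour of traffic: steady 100 requests/minute with a 5-minute burst.
const samples = [];
for (let minute = 0; minute < 60; minute++) {
  const burst = minute >= 30 && minute < 35;
  samples.push({ minute, requests: burst ? 1300 : 100 });
}

const perMinute = aggregate(samples, 1);
const hourly = aggregate(samples, 60);

console.log(Math.max(...perMinute)); // 1300: the burst is visible per minute
console.log(hourly[0]);              // 200: the burst is averaged away hourly
```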

If you missed the Build talk, you can now access it here. It covers the features mentioned above in more detail, in addition to best practices.

The SQL Server Team (@SQLServer) posted Microsoft Discusses Big Data at Hadoop Summit 2013 on 6/27/2013:

Hortonworks and Yahoo! kicked off the sixth annual Hadoop Summit, the leading conference for the Apache Hadoop community, yesterday in San Jose. We’ve been on the ground discussing our big data strategy with attendees and showcasing HDInsight Service, our Hadoop-based distribution for Windows Azure, as well as our latest business intelligence (BI) tools.

This morning, Microsoft Corporate Vice President, Quentin Clark will deliver a presentation on “Reaching a Billion Users with Hadoop” where he will discuss how Microsoft is simplifying data management for customers across all types of platforms. You can tune in live at 8:30 AM PT at www.hadoopsummit.org/sanjose.

Hortonworks also made an announcement this morning that aligns well with our goal to continue to simplify Hadoop for the enterprise. They announced that they will develop management packs for Microsoft System Center Operations Manager and Microsoft System Center Virtual Machine Manager that will manage and monitor the Hortonworks Data Platform (HDP). With these management packs, customers will be able to monitor and manage HDP from System Center Operations Manager alongside existing data center deployments, and manage HDP from System Center Virtual Machine Manager in virtual and cloud infrastructure deployments. For more information, visit www.hortonworks.com.

Another Microsoft partner, Simba Technologies, also announced yesterday that it will provide Open Database Connectivity (ODBC) access to Windows Azure HDInsight, Microsoft’s 100% Apache-compatible Hadoop distribution. Simba’s Apache Hive ODBC Driver with SQL Connector gives customers easy access to their data for BI and analytics using the SQL-based application of their choice. For more information, see the full press release at http://www.simba.com/about-simba/in-the-news/simba-provides-hdinsight-big-data-connectivity. For more information on Hadoop, see http://hortonworks.com/hadoop/.

Mike Flasko, a senior program manager for SQL Server, will also deliver a session this afternoon at 4:25PM PT focused on how 343 Industries, the studio behind the Halo franchise, is leveraging Windows Azure HDInsight Service to gain insight into the millions of concurrent gamers that lead to weekly Halo 4 updates and support email campaigns designed to increase player retention. If you are attending Hadoop Summit, be sure to sit in on Quentin’s keynote, stop by Mike’s session and check out our booth in the Expo Hall!

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

‡‡ My (@rogerjenn) The OakLeaf System ToDo Demo Windows Store App Passes the Windows Blue (8.1) RT Preview Test post of 6/29/2013 reports:

I updated my wife’s Windows RT tablet to Windows Blue (8.1) RT by downloading the bits from the Windows Store on 6/29/2013. After verifying that the OS was behaving as expected, I decided to test the Windows Store app that’s described in my Installing and Testing the OakLeaf ToDo List Windows Azure Mobile Services Demo on a Surface RT Tablet post of January 1, 2013.

Here’s the Windows Blue Start screen with the ToDo List demo emphasized:

Clicking the OakLeaf Systems ToDo List tile opens a splash screen:

After a few seconds, the sign-in screen opens:

Type a Windows Live ID and password, optionally mark the Keep Me Signed In check box and click Sign in to display a login confirmation dialog:

Click OK to open a text box and list of previous ToDo List items:

Type a ToDo item in the text box and click Save to add it to the list:

Click an item in the Query and Update Data list to mark it completed by removing it.

Click here for more information about creating Windows Azure Mobile Services apps.

‡‡ Carlos Figueira (@carlos_figueira) described Exposing authenticated data from Azure Mobile Services via an ASP.NET MVC application in a 6/27/2013 post:

After seeing a couple of posts on this topic, I decided to try to get this scenario working. Having never used the OAuth support in MVC, I found it a good opportunity to learn a little about it. So here’s a very detailed, step-by-step account of what I did (and what worked for me); hopefully it will be useful if you got to this post. As with my previous step-by-step post, it may have more detail than some people care about, so if you’re only interested in the connection between the MVC application and Azure Mobile Services, feel free to skip to section 3 (Using the Azure Mobile Service from the MVC app). The project will be a simple contact list, which I have used in other posts in the past.

1. Create the MVC project

Let’s start with a new MVC 4 project in Visual Studio (I’m using VS 2012):

And select “Internet Application” in the project type:

The app is now created and can be run. Next, let’s create the model by adding a new class to the project:

    public class Contact
    {
        public int Id { get; set; }
        public string Name { get; set; }
        public string Telephone { get; set; }
        public string EMail { get; set; }
    }

And after building the project, we can create a new controller (called ContactController), and I’ll choose the MVC Controller with actions and views using EF, since it gives me for free the nice scaffolding views, as shown below. In the data context class, choose “<New data context...>” – and choose any name, since it won’t be used once we start talking to the Azure Mobile Services for the data.

Now that the template created the views for us, we can update the layout to start using the new controller / views. Open the _Layout.cshtml file under Views / Shared, and add a new action link to the new controller so we can access it (I’m not going to touch the rest of the page to keep this post short).

    <div class="float-right">
        <section id="login">
            @Html.Partial("_LoginPartial")
        </section>
        <nav>
            <ul id="menu">
                <li>@Html.ActionLink("Home", "Index", "Home")</li>
                <li>@Html.ActionLink("Contact List", "Index", "Contact")</li>
                <li>@Html.ActionLink("About", "About", "Home")</li>
                <li>@Html.ActionLink("Contact", "Contact", "Home")</li>
            </ul>
        </nav>
    </div>

At this point the project should be “F5-able”, so try running it. If everything is OK, you should see the new item in the top menu (circled below), and after clicking it you should be able to enter data (currently stored in the local DB).

Now since I want to let each user have their own contact list, I’ll enable authentication in the MVC application. I found the Using OAuth Providers with MVC 4 tutorial to be quite good, and I was able to add Facebook login to my app in just a few minutes. First, you have to register a new Facebook Application with the Facebook Developers Portal (and the “how to: register for Facebook authentication” guide on the Windows Azure documentation shows step-by-step what needs to be done). Once you have a client id and secret for your FB app, open the AuthConfig.cs file under the App_Start folder, and uncomment the call to RegisterFacebookClient:

    OAuthWebSecurity.RegisterFacebookClient(
        appId: "YOUR-FB-APP-ID",
        appSecret: "YOUR-FB-APP-SECRET");

At this point we can now change our controller class to require authorization (via the [Authorize] attribute) so that it will redirect us to the login page if we try to access the contact list without logging in first.

    [Authorize]
    public class ContactController : Controller
    {
        // ...
    }

Now if we either click the Log in button, or if we try to access the contact list while logged out, we’ll be presented with the Login page.

Notice the two choices for logging in. In this post I’ll talk about the Facebook login only (so we can ignore the local account option), but this could also work with Azure Mobile Services, as shown in this post by Josh Twist.

And the application now works with the data stored in the local database. Next step: consume the data via Azure Mobile Services.

2. Create the Azure Mobile Service backend

Let’s start with a brand new mobile service for this example, by going to the Azure Management Portal and selecting to create a new Mobile Service:

Once the service is created, select the “Data” tab as shown below:

And create a new table. Since we only want authenticated users to access the data, we should set the permissions for the table operations accordingly.

Now, as I talked about in the “storing per-user data” post, we should modify the table scripts to make sure that no malicious client tries to access data from other users. So we need to update the insert script:

    function insert(item, user, request) {
        item.userId = user.userId;
        request.execute();
    }

Read:

    function read(query, user, request) {
        query.where({ userId: user.userId });
        request.execute();
    }

Update:

    function update(item, user, request) {
        tables.current.where({ id: item.id, userId: user.userId }).read({
            success: function(results) {
                if (results.length) {
                    request.execute();
                } else {
                    request.respond(401, { error: 'Invalid operation' });
                }
            }
        });
    }

And finally delete:

    function del(id, user, request) {
        tables.current.where({ id: id, userId: user.userId }).read({
            success: function(results) {
                if (results.length) {
                    request.execute();
                } else {
                    request.respond(401, { error: 'Invalid operation' });
                }
            }
        });
    }
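
The update and delete scripts let a request through only if a row with that id already belongs to the caller. One way to check this logic outside the portal is to run it against hand-written stand-ins for `tables`, `user` and `request`. The mocks below are hypothetical and imitate only the few members the scripts call. They are not the Mobile Services runtime, and unlike the real `read` they invoke `success` synchronously.

```javascript
// Hypothetical in-memory stand-ins for the Mobile Services script globals.
const rows = [
  { id: 1, userId: "Facebook:alice", name: "Bob" },
  { id: 2, userId: "Facebook:carol", name: "Dan" },
];

const tables = {
  current: {
    // Mimics where(filter).read({ success }) with an equality filter.
    where(filter) {
      return {
        read(options) {
          const results = rows.filter(r =>
            Object.keys(filter).every(k => r[k] === filter[k]));
          options.success(results);
        },
      };
    },
  },
};

// Records what the script decided instead of touching a database.
function makeRequest() {
  return {
    outcome: null,
    execute() { this.outcome = "executed"; },
    respond(status, body) { this.outcome = status; },
  };
}

// Same logic as the update script above.
function update(item, user, request) {
  tables.current.where({ id: item.id, userId: user.userId }).read({
    success: function (results) {
      if (results.length) {
        request.execute();
      } else {
        request.respond(401, { error: 'Invalid operation' });
      }
    },
  });
}

// Alice edits her own contact: allowed.
const own = makeRequest();
update({ id: 1, name: "Bobby" }, { userId: "Facebook:alice" }, own);
console.log(own.outcome); // executed

// Alice tries to edit Carol's contact: rejected.
const other = makeRequest();
update({ id: 2, name: "Eve" }, { userId: "Facebook:alice" }, other);
console.log(other.outcome); // 401
```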

We’ll also need to go to the “Identity” tab in the portal to add the same Facebook credentials we added to the MVC application (that’s how the Azure Mobile Services runtime validates the login call with Facebook).

The mobile service is now ready to be used. Next, we need to start calling it from the web app.

3. Using the Azure Mobile Service from the MVC app

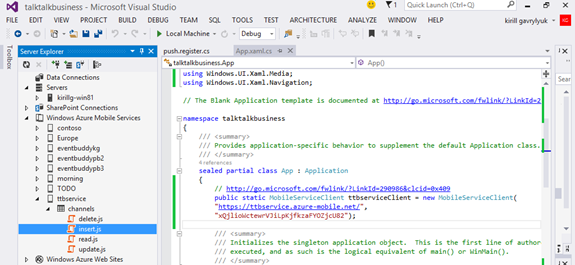

In a great post about this topic a while back, Filip W talked about using the REST API to talk to the service. While that is still a valid option, version 1.0 of the Mobile Services SDK NuGet package also supports the “full” .NET Framework 4.5 (not only Windows Store or Windows Phone apps, as it did in the past), so we can use it to make our code simpler. First, right-click on the project references, and select “Manage NuGet Packages…”

And on the Online tab, search for “mobileservices”, and install the “Windows Azure Mobile Services” package.

We can now start updating the contacts controller to use that instead of the local DB. First, remove the declaration of the ContactContext property, and replace it with a mobile service client one. Notice that since we’ll use authentication, we don’t need to pass the application key.

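    // Requires: using Microsoft.WindowsAzure.MobileServices;
    //private ContactContext db = new ContactContext();

    // Note: a static client is shared by every request (and every user) this app serves.
    private static MobileServiceClient MobileService = new MobileServiceClient(
        "https://YOUR-SERVICE-NAME.azure-mobile.net/"
    );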

Now to the controller actions. For all operations, we need to ensure that the client is logged in, and to log in, we need the Facebook access token. As suggested in the Using OAuth Providers with MVC 4 tutorial, I updated the ExternalLoginCallback method to store the Facebook token in the session object.

    [AllowAnonymous]
    public ActionResult ExternalLoginCallback(string returnUrl)
    {
        AuthenticationResult result = OAuthWebSecurity.VerifyAuthentication(
            Url.Action("ExternalLoginCallback", new { ReturnUrl = returnUrl }));
        if (!result.IsSuccessful)
        {
            return RedirectToAction("ExternalLoginFailure");
        }

        if (result.ExtraData.Keys.Contains("accesstoken"))
        {
            Session["facebooktoken"] = result.ExtraData["accesstoken"];
        }

        //...
    }

Now we can use that token to log in the web application to the Azure Mobile Services backend. Since we need to ensure that all operations are executed within a logged in user, the ideal component would be an action (or authentication) filter. To make this example simpler, I’ll just write a helper method which will be called by all action methods. In the method, shown below, we take the token from the session object, package it in the format expected by the service (an object with a member called “access_token” with the value of the actual token), and make a call to the LoginAsync method. If the call succeeded, then the user is logged in. If the MobileService object had already been logged in, its ‘CurrentUser’ property would not be null, so we bypass the call and return a completed task.

    // Requires: using System.Threading.Tasks; using Newtonsoft.Json.Linq;
    private Task<bool> EnsureLogin()
    {
        if (MobileService.CurrentUser == null)
        {
            // Package the Facebook token as { "access_token": "<token>" }.
            var accessToken = Session["facebooktoken"] as string;
            var token = new JObject();
            token.Add("access_token", accessToken);
            return MobileService.LoginAsync(MobileServiceAuthenticationProvider.Facebook, token).ContinueWith<bool>(t =>
            {
                // Report success only if the login task completed without error.
                if (t.Status == TaskStatus.RanToCompletion)
                {
                    return true;
                }
                else
                {
                    System.Diagnostics.Trace.WriteLine("Error logging in: " + t.Exception);
                    return false;
                }
            });
        }

        // Already logged in: return an already-completed task.
        return Task.FromResult(true);
    }
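
The logic of `EnsureLogin` can be sketched in JavaScript so it runs without a network. It reuses an existing login. Otherwise it exchanges the Facebook token, packaged as an object with an `access_token` member, for a Mobile Services login. It reports failure as `false` rather than throwing. The `client` object, with its `currentUser` property and `login(provider, token)` method, is a hypothetical stand-in for `MobileServiceClient`, injected so the logic is testable. Passing the client in also makes explicit whose login is being reused. In the C# sample the client is a static field, so one login is shared by every request the site serves, which is worth keeping in mind for a multi-user web app.

```javascript
// Sketch of the EnsureLogin pattern with an injected, hypothetical client.
async function ensureLogin(client, session) {
  // Already logged in: nothing to do.
  if (client.currentUser !== null) {
    return true;
  }
  // Package the provider token as an object with an "access_token" member.
  const token = { access_token: session.facebooktoken };
  try {
    client.currentUser = await client.login("facebook", token);
    return true;
  } catch (err) {
    console.error("Error logging in: " + err);
    return false;
  }
}

// Fake client that accepts exactly one known token.
function makeFakeClient() {
  return {
    currentUser: null,
    loginCalls: 0,
    async login(provider, token) {
      this.loginCalls += 1;
      if (token.access_token === "good-token") {
        return { userId: "Facebook:123" };
      }
      throw new Error("invalid token");
    },
  };
}

// Example: the second call reuses the existing login.
(async () => {
  const client = makeFakeClient();
  console.log(await ensureLogin(client, { facebooktoken: "good-token" })); // true
  console.log(await ensureLogin(client, { facebooktoken: "good-token" })); // true
  console.log(client.loginCalls); // 1
})();
```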

Now for the actions themselves. When listing all contacts, we first ensure that the client is logged in, then retrieve all items from the mobile service. This is a very simple and naïve implementation – it doesn’t do any paging, so it will only work for small contact lists – but it illustrates the point of this post. Also, if the login fails the code simply redirects to the home page; in a more realistic scenario it would send some better error message to the user.

    //
    // GET: /Contact/
    public async Task<ActionResult> Index()
    {
        if (!await EnsureLogin())
        {
            return this.RedirectToAction("Index", "Home");
        }

        var list = await MobileService.GetTable<Contact>().ToListAsync();
        return View(list);
    }
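
The Index action fetches every row in one call, which, as noted, only works for small lists. A common fix is a paging loop that requests fixed-size pages until a short page comes back. The sketch below assumes a hypothetical async `fetchPage(skip, take)` function in place of a paged query against the table. It is not a Mobile Services SDK call. A real UI would more likely request one page per view than loop over all of them.

```javascript
// Retrieve all rows in fixed-size pages instead of one unbounded request.
// fetchPage is a hypothetical async function (skip, take) => array of rows.
async function fetchAll(fetchPage, pageSize) {
  const all = [];
  for (let skip = 0; ; skip += pageSize) {
    const page = await fetchPage(skip, pageSize);
    all.push(...page);
    // A page shorter than requested means there is nothing left to read.
    if (page.length < pageSize) {
      break;
    }
  }
  return all;
}

// In-memory stand-in for the contacts table that counts requests.
function makeFakeTable(count) {
  const rows = Array.from({ length: count }, (_, i) => ({ id: i + 1 }));
  const stats = { requests: 0 };
  async function fetchPage(skip, take) {
    stats.requests += 1;
    return rows.slice(skip, skip + take);
  }
  return { fetchPage, stats };
}

const table = makeFakeTable(23);
fetchAll(table.fetchPage, 10).then(all => {
  console.log(all.length, table.stats.requests); // 23 3
});
```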

Displaying the details for a specific contact is similar: retrieve the contact with the given id from the service, then display it.

    //
    // GET: /Contact/Details/5
    public async Task<ActionResult> Details(int id = 0)
    {
        if (!await EnsureLogin())
        {
            return this.RedirectToAction("Index", "Home");
        }

        var contacts = await MobileService.GetTable<Contact>().Where(c => c.Id == id).ToListAsync();
        if (contacts.Count == 0)
        {
            return HttpNotFound();
        }

        return View(contacts[0]);
    }

Likewise, creating a new contact involves getting the table and inserting the item using the InsertAsync method.

    //
    // POST: /Contact/Create
    [HttpPost]
    [ValidateAntiForgeryToken]
    public async Task<ActionResult> Create(Contact contact)
    {
        if (ModelState.IsValid)
        {
            if (!await EnsureLogin())
            {
                return RedirectToAction("Index", "Home");
            }

            var table = MobileService.GetTable<Contact>();
            await table.InsertAsync(contact);
            return RedirectToAction("Index");
        }

        return View(contact);
    }

And, for completeness’ sake, here are the other operations (edit / delete):

    //
    // GET: /Contact/Edit/5
    public async Task<ActionResult> Edit(int id = 0)
    {
        if (!await EnsureLogin())
        {
            return RedirectToAction("Index", "Home");
        }

        var contacts = await MobileService.GetTable<Contact>().Where(c => c.Id == id).ToListAsync();
        if (contacts.Count == 0)
        {
            return HttpNotFound();
        }

        return View(contacts[0]);
    }

    //
    // POST: /Contact/Edit/5
    [HttpPost]
    [ValidateAntiForgeryToken]
    public async Task<ActionResult> Edit(Contact contact)
    {
        if (ModelState.IsValid)
        {
            if (!await EnsureLogin())
            {
                return RedirectToAction("Index", "Home");
            }

            await MobileService.GetTable<Contact>().UpdateAsync(contact);
            return RedirectToAction("Index");
        }

        return View(contact);
    }

    //
    // GET: /Contact/Delete/5
    public async Task<ActionResult> Delete(int id = 0)
    {
        if (!await EnsureLogin())
        {
            return RedirectToAction("Index", "Home");
        }

        var contacts = await MobileService.GetTable<Contact>().Where(c => c.Id == id).ToListAsync();
        if (contacts.Count == 0)
        {
            return HttpNotFound();
        }

        return View(contacts[0]);
    }

    //
    // POST: /Contact/Delete/5
    [HttpPost, ActionName("Delete")]
    [ValidateAntiForgeryToken]
    public async Task<ActionResult> DeleteConfirmed(int id)
    {
        if (!await EnsureLogin())
        {
            return RedirectToAction("Index", "Home");
        }

        await MobileService.GetTable<Contact>().DeleteAsync(new Contact { Id = id });
        return RedirectToAction("Index");
    }

That should be it. If you run the code now, try logging in to your Facebook account, inserting a few items then going to the portal to browse the data – it should be there. Deleting / editing / querying the data should also work.

Wrapping up

Logging in via an access (or authorization) token currently only works for Facebook, Microsoft and Google accounts; Twitter isn’t supported yet. So the example above could work (although I haven’t tried it) just as well for the other two supported account types.

The code for this post can be found in the MSDN Code Samples at http://code.msdn.microsoft.com/Flowing-authentication-08b8948e.

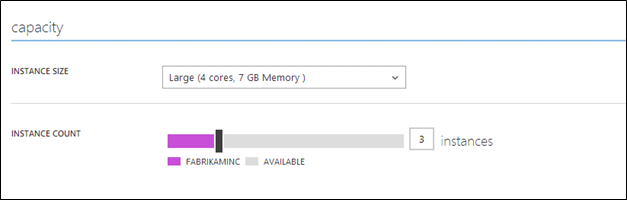

• Steven Martin (@stevemar_msft) posted Announcing the General Availability of Windows Azure Mobile Services, Web Sites and continued Service innovation to the Windows Azure Team blog during the //BUILD/ 2013 Conference keynote on 6/27/2013:

We strive to deliver innovation that gives developers a diverse platform for building the best cloud applications that can reach customers around the world in an instant. Many new applications fall into the category of what we call “Modern Applications” which are invariably web based and accessible by a broad spectrum of mobile devices. Today, we’re taking a major step towards making this a reality with the General Availability (GA) of Windows Azure Mobile Services and Windows Azure Web Sites.

Windows Azure Mobile Services

Mobile Services makes it fast and easy to create a mobile backend for every device. Mobile Services simplifies user authentication, push notification, server side data and business logic so you can get your mobile application in market fast. Mobile Services provides native SDKs for Windows Store, Windows Phone, Android, iOS and HTML5 as well as REST APIs.

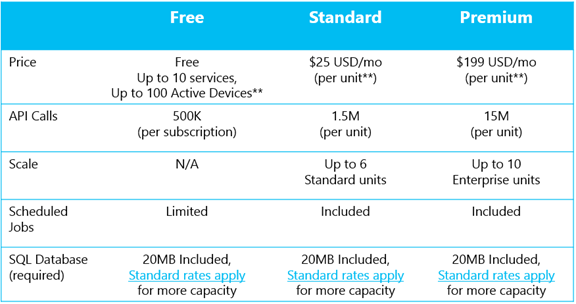

Starting today, Mobile Services is Generally Available (GA) in three tiers—Free, Standard and Premium. The Standard and Premium tiers are metered by number of API calls and backed by our standard 99.9% monthly SLA. You can find the full details of the new pricing here. All tiers of Mobile Services will be free of charge until August 1, 2013 to give customers the opportunity to select the appropriate tier for their application. SQL database and storage will continue to be billed separately during this period.

In addition, building Windows 8.1 connected apps is easier than ever with first class support for Mobile Services in the Visual Studio 2013 Preview and customers can also turn on Gzip compression between service and client.

Companies like Yatterbox, Sly Fox, Verdens Gang, Redbit and TalkTalk Business are already building apps that distribute content and provide up to the minute information across a variety of devices.

Developers can also use Windows Azure Mobile Services with their favorite third party services from partners such as New Relic, SendGrid, Twilio and Xamarin.

Windows Azure Web Sites

Windows Azure Web Sites is the fastest way to build, scale and manage business-grade Web applications. Windows Azure Web Sites is open and flexible, with support for multiple languages and frameworks including ASP.NET, PHP, Node.js and Python, multiple open-source applications including WordPress and Drupal, and even multiple databases. ASP.NET developers can easily create new web sites, or move existing ones, to Windows Azure directly from inside Visual Studio.

We are also pleased to announce the General Availability (GA) of the Windows Azure Web Sites Standard (formerly named Reserved) and Free tiers. The Standard tier is backed by our standard 99.9% monthly SLA. The 33% preview pricing discount for Standard tier Windows Azure Web Sites will expire on August 1, 2013. Web sites running in the Shared tier remain in preview with no changes. Visit our pricing page for a comprehensive look at all the pricing changes.

Service Updates for Windows Azure Web Sites Standard tier include:

- SSL Support: SNI or IP based SSL support is now available.

- Independent site scaling: Customers can select individual sites to scale up or down.

- Memory dumps for debugging: Customers can get access to memory dumps using a REST API to help with site debugging and diagnostics.

- Support for 64-bit processes: Customers can run 64-bit web sites and take advantage of additional memory and faster computation.

Innovation continues on existing Services

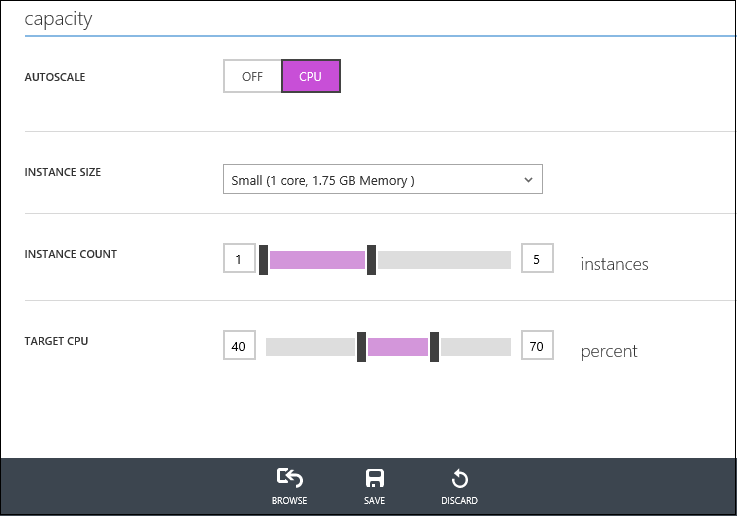

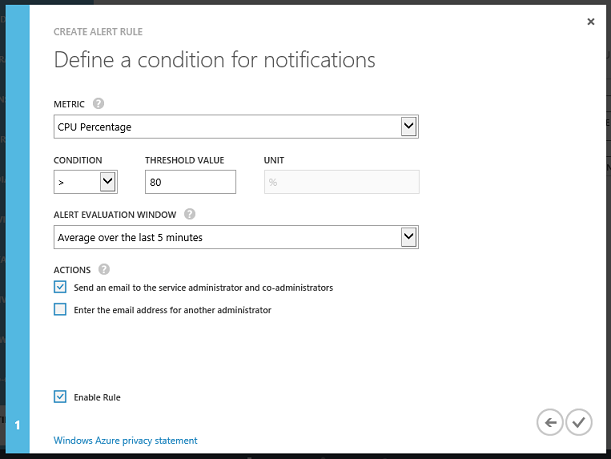

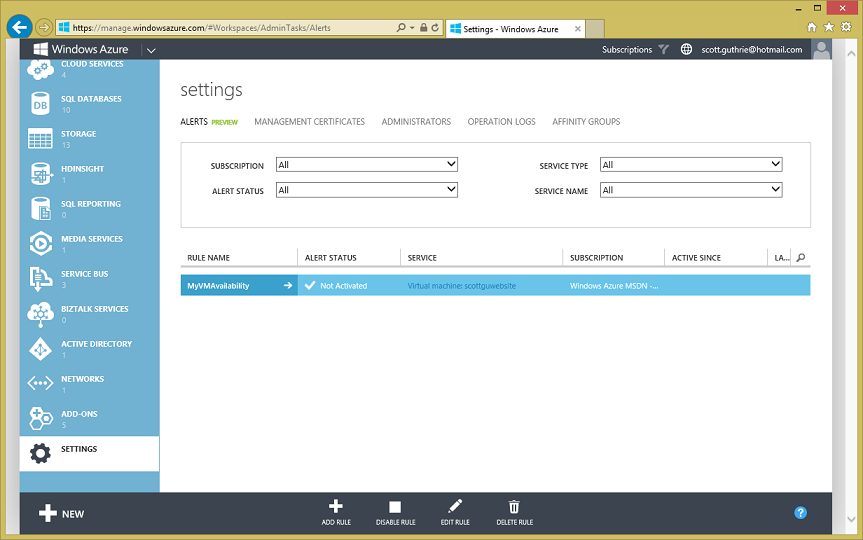

Auto scale, alerts and monitoring Preview

Windows Azure now provides a number of capabilities that help you better understand the health of your applications. These features, available in preview, allow you to monitor the health and availability of your applications, receive notifications when your service availability changes, perform action-based events, and automatically scale to match current demands.

Availability, monitoring, auto scaling and alerting are available in preview for Windows Azure Web Sites, Cloud Services, and Virtual Machines. Alerts and monitoring are available in preview for Mobile Services. There is no additional cost for these features while in preview.
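Automatic scaling of this kind is usually expressed as a rule over a metric plus an allowed instance range. The Python sketch below shows one threshold-based rule: scale out when average CPU is above a target range, scale in when it is below, and clamp the result to a minimum and maximum instance count. The function name, the thresholds, and the one-instance step are hypothetical illustrations, not the rules or defaults the Windows Azure preview actually applies.

```python
def next_instance_count(avg_cpu_percent: float, current: int,
                        min_instances: int = 1, max_instances: int = 3,
                        scale_out_above: float = 80.0, scale_in_below: float = 60.0,
                        step: int = 1) -> int:
    """Hypothetical threshold rule: return the instance count to run next."""
    if avg_cpu_percent > scale_out_above:
        # Demand is above the target range: add capacity, up to the maximum
        return min(current + step, max_instances)
    if avg_cpu_percent < scale_in_below:
        # Demand is below the target range: remove capacity, down to the minimum
        return max(current - step, min_instances)
    # Within the target range: leave the deployment as it is
    return current
```

Keeping a gap between the two thresholds (60% to 80% here) helps avoid flapping, where one scale action immediately triggers the opposite one.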

New Windows Azure Virtual Machines images available

SQL Server 2014 and Windows Server 2012 R2 preview images are now available in the Virtual Machines Image Gallery. At the heart of the Microsoft Cloud OS vision, Windows Server 2012 R2 offers many new features and enhancements across storage, networking, and access and information protection. You can get started with Windows Server 2012 R2 and SQL Server 2014 by simply provisioning a prebuilt image from the gallery. These images are available now at a 33% discount during the preview. And you pay for what you use, by the minute.
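Per-minute billing and the preview discount combine as simple arithmetic: the hourly rate is prorated to the minutes actually used and then reduced by the discount. The sketch below assumes a hypothetical hourly rate (the announcement quotes no prices) and, for contrast, computes what rounding every partial hour up to a full hour would cost.

```python
import math
from decimal import Decimal


def per_minute_cost(minutes: int, hourly_rate: Decimal, discount: Decimal) -> Decimal:
    """Cost when usage is prorated by the minute, after a fractional discount."""
    return hourly_rate / 60 * minutes * (1 - discount)


def whole_hour_cost(minutes: int, hourly_rate: Decimal, discount: Decimal) -> Decimal:
    """Cost if every partial hour were billed as a full hour (for comparison only)."""
    return hourly_rate * math.ceil(minutes / 60) * (1 - discount)


if __name__ == "__main__":
    rate = Decimal("0.12")      # hypothetical hourly rate, in dollars
    discount = Decimal("0.33")  # the 33% preview discount
    print(per_minute_cost(95, rate, discount))  # 95 minutes, prorated
    print(whole_hour_cost(95, rate, discount))  # 95 minutes, rounded up to 2 hours
```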

Windows Azure Active Directory Sneak Peek

In today’s keynote at //Build, Satya Nadella gave a sneak peek into future enhancements to Windows Azure Active Directory. We’re working with third parties like Box and others so they can leverage Windows Azure Active Directory to enable a single sign-on (SSO) experience for their users. If a higher level of security is needed, you can leverage Active Authentication to give you multifactor authentication. If you are an ISV and interested in integrating with Windows Azure Active Directory for SSO please let us know by filling out a short survey.

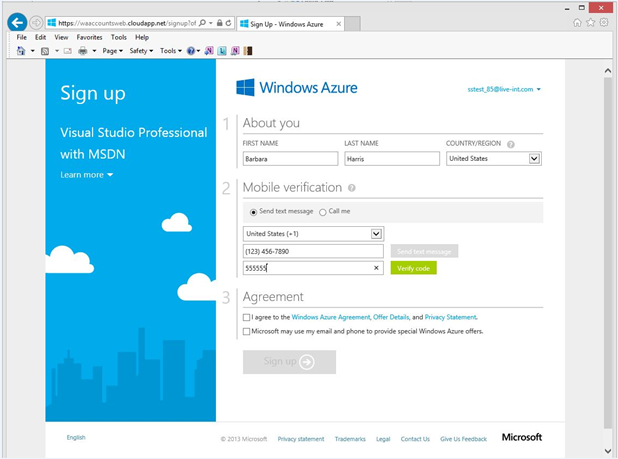

No credit card required for MSDN subscribers

Windows Azure is an important platform for development and test as it provides developers with computing capacity that may not be available to them on-premises. Previously, at TechEd 2013 we announced new Windows Azure MSDN benefits with monetary credit, reduced rates, and MSDN software usage on Windows Server for no additional fee. Now, most MSDN customers can activate their Windows Azure benefits in corporate accounts without entering a credit card number making it easier to claim this benefit.

Today’s announcement reinforces our commitment to developers building Modern Applications by delivering continued innovation to our platform and infrastructure services. Expect to see more new and exciting updates from us shortly. In the meantime, I encourage you to engage and build by visiting the developer center for mobile and web apps, watching live streams of sessions from //build/, and getting answers to your questions on the Windows Azure forums and on Stack Overflow.


Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

The WCF Data Services Team announced availability of the WCF Data Services 5.6.0 Alpha on 6/27/2013:

Today we are releasing updated NuGet packages and tooling for WCF Data Services 5.6.0. This is an alpha release and as such we have both features to finish as well as quality to fine-tune before we release the final version.

You will need the updated tooling to use the portable libraries feature mentioned below. It takes us a bit of extra time to get the tooling up to the download center, but we will update this blog post with a link when the tools are available for download.

What is in the release:

Visual Studio 2013 Support

The WCF DS 5.6.0 tooling installer has support for Visual Studio 2013. If you are using the Visual Studio 2013 Preview and would like to consume OData services, you can use this tooling installer to get Add Service Reference support for OData. Should you need to use one of our prior runtimes, you can still do so using the normal NuGet package management commands (you will need to uninstall the installed WCF DS NuGet packages and install the older WCF DS NuGet packages).

Portable Libraries

All of our client-side libraries now have portable library support. This means that you can now use the new JSON format in Windows Phone and Windows Store apps. The core libraries have portable library support for .NET 4.0, Silverlight 5, Windows Phone 8 and Windows Store apps. The WCF DS client has portable library support for .NET 4.5, Silverlight 5, Windows Phone 8 and Windows Store apps. Please note that this version of the client does not have tombstoning, so if you need that feature for Windows Phone apps you will need to continue using the Windows Phone-specific tooling.

URI Parser Integration

The URI parser is now integrated into the WCF Data Services server bits, which means that the URI parser is capable of parsing any URL supported in WCF DS. We are currently still working on parsing functions, with those areas of the code base expected to be finalized by RTW.
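To illustrate what a URI parser has to separate, the Python sketch below splits an OData request URL into resource-path segments, system query options (the `$`-prefixed ones such as `$filter` and `$top`), and custom query options. It is only an illustration built on the standard library's `urllib.parse`: it does not parse `$filter` expressions or key predicates, and it is unrelated to the actual WCF Data Services parser API. The function name, service root, and URL are hypothetical.

```python
from typing import Dict, List, Tuple
from urllib.parse import parse_qsl, urlsplit


def parse_odata_uri(service_root: str, uri: str) -> Tuple[List[str], Dict[str, str], Dict[str, str]]:
    """Split an OData URI into (path segments, system query options, custom query options)."""
    root = urlsplit(service_root)
    parts = urlsplit(uri)
    root_path = root.path.rstrip("/")
    if parts.netloc != root.netloc or not parts.path.startswith(root_path):
        raise ValueError("URI is not under the service root")
    # Resource path relative to the service root, e.g. "Products(1)/Category"
    resource_path = parts.path[len(root_path):].strip("/")
    segments = [segment for segment in resource_path.split("/") if segment]
    # parse_qsl percent-decodes names and values ("Price%20gt%2010" -> "Price gt 10")
    query = parse_qsl(parts.query, keep_blank_values=True)
    system = {name: value for name, value in query if name.startswith("$")}
    custom = {name: value for name, value in query if not name.startswith("$")}
    return segments, system, custom
```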

Public Provider Improvements

In the 5.5.0 release we started working on making our providers public. In this release we have made it possible to override the behavior of included providers with respect to properties that don’t have native support in OData v3. Specifically, you can now create a public provider that inherits from the Entity Framework provider and override a method to make enum and spatial properties work better with WCF Data Services. We have also done some internal refactoring such that we can ship our internal providers in separate NuGet packages. We hope to be able to ship an EF6 provider soon.

Known Issues

With any alpha, there will be known issues. Here are a few things you might run into:

- We ran into an issue with a build of Visual Studio that didn’t have the NuGet Package Manager installed. If you’re having problems with Add Service Reference, please verify that you have a version of the NuGet Package Manager and that it is up-to-date.

- We ran into an issue with build errors referencing resource assemblies on Windows Store apps. A second build will make these errors go away.

We want feedback!

This is a very early alpha (we think the final release will happen around the start of August), but we really need your feedback now, especially in regards to the portable library support. Does it work as expected? Can you target what you want to target? Please leave your comments below or e-mail me at mastaffo@microsoft.com. Thank you!


Windows Azure Service Bus, BizTalk Services and Workflow

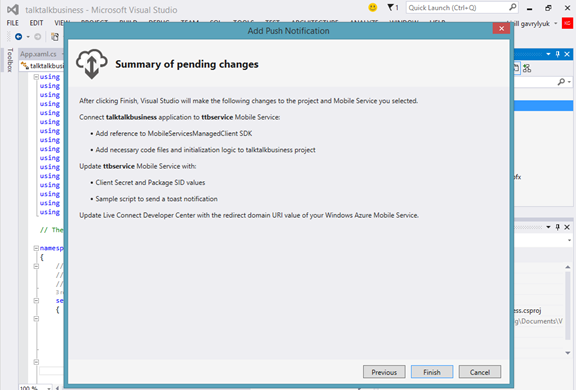

‡‡ Haddy Al-Haggan described Push Notification using Service Bus in a 6/28/2013 post:

As with push notifications on Windows Phone 7, you can also send notifications by using the Service Bus. There are several ways to do so; the newest, which greatly simplifies both development and the registration of devices with the Service Bus, is the Service Bus Notification Hub. As with Service Bus Relay Messaging and Service Bus Brokered Messaging, there are some steps you must follow to create the required Notification Hub. This feature is still in preview.

Here are the steps:

You have to download special references to develop for the Notification Hub. After creating your project in Visual Studio, go to Tools -> Library Package Manager -> Package Manager Console and enter the following command:

```powershell
Install-Package ServiceBus.Preview
```

Going to the portal:

First of all, go to your Windows Azure Portal and use Quick Create to create a notification hub, as shown below.

After creating the notification hub, there are a few things that must be done to register with the Service Bus.

Here they are in brief; I will explain each of them later on.

- Download the required ServiceBus.Preview.dll from NuGet, as explained previously.

- Create a Windows Store application using Visual Studio

- After registering the application in the Store, get its Package SID and Client Secret and paste them into the Notification Hub configuration.

- Associate the application created on Visual Studio with the one created on the store.

- Get the Connection information from the Notification hub.

- Enable the Toast Notification on the Windows 8 application.

- Get the Microsoft.WindowsAzure.Messaging.Managed.dll from the following link.

- Add the notification hub as a field of the App class.

I will skip the second step, creating a Windows Store application, because it is so easy.

For the third step, go to https://appdev.microsoft.com and create an account if you don’t have one. After that, submit your app, reserve your application name, and then click the third tile, named Services.

Under Services, go to Authenticating your service.

Copy the Package SID and the Client secret:

Now for the fourth step: in Visual Studio, right-click the Windows Store project you created, go to Store, and then click Associate App with the Store. You will be asked to sign in with the Windows Live account you used to create the developer account. After that, associate your application with the one registered in the Store.

For the fifth step, go back to the Windows Azure portal: you can get the notification hub’s connection information from its Connection Information option under Service Bus, as in the following picture:

The sixth step, enabling toast push notifications in your Windows 8 application, is small and easy: open Package.appmanifest and set Toast capable to “Yes”.

The rest is development in the Windows Store app. The first thing we have to do is reference the required namespaces, so in App.xaml.cs enter the following using directives:

```csharp
using Microsoft.WindowsAzure.Messaging;
using System.Threading.Tasks;
using Windows.Data.Xml.Dom;
using Windows.UI.Notifications;
using Windows.Networking.PushNotifications;
```

The next step is to create an instance of the NotificationHub object:

```csharp
// Field of the App class that holds the hub client
NotificationHub notificationhub;
```

And in the constructor of the App class (App.xaml.cs), initialize the instance of the object. Just don’t forget to replace DefaultListenSharedAccessSignature with its true value from the connection information retrieved from the Windows Azure portal in a previous step.

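```csharp
// "myhub" is the notification hub's name; the second argument is the
// DefaultListenSharedAccessSignature connection string from the portal
notificationhub = new NotificationHub("myhub", "DefaultListenSharedAccessSignature");
```

Initialize notifications by registering the push channel by its Uri: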

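```csharp
async Task initializenotification()
{
    // Get the WNS push channel for this application
    var channel = await PushNotificationChannelManager.CreatePushNotificationChannelForApplicationAsync();
    // Register the channel URI with the notification hub
    await notificationhub.RegisterAsync(new Registration(channel.Uri));
}
```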

After that, call the initialization function when the application launches (OnLaunched) or in OnActivated:

```csharp
// The calling method (OnLaunched or OnActivated) must be marked async to use await
await initializenotification();
```

The previous part was for receiving notifications; to send a notification, use the following code. Don’t forget to add the necessary using directives.

You can enter the following code in the desired function:

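```csharp
// Replace "connectionstring" with a connection string that has Send rights
// (for example, DefaultFullSharedAccessSignature); "myhub" is the hub name
var hubClient = NotificationHubClient.CreateClientFromConnectionString("connectionstring", "myhub");
// Native Windows toast payload using the ToastText01 template
var toast = "<toast><visual><binding template=\"ToastText01\"><text id=\"1\">Hello!</text></binding></visual></toast>";
hubClient.SendWindowsNativeNotification(toast);
```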

After that, you can develop the application as required. Related development guidance is available for Windows Store apps, for Android, and for iOS.

For further development, I have written a simple Windows 8 application that sends and receives push notifications using the Service Bus Notification Hub; you can download the source code from here.

For now, the Notification Hub supports only Microsoft platforms, Apple iOS and Android. Here are some Channel 9 videos that I hope will help you during your development.

Here is one of my sources, which explains Push Notification using Service Bus in detail.

•• Abhishek Lal described the Durable Task Framework (Preview) with Azure Service Bus in a 6/27/2013 post:

In today’s landscape we have connected clients and continuous services that are powering rich and connected experiences for users. Developers face various challenges when writing code for both clients and services. I recently presented a session at the TechEd conference covering some of the challenges that connected clients face and how Service Bus can help with these (Connected Clients and Continuous Services with Windows Azure Service Bus).

From the continuous services perspective, consider scenarios where you have to perform long-running operations spanning several services in a reliable manner. Some examples:

Compositions: Upload video -> Encode -> Send Push Notification

Provisioning: Bring up a Database followed by a Virtual Machine

For each of these you will generally need a state management infrastructure that must then be maintained and incorporated in your code. Because Windows Azure Service Bus provides durable messaging, including advanced features like sessions and transactions, we have released a preview of the Durable Task Framework, which allows you to write these long-running orchestrations easily using C# Task code. Following are a developer guide and additional information:

- Service Bus Durable Task Framework (Preview) Developer guide

- Code Samples for Durable Task Framework

- NuGet library for Durable Task Framework
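The point of the framework is that an orchestration is written as ordinary sequential code whose progress survives restarts. A common way to achieve this, and the approach replay-based orchestration frameworks such as this one take, is to record each completed step's result durably and, on restart, re-run the orchestration from the top while returning recorded results instead of re-executing finished steps. The Python sketch below shows that replay idea for the "Upload video -> Encode -> Send Push Notification" composition. It is not the Durable Task Framework's C# API: `ReplayContext`, the activity functions, and the in-memory `history` list (standing in for state the framework keeps durably in Service Bus) are all hypothetical.

```python
from typing import Any, Callable, List


class ReplayContext:
    """Hypothetical orchestration context: replays recorded results, runs new steps."""

    def __init__(self, history: List[Any]) -> None:
        self.history = history  # stand-in for durable, Service Bus-backed state
        self.position = 0

    def call(self, activity: Callable[..., Any], *args: Any) -> Any:
        if self.position < len(self.history):
            result = self.history[self.position]  # replay: reuse the recorded result
        else:
            result = activity(*args)  # first execution of this step
            self.history.append(result)  # checkpoint before moving on
        self.position += 1
        return result


executed: List[str] = []  # records which activities actually ran


def upload(name: str) -> str:
    executed.append("upload")
    return f"videos/{name}"


def encode(blob: str) -> str:
    executed.append("encode")
    return blob + ".mp4"


def send_push(asset: str) -> str:
    executed.append("push")
    return f"notified: {asset}"


def video_orchestration(ctx: ReplayContext, name: str) -> str:
    """Upload video -> Encode -> Send Push Notification, as plain sequential code."""
    blob = ctx.call(upload, name)
    asset = ctx.call(encode, blob)
    return ctx.call(send_push, asset)


if __name__ == "__main__":
    # Simulate a restart after the upload step had completed and been recorded
    history: List[Any] = ["videos/cat"]
    print(video_orchestration(ReplayContext(history), "cat"))
    print(executed)  # upload is not run again
```

Replay only works if the orchestration code itself is deterministic, which is why frameworks built this way ask that I/O, clock reads, and random numbers happen inside activities rather than in the orchestration body.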

Please give this a try and let us know what you think!


Windows Azure Access Control, Active Directory, Identity and Workflow

‡‡ Mike McKeown (@nwoekcm) posted Organizational Identity/Microsoft Accounts and Azure Active Directory – Part 1 to the Aditi Technologies blog on 6/24/2013 (missed when published):

A Microsoft account is the new name for what was previously called a Windows Live ID. Your Microsoft account is a combination of an email address and a password that you use to sign in to services like Hotmail, Messenger, SkyDrive, Windows Phone, Xbox LIVE, or Outlook.com.

If you use an email address and password to sign in to these or other Microsoft services, you already have a Microsoft account. Examples of a Microsoft account may be alex.smith@outlook.com or alex.smith@hotmail.com and can be managed here: https://account.live.com/. Once you log in you can manage your personal account information including security, billing, notifications, etc.

An Organizational Identity (OrgID) is a user identity stored in Azure Active Directory (AAD). Office 365 users automatically have an OrgID, as AAD is the underlying directory service for Office 365. An example of an organizational account is alex.smith@contoso.onmicrosoft.com.

Why Two Different Identities?

A person’s Microsoft account is used by services generally considered consumer-oriented. The user of a Microsoft account is responsible for the management (for example, password resets) of the account.

A person’s Organizational Identity is managed by their organization in that organization’s AAD tenant. The identities in the AAD tenant can be synchronized with the identities maintained in the organization’s on-premises identity store (for example, on-premises Active Directory). If an organization subscribes to Office 365, CRM Online, or Intune, user organizational accounts for these services are maintained in AAD.

Tenants and Subscriptions

AAD tenants are cloud-based directory service instances and are only indirectly related to Azure subscriptions through identities. That is, identities can belong to an AAD tenant, and identities can be co-administrators of an Azure subscription. There is no direct relationship between the Azure subscription and the AAD tenant except the fact that they might share user identities. An example of an AAD tenant may be contoso.onmicrosoft.com. An identity in this AAD tenant is the same as a user’s OrgID.

Azure subscriptions are different from AAD tenants. Azure subscriptions have co-administrators whose permissions are not related to permissions in an AAD tenant. An Azure subscription can include a number of Azure services, which are managed using the Azure Portal. An AAD tenant can be one of those services managed using the Azure Portal.
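The relationship described above can be pictured as two independent objects whose only connection is the identities they have in common. A hypothetical Python model (class and function names are illustrative, not an Azure API):

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class AadTenant:
    domain: str
    users: Set[str] = field(default_factory=set)          # OrgIDs in the directory
    global_admins: Set[str] = field(default_factory=set)  # users holding Global Administrator


@dataclass
class AzureSubscription:
    name: str
    co_admins: Set[str] = field(default_factory=set)  # identities that manage the subscription


def shared_identities(tenant: AadTenant, subscription: AzureSubscription) -> Set[str]:
    """The only link between a tenant and a subscription: identities in both."""
    return tenant.users & subscription.co_admins


def can_manage_both(identity: str, tenant: AadTenant, subscription: AzureSubscription) -> bool:
    """True if the identity is a tenant Global Administrator and a subscription co-administrator."""
    return identity in tenant.global_admins and identity in subscription.co_admins
```

`can_manage_both` mirrors the integration-of-duties case discussed under Administrators in AAD: a Global Administrator can manage a subscription only after being added as one of its co-administrators.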

Many Types of Administrators

Once you understand the types of accounts, tenants, and subscriptions, it makes sense to discuss the many types of administrators within AAD and Azure.

Administrators in AAD

An AAD Global Administrator is an administrator role for an AAD tenant.

- If integration of duties across Azure and AAD is desired, an AAD Global Administrator must be assigned as a co-administrator of the Azure subscription to be managed. This allows Global Administrators to manage their Azure subscription as well as the AAD tenant.

- If separation of duties is desired, those who manage the organization’s production Azure subscription are separate from those who manage the AAD tenant. Create a new Azure subscription and add only AAD Global Administrators as its Azure co-administrators.

This provides an AAD management portal while separating the two different administration functions – Azure production versus AAD production. In the near future the Azure Portal intends to provide more granular management capabilities eliminating the need for an additional Azure subscription for separation of duties.

Admins in Azure

Depending upon the subscription model there are many types of administrators in Azure.

Azure co-administrator is an administrator role for one or more Azure subscriptions. An Azure co-administrator requires Global Administrator privileges (granted in their AAD organizational account) to manage the AAD tenant as well as the Azure subscription.

Azure Service administrator is a special administrator role for one or more Azure subscriptions, held by the user who is assigned the subscription. This user cannot be removed as an Azure administrator until this user is unassigned from the Azure subscription.

Azure account administrator/owner monitors usage and manages billing through the Windows Azure Account Center. A Windows Azure subscription has two aspects:

- The Windows Azure account, through which resource usage is reported and services are billed. Each account is identified by a Windows Live ID or corporate email account, and is associated with at least one subscription.

- The subscription itself, which governs access to and use of subscribed Windows Azure services. The subscription holder uses the Management Portal to manage services.

The account and the subscription can be managed by the same individual or by different individuals or groups. In a corporate enrollment, an account owner might create multiple subscriptions to give members of the technical staff access to services. Because resource usage within an account is reported for billing per subscription, an organization can use subscriptions to track expenses for projects, departments, regional offices, and so forth. In this scenario, the account owner uses the Windows Live ID associated with the account to log into the Windows Azure Account Center, but does not have access to the Management Portal unless the account owner creates a subscription for themselves.

Further information about Azure administrator roles can be found here:

- http://msdn.microsoft.com/en-us/library/windowsazure/hh531793.aspx

- http://www.windowsazure.com/en-us/support/changing-service-admin-and-co-admin/

In Part 2 of this post, we will examine the different use cases for AAD and Azure with respect to administrative access and the ability to authenticate and provide permissions to your directory and Cloud resources.

• Steven Martin (@stevemar_msft) posted Announcing the General Availability of Windows Azure Mobile Services, Web Sites and continued Service innovation to the Windows Azure Team blog during the //BUILD/ 2013 Conference keynote on 6/27/2013:

… Windows Azure Active Directory Sneak Peek

In today’s keynote at //Build, Satya Nadella gave a sneak peek into future enhancements to Windows Azure Active Directory. We’re working with third parties like Box and others so they can leverage Windows Azure Active Directory to enable a single sign-on (SSO) experience for their users.

If a higher level of security is needed, you can leverage Active Authentication to give you multifactor authentication. If you are an ISV and interested in integrating with Windows Azure Active Directory for SSO please let us know by filling out a short survey. …

![image_thumb2[1] image_thumb2[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgkTpKLG2rGaqNC6jXMkTiTmrJqN8KKWahesDNkUA3qcoDN71D0m2otRF4IjfAlpf2dyT1544D1JMBpJ8TZClRIDefan2N1YnVUxVP7sMLF_Zgh61fXGlUJ68_uQOkxtWrkD-tcnm9j/?imgmax=800) See the Windows Azure SQL Database, Federations and Reporting, Mobile Services directory for the full post.


Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

‡‡ Brady Gaster (@bradygaster) posted New Relic and Windows Azure Web Sites on 6/29/2013:

This past week I was able to attend the //BUILD/ Conference in San Francisco, and whilst at the conference I and some teammates and colleagues were invited to hang out with the awesome dudes from New Relic. To correspond with the Web Sites GA announcement this week, New Relic announced their support for Windows Azure Web Sites. I wanted to share my experiences getting New Relic set up with my Orchard CMS blog, as it was surprisingly simple. I had it up and running in under 5 minutes, and promptly tweeted my gratification.

Hanselman visited New Relic a few months ago and blogged about how he instrumented his sites using New Relic in order to save money on compute resources. Now that I’m using their product and really diving in I can’t believe the wealth of information available to me, on an existing site, in seconds.

FTP, Config, Done.

Basically, it’s all FTP and configuration. Seriously. I uploaded a directory, added some configuration settings using the Windows Azure portal, PowerShell cmdlets, or Node.js CLI tools, and partied. There’s extensive documentation on setting up New Relic with Web Sites on their site that starts with a Quick Install process.

In the spirit of disclosure, when I set up my first MVC site with New Relic I didn’t follow the instructions, and it didn’t work quite right. One of New Relic’s resident ninjas, Nick Floyd, had given Vstrator’s Rob Zelt and myself a demo the night before during the Hackathon. So I emailed Nick and was all dude meet me at your booth and he was all dude totally so we like got totally together and he hooked me up with the ka-knowledge and stuff. I’ll ‘splain un momento. The point in my mentioning this? RT#M when you set this up and life will be a lot more pleasant.

I don’t need to go through the whole NuGet-pulling process, since I’ve already got an active site running, specifically using Orchard CMS. Plus, I’d already created a Visual Studio Web Project to follow Nick’s instructions so I had the content items that the New Relic Web Sites NuGet package imported when I installed it.

So, I just FTPed those files up to my blog’s root directory. The screen shot below shows how I’ve got a newrelic folder at the root of my site, with all of New Relic’s dependencies and configuration files.

They’ve made it so easy, I didn’t even have to change any of the configuration before I uploaded it and the stuff just worked.

Earlier, I mentioned having had one small issue as a result of not reading the documentation. In spite of the fact that their docs say, pretty explicitly, to either use the portal or the PowerShell/Node.js CLI tools, I’d just added the settings to my Web.config file, as depicted in the screen shot below.

Since the ninjas at New Relic support non-.NET platforms too, they do expect those application settings to be set at a deeper level than the *.config file. New Relic needs these settings to be at the environment level. Luckily the soothsayer PMs on the Windows Azure team predicted this sort of thing would happen, so when you use some other means of configuring your Web Site, Windows Azure persists those settings at that deeper level. So don’t do what I did, okay? Do the right thing.
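One way to see why the Web.config route fails: a .NET profiler such as New Relic’s agent is attached by the CLR when the worker process starts, based on environment variables (`COR_ENABLE_PROFILING` and `COR_PROFILER` are the standard CLR profiler variables), whereas `<appSettings>` entries are only read by the application once it is already running and never appear in the process environment. The Python sketch below is a hypothetical diagnostic that reports which required variables are missing from an environment. The two names listed are the standard CLR ones; the complete set New Relic needs, with values, should come from its Web Sites documentation, not from this sketch.

```python
import os
from typing import Iterable, List, Mapping, Optional

# Standard CLR profiler variables; New Relic's setup requires others too (see its docs).
PROFILER_VARIABLES = ("COR_ENABLE_PROFILING", "COR_PROFILER")


def missing_environment_settings(required: Iterable[str] = PROFILER_VARIABLES,
                                 env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the required variable names that are absent or empty in env.

    Defaults to the current process environment, where settings made in the portal
    or with the PowerShell/CLI tools surface and where Web.config appSettings do not.
    """
    environment = os.environ if env is None else env
    return [name for name in required if not environment.get(name)]


if __name__ == "__main__":
    missing = missing_environment_settings()
    print("missing:", missing or "none")
```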

Just to make sure you see the right way, take a look at this screen shot below, which I lifted from the New Relic documentation tonight. It’s the PowerShell code you’d need to run to automate the configuration of these settings.

Likewise, you could configure New Relic using the Windows Azure portal.

Bottom line is this:

- If you just use the Web.config, it won’t work

- Once you light it up in the portal, it works like a champ

Deep Diving into Diagnostics

Once I spent 2 minutes and got the monitoring activated on my site, it worked just fine. I was able to look right into what Orchard’s doing all the way back to the database level. Below, you’ll see what the most basic monitoring page looks like when I log into New Relic. I can see a great snapshot of everything right away.

Where I’m spending some time right now is on the Database tab in the New Relic console. I’m walking through the SQL that’s getting executed by Orchard against my SQL database, learning all sorts of interesting stuff about what’s fast, not-as-fast, and so on.

I can’t tell you how impressed I was by the New Relic product when I first saw it, and how stoked I am that it’s officially unveiled on Windows Azure Web Sites. Now you can get deep visibility and metrics information about your web sites, just like what was available for Cloud Services prior to this week’s release.

I’ll have a few more of these blog posts coming out soon, maybe even a Channel 9 screencast to show part of the process of setting up New Relic. Feel free to sound off if there’s anything on which you’d like to see me focus. In the meantime, happy monitoring!

‡‡ Scott Guthrie (@scottgu) recommended the Edge Show 64 - Windows Azure Point to Site VPN video by David Tesar (@dtzar) in a 6/30/2013 tweet:

Yu-Shun Wang, Program Manager for Windows Azure Networking, discusses the new networking enhancements currently in preview and lets us know some of the implementations they are considering for future releases. We dive into how to setup and configure and then demo the new point-to-site networking in Windows Azure.

In this interview that starts at [04:21], we cover:

- The differences between site-to-site and point-to-site VPN connections and when you might want to use one versus the other.

- [07:09] Can you use point-to-site and site-to-site to the same virtual network?

- [08:03] How do you connect two Windows Azure virtual networks to each other? How do you connect multiple sites to a single Windows Azure virtual network?

- [09:04] Demo—How to setup and configure a new point-to-site virtual network connection?

  - Create a new Virtual Network

  - How many clients can the point-to-site connection handle?

  - What the gateway subnet does and when you should add it

- [16:38] What kinds of certificates can you use?

- [19:30] How the certificate gets attached to the VPN client and when to install it

- [21:42] What protocols does point-to-site use and what ports do you need to open up on your firewall?

- [22:10] Demo—point-to-site connection working between a VM in Windows Azure and a client machine over the internet.

- [23:50] What is the difference between dynamic and static routing in Windows Azure Networking? When should you use dynamic versus static routing?

- [25:50] What routing protocols are used with dynamic routing? Are we looking into supporting any routing protocols?

News:

- Edge Team on Channel 9 Live at TechEd NA

- Reminder: Edge Interviews @ MMS 2013 http://aka.ms/edgemms

- Lync - Skype Federation now available!

- MAP toolkit 8.5 beta available

- Updates to Windows Azure post GA

• Steven Martin (@stevemar_msft) posted Announcing the General Availability of Windows Azure Mobile Services, Web Sites and continued Service innovation to the Windows Azure Team blog during the //BUILD/ 2013 Conference keynote on 6/27/2013:

… Windows Azure Web Sites

Windows Azure Web Sites is the fastest way to build, scale and manage business-grade Web applications. Windows Azure Web Sites is open and flexible, with support for multiple languages and frameworks including ASP.NET, PHP, Node.js and Python, multiple open-source applications including WordPress and Drupal, and even multiple databases. ASP.NET developers can easily create new web sites or move existing ones to Windows Azure directly from inside Visual Studio.

We are also pleased to announce the General Availability (GA) of the Windows Azure Web Sites Standard (formerly named Reserved) and Free tiers. The Standard tier is backed by our standard 99.9% monthly SLA. The 33% preview pricing discount for Standard tier Windows Azure Web Sites will expire on August 1, 2013. Websites running in the Shared tier remain in preview with no changes. Visit our pricing page for a comprehensive look at all the pricing changes.

Service Updates for Windows Azure Web Sites Standard tier include:

- SSL Support: SNI or IP based SSL support is now available.

- Independent site scaling: Customers can select individual sites to scale up or down.

- Memory dumps for debugging: Customers can get access to memory dumps using a REST API to help with site debugging and diagnostics.

- Support for 64-bit processes: Customers can run 64-bit web sites and take advantage of additional memory and faster computation.

New Windows Azure Virtual Machines images available

SQL Server 2014 and Windows Server 2012 R2 preview images are now available in the Virtual Machines Image Gallery. At the heart of the Microsoft Cloud OS vision, Windows Server 2012 R2 offers many new features and enhancements across storage, networking, and access and information protection. You can get started with Windows Server 2012 R2 and SQL Server 2014 by simply provisioning a prebuilt image from the gallery. These images are available now at a 33% discount during the preview. And you pay for what you use, by the minute. …

See the entire post in the Windows Azure SQL Database, Federations and Reporting, Mobile Services section above.

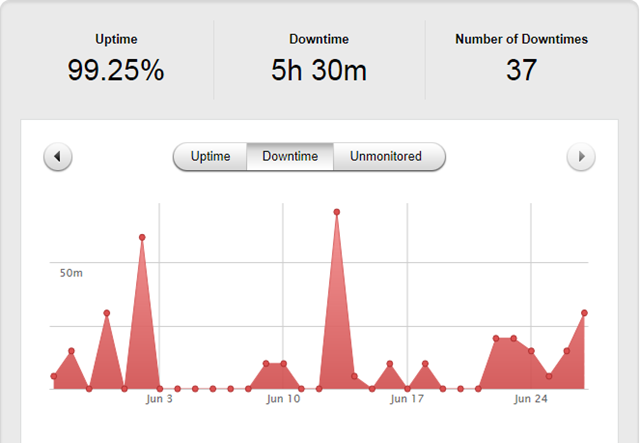

I’m not sure that WAWS is ready for prime time with a 99.9% availability SLA for shared sites, which remain in preview status. See my Uptime Report for My Windows Azure Web Services (Preview) Demo Site: May 2013 = 99.58% of 6/12/2013 for the downtime details in May 2013. Following is Pingdom’s Downtime report for my Android MiniPCs and TVBoxes WAWS for the last 30 days:

• Larry Franks (@larry_franks) described Custom logging with Windows Azure web sites in a 6/27/2013 post to the [Windows Azure’s] Silver Lining blog:

One of the features of Windows Azure Web Sites is the ability to stream logging information to the console on your development box. Both the Command-Line Tools and PowerShell bits for Windows Azure support this using the following commands:

Command-Line Tools

```shell
azure site log tail
```

PowerShell

```powershell
get-azurewebsitelog -tail
```

This is pretty useful if you're trying to debug a problem, as you don't have to wait until you download the log files to see when something went wrong.

One thing that I didn't realize until recently was that not only will this stream information from the standard logs created by Windows Azure Web Sites, but it will also stream information written to any text file in the D:/home/logfiles directory of your web site. This enables you to easily log diagnostic information from your application by just saving it out to a file.

Example code snippets

Node.js

Node.js doesn't really need to make use of this, because the IISNode module that Node.js applications run under in Windows Azure Web Sites captures the stdout/stderr streams and saves them to a file. See How to debug a Node.js application in Windows Azure Web Sites for more information.

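However, if you do want to log to a file, you can use a module such as winston with its file transport. For example:

```javascript
// 2013-era winston API: winston.add(TransportClass, options).
// Newer winston releases expect a transport instance instead.
var winston = require('winston');
// Write to a file in d:\home\logfiles so the log-streaming tools pick it up.
winston.add(winston.transports.File, { filename: 'd:\\home\\logfiles\\something.log' });
winston.log('info', 'logging some information here');
```

PHP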

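```php
// Message type 3 appends the message to the named file.
// error_log() does not add a trailing newline in this mode, so include one.
error_log("Something is broken\n", 3, "d:/home/logfiles/errors.log");
```

Python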

I haven't gotten this fully working with Python; it's complicated. The standard log handler (RotatingFileHandler) doesn't play nicely with locking in Windows Azure Web Sites. It creates a file, but the file stays at zero bytes and nothing else can access it. I've been told that ConcurrentLogHandler should work, but it requires pywin32, which isn't on Windows Azure Web Sites by default. Anyway, I'll keep investigating this and see if I can figure out the steps and do a follow-up post.
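One untested idea, offered as an assumption rather than a fix: if the trouble comes from a handler that keeps the log file open, a handler that opens the file, appends a single record, and closes it again holds no handle between writes. The class `AppendPerRecordHandler` and the helper `get_site_logger` are hypothetical names, and this sketch has not been verified on Windows Azure Web Sites, so it may run into the same locking behavior described above.

```python
import logging
import os


class AppendPerRecordHandler(logging.Handler):
    """Hypothetical handler: open the file, append one record, close it.

    Not holding a file handle between records is an assumption about what
    might avoid the locking problem; it is untested on Windows Azure Web Sites.
    """

    def __init__(self, filename, encoding="utf-8"):
        super().__init__()
        self.filename = filename
        self.encoding = encoding

    def emit(self, record):
        try:
            line = self.format(record) + "\n"
            # Append mode, reopened for every record, so no handle stays open.
            with open(self.filename, "a", encoding=self.encoding) as f:
                f.write(line)
        except Exception:
            # Standard logging behavior: report the failure without raising.
            self.handleError(record)


def get_site_logger(log_dir=r"D:\home\logfiles", name="site"):
    """Return a logger that appends to <log_dir>/<name>.log."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    # Avoid attaching a second handler if this is called more than once.
    if not any(isinstance(h, AppendPerRecordHandler) for h in logger.handlers):
        handler = AppendPerRecordHandler(os.path.join(log_dir, name + ".log"))
        handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
        logger.addHandler(handler)
    return logger
```

With the default arguments, `get_site_logger().info("logging some information here")` would append a line to D:\home\logfiles\site.log, where the streaming commands should see it. Reopening the file for every record is slower than keeping it open; that is the trade-off for never holding a handle.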

.NET

As with Node.js, output written using the System.Diagnostics.Trace class is picked up and logged to a file automatically if logging is enabled for your web site, so there's less need for this with .NET applications. Scott Hanselman has a blog post that goes into a lot of detail on how this works.

Summary

If you're developing an application on Windows Azure, or trying to figure out a problem with a production application, the above should be useful in capturing output from your application code.

• Yung Chou (@yungchou) produced TechNet Radio: How to Migrate from VMware to Windows Azure or Hyper-V for TechNet on 6/26/2013:

Keith Mayer and Yung Chou are back, and in today’s episode they show us how to migrate your virtual machines from VMware to Windows Azure or Windows Server 2012. Tune in as they showcase some of the free tools that are available, such as the Microsoft Virtual Machine Converter, and walk us through an end-to-end virtual machine migration.

• The Windows Azure Virtual Network Team announced in a 4/15/2013 post (missed when posted) that Windows Azure Connect will be retired on 7/3/2013:

Windows Azure Connect will be retired on 7/3/2013. We recommend that you migrate your Connect services to Windows Azure Virtual Network prior to this date. The Connect service will no longer be operational after 7/3/2013.

Please see About Secure Cross-Premises Connectivity for information about secure site-to-site and point-to-site cross-premises communication using Virtual Network.

Please refer to Migrating Cloud Services from Windows Azure Connect to Windows Azure Virtual Network for migration information.


Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

‡‡ Brian Benz (@bbenz) posted Extensions and Binding Updates for Business Messaging Open Standard Spec OASIS AMQP by David Ingham and Rob Dolin (@robdolin) to the Interoperability @ Microsoft blog on 6/28/2013:

- David Ingham, Program Manager, Windows Azure Service Bus

- Rob Dolin, Program Manager, Microsoft Open Technologies, Inc.

We’re pleased to share an update on four new extensions, currently in development, that greatly enhance the Advanced Message Queuing Protocol (AMQP) ecosystem.

First, a quick recap: AMQP is an open standard wire-level protocol for business messaging. It has been developed at OASIS through a collaboration among:

- Larger product vendors like Red Hat, VMware and Microsoft

- Smaller product vendors like StormMQ and Kaazing

- Large user firms like JPMorgan Chase and Deutsche Bourse with requirements for extremely high reliability.

- Government institutions

- Open source software developers including the Apache Qpid project and the Fedora project

In October of 2012, AMQP 1.0 was approved as an OASIS standard.

EXTENSION SPECS: The AMQP ecosystem continues to expand while the community continues to work collaboratively to ensure interoperability. There are four additional extension and binding working drafts being developed and co-edited by ourselves, JPMorgan Chase, and Red Hat within the AMQP Technical Committee and the AMQP Bindings and Mappings Technical Committee:

- Global Addressing – This specification defines a standard syntax for representing AMQP addresses to enable routing of AMQP messages through a variety of network topologies, potentially involving heterogeneous AMQP infrastructure components. This enables more uses for AMQP ranging from business-to-business transactional messaging to low-overhead “Internet of Things” communications.

- Management – This specification defines how entities such as queues and pub/sub topics can be managed through a layered protocol that uses AMQP 1.0 as the underlying transport. The specification defines a set of standard operations including create, read, update and delete, as well as custom, entity-specific operations. Using this mechanism, any AMQP 1.0 client library will be able to manage any AMQP 1.0 container, e.g., a message broker like Azure Service Bus. For example, an application will be able to create topics and queues, configure them, send messages to them, receive messages from them and delete them, all dynamically at runtime without having to resort to any vendor-specific protocols or tools.

- WebSocket Binding – This specification defines a binding from AMQP 1.0 to the Internet Engineering Task Force (IETF) WebSocket Protocol (RFC 6455) as an alternative to plain TCP/IP. The WebSocket protocol is the commonly used standard for enabling dynamic Web applications in which content can be pushed to the browser dynamically, without requiring continuous polling. The AMQP WebSocket binding allows AMQP messages to flow directly from backend services to the browser at full fidelity. The WebSocket binding is also useful for non-browser scenarios as it enables AMQP traffic to flow over standard HTTP ports (80 and 443) which is particularly useful in environments where outbound network access is restricted to a limited set of standard ports.

- Claims-based Security – This specification defines a mechanism for passing granular claims-based security tokens via AMQP messages. This enables AMQP to interoperate with external security token services, such as those based on the IETF’s OAuth 2.0 specification (RFC 6749), as well as with other identity, authentication, and authorization management and security services.
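To make the Management extension more concrete, here is a Python sketch of what a management request might carry. It models the message as plain data instead of using a real AMQP client. The target node `$management`, the application-property keys `operation`, `type` and `name`, and the HTTP-style status codes in replies follow later public drafts of the AMQP Management specification as I understand them; treat them as assumptions, since the 2013 working draft may differ. The entity type `example:queue` and the custom operation `PURGE` used below are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional

# Assumption: well-known node address that receives management requests.
MANAGEMENT_NODE = "$management"

# The standard operations named in the Management extension description.
STANDARD_OPERATIONS = {"CREATE", "READ", "UPDATE", "DELETE"}


@dataclass
class ManagementRequest:
    """Plain-data stand-in for an AMQP 1.0 message (not a real client library type)."""
    to: str
    reply_to: str
    application_properties: Dict[str, Any]
    body: Optional[Dict[str, Any]] = None


def build_request(operation: str, entity_type: str, name: str,
                  reply_to: str, body: Optional[Dict[str, Any]] = None) -> ManagementRequest:
    """Describe one operation on one named entity; property keys are assumptions."""
    if not operation:
        raise ValueError("operation is required")
    props = {"operation": operation, "type": entity_type, "name": name}
    return ManagementRequest(to=MANAGEMENT_NODE, reply_to=reply_to,
                             application_properties=props, body=body)


def is_custom(request: ManagementRequest) -> bool:
    """Anything outside CREATE/READ/UPDATE/DELETE is treated as entity-specific."""
    return request.application_properties["operation"] not in STANDARD_OPERATIONS


def succeeded(status_code: int) -> bool:
    """Assumption: replies carry an HTTP-style status code, with 2xx meaning success."""
    return 200 <= status_code < 300
```

For instance, `build_request("CREATE", "example:queue", "orders", reply_to="client-replies")` describes creating a queue named orders, while a `PURGE` request would be classified by `is_custom` as entity-specific. Because the request is just an ordinary message with agreed-upon properties, any AMQP 1.0 client that can send messages could, in principle, issue it.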

All of these extension and binding specifications are being developed through an open community collaboration among people from vendor organizations, customer organizations, and independent experts.
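The WebSocket binding rides on the standard RFC 6455 opening handshake, an HTTP Upgrade request, which is why AMQP traffic can then use ports 80 and 443. The Python sketch below builds a client's upgrade request and checks the server's reply. The key/accept computation and the fixed GUID come from RFC 6455; the subprotocol token `amqp` and the host name `broker.example.com` are assumptions for illustration. It stops at the handshake: framing AMQP bytes inside WebSocket messages is not shown.

```python
import base64
import hashlib
import os

# Fixed GUID defined by RFC 6455 for computing Sec-WebSocket-Accept.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"
# Assumption: subprotocol token negotiated for the AMQP binding.
AMQP_SUBPROTOCOL = "amqp"


def make_client_key():
    """A random 16-byte nonce, base64-encoded, as RFC 6455 requires."""
    return base64.b64encode(os.urandom(16)).decode("ascii")


def expected_accept(client_key):
    """The value a conforming server returns in Sec-WebSocket-Accept."""
    digest = hashlib.sha1((client_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def build_upgrade_request(host, path="/", client_key=None):
    """Return (request_text, key) for an opening handshake asking for AMQP."""
    key = client_key or make_client_key()
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Upgrade: websocket",
        "Connection: Upgrade",
        f"Sec-WebSocket-Key: {key}",
        "Sec-WebSocket-Version: 13",
        f"Sec-WebSocket-Protocol: {AMQP_SUBPROTOCOL}",
    ]
    return "\r\n".join(lines) + "\r\n\r\n", key


def accepts_amqp(status_code, headers, client_key):
    """Check the server's reply; header names are compared case-insensitively."""
    h = {k.lower(): v for k, v in headers.items()}
    return (status_code == 101
            and h.get("sec-websocket-accept") == expected_accept(client_key)
            and h.get("sec-websocket-protocol") == AMQP_SUBPROTOCOL)
```

For example, `build_upgrade_request("broker.example.com")` returns the request text plus the generated key, and `accepts_amqp` can then verify the server's 101 Switching Protocols response against that key before any AMQP frames are sent.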

LEARNING ABOUT AMQP: If you’re looking to learn more about AMQP or understand its business value, start at: http://www.amqp.org/about/what.

CONNECTING WITH THE COMMUNITY: We hope you’ll consider joining some of the AMQP conversations taking place on LinkedIn, Twitter, and Stack Overflow.

TRY AMQP: You can also find a list of vendor-supported products, open source projects, and customer success stories on the AMQP website: http://www.amqp.org/about/examples. We’re biased, but you can try our favorite hosted implementation of AMQP: the Windows Azure Service Bus. Visit the Developers Guide for links to getting started with AMQP in .NET, Java, PHP, or Python.

Let us know how your experience with AMQP has been so far, whether you’re a novice user or an active contributor to the community.

‡‡ Philip Fu posted [Sample Of Jun 29th] How to use bing search API in Windows Azure to the Microsoft All-In-One Code Framework site on 6/29/2013:

Sample Download:

- CS Version: http://code.msdn.microsoft.com/How-to-use-bing-search-API-4c8b287e

- VB Version: http://code.msdn.microsoft.com/How-to-use-bing-search-API-dfde7b10

The Bing Search API offers multiple source types (or types of search results). You can request a single source type or multiple source types with each query. For instance, you can request web, images, news, and video results for a single search query.
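As a rough illustration of requesting several source types in one query, the Python sketch below builds a URL for the Composite operation of the Windows Azure Marketplace version of the Bing Search API. The root URL, the `Sources`, `Query` and `$format` parameter names, and the convention of passing values as single-quoted OData string literals joined with `+` are recalled from that API's documentation and should be treated as assumptions. Authentication with your Marketplace account key is omitted.

```python
from urllib.parse import quote

# Assumption: root of the 2013-era Windows Azure Marketplace Bing Search API.
COMPOSITE_ROOT = "https://api.datamarket.azure.com/Bing/Search/v1/Composite"


def odata_string(value: str) -> str:
    """Wrap a value as an OData string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def composite_url(query: str, sources, fmt: str = "json") -> str:
    """Build one request URL that asks for several source types at once."""
    # Source types are joined with '+' inside one quoted literal, then percent-encoded.
    sources_literal = odata_string("+".join(sources))
    params = [
        "Sources=" + quote(sources_literal, safe=""),
        "Query=" + quote(odata_string(query), safe=""),
        "$format=" + fmt,
    ]
    return COMPOSITE_ROOT + "?" + "&".join(params)
```

Calling `composite_url("xbox", ["web", "image", "news", "video"])` produces a single URL whose response would contain a result set per requested source type, which is the point of the Composite operation: one round trip instead of four.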

You can find more code samples that demonstrate the most typical programming scenarios by using Microsoft All-In-One Code Framework Sample Browser or Sample Browser Visual Studio extension.

They give you the flexibility to search samples, download samples on demand, manage the downloaded samples in a centralized place, and be notified automatically about sample updates. If this is the first time you’ve heard about Microsoft All-In-One Code Framework, please watch the introduction video on Microsoft Showcase, or read the introduction on our homepage http://1code.codeplex.com/.

•• Robert Green (@rogreen_ms) posted Episode 70 of Visual Studio Toolbox: Visual Studio 2013 Preview on 6/26/2013:

Visual Studio 2013 Preview is here with lots of exciting new features across Windows Store, Desktop and Web development. Dmitry Lyalin joins me for a whirlwind tour of this preview of the next release of Visual Studio, which is now available for download. Dmitry and Robert show the following in this episode:

- Recap of Team Foundation Service announcements from TechEd [02:00], including Team Rooms for collaboration, Code Comments in changesets, mapping backlog items to features

- IDE improvements [11:00], including more color and redesigned icons, undockable Pending Changes window, Connected IDE and synchronized settings

- Productivity improvements [17:00], including CodeLens indicators showing references, changes and unit test results, enhanced scrollbar, Peek Definition for inline viewing of definitions

- Web development improvements [28:00], including Browser Link for connecting Visual Studio directly to browsers, One ASP.NET

- Debugging and diagnostics improvements [37:00], including edit and continue in 64-bit projects, managed memory analysis in memory dump files, Performance and Diagnostics hub to centralize analysis tools [44:00], async debugging [51:00]

- Windows Store app development improvements, including new project templates [40:00], Energy Consumption and XAML UI Responsiveness Analyzers [45:00], new controls in XAML and JavaScript [55:00], enhanced IntelliSense and Go To Definition in XAML files [1:00:00]

Visual Studio 2013 and Windows 8.1:

- Visual Studio 2013 Preview download

- Visual Studio 2013 Preview announcement

- Windows 8.1 Preview download

- Windows 8.1 Preview announcement

Additional resources:

- Visual Studio team blog

- Brian Harry's blog

- ALM team blog

- Web tools team blog

- Modern Application Lifecycle Management talk at TechEd

- Microsoft ASP.NET, Web, and Cloud Tools Preview talk at TechEd

- Using Visual Studio 2013 to Diagnose .NET Memory Issues in Production

- What's new in XAML talk at Build

- What's new in WinJS talk at Build

•• The Windows Azure Customer Advisory Team (CAT, @WindowsAzureCAT) described Telemetry basics and troubleshooting in a 6/28/2013 post via @CraigKitterman:

Editor's Note: This post comes from Silvano Coriani from the Azure CAT Team.

In the Building Blocks of Great Cloud Applications blog post, we introduced the Azure CAT team’s series of blog posts and tech articles describing the Cloud Service Fundamentals in Windows Azure code project posted on MSDN Code Gallery. The first component we are addressing in this series is Telemetry. This was one of the first reusable components we built while working on Windows Azure customer projects of all sizes. Indeed, someone once said: “Trying to manage a complex cloud solution without a proper telemetry infrastructure in place is like trying to walk across a busy highway with blind eyes and deaf ears”. You have little to no idea of where the issues can come from, and no chance to make any smart move without getting in trouble. Instead, with an adequate level of monitoring and diagnostic information on the status of your application components over time, you will be able to make educated decisions on things like cost and efficiency analysis, capacity planning and operational excellence. This blog post also has a corresponding wiki article that goes deeper into Telemetry basics and troubleshooting.