Windows Azure and Cloud Computing Posts for 6/3/2013+

Top News This Week: Scott Guthrie (@scottgu) reported from TechEd North America 2013:

This morning we released some fantastic enhancements to Windows Azure: Dev/Test in the Cloud: MSDN Use Rights, Unbeatable MSDN Discount Rates, MSDN Monetary Credits; and BizTalk Services: Great new service for Windows Azure that enables EDI and EAI integration...

Today we are announcing a number of enhancements to Windows Azure that make it an even better environment in which to do dev/test:

- No Charge for Stopped VMs

- Pay by the Minute Billing

- MSDN Use Rights now supported on Windows Azure

- Heavily Discounted MSDN Dev/Test Rates

- MSDN Monetary Credits

- Portal Support for Better Tracking MSDN Monetary Credit Usage
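To make the billing change concrete, here is a small C# sketch that compares per-minute charges with a model in which any partial hour is charged as a full hour. That earlier model, the `ComputeBilling` class and its methods, and the hourly rate passed in are all hypothetical illustrations, not Windows Azure prices or APIs; a stopped VM is simply modeled as accruing no running minutes.

```csharp
using System;

// Hypothetical comparison of two billing models for one VM's compute time.
// Only minutes in the running state are billed; a stopped VM adds none.
public static class ComputeBilling
{
    // Assumed earlier model: any partial hour is charged as a full hour.
    public static decimal PerHourRoundedUp(int runningMinutes, decimal hourlyRate)
    {
        if (runningMinutes < 0) throw new ArgumentOutOfRangeException(nameof(runningMinutes));
        int billedHours = (runningMinutes + 59) / 60;
        return billedHours * hourlyRate;
    }

    // Per-minute model: the hourly rate is prorated by the minute.
    public static decimal PerMinute(int runningMinutes, decimal hourlyRate)
    {
        if (runningMinutes < 0) throw new ArgumentOutOfRangeException(nameof(runningMinutes));
        return runningMinutes * hourlyRate / 60m;
    }
}
```

For a VM that runs for 90 minutes at a hypothetical $0.12 per hour, `PerHourRoundedUp(90, 0.12m)` returns 0.24 while `PerMinute(90, 0.12m)` returns 0.18.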

Check out full coverage of Windows Azure sessions at TechEd North America 2013 in the Windows Azure Service Bus, BizTalk Services and Workflow section below.

‡ Updated 6/8/2013 with new articles marked ‡

•• Updated 6/8/2013 with new articles marked ••.

• Updated 6/7/2013 for Microsoft TechEd North America 2013 archive videos and slides marked • in the Windows Azure Pack, Hyper-V and Private/Hybrid Clouds section below.

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hyper-V and Private/Hybrid Clouds

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services


Windows Azure SQL Database, Federations and Reporting, Mobile Services

No significant articles today


Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData


Windows Azure Service Bus, BizTalk Services and Workflow

‡ Haishi Bai (@HaishiBai2010) described Content-based message routing using Windows Azure Service Bus in a 6/5/2013 post:

In one of my earlier blog articles I introduced how to use Shared Access Signatures (SAS) to provide constrained access to Service Bus clients, with sample code for Service Bus queues. Here I’m going to present another example that implements content-based message routing using Windows Azure Service Bus topics and subscriptions.

In this scenario, enterprise A needs to send orders to one of its two partners, enterprise B and enterprise C. Enterprise B handles high-value orders, while enterprise C handles low-value orders. For this purpose, enterprise A creates an “orders” topic and two separate subscriptions, one for enterprise B and one for enterprise C, each with a filter on order value. In addition, enterprise A creates SAS keys with read-only (Listen) access for its partners, so that they can receive orders but cannot send messages to the topic or manage it.

The sample code is a Visual Studio solution with two console applications: one for enterprise A and another for its partners.

Sender

The sender code is shown below. You’ll need to add the WindowsAzure.ServiceBus NuGet package and a reference to System.Configuration to your project, and set the Microsoft.ServiceBus.ConnectionString setting in app.config to your Service Bus connection string. Because the sender deletes and creates the topic and its subscriptions, that connection string needs Manage rights.

```csharp
using System;
using System.Configuration;
using Microsoft.ServiceBus;
using Microsoft.ServiceBus.Messaging;

class Program
{
    static void Main(string[] args)
    {
        string topicName = "orders";
        string connectionString = ConfigurationManager.AppSettings["Microsoft.ServiceBus.ConnectionString"];
        NamespaceManager nm = NamespaceManager.CreateFromConnectionString(connectionString);

        // Recreate the topic on every run so the sample starts clean.
        if (nm.TopicExists(topicName))
            nm.DeleteTopic(topicName);

        // Listen-only SAS rules for the two partners ("ForCompanyA", "ForCompanyB").
        // The rules live on the topic, so each key can receive from any subscription of "orders".
        TopicDescription topic = new TopicDescription(topicName);
        string keyA = SharedAccessAuthorizationRule.GenerateRandomKey();
        string keyB = SharedAccessAuthorizationRule.GenerateRandomKey();
        topic.Authorization.Add(new SharedAccessAuthorizationRule("ForCompanyA", keyA, new AccessRights[] { AccessRights.Listen }));
        topic.Authorization.Add(new SharedAccessAuthorizationRule("ForCompanyB", keyB, new AccessRights[] { AccessRights.Listen }));
        nm.CreateTopic(topic);
        Console.WriteLine("Key for company A: " + keyA);
        Console.WriteLine("Key for company B: " + keyB);

        // Content-based routing: each subscription keeps only the messages
        // whose "value" property satisfies its SQL filter.
        nm.CreateSubscription(topicName, "high_value", new SqlFilter("value >= 10000"));
        nm.CreateSubscription(topicName, "low_value", new SqlFilter("value < 10000"));

        TopicClient client = TopicClient.CreateFromConnectionString(connectionString, topicName);
        int orderNumber = 1;
        while (true)
        {
            Console.Write("Enter value for order #" + orderNumber + ": ");
            string value = Console.ReadLine();
            int orderValue;
            if (int.TryParse(value, out orderValue))
            {
                // The order travels as message properties, which is what the filters evaluate.
                var message = new BrokeredMessage();
                message.Properties.Add("order_number", orderNumber);
                message.Properties.Add("value", orderValue);
                client.Send(message);
                Console.WriteLine("Order " + orderNumber + " is sent!");
                orderNumber++;
            }
            else if (string.IsNullOrEmpty(value))
            {
                break; // an empty line ends the session
            }
            else
            {
                Console.WriteLine("Invalid input. Please try again.");
            }
        }
        client.Close();
    }
}
```

Receiver

(You’ll also need to add the WindowsAzure.ServiceBus NuGet package to the receiver project.)

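```csharp
using System;
using Microsoft.ServiceBus;
using Microsoft.ServiceBus.Messaging;

class Program
{
    static void Main(string[] args)
    {
        Console.Write("Please enter your key: ");
        string key = Console.ReadLine();

        // "mybooks" is the author's Service Bus namespace; replace it with yours.
        // The token provider authenticates with the listen-only "ForCompanyA" rule.
        MessagingFactory factory = MessagingFactory.Create(
            ServiceBusEnvironment.CreateServiceUri("sb", "mybooks", string.Empty),
            TokenProvider.CreateSharedAccessSignatureTokenProvider("ForCompanyA", key));

        // Read only from the subscription whose filter is "value >= 10000".
        SubscriptionClient client = factory.CreateSubscriptionClient("orders", "high_value");
        Console.WriteLine("Waiting for message");
        while (true)
        {
            // Receive() returns null if no message arrives within the default wait time.
            var message = client.Receive();
            if (message != null)
            {
                Console.WriteLine(string.Format("Received order #{0} with value {1}",
                    message.Properties["order_number"], message.Properties["value"]));
                message.Complete(); // remove the message from the subscription
            }
        }
    }
}
```

Test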

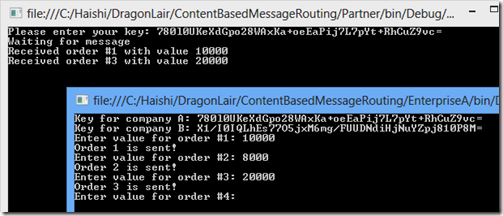

Launch both applications. When the receiver starts, copy and paste the secret key for company A from the sender’s console. Then enter different order values in the sender. The screenshot shows three orders with values $10,000, $8,000, and $20,000. Orders 1 and 3 were delivered to the partner, and order 2 was filtered out as expected: it matched the low_value subscription, which this receiver does not read.
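To see why order 2 never reaches this receiver, the following C# sketch models the topic’s routing in memory: each subscription owns a predicate that mirrors its SqlFilter, and a message is copied to every subscription whose predicate matches. It is only an approximation for illustration; the `OrderRouter` class is hypothetical and makes no Service Bus calls.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory model of the "orders" topic. No Service Bus calls are made.
public static class OrderRouter
{
    public static readonly Dictionary<string, Func<IDictionary<string, object>, bool>> Subscriptions =
        new Dictionary<string, Func<IDictionary<string, object>, bool>>
        {
            // Mirrors SqlFilter("value >= 10000")
            ["high_value"] = props => props.TryGetValue("value", out var v) && Convert.ToInt32(v) >= 10000,
            // Mirrors SqlFilter("value < 10000")
            ["low_value"] = props => props.TryGetValue("value", out var v) && Convert.ToInt32(v) < 10000,
        };

    // Returns the names of the subscriptions that would receive a copy of the
    // message; in this model a message without a "value" property matches neither.
    public static List<string> Route(IDictionary<string, object> properties) =>
        Subscriptions.Where(s => s.Value(properties))
                     .Select(s => s.Key)
                     .ToList();
}
```

With the test run above, the $10,000 and $20,000 orders map to high_value and the $8,000 order maps to low_value, so the order is not lost; it simply waits in a subscription this receiver does not read.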

‡ Nathan Totten (@ntotten) and Nick Harris (@CloudNick) produced CloudCover Episode 110 - Windows Azure BizTalk Services on 6/3/2013:

In this episode Nick Harris and Nathan Totten are joined by Kent Brown, Senior Product Marketing Manager, and Guru Venkataraman, Senior Program Manager, who introduce us to Windows Azure BizTalk Services. Kent and Guru discuss how BizTalk Services brings the power of BizTalk to the cloud and makes enterprise integration scenarios even easier. Guru also demonstrates how BizTalk can be used to connect SAP systems to both on-premises and cloud SQL databases.

For more details on BizTalk Services see WindowsAzure.com and Scott Guthrie's blog post.

Javed Sikander’s Introduction to Windows Azure BizTalk Services (WAD-B313) presentation became available on 6/4/2013 for viewing and downloading in high-quality MP4 format:

Microsoft has recently released Windows Azure BizTalk Services (WABS), our exciting new integration PaaS service. Learn about the core scenarios WABS enables for cloud integration and hybrid solutions. Learn how to develop solutions on BizTalk Services.

The BizTalk Server Team (@MS_BizTalk) posted Hello Windows Azure BizTalk Services! to its blog on 6/4/2013:

Today we are proudly announcing the preview release of Windows Azure BizTalk Services (WABS). We have collaborated with multiple partners and customers to build a simple, powerful and extensible cloud-based integration service that provides Business-to-Business (B2B) and Enterprise Application Integration (EAI) capabilities for delivering cloud and hybrid integration solutions. The service runs in a secure, dedicated per-tenant environment that you can provision on demand and that Microsoft manages and operates.

Using the various Windows Azure offerings, users can create applications that run in the cloud. However, these applications operate in their own ‘space’ in the cloud while also needing to interact with other on-premises or cloud applications, so the message and transport protocol mismatches between these disparate applications must be bridged. Bridging these mismatches is the realm of integration, which can take different forms.

EAI

WABS provides rich EAI capabilities through configuration-driven design tools that bridge the message and transport protocol mismatch between two disparate systems. To name just a few of the WABS EAI capabilities:

- The ability to connect systems following different transport protocols

- The ability to validate the message originating from the source endpoint against a standard schema

- The ability to transform the message as required by destination endpoints

- The ability to enrich the message and extract specified properties from the message. The extracted properties can then be used to route the message to a destination or an intermediary endpoint.

- The ability to track messages.

B2B

The WABS B2B solution, which comprises the BizTalk Services Portal and B2B pipelines, enables customers to add trading partners and configure B2B pipelines that can be deployed to WABS. The trading partners can then send EDI messages using HTTP, AS2, and FTP transports. Once a message is received, it is processed by the B2B pipeline deployed in the cloud and routed to the destination configured in that pipeline. A few of the WABS EDI capabilities are:

- Easily manage and onboard trading partners using the BizTalk Services Portal. With the BizTalk Services Portal, customers will be able to cut down the on-boarding time from weeks to days.

- Leverage Microsoft hosted B2B pipelines as services to exchange B2B documents and run them at scale for customers. This minimizes overhead in managing B2B pipelines and their corresponding scale issues with dedicated servers.

- Ability to track messages.

Getting Started

Follow this link to get started with provisioning your own BizTalk Service. You will need a Windows Azure account. If you do not have one, you can sign up for a no-obligation Free Trial.

Important Links

Please refer to the below links for more information.

| Area | Link |
|---|---|
| SDK, EDI Schemas and Tools | http://www.microsoft.com/en-us/download/details.aspx?id=39087 |
| Samples | |
| Documentation | http://msdn.microsoft.com/en-us/library/windowsazure/hh689864.aspx & http://www.windowsazure.com/en-us/manage/services/biztalk-services/ |
| BizTalk Portal | |
| BizTalk Service Forums | http://social.msdn.microsoft.com/Forums/en-US/azurebiztalksvcs/threads |
| BizTalk Team Blog | |

Watch for more blog posts in the near future explaining more WABS capabilities. We are extremely excited by the opportunities that WABS is going to open up and hope that the community will continue to invest in WABS, as we have, to drive its evolution.

The BizTalk Server Team (@MS_BizTalk) also posted Windows Azure BizTalk Service EAI Overview on 6/3/2013:

Introduction

Earlier today we announced the public preview of Windows Azure BizTalk Services (WABS). We have collaborated with multiple partners and customers to build a simple, powerful and extensible cloud-based integration service that provides Business-to-Business (B2B) and Enterprise Application Integration (EAI) capabilities for delivering cloud and hybrid integration solutions. The service runs in a secure, dedicated per-tenant environment that you can provision on demand and that Microsoft manages and operates.

Let’s look at a brief overview of our EAI offering in WABS.

EAI in WABS

One of the core requirements for WABS is to bridge the message and transport protocol mismatch between two disparate systems. In cloud parlance, each system in the cloud should be thought of as an endpoint. A message exchange between two such endpoints (each either an extension of an on-premises application or an application running in the cloud) happens through Service Bus. Service Bus, acting purely as a relay, just passes the message from one endpoint to the other. However, because the two systems are disparate and probably use different message formats and protocols, it becomes imperative for Service Bus to provide rich processing capabilities between the two endpoints. These capabilities could include the following:

- The ability to connect systems following different transport protocols

- The ability to validate the message originating from the source endpoint against a standard schema

- The ability to transform the message as required by destination endpoints

- The ability to enrich the message and extract specified properties from the message. The extracted properties can then be used to route the message to a destination or an intermediary endpoint.

These capabilities are made available through EAI on WABS, which provides them as different stages of a ‘message processing bridge’. Each of these stages can be configured as part of the bridge. Let’s look at an overview of some of these capabilities.

Feature outline and overview: Bridges

Conceptually, a bridge is a single message processing unit composed of three parts: sources, pipelines, and destinations. It is the basic building block for designing one’s integration platform.

Pipelines are message mediation patterns. Message mediation, as the name implies, is an intermediate processing stage of a message as it travels from its origin to its final destination. Mediating a message might involve decoding, inspecting, transforming, validating, routing, or enriching it. In a stricter sense with respect to WABS, bridges offer one kind of message mediation: bridging message-related mismatches in scenarios where the origin and the destination of the message are heterogeneous but are still part of a message flow. The following are certain characteristics of bridges provided as part of Windows Azure BizTalk Services.

- Pipelines are composed of stages and activities, where each stage is itself a message processing unit.

- Each stage of a pipeline is atomic: a message either completes a stage or it does not. A stage can be turned on or off, determining whether it processes a message or simply lets it pass through.
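The two stage properties just described, atomicity and the on/off switch, can be sketched in a few lines of C#. This is a hypothetical model for illustration only: `Stage` and `Pipeline` are not the WABS object model, and messages are plain strings rather than bridge messages.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of a bridge pipeline (not the WABS object model):
// an ordered list of stages, each of which either completes for a message
// or fails as a whole, and each of which can be switched off.
public sealed class Stage
{
    private readonly Func<string, string> _process;

    public Stage(string name, Func<string, string> process)
    {
        Name = name;
        _process = process;
    }

    public string Name { get; }

    // When disabled, the stage lets the message pass through unchanged.
    public bool Enabled { get; set; } = true;

    public string Apply(string message) => Enabled ? _process(message) : message;
}

public static class Pipeline
{
    public static string Run(IEnumerable<Stage> stages, string message)
    {
        foreach (var stage in stages)
        {
            try
            {
                // The output is handed on only if the stage completes;
                // a failing stage stops the whole run.
                message = stage.Apply(message);
            }
            catch (Exception ex)
            {
                throw new InvalidOperationException($"Stage '{stage.Name}' failed.", ex);
            }
        }
        return message;
    }
}
```

A pipeline built from illustrative "Validate", "Enrich", and "Transform" stages runs them in order; disabling the transform stage makes the run return the enriched but untransformed message, and a message that fails validation never reaches the later stages.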

WABS also provides a rich set of sources and destinations for building one’s message interchange platform, along with the flexibility to configure different types of pipelines.

Feature outline and overview: VS Design Experience

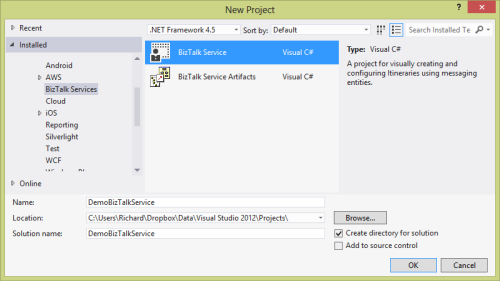

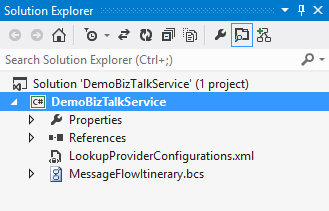

WABS EAI provides connectivity to different protocols and applications, and provides message-processing capabilities such as validation, transformation, extraction, and enrichment in the cloud. However, none of these can be used in isolation; they must ‘tie up’ with other entities in the cloud, such as topics and queues, to provide an end-to-end message flow. For example, you could have a scenario where a client sends a request message that needs to be processed in the cloud, routed to a queue, and then eventually inserted into a SQL Server database. To configure this scenario, you need an XML bridge, a Service Bus queue, and BizTalk Adapter Service, in that sequence. This calls for a design surface on which you can stitch the different components of a message flow together. Azure BizTalk Services provides such a design surface, the BizTalk Service project, which is available as a Visual Studio project type and is installed with the Azure BizTalk Services SDK.

The project provides a friendly toolbox from which you can drag and drop pipelines, sources, and destinations, including LOB entities. It makes it easy to configure all the entities involved and, once you are done, to deploy the solution to your WABS service. You can also upload the artifacts your project requires.

Feature outline and overview: Flat file/XML file support

Flat-file message transfer is a key requirement in real-world Azure BizTalk Services scenarios. Many enterprise applications receive flat-file messages, such as SAP IDOCs, from client applications. To enable flat-file processing in the cloud, you can use the bridges available as part of Azure BizTalk Services.

In addition to flat files, EAI supports XML file processing. XML is a more standard message format and is easier to work with.
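The normalization a bridge performs on flat files, turning delimited records into XML that later stages can validate and transform, can be sketched in C# with LINQ to XML. This is a minimal sketch, assuming a hypothetical comma-delimited purchase-order layout (PONumber, Item, Quantity); a real bridge would take the record layout from a flat-file schema, and the `FlatFileParser` class and element names are illustrative only.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

// Hypothetical flat-file-to-XML step. The record layout is hard-coded here;
// a real bridge would take it from a flat-file schema.
public static class FlatFileParser
{
    private static readonly string[] Fields = { "PONumber", "Item", "Quantity" };

    public static XElement ToXml(string flatFile)
    {
        var lines = flatFile.Split(new[] { "\r\n", "\n" }, StringSplitOptions.RemoveEmptyEntries);
        return new XElement("PurchaseOrders",
            lines.Select(line =>
            {
                var values = line.Split(',');
                // Reject records that do not match the expected layout.
                if (values.Length != Fields.Length)
                    throw new FormatException($"Expected {Fields.Length} fields but found {values.Length}: '{line}'");
                // One child element per field, in layout order.
                return new XElement("PurchaseOrder",
                    Fields.Select((name, i) => new XElement(name, values[i].Trim())));
            }));
    }
}
```

For example, the two-line input `PO1,Widget,10` and `PO2,Gadget,5` becomes a `PurchaseOrders` element with two `PurchaseOrder` children, and a record with a missing field is rejected rather than passed on half-parsed.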

Feature outline and overview: Custom code

While the fixed pattern of bridges (Validate, Enrich, Transform, and Enrich) provided with Azure BizTalk Services serves the requirements of many integration scenarios, sometimes you need to include custom processing as part of your bridge configuration. For example, you might want to convert a message from a flat-file or XML format to another popular format, such as XLS or PDF, before sending it out. Similarly, at each stage of message processing, you might want to archive the message to a central data store. In such cases, the fixed pattern of the out-of-the-box bridges is insufficient, so bridges include the option of executing custom code at some key stages.

Feature outline and overview: Tracking

Within a bridge, a message undergoes processing in various stages and can be routed to configured endpoints. Bridge developers need to track and query specific details of the message, such as transport properties and message properties, to keep track of message processing. Additionally, while a message is being processed by the bridge, failures of many types can occur. These failures must be propagated back to the bridge developers or administrators, or to the message-sending client, so that appropriate action can be taken to fix them.

Bridges now support message tracking, enabling the bridge developer and message-sending clients to track the message properties defined during bridge configuration. You can configure a bridge to track messages using the options available on the Bridge Configuration surface.

The BizTalk Server Team (@MS_BizTalk) continued with Windows Azure BizTalk Service B2B Overview on 6/3/2013:

Introduction

Earlier today we announced the public preview of Windows Azure BizTalk Services (WABS). We have collaborated with multiple partners and customers to build a simple, powerful and extensible cloud-based integration service that provides Business-to-Business (B2B) and Enterprise Application Integration (EAI) capabilities for delivering cloud and hybrid integration solutions. The service runs in a secure, dedicated per-tenant environment that you can provision on demand and that Microsoft manages and operates.

Let’s look at a brief overview of our B2B offering in WABS.

B2B in WABS

To be successful as a business, enterprises must effectively manage data exchanged with other organizations such as vendors and business partners. Data exchanged with partner organizations is often categorized as business-to-business (B2B) data transfer. One of the standard and most commonly used suites of protocols for B2B data transfer is Electronic Data Interchange (EDI).

Some of the challenges that customers face while opting for a B2B solution are:

- Total cost of ownership (TCO) for setting up a B2B solution, especially for the small and medium business (SMB) shops

- High maintenance cost for the B2B solutions including onboarding partners, managing agreements, etc.

Using B2B in WABS enables customers to:

- Lower their TCO with the pay-as-you-use model.

- Easily manage and onboard trading partners using the BizTalk Services Portal. With the BizTalk Services Portal, customers will be able to cut down the on-boarding time from weeks to days.

- Leverage Microsoft hosted B2B pipelines as services to exchange B2B documents and run them at scale for customers. This minimizes overhead in managing B2B pipelines and their corresponding scale issues with dedicated servers.

The WABS B2B solution, which comprises the BizTalk Services Portal and B2B pipelines, enables customers to add trading partners and configure B2B pipelines that can be deployed to WABS. The trading partners can then send EDI messages using HTTP, AS2, and FTP transports. Once a message is received, it is processed by the B2B pipeline deployed in the cloud and routed to the destination configured in that pipeline.
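To make "EDI message" concrete, the following C# sketch tokenizes a fragment of an X12 850 purchase order into segments and elements. It assumes '~' as the segment terminator and '*' as the element separator, which are common choices; in real interchanges the delimiters are declared in the ISA envelope header, and a B2B pipeline also handles the envelopes and validates against the transaction-set schema. The `X12Tokenizer` class is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, minimal X12 tokenizer: no envelope handling, no validation.
public static class X12Tokenizer
{
    // Splits an X12 string into segments, and each segment into elements.
    // Element 0 of each segment is the segment ID (e.g. "BEG").
    public static List<string[]> Parse(string x12, char segmentTerminator = '~', char elementSeparator = '*')
    {
        return x12.Split(segmentTerminator)
                  .Select(segment => segment.Trim())      // tolerate line breaks between segments
                  .Where(segment => segment.Length > 0)   // drop the empty piece after the final terminator
                  .Select(segment => segment.Split(elementSeparator))
                  .ToList();
    }

    // Returns the first segment with the given ID, or null if there is none.
    public static string[] FirstSegment(IEnumerable<string[]> segments, string id) =>
        segments.FirstOrDefault(s => s[0] == id);
}
```

For the fragment `ST*850*0001~BEG*00*SA*PO12345**20130603~PO1*1*10*EA*9.95**VP*WIDGET~SE*4*0001~`, element 3 of the BEG segment is the purchase order number, PO12345, and the empty string at element 4 is an omitted (optional) release number.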

Feature outline and overview

B2B in WABS provides a multitude of features with the goal of being simple, powerful, and extensible at the same time. To fulfill business needs, service providers need to model, store, and manage information about:

- Partners and their businesses

- Rules of engagement with the partners, which include details such as message encoding protocol (EDI standards like X12), transport protocol (AS2), etc.

Let us review some of these and how WABS B2B helps address them.

Feature outline and overview: Partners

Each participating organization in a business relationship is a trading partner. A trading partner is at the root level and forms the base for a trading partner solution. A trading partner is one of the two or more participants in an ongoing business relationship.

Using the Windows Azure BizTalk Portal, one can easily set up partners representing all the organizations involved in a business trade. Each partner has an associated profile that can be updated as business needs change. Multiple profiles can be created to represent the different divisions of the organization.

Feature outline and overview: Agreements

A Trading Partner Agreement (TPA) is defined as a definitive and binding agreement between two trading partners for transacting messages over a specific B2B protocol. It is a comprehensive collection of all aspects governing the business transaction between the two trading partners.

The Windows Azure BizTalk Portal makes it easy to set up agreements between trading partners. Using the profiles created for partners, one can quickly create templates for setting up agreements while reducing configuration errors.

Feature outline and overview: Artifacts

The WABS portal allows one to manage the artifacts used for B2B operations. Four kinds of artifacts are currently supported: schemas, maps, certificates, and assemblies. The portal lets users easily upload and delete artifacts related to partner agreements.

You are also able to manage the bridges created as part of the agreement deployments.

Feature outline and overview: Tracking

WABS provides the ability to monitor EDI and AS2 messages, batches, and bridges deployed in an Azure BizTalk Services subscription. The tracking information helps you in the following ways:

- Helps troubleshoot message processing issues.

- Provides specific details of a message’s properties.

- Creates copies of messages.

- Helps determine the flow of events when a message is being processed.

You must enable tracking as part of an agreement before you can view tracking data.

Feature outline and overview: Extensibility

The Windows Azure BizTalk Services Portal offers a rich user experience for creating and managing partners and agreements for business-to-business messaging. However, at times you need to create the different entities that are part of the BizTalk Services Portal programmatically. Windows Azure BizTalk Services offers a WCF Data Service-based object model to programmatically create and maintain entities such as partners and agreements for the BizTalk Services Portal.

In addition, PowerShell cmdlets are available for tasks such as creating and deleting artifacts and starting or stopping sources, providing rich automation and scripting capabilities. These cmdlets will be covered in more detail in a future blog post. The documentation has more information about them as well.

Sign up for a Windows Azure BizTalk Services Preview account and download the Windows Azure BizTalk Services Preview SDK, which supersedes the Windows Azure EAI and EDI Lab, available as of 6/3/2013:

Windows Azure BizTalk Services (WABS) is a simple, powerful, and extensible cloud-based integration service that provides Business-to-Business (B2B) and Enterprise Application Integration (EAI) capabilities for delivering cloud and hybrid integration solutions. The service runs in a secure, dedicated per-tenant environment that you can provision on demand.

Windows Azure BizTalk Services SDK

- Installs the Visual Studio project templates for creating a BizTalk Service project for Enterprise Application Integration (EAI) and BizTalk Service Artifacts for Electronic Data Interchange (EDI).

- Provides the tools to develop and deploy EAI Bridges and Artifacts.

- BizTalk Adapter Service

  - Developer SDK: This is required to create a BizTalk Service project that can include a BizTalk Adapter Service component.

  - Runtime and Tools: The BizTalk Adapter Service (BAS) feature allows an application in the cloud to communicate with an on-premises line-of-business (LOB) system in your network, behind your firewall. It also includes PowerShell cmdlets to manage the BAS runtime components.

- Find the Windows Azure BizTalk Services Documentation here.

- Try out the Windows Azure BizTalk Services samples here.

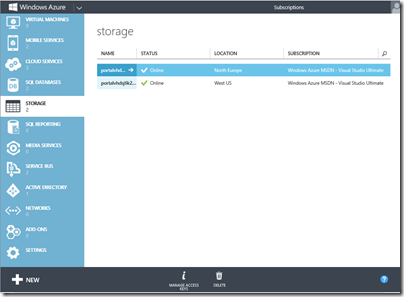

Then check out BizTalk Services: Provisioning Using Windows Azure Management Portal for setup details:

The Windows Azure BizTalk Services Team published Windows Azure BizTalk Services - June 2013 Preview documentation on 6/3/2013:

[This topic is pre-release documentation and is subject to change in future releases. Blank topics are included as placeholders.]

Windows Azure BizTalk Services provides integration capabilities for the Windows Azure Platform to extend on-premises applications to the cloud, provides rich messaging endpoints on the cloud to process and transform the messages, enables business-to-business messaging, and helps organizations integrate with disparate applications, both on cloud and on-premises. In other words, BizTalk Services provides common integration capabilities (e.g. bridges, transforms, B2B messaging) on Windows Azure.

Nitin Mehrota published Using Service Bus EAI and EDI Labs to Integrate with an On-Premises SAP Server to the Windows Azure Web site on 6/3/2013:

Windows Azure BizTalk Services provides a rich set of integration capabilities that enable organizations to create hybrid solutions in which their customer- or partner-facing applications are hosted on Azure, while the data related to customers or partners is stored on-premises in LOB applications. In this article, we talk about how to set up such a hybrid scenario using Azure BizTalk Services. To demonstrate how to integrate Azure applications with an on-premises LOB application using Azure BizTalk Services, let us consider a scenario involving two business partners, Fabrikam and Contoso.

Business Scenario

Contoso sends a purchase order (PO) message to Fabrikam in X12 Electronic Data Interchange (EDI) format, using the PO (X12 850) schema. Fabrikam, which uses an SAP Server to manage partner data, accepts POs from its partners as ORDERS05 IDOCs. To enable Contoso to send a PO directly to Fabrikam’s on-premises SAP Server, Fabrikam decides to use Windows Azure’s integration offering, Azure BizTalk Services, to set up a hybrid integration scenario in which the integration layer is hosted on Azure and the SAP Server remains within the organization’s firewall. Fabrikam uses Azure BizTalk Services in the following ways to enable this hybrid integration scenario:

- Fabrikam uses the BizTalk Adapter Service component available with Azure BizTalk Services to expose the Send operation on the ORDERS05 IDOC through a Service Bus relay endpoint. Fabrikam also creates the schema for the Send operation using BizTalk Adapter Service.

Note

A Send operation on an IDOC is an operation that is exposed by the BizTalk Adapter Pack on any IDOC to send the IDOC to the SAP Server. BizTalk Adapter Service uses BizTalk Adapter Pack to connect to an SAP Server.

- Fabrikam uses the Transform component available with Azure BizTalk Services to create a map to transform the PO message in X12 format into the schema required by the SAP Server to invoke the Send operation on the ORDERS05 IDOC.

- Fabrikam uses the Windows Azure BizTalk Services Portal (the TPM portal) available with Azure BizTalk Services to create and deploy an EDI agreement on Service Bus that processes the X12 850 PO message. As part of the message processing, the agreement also does the following:

  - Receives an X12 850 PO message over FTP.

  - Transforms the X12 PO message into the schema required by the SAP Server using the transform created earlier.

  - Routes the transformed message to another XML bridge that eventually routes the message to a relay endpoint created for sending a PO message to an SAP Server. Fabrikam earlier exposed (as explained in bullet 1 above) the Send operation on the ORDERS05 IDOC to enable partners to send PO messages using BizTalk Adapter Service.

Note: For the Azure BizTalk Services December 2011 release, the EDI bridge deployed using the TPM portal does not support setting route actions, which are mandatory for setting SOAP action headers on the messages being sent to an LOB relay endpoint. So, as an intermediate step, Fabrikam configures the EDI agreement to route the transformed message to an XML bridge, which then routes the message to the relay endpoint at which the Send operation for ORDERS05 IDOC is exposed. As part of this intermediary routing, the XML bridge sets the required SOAP action headers on the message.
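The SOAP action header that the intermediary XML bridge adds is an ordinary HTTP header on a SOAP 1.1 request. The C# sketch below builds such a request with System.Net.Http without sending it, to show where that header lives. The `LobRequestBuilder` class, the relay address, and the action string are hypothetical placeholders; in the scenario described here the XML bridge, not your code, sets the header, using the action defined for the Send operation on ORDERS05.

```csharp
using System;
using System.Net.Http;
using System.Text;

// Hypothetical helper: builds (but does not send) a SOAP 1.1 request.
public static class LobRequestBuilder
{
    public static HttpRequestMessage Build(Uri endpoint, string soapAction, string soapEnvelope)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, endpoint)
        {
            // SOAP 1.1 messages are sent as text/xml.
            Content = new StringContent(soapEnvelope, Encoding.UTF8, "text/xml")
        };
        // SOAP 1.1 names the operation to invoke in a quoted SOAPAction header.
        request.Headers.Add("SOAPAction", "\"" + soapAction + "\"");
        return request;
    }
}
```

Without that header, the receiving endpoint has no operation name to dispatch on, which is why the note treats route actions as mandatory for messages sent to an LOB relay endpoint.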

Once this is set up, Contoso drops an X12 850 PO message at the FTP location, where it is consumed by the EDI receive pipeline. The pipeline processes the message, transforms it to an ORDERS05 IDOC, and routes it to the intermediary XML bridge. The bridge then routes the message to the relay endpoint on Service Bus, from which it is sent to the on-premises SAP Server. The following illustration represents the same scenario.

How to Use This Article

This tutorial is written around a sample, SAPIntegration, which is available as part of the download (SAPIntegration.zip) from the MSDN Code Gallery. You can either use the SAPIntegration sample and go through this tutorial to understand how the sample was built, or use this tutorial to create your own application. This tutorial is targeted towards the second approach so that you understand how the application was built. To be consistent with the sample, the names of artifacts (e.g. schemas, transforms, etc.) used in this tutorial are the same as those in the sample.

The sample available from the MSDN Code Gallery contains only the half of the solution that can be developed at design time on your computer. It cannot include the configuration you must perform on the EDI Portal on Azure; for that, you must follow the steps in this tutorial to set up your EDI pipeline. Although Microsoft recommends that you follow the tutorial to best understand the concepts and procedures, if you really wish to use the sample, this is what you should do:

- Download the SAPIntegration.zip package, extract the SAPIntegration sample and make relevant changes like providing your service namespace, issuer name, issuer key, etc. After changing the sample, deploy the application to get the endpoint URL at which the XML bridge is deployed.

- Go to the EDI Portal, and configure the EDI Receive pipeline as described at Create and Deploy the EDI Receive Pipeline and follow the procedures to hook the EDI Receive pipeline to the XML bridge you already deployed.

- Drop a test message at the FTP location configured as part of the agreement and verify that the application works as expected.

- If the message is successfully processed, it will be routed to the SAP Server and you can verify the ORDERS IDOC using the SAP GUI.

- If the EDI agreement fails to process the message, the failure/error messages are routed to a relay endpoint on Service Bus. To receive such messages, you must set up a relay receiver service that receives any message that comes to that specific relay endpoint. As part of the EDI agreement, you will specify this endpoint to receive notifications for messages that fail to be processed by the agreement. A receiver service (RelayReceiverService) is also provided as part of the SAPIntegration.zip package. You can use this service to receive the error messages explaining why the EDI agreement failed to process a PO message. More details on why you need this service and how to use it are available at Test the Solution.


Richard Seroter (@rseroter) posted a Walkthrough of New Windows Azure BizTalk Services on 6/3/2013:

The Windows Azure EAI Bridges are dead. Long live BizTalk Services! Initially released last year as a “lab” project, the Service Bus EAI Bridges were a technology for connecting cloud and on-premises endpoints through an Azure-hosted broker. This technology has been rebranded (“Windows Azure BizTalk Services”) and upgraded and is now available as a public preview. In this blog post, I’ll give you a quick tour around the developer experience.

First off, what actually *is* Windows Azure BizTalk Services (WABS)? Is it BizTalk Server running in the cloud? Does it run on-premises? Check out the announcement blog posts from the Windows Azure and BizTalk teams, respectively, for more. But basically, it’s a separate technology from BizTalk Server, meant to be highly complementary. Even though it uses a few of the same types of artifacts, such as schemas and maps, they aren’t interchangeable. For example, WABS maps don’t run in BizTalk Server, and vice versa. Also, there’s no concept of long-running workflow (i.e. orchestration), and none of the value-added services that BizTalk Server provides (e.g. Rules Engine, BAM). All that said, this is still an important technology, because it makes it quick and easy to connect a variety of endpoints regardless of location. It’s a powerful way to expose line-of-business apps to cloud systems, and the Windows Azure hosting model makes it possible to rapidly scale solutions. Check out the pricing FAQ page for more details on the scaling functionality and the reasonable pricing.

Let’s get started. When you install the preview components, you’ll get a new project type in Visual Studio 2012.

Each WABS project can contain a single “bridge configuration” file. This file defines the flow of data between source and destination endpoints. Once you have a WABS project, you can add XML schemas, flat-file schemas, and maps.

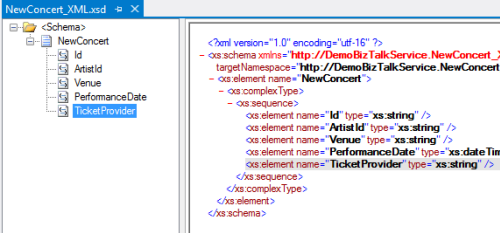

The WABS Schema Editor looks identical to the BizTalk Server Schema Editor and lets you define XML or flat file message structures. While the right-click menu promises the ability to generate and validate file instances, my pre-preview version of the bits only let me validate messages, not generate sample ones.

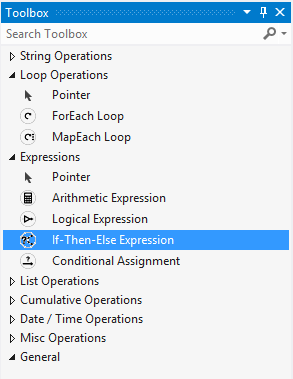

The WABS schema mapper is very different from the BizTalk Mapper. And that’s a good thing. The UI has subtle alterations, but the more important change is in the palette of available “functoids” (components for manipulating data). First, you’ll see more sophisticated looping and logical expression handling. This includes a ForEach Loop and, finally, an If-Then-Else Expression option.

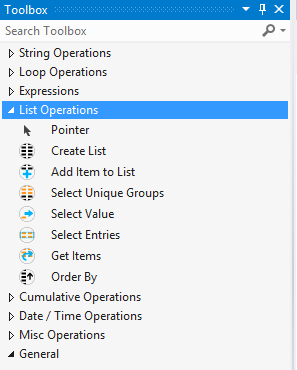

The concept of “lists” is also entirely new. You can populate, persist, and query lists of data and create powerfully complex mappings between structures.

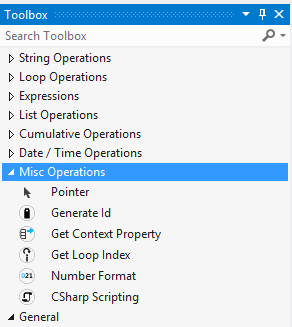

Finally, there are some “miscellaneous” operations that introduce small – but helpful – capabilities. These functoids let you grab a property from the message’s context (metadata), generate a random ID, and even embed custom C# code into a map. I seem to recall that custom code was excluded from the EAI Bridges preview, and many folks expressed concern that this would limit the usefulness of these maps for tricky, real-world scenarios. Now, it looks like this is the most powerful data mapping tool that Microsoft has ever produced. I suspect that an entire book could be written about how to properly use this Mapper. …

Read the rest of Richard’s detailed article here.

Following are prices of the four Windows Azure BizTalk Services versions during the preview period:

| Version | Developer | Basic | Standard | Premium |
|---|---|---|---|---|
| Price (Preview) | $0.065/hour (~$48/month) | $0.335/hour (~$249/month) | $2.015/hour per unit (~$1,499/month) | $4.03/hour per unit (~$2,998/month) |
| Production Use | Not available | Yes | Yes | Yes |
| Scale Out | N/A | N/A | Up to 4 units | Up to 8 units |
| EAI Bridges per unit | 30 | 50 | 125 | 250 |
| Number of connections using BizTalk Adapter Service | 1 | 0 | 5 | 25 |
| EDI Agreements per unit | 10 | 0 | 0 | 1000 |
| Availability SLA | No | Yes for GA, No for Preview | Yes for GA, No for Preview | Yes for GA, No for Preview |

Data transfer charges are billed separately at the standard Data Transfer rates.
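The approximate monthly figures in the pricing table match each hourly rate multiplied by 744 hours, which is a 31-day month. That multiplier is inferred from the numbers and is not stated by Microsoft. The Python sketch below reproduces the estimates and applies the scale-out limits from the table. The function name is illustrative, and data transfer charges are excluded.

```python
# Preview hourly rates from the WABS pricing table (USD per hour, per unit).
PREVIEW_RATES = {"Developer": 0.065, "Basic": 0.335, "Standard": 2.015, "Premium": 4.03}

# Scale-out limits from the table; Developer and Basic are single-unit tiers.
MAX_UNITS = {"Developer": 1, "Basic": 1, "Standard": 4, "Premium": 8}

# Assumption: the table's monthly estimates appear to use a 31-day month.
HOURS_PER_MONTH = 31 * 24  # 744 hours


def monthly_estimate(tier: str, units: int = 1, hours: int = HOURS_PER_MONTH) -> float:
    """Estimate a tier's preview charge for one month, excluding data transfer."""
    if not 1 <= units <= MAX_UNITS[tier]:
        raise ValueError(f"{tier} supports 1 to {MAX_UNITS[tier]} units")
    return round(PREVIEW_RATES[tier] * units * hours, 2)


for tier in PREVIEW_RATES:
    print(f"{tier}: ~${monthly_estimate(tier):,.2f}/month")
```

For example, a Standard deployment scaled out to its four-unit maximum works out to about $5,996.64 a month at these preview rates.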

Here are the features supported by each of the four WABS versions:

| Version | Developer | Basic | Standard | Premium |
|---|---|---|---|---|
| Basic Mediation | ✔ | ✔ | ✔ | ✔ |
| Standard Protocols: HTTP/S, REST, FTP, WCF, SB | ✔ | ✔ | ✔ | ✔ |
| Secure Protocol: SFTP | ✔ | ✔ | ✔ | ✔ |
| Custom Code | ✔ | ✔ | ✔ | ✔ |
| Transform (XSLT, Scripting) | ✔ | ✔ | ✔ | ✔ |
| EDI Capabilities (Message Types, TPM Portal) | ✔ | ✔ | | |
| Archiving | ✔ | ✔ | | |
| BizTalk Standard License for on-premises | ✔ | | | |

Estimated BizTalk Server 2013 license prices by edition:

| Edition | Primary Usage Scenario | Est. Price per Core License | Est. Cost, 4 Cores Min. |
|---|---|---|---|
| Enterprise | For customers with enterprise-level requirements for high volume, reliability, and availability | $10,835 | $43,340 |
| Standard | For organizations with moderate volume and deployment scale requirements | $2,485 | $9,940 |
| Branch | Specialty version of BizTalk Server designed for hub and spoke deployment scenarios, including RFID | $620 | $2,480 |

Licenses for a minimum of four cores must be purchased for each processor on which BizTalk Server 2013 runs. Per-core pricing for BizTalk Server 2013 is one-fourth that of BizTalk Server 2010’s per-processor license.
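A worked estimate makes the four-core minimum easier to see. This minimal Python sketch uses the estimated per-core prices from the edition table and applies the minimum to each processor separately. It assumes that cores beyond four are licensed one by one. The function and its inputs are hypothetical and are not a Microsoft pricing tool.

```python
# Estimated per-core license prices from the BizTalk Server 2013 edition table (USD).
PRICE_PER_CORE = {"Enterprise": 10_835, "Standard": 2_485, "Branch": 620}

# Licensing minimum from the text: at least four cores per processor.
MIN_CORES_PER_PROCESSOR = 4


def license_cost(edition: str, cores_per_processor: list[int]) -> int:
    """Estimate the license cost for one server, given the core count of each processor."""
    # Each processor is licensed for its actual cores, but never fewer than four.
    billable_cores = sum(max(cores, MIN_CORES_PER_PROCESSOR) for cores in cores_per_processor)
    return billable_cores * PRICE_PER_CORE[edition]


# A single dual-core processor is still billed as four cores.
print(license_cost("Standard", [2]))        # 9940
# Two six-core processors: 12 billable cores.
print(license_cost("Enterprise", [6, 6]))   # 130020
```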

According to Microsoft’s Line of Business Adapter Pack page, the following adapters are included with BizTalk Server 2013 licenses:

According to Microsoft’s Kent Brown:

[With a] Standard or Premium subscription for Windows Azure BizTalk Services, you get rights to use the BizTalk Adapters on-prem (hosted in IIS). The number of connections allowed is according to Standard or Premium.

Note: The RSSBus folks announced a beta release of their OData BizTalk Adapter on 6/3/2013:

Enterprise BizTalk Adapters for OData

Powerful BizTalk Adapters that allow you to easily connect BizTalk Server with live OData feeds through standard orchestrations. Use the OData Data Adapters to synchronize with OData Services. Perfect for data synchronization, local back-ups, workflow automation, and more!

- Similar to the BizTalk Adapter for SQL Server but for OData Services.

- Supports meta-data discovery and schema generation for OData entities.

- Includes a Receive Adapter and a two-way Send Adapter with support for updategrams, stored procedures, and queries.

Connect BizTalk Workflows With OData Data

The RSSBus BizTalk Adapter for OData allows you to poll OData using SQL queries and stored procedures. The Adapter lets you create an XML view of your entities in OData allowing you to act on it as if it were an XML message. The Adapter supports the standard SQL updategrams making it easy to insert, update, or delete OData entities.

<Return to section navigation list>

Windows Azure Access Control, Active Directory, Identity and Workflow

•• Vittorio Bertocci (@vibronet) reported The JSON Web Token Handler for .NET 4.5 Reaches GA! in a 6/6/2013 post to his new CloudIdentity blog:

After just a few months in developer preview, I am extremely pleased to report that v1 of the JSON Web Token (JWT) Handler for .NET is generally available!

Your feedback was super-helpful in shaping the final form of v1. I am currently at TechEd, so I won’t go into all the details here (I will update this post afterwards); however, here are a couple of highlights from the main changes since the preview:

- Namespace change. We got consistent feedback that many people associate the Microsoft.* namespace with WIF 1.0 from .NET 3.5, and because the handler builds on top of .NET 4.5, this was a source of confusion.

Hence, we took all the necessary steps to move the assembly under System.IdentityModel, where all the other associated classes live.

Important: this move changed the ID of the NuGet package, so in order to pick up the GA version you’ll have to reference it explicitly. We’ll be taking down the preview version shortly.

- Config integration. The handler is now better integrated with the WIF configuration elements: it plays nice with the ValidatingIssuerNameRegistry, has its own config element for custom settings, and so on.

- Improved mapping for short-to-long claim types. The handler has a fully revamped claim types mapping engine, reflecting the claim types traded by OpenID Connect, Windows Azure AD and ADFS in Windows Server 2012 R2. Also, you can customize the mapping or turn it off completely!

Hopefully this will make Dominick happy.

- Multiple keys in TokenValidationParameters. We now allow you to specify a collection of signing keys, so that you don’t need to run explicit validation cycles when the issuer you trust has more than one key (e.g., the current key and one about to roll over).

- Consistent behavior in BootstrapContext. In the preview, a JWT in the BootstrapContext would be presented as a SecurityToken at first, but changed to a string at the first recycle. In the GA version it is always a string containing the encoded original token.

- Signature provider factory. In the GA handler you can provide a custom signature algorithm.

- MANY fine-grained improvements in the object model, which we will detail shortly (see above).
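The multiple-keys change matters during key rollover. A validator should accept a token whose signature verifies under any currently trusted key. The following Python sketch illustrates that idea for HMAC-SHA256 (HS256) JWTs, using only the standard library. It is not the .NET handler's API, and `sign_hs256` and `validate_with_any_key` are hypothetical helpers. A real validator must also check expiry, issuer, and audience, ideally through a maintained JWT library.

```python
import base64
import hashlib
import hmac
import json


def b64url_encode(data: bytes) -> str:
    """Base64url without '=' padding, as JWTs use."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    """Restore the stripped '=' padding before decoding."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: dict, key: bytes) -> str:
    """Create a compact HS256 JWT (demo only; real issuers add exp, iss, aud)."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    signing_input = f"{header}.{payload}".encode("ascii")
    signature = hmac.new(key, signing_input, hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url_encode(signature)}"


def validate_with_any_key(token: str, signing_keys: list[bytes]) -> dict:
    """Return the claims if the signature verifies under any trusted key."""
    header_b64, payload_b64, signature_b64 = token.split(".")
    if json.loads(b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unsupported algorithm")
    signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    signature = b64url_decode(signature_b64)
    for key in signing_keys:
        expected = hmac.new(key, signing_input, hashlib.sha256).digest()
        if hmac.compare_digest(expected, signature):  # constant-time comparison
            return json.loads(b64url_decode(payload_b64))
    raise ValueError("signature did not match any trusted key")


current_key, next_key = b"current-secret", b"next-secret"  # hypothetical keys
token = sign_hs256({"sub": "alice"}, next_key)  # issuer has already rolled to the new key
print(validate_with_any_key(token, [current_key, next_key]))  # {'sub': 'alice'}
```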

All the samples (in common with the AAL .NET dev preview, also refreshed today) have been updated to reflect the new object model; I recommend checking them out to see in detail what changed.

Thank you again for having helped us refine this fundamental building block of our REST development story. Also, keep the feedback coming!

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

•• The Data Platform Team wrote Cross-Post: SharePoint on Windows Azure Virtual Machines and Craig Kitterman (@craigkitterman) posted it to the Windows Azure blog on 6/7/2013:

(Editor's Note: This post comes from the Data Platform Team.)

SharePoint Farms

Hello everyone:

We want to inform you [about] a new tutorial. With this tutorial you will learn how to:

- Configure and deploy a SharePoint farm on a set of Virtual Machines.

- Create a set of Virtual Machines using images from the gallery including Windows Server 2012, SQL Server 2012, and SharePoint Server 2013.

- Create a domain, join machines to the domain, and run the SharePoint configuration wizard.

- Enable the SQL Server AlwaysOn feature for high availability.

You can find the tutorial here: Installing SharePoint 2013 on Windows Azure Infrastructure Services.

For additional guidance, go to SharePoint 2013 on Windows Azure Infrastructure Services.

•• My (@rogerjenn) Windows Azure competes with AWS, pushes more frequent, granular updates article of 4/30/2013 for TechTarget’s SearchCloudComputing.com (missed when published) begins:

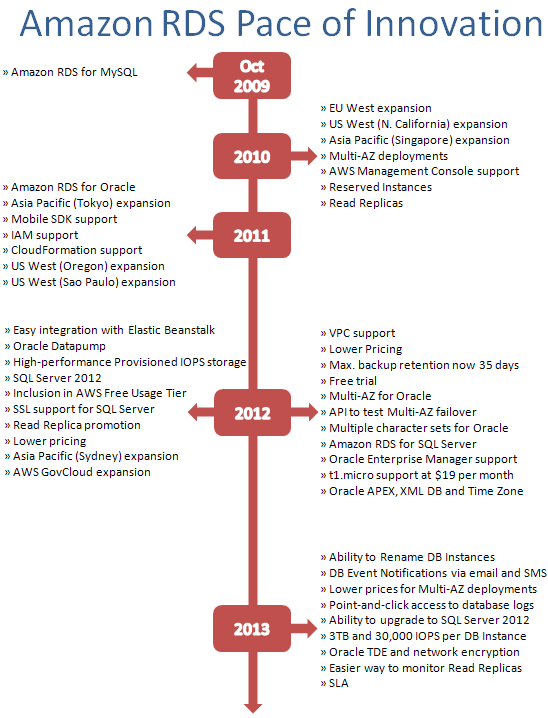

Developers and IT managers who've adopted or are evaluating Windows Azure often complain about the glacial pace of its infrastructure and platform advancements when compared to Amazon Web Services' almost weekly new feature proclamations. Microsoft Corporate Vice President Scott Guthrie put those gripes to rest with a volley of three blog posts describing his team's latest updates.

What does this mean for cloud consumers? The accelerated schedule likely means more granular updates and upgrades to Windows Azure Platform as a Service (PaaS) and Infrastructure as a Service (IaaS) offerings, similar in scope to those Jeff Barr posts to his AWS Evangelism blog. Here's a rundown of Microsoft's Windows Azure April updates.

April 22: General availability of IaaS

Windows Azure virtual machines (VMs) and virtual networks (VNs) -- the heart of Microsoft's drive to compete directly with AWS in the IaaS marketplace -- have been in preview purgatory since their announcement on June 7 last year. Enterprises are hesitant to deploy cloud projects to IaaS in the preview (read: beta) stage because previews don't offer service-level agreements (SLAs) and they're subject to frequent breaking technical changes that require costly DevOps workarounds.

Guthrie's April 22 blog post announced that VMs and VNs had gained full production status in Microsoft data centers supporting Windows Azure, as well as an enterprise SLA, deployment automation with the Windows Azure Management Portal and technical support through official Microsoft support channels. The upshot: Windows Azure IaaS was ready for enterprise prime time at last.

In addition, Guthrie pointed out these new technical and economic VM enhancements:

- More VM image templates, including SQL Server, BizTalk Server and SharePoint images

- More VM sizes, including larger memory machines

- Lower VM prices, reduced by 21% to 33% for IaaS and PaaS VMs

The official infrastructure general availability and pricing announcement from Microsoft's Bill Hilf is here.

Windows Azure IaaS' advance to GA status came hot on the heels of the decommissioning of Windows Azure VM roles in favor of VMs. …

The article continues with

Roger Doherty of the Customer Advisory Team described The Top 10 Things to Know When Running SQL Server Workloads on Windows Azure Virtual Machines in a 6/4/2013 post to the Windows Azure SQL Database blog:

When we announced the availability of the Windows Azure Virtual Machines and Virtual Network previews (we call these two sets of services Windows Azure Infrastructure Services) in June 2012, organizations all over the world began testing their Microsoft SQL Server workloads and pushing the preview to its limits.

You can do amazing things with Windows Azure Infrastructure Services. The ability to rapidly deploy virtual machines (VMs) capable of running many different types of SQL Server workloads at a low cost without having to procure and manage hardware has broad appeal. The ability to do complex multi-VM deployments in a virtual network, support for Active Directory (AD), support for SharePoint, and the ability to connect your virtual network back to on-premises networks or remote machines using virtual private network (VPN) gateways make it even more interesting as an off-premises hosting environment for IT shops and developers alike.

Windows Azure Infrastructure Services is a stepping stone that organizations can use to migrate some of their existing workloads to the cloud as is, with no changes, while at the same time taking advantage of more modern "Platform-as-a-Service" capabilities of Windows Azure in a hybrid fashion. We've seen organizations run everything from simple development and test SQL Server workloads to complex distributed mission-critical workloads. Here are a few things we've learned from their experiences.

- Know Your SLA:

Before you unplug that server and move your SQL Server workloads to Windows Azure, you need to understand the relevant Service Level Agreements (SLA). The key thing to pay attention to is this statement: "For all Internet facing Virtual Machines that have two or more instances deployed in the same Availability Set, we guarantee you will have external connectivity at least 99.95% of the time." What does this mean from a SQL Server perspective? It means that in order to be covered by this SLA, you will need to deploy more than one VM running SQL Server and add them all to the same Availability Set. See Manage the Availability of Virtual Machines for more details. It also means that you will need to implement a SQL Server High Availability Solution if you want to ensure that your databases are in sync across all of the virtual machines in your Availability Set. The bottom line is that you have to do some work to ensure high availability in the cloud just as you would have to do if these workloads were running on-premises. When properly configured, Availability Sets ensure that your SQL Server workloads will keep running even during maintenance operations like upgrades and hardware refreshes.

- Know Your Support Policy:

The beautiful thing about running SQL Server in Windows Azure Virtual Machines is that it's very much like running SQL Server anywhere else. It just works. You don't have to change your applications or worry whether various SQL Server features are supported. Most SQL Server features are fully supported when running on Windows Azure Infrastructure Services, with a few important exceptions. Let's start with SQL Server version support. Microsoft provides technical support for SQL Server 2008 and later versions on Windows Azure Infrastructure Services. If you are still running workloads on SQL Server 2005 or earlier, you will need to upgrade to a newer version in order to get support. If you are going to upgrade, we suggest you upgrade to SQL Server 2012. It was designed to be "cloud ready" with native support for Windows Azure in the management tools, development tools and the underlying database engine.

First, let's talk about high availability. If you don't think you need to worry about implementing a high availability solution for your SQL Server deployments in Windows Azure Infrastructure Services, think again. As mentioned in the previous section, you will need to implement some kind of database redundancy in a virtual machine Availability Set in order to be covered by our SLA. However, there are some limitations that affect SQL Server high availability features. First of all, SQL Server Failover Clustering is not supported. Don't panic; there are plenty of other options if you want to deploy SQL Server in a high availability configuration, such as AlwaysOn Availability Groups, or legacy features like Database Mirroring or Log Shipping. We recommend you use the AlwaysOn Availability Groups feature in SQL Server 2012 for high availability, but there are some considerations you should be aware of if you go this route. Availability Group Listeners are not currently supported, but stay tuned, as we plan to add support for this in the near future. If you can't wait for Listener support and you still want to use AlwaysOn Availability Groups, there is a work-around: you can use the FailoverPartner connection string attribute instead. Be aware that this approach limits you to two replicas in your AlwaysOn Availability Group (one primary and one secondary) and does not support the concept of a readable secondary. See Connect Clients to a Database Mirroring Session for more information.
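With the FailoverPartner workaround, the client names both servers in its connection string. The Python sketch below assembles an ADO.NET (SqlClient)-style string, where the keyword is spelled `Failover Partner`; other drivers may spell it differently. The server, database, and credential values are placeholders. To keep the sketch short, the helper rejects `;` in values instead of implementing SqlClient's quoting rules.

```python
def mirrored_connection_string(primary: str, partner: str, database: str,
                               user: str, password: str) -> str:
    """Build a SqlClient-style connection string that names one failover partner."""
    settings = {
        "Data Source": primary,        # replica the client tries first
        "Failover Partner": partner,   # only one partner can be named, hence two replicas
        "Initial Catalog": database,   # database protected by the availability group
        "User ID": user,
        "Password": password,
    }
    for key, value in settings.items():
        # Simplification: reject ';' instead of implementing SqlClient's quoting rules.
        if ";" in value:
            raise ValueError(f"{key} must not contain ';'")
    return ";".join(f"{key}={value}" for key, value in settings.items()) + ";"


# Placeholder names for two SQL Server VMs in the same virtual network.
print(mirrored_connection_string("sqlvm1", "sqlvm2", "AppDb", "appuser", "<password>"))
```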

Next, let's talk about some important considerations that apply when configuring storage for your SQL Server databases. In general, we recommend you attach a single data disk to your VM and use it to store all of your data and log files. If you decide to spread your data and log files across multiple data disks to get more storage capacity or better performance, you should not enable geo-replication. Geo-replication cannot be used with multiple-disk configurations, because consistent write order across multiple disks is not guaranteed.

The final aspect we will cover is the various "distributed" features that SQL Server supports such as Replication, Service Broker, distributed transactions, distributed queries, linked servers, etc. All of these things should work just fine across SQL Server VMs deployed in the same virtual network, but once you start crossing that boundary (either across the public internet or a virtual network VPN gateway), you had better test them thoroughly. These features were designed for use in on-premises data centers, LANs and WANs, not across the public internet.

Take some time to read the Support policy for Microsoft SQL Server products that are running in a hardware virtualization environment for more specifics.

- Know Your Licensing:

The first (and easiest) way to license your SQL Server deployment on Windows Azure Infrastructure Services is to create a new virtual machine using one of our pre-built SQL Server platform images in the Image Gallery. Using this approach, you pay an hourly rate depending upon the edition of SQL Server you choose (Enterprise, Standard or Web). There's no need to worry about product keys, activation, etc., and you can get access to your newly provisioned SQL Server VM in minutes. Be aware that you are charged per minute and there are no minimums. See Provisioning a SQL Server Virtual Machine on Windows Azure for more information. Microsoft doesn't look inside your VMs, so if you uninstall SQL Server from a VM that was provisioned using a platform image, you will still be charged for SQL Server usage until you delete the VM.

The second option is to "bring your own VM". This involves building your own Hyper-V VMs on-premises, installing SQL Server on them, then uploading them to Windows Azure. See Creating and Uploading a Virtual Hard Disk that Contains the Windows Server Operating System for guidance on how to do this. When you bring your own VM, the cost of the Windows Server operating system license is built into your hourly compute charges, but this is not the case for other server products like SQL Server, and it's up to you to make sure that your VMs comply with Microsoft licensing policies. By default, server products like SQL Server are not licensed to run in virtualized configurations or in a hosting environment like Windows Azure Infrastructure Services. Microsoft offers different SQL Server licensing options for this scenario depending upon whether you are running production workloads or development / test workloads.

For running production SQL Server workloads, you must purchase software maintenance. See Microsoft License Mobility through Software Assurance for more details. From a licensing standpoint, you will need the same SQL Server edition and number of licenses that you needed on-premises. For instance, if you use SQL Server Standard or Enterprise Edition core-based licenses on-premises, with Software Assurance you can move those core licenses to Windows Azure. A minimum of four core licenses per Virtual Machine applies, so pick an appropriately sized virtual machine. Please note that you will have to wait 90 days if you choose to reassign your license back to a server on-premises. For running development / test SQL Server workloads, you should consider purchasing an MSDN Pro, Premium or Ultimate Subscription. You can install much of the software included with your MSDN subscription (including SQL Server) on your VMs at no additional cost. See Windows Azure Benefit for MSDN Subscribers for more information.

If you do decide to create your own SQL Server VMs, you should be aware of some recent improvements we announced in a cumulative update to SQL Server 2012 SP1 that greatly simplify the preparation of SQL Server VM images using the SysPrep utility. See Expanded SysPrep Support in SQL Server 2012 SP1 CU2 for more information.

For SQL Server AlwaysOn Availability Group deployments, please be aware that the "free passive failover instance" licensing benefit does not apply to SQL Server deployments running in Windows Azure Infrastructure Services (or any other hosting environment). This benefit only applies to on-premises deployments, i.e., deployments that do not involve a shared hosting environment. That means all of your replicas will require a fully licensed copy of SQL Server Enterprise Edition. If you want to get up to speed on all of these details, check out the SQL Server 2012 Licensing Guide.

- Know Your Hardware and Storage:

In this section we will examine the performance characteristics of Windows Azure Infrastructure Services from a CPU, RAM, I/O and network standpoint. Microsoft is committed to providing great compute and storage performance at a very competitive cost in Windows Azure Infrastructure Services (see Virtual Machine Pricing Details for more information). But you have to understand that the core value proposition of the cloud is to scale out using shared, low cost compute and storage infrastructure, not to scale up on expensive dedicated big iron. Many large organizations have already virtualized some or all of their SQL Server workloads in their own private clouds, yet some hard-core SQL Server stalwarts remain skeptical about performance and reliability. Just for the record, SQL Server virtualization is fully supported and is here to stay. Having said that, you cannot expect to achieve the same level of performance using a VM that is possible when scaling up on big expensive servers and storage subsystems.

Windows Azure Virtual Machines are hosted on commodity servers in shared clusters, and Windows Azure Disks (OS and data disks) are implemented using Windows Azure Storage, which is a shared storage service with built-in redundancy. From a CPU perspective, you pay a price for virtualization. From an IO perspective, you pay a price for shared redundant storage. So before you forklift that highly tuned, mission-critical SQL Server OLTP workload to Windows Azure Infrastructure Services, you should do your homework on performance, throughput and latency. If you plan and test thoroughly, the vast majority of typical SQL Server workloads will run just fine in Windows Azure Virtual Machines. But there is a small percentage of performance-sensitive "scale-up" workloads that will never be a good fit for this kind of environment.

Let's start with CPU. The clock speed and other characteristics of our virtual cores may vary somewhat depending upon what kind of host server you land on. You should use at least a medium-sized VM instance (A2), because SQL Server needs approximately 4 GB of RAM to breathe. A2 and larger VMs offer dedicated virtual cores, so you won't have to share your virtual cores with other VMs on the same host. Depending upon the VM size you choose, you can get anywhere from two virtual cores (A2) to eight virtual cores (extra-large A4 VMs and larger).

Next let's look at RAM. Read-intensive SQL Server and Analysis Services workloads on big data sets often require lots of RAM to cache all that data in memory for optimal performance. If you see your SQL Server cache hit ratio trending downwards, you might want to move to a larger VM size to get more RAM. You can get anywhere from 3.5 GB RAM in a medium (A2) VM to 56 GB RAM in a high-memory A7 VM. In order to control costs, you should be conservative when sizing VMs. Test your workloads on smaller VM sizes and see if the performance is acceptable. You can always upgrade to a larger VM size later if necessary.
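Conservative sizing amounts to picking the smallest size that meets the workload's minimums. This Python sketch assumes the 2013-era A2 through A7 sizes, with A2 as the recommended minimum from the text. The core and RAM figures for A3 through A6 are not from the text. The sketch approximates "smallest" by RAM and then core count, not by price.

```python
from typing import NamedTuple


class VmSize(NamedTuple):
    name: str
    cores: int
    ram_gb: float


# Assumption: 2013-era Windows Azure sizes from A2 (the suggested minimum) through A7.
SIZES = [
    VmSize("A2 (Medium)", 2, 3.5),
    VmSize("A3 (Large)", 4, 7.0),
    VmSize("A4 (Extra Large)", 8, 14.0),
    VmSize("A5", 2, 14.0),
    VmSize("A6", 4, 28.0),
    VmSize("A7", 8, 56.0),
]


def smallest_fit(min_cores: int, min_ram_gb: float) -> VmSize:
    """Pick the size with the least RAM (then fewest cores) that meets both minimums."""
    candidates = [s for s in SIZES if s.cores >= min_cores and s.ram_gb >= min_ram_gb]
    if not candidates:
        raise ValueError("no listed VM size meets these requirements")
    return min(candidates, key=lambda s: (s.ram_gb, s.cores))


print(smallest_fit(2, 3.5).name)  # A2 (Medium)
print(smallest_fit(2, 10).name)   # A5
```

If testing shows the cache hit ratio falling, you can rerun the selection with a higher RAM minimum to find the next size up.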

Next let's examine storage performance. As we mentioned before, your VHDs (both OS and data disks) are implemented using Windows Azure Disks, which are a special type of Windows Azure Storage page blob that is cached locally on the host server in a shared disk subsystem. Local redundancy is built in, and geo-redundancy is an additional option. The page blobs backing your locally cached VHDs are stored remotely in a shared storage service that is accessed via REST APIs over high-speed interconnects. Check out Data Series: Exploring Windows Azure Drives, Disks and Images for more information. By now you should realize that the performance characteristics, configuration and behavior of Windows Azure Disks are quite different from locally attached storage or even SANs.

So what about storage capacity? First of all, do not store your databases on the OS drive unless they are very small. Do not use the D: temporary drive for databases (including tempdb); data stored on this drive could be lost after a restart, and the temporary drive does not provide predictable performance. We recommend you attach a single data disk to your VM and use it to store all of your user databases. See How to Attach a Data Disk to a Virtual Machine for more information. A data disk can be up to 1 TB in size, and you can attach a maximum of 16 data disks to an A4 or larger VM. If your database is larger than 1 TB, you can use SQL Server file groups to spread your database across multiple data disks. Alternatively, you can combine multiple data disks into a single large volume using Storage Spaces in Windows Server 2012. Storage Spaces are better than legacy OS striping technologies because they work well with the append-only nature of Windows Azure Storage. As discussed previously in section 2, do not enable geo-replication if you intend to use multiple data disks.

Input/Output Operations per Second (IOPS) tends to be the key metric used to measure disk performance for SQL Server workloads. So how many IOPS can you get from a Windows Azure disk? The answer is that it depends on the size of IOs and access patterns. For a 60/40 read/write ratio workload doing 8 KB IOs, our target is to provide up to 500 IOPS for a single disk. Need to go higher? Add more data disks and spread your database workload across them. When creating or restoring large databases, you should use instant file initialization to speed up performance.
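The capacity and IOPS guidance together give a simple estimate of how many data disks to attach. The Python sketch below treats 500 IOPS per disk as the target stated above for 8 KB IOs at a 60/40 read/write ratio; it is a target, not a guarantee. It uses the 1 TB disk limit and defaults to the 16-disk ceiling for A4 and larger VMs. For smaller sizes, pass a smaller `max_disks`.

```python
import math


def data_disks_needed(target_iops: int, database_tb: float,
                      iops_per_disk: int = 500, disk_tb: float = 1.0,
                      max_disks: int = 16) -> int:
    """Estimate how many data disks an IOPS target and database size call for."""
    for_iops = math.ceil(target_iops / iops_per_disk)    # spread IO across disks
    for_capacity = math.ceil(database_tb / disk_tb)      # each disk holds up to 1 TB
    disks = max(1, for_iops, for_capacity)
    if disks > max_disks:
        raise ValueError(f"needs {disks} data disks; this VM size allows {max_disks}")
    return disks


print(data_disks_needed(1800, 0.5))  # 4: IOPS, not capacity, drives the count
print(data_disks_needed(1000, 2.5))  # 3: capacity drives the count
```

At 8 KB per IO, 500 IOPS works out to roughly 4 MB/s per disk. This is why spreading a busy database across several disks helps.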

One final point regarding network bandwidth and latency. VMs are allocated a certain amount of network bandwidth based upon size. This allocation can impact the performance of data transfers and backups, so if you plan on moving a lot of data around you should consider using a larger VM size. When connecting to your VMs over a public endpoint or VPN gateway, it's important to remember that your infrastructure is being accessed over the public internet. Your VMs are far away from your physical location and sitting behind sophisticated network infrastructure like load balancers and gateways with advanced security options enabled. This introduces network latency, and requires a different approach for many types of operations that rely on low-latency networks. For example, migrating a large database to the cloud will take much longer, and client applications that were not designed for cloud-style network latency may not behave properly. We'll discuss networking in more detail in the next section.

For more information on VM sizes and options, see Virtual Machine Cloud Service Sizes. See Performance Guidance for SQL Server in Windows Azure Virtual Machines for detailed information on performance best practices.

- Plan Your Network First

Windows Azure Infrastructure Services offers a full range of network connectivity options for your VM deployments. You should plan your network configuration first before creating VMs to avoid having to start from scratch if you make a mistake. You can use Remote Desktop to connect to individual VMs from your desktop and administer them. See How to Log on to a Virtual Machine Running Windows Server for more information. If you want to allow connections into your VMs from the public internet, you can open up ports using endpoints. See How to Set Up Communication with a Virtual Machine for more details. If you want to administer your SQL Server remotely over the internet, you can create an endpoint that allows access to your VM over the standard SQL Server port 1433, but since this port is well known to hackers we suggest using a random public port for your SQL Server endpoint. You can automatically load-balance incoming connections to your endpoints across a collection of VMs. See Load Balancing Virtual Machines for more information. This is useful for scenarios like scaling out front-end web servers across multiple VMs.
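Here is a Python sketch of the random-public-port advice. It draws an unpredictable port from the IANA dynamic range, which is an assumed choice of pool. It maps that port to the private port 1433 and formats the `tcp:host,port` server name that SQL Server clients use for a non-default port. The endpoint dictionary and the cloud service name are hypothetical stand-ins, not the Windows Azure management API.

```python
import secrets

SQL_SERVER_PRIVATE_PORT = 1433  # default port SQL Server listens on inside the VM
# Assumption: draw public ports from the IANA dynamic/private range.
DYNAMIC_PORTS = range(49152, 65536)


def choose_public_port(in_use: set[int]) -> int:
    """Pick an unpredictable public port not already claimed by another endpoint."""
    free = [port for port in DYNAMIC_PORTS if port not in in_use]
    if not free:
        raise RuntimeError("no free ports left in the dynamic range")
    return secrets.choice(free)


def sql_endpoint(cloud_service_dns: str, in_use: set[int]) -> tuple[dict, str]:
    """Return a hypothetical endpoint mapping and the server name clients should use."""
    public_port = choose_public_port(in_use)
    endpoint = {"protocol": "tcp", "public_port": public_port,
                "private_port": SQL_SERVER_PRIVATE_PORT}
    # SQL Server clients accept "tcp:host,port" to reach a non-default port.
    return endpoint, f"tcp:{cloud_service_dns},{public_port}"


# "myservice.cloudapp.net" is a placeholder cloud service name.
endpoint, server = sql_endpoint("myservice.cloudapp.net", in_use={55000})
print(endpoint, server)
```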

To establish full connectivity between your VMs, create a Windows Azure Virtual Network first, then create your VMs inside it. Your new VMs are automatically assigned IP addresses from the ranges specified in your virtual network configuration, so there's no need to implement your own DHCP service. Virtual networks come with built-in DNS, or you can deploy your own DNS server; see Windows Azure Name Resolution for more information. Test your name resolution thoroughly before continuing. If you don't have a lot of networking expertise, you might want a colleague to review your configuration before proceeding so you don't back yourself into a corner.
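One way to make the name-resolution test repeatable is a small script you run from a VM inside the virtual network. This Python sketch checks that each hostname resolves and that the resulting address falls inside the address space you configured for the virtual network. The hostnames and the 10.0.0.0/16 range used with it are hypothetical placeholders.

```python
import ipaddress
import socket
from collections.abc import Callable, Iterable
from typing import Optional


def check_name_resolution(
    hostnames: Iterable[str],
    address_space: str,
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> dict[str, Optional[str]]:
    """Map each hostname to a problem description, or None if it looks healthy.

    A name is healthy when it resolves to an IPv4 address inside address_space,
    the range configured for the virtual network.
    """
    network = ipaddress.ip_network(address_space)
    problems: dict[str, Optional[str]] = {}
    for name in hostnames:
        try:
            ip = ipaddress.ip_address(resolve(name))
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            problems[name] = f"does not resolve: {exc}"
            continue
        problems[name] = None if ip in network else f"resolves to {ip}, outside {network}"
    return problems
```

For example, calling `check_name_resolution(["sqlvm1", "dc1"], "10.0.0.0/16")` on a VM in the network should return a dictionary whose values are all None; any string value names the host to investigate. Because the resolver is a parameter, you can also exercise the check with a stub instead of a live DNS server.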

Now things start to get really interesting. You can establish site-to-site connectivity between your corporate network and your virtual network using a secure VPN gateway. This requires a VPN device on your corporate network; we support both dedicated VPN devices and software-based options for establishing a VPN gateway. See Create a Virtual Network for Cross-Premise Connectivity for more information. You can also establish point-to-site connectivity using a VPN connection directly from your computer to your virtual network, which is great for developers or administrators who need to access the virtual network from a remote location. See Configure a Point-To-Site VPN in the Management Portal for more information.
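Site-to-site routing only works when the virtual network's address space and your on-premises address ranges don't overlap, so it pays to check for conflicts before defining the local network. Here is a minimal Python sketch using the standard `ipaddress` module; it handles IPv4 prefixes only, and the prefixes in the example are placeholders for your own ranges.

```python
import ipaddress
from collections.abc import Iterable
from itertools import product


def overlapping_ranges(
    vnet_spaces: Iterable[str], on_prem_spaces: Iterable[str]
) -> list[tuple[ipaddress.IPv4Network, ipaddress.IPv4Network]]:
    """Return every (virtual network, on-premises) prefix pair that overlaps."""
    vnets = [ipaddress.IPv4Network(s) for s in vnet_spaces]
    local = [ipaddress.IPv4Network(s) for s in on_prem_spaces]
    return [(vnet, lan) for vnet, lan in product(vnets, local) if vnet.overlaps(lan)]


if __name__ == "__main__":
    # Placeholder prefixes: substitute your real virtual network and corporate ranges.
    for vnet, lan in overlapping_ranges(["10.0.0.0/16"], ["10.0.128.0/20", "192.168.0.0/24"]):
        print(f"conflict: virtual network {vnet} overlaps on-premises {lan}")
```

An empty result means the ranges are disjoint. Any pair it reports has to be resolved by renumbering one side before the VPN gateway can route traffic between them.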

Most Windows Server deployments rely on Active Directory (AD) for identity and security. Windows Azure Infrastructure Services supports a full range of Active Directory deployment options. See Install a new Active Directory forest in Windows Azure if you want a stand-alone AD deployment used only by VMs in your virtual network. If you implement a VPN gateway, you can domain-join VMs in your virtual network to your corporate Active Directory. In this scenario it makes sense to deploy a read-only AD domain controller in your virtual network for improved performance and reliability; see Install a Replica Active Directory Domain Controller in Windows Azure Virtual Networks for guidance on how to do this. Many more powerful AD configurations are also supported, such as Active Directory Federation Services and hybrid identity scenarios that span your VMs and other cloud services running in Windows Azure. See Windows Azure Active Directory for more information.

- Set the Time Zone on Your VMs to UTC

Consider setting the time zone on your VMs to UTC. Windows Azure Infrastructure Services uses UTC in all data centers and regions, and using the UTC time zone may avoid rare daylight saving time issues that could crop up in the future. Clients should, of course, continue using their local time zone.

- Use Data and Backup Compression

SQL Server supports Data Compression and Backup Compression features that can help boost I/O performance with minimal CPU overhead. Compressing your data and backups results in faster I/O operations against Windows Azure Storage, and your data takes up less space.

- Back Up to Blob Storage Instead of Disks

In SQL Server 2012 Service Pack 1 Cumulative Update 2 we enabled a handy new backup scenario for SQL Server deployments in Windows Azure Infrastructure Services. Instead of provisioning additional data disks to store your backups, you can back up and restore your databases using Windows Azure Blob Storage. Blob storage provides limitless capacity and offers built-in local redundancy and optional geo-redundancy. This frees up precious capacity on your data disks so you can dedicate them to data and log files. See SQL Server Backup and Restore with Windows Azure Blob Storage Service for more information. As an added benefit, you can copy your backup blobs asynchronously across storage accounts and even regions without wasting precious time and bandwidth on unnecessary upload and download operations.

- Don't Get Hacked

Take the time to properly secure your VMs and SQL Server deployments in Windows Azure Infrastructure Services to protect them from unauthorized access. Hackers are always looking to take over poorly secured machines on the internet and use them for their own purposes. We recommend that you secure your SQL Server deployments in Windows Azure Infrastructure Services the same way you would secure your on-premises SQL Server deployments behind your network DMZ. Avoid opening public endpoints for RDP or T-SQL; instead, set up a secure VPN gateway and administer your database servers directly. Use Windows Authentication for identity and access control. If you must use SQL Authentication, create a separate account for SQL Server administration, add it to the sysadmin role, give it a strong password, and then disable the sa account. Minimize your attack surface by stopping and disabling services you don't intend to use. Consider using SQL Server Transparent Data Encryption (TDE) to protect your data, log and backup files at rest; if these files are copied outside your VM, they can't be used without the certificate that protects their encryption key.

- Learn PowerShell

The Windows Azure Management Portal offers a rich graphical interface for provisioning and managing your VM deployments in Windows Azure Infrastructure Services, but if you have to deploy a lot of virtual machines, you should take the time to learn PowerShell. You can save yourself a ton of time and effort by developing a library of PowerShell scripts to provision and configure your VMs and virtual networks. See Automating Windows Azure Infrastructure Services (IaaS) Deployment with PowerShell for more information.

Now that you are armed with some information, we encourage you to jump in and start identifying which of your SQL Server workloads are ready for Windows Azure Infrastructure Services. Start migrating some of your smaller workloads so you can learn and gain confidence, then move on to more intensive workloads. Keep in mind that besides compatibility, Windows Azure Infrastructure Services offers (and requires) granular control over the configuration and maintenance of your SQL Server deployments. This is a selling point for many IT organizations, but others want to get out of the business of maintaining servers so they can focus more on innovation.

Windows Azure SQL Database is built on SQL Server technology and delivered as a service. There's no need to install and manage server software, and advanced features like high availability and disaster recovery are built in. Still others want the best of both worlds: they run SQL Server on Windows Azure Infrastructure Services, with its compatibility and granular control, to migrate existing workloads, and use Windows Azure SQL Database, with the agility and benefits of a managed service, for new workloads. This kind of hybrid usage of Windows Azure is fully supported and has become one of the key differentiating factors compared with other public cloud providers. See Data Management for more information on all of these capabilities.

Yung Chou produced TechNet Radio: Virtually Speaking with Yung Chou Joined by Keith Mayer (Part 2): Greg Shields on Deploying & Managing a Service in the Cloud with Service Templates on 5/25/2013 (missed when published):

Yung Chou, Keith Mayer and Greg Shields from Concentrated Technology are back for part 2 of their series on deploying and managing a service in the cloud, and in today's episode they demo how to plan for and deploy RDS using System Center 2012 SP1 Virtual Machine Manager Service Templates. Tune in for this great follow-up episode and a preview of an upcoming TechEd 2013 session.

This presentation implies that Remote Desktop Services (RDS) are supported by Windows Azure Virtual Machines running Windows Server 2012. It’s my understanding that licensing restrictions preclude use of RDS and Remote Desktop Web Access (RDWA) at this time.

I’ve been complaining about this issue since I wrote Installing Remote Desktop Services on a Windows Azure Virtual Machine running Windows Server 2012 RC on 6/12/2012. Perhaps Yung knows something about this topic that I’ve missed.

<Return to section navigation list>

Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

•• Beth Massi (@bethmassi) presented Building Modern, HTML5-Based Business Apps on Windows Azure with Microsoft Visual Studio LightSwitch to TechEd North America 2013 on 6/6/2013:

With the recent addition of HTML5 support, Visual Studio LightSwitch remains the easiest way to create modern line-of-business applications for the enterprise. In this demo-heavy session, see how to build and deploy data-centric business applications that run in Windows Azure and provide rich user experiences tailored for modern devices.

We cover how LightSwitch helps you focus your time on what makes your application unique, allowing you to easily implement common business application scenarios—such as integrating multiple data sources, data validation, authentication, and access control—as well as leveraging Data Services created with LightSwitch to make data and business logic available to other applications and platforms. Also, see how developers can use their knowledge of HTML5 and JavaScript to build touch-centric business applications that run well on modern mobile devices.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Mary Jo Foley (@maryjofoley) asserted “The new Linux and Windows Server virtual machines on Windows Azure are attracting more customers to Microsoft's public cloud” in a summary of her Microsoft: We're adding 7,000 Azure IaaS users per week article of 6/7/2013 for ZDNet’s All About Microsoft blog:

… I met with Martin at TechEd this week in New Orleans about Azure's growth trajectory. He said Microsoft is adding about 1,000 new Azure customers a day.

Microsoft officials said back in April 2013 that it has 200,000 customers for Windows Azure. Company officials have declined to say how many of these customers are part of Microsoft's own various divisions and/or how many of these customers are paying customers.

Here's where things get more interesting: Martin said that before Microsoft added infrastructure-as-a-service (IaaS) components to Azure, it was adding about 3,000 customers a week. But since mid-April, when it made generally available persistent virtual machines for hosting Linux and Windows Server on Azure, Microsoft is adding 7,000 per week. Since April 2013, Microsoft has added a total of 30,000 Azure IaaS users (again, with no word on how many of these are Microsoft users and how many are paying customers), officials said.

When Microsoft first rolled out Windows Azure, it was almost entirely a platform-as-a-service (PaaS) play. To better compete with Amazon, Microsoft then decided to add IaaS elements to Azure, hoping to use IaaS as an onramp to PaaS.

Martin also said Microsoft plans to add 25 new datacenters in calendar year 2013. Some of these will be additional datacenters in existing locations; others will be brand-new locations, Martin said. Microsoft recently announced expansion plans for Azure coverage in China, Japan and Australia.

Another new development on the Windows Azure front which didn't get a lot of play this week -- but which current and potential customers may find useful -- is the addition to the Azure.com Web site of real pricing and licensing information about all the different Azure services. This isn't just a pricing calculator. It's the actual prices for individual components, all in one place.

Read Mary Jo’s entire post here.

<Return to section navigation list>

Windows Azure Pack, Hyper-V and Private/Hybrid Clouds

•• Marc van Eijk described Windows Azure Pack High Availability in a 6/5/2013 blog post:

This blog was published a couple of days after Microsoft announced (at TechEd 2013 North America) that Windows Azure Pack is the new name for Windows Azure for Windows Server. This blog is based on the RTM bits of Windows Azure for Windows Server. When the Windows Azure Pack software is available I’ll check the installation and if any changes are required I’ll update this blog.

My previous post System Center VMM 2012 SP1 High Availability with SQL Server 2012 AlwaysOn Availability Groups describes, step by step, how to install and configure a Private Cloud with high availability at the host level, the database level and the VMM level. With System Center 2012 SP1 Service Provider Foundation High Availability, multi-tenancy was added to the Private Cloud, enabling Service Providers to offer their customers an extensible OData web service and even leverage the investment in their current portal by connecting it to that OData web service.

To top it off, this blog describes, step by step, how to install Windows Azure for Windows Server in a highly available configuration. You could add two servers to the environment that was built in the previous blogs.