Windows Azure and Cloud Computing Posts for 12/10/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

• Updated 12/12/2012 with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, HDInsight and Media Services

• Bryan Group reported a new .NET SDK for Hadoop Update in a 12/12/2012 post:

If you’re doing any development in the “big data” space then you’ll want to be aware of an update to the .NET SDK for Hadoop. This release contains updated versions (0.2.0.0-prerelease) of Map/Reduce, LINQ To Hive, and a new WebHDFS client.

This SDK currently contains an API for writing [Map/Reduce] jobs, as well as submitting them. According to the published roadmap, the full suite of components [to be] delivered once the SDK reaches the official release stage includes:

- LINQ to Hive Tooling to generate types

- Integration with Templeton/WebHCat

- Experiment enabling UDF's

- Support for Partitions and advanced Hive DDL

- LINQPad integration

- Job Submission API

- WebHDFS Client

More information on Hadoop for Azure (Windows Azure HDInsight Preview) can be found here.

Andy Cross (@andybareweb) continued his series with Bright HDInsight Part 4: Blob Storage in Azure Storage Vault (examples in C#) on 12/10/2012:

When using an HDInsight cluster, a key concern is sourcing the data on which you will run your Map/Reduce jobs. The time needed to load the data for your job into your Mapper or Reducer context must be as low as possible; the quality of a source can be ranked by this load wait, that is, the data latency before a Map/Reduce job can commence.

Typically, you store the data for your jobs within the Hadoop Distributed File System, HDFS. With HDFS, you are limited by the size of the storage attached to your HDInsight nodes. For instance, in the HadoopOnAzure.com preview, you are limited to 1.5 TB of data. Alternatively, you can use Azure Storage Vault and access up to 150 TB in an Azure Storage account.

Azure Storage Vault

The Azure Storage Vault is a storage location, backed by Windows Azure Blob Storage, that can be addressed and accessed by Hadoop’s native processes. This is achieved by specifying a protocol scheme for the URI of the assets you are trying to access: ASV://. The ASV:// scheme is analogous to other storage-access schemes such as file://, hdfs:// and s3://.

With ASV, you configure access to a given account and key in the HDInsight portal Manage section.

Once in this section, one simply begins the configuration process by entering the Account Name and Key and clicking Save:

Using ASV

Once you have configured the Account Name and Key, you can use the new storage provided by ASV by addressing it with the ASV:// scheme. The format of these URIs is:

ASV://container/path/to/blob.ext

As you can see, ASV:// is hardwired to the configured account name (and key), so you don’t have to specify the account or authentication details to access it. It is a shortcut to http(s)://myaccount.blob.core.windows.net. Furthermore, since it encapsulates security, you don’t need to worry about access to these blobs.
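To make the URI shape concrete, here is a minimal C# sketch that splits an ASV path into its container and blob path and shows the blob endpoint it stands in for. The `AsvPath` class and the `myaccount` account name are illustrative assumptions, not part of the HDInsight SDK; Hadoop resolves ASV:// itself once the account has been configured in the portal.

```csharp
using System;

// Hypothetical helper for illustration only; not part of any SDK.
public static class AsvPath
{
    private const string Scheme = "asv://";

    // Splits "asv://container/path/to/blob.ext" into container and blob path.
    public static void Split(string asvUri, out string container, out string blobPath)
    {
        if (asvUri == null || !asvUri.StartsWith(Scheme, StringComparison.OrdinalIgnoreCase))
            throw new ArgumentException("Expected a URI of the form asv://container/path", "asvUri");

        string rest = asvUri.Substring(Scheme.Length);
        int slash = rest.IndexOf('/');
        container = slash < 0 ? rest : rest.Substring(0, slash);
        blobPath = slash < 0 ? string.Empty : rest.Substring(slash + 1);

        if (container.Length == 0)
            throw new ArgumentException("The container name is missing", "asvUri");
    }

    // Builds the blob endpoint URL that the ASV path corresponds to for a given account.
    public static string ToBlobUrl(string accountName, string asvUri)
    {
        string container, blobPath;
        Split(asvUri, out container, out blobPath);
        return "https://" + accountName + ".blob.core.windows.net/" + container + "/" + blobPath;
    }
}
```

For example, `AsvPath.ToBlobUrl("myaccount", "asv://container/path/to/blob.ext")` yields `https://myaccount.blob.core.windows.net/container/path/to/blob.ext`, which is the address the same blob would have in a storage tool.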

Benefits of ASV

There are many benefits of ASV, from exceptionally low storage costs (lower than S3 at the time of writing), to the ability to seamlessly provide geo-located redundancy on the files for added resilience. For me, as a user of the time-limited (at the time of writing again!) HadoopOnAzure.com clusters, a really big benefit is that I don’t lose the files when the cluster is released, as I would if they were stored on HDFS. Additional benefits to me include the ability to read and write to ASV and access those files immediately off the cluster in a variety of tools that I have gotten to know very well over the past few years, such as Cerebrata’s Cloud Storage Studio.

How to use ASV

It is exceptionally easy to configure your C# Map/Reduce tasks to use ASV, due to the way it has been designed. The approach is also equivalent and compatible with any other Streaming, Native or ancillary job creation technique.

To use ASV in a C# application, first configure ASV in the HDInsight portal as above, and then configure the HadoopJob to access that resource through the InputPath and OutputFolder locations.

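    public override HadoopJobConfiguration Configure(ExecutorContext context)
    {
        try
        {
            HadoopJobConfiguration config = new HadoopJobConfiguration();
            // Read every file under /inputpath/ in the configured ASV container.
            config.InputPath = "asv://container/inputpath/";
            // Append a timestamp so each run writes to a fresh folder; "HH" is the
            // 24-hour clock ("hh" would repeat folder names between AM and PM runs).
            config.OutputFolder = "asv://container/outputpath" + DateTime.Now.ToString("yyyyMMddHHmmss");
            return config;
        }
        catch (Exception ex)
        {
            // Log the failure; the caller receives null instead of a configuration.
            Console.WriteLine(ex.ToString());
        }
        return null;
    }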


As you can see, this configuration commands Hadoop to load from the configured ASV:// storage account container “container”, find any files in the /inputpath/ folder location and include them all as input files. You can also specify an individual file.

Similarly, OutputFolder specifies the location to which the HDInsight job should write the output of the Map/Reduce job.

As a nice additional benefit, using ASV adds counters for the number of bytes read and written by the Hadoop system through ASV, allowing you to track your usage of the data in your Storage Account.

All very simple stuff, but amazingly powerful.

See Andy’s earlier post of part 3 below.

Joe Giardino and Serdar Ozler of the Windows Azure Storage Team posted Updated: Known Issues for Windows Azure Storage Client Library 2.0 for .NET and Windows Runtime to the Windows Azure Storage Team blog on 12/9/2012:

We recently released the 2.0 version of the Windows Azure Storage Client Library. This is our largest update to our .NET library to date which includes new features, broader platform compatibility, and revisions to address the great feedback you’ve given us over time. For more about this release see here. For information regarding breaking changes see here.

This Storage Client 2.0 release contains some known issues that are being addressed in the current and upcoming releases of the libraries and are detailed below. Some of these were reported by you, and we appreciate you bringing them to our attention!

Pending Issues

The following are the set of known issues that are pending fixes.

Some Azure SDK assemblies still reference Storage Client Library 1.7

- Description: SDK assemblies such as Microsoft.WindowsAzure.Diagnostics.dll and Microsoft.WindowsAzure.CloudDrive.dll still reference Storage Client Library version 1.7.

- Status: Will be resolved in a future release of the Windows Azure SDK

- Impacted Platforms: .NET

- Workaround: Please add references to both the old version (Microsoft.WindowsAzure.StorageClient.dll; version 1.7) and the new version (Microsoft.WindowsAzure.Storage.dll; version 2.0 or greater) of Storage Client Library in your project.

TableEntity does not support deserializing nullable values

- Description: TableEntity does not support nullable values such as int? or long? during deserialization. The example below illustrates this issue:

    class AgeEntity : TableEntity
    {
        public int? Age { get; set; }
    }
    …
    AgeEntity entity = new AgeEntity()
    {
        PartitionKey = "FirstName",
        RowKey = "LastName",
        Age = 25,
    };
    table.Execute(TableOperation.Insert(entity));
    …
    TableResult result = table.Execute(TableOperation.Retrieve<AgeEntity>("FirstName", "LastName"));
    entity = (AgeEntity)result.Result; // entity.Age will be null

- Status: Will be resolved in version 2.0.3

- Resolution: TableEntity will support deserializing nullable int, long, double, and bool values.

- Impacted Platforms: All

- Workaround: If this impacts you, then upgrade to 2.0.3 when it is available. If you are unable to upgrade, please use the legacy Table Service implementation as described in the Migration Guide.

Extra TableResults returned via ExecuteBatch when a retry occurs

- Description: When a batch execution fails and is subsequently retried, it is possible to receive extraneous TableResult objects from any failed attempt(s). The resulting behavior is that upon completion the correct N TableResults are located at the end of the IList<TableResult> that is returned, with the extraneous results listed at the start.

- Status: Will be resolved in version 2.0.3

- Resolution: The list returned back will only contain the results from the last retry.

- Impacted Platforms: All

- Workaround: If this impacts you, then upgrade to 2.0.3 when it is available. If you are unable to upgrade, please only check the last N TableResults in the list returned. As an alternative, you can also disable retries:

    client.RetryPolicy = new NoRetry();

GenerateFilterConditionForLong does not work for values larger than maximum 32-bit integer value

- Description: When doing a table query and filtering on a 64-bit integer property (long), values larger than maximum 32-bit integer value do not work correctly. The example below illustrates this issue:

    TableQuery query = new TableQuery().Where(
        TableQuery.GenerateFilterConditionForLong("LongValue", QueryComparisons.Equal, 1234567890123));
    List<DynamicTableEntity> results = table.ExecuteQuery(query).ToList(); // Will throw StorageException

- Status: Will be resolved in version 2.0.3

- Resolution: The required ‘L’ suffix will be correctly added to 64-bit integer values.

- Impacted Platforms: All

- Workaround: If this impacts you, then upgrade to 2.0.3 when it is available. If you are unable to upgrade, please convert the value to a string and then append the required ‘L’ suffix:

    TableQuery query = new TableQuery().Where(
        TableQuery.GenerateFilterCondition("LongValue", QueryComparisons.Equal, "1234567890123L"));
    List<DynamicTableEntity> results = table.ExecuteQuery(query).ToList();

CloudTable.EndListTablesSegmented method does not work correctly

- Description: Listing tables segment by segment using APM does not work, because CloudTable.EndListTablesSegmented method always throws an exception. The example below illustrates this issue:

    IAsyncResult ar = tableClient.BeginListTablesSegmented(null, null, null);
    TableResultSegment results = tableClient.EndListTablesSegmented(ar); // Will throw InvalidCastException

- Status: Will be resolved in version 2.0.3

- Resolution: EndListTablesSegmented will correctly return the result segment.

- Impacted Platforms: .NET

- Workaround: If this impacts you, then upgrade to 2.0.3 when it is available. If you are unable to upgrade, please use the synchronous method instead:

    TableResultSegment results = tableClient.ListTablesSegmented(null);

CloudQueue.BeginCreateIfNotExists and CloudQueue.BeginDeleteIfExists methods expect valid options argument

- Description: BeginCreateIfNotExists and BeginDeleteIfExists methods on a CloudQueue object do not work if the options argument is null. The example below illustrates this issue:

    queue.BeginCreateIfNotExists(null, null); // Will throw NullReferenceException
    queue.BeginCreateIfNotExists(null, null, null, null); // Will throw NullReferenceException

- Status: Will be resolved in version 2.0.3

- Resolution: Both methods will be updated to accept null as a valid argument.

- Impacted Platforms: .NET

- Workaround: If this impacts you, then upgrade to 2.0.3 when it is available. If you are unable to upgrade, please create a new QueueRequestOptions object and use it instead:

    queue.BeginCreateIfNotExists(new QueueRequestOptions(), null, null, null);

Metadata Correctness tests fail when submitting apps to the Windows Store

- Description: An application that references Storage Client Library will fail the Metadata Correctness test during Windows Store certification process.

- Status: Will be resolved in version 2.0.3

- Resolution: All non-sealed public classes will be either removed or sealed.

- Impacted Platforms: Windows Store Apps

- Workaround: Not available

Missing Queue constants

- Description: A few general purpose queue constants have not been exposed as public on CloudQueueMessage. Missing constants are shown below:

    public static long MaxMessageSize { get; }
    public static TimeSpan MaxTimeToLive { get; }
    public static int MaxNumberOfMessagesToPeek { get; }

- Status: Will be resolved in version 2.0.3

- Resolution: CloudQueueMessage constants will be made public.

- Impacted Platforms: Windows Store Apps

- Workaround: If this impacts you, then upgrade to 2.0.3 when it is available. If you are unable to upgrade, please use the values directly in your code. MaxMessageSize is 64KB, MaxTimeToLive is 7 days, and MaxNumberOfMessagesToPeek is 32.
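One way to apply this workaround without scattering literals through your code is to keep the three values in a small class of your own. The sketch below is an assumption about how you might organize that; `QueueLimits` and `ClampPeekCount` are hypothetical names, and the values are the ones quoted in the workaround above.

```csharp
using System;

// Hypothetical stand-in for the constants that CloudQueueMessage does not yet expose.
public static class QueueLimits
{
    // 64 KB, expressed in bytes.
    public const long MaxMessageSize = 64 * 1024;

    // Messages live for at most 7 days.
    public static readonly TimeSpan MaxTimeToLive = TimeSpan.FromDays(7);

    // At most 32 messages can be requested in a single peek.
    public const int MaxNumberOfMessagesToPeek = 32;

    // Clamps a requested peek count into the range 1..MaxNumberOfMessagesToPeek.
    public static int ClampPeekCount(int requested)
    {
        return Math.Max(1, Math.Min(requested, MaxNumberOfMessagesToPeek));
    }
}
```

Once you upgrade to a version that exposes the constants, the class can be deleted and its usages pointed at CloudQueueMessage instead.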

Service Client RetryPolicy does not support null

- Description: The Cloud[Blob|Queue|Table]Client.RetryPolicy does not support null.

- Status: Not resolved

- Impacted Platforms: All

- Workaround: If you wish to disable retries, please use:

    client.RetryPolicy = new NoRetry();

Windows Store Apps developed in JavaScript cannot use Table Service layer due to missing OData dependencies

- Description: Windows Store Apps developed in JavaScript are unable to load the dependent dlls to a referenced component (WinMD). Because the Table Storage API is dependent on Microsoft.Data.OData.WindowsStore.dll, invoking Table APIs will result in a FileNotFoundException at runtime.

- Status: Not Resolved. We are actively exploring options to bring Table Storage support to Windows Store Apps developed in JavaScript.

- Impacted Platforms: Windows Store Apps

- Workaround: Not available

Resolved Issues

The following are the set of known issues that have been fixed and released.

IAsyncResult object returned by asynchronous methods is not compatible with TaskFactory.FromAsync

- Description: Both the System.Threading.Tasks.TaskFactory and System.Threading.Tasks.TaskFactory<TResult> classes provide several overloads of the FromAsync and FromAsync<TResult> methods that let you encapsulate an APM Begin/End method pair in one Task instance or Task<TResult> instance. Unfortunately, the IAsyncResult implementation of Storage Client Library is not compatible with these methods, which leads to the End method being called twice. The effect of calling the End method multiple times with the same IAsyncResult is not defined. The example below illustrates this issue. The call will throw a SemaphoreFullException even if the actual operation succeeds:

    TableServiceContext context = client.GetTableServiceContext();
    // Your Table Service operations here
    await Task.Factory.FromAsync<DataServiceResponse>(
        context.BeginSaveChangesWithRetries,
        context.EndSaveChangesWithRetries,
        null); // Will Throw SemaphoreFullException

- Status: Resolved in version 2.0.2

- Resolution: IAsyncResult.CompletedSynchronously flag now reports the status correctly and thus FromAsync methods can work with the Storage Client Library APM methods.

- Impacted Platforms: .NET

- Workaround: If you are unable to upgrade to 2.0.2, please use APM methods directly without passing them to FromAsync methods.
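If you still want a Task while using the APM methods directly, one option is to wrap the Begin/End pair yourself so that End is called exactly once, from the callback. The sketch below is an assumption about how that could look; `ApmTask.Run` is a hypothetical helper, it is demonstrated with a plain MemoryStream rather than the storage library, and it has not been verified against the affected 2.0.0 and 2.0.1 builds.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

// Hypothetical helper for illustration only; not part of the Storage Client Library.
public static class ApmTask
{
    // Wraps a Begin/End pair in a Task, calling End exactly once from the callback.
    public static Task<TResult> Run<TResult>(
        Func<AsyncCallback, object, IAsyncResult> begin,
        Func<IAsyncResult, TResult> end)
    {
        var tcs = new TaskCompletionSource<TResult>();
        try
        {
            begin(ar =>
            {
                try { tcs.TrySetResult(end(ar)); }
                catch (Exception ex) { tcs.TrySetException(ex); }
            }, null);
        }
        catch (Exception ex)
        {
            // Begin itself failed before any callback could run.
            tcs.TrySetException(ex);
        }
        return tcs.Task;
    }

    public static void Main()
    {
        // A BCL stream stands in for a storage Begin/End pair here.
        var stream = new MemoryStream(new byte[] { 1, 2, 3 });
        var buffer = new byte[8];
        Task<int> read = Run<int>(
            (callback, state) => stream.BeginRead(buffer, 0, buffer.Length, callback, state),
            stream.EndRead);
        Console.WriteLine(read.Result); // number of bytes read
    }
}
```

The wrapper never looks at IAsyncResult.CompletedSynchronously, which is the flag the resolution above says was reported incorrectly.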

Public DynamicTableEntity constructors use DateTime even though the Timestamp property is of type DateTimeOffset

- Description: DynamicTableEntity class defines the Timestamp property as a DateTimeOffset value. However, its public constructors use DateTime.MinValue to initialize the property. Therefore, if the machine’s time zone is ahead of UTC (such as UTC+01), DynamicTableEntity references cannot be instantiated. The example below illustrates this issue if the time zone is ahead of UTC:

    CloudTable table = client.GetTableReference(tableName);
    TableQuery query = new TableQuery();
    IEnumerable<DynamicTableEntity> result = table.ExecuteQuery(query); // Will Throw StorageException

- Status: Resolved in version 2.0.2

- Resolution: IAsyncResult.CompletedSynchronously flag now reports the status correctly and thus FromAsync methods can work with the Storage Client Library APM methods.

- Impacted Platforms: All

- Workaround: Not available

BeginSaveChangesWithRetries ignores SaveChangesOptions argument

- Description: TableServiceContext class provides several overloads of the BeginSaveChangesWithRetries method that let you begin an asynchronous operation to save changes. One of the overloads ignores the “options” argument. The example below illustrates this issue:

    IAsyncResult ar = tableContext.BeginSaveChangesWithRetries(
        SaveChangesOptions.Batch, null, null);
    …
    DataServiceResponse response = tableContext.EndSaveChangesWithRetries(ar);
    int statusCode = response.BatchStatusCode; // Will return -1

- Status: Resolved in version 2.0.2

- Resolution: All overloads of BeginSaveChangesWithRetries now make use of the “options” argument correctly.

- Impacted Platforms: .NET

- Workaround: If you are unable to upgrade to 2.0.2, please use a different overload of BeginSaveChangesWithRetries method:

    IAsyncResult ar = tableContext.BeginSaveChangesWithRetries(
        SaveChangesOptions.Batch, null, null, null, null);
    …
    DataServiceResponse response = tableContext.EndSaveChangesWithRetries(ar);
    int statusCode = response.BatchStatusCode;

CloudStorageAccount.Parse cannot parse DevelopmentStorageAccount strings if a proxy is not specified

- Description: CloudStorageAccount.Parse() and TryParse() do not support DevelopmentStorageAccount strings if a proxy is not specified. CloudStorageAccount.DevelopmentStorageAccount.ToString() will serialize to the string: “UseDevelopmentStorage=true” which illustrates this particular issue. Passing this string into CloudStorageAccount.Parse() or TryParse() will throw a KeyNotFoundException.

CloudStorageAccount myAccount = CloudStorageAccount.Parse("UseDevelopmentStorage=true"); // Will Throw KeyNotFoundException

- Status: Resolved in version 2.0.1

- Resolution: CloudStorageAccount can now parse this string correctly.

- Impacted Platforms: All

- Workaround: If you are unable to upgrade to 2.0.1, you can use:

    CloudStorageAccount myAccount = CloudStorageAccount.DevelopmentStorageAccount;

StorageErrorCodeStrings class is missing

- Description: Static StorageErrorCodeStrings class that contains common error codes across Blob, Table, and Queue services is missing. The example below shows some of the missing error codes:

    public const string OperationTimedOut = "OperationTimedOut";
    public const string ResourceNotFound = "ResourceNotFound";

- Status: Resolved in version 2.0.1

- Resolution: StorageErrorCodeStrings class is added.

- Impacted Platforms: All

- Workaround: If you are unable to upgrade to 2.0.1, you can directly use the error strings listed in the Status and Error Codes article.

ICloudBlob interface does not contain Shared Access Signature creation methods

- Description: Even though both CloudBlockBlob and CloudPageBlob have methods to create a shared access signature, the common ICloudBlob interface does not contain them which prevents a generic method to create a SAS token for any blob irrespective of its type. Missing methods are shown below:

    string GetSharedAccessSignature(SharedAccessBlobPolicy policy);
    string GetSharedAccessSignature(SharedAccessBlobPolicy policy, string groupPolicyIdentifier);

- Status: Resolved in version 2.0.1

- Resolution: GetSharedAccessSignature methods are added to ICloudBlob interface.

- Impacted Platforms: All

- Workaround: If you are unable to upgrade to 2.0.1, please cast your object to CloudBlockBlob or CloudPageBlob depending on the blob’s type and then call GetSharedAccessSignature:

    CloudBlockBlob blockBlob = (CloudBlockBlob)blob;
    string signature = blockBlob.GetSharedAccessSignature(policy);

Summary

We continue to work hard on delivering a first class development experience for the .NET and Windows 8 developer communities to work with Windows Azure Storage. We have actively embraced both NuGet and GitHub as release mechanisms for our Windows Azure Storage Client libraries. As such, we will continue to release updates to address any issues as they arise in a timely fashion. As always, your feedback is welcome and appreciated.

Andy Cross (@andybareweb) continued his series with Bright HDInsight Part 3: Using custom Counters in HDInsight on 12/9/2012:

Telemetry is life! I repeat this mantra over and over; with any system, but especially with remote systems, the state of the system is difficult or impossible to ascertain without metrics on its internal processing. Computer systems operate outside the scope of human comprehension: they’re too quick, complex and transient for us to ever be able to confidently know their state while they are running. The best we can do is emit metrics and provide a way to view these metrics to judge a general sense of progress.

Metrics in HDInsight

The metrics I will present here relate to HDInsight and the Hadoop Streaming API, presented in C#. It is possible to access the same counters from other programmatic interfaces to HDInsight as they are a core Hadoop feature.

These metrics shouldn’t be used for data gathering, as that is not their purpose. You should use them to track system state and not system result. However, the line between the two is thin.

For instance, if we know there are 100 million rows for data pertaining to “France” and 100 million rows for data pertaining to “UK” and these are across multiple files and partitions then we might want a metric which reports the progress across these two data aspects. In practice however, this type of scenario (progress through a job) is better measured without reverting to measuring data directly.

Typically we also want the ability to group similar metrics together in a category for easier reporting, and I shall show an example of that.

Scenario

The example data used here consists of randomly generated strings with a data identifier, an action type and a country of origin. This slim three-field file will be mapped to select the country of origin as the key and reduced to count everything by country of origin.

823708 rz=q UK

806439 rz=q UK

473709 sf=21 France

713282 wt.p=n UK

356149 sf=1 UK

595722 wt.p=n France

238589 sf=1 France

478163 sf=21 France

971029 rz=q France

…… 10000 rows ……

Mapper

This example shows how to add the counters to the Map class of the Map/Reduce job.

    using System;
    using Microsoft.Hadoop.MapReduce;

    namespace Elastacloud.Hadoop.SampleDataMapReduceJob
    {
        public class SampleMapper : MapperBase
        {
            public override void Map(string inputLine, MapperContext context)
            {
                try
                {
                    // Uncategorized counter: one increment per input line.
                    context.IncrementCounter("Line Processed");
                    var segments = inputLine.Split("\t".ToCharArray(), StringSplitOptions.RemoveEmptyEntries);
                    // Categorized counter: one counter per country in the "country" category.
                    context.IncrementCounter("country", segments[2], 1);
                    context.EmitKeyValue(segments[2], inputLine);
                    // Uncategorized counter with a custom increment.
                    context.IncrementCounter("Text chars processed", inputLine.Length);
                }
                catch (IndexOutOfRangeException ex)
                {
                    // We still allow other exceptions to throw and set an error state on the task, but this
                    // exception type we are confident is due to the input not having at least 3 separated segments.
                    context.IncrementCounter("Logged recoverable error", "Input Format Error", 1);
                    context.Log(string.Format("Input Format Error on line {0} in {1} - {2} was {3}", inputLine, context.InputFilename,
                        context.InputPartitionId, ex.ToString()));
                }
            }
        }
    }


Here we can see that we are using the parameter “context” to interact with the underlying Hadoop runtime. context.IncrementCounter is the key operation we are calling, using its underlying stderr output to write out in the format:

    "reporter:counter:{0},{1},{2}", category, counterName, increment

We are using this method’s overloads in different ways: to increment a counter simply by name, to increment a counter by name and category, and to increment a counter by a custom amount.

We are free to add these counters to any point of the map/reduce program in order that we can gain telemetry of our job as it progresses.
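Because the counter update is just a line written to stderr, the mechanism can be sketched without the SDK at all. The `StreamingCounters` class below is a hypothetical illustration of the line format quoted above, not the SDK’s implementation; using "Custom" as the category for uncategorized counters is an assumption based on where such counters appear in the job tracker.

```csharp
using System;
using System.IO;

// Illustrative only: mimics what a counter increment boils down to in Hadoop Streaming.
public static class StreamingCounters
{
    // Formats one counter update line for the given category, counter name and increment.
    public static string Format(string category, string counterName, long increment)
    {
        return string.Format("reporter:counter:{0},{1},{2}", category, counterName, increment);
    }

    // Writes the counter update to the supplied writer (stderr in a streaming task).
    public static void Increment(TextWriter stderr, string category, string counterName, long increment)
    {
        stderr.WriteLine(Format(category, counterName, increment));
    }

    public static void Main()
    {
        Increment(Console.Error, "country", "UK", 1);
        Increment(Console.Error, "Custom", "Text chars processed", 14);
    }
}
```

Since the fields are comma separated, it seems wise to keep commas out of category and counter names.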

Viewing the counters

In order to view the counters, visit the Hadoop Job Tracking portal. The Hadoop console output contains the tracking URL, including the job id, for your Streaming job. For me it was http://10.174.120.28:50030/jobdetails.jsp?jobid=job_201212091755_0001, reported in the output as:

12/12/09 18:01:37 INFO streaming.StreamJob: Tracking URL: http://10.174.120.28:50030/jobdetails.jsp?jobid=job_201212091755_0001
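If you capture the console output programmatically, the tracking URL and job id can be pulled out of that line with a regular expression. This is a minimal sketch that assumes the line looks like the one above; `TrackingUrl` is a hypothetical helper and the pattern has only been checked against this sample.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper for illustration only.
public static class TrackingUrl
{
    // Captures the whole URL and the job id that follows "jobid=".
    private static readonly Regex Pattern =
        new Regex(@"Tracking URL:\s*(?<url>\S+jobid=(?<job>job_\d+_\d+))");

    // Returns true and fills url/jobId when the line contains a tracking URL.
    public static bool TryParse(string consoleLine, out string url, out string jobId)
    {
        Match m = Pattern.Match(consoleLine ?? string.Empty);
        url = m.Success ? m.Groups["url"].Value : null;
        jobId = m.Success ? m.Groups["job"].Value : null;
        return m.Success;
    }
}
```

For the sample line, `TryParse` returns the full jobdetails.jsp address as the URL and `job_201212091755_0001` as the job id.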

Since I used two counters that were not given a category, they appear in the “Custom” category:

Another of my counters was given a custom category, and thus it appears in a separate section of the counters table:

In my next posts, I will focus on error handling, status and more data centric operations.


Windows Azure SQL Database, Federations and Reporting, Mobile Services

Adam Hoffman posted Developing iOS Applications with a cloud back end to the US DPE Azure Connection blog on 12/11/2012:


Are you building iOS apps and need to build up a back end to store some data? Also interested in push notifications using the Apple Push Notification Service (APNS)? Then this should strike you as super cool news – the already awesome Windows Azure Mobile Services has been updated (again – it’s really growing fast) to provide full support for iOS.

WAMS already had an iOS SDK, but it was more limited initially. You could take advantage of the great data services (via the OData endpoints), but it didn’t originally support Push Notifications. Well, now it does. Push notifications for iOS now work very similarly to push notifications for Windows 8 and Windows Phone 8, except they use the Apple Push Notifications Service (APNS) instead of the Windows Push Notification Service.

Ready to get started and get a serious cloud back end for your iOS applications? Read the docs about configuring push notifications for your iOS applications here: https://www.windowsazure.com/en-us/develop/mobile/tutorials/get-started-with-push-ios/

Don’t forget – you can try the whole Azure stack for free with a 90 day trial by going to http://aka.ms/Azure90DayTrial . Even after the trial is done, you can have up to ten (10!) Windows Azure Mobile Services for free. Just convert your trial to a “pay as you go” account, and you’re up and running. If you end up using the data services in WAMS, then you’ll need to pay a tiny bit for the underlying Windows Azure SQL Database (currently just $5 per month for the first 100 MB, see below for more details) but it’s still an unbelievable bargain for the service you get.

You can get more details at ScottGu’s blog here: http://weblogs.asp.net/scottgu/archive/2012/12/04/ios-support-with-windows-azure-mobile-services-now-with-push-notifications.aspx.

Current (as of 12/11/2012) Windows Azure SQL Database Pricing

The question now is “When do we get full support for Android phones and tablets?”

Kirill Gavrylyuk (@kirillg_msft) described Best practices for using push notifications, SMS and email in your mobile app in a 12/10/2012 post to the Windows Azure Team blog:

After we delivered iOS client libraries for Mobile Services in October, many of you asked when support for iOS push notifications would follow. As Scott Guthrie announced Wednesday, Mobile Services now supports sending iOS push notifications! We’ve also improved our iOS Client API by adding an even easier login method; configuring user authentication now only takes a single line of Objective-C code.

With this update, Mobile Services now fully supports Windows Store, Windows Phone 8, and iOS apps.

Since we now support three means of communicating with your customers—push notifications, SMS via Twilio, and email via SendGrid—across three platforms, this post will first cover the basics of iOS push and then turn to general guidelines on when and why to use each.

The Basics of Configuring iOS Push Notifications

Scott’s blog has all the details you need to get started with push, but to summarize the two big takeaways:

- Mobile Services makes it incredibly easy to send a push notification.

- Mobile Services gives you the tools you need to handle feedback for expired devices and channels right in the portal. Periodically pruning your database of invalid tokens allows you to save money by not sending notifications to uninstalled apps.

Josh Twist, another member of the Mobile Services team, demos these new additions in the following video:

There are also two fantastic tutorials available in the mobile dev center. The first provides a walkthrough of the basics of configuring your iOS app for push and sending push notifications. The second details how to use a table to store APNS tokens that can then be used to send push notifications to an app’s users.

You can also review our reference docs for complete details on how to use the APNS object to send your push notifications.

When to use Push vs Email vs SMS

Just as important as understanding how to use these push notifications is understanding when to use a push notification versus an SMS versus an email. The easy answer is that it depends on the app.

I’m going to share, however, some general guidelines we’ve adopted as best practices as well as share some examples that illustrate exceptions to the rule. I’d love to hear some of your own best practices in the comments, as well as discuss when it makes sense to depart from these or your own general guidelines.

Push Notifications: the Canonical Default

Push notifications were created specifically with smartphones and apps in mind. They are the most powerful and effective channel for engaging your customers.

Be careful, though, because with great power comes great responsibility. Most users will give you some leeway at the outset and allow push notifications. They will withdraw that consent just as quickly, however.

The most common danger zones?

- Apps with a social component that send alert notifications every time a user’s Facebook friend signs up, without limiting notifications to only those friends that the user actually invited or with whom they engage regularly.

- News apps that add to the badge count every time there’s a new story published in a category, even if you are not following the specific topic.

- Selling any type of notification to a third party is obviously a fast way to have your users disable push notifications.

Toast notification best practices:

- Keep it brief and simple. Know that you’re working with a very small space and try to avoid ellipses. Make sure the content of a toast notification is valuable in and of itself.

The exception here is when the content of the notification before the ellipses is compelling enough for your user to open and interact in the app. If the content of the toast is a truly irresistible teaser, then by all means use the ellipses. Facebook posts are a great example of this exception.

- Use this with a clear call to a very specific action or non-action. Even with something as simple as a to-do app, “You have a new task ‘Buy a gallon of milk’” sounds infinitely better than “A new item ‘Buy a gallon of milk’ has been added.”

Badge count best practices:

- The action required to engage with and reset the badge count should take three or fewer gestures (taps, swipes, etc.).

- Limit the number of things a badge notification can mean. Take a turn-based game with a chat component. If the badge count increases with both game updates and a new message arriving in the in-game chat, your customer doesn’t have a clear understanding of the ask.

- The badge count should never hit double digits. Once it does, the customer is definitely ignoring the app and likely to disable push notifications right away, if not uninstall outright.

The Mail app is definitely the exception here. It’s not uncommon for users to not only tolerate a badge count of more than ten, but a badge count of more than one hundred. This is likely due to the fact that users have had decades to get comfortable and familiar with high unread mail counts. Unless your app has a similarly long history, it's probably best to avoid letting the badge count run too high.

SMS: Must Read Messages

There is an almost infinite number of ways to use SMS with your app. Our friends at Twilio have an army of Doers that have proved just how versatile a simple SMS can be. Let's narrow our discussion, however, to SMS only as a means of customer communication. SMS should be reserved for must-read items. Since it can’t be turned off, the risk of uninstall is much higher too. Be sure that it’s worth it.

When to use Email: the Basics and the Important Stuff

Just like with SMS, there are tons of fantastic and non-standard use cases for Email in the modern app. Developers are using SendGrid to do that every day. When limiting the discussion again to just a means of customer communication, however, one scenario shines through. Use email for the content you want your users to be able to go back and find days, weeks, or months later.

Does that include confirmation on successful authentication? Absolutely. Does that include a shot of their highest-scoring final board in a Scrabble-type game? Absolutely. Does that include requests to invite friends to the app? Maybe not.

Uber does a great job with this. Instead of sending a toast notification that you have a new receipt, Uber emails a receipt and ride summary to their customer. This makes sense since it’s content that customers will likely revisit.

If you would like to get started with Mobile Services and are new to Windows Azure, sign up for the Windows Azure 90-day Free Trial and receive 10 free Mobile Services running on shared instances.

Visit the Windows Azure Mobile Development Center to learn more about building apps using Mobile Services.

If you have feedback (or just want to show off your app), shoot an email to the Windows Azure Mobile Services team at mobileservices@microsoft.com.

As always, if you have questions, please ask them in our forum. If there’s a feature you’d like to see, please visit our Mobile Services uservoice page and let us know.

You can also find me on Twitter @kirillg_msft

David Chappell wrote a Data Management: Choosing the Right Technology white paper for Microsoft’s Windows Azure site and the Windows Azure SQL Database Team posted it on or about 12/10/2012:

Windows Azure provides several different ways to store and manage data. This diversity lets users of the platform address a variety of different problems. Yet diversity implies choice: Which data management option is right for a particular situation?

This short overview is intended to help you answer that question. After a quick summary of the data management technologies in Windows Azure, we’ll walk through several different scenarios, describing which of these technologies is most appropriate in each case. The goal is to make it easier to choose the right options for the problem you face.

Windows Azure Data Management: A Summary

As discussed in Windows Azure Data Management and Business Analytics, Windows Azure has four main options for data management:

SQL Database, a managed service for relational data. This option fits in the category known as Platform as a Service (PaaS), which means that Microsoft takes care of the mechanics—you don’t need to manage the database software or the hardware it runs on.

SQL Server in a VM, with SQL Server running in a virtual machine created using Windows Azure Virtual Machines. This approach lets you use all of SQL Server, including features that aren’t provided by SQL Database, but it also requires you to take on more of the management work for the database server and the VM it runs in.

Blob Storage, which stores collections of unstructured bytes. Blobs can handle large chunks of unstructured data, such as videos, and they can also be used to store smaller data items.

Table Storage, providing a NoSQL key/value store. Tables provide fast, simple access to large amounts of loosely structured data. Don’t be confused, however: Despite its name, Table Storage doesn’t offer relational tables.

All four options let you store and access data, but they differ in a number of ways. Figure 1 summarizes the differences that most often drive the decision about which data management technology to use.

Figure 1: Different Windows Azure data management options have different characteristics.

Despite their differences, all of these data management options provide built-in replication. For example, all data stored in SQL Database, Blob Storage, and Table Storage is replicated synchronously on three different machines within the same Windows Azure datacenter. Data in Blob Storage and Table Storage can also be replicated asynchronously to another datacenter in the same region (North America, Europe, or Asia). This geo-replication provides protection against an entire datacenter becoming inaccessible, since a recent copy of the data remains available in another nearby location. And because databases used with SQL Server running in a VM are actually stored in blobs, this data management option also benefits from built-in replication.

It's also worth noting that third parties provide other data management options running on Windows Azure. For example, ClearDB provides a managed MySQL service, while Cloudant provides a managed version of its NoSQL service. It’s also possible to run other relational and NoSQL database management systems in the Windows and Linux VMs that Windows Azure provides.

Choosing Data Management Technologies: Examples

Choosing the right Windows Azure data management option requires understanding how your application will work with data in the cloud. What follows walks through some common scenarios, evaluating the Windows Azure data management options for each one.

Creating an Enterprise or Departmental Application for a Single Organization

Suppose your organization chooses to build its next enterprise or departmental application on Windows Azure. You might do this to save money—maybe you don’t want to buy and maintain more hardware—or to avoid complex procurement processes or to share data more effectively via the cloud. Whatever the reason, you need to decide which Windows Azure data management options your application should use.

If you were building this application on Windows Server, your first choice for storage would likely be SQL Server. The relational model is familiar, which means that your developers and administrators already understand it. Relational data can also be accessed by many reporting, analysis, and integration tools. These benefits still apply to relational data in the cloud, and so the first data management option you should consider for this new Windows Azure application is SQL Database. Most of the existing tools that work with SQL Server data will also work with SQL Database data, and writing code that uses this data in the cloud is much like writing code to access on-premises SQL Server data.

There are some situations where you might not want to use SQL Database, however, or at least, not only SQL Database. If your new application needs to work with lots of binary data, such as displaying videos, it makes sense to store this in blobs—they’re much cheaper—perhaps putting pointers to these blobs in SQL Database. If the data your application will use is very large, this can also raise concerns. SQL Database’s limit of 150 gigabytes per database isn’t stingy, but some applications might need more.
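The "blobs plus pointers" arrangement described above can be sketched in a few lines. This is only an illustration under stated assumptions: `sqlite3` stands in for SQL Database, a plain dictionary stands in for Blob Storage, and the names `blob_store`, `upload_blob`, `add_video` and `load_video` are hypothetical, not part of any Windows Azure SDK.

```python
import sqlite3

# Stand-in for Blob Storage (assumption): maps a blob name to its bytes.
blob_store = {}

def upload_blob(name: str, data: bytes) -> str:
    """Store the bytes under a name and return that name as the 'pointer'."""
    blob_store[name] = data
    return name

# sqlite3 stands in for SQL Database so the sketch runs anywhere.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE videos (id INTEGER PRIMARY KEY, title TEXT, blob_name TEXT)")

def add_video(title: str, data: bytes) -> int:
    """Keep the large payload in the blob store and only its pointer in the table."""
    blob_name = upload_blob(f"videos/{title}.mp4", data)
    cur = db.execute(
        "INSERT INTO videos (title, blob_name) VALUES (?, ?)", (title, blob_name)
    )
    db.commit()
    return cur.lastrowid

def load_video(video_id: int) -> bytes:
    """Look up the pointer relationally, then fetch the bytes from the blob store."""
    row = db.execute(
        "SELECT blob_name FROM videos WHERE id = ?", (video_id,)
    ).fetchone()
    if row is None:
        raise KeyError(video_id)
    return blob_store[row[0]]

video_id = add_video("intro", b"\x00\x01fake-video-bytes")
```

The relational row stays small and queryable, while the bulky binary data lives in the cheaper store.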

One option for working with data that exceeds this limit is to use SQL Database’s federation option. Doing this lets your database be significantly larger, but it also changes how the application works with that data in some ways. A federated database contains some number of federation members, each holding its own data, and an application needs to determine which member a query should target.
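To make the routing step concrete, here is a minimal sketch of how an application might pick the federation member for a given key. It assumes members are split by ranges of a numeric key; the bounds, the member names and the `member_for` helper are all hypothetical and do not represent the SQL Database API.

```python
from bisect import bisect_right

# Hypothetical layout: each member covers keys from its lower bound (inclusive)
# up to the next member's lower bound (exclusive).
MEMBER_LOWER_BOUNDS = [0, 1000, 5000]
MEMBER_NAMES = ["member_0", "member_1", "member_2"]

def member_for(customer_id: int) -> str:
    """Return the name of the member whose key range contains customer_id."""
    if customer_id < MEMBER_LOWER_BOUNDS[0]:
        raise ValueError(f"no member covers key {customer_id}")
    # bisect_right finds the first bound greater than the key; the member
    # that owns the key is the one just before that position.
    index = bisect_right(MEMBER_LOWER_BOUNDS, customer_id) - 1
    return MEMBER_NAMES[index]
```

Every query that touches customer data would first call something like `member_for` and then run against that member only, which is the change in application behavior the paragraph above refers to.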

Another choice for working with large amounts of data is to use Windows Azure Table Storage. A table can be much, much bigger than a non-federated database in SQL Database, and tables are also significantly cheaper. Yet using tables requires your developers and administrators to understand a new data model, one with many fewer capabilities than SQL Database offers. Choosing tables also means giving up the majority of data reporting, analysis, and integration tools, since most of these are designed to be used with relational data.

Still, enterprise and departmental applications primarily target an organization’s own employees. Given this limited number of users, a database size limit of 150 gigabytes may well be enough. If it is, sticking with SQL Database and the relational world you know is likely to be the best approach.

One more option worth considering is SQL Server running in a Windows Azure VM. This entails more work than using SQL Database, especially if you need high availability, as well as more ongoing management. It does let you use the full capabilities of SQL Server, however, and it also allows creating larger databases without using federation. In some cases, these benefits might be worth the extra work this option brings.

Creating a SaaS Application for Many Organizations

Using relational storage is probably the best choice for a typical business application used by a single enterprise. But what if you’re a software vendor creating a Software as a Service (SaaS) application that will be used by many enterprises? Which cloud storage option is best in this situation?

The place to start is still relational storage. Its familiarity and broad tool support bring real advantages. With SQL Database, for example, a small SaaS application might be able to use just one database to store data for all of its customers. Because SaaS applications are typically multi-tenant, however, which means that they support multiple customer organizations with a single application instance, it’s common to create a separate database for each of these customers. This keeps data separated, which can help soothe customer security fears, and it also lets each customer store up to 150 gigabytes. If this isn’t enough, an application can use SQL Database’s federation option to spread the application’s data across multiple databases. And as with enterprise applications, using SQL Server in a VM can sometimes be a better alternative, such as when a SaaS application needs the full functionality of the product or when you need a larger relational database without using federation.
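The database-per-customer approach might look like the following sketch. In-memory `sqlite3` databases stand in for separate SQL Database instances, and the helper names (`db_for`, `add_order`, `list_orders`) and the tenant names are made up for illustration.

```python
import sqlite3

# One database per tenant, created lazily (in-memory stand-ins, an assumption).
_tenant_dbs = {}

def db_for(tenant: str) -> sqlite3.Connection:
    """Return the tenant's own database, creating it on first use."""
    if tenant not in _tenant_dbs:
        conn = sqlite3.connect(":memory:")
        conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT)")
        _tenant_dbs[tenant] = conn
    return _tenant_dbs[tenant]

def add_order(tenant: str, item: str) -> None:
    """Write only to the database that belongs to this tenant."""
    conn = db_for(tenant)
    conn.execute("INSERT INTO orders (item) VALUES (?)", (item,))
    conn.commit()

def list_orders(tenant: str) -> list:
    """Read only from the database that belongs to this tenant."""
    rows = db_for(tenant).execute("SELECT item FROM orders ORDER BY id")
    return [row[0] for row in rows]

add_order("contoso", "widget")
add_order("fabrikam", "gadget")
```

Because each tenant's rows live in a physically separate database, a query for one customer cannot accidentally return another customer's data.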

Yet Windows Azure Table Storage can also be attractive for a SaaS application. Its low cost improves the SaaS vendor’s margins, and its scalability allows storing huge amounts of customer data. Tables are also very fast to access, which helps the application’s performance. If the vendor’s customers expect traditional reports, however, especially if they wish to run these reports themselves against their data, tables are likely to be problematic. Similarly, if the way the SaaS application accesses data is complicated, the simple key/value approach implemented by Table Storage might not be sufficient. Because an application can only access table data by specifying the key for an entity, there’s no way to query against property values, use a secondary index, or perform a join. And given the limitations of transactions in Table Storage—they’re only possible with entities stored in the same partition—using them for business applications can create challenges for developers.
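A toy model can make these constraints easier to picture. The class below is a plain in-memory sketch, not the Table Storage client library: entities are addressed by a (partition key, row key) pair, and a batch is rejected unless every entity shares one partition. `TableSketch` and its methods are hypothetical names.

```python
class TableSketch:
    """In-memory stand-in for a key/value table addressed by two-part keys."""

    def __init__(self):
        self._entities = {}  # (partition_key, row_key) -> dict of properties

    def insert(self, partition_key, row_key, properties):
        self._entities[(partition_key, row_key)] = dict(properties)

    def get(self, partition_key, row_key):
        """Point lookup by full key, the access path the store is built for."""
        return self._entities[(partition_key, row_key)]

    def batch_insert(self, entities):
        """Insert several entities together, allowed only within one partition."""
        entities = list(entities)
        partitions = {pk for pk, _, _ in entities}
        if len(partitions) > 1:
            # Checked before any write, so a rejected batch changes nothing.
            raise ValueError("a batch may only touch one partition")
        for pk, rk, props in entities:
            self.insert(pk, rk, props)
```

Choosing the partition key therefore decides which entities can ever be updated together, which is one reason the data model needs to be designed around the application's access patterns.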

Table Storage also brings one more potential drawback for SaaS applications. Especially for smaller software vendors, it’s common to have some customers demand an on-premises version of the application—they don’t (yet) want SaaS. If the application uses relational storage on Windows Azure, moving both code and data to Windows Server and SQL Server can be straightforward. If the SaaS application uses Table Storage, however, this gets harder, since there’s no on-premises version of tables. When using the same code and data in the cloud and on-premises is important, Table Storage can present a roadblock.

These realities suggest that if relational storage can provide sufficient scale and if its costs are manageable, it’s probably the best choice for a SaaS application. Either SQL Server in a VM or SQL Database can be the right choice, depending on the required database size and the exact functionality you need. One more issue to be aware of is that because SQL Database is a shared service, its performance depends on the load created by all of its users at any given time. This means, for example, that how long a stored procedure takes to execute can vary from one day to the next. A SaaS application using SQL Database must be written to allow for this performance variability.
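One common way to allow for this variability is to treat an occasional slow or throttled call as transient and retry it with increasing delays. The sketch below assumes such failures surface as an exception; `TransientError` and `call_with_retry` are hypothetical names, and the attempt count and delays are arbitrary.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical marker for a timeout or throttling error worth retrying."""

def call_with_retry(operation, attempts=4, base_delay=0.1, sleep=time.sleep):
    """Run operation(), retrying transient failures with exponential backoff."""
    for attempt in range(attempts):
        try:
            return operation()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the failure
            # Double the delay on each attempt and add jitter so that many
            # clients do not retry at the same instant.
            sleep(base_delay * (2 ** attempt) * (1 + random.random()))
```

The `sleep` parameter is injectable only so the behavior can be exercised without real waiting; production code would normally leave it at the default.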

One final point: An application certainly isn’t obligated to use just one of the platform’s storage options. For example, a SaaS application might use SQL Database for customer data, Table Storage for its own configuration information, and Blob Storage to hold uploaded video. Understanding the strengths and weaknesses of each option is essential to choosing the right technology.

Creating a Scalable Consumer Application

Suppose you’d like to create a new consumer application in the cloud, such as a social networking app or a multi-player game. Your goal is to be wildly successful, attracting millions of users, and so the application must be very scalable. Which Windows Azure storage option should you pick?

Relational storage, whether with SQL Database or SQL Server in a VM, is less attractive here than it is for the more business-oriented scenarios we’ve looked at so far. In this scenario, scalability and low cost are likely to matter more than developer familiarity and the ability to use standard reporting tools. Given this, Table Storage should probably be the first option you consider. Going this route means that your application must be designed around the simple kinds of data access this key/value store allows, but if this is possible, tables offer fast data access and great scalability at a low price.

Depending on the kind of data your application works with, using Blob Storage might also make sense. And relational storage can still play a role, such as storing smaller amounts of data that you want to analyze using traditional business intelligence (BI) tools. In large consumer applications today, however, it’s increasingly common to use an inexpensive, highly scalable NoSQL technology for transactional data, such as Table Storage, then use the open-source technology Hadoop for analyzing that data. Because it provides parallel processing of unstructured data, Hadoop can be a good choice for performing analysis on large amounts of non-relational data. Especially when that data is already in the cloud, as it is with Windows Azure tables, running Hadoop in the cloud to analyze that data can be an attractive choice. Microsoft provides a Hadoop service as part of Windows Azure, and so this approach is a viable option for Windows Azure applications.

Creating a Big Data Application

By definition, a big data application works with lots of data. Cloud platforms can be an attractive choice in this situation, since the application can dynamically request the resources it needs to process this data. With Windows Azure, for instance, an application can create many VMs to process data in parallel, then shut them down when they’re no longer needed. This can be significantly cheaper than buying and maintaining an on-premises server cluster.

As with other kinds of software, however, the creators of big data applications face a choice: What’s the best storage option? The answer depends on what the application does with that data. Here are two examples:

An application that renders frames for digital effects in a movie might store the raw data in Windows Azure Blob Storage. It can then create a separate VM for each frame to be rendered and move the data needed for that frame into the VM’s local storage (also referred to as instance storage). This puts the data very close to the application—it’s in the same VM—which is important for this kind of data-intensive work. VM local storage isn’t persistent, however. If the VM goes down, the work will be lost, and so the results will need to be written back to a blob. Still, using the VM’s own storage during the rendering process will likely make the application run significantly faster, since it allows local access to the data rather than the network requests required to access blobs.

As mentioned earlier, Microsoft provides a Hadoop service on Windows Azure for working with big data. The service is called HDInsight, and a Windows Azure application that uses it processes data in parallel with multiple instances, each working on its own chunk of the data. A Hadoop application might read data directly from blobs or copy it into a VM’s local storage for faster access.
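The rendering example above follows a copy-in, process, copy-out pattern, which can be sketched as follows. A dictionary stands in for Blob Storage and a temporary directory stands in for VM local storage; `render` is a placeholder for the real work, and all names are hypothetical.

```python
import tempfile
from pathlib import Path

# Stand-in for Blob Storage (assumption): blob name -> bytes.
blob_store = {"frames/0001.raw": b"raw-frame-data"}

def render(raw: bytes) -> bytes:
    """Placeholder for the real, expensive rendering step."""
    return raw.upper()

def render_frame(blob_name: str) -> str:
    """Copy a blob to local storage, process it there, and persist the result."""
    with tempfile.TemporaryDirectory() as local:  # stand-in for VM local storage
        local_path = Path(local) / "input.raw"
        local_path.write_bytes(blob_store[blob_name])  # 1. copy blob to local disk
        result = render(local_path.read_bytes())       # 2. work on the local copy
    # 3. Local storage is gone at this point, so the result must go back to a blob.
    result_name = blob_name.replace(".raw", ".rendered")
    blob_store[result_name] = result
    return result_name
```

The temporary directory disappearing at the end of the `with` block mirrors the point made above: anything left only in local storage is lost when the VM goes away.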

One more concern with running big data applications in the cloud is getting the data into the cloud in the first place. If the data is already there—maybe it’s created by a consumer SaaS application using Table Storage—this isn’t a problem. If the big data is on premises, however, moving it into Blob Storage can take a while. In cases like this, it’s important to plan for the time and expense that moving the data will entail.

Creating a Data Streaming Application

An application that does data streaming, such as an online movie service, needs to store lots of binary data. It also needs to deliver this data effectively to its users, perhaps even to users around the world. Windows Azure can address both of these requirements.

To store the data, a data streaming application can use Blob Storage. Blobs can be big, and they’re inexpensive to use. To deliver the data in a blob, an application can use the Windows Azure Content Delivery Network (CDN). By caching data closer to its users, the CDN speeds up delivery of that data when it’s accessed by multiple customers. The Windows Azure CDN also supports smooth streaming to help provide a better video experience for diverse clients, including Windows 8, iOS, Android, and Windows Phone.

Moving a Windows Server Application to Windows Azure

It sometimes makes sense to move code and data from the on-premises Windows Server world to Windows Azure. Suppose an enterprise elects to move an existing application into the cloud to save money or free up datacenter space or for some other reason. Or imagine that a software vendor decides to create a SaaS version of an existing application, reusing as much of the application’s code as possible. In situations like these, what’s the best choice for data management?

The answer depends on what the application already uses. If the goal is to move to the cloud with as little change as possible, then using the Windows Azure options that most closely mirror the on-premises world is the obvious choice. For example, if the application uses SQL Server, the simplest option will likely be to use SQL Server running in a Windows Azure VM. This lets the application use whatever aspects of SQL Server it needs, since the same product is now running in the cloud. Alternatively, it might make sense to modify the application a bit to use SQL Database. Like SQL Server, SQL Database can be accessed using Entity Framework, ADO.NET, JDBC, PHP, and other options, which allows moving the application without extensive changes to its data access code. Similarly, an application that relies on the Windows file system can use the NTFS file system provided by Windows Azure drives to move code with as little change as possible.

Creating an On-Premises Application that Uses Cloud Data

Not all applications that use cloud data need to run in the cloud. It can sometimes be useful to store data in Windows Azure, then access that data from software running somewhere else. Here are a few examples:

An application running on mobile phones can rely on a large database stored in the cloud. For instance, a mapping application could require a database that’s too large to store on the phone, as might a mobile application that does language translation.

A business might store data in the cloud to help share the information across many users, such as its divisions or a dealer network. Think about a company that provides a large parts catalog, for example, that’s updated frequently. Storing this data in the cloud makes it easier to share and to update.

The creators of a new departmental application might choose to store the application’s data in the cloud rather than buy, install, and administer an on-premises database management system. Taking this approach can be faster and cheaper in quite a few situations.

Users that wish to access the same data from many devices, such as digital music files, might use applications that access a single copy of that data stored in the cloud.

This kind of application is sometimes referred to as a hybrid: It spans the cloud and on-premises worlds. Which storage option is best for these scenarios depends on the kind of data the application is using. Large amounts of geographic data might be kept in Blob Storage, for example, while business data, such as a parts catalog or data used by a departmental application, might use SQL Database. As always, the right choice varies with how the data is structured and how it will be used.

Storing Backup Data in the Cloud

One more popular way to use Windows Azure storage is for backups. Cloud storage is cheap, it’s offsite in secure data centers, and it’s replicated, all attractive attributes for data backup.

The most common approach for doing this is to use Blob Storage. Because blobs can be automatically copied to another datacenter in the same region, they provide built-in geo-replication. If desired, users are free to encrypt backups as well before copying them into the cloud. Another option is to use StorSimple, an on-premises hardware appliance that automatically moves data in and out of Windows Azure Blob Storage based on how that data is accessed. Especially for organizations new to the public cloud, using blobs for backup data can be a good way to get started.

Final Thoughts

Applications use many different kinds of data, and they work with it in different ways. Because of this, Windows Azure provides several options for data management. Using these options effectively requires understanding each one and the scenarios for which it makes the most sense.

Each of the platform’s data management options—SQL Database, SQL Server in a VM, Blob Storage, and Table Storage—has strengths and weaknesses. Once you understand what your needs are, you can decide which tradeoffs to make. Is cost most important? Does massive scalability matter, or is the ability to do complex processing on the data more important? Do you need to use standard business intelligence tools on the data? Will you be doing analysis with big data? Whatever your requirements, the goal of Windows Azure is to provide a data management technology that’s an effective solution for your problem.

About the Author

David Chappell is Principal of Chappell & Associates (www.davidchappell.com) in San Francisco, California. Through his speaking, writing, and consulting, he helps people around the world understand, use, and make better decisions about new technologies.

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

<Return to section navigation list>

Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

• Bruno Terkaly (@brunoterkaly) and Ricardo Villalobos (@ricvilla) wrote Windows Azure Service Bus: Messaging Patterns Using Sessions for MSDN Magazine’s December 2012 issue. From the introduction:

In one of our previous articles, we discussed the importance of using messaging patterns in the cloud in order to decouple solutions and promote easy-to-scale software architectures. (See “Comparing Windows Azure Queues and Service Bus Queues” at msdn.microsoft.com/magazine/jj159884.)

Queuing is one of these messaging patterns, and the Windows Azure platform offers two main options to implement this approach: Queue storage services and Service Bus Queues, both of which cover scenarios where multiple consumers compete to receive and process each of the messages in a queue. This is the canonical model for supporting variable workloads in the cloud, where receivers can be dynamically added or removed based on the size of the queue, offering a load balancing/failover mechanism for the back end (see Figure 1).


Figure 1 Queuing Messaging Pattern: Each Message Is Consumed by a Single Receiver

Even though the queuing messaging pattern is a great solution for simple decoupling, there are situations where each receiver requires its own copy of the message, with the option of discarding some messages based on specific rules. A good example of this type of scenario is shown in Figure 2, which illustrates a common challenge that retail companies face when sending information to multiple branches, such as the latest products catalog or an updated price list.


Figure 2 Publisher/Subscriber Messaging Pattern: Each Message Can Be Consumed More Than Once

For these situations, the publisher/subscriber pattern is a better fit, where the receivers simply express an interest in one or more message categories, connecting to an independent subscription that contains a copy of the message stream. The Windows Azure Service Bus implements the publisher/subscriber messaging pattern through topics and subscriptions, which greatly enhances the ability to control how messages are distributed, based on independent rules and filters. In this article, we’ll explain how to apply these Windows Azure Service Bus capabilities using a simple real-life scenario, assuming the following requirements:

- Products should be received in order, based on the catalog page.

- Some of the stores don’t carry specific catalog categories, and products in these categories should be filtered out for each store.

- New catalog information shouldn’t be applied to the store system until all the messages have arrived.

All the code samples for this article were created with Visual Studio 2012, using C# as the programming language. You’ll also need the Windows Azure SDK version 1.8 for .NET developers and access to a Windows Azure subscription. …

Read more.

Riccardo Becker (@riccardobecker) continued his Service Bus series with Chaining with Service Bus part 2 on 12/11/2012:

In the previous post I demonstrated how to send messages to a topic and how to add subscriptions receiving messages based on a region. This post will demonstrate how to scale out your system by using chaining and enabling messages to be forwarded from the region subscription to a specific country subscription. The previous post described how messages sent to a topic are picked up by subscriptions based on a property of the message (in this case a region like USA or EMEA).

This post will demonstrate how to further distribute messages by another property called Country. To enable this we will use a technique called auto-forwarding. Consider the following:

Different systems insert purchase orders in our system. The purchase order is reflected by the following simplified class:

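    using System.Runtime.Serialization;

    // Simplified purchase order; the data contract attributes make it serializable.
    [DataContract]
    public class PurchaseOrder
    {
        [DataMember]
        public string Region;

        [DataMember]
        public decimal Amount;

        [DataMember]
        public string Article;

        [DataMember]
        public string Country;
    }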

Auto-forwarding enables a subscription to be "chained" to another topic or queue. The scenario we are realizing here is to have messages that are added to our EMEA subscription forwarded to topics based on country, e.g. Holland and Germany. Messages that are forwarded are automatically removed from the subscription (in our case, the EMEA subscription) and placed in the designated topic. See the code snippet below.

    //FORWARDING
    // Create a "Holland" subscription that forwards matching messages to the "Holland" topic.
    SubscriptionDescription description = new SubscriptionDescription(poTopic.Path, "Holland");
    description.ForwardTo = "Holland";
    SqlFilter filter = new SqlFilter("Country = 'Holland'");
    if (!namespaceClient.SubscriptionExists(poTopic.Path, "Holland"))
    {
        namespaceClient.CreateSubscription(description, filter);
    }
    //FORWARDING

This piece of code enables messages sent to the EMEA topic (as before) that carry another property called Country with the value 'Holland' to be forwarded to a specific topic called 'Holland' for further processing.

By using forwarding you can scale out your load on the initial topic and distribute messages based on some property. In the figure below you can see that no messages appear anymore in the EMEA topic but they all show up in the Holland Topic which is accomplished by the code snippet above.
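For readers who want to see the chaining behavior without a Service Bus namespace, here is a small stand-alone simulation. It is plain Python rather than the Service Bus SDK, and the `Subscription` class, the `topics` dictionary and the `send` function are hypothetical names chosen for this sketch. A subscription whose filter matches either keeps the message or, if it has a forwarding target, passes it on to that topic.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Subscription:
    name: str
    matches: Callable[[dict], bool]    # stands in for a SQL filter
    forward_to: Optional[str] = None   # stands in for the ForwardTo setting
    messages: list = field(default_factory=list)

# The EMEA topic has a filtered, forwarding subscription; the Holland topic
# has a catch-all subscription that actually holds the messages.
topics = {
    "EMEA": [Subscription("Holland", lambda m: m["Country"] == "Holland",
                          forward_to="Holland")],
    "Holland": [Subscription("all", lambda m: True)],
}

def send(topic: str, message: dict) -> None:
    """Deliver a message to every matching subscription on the topic."""
    for sub in topics[topic]:
        if not sub.matches(message):
            continue
        if sub.forward_to is not None:
            # Forwarded messages do not stay in the forwarding subscription.
            send(sub.forward_to, message)
        else:
            sub.messages.append(message)

send("EMEA", {"Region": "EMEA", "Country": "Holland", "Amount": 100})
send("EMEA", {"Region": "EMEA", "Country": "Germany", "Amount": 50})
```

After both sends, the forwarding subscription on EMEA is empty and the Holland order sits in the Holland topic's subscription; the Germany order matches no subscription in this toy setup and is simply not delivered.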

Alex Simons reported Windows Azure Active Directory Supports JSON Web Tokens in a 12/10/2012 post to the Windows Azure Team blog:

Many of you may not have realized that the developer preview of Windows Azure Active Directory (AD) supports the JSON Web Token (JWT). We just haven’t talked about it much in this blog or in our developer documentation. JWT is a compact token format that is especially apt for REST based development. Defined by the OAuth Working Group at the IETF, JWT is also one of the basic components of OpenID Connect. JWT use is growing, and products supporting the format are increasingly common in the industry. Windows Azure Active Directory already issues JWTs for a number of important scenarios, including securing calls to the Graph API, Office 365 integration scenarios and protection of 3rd party REST services.

Today I’m excited to let you know that we’ve just released the JSON Web Token Handler for the Microsoft .NET Framework 4.5, a .NET 4.5 assembly (distributed via a NuGet package) that makes it easy for .NET developers to use the JWT capabilities of Windows Azure AD. The JWT handler provides a collection of classes you can use for deserializing, validating, manipulating, generating, issuing and serializing JWTs. .NET developers will be able to use the JWT format both as part of existing workloads (such as Web single sign on) and within new scenarios (such as the REST based solutions made possible by the Windows Azure Authentication Library, also in preview) with the same ease they’d experience when using token formats supported out of the box, such as SAML2.0.

For more details on how to use the handler, including some concrete examples, please refer to Vittorio’s post here.

The JSON Web Token Handler provides a new capability for our platform which was previously obscured within the preview of the Windows Azure Authentication Library. We heard your feedback, and as part of our refactoring we made available that functionality as an individual component in its own right. Moving forward, you can expect other artifacts to build on it to deliver higher-level functionality such as support for protocols taking advantage of JWT.

I look forward to your comments, questions and feedback below and in the forums.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

• See Craig Kitterman posted Il-Sung Lee’s Regulatory Compliance Considerations for SQL Server Running in Windows Azure Virtual Machine post in the Cloud Security, Compliance and Governance section below.

Brent Stineman (@BrentCodeMonkey) described Windows Azure Web Sites – Quotas, Scaling, and Pricing in a 12/11/2012 post:

It hasn’t been easy making the transition from a consultant to someone who, for lack of a better explanation, is a cross between pre-sales and technical support. But I’ve come to love two aspects of this job. First off, I get to talk to many different people and I’m constantly learning as much from their questions as I’m helping teach them about the platform. Secondly, when not talking with partners about the platform, I’m digging up answers to questions. This gives me the perfect excuse… er… reason to dig into some of the features and learn more about them. I had to do this as a consultant, but the issue there is that since I’d be asked to do this by paying clients, they would own the results. But now that I do this work on behalf of Microsoft, it’s much easier to share these findings with the community (providing it doesn’t violate non-disclosure agreements of course). And since this blog has always been a way for me to document things so I can refer back to them, it’s a perfect opportunity to start sharing this research.

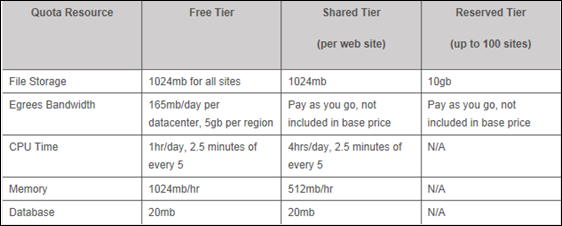

Today’s topic is Windows Azure Web Sites quotas and pricing. Currently we (Microsoft) don’t IMHO do a very good job of making this information really clear. Some of it is available over on the pricing page, but for the rest you’ve got to dig it out of blog posts or from the Web Site dashboard’s usage overview details in the management portal. So I decided it was time to consolidate a few things.

Usage Quotas

A key aspect of the use of any service is to understand the limits. And nowhere is this truer than the often complex/confusing world of cloud computing services. But when someone slaps a “free” in front of a service, we tend to forget this. Well here I am to remind you. Windows Azure Web Sites has several dials that we need to be aware of when selecting the level/scale of Windows Azure Web Sites (Free, Shared, and Reserved).

File System/Storage: This is the total amount of space you have to store your site and content. There’s no timeframe on this one. If you reach the quota limit, you simply can’t write any new content to the system.

Egress Bandwidth: This is the amount of content that is served up by your web site. If you exceed this quota, your site will be temporarily suspended (no further requests) until the quota timeframe (1 day) resets.

CPU Time: This is the amount of time that is spent processing requests for your web site. Like the bandwidth quota, if you exceed the quota, your site will be temporarily suspended until the quota timeframe resets. There are two quota timeframes, a 5 minute limit, and a daily limit.

Memory: This is the amount of RAM that the site can use at one shot (there’s no timeframe). If you exceed the quota, a long running or abusive process will be terminated. And if this occurs often enough, your site may be suspended. Which is pretty good encouragement to rethink that process.

Database: There’s also up to 20 MB of storage for your related database (MySQL or Windows Azure SQL Database currently). I can’t find any details but I’m hoping/guessing this will work much like the File Storage quota.

Now for the real meat of this. What are the quotas for each tier? For that I’ve created the following table:

Now there’s an important but slightly confusing “but” to the free tier. At that level, you get a daily egress bandwidth quota per sub-region (aka datacenter), but there’s also a regional (US, EU, Asia) limit (5 GB). The regional limit is the sum total of all web sites you’re hosting and is shared with any other services. So if you’re also using Blob storage to serve up images from your site, that will count against your “free” 5 GB. When you move to the shared/reserved tier, there’s no limit, but you pay for every gigabyte that leaves the datacenter.

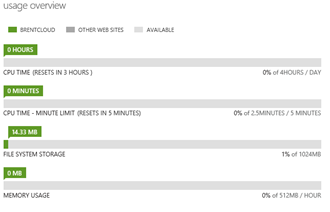

Monitoring Usage

Now the next logical question is how you monitor the resources your sites are using. Fortunately, the most recent update to the Windows Azure portal has a dashboard that provides a quick glance at how much you’re using of each quota. This displays just below the usage grid on the “Dashboard” panel of the web site.

At a glance you can tell where you are on any quota, which also makes it convenient for you to predict your usage. Run some common scenarios, see what they do to your numbers, and extrapolate from there.

You can also configure the site for diagnostics (again via the management portal). This allows you to take the various performance indicators and save them to Windows Azure Storage. From there you can download the files and set up automated monitors to alert you to problems. Just keep in mind that turning this on will consume resources and incur additional charges.

Fortunately, there’s a pretty good article we’ve published on Monitoring Windows Azure Web Sites.

Scaling & Pricing

Now that we’ve covered your usage quotas and how to monitor your usage, it’s important to understand how we can scale the capacity of our web sites and the impact this has on pricing.

Scaling our web site is pretty straightforward. We can go from the Free tier, to Shared, to Reserved using the management portal. Select the web site, click on the level, and then save to “scale” your site. But before you do that, you will want to understand the pricing impacts.

At the Free tier, we get up to 10 web sites. When we move a web site to shared, we will pay $0.02 per hour for each web site (at general availability). Now at this point, I can mix and match free (10 per sub-region/datacenter) and shared (100 per sub-region/datacenter) web sites. But things get a bit trickier when we move to reserved. A reserved web site is a dedicated virtual machine for your needs. When you move a web site within a region to the reserved tier, all web sites in that same sub-region/datacenter (up to the limit of 100) will also be moved to reserved.

Now this might seem a bit confusing until you realize that at the reserved tier, you’re paying for the virtual machine and not an individual web site. So it makes sense to have all your sites hosted on that instance, maximizing your investment. Furthermore, if you are running enough shared tier web sites, it may be more cost effective to run them as reserved.

Back to scaling: if you scale back down to the free or shared tiers, the other sites will revert to their old states. For example, let’s assume you have two web sites, one at the free tier and one at the shared tier. I scale the free web site up to reserved and now both sites are reserved. If I scale the original free tier site back to free, the other site returns to shared. If I opted to scale the original shared site back to shared or free, then the original free site returns to its previous free tier. So it’s important when dealing with reserved sites that you remember what tier they were at previously.
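The revert behavior is easier to follow as a small simulation. The Python sketch below is only a model inferred from the description above, not how the platform is implemented; the `SubRegion` class and the site names are hypothetical.

```python
class SubRegion:
    """Models the tier of each web site in one sub-region (datacenter)."""

    def __init__(self, tiers):
        self.tiers = dict(tiers)  # current tier per site
        self.previous = None      # tiers remembered while everything is reserved

    def scale(self, site, tier):
        if tier == "reserved":
            # Moving one site to reserved drags every site along with it.
            if self.previous is None:
                self.previous = dict(self.tiers)
            for name in self.tiers:
                self.tiers[name] = "reserved"
        else:
            # Leaving reserved: the other sites fall back to their old tiers,
            # and the site being scaled takes the tier that was asked for.
            if self.previous is not None:
                self.tiers = self.previous
                self.previous = None
            self.tiers[site] = tier


region = SubRegion({"blog": "free", "shop": "shared"})
region.scale("blog", "reserved")  # both sites are now reserved
region.scale("blog", "free")      # "shop" returns to shared
```

Scaling "shop" down instead of "blog" would likewise return "blog" to free, which matches the second case in the example.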

The tiers are not our only option for scaling our web sites. We also have a slider labelled instance count. When running at the free or shared tiers, this slider will change the number of processing threads that are servicing the web site, allowing us between 1 and 3 threads. Of course, if we increase the threads, there’s a greater risk of hitting our CPU usage quota. But this adjustment could come in real handy if we’re experiencing a short-term spike in traffic. Running at the reserved tier, the slider increases the number of virtual machine instances we run (and subsequently our cost).

Also at the reserved tier, we can increase the size of our virtual machine. By default, our reserved instance will be a “Small”, giving us a single CPU core and 1.75 GB of memory at a cost of $0.12/hr. We can increase the size to “Medium” and even “Large”, with each size increase doubling our resources and the price per hour ($0.24 and $0.48 respectively). This cost will be per virtual machine instance, so if I have opted to run 3 instances, take my cost per hour for the size and multiply it by 3.
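The arithmetic can be sketched in a few lines of Python using only the hourly prices quoted in this post ($0.02 per shared site; $0.12, $0.24 and $0.48 per reserved instance). The function names are hypothetical, bandwidth charges are ignored, and amounts are kept in whole cents to avoid floating-point rounding.

```python
SHARED_CENTS_PER_SITE_HOUR = 2  # $0.02/hr per shared web site
RESERVED_CENTS_PER_INSTANCE_HOUR = {"small": 12, "medium": 24, "large": 48}


def shared_cost_cents(site_count):
    """Hourly cost of running site_count web sites at the shared tier."""
    return site_count * SHARED_CENTS_PER_SITE_HOUR


def reserved_cost_cents(size, instances=1):
    """Hourly cost of the reserved tier: price for the size times instance count."""
    return RESERVED_CENTS_PER_INSTANCE_HOUR[size] * instances


def break_even_sites(size, instances=1):
    """Smallest number of shared sites whose cost reaches the reserved cost."""
    reserved = reserved_cost_cents(size, instances)
    return -(-reserved // SHARED_CENTS_PER_SITE_HOUR)  # ceiling division


three_medium = reserved_cost_cents("medium", 3)  # 3 instances x 24 cents
sites_needed = break_even_sites("small")         # shared sites matching one Small
print(f"3 Medium instances: ${three_medium / 100:.2f}/hr")
print(f"Shared sites that cost as much as one Small instance: {sites_needed}")
```

On these numbers, six shared sites cost the same per hour as a single Small reserved instance, which is the point at which consolidating them onto reserved starts to pay off.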

So what’s next?

This pretty much hits the limits of what we can do with scaling web sites. But fortunately we’re running on a platform that’s built for scale. So it’s just a hop, skip, and jump from Web Sites to Windows Azure Cloud Services (Platform as a Service) or Windows Azure Virtual Machines (Infrastructure as a service). But that’s an article for another day.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Capt. Jeff Davis, US Navy reported NORAD Tracks Santa with the Help of Partners (Windows Azure and Bing Maps) in a 12/11/2012 guest post to TechNet’s Official Microsoft Blog:

Editor’s Note: The following is a guest post from Capt. Jeff Davis, U.S. Navy and Director, NORAD and U.S. Northern Command Public Affairs. For more than 50 years, NORAD has helped children around the world track Santa during his Christmas journey, and this year Microsoft is partnering with NORAD to make following the big red sleigh easier than ever. The Santa Tracker tool is built on the Microsoft Windows Azure cloud computing platform and Bing Maps, and anxious kids can even track Kris Kringle on Windows Phone and Windows 8 apps.

It’s hard to believe it all started with a typo.

A program renowned the world over – one that brings in thousands of volunteers, prominent figures such as the First Lady of the United States, and one that has been going on for more than five decades – all started as a misprint.

That error ran in a local Colorado Springs newspaper back in 1955 after a local department store printed an advertisement with an incorrect phone number that children could use to “call Santa.” Except that someone goofed. Or someone mistook a three for an eight. Maybe elves broke into the newspaper and changed the number. We’ll never know.

But somehow, the number in the advertisement changed, and instead of reaching the “Santa” on call for the local department store, it rang at the desk of the Crew Commander on duty at the Continental Air Defense Command Operations Center, the organization that would one day become the North American Aerospace Defense Command, or “NORAD.”

And when the commander on duty, Col. Harry Shoup, first picked up the phone and heard kids asking for Santa, he could have told them they had a wrong number.

But he didn’t.

Instead, the kind-hearted colonel asked his crew to play along and find Santa’s location. Just like that, NORAD was in the Santa-tracking business.

Colonel Shoup probably had no way of knowing what he had started. Fast forward 57 years, and NORAD is still tracking Santa, and with the help of technology and our generous contributors – including Microsoft, Analytical Graphics Inc., Verizon, Visionbox and more than 50 others – the ability for people around the world to follow Santa’s journey has grown in ways no one could have imagined back in 1955.

This year, nearly 25 million people around the world are expected to follow Santa’s journey in real-time on the Web, on their mobile devices, by e-mail and by phone. This combination of new and old technologies is essential to helping NORAD keep up with the incredible demand for Santa tracking that grows each year.

To put the program into perspective, last year the NORAD Tracks Santa Operations Center volunteers in Colorado Springs received more than 102,000 calls, 7,721 e-mails and reached nearly 20 million people in more than 220 countries around the world through the www.noradsanta.org website. With the help of a worldwide network of partners, military and civilian volunteers, and thanks to the special friendship between the U.S. and Canada, NORAD will be able to reach even more people this year.

Starting at 12 a.m. MST on Dec. 24, website visitors can watch Santa make the preparations for his flight. Then, at 4:00 a.m. MST (6:00 a.m. EST), trackers worldwide can speak with a live phone operator to inquire as to Santa’s whereabouts by dialing the toll-free number 1-877-Hi-NORAD (1-877-446-6723) or by sending an e-mail to noradtrackssanta@outlook.com. NORAD’s “Santa Cams” will also stream videos as Santa makes his way over various locations throughout the world.

It’s a big job, and we can’t do it alone, but the holidays have always been a time for bringing people together. With the help of our industry partners and friends in Canada, the tradition that started as a mistake will live on again this year.

If you would like to track Santa, visit www.noradsanta.org or visit us on Facebook at http://www.facebook.com/noradsanta.

Bruno Terkaly (@brunoterkaly) described Leveraging Windows Azure and Twilio to support SMS in the Cloud in a 12/11/2012 post:

Introduction

Leveraging SMS messaging in a cloud context is a powerful capability. There are a wide variety of applications that can be built upon the techniques I illustrate. Imagine that you have a cloud service that responds to SMS messages. Here are some examples:

- Imagine you are presenting at a conference and you want to collect email addresses from the audience. This is the use case I will demonstrate.

- You could SMS the message "TIME, JAPAN" and the cloud would send back the answer

- The list goes on. Use your imagination.

- My solution leverages Twilio from .NET. My colleague and I found the Twilio guidance on doing this fairly challenging to implement. It took many hours of experimentation.
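As a rough illustration of the "TIME, JAPAN" idea, the Python sketch below shows only the command-handling step a cloud service might run on an incoming message body. It does not call Twilio or Windows Azure: how the text arrives and how the reply is sent are out of scope here, and the `handle_sms` function and its lookup table are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical lookup table. Japan uses a single zone at UTC+9 with no
# daylight saving time, so a fixed offset is enough for this example.
UTC_OFFSETS = {"JAPAN": timedelta(hours=9)}


def handle_sms(body, now_utc=None):
    """Return the reply text for an SMS body such as 'TIME, JAPAN'."""
    parts = [part.strip().upper() for part in body.split(",")]
    if len(parts) != 2 or parts[0] != "TIME":
        return "Unrecognized command"
    offset = UTC_OFFSETS.get(parts[1])
    if offset is None:
        return f"Unknown location: {parts[1]}"
    if now_utc is None:
        now_utc = datetime.now(timezone.utc)
    local = now_utc.astimezone(timezone(offset))
    return f"{local:%H:%M} in {parts[1].title()}"


reply = handle_sms("TIME, JAPAN")
```

Passing `now_utc` explicitly keeps the function easy to test without depending on the clock.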

Prerequisites

- Sign up for an Azure account

- You can do that here: http://bit.ly/azuretestdrive