Windows Azure and Cloud Computing Posts for 12/5/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access & Identity Control, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop and Media Services

Steve Martin (@stevemar_msft) posted Announcing Reduced Pricing for Windows Azure Storage on 12/5/2012:

Today we are happy to announce another price reduction for Windows Azure Storage by as much as 28%, effective on December 12. This follows our March 8, 2012 reduction of 12% furthering our commitment to best overall value in the industry.

Reducing prices is only part of the story; we’ve also added more value to our storage offerings in a number of ways. Our Geo Redundant Storage continues to lead the market in durability with more than 400 miles of separation between replicas. We recently announced deployment of a flat network for Windows Azure across all of our datacenters to provide very high bandwidth network connectivity for storage clients which significantly enhances scenarios like MapReduce, HPC, and others. We also announced increased scalability targets for Windows Azure Storage and continue to invest in improving bandwidth between compute and storage.

Our continued commitment to reduce costs and increase capabilities seems to be resonating with our customers, based on the tremendous growth we are seeing. We have over 4 trillion objects stored, an average of 270,000 requests processed per second, and a peak of 880,000 requests per second.

Windows Azure Storage accounts have geo-replication on by default to provide the greatest durability. Customers can turn geo-replication off to use what we call Locally Redundant Storage, which results in a discounted price relative to Geo Redundant. You can find more information on Geo Redundant Storage and Locally Redundant Storage here.

Here is the detailed information on new, reduced pricing for both Geo and Locally redundant storage (prices below are per GB per month):

In addition to low cost storage, we continue to offer a variety of options for developers to use Windows Azure for free or at significantly reduced prices. To name a few examples:

- We offer a free 90 day trial for new users

- We have great offers for MSDN customers, Microsoft partner network members and startups that provide free usage every month up to $300 per month in value

- We have monthly commitment plans that can save you up to 32% on everything you use on Windows Azure

Find out more about Windows Azure pricing and offers, and sign up to get started.

This price reduction to meet Amazon S3 and Google Compute Engine storage price reductions was expected.


<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

Scott Guthrie (@scottgu) described iOS Support with Windows Azure Mobile Services – now with Push Notifications in a 12/4/2012 post:

A few weeks ago I posted about a number of improvements to Windows Azure Mobile Services. One of these was the addition of an Objective-C client SDK that allows iOS developers to easily use Mobile Services for data and authentication. Today I'm excited to announce a number of improvements to our iOS SDK and, most significantly, our new support for Push Notifications via APNS (Apple Push Notification Services). This makes it incredibly easy to fire push notifications to your iOS users from Windows Azure Mobile Service scripts.

Push Notifications via APNS

We've provided two complete tutorials that take you step-by-step through the provisioning and setup process to enable your Windows Azure Mobile Service application with APNS (Apple Push Notification Services), including all of the steps required to configure your application for push in the Apple iOS provisioning portal:

- Getting started with Push Notifications - iOS

- Push notifications to users by using Mobile Services - iOS

Once you've configured your application in the Apple iOS provisioning portal and exported the APNS push certificate, it's just a matter of uploading that certificate to Mobile Services using the Windows Azure admin portal:

Clicking the “upload” within the “Push” tab of your Mobile Service allows you to browse your local file-system and locate/upload your exported certificate. As part of this you can also select whether you want to use the sandbox (dev) or production (prod) Apple service:

Now, the code to send a push notification to your clients from within a Windows Azure Mobile Service is as easy as the code below:

    push.apns.send(deviceToken, {
        alert: 'Toast: A new Mobile Services task.',
        sound: 'default'
    });

This will cause Windows Azure Mobile Services to connect to APNS (Apple Push Notification Service) and send a notification to the iOS device you specified via the deviceToken:

Check out our reference documentation for full details on how to use the new Windows Azure Mobile Services apns object to send your push notifications.
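As a rough illustration of how the call above might be wrapped, the sketch below builds the same payload inside a small helper and exercises it against a stand-in `push` object so it can run outside Mobile Services. The names `notifyNewTask` and `createFakePush` are hypothetical and exist only for this example; the only call taken from the post is `push.apns.send` with its `alert` and `sound` fields.

```javascript
// Hypothetical helper: builds the APNS payload shown above and hands it
// to whatever `push` object the script environment provides.
function notifyNewTask(push, deviceToken, taskText) {
    if (!deviceToken) {
        return false; // no device registered, nothing to send
    }
    push.apns.send(deviceToken, {
        alert: 'Toast: ' + taskText,
        sound: 'default'
    });
    return true;
}

// Stand-in for the Mobile Services `push` object (an assumption of this
// sketch) that records calls instead of contacting APNS.
function createFakePush() {
    var sent = [];
    return {
        sent: sent,
        apns: {
            send: function (token, payload) {
                sent.push({ token: token, payload: payload });
            }
        }
    };
}

var fakePush = createFakePush();
notifyNewTask(fakePush, 'abc123', 'A new Mobile Services task.');
console.log(fakePush.sent[0].payload.alert);
```

Passing the `push` object in as a parameter keeps the helper testable; inside a real server script the global `push` object would be supplied instead of the fake.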

Feedback Scripts

An important part of working with any PNS (Push Notification Service) is handling feedback for expired device tokens and channels. This typically happens when your application is uninstalled from a particular device and can no longer receive your notifications. With Windows Notification Services you get an instant response from the HTTP server. Apple’s Notification Services works in a slightly different way and provides an additional endpoint you can connect to and poll for a list of expired tokens.

As with all of the capabilities we integrate with Mobile Services, our goal is to allow developers to focus more on building their app and less on building infrastructure to support their ideas. Therefore we knew we had to provide a simple way for developers to integrate feedback from APNS on a regular basis.

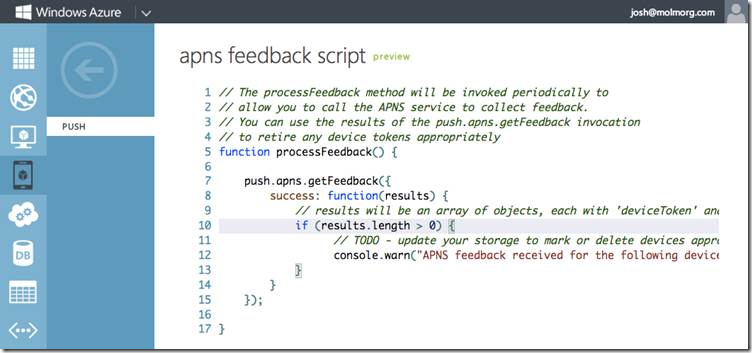

This week’s update now includes a new screen in the portal that allows you to optionally provide a script to process your APNS feedback – and it will be executed by Mobile Services on an ongoing basis:

This script is invoked periodically while your service is active. To poll the feedback endpoint you can simply call the apns object's getFeedback method from within this script:

    push.apns.getFeedback({
        success: function(results) {
            // results is an array of objects with deviceToken and time properties
        }
    });

This returns you a list of invalid tokens that can now be removed from your database.
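What "removing invalid tokens" amounts to can be sketched with plain arrays. The helper name `removeExpiredTokens` and the in-memory token list are assumptions for illustration; a real script would delete rows from whatever table stores the device registrations. The shape of each feedback entry (a `deviceToken` and a `time` property) follows the comment in the snippet above.

```javascript
// Hypothetical helper: given the tokens currently stored and the feedback
// results (objects with deviceToken and time properties), return only
// the tokens that were not reported as expired.
function removeExpiredTokens(storedTokens, feedbackResults) {
    var expired = {};
    feedbackResults.forEach(function (entry) {
        expired[entry.deviceToken] = true;
    });
    return storedTokens.filter(function (token) {
        return !Object.prototype.hasOwnProperty.call(expired, token);
    });
}

// Sample data invented for the sketch.
var stored = ['token-a', 'token-b', 'token-c'];
var feedback = [{ deviceToken: 'token-b', time: new Date() }];
var remaining = removeExpiredTokens(stored, feedback);
console.log(remaining.join(', '));
```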

iOS Client SDK improvements

Over the last month we've continued to work with a number of iOS advisors to make improvements to our Objective-C SDK. The SDK is being developed under an open source license (Apache 2.0) and is available on GitHub.

Many of the improvements are behind the scenes to improve performance and memory usage. However, one of the biggest improvements to our iOS Client API is the addition of an even easier login method. Below is the Objective-C code you can now write to invoke it:

    [client loginWithProvider:@"twitter"
                 onController:self
                     animated:YES
                   completion:^(MSUser *user, NSError *error) {
        // if no error, you are now logged in via twitter
    }];

This code will automatically present and dismiss our login view controller as a modal dialog on the specified controller. This does all the hard work for you and makes login via Twitter, Google, Facebook and Microsoft Account identities just a single line of code.

My colleague Josh just posted a short video demonstrating these new features which I'd recommend checking out:

Summary

The above features are all now live in production and are available to use immediately. If you don’t already have a Windows Azure account, you can sign-up for a free trial and start using Mobile Services today. Visit the Windows Azure Mobile Developer Center to learn more about how to build apps with Mobile Services.

Cihan Biyikoglu (@cihangirb) reported Doctrine (PHP) Now Supports Federations in Azure SQL Database... in a 12/3/2012 post:

If you are a Doctrine fan, you can now scale Doctrine on Azure SQL Database with Federations. Details are published here:

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

Carl Nolan (@carl_nolan) described Co-occurrence Approach to an Item Based Recommender Update in a 12/5/2012 post:

In a previous post I talked about a Co-occurrence Approach to an Item Based Recommender, that utilized the Math.Net Numerics library. Recently the Math.Net Numerics library was updated to version 2.3.0. With this version of the library I was able to update the code to more efficiently read the Sparse Matrix entries. As such I have updated the code to reflect these library changes:

http://code.msdn.microsoft.com/Co-occurrence-Approach-to-57027db7

The new Math.Net Numerics library changes were around the storage of the Vector and Matrix elements. As such, I was now able to access the storage directly and use the Compressed Sparse Row matrix format to more efficiently access the Sparse Matrix elements.

The original code that accessed the elements of the Sparse Matrix was a simple row/column traverse:

    let getQueue (products:int array) =
        // Define the priority queue and lookup table
        let queue = PriorityQueue(coMatrix.ColumnCount)
        let lookup = HashSet(products)

        // Add the items into a priority queue
        products
        |> Array.iter (fun item ->
            let itemIdx = item - offset
            if itemIdx >= 0 && itemIdx < coMatrix.ColumnCount then
                seq {
                    for idx = 0 to (coMatrix.ColumnCount - 1) do
                        let productIdx = idx + offset
                        let item = coMatrix.[itemIdx, idx]
                        if (not (lookup.Contains(productIdx))) && (item > 0.0) then
                            yield KeyValuePair(item, productIdx)
                }
                |> queue.Merge)

        // Return the queue
        queue

Now that one has access to the storage elements, I was able to more efficiently access just the sparse element values:

    products
    |> Array.iter (fun item ->
        let itemIdx = item - offset
        let sparse = coMatrix.Storage :?> SparseCompressedRowMatrixStorage<double>
        let last = sparse.RowPointers.Length - 1
        if itemIdx >= 0 && itemIdx <= last then
            let (startI, endI) =
                if itemIdx = last then
                    (sparse.RowPointers.[itemIdx], sparse.RowPointers.[itemIdx])
                else
                    (sparse.RowPointers.[itemIdx], sparse.RowPointers.[itemIdx + 1] - 1)
            seq {
                for idx = startI to endI do
                    let productIdx = sparse.ColumnIndices.[idx] + offset
                    let item = sparse.Values.[idx]
                    if (not (lookup.Contains(productIdx))) && (item > 0.0) then
                        yield KeyValuePair(item, productIdx)
            }
            |> queue.Merge)

    // Return the queue
    queue

In the new version of the code, the Values array provides access to the underlying non-empty values. The RowPointers array provides access to the value indexes where each row starts. Finally, the ColumnIndices array holds the column indices corresponding to the values.
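To make the roles of the three arrays concrete, here is a small stand-alone sketch of compressed sparse row traversal. It is not the Math.Net Numerics API: the object layout and the `rowEntries` helper are invented for illustration, and it assumes the common textbook layout in which the row-pointer array carries one extra trailing entry, which may differ from the library's storage class used above.

```javascript
// Textbook CSR layout (an assumption of this sketch): rowPointers has
// rowCount + 1 entries, so row r occupies the half-open index range
// [rowPointers[r], rowPointers[r + 1]) in values and columnIndices.
var csr = {
    values:        [2.0, 1.0, 3.0, 4.0],
    columnIndices: [0,   2,   1,   2],
    rowPointers:   [0, 2, 3, 4]
};
// Dense equivalent of the matrix above:
//   [2, 0, 1]
//   [0, 3, 0]
//   [0, 0, 4]

// Return the non-zero entries of one row as { column, value } pairs,
// touching only the stored elements rather than every column.
function rowEntries(matrix, row) {
    var entries = [];
    var start = matrix.rowPointers[row];
    var end = matrix.rowPointers[row + 1]; // exclusive upper bound
    for (var i = start; i < end; i++) {
        entries.push({
            column: matrix.columnIndices[i],
            value: matrix.values[i]
        });
    }
    return entries;
}

console.log(JSON.stringify(rowEntries(csr, 0)));
```

The saving over the row/column traverse is that the loop length equals the number of stored values in the row, not the column count.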

Other than this change all other aspects of the library’s usage were effectively unchanged; including the MapReduce code (postings can be found here), as this uses a collection of Vector types. I did however update the job submission scripts.

<Return to section navigation list>

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

Clemens Vasters (@clemensv) reminded readers to Subscribe! - Getting Started with Service Bus on 12/4/2012:

Over on my new Channel 9 blog I've started a series that will (hopefully) help novices get started developing applications that leverage Windows Azure Service Bus (and, in coming episodes, also Service Bus for Windows Server).

The first two episodes are up:

- Getting Started with Service Bus. Part 1: The Portal

- Getting Started with Service Bus. Part 2: .NET SDK and Visual Studio

There's much more to come, and the best way to get at it as it comes out is to subscribe to the RSS feed or bookmark the landing page.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Sandrino di Mattia described Securing access to your Windows Azure Virtual Machines in a 12/5/2012 post:

A few days ago Alan Smith (Windows Azure MVP) started a discussion about the “Virtual Machine hacking” thread on the MSDN forum and how we could protect our Virtual Machines. Let’s take a look at our options for reducing the attack surface of a Windows VM (some options can also be applied to Linux VMs).

The problem

When you create a new Windows Server Virtual Machine from the gallery you’ll see that you can’t change the username (defaults to Administrator). This means anyone who could access your Virtual Machine already knows your username is Administrator.

After your Virtual Machine has been created you’ll also see that the VM already has 1 endpoint defined:

Port 3389 allows you to connect to a machine using the Remote Desktop Protocol (RDP) and opening this endpoint means anyone can try to connect to your Virtual Machine. If you do a quick search on

So if you leave this open for a while there’s a high chance that you’ll see this in the Event Log of the VM:

Change the public port

A good start would be to change the public port:

This way people will have a harder time guessing what the port is for and you’ll probably already secure your VM from all those kiddie scripters. But the port is still open, meaning anyone who does a full portscan on each IP in the Windows Azure IP Address range could find this open endpoint. With the required tools they’ll also be able to see that this port is for RDP.

Change the administrator username

Ok so we kinda applied security through obscurity. What if we could make it harder for those tools by renaming the default Administrator user? This is very easy to do with PowerShell:

This script will rename the Administrator user to a generated random username (with some allowed special characters). Make sure you also have a complex password and the bruteforce tools will have a hard time guessing the username/password combination.

I noticed a few issues after renaming the Administrator account (like IE not working), and simply rebooting the Virtual Machine fixed the issues.

Improving security for the public port

This still leaves us with 1 issue: anyone connected to the internet could connect to our public port. But this can easily be changed using the Windows Firewall (in Control Panel > System And Security). You’ll see that the Windows Firewall contains 2 rules for RDP:

By double clicking these rules you can specify an IP address range which can access these ports:

Add your static IP or your IP range(s) if you don’t have a static IP. If you press OK or Apply the firewall immediately applies these changes, so be careful and make sure you don’t lock yourself out.
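Conceptually, a scope restriction like this is a range test on the source address. The sketch below shows that test for IPv4 only. It is not how Windows Firewall is implemented, the function names are hypothetical, and the addresses come from ranges reserved for documentation.

```javascript
// Convert a dotted-quad IPv4 address to a number between 0 and 2^32 - 1.
function ipToNumber(ip) {
    var parts = ip.split('.');
    if (parts.length !== 4) {
        throw new Error('Not an IPv4 address: ' + ip);
    }
    return parts.reduce(function (acc, part) {
        var octet = Number(part);
        if (!/^\d+$/.test(part) || octet > 255) {
            throw new Error('Bad octet in address: ' + ip);
        }
        return acc * 256 + octet;
    }, 0);
}

// True when `ip` lies inside the inclusive range [rangeStart, rangeEnd].
function isInRange(ip, rangeStart, rangeEnd) {
    var n = ipToNumber(ip);
    return n >= ipToNumber(rangeStart) && n <= ipToNumber(rangeEnd);
}

// Example: allow only a (made-up) corporate range.
console.log(isInRange('203.0.113.25', '203.0.113.0', '203.0.113.255'));
console.log(isInRange('198.51.100.7', '203.0.113.0', '203.0.113.255'));
```

Running the same check against your own current address before applying a rule is one way to avoid the lock-out mentioned above.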

Looks like we did it. RDP is only accessible from our machine/corporate network and we renamed the Administrator user to something complex.

Removing the RDP endpoint and using Windows Azure Connect

Using the Windows Firewall to secure the RDP port is ok for small deployments, but what if you have a whole farm you need to manage like this? There is another way to secure the public endpoint… by removing it. Hackers won’t be able to connect to the endpoint but you won’t either.

The solution here would be to create an IPSec connection between your machine(s) and the Virtual Machines in Windows Azure (Virtual Networks would also be a solution but this means you would need specific hardware). Setting up this IPSec connection is possible with Windows Azure Connect. Simply go to the old portal (http://windows.azure.com) and navigate to the Virtual Network > Connect tab.

The Connect tab allows you to download the Windows Azure Connect Endpoint Software (because of the activation token you’ll need to download it once per machine you want to install it on). Click the Install Local Endpoint button to download and install the software on your machine and on the Virtual Machine:

After installing the software on your machine and your VM you’ll see them show up under endpoints:

Finally you need to create a group, add both machines in the group and check the “Allow connections between endpoints in group” option:

That’s it! Your machines are connected. Take a look at the Windows Azure Connect icon next to your clock (the tooltip should say Status: Connected). You should also be able to resolve the IP of the Virtual Machine (by using ping <vmname> for example):

You can now safely remove the endpoint and connect directly to the VM:

And in case there is an issue with Windows Azure Connect, you can simply add the public endpoint for RDP to connect to your VM and fix the issue.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Brian Benz (@bbenz) described Windows Azure Authentication module for Drupal using WS-Federation in a 12/5/2012 post to the Interoperability @ Microsoft blog:

At Microsoft Open Technologies, Inc., we’re happy to share the news that Single Sign-on of Drupal Web sites hosted on Windows Azure with Windows Live IDs and / or Google IDs is now available. Users can now log in to your Drupal site using Windows Azure's WS-Federation-based login system with their Windows Live or Google ID. Simple Web Tokens (SWT) are supported and SAML 2.0 support is currently planned but not yet available.

Setup and configuration is easy via your Windows Azure account administrator UI. Setup details are available via the Drupal project sandbox here. Full details of setup are here.

Under the hood, WS-Federation is used to identify and authenticate users and identity providers. WS-Federation extends WS-Trust to provide a flexible Federated Identity architecture with clean separation between trust mechanisms (in this case Windows Live and Google), security token formats (in this case SWT), and the protocol for obtaining tokens.

The Windows Azure Authentication module acts as a relying party application to authenticate users. When downloaded, configured and enabled on your Drupal Web site, the module:

- Makes a request via the Drupal Web site for supported identity providers

- Displays a list of supported identity providers with Authentication links

- Provides return URL for authentication, parsing and validating the returned SWT

- Logs the user in or directs the user to register
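The "parsing and validating" step in the list above can be pictured with a short sketch. A Simple Web Token is a form-encoded list of name/value pairs that includes `Issuer`, `Audience` and `ExpiresOn` (seconds since the Unix epoch) and ends with an `HMACSHA256` signature. The code below only splits the token and checks expiry; verifying the signature, which a real relying party must do, is deliberately left out. This is not the Drupal module's code, and the function names and sample token values are invented.

```javascript
// Parse a Simple Web Token into an object of decoded name/value pairs.
function parseSwt(token) {
    var claims = {};
    token.split('&').forEach(function (pair) {
        var idx = pair.indexOf('=');
        if (idx < 0) {
            return; // skip malformed fragments
        }
        var name = decodeURIComponent(pair.substring(0, idx).replace(/\+/g, ' '));
        var value = decodeURIComponent(pair.substring(idx + 1).replace(/\+/g, ' '));
        claims[name] = value;
    });
    return claims;
}

// ExpiresOn holds seconds since the Unix epoch; nowMs is in milliseconds.
// A missing or non-numeric ExpiresOn is treated as expired.
function isExpired(claims, nowMs) {
    var expiresOn = Number(claims.ExpiresOn);
    return !isFinite(expiresOn) || expiresOn * 1000 <= nowMs;
}

// Sample token with made-up issuer, audience and signature values.
var sampleToken =
    'Issuer=https%3a%2f%2fexample.accesscontrol.windows.net%2f' +
    '&Audience=http%3a%2f%2fexample.com%2f' +
    '&ExpiresOn=1354752000' +
    '&HMACSHA256=c2lnbmF0dXJl';

var claims = parseSwt(sampleToken);
console.log(claims.Issuer);
console.log(isExpired(claims, Date.now()));
```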

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Beth Massi (@bethmassi) posted LightSwitch Community & Content Rollup–November 2012 on 12/5/2012:

Last year I started posting a rollup of interesting community happenings, content, samples and extensions popping up around Visual Studio LightSwitch. If you missed those rollups you can check them all out here: LightSwitch Community & Content Rollups.

I realize I’m a couple days late for this one but I’ve been travelling a lot lately. In fact, I’m sitting in a hotel right now in Toronto writing this before I head out to the Metro Toronto .NET User’s Group meeting (which is at capacity!) to deliver a session. I’m currently on a user group tour here in Canada and will end up in Vermont next week. For details on my schedule see the events section below…

HTML Client Preview 2 Released!

The big news in November was we released the LightSwitch HTML Client Preview 2 that installs right into Visual Studio 2012! With this release, LightSwitch enables developers to easily build touch-oriented business applications with HTML5 that run well across a breadth of devices. These apps can be standalone, but with this preview developers can now also quickly build and deploy data-driven apps for SharePoint using the new web standards-based apps model.

We created a page on the Developer Center that has everything you need to get started: http://msdn.com/lightswitch/htmlclient

1- Get the preview

The LightSwitch HTML Client Preview 2 is a Web Platform Installer (WPI) package which is included in the Microsoft Office Developer Tools for Visual Studio Preview 2. This includes other components for building SharePoint 2013 Apps. Make sure you have Visual Studio 2012 Professional or higher installed first.

Download: Microsoft Office Developer Tools for Visual Studio - Preview 2

2- Work through the tutorials

We’ve got a couple tutorials that we released to help you learn the new capabilities. Also check out the HTML Client documentation on MSDN.

LightSwitch HTML Client Tutorial – this tutorial walks you through building an application that connects to existing data services and provides a touch-first, modern experience for mobile devices.

LightSwitch SharePoint Tutorial – this tutorial shows you how to use LightSwitch to build a SharePoint application with an HTML client that runs on a variety of mobile devices. The tutorial shows you how to sign up and deploy to an Office 365 online account.

3- Ask questions and report issues in the forum

We’ve got a dedicated forum specifically for getting feedback and answering questions about the HTML Client Preview release. The team is ready and listening so fire away!

Events

As part of the .NET Rocks! Road Trip, I was in San Diego last week with Richard Campbell & Carl Franklin. Carl & Richard rented a big 37' RV and have been travelling all over the US & Canada for the launch of Visual Studio 2012. At each stop they record a live .NET Rocks! show with a guest star. Following that, they each do a presentation around building modern applications on the Windows platform.

We had the event at the Nokia offices and it was a great venue. Carl did a presentation on building a Windows 8 Store App that let you listen to the DnR podcasts and Richard showed us some of the cool stuff coming in System Center. The hosts were amazing, the crowd was great, and I am now totally convinced I need a red Lumia 920! ;-)

Right now I’m on my eastern Canada speaking tour so if you’re in the area and you want to learn more about all the great new stuff in LightSwitch, come on out! I’ll also be delivering an all day workshop on the 8th in Montreal that’s sure to be a blast!

- Dec 5th: East of Toronto .NET UG (Pickering, ON)

- Dec 6th: Ottawa IT Community User Group (Ottawa, ON)

- Dec 8th: Full-day LightSwitch Workshop (Montreal, QC)

- Dec 10th – 11th: DevTeach 2012 (Montreal, QC)

- Dec 11th: Vermont.NET User Group (Winooski, VT)

New Visual Studio 2012 Videos Released

In November, we also added a couple new videos to the LightSwitch Developer Center’s “How Do I?” video section. These videos continue the Visual Studio 2012 series where I walk through the new features available in LightSwitch in VS 2012.

How Do I: Perform Automatic Row-Level Filtering of Data?

In this video, see how you can perform row level filtering by using the new Filter methods in LightSwitch in Visual Studio 2012. Since LightSwitch is all about data, one of the features we added in Visual Studio 2012 is the ability to filter sets of data no matter how or what client is accessing them. This allows you to set up system-wide filtering on your data to support row level security as well as multi-tenant scenarios.

How Do I: Deploy a LightSwitch App to Azure Websites?

In this video learn how you can deploy your LightSwitch applications to the new Azure websites using Visual Studio 2012. Azure Websites are for quick and easy web application and service deployments. You can start for free and scale as you go. One of the many great features of LightSwitch is that it allows you to take your applications and easily deploy them to Azure.

More Notable Content this Month

Extensions released this month (see over 100 of them here!):

- CLASS Extensions (Kostas Christodoulou (Computer Life)) - A Collection of business types, controls and more

Samples (see all 95 of them here):

- LightSwitch HTML Client Tutorial (LightSwitch Team)

- LightSwitch SharePoint Tutorial (LightSwitch Team)

- Executing an arbitrary method or long-running process on the LightSwitch server (Jan Van der Haegen)

- LightSwitch HTML Preview - first impressions (Jan Van der Haegen)

Team Articles:

- New LightSwitch HTML Client APIs (Stephen Provine)

- A New API for LightSwitch Server Interaction: The ServerApplicationContext (Joe Binder)

- Building a LightSwitch HTML Client: eBay Daily Deals (Andy Kung)

- Getting Started with the LightSwitch HTML Client Preview 2 (Beth Massi)

- Building an HTML Client for a LightSwitch Solution in 5 Minutes (Beth Massi)

Community Articles:

Thanks to all of you who share your knowledge with the community for free, whether that’s blogs, forums, speaking, etc. The community (particularly Michael Washington, Paul Van Bladel & Jan van der Haegen) also published these gems in November. As you can see, these folks are pretty excited about the HTML Client Preview 2.

- Writing JavaScript In LightSwitch HTML Client Preview

- Creating JavaScript Using TypeScript in Visual Studio LightSwitch (see the discussion in the forum thread here: TypeScript and LightSwitch HTML - a perfect match?)

- Fixing WCF RIA Services After Upgrading To HTML Client

- Theming Your LightSwitch Website Using JQuery ThemeRoller

- Rumor has it… LightSwitch HMTL Client preview 2 is released – omg omg omg with a ServerApplicationContext?!?

- Executing an arbitrary method or long-running process on the LightSwitch server

- OData Security Using The Command Table Pattern And Permission Elevation

- LightSwitch News – Find out what the LightSwitch community is up to

- CodeSchool: taking my first baby steps in JQuery

- Automatically deploy a LightSwitch-ready hosting website with ability to set the https certificate.

- Automatically deploy a LightSwitch-ready application pool with ability to set the app pool Identity.

- Integrating hosting web site creation in an automated LightSwitch factory

- Using SignalR in LightSwitch to process toast notifications to connected clients.

- Handling in LightSwitch commands in less than 10 lines of code.

- LightSwitch Windows Azure Website using OData service

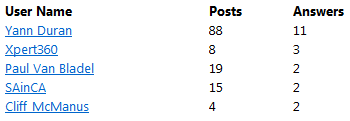

Top Forum Answerers

Thanks to all our contributors to the LightSwitch forums on MSDN. Thank you for helping make the LightSwitch community a better place. Another huge shout out to Yann Duran who consistently provides help in our General forum and is now one of our forum moderators!

Keep up the great work guys!

LightSwitch Team Community Sites

Become a fan of Visual Studio LightSwitch on Facebook. Have fun and interact with us on our wall. Check out the cool stories and resources. Here are some other places you can find the LightSwitch team:

- LightSwitch MSDN Forums

- LightSwitch Developer Center

- LightSwitch Team Blog

- LightSwitch on Twitter (@VSLightSwitch, #VS2012 #LightSwitch)

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Lydia Leong (@cloudpundit) asserted Cloud IaaS SLAs can be meaningless in a 12/5/2012 post:

In infrastructure services, the purpose of an SLA (or, for that matter, the liability clause in the contract) is not “give the customer back money to compensate for the customer’s losses that resulted from this downtime”. Rather, the monetary guarantees involved are an expression of shared risk. They represent a vote of confidence: how sure is the provider of its ability to deliver to the SLA, and how much money is the provider willing to bet on that? At scale, there are plenty of good, logical reasons to fear the financial impact of mass outages: the nature of many cloud IaaS architectures creates a possibility of mass failure that only rarely occurs in other services like managed hosting or data center outsourcing. IaaS, like traditional infrastructure services, is vulnerable to catastrophes in a data center, but it is additionally vulnerable to logical and control-plane errors.

Unfortunately, cloud IaaS SLAs can readily be structured to make it unlikely that you’ll ever see a penny of money back — greatly reducing the provider’s financial risks in the event of an outage.

Amazon Web Services (AWS) is the poster-child for cloud IaaS, but the AWS SLA also has the dubious status of “worst SLA of any major cloud IaaS provider”. (It’s notable that, in several major outages, AWS did voluntary givebacks — for some outages, there were no applicable SLAs.)

HP has just launched its OpenStack-based Public Cloud Compute into general availability. HP’s SLA is unfortunately arguably even worse.

Both companies have chosen to express their SLAs in particularly complex terms. For the purposes of this post, I am simplifying all the nuances; I’ve linked to the actual SLA text above for the people who want to go through the actual word salad.

To understand why these SLAs are practically useless, you need to understand a couple of terms. Both providers divide their infrastructure into “regions”, a grouping of data centers that are geographically relatively close to one another. Within each region are multiple “availability zones” (AZs); each AZ is a physically distinct data center (although a “data center” may be comprised of multiple physical buildings). Customers obtain compute in the form of virtual machines known as “instances”. Each instance has ephemeral local storage; there is also a block storage service that provides persistent storage (typically used for databases and anything else you want to keep). A block storage volume resides within a specific AZ, and can only be attached to a compute instance in that same AZ.

AWS measures availability over the course of a year, rather than monthly, like other providers (including HP) do. This is AWS’s hedge against a single short outage in a month, especially since even a short availability-impacting event takes time to recover from. 99.95% monthly availability only permits about 21 minutes of downtime; 99.95% yearly availability permits nearly four and a half hours of downtime, cumulative over the course of the year.
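The downtime figures above follow from simple arithmetic, sketched below. The function name is made up for the example, and the 30-day month and 365-day year are assumptions; real SLAs define their own measurement periods.

```javascript
// Minutes of downtime permitted by an availability percentage over a
// measurement period given in days.
function allowedDowntimeMinutes(availabilityPercent, periodDays) {
    var totalMinutes = periodDays * 24 * 60;
    return totalMinutes * (100 - availabilityPercent) / 100;
}

var monthly = allowedDowntimeMinutes(99.95, 30);  // 30-day month assumed
var yearly = allowedDowntimeMinutes(99.95, 365);  // non-leap year assumed

console.log(monthly.toFixed(1) + ' minutes per month'); // about 21.6
console.log((yearly / 60).toFixed(2) + ' hours per year'); // about 4.38
```

The yearly budget is roughly twelve times the monthly one, which is why a single outage of a few hours can breach a monthly SLA while staying within a yearly one.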

However, AWS and HP both define their SLA not in terms of instance availability, or even AZ availability, but in terms of region availability. In the AWS case, a region is considered unavailable if you’re running instances in at least two AZs within that region, and in both of those AZs, your instances have no external network connectivity and you can’t launch instances in that AZ that do; this is metered in five-minute intervals. In the HP case, a region is considered unavailable if an instance within that region can’t respond to API or network requests, you are currently running in at least two AZs, and you cannot launch a replacement instance in any AZ within that region; the downtime clock doesn’t start ticking until there’s more than 6 minutes of unavailability.

Every AZ that a customer chooses to run in effectively imposes a cost. An AZ, from an application architecture point of view, is basically a data center, so running in multiple AZs within a region is basically like running in multiple data centers in the same metropolitan region. That’s close enough to do synchronous replication. But it’s still a pain to have to do this, and many apps don’t lend themselves well to a multi-data-center distributed architecture. Also, that means paying to store your data in every AZ that you need to run in. Being able to launch an instance doesn’t do you very much good if it doesn’t have the data it needs, after all. The AWS SLA essentially forces you to replicate your data in two AZs; the HP one makes you do this for all the AZs within a region. Most people are reasonably familiar with the architectural patterns for two data centers; once you add a third and more, you’re further departing from people’s comfort zones, and all HP has to do is to decide they want to add another AZ in order to essentially force you to do another bit of storage replication if you want to have an SLA.

(I should caveat the former by saying that this applies if you want to be able to usefully run workloads within the context of the SLA. Obviously you could just choose to put different workloads in different AZs, for instance, and not bother trying to replicate into other AZs at all. But HP’s “all AZs not available” is certainly worse than AWS’s “two AZs not available”.)

Amazon has a flat giveback of 10% of the customer’s monthly bill in the month in which the most recent outage occurred. HP starts its giveback at 5% and caps it at 30% (for less than 99% availability), but it covers strictly the compute portion of the month’s bill.

HP has a fairly nonspecific claim process; Amazon requires that you provide the instance IDs and logs proving the outage. (In practice, Amazon does not seem to have actually required detailed documentation of outages.)

Neither HP nor Amazon SLA their management consoles; the create-and-launch instance APIs are implicitly part of their compute SLAs. More importantly, though, neither HP nor Amazon SLA their block storage services. Many workloads are dependent upon block storage. If the storage isn’t available, it doesn’t matter if the virtual machine is happily up and running; it can’t do anything useful. For an example of why this matters, you need look no further than the previous Amazon EBS outages, where the compute instances were running happily, but tons of sites were down because they were dependent on data stores on EBS (and used EBS-backed volumes to launch instances, etc.).

Contrast these messes to, say, the simplicity of the Dimension Data (OpSource) SLA. The compute SLA is calculated per-VM (i.e., per-instance). The availability SLA is 100%; credits start at 5% of monthly bill for the region, and go up to 100%, based on cumulative downtime over the course of the month (5% for every hour of downtime). One caveat: Maintenance windows are excluded (although in practice, maintenance windows seem to affect the management console, not impacting uptime for VMs). The norm in the IaaS competition is actually strong SLAs with decent givebacks, that don’t require you to run in multiple data centers.
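The Dimension Data credit schedule described above is simple enough to write down directly. This is a minimal sketch of that description only: it takes downtime in whole hours because the text does not say how partial hours are counted, and the function names are invented for illustration.

```javascript
// Credit as a percentage of the monthly bill: 5% per hour of cumulative
// downtime in the month, capped at 100%.
// Assumption: downtime is supplied in whole hours.
function creditPercent(downtimeHours) {
  return Math.min(100, 5 * downtimeHours);
}

// Credit in currency units for a given monthly bill.
function creditAmount(monthlyBill, downtimeHours) {
  return monthlyBill * creditPercent(downtimeHours) / 100;
}

console.log(creditPercent(3) + '% credit after 3 hours of downtime');
console.log(creditPercent(25) + '% credit after 25 hours of downtime');
```

Under this reading, the cap is reached after 20 cumulative hours of downtime in a month.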

Amazon’s SLA gives enterprises heartburn. HP had the opportunity to do significantly better here, and hasn’t. To me, it’s a toss-up which SLA is worse. HP has a monthly credit period and an easier claim process, but I think that’s totally offset by HP essentially defining an outage as something impacting every AZ in a region — something which can happen if there’s an AZ failure coupled with a massive control-plane failure in a region, but not otherwise likely.

Customers should expect that the likelihood of a meaningful giveback is basically nil. If a customer needs to, say, mitigate the fact he’s losing a million dollars an hour when his e-commerce site is down, he should be buying cyber-risk insurance. The provider absorbs a certain amount of contractual liability, as well as the compensation given by the SLA, but this is pretty trivial — everything else is really the domain of the insurance companies. (Probably little-known fact: Amazon has started letting cyber-risk insurers inspect the AWS operations so that they can estimate risk and write policies for AWS customers.)

I’m surprised Lydia Leong didn’t include Google Compute Engine and Windows Azure in her analysis.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Kevin Remde (@KevinRemde) released TechNet Radio: Cloud Innovators – My interview with Tom Shinder and Yuri Diogenes (Part 3) on 12/5/2012:

Continuing our Private Cloud basics series, Tom Shinder and Yuri Diogenes are back. And in today’s episode they focus on how you can get started in planning a private cloud environment. Tune in as they lay out some best practices you may want to consider.

After watching this video, follow these next steps:

Step #1 – Download Windows Server 2012

Step #2 – Download Your FREE Copy of Hyper-V Server 2012

If you're interested in learning more about the products or solutions discussed in this episode, click on any of the below links for free, in-depth information:

Resources:

Websites & Blogs:

- Kevin Remde’s Blog – Full of I.T.

- TechNet Private Cloud Scenario Hub

- TechNet Private Cloud Architecture Blog

- Yuri Diogenes’ Blog

- TechNet article: “Plan a Private Cloud”

- TechNet article: “Reference Architecture for Private Cloud”

Videos:

- TechNet Radio: Cloud Innovators – (Part 1) Private Cloud Principles

- TechNet Radio: Cloud Innovators – (Part 2) Private Cloud Security

Virtual Labs:


<Return to section navigation list>

Cloud Security and Governance

<Return to section navigation list>

Cloud Computing Events

Scott Densmore announced on 12/5/2012 that p&p Symposium 2013 will be held in Redmond, WA on 1/15 through 1/17/2013:

The patterns & practices Symposium 2013 is scheduled for January 15 – 17, on campus in Redmond. This is a great opportunity for you to connect and learn.

They have an amazing set of presenters this year. To celebrate the theme of exploration, they are bringing in two special speakers from outside the software development world.

Adam Steltzner, Curiosity Team (@steltzner)

Felicity Aston, Antarctic Explorer (@felicity_aston)

Along with many Microsoft and community speakers:

- Scott Guthrie

- Scott Hanselman

- Aleš Holeček

- Vittorio Bertocci

- Greg Young

- Ward Bell

- Ted Neward

- Mark Groves

- Guillermo Rauch

- Marc Mercuri

- Colin Miller

- Chris Tavares

- Eugenio Pace

- Grigori Melnik

- Larry Brader

More information about sessions, schedule, and registration is available online:

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Jeff Barr (@jeffbarr) reported AWS SDK for Node.js - Now Available in Preview Form in a 12/4/2012 post:

The AWS Developer Tools Team focuses on delivering the developer tools and SDKs that are a good fit for today's languages and programming environments.

Today we are announcing support for the JavaScript language in the Node.js environment -- the new AWS SDK for Node.js.

Node.js gives you the power to write server-side applications in JavaScript. It uses an event-driven non-blocking I/O model to allow your applications to scale while keeping you from having to deal with threads, polling, timeouts, and event loops. You can, for example, initiate and manage parallel calls to several web services in a clean and obvious fashion.
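As a rough illustration of the parallel, non-blocking calls described above, here is a sketch that uses only built-in JavaScript features. It assumes a current Node.js runtime with native Promises, and `callService` is a hypothetical stand-in for a real web-service request (it just resolves after a delay); none of it is part of the AWS SDK.

```javascript
// Hypothetical stand-in for a web-service call: resolves after delayMs.
function callService(name, delayMs) {
  return new Promise(function (resolve) {
    setTimeout(function () { resolve(name + ' done'); }, delayMs);
  });
}

// Start both calls before waiting on either, so neither blocks the other.
function callBoth() {
  const started = Date.now();
  return Promise.all([
    callService('serviceA', 50),
    callService('serviceB', 50)
  ]).then(function (results) {
    return { results: results, elapsedMs: Date.now() - started };
  });
}

callBoth().then(function (outcome) {
  console.log(outcome.results, 'in about', outcome.elapsedMs, 'ms');
});
```

Because both timers run at the same time, the elapsed time should be close to the longer of the two delays rather than their sum.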

The SDK is available as an npm (Node Packaged Module) at https://npmjs.org/package/aws-sdk. Once installed, you can load it into your code and configure it as follows:

var AWS = require('aws-sdk');

AWS.config.update({
  accessKeyId: 'ACCESS_KEY',
  secretAccessKey: 'SECRET_KEY',
  region: 'us-east-1'
});

Then you create a service interface object:

var s3 = new AWS.S3();

You can then use the object to make requests. Here's how you would upload a pair of objects into Amazon S3 concurrently:

var params1 = {Bucket: 'myBucket', Key: 'myKey1', Body: 'Hello!'};
var params2 = {Bucket: 'myBucket', Key: 'myKey2', Body: 'World!'};

s3.client.putObject(params1).done(function(resp) {
  console.log("Successfully uploaded data to myBucket/myKey1");
});

s3.client.putObject(params2).done(function(resp) {
  console.log("Successfully uploaded data to myBucket/myKey2");
});

The SDK supports Amazon S3, Amazon EC2, Amazon DynamoDB, and the Amazon Simple Workflow Service, with support for additional services on the drawing board.

Here are some links to get you going:

- AWS SDK for Node.js

- AWS SDK for Node.js Getting Started Guide

- AWS SDK for Node.js API Reference

- AWS SDK for Node.js on GitHub

Give it a shot and let me know what you think!

Brandon Butler (@BButlerNWW) asserted “In the cloud, it's a question of who has the ability to scale to meet enterprise needs” in a deck for his Cloud computing showdown: Amazon vs. Rackspace (OpenStack) vs. Microsoft vs. Google article of 12/3/2012 for Network World:

It wouldn't be a mischaracterization to equate the cloud computing industry to the wild, wild west.

There is such a variety of vendors gunning at one another and the industry is young enough that true winners and losers have not yet been determined. Amazon has established itself as the early market leader, but big-name legacy IT companies are competing hard, especially on the enterprise side, and a budding crop of startups are looking to stake their claims, too.

In its latest Magic Quadrant report, research firm Gartner lists 14 infrastructure as a service (IaaS) companies, but Network World looked at four of the biggest names to compare and contrast: Amazon Web Services, Rackspace (and OpenStack), Microsoft and Google.

Amazon Web Services

It's hard to find someone who doesn't agree that Amazon Web Services is the market leader in IaaS cloud computing. The company has one of the widest breadths of cloud services - including compute, storage, networking, databases, load balancers, applications and application development platforms all delivered as a cloud service. Amazon has dropped its prices 21 times since it debuted its cloud six years ago and fairly consistently fills whatever gaps it has in the size of virtual machine instances on its platform - the company recently rolled out new high-memory instances, for example.

There are some cautions for Amazon though. Namely, its cloud has experienced three major outages in two years. One analyst, Jillian Mirandi of Technology Business Research, has suggested that continued outages could eventually start hindering businesses' willingness to invest in Amazon infrastructure.

That sentiment gets to a larger point about AWS though - the service seems to be popular in the startup community, providing the IT infrastructure for young companies and allowing them to avoid investing in expensive technology themselves. But Mark Bowker, a cloud analyst for Enterprise Strategy Group, says Amazon hasn't been as popular in the enterprise community. "Amazon's made it really easy for pretty much anyone to spin up cloud services or get VMs," he says. Where is Amazon getting those customers from? Some are developers and engineers who get frustrated by their own IT shops not being able to supply VMs as quickly as Amazon can, so they use Amazon's cloud in the shadows of IT. "Taxi cabs pay for a lot of VMs," says Beth Cohen, an architect at consultancy Cloud Technology Partners, referring to users expensing Amazon services on travel reports. The point is there's a hesitation by some enterprises to place their Tier 1, mission critical applications in a public cloud.

Amazon is looking to extend its enterprise reach though. In recent months the company has made a series of announcements targeting enterprises and developers. It rolled out Glacier, a long-term storage service, while it's made updates to its Elastic application development platform and its Simple Workflow Service, which helps developers automate applications running in Amazon's cloud. …

<Return to section navigation list>
