Windows Azure and Cloud Computing Posts for 10/3/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

‡ Updated 10/6/2012 for date change of Robin Shahan’s Azure Cloud Storage for Everyone! presentation to the San Francisco Bay Area Azure Developers Group meeting to 10/16/2012 from 10/9/2012 at 6:30 PM (see Cloud Computing Events section below.)

•• Updated 10/6/2012 with new articles marked ••.

• Updated 10/5/2012 with new articles marked •.

Tip: Copy bullet(s) or dagger, press Ctrl+f, paste it/them to the Find textbox and click Next to locate updated articles:

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop and Media Services

• Gaurav Mantri (@gmantri) began a series with Windows Azure Media Service–Part I (Introduction) on 10/5/2012:

Windows Azure Media Service (WAMS) is one of the newest service offerings on the Windows Azure platform. I haven’t really looked at it until now (thanks to Brent Stineman for pushing me), and in the next few blog posts I will share my understanding of this service, what it can do for you and how you can make use of it. Since my ultimate interest is in understanding the REST API for managing it, I will mostly talk about that (if I ever get that far).

This is an introductory post about what this service is, how you can sign up for it, and some of its processes and terminology. It will become the foundation of subsequent posts (so wish me luck!!!)

What is Windows Azure Media Service (WAMS)?

This is how it is defined on MSDN website (http://msdn.microsoft.com/en-us/library/windowsazure/hh973632.aspx):

Windows Azure Media Services form an extensible cloud-based media platform that enables developers to build solutions for ingesting, processing, managing, and delivering media content. Media Services are built on the infrastructure of Windows Azure (to provide media processing and asset storage), and IIS Media Services (to provide content delivery).

If we look at the definition, a few things stand out:

- an extensible cloud-based media platform

- ingesting, processing, managing and delivering media content

- built on the infrastructure of Windows Azure and IIS Media Services

To understand the significance of this, let me share a story with you (and this is a real story).

A few years ago, a cousin of mine had an idea of broadcasting events live on the Internet. His idea was to broadcast events like weddings, birthday parties and other personal events over the Internet. Take the scenario where someone close to you in your family is getting married and, for some unfortunate reason, you’re not able to attend the wedding in person. My cousin’s idea was to provide a platform so that the wedding can be broadcast live over the Internet, letting you watch the events in real time as they occur instead of watching a DVD of the wedding later.

Anyway, he was supercharged about this idea and we discussed it in great detail. While I could see some sentimental value in this business, the barrier to entry was the massive technical infrastructure that would be needed. He would need massive computing power to process the media and, once the media was processed, huge bandwidth to broadcast the event. Furthermore, if you’re aware of wedding scenarios in India, they happen only at certain times of the year. He would therefore need to be prepared for high traffic during the “wedding” season and then somehow justify the cost of the infrastructure acquired to accommodate that traffic while his business was going through the lean season.

Needless to say, I advised him to drop the idea and eventually he did.

Now let’s take the same scenario with this service in the picture. With this service, he will be able to process the media without investing in the infrastructure needed for that purpose. For the delivery of the media, he can also make use of this service (through Windows Azure CDN, a partner CDN or Windows Azure Blob Storage), so he need not invest in procuring really high-speed Internet for his infrastructure. Because this is built on the Windows Azure Platform, where the basic premise is that you only pay for the resources you consume, he need not worry about what to do with the infrastructure during the lean season, simply because he doesn’t own any infrastructure. During peak season, he can scale out thanks to the elastic nature of Windows Azure.

Based on this, if he were to come to me with this idea today, I would tell him to go ahead with it.

So what’s WAMS??? Essentially if you’re in a business where you want to either process and/or deliver media content but were worried about the scalable infrastructure requirement, WAMS is the answer for you. Think of it as a Platform as a Service (PaaS) for Media-based applications.

With WAMS, Microsoft has again lived up to the promise it made when it launched Windows Azure:

You (as a business owner/developer) focus on solving business problems, leave the platform to us (Microsoft).

Possibilities

This obviously opens up a lot of possibilities for developers/entrepreneurs like us. Some of the things I could think of (just thinking out loud):

- Build your own version of YouTube or Vimeo to serve user generated content.

- Build something like Spotify.

- If you’re an independent film maker, you could use this platform to process your movies/content.

and many more.

Signing Up

At the time of writing, this service is in Preview mode (in other words, in beta). You therefore need to enable it for your subscription. To do so, please visit the account section of the Windows Azure Portal (https://account.windowsazure.com) and, after signing in using your Live Id, click on the “preview features” tab.

You should see the list of preview services. Click on “try it now” button next to Media Services.

On the pop-up window you will see all the subscriptions associated with your Live Id. Please select appropriate subscription and click OK button.

Once this feature is enabled under your subscription, you will receive an email and then you can start using this service. I got my notification email in about 10 minutes but if you don’t receive an email in 10 minutes, give it a day or so before contacting support. For some of the services, I had to wait for a day or so.

One important note:

Since this service is in preview mode, you would need to activate it individually for each subscription. For example, if you have 2 subscriptions (say one personal and one official), you would need to repeat the steps for both of your subscriptions.

This applies to all services that are currently in preview mode, such as this one and Windows Azure Websites.

Terminology

Let’s take a moment and understand some of the terms used in context with WAMS. Most of the definitions are copied from MSDN site: http://msdn.microsoft.com/en-us/library/windowsazure/hh973632.aspx

Asset

An asset is a logical entity which contains information about media. It may contain one or more files (audio, video etc.) which need to be processed.

Delivery

Delivery is the operation of delivering processed media. This may include streaming content live or on-demand to clients, retrieving or downloading specific media files from the cloud, deploying media assets on other servers such as an Azure CDN server, or sending media content to another provider or delivery network.

File

A File is an object containing audio/video blob to be processed. Files are stored in Windows Azure Blob Storage. A file is always associated with an asset and as mentioned above, an asset can contain one or more files.

Ingestion

Ingestion is the process of bringing assets into WAMS. This includes uploading files into blob storage and encrypting assets for protection.

Job

A job is again a logical entity representing the work to be done on assets and files. The work is performed by one or more tasks (described below). In short, we can say that a job is a collection of tasks. For example, you could have an “Encoding” job which encodes an input file into multiple formats (each task is responsible for converting the file into a specific format).

Manage

Managing is the process of managing assets which are already in WAMS (i.e. ingestion process has been done on them). This may include listing and tagging media assets, deleting assets, editing assets, managing asset keys, DRM key management, and checking account usage reports.

Process

This to me is the most important operation in WAMS. This operation involves working on your ingested assets and performing encoding, converting them or creating new assets out of existing assets. This also includes encoding and bulk-encoding assets, transmuxing assets (transmuxing means changing the outer file or stream format that contains a set of media files, while keeping the media file contents unchanged), creating encoding jobs, creating job templates and presets, checking job status, cancelling jobs, and other related tasks.

Task

A task is an individual operation to be performed on an asset or file. A task is always associated with a job.

Access Policies

As the name suggests, access policies define the permissions on an asset or a file. The permissions can be access type (read/write/delete/list/none) and the duration for which the access type is valid.

Locator

A locator is a URI which provides time based access to an asset. It is used in conjunction with an access policy to define the permissions and duration that a client has access to a given asset.
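To make the relationship between a locator and its access policy concrete, here is a minimal Python sketch. It is only an illustration of the idea of time-based access: the class names, field names and the example URI are assumptions made for this sketch and are not the WAMS API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class AccessPolicy:
    """Which access types are granted, and for how long (illustrative names)."""
    permissions: frozenset
    duration: timedelta


@dataclass(frozen=True)
class Locator:
    """A URI plus the policy and start time that bound access to an asset."""
    uri: str
    policy: AccessPolicy
    start: datetime

    def allows(self, permission: str, at: datetime) -> bool:
        # Access is granted only inside the time window and only for
        # the access types listed in the policy.
        in_window = self.start <= at < self.start + self.policy.duration
        return in_window and permission in self.policy.permissions


# Hypothetical usage: read-only access for one hour.
read_one_hour = AccessPolicy(frozenset({"read"}), timedelta(hours=1))
start = datetime(2012, 10, 5, 12, 0)
locator = Locator("https://example.invalid/asset", read_one_hour, start)
```

With these placeholder values, a read request thirty minutes after the start time would be allowed, while a write request, or any request after the hour has elapsed, would be refused.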

Job Templates

If you have a job that you wish to perform repeatedly, you can create a template for that job and reuse that template so that you don’t end up creating a new job from scratch. It is essentially a collection of task templates (defined below).

Content Keys

At times you may want to protect assets in WAMS by encrypting them. Content keys store the data which is used for encrypting an asset.

Task Templates

A task template basically provides a template that you can use for a task which you perform repeatedly so that you don’t end up creating a new task from scratch. A collection of these task templates makes up a job template.

Workflow

Basically your media application goes through these 4 operations/processes:

Ingest –> Process –> Manage –> Delivery

Please see the section above for explanation of these operations.
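The relationships among the terms defined above (an asset contains files, a job is a collection of tasks, and each task works on an asset) can be sketched as a small data model. This is purely illustrative Python: the class names, field names, file name and target formats are assumptions for the sketch, not types from the WAMS REST or managed APIs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MediaFile:
    """An audio/video blob; always associated with an asset."""
    name: str


@dataclass
class Asset:
    """Logical entity that contains one or more files."""
    name: str
    files: List[MediaFile] = field(default_factory=list)


@dataclass
class Task:
    """An individual operation performed on an asset."""
    description: str
    input_asset: Asset


@dataclass
class Job:
    """A collection of tasks."""
    name: str
    tasks: List[Task] = field(default_factory=list)


# Hypothetical example: an "Encoding" job with one task per target format.
wedding = Asset("wedding", [MediaFile("ceremony.wmv")])
encoding_job = Job(
    "Encoding",
    [Task(f"encode to {fmt}", wedding) for fmt in ("MP4", "Smooth Streaming")],
)

# The four workflow operations, in the order given above.
WORKFLOW = ["Ingest", "Process", "Manage", "Delivery"]
```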

Some Useful Links

You may find these links useful when working with WAMS:

MSDN Documentation:

http://msdn.microsoft.com/en-us/library/windowsazure/hh973629.aspx

Mingfei Yan’s Blog: She is a PM on the WAMS team and has written excellent blog posts on the subject.

Summary

Well, this is the start of hopefully a long and fruitful journey exploring WAMS. I hope you have found this information useful. As always, if you find some issues with this blog post please let me know immediately and I will fix them ASAP. In the next post, we’ll start exploring some of the APIs (REST and hopefully managed too).

WAMS is also an acronym for Windows Azure Mobile Services. To avoid confusion, my posts about Mobile Services use WAMoS and those about Media Services use WAMeS.

Mary Jo Foley (@maryjofoley) asserted “Microsoft and Hortonworks' implementation of Hadoop for Windows Server hasn't disappeared or been cut. It's actually in private preview, according to a new Microsoft roadmap” in a deck for her Microsoft's Windows Server implementation of Hadoop is in private preview article of 10/3/2012 for ZDNet’s All About Microsoft blog:

At last, big-data fans, we've got some word of the seemingly-missing-but-not-forgotten Windows Server implementation of Hadoop promised by Microsoft and Hortonworks.

I'd started wondering whether Microsoft's repeated "no comments" about the project's whereabouts -- the most recent of which I received just a couple weeks ago, at the end of September 2012 -- meant Microsoft had decided to go cloud-only with Hadoop. But it turns out the Windows Server version of the Microsoft-Hortonworks Hadoop implementation is still around, and is just in private preview.

A quick refresher as to what's going on with Microsoft and Hadoop.

In the fall of 2011, Microsoft announced it was partnering with Hortonworks to create both Windows Azure and Windows Server implementations of the Hadoop big data framework. At that time, Microsoft officials committed to providing a Community Technology Preview (CTP) test build of the Hadoop-based service for Windows Azure before the end of calendar 2011 and a CTP of the Hadoop-based distribution for Windows Server some time in 2012. A month after announcing the Hortonworks partnership, Microsoft dropped plans to make its own big data alternative, codenamed Dryad.

In late December 2011, Microsoft posted a video on its Channel 9 site that provided updated information about the company's Hadoop plans. According to that video, which Microsoft subsequently pulled from Channel 9, the company planned to make Hadoop on Windows Azure generally available in March 2012, and Hadoop for Windows Server generally available in June 2012.

Ever since, Microsoft officials have gone silent on the new timetables for the Hadoop for Azure and Hadoop for Windows Server offerings. Until late September 2012, that is.

A slide deck from the "24 Hours of PASS" event from Denny Lee, Technical Principal Program Manager for SQL Business Intelligence Group, made its way to the Web recently. Lee, according to his bio, is "one of the original core members of Microsoft Hadoop on Windows and Azure (code name: Isotope) and had helped bring Hadoop into Microsoft."

A few of the interesting slides from Lee's deck from his September 21, 2012 presentation:

Hadoop on Azure is still in preview, as Lee's slide says. (The latest publicly acknowledged build was the second Community Technology Preview release.) But now we know that the Windows Server version is in private preview, according to Lee's deck. I'm not sure how long it's been in private preview, and have never found any testers who've claimed to have been part of the preview for it.

Also: there's seemingly a new deliverable on the roadmap: An "on-demand" dedicated Hadoop cluster in the cloud, which seems to be some kind of hybrid between the two (best I can tell). Anyone know any more about this?

Microsoft officials have been saying for a while that it wasn't just the Hadoop framework which Microsoft planned to support. There are lots of other related components in the works, like the Excel Hive Add-in, Sqoop, Apache Pig, Hive ODBC and more, as this slide notes. I'm assuming the features listed below the beige bar are the features that will be in the Windows Server version of the Hadoop implementation, and those above the bar are what are in the Azure Hadoop one.

Hadoop for Windows Server includes an interactive console, remote-desktop support, and other related elements, as this slide seems to indicate.

The O'Reilly Strata Conference plus Hadoop World are on tap for late October in New York City. Maybe Microsoft and Hortonworks will share more about their Windows Azure and Windows Server Hadoop plans and progress then (even though there aren't many Softies listed as speakers)?


Windows Azure SQL Database, Federations and Reporting, Mobile Services

• Nick Harris (@cloudnick) started a new Blog Series: Sending Windows 8 Push Notifications using Windows Azure Mobile Services on 10/4/2012:

Recently we announced the preview of Windows Azure Mobile Services. In this blog series I will detail how to build a Windows Azure Mobile Service to send push notifications of varying types to your Windows 8 applications.

This series will walk through creating a push notification scenario using WNS and Windows Azure Mobile Services.

- Part 1: Windows Azure Mobile Services and Push Notifications an Overview

- Part 2: Setup: Create and configure your Mobile Service and Windows Store app for Push Notifications

- Part 3: Request a Channel and Register with your Mobile Service

- Part 4: Sending a Toast, Tile or Badge Notification

- Part 5: Efficiency tips and tricks for Push Notification scenarios

As I post each part I will update the links below and tweet as the post is available. So let’s get started with Part 1 - Azure Mobile Services and Push Notifications an Overview

Part 1: Windows Azure Mobile Services and Push Notifications an Overview

What is Windows Azure Mobile Services?

Here is an info-graphic on the current Windows Azure Mobile Services stack I pulled together as part of a presentation I recently gave at TechEd. It’s important to note that this info-graphic captures Mobile Services today and over time you will see the feature set of Mobile Services grow exponentially with subsequent releases.

The goal of Windows Azure Mobile Services is to make it incredibly easy for developers to add a cloud backend to their client apps be it a Windows 8, Windows Phone, iOS or Android application. To do this we provide a number of turn key features baked right into the Mobile Services experience. As the diagram depicts Mobile Services today provides:

- Structured Storage

  - Ability to store structured data in a SQL Database using dynamic schema, without being concerned with writing the underlying T-SQL.

  - If you use a single database, apps are automatically partitioned by schema, e.g. AppX.Todoitem, AppY.Todoitem.

  - If you want access to your data, you are not locked out and can manage it in a number of ways, including the Mobile Service Portal, SQL Portal, SQL Management Studio, REST API etc.

- Server Logic

  - Service API: Mobile Services automatically generates a REST API to allow you to perform CRUD operations from your client application on your structured storage.

  - With Dynamic Schema (enabled by default), your Mobile Service will automatically add columns to tables as necessary to store incoming data.

  - Ability to author server-side business logic directly in the portal that is executed directly within the CRUD operation pipeline.

- Auth

  - Makes it easy for your users to authenticate against Windows Live. Other major identity providers are coming soon.

  - The REST API can be locked down using table-level permissions using a simple drop-down. No complex code required. Available permission levels include: Everyone, Anyone with an Application Key, Only Authenticated Users, Only Scripts and Admins. These permissions can be set individually on each table and can granularly control each CRUD operation of each table.

  - More granular control can be added using server-side scripts and the user object.

- Push Notifications

  - Integrates with WNS to provide Toast, Tile and Badge Notifications.

  - WNS auth is made easy because the portal captures your WNS client secret and package SID.

  - The server-side script push.wns.* namespace performs WNS auth for you and provides a clean and easy object model to compose notifications.

- Common tenets of Windows Azure Services

  - Scale

    - Compute: scale between shared and reserved mode, increase/decrease your instance count.

    - Storage: ability to scale out your mobile service tenant(s) to a dedicated SQL DB. Ability to scale up your SQL DB from web through business to 150GB.

  - Diagnostics

    - View diagnostics directly in the portal, including API calls, CPU time and Data Out.

  - Logging

    - console.* operations like console.log and console.error provide an easy means to debug your server-side scripts.
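The table-level permissions described in the list above can be pictured as a lookup from table and CRUD operation to a permission level. The following Python sketch is only a mental model: the four level names come from the text, but the table settings, the function name and the way a request is evaluated against a level are assumptions made for illustration and do not describe how the service is implemented.

```python
from enum import Enum


class Permission(Enum):
    EVERYONE = "Everyone"
    APPLICATION_KEY = "Anyone with an Application Key"
    AUTHENTICATED = "Only Authenticated Users"
    ADMIN = "Only Scripts and Admins"


# Hypothetical settings: one permission level per CRUD operation per table.
TABLE_PERMISSIONS = {
    "TodoItem": {
        "read": Permission.APPLICATION_KEY,
        "insert": Permission.AUTHENTICATED,
        "update": Permission.AUTHENTICATED,
        "delete": Permission.ADMIN,
    }
}


def is_allowed(table, operation, *, has_app_key=False,
               is_authenticated=False, is_admin=False):
    """Assumed evaluation order: admins always pass, then match the level."""
    if is_admin:
        return True
    level = TABLE_PERMISSIONS[table][operation]
    if level is Permission.EVERYONE:
        return True
    if level is Permission.APPLICATION_KEY:
        return has_app_key
    if level is Permission.AUTHENTICATED:
        return is_authenticated
    return False  # Permission.ADMIN and the caller is not an admin
```

Under these assumed settings, a client holding only the application key could read TodoItem rows but could not insert or delete them.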

Today we provide client libraries for Windows 8 to make consuming mobile services easy. For more details on what client libraries (Windows 8, Windows Phone, iOS and Android) are supported at the time of reading please see: Mobile Services and Mobile Service Reference.

What are Push Notifications?

The Windows Push Notification Services (WNS) enables you to send toast, tile, badge and raw notifications from the cloud to your Windows Store applications even when your app is not running. Push notifications are ideal for scenarios when you need to target a specific user with personalized content.

The following diagram depicts the general lifecycle of a push notification via the Windows Push Notification Services (WNS). We’ll walk through the steps shortly, but before doing so I thought it would be important to call out that, as a developer implementing a push notification scenario, all you need to do is implement those interactions in grey and the applications/services in blue. Fortunately, Mobile Services makes a great deal of this easy for you, as you will see throughout the rest of this series.

The process of sending a push notification boils down to three basic steps:

- 1. Request a channel. Utilize the WinRT API to request a Channel Uri from WNS. The Channel Uri will be the unique identifier you use to send notifications to your application.

- 2. Register the channel with your Windows Azure Mobile Service. Once you have your channel you can then store your channel and associate it with any application specific data (e.g user profiles and such) until your services decide that it’s time to send a notification to the given channel.

- 3. Authenticate and Push Notification to WNS. To send notifications to your channel URI, you are first required to authenticate against WNS using OAuth2 to retrieve a token to be used for each subsequent notification that you push to WNS. Once you have this token, you can compose and push the notification to the channel recipient. The push.wns.* methods make this task exceptionally quick to accomplish compared to writing it all from scratch yourself.
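For readers curious about what step 3 involves when done by hand, here is a rough Python sketch of the two HTTP requests. The token endpoint, form fields and X-WNS-Type header reflect the public WNS documentation as best I understand it; the function names are invented for this sketch, and the package SID, client secret, channel URI and access token are placeholders. The requests are only constructed, never sent. With Mobile Services, the push.wns.* functions take care of all of this for you.

```python
import urllib.parse
import urllib.request
from xml.sax.saxutils import escape

TOKEN_URL = "https://login.live.com/accesstoken.srf"


def build_token_request(package_sid: str, client_secret: str) -> urllib.request.Request:
    """OAuth2 client-credentials request that asks WNS for an access token."""
    form = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": package_sid,
        "client_secret": client_secret,
        "scope": "notify.windows.com",
    }).encode("ascii")
    return urllib.request.Request(
        TOKEN_URL,
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def build_toast_request(channel_uri: str, access_token: str, text: str) -> urllib.request.Request:
    """POST of a one-line toast to the channel URI obtained in step 1."""
    xml = (
        '<toast><visual><binding template="ToastText01">'
        f'<text id="1">{escape(text)}</text>'
        "</binding></visual></toast>"
    )
    return urllib.request.Request(
        channel_uri,
        data=xml.encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "text/xml",
            "X-WNS-Type": "wns/toast",
        },
        method="POST",
    )


# Placeholder values only; nothing here is a real credential or channel.
token_request = build_token_request("ms-app://placeholder-sid", "placeholder-secret")
toast_request = build_toast_request(
    "https://example.notify.windows.com/?token=placeholder",
    "placeholder-token",
    "Hello from the cloud <3",
)
```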

All in all, Windows Azure Mobile Services makes all these concepts and steps incredibly simple to implement through its structured storage and push notification features. This series will walk through creating a push notification scenario using WNS and Windows Azure Mobile Services.

For my detailed Push Notifications tutorial for Windows Azure Mobile Services, see my Windows Azure Mobile Services Preview Walkthrough–Part 3: Pushing Notifications to Windows 8 Users (C#) post of 9/22/2012.

• Kirill Gavrylyuk (@kirillg_msft) and Josh Twist (@joshtwist) produced a 00:41:38 Inside Windows Azure Mobile Services video for Channel 9 on 10/3/2012:

Kirill Gavrylyuk and Josh Twist dig into Windows Azure Mobile Services, which enables developers to connect a scalable cloud backend to their client and mobile applications across platforms. Windows Azure Mobile Services allows you to easily store structured data in the cloud that can span both devices and users and integrate it with user authentication as well as send out updates to clients via push notifications.

In this conversation - with plenty of whiteboarding - Kirill and Josh explain how this works, what's involved, where it is today and may be tomorrow. If you want to understand the how and why behind Mobile Services, this is for you. Tune in.

• Matteo Pagani (@qmatteoq) described Having fun with Azure Mobile Services – The setup in a 10/2/2012 post:

Azure Mobile Services is one of the coolest features that have recently been added on top of Azure. Basically, it’s a simple way to generate and host services that can be used in combination with mobile applications (not only Microsoft made, as we’ll see later) for different purposes: generic data services, authentication or push notification.

It isn’t something new: these services are built on top of the existing Azure infrastructure (the service is hosted by a Web Role and data is stored in a SQL Database); they’re just simpler for the developer to create and to interact with.

In the next posts I’m going to show you how to use Azure Mobile Services to host a simple service that provides some data and how to interact with this data (read, insert, update, etc.) from a Windows 8 and a Windows Phone application. These services are simply REST services that return JSON responses and that can be consumed by any application. As you will see, interacting with Windows 8 is really simple: Microsoft has released an add-on for Visual Studio 2012 that adds a library, which can be referenced by a Windows Store app, that makes it incredibly easy to perform operations with the service.

Windows Phone isn’t supported yet (even if I’m sure that, as soon as the Windows Phone 8 SDK is released, a library for this platform will be provided too): in this case we’ll switch to “manual mode”, which will be useful to understand how to interact with Azure Mobile Services also from an application not written using Microsoft technology (like an iOS or Android app). In this case, we’ll have to send web requests to the service and parse the response: as we’ll see, thanks to some open source libraries, it won’t be as difficult as it sounds.

Before starting to write some code, let’s see how to configure Azure Mobile Services.

Activating the feature

Of course, the first thing you’ll need is an Azure subscription: if you don’t have one, you can subscribe for the free trial by following these instructions.

Then, you’ll need to enable the feature: since Azure Mobile Services is in a preview stage, it isn’t enabled by default. To do that, you’ll need to access the Azure Account Management portal and open the Preview features section: you’ll see a list of the features that are available. Click on the Try now button next to the Mobile Services section, confirm the activation and… you’re ready! Within a few minutes you should receive a confirmation email and the page should display the message You are active.

Creating the service

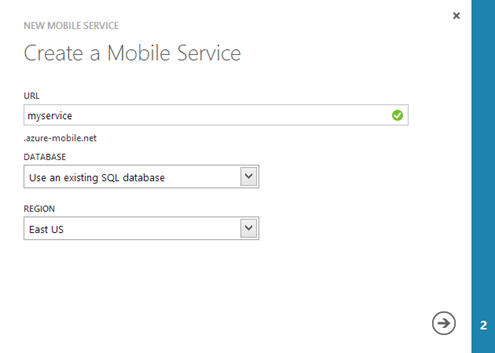

Now that the feature is enabled, we can start using it from the new Azure Management portal: press the New button and choose Create in the Mobile Services section. In the first step of the wizard you’ll be asked to choose:

- A name for the service (it will be the first part of the URL, followed by the domain azure-mobile.net; for example, myservice.azure-mobile.net).

- The database to use (actually, you’ll be forced to select Create a new SQL database, unless you already have other SQL Azure instances).

- The region where the service will be hosted: for better performance, choose the closest region to your country.

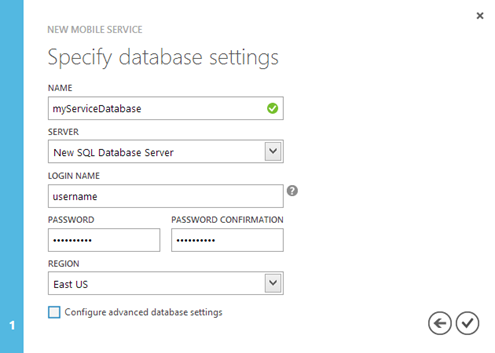

The next step is about the database: in the form you’re going to set some important options.

- The name of the database.

- The server on which to store the database (use the default option, which is New SQL Database server).

- Login and password of the user that will be used to access the database.

- The region where the database will be hosted: for better performance, choose the same region that you’ve selected to host the service.

And you’re done! Your service is up and running! If you go to the URL that you’ve chosen in the first step you’ll see a welcome page. This is the only “real” page you’ll see: we have created a service, not a website, specifically it’s a standard REST service. As we’ll see in a moment, we’ll be able to do operations on the database simply by using standard HTTP requests.

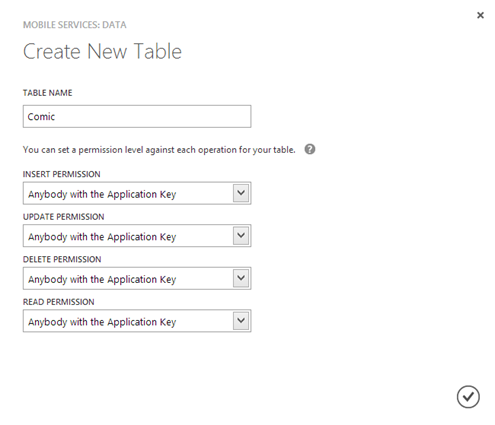

Let’s create a table

To host our data we need a table: for this example, since I’m a comics addict, we’ll create a simple table to store comics information, like the title, the author and the publishing year. Creating the table is the only part that is a little bit tricky: the Azure Mobile Service interface, as we’ll see later, provides built-in functions just to create a table, without providing functionality to change the schema and add new columns.

The first thing is to create the table by choosing the service we’ve just created in the portal (in the Mobile Services section), switching to the Data tab and pressing the Create button. You’ll be asked to give the table a name and to set the permissions: by default, we’ll give full access to any application that has been authorized using the secret application key.

Now it’s time to move to the specific Azure tool to manage our SQL instance: in fact, by default, the table will contain just an Id field, already configured to be an identity column (its value will be auto generated every time a new row is inserted) and to act as the primary key of our table. In the management panel, click on the SQL Databases tab; you’ll find the database you’ve just created in the previous wizard. Click on the Manage button that is placed below: if it’s the first time you connect to this database from your connection, the portal will prompt you to add your IP address to the firewall rules: just say Yes, otherwise you won’t be able to manage it.

Once you’ve added it, you’ll see another confirmation prompt that this time will ask you if you want to manage your database now: choose Yes and log in to the account using the database credentials you’ve created in the first step.

Now you have full access to the database management and we can start creating our table: click on the database connected to your service (look for the name you’ve chosen in the second step of the wizard), then click on the Design tab and choose Create new table. If you’re familiar with databases, it should be easy to create what we need: let’s simply add some columns to store our information.

- A title, which is a varchar with label Title

- An author, which is another varchar with label Author

There’s another way to add new columns: by using dynamic schema. When this feature is enabled (you can check it in the Configure tab of your mobile service, but it’s enabled by default), you’ll be able to add new columns directly from your application, simply by adding new properties to the class that you’re going to use to map the table. We’ll see how to do this in the next post.
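To picture what adding columns on the fly means, here is a toy in-memory model in Python. It only mimics the behavior described above (new properties on an incoming item become new columns when the feature is on); the class, its method names and the sample values are assumptions for illustration and have nothing to do with how the service stores data in SQL Database.

```python
class Table:
    """Toy table that grows its column list on insert, like dynamic schema."""

    def __init__(self, name, dynamic_schema=True):
        self.name = name
        self.dynamic_schema = dynamic_schema
        self.columns = ["id"]   # a new table starts with just an id column
        self.rows = []

    def insert(self, item: dict) -> dict:
        new_columns = [c for c in item if c not in self.columns]
        if new_columns and not self.dynamic_schema:
            # With the feature switched off, unknown properties are rejected.
            raise ValueError(f"unknown columns: {new_columns}")
        self.columns.extend(new_columns)
        row = {"id": len(self.rows) + 1, **item}
        self.rows.append(row)
        return row


# Hypothetical usage: the second insert introduces a "year" column.
comics = Table("Comic")
comics.insert({"title": "Example Comic", "author": "Example Author"})
comics.insert({"title": "Another Comic", "author": "Another Author", "year": 2012})
```

After the two inserts, the toy table has the columns id, title, author and year, even though year was never declared up front.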

As we’ve seen before, if we call the URL of our service (for example, http://myapp.azure-mobile.net) you’ll see a welcome page: to actually query our tables, we have to do some REST calls. To get the content of a table, we simply need to do a GET using the following URL.

http://myapp.azure-mobile.net/tables/Comic

If everything worked, the browser should display a JSON response like the following:

{"code":401,"error":"Unauthorized"}

This is the expected behavior: Azure Mobile Services requires authentication so that not just anyone who knows the URL of your service can access your data. Receiving this error confirms that the table has been created successfully; otherwise, we would have received an error saying that the requested table doesn’t exist:

{"code":404,"error":"Table 'Comic' does not exist."}

Now that we have set up everything we need, we are ready to write some code: in the next posts we’ll see how to develop a Windows 8 and a Windows Phone application that can connect to our service.
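As a rough illustration of the GET request and the two error responses described above, here is a small Python sketch. The X-ZUMO-APPLICATION header is, as far as I know, how Mobile Services clients pass the application key; the function names are made up for this sketch, the host is the example one used in the text and the key is a placeholder. The request is only built, not sent.

```python
import json
import urllib.request

SERVICE_URL = "http://myapp.azure-mobile.net"   # example host from the text
APP_KEY = "<your-application-key>"              # placeholder, not a real key


def build_table_request(table: str) -> urllib.request.Request:
    """Build (but do not send) a GET for the contents of a table."""
    return urllib.request.Request(
        f"{SERVICE_URL}/tables/{table}",
        headers={"X-ZUMO-APPLICATION": APP_KEY, "Accept": "application/json"},
        method="GET",
    )


def describe_error(body: str) -> str:
    """Turn the JSON error bodies shown above into a short diagnosis."""
    payload = json.loads(body)
    if payload.get("code") == 401:
        return "table exists, but the request was not authorized"
    if payload.get("code") == 404:
        return "table not found"
    return f"unexpected response: {payload}"


request = build_table_request("Comic")
```

A browser sends no application key, which is why it receives the 401 body; a client that adds the key header to the same URL should get the table contents instead.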

For a more detailed tutorial about setting up WAMS, see my Windows Azure Mobile Services Preview Walkthrough–Part 1: Windows 8 ToDo Demo Application (C#) of 9/8/2012.

Nick Harris (@cloudnick) described Localized Windows Azure Mobile Services Deck, HOL and Demo script in a 10/4/2012 post:

For those of you looking for localized content, whether to help you get started with learning about Windows Azure Mobile Services or to go out and present about it in your local community, we have made available localized hands-on labs, PowerPoint decks and demo scripts.

As a preview, here is a screenshot of the Agenda slide from the Chinese zh-TW PowerPoint deck:

We have localized the content into the following languages thus far. You can click on the link to get access to the content directly.

· Chinese zh-TW

· English en-US

· German de-DE

· French fr-FR

· Italian it-IT

· Japanese ja-JP

· Korean ko-KR

· Portuguese pt-BR

Coming soon:

· Spanish

· Russian

Jesus Rodriguez described a Mobilizing Your Line of Business Systems : Introducing The Enterprise Mobile Backend As A Service Webinar by Tellago to be held on 10/11/2012 at 12:00 PM PDT:

After a brief hiatus, I am super happy to announce our next Tellago Technology Update. This time we will be focusing on one of my favorite topics: enterprise mobility. Here is a quick summary:

Title: Mobilizing Your Line of Business Systems : Introducing The Enterprise Mobile Backend As A Service

Enterprise mobility is, undoubtedly, one of the key trends in the modern enterprise. However, the traditional approach to enterprise mobility represents a fairly expensive proposition both technically and financially for most organizations. To keep up with the fast evolution of enterprise mobile trends, the industry desperately needs alternative technical and commercial models that can make enterprise mobility mainstream. The answer might lie in another hot software trend: Backend as a Service.

This session introduces the concept of an Enterprise Mobile Backend as a Service (EntMBaaS). The session explores the different EntMBaaS capabilities and how they enable the integration of enterprise mobile applications with line of business systems. We will use real world examples that illustrate how the EntMBaaS model facilitates the implementation and enablement of enterprise mobile applications and will compare the differences with traditional enterprise mobility approaches.

Please register at https://www3.gotomeeting.com/register/429194358. We hope to see you there!

Sounds like Windows Azure Mobile Services to me.

Saurabh Kothari posted Introducing Windows Azure Mobile Services to the Aditi Technologies Blog on 10/3/2012:

With so much buzz around Windows 8 Metro and Windows Phone, Microsoft has provided developers with a lot of tools and infrastructure. Any Mobile or Windows 8 Metro development requires data storage, push notifications and services to access the data store. All of these are quite time-consuming and require stitching together various technologies to build an application with even a simple data model.

To build something as simple as a to-do list, you need a WCF service hosted in Windows Azure as a Web role that talks to SQL Azure. Push notifications require a bit of work as well. On top of that, you would want to secure these services with some kind of authentication. Windows Azure does provide all the infrastructure for this, but there is still a lot of configuration and deployment.

Typically technologies involved would be:

- WCF Service

- Azure Hosting with Web Role

- Access Control Services

- SQL Azure

- Setting up Push notifications

- Code to do the push notifications.

Windows Azure Mobile Services is a single packaged solution for all of these tasks.

With Windows Azure Mobile Services, you can perform these tasks with just a few configurations:

- Set up Service End point

- Create database and tables on SQL Azure [you don’t have to go to SQL Azure management]

- Write triggers on data update to send push notifications with one line of code.

- Integration with Windows Live for authentications

Windows Azure Mobile services provide one unified solution to the developer to configure various aspects of the development. It takes care of exposing the SQL Azure tables as “Data” in the management portal.

As you can see, there are tabs to configure items for Push notifications and Identity management. The current identity support seems to be limited to Windows Live accounts, whereas ACS would let you configure Facebook and various other OAuth providers.

So how does it work? Let’s map various development aspects that we discussed with the Windows Azure Mobile Services:

A. Data Storage - SQL Azure

- This is exposed as tables for the developer. You can create as many tables and define columns as in SQL Azure.

- This also lets you keep the table schema dynamic. This means that the object model can define the columns, and if the table does not have a column, it creates one. Scenario for this..? Well, I don’t have one in mind yet.

- This has Scripts options where you can do some processing on Insert, Update and Delete operations of data.

- One thing to note is that these are table level operations and not stored procedures.
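To make the dynamic schema idea concrete, here is a small in-memory sketch (in Python, purely illustrative). `DynamicTable` is a hypothetical stand-in and not the Mobile Services API: it only mimics the behavior described above, where a property that the table has not seen before becomes a new column on insert.

```python
class DynamicTable:
    """In-memory stand-in for a table with dynamic schema enabled."""

    def __init__(self, columns):
        self.columns = list(columns)
        self.rows = []

    def insert(self, item: dict) -> None:
        # Any property the table has not seen yet becomes a new column.
        for name in item:
            if name not in self.columns:
                self.columns.append(name)
        self.rows.append(dict(item))


items = DynamicTable(["id", "text"])
items.insert({"id": 1, "text": "Buy milk", "complete": False})
print(items.columns)
```

After the insert, the table carries a third column, `complete`, that was never declared up front. One plausible scenario is early prototyping, when the client-side model is still changing.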

B. WCF service – ODATA

- Now there is no separate WCF service hosting required.

- The table data is exposed as an ODATA REST-based service encapsulated in the Microsoft.WindowsAzure.MobileServices namespace and the MobileServiceClient class.

- MobileServiceClient is initialized with the secret keys and the service URL [this is where it is hosted] https://xyz.azure-mobile.net/

- So we work with the object model that matches the table structure and call methods on MobileServiceClient to update, insert or delete, similar to the Entity Framework.

C. Hosting

- This is taken care [of] as soon as you create new Mobile service.

D. Permissions

- You can set up identity with Windows Live as of now. On Windows Live, register the app for Windows Live integration, similar to the older RPS model.

- At table level you can do permissions as follows:

- With MobileServiceClient, you can log in the user by sending the Auth token received from the user’s Live login using the LoginAsync method.

E. Authentication: Windows Live

- You can use the Windows Live SDK on the client to log the user in to the application and send the authentication token to Windows Azure Mobile Services.

So with Windows Azure Mobile Services, all back end work is taken care of for Windows 8 Metro and Windows Phone.

Bruno Terkaly (@brunoterkaly) completed his series with Part 4 of 4: Introduction to Consuming Azure Mobile Services from Android on 10/3/2012:

The post covers:

- Creating a new Android Application

- How to name your application and modules

- Application Name

- Project Name

- Package Name

- Creating a simple hello world application

- How to add a listview control

- Understanding and adding import statements

- Adding java code to populate the listview control with strings

- Download the httpclient library from the Apache Foundation

- Adding the httpclient library to our Android project

- Adding code to call into Azure Mobile Services

- Adding permissions to allow our Android app to call into Azure Mobile Services

- Adding all the java code needed to call into Azure Mobile Services

…

Bruno continues with a fully illustrated tutorial for a simple todo app.

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

<Return to section navigation list>

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

•• Christian Weyer reported a Bug in ASP.NET 4.0 routing: Web API Url.Link may return null in a 10/6/2012 post to the Thintecture blog:

The other day I was building an integration layer for native HTML5/JS-based mobile apps with Windows Azure’s ACS. For that I needed to craft a redirect URL in one of the actions in a controller called AcsController.

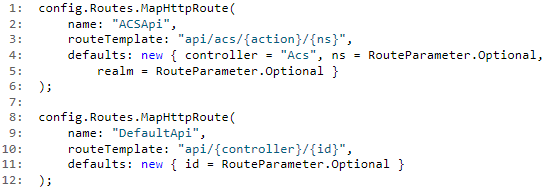

This is my route setup for Web API:

Then in the controller action I try to use Url.Link to build a redirect URL:

    var redirectUrl = Url.Link("ACSApi", new { controller = "Acs", action = "Noop" });

On various systems running .NET 4.0, including Windows Azure Web Sites, this call returned null. On installations with .NET 4.5 all was fine. After several emails back and forth with the Web API team in Redmond it turned out that there is a bug in .NET 4.0.

The issue is in ASP.NET Routing with routes that have optional route values followed by a slash. This issue has been fixed in .NET 4.5. But there is a workaround for 4.0 like this:

Hope this helps.

•• Haishi Bai (@HaishiBai2010) described Cross-role Events using Windows Azure Caching (Preview) in a 10/5/2012 post:

Problem

There are several different options for Web Roles and Worker Roles to communicate with each other within the boundary of a Cloud Service:

- Use Service Bus Queues or Windows Azure Queue Storage (async communication, not constrained by service boundary)

- Use Internal Endpoints to directly dispatch workloads (direct communication, within service boundary)

- Use standard cross-machine communications such as WCF (depends on WCF configuration)

- Use a common storage such as Windows Azure Table Storage (shared-storage integration pattern, not constrained by service boundary)

Other than the WCF option, which is more complex than the others, none of the above options provides good support for an event-driven pattern. It would be nice if we could implement a simple cross-role eventing mechanism so that we can raise events across role boundaries.

Solution

Windows Azure Caching (Preview) provides some built-in notification capabilities. You can subscribe to notifications at cluster, region, as well as item level. Because the cache cluster is accessible to all configured roles, we can easily tap into this notification mechanism and gain some simple eventing capabilities without much effort. Of course, implementing a robust event system requires much more than what’s presented here. This post merely provides some food for thought.

The first thing is to enable notification on your cache cluster. This is a simple configuration change:

Then, create a DataCache client with a reduced change-polling interval. By default the interval is 5 minutes. In the following code I set the interval to 5 seconds instead. Note that in the code I’m looking for a specific cache named “role_events”. I think it’s a good idea to keep a named cache for events separate from other caches, but of course you can use the default cache if you want to.

    DataCacheFactoryConfiguration configuration = new DataCacheFactoryConfiguration();
    // Poll for notifications every 5 seconds instead of the default interval.
    configuration.NotificationProperties = new DataCacheNotificationProperties(1000, new TimeSpan(0, 0, 5));
    DataCacheFactory factory = new DataCacheFactory(configuration);
    DataCache cache = factory.GetCache("role_events");

Once we have the cache client, we can subscribe to different cache notifications. In the following code I register for cluster-level add and replace events; depending on your design, you may want to use different events.

    if (cache != null)
    {
        cache.AddCacheLevelCallback(DataCacheOperations.AddItem | DataCacheOperations.ReplaceItem,
            (namedCache, regionName, key, version, cacheOperation, nd) =>
            {
                //CALL CALLBACKS
            });
    }

Now the callbacks will be called whenever an item is added or updated in the “role_events” cache. To make things even a little nicer, I wrote a simple wrapper to enable .Net style events. Again, this is an over-simplified sample. Don’t use it in your production code.

    public class EventBus
    {
        public static event EventHandler<BrokeredEventArgs> EventReceived;
        private static DataCache mCache;

        private EventBus() { }

        static EventBus()
        {
            DataCacheFactoryConfiguration configuration = new DataCacheFactoryConfiguration();
            configuration.NotificationProperties = new DataCacheNotificationProperties(1000, new TimeSpan(0, 0, 5));
            DataCacheFactory factory = new DataCacheFactory(configuration);
            // Assign the static field; a local variable here would leave mCache null
            // and the callback below would never be registered.
            mCache = factory.GetCache("role_events");
            if (mCache != null)
            {
                mCache.AddCacheLevelCallback(DataCacheOperations.AddItem | DataCacheOperations.ReplaceItem,
                    (namedCache, regionName, key, version, cacheOperation, nd) =>
                    {
                        if (EventReceived != null)
                            EventReceived(mCache, new BrokeredEventArgs(key, mCache.Get(key)));
                    });
            }
        }
    }

Sending an event is easy: simply add or update an item in the cache. Note that here I’m not using any custom classes. It’s all standard caching operations. On the other hand, it doesn’t hurt to add a Send() method on the EventBus class to provide some additional abstraction. Note that the sender’s side doesn’t need notification polling as aggressive as on the receiver’s side. Keep that in mind when you implement such a method.

    DataCache cache = new DataCache();
    cache.Put("JobCreated", job);

Receiving events is easy as well:

    EventBus.EventReceived += (o, e) =>
    {
        var name = e.EventName;
        var payload = e.Payload;
    };

And BrokeredEventArgs is a simple class with a name and a payload:

    public class BrokeredEventArgs : EventArgs
    {
        public string EventName { get; private set; }
        public object Payload { get; private set; }

        public BrokeredEventArgs(string name, object payload)
        {
            EventName = name;
            Payload = payload;
        }
    }

That’s it! One last thing I want to mention is that, for simplicity (yeah, right), the code above doesn’t handle any transient errors. In reality you need to prepare for such errors, especially when the caching cluster is hosted on a dedicated role. In this case the cluster role instances and other web/worker role instances are initialized independently. Chances are that when you make your first caching call the cluster is not ready yet. Although DataCache has some built-in retries when it connects to a cluster, you’d better still prepare for such errors.
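The retry advice above is language-neutral. As a rough illustration (in Python rather than C#, and without the caching client), the sketch below retries an operation a few times with a growing delay. `call_with_retries`, its parameters, and the choice of `ConnectionError` as the transient error type are all assumptions made for this example; none of them comes from the Windows Azure Caching API.

```python
import time


def call_with_retries(operation, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call operation(); on ConnectionError, wait and try again.

    The delay doubles after each failed attempt, and the last failure
    is re-raised to the caller.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))


# Usage: a stand-in for the first cache call, which fails twice and then succeeds.
calls = {"count": 0}


def flaky_get_cache():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("cache cluster not ready")
    return "role_events"


print(call_with_retries(flaky_get_cache, sleep=lambda seconds: None))
```

Passing `sleep` as a parameter keeps the sketch easy to exercise without real waiting. In the C# sample, the equivalent wrapper would sit around the first call that touches the cache.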

Bharat Shah announced Microsoft Acquires PhoneFactor on 10/4/2012:

Today I am excited to announce that we are welcoming PhoneFactor to the Microsoft family. For those of you not familiar with PhoneFactor, they are an industry leader in phone-based multi-factor authentication (MFA) and their solutions bring a unique blend of security and convenience to our developers, partners and customers.

People are connecting to critical applications and services through an ever-growing number of devices – corporate PCs, business or personal laptops, personal phones, and more. These applications and devices are generally only secured using single factor authentication (i.e. passwords). As many are aware, single-factor authentication can often be insufficient, which is why leading businesses around the world are turning to MFA to enhance security in a multi-device, mobile, and cloud-centric world. Typical MFA solutions require the user to have something they know (like their password) and something they physically possess (a device of some kind like a smartcard) – and the result is often too complex or hard to use. MFA is meant to provide enhanced security, but for it to be effective it must also be convenient. PhoneFactor is popular because its solutions interoperate well with Active Directory so users don’t have to learn new passwords and IT administrators and application developers can use infrastructure and services they already know. Also, perhaps most importantly, PhoneFactor is popular because it conveniently relies on a device that most users already have with them – their phone.

PhoneFactor’s solutions can be implemented to help Microsoft customers protect data in SharePoint, on their file servers and with their critical business apps running on-premises. In addition, they can be used to enhance the security of applications running in the cloud. To learn more about PhoneFactor and what our MFA solutions can do for you – today – please visit: www.PhoneFactor.com.

As we bring PhoneFactor onboard, we will drive further integration with key Microsoft technologies like Active Directory, Windows Azure Active Directory and Office 365, making it even easier for customers to protect their on-premises and cloud assets. Of course, we will continue to work with other security partners in the industry to offer a broad array of multi-factor and strong authentication solutions to best meet the wide-ranging and unique security requirements of our customers.

I am thrilled to welcome PhoneFactor to Microsoft and I look forward to sharing more about our plans in the near future.

- Bharat Shah, Corporate Vice President, Server and Tools Division

No significant articles today

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Haishi Bai (@HaishiBai2010) delivered Recipes for Multi-tenant Cloud Service Design – Recipe 1: Throttling by Tenants Using Multi-site Cloud Services and IIS 8 CPU Throttling on 10/3/2012:

About Multi-tenancy

One of the major mind shifts an ISV needs to go through when it migrates on-premises systems to cloud-based systems is the shift from multi-instance (or single-tenant) architecture to multi-tenant architecture. Instead of deploying separate service instances for individual customers, an ISV can serve all its customers from the cloud. During this migration, however, some problems, which may not be so critical in a multi-instance system, will surface and cause troubles if the ISV doesn’t plan ahead. So, migrating an existing service to cloud is not just a simple matter of switching host environments. There are several new challenges the ISV has to face:

- Tenant isolation. An ISV needs to ensure customers are isolated from each other – one customer should not gain access to other customers’ data, either accidentally or intentionally; workflows from different customers should not interfere with each other; provisioning or deprovisioning a customer should have zero impact on other customers; customer-level configuration changes should have no global impacts… In other words, a customer should feel (and be assured) that the service is logically dedicated to him, regardless of the fact that it’s hosted on a shared infrastructure.

- Problem containment. A multi-tenant system comes with many benefits such as dramatic reduction in TCO, agility in reacting to market changes, and high availability based on a robust, fault-tolerant hosting environment such as Windows Azure. However, it also brings additional risks to an ISV. For example, a successful DoS attack on an on-premises system brings down only one customer, while the same attack on a multi-tenant system may cause a system-wide failure across all customers. That’s a much higher risk to deal with. An ISV needs ways to closely monitor system health and to constrain the impact to the smallest scope when problems do occur.

- Security and compliance. This is a broad problem that contains several aspects across a wide spectrum. First, authentication and authorization may become challenging for systems that have been relying on Active Directory. How do you leverage Active Directory in the cloud? Second, many industries and countries have strict compliance requirements such as where the data can be stored and who can execute a particular workflow. How does an ISV satisfy the compliance requirements while it runs the service in the cloud? Third, in a hosted environment, service clients communicate with the services over the public Internet. This requires precautions against attacks such as phishing and eavesdropping. Fourth, in a multi-instance (or single-tenant) system, the system contains a single security boundary, while in a multi-tenant system, the system contains multiple security boundaries isolating different customers. An ISV has to make sure such finer security boundaries are reinforced to guard against attacks such as spoofing and impersonation.

- Data migration and transformation. Is the ISV’s database schema designed to hold data for multiple customers? Should the ISV consider using separate database for different customers? What are the needs to migrate existing customer data? Will the database layer scale? Should the data be synced among different sources? All these questions require serious consideration and careful planning.

- Transient errors. Service calls fail. Maybe the connection is dropped. Maybe the service is down. Maybe the server is throttling your requests. Maybe your requests timed out due to heavy workloads… Transient errors are the type of errors that are temporary and automatically resolved – if you try the same operation again later the call is likely to succeed. However, if an ISV’s code is not prepared for transient errors and takes for granted that certain calls will complete, it will probably be surprised by strange behaviors that are hard to reproduce and very difficult to fix. And I can almost guarantee you when that happens, it ALWAYS happens at the worst possible time.

- Performance, performance, performance. I can’t emphasize enough the importance of performance, because now the ISV’s services and clients are communicating over a long wire. Is the added latency acceptable? How can it be reduced? In addition, transferring a large amount of data on a corporate network might work fine, but is it still feasible to transfer the same amount of data over the Internet? Can we keep the same level of caching per customer without exhausting server resources? How do we maintain the performance level when the number of requests spikes? How do we handle long-running third-party service calls? How do we identify and resolve performance bottlenecks across application layers? Remember, in the world of the Internet, a slow service equals a broken service – and you can quote me on that.

- BI opportunities. It’s much easier for a multi-tenant system to gain a holistic view of all customers because the system is directly connected with all customer activities. Very few ISVs can resist (nor should they) the tremendous business value such insights can bring them. And many more innovative scenarios become possible when deeper BI is acquired. However, BI doesn’t come for free. It’s by itself a sizable project. What I’ve observed is that without proper planning, some initial data-mining capabilities are sprinkled here and there and people LOVE them. And more and more demands come in (often from “the top”) as the project goes along, until the system starts to suffer from the lack of a proper BI architecture. In one particular case, because the OLAP queries were locking up transactional data, they had to be pulled out of the system at a very late stage so that the service was not interrupted. And I can tell you the situation wasn’t pretty.

As you can see, there is a LOT to consider when implementing a multi-tenant system. But I don’t want you to get discouraged. Instead, you should be excited, as MANY people have worked hard to provide all kinds of guidance, services and tools to help build multi-tenant systems. As a world leader in services, Microsoft is no exception. If you look closely at the new features provided by Windows Server 2012, IIS 8, and Windows Azure, you can see multi-tenancy written everywhere. There are good chemical reactions happening between Windows Azure and Windows Server – they leverage each other and pull each other forward. The interaction creates not only a better and better cloud platform, but also stronger, more scalable on-premises servers.

For the rest of the series, I’ll go through several recipes that provide potential solutions to many of the above problems and challenges. Each recipe is presented in a problem-solution format, with tags on problems indicating to which areas the problems are related. I’ll be using features and capabilities of Windows Server 2012, IIS 8, Windows Azure, Windows Azure SDK, as well as .Net 4.5. However, you should be able to try out most of the samples with a Windows 8 + IIS 8 + Visual Studio 2012 + Windows Azure SDK machine. Now let’s jump in!

Recipe 1: Throttling by Tenants Using Multi-site Cloud Services and IIS 8 CPU Throttling

Problem [Tenant isolation][Problem containment][Performance]

It’s very common for an ISV to provide different sites for its SaaS subscribers. The approach creates not only a sense of ownership, but also security boundaries and management boundaries to manage the customers more effectively. Because customers from all sites compete for the same pool of precious resources the ISV can provide – CPU, storage, bandwidth, etc. – the ISV has to make sure excessive workload from one customer has minimal impact on other customers. In addition, the ISV may have signed different SLAs with different customers, so it has to ensure that the prime subscribers are granted sufficient resources to keep the SLA promises.

In summary, our requirements are:

- Be able to throttle users based on their service subscriptions.

- Heavy workloads should not jeopardize the overall system performance.

Solution

With IIS 8 on Windows Server 2012, IIS application pools are isolated into sand-boxes. The sand-boxes provide not only the security boundaries, but also the resource management boundaries. With sand-boxed application pools, an ISV can truly limit how much CPU each tenant can use (read more about sand-boxing on www.iis.net). In this sample solution, I’ll assume the ISV has two levels of subscriptions: gold and silver. To ensure SLA, the ISV allows gold subscribers to consume more CPU than the silver subscribers can ever request. In addition, the CPU throttling also makes sure the system is not saturated by unexpected surge of workloads. The solution is simple - I’ll use Web Role multi-site capability to create two separate sites, one for each level of subscription. And then, I’ll configure the corresponding application pools according to predefined throttling policy.

Sample walkthrough

The following is a walkthrough of creating the sample scenario from scratch. I do assume you are familiar with Windows Azure Cloud Service, IIS Manager and ASP.NET MVC in general, so some of the steps won’t be as detailed as they would be for beginners.

Prerequisites

- Visual Studio 2012

- Windows 8 with IIS 8.0

- Windows Azure SDK

Step 1: Create a simple multi-site Web Role with a heavy workload simulator.

- Create a new Cloud Service with an ASP.NET MVC4 Web Role.

- In Solution Explorer, right-click on the Cloud Service project and select Properties…. Then, in Web tab, change Local Development Server to Use IIS Web Server.

- Add a new CPUThrottleController Controller with an empty Index view.

- CPUThrottleController contains two methods:

    public ActionResult Index()
    {
        return View();
    }

    public ActionResult HeavyLoad()
    {
        // One busy thread per logical processor on the test machine.
        int count = 4;
        for (int i = 0; i < count; i++)
        {
            ThreadPool.QueueUserWorkItem((obj) =>
            {
                Random rand = new Random();
                while (true)
                {
                    rand.Next();
                }
            });
        }
        return new HttpStatusCodeResult(200);
    }

The Index() method returns the Index view, which we’ll implement in a moment. The HeavyLoad() method simulates CPU-intensive work by creating a tight loop. Because my test machine has four logical processors, I used count 4 to make sure all of them are saturated. You can adjust this value according to your machine’s configuration.

- Modify the Index view for CPUThrottleController and add a Kill CPU link:

    @Html.ActionLink("Kill CPU", "HeavyLoad");

- Now define the sites in Cloud Service project’s .csdef file:

    ...
    <Runtime executionContext="elevated"/>
    <Sites>
      <Site name="gold" physicalDirectory="..\..\..\IIS8Features.Web">
        <Bindings>
          <Binding name="Endpoint1" endpointName="Endpoint1" hostHeader="gold.haishibai.com"/>
        </Bindings>
      </Site>
      <Site name="silver" physicalDirectory="..\..\..\IIS8Features.Web">
        <Bindings>
          <Binding name="Endpoint1" endpointName="Endpoint1" hostHeader="silver.haishibai.com"/>
        </Bindings>
      </Site>
    </Sites>
    ...

Note: you’ll need to update the physicalDirectory values to reflect your own solution folder structure and Web Role project name. Also, I included the instruction to run RoleEntryPoint with elevated privilege, because we’ll need to reconfigure application pools, which requires administrative access. You can also update the hostHeader values to use the values of your choice.

- Edit your hosts file under the %systemroot%\System32\Drivers\etc folder to include two lookup entries for the above two host names:

    127.255.0.0 gold.haishibai.com
    127.255.0.0 silver.haishibai.com

Step 2: Test the application without throttling

- Press F5 to start the application. You’ll see a browser with a 400 error – that’s expected.

- Launch another browser and go to either http://gold.haishibai.com:82 or http://silver.haishibai.com:82. Note that on your system you may get a different port number. The way to get this port number is to open IIS manager and examine the binding of deployed sites:

- Start the Task Manager. Arrange the browser window and Task Manager window side-by-side:

- Now in the browser address, add /CPUThrottle then press enter. Then, click on the Kill CPU link on the page. Watch CPUs go nuts:

- Close BOTH browser windows. Visual Studio will clean up the web sites and corresponding application pools it created for you, and your CPU usage should drop back to normal.

Step 3: Enable Throttling

- Add a reference to Microsoft.Web.Administration.dll to your Web Role project (browse to %systemroot%\System32\inetsrv folder to find the assembly).

- Modify the WebRole class to reconfigure application pools to enable CPU throttling:

    using System.Linq;
    using Microsoft.Web.Administration;
    using Microsoft.WindowsAzure.ServiceRuntime;

    public class WebRole : RoleEntryPoint
    {
        public override bool OnStart()
        {
            // CPU limits are in 1/1000ths of a percent: 40000 = 40%, 30000 = 30%.
            CustomerProfile[] profiles =
            {
                new CustomerProfile { Name = "gold", CPUThrottle = 40000 },
                new CustomerProfile { Name = "silver", CPUThrottle = 30000 }
            };
            using (ServerManager serverManager = new ServerManager())
            {
                var applicationPools = serverManager.ApplicationPools;
                foreach (var profile in profiles)
                {
                    // Find the application pool used by the site deployed for this profile.
                    var appPoolName = serverManager.Sites[RoleEnvironment.CurrentRoleInstance.Id + "_" + profile.Name].Applications.First().ApplicationPoolName;
                    var appPool = applicationPools[appPoolName];
                    appPool.Cpu.Limit = profile.CPUThrottle;
                    appPool.Cpu.Action = ProcessorAction.Throttle;
                }
                serverManager.CommitChanges();
            }
            return base.OnStart();
        }

        private struct CustomerProfile
        {
            public string Name;
            public int CPUThrottle;
        }
    }

In the above implementation, the OnStart() method finds the application pool each site is using, and then applies CPU throttling to each pool according to the CustomerProfile settings. CPUThrottle is expressed in 1/1000ths of a percent of CPU (so 10 percent is 10,000). This sets the upper limit of CPU power the application pool is allowed to use. You can see that for “gold” users we are allowing up to 40%, while for “silver” users we are allowing up to 30%. ProcessorAction.Throttle specifies that when the throttling limit is hit, user requests should be throttled. There are other options such as KillW3wp (similar to what you get in IIS 7) and ThrottleUnderLoad. You can read more about these options on www.iis.net.

- That’s all we have to do! Now launch the application again and try the Kill CPU link. You can see CPU consumption is well under control. If you want, you can launch another browser, navigate to the other site and do the same Kill CPU operation. Because collectively the applications are allowed to use up to 70 percent of CPU, the CPUs are not saturated in either case:
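The unit used by the CPU limit is easy to get wrong, so here is a small arithmetic sketch (in Python, purely for illustration). `percent_to_cpu_limit` is a hypothetical helper, not an IIS API: it converts a percentage to the 1/1000-percent value described above and adds up the combined budget of the two sample pools.

```python
def percent_to_cpu_limit(percent: float) -> int:
    """Convert a CPU percentage to 1/1000ths of a percent (10% -> 10000)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(percent * 1000)


gold = percent_to_cpu_limit(40)    # 40000, as in the gold profile
silver = percent_to_cpu_limit(30)  # 30000, as in the silver profile

# Combined ceiling of both pools, converted back to percent.
print(gold, silver, (gold + silver) / 1000)
```

The combined ceiling of 70 percent matches the observation that the CPUs are not saturated even when both sites run the heavy workload at the same time.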

Some additional notes

- You can also configure the throttling settings via AppCmd. For example, this command limits CPU consumption of DefaultAppPool to 30%:

%systemroot%\system32\inetsrv\appcmd set apppool DefaultAppPool /cpu.limit:30000 /cpu.action:Throttle- If you want to use appcmd in a startup task to configure the application pools, be aware that by the time the startup task runs, the application pools have not been created. As a workaround, you can use appcmd to change the default application pool settings so that all application pools created hereafter will be affected. However it’s not possible to set up different settings for different application pools:

%systemroot%\system32\inetsrv\appcmd set config -section:system.applicationHost/applicationPools /applicationPoolDefaults.cpu.limit:90000 /commit:apphost

- After the sites are deployed, you can also change the setting in IIS manager UI – simply select the application pool you want to configure and bring up Advanced Settings:

Part 1 Summary

In this post we created a multi-site Web Role for two levels of subscriptions: silver and gold. We then configured the corresponding application pools to ensure that gold users have abundant CPU resources regardless of the load on the other group.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Himanshu Singh (@himanshuks) posted Real World Windows Azure: Exhaust Systems Manufacturer Akrapovič Revs Up for Global Operations with Windows Azure on 10/4/2012:

As part of the Real World Windows Azure series, I connected with Aleš Tancer, CIO at Akrapovič to learn more about how Windows Azure has provided the company with a platform that will support the high growth of their business and their global operations. Read Akrapovič’s success story here. Read on to find out what he had to say.

Himanshu Kumar Singh: Tell me about Akrapovič.

Aleš Tancer: Akrapovič is the leading global manufacturer of top-quality exhaust systems for motorcycles and performance cars and supports many of the world's top motorcycle and car racing teams. Based in Slovenia, we are also an innovator in titanium and carbon fiber product manufacturing. Akrapovič aftermarket car exhaust systems can be found on leading global car brands such as Abarth, Audi, BMW, Ferrari, Porsche, Lamborghini and many others. Our motorcycle aftermarket or first fit exhaust systems are used by Aprilia, BMW, Ducati, Gilera, Harley Davidson, Honda and Yamaha, among others.

HKS: How fast is your company growing?

AT: We have a partner network that spans more than 60 countries and serves more than 3,000 partners, and our innovative solutions and technologies and global brand recognition have driven rapid company growth. Our goal is to increase revenue by 100 percent in the next five years and add several thousand new partners to our partnership network. To effectively support these ambitious development goals, we needed a comprehensive solution that would efficiently link all business processes related to B2B and CRM operations.

HKS: Who did you turn to for the development of this comprehensive solution?

AT: We decided to entrust the development of our Akrapovič Business Platform system to Microsoft Consulting Services (MCS) Slovenia and Agito. Agito has already completed several projects for our company and has proven that they are among the most dependable, effective and competent partners for developing software projects by using Microsoft's technologies. The company is also renowned as a leading regional company for Microsoft SharePoint Server deployments, custom .NET development and different integration projects.

HKS: What role did Microsoft Consulting Services play?

AT: Microsoft Consulting Services participated in all stages of the projects, which included the assessment of the existing environment and planning future business processes and solutions. Microsoft architects were involved in designing the features that would be best suited to our needs in terms of performance, availability, security and scalability requirements. Microsoft Consulting Services also cooperated in the integration stage of the project where Microsoft consultants took over the integration of individual systems while Agito provided integration interfaces.

HKS: Tell me about the solution.

AT: The Akrapovič Business Platform solution consists of several closely linked systems: Product Information System (PIM), Business Portal (BP), Internet Portal (IP) and CRM system integration. It supports all the most important business processes: management of customers, campaigns, opportunities, orders, contracts and payments. The solution also links these processes to research and development processes.

We wanted to take advantage of the benefits offered by using both on-site solutions as well as cloud computing. The result is a tightly integrated hybrid environment, where some systems, such as Dynamics CRM and BizTalk are deployed on-site, while a hosting provider hosts others. We also utilized Windows Azure, which means that the solution represents a realistic and gradual migration path from the local environment to the cloud.

HKS: What other Microsoft solutions are you using?

AT: SharePoint Server 2010 enabled us to unify our Internet and Extranet platforms and to pave the way for deploying an Intranet in the future. All collaboration processes and workflows that serve internal departments, teams, individuals, and external partners are supported by the system. The result of this approach is streamlined content management and content publishing processes.

Microsoft Dynamics CRM 2011 and Microsoft Office 2010 brought a fresh, yet familiar experience to our users in Marketing & Sales. The user experience that combines CRM and Outlook drives user adoption with a familiar and flexible user interface, helping to minimize resource and training investments. Many teams, especially in the sales department, have switched from SAP to CRM, where they have everything they need for their daily work.

Microsoft BizTalk Server 2010 was used to integrate SAP, CRM, PIM and the B2B Portal. Chosen because it is a mature enterprise product for integration and connectivity, BizTalk Server includes more than 25 multi-platform adapters and a robust messaging infrastructure to provide connectivity between core systems whether they are local, remote, hosted or cloud-based.

And we chose Windows Azure Content Delivery Network (CDN) to serve multimedia content and technical documents. Akrapovič products come with a huge amount of marketing multimedia and technical materials, which is ideal for cloud storage. Windows Azure CDN enables us to ensure that our partners all over the globe share the same user experience in terms of download speeds.

HKS: How are you rolling out this new solution?

AT: At the project launch in Q1 of 2012 the first partners were given access to the new business portal and their feedback was very positive. Our goal is that all existing partners will be included in the new business community, which means about 3,000 partners in more than 60 countries in Europe, North and South America, Asia and the Middle East. In Q3 2012, the same solution will also be deployed in our American subsidiary. In the future, new Sales business units are scheduled to be opened in Europe, Asia and South America, where the same back-office solution will be considered.

HKS: What are some of the internal business benefits you’ve seen with this new solution on Windows Azure?

AT: The new solution provides us with better control over Marketing & Sales and Research & Development business processes, connects partners to all of our departments and people, and centralizes all information and content about products in one place, an important benefit for all partners.

Before the implementation of the new solution, all sales orders were taken by phone, fax or e-mail and manually entered into the SAP system. This process was very time consuming and required a lot of administrative work, with much room for error and uncertainty. With the new solution, the administrative burden has been significantly reduced, resulting in a shorter and more effective sales cycle. Other benefits include more responsive marketing services, a faster ordering process, and reduced time from ordering to distribution. We now also have a powerful marketing tool for executing campaigns or sending newsletters.

HKS: What about the partner and customer benefits?

AT: By implementing the new solution, we’ve been able to dramatically increase the quality and efficiency of collaboration in our partner network and to improve the control over our internal marketing, sales and development processes. The new solution provides a single point of entry and information source for all partners. The company is now able to provide richer and more up-to-date information to our partners.

HKS: Are there also business benefits to your company?

AT: Yes, by using the information from the on-line ordering system, we are better positioned to plan production more accurately to reduce back-orders and warehousing costs. The new solution offers a high level of flexibility that enables us to implement different business approaches for different partner segments and offer high levels of localization. We are now able to run more accurate analytics, which in turn supports better strategic decisions.

The solution also enables us to expand our business operations, both in volume and geographically. Agents can easily introduce new dealers, importers, and distributors and manage them. Among the many benefits of the new information system are its high availability and the ability to place orders 24/7, which is mandatory for global expansion. By implementing the system, we have established a platform that will support high growth of our business and our global operations.

Read how others are using Windows Azure.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Gaurav Mantri posted Understanding Windows Azure Diagnostics Costs And Some Ways To Control It on 10/3/2012:

In this blog post, we’ll try to understand the costs associated with Windows Azure Diagnostics (WAD) and some of the things we could do to keep them down.

Brief Introduction

Let’s take a moment and talk briefly about WAD, especially how the data is stored. If you’re familiar with WAD and its data store, please feel free to skip this section.

Essentially, Windows Azure Storage (Tables and Blobs) is used to store the WAD data collected by your application. The following table summarizes the tables and blob containers used for storing WAD data:

| Table / Blob Container Name | Purpose |
| --- | --- |
| WADLogsTable | Table to store application tracing data. |
| WADDiagnosticInfrastructureLogsTable | Table to store diagnostics infrastructure data collected by Windows Azure. |
| WADPerformanceCountersTable | Table to store performance counters data. |
| WADWindowsEventLogsTable | Table to store event logs data. |
| WADDirectoriesTable | Pointer table for some of the diagnostics data stored in blob storage. |
| wad-iis-logfiles | Blob container to store IIS logs. |
| wad-iis-failedrequestlogfiles | Blob container to store IIS failed request logs. |
| wad-crash-dumps | Blob container to store crash dump data. |
| wad-control-container | Blob container to store WAD configuration data. |

In this blog post, we will focus only on tables.

Understanding Overall Costing

Now let’s take a moment and understand how you’re charged. Since the data is stored in Windows Azure Storage, there are two components:

Storage Costs

This is the cost of storing the data. Since the data is stored in the form of entities in the tables mentioned above, it is possible to calculate the storage size. The formula for calculating the storage size of an entity is:

4 bytes + Len (PartitionKey + RowKey) * 2 bytes + For-Each Property(8 bytes + Len(Property Name) * 2 bytes + Sizeof(.Net Property Type))

Where, the Sizeof(.Net Property Type) for the different types is:

- String – # of Characters * 2 bytes + 4 bytes for length of string

- DateTime – 8 bytes

- GUID – 16 bytes

- Double – 8 bytes

- Int – 4 bytes

- INT64 – 8 bytes

- Bool – 1 byte

- Binary – sizeof(value) in bytes + 4 bytes for length of binary array
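The formula and the per-type sizes above can be sketched as a small calculator. This is only an illustrative sketch: the function names, the type labels ("string", "int64", and so on) and the sample entity are hypothetical, and Python's len() counts code points, so the string sizes assume characters that each occupy one 2-byte unit.

```python
# Fixed value sizes in bytes, taken from the list above.
FIXED_SIZES = {
    "datetime": 8,
    "guid": 16,
    "double": 8,
    "int": 4,
    "int64": 8,
    "bool": 1,
}


def property_value_size(type_name, value=None):
    """Size in bytes of a property value of the given type."""
    if type_name == "string":
        # 2 bytes per character + 4 bytes for the string length
        return len(value) * 2 + 4
    if type_name == "binary":
        # raw bytes + 4 bytes for the array length
        return len(value) + 4
    return FIXED_SIZES[type_name]


def entity_size(partition_key, row_key, properties):
    """Estimated stored size of one entity.

    properties is a list of (name, type_name, value) tuples.
    """
    size = 4 + (len(partition_key) + len(row_key)) * 2
    for name, type_name, value in properties:
        size += 8 + len(name) * 2 + property_value_size(type_name, value)
    return size


# Hypothetical entity with one Int and one String property.
sample = entity_size("pk", "rk", [("Level", "int", None),
                                  ("Message", "string", "hello")])
print(sample)  # 70
```

For the sample entity, the keys contribute 4 + 4 * 2 = 12 bytes, Level contributes 8 + 10 + 4 = 22 bytes, and Message contributes 8 + 14 + 14 = 36 bytes.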

At the time of writing this blog post, the cost of storing 1 GB of data in Windows Azure Storage was:

$0.125 – Geo redundant storage

$0.093 – Locally redundant storage
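As a rough illustration of how these rates translate into a bill, the sketch below converts a stored volume into a cost. It assumes the quoted prices are per GB per month and that 1 GB is 1024^3 bytes; the function and dictionary names are hypothetical.

```python
BYTES_PER_GB = 1024 ** 3  # assumption: 1 GB = 2^30 bytes

# Prices quoted above, assumed to be per GB per month.
PRICE_PER_GB = {"geo": 0.125, "local": 0.093}


def monthly_storage_cost(total_bytes, redundancy="geo"):
    """Cost of keeping total_bytes in storage for one month."""
    return total_bytes / BYTES_PER_GB * PRICE_PER_GB[redundancy]


# Example: 10 GB of diagnostics data held for a month.
ten_gb = 10 * BYTES_PER_GB
print(monthly_storage_cost(ten_gb, "geo"))
print(monthly_storage_cost(ten_gb, "local"))
```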

Transaction Costs

This is the cost of inserting records in Windows Azure Table Storage. WAD makes use of entity group transactions, and the PartitionKey for WAD tables represents the date/time (in UTC) with precision up to the minute. This means that for each minute of diagnostics data stored in table storage, you incur the charge for a single transaction. This is based on the assumptions that:

- You’re not collecting more than 100 data points per minute, because there’s a limit of 100 entities per entity group transaction. For example, if you’re collecting 5 performance counters every second, you’re collecting 300 data points per minute. In this case, WAD would need to perform 3 transactions to transfer the data.

- Total payload size is less than 4 MB, because of the size limitation on an entity group transaction. For example, if a WAD entity is 1 MB in size and you have 10 such entities per minute, the total payload is 10 MB, so WAD would need to perform 3 transactions to transfer the data.

At the time of writing this blog post, the cost of performing 100,000 transactions against your storage account was $0.01.
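The two limits and the transaction price above can be combined into a rough estimator. This is a sketch under simplifying assumptions: all entities written in a minute are the same size, the estimate is a lower bound that ignores per-request overhead, and a month is taken as 30 days. The function and constant names are hypothetical.

```python
import math

MAX_ENTITIES_PER_BATCH = 100          # entity group transaction limit
MAX_BATCH_BYTES = 4 * 1024 * 1024     # 4 MB payload limit
PRICE_PER_100K_TRANSACTIONS = 0.01    # price quoted above


def transactions_per_minute(entity_count, entity_bytes):
    """Lower-bound number of batch transactions for one minute of data."""
    if entity_count == 0:
        return 0
    by_count = math.ceil(entity_count / MAX_ENTITIES_PER_BATCH)
    by_size = math.ceil(entity_count * entity_bytes / MAX_BATCH_BYTES)
    return max(by_count, by_size)


def monthly_transaction_cost(tx_per_minute, days=30):
    """Transaction cost for a month at a steady per-minute rate."""
    total = tx_per_minute * 60 * 24 * days
    return total / 100_000 * PRICE_PER_100K_TRANSACTIONS


# 5 counters sampled every second: 300 small entities per minute.
print(transactions_per_minute(300, 200))           # 3
# 10 entities of 1 MB each: 10 MB of payload per minute.
print(transactions_per_minute(10, 1024 * 1024))    # 3
print(monthly_transaction_cost(3))
```

At 3 transactions per minute, a 30-day month comes to 129,600 transactions, or a little over one cent at the quoted price.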

Bandwidth Costs

There are also bandwidth costs, but we will not consider them in our calculations because I’m assuming your compute instances and diagnostics storage account are co-located in the same data center (even in the same affinity group), and you don’t pay for bandwidth unless the data leaves the data center.

Storage Cost Calculator

Now let’s take some sample data and calculate how much it would cost us to store just that data. One can then extrapolate that data to calculate total storage costs.

Since all tables have different attributes, we will take each table separately.

So if I am writing the following line of code once per second: