Windows Azure and Cloud Computing Posts for 10/15/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

‡ Updated 10/19/2012 8:00 AM PDT with corrected Codename “Cloud Numerics” signup link and added other articles marked ‡.

•• Updated 10/18/2012 with new articles marked ••.

• Updated 10/16/2012 with new articles marked •.

Tip: Copy the bullet(s) or dagger, press Ctrl+F, paste it/them into the Find text box, and click Next to locate updated articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop and Media Services

‡ Benjamin Guinebertiere (@benjguin) posted Hadoop + SSIS, SSIS + Windows Azure Blob Storage on 10/18/2012 in English and French. Here’s the English version:

I worked on a white paper which has just been published on MSDN: Leveraging a Hadoop cluster from SQL Server Integration Services (SSIS)

I’d like to point out that the paper comes with sample code (thanks Rémi!) that is useful even without Hadoop, because it enables data movement to and from Windows Azure Blob storage from SQL Server’s ETL tool, SSIS.

•• John Waters (@johnkwaters) asserted “A new, free tool from JNBridge connects .NET developers with HBase, the database for Hadoop” in a deck for his LINQing .NET and Hadoop article of 10/16/2012 for Visual Studio Magazine:

As Big Data becomes more critical, the tools that connect the data to various development environments take on increasing importance.

For .NET developers, gaps continue to exist, but they're getting filled more quickly than ever, making interoperability an issue that can almost be taken for granted.

Take, for example, JNBridge, the Boulder, Colo.-based maker of tools that connect Java and .NET Framework-based components and apps. Last week, it released another in its evolving series of free interoperability kits. The latest "lab" demonstrates how to build .NET-based LINQ providers for HBase, which "expands the possibilities by enabling .NET-based front-ends to access HBase."

HBase is a Java-based, open source, distributed, scalable database for Big Data used by Apache Hadoop, the popular open-source platform for data-intensive distributed computing. LINQ (Language Integrated Query) is Microsoft's .NET Framework component that adds native data querying capabilities to .NET languages (C#, VB, etc.).

HBase and Hadoop are now standard tools for accessing Big Data. But HBase can be accessed only through Java client libraries, and there's no support for front-end data queries through languages like LINQ. Developers working with Hadoop end up creating single-platform solutions -- which is a problem in the real world of the heterogeneous enterprise, explained JNBridge CTO Wayne Citrin.

"Considering that a lot of analysis and data visualization in the real world is done on things like Microsoft Excel," he told ADTmag, "wouldn't it be nice if you could use LINQ as an abstract layer and provide a .NET client so that .NET becomes a first-class citizen in the Hadoop world?"

The new kit offers two new ways to create queries in .NET-based clients: simple, straightforward LINQ providers for accessing HBase; and even more efficient (5 to 6 times faster, Citrin says) LINQ providers for HBase that integrate MapReduce into the queries.

"Developers using these LINQ providers in their code don't need to know anything about HBase and Hadoop," Citrin explained. "They can write a LINQ query, and it'll just work. Nothing else currently out there does this."

The company is aiming the interoperability kits at developers looking for new ways of connecting disparate technologies. The "some assembly required" kits are not yet full-blown products or features. They're scenarios that demonstrate the kinds of use cases possible with the company's out-of-the-box products, such as JNBridgePro. They include pointers to documentation and links to source code, and users are encouraged to enhance them. This is the third kit offered by the company. The first, released in March, was an SSH Adapter for Microsoft's BizTalk Server, which was designed to enable the secure access and manipulation of files over the network. The second, released in May, demonstrated how to build and use .NET-based MapReducers with Hadoop.

"Microsoft has left a gap here, and we're filling it," Citrin said. "There's really nothing else out there like this."

The company's flagship product, JNBridgePro, is a general purpose Java/.NET interoperability tool designed to bridge anything Java to .NET, and vice versa, allowing developers to access the entire API from either platform. Last year the company stepped into the cloud with JNBridgePro 6.0.

The JNBridge Labs are free and available for download from the company's Web site here.

Full disclosure: I’m a contributing editor for 1105 Media’s Visual Studio Magazine.

•• Steve Marx (@smarx) described Wazproxy: an HTTP Proxy That Signs Windows Azure Storage Requests in a 10/17/2012 post:

Last night, I published Wazproxy, an HTTP proxy written in Node.js that automatically signs requests to Windows Azure blob storage for a given account. This is useful for developers who want to try out the Windows Azure REST API without having to deal with authentication. By running wazproxy and proxying web requests through it, you can use simple tools like curl or even a web browser to interact with Windows Azure storage.

Wazproxy is also useful for adapting existing apps to work with Windows Azure storage. For example, if you have an application that can consume a generic OData feed but doesn't support Windows Azure storage authentication, you can start wazproxy, change your proxy settings, and use the application as-is.
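What Wazproxy saves you from is computing the Shared Key `Authorization` header for every request. The Python sketch below is not Wazproxy's code (Wazproxy is written in Node.js); it is a minimal illustration of the Blob service Shared Key scheme as described in the Windows Azure Storage REST documentation for service version 2009-09-19 and later. Treat the exact string-to-sign rules, such as how a zero `Content-Length` is represented (which changed in later service versions), as assumptions to check against the documentation for the version you send in `x-ms-version`.

```python
import base64
import hashlib
import hmac
from urllib.parse import parse_qsl, urlsplit

# Standard headers included, in this order, in the string-to-sign (empty if absent).
STANDARD_HEADERS = [
    "content-encoding", "content-language", "content-length", "content-md5",
    "content-type", "date", "if-modified-since", "if-match",
    "if-none-match", "if-unmodified-since", "range",
]


def string_to_sign(verb, url, headers, account):
    """Build the Shared Key string-to-sign for a Blob service request."""
    lower = {k.lower(): v.strip() for k, v in headers.items()}
    lines = [verb.upper()] + [lower.get(h, "") for h in STANDARD_HEADERS]
    # Canonicalized headers: every x-ms-* header, lowercased and sorted by name.
    canonical_headers = "".join(
        f"{k}:{v}\n" for k, v in sorted(lower.items()) if k.startswith("x-ms-")
    )
    # Canonicalized resource: /account/path, then query parameters sorted by name.
    parts = urlsplit(url)
    resource = f"/{account}{parts.path or '/'}"
    params = {}
    for k, v in parse_qsl(parts.query, keep_blank_values=True):
        params.setdefault(k.lower(), []).append(v)
    for k in sorted(params):
        resource += f"\n{k}:{','.join(sorted(params[k]))}"
    return "\n".join(lines) + "\n" + canonical_headers + resource


def authorization_header(verb, url, headers, account, key_b64):
    """Return the Authorization header value: HMAC-SHA256 over the string-to-sign."""
    digest = hmac.new(
        base64.b64decode(key_b64),
        string_to_sign(verb, url, headers, account).encode("utf-8"),
        hashlib.sha256,
    ).digest()
    return f"SharedKey {account}:{base64.b64encode(digest).decode('ascii')}"
```

A proxy like Wazproxy would add the `x-ms-date` and `x-ms-version` headers, call something equivalent to `authorization_header`, and forward the request, which is why curl or a browser can talk to storage through it unchanged.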

To install, just run `npm install wazproxy -g`. Then run `wazproxy -h` to see the usage:

`Usage: wazproxy.js [options]`

`Options:`

`-h, --help output usage information`

`-V, --version output the version number`

`-a, --account [account] storage account name`

`-k, --key [key] storage account key`

`-p, --port [port] port (defaults to 8080)`

There are more examples on the Wazproxy GitHub page, but here's how you can manipulate blob storage using curl. This example creates a container, uploads a blob, retrieves that blob, and then deletes the container:

`curl <account>.blob.core.windows.net/testcontainer?restype=container -X PUT -d ""`

`curl <account>.blob.core.windows.net/testcontainer/testblob -X PUT -d "hello world" -H "content-type:text/plain" -H "x-ms-blob-type:BlockBlob"`

`curl <account>.blob.core.windows.net/testcontainer/testblob # output: "hello world"`

`curl <account>.blob.core.windows.net/testcontainer?restype=container -X DELETE`

Get the code

The full source code is available on GitHub, under an MIT license: https://github.com/smarx/wazproxy
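The four curl calls in the Wazproxy example can also be scripted. The Python sketch below assumes wazproxy is already running locally on its default port 8080 (for example `wazproxy -a <account> -k <key>`); `PROXY_URL`, `make_opener`, and `blob_requests` are illustrative names, not part of Wazproxy. The requests are deliberately unsigned: the proxy is what adds authentication.

```python
import urllib.request

# Assumption: wazproxy is running locally with its default port.
PROXY_URL = "http://localhost:8080"


def make_opener(proxy_url=PROXY_URL):
    """Opener that routes plain-HTTP requests through the signing proxy."""
    return urllib.request.build_opener(urllib.request.ProxyHandler({"http": proxy_url}))


def blob_requests(account):
    """The same four operations as the curl example, as unsigned requests."""
    base = f"http://{account}.blob.core.windows.net/testcontainer"
    return [
        # Create the container.
        urllib.request.Request(base + "?restype=container", data=b"", method="PUT"),
        # Upload a block blob.
        urllib.request.Request(
            base + "/testblob",
            data=b"hello world",
            method="PUT",
            headers={"Content-Type": "text/plain", "x-ms-blob-type": "BlockBlob"},
        ),
        # Read the blob back.
        urllib.request.Request(base + "/testblob", method="GET"),
        # Delete the container (and the blob with it).
        urllib.request.Request(base + "?restype=container", method="DELETE"),
    ]
```

To run it against a real account, open each request in order with `make_opener().open(req)`. Because the proxy holds the account key, the script itself never needs it.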

Adam Hoffman (@stratospher_es) explained Enabling cross domain access to Windows Azure Blobs from Flash clients in a 10/15/2012 post:

Here’s an interesting tidbit that came across my desk recently. If you’re building applications with Adobe Flash and want to enable the use of Windows Azure for blob storage, you’ll need to be able to create a “cross-domain policy file” in order to get the Flash client to request blobs.

Why? Because the Flash client requires it. Specifically:

“For security reasons, a Macromedia Flash movie playing in a web browser is not allowed to access data that resides outside the exact web domain from which the SWF originated.” – Source: Cross-domain policy for Flash movies

So how does that relate to the use of Windows Azure Blob Storage from Flash applications?

Well, imagine this. You create a Flash application and host it on your site. It might even be a site hosted on Windows Azure, or maybe not. Either way, the application itself has an “exact web domain from which the SWF originated”, as follows:

| Hosting Platform | Typical URL | Originating Domain (as seen by Flash) |
|---|---|---|
| Non Windows Azure Host | http://www.mycompany.com | mycompany.com |
| Windows Azure Cloud Services, no custom CNAME | http://mycompany.cloudapp.net | mycompany.cloudapp.net |
| Windows Azure Cloud Services, with custom CNAME | http://www.mycompany.com | mycompany.com |
| Windows Azure Websites | http://mycompany.azurewebsites.net | mycompany.azurewebsites.net |
| Windows Azure Websites, Shared or Reserved Mode, with custom domain name | http://www.mycompany.com | mycompany.com |

Now, here comes the problem. When you access Windows Azure Blob Storage, the domain serving up your blobs is going to be a subdomain of blob.core.windows.net (something like yourcompany.blob.core.windows.net), and that doesn’t match up with _any_ of these domains. By default, Flash won’t let you access this domain unless you are able to serve up a crossdomain.xml file from that domain. This policy file is a little XML file that gives the Flash Player permission to access data from a given domain without displaying a security dialog. When it resides on a server, it lets the Flash Player have direct access to data on the server, without prompting the user for access. But since Windows Azure Blob Storage is an Azure service, that’s not possible, right?

As it turns out… it is possible. You can actually host the crossdomain.xml file in the root container of your blob storage, and then simply ensure that the root container has public read access. It looks like the following:

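// Get the special $root container; its blobs are served from the account root, e.g. http://yourcompany.blob.core.windows.net/crossdomain.xml.

CloudBlobContainer cloudBlobContainer = cloudBlobClient.GetContainerReference("$root");

// Create $root if it doesn't already exist.

cloudBlobContainer.CreateIfNotExist();

// Allow anonymous read access to individual blobs (not container listing) so the Flash Player can fetch crossdomain.xml.

cloudBlobContainer.SetPermissions(new BlobContainerPermissions { PublicAccess = BlobContainerPublicAccessType.Blob });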
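The C# code above only prepares the public `$root` container; the policy file itself still has to be written and uploaded as a blob named crossdomain.xml. Here is a minimal Python sketch that generates one, assuming you list the originating domains of your SWFs (the domains below are hypothetical examples from the table). It emits only the basic `cross-domain-policy` / `allow-access-from` elements; check Adobe's policy-file specification before relying on other options.

```python
import xml.etree.ElementTree as ET


def build_crossdomain_policy(allowed_domains):
    """Return crossdomain.xml text that lets SWFs from allowed_domains read this host."""
    policy = ET.Element("cross-domain-policy")
    for domain in allowed_domains:
        # One <allow-access-from> element per originating domain; wildcards
        # such as "*.mycompany.com" are also accepted by the Flash Player.
        ET.SubElement(policy, "allow-access-from", domain=domain)
    return '<?xml version="1.0"?>\n' + ET.tostring(policy, encoding="unicode") + "\n"
```

For example, `build_crossdomain_policy(["www.mycompany.com", "mycompany.cloudapp.net"])` yields a file permitting SWFs served from either host. Listing specific domains, rather than `*`, keeps other sites' Flash content from reading your public blobs without a prompt.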

Thanks to my pal Marcus for the information on this!

Slodge (@slodge) showed how to enable Piggybank for Hadoop on Azure in a 10/15/2012 post:

I needed some time conversion scripts for my Pig on HadoopOnAzure...

I looked around and could only find this http://www.bimonkey.com/2012/08/no-piggybank-for-pig-on-hadoop-on-azure/

Fortunately, building one didn't seem too bad. Basically, you just have to:

- have JDK 7 installed

- download ant from Apache

- set up some path variables (ANT_HOME and JAVA_HOME)

- download the Pig source

- open a cmd prompt, cd to the pig directory, then type `ant`

- cd to the piggybank directory and type `ant`

- download the Jodatime source

- cd to the Jodatime directory and type `ant`

If this feels like too much effort, here are some ready-made jars: http://slodge.com/pig/piggybank.zip.

• According to a message from Matt Winkler (@mwinkle) of 10/15/2012 in the HadoopOnAzureCTP Yahoo! Group (requires joining):

We've got piggybank on our backlog, likely won't be the next update which is rolling out shortly, but we will look at it after that. …

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

‡ Nathan Totten (@ntotten) and Nick Harris (@cloudnick) released Cloud Cover TV Episode 91 - Windows Azure Mobile Services Updates on 10/19/2012:

In this episode Nick and Nate catch up on a variety of Windows Azure news and discuss the latest improvements to Windows Azure Mobile Services. Nick demonstrates how easy it is to send SMS messages using Twilio and Mobile Services. Additionally, Nick shows how to use the Windows Azure SDK for Node.js from Mobile Services using the 'azure' node module.

In the News:

- Windows Azure Mobile Services: New support for iOS apps, Facebook/Twitter/Google identity, Emails, SMS, Blobs, Service Bus and more

- Best Practices for the Design of Large-Scale Services on Windows Azure Cloud Services

- Announcing: Improvements to the Windows Azure Portal

- Introducing a New User Experience for Windows Azure Service Bus

- Announcing The Microsoft Accelerator for Windows Azure, powered by TechStars

- Taking the Fear Out of Commitment; New Offer For the Way You Build in The Cloud

- Microsoft Acquires PhoneFactor

- Microsoft Reaches Definitive Agreement to Acquire StorSimple

‡ Han, MSFT reported SQL Data Sync Preview October Service Update Is Now Live! in a 10/18/2012 post to the Data Sync Team blog:

We have just released the October service update for SQL [Azure] Data Sync Preview. In this update, users can now create multiple Sync Servers under a single Windows Azure subscription. With this feature, users who create multiple sync groups with hubs in different regions can improve data synchronization performance by provisioning each Sync Server in the same region as its hub.

Please download the new Agent from http://www.microsoft.com/en-us/download/details.aspx?id=27693. For detail[ed] Agent upgrade procedures, please visit http://msdn.microsoft.com/en-us/library/windowsazure/hh667308.

•• Aneesh Pulukkul posted Understanding Push Notifications on Windows Azure on 10/17/2012 to the Aditi Technologies blog:

Picture this: a cricket match is being played between your favorite teams. Because of an urgent task you have to miss the match, but you still want to know how the game progresses. As the game advances, you receive updates on your mobile device. How does this happen? An application vendor creates game score applications. When a game is on, score updates are sent as push notifications (PNs), which end users receive on their mobile devices.

The concept of push notifications has been around for a while, and each mobile platform vendor has implemented its own Push Notification Service (PNS). Microsoft’s version, the Microsoft Push Notification Service (MPNS), helps application vendors send notifications to Windows Phone (WP) devices.

This blog post covers a custom implementation of a scalable PNS, which is hosted on Windows Azure and operates as a proxy to MPNS. I will also discuss scalability and reliability, which are the key characteristics of this service.

Data Flow Diagram:

To use this service, application vendors have to register themselves with the PNS. This registration is made through a portal; I am focusing on the services, not on the operational details of the portal.

Mobile device users who use the WP application must register with the PNS. When registering, users specify the channels they are interested in and the unique Device URI they receive from MPNS. After this, vendors can send notifications to the registered mobile devices.

Architecture:

The PNS comprises two components:

- RESTful Web service – this is implemented as a web role.

- Dispatcher – this is implemented as a worker role.

The PNS service uses Azure Tables, Queues and Blobs for storing information.

RESTful Web Service

To implement the service operations, we chose the WCF REST programming model, with XML as the data exchange format. JSON would have been an equally good choice for a RESTful service.

Since MPNS uses XML for payloads, we opted for XML format to maintain consistency.
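For readers unfamiliar with MPNS payloads, here is a rough Python sketch of the kind of XML toast notification the dispatcher ultimately POSTs to a device's URI. The payload shape (`wp:Notification` / `wp:Toast` / `Text1` / `Text2` in the `WPNotification` namespace) and the `X-WindowsPhone-Target` and `X-NotificationClass` headers reflect my understanding of the Windows Phone 7 MPNS documentation; verify them there before use. The device URI is a placeholder, and the request is built but not sent.

```python
import urllib.request
import xml.etree.ElementTree as ET

WP_NS = "WPNotification"  # namespace used by Windows Phone toast payloads
ET.register_namespace("wp", WP_NS)


def toast_payload(title, message):
    """Serialize a Windows Phone toast notification as UTF-8 XML bytes."""
    root = ET.Element(f"{{{WP_NS}}}Notification")
    toast = ET.SubElement(root, f"{{{WP_NS}}}Toast")
    ET.SubElement(toast, f"{{{WP_NS}}}Text1").text = title
    ET.SubElement(toast, f"{{{WP_NS}}}Text2").text = message
    return ET.tostring(root, encoding="utf-8", xml_declaration=True)


def toast_request(device_uri, title, message):
    """Build (but do not send) the POST to a device's MPNS channel URI."""
    return urllib.request.Request(
        device_uri,
        data=toast_payload(title, message),
        method="POST",
        headers={
            "Content-Type": "text/xml",
            "X-WindowsPhone-Target": "toast",  # payload type
            "X-NotificationClass": "2",        # 2 = deliver the toast immediately
        },
    )
```

Since the payload to MPNS is XML anyway, accepting XML from vendors lets the service pass message text through with little conversion, which is the consistency argument the post makes.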

To protect the service operations, a hash token is generated when an application vendor first registers with the PNS. This token is hashed again (to protect it) and passed in the HTTP headers whenever the vendor calls a service operation.

In addition to the hash token, application vendors can configure a set of allowed IP addresses so that notifications from only those IP Addresses are accepted by the PNS.
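The two checks described above (a re-hashed token in a header plus an optional IP allow-list) can be sketched as follows. The post does not say which hash function, encoding, or storage the PNS uses, so SHA-256 hex digests, an in-memory `VendorRegistry`, and the helper names are all assumptions for illustration only.

```python
import hashlib
import hmac
import ipaddress
import secrets


def hash_token(token):
    """Hash a token (hex SHA-256); the vendor sends hash_token(token) in a header."""
    return hashlib.sha256(token.encode("utf-8")).hexdigest()


class VendorRegistry:
    """Hypothetical in-memory store of registered application vendors."""

    def __init__(self):
        self._vendors = {}  # app_id -> (expected header value, allowed IPs)

    def register(self, app_id, allowed_ips=()):
        """Issue a token to a new vendor; only its hash is kept server-side."""
        token = secrets.token_hex(32)
        allowed = {ipaddress.ip_address(ip) for ip in allowed_ips}
        self._vendors[app_id] = (hash_token(token), allowed)
        return token

    def is_authorized(self, app_id, header_value, client_ip):
        entry = self._vendors.get(app_id)
        if entry is None:
            return False
        expected, allowed = entry
        # An empty allow-list means the vendor did not restrict source IPs.
        if allowed and ipaddress.ip_address(client_ip) not in allowed:
            return False
        # Constant-time comparison of the hashed token.
        return hmac.compare_digest(expected.encode("utf-8"), header_value.encode("utf-8"))
```

Keeping only the hash server-side means a leaked table doesn't reveal the raw token, and the constant-time comparison avoids leaking how many leading characters matched.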

Dispatcher

The dispatcher’s role is to fetch the details of the devices subscribed to a particular channel and forward incoming messages from application vendors to those devices. Because the dispatcher does most of the work in the overall flow, it went through a series of performance improvements, which are described in the Design Challenges section.
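The dispatcher's fan-out step can be sketched like this. The original worker role is .NET and uses the Task Parallel Library; this Python sketch uses a thread pool as a stand-in, and the `subscriptions` mapping and `send` callable are hypothetical placeholders for the Azure table lookup and the HTTP POST to MPNS.

```python
from concurrent.futures import ThreadPoolExecutor


def dispatch(message, channel, subscriptions, send, max_workers=8):
    """Forward one vendor message to every device subscribed to `channel`.

    subscriptions: dict mapping channel name -> list of device URIs.
    send: callable(device_uri, message) -> status, e.g. an HTTP POST to MPNS.
    Returns a dict mapping each device URI to the status `send` returned.
    """
    device_uris = list(subscriptions.get(channel, []))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so statuses line up with device_uris.
        statuses = list(pool.map(lambda uri: send(uri, message), device_uris))
    return dict(zip(device_uris, statuses))
```

Sending in parallel matters because each delivery is a network round trip to MPNS; a sequential loop over thousands of subscribers would leave the queue backing up.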

Design Challenges:

Scalability - Because the PNS is targeted at mobile users, it must scale to handle millions of requests without any degradation or disruption of service.

Performance - Performance was analyzed and tuned in two areas:

- Partitioning of Azure tables

- Async operations for service using Task Parallel Library.

Because device information is stored in and retrieved from Azure tables, partitioning is very important for performance. We improved the table partitioning over a couple of iterations. The partition key is formed by concatenating the application’s unique ID with the remainder obtained by dividing the hash code of the device’s unique ID by a partition count.

The following code snippet shows this, using the modulo operator (%):

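// Mask the sign bit instead of calling Math.Abs, which throws OverflowException when GetHashCode() returns int.MinValue.

string partitionKey = device.AppId + "_" + ((device.DeviceId.GetHashCode() & 0x7FFFFFFF) % devicePartitionLimit).ToString("0000");

Example: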

For an application with the application ID “AngryBirds”, a device hash code of 78 and a devicePartitionLimit of 9, the partition key would be AngryBirds_0006, because 78 % 9 is 6.

All the device registrations with same modulo value will be placed under a single partition.
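Here is a Python version of the partition-key scheme that reproduces the worked example when given the hash directly. One caveat before copying the C# snippet: .NET's `String.GetHashCode` is documented as platform- and version-dependent (it differs between 32-bit and 64-bit runtimes), so a key persisted in a table might be computed differently later. This sketch therefore swaps in CRC-32 (`zlib.crc32`) as a stable hash; that substitution is my assumption, not the author's design.

```python
import zlib


def partition_key_from_hash(app_id, device_hash, partition_limit):
    """AppId + "_" + zero-padded (hash mod limit), the format used in the post."""
    # Masking the sign bit keeps the bucket non-negative for any 32-bit hash.
    bucket = (device_hash & 0x7FFFFFFF) % partition_limit
    return f"{app_id}_{bucket:04d}"


def partition_key(app_id, device_id, partition_limit):
    """Same scheme, but with CRC-32 as a hash that is stable across processes."""
    return partition_key_from_hash(
        app_id, zlib.crc32(device_id.encode("utf-8")), partition_limit
    )
```

`partition_key_from_hash("AngryBirds", 78, 9)` gives `AngryBirds_0006`, and so does a hash of 87 (87 % 9 is also 6), which is exactly the grouping described above: devices with the same remainder share a partition, so one application's registrations spread over at most `partition_limit` partitions.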

The service operations are implemented asynchronously, so callers don’t have to wait for an operation to complete. This contributed to better performance.

Handling failures:

Because the PNS is a real-time service hosted on Azure, fault handling is crucial: when a failure occurs, the support and technical teams must resolve the issue as early as possible and get the service up and running again. To help the technical support team, we added an adequate level of logging to identify the root cause of errors.

Operations and Support:

The service runs on two Small instances of the web role and twenty Extra Small instances of the worker role. Extra Small instances were chosen to strike a balance between cost and resources. For details on Azure VM sizes, please refer to this MSDN link.

Need for Scalability:

Scaling can be implemented in two ways: manual and automated. If the load is quite predictable, automated scaling would be the better option. When a load increase is expected within a known, short time window, manual scaling will do the job.

In this implementation of the PNS, manual mode was chosen, as the customer required. There are two situations in which scaling operations are performed:

- Customer informs the operations team about the upcoming demand. The operations team then adds the required number of virtual machines to the service.

- Operations team observes that a high number of messages to be processed are in the queue. In this case, the operations team increases the number of virtual machines proportionally to process the messages without delay.
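The second situation ("increase the number of virtual machines proportionally") can be made concrete as a sizing calculation the operations team might run against the queue length. Only the baseline of twenty worker instances comes from the post; the per-instance throughput, the target drain time, and the upper cap are hypothetical parameters you would measure or choose yourself.

```python
import math


def required_worker_instances(queue_length, msgs_per_instance_per_sec,
                              target_drain_secs, min_instances=20,
                              max_instances=100):
    """Hypothetical sizing rule: enough workers to drain the backlog in time.

    min_instances matches the post's baseline; the other parameters are assumptions.
    """
    if queue_length <= 0:
        return min_instances
    capacity_per_instance = msgs_per_instance_per_sec * target_drain_secs
    needed = math.ceil(queue_length / capacity_per_instance)
    # Never drop below the baseline, and cap to control cost.
    return max(min_instances, min(max_instances, needed))
```

For instance, with an assumed 50 messages per second per instance and a 60-second drain target, a backlog of 150,000 messages calls for 50 instances, while 30,000 messages stays at the 20-instance baseline.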

Performance Counters:

When the PNS service is started, performance counters are registered. This helps in understanding the scaling needs for the service. For the web service, requests per second and available memory are considered whereas for the dispatcher, CPU usage, available memory and a custom counter for number of messages to be processed are considered.

•• Kirill Gavrylyuk (@kirillg_msft) rang in with Announcing the Windows Azure Mobile Services October Update on 10/17/2012:

Yesterday we made some big updates to Windows Azure Mobile Services! The feature suite for Windows Store apps is growing and you can begin using Mobile Services to develop native iOS apps.

This October update includes:

- Current iOS libraries added to the Mobile Services GitHub repo

- Email services through partnership with SendGrid

- SMS & voice services through partnership with Twilio

- Facebook, Twitter, and Google user authentication

- Access to Windows Azure Blob, Table, Queues, and ServiceBus from your Mobile Service

- Deployment to the US-West data center

You can also check out Scott Guthrie’s blog post for more information regarding this update.

Update: iOS support still in development, but current libraries up on GitHub

The Mobile Services team is very proud to honor the wider Windows Azure commitment to open source development. We previously announced that iOS development was in the works, and today we’re happy to share an update on our progress. The most current iOS libraries are available on GitHub, you can now access the iOS Quick Start project in the Windows Azure portal, and you can find tutorials in the Mobile Services dev center.

The current libraries support structured storage, the full array of user authentication options (Windows Live, Facebook, Twitter, Google), email send through SendGrid, SMS & voice through Twilio, and of course allow you to access Blobs, Tables, Queues, and ServiceBus. A simple push notification service for iOS is not currently supported. Look for subsequent preview releases to deliver a complete solution for iOS and to add support for Android and Windows Phone!

Mobile Services are still free for your first ten applications running on shared instances. With the 90-day Windows Azure free trial, you also receive 1 GB SQL database and 165 MB daily egress (unlimited ingress). Both iOS and Windows Store apps count towards your total of 10 free Mobile Services.

Power of email

The Windows Azure Mobile Services team is very excited to announce that we’re building on our partnership with SendGrid to deliver a turnkey email solution for your Mobile Services app. We’re teaming up to make it easier for you to include a welcome email upon successful authentication, an alert email when a table is changed, and pretty much anything else that will help you build a more complete and compelling app.

You can add email to your app in three simple steps.

- First sign up here to activate a SendGrid account and receive 25,000 free emails per month through the introductory SendGrid + Windows Azure offer.

- Once you receive approval from SendGrid, login to the Windows Azure portal and navigate to the DATA tab on your to-do-list getting started project.

- Click SCRIPT, then INSERT and replace pre-populated code with what is below:

That’s it. Now every time one user updates the todo list, the other users will get an email letting them know what needs to get done.

Visit the Windows Azure Mobile Services dev center for the full tutorial. Our friends at SendGrid have also put together a tutorial for sending a welcome email on successful authentication. If you’re fired up about how you added email to your Mobile Services app, let us know!

You can review how to use SendGrid with all Windows Azure services here.

SMS & Voice

Today at TwilioCon, Scott Guthrie’s demo showed just how quickly you can add SMS capabilities to your app through Twilio and Windows Azure Mobile Services, and just how powerful that end product can be.

Incorporating voice and SMS into your app is just as quick and painless as adding email was above. If you decide to send an SMS alert rather than an email every time an item is added to the todo list, you would still follow three easy steps:

- Activate a Twilio account. (When you’re ready to upgrade later, you can receive 1,000 free text messages or incoming voice minutes for using Twilio and Windows Azure together.)

- Head back to the Windows Azure portal and navigate to the DATA tab on your to-do-list getting started project.

- Click SCRIPT, then INSERT and replace the code you copied from above (or the pre-populated code if you’re starting fresh) with what is below:

If you want to show off how Twilio is making your Mobile Services app even better, tell us where to look!

You can review how to use Twilio with all Windows Azure services here.

3rd Party User Auth

Microsoft account authentication was part of the initial August preview launch and thousands of you have incorporated that into your Windows Store apps so far. Today, we’re expanding your authentication options to include Facebook, Twitter, and Google.

To add any of these authentication options, you first need to register your app with the identity provider of your choice. Then, copy your Mobile Service’s URL from the Dashboard (https://<yourapp-name>.azure-mobile.net) and follow the appropriate tutorial for registering your app with Microsoft, Facebook, Twitter, or Google.

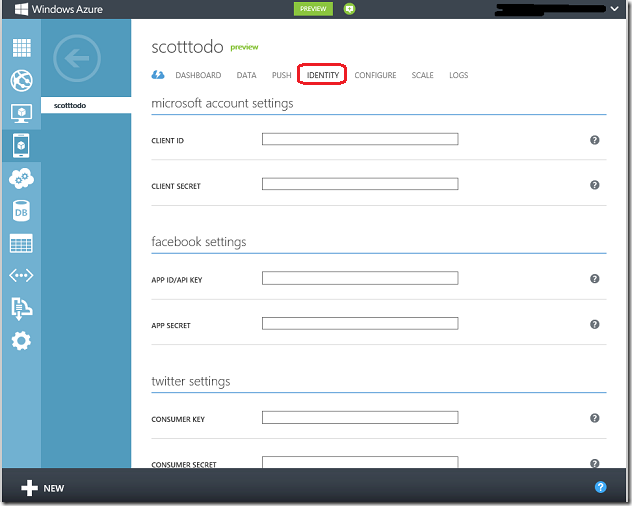

Each of these tutorials will show you how to get a client ID and secret key, which you will then need to paste into the appropriate location on the identity tab. Don’t forget to hit Save!

There are additional authentication tutorials available under the following ‘Getting Started’ walk-throughs:

- Getting started with authentication in Mobile Services for Windows Store (C#)

- Getting started with authentication in Mobile Services for Windows Store (JavaScript)

- Getting started with authentication in Mobile Services for iOS

Access Windows Azure Blobs, Tables, and ServiceBus

This update includes the ability to work with other Windows Azure services from your Mobile Service server script. Mobile Service server scripts run on Node.js, so to access additional Azure services, you simply need to use the Windows Azure SDK for Node.js. If you wanted to obtain a reference to a Windows Azure Table in a Mobile Services script, for instance, you would only need:

The tutorials in the Windows Azure Node.js dev center will tell you everything you need to know about starting to work with Blobs, Tables, Queues, and Service Bus using the azure module.

Deploy to US-West

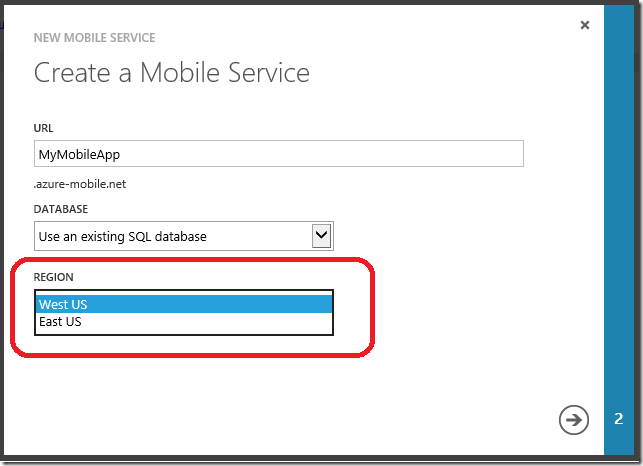

Until now, you’ve only been able to deploy Mobile Services to the US-East data center. Now you’ll also be able to deploy Mobile Services to the US-West data center.

There are a couple of things you need to know:

- Manage cost and latency by deploying your Mobile Service and SQL database to the same data center.

If you are creating a new database, simply select the same data center in the drop down as you did for your Mobile Service. If you are connecting an existing database to a Mobile Service and need to move to a new data center, instructions for how to do so can be found here and here.

- You can deploy different Mobile Services to different data centers from the same subscription.

- If you upgrade a Mobile Service in one data center to Reserved instances, you must also upgrade all other Mobile Services you have deployed to that data center to Reserved instances.

For example, consider a scenario where you have four Mobile Services—A, B, C, and D—and the first two are deployed to the US-East data center but the second two are deployed to the US-West data center. If you upgrade Mobile Service A to Reserved instances, Mobile Service B will automatically be upgraded to Reserved instances as well because both are in the US-East data center. Mobile Services C and D will not automatically be upgraded since they are in a different data center.

If you later choose to upgrade Mobile Services C and D to Reserved instances, those charges will appear separately on your monthly bill so that you can better monitor your usage.

- You still receive 10 free Mobile Services in total, not 10 per data center. You can of course still deploy to US-East, if you prefer.
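The Reserved-instance rule in the list above is easy to misread, so here it is as a tiny Python model. It encodes only the rule as stated (upgrading one Mobile Service upgrades every service in the same data center); the function name and the dictionary layout are illustrative.

```python
def reserved_after_upgrade(service_regions, upgraded):
    """Mobile Services that end up on Reserved instances when `upgraded` is upgraded.

    service_regions maps each Mobile Service name to its data center; per the
    rule described above, every service in the same data center is upgraded too.
    """
    region = service_regions[upgraded]
    return {name for name, dc in service_regions.items() if dc == region}
```

With A and B in US-East and C and D in US-West, upgrading A yields `{"A", "B"}`, and C and D stay on shared instances, matching the example in the list.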

What’s Next?

Later this month we will add support for the Windows Server 2012 and .NET 4.5 release. In that update, we will enable new web and worker role images with Windows Server 2012 and .NET 4.5 as well as support .NET 4.5 with Web Sites.

If you have questions, ask them in the forum. If you have feedback (or just want to show off your app), send it to the Windows Azure Mobile Services team at mobileservices@microsoft.com.

•• Ralph Squillace riffed on Windows Azure Mobile Services with iOS and Android in a 10/17/2012 post:

Yesterday, Scott Guthrie blogged about the new iOS client SDK and feature set for Mobile Services.

I'd like to bring several great bits of documentation together so that if you're interested in seeing the different ways you can use non-Microsoft platforms like iOS and Android to connect to Mobile Services, you have them all here. First, you can build the quickstart "Todo" Mobile Service by starting with the Windows Store quickstart here: https://www.windowsazure.com/en-us/develop/mobile/tutorials/get-started/. (To use the iOS instructions, you can go to https://www.windowsazure.com/en-us/develop/mobile/tutorials/get-started-ios/.) (I had a hand in writing the code for the native iOS client SDK application tutorial.)

That's existed for some time, and early on Microsoft evangelist Bruno Terkaly was very interested in showing how to use the service from any client, so he built a great tutorial set for iOS that makes HTTP REST requests and uses the JSONKit library to handle the JSON formatting. It's a very simple walkthrough, and once you've built the basic ToDo Mobile Service, you can use and reuse it again and again from any application, including nodejs or php sites (duh). His iOS tutorial is in five parts, beginning here:

Now you can do these same steps using the Mobile Services client iOS SDK. Those tutorials are:

- Getting Started with Mobile Services iOS SDK.

- Getting Started with Data using an iOS application. (Shows how to add support for Mobile Services to a pre-existing iOS application.)

- Getting Started with Authentication using an iOS application. (Shows how to add support for FB, Google, Twitter, and Microsoft Account authentication to a pre-existing iOS application.)

Got that? The first five demonstrate how to use Mobile Services by making direct HTTP requests and using the JSONKit library for serialization. The second set shows you how to do the same thing, with the same Todo Mobile Service, but using the native iOS client SDK.

But Bruno didn't stop there. He's still out ahead of our own releases. Next up is Android support, and sure enough, he's already there. I get to help write up any Android client SDK when it arrives, but for now:

- Mobile Services from Android, Part One.

- Mobile Services from Android, Part Two.

- Mobile Services from Android, Part Three.

- Mobile Services from Android, Part Four.

Hopefully we'll catch up with Bruno soon.

• Scott Guthrie (@scottgu) announced Windows Azure Mobile Services: New support for iOS apps, Facebook/Twitter/Google identity, Emails, SMS, Blobs, Service Bus and more in a 10/16/2012 post:

A few weeks ago I blogged about Windows Azure Mobile Services - a new capability in Windows Azure that makes it incredibly easy to connect your client and mobile applications to a scalable cloud backend.

Earlier today we delivered a number of great improvements to Windows Azure Mobile Services. New features include:

- iOS support – enabling you to connect iPhone and iPad apps to Mobile Services

- Facebook, Twitter, and Google authentication support with Mobile Services

- Blob, Table, Queue, and Service Bus support from within your Mobile Service

- Sending emails from your Mobile Service (in partnership with SendGrid)

- Sending SMS messages from your Mobile Service (in partnership with Twilio)

- Ability to deploy mobile services in the West US region

All of these improvements are now live in production and available to start using immediately. Below are more details on them:

iOS Support

This week we delivered initial support for connecting iOS based devices (including iPhones and iPads) to Windows Azure Mobile Services. Like the rest of our Windows Azure SDK, we are delivering the native iOS libraries to enable this under an open source (Apache 2.0) license on GitHub. We’re excited to get your feedback on this new library through our forum and GitHub issues list, and we welcome contributions to the SDK.

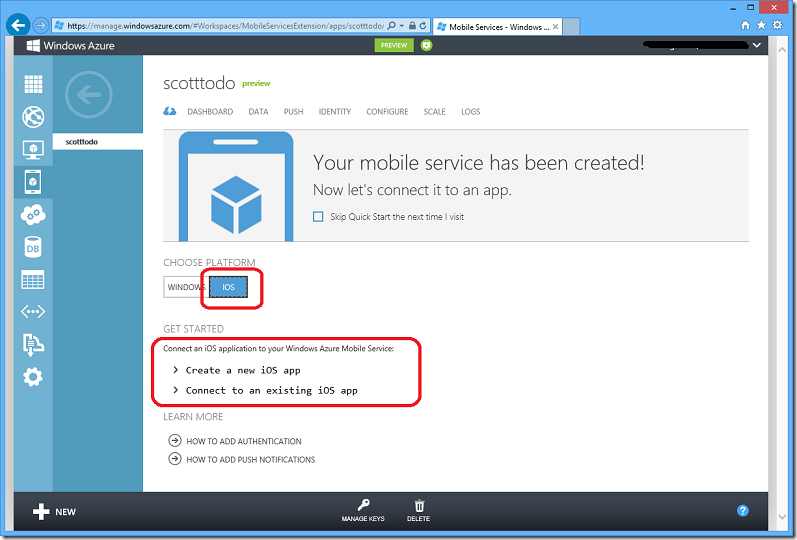

To create a new iOS app or connect an existing iOS app to your Mobile Service, simply select the “iOS” tab within the Quick Start view of a Mobile Service within the Windows Azure Portal, and then follow either the “Create a new iOS app” or “Connect to an existing iOS app” link below it.

Clicking either of these links expands step-by-step instructions for building an iOS application that connects with your Mobile Service.

Read this getting started tutorial to walk through how you can build (in less than 5 minutes) a simple iOS “Todo List” app that stores data in Windows Azure. Then follow the tutorials below to explore how to use the iOS client libraries to store data and authenticate users.

- Get Started with data in Mobile Services for iOS

- Get Started with authentication in Mobile Services for iOS

Facebook, Twitter, and Google Authentication Support

Our initial preview of Mobile Services supported the ability to authenticate users of mobile apps using Microsoft Accounts (formerly called Windows Live ID accounts). This week we are adding the ability to also authenticate users using Facebook, Twitter, and Google credentials. These are now supported with both Windows 8 apps and iOS apps (and a single app can support multiple forms of identity simultaneously, so you can offer your users a choice of how to log in).

The tutorials below walk through how to register your Mobile Service with an identity provider:

- How to register your app with Microsoft Account

- How to register your app with Facebook

- How to register your app with Twitter

- How to register your app with Google

The tutorials above walk through how to obtain a client ID and a secret key from the identity provider. You can then click on the “Identity” tab of your Mobile Service (within the Windows Azure Portal) and save these values to enable server-side authentication with your Mobile Service.

You can then write code within your client or mobile app to authenticate your users to the Mobile Service. For example, below is the code you would write to have them log in to the Mobile Service using their Facebook credentials:

Windows Store App (using C#):

    var user = await App.MobileService
        .LoginAsync(MobileServiceAuthenticationProvider.Facebook);

iOS app (using Objective C):

    UINavigationController *controller =
        [self.todoService.client
            loginViewControllerWithProvider:@"facebook"
            completion:^(MSUser *user, NSError *error) {
                // ...
            }];

Learn more about authenticating Mobile Services using Microsoft Account, Facebook, Twitter, and Google from these tutorials:

- Get started with authentication in Mobile Services for Windows Store (C#)

- Get started with authentication in Mobile Services for Windows Store (JavaScript)

- Get started with authentication in Mobile Services for iOS

Using Windows Azure Blob, Tables and ServiceBus with your Mobile Services

Mobile Services provide a simple but powerful way to add server logic using server scripts. These scripts are associated with the individual CRUD operations on your mobile service’s tables. Server scripts are great for data validation, custom authorization logic (e.g. does this user participate in this game session), augmenting CRUD operations, sending push notifications, and other similar scenarios.

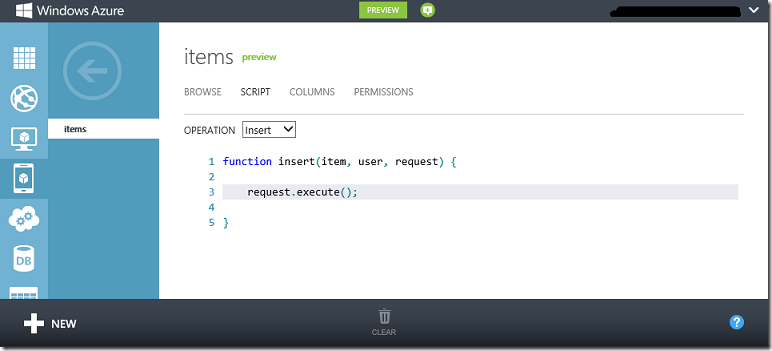

Server scripts are written in JavaScript and are executed in a secure server-side scripting environment built using Node.js. You can edit these scripts and save them on the server directly within the Windows Azure Portal:
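As a sketch of the data-validation case mentioned above, here is an insert script in the (item, user, request) shape Mobile Services uses. The 100-character limit is an arbitrary example, and BAD_REQUEST stands in for the statusCodes.BAD_REQUEST global that the Mobile Services runtime is assumed to provide, so the snippet also runs under plain Node.js:

```javascript
// Stand-in for the statusCodes.BAD_REQUEST global that Mobile Services is assumed to
// provide; defined here so the sketch also runs under plain Node.js.
var BAD_REQUEST = 400;

// Insert script: reject invalid to-do text instead of writing it to the table.
function insert(item, user, request) {
  var text = item.text;
  if (typeof text !== "string" || text.length === 0 || text.length > 100) {
    request.respond(BAD_REQUEST, "Text must be between 1 and 100 characters");
    return;
  }
  request.execute(); // valid: continue with the normal insert
}
```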

In this week’s release we have added the ability to work with other Windows Azure services from your Mobile Service server scripts. This is supported using the existing “azure” module within the Windows Azure SDK for Node.js. For example, the code below could be used in a Mobile Service script to obtain a Table service client for your storage account (after which you could query a table or insert data into it):

    var azure = require('azure');
    var tableService = azure.createTableService("<< account name >>",
        "<< access key >>");

Follow the tutorials on the Windows Azure Node.js dev center to learn more about working with Blob, Tables, Queues and Service Bus using the azure module.
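Table storage expects every entity to carry string PartitionKey and RowKey properties alongside your own fields. The minimal sketch below shows one way to shape a to-do item before handing it to the azure module; toTableEntity, the per-user partitioning, and the todoarchive table name are hypothetical choices, and the insertEntity call in the comment reflects the Node.js SDK of that period as we understand it, so check it against the SDK version you use:

```javascript
// Hypothetical helper: maps a to-do item to a Table storage entity. Table storage
// requires string PartitionKey and RowKey properties on every entity.
function toTableEntity(item, userId) {
  return {
    PartitionKey: String(userId), // illustrative choice: one partition per user
    RowKey: String(item.id),      // illustrative choice: reuse the item's id
    text: item.text,
    complete: Boolean(item.complete)
  };
}

// In a server script the result would be passed to the azure module, e.g. (assumed call):
// tableService.insertEntity('todoarchive', toTableEntity(item, user.userId), function (error) { /* ... */ });
const entity = toTableEntity({ id: 42, text: "Buy milk", complete: false }, "Facebook:123");
console.log(entity.PartitionKey, entity.RowKey); // Facebook:123 42
```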

Sending emails from your Mobile Service

In this week’s release we have also added the ability to easily send emails from your Mobile Service, building on our partnership with SendGrid. Whether you want to add a welcome email upon successful user registration, or make your app alert you of certain usage activities, you can do this now by sending email from Mobile Services server scripts.

To get started, sign up for a SendGrid account at http://sendgrid.com. Windows Azure customers receive a special offer of 25,000 free emails per month from SendGrid. To sign up for this offer, or get more information, please visit http://www.sendgrid.com/azure.html.

Once you have signed up, you can add the following script to your Mobile Service server scripts to send email via the SendGrid service:

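    // Load the SendGrid module so the SendGrid constructor is defined
    var SendGrid = require('sendgrid').SendGrid;
    var sendgrid = new SendGrid('<< account name >>', '<< password >>');
    // item is the to-do row passed to the table script
    sendgrid.send({
        to: '<< enter email address here >>',
        from: '<< enter from address here >>',
        subject: 'New to-do item',
        text: 'A new to-do was added: ' + item.text
    }, function (success, message) {
        // On failure, message describes the error
        if (!success) {
            console.error(message);
        }
    });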

Follow the Send email from Mobile Services with SendGrid tutorial to learn more.
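In practice the SendGrid snippet runs inside a table script, where item is the row being inserted. The sketch below assumes an insert script that saves the row, responds to the client, and only then sends the notification, so the mail call does not delay the app. sendEmail and buildTodoEmail are hypothetical helpers; in a real script sendEmail would wrap the sendgrid.send(...) call shown above:

```javascript
// Hypothetical sender: in a real script this would wrap the sendgrid.send(...) call above.
var sendEmail = function (message) {
  console.log("Would send email:", message.subject);
};

// Hypothetical helper: builds the to/from/subject/text object that sendgrid.send() takes.
function buildTodoEmail(item, to, from) {
  return {
    to: to,
    from: from,
    subject: "New to-do item",
    text: "A new to-do was added: " + item.text
  };
}

// Insert script: save the row, answer the client, then send the notification.
function insert(item, user, request) {
  request.execute({
    success: function () {
      request.respond(); // send the default response (the inserted item) to the client
      sendEmail(buildTodoEmail(item, "<< enter email address here >>",
        "<< enter from address here >>"));
    }
  });
}
```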

Sending SMS messages from your Mobile Service

SMS is a key communication medium for mobile apps - it comes in handy if you want your app to send users a confirmation code during registration, allow your users to invite their friends to install your app or reach out to mobile users without a smartphone.

Using Mobile Service server scripts and Twilio’s REST API, you can now easily send SMS messages from your app. To get started, sign up for a Twilio account. Windows Azure customers receive 1000 free text messages when using Twilio and Windows Azure together.

Once signed up, you can add the following to your Mobile Service server scripts to send SMS messages:

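    var httpRequest = require('request');
    var account_sid = "<< account SID >>";
    var auth_token = "<< auth token >>";
    // from, to, and message are assumed to be set earlier in the script.
    // Create the form-encoded request body; encoding keeps a leading '+' in
    // phone numbers from being decoded as a space.
    var body = "From=" + encodeURIComponent(from) +
        "&To=" + encodeURIComponent(to) +
        "&Body=" + encodeURIComponent(message);
    // Make the HTTP request to Twilio
    httpRequest.post({
        url: "https://" + account_sid + ":" + auth_token +
            "@api.twilio.com/2010-04-01/Accounts/" + account_sid + "/SMS/Messages.json",
        headers: { 'content-type': 'application/x-www-form-urlencoded' },
        body: body
    }, function (err, resp, body) {
        if (err) {
            console.error(err);
            return;
        }
        console.log(body);
    });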
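The form body Twilio receives must be URL-encoded: phone numbers are usually written in E.164 form with a leading +, and an unencoded + is decoded as a space. A minimal sketch of the encoding step, assuming a Node.js runtime where URLSearchParams is a global (the 2012 Mobile Services runtime may not have offered it); buildSmsBody and the 555 numbers are placeholders:

```javascript
// Hypothetical helper: builds the application/x-www-form-urlencoded body for the SMS call.
// URLSearchParams applies form-encoding rules: '+' becomes %2B and spaces become '+'.
function buildSmsBody(from, to, message) {
  return new URLSearchParams({ From: from, To: to, Body: message }).toString();
}

console.log(buildSmsBody("+15550001111", "+15550002222", "Your code is 1234"));
// From=%2B15550001111&To=%2B15550002222&Body=Your+code+is+1234
```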

I’m excited to be speaking at the TwilioCon conference this week, and will be showcasing some of the cool scenarios you can now enable with Twilio and Windows Azure Mobile Services.

Mobile Services availability in West US region

Our initial preview of Windows Azure Mobile Services was only supported in the US East region of Windows Azure. As with every Windows Azure service, over time we will extend Mobile Services to all Windows Azure regions. With this week’s preview update we’ve added support so that you can now create your Mobile Service in the West US region as well.

Summary

The above features are all now live in production and are available to use immediately. If you don’t already have a Windows Azure account, you can sign-up for a free trial and start using Mobile Services today. Visit the Windows Azure Mobile Developer Center to learn more about how to build apps with Mobile Services.

We’ll have even more new features and enhancements coming later this week – including .NET 4.5 support for Windows Azure Web Sites. Keep an eye out on my blog for details as new features become available.

Hope this helps,

Scott

It’s nice to have Mobile Services hosted closer to home. I’ll update the OakLeaf ToDo Demo app and resubmit it for listing in the Windows Store.

• Glenn Gailey (@ggailey777) provides additional background in his Windows Azure Mobile Services—Now We Are Getting Somewhere in a 10/16/2012 post:

The Windows Azure team just enabled a whole set of new features and functionalities for the still-in-preview Mobile Services offering. You can read ScottGu’s blog post with the full details (there is a lot), but I wanted to specifically highlight two huge features that are (IMHO) really going to drive the adoption of Mobile Services as a cloud backend service for mobile apps…..

Support for iOS Apps

Limiting support to Windows Store apps was an achievable and strategically positioned starting point for the Mobile Services preview. However, with a full-scale release of Windows 8 still pending, we have yet to see the impact of Windows Store apps in the mobile universe (although I’m pretty sure that apps will catch on with Windows as they have on iPad). While app devs today may be investigating Windows Store apps as the launch of Win8 looms, everyone who is writing mobile apps for a living is writing iOS apps for both iPhone and iPad, as well as Android apps. (Hopefully, we can soon add Windows Phone 8 to this list.)

The good news that Scott just announced is that we just added support for the iOS platform to the Mobile Services preview, which means iOS developers get the following:

- The same kind of quickstart experience, including a portal-generated code project, that you get for Windows Store apps.

- An iOS client library that is (essentially) a functional equivalent of the C# and JavaScript client libraries.

- iOS versions of the following key tutorial topics:

(I’m not an iOS guy, and I don’t even have an Apple machine on which to run Xcode, so I got help with these tutorials from the ever-versatile Ralph Squillace.)

- Get started with Mobile Services

- Get started with authentication

- Use scripts to authorize users

- Get started with data

Just a note that support for iOS apps is still considered “in development,” in particular because support for push notifications is not yet available.

Support for Major Identity Providers

Apps need to be able to authenticate and authorize users to provide a more customized experience and a more secure partitioning of data. Mobile Services has always provided support for authenticating users, but at first this could only be done by using Live Connect with (what is now called) a Microsoft Account. This solution basically worked, but it was pretty much a Windows Store app thing that got even more difficult to configure after Windows retired their preview app registration site from last year’s BUILD conference. While this approach provides the benefits of single sign-on for Windows Store apps and lets you retrieve Microsoft Account info for the logged-on user, all the authentication work is done on the client by using the Live Connect client library.

Today, in addition to using a Microsoft Account, you can also authenticate users by using a Facebook, Twitter, or Google login. Mobile Services enables you to register your app with these identity providers, register the client secret values with your mobile service, and request Mobile Services to initiate an authentication request to your preferred identity provider with a single line of code:

    user = await App.MobileService
        .LoginAsync(MobileServiceAuthenticationProvider.Facebook);

This (C#) code sends a login request from a Windows Store app to Mobile Services asking to authenticate the user with (in this case) a Facebook login. The server then handles the OAuth interaction by displaying an identity provider web page that allows a user to log in.

On successful completion of the login process, Mobile Services returns a userId value—the same userId value that is used on the service-side for authorization.
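That userId is what server scripts typically key on. As a sketch of per-user authorization in the spirit of the "Use scripts to authorize users" tutorial listed earlier, the insert script below stamps each row with the caller's id and the read script filters on it. The userId column name is an assumption, and the scripts also assume the table's permissions require authenticated users, so that user is always populated:

```javascript
// Insert script: record which authenticated user owns the new row.
function insert(item, user, request) {
  item.userId = user.userId;
  request.execute();
}

// Read script: restrict the query to rows owned by the caller.
function read(query, user, request) {
  query.where({ userId: user.userId });
  request.execute();
}
```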

Pros on this new approach:

- Simple client code (single method)

- Free registration with identity providers (even for Windows Store apps)

- Users can choose their preferred login provider.

Cons:

- Mobile Services doesn’t request or store any individual user info from the identity provider, so you can’t access things like the user’s name in the client app like you can when using the Live Connect SDK.

For a complete walkthrough of this new authentication process, see Get started with authentication for Windows Store apps (or this new iOS version).

For folks writing Windows Store apps with Live Connect, no need to despair. Single sign-on is still available for Windows Store apps, but you need a developer registration ($50) to be able to register your app just to try it out (you don’t need to actually publish your app).

For instructions on how to still do this in a Windows Store app, see Authenticate with Live Connect single sign-on.

• CA Technologies announced CA ERwin Data Modeler for Microsoft SQL Azure in a recent two-page datasheet. From Page 1:

• Karthikeyan (@f5debug) posted Learn Windows Store App Development in 31 Days – Day 1 – Overview and Requirements of Windows Store App Development

Welcome to the Learn Windows Store Application Development in 31 Days series. In this 31-day tutorial series we are going to look at what a Windows Store app is all about, understand the latest and much-awaited Modern UI design, develop our first Windows Store application, and upload it to the Windows Store. This tutorial is targeted at a Level 100 to Level 300 audience; it explains how to start developing an app from scratch and describes the components and tools used to develop a unique application that will be packaged and uploaded to the marketplace.

What is a Windows Store Application?

Before we dig into what a Windows Store application is and why it matters, we will first take a look at Windows 8, since Windows 8 is the base operating system that the Windows Store targets. For those who are not familiar with Windows 8, here is a brief description: “Windows 8 is the new operating system announced by Microsoft for use on personal computers, including home and business desktops, laptops, tablets, and home theater PCs. Windows 8 introduces significant changes to the operating system's graphical user interface and platform, such as a new interface design incorporating a new design language used by other Microsoft products, a new Start screen to replace the Start menu used by previous versions of Windows, a new online store that can be used to obtain new applications, along with a new platform for apps that can provide what Microsoft described as a "fast and fluid" experience with emphasis on touchscreen input.”

As the quote says, Microsoft has a new online store for applications developed to a set of acceptance guidelines, based on the new Modern UI (formerly called Metro UI); such apps are now called “Windows Store applications.” Here is a brief idea of what the new Windows 8 operating system is like.

Windows 8 is fast when we use applications or play games, because it starts quickly and uses less memory for applications and games. Windows 8 is also cloud-connected, so we have direct access to our data in the cloud from a Windows 8 PC or tablet on the go. Like the Windows Phone Marketplace, Windows 8 has a marketplace where we can download the latest applications and games and share them with our friends over social networking media.

So what are the requirements for Windows Store App Development?

Windows Store applications are developed with Visual Studio 2012, which launched a month ago, so we need a development machine running Windows 8 (already available as RTM) along with the Visual Studio 2012 IDE. To download this software, visit http://msdn.microsoft.com and install it on the development machine. Below are some useful links for downloading the required software.

Once you have downloaded and installed Windows 8 RTM and the Visual Studio 2012 IDE, you can see the base templates that are available for developing Windows Store applications, as shown in the screen below.

We will look into these templates in detail in upcoming articles. Meanwhile, once the Windows Store templates are available, we need to register and activate the license for Windows Store application development using Visual Studio 2012. Please follow the steps that I have already documented in my other blog post, “How to Activate Windows 8 RTM with a Product Key.”

What are the Software and Hardware Requirements to Build a Windows Store Application?

Below are some of the requirements that need to be taken into consideration when setting up the development environment for Windows Store applications.

Hardware Requirements: (For Installing Windows 8 RTM)

- Processor: 1 gigahertz (GHz) or faster

- RAM: 1 gigabyte (GB) (32-bit) or 2 GB (64-bit)

- Hard disk space: 16 GB (32-bit) or 20 GB (64-bit)

- Graphics card: Microsoft DirectX 9 graphics device with WDDM driver

Operating System: (As of today, RTM is the latest build available for download)

- Windows 8 RTM evaluation copy

- Windows 8 RTM MSDN Copy

Software Requirements:

- Visual Studio 2012 Express or RTM

- Windows 8 SDK

- Windows App Certification Kit for Windows RT

- Windows 8 Ads in Apps SDK

- Windows Azure Mobile Services

What does a Windows Store Application look like?

Windows Store applications are next-generation apps that focus on the user experience more closely than on other aspects. With Windows Store applications, users have some really exciting options for navigating and playing around with the applications and games that can be developed. An application opens full screen, with navigation options available through panels that slide in from the right side, as well as through the easily accessible new Start screen.

The screen below shows a sample Windows Store application, developed using XAML with C#. This app is called Jewel Manager, and it keeps track of all purchases made on jewels, specifically gold and silver.

In our next tutorial we will look at the different templates available and how to start developing our first Windows Store application using the Visual Studio 2012 IDE. To follow this series, I suggest everyone install the required software on their development machines and get ready to develop a unique Windows Store app to publish to the Windows Store.

I hope this tutorial is useful to you. If you're interested, please connect with me on Twitter, Facebook and GooglePlus for updates. Also subscribe to the F5debug Newsletter to get all the updates delivered directly to your inbox. We won’t spam you or share your email address, as we respect your privacy.

David Pallman posted Windows 8 and the Cloud: Better Together on 10/14/2012:

In this post we're going to talk about how you can leverage cloud computing in your Windows 8 apps: why you should, how to do it, and some illustrative examples. We'll look at 4 ways to use the cloud in your Windows 8 apps:

A Quick Primer on Windows 8 and Windows Azure

Windows 8, as everyone knows, is Microsoft's latest operating system. It's a big deal in many respects: a cross-over between PCs and mobile devices, designed to run well on both standard PCs and new ARM devices. It includes a new kind of app and styling ("The Design Style Formerly Known as Metro"). Developers need to go through a process to get their apps into the Windows Store, or alternatively they can be side-loaded for enterprise users. Developers have the choice of using C++/XAML, C#/XAML, or HTML5/CSS/JavaScript to create native applications.

Windows 8: Microsoft's New Cross-over Operating System

Windows Azure is Microsoft's cloud computing platform, and it is powerful indeed. With low-cost, consumption-based pricing and elastic scale, the cloud puts the finest data centers in the world in reach of just about anybody. It provides a wealth of services spanning from Compute (hosting) to Storage to Identity to Worldwide Traffic Management.

Windows Azure: Microsoft's Cloud Computing Platform

Both Windows 8 and Windows Azure are interesting and compelling in their own right, but the real power comes from combining them. Let's see why.

Why Use Windows 8 and the Cloud Together?

Although a Windows 8 app can be stand-alone, there are all sorts of reasons to consider leveraging cloud computing in your app. Here are some of the more compelling ones:

- Data: The cloud is a safe (triply-redundant) place for your app data

- Continuity: A home base so your users can switch between devices

- Elastic Scale: support mobile/social communities of any size

- Functionality: cloud computing services provide a spectrum of useful capabilities

- Connectivity: for sharing/collaborating with others you need a hub or exchange

- Processing: do background / deferred / parallel processing for your app in the cloud

First off, let's note that there are two big revolutions going on right now in the computing world: the front-end revolution, which has to do with HTML5, mobility, and social networking; and the back-end revolution, which has to do with services and cloud computing. The point of the front-end revolution is relevance: ensuring you reach and stay well-connected to your users and their changing digital lifestyles. The point of the back-end revolution is transforming the way we do IT and supporting that front-end revolution. So, the cloud provides a very necessary back-end to what's happening on the front lines. This is true not only for Windows 8 but for all mobile apps, whether native or web-based.

This new digital world has users moving between devices big and small all the time. Even a single user is likely to move around between different devices: their work computer, their home computer, their phone, their tablet, an airport web kiosk. People need continuity: the ability to get at their content and apps from anywhere, any time. This behavior requires a backbone for consistency that is ever-present. The cloud and its services are that backbone. Microsoft has a very good description for this: We're living in the era of Connected Devices, Continuous Services.

And then there's the Personal Cloud pattern, a very good illustration of which can be found in the Kindle Reader iPad App. The Kindle app can be used on multiple devices. The app has two views, Cloud and Device. In the Cloud view, you see everything in your library that you've purchased. In the Device view, you see the titles you've downloaded to this particular device. We can see this pattern implemented similarly in other popular apps and services such as iTunes.

Personal Cloud Pattern on Kindle iPad App

There's also a power and capacity synergy between device and cloud worth noting. Mobile devices, even though they're getting more and more powerful, still have very limited computing power and storage capacity compared to the cloud, which has near-infinite processing and storage capacity. Smart apps combine the two.

1. Using SkyDrive in Your Windows 8 App

Although I'm mostly going to be talking about Windows Azure in this post, I want to mention that Windows Live SkyDrive comes with Windows 8 and there is automatic integration. As an example, there's a Windows 8 app I'm in the middle of working on called TaskBoard. When I invoke a File Open or File Save dialog, the user can navigate to a variety of file locations (such as Documents) and decide where to open or save a project. Included in that navigation is the option to load from or store in SkyDrive. I did not have to do anything special in my app to make this happen; it's an automatic feature.

SkyDrive is Built-in to Windows 8 File Open and Save Dialogues

2. Using Cloud Media in Your Windows 8 App

It's of course quite common these days to leverage media (images, audio, or video) in our modern apps. You could of course just include your media directly in your app, but that makes it difficult to extend or change the content, requiring you to update your app and push it out through the Windows Store. It's much more flexible to have your app get its media from the cloud, where you can update or extend the media collection any time you wish.

The Windows Azure Storage service includes file-like storage called Blob storage. Like a file, a blob can hold any kind of content, and that includes media files. Blobs live in Containers, similar to how files reside in file folders. In the cloud, you can make your containers public or private. If private, you can only access them using a REST API and providing credentials. If public, the blobs have Internet URLs and for reading purposes you can use them anywhere, such as in the IMG tags of your HTML.

Let's demonstrate this, first by looking at one of the Windows 8 samples Microsoft provides which is called FlipView. We'll use the WinJS (HTML5/JavaScript) edition. FlipView shows us how to use the Windows 8 FlipView control, as you can see from the screen capture below. If we move through the FlipView by using its right or left navigation arrows, we see there is a collection of outdoor images.

FlipView Windows 8 Sample

If we look in the code, we see that the FlipView images are part of the solution itself and the list of images and descriptions is nothing more than a JavaScript array. The HTML markup references a FlipView control and uses data binding attributes to define an image-and-title template to make it all happen.

FlipView Sample Markup and JavaScript Code

None of this is complicated to understand, but again all of this is hard-coded to the app internally. We'd like to make this dynamic and easily modifiable using the cloud. So let's get to it. In my case, I've made a copy of the app and I'm changing it around a bit to be about hot sauces. (October is Chili Cook-off season for me, and I spend much of the month subjecting my family to various recipes and hot sauces as we experiment.)

The first step, then, is to get some images and put them in the cloud.

A Collection of Images We'll Use for our Hot Sauce Gallery App

After locating some images, I provisioned a Windows Azure storage account and created a container named hotsauces. I then used a Storage Explorer Tool to upload those images to the cloud container.

Images Uploaded to Windows Azure Blob Storage

Because the container is marked public, each image that has been uploaded has an Internet URL. For example, http://neudesic.blob.core.windows.net/hotsauces/cholula.png will bring up one of the images in a browser.
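The public URL follows a fixed pattern of account, container, and blob name. A small hypothetical helper (blobUrl is not part of any SDK) that reproduces the URL above, encoding each path segment while keeping '/' so virtual-directory-style blob names still work:

```javascript
// Hypothetical helper: public URL of a blob in a public container, following the
// http://<account>.blob.core.windows.net/<container>/<blob> pattern.
function blobUrl(account, container, blobName) {
  // Encode each segment but keep '/', which blob names may use like folder separators.
  const path = blobName.split("/").map(function (segment) {
    return encodeURIComponent(segment);
  }).join("/");
  return "http://" + account + ".blob.core.windows.net/" + container + "/" + path;
}

console.log(blobUrl("neudesic", "hotsauces", "cholula.png"));
// http://neudesic.blob.core.windows.net/hotsauces/cholula.png
```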

Now that we have our images in the cloud, we also need our JavaScript array describing the images and their title (and one more addition, Scoville heat rating) to be dynamic and hosted in the cloud. All we need to do for that is create a text file in JSON format and also upload it to the cloud.

JavaScript Array for Cloud-based Hot Sauce Items

With our data list and images in the cloud, all that remains is to change the Windows 8 app itself to retrieve those items. Since we've added a Scoville heat rating to our data, we'll first amend our HTML markup to include that data item.

In the application start-up code, we'll need to modify how the array is set and bound to the HTML. Previously, the array was just directly populated in code. Now, we're going to load it from the cloud. We'll do that using the WinJS.xhr function, which performs an asynchronous communication request. Since our JSON data and images are at Internet-accessible URLs, this is very straightforward.

The code below shows how we do it. Notice that the communication is asynchronous, and the inner code that pushes items into the array runs upon successful retrieval of the JSON data.

Revised Code to Download JSON array and Images from the Cloud
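The logic in that screenshot can be approximated as follows. This sketch assumes the JSON file is an array of objects with title, picture, and heat fields (the post shows its actual field names only in an image), and factors the success handler into a hypothetical appendItems function that pushes into whatever list the FlipView is bound to:

```javascript
// Hypothetical success-handler logic: parse the downloaded JSON array and push each
// item into the list bound to the FlipView. Field names are assumptions.
function appendItems(list, responseText) {
  const items = JSON.parse(responseText);
  if (!Array.isArray(items)) {
    throw new Error("Expected a JSON array of hot sauce items");
  }
  items.forEach(function (item) {
    list.push({
      title: item.title,
      picture: item.picture,  // absolute blob URL of the image
      heat: Number(item.heat) // Scoville heat rating as a number
    });
  });
  return list;
}
```

In the app, list would be the WinJS.Binding.List bound to the FlipView, and the call would sit inside the promise returned by WinJS.xhr, for example `WinJS.xhr({ url: "<< JSON blob URL >>" }).then(function (request) { appendItems(list, request.responseText); });`.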

That's all there is to it. When we now run the app, it goes out and gets the hot sauce JSON array which in turn has the title, heat rating, and Internet URLs of each hot sauce. Our app now looks like this when we run it:

Our Hot Sauce App, Now Using Dynamic Content from the Cloud

Moreover, we can easily change, add, or remove images and data just by modifying what's up in the cloud storage account. There's no need to update an app and push out a new version if we want to update our content.

A couple of other things to know about using Windows Azure storage. If you want, you can combine what we just did with the Windows Azure Content Delivery Network (CDN). This uses an edge cache network to efficiently cache and deliver media worldwide based on user location. The only impact using the CDN would have on what we just did above is that the URL prefix would change. You should also be aware that Windows Azure Storage provides not only blob (file-like) storage but also data table storage and queues, all of which can be accessed through WinJS.xhr and a REST API.
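Since only the URL prefix changes when you front blob storage with the CDN, the switch can be a single rewrite of the host name. A sketch assuming a runtime with the WHATWG URL class; toCdnUrl is hypothetical and cdn.example.net is a placeholder for your CDN endpoint's host name:

```javascript
// Hypothetical helper: point a blob URL at a CDN endpoint. Only the host changes;
// the container and blob path stay the same.
function toCdnUrl(sourceUrl, cdnHost) {
  const url = new URL(sourceUrl);
  url.host = cdnHost;
  return url.toString();
}

console.log(toCdnUrl("http://neudesic.blob.core.windows.net/hotsauces/cholula.png",
  "cdn.example.net"));
// http://cdn.example.net/hotsauces/cholula.png
```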

If you're working with video and want to intelligently stream it and handle multiple formats, you should investigate Windows Azure Media Services which is currently in preview.

3. Create Your Own Back-end In the Cloud

Although you can create them in HTML5 and JavaScript, a Windows 8 app is not a web app. A web app always has a server, for example, and its communication is domain-restricted to that server. Your Windows 8 app doesn't have a domain restriction, nor does it come with or require a server. However, nothing prevents you from putting up a server with web services for your app, and this is often a good idea. Why have a server back-end in the cloud? Here are some reasons to consider:

- For many of the same reasons a web app benefits from a web server

- Distribution of work - some done on the local device, some done back on the server

- To keep credentials off the local machine which is a security vulnerability

- To take advantage of the many useful cloud services that are available

- To connect to your enterprise to integrate with its internal systems and resources

What are some of the cloud services offered by Windows Azure? They include these:

So then, you just might want to put up some web services for your app to use, and putting them in the cloud makes a lot of sense: you get elastic scale, meaning you can handle any size load; it's cost-effective; and you can have affordable worldwide presence.

Let's consider how we would build our own back-end service on Windows Azure. We're going to build a really simple service that returns the time of day in various time zones. For this, we'll use the new ASP.NET Web API that is becoming a popular alternative to WCF for building web services for apps in the Microsoft world. We're going to host this in the cloud, and there are actually a few different ways to do that (see Windows Azure is a 3-lane Highway). In our case we are just going to build a really simple service, so we'll use the Windows Azure Web Sites hosting feature, which is fast and simple. For a more complicated example where you leverage many of the cloud services, you'd be best off using the Cloud Services form of hosting.

Creating our service is quite easy. We fire up VS2012 and create a new MVC4 Web API project. We then go into the pre-generated "values" controller and add some methods to return the time of day.

Web API Service to Return Time

If we run this locally, we can invoke its functions with URLs like this: http://localhost:84036/api/values/-7 and we'll get a simple response like "4:30 PM" in JSON format. Doing this much is enough to test locally, so we can now move on to creating the client. Once we're satisfied everything works, we will of course deploy this service up to the cloud.
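The service itself appears only as a screenshot, but its arithmetic is simple: shift the current UTC time by an hour offset and format it. The sketch below mirrors that logic in JavaScript rather than the post's C#; timeAtOffset is a hypothetical name, and, as with any plain whole-hour offset, it ignores daylight saving time:

```javascript
// Hypothetical sketch of the /api/values/{offset} logic: shift UTC by a number of
// hours and format the result as "h:mm AM/PM".
function timeAtOffset(now, offsetHours) {
  const shifted = new Date(now.getTime() + offsetHours * 60 * 60 * 1000);
  const hours = shifted.getUTCHours();
  const minutes = shifted.getUTCMinutes();
  const suffix = hours >= 12 ? "PM" : "AM";
  const hour12 = hours % 12 === 0 ? 12 : hours % 12;
  return hour12 + ":" + String(minutes).padStart(2, "0") + " " + suffix;
}

// 23:30 UTC seen from UTC-7 is 4:30 PM, matching the sample response above.
console.log(timeAtOffset(new Date(Date.UTC(2012, 9, 15, 23, 30)), -7)); // 4:30 PM
```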

Now for the client side. We create a new empty Windows 8 app (using the HTML5/JavaScript approach), and now we need to provide some markup, CSS, and JavaScript code. For the markup, we're just going to show the various time zones and current time in a couple of HTML tables, and we'll also include a world time zone map.

Windows 8 World Time App - Markup

There's also a bit of CSS for styling, but I won't bother showing that here. Now, what needs to happen, coding-wise? At application start-up, we want to go out and get the time for each of the time zones in our table and populate each cell with its value. We'll use a timer to repeat this once a minute to keep the time current. We use the WinJS.xhr method to invoke the web service asynchronously.

Windows 8 World Time App - JavaScript Code
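The JavaScript for this step is likewise shown only as a screenshot. Here is a minimal TypeScript sketch of the same refresh loop. It requests the time for every offset in parallel, writes each result into its cell, and repeats once a minute. To keep the sketch independent of WinJS, the HTTP call and the cell update are passed in as functions. In the Windows 8 app, getText would wrap WinJS.xhr (or fetch) and resolve with the response text. The names timeUrl, refreshTimes, and startClock, and the "--" placeholder shown for a failed call, are assumptions and not the app's actual code.

```typescript
// Assumed shapes: getText performs an HTTP GET and resolves with the body;
// updateCell writes one time string into the table cell for an offset.
type GetText = (url: string) => Promise<string>;
type UpdateCell = (offsetHours: number, text: string) => void;

// Builds a request URL following the service's /api/values/{offset} pattern.
function timeUrl(baseUrl: string, offsetHours: number): string {
  return baseUrl.replace(/\/+$/, "") + "/api/values/" + offsetHours;
}

// Requests all offsets in parallel. The service replies with a JSON string
// such as "4:30 PM", so each body is parsed before it is displayed.
async function refreshTimes(
  baseUrl: string,
  offsets: number[],
  getText: GetText,
  updateCell: UpdateCell
): Promise<void> {
  await Promise.all(
    offsets.map(async (offset) => {
      try {
        const body = await getText(timeUrl(baseUrl, offset));
        updateCell(offset, String(JSON.parse(body)));
      } catch {
        // One failed call should not leave the rest of the table stale.
        updateCell(offset, "--");
      }
    })
  );
}

// Refreshes once at start-up and then every 60 seconds. Returns the timer
// handle so the caller can stop the clock with clearInterval.
function startClock(
  baseUrl: string,
  offsets: number[],
  getText: GetText,
  updateCell: UpdateCell
) {
  void refreshTimes(baseUrl, offsets, getText, updateCell);
  return setInterval(() => {
    void refreshTimes(baseUrl, offsets, getText, updateCell);
  }, 60 * 1000);
}
```

Keeping the base URL as a parameter means that deployment changes only one value, for example from the localhost address to http://timeservice.azurewebsites.net.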

We can now run the app and see it work:

Windows 8 World Time App - Running

Very good, but remember: our service is still running locally. We need to put it up into the cloud. With Windows Azure Web Sites this is a fast and simple process that takes less than a minute.

Create a Windows Azure Web Site to Host Web Service in the Cloud

After creating the web site, we can download a publishing profile and deploy right from Visual Studio using Web Deploy. The last thing we need to do is change the URL the client code is using, which is now of the form http://timeservice.azurewebsites.net/api/values/timezone.

4. Using Windows Azure Mobile Services

We just showed you how you can create your own back-end in the cloud for your Windows 8 app, but maybe you don't really want to learn all those cloud details and would really like to stay focused on your app. Microsoft has a new service that will automatically create a back-end in the cloud for your Windows 8 app (and eventually, for other mobile platforms as well).

Because I've recently blogged on Windows Azure Mobile Services, I'll direct you to that post rather than repeating it here. However, I do want to point out how valuable this service is. It's really a mobility back-end in a box that you can set up and configure effortlessly. Among other things, it gives you support for the following:

- Relational Database (including auto-creation of new columns when you alter your app's data model)

- Identity

- Push Notifications

- Server-side scripting (in JavaScript)

Windows Azure Mobile Services is definitely worth checking out. It offers a great experience and is very easy to get started with.

This talk was recently given at a code camp, and you can find the presentation here: http://davidpallmann.blogspot.com/2012/10/presentation-windows-8-and-cloud.html

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

‡ •• Ronnie Hoogerwerf (@rhoogerw) announced Microsoft Codename “Cloud Numerics” Lab Refresh on 10/18/2012. This post is a repeat of an 8/2/2012 post about the v0.2 August 2012 update, reported here, with minor edits that caused it to reappear with a new publish date:

We are announcing a refresh of the Microsoft Codename "Cloud Numerics" Lab. We want to thank everyone who participated in the initial lab. We amassed and used your feedback to make improvements and add exciting features. Your participation is what makes this lab a success. Thank you.

Here’s what is new in the refresh:

Improved user experience: through more actionable exception messages, a refactoring of the probability distribution function APIs, and better and more actionable feedback in the deployment utility. In addition, deployment now takes less time, and the installer supports installation on an on-premises Windows HPC Cluster. All up, this refresh provides a more efficient way of writing and deploying “Cloud Numerics” applications to Windows Azure. [Emphasis added.]

More scale-out enabled functions: more algorithms are enabled to work on distributed arrays. This significantly increases the breadth and depth of big data algorithms that can be developed using “Cloud Numerics” Lab. Scale-out functionality was added in the following areas: Fourier transforms, linear algebra, descriptive statistics, pattern recognition, random sampling, similarity measures, set operations, and matrix math.
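The following minimal TypeScript sketch illustrates why descriptive statistics scale out well. It shows one standard technique, not necessarily the one used by “Cloud Numerics”, which is a .NET library. Each node reduces its slice of a distributed array to a small summary consisting of the count, the mean, and the sum of squared deviations. The summaries are then merged pairwise, so no node ever needs the whole array. The names Summary, summarize, mergeSummaries, and populationVariance are hypothetical.

```typescript
// Per-partition summary: enough to recover mean and variance after merging.
interface Summary {
  count: number;
  mean: number;
  m2: number; // sum of squared deviations from the mean
}

// Single pass over one partition (Welford's update).
function summarize(values: number[]): Summary {
  let count = 0;
  let mean = 0;
  let m2 = 0;
  for (const x of values) {
    count++;
    const delta = x - mean;
    mean += delta / count;
    m2 += delta * (x - mean);
  }
  return { count, mean, m2 };
}

// Combines two partition summaries without revisiting the raw data.
function mergeSummaries(a: Summary, b: Summary): Summary {
  if (a.count === 0) return b;
  if (b.count === 0) return a;
  const count = a.count + b.count;
  const delta = b.mean - a.mean;
  return {
    count,
    mean: a.mean + (delta * b.count) / count,
    m2: a.m2 + b.m2 + (delta * delta * a.count * b.count) / count,
  };
}

function populationVariance(s: Summary): number {
  return s.count > 0 ? s.m2 / s.count : NaN;
}
```

Merging gives the same result, up to rounding, however the partial summaries are grouped. That property is what makes this kind of reduction easy to spread across nodes. For the partitions [1, 2, 3], [4, 5] and [6], the merged summary has mean 3.5 and population variance 17.5 / 6.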

Array indexing and manipulation: a large part of any data analytics application concerns handling and preparing data to be in the right shape and have the right content. With this refresh “Cloud Numerics” adds advanced array indexing enabling users to easily and efficiently set and extract subsets of arrays and to apply Boolean filters.
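Boolean filtering is easiest to see on a small local array. The following TypeScript sketch shows the idea only. It is not the “Cloud Numerics” API, which applies this kind of indexing to distributed .NET arrays, and booleanFilter and booleanAssign are hypothetical names.

```typescript
// Extracts the elements whose mask entry is true.
function booleanFilter<T>(values: T[], mask: boolean[]): T[] {
  if (values.length !== mask.length) {
    throw new RangeError("mask length must match array length");
  }
  return values.filter((_, i) => mask[i]);
}

// Returns a copy in which every element selected by the mask is set to value.
function booleanAssign<T>(values: T[], mask: boolean[], value: T): T[] {
  if (values.length !== mask.length) {
    throw new RangeError("mask length must match array length");
  }
  return values.map((v, i) => (mask[i] ? value : v));
}
```

Take the readings [3, -1, 4, -1, 5] with the mask readings.map(r => r < 0). Here booleanFilter returns [-1, -1] and booleanAssign(readings, mask, 0) returns [3, 0, 4, 0, 5].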

Sparse data structures and algorithms: many real-world big data sets are sparse, i.e., not every field in a table has a value. With this refresh of the lab we introduce a distributed sparse matrix structure to hold these datasets, along with core sparse linear algebra functions that enable scenarios such as document classification, collaborative filtering, etc.
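To make "sparse data structure" concrete, here is a minimal single-machine TypeScript sketch of one common layout, compressed sparse row (CSR). It also shows the matrix-vector product on which sparse linear algebra is built. The announcement does not say which layout “Cloud Numerics” uses for its distributed sparse matrix, so treat CSR and the names CsrMatrix, toCsr, and csrMatVec as illustrative assumptions.

```typescript
// Compressed sparse row layout: only nonzero entries are stored.
interface CsrMatrix {
  rows: number;
  cols: number;
  rowPtr: number[]; // nonzeros of row i live at indices rowPtr[i] .. rowPtr[i + 1] - 1
  colIdx: number[]; // column index of each stored value
  values: number[]; // the stored nonzero values
}

// Builds a CSR matrix from a rectangular dense 2-D array, skipping zeros.
function toCsr(dense: number[][]): CsrMatrix {
  const rows = dense.length;
  const cols = rows > 0 ? dense[0].length : 0;
  const rowPtr: number[] = [0];
  const colIdx: number[] = [];
  const values: number[] = [];
  for (const row of dense) {
    if (row.length !== cols) throw new RangeError("rows must all have the same length");
    row.forEach((v, j) => {
      if (v !== 0) {
        colIdx.push(j);
        values.push(v);
      }
    });
    rowPtr.push(values.length);
  }
  return { rows, cols, rowPtr, colIdx, values };
}

// Sparse matrix-vector product y = A x, touching only the stored nonzeros.
function csrMatVec(a: CsrMatrix, x: number[]): number[] {
  if (x.length !== a.cols) throw new RangeError("vector length must equal column count");
  const y: number[] = [];
  for (let i = 0; i < a.rows; i++) {
    let sum = 0;
    for (let k = a.rowPtr[i]; k < a.rowPtr[i + 1]; k++) {
      sum += a.values[k] * x[a.colIdx[k]];
    }
    y.push(sum);
  }
  return y;
}
```

The 3-by-3 matrix [[0, 2, 0], [1, 0, 3], [0, 0, 0]] stores only three values. Multiplying it by [1, 2, 3] gives [4, 10, 0]. The work grows with the number of stored nonzeros, plus one step per row, rather than with rows times columns.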

Apply/Sweep framework: in addition to the built-in parallelism of the “Cloud Numerics” Lab, this refresh exposes a set of APIs that enable embarrassingly parallel patterns. The Apply framework enables applying arbitrary serializable .NET code to each element of an array or to each row or column of an array. The framework also provides a set of expert-level interfaces to define arbitrary array splits. The Sweep framework does what its name implies: it enables distributed parameter sweeps across a set of nodes, reducing execution times.
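The Apply and Sweep patterns are easier to picture in miniature. This TypeScript sketch runs on a single machine and is not the “Cloud Numerics” API, which distributes serializable .NET code across Windows Azure nodes. The names applyRows, applyColumns, and sweep are hypothetical. Promise.all stands in for the fan-out: it starts every evaluation and waits for all of them, which only approximates spreading the work across separate nodes.

```typescript
// Apply: run fn on each row of a rectangular 2-D array.
function applyRows<T, R>(matrix: T[][], fn: (row: T[]) => R): R[] {
  return matrix.map((row) => fn(row));
}

// Apply: run fn on each column of a rectangular 2-D array.
function applyColumns<T, R>(matrix: T[][], fn: (column: T[]) => R): R[] {
  const columnCount = matrix.length > 0 ? matrix[0].length : 0;
  const results: R[] = [];
  for (let j = 0; j < columnCount; j++) {
    results.push(fn(matrix.map((row) => row[j])));
  }
  return results;
}

// Sweep: evaluate fn once per parameter value, start all evaluations at once,
// and pair each parameter with its result (input order is preserved).
async function sweep<P, R>(params: P[], fn: (param: P) => Promise<R>): Promise<Array<[P, R]>> {
  const results = await Promise.all(params.map((p) => fn(p)));
  return params.map((p, i): [P, R] => [p, results[i]]);
}
```

On [[1, 2], [3, 4]], applyRows with a row-sum function gives [3, 7], and applyColumns with a column-sum function gives [4, 6]. Sweeping [1, 2, 3] with a squaring function yields the pairs [[1, 1], [2, 4], [3, 9]].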

Improved IO functionality: we added more parallel readers to enable out-of-the-box data ingress from Windows Azure storage, and we introduced parallel writers. [Emphasis added.]

Documentation: we introduced detailed mathematical descriptions of more than half of the algorithms. These use print-quality formulae and best-of-web equation rendering that help clarify each algorithm’s mathematical definition and method behavior. In addition, we updated the “Getting Started” wiki, and we added conceptual documentation to the “Cloud Numerics” help that covers the programming model, the new Apply framework, IO, and so on.

Stay tuned for upcoming blog posts:

- F#: We’ll be distributing an F# add-in for “Cloud Numerics” soon. The add-in exposes the “Cloud Numerics” APIs in a more functional manner. It introduces operators, such as matrix multiply, as well as F#-style constructors for “Cloud Numerics” arrays and indexing on them.

- Text analytics using sparse data structures

Do you want to learn more about Microsoft Codename “Cloud Numerics” Lab? Please visit us on our SQL Azure Labs home page, take a deeper look at the Getting Started material and Sign Up to get access to the installer. Let us know what you think by sending us email at cnumerics-feedback@microsoft.com.

The “Cloud Numerics” refresh depends on the newly released Azure SDK 1.7 and Microsoft HPC Server R2 SP4. It does not provide support for the Visual Studio 2012 RC. [Emphasis added.]

I’ll assume it supports VS 2012 RTM until I discover otherwise. However, I encountered problems with the sign-up link and download from Microsoft Connect. I’ll update this post when problems are resolved.

‡ As of 10/19/2012, 8:00 AM PDT, problems with the signup link above have been corrected. Sign in to Microsoft Connect with your Microsoft Account (formerly Live ID). If you didn’t sign up for an earlier version, complete and submit the self-nomination form. Then wait for the email acknowledging your signup, and follow its instructions to gain access to Codename “Cloud Numerics” downloads.

See the “Codename “Cloud Numerics” from SQL Azure Labs” section of my Recent Articles about SQL Azure Labs and Other Added-Value Windows Azure SaaS Previews: A Bibliography post for links to five earlier articles about “Cloud Numerics.”

<Return to section navigation list>

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

No significant articles today

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

• Michael Park announced Microsoft Reaches Definitive Agreement to Acquire StorSimple in a 10/16/2012 post to the Windows Azure blog:

Today I am excited to announce that we have reached a definitive agreement to acquire StorSimple, a leader in an emerging category known as Cloud-integrated Storage (CiS).

We know that many of you, our customers, are faced with an explosion in data, and the resulting cost to store, manage and archive this data is ballooning. This is why cloud storage solutions are so compelling: they provide increased flexibility, almost unlimited scalability, and the improved economics you need. But to realize those benefits, cloud storage needs to be integrated into the enterprise IT infrastructure and application environment. This is where CiS and StorSimple come in.

CiS is a rapidly emerging category of storage solutions that consolidate the management of primary data, backup, disaster recovery and archival data, and deliver seamless integration between on-premises and cloud environments. This seamless integration and orchestration enables new levels of speed, simplicity and reliability for backup and disaster recovery (DR) while reducing costs for both primary data and data protection.

You may have heard us talk about the “Cloud OS” over the last few months - the Cloud OS is our vision to deliver a consistent, intelligent and automated platform of compute, network and storage across a company’s datacenter, a service provider’s datacenter and the Windows Azure public cloud. With Windows Server 2012 and Windows Azure at its core, and System Center 2012 providing automation, orchestration and management capabilities, the Cloud OS helps customers transform their data centers for the future.

StorSimple’s approach of seamless integration of on-premises storage with cloud storage is clearly aligned with our Cloud OS vision. Their innovative solutions enable IT organizations to reduce the cost of storing data for backup, DR and archival and ensure fast recovery through a single console. Customers looking to embrace cloud storage and realize its benefits today can learn more about this announcement and StorSimple here: www.StorSimple.com.

As you know, there are a number of robust storage options already available that integrate with Windows Server 2012 and Windows Azure and we will continue to work with our broad ecosystem of partners to deliver a variety of innovative storage solutions – both on-premises and cloud integrated.

By working together Microsoft and StorSimple can help you with the storage challenges you face today and continue to provide platforms and technologies on which our partners can innovate and extend. Obviously, we are in the early stages of this acquisition – but the possibilities are exciting – and we look forward to sharing more about our plans in the future.

Michael Park is Corporate Vice President, Server and Tools Division, Microsoft

• Brian Swan (@brian_swan) explained Getting Error Info for PHP Sites in Windows Azure Web Sites

This is just a short post about how to get error information for PHP sites running in Windows Azure Web Sites. We all want to know when something goes wrong, and better yet, we want to know why something goes wrong. Hopefully, the information here will help get you started in understanding the *why*. Note that many of the options below are probably intended for use when you are developing a site. You may want to turn off some of the functionality below when you are ready to go to production.

Turn on logging options

In the Windows Azure Management Portal, on the CONFIGURE tab for your website, you have the option of turning on three logging options: web server logging, detailed error messages, and failed request tracing. To turn these on, find the diagnostics section and click ON next to each (be sure to click SAVE at the bottom of the page!):

One way to retrieve these logs is via FTP. Again in the Azure Management Portal, in the right-hand panel you should see FTP HOSTNAME and DEPLOYMENT / FTP USER. Using your favorite FTP client, you should be able to use those values (along with your password) to get the logs:

Another way to retrieve these files is by using the Windows Azure Command Line Tools for Mac and Linux. The following command will download a .zip file to the directory from which it was executed:

azure site log download <site name>

Note: You may have to run that as a super user on Mac or Linux (i.e. sudo azure …)

Configure PHP error reporting

Whether you are using the built-in PHP runtime or supplying your own, you can configure PHP to report errors via the php.ini file. If you are using a custom PHP runtime, you can simply modify the accompanying php.ini file, but if you are using the built-in PHP runtime, you need to use a .user.ini file. In either case, here are some of the settings I’d change:

display_errors=On

log_errors=On

error_log = "D:\home\site\wwwroot\bin\errors.log"

Notice that for the error_log setting, you need to create a bin directory in your application root if you want to use the path I’m using. Regardless, you can only write to files in your application root, so you need to know that it is at D:\home\site\wwwroot.
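Once errors.log has been downloaded via FTP, a quick tally by severity can help you decide what to look at first. The following TypeScript sketch assumes PHP's usual file-log line shape, "[timestamp] PHP Severity:  message", and skips any line that does not match. countBySeverity is a hypothetical helper, not part of PHP or the Windows Azure tools.

```typescript
// Tallies PHP error-log entries by severity ("Warning", "Notice",
// "Fatal error", ...). Assumes lines shaped like
//   [16-Oct-2012 17:21:05 UTC] PHP Warning:  message in file on line N
// and ignores any line that does not match that shape.
function countBySeverity(logText: string): Map<string, number> {
  const entryPattern = /^\[[^\]]+\] PHP ([A-Za-z ]+?):\s/;
  const counts = new Map<string, number>();
  for (const line of logText.split(/\r?\n/)) {
    const match = entryPattern.exec(line);
    if (match === null) continue;
    const severity = match[1];
    counts.set(severity, (counts.get(severity) ?? 0) + 1);
  }
  return counts;
}
```

Reading the file is left out to keep the sketch self-contained. In Node.js, fs.readFileSync(path, "utf8") would supply the text.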

Enable XDebug

I wrote a post a couple of weeks ago that describes how to enable XDebug for both the built-in PHP runtime and a custom PHP runtime, so I’ll just point you there: How to Enable XDebug in Windows Azure Web Sites. If you follow those instructions, you can get XDebug profiles via FTP:

Pro Tip