Windows Azure and Cloud Computing Posts for 10/12/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop and Media Services

Benjamin Guinebertiere (@benjguin) described How to download a blob on a linux machine | Comment télécharger un blob sur une machine Linux on 10/12/2012:

I suppose you have a Windows machine and a Linux machine. You want to get a blob directly from Blob storage to the Linux machine.

On the Windows machine, use a tool like CloudXplorer to create a Shared Access Signature.

Copy the shared access signature, which is a URL that carries the authorization to download the private blob.

Check the start date, and keep the authorized download window to one hour or less (a longer window would require a policy at the container level, which is more complicated).

On the Linux machine, paste that URL into a curl command, quoted so that the shell does not interpret the & characters in the query string.

This technique could also be used to upload blobs, by creating a write shared access signature and using HTTP PUT instead of GET.
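For readers who would rather script the transfer than type it, the steps above can be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not the author's command: the function names, the example URL, and the accepted timestamp formats are hypothetical. It relies on the `st` (start) and `se` (expiry) query parameters of a shared access signature, and on the `x-ms-blob-type` header that the Put Blob operation expects for an upload.

```python
import shutil
import urllib.request
from datetime import datetime
from urllib.parse import parse_qs, urlsplit

# Timestamp layouts accepted by this sketch (an assumption, not an exhaustive list).
SAS_TIME_FORMATS = ("%Y-%m-%dT%H:%M:%SZ", "%Y-%m-%dT%H:%MZ", "%Y-%m-%d")


def _parse_sas_time(text):
    """Parse a UTC timestamp taken from the st/se query parameters."""
    for fmt in SAS_TIME_FORMATS:
        try:
            return datetime.strptime(text, fmt)
        except ValueError:
            continue
    raise ValueError("unrecognized SAS timestamp: %r" % text)


def sas_window(sas_url):
    """Return se - st as a timedelta, or None when the URL has no start time."""
    params = parse_qs(urlsplit(sas_url).query)
    if "se" not in params:
        raise ValueError("URL has no 'se' (expiry) parameter")
    if "st" not in params:
        return None
    return _parse_sas_time(params["se"][0]) - _parse_sas_time(params["st"][0])


def download_blob(sas_url, dest_path):
    """HTTP GET the blob through its read SAS URL and stream it to a file."""
    with urllib.request.urlopen(sas_url) as resp, open(dest_path, "wb") as out:
        shutil.copyfileobj(resp, out)


def build_upload_request(sas_url, data):
    """Build (but do not send) an HTTP PUT for a write SAS URL."""
    return urllib.request.Request(
        sas_url,
        data=data,
        method="PUT",
        headers={"x-ms-blob-type": "BlockBlob"},
    )
```

Calling `sas_window(url)` before `download_blob(url, "blob.bin")` is a cheap way to confirm that the signature generated on the Windows machine stays within the one-hour window. An upload would pass the result of `build_upload_request` to `urllib.request.urlopen`.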

Windows Azure SQL Database, Federations and Reporting, Mobile Services

No significant articles today

Marketplace DataMarket, Cloud Numerics, Big Data and OData

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

Richard Seroter (@rseroter) described Capabilities and Limitations of “Contract First” Feature in Microsoft Workflow Services 4.5 in a 10/12/2012 post:

I think we’ve moved well past the point of believing that “every service should be a workflow” and other things that I heard when Microsoft was first plugging their Workflow Foundation. However, there still seem to be many cases where executing a visually modeled workflow is useful. Specifically, they are very helpful when you have long running interactions that must retain state. When Microsoft revamped Workflow Services with the .NET 4.0 release, it became really simple to build workflows that were exposed as WCF services. But, despite all the “contract first” hoopla with WCF, Workflow Services were inexplicably left out of that. You couldn’t start the construction of a Workflow Service by designing a contract that described the operations and data payloads. That has all been rectified in .NET 4.5, as developers can now do true contract-first development with Workflow Services. In this blog post, I’ll show you how to build a contract-first Workflow Service and include a list of all the WCF contract properties that get respected by the workflow engine.

First off, there is an MSDN article (How to: Create a workflow service that consumes an existing service contract) that touches on this, but there are no pictures and limited details, and my readers demand both, dammit.

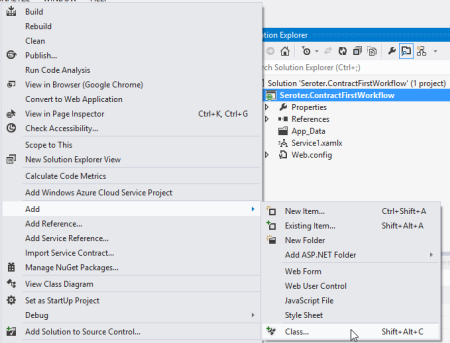

To begin with, I created a new Workflow Services project in Visual Studio 2012.

Then, I chose to add a new class directly to the Workflow Services project.

Within this new class file, named IOrderService, I defined a new WCF service contract that included an operation that processes new orders. You can see below that I have one contract and two data payloads (“order” and “order confirmation”).

    using System.Runtime.Serialization;
    using System.ServiceModel;

    namespace Seroter.ContractFirstWorkflow
    {
        // Service contract with an explicit external name and namespace.
        [ServiceContract(Name = "OrderService", Namespace = "http://Seroter.Demos")]
        public interface IOrderService
        {
            // Exposed as "SubmitOrder", although the method itself is named Submit.
            [OperationContract(Name = "SubmitOrder")]
            OrderConfirmation Submit(Order customerOrder);
        }

        // Request payload, exposed externally as "CustomerOrder".
        [DataContract(Name = "CustomerOrder")]
        public class Order
        {
            [DataMember] public int ProductId { get; set; }
            [DataMember] public int CustomerId { get; set; }
            [DataMember] public int Quantity { get; set; }
            [DataMember] public string OrderDate { get; set; }

            // No [DataMember] attribute, so this property is not part of the data contract.
            public string ExtraField { get; set; }
        }

        // Response payload.
        [DataContract]
        public class OrderConfirmation
        {
            [DataMember] public int OrderId { get; set; }
            [DataMember] public string TrackingId { get; set; }
            [DataMember] public string Status { get; set; }
        }
    }

Now which WCF service/operation/data/message/fault contract attributes are supported by the workflow engine? You can’t find that information from Microsoft at the moment, so I reached out to the product team, and they generously shared the content below. You can see that a good portion of the contract attributes are supported, but there are a number of key ones (e.g. callback and session) that won’t make it over. Also, from my own experimentation, you can’t use the RESTful attributes like WebGet/WebInvoke.

| Attribute | Property Name | Supported | Description |
| --- | --- | --- | --- |
| Service Contract | CallbackContract | No | Gets or sets the type of callback contract when the contract is a duplex contract. |
| Service Contract | ConfigurationName | No | Gets or sets the name used to locate the service in an application configuration file. |
| Service Contract | HasProtectionLevel | Yes | Gets a value that indicates whether the member has a protection level assigned. |
| Service Contract | Name | Yes | Gets or sets the name for the `<portType>` element in Web Services Description Language (WSDL). |
| Service Contract | Namespace | Yes | Gets or sets the namespace of the `<portType>` element in Web Services Description Language (WSDL). |
| Service Contract | ProtectionLevel | Yes | Specifies whether the binding for the contract must support the value of the ProtectionLevel property. |
| Service Contract | SessionMode | No | Gets or sets whether sessions are allowed, not allowed or required. |
| Service Contract | TypeId | No | When implemented in a derived class, gets a unique identifier for this Attribute. (Inherited from Attribute.) |
| Operation Contract | Action | Yes | Gets or sets the WS-Addressing action of the request message. |
| Operation Contract | AsyncPattern | No | Indicates that an operation is implemented asynchronously using a `Begin<methodName>` and `End<methodName>` method pair in a service contract. |
| Operation Contract | HasProtectionLevel | Yes | Gets a value that indicates whether the messages for this operation must be encrypted, signed, or both. |
| Operation Contract | IsInitiating | No | Gets or sets a value that indicates whether the method implements an operation that can initiate a session on the server (if such a session exists). |
| Operation Contract | IsOneWay | Yes | Gets or sets a value that indicates whether an operation returns a reply message. |
| Operation Contract | IsTerminating | No | Gets or sets a value that indicates whether the service operation causes the server to close the session after the reply message, if any, is sent. |
| Operation Contract | Name | Yes | Gets or sets the name of the operation. |
| Operation Contract | ProtectionLevel | Yes | Gets or sets a value that specifies whether the messages of an operation must be encrypted, signed, or both. |
| Operation Contract | ReplyAction | Yes | Gets or sets the value of the SOAP action for the reply message of the operation. |
| Operation Contract | TypeId | No | When implemented in a derived class, gets a unique identifier for this Attribute. (Inherited from Attribute.) |
| Message Contract | HasProtectionLevel | Yes | Gets a value that indicates whether the message has a protection level. |
| Message Contract | IsWrapped | Yes | Gets or sets a value that specifies whether the message body has a wrapper element. |
| Message Contract | ProtectionLevel | No | Gets or sets a value that specifies whether the message must be encrypted, signed, or both. |
| Message Contract | TypeId | Yes | When implemented in a derived class, gets a unique identifier for this Attribute. (Inherited from Attribute.) |
| Message Contract | WrapperName | Yes | Gets or sets the name of the wrapper element of the message body. |
| Message Contract | WrapperNamespace | No | Gets or sets the namespace of the message body wrapper element. |
| Data Contract | IsReference | No | Gets or sets a value that indicates whether to preserve object reference data. |
| Data Contract | Name | Yes | Gets or sets the name of the data contract for the type. |
| Data Contract | Namespace | Yes | Gets or sets the namespace for the data contract for the type. |
| Data Contract | TypeId | No | When implemented in a derived class, gets a unique identifier for this Attribute. (Inherited from Attribute.) |
| Fault Contract | Action | Yes | Gets or sets the action of the SOAP fault message that is specified as part of the operation contract. |
| Fault Contract | DetailType | Yes | Gets the type of a serializable object that contains error information. |
| Fault Contract | HasProtectionLevel | No | Gets a value that indicates whether the SOAP fault message has a protection level assigned. |
| Fault Contract | Name | No | Gets or sets the name of the fault message in Web Services Description Language (WSDL). |
| Fault Contract | Namespace | No | Gets or sets the namespace of the SOAP fault. |
| Fault Contract | ProtectionLevel | No | Specifies the level of protection the SOAP fault requires from the binding. |
| Fault Contract | TypeId | No | When implemented in a derived class, gets a unique identifier for this Attribute. (Inherited from Attribute.) |

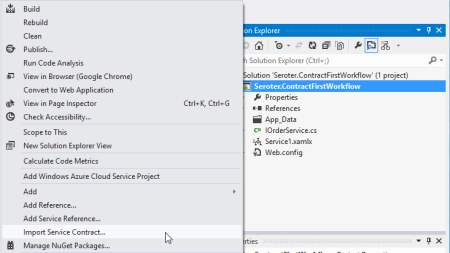

With the contract in place, I could then right-click the workflow project and choose to Import Service Contract.

From here, I chose which interface to import. Notice that I can look inside my current project, or, browse any of the assemblies referenced in the project.

After the WCF contract was imported, I got a notice that I “will see the generated activities in the toolbox after you rebuild the project.” Since I don’t mind following instructions, I rebuilt my project and looked at the Visual Studio toolbox.

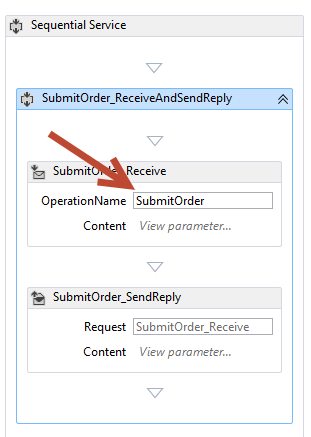

Nice! So now I could drag this shape onto my Workflow and check out how my WCF contract attributes got mapped over. First off, the “name” attribute of my contract operation (“SubmitOrder”) differed from the name of the operation itself (“Submit”). You can see here that the operation name of the Workflow Service correctly uses the attribute value, not the operation name.

What was interesting to me is that none of my DataContract attributes got recognized in the Workflow itself. If you recall from above, I set the “name” attribute of the DataContract for “Order” to “CustomerOrder” and excluded one of the fields, “ExtraField”, from the contract. However, the data type in my workflow is called “Order”, and I can still access the “ExtraField.”

So maybe these attribute values only get reflected in the external contract, not the internal data types. Let’s find out! After starting the Workflow Service and inspecting the WSDL, sure enough, the “type” of the inbound request corresponds to the data contract attribute (“CustomerOrder”).

In addition, the field (“ExtraField”) that I excluded from the data contract is also nowhere to be found in the type definition.

Finally, the name and namespace of the service should reflect the values I defined in the service contract. And indeed they do. The target namespace of the service is the value I set in the contract, and the port type reflects the overall name of the service.

All that’s left to do is test the service, which I did in the WCF Test Client.

The service worked fine. That was easy. So if you have existing service contracts and want to use Workflow Services to model out the business logic, you can now do so.

Christian Weyer (@christianweyer) asked and answered You thought identity management is done? Think twice: thinktecture IdentityServer v2 Beta is here on 10/12/2012:

The Beta version of our open source IdentityServer STS has been released today: http://leastprivilege.com/2012/10/12/thinktecture-identityserver-v2-beta/

Check out the short intro video to get a quick start: http://leastprivilege.com/2012/10/12/setup-thinktecture-identityserver-v2-in-7-minutes/

Any feedback is always welcome and highly appreciated! Dominick and Brock (and a little bit of myself) will hang out there:

https://github.com/thinktecture/Thinktecture.IdentityServer.v2/issues

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Mike McKeown (@nwoekcm) posted Azure Tips from the IT Pro Water Cooler to the Aditi Technologies blog on 10/11/2012:

With the release of Infrastructure as a Service (IaaS) this summer, there suddenly seems to be increased interest among IT Pros in Windows Azure. Originally most of Azure's features, like Web and worker roles, were focused primarily upon developers. This accidentally led to Windows Azure being erroneously viewed by the IT Pro community as a chance for developers to bleed over into the roles of the IT Pro. It has also been viewed unfairly as a driver behind their jobs eventually going away. After all, if the Cloud handles all the deployment, installation, and patching of the core software, and provisioning of the hardware, what roles still exist for IT Pros in that environment?

Once you get a better understanding of the types of opportunities that are created by the Cloud, you can see that the theory of an obsolete IT Pro replaced by Windows Azure is not very realistic. With the introduction of IaaS, including Azure VMs and Azure Web Sites, Windows Azure is now also an IT Pro platform. As an IT Pro it might help to think of Azure as an extension of your IT department.

Over the next few posts I will be discussing concise Windows Azure tips and best practices for IT Pros. Let's start with our first topic - Deployment.

Deployment

- With Windows Azure, developers can go right to Azure and deploy their applications. For your on-premises servers you would not allow developers to deploy directly to production. IT Pros need to take this control back! To manage deployment and keep developers from deploying directly from Visual Studio (they can also deploy with configuration files), create two Azure accounts: one for development and one for production. Give developers the development account to work with as they see fit. When they need to deploy to production, however, they must go through your account, and you do the deployment for them.

- When you deploy, try to keep the Azure storage and code in the same location. You can create and use affinity groups to help with that. So if you have a market in the Far East, you can easily deploy it all into the Far East. This is a deployment advantage you get with the cloud. You cannot choose a specific data center, but you can choose a region of the world. The affinity group guarantees that a hosted service and storage will be in the same data center. Group application pieces into a single deployment package when they must be hosted in the same data center. Note: you cannot use affinity groups with Windows Azure SQL Database.

Management Certificates

Closely related to the topic of deployment is the management of certificates in the Azure portal. Each subscription should have its own Azure service management certificate, unique to that subscription. To use a management or service certificate, you must upload it through the Windows Azure Platform Management Portal. Windows Azure uses both management certificates and service certificates.

- Management Certificates – Stored at the subscription level, these certificates are used to enable management of Windows Azure through the ‘management’ tools: SDK tools, the Windows Azure Tools for Visual Studio, or REST API calls. These certificates are independent of any hosted service or deployment.

- Service Certificates – Stored at the hosted service level, these certificates are used by your deployed services.
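To make the "REST API calls" case concrete, here is a minimal Python sketch of a management call authenticated with a management certificate. It assumes the classic Service Management endpoint `https://management.core.windows.net`, the `x-ms-version` request header, and a PEM file holding both the certificate and its private key. The version string, function names, and file path are illustrative assumptions rather than details from the post.

```python
import ssl
import urllib.request

MANAGEMENT_ENDPOINT = "https://management.core.windows.net"
API_VERSION = "2012-03-01"  # assumed version string; use the one your tooling targets


def build_list_hosted_services_request(subscription_id):
    """Build the GET request that lists hosted services in one subscription."""
    url = "%s/%s/services/hostedservices" % (MANAGEMENT_ENDPOINT, subscription_id)
    return urllib.request.Request(
        url, headers={"x-ms-version": API_VERSION}, method="GET"
    )


def list_hosted_services(subscription_id, cert_pem_path):
    """Send the request, presenting the management certificate as a TLS client cert.

    cert_pem_path is a hypothetical path to a PEM file that contains both the
    certificate uploaded to the portal and its private key.
    """
    context = ssl.create_default_context()
    context.load_cert_chain(certfile=cert_pem_path)
    request = build_list_hosted_services_request(subscription_id)
    with urllib.request.urlopen(request, context=context) as response:
        return response.read().decode("utf-8")  # XML document describing the services
```

Because the certificate is bound to a subscription rather than to a hosted service, whoever holds the PEM file can manage everything in that subscription, which is why the role-based distribution of certificates matters.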

Typical IT policies define distinct roles for parties associated with application Development, Test, Integration, Deployment, and Production. These policies restrict roles from operations that exceed their defined responsibilities. Here are some suggestions on how to manage certificates for these roles.

- Development – Share a certificate between all the developers to allow freedom of development.

- Integration – Has its own management certificate

- Test – Certificate shared only with the Operations team

- Deployment – Used for deployment roles and only distributed to parties responsible for application deployment

- Production - Certificate shared only with the Operations team

Any other tips? Leave me a comment.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Himanshu Singh (@himanshuks) reported Real World Windows Azure: Egyptian World Heritage Site Launches Mobile Portal on Windows Azure to Enhance and Revitalize Luxor Tourism on 10/11/2012:

As part of the Real World Windows Azure series, we connected with Wafaa Hassan, Governorate Development Consultant at the Egyptian Ministry of Communications and Information Technology (MCIT) to find out how the company built the Luxor Mobile Portal on Windows Azure that enables visitors to take virtual tours of historical sites and locate services in Luxor. MCIT works with public entities in Egypt to help introduce new technologies and develop new business models that can be repeated throughout Egyptian society. Read the city of Luxor’s success story here. Read on to find out what he had to say.

Himanshu Kumar Singh: Tell me about tourism in the city of Luxor.

Wafaa Hassan: Luxor is a modern metropolis, the capital of Luxor Governorate, with a population of almost 500,000. Luxor is also the site of the ancient city of Thebes, a United Nations World Heritage Site, which includes the temple complexes at Karnak and Luxor, the West Bank Necropolis on the Nile River, the Valley of the Kings, and the Valley of the Queens. As many as 12,000 visitors a day come to see what has been called one of the world’s great open air museums.

Tourism is a significant part of the city’s economy, generating E£400 million (US$66 million) a year. We want to encourage people around the world to visit Luxor’s temples, tombs, and other historical treasures, and help visitors get the most out of that experience. To help support the local tourist industry and promote amenities offered in the modern city, Luxor also needs to help visitors find tour guides, hotels, restaurants, entertainment, transportation, and vital services.

HKS: Why did you develop a mobile application?

WH: Luxor is an important cultural site and an international travel destination. We need to offer foreign visitors the information they want and help them find the services they need, and still serve the people who live and work in Luxor.

In 2011, Egypt entered a period of sweeping social change, which, while generally positive, reduced tourism in Egypt by almost 75 percent. Observers have noted the role that new technologies, such as social media, smartphones, and other mobile devices, played in the country’s transformation. We wanted to harness the power of these technologies to help bring visitors back to Luxor.

HKS: Tell me about the Luxor Mobile Portal.

WH: We began working with Tagipedia, an independent software vendor based in Cairo, to develop the Luxor Mobile Portal. Visitors to Luxor can now use smartphones running the Windows Phone 7 operating system to scan quick response (QR) tags installed at monument sites, visitor centers, and other locations around Luxor. The QR tags activate virtual tours of specific sites, with text, images, sound, and video. When visitors scan a Luxor Mobile Portal QR tag at a temple or other site, they can take the virtual tour of that site and follow links to related monuments. They can also search the portal for historical material or information about guided tours, lodging, dining, bank and ATM locations, and other services available in Luxor, with the ability to instantly connect with the businesses or organizations online or by direct phone call.

HKS: Where does Windows Azure fit into the solution?

WH: We originally supported the Luxor Mobile Portal with a private cloud environment and Windows Azure. Scalability of Windows Azure is a key benefit; seasonal increases and decreases in tourism directly affect demands on application usage, so we quickly decided to manage the Luxor Mobile Portal solely with Windows Azure. The portal uses Windows Azure Blob storage and Windows Azure SQL Database to store and manage data. When a user scans a QR code, Tagipedia technology hosted in Windows Azure identifies the application associated with the code, and either runs the program from within Tagipedia—as with the Luxor Mobile Portal—or allows the user to download the app from another source.

For the Luxor Mobile Portal, Tagipedia manages the Windows Azure infrastructure and operates analytics tools to monitor the application, estimate tourism levels or evaluate marketing campaigns, and deliver reports to Luxor. Luxor staff manages content on the portal. If a new bank or other service opens in Luxor, city staff can easily add the new information to the Luxor website and the mobile portal with a single update, which saves significant time.

HKS: How did you launch and promote the solution?

WH: We launched the Luxor Mobile Portal and installed permanent signs with QR tags at monument sites and other locations in Luxor in March 2012. We will also distribute tags with promotional material on airline flights and in magazine advertisements, so from anywhere in the world, users can scan a tag with a Windows Phone 7 device, take a tour of the sites at Luxor, and even begin planning a trip.

HKS: How does the mobile app help visitors?

WH: With the Luxor Mobile Portal we can provide visitors to Luxor a much richer experience. It’s like having a tour guide in the palm of your hand. Once visitors are in Luxor, they can use a single mobile application to fully experience some of the most important historical sites on Earth, find their way around Luxor, have a meal, and even make hotel reservations.

HKS: What results have you seen with the Luxor Mobile Portal?

WH: By launching the Luxor Mobile Portal, we have helped support one of the most important aspects of the Luxor economy and enhanced the city’s ability to serve visitors and local businesses in Luxor. The city can also track and analyze its marketing campaigns, so it can more effectively promote its historical sites.

By July 2012, the Egyptian Ministry of Antiquities wanted to deploy similar portals for other sites in Egypt, and South Sinai was interested in a portal to promote its beaches, activities, and resorts.

HKS: What’s next?

WH: As the mobile portal expands, Luxor can cross-market with other historical sites, resorts, or regional activities, such as hot-air balloon trips or photo-safaris.

And as the political situation in Egypt stabilizes and tourism increases, we can scale up Windows Azure easily and duplicate the success of the Luxor Mobile Portal in other cities.

Read how others are using Windows Azure.

Bruno Terkaly (@brunoterkaly) and Ricardo Villalobos (@ricvilla) wrote Windows 8 and Windows Azure: Convergence in the Cloud for the Windows 8 Special Issue of MSDN Magazine:

There’s little question that today’s software developer must embrace cloud technologies to create compelling Windows Store applications—the sheer number of users and devices make that a no-brainer. More than one-third of the Earth’s population is connected to the Internet, and there are now more devices accessing online resources than there are people. Moreover, mobile data traffic grew 2.3-fold in 2011, more than doubling for the fourth year in a row. No matter how you look at it, you end up with a very simple conclusion: Modern applications require connectivity to the cloud.

The value proposition of cloud computing is compelling. Most observers point to the scalability on demand and to the fact that you pay only for what you use as driving forces to cloud adoption. However, the cloud actually provides some essential technology in the world of multiple connected devices. Windows Store application users, likely to be using many applications and multiple devices, expect their data to be centrally located. If they save data on a Windows Phone device, it should also be immediately available on their tablets or any of their other devices, including iOS and Android devices.

Windows Azure is the Microsoft public cloud platform, offering the biggest global reach and the most comprehensive service back end. It supports the use of multiple OS, language, database and tool options, providing automatic OS and service patching. The underlying network infrastructure offers automatic load balancing and resiliency to hardware failure. Last, but not least, Windows Azure supports a deployment model that enables developers to upgrade applications without downtime.

The Web service application presented in this article can be hosted in one or more of Microsoft’s global cloud datacenters in a matter of minutes. Whether you’re building a to-do list application, a game or even a line-of-business accounting application, it’s possible to leverage the techniques in this article to support scenarios that rely on either permanently or occasionally connected clients.

What You’ll Learn

First, we’ll describe how to build a simple cloud-hosted service on Windows Azure to support asynchronous clients, regardless of the type of device it’s running on—phone, slate, tablet, laptop or desktop. Then we’ll show you how easy it is to call into a Web service from a Windows Store application to retrieve data.

Generally speaking, there are two ways data can make its way into a Windows Store application. This article will focus on the “pull approach” for retrieving data, where the application needs to be running and data requests are issued via HTTP Web calls. The pull approach typically leverages open standards (HTTP, JSON, Representational State Transfer [REST]), and most—if not all—device types from different vendors can take advantage of it.
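The pull approach described above can be sketched in a few lines of Python to show how little it demands of a client: an HTTP GET that asks for JSON, then a parse step. This is a minimal sketch, not the article's Windows Store client code. The function names and the injectable `opener` parameter are assumptions made so the example stays self-contained.

```python
import json
import urllib.request


def build_pull_request(url):
    """Build an HTTP GET that asks the service for a JSON representation."""
    return urllib.request.Request(
        url, headers={"Accept": "application/json"}, method="GET"
    )


def pull_json(url, opener=urllib.request.urlopen, timeout=10):
    """Issue the GET while the application is running and decode the JSON body.

    opener is injectable so the function can be exercised without a network;
    by default it is the standard urllib opener.
    """
    with opener(build_pull_request(url), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Because nothing here depends on the client platform, the same request works from a browser, a phone, or a desktop application, which is the interoperability argument for building on open standards.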

We won’t be covering the “push approach” in this article. This approach relies on Windows Push Notification Services (WNS), which allows cloud-hosted services to send unsolicited data to a Windows Store application. Such applications don’t need to be running in the foreground and there’s no guarantee of message delivery. For information about using WNS, see bit.ly/RSXomc.

Two Projects

The full solution requires two main components: a server-side or Web services project (which can be deployed on-premises or in Windows Azure), and a client-side project, which consists of a Windows Store application based on the new Windows UI. Both projects can be created with Visual Studio 2012.

Basically, there are two options for building the Web services project: Windows Communication Foundation (WCF) or the ASP.NET Web API, which is included with ASP.NET MVC 4. Because exposing services via WCF is widely documented, for our scenario we’ll use the more modern approach that the ASP.NET Web API brings to the table, truly embracing HTTP concepts (URIs and verbs). Also, this framework lets you create services that use more advanced HTTP features, such as request/response headers and hypermedia constructs.

Both projects can be tested on a single machine during development. You can download the entire solution at archive.msdn.microsoft.com/mag201210AzureInsider.

What You Need

The most obvious starting point is that Windows 8 is required, and it should come as no surprise that the projects should be created using the latest release of Visual Studio, which can be downloaded at bit.ly/win8devtools.

For the server-side project, you’ll need the latest Windows Azure SDK, which includes the necessary assemblies and tooling for creating cloud projects from within Visual Studio. You can download this SDK and related tooling at bit.ly/NlB5OB. You’ll also need a Windows Azure account. You can sign up for a free trial at bit.ly/azuretestdrive.

Historically, SOAP has been used to architect Web services, but this article will focus on REST-style architectures. In short, REST is easier to use, carries a smaller payload and requires no special tooling.

Developers must also choose a data-exchange format when building Web services. That choice is generally between JSON and XML. JSON uses a compact data format based on the JavaScript language. It’s often referred to as the “fat-free alternative” to XML because it has a much smaller grammar and maps directly to data structures used in client applications. We’ll use JSON data in our samples.
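The "fat-free" point can be illustrated by encoding one record both ways. The sketch below uses a hypothetical to-do item and a deliberately naive XML layout (one child element per field). It is an illustration of the trade-off, not a benchmark or the article's sample code.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical record, chosen only for illustration.
record = {"id": 1, "title": "Buy milk", "done": False}


def to_json(item):
    """Serialize the dict as compact JSON."""
    return json.dumps(item, separators=(",", ":"))


def to_xml(item, root_name="item"):
    """Serialize the dict as XML with one child element per key."""
    root = ET.Element(root_name)
    for key, value in item.items():
        ET.SubElement(root, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


as_json = to_json(record)
as_xml = to_xml(record)

# JSON decodes straight back into the same dict, with types intact.
decoded = json.loads(as_json)

# In this naive XML layout every value comes back as text, so the client
# must convert "1" and "False" to the right types itself.
xml_values = {child.tag: child.text for child in ET.fromstring(as_xml)}
```

For this record the JSON string is shorter because it has no closing tags, and `decoded` equals the original dict, while `xml_values` holds only strings.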

With these decisions made, we’re ready to create our Web service. Our goal is to build an HTTP-based service that reaches the broadest possible range of clients, including browsers and mobile devices.

Building the Web Service

Let’s begin by starting Visual Studio 2012 as Administrator. Here are the steps to create the server-side Web service, using the ASP.NET MVC 4 Web API:

- Click on the File menu and choose New | Project (see Figure 1).

- Select Visual C# | Web for the template type.

- Select .NET Framework 4 in the dropdown at the top.

- Select ASP.NET MVC 4 Web Application.

- Enter the Name WebService and a Location of your choice.

- Click OK.

- The wizard will ask you to select the Project Template. Choose Web API and be sure the View engine is Razor (the default), as shown in Figure 2.

- A variety of files will be generated by Visual Studio. This can be overwhelming, but we only need to worry about a couple of files, as shown in Figure 3.

Figure 1 New Project Dialog Box

Figure 2 Project Template Dialog Box

Figure 3 Solution Explorer for WebService

… The authors continue with detailed instructions for completing the server and client components.

Visual Studio LightSwitch and Entity Framework 4.1+

Windows Azure Infrastructure and DevOps

David Linthicum (@DavidLinthicum) asserted “With the explosion of cloud computing jobs, now is the time to map your path to more money and more cloud” in a deck for his How to get your first cloud computing job article of 10/12/2012 for InfoWorld’s Cloud Computing blog:

Cloud computing is expanding rapidly, with an accompanying need for cloud computing "experts" to make this technology work. That translates into many new jobs chasing very few qualified candidates. At the same time, many IT professionals are attempting to figure out how they can cash in on the cloud.

Most of the cloud jobs to be found these days require deep knowledge around a particular technology, such as Amazon Web Services, OpenStack, Salesforce.com, or Azure. This is typically due to the fact that the company has standardized on a cloud technology. I call these jobs cloud technology specialists, in that they focus on a specific cloud technology: development, implementation, management, and so on.

Other jobs would be cloud planning or architecture positions, often around the configuration of new systems in the cloud or the migration of existing systems to the cloud. I call these cloud planners. While you'd think candidates for this position would also be in demand, in most instances the listings are filled by existing IT staffers who understand full well that having cloud computing experience on their CV translates into larger paychecks going forward. You can't blame them.

Those looking to break into cloud computing will have the best luck by learning a specific technology, then taking a cloud technology specialist job. The trick is getting the initial experience.

The most ambitious candidates will begin their own "shadow IT" projects using a hot cloud computing technology, then soon find their way to a formal and high-paying cloud gig. Cloud computing is littered with stories about self-taught successes, due to the lack of formal training offered.

Those seeking higher-level jobs such as cloud planners and architects won't entertain as many options, but they can be found. The best way to prepare for these jobs is to understand all you can about the technology, including use cases and existing architectural best practices and approaches.

If there's an upside to the emergence of cloud computing, it's the number of job opportunities it's creating, much like any hyped technology trend we've seen in the past. Cloud computing, however, is a further-reaching, more systemic change in the way we consume technology. Thus, the job growth around this change will last for many years. Perhaps it's time to take advantage.

Brian Prince (@brianhprince) described the recent Update to Windows Azure Management Portal in a 10/12/2012 post:

This past week we released several changes to the portal. As many of you know, we released the ‘new’ portal this past June. Since then, some features have remained available only in the ‘old’ portal.

The new portal is based on HTML so that it is more cross-platform, lining up to support those developers who are using our new native Mac and Linux tools. Yay us! The old portal still uses Silverlight.

Anyway, what is in this update?

- Service Bus Management and Monitoring – You can now manage all of your Service Bus needs from the new portal.

- Support for Managing Co-administrators – Many people create subscriptions under an accounting or ‘super admin’ Live ID, and then add their developers’ individual Live IDs as co-admins. You can do this in the new portal now as well. It is under the settings tab.

- Import/Export support for SQL Databases – I love this feature. I can now, from the new portal, import and export the contents of my SQL Databases. The file format is BACPAC, which your normal, boring SQL Server fully understands. When you export, you will create the file in blob storage, which you can then download to your local machines.

- Virtual Machine Experience Enhancements – Now you can manage your VM disks when you manage your VMs. In the new portal, you can delete a VM and leave the disks in storage (for later use). If you want to delete the disks when you delete the VM because you are truly destroying everything (a sample machine, for example), this will now be easier.

- Improved Cloud Service Status Notifications – In the new portal, it was hard to tell what was happening with instances for cloud services as they were changing state (coming online, updating, running out for cat food, etc.). This is now surfaced in the UI so you can be all up to date and stuff.

- Media Services Monitoring Support – We have Media Services on Windows Azure, which lets you run media encoding jobs. The new portal can now show you what the status of those jobs is.

- Storage Container Creation and Access Control Support – You have always been able to create storage accounts in the new portal, and manage the keys. We have now added the ability to create blob containers in a storage account, and set their permission level at creation from within the portal. This saves you from having to write some code to do it.
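
For readers curious about the code the last item refers to, here is a minimal sketch of the Blob service "Create Container" REST request that the portal now issues on your behalf. It only builds a description of the request and sends nothing. The account name `myaccount`, the container name `images`, and the helper `create_container_request` are hypothetical, and the `x-ms-date` and `Authorization` (Shared Key) headers that a real call needs are deliberately left out.

```python
import re
from urllib.parse import urlencode

# Container names: 3-63 characters, lowercase letters, digits and single hyphens,
# starting and ending with a letter or digit.
_NAME_RE = re.compile(r"^[a-z0-9]+(-[a-z0-9]+)*$")

# None means private; "blob" and "container" are the two public access levels.
VALID_ACCESS = (None, "blob", "container")


def create_container_request(account, container, public_access=None,
                             api_version="2012-02-12"):
    """Describe (but do not send) a Create Container request.

    Hypothetical helper for illustration. A real request must also carry
    x-ms-date and a signed Authorization header, which are omitted here.
    """
    if not (3 <= len(container) <= 63 and _NAME_RE.match(container)):
        raise ValueError(f"invalid container name: {container!r}")
    if public_access not in VALID_ACCESS:
        raise ValueError(f"invalid access level: {public_access!r}")

    query = urlencode({"restype": "container"})
    url = f"https://{account}.blob.core.windows.net/{container}?{query}"

    headers = {"x-ms-version": api_version}
    if public_access is not None:
        # Omitting this header leaves the container private.
        headers["x-ms-blob-public-access"] = public_access

    return {"method": "PUT", "url": url, "headers": headers}


if __name__ == "__main__":
    request = create_container_request("myaccount", "images", public_access="blob")
    print(request["method"], request["url"])
    for name, value in request["headers"].items():
        print(f"{name}: {value}")
```

Setting the permission level at creation corresponds to the optional `x-ms-blob-public-access` header; leaving it out keeps the container private.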

It is amazing how fast we are moving with Windows Azure. Keep your ear out for more features, more fun, and more awesome.

For all of the details on this release to the portal, please check out Scott’s blog. He has fancy screen shots and everything. –> http://weblogs.asp.net/scottgu/archive/2012/10/07/announcing-improvements-to-the-windows-azure-portal.aspx

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

<Return to section navigation list>

Cloud Security and Governance

<Return to section navigation list>

Cloud Computing Events

Himanshu Singh (@himanshuks) posted Windows Azure Community News Roundup (Edition #40) on 6/12/2012:

Welcome to the latest edition of our weekly roundup of the latest community-driven news, content and conversations about cloud computing and Windows Azure. Here are the highlights for this week.

Articles and Blog Posts

- Windows Azure Cloud Service or Web Site: Where am I Deployed? by @SyntaxC4 (posted Oct 2)

- Setting up a SharePoint farm as an extension to Enterprise Network by @BanerjeeSanjeev (posted Oct 2)

- Round Three: Windows Azure Mobile Services on DevRadio by @jerrynixon (posted Oct 5)

- Having fun with Windows Azure Mobile Services – The Windows Phone application by @qmatteoq (posted Oct 9)

- Windows Azure Mobile Services, Maps & More by @JohannesKebeck (posted Oct 9)

- What PartitionKey and RowKey are for in Windows Azure Table Storage by @MaartenBalliauw (posted Oct 8)

Upcoming Events and User Group Meetings

North America

- October - June, 2013: Windows Azure Developer Camp – Various (50+ cities)

- October 10-14: Cloud & Virtualization LIVE – Orlando, FL

- October 16-18: TwilioCon – San Francisco, CA

- October 30-November 2: Microsoft BUILD 2012 – Redmond, WA

- November 12: (Satory Global) Windows Azure Developer Camp – Mtn. View, CA

- December 4: MongoSV – Silicon Valley, CA

Europe

- October 25: Catching the Long Tail with SaaS and Windows Azure – Leuven, Belgium

- November 5 – 9: Oredev – Malmo, Sweden

Rest of World/Virtual

- October 12,16,19: TechDays 2012 – South Africa (three cities)

- October 17: Brisbane Windows Azure User Group – Brisbane, Australia

- October 18: Windows Azure Sydney User Group – Sydney, Australia

- Ongoing: Windows Azure Virtual Labs – On-demand

Recent Windows Azure MSDN Forums Discussion Threads

- WAWS Preview to support .NET Framework 4.5 -- 1,819 views, 2 replies

- How to determine my monthly bill – 1,705 views, 3 replies

- How to change subscription location – 1,887 views, 1 reply

- Table data restriction – SQL server – 1,867 views, 1 reply

Recent Windows Azure Discussions on Stack Overflow

- Accessing Windows Azure Queues from client side javascript/jquery – 2 answers, 1 vote

- Does Azure Caching (Preview) preserve state across deployments? – 1 answer, 2 votes

Send us articles that you’d like us to highlight, or content of your own that you’d like to share. And let us know about any local events, groups or activities that you think we should tell the rest of the Windows Azure community about. You can use the comments section below, or talk to us on Twitter @WindowsAzure.

Jim O’Neil (@jimoneil) described on 10/11/2012 Boston Code Camp–October 20th:

I am a firm believer that community events like Code Camps are one of the best investments you as a technologist can make in your own career.

Where else can you learn about a myriad of topics – from beginner to advanced – and network with fellow developers, designers, architects, and IT professionals – FREE?

Your next opportunity to make that investment is Boston Code Camp, being held on Saturday, October 20th, at the New England Research and Development Center (NERD) in Cambridge.

The agenda isn’t quite yet complete - there’s still a brief window of opportunity to submit your own session - but here’s a subset of the nearly three dozen topics proposed so far.

- Arduino and .NET, Android Services with C#, NoSQL with Couchbase Server

- Multithreading in .NET 4.5, TypeScript, SVG Graphics with D3

- Spine.js on Azure, iPhone Native Apps with jyOS, Windows 8 and MonoGame

- PowerShell Programming, Securing WebAPI Services, Machine Learning in 60 Minutes

Attendance is free, thanks to sponsors like Telerik, ComponentOne, Bluefin Technical Services, and RGood Software, but do register for an accurate count for breakfast and lunch.

Steve Plank (@plankytronixx) reported on 10/11/2012 Event: Tech.Days Online in the UK: 30/31 October ‘12:

The IT Pro camps have proved super-popular. One of them filled within 10 minutes of its announcement on the blogs of my colleagues Simon May and Andrew Fryer. So there will be online sessions added to the schedule. These are running on the 30th and 31st October.

- 9:30–12:30 Windows Server 2012: PowerShell 3 and management, SMB3 and Hyper-V, to name a few

- 13:00–16:30 Windows Azure for IT Pros: a close look at the new VMs in Azure, ADFS and the stuff you need to know about managing Azure as part of your infrastructure

- 9:30–12:30 Windows 8, featuring VDI, BranchCache, Dynamic Access Control and BitLocker To Go

- 13:00–16:30 Private Cloud, focused on System Center 2012 with what’s new in the upcoming SP1

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Tiffany Trader asked Did Amazon Really Buy a Boatload of Teslas? in a 10/11/2012 post to the HPC in the Cloud (@HPCintheCloud) blog:

There's been some interesting speculation involving a popular GPU vendor and a certain Internet-bookstore-turned-cloud-provider. According to this piece published on Oct. 4, Amazon purchased more than 10,000 NVIDIA Tesla K10 boards for its AWS EC2 cloud.

While normally these boards, which pair two "Kepler" GK104 GPUs with 8GB of GDDR5 memory, sell for around $4,000, Amazon is said to have negotiated the price down to somewhere between $1,499 and $1,799 per card, bringing the total amount paid (or allegedly paid) into the $15 to $18 million range.

But there's more: Amazon reportedly subscribed to a warranty program worth almost as much as the GPGPUs. For an extra $500 per board per year, NVIDIA will immediately replace any faulty units. The two-year deal brings the total contract price up to a cool $25-28 million.
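
As a sanity check on the rumored figures, the arithmetic can be reproduced in a few lines. This is only a sketch: it takes 10,000 boards as a floor for "more than 10,000", and the function name `deal_estimate` is made up for illustration.

```python
# Back-of-the-envelope check of the rumored figures quoted above (all USD).
BOARDS = 10_000                  # "more than 10,000" boards, so this is a floor
PRICE_LOW = 1_499                # rumored negotiated price per board, low end
PRICE_HIGH = 1_799               # rumored negotiated price per board, high end
WARRANTY_PER_BOARD_YEAR = 500    # rumored replacement warranty per board per year
WARRANTY_YEARS = 2               # length of the rumored deal


def deal_estimate(boards=BOARDS):
    """Return (hardware_low, hardware_high, warranty, total_low, total_high)."""
    hardware_low = boards * PRICE_LOW
    hardware_high = boards * PRICE_HIGH
    warranty = boards * WARRANTY_PER_BOARD_YEAR * WARRANTY_YEARS
    return (hardware_low, hardware_high, warranty,
            hardware_low + warranty, hardware_high + warranty)


if __name__ == "__main__":
    hw_low, hw_high, warranty, total_low, total_high = deal_estimate()
    print(f"Hardware: ${hw_low / 1e6:.2f}M to ${hw_high / 1e6:.2f}M")
    print(f"Warranty: ${warranty / 1e6:.2f}M over {WARRANTY_YEARS} years")
    print(f"Total:    ${total_low / 1e6:.2f}M to ${total_high / 1e6:.2f}M")
```

At exactly 10,000 boards the hardware comes to $14.99 to $17.99 million and the warranty to $10 million, which lines up with the quoted $15 to $18 million and $25 to $28 million ranges.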

The author who made these detailed claims based them on "sources close to the company." He added that while Amazon was mainly interested in the single-precision K10s, it is also looking at buying some K20 boards for the subset of HPC users who require double-precision performance. This K20 is the same GPU inside petascale behemoths like Titan and Blue Waters. It has a lower retail price of $3,199, but due to high demand, significant volume discounts are difficult to come by.

Are the rumors true?

If the buy happened, why not shout it from the rooftops? It's a win-win for both companies. For Amazon, it gives cred to the HPC cloud model and affirms the company's 2010 decision to enter the GPU supercomputing space. And for NVIDIA, this is a huge single-customer sale, even taking into account the volume discount. There's even speculation that NVIDIA's stock price has been influenced on the basis of this story alone.

The level of detail in the source article belies the usual rumor mill pap. The author even mentioned that the boards "look a bit different" from the standard issue press photo. And there's a logic to the story – it certainly could be true. Still, this is essentially an unvetted piece, one that our source at NVIDIA denied and our AWS contact was unwilling to comment on. Perhaps NVIDIA and Amazon signed a non-disclosure agreement. Maybe one or both are planning a grand announcement and don't want to spoil the surprise.

For the record, a number of NVIDIA partners are making the power of GPUs available as a service. Amazon Web Services, PEER1/Zunicore, Nimbix, SoftLayer and Penguin Computing all offer GPU-based supercomputing on-demand.

As it stands now, Amazon's Cluster GPU Instance comes with a pair of NVIDIA Tesla M2050 "Fermi" chips, each of which contains 448 CUDA cores and offers 1.03 teraflops of single-precision floating point performance with 148 gigabytes per second of memory bandwidth. The Kepler architecture, which fits two Tesla K10 GPUs on a single accelerator board, has 3,072 cores total (1,536 per GPU) and delivers an aggregate 4.58 teraflops of single-precision performance and 320 gigabytes per second memory bandwidth.
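
To put those numbers side by side, the sketch below compares one K10 board against the pair of M2050s in the current Cluster GPU Instance, using only the figures quoted above. Summing cores, teraflops and bandwidth across the two M2050s is a simplifying assumption (each GPU has its own memory), and the `GpuConfig` type and helper names are invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuConfig:
    name: str
    cuda_cores: int
    sp_teraflops: float        # single-precision peak
    mem_bandwidth_gbs: float   # gigabytes per second


# Figures as quoted in the article.
M2050 = GpuConfig("Tesla M2050 (one GPU)", 448, 1.03, 148.0)
K10_BOARD = GpuConfig("Tesla K10 (one board, two GPUs)", 3072, 4.58, 320.0)


def times(cfg, n):
    """Aggregate n identical GPUs by simple summation (an idealized assumption)."""
    return GpuConfig(f"{n} x {cfg.name}", cfg.cuda_cores * n,
                     cfg.sp_teraflops * n, cfg.mem_bandwidth_gbs * n)


def ratios(new, old):
    """How many times larger each headline figure is in `new` than in `old`."""
    return {
        "cuda_cores": new.cuda_cores / old.cuda_cores,
        "sp_teraflops": new.sp_teraflops / old.sp_teraflops,
        "mem_bandwidth_gbs": new.mem_bandwidth_gbs / old.mem_bandwidth_gbs,
    }


if __name__ == "__main__":
    current_instance = times(M2050, 2)   # the pair in today's Cluster GPU Instance
    for metric, ratio in ratios(K10_BOARD, current_instance).items():
        print(f"{metric}: {ratio:.2f}x")
```

On these peak figures, a single K10 board roughly doubles the single-precision throughput of the current two-GPU instance, while aggregate memory bandwidth improves only slightly.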

Amazon will need to upgrade its GPU offering sooner or later, and Kepler is the logical choice if they want to stay current to the needs of high-end users.

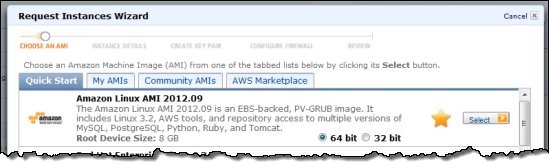

Jeff Barr (@jeffbarr) posted Amazon Linux AMI 2012.09 Now Available by Max Spevack on 10/11/2012:

Max Spevack of the Amazon EC2 team brings news of the latest Amazon Linux AMI.

-- Jeff;

The Amazon Linux AMI 2012.09 is now available.

Since we removed the “Public Beta” tag from the Amazon Linux AMI last September, we’ve been on a six-month release cycle focused on making sure that EC2 customers have a stable, secure, and simple Linux-based AMI that integrates well with other AWS offerings.

There are several new features worth discussing, as well as a host of general updates to packages in the Amazon Linux AMI repositories and to the AWS command line tools. Here's what's new:

- Kernel 3.2.30: We have upgraded the kernel to version 3.2.30, which follows the 3.2.x kernel series that we introduced in the 2012.03 AMI.

- Apache 2.4 & PHP 5.4: This release supports multiple versions of both Apache and PHP, and they are engineered to work together in specific combinations. The first combination is the default, Apache 2.2 in conjunction with PHP 5.3, which are installed by running yum install httpd php. Based on customer requests, we support Apache 2.4 in conjunction with PHP 5.4 in the package repositories. These packages are accessed by running yum install httpd24 php54.

- OpenJDK 7: While OpenJDK 1.6 is still installed by default on the AMI, OpenJDK 1.7 is included in the package repositories, and available for installation. You can install it by running yum install java-1.7.0-openjdk.

- R 2.15: Also coming from your requests, we have added the R language to the Amazon Linux AMI. We are here to serve your statistical analysis needs! Simply yum install R and off you go.

- Multiple Interfaces & IP Addresses: Additional network interfaces attached while the instance is running are configured automatically. Secondary IP addresses are refreshed during DHCP lease renewal, and the related routing rules are updated.

- Multiple Versions of GCC: The default version of GCC that is available in the package repositories is GCC 4.6, which is a change from the 2012.03 AMI in which the default was GCC 4.4 and GCC 4.6 was shipped as an optional package. Furthermore, GCC 4.7 is available in the repositories. If you yum install gcc you will get GCC 4.6. For the other versions, either run yum install gcc44 or yum install gcc47.
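
The package choices in the list above can be summarized as a small lookup. The sketch below only assembles the `yum install` command line for a chosen set of features and does not run it. The feature keys and the `yum_install_command` helper are hypothetical names, the package names are the ones given in the release notes, and the `-y` flag (answer yes to prompts) is an addition for unattended use.

```python
# Package sets named in the release notes above; the dictionary keys are
# hypothetical labels chosen for this example.
PACKAGE_SETS = {
    "apache22-php53": ["httpd", "php"],        # default combination
    "apache24-php54": ["httpd24", "php54"],    # newer combination
    "openjdk7": ["java-1.7.0-openjdk"],
    "r": ["R"],
    "gcc46": ["gcc"],                          # default GCC in 2012.09
    "gcc44": ["gcc44"],
    "gcc47": ["gcc47"],
}


def yum_install_command(*features):
    """Build (but do not run) a yum command line for the requested features."""
    packages = []
    for feature in features:
        if feature not in PACKAGE_SETS:
            raise KeyError(f"unknown feature: {feature!r}")
        packages.extend(PACKAGE_SETS[feature])
    if not packages:
        raise ValueError("no features requested")
    # -y is an assumption for non-interactive installs; drop it to be prompted.
    return ["yum", "install", "-y", *packages]


if __name__ == "__main__":
    command = yum_install_command("apache24-php54", "openjdk7", "gcc47")
    print(" ".join(command))
```

The returned list can be passed to `subprocess.run` on an instance with root privileges, or simply printed and pasted into a shell.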

The Amazon Linux AMI 2012.09 is available for launch in all regions. Users of 2012.03, 2011.09, and 2011.02 versions of the Amazon Linux AMI can easily upgrade using yum.

The Amazon Linux AMI is a rolling release, configured to deliver a continuous flow of updates that allow you to roll from one version of the Amazon Linux AMI to the next. In other words, Amazon Linux AMIs are treated as snapshots in time, with a repository and update structure that gives you the latest packages that we have built and pushed into the repository. If you prefer to lock your Amazon Linux AMI instances to a particular version, please see the Amazon Linux AMI FAQ for instructions.

As always, if you need any help with the Amazon Linux AMI, don’t hesitate to post on the EC2 forum, and someone from the team will be happy to assist you.

<Return to section navigation list>
