Windows Azure and Cloud Computing Posts for 9/27/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 9/29/2012 8:00 AM PDT with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Data Hub, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop and Media Services

Mary Jo Foley (@maryjofoley) asserted “Microsoft's Bing team is teaming up with social-media vendor Klout in the name of social-influence and big data” in a deck for her Microsoft invests in Klout; integrates data into Bing post of 9/27/2012:

Microsoft is making a "strategic investment" of an undisclosed size in social-media vendor Klout, company officials announced on its Bing Community blog on September 27.

On Bing, Microsoft is going to display Klout data -- including a person's Klout score and topics they are "influential" about -- on the new Bing Sidebar pane for those users who can and want to see this information. And on Klout, "highlights from Bing will begin surfacing in the 'moments' section of some people's Klout profiles," a Microsoft spokesperson said.

This new partnership is related to Microsoft's ongoing work to integrate social-search results into its Bing search engine via the sidebar panel, the same way that it does with Quora and foursquare.

Your reaction to this news probably indicates a lot of things about you. (I know it does of me.)

If you're living in the Silicon Valley area and/or are someone who thinks your Klout score really matters, you probably are thinking: "Wow, Microsoft!" If you're a jaded non-Bubble-dwelling person like me, you might be thinking something more like "Wow, Microsoft?"

As one of my Twitter chums joked today, my Klout score on Microsoft -- which I truly don't know and don't care -- is probably minus-500 after my tweets and this post.

Microsoft is maintaining this isn't all fluff and no stuff. There's also a big-data connection to today's partnership and investment, according to today's post. Microsoft officials have said repeatedly that one of the biggest benefits of Bing is the massive amount of information it helps Microsoft collect and parse.

"Search as a new outbound signal is an interesting new development in the way we think about big data and how it can add value to lots of the other services we use each day," according to today's post. (And no, I don't really know what, if anything, that sentence actually means, either.)

I'm not anti-social media. I find Twitter really useful, and I know some do take Klout score quite seriously. I am not among them. I would never use Klout to find an expert in a subject area, as I know that many folks give one another Klout points as jokes. But I'm also someone who doesn't want to see my Twitter, Facebook and LinkedIn friends' recommendations on my search queries, either -- which is something Microsoft is encouraging with its latest Bing redesign, which the company announced in March 2012.

To try the new Klout-Bing integration, users should go to Bing.com, log into Facebook and try some searches. Microsoft suggests starting with “movies, nfl schedule, or stanford university.”

Update: So maybe there really is a big data --and a Hadoop-specific play -- in this Klout arrangement after all. Thanks to another of my Twitter buds, @Lizasisler from Perficient, comes this May 2012 GigaOm story about the relationship between Hadoop, Microsoft and Klout. (Remember, Microsoft is working on Hadoop for Windows Azure, and supposedly still Hadoop for Windows Server.) It sounds from this article as though Klout is a big SQL Server shop and a likely MySQL switcher.

I live in Oakland, about 50 miles north of Silicon Valley and my Klout score hovers near 50, whatever that means.

The Bing Team posted Taking the Fear Out of Commitment; New Offer For the Way You Build in The Cloud on 9/27/2012:

In May we introduced sidebar, bringing you relevant information from friends, experts and enthusiasts across social networks to help you find anything from technical tips and restaurant advice to the latest media buzz – right within Bing. Since then, we’ve added new partners and expanded our social data to include the top social networks on the web like Facebook, Twitter, foursquare and Quora – and we’re not done yet!

Today, we’re excited to announce that Bing and Klout are partnering to help enrich the discovery and recognition of influencers across our platforms. This is an alliance based on a shared belief that people are at the center of task completion. To help you find the right person we need to determine who is influential and trusted on different topics on the web. Bing and Klout share this vision. In addition to the technical partnership described below, we are announcing that we are making a strategic investment in Klout.

Klout brings a deep social DNA to the table having done some very cool and exciting work to help people understand social influence, find their voice and have an impact on the world. When we combine that expertise with the work our Bing team has done on the marriage of search and social, along with the big science challenges around relevance and ranking (especially people ranking), there are exciting possibilities for what we can do together for web users. Our engineering teams will work together to expand the scope of social search and influence.

In our products, we have some interesting integrations into Bing and also into Klout. On Bing, we will surface Klout scores and influential topics for many of the experts in the People Who Know section of the sidebar. Klout as a social influence signal in the sidebar will really help customers connect with the right experts on the topics they are searching for.

As part of Klout’s recently unveiled moments feature, we’ll begin surfacing Bing highlights on some Klout users’ profiles, demonstrating how search can be a powerful new indicator of online influence. What’s interesting about our work on the Klout service is that for the first time Bing is an outbound signal for influence. Search as a new outbound signal is an interesting new development in the way we think about big data and how it can add value to lots of the other services we use each day.

This is just the beginning of Bing and Klout’s partnership and we believe that there’s a lot of exciting opportunities when you look at deepening the integration of social search and online influence. We hope that the partnership will ultimately lead to platform enhancements that enrich the discovery and recognition of influencers on both Bing and Klout so stay tuned!

Give it a try at bing.com. Make sure you’re logged into Facebook and start searching. Try searching for “movies, nfl schedule, or stanford university” and tell us what you think.

Denny Lee (@dennylee) reported Klout powers Bing Social Search! on 9/27/2012:

A pretty exciting announcement by Klout happened today – they are now powering Bing Social Search as noted in Bing has Klout!

Hats off to them on how they have changed the landscape of social media.

And if you want more information on how they did it? Check out Dave Mariani (@dmariani) – VP Engineering at Klout – and my presentation during Hadoop Summit 2012:

How Klout is changing the landscape of social media with Hadoop and BI from Denny Lee [see post below.]

To know more about how Analysis Services to Hive works (the BI portion noted in the above slides), please refer to our case study SQL Server Analysis Services to Hive – A Klout Case Study authored by Kay Unkroth.

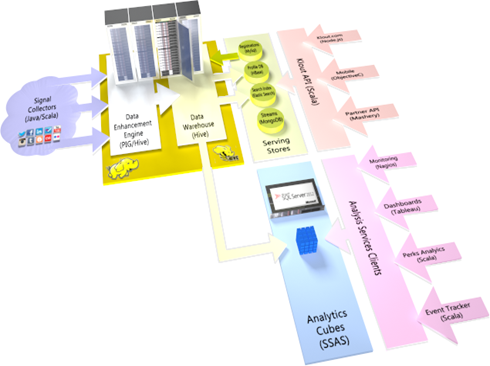

Denny Lee (@dennylee) described SQL Server Analysis Services to Hive [with SSIS] in a 9/26/2012 post:

Over the last few months, we’ve chatted about how Hadoop and BI are better together – it’s a common theme that Dave Mariani (@dmariani) and I have spoken about together in our session How Klout changed the landscape of social media with Hadoop and BI.

While this all sounds great, the typical question is “How do I do it”? I am proud to say that after months of intense writing – all of this is due to the amazing work by Kay Unkroth who regularly blogs on the official Analysis Services and PowerPivot Team blog – we now have the first of our great collateral: SQL Server Analysis Services to Hive: A Klout Case Study.

Boris Evelson asked What Do BI Vendors Mean When They Say They Integrate With Hadoop in a 9/25/2012 post to his Forrester Research blog:

There's certainly a lot of hype out there about big data. As I previously wrote, some of it is indeed hype, but there are still many legitimate big data cases - I saw a great example during my last business trip. Hadoop certainly plays a key role in the big data revolution, so all business intelligence (BI) vendors are jumping on the bandwagon and saying that they integrate with Hadoop. But what does that really mean?

First of all, Hadoop is not a single entity; it's a conglomeration of multiple projects, each addressing a certain niche within the Hadoop ecosystem, such as data access, data integration, DBMS, system management, reporting, analytics, data exploration, and much much more. To lift the veil of hype, I recommend that you ask your BI vendors the following questions:

- Which specific Hadoop projects do you integrate with (HDFS, Hive, HBase, Pig, Sqoop, and many others)?

- Do you work with the community edition software or with commercial distributions from MapR, EMC/Greenplum, Hortonworks, or Cloudera? Have these vendors certified your Hadoop implementations?

- Are you querying Hadoop data directly from your BI tools (reports, dashboards) or are you ingesting Hadoop data into your own DBMS? If the latter:

- Are you selecting Hadoop result sets using Hive?

- Are you ingesting Hadoop data using Sqoop?

- Is your ETL generating and pushing down Map Reduce jobs to Hadoop? Are you generating Pig scripts?

- Are you querying Hadoop data via SQL?

- If yes, who provides relational structures? Hive? If Hive,

- Who translates HiveQL to SQL?

- Who provides transactional controls like multiphase commits and others?

- Do you need Hive to provide relational structures, or can you query HDFS data directly?

- Are you querying Hadoop data via MDX? If yes, please let me know what tools you are using, as I am not aware of any.

- Can you access NoSQL Hadoop data? Which NoSQL DBMS? HBase, Cassandra? Since your queries are mostly based on SQL or MDX, how do you access these key value stores? If yes, please let me know what use cases you have for BI using NoSQL, as I am not aware of any.

- Do you have a capability to explore HDFS data without a data model? We call this discovery, exploration.

- As Hadoop MapReduce jobs are running, who provides job controls? Do you integrate with Hadoop Oozie, Ambari, Chukwa, Zookeeper?

- Can you join Hadoop data with other relational or multidimensional data in federated queries? Is it a pass-through federation? Or do you persist the results? Where? In memory? In Hadoop? In your own server?

As you can see, you really need to peel back a few layers of the onion before you can confirm that your BI vendor REALLY integrates with Hadoop.

Curious to hear from our readers if I missed anything.

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

• Nick Harris (@cloudnick) explained Windows 8: How to upload an Image using a Blob Storage SAS generated by Windows Azure Mobile Services on 9/25/2012 (missed when posted):

This post details how to capture an image on Windows 8 and upload it directly to Windows Azure Blob Storage using a Shared Access Signature (SAS) generated within Windows Azure Mobile Services. It demonstrates an alternative approach (i.e. using a SAS) suited to larger-scale implementations, in contrast to the approach in the article Storing Images from Android in Windows Azure Mobile Services.

Note: This topic is advanced and assumes that you have a good knowledge of the Windows Azure Blob REST API and Windows Azure Mobile Services. I would suggest you check out the following tutorials and Blob Storage REST API prior to starting

Note: Although this is specifically an image example, you could upload any media/binary data to blob storage using the same approach.

Background – Shared Access Signature

What Are Shared Access Signatures?

A Shared Access Signature is a URL that grants access rights to containers, blobs, queues, and tables. By specifying a Shared Access Signature, you can grant users who have the URL access to a specific resource for a specified period of time. You can also specify what operations can be performed on a resource that’s accessed via a Shared Access Signature. In the case of Blobs operations include:

- Reading and writing page or block blob content, block lists, properties, and metadata

- Deleting, leasing, and creating a snapshot of a blob

- Listing the blobs within a container
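To make the idea of "a URL that grants access rights" concrete, the following is a minimal Node sketch that picks a SAS URL apart and reports what it grants. The sample URL, its signature value, and the `describeSas` helper are all made up for illustration; the query parameter names (`sv`, `sr`, `se`, `sp`, `sig`) are the ones used by the server-side script later in this post.

```javascript
// Sketch: inspect the query parameters of a Shared Access Signature URL.
// The sample URL and its signature are invented for illustration only.
const sampleSas =
  'https://myaccount.blob.core.windows.net/test/photo.jpg' +
  '?sv=2012-02-12&sr=b&se=2012-09-25T12%3A30%3A00Z&sp=rw&sig=ZmFrZVNpZ25hdHVyZQ%3D%3D';

// describeSas is a hypothetical helper, not part of any Azure SDK.
function describeSas(sasUrl, now) {
  const url = new URL(sasUrl);
  const q = url.searchParams;
  const expiry = new Date(q.get('se'));
  return {
    resourcePath: url.pathname,          // container and blob the token applies to
    version: q.get('sv'),                // storage REST API version
    resourceType: q.get('sr'),           // 'b' = blob, 'c' = container
    permissions: q.get('sp').split(''),  // e.g. ['r', 'w']
    expiry: expiry,
    expired: now.getTime() > expiry.getTime(),
    hasSignature: q.has('sig')
  };
}

const info = describeSas(sampleSas, new Date('2012-09-25T12:00:00Z'));
console.log(info);
```

The sketch only reads the token; it cannot tell whether the signature is valid, since that check needs the account key and happens in the storage service.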

Why not just use the storage account name and key directly?

There are a few standout reasons:

- Security – When building device applications you should not store your storage account name and key within the device app, because doing so leaves your storage account open to misuse. If someone were to reverse engineer your application and take your storage account key, they would essentially have access to 100TB of cloud-based storage until you noticed and reset the key. The safer approach is to use a SAS, as it provides a time-boxed token with defined permissions to a defined resource. With policies, the token can also be invalidated/revoked.

- Scale Out (and associated costs) – A common approach I see is uploading an image through the web tier, e.g. a Web API or Mobile Service. The unfortunate consequence of this at scale is that you are unnecessarily loading your web tier. Consider that each instance on your web tier has limited network I/O. Uploading images through it will max out that I/O and force you to scale out (add more instances) much sooner than alternative approaches would. Now consider a scenario where your application requests only a SAS from your web tier: you have moved MBs of image load off your web tier and replaced it with a small ~100–200 byte SAS. A single instance will now provide much more throughput, and your upload I/O hits the Blob storage service directly.
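A rough back-of-the-envelope calculation shows the size of the scale-out effect. The upload count and image size below are assumptions chosen purely for illustration; only the ~200 byte SAS size comes from the text above.

```javascript
// Assumed figures for illustration only: 1,000 uploads of a 2 MB image each,
// and a 200-byte SAS (the upper end of the range quoted above).
const uploads = 1000;
const imageBytes = 2 * 1024 * 1024;
const sasBytes = 200;

// Bytes that cross the web tier when images are proxied through it.
const proxiedBytes = uploads * imageBytes;
// Bytes that cross the web tier when it only hands out SAS tokens.
const sasOnlyBytes = uploads * sasBytes;

console.log('proxied through web tier:', proxiedBytes, 'bytes');
console.log('SAS only:', sasOnlyBytes, 'bytes');
console.log('reduction factor:', proxiedBytes / sasOnlyBytes);
```

Under these assumptions the web tier carries roughly ten thousand times less payload when it only issues tokens; the image bytes still travel, but to the Blob storage service instead.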

What is the general workflow for uploading a blob using a SAS?

The four basic steps required when uploading an image using the SAS approach depicted are as follows:

- Request a SAS from your service

- SAS returned from your service

- Upload blob (image/video/binary data) directly to Blob Storage using the SAS

- Storage service returns response

For this post we will focus specifically on how to write/upload a blob using a shared access signature that is generated in the mobile service insert trigger.

Creating your Mobile Service

In this post I will extend the Mobile Services quick start sample. Before proceeding to the next section create a mobile service and download the quickstart as detailed in the tutorial here

Capturing the Image|Media

Our first task is to capture the media we wish to upload. To do this follow the following steps.

- Add an AppBar to MainPage.xaml with a take photo button to allow us to capture the image

...

</Grid>

...

<Page.BottomAppBar>

<AppBar>

<Button Name="btnTakePhoto" Style="{StaticResource PhotoAppBarButtonStyle}"

Command="{Binding PlayCommand}" Click="OnTakePhotoClick" />

</AppBar>

</Page.BottomAppBar>

...

</Page>


- Add the OnTakePhotoClick handler and use the CameraCaptureUI class for taking photo and video

using Windows.Media.Capture;

private async void OnTakePhotoClick(object sender, RoutedEventArgs e)

{

//Take photo or video

CameraCaptureUI cameraCapture = new CameraCaptureUI();

StorageFile media = await cameraCapture.CaptureFileAsync(CameraCaptureUIMode.PhotoOrVideo);

}


Generating a Shared Access Signature (SAS) using Mobile Services server-side script

In this step we add a server-side script to generate a SAS on the insert operation of the TodoItem table.

To do this perform the following steps:

- Navigate to your Mobile Service and select the Data Tab, then click on Todoitem

- Select Script, then the Insert drop down

- Add the following server side script to generate the SAS

Note: this code assumes there is already a public container called test.

//Simple example of generating a Windows Azure blob SAS in Node, created using the guidance at http://msdn.microsoft.com/en-us/library/windowsazure/hh508996.aspx.

//If your environment has access to the Windows Azure SDK for Node (https://github.com/WindowsAzure/azure-sdk-for-node) then you should use that instead.

function insert(item, user, request) {

var accountName = '<Your Account Name>';

var accountKey = '<Your Account Key>';

//Note: this code assumes the container already exists in blob storage.

// If you wish to dynamically create the container then implement guidance here - http://msdn.microsoft.com/en-us/library/windowsazure/dd179468.aspx

var container = 'test';

var imageName = item.ImageName;

item.SAS = getBlobSharedAccessSignature(accountName, accountKey, container, imageName);

request.execute();

}

function getBlobSharedAccessSignature(accountName, accountKey, container, fileName){

signedExpiry = new Date();

signedExpiry.setMinutes(signedExpiry.getMinutes() + 30);

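//Format from a literal template: formatting the module-level variable in place would leave no %s placeholders for a later call
canonicalizedResource = util.format('/%s/%s/%s', accountName, container, fileName);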

signature = getSignature(accountKey);

var queryString = getQueryString();

return util.format(resource, accountName, container, fileName, queryString);

}

function getSignature(accountKey){

var decodedKey = new Buffer(accountKey, 'base64');

var stringToSign = signedPermissions + "\n" + signedStart + "\n" + getISO8601NoMilliSeconds(signedExpiry) + "\n" + canonicalizedResource + "\n" + signedIdentifier + "\n" + signedVersion;

stringToSign = stringToSign.toString('UTF8');

return crypto.createHmac('sha256', decodedKey).update(stringToSign).digest('base64');

}

function getQueryString(){

var queryString = "?";

queryString += addEscapedIfNotNull(queryString, Constants.SIGNED_VERSION, '2012-02-12');

queryString += addEscapedIfNotNull(queryString, Constants.SIGNED_RESOURCE, signedResource);

queryString += addEscapedIfNotNull(queryString, Constants.SIGNED_START, getISO8601NoMilliSeconds(signedStart));

queryString += addEscapedIfNotNull(queryString, Constants.SIGNED_EXPIRY, getISO8601NoMilliSeconds(signedExpiry));

queryString += addEscapedIfNotNull(queryString, Constants.SIGNED_PERMISSIONS, signedPermissions);

queryString += addEscapedIfNotNull(queryString, Constants.SIGNATURE, signature);

queryString += addEscapedIfNotNull(queryString, Constants.SIGNED_IDENTIFIER, signedIdentifier);

return queryString;

}

function addEscapedIfNotNull(queryString, name, val){

var result = '';

if(val)

{

var delimiter = (queryString.length > 1) ? '&' : '' ;

result = util.format('%s%s=%s', delimiter, name, encodeURIComponent(val));

}

return result;

}

function getISO8601NoMilliSeconds(date){

if(date)

{

var raw = date.toJSON();

//blob service does not like milliseconds on the end of the time so strip

return raw.substr(0, raw.lastIndexOf('.')) + 'Z';

}

}

var Constants = {

SIGNED_VERSION: 'sv',

SIGNED_RESOURCE: 'sr',

SIGNED_START: 'st',

SIGNED_EXPIRY: 'se',

SIGNED_PERMISSIONS: 'sp',

SIGNED_IDENTIFIER: 'si',

SIGNATURE: 'sig',

};

var crypto = require('crypto');

var util = require('util');

//http://msdn.microsoft.com/en-us/library/windowsazure/hh508996.aspx

var resource = 'https://%s.blob.core.windows.net/%s/%s%s';

//Version of the storage rest API

var signedVersion = '2012-02-12';

//signedResource. use b for blob, c for container

var signedResource = 'b'; //

// The signedpermission portion of the string must include the permission designations in a fixed order that is specific to each resource type. Any combination of these permissions is acceptable, but the order of permission letters must match the order in the following table.

var signedPermissions = 'rw'; //blob perms must be in this order rwd

// Example - Use ISO 8601 format

var signedStart = '';

var signedExpiry = '';

// Example Blob

// URL = https://myaccount.blob.core.windows.net/music/intro.mp3

// canonicalizedresource = "/myaccount/music/intro.mp3"

var canonicalizedResource = '/%s/%s/%s';

//The string-to-sign is a unique string constructed from the fields that must be verified in order to authenticate the request. The signature is an HMAC computed over the string-to-sign and key using the SHA256 algorithm, and then encoded using Base64 encoding.

var signature = '';

//Optional. A unique value up to 64 characters in length that correlates to an access policy specified for the container, queue, or table.

var signedIdentifier = '';
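The script above keeps its intermediate values in module-level variables. As an alternative way to read the same steps, here is a compact sketch that passes everything in as parameters and returns the SAS URL. The `buildBlobSas` helper and its option names are hypothetical; the string-to-sign field order and the `2012-02-12` version are taken from the script above, and the account name and key are placeholders rather than real credentials.

```javascript
const crypto = require('crypto');

// buildBlobSas is a hypothetical helper; option names are chosen for this sketch.
function buildBlobSas(opts) {
  const version = '2012-02-12';
  // Strip milliseconds: 2012-09-25T12:30:00.000Z -> 2012-09-25T12:30:00Z
  const expiry = opts.expiry.toISOString().replace(/\.\d{3}Z$/, 'Z');
  const canonicalizedResource =
    '/' + opts.accountName + '/' + opts.container + '/' + opts.blobName;

  // Field order follows the script above: permissions, start, expiry,
  // canonicalized resource, identifier, version. Start and identifier are empty.
  const stringToSign = [
    opts.permissions, '', expiry, canonicalizedResource, '', version
  ].join('\n');

  // HMAC-SHA256 over the string-to-sign, keyed with the base64-decoded account key.
  const signature = crypto
    .createHmac('sha256', Buffer.from(opts.accountKey, 'base64'))
    .update(stringToSign, 'utf8')
    .digest('base64');

  const query = [
    ['sv', version], ['sr', 'b'], ['se', expiry],
    ['sp', opts.permissions], ['sig', signature]
  ].map(function (pair) {
    return pair[0] + '=' + encodeURIComponent(pair[1]);
  }).join('&');

  return 'https://' + opts.accountName + '.blob.core.windows.net/' +
    opts.container + '/' + opts.blobName + '?' + query;
}

// The account name and key are placeholders, not real credentials.
const sas = buildBlobSas({
  accountName: 'myaccount',
  accountKey: Buffer.from('not-a-real-key').toString('base64'),
  container: 'test',
  blobName: 'photo.jpg',
  permissions: 'rw',
  expiry: new Date('2012-09-25T12:30:00.000Z')
});
console.log(sas);
```

Because nothing is stored between calls, the function can be called repeatedly with different blob names. The sketch does not escape unusual characters in the blob name, which a production version would need to handle.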


Uploading the Image directly to storage using the SAS

- To generate the SAS we must insert a todoItem, which will now return the SAS property for the image. Thus, update the OnTakePhotoClick handler to insert an item.

private async void OnTakePhotoClick(object sender, RoutedEventArgs e)

{

//Take photo or video

CameraCaptureUI cameraCapture = new CameraCaptureUI();

StorageFile media = await cameraCapture.CaptureFileAsync(CameraCaptureUIMode.PhotoOrVideo);

//add todo item to trigger insert operation which returns item.SAS

var todoItem = new TodoItem() { Text = "test image", ImageName = media.Name };

await todoTable.InsertAsync(todoItem);

items.Add(todoItem);

//TODO: Upload image direct to blob storage using SAS

}


- Update the OnTakePhotoClick handler to upload the image directly to blob storage using the HttpClient and the generated item.SAS

private async void OnTakePhotoClick(object sender, RoutedEventArgs e)

{

//Take photo or video

CameraCaptureUI cameraCapture = new CameraCaptureUI();

StorageFile media = await cameraCapture.CaptureFileAsync(CameraCaptureUIMode.PhotoOrVideo);

//add todo item

var todoItem = new TodoItem() { Text = "test image", ImageName = media.Name };

await todoTable.InsertAsync(todoItem);

items.Add(todoItem);

//Upload image with HttpClient to the blob service using the generated item.SAS

using (var client = new HttpClient())

{

//Get a stream of the media just captured

using (var fileStream = await media.OpenStreamForReadAsync())

{

var content = new StreamContent(fileStream);

content.Headers.Add("Content-Type", media.ContentType);

content.Headers.Add("x-ms-blob-type", "BlockBlob");

using (var uploadResponse = await client.PutAsync(new Uri(todoItem.SAS), content))

{

//TODO: any post processing

}

}

}

}
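For readers who want to exercise a SAS outside a Windows 8 app, the same upload request can be sketched in Node. This is only an illustration of the request shape, not part of the original post: `buildBlobPutOptions` is a hypothetical helper, the SAS URL is a made-up placeholder, and the block prepares the request without sending it.

```javascript
// buildBlobPutOptions is a hypothetical helper; it only prepares the request.
function buildBlobPutOptions(sasUrl, contentType, contentLength) {
  const url = new URL(sasUrl);
  return {
    method: 'PUT',
    hostname: url.hostname,
    path: url.pathname + url.search,   // the SAS query string must be kept intact
    headers: {
      'Content-Type': contentType,
      'Content-Length': contentLength,
      'x-ms-blob-type': 'BlockBlob'    // same header the C# handler sets
    }
  };
}

// Placeholder SAS URL; a real one would come back from the Mobile Service insert.
const options = buildBlobPutOptions(
  'https://myaccount.blob.core.windows.net/test/photo.jpg?sv=2012-02-12&sr=b&sp=rw&sig=abc',
  'image/jpeg',
  1024
);
console.log(options);

// To actually send the bytes, the options could be passed to https.request:
//   const req = require('https').request(options, function (res) {
//     console.log(res.statusCode);   // Put Blob is documented to return 201 on success
//   });
//   req.end(imageBuffer);            // imageBuffer: a Buffer holding the image
```

Note that no storage account key appears anywhere on the client side; the signed URL is the only credential the uploader holds.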


Run the application

- Hit F5 on the application and right click with your mouse to show the app bar

- Press the Take Photo button

- Observe that the SAS is returned from your Mobile Service

- Check your storage account now has the captured virtual High Five photo/video

Mike Taulty (@mtaulty) continued his series with Experimenting with Windows Azure Mobile Services (Round 2) on 9/27/2012:

One of the things that I was looking at in my post on Azure Mobile Services the other day was the idea of creating a ‘virtual table’ or a view which represented a join across two tables.

For example, imagine I have a new service mtaultyTest.azure-mobile.net where I’ve defined a person table;

and an address table;

and then if I insert a few rows;

and then I can very easily select data from either table with an HTTP GET, but if I wanted to join the tables (server-side) it’s a little more complex, because I’d have to alter the read script on the person table to automatically join to address and return that dataset, or I could alter the read script on the address table.

However, I don’t really want to alter either script and so (as in the previous post) I create a personAddress table where my hope is to join the tables together in a read script and present a ‘view’.

In the previous post while I knew that I could create a read script on personAddress, I didn’t know how I could grab the details of the query (against personAddress) with a view to trying to redirect it towards another table such as person. I asked around and Paul gave me some really useful info although it’s not quite going to get me where I want to be in this particular post.

Here’s the info that I wasn’t aware of - imagine I’m sending a query like this to the person table;

on the server-side, I can pick up the details of that query in a read script by making a call to getComponents();

and the log output of this looks like;

i.e. it gives me all the information I need to pull the query apart (albeit in a way that’s not documented against Azure Mobile Services right now as far as I know).

There’s a corresponding setComponents method which promises the idea of being able to change the query. Clearing the read script from my person table, for my personAddress table I thought that I might be able to write a read script like;

and, in theory, this does work and you can alter the properties like skip, take, includeTotalCount and so on but in the current preview you can’t actually alter the table property which is what I was wanting to do in order to query a different table than the one that the script is directly targeted at.

I believe that this might change in the future so I’ll revisit this idea as/when the preview moves along but I thought I’d share the getComponents()/setComponents() part.
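The getComponents()/setComponents() pattern described above can be sketched as follows. Because the screenshots of the original scripts are not reproduced here and the API is undocumented, this example runs against a stand-in query object: `makeFakeQuery` is hypothetical, and the shape of the components object is assumed from the property names mentioned in the text (table, skip, take, includeTotalCount). It illustrates the idea and is not the author's script.

```javascript
// makeFakeQuery is a stand-in for the query object a read script receives.
// Its component shape is assumed from the property names mentioned above.
function makeFakeQuery(components) {
  let current = Object.assign({}, components);
  return {
    getComponents: function () { return Object.assign({}, current); },
    setComponents: function (next) { current = Object.assign({}, next); }
  };
}

// A read script in the style described: inspect the query, adjust paging, run it.
function read(query, user, request) {
  const components = query.getComponents();
  console.log(components);                       // log what the client asked for
  components.take = Math.min(components.take || 50, 10);
  components.includeTotalCount = true;
  query.setComponents(components);
  request.execute();
}

// Exercise the script with the stand-in objects.
const query = makeFakeQuery({ table: 'person', skip: 0, take: 25, includeTotalCount: false });
let executed = false;
read(query, {}, { execute: function () { executed = true; } });
console.log(query.getComponents());
```

As the post notes, changing the table property had no effect in the preview, so the sketch only touches the paging-related properties.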

Josh Twist (@joshtwist) explained Making HTTP requests from Scripts in Mobile Services in a 9/26/2012 post:

It’s no secret that my favorite feature of our first release of Mobile Services is the ability to execute scripts on the server. This is useful for all kinds of scenarios from validation and authorization to sending push notifications. We made it very easy to send push notifications via WNS (Windows Notification Services), it’s basically a single code statement:

push.wns.sendTileWideImageAndText01(channelUrl, { text: "foo", imageSrc: "http://someurl/where/an/image/lives.jpg" });

It’s amazing that this is all it takes to send a live tile update with an image to your Windows 8 device. For more information on getting started with push – check out our tutorials:

HTTP with request

It’s also no secret that the Mobile Services runtime uses NodeJS to give you the power of JavaScript on the server – with the ability to require some of the best modules in Node, including my favorite: request from Mikeal. The request module makes http a doddle. Here’s what it looks like to make a simple request to twitter, for example, in a Mobile Services insert script.

function insert(item, user, request) {
    var req = require('request');
    req.get({
        url: "http://search.twitter.com/search.json",
        qs: { q: item.text, lang: "en" }
    }, function(error, result, body) {
        var json = JSON.parse(body);
        item.relevantTweet = json.results[0].text;
        // now go ahead and save the data, with
        // the new property, courtesy of twitter
        request.execute();
    });
}

The script above uses the text property of item being inserted and searches twitter for matching tweets. It then appends a property to the record containing the content of the first tweet and inserts everything to the database. Who wouldn’t want a tweet to accompany each and every item on your todolist? Here’s the exciting result in the Windows Azure Portal:

Now that we understand push and HTTP wouldn’t it be cool to pull the two together, and use the power of the internet to help us find an image to accompany our push notification. Imagine we want to send all our devices a live tile with an image whenever a new item is added to our list. And what’s more we want the image to be something that portrays the text of item inserted.

Enter Bing Search.

You can sign up for the Bing Search API here and you get 5,000 searches for free per month. As you might expect, you can invoke the Bing Search API via HTTP.

Combining these two ideas is now pretty straightforward:

// This is your primary account key from the datamarket
// This isn’t my real key, so don’t try to use it! Get your own.
var bingPrimaryAccountKey = "TIjwq9cfmNotTellingYouE6lNMNhFo4xSMkMGs=";

// This creates a basic auth token using your account key
var basicAuthHeader = "Basic " + new Buffer(":" + bingPrimaryAccountKey).toString('base64');

// and now for the insert script
function insert(item, user, request) {
    request.execute({
        success: function() {
            request.respond();
            getImageFromBing(item, sendPushNotification);
        }
    });
}

// this function does all the legwork of calling bing to find the image
function getImageFromBing(item, callback) {
    var req = require('request');
    var url = "https://api.datamarket.azure.com/Data.ashx" +
        "/Bing/Search/v1/Composite?Sources=%27image%27&Query=%27" +
        escape(item.text) +
        "%27&Adult=%27Strict%27&" +
        "ImageFilters=%27Size%3aMedium%2bAspect%3aSquare%27&$top=50&$format=Json";
    req.get({
        url: url,
        headers: { "Authorization": basicAuthHeader }
    }, function (e, r, b) {
        try {
            var image = JSON.parse(b).d.results[0].Image[0].MediaUrl;
            callback(item, image);
        } catch (exc) {
            console.error(exc);
            // in case we got no image, just send no image
            callback(item, "");
        }
    });
}

// send the push notification
function sendPushNotification(item, image) {
    getChannels(function(results) {
        results.forEach(function(result) {
            push.wns.sendTileWideImageAndText01(result.channelUri, {
                image1src: image,
                text1: item.text
            }, {
                // logging success is handy during development
                // mobile services automatically logs failure
                success: console.log
            });
        });
    });
}

// this is where you load the channel URLs from the database. This is
// really an exercise for the reader based on how they decided to store
// channelUrls. See the second push tutorial above for an example
function getChannels(callback) {
    // this example loads all the channelUris from a table called Channel
    var sql = "SELECT channelUri FROM Channel";
    mssql.query(sql, {
        success: function(results) {
            callback(results);
        }
    });
}

Which resulted in:

Which is pretty neat. Have fun.

Note: Finding images on the internet is risky; you really don’t know what might show up! Also, I make no statement about the legality or otherwise of using images from Bing or other search providers in your own applications. As always, consult the terms and conditions of any services you integrate with and abide by them. Be good.
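Two small pieces of the Bing script are easy to get wrong: the Basic auth header (an empty user name, then a colon, then the account key) and the quoting of values in the query URL. The sketch below isolates both so they can be checked without calling the service. The helper names and the placeholder key are hypothetical; the endpoint and parameter names are copied from the script in this post.

```javascript
// Placeholder key for illustration; substitute your own account key.
const accountKey = 'example-account-key';

// Basic auth with an empty user name: base64(":" + key).
function basicAuthHeader(key) {
  return 'Basic ' + Buffer.from(':' + key).toString('base64');
}

// buildImageSearchUrl is a hypothetical helper mirroring the URL in the script above.
// Each value is wrapped in %27 (an encoded single quote), as in the original URL.
function buildImageSearchUrl(text) {
  const quoted = function (value) {
    return '%27' + encodeURIComponent(value) + '%27';
  };
  return 'https://api.datamarket.azure.com/Data.ashx/Bing/Search/v1/Composite' +
    '?Sources=' + quoted('image') +
    '&Query=' + quoted(text) +
    '&Adult=' + quoted('Strict') +
    '&ImageFilters=' + quoted('Size:Medium+Aspect:Square') +
    '&$top=50&$format=Json';
}

const header = basicAuthHeader(accountKey);
const searchUrl = buildImageSearchUrl('red car');
console.log(header);
console.log(searchUrl);
```

This version uses encodeURIComponent where the original uses escape; that is a substitution made for this sketch, chosen because encodeURIComponent encodes non-ASCII text as UTF-8.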

Bruno Terkaly (@brunoterkaly) completed his series with Part 5 of 5: Introduction to Consuming Azure Mobile Services from iOS on 9/26/2012:

This is probably the most code heavy of all the posts.

- You should be able to replicate everything I've done without too much trouble.

Previous Posts:

As explained previously, the JSON kit helps with the parsing of our JSON data.

- There are two files we are interested in:

- JSONKit.h

- JSONKit.m

Adding the JSON Kit to our project

- Simply copy and paste the two files into our project.

- After this step we will compile the project to make sure there aren't any errors.

Verifying correctness

- Go to the PRODUCT menu and choose BUILD.

Modifying RootViewController.h

- We will modify two source code modules: (1) RootViewController.h and (2) RootViewController.m

- Perform the following:

- Add the two lines of code outlined in red.

- These lines will be used to contain the data returned by the Azure Mobile Service

- toDoItems will be assigned to the TableView control in the iOS application.

- toDoItems will hold the data coming back from the Windows Azure Mobile Service.

Add the JSONKit header declaration

- We do this at RootViewController.m

- This obviously assumes we've added the JSONKit to our project.

Modifying RootViewController.m

- This is where all the magic happens.

- There will be a combination of things added.

- Declarations for the data

- Methods to connect to Azure Mobile Services

- Code to retrieve the data from Azure Mobile Services, parse it, and add it to the TableView control in the iOS app

Adding code to RootViewController.m

- There are declarations to add as well as methods and code snippets.

- Use the table above to help you get your code to look like the code below.

RootViewController.m

[255 lines of source code elided for brevity; see the download link at the beginning of this post.]

- Assuming you've made no mistakes in your code.

- You can download the code here:

- The steps to execute are:

- From the menu, choose PRODUCT / BUILD.

- Next, from the menu, choose PRODUCT / RUN.

You will need a trial account for Windows Azure

Please sign up for it here: http://www.microsoft.com/click/services/Redirect2.ashx?CR_CC=200114759

Thanks..

I appreciate that you took the time to read this post. I look forward to your comments.

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Data Hub, Big Data and OData

Avi Kovarsky announced Codename “Data Hub”: End of Service in a 9/26/2012 e-mail message:

As communicated at the release of [the Codename “Data Hub”] lab, we are shutting down the service by the end of this week.

The original plan was to do so by the end of August, but due to many requests from customers we took another month to let you continue to test and provide us with your valuable feedback. But as everything comes to an end one day, this is the end of the Data Hub Lab in SQL Azure Labs.

I wanted to thank every one of you for the great and valuable feedback you provided, it was our pleasure to work with you and we’ll be glad to see you joining us in the next milestone.

I’ll keep my promise and update you about next Public Preview of the SQL IS solution, where Data Hub is part of it, closer to the release date (potentially end of this year).

This Friday, September 28, we’ll delete all instances of the Data Hub provisioned during the Lab and all related data associated with the instances.

If, for any reason, you need your instance to stay live for an additional couple of days, please let me know ASAP.

The WCF Data Services Team reported WCF Data Service 5.1.0-rc2 Released in a 9/26/2012 post:

Today we are releasing refreshed NuGet packages as well as an installer for WCF Data Services 5.1.0-rc2. This RC is very close to complete. We do still have a few optimizations to make in the payload, but this RC is very representative of the final product.

The RC introduces several new features:

- JSON Light: the new JSON serialization format

- $format/$callback

- New client-side events for more control over the request/response lifecycle

The new JSON serialization format

We’ve blogged a few times about the new JSON serialization format. We think this new format has the feel of a custom REST API with the benefits of a standardized format. What does that mean exactly?

The feel of a custom REST API

If you were to create a custom REST API, you probably wouldn’t create a lot of ceremony in the API. For example, if you were to GET a single contact, the payload might look like the payload below. In this payload, objects are simple JSON objects with name/value pairs representing most properties and arrays representing collections. In fact, the only indicator we have that this is an OData payload is the first name/value pair, which helps to disambiguate what is in the payload.

{
  "odata.metadata":"http://contacts.svc/$metadata#MyContactsService/Contacts/@Element",
  "LastUpdatedAt":"2012-09-24T10:18:57.2183229-07:00",
  "FirstName":"Pilar",
  "LastName":"Ackerman",
  "Age":25,
  "Address":{
    "StreetAddress":"5678 2nd Street",
    "City":"New York",
    "State":"NY",
    "PostalCode":"98052"
  },
  "EmailAddresses":["pilar@contoso.com","packerman@cohowinery.com"],
  "PhoneNumbers":[
    { "Type":"home", "Number":"(212) 555-0162" },
    { "Type":"fax", "Number":"(646) 555-0157" }
  ]
}

In an upcoming blog post, we’ll dig more into what’s so awesome about this new format.

The benefits of a standardized format

One of the reasons OData is so powerful is that it has a very predictable set of patterns that enable generic clients to communicate effectively with a variety of servers. This is very different from the custom REST API world, where clients need to read detailed documentation on each service they plan to consume to determine things like:

- How do I construct URLs?

- What is the schema of the request and response payloads?

- What HTTP verbs are supported, and what do they do?

- How do I format the request and response payloads?

- How do I do advanced operations like custom queries, sorting, and paging?

Standardization + custom feel = new format

When we combine the terseness and custom feel of the new format with the power of standardization, we get a payload that is only ~10% of the size of AtomPub but is still capable of producing the same strong metadata framework.

Code gen

We’ve simplified the process for telling the client to ask for the new serialization format. Now all you need to do is call context.UseJsonFormatWithDefaultServiceModel() (the jury’s out as to whether this will be the final API name, but you can be sure it will be as simple as a single method call). To get to that level of simplicity, we had to modify some things in code gen, so you’ll need to download the installer we just released if you want to simplify the process of bootstrapping a client.

$format/$callback

In this release we’ve also provided in-the-box support for $format and $callback. These two system query options enable JSONP scenarios from many JavaScript clients. As a personal comment, I’ve also really appreciated not having to jump into Fiddler quite as much to look at JSON responses.

New client-side events

Finally, we have added two new events on the client side.

BuildingRequest

SendingRequest2 (and its deprecated predecessor SendingRequest) fires after the request is built. WebRequest does not allow you to modify the URL after construction. The new event lets you modify the URL before we build the underlying request, giving you full control over the request.

ReceivingResponse

This is an event the community has requested several times. When we receive ANY response on the client, we will fire this event so you can examine response headers and more.

But wait, there’s more…

There are still some things for us to do, but most applications will be unaffected by them (or will experience a free performance boost when we RTW). Please understand that these are our current plans, not promises. That said, here’s the remaining list of work we hope to complete by RTW:

- Relative URLs: When the server generates a URL and that URL actually needs to be in the new JSON serialization format, the server should automatically try to make it a relative URL.

- Actions/functions: There’s a little bit more smoothing necessary for action and function support.

- Disable the new format: Hopefully you’d never want to do this, but we have a common practice of allowing you to disable features in WCF Data Services.

- Bugs: We have some bugs and inconsistencies that we already know about that need to be fixed before we have release quality.

Known Issues

Among the other bugs and issues we’ve already identified, you should be aware that if you install the MSI referenced above, any new code gen’d service references will require the project to reference WCF Data Services 5.1.0. We will have an acceptable resolution for this problem by RTW.

We need your help

This is a big release. We could really use your feedback, comments and bug reports as we wind down this release and prepare to RTW. Feel free to leave a comment below or e-mail me directly at mastaffo@microsoft.com.

Mark Stafford (@markdstafford) posted API design: simplicity vs. explicitness on 9/25/2012:

We came across an interesting design question on the WCF Data Services team at Microsoft recently; I’m hoping you can help provide some feedback.

The issue

The issue in question is the right set of APIs for turning on our new JSON serialization format (I’ll refer to this as just “JSON” throughout the rest of this post). Should we allow something as simple as context.Format.UseJson(), or should we be more explicit and require the user to pass in their model, e.g., context.Format.UseJson(context.GeneratedEdmModel)?

Background

There are three things that we should cover as part of the context for this question. First, WCF Data Services has historically been very opinionated about design-time API experiences. That’s why the DataServiceContext constructor takes a URI rather than a string. The goal of the WCF Data Services team has been to prevent as many problems as possible at design time, with a secondary focus on the appearance of the API.

Second, you should know that the WCF Data Services client requires an IEdmModel (an in-memory representation of an Entity Data Model) to properly serialize/deserialize JSON payloads. Since JSON has a lot of magic that happens behind the scenes, this model is absolutely required for the client to work correctly.

Third, for now the client has to opt in to turning on JSON as the communication format with the server. Since not all servers will support JSON, the client must call some API to indicate that they understand that they can communicate with the server using JSON. In the future this may change as we work on vocabularies that expose the server’s capabilities.

The candidates

We have two possible APIs that we could expose for turning on JSON in the client:

context.Format.UseJson();

context.Format.UseJson(context.GeneratedEdmModel);

Each API has strengths and weaknesses. Let’s look at them in detail.

public void UseJson(IEdmModel model);

This API is required; it will ship with WCF Data Services 5.1.0 regardless of the other API. It’s required in scenarios where the developer doesn’t use code generation or wants to supply their own model (e.g., using POCOs). For the short term, it will be up to the caller to come up with an IEdmModel. This is fairly straightforward using EdmLib and CSDL, and significantly more complicated for reflection-based scenarios. (Although this is something we’d like to make easier someday.)

We could use this API with code gen, also. In this case the developer using WCF Data Services would need to call something like context.Format.UseJson(context.GeneratedEdmModel). The primary strength of this API is its explicitness. We’ve already stated that JSON does require the model, and this API accurately reflects that. The primary weakness of this API is the questions it raises: “Where do I get the model to pass to the method call? And what is an IEdmModel and why is it required in the first place?” Regardless of whether we make the model easy to get to in Add Service Reference (ASR) scenarios, it’s still something that will likely cause developers to stumble.

public void UseJson();

This API is optional; we’re debating whether to ship it with WCF Data Services 5.1.0 in order to simplify enabling JSON from the client. This API is useful in our most common scenarios, where the developer uses the Add Service Reference (ASR) wizard to generate a DataServiceContext in the client. This exempts the vast majority of our customers from having to worry about what an IEdmModel is or where to get it if you use ASR.

We would primarily expect this API to be used with code gen, although it would be possible to use this API in other scenarios. The primary strength of this API is its simplicity. You don’t have to worry about what an IEdmModel is or where to get it for most scenarios. The primary weakness of this API is that you might get a runtime error if you didn’t use Add Service Reference.

What do you prefer?

So what do you think? Should we forge new ground and ship a simple API that could result in a runtime error in the 10% case? Or should we protect the 10% and accept understanding of IEdmModels as part of the bar to use JSON? I’m very interested in your opinion, which you can leave in the comments below or on the Contact Me page.
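To make the trade-off concrete, here is a small sketch of the two candidate shapes transposed into Java. It is an analogy only and not the WCF Data Services API: Model stands in for IEdmModel, Context for a generated DataServiceContext, and every name in it is hypothetical.

```java
// Hypothetical Java analogy of the two candidate APIs; not the real client library.
final class JsonFormatSketch {

    // Stand-in for IEdmModel.
    interface Model { }

    // Stand-in for a DataServiceContext; generatedModel is null when no code gen was used.
    static final class Context {
        private final Model generatedModel;
        private Model activeModel;

        Context(Model generatedModel) {
            this.generatedModel = generatedModel;
        }

        // Explicit form: the caller must supply the model, so a missing model is visible at the call site.
        void useJson(Model model) {
            if (model == null) {
                throw new IllegalArgumentException("model is required");
            }
            this.activeModel = model;
        }

        // Simple form: nothing to pass, but it can only fail at run time when no model was generated.
        void useJson() {
            if (generatedModel == null) {
                throw new IllegalStateException("No generated model; call useJson(model) instead");
            }
            useJson(generatedModel);
        }

        boolean jsonEnabled() {
            return activeModel != null;
        }
    }
}
```

In this sketch the no-argument overload is a convenience layered over the explicit one, so the cost of simplicity is exactly the run-time exception described above for hand-built contexts.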

<Return to section navigation list>

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

No significant articles today

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

• Sanjeev Bannerjee described HOW TO: Install SharePoint Foundation on Windows Azure VM in a 9/28/2012 post to the Aditi Technologies blog:

Step 1 : Get an Azure Subscription or free trial ( need phone and credit card handy)

Step 2: Once subscription created, login to management portal

Step 3: create a storage blob

Step 4: Create a VM ( it might ask you to sign up for preview program) – I have selected Windows 2008 R2

Step 5: Configure the VM

Step 6: configure DNS

Step 7: review the VM provisioning. Notice IAAS cloud service is created

Step 8: Login to the VM using RDP

Step 9: Install SP 2010 foundation with SQL Express on the VM

Step 10: Add an endpoint for the VM

Step 11: We are done and now the SharePoint site on the Azure VM is accessible remotely

Clint Edmonson (@clinted) announced the availability of Windows Azure Virtual Machine Test Drive Kit in a 9/27/2012 post to the US DPE Azure Connection blog:

The public preview of hosted Virtual Machines in Windows Azure is now available to the general public. This platform preview enables you to evaluate our new IaaS and Enterprise Networking capabilities. Once you have registered for the 90 Day Free Trial and created a new account, you can access the preview directly at this link: https://account.windowsazure.com/PreviewFeatures

If you’ve been to any of my presentations lately, you’ll know that I’m fired up about these new offerings. As I’ve worked through some scenarios for myself and with my customers, I’ve been collecting the resources that helped me to ramp up.

Here’s a collection of links to the items I’ve found most useful:

Core Resources

- Digital Chalk Talk Videos – detailed technical overviews of the new Windows Azure services and supporting technologies as announced June 7, including Virtual Machines (IaaS Windows and Linux), Storage, Command Line Tools http://www.meetwindowsazure.com/DigitalChalkTalks

- Scenarios Videos on You Tube – “how to” guides, including “Create and Manage Virtual Networks”, “Create & Manage SQL Database”, and many more http://www.youtube.com/user/windowsazure

- Windows Azure Trust Center - provides a comprehensive view of Windows Azure and security and compliance practices http://www.windowsazure.com/en-us/support/trust-center/

- MSDN Forums for Windows Azure http://www.windowsazure.com/en-us/support/preview-support/

- Microsoft Knowledge Base article Microsoft server software support for Windows Azure Virtual Machines

Videos

- Deep Dive into Running Virtual Machines on Windows Azure

- Windows Azure Virtual Machines and Virtual Networks

- Windows Azure IaaS and How It Works

- Deep Dive into Windows Azure Virtual Machines: From the Cloud Vendor and Enterprise Perspective

- An Overview of Managing Applications, Services, and Virtual Machines in Windows Azure

- Monitoring and Managing Your Windows Azure Applications and Services

- Overview of Windows Azure Networking Features

- Hybrid Will Rule: Options to Connect, Extend and Integrate Applications in Your Data Center and Windows Azure

- Business Continuity in the Windows Azure Cloud

- Linux on Windows Azure

Blogs

Brian Swan (@brian_swan) explained how to Store DB Connection Info in Windows Azure Web Sites App Settings in a 9/26/2012 post to the (Windows Azure’s) Silver Lining blog:

This post is really just a Pro Tip: Use app settings in Windows Azure Web Sites to store your database connection information. A question on Stackoverflow the other day made me realize that using app settings in Windows Azure Web Sites to store database connection information was the key to migrating and/or upgrading databases easily. To test my idea, I decided to migrate a local WordPress website to a Windows Azure Web Site, then upgrade the database. Although I’m using WordPress, the idea can be used for any application. (I thought WordPress would be a good example since, like many PHP applications, database information is stored in a configuration file.) I won’t go into all the details, but will instead focus on how I used the app settings to make the migration and upgrade easy.

The first thing I did was to create a Windows Azure Web Site with a MySQL database and enable Git publishing. Then, on the CONFIGURE tab for my site in the Management Portal, I viewed my database connection string information and manually copied it into four app settings:

Note: Be sure to click Save at the bottom of the page after you enter app settings.

Now, to access the app settings, I needed to modify the wp-config.php file in my application. Instead of hard-coding database connection string information, I needed to use the getenv function to get this information from the app settings (which are accessible as environment variables):

// ** MySQL settings - You can get this info from your web host ** //
/** The name of the database for WordPress */
define('DB_NAME', getenv('DBNAME'));

/** MySQL database username */
define('DB_USER', getenv('DBUSER'));

/** MySQL database password */
define('DB_PASSWORD', getenv('DBPASSWORD'));

/** MySQL hostname */
define('DB_HOST', getenv('DBHOST'));

Next, I used Git to push my local code to my remote site, and I used mysqldump to copy my local database to my remote DB. When I browsed to my Windows Azure Web Site, Voila!, everything worked perfectly.
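The same pattern carries over to other runtimes. As a rough sketch, assuming app settings reach the process as environment variables in the same way, a Java application could read the four settings like this. The DbSettings class is hypothetical; only the setting names DBNAME, DBUSER, DBPASSWORD and DBHOST come from the post:

```java
import java.util.Map;

// Hypothetical holder for the four app settings used in the post.
final class DbSettings {
    final String name;
    final String user;
    final String password;
    final String host;

    private DbSettings(String name, String user, String password, String host) {
        this.name = name;
        this.user = user;
        this.password = password;
        this.host = host;
    }

    // Takes the environment as a map so it can be exercised without touching the real one.
    static DbSettings fromEnvironment(Map<String, String> env) {
        return new DbSettings(
                require(env, "DBNAME"),
                require(env, "DBUSER"),
                require(env, "DBPASSWORD"),
                require(env, "DBHOST"));
    }

    // Fails fast with the name of the missing setting instead of connecting with a null value.
    private static String require(Map<String, String> env, String key) {
        String value = env.get(key);
        if (value == null || value.isEmpty()) {
            throw new IllegalStateException("Missing app setting: " + key);
        }
        return value;
    }

    public static void main(String[] args) {
        try {
            DbSettings settings = fromEnvironment(System.getenv());
            System.out.println("Using " + settings.host + "/" + settings.name + " as " + settings.user);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Because nothing is hard-coded, pointing the application at a different database only requires changing the app settings.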

The real value in storing my database connection info in app settings came when I wanted to upgrade my database. With Windows Azure Web Sites, you get one 20MB MySQL database for free, provided by ClearDB. However, ClearDB has made it very easy to add more MySQL databases of varying sizes (some for a fee, but not all). By selecting the database size that met my needs, I was able to choose where it is deployed...

…and I had connection information in a matter of minutes:

Then, I again used mysqldump to copy my existing database to the new (bigger) DB, and I only had to update my app settings with the new connection information to begin using it.

Hopefully, this gives you some ideas about ways to use the app settings that are available in Windows Azure Web Sites. If you have comments on this post, or if you have other ideas about useful ways to use app settings, I’d love to hear them in the comments below.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Martin Sawicki announced the availability of the Windows Azure Plugin for Eclipse with Java – September 2012 Preview in an 8/28/2012 post to the Interoperability @ Microsoft blog:

The Windows Azure Plugin for Eclipse with Java (by Microsoft Open Technologies) – September 2012 Preview has been released. This service update includes a number of additional bug fixes since the August 2012 Preview, as well as some feedback-driven usability enhancements in existing features:

- Support for Windows 8 and Windows Server 2012 as the development OS, resolving issues previously preventing the plugin from working properly on those operating systems

- Improved support for specifying endpoint port ranges

- Bug fixes related to file paths containing spaces

- Role context menu improvements for faster access to role-specific configuration settings

- Minor refinements in the “Publish to cloud” wizard and a number of additional bug fixes

You can learn more about the plugin on the Windows Azure Dev Center.

To learn how to install the plugin, go here.

Towers Watson (@towerswatson) reported Towers Watson Develop Cloud Functionality for Risk-Based Modeling Applications in a 9/25/2012 press release reported by the HPC in the Cloud blog:

Global professional services company Towers Watson has expanded their alliance with Microsoft Corp. to adapt some of its software applications for the Windows Azure platform. As part of the expanded relationship, Towers Watson has been working closely with Microsoft’s technology centers to develop new processes for its risk-based modeling applications and solutions to exploit the flexibility offered by cloud computing.

“We have been working with Microsoft for many years, covering different technology areas, and this takes the relationship to a new level,” said Mark Beardall, global application leader, Risk and Financial Services, Towers Watson. “Cloud computing offers unique benefits in flexibility and cost effectiveness, and we are delighted to have access to the Microsoft labs to optimize and fully test our products for the cloud. We are one of the very few global companies in this position, and believe it is a compelling differentiator and the alliance underscores our commitment to be a technology leader.”

Over the years, Towers Watson has developed a wide range of software solutions to complement and support its capabilities in actuarial, investment, human resource and risk management. The technology is currently used by many clients including pension funds, insurers, multinational companies and sovereign funds. The alliance with Microsoft will strengthen the modeling software developed by Towers Watson’s risk management businesses, and specific applications are already in the pipeline.

“We are delighted to be strengthening our relationship with Towers Watson in this way,” said Dewey Forrester, senior director, Global Partner Team, Microsoft Corp. “Towers Watson is a company offering a powerful combination of deep consulting expertise and excellent software solutions across multiple industries. Their innovative use of Windows Azure in high-end computing and analytical modeling, will offer customers exceptional value.”

About Towers Watson

Towers Watson is a leading global professional services company that helps organizations improve performance through effective people, risk and financial management. The company offers solutions in the areas of benefits, talent management, rewards, and risk and capital management. Towers Watson has 14,000 associates around the world and is located on the web at towerswatson.com.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

• The Windows Azure (@WindowsAzure) team sent an Introducing the Monetary Commitment Offer from Windows Azure email message on 9/28/2012:

Dear Customer,

We are making a change to our 6-month and 12-month purchase options for Windows Azure.

We are introducing a new plan, which can save you up to 32 percent. If you purchase our new 6-month plan and commit to a minimum of $500 per month, you can get the following discounts for usage associated with your Windows Azure subscription up to the levels provided in your monthly commitment plan:

| Monthly commitment | Discount on 6-month plan* |
| --- | --- |
| $500 to $14,999 | 20% |
| $15,000 to $39,999 | 23% |
| $40,000 and above | 27% |

You can get an additional 2.5 percent discount if you purchase a 12-month plan and another 2.5 percent if you elect to pay for your entire commitment up-front. For more details, please visit purchase options.

*If your resource usage exceeds the level provided in your commitment plan, you’ll be charged at the base Pay-As-You-Go rates. For these commitment plans all prices for meters with graduated Pay-as-You-Go rates are calculated using the base rate, regardless of the amount used and may not be combined with graduated pricing discounts. Additional exclusions apply. See purchase options for details.
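As a worked example of how the tiers combine, the sketch below computes the total discount for a given commitment. It assumes the two 2.5 percent bonuses are added as percentage points on top of the tier discount, which is consistent with the stated 32 percent maximum (27 + 2.5 + 2.5), and that the up-front bonus applies to either term. The class and method names are hypothetical, and the official purchase options page remains the authority on the exact rules.

```java
// Hypothetical calculator for the discount tiers quoted in the email.
final class CommitmentDiscount {

    // Returns the total discount in percent for a monthly commitment in US dollars.
    // Assumption: the 12-month and up-front bonuses are additive percentage points.
    static double discountPercent(int monthlyCommitmentUsd, boolean twelveMonthPlan, boolean paidUpFront) {
        if (monthlyCommitmentUsd < 500) {
            throw new IllegalArgumentException("Minimum commitment is $500 per month");
        }
        double percent;
        if (monthlyCommitmentUsd < 15_000) {
            percent = 20.0;
        } else if (monthlyCommitmentUsd < 40_000) {
            percent = 23.0;
        } else {
            percent = 27.0;
        }
        if (twelveMonthPlan) {
            percent += 2.5;
        }
        if (paidUpFront) {
            percent += 2.5;
        }
        return percent;
    }

    public static void main(String[] args) {
        System.out.println(discountPercent(40_000, true, true) + "%");
    }
}
```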

If you have any questions or would like to upgrade your existing subscription to one of these purchase options, please contact us.

Regards,

Windows Azure Team

• Richard Conway (@azurecoder) described writing A Windows Azure Service Management Client in Java in a 9/29/2012 post:

Recently I worked on a Service Management problem with my friend and colleague Wenming Ye from Microsoft. We looked at how easy it was to construct a Java Service Management API example. Our criteria were fairly simple:

- Extract a Certificate from a .publishsettings file

- Use the certificate in a temporary call or context (session) and then delete when the call is finished

- Extract the relevant subscription information from .publishsettings file too

- Make a call to the WASM API to check whether a cloud service name is available

Simple right? Wrong!
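The reading half of those criteria is the easy part. As a minimal sketch using only the JDK's XML parser, the certificate and subscription ID can be pulled out of the file's XML like this. It assumes the file holds a PublishProfile element with a ManagementCertificate attribute and a nested Subscription element with an Id attribute; the class and method names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

// Hypothetical reader; the element and attribute names are assumptions about the file layout.
final class PublishSettingsReader {

    // Returns { base64 management certificate, subscription id } from .publishsettings XML.
    static String[] read(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Element profile = (Element) doc.getElementsByTagName("PublishProfile").item(0);
            Element subscription = (Element) doc.getElementsByTagName("Subscription").item(0);
            if (profile == null || subscription == null) {
                throw new IllegalArgumentException("Not a recognizable .publishsettings document");
            }
            return new String[] {
                profile.getAttribute("ManagementCertificate"),
                subscription.getAttribute("Id")
            };
        } catch (IllegalArgumentException e) {
            throw e;
        } catch (Exception e) {
            // Wrap parser and I/O failures so callers deal with a single exception type.
            throw new IllegalArgumentException("Could not parse .publishsettings XML", e);
        }
    }
}
```

The hard part, importing the unpassworded PKCS#12 structure behind that Base64 string, is what the rest of the post deals with.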

Although I’ve been a Microsoft developer for all of my career I have written a few projects in Java in the past so am fairly familiar with Java Enterprise Edition. I even started a masters in Internet Technology (University of Greenwich) which revolved around Java 1.0 in 1996/97! In fact I’m applying my Java experience to Hadoop now which is quite refreshing!

I used to do a lot of Crypto work and was very familiar with the excellent Java provider model established in the JCE. As such I thought that the certificate management would be fairly trivial. It is, but there are a few gotchas. We’ll go over them now so that we can hit the problem and resolution before we hit the code.

The Sun Crypto provider has a problem with loading a PKCS#12 struct which contains the private key and associated certificate. In C#, System.Security.Cryptography is specifically built for this extraction and there is a fairly easy and fluent way of importing the private key and certificate into the Personal store. Java has a keystore file which can act like the certificate store, so in theory the PKCS#12/PFX (not the same exactly, but for the purposes of this article they are) resident in the .publishsettings can be imported into the keystore.

In practice the Sun Provider doesn’t support unpassworded imports so this will always fail. If anybody has read my blog posts in the past you will know that I am a big fan of BouncyCastle (BC) and have used it in the past with Java (its original incarnation). Swapping the BC provider in place of the Sun one fixes this problem.

Let’s look at the code. To begin, we import the following:

import java.io.*;
import java.net.URL;
import javax.net.ssl.*;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.bouncycastle.jce.provider.BouncyCastleProvider;
import org.bouncycastle.util.encoders.Base64;
import org.w3c.dom.*;
import org.xml.sax.SAXException;
import java.security.*;

The BC imports are necessary, and the XML packages are used to parse the .publishsettings file.

We need to declare the following variables to hold details of the Service Management call and the keystore file details:

// holds the name of the store which will be used to build the output
private String outStore;
// holds the name of the publishSettingsFile
private String publishSettingsFile;
// The value of the subscription id that is being used
private String subscriptionId;
// the name of the cloud service to check for
private String name;

We’ll start by looking at the on-the-fly creation of the Java Keystore. Here we get a Base64-encoded certificate and, after adding the BC provider and getting an instance of a PKCS#12 keystore, we set up an empty store. When this is done we can decode the PKCS#12 structure into a byte input stream, add it to the store (with an empty password) and write the store out, again with an empty password, to a keystore file.

/* Used to create the PKCS#12 store - important to note that the store is created on the fly so is in fact passwordless -
 * the JSSE fails with masqueraded exceptions so the BC provider is used instead - since the PKCS#12 import structure does
 * not have a password it has to be done this way otherwise BC can be used to load the cert into a keystore in advance and
 * password */
private KeyStore createKeyStorePKCS12(String base64Certificate) throws Exception {
    Security.addProvider(new BouncyCastleProvider());
    KeyStore store = KeyStore.getInstance("PKCS12", BouncyCastleProvider.PROVIDER_NAME);
    store.load(null, null);

    // read in the value of the base 64 cert without a password (PBE can be applied afterwards if this is needed)
    InputStream sslInputStream = new ByteArrayInputStream(Base64.decode(base64Certificate));
    store.load(sslInputStream, "".toCharArray());

    // we need to create a physical keystore as well here
    OutputStream out = new FileOutputStream(getOutStore());
    store.store(out, "".toCharArray());
    out.close();
    return store;
}

Of course, in Java, you have to do more work to set the connection up in the first place. Remember the private key is used to sign messages. Your Windows Azure subscription has a copy of the certificate so can verify each request. This is done at the Transport level and TLS handles the loading of the client certificate so when you set up a connection on the fly you have to attach the keystore to the SSL connection as below.

/* Used to get an SSL factory from the keystore on the fly - this is then used in the
 * request to the service management which will match the .publishsettings imported
 * certificate */
private SSLSocketFactory getFactory(String base64Certificate) throws Exception {
    KeyManagerFactory keyManagerFactory = KeyManagerFactory.getInstance("SunX509");
    KeyStore keyStore = createKeyStorePKCS12(base64Certificate);

    // gets the TLS context so that it can use client certs attached to the
    SSLContext context = SSLContext.getInstance("TLS");
    keyManagerFactory.init(keyStore, "".toCharArray());
    context.init(keyManagerFactory.getKeyManagers(), null, null);

    return context.getSocketFactory();
}

The main method looks like this. It should be fairly familiar to those of you who have been working with the WASM API for a while. We load and parse the XML, add the required headers to the request, send it and parse the response.

public static void main(String[] args) {
    ServiceManager manager = new ServiceManager();
    try {
        manager.parseArgs(args);
        // Step 1: Read in the .publishsettings file
        File file = new File(manager.getPublishSettingsFile());
        DocumentBuilderFactory dbf = DocumentBuilderFactory.newInstance();
        DocumentBuilder db = dbf.newDocumentBuilder();
        Document doc = db.parse(file);
        doc.getDocumentElement().normalize();
        // Step 2: Get the PublishProfile
        NodeList ndPublishProfile = doc.getElementsByTagName("PublishProfile");
        Element publishProfileElement = (Element) ndPublishProfile.item(0);
        // Step 3: Get the management certificate from the PublishProfile
        String certificate = publishProfileElement.getAttribute("ManagementCertificate");
        System.out.println("Base 64 cert value: " + certificate);
        // Step 4: Load certificate into keystore
        SSLSocketFactory factory = manager.getFactory(certificate);
        // Step 5: Make HTTP request - https://management.core.windows.net/[subscriptionid]/services/hostedservices/operations/isavailable/javacloudservicetest
        URL url = new URL("https://management.core.windows.net/" + manager.getSubscriptionId() + "/services/hostedservices/operations/isavailable/" + manager.getName());
        System.out.println("Service Management request: " + url.toString());
        HttpsURLConnection connection = (HttpsURLConnection)url.openConnection();
        // Step 6: Add certificate to request
        connection.setSSLSocketFactory(factory);
        // Step 7: Generate response
        connection.setRequestMethod("GET");
        connection.setRequestProperty("x-ms-version", "2012-03-01");
        int responseCode = connection.getResponseCode();
        // response code should be a 200 OK - other likely code is a 403 forbidden if the certificate has not been added to the subscription for any reason
        InputStream responseStream = null;
        if(responseCode == 200) {
            responseStream = connection.getInputStream();
        } else {
            responseStream = connection.getErrorStream();
        }
        BufferedReader buffer = new BufferedReader(new InputStreamReader(responseStream));
        // response will come back on a single line
        String inputLine = buffer.readLine();
        buffer.close();
        // get the availability flag
        boolean availability = manager.parseAvailablilityResponse(inputLine);
        System.out.println("The name " + manager.getName() + " is available: " + availability);
    }
    catch(Exception ex) {
        System.out.println(ex.getMessage());
    }
    finally {
        manager.deleteOutStoreFile();
    }
}

For completeness, in case anybody wants to try this sample out, here is the rest.

/* <AvailabilityResponse xmlns="http://schemas.microsoft.com/windowsazure"
 *     xmlns:i="http://www.w3.org/2001/XMLSchema-instance">
 *   <Result>true</Result>
 * </AvailabilityResponse>
 * Parses the value of the result from the returning XML */
private boolean parseAvailablilityResponse(String response) throws ParserConfigurationException, SAXException, IOException {
    DocumentBuilderFactory dbf = DocumentBuilderFactory.newInstance();
    DocumentBuilder db = dbf.newDocumentBuilder();
    // read this into an input stream first and then load into xml document
    @SuppressWarnings("deprecation")
    StringBufferInputStream stream = new StringBufferInputStream(response);
    Document doc = db.parse(stream);
    doc.getDocumentElement().normalize();
    // pull the value from the Result and get the text content
    NodeList nodeResult = doc.getElementsByTagName("Result");
    Element elementResult = (Element) nodeResult.item(0);
    // use the text value to return a boolean value
    return Boolean.parseBoolean(elementResult.getTextContent());
}

// Parses the string arguments into the class to set the details for the request
private void parseArgs(String args[]) throws Exception {
    String usage = "Usage: ServiceManager -ps [.publishsettings file] -store [out file store] -subscription [subscription id] -name [name]";
    if(args.length != 8)
        throw new Exception("Invalid number of arguments:\n" + usage);
    for(int i = 0; i < args.length; i++) {
        switch(args[i]) {
            case "-store":
                setOutStore(args[i+1]);
                break;
            case "-ps":
                setPublishSettingsFile(args[i+1]);
                break;
            case "-subscription":
                setSubscriptionId(args[i+1]);
                break;
            case "-name":
                setName(args[i+1]);
                break;
        }
    }
    // make sure that all of the details are present before we begin the request
    if(getOutStore() == null || getPublishSettingsFile() == null || getSubscriptionId() == null || getName() == null)
        throw new Exception("Missing values\n" + usage);
}

// gets the name of the java keystore
public String getOutStore() {
    return outStore;
}

// sets the name of the java keystore
public void setOutStore(String outStore) {
    this.outStore = outStore;
}

// gets the name of the publishsettings file
public String getPublishSettingsFile() {
    return publishSettingsFile;
}

// sets the name of the publishsettings file
public void setPublishSettingsFile(String publishSettingsFile) {
    this.publishSettingsFile = publishSettingsFile;
}

// gets the value of the subscription id
public String getSubscriptionId() {
    return subscriptionId;
}

// sets the value of the subscription id
public void setSubscriptionId(String subscriptionId) {
    this.subscriptionId = subscriptionId;
}

// gets the hosted service name to check
public String getName() {
    return name;
}

// sets the hosted service name to check
public void setName(String name) {
    this.name = name;
}

// deletes the outstore keystore when it has finished with it
private void deleteOutStoreFile() {
    // the file will exist if we reach this point
    try {
        java.io.File file = new java.io.File(getOutStore());
        file.delete();
    }
    catch(Exception ex){}
}

One last thing: head to the BouncyCastle website to download the package and provider. In this implementation, Wenming and I called the class ServiceManager.
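The parseAvailablilityResponse method above reads the response through the deprecated StringBufferInputStream. As a minimal sketch, assuming the response has the AvailabilityResponse shape shown in the comment, the same parse can be done with an InputSource wrapped around a StringReader. The class name AvailabilityResponseParser and the missing-element check are illustrative additions, not part of the original ServiceManager sample.

```java
import java.io.IOException;
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;

// Hypothetical helper class; not part of the original ServiceManager sample.
class AvailabilityResponseParser {

    // Returns the boolean held in the <Result> element of the response XML.
    static boolean parse(String response)
            throws ParserConfigurationException, SAXException, IOException {
        DocumentBuilderFactory dbf = DocumentBuilderFactory.newInstance();
        DocumentBuilder db = dbf.newDocumentBuilder();

        // Feed the parser a character stream instead of the deprecated byte-stream wrapper.
        Document doc = db.parse(new InputSource(new StringReader(response)));
        doc.getDocumentElement().normalize();

        NodeList results = doc.getElementsByTagName("Result");
        if (results.getLength() == 0) {
            // Assumption: treat a response without <Result> (e.g. an error body) as a failure.
            throw new IOException("No <Result> element in response");
        }
        return Boolean.parseBoolean(results.item(0).getTextContent().trim());
    }

    public static void main(String[] args) throws Exception {
        String xml = "<AvailabilityResponse xmlns=\"http://schemas.microsoft.com/windowsazure\">"
                + "<Result>true</Result></AvailabilityResponse>";
        System.out.println("Available: " + parse(xml));
    }
}
```

Throwing on a missing Result element is a design choice for this sketch: an error body returned with a 403 would otherwise surface as a NullPointerException with no message.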

Looking to the future, when I’ve got some time I may look at porting Fluent Management to Java using this technique. As it stands, I feel this is a better technique than using the certificate store to manage the keys and underlying collection, because you don’t need elevated privileges to interact with it. That requirement has proved a little difficult to work with when I’ve been using locked-down clients in the workplace or Windows 8.

I’ve recently been working on both Windows Azure Active Directory and the new OPC package format with Fluent Management, so expect some more blog posts shortly. Happy trails etc.

• David Linthicum (@DavidLinthicum) asserted “Performance is a larger issue than many expected with cloud computing, but you can solve that problem if you think ahead” in a deck for his 3 ways to improve cloud performance article for InfoWorld’s Cloud Computing blog of 9/28/2012:

Performance issues hold back some cloud computing efforts. This happens because many of those who stand up cloud-based applications did not account for the latency systemic to many cloud-based systems.

For the most part, these performance issues are caused by the fact that cloud-based applications are typically widely distributed, with the data far away from the application logic, which itself may be far away from the user. Unless careful planning has gone into the design of the system, you're going to run into latency and even reliability issues.

Here are three things to look at to get good cloud computing performance:

- First, focus on the architecture and planning. At the end of the day, you're dealing with widely distributed, loosely coupled systems where the data, the application, and the human or machine that consumes the application services could be thousands of miles apart. Thus, you need to create an architecture designed explicitly to deal with the latency. Techniques include using buffers or a cache, as well as moving components that constantly chat closer together physically.

- Second, minimize how much information moves among the core components of your cloud-based applications. In on-premises systems, you're used to having the bandwidth and the performance to toss huge messages back and forth within the enterprise. Likewise, when dealing with cloud-based systems, moving information within the cloud typically does not cause that much latency. But handling latency across cloud providers and between the cloud and the enterprise can be a challenge.

- Third, test before you buy. In many cases, the latency issues can't be easily solved because their causes are engineered into the cloud platforms themselves. You need to undertake basic proof-of-concept testing, including performance and reliability, before you select your cloud providers. Make sure to test with real-world data loading, and don't be afraid to test-drive several providers. You'll find that they're all at least a little different.
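The proof-of-concept testing recommended in the third point can start with something as small as a latency probe. The following is a rough sketch, not a benchmark tool: it times a repeated operation and reports nearest-rank percentiles. The class name LatencyProbe, the run count, and the simulated 5 ms call are all assumptions made for illustration; in practice the Runnable would wrap a real request to the provider under evaluation.

```java
import java.util.Arrays;

// Hypothetical proof-of-concept harness; names and sample counts are illustrative.
class LatencyProbe {

    // Runs the operation `runs` times and returns the durations in nanoseconds, sorted ascending.
    static long[] measure(Runnable operation, int runs) {
        long[] samples = new long[runs];
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            operation.run();
            samples[i] = System.nanoTime() - start;
        }
        Arrays.sort(samples);
        return samples;
    }

    // Nearest-rank percentile over an ascending-sorted array; p is expected in (0, 100].
    static long percentile(long[] sorted, double p) {
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }

    public static void main(String[] args) {
        // Stand-in for a real call to a candidate provider (for example, an HTTPS request).
        Runnable simulatedCall = () -> {
            try {
                Thread.sleep(5);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        long[] samples = measure(simulatedCall, 50);
        System.out.printf("median=%d ms, p95=%d ms%n",
                percentile(samples, 50) / 1_000_000,
                percentile(samples, 95) / 1_000_000);
    }
}
```

Comparing the median with the 95th percentile across several providers, using realistic payload sizes, gives a first indication of how different they are.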

Mike McKeown (@nwoekcm) posted Learn Everything About Azure Performance Counters and SCOM 2012 on 9/25/2012:

I have been playing around with SCOM 2012 and the System Center Monitoring Pack for Windows Azure Applications. My goal was to understand how to monitor and view Performance counters for my Azure service using SCOM. I had no previous experience with SCOM so this was a new adventure for me. Overall, I found SCOM very powerful, but not as straightforward to use as I had hoped.

There are more intuitive tools, like AzureOps from OpStera, to monitor Azure services and applications. I had to create Run As accounts as Binary Authentication (for the certificate and private key) and Basic Authentication (for the certificate’s password). I then created a management pack, which serves as a container for other SCOM entities. From there I derived a Monitoring pack from the Windows Azure Application template. This is where I added the Azure-specific values to uniquely identify to SCOM the Azure service I wanted to monitor. Finally I created rules, one per performance counter I wanted to monitor. Rule creation has a wizard too (most SCOM tasks I tried did), but a few of the fields were not as straightforward to complete, such as role instance type.

Counters used for an Azure application are a subset of those you would use for a Windows Server 2008 application. For my Azure application I decided to use a sampling rate of one minute (1-2 is recommended) and a transfer rate of every 5 minutes. The transfer rate is how often Diagnostics Monitor will move the counter data from local storage into Azure storage. I used the following Perfmon counters which are typical ones you would use in your Azure monitoring process. The counters I monitored for a worker role are a subset of those I monitored for a Web role because the worker role does not include any IIS or ASP.NET functionality.

Counters for Web Roles

The following counter is used with the Network Interface monitoring object.

Bytes Sent/sec – Helps you determine the amount of Azure bandwidth you are using.

The following counters are used with the ASP.NET Applications monitoring object.

Request Error Events Raised - If this value is high you may have an application problem. Excessive time in error processing can degrade performance.

(__Total__)\Requests/Sec - A high value shows how your application is behaving under stress. If the value is low while other counters (CPU or memory) show a lot of activity, there is probably a bottleneck or a memory leak.

(__Total__)\Requests Not Found – If a lot of requests are not found, you may have a virus or something wrong with the configuration of your Web site.

(__Total__)\Requests Total – Displays the throughput of your application. If this is low and CPU or memory are being used in large amounts, you may have a bottleneck or memory leak.

(__Total__)\Requests Timed Out – A good indicator of performance. A high value means your system cannot turn over requests fast enough to handle new ones. For an Azure application this might mean adding instances of your Web role until these timeouts disappear.

(__Total__)\Requests Not Authorized – A high value could mean a DoS attack. You may be able to throttle these requests to allow valid ones to come through.

Counters for Web and Worker Roles

For both worker and Web roles here are some counters to watch for your Azure service/application.

The following counter is used with the Processor monitoring object.

(_Total)\% Processor Time – One of the key counters to watch in your application. If the value is high along with the number of Connections Established, you may want to increase the number of cores in the VM for your hosted service. If this value is high but the number of requests is low, your application may be taking more CPU than it should.

The following counters are used with the Memory monitoring object.

Available MBytes – If the value is low, you can increase the size of your Azure instance to make more memory available.

Committed Bytes – If this is constantly increasing, it makes no sense to increase the Azure instance size, since you most likely have a memory leak.

The following counters are used with the TCPv4 monitoring object.

Connections Established – Shows how many connections to your service are established. If this is high and the Processor Time counter is low, you may not be releasing connections properly.

Segments Sent/sec – If the value is high, you may want to increase the Azure instance size.

In summary, using Perfmon counters is a valuable way to indirectly keep an eye on your application’s use of Azure resources. Often performance counters can be used more effectively in conjunction with each other. For instance, if you see a lot of memory being used, you might want to check CPU utilization. If CPU is high, lots of apps are using the memory and you need to scale up. If CPU is low, then you probably have an issue with how the memory is being allocated or released.
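The combined readings described above can be written down as a small decision rule. The sketch below is illustrative only: the class name CounterTriage, the diagnosis labels, and every threshold are assumptions, since the post gives no numeric cut-offs. It takes one reading each of % Processor Time, Available MBytes, and Connections Established and applies the heuristics from the counter descriptions.

```java
// Hypothetical triage helper; thresholds are placeholders, not recommended values.
class CounterTriage {

    enum Diagnosis {
        HEALTHY,
        SCALE_UP,
        POSSIBLE_MEMORY_LEAK,
        POSSIBLE_CONNECTION_LEAK,
        EXCESSIVE_CPU_USE
    }

    static final double HIGH_CPU_PERCENT = 80.0;       // assumed threshold
    static final double LOW_AVAILABLE_MBYTES = 256.0;  // assumed threshold
    static final long HIGH_CONNECTIONS = 1000;         // assumed threshold

    static Diagnosis diagnose(double cpuPercent, double availableMBytes, long connectionsEstablished) {
        boolean highCpu = cpuPercent >= HIGH_CPU_PERCENT;
        boolean lowMemory = availableMBytes <= LOW_AVAILABLE_MBYTES;
        boolean manyConnections = connectionsEstablished >= HIGH_CONNECTIONS;

        // Memory pressure: busy CPU suggests real load, idle CPU suggests a leak.
        if (lowMemory) {
            return highCpu ? Diagnosis.SCALE_UP : Diagnosis.POSSIBLE_MEMORY_LEAK;
        }
        // Many connections: busy CPU suggests real load, idle CPU suggests unreleased connections.
        if (manyConnections) {
            return highCpu ? Diagnosis.SCALE_UP : Diagnosis.POSSIBLE_CONNECTION_LEAK;
        }
        // High CPU with little traffic: the application may be using more CPU than it should.
        if (highCpu) {
            return Diagnosis.EXCESSIVE_CPU_USE;
        }
        return Diagnosis.HEALTHY;
    }

    public static void main(String[] args) {
        // Example reading: 25% CPU, 120 MB available, 40 connections.
        System.out.println(diagnose(25.0, 120.0, 40));
    }
}
```

A single sample is rarely conclusive. A real rule would look at trends over several transfer intervals, for example Committed Bytes rising steadily, before flagging a leak.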

You can use SCOM to track Perfmon if you know how to use it. Remember SCOM is a very rich and robust enterprise-scale tool with a ton of functionality. For instance, once you configure your hosted service as a monitoring pack, you can then view it in the Distributed Applications tab. This gives you consolidated and cascading summaries of the performance and availability of your Azure service.

If you don’t own or use SCOM, or if you want to keep it simple, then AzureOps is probably an easier option. It requires no installation or setup (it runs as a Web service) and offers simple Azure auto-scaling based upon Perfmon threshold values. Take a look at it here.


Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds


Cloud Security and Governance