Windows Azure and Cloud Computing Posts for 9/17/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

•• Updated 9/19/2012 12:00 PM PDT with new articles marked ••.

• Updated 9/18/2012 4:30 PM PDT with new articles marked •.

Tip: Copy bullet(s), press Ctrl+f, paste it/them to the Find textbox and click Next to locate updated articles:

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop, Online Backup and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop, Online Backup and Media Services

• Hortonworks (@hortonworks) posted on 9/17/2012 a 00:52:00 archive of its Pig Out to Hadoop presentation of 9/12/2012 by Alan F. Gates (@alanfgates) - requires registration:

Pig has added some exciting new features in 0.10, including a boolean type, UDFs in JRuby, load and store functions for JSON, bloom filters, and performance improvements.

Join Alan Gates, Hortonworks co-founder and long-time contributor to the Apache Pig and HCatalog projects, to discuss these new features, as well as talk about work the project is planning to do in the near future. In particular, we will cover how Pig can take advantage of changes in Hadoop.

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

•• My (@rogerjenn) Windows Azure Mobile Services Preview Walkthrough–Part 5: Distributing Your App From the Windows Store of 9/19/2012 begins with a workaround for a problem many Mobile Services users will encounter when registering a Windows Store (formerly Windows 8, Modern UI and Metro) App:

As noted at the end of the Windows Azure Mobile Services Preview Walkthrough–Part 4: Customizing the Windows Store App’s UI post:

I was surprised to discover that packaging and submitting the app to the Windows Store broke the user authentication feature described in the Windows Azure Mobile Services Preview Walkthrough–Part 2: Authenticating Windows 8 App Users (C#) post, which causes the landing page to appear briefly and then disappear.

The problem is related to conflicts between registration of the app with the Live Connect Developer Center as described in Section 1 of my Windows Azure Mobile Services Preview Walkthrough–Part 2: Authenticating Windows 8 App Users (C#) and later association and registration with the Windows Store as noted in the two sections below.

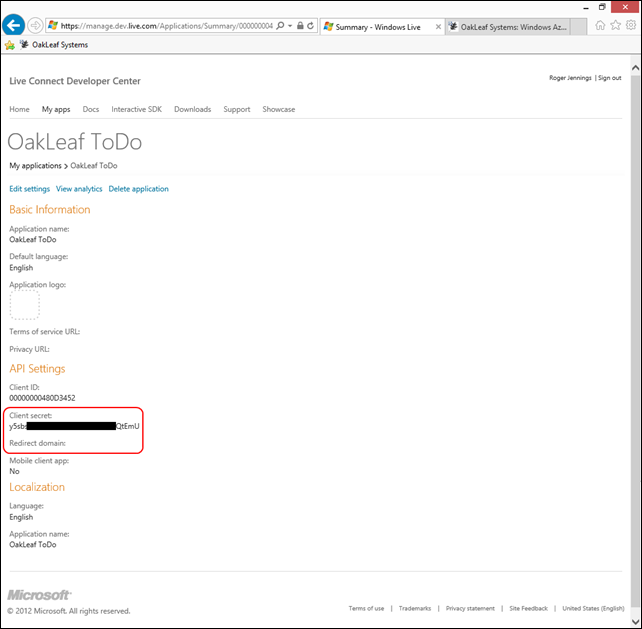

The Live Connect Developer Center’s My Applications page contains two entries for the OakLeaf ToDo app as emphasized below:

The other entries in the preceding list are Windows Azure cloud projects and storage accounts created during the past 1.5 years with my MSDN Benefit subscription.

Clicking the oakleaf_todo item opens the original app settings form with the same Client Secret, Package Name, and Package Security Identifier (SID) as shown in Step 1-3 of Windows Azure Mobile Services Preview Walkthrough–Part 2: Authenticating Windows 8 App Users (C#):

The Redirect Domain points to the Mobile Services back end created in steps 7 through 13 of Windows Azure Mobile Services Preview Walkthrough–Part 1: Windows 8 ToDo Demo Application (C#).

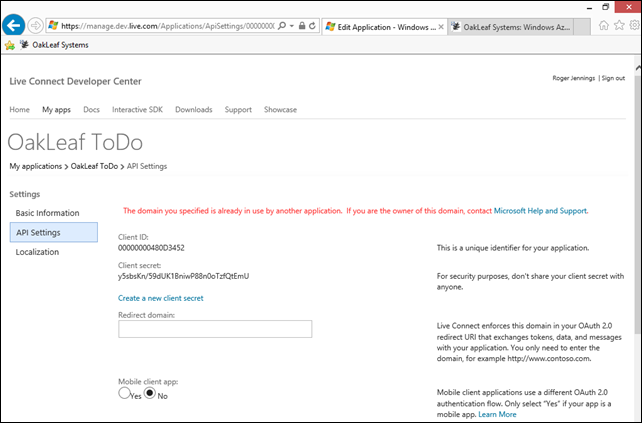

The OakLeaf ToDo app settings show a different Client Secret and empty Redirect Domain:

Clicking the Edit Settings link and clicking the API Settings tab opens this form with the above client secret, an empty Redirect Domain text box, and the missing Package SID:

If you type https://oakleaf-todo.azure-mobile.net/ and click save, you receive the following error:

There’s no apparent capability to delete an app from the list, so open the oakleaf_todo item, click Edit Settings, copy the redirect domain URL to the clipboard, delete the URL from the text box and click Save, which adds a “Your changes were saved” notification to the form.

Open the OakLeaf ToDo item in the list, click Edit Settings, paste the redirect domain URL to the text box and click Save, which adds the same “Your changes were saved” notification to the form.

The App Store’s registration process asserts that Terms of Service and Privacy URLs are required, so click the Basic Information tab, add the path to a 48-px square logo, copy the two URLs to the text boxes and click Save:

Note: Step 1-2 of Windows Azure Mobile Services Preview Walkthrough–Part 4: Customizing the Windows Store App’s UI specified a 50-px square logo.

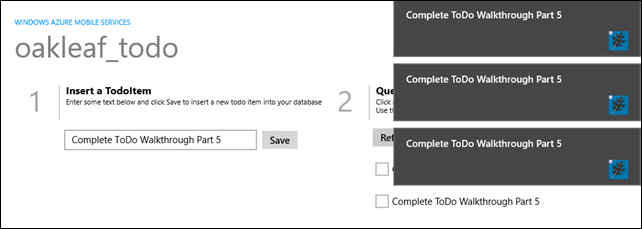

Clicking the Start Menu’s OakLeaf ToDo tile now opens the logged in notification page, as expected:

Clicking OK and inserting a ToDoItem displays the repeated notifications described in Windows Azure Mobile Services Preview Walkthrough–Part 3: Pushing Notifications to Windows 8 Users (C#)’s section “7 - Verify the Push Notification Behavior.”

Credit: Thanks to the Windows Azure Mobile Team’s Paul Batum for his assistance in tracking down the source of the problem. …

The post continues with “1 - Associate the App with the Windows Store” and “2 - Completing the Registration Process” sections.

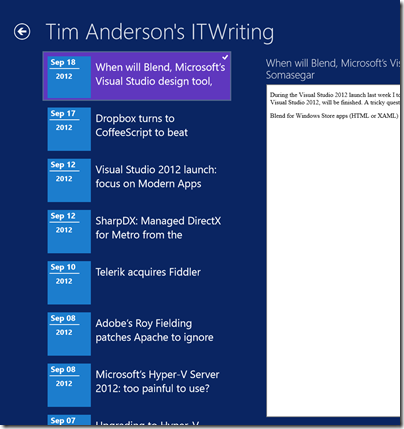

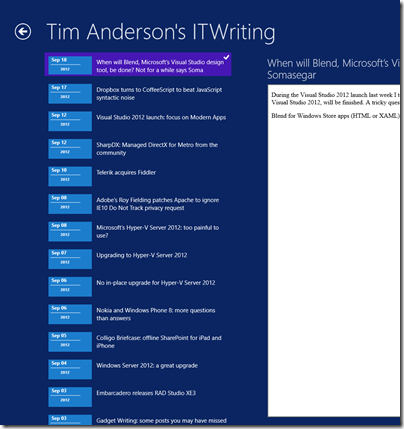

•• Tim Anderson (@timanderson) posted Information Density in Metro, sorry Windows Store apps in a 9/19/2012 post:

Regular readers will recall that I wrote a simple blog reader for Windows 8, or rather adapted Microsoft’s sample. The details are here.

This is a Windows Store app – a description I am trying to get used to after being assured by Microsoft developer division Corp VP Soma Somasegar that this really is what we should call them – though my topic is really the design style, which used to be called Metro but is now, hmm, Windows Store app design style?

No matter, the subject that caught my attention is that typical Windows Store apps have low information density. This seems to be partly due to Microsoft’s design guidelines and samples, and partly due to the default controls which are so boldly drawn and widely spaced that you end up with little information to view.

Part of the rationale is to make touch targets easy to hit with fat fingers, but it seems to go beyond that. We should bear in mind that Windows Store apps will also be used on screens that lack touch input.

I am writing this on a Windows 8 box with a 1920 x 1080 display. Here is my blog reader, which displays a mere 7 items in the list of posts:

This was based on Microsoft’s sample, and the font sizes and spacing come from there. I had a poke around, and after a certain amount of effort figuring out which values to change in the list’s item template, came up with a slightly denser list which manages to show 14 items in the list. The items are still easily large enough to tap with confidence.

Games aside though, I am noticing that other Windows Store apps also have low information density. Tweetro, for example, a Twitter client, shows only 11 tweets to view on my large display.

The densest display I can find quickly is in Wordament, which is a game but a text-centric one:

I have noticed this low information density issue less with iPad apps. Two reasons. One is that iOS does not push you in the same way towards such extremely large-looking apps. The other is that you only run iOS on either iPhone or iPad, not on large desktop displays.

Is Windows 8 pushing developers too far towards apps with low information density, or has Microsoft got it right? It is true that developers historically have often tried to push too much information onto single screens, while designers mitigate this with more white space and better layouts. I wonder though whether Windows 8 store apps have swung too far in the opposite direction.

Related posts:

- Run Metro apps in a window on Windows 8

- Windows Phone, Windows 8, and Metro Metro Metro feature in Microsoft’s last keynote at CES

- Mac App Store, Windows Store, and the decline of the open platform

- Embarcadero previews Metropolis in RAD Studio XE3: fake Metro apps?

- No Java or Adobe AIR apps in Apple’s Mac App Store

I share Tim’s concern with this issue.

•• Travis J. Hampton asserted Windows Azure Mobile Services Aim to Simplify App Development in a 9/19/2012 post to the SiliconANGLE blog:

Microsoft recently announced Windows Azure Mobile Services, an app infrastructure stack that will allow mobile developers to focus on developing their app rather than the infrastructure. In doing so, Microsoft is trying out what many startups have attempted: offering a mobile backend as a service to its app developers.

The problem with mobile app development is that developers in the past essentially had to start from scratch, building out their mobile apps, supporting them across multiple devices, and scaling them when necessary. To remedy this problem, many startups have emerged that offer app infrastructure. Microsoft’s will be built on a platform as a service (PaaS), hosted on Microsoft’s servers, and attached to an SQL database.

In what Microsoft calls a matter of “minutes”, app developers can add a cloud backend to their Windows Store apps. Currently, this is only available for Windows 8, but Microsoft plans to add support for iPhone, Android, and its own Windows Phone soon.

Whenever an app developer offers any type of service along with the app, whether it is a free trial, a sales promotion, simple notifications, or user authentication, the cloud backend can handle all of it, eliminating the need for the developer to also be a system administrator, hosting servers and pushing out services to customers.

Microsoft’s developer division president Scott Guthrie explained, “When you create a Windows Azure Mobile Service, we automatically associate it with a SQL Database inside Windows Azure. The Windows Azure Mobile Service backend then provides built-in support for enabling remote apps to securely store and retrieve data from it (using secure REST end-points utilizing a JSON-based OData format) — without you having to write or deploy any custom server code. Built-in management support is provided within the Windows Azure portal for creating new tables, browsing data, setting indexes, and controlling access permissions”.
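To make the "REST end-points utilizing a JSON-based OData format" in the quote above more concrete, here is a minimal Python sketch that builds, but does not send, an insert request and a filtered query request against a table endpoint. The service URL, application key and table name are placeholders. The `/tables/<name>` path and the `X-ZUMO-APPLICATION` header are assumptions based on my recollection of the preview's REST interface, so check them against the official reference before relying on them.

```python
import json
import urllib.parse
import urllib.request

# Placeholders, not values from the article.
SERVICE_URL = "https://example-service.azure-mobile.net"  # hypothetical service
APP_KEY = "YOUR-APPLICATION-KEY"                           # hypothetical key


def build_insert_request(table, item):
    """Build (but do not send) a POST that inserts one JSON item into a table."""
    return urllib.request.Request(
        url=f"{SERVICE_URL}/tables/{urllib.parse.quote(table)}",
        data=json.dumps(item).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumed header name for the application key.
            "X-ZUMO-APPLICATION": APP_KEY,
        },
    )


def build_query_request(table, odata_filter):
    """Build (but do not send) a GET with an OData-style $filter clause."""
    query = urllib.parse.urlencode({"$filter": odata_filter})
    return urllib.request.Request(
        url=f"{SERVICE_URL}/tables/{urllib.parse.quote(table)}?{query}",
        method="GET",
        headers={"X-ZUMO-APPLICATION": APP_KEY},
    )


insert = build_insert_request("TodoItem", {"text": "Buy milk", "complete": False})
query = build_query_request("TodoItem", "complete eq false")
print(insert.get_method(), insert.full_url)
print(query.get_method(), query.full_url)
```

Passing either request to `urllib.request.urlopen` would send it. The sketch stops short of that so it runs without a live service.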

Windows Azure is Microsoft’s cloud platform. With it, Microsoft users can deploy anything from single applications to full virtual operating systems from within the Azure interface. Like software as a service, Microsoft’s platform as a service is hosted at Microsoft’s data centers and is fully managed, offering the user a noticeably hands-off approach to server management. Microsoft has plenty of competition in this arena, and the company has even instituted the capability of installing non-Windows operating systems, such as Linux, in order to stay competitive.

•• Gregory Leake posted Announcing Updates to Windows Azure SQL Database on 9/19/2012:

We are excited to announce that we have recently deployed new Service Updates to Windows Azure SQL Database that provide additional functionality. Specifically, the following new features are now available:

- SQL Server support for Linked Server and Distributed Queries against Windows Azure SQL Database.

- Recursive triggers are now supported in Windows Azure SQL Database.

- DBCC SHOW_STATISTICS is now supported in Windows Azure SQL Database.

- Ability to configure Windows Azure SQL Database firewall rules at the database level.

SQL Server Support for Linked Server and Distributed Queries against Windows Azure SQL Database

It is now possible to add a Windows Azure SQL Database as a Linked Server and then use it with Distributed Queries that span the on-premises and cloud databases. This is a new component for database hybrid solutions spanning on-premises corporate networks and the Windows Azure cloud.

SQL Server box product contains a feature called “Distributed Query” that allows users to write queries to combine data from local data sources and data from remote sources (including data from non-SQL Server data sources) defined as Linked Servers. Previously Windows Azure SQL Databases didn’t support distributed queries natively, and needed to use the ODBC-to-OLEDB proxy, which was not recommended for performance reasons. We are happy to announce that Windows Azure SQL Databases can now be used through “Distributed Query”. In practical terms, every single Windows Azure SQL Database (except the virtual master) can be added as an individual Linked Server and then used directly in your database applications as any other database.

The benefits of using Windows Azure SQL Database include manageability, high availability, scalability, working with a familiar development model, and a relational data model. The requirements of your database application play an important role in deciding how it would use Windows Azure SQL Databases in the cloud. You can move all of your data at once to Windows Azure SQL Databases, or progressively move some of your data while keeping the remaining data on-premises. For such a hybrid database application, Windows Azure SQL Databases can now be added as linked servers and the database application can issue distributed queries to combine data from Windows Azure SQL Databases and on-premises data sources.

Here’s a simple example explaining how to connect to a Windows Azure SQL Database using Distributed Queries:

------ Configure the linked server

-- Add one Windows Azure SQL DB as Linked Server

EXEC sp_addlinkedserver

@server='myLinkedServer', -- here you can specify the name of the linked server

@srvproduct='',

@provider='sqlncli', -- using SQL Server native client

@datasrc='myServer.database.windows.net', -- add here your server name

@location='',

@provstr='',

@catalog='myDatabase' -- add here your database name as initial catalog (you cannot connect to the master database)

-- Add credentials and options to this linked server

EXEC sp_addlinkedsrvlogin

@rmtsrvname = 'myLinkedServer',

@useself = 'false',

@rmtuser = 'myLogin', -- add here your login on Azure DB

@rmtpassword = 'myPassword' -- add here your password on Azure DB

EXEC sp_serveroption 'myLinkedServer', 'rpc out', true;

------ Now you can use the linked server to execute 4-part queries

-- You can create a new table in the Azure DB

exec ('CREATE TABLE t1tutut2(col1 int not null CONSTRAINT PK_col1 PRIMARY KEY CLUSTERED (col1) )') at myLinkedServer

-- Insert data from your local SQL Server

exec ('INSERT INTO t1tutut2 VALUES(1),(2),(3)') at myLinkedServer

-- Query the data using 4-part names

select * from myLinkedServer.myDatabase.dbo.t1tutut2

More information on Linked Servers and Distributed Queries is available here.

Recursive Triggers

A trigger may now call itself recursively if this option is set to on (Default) for a particular database. Just like SQL Server 2012, the option can be configured via the following query:

ALTER DATABASE YOURDBNAME SET RECURSIVE_TRIGGERS ON|OFF;

For full information on recursive triggers, see the SQL Server 2012 Books Online Topic.
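To see what the recursive-trigger switch changes in practice, here is a small runnable sketch. It does not use Windows Azure SQL Database at all: it uses SQLite through Python's standard library, where `PRAGMA recursive_triggers` stands in for `ALTER DATABASE ... SET RECURSIVE_TRIGGERS`. The table and trigger names are made up for illustration, and T-SQL trigger syntax differs, so treat it only as a demonstration of the concept.

```python
import sqlite3


def countdown_rows(recursive):
    """Insert 3 into a table whose trigger re-inserts n - 1, and return all rows."""
    conn = sqlite3.connect(":memory:")
    # SQLite's switch, standing in for ALTER DATABASE ... SET RECURSIVE_TRIGGERS.
    conn.execute(f"PRAGMA recursive_triggers = {'ON' if recursive else 'OFF'}")
    conn.execute("CREATE TABLE countdown (n INTEGER)")
    conn.execute(
        """
        CREATE TRIGGER trg_countdown AFTER INSERT ON countdown
        WHEN NEW.n > 0
        BEGIN
            INSERT INTO countdown (n) VALUES (NEW.n - 1);
        END
        """
    )
    conn.execute("INSERT INTO countdown (n) VALUES (3)")
    rows = [n for (n,) in conn.execute("SELECT n FROM countdown ORDER BY n DESC")]
    conn.close()
    return rows


print("recursion off:", countdown_rows(recursive=False))
print("recursion on: ", countdown_rows(recursive=True))
```

With recursion off, the trigger fires once for the original insert and its own insert does not fire it again. With recursion on, it keeps firing until the `WHEN` condition stops it.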

DBCC SHOW_STATISTICS Now Supported

DBCC SHOW_STATISTICS displays current query optimization statistics for a table or indexed view. The query optimizer uses statistics to estimate the cardinality or number of rows in the query result, which enables the query optimizer to create a high quality query plan. For example, the query optimizer could use cardinality estimates to choose the index seek operator instead of the index scan operator in the query plan, improving query performance by avoiding a resource-intensive index scan. For more information click here.
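The seek-versus-scan decision described above can be illustrated with a toy model. The Python sketch below builds a very simplified histogram, estimates the row count for an equality predicate and picks an operator. The step layout, the even-spread assumption inside a step and the 5% threshold are all illustrative assumptions. None of this is the actual SQL Server optimizer logic or the output format of DBCC SHOW_STATISTICS.

```python
from collections import Counter


def build_histogram(values, max_steps=3):
    """Build steps of (upper_bound, eq_rows, range_rows, distinct_range_values).

    `values` must be non-empty. The first step holds only the lowest value,
    so no row can fall below it.
    """
    counts = sorted(Counter(values).items())
    steps = [(counts[0][0], counts[0][1], 0, 0)]
    rest = counts[1:]
    # Ceiling division: distinct values per remaining step.
    per_step = max(1, -(-len(rest) // max(1, max_steps - 1)))
    for i in range(0, len(rest), per_step):
        chunk = rest[i:i + per_step]
        upper, eq_rows = chunk[-1]
        below = chunk[:-1]
        steps.append((upper, eq_rows, sum(c for _, c in below), len(below)))
    return steps


def estimate_equal(histogram, value):
    """Estimate how many rows satisfy `column = value`."""
    for upper, eq_rows, range_rows, distinct_range in histogram:
        if value == upper:
            return float(eq_rows)
        if value < upper:
            # Inside a step: assume rows are spread evenly over its distinct values.
            return range_rows / distinct_range if distinct_range else 0.0
    return 0.0  # above the highest boundary


def choose_operator(estimated_rows, table_rows, seek_threshold=0.05):
    """Pick a seek when the predicate is selective; the threshold is hypothetical."""
    ratio = estimated_rows / table_rows
    return "index seek" if ratio <= seek_threshold else "index scan"


values = [1] * 2 + [2] * 3 + [3] * 90 + [4] * 5
hist = build_histogram(values)
for probe in (2, 3):
    est = estimate_equal(hist, probe)
    print(probe, est, choose_operator(est, len(values)))
```

A selective value (2, about 3 rows out of 100) leads to a seek, while a value that matches most of the table (3, about 90 rows) leads to a scan.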

Ability to Configure SQL Database Firewall Rules at the Database Level

Previously Windows Azure SQL Database firewall rules could be set only at the server level, either through the management portal or via T-SQL commands. Now, firewall rules can be additionally set at the more granular database level, with different rules for different databases hosted on the same logical SQL Database server. For more information click here.
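As a rough mental model of how the two rule levels combine, the Python sketch below checks a client address against the target database's own rules first and then against the server-level rules. That checking order is my understanding of the service's behavior rather than something stated in the announcement. The rule sets, database names and addresses are hypothetical.

```python
import ipaddress


def in_any_range(ip, rules):
    """Return True if ip falls inside any (start, end) rule, bounds inclusive."""
    addr = ipaddress.ip_address(ip)
    return any(
        ipaddress.ip_address(start) <= addr <= ipaddress.ip_address(end)
        for start, end in rules
    )


def is_allowed(ip, database, server_rules, database_rules):
    """Check the target database's own rules first, then the server-level rules."""
    if in_any_range(ip, database_rules.get(database, [])):
        return True
    return in_any_range(ip, server_rules)


# Hypothetical rule sets; the addresses come from documentation-reserved ranges.
server_rules = [("203.0.113.0", "203.0.113.255")]
database_rules = {"SalesDb": [("198.51.100.10", "198.51.100.20")]}

print(is_allowed("198.51.100.15", "SalesDb", server_rules, database_rules))
print(is_allowed("198.51.100.15", "HrDb", server_rules, database_rules))
print(is_allowed("203.0.113.7", "HrDb", server_rules, database_rules))
```

The first client reaches only SalesDb through its database-level rule, while the third reaches any database on the server through the server-level rule.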

For questions or more technical information about these features, you can post a question on the SQL Database MSDN Support Forum.

•• Mike Taulty (@mtaulty) described Experimenting with Windows Azure Mobile Services in a 9/19/2012 post:

The first preview of Windows Azure Mobile Services came out the other week. Over on YouTube, Scott Guthrie gives a great overview of what Mobile Services provides in the preview and on the new Mobile Services site you can get a whole bunch more detail about building with Mobile Services.

At the time, I made a note to set some time aside to look at Mobile Services but I hadn’t quite got around to that when I was down at the Dev4Good hackathon the weekend before last and some of the guys I was working with wanted to try and use these new bits to store their data in the cloud.

I ended up doing a bit of learning with them on the spot and, although I’d seen a few demos, it’s always different when you’re actually sitting in front of the code editor/configuration UIs yourself and someone says “Go on then, set it up” but between us we had something up and running in about 30 minutes which is testament to the productivity of what Mobile Services does for you.

Beyond the main site, we spent a bit of time reading blog posts from Josh Twist and Carlos Figueira and I’d definitely recommend catching up with what those guys have been writing.

A couple of weeks later, I finally managed to get a bit of downtime to take a look at Mobile Services myself and the rest of this post is a set of rough notes I made as I was poking around and trying to make what I’d read in the quick-starts and on the blogs come to life by experimenting with some code and services myself.

What’s Mobile Services?

The description I’ve got in my head for Mobile Services at this point is something like “Instant Azure Storage & RESTful Data Services” with those services being client agnostic but already offering a specific set of features that make them very attractive as a back end for Windows 8 Store apps such as;

1) Easy integration of the Windows Notification Service for push notifications.

2) Integrated Windows Live authentication so that I can pick up a user’s live ID on the back-end and make authorization decisions around it.

3) Client libraries in place for Windows 8 Store apps written in .NET or JavaScript.

I think the ability to quickly create CRUD-like services over a set of tables and have them exposed over a set of remotely hosted services in a client-agnostic way is a pretty useful thing to be able to do and is likely to save a bunch of boiler-plate effort in hand-cranking your own service to expose and serialize data and get it all set up on Azure (or another cloud service) so I wanted to dive in and dig around a little.

Initial Steps

Once I’d signed up and ticked the right tickboxes for Mobile Services (which took about 3 minutes), I found myself at the dashboard.

Where I figured I’d create a new service alongside a new database;

I’ll delete this service and database server once I’ve written my blog post and I don’t generally use ‘mtaultySa’ as my user name just in case you were wondering.

With that in place, I had a database server and a mobile service sitting somewhere on the other side of the world and navigating into my service showed me that I didn’t have any tables hanging off the service so I figured I’d make one.

And I made one called Person because I always make something called Person when confronted with new technology.

And that made me a Person table;

Which had one column of type bigint (mandatory for a mobile service) to provide me with a primary key but with no data as of yet.

Now, from the quick-starts, I’m aware that a mobile service can have a dynamic schema such that if I send an insert to this table with a column called firstName which can be inferred to be a string then this option;

will ensure that my table will ‘magically’ grow a new column called firstName to store that string. However, rather than letting the data define the schema, I felt that I wanted to define my own schema a little to try things out and so instead I went over to the DB tab;

And wandered into the table definition and added a couple of new columns;

And then I saved that and added a couple of rows of data;

At this point, I felt that I wanted to be reassured that I could point SQL Management Studio at this database. I’m not sure why but I went off on that side-journey for a moment and made sure I could get a connection string from Azure and connect SQLMS to it once I’d set up the firewall rule to allow my connection to that server;

And that all worked fine and I can get to my data from management studio;

Although I’m not sure whether Mobile Services supports me in just creating arbitrary columns of any data type, as I think the data-types are [string, numeric, bool, datetime]. I think I also noticed that if I created a table in this DB then it didn’t magically show up in my Mobile Service, so I guess there must be a configuration aspect to that somewhere which stores which DB tables are actually part of your service.
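The dynamic-schema idea, in which an insert carrying an unknown property makes the table grow a matching column, can be sketched in a few lines of Python. This is only a guess at the general shape of such logic, not how Mobile Services implements it. The four type names come from the list above, and treating ISO 8601 strings as datetimes is my own assumption.

```python
from datetime import datetime


def infer_column_type(value):
    """Map a JSON value to one of the four column types listed in the post."""
    if isinstance(value, bool):  # test bool first: bool is a subclass of int
        return "bool"
    if isinstance(value, (int, float)):
        return "numeric"
    if isinstance(value, str):
        try:
            datetime.fromisoformat(value)  # assumption: dates arrive as ISO 8601 text
            return "datetime"
        except ValueError:
            return "string"
    raise TypeError(f"unsupported value: {value!r}")


def apply_insert(schema, item):
    """Add a column for every unseen property, mimicking a dynamic schema."""
    added = []
    for name, value in item.items():
        if name not in schema:
            schema[name] = infer_column_type(value)
            added.append(name)
    return added


schema = {"id": "numeric"}
new_columns = apply_insert(
    schema,
    {"firstName": "Mike", "age": 42, "active": True, "joined": "2012-09-19T12:00:00"},
)
print(new_columns)
print(schema)
```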

From there, I wanted to see my service so I went to its URL;

and, sure enough, there’s my service on the web or, at least, a web page that indicates that there’s a sign of life. Not bad for 3-5 minutes of clicking on the web site.

If you’re interested in Mobile Services, be sure to subscribe to Carlos Figueira’s (@carlos_figueira) blog. Thanks to Mike for the heads up.

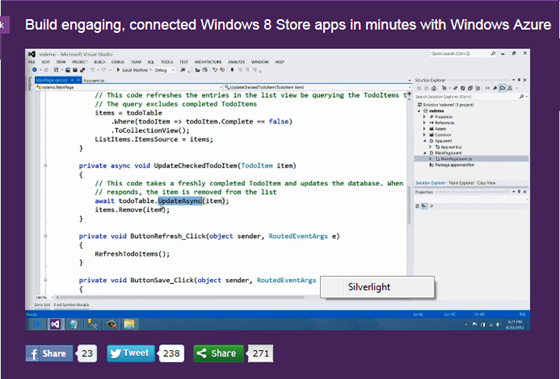

Josh Twist (@joshtwist) described how to Build engaging, connected Windows 8 Store apps in minutes with Windows Azure in a 9/17/2012 archive of his 14-minute Visual Studio Release video:

The best apps need cloud services. Join this session to see how you can leverage Visual Studio 2012 and Windows Azure Mobile Services to add structured storage, integrated authentication, and even push notifications in literally minutes to your Windows 8 Store app.

Click here to watch the video.

Chris Auld published on 9/17/2012 the source code for his SQL Azure Federations Deep Dive–TechEd ANZ presentation:

So I promised that I’d post all the bits from my TechEd Australia and NZ session on SQL Azure Federations. I have been tardy in delivering on this goal.

If you missed the session they recorded it for me in Auckland so you can take a look at the recording on channel 9: http://channel9.msdn.com/Events/TechEd/NewZealand/TechEd-New-Zealand-2012/AZR304

So here goes.

The first item is the SQL Script that I walked through. This will give you a high-level idea of the basics of Federations using a simple eCommerce type scenario (partial AdventureWorks).

--<<<<<<<<<<<<<<<<<<<<< Task 1 – Create Federations Root Database >>>>>>>>>>>>>>>>>>>>>
CREATE DATABASE [TechEd2012]
GO

--<<<<<<<<<<<<<<<<<<<<< Task 2 – Connect directly to Root Database >>>>>>>>>>>>>>>>>>>>>
/* Using tooling support in latest release of SQL Server Management Studio */

--<<<<<<<<<<<<<<<<<<<<< Task 3 – Create Federation Object >>>>>>>>>>>>>>>>>>>>>
CREATE FEDERATION CustomerFederation(cid BIGINT RANGE)
GO

--<<<<<<<<<<<<<<<<<<<<< Task 4 – View Federations Metadata >>>>>>>>>>>>>>>>>>>>>
-- Route connection to the Federation Root
USE FEDERATION ROOT WITH RESET
GO
SELECT db_name() [db_name], db_id() [db_id]
SELECT * FROM sys.federations
SELECT * FROM sys.federation_distributions
SELECT * FROM sys.federation_member_distributions ORDER BY federation_id, range_low;
SELECT * FROM sys.databases;
GO
-- Route connection to the 1 Federation Member (aka shard)
USE FEDERATION CustomerFederation(cid=100) WITH RESET, FILTERING=OFF
GO
SELECT db_name() [db_name], db_id() [db_id]
SELECT * FROM sys.federations
SELECT * FROM sys.federation_distributions
SELECT * FROM sys.federation_member_distributions
GO
SELECT f.name, fmc.federation_id, fmc.member_id, fmc.range_low, fmc.range_high
FROM sys.federations f
JOIN sys.federation_member_distributions fmc ON f.federation_id=fmc.federation_id
ORDER BY fmc.federation_id, fmc.range_low;
GO

--<<<<<<<<<<<<<<<<<<<<< Task 5 – Create Federated Tables >>>>>>>>>>>>>>>>>>>>>
-- Route connection to the 1 Federation Member (aka shard).
-- Filtering OFF so we can make DDL operations
USE FEDERATION CustomerFederation(cid=10) WITH RESET, FILTERING=OFF
GO
-- Table [dbo].[Customer]
SET ANSI_NULLS ON
GO
SET QUOTED_IDENTIFIER ON
GO
CREATE TABLE [dbo].[Customer](
    [CustomerID] [bigint] NOT NULL,
    [Title] [nvarchar](8) NULL,
    [FirstName] [nvarchar](50) NOT NULL,
    [MiddleName] [nvarchar](50) NULL,
    [LastName] [nvarchar](50) NOT NULL,
    [Suffix] [nvarchar](10) NULL,
    [CompanyName] [nvarchar](128) NULL,
    [SalesPerson] [nvarchar](256) NULL,
    [EmailAddress] [nvarchar](50) NULL,
    [Phone] [nvarchar](25) NULL,
    CONSTRAINT [PK_Customer] PRIMARY KEY CLUSTERED ( [CustomerID] ASC )
) FEDERATED ON (cid=CustomerID) --Note the use of the FEDERATED ON statement
GO
CREATE TABLE [dbo].[Order](
    [OrderID] [uniqueidentifier] NOT NULL DEFAULT newid(),
    [CustomerID] [bigint] NOT NULL,
    [OrderTotal] [money] NULL,
    CONSTRAINT [PK_Order] PRIMARY KEY CLUSTERED ( [OrderID],[CustomerID] ASC )
) FEDERATED ON (cid=CustomerID) --Note the use of the FEDERATED ON statement
GO

--<<<<<<<<<<<<<<<<<<<<< Task 6 – Insert Dummy Data >>>>>>>>>>>>>>>>>>>>>
-- Route connection to the 1 Federation Member (aka shard).
-- Filtering OFF so we can insert multiple Atomic Units
USE FEDERATION CustomerFederation(cid=10) WITH RESET, FILTERING=OFF
GO
INSERT INTO [dbo].[Customer]
    ([CustomerID], [Title], [FirstName], [MiddleName], [LastName], [Suffix], [CompanyName], [SalesPerson], [EmailAddress], [Phone])
VALUES
(55, 'Mr.', 'Frank', '', 'Campbell', '', 'Rally Master Company Inc', 'adventure-works\shu0', 'frank4@adventure-works.com', '491-555-0132'),
…

Many lines of values code elided for brevity.

(210, 'Mr.', 'Josh', '', 'Barnhill', '', 'Gasless Cycle Shop', 'adventure-works\garrett1', 'josh0@adventure-works.com', '584-555-0192');
GO
INSERT INTO [dbo].[Order]( [CustomerID], [OrderTotal])
VALUES
(55,213.56), (55,31.56), (55,3412.56),
(78,312.56), (78,255.78),
(79,112.12), (79,555.00), (79,765.12), (79,967.88),
(101,903.04), (101,512.43),
(110,1250.00), (110,325.68),
(204,112.99),
(205,107.43), (205,895.21)
GO

--<<<<<<<<<<<<<<<<<<<<< Task 7 – Query Federation Data with Filtering off >>>>>>>>>>>>>>>>>>>>>
-- Route connection to the 1 Federation Member (aka shard)
USE FEDERATION CustomerFederation(cid=100) WITH RESET, FILTERING=OFF
GO
SELECT db_name() [db_name], db_id() [db_id]
SELECT * FROM sys.federation_member_distributions
-- Federation ranges
SELECT f.name, fmc.federation_id, fmc.member_id, fmc.range_low, fmc.range_high
FROM sys.federations f
JOIN sys.federation_member_distributions fmc ON f.federation_id=fmc.federation_id
ORDER BY fmc.federation_id, fmc.range_low;
-- User tables count (federated & reference)
SELECT fmc.member_id, fmc.range_low, fmc.range_high, '[' + s.name + '].[' + t.name + ']' [name], p.row_count
FROM sys.tables t
JOIN sys.schemas s ON t.schema_id=s.schema_id
JOIN sys.dm_db_partition_stats p ON t.object_id=p.object_id
JOIN sys.federation_member_distributions fmc ON 1=1
WHERE p.index_id=1
ORDER BY s.name, t.name
GO
-- Query customer table for high/low Federated Keys
SELECT MIN(CustomerID) [CustomerID Low], MAX(CustomerID) [CustomerID High] FROM Customer
GO

--<<<<<<<<<<<<<<<<<<<<< Task 8 – Perform Federations Split Operation >>>>>>>>>>>>>>>>>>>>>
USE FEDERATION ROOT WITH RESET
GO
ALTER FEDERATION CustomerFederation SPLIT AT (cid=100)
GO

--<<<<<<<<<<<<<<<<<<<<< Task 9 – Wait for Split to complete >>>>>>>>>>>>>>>>>>>>>
--View the background Federation operations table (Rinse and Repeat Until Complete)
SELECT percent_complete, * FROM sys.dm_federation_operations
GO

--<<<<<<<<<<<<<<<<<<<<< Task 10 – Query Federation Members (again) >>>>>>>>>>>>>>>>>>>>>
-- Route connection to the Federation Root
USE FEDERATION ROOT WITH RESET
GO
SELECT * FROM sys.federations
SELECT * FROM sys.federation_member_distributions ORDER BY federation_id, range_low;
GO
-- Route connection to the 2nd Federation Member (aka shard)
USE FEDERATION CustomerFederation(cid=100) WITH RESET, FILTERING=OFF
GO
--Query metadata
SELECT db_name() [db_name], db_id() [db_id]
SELECT * FROM sys.federation_member_distributions
-- Query customer table for high/low Federated Keys
SELECT MIN(CustomerID) [CustomerID Low], MAX(CustomerID) [CustomerID High] FROM Customer
--Get all rows from Customers in this member
SELECT * FROM Customer
GO

--<<<<<<<<<<<<<<<<<<<<< Task 11 – Query data from Federation member with Filtering On >>>>>>>>>>>>>>>>>>>>>
--Route to the 2nd federation but with filtering on
USE FEDERATION CustomerFederation(cid=100) WITH RESET, FILTERING=ON
GO
-- Query customer table for high/low Federated Keys
SELECT MIN(CustomerID) [CustomerID Low], MAX(CustomerID) [CustomerID High] FROM Customer
--Get all rows from Customers
SELECT * FROM Customer
GO

--<<<<<<<<<<<<<<<<<<<<< Task 12 – Using the SSMS Tooling >>>>>>>>>>>>>>>>>>>>>
-- List federation members

--<<<<<<<<<<<<<<<<<<<<< Perform Additional Split Operation >>>>>>>>>>>>>>>>>>>>>
USE FEDERATION ROOT WITH RESET
GO
ALTER FEDERATION CustomerFederation SPLIT AT (cid=150)
GO
--<<<<<<<<<<Return to Deck>>>>>>>>>>>--

The next items are the fan-out queries I showed. These should be used in conjunction with the Fan Out Query demo tool that can be found at: http://federationsutility-scus.cloudapp.net/

-- FANOUT SAMPLES. Execute using Fanout tool
--Simple Count in each member
SELECT count(*) from Customer
--Simple Select *
SELECT * FROM Customer
-- ALIGNED QUERY with ALIGNED AGGREGATES
-- get total orders value by customer having > $1000
SELECT [order].customerid, SUM(ordertotal)
FROM customer inner join [order] on customer.customerid=[order].customerid
GROUP BY [order].customerid
HAVING SUM(ordertotal)>1000
--OK to add some more detail from customer table.
SELECT firstname,lastname,companyname,[order].customerid, SUM(ordertotal)
FROM customer inner join [order] on customer.customerid=[order].customerid
GROUP BY [order].customerid,firstname,lastname,companyname
HAVING SUM(ordertotal)>1000
--So now to group by Company name. i.e. Non Aligned query
SELECT companyname, SUM(ordertotal) as ordertotal
FROM customer inner join [order] on customer.customerid=[order].customerid
GROUP BY companyname
HAVING SUM(ordertotal)>1000
--We need a post processing step to aggregate the aggregates
SELECT companyname, SUM(ordertotal) FROM #Table GROUP BY companyname
-- ALIGNED QUERY with NON-ADDITIVE AGGREGATES
-- avg order size per customer
-- OK because we are aggregating within the atomic unit
SELECT firstname,lastname,companyname,[order].customerid, AVG(ordertotal)
FROM customer inner join [order] on customer.customerid=[order].customerid
GROUP BY [order].customerid,firstname,lastname,companyname
--NON-ALIGNED QUERY with NON-ADDITIVE AGGREGATE
--avg order size
--#FAIL
SELECT AVG(ordertotal) FROM [order]
-- success --
-- Average: get sum and count instead of avg
SELECT SUM(ordertotal) tot, COUNT(*) cnt FROM [order]
-- Summary Query:
SELECT SUM(tot)/SUM(cnt) average FROM #Table
--<<<<<<<<<<<<Back to Deck>>>>>>>>>>>>>>>--
-- deploy a new reference table and add some data
CREATE TABLE tab1(dbname nvarchar(128), secs int, msecs int, primary key (dbname, secs, msecs));
INSERT INTO tab1 values(db_name(), datepart(ss, getdate()), datepart(ms, getdate()));
SELECT * FROM tab1;
-- update stats on all members
EXEC sp_updatestats
-- #session per member
SELECT db_name() dbname, count(a.session_id) session_count FROM sys.dm_exec_sessions a
-- top 5 queries by average CPU time per member
SELECT TOP 5 db_name(), query_stats.query_hash AS "Query Hash",
    SUM(query_stats.total_worker_time) / SUM(query_stats.execution_count) AS "Avg CPU Time",
    MIN(query_stats.statement_text) AS "Statement Text"
FROM
    (SELECT QS.*,
        SUBSTRING(ST.text, (QS.statement_start_offset/2) + 1,
            ((CASE statement_end_offset
                WHEN -1 THEN DATALENGTH(ST.text)
                ELSE QS.statement_end_offset END
              - QS.statement_start_offset)/2) + 1) AS statement_text
     FROM sys.dm_exec_query_stats AS QS
     CROSS APPLY sys.dm_exec_sql_text(QS.sql_handle) as ST) as query_stats
GROUP BY query_stats.query_hash
-- column 3 is "Avg CPU Time" (column 2 is the query hash because db_name() comes first)
ORDER BY 3 DESC;

As always… feel free to flick across comments or questions.
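The non-additive aggregate problem in the fan-out samples above can also be illustrated outside SQL. The following is a minimal Python sketch, assuming two hypothetical federation members with made-up order totals; `member_partial` and `combine` are illustrative names, not part of the Fan Out Query tool. It suggests why averaging the per-member `AVG` results goes wrong when members hold different row counts, while collecting `SUM`/`COUNT` pairs and dividing once gives the true average.

```python
from fractions import Fraction

# Hypothetical order totals held by two federation members (made-up data).
member_orders = {
    "member_1": [100, 200, 300],   # 3 orders
    "member_2": [1000],            # 1 order
}

def member_partial(order_totals):
    """What each member returns: SELECT SUM(ordertotal) tot, COUNT(*) cnt."""
    return sum(order_totals), len(order_totals)

def combine(partials):
    """Summary query: SELECT SUM(tot)/SUM(cnt) over the collected partials."""
    total = sum(tot for tot, _ in partials)
    count = sum(cnt for _, cnt in partials)
    return Fraction(total, count)

partials = [member_partial(rows) for rows in member_orders.values()]
true_average = combine(partials)

# The failing approach: run AVG() on each member, then average the results.
per_member_avgs = [Fraction(sum(r), len(r)) for r in member_orders.values()]
average_of_averages = sum(per_member_avgs) / len(per_member_avgs)

print(true_average)          # 400
print(average_of_averages)   # 600
```

The same reasoning applies to any aggregate that cannot be recombined from its own per-member results: ship the additive pieces and finish the calculation in the summary step.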

FWIW, Chris was the developer of the first high-traffic app (for event ticketing) running in Windows Azure.


Marketplace DataMarket, Cloud Numerics, Big Data and OData


Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

•• Manu Cohen-Yashar (@ManuKahn) described New tools for Federation in windows 8 and Framework 4.5 in a 9/19/2012 post:

If you try to install WIF SDK on a Windows 8 [machine] with Visual Studio 2012 and then create a simple claim based application, you will see that “Add STS reference” is gone.

So how do we use federation in Visual Studio 2012 and .NET 4.5?

Well it turns out that WIF as we know it is deprecated because it was integrated in the core of .NET 4.5 and the SDK is now provided as a set of powerful tools integrated into Visual Studio.

The tools include a built-in local STS for testing, great integration with ACS, integration with ADFS and much more…

Vibro.net has a great series of blogs describing the tools and how they can be used.

You can get it from here, or directly from within Visual Studio 11 by searching for “identity” directly in the Extensions Manager.

The good news: With VS 2012 and .NET 4.5, it is easier to do federation than ever before.

• Christian Weyer (@christianweyer) described Federating Windows Azure Service Bus Access Control Service with a custom STS: thinktecture IdentityServer helps with more real-world-ish Relay and Brokered Messaging in a 9/18/2012 post:

The Windows Azure Service Bus and the Access Control Service (ACS) are good friends – buddies, indeed.

Almost all official Windows Azure Service Bus-related demos use a shared secret approach to authenticate directly against ACS (although it actually is not an identity provider), get back a token and then send that token over to the Service Bus. This magic usually happens all under the covers when using the WCF bindings.

All in all, this is not really what we need and look for in real projects.

We need the ability to have users or groups (or: identities with certain claims) authenticate against identity providers that are already in place. This IdP needs to be federated with the Access Control Service, which then in turn spits out a token the Service Bus can understand. Wouldn’t it be nice to authenticate via username/password (for real end users) or via certificates (for server/services entities)?

Let’s see how we can get this working by using our thinktecture IdentityServer. For the purpose of this blog post I am using our demo IdSrv instance at https://identity.thinktecture.com/idsrv.

The first thing to do is to register IdSrv as an identity provider for the respective Service Bus ACS namespace. Each SB namespace has a so called buddy namespace in ACS. The buddy namespace for christianweyer is christianweyer-sb. You can get to it by clicking the Access Control Service button in the Service Bus portal like here:

In the ACS portal for the SB buddy namespace we can then add a new identity provider.

thinktecture IdentityServer does support various endpoints and protocols, but for this scenario we are going to add IdSrv as a WS-Federation-compliant IdP to ACS. At the end we will be requesting a SAML token from IdSrv.

The easiest way to add it to ACS is to use the service metadata from https://identity.thinktecture.com/idsrv/FederationMetadata/2007-06/FederationMetadata.xml

Note: Make sure that the checkbox is ticked for the ServiceBus relying party at the bottom of the page.

Next, we need to add new claims rules for the new IdP.

Let’s create a new rule group.

I am calling the new group IdSrv SB users. In that group I want to add at least one rule which says that a user called Bob is allowed to open endpoints on my Service Bus namespace.

To reach our goal, we say that when an incoming claim of (standard) type name contains the value Bob, we emit a new claim of type net.windows.servicebus.action with the value Listen. This is the claim the Service Bus can understand.

Simple and powerful. We could now just add a couple more rules here for other users or even X.509 client certificates.
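The rule described above is essentially a claim transformation. The sketch below models it in Python for illustration only: ACS rules are configured in the portal, and the `Claim` tuple, the `Rule` class and `apply_rules` are hypothetical names, not an ACS API. It also assumes that the input claim type is the standard name claim URI and that values are matched by exact, case-sensitive equality.

```python
from collections import namedtuple

Claim = namedtuple("Claim", ["type", "value"])

# Assumed input claim type: the standard "name" claim URI.
NAME_CLAIM = "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name"
# Output claim type named in the post.
SB_ACTION_CLAIM = "net.windows.servicebus.action"

class Rule:
    """Hypothetical model of one rule in a rule group."""
    def __init__(self, in_type, in_value, out_type, out_value):
        self.in_type, self.in_value = in_type, in_value
        self.out_type, self.out_value = out_type, out_value

    def matches(self, claim):
        # Assumption: exact, case-sensitive match on both type and value.
        return claim.type == self.in_type and claim.value == self.in_value

def apply_rules(rules, incoming_claims):
    """Emit one output claim for every (rule, claim) pair that matches."""
    return [Claim(r.out_type, r.out_value)
            for r in rules for c in incoming_claims if r.matches(c)]

# The "IdSrv SB users" group with its single rule: Bob may listen.
idsrv_sb_users = [Rule(NAME_CLAIM, "Bob", SB_ACTION_CLAIM, "Listen")]

bob = [Claim(NAME_CLAIM, "Bob")]
alice = [Claim(NAME_CLAIM, "Alice")]
print(apply_rules(idsrv_sb_users, bob))
print(apply_rules(idsrv_sb_users, alice))  # no matching rule, so no claims
```

In this model, adding a second rule that emits the value Send for the same user would let that identity send as well as listen.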

Before we can leave the ACS configuration alone we need to enable the newly created rule group on the ServiceBus relying party:

… and last but not least I have to add a new relying party configuration in Identity Server for my SB buddy namespace with its related WRAP endpoint:

Done.

For using the external IdP in my WCF-based Service Bus applications I wrote a very small and simple helper class with extension methods for the TransportClientEndpointBehavior. It connects to the STS/IdP requesting a SAML token which is then used as the credential for the Service Bus.

using System;
using System.IdentityModel.Tokens;
using System.Security.Cryptography.X509Certificates;
using System.ServiceModel;
using System.ServiceModel.Security;
using Microsoft.IdentityModel.Protocols.WSTrust;
using Microsoft.IdentityModel.Protocols.WSTrust.Bindings;
using Microsoft.ServiceBus;

namespace ServiceBus.Authentication
{
    public static class TransportClientEndpointBehaviorExtensions
    {
        // Requests a SAML token from the STS using username/password credentials.
        public static string GetSamlTokenForUsername(
            this TransportClientEndpointBehavior behavior,
            string issuerUrl, string serviceNamespace, string username, string password)
        {
            var trustChannelFactory = new WSTrustChannelFactory(
                new UserNameWSTrustBinding(SecurityMode.TransportWithMessageCredential),
                new EndpointAddress(new Uri(issuerUrl)));
            trustChannelFactory.TrustVersion = TrustVersion.WSTrust13;
            trustChannelFactory.Credentials.UserName.UserName = username;
            trustChannelFactory.Credentials.UserName.Password = password;

            try
            {
                var tokenString = RequestToken(serviceNamespace, trustChannelFactory);
                trustChannelFactory.Close();
                return tokenString;
            }
            catch
            {
                // Abort the faulted factory, then let the original exception propagate.
                trustChannelFactory.Abort();
                throw;
            }
        }

        // Requests a SAML token from the STS using an X.509 client certificate.
        public static string GetSamlTokenForCertificate(
            this TransportClientEndpointBehavior behavior,
            string issuerUrl, string serviceNamespace, string certificateThumbprint)
        {
            var trustChannelFactory = new WSTrustChannelFactory(
                new CertificateWSTrustBinding(SecurityMode.TransportWithMessageCredential),
                new EndpointAddress(new Uri(issuerUrl)));
            trustChannelFactory.TrustVersion = TrustVersion.WSTrust13;
            trustChannelFactory.Credentials.ClientCertificate.SetCertificate(
                StoreLocation.CurrentUser,
                StoreName.My,
                X509FindType.FindByThumbprint,
                certificateThumbprint);

            try
            {
                var tokenString = RequestToken(serviceNamespace, trustChannelFactory);
                trustChannelFactory.Close();
                return tokenString;
            }
            catch
            {
                // Abort the faulted factory, then let the original exception propagate.
                trustChannelFactory.Abort();
                throw;
            }
        }

        // Issues a bearer SAML 2.0 token scoped to the WRAP endpoint of the SB buddy namespace.
        private static string RequestToken(string serviceNamespace, WSTrustChannelFactory trustChannelFactory)
        {
            var rst = new RequestSecurityToken(
                WSTrust13Constants.RequestTypes.Issue,
                WSTrust13Constants.KeyTypes.Bearer);
            rst.AppliesTo = new EndpointAddress(
                String.Format("https://{0}-sb.accesscontrol.windows.net/WRAPv0.9/", serviceNamespace));
            rst.TokenType = Microsoft.IdentityModel.Tokens.SecurityTokenTypes.Saml2TokenProfile11;

            var channel = (WSTrustChannel)trustChannelFactory.CreateChannel();
            var token = channel.Issue(rst) as GenericXmlSecurityToken;
            var tokenString = token.TokenXml.OuterXml;

            return tokenString;
        }
    }
}

Following is a sample usage of the above class.

static void Main(string[] args)
{
    var serviceNamespace = "christianweyer";
    var usernameIssuerUrl = "https://identity.thinktecture.com/idsrv/issue/wstrust/mixed/username";

    var host = new ServiceHost(typeof(EchoService));

    var a = ServiceBusEnvironment.CreateServiceUri(
        "https", serviceNamespace, "echo");
    var b = new WebHttpRelayBinding();
    b.Security.RelayClientAuthenticationType =
        RelayClientAuthenticationType.None; // for demo only!
    var c = typeof(IEchoService);

    var authN = new TransportClientEndpointBehavior();
    var samlToken = authN.GetSamlTokenForUsername(
        usernameIssuerUrl, serviceNamespace, "bob", ".......");
    authN.TokenProvider = TokenProvider.CreateSamlTokenProvider(samlToken);

    var ep = host.AddServiceEndpoint(c, b, a);
    ep.Behaviors.Add(authN);
    ep.Behaviors.Add(new WebHttpBehavior());

    host.Open();

    Console.WriteLine("Service running...");
    host.Description.Endpoints.ToList()
        .ForEach(enp => Console.WriteLine(enp.Address));

    Console.ReadLine();
    host.Close();
}

And the running service in action (super spectacular!)

Hope this helps!

Mary Jo Foley (@maryjofoley) asserted “Microsoft has added new updates to its Azure Web-hosting and directory service offerings” in a summary of her Microsoft updates Windows Azure Web Sites, Active Directory previews report of 9/17/2012 for ZDNet’s All About Microsoft blog:

Microsoft rolled out previews of a number of its Windows Azure technologies earlier this year. In the past week-plus, the team has updated these previews with some new features.

(Because these are "services" rather than "software," Microsoft seems to prefer to position these as "rolling updates" to the preview, rather than as a new version of the preview. The Softies' preferred naming convention seems to be to refer to these as updates to the original previews rather than "Preview 2" or "Preview 3," etc. Microsoft is expected to continue to deliver updates to its Azure previews as it heads towards general availability of these various new cloud services. I'm now thinking the wave of latest Azure updates is what some of my contacts described as "RTMing" in August and being rolled out in September.)

First up: Windows Azure Active Directory, or WAAD, for short. WAAD is a cloud implementation of Microsoft's Active Directory directory service. A number of Microsoft cloud properties already are using WAAD, including Windows Azure Online Backup, Windows Azure, Office 365, Dynamics CRM Online and Windows InTune.

Last week, Microsoft announced it was adding three new sets of capabilities to the WAAD preview which it rolled out in July 2012.

The three new additions:

- The ability to create a standalone Windows Azure AD tenant

- A preview of the Directory Management User Interface. (This new UI supports the recently updated preview of Windows Azure Online Backup)

- Write support in WAAD's GraphAPI

Microsoft also announced on September 17 updates to Windows Azure Web Sites (codenamed Antares). Azure Web Sites is a hosting framework for Web applications and sites created using various languages and stacks -- including a number of open-source, non-Microsoft-developed ones. Microsoft's goal is to make this hosting framework available for both the cloud and on premises on Windows Servers, so that companies can use it as a hosting environment for public or private cloud sites and apps.

The newly announced additions to Azure Web Sites include a shared-mode scaling option; support for custom domains with shared and reserved mode web-sites using both CNAME and A-Records (the latter enabling naked domains, e.g. http://microsoft.com in addition to http://www.microsoft.com); continuous deployment support using both CodePlex and GitHub; and FastCGI extensibility.

"We will also in the future enable Server Name Indication (SNI)-based SSL as a built-in feature with shared mode web-sites," blogged Server and Tools Corporate Vice President Scott Guthrie, who noted that this functionality isn’t supported with today’s release, but will be coming later this year to both the shared and reserved tiers.

When using reserved instance mode, an Azure customer's sites are guaranteed to run isolated within their own Small, Medium or Large virtual machine, meaning no other customers run within it, Guthrie explained. Users can run any number of web-sites within a VM, and there are no quotas on CPU or memory limits, he added.

"All of these improvements are now live in production and available to start using immediately," Guthrie said.

"We’ll have even more new features and enhancements coming in the weeks ahead – including support for the recent Windows Server 2012 and .NET 4.5 releases (we will enable new web and worker role images with Windows Server 2012 and .NET 4.5 next month)," Guthrie added.

See Scott Guthrie’s (@scottgu) Announcing: Great Improvements to Windows Azure Web Sites post in the section below.


Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

•• Clint Edmonson (@clinted) posted Announcing the New Windows Azure Web Sites Shared Scaling Tier on 9/18/2012:

Windows Azure Web Sites has added a new pricing tier that will solve the #1 blocker for the web development community. The shared tier now supports custom domain names mapped to shared-instance web sites. This post will outline the plan changes and elaborate on how the new pricing model makes Windows Azure Web Sites an even richer option for web development shops of all sizes.

Setting the Stage

In June, we released the first public preview of Windows Azure Web Sites, which gave web developers a great platform on which to get web sites running using their web development framework of choice. PHP, Node.js, classic ASP, and ASP.NET developers can all utilize the Windows Azure platform to create and launch their web sites. Likewise, these developers have a series of data storage options using Windows Azure SQL Databases, MySQL, or Windows Azure Storage. The Windows Azure Web Sites free offer enabled startups to get their site up and running on Windows Azure with a minimal investment, and with multiple deployment and continuous integration features such as Git, Team Foundation Services, FTP, and Web Deploy.

The response to the Windows Azure Web Sites offer has been overwhelmingly positive. Since the addition of the service on June 12th, tens of thousands of web sites have been deployed to Windows Azure and the volume of adoption is increasing every week.

Preview Feedback

In spite of the growth and success of the product, the community has had questions about features lacking in the free preview offer. The main question web developers asked regarding Windows Azure Web Sites relates to the lack of the free offer’s support for domain name mapping. During the preview launch period, customer feedback made it obvious that the lack of domain name mapping support was an area of concern. We’re happy to announce that this #1 request has been delivered as a feature of the new shared plan.

New Shared Tier Portal Features

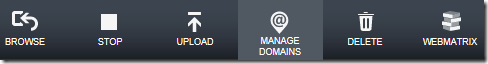

In the screen shot below, the “Scale” tab in the portal shows the new tiers – Free, Shared, and Reserved – and gives the user the ability to quickly move any of their free web sites into the shared tier. With a single mouse-click, the user can move their site into the shared tier.

Once a site has been moved into the shared tier, a new Manage Domains button appears in the bottom action bar of the Windows Azure Portal giving site owners the ability to manage their domain names for a shared site.

This button brings up the domain-management dialog, which can be used to enter in a specific domain name that will be mapped to the Windows Azure Web Site.

Shared Tier Benefits

Startups and large web agencies will both benefit from this plan change. Here are a few examples of scenarios which fit the new pricing model:

- Startups no longer have to select the reserved plan to map domain names to their sites. Instead, they can use the free option to develop their sites and choose on a site-by-site basis which sites they elect to move into the shared plan, paying only for the sites that are finished and ready to be domain-mapped

- Agencies who manage dozens of sites will realize a lower cost of ownership over the long term by moving their sites into reserved mode. Once multi-site companies reach a certain price point in the shared tier, it is much more cost-effective to move sites to a reserved tier.

Long-term, it’s easy to see how the new Windows Azure Web Sites shared pricing tier makes Windows Azure Web Sites a great choice for both startups and agency customers, as it enables rapid growth and upgrades while keeping the cost to a minimum. Large agencies will be able to have all of their sites in their own instances, and startups will have the capability to scale up to multiple-shared instances for minimal cost and eventually move to reserved instances without worrying about continually incurring additional costs. Customers can feel confident they have the power of the Microsoft Windows Azure brand and our world-class support, at prices competitive in the market. Plus, in addition to realizing the cost savings, they’ll have the whole family of Windows Azure features available.

Continuous Deployment from GitHub and CodePlex

Along with this new announcement are two other exciting new features. I’m proud to announce that web developers can now publish their web sites directly from CodePlex or GitHub.com repositories. Once connections are established between these services and your web sites, Windows Azure will automatically be notified every time a check-in occurs. This will then trigger Windows Azure to pull the source and compile/deploy the new version of your app to your web site automatically.

Walk-through videos on how to perform these functions are below:

These changes, as well as the enhancements to the reserved plan model, make Windows Azure Web Sites a truly competitive hosting option. It’s never been easier or cheaper for a web developer to get up and running. Check out the free Windows Azure web site offering and see for yourself.

Stay tuned to my twitter feed for Windows Azure announcements, updates, and links: @clinted

• Brian Swan (@brian_swan) explained Using Custom PHP Extensions in Windows Azure Web Sites in a 9/17/2012 post to the [Windows Azure’s] Silver Lining blog:

I’m happy to announce that with the most recent update to Windows Azure Web Sites, you can now easily enable custom PHP extensions. If you have read any of my previous posts on how to configure PHP in Windows Azure Web Sites (Windows Azure Web Sites: A PHP Perspective and Configuring PHP in Windows Azure Web Sites with .user.ini Files), you probably noticed one glaring shortcoming: you were limited to only the PHP extensions that were available by default. Now, the list of default extensions wasn’t bad (you can see the default extensions by creating a site and calling phpinfo() ), but not being able to add custom extensions was a real limitation for many PHP applications. The Windows Azure Web Sites team recognized this and has been working hard to add the flexibility you need to extend PHP functionality through extensions.

If you have used Windows Azure Web Sites before to host PHP applications, here’s the short story for how to add custom extensions (I’m assuming you have created a site already):

1. Add a new folder to your application locally and add your custom PHP extension binaries to it. I suggest adding a folder called bin so that visitors of your site can’t browse its contents. So, for example, I created a bin folder in my application’s root directory and added the php_mongo.dll file to it.

2. Push this new content to your site (via Git, FTP, or WebMatrix).

3. Navigate to your site’s dashboard in the Windows Azure Portal, and click on CONFIGURE.

4. Find the app settings section, and create a new key/value pair. Set the key to PHP_EXTENSIONS, and set the value to the location (relative to your application root) of your PHP extensions. In my case, it looks like this:

5. Click on the checkmark (shown above) and click SAVE at the bottom of the page.

That’s it. Any extensions you enabled should now be ready to use. If you want to add multiple extensions, simply set the value of PHP_EXTENSIONS to a comma delimited list of extension paths (e.g. bin/php_mongo.dll,bin/php_foo.dll).
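As a small illustration of the multi-extension format described above, the following Python sketch builds the comma-delimited value for the PHP_EXTENSIONS app setting from a list of paths. The function name `build_php_extensions_value` and its sanity checks (relative paths only, `.dll` suffix) are assumptions made for this example; only the key name and the comma-delimited format come from the post.

```python
from pathlib import PureWindowsPath

def build_php_extensions_value(paths):
    """Join extension paths (relative to the app root) into one setting value."""
    cleaned = []
    for raw in paths:
        path = PureWindowsPath(raw.strip())
        # Assumed sanity checks for this sketch, not documented portal behavior.
        if path.anchor:
            raise ValueError(f"path must be relative to the app root: {raw!r}")
        if path.suffix.lower() != ".dll":
            raise ValueError(f"expected a .dll extension binary: {raw!r}")
        # Normalize backslashes to forward slashes, as in the post's example.
        cleaned.append(path.as_posix())
    return ",".join(cleaned)

value = build_php_extensions_value(["bin/php_mongo.dll", "bin/php_foo.dll"])
print(f"PHP_EXTENSIONS={value}")
```

The resulting string is what would be pasted into the value field of the app setting; the portal steps themselves are unchanged.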

Note: I’m using the MongoDB extension as an example here even though MongoDB is not available in Windows Azure Web Sites. However, you can run MongoDB in a Windows Azure Virtual Machine and connect to it from Windows Azure Web Sites. To learn more about how to do this, see this tutorial: Install MongoDB on a virtual machine running CentOS Linux in Windows Azure.

If you are new to using Windows Azure Web Sites to host PHP applications, you can learn how to create a site by following this tutorial: Create a PHP-MySQL Windows Azure web site and deploy using Git. Or, for more brief instructions (that do not include setting up a database), follow the How to: Create a Website Using the Management Portal section in this tutorial: How to Create and Deploy a Website. After you have created a website, follow the steps above to enable custom PHP extensions.

Enabling custom extensions isn’t the only nice thing for PHP developers in this update to Windows Azure Web Sites. You can now publish from GitHub and you can publish to a Windows Azure Web Site from a branch other than master. For more information on these updates, see the following videos:

• Tyler Doerksen described Deploying Azure Websites with Git in a 9/17/2012 post:

Right now I am gearing up for this week’s Winnipeg .NET User Group session “Git by a Git with Marc Jeanson” on September 20th (this Thursday). I am really excited about this session because Git is something that has always interested me but in my professional career I have not crossed paths with Git. That is, until now!

As an Azure Virtual Technical Specialist and overall enthusiast I try and keep up with all the great features of the Azure platform. Not the least of these features is the ability to publish changes to an Azure Website using Git and more specifically GitHub.

So, this is what I plan to accomplish in this post:

- Create a new Azure Website

- Associate my site to GitHub

- Make changes and re-deploy

Sounds simple, right? Well, let’s find out.

Step 1: Set up a new Azure Website

With your Azure subscription you currently get 10 shared websites for free so this should be easy to set up and play with.

Go to http://manage.windowsazure.com and select “New” > “Compute” > “Web Site” > “Quick Create”, type in the Url prefix (in my case it is wpgnetug.azurewebsites.net) and choose the region and subscription in which you want this website hosted.

Once you select create it should be completed in no time, maybe a bit longer if this is your first website.

Select your new site from the list and if it does not bring you to the Quick Start click the little cloud at the beginning of the top navigation bar.

There are two options for source control, TFS and Git. TFS only works with TfsOnline.com (the SaaS hosted TFS service) and right now we are going to focus on Git.

Note: You can only choose one deployment mechanism so if you need to change you may have to re-create your site or try contacting Azure support.

Select the “Set up Git publishing” link and you should eventually see a Git Url.

Step 2: Associate your site to GitHub

So we could normally go through setting up your local Git repository to Azure but I currently want to deploy from a GitHub project and with the GitHub client for Windows I can easily set up local repositories on my machine.

So once you have a project set up in GitHub, click the “Authorize Windows Azure” link under “Deploy from my GitHub project”

Once you’re authorized, select the project you want to deploy.

If your site has code in there, the deployment should start right away. Once it shows an active deployment, go to your site and check it out (http://wpgnetug.azurewebsites.net/). You should also see this under the “Deployments” tab of your site.

Step 3: Make some changes to the site and re-deploy

So to do this I am going to make sure I have cloned the latest version of the site to my local repository. I use GitHub for Windows to manage the local repositories on my machine.

So to show the deployment I am going to change the main title of the home page to Tylers .NET User Group – *Evil Laugh*

Now I see that GitHub shows uncommitted changes.

Commit the change with a description and then Sync to your repository. Now go back to the Windows Azure management page. You should see a new deployment.

And finally to verify the change I can see the updated title on http://wpgnetug.azurewebsites.net/

Wow! That was easy.

You can find more information on Git and Azure websites in the Azure documentation here: https://www.windowsazure.com/en-us/develop/net/common-tasks/publishing-with-git/

If you are in Winnipeg I hope to see you at this week’s event!

Scott Guthrie (@scottgu) posted Announcing: Great Improvements to Windows Azure Web Sites on 9/17/2012:

I’m excited to announce some great improvements to the Windows Azure Web Sites capability we first introduced earlier this summer.

Today’s improvements include: a new low-cost shared mode scaling option, support for custom domains with shared and reserved mode web-sites using both CNAME and A-Records (the latter enabling naked domains), continuous deployment support using both CodePlex and GitHub, and FastCGI extensibility. All of these improvements are now live in production and available to start using immediately.

New “Shared” Scaling Tier

Windows Azure allows you to deploy and host up to 10 web-sites in a free, shared/multi-tenant hosting environment. You can start out developing and testing web sites at no cost using this free shared mode, and it supports the ability to run web sites that serve up to 165MB/day of content (5GB/month). All of the capabilities we introduced in June with this free tier remain the same with today’s update.

Starting with today’s release, you can now elastically scale up your web-site beyond this capability using a new low-cost “shared” option (which we are introducing today) as well as using a “reserved instance” option (which we’ve supported since June). Scaling to either of these modes is easy. Simply click on the “scale” tab of your web-site within the Windows Azure Portal, choose the scaling option you want to use with it, and then click the “save” button. Changes take only seconds to apply and do not require any code to be changed, nor the app to be redeployed:

Below are some more details on the new “shared” option, as well as the existing “reserved” option:

Shared Mode

With today’s release we are introducing a new low-cost “shared” scaling mode for Windows Azure Web Sites. A web-site running in shared mode is deployed in a shared/multi-tenant hosting environment. Unlike the free tier, though, a web-site in shared mode has no quotas/upper-limit around the amount of bandwidth it can serve. The first 5 GB/month of bandwidth you serve with a shared web-site is free, and then you pay the standard “pay as you go” Windows Azure outbound bandwidth rate for outbound bandwidth above 5 GB.

A web-site running in shared mode also now supports the ability to map multiple custom DNS domain names, using both CNAMEs and A-records, to it. The new A-record support we are introducing with today’s release provides the ability for you to support “naked domains” with your web-sites (e.g. http://microsoft.com in addition to http://www.microsoft.com). We will also in the future enable SNI based SSL as a built-in feature with shared mode web-sites (this functionality isn’t supported with today’s release – but will be coming later this year to both the shared and reserved tiers).

You pay for a shared mode web-site using the standard “pay as you go” model that we support with other features of Windows Azure (meaning no up-front costs, and you pay only for the hours that the feature is enabled). A web-site running in shared mode costs only 1.3 cents/hr during the preview (so on average $9.36/month).

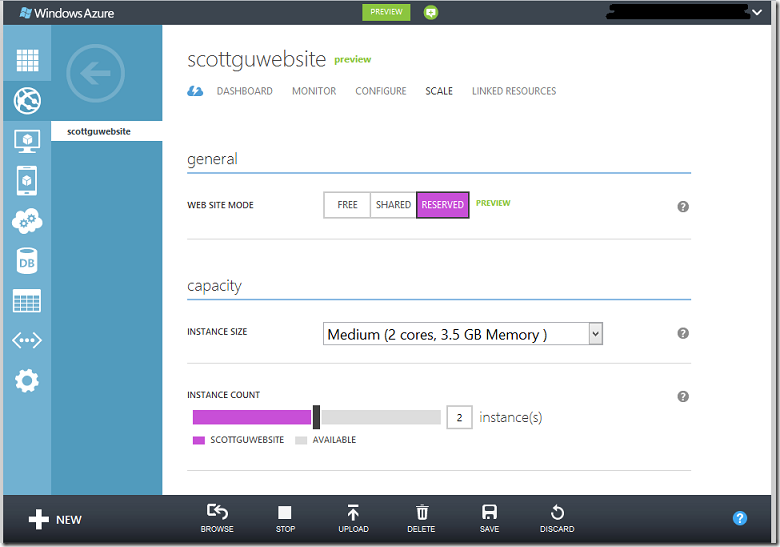

Reserved Instance Mode

In addition to running sites in shared mode, we also support scaling them to run within a reserved instance mode. When running in reserved instance mode your sites are guaranteed to run isolated within your own Small, Medium or Large VM (meaning no other customers run within it). You can run any number of web-sites within a VM, and there are no quotas on CPU or memory limits.

You can run your sites using either a single reserved instance VM, or scale up to have multiple instances of them (e.g. 2 medium sized VMs, etc). Scaling up or down is easy – just select the “reserved” instance VM within the “scale” tab of the Windows Azure Portal, choose the VM size you want, the number of instances of it you want to run, and then click save. Changes take effect in seconds:

Unlike shared mode, there is no per-site cost when running in reserved mode. Instead you pay only for the reserved instance VMs you use – and you can run any number of web-sites you want within them at no extra cost (e.g. you could run a single site within a reserved instance VM or 100 web-sites within it for the same cost). Reserved instance VMs start at 8 cents/hr for a small reserved VM.

Elastic Scale-up/down

Windows Azure Web Sites allows you to scale-up or down your capacity within seconds. This allows you to deploy a site using the shared mode option to begin with, and then dynamically scale up to the reserved mode option only when you need to – without you having to change any code or redeploy your application.

If your site traffic starts to drop off, you can scale back down the number of reserved instances you are using, or scale down to the shared mode tier – all within seconds and without having to change code, redeploy, or adjust DNS mappings. You can also use the “Dashboard” view within the Windows Azure Portal to easily monitor your site’s load in real-time (it shows not only requests/sec and bandwidth but also stats like CPU and memory usage).

Because of Windows Azure’s “pay as you go” pricing model, you only pay for the compute capacity you use in a given hour. So if your site is running most of the month in shared mode (at 1.3 cents/hr), but there is a weekend when it gets really popular and you decide to scale it up into reserved mode to have it run in your own dedicated VM (at 8 cents/hr), you only have to pay the additional pennies/hr for the hours it is running in the reserved mode. There is no upfront cost you need to pay to enable this, and once you scale back down to shared mode you return to the 1.3 cents/hr rate. This makes it super flexible and cost effective.
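The pay-as-you-go arithmetic above can be checked with a short calculation. This is a minimal Python sketch using only the two preview rates quoted in the post; the 30-day month, the 48-hour weekend, and the reading that an hour is billed at either the shared or the reserved rate (not both) are assumptions, and `monthly_cost` is a hypothetical helper, not an Azure API.

```python
from decimal import Decimal

# Preview prices quoted in the post, in US dollars per hour.
SHARED_RATE = Decimal("0.013")    # 1.3 cents/hr per shared site
RESERVED_RATE = Decimal("0.08")   # 8 cents/hr for one small reserved VM

def monthly_cost(total_hours, reserved_hours=0):
    """Cost of one site that spends reserved_hours in a small reserved VM
    and the remaining hours in shared mode (assumed to be either/or)."""
    if not 0 <= reserved_hours <= total_hours:
        raise ValueError("reserved_hours must be between 0 and total_hours")
    shared_hours = total_hours - reserved_hours
    return shared_hours * SHARED_RATE + reserved_hours * RESERVED_RATE

HOURS_IN_MONTH = 24 * 30  # assumed 30-day month

print(monthly_cost(HOURS_IN_MONTH))                     # 9.360
print(monthly_cost(HOURS_IN_MONTH, reserved_hours=48))  # 12.576
```

The first figure matches the $9.36/month average quoted earlier for shared mode; under these assumptions, one weekend in a small reserved VM adds a little over three dollars to the month.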

Improved Custom Domain Support

Web sites running in either “shared” or “reserved” mode support the ability to associate custom host names to them (e.g. www.mysitename.com). You can associate multiple custom domains to each Windows Azure Web Site.

With today’s release we are introducing support for A-Records (a big ask by many users). With the A-Record support, you can now associate ‘naked’ domains to your Windows Azure Web Sites – meaning instead of having to use www.mysitename.com you can instead just have mysitename.com (with no sub-name prefix). Because you can map multiple domains to a single site, you can optionally enable both a www and naked domain for a site (and then use a URL rewrite rule/redirect to avoid SEO problems).
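The redirect mentioned above can be sketched as follows. This is an illustration in Python rather than an actual URL rewrite rule for the site; `canonical_redirect` is a hypothetical helper, and treating the www host as the canonical one is an assumption (either direction works as long as it is applied consistently).

```python
from urllib.parse import urlsplit, urlunsplit

def canonical_redirect(url, canonical_host="www.mysitename.com"):
    """Return the URL to redirect to, or None if no redirect applies."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if host == canonical_host:
        return None  # already on the canonical host
    # Only redirect the naked form of the canonical host (assumed policy).
    if canonical_host.startswith("www.") and host == canonical_host[4:]:
        netloc = canonical_host
        if parts.port:
            netloc += f":{parts.port}"
        return urlunsplit(
            (parts.scheme, netloc, parts.path, parts.query, parts.fragment))
    return None  # unrelated host: leave it alone

print(canonical_redirect("http://mysitename.com/products?id=7"))
print(canonical_redirect("http://www.mysitename.com/products?id=7"))  # None
```

Serving the returned URL as a permanent redirect keeps search engines from indexing the same page under both host names.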

We’ve also enhanced the UI for managing custom domains within the Windows Azure Portal as part of today’s release. Clicking the “Manage Domains” button in the tray at the bottom of the portal now brings up custom UI that makes it easy to manage/configure them:

As part of this update we’ve also made it significantly smoother/easier to validate ownership of custom domains, and made it easier to switch existing sites/domains to Windows Azure Web Sites with no downtime.

Continuous Deployment Support with Git and CodePlex or GitHub

One of the more popular features we released earlier this summer was support for publishing web sites directly to Windows Azure using source control systems like TFS and Git. This provides a really powerful way to manage your application deployments using source control. It is really easy to enable this from a website’s dashboard page:

The TFS option we shipped earlier this summer provides a very rich continuous deployment solution that enables you to automate builds and run unit tests every time you check in your web-site, and then if they are successful automatically publish to Azure.

With today’s release we are expanding our Git support to also enable continuous deployment scenarios and integrate with projects hosted on CodePlex and GitHub. This support is enabled with all web-sites (including those using the “free” scaling mode).

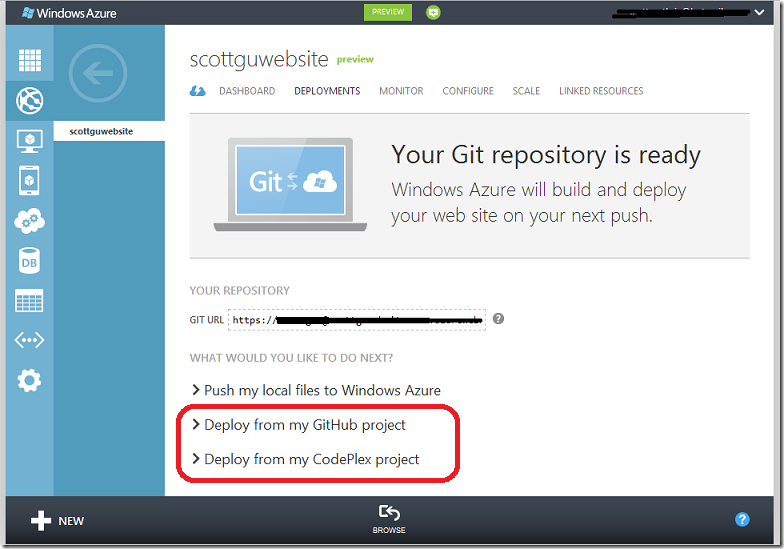

Starting today, when you choose the “Set up Git publishing” link on a website’s “Dashboard” page you’ll see two additional options show up when Git based publishing is enabled for the web-site:

You can click either the “Deploy from my CodePlex project” link or the “Deploy from my GitHub project” link to walk through a simple workflow that configures a connection between your website and a source repository you host on CodePlex or GitHub. Once this connection is established, CodePlex or GitHub will automatically notify Windows Azure every time a check-in occurs. This will then cause Windows Azure to pull the source and compile/deploy the new version of your app automatically.

The two videos below walk through how easy it is to enable this workflow, deploy an initial app, and then make a change to it:

- Enabling Continuous Deployment with Windows Azure Websites and CodePlex (2 minutes)

- Enabling Continuous Deployment with Windows Azure Websites and GitHub (2 minutes)

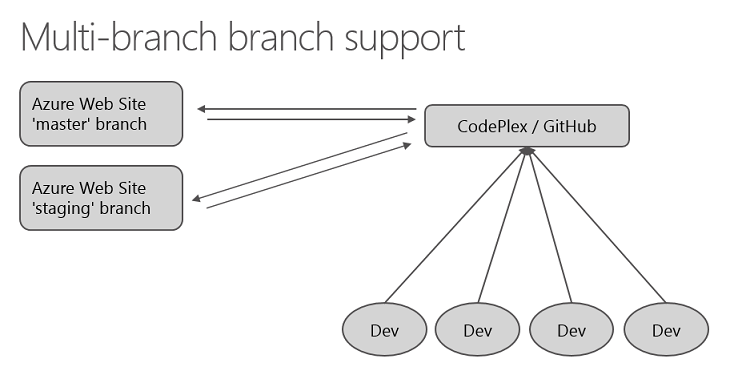

This approach enables a really clean continuous deployment workflow, and makes it much easier to support a team development environment using Git:

Note: today’s release supports establishing connections with public GitHub/CodePlex repositories. Support for private repositories will be enabled in a few weeks.

Support for multiple branches

Previously, we only supported deploying from the git ‘master’ branch. Often, though, developers want to deploy from alternate branches (e.g. a staging or future branch). This is now a supported scenario – both with standalone git based projects, as well as ones linked to CodePlex or GitHub. This enables a variety of useful scenarios.

For example, you can now have two web-sites - a “live” and “staging” version – both linked to the same repository on CodePlex or GitHub. You can configure one of the web-sites to always pull whatever is in the master branch, and the other to pull what is in the staging branch. This enables a really clean way to enable final testing of your site before it goes live.
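The live/staging arrangement can be pictured as a simple mapping from each web-site to the branch it tracks. The sketch below is only a model of that idea, not of how Windows Azure stores the setting; the site names and the function name are hypothetical.

```python
# Hypothetical site names mapped to the branch each one is configured to deploy.
SITE_BRANCHES = {
    "mysite-live": "master",
    "mysite-staging": "staging",
}


def sites_to_redeploy(pushed_branch, site_branches=SITE_BRANCHES):
    """Return the sites (sorted by name) that track the branch that was pushed."""
    return sorted(site for site, branch in site_branches.items()
                  if branch == pushed_branch)


if __name__ == "__main__":
    for branch in ("master", "staging", "feature-x"):
        print(branch, "->", sites_to_redeploy(branch))
```

A push to staging touches only the staging site, so changes can be tested there before they are merged into master and picked up by the live site.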

This 1 minute video demonstrates how to configure which branch to use with a web-site.

Summary

The above features are all now live in production and available to use immediately. If you don’t already have a Windows Azure account, you can sign-up for a free trial and start using them today. Visit the Windows Azure Developer Center to learn more about how to build apps with it.

We’ll have even more new features and enhancements coming in the weeks ahead – including support for the recent Windows Server 2012 and .NET 4.5 releases (we will enable new web and worker role images with Windows Server 2012 and .NET 4.5 next month). Keep an eye out on my blog for details as these new features become available.

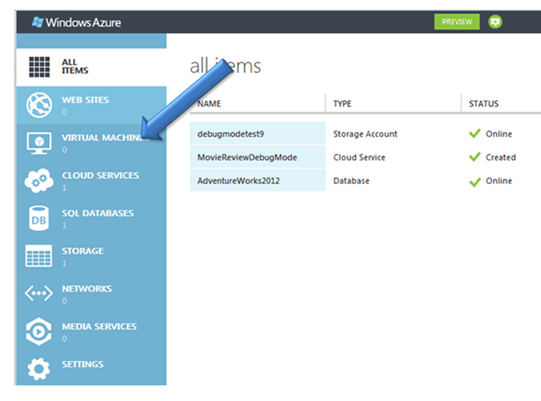

Dhananjay Kumar (@Debug_Mode) described Step by Step creating Virtual Machine in Windows Azure in a 9/17/2012 post:

In this post we will walk through, step by step, how to create a Virtual Machine in Windows Azure. To start, log in to the Windows Azure Management Portal and then click the Virtual Machines option in the left panel.

If you have not created any virtual machines yet, you will get the following message. Click CREATE A VIRTUAL MACHINE. After clicking it, you will get the following options:

- QUICK CREATE

- FROM GALLERY

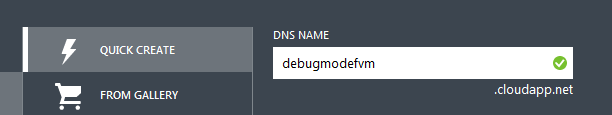

In this tutorial let us try the Quick Create option. On selecting this option, you first need to provide the DNS NAME of the virtual machine. It must be unique; a check mark appears when the DNS name is valid.

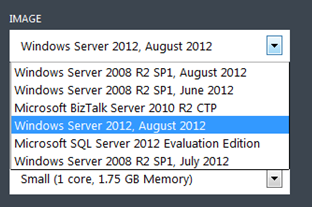

Next you need to choose the image for the Virtual Machine. Choose any image that meets your requirements. The virtual machine will be created with the image you choose from the dropdown.

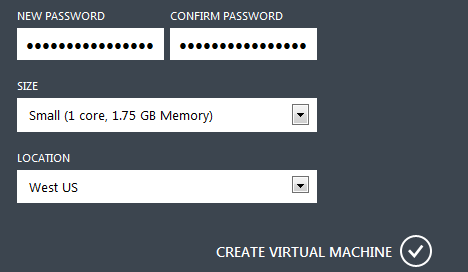

Next you need to provide a password to access the Virtual Machine. Choose the size of the machine and the datacenter. After providing all of this information, click Create Virtual Machine to create the virtual machine.

At the bottom of the page you can see information about the status of the virtual machine being created.

Once the Virtual Machine has been created successfully, you can see its details as follows:

In this way you can create a Virtual Machine in Windows Azure. I hope you find this post useful. Thanks for reading.

Michael Washam (@MWashamMS) reported that his Windows Azure Virtual Machines and Virtual Networks – TechEd Australia Recording is available in a 9/16/2012 post:

The recording of my TechEd Australia session on Windows Azure Infrastructure as a Service is now posted:

Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Larry Franks (@larry_franks) described Continuous Deployment with Windows Azure Web Sites in a 9/19/2012 post:

Last Friday, Windows Azure added support for continuous deployment from GitHub and CodePlex repositories to Windows Azure Web Sites. Continuous deployment notifies Windows Azure when updates are made to a Git repository on GitHub or CodePlex, and Windows Azure will then pull in those changes to update your website.

I thought I'd try setting up something that would benefit from continuous deployment. Like a blog created using Octopress.

Octopress

Octopress is a Ruby blogging framework that takes articles you write in Markdown and generates a static site from them. Static site means there's no database, no dynamic generation of pages when they're viewed, etc. Just plain old HTML, JavaScript, CSS, etc.

Since it's a blog (which in theory I'd update regularly), it's a perfect use for continuous deployment; I write a post, check it into my local Git repository, push to GitHub, and it automatically gets pulled into my website on Windows Azure.

Note that while I use Octopress, the same general process could be used with other projects. Octopress just happens to be a handy example.

Setting up a Windows Azure Web Site

Before we get into the process of setting up Octopress, let's walk through the steps to create a Windows Azure Web Site to host it. While there are multiple ways to create a Windows Azure Web Site, I just used the portal since it's fairly straightforward. Here are the steps:

Open a browser and go to http://windows.azure.com. Login with your subscription.

NOTE: You want the preview portal, which may or may not be your default (it stays with whichever portal you selected last). If you're not on the preview portal, select the Visit the Preview Portal link at the bottom of the page.

Click + NEW at the bottom of the page, select WEB SITE, and then QUICK CREATE.

Fill in the URL, select a region, etc. and then click the checkbox to create the site.

Once the site has been created, select the site name to navigate to the DASHBOARD. Look for the SITE URL on the right side of the page. This will be something like http://mysite.azurewebsites.net/. Save this value as you will need it during the Octopress configuration steps.

Setting up Octopress

Octopress setup is pretty well documented already. I was able to follow the setup and configuration steps with only minor problems.

1. Start by creating a fork of the Octopress project on GitHub and cloning the fork to your local machine.

2. Follow the steps on the Octopress setup page. This will guide you through installing required gems and initializing the theme.

3. Follow the steps on the Configuring Octopress page. This will guide you through updating the _config.yml with values for your site. You will need the site URL value from the Windows Azure Portal for the 'url' value in this file.

4. Follow the steps on the Blogging basics page to create a blog post or two. This will guide you through the process of creating posts for the site.

5. Run rake generate to generate the static files in the /public sub-directory.

6. Finally, commit the updates to your local repository and push back to the GitHub repository by doing:

       git add .
       git commit -m "new blog post"
       git push origin master

That pushes your blog posts and the static site generated in step 5 above back up to your GitHub repository.

Enable continuous deployment

Back in the Windows Azure Portal, select your website and select the DASHBOARD. In the quick glance section, select setup git publishing.

NOTE: If you haven't set up a Git repository for a Windows Azure Web Site previously, you'll be prompted for a username and password.

Once the repository has been created, you'll have several options: Push my local files to Windows Azure, Deploy from my GitHub project, or Deploy from my CodePlex project. Expand Deploy from my GitHub project and click the Authorize Windows Azure link.

You'll be prompted to login to GitHub, and finally to select the repository you want to associate with this web site. Select the repository you created earlier for Octopress.

Once you've selected the repository, Windows Azure will pull in the latest changes and begin serving up the files.

Oh noes! Something is wrong!

If you've tried browsing your Windows Azure Web Site at this point, you'll get an error, probably something along the lines of "You do not have permissions to view this directory or page". There are two problems causing this:

All our static files are down in the /public directory and there's no default file (index.html, for example) in the root of the site.

The .gitignore file for the project has the /public directory listed, which is excluding all our generated static files from being deployed.

Both problems are easily fixed by performing the following steps in the local repository:

Edit the .gitignore file and remove the line containing public.

Create a file named web.config in the root of your local Octopress repository and paste the following into it:

The important piece is the rules section, which takes incoming requests and rewrites the request to the public folder.
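To make the effect of that rewrite concrete, here is a small Python sketch of the mapping it describes: an incoming request path is served from the corresponding file under the public folder. This is not the web.config content (that is IIS configuration); it only models the path translation. The function name, the index.html default document, and the path normalization are assumptions for the example.

```python
import posixpath


def rewrite_to_public(request_path, public_dir="public", default_doc="index.html"):
    """Map an incoming request path to a file path under the public folder."""
    # Normalize so that ".." segments cannot climb above the site root.
    clean = posixpath.normpath("/" + request_path.lstrip("/"))
    relative = clean.lstrip("/")
    # Directory-style requests (root or trailing slash) get the default document.
    if request_path.endswith("/") or relative == "":
        relative = posixpath.join(relative, default_doc)
    return posixpath.join(public_dir, relative)


if __name__ == "__main__":
    for path in ("/", "/blog/archives/", "/stylesheets/screen.css"):
        print(path, "->", rewrite_to_public(path))
```

With a mapping like this in place, a request for the site root is answered from the generated files in /public rather than from the (empty) repository root.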

Commit this change by using:

    git add .
    git commit -m "adding public content and rewrite rule"
    git push origin master

Once the push has completed, if you look at the DEPLOYMENT section of your web site in the Windows Azure Portal you should see it automatically pull in this update as the new active deployment:

Now you can browse to the site URL and the main page should appear.

The secret sauce

The continuous deployment feature works by creating a unique URL for your web site, which then gets added to your repository settings on GitHub. You can find the URL by going to the CONFIGURE section of your web site in the Windows Azure portal and looking for the DEPLOYMENT TRIGGER URL. Note that right beneath this you can also control which branch it pulls from.

Now, to see where it's wired up on GitHub, go to the Admin link for your repository, select Service Hooks, and finally select WebHook URLs.

When you push an update to GitHub, it sends a POST request to the WebHook URL(s) telling those services that an update has occurred. Windows Azure checks if the update was to the branch you've told it to monitor (master by default) and if so, pulls down the updates.
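The branch check described above can be sketched in a few lines of Python. GitHub push notifications carry a "ref" field such as "refs/heads/master". The sketch assumes the notification body arrives as JSON, and the function names are hypothetical. How Windows Azure actually processes the notification is not described in the post.

```python
import json


def pushed_branch(payload_body):
    """Extract the branch name from a push notification body, or None.

    Assumes a JSON body with a "ref" field such as "refs/heads/master".
    """
    try:
        payload = json.loads(payload_body)
    except (TypeError, ValueError):
        return None  # not JSON at all
    ref = payload.get("ref", "") if isinstance(payload, dict) else ""
    prefix = "refs/heads/"
    if not isinstance(ref, str) or not ref.startswith(prefix):
        return None  # e.g. a tag push, or a payload without a ref
    return ref[len(prefix):]


def should_deploy(payload_body, monitored_branch="master"):
    """True when the push was to the branch the site is configured to monitor."""
    return pushed_branch(payload_body) == monitored_branch


if __name__ == "__main__":
    print(should_deploy('{"ref": "refs/heads/master"}'))
    print(should_deploy('{"ref": "refs/heads/staging"}'))
    print(should_deploy('{"ref": "refs/heads/staging"}', monitored_branch="staging"))
```

Pushes to any other branch, or notifications that cannot be parsed, are simply ignored, which matches the "if so, pulls down the updates" behavior described above.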

If you want to disable continuous updates, you simply remove the WebHook URL from GitHub.

Archives

One annoyance I did run into is that the Archives link wouldn't work. Turns out this is because it is missing a trailing '/'. I updated the source/_includes/custom/navigation.html file and added a trailing '/' to the archives link.

Summary

The continuous update feature makes it pretty trivial to keep a Windows Azure Web Site updated with the latest and greatest software from your GitHub or CodePlex repository. While Octopress is just one example of using this functionality, you can do the same thing with any PHP, Node.js, .NET or static website. Alas, there's no Ruby support yet; for something like a Rails application you still have to use something like the RubyRole project I've blogged about previously.

Visual Studio LightSwitch and Entity Framework 4.1+

• The Entity Framework Team reported EF Nightly Builds Available on 9/17/2012:

A couple of months ago we announced that we are developing EF in an open source code base. As part of our ongoing effort to make it easier to get involved in the next version of EF and provide feedback we are now making nightly builds of the open source code base available.

There is a Nightly Builds page on our CodePlex site that provides instruction for using nightly builds.

Be sure to check out the Feature Specifications page for information on the features that are being added to the code base.

We make no guarantees about the quality or availability of nightly builds.

Windows Azure Infrastructure and DevOps

•• Robert Green posted Episode 49 of Visual Studio Toolbox: Getting Started with Windows Azure on 9/19/2012: