Windows Azure and Cloud Computing Posts for 9/10/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

• Updated 9/12/2012 3:00 PM with new articles marked •.

Tip: Copy the bullet (•), press Ctrl+F, paste it into the Find text box, and click Next to locate updated articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop, Online Backup and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop, Online Backup and Media Services

• Mary Jo Foley (@maryjofoley) asserted “An updated test release of Microsoft's cloud backup service adds support for Windows Server Essentials 2012, among other new features” as a deck for her Microsoft releases updated preview of its Windows Azure cloud backup service report of 9/11/2012 for ZDNet’s All About Microsoft blog:

Microsoft delivered a beta of its Windows Azure Online Backup service back in March 2012. Late last week, officials shared more on the updated version of that service, which the company is calling a "preview."

Windows Azure Online Backup is a cloud-based backup service that allows server data to be backed up and recovered from the cloud. Microsoft is pitching it as an alternative to on-premises backup solutions. It offers block-level incremental backups (only changed blocks of information are backed up to reduce storage and bandwidth utilization); data compression, encryption and throttling; and verification of data integrity in the cloud, among other features.
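To make the block-level idea concrete, here is a minimal C# sketch of how changed blocks could be detected between two backups. It assumes tiny fixed-size blocks and SHA-256 fingerprints purely for illustration. The report doesn't document the service's actual block size or change-tracking method, and the helper names `HashBlocks` and `ChangedBlocks` are hypothetical. The sketch requires .NET 5 or later for `SHA256.HashData` and `Convert.ToHexString`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;

// Compute a SHA-256 fingerprint for each fixed-size block; the last block may be shorter.
List<string> HashBlocks(byte[] data, int blockSize)
{
    var hashes = new List<string>();
    for (int offset = 0; offset < data.Length; offset += blockSize)
    {
        int length = Math.Min(blockSize, data.Length - offset);
        hashes.Add(Convert.ToHexString(SHA256.HashData(data.AsSpan(offset, length))));
    }
    return hashes;
}

// Return the indices of blocks that are new or whose fingerprint differs from the
// previous backup; only these blocks would have to be sent to the cloud.
List<int> ChangedBlocks(List<string> previousHashes, byte[] current, int blockSize)
{
    List<string> currentHashes = HashBlocks(current, blockSize);
    var changed = new List<int>();
    for (int i = 0; i < currentHashes.Count; i++)
    {
        if (i >= previousHashes.Count || previousHashes[i] != currentHashes[i])
            changed.Add(i);
    }
    return changed;
}

// Usage: change one byte in block 1 and append two bytes, which form a new block 3.
byte[] original = Enumerable.Range(0, 12).Select(n => (byte)n).ToArray();
byte[] modified = original.Concat(new byte[] { 99, 99 }).ToArray();
modified[5] = 42;
List<string> baseline = HashBlocks(original, 4);
Console.WriteLine(string.Join(",", ChangedBlocks(baseline, modified, 4))); // prints 1,3
```

Only the blocks at the returned indices would then be compressed, encrypted, and uploaded; keeping the stored fingerprints would also make a later integrity check straightforward.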

The beta of the Azure Online Backup service only worked with Windows Server 2012 (then known as Windows Server 8). The newly released Azure backup preview also works with the near-final release candidate build of Windows Server Essentials 2012, which is Microsoft's new small business server. The final version of Windows Server Essentials 2012 is due out before the end of this year.

The Azure backup service also supports the Data Protection Manager (DPM) component of System Center 2012 Service Pack 1. Microsoft made the beta of System Center 2012 Service Pack 1 available on September 10 and has said the final version of that service pack will be out in early 2013.

Alongside the new preview of the backup service, Microsoft also released an updated preview build of the Windows Azure Active Directory Management Portal. This portal is where customers sign up for the Windows Azure Online Backup service and where administrators manage users' access to it.

Microsoft officials said they had no comment on when Microsoft plans to move Windows Azure Online Backup from preview to final release.

Speaking of System Center 2012 Service Pack 1, there are a number of new capabilities and updates coming in this release. SP1 enables all System Center components to run on and manage Windows Server 2012. SP1 adds support for Windows Azure virtual machine management and is key to Microsoft's "software defined networking" support. On the client-management side, SP1 provides the ability to deploy and manage Windows 8 and Windows Azure-based distribution points.

The Configuration Manager Service Pack 1 component -- coupled with the version of Windows Intune due out in early 2013 -- will support the management of Windows RT and Windows Phone 8 devices.

Stay tuned for a walkthrough of Windows Azure Online Backup (WAOB) later this week.

Herve Roggero (@hroggero) suggested that you Backup Azure Tables, schedule Azure scripts… and more with Enzo Backup 2.0 beta in a 9/11/2012 post:

Well – months of effort are now officially over… or should I say it’s just the beginning? Enzo Cloud Backup 2.0 (beta) is now officially out!!!

This tool will let you do the following:

- Back up SQL Database (and SQL Server to a limited extent)

- Back up Azure Tables

- Restore SQL backups into another SQL environment

- Restore Azure Tables in Azure Storage or a SQL environment

- Manage and schedule database maintenance scripts

- Drop database schema containers (with preview) for SaaS environments

- Receive alerts (SMTP) when operations complete or fail

That’s it at a high level… but you need to see the flexibility around these features. For example you can select a specific backup strategy for Azure Tables allowing faster backup operations when partition keys use GUIDs. You can also call custom stored procedures during the restore operation of Azure Tables, allowing you to transform the data along the way. You can also set a performance threshold during Azure Table backup operations to help you control possible throttling conditions in your Storage Account.
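Roggero doesn't say how the GUID-oriented strategy works. One plausible approach, sketched below in C# as an assumption rather than Enzo's actual code, is to split the key space on the first hex digit so that each range can be read by a separate, parallel Table service query with a `$filter` on `PartitionKey`. The sketch assumes lowercase hex GUID strings and a range count that divides 16; `GuidPartitionFilters` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;

// Split the GUID key space on the first hex digit into `rangeCount` contiguous ranges
// and return one OData $filter expression per range. Assumes lowercase hex GUID keys.
List<string> GuidPartitionFilters(int rangeCount)
{
    const string hexDigits = "0123456789abcdef";
    if (rangeCount < 1 || rangeCount > hexDigits.Length || hexDigits.Length % rangeCount != 0)
        throw new ArgumentOutOfRangeException(nameof(rangeCount), "Must divide 16.");

    int width = hexDigits.Length / rangeCount;
    var filters = new List<string>();
    for (int i = 0; i < rangeCount; i++)
    {
        string lower = $"PartitionKey ge '{hexDigits[i * width]}'";
        // The last range is left open-ended so that no key is skipped.
        filters.Add(i == rangeCount - 1
            ? lower
            : $"{lower} and PartitionKey lt '{hexDigits[(i + 1) * width]}'");
    }
    return filters;
}

// Usage: four ranges, each of which could be backed up by its own worker.
foreach (string filter in GuidPartitionFilters(4))
    Console.WriteLine(filter);
```

Because GUIDs are spread evenly across the first hex digit, the ranges should hold roughly equal numbers of entities, which is what makes parallel reads worthwhile.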

Regarding database scripts, you can now define T-SQL scripts and schedule them for execution in a specific order. You can also tell Enzo to execute pre- and post-scripts during Azure Table restore operations against a SQL environment.
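A minimal sketch of ordered script execution with optional pre- and post-scripts, plus the kind of success or failure summary that could feed an SMTP alert. Everything here is hypothetical: the tuple shape, the `ExecutionPlan` and `RunPlan` names, and the sample T-SQL. The `execute` delegate stands in for whatever actually runs a script against the database.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Order the scheduled scripts by sequence number and wrap them with the optional
// pre- and post-scripts.
List<string> ExecutionPlan(IEnumerable<(int Sequence, string Sql)> scripts,
                           string? preScript = null, string? postScript = null)
{
    var plan = new List<string>();
    if (preScript is not null) plan.Add(preScript);
    plan.AddRange(scripts.OrderBy(s => s.Sequence).Select(s => s.Sql));
    if (postScript is not null) plan.Add(postScript);
    return plan;
}

// Run the steps in order and stop at the first failure. The returned text is the kind
// of summary an alert e-mail could carry.
string RunPlan(List<string> plan, Func<string, bool> execute)
{
    for (int step = 0; step < plan.Count; step++)
    {
        if (!execute(plan[step]))
            return $"Failed at step {step + 1} of {plan.Count}: {plan[step]}";
    }
    return $"Completed {plan.Count} steps.";
}

// Usage with hypothetical maintenance scripts, deliberately listed out of order.
var nightly = ExecutionPlan(
    new[] { (2, "UPDATE STATISTICS dbo.Orders"), (1, "DELETE FROM dbo.AuditLog WHERE Created < '2012-01-01'") },
    preScript: "SET NOCOUNT ON");
Console.WriteLine(RunPlan(nightly, sql => true)); // prints Completed 3 steps.
```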

The backup operation now supports backing up to multiple devices at the same time. So you can execute a single backup request against both a local file and a blob, guaranteeing that both will contain exactly the same data. And because so many options are available, you can save backup definitions for later reuse. The screenshot below backs up Azure Tables to two devices (a blob and a SQL Database).
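The same-data guarantee follows naturally if the source is read once and every chunk is written to all destinations. This C# sketch assumes plain `Stream` destinations, with `MemoryStream` instances standing in for a local file and a blob upload; `BackupToAll` is a hypothetical helper, not part of Enzo.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Read the source once and write every chunk to all destinations, so each
// destination receives exactly the same bytes in the same order.
long BackupToAll(Stream source, IReadOnlyList<Stream> destinations, int bufferSize = 81920)
{
    var buffer = new byte[bufferSize];
    long total = 0;
    int read;
    while ((read = source.Read(buffer, 0, buffer.Length)) > 0)
    {
        foreach (Stream destination in destinations)
            destination.Write(buffer, 0, read);
        total += read;
    }
    foreach (Stream destination in destinations)
        destination.Flush();
    return total;
}

// Usage: MemoryStreams stand in for a local backup file and a blob upload stream.
var database = new MemoryStream(Enumerable.Range(0, 1000).Select(n => (byte)n).ToArray());
var localFile = new MemoryStream();
var blob = new MemoryStream();
Console.WriteLine(BackupToAll(database, new Stream[] { localFile, blob }, 256)); // prints 1000
Console.WriteLine(localFile.ToArray().SequenceEqual(blob.ToArray()));            // prints True
```

Reading the source separately for each device could instead capture two different snapshots if the data changed in between, which is the problem this single-pass design avoids.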

You can also manage your database schemas for SaaS environments that use schema containers to separate customer data. This new edition allows you to see how many objects you have in each schema, backup specific schemas, and even drop all objects in a given schema. For example the screenshot below shows that the EnzoLog database has 4 user-defined schemas, and the AFA schema has 5 tables and 1 module (stored proc, function, view…). Selecting the AFA schema and trying to delete it will prompt another screen to show which objects will be deleted.

As you can see, Enzo Cloud Backup provides amazing capabilities that can help you safeguard your data in SQL Database and Azure Tables, and give you advanced management functions for your Azure environment. Download a free trial today at http://www.bluesyntax.net.


Windows Azure SQL Database, Federations and Reporting, Mobile Services

• My (@rogerjenn) Windows Azure Mobile Services Preview Walkthrough–Part 3: Pushing Notifications to Windows 8 Users (C#) concludes the trilogy:

The Windows Azure Mobile Services (WAMoS) Preview’s initial release enables application developers targeting Windows 8 to automate the following programming tasks:

- Creating a Windows Azure SQL Database (WASDB) instance and table to persist data entered in a Windows 8 Modern (formerly Metro) UI application’s form

- Connecting the table to the data entry front end app

- Adding and authenticating the application’s users

- Pushing notifications to users

My Windows Azure Mobile Services Preview Walkthrough–Part 1: Windows 8 ToDo Demo Application (C#) post of 9/8/2012 covered tasks 1 and 2; Windows Azure Mobile Services Preview Walkthrough–Part 2: Authenticating Windows 8 App Users (C#) covered task 3.

This walkthrough describes the process for completing task 4 based on the Get started with push notifications and Push notifications to users by using Mobile Services tutorials. The process involves the following steps:

- Add push notifications to the app

- Update scripts to send push notifications

- Insert data to receive notifications

- Create the Channel table

- Update the app

- Update server scripts

- Verify the push notification behavior

This walkthrough adds screen captures for each step of the two tutorials and modifies their code to accommodate user authentication.

Prerequisites: Completion of the oakleaf-todo C# application in Part 1, completing the user authentication addition of Part 2 and downloading/installing the Live SDK for Windows and Windows Phone, which provides a set of controls and APIs that enable applications to integrate single sign-on (SSO) with Microsoft accounts and access information from SkyDrive, Hotmail, and Windows Live Messenger on Windows Phone and Windows 8.

1 – Add Push Notifications to the App

1-1. Launch your WAMoS app in Visual Studio 2012 for Windows 8 or higher, open the App.xaml.cs file and add the following using statement:

```csharp
using Windows.Networking.PushNotifications;
```

1-2. Add the following code to App.xaml.cs after the OnSuspending() event handler:

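```csharp
// Holds the push notification channel acquired for this app instance.
public static PushNotificationChannel CurrentChannel { get; private set; }

// Requests a channel from WNS for the app and stores it in CurrentChannel.
private async void AcquirePushChannel()
{
    CurrentChannel =
        await PushNotificationChannelManager.CreatePushNotificationChannelForApplicationAsync();
}
```

This code acquires and stores a push notification channel.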

1-3. At the top of the OnLaunched event handler in App.xaml.cs, add the following call to the new AcquirePushChannel method:

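```csharp
AcquirePushChannel();
```

This ensures that a channel is requested each time the application is launched. Because the call isn't awaited, the CurrentChannel property is populated shortly after launch rather than immediately.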

1-4. Open the project file MainPage.xaml.cs and add the following new attributed property to the TodoItem class:

```csharp
[DataMember(Name = "channel")]
public string Channel { get; set; }
```

Note: When dynamic schema is enabled on your mobile service, a new 'channel' column is automatically added to the TodoItem table when a new item that contains this property is inserted.

1-5. Replace the ButtonSave_Click event handler method with the following code:

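```csharp
private void ButtonSave_Click(object sender, RoutedEventArgs e)
{
    // Attach the client's current channel URI so server scripts can push to it.
    // CurrentChannel is assigned asynchronously at launch, so it can still be null
    // (and this line would throw) if the user saves immediately after startup.
    var todoItem = new TodoItem { Text = TextInput.Text, Channel = App.CurrentChannel.Uri };
    InsertTodoItem(todoItem);
}
```

This sets the client's current channel value on the item before it is sent to the mobile service.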

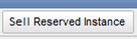

1-6. In the Management Portal’s Mobile Services Preview section, click the service name (oakleaf-todo for this example), click the Push tab, paste the Package SID value from Windows Azure Mobile Services Preview Walkthrough–Part 2: Authenticating Windows 8 App Users (C#) step 1-3 into the Package SID text box, and click the Save button:

The walkthrough continues with sections 2 through 7. Here’s a screen capture from section 7 with multiple push notifications:

My (@rogerjenn) Windows Azure Mobile Services Preview Walkthrough–Part 2: Authenticating Windows 8 App Users (C#) continues the series:

The Windows Azure Mobile Services (WAMoS) Preview’s initial release enables application developers targeting Windows 8 to automate the following programming tasks:

- Creating a Windows Azure SQL Database (WASDB) instance and table to persist data entered in a Windows 8 Modern (formerly Metro) UI application’s form

- Connecting the table to the data entry front end app

- Adding and authenticating the application’s users

- Pushing notifications to users

My Windows Azure Mobile Services Preview Walkthrough–Part 1: Windows 8 ToDo Demo Application (C#) post of 9/8/2012 covered tasks 1 and 2.

This walkthrough describes the process for completing task 3:

- Registering your Windows 8 app at the Live Connect Developer Center

- Restricting permissions to authenticated users

- Adding authentication code to the front-end application

- Authorizing users with scripts

The walkthrough is based on a combination of the Get started with authentication in Mobile Services and Authorize users with scripts tutorials. It has additional and updated screen captures for the first three operations. Step 4 is taken almost verbatim from the Microsoft tutorial.

A future walkthrough will cover task 4, Pushing notifications to Windows 8 users. I’ll provide similar walkthroughs for Windows Phone, Surface and Android devices when the corresponding APIs become available.

Prerequisites: Completion of the oakleaf-todo C# application in Part 1 and downloading/installing the Live SDK for Windows and Windows Phone, which provides a set of controls and APIs that enable applications to integrate single sign-on (SSO) with Microsoft accounts and access information from SkyDrive, Hotmail, and Windows Live Messenger on Windows Phone and Windows 8.

1 - Registering your Windows 8 app at the Live Connect Developer Center

1-1. Open the TodoList application in Visual Studio 2012 running under Windows 8, display Solution Explorer, select the Package.appxmanifest node and click the Packaging tab:

1-2. Make a note of the Package Display Name and Publisher values for registering your app, and navigate to the Windows Push Notifications & Live Connect page, log on with the Windows Live ID you used to create the app, and type the Package Display Name and Publisher values in the text boxes:

Note: The preceding screen capture has been edited to reduce white space, but the obsolete capture from Visual Studio has not been updated.

1-3. Click the I Accept button to configure the application manifest and generate Package Name, Client Secret and Package SID values:

1-4. Copy the Package Name value to the clipboard, return to Visual Studio, and paste the Package Name value into the eponymous text box:

1-5. Press Ctrl+S to save the value, log on to the Windows Azure Management Portal, click the Mobile Services icon in the navigation frame, click your app to open its management page and click the Dashboard button:

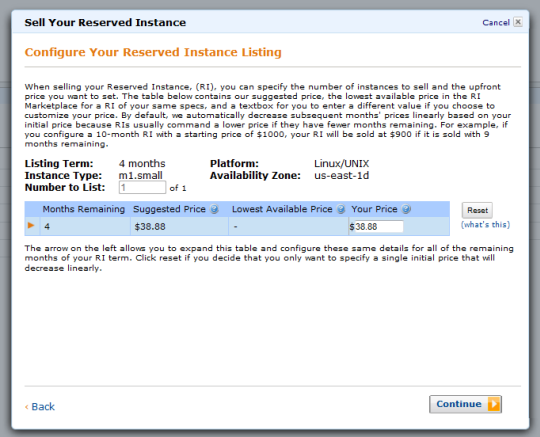

1-6. Make a note of the Site URL and navigate to the Live Connect Developer Center’s My Applications dashboard:

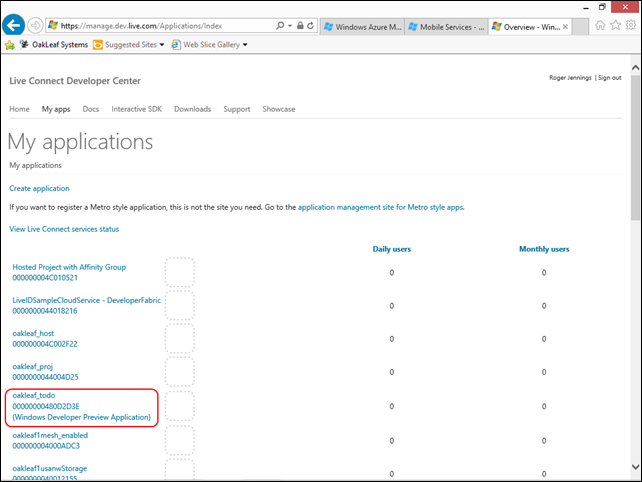

1-7. Click the app item (oakleaf_todo for this example) to display its details page:

1-8. Click the Edit Settings and API Settings buttons to open the Edit API Settings page and paste the Site URL value from step 5 in the Redirect Domain text box:

1-9. Accept the remaining defaults and click the Save button to save your changes, return to the Management Portal, click the Identity button, and paste the Client Secret value in the text box:

1-10. Click Save and Yes to save and confirm the change and complete configuration of your Mobile Service and client app for Live Connect. …

The article continues with “Restricting permissions to authenticated users,” “Adding authentication code to the front-end application” and “Authorizing users with scripts” sections.

The concluding part, Windows Azure Mobile Services Preview Walkthrough–Part 3: Pushing Notifications to Windows 8 Users (C#) post will be available shortly.

My (@rogerjenn) Windows Azure Mobile Services Preview Walkthrough–Part 1: Windows 8 ToDo Demo Application (C#) begins a series:

The Windows Azure Mobile Services (WAMoS) Preview’s initial release enables application developers targeting Windows 8 to automate the following programming tasks:

- Creating a Windows Azure SQL Database (WASDB) instance and table to persist data entered in a Windows 8 Modern (formerly Metro) UI application’s form

- Connecting the table to the generated data entry front end app

- Authenticating application users

- Pushing notifications to users

This walkthrough, which is simpler than the Get Started with Data walkthrough, explains how to obtain a Windows Azure 90-day free trial, create a C#/XAML WASDB instance for a todo application, add a table to persist todo items, and generate and use a sample oakleaf-todo Windows 8 front-end application. During the preview period, you can publish up to six free Windows Mobile applications.

My (@rogerjenn) Windows Azure Mobile Services Preview Walkthrough–Part 2: Authenticating Windows 8 App Users (C#) covers task 3.

A future walkthrough will cover task 4, Pushing notifications to users.

Prerequisites: You must perform this walkthrough under Windows 8 RTM with Visual Studio 2012 Express or higher. Downloading and installing the Mobile Services SDK Preview from GitHub also is required.

Note: The WAMoS abbreviation for Mobile Services distinguishes them from Windows Azure Media Services (WAMeS). …

Free trial signup steps elided for brevity.

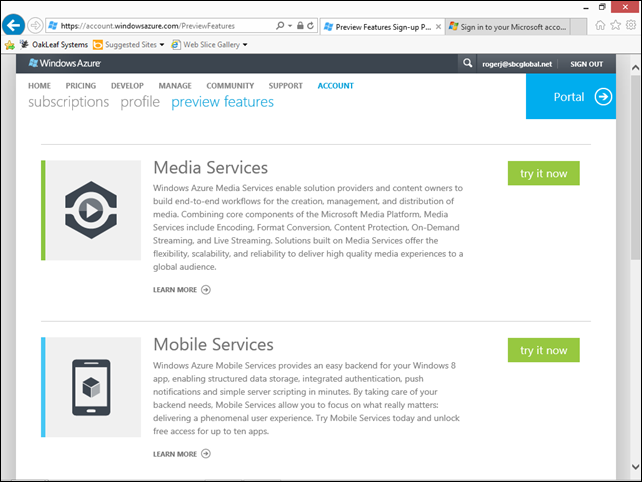

7. Open the Management Portal’s Account tab and click the Preview Features button:

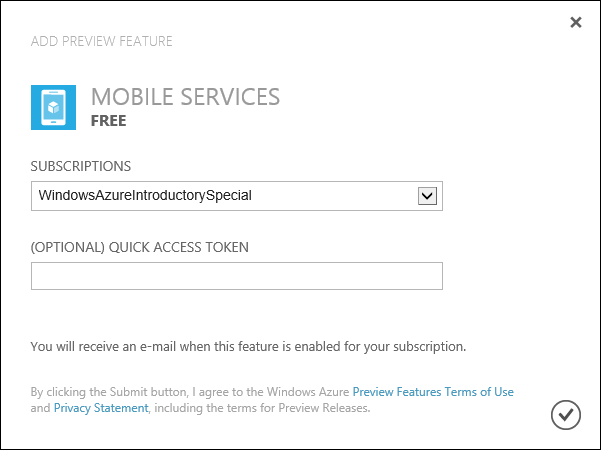

8. Click the Mobile Services Try It Now button to open the Add Preview Feature form, accept the default or select a subscription, and click the Submit button to request admission to the preview:

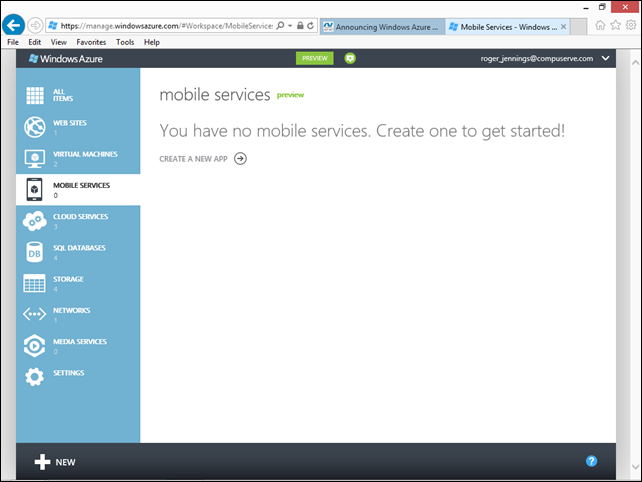

9. Follow the instructions contained in the e-mail sent to your Live ID e-mail account, which will enable the Mobile Services item in the Management Portal’s navigation pane:

Note: My rogerj@sbcglobal.net Live ID is used for this example because that account doesn’t have WAMoS enabled. The remainder of this walkthrough uses the subscription(s) associated with my roger_jennings@compuserve.com account.

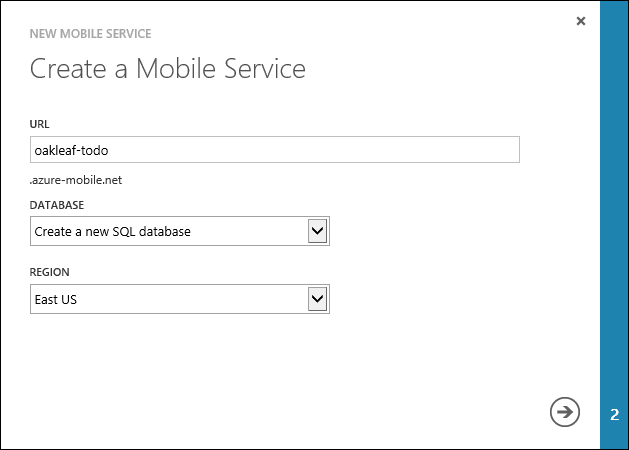

10. Click the Create a New App button to open the Create a Mobile Service form, type a DNS prefix for the ToDo back end in the URL text box (oakleaf-todo for this example), select Create a new SQL Database in the Database list, and accept the default East US region.

Note: Only Microsoft’s East US data center supported WAMoS when this walkthrough was published.

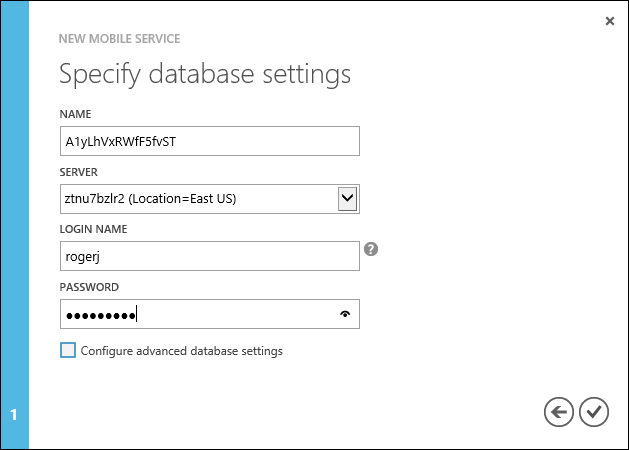

11. Click the next button to open the Specify Database Settings form, accept the default Name and Server settings, and type a database user name and complex password:

Note: You don’t need to configure advanced database settings, such as database size, for most Mobile Services.

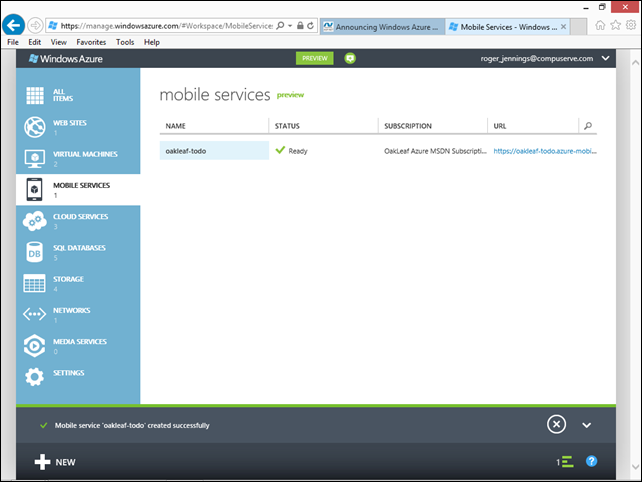

12. Click the Submit button to create the Mobile Service’s database and enable the Mobile Services item in the Management Portal’s navigation pane. Ready status usually will appear in about 30 seconds:

The article continues with eight more illustrated steps.

• Frans Bouma (@FransBouma) describes the agony of The Windows Store... why did I sign up with this mess again? in a 9/12/2012 post:

Yesterday, Microsoft revealed that the Windows Store is now open to all developers in a wide range of countries and locations. For the people who think "wtf is the 'Windows Store'?", it's the central place where Windows 8 users will be able to find, download and purchase applications (or as we now have to say to not look like a computer illiterate: <accent style="Kentucky">aaaaappss</accent>) for Windows 8.

As this is the store which is integrated into Windows 8, it's an interesting place for ISVs, as potential customers might very well look there first. This of course isn't true for all kinds of software, and developer tools in general aren't the kind of applications most users will download from the Windows store, but a presence there can't hurt.

Now, this Windows Store hosts two kinds of applications: 'Metro-style' applications and 'Desktop' applications. The 'Metro-style' applications are applications created for the new 'Metro' UI which is present on Windows 8 desktop and Windows RT (the single color/big font fingerpaint-oriented UI). 'Desktop' applications are the applications we all run and use on Windows today. Our products are desktop applications. The Windows Store hosts all Metro-style applications locally in the store and handles the payment for these applications. This means you upload your application (sorry, 'app') to the store, jump through a lot of hoops, Microsoft verifies that your application is not violating a tremendously long list of rules and after everything is OK, it's published and hopefully you get customers and thus earn money. Money which Microsoft will pay you on a regular basis after customers buy your application.

Desktop applications are not following this path however. Desktop applications aren't hosted by the Windows Store. Instead, the Windows Store more or less hosts a page with the application's information and where to get the goods. I.o.w.: it's nothing more than a product's Facebook page. Microsoft will simply redirect a visitor of the Windows Store to your website and the visitor will then use your site's system to purchase and download the application. This last bit of information is very important.

So, this morning I started with fresh energy to register our company 'Solutions Design bv' and our two applications, LLBLGen Pro and ORM Profiler, at the Windows Store. First I went to the Windows Store dashboard page. You have to log in with your live account, or sign up for one if you don't have it. I signed in with my live account. After that, it greeted me with a page where I had to fill in a code which was mailed to me. My local mail server polls for email every few minutes, so I had to kick it to get it immediately.

I grabbed the code from the email and I was presented with a multi-step process to register myself as a company or as an individual. In red I was warned that this choice was permanent and not changeable. I chuckled: Microsoft apparently stores its data on paper, not in digital form. I chose 'company' and was presented with a lengthy form to fill out. On the form there were two strange remarks:

- Per company there can just be 1 (one, uno, not zero, not two or more) registered developer, and only that developer is able to upload stuff to the store. I have no idea how this works with large companies, oh the overhead nightmares... "Sorry, but John, our registered developer with the Windows Store is on holiday for 3 months, backpacking through Australia, no, he's not reachable at this point. M'yeah, sorry bud. Hey, did you fill in those TPS reports yesterday?"

- A separate Approver has to be specified, which has to be a different person than the registered developer. Apparently to Microsoft a company with just 1 person is not a company. Luckily we're with two people! *pfew*, dodged that one, otherwise I would be stuck forever: the choice I already made was not reversible!

After I had filled out the form and it was all well and good and accepted by the Microsoft lackey who had to write it all down in some paper notebook ("Hey, be warned! It's a permanent choice! Written down in ink, can't be changed!"), I was presented with the question how I wanted to pay for all this. "Pay for what?" I wondered. Must be the paper they were scribbling the information on, I concluded. After all, there's a financial crisis going on! How could I forget! Silly me.

"Ok fair enough".

The price was 75 Euros, not the end of the world. I could only pay by credit card, so it was accepted quickly. Or so I thought. You see, Microsoft has a different idea about CC payments. In the normal world, you type in your CC number, some date, a name and a security code and that's it. But Microsoft wants to verify this even more. They want to make a verification purchase of a very small amount and are doing that with a special code in the description. You then have to type in that code in a special form in the Windows Store dashboard and after that you're verified. Of course they'll refund the small amount they pull from your card.

Sounds simple, right? Well... no. The problem starts with the fact that I can't see the CC activity on some website: I have a bank issued CC card. I get the CC activity once a month on a piece of paper sent to me. The bank's online website doesn't show them. So it's possible I have to wait for this code till October 12th. One month.

"So what, I'm not going to use it anyway, Desktop applications don't use the payment system", I thought. "Haha, you're so naive, dear developer!" Microsoft won't allow you to publish any applications till this verification is done. So no application publishing for a month. Wouldn't it be nice if things were, you know, digital, so things got done instantly? But of course, that lackey who scribbled everything in the Big Windows Store Registration Book isn't that quick. Can't blame him though. He's just doing his job.

Now, after the payment was done, I was presented with a page which tells me Microsoft is going to use a third party company called 'Symantec', which will verify my identity again. The page explains to me that this could be done through email or phone and that they'll contact the Approver to verify my identity. "Phone?", I thought... that's a little drastic for a developer account to publish a single page of information about an external hosted software product, isn't it? On Facebook I just added a page, done. And paying you, Microsoft, took less information: you were happy to take my money before my identity was even 'verified' by this 3rd party's minions! "Double standards!", I roared. No-one cared. But it's the thought of getting it off your chest, you know.

Luckily for me, everyone at Symantec was asleep when I was registering so they went for the fallback option in case phone calls were not possible: my Approver received an email. Imagine you have to explain the idiot web of security theater I was caught in to someone else who then has to reply to a random person over the internet that I indeed was who I said I was. As she's a true sweetheart, she gave me the benefit of the doubt and confirmed that for now, I was who I said I was.

Remember, this is for a desktop application, which is only a link to a website, some pictures and a piece of text. No file hosting, no payment processing, nothing, just a single page. Yeah, I also thought I was crazy. But we're not at the end of this quest yet.

I clicked around in the confusing menus of the Windows Store dashboard and found the 'Desktop' section. I get a helpful screen with a warning in red that it can't find any certified 'apps'. True, I'm just getting started, buddy. I see a link: "Check the Windows apps you submitted for certification". Well, I haven't submitted anything, but let's see where it brings me. Oh the thrill of adventure!

I click the link and I end up on this site: the hardware/desktop dashboard account registration. "Erm... but I just registered...", I mumbled to no-one in particular. Apparently for desktop registration / verification I have to register again, it tells me. But not only that, the desktop application has to be signed with a certificate. And not just some random el-cheapo certificate you can get at any mall's discount store. No, this certificate is special. It's precious. This certificate, the 'Microsoft Authenticode' Digital Certificate, is the only certificate that's acceptable, and jolly, it can be purchased from VeriSign for the price of only ... $99.-, but be quick, because this is a limited time offer! After that it's, I kid you not, $499.-. 500 dollars for a certificate to sign an executable. But, I do feel special, I got a special price. Only for me! I'm glowing. Not for long though.

Here I started to wonder, what the benefit of it all was. I now again had to pay money for a shiny certificate which will add 'Solutions Design bv' to our installer as the publisher instead of 'unknown', while our customers download the file from our website. Not only that, but this was all about a Desktop application, which wasn't hosted by Microsoft. They only link to it. And make no mistake. These prices aren't single payments. Every year these have to be renewed. Like a membership of an exclusive club: you're special and privileged, but only if you cough up the dough.

To give you an example how silly this all is: I added LLBLGen Pro and ORM Profiler to the Visual Studio Gallery some time ago. It's the same thing: it's a central place where one can find software which adds to / extends / works with Visual Studio. I could simply create the pages, add the information and they show up inside Visual Studio. No files are hosted at Microsoft, they're downloaded from our website. Exactly the same system.

As I have to wait for the CC transcripts to arrive anyway, I can't proceed with publishing in this new shiny store. After the verification is complete I have to wait for verification of my software by Microsoft. Even Desktop applications need to be verified using a long list of rules which are mainly focused on Metro-style applications. Even while they're not hosted by Microsoft. I wonder what they'll find. "Your application wasn't approved. It violates rule 14 X sub D: it provides more value than our own competing framework".

While I was writing this post, I tried to check something in the Windows Store Dashboard, to see whether I remembered it correctly. I was presented again with the question, after logging in with my live account, to enter the code that was just mailed to me. Not the previous code, a brand new one. Again I had to kick my mail server to pull the email to proceed. This was it. This 'experience' is so beyond miserable, I'm afraid I have to say goodbye for now to the 'Windows Store'. It's simply not worth my time.

Now, about live accounts. You might know this: live accounts are tied to everything you do with Microsoft. So if you have an MSDN subscription, e.g. the one which costs over $5000.-, it's tied to this same live account. But the fun thing is, you can login with your live account to the MSDN subscriptions with just the account id and password. No additional code is mailed to you. While it gives you access to all Microsoft software available, including your licenses.

Why the draconian security theater with this Windows Store, while all I want is to publish some desktop applications while on other Microsoft sites it's OK to simply sign in with your live account: no codes needed, no verification and no certificates? Microsoft, one thing you need with this store and that's: apps. Apps, apps, apps, apps, aaaaaaaaapps. Sorry, my bad, got carried away. I just can't stand the word 'app'. This store's shelves have to be filled to the brim with goods. But instead of being welcomed into the store with open arms, I have to fight an uphill battle with an endless list of rules and bullshit to earn the privilege to publish in this shiny store. As if I have to be thrilled to be one of the exclusive club called 'Windows Store Publishers'. As if Microsoft doesn't want it to succeed.

Craig Stuntz sent me a link to an old blog post of his regarding code signing and uploading to Microsoft's old mobile store from back in the WinMo5 days: http://blogs.teamb.com/craigstuntz/2006/10/11/28357/. Good read and good background info about how little things changed over the years.

I hope this helps Microsoft make things clearer and smoother and also helps ISVs decide whether to go with the Windows Store scheme or ignore it. For now, I don't see the advantage of publishing there, especially not with the nonsense rules Microsoft cooked up. Perhaps it changes in the future, who knows.

• David Ramel (@dramel) reported Microsoft Eases Mobile Data Access in the Cloud in an 8/29/2012 post to his Data Driver blog (missed when published):

The recent announcement of Windows Azure Mobile Services included some interesting stuff for you data developers.

As explained by Scott Guthrie, when Windows Azure subscribers create a new mobile service, it automatically is associated with a Windows Azure SQL Database. That provides ready-made support for secure database access. It uses the OData protocol, JSON and RESTful endpoints. The Windows Azure management portal can be used for common tasks such as handling tables, access control and more.

Guthrie provided a C# code snippet to illustrate how developers can write LINQ queries--using strongly typed POCO objects--that get translated into REST queries over HTTP.
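Whatever the client library does internally, a translated query ends up as an ordinary HTTP request. The C# sketch below builds, without sending, a GET request that carries an OData `$filter` against a table endpoint. It is not Guthrie's snippet or the Mobile Services SDK: the `https://<service>.azure-mobile.net/tables/<table>` URL pattern, the `X-ZUMO-APPLICATION` key header, the `complete` column, the key value, and the `BuildTableQuery` name are all assumptions for illustration, so check the Mobile Services REST documentation before relying on them.

```csharp
using System;
using System.Net.Http;

// Build (without sending) the HTTP GET that a filtered table query corresponds to.
// The host pattern and the X-ZUMO-APPLICATION header are assumptions about the
// preview's REST interface, used here for illustration only.
HttpRequestMessage BuildTableQuery(string serviceName, string table, string odataFilter, string applicationKey)
{
    string url = $"https://{serviceName}.azure-mobile.net/tables/{table}"
               + "?$filter=" + Uri.EscapeDataString(odataFilter);
    var request = new HttpRequestMessage(HttpMethod.Get, url);
    request.Headers.Accept.ParseAdd("application/json");   // results come back as JSON
    request.Headers.Add("X-ZUMO-APPLICATION", applicationKey);
    return request;
}

// Roughly what a LINQ filter on a hypothetical TodoItem.Complete property
// (Complete == false) would be sent as; "app-key-here" is a placeholder.
var query = BuildTableQuery("oakleaf-todo", "TodoItem", "complete eq false", "app-key-here");
Console.WriteLine(query.RequestUri);
```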

The key point about all this is that it enables data access to the cloud from mobile or Windows Store (or desktop) apps without having to create your own server-side code, a somewhat difficult task for many developers. Instead, developers can concentrate on the client and the user experience. That greatly appeals to me.

In response to a reader query about what exactly is "mobile" about Mobile Services, Guthrie explained:

The reason we are introducing Windows Azure Mobile Services is because a lot of developers don't have the time/skillset/inclination to have to build a custom mobile backend themselves. Instead they'd like to be able to leverage an existing solution to get started and then customize/extend further only as needed when their business grows.

Looks to me like another step forward in the continuing process to ease app development so just about anybody can do it. I'm all for it!

When asked by another reader why this new service only targets SQL Azure (the old name), instead of also supporting BLOBs or table storage, Guthrie replied that it was in response to developers who wanted "richer querying capabilities and indexing over large amounts of data--which SQL is very good at." However, he noted that support for unstructured storage will be added later for those developers who don't require such rich query capabilities.

This initial Preview Release supports only Windows 8 apps, but support is expected to be added for iOS, Android and Windows Phone apps, according to this announcement. Guthrie explains more about the new product in a Channel9 video, and more information, including tutorials and other resources, can be found at the Windows Azure Mobile Services Dev Center.

Full disclosure: I’m a contributing editor for 1105 Media’s Visual Studio Magazine.

Adam Hoffman (@stratospher_es) described Configuring Live SDK to allow your Windows 8 Application to use it on 9/11/2012:

Have you tried to use the new Live SDK to authenticate users of your application, only to find it throwing back errors like this?

The app is not configured correctly to use Live Connect services. To configure your app, please follow the instructions on http://go.microsoft.com/fwlink/?LinkId=220871.

If so, the problem is that you haven’t yet told Windows Live (and the Windows Store) about the existence of your application. In order to do this, just do the following:

Head to the Windows Store Dashboard at http://msdn.microsoft.com/en-us/windows/apps/br216180 – and if you’re not already registered for the store, then everything here comes to a grinding halt, as you see the “The Windows Store is coming soon!” page. So now what?

Well, if you’re not fortunate enough to be early registered for the store (if you want to get registered, then contact me), you’ll have to go a different route for your development for now.

Instead, head to the Live Connect app management site for Metro style apps at https://manage.dev.live.com/build?wa=wsignin1.0 . Sign in if necessary and register your application here. You’ll be registering your application for “Windows Push Notifications and Live Connect”, which will allow you to use either of these two services from your application. Just follow the steps below:

Open your Package.appxmanifest file in Visual Studio 2012.

Switch to the Packaging tab.

Fill out reasonable values for “Package display name” and “Publisher”. These might already have reasonable defaults, but check to be sure they’re what you want. Once you have these values, you’ll copy and paste these into the form on the Live Connect app management site. This is what binds your application together with the Live ID service.

Press the I accept button. If all goes well, you’ll be redirected to a page that has new values for parts of your manifest. Copy the value that Live sends back to you for “Package name” and paste it into your manifest. If you’re only using the Live Authentication service, you don’t need the “Client secret” and “Package Security Identifier (SID)” values at this point. They are used by the Push Notifications Service, though, so check out http://msdn.microsoft.com/en-us/library/windows/apps/hh465407.aspx if that’s what you’re trying to accomplish.

Save and rebuild your application, and you should now be able to use the Live services from your Windows 8 application.

My Windows Azure Mobile Services Preview Walkthrough–Part 2: Authenticating Windows 8 App Users (C#) post (above) describes this process with screen captures.

Josh Twist (@joshtwist) posted Understanding the pipeline (and sending complex objects into Mobile Services) on 9/9/2012:

In my last post, Going deep with Mobile Services data, we looked at how we could use server scripts to augment the results of a query, even returning a hierarchy of objects. This time, let us explore some trickery to do the same, but in reverse.

Imagine we want to post up a series of objects, maybe comments, all at once and process them in a single script… here’s a (somewhat manufactured) scenario demonstrating how you can do this. But first, we’ll need to understand a little more about the way data is handled in Mobile Services.

If you think of the Mobile Service data API as a pipeline, there are two key stages: scripting and storage. You write scripts that can intercept writes and reads to storage. There are two additional layers to consider:

1. Pre-scripting: this is where Mobile Services performs the authentication checks and validates that the payload makes sense – we’ll talk about this in more detail below.

2. Pre-storage: at this point, we have to make sure that anything you’re about to do makes sense – this validation layer is much stricter and won’t allow complex objects through. This stage is also where we handle and log any nasty errors (to help you diagnose issues) and perform dynamic schematization.

The pre-scripting layer (1) expects a single JSON object (no arrays), and if the operation is an update (PATCH) and has an id specified in the JSON body, that id must match the id specified on the URL, e.g. http://yourapp.azure-mobile.net/tables/foo/63
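The two pre-scripting rules just described can be expressed as a small check. This is a minimal sketch, not the service's actual code: `checkPreScripting` and its return shape are hypothetical. Note that a body like `{ ratings: [...] }` passes, because it is a single object that merely contains an array; that is the loophole the rest of this post relies on.

```javascript
// Hypothetical re-creation of the two pre-scripting checks described above;
// the service's real implementation is not published in this post.
function checkPreScripting(method, urlId, body) {
  // Rule 1: the body must be a single JSON object, never an array.
  if (body === null || typeof body !== "object" || Array.isArray(body)) {
    return { ok: false, reason: "body must be a single JSON object" };
  }
  // Rule 2: on an update (PATCH), an id in the body must match the id in the URL.
  if (method === "PATCH" && body.id !== undefined && String(body.id) !== String(urlId)) {
    return { ok: false, reason: "id in body does not match id in URL" };
  }
  return { ok: true };
}

// An array is rejected, but an object that merely contains an array passes.
console.log(checkPreScripting("POST", undefined, [{ movieId: 10 }]).ok);
console.log(checkPreScripting("POST", undefined, { ratings: [{ movieId: 10 }] }).ok);
```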

With that knowledge in mind, we can still use the scripting layer to perform some trickery, if you so desire, and go to work on the JSON payload however we see fit. Take the following example server script; it expects a JSON body like this:

{ "ratings": [ { "movieId": 10, "rating": 5 },
               { "movieId": 63, "rating": 2 } ] }

A body with a single ‘ratings’ property that contains an array of ratings.

function insert(item, user, request) {
    // TODO - perform any validation and security checks
    var ratingsTable = tables.getTable('ratings');
    var ratings = item.ratings;
    var ids = new Array(ratings.length);
    var count = 0;
    ratings.forEach(function(rating, index) {
        ratingsTable.insert(rating, {
            success: function() {
                // keep a count of callbacks
                count++;
                // build a list of new ids - make sure
                // they go back in the right order
                ids[index] = rating.id;
                if (ratings.length === count) {
                    // we've finished all updates,
                    // send response with new IDs
                    request.respond(201, { ratingIds: ids });
                }
            }
        });
    });
}

Sending the correct payload from Fiddler is easy: just POST the above JSON body to http://yourapp.azure-mobile.net/tables/ratings and watch the script unfold the results for you. You should get a response as follows:

HTTP/1.1 201 Created
Content-Type: application/json
Content-Length: 19

{"ratingIds":[7,8]}

Which is pretty cool – Mobile Services really does present a great way of building data focused JSON APIs. But what about uploading data like this using the MobileServiceClient in both C# and JS?

C# Client

The C# client is a little trickier. You’re probably using types and have a UserRating class with MovieId and Rating properties. If you have an IMobileServiceTable<UserRating>, you can’t ‘insert’ a List<UserRating>; it simply won’t compile. In this case, you’ll want to drop to the JSON version of the client – it’s still really easy to use.

// Source data
List<UserRating> ratings = new List<UserRating>() {
    new UserRating { MovieId = 5, Rating = 2 },
    new UserRating { MovieId = 45, Rating = 7 }
};

// convert to an array in JSON
JsonArray arr = new JsonArray();
foreach (var rating in ratings)
{
    arr.Add(MobileServiceTableSerializer.Serialize(rating));
}

// Now create a JSON body
JsonObject body = new JsonObject();
body.Add("ratings", arr);

// insert!
IJsonValue response = await MobileService.GetTable("ratings").InsertAsync(body);

// the whole hog - process results and
// attach the new Ids to objects
var inserted = response.GetObject()["ratingIds"].GetArray();
for (var i = 0; i < inserted.Count; i++)
{
    ratings[i].Id = Convert.ToInt32(inserted[i].GetNumber());
}

If you were doing this a lot, you’d probably want to create a few helper methods to help you with the creation of the JSON, but otherwise, it’s pretty straightforward. Naturally, JavaScript has a slightly easier time with JSON:

JS Client

// data source
var ratings = [{ movieId: 1, rating: 5 },
               { movieId: 2, rating: 4 }];

// insert!
client.getTable("ratings").insert({ ratings: ratings }).done(function (result) {
    // map the result ids onto the source
    for (var i = 0; i < result.ratingIds.length; i++) {
        ratings[i].id = result.ratingIds[i];
    }
});

Nice!
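Why does the server script store each new id at `ids[index]` instead of pushing it onto the array? Because the insert callbacks can complete in any order. The sketch below runs the same script logic in Node.js against hypothetical mock `tables` and `request` objects (stand-ins for the Mobile Services globals, invented here for local experimentation) whose inserts finish in reverse order; the ids still come back matched to their input positions.

```javascript
// Returns the server script's insert function with `tables` injected, so it
// can run outside Mobile Services. The body mirrors the script above.
function createInsert(tables) {
  return function insert(item, user, request) {
    var ratingsTable = tables.getTable('ratings');
    var ratings = item.ratings;
    var ids = new Array(ratings.length);
    var count = 0;
    ratings.forEach(function (rating, index) {
      ratingsTable.insert(rating, {
        success: function () {
          count++;
          // Store by input position, not by completion order.
          ids[index] = rating.id;
          if (ratings.length === count) {
            request.respond(201, { ratingIds: ids });
          }
        }
      });
    });
  };
}

// Hypothetical mock of `tables`: ids are assigned in call order (7, 8, ...),
// but each insert completes after its own delay, so callbacks can arrive
// out of order.
function createMockTables(delaysMs) {
  var nextId = 7;
  var call = 0;
  var table = {
    insert: function (record, options) {
      var id = nextId++;
      var delay = delaysMs[call++ % delaysMs.length];
      setTimeout(function () {
        record.id = id;
        options.success();
      }, delay);
    }
  };
  return { getTable: function () { return table; } };
}

// Runs the script once and resolves with whatever it passes to request.respond.
function simulate(item, delaysMs) {
  return new Promise(function (resolve) {
    var insert = createInsert(createMockTables(delaysMs));
    insert(item, null, {
      respond: function (status, body) {
        resolve({ status: status, body: body });
      }
    });
  });
}

// The second insert finishes first, yet the ids still line up with the input.
simulate({ ratings: [{ movieId: 10, rating: 5 }, { movieId: 63, rating: 2 }] }, [30, 5])
  .then(function (res) {
    console.log(res.status, JSON.stringify(res.body));
  });
```

As in the original script, an empty `ratings` array never reaches `request.respond`, so a production version would want to handle that case explicitly.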

If this feels like too much work, don’t worry, we’re working on making it even easier to build any API you like in Mobile Services. Stay tuned!

Idera asserted “DBAs Can Quickly and Reliably Back Up and Restore Azure SQL Databases” in a deck for its Idera Announces Free Backup Product for SQL Databases in the Azure Cloud press release of 9/10/2012:

Today, Idera, a leading provider of application and server management solutions, announced Azure SQL Database Backup™, a free product that provides fast, reliable backup and restore capabilities for Azure SQL Databases.

As more companies move their applications to Microsoft's Azure cloud service, they are looking for a fast, reliable backup solution to ensure that data stored in Azure SQL Databases can be recovered in the case of accidental deletion or damage. Built on technology acquired from Blue Syntax, a leading Azure consultancy and services provider led by SQL Azure Database MVP Herve Roggero, Idera's new Azure SQL Database Backup product provides fast, reliable backup and restore capabilities, including:

- Save time and storage space with compression up to 95%

- Backup on-premise or to Azure BLOB storage

- View historical backup and restore operations

- Restore databases to and from the cloud with transaction consistency

"Our new Azure SQL Database Backup product builds on our strong and successful portfolio of SQL Server management solutions," said Heather Sullivan, Director of SQL Products at Idera. "Moving SQL Server data to the cloud can be an intimidating exercise. Our Azure SQL Database Backup tool helps DBAs sleep better at night knowing they are reaping the benefits of cloud services while ensuring the integrity of their data."

Pricing and Availability

Idera Azure SQL Database Backup is available today for free. To learn more or to download Azure SQL Database Backup, please visit http://www.idera.com/Free-Tools/SQL-azure/.

About Idera

Idera provides systems and application management software for Windows and Linux Servers, including solutions for SQL Server and SharePoint administration. Our award-winning products address real-world challenges including performance monitoring, backup and recovery, security, compliance, and administration. Headquartered in Houston, Texas, Idera is a Microsoft Managed Partner and has over 10,000 customers worldwide. To learn more, please contact Idera at +1-713.523.4433 or visit www.idera.com.

Idera and Azure SQL Database Backup are registered trademarks of Idera, Inc. in the United States and trademarks in other jurisdictions. All other company and product names may be trademarks of their respective companies.

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

• Parshva Vora described Client data access with OData and CSOM in SharePoint 2013 in a 9/11/2012 post to the Perficient Blog:

SharePoint 2013 has improved OData support. In fact, it offers a full-blown, OData-compliant, REST-based interface to program against. For those who aren’t familiar with OData, it is an open standard for querying and updating data that relies on common web standards such as HTTP. Read this OData primer for more details. OData support means that:

- SharePoint data can be made available on non-Microsoft platforms and to mobile devices

- SharePoint can connect and bring in data from any OData sources

Client Programming Options: In SharePoint 2010, there were primarily four ways to access SharePoint data from the client or an external environment.

1. Client-side object model (CSOM) - SharePoint offers three different sets of APIs, each intended for a certain type of client application.

- Managed client object model – for .NET client applications

- Silverlight client object model – for client applications written in Silverlight

- ECMAScript (JavaScript) object model – for JavaScript client applications

Each object model uses its own proxy to communicate with the SharePoint server object model through the WCF service Client.svc. This service is responsible for all communication between the client models and the server object model.

2. ListData.SVC – REST based interface to add and update lists.

3. Classic ASMX web services – These services were used when parts of the server object model, such as profiles, publishing and taxonomy, weren’t available through CSOM or the ListData service. They also provided backward compatibility for code written for SharePoint 2007.

4. Custom WCF services – When a part of the server object model isn’t accessible through any of the three options above, custom-written WCF services can expose SharePoint functionality.

Architecture:

In SharePoint 2010, Client.svc wasn’t accessible directly. SharePoint 2013 extends Client.svc with REST capabilities, and it can now accept HTTP GET, POST, PUT, MERGE and DELETE requests. Firewalls usually block HTTP verbs other than GET and POST. Fortunately, OData supports verb tunneling, where PUT, MERGE and DELETE are submitted as POST requests and the X-HTTP-Method header carries the actual verb. The path /_vti_bin/client.svc is abstracted as _api in SharePoint 2013.

CSOM additions: User profiles, publishing, taxonomy, workflow, analytics, eDiscovery and many other APIs are available in the client object model. Previously, these APIs were available only in the server object model.
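The verb-tunneling rule and the `_api` shorthand can be captured in a small request-building sketch. `buildTunneledRequest` is a hypothetical helper that only describes the request (method, URL, headers) rather than sending it; a real SharePoint write would also need authentication and further headers (for example a form digest), which are out of scope here.

```javascript
// Hypothetical helper: describes (but does not send) a request to the
// SharePoint 2013 REST endpoint, tunneling verbs that firewalls often block.
const TUNNELED_VERBS = ["PUT", "MERGE", "DELETE"];

function buildTunneledRequest(siteUrl, resourcePath, verb) {
  const upper = verb.toUpperCase();
  const headers = {};
  let method = upper;
  if (TUNNELED_VERBS.includes(upper)) {
    // Send as POST; the real verb travels in the X-HTTP-Method header.
    method = "POST";
    headers["X-HTTP-Method"] = upper;
  } else if (upper !== "GET" && upper !== "POST") {
    throw new Error("Verb not supported by this sketch: " + verb);
  }
  // _api is SharePoint 2013's shorthand for /_vti_bin/client.svc.
  const url = siteUrl.replace(/\/+$/, "") + "/_api/" + resourcePath.replace(/^\/+/, "");
  return { method, url, headers };
}

console.log(buildTunneledRequest("http://sp2013/", "web/lists", "MERGE"));
```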

ListData.svc is still available mainly for backward compatibility.

Atom or JSON response – The response to an OData request can be in Atom XML or JSON format. Atom is usually used with managed clients, while JSON is the preferred format for JavaScript clients, because the response is already a nested object and no extra parsing is required. The request’s HTTP Accept header must specify the desired response type; otherwise the response is Atom, which is the default.
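Requesting and unpacking JSON might look like this. The sketch assumes SharePoint 2013’s verbose OData JSON format, requested with an `Accept: application/json;odata=verbose` header, in which payloads are wrapped in a `d` property and collections in `d.results`; `acceptHeaderFor` and `unwrapVerboseJson` are hypothetical helpers.

```javascript
// Hypothetical helpers for SharePoint 2013 REST responses (assumption: the
// verbose OData JSON format, which wraps payloads in a "d" property).
function acceptHeaderFor(format) {
  if (format === "json") return "application/json;odata=verbose";
  if (format === "atom") return "application/atom+xml";
  throw new Error("Unknown format: " + format);
}

// In verbose JSON, a single entity arrives as { d: {...} } and a
// collection as { d: { results: [...] } }.
function unwrapVerboseJson(body) {
  if (!body || typeof body.d !== "object" || body.d === null) {
    throw new Error("Not a verbose OData JSON payload");
  }
  return Array.isArray(body.d.results) ? body.d.results : body.d;
}

const sample = { d: { results: [{ Title: "Tasks" }, { Title: "Documents" }] } };
console.log(acceptHeaderFor("json"));
console.log(unwrapVerboseJson(sample).map((list) => list.Title));
```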

• Parshva Vora posted Consuming OData sources in SharePoint 2013 App step by step in a 9/11/2012 post to the Perficient Blog:

SharePoint 2013 has out-of-the-box support for connecting to OData sources using BCS. You are no longer required to write a .NET assembly connector to talk to external OData sources.

1. Open Visual Studio 2012 and create a new SharePoint 2013 App project. This project template is available after installing the Office Developer Tools for Visual Studio 2012.

Create App

Select App Settings

2. Choose a name for the app and type in the target URL where you’d like to deploy the app. Also choose the appropriate hosting option from the drop-down list. For on-premises deployment, select “SharePoint-Hosted”. You can change the URL later by changing the “Site URL” property of the app in Visual Studio.

3. Add an external content type as shown in the snapshot below. It will launch a configuration wizard. Type in the URL of the OData source you wish to connect to and assign it an appropriate name. For this demo, I am using the publicly available NorthWind OData source.

4. Select the entities you would like to import. Select only the entities that you need for the app; otherwise, not only will the app end up with an inflated model, but all data associated with each entity will also be brought into external lists. Click Finish. At this point, Visual Studio has created a model under “External Content Types” for you. The feature is also updated to include new modules.

5. Expand “NorthWind” and you should see customer.ect. This is the BCS model. Unlike its predecessor, it doesn’t have a “.bdcm” extension; however, that doesn’t alter its behavior, as the model is still defined in XML. If you open the .ect file with an ordinary XML editor, you can observe the similarity in schema.

Customer BDC model representing OData entity

6. Deploy the app and browse to http://sp2013/ListCustomers/Lists/Customer (the pattern is {BaseTargetUrl}/{AppName}/Lists/{EntityName}). You should see the imported customer data in an external list.

7. You can program against this data like any other external list.
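The external-list URL from step 6 follows a simple pattern, which a hypothetical helper can fill in. This sketch assumes the app and entity names need only standard URL-component encoding.

```javascript
// Hypothetical helper that fills in the pattern
// {BaseTargetUrl}/{AppName}/Lists/{EntityName} from step 6.
function externalListUrl(baseTargetUrl, appName, entityName) {
  const base = baseTargetUrl.replace(/\/+$/, ""); // drop any trailing slash
  return base + "/" + encodeURIComponent(appName) + "/Lists/" + encodeURIComponent(entityName);
}

console.log(externalListUrl("http://sp2013/", "ListCustomers", "Customer"));
```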

Narine Mossikyan posted PowerShell cmdlets invocation through Management ODATA using WCF client on 9/10/2012:

Management ODATA uses the Open Data Protocol (ODATA) to expose and consume data over the Web or an intranet.

It is primarily designed to expose resources manipulated by PowerShell cmdlets and scripts as schematized ODATA entities using the semantics of representational state transfer (REST).

The philosophy of REST ODATA limits the verbs that can be supported on resources to only the basic operations: Create, Read, Update and Delete.

In this topic I will talk about Management ODATA’s ability to expose resources that model PowerShell pipelines returning unstructured data. This is an optional feature called “PowerShell pipeline invocation” or “Invoke”. A single Management ODATA endpoint can expose schematized resources, arbitrary cmdlet resources, or both.

In this blog post I will show how to write a Windows client, built on the WCF Data Services client, that creates a PowerShell pipeline invocation.

Any client that supports ODATA can be used. WCF Data Services includes a set of client libraries for general .NET Framework client applications, which are used in this example.

You can read more about WCF Data Services at: http://msdn.microsoft.com/en-us/library/cc668792.aspx.

If you are building a WCF client, the only compatibility requirement is to use the WCF Data Services 5.0 libraries. In this topic I will assume you already have a MODATA endpoint configured and running. For more information on MODATA in general and how to create an endpoint, please refer to the MSDN documentation at http://msdn.microsoft.com/en-us/library/windows/desktop/hh880865(v=vs.85).aspx

Because the “Invoke” feature is optional and disabled by default, you will need to enable it by adding the following configuration to your MODATA endpoint’s web.config:

<commandInvocation enabled="true"/>

Table 1.1 – Enable Command Invocation

To make sure “Invoke” is enabled, send a GET http://endpoint_service_URI/$metadata query to the MODATA endpoint; you should see a response similar to the following:

<Schema>
  <EntityType Name="CommandInvocation">
    <Key>
      <PropertyRef Name="ID"/>
    </Key>
    <Property Name="ID" Nullable="false" Type="Edm.Guid"/>
    <Property Name="Command" Type="Edm.String"/>
    <Property Name="Status" Type="Edm.String"/>
    <Property Name="OutputFormat" Type="Edm.String"/>
    <Property Name="Output" Type="Edm.String"/>
    <Property Name="Errors" Nullable="false" Type="Collection(PowerShell.ErrorRecord)"/>
    <Property Name="ExpirationTime" Type="Edm.DateTime"/>
    <Property Name="WaitMsec" Type="Edm.Int32"/>
  </EntityType>
  <ComplexType Name="ErrorRecord">
    <Property Name="FullyQualifiedErrorId" Type="Edm.String"/>
    <Property Name="CategoryInfo" Type="PowerShell.ErrorCategoryInfo"/>
    <Property Name="ErrorDetails" Type="PowerShell.ErrorDetails"/>
    <Property Name="Exception" Type="Edm.String"/>
  </ComplexType>
  <ComplexType Name="ErrorCategoryInfo">
    <Property Name="Activity" Type="Edm.String"/>
    <Property Name="Category" Type="Edm.String"/>
    <Property Name="Reason" Type="Edm.String"/>
    <Property Name="TargetName" Type="Edm.String"/>
    <Property Name="TargetType" Type="Edm.String"/>
  </ComplexType>
  <ComplexType Name="ErrorDetails">
    <Property Name="Message" Type="Edm.String"/>
    <Property Name="RecommendedAction" Type="Edm.String"/>
  </ComplexType>
</Schema>

Table 1.2 - Command Invocation Schema Definition
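If you want to automate that check, a quick sketch like the following can pull the CommandInvocation property names out of the $metadata text. It is regex-based, not a real XML/EDMX parser, and `commandInvocationProperties` is a hypothetical helper; treating a `null` result as “Invoke is not enabled” is an assumption based on the schema shown above.

```javascript
// Quick regex-based sketch, not a real XML/EDMX parser: returns the property
// names of the CommandInvocation entity type, or null if it is absent
// (which would suggest that "Invoke" is not enabled on the endpoint).
function commandInvocationProperties(metadataXml) {
  const entity = metadataXml.match(
    /<EntityType\s+Name="CommandInvocation"[^>]*>([\s\S]*?)<\/EntityType>/
  );
  if (entity === null) return null;
  const names = [];
  // "<PropertyRef" does not match, because "Ref" follows "Property" directly.
  const propertyPattern = /<Property\s+Name="([^"]+)"/g;
  let match;
  while ((match = propertyPattern.exec(entity[1])) !== null) {
    names.push(match[1]);
  }
  return names;
}

const metadata = `<Schema>
  <EntityType Name="CommandInvocation">
    <Key><PropertyRef Name="ID"/></Key>
    <Property Name="ID" Nullable="false" Type="Edm.Guid"/>
    <Property Name="Command" Type="Edm.String"/>
    <Property Name="Status" Type="Edm.String"/>
  </EntityType>
</Schema>`;
console.log(commandInvocationProperties(metadata));
```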

Management ODATA defines two ODATA resource sets related to PowerShell pipeline execution: CommandDescriptions and CommandInvocations. The CommandDescriptions resource set represents the collection of commands available on the server. By enumerating the resource set, a client can discover the commands that it is allowed to execute and their parameters.

The client must be authorized to execute Get-Command cmdlet for the CommandDescriptions query to succeed.

At a high level, if a client sends the following request:

GET http://endpoint_service_URI/CommandDescriptions

Table 1.3 – Command Invocation Query Sample

…then the server might reply with the following information:

<entry>
  <id>http://endpoint_service_URI/CommandDescriptions('Get-Process')</id>
  <category scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" term="PowerShell.CommandDescription"/>
  <link title="CommandDescription" href="CommandDescriptions('Get-Process')" rel="edit"/>
  <title/>
  <updated>2012-09-10T23:14:52Z</updated>
  <author>
    <name/>
  </author>
  <content type="application/xml">
    <m:properties>
      <d:Name>Get-Process</d:Name>
      <d:HelpUrl m:null="true"/>
      <d:AliasedCommand m:null="true"/>
      <d:Parameters m:type="Collection(PowerShell.CommandParameter)">
        <d:element>
          <d:Name>Name</d:Name>
          <d:ParameterType>System.String[]</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>Id</d:Name>
          <d:ParameterType>System.Int32[]</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>ComputerName</d:Name>
          <d:ParameterType>System.String[]</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>Module</d:Name>
          <d:ParameterType>System.Management.Automation.SwitchParameter</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>FileVersionInfo</d:Name>
          <d:ParameterType>System.Management.Automation.SwitchParameter</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>InputObject</d:Name>
          <d:ParameterType>System.Diagnostics.Process[]</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>Verbose</d:Name>
          <d:ParameterType>System.Management.Automation.SwitchParameter</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>Debug</d:Name>
          <d:ParameterType>System.Management.Automation.SwitchParameter</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>ErrorAction</d:Name>
          <d:ParameterType>System.Management.Automation.ActionPreference</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>WarningAction</d:Name>
          <d:ParameterType>System.Management.Automation.ActionPreference</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>ErrorVariable</d:Name>
          <d:ParameterType>System.String</d:ParameterType>
        </d:element>
        <d:element>
          <d:Name>WarningVariable</d:Name>
          <d:ParameterType>System.String</d:ParameterType>
        </d:element>
      </d:Parameters>
    </m:properties>
  </content>
</entry>

Table 1.4 – Command Response Sample

This indicates that the client is allowed to execute the Get-Process command.

The CommandInvocations resource set represents the collection of commands or pipelines that have been invoked on the server. Each entity in the collection represents a single invocation of some pipeline. To invoke a pipeline, the client sends a POST request containing a new entity. The contents of the entity include the PowerShell pipeline itself (as a string), the desired output format (typically “xml” or “json”), and the length of time to wait synchronously for the command to complete. A pipeline string is a sequence of one or more commands, optionally with parameters and delimited by a vertical bar character.

For example, if the server receives the pipeline string “Get-Process -Name iexplore” with the output type specified as “xml”, it will execute the Get-Process command (with the optional parameter Name set to “iexplore”) and send its output to “ConvertTo-XML”.
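The request body and the pipeline the server ends up running can be sketched as follows. `buildInvocationBody` and `effectivePipeline` are hypothetical helpers; the property names come from the CommandInvocation schema above, but the mapping of `"json"` to `ConvertTo-Json` and the naive split on `|` (which would mis-handle a `|` inside a quoted string) are assumptions.

```javascript
// Hypothetical helpers. Property names come from the CommandInvocation
// schema; everything else is an assumption for illustration.
function buildInvocationBody(pipeline, outputFormat, waitMsec) {
  return { Command: pipeline, OutputFormat: outputFormat, WaitMsec: waitMsec };
}

// "xml" -> ConvertTo-XML is stated in the post; "json" -> ConvertTo-Json is assumed.
const CONVERTERS = { xml: "ConvertTo-XML", json: "ConvertTo-Json" };

// Returns the pipeline the server is described as running: the client's
// commands followed by the ConvertTo-XX cmdlet chosen by OutputFormat.
function effectivePipeline(pipeline, outputFormat) {
  const key = outputFormat.toLowerCase();
  if (!Object.prototype.hasOwnProperty.call(CONVERTERS, key)) {
    throw new Error("Unknown output format: " + outputFormat);
  }
  // Naive split on "|": a pipe inside a quoted string would be mis-split.
  const commands = pipeline.split("|").map((c) => c.trim()).filter((c) => c.length > 0);
  return commands.concat(CONVERTERS[key]).join(" | ");
}

console.log(JSON.stringify(buildInvocationBody("Get-Process -Name iexplore", "xml", 5000)));
console.log(effectivePipeline("Get-Process -Name iexplore", "xml"));
```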

The server begins executing the pipeline when it receives the request. If the pipeline completes quickly (within the synchronous-wait time) then the server stores the output in the entity’s Output property, marks the invocation status as “Complete”, and returns the completed entity to the client.

If the synchronous-wait time expires while the command is executing, then the server marks the entity as “Executing” and returns it to the client. In this case, the client must periodically request the updated entity from the server; once the retrieved entity’s status is “Complete”, then the pipeline has completed and the client can inspect its output.

The client should then send an ODATA DeleteEntity request, allowing the server to delete resources associated with the pipeline.
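The client side of this lifecycle (create, poll while “Executing”, then delete) can be sketched independently of any particular OData library. `getInvocation` and `deleteInvocation` are hypothetical async functions you would implement with your client, for example with the WCF Data Services calls in the C# listing of this post; the 100 ms poll interval mirrors that listing, while the `maxPolls` cap is an added assumption so the loop cannot spin forever. The sketch treats any status other than “Executing” as finished, since the post uses both “Complete” and “Completed” for the final state.

```javascript
// Sketch of the invocation lifecycle: poll while the server reports
// "Executing", then delete the entity so the server can free resources.
// getInvocation(id) and deleteInvocation(id) are hypothetical async functions.
async function waitForInvocation(id, getInvocation, deleteInvocation, pollMs = 100, maxPolls = 600) {
  let entity = await getInvocation(id);
  let polls = 0;
  while (entity.Status === "Executing") {
    if (++polls > maxPolls) {
      throw new Error("Gave up waiting for invocation " + id);
    }
    await new Promise((resolve) => setTimeout(resolve, pollMs));
    entity = await getInvocation(id);
  }
  // Any other status ("Complete"/"Completed", "Error", ...) means the
  // pipeline has finished, so release the server-side entity.
  await deleteInvocation(id);
  return entity;
}
```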

There are some important restrictions on the types of commands that can be executed. Specifically, requests that use the following features will not execute successfully:

- script blocks

- parameters using environment variables such as "Get-Item -path $env:HOMEDRIVE\\Temp"

- interactive parameters such as -Paging (Get-Process | Out-Host -Paging)
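A client could screen pipeline strings for these restricted features before sending them. The sketch below is a hypothetical heuristic (`findUnsupportedFeatures` is invented here), a plain text scan rather than a PowerShell parser, so it can both miss cases and flag harmless text such as a `{` inside a quoted string.

```javascript
// Hypothetical client-side pre-check for the restrictions listed above.
// It is a heuristic text scan, not a PowerShell parser.
function findUnsupportedFeatures(pipeline) {
  const problems = [];
  if (/[{}]/.test(pipeline)) {
    problems.push("script block");
  }
  if (/\$env:/i.test(pipeline)) {
    problems.push("environment variable");
  }
  // Accept both "-" and the en dash (\u2013) that often sneaks in from documents.
  if (/[-\u2013]Paging\b/i.test(pipeline)) {
    problems.push("interactive parameter -Paging");
  }
  return problems;
}

console.log(findUnsupportedFeatures("Get-Process -Name iexplore"));
console.log(findUnsupportedFeatures("Get-Process | Out-Host -Paging"));
```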

Authorization and PowerShell initial session state are handled by the same CLR interfaces as for other Management ODATA resources. Note that every invocation calls some ConvertTo-XX cmdlet, controlled by the OutputFormat property of the invocation. The client must be authorized to execute this cmdlet in order for the invocation to succeed.

Here is the code snippet that shows how to send a request to create a PowerShell pipeline invocation and how to get the cmdlet execution result:

using System;
using System.Collections.Generic;

public class CommandInvocationResource
{
    public Guid ID { get; set; }
    public string Command { get; set; }
    public string OutputFormat { get; set; }
    public int WaitMsec { get; set; }
    public string Status { get; set; }
    public string Output { get; set; }
    public List<ErrorRecordResource> Errors { get; set; }
    public DateTime ExpirationTime { get; set; }

    public CommandInvocationResource()
    {
        this.Errors = new List<ErrorRecordResource>();
    }
}

public class ErrorRecordResource
{
    public string FullyQualifiedErrorId { get; set; }
    public ErrorCategoryInfoResource CategoryInfo { get; set; }
    public ErrorDetailsResource ErrorDetails { get; set; }
    public string Exception { get; set; }
}

public class ErrorCategoryInfoResource
{
    public string Activity { get; set; }
    public string Category { get; set; }
    public string Reason { get; set; }
    public string TargetName { get; set; }
    public string TargetType { get; set; }
}

public class ErrorDetailsResource
{
    public string Message { get; set; }
    public string RecommendedAction { get; set; }
}

Table 2.1 – Helper class definitions

using System;
using System.Collections.Generic;
using System.Data.Services.Client;
using System.Linq;
using System.Net;
using System.Threading;

// The user needs to specify the endpoint service URI and pass a user name, password and domain
Uri serviceroot = new Uri("<endpoint_service_URL>");
NetworkCredential serviceCreds = new NetworkCredential("testuser", "testpassword", "testdomain");
CredentialCache cache = new CredentialCache();
cache.Add(serviceroot, "Basic", serviceCreds);

// Create a data service context with protocol version 3 to connect to your endpoint
DataServiceContext context = new DataServiceContext(serviceroot, System.Data.Services.Common.DataServiceProtocolVersion.V3);
context.Credentials = cache;

// Expect returned data to be XML formatted. You can set it to "json" for the returned data to be in JSON
string outputType = "xml";

// PowerShell pipeline invocation command sample
String strCommand = "Get-Process -Name iexplore";

// Create an invocation instance on the endpoint
CommandInvocationResource instance = new CommandInvocationResource()
{
    Command = strCommand,
    OutputFormat = outputType
};
context.AddObject("CommandInvocations", instance);
DataServiceResponse data = context.SaveChanges();

// Ask for the invocation instance we just created, using a fresh context
DataServiceContext afterInvokeContext = new DataServiceContext(serviceroot,
    System.Data.Services.Common.DataServiceProtocolVersion.V3);
afterInvokeContext.Credentials = cache;
afterInvokeContext.MergeOption = MergeOption.OverwriteChanges;
CommandInvocationResource afterInvokeInstance = afterInvokeContext
    .CreateQuery<CommandInvocationResource>("CommandInvocations")
    .Where(it => it.ID == instance.ID)
    .FirstOrDefault();
if (afterInvokeInstance == null)
{
    throw new InvalidOperationException("instance was not found!");
}

while (afterInvokeInstance.Status == "Executing")
{
    // Wait for the invocation to be completed, then re-read it
    Thread.Sleep(100);
    afterInvokeInstance = afterInvokeContext
        .CreateQuery<CommandInvocationResource>("CommandInvocations")
        .Where(it => it.ID == instance.ID)
        .First();
}

if (afterInvokeInstance.Status == "Completed")
{
    // The result is returned as a string in afterInvokeInstance.Output, in XML format
}

// In case the command execution has errors, you can analyze the data
if (afterInvokeInstance.Status == "Error")
{
    string errorOutput = string.Empty;
    List<ErrorRecordResource> errors = afterInvokeInstance.Errors;
    foreach (ErrorRecordResource error in errors)
    {
        errorOutput += "CategoryInfo:Category " + error.CategoryInfo.Category + "\r\n";
        errorOutput += "CategoryInfo:Reason " + error.CategoryInfo.Reason + "\r\n";
        errorOutput += "CategoryInfo:TargetName " + error.CategoryInfo.TargetName + "\r\n";
        errorOutput += "Exception " + error.Exception + "\r\n";
        errorOutput += "FullyQualifiedErrorId " + error.FullyQualifiedErrorId + "\r\n";
        // ErrorDetails is a complex type; print its message rather than the object itself
        errorOutput += "ErrorDetails " + (error.ErrorDetails != null ? error.ErrorDetails.Message : string.Empty) + "\r\n";
    }
}

// Delete the invocation instance on the endpoint
afterInvokeContext.DeleteObject(afterInvokeInstance);
afterInvokeContext.SaveChanges();

Table 2.2 – Client Code Implementation

Narine Mossikyan

Software Engineer in Test

Standards Based Management

<Return to section navigation list>

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

• Alex Simons reported More Advances in the Windows Azure Active Directory Developer Preview in a 9/12/2012 post:

I’ve got more cool news to share with you today about Windows Azure Active Directory (AD). We’ve been hard at work over the last 6 weeks improving the service and today we’re sharing the news about three major enhancements to our developer preview:

- The ability to create a standalone Windows Azure AD tenant

- A preview of our Directory Management User Interface

- Write support in our GraphAPI

With these enhancements, Windows Azure Active Directory changes from being a compelling promise into a standalone cloud directory with a user experience, supported by a simple yet robust set of developer APIs.

Here’s a quick overview of each of these new capabilities and links that let you try them out and read more about the details.

New standalone Windows Azure AD tenants

First we’ve added the ability to create a new Windows Azure AD tenant for your business or organization without needing to sign up for Office 365, Intune or any other Microsoft service. Developers or administrators who want to try out the Windows Azure Active Directory Developer preview can now quickly create an organizational domain and user accounts using this page. For the duration of the preview these AD tenants are available free of charge.

User Interface Preview

Second, I’m excited to share with you that we have also added a preview of the Windows Azure Active Directory Management UI. It went live as a preview last Friday to support the preview of Windows Azure Online Backup. With this new user interface, administrators of any service that uses Windows Azure AD as its directory (Windows Azure Online Backup, Windows Azure, Office 365, Dynamics CRM Online and Windows InTune) can use the preview portal at https://activedirectory.windowsazure.com. Administrators can use this UI to manage the users, groups, and domains used in their Windows Azure Active Directory, and to integrate their on-premises Active Directory with their Windows Azure Active Directory. The UI is a standalone preview release. As we work to enhance it over the coming months, we will move it into the Windows Azure Management portal to ensure developers and IT Admins have a single place to go to manage all their Windows Azure services.

Please note that if you log in using your existing Windows Azure AD account from Office 365 or another Microsoft service, you’ll be working with actual live data. Any changes made through this UI will affect live data in the directory and will be reflected in all the Microsoft services your company subscribes to (e.g. Office 365, Intune, etc.). That is, of course, the entire purpose of having a shared directory, but during the preview you might want to create a new tenant and a new set of users rather than experiment with mission-critical live data. Also note that the existing portals you already use for identity management in these services will continue to work as is, providing a dedicated in-service experience.

Write access for the Windows Azure Active Directory Graph API

In our first preview release of the Graph API, we introduced the ability for third-party applications to read data from Windows Azure Active Directory. Today we released the ability for applications to easily write data to the directory. This update includes support for:

- Create, Delete and Update operations for Users, Groups and Group Membership

- User License assignments

- Contact management

- Returning the thumbnail photo property for Users

- Setting JSON as the default data format
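To make the new write support concrete, here is a minimal Python sketch that builds, but does not send, an HTTP POST that would create a user. The URL shape (`/{tenant}/Users`), the `api_version` argument, the bearer-token header and the user property names are all assumptions for illustration; the updated MSDN documentation is the authority on the real endpoint and schema.

```python
import json
import urllib.request

# Assumed base address; confirm against the MSDN Graph API documentation.
GRAPH_BASE = "https://graph.windows.net"


def build_create_user_request(tenant, access_token, user, api_version):
    """Build (but do not send) a POST that would create a directory user.

    The "/Users" path and the header set are illustrative assumptions.
    """
    url = f"{GRAPH_BASE}/{tenant}/Users?api-version={api_version}"
    body = json.dumps(user).encode("utf-8")
    req = urllib.request.Request(url, data=body, method="POST")
    # JSON is now the default format; stating it explicitly does no harm.
    req.add_header("Content-Type", "application/json")
    req.add_header("Accept", "application/json")
    req.add_header("Authorization", f"Bearer {access_token}")
    return req


if __name__ == "__main__":
    # Hypothetical tenant, token, API version and user properties.
    new_user = {
        "displayName": "Test User",
        "userPrincipalName": "testuser@contoso.onmicrosoft.com",
        "accountEnabled": True,
    }
    request = build_create_user_request(
        "contoso.onmicrosoft.com", "<access-token>", new_user, "<api-version>"
    )
    print(request.get_method(), request.full_url)
    # Sending it would be urllib.request.urlopen(request).
```

Update and delete calls would follow the same pattern with a different HTTP method and a URL that identifies the existing object.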

An updated Visual Studio sample application is available from here, and a Java version of the sample application is available from here. Please try the new capabilities and provide feedback directly to the product team; the download pages include a section where you can submit questions and comments and report any issues found with the sample applications.

For more detailed information on the new capabilities for Windows Azure AD Graph API, visit our updated MSDN documentation.

It’s exciting to get to share these new enhancements with you. We really hope you’ll find them useful for building and managing your organization’s cloud-based applications. And of course we’d love to hear any feedback or suggestions you might have.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Mary Jo Foley (@maryjofoley) asserted “There's a new codename on the Windows Azure roadmap. And it involves bridging on-premises and cloud servers beyond what's available in Azure Connect” in a deck for her Project Brooklyn: Extending an enterprise network to the Windows Azure cloud report of 9/12/2012 about Windows Azure Virtual Networks:

It's time for another new Microsoft codename, decoder-ring fans.

This week's entry: Project Brooklyn. (Thanks to Chris Woodruff of Deep Fried Bytes podcast fame for the pointer.)

Here's the reasoning behind the codename: The same way that the Brooklyn Bridge connects Manhattan and Brooklyn, Project Brooklyn is designed to connect enterprise networks to the cloud, and specifically the Windows Azure cloud. The overarching idea is Brooklyn will allow enterprises to use Azure as their virtual branch office/datacenter in the cloud.

From Microsoft's description of what this is:

"Project 'Brooklyn' is a networking on-ramp for migrating existing Enterprise applications onto Windows Azure. Project 'Brooklyn' enables Enterprise customers to extend their enterprise networks into the cloud by enabling customers to bring their IP address space into WA and by providing secure site-to-site IPsec VPN connectivity between the Enterprise and Windows Azure. Customers can run 'hybrid' applications in Windows Azure without having to change their applications."

(The "into WA" part of this description means into Microsoft's own Azure datacenters, I'd assume.)
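Bringing your own IP address space into Windows Azure over a site-to-site VPN generally only works if the cloud-side ranges don't collide with on-premises subnets; otherwise traffic can't be routed unambiguously. As a minimal planning sketch (the CIDR ranges below are hypothetical examples, not anything from Microsoft's description), Python's standard `ipaddress` module can flag overlaps before you define a virtual network:

```python
import ipaddress


def find_overlaps(on_premises, virtual_network):
    """Return (on-premises, cloud) CIDR pairs whose address ranges overlap."""
    overlaps = []
    for local in on_premises:
        local_net = ipaddress.ip_network(local)
        for cloud in virtual_network:
            if local_net.overlaps(ipaddress.ip_network(cloud)):
                overlaps.append((local, cloud))
    return overlaps


if __name__ == "__main__":
    # Hypothetical address plans for illustration only.
    onprem = ["10.0.0.0/16", "192.168.1.0/24"]
    azure_vnet = ["10.1.0.0/16", "192.168.1.128/25"]
    for pair in find_overlaps(onprem, azure_vnet):
        print("overlap:", pair)
```

Here the two `10.x` ranges are disjoint, but `192.168.1.128/25` sits inside `192.168.1.0/24`, so that pair is reported.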

There's a video walk-through about Brooklyn, dating back to June's TechEd 2012 show, available on Microsoft's Channel 9 site.

I believe Brooklyn was part of the wave of features Microsoft unveiled as part of its "spring" updates for Windows Azure. The service is still in preview, as of this writing. Update: The official product name for Brooklyn is Windows Azure Virtual Network.

Brooklyn got a brand-new mention this week in a blog post about High Performance Computing (HPC) Pack 2012, which is built on top of Windows Server 2012. (Microsoft is accepting beta applicants for the HPC Pack 2012 product as of September 10.) Among the new features listed as part of HPC Pack 2012 is Project Brooklyn.

Brooklyn seems to be the follow-on to Windows Azure Connect. Azure Connect, codenamed "Project Sydney," was originally announced in November 2009. The networking component of Connect was described as allowing cloud-hosted virtual machines and local computers to communicate via IPSec connection as if they were on the same network. Windows Azure Connect, as it evolved, didn't support virtual addresses and virtual servers, however; it was more about establishing networks between individual machines.

Brooklyn fits with Microsoft's goal of convincing users that they don't have to create Azure cloud apps from scratch (which was Microsoft's message until it added persistent virtual machines to Azure earlier this year). Microsoft's intention is to make it easier for users to bring existing apps to the Azure cloud and/or bridge their on-premises apps with Azure apps in a hybrid approach.

And as to why I am writing about this now, months after it was unveiled: for this codename queen, it's all about the discovery of the codename.

The Project “Brooklyn” codename has been around (but under the covers) for quite a while.

The Windows Azure Connect team warned users to Upgrade to the latest Connect endpoint software now in a 9/10/2012 post:

On 10/28/2012, the current CA certificate used by Windows Azure Connect endpoint software will expire. To continue to use Windows Azure Connect after this date, the Connect endpoint software on your Windows Azure roles and on-premises machines must be upgraded to the latest version. Depending on your environment and configuration, you may or may not need to take any action.

For your Web and Worker roles, if they are configured to upgrade to the new guest OS automatically, you don’t need to take any action. When the new OS is rolled out later this month, the Connect endpoint software will be refreshed to the new version automatically. To upgrade the endpoint software manually, set the following setting to true in your .cscfg file and perform an “Upgrade”:

<Setting name="Microsoft.WindowsAzure.Plugins.Connect.Upgrade" value="true" />
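If you maintain several service configuration files, the same change can be scripted. This is a minimal Python sketch using the standard library's ElementTree; it assumes the usual .cscfg namespace shown below and only flips the value where a role already declares the Connect Upgrade setting. Adding the setting to a role that doesn't import the Connect plug-in is not something this sketch attempts.

```python
import xml.etree.ElementTree as ET

# Assumed service-configuration namespace used by .cscfg files.
NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"
SETTING = "Microsoft.WindowsAzure.Plugins.Connect.Upgrade"


def enable_connect_upgrade(cscfg_xml):
    """Set the Connect Upgrade setting to "true" wherever it already appears.

    Returns the rewritten XML text and the number of settings changed.
    """
    # Keep the namespace as the default so the output has no "ns0:" prefixes.
    ET.register_namespace("", NS)
    root = ET.fromstring(cscfg_xml)
    changed = 0
    for setting in root.iter(f"{{{NS}}}Setting"):
        if setting.get("name") == SETTING and setting.get("value") != "true":
            setting.set("value", "true")
            changed += 1
    return ET.tostring(root, encoding="unicode"), changed
```

After rewriting the file, you would still upload it and perform the “Upgrade” as described above.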

For PaaS VM roles, update the on-premises VHD image either via Windows Update or manually, and then re-upload it.

For the new IaaS roles and on-premises machines, you can upgrade via Windows Update or install the update manually.

To verify that the upgrade worked, go to “Virtual Network” –> “Activated Endpoints” –> “Properties” in the Silverlight portal and make sure the version is 1.0.0960.2.
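If you check endpoint versions in a script rather than in the portal, compare them numerically. The portal shows a zero-padded field (`0960`), and comparing version strings character by character goes wrong as soon as one source pads and another doesn't. A minimal sketch (the function names are hypothetical):

```python
# Minimum version quoted in the Connect team's post.
TARGET = (1, 0, 960, 2)


def parse_version(text):
    """Turn '1.0.0960.2' into (1, 0, 960, 2); leading zeros are ignored."""
    return tuple(int(part) for part in text.strip().split("."))


def is_upgraded(reported, target=TARGET):
    """True if the reported endpoint version is at least the target."""
    return parse_version(reported) >= target
```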

If you plan to upgrade manually, use the following Microsoft Update Catalog link for the manual install.

Place the “update” in the “basket”, click “download” to a temp folder, and run either the x86 or the amd64 upgrade package.

Directory of C:\temp\Update for Windows Azure Connect Endpoint Upgrade (1.0.0960.2)

- 08/24/2012 10:05 AM 2,948,720 AMD64-en-wacendpointupg_prod_d0e8b5df2bdf2587cdbb75fdfdef1946de7f5f56.exe

- 08/24/2012 10:05 AM 2,316,424 X86-en-wacendpointupg_prod_f2f3fe266e7a3399691042ce8eb4606dc82a6925.exe
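Which of the two packages to run depends on the machine's processor architecture. As a small sketch for scripted rollouts (the helper name and mapping table are hypothetical), the choice can key off the file-name prefixes in the listing above. On Windows, `platform.machine()` typically reports `AMD64` or `x86`, though a 32-bit Python on 64-bit Windows may report `x86`, so check that value before relying on it.

```python
# Map processor-architecture names to the package prefixes in the listing.
PREFIXES = {
    "AMD64": "AMD64-",
    "X86_64": "AMD64-",
    "X86": "X86-",
    "I386": "X86-",
    "I686": "X86-",
}


def pick_package(machine, filenames):
    """Return the Connect endpoint upgrade package matching `machine`."""
    prefix = PREFIXES.get(machine.upper())
    if prefix is None:
        raise LookupError(f"no Connect package for machine type {machine!r}")
    for name in filenames:
        if name.upper().startswith(prefix):
            return name
    raise LookupError(f"no file starting with {prefix!r}")
```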

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Himanshu Singh published Guest Post: 10gen and Microsoft Partner to Deliver NoSQL in the Cloud on 9/11/2012:

Editor’s Note: Today’s post comes from Sridhar Nanjundeswaran, Software Engineer at 10gen. 10gen develops MongoDB, and offers production support, training, and consulting for the open source database.

We at 10gen are excited about our ongoing collaboration with Microsoft. We are actively leveraging new features in Windows Azure to ensure our common customers using MongoDB on Windows Azure have access to the latest features and the best possible experience.

In early June, Microsoft announced the preview version of Windows Azure Virtual Machines, which enables customers to deploy and run Windows and Linux instances on Windows Azure. This provides more control over actual instances as opposed to using Worker Roles. Additionally, this is the paradigm that is most familiar to users who run instances in their own private clouds or on other public clouds. In conjunction with Windows Azure’s release, 10gen and Microsoft are now delivering the MongoDB Installer for Windows Azure.

The MongoDB Installer for Windows Azure automates the process of creating instances on Windows Azure, deploying MongoDB, opening up relevant ports, and configuring a replica set. The installer currently works when used on a Windows machine, and can be used to deploy MongoDB replica sets to Virtual Machines on Windows Azure. Additionally, the installer uses the instance OS drive to store MongoDB data, which limits storage and performance. As such, we recommend that customers only use the installer for experimental purposes at this stage.

There are also tutorials that walk users through deploying a single standalone MongoDB server to Windows Azure Virtual Machines running Windows Server 2008 R2 and CentOS. In both cases, using Windows Azure data disks provides data safety: because the disks are persistent, the data survives instance crashes or reboots.

Furthermore, Windows Azure’s triple replication of the data guards against storage corruption. Neither of these solutions, however, takes advantage of MongoDB’s high-availability features. To make MongoDB highly available, you can use MongoDB replica sets; more information on this high-availability feature can be found here.
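A replica set can only elect a primary while a strict majority of its voting members can reach each other, which is why odd-sized sets are the usual recommendation. This short Python sketch works through that arithmetic:

```python
def majority(voting_members):
    """Votes needed to elect a primary: a strict majority."""
    return voting_members // 2 + 1


def tolerated_failures(voting_members):
    """Members that can be lost while a majority is still reachable."""
    return voting_members - majority(voting_members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} members: majority {majority(n)}, tolerates {tolerated_failures(n)}")
```

A three-member set survives one failure, and a fourth member adds no extra tolerance (still one), so the next useful size is five, which survives two.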

Finally, customers who would like to deploy MongoDB replica sets to CentOS VMs can follow these basic steps:

- Sign up for the Windows Azure VM preview feature

- Create the required number of VM instances

- Attach and format data disks

- Configure the ports to allow remote shell and MongoDB access

- Install MongoDB and launch it

- Configure the replica set
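For the last step, each `mongod` is started with the same `--replSet` name, and the set is then initiated once from the mongo shell with a configuration document. Here is a minimal Python sketch that generates that document; the set name and host names are hypothetical, and 27017 is MongoDB's default port, which is also the port the endpoint configuration step would need to open between members.

```python
import json


def replica_set_config(name, hosts, port=27017):
    """Build the document passed to rs.initiate() in the mongo shell."""
    return {
        "_id": name,
        "members": [
            {"_id": i, "host": f"{host}:{port}"} for i, host in enumerate(hosts)
        ],
    }


if __name__ == "__main__":
    # Hypothetical VM host names; substitute the names of your own instances.
    cfg = replica_set_config(
        "rs0", ["mongovm1.example.net", "mongovm2.example.net", "mongovm3.example.net"]
    )
    print(f"rs.initiate({json.dumps(cfg)})")
```

The printed line can be pasted into the mongo shell on one member; the tutorial referenced below remains the authoritative procedure.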

Detailed steps for this procedure are outlined in the tutorial, “Deploying MongoDB Replica Sets to Linux on Windows Azure.”

Rick G. Garibay (@rickggaribay) reported on 9/10/2012 that CODE Magazine published Real-Time Web Apps Made Easy with WebSockets in .NET 4.5 in their Sept/Oct 2012 issue:

My new article on WebSockets has been published in the Sept/Oct issue of CODE Magazine: http://www.code-magazine.com/Article.aspx?quickid=1210051

The article includes many of the samples and concepts I’ve presented in my recent DevConnections and That Conference talks, including using Node.js as a simple alternative to ASP.NET/WCF 4.5. In fact, I plan to update all of the samples to run in Windows Azure Compute Services (Worker Role) as soon as Windows Server 2012/.NET 4.5 is rolled out to Windows Azure Compute Services, and hopefully show them at Desert Code Camp in November.
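The article's samples use .NET 4.5 and Node.js. Purely to illustrate the protocol they build on, here is a Python sketch of the server side of the RFC 6455 opening handshake, which any WebSocket server performs: append the fixed GUID from the RFC to the client's `Sec-WebSocket-Key`, hash with SHA-1, and return the Base64 result as `Sec-WebSocket-Accept`. The sample key and expected value are the worked example given in RFC 6455.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(client_key):
    """Compute Sec-WebSocket-Accept from the client's Sec-WebSocket-Key."""
    digest = hashlib.sha1((client_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


if __name__ == "__main__":
    print(accept_key("dGhlIHNhbXBsZSBub25jZQ=="))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```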

BTW, if you run a user group or are involved in a code camp or other event and would be interested in handing out some complimentary copies of CODE Magazine, please let me know and I'll get you hooked up with the right folks.

As always, I appreciate any comments/feedback you might have.

Himanshu Singh (@himanshuks) posted Gabe Moothart’s Guest Post: Getting Started with SendGrid on Windows Azure on 9/10/2012:

Editor’s Note: Today’s post comes from Gabe Moothart, Software Engineer at SendGrid. SendGrid provides businesses with a cloud-based email service, relieving businesses of the cost and complexity of maintaining custom email systems.

Did you know that as a Windows Azure customer, you can easily access a highly scalable email delivery solution that integrates into applications on any Windows Azure environment? Getting started with SendGrid on Windows Azure is easy.

The first thing you’ll need is a SendGrid account. You can find SendGrid in the Windows Azure Marketplace here. Just click the green “Learn More” button and continue through the signup process to create a SendGrid account. As a Windows Azure customer, you are automatically given a free package to send up to 25,000 emails/month.
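Because SendGrid is reached over standard SMTP, the account works from any SMTP client, not only ASP.NET. As a minimal Python sketch, assuming the `smtp.sendgrid.com` host used in the Web.config below and assuming port 587 with STARTTLS (check SendGrid's documentation for the ports your account supports), the message-building and sending steps look like this; the addresses and credentials are placeholders.

```python
import smtplib
from email.message import EmailMessage

SMTP_HOST = "smtp.sendgrid.com"  # same host as the Web.config in this post
SMTP_PORT = 587                  # assumption: STARTTLS submission port


def build_message(sender, recipient, subject, body):
    """Assemble a plain-text email."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send(msg, username, password):
    """Send through SendGrid using your SendGrid account credentials."""
    with smtplib.SMTP(SMTP_HOST, SMTP_PORT) as smtp:
        smtp.starttls()
        smtp.login(username, password)
        smtp.send_message(msg)
```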

Then click “Sign up Now” to create your SendGrid account.

Now that your SendGrid account has been created, it’s time to integrate it into a Windows Azure Web Site. Log in and click the “+ New” button at the bottom of the page to create a new Web Site:

After the website has been created, select it in the management portal and find the “Web Matrix” button at the bottom of the page. This will open your new website in WebMatrix, installing it if necessary. WebMatrix will alert you that your site is empty:

Choose “Yes, install from the template Gallery” and select the “Empty Site” template.

Choose the “Files” view on the left-hand side, and then from the File menu, select “New” -> “File”, and choose the “Web.Config (4.0)” file type.

Next, we need to tell ASP.NET to use the SendGrid SMTP server. Add this text to the new Web.config file, inside the <configuration> tag:

<system.net>

<mailSettings>

<smtp>

<network host="smtp.sendgrid.com" userName="sendgrid username" password="sendgrid password" />

</smtp>