Windows Azure and Cloud Computing Posts for 12/3/2011+

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

Important: See official notice of Windows Azure’s ISO 27001 certification in the Windows Azure Infrastructure and DevOps section and the clarification of 12/5/2011 that the certification does not include SQL Azure, Service Bus, Access Control, Caching, or Content Delivery Network.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Barb Darrow (@gigabarb) takes on the crowd in a Why some execs think Hadoop ain't all that … post of 11/30/2011 to GigaOm’s Structure blog:

And so the backlash begins. Hadoop, the open-source framework for handling tons of distributed data, does a lot, and it is a big draw for businesses wanting to leverage the data they create and that is created about them. That means it’s a hot button as well for the IT vendors who want to capture those customers. Virtually every tech vendor from EMC (s EMC) to Oracle (s ORCL) to Microsoft (s MSFT) has announced a Hadoop-oriented “big data” strategy in the past few months.

But here comes the pushback. Amid the hype, some vendors are starting to point out that building and maintaining a Hadoop cluster is complicated and — given demand for Hadoop expertise — expensive. Larry Feinsmith, the managing director of JPMorgan Chase’s (s JPM) office of the CIO, told Hadoop World 2011 attendees recently that Chase pays a 10 percent premium for Hadoop expertise — a differential that others said may be low.

Manufacturing, which typically generates a ton of relational and nonrelational data from ERP and inventory systems, the manufacturing operations themselves, and product life cycle management, is a perfect use case for big data collection and analytics. But not all manufacturers are necessarily jumping into Hadoop.

General Electric’s (s GE) Intelligent Platforms Division, which builds software for monitoring and collecting all sorts of data from complex manufacturing operations, is pushing its new Proficy Historian 4.5 software as a quicker, more robust way to do what Hadoop promises to do.

“We have an out-of-the-box solution that is performance comparable to a Hadoop environment but without that cost and complexity. The amount of money it takes to implement Hadoop and hire Hadoop talent is very high,” said Brian Courtney, the GM of enterprise data management for GE.

Proficy Historian handles relational and nonrelational data from product manufacturing and testing — data like the waveforms generated in the process. GE has a lot of historical data about what happens in the production and test phases of building such gear, and it could be put to good use, anticipating problems that can occur down the pike.

For example, the software can look at the electrical signature generated when a gas turbine starts up, said Courtney. “The question is what is the digital signature of that load in normal startup mode and then what happens if there’s an anomaly? Have you ever seen that anomaly before? Given this waveform, you can go back five years and look at other anomalies and whether they were part of a subsequent system failure.”

If similar anomalies caused a system failure, you can examine how much time it took after the anomaly for the failure to happen. That kind of data lets the manufacturer prioritize fixes.

The new release of Proficy software seeks to handle bigger amounts of big data, supporting up to 15 million tags, up from two million in the previous release.

Joe Coyle, the CTO of Capgemini, (s CAP) the big systems integrator and consulting company, said big data is here to stay but that many businesses aren’t necessarily clued in to what that means. “After cloud, big data is question number two I get from customers. CIOs will call and say, ‘Big data, I need it. Now what is it?’”

Coyle agrees that Hadoop has big promise but is not quite ready for prime time. “It’s expensive and some of the tools aren’t there yet. It needs better analytics reporting engines. Right now, you really need to know what to analyze. Hadoop brings a ton of data, but until you know what to ask about it, it’s pretty much garbage in, garbage out.”

The companies who make the best use of big data are those that know what to ask about it.

For example, Coyle explained, “Victoria’s Secret harvests information from Facebook and can tell you in detail about every 24-year-old who bought this product in the last 12 months. It’s very powerful, but that’s because of the humans driving it.”


<Return to section navigation list>

SQL Azure Database and Reporting

Patrick Schwanke (@pschwanke) described Monitoring SQL Azure with Quest Software’s new SQL Azure cartridge for its Foglight product in a 12/3/2011 post:

Quest Software (the company I’m working for) just brought out a SQL Azure cartridge for its application performance management solution Foglight.

Unlike Spotlight on Azure and Spotlight on SQL Server Enterprise (which also covers SQL Azure), tools that focus on the Azure platform or on SQL Server/SQL Azure, Foglight is a much broader story: it covers end-user, application, database, OS and virtualization-layer performance, among others.

So, what does Foglight’s SQL Azure part look like?

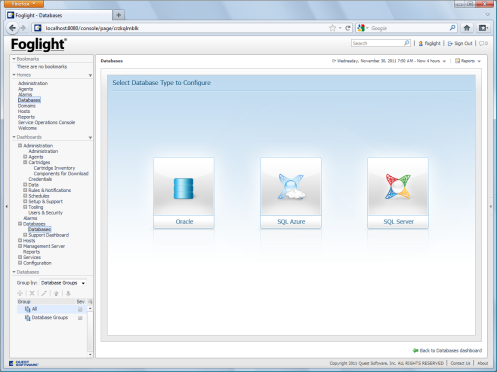

For playing around, I used my existing Foglight environment covering on-premise Oracle and SQL Server. After importing the SQL Azure cartridge, the starting page looks like this:

Starting page in Foglight for Databases

Clicking on the SQL Azure button launched a short wizard with these steps:

- Step 1: Choosing an agent manager: This is Foglight administrative stuff, determining where the remote collector agent is running. I used my local VM here. Alternatively, it could be deployed inside the Azure cloud.

- Step 2: Providing an Azure instance and login credentials.

- Step 3: Choosing one or more databases in the Azure instance.

- Step 4: Validating connectivity and starting monitoring

After that, the starting page looks like this. You may have to wait for a few minutes until all parts are actually filled with data:

Foglight for Databases Starting Page

The upper part shows the overall database environment, including the current alarm status. The lower part gives a quick overview of the selected Azure database. While the components are quite self-explanatory, most of them offer more detail when clicked.

The Home Page button at the lower right sets the focus to the selected Azure database:

Foglight view of one Azure database

Among other things, it shows the current alarms, availability, performance and storage capacity of the database, I/O from applications in the Azure cloud as well as from those outside (on-premise applications), and cost. Again, all of these items are clickable and lead to detailed drilldowns. The same drilldowns can be reached via the menu at the top.

Nearly all of the displayed information is gathered automatically from the Azure instance and/or database. Two optional pieces of information have to be provided manually in the Administration drilldown (see next screenshot):

- The Data Center and Windows Live ID, displayed in the upper left corner.

- Pricing information: Based on provided storage price and price for outgoing and incoming I/O, both per GB, the total storage cost is calculated and displayed in the upper right corner.

SQL Azure Administration drilldown

The only thing I couldn’t get working was the pricing information. From the error messages, it looked very much like an issue with my German Windows mixing up dot and comma in the price values. OK, it’s the very first version of the SQL Azure cartridge… nevertheless, I already filed a CR with Quest support on this.
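The dot-versus-comma problem and the cost figure described above can be illustrated with a small sketch. This is not Foglight's code: the function names, the cost formula (storage plus incoming and outgoing I/O, each priced per GB) and all prices are assumptions made for illustration only.

```python
from decimal import Decimal, InvalidOperation


def parse_price(text: str) -> Decimal:
    """Parse a price typed with either '.' or ',' as the decimal separator."""
    cleaned = text.strip().replace(" ", "")
    last_dot, last_comma = cleaned.rfind("."), cleaned.rfind(",")
    if last_dot != -1 and last_comma != -1:
        # Both separators present: the last one is the decimal separator,
        # the other one is thousands grouping and is dropped.
        if last_comma > last_dot:
            cleaned = cleaned.replace(".", "").replace(",", ".")
        else:
            cleaned = cleaned.replace(",", "")
    else:
        # Only one kind present: treat a lone comma as the decimal separator.
        cleaned = cleaned.replace(",", ".")
    try:
        return Decimal(cleaned)
    except InvalidOperation as exc:
        raise ValueError(f"not a price: {text!r}") from exc


def estimated_cost(storage_gb, ingress_gb, egress_gb,
                   storage_price, ingress_price, egress_price) -> Decimal:
    """Assumed formula: every quantity is billed at its own per-GB price."""
    return (storage_gb * storage_price
            + ingress_gb * ingress_price
            + egress_gb * egress_price)


# Hypothetical quantities and prices, typed the way a German and a US user might.
cost = estimated_cost(Decimal("5"), Decimal("10"), Decimal("4"),
                      parse_price("9,99"), parse_price("0,10"), parse_price("0.15"))
print(cost)
```

Normalizing the separator before converting keeps the calculation independent of the Windows regional settings, which is the kind of handling the error messages suggest was missing.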

So, all this looks quite cool for diagnostics. But Foglight also claims to cover monitoring and alarming. Around a dozen alarms are pre-defined and can be adjusted in the Administration drilldown:

- Database Reaching Maximum Limit Capacity

- Database Requires Change In Edition

- Database Reaching Current Limit Capacity

- Long Lock Running

- Response Time

- Connection Time

- Instance/Database Unresponsive

- Database Property Changed

- Collection Status

- Executions Baseline Deviation

- Total CLR Time Baseline Deviation

- Total I/O Baseline Deviation

Alarm Rules Management

If you want to give it a try, you can start downloading here.

Downloading a trial version requires registration. After registering, you can download the documentation for SQL Azure, most of which is dated 10/10/2011:

- Foglight for SQL Server-Release Notes

- Foglight for SQL Azure - User Guide


- Foglight for SQL Azure - Getting Started Guide

<Return to section navigation list>

Marketplace DataMarket, Social Analytics and OData

Chris “Woody” Woodruff (@cwoodruff) continued his series with 31 Days of OData – Day 3 OData Entity Data Model on 12/4/2011:

From Day 2 of the series, we looked at data types that are present in the OData protocol. These data types allow for the richness of the data experience. Next we will look at taking these data types and crafting the entities that make up the OData feed.

An OData feed is very much like a database with tables, foreign keys and stored procedures. The data from an OData feed does not have to originate in a relational database, but this is the first and most important type of data repository that OData can represent. OData’s Entity Data Model (EDM) is simple but elegant in its representation of the data. It is designed to handle not only the data but also the relationships between entities that allow for the rich data experience we expect.

The Roadmap of the EDM

Just like a roadmap the EDM acts as a guide that allows us to travel the OData feed’s paths. It furnishes us the collections of entities and all details needed to understand the entities within its map. The EDM also gives us the paths or associations between the unique Entities of the OData feed. The ability to navigate easily without knowing much about the layout of the OData land is where OData gives us the freedom to have the Data Experiences (DX) we need for today’s solutions and consumer expectations.

The Entities in an OData feed each represent a specific type of data, or Entity Type. The Entity Types of an OData feed could model some real-world item, concept or idea such as a Book, College Course or Business Plan. The Entity Type contains properties that must adhere to the data types allowed and provided by the OData protocol or be of a Complex Type (explained later). To exist in the OData protocol, each Entity must be uniquely identifiable, so the Entity Type must have a unique identifier, the Entity Key, that allows the Entities of that Entity Type to be located and used within the OData feed. The Entity Key can be composed of one or more Entity properties and must be unique within the Entity Type. Most Entity Types will have a single-property key, but that is not a requirement. An example of a multiple-property key is the Batting Entity Type in my baseball stats OData feed.

The Batting Entity Type in my baseball stats OData feed is comprised of the following properties:

- playerID – the Player Entity Type’s Key

- yearID – the year of the baseball season

- stint – baseball players could be traded and traded back to a team in the same season so needs to be tracked

- teamID – the team the Player played for in the stint

- lgID – the league of the team the player played for in the stint

As you can see OData can handle complex or composite Keys and allow for almost anything we give it. The collection of Entities is grouped in an Entity Set within the OData feed.
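To make the composite key concrete, here is a minimal sketch of how a client might address one Batting entity by its key. It assumes that all five listed properties form the key; the service root, the entity set names and every key value are hypothetical. Only string and integer key values are handled, and URL-encoding of the result is left out.

```python
def key_literal(value):
    """Format a key value the way it appears inside an OData key predicate."""
    if isinstance(value, bool):
        raise TypeError("booleans are not handled in this sketch")
    if isinstance(value, int):
        return str(value)
    if isinstance(value, str):
        # A single quote inside a string literal is written as two quotes.
        return "'" + value.replace("'", "''") + "'"
    raise TypeError(f"unsupported key type: {type(value).__name__}")


def entity_url(service_root, entity_set, key):
    """Build the address of one entity from its (possibly composite) key."""
    if len(key) == 1:
        # A single-property key may be written without the property name.
        predicate = key_literal(next(iter(key.values())))
    else:
        predicate = ",".join(f"{name}={key_literal(v)}" for name, v in key.items())
    return f"{service_root.rstrip('/')}/{entity_set}({predicate})"


# Hypothetical service root and key values.
url = entity_url(
    "http://example.com/baseball.svc/",
    "Batting",
    {"playerID": "player01", "yearID": 2011, "stint": 1, "teamID": "TEAM", "lgID": "LG"},
)
print(url)
```

A single-property key collapses to the short form, for example `Player('player01')`, while the composite key spells out each `name=value` pair.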

The OData feed can also contain Associations that define the connections (or roads from our earlier analogy) between the Entity Type and another Entity Type. An example would be the Association between a Baseball Player and his Batting Statistics. An Entity Type’s collection of Associations is called an Association Set and along with the Entity Set is assembled into an Entity Container. The Link or Navigation Property that makes up the Entity Type must act on behalf of a defined Association in the OData feed.

A special kind of structured type that can appear in an OData feed is a Complex Type. The Complex Type is a collection of properties without a unique Key. This type can only be contained within an Entity Type as a property or live outside of all Entity Types as temporary data.

The last item that can be represented in the Entity Data Model is a Service Operation. We will look at the Service Operation later in the series. For now, understand that Service Operations are functions that can be called on an OData feed. A Service Operation behaves similarly to a stored procedure in a relational database.
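The building blocks described so far can be pictured as a handful of small data structures. The sketch below is only an in-memory illustration, not how any OData library represents its metadata: the class names mirror the EDM terms, the `Edm.*` types assigned to the Batting properties are guesses, and Service Operations are left out.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass(frozen=True)
class Property:
    name: str
    type_name: str  # a primitive such as "Edm.String", or a Complex Type name


@dataclass(frozen=True)
class ComplexType:
    name: str
    properties: Tuple[Property, ...]  # properties only, no key


@dataclass(frozen=True)
class EntityType:
    name: str
    properties: Tuple[Property, ...]
    key: Tuple[str, ...]  # names of the properties that make up the Entity Key

    def __post_init__(self):
        names = {p.name for p in self.properties}
        missing = [k for k in self.key if k not in names]
        if not self.key or missing:
            raise ValueError(f"{self.name}: key must name existing properties")


@dataclass(frozen=True)
class Association:
    name: str
    from_type: str  # Entity Type name at one end
    to_type: str    # Entity Type name at the other end


@dataclass
class EntityContainer:
    entity_sets: Dict[str, EntityType] = field(default_factory=dict)
    association_sets: Dict[str, Association] = field(default_factory=dict)


batting = EntityType(
    "Batting",
    (Property("playerID", "Edm.String"), Property("yearID", "Edm.Int32"),
     Property("stint", "Edm.Int32"), Property("teamID", "Edm.String"),
     Property("lgID", "Edm.String")),
    key=("playerID", "yearID", "stint", "teamID", "lgID"),
)
container = EntityContainer()
container.entity_sets["Batting"] = batting
container.association_sets["PlayerBatting"] = Association("PlayerBatting", "Player", "Batting")
```

The only rule enforced here is the one the text stresses: an Entity Type must have a key, and the key must be built from its own properties, whereas a Complex Type has none.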

We have looked at the Entity Data Model and what defines it in the world of OData. This will allow us to lay down a foundation for future blog posts in this series to explain the behaviors and results that we expect from our OData feeds.

Chris “Woody” Woodruff (@cwoodruff) posted the second member of his OData series, 31 Days of OData – Day 2 Data Types of OData, on 12/2/2011:

Since data is the basis for all OData feeds, it is natural that describing the data that represents the types of Entities within the feed is important. It is important not only for the data but also for the metadata that plays a significant role in the OData protocol.

The OData protocol is a very structured standard, and with it comes a set of primitive data types. Each data type has a distinct set of properties, and we will look at each to understand those characteristics. Let’s dig into the list, shall we?

What are the Data Types for OData?

I will give the list of primitive data types that can be used with the OData protocol and additionally I will give some of the characteristics for each type. We all should know most of these data types but it will be a good refresher.

| Primitive Types | Description | Notes | Examples |
| --- | --- | --- | --- |
| Null | Represents the absence of a value | | null |
| Edm.Binary | Represent fixed- or variable-length binary data | X and binary are case sensitive. Spaces are not allowed between binary and the quoted portion. Spaces are not allowed between X and the quoted portion. Odd pairs of hex digits are not allowed. | X'23AB' |
| Edm.Boolean | Represents the mathematical concept of binary-valued logic | | Example 1: true; Example 2: false |
| Edm.Byte | Unsigned 8-bit integer value | | FF |
| Edm.DateTime | Represents date and time with values ranging from 12:00:00 midnight, January 1, 1753 A.D. through 11:59:59 P.M, December 9999 A.D. | Spaces are not allowed between datetime and quoted portion. datetime is case-insensitive | datetime'2000-12-12T12:00' |
| Edm.Decimal | Represents numeric values with fixed precision and scale. This type can describe a numeric value ranging from negative 10^255 + 1 to positive 10^255 -1 | | 2.345M |
| Edm.Double | Represents a floating point number with 15 digits precision that can represent values with approximate range of ± 2.23e -308 through ± 1.79e +308 | | Example 1: 1E+10; Example 2: 2.029; Example 3: 2.0 |
| Edm.Single | Represents a floating point number with 7 digits precision that can represent values with approximate range of ± 1.18e -38 through ± 3.40e +38 | | 2.0f |
| Edm.Guid | Represents a 16-byte (128-bit) unique identifier value | | '12345678-aaaa-bbbb-cccc-ddddeeeeffff' |
| Edm.Int16 | Represents a signed 16-bit integer value | | Example 1: 16; Example 2: -16 |
| Edm.Int32 | Represents a signed 32-bit integer value | | Example 1: 32; Example 2: -32 |
| Edm.Int64 | Represents a signed 64-bit integer value | | Example 1: 64L; Example 2: -64L |
| Edm.SByte | Represents a signed 8-bit integer value | | Example 1: 8; Example 2: -8 |
| Edm.String | Represents fixed- or variable-length character data | | Example 1: 'Hello OData' |
| Edm.Time | Represents the time of day with values ranging from 0:00:00.x to 23:59:59.y, where x and y depend upon the precision | | Example 1: 13:20:00 |
| Edm.DateTimeOffset | Represents date and time as an Offset in minutes from GMT, with values ranging from 12:00:00 midnight, January 1, 1753 A.D. through 11:59:59 P.M, December 9999 A.D | | |
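As a rough illustration of the literal forms in the Examples column, the sketch below turns Python values into the corresponding text. The function name is made up for this example, only a subset of the types is covered (the ones whose example form is unambiguous in the table), and it is not taken from any OData library.

```python
import datetime
import decimal


def format_literal(edm_type, value):
    """Render a Python value in the literal form shown for the given Edm type."""
    if value is None:
        return "null"
    if edm_type == "Edm.Boolean":
        return "true" if value else "false"
    if edm_type in ("Edm.Int16", "Edm.Int32", "Edm.SByte"):
        return str(int(value))
    if edm_type == "Edm.Int64":
        return f"{int(value)}L"                  # 64-bit integers carry an L suffix
    if edm_type == "Edm.Decimal":
        return f"{decimal.Decimal(value)}M"      # decimals carry an M suffix
    if edm_type == "Edm.Single":
        return f"{float(value)}f"                # single-precision floats carry an f suffix
    if edm_type == "Edm.Double":
        return repr(float(value))
    if edm_type == "Edm.String":
        return "'" + str(value).replace("'", "''") + "'"
    if edm_type == "Edm.Binary":
        # An even number of hex digits, no space between X and the quoted portion.
        return "X'" + bytes(value).hex().upper() + "'"
    if edm_type == "Edm.DateTime":
        return "datetime'" + value.strftime("%Y-%m-%dT%H:%M") + "'"
    raise ValueError(f"type not covered by this sketch: {edm_type}")


print(format_literal("Edm.Binary", bytes([0x23, 0xAB])))
print(format_literal("Edm.DateTime", datetime.datetime(2000, 12, 12, 12, 0)))
print(format_literal("Edm.Int64", -64))
```

Passing the Edm type explicitly, rather than inferring it from the Python type, avoids guessing whether an `int` is meant as an Int16, Int32 or Int64.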

Where do the Data Types for OData come from?

Where the data types for OData originate offers a great look at the history of OData and the other products that helped shape the protocol. OData originated with Microsoft, so first we will look at where most data resides when developing solutions on the Microsoft stack of tools and technologies: SQL Server. When the OData protocol was announced back in 2010, most of the data types used in Microsoft SQL Server 2008 could be used to represent data in an OData feed. It seems only logical that a technology developed within an organization would be shaped and influenced by the tools at hand, and OData is no exception.

But that is just the start. We must also look at the Entity Framework as another key influencer in the maturation of the OData protocol. If you look at the table above, the data types all carry the prefix “Edm.” That comes from the Entity Framework and means “Entity Data Model”. Why is this so? It could be that other data types will be allowed at some time and that some with the same literal name could be brought into the OData protocol specification but with slightly different characteristics. If that happened, this new set of primitives could conflict with the existing data types (an example could be data types from Oracle).

The OData team was smart enough to allow for this and started using prefixes that could distinguish possibly a different representation of a string by two different database products (example could be the maximum fixed length of a string). These new data types could be given their own prefix and happily live side by side with the original data types from SQL Server and Entity Framework.

What about Spatial Data Types?

The Spatial data types that were first brought to the Microsoft world in SQL Server 2008 are an exciting new feature of both the Entity Framework 4.0 product and OData. These new data types are so exciting that I will be spending an entire blog post covering them later in the month. I will update and link this blog post chapter to that future post, so stay tuned.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Damir Dobric (@ddobric) described X509 Certificate flow in Windows Azure Access Control Service in an 11/30/2011 post:

The picture below shows the flow of X509 certificates in the communication between client and service when using WCF and the Windows Azure Access Control Service. In this scenario you will almost certainly be confused, at least by the number of certificates used in the communication process. I hope this picture helps a bit.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Ralph Squillace claimed Windows Azure: P & P Enterprise Integration Pack with failure/retry **and** fine-grained autoscaling APIs (among others) in a 12/4/2011 post:

This release of the Patterns & Practices Enterprise Integration Pack is simply wonderful and is required for real-world Azure development. Read the announcement for the full list of features, but in particular I want to call out the autoscaling and transient failure/retry APIs offered. Azure applications can use these to handle a majority of the big needs that flexible and robust apps in the cloud need -- and still take advantage of the entire platform as designed, rather than being "trapped" in a VM.

We're soon going to release some guidance around building multi tenant applications in Windows Azure, and this integration pack is very, very important for that design approach as well. Have a look. [Emphasis added.]

Tarun Arora (@arora_tarun) continued his series with Part 3 - Load Testing In the Cloud Automating Test Rig Deployment on 12/3/2011:

Is this blog post worth your time?

Setting up the Load Test Rig in the cloud is not the challenge; convincing enterprises to embrace the cloud is. Not all enterprises will accept their business-critical applications being profiled from a VM hosted outside their network. Through Windows Azure Connect you can join the cloud-hosted role to the on-premise domain, effectively forcing the domain policies on the external VM, but how many enterprises will authorize you to do this? I have worked with various energy trading companies who admire the flexibility that Azure brings but often shy away from the implementation because they still consider it risky. There is no immediate solution to this, but I do think that the thought process could be transformed by implementing the change gradually. The client may not be willing to experiment with the business-critical applications, but you stand a good chance if you can identify the less important applications and highlight the benefits of Azure-ing the Test Rig, such as:

- Zero Administration overhead.

- Since Microsoft manages the hardware (patching, upgrades and so forth), as well as application server uptime, the involvement of IT pros is minimized.

- Near to 100% guaranteed uptime.

- Utilizing the power of Azure compute to run a heavy virtual user load.

- Benefiting from Azure's flexibility: destroy Test Agents when they are not in use; it takes less than 25 minutes to spin up a new Test Agent.

- Most important, testing network latency (network latency and speed of connection are two different things, and network latency is usually very hard to test): by placing the Test Agents in Microsoft data centres around the globe, one can actually test the lag in transferring the bytes that occurs not because of a slow connection but because the page has been requested from the other side of the globe.
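The difference between latency and connection speed in the last point can be made concrete with a back-of-the-envelope model. The formula below is a deliberate simplification (each round trip costs one full round-trip time, and the payload then streams at the full bandwidth), and every number is hypothetical.

```python
def transfer_time_seconds(payload_bytes, bandwidth_bits_per_s, rtt_s, round_trips=1):
    """Rough page-load estimate: time spent waiting plus time spent transferring."""
    if bandwidth_bits_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    waiting = round_trips * rtt_s                       # cost of latency
    streaming = payload_bytes * 8 / bandwidth_bits_per_s  # cost of connection speed
    return waiting + streaming


# Same 100 KB page over the same 10 Mbit/s link, needing 4 round trips.
nearby = transfer_time_seconds(100_000, 10_000_000, rtt_s=0.010, round_trips=4)
far_away = transfer_time_seconds(100_000, 10_000_000, rtt_s=0.300, round_trips=4)
print(f"nearby agent: {nearby:.2f} s, agent across the globe: {far_away:.2f} s")
```

With identical bandwidth, the distant agent is dominated by waiting rather than by transfer, which is the effect that geographically spread Test Agents let you measure.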

Welcome to Part 3. In Part 1 we discussed the advantages of taking the Test Rig to the cloud, and in Part 2 we manually set up the Test Rig in the cloud. In Part 3 I intend to completely automate the end-to-end deployment of the Test Agent to the cloud.

Another Azure Gem – Startup Tasks in Worker Role

Let’s start with what we need to automate the Test Agent deployment to the cloud. We can use startup tasks to perform operations before the role starts. Operations that one might want to perform include installing a component, registering COM components, setting registry keys, or starting a long-running process. Read more about startup tasks here. I will be leveraging startup tasks to install the Visual Studio Test Agent software and then register the Test Agent with the controller.

I. Packaging everything in to the Solution

In Part 2 we created a solution using the Windows Azure Project template; I’ll be extending the same solution here.

DOWNLOAD A WORKING SOLUTION OR follow the instructions below:

1. StartupTask - Create a new Folder under the WorkerRole1 Project and call it StartupTask.

2. VS2010Agents.zip - If you haven’t already, download the Visual Studio Agents software from MSDN. I used ‘en_visual_studio_agents_2010_x86_x64_dvd_509679.iso’, extracted the ISO, zipped the TestAgents folder and called it VS2010Agents.zip. Add VS2010Agents.zip to the StartupTask folder; the zip file is approximately 75 MB.

3. Setup.cmd - Create a new file Setup.cmd and add it to the StartupTask folder. Setup.cmd will just be the workflow manager. The cmd file will call the Setup.ps1 script that we will generate in the next step and log the output to a log file. Your cmd file should look like below.

    REM Allow Power Shell scripts to run unrestricted
    powershell -command "set-executionpolicy Unrestricted"

    REM Unzip VS2010 Agents
    powershell -command ".\setup.ps1" -NonInteractive > out.txt

4. Setup.ps1 - Unzipping VS2010Agents.zip – I found a very helpful post that shows you how to unzip a file using PowerShell. Create a function Unzip-File that accepts two parameters, the source and the destination, verifies that the source location is valid, and unzips the file to the destination location; the script creates the destination folder before calling the function. So, your Setup.ps1 file should look like below.

    function Unzip-File
    {
        PARAM
        (
            $zipfilename="E:\AppRoot\StartupTask\VS2010Agents.zip",
            $destination="C:\resources\temp\vsagentsetup"
        )

        if(test-path($zipfilename))
        {
            $shellApplication = new-object -com shell.application
            $zipPackage = $shellApplication.NameSpace($zipfilename)
            $destinationFolder = $shellApplication.NameSpace($destination)
            $destinationFolder.CopyHere($zipPackage.Items(), 1040)
        }
        else
        {
            echo "The source ZIP file does not exist";
        }
    }

    md "C:\resources\temp\vsagentsetup"
    Unzip-File "E:\AppRoot\StartupTask\VS2010Agents.zip" "C:\resources\temp\vsagentsetup"

5. SetupAgent.ini – Unattended Installation - Once setup.ps1 has run, VS2010Agents.zip is unzipped to C:\resources\temp\vsagentsetup. Now you need to install the Agents software, and you will want to run an unattended installation. I found a great walkthrough on MSDN that shows you how to create an unattended installation of Visual Studio, and I used it to create the setupAgent.ini file. Don’t worry if you have trouble creating the ini file; the entire solution is available for download at the bottom of the post.

Once you have created or downloaded the setupagent.ini file, you need to append instructions to the setup.cmd to start the unattended install using the setupagent.ini that you just created. You can add the below statements to the setup.cmd.

    REM Unattended Install of Test Agent Software
    C:\resources\temp\vsagentsetup\VS2010TestAgents\testagent\setup.exe /q /f /norestart /unattendfile e:\approot\StartupTask\setupagent.ini

6. ConfigAgent.cmd – Now you need to configure the agent to work with the test controller. This is easy to do: you can call TestAgentConfig.exe from the command line, passing the user details and controller details. The MSDN post here was helpful. Create a new file, call it ConfigAgent.cmd, and make it look like below:

    "D:\Program Files (x86)\Microsoft Visual Studio 10.0\Common7\IDE\TestAgentConfig.exe" configureAsService ^
        /userName:vstestagent /password:P2ssw0rd /testController:TFSSPDEMO:6901

You will need to append instructions to setup.cmd to call ConfigAgent.cmd and run it as a scheduled task with elevated permissions. You can add the below statements to setup.cmd:

    REM Create a task that will run with full network privileges.
    net start Schedule
    schtasks /CREATE /TN "Configure Test Agent Service" /SC ONCE /SD 01/01/2020 ^
        /ST 00:00:00 /RL HIGHEST /RU admin /RP P2ssw0rd /TR e:\approot\configagent.cmd /F
    schtasks /RUN /TN "Configure Test Agent Service"

7. Setupfw.cmd – You will need to open up ports for communication between the test controller and the test agent. In a normal developer environment you can at times get away with turning off the firewall; however, this will not be a possibility in a corporate network. I found a very useful post that shows you how to configure the Windows 2008 Server Advanced firewall from the CLI. Once you are through, Setupfw.cmd should look like below:

    netsh advfirewall firewall add rule name="RPC" ^
        dir=in action=allow service=any enable=yes profile=any localport=rpc protocol=tcp
    netsh advfirewall firewall add rule name="RPC-EP" ^
        dir=in action=allow service=any enable=yes profile=any localport=rpc-epmap protocol=tcp
    ..
    netsh advfirewall firewall add rule name="Port 6910 TCP" ^
        dir=in action=allow service=any enable=yes profile=any localport=6910 protocol=tcp
    netsh advfirewall firewall add rule name="Port 6910 UDP" ^
        dir=in action=allow service=any enable=yes profile=any localport=6910 protocol=udp

II. How will the Startup Tasks be executed?

- Set the value of Copy to Output Directory to Copy Always. Failing to do so will result in a deployment error. This step will ensure that all the startupTask files are added to the package and uploaded to the Test Agent VM. By default the files are uploaded to E:\AppRoot\

- Open ServiceDefinition.csdef and include a trigger to call the workflow cmd to start the execution of the command file. The csdef file should look like below once you have added the Startup Task tags.

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceDefinition name="WindowsAzureProject2" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
      <WorkerRole name="WorkerRole1" vmsize="Small">
        <Imports>
          <Import moduleName="Diagnostics" />
          <Import moduleName="RemoteAccess" />
          <Import moduleName="RemoteForwarder" />
          <Import moduleName="Connect" />
        </Imports>
        <Startup>
          <Task commandLine="StartupTask\setup.cmd" executionContext="elevated" taskType="background" />
          <Task commandLine="StartupTask\setupfw.cmd" executionContext="elevated" taskType="background" />
        </Startup>
        <LocalResources>
        </LocalResources>
      </WorkerRole>
    </ServiceDefinition>

- ExecutionContext – Make sure you set the execution context to run as elevated. The execution of the cmd file will fail if the file is executed in non elevated mode.

- TaskType – You have the option to set the task type to simple, background or foreground. If you set the taskType to simple, the deployment of the VM will not complete until the startup task is complete. I don’t recommend running the startup task as simple; run it as background so that, if something goes wrong, you can still log in to the machine remotely and investigate the reason for the failure.
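The two settings above are easy to get wrong by hand, so a quick check before publishing can help. The sketch below is one possible approach, not part of the original solution: it parses a service definition with Python's standard library and flags any startup task that is not elevated or that runs as simple. The helper names are made up, and the sample is a trimmed copy of the csdef shown earlier.

```python
import xml.etree.ElementTree as ET

CSDEF_NS = {"sd": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"}

# Trimmed sample; the raw string keeps the backslashes in the command lines intact.
SAMPLE_CSDEF = r"""<ServiceDefinition name="WindowsAzureProject2"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WorkerRole name="WorkerRole1" vmsize="Small">
    <Startup>
      <Task commandLine="StartupTask\setup.cmd" executionContext="elevated" taskType="background" />
      <Task commandLine="StartupTask\setupfw.cmd" executionContext="elevated" taskType="background" />
    </Startup>
  </WorkerRole>
</ServiceDefinition>"""


def startup_tasks(csdef_xml):
    """Return one dict per startup task declared for any worker role."""
    root = ET.fromstring(csdef_xml)
    tasks = []
    for role in root.findall("sd:WorkerRole", CSDEF_NS):
        for task in role.findall("sd:Startup/sd:Task", CSDEF_NS):
            tasks.append({
                "role": role.get("name"),
                "commandLine": task.get("commandLine"),
                "executionContext": task.get("executionContext"),
                "taskType": task.get("taskType"),
            })
    return tasks


def check_tasks(tasks):
    """List the settings that contradict the advice in this post."""
    problems = []
    for t in tasks:
        if t["executionContext"] != "elevated":
            problems.append(f"{t['commandLine']}: executionContext is not elevated")
        if t["taskType"] == "simple":
            problems.append(f"{t['commandLine']}: simple tasks block the deployment")
    return problems


for problem in check_tasks(startup_tasks(SAMPLE_CSDEF)):
    print(problem)
```

For the sample above nothing is printed, because both tasks are elevated and run in the background.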

FAQ: Something has failed but I don’t know what?

Well, to be honest, there isn’t too much that can go wrong during the deployment, but in case there is a failure, your best bet is to look at the VM creation logs and at the output log created when the cmd file runs (out.txt in the Setup.cmd above). I found some great troubleshooting tips, tricks and gotchas in the blog posts (this, this) of the Channel 9 @cloudcover team.

III. Publish, Scaling up or down

Now the most important step, once you have finished writing all the startup tasks and changing the setting of the startup tasks to copy always, you are ready to test the deployment.

You have two options to carry out the deployment,

- Publish From Visual Studio – Right click the Azure project and choose Publish

- Package From Visual Studio – Once the package is ready, you can start deployment from the Windows Azure Portal.

Once the deployment is complete you can still change the configuration. For example, in the screen shot below, I can change the Instance count from 1 to 10. This triggers the deployment of 9 more instances using the same package that was used to create the original machine. The benefit: you can scale up your environment in a very short time. You also have the option to stop or delete the machines once they are no longer required.
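The instance count changed in the portal corresponds to the `Instances` element of the role in the service configuration (.cscfg) file. As a hedged sketch of the same change made on the file itself, the helper below rewrites that count; the function name and the trimmed sample configuration are assumptions for illustration, and uploading the edited configuration is not shown.

```python
import xml.etree.ElementTree as ET

CSCFG_URI = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"
CSCFG_NS = {"sc": CSCFG_URI}

# Trimmed, hypothetical configuration for the worker role used in this post.
SAMPLE_CSCFG = """<ServiceConfiguration serviceName="WindowsAzureProject2"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WorkerRole1">
    <Instances count="1" />
  </Role>
</ServiceConfiguration>"""


def set_instance_count(cscfg_xml, role_name, count):
    """Return the configuration text with the role's instance count replaced."""
    if count < 1:
        raise ValueError("instance count must be at least 1")
    ET.register_namespace("", CSCFG_URI)  # keep the default namespace on output
    root = ET.fromstring(cscfg_xml)
    for role in root.findall("sc:Role", CSCFG_NS):
        if role.get("name") == role_name:
            instances = role.find("sc:Instances", CSCFG_NS)
            if instances is None:
                raise ValueError(f"role {role_name!r} has no Instances element")
            instances.set("count", str(count))
            return ET.tostring(root, encoding="unicode")
    raise KeyError(role_name)


scaled = set_instance_count(SAMPLE_CSCFG, "WorkerRole1", 10)
print(scaled)
```

Because every instance is built from the same package, the startup tasks described earlier run on each new Test Agent without further work.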

Review and What’s next?

In this blog post, we have automated the process of spinning up the Test Agents in Windows Azure. You can download the demo solution from here.

You can read the earlier posts in the series here,

Earlier Azure-ing your Test Rig posts

I have various blog posts on Performance Testing with Visual Studio Ultimate, you can follow the links and videos below,

Blog Posts:

- Part 1 – Performance Testing using Visual Studio 2010 Ultimate

- Part 2 – Performance Testing using Visual Studio 2010 Ultimate

- Part 3 – Performance Testing using Visual Studio 2010 Ultimate

Videos:

- Test Tools Configuration & Settings in Visual Studio

- Why & How to Record Web Performance Tests in Visual Studio Ultimate

- Goal Driven Load Testing using Visual Studio Ultimate

I have some ideas around Azure and TFS that I am continuing to work on. I’m not giving away any hints here, but remember to subscribe to http://feeds.feedburner.com/TarunArora; I’ll be blogging the results of these experiments soon! I hope you enjoyed this post and would love to hear your feedback. If you have any recommendations on things that I should consider, or any questions, feel free to leave a comment.

Tinu Thomas (@unitinu) claimed to have Been diagnosed with Acute Windows Azure Diagnostics Understanding Syndrome in a 12/2/2011 post:

I have had a total of 2 years, 3 months, 2 hours of Windows Azure developer experience and it humiliates me to suicidal extents to confess that I am hardly conversant with the platform’s native Diagnostics technicalities. “I am Tinu Thomas and I am a diagnostic-ignorant Azure Developer.” Oh my god…how could he?? Now that we have that past us <sheepish grin>, I would also like to bask in the afterglow of redemption. Thanks to a much-needed job transition phase, I was able to find enough time to explore my forbidden fantasies regarding Diagnostics and eventually came to a foregone conclusion – I “need” to blog about this, irrespective of whether or not it’s a tried and tested topic, it was my moral responsibility as a mortal developer to reiterate my freshly acquired knowledge to my other fellow kind, despite the fact that they may already be well aware and much more well-informed than me on the subject, because it is written in the holy texts of GeeksAndTheirInternet – ‘Thou shall blog what thou hath painstakingly learned’. Amen.

So how does WAD work?

So, before your role ‘starts’, a process called the Diagnostic Monitor latches itself on to the role (think Aliens and you got it). Let’s say that this monitor has already been ‘configured’ (more on this later). Once the role starts, it goes about its usual activity <yada-yada-yada>, all the while being stealthily screened by our DM for any errors, information or logs, which are in turn stored within the local storage of the role’s VM. These logs are then meticulously transferred to Azure Storage at intervals. So that’s basically the gist of it. Not that bad, huh?

Well, put on your gas masks… We are going in!

When I mentioned that the monitor was ‘configured’, what did I mean? Well, like every other process, the Diagnostic Monitor also needs to be told what to do. Configuring the DM is like instructing it to follow a set of rules – for example: what exactly should it be monitoring, when exactly should it be monitoring that, when should it transfer the data from local to Azure storage and so on. Alright, so let’s take a look at how this configuration thing works.

1. Diagnostic Monitors have a default configuration. This configuration enables you to deploy your Windows Azure application directly, typically without any extra effort to set up the DM. The only care you have to take is to ensure that the DM is initialized in your role (more later) and that a storage credential has been provided for logging the traces. Here’s a code snippet that retrieves the default configuration of a Diagnostic Monitor:

    using Microsoft.WindowsAzure.Diagnostics;
    using Microsoft.WindowsAzure.ServiceRuntime;

    public class WebRole : RoleEntryPoint
    {
        public override bool OnStart()
        {
            // For information on handling configuration changes
            // see the MSDN topic at http://go.microsoft.com/fwlink/?LinkId=166357.

            // Retrieve the configuration the Diagnostic Monitor uses by default.
            DiagnosticMonitorConfiguration diagConfig =
                DiagnosticMonitor.GetDefaultInitialConfiguration();

            return base.OnStart();
        }
    }

The DiagnosticMonitorConfiguration is a class that holds all the configurable properties of the DM. The GetDefaultInitialConfiguration method of the DM returns the default configuration that the DM is currently equipped with. Let’s see what this default configuration contains.

Ahh. Now we know what the DM has that we can configure. Big deal. The ConfigurationChangePollInterval property is set to 1 minute. This means that the DM will look for a change in its configuration every minute. But where does it look? Patience, my love. There are other properties that can be set, and all these properties are nothing but elements present in the DiagnosticMonitorConfiguration schema. I will not go in depth about these properties in this blog post, simply because I myself am yet to explore them further, but rest assured my future posts will attempt to reveal the mysteries behind these.

2. Moving on. Once you have obtained the default configuration you can joyfully modify the configuration settings described above. Note that the code has been added within the OnStart method of your role. This means that you are altering the DM settings before the role starts. Here’s an example wherein I have configured the DM to transfer only those infrastructure logs that were logged at the Error level. In addition, I have configured the transfer of the logs from local storage to Azure storage to run every 30 seconds. Once the configuration is done, I start the Diagnostic Monitor by providing it with these initial configs.

    using System;
    using Microsoft.WindowsAzure.Diagnostics;
    using Microsoft.WindowsAzure.ServiceRuntime;

    public class WebRole : RoleEntryPoint
    {
        public override bool OnStart()
        {
            DiagnosticMonitorConfiguration diagConfig =
                DiagnosticMonitor.GetDefaultInitialConfiguration();

            // Transfer only infrastructure logs filtered at the Error level.
            diagConfig.DiagnosticInfrastructureLogs.ScheduledTransferLogLevelFilter = LogLevel.Error;

            // Move logs from local storage to Azure storage every 30 seconds.
            diagConfig.DiagnosticInfrastructureLogs.ScheduledTransferPeriod = new TimeSpan(0, 0, 30);

            DiagnosticMonitor.Start("Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString", diagConfig);

            return base.OnStart();
        }
    }

The Start method of the DM takes in two parameters here. One is the name of the diagnostics storage account configuration setting. This is the name of the setting that you have provided in your cscfg for the diagnostics connection string. This will become clearer once we move to the DM initialization part. The other parameter is the DiagnosticMonitorConfiguration object that we have just initialized and modified to suit our fancies.

The Start method can also be invoked by passing only the ConfigurationSettingName mentioned above in which case the DM will be started using the default config as explained in the first point. The other set of parameters that the Start method can take instead are the CloudStorageAccount object itself and then our DiagnosticMonitorConfiguration object (diagConfig).

3. So that was all about configuring the DM before the role starts – in short we set up a start-up task for the role. What if there are some configs that must be applied at runtime? Say that your application contains a Web and a Worker Role. After a particular event in the application – say an Administrator login – you need to monitor a Performance Counter of the Worker Role to check on the health of the VM. Oops! But you haven't configured the DM to log that particular information. Ta-Da. Presenting the RoleInstanceDiagnosticManager. This little superhero enables you to configure the diagnostics of a single role instance. Here’s how it works:

    // CloudStorageAccount.Parse expects the connection string itself, not the
    // setting name, so read the setting's value from the service configuration.
    string connectionString = RoleEnvironment.GetConfigurationSettingValue(
        "Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString");

    RoleInstanceDiagnosticManager instanceDiagManager =
        new RoleInstanceDiagnosticManager(
            CloudStorageAccount.Parse(connectionString),
            "<deployment-id>", "<role-name>", "<role-instance-id>");

    DiagnosticMonitorConfiguration diagConfig =
        instanceDiagManager.GetCurrentConfiguration();

So the RoleInstanceDiagnosticManager is initialized using 4 parameters: the CloudStorageAccount, the deployment ID, the role name and the role instance ID, all of which can be retrieved using the Service Management APIs. Once initialized, it can be used to obtain the current DiagnosticMonitorConfiguration using GetCurrentConfiguration. Note that the earlier config that we dealt with was the default one, whereas this config is the one that the role is equipped with at that moment. And that’s it. You can then use the diagConfig to alter the configuration settings of the DM (as in OnStart) and then call instanceDiagManager.SetCurrentConfiguration(diagConfig) to set the diagnostics configuration of the DM for that particular Worker Role instance.

4. Another way to go about configuring the DM would be the PowerShell way. If you are into Windows Azure you have to be a PowerShell guy. Being the ‘suh-weeeet’ topic that it is, it deserves a blog post of its own and I being a worthy disciple of GeeksAndTheirInternet , will comply. My future blog posts will boast about the ways in which PowerShell can be used to remotely configure diagnostics. That’s right – remotely.

For now, let’s get back to the DM initialization part. The what? Now, if you did scroll up and eventually notice that the 1st point in the DM config part did mention this vaguely, you would at this moment be going “Uh-huh. The DM initialization. ‘Course I remember”. Now, the DM initialization isn’t that big a deal. In fact, chances are that when you launched your first Windows Azure app you didn’t even realize that you had initialized the DM. That’s because Visual Studio 2010 provides the Cloud solution with built-in csdef and cscfg files that already have a diagnostics setting enabled for each role. Don’t believe me? Open your cscfg for a new Azure project and I bet what you find is this:

    <ConfigurationSettings>
      <Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString"
               value="UseDevelopmentStorage=true" />
    </ConfigurationSettings>

The settings described herein are the ones that provide the storage credentials of your Windows Azure Storage account. When pushing your app to the cloud, it is imperative that you provide your storage credentials here. The DM will then know where to transfer the logs from the local storage. This setting name is the same one that we used as a parameter in the Start method of the DM in point 2 of our DM configuration part. Thus the DM will use the storage credentials provided here to perform its activities.

The csdef is also built to import the Diagnostics Module.

    <Imports>
      <Import moduleName="Diagnostics" />
    </Imports>

And if you open your ASP.NET Web Role’s web.config, you will very likely find this:

    <system.diagnostics>
      <trace>
        <listeners>
          <add type="Microsoft.WindowsAzure.Diagnostics.DiagnosticMonitorTraceListener, Microsoft.WindowsAzure.Diagnostics, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"
               name="AzureDiagnostics">
            <filter type="" />
          </add>
        </listeners>
      </trace>
    </system.diagnostics>

The above code is nothing but the declaration of a trace listener within the Web Role. This trace listener can then be used to trace errors within the application. Example:

    try
    {
        string s = null;
        if (s.Length > 0)
        {
            // Hmm...Something tells me that this is not going to work!
        }
    }
    catch (Exception ex)
    {
        // Well.. at least I was right about something
        System.Diagnostics.Trace.TraceError(System.DateTime.Now + " Error: " + ex.Message);
    }

The System.Diagnostics.Trace.TraceError method will now log the error into WAD Logs since the trace listener is in place.

Thus the DM has been initialized using nothing but simple storage account credentials. Another question here is how and when does the DM know that the configuration has been changed? Well, remember that itsy-bitsy configuration setting – ConfigurationChangePollInterval – that we saw earlier? That setting decides the time interval at which the DM polls Azure storage to check whether any changes have been made to its configuration. Uhhh. Ok. But why does the DM poll Azure storage? ‘Cos that’s where the diagnostic configuration setting file is kept, silly!

Wait. I didn’t tell you about the diagnostic configuration setting file? Oops! Remember when we talked about the DiagnosticMonitorConfiguration schema earlier in the 1st point on DM config? Windows Azure maintains an XML file (with the extension *.wadcfg), similar to your cscfg, which is the grand summation of all the settings of your DM. This file is typically present in the wad-control-container blob container within your Windows Azure Blob Storage and also in your local storage within the root directory of your role. Here is a comprehensive detail of the schema. So that’s why the DM keeps polling Azure (blob) storage to check for any changes in the configuration and apply them accordingly.
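The polling behaviour described above can be pictured with a small conceptual model. This is only a sketch of the idea and not how the Diagnostic Monitor is actually implemented: the ConfigPoller class, its fetch_config callback and the in-memory dictionary standing in for the configuration blob are all hypothetical.

```python
from datetime import datetime, timedelta


class ConfigPoller:
    """Conceptual model: re-read a stored configuration once per poll interval."""

    def __init__(self, fetch_config, poll_interval, initial_config, start):
        self.fetch_config = fetch_config      # stands in for reading the blob
        self.poll_interval = poll_interval    # plays the role of ConfigurationChangePollInterval
        self.current = initial_config
        self.next_poll = start + poll_interval

    def tick(self, now):
        """Return True if a changed configuration was picked up at this tick."""
        if now < self.next_poll:
            return False                      # interval has not elapsed yet
        self.next_poll = now + self.poll_interval
        latest = self.fetch_config()
        if latest != self.current:
            self.current = latest
            return True
        return False


# A dictionary plays the part of the stored configuration file.
stored = {"ScheduledTransferPeriodSeconds": 60}
start = datetime(2011, 12, 2, 12, 0, 0)
poller = ConfigPoller(lambda: dict(stored), timedelta(minutes=1), dict(stored), start)

stored["ScheduledTransferPeriodSeconds"] = 30       # someone edits the stored config
early = poller.tick(start + timedelta(seconds=30))  # before the interval elapses
on_time = poller.tick(start + timedelta(minutes=1)) # first scheduled poll
print(early, on_time)
```

In this model, a change made to the stored configuration has no effect until the next poll, which matches the one-minute delay implied by the default interval.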

Well, that just about covers the basics of Windows Azure Diagnostics. As promised, posts ahead will definitely dare to tread the fields of Diagnostic Configuration Settings elaboration and PowerShell usage to configure diagnostics.

Avkash Chauhan (@avkashchauhan) described Using CSPACK to create Windows Azure Package returns error “Error CloudServices050 : Top-level XML element does not conform to schema” in a 12/2/2011 post:

I was investigating an issue in which using CSPACK to create a Windows Azure package (*.cspkg) returned the error below:

Error CloudServices050 : Top-level XML element does not conform to schema

The reason for this error was simple. The problem was caused by passing the service configuration file (*.cscfg) as the parameter, whereas CSPACK uses the service definition file (*.csdef) as its input parameter. Because CSPACK looks for the Service Definition namespace in the top-level element, passing a service configuration instead of a service definition causes this error.

To solve this problem, you just need to pass the correct service definition file to CSPACK.

Here is an example of Service Configuration:

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceConfiguration serviceName="WebRoleTestServer"
        xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"
        osFamily="1" osVersion="*">
      …………
    </ServiceConfiguration>

Here is an example of Service Definition:

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceDefinition name="WebRoleTestServer"
        xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
      ….
    </ServiceDefinition>
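Since the two files differ in their top-level element and namespace, a quick check before calling CSPACK can catch the mix-up. The following is a minimal sketch under that assumption; the function name classify_service_file and the shortened sample documents are hypothetical, and the check only inspects the root element rather than validating against the full schema.

```python
import xml.etree.ElementTree as ET

DEFINITION_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"
CONFIGURATION_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"


def classify_service_file(xml_text):
    """Return 'definition', 'configuration' or 'unknown' from the root element."""
    root = ET.fromstring(xml_text)
    if root.tag == "{%s}ServiceDefinition" % DEFINITION_NS:
        return "definition"
    if root.tag == "{%s}ServiceConfiguration" % CONFIGURATION_NS:
        return "configuration"
    return "unknown"


# Shortened stand-ins for a *.csdef and a *.cscfg file.
csdef = '<ServiceDefinition name="WebRoleTestServer" xmlns="%s" />' % DEFINITION_NS
cscfg = '<ServiceConfiguration serviceName="WebRoleTestServer" xmlns="%s" />' % CONFIGURATION_NS

print(classify_service_file(csdef))
print(classify_service_file(cscfg))
```

Only a file classified as a definition should be passed to CSPACK as its input.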

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

The Azureator.fr blog reported Windows Azure est ISO 27001 in a 12/4/2011 post:

Par Laurent Capin <Head in the cloud, Feet on Azure> le dimanche 4 décembre 2011, 19:26 - Actu- Lien permanent

Mais ISO 27001, qu'est-ce que c'est ? Eh bien c'est la norme décrivant les exigences pour un Système de Management de la Sécurité de l'Information. Et ce genre de démarches est primordiale dans le cloud, où la suspicion est forte et la prudence de mise. Mais ça ne sera peut-être pas suffisant pour convaincre les clients les plus paranoïaques, qui argueront que les organismes de certifications, même s'ils font un travail remarquable, ne sont pas tout à fait indépendants. Un peu comme pour CMMI, où les organismes de formations sont ceux qui certifient. Un point de plus pour une agence de notation du cloud ?

La certifiation : BSI

Une petite définition d'ISO 27001 : Wikipedia

Here’s the Bing translation:

But ISO 27001, what is it? Well that is the standard décrivantles requirements for a Management system of Information Security. FTEs kind of approaches is paramount in the cloud, where suspicion is forteet the last caution. But it may not be sufficient for customer convaincreles most paranoid, who argue that the decertifications organizations, even if they do a remarkable job, are not at faitindépendants. Just as for CMMI, where formations are unfurred certify. A point for a notationdu cloud Agency?

The certification: BSI

A small definition of ISO 27001: Wikipedia

[Bing appears to have a problem recognizing all spaces in the text.]

And here’s a capture of the PDF registered on 11/29/2011 from the La certifiation : BSI link:

Now all we need is a copy of the Windows Azure ISMS statement.

Clarified 12/5/2011 10:22 AM PST: Note that the certificate does not include SQL Azure, Service Bus, Access Control, Caching or Content Delivery Network.

Steve Plank (@plankytronixx) reported Windows Azure is now ISO 27001 compliant in a 12/3/2011 post:

The data centres have enjoyed this certification for quite a while now, as has Office 365, but Windows Azure was trailing behind a little. Well, no longer. The certification was performed by BSI Americas. Microsoft will be receiving the certificate in approximately one month’s time.

I complained about Windows Azure’s comparative lack of certifications and attestations in my Where is Windows Azure’s Equivalent of Office 365’s “Security, Audits, and Certifications” Page? post of 11/17/2011.

My (@rogerjenn) Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: November 2011 of 12/2/2011 described the third month of 99.99% availability and the sixth month of >= 99.98% availability for the oldest Windows Azure sample app:

My live OakLeaf Systems Azure Table Services Sample Project demo runs two small Windows Azure Web role instances from Microsoft’s South Central US (San Antonio, TX) data center. Here’s its uptime report from Pingdom.com for November 2011:

The exact 00:05:00 downtime shown probably is a 5-minute sampling interval artifact and actual downtime probably was shorter.
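As a quick check of the arithmetic, five minutes of downtime over November's 30 days works out to the 99.99% availability quoted above. The helper name availability_percent is a hypothetical name used only for this illustration.

```python
def availability_percent(downtime_minutes, days_in_month):
    """Percentage of the month during which the service was reachable."""
    total_minutes = days_in_month * 24 * 60
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


# 5 minutes of reported downtime in the 30 days of November 2011.
november = availability_percent(5, 30)
print("%.2f%%" % november)
```

If the actual downtime was shorter than the 5-minute sampling interval, the true figure would be slightly higher.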

Following is detailed Pingdom response time data for the month of November 2011:

This is the sixth uptime report for the two-Web role version of the sample project. Reports will continue on a monthly basis.

The Azure Table Services Sample Project

See my Republished My Live Azure Table Storage Paging Demo App with Two Small Instances and Connect, RDP post of 5/9/2011 for more details of the Windows Azure test harness instance.

I believe this project is the oldest continuously running Windows Azure application. I first deployed it in November 2008 when I was writing Cloud Computing with the Windows Azure Platform for Wiley/WROX, which was the first book published about Windows Azure.

For more details about the project or to download its source code, visit its Microsoft Pinpoint entry. Following are Pinpoint listings for two other OakLeaf sample projects:

- Microsoft Codename “Social Analytics” WinForms Client Sample (11/22/2011)

- SQL Azure Reporting Services Preview Sample (10/24/2011)

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

Kent Weare (@wearsy) reported that he’s Speaking at Prairie Dev Con West–March 2012 in a 12/2/2011 post:

I have recently been informed that my session abstract has been accepted and I will be speaking at Prairie Dev Con West in March. My session will focus on Microsoft’s Cloud based Middleware platform: Azure AppFabric. In my session I will be discussing topics such as Service Bus, AppFabric Queues/Subscriptions and bridging on-premise Line of Business systems with cloud based integration.

You can read more about the Prairie Dev Con West here and find more information about registration here. Do note there is some early bird pricing in effect.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

My (@rogerjenn) Oracle Offers Database, Java and Fusion Apps Slideware in Lieu of Public Cloud post of 12/4/2011 takes on Oracle’s cloudwashing:

Oracle’s Public Cloud site includes a video describing a development team’s need to get an enterprise-grade “mission critical” application running in the cloud within 60 days. The problem is that the team must register to be notified when the Oracle Public Cloud will become available. There’s no clue from the site when a release might occur. Presumably, the initial release will be in preview or beta form.

The slideware is an impressive example of cloudwashing:

A more accurate Database slogan would be “The Oracle database you love, in the cloud someday.”

According to Oracle’s 10/5/2011 Oracle Unveils Oracle Public Cloud press release from Oracle OpenWorld, “Pricing for the Oracle Public Cloud will be based on a monthly subscription model, and each service can be purchased independently of other services.” Obviously, this pricing model will make it difficult for prospective users to compare its cost with competitive offerings from Amazon Web Services, Windows Azure/SQL Azure, and Rackspace, all of which charge hourly for computing resources and by the GB for data storage and egress.

I’ve requested to be notified of updates and will report if and when I hear anything concrete from Oracle.

My (@rogerjenn) HP Announces “Secret Sauce” to Accelerate Windows Azure Development post of 12/3/2011 asks “What is it?”:

An HP Offers Services to Accelerate Application Development and Deployment on the Microsoft Windows Azure Platform press release of 11/30/2011 from its HP Discover 2011 - Vienna event begins:

HP Enterprise Services today announced new applications services designed to accelerate the building or migration of applications to the Microsoft Windows® Azure platform.

Traditional applications development and deployment approaches often take too long and cost too much. Cloud platform offerings, such as the industry-leading Windows Azure platform, can improve responsiveness to business needs while reducing costs.

With the new HP Cloud Applications Services for Windows Azure, applications developers can quickly design, build, test and deploy applications for the Windows Azure platform. As a result, enterprises can more quickly capitalize on new opportunities. The services also enable clients to accelerate adoption of the Windows Azure platform as part of their hybrid service delivery environments.

Additionally, clients can manage risk and optimize IT resources with a methodical approach to assessing, migrating and developing applications on the Windows Azure platform. With usage-based pricing for the platform, clients also can align IT costs with changes in demand and volumes. “As their needs shift, enterprises want to confidently and rapidly adopt cloud-based applications,” said Srini Koushik, vice president, Strategic Enterprise Services, Worldwide Applications and Business Services, HP. “HP helps clients speed time to market by transforming or developing new applications, then efficiently and cost-effectively deploying them onto the Windows Azure platform.”

HP has deep and diverse expertise in transforming legacy applications to modern platforms like Windows Azure. HP uses the HP Cloud Applications Guidebook and HP Cloud Advisory tool to analyze applications for cloud suitability based on business and technical characteristics. HP also developed and uses Windows Azure delivery and migration guides, plus proven techniques, to modernize and migrate applications.

HP Cloud Applications Services for Windows Azure is part of HP Hybrid Delivery, which helps clients build, manage and consume services using the right delivery model for them. Available worldwide, HP Cloud Applications Services for Windows Azure are delivered through a dedicated Windows Azure Center of Expertise that is supported in region by consulting services and seven global joint Microsoft and HP competency centers. [Link added.]

HP and Microsoft have delivered technical innovation together for more than 25 years. They offer joint solutions that help organizations around the world improve services through the use of innovative technologies. More information about the HP and Microsoft alliance is available at http://h10134.www1.hp.com/insights/alliances/microsoft/.

HP helps businesses and governments in their pursuit of an Instant-On Enterprise. In a world of continuous connectivity, the Instant-On Enterprise embeds technology in everything it does to serve customers, employees, partners and citizens with whatever they need, instantly.

The question is what are HP Cloud Applications Services for Windows Azure? The usual cadres of HP Enterprise Services (formerly EDS) consultants drawing boxes and lines on whiteboards at $300/hour each? HP’s Enterprise Service for Cloud Computing page (#230 of 266 categories) offers only the brief description outlined below in red:

Other HP Enterprise Cloud Services offer links to additional descriptive information:

- With HP Enterprise Cloud Services – Compute, you receive a hosted cloud computing infrastructure-as-a-service that supports important business workloads through high levels of availability and responsiveness as well as an efficient, flexible consumption model.

- HP Enterprise Cloud Services for Microsoft Dynamics CRM offers a winning combination of the market's leading enterprise CRM application with our virtual private cloud solution.

- HP Enterprise Cloud Services for SAP Applications provide you with flexibility, improved cost control and agility needed to meet dynamic requirements; we currently offer services supporting an SAP development environment for your ERP projects and services supporting SAP’s CRM application to improve your customers’ experience with your company.

- HP Applications Transformation to Cloud services enables enterprises to determine cloud suitability for applications.

but not HP Cloud Applications Services for Windows Azure. Observe the lack of any reference to the promised HP implementation of the Windows Azure Platform Appliance (WAPA).

Note: Other writers have covered this topic but mistakenly named the offering “HP Cloud Applications Services for Microsoft Azure.” These articles include:

- HP Wants Third Parties Selling Its Cloudware by Maureen O’Gara (12/2/2011)

- HP Targets Cloud Providers With New Certifications, Wares by Andrew R. Hickey (11/30/2011)

Looks like more smoke and mirrors to me.

<Return to section navigation list>
