Making Sense of Windows Azure’s Global Performance Ratings by CloudSleuth

Update 4/4/2011 12:30 PM PDT: Ryan Bateman of Compuware Gomez/CloudSleuth responded to this post on Twitter. See end of article for tweets.

Lori MacVittie (@lmacvittie) asserted “Application performance is more and more about dependencies in the delivery chain, not the application itself” in a preface to her On Cloud, Integration and Performance post of 4/4/2011 to F5’s DevCentral blog:

When an article regarding cloud performance and an associated average loss of $1M appeared, it caused an uproar in the Twittersphere, at least amongst the Clouderati. There was much gnashing of teeth and pounding of fists that led to questioning the methodology and, ultimately, the veracity of the report.

If you were worried about the performance of cloud-based applications, here's fair warning: You'll probably be even more so when you consider findings from a recent survey conducted by Vanson Bourne on behalf of Compuware.

On average, IT directors at 378 large enterprises in North America reported their organizations lost almost $1 million annually due to poorly performing cloud-based applications. More specifically, close to one-third of these companies are losing $1.5 million or more per year, with 14% reporting that losses reach $3 million or more a year.

In a newly released white paper, called "Performance in the Cloud," Compuware notes its previous research showing that 33% of users will abandon a page and go elsewhere when response times reach six seconds. Response times are a notable worry, then, when the application rides over the complex and extended delivery chain that is cloud, the company says.

-- The cost of bad cloud-based application performance, Beth Shultz, NetworkWorld

I had a chance to chat with Compuware about the survey after the hoopla and dug up some interesting tidbits – all of which were absolutely valid points and concerns regarding the nature of performance and cloud-based applications.

…

What the Compuware performance survey does is highlight the very real problem of measuring that performance from a provider point of view. It’s one thing to suggest that IT find a way to measure applications holistically, and application performance vendors like Compuware will be quick to point out that agents are more than capable of measuring the performance of the individual services that make up an application. But that’s only part of the performance picture. As we grow increasingly dependent on third-party, off-premise and cloud-based services for application functionality and business processing, we will need to find a better way to integrate performance monitoring of those services into IT as well. And therein lies the biggest challenge of a hyper-connected, distributed application. Without some kind of standardized measurement and monitoring services for those application- and business-related services, there’s no consistency in measurement across customers. No measurement means no visibility, and no visibility makes it harder for IT operations to optimize, manage, and provision appropriately in the face of degrading performance.

Application performance monitoring and management doesn’t scale well in the face of off-premise, distributed, third-party services. Cloud-based applications that IT deploys and controls can employ agents or other enterprise-standard monitoring and management as part of the deployment, but IT has no visibility into, let alone control over, Twitter or its supply-chain provider’s services.

It’s a challenge that will continue to plague IT for the foreseeable future, until some means of visibility into those services, at least, is provided so that IT and operations can make the appropriate adjustments (compensatory controls) inside the data center to address any performance issues arising from the use of third-party services.

Compuware’s CloudSleuth application is based on the company’s Gomez SaaS-based application performance management (APM) solution, which is claimed to “provide visibility across the entire web application delivery chain, from the First Mile (data center) to the Last Mile (end user).”

Charles Babcock claimed “CloudSleuth's comparison of 13 cloud services showed a tight race among Microsoft, Google App Engine, GoGrid, Amazon EC2, and Rackspace” in a deck for his Microsoft Azure Named Fastest Cloud Service article of 3/4/2011:

In a comparative measure of cloud service providers, Microsoft's Windows Azure has come out ahead. Azure offered the fastest response times to end users for a standard e-commerce application. But the amount of time that separated the top five public cloud vendors was minuscule.

These are the first results I know of that try to show the ability of various providers to deliver a workload result. The same application was placed in each vendor's cloud, then banged on by thousands of automated users over the course of 11 months. …

Google App Engine was the number two service, followed by GoGrid, market leader Amazon EC2, and Rackspace, according to tests by Compuware's CloudSleuth service. The top five were all within 0.8 second of each other, indicating the top service providers show a similar ability to deliver responses from a transaction application.

For example, the test involved the ability to deliver a Web page filled with catalog-type information consisting of many small images and text details, followed by a second page consisting of a large image and labels. Microsoft's Azure cloud data center outside Chicago was able to execute the required steps in 10.142 seconds. GoGrid delivered results in 10.468 seconds and Amazon's EC2 Northern Virginia data center weighed in at 10.942 seconds. Rackspace delivered in 10.999 seconds.

It's amazing, given the variety of architectures and management techniques involved, that the top five show such similar results. "Those guys are all doing a great job," said Doug Willoughby, director of cloud strategy at Compuware. I tend to agree. The results listed are averages for the month of December, when traffic increased at many providers. Results for October and November were slightly lower, between 9 and 10 seconds.

The response times might seem long compared to, say, the Google search engine's less than a second responses. But the test application is designed to require a multi-step transaction that's being requested by users from a variety of locations around the world. CloudSleuth launches queries to the application from an agent placed on 150,000 user computers.

It's the largest bot network in the world, said Willoughby, then he corrected himself. "It's the largest legal bot network," since malware bot networks of considerable size keep springing up from time to time.
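The gaps among the providers whose December averages Babcock quotes are easy to check. The following Python sketch simply transcribes those figures and computes each provider's lag behind the fastest; Google App Engine is left out because the excerpt doesn't give its time, and the `gaps_from_leader` helper is illustrative, not part of any CloudSleuth tooling.

```python
# December average response times in seconds, transcribed from the excerpt above.
# Google App Engine ranked second, but its time is not quoted, so it is omitted.
RESPONSE_TIMES = {
    "Windows Azure (Chicago)": 10.142,
    "GoGrid": 10.468,
    "Amazon EC2 (Northern Virginia)": 10.942,
    "Rackspace": 10.999,
}


def gaps_from_leader(times: dict[str, float]) -> dict[str, float]:
    """Return each provider's lag behind the fastest one, fastest first."""
    best = min(times.values())
    ranked = sorted(times.items(), key=lambda item: item[1])
    # Round to milliseconds, the precision of the published figures.
    return {name: round(t - best, 3) for name, t in ranked}


if __name__ == "__main__":
    for name, gap in gaps_from_leader(RESPONSE_TIMES).items():
        print(f"{name:32} +{gap:.3f} s")
```

By this arithmetic Rackspace trails Azure by about 0.86 second, slightly more than the 0.8-second spread cited in the article.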


Ryan Bateman (@ryanbateman) ranked Windows Azure #1 of the top 15 cloud service providers in his Cloud Provider Global Performance Ranking – January post of 3/16/2011 to the CloudSleuth blog (missed when posted):

A few weeks ago we released our calendar-year Q4 “Top 15 Cloud Service Providers – Ranked by Global Performance.” My intention here is to start a trend: releasing our ranking of the top cloud service providers based on a global average of real-time performance results as seen from the Gomez Performance Network and its Last Mile nodes.

If you missed out on the last post and are curious about the methodology behind these numbers, here is the gist:

1. We (Compuware) signed up for services with each of the cloud providers in the ranking.

2. We provisioned an identical sample application to each provider. This application represents a simple ecommerce design: one page with sample text, thumbnails and generic nav functions, followed by another similar page with a larger image. This generic app is specifically designed so as not to favor the performance strengths of any one provider.

3. Each hour, we choose 200 of the 150,000+ Gomez Last Mile peers (real user PCs) to run performance tests. Of the 200 peers selected, 125 are in the US; the remaining 75 are spread across the top 30 countries by GDP.

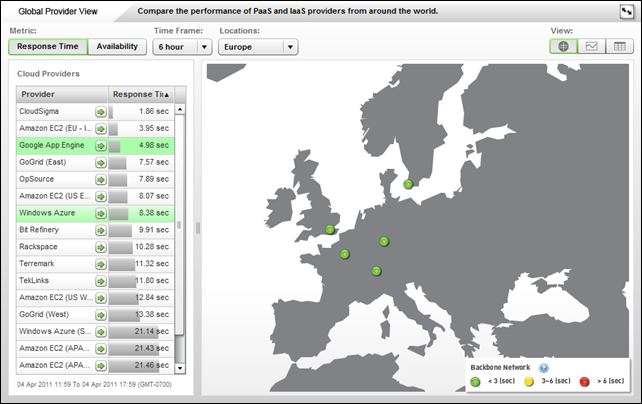

4. We gather those results and make them available through the Global Provider View.
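Step 3's hourly peer selection can be sketched as follows. This is a hypothetical reconstruction, not Compuware's code: the `(peer_id, country)` pool format, the `select_hourly_peers` helper, and the round-robin split of the 75 non-US peers across countries are all assumptions; only the 200/125/75 quotas come from the post.

```python
import random
from collections import Counter

US_QUOTA = 125      # US peers per hour (published figure)
NON_US_QUOTA = 75   # non-US peers per hour (published figure)


def select_hourly_peers(pool, top_countries, rng):
    """Hypothetical helper: pick one hour's test peers under the 125/75 split.

    pool is a list of (peer_id, country_code) tuples. Assumption: the 75
    non-US peers are dealt round-robin across top_countries, one plausible
    reading of "spread across the top 30 countries based on GDP".
    """
    us_peers = [p for p in pool if p[1] == "US"]
    chosen = rng.sample(us_peers, US_QUOTA)

    by_country = {c: [p for p in pool if p[1] == c] for c in top_countries}
    if sum(len(peers) for peers in by_country.values()) < NON_US_QUOTA:
        raise ValueError("not enough non-US peers to fill the quota")
    for peers in by_country.values():
        rng.shuffle(peers)

    # Deal one peer at a time to each country in turn, skipping exhausted ones.
    i = 0
    while len(chosen) < US_QUOTA + NON_US_QUOTA:
        country = top_countries[i % len(top_countries)]
        if by_country[country]:
            chosen.append(by_country[country].pop())
        i += 1
    return chosen


if __name__ == "__main__":
    # Synthetic pool: 1,000 US peers plus 20 peers in each of 30 placeholder countries.
    countries = [f"C{n:02d}" for n in range(30)]
    pool = [(f"us-{n}", "US") for n in range(1000)]
    pool += [(f"{c}-{n}", c) for c in countries for n in range(20)]
    hour = select_hourly_peers(pool, countries, random.Random(42))
    print(len(hour), Counter(country for _, country in hour).most_common(3))
```

Round-robin dealing gives each of 30 countries two or three peers per hour; a GDP-proportional split would be an equally plausible reading of the post.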

These decisions are fueled by our desire to deliver the closest thing to an apples-to-apples comparison of the performance of these cloud providers. Got ideas about how to tweak the sample application or shift the Last Mile peer blend or anything else to make this effort more accurate? Let us know, we take your suggestions seriously.

Ryan’s post was reported (but usually not linked) by many industry pundits and bloggers.

More details about the technology CloudSleuth uses are available on its site.

Here’s a 4/1/2011 screen capture of the worldwide response-time results for the last 30 days with Google App Engine back in first place:

Note that the heavy weighting toward US users (125 of the 200 peers each hour) makes results from Amazon EC2 (EU – Ireland), Amazon EC2 (APAC – Tokyo) and Windows Azure (Southeast Asia) much slower than what users in regions closer to these data centers can expect.
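The effect of a US-heavy peer mix on a single global average can be shown in a few lines of Python. The response times here are invented for illustration, not CloudSleuth measurements, and lumping all 75 non-US peers into one group is a simplification: both hypothetical data centers answer nearby peers in 10 s and distant peers in 12 s, yet the peer-weighted average ranks them differently.

```python
# Published hourly peer mix: 125 US peers and 75 non-US peers.
US_PEERS = 125
NON_US_PEERS = 75


def global_average(us_mean: float, non_us_mean: float) -> float:
    """Peer-weighted mean response time across the whole hourly sample."""
    total = US_PEERS + NON_US_PEERS
    return (US_PEERS * us_mean + NON_US_PEERS * non_us_mean) / total


if __name__ == "__main__":
    # Invented numbers: each hypothetical data center answers its nearby
    # peers in 10 s and its distant peers in 12 s.
    print(global_average(us_mean=10.0, non_us_mean=12.0))  # US-hosted: 10.75
    print(global_average(us_mean=12.0, non_us_mean=10.0))  # hosted abroad: 11.25
```

Identical local performance yields a half-second penalty for the data center outside the US, purely from the 125/75 weighting.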

Windows Azure still takes the top spot in North America, as shown here:

Clicking the arrow at the right of the Provider column to check response time by city shows that the data is for Microsoft’s North Central US (Chicago) data center:


The following Global Provider View of 4/4/2011 indicates that the Google App Engine data center used for testing is located near Washington, DC (probably in Northern Virginia):

Unlike Amazon Web Services, for which CloudSleuth returns results for four regional data centers (US East – Northern Virginia, US West – Northern California, EU – Ireland, and APAC – Tokyo), Windows Azure data is limited to two data centers (US – North Central and Southeast Asia [Singapore]), and Google App Engine data to only one.

Measuring performance from distant data centers when a provider has closer ones unfairly penalizes providers with multinational coverage, as shown here on 4/4/2011:

Chicago, IL is roughly 600 miles from Washington, DC, which puts it a few hundred miles farther from Western Europe and could account for at least some of the difference in performance between Google App Engine and Windows Azure. Microsoft has European data centers for Windows Azure in Amsterdam and Dublin.
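Distance comparisons like this one are easy to check with the haversine great-circle formula. The sketch below assumes approximate city-center coordinates (actual data center and peer sites differ) and a mean Earth radius of 3,958.8 miles.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius

# Approximate city-center coordinates as (latitude, longitude) in degrees.
CITIES = {
    "Chicago": (41.8781, -87.6298),
    "Washington, DC": (38.9072, -77.0369),
    "London": (51.5074, -0.1278),
    "Dublin": (53.3498, -6.2603),
}


def great_circle_miles(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Haversine great-circle distance between two (lat, lon) points."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * asin(sqrt(h))


if __name__ == "__main__":
    print(f"Chicago to Washington: {great_circle_miles(CITIES['Chicago'], CITIES['Washington, DC']):,.0f} mi")
    for city in ("London", "Dublin"):
        chi = great_circle_miles(CITIES["Chicago"], CITIES[city])
        dc = great_circle_miles(CITIES["Washington, DC"], CITIES[city])
        print(f"{city}: Chicago {chi:,.0f} mi, Washington {dc:,.0f} mi, extra {chi - dc:,.0f} mi")
```

With these coordinates it reports roughly 3,950 miles from Chicago to London versus about 3,665 from Washington, and roughly 3,660 versus 3,380 miles to Dublin, so Chicago's extra great-circle distance to these cities is around 280 miles. Great-circle distance is only a proxy; real network paths and peering points matter more to latency.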

Google discloses public Data Center Locations on a Corporate page with the following details:


United States

- Berkeley County, SC

- Council Bluffs, IA

- Lenoir, NC

- Mayes County, OK (scheduled to be operational in 2011)

- The Dalles, OR

Europe

- Hamina, Finland (scheduled to be operational in 2011)

- St Ghislain, Belgium

Conclusion:

CloudSleuth should revise its performance measurement methodology to use the provider’s data center that’s closest to the region of interest.
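One way to implement that recommendation is to route each test peer to whichever of the provider's data centers is geographically closest. This is a hypothetical illustration, not CloudSleuth's method: the `nearest_data_center` helper, the city-center coordinates for the Windows Azure data centers named in this post, and the use of great-circle distance as a stand-in for network proximity are all assumptions.

```python
from math import asin, cos, radians, sin, sqrt

# Approximate city-center coordinates (lat, lon) for the Windows Azure data
# centers mentioned in this post; real facility locations will differ.
AZURE_DATA_CENTERS = {
    "North Central US (Chicago)": (41.8781, -87.6298),
    "Southeast Asia (Singapore)": (1.3521, 103.8198),
    "Europe (Amsterdam)": (52.3676, 4.9041),
    "Europe (Dublin)": (53.3498, -6.2603),
}


def great_circle_km(a, b):
    """Haversine distance in kilometers between two (lat, lon) points."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(h))  # 6371 km = mean Earth radius


def nearest_data_center(peer, data_centers=AZURE_DATA_CENTERS):
    """Hypothetical helper: name of the data center closest to a peer location."""
    return min(data_centers, key=lambda name: great_circle_km(peer, data_centers[name]))


if __name__ == "__main__":
    peers = {"London": (51.5074, -0.1278), "Tokyo": (35.6762, 139.6503), "New York": (40.7128, -74.0060)}
    for city, location in peers.items():
        print(f"{city} -> {nearest_data_center(location)}")
```

A production version would more likely choose data centers by measured latency or by the provider's own region-routing rules than by raw distance.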

Note: Major parts of this post previously appeared in my Windows Azure and Cloud Computing Posts for 3/4/2011+ and Windows Azure and Cloud Computing Posts for 3/30/2011+.

Update 4/4/2011 12:30 PM PDT: Ryan Bateman tweeted the following:

I replied with this question:

Waiting for Ryan’s response. …
