Windows Azure and Cloud Computing Posts for 4/11/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

•• Update 4/12/2011 8:00 PM PST from MIX 11: Articles marked •• from The Windows Azure Team, James Conard, Steve Marx, Steve Yi, Judith Hurwitz, Tyler Doerksen, Beth Massi, Dell Computer, Marketwire and Geva Perry.

• Update 4/11/2011 10:45 AM PST from MIX 11: Articles marked • from The Windows Azure Team, Windows Azure AppFabric Team, and Remy Pairault.

Note: Updates will not be as frequent or lengthy as usual this week as I am in Las Vegas for MIX 11 and a partial vacation with my wife.

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

•• Steve Yi (pictured below) posted Real World SQL Azure: Interview with John Dennehy, Founder and CEO at HRLocker to the SQL Azure team blog on 4/11/2011:

As part of the Real World SQL Azure series, we talked to John Dennehy, Founder and Chief Executive Officer at HRLocker, about using Microsoft SQL Azure for the company's cloud-based human resources management solution. Here's what he had to say:

MSDN: Can you tell us about HRLocker and the services you offer?

Dennehy: HRLocker is a low-cost, web-based human resources (HR) management solution that is ideal for small and midsize businesses that have globally dispersed workforces. By using HRLocker, employees can submit timesheets, share HR-related documents, and manage and share leave information with other employees.

MSDN: What were some of the challenges that HRLocker faced prior to adopting SQL Azure?

Dennehy: When I founded HRLocker, I knew I wanted to develop our software as a scalable, cloud-based solution, but we needed a platform as a service so that we could avoid costly infrastructure maintenance and management costs. At the same time, we needed a platform that we trusted with our customers' sensitive employee data and that offered reliable data centers around the globe to help us address application performance issues in China.

MSDN: Why did you choose SQL Azure and Windows Azure for your solution?

Dennehy: We had three criteria to meet when it came to choosing our cloud services provider: security, performance, and reliability. Windows Azure and SQL Azure met all of our requirements. The investment that Microsoft has made in security, and particularly when it comes to the Windows Azure platform, is industry-recognized and was key to our decision.

MSDN: Can you describe how HRLocker is using SQL Azure and Windows Azure?

Dennehy: HRLocker is hosted in web roles in Windows Azure and uses worker roles to execute its back-end processes. We use Windows Azure Blob Storage to collect binary data, such as images, documents, and other files that employees can upload; we also use Table Storage in Windows Azure to keep application session data and configuration data, including new feature configurations. We deployed SQL Azure in a multitenant environment to store all employee data for customers, including names, email addresses, and benefit summary information, such as how much time off the employee has accrued. We had previously built a prototype of HRLocker that used Microsoft SQL Server data management software, and we simply used the same database schema and data structures in SQL Azure that we did in SQL Server.

MSDN: What makes your solution unique?

Dennehy: There is a lot of HR software on the market and many require significant investments in infrastructure. By offering a cloud-based solution, we can help customers avoid those costs. The total cost of ownership for HRLocker is at least 75 percent less than any other competing HR management application. We estimate that it costs customers from as little as U.S.$18 per employee annually to run HRLocker. For competing products, it costs at least $80 per employee annually.

MSDN: What benefits is HRLocker realizing with SQL Azure and Windows Azure?

Dennehy: We are growing our business, and it's important that we be able to choose which global data center location to deploy HRLocker because by bringing data closer to customers' physical locations, we can improve application performance. When we first demonstrated our prototype, which was hosted in Ireland, it took 20 seconds to load a single page when we were in China; the software was unusable. But we deployed an instance of HRLocker to the data center in Hong Kong and now it takes less than two seconds to load a page; it's nearly instantaneous and a dramatic improvement that makes our product viable in that region. In addition, we avoided the costly capital investments required to build our own hosting infrastructure. If we had built our own infrastructure, we would have nothing left from our initial seed funding.

Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000009552

To read more SQL Azure customer success stories, visit:

www.sqlazure.com

Shashank Pawar (@pawarup) described SQL Server as a Service/ SQL Server Private Cloud Offering on 4/11/2011:

Recently I have been presenting around Australia about implementing SQL Server as a Service, also referred to as SQL Server Private cloud. After the presentations, I was asked to make resources available to help you implement such an environment. As such, I have made the slides we used for the Platform briefing available at the following link: http://cid-895a9e8d1c0f1cd0.office.live.com/view.aspx/Presentations/SQL%20as%20a%20service%5E_Final1.1%5E_ExtReady.pptx

In addition to the slides I presented, a new guide has been made available based on the framework that is used by Microsoft Consulting Services to deliver virtualisation based solutions. This guide outlines the major considerations that must be taken into account when onboarding Microsoft SQL Server environments into a private cloud infrastructure based on virtualisation.

You can download the complete whitepaper here: http://download.microsoft.com/download/7/A/3/7A3278D7-C660-4DFB-91FD-0414E3E0E022/Onboarding_SQL_Server_Private_Cloud_Environments.docx

A SQL Server as a service/private cloud offering helps optimise your existing SQL Server environment by reducing total cost of ownership and improving agility for business solutions that require database platforms.

From the “Hyper-V Cloud Practice Builder: Onboarding SQL Server Environments” whitepaper’s introduction:

This guide outlines the major considerations that must be taken into account when onboarding Microsoft® SQL Server® environments into a private cloud infrastructure.

There is a strong trend in IT to virtualize servers whenever possible, driven by:

- Standardization

- Manageability

- IT agility and efficiency

- Consolidating servers reduces hardware, energy, and datacenter space utilization costs

- Virtualized environments allow new Disaster Recovery strategies

The Hyper-V™ role in Windows Server® 2008 R2 provides a robust and cost-effective virtualization foundation to deliver these scenarios.

However, there is significant risk in virtualizing SQL Server environments without giving careful consideration to the workloads being virtualized and the requirements of the server applications running on a Hyper-V environment.

This Guide is part of the Hyper-V Cloud Practice Builder that is based on the framework that Microsoft Consulting Services has utilized to deliver Server Virtualization for several years in over 82 countries.

Steve Yi described a TechNet Wiki: SQL Azure Firewall article in a 4/11/2011 post:

SQL Azure Firewall provides users with security in the cloud, by allowing you to specify which computers have permission to access data. TechNet has released an interesting wiki article, describing SQL Azure Firewall and how to configure it using the Windows Azure Management Portal. Take a look at this article, and start optimizing your firewall settings.

Click here to go to the article.

Do you have any questions, or have you had a unique experience with SQL Azure Firewall or security in the cloud? Let me know by leaving a comment below.
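As a rough illustration of the idea in Steve's post (only listed computers may connect), here is a minimal Python sketch of an allow-list made of inclusive IP ranges. The rule names and addresses are hypothetical documentation addresses, and the `is_allowed` helper is an assumption for illustration; it is not the SQL Azure API or portal behavior.

```python
from ipaddress import IPv4Address

# Hypothetical rule set: (rule name, first allowed address, last allowed address).
FIREWALL_RULES = [
    ("office", IPv4Address("203.0.113.10"), IPv4Address("203.0.113.20")),
    ("build-server", IPv4Address("198.51.100.7"), IPv4Address("198.51.100.7")),
]


def is_allowed(client_ip, rules=FIREWALL_RULES):
    """Return True if client_ip falls inside any inclusive start/end range."""
    address = IPv4Address(client_ip)
    return any(start <= address <= end for _name, start, end in rules)
```

A single-address rule is simply a range whose start and end are equal, which keeps the check uniform.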

<Return to section navigation list>

Marketplace DataMarket and OData

Brian Keller produced a 00:09:17 OData Service for Team Foundation Server 2010 Channel9 video segment on 4/9/2011:

In this video, Brian Keller (Sr. Technical Evangelist for Team Foundation Server) demonstrates the beta of the new OData Service for Team Foundation Server 2010.

The purpose of this project is to help developers work with data from Team Foundation Server on multiple types of devices (such as smartphones and tablets) and operating systems. OData provides a great solution for this goal, since the existing Team Foundation Server 2010 object model only works for applications developed on the Windows platform. The Team Foundation Server 2010 application tier also exposes a number of web services, but these are not supported interfaces and interaction with these web services directly may have unintended side effects. OData, on the other hand, is accessible from any device and application stack which supports HTTP requests. As such, this OData service interacts with the client object model in the SDK (it does not manipulate any web services directly).
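To make the "any stack that supports HTTP requests" point concrete, here is a small Python sketch that only builds an OData query URL using the standard `$filter`, `$top` and `$orderby` query options. The service root, the `WorkItems` entity set and the `State` property are hypothetical placeholders, not confirmed names from the beta.

```python
from urllib.parse import quote


def build_odata_url(service_root, entity_set, filter_expr=None, top=None, orderby=None):
    """Compose an OData query URL; no request is sent."""
    options = []
    if filter_expr is not None:
        # Percent-encode spaces but keep quotes and parentheses readable.
        options.append("$filter=" + quote(filter_expr, safe="'()"))
    if orderby is not None:
        options.append("$orderby=" + quote(orderby, safe="'()"))
    if top is not None:
        options.append("$top=" + str(int(top)))
    url = service_root.rstrip("/") + "/" + entity_set
    return url + ("?" + "&".join(options) if options else "")
```

Any HTTP client on a phone, tablet or non-Windows machine could then issue a GET against the resulting URL.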

Download the beta: OData Service for Team Foundation Server 2010

Provide feedback on the beta: TFSOData@Microsoft.com

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

•• Tyler Doerksen described using Azure ACS on Windows Phone 7 in a 4/12/2011 post:

Recently I was digging into Azure ACS (Access Control Service) V2, trying to leverage ACS as an authentication mechanism for a Windows Phone application.

I found a sample app on the ACS Codeplex page here. The main architecture of the sample is as follows.

- WP7 client queries the JSON endpoint for a list of providers and the corresponding login pages.

- Using a Browser control the user logs in to the provider page which redirects the result to ACS

- ACS then redirects a token response to a “Relying Party Page”

- The “Relying Party Page” decodes the token and using a script notify sends the text form of the token back to the client

- WP7 client saves the text token and attaches an OAuth header to all requests to the server

Clear as mud? It seems like there are many steps that can be removed from that flow, and also it requires you to have a separate ASP.NET relying party page online which simply performs a script notify. I developed a workaround that will eliminate the need for a Relying Party Page.

After posting on the Codeplex project, I found out that there is a parameter that can be set which will cause ACS to send a script notify to the client application. Here are the changes I made to the sample:

ContosoContactsApp\SignIn.xaml.cs

    // protocol=javascriptnotify asks ACS to script-notify the client directly.
    SignInControl.GetSecurityToken(new Uri("https://<namespace>.accesscontrol.appfabriclabs.com:443/v2/metadata/IdentityProviders.js?protocol=javascriptnotify&realm=http%3a%2f%2fcontosocontacts%2f&reply_to=&context=&request_id=&version=1.0"));

Added ACSResponse JSON Data Contract

    using System.IO;
    using System.Runtime.Serialization;
    using System.Runtime.Serialization.Json;
    using System.Text;

    [DataContract]
    public class ACSResponse
    {
        [DataMember]
        public string appliesTo { get; set; }

        [DataMember]
        public string context { get; set; }

        [DataMember]
        public long created { get; set; }

        [DataMember]
        public long expires { get; set; }

        [DataMember]
        public string securityToken { get; set; }

        [DataMember]
        public string tokenType { get; set; }

        // Deserializes the JSON text delivered through the script notify.
        internal static ACSResponse FromJSON(string response)
        {
            var memoryStream = new MemoryStream(Encoding.Unicode.GetBytes(response));
            var serializer = new DataContractJsonSerializer(typeof(ACSResponse));
            var returnToken = serializer.ReadObject(memoryStream) as ACSResponse;
            memoryStream.Close();

            return returnToken;
        }
    }

AccessControlServiceSignIn.xaml.cs

    private void SignInWebBrowserControl_ScriptNotify(object sender, NotifyEventArgs e)
    {
        var acsResponse = ACSResponse.FromJSON(e.Value);

        RequestSecurityTokenResponse rstr = null;
        Exception exception = null;
        try
        {
            ShowProgressBar("Signing In");

            string binaryToken = HttpUtility.HtmlDecode(acsResponse.securityToken);
            string tokenText = RequestSecurityTokenResponseDeserializer.ProcessBinaryToken(binaryToken);
            // The token lifetime is the difference between the two timestamps, in seconds.
            DateTime expiration = DateTime.Now + TimeSpan.FromSeconds(acsResponse.expires - acsResponse.created);

            rstr = new RequestSecurityTokenResponse
            {
                Expiration = expiration,
                TokenString = tokenText,
                TokenType = acsResponse.tokenType
            };

The RequestSecurityTokenResponseDeserializer is from the previous relying party page. However, the ACS Response object does not have the same structure as the WS-Federation token, so much of the deserializer can be removed. Here is what I have left.

    using System;
    using System.Text;
    using System.Xml.Linq;

    public class RequestSecurityTokenResponseDeserializer
    {
        static XNamespace wsSecuritySecExtNamespace = "http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd";
        static XName binarySecurityTokenName = wsSecuritySecExtNamespace + "BinarySecurityToken";

        // Extracts the Base64 payload of the BinarySecurityToken element and decodes it as UTF-8 text.
        public static string ProcessBinaryToken(string token)
        {
            XDocument requestSecurityTokenResponseXML = XDocument.Parse(token);
            XElement binaryToken = requestSecurityTokenResponseXML.Element(binarySecurityTokenName);

            byte[] tokenBytes = Convert.FromBase64String(binaryToken.Value);
            return Encoding.UTF8.GetString(tokenBytes, 0, tokenBytes.Length);
        }
    }
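For readers who want to experiment with the same token handling outside the phone project, the steps above can be sketched in Python: parse the JSON response, HTML-decode the `securityToken` field, read the Base64 payload of the `BinarySecurityToken` element, and derive the lifetime from `expires - created`. The function name `process_acs_response` is made up for this sketch, and the field names are taken from the ACSResponse contract in the post.

```python
import base64
import html
import json
import xml.etree.ElementTree as ET

WSSE_NS = ("http://docs.oasis-open.org/wss/2004/01/"
           "oasis-200401-wss-wssecurity-secext-1.0.xsd")


def process_acs_response(raw_json):
    """Return (token_text, lifetime_seconds, token_type) from the JSON response."""
    response = json.loads(raw_json)
    # The token arrives HTML-encoded, mirroring HttpUtility.HtmlDecode in the post.
    token_xml = html.unescape(response["securityToken"])
    element = ET.fromstring(token_xml)
    if element.tag != "{%s}BinarySecurityToken" % WSSE_NS:
        raise ValueError("unexpected root element: " + element.tag)
    token_text = base64.b64decode(element.text).decode("utf-8")
    lifetime_seconds = response["expires"] - response["created"]
    return token_text, lifetime_seconds, response["tokenType"]
```

The caller would add `lifetime_seconds` to the current time to get an expiration, as the C# handler does.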

And that is about it. You can download my source code. If you have any questions please comment or email me.

Making this change allowed me to eliminate lots of complexity in the overall architecture of this sample.

Hopefully soon more documentation will be added to the ACS Codeplex project for other gems like the different protocols. Until then I hope this will help.

• The Windows Azure AppFabric Team (a.k.a., NetSQLServicesTeam) announced Windows Azure AppFabric Access Control April release available now! on 4/12/2011:

Windows Azure AppFabric Access Control April release has been released to production and is now generally available at http://windows.azure.com.

The new version of the Access Control service represents a major step forward from the previous production version, introducing support for web application single sign-on (SSO) scenarios using WS-Federation, federation for SOAP and REST web services using WS-Trust and OAuth, and more. In addition, a new web-based management portal and OData-based management service are now available for configuring and managing the service.

Web Single Sign-On Scenarios

In web SSO scenarios, out-of-the-box support is available for Windows Live ID, Google, Yahoo, Facebook, and custom WS-Federation identity providers such as Active Directory Federation Services (AD FS) 2.0. To create the sign-in experience for these identity providers, the service now features a new JSON-based home realm discovery service that allows your web application to get your identity provider configuration from the service and display the correct sign-in links.

Access Control automatically handles all protocol transitions between the different identity providers, including Open ID 2.0 for Google and Yahoo, Facebook Graph for Facebook, and WS-Federation for Windows Live ID and custom identity providers. The service then delivers a single SAML 1.1, SAML 2.0, or SWT token to your web application using the WS-Federation protocol once a user has completed the sign in process. The service works with Windows Identity Foundation (WIF), making it easy for your ASP.NET applications to consume SAML tokens issued from it.

For more information on web application scenarios and Access Control, see Web Applications and ACS in the MSDN documentation.
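As an illustration of the home realm discovery step described above, the following Python sketch turns a JSON list of identity providers into sign-in links. The payload shape (a list of objects with `Name` and `LoginUrl`) and the URLs are assumptions for this example; check the MSDN documentation for the actual feed format.

```python
import json

# Hypothetical discovery payload; field names and URLs are illustrative only.
SAMPLE_FEED = """[
  {"Name": "Windows Live ID", "LoginUrl": "https://login.example.invalid/live"},
  {"Name": "Google", "LoginUrl": "https://login.example.invalid/google"}
]"""


def sign_in_links(feed_json):
    """Return (display name, login URL) pairs for rendering sign-in links."""
    providers = json.loads(feed_json)
    return [(provider["Name"], provider["LoginUrl"]) for provider in providers]
```

A web page would render one link per pair, so adding a provider in the service changes the page without a code change.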

Web Service Scenarios

Access Control supports active federation with web services using the WS-Trust, OAuth WRAP, or OAuth 2.0 protocol.

To access a web service protected by Access Control, a web service client can obtain a bearer token from an identity provider (such as AD FS 2.0), and then exchange that token with the service for a new SAML 1.1, SAML 2.0, or SWT token required to access the protected web service. Alternatively, in the cases where no identity providers are available, the client can authenticate directly with Access Control using a service identity (a credential type configured in the service) in order to obtain the required token. A service identity credential can be a password, an X.509 certificate, or a 256-bit symmetric signing key (used to validate the signature of a self-signed SWT token presented by the client).

For more information on web service scenarios and Access Control, see Web Services and ACS in the MSDN documentation.
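To show what "a self-signed SWT token validated with a 256-bit symmetric key" can look like, here is a hedged Python sketch. It assumes the common Simple Web Token layout (form-encoded name/value pairs followed by an `HMACSHA256` parameter holding the Base64 HMAC-SHA256 of the preceding text); the claim names and helper functions are illustrative and not taken from the announcement.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote, unquote


def sign_swt(claims, key):
    """Build a token from (name, value) pairs and append the HMACSHA256 parameter."""
    unsigned = "&".join(f"{quote(k, safe='')}={quote(v, safe='')}" for k, v in claims)
    digest = hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    signature = quote(base64.b64encode(digest).decode("ascii"), safe="")
    return f"{unsigned}&HMACSHA256={signature}"


def verify_swt(token, key):
    """Recompute the HMAC over everything before the signature and compare."""
    unsigned, separator, signature = token.rpartition("&HMACSHA256=")
    if not separator:
        return False
    expected = hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    try:
        presented = base64.b64decode(unquote(signature))
    except ValueError:
        return False
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(expected, presented)
```

Both sides must hold the same 32-byte key, which is why the key is configured on the service identity ahead of time.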

Management

Access Control features a web-based management portal, which makes it easy to configure core web application and web service scenarios for a selected namespace. This includes support for configuring and managing the following components:

- Identity providers

- Relying party applications, which represent your web applications and services

- Rules and rule groups, which define what information is passed from identity providers and clients to your applications

- Certificates and keys for token signing, encryption, and decryption

- Service identities for web service authentication

- Management credentials for accessing the portal and management service

The management portal can be launched by visiting the Service Bus, Access Control, and Caching section of the Windows Azure portal, clicking the Access Control node, selecting a service namespace, and then clicking Manage Access Control Service in the ribbon above.

In addition to the management portal, Access Control now features a redesigned management service that enables all service components to be managed using the OData protocol. For more information on the Access Control management service, see ACS Management Service in the MSDN documentation.

Getting Started

Be sure to check out the following resources for help getting started with Access Control:

- Detailed FAQs

- MSDN Documentation

- CodePlex Site (for code samples)

- Identity Training Kit

- Windows Azure Platform Security Forum

If you have not signed up for Windows Azure AppFabric and would like to start using Access Control, be sure to take advantage of our free trial offer.

• The Windows Azure AppFabric Team posted Announcing the commercial release of Windows Azure AppFabric Caching and Access Control on 4/11/2011:

Today at the MIX conference we announced the first production release of Windows Azure AppFabric Caching service and a release of a new version of the production Access Control service. These two AppFabric services are particularly appealing and powerful for web development.

The Caching service is a distributed, in-memory, application cache service that accelerates the performance of Windows Azure and SQL Azure applications by allowing you to keep data in-memory and saving you the need to retrieve that data from storage or database.
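The benefit described here (keep data in memory and skip the trip to storage or the database) is usually realized with a cache-aside pattern. The Python sketch below uses an in-process dictionary as a stand-in for the distributed cache; the `CacheAside` class and its parameters are hypothetical and are not the AppFabric Caching API.

```python
import time


class CacheAside:
    """Serve reads from memory and fall back to the backing store on a miss."""

    def __init__(self, load_from_store, ttl_seconds=60.0, clock=time.monotonic):
        self._load = load_from_store      # callable that reads the database or storage
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}                # key -> (value, expiry time)

    def get(self, key):
        entry = self._entries.get(key)
        now = self._clock()
        if entry is not None and entry[1] > now:
            return entry[0]               # cache hit: no store access
        value = self._load(key)           # cache miss or expired entry
        self._entries[key] = (value, now + self._ttl)
        return value
```

The injectable `clock` is a design choice that makes expiry behavior easy to exercise without waiting for real time to pass.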

Ebbe George Haabendal Brandstrup, CTO and co-founder of Pixel Pandemic, a company developing a unique game engine technology for persistent browser-based MMORPGs that is already using the Caching service as part of its solution, is quoted as saying:

“We're very happy with how Azure and the caching service is coming along.

Most often, people use a distributed cache to relieve DB load and they'll accept that data in the cache lags behind with a certain delay. In our case, we use the cache as the complete and current representation of all game and player state in our games. That puts a lot extra requirements on the reliability of the cache. Any failed communication when writing state changes must be detectable and gracefully handled in order to prevent data loss for players and with the Azure caching service we’ve been able to meet those requirements.”

The new version of the Access Control service adds all the great capabilities that provide a Single-Sign-On experience to applications by integrating with standards-based identity providers, including enterprise directories such as Active Directory®, and web identities such as Windows Live ID, Google, Yahoo! and Facebook.

Niels Hartvig, Founder of Umbraco, one of the most deployed Web Content Management Systems on the Microsoft stack, which can be integrated easily with the Access Control service through an extension, is quoted as saying:

“We're excited about the very diverse integration scenarios the ACS (Access Control service) extension for Umbraco allows. The ACS Extension for Umbraco is one great example of what is possible with Windows Azure and the Microsoft Web Platform.”

At the PDC’10, we highlighted these two services as Community Technology Previews (CTPs) to get broad customer trial. After several months of listening to customer feedback during this preview period and making customer-requested enhancements, we’re now pleased to release them as production services with full SLAs.

The updated Access Control service is now live, and Caching will be released at the end of April.

Both services will have a promotion period of a few months in which customers can start using them for no charge.

To learn more about these services please use the following resources:

Caching:

- Technical blog post for the release

- Video: Introduction to the Windows Azure AppFabric Cache

- Video: Windows Azure AppFabric Caching – How to Set-up and Deploy a Simple Cache

- Detailed FAQs

- MSDN Magazine article on Caching

- MSDN Documentation

Access Control:

- Technical blog post for the release

- Detailed FAQs

- Vittorio Bertocci’s blog

- Channel 9 video announcing the release

- MSDN Documentation

The Access Control service is already available at our production environment at: http://appfabric.azure.com.

The Caching service is available on our previews environment at: http://portal.appfabriclabs.com/ and will be added to the production environment when released at the end of April. So be sure to login and start using the new capabilities.

If you have any questions, be sure to visit our Windows Azure Platform Forums.

For questions specifically on the Caching service visit the Windows Azure AppFabric CTP Forum.

For questions specifically on the Access Control service visit the Security for the Windows Azure Platform section of the forums.

If you have not signed up for Windows Azure AppFabric yet, and would like to start using these services, be sure to take advantage of our free trial offer.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

• The Windows Azure Team posted Understanding Windows Azure CDN Billing on 4/11/2011:

What is the Windows Azure Content Delivery Network (CDN)?

The Windows Azure CDN is a global solution for delivering high-bandwidth content hosted on Windows Azure. The CDN caches publicly available objects at strategically placed locations to provide maximum bandwidth for delivering content to users.

What is Windows Azure billing in general?

The Windows Azure CDN is a pay-per-use service. As a subscriber, you pay for the "amount" of content delivered. This article will discuss how customers are billed for using the Windows Azure CDN. We'll cover the differences in geographies (regions), what incurs cost, and some specialized billing cases.

What is a billing region?

A billing region is a geographic area used to determine what rate is charged for delivery of objects from the CDN. The current billing regions are:

- United States

- Europe

- Rest of World

Click here for more details.

How are delivery charges calculated by region?

The CDN billing region is based on the location of the source server delivering the content to the end user. The destination (physical location) of the client is not considered the billing region.

For example, if a user located in Mexico issues a request and this request is serviced by a server located in a US point of presence (for example Los Angeles) due to peering or traffic conditions, the billing region will be US.
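The rule in the Mexico example can be written as a tiny Python sketch: the billing region is looked up from the point of presence that served the request, and the client's location is ignored. The mapping below is a hypothetical illustration, not the actual list of CDN locations.

```python
# Illustrative mapping from serving point of presence to billing region.
POP_REGION = {
    "Los Angeles": "United States",
    "Amsterdam": "Europe",
    "Hong Kong": "Rest of World",
}


def billing_region(serving_pop, client_country):
    """Return the billing region for a request.

    client_country is accepted only to make the point that it plays no
    part in the decision.
    """
    return POP_REGION[serving_pop]
```

So `billing_region("Los Angeles", "Mexico")` yields the United States rate, matching the example above.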

What is a billable CDN transaction?

Any HTTP(S) request that terminates at the CDN is a billable event. This includes all response types, success, failure, or other. However, different responses may generate very different traffic amounts. For example, 304 NOT MODIFIED (and other header-only) responses generate very little transfer since they are a small header response; similarly, error responses (e.g., 404 NOT FOUND) are billable but incur very little cost because of the tiny response payload.

What other Windows Azure costs are associated with CDN use?

Using the Windows Azure CDN also incurs some usage charges on the services used as "origin" for your objects. These costs are typically a very small fraction of the overall CDN usage cost.

If you are using blob storage as "origin" for your content, you also incur charges for cache fills for:

- Storage - actual GB used (the actual storage of your source objects),

- Storage - transfer in GB (amount of data transferred to fill the CDN caches), and

- Storage - transactions (as needed to fill the cache. Read this blog post for more information.)

If you are using "hosted service delivery" (available as of SDK 1.4) you will incur:

- Windows Azure Compute time (the compute instances that act as origin), and

- Windows Azure Compute transfer (the data transfer from the compute instances to fill the CDN caches)

If your client uses byte-range requests (regardless of "origin" service):

- A byte-range request is a billable transaction at the CDN. When a client issues a "byte-range" request, this is a request for a subset (range) of the object. The CDN will respond with only the partial content requested. The "partial" response is a billable transaction and the transfer amount is limited to the size of the range response (plus headers).

- When a request comes in for only part of an object (by specifying a byte-range header), the CDN may fetch the entire object into its cache. That means that although the billable transaction from the CDN is for a partial response, the billable transaction from the origin may involve the full size of the object.
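The two byte-range bullets above can be turned into simple arithmetic. This Python sketch assumes the worst case the article mentions, in which the CDN fetches the whole object from the origin on a miss; the function name and the `header_bytes` parameter are made up for illustration.

```python
def range_request_transfer(object_size, range_start, range_end,
                           cdn_has_object, header_bytes=0):
    """Return (bytes delivered by the CDN, bytes pulled from the origin).

    Byte ranges are inclusive. On a cache miss the sketch assumes the CDN
    pulls the full object from the origin.
    """
    if not 0 <= range_start <= range_end < object_size:
        raise ValueError("invalid byte range")
    cdn_bytes = (range_end - range_start + 1) + header_bytes
    origin_bytes = 0 if cdn_has_object else object_size
    return cdn_bytes, origin_bytes
```

For a 10 MB object, a request for the first 1 MB bills 1 MB at the CDN, but a cold cache can still move all 10 MB from the origin.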

How much transfer is incurred to support the cache?

- Each time a CDN point of presence needs to fill its cache, it will make a request to the origin for the object being cached. This means the origin will incur a billable transaction on every cache miss. How many cache misses you have depends on a number of factors:

- How "cacheable" content is: If content has high TTL/expiration values and is accessed frequently so it stays popular in cache, then the vast majority of the load is handled by the CDN. A typical "good" cache-hit ratio is well over 90%, meaning that less than 10% of client requests have to return to origin (either for a cache miss or object refresh).

- How many nodes need to load the object: Each time a node loads an object from the origin, it incurs a billable transaction. This means that more global content (accessed from more nodes) results in more billable transactions.

- Influence of TTL: A higher TTL (Time-To-Live) for an object means it needs to be fetched from the origin less frequently. It also means clients (like browsers) can cache the object longer, which can even reduce the transactions to the CDN.
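A quick back-of-the-envelope calculation shows how these factors interact. The Python sketch below estimates origin requests from a cache-hit ratio and gives an upper bound on per-day refreshes for one object; both helpers are simplifications under the stated assumptions, not a billing formula.

```python
import math


def origin_requests(client_requests, hit_ratio):
    """Requests that fall through to the origin for a given cache-hit ratio."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return round(client_requests * (1.0 - hit_ratio))


def refreshes_per_day(ttl_seconds, nodes):
    """Upper bound on daily origin fetches for one object.

    Assumes every node re-fetches the object as soon as its TTL expires.
    """
    return nodes * math.ceil(86400 / ttl_seconds)
```

With a 90% hit ratio, one million client requests produce about 100,000 origin requests; raising the TTL from one hour to one day cuts the refresh bound for five nodes from 120 to 5.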

How do I manage my costs most effectively?

Set the longest TTLs possible. Click here to learn more.

Wely Lau explained Establishing Remote Desktop to Windows Azure Instance – Part 1 in a 4/11/2011 post:

Introduction

As a Platform-as-a-Service (PaaS) provider, Windows Azure provides a provisioned VM environment for customers. It is no longer our responsibility to take care of platform-related matters, including applying new patches to the operating system. The instances also sit behind a load balancer.

We only need to focus on taking care of our application that is hosted in Windows Azure.

The application that is hosted on Windows Azure actually runs on VM instances. Those VM instances are provisioned from Windows Server 2008 images based on the VM size that we specify in the ServiceDefinition file (small, medium, large, etc.).

Troubleshooting on Windows Azure

In many scenarios, especially when troubleshooting an issue, we need to understand the actual problem in the environment. Traditionally, we have well-known tools and a protocol (RDP) for connecting to the remote desktop of a particular machine. Before Windows Azure SDK 1.3, troubleshooting a Windows Azure application was very painful. With the release of Windows Azure SDK 1.3 (around the end of 2010), we are now able to RDP to a particular Windows Azure instance.

How It Works

The idea of RDP-ing to a Windows Azure instance is to use the RemoteAccess plugin in the Windows Azure project. As mentioned earlier, the VMs on Windows Azure sit behind the load balancer, which means that when we RDP, we are not hitting the VM directly. Instead, our request is passed to the VM over port 3389.

Alright, let’s move on to the more hands-on stuff. There are two major sets of tasks to be done. First, we use Visual Studio to configure the Remote Desktop settings. Second, we need to upload a certificate to the Windows Azure Platform.

Part 1. Some settings in Visual Studio

0. I assume that you are ready to go with your application.

1. Right click on your Windows Azure Project, and select Publish.

2. The Deploy Windows Azure project dialog box appears.

Regardless of whether you want to deploy now or later, click the Configure Remote Desktop connections… link.

3. Another dialog box appears: Remote Desktop Configuration.

Check the Enable connection for all roles checkbox.

4. The next step is to select a certificate. We also need to specify the username and password that will be used when remoting into the instance. To ensure that our credentials (username and password) are encrypted properly, a certificate is used to secure communication between the Visual Studio environment and the Windows Azure instance. The certificate can be self-signed, so there is no need to purchase one from a certificate provider.

Click the dropdown list to select an available certificate or create a new one. To create a new one, you can either use the makecert tool or simply use the wizard provided there.

In this example, I assume we have an available certificate installed.

5. Next, we need to enter a username and password. We also need to specify an account expiration date to limit inappropriate usage in case the credentials are lost.

Click OK on Remote Desktop Configuration dialog.

6. Just click Cancel. Do not click OK in the Deploy Windows Azure project dialog, as I guarantee that you’ll fail to deploy since you are not done yet.

7. If you open the ServiceDefinition.csdef and ServiceConfiguration.cscfg files, you will notice that some new entries have been added automatically.

In ServiceDefinition.csdef file it adds 1 or 2 plugin module? Why it’s 1 or 2?

Okay, pay attention here, for Role that has input endpoint, it’s only require “Remote Access” module. However, for Role that do not have an input endpoint, it will need to have internal endpoint port 3389 that is accessible through the role with input endpoint.

In the example below. There are 2 roles: GatewayRole and TargetRole. In order to remote desktop to GatewayRole, RemoteAccess module only is sufficient enough.

However if we need to remote desktop to TargetRole, we need both include RemoteAccess in this role and RemoteForwarder plugin on the GatewayRole.
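As a rough illustration of the two-role layout just described, the Python sketch below parses a trimmed-down service definition and lists which plugin modules each role imports. The module names RemoteAccess and RemoteForwarder and the role names come from the text above; the service name and the exact XML are assumptions made for this sketch, not the file the wizard generated.

```python
import xml.etree.ElementTree as ET

# XML namespace used by ServiceDefinition.csdef files.
NS = {"sd": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"}

# Hypothetical, trimmed-down definition mirroring the two roles described above.
SAMPLE_CSDEF = """\
<ServiceDefinition name="RemoteDesktopDemo"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="GatewayRole">
    <Imports>
      <Import moduleName="RemoteAccess" />
      <Import moduleName="RemoteForwarder" />
    </Imports>
  </WebRole>
  <WorkerRole name="TargetRole">
    <Imports>
      <Import moduleName="RemoteAccess" />
    </Imports>
  </WorkerRole>
</ServiceDefinition>
"""


def imported_modules(csdef_text):
    """Return {role name: [imported plugin module names]} for every role."""
    root = ET.fromstring(csdef_text)
    result = {}
    for tag in ("WebRole", "WorkerRole"):
        for role in root.findall(f"sd:{tag}", NS):
            result[role.get("name")] = [
                imp.get("moduleName")
                for imp in role.findall("sd:Imports/sd:Import", NS)
            ]
    return result


modules = imported_modules(SAMPLE_CSDEF)
for role_name, names in modules.items():
    print(role_name, names)
```

In this sample only GatewayRole carries the forwarder, while both roles import RemoteAccess.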

Still can’t understand? Forget it; it’s actually transparent to us anyway.

8. Now check out the ServiceConfiguration.cscfg file.

It’s basically the key-value pairs of the settings we entered in the wizard.
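To make the key-value idea concrete, here is a minimal Python sketch that reads the settings of one role from a configuration file into a dictionary. The setting names follow the Microsoft.WindowsAzure.Plugins.RemoteAccess and RemoteForwarder naming used by the plugins; the service name, username and expiration value are made up for illustration, and the encrypted password setting is left out.

```python
import xml.etree.ElementTree as ET

# XML namespace used by ServiceConfiguration.cscfg files.
NS = {"sc": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"}

# Hypothetical configuration; all values are placeholders.
SAMPLE_CSCFG = """\
<ServiceConfiguration serviceName="RemoteDesktopDemo"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="GatewayRole">
    <Instances count="1" />
    <ConfigurationSettings>
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="demo-user" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountExpiration" value="2011-05-11T23:59:59.0000000+08:00" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="true" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>
"""


def role_settings(cscfg_text, role_name):
    """Return the {setting name: value} pairs configured for one role."""
    root = ET.fromstring(cscfg_text)
    for role in root.findall("sc:Role", NS):
        if role.get("name") == role_name:
            return {
                setting.get("name"): setting.get("value")
                for setting in role.findall("sc:ConfigurationSettings/sc:Setting", NS)
            }
    raise KeyError(f"no role named {role_name!r}")


settings = role_settings(SAMPLE_CSCFG, "GatewayRole")
for name, value in settings.items():
    print(f"{name} = {value}")
```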

Time out here…

Take a break here. I’ll continue this on the next post again. Stay tuned.

See also Tim Anderson (@timanderson) described Trying out Remote Desktop to a Microsoft Azure virtual machine on 4/10/2011 in the Visual Studio LightSwitch section.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Steve Marx (@smarx) posted on 4/12/2011 Things You Can Do with Windows Azure to accompany his 4/13/2011 MIX 11 10 Things You Didn’t Know You Could Do with Windows Azure session:

Things You Can Do with Windows Azure

- Always Start with an Introduction

- Combine Web and Worker Roles

- Configure IIS Application Pools

- Create an Application Programmatically

- Deploy an Application Programmatically

- Enable Classic ASP

- Give Out Pre-Signed URLs to Blobs (SAS)

- Host Adaptive Streaming Video in Blob Storage

- Host Web Content in Blob Storage

- Install ASP.NET MVC 3

- Install Application Request Routing

- Install Expression Encoder

- Install PHP

- Install Python

- Install Ruby

- Lease Blobs

- Log Performance Counters in Real-Time

- Package from the Command Line

- Query Blob Storage from the Browser

- Register COM Objects

- Run ASP.NET Web Pages in a Web Role

- Run IIS in a Worker Role

- Run Mencoder

- Run Multiple Websites in the Same Web Role

- Run Node.js

- Run Web and Worker Roles Elevated

- Share Folders (net share)

- Use Custom Domains

- Use Extra Small VMs

- Use Memcached

- Use Remote Desktop

You already knew you could run your .NET-based applications on Windows Azure, but did you know you can encode videos in Windows Azure with Expression Encoder, use Memcached, run multiple web sites in a Web Role, and more? Steve Marx will show you ten things he’s done with Windows Azure that may surprise you, along with the technical details of how each was accomplished.

•• James Conard announced the availability of the Windows Azure Accelerator for Umbraco during his MIX 11 What’s New In the Windows Azure Platform session on 4/12/2011:

The Windows Azure Accelerator for Umbraco is designed to enable Umbraco applications to be easily deployed into Windows Azure. By running in Windows Azure, Umbraco applications can benefit from the automated service management, reduced administration, high availability, and scalability provided by the Windows Azure Platform. For more information about the benefits of Windows Azure, please visit the Windows Azure web site.

The accelerator has been designed to enable you to rapidly deploy Umbraco applications and updates to your application without redeploying a full Windows Azure Service Package. Included with the accelerator is a simple deployment client that can upload and start an Umbraco site within Windows Azure in a matter of minutes.

How does it work?

The accelerator is a simple application which synchronizes the instances of Umbraco in the Hosted Service with those stored in Blob Storage. This synchronization occurs both ways, so any change made on one instance is replicated to all the others.

If a new instance of Umbraco is detected in blob storage, then a new site is created and configured in the Hosted Service IIS, leveraging the Full IIS feature introduced with Windows Azure SDK & Tools 1.3.
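The two-way synchronization idea can be sketched in a few lines of Python. This is not the accelerator's actual code: it assumes each side is a simple map from file path to a (modified time, content) pair, resolves conflicts with a "newest copy wins" rule, and ignores deletions. All of those choices, and the function name, are assumptions made for illustration.

```python
def sync_two_way(local, remote):
    """Bring two {path: (modified_time, content)} maps to the same state.

    The newer copy of each file wins. Returns the paths that were new to the
    local side, which a host could use to decide that a new site appeared.
    """
    new_locally = []
    for path in sorted(set(local) | set(remote)):
        mine, theirs = local.get(path), remote.get(path)
        if mine is None:
            # Only in blob storage: pull it down.
            local[path] = theirs
            new_locally.append(path)
        elif theirs is None or mine[0] > theirs[0]:
            # Only local, or local copy is newer: push it up.
            remote[path] = mine
        elif theirs[0] > mine[0]:
            # Blob storage copy is newer: pull it down.
            local[path] = theirs
    return new_locally


# Hypothetical file sets for one role instance and the blob container.
instance_files = {
    "site1/web.config": (10, "v1"),
    "site1/default.aspx": (5, "old"),
}
blob_files = {
    "site1/default.aspx": (8, "new"),
    "site2/web.config": (3, "fresh"),
}

pulled = sync_two_way(instance_files, blob_files)
print(pulled)
```

After the call both maps hold the same files, and the returned list names the paths that arrived from blob storage for the first time.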

For more information please refer to the setup documentation included with the release.

Requirements

For running the setup scripts and generating the deployment package you will need the following pre-requisites:

•• James Conard announced the availability of the Access Control Service Extensions for Umbraco during his MIX 11 What’s New In the Windows Azure Platform session on 4/12/2011:

Welcome!

Welcome to the home page of the Access Control Service (ACS) Extensions for Umbraco. The ACS Extensions augment your web site with powerful identity and access management capabilities:

- Authenticate users from social identity providers such as Facebook, Windows Live ID, Google and Yahoo.

- Manage those users and access policies through the familiar Umbraco management UI, without the hassle of maintaining passwords for them!

- Make your web site available to business federated partners from Active Directory or any other identity provider with federation capabilities. Apply fine-grained access policies mapping claims to your existing Member Groups.

- Handle user invitations via a powerful email invitation & verification system.

All those capabilities are implemented by integrating Umbraco with the Windows Azure AppFabric Access Control Service. The ACS Extensions are designed to blend into the normal workflow you follow when managing your Umbraco instance.

You can either install the ACS Extensions via the provided NuGet package (the preferred method), or you can follow the step by step document which details how to modify the Umbraco source. In both cases, the final result will be an enhanced Umbraco management UI which supports the new capabilities and guides you through the various configurations.

The simplest way of installing the ACS Extensions is using the NuGet package directly from Visual Studio via Library Package Manager (see the NuGet web site for more info), or downloaded from the ACS Extensions home on Codeplex. Alternatively, you can download the ACS Extensions source code package and refer to the manual installation document it provides.

IMPORTANT: The ACS Extensions code sample has been designed with the intent to demonstrate how to take advantage of the Windows Azure Access Control Service and the Umbraco extensibility model, and is not meant to be used in production as is.

Getting Started

We have documents and video that will help you to hit the ground running, by walking you through the process of setting up and using the ACS Extensions for common authentication and authorization tasks in your Umbraco web site.

- Setting up Umbraco and the ACS Extensions

- Provisioning, Authenticating and Authorizing Members from Social Providers

- Integrating with ADFS and Any Other WS-Federation Provider

If you want to keep a version of those documents on your local environment, you can download the source code package: you will find the same tutorials in HTML and DOCX format.

About Windows Azure AppFabric Access Control Service

Windows Azure AppFabric Access Control Service (ACS) is part of the Windows Azure Platform: it provides you a convenient way of brokering authentication between your application and the identity providers you want to target, decoupling your solutions from the complexities of implementing specific protocols, handling trust relationships and expressing access policies. The ACS Extensions augment Umbraco with identity and access control capabilities offered by ACS.

If you want to know more about ACS, please refer to the Service Bus, Access Control & Caching section in the Windows Azure Portal.

Requirements

- Umbraco 4.7.0

- Microsoft .NET Framework 4

- Internet Information Services 7.x

- SQL Server 2008 Express (or later)

- NuGet 1.2

- Windows Identity Foundation

•• The Windows Azure Team reported on 4/10/2011 a New Azure Training Kit Available:

Today we released an updated version of the Azure Services Training Kit. The first Azure Services Training Kit was released during the week of PDC and it contained all of the PDC hands-on labs. Since then the team has been creating new content covering new features in the platform.

The Azure Services Training Kit April update now includes the following content covering Windows Azure, .NET Services, SQL Services, and Live Services:

- 11 hands-on labs – including new hands-on labs for PHP and Native Code on Windows Azure.

- 18 demo scripts – These demo scripts are designed to provide detailed walkthroughs of key features so that someone can easily give a demo of a service

- 9 presentations – the presentations used for our 3 day training workshops including speaker notes

The training kit is available as an installable package on the Microsoft Download Center. You can download it from http://go.microsoft.com/fwlink/?LinkID=130354

<Return to section navigation list>

Visual Studio LightSwitch

•• Beth Massi (@bethmassi) produced CodeCast Episode 104: Visual Studio LightSwitch with Beth Massi on 4/12/2011:

I woke up early this morning to do a phone interview with an old friend of mine, Ken Levy, about Visual Studio LightSwitch. We chatted about what LightSwitch is and what it’s used for, some of the latest features in Beta 2, deployment scenarios including Azure deployment, and a quick intro to the extensibility model. I’m always pretty candid in these and this one is no different – lots of chuckling and I had a great time as always. Thanks Ken!

Check it out: CodeCast Episode 104: Visual Studio LightSwitch with Beth Massi (Length: 47:44)

Links from the show:

- LightSwitch Developer Center - http://msdn.com/lightswitch

- LightSwitch Team Blog - http://blogs.msdn.com/lightswitch

And if you like podcasts, here are a couple more episodes with the team:

Mykre continued his Access Database to LightSwitch conversion example with LightSwitch Project management Sample - Part 5 on 4/12/2011:

Looking over the Access database application, the Projects and Tasks have some fields that allow you to select from a drop down selection box. Inside Access you can add a Lookup for this or have the system read those values from a table or query. Inside LightSwitch you have similar options; the option I am going with for the conversion is to create a Choice List for these fields.

Previous Posts in the Series.

- Access, SQL Server and LightSwitch Data Types

- LightSwitch Project Management Sample – Part 1, Introduction

- LightSwitch Project Management Sample – Part 2, Access Data Model

- LightSwitch Project management Sample – Part 3, Data Model, Summary and Display Properties

- LightSwitch Project Management Sample – Part 4, Creating Screens

Creating a Choice List inside LightSwitch

- Inside Visual Studio open the Table designer for the Projects Table.

- Highlight the field “Priority”

- Inside the Properties window you will see an option called “Choice List…”, Select this Link.

- Enter the values as per the picture below

- Press OK to update the Choice List

- With the field “Priority” Still highlighted, change the data type to an “Integer”

- Save the Application and if you have the need you can execute it to see the changes.

When doing this change (As we are also changing the Data Type) you will notice that the IDE will give you a warning that changing this will remove all of the data as we are changing the table. This is ok as we do not have anything in there at the moment, but in a production system you will need to make sure that you are ready for the change.

Now that you have run the application you will notice that when adding or changing the priority you are now presented with a drop down box that has the values we just entered in. So in one swoop we have changed the control type, changed the data type and supplied the user with a hard coded value to choose from… and we did not have to change the screen design or code to do it.

Next we need to add another choice list for the Status field using the following values. Once done make the same changes to the Tasks Table in the “Priority” and “Status” Fields.

Pulling a Choice list from a Table

The other option of allowing a User to make a choice based on a list is to have that list stored as a table. Doing this allows that list to be added to.

To do this we will need to create a new table in the application.

Here is the Table.

Now inside the Projects Table Delete the Category Field and add a new Relationship as per the screen shot below.

Now as we have removed the “Category” Field from the Table we will need to go back and change the screen that we have created for the Projects.

On the Search screen when you look at the designer you will notice that the “Category” Field has been removed. What we need to do now is add the new “ProjectCategory” item in its place. On the left side you will see the Queries and the fields that can be added to the screen.

Drag the “ProjectCategory” Item onto the search screen in the place between the Project Name and the Priority items as per the screen shot below.

Doing this will add all of the controls that we need and set up the links. Next we need to do the same for the ProjectDetails and the ProjectsListDetails screens. So that we can manage the Project Categories we need to add another screen. This screen only needs to be simple, so an “Editable Grid Screen” will do. Leave the defaults when creating the screen as we can change things later if needed.

Once we have created the screen you will notice that the screen name is over complicated. To make it easier for the user open the screen in the designer and inside the properties you will see a Display Name option. Change the name there so it is simply “Project Categories”.

Organize our Menus

To make it easier for the user to find their way around, and also for us to lock it down we can use LightSwitch’s built in menus. When you run the application now you will see a menu system on the Left of the screen (Note that as templates start to appear on the web, this menu could be anywhere as it will be in relation to the template that you are using).

If you right click on the Project in the solution explorer and select the properties option you will see a Tab that is called “Screen Navigation” At this point I will get you to highlight the “Projects List Detail” and at the bottom of the Tab press the “Set” Button to set the screen as the start up screen.

You will see in the above screen shot that the screens are broken up into two main groups. At present we are not using the “Administration” section as we do not have the Authentication model set up.

Press the “Add Group” Button and add a “Project Configuration” Section. Once done press the “Include Screen” button and include the “Project Categories” Screen. You will now need to move up into the Tasks Section and Delete the Screen from that group.

Now if you execute the Application you will see that we have two menu groups active and we now have a full section to include our Project Configuration screens into.

Tim Anderson (@timanderson) described Trying out Remote Desktop to a Microsoft Azure virtual machine on 4/10/2011:

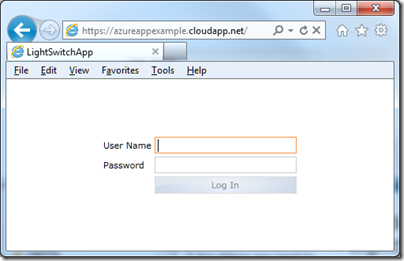

I have been trying out Visual Studio LightSwitch, which has an option to deploy apps to Windows Azure.

Of course I wanted to try this, and after a certain amount of hassle generating certificates and switching between Visual Studio LightSwitch and the Azure management portal I succeeded.

I doubt I would have made it without this step by step guide by Andy Kung. The article begins:

One of the many features introduced in Visual Studio LightSwitch Beta 2 is the ability to publish your app directly to Windows Azure with storage in SQL Azure. We have condensed many steps one would typically have to go through to deploy an application to the cloud manually.

Somewhere between 30 and 40 screens later he writes:

The last step shows you a summary of what you’re about to publish. FINALLY! Click Publish.

We just have to imagine how many screens there would have been if Microsoft had not condensed the “many steps”. The result is also not quite right, because it uses self-signed certificates that will present security warnings when you use the app. For a product supposedly aimed at non-developers it is all hopelessly difficult; but I guess techies are used to this kind of thing.

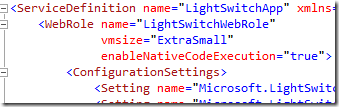

I was not content though. First, I wanted to use an Extra Small instance, and LightSwitch defaults to a Small instance with no obvious way to change it. I cracked that one. You switch the view in Solution Explorer to the File view, then find the file ServiceDefinition.csdef and edit the vmsize attribute:
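The same edit can be scripted. The Python sketch below sets the vmsize attribute to ExtraSmall on every web or worker role in a service definition; the sample XML and the role name are placeholders rather than the file LightSwitch generates, so treat it as an illustration of the change, not a tested deployment step.

```python
import xml.etree.ElementTree as ET

# XML namespace used by ServiceDefinition.csdef files.
SD_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"
# Keep the default namespace unprefixed when the document is written back out.
ET.register_namespace("", SD_NS)


def set_vm_size(csdef_text, size="ExtraSmall"):
    """Return the service definition with vmsize set on every role."""
    root = ET.fromstring(csdef_text)
    role_tags = {f"{{{SD_NS}}}WebRole", f"{{{SD_NS}}}WorkerRole"}
    for role in root:
        if role.tag in role_tags:
            role.set("vmsize", size)
    return ET.tostring(root, encoding="unicode")


# Hypothetical definition with a single role on the default Small size.
SAMPLE = """\
<ServiceDefinition name="DemoApp"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="DemoWebRole" vmsize="Small" />
</ServiceDefinition>
"""

updated = set_vm_size(SAMPLE)
print(updated)
```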

It worked and I had an Extra Small instance.

I was still not satisfied though. I wanted to use Remote Desktop so I could check out the VM Azure had created for me. I could not see any easy way to do this in the LightSwitch project, so I created another Azure project and configured it for Remote Desktop access using the guide on MSDN. More certificate fun, more passwords. I then started to publish the project, but bailed out when it warned me that I was overwriting a previous deployment.

Then I copied the likely looking parts of ServiceDefinition.csdef and ServiceConfiguration.cscfg from the standard Azure project to the LightSwitch project. In ServiceDefinition.csdef that was the Imports section and the Certificates section. In ServiceConfiguration.cscfg it was all the settings starting Microsoft.WindowsAzure.Plugins.Remote…; and again the Certificates section. I think that was it.
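For the configuration half of that copy, a sketch along the following lines would carry every setting whose name starts with Microsoft.WindowsAzure.Plugins.Remote from one configuration into each role of another. It does not touch the Certificates sections or the Imports in the service definition, and the sample documents, role names and values are invented for the example.

```python
import xml.etree.ElementTree as ET

SC_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"
ET.register_namespace("", SC_NS)
PREFIX = "Microsoft.WindowsAzure.Plugins.Remote"


def q(tag):
    """Qualify a tag name with the service configuration namespace."""
    return f"{{{SC_NS}}}{tag}"


def copy_remote_settings(source_text, target_text):
    """Copy remote desktop plugin settings from source into every target role."""
    source = ET.fromstring(source_text)
    target = ET.fromstring(target_text)
    remote = [s for s in source.iter(q("Setting"))
              if s.get("name", "").startswith(PREFIX)]
    for role in target.findall(q("Role")):
        settings = role.find(q("ConfigurationSettings"))
        if settings is None:
            settings = ET.SubElement(role, q("ConfigurationSettings"))
        existing = {s.get("name") for s in settings}
        for s in remote:
            if s.get("name") not in existing:
                ET.SubElement(settings, q("Setting"),
                              {"name": s.get("name"), "value": s.get("value", "")})
                existing.add(s.get("name"))
    return ET.tostring(target, encoding="unicode")


# Hypothetical source (standard Azure project) and target (LightSwitch project).
SOURCE = f"""\
<ServiceConfiguration serviceName="StandardProject" xmlns="{SC_NS}">
  <Role name="WebRole1">
    <ConfigurationSettings>
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="true" />
      <Setting name="SomeOtherSetting" value="ignored" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>
"""
TARGET = f"""\
<ServiceConfiguration serviceName="LightSwitchProject" xmlns="{SC_NS}">
  <Role name="DemoWebRole">
    <Instances count="1" />
  </Role>
</ServiceConfiguration>
"""

merged = copy_remote_settings(SOURCE, TARGET)
print(merged)
```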

It worked. I published the LightSwitch app, went to the Azure management portal, selected the instance, and clicked Connect.

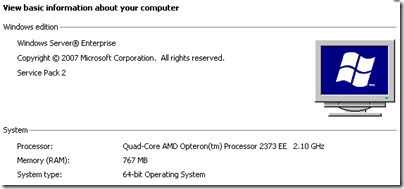

What I found was a virtual Quad-Core Opteron with 767MB RAM and running Windows Server 2008 Enterprise SP2. It seems Azure does not use Server 2008 R2 yet – at least, not for Extra Small instances.

750MB RAM is less than I would normally consider for Server 2008 – this is Extra Small, remember – but I tried using my simple LightSwitch app and it seemed to cope OK, though memory is definitely tight.

This VM is actually not that small in relation to many Linux VMs out there, happily running Apache, PHP, MySQL and numerous web applications. Note that my Azure VM is not running SQL Server; SQL Azure runs on separate servers. I am not 100% sure why Azure does not use Server Core for VMs like this. It may be because server core is usually used in conjunction with GUI tools running remotely, and setting up all the permissions for this to work is a hassle.

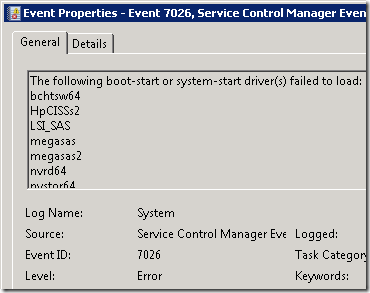

I took a look at the Event Viewer. I have never seen a Windows event log without at least a few errors, and I was interested to see if a Microsoft-managed VM would be the first. It was not, though there are a mere 16 “Administrative events” which is pretty good, though the VM has only been running for an hour or so. There were a bunch of boot-start drivers which failed to load:

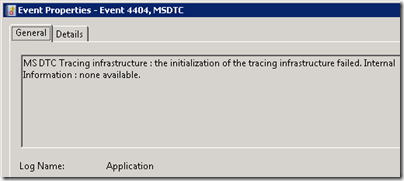

and this, which I would describe as a typical obscure and probably-unimportant-but-who-knows Windows error:

The Azure VM is not domain-joined, but is in a workgroup. It is also not activated; I presume it will become activated if I leave it running for more than 14 days.

Internet Explorer is installed but I was unable to browse the web, and attempting to ping out gave me “Request timed out”. Possibly strict firewall rules prevent this. It must be carefully balanced, since applications will need to connect out.

The DNS suffix is reddog.microsoft.com – a remnant of the Red Dog code name which was originally used for Azure.

As I understand it, the main purpose of remote desktop access is for troubleshooting, not so that you can install all sorts of extra stuff on your VM. But what if you did install all sorts of extra stuff? It would not be a good idea, since – again as I understand it – the VM could be zapped by Azure at any time, and replaced with a new one that had reverted to the original configuration. You are not meant to keep any data that matters on the VM itself; that is what the Azure storage services are for.


See also Wely Lau explained Establishing Remote Desktop to Windows Azure Instance – Part 1 in a 4/11/2011 post in the Windows Azure VM Role, Virtual Network, Connect, RDP and CDN section.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

•• The Windows Azure Team announced in a 4/12/2011 email to current users that the Windows Azure Platform Introductory Special will be extended to 9/30/2011 as follows:

We are pleased to inform you that as of April 12, 2011, the Windows Azure platform Introductory Special has been extended through September 30, 2011 and now includes 20 GB of storage plus data transfers of up to 20 GB in and 20 GB out. These enhancements have been applied to your subscription at no extra charge. Other services remain unchanged.

The Introductory Special promotion will end September 30, 2011 and all usage for billing months beginning after this date will be charged at the standard rates. Please refer to this link for complete details on your offer.

From the updated page:

This promotional offer enables you to try a limited amount of the Windows Azure platform at no charge. The subscription includes a base level of monthly compute hours, storage, data transfers, a SQL Azure database, Access Control transactions and Service Bus connections at no charge. Please note that any usage over this introductory base level will be charged at standard rates.

Included each month at no charge:

- Windows Azure

- 750 hours of an extra small compute instance

- 25 hours of a small compute instance

- 20 GB of storage

- 50,000 storage transactions

- SQL Azure

- 1GB Web Edition database (available for first 3 months only)

- Windows Azure platform AppFabric

- 100,000 Access Control transactions

- 2 Service Bus connections

- Data Transfers (worldwide)

- 20 GB in

- 20 GB out

Any monthly usage in excess of the above amounts will be charged at the standard rates. This introductory special will end on September 30, 2011 and all usage will then be charged at the standard rates.
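A quick way to reason about these allowances is to subtract them from a month's usage and see what is left over. The Python sketch below does that for the metered items listed above; the meter names are invented for the example, the SQL Azure database is left out because it is time-limited rather than metered, and no prices are included because the standard rates are not quoted here.

```python
# Monthly amounts included at no charge, taken from the list above.
FREE_MONTHLY = {
    "extra_small_hours": 750,
    "small_hours": 25,
    "storage_gb": 20,
    "storage_transactions": 50_000,
    "acs_transactions": 100_000,
    "service_bus_connections": 2,
    "data_in_gb": 20,
    "data_out_gb": 20,
}


def billable_overage(usage):
    """Return {meter: amount above the free allowance} for meters that exceed it."""
    unknown = set(usage) - set(FREE_MONTHLY)
    if unknown:
        raise KeyError(f"unknown meters: {sorted(unknown)}")
    return {
        meter: used - FREE_MONTHLY[meter]
        for meter, used in usage.items()
        if used > FREE_MONTHLY[meter]
    }


# One extra small instance running for a whole 31-day month uses 744 hours.
one_instance = billable_overage({"extra_small_hours": 31 * 24})
# Two such instances plus 25 GB of outbound data go over on both meters.
two_instances = billable_overage({"extra_small_hours": 2 * 31 * 24, "data_out_gb": 25})

print(one_instance)
print(two_instances)
```

The first case shows why 750 hours matters: a single extra small instance left running all month stays inside the allowance, while a second instance does not.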

• Remy Pairault reported Windows Azure Traffic Manager CTP is live on 4/12/2011:

In time for MIX’11, Windows Azure is unveiling the Windows Azure Traffic Manager Community Technology Preview (CTP).

This feature will allow Windows Azure customers to more easily balance application performance across multiple geographies. Using policy management you will be able to route some traffic to a hosted service in one region, and route another portion of your application traffic to another region.

The applications of such a feature are broken down three ways:

- Performance: route traffic to one region or another based on performance

- Failover: if the Traffic Manager detects that the service is offline, it will send traffic to the next best available hosted service

- Round Robin: route traffic alternatively across the hosted services in all regions
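The three policies above can be pictured with a small Python sketch. It is only a model of the selection rules as described in the list, not how Traffic Manager is implemented: the Endpoint type, the region labels, the latency figures, and the choice to skip offline services during round robin are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    region: str        # hypothetical region label
    online: bool       # result of a health probe
    latency_ms: float  # measured latency from the caller


def pick_performance(endpoints):
    """Performance: choose the online endpoint with the lowest latency."""
    live = [e for e in endpoints if e.online]
    if not live:
        raise RuntimeError("no hosted service is online")
    return min(live, key=lambda e: e.latency_ms)


def pick_failover(endpoints):
    """Failover: choose the first online endpoint in priority order."""
    for endpoint in endpoints:
        if endpoint.online:
            return endpoint
    raise RuntimeError("no hosted service is online")


class RoundRobin:
    """Round robin: rotate through the endpoints, skipping offline ones."""

    def __init__(self, endpoints):
        self._endpoints = list(endpoints)
        self._next = 0

    def pick(self):
        for _ in range(len(self._endpoints)):
            endpoint = self._endpoints[self._next % len(self._endpoints)]
            self._next += 1
            if endpoint.online:
                return endpoint
        raise RuntimeError("no hosted service is online")


services = [
    Endpoint("us-north", online=True, latency_ms=80),
    Endpoint("europe-west", online=True, latency_ms=30),
    Endpoint("asia-east", online=False, latency_ms=10),
]

fastest = pick_performance(services).region
primary = pick_failover(services).region
rotation = RoundRobin(services)
sequence = [rotation.pick().region for _ in range(4)]
print(fastest, primary, sequence)
```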

The Windows Azure Traffic Manager is now available as a Community Technology Preview (CTP), free of charge, but it is invitation only. You’ll have to request access on the Windows Azure Portal, and invitations will be processed based on feature and operational criteria, so you’ll have to be patient.

To submit your invitation request to join the Windows Azure Traffic Manager CTP, follow the instructions below:

- Go to http://windows.azure.com

- Sign in using a valid Windows Live ID for your Windows Azure subscription

- Click on the Beta Programs tab on the left nav bar

- Select the check box for Windows Azure Traffic Manager

- Click on Apply for Access


• The Windows Azure Team announced Windows Azure News from MIX11 on 4/12/2011:

We announced several updates to the Windows Azure platform today at MIX11 in Las Vegas. These new capabilities will help developers deploy applications faster, accelerate their application performance and enable access to applications through popular identity providers including Microsoft, Facebook and Google. Details follow.

New Services and Functionality

A myriad of new Windows Azure services and functionality were unveiled today. These include:

- An update to the Windows Azure SDK that includes a Web Deployment Tool to simplify the migration, management and deployment of IIS Web servers, Web applications and Web sites. This new tool integrates with Visual Studio 2010 and the Web Platform Installer. This update will ship later today.

- Updates to the Windows Azure AppFabric Access Control service, which provides a single-sign-on experience to Windows Azure applications by integrating with enterprise directories and Web identities.

- Release of the Windows Azure AppFabric Caching service in the next 30 days, which will accelerate the performance of Windows Azure and SQL Azure applications.

- A community technology preview (CTP) of Windows Azure Traffic Manager, a new service that allows Windows Azure customers to more easily balance application performance across multiple geographies.

- A preview of the Windows Azure Content Delivery Network (CDN) for Internet Information Services (IIS) Smooth Streaming capabilities, which allows developers to upload IIS Smooth Streaming-encoded video to a Windows Azure Storage account and deliver that video to Silverlight, iOS and Android Honeycomb clients.

Windows Azure Platform Offer Changes

We also announced several offer changes today, including:

- The extension of the expiration date and increases to the amount of free storage, storage transactions and data transfers in the Windows Azure Introductory Special offer. This promotional offer now includes 750 hours of extra-small instances and 25 hours of small instances of the Windows Azure service, 20GB of storage, 50K of storage transactions, and 40GB of data transfers provided each month at no charge until September 30, 2011. More information can be found here.

- An existing customer who signed up for the original Windows Azure Introductory Special offer will get a free upgrade as of today. An existing customer who signed up for a different offer (other than the Windows Azure Introductory Special) would need to sign up for the updated Windows Azure Introductory Special Offer separately.

- MSDN Ultimate and Premium subscribers will benefit from increased compute, storage and bandwidth benefits for Windows Azure. More information can be found here.

- The Cloud Essentials Pack for Microsoft partners now includes 750 hours of extra-small instances and 25 hours of small instances of the Windows Azure service, 20GB of storage and 50GB of data transfers provided each month at no charge. In addition, the Cloud Essentials Pack also contains other Microsoft cloud services including SQL Azure, Windows Azure AppFabric, Microsoft Office 365, Windows Intune and Microsoft Dynamics CRM Online. More information can be found here.

Please read the press release or visit the MIX11 Virtual Press Room to learn more about today's announcements at MIX11. For more information about the Windows Azure AppFabric announcements, read the blog post, "Announcing the Commercial Release of Windows Azure AppFabric Caching and Access Control" on the Windows Azure AppFabric blog.

Follow @MIXEvent or search #MIX11 on Twitter for up-to-the-minute updates throughout the event.

Arik Hesseldahl [pictured below] had Seven Questions for Doug Hauger, Head of Microsoft’s Azure Cloud Platform in a 4/11/2011 interview for D|All Things Digital:

I had always been a little confused about Microsoft’s Windows Azure cloud computing platform. Amazon Web Services I get. But had you asked me to tell you how it and Windows Azure are different, I would have been a little hard pressed to tell you.

I can tell you that Windows Azure is going to make the telematics systems in the next generation of Toyota cars smarter. And I also know that this unit of Microsoft has been in a state of management flux recently. Amitabh Srivastava, the Microsoft Distinguished Fellow, who in 2006 took over a project then known only as Red Dog that went on to become Azure, left the company in February.

It’s no secret that, like so many other companies, Microsoft has some big plans for cloud services. It recently disclosed that it plans to spend more than $8 billion in research and development funds on its cloud strategy.

On a recent visit to the Microsoft campus in Redmond, I got a chance to sit down with Doug Hauger, Microsoft’s general manager of Windows Azure [pictured at right]. And my first question was really really basic.

NewEnterprise: Doug, there’s so much happening in the cloud computing space these days, and most of the time when people think of cloud services they think of Amazon Web Services. And if they mention Windows Azure, they think, well, that’s Microsoft’s answer to Amazon. But you describe Azure as more of a platform-as-a-service. Can you walk me through the differences?

Hauger: Windows Azure started about five years ago. At that point it started because the company, as with all service providers, was facing some challenges on providing large, scalable, manageable services, not just to consumers, but to businesses that could dynamically scale, and that we could innovate on quickly, and bring out new features. Originally it was meant to be a platform we would use internally for services that we would then deliver out to customers. We quickly realized that we should sell it to partners and customers, and allow them to build on it as a platform.

There are fundamental differences between infrastructure as a service and what we did as platform as a service. It’s different in key ways from, say, what Amazon does with EC2 and S3 or VMWare being implemented in a data center. Our starting point for the design was to see the data center as a unit. That means the networking structure, the load-balancers, the power management, and so on–rather than in infrastructure as a service, you start from an individual server and move up.

If you allocate a service into Windows Azure and say you want it available 100 percent of the time, we will allocate it across multiple upgrade domains and physical power domains in such a way so that if any individual rack goes down or if we’re upgrading the operating system, there’s no interruption in service. That’s just a fundamentally different starting point than starting with an individual server and moving up. And the way that we do that is we have built out an abstraction layer of APIs that let you write to a set of services, storage services, computer services, networking services, et cetera. As a developer you can write to the service, and give us your application, and it just gets provisioned through what we call a fabric controller, that controls the data center, and also across multiple data centers. That was a design point. That’s how we allow people to write services that can scale and won’t fail and will be available all the time.

The conversation about infrastructure as a service typically starts at cost savings. You go see a customer and they say they want to cut their IT budget and outsource their IT, and so they start there. Platform as a service you start at the cost savings, but very quickly you see 10, 20 or 30 percent cost savings. But the conversation quickly turns to the innovation life cycle that they can get out of the platform. It’s much faster than you can at infrastructure as a service.

The big point that everyone gets about the cloud is that they can use it to save money, but then they quickly start asking what more can they do with the cloud. Are you seeing the same thing?

Yes, exactly. In the enterprise, they’re starting to turn the crank on innovation. I talk to customers who are turning things around in six weeks or a month whereas before they would six months or a year. I actually just talked to a customer the other day, and they said their developers were spending 40 to 50 percent of their time managing services and they couldn’t use that time writing software which was their job. When they moved to a platform as a service, they didn’t have to worry about that anymore. We’re seeing this happening in the enterprise where people are doing this for internal development and on services they’re building for their customers.

One example: Daimler just did their new version of the smart car. They wanted a service so you can check the status of your car when it’s charging from your smart phone, locate it, et cetera. They turned it around in a couple of weeks on Azure and launched it at the same time as the car launched.

We’re also seeing small players compete at the enterprise level. There’s a small company called Margin Pro and they do mortgage analysis and risk assessment on mortgages. Basically it’s a couple of economists and developers. They wrote the software on Windows Azure, and now they have 70 banks around the world, tens of millions of dollars in revenue, and they are competing with some of the biggest financial services companies in the world because of this back-end infrastructure data center they can use to deliver their results to their customers.

But do you have customers who run standard apps on it too?

Many standard applications have some level of customization, and so we’re seeing a lot of hybrid applications, where customers are extending them into Azure. We have a case with Coca-Cola Enterprises which has a back-end order-processing app that they’ve extended into Azure. And what they wanted to do was get more reach and more agility for the front-end. So they built a secure connection between their data center and Windows Azure and then extended the application out to their partners and customers, essentially people like Domino’s Pizza who order Coca Cola products. We’re seeing a lot of these cases of existing applications being extended like that.

We’re also seeing companies using the high performance computing workload. One example is a company called Greenbutton, which has done a high performance scheduling and billing system on Azure. Another is Pixar, which has an application called RenderMan, which does rendering. Most large animation houses have their own clusters they do this rendering on. Pixar wanted to open up a market for smaller animation houses, little Pixars if you will. They’re working with Greenbutton to embed their technology into RenderMan. They can farm their rendering out to Azure and be billed on a usage basis. That’s a case where you have a large company and a smaller one working together and leveraging the power of the cloud to open up a whole new marketplace where they can be competitive. We call it the democratization of IT.

At what point is the customers’ thinking right now? Are they still at that point where they want to see how much money they can save by moving things that are on-premise to the cloud or are they past that by now?

I would say there’s three buckets of customers. I’ve been in this role for three years and the conversations have evolved in some interesting ways. Three years ago I was telling people they should be adopters and get on board with this platform early. They all said to come back and talk to them in five years. Then about two years ago, the majority of customers were in the first bucket, interested in wanting to save money but they weren’t interested in doing any new innovation. And then there were a few willing to innovate a bit by extending their applications into the cloud. Today I would say many, but not the majority yet, but a lot of them say they get the cloud, they get the cost savings, and now they want to drive the innovation life cycle faster. And there is a growing percentage who are willing to do something completely different and compete in a new way and build a brand new business. It’s been exciting to see that.

What’s been really exciting has been seeing mid-sized companies realizing they can use the cloud to give them an advantage to innovate faster and compete against really big companies. So that is sort of the landscape. Interestingly, I’ve been seeing a lot more adoption among the financial services companies than I had anticipated.

Aren’t they the ones who are supposed to be the most conservative when it comes to IT? I mean, they’re aggressive on performance, but obsessed with security and so skeptical of using the cloud because they don’t want to let their data leave their hands.

Exactly. But think about financial services. They’ve been in cloud computing forever, but it’s just been running on their own proprietary clouds. And so they are very good about understanding their application portfolio, and what can run in a public cloud, what has to stay in a private cloud, and how they can span those clouds. You can basically say you want to do risk assessment on portfolios, you anonymize the data, and you run it on the public cloud, you do all the analytics, you bring it back on-premise and then you deliver it to your customer. Having that kind of mentality in that industry allows them to move very quickly.

Also, manufacturing is moving and adopting the cloud faster than I would have guessed. And interestingly enough, government–not so much federal, because there’s so many certification requirements–but state and local governments are embracing the cloud because of the economic situation, and these are not just governments within the U.S. In Australia and Western Europe, we’re seeing governments adopting and building out applications so they can get services out to their citizens.

So what’s keeping you up at night? What makes you worry?

There’s a few things I think about. While we drive customers to a very fast innovation life cycle, we need to stay ahead of that innovation life cycle ourselves. We’ve done a pretty good job with that. One example, when we first released in beta a few years ago, we had .NET but we didn’t have PHP or Java. We got feedback immediately, almost on the first day, that customers wanted those and right away. And so we turned it around and added those within three months. Our ability to turn the crank pretty quickly is there. And that is something that in the software industry and specifically Microsoft, we have to make sure we make this turn toward service delivery, where we have to innovate quickly so you can deliver services. I think we’re doing a good job, but it’s something top of mind for me.

What are they asking for now? Is there something new the customers want that they don’t have?

They’re asking for continued investment in Java. We have it now, but making it a truly first class citizen, which is what we’re focused on delivering. We also need to keep our ear to the ground around things like application frameworks, extending the modeling capabilities in Visual Studio and things like that. It’s just a matter of thinking about the developer. We need to understand what they want, that’s what we’re here for.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

•• Dell Computer posted a Windows Azure Technology From Dell page as part of its recently revamped Cloud Computing site:

Windows Azure Technology From Dell

Providing Cloud Options, Easing Cloud Adoption

Searching for the right way to introduce the flexibility, scalability and cost savings of cloud computing into your IT environment? Dell can help. We have partnered with Microsoft to add the Windows® Azure™ platform to the options available from the Dell Services cloud.

We have also partnered in creating the Dell-powered Windows Azure Platform Appliance. Working on the platform-as-a-service (PaaS) cloud delivery model — one of three cloud delivery models — the Windows Azure Platform Appliance offers cost-saving elasticity. You can scale up when you need capacity and pull back when you don’t.

The result of using the Dell-powered Windows Azure Platform Appliance is a flexible cloud delivery model that adapts to your unique needs and goals — in other words, it’s a cloud the way you want it. With public or private options, we offer the level of cloud support you need, whether it’s:

- Standardized technology and proactive, remote management

- Simplicity of services delivered through the cloud

- Ease of use, with automated, self-configuring, open technology

- Hyper-efficient storage that’s tiered, safe, affordable and open

Dell Services is ready to help you evaluate where and how your organization can benefit from cloud computing. We offer:

- Cloud consulting services customized for your specialized needs

- Application migration, integration and implementation services

- Expertise in the full range of cloud models, including software as a service, infrastructure as a service and platform as a service

Dell has been designing infrastructure solutions for the leading cloud service providers for years. Talk to Dell today about how we can help you move to the cloud.

Cloud Solutions

Reduce operating expenses; increase capacity; provide resiliency — no matter what drives your cloud goals, Dell can help you get there.

More Details

Cloud-Based Services

Boost IT capabilities without adding hardware maintenance complexities and expensive up-front costs.

More Details

I’m glad to see Dell devoting some Web space to last year’s agreement with Microsoft to deliver turnkey WAPA data centers.

•• Judith Hurwitz (@jhurwitz) asserted Yes, you can have an elastic private cloud in a 4/11/2011 post:

I was having a discussion with a skeptical CIO the other day. His issue was that a private cloud isn’t real. Why? In contrast to the public cloud, which has unlimited capability on demand, a private cloud is limited by the size and capacity of the internal data center. While I understand this point I disagree and here is why. I don’t know of any data center that doesn’t have enough servers or capacity. In fact, if you talk to most IT managers they will quickly admit that they don’t lack physical resources. This is why there has been so much focus on server virtualization. With server virtualization, these organizations actually get rid of servers and make their IT organization more efficient.

Even when data centers are able to improve their efficiency, they still do not lack resources. What data centers lack is the organizational structure to enable provisioning of those resources in a proactive and efficient way. The converse is also true: data centers lack the ability to reclaim resources once they have been provisioned.

So, I maintain that the problem with the data center is not a lack of resources but rather the management and the automation of those resources. Imagine an organization leverages the existing physical resources in a data center by adding self-service provisioning and business process rules for allocating resources based on business need. This would mean that when developers start working on a project they are allocated the amount of resources they need – not what they want. More importantly, when the project is over, those resources are returned to the pool.
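The allocate-and-reclaim cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `ResourcePool` class, its method names, and the per-project `approved_limit` (standing in for a business rule) are hypothetical and do not come from the post.

```python
class ResourcePool:
    """A toy pool of servers with self-service provisioning and reclaim."""

    def __init__(self, total_servers):
        self.free = total_servers
        self.allocations = {}  # project name -> servers currently held

    def provision(self, project, requested, approved_limit):
        # Grant what the business rule allows (need), not what was asked (want),
        # and never more than the pool actually has free.
        granted = max(0, min(requested, approved_limit, self.free))
        if granted:
            self.free -= granted
            self.allocations[project] = self.allocations.get(project, 0) + granted
        return granted

    def release(self, project):
        # When the project ends, its servers go back to the shared pool.
        returned = self.allocations.pop(project, 0)
        self.free += returned
        return returned


pool = ResourcePool(total_servers=10)
granted = pool.provision("billing-rewrite", requested=8, approved_limit=4)
free_during_project = pool.free
returned = pool.release("billing-rewrite")
print(granted, free_during_project, returned, pool.free)
```

The point of the sketch is the second method: elasticity in a fixed-size data center depends as much on reliably returning capacity as on handing it out.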

This, of course, does not work for every application and every workload in the data center. There are applications that are highly specialized and are not going to benefit from automation. However, there can indeed be increasingly large aspects of computing that can be transformed in the private cloud environment based on truly tuning workloads and resources to make the private cloud as elastic as what we think of as an ever-expanding public cloud.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

No significant articles today.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

•• Geva Perry (@gevaperry) reported VMWare's CloudFoundry.com Announcement in a 4/12/2011 post to his Thinking Out Cloud blog:

Today VMWare is about to make a big announcement about CloudFoundry.com. I'm writing this post before the actual announcement is made and while on the road, so more details will probably emerge later, but here is the gist of it:

VMWare is launching CloudFoundry.com. This is a VMWare owned and operated Platform-as-a-Service. It's a big step in the OpenPaaS initiative they have been talking about for the past year: "Multiple Clouds, Multiple Frameworks, Multiple Services".

For those of you keeping track, CloudFoundry is the official name for the DevCloud and AppCloud services which have come out in various alpha and beta releases in the last few months.

The following diagram summarizes the basic idea behind CloudFoundry.com:

In other words, in addition to running your apps on VMWare's own PaaS service (CloudFoundry.com), VMWare will make this framework available to other cloud providers -- as well as for enterprises to run in-house as a private cloud (I'm told this will be in beta by the end of this year). In fact, they are going to open source CloudFoundry under an Apache license. They're also going to support multiple frameworks, not just their own Java/Spring framework but Ruby, Node.JS and others (initially, several JVM-based frameworks). This concept is similar to the one from DotCloud, which I discussed in What's the Best Platform-as-a-Service.