Windows Azure and Cloud Computing Posts for 4/4/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 4/5/2011 10:30 AM PDT with articles marked • from Wade Wegner and Karandeep Anand, Mark Seemann, Noah Gift, Tim Anderson, Adron Hall, Kenon Owens, Mikael Ricknäs, SharePoint Pro, IDC and IDG Enterprise, Microsoft PR and Beth Schultz.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Edu Lorenzo explained how to grant Read only access to SQL Azure DB in a 4/3/2011 post:

Just recently, I was testing out some stuff with SQL Azure. Then I got this bright idea of giving out the connection string to some friends, also for testing. As it turned out, they all tried and succeeded in inserting data, a lot of data. So I got to thinking: is it possible to give out SQL Azure as read-only?

Well there’s no better way than to try.

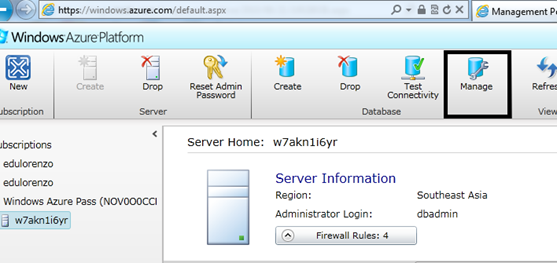

So I logged in to my SQL Azure DB and clicked “Manage” on the Master DB. This should restrict writing to ALL my databases as SQL Azure still does not support the USE keyword.

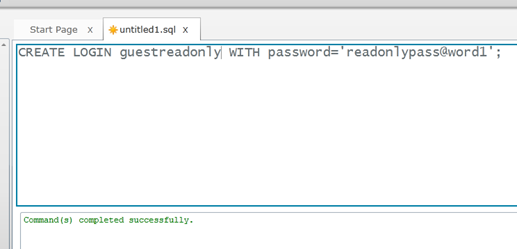

Then I create a readonly login

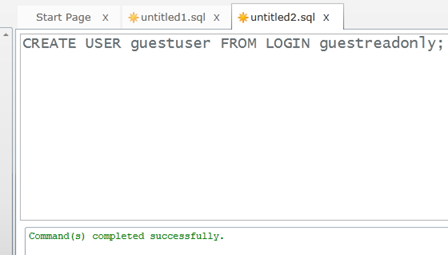

Then I create a user as guestreadonly

OK. So I have a login and a user. What I need to do next is to assign the role to the user and that’s it!

EXEC sp_addrolemember 'db_datareader', 'guestuser';
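The three steps above (create a login, create a user, add the user to the db_datareader role) can be sketched as a small Python helper that only builds the T-SQL text. The login name, user name and password below are hypothetical placeholders, not values from the post, and the sketch assumes the usual SQL Azure split where the login is created in master and the user and role membership in the target database.

```python
def quote_ident(name):
    """Bracket-quote a T-SQL identifier, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value):
    """Single-quote a T-SQL string literal, doubling embedded quotes."""
    return "'" + value.replace("'", "''") + "'"


def read_only_grant_statements(login, user, password):
    """Return the T-SQL statements for a read-only user, in execution order.

    All three arguments are caller-supplied placeholders (assumptions).
    """
    return [
        # Step 1: create the login (assumed to run against master).
        f"CREATE LOGIN {quote_ident(login)} WITH PASSWORD = {quote_literal(password)};",
        # Step 2: create a database user mapped to that login.
        f"CREATE USER {quote_ident(user)} FROM LOGIN {quote_ident(login)};",
        # Step 3: add the user to the built-in read-only role.
        f"EXEC sp_addrolemember {quote_literal('db_datareader')}, {quote_literal(user)};",
    ]


if __name__ == "__main__":
    for statement in read_only_grant_statements("readonlylogin", "guestuser", "Str0ng!Passw0rd"):
        print(statement)
```

Building the statements as text keeps the sketch independent of any particular database driver; the output can be pasted into whichever query tool is at hand.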

<Return to section navigation list>

Marketplace DataMarket and OData

The Visit Mix 11 Team announced MIX11 OData Feed Now Available on 3/31/2011 (missed when posted):

Here’s Fabrice Marguerie’s Sesame OData feed reader displaying a few of the MIX 11 sessions tagged Windows Azure:

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

• Wade Wegner (@WadeWegner) and Karandeep Anand wrote Introducing the Windows Azure AppFabric Caching Service for the 4/2011 issue of MSDN Magazine. From the introduction:

In a world where speed and scale are the key success metrics of any solution, they can’t be afterthoughts or retrofitted to an application architecture—speed and scale need to be made the core design principles when you’re still on the whiteboard architecting your application.

The Windows Azure AppFabric Caching service provides the necessary building blocks to simplify these challenges without having to learn about deploying and managing another tier in your application architecture. In a nutshell, the Caching service is the elastic memory that your application needs for increasing its performance and throughput by offloading the pressure from the data tier and the distributed state so that your application is able to easily scale out the compute tier.

The Caching service was released as a Community Technology Preview (CTP) at the Microsoft Professional Developers Conference in 2010, and was refreshed in February 2011.

The Caching service is an important piece of the Windows Azure platform, and builds upon the Platform as a Service (PaaS) offerings that already make up the platform. The Caching service is based on the same code-base as Windows Server AppFabric Caching, and consequently it has a symmetric developer experience to the on-premises cache.

The Caching service offers developers the following capabilities:

- Pre-built ASP.NET providers for session state and page output caching, enabling acceleration of Web applications without having to modify application code

- Caches any managed object—no object size limits, no serialization costs for local caching

- Easily integrates into existing applications

- Consistent development model across both Windows Azure AppFabric and Windows Server AppFabric

- Secured access and authorization provided by the Access Control service

While you can set up other caching technologies (such as memcached) on your own as instances in the cloud, you’d end up installing, configuring and managing the cache clusters and instances yourself. This defeats one of the main goals of the cloud, and PaaS in particular: to get away from managing these details. The Windows Azure AppFabric Caching service removes this burden from you, while also accelerating the performance of ASP.NET Web applications running in Windows Azure with little or no code or configuration changes. We’ll show you how this is done throughout the remainder of the article.
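The idea of offloading pressure from the data tier is commonly implemented with the cache-aside pattern, sketched below in Python. This is not the Caching service's API (which is a .NET client library); `DictCache`, `get_with_cache` and the loader callback are hypothetical names, and an in-memory dictionary stands in for the distributed cache.

```python
import time


class DictCache:
    """In-memory stand-in for a distributed cache (hypothetical, for illustration)."""

    def __init__(self):
        self._items = {}  # key -> (value, expiry time on the monotonic clock)

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            # Expired entries behave like a cache miss.
            del self._items[key]
            return None
        return value

    def put(self, key, value, ttl_seconds):
        self._items[key] = (value, time.monotonic() + ttl_seconds)


def get_with_cache(cache, key, load_from_data_tier, ttl_seconds=60.0):
    """Cache-aside lookup: try the cache first, fall back to the data tier.

    A value of None is treated as a miss, so None results are never cached.
    """
    value = cache.get(key)
    if value is None:
        value = load_from_data_tier(key)
        cache.put(key, value, ttl_seconds)
    return value


if __name__ == "__main__":
    cache = DictCache()
    loader = lambda key: {"id": key, "name": "example"}  # pretend database read
    print(get_with_cache(cache, "product-1", loader))  # miss: calls the loader
    print(get_with_cache(cache, "product-1", loader))  # hit: served from the cache
```

The second lookup never touches the loader, which is the offloading effect the introduction describes.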

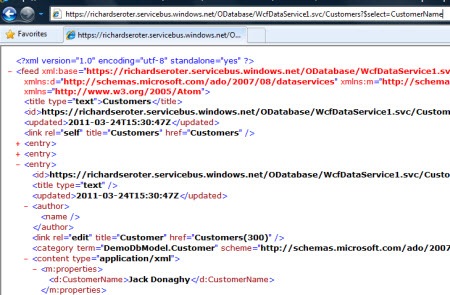

Richard Seroter (@rseroter) described Exposing On-Premise SQL Server Tables As OData Through Windows Azure AppFabric on 3/24/2011 (missed when published):

Have you played with OData much yet? The OData protocol allows you to interact with data resources through a RESTful API. But what if you want to securely expose that OData feed out to external parties? In this post, I’ll show you the very simple steps for exposing an OData feed through Windows Azure AppFabric.

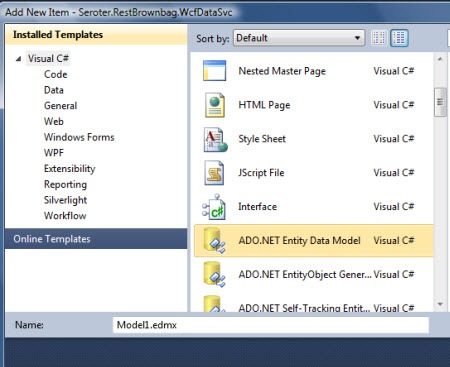

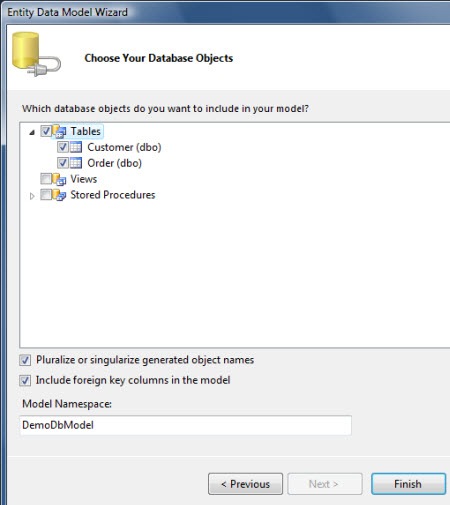

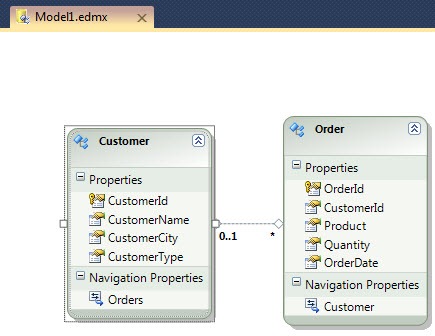

- Create ADO.NET Entity Data Model for Target Database. In a new VS.NET WCF Service project, right click the project and choose to add a new ADO.NET Entity Data Model. Choose to generate the model from a database. I’ve selected two tables from my database and generated a model.

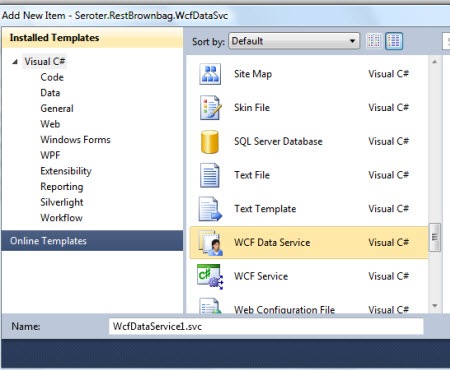

- Create a new WCF Data Service. Right-click the Visual Studio project and add a new WCF Data Service.

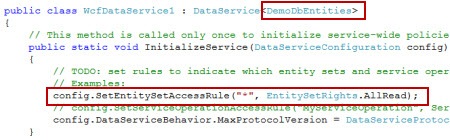

- Update the WCF Data Service to Use the Entity Model. The WCF Data Service template has a placeholder where we add the generated object that inherited from ObjectContext. Then, I uncommented and edited the “config.SetEntitySetAccessRule” line to allow Read on all entities.

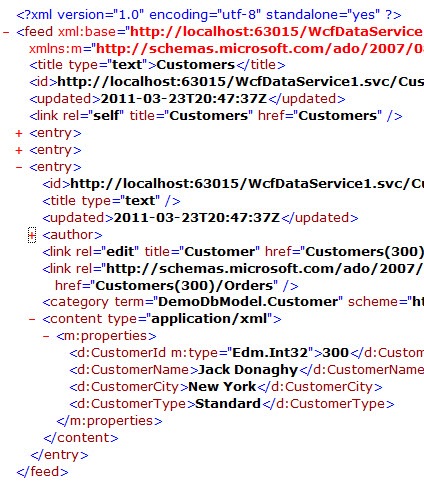

- View the Current Service. Just to make sure everything is configured right so far, I viewed the current service and hit my “/Customers” resource and saw all the customer records from that table.

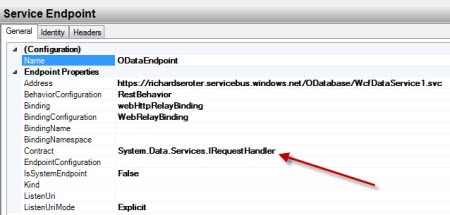

- Update the web.config to Expose via Azure AppFabric. The service thus far has not forced me to add anything to my service configuration file. Now, however, we need to add the appropriate AppFabric Relay bindings so that a trusted partner could securely query my on-premises database in real-time.

I added an explicit service to my configuration as none was there before. I then added my cloud endpoint that leverages the System.Data.Services.IRequestHandler interface. I then created a cloud relay binding configuration that set the relayClientAuthenticationType to None (so that clients do not have to authenticate – it’s a demo, give me a break!). Finally, I added an endpoint behavior that had both the webHttp behavior element (to support REST operations) and the transportClientEndpointBehavior which identifies which credentials the service uses to bind to the cloud. I’m using the SharedSecret credential type and providing my Service Bus issuer and password.

- Connect to the Cloud. At this point, I can connect my service to the cloud. In this simple case, I right-clicked my OData service in Visual Studio.NET and chose View in Browser. When this page successfully loads, it indicates that I’ve bound to my cloud namespace. I then plugged in my cloud address, and sure enough, was able to query my on-premises database through the OData protocol.

That was easy! If you’d like to learn more about OData, check out the OData site. Most useful is the page on how to manipulate URIs to interact with the data, and also the live instance of the Northwind database that you can mess with. This is yet another way that the innovative Azure AppFabric Service Bus lets us leverage data where it rests and allows select internet-connected partners to access it.
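Once the feed is reachable at a cloud address, a partner queries it by manipulating the URI. A minimal Python sketch of building such URIs follows; the service root, the `City` and `Name` property names and the helper name are assumptions for illustration, while `$filter`, `$orderby` and `$top` are standard OData system query options.

```python
from urllib.parse import quote


def odata_query_url(service_root, entity_set, filter_expr=None, orderby=None, top=None):
    """Build an OData query URL for one entity set.

    service_root is the address the service is exposed at (hypothetical here).
    """
    options = []
    if filter_expr is not None:
        # Keep quotes and parentheses readable; percent-encode spaces and the rest.
        options.append("$filter=" + quote(filter_expr, safe="()',"))
    if orderby is not None:
        options.append("$orderby=" + quote(orderby, safe=","))
    if top is not None:
        options.append("$top=" + str(int(top)))
    url = service_root.rstrip("/") + "/" + entity_set
    if options:
        url += "?" + "&".join(options)
    return url


if __name__ == "__main__":
    root = "https://example-namespace.servicebus.windows.net/MyODataService"  # placeholder
    print(odata_query_url(root, "Customers"))
    print(odata_query_url(root, "Customers", filter_expr="City eq 'Seattle'", top=5))
```

The helper only produces the address; fetching it is left to whichever HTTP client the caller prefers.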

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Avkash Chauhan described How to Login into Windows Azure Virtual Machine using Remote Desktop in a 4/3/2011 post:

To start RDP access for the first time, you just need to go to the Windows Azure Management Portal and use the Remote Desktop credentials.

If you want to know how to set up RDP access to a Windows Azure application, see the following blog post:

In the Windows Azure Management Portal, when you select your service (here azure14VMRoleService, with the role named "VmRoleFinal"), you will see that the Remote Access toolbar options are grayed out, as below:

In the same Windows Azure Management Portal, if you select the role "VMRole1" of the azure14VMRoleService service, you will see that a few settings in the Remote Access toolbar are enabled, as below:

In the above Remote Access options:

- "Enable" means that RDP access is enabled for this role

- The "Configure" option allows you to modify the following:

  - User name

  - Password

  - Password expiration

  - Password encryption certificate

If you click the "Configure" option, you will see the following dialog to modify the above details:

In the same Windows Azure Management Portal, please select the role instance "VMRole1_IN_0" of the azure14VMRoleService service; you will see that the "Connect" option in the Remote Access toolbar is enabled, as below:

This means you can connect to the virtual machine associated with this particular role instance. To connect, please select the "Connect" button and you will see the following dialog (I am using IE9 as my default browser):

Once you select the "Connect" option, an RDP file is created and made available for you to download and save on your machine, where it can be used for subsequent RDP access. Let's "Save" the file to our Azure14VMRole folder.

Now whenever we need to RDP our Virtual Machine we can just run this azure14VMRoleService-Production-VMRole1_IN_0.rdp script instead of opening Windows Azure Management Portal.

Let's run azure14VMRoleService-Production-VMRole1_IN_0.rdp and select "Connect" option:

The following dialog will open for me to enter Remote Access credentials (User name and Password):

After you enter the correct user name and password, the following dialog will appear, warning that the certificate authority for the certificate is not trusted. This is because we created the certificate in Visual Studio instead of buying one from a certified Certificate Authority (CA). Not a problem, please select "Yes" below:

You will see that the Remote Desktop session configuration is in progress as below:

After a few seconds, the following window finally appears, which shows that we have access to the virtual machine we created earlier:

That’s it!!

Once your work is done in the virtual machine, it is good practice to log off properly.

How to share RDP access with others:

If you need to share RDP access with someone else, you can do the following to keep your own credentials private:

1. Go to the Windows Azure Management Portal and, in the Remote Access toolbar, please select "Configure".

2. Now change the user name and password (this way you can keep your personal password to yourself).

3. After that, please select the "Connect" option from the Remote Access toolbar and, when you are asked to save the .RDP file, save it on your local machine.

4. Finally, zip this .RDP file and share it with the other person, and pass on your temporary credentials by email or verbally.

5. Once the other person's work in your virtual machine is completed, please repeat step #2 above and generate a new user name and password to disable the previous credentials.

Richard Seroter (@rseroter) explained Using the BizTalk Adapter Pack and AppFabric Connect in a Workflow Service on 4/3/2011:

I was recently in New Zealand speaking to a couple user groups and I presented a “data enrichment” pattern that leveraged Microsoft’s Workflow Services. This Workflow used the BizTalk Adapter Pack to get data out of SQL Server and then used the BizTalk Mapper to produce an enriched output message. In this blog post, I’ll walk through the steps necessary to build such a Workflow. If you’re not familiar with AppFabric Connect, check out the Microsoft product page, or a nice long paper (BizTalk and WF/WCF, Better Together) which actually covers a few things that I show in this post, and also Thiago Almeida’s post on installation considerations.

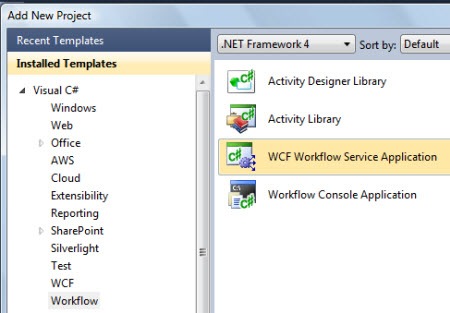

First off, I’m using Visual Studio 2010 and therefore Workflow Services 4.0. My project is of type WCF Workflow Service Application.

Before actually building a workflow, I want to generate a few bits first. In my scenario, I have a downstream service that accepts a “customer registration” message. I have a SQL Server database with existing customers that I want to match against to see if I can add more information to the “customer registration” message before calling the target service. Therefore, I want a reference both to my database and my target service.

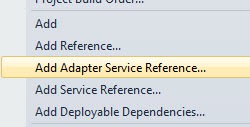

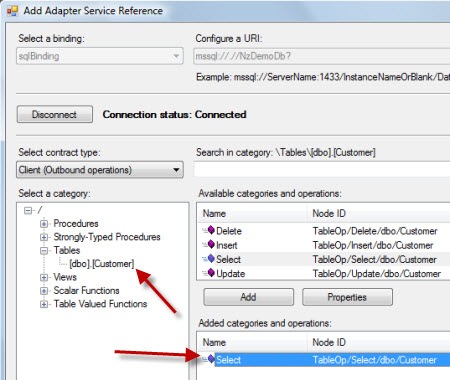

If you have installed the BizTalk Adapter Pack, which exposes SQL Server, Oracle, Siebel and SAP systems as WCF services, then right-clicking the Workflow Service project should show you the option to Add Adapter Service Reference.

After selecting that option, I see the wizard that lets me browse system metadata and generate proxy classes. I chose the sqlBinding and set my security settings, server name and initial database catalog. After connecting to the database, I found my database table (“Customer”) and chose to generate the WF activity to handle the Select operation.

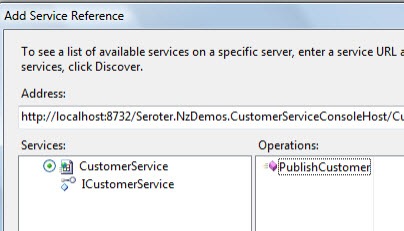

Next, I added a Service Reference to my project and pointed to my target service which has an operation called PublishCustomer.

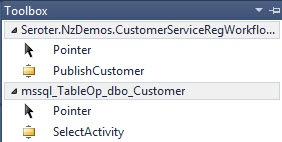

After this I built my project to make sure that the Workflow Service activities are properly generated. Sure enough, when I open the .xamlx file that represents my Workflow Service, I see the customer activities in the Visual Studio toolbox.

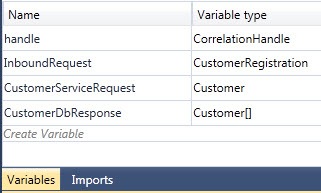

This service is an asynchronous, one-way service, so I removed the “Receive and Send Reply” activities and replaced them with a single Receive activity. But, what about my workflow variables? Let’s create the variables that my Workflow Service needs. The InboundRequest variable points to a WCF data contract that I added to the project. The CustomerServiceRequest variable refers to the Customer object generated by my WCF service reference. Finally, the CustomerDbResponse holds an array of the Customer object generated by the Adapter Service Reference.

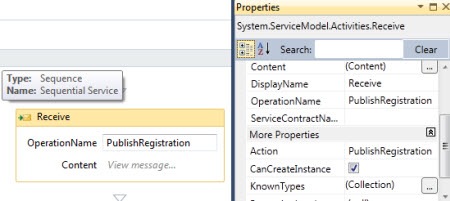

With all that in place, let’s flesh out the workflow. The initial Receive activity has an operation called PublishRegistration and uses the InboundRequest variable.

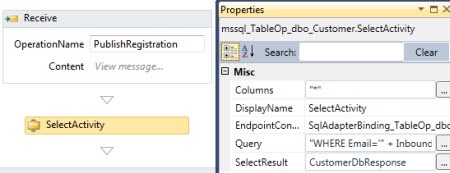

Next up, I have the custom Workflow activity called SelectActivity. This is the one generated by the database reference. It has a series of properties including which columns to bring back (I chose all columns), any query parameters (e.g. a “where” clause) and which variable to put the results in (the CustomerDbResponse).

Now I’m ready to start building the request message for the target service. I used an Assign shape to instantiate the CustomerServiceRequest variable. Then I dragged the Mapper activity that is available if you have AppFabric Connect installed.

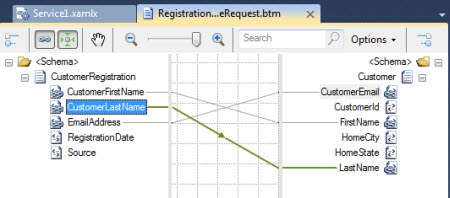

When the activity is dropped onto the Workflow surface, we get prompted for what “types” represent the source and destination of the map. The source type is the customer registration that the Workflow initially receives, and the destination is the customer object sent to target service. Now I can view, edit and save the map between these two data types. The Mapper activity comes in handy when you have a significant number of values to map from a source to destination variable and don’t want to have 45 Assign shapes stuffed into the workflow.

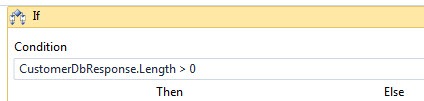

Recall that I want to see if this customer is already known to us. If they are not, then there are no results from my database query. To prevent any errors from trying to access a database result that doesn’t exist, I added an If activity that looks to see if there were results from our database query.

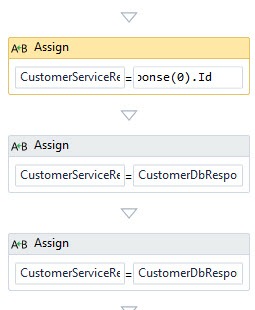

Within the Then branch, I extract the values from the first result of the database query. This is done through a series of Assign shapes which access the “0” index of the database customer array.

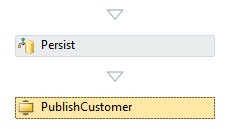

Finally, outside of the previous If block, I added a Persist shape (to protect me against downstream service failures and allow retries from Windows Server AppFabric) and then the custom PublishCustomer activity that was created by our WCF service reference.

The result? A pretty clean Workflow that can be invoked as a WCF service. Instead of using BizTalk for scenarios like this, Workflow Services provide a simpler, more lightweight means for doing simple data enrichment solutions. By adding AppFabric Connect and the Mapper activity, in addition to the Persist capability supported by Windows Server AppFabric, you get yourself a pretty viable enterprise solution.
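The control flow of this data-enrichment workflow (map the inbound registration, query the database, enrich only if a match exists) can be sketched in plain Python. The field names and class names below are hypothetical stand-ins for the generated types, and the Persist and PublishCustomer steps are left out.

```python
from dataclasses import dataclass, replace
from typing import Optional, Sequence


@dataclass(frozen=True)
class Registration:
    """Inbound 'customer registration' message (hypothetical fields)."""
    name: str
    email: str


@dataclass(frozen=True)
class DbCustomer:
    """One row returned by the database Select (hypothetical fields)."""
    customer_id: int
    region: str


@dataclass(frozen=True)
class CustomerRequest:
    """Message sent to the target service (hypothetical fields)."""
    name: str
    email: str
    customer_id: Optional[int] = None
    region: Optional[str] = None


def map_registration(registration: Registration) -> CustomerRequest:
    """Plays the role of the Mapper activity: copy fields source to destination."""
    return CustomerRequest(name=registration.name, email=registration.email)


def enrich(registration: Registration, db_results: Sequence[DbCustomer]) -> CustomerRequest:
    request = map_registration(registration)
    if db_results:  # the If activity: only enrich when the query found a match
        first = db_results[0]  # index 0 of the database customer array
        request = replace(request, customer_id=first.customer_id, region=first.region)
    return request


if __name__ == "__main__":
    reg = Registration(name="Ann Example", email="ann@example.com")
    print(enrich(reg, []))                        # unknown customer: mapped only
    print(enrich(reg, [DbCustomer(42, "West")]))  # known customer: enriched
```

Guarding on an empty result list before reading index 0 is the same protection the If activity provides in the workflow.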

Avkash Chauhan gave instructions for Setting RDP Access in Windows Azure Application with Windows Azure SDK 1.3/1.4 on 4/3/2011:

In this article we will learn how to enable RDP access to a Windows Azure application. Right-click your cloud project (here "Azure14VMRoleApplication") and, in the newly opened menu, please select the "Publish" option as below:

You will see a new dialog opened as below:

Because we are only setting up RDP access to our application, neither of the following settings matters at this time:

- Create Service Package Only

- Deploy your Windows Azure project to Windows Azure

We just need to click on "Configure Remote Desktop connections..." above.

We will see a new dialog, "Remote Desktop Configuration". In this dialog, you will need to check the "Enable connections for all roles" check box, which enables all of the disabled input boxes so that you can enter proper credentials. Once you have checked "Enable connections for all roles", please select a certificate, which will be used to encrypt your password. You also need to upload this certificate in the Certificates section of your service in the Windows Azure Management Portal.

Above, I have selected a certificate. I need to upload this certificate to the Windows Azure Management Portal, so let's export it now.

To export the certificate, please select the "View" button and you will see the certificate window as below:

Now please open the "Details" tab in the above window and select "Copy to File":

This action will open the "Certificate Export Wizard" as below; please select "Next":

In the next wizard window, "Export Private Key", please select the "Yes, export the private key" option and select "Next":

You will see the "Export File Format" window; please leave all the defaults and just select "Next" here:

Now you will be asked to enter a password to protect your key, so please enter a password in both input boxes and then select "Next":

You will be asked to provide a folder location and file name to save your certificate as below:

Please select the "Browse" button to locate your VM Role Training Material folder as below and enter the certificate name "Azure14VMRoleRDPCertificate":

The full file name with path will appear in the next window as below:

Please select "Next" in the above dialog and then you will see the final confirmation about your exported certificate as below:

Please select "Finish" above and an export confirmation will appear:

Finally, you can verify the exported certificate in your folder as below:

To upload the recently exported certificate, please select the "Certificate" node in your "Azure14VMRoleService" hosted service as below:

Once you select "Add Certificate" from the toolbar, you will see the following "Upload an X.509 Certificate" window, in which you will need to provide:

- The Azure14VMRoleRDPCertificate.pfx certificate, which you can select using the "Browse" button.

- The correct password and the matching confirmation password.

Once you select the "Create" button above, you will see a "verification" activity, and after a few seconds the certificate will be created as below:

If you select the above certificate by clicking on it, on the right side of the Windows Azure Management Portal you will see the detailed information about this certificate as below:

To confirm that your application has the Remote Desktop settings configured correctly, please open your Service Configuration (CSCFG) file, which will have a few new settings, including "AccountUsername" and "AccountEncryptedPassword", as below:

<?xml version="1.0" encoding="utf-8"?>

<ServiceConfiguration serviceName="Azure14VMRoleApplication" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration" osFamily="1" osVersion="*">

<Role name="VMRole1">

<Instances count="1" />

<ConfigurationSettings>

<Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString" value="UseDevelopmentStorage=true" />

<Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />

<Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="avkash" />

<Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountEncryptedPassword" value="***************************==" />

<Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountExpiration" value="2011-08-01T23:59:59.0000000-07:00" />

<Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="true" />

</ConfigurationSettings>

<Certificates>

<Certificate name="Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption" thumbprint="*******************************" thumbprintAlgorithm="sha1" />

</Certificates>

</Role>

</ServiceConfiguration>

You are done with the Windows Azure Management Portal for now. You can go back to the Visual Studio 2010 window to upload the package directly from VS2010, or publish locally and then upload manually.

Note: If you decide to use your own certificate instead of creating a new one, you can certainly do so; follow the steps above and then upload it to the Windows Azure Management Portal.
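Checking the service configuration for the Remote Desktop settings can also be scripted. The Python sketch below parses a .cscfg document with the standard library and reports whether the RemoteAccess and RemoteForwarder plugins are enabled for a role; the helper names and the trimmed sample document are assumptions, while the namespace and setting names are taken from the configuration shown above.

```python
import xml.etree.ElementTree as ET

NS = {"sc": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"}
PREFIX = "Microsoft.WindowsAzure.Plugins."

# Trimmed sample modeled on the configuration above (values are placeholders).
SAMPLE_CSCFG = """<ServiceConfiguration serviceName="Azure14VMRoleApplication"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="VMRole1">
    <Instances count="1" />
    <ConfigurationSettings>
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="demo" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="true" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""


def remote_settings(cscfg_xml, role_name):
    """Return the Remote* plugin settings of one role as a name -> value dict."""
    root = ET.fromstring(cscfg_xml)
    for role in root.findall("sc:Role", NS):
        if role.get("name") != role_name:
            continue
        settings = {}
        for setting in role.findall("sc:ConfigurationSettings/sc:Setting", NS):
            name = setting.get("name") or ""
            if name.startswith(PREFIX + "Remote"):
                settings[name] = setting.get("value")
        return settings
    raise KeyError(f"role not found: {role_name}")


def rdp_enabled(settings):
    """True when both the RemoteAccess and RemoteForwarder plugins are switched on."""
    return (settings.get(PREFIX + "RemoteAccess.Enabled") == "true"
            and settings.get(PREFIX + "RemoteForwarder.Enabled") == "true")


if __name__ == "__main__":
    found = remote_settings(SAMPLE_CSCFG, "VMRole1")
    for name, value in sorted(found.items()):
        print(name, "=", value)
    print("RDP enabled:", rdp_enabled(found))
```

The check only reads the file; it does not validate the encrypted password or the certificate thumbprint.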

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Noah Gift (@noahgift) wrote Parsing Log Files with F#, MapReduce and Windows Azure for MSDN Magazine’s 4/2011 issue. From the introduction:

As a longtime Python programmer, I was intrigued by an interview with Don Syme, architect of the F# language. In the interview, Don mentioned that, “some people see [F#] as a sort of strongly typed Python, up to syntactic differences.” This struck me as something worth investigating further.

As it turns out, F# is a bold and exciting new programming language that’s still a secret to many developers. F# offers the same benefits in productivity that Ruby and Python programmers have enjoyed in recent years. Like Ruby and Python, F# is a high-level language with minimal syntax and elegance of expression. What makes F# truly unique is that it combines these pragmatic features with a sophisticated type-inference system and many of the best ideas of the functional programming world. This puts F# in a class with few peers.

But a new high-productivity programming language isn’t the only interesting new technology available to you today.

Broad availability of cloud platforms such as Windows Azure makes distributed storage and computing resources available to single developers or enterprise-sized companies. Along with cloud storage have come helpful tools like the horizontally scalable MapReduce algorithm, which lets you rapidly write code that can quickly analyze and sort potentially gigantic data sets.

Tools like this let you write a few lines of code, deploy it to the cloud and manipulate gigabytes of data in an afternoon of work. Amazing stuff.

In this article, I hope to share some of my excitement about F#, Windows Azure and MapReduce. I’ll pull together all of the ideas to show how you can use F# and the MapReduce algorithm to parse log files on Windows Azure. First, I’ll cover some prototyping techniques to make MapReduce programming less complex; then, I’ll take the results … to the cloud.
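The article's code is in F#; as a language-neutral illustration of the same idea, here is a minimal single-process MapReduce sketch in Python that counts HTTP status codes in log lines. The log format (status code as the second-to-last whitespace-separated field) and all function names are assumptions, not the article's implementation.

```python
from collections import defaultdict
from itertools import chain


def map_line(line):
    """Map step: emit (status_code, 1) for one log line.

    Assumes a Common Log Format style line where the status code is the
    second-to-last whitespace-separated field; other lines emit nothing.
    """
    parts = line.split()
    if len(parts) >= 2 and parts[-2].isdigit():
        yield parts[-2], 1


def reduce_counts(pairs):
    """Reduce step: sum the values for each key."""
    totals = defaultdict(int)
    for key, value in pairs:
        totals[key] += value
    return dict(totals)


def count_status_codes(lines):
    """Run map over every line, then reduce all emitted pairs."""
    return reduce_counts(chain.from_iterable(map_line(line) for line in lines))


if __name__ == "__main__":
    sample = [
        '127.0.0.1 - - [04/Apr/2011:10:00:00 -0700] "GET /index.html HTTP/1.1" 200 2326',
        '127.0.0.1 - - [04/Apr/2011:10:00:01 -0700] "GET /missing HTTP/1.1" 404 512',
        '127.0.0.1 - - [04/Apr/2011:10:00:02 -0700] "GET /about.html HTTP/1.1" 200 1200',
    ]
    print(count_status_codes(sample))
```

Because the map step looks at one line at a time and the reduce step only sums, the same two functions could be run over separate chunks of a log and their results merged, which is what makes the approach scale horizontally.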

• Mark Seemann wrote CQRS on Windows Azure for the 4/2011 issue of MSDN Magazine. From the introduction:

Microsoft Windows Azure offers unique opportunities and challenges. There are opportunities in the form of elastic scalability, cost reduction and deployment flexibility, but also challenges because the Windows Azure environment is different from the standard Windows servers that host most Microsoft .NET Framework services and applications today.

One of the most compelling arguments for putting applications and services in the cloud is elastic scalability: You can turn up the power on your service when you need it, and you can turn it down again when demand trails off. On Windows Azure, the least disruptive way to adjust power is to scale out instead of up—adding more servers instead of making existing servers more powerful. To fit into that model of scalability, an application must be dynamically scalable. This article describes an effective approach to building scalable services and demonstrates how to implement it on Windows Azure.

Command Query Responsibility Segregation (CQRS) is a new approach to building scalable applications. It may look different from the kind of .NET architecture you’re accustomed to, but it builds on tried and true principles and solutions for achieving scalability. There’s a vast body of knowledge available that describes how to build scalable systems, but it requires a shift of mindset.

Taken figuratively, CQRS is nothing more than a statement about separation of concerns, but in the context of software architecture, it often signifies a set of related patterns. In other words, the term CQRS can take on two meanings: as a pattern and as an architectural style. In this article, I’ll briefly outline both views, as well as provide examples based on a Web application running on Windows Azure.
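The separation the introduction describes can be shown with a very small Python sketch: commands change state and return nothing, queries return data and change nothing. The reservation scenario, the class names and the in-memory queue (standing in for a durable queue) are all assumptions made for illustration, not the article's code.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class MakeReservation:
    """A command: a request to change state (hypothetical example)."""
    date: str
    quantity: int


class ReservationSystem:
    def __init__(self, capacity):
        self._capacity = capacity
        self._pending = deque()  # in-memory stand-in for a durable command queue
        self._reserved = {}      # read model: date -> seats reserved

    # Command side: accept the command and return nothing.
    def send(self, command):
        self._pending.append(command)

    # Background worker: apply queued commands to the read model.
    def process_pending(self):
        while self._pending:
            command = self._pending.popleft()
            reserved = self._reserved.get(command.date, 0)
            if reserved + command.quantity <= self._capacity:
                self._reserved[command.date] = reserved + command.quantity
            # Commands that exceed capacity are dropped in this sketch.

    # Query side: read-only, never modifies state.
    def remaining(self, date):
        return self._capacity - self._reserved.get(date, 0)


if __name__ == "__main__":
    system = ReservationSystem(capacity=10)
    system.send(MakeReservation("2011-04-05", 4))
    print(system.remaining("2011-04-05"))  # still 10: command not processed yet
    system.process_pending()
    print(system.remaining("2011-04-05"))  # 6 after the worker runs
```

The gap between sending a command and seeing its effect in a query is the eventual consistency that this style accepts in exchange for scaling the two sides independently.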

Joannes Vermorel and Rinat Abdullin have written extensively on CQRS and Windows Azure. See their Lokad-CQRS project on Google Code. (Ignore the spurious “Your version of Internet Explorer is not supported” warning, an artifact of the new browser war. I don’t believe free browsers need have open source.)

Maarten Balliauw (@maartenballiauw) described Lightweight PHP application deployment to Windows Azure in a 4/4/2011 post:

Those of you who are deploying PHP applications to Windows Azure, are probably using the Windows Azure tooling for Eclipse or the fantastic command-line tools available. I will give you a third option that allows for a 100% customized setup and is much more lightweight than the above options. Of course, if you want to have the out-of-the box functionality of those tools, stick with them.

Note: while this post is targeted at PHP developers, it also shows you how to build your own .cspkg from scratch for any other language out there. That includes you, .NET and Ruby!

Oh, my syntax highlighter is broken so you won't see any fancy colours down this post :-)

Phase 1: Creating a baseline package template

Every Windows Azure package is basically an OpenXML package containing your application. For those who don’t like fancy lingo: it’s a special ZIP file. Fact is that it contains an exact copy of a folder structure you can create yourself. All it takes is creating the following folder & file structure:

- ServiceDefinition.csdef

- ServiceConfiguration.cscfg

- PhpOnAzure.Web

  - bin

  - resources

  - Web.config

I’ll go through each of those. First off, the ServiceDefinition.csdef file is the metadata describing your Windows Azure deployment. It (can) contain the following XML:

<?xml version="1.0" encoding="utf-8"?>

<ServiceDefinition name="PhpOnAzure" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">

<WebRole name="PhpOnAzure.Web" enableNativeCodeExecution="true">

<Sites>

<Site name="Web" physicalDirectory="./PhpOnAzure.Web">

<Bindings>

<Binding name="Endpoint1" endpointName="HttpEndpoint" />

</Bindings>

</Site>

</Sites>

<Startup>

<Task commandLine="add-environment-variables.cmd" executionContext="elevated" taskType="simple" />

<Task commandLine="install-php.cmd" executionContext="elevated" taskType="simple" />

</Startup>

<Endpoints>

<InputEndpoint name="HttpEndpoint" protocol="http" port="80" />

</Endpoints>

<Imports>

<Import moduleName="Diagnostics"/>

</Imports>

<ConfigurationSettings>

</ConfigurationSettings>

</WebRole>

</ServiceDefinition>

Basically, it tells Windows Azure to create a WebRole named “PhpOnAzure.Web” (notice the not-so-coincidental match with one directory of the folder structure described earlier). It will contain one site that listens on a HttpEndpoint (port 80). Next, I added 2 startup tasks, add-environment-variables.cmd and install-php.cmd. More on these later on.

Next, ServiceConfiguration.cscfg is the actual configuration file for your Windows Azure deployment. It looks like this:

<?xml version="1.0" encoding="utf-8"?>

<ServiceConfiguration serviceName="PhpOnAzure" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration" osFamily="2" osVersion="*">

<Role name="PhpOnAzure.Web">

<Instances count="1" />

<ConfigurationSettings>

<Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString" value="<your diagnostics connection string here>"/>

</ConfigurationSettings>

</Role>

</ServiceConfiguration>

Just like in a tooling-based Windows Azure deployment, it allows you to set configuration details like the connection string where the diagnostics monitor should write all logging to.

The PhpOnAzure.Web folder is the actual root where my web application will live. It’s the wwwroot of your app, the htdocs folder of your app. Don’t put any contents in here yet, as we’ll automate that later in this post. Anyways, it (optionally) contains a Web.config file where I specify that index.php should be the default document:

<?xml version="1.0"?>

<configuration>

<system.webServer>

<defaultDocument>

<files>

<clear />

<add value="index.php" />

</files>

</defaultDocument>

</system.webServer>

</configuration>

Everything still OK? Good! (I won’t take no for an answer :-)). Add a bin folder in there as well as a resources folder. The bin folder will hold our startup tasks (see below), the resources folder will contain a copy of the Web Platform Installer command-line tools.

That’s it! A Windows Azure deployment package is actually pretty simple and easy to create yourself.
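Creating the baseline folder and file structure can be scripted. The Python sketch below scaffolds the layout with empty placeholder files; it assumes, based on the description above, that bin, resources and Web.config live inside PhpOnAzure.Web, and it does not fill in the XML or build the .cspkg itself. The function name and layout constant are hypothetical.

```python
import tempfile
from pathlib import Path

# Entries ending in "/" are folders; everything else is an empty placeholder file.
LAYOUT = [
    "ServiceDefinition.csdef",
    "ServiceConfiguration.cscfg",
    "PhpOnAzure.Web/Web.config",
    "PhpOnAzure.Web/bin/",
    "PhpOnAzure.Web/resources/",
]


def scaffold(root):
    """Create the baseline package template under root and return the created paths."""
    root = Path(root)
    created = []
    for entry in LAYOUT:
        path = root / entry
        if entry.endswith("/"):
            path.mkdir(parents=True, exist_ok=True)
        else:
            path.parent.mkdir(parents=True, exist_ok=True)
            path.touch()
        created.append(path)
    return created


if __name__ == "__main__":
    # Use a throwaway directory so the example leaves nothing behind.
    with tempfile.TemporaryDirectory() as tmp:
        for path in scaffold(tmp):
            kind = "dir " if path.is_dir() else "file"
            print(kind, path.relative_to(tmp))
```

The placeholder files would then be filled with the ServiceDefinition.csdef, ServiceConfiguration.cscfg and Web.config contents shown above.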

Phase 2: Auto-installing the PHP runtime

I must admit: this one’s stolen from the excellent Canadian Windows Azure MVP Cory Fowler aka SyntaxC4. He blogged about using startup tasks and the WebPI Command-line tool to auto-install PHP when your Windows Azure VM boots. Read his post for in-depth details, I’ll just cover the startup task doing this. Which I shamelessly copied from his blog as well. Credits due.

Under PhpOnAzure.Web\bin, add a script named install-php.cmd and copy in the following code:

@echo off

ECHO "Starting PHP installation..." >> ..\startup-tasks-log.txtmd "%~dp0appdata"

cd "%~dp0appdata"

cd ..

reg add "hku\.default\software\microsoft\windows\currentversion\explorer\user shell folders" /v "Local AppData" /t REG_EXPAND_SZ /d "%~dp0appdata" /f

"..\resources\WebPICmdLine\webpicmdline" /Products:PHP53 /AcceptEula >> ..\startup-tasks-log.txt 2>>..\startup-tasks-error-log.txt

reg add "hku\.default\software\microsoft\windows\currentversion\explorer\user shell folders" /v "Local AppData" /t REG_EXPAND_SZ /d %%USERPROFILE%%\AppData\Local /f

ECHO "Completed PHP installation." >> ..\startup-tasks-log.txt

What it does is:

- Create a local application data folder

- Add that folder name to the registry

- Call “webpicmdline” and install PHP 5.3.x. And of course, /AcceptEula will ensure you don’t have to go to a Windows Azure datacenter, break into a container and click “I accept” on the screen of your VM.

- Awesomeness happens: PHP 5.3.x is installed!

- And everything gets logged into the startup-tasks-log.txt file in the root of your website, with errors going to startup-tasks-error-log.txt. It allows you to inspect the output of all these commands once your VM has booted.

Phase 3: Fixing a problem

So far only sunshine. But… Since the technique used here is creating a full-IIS web role (a good thing), there’s a small problem there… Usually, your web role will spin up IIS hosted core and run in the same process that launched your VM in the first place. In a regular web role, the hosting process contains some interesting environment variables about your deployment: the deployment ID and the role name and even the instance name!

With full IIS, your web role is running inside IIS. The real IIS, that’s right. And that’s a different process from the one that launched your VM, which means that these useful environment variables are unavailable to your application. No problem for a lot of applications, but if you’re using the PHP-based diagnostics manager from the Windows Azure SDK for PHP (or other code that relies on these environment variables), well, you’re sc…. eh, in deep trouble.

Luckily, startup tasks have access to the Windows Azure assemblies that can also give you this information. So why not create a task that copies this info into a machine environment variable?

We’ll need two scripts: one .cmd file launching PowerShell, and of course a PowerShell script. Let’s start with a file named add-environment-variables.cmd under PhpOnAzure.Web\bin:

@echo off

ECHO "Adding extra environment variables..." >> ..\startup-tasks-log.txt

powershell.exe Set-ExecutionPolicy Unrestricted

powershell.exe .\add-environment-variables.ps1 >> ..\startup-tasks-log.txt 2>>..\startup-tasks-error-log.txt

ECHO "Added extra environment variables." >> ..\startup-tasks-log.txt

Nothing fancy, just as promised we’re launching PowerShell. But to ensure that we have all possible options in PowerShell, the execution policy is first set to Unrestricted. Next, add-environment-variables.ps1 is launched:

[Reflection.Assembly]::LoadWithPartialName("Microsoft.WindowsAzure.ServiceRuntime")

$rdRoleId = [Environment]::GetEnvironmentVariable("RdRoleId", "Machine")

[Environment]::SetEnvironmentVariable("RdRoleId", [Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment]::CurrentRoleInstance.Id, "Machine")

[Environment]::SetEnvironmentVariable("RoleName", [Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment]::CurrentRoleInstance.Role.Name, "Machine")

[Environment]::SetEnvironmentVariable("RoleInstanceID", [Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment]::CurrentRoleInstance.Id, "Machine")

[Environment]::SetEnvironmentVariable("RoleDeploymentID", [Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment]::DeploymentId, "Machine")

if ($rdRoleId -ne [Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment]::CurrentRoleInstance.Id) {

Restart-Computer

}

[Environment]::SetEnvironmentVariable('Path', $env:RoleRoot + '\base\x86;' + [Environment]::GetEnvironmentVariable('Path', 'Machine'), 'Machine')

Wow! A lot of code? Yes. First of all, we’re loading the Microsoft.WindowsAzure.ServiceRuntime assembly. Next, we query the current environment variables for a variable named RdRoleId and copy it in a variable named $rdRoleId. Next, we set all environment variables (RdRoleId, RoleName, RoleInstanceID, RoleDeploymentID) to their actual values. Just like that. Isn’t PowerShell a cool thing?

After all this, the $rdRoleId variable is compared with the current RdRoleId environment variable. Are they the same? Good! Are they different? Reboot the instance. Rebooting the instance is the easiest way for IIS and PHP to pick these new values up.
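The compare-and-reboot decision described above can be sketched outside PowerShell as well. The Python fragment below is only an illustration and not part of the original template: the function names and the dictionary standing in for the machine-level environment are assumptions made for this example.

```python
def needs_reboot(stored_role_id, current_instance_id):
    """Return True when the stored RdRoleId differs from the running instance's ID."""
    return stored_role_id != current_instance_id


def apply_role_variables(machine_env, instance_id, role_name, deployment_id):
    """Mimic the flow of add-environment-variables.ps1 on a plain dict.

    machine_env is a hypothetical stand-in for machine-level environment
    variables. The return value says whether a reboot would be requested.
    """
    # Remember the value that was stored before this run (None on first boot).
    stored = machine_env.get("RdRoleId")

    # Copy the role details into the "machine" environment.
    machine_env["RdRoleId"] = instance_id
    machine_env["RoleName"] = role_name
    machine_env["RoleInstanceID"] = instance_id
    machine_env["RoleDeploymentID"] = deployment_id

    # Only a changed instance ID requires a restart, so the loop ends after one reboot.
    return needs_reboot(stored, instance_id)
```

On a first boot nothing is stored yet, so the function reports that a reboot is needed; on the next start the stored value matches and no further reboot is requested.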

Phase 4: Automating packaging

One thing left to do: we do have a folder structure now, but I don’t see any .cspkg file around for deployment… Let’s fix that by creating a good old batch file that does the packaging for us. Note that this is *not* a necessary part, but it will ease your life. Here’s the script:

@echo off

IF "%1"=="" GOTO ParamMissing

echo Copying default package components to temporary location...

mkdir deploy-temp

xcopy /s /e /h deploy deploy-temp

echo Copying %1 to temporary location...

xcopy /s /e /h %1 deploy-temp\PhpOnAzure.Web

echo Packaging application...

"c:\Program Files\Windows Azure SDK\v1.4\bin\cspack.exe" deploy-temp\ServiceDefinition.csdef /role:PhpOnAzure.Web;deploy-temp\PhpOnAzure.Web /out:PhpOnAzure.cspkg

copy deploy-temp\ServiceConfiguration.cscfg

echo Cleaning up...

rmdir /S /Q deploy-temp

GOTO End

:ParamMissing

echo Parameter missing: please specify the path to the application to deploy.

:End

You can invoke it from a command line:

package c:\patch-to-my\app

This will copy your application to a temporary location, merge in the template we created in the previous steps and create a .cspkg file by calling the cspack.exe from the Windows Azure SDK, and a ServiceConfiguration.cscfg file containing your configuration.
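For readers who prefer to drive the packaging step from another scripting language, here is a rough sketch of how the cspack.exe call from the batch file could be assembled. It is not part of the original template: the helper name `build_cspack_command` is made up for this example, and only the arguments that already appear in the batch file above are used.

```python
import ntpath  # Windows-style path joining, regardless of the host OS


def build_cspack_command(sdk_bin, temp_dir, role_name, package_name):
    """Assemble the cspack.exe argument list used by the packaging batch file."""
    cspack = ntpath.join(sdk_bin, "cspack.exe")
    definition = ntpath.join(temp_dir, "ServiceDefinition.csdef")
    role_dir = ntpath.join(temp_dir, role_name)
    return [
        cspack,
        definition,
        # /role:<role name>;<role directory>, exactly as in the batch file
        "/role:{0};{1}".format(role_name, role_dir),
        # /out:<package file>
        "/out:{0}".format(package_name),
    ]
```

On a Windows machine with the SDK installed, the returned list could be handed to `subprocess.run`; building the list separately keeps the quoting of the "Program Files" path out of your hands.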

Phase 5: Package hello world!

Let’s create an application that needs massive scale. Here’s the source code for the index.php file which will handle all requests. Put it in your c:\temp or wherever you want.

<?php

echo "Hello, World!";

Next, call the package.bat created previously:

package c:\patch-to-my\app

There you go: PhpOnAzure.cspkg and ServiceConfiguration.cscfg at your service. Upload, deploy and enjoy. Once the VM is booted in Windows Azure, all environment variables will be set and PHP will be automatically installed. Feel free to play around with the template I created (lightweight-php-deployment-azure.zip (854.44 kb)), as you can also install, for example, the Wincache extension or SQL Server Driver for PHP from the WebPI command-line tools. Or include your own PHP distro. Or achieve world domination by setting the instance count to a very high number (of course, this requires you to call Microsoft if you want to go beyond 20 instances, just to see if you’re worthy for world domination).

Conclusion

Next to the officially supported packaging tools, there’s also the good old craftsmen’s hand-made deployment. And if you automate some parts, it’s extremely easy to package your application in a very lightweight fashion. Enjoy!

Here’s the download: lightweight-php-deployment-azure.zip (854.44 kb)

ConcreteIT published Showcase "Windows Azure calling, who is this?" – FAQ to a Facebook page on 3/30/2011 “[t]o draw attention to our session "Windows Azure calling who is this?" at TechDays 2011 in Örebro, Sweden”:

What was this demo site all about?

This very small lottery site is a sample site that uses a bunch of fun technology and had a two-fold purpose:

- To draw attention to our session "Windows Azure calling who is this?" at TechDays 2011 in Örebro, Sweden.

- To showcase a web application in Windows Azure using claims based authentication along with the Windows Azure AppFabric Access Control (AC) service.

What made this demo site so special?

- Participants at the session "Windows Azure calling, who is this?" at TechDays 2011 in Örebro, Sweden, joined the lottery by signing in and adding themselves to the draw.

- The site is built so as not to store any valuable information about the user, like for instance email. The site does not even have to have a privacy policy!

What technologies are used in the demo site?

- Claims based authentication

- Windows Azure AppFabric Access Control (AC) service

- Windows Azure

- ASP.NET MVC 3

- The Razor View Engine

Who built this demo site?

- Sergio Molero - Concrete IT - http://twitter.com/sergio_molero

- Magnus Mårtensson - Diversify - http://twitter.com/noopman

What was the session "Windows Azure calling, who is this?" actually about?

Identifying and authorizing your users in the cloud has taken quantum leaps in the latest versions of Windows Identity Foundation (WIF). The goal in your applications is to be able to have security confidence whether they are on premise or in the Cloud. The AppFabric Access Control service is the solution to these concerns. You do not have to know anything about how to implement security, nor do you need to know how to handle Claims Based Security. Access Control handles all of this for you.

The only thing that is left for you to do is configure Access Control and prepare your application to use it using the same application security code you have always done. In this session we looked at this powerful service and explained how you can begin to use it in your applications today!

Are you interested to know more about "The AppFabric Access Control" or this demo site? Feel free to send us a message here on Facebook and we’ll get back to you as soon as possible.

- Demo site for the session "Windows Azure calling who is this?" at TechDays 2011 in Örebro.

- The site shows off Windows Azure, MVC 3, Access Control Service and Claims Based Identities.

- Created 2011 by Magnus Mårtensson (Diversify) and Sergio Molero (Concrete IT).

<Return to section navigation list>

Visual Studio LightSwitch

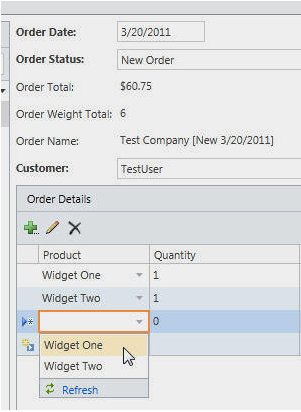

Michael Washington (@ADefWebServer) updated his LightSwitch Online Ordering System for LightSwitch Beta 2 on 4/1/2011:

Also see: LightSwitch and HTML

Creating an ASP.NET website that communicates with the LightSwitch business layer

An End-To-End LightSwitch Example

In this article we will create an end-to-end application in LightSwitch. The purpose is to demonstrate how LightSwitch allows you to create professional business applications that would take a single developer weeks to create. With LightSwitch you can create such applications in under an hour.

I have more LightSwitch tutorials on my site http://LightSwitchHelpWebsite.com.

You can download LightSwitch at http://www.microsoft.com/visualstudio/en-us/lightswitch.

The Scenario

In this example, we will be tasked with producing an application that meets the following requirements for an order tracking system:

- Products

- Allow Products to be created

- Allow Products to have weight

- Allow Products to have a price

- Companies

- Allow Companies to be created

- Allow Companies to log in and see their orders

- Orders

- Allow administrators to see all Companies and to place an order for any Company

- Allow Companies who log in, to see and place orders only for themselves

- Business Rules

- No single order can be over 10 pounds

- No single order can be over $100

- An Order status must be one of the valid order types
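To make the three business rules concrete, here is a small sketch of the checks in plain Python. It is not LightSwitch code and not taken from the tutorial: the `OrderLine` shape, the `validate_order` helper, and the status values are all hypothetical (the tutorial defines the real statuses in a Choice List).

```python
from collections import namedtuple
from decimal import Decimal

# Hypothetical order line: weight and price are per unit.
OrderLine = namedtuple("OrderLine", ["weight_lbs", "price", "quantity"])

# Assumed status values, for illustration only.
VALID_STATUSES = {"New", "Processing", "Complete"}
MAX_WEIGHT_LBS = Decimal("10")
MAX_TOTAL_USD = Decimal("100")


def validate_order(lines, status):
    """Return a list of rule violations; an empty list means the order is valid."""
    errors = []
    total_weight = sum((l.weight_lbs * l.quantity for l in lines), Decimal("0"))
    total_price = sum((l.price * l.quantity for l in lines), Decimal("0"))

    if total_weight > MAX_WEIGHT_LBS:
        errors.append("Order weight exceeds 10 pounds")
    if total_price > MAX_TOTAL_USD:
        errors.append("Order total exceeds $100")
    if status not in VALID_STATUSES:
        errors.append("Invalid order status")
    return errors
```

Both limits are totals across all lines of an order, so the checks sum weight and price over the order's detail rows before comparing.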

Creating The Application

Open Visual Studio and select File, then New Project.

Select LightSwitch, and then the programming language of your choice. This tutorial will use C#.

In the Solution Explorer, open Properties.

Select Web as the Application Type, and indicate that it will be hosted in IIS.

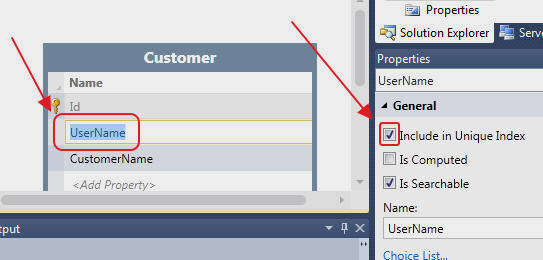

Select Create new table.

Click on the table name to change it to Customer. Enter the fields for the table according to the image above.

(Note: we will also refer to the Tables as Entities, because when you are writing custom programming it is best to think of them as Entities)

Click on the UserName field, and in the Properties, check Include in Unique Index. This will prevent a duplicate UserName from being entered.

LightSwitch does not allow you to create primary keys for Entities created inside LightSwitch (you can have primary keys for external tables you connect to). Where you would normally create a primary key, use the Include in Unique Index.

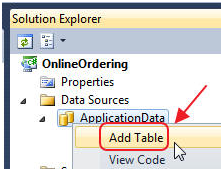

Right-click on the ApplicationData node to add a new Table (Entity).

Add a Product Entity according to the image above.

Add an Order Entity according to the image above.

Add an OrderDetail Entity according to the image above.

Creating A Choice List

Open the Order Entity and select the OrderStatus field. In Properties, select Choice List.

Enter the choices according to the image above.

This will cause screens (created later), to automatically provide a drop down with these choices.

Connect (Associate) the Entities (Tables) Together

It is important that you create associations with all the Entities in your LightSwitch project. In a normal application, this would be the process of creating foreign keys (and that is what LightSwitch does in the background). Foreign keys are usually optional because most applications will continue to work without them. With LightSwitch you really must create the associations for things to work properly.

It will be very rare for an Entity to not have one or more associations with other Entities.

Open the Order Entity and click the Relationship button.

Associate it with the Customer Entity according to the image above.

Repeat the process to associate the OrderDetail Entity to the Order entity according to the image above.

Repeat the process to associate the OrderDetail Entity to the Product entity according to the image above.

…

Michael continues with another 50 feet of detailed instructions.

According to his tweet stream, Michael has updated the following projects to LightSwitch Beta 2 as of 4/4/2011:

- Creating A #LightSwitch Custom Silverlight Control http://bit.ly/idsz2e

- A LightSwitch Home Page http://bit.ly/dSiheI

- #LightSwitch (Part 2): Business Rules and Screen Permissions http://bit.ly/a3BOXQ

- #LightSwitch Student Information System http://bit.ly/ax90OJ

Michael also announced that “http://LightSwitchHelpWebsite.com is now active! (ok it's just a new domain name.)”

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

• Microsoft PR claimed “As industry calls for closer collaboration, Microsoft launches Reference Architecture Framework for Discrete Manufacturers Initiative to accelerate cloud computing, improved collaboration across value chain” in an introduction to their Digital Infrastructure, Cloud Computing Transforming Fragmented Manufacturing Industry Value Chain, According to Microsoft Study press release of 4/4/2011 from Hanover Messe 2011:

New technology advancements available in a digital infrastructure, such as cloud computing and social computing, are transforming manufacturing industry value chains, according to a Microsoft Corp. survey released today at HANNOVER MESSE 2011 in Hannover, Germany.

The survey results revealed a need to better integrate collaboration tools with business systems (47.4 percent) and to improve access to unstructured data and processes (36.2 percent). Almost 60 percent see an industrywide collaboration that includes manufacturing products and services providers, IT providers, systems integrators and in-house business analysts as most capable of bringing about these improvements.

To support tighter collaboration across these stakeholders, Microsoft has created a Reference Architecture Framework for Discrete Manufacturers Initiative (DIRA Framework) to drive solutions based on cloud computing across manufacturing networks while helping integrate processes within and across the enterprise, extend the reach of the network to more companies globally, and connect smart devices to the cloud. Microsoft partners involved in the initiative currently include Apriso Corp., Camstar Systems Inc., ICONICS Inc., Rockwell Automation Inc., Siemens MES and Tata Consultancy Services Ltd.

“Globalization has fragmented industry value chains, making them more complex and unable to quickly respond to increased competition and shorter product life cycles. Cloud computing is empowering today’s global manufacturers to rethink how they innovate and collaborate across the value chain,” said Sanjay Ravi, managing director, Worldwide Discrete Manufacturing Industry for Microsoft. “As a result of these increasingly rapid changes in technology and business, manufacturers are seeking guidance on how to best plan and deploy these powerful technologies in concert with their business strategies and priorities and how to achieve greater competitive differentiation. Microsoft is leading a DIRA Framework in response to this need while offering a pragmatic solution road map for IT integration and adoption.”

Cloud Computing Survey Findings

The Microsoft Discrete Manufacturing Cloud Computing Survey 2011, which polled 152 IT and business decision-makers within automotive, aerospace, high-tech and electronics, and industrial equipment manufacturing companies in Germany, France and the United States, found that the biggest benefit of cloud computing is lowered cost of optimizing infrastructure, according to 48.3 percent of respondents. This was followed closely by efficient collaboration across geographies (47.7 percent) and the ability to respond quickly to business demands (38.4 percent).

“The survey shows current cloud computing initiatives are targeted at cost reduction, but a growing number of forward-looking companies are exploring new and innovative business capabilities uniquely delivered through the cloud,” Ravi said. “Manufacturers are exploring ways to improve product design with social product development, enhance visibility across multiple tiers in the value chain, and create new business models and customer experiences based on smart devices connecting to the cloud.”

DIRA Framework

Development of the discrete manufacturing reference architecture will be an ongoing effort led by Microsoft, with close collaboration by participating companies, including industry solution vendors and systems integrators. The initiative defines six key themes to guide this development: natural user interfaces, role-based productivity and insights, social business, dynamic value networks, smart connected devices, and security-enhanced, scalable and adaptive infrastructure.

Microsoft has developed the principles of the DIRA Framework in conjunction with the technology adoption plans and solution strategies of its key partners. Several Microsoft partners across multiple business areas in manufacturing have endorsed the DIRA Framework because it helps them deliver high levels of customer value through complementary solutions aligned with the framework. …

The press release continues with the obligatory quotes from participants.

Lori MacVittie (@lmacvittie) asserted Application performance is more and more about dependencies in the delivery chain, not the application itself in a preface to her On Cloud, Integration and Performance post of 4/4/2011 to F5’s DevCentral blog:

When an article regarding cloud performance and an associated average $1M in loss as a result appeared it caused an uproar in the Twittersphere, at least amongst the Clouderati. There was much gnashing of teeth and pounding of fists that ultimately led to questioning the methodology and ultimately the veracity of the report.

If you were worried about the performance of cloud-based applications, here's fair warning: You'll probably be even more so when you consider findings from a recent survey conducted by Vanson Bourne on the behalf of Compuware.

On average, IT directors at 378 large enterprises in North America reported their organizations lost almost $1 million annually due to poorly performing cloud-based applications. More specifically, close to one-third of these companies are losing $1.5 million or more per year, with 14% reporting that losses reach $3 million or more a year.

In a newly released white paper, called "Performance in the Cloud," Compuware notes its previous research showing that 33% of users will abandon a page and go elsewhere when response times reach six seconds. Response times are a notable worry, then, when the application rides over the complex and extended delivery chain that is cloud, the company says.

-- The cost of bad cloud-based application performance, Beth Schultz, NetworkWorld

I had a chance to chat with Compuware about the survey after the hoopla and dug up some interesting tidbits – all of which were absolutely valid points and concerns regarding the nature of performance and cloud-based applications.

I SAY CLOUD YOU SAY TROPOSPHERE

I’ve written some rather terse commentary regarding the use of language and in particular the use of the term “cloud” to refer to all things related to cloud because it – if you’ll pardon the pun – clouds conversation and makes it difficult to discuss the technology with any kind of common understanding. That’s primarily what happened with this report. The article in question used the words “cloud-based application performance” which many folks interpreted to merely mean “applications deployed in a public cloud.”

[ Insert pithy reference of choice to the adage about what happens when you assume ]

If you dug into the white paper you might have discovered some details that are, for anyone intimately familiar with integration of applications, unsurprising. In fact I’d argue that the premise of the report is not really new, just the use of the term “cloud” to describe those external services upon which applications have relied for nearly a decade now. If you don’t like that “guilt by association”, then take care to be more precise in your language. Those who decided to label every service or application delivered over the Internet “cloud” are to blame, not the messenger.

The findings of the report aren’t actually anything we haven’t heard before nor anything I haven’t said before. Performance of an application is highly dependent upon its dependent services. Applications are not islands, they are not silos, they are one cog in a much bigger wheel of interdependent services. If performance of one of them suffers, they all suffer. If the Internets are slow, the application is slow. If a network component is overloaded and becomes a bottleneck, impairing its ability to pass packets at an expected rate, the application is slow. If the third party payment processor upon which an application relies is slow because of high traffic or an impaired router or the degradation of performance of one of its dependent services, the application is slow.

One of the challenges we faced back in the days of Network Computing and its “Real World Labs” was creating just such an environment for performance testing. It wasn’t enough for us to test a network component; we needed to know how the component performed in the context of a real, integrated IT architecture. As the editor responsible for maintaining NWC Inc, where we built out applications and integrated them with standard IT application and service components, I’d be the first one to stand up and say it still didn’t go far enough. We never integrated outside the lab because we had no control, no visibility, into the performance of externally hosted services. We couldn’t adequately measure those services and therefore their impact on the performance of whatever we were testing could not be controlled for in a scientific measurement sense. And yet the vast majority of enterprise architectures are dependent on at least some off-premise services over which they have no control. And some of them are going to be slow sometimes.

Slow applications lead to lost revenue. Yes, it’s only lost potential revenue (maybe visitors who abandoned their shopping carts were just engaged in the digital version of window shopping), but organizations have for many years now been treating that loss of potential revenue as a loss. They’ve also used that “loss”, as it were, to justify the need for solutions that improve performance of applications. To justify a new router, a second switch, another server, even new development tools. The loss of revenue or loyalty of customers due to “slow” applications is neither new nor a surprise. If it is, I’d suggest you’ve been living on some other planet.

And that’s what Compuware found: that the increasing dependence of applications on “cloud-based” applications is problematic. That poorly performing “cloud-based” applications impact the performance of those applications that have integrated its services. That “application performance” is really “application delivery chain performance” and that we ought to be focused on optimizing the entire service delivery chain rather than pointing our fingers at developers who, when it comes down to it, can’t do anything about the performance of a third-party service.

APPLICATION PERFORMANCE is a SUMMATION

The performance of an application is not the performance of the application alone. It’s the performance of the application as an aggregate view of a request/response pair as served by a complete and fully functional infrastructure, with all dependent services included. Every microsecond of latency introduced by service requests on the back-end, i.e. the integrated services on and off-premise, is counted against the performance of the “application” as a whole.

Even services integrated at the browser, through included ad services or Twitter streams or Facebook authentication, count against the performance of the application because the user doesn’t necessarily stop to check out the status bar in their browser when a “web page” is loading slowly. They don’t and often can’t distinguish between the response you’re delivering and the data being delivered by a third-party service. It’s your application, it’s your performance problem.
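The "summation" idea can be illustrated with a few lines of arithmetic. The sketch below is a simplification that assumes the dependent services are called one after another, so their latencies simply add up; the function names, service names and millisecond figures are invented for illustration and do not come from the Compuware survey.

```python
def end_to_end_latency_ms(app_ms, dependency_ms):
    """Total response time when every dependency is called sequentially."""
    return app_ms + sum(dependency_ms.values())


def slowest_dependency(dependency_ms):
    """Name of the dependency contributing the most latency."""
    return max(dependency_ms, key=dependency_ms.get)


# Hypothetical measurements for one request, in milliseconds.
dependencies = {"payment-processor": 180, "ad-service": 40, "auth-provider": 75}
total = end_to_end_latency_ms(120, dependencies)
worst = slowest_dependency(dependencies)
```

With these made-up numbers the application's own 120 ms is less than a third of what the user experiences, which is the point of the argument above: tuning the application alone leaves most of the delivery chain untouched.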

What the Compuware performance survey does is highlight the very real problem with measuring that performance from a provider point of view. It’s one thing to suggest that IT find a way to measure applications holistically, and application performance vendors like Compuware will be quick to point out that agents are more than capable of measuring the performance of the individual services comprising an application, but that’s only part of the performance picture. As we grow increasingly dependent on third-party, off-premise and cloud-based services for application functionality and business processing we will need to find a better way to integrate performance monitoring into IT as well. And therein lies the biggest challenge of a hyper-connected, distributed application. Without some kind of standardized measurement and monitoring services for those application and business related services, there’s no consistency in measurement across customers. No measurement means no visibility, and no visibility means a more challenging chore for IT operations to optimize, manage, and provision appropriately in the face of degrading performance.

Application performance monitoring and management doesn’t scale well in the face of off-premise distributed third-party provided services. Cloud-based applications IT deploys and controls can employ agents or other enterprise-standard monitoring and management as part of the deployment, but they have no visibility into let alone control over Twitter or their supply-chain provider’s services.

It’s a challenge that will continue to plague IT for the foreseeable future, until some method of providing visibility into those services, at least, is provided such that IT and operations can make the appropriate adjustments (compensatory controls) internal to the data center to address any performance issues arising from the use of third-party provided services.

Neil MacKenzie (@nknz) posted a concise Overview of Windows Azure on 4/3/2011:

Windows Azure is the Platform-as-a-Service offering (PaaS) from Microsoft. Like other cloud services it provides the following features:

- Compute

- Storage

- Connectivity

- Management

Compute

Windows Azure Compute is exposed through hosted services deployable as a package to an Azure datacenter. A hosted service provides an organizational and security boundary for a related set of compute resources. It is an organizational boundary because the compute and connectivity resources used by the hosted service are specified in its service model. It is a security boundary because only compute resources in the hosted service can have direct connectivity to other compute resources in the service.

A hosted service comprises a set of one or more roles, each of which provides specific functionality for the service. A role is a logical construct made physical through the deployment of one or more instances hosting the services provided by the role. Each instance is hosted in a virtual machine (VM) providing a guaranteed level of compute – cores, memory, local disk space – to the instance. Windows Azure offers various instance sizes from Small with one core to Extra-Large with eight cores. The other compute parameters scale similarly with instance size. An Extra-Small instance providing a fraction of a core is currently in beta.

A role is the scalability unit for a hosted service since its horizontal and vertical scaling characteristics are specified at the role level. All instances of a role have the same instance size, specified in the service model for the hosted service, but different roles can have different instance sizes. However, the power of cloud computing comes not from vertical scaling through changing the instance size, which is inherently limited, but from horizontal scaling through varying the number of instances of a role. Furthermore, while the instance size is fixed when the role is deployed the number of instances can be varied as needed following deployment. It is the elastic scaling – up and down – of instance numbers that provides the central financial benefit of cloud computing.

Windows Azure provides three types of role:

- Web role

- Worker role

- VM role

Web roles and worker roles are central to the PaaS model of Windows Azure. The primary difference between them is that IIS is turned on and configured for a web role – and IIS is sufficiently privileged that other web servers cannot be hosted on a web role. However, other web servers can be hosted on worker roles. Otherwise, there is little practical difference between web roles and worker roles.

Web roles and worker roles serve respectively as application-hosting environments for IIS websites and long-running applications. The power of PaaS comes from the lifecycle of role instances being managed by Windows Azure. In the event of an instance failure Windows Azure automatically restarts the instance and, if necessary, moves it to a new VM. Similarly, updates to the operating system of the VM can be handled automatically. Windows Azure also automates role upgrades so that instances of a role are upgraded in groups allowing the role to continue providing its services albeit with a reduced service level.

Windows Azure provides two features supporting modifications to the role environment: elevated privileges and startup tasks. When running with elevated privilege, an instance has full administrative control of the VM and can perform modifications to the environment that would be disallowed under the default limited privilege. Note that in a web role this privilege escalation applies only to the process hosting the role entry point and does not apply to the separate process hosting IIS. This limits the risk in the event of a website being compromised. A startup task is a script that runs on instance startup and which can be used, for example, to ensure that any required application is installed on the instance. This verification is essential due to the stateless nature of an instance, since any application installed on an instance is lost if the instance is reimaged or moved to a new VM.

The VM role exists solely to handle situations where even the modifications to a web role or worker role possible using startup tasks and elevated privileges are insufficient. An example would be where the installation of an application in a startup task takes too long or requires human intervention. Deployment to a VM role requires the creation and uploading of an appropriately configured Windows Server 2008 guest OS image. This image can be configured as desired up to and including the installation of arbitrary software – consistent with any licensing requirements of that software. Once deployed, Windows Azure manages the lifecycle of role instances just as it does with other role types. Similarly to the other role types, VM roles host applications – the difference being that for a VM role the application is a Windows Server 2008 guest OS. For VM roles, it is particularly important to be aware of the statelessness of the instance. Any changes to an instance of a VM role are lost when the instance is reimaged or moved.

Storage

Windows Azure supports various forms of permanent storage with differing capabilities. The Windows Azure Storage service provides cost-effective storage for up to hundreds of terabytes. SQL Azure provides a hosted version of SQL Server scaling up to tens of gigabytes. It is very much the intent that applications use both of these, as needed, with relational data stored in SQL Azure and large-scale data stored in Windows Azure Storage. Both Windows Azure Storage and SQL Azure are shared services with scalability limits to ensure that all users of the services get satisfactory performance.

The Windows Azure Storage Service supports blobs, queues and tables. Azure Blob supports the storage of blobs in a simple two-level hierarchy of containers and blobs. Azure Queue supports the storage of messages in queues. These are best-effort FIFO queues intended to support the asynchronous management of multi-step tasks such as when an image uploaded by a web role instance is processed by a worker role instance. Azure Table is the Windows Azure implementation of a NoSQL database supporting the storage of large amounts of data. This data is schemaless to the extent that each entity inserted into a table in Azure Table carries its own schema.
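The web role and worker role hand-off through a queue can be sketched as follows. This is a minimal in-process illustration, assuming Python's standard `queue.Queue` as a stand-in for Azure Queue; the function names and the message layout are hypothetical and nothing here calls the real storage service. Note that `queue.Queue` is strictly FIFO, whereas the text describes Azure Queue as best-effort FIFO.

```python
import queue

# Stand-in for an Azure Queue shared by the two roles (assumption).
work_queue = queue.Queue()


def web_role_upload(image_name):
    """Web role side: after storing the image as a blob (omitted here),
    enqueue a small message that names the blob to process."""
    work_queue.put({"blob": image_name})


def worker_role_drain():
    """Worker role side: process every waiting message and return the
    names of the results it produced."""
    processed = []
    while True:
        try:
            message = work_queue.get_nowait()
        except queue.Empty:
            break  # nothing left to do for now
        # Placeholder for real work such as generating a thumbnail.
        processed.append("thumb-" + message["blob"])
    return processed


web_role_upload("a.png")
web_role_upload("b.png")
results = worker_role_drain()
```

The message carries only a reference to the blob, so the web role can return to serving requests while the worker role does the slow part later.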

The definitive way to access the Azure Storage Service is through a RESTful interface. The Windows Azure SDK provides a Storage Client library which provides a high-level .NET wrapper to the RESTful interface. For Azure Table, this wrapper uses WCF Data Services. Other than operations against Azure Blob containers and blobs configured for public access, all storage operations against the Azure Storage Service are authenticated.
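As a rough illustration of what authenticating a REST request involves, the sketch below signs a request description with an account key using HMAC-SHA256 and builds a `SharedKey` authorization header. The account name, the key and the string being signed are placeholders chosen for this example; the exact layout of the string to sign is defined by the storage service documentation and is not reproduced here.

```python
import base64
import hashlib
import hmac


def sign(string_to_sign, account_key_b64):
    """Return the Base64-encoded HMAC-SHA256 of the string, keyed with
    the Base64-decoded account key."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def authorization_header(account, string_to_sign, account_key_b64):
    """Build the value of the Authorization header for a signed request."""
    return "SharedKey {}:{}".format(account, sign(string_to_sign, account_key_b64))


# Placeholder values for illustration only.
demo_key = base64.b64encode(b"not-a-real-key").decode("ascii")
header = authorization_header("myaccount", "GET\n/myaccount/images", demo_key)
```

The Storage Client library performs this signing on the caller's behalf, so application code rarely needs to do it by hand.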

With Azure Drive, a blob containing an appropriately created virtual hard disk (VHD) can be used to provide the backing store for an NTFS drive mounted on a compute instance. This provides the appearance of locality for persistent storage attached to a compute instance.

SQL Azure is essentially a hosted version of Microsoft SQL Server exposing many but not all of its features. Connections to SQL Azure use the same TDS protocol used with SQL Server. Individual databases can be up to 50GB in size. The storage of larger datasets can be achieved through sharding (the distribution of data across several databases) which can also aid throughput. Microsoft has announced the development of SQL Azure Federation services which will essentially automate the sharding process for SQL Azure. All operations against SQL Azure must be authenticated using SQL Server authentication.
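Sharding can be sketched as a function that maps each key to one of several databases. This is a minimal example assuming simple hash-based routing; the database names are hypothetical, and it says nothing about how SQL Azure Federation will distribute data.

```python
import hashlib

# Hypothetical database names, one per shard.
SHARDS = ["customers_0", "customers_1", "customers_2", "customers_3"]


def shard_for(key, shard_count):
    """Map a key to a shard index using a hash that is stable across runs."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def database_for(customer_id):
    """Return the name of the database that holds this customer's rows."""
    return SHARDS[shard_for(customer_id, len(SHARDS))]


target = database_for("alice")
```

Every query for a given customer goes to the same database, while different customers spread across all four. Changing the number of shards remaps most keys with this scheme, which is one reason automated federation is attractive.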

Windows Azure also supports two types of volatile storage: local storage on an instance and the Windows Azure AppFabric Caching service (currently in beta). Local storage for an instance can be configured in the service model to provide space on the local file system that can be used as desired by the instance. Local storage can be configured to survive an instance recycle but it does not survive instance reimaging. The Windows Azure AppFabric Caching service essentially provides a managed version of the Windows AppFabric Caching service. It provides the core functionality of this service including central caching of data with a local cache providing high-speed access to it. The Windows Azure AppFabric Caching service also directly supports the caching of ASP.NET session state for web roles.
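The idea of a central cache fronted by a local cache can be sketched as a two-level lookup. The class below is an illustration only: a plain dictionary stands in for the shared caching service, the names are hypothetical, and expiry and invalidation are left out entirely.

```python
class TwoLevelCache:
    """Check a per-instance local cache first, then a shared central
    cache, and only then fall back to the slow backing store."""

    def __init__(self, central, load):
        self.local = {}         # private to this instance
        self.central = central  # dict standing in for the shared cache service
        self.load = load        # function that reads from the backing store

    def get(self, key):
        if key in self.local:
            return self.local[key]
        if key in self.central:
            value = self.central[key]
        else:
            value = self.load(key)
            self.central[key] = value
        self.local[key] = value
        return value


store_reads = []


def load_from_store(key):
    """Pretend backing store that records every read it serves."""
    store_reads.append(key)
    return key.upper()


shared = {}
instance_a = TwoLevelCache(shared, load_from_store)
instance_b = TwoLevelCache(shared, load_from_store)
first = instance_a.get("x")
second = instance_b.get("x")
```

Here the second instance finds the value in the central cache, so the backing store is read only once.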

Connectivity

Windows Azure supports various forms of connectivity:

- Input endpoints

- Internal endpoints

- Windows Azure AppFabric Service Bus

- Windows Azure Connect

A hosted service can expose one or more input endpoints to the public internet. All input endpoints use the single virtual IP address associated with the hosted service but each input endpoint uses a different port. The virtual IP address assigned to the hosted service is unlikely to change during the lifetime of the service but this is not guaranteed. All access to an input endpoint is load-balanced across all instances of whichever role is configured to handle that endpoint. A CNAME record can be used to map one or more vanity domains to the domain name of the hosted service provided by Windows Azure. These vanity domains can be handled by a web role and used to expose different websites to different domains.
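Load balancing across the instances of a role can be illustrated with a simple round-robin dispatcher. The text does not say which algorithm the Windows Azure load balancer uses, so round robin is an assumption made purely for illustration, as are the instance names.

```python
import itertools


def make_balancer(instances):
    """Return a function that assigns each request to the next instance
    in turn, wrapping around at the end of the list."""
    rotation = itertools.cycle(instances)

    def route(request):
        return next(rotation), request

    return route


route = make_balancer(["web_0", "web_1"])
assigned = [route("GET /")[0] for _ in range(4)]
```

Because any instance may receive any request, instances should not rely on state held in memory from an earlier request.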

An internal endpoint is exposed by instances of a role only to other instances inside the same hosted service. Internal endpoints are not visible outside the service. These endpoints are not load balanced.

The Windows Azure AppFabric Service Bus provides a way to expose web services through a public endpoint in a Windows Azure datacenter and consequently avoid compromising a corporate firewall. The physical endpoint of the service can remain private and, indeed, can remain behind a corporate firewall. The Windows Azure AppFabric Service Bus can be configured to attempt the fusion of the client request to the public endpoint with the service's connection to the public endpoint and thereby allow a direct connection between the client and the service.

Windows Azure Connect supports the creation of a virtual private network (VPN) between on-premises computers and hosted services in a Windows Azure datacenter. This allows for hybrid services with, for example, a web role exposing a website where the backing store is a SQL Server database residing in a corporate datacenter. This allows a company to retain complete control of its data while gaining the benefit of using Windows Azure hosted services for its public-facing services. Windows Azure Connect also facilitates the remote administration of instances in a hosted service. Note that Windows Azure Connect is currently in CTP.

Connectivity brings with it the problem of authentication. The Windows Azure AppFabric Access Control Service (ACS) provides support for authentication for RESTful web services. The preview version, currently in the Windows Azure AppFabric labs, promises to be much more interesting and provides a security token service (STS) able to transform claims coming from various identity providers including Facebook, Google, Yahoo and Windows Live ID – as well as Active Directory Federation Services. The claims produced by the preview ACS can be consumed by a web role and used to support claims-based authentication to websites it hosts.

Management

The Windows Azure Portal can be used to manage the compute, storage and connectivity provided by Windows Azure. This includes the creation of hosted services and storage accounts as well as the management of the service endpoints required by the Windows Azure AppFabric Service Bus and Caching service. The Windows Azure Portal also supports the configuration of the Windows Azure Connect VPN.

The Windows Azure Service Management REST API exposes much of the functionality of the Windows Azure Portal for managing hosted services and Windows Azure Storage service storage accounts. The Windows Azure Service Management Cmdlets provide a wrapper to this API. Cerebrata has several useful management tools for Windows Azure including Cloud Storage Studio for managing the blobs, queues and tables hosted by the Windows Azure Storage service. SQL Server Management Studio can be used to manage SQL Azure databases.

Next Steps for Developers

The first step is to go to the Get Started page on the Windows Azure website and download the Windows Azure SDK. You can then try Windows Azure for free (without a credit card) by going to windowsazurepass.com and using the Promo Code NEILONAZURE (valid in the USA). This gets you a 30-day pass to try out Windows Azure by providing three small compute instances, two 1GB SQL Azure databases as well as connectivity and Windows Azure Storage.

Neil is a Windows Azure MVP.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

• Beth Schultz continued Network World’s cloud computing series by asserting “Many enterprises are looking at private cloud as first step, but experts urge an approach that incorporates all cloud options” as a deck for Part 2, Public cloud vs. private cloud: Why not both?, of 4/4/2011:

As cloud computing moves from hype to reality, certain broad trends and best practices are emerging when it comes to the public cloud vs. private cloud deployment debate.

Anecdotally and from surveys, it's becoming clear that most enterprises are first looking to the private cloud as a way to play with cloud tools and concepts in the safety of their own secure sandbox.

For example, a recent Info-Tech survey shows that 76% of IT decision-makers will focus initially or, in the case of 33% of respondents, exclusively on the private cloud.

"The bulk of our clients come in thinking private. They want to understand the cloud, and think it's best to get their feet wet within their own four walls," says Joe Coyle, CTO at Capgemini in North America.

But experts say a better approach is to evaluate specific applications, factor in security and compliance considerations, and then decide what apps are appropriate for a private cloud, as well as what apps can immediately be shifted to the public cloud.

Private cloud oftentimes is the knee-jerk reaction, but not necessarily the right decision, Coyle adds. "What companies really need to do is look at each workload to determine which kind of cloud it should be in. By asking the right questions around criteria such as availability, security and cost, the answers will push the workload to the public or private, or maybe community, cloud," he says.

Certainly before moving data to the multitenant public cloud, enterprise IT executives want assurances about the classic cautions around security, availability and accountability of data, agrees John Sloan, lead analyst with Info-Tech Research Group, an IT research and advice firm.

"But I stress, those are cautions and not necessarily red flags. They're more like yellow flags," he says. That's because what will assure one company won't come near to placating another, Sloan adds.

Company size and type of business make a difference. He uses availability as an example. "If you're at a smaller company and don't have an N-plus data center, then three-nines availability might be good enough for what you run and, in fact, it might even be better than what you can provide internally. So from that viewpoint, a public cloud service will be perfectly acceptable," Sloan says.

"But if you're at a larger enterprise with a large N-plus data center and you're guaranteeing five-nines availability to mission-critical or core applications, then you'd dismiss the public cloud for your most important stuff because it can't beat what you've already got," he adds.
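To put the "nines" in perspective, an availability percentage converts directly into allowed downtime per year. The short calculation below assumes a 365-day year; the function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap year


def downtime_hours_per_year(availability_percent):
    """Hours per year a service may be down at the given availability."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


three_nines_hours = downtime_hours_per_year(99.9)
five_nines_minutes = downtime_hours_per_year(99.999) * 60
```

Three nines allows roughly 8.76 hours of downtime a year, while five nines allows a little over five minutes, which is the gap Sloan is pointing to.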

And just as public vs. private doesn't have to be an either/or proposition, there are still other models, such as hosted private cloud, or hybrid cloud that provide additional options and flexibility for companies moving to the cloud.

If you have major security and privacy issues, and you don't want to build your own private cloud, a virtual private cloud, your own gated community within the public cloud universe, is an option.

• Kenon Owens described Great TechNet Edge Videos from MMS in a 4/3/2011 post to Nexus SC: The System Center Team blog:

Adam (Bomb) of TechNet Edge made some GREAT videos during MMS. Check them out:

The Microsoft Management Summit (MMS) and TechNet Edge have a great relationship – their audience is a dedicated, specialized IT Pro audience, and Edge has been on site doing interviews with them for the past 4 years. …

This year TechNet Edge was the digital media source for MMS – the on-demand keynotes from both days are hosted on Edge, and we worked closely with PR and Coms to release our videos in conjunction with their announcements. They linked to the Edge videos from their Press page. We did a bit on Windows Embedded and their new Device Management Product, and they put us on their press site, too.

The Day 1 Keynote was about Empowering IT – a big focus on System Center 2012 product announcements that enable Private and Public cloud capabilities, like Virtual Machine Manager 2012, the cloud based System Center Advisor, and Concero.

The Day 2 Keynote was about Empowering Users – consumerization of IT. Announcements included the mobile device management capabilities coming in Configuration Manager (including iOS, and Android mgmt.), and the RTM of Windows Intune for SaaS based PC management.

With the EvNet team heads down with Mix, we worked with the event team to deliver the show ourselves – sourcing local camera and editing crews, and publishing to TechNet. The STO team was a critical part of our success - most videos were published within hours of shooting.

In addition to interviewing CVP Brad Anderson, we also interviewed System Center Senior Director Ryan O’Hara, Config Manager Principal GPM Josh Pointer, and several other members of engineering teams (complete list below). All up, the Corp ITE team delivered 18 videos from MMS, in just over 2 days of shooting.

You can see them all by visiting the MMS2011 tag page on Edge. The whole list is also included below.