Windows Azure and Cloud Computing Posts for 4/16/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Updated 4/16/2011 3:00 PM PDT with articles marked • from Dinesh Haridas, Bruce Kyle, Matias Woloski and Liran Zelkha.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

• Bruce Kyle (pictured below) announced the availability of an ISV Video: StorSimple Integrates On-Premises, Cloud Storage with Windows Azure on 4/16/2011:

ISV StorSimple provides a hybrid storage solution that combines on-premises storage with a scalable, on-demand cloud storage model. StorSimple integrates with several cloud storage providers, including Windows Azure.

Dr. Ian Howells, CMO for StorSimple, talks with Developer Evangelist Wes Yanaga about how the team created a solution that enables companies to seamlessly integrate cloud storage services for Microsoft Exchange Server, SharePoint Server, and Windows Server. Howells describes why the company offers storage on Azure and how that benefits its customers.

Video link: StorSimple Integrates On-Premises Storage to Windows Azure

StorSimple uses an algorithm that tiers storage to an on-premises, high-performance disk or to Windows Azure Blob storage to optimize performance.

To address performance issues with on-premises data storage, a data compression process eliminates redundant data segments and minimizes the amount of storage space that an application consumes. At the same time that StorSimple optimizes storage space for data-intensive applications, it uses Cloud Clones—a patented StorSimple technology—to persistently store copies of application data in the cloud.

With StorSimple and Windows Azure, customers maintain control of their data and can take advantage of public cloud services with security-enhanced data connections. StorSimple uses Windows Azure AppFabric Access Control to provide rule-based authorization to validate requests and connect the on-premises applications to the cloud. Customers who deploy StorSimple use a private key to encrypt the data with AES-256 military-grade encryption before it is pushed to Windows Azure, helping to enhance security and ensure that data can only be retrieved by the customer.

About StorSimple

StorSimple provides application-optimized cloud storage for Microsoft Server applications. StorSimple's mission is to bring the benefits of the cloud to on-premises applications without forcing the migration of applications into the cloud. StorSimple manages storage and backup for working set apps such as Microsoft SharePoint, shared file drives, Microsoft Exchange, and virtual machine libraries, offering SSD performance with cloud elasticity.

Other ISV Videos

For videos on Windows Azure Platform, see:

- Accumulus Makes Subscription Billing Easy for Windows Azure

- Azure Email-Enables Lists, Low-Cost Storage for SharePoint

- Credit Card Processing for Windows Phone 7 on Windows Azure

- Crowd-Sourcing Public Sector App for Windows Phone, Azure

- Food Buster Game Achieves Scalability with Windows Azure

- BI Solutions Join On-Premises To Windows Azure Using Star Analytics Command Center

• Dinesh Haridas of the Windows Azure Storage Team described Using SMB to Share a Windows Azure Drive among multiple Role Instances on 4/16/2011:

We often get questions from customers about how to share a drive with read-write access among multiple role instances. A common scenario is that of a content repository for multiple web servers to access and store content. An Azure drive is similar to a traditional disk drive in that it may only be mounted read-write on one system. However, using SMB, it is possible to mount a drive on one role instance and then share it out to other role instances, which can map the network share to a drive letter or mount point.

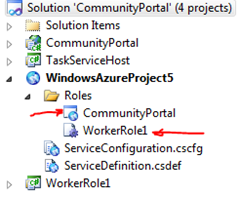

In this blog post we’ll cover the specifics on how to set this up and leave you with a simple prototype that demonstrates the concept. We’ll use an example of a worker role (referred to as the server) which mounts the drive and shares it out and two other worker roles (clients) that map the network share to a drive letter and write log records to the shared drive.

Service Definition on the Server role

The server role has TCP port 445 enabled as an internal endpoint so that it can receive SMB requests from other roles in the service. This is done by defining the endpoint in ServiceDefinition.csdef as follows:

    <Endpoints>
      <InternalEndpoint name="SMB" protocol="tcp" port="445" />
    </Endpoints>

Now when the role starts up, it must mount the drive and then share it. Sharing the drive requires the Server role to be running with administrator privileges. Beginning with SDK 1.3, it’s possible to do that using the following setting in the ServiceDefinition.csdef file:

    <Runtime executionContext="elevated">
    </Runtime>

Mounting the drive and sharing it

When the server role instance starts up, it first mounts the Azure drive and executes shell commands to

- Create a user account for the clients to authenticate as. The user name and password are derived from the service configuration.

- Enable inbound SMB protocol traffic through the role instance firewall

- Share the mounted drive with the share name specified in the service configuration and grant the user account previously created full access. The value for path in the example below is the drive letter assigned to the drive.

Here’s a snippet of C# code that does that.

    String error;
    // Create the user account that clients authenticate as
    ExecuteCommand("net.exe", "user " + userName + " " + password + " /add", out error, 10000);
    // Allow inbound SMB traffic through the role instance firewall
    ExecuteCommand("netsh.exe", "firewall set service type=fileandprint mode=enable scope=all", out error, 10000);
    // Share the mounted drive and grant the new account full access
    ExecuteCommand("net.exe", " share " + shareName + "=" + path + " /Grant:" + userName + ",full", out error, 10000);

The shell commands are executed by the routine ExecuteCommand.

    public static int ExecuteCommand(string exe, string arguments, out string error, int timeout)
    {
        Process p = new Process();
        int exitCode;
        p.StartInfo.FileName = exe;
        p.StartInfo.Arguments = arguments;
        p.StartInfo.CreateNoWindow = true;
        p.StartInfo.UseShellExecute = false;
        p.StartInfo.RedirectStandardError = true;
        p.Start();
        // Capture anything the command writes to standard error
        error = p.StandardError.ReadToEnd();
        p.WaitForExit(timeout);
        exitCode = p.ExitCode;
        p.Close();

        return exitCode;
    }

We haven’t touched on how to mount the drive because that is covered in several places including here.
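One detail of ExecuteCommand is worth a note: because standard error is read to the end before WaitForExit is called, the timeout argument rarely comes into play, and reading ExitCode would throw if the process had not actually exited. The following is a minimal sketch of the same "run a command, capture stderr, enforce a timeout" idea, written in Python only so that it is self-contained and runnable anywhere. The function name and return shape are assumptions for illustration and are not part of the prototype.

```python
import subprocess
import sys


def execute_command(exe, arguments, timeout_seconds):
    """Run a command and return (exit_code, stderr_text).

    Hypothetical helper, not the prototype's routine. If the command runs
    longer than timeout_seconds, subprocess.run kills the child process and
    raises subprocess.TimeoutExpired, so the caller never reads an exit
    code from a process that is still running.
    """
    completed = subprocess.run(
        [exe, *arguments],
        stdout=subprocess.DEVNULL,   # only stderr is of interest here
        stderr=subprocess.PIPE,
        text=True,
        timeout=timeout_seconds,
    )
    return completed.returncode, completed.stderr


if __name__ == "__main__":
    # Use the Python interpreter itself as a stand-in for net.exe.
    code, err = execute_command(sys.executable, ["-c", "import sys; sys.exit(3)"], 10)
    print(code, repr(err))
```

A caller would treat TimeoutExpired the same way as a non-zero exit code: log it and decide whether to retry.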

Mapping the network drive on the client

When the clients start up, they locate the instance of the SMB Server and then identify the address of the SMB endpoint on the server. Next they execute a shell command to map the share served by the SMB server to a drive letter specified by the configuration setting localpath. Note that sharename, username and password must match the settings on the SMB server.

    var server = RoleEnvironment.Roles["SMBServer"].Instances[0];
    machineIP = server.InstanceEndpoints["SMB"].IPEndpoint.Address.ToString();
    machineIP = "\\\\" + machineIP + "\\";
    string error;
    ExecuteCommand("net.exe", " use " + localPath + " " + machineIP + shareName + " " + password + " /user:" + userName, out error, 20000);

Once the share has been mapped to a local drive letter, the clients can write whatever they want to the share, just as they would to a local drive.

Note: Since the clients may come up before the server is ready, the clients may have to retry or alternatively poll the server on some other port for status before attempting to map the drive. The prototype retries in a loop until it succeeds or times out.
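The retry behavior described in the note can be sketched as a small bounded loop. This is an illustrative Python sketch, not the prototype's code: `retry_until_success`, its parameters, and the injectable `sleep` function are assumptions made so the example can run without an SMB server.

```python
import time


def retry_until_success(action, max_attempts, delay_seconds, sleep=time.sleep):
    """Call action() until it returns True or max_attempts is reached.

    Returns the number of attempts used on success, or 0 if every attempt
    failed. The attempt counter lives outside the loop body, so the limit
    is actually enforced.
    """
    for attempt in range(1, max_attempts + 1):
        if action():
            return attempt
        if attempt < max_attempts:
            sleep(delay_seconds)  # wait before the next attempt
    return 0


if __name__ == "__main__":
    outcomes = iter([False, False, True])  # stand-in for a server that comes up late
    print(retry_until_success(lambda: next(outcomes), max_attempts=100, delay_seconds=0))
```

In the scenario above, `action` would wrap the `net use` call and return True when its exit code is 0. With 100 attempts and a 10-second delay, the loop gives up after roughly 17 minutes of waiting, not counting the time each attempt itself takes.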

Enabling High Availability

With a single server role instance, the file share will be unavailable when the role is being upgraded. If you need to mitigate that, you can create a few warm stand-by instances of the server role thus ensuring that there is always one server role instance available to share the Azure Drive to clients.

Another approach would be to make each of your roles a potential host for the SMB share. Each role instance could potentially run an SMB service, but only one of them would get the mounted Azure Drive behind the SMB service. The roles can then iterate over all the role instances, attempting to map the SMB share with each one. The mapping will succeed when the client connects to the instance that has the drive mounted.
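The "try every instance until one works" idea can be sketched as follows. This is a hedged Python illustration, not code from the prototype: `find_active_server` and the `try_map` callback are hypothetical names, and in a real role the address list would come from the role environment while `try_map` would wrap the `net use` command.

```python
def find_active_server(instance_addresses, try_map):
    """Return the first address for which try_map(address) succeeds, else None.

    try_map is a caller-supplied function (hypothetical here) that attempts
    to map the share on one instance and returns True on success. Only the
    instance that holds the mounted drive is expected to succeed.
    """
    for address in instance_addresses:
        if try_map(address):
            return address
    return None


if __name__ == "__main__":
    addresses = ["10.0.0.4", "10.0.0.5", "10.0.0.6"]
    # Pretend only 10.0.0.5 currently has the drive mounted.
    print(find_active_server(addresses, lambda a: a == "10.0.0.5"))
```

If the function returns None, no instance has the drive mounted yet, and the client can wait and scan again.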

Another scheme is to have the role instance that successfully mounted the drive inform the other role instances so that the clients can query to find the active server instance.

Note: The high availability scenario is not captured in the prototype but is feasible using standard Azure APIs.

Sharing Local Drives within a role instance

It’s also possible to share a local resource drive mounted in a role instance among multiple role instances using similar steps. The key difference, though, is that writes to the local storage resource are not durable, while writes to Azure Drives are persisted and available even after the role instances are shut down.

Dinesh Haridas

Sample Code

Here’s the code for the Server and Client in its entirety for easy reference.

Server – WorkerRole.cs

This file contains the code for the SMB server worker role. In the OnStart() method, the role instance initializes tracing before mounting the Azure Drive. It gets the settings for storage credentials, drive name and drive size from the Service Configuration. Once the drive is mounted, the role instance creates a user account, enables SMB traffic through the firewall and then shares the drive. These operations are performed by executing shell commands using the ExecuteCommand() method described earlier. For simplicity, parameters like account name, password and the share name for the drive are derived from the Service Configuration.

    using System;
    using System.Collections.Generic;
    using System.Diagnostics;
    using System.Linq;
    using System.Net;
    using System.Threading;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.Diagnostics;
    using Microsoft.WindowsAzure.ServiceRuntime;
    using Microsoft.WindowsAzure.StorageClient;

    namespace SMBServer
    {
        public class WorkerRole : RoleEntryPoint
        {
            public static string driveLetter = null;
            public static CloudDrive drive = null;

            public override void Run()
            {
                Trace.WriteLine("SMBServer entry point called", "Information");

                while (true)
                {
                    Thread.Sleep(10000);
                }
            }

            public override bool OnStart()
            {
                // Set the maximum number of concurrent connections
                ServicePointManager.DefaultConnectionLimit = 12;

                // Initialize logging and tracing
                DiagnosticMonitorConfiguration dmc = DiagnosticMonitor.GetDefaultInitialConfiguration();
                dmc.Logs.ScheduledTransferLogLevelFilter = LogLevel.Verbose;
                dmc.Logs.ScheduledTransferPeriod = TimeSpan.FromMinutes(1);
                DiagnosticMonitor.Start("Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString", dmc);
                Trace.WriteLine("Diagnostics Setup complete", "Information");

                CloudStorageAccount account = CloudStorageAccount.Parse(RoleEnvironment.GetConfigurationSettingValue("StorageConnectionString"));

                try
                {
                    CloudBlobClient blobClient = account.CreateCloudBlobClient();
                    CloudBlobContainer driveContainer = blobClient.GetContainerReference("drivecontainer");
                    driveContainer.CreateIfNotExist();

                    String driveName = RoleEnvironment.GetConfigurationSettingValue("driveName");
                    LocalResource localCache = RoleEnvironment.GetLocalResource("AzureDriveCache");
                    CloudDrive.InitializeCache(localCache.RootPath, localCache.MaximumSizeInMegabytes);

                    drive = new CloudDrive(driveContainer.GetBlobReference(driveName).Uri, account.Credentials);
                    try
                    {
                        drive.Create(int.Parse(RoleEnvironment.GetConfigurationSettingValue("driveSize")));
                    }
                    catch (CloudDriveException ex)
                    {
                        Trace.WriteLine(ex.ToString(), "Warning");
                    }

                    driveLetter = drive.Mount(localCache.MaximumSizeInMegabytes, DriveMountOptions.None);

                    string userName = RoleEnvironment.GetConfigurationSettingValue("fileshareUserName");
                    string password = RoleEnvironment.GetConfigurationSettingValue("fileshareUserPassword");

                    // Modify path to share a specific directory on the drive
                    string path = driveLetter;
                    string shareName = RoleEnvironment.GetConfigurationSettingValue("shareName");
                    int exitCode;
                    string error;

                    //Create the user account
                    exitCode = ExecuteCommand("net.exe", "user " + userName + " " + password + " /add", out error, 10000);
                    if (exitCode != 0)
                    {
                        //Log error and continue since the user account may already exist
                        Trace.WriteLine("Error creating user account, error msg:" + error, "Warning");
                    }

                    //Enable SMB traffic through the firewall
                    exitCode = ExecuteCommand("netsh.exe", "firewall set service type=fileandprint mode=enable scope=all", out error, 10000);
                    if (exitCode != 0)
                    {
                        Trace.WriteLine("Error setting up firewall, error msg:" + error, "Error");
                        goto Exit;
                    }

                    //Share the drive
                    exitCode = ExecuteCommand("net.exe", " share " + shareName + "=" + path + " /Grant:" + userName + ",full", out error, 10000);
                    if (exitCode != 0)
                    {
                        //Log error and continue since the drive may already be shared
                        Trace.WriteLine("Error creating fileshare, error msg:" + error, "Warning");
                    }

                    Trace.WriteLine("Exiting SMB Server OnStart", "Information");
                }
                catch (Exception ex)
                {
                    Trace.WriteLine(ex.ToString(), "Error");
                    Trace.WriteLine("Exiting", "Information");
                    throw;
                }

            Exit:
                return base.OnStart();
            }

            public static int ExecuteCommand(string exe, string arguments, out string error, int timeout)
            {
                Process p = new Process();
                int exitCode;
                p.StartInfo.FileName = exe;
                p.StartInfo.Arguments = arguments;
                p.StartInfo.CreateNoWindow = true;
                p.StartInfo.UseShellExecute = false;
                p.StartInfo.RedirectStandardError = true;
                p.Start();
                error = p.StandardError.ReadToEnd();
                p.WaitForExit(timeout);
                exitCode = p.ExitCode;
                p.Close();

                return exitCode;
            }

            public override void OnStop()
            {
                if (drive != null)
                {
                    drive.Unmount();
                }
                base.OnStop();
            }
        }
    }

Client – WorkerRole.cs

This file contains the code for the SMB client worker role. The OnStart method initializes tracing for the role instance. In the Run() method, each client maps the drive shared by the server role using the MapNetworkDrive() method before writing log records at ten second intervals to the share in a loop.

In the MapNetworkDrive() method the client first determines the IP address and port number for the SMB endpoint on the server role instance before executing the shell command net use to connect to it. As in the case of the server role, the routine ExecuteCommand() is used to execute shell commands. Since the server may start up after the client, the client retries in a loop sleeping 10 seconds between retries and gives up after about 17 minutes. Between retries the client also deletes any stale mounts of the same share.

    using System;
    using System.Collections.Generic;
    using System.Diagnostics;
    using System.Linq;
    using System.Net;
    using System.Threading;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.Diagnostics;
    using Microsoft.WindowsAzure.ServiceRuntime;
    using Microsoft.WindowsAzure.StorageClient;

    namespace SMBClient
    {
        public class WorkerRole : RoleEntryPoint
        {
            public const int tenSecondsAsMS = 10000;

            public override void Run()
            {
                // The code here mounts the drive shared out by the server worker role
                // Each client role instance writes to a log file named after the role instance in the logfile directory
                Trace.WriteLine("SMBClient entry point called", "Information");

                string localPath = RoleEnvironment.GetConfigurationSettingValue("localPath");
                string shareName = RoleEnvironment.GetConfigurationSettingValue("shareName");
                string userName = RoleEnvironment.GetConfigurationSettingValue("fileshareUserName");
                string password = RoleEnvironment.GetConfigurationSettingValue("fileshareUserPassword");

                string logDir = localPath + "\\" + "logs";
                string fileName = RoleEnvironment.CurrentRoleInstance.Id + ".txt";
                string logFilePath = System.IO.Path.Combine(logDir, fileName);

                try
                {
                    if (MapNetworkDrive(localPath, shareName, userName, password) == true)
                    {
                        System.IO.Directory.CreateDirectory(logDir);

                        // do work on the mounted drive here
                        while (true)
                        {
                            // write to the log file
                            System.IO.File.AppendAllText(logFilePath, DateTime.Now.TimeOfDay.ToString() + Environment.NewLine);
                            Thread.Sleep(tenSecondsAsMS);
                        }
                    }
                    Trace.WriteLine("Failed to mount " + shareName, "Error");
                }
                catch (Exception ex)
                {
                    Trace.WriteLine(ex.ToString(), "Error");
                    throw;
                }
            }

            public static bool MapNetworkDrive(string localPath, string shareName, string userName, string password)
            {
                int exitCode = 1;
                // Retry counter; declared outside the loop so that the retry limit takes effect
                int i = 0;
                string machineIP = null;

                while (exitCode != 0)
                {
                    string error;

                    var server = RoleEnvironment.Roles["SMBServer"].Instances[0];
                    machineIP = server.InstanceEndpoints["SMB"].IPEndpoint.Address.ToString();
                    machineIP = "\\\\" + machineIP + "\\";
                    exitCode = ExecuteCommand("net.exe", " use " + localPath + " " + machineIP + shareName + " " + password + " /user:" + userName, out error, 20000);

                    if (exitCode != 0)
                    {
                        Trace.WriteLine("Error mapping network drive, retrying in 10 seconds error msg:" + error, "Information");
                        // clean up stale mounts and retry
                        ExecuteCommand("net.exe", " use " + localPath + " /delete", out error, 20000);
                        Thread.Sleep(10000);
                        i++;
                        if (i > 100) break;
                    }
                }

                if (exitCode == 0)
                {
                    Trace.WriteLine("Success: mapped network drive " + machineIP + shareName, "Information");
                    return true;
                }
                else
                    return false;
            }

            public static int ExecuteCommand(string exe, string arguments, out string error, int timeout)
            {
                Process p = new Process();
                int exitCode;
                p.StartInfo.FileName = exe;
                p.StartInfo.Arguments = arguments;
                p.StartInfo.CreateNoWindow = true;
                p.StartInfo.UseShellExecute = false;
                p.StartInfo.RedirectStandardError = true;
                p.Start();
                error = p.StandardError.ReadToEnd();
                p.WaitForExit(timeout);
                exitCode = p.ExitCode;
                p.Close();

                return exitCode;
            }

            public override bool OnStart()
            {
                // Set the maximum number of concurrent connections
                ServicePointManager.DefaultConnectionLimit = 12;

                DiagnosticMonitorConfiguration dmc = DiagnosticMonitor.GetDefaultInitialConfiguration();
                dmc.Logs.ScheduledTransferLogLevelFilter = LogLevel.Verbose;
                dmc.Logs.ScheduledTransferPeriod = TimeSpan.FromMinutes(1);
                DiagnosticMonitor.Start("Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString", dmc);
                Trace.WriteLine("Diagnostics Setup complete", "Information");

                return base.OnStart();
            }
        }
    }

ServiceDefinition.csdef

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceDefinition name="AzureDemo" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
      <WorkerRole name="SMBServer">
        <Runtime executionContext="elevated">
        </Runtime>
        <Imports>
          <Import moduleName="Diagnostics" />
        </Imports>
        <ConfigurationSettings>
          <Setting name="StorageConnectionString" />
          <Setting name="driveName" />
          <Setting name="driveSize" />
          <Setting name="fileshareUserName" />
          <Setting name="fileshareUserPassword" />
          <Setting name="shareName" />
        </ConfigurationSettings>
        <LocalResources>
          <LocalStorage name="AzureDriveCache" cleanOnRoleRecycle="true" sizeInMB="300" />
        </LocalResources>
        <Endpoints>
          <InternalEndpoint name="SMB" protocol="tcp" port="445" />
        </Endpoints>
      </WorkerRole>
      <WorkerRole name="SMBClient">
        <Imports>
          <Import moduleName="Diagnostics" />
        </Imports>
        <ConfigurationSettings>
          <Setting name="fileshareUserName" />
          <Setting name="fileshareUserPassword" />
          <Setting name="shareName" />
          <Setting name="localPath" />
        </ConfigurationSettings>
      </WorkerRole>
    </ServiceDefinition>

ServiceConfiguration.cscfg

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceConfiguration serviceName="AzureDemo" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration" osFamily="1" osVersion="*">
      <Role name="SMBServer">
        <Instances count="1" />
        <ConfigurationSettings>
          <Setting name="StorageConnectionString" value="DefaultEndpointsProtocol=http;AccountName=yourstorageaccount;AccountKey=yourkey" />
          <Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString" value="DefaultEndpointsProtocol=https;AccountName=yourstorageaccount;AccountKey=yourkey" />
          <Setting name="driveName" value="drive2" />
          <Setting name="driveSize" value="1000" />
          <Setting name="fileshareUserName" value="fileshareuser" />
          <Setting name="fileshareUserPassword" value="SecurePassw0rd" />
          <Setting name="shareName" value="sharerw" />
        </ConfigurationSettings>
      </Role>
      <Role name="SMBClient">
        <Instances count="2" />
        <ConfigurationSettings>
          <Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString" value="DefaultEndpointsProtocol=https;AccountName=yourstorageaccount;AccountKey=yourkey" />
          <Setting name="fileshareUserName" value="fileshareuser" />
          <Setting name="fileshareUserPassword" value="SecurePassw0rd" />
          <Setting name="shareName" value="sharerw" />
          <Setting name="localPath" value="K:" />
        </ConfigurationSettings>
      </Role>
    </ServiceConfiguration>

Scott Densmore described adding Multi Entity Schema Tables in Windows Azure in a 4/14/2011 post:

The source for this is available on github.

Intro

When we wrote Moving Applications to the Cloud, we talked about doing a-Expense using a multi entity schema yet never implemented this in code. As we finish up V2 of the Claims Identity Guide, we are thinking about how to update the other two guides for Windows Azure. I read an article from Jeffrey Richter about this same issue, so I decided to take our a-Expense with Workers reference application and update it to use multi entity schemas based on the article. I also decided to fix a few bugs, most of which had to do with saving expenses. In the old way of doing things, we would save the expense items, then the receipt images, and then the expense header. The first problem was that if you debugged the project, things could get a little out of sync, and you could end up trying to update a receipt URL on an expense item before it was saved. Also, if the expense header failed to save, you would have orphaned records and would need another process to scavenge them.

Implementation

There have been many code changes since the version shipped for Moving to the Cloud. The major change is to the save method for the expense repository.

The main changes for this code are the following:

- Multi Entity Schema for the Expense

- Remove updates back to the table for Receipt URIs

- Update the Queue handlers to look for Poison Messages and move to another Queue

Multi Entity Schema

The previous version of the project was split into two tables: Expense and ExpenseItems. This created a few problems that needed to be addressed. The first was that you could not create a transaction across the two entities. The way we solved this was to create the ExpenseItem(s) before creating the Expense. If there was a problem between saving the ExpenseItem and the Expense, there would be orphaned ExpenseItems, which would require a scavenger looking for all the orphaned records. All of this adds up to more cost. Now we are going to save the ExpenseItem and Expense in the same table. The following is a diagram of doing this:

This now lets us have one transaction across the table.
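To make the single-table idea concrete, here is a minimal sketch in Python (chosen only so it runs without the Azure SDK). It relies on one fact about Windows Azure table storage: an entity group transaction can cover up to 100 entities, and they must all share a partition key. Everything else, including the function names, the use of the user name as partition key, the row-key format and the "Kind" discriminator property, is an assumption for illustration and is not taken from the a-Expense source.

```python
def build_expense_batch(user_name, expense_id, header, items):
    """Return the header row and item rows for one expense.

    All rows share a partition key so that they are eligible for a single
    batch; a "Kind" property tells the two entity types apart when reading.
    """
    partition_key = user_name  # assumed partitioning scheme
    rows = [{
        "PartitionKey": partition_key,
        "RowKey": f"{expense_id}_header",
        "Kind": "Expense",
        **header,
    }]
    for index, item in enumerate(items):
        rows.append({
            "PartitionKey": partition_key,
            "RowKey": f"{expense_id}_item_{index:04d}",
            "Kind": "ExpenseItem",
            **item,
        })
    return rows


def can_commit_atomically(rows, max_batch_size=100):
    """Check the two batch constraints: one partition key, at most 100 rows."""
    partition_keys = {row["PartitionKey"] for row in rows}
    return len(partition_keys) == 1 and 0 < len(rows) <= max_batch_size


if __name__ == "__main__":
    batch = build_expense_batch("alice", "exp-42", {"Title": "Trip"},
                                [{"Amount": 12.5}, {"Amount": 30.0}])
    print(can_commit_atomically(batch))
```

Because the header and its items either all commit or all fail, no orphaned items are left behind and no scavenger process is needed.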

Remove Updates for Receipts

In the first version, when you uploaded receipts along with the expense, the code would post a message to a queue that would then update the table with the URI of the thumbnail and receipt images. In this version, we used a more convention-based approach. Instead of updating the table, a new property, "HasReceipts", is added so that when displaying the receipts the code can tell when there is a receipt and when there is not. Now when there is a receipt, the URI is built on the fly and accessed. This saves the cost of the update to the table. Here is the diagram:
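A convention of this kind can be sketched in a few lines. The Python below is illustrative only: the function name, the container URL, and the `<id>.jpg` / `<id>_thumb.jpg` naming scheme are all hypothetical, since the post does not spell out the actual convention. Only the "HasReceipts" flag comes from the text.

```python
def receipt_uris(container_base_url, expense_item_id, has_receipts):
    """Build receipt and thumbnail URIs from the item id, or return None.

    Nothing is read from or written to the table: the URIs follow purely
    from the (assumed) naming convention and the HasReceipts flag.
    """
    if not has_receipts:
        return None
    base = container_base_url.rstrip("/")
    return {
        "receipt": f"{base}/{expense_item_id}.jpg",
        "thumbnail": f"{base}/{expense_item_id}_thumb.jpg",
    }


if __name__ == "__main__":
    print(receipt_uris("https://account.example.invalid/receipts/", "item-7", True))
```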

Poison Messages

In the previous version, a message that had been dequeued 5 times was deleted. Now you have the option of moving it to a new queue.

...

    try
    {
        action(message);
        success = true;
    }
    catch (Exception)
    {
        success = false;
    }
    finally
    {
        if (deadQueue != null && message.DequeueCount > 5)
        {
            deadQueue.AddMessage(message);
        }
        if (success || message.DequeueCount > 5)
        {
            queue.DeleteMessage(message);
        }
    }

...
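The handling above can be exercised without a storage account by simulating the queue in memory. The following Python sketch is an approximation under stated assumptions: `Message`, `process_next` and the in-memory deques are hypothetical stand-ins for the real queue API, and re-appending a message stands in for it becoming visible again after a failed attempt. One deliberate difference, which is an assumption about intent, is that a message is copied to the dead queue only when its final attempt also fails.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Message:
    body: str
    dequeue_count: int = 0


def process_next(queue, dead_queue, action, max_dequeue_count=5):
    """Handle one message; return True if it left the main queue."""
    message = queue.popleft()
    message.dequeue_count += 1  # the real service tracks this count itself
    try:
        action(message)
        success = True
    except Exception:
        success = False
    poisoned = message.dequeue_count > max_dequeue_count
    if poisoned and not success and dead_queue is not None:
        dead_queue.append(message)  # keep the poison message for inspection
    if success or poisoned:
        return True  # equivalent of deleting the message
    queue.append(message)  # becomes available for another attempt
    return False


if __name__ == "__main__":
    def always_fails(message):
        raise ValueError("cannot process " + message.body)

    main, dead = deque([Message("export-1")]), deque()
    while main:
        process_next(main, dead, always_fails)
    print(len(dead), dead[0].dequeue_count)
```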

    QueueCommandHandler.For(exportQueue)
        .Every(TimeSpan.FromSeconds(5))
        .WithPosionMessageQueue(poisonExportQueue)
        .Do(exportQueueCommand);

Conclusion

This is the beginning of our updates to our Windows Azure Guidance. We want to show even better ways of moving and developing applications for the cloud. Go check out the source.


SQL Azure Database and Reporting

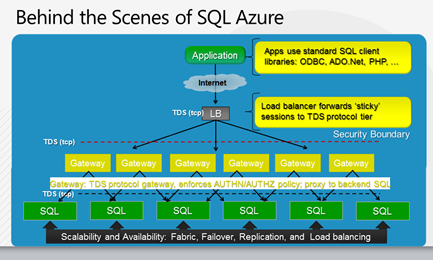

Per Ola Sæther published Push Notifications for Windows Phone 7 using SQL Azure and Cloud services - Part 1 to the DZone blog on 4/13/2011:

In this article series I will write about push notifications for Windows Phone 7 with focus on the server-side covering notification subscription management, subscription matching and notification scheduling. The server-side will be using SQL Azure and WCF Web Service deployed to Azure.

As an example I will create a very simple news reader application where the user can subscribe to receive notifications when a new article within a given category is published. Push notifications will be delivered as Toast notifications to the client. The server-side will consist of a SQL database and WCF Web Services that I deploy to Windows Azure.

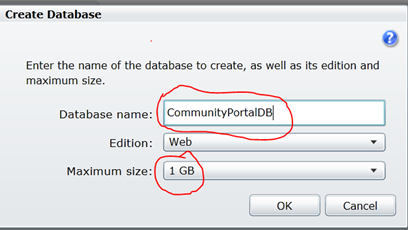

Creating the SQL Azure database

The database will store subscription information and news articles. To achieve this I will create three tables; Category, News and Subscription.

The category table is just a place holder for categories containing a unique category ID and the category name. The news table is where we store our news articles containing a unique news ID, header, article, date added and a reference to a category ID. In the subscription table we store information about the subscribers containing a global unique device id, channel URI and a reference to a category ID.

I create the database in Windows Azure as a SQL Azure database. To learn how to sign up to SQL Azure and create a database there, you can read an article I wrote earlier explaining how to sign up for and use SQL Azure. When you have signed up to SQL Azure and created the database, you can use the following script to create the three tables we will be using in this article.

    USE [PushNotification]
    GO

    CREATE TABLE [dbo].[Category]
    (
        [CategoryId] [int] IDENTITY (1,1) NOT NULL,
        [Name] [nvarchar](60) NOT NULL,
        CONSTRAINT [PK_Category] PRIMARY KEY ([CategoryId])
    )
    GO

    CREATE TABLE [dbo].[Subscription]
    (
        [SubscriptionId] [int] IDENTITY (1,1) NOT NULL,
        [DeviceId] [uniqueidentifier] NOT NULL,
        [ChannelURI] [nvarchar](250) NOT NULL,
        PRIMARY KEY ([SubscriptionId]),
        [CategoryId] [int] NOT NULL FOREIGN KEY REFERENCES [dbo].[Category]([CategoryId])
    )
    GO

    CREATE TABLE [dbo].[News]
    (
        [NewsId] [int] IDENTITY (1,1) NOT NULL,
        [Header] [nvarchar](60) NOT NULL,
        [Article] [nvarchar](250) NOT NULL,
        [AddedDate] [datetime] NOT NULL,
        PRIMARY KEY ([NewsId]),
        [CategoryId] [int] NOT NULL FOREIGN KEY REFERENCES [dbo].[Category]([CategoryId])
    )
    GO

By running this script, the three tables Category, Subscription and News that will be used in this example are created. Be aware that I have not normalized the tables to avoid complicating the code. You can create a normal MS SQL database and run it locally or at a server if you want to, but for this example I have used SQL Azure.
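The Subscription table is what makes content matching possible: given the category of a newly published article, the service only needs the channel URIs of the devices subscribed to that category. The following is a small in-memory sketch of that lookup, written in Python for brevity. The `Subscription` class mirrors the table's columns, while the function name and the sample data are made up for illustration and are not taken from the article's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subscription:
    device_id: str
    channel_uri: str
    category_id: int


def channels_for_category(subscriptions, category_id):
    """Return the distinct channel URIs subscribed to category_id, in first-seen order."""
    channels = []
    for subscription in subscriptions:
        if subscription.category_id == category_id and subscription.channel_uri not in channels:
            channels.append(subscription.channel_uri)
    return channels


if __name__ == "__main__":
    subscriptions = [
        Subscription("device-a", "https://push.example.invalid/a", 1),
        Subscription("device-b", "https://push.example.invalid/b", 2),
        Subscription("device-a", "https://push.example.invalid/a", 2),
    ]
    print(channels_for_category(subscriptions, 2))
```

In the database, the same lookup is a query on Subscription filtered by CategoryId.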

Creating the WCF Cloud service

The WCF Cloud service will be consumed by the WP7 app and will communicate with the SQL Azure database. The service is responsible for pushing notifications to the WP7 clients.

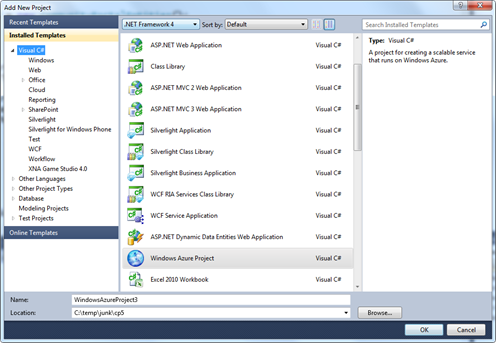

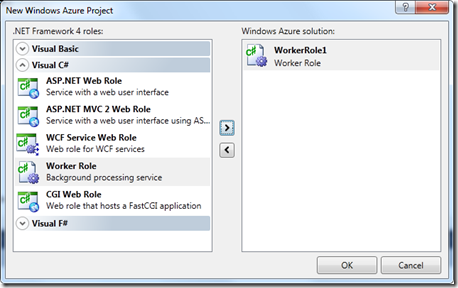

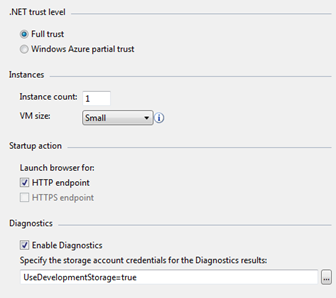

Steps to create the WCF Cloud service with Object Model

Create a Windows Cloud project in Visual Studio 2010; I named mine NewsReaderCloudService. Select WCF Service Web Role; I named mine NewsReaderWCFServiceWebRole. Then create an Object Model that connects to your SQL Azure database; I named mine NewsReaderModel and named the entity NewsReaderEntities. For a more detailed explanation of how to do this, you can take a look at an article I have written earlier on the subject.

If you have followed the steps you should now have a Windows Cloud project connected to your SQL Azure database.

Creating the WCF contract

The WCF contract is written in the auto-generated IService.cs file. The first thing I do is rename this file to INewsReaderService.cs. In this interface I have added seven OperationContract methods: SubscribeToNotification, RemoveCategorySubscription, GetSubscriptions, AddNewsArticle, AddCategory, GetCategories and PushToastNotifications. I will explain these methods in the next section, when we implement the service based on the INewsReaderService interface. Below you can see the code for INewsReaderService.cs:

    using System;
    using System.Collections.Generic;
    using System.ServiceModel;

    namespace NewsReaderWCFServiceWebRole
    {
        [ServiceContract]
        public interface INewsReaderService
        {
            [OperationContract]
            void SubscribeToNotification(Guid deviceId, string channelURI, int categoryId);

            [OperationContract]
            void RemoveCategorySubscription(Guid deviceId, int categoryId);

            [OperationContract]
            List<int> GetSubscriptions(Guid deviceId);

            [OperationContract]
            void AddNewsArticle(string header, string article, int categoryId);

            [OperationContract]
            void AddCategory(string category);

            [OperationContract]
            List<Category> GetCategories();

            [OperationContract]
            void PushToastNotifications(string title, string message, int categoryId);
        }
    }

Creating the service

The service implements the INewsReaderService interface in the auto-generated Service1.svc.cs file, which I first rename to NewsReaderService.svc.cs. I start by implementing the members of the interface. Below you can see the code for NewsReaderService.svc.cs with empty implementations. In the next sections I will complete these empty methods.

    using System;
    using System.Collections.Generic;
    using System.Data.Objects;
    using System.IO;
    using System.Linq;
    using System.Net;
    using System.Xml;

    namespace NewsReaderWCFServiceWebRole
    {
        public class NewsReaderService : INewsReaderService
        {
            public void SubscribeToNotification(Guid deviceId, string channelURI, int categoryId)
            {
                throw new NotImplementedException();
            }

            public void RemoveCategorySubscription(Guid deviceId, int categoryId)
            {
                throw new NotImplementedException();
            }

            public List<int> GetSubscriptions(Guid deviceId)
            {
                throw new NotImplementedException();
            }

            public void AddNewsArticle(string header, string article, int categoryId)
            {
                throw new NotImplementedException();
            }

            public void AddCategory(string category)
            {
                throw new NotImplementedException();
            }

            public List<Category> GetCategories()
            {
                throw new NotImplementedException();
            }

            public void PushToastNotifications(string title, string message, int categoryId)
            {
                throw new NotImplementedException();
            }
        }
    }

SubscribeToNotification method

This is the method that the client calls to subscribe to notifications for a given category. The information is stored in the Subscription table and will be used when notifications are pushed.

    public void SubscribeToNotification(Guid deviceId, string channelURI, int categoryId)
    {
        using (var context = new NewsReaderEntities())
        {
            context.AddToSubscription(new Subscription
            {
                DeviceId = deviceId,
                ChannelURI = channelURI,
                CategoryId = categoryId,
            });
            context.SaveChanges();
        }
    }

RemoveCategorySubscription method

This is the method that the client calls to remove a subscription to notifications for a given category. If the Subscription table has a match for the given device ID and category ID, this entry will be deleted.

    public void RemoveCategorySubscription(Guid deviceId, int categoryId)
    {
        using (var context = new NewsReaderEntities())
        {
            // FirstOrDefault returns null instead of throwing when no row matches.
            Subscription selectedSubscription = (from o in context.Subscription
                                                 where (o.DeviceId == deviceId && o.CategoryId == categoryId)
                                                 select o).FirstOrDefault();
            if (selectedSubscription != null)
            {
                context.Subscription.DeleteObject(selectedSubscription);
                context.SaveChanges();
            }
        }
    }

GetSubscriptions method

This method is used to return all categories which a device subscribes to.

    public List<int> GetSubscriptions(Guid deviceId)
    {
        var categories = new List<int>();
        using (var context = new NewsReaderEntities())
        {
            IQueryable<int> selectedSubscriptions = from o in context.Subscription
                                                    where o.DeviceId == deviceId
                                                    select o.CategoryId;
            categories.AddRange(selectedSubscriptions.ToList());
        }
        return categories;
    }

AddNewsArticle method

This is a utility method for adding a new article to the News table. I will use it later on to demonstrate push notifications sent based on content matching: when a news article is published for a given category, only the clients that have subscribed to that category will receive a notification.

    public void AddNewsArticle(string header, string article, int categoryId)
    {
        using (var context = new NewsReaderEntities())
        {
            context.AddToNews(new News
            {
                Header = header,
                Article = article,
                CategoryId = categoryId,
                AddedDate = DateTime.Now,
            });
            context.SaveChanges();
        }
    }

AddCategory method

This method is also a utility method so that we can add new categories to the Category table.

    public void AddCategory(string category)
    {
        using (var context = new NewsReaderEntities())
        {
            context.AddToCategory(new Category
            {
                Name = category,
            });
            context.SaveChanges();
        }
    }

GetCategories method

This method is used by the client to get a list of all available categories. We use this list to let the user select which categories to receive notifications for.

    public List<Category> GetCategories()
    {
        var categories = new List<Category>();
        using (var context = new NewsReaderEntities())
        {
            IQueryable<Category> selectedCategories = from o in context.Category
                                                      select o;
            foreach (Category selectedCategory in selectedCategories)
            {
                // Copy into fresh objects so the result is detached from the context.
                categories.Add(new Category { CategoryId = selectedCategory.CategoryId, Name = selectedCategory.Name });
            }
        }
        return categories;
    }

PushToastNotifications method

This method will construct the toast notification with a title and a message. The method will then get the channel URI for all devices that have subscribed to the given category. For each device in that list, the constructed toast notification will be sent.

    public void PushToastNotifications(string title, string message, int categoryId)
    {
        string toastMessage = "<?xml version=\"1.0\" encoding=\"utf-8\"?>" +
                              "<wp:Notification xmlns:wp=\"WPNotification\">" +
                              "<wp:Toast>" +
                              "<wp:Text1>{0}</wp:Text1>" +
                              "<wp:Text2>{1}</wp:Text2>" +
                              "</wp:Toast>" +
                              "</wp:Notification>";
        // Escape the text so characters such as & or < cannot break the XML payload.
        toastMessage = string.Format(toastMessage,
                                     System.Security.SecurityElement.Escape(title),
                                     System.Security.SecurityElement.Escape(message));
        byte[] messageBytes = Encoding.UTF8.GetBytes(toastMessage);

        // Send toast notification to all devices that subscribe to the given category
        PushToastNotificationToSubscribers(messageBytes, categoryId);
    }

    private void PushToastNotificationToSubscribers(byte[] data, int categoryId)
    {
        Dictionary<Guid, Uri> categorySubscribers = GetSubscribersBasedOnCategory(categoryId);
        foreach (Uri categorySubscriberUri in categorySubscribers.Values)
        {
            // Add headers to HTTP Post message.
            var myRequest = (HttpWebRequest)WebRequest.Create(categorySubscriberUri); // Push client's channel URI
            myRequest.Method = WebRequestMethods.Http.Post;
            myRequest.ContentType = "text/xml";
            myRequest.ContentLength = data.Length;
            myRequest.Headers.Add("X-MessageID", Guid.NewGuid().ToString()); // gives this message a unique ID
            myRequest.Headers["X-WindowsPhone-Target"] = "toast";

            // 2 = immediately push toast
            // 12 = wait 450 seconds before push toast
            // 22 = wait 900 seconds before push toast
            myRequest.Headers.Add("X-NotificationClass", "2");

            // Merge headers with payload.
            using (Stream requestStream = myRequest.GetRequestStream())
            {
                requestStream.Write(data, 0, data.Length);
            }

            // Send notification to this phone!
            try
            {
                // Dispose the response so the underlying connection is released.
                using (var response = (HttpWebResponse)myRequest.GetResponse())
                {
                    // Inspect response.StatusCode or the response headers here if needed.
                }
            }
            catch (WebException ex)
            {
                // Log or handle exception
            }
        }
    }

    private Dictionary<Guid, Uri> GetSubscribersBasedOnCategory(int categoryId)
    {
        var categorySubscribers = new Dictionary<Guid, Uri>();
        using (var context = new NewsReaderEntities())
        {
            IQueryable<Subscription> selectedSubscribers = from o in context.Subscription
                                                           where o.CategoryId == categoryId
                                                           select o;
            foreach (Subscription selectedSubscriber in selectedSubscribers)
            {
                categorySubscribers.Add(selectedSubscriber.DeviceId, new Uri(selectedSubscriber.ChannelURI));
            }
        }
        return categorySubscribers;
    }

Running the WCF Cloud service

The WCF Cloud service is now completed and you can run it locally or deploy it to Windows Azure.
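One thing the push code leaves open is what to do when the notification service rejects a request: the catch block only carries a "log or handle" comment. The following is a minimal sketch of one way to decide on a follow-up action. The `PushOutcome` enum and `MpnsResponse.Classify` helper are hypothetical names, and the status-code mapping (404 or an `Expired` subscription status meaning the channel is dead; 406, 412 and 503 meaning "try again later") reflects my reading of the Microsoft Push Notification Service response codes, so verify it against the official documentation before relying on it.

```csharp
using System;

// Hypothetical follow-up actions for one push attempt.
public enum PushOutcome { Accepted, RetryLater, RemoveSubscription, Failed }

public static class MpnsResponse
{
    // Maps the HTTP status code and the X-SubscriptionStatus response header
    // to an action. The mapping is an assumption, not an official table.
    public static PushOutcome Classify(int httpStatus, string subscriptionStatus)
    {
        bool expired = string.Equals(subscriptionStatus, "Expired",
                                     StringComparison.OrdinalIgnoreCase);

        // The channel URI is no longer valid: drop the row from the Subscription table.
        if (httpStatus == 404 || expired) return PushOutcome.RemoveSubscription;

        // The service accepted the request (delivery itself is not guaranteed).
        if (httpStatus == 200) return PushOutcome.Accepted;

        // Throttled, device inactive, or service unavailable: resend later.
        if (httpStatus == 406 || httpStatus == 412 || httpStatus == 503)
            return PushOutcome.RetryLater;

        return PushOutcome.Failed;
    }
}
```

Inside the catch block, the inputs could come from `ex.Response as HttpWebResponse`: cast its `StatusCode` to `int` and read `Headers["X-SubscriptionStatus"]`, after checking the response for null.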

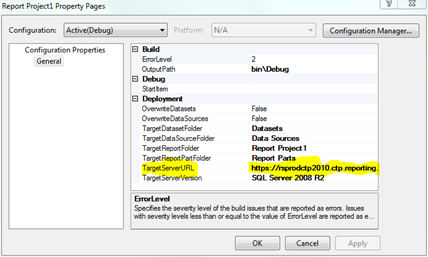

Deploying the WCF service to Windows Azure

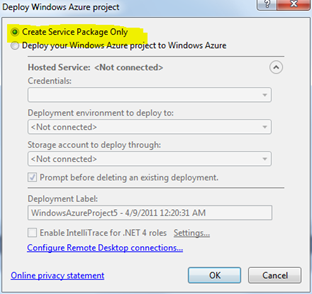

Create a service package

Go to your service project in Visual Studio 2010. Right-click the project in Solution Explorer and click “Publish”, select “Create service package only” and click “OK”. A file explorer window will pop up with the service package files that were created. Keep this window open; you will need to browse to these files later.

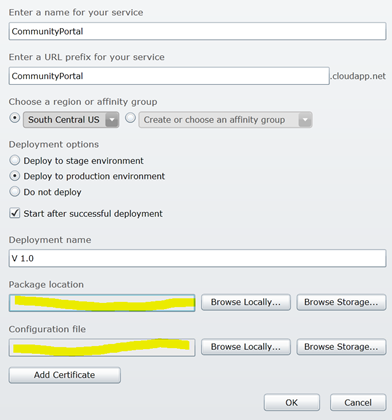

Create a new hosted service

Go to https://windows.azure.com and log in with the account you used when signing up for SQL Azure. Click “New Hosted Service” and a pop-up window will be displayed. Select the subscription you created for the SQL Azure database, then enter a name for the service and a URL prefix. Select a region, deploy to Stage and give it a description. Now browse to the two files that were created when you published the service in the step above and click “OK”. Your service will be validated and created (note that this step might take a while). Once that is done, you will see the deployed service under Hosted Services in the Management Portal for Windows Azure.

Test the deployed WCF service with WCF Test Client

Open the WCF Test Client, on my computer it's located at: C:\Program Files (x86)\Microsoft Visual Studio 10.0\Common7\IDE\WcfTestClient.exe

Go to the Management Portal for Windows Azure and look at your hosted service. Select the NewsReaderService (type Deployment) and click the DNS name link on the right side. A browser window will open and most likely display a 403 Forbidden error message. That's OK; we are only going to copy the URL. Then select File and Add Service in WCF Test Client. Paste in the address you copied from the browser, add /NewsReaderService.svc to the end and click OK. When the service is added you can see its methods on the left side; double-click AddCategory to add some categories to your database. In the request window, enter a category name as the value and click the Invoke button.

You can now log in to your SQL Azure database using Microsoft SQL Server Management Studio and run a select on the Category table. You should now see the Categories you added from the WCF Test Client.

Part 2

In part 2 of this article series I will create a Windows Phone 7 application that consumes the WCF service you just created. The application will also receive toast notifications for subscribed categories. Continue to part 2.

<Return to section navigation list>

Marketplace DataMarket and OData

Channel9 posted the video segment for Mike Flasko’s OData in Action: Connecting Any Data Source to Any Device on 4/14/2011:

We are collecting more diverse data than ever before and at the same time undergoing a proliferation of connected devices ranging from the phone to the desktop, each with its own requirements. This can pose a significant barrier to developers looking to create great end-to-end user experiences across devices. The OData protocol (http://odata.org) was created to provide a common way to expose and interact with data on any platform (DB, NoSQL stores, web services, etc). In this code-heavy session we’ll show you how Netflix, eBay and others have used OData and Azure to quickly build secure, internet-scale services that power immersive client experiences from rich cross-platform mobile applications to insightful BI reports.

Tony Bailey (a.k.a. tbtechnet) described B2B Marketplaces Emerging for Windows Azure at Ingram Micro in a 4/12/2011 post to the Windows Azure Platform, Web Hosting and Web Services blog:

New “marketplaces” are emerging for developers, startups and ISVs that are building Windows Azure platform applications and who want visibility with VARs and to develop new channel development leads.

The new Ingram Micro Cloud Marketplace will feature solutions and help developers to participate in customized marketing campaigns.

Ingram Micro expects more than half of its 20,000 active solution providers to deploy cloud software and services in the next two years. http://www.ingrammicro.com/ext/0,,23762,00.html

Ingram Micro Deliverables:

- Ingram Micro Cloud Platform: Marketplace with links to your website

- Your Cloud Service landing page with your services descriptions, specs, features and benefits

- Post your service assets (training videos, pricing, fact sheets, technical documents)

- Education section: post your white papers and case studies

- Banner ads on Ingram Micro Cloud and on your microsite

- Ingram Micro Services and Cloud newsletter presence (June and September)

- Face-to-face events

Join Ingram Micro at a live webinar on Thursday 28th April 10am PST to learn more about the opportunity to launch your solution in the Ingram Micro Cloud Marketplace.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

• Matias Woloski (@woloski) described Troubleshooting WS-Federation and SAML2 Protocol in a 4/16/2011 post:

During the last couple of years we have helped companies deploy federated identity solutions using the WS-Fed and SAML2 protocols with products like ADFS and SiteMinder on various platforms. Claims-based identity has many benefits but, like every solution, it has its downsides. One of them is the additional complexity of troubleshooting issues when something goes wrong, especially when things are distributed and in production. Since authentication is outsourced and is no longer part of the application logic, you need some way to see what is happening behind the scenes.

I’ve used Fiddler and HttpHook in the past to see what’s going on on the wire. These are great tools but they are developer-oriented. If the user who is having trouble logging in to an app is not a developer, then things get more difficult.

- Either you have some kind of server side log with all the tokens that have been issued and a nice way to query those by user

- Or you have some kind of tool that the user can run and intercept the token

Fred, one of the guys working on my team, had the idea a couple of months ago to implement the latter. So we coded together the first version (very rough) of the token debugger. The code is really simple: we embed a WebBrowser control in a WinForms app and inspect the content on the Navigating event. If we detect a token being posted, we show it.

Let’s see how it works. First you enter the URL of your app; in this case we are using wolof (the tool we use for the backlog), which is a Ruby app speaking the WS-Fed protocol.
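To make the detection step concrete, here is a minimal sketch of how a posted token could be picked out of a form body. It is not the token debugger's actual code: the `TokenSniffer` class is a hypothetical name, and it assumes the raw `application/x-www-form-urlencoded` body is available as a string. It relies on two protocol conventions: a WS-Federation sign-in response carries the token in the `wresult` field, and the SAML 2.0 HTTP POST binding carries a base64-encoded response in the `SAMLResponse` field.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class TokenSniffer
{
    // Splits a form-urlencoded body into decoded key/value pairs.
    public static Dictionary<string, string> ParseForm(string body)
    {
        var fields = new Dictionary<string, string>(StringComparer.Ordinal);
        string[] pairs = (body ?? "").Split(new[] { '&' }, StringSplitOptions.RemoveEmptyEntries);
        foreach (string pair in pairs)
        {
            int eq = pair.IndexOf('=');
            string key = eq < 0 ? pair : pair.Substring(0, eq);
            string value = eq < 0 ? "" : pair.Substring(eq + 1);
            fields[Decode(key)] = Decode(value);
        }
        return fields;
    }

    // Form encoding uses '+' for spaces and %XX escapes for everything else.
    private static string Decode(string s)
    {
        return Uri.UnescapeDataString(s.Replace('+', ' '));
    }

    // Returns the token XML if the body carries one, otherwise null.
    public static string ExtractToken(string body)
    {
        Dictionary<string, string> form = ParseForm(body);
        string token;
        if (form.TryGetValue("wresult", out token))
            return token; // WS-Federation: plain XML
        if (form.TryGetValue("SAMLResponse", out token))
            return Encoding.UTF8.GetString(Convert.FromBase64String(token)); // SAML2 POST binding
        return null;
    }
}
```

A caller would pass each intercepted POST body to `ExtractToken` and show the result whenever it is not null.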

After clicking the Southworks logo and entering my Active Directory account credentials, ADFS returns the token and it is POSTed to the app. In that moment, we intercept it and show it.

You can do two things with the token: send it via email (to someone that can read it) or continue with the usual flow. If there is another STS in the way it will also show a second token.

Since I wanted to have this app handy I enabled ClickOnce deployment and deployed it to AppHarbor (which works really well btw)

If you want to use it browse to and launch the ClickOnce app @ http://miller.apphb.com/

If you want to download the source code or contribute @ https://github.com/federicoboerr/token-requestor

Vittorio Bertocci (@vibronet) began a new series with ACS Extensions for Umbraco - Part I: Setup on 4/14/2011:

More unfolding of the tangle of new content announced with the ACS launch.

Today I want to highlight the work we’ve been doing for augmenting Umbraco with authentication and authorization capabilities straight out of ACS. We really made an effort to make those new capabilities blend into the Umbraco UI, and without false modesty I think we didn’t get far from the mark.

I would also like to take this chance to thank Southworks, our long-time partner on this kind of activity, for their great work on the ACS Extensions for Umbraco.

Once again, I’ll apply the technique I used yesterday for the ACS+WP7+OAuth2+OData lab post; I will paste here the documentation as is. I am going to break this in 3 parts, following the structure we used in the documentation as well.

Access Control Service (ACS) Extensions for Umbraco

'Click here for a video walkthrough of this tutorial'

Setting up the ACS Extensions in Umbraco is very simple. You can use the Add Library Package Reference from Visual Studio to install the ACS Extensions NuGet package to your existing Umbraco 4.7.0 instance. Once you have done that, you just need to go to the Umbraco installation pages, where you will find a new setup step: there you will fill in a few details describing the ACS namespace you want to use, and presto! You’ll be ready to take advantage of your new authentication capabilities.

Alternatively, if you don’t want the NuGet Package to update Umbraco’s source code for you, you can perform the required changes manually by following the steps included in the manual installation document found in the ACS Extensions package. Once you finished all the install steps, you can go to the Umbraco install pages and configure the extension as described above. You should consider the manual installation procedure only in the case in which you really need fine control on the details of how the integration takes place, as the procedure is significantly less straightforward than the NuGet route.

In this section we will walk you through the setup process. For your convenience we are adding one initial section on installing Umbraco itself. If you already have one instance, or if you want to follow a different installation route than the one we describe here, feel free to skip to the first section below and go straight to the Umbraco.ACSExtensions NuGet install section.

Install Umbraco using the Web Platform Installer and Configure It

- Launch the Microsoft Web Platform Installer from http://www.microsoft.com/web/gallery/umbraco.aspx

Figure 1 - Windows Web App Gallery | Umbraco CMS

- Click on Install button. You will get to a screen like the one below:

Figure 2 - Installing Umbraco via WebPI

- Choose Options. From there you’ll have to select IIS as the web server (the ACS Extensions won’t work on IIS7.5).

Figure 3 - Web Platform Installer | Umbraco CMS setup options

- Click on OK, and back on the Umbraco CMS dialog click on Install.

- Select SQL Server as database type. Please note that later in the setup you will need to provide the credentials for a SQL administrator user, hence your SQL Server needs to be configured to support mixed authentication.

Figure 4 - Choose database type

- Accept the license terms to start downloading and installing Umbraco.

- Configure the web server settings with the following values and click on Continue.

Figure 5 - Site Information

- Complete the database settings as shown below.

Figure 6 - Database settings

- When the installation finishes, click on Finish button and close the Web Platform Installer.

- Open Internet Information Services Manager and select the web site created in step 7.

- In order to properly support the authentication operations that the ACS Extensions will enable, your web site needs to be capable of protecting communications. On the Actions pane on the right, click Bindings… and add one https binding as shown below.

Figure 7 - Add Site Binding

- Open the hosts file located in C:\Windows\System32\drivers\etc, and add a new entry pointing to the Umbraco instance you’ve created so that you will be able to use the web site name on the local machine.

Figure 8 - Hosts file entry

- At this point you have all the bits you need to run your Umbraco instance. All that’s left to do is make some initial configuration: Umbraco provides you with one setup portal which enables you to do just that directly from a browser. Browse to http://{yourUmbracoSite}/install/; you will get to a screen like the one below.

Figure 9 - The Umbraco installation wizard

- Please refer to the Umbraco documentation for a detailed explanation of all the options: here we will do the bare minimum to get the instance running. Click on “Let’s get started!” button to start the wizard.

- Accept the Umbraco license.

- Hit Install in the Database configuration step and click on Continue once done.

- Set a name and password for the administrator user in the Create User step.

- Pick a Starter Kit and a Skin (in this tutorial we use Simple and Sweet@s).

- Click on Preview your new website: your Umbraco instance is ready.

Figure 10 - Your new Umbraco instance is ready!
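For the hosts-file step above, the entry takes the usual address-then-name form. The host name shown here is hypothetical; use whatever site name you entered in the Site Information step.

```text
# C:\Windows\System32\drivers\etc\hosts
127.0.0.1    umbraco.local
```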

Install the Umbraco.ACSExtensions via NuGet Package

Installing the ACS Extensions via NuGet package is very easy.

- Open the Umbraco website from Visual Studio 2010 (File -> Open -> Web Site…)

- Open the web.config file and set the umbracoUseSSL setting to true.

Figure 11 - umbracoUseSSL setting

- Click on Save All to save the solution file.

- Right-click on the website project and select “Add Library Package Reference…” as shown below. If you don’t see the entry in the menu, please make sure that NuGet 1.2 is correctly installed on your system.

Figure 12 - umbracoUseSSL setting

- Select the Umbraco.ACSExtensions package from the appropriate feed and click install.

By the time you read this tutorial, the ACS Extensions NuGet package will be available on the official NuGet package source: please select Umbraco.ACSExtensions from there. At the time of writing the ACS Extensions are not yet published on the official feed, hence in the figure here we are selecting it from a local repository. (If you want to host your own feed, see Create and use a NuGet local repository)

Figure 13 - Installing the Umbraco.ACSExtensions NuGet package

If the installation takes place correctly, a green checkmark will appear in place of the install button in the Add Library Package Reference dialog. You can close Visual Studio; from now on you’ll do everything directly from the Umbraco management UI.
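For reference, the umbracoUseSSL change made in web.config earlier in this section would look roughly like the fragment below. This is a sketch that assumes the key sits under appSettings, as it does in a stock Umbraco 4.x web.config; the surrounding entries are omitted.

```xml
<appSettings>
  <!-- other Umbraco settings omitted -->
  <add key="umbracoUseSSL" value="true" />
</appSettings>
```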

Configure the ACS Extensions

Now that the extension is installed, the new identity and access features are available directly in the Umbraco management console. You haven’t configured the extensions yet: the administrative UI will sense that and direct you accordingly.

- Navigate to the management console of your Umbraco instance, at http://{yourUmbracoSite}/umbraco/. If you used an untrusted certificate when setting up the SSL binding of the web site, the browser will display a warning: dismiss it and continue to the web site.

- The management console will prompt you for a username and a password; use the credentials you defined in the Umbraco setup steps.

- Navigate to the Members section as shown below.

Figure 14 - The admin console home page

- The ACS Extensions added some new panels here. In the Access Control Service Extensions for Umbraco panel you’ll notice a warning indicating that the ACS Extensions for Umbraco are not configured yet. Click on the ACS Extensions setup page link in the warning box to navigate to the setup pages.

Figure 15 - The initial ACS Extensions configuration warning.

Figure 16 - The ACS Extensions setup step.

The ACS Extensions setup page extends the existing setup sequence, and lives at the address https://{yourUmbracoSite}/install/?installStep=ACSExtensions. It can be used both for the initial setup, as shown here, and for managing subsequent changes (for example when you deploy the Umbraco site from your development environment to its production hosting, in which case the URL of the web site changes). Click Yes to begin the setup.

Access Control Service Settings

Enter your ACS namespace and the URL at which your Umbraco instance is deployed. Those two fields are mandatory, as the ACS Extensions cannot set up ACS and your instance without them.

The management key field is optional, but if you don’t enter it, most of the extensions’ features will not be available.

Figure 17 - Access Control Service Settings

The management key can be obtained through the ACS Management Portal. The setup UI provides a link to the right page in the ACS portal, but you’ll need to substitute the string {namespace} with the actual namespace you want to use.

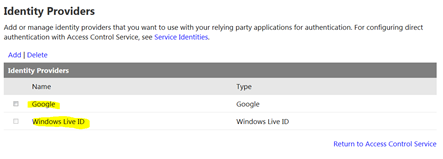

Social Identity Providers

Decide which social identity providers you want to accept users from. This feature requires you to have entered your ACS namespace management key: if you didn’t, the ACS Extensions will use whatever identity providers are already set up in the ACS namespace.

Note that in order to integrate with Facebook you’ll need to have a Facebook application properly configured to work with your ACS namespace. The ACS Extensions gather from you the Application Id and Application Secret that are necessary for configuring ACS to use the corresponding Facebook application.

Figure 18 - Social Identity Providers

SMTP Settings

Users from social identity providers are invited to gain access to your web site via email. In order to use the social provider integration feature you need to configure an SMTP server.

Figure 19 - SMTP Settings

If everything goes as expected, you will see a confirmation message like the one above. If you navigate back to the admin console and to the member section, you will notice that the warning is gone. You are now ready to take advantage of the ACS Extensions.

Figure 21 - The Member section after the successful configuration of the ACS Extensions

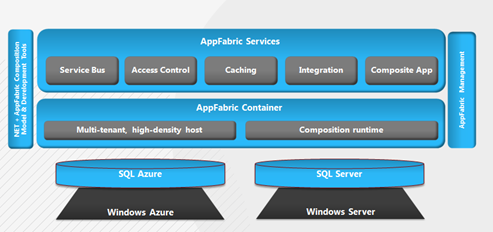

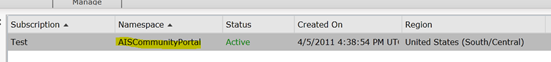

Zane Adam reported Delivering on our roadmap: Announcing the production release of Windows Azure AppFabric Caching and Access Control services in a 4/12/2011 post:

A few months ago, at the Professional Developer Conference (PDC), we released Community Technology Previews (CTPs) and made several announcements regarding new services and capabilities we are adding to the Windows Azure Platform. Ever since then we have been listening to customer feedback and working hard on delivering against our roadmap. You can find more details on these deliveries in my previous two blog posts on SQL Azure and Windows Azure Marketplace DataMarket.

Today at the MIX conference we announced the production release of two of the services that CTPed at PDC: the Windows Azure AppFabric Caching and Access Control services. The updated Access Control is now live, and Caching will be released at the end of April.

These two services are particularly appealing to web developers. The Caching service seamlessly accelerates applications by storing data in memory, and the Access Control service enables the developer to provide users with a single-sign-on experience by providing out-of-the-box support for identities such as Windows Live ID, Google, Yahoo!, Facebook, as well as enterprise identities such as Active Directory.

Pixel Pandemic, a company developing a unique game engine technology for persistent browser based MMORPGs, is already using the Caching service as part of their solution. Here is a quote from Ebbe George Haabendal Brandstrup, CTO and co-founder, regarding their use of the Caching service:

“We're very happy with how Azure and the caching service is coming along.

Most often, people use a distributed cache to relieve DB load and they'll accept that data in the cache lags behind with a certain delay. In our case, we use the cache as the complete and current representation of all game and player state in our games. That puts a lot extra requirements on the reliability of the cache. Any failed communication when writing state changes must be detectable and gracefully handled in order to prevent data loss for players and with the Azure caching service we’ve been able to meet those requirements.”

Umbraco, one of the most deployed Web Content Management Systems on the Microsoft stack, can be easily integrated with the Access Control service through an extension. Here is a quote from Niels Hartvig, founder of the company, regarding the Access Control service:

“We're excited about the very diverse integration scenarios the ACS (Access Control service) extension for Umbraco allows. The ACS Extension for Umbraco is one great example of what is possible with Windows Azure and the Microsoft Web Platform.”

You can read more about these services and the release announcement on the Windows Azure AppFabric Team Blog.

We are continuing to innovate and move quickly in our cloud services and release new services as well as enhance our existing services every few months.

The releases of these two services are a great enhancement to our cloud services offering enabling developers to more easily build applications on top of the Windows Azure Platform.

Look forward to more CTPs and releases in the coming months!

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Wely Lau continued his RDP series with Establishing Remote Desktop to Windows Azure Instance – Part 2 on 4/16/2011:

Part 2 - Uploading Certificate on Windows Azure Developer Platform

This is the second part of this blog post series about Establishing Remote Desktop to Windows Azure. You can check out the first part here.

So far we’ve only done the work on the development environment side. There’s still something that needs to be done on the Windows Azure Portal.

Export Your Certificate

1. The first step is to export the physical certificate file since we need to upload it to Windows Azure Portal.

There are actually a few ways to export a certificate file. The most common way is using MMC. Since we use Visual Studio to configure our remote desktop, we can use that feature as well. Refer to step 4 of the first part of this series, where you created a certificate using the Visual Studio wizard. With the preferred certificate selected, click on View to see the details of your certificate.

2. Click on the Details tab of the Certificate dialog box, and then click on the Copy to File button. It should bring you to the Certificate Export Wizard.

3. Clicking on the Next button will bring you to the next step, where you can select whether to export the private key. On this first pass, select “No, do not export private key”, keep following the wizard, and on the last page name the physical file [Name].CER.

4. Repeat steps 1 to 3, but this time select “Yes, export private key”, which will require you to define a password, and export it to another file, [Name].pfx.
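The difference between the two exported files can also be shown in code. The sketch below is not part of the wizard flow above: it creates a throwaway self-signed certificate and exports it both ways, assuming a runtime that provides `CertificateRequest` (.NET Framework 4.7.2 or later, or .NET Core 2.0 or later). The `CertificateExport` class name, the subject and the password are hypothetical.

```csharp
using System;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

public static class CertificateExport
{
    // Creates a self-signed certificate and returns it in both forms:
    // cerBytes holds the public part only (.cer), pfxBytes also holds the
    // private key, protected by the given password (.pfx).
    public static void Export(string subjectName, string pfxPassword,
                              out byte[] cerBytes, out byte[] pfxBytes)
    {
        using (RSA rsa = RSA.Create(2048))
        {
            var request = new CertificateRequest(
                subjectName, rsa, HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);

            using (X509Certificate2 cert = request.CreateSelfSigned(
                DateTimeOffset.UtcNow.AddDays(-1), DateTimeOffset.UtcNow.AddYears(1)))
            {
                cerBytes = cert.Export(X509ContentType.Cert);              // like step 3
                pfxBytes = cert.Export(X509ContentType.Pfx, pfxPassword);  // like step 4
            }
        }
    }
}
```

Loading the two byte arrays back with `X509Certificate2` shows the distinction through the `HasPrivateKey` property: it is false for the .cer bytes and true for the .pfx bytes.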

Upload the certificate to Windows Azure Portal

Now that we have exported both the private and the public key of the certificate, the next step is to upload them to Windows Azure.

5. Log in to your Windows Azure Developer Portal (https://windows.azure.com). I assume that you have your subscription ready with your Live ID.

6. Click on “Hosted Service, Storage Account, and CDN” on the left-hand side menu. On the upper part, click on Management Certificate. If you have uploaded certificates previously, you will see them listed.

7. Next, click on the Add Certificate button, and a modal pop-up dialog will prompt you to select your subscription and upload your .CER certificate.

As instructed, go ahead and select your subscription, then browse to the .CER file you exported in step 3. It may take a few seconds to upload your certificate. You’ve now successfully uploaded the public key of the certificate.

8. Now you will also need to upload the private key. To do that, click on Hosted Service in the upper menu. Click on the New Hosted Service button in the upper menu and you will see the Create a new Hosted Service dialog show up. There are a few sections that you need to fill in here.

a. Enter the name of your service as well as the URL prefix. Please note that the URL prefix must be globally unique.

b. Subsequently, select the region or affinity group where you want to host your service.

c. The next one is your deployment option: whether you want to deploy immediately to the staging or production environment, or not deploy yet. I assume that you deploy to the production environment.

d. You can give your deployment a name or label. People sometimes like to use either a version number or the current time as the label.

e. Now browse to the package and configuration files that you created in step 9 of the previous post.

f. Finally, you need to add a certificate again, but this time it’s the private key certificate that you exported in step 4 above.

Click OK when you are done with that. If a warning appears stating that you have only 1 instance, you can consider whether to increase your instance count to meet the 99.95% Microsoft SLA. If you are doing this only for development or testing purposes, I believe 1 instance doesn’t really matter. You can click on OK to continue.

9. It will take some time to upload the package and wait for the fabric controller to allocate a place for your hosted service. You will see the status change slowly from “uploading” to “initializing”, “busy”, and eventually “ready”, if everything goes well.

Remote Desktop to Your Windows Azure Instance

10. Assuming that your instance has been deployed successfully, you can now connect via remote desktop by selecting the instance of your hosted service and clicking on the Connect button in the upper menu.

This will prompt you to download an .rdp file.

11. Open the .rdp file and you will be asked to confirm that you want to connect despite the certificate errors. Simply ignore the warning and click on Yes.

It will then prompt you for the username and password that you specified in Visual Studio when configuring the remote desktop. But here’s a little trick. Don’t simply click on your name, since that will use your computer name as the domain. Instead, use “\” (backslash) followed by your username. For example: “\wely”. And of course you’ll need to enter your password as well.

12. If it goes well, you’ll see that you’ve successfully connected via remote desktop to your Windows Azure instance. Bingo!

Alright, that’s all for this post. Hope it helps! See you on another blog post.

Richard Seroter reported Code Uploaded for WCF/WF and AppFabric Connect Demonstration in a 4/13/2011 post:

A few days ago I wrote a blog post explaining a sample solution that took data into a WF 4.0 service, used the BizTalk Adapter Pack to connect to a SQL Server database, and then leveraged the BizTalk Mapper shape that comes with AppFabric Connect.

I had promised some folks that I’d share the code, so here it is.

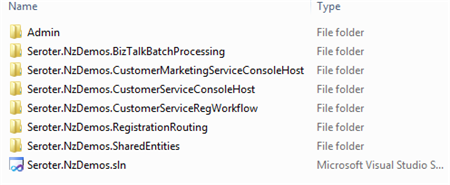

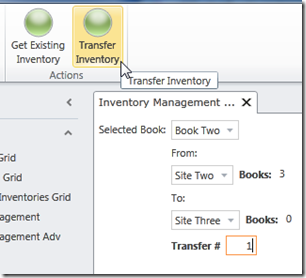

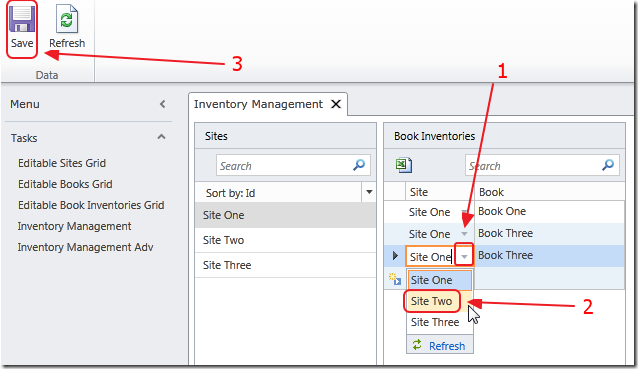

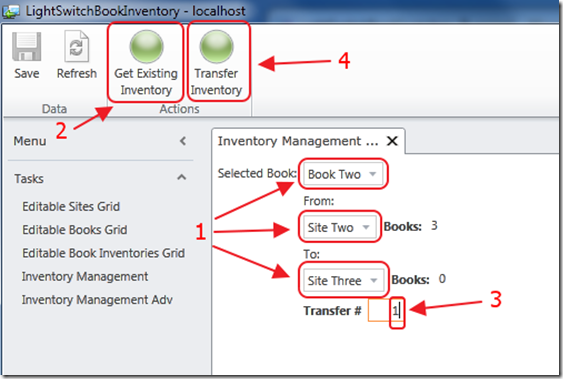

The code package has the following bits:

- The Admin folder has a database script for creating the database that the Workflow Service queries.

- The CustomerServiceConsoleHost project represents the target system that will receive the data enriched by the Workflow Service.

- The CustomerServiceRegWorkflow is the WF 4.0 project that has the Workflow and Mapping within it.

- The CustomerMarketingServiceConsoleHost is an additional target service that the RegistrationRouting (an instance of the WCF 4.0 Routing Service) may invoke if the inbound message matches the filter.

On my machine, I have the Workflow Service and WCF 4.0 Routing Service hosted in IIS, but feel free to monkey around with the solution and hosting choices. If you have any questions, don’t hesitate to ask.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Andy Cross (@andybareweb) described reducing iterative deployment time from 21 minutes to 5 seconds in his Using Web Deploy in Azure SDK 1.4.1 post of 4/16/2011:

The recently refreshed Azure SDK v1.4.1 now supports the in-place upgrade of running Web Role instances using Web Deploy. This post details how to use this new feature.

The SDK refresh is available for download here: Get Started

As announced by the Windows Azure team blog,

Until today, the iterative development process for hosted services has required you to re-package the application and upload it to Windows Azure using either the Management portal or the Service Management API. The Windows Azure SDK 1.4 refresh solves this problem by integrating with the Web Deployment Tool (Web Deploy).

A common complaint regarding Azure has been that roles take too long to start up, and since every update to your software requires a start up cycle (provision, initialize, startup, run) the development process is slowed significantly. The Web Deploy tool can be used instead to upgrade an in-place role – meaning the core of an application can be refreshed in an iterative development cycle without having to repackage the whole cloud project. This means a missing CSS link, validation element, code behind element or other change can be made without having to terminate your Role Instance.

It is a useful streamlining of the Windows Azure deployment path as it bypasses the lengthy process of Startup. This must be considered when choosing whether to do a full deployment or a partial Web Deploy, for things such as Startup Tasks do not run when performing a Web Deploy. Any install task (for instance) has already been undertaken when the Role was initially created, and so does not execute again.

In this post, I use the code from my previous post, Restricting Access to Azure by IP Address. I use that code because it includes a startup task that adds a significant length of time between the initial publish and the Role becoming available and responsive to browser requests. This delay is greater than the standard delay in starting an Azure Role because the task installs a module into IIS. It is exactly this type of delay that the Web Deploy integration acts as a remedy for, as the startup tasks do not need to be repeated on a Web Deploy.

Firstly, one has to deploy the Cloud Project to Azure in the standard way. This is achieved by right clicking on the Cloud Project and selecting “Publish”.

Standard Publish

On this screen, those familiar with SDK v1.4 and earlier will notice a new checkbox; initially greyed out, this allows the enabling of Web Deploy to your Azure role. We need to enable this, and the way to do so is (as hinted by the screen label) to firstly enable Remote Desktop access to the Azure role. Click the “Configure Remote Desktop Connections” link, check the Enable Connections for all Roles and fill out all the details required on this screen. Make sure you remember the Password you enter, as you’ll need this later.

Set up Remote Desktop to the Azure Roles

Once we have done this, the Enable Web Deploy checkbox is available for our use.

Check the Enable Web Deploy checkbox

The warning triangle shown warns:

“Web Deploy uses an untrusted, self-signed certificate by default, which is not recommended for upload sensitive data. See help for more information about how to secure Web Deploy”.

For now I recommend you don’t upload sensitive data at all using the Web Deploy tool, and if in doubt, this should not be the preferred route for you to use.

Once you have enabled Web Deploy for roles, click OK, and the deploy begins. This is the standard, full deploy that takes a while to provision an Instance and instantiate it.

From my logs, this takes 21 minutes. The reason for this particularly long delay is that I have a new module being installed into IIS, which adds at least 8 to 9 minutes. Typically the deploy would take 10-15 minutes.

12:33:52 - Preparing...
12:33:52 - Connecting...
12:33:54 - Uploading...
12:35:08 - Creating...
12:36:04 - Starting...
12:36:46 - Initializing...
12:36:46 - Instance 0 of role IPRestricted is initializing
12:41:27 - Instance 0 of role IPRestricted is busy
12:54:12 - Instance 0 of role IPRestricted is ready
12:54:12 - Creating publish profiles in local web projects...
12:54:12 - Complete.

This 21 minute delay is what Web Deploy is seeking to reduce. I will now access my role.

You are an IP address!

We will now make a trivial change to the MasterPage of the solution, changing “You are: an IP address” to “Your IP is: an IP address”. This change requires a recompilation of the ASPX Web Application, and so could be cosmetic like this change, or programmatic or referential. Some changes are not possible with Web Deploy – such as adding new Roles, changing startup tasks and changing ServiceDefinitions.
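The distinction between changes that Web Deploy can push and changes that need a full publish can be sketched as a small check. This is a minimal illustration only: the category names below are hypothetical labels invented for the example and are not part of the Azure SDK or the Web Deploy tool. The three "full publish" categories are the ones named in the paragraph above.

```python
# Hypothetical labels for kinds of change; these names are illustrative only.
# The three entries mirror the limitations listed in the post: new Roles,
# startup task changes and ServiceDefinition changes.
FULL_PUBLISH_CHANGES = {"new_role", "startup_task", "service_definition"}


def needs_full_publish(changes):
    """Return True if any change in the iterable cannot be pushed by Web Deploy."""
    return any(change in FULL_PUBLISH_CHANGES for change in changes)


# A cosmetic edit plus a code-behind edit can go through Web Deploy.
print(needs_full_publish(["markup", "code_behind"]))

# Touching a startup task forces the standard, full publish.
print(needs_full_publish(["markup", "startup_task"]))
```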

In order to update our code, right click on the Web Role APPLICATION Project in Visual Studio. This is different to the previous Publish, which was accessed by right clicking on the Cloud Project. Select “Publish”.

Web Deployment options

All of these options are completed for you, although you have to enter the Password that you entered when you set up the Remote Desktop connection to your Roles.

Clicking Publish builds and publishes your application to Azure. This happens for me in under 5 seconds.

========== Publish: 1 succeeded, 0 failed, 0 skipped ==========

That is that. It’s amazingly quick, and I wasn’t even sure it was working initially.

Going to the same Service URL gives the following:

An updated web app!

As you can see, the web application has been updated. We have gone from a deployment cycle of 21 minutes, to an iterative deployment cycle of 5 seconds. AMAZING.
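To see where the time in the full publish went, the timestamps from the publish log quoted earlier can be turned into per-phase durations. The sketch below is not part of the original post. It copies the log timestamps by hand (dropping the duplicate "Connecting" entry), and the function names are made up for the example.

```python
from datetime import datetime

# Timestamps copied from the publish log quoted earlier in the post.
# Each entry marks the moment a phase started.
LOG = [
    ("12:33:52", "Preparing"),
    ("12:33:54", "Uploading"),
    ("12:35:08", "Creating"),
    ("12:36:04", "Starting"),
    ("12:36:46", "Initializing"),
    ("12:41:27", "Busy"),
    ("12:54:12", "Ready"),
]

WEB_DEPLOY_SECONDS = 5  # the iterative publish time reported in the post


def seconds_between(start, end):
    """Elapsed whole seconds between two HH:MM:SS strings on the same day."""
    fmt = "%H:%M:%S"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return int(delta.total_seconds())


def phase_durations(log):
    """Return (phase, seconds) pairs, measuring each phase until the next entry."""
    return [
        (name, seconds_between(started, next_started))
        for (started, name), (next_started, _) in zip(log, log[1:])
    ]


total = seconds_between(LOG[0][0], LOG[-1][0])
for name, secs in phase_durations(LOG):
    print(f"{name:<13}{secs:>5} s")
print(f"Full publish: {total} s")
print(f"Ratio to a {WEB_DEPLOY_SECONDS} s Web Deploy: about {total / WEB_DEPLOY_SECONDS:.0f}x")
```

By these timestamps, most of the wait falls in the "busy" phase, which is where the startup task that installs the IIS module runs. That is the part Web Deploy skips.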

One last thing to note, straight from the Windows Azure Team Blog regarding constraints on using Web Deploy:

Please note the following when using Web Deploy for interactive development of Windows Azure hosted services:

- Web Deploy only works with a single role instance.

- The tool is intended only for iterative development and testing scenarios

- Web Deploy bypasses the package creation. Changes to the web pages are not durable. To preserve changes, you must package and deploy the service.

Happy clouding,

Andy

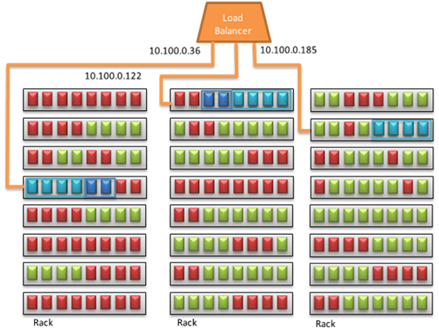

Neil MacKenzie described Windows Azure Traffic Manager in a 4/15/2011 post:

The CTP for the Windows Azure Traffic Manager was announced at Mix 2011, and a colorful hands-on lab was introduced in the Windows Azure Platform Training Kit (April 2011). The lab has also been added to the April 2011 refresh of the Windows Azure Platform Training Course – a good, but under-appreciated, resource. You can apply to join the CTP on the Beta Programs section of the Windows Azure Portal.

The Windows Azure Traffic Manager provides several methods of distributing internet traffic among two or more hosted services, all accessible with the same URL, in one or more Windows Azure datacenters. It uses a heartbeat to detect the availability of a hosted service. The Traffic Manager provides various ways of handling the lack of availability of a hosted service.

Heartbeat

The Traffic Manager requests a heartbeat web page from the hosted service every 30 seconds. If it does not get a 200 OK response for this heartbeat three consecutive times the Traffic Manager assumes that the hosted service is unavailable and takes it out of load-balancer rotation. (In fact, on not getting a 200 OK the Traffic Manager immediately issues another request – and failure requires three pairs of failed requests every 30 seconds.)
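The heartbeat rule described above can be sketched as a small simulation. This is only an illustration of the logic as the post describes it, not the Traffic Manager's actual implementation. The `probe` callable and the function names are hypothetical, and the scripted status codes stand in for real HTTP requests.

```python
from typing import Callable, Optional

PROBE_INTERVAL_S = 30   # from the post: a heartbeat is requested every 30 seconds
FAILURES_TO_REMOVE = 3  # from the post: three consecutive failed heartbeats


def heartbeat_ok(probe: Callable[[], int]) -> bool:
    """One heartbeat: a non-200 response triggers one immediate retry."""
    return probe() == 200 or probe() == 200


def intervals_until_removed(probe: Callable[[], int],
                            max_intervals: int = 100) -> Optional[int]:
    """Return the 1-based interval at which the service leaves rotation, or None."""
    consecutive_failures = 0
    for interval in range(1, max_intervals + 1):
        if heartbeat_ok(probe):
            consecutive_failures = 0  # any success resets the count
        else:
            consecutive_failures += 1
            if consecutive_failures == FAILURES_TO_REMOVE:
                return interval
    return None


# Scripted status codes standing in for HTTP responses (hypothetical data):
# interval 1 succeeds, interval 2 succeeds on the retry, intervals 3-5 fail twice each.
responses = iter([200, 500, 200, 500, 500, 500, 500, 500, 500])
removed_at = intervals_until_removed(lambda: next(responses))
print(f"Removed from rotation at interval {removed_at}")
print(f"Failed heartbeats span up to {FAILURES_TO_REMOVE * PROBE_INTERVAL_S} s")
```

Three failed intervals at 30 seconds each is consistent with the 90 seconds the post cites for detecting a failure.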

Traffic Manager Policies

The Traffic Manager is configured at the subscription level on the Windows Azure Portal through the creation of one or more Traffic Manager policies. Each policy associates a load-balancing technique with two or more hosted services which are subject to the policy. A hosted service can be in more than one policy at the same time. Policies can be enabled and disabled.

The Traffic Manager supports various load balancing techniques for allocating traffic to hosted services.

- failover

- performance

- round robin

With failover, all traffic is directed to a single hosted service. When the Traffic Manager detects that the hosted service is not available, it modifies DNS records and directs all traffic to the hosted service configured for failover. This failover hosted service can be in the same or another Windows Azure datacenter. Since it takes 90 seconds for the Traffic Manager to detect the failure and a minute or two for DNS propagation, the service will be unavailable for a few minutes.

In a round robin configuration, the Traffic Manager uses a round-robin algorithm to distribute traffic equally among all hosted services configured in the policy. The Traffic Manager automatically removes from the load-balancer rotation any hosted service it detects as unavailable. The hosted services can be in one or more Windows Azure datacenters.

With performance, the Traffic Manager uses information collected about internet latency to direct traffic to the “closest” hosted service. This is useful only if the hosted services are in different Windows Azure datacenters.
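The three techniques can be compared side by side with a toy model of the selection step. This sketch only models which hosted service would be chosen under each policy. It says nothing about how the Traffic Manager implements the choice, and the class names, service names and latency figures are all hypothetical.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class HostedService:
    name: str
    available: bool = True
    latency_ms: float = 0.0  # hypothetical measured latency to the client


def pick_failover(services):
    """Failover: first available service in priority order (primary first)."""
    return next((s for s in services if s.available), None)


class RoundRobin:
    """Round robin: rotate through the services that are currently available."""

    def __init__(self):
        self._counter = count()

    def pick(self, services):
        live = [s for s in services if s.available]
        if not live:
            return None
        return live[next(self._counter) % len(live)]


def pick_performance(services):
    """Performance: the available service with the lowest latency."""
    live = [s for s in services if s.available]
    return min(live, key=lambda s: s.latency_ms, default=None)


# Hypothetical services in two datacenters.
services = [
    HostedService("primary-us", latency_ms=40),
    HostedService("secondary-eu", latency_ms=120),
]
rr = RoundRobin()
print([rr.pick(services).name for _ in range(4)])
print(pick_performance(services).name)

services[0].available = False  # simulate the heartbeat marking the primary as down
print(pick_failover(services).name)
```

In every policy an unavailable service simply drops out of the candidate list, which mirrors the removal from load-balancer rotation described above.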

Thoughts of Sorts

The Windows Azure Traffic Manager is really easy to use and works as advertised. The configuration is simple with a nice user experience. This is the type of feature that simplifies the task of developing scalable internet services. In particular, a lot of people ask about automated failover when they initially find out about distributed datacenters. And it is a feature I have seen a lot of hand waving over. It looks like the hand waving is about to end.

Tony Bailey (a.k.a. tbtechnet) asserted Speed and Support Matters in a 4/12/2011 post to the Windows Azure Platform, Web Hosting and Web Services blog:

Sure. You can put up a website on a hosted or on-premise environment. You can then spend hours a month patching, monitoring, configuring your server.

Or, sure. You can go cheap and put up a site in a low monthly cost environment.

Azure is not about that.

The Windows Azure platform is about low cost of development because you can build web services fast. Azure is about no hassle server management. Azure is about building scalable solutions to accommodate high demand when you need it and scale down when you don’t.

Content management systems from Umbraco, Kentico, SiteCore and Composite can enable you to build highly scalable web experiences on Windows Azure.

Check it out: http://www.microsoft.com/windowsazure/free-trial/cloud-website-development/

And with Microsoft, you get that other scale. The scale of a massive ecosystem of developers and all kinds of free technical support:

Sounds to me like a shot at VMware’s CloudFoundry. See the Other Cloud Computing Platforms and Services section below for more about CloudFoundry.

Tony Bailey (a.k.a. tbtechnet) posted Build at the Speed of Social: Facebook and Azure on 4/12/2011 to the Windows Azure Platform, Web Hosting and Web Services blog:

As I’ve always said, with Windows Azure and SQL Azure you can just concentrate on coding.

Let someone else worry about server provisioning, patching and configuring.

It now gets better for social applications.

Build Facebook apps quickly and on Windows Azure with less fuss.

- Technical Walkthrough

- Academy Live Session

- Free Facebook C# SDK download

- It’s all here: http://www.microsoft.com/windowsazure/social-applications/

Joe did it. So can you.

Need help? Get free technical support too: http://www.microsoftplatformready.com/us/dashboard.aspx

<Return to section navigation list>

Visual Studio LightSwitch

Matt Thalman described Invoking Tier-Specific Logic from Common Code in LightSwitch in a 4/12/2011 post (missed when posted):