Windows Azure and Cloud Computing Posts for 4/7/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 4/9/2011 with new articles marked • from Chris Hoff, Steve Plank, Derrick Harris, Alik Levin, SD Times on the Web, Steve Yi, Steve Marx, Matthew Weinberger, Nicholas Mukhar, Eric Erhardt, Chris Preimesberger, the SQL Azure Team, and the Windows Azure Team.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

• The SQL Azure Team posted a SQL Azure @ MIX11: Here’s the Lowdown page on 4/8/2011:

MIX11

April 12-14, 2011

Mandalay Bay, Las Vegas

Heading to MIX11? We are too! Make sure you connect with the SQL Azure team. You’ll find us at these places.

SQL Azure Team Kiosk

Our kiosk will be located in the “Commons” (Ballrooms J-L). Come down and chat with us about SQL Azure, OData, DataMarket, and all things cloud; and get your questions answered. Plus, check out some very cool live demos!

SQL Azure Private After-Party (Limited Invites)

April 12th // 8:00pm-10:00pm // EyeCandy Sound Lounge, Mandalay Bay

We scored a sweet space in the EyeCandy Sound Lounge – in the same building as MIX11. It’s all about free drinks, food and a relaxed way to chat with the Microsoft team, peers, SMEs and User Group leaders, in a much cozier setting.

Plus, you could win an Xbox 360! Do you really need more of an excuse?

How to get in: There are a limited number of invites (wristbands) for the SQL Azure Private After-Party. Stop by our kiosk to grab one before they’re gone.

Sessions: SQL Azure and Cloud Data Services

Here are some interesting sessions at MIX11 that you’ve got to check out.

Powering Data on the Web and Beyond with SQL Azure (SVC05)

Mashing Up Data On the Web and Windows Phone with Windows Azure DataMarket (SVC08)

OData Roadmap: Services Powering Next Generation Experiences (FRM11)

OData in Action: Connecting Any Data Source to Any Device (FRM10)

OData Roadmap: Exposing Any Data Source as an OData service (FRM16)

Data in an HTML5 World

Build Fast Web Applications with Windows Azure AppFabric Caching (SVC01)

• Steve Yi described Migrating My WordPress Blog to SQL Azure in a 4/8/2011 post:

This week I decided to take on an interesting challenge and see which commonly available applications I could get running on SQL Azure and the Windows Azure platform. I remembered that a while ago WordPress had started making significant investments in partnering with Microsoft, and I was really pleased to run across http://wordpress.visitmix.com. Historically running only on MySQL, WordPress now runs on SQL Server and SQL Azure, using the PHP Driver for SQL Server! The site has great how-to articles on installing WordPress on SQL Azure and on migrating existing WordPress blogs to a new deployment.

WordPress is one of the most prevalent content management and blog engines on the web, with an estimated nearly 1 million downloads per week, and it is used by up to 12% of all websites. Coincidentally, my personal blog ran on WordPress, and I thought migrating it to SQL Azure and Windows Azure would be a fantastic challenge.

I chose to implement this using the Windows Azure VM role. Using an on-premises Hyper-V server, I created a virtual image of Windows Server 2008 R2 with IIS and installed WordPress. The install wizard automatically created the database schema, making the database portion of setup very easy. During the setup wizard, all I had to do was provide the location and credentials for my SQL Azure database running in the cloud. Walkthroughs of how to accomplish that are here.
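The "location and credentials" the wizard asks for can be sketched in Python (WordPress itself is PHP, so this is illustrative only). The sketch assumes SQL Azure's conventions of the time: a server DNS name of the form <server>.database.windows.net, logins written as user@server, and TCP port 1433. The server, database and user names, and the ODBC driver name, are hypothetical placeholders.

```python
def sql_azure_settings(server: str, database: str, user: str, password: str) -> dict:
    """Collect the location and credentials a setup wizard asks for.

    Assumes SQL Azure conventions: the server's DNS name is
    <server>.database.windows.net and logins are written as user@server.
    """
    return {
        "host": f"{server}.database.windows.net",
        "port": 1433,  # SQL Azure accepts connections on TCP port 1433 only
        "database": database,
        "login": f"{user}@{server}",
        "password": password,
    }


def odbc_connection_string(settings: dict) -> str:
    """Render the settings as an ODBC-style connection string.

    The driver name is a placeholder assumption; use whichever SQL Server
    driver is actually installed on the web server image.
    """
    return (
        "Driver={SQL Server Native Client 10.0};"
        f"Server=tcp:{settings['host']},{settings['port']};"
        f"Database={settings['database']};"
        f"Uid={settings['login']};"
        f"Pwd={settings['password']};"
        "Encrypt=yes;"
    )
```

The user@server login form is the detail most often missed when pointing an existing application at SQL Azure.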

Once I had customized the settings and theme, I uploaded and deployed the virtual image to Windows Azure, making this a complete cloud deployment of both runtime and database. Everything works without my having to make any compromises to get it into the cloud.

Take a moment to check it out. The address is: http://blog.stevenyi.com. Right now it features my outdoor passions for climbing and photography - I'll start posting on some additional topics there in the future, too.

The last step was managing DNS entries properly so that my blog is reachable at my 'vanity' URL instead of the *.cloudapp.net address you assign when deploying a service in Windows Azure. Not being a DNS expert, I was stumped by this for a little while. Windows Azure provides friendly DNS names that give you a consistent way to reach your instances, acting as an abstraction layer over Virtual IP addresses (VIPs), which may change if you deploy an application from a different datacenter or from multiple datacenters.

Fortunately, one of the great things about working at Microsoft is being able to reach out to some very bright people. Steve Marx on the Windows Azure team writes a fantastic blog on development topics at http://blog.smarx.com that explains this in more detail, including how to map custom domains to Windows Azure using CNAME records and domain forwarding. The post is here. I did mention that I wasn't a DNS expert, so even after I'd read the post, he still had to call me and basically repeat the same thing.
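The CNAME approach can be sketched as a small Python helper that emits a zone-file record pointing a custom host name at a service's cloudapp.net name. The host, zone and service names are hypothetical, and the exact record syntax your DNS provider's control panel accepts may differ. The apex check reflects a general DNS rule: a CNAME cannot coexist with the SOA and NS records at the root of a zone, which is why the bare domain needs forwarding instead.

```python
def cname_record(host: str, zone: str, service: str, ttl: int = 3600) -> str:
    """Return a zone-file CNAME line pointing a custom host name at a
    Windows Azure hosted service (<service>.cloudapp.net)."""
    host = host.rstrip(".").lower()
    zone = zone.rstrip(".").lower()
    if host == zone:
        # A CNAME cannot sit at the zone apex next to SOA/NS records,
        # so the bare domain has to be forwarded (redirected) instead.
        raise ValueError("cannot place a CNAME at the zone apex; use domain forwarding")
    if not host.endswith("." + zone):
        raise ValueError(f"{host} is not inside zone {zone}")
    return f"{host}. {ttl} IN CNAME {service}.cloudapp.net."
```

For example, cname_record("www.example.com", "example.com", "myblog") returns "www.example.com. 3600 IN CNAME myblog.cloudapp.net.", and because the record targets a name rather than a VIP, a VIP change after redeployment does not require a DNS update.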

If you have a moment, check it out. I hope you enjoy the pics.

Steve Yi posted a Real World SQL Azure: Interview with Robert Johnston, Founder and Vice President, Paladin Data Systems, a Microsoft Gold Certified Partner case study on 4/8/2011:

As part of the Real World SQL Azure series, we talked to Robert Johnston, Founder and Vice President of Paladin Data Systems, about moving to SQL Azure to easily and cost-effectively serve both larger customers and small civic governments and local jurisdictions. Here's what he had to say:

MSDN: Can you tell us about Paladin Data Systems and the products and services you offer?

Johnston: Our core philosophy is simple: we combine a commitment to old-world values and service with modern technology solutions for industries such as military and government organizations, the natural resource community, and city and county agencies. Our solutions help automate business processes and drive efficiencies for our customers.

MSDN: Why did you start thinking about offering a Microsoft cloud-based solution?

Johnston: Well, the story starts when we decided to recreate our planning and permits solution, called Interlocking Software, using Microsoft technologies. It had been built on the Oracle platform, but most local jurisdictions have settled into the Microsoft technology stack, and they considered Oracle to be too complicated and expensive. So with our new product, called SMARTGov Community, we made a move to Microsoft to open up that market. To ease deployment and reduce costs for small jurisdictions, we wanted to offer a hosted version. But we didn't want to get into the hosting business; it would be too expensive to build the highly available, clustered server scenario required to meet our customers' demands for application stability and availability. Luckily, it was around this time that Microsoft launched SQL Azure.

MSDN: Why did you choose SQL Azure and Windows Azure to overcome this challenge?

Johnston: We looked at Amazon Elastic Compute Cloud, but with that solution we would have had to manage our own database servers. By comparison, Microsoft cloud computing offered a complete platform that combines computing services and data storage. Our solution depends on a relational database, and having a completely managed relational database in the cloud proved to us that SQL Azure was made for SMARTGov Community. Talking to customers, we found that many trusted the Microsoft name when it came to cloud-based services. We liked the compatibility angle because our own software is based on the Windows operating system, and Windows Azure offers a pay-per-use pricing model that makes it easier for us to predict the cost of our expanding operations. And with SQL Azure, our data is safe and manageable. We can keep adding more customers without having to provision back-end servers, configure hard disks, or work with any kind of hardware layer.

MSDN: How long did it take to make the transition?

Johnston: It took a couple of months. It was a very easy transition for us to take the existing technology that we were developing and deploy it into the cloud. There were a few little things we had to account for to get SMARTGov Community to run in a high-availability, multitenant environment, but we didn't have to make major changes. Microsoft offered us a lot of help, which made the transition to SQL Azure much more successful.

MSDN: What benefits did you gain by switching to SQL Azure?

Johnston: With SQL Azure, we've taken a product with a lot of potential and tailored it to meet customers' needs in an untapped market, and our first customer, the City of Mukilteo, signed up for the solution as soon as it was released. Running SMARTGov Community in the cloud will reduce the cost of doing business compared to the on-premises, client/server model of its predecessor. And we are more agile: instead of spending up to 300 hours installing an on-premises deployment of Interlocking Software, we now offer customers a 30-hour deployment. Faster deployments shorten sales cycles and impress our customers. With SQL Azure, we found the perfect computing model for smaller jurisdictions that want easy, maintenance-free access to SMARTGov Community's rich features and functionality at low cost.

Read the full story at:

www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000009546

To read more SQL Azure customer success stories, visit: www.sqlazure.com

<Return to section navigation list>

Marketplace DataMarket and OData

Marcelo Lopez Ruiz announced OData and TFS and yes, CodePlex on 4/8/2011:

The beta of the OData Service for Team Foundation Server 2010 was announced yesterday, and of course, as a TFS user, I find this tremendously exciting. TFS ends up holding a lot of data about my day-to-day work, and now, thanks to OData, it's easily unlocked and programmable!

For extra awesome points, there is a preview of the CodePlex OData API as well.
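Because the TFS and CodePlex services speak OData, a query is just a URL carrying system query options such as $filter, $orderby and $top. The Python sketch below builds one. The service root and the WorkItems entity set name are hypothetical placeholders, not the documented TFS endpoint.

```python
from urllib.parse import quote, urlencode


def odata_query_url(service_root: str, entity_set: str, **options: str) -> str:
    """Build an OData query URL from system query options.

    Keyword arguments map to $-prefixed options, e.g. top="10" -> $top=10.
    """
    params = {f"${name}": value for name, value in options.items()}
    # Percent-encode spaces as %20 but leave "$" and "'" readable.
    query = urlencode(params, safe="$'", quote_via=quote)
    return f"{service_root.rstrip('/')}/{entity_set}?{query}"
```

For example, odata_query_url("https://tfs.example.com/odata/DefaultCollection", "WorkItems", filter="State eq 'Active'", top="10") yields a URL ending in WorkItems?$filter=State%20eq%20'Active'&$top=10, which any HTTP client (or a browser) can fetch.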

Scott Hanselman produced an Open Data Protocol (OData) with Pablo Castro Hanselminutes podcast (#205) on 3/11/2011 (missed when published):

Astoria, ADO.NET Data Services, [WCF Data Services] and OData - what's the difference and the real story? How does OData work and when should I use it? When do I use OData and when do I use WCF? Scott gets the scoop from the architect himself, Pablo Castro.

The episode includes a professionally produced transcript.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

The Windows Azure AppFabric Team announced Windows Azure AppFabric April release now available featuring a new version of the Access Control service! on 4/7/2011:

We are excited to announce that today we released the Windows Azure AppFabric April release that includes a new version of the Access Control service.

The new version of the Access Control service includes all the great capabilities and enhancements that have been available in the Community Technology Preview (CTP) of the service for several months. Now you can start using these capabilities in our production service.

The new version of the service adds the following capabilities:

Federation provider and Security Token Service

- Out of box federation with Active Directory Federation Services 2.0, Windows Live ID, Google, Yahoo, Facebook

New authorization scenarios

- Delegation using OAuth 2.0

Improved developer experience

- New web-based management portal

- Fully programmatic management using OData

- Works with Windows Identity Foundation

Additional protocol support

- WS-Federation, WS-Trust, OpenID 2.0, OAuth 2.0 (Draft 13)
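For the WS-Federation passive profile, a relying party starts sign-in by redirecting the browser to the issuer with a few standard query parameters (wa, wtrealm, and optionally wreply and wctx). The Python sketch below builds such a URL. The namespace name, the realm and the ACS v2 endpoint path are assumptions to replace with the values shown in the management portal; in practice WIF generates this redirect for you.

```python
from typing import Optional
from urllib.parse import urlencode


def wsfed_signin_url(namespace: str, realm: str,
                     reply: Optional[str] = None,
                     context: Optional[str] = None) -> str:
    """Build a WS-Federation passive sign-in request URL."""
    # Assumed ACS v2 endpoint pattern; copy the real value from the portal.
    issuer = f"https://{namespace}.accesscontrol.windows.net/v2/wsfederation"
    params = {"wa": "wsignin1.0", "wtrealm": realm}
    if reply:
        params["wreply"] = reply  # where the issued token should be posted back
    if context:
        params["wctx"] = context  # opaque state the issuer returns unchanged
    return f"{issuer}?{urlencode(params)}"
```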

We will share more on this release, including new content that will help you get started with the service in the near future.

The new version of the Access Control service will run in parallel with the previous version. Existing customers will not be automatically migrated to the new version, and the previous version remains fully supported. You will see both versions of the service in the AppFabric Management Portal.

We encourage you to try the new version of the service and will be offering the service at no charge during a promotion period ending January 1, 2012. Customers using the previous version of the service will also enjoy the promotion period.

Please use the following resources to learn more about this release:

Another change introduced in this release is in the way we calculate the monthly charge for Service Bus. From now on the computation of the daily connection charge will always assume a month of 31 days, instead of using the actual number of days in the current month. This is done in order to ensure consistency in the charges across months. You can find more details of how the Service Bus charges are calculated in our Detailed FAQs.
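To see why a fixed 31-day divisor keeps charges consistent, here is a small worked sketch in Python. The $3.99 per connection-month rate is purely illustrative, and the model (a per-connection monthly rate divided into daily charges) is a simplification of the rules in the Detailed FAQs.

```python
from decimal import ROUND_HALF_UP, Decimal

DAYS_PER_BILLING_MONTH = 31  # fixed divisor, whatever the calendar month


def daily_connection_charge(monthly_rate: Decimal, connections: int) -> Decimal:
    """Charge for one day at the given number of connections."""
    return monthly_rate * connections / DAYS_PER_BILLING_MONTH


def monthly_connection_charge(monthly_rate: Decimal, daily_connections: list) -> Decimal:
    """Sum the daily charges for a month (one list entry per calendar day)."""
    total = sum(
        (daily_connection_charge(monthly_rate, c) for c in daily_connections),
        Decimal(0),
    )
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

With the fixed divisor, a connection costs the same per day in every month: at one connection, a 31-day month totals $3.99, a 30-day month $3.86, and a 28-day February $3.60.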

In addition, we have deprecated the ASP.NET Management Portal as part of this release. From now on only the new Silverlight-based Management Portal will be available. The new portal has the same management capabilities as the ASP.NET management portal, but with an enhanced and improved experience. All links to the Management Portal should work and direct you to the new Silverlight portal.

The updates are available at http://appfabric.azure.com, so be sure to log in and start using the new capabilities.

If you have any questions, be sure to visit our Windows Azure Platform Forums. For questions specifically on the Access Control service visit the Security for the Windows Azure Platform section of the forums.

If you have not signed up for Windows Azure AppFabric and would like to start using these great new capabilities, be sure to take advantage of our free trial offer. Just click on the image below and get started today!

The Windows Azure AppFabric Team.

Eugenio Pace (@eugenio_pace) described Intuit Data Services + Windows Azure + Identity in a 4/7/2011 post to the Geneva Team Blog:

This week, we completed a small PoC for brabant court, a customer that is building a Windows Azure application that integrates with Intuit’s Data Services (IDS).

A couple of words on mabbled, from brabant court:

Mabbled is a Windows Azure app (ASP.NET MVC 3, EF Code First, SQL Azure, AppFabric ACS|Caching, jQuery) that provides complementary services to users of Intuit QuickBooks desktop and QuickBooks Online application. Mabbled achieves this integration with the Windows Azure SDK for Intuit Partner Platform (IPP). An overriding design goal of mabbled is to leverage as much of Microsoft’s platform and services as possible in order to avoid infrastructure development and focus energy on developing compelling business logic. A stumbling block for mabbled’s developers has been identity management and interop between Intuit and the Windows Azure application.

In this PoC we demonstrate how to integrate WIF with an Intuit/Windows Azure ASP.NET app. Intuit uses SAML 2.0 tokens and the SAML 2.0 protocol (SAMLP). WIF supports SAML 2.0 tokens out of the box, but not the protocol.

I used one of Intuit’s sample apps (OrderManagement) as the base which currently doesn’t use WIF at all.

The goal: to supply identity information originating in Intuit’s Workplace to the .NET Windows Azure app through the WIF programming model (e.g. ClaimsPrincipal), and to leverage as much standard infrastructure as possible (e.g. ASP.NET authorization, IPrincipal.IsInRole, etc.).

Why? The biggest advantage of this approach is that it eliminates any dependency on custom code for identity-related concerns (e.g. querying for roles, user information, etc.).

How does it work today?

If you’ve seen Intuit’s sample app, you know that they provide a handler for the app that parses a SAML 2.0 token posted back from their portal (http://workplace.intuit.com). This SAML token contains 3 claims: LoginTicket, TargetUrl and RealmId. Of these, LoginTicket is also encrypted.

The sample app includes a couple of helper classes that use the Intuit API to retrieve user information such as roles, profile info such as e-mail, last login date, etc. This API uses the LoginTicket as the handle to get this information (sort of an API key).

Some of this information is then persisted in cookies, in session state, etc. The problem with this approach is that the identity data is not exposed through standard .NET interfaces. So the app uses code like this:

where RoleHelper.UserisInRole is:

WIF provides nice integration with the standard .NET interfaces, so code like this in a web page just works: this.User.IsInRole(role);

The app currently includes an ASP.NET HTTP handler (called “SamlHandler”) whose responsibility is to receive the SAML 2.0 token, parse it, validate it and decrypt the claim. Sound familiar? If it does, it’s because WIF does the same.

What changed?

I had trouble parsing the token with WIF’s FederationAuthenticationModule (probably because of the encrypted claim, which I think is not supported, but I need to double-check).

Inside the original app handler, I’m taking the parsed SAML token (using the existing Intuit’s code) and extracting the claims supplied in it.

Then, I query Intuit Workplace for the user’s general data (e.g. e-mail, name, last name, etc.) and for the roles he is a member of (this requires 2 API calls using the LoginTicket). All this information also goes into the Claims collection in the ClaimsPrincipal.

After that I create a ClaimsPrincipal and I add all this information to the claim set:

The last step is to create a session for this user, and for that I’m (re)using WIF’s SessionAuthenticationModule.

This uses whatever mechanism you configured in WIF. Because this was a quick test, I left all the defaults. But since this is a Windows Azure app, I suggest you follow the specific recommendations for Windows Azure.

The handler’s original structure is unchanged (I think it would need some refactoring, especially with regard to error handling, but that was out of scope for this PoC).

Some highlights of this code:

- Some API calls require a dbid parameter that Intuit passes to the app as a query string in a later call. I’m parsing the dbid from the TargetUrl claim to avoid a two-pass claims generation process and to resolve everything here. This is not ideal, but not too bad. It would be simpler to get the dbid in the SAML token.

- The sample app uses a local mapping mechanism to translate “Workplace roles” into “Application Roles” (it uses a small XML document stored in config to do the mapping). I moved all this here so that the ClaimsPrincipal contains everything the application needs right away. I didn’t attempt to optimize any of this code; I just moved the code pieces from their original location to here. This is the “RoleMappingHelper”.

- I removed everything from the session. The “LoginTicket”, for instance, was one of the pieces of information stored in session, but I found it strange that it is sent as an encrypted claim in the SAML token and then stored in a cookie. I removed all this.

- The WIF SessionAuthenticationModule (SAM) is then used to serialize/encrypt/chunk ClaimsPrincipal. This is all standard WIF behavior as described before.
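The first two highlights (deriving dbid from TargetUrl, and mapping Workplace roles to application roles before building the claim set) can be sketched in Python as follows. This is not the PoC’s C# code: the TargetUrl format, the XML role-mapping schema (Map elements with workplaceRole and appRole attributes) and the plain “dbid” claim type are hypothetical. Only the role claim URI is WIF’s standard ClaimTypes.Role value.

```python
import xml.etree.ElementTree as ET
from typing import Optional
from urllib.parse import parse_qs, urlsplit

# WIF's ClaimTypes.Role URI; the "dbid" claim type used below is hypothetical.
ROLE_CLAIM = "http://schemas.microsoft.com/ws/2008/06/identity/claims/role"


def dbid_from_target_url(target_url: str) -> Optional[str]:
    """Pull the dbid query-string parameter out of the TargetUrl claim."""
    values = parse_qs(urlsplit(target_url).query).get("dbid")
    return values[0] if values else None


def load_role_map(xml_text: str) -> dict:
    """Read a hypothetical <Map workplaceRole="..." appRole="..."/> document."""
    root = ET.fromstring(xml_text)
    return {m.get("workplaceRole"): m.get("appRole") for m in root.iter("Map")}


def build_claims(saml_claims: dict, workplace_roles: list, role_map: dict) -> list:
    """Combine the token's claims, the derived dbid and mapped roles
    into a single list of (claim type, value) pairs."""
    claims = list(saml_claims.items())
    dbid = dbid_from_target_url(saml_claims.get("TargetUrl", ""))
    if dbid:
        claims.append(("dbid", dbid))
    for role in workplace_roles:
        app_role = role_map.get(role)
        if app_role:  # Workplace roles without a mapping are dropped
            claims.append((ROLE_CLAIM, app_role))
    return claims
```

Doing the mapping once, at sign-in, is what lets the rest of the app rely on role claims alone.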

The web application:

In the web app, I first changed the config to add the WIF module and its configuration:

Notice that the usual FederationAuthenticationModule is not there. That’s because its responsibilities are now taken over by the handler. The SAM, however, is there, so it will automatically reconstruct the ClaimsPrincipal if it finds the FedAuth cookies created inside the handler. The result is that the application now receives the complete ClaimsPrincipal on each request.
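As a rough illustration of what the SAM does with a large ClaimsPrincipal, the Python sketch below serializes a claim list, splits it across cookies named FedAuth, FedAuth1, FedAuth2 and so on, and reassembles it on the next request. It deliberately omits encryption and signing, and the 2,000-character chunk size is an assumption. On Windows Azure, a common recommendation at the time was to protect these cookies with a certificate (RSA) rather than DPAPI, because DPAPI keys differ from one role instance to the next.

```python
import base64
import json

CHUNK_SIZE = 2000  # assumed chunk size, in characters


def to_cookies(claims: list, name: str = "FedAuth", chunk_size: int = CHUNK_SIZE) -> dict:
    """Serialize claims and split the result across FedAuth, FedAuth1, ... cookies.

    Sketch only: real WIF session cookies are also encrypted and signed.
    """
    payload = base64.b64encode(json.dumps(claims).encode("utf-8")).decode("ascii")
    chunks = [payload[i:i + chunk_size] for i in range(0, len(payload), chunk_size)]
    return {(name if i == 0 else f"{name}{i}"): chunk for i, chunk in enumerate(chunks)}


def from_cookies(cookies: dict, name: str = "FedAuth") -> list:
    """Reassemble the chunks in order and deserialize the claims."""
    parts = []
    i = 0
    while True:
        key = name if i == 0 else f"{name}{i}"
        if key not in cookies:
            break
        parts.append(cookies[key])
        i += 1
    return json.loads(base64.b64decode("".join(parts)))
```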

This is the “CustomerList.aspx” page (post authentication):

The second big change was to refactor all RoleHelper methods to use the standard interfaces:

An interesting case is the IsGuest property, which originally checked whether the user was a member of any role (the roles a user was a member of were stored in session too, which I’m not a big fan of). This is now resolved with this single query to the Claims collection:
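The original C# query isn’t reproduced here, but the idea can be sketched in Python with a hypothetical stand-in for ClaimsPrincipal that holds (type, value) pairs. The sketch assumes “guest” means a user with no role claims at all; the role claim URI is WIF’s ClaimTypes.Role value.

```python
from dataclasses import dataclass, field

ROLE_CLAIM = "http://schemas.microsoft.com/ws/2008/06/identity/claims/role"


@dataclass
class ClaimsPrincipalSketch:
    """Hypothetical stand-in for a WIF ClaimsPrincipal: a list of (type, value) claims."""
    claims: list = field(default_factory=list)

    def is_in_role(self, role: str) -> bool:
        # A role check is just a lookup for a role claim with a matching value.
        return any(t == ROLE_CLAIM and v == role for t, v in self.claims)

    @property
    def is_guest(self) -> bool:
        # Assumption: a guest is anyone who carries no role claims.
        return not any(t == ROLE_CLAIM for t, _ in self.claims)
```

Because the roles travel with the principal, neither check needs session state or another call to the Intuit API.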

The structure of the app was left more or less intact, but I did delete a lot of code that was not needed anymore.

Again, a big advantage of this approach is that it allows you to plug any existing standard infrastructure into the app (like the [Authorize] attribute in an MVC application) and it “just works”.

In this example, the “CustomerList.aspx” page has this code at the beginning of the Page_Load event:

As mentioned above, the RoleHelper methods are now using the ClaimsPrincipal to resolve the “IsInRole” question (through HttpContext.User.IsInRole). But you could achieve something similar with pure ASP.NET infrastructure. Just as a quick test, I added this to the web.config:

And now, when trying to browse “CustomerList.aspx”, you get an “Access Denied” because the user is not supplying a claim of type role with the value “SuperAdministrator”.
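The author’s actual web.config entry isn’t shown, so the Python sketch below uses a hypothetical location fragment of the kind that would produce this behavior, and models how ASP.NET URL authorization evaluates allow and deny rules: top to bottom, with the first matching rule deciding. It is a simplified model (no verbs attribute, and no wildcards beyond “*” for all users and “?” for anonymous users).

```python
import xml.etree.ElementTree as ET

# Hypothetical web.config fragment of the kind described above.
WEB_CONFIG = """<configuration>
  <location path="CustomerList.aspx">
    <system.web>
      <authorization>
        <allow roles="SuperAdministrator" />
        <deny users="*" />
      </authorization>
    </system.web>
  </location>
</configuration>"""


def _split(value):
    """Turn a comma-separated attribute value into a list of names."""
    return [item.strip() for item in (value or "").split(",") if item.strip()]


def rules_for(config_xml: str, path: str) -> list:
    """Return (action, users, roles) tuples for the <location> matching path."""
    root = ET.fromstring(config_xml)
    for location in root.iter("location"):
        if location.get("path") == path:
            auth = next(location.iter("authorization"), None)
            if auth is None:
                return []
            return [(rule.tag, _split(rule.get("users")), _split(rule.get("roles")))
                    for rule in auth if rule.tag in ("allow", "deny")]
    return []


def is_authorized(rules: list, user, roles) -> bool:
    """Evaluate rules top to bottom; the first matching rule decides.

    user is None for an anonymous request. With no matching rule this
    sketch allows access, standing in for the allow-all rule inherited
    from the root configuration.
    """
    for action, users, rule_roles in rules:
        matches = ("*" in users
                   or (user is None and "?" in users)
                   or (user is not None and user in users)
                   or any(r in rule_roles for r in roles))
        if matches:
            return action == "allow"
    return True
```

The rule order matters: swapping the two entries would deny everyone, including users with the SuperAdministrator role claim.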

Final notes

A more elegant approach would probably be to use deeper WIF extensibility to implement the appropriate “protocol”, etc., but that seems to be justified only if you are really implementing a “complete” protocol/handler (SAMLP in this case). That’s much harder work.

This is a more pragmatic approach that works for this case. I think it fulfills the goal of isolating as much “plumbing” as possible from the application code. When WIF evolves to support SAMLP natively for example, you would simply replace infrastructure, leaving your app mostly unchanged.

Finally, one last observation: we are calling the Intuit API a couple of times to retrieve user info. This could be avoided entirely if the original SAML token sent by Intuit contained the information right away! There might be good reasons why they are not doing it today. Maybe it’s on their roadmap. Once again, with this design, the changes to your app would be minimal if that happens.

This was my first experience with Intuit’s platform, and I was surprised by how easy it was to get going and grateful for their excellent support.

I want to thank Daz Wilkin (brabant court Founder) for spending a whole day with us, Jarred Keneally from Intuit for all his assistance, and Federico Boerr & Scott Densmore from my team for helping me polish the implementation.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Ricardo LFH started a new series by describing Load Testing with Agents running on Windows Azure – part 1 in a 4/8/2011 post:

I’ve been following the cloud computing scene with some interest, as I find cloud computing to be quite an interesting approach to IT for some (or many) companies. A few characteristics of cloud computing strike me as very useful (and, why not, exciting!), in particular elasticity, where you scale to meet demand without worrying about hardware constraints, and the pay-as-you-go model, where you are only charged when you actually deploy and use the system.

Of course, a big part of getting value from cloud computing is identifying the most suitable workloads to take to the cloud, so after some thinking (and based on some needs from a customer) I decided to try to build a Load Testing rig where the Agents run on Windows Azure. This scenario has some aspects that make it “cloudable”:

- Running the Agents as Windows Azure instances allows me to quickly create more of them and, thus, generate as much load as I can;

- A load test rig is not something that is used 24x7, so it’d be nice to just destroy the rig and not have it around when it isn’t needed.
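Both points come down to simple arithmetic, sketched below in Python. The per-agent capacity and the hourly instance rate are hypothetical inputs you would measure or look up, and the sketch assumes each started hour is billed as a full instance-hour.

```python
import math
from decimal import Decimal


def agents_needed(target_users: int, users_per_agent: int) -> int:
    """Smallest number of agent instances that can generate the target load."""
    if users_per_agent <= 0:
        raise ValueError("users_per_agent must be positive")
    return -(-target_users // users_per_agent)  # integer ceiling division


def rig_cost(agents: int, hours_used: float, hourly_rate: Decimal) -> Decimal:
    """Pay-as-you-go cost of a rig that exists only for the test run.

    Assumes every started hour is billed as a full instance-hour.
    """
    return hourly_rate * agents * math.ceil(hours_used)
```

For example, if one agent can drive 1,000 virtual users, a 2,500-user test needs agents_needed(2500, 1000) == 3 agents, and at an illustrative $0.12 per instance-hour a 3.5-hour run costs rig_cost(3, 3.5, Decimal("0.12")) == Decimal("1.44"), after which the rig is deleted and stops accruing charges.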

So, my first step was to decide how to design this and where to place each component (cloud or on-premises) to test a more or less typical application. Based on some decisions I’ll explain later in the post, I came up with this design:

The major components are:

- Agents – As intended, they should be running on Windows Azure so I could make the most of the cloud’s elasticity;

- Controller – I took some time deciding where the controller should be located: in the cloud or on-premises. The Controller plays an important part in the testing process because it must communicate both with the agents, to send them work and collect their performance data, and with the system being tested, to collect its performance data. Because of this, some sort of cloud/on-premises communication would be needed. I decided to keep the controller on-premises, mostly because the alternative would mean either opening the firewalls to allow connections between a Controller in the cloud and, for example, a database server, or deploying the Windows Azure Connect plugin to the database server itself. For the customer I had in mind, this was not feasible.

- Windows Azure Connect – The Windows Azure Connect endpoint software must be active on all Azure instances and on the Controller machine as well. This provides IP connectivity between them and, provided that the firewall is properly configured, allows the Controller to send workloads to the agents. In parallel, and using the LAN, the Controller collects performance data from the stressed systems using the traditional WMI mechanisms.

Once I knew what I wanted to design, my next step was to start working hands-on on the scripts that would build it. My ultimate goal was something fully automated, with as little manual work as possible. I also knew I had to decide which type of role to use for the Agent instances: web, worker or VM.

I’ll explore these topics in the next parts of this post.

Avkash Chauhan explained Sysprep related issues when creating Virtual Machine for VM Role in a 4/7/2011 post:

When creating a VHD for the VM Role, one important step is to run SYSPREP on your OS. You can launch the SYSPREP application (sysprep.exe), which is located in the %WINDIR%\System32\sysprep folder, as shown below:

Once the SYSPREP application is launched, you will see the following window. Select "Enter System Out-of-Box Experience (OOBE)", check "Generalize", choose "Shutdown" as the shutdown option, and then click "OK":

If you run into any problems during the SYSPREP phase, please read the sysprep logs located here:

%WINDIR%\System32\sysprep\Panther

The details in the SYSPREP log files will help you solve the problem. I found that the most common issue is related to running SYSPREP too many times without activation. If that is the case, you will need to activate the OS before you can run SYSPREP again.
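For scripted image preparation, the dialog choices above correspond to sysprep.exe’s /oobe, /generalize and /shutdown switches. This Python sketch only builds the command line and the Panther log paths (setupact.log and setuperr.log, the usual action and error logs); the default C:\Windows location is an assumption standing in for %WINDIR%.

```python
from pathlib import PureWindowsPath


def sysprep_command(windir: str = r"C:\Windows") -> list:
    """Command-line equivalent of the dialog choices: OOBE, Generalize, Shutdown."""
    exe = PureWindowsPath(windir, "System32", "sysprep", "sysprep.exe")
    return [str(exe), "/generalize", "/oobe", "/shutdown"]


def sysprep_logs(windir: str = r"C:\Windows") -> list:
    """Where to look when SYSPREP fails: the Panther folder's action and error logs."""
    panther = PureWindowsPath(windir, "System32", "sysprep", "Panther")
    return [str(panther / "setupact.log"), str(panther / "setuperr.log")]
```

On the VM you are preparing (never on a machine you want to keep as-is), the list could be passed to subprocess.run(sysprep_command(), check=True); setuperr.log is usually the quickest place to find the failing step.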

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• The Windows Azure Team posted a Real World Windows Azure: Interview with Paul Hernacki, Chief Technology Officer at Definition 6 case study on 4/8/2011:

As part of the Real World Windows Azure series, we talked to Paul Hernacki, Chief Technology Officer at Definition 6 about using the Windows Azure platform, in combination with its content management system from Umbraco, to host its customers' websites. Here's what he had to say:

MSDN: Tell us about Definition 6 and the services you offer.

Hernacki: Definition 6 is a unified marketing agency that redefines brand experiences by unifying marketing and technology. We focus on developing interactive, rich-media websites and web-based applications that engage users and turn them into brand advocates for our customers. Most of the websites we develop are large, content-managed websites.

MSDN: What were the biggest challenges that you faced prior to implementing the Windows Azure platform?

Hernacki: We were looking for a content management system built on the Microsoft .NET Framework and one that worked well with Microsoft products and technologies that are commonly found in our customers' enterprise environments, such as Windows Server. For that we turned to Umbraco, an open-source content management system that is based on Microsoft ASP.NET. From there, we needed a cloud-based service that would work with Umbraco. While we offer traditional hosting methods, some of our customers wanted to host their websites in the cloud in order to scale up and scale down to meet unpredictable site traffic demand and to avoid capital expenditures.

MSDN: Why did you choose the Windows Azure platform?

Hernacki: We evaluated cloud offerings from Amazon and Google, but neither of them blends seamlessly with the way we work, nor do they allow us to keep working exactly as we do, with the tools we have, to build the websites we want to build for our customers. However, Umbraco developed an accelerator that enables customers to deploy websites that use the content management system on the Windows Azure platform, which works seamlessly with customers' enterprise IT environments.

MSDN: Can you describe how you use the Windows Azure platform with the Umbraco content management system for customer websites?

Hernacki: We tested the solution on Cox Enterprises' Cox Conserves Heroes website, which we completely migrated from our colocation hosting environment to the Windows Azure platform in only two weeks. We use web roles to host the website, easily adding web role instances to scale up during high-traffic periods and reducing the number of instances to scale down when the site typically sees the fewest visitors. We also use Blob storage in Windows Azure to store binary data and Microsoft SQL Azure for our relational database needs.

MSDN: What makes Definition 6 unique?

Hernacki: In addition to the interactive sites we develop for our customers, we can also deliver a solution that includes a content management system and cloud service that all work together, and work well in an enterprise IT environment. It makes our customers' marketing directors and CIOs both happy.

MSDN: What kinds of benefits have you realized with the Windows Azure platform?

Hernacki: Development and deployment was very straightforward. We used our existing skills and development tools, including Microsoft Visual Studio development system, to migrate the website to the Windows Azure platform. Plus, we won't have to change the way we work going forward. We now have a viable cloud-based solution that we can offer to our customers. By using Windows Azure and the Umbraco accelerator, we can meet our customers' complex content management needs and their desire to take advantage of the benefits of the cloud.

Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000009229

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

• Alik Levin listed How-To’s And Scenarios Indexes For Windows Azure, Visual Studio Tools For Windows Azure, SQL Azure, Service Bus, And ACS in a comprehensive 4/8/2011 post:

Windows Azure How-to Index

- Windows Azure Tools for Microsoft Visual Studio

- Scenario: Creating a New Windows Azure Project with Visual Studio

- Scenario: Managing Roles in the Windows Azure Project with Visual Studio

- Scenario: Configuring the Windows Azure Application with Visual Studio

- Scenario: Accessing the Windows Azure Storage Services

- Scenario: Debugging a Windows Azure Application in Visual Studio

- Scenario: Deploying a Windows Azure Application from Visual Studio

- Scenario: Using Remote Desktop with Windows Azure Roles

- Scenario: Using Windows Azure Connect to Create Virtual Networks

- Scenario: Troubleshooting

ACS How To's

- SQL Azure Development: How-to Topics

- Service Bus How-To’s:

How to: Design a WCF Service Contract

How to: Expose a REST-based Web Service Through the AppFabric Service Bus

- How to: Design a WCF Service Contract for use with the Service Bus.

How to: Configure a Service Programmatically

How to: Configure a Service Using a Configuration File

How to: Configure a Service Bus Client Using Code

How to: Configure a Service Bus Client Using a Configuration File

How to: Configure a Windows Azure-Hosted Service or Client Application

How to: Change the Connection Mode

How to: Set Security and Authentication on an AppFabric Service Bus Application

How to: Modify the AppFabric Service Bus Connectivity Settings

How to: Host a WCF Service that Uses the AppFabric Service Bus Service

How to: Host a Service on Windows Azure that Accesses the AppFabric Service Bus

How to: Create a REST-based Service that Accesses the AppFabric Service Bus

How to: Use a Third Party Hosting Service with the AppFabric Service Bus

- How to: Create a WCF SOAP Client Application

How to: Publish a Service to the AppFabric Service Bus Registry

How to: Discover and Expose an AppFabric Service Bus Application

How to: Expose a Metadata Endpoint

How to: Configure an AppFabric Service Bus Message Buffer

How to: Create and Connect to an AppFabric Service Bus Message Buffer

How to: Send Messages to an AppFabric Service Bus Message Buffer

How to: Retrieve a Message from an AppFabric Service Bus Message Buffer

Related Materials

- Windows Azure: Changing Configuration In Web.Config Without Redeploying The Whole ASP.NET Application

- Windows Azure Content And Guidance Map For Developers – Part 1, Getting Started

- Windows Azure Content And Guidance Map For Developers – Part 2, Case Studies

- New Content Available For Windows Azure Developers - Windows Azure Code Quick Launch Samples and Walkthroughs

- WCF Data Services How-To’s Index

- WCF How To’s Index

Bruno Terkaly posted Bruno’s Tour of the Windows Azure Platform Training Kit–Web Roles, Worker Roles, Tables, Blobs, and Queues on 4/8/2011:

Introduction

My goal in this series is to highlight the best parts of the hands-on labs.

I constantly give presentations to software developers about cloud computing, specifically about the Windows Azure Platform. As the founder of The San Francisco Bay Area Azure Developers Group (http://www.meetup.com/bayazure/), I am always asked for the best place to start.

Luckily there is one easy answer - The Windows Azure Platform Training Kit.

As of this writing, this is the latest version.

Download the Windows Azure Platform Training Kit

Hands-On Labs or Demos

I will focus on the Hands-On Labs, not the demos, at least initially. But don't discount the demos just because they are easier and shorter. I will point out some of the useful demos as I progress through this post.

Lab #1 - Introduction to Windows Azure

This is perhaps the best of all the hands-on labs in the kit. But it certainly is not the easiest.

Technical Value

As you may be aware, the sweet spot for making use of Windows Azure is that it is "Platform as a Service (PaaS)," as opposed to "Infrastructure as a Service (IaaS)."

This post describes the difference:

Differences between IaaS, PaaS, SaaS

My take is that the future is PaaS because it frees you from worrying about the underlying servers, load balancers, and networking. It is also the most economical. At the end of the day, it is about the ratio of servers to underlying support staff. PaaS is far more self-sustaining and autonomous than IaaS.

The Big Picture

Now because Windows Azure is PaaS, Lab #1 is ideal. It gives you the "big picture," a clear understanding of how web roles, worker roles, tables, blobs, and queues work together to form a whole PaaS application.

What Lab #1 illustrates

Most of Azure’s core technologies are explained in this excellent lab.

For Beginners – The Demos

A gentler introduction to the platform can be found in the “Demos.”

Wade Wegner (@WadeWegner) posted a 00:30:13 Cloud Cover Episode 43 - Scalable Counters with Windows Azure Channel9 video segment on 4/8/2011:

Join Wade [right] and Steve [left] each week as they cover the Windows Azure Platform. You can follow and interact with the show @CloudCoverShow.

In this episode, Steve and Wade explain the application architecture of their latest creation—the Apathy Button. Like many other popular buttons on the Internet, the Apathy Button has to deal with the basic challenge of concurrency. This concurrency challenge arises from multiple role instances—as well as multiple threads—all trying to update the same number. In this show, you'll learn a few approaches to solving this in Windows Azure.

In the news:

- MSDN Magazine: Introducing the Windows Azure AppFabric Caching Service

- New Windows Azure Code Quick Start samples are available

- Japanese MVPs help out with relief efforts

- Windows Azure helps to power eBay's iPad Marketplace sales

Grab the source code for the Apathy Button

Steve's blog post on the application

• Steve Marx (@smarx) expanded on the CloudCover episode in his Architecting Scalable Counters with Windows Azure post of 4/7/2011:

On this week’s upcoming episode of Cloud Cover, Wade and I showed our brand new creation, the Apathy Button. This app requires keeping an accurate counter at scale. (See the picture on the left… 36,000 clicks were registered at the time I’m writing this. The majority occurred during the hour-long period we were recording this week’s show.)

The basic challenge in building a scalable counter is one of concurrency. With many simultaneous requests coming to many role instances all updating the same number, the difficulty arises in making sure that number is correct and that the writers don’t spend a lot of time retrying after concurrency failures.

There are a number of potential solutions to this problem, and if you’re interested, I encourage you to watch the Cloud Cover episode for our discussion. Here I’ll outline the solution we chose and share the source code for the Apathy Button application.

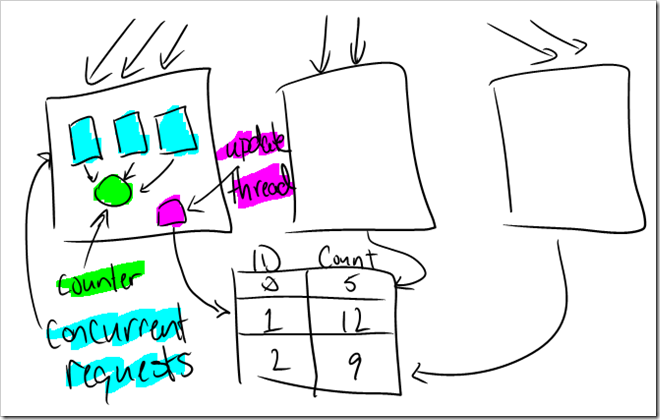

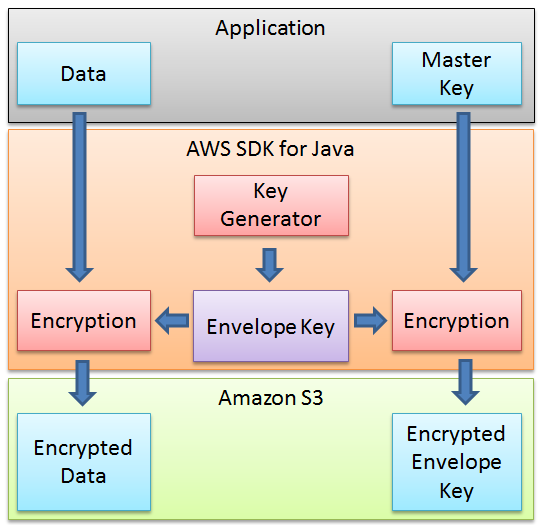

The diagram below shows the basic architecture. Each instance keeps a local count that represents the number of clicks handled by that instance (the green “counter” below). This count is incremented using Interlocked.Increment, so it can be safely updated from any number of threads (blue squares). Each instance also has a single thread responsible for pushing that local count into an entity in table storage (purple square). There’s one entity per instance, so no two instances are trying to write to the same entity. The click count is now the sum of all those entities in table storage. Querying for them and summing them is a lightweight operation, because the number of entities is bounded by the number of role instances.
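The scheme just described can be sketched in a few dozen lines of C#. This is not the Apathy Button source. It is a minimal, self-contained illustration in which a `ConcurrentDictionary` stands in for table storage, and the class names (`FakeCounterTable`, `InstanceCounter`, `CounterDemo`) are hypothetical. Request threads call `Click`, which uses `Interlocked.Increment` on the instance's local count. A single flush per instance then writes that count to the instance's own entity, and the global total is the sum of those per-instance entities.

```csharp
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for table storage: one "entity" per role instance,
// keyed by instance ID. The real app uses Windows Azure table storage.
public class FakeCounterTable
{
    private readonly ConcurrentDictionary<string, long> entities =
        new ConcurrentDictionary<string, long>();

    // Each instance writes only its own row, so writers never conflict.
    public void Upsert(string instanceId, long count) => entities[instanceId] = count;

    // The global click count is the sum of one small row per instance.
    public long Total() => entities.Values.Sum();
}

public class InstanceCounter
{
    private long localCount; // clicks handled by this instance
    private readonly string instanceId;
    private readonly FakeCounterTable table;

    public InstanceCounter(string instanceId, FakeCounterTable table)
    {
        this.instanceId = instanceId;
        this.table = table;
    }

    // Safe to call from any number of request threads.
    public void Click() => Interlocked.Increment(ref localCount);

    // Called by the single per-instance "flusher" thread.
    public void Flush() => table.Upsert(instanceId, Interlocked.Read(ref localCount));
}

public static class CounterDemo
{
    public static long SimulateClicks(int instances, int threadsPerInstance, int clicksPerThread)
    {
        var table = new FakeCounterTable();
        var counters = Enumerable.Range(0, instances)
            .Select(i => new InstanceCounter("instance-" + i, table))
            .ToList();

        // Many threads on many instances increment concurrently.
        Parallel.ForEach(counters, counter =>
            Parallel.For(0, threadsPerInstance, _ =>
            {
                for (int c = 0; c < clicksPerThread; c++) counter.Click();
            }));

        // Each instance pushes its local count to its own entity.
        foreach (var counter in counters) counter.Flush();
        return table.Total();
    }
}
```

Because no two writers ever touch the same entity, no optimistic-concurrency retries are needed. The trade-off is that the summed total can lag slightly behind the true count until each instance's next flush.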

To see how it’s implemented, download the full source code here: http://cdn.blog.smarx.com/files/ApathyButton_source.zip and watch this week’s episode of Cloud Cover.

And of course, if none of this interests you in the slightest, be sure to show your apathy over at http://www.apathybutton

Joseph Palenchar provided additional background in his Toyota To Upgrade Telematics Services article of 4/7/2011 for This Week in Consumer Electronics (TWICE):

Toyota will upgrade its telematics capabilities in the U.S. and worldwide through a Microsoft partnership that, among other things, will enable consumers to control and monitor the vehicles' systems via smartphones.

Ford already offers a similar capability, as does GM's OnStar subsidiary.

The Toyota service will use Microsoft's cloud-computing Windows Azure platform to offer Toyota telematics services throughout the world. In the U.S., Toyota began offering telematics services on select 2010-model-year U.S. models through Dallas-based ATX Group, which provides GPS-based automatic collision notification, in-vehicle emergency assistance (SOS) button, roadside assistance and stolen-vehicle location service.

Some Azure-based telematics services will be available on electric and plug-in hybrid Toyotas in 2012, but the telematics platform won't be finished until 2015.

Starting in 2012, customers who purchase one of Toyota's electric or plug-in hybrid vehicles will be able to connect via the cloud to control and monitor their car from anywhere, the companies said. Consumers, for example, will be able to turn on the heat or AC in their car, dynamically monitor miles until the next charging station through their GPS system, and use a smart phone to remotely check battery power or maintenance information. Consumers would also be able to remotely command a car to charge at the time of day when energy demand is low, reducing charging costs.

The companies also see potential for monitoring and controlling home systems remotely from the car.

Toyota president Akio Toyoda said the Azure platform will also turn cars into information terminals, "moving beyond today's GPS navigation and wireless safety communications, while at the same time enhancing driver and traffic safety." For example, he said, "this new system will include advanced car-telematics, like virtual operators with voice recognition, management of vehicle charging to reduce stress on energy supply, and remote control of appliances, heating and lighting at home."

Microsoft CEO Steve Ballmer said his cloud platform "will be able to deliver these new applications and services in the 170 countries where Toyota cars are sold." In the past, he said, "this type of service was limited to only major markets where the automotive maker could build and maintain a datacenter." Toyota also benefits from paying only for the computing power it uses and speeding its telematics entry into new markets.

Cory Fowler (@SyntaxC4) described Cloud Aware Configuration Settings in a 4/7/2011 post:

In this post we will look at how to write a piece of code that will allow your Application to be environment-aware and change where it receives its connection string when hosted on Windows Azure.

Are you looking to get started with Windows Azure? If so, you may want to read the post “Get your very own Cloud Playground” to find out about a special offer on Windows Azure Deployments.

State of Configuration

In a typical ASP.NET application the obvious location to store a connectionString is the Web.config file. However, when you deploy to Windows Azure, the web.config gets packaged within the cspkg file and cannot be changed without redeploying your application.

Windows Azure does supply an accessible configuration file to store configuration settings such as a connectionString. This file is the cscfg file and is required to upload a Service to Windows Azure. The cscfg file is definitely where you will want to place the majority of your configuration settings that need to be modified over the lifetime of your application.

I know you’re probably asking yourself, what if I want to architect my application to work both On-Premise and in the Cloud on one Codebase? Surprisingly, this is possible and I will focus on a technique to allow a Cloud focused application to be deployed on a Shared Hosting or On-Premise Server without the need to make a number of Code Changes.

Obviously this solution does fit within a limited scope, and you may also want to consider architecting your solution for cost as well as portability. When building a solution on Windows Azure, look into leveraging the Storage Services as part of a more cost effective solution.

Cloud Aware Database Connection String

One of the most common Configuration Settings you would like to be “Cloud-Aware” is your Database Connection String. This is easily accomplished in your application code by making a class that can resolve your connection string based on where the code is Deployed.

How do we know where the code is deployed, you ask? That’s rather simple: Windows Azure provides a static RoleEnvironment class, which exposes an IsAvailable property that returns true only if the application is running in either Windows Azure itself or the Windows Azure Compute Emulator.

Here is a code snippet that will give you a rather good idea:

```csharp
namespace Net.SyntaxC4.Demos.WindowsAzure
{
    using System.Configuration;
    using Microsoft.WindowsAzure.ServiceRuntime;

    public static class ConnectionStringResolver
    {
        private static string connectionString;

        public static string DatabaseConnectionString
        {
            get
            {
                if (string.IsNullOrWhiteSpace(connectionString))
                {
                    connectionString = (RoleEnvironment.IsAvailable)
                        ? RoleEnvironment.GetConfigurationSettingValue("ApplicationData")
                        : ConfigurationManager.ConnectionStrings["ApplicationData"].ConnectionString;
                }
                return connectionString;
            }
        }
    }
}
```

Let’s take a moment to step through this code to get a better understanding of what the class is doing.

As you can see, the class is declared as static and exposes one static property, which returns the configuration setting required for the environment the application is deployed on.

If the connectionString variable has not previously been set, a conditional statement is evaluated on the RoleEnvironment.IsAvailable property. If the condition is true, the value of connectionString is retrieved from the CSCFG file by calling GetConfigurationSettingValue, a static method of the RoleEnvironment class, which searches the Cloud Service Configuration file for the value of the setting named “ApplicationData”.

If the RoleEnvironment.IsAvailable property evaluates to false, the application is not hosted in the cloud, and the connection string is read from the web.config file by using the System.Configuration.ConfigurationManager class.

The same technique can be used to resolve AppSettings by accessing the AppSettings NameValueCollection from the ConfigurationManager class.
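As a minimal sketch of that AppSettings variant, the class below uses the hypothetical name `CloudAwareSettings`. It is not from the original post. To keep it runnable outside a role, the environment check, the cloud lookup, and the local collection are passed in. In a deployed application you would supply `() => RoleEnvironment.IsAvailable`, `RoleEnvironment.GetConfigurationSettingValue`, and `ConfigurationManager.AppSettings` respectively.

```csharp
using System;
using System.Collections.Specialized;

// Hypothetical helper: resolves a named setting from the cloud service
// configuration (.cscfg) when running in Windows Azure, otherwise from
// the web.config <appSettings> collection.
public class CloudAwareSettings
{
    private readonly Func<bool> isCloud;                // e.g. () => RoleEnvironment.IsAvailable
    private readonly Func<string, string> cloudLookup;  // e.g. RoleEnvironment.GetConfigurationSettingValue
    private readonly NameValueCollection localSettings; // e.g. ConfigurationManager.AppSettings

    public CloudAwareSettings(Func<bool> isCloud,
                              Func<string, string> cloudLookup,
                              NameValueCollection localSettings)
    {
        this.isCloud = isCloud;
        this.cloudLookup = cloudLookup;
        this.localSettings = localSettings;
    }

    // Returns null for a missing local key, matching NameValueCollection's indexer.
    public string Get(string name)
    {
        return isCloud() ? cloudLookup(name) : localSettings[name];
    }
}
```

Injecting the lookups also lets you unit-test both branches without the compute emulator.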

Beyond The Basics

There are a few other things you may come across when creating your Cloud Aware application.

Providers, Providers, Providers

ASP.NET also contains a powerful Provider model which is responsible for such things as Membership (Users, Roles, Profiles). Typically these settings are configured using string look-ups that are done within the web.config file. This is problematic because we don’t have the ability to change the web.config without a redeployment of our Application.

It is possible to use the RoleEntryPoint OnStart method to execute some code to programmatically re-configure the Web.config, but that can be both a lengthy process as well as a very error prone way to set your configuration settings.

To handle these scenarios you will want to create a custom provider and provide a few [well documented] configuration settings within your Cloud Service Configuration file that are used by Convention.

One thing to note when using providers is that you are able to register multiple providers; however, you can only provide so much information in the web.config file. In order to make your application cloud aware, you will need to wrap the use of the provider objects in your code with a check for RoleEnvironment.IsAvailable so you can substitute the proper provider for the current deployment.
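One way to keep that check in a single place is a small factory, sketched below with hypothetical types (`IUserStore`, `TableStorageUserStore`, `SqlUserStore`, `UserStoreFactory`). None of these come from ASP.NET or the Windows Azure SDK. In a real application, `runningInCloud` would be `RoleEnvironment.IsAvailable`, and each implementation would delegate to an actual provider rather than return a description string.

```csharp
// Hypothetical abstraction the application codes against.
public interface IUserStore
{
    string Describe();
}

// Hypothetical cloud implementation (e.g. wrapping a table-storage-backed provider).
public class TableStorageUserStore : IUserStore
{
    public string Describe() => "table storage";
}

// Hypothetical on-premise implementation (e.g. wrapping the SQL membership provider).
public class SqlUserStore : IUserStore
{
    public string Describe() => "sql server";
}

public static class UserStoreFactory
{
    // The single place where the environment decides which provider is used.
    public static IUserStore Create(bool runningInCloud)
    {
        return runningInCloud ? (IUserStore)new TableStorageUserStore() : new SqlUserStore();
    }
}
```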

Something to Consider

Up until now we’ve been trying to [or more accurately managed to] avoid the need to recompile our project to deploy to the Cloud. It is possible to package your Application into a Cloud Service Package without the need to recompile, however if you’re building your solution in Visual Studio there is a good chance the Application will get re-compiled before it is Packaged for a Cloud Deployment.

Knowing this gives you a unique opportunity to remove a large amount of conditional logic that would otherwise execute at runtime by handing that logic off to the compiler.

Preprocessor Directives are a handy feature that isn’t leveraged very often, but they are a very useful tool. You can create a Cloud Deployment Build Configuration that supplies a “Cloud” Compilation Symbol. Wrapping the logic that switches configuration values or Providers in preprocessor conditionals based on this symbol can reduce the amount of code executed when serving the application to a user, because only the appropriate code is compiled into the DLL. To learn more about Preprocessor Directives, see the first programming article I wrote.
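A minimal sketch of the compile-time switch follows. It assumes the Cloud build configuration defines a conditional compilation symbol named `CLOUD`; that exact name is an assumption, and symbols are case-sensitive. The class `DeploymentTarget` is hypothetical. To stay self-contained, its branches return strings. In a real resolver, they would hold the `RoleEnvironment.GetConfigurationSettingValue` and `ConfigurationManager` calls from `ConnectionStringResolver` above, with no runtime `IsAvailable` check.

```csharp
// Assumes a "Cloud" build configuration defining the symbol CLOUD
// (Project Properties > Build > Conditional compilation symbols).
public static class DeploymentTarget
{
    public static string SettingsSource
    {
        get
        {
#if CLOUD
            // Compiled only into the Cloud build: settings come from the .cscfg file.
            return "ServiceConfiguration.cscfg";
#else
            // Compiled into every other build: settings come from web.config.
            return "web.config";
#endif
        }
    }
}
```

The branch that is excluded is not compiled at all. This is why its code never runs, but it is also why a typo in that branch goes unnoticed until you build the other configuration.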

Conclusion

With a little planning and an understanding of the application you are going to build, some decisions can be made very early to prepare for an eventual cloud deployment. You won't need to write an abundance of extra code during the regular development cycle, nor will you need to rewrite a large portion of your application if you choose to build in the functionality once the application is ready for cloud deployment. That said, there are still obvious improvements to be gained by leveraging the Cloud Platform to its full potential. Windows Azure has a solid SDK that is constantly and consistently iterated on to provide developers with a rich development API.

If you want to leverage more of Windows Azure’s offerings it is a good idea to create a wrapper around Microsoft’s SDK so you will be able to create a pluggable architecture for your application to allow for maximum portability from On-Premise to the Cloud and ultimately between different cloud providers.
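A minimal sketch of such a wrapper is shown below for storage. The interface `IFileStore` and the class `InMemoryFileStore` are hypothetical names, not part of Microsoft's SDK. The application depends only on the interface. A cloud implementation would wrap the SDK's blob storage client, and an in-memory or file-system implementation serves tests and on-premise runs.

```csharp
using System.Collections.Generic;

// Hypothetical storage abstraction: only one implementation class
// would reference the Windows Azure SDK.
public interface IFileStore
{
    void Save(string name, byte[] content);
    byte[] Load(string name); // returns null if the file does not exist
}

// In-memory implementation for tests or on-premise runs.
public class InMemoryFileStore : IFileStore
{
    private readonly Dictionary<string, byte[]> files = new Dictionary<string, byte[]>();

    // Copy on the way in and out so callers cannot mutate stored data.
    public void Save(string name, byte[] content) => files[name] = (byte[])content.Clone();

    public byte[] Load(string name) =>
        files.TryGetValue(name, out var data) ? (byte[])data.Clone() : null;
}
```

Swapping cloud providers later then means writing one new `IFileStore` implementation rather than touching every caller.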

<Return to section navigation list>

Visual Studio LightSwitch

• Eric Erhardt explained How Do I: Display a chart built on aggregated data (Eric Erhardt) in a 4/8/2011 post to the LightSwitch Team blog:

In a business application there is often a need to roll up data and display it in a concise format. This gives decision makers the ability to analyze the state of the business and make a decision quickly and correctly. This roll-up may retrieve data from many different sources, slice it, dice it, transform it, and then display the information in many different ways to the user. One common way to display this information is in a chart. Charts display a lot of information in a small amount of space.

Visual Studio LightSwitch v1.0 doesn’t provide a report designer out of the box. However, that doesn’t mean that the hooks aren’t there for you to implement something yourself. It may take a little more work than building a search screen, or data entry screen, but being able to report on your business data is going to be a requirement from almost any business application user.

I am going to explain, from beginning to end, an approach you can use to aggregate data from your data store and then add a bar chart in your LightSwitch application to display the aggregate data.

The approach I am going to take is to use a Custom WCF RIA DomainService to return the aggregated data. It is possible to bring aggregate data into LightSwitch using a database View. However, that approach may not always be possible. If you don’t have the rights to modify the database schema, or if you are using the intrinsic “ApplicationData” data source, you won’t be able to use a database View. The following approach will work in all database scenarios.

In order to follow along with this example, you will need:

- Visual Studio LightSwitch Beta2

- Visual C# 2010 Express or Visual Basic 2010 Express or Visual Studio 2010 Professional or above

- Silverlight 4 Toolkit

- A Northwind database

The business scenario

Let’s say you have a sales management database (we’ll use the Northwind database for the example) that allows you to track the products you have for sale, your customers and the orders for these products. The sales manager would like to have a screen that allows him/her to view how much revenue each product has produced.

Creating your LightSwitch application

First, let’s start by launching Visual Studio LightSwitch and creating a new project. Name it “NorthwindTraders”. Click on the “Attach to external Data Source” link in the start screen, select Database and enter the connection information to your Northwind database. Add all tables in the Northwind database. Build your project and make sure there are no errors. Your Solution Explorer should look like the following:

Creating a Custom WCF RIA Service

LightSwitch version 1.0 doesn’t support aggregate queries (like GROUP BY, SUM, etc.). In order to get aggregate data into a LightSwitch application, we will need to create a Custom WCF RIA Service. In order to create a Custom WCF RIA Service, we need to create a new “Class Library” project. Visual Studio LightSwitch by itself can only create LightSwitch applications. You will need to either use Visual Studio or Visual C#/Basic Express to create the new Class Library project. Name this Class Library “NorthwindTraders.Reporting”. Build the project to make sure everything was created successfully.
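To make concrete what the aggregate query has to compute, here is a LINQ to Objects sketch over in-memory rows. The `OrderLine` and `SalesAggregation` types are hypothetical stand-ins for Northwind's Order Details table, not generated LightSwitch code. Revenue per product is the sum of UnitPrice × Quantity × (1 − Discount), which is the same formula the service's query uses later. This in-memory version can stay in `decimal` throughout because, unlike LINQ to Entities, LINQ to Objects allows the cast.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for a Northwind "Order Details" row.
public class OrderLine
{
    public string ProductName { get; set; }
    public decimal UnitPrice { get; set; }
    public short Quantity { get; set; }
    public float Discount { get; set; } // fraction, e.g. 0.05f for 5%
}

public static class SalesAggregation
{
    // Equivalent of GROUP BY product, SUM(UnitPrice * Quantity * (1 - Discount)).
    public static Dictionary<string, decimal> TotalsByProduct(IEnumerable<OrderLine> lines)
    {
        return lines
            .GroupBy(l => l.ProductName)
            .ToDictionary(
                g => g.Key,
                g => g.Sum(l => l.UnitPrice * l.Quantity * (1 - (decimal)l.Discount)));
    }
}
```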

Once you have created the Class Library project, you can add it into your LightSwitch solution. First, make sure the solution is being shown by opening Tools –> Options. Under “Projects and Solutions” ensure that “Always show solution” is checked. Then, in the Solution Explorer, right-click on the solution and select “Add –> Existing Project”. Navigate to the Class Library you created above. (The Open File Dialog may show only *.lsproj files. Either type the full path into the dialog, or navigate to the folder, type * into the File name text box and press “Enter”; either way you will be able to select the NorthwindTraders.Reporting.cs/vbproj project.)

Now you will need to add a few references to the NorthwindTraders.Reporting project. Add the following:

- System.ComponentModel.DataAnnotations

- System.Configuration

- System.Data.Entity

- System.Runtime.Serialization

- System.ServiceModel.DomainServices.Server (Look in %ProgramFiles(x86)%\Microsoft SDKs\RIA Services\v1.0\Libraries\Server if it isn’t under the .Net tab)

- System.Web

You can rename the default “Class1” to “NorthwindTradersReportData”. Also make sure it inherits from the System.ServiceModel.DomainServices.Server.DomainService base class. You should now have a Custom WCF RIA Service that can be consumed in LightSwitch. For more information on using Custom WCF RIA Services in LightSwitch, refer to the following blog articles:

- The Anatomy of a LightSwitch Application Part 4 – Data Access and Storage

- How to create a RIA service wrapper for OData Source

Returning aggregate data from the Custom WCF RIA Service

Now comes the real work of connecting to the Northwind database and writing a query that will aggregate our product sales information. First, we need a way to query our Northwind data. One option is to re-create an ADO.Net Entity Framework model that connects to the Northwind database, or to use LINQ To SQL. However, that would force us to keep two database models in sync, which may take more maintenance. It would be really nice if we could re-use the Entity Framework model that our LightSwitch application uses. Fortunately, we can re-use this model by Adding an Existing Item to our NorthwindTraders.Reporting project and selecting “Add as Link”. This will allow both our LightSwitch application and NorthwindTraders.Reporting projects to consume the same files. The LightSwitch application will keep the files up-to-date and the Reporting project will just consume them.

To do this, in the Solution Explorer, right-click on the “NorthwindTraders.Reporting” project and select “Add –> Existing Item”. Navigate to the folder containing your LightSwitch application’s .lsproj file, then navigate to “ServerGenerated” \ “GeneratedArtifacts”. Select “NorthwindData.vb/cs”. WAIT! Don’t click the “Add” button just yet. See that little drop down button inside the Add button? Click the drop down and select “Add As Link”.

The NorthwindData.vb/cs file is now shared between your LightSwitch application and the NorthwindTraders.Reporting project. As LightSwitch makes modifications to this file, both projects will get the changes and both projects will stay in sync.

Now that we can re-use our Entity Framework model, all we need to do is create an ObjectContext and query Northwind using our aggregate query. To do this, add the following code to your NorthwindTradersReportData class you added above.

Visual Basic:

```vb
Imports System.ComponentModel.DataAnnotations
Imports System.Data.EntityClient
Imports System.ServiceModel.DomainServices.Server
Imports System.Web.Configuration

Public Class NorthwindTradersReportData
    Inherits DomainService

    Private _context As NorthwindData.Implementation.NorthwindDataObjectContext

    Public ReadOnly Property Context As NorthwindData.Implementation.NorthwindDataObjectContext
        Get
            If _context Is Nothing Then
                Dim builder = New EntityConnectionStringBuilder
                builder.Metadata = "res://*/NorthwindData.csdl|res://*/NorthwindData.ssdl|res://*/NorthwindData.msl"
                builder.Provider = "System.Data.SqlClient"
                builder.ProviderConnectionString = WebConfigurationManager.ConnectionStrings("NorthwindData").ConnectionString
                _context = New NorthwindData.Implementation.NorthwindDataObjectContext(builder.ConnectionString)
            End If
            Return _context
        End Get
    End Property

    ''' <summary>
    ''' Override the Count method in order for paging to work correctly
    ''' </summary>
    Protected Overrides Function Count(Of T)(query As IQueryable(Of T)) As Integer
        Return query.Count()
    End Function

    <Query(IsDefault:=True)>
    Public Function GetSalesTotalsByProduct() As IQueryable(Of ProductSales)
        Return From od In Me.Context.Order_Details
               Group By Product = od.Product Into g = Group
               Select New ProductSales With {.ProductId = Product.ProductID,
                                             .ProductName = Product.ProductName,
                                             .SalesTotalSingle = g.Sum(Function(od) _
                                                 (od.UnitPrice * od.Quantity) * (1 - od.Discount))}
    End Function
End Class

Public Class ProductSales
    <Key()>
    Public Property ProductId As Integer
    Public Property ProductName As String
    Public Property SalesTotal As Decimal

    ' This is needed because the cast isn't allowed in LINQ to Entity queries
    Friend WriteOnly Property SalesTotalSingle As Single
        Set(value As Single)
            Me.SalesTotal = New Decimal(value)
        End Set
    End Property
End Class
```

C#:

```csharp
using System.ComponentModel.DataAnnotations;
using System.Data.EntityClient;
using System.Linq;
using System.ServiceModel.DomainServices.Server;
using System.Web.Configuration;
using NorthwindData.Implementation;

namespace NorthwindTraders.Reporting
{
    public class NorthwindTradersReportData : DomainService
    {
        private NorthwindDataObjectContext context;

        public NorthwindDataObjectContext Context
        {
            get
            {
                if (this.context == null)
                {
                    EntityConnectionStringBuilder builder = new EntityConnectionStringBuilder();
                    builder.Metadata = "res://*/NorthwindData.csdl|res://*/NorthwindData.ssdl|res://*/NorthwindData.msl";
                    builder.Provider = "System.Data.SqlClient";
                    builder.ProviderConnectionString =
                        WebConfigurationManager.ConnectionStrings["NorthwindData"].ConnectionString;

                    this.context = new NorthwindDataObjectContext(builder.ConnectionString);
                }
                return this.context;
            }
        }

        /// <summary>
        /// Override the Count method in order for paging to work correctly
        /// </summary>
        protected override int Count<T>(IQueryable<T> query)
        {
            return query.Count();
        }

        [Query(IsDefault = true)]
        public IQueryable<ProductSales> GetSalesTotalsByProduct()
        {
            return this.Context.Order_Details
                .GroupBy(od => od.Product)
                .Select(g =>
                    new ProductSales()
                    {
                        ProductId = g.Key.ProductID,
                        ProductName = g.Key.ProductName,
                        SalesTotalFloat = g.Sum(od =>
                            ((float)(od.UnitPrice * od.Quantity)) * (1 - od.Discount))
                    });
        }
    }

    public class ProductSales
    {
        [Key]
        public int ProductId { get; set; }

        public string ProductName { get; set; }

        public decimal SalesTotal { get; set; }

        // This is needed because the cast isn't allowed in LINQ to Entity queries
        internal float SalesTotalFloat
        {
            set
            {
                this.SalesTotal = new decimal(value);
            }
        }
    }
}
```

Import the Custom WCF RIA Service into LightSwitch

Now that we have a WCF RIA Service that will return our aggregated data, it is time to bring that data into our LightSwitch application. In the Solution Explorer, right-click the “Data Sources” folder under your NorthwindTraders project. Select “Add Data Source…”. In the Attach Data Source Wizard, select “WCF RIA Service”. Click Next.

You shouldn’t have any available WCF RIA Service classes yet. LightSwitch needs you to add a reference to your NorthwindTraders.Reporting project in order for the service to be picked up. Click the Add Reference button.

In the Add Reference dialog, select the “Projects” tab at the top and select your “NorthwindTraders.Reporting” project.

After you add the reference, your NorthwindTradersReportData WCF RIA DomainService class will show up in the WCF RIA Service classes list. Select it and click Next.

Check the “Entities” node in the tree to select all the data source objects that are exposed by the NorthwindTradersReportData service. Then click Finish.

You should get a new Data Source node in your LightSwitch application project. This new data source will call into the aggregate query and return total sales numbers for each Product in the Northwind database. You should build a “Search Data Screen” based on the ProductSales data in order to test that everything is working in your Custom WCF RIA Service. If everything is working, you should get a screen that looks like the following:

Adding a Bar Chart using a Custom Control

Now that we can bring aggregate data into our LightSwitch application, we want to display the data in a bar chart that lets our end users see the total sales for each product at a glance.

First, we’ll need to create the bar chart Silverlight control. To do this, add a new .xaml file to the “Client” project. In the Solution Explorer, select the “NorthwindTraders” project node, and switch to “File View” by clicking the view switching button in the Solution Explorer toolbar:

Next, right-click on the “Client” project and select “Add –> New Item…”. Selecting a “Text File” in the dialog will be fine, but be sure to name it with a “.xaml” extension. I named mine “ProductSalesBarChart.xaml”.

Add the following xaml code to the file:

```xml
<UserControl x:Class="NorthwindTraders.Client.ProductSalesBarChart"
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    xmlns:chartingToolkit="clr-namespace:System.Windows.Controls.DataVisualization.Charting;assembly=System.Windows.Controls.DataVisualization.Toolkit">
    <chartingToolkit:Chart x:Name="ProductSalesChart">
        <chartingToolkit:Chart.Series>
            <chartingToolkit:BarSeries Title="Sales Totals"
                ItemsSource="{Binding Screen.ProductSales}"
                IndependentValueBinding="{Binding ProductName}"
                DependentValueBinding="{Binding SalesTotal}">
            </chartingToolkit:BarSeries>
        </chartingToolkit:Chart.Series>
    </chartingToolkit:Chart>
</UserControl>
```

For Visual Basic users, this is the only file that is required. However, C# users will also have to add a code file that calls InitializeComponent from the constructor of the control:

```csharp
namespace NorthwindTraders.Client
{
    public partial class ProductSalesBarChart
    {
        public ProductSalesBarChart()
        {
            InitializeComponent();
        }
    }
}
```

Since we use the Silverlight 4 Toolkit in the xaml, add a reference from the Client project to the “System.Windows.Controls.DataVisualization.Toolkit” assembly by right-clicking the Client project in the Solution Explorer and selecting “Add Reference…”.

Note: if you don’t see this assembly, make sure you have the Silverlight 4 Toolkit installed.

All that is left is to add this custom control to a LightSwitch screen. To do this, switch back to the “Logical View” in the Solution Explorer using the view switching toolbar button. Open the “SearchProductSales” screen that was previously created on the ProductSales data. Delete the “Data Grid | Product Sales” content item in the screen designer. This will leave you with a blank screen that has a single ScreenCollectionProperty named “ProductSales”.

Click on the “Add” button in the screen designer and select “New Custom Control…”

Next, find the ProductSalesBarChart custom control we added to the Client project. It should be under the “NorthwindTraders.Client” assembly in the “NorthwindTraders.Client” namespace. Select it and click OK.

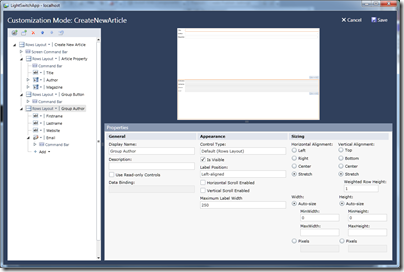

One last step is to fix a little sizing issue. By default, the Silverlight chart control will try to take up the minimal size possible, which isn’t readable in the screen. To fix this, you need to set the control to be “Horizontal Alignment = Stretch” and “Vertical Alignment = Stretch” in the Properties sheet.

Also remove the “Screen Content” default label.

You can now press F5 to run your project and see your chart control in LightSwitch:

Something you should keep in mind is that this data is paged to 45 records by default, so you aren’t seeing all available products. You can either turn off paging (which I don’t recommend) or add paging buttons to your screen. The backing code for these paging buttons could look like:

Visual Basic

```vb
Private Sub PreviousPage_CanExecute(ByRef result As Boolean)
    ' PageNumber is 1-based
    result = Me.Details.Properties.ProductSales.PageNumber > 1
End Sub

Private Sub PreviousPage_Execute()
    Me.Details.Properties.ProductSales.PageNumber -= 1
End Sub

Private Sub NextPage_CanExecute(ByRef result As Boolean)
    result = Me.Details.Properties.ProductSales.PageNumber < Me.Details.Properties.ProductSales.PageCount
End Sub

Private Sub NextPage_Execute()
    Me.Details.Properties.ProductSales.PageNumber += 1
End Sub
```

C#

```csharp
partial void PreviousPage_CanExecute(ref bool result)
{
    // PageNumber is 1-based.
    result = this.Details.Properties.ProductSales.PageNumber > 1;
}

partial void PreviousPage_Execute()
{
    this.Details.Properties.ProductSales.PageNumber--;
}

partial void NextPage_CanExecute(ref bool result)
{
    result = this.Details.Properties.ProductSales.PageNumber <
             this.Details.Properties.ProductSales.PageCount;
}

partial void NextPage_Execute()
{
    this.Details.Properties.ProductSales.PageNumber++;
}
```

Conclusion

Hopefully by now you can see that the extensibility story in Visual Studio LightSwitch is pretty powerful. Even though aggregate data and chart controls are not built into Visual Studio LightSwitch out of the box, there are hooks available that allow you to add these features into your application and meet your end users’ needs.

It’s too bad Eric didn’t include a link to download the finished project.

Tim Anderson (@timanderson) asked Hands On with Visual Studio LightSwitch – but what is it for? on 4/7/2011:

Visual Studio LightSwitch, currently in public beta, is Microsoft’s most intriguing development tool for years. It is, I think, widely misunderstood, or not understood; but there is some brilliant work lurking underneath it. That does not mean it will succeed. The difficulty Microsoft is having in positioning it, together with inevitable version one limitations, may mean that it never receives the attention it deserves.

Let’s start with what Microsoft says LightSwitch is all about. Here is a slide from its Beta 2 presentation to the press:

Get the idea? This is development for the rest of us, “a simple tool to solve their problems” as another slide puts it.

OK, so it is an application builder, where the focus is on forms over data. That makes me think of Access and Excel, or going beyond Microsoft, FileMaker. This being 2011 though, the emphasis is not so much on single user or even networked Windows apps, but rather on rich internet clients backed by internet-hosted services. With this in mind, LightSwitch builds three-tier applications with database and server tiers hosted on Windows Server and IIS, or optionally on Windows Azure, and a client built in Silverlight that runs either out of browser on Windows (in which case it gets features like export to Excel) or in-browser as a web application.

There is a significant issue with this approach. There is no mobile client. Although Windows Phone runs Silverlight, LightSwitch does not create Windows Phone applications; and the only mobile that runs Silverlight is Windows Phone.

LightSwitch apps should run on a Mac with Silverlight installed, though Microsoft never seems to mention this. It is presented as a tool for Windows. On the Mac, desktop applications will not be able to export to Excel since this is a Windows-only capability in Silverlight.

Silverlight MVP Michael Washington has figured out how to make a standard ASP.NET web application that accesses a LightSwitch back end. I think this should have been an option from the beginning.

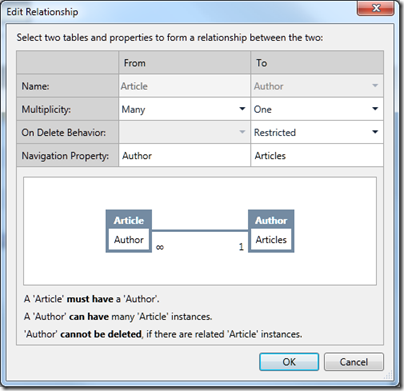

I digress though. I decided to have a go with LightSwitch to see if I can work out how the supposed target market is likely to get on with it. The project I set myself was an index of magazine articles; you may recognize some of the names. With LightSwitch you are insulated from the complexities of data connections and can just get on with defining data. Behind the scenes it is SQL Server. I created tables for Articles, Authors and Magazines, where magazines are composed of articles, and each article has an author.

The LightSwitch data designer is brilliant. It has common-sense data types and an easy relationship builder. I created my three tables and set the relationships.

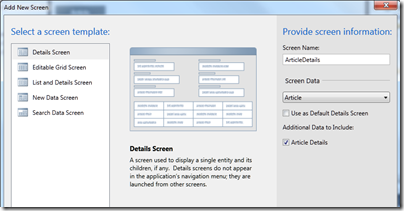
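The relationships described above (a magazine is composed of many articles, and each article has one author) can be sketched as plain C# classes. This is only an illustration under assumed property names; LightSwitch generates its own entity code from the data designer, and the Article.Create helper is hypothetical.

```csharp
using System.Collections.Generic;

// Plain classes mirroring the two one-to-many relationships:
// Author 1..* Article and Magazine 1..* Article.
public class Author
{
    public string Firstname { get; set; }
    public string Lastname { get; set; }
    public List<Article> Articles { get; } = new List<Article>();
}

public class Magazine
{
    public string Title { get; set; }
    public List<Article> Articles { get; } = new List<Article>();
}

public class Article
{
    public string Title { get; set; }
    public Author Author { get; private set; }
    public Magazine Magazine { get; private set; }

    // Hypothetical factory that wires both ends of each relationship,
    // roughly what a relationship drawn in the data designer expresses.
    public static Article Create(string title, Author author, Magazine magazine)
    {
        var article = new Article { Title = title, Author = author, Magazine = magazine };
        author.Articles.Add(article);
        magazine.Articles.Add(article);
        return article;
    }
}
```

Keeping the collection on the "one" side and a single reference on the "many" side is what lets a grid of articles show Author and Magazine columns, as the generated screen does.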

Then I created a screen for entering articles. When you add a screen you have to say what kind of screen you want:

I chose an Editable Grid Screen for my three tables. LightSwitch is smart about including fields from related tables, so my Articles grid automatically included columns for Author and for Magazine. I did notice that the Author column only showed the author’s first name, which is not good. I discovered how to fix it: go into the Authors table definition, create a new calculated field called FullName, click Edit Method, and write some code:

```csharp
partial void FullName_Compute(ref string result)
{
    // Set result to the desired field value
    result = this.Firstname + " " + this.Lastname;
}
```

Have we lost our non-developer developer? I don’t think so; this is easier than a formula in Excel once you work out the steps. I was interested to see the result variable in the generated code; echoes of Delphi and Object Pascal.
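One side effect of the simple concatenation in FullName_Compute is a trailing space when Lastname is empty or null. As a hedged variant, the following standalone sketch uses a hypothetical Author class whose read-only FullName property plays the role of the computed field and joins only the non-blank parts. It is not LightSwitch-generated code.

```csharp
using System.Linq;

// Standalone stand-in for the Authors entity.
public class Author
{
    public string Firstname { get; set; }
    public string Lastname { get; set; }

    // Read-only computed property, analogous to FullName_Compute.
    // Null or blank parts are skipped, so a missing Lastname does not
    // leave a trailing space.
    public string FullName
    {
        get
        {
            var parts = new[] { Firstname, Lastname }
                .Where(p => !string.IsNullOrWhiteSpace(p))
                .Select(p => p.Trim());
            return string.Join(" ", parts);
        }
    }
}
```

The same filtering expression could go inside FullName_Compute itself, assigning the joined string to result.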

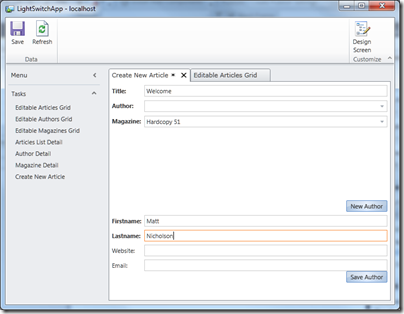

I did discover though that my app has a usability problem. In LightSwitch, the user interface is generated for you. Each screen becomes a Task in a menu on the left, and double-clicking opens it. The screen layout is also generated for you. My problem: when I tried entering a new article, I had to specify the Author from a drop-down list. If the author did not yet exist though, I had to open an Authors editable grid, enter the new author, save it, then go back to the Articles grid to select the new author.

I set myself the task of creating a more user-friendly screen for new articles. It took me a while to figure out how, because the documentation does not seem to cover my requirement, but after some help from LightSwitch experts I arrived at a solution.

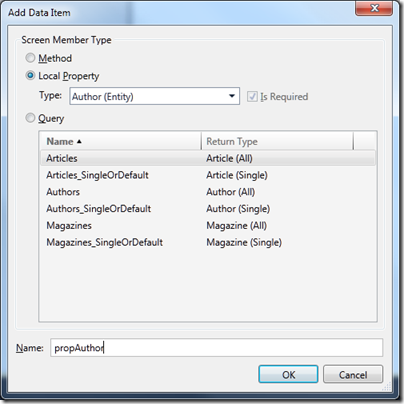

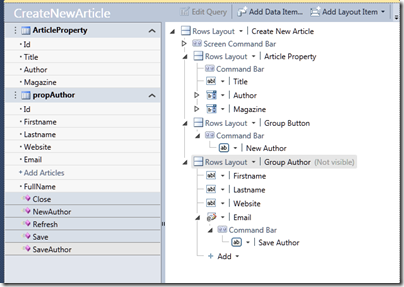

First, I created a New Data Screen based on the Article table. Then I clicked Add Data Item and selected a local property of type Author, which I called propAuthor.

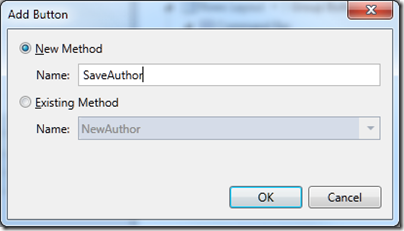

Next, I added two groups to the screen designer. Screen designs in LightSwitch are not like any screen designs you have seen before. They are a hierarchical list of elements, with properties that affect their appearance. I added two new groups, Group Button and GroupAuthor, and set GroupAuthor to be invisible. Then I dragged fields from propAuthor into the Author group. Then I added two buttons, one called NewAuthor and one called SaveAuthor. Here is the dialog for adding a button:

and here is my screen design:

So the idea is that when I enter a new article, I can select the author from a drop down list; but if the author does not exist, I click New Author, enter the author details, and click Save. Nicer than having to navigate to a new screen.

In order to complete this I have to write some more code. Here is the code for NewAuthor:

```csharp
partial void NewAuthor_Execute()
{
    // Write your code here.
    this.propAuthor = new Author();
    this.FindControl("GroupAuthor").IsVisible = true;
}
```

Note the use of FindControl. I am not sure if there is an easier way, but for some reason the group control does not show up as a property of the screen.

Here is the code for SaveAuthor:

```csharp
partial void SaveAuthor_Execute()
{
    // Write your code here.
    this.ArticleProperty.Author = propAuthor;
    this.Save();
}
```

This works perfectly. When I click Save Author, the new author is added to the article, and both are saved. Admittedly the screen layout leaves something to be desired; when I have worked out what Weighted Row Height is all about I will try and improve it.
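To make the order of operations in the two button handlers explicit, here is a standalone C# model of the screen. NewArticleScreen, GroupAuthorVisible and SaveCount are hypothetical stand-ins for the generated screen, the GroupAuthor control's IsVisible flag, and Save(); the sketch does not use the LightSwitch API and only models the sequence: create a blank author, reveal the group, attach the author to the article, then save.

```csharp
using System;

// Hypothetical stand-ins for the generated entities.
public class Author
{
    public string Firstname { get; set; }
    public string Lastname { get; set; }
}

public class Article
{
    public string Title { get; set; }
    public Author Author { get; set; }
}

// Hypothetical model of the New Data Screen with its local property.
public class NewArticleScreen
{
    public Article ArticleProperty { get; } = new Article();
    public Author PropAuthor { get; private set; }        // local screen property
    public bool GroupAuthorVisible { get; private set; }  // GroupAuthor.IsVisible
    public int SaveCount { get; private set; }

    // NewAuthor button: create a blank author and reveal the hidden group.
    public void NewAuthor()
    {
        PropAuthor = new Author();
        GroupAuthorVisible = true;
    }

    // SaveAuthor button: attach the new author to the article, then save.
    public void SaveAuthor()
    {
        if (PropAuthor == null)
            throw new InvalidOperationException("Click New Author first.");
        ArticleProperty.Author = PropAuthor;
        Save();
    }

    // Stand-in for the screen's Save(); here it only counts calls.
    private void Save() => SaveCount++;
}
```

The null guard in SaveAuthor exists only in this sketch; the handler in the article has none.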

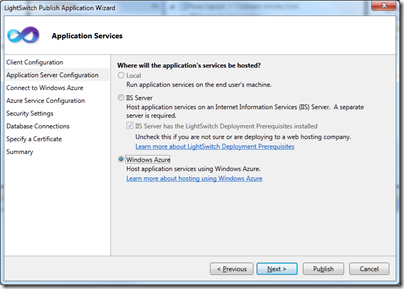

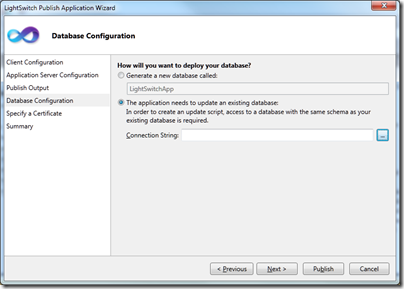

Before I finish, I must mention the LightSwitch Publish Wizard, which is clearly the result of a lot of work on Microsoft’s part. First, you choose between a desktop or web application. Next you choose an option for where the services are hosted, which can be local, or on an IIS server, or on Windows Azure.

Something I like very much: when you deploy, there is an option to create a new database, but to export the data you have already entered while creating the app. Thoughtful.

As you can see from the screens, LightSwitch handles security and access control as well as data management.

What do I think of LightSwitch after this brief exercise? Well, I am impressed by the way it abstracts difficult things. Considered as an easy to use tool for model-driven development, it is excellent.

At the same time, I found it frustrating and sometimes obscure. The local property concept is a critical one if you want to build an application that goes beyond what is generated automatically, but the documentation does not make this clear. I also have not yet found a guide or reference to writing code, which would tell me whether my use of FindControl was sensible or not.

The generated applications are functional rather than beautiful, and the screen layout designer is far from intuitive.

How is the target non-developer developer going to get on with this? I think they will retreat back to the safety of Access or FileMaker in no time. The product this reminds me of most is FoxPro, which was mainly used by professionals.

Making sense of LightSwitch

So what is LightSwitch all about? I think this is a bold effort to create a Visual Basic for Azure, an easy to use tool that would bring multi-tier, cloud-hosted development to a wide group of developers. It could even fit in with the yet-to-be-unveiled app store and Appx application model for Windows 8. But it is the Visual Basic or FoxPro type of developer which Microsoft should be targeting, not professionals in other domains who need to knock together a database app in their spare time.

There are lots of good things here, such as the visual database designer, the Publish Application wizard, and the whole model-driven approach. I suspect though that confused marketing, the Silverlight dependency, and the initial strangeness of the whole package will combine to make it a hard sell for Microsoft. I would like to be wrong though, as a LightSwitch version 2 which generates HTML 5 instead of Silverlight could be really interesting.

Related posts:

- Ten things you need to know about Microsoft’s Visual Studio LightSwitch

- Visual Studio LightSwitch – model-driven architecture for the mainstream?

- Visual Studio 2010 nine months on: how good has it proved?

- Visual Studio 2010 and .NET Framework 4.0 – a simply huge release

- New Visual Studio 2010 beta has WPF editor, Silverlight designer

Alfredo Delsors described How to publish a Sharepoint 2010 list on Azure using LightSwitch Beta 2? in a 4/5/2011 post:

If you have a corporate SharePoint 2010 server behind a firewall, you can use LightSwitch Beta 2 to publish any of its lists on Azure with zero code.

Here are the steps required.

1. Create a list on SharePoint 2010, for example AzureSpainTasks:

3. SharePoint 2010 publishes its lists on a WCF Data Services endpoint that uses the OData protocol. To check it, browse to http://<spserver>/_vti_bin/ListData.svc/<ListName>, as in the example:

4. Using the "Install Local Endpoint" button provided by "Virtual Network" on the Windows Azure Portal, install the Windows Azure Connect local endpoint on the SharePoint server:

5. Install the local endpoint on a development machine too, and create a "Virtual Network" group containing both machines. Check "Allow connections between endpoints in group", which makes the server available by name from the development machine.

6. Wait until the local endpoint status is "Connected" on both machines. To see the status, right-click the Connect icon on the taskbar and select "Open Windows Azure Connect".

7. Create a LightSwitch project on the development machine:

8. Use LightSwitch Beta 2 to publish on Azure:

9. Select a Sharepoint Data Source:

10. The Azure Connect virtual network provides DNS resolution for machine names in the group, so use the name of the SharePoint 2010 server as the SharePoint Site Address:

11. After a while you can select the List:

12. Details:

13. Add a screen:

14. Select a template for the screen, and select the SharePoint list data source you just created as the Screen Data:

15. Test locally. Thanks to Azure Connect, you can access the SharePoint list even when you are not on the corporate network:

16. Publish the LightSwitch project on Azure:

17. The Other Connections option shows the connection to the SharePoint server: