Windows Azure and Cloud Computing Posts for 4/15/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Avkash Chauhan explained how to Mount a Page Blob VHD in any Windows Azure VM outside any Web, Worker or VM Role in a 4/15/2011 post:

Following is the C# Source code to mount a Page Blob VHD in any Windows Azure VM outside any Web, Worker or VM Role:

    // Namespaces used: System, Microsoft.WindowsAzure,
    // Microsoft.WindowsAzure.ServiceRuntime and Microsoft.WindowsAzure.StorageClient.
    Console.Write("Role Environment Verification: ");
    if (!RoleEnvironment.IsAvailable)
    {
        Console.WriteLine(" FAILED........!!");
        return;
    }
    Console.WriteLine(" SUCCESS........!!");
    Console.WriteLine("Starting Drive Mount!!");

    // Replace the <...> placeholders with your own values (for example, myvhd64.vhd for <VHD_NAME>).
    var cloudDriveBlobPath = "http://<Storage_Account>.blob.core.windows.net/<Container>/<VHD_NAME>";
    Console.WriteLine("Role Name: " + RoleEnvironment.CurrentRoleInstance.Role.Name);
    StorageCredentialsAccountAndKey credentials = new StorageCredentialsAccountAndKey("<Storage_ACCOUNT>", "<Storage_KEY>");
    Console.WriteLine("Deployment ID:" + RoleEnvironment.DeploymentId.ToString());
    Console.WriteLine("Role count:" + RoleEnvironment.Roles.Count);

    // Initialize the drive cache in the role's local storage resource.
    LocalResource localCache;
    try
    {
        localCache = RoleEnvironment.GetLocalResource("<Correct_Local_Storage_Name>");
        Char[] backSlash = { '\\' };
        String localCachePath = localCache.RootPath.TrimEnd(backSlash);
        CloudDrive.InitializeCache(localCachePath, localCache.MaximumSizeInMegabytes);
        Console.WriteLine(localCache.Name + " | " + localCache.RootPath + " | " + localCachePath + " ! " + localCache.MaximumSizeInMegabytes);
    }
    catch (Exception eXp)
    {
        Console.WriteLine("Problem with Local Storage: " + eXp.Message);
        return;
    }

    // Mount the page blob VHD, wait for a key press, then unmount it.
    CloudDrive drive = new CloudDrive(new Uri(cloudDriveBlobPath), credentials);
    try
    {
        Console.WriteLine("Calling Drive Mount API!!");
        string driveLetter = drive.Mount(localCache.MaximumSizeInMegabytes, DriveMountOptions.None);
        Console.WriteLine("Drive :" + driveLetter);
        Console.WriteLine("Finished Mounting!!");
        Console.WriteLine("************Lets Unmount now********************");
        Console.WriteLine("Press any key......");
        Console.ReadKey();
        Console.WriteLine("Starting Unmount......");
        drive.Unmount();
        Console.WriteLine("Finished Unmounting!!");
        Console.WriteLine("Press any key to exit......");
        Console.ReadKey();
    }
    catch (Exception exP)
    {
        Console.WriteLine(exP.Message + "//" + exP.Source);
        Console.WriteLine("Failed Mounting!!");
        Console.ReadKey();
    }

Sample output:

    Role Environment Verification: SUCCESS........!!
    Starting Drive Mount!!
    Role Name: VMRole1
    Deployment ID:207c1761a3e74d6a8cad9bf3ba1b23dc
    Role count:1
    LocalStorage | C:\Resources\LocalStorage | C:\Resources\LocalStorage ! 870394
    Calling Drive Mount API!!
    Drive :B:\
    Finished Mounting!!
    ************Lets Unmount now********************
    Press any key......
    Starting Unmount......
    Finished Unmounting!!
    Press any key to exit......

Possible Errors:

1. Be sure to use the correct Local Storage folder name; otherwise you will get the error:

   - no such local resource

2. Be sure your VHD exists; otherwise you will get the error:

   - ERROR_BLOB_DOES_NOT_EXIST

3. If your VHD is not a page blob VHD, it will not mount:

   - ERROR_BLOB_NOT_PAGE_BLOB

4. If you receive the following error, your application build is set to x86:

   - Could not load file or assembly 'mswacdmi, Version=1.1.0.0

   - Set the build to 64-bit to solve this problem.

To Create VHD drive:

When using Microsoft Disk Management to create VHD be sure:

- To create FIXED SIZE VHD

- Use MBR Partition type (GPT based disk will not be able to mount)

I tested with Web Role, Worker Role and VM Role, and I was able to mount a valid Page Blob VHD with this code in all three kinds of roles with Windows Azure SDK 1.4.
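As a rough pre-upload check for the fixed-size requirement above, the following sketch inspects the 512-byte footer at the end of a VHD image. It assumes the footer layout from the published VHD image format (the "conectix" cookie at footer offset 0 and a big-endian disk type at offset 60, where 2 means fixed); none of this comes from the original post, and `describeVhd` and `makeFakeVhd` are hypothetical helper names, not part of any Windows Azure SDK.

```javascript
// Hypothetical helper (not part of the Windows Azure SDK): inspects the
// 512-byte footer at the end of a VHD image held in a Node.js Buffer.
function describeVhd(image) {
  const FOOTER_SIZE = 512;
  if (image.length < FOOTER_SIZE) {
    return { valid: false, reason: "too small to contain a VHD footer" };
  }
  const footer = image.subarray(image.length - FOOTER_SIZE);
  if (footer.toString("ascii", 0, 8) !== "conectix") {
    return { valid: false, reason: "missing VHD footer cookie" };
  }
  // Assumed layout: disk type is a big-endian 32-bit value at footer offset 60
  // (2 = fixed, 3 = dynamic, 4 = differencing).
  const diskType = footer.readUInt32BE(60);
  return {
    valid: true,
    fixed: diskType === 2,
    // Page blobs are written in 512-byte pages, so the size should be aligned.
    pageAligned: image.length % 512 === 0,
  };
}

// Builds a tiny fake image for demonstration: 1024 data bytes plus a footer.
function makeFakeVhd(diskType) {
  const image = Buffer.alloc(1024 + 512);
  const footer = image.subarray(1024);
  footer.write("conectix", 0, "ascii");
  footer.writeUInt32BE(diskType, 60);
  return image;
}

console.log(describeVhd(makeFakeVhd(2))); // a fixed-size image
console.log(describeVhd(makeFakeVhd(3))); // a dynamic image, which would not qualify
```

A real check would read only the last 512 bytes of the file rather than loading the whole image into memory.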

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi reported the availability of a TechNet Wiki- Overview of Security of SQL Azure on 5/15/2011:

TechNet has written an article which provides an overview of the security features of SQL Azure. It provides a great overview of security considerations for SQL Azure and you should definitely take a look as you start putting your data into SQL Azure.

Click here to look at the article.

In a previous blog post we also covered SQL Azure security which had a good overview video and samples.

Bill Ramos explained Migrating Access Jet Databases to SQL Azure in a 4/14/2011 post:

In this blog, I’ll describe how to use SSMA for Access to convert your Jet database for your Microsoft Access solution to SQL Azure. This blog builds on Access to SQL Server Migration: How to Use SSMA using the Access Northwind 2007 template. The blog also assumes that you have a SQL Azure account set up and that you have configured firewall access for your system as described in the blog post Migrating from MySQL to SQL Azure Using SSMA.

Creating a Schema on SQL Azure

If you are using a trial version of SQL Azure, you’ll want to get the most out of your free 1 GB Web Edition database. By using a SQL Server schema, you can accommodate multiple Jet database or MySQL migrations into a single database and limit access to users for each schema via the SQL Server permissions hierarchy.

SSMA for Microsoft Access version 4.2 doesn’t support the creation of a database schema within the tool, so you will need to create the schema using the Windows Azure Portal. Launch the Windows Azure Portal with your Live ID and follow the steps as shown below.

- Click on the Database node in the left hand navigation pane.

- Expand out the subscription name for your Azure account until you see your databases

- Select the target database that you created when you first connected to the Azure portal – see Migrating from MySQL to SQL Azure Using SSMA for how the SSMADB was created for this blog.

- Click on the Manage command to launch the Database Manager. You will log in to the SQL Azure database as shown below.

Once in the Database Manager, you will need to press the New Query command as shown below so that you can create the target schema for the Northwind2007 database.

Now that you have the new query window, you can do the following steps as illustrated below.

- Type in the Transact-SQL command to create your target schema: create schema Northwind2007

- Press the Execute command in the toolbar to run the statement.

- Click on the Message window command to show that the command was completed successfully.

You are now ready to use SSMA for Access to migrate your database to SQL Azure into the Northwind2007 schema.

Creating a Migration Project with SQL Azure as the Destination

Start SSMA for Access as usual, but close the Migration Wizard that starts by default. The Migration Wizard will end up creating the tables in the dbo schema instead of the Northwind2007 schema that you created. Follow the steps shown below to create your manual migration project.

- Click on the New Project command.

- Enter in the name of your project.

- Select SQL Azure for the Migration To option and click OK. If you forget to select SQL Azure, you’ll need to create a new project again because you can’t change the option once you have completed the dialog.

The next step is to add the Northwind2007 database file to the project and connect to your SQL Azure database as shown below.

- Click on the Add Databases command and select the Northwind2007 database.

- Expand the Access-metadata node in the Access Metadata Explorer to show the Queries and Tables nodes and select the Tables checkbox.

- Click on the Connect to SQL Azure command

- Complete the connection dialog to your SQL Azure database

Choosing the Target Schema

To change the target schema, you need to modify the default value from master.dbo to the database name and schema that you created for your SQL Azure database (in this example, SSMADB.Northwind2007) by following the steps below.

- Click on the Modify button in the Schema tab.

- Click on the […] browse button in the Choose Target Schema dialog.

- Choose the target schema – Northwind2007 - and then click the Select and the OK button.

Migrate the Tables and Data with the Convert, Load, and Migrate Command

At this point, you are ready to proceed with the standard migration steps for SSMA, which include (ignoring errors):

- Click on the Tables folder for the Northwind2007 database in the Access Metadata Explorer to enable the migration toolbar commands.

- Click on the Convert, Load, and Migrate command to do all the steps to complete the migration with the one command.

- Click OK for the Synchronize with the Database dialog as shown below to create the tables in the Northwind2007 schema within the SSMADB database.

- Dismiss the Convert, Load, and Migrate dialog assuming everything worked.

Using SSMA to Verify the Migration Result

To verify the results, you can use the Access and SQL Azure Metadata Explorers to compare data after the transfer as follows.

- Click on the source table Employees in the Access Metadata Explorer

- Select the Data tab in the Access workspace to see the data

- Click on the target table Employees in the SQL Azure Metadata Explorer

- Select the Table tab in the SQL Azure workspace to see the schema or the Data tab to view the data.

You can also use the SQL Azure Database Manager to view the table schema and data as described at the end of the blog post Migrating from MySQL to SQL Azure Using SSMA.

Creating Linked Tables to SQL Azure for your Access Solution

To make your Access solution use the SQL Azure tables, you need to create Linked tables to the SQL Azure database. To create the Linked tables, you need to select the Tables folder in the Access Metadata Explorer as shown below.

Right click on the Tables folder and select the Linked Tables command. SSMA will create a backup of the tables in your Access solution file and then create the Linked Table that connects to the table in SQL Azure.

Summary

As you can see, migrating your Access solution that uses Jet tables is as easy as:

- Creating a target schema in your target SQL Azure database.

- Creating a project with the Migrate To option set to SQL Azure.

- Following the normal steps for migrating schema and data within SSMA.

- Verifying the results within SSMA or through the SQL Azure Database Manager.

- Creating Linked Tables to the SQL Azure database within your Access solution using SSMA.

Additional SQL Azure Resources

To learn more about SQL Azure, see the following resources.

<Return to section navigation list>

Marketplace DataMarket and OData

Sudhir Hasbe described Real-time Stock quotes from BATS Exchange available on Azure DataMarket in a 4/13/2011 post:

Get instant access to real-time stock quotes for U.S. equities traded on NASDAQ, NYSE, NYSE Alternext and over-the-counter (OTC) exchanges. Quotes are provided by the BATS Exchange, one of the largest U.S. market centers for stock transactions. Each real-time quote includes trading volume as well as open, close, high, low and last sale prices.

For developers of websites and software applications, XigniteBATSLastSale offers a high-performance web service API capable of scaling to meet the requirements of even the most demanding software applications and websites. For individual investors or professional traders, XigniteBATSLastSale offers simple, easy-to-use operations that let you cherry-pick exactly the data you want when you want it and pay only for as much data as you think you’ll need.

The WCF Data Services Team posted a Reference Data Caching Walkthrough on 4/13/2011:

This walkthrough shows how a simple web application can be built using the reference data caching features in the “Microsoft WCF Data Services For .NET March 2011 Community Technical Preview with Reference Data Caching Extensions” aka “Reference Data Caching CTP”. The walkthrough isn’t intended for production use but should be of value in learning about the caching protocol additions to OData as well as to provide practical knowledge in how to build applications using the new protocol features.

Walkthrough Sections

The walkthrough contains five sections as follows:

- Setup: This defines the pre-requisites for the walkthrough and links to the setup of the Reference Data Caching CTP.

- Create the Web Application: A web application will be used to host an OData reference data caching-enabled service and HTML 5 application. In this section you will create the web app and configure it.

- Build an Entity Framework Code First Model: An EF Code First model will be used to define the data model for the reference data caching-enabled application.

- Build the service: A reference data caching-enabled OData service will be built on top of the Code First model to enable access to the data over HTTP.

- Build the front end: A simple HTML5 front end that uses the datajs reference data caching capabilities will be built to show how OData reference data caching can be used.

Setup

The pre-requisites and setup requirements for the walkthrough are:

- This walkthrough assumes you have Visual Studio 2010, SQL Server Express, and SQL Server Management Studio 2008 R2 installed.

- Install the Reference Data Caching CTP. This setup creates a folder at “C:\Program Files\WCF Data Services March 2011 CTP with Reference Data Caching\Binaries\” that contains:

- A .NETFramework folder with:

- i. An EntityFramework.dll that allows creating off-line enabled models using the Code First workflow.

- ii. A System.Data.Services.Delta.dll and System.Data.Services.Delta.Client.dll that allow creation of delta enabled Data Services.

- A JavaScript folder with:

- i. Two delta-enabled datajs OData library files: datajs-0.0.2.js and datajs-0.0.2.min.js.

- ii. A .js file that leverages the caching capabilities inside of datajs for the walkthrough: TweetCaching.js

Create the web application

Next you’ll create an ASP.NET Web Application where the OData service and HTML5 front end will be hosted.

- Open Visual Studio 2010 and create a new ASP.NET web application and name it ConferenceReferenceDataTest. When you create the application, make sure you target .NET Framework 4.

- Add the .js files in “C:\Program Files\WCF Data Services March 2011 CTP with Reference Data Caching\Binaries\Javascript” to your scripts folder.

- Add a reference to the new reference data caching-enabled data service libraries found in “C:\Program Files\WCF Data Services March 2011 CTP with Reference Data Caching\Binaries\.NETFramework”:

- Microsoft.Data.Services.Delta.dll

- Microsoft.Data.Services.Delta.Client.dll

- Add a reference to the reference data caching-enabled EntityFramework.dll found in “C:\Program Files\WCF Data Services March 2011 CTP with Reference Data Caching\Binaries\.NETFramework”.

- Add a reference to System.ComponentModel.DataAnnotations.

Build your Code First model and off-line enable it.

In order to build a Data Service we need a data model and data to expose. You will use Entity Framework Code First classes to define the delta enabled model and a Code First database initializer to ensure there is seed data in the database. When the application runs, EF Code First will create a database with appropriate structures and seed data for your delta-enabled service.

- Add a C# class file to the root of your project and name it model.cs.

- Add the Session and Tweet classes to your model.cs file. Mark the Tweet class with the DeltaEnabled attribute. Marking the Tweet class with the attribute will force Code First to generate additional database structures to support change tracking for Tweet records.

    public class Session
    {
        public int Id { get; set; }
        public string Name { get; set; }
        public DateTime When { get; set; }
        public ICollection<Tweet> Tweets { get; set; }
    }

    [DeltaEnabled]
    public class Tweet
    {
        [Key, DatabaseGenerated(DatabaseGeneratedOption.Identity)]
        [Column(Order = 1)]
        public int Id { get; set; }

        [Key]
        [Column(Order = 2)]
        public int SessionId { get; set; }

        public string Text { get; set; }

        [ForeignKey("SessionId")]
        public Session Session { get; set; }
    }

Note the attributes used to further configure the Tweet class for a composite primary key and foreign key relationship. These attributes will directly affect how Code First creates your database.

3. Add a using statement at the top of the file so the DeltaEnabled annotation will resolve:

    using System.ComponentModel.DataAnnotations;

4. Add a ConferenceContext DbContext class to your model.cs file and expose Session and Tweet DbSets from it.

    public class ConferenceContext : DbContext
    {
        public DbSet<Session> Sessions { get; set; }
        public DbSet<Tweet> Tweets { get; set; }
    }

5. Add a using statement at the top of the file so the DbContext and DbSet classes will resolve:

    using System.Data.Entity;

6. Add seed data for sessions and tweets to the model by adding a Code First database initializer class to the model.cs file:

    public class ConferenceInitializer : IncludeDeltaEnabledExtensions<ConferenceContext>
    {
        protected override void Seed(ConferenceContext context)
        {
            Session s1 = new Session() { Name = "OData Futures", When = DateTime.Now.AddDays(1) };
            Session s2 = new Session() { Name = "Building practical OData applications", When = DateTime.Now.AddDays(2) };
            Tweet t1 = new Tweet() { Session = s1, Text = "Wow, great session!" };
            Tweet t2 = new Tweet() { Session = s2, Text = "Caching capabilities in OData and HTML5!" };
            context.Sessions.Add(s1);
            context.Sessions.Add(s2);
            context.Tweets.Add(t1);
            context.Tweets.Add(t2);
            context.SaveChanges();
        }
    }

7. Ensure the ConferenceInitializer is called whenever the Code First DbContext is used by opening the global.asax.cs file in your project and adding the following code:

    void Application_Start(object sender, EventArgs e)
    {
        // Code that runs on application startup
        Database.SetInitializer(new ConferenceInitializer());
    }

Calling the initializer will direct Code First to create your database and call the ‘Seed’ method above, placing data in the database.

8. Add a using statement at the top of the global.asax.cs file so the Database class will resolve:

    using System.Data.Entity;

Build an OData service on top of the model

Next you will build the delta enabled OData service. The service allows querying for data over HTTP just as any other OData service but in addition provides a “delta link” for queries over delta-enabled entities. The delta link provides a way to obtain changes made to sets of data from a given point in time. Using this functionality an application can store data locally and incrementally update it, offering improved performance, cross-session persistence, etc.

1. Add a new WCF Data Service to the Project and name it ConferenceService.

2. Remove the references to System.Data.Services and System.Data.Services.Client under the References treeview. We are using the Data Service libraries that are delta enabled instead.

3. Change the service to expose sessions and tweets from the ConferenceContext and change the protocol version to V3. This tells the OData delta enabled service to expose Sessions and Tweets from the ConferenceContext Code First model created previously.

    public class ConferenceService : DataService<ConferenceContext>
    {
        // This method is called only once to initialize service-wide policies.
        public static void InitializeService(DataServiceConfiguration config)
        {
            config.SetEntitySetAccessRule("Sessions", EntitySetRights.All);
            config.SetEntitySetAccessRule("Tweets", EntitySetRights.All);
            config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V3;
        }
    }

4. Right-click on ConferenceService.svc in Solution Explorer and click “View Markup”. Change the Data Services reference as follows.

Change:

<%@ ServiceHost Language="C#" Factory="System.Data.Services.DataServiceHostFactory, System.Data.Services, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" Service="ConferenceReferenceDataTest.ConferenceContext" %>

To:

<%@ ServiceHost Language="C#" Factory="System.Data.Services.DataServiceHostFactory, Microsoft.Data.Services.Delta, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" Service="ConferenceReferenceDataTest.ConferenceService" %>

6. Change the ConferenceService.svc to be the startup file for the application by right clicking on ConferenceService.svc in Solution Explorer and choosing “Set as Start Page”.

7. Run the application by pressing F5.

- In your browser settings ensure feed reading view is turned off. Using Internet Explorer 9 you can turn feed reading view off by choosing Options->Content then clicking “Settings for Feeds and Web Slices”. In the dialog that opens uncheck the “Turn on feed reading view” checkbox.

- Request all Sessions with this OData query: http://localhost:11497/ConferenceService.svc/Sessions (note the port, 11497, will likely be different on your machine) and note there’s no delta link at the bottom of the page. This is because the DeltaEnabled attribute was not placed on the Session class in the model.cs file.

- Request all tweets with this OData query: http://localhost:11497/ConferenceService.svc/Tweets and note there is a delta link at the bottom of the page:

<link rel="http://odata.org/delta" href="http://localhost:11497/ConferenceService.svc/Tweets?$deltatoken=2002" />

The delta link is shown because the DeltaEnabled attribute was used on the Tweet class.

- The delta link will allow you to obtain any changes made to the data from that point in time onward. You can think of it as a reference to what your data looked like at a specific point in time. For now, copy just the delta link to the clipboard with Control-C.

8. Open a new browser instance, paste the delta link into the nav bar: http://localhost:11497/ConferenceService.svc/Tweets?$deltatoken=2002, and press enter.

9. Note the delta link returns no new data. This is because no changes have been made to the data in the database since we queried the service and obtained the delta link. If any changes are made to the data (Inserts, Updates or Deletes) changes would be shown when you open the delta link.

10. Open SQL Server Management Studio, create a new query file and change the connection to the ConferenceReferenceDataTest.ConferenceContext database. Note the database was created by a Code First convention based on the classes in model.cs.

11. Execute the following query to add a new row to the Tweets table:

insert into Tweets (Text, SessionId) values ('test tweet', 1)

12. Refresh the delta link and note that the newly inserted test tweet record is shown. Notice also that an updated delta link is provided, giving a way to track any changes from the current point in time. This shows how the delta link can be used to obtain changed data. A client can hence use the OData protocol to query for delta-enabled entities, store them locally, and update them as desired.

13. Stop debugging the project and return to Visual Studio.
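The delta-link round trip in the steps above can be pictured with a small in-memory simulation. This is only a sketch of the idea (query once, keep the token, then ask for changes since that token); `createFakeFeed` and `sync` are hypothetical names, the integer tokens are an assumption, and nothing here reproduces the CTP's actual wire format or server logic.

```javascript
// In-memory stand-in for a delta-enabled feed. Everything here is
// hypothetical; it mimics the behaviour described above, not the CTP's code.
function createFakeFeed() {
  const changes = []; // ordered change log: { token, type, item }
  let nextToken = 1;
  return {
    insert(item) {
      changes.push({ token: nextToken++, type: "upsert", item });
    },
    remove(id) {
      changes.push({ token: nextToken++, type: "delete", item: { Id: id } });
    },
    // Returns the changes made after `sinceToken`, plus a fresh delta token.
    read(sinceToken = 0) {
      return {
        changes: changes.filter((c) => c.token > sinceToken),
        deltaToken: nextToken - 1,
      };
    },
  };
}

// Applies one response to the local copy and returns the token to use next time.
function sync(localStore, feed, deltaToken) {
  const response = feed.read(deltaToken);
  for (const change of response.changes) {
    if (change.type === "delete") {
      localStore.delete(change.item.Id);
    } else {
      localStore.set(change.item.Id, change.item);
    }
  }
  return response.deltaToken;
}

const feed = createFakeFeed();
feed.insert({ Id: 1, Text: "Wow, great session!" });
feed.insert({ Id: 2, Text: "Caching capabilities in OData and HTML5!" });

const localStore = new Map();
let token = sync(localStore, feed, 0);   // initial load: both seed tweets
feed.insert({ Id: 3, Text: "test tweet" });
token = sync(localStore, feed, token);   // incremental: only the new tweet is returned
console.log(localStore.size, token);
```

The client never re-downloads rows it already holds; it only needs to persist the most recent token alongside its local copy.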

Write a simple HTML5 front end

We’re going to use HTML5 and the reference data caching-enabled datajs library to build a simple front end that allows browsing sessions and viewing session detail with tweets. We’ll leverage a pre-written library that uses the reference data capabilities added to OData and leverages the datajs local store capabilities for local storage.

1. Add a new HTML page to the root of the project and name it SessionBrowsing.htm.

2. Add the following HTML to the <body> section of the .htm file:

    <body>
        <button id='ClearDataJsStore'>Clear Local Store</button>
        <button id='UpdateDataJsStore'>Update Tweet Store</button>
        <br />
        <br />
        Choose a Session:
        <select id='sessionComboBox'>
        </select>
        <br />
        <br />
        <div id='SessionDetail'>No session selected.</div>
    </body>

3. At the top of the head section, add the following script references immediately after the head element:

    <head>
        <script src="http://ajax.aspnetcdn.com/ajax/jQuery/jquery-1.5.2.min.js" type="text/javascript"></script>
        <script src="./Scripts/datajs-0.0.2.js" type="text/javascript"></script>
        <script src="./Scripts/TweetCaching.js" type="text/javascript"></script>
        …

4. In the head section, add the following javascript functions. These functions:

- Pull sessions from our OData service and add them to your combobox.

- Create a javascript handler for when a session is selected from the combobox.

- Create handlers for clearing and updating the local store.

    <script type="text/javascript">
        // Load all sessions through OData.
        $(function () {
            // Query for loading sessions from OData.
            var sessionQuery = "ConferenceService.svc/Sessions?$select=Id,Name";

            // Initial load of sessions.
            OData.read(sessionQuery,
                function (data, request) {
                    $("#sessionComboBox").html("");
                    $("<option value='-1'></option>").appendTo("#sessionComboBox");
                    var i, length;
                    for (i = 0, length = data.results.length; i < length; i++) {
                        $("<option value='" + data.results[i].Id + "'>" + data.results[i].Name + "</option>").appendTo("#sessionComboBox");
                    }
                },
                function (err) {
                    alert("Error occurred " + err.message);
                }
            );

            // Handler for the combo box.
            $("#sessionComboBox").change(function () {
                var sessionId = $("#sessionComboBox").val();
                if (sessionId > 0) {
                    var sessionName = $("#sessionComboBox option:selected").text();
                    var localStore = datajs.createStore("TweetStore");
                    document.getElementById("SessionDetail").innerHTML = "";
                    $('#SessionDetail').append('Tweets for session ' + sessionName + ":<br>");
                    AppendTweetsForSessionToForm(sessionId, localStore, "SessionDetail");
                } else {
                    var detailHtml = "No session selected.";
                    document.getElementById("SessionDetail").innerHTML = detailHtml;
                }
            });

            // Handler for clearing the store.
            $("#ClearDataJsStore").click(function () {
                ClearStore();
            });

            // Handler for updating the store.
            $("#UpdateDataJsStore").click(function () {
                UpdateStoredTweetData();
                alert("Local Store Updated!");
            });
        });
    </script>

5. Change the startup page for the application to the SessionBrowsing.htm file by right clicking on SessionBrowsing.htm in Solution Explorer and choosing “Set as Start Page”.

6. Run the application. The application should look similar to:

- Click the ‘Clear Local Store’ button: This removes all data from the cache.

- Click the ‘Update Tweet Store’ button. This refreshes the cache.

- Choose a session from the combo box and note the tweets shown for the session.

- Switch to SSMS and add, update or remove tweets from the Tweets table.

- Click the ‘Update Tweet Store’ button then choose a session from the drop down box again. Note tweets were updated for the session in question.

This simple application uses OData delta enabled functionality in an HTML5 web application using datajs. In this case datajs local storage capabilities are used to store Tweet data and datajs OData query capabilities are used to update the Tweet data.

Conclusion

This walkthrough offers an introduction to how a delta enabled service and application could be built. The objective was to build a simple application in order to walk through the reference data caching features in the OData Reference Data Caching CTP. The walkthrough isn’t intended for production use but hopefully is of value in learning about the protocol additions to OData as well as in providing practical knowledge in how to build applications using the new protocol features. Hopefully you found the walkthrough useful. Please feel free to give feedback in the comments for this blog post as well as in our prerelease forums here.

Asad Khan posted Announcing datajs version 0.0.3 on 3/13/2011 to the WCF Data Services Team Blog:

Today we are very excited to announce a new release of datajs. The latest release of datajs makes use of HTML5 storage capabilities to provide a caching component that makes your web application more interactive by reducing the impact of network latency. The library also comes with a pre-fetcher component that sits on top of the cache. The pre-fetcher detects application needs and brings in the data ahead of time; as a result the application user never experiences network delays. The pre-fetcher and the cache are fully configurable. The application developer can also specify which browser storage to use for data caching; by default datajs will pick the best available storage.

The following example shows how to set up the cache and load the first set of data.

    var cache = datajs.createDataCache({
        name: "movies",
        source: "http://odata.netflix.com/Catalog/Titles?$filter=Rating eq 'PG'"
    });

    // Read ten items from the start
    cache.readRange(0, 10).then(function (data) {
        // display 'data' results
    });

The createDataCache function takes a ‘name’ property that specifies the name that will be assigned to the underlying store. The 'source' property specifies the URI of the data source and follows the OData URI convention (to read more about OData, visit odata.org). The readRange function takes the range of items you want to display on your first page.
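To picture what a range read involves, here is a minimal sketch that assumes the cache groups items into fixed-size pages and only fetches pages it has not seen. That paging model is an assumption made for illustration, and `pagesForRange` and `createPagedCache` are hypothetical names; this is not how datajs itself is implemented, and the real readRange is asynchronous.

```javascript
// Hypothetical helper: which zero-based pages cover items [index, index + count)?
function pagesForRange(index, count, pageSize) {
  if (count <= 0) return [];
  const first = Math.floor(index / pageSize);
  const last = Math.floor((index + count - 1) / pageSize);
  const pages = [];
  for (let p = first; p <= last; p++) pages.push(p);
  return pages;
}

// Hypothetical synchronous cache over an in-memory array standing in for the feed.
function createPagedCache(source, pageSize) {
  const pages = new Map(); // page number -> array of items
  let fetches = 0;
  return {
    readRange(index, count) {
      const items = [];
      for (const p of pagesForRange(index, count, pageSize)) {
        if (!pages.has(p)) {
          // Simulated network fetch of one whole page.
          pages.set(p, source.slice(p * pageSize, (p + 1) * pageSize));
          fetches++;
        }
        items.push(...pages.get(p));
      }
      // Trim the collected pages down to the requested window.
      const offset = index - Math.floor(index / pageSize) * pageSize;
      return items.slice(offset, offset + count);
    },
    get fetches() {
      return fetches;
    },
  };
}

const titles = Array.from({ length: 100 }, (_, i) => "Title " + i);
const pagedCache = createPagedCache(titles, 20);
console.log(pagedCache.readRange(0, 10).length, pagedCache.fetches);
console.log(pagedCache.readRange(15, 10)[0], pagedCache.fetches);
```

The second read overlaps a page that is already held, so only the one missing page is fetched.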

The application developer has full control over the size of the cache, the pre-fetch page size, and the lifetime of the cached items. For example, one can define the pageSize and the prefetchSize as follows:

    var cache = datajs.createDataCache({
        name: "movies",
        source: "http://odata.netflix.com/Catalog/Titles?$filter=Rating eq 'PG'",
        pageSize: 20,
        prefetchSize: 350
    });

This release also includes a standalone local storage component which is built on HTML5. This is the same component that the datajs cache components use for offline storage. If you are not interested in the pre-fetcher, you can directly use the local storage API to store items offline. datajs local storage gives you the same storage experience no matter which browser or OS you run your application on.
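The "same storage experience on any browser" idea can be sketched as a small wrapper that uses a DOM-style storage object when one is handed to it and falls back to memory otherwise. This is an illustrative assumption about how such a component could be structured; `createStore` below is a hypothetical function written for this sketch and does not reproduce the datajs store API.

```javascript
// Hypothetical store factory: uses a DOM-style storage object (getItem,
// setItem, removeItem) when one is supplied, otherwise an in-memory Map.
function createStore(name, domStorage) {
  const prefix = name + ":";
  if (domStorage) {
    return {
      mechanism: "dom",
      add(key, value) {
        domStorage.setItem(prefix + key, JSON.stringify(value));
      },
      read(key) {
        const raw = domStorage.getItem(prefix + key);
        return raw === null || raw === undefined ? undefined : JSON.parse(raw);
      },
      remove(key) {
        domStorage.removeItem(prefix + key);
      },
    };
  }
  const memory = new Map();
  return {
    mechanism: "memory",
    add(key, value) {
      memory.set(key, value);
    },
    read(key) {
      return memory.get(key);
    },
    remove(key) {
      memory.delete(key);
    },
  };
}

// In a browser this picks up window.localStorage; elsewhere it falls back to memory.
const tweetStore = createStore(
  "TweetStore",
  typeof window !== "undefined" ? window.localStorage : undefined
);
tweetStore.add("1", { Text: "Wow, great session!" });
console.log(tweetStore.mechanism, tweetStore.read("1"));
```

Callers use the same add, read and remove calls either way, which is the point of hiding the storage mechanism behind one interface.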

You can get the latest drop of datajs library from: http://datajs.codeplex.com/. You can also get the latest version of the sources, which are less stable, but contain the latest features the datajs team is working on. Please visit the project page for detailed documentation, feedback and to get involved in the discussions.

datajs is an open source JavaScript library aimed at making data-centric web applications better. The library is released under the MIT license; you can read the earlier blog post on datajs here, or visit the project release site at http://datajs.codeplex.com.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, Caching, WIF and Service Bus

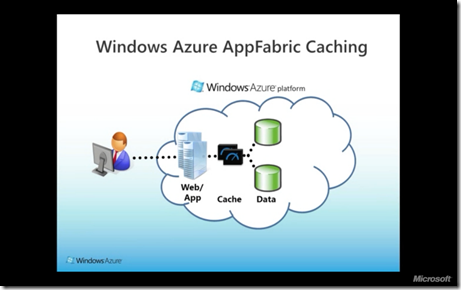

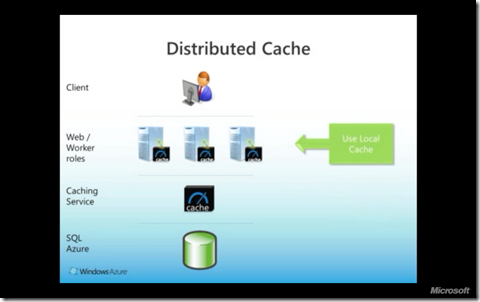

Cennest posted Microsoft Azure App-Fabric Caching Service Explained! on 4/14/2011:

MIX always comes with a mix of feelings: excitement at the prospect of trying out the new releases, and the heartache that comes with trying to understand the new technologies “in depth”. And so starts the “googling... oops, binging”: blogs, videos, etc. What does it mean? How does it impact me?

One such very important release at MIX 2011 is the AppFabric Caching Service. At Cennest we do a lot of Azure development and migration work, and this feature caught our immediate attention as something which will have a high impact on the architecture, cost and performance of new applications and migrations.

So we collated information from various sources (references below), and here is an attempt to simplify the explanation for you!

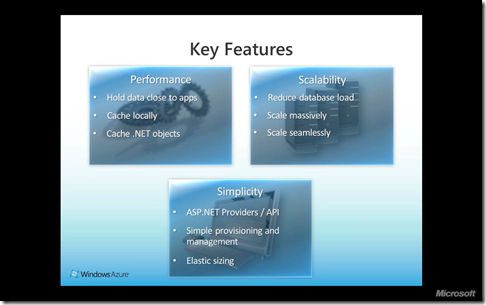

What is caching?

The Caching service is a distributed, in-memory, application cache service that accelerates the performance of Windows Azure and SQL Azure applications by allowing you to keep data in memory, saving you the need to retrieve that data from storage or a database. (Implicit cost benefit? That depends on the pricing of the Caching service, which is yet to be released.)

Basically, it’s a layer that sits between the database and the application and can be used to “store” data, preventing frequent trips to the database and thereby reducing latency and improving performance.

How does this work?

Think of the Caching service as Microsoft running a large set of cache clusters for you, heavily optimized for performance, uptime, resiliency and scale-out, and exposed as a simple network service with an endpoint for you to call. The Caching service is a highly available multitenant service with no management overhead for its users.

As a user, what you get is a secure Windows Communication Foundation (WCF) endpoint to talk to and the amount of usable memory you need for your application and APIs for the cache client to call in to store and retrieve data.

The Caching service does the job of pooling in memory from the distributed cluster of machines it’s running and managing to provide the amount of usable memory you need. As a result, it also automatically provides the flexibility to scale up or down based on your cache needs with a simple change in the configuration.

Are there any variations in the types of cache available?

Yes, apart from using the cache on the Caching service there is also the ability to cache a subset of the data that resides in the distributed cache servers, directly on the client—the Web server running your website. This feature is popularly referred to as the local cache, and it’s enabled with a simple configuration setting that allows you to specify the number of objects you wish to store and the timeout settings to invalidate the cache.

What can I cache?

You can pretty much keep any object in the cache: text, data, blobs, CLR objects and so on. There’s no restriction on the size of the object, either. Hence, whether you’re storing explicit objects in cache or storing session state, the object size is not a consideration to choose whether you can use the Caching service in your application.

However, the cache is not a database! A SQL database is optimized for a different set of patterns than the cache tier is designed for. In most cases, both are needed and can be paired to provide the best performance and access patterns while keeping the costs low.

How can I use it?

- For explicit programming against the cache APIs, include the cache client assembly in your application from the SDK and you can start making GET/PUT calls to store and retrieve data from the cache.

- For higher-level scenarios that in turn use the cache, you need to include the ASP.NET session state provider for the Caching service and interact with the session state APIs instead of interacting with the caching APIs. The session state provider does the heavy lifting of calling the appropriate caching APIs to maintain the session state in the cache tier. This is a good way for you to store information like user preferences, shopping cart, game-browsing history and so on in the session state without writing a single line of cache code.

When should I use it?

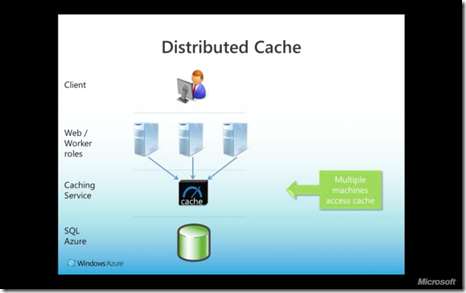

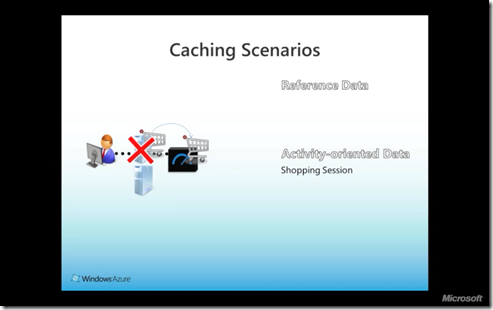

A common problem that application developers and architects have to deal with is the lack of guarantee that a client will always be routed to the same server that served the previous request.

When these sessions can’t be sticky, you’ll need to decide what to store in session state and how to bounce requests between servers to work around the lack of sticky sessions. The cache offers a compelling alternative to storing any shared state across multiple compute nodes. (These nodes would be Web servers in this example, but the same issues apply to any shared compute tier scenario.) The shared state is consistently maintained automatically by the cache tier for access by all clients, and at the same time there’s no overhead or latency of having to write it to a disk (database or files).
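To make the difference concrete, here is a minimal sketch in JavaScript. It is not the AppFabric cache API: `InMemoryStore`, `WebServer` and `handleRequest` are hypothetical names, and a plain in-process `Map` stands in for the remote cache tier. It only illustrates why per-server state breaks without sticky sessions while a shared cache tier does not.

```javascript
// Hypothetical key/value store. One instance per server models private memory;
// a single instance handed to every server models the shared cache tier.
class InMemoryStore {
  constructor() {
    this.items = new Map();
  }
  get(key) {
    return this.items.get(key);
  }
  put(key, value) {
    this.items.set(key, value);
  }
}

// Hypothetical web server that keeps session state in whatever store it is given.
class WebServer {
  constructor(name, sessionStore) {
    this.name = name;
    this.sessionStore = sessionStore;
  }
  // Adds an item to the session's cart and returns the cart as this server sees it.
  handleRequest(sessionId, item) {
    const cart = this.sessionStore.get(sessionId) || [];
    const updated = cart.concat(item);
    this.sessionStore.put(sessionId, updated);
    return updated;
  }
}

// Case 1: each server keeps private state and requests are not sticky.
const privateA = new WebServer("A", new InMemoryStore());
const privateB = new WebServer("B", new InMemoryStore());
privateA.handleRequest("session-1", "book");
const lostCart = privateB.handleRequest("session-1", "pen"); // B never saw "book"

// Case 2: both servers read and write the same cache tier.
const cacheTier = new InMemoryStore();
const sharedA = new WebServer("A", cacheTier);
const sharedB = new WebServer("B", cacheTier);
sharedA.handleRequest("session-1", "book");
const fullCart = sharedB.handleRequest("session-1", "pen"); // sees both items

console.log(lostCart); // [ 'pen' ]
console.log(fullCart); // [ 'book', 'pen' ]
```

In the second case it does not matter which server the load balancer picks, because the session state lives outside both of them.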

How long does the cache store content?

Both the Azure and the Windows Server AppFabric Caching Service use various techniques to remove data from the cache automatically: expiration and eviction. A cache has a default timeout associated with it after which an item expires and is removed automatically from the cache.

This default timeout may be overridden when items are added to the cache. The local cache similarly has an expiration timeout.

Eviction refers to the process of removing items because the cache is running out of memory. A least-recently used algorithm is used to remove items when cache memory comes under pressure – this eviction is independent of timeout.
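The two removal mechanisms can be sketched as follows. This is an illustrative toy, not the service's implementation: `ExpiringLruCache` is a hypothetical class, memory pressure is approximated by a maximum item count, and the clock is injected so the behavior is easy to follow.

```javascript
// Toy cache with a default timeout (expiration) and least-recently-used eviction.
class ExpiringLruCache {
  constructor(maxItems, defaultTtlMs, now = () => Date.now()) {
    this.maxItems = maxItems;
    this.defaultTtlMs = defaultTtlMs;
    this.now = now;          // injectable clock
    this.items = new Map();  // Map keeps insertion order: first key = least recently used
  }

  // The default timeout can be overridden per item via ttlMs.
  put(key, value, ttlMs = this.defaultTtlMs) {
    this.items.delete(key); // re-inserting moves the key to the most-recent end
    this.items.set(key, { value, expiresAt: this.now() + ttlMs });
    // Eviction: driven by capacity pressure, independent of any timeout.
    while (this.items.size > this.maxItems) {
      const leastRecentlyUsed = this.items.keys().next().value;
      this.items.delete(leastRecentlyUsed);
    }
  }

  get(key) {
    const entry = this.items.get(key);
    if (entry === undefined) return undefined;
    // Expiration: the item outlived its timeout, so remove it.
    if (this.now() >= entry.expiresAt) {
      this.items.delete(key);
      return undefined;
    }
    // Mark the item as recently used.
    this.items.delete(key);
    this.items.set(key, entry);
    return entry.value;
  }
}

let fakeTime = 0;
const cache = new ExpiringLruCache(2, 1000, () => fakeTime);

cache.put("a", 1);        // uses the default timeout of 1000 ms
cache.put("b", 2, 5000);  // overrides the default timeout
cache.get("a");           // "a" is now more recently used than "b"
cache.put("c", 3, 5000);  // capacity exceeded: "b" is evicted despite its long timeout
const evicted = cache.get("b");

fakeTime = 1500;
const expired = cache.get("a"); // past its 1000 ms timeout
const alive = cache.get("c");   // still within its 5000 ms timeout

console.log(evicted, expired, alive); // undefined undefined 3
```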

What does it mean to me as a Developer?

One thing to note about the Caching service is that it’s an explicit cache that you write to and have full control over. It’s not a transparent cache layer on top of your database or storage. This has the benefit of providing full control over what data gets stored and managed in the cache, but also means you have to program against the cache as a separate data store using the cache APIs.

This pattern is typically referred to as cache-aside: you first check whether the data exists in the cache and, only when it’s not available there, you explicitly read it from the data tier and load it into the cache. So, as a developer, you need to learn the cache programming model, the APIs, and common tips and tricks to make your usage of the cache efficient.
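A minimal sketch of cache-aside, under stated assumptions: `cache` and `database` are in-memory stand-ins (plain `Map` objects), and `getProduct`, `updateProduct` and `readFromDatabase` are hypothetical helper names, not calls from the AppFabric cache client. The read path checks the cache, falls back to the data tier on a miss, and then populates the cache.

```javascript
// In-memory stand-ins for the cache tier and the data tier.
const cache = new Map();
const database = new Map([["product:42", { name: "Widget", price: 9.99 }]]);
let databaseReads = 0;

function readFromDatabase(key) {
  databaseReads += 1; // count trips to the data tier
  return database.get(key);
}

function getProduct(key) {
  // 1. Look in the cache first.
  if (cache.has(key)) {
    return cache.get(key);
  }
  // 2. On a miss, read explicitly from the data tier...
  const value = readFromDatabase(key);
  // 3. ...and load the result into the cache for later reads.
  if (value !== undefined) {
    cache.set(key, value);
  }
  return value;
}

function updateProduct(key, value) {
  database.set(key, value);
  cache.delete(key); // invalidate so the next read reloads fresh data
}

const firstRead = getProduct("product:42");  // miss: one database read
const secondRead = getProduct("product:42"); // hit: served from the cache
const readsAfterTwoGets = databaseReads;

updateProduct("product:42", { name: "Widget", price: 11.5 });
const thirdRead = getProduct("product:42");  // miss again after invalidation

console.log(readsAfterTwoGets, databaseReads, thirdRead.price); // 1 2 11.5
```

Invalidating on write is only one possible choice; writing the new value straight into the cache is another, with different consistency trade-offs.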

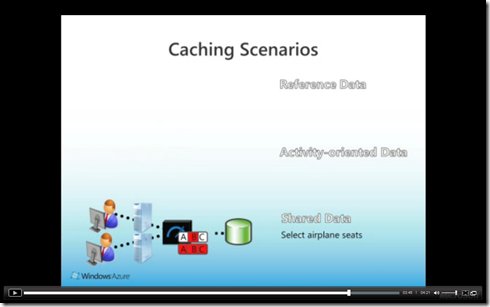

What does it mean to me as an Architect?

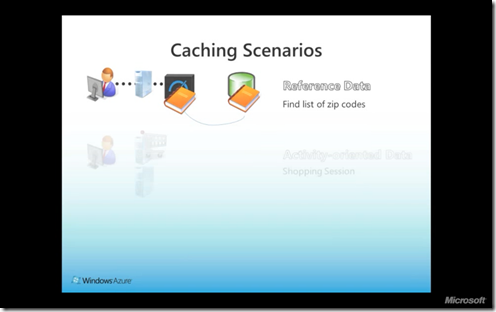

What data should you put in the cache? The answer varies significantly with the overall design of your application. When we talk about data for caching scenarios, we usually break it into data types and access patterns:

- Reference Data (Shared Read): Reference data is a great candidate for keeping in the local cache or co-located with the client.

- Activity Data (Exclusive Write): Data relevant to the current session between the user and the application.

Take a shopping cart, for example. During the buying session, the shopping cart is cached and updated with selected products. The shopping cart is visible and available only to the buying transaction. Upon checkout, as soon as the payment is applied, the shopping cart is retired from the cache to a data source application for additional processing.

Such a collection of data is best stored in the cache server, which gives all the distributed servers access so that any of them can send updates to the shopping cart. If it were stored only in a web server’s local cache, it would get lost.

- Shared Data (Multiple Read and Write): There is also data that is shared, concurrently read and written, and accessed by lots of transactions. Such data is known as resource data.

Depending on the situation, caching shared data on a single computer can provide some performance improvement, but for large-scale scenarios such as auctions, a single cache cannot provide the required scale or availability. For this purpose, some types of data can be partitioned and replicated in multiple caches across the distributed cache.
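As an illustration of partitioning and replication, the sketch below assigns each key a primary cache node by hashing and places one extra copy on the next node. Everything here is an assumption made for the example: the node names, the `hashKey` and `ownersForKey` helpers, and the hash-modulo scheme itself. The source does not describe how the Caching service actually distributes data.

```javascript
// FNV-1a-style 32-bit hash over the key's UTF-16 code units (enough for a sketch).
function hashKey(key) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < key.length; i++) {
    hash ^= key.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

// Returns the nodes that hold a key: the primary first, then its replicas.
function ownersForKey(key, nodes, copies = 2) {
  const primaryIndex = hashKey(key) % nodes.length;
  const owners = [];
  const count = Math.min(copies, nodes.length);
  for (let i = 0; i < count; i++) {
    owners.push(nodes[(primaryIndex + i) % nodes.length]);
  }
  return owners;
}

// Hypothetical node names and keys.
const cacheNodes = ["cache-0", "cache-1", "cache-2"];
const auctionKeys = ["auction:1001", "auction:1002", "auction:1003", "auction:1004"];

const placement = {};
for (const key of auctionKeys) {
  placement[key] = ownersForKey(key, cacheNodes);
}
console.log(placement);
```

A plain modulo scheme reshuffles most keys when the number of nodes changes; schemes such as consistent hashing exist to reduce that movement.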

Be sure to spend enough time in capacity planning for your cache. Number of objects, size of each object, frequency of access of each object and pattern for accessing these objects are all critical in not only determining how much cache you need for your application, but also on which layers to optimize for (local cache, network, cache tier, using regions and tags, and so on).
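A back-of-the-envelope calculation along these lines might look like the sketch below. The workload numbers and the 1.5 overhead factor are made up for illustration, and `estimateCacheSizeMb` and `estimateReadTrafficKbPerSec` are hypothetical helpers; real sizing should use measurements from your own application.

```javascript
// Rough memory estimate: objects x average size x overhead, rounded up to whole megabytes.
function estimateCacheSizeMb(objectCount, averageObjectBytes, overheadFactor) {
  const totalBytes = objectCount * averageObjectBytes * overheadFactor;
  return Math.ceil(totalBytes / (1024 * 1024));
}

// Rough read traffic between the application and the cache tier, in kilobytes per second.
function estimateReadTrafficKbPerSec(readsPerSecond, averageObjectBytes) {
  return (readsPerSecond * averageObjectBytes) / 1024;
}

// Made-up workload: 50,000 objects of about 2 KB each, 50% overhead, 200 reads per second.
const cacheSizeMb = estimateCacheSizeMb(50000, 2048, 1.5);
const readTrafficKb = estimateReadTrafficKbPerSec(200, 2048);

console.log(cacheSizeMb, readTrafficKb); // 147 400
```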

If you have a large number of small objects, and you don’t optimize for how frequently and how many objects you fetch, you can easily get your app to be network-bound.

Also, Microsoft will soon release pricing for the Caching service, so you need to ensure that your usage of it is “optimized”, and when it comes to the cloud, “Optimized = Performance + Cost”!

Matias Woloski described Adding Internet Identity Providers like Facebook, Google, LiveID and Yahoo to your MVC web application using Windows Azure AppFabric Access Control Service and jQuery in 3 steps in a 4/12/2011 post:

If you want to achieve a login user experience like the one shown in the following screenshot, then keep reading…

Windows Azure AppFabric Access Control 2.0 was released last week after one year in the Labs environment, and it was officially announced today at MIX. If you haven’t heard about it yet, here is the elevator pitch of ACS v2:

Windows Azure AppFabric Access Control Service (ACS) is a cloud-based service that provides an easy way of authenticating and authorizing users to gain access to your web applications and services while allowing the features of authentication and authorization to be factored out of your code. Instead of implementing an authentication system with user accounts that are specific to your application, you can let ACS orchestrate the authentication and much of the authorization of your users. ACS integrates with standards-based identity providers, including enterprise directories such as Active Directory, and web identities such as Windows Live ID, Google, Yahoo!, and Facebook

According to the blog post published today by the AppFabric team, you can use this service for free (at least until January 1, 2012). The Labs environment is also still available for testing purposes (not sure when they will turn it off).

We encourage you to try the new version of the service and will be offering the service at no charge during a promotion period ending January 1, 2012.

Now that we can use this for real, in this post I will show you how to create a little widget that lets users of your website log in using social identity providers like Google, Facebook, LiveID or Yahoo.

I will use an MVC web application, but this can also be implemented in WebForms, or even WebMatrix, if you understand the implementation details.

Step 1. Configure Windows Azure AppFabric Access Control Service

- Create a new Service Namespace in portal.appfabriclabs.com or if you have an Azure subscription use the production version at windows.azure.com

- The service namespace will be activated in a few minutes. Select it and click on Access Control Service to open the management console for that service namespace.

- In the management console, go to Identity Providers and add Google, Yahoo and Facebook (LiveID is added by default). It’s very straightforward. This is the information you have to provide for each of them. I just googled for the logos and some of them are not the best quality, so feel free to change them.

- Google

- Login Text: Google

- Image Url: http://www.google.com/images/logos/ps_logo2.png

- Yahoo

- Login Text: Yahoo

- Image Url: http://a1.twimg.com/profile_images/1178764754/yahoo_logo_twit_normal.jpg

- Facebook

- Display Name: Facebook

- Application Id: …… (follow this tutorial to get one)

- Application secret: idem

- Application permissions: email (you can request more things from here http://developers.facebook.com/docs/authentication/permissions/)

- Image Url: https://secure-media-sf2p.facebook.com/ads3/creative/pressroom/jpg/n_1234209334_facebook_logo.jpg

- The next thing is to register the web application you just created in ACS. To do this, go to Relying party applications and click Add.

- Enter the following information

Name: a display name for ACS

Realm: https://localhost/<TheNameOfTheWebApp>/

This is the logical identifier for the app. For this, we can use any valid URI (notice the I instead of L). Using the base url of your app is a good idea in case you want to have one configuration for each environment.

Return Url: https://localhost/<TheNameOfTheWebApp>/

This is the URL where the token will be posted. Since there will be an HTTP module listening for any HTTP POST request coming in with a token, you can use any valid URL of the app. The root is a good choice and, don’t worry, you can then redirect the user back to the original URL she was browsing (in case of bookmarking).

- Leave the other fields with the default values and click Save. You will notice that Facebook, Google, LiveID and Yahoo are checked. This means that you want to enable those identity providers for this application. If you uncheck one of those, the widget won’t show it.

- Finally, go to the Rule Groups and click on the rule group for your web application.

- Since each identity provider will give us different information (claims about the user), we have to generate a set of rules to pass that information through to our application. Otherwise, that won’t happen by default. To do this, click on Generate, make sure all the identity providers are checked and save. You should see a screen like this

Step 2. Configure your application with Windows Azure AppFabric Access Control Service

- Now that we have configured ACS, we have to go to our application and configure it to use ACS.

- Create a new ASP.NET MVC Application. Use the Internet Application template to get the master page, controllers, etc.

NOTE: I am using MVC3 with Razor but you can use any version.

- Before moving forward, make sure you have the Windows Identity Foundation SDK installed on your machine. Once you have it, right-click the web application and click Add STS Reference…. In the first step the right values will already be filled in, so click Next.

- In the next step, select Use an existing STS. Enter the url of your service namespace Federation Metadata. This URL has a pattern like this:

https://<YourServiceNamespace>.accesscontrol.appfabriclabs.com/FederationMetadata/2007-06/FederationMetadata.xml

- In the following steps go ahead and click Next until the wizard finishes.

- The wizard will add a couple of http modules and a section on the web.config that will have the thumbprint of the certificate that ACS will use to sign tokens. This is the basis of the trust relationship between your app and ACS. If you change that number, it means the trust is broken.

- The next thing you have to do is replace the default AccountController with one that works when authentication is outsourced from the app. Download the AccountController.cs, change the namespace to yours and replace it. Among other things, this controller has an action called IdentityProviders that returns the list of identity providers from ACS in JSON format.

    public ActionResult IdentityProviders(string serviceNamespace, string appId)
    {
        string idpsJsonEndpoint = string.Format(IdentityProviderJsonEndpoint, serviceNamespace, appId);
        var client = new WebClient();
        var data = client.DownloadData(idpsJsonEndpoint);
        return Content(Encoding.UTF8.GetString(data), "application/json");
    }

Step 3. Using jQuery Dialog for the login box

- In this last step we will use the jQuery UI dialog plugin to show the list of identity providers when clicking the LogOn link. Open the LogOnPartial cshtml file

- Replace the LogOnPartial markup with the following (or copy from here). IMPORTANT: change the service namespace and appId in the ajax call to use your settings.

    @if(Request.IsAuthenticated) {
      <text>Welcome <b>@Context.User.Identity.Name</b>! [ @Html.ActionLink("Log Off", "LogOff", "Account") ]</text>
    } else {
      <a href="#" id="logon">Log On</a>
      <div id="popup_logon"> </div>
      <style type="text/css">
        #popup_logon ul { list-style: none; }
        #popup_logon ul li { margin: 10px; padding: 10px }
      </style>
      <script type="text/javascript">
        $("#logon").click(function() {
          $("#popup_logon").html("<p>Loading...</p>");
          $("#popup_logon").dialog({
            modal: true,
            draggable: false,
            resizable: false,
            title: 'Select your preferred login method'
          });
          $.ajax({
            url : '@Html.Raw(Url.Action("IdentityProviders", "Account", new { serviceNamespace = "YourServiceNamespace", appId = "https://localhost/<YourWebApp>/" }))',
            success : function(data){
              dialogHtml = '<ul>';
              for (i=0; i<data.length; i++) {
                dialogHtml += '<li>';
                if (data[i].ImageUrl == '') {
                  dialogHtml += '<a href="' + data[i].LoginUrl + '">' + data[i].Name + '</a>';
                } else {
                  dialogHtml += '<a href="' + data[i].LoginUrl + '"><img style="border: 0px; width: 100px" src="' + data[i].ImageUrl + '" alt="' + data[i].Name + '" /></a>';
                }
                dialogHtml += '</li>';
              }
              dialogHtml += '</ul>';
              $("#popup_logon").html(dialogHtml);
            }
          })
        });
      </script>
    }

- Include jQuery UI and the corresponding css in the Master page (Layout.cshtml)

    <link href="@Url.Content("~/Content/Site.css")" rel="stylesheet" type="text/css" />
    <link href="@Url.Content("~/Content/themes/base/jquery-ui.css")" rel="stylesheet" type="text/css" />
    <script src="@Url.Content("~/Scripts/jquery-1.4.4.min.js")" type="text/javascript"></script>
    <script src="@Url.Content("~/Scripts/jquery-ui.min.js")" type="text/javascript"></script>

Step 4. Try it!

- That’s it. Start the application and click on the Log On link. Select one of the login methods and you will get redirected to the right page. You will have to login and the provider may ask you to grant permissions to access certain information from your profile. If you click yes you will be logged in and ACS will send you a set of claims like the screen below shows.

I added this line in the HomeController to show all the claims:

    ViewBag.Message = string.Join("<br/>", ((IClaimsIdentity)this.User.Identity).Claims.Select(c => c.ClaimType + ": " + c.Value).ToArray());

Well, it wasn’t 3 steps, but you get the point. Now, it would be really cool to create a NuGet package that will do all this automatically…
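As a side note on the Step 3 script, the list-building part of its success callback can be pulled out into a pure function, which makes it easier to test outside the browser. The sketch below is an illustration rather than the author's code: `renderProviders` and `escapeHtml` are hypothetical names, the HTML escaping is an addition the original script does not have, and the sample URLs are placeholders. It reads the same `Name`, `LoginUrl` and `ImageUrl` fields that the original script uses.

```javascript
// Escapes text before it is placed into HTML (an addition, not in the original script).
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Builds the same <ul> structure as the success callback in the LogOnPartial script.
function renderProviders(providers) {
  let html = "<ul>";
  for (const provider of providers) {
    const name = escapeHtml(provider.Name);
    const loginUrl = escapeHtml(provider.LoginUrl);
    html += "<li>";
    if (provider.ImageUrl === "") {
      // No logo configured: show a text link.
      html += '<a href="' + loginUrl + '">' + name + "</a>";
    } else {
      // Logo configured: show the image as the link.
      html += '<a href="' + loginUrl + '"><img style="border: 0px; width: 100px" src="' +
        escapeHtml(provider.ImageUrl) + '" alt="' + name + '" /></a>';
    }
    html += "</li>";
  }
  return html + "</ul>";
}

// Placeholder data shaped like the fields the original script reads.
const sampleProviders = [
  { Name: "Google", LoginUrl: "https://example.com/login/google", ImageUrl: "" },
  { Name: "Yahoo!", LoginUrl: "https://example.com/login/yahoo", ImageUrl: "https://example.com/yahoo.png" }
];
const listHtml = renderProviders(sampleProviders);
console.log(listHtml);
```

Inside the callback, the call would then be `$("#popup_logon").html(renderProviders(data));`.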

Just for future reference, these are the claims that each identity provider will return by default:

Facebook

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier: 619815976

http://schemas.microsoft.com/ws/2008/06/identity/claims/expiration: 2011-04-09T21:00:01.0471518Z

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress: yourfacebookemail@boo.com

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name: Matias Woloski

http://www.facebook.com/claims/AccessToken: 111617558888963|2.k <stripped> 976|z_fmV<stripped>3kQuo

http://schemas.microsoft.com/accesscontrolservice/2010/07/claims/identityprovider: Facebook-<appid>

Google

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier: https://www.google.com/accounts/o8/id?id=AIt<stripped>UoU

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress: yourgooglemail@gmail.com

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name: Matias Woloski

http://schemas.microsoft.com/accesscontrolservice/2010/07/claims/identityprovider: Google

LiveID

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier: WJoV5kxtlzEbsu<stripped>mMxiMLQ=

http://schemas.microsoft.com/accesscontrolservice/2010/07/claims/identityprovider: uri:WindowsLiveID

Yahoo

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier: https://me.yahoo.com/a/VH6mn5oV<stripped>58mGa#e7b0c

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress: youryahoomail@yahoo.com

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name: Matias Woloski

http://schemas.microsoft.com/accesscontrolservice/2010/07/claims/identityprovider: Yahoo!

Following up

Get the code used in this post from here.

If you are interested in other features of ACS, these are some of the things you can do:

- Read Vittorio’s post announcing ACS and pointing to a lot of deliverables coming out today. This has been hard work from @nbeni, @sebasiaco, @litodam and more southies.

- Go through the ACSv2 labs in the Identity Training Kit

- Follow Vittorio’s blog, Eugenio’s blog, Justin’s blog and the AppFabric team blog

- Read articles like this

- I am collaborating with patterns & practices on the second part of the Guide to Claims Based Identity and Access Control, which includes ACS and explains the scenarios it enables. Stay tuned at claimsid.codeplex.com for fresh content.

DISCLAIMER: use this at your own risk, this code is provided as-is.
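One practical consequence of the default claim sets listed earlier in this post is that an application cannot rely on every provider sending a name or an email address: the LiveID sample contains only a name identifier and the identity provider. The sketch below shows one way to cope with that. It is an assumption-laden illustration: `describeUser` and `CLAIM_TYPES` are hypothetical names, the claims are modeled as a plain object keyed by claim type, and the identifier values are placeholders.

```javascript
// Claim type URIs as they appear in the listing earlier in this post.
const CLAIM_TYPES = {
  nameIdentifier: "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier",
  email: "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress",
  name: "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name",
  identityProvider: "http://schemas.microsoft.com/accesscontrolservice/2010/07/claims/identityprovider"
};

// Builds a small user summary that tolerates missing name and email claims.
function describeUser(claims) {
  const provider = claims[CLAIM_TYPES.identityProvider];
  const nameIdentifier = claims[CLAIM_TYPES.nameIdentifier];
  const email = claims[CLAIM_TYPES.email] || null;
  const name = claims[CLAIM_TYPES.name] || null;
  return {
    // Provider plus name identifier, present in every sample claim set.
    userKey: provider + "|" + nameIdentifier,
    displayName: name || email || "(name not provided)",
    email: email
  };
}

// Placeholder claim sets modeled on the Yahoo and LiveID samples.
const yahooClaims = {
  [CLAIM_TYPES.nameIdentifier]: "https://me.yahoo.com/a/example-id",
  [CLAIM_TYPES.email]: "youryahoomail@yahoo.com",
  [CLAIM_TYPES.name]: "Matias Woloski",
  [CLAIM_TYPES.identityProvider]: "Yahoo!"
};
const liveIdClaims = {
  [CLAIM_TYPES.nameIdentifier]: "example-live-id",
  [CLAIM_TYPES.identityProvider]: "uri:WindowsLiveID"
};

const yahooUser = describeUser(yahooClaims);
const liveIdUser = describeUser(liveIdClaims);
console.log(yahooUser.displayName, "/", liveIdUser.displayName);
```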

Asir Vedamuthu Selvasingh posted AppFabric ACS: Single-Sign-On for Active Directory, Google, Yahoo!, Windows Live ID, Facebook & Others to the Interoperability @ Microsoft blog on 4/12/2011:

Until today, you had to build your own custom solutions to accept a mix of enterprise and consumer-oriented Web identities for applications in the cloud or anywhere. We heard you and we have built a service to make it simpler.

Today at MIX11, we announced a new production version of Windows Azure AppFabric Access Control service, which enables you to build Single-Sign-On experience into applications by integrating with standards-based identity providers, including enterprise directories such as Active Directory, and consumer-oriented web identities such as Windows Live ID, Google, Yahoo! and Facebook.

The Access Control service enables this experience through commonly used industry standards to facilitate interoperability with other software and services that support the same standards:

- OpenID 2.0

- OAuth WRAP

- OAuth 2.0 (Draft 13)

- SAML 1.1, SAML 2.0 and Simple Web Token (SWT) token formats

- WS-Trust, WS-Federation, WS-Security, XML Digital Signature, WS-Security Policy, WS-Policy and SOAP.

And, we continue to work with the following industry orgs to develop new standards where existing ones are insufficient for the emerging cloud platform scenarios:

- OpenID Foundation

- IETF OAuth WG

- OASIS Identity in the Cloud Technical Committee

- Cloud Security Alliance

Check out the Access Control service! There are plenty of docs and samples available on our CodePlex project to get started.

Thanks,

Asir Vedamuthu Selvasingh, Technical Diplomat, Interoperability

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team reported Now Available: Windows Azure SDK 1.4 Refresh with WebDeploy Integration on 4/15/2011:

We are pleased to announce the immediate availability of the Windows Azure SDK 1.4 refresh. Available for download here, this refresh enables web developers to increase their development productivity by simplifying the iterative development process of web-based hosted services.

Until today, the iterative development process for hosted services has required you to re-package the application and upload it to Windows Azure using either the Management portal or the Service Management API. The Windows Azure SDK 1.4 refresh solves this problem by integrating with the Web Deployment Tool (Web Deploy).

Please note the following when using Web Deploy for interactive development of Windows Azure hosted services:

- Web Deploy only works with a single role instance.

- The tool is intended only for iterative development and testing scenarios

- Web Deploy bypasses the package creation. Changes to the web pages are not durable. To preserve changes, you must package and deploy the service.

Click here to install the WebDeploy plug-in for Windows Azure SDK 1.4.

Steve Marx (@smarx) explained Building a Simple “About.me” Clone in Windows Azure in a 4/14/2011 post:

A few weeks ago, I built a sample app http://aboutme.cloudapp.net that I used as a demo in a talk for the Dr. Dobb’s “Programming for the Cloud: Getting Started” virtual event. That pre-recorded talk went live today, and I believe you can still watch the talk on-demand.

For regular readers of my blog, the talk is probably a bit basic, but the app is yet another example of that common pattern of a web role front-end serving up web pages and a worker role back-end doing asynchronous work. The full source code is linked from the bottom of the http://aboutme.cloudapp.net page, so feel free to check it out.

Note that this is pretty much the most obvious use case ever for the CDN, but I didn’t use it. That was to keep the example as simple as possible for people brand new to the platform.

I don’t promise to keep the app running for very long, so don’t get too attached to your sweet aboutme.cloudapp.net URL. (Mine’s http://aboutme.cloudapp.net/smarx.)

Marius Revhaug described the Microsoft Platform Ready Test Tool - Windows Azure Edition in a 4/14/2011 post:

This is a guide for testing Windows Azure and SQL Azure readiness with the MPR testing tool. However, you can also use the Microsoft Platform Ready (MPR) Test Tool to test applications for the following platforms:

Windows® 7

Windows Server® 2008 R2

Microsoft® SQL Server® 2008 R2

Microsoft Dynamics™ 2011

Microsoft Exchange 2010 + Microsoft Lync™ 2010

This guide outlines the steps for using the Microsoft Platform Ready (MPR) Test Tool to verify a Windows Azure and SQL Azure application.

1. Download the MPR Test Toolkit

The MPR Test Toolkit is available from the www.microsoftplatformready.com Web site. You must first sign up to access the MPR Test Toolkit.

After you have successfully registered the application(s) you want to test, you will see the MPR Test Toolkit located on the Test tab as seen in figure 1.1.

Figure 1.1: MPR Test Tool download location

2. Start the MPR Test Toolkit

After you have successfully installed the MPR Test Toolkit you will see shortcuts to start the MPR Test Toolkit available on the Start menu and on the Desktop.

3. Select an operation

Once you have started the MPR Test Tool, you can either start a new test, resume a previous test or create reports. The options are shown in figure 1.2.

Figure 1.2: MPR Test Tool options

4. Start a new test

Click Start New Test to choose the type of platform you want to test as shown in figure 1.3.

If your application utilizes several platforms, you can select all of the platforms used on this screen. This guide will focus on Windows Azure and SQL Azure.

At this point, name your test by entering a name in the Test Name textbox. The name of the test should match the name you specified when you registered your application on the MPR Web site.

Figure 1.3: Name your test and select the platform(s) to test

5. Enter server information

On the next screen click the Edit links to enter appropriate information to connect to Windows Azure and SQL Azure.

For SQL Azure you need to specify the Server Name, Username and Password used to connect as seen in figure 1.4.

Figure 1.4: Specify server information

For Windows Azure you need to specify the Physical Server Address and Virtual IP Address used to connect as seen in figure 1.5.

Figure 1.5: Specify server information

The following screen summarizes the results of the Server Prerequisites test as seen in figure 1.6.

Figure 1.6: Summary of Server Prerequisites test

6. Test application functionality

Your application’s primary functionality must be executed now. Before proceeding, ensure that your application is already installed and fully configured. Execute all features in your application, including functionality that invokes drivers or devices where applicable. If your application is distributed across multiple computers, exercise all client-server functionality. Where applicable, you are required to test client components in your application concurrently with its server components. You may now minimize the MPR Test Tool, execute the primary functionality of your application and return to the MPR Test Tool.

Once you have executed all primary functionality, make sure to check the “Confirm that all primary functionality testing of your application” option as seen in figure 1.7.

Figure 1.7: Execute all primary functionality of your application

7. See if your application passed

Once you click Next, you will see immediately if your application passed the test as seen in figure 1.8.

If one or more components in your application fail, you have the ability to submit a waiver.

You will find the instructions for how to acquire a waiver described at the bottom of the screen.

Figure 1.8: You will see immediately if your application has passed or failed

8. View the test results report

You have now completed the test portion. To view the report, click the Show button seen in figure 1.9.

Figure 1.9: Click the Show button to view the test report

Figure 1.10 displays an example of a test report.

Figure 1.10: Example of a test report

9. Generate a test results package

In this next step you will generate a test results package that you can submit to the MPR Web site.

Start this process by clicking the Reports button as seen in figure 1.11.

Figure 1.11: Click the Reports button to start the process for creating a test results package

10. Select reports to include in the Test Results Package

To create a Test Results Package start by selecting the test(s) you want to include.

You can include one or more tests. You can also click the Add button, as seen in figure 1.12, to select and include reports located on other computers.

Figure 1.12: Select the reports you want to include

11. View summary

The screen shown in figure 1.13 displays a summary of reports that will be included in the test results package.

Figure 1.13: Reports summary

12. Specify application name and version

Next you will specify the application name and version. Enter the application name and version in the textboxes shown in figure 1.14.

The application name should match the name you specified when you registered your application on the MPR Web site.

Figure 1.14: Specify application name and version

13. Specify test results package name and application ID

Next you will specify a name for the test package and application ID. Enter the name and application ID in the textboxes as shown in figure 1.15.

The application ID must match the application ID that was generated on the MPR Web site when you first registered your application.

You also need to agree to the Windows Azure Logo Program legal requirements before you can continue.

Figure 1.15: Specify the Test Results Package Name, Application ID and agree to Windows Azure Logo program requirements

14. Finish the test process

The test process is now complete. You can now upload the Test Results Package (zip file) to the MPR Web site.

The path to the Test Results Package is located in the Test Results Package Location textbox as seen in figure 1.16.

Figure 1.16: Test Results Package location

15. Upload Test Results Package to the MPR Web site

In this last and final step you will upload the Test Results Package created by the MPR tool.

To upload the Test Results Package visit the MPR Web site.

Click the Browse button to locate the generated Test Results Package zip file then click the Upload button as seen in figure 1.17.

Figure 1.17: Upload Test Results Package

The MPR tool will upload your Test Results Package to the MPR Web site.

The MPR tool’s UI has improved greatly since I used it to obtain the “Powered by Windows Azure” logo for my OakLeaf Systems Azure Table Services Sample Project - Paging and Batch Updates Demo several months ago.

Wes Yanaga recommended that you Speed up your Journey to the Cloud with the FullArmor CloudApp Studio (US) on 4/14/2011:

We know that getting to the cloud is not always straight forward. That's why we are committed to bringing you the latest tools and resources to help you to quickly get your solution cloud-ready.

We would like to introduce you to the FullArmor CloudApp Studio. It makes it easy to rapidly deploy applications and websites onto the Microsoft Windows Azure platform, making development for the cloud simple.

It's a tool designed to be used by anyone - not just developers - and doesn't require coding knowledge. Using a familiar interface it can take just a few minutes to get your application or website ready for Windows Azure.

You are invited to take part in a three month preview of the FullArmor CloudApp Studio, starting on April 14, 2011 and ending on June 30, 2011.

Steps to Get Started with the FullArmor CloudApp Studio Preview

- Sign up for a CloudApp Studio Preview Account.

- Log in to the Windows Azure Dev portal as normal.

- If you don’t already have a Windows Azure account, you can try Windows Azure for 30 days – at no cost. Click here and use Promo Code DPWE01 for your free Windows Azure 30 Day Pass. No credit card required*.

- Select Beta Programs, then VM Role, then Apply for Access [takes 3-7 business days].

- Once your VM Role access has been enabled, download the FullArmor CloudApp Studio Quick Start Guide for information on how to get started.

- That's it! Log into FullArmor CloudApp Studio to start migrating your applications or websites onto the Windows Azure platform.

FullArmor CloudApp Studio Tech Specs:

- A single disk virtual machine running on Windows Server 2008 R2 Hyper-V X64

- Admin access to the virtual machine (for the virtual machine to Azure VMRole web site migration)

- Find more information in the CloudApp Studio Technical Guide

Get more out of the cloud with Microsoft Platform Ready for Windows Azure

Microsoft Platform Ready offers no-cost technical support, developer resources, software development kits and marketing resources to help you get into the cloud faster.

With Microsoft Platform Ready you gain exclusive access to developer resources and can test compatibility with online testing tools. Drive sales with customized marketing toolkits and when you’re done you can exhibit your solutions in Microsoft catalogs.

Windows Azure Platform developer resources

- Step-by-step guidance on developing and deploying on the Windows Azure Platform

- Get hands-on learning by taking the Windows Azure virtual lab

- Analyze your potential cost savings with the Windows Azure Platform TCO and ROI Calculator

- Connect with other developers at the Windows Azure Platform Developer Center

Try Windows Azure for 30 days – at no cost*

Take advantage of the CloudApp Studio preview with a free Windows Azure 30 day pass. You can quickly migrate your app or website to the cloud and see how it performs on the Windows Azure platform.

Use Promo Code DPWE01 to get your free 30 day Windows Azure Pass today

*Windows Azure 30 Day Pass Terms and Conditions

Note: If you would like to continue to use the Windows Azure platform after your 30 day pass has expired, you will need to sign up for a Windows Azure paying account here.

Julie Lerman (@julielerman) answered Why can’t I Edit or Delete my Data Service data? on 4/14/2011:

I spent waaaaaay too much time on this problem yesterday and wanted to share the solution, or more accurately, the workaround.

I’m creating an Azure-hosted Data Service and, as its consumer, an Azure-hosted MVC application.

My app allows users to query, create, edit and delete data.

I have the permission set properly in the data service. In fact, in desperation I completely opened up the service’s permissions with

config.SetEntitySetAccessRule("*", EntitySetRights.All);

But calling SaveChanges when a modification or delete was involved always threw an error: “Access is Denied” or, in some situations, a 404 “page not found”.

When you develop Azure apps in Visual Studio, you do so with Visual Studio running as an Admin and the Azure tools use IIS to host the local emulator. I so rarely develop against IIS anymore. I mostly just use the development server (aka cassini) that shows up as localhost with a port number, e.g., localhost:37.

By default IIS locks down calls to PUT, MERGE and DELETE (i.e., update and delete).

It took me a long time to even consider this, but then Chris Woodruff asked about it.

So I went into IIS and even saw that “All Verbs” was checked in the Handler Mappings and decided that wasn’t the problem.

Eventually I went back and explicitly added PUT, POST, MERGE, DELETE, GET in the verbs list.

But the problem still didn’t go away.

I deployed the app to see if Azure would have the same problem and unfortunately it did.

I did a lot of whining (begging for ideas) on twitter and some email lists and none of the suggestions I got were panning out.

Today I was very fortunate to have Shayne Burgess and Phani Raj from Microsoft take a look at the problem. Phani suggested using the workaround that the WCF Data Services team created for this exact problem: POST is honored (e.g. insert) but PUT, MERGE and DELETE are rejected by the server.

There is a property on the DataServiceContext called UsePostTunneling.

Setting it to true essentially tricks the request into looking like a POST and then, when the request gets to the server, says “haha, just kidding, it’s a MERGE or DELETE”. (My twisted explanation…Phani’s was more technical.)
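To make the tunneling idea concrete, here is a minimal, language-neutral sketch in Python of what happens on the wire. It assumes the WCF Data Services convention of sending the real verb in an `X-HTTP-Method` header on a POST request; the function names `tunnel_request` and `untunnel_request` are hypothetical and exist only for illustration. In the .NET client itself the whole thing is a one-line property assignment on the context (`UsePostTunneling = true`).

```python
# Verbs that a locked-down server or proxy may reject; tunneling hides them behind POST.
TUNNELED_VERBS = {"PUT", "MERGE", "DELETE"}


def tunnel_request(method, headers=None):
    """Client side: return (method, headers) as sent when POST tunneling is on.

    Hypothetical helper; assumes the intended verb travels in X-HTTP-Method.
    """
    method = method.upper()
    wire_headers = dict(headers or {})
    if method in TUNNELED_VERBS:
        wire_headers["X-HTTP-Method"] = method  # the "just kidding" part
        return "POST", wire_headers
    return method, wire_headers


def untunnel_request(method, headers):
    """Server side: recover the intended verb from a tunneled POST."""
    intended = headers.get("X-HTTP-Method")
    if method.upper() == "POST" and intended:
        return intended.upper()
    return method.upper()


if __name__ == "__main__":
    for verb in ("GET", "POST", "MERGE", "DELETE"):
        wire_method, wire_headers = tunnel_request(verb)
        print(verb, "->", wire_method, wire_headers,
              "->", untunnel_request(wire_method, wire_headers))
```

Because only POST ever reaches the handler mapping, a verb filter that rejects MERGE or DELETE never sees them, which is consistent with the workaround succeeding both locally and on Azure.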

This did the trick both locally and on Azure. But it is a workaround and our hope is to eventually discover the root cause of the problem.

Here's a blog post by Marcelo Lopez Ruiz about the property:

http://blogs.msdn.com/b/marcelolr/archive/2008/08/20/post-tunneling-in-ado-net-data-services.aspx

Hopefully my blog post has some search terms that will help others who have the same problem find it. In all of the searching I did, I never came across post tunneling, though I do remember seeing something about flipping POST and PUT in a request header; the context was not close enough to my problem for me to recognize it as a path to the solution.

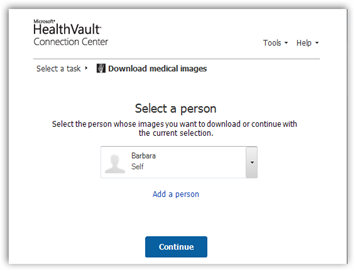

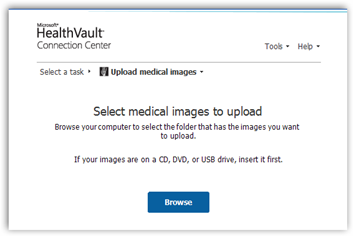

Barbara Duck (@MedicalQuack) reported HealthVault Begins Storing Medical Images (Dicom) Using Windows Azure Cloud Services With Full Encryption on 4/14/2011:

This is cool and makes me think of what my mother as a senior has gone through with 2nd opinions and so on. She has literally had to enlist the help of friends to pick up images and take them in hand to various doctors’ offices. Yes, that is a pain, and having HealthVault as a storage point is great. Dicom images are big files, so that is where the cloud comes in. If you have images already in your possession, there’s an interface to upload them to HealthVault.

If you share with a provider they can download and keep the images in your record so again this is a big timesaver. Imaging centers can automatically send your images to your personal health record with HealthVault.

The one vendor mentioned has a “drop box” for files and, if you have used any of those types of sharing programs, it works well and is pretty simple to use. If you want to get started, there’s always a link on this site under resources on the right-hand side.

It has been a while since I mentioned this, but you can track your prescriptions from Walgreens and CVS too and have them all in one place. CVS Minute Clinic also works with HealthVault and, from conversations I have had with a few locations, they now pretty much ask you right up front if you want to connect with HealthVault or Google Health.

I have uploaded documents to my file, but imaging files are huge, so using the cloud takes care of the “size” issues. What is also nice is having the Dicom viewer and not needing another piece of software to view the images. I can use one less image viewer on my computer too. BD

I'm always talking about how lame the health IT landscape is --- and with good reason. But there is one domain in which advances have been consistent and amazing and clearly impactful to care: medical imaging. Our ability to look inside a body and see with incredible detail what's going on inside is just staggering.

Well, today there is no way I could be more over-the-top excited to say --- medical imaging is now a core part of the HealthVault platform. We're talking full diagnostic quality DICOM images, with an integration that makes it immediately useful to consumers and their providers:

- A new release of HealthVault Connection Center that makes it super-easy to upload images from a CD or DVD, and enables users to burn new discs to share with providers --- complete with a viewer and "autoplay" behavior that providers expect.

- Full platform support for HealthVault applications to store and retrieve images themselves, enabling seamless integration with radiology centers and clinical systems.

Of course, we have to ensure that we keep the data secure and private as well --- we do this with a really cool piece of code that creates unique encryption keys for every image, stores those keys in our core HealthVault data center, and only sends encrypted, chunked data to Azure. This effectively lets us use Azure as an infinitely-sized disk --- warms this geek's heart to see it all come together.

Bruce Kyle reported Windows Azure Updates SDK with New Deployment Tool, CDN Preview, Geo Performance Balancing on 4/13/2011:

Announcements at MIX11 covered a myriad of new Windows Azure services and functionality, including:

- A new Web Deployment Tool that is part of an updated SDK.

- Preview of Windows Azure Content Delivery Network (CDN) for Internet Information Services (IIS) Smooth Streaming.

- Preview of Windows Azure Traffic Manager.

The announcements were made by the Windows Azure team on their blog Windows Azure News from MIX11.

New & Updated Windows Azure Features

Announcements include:

- An update to the Windows Azure SDK that includes a Web Deployment Tool to simplify the migration, management and deployment of IIS Web servers, Web applications and Web sites. This new tool integrates with Visual Studio 2010 and the Web Platform Installer. Get the tools from Download Windows Azure SDK.

- A community technology preview (CTP) of Windows Azure Traffic Manager, a new service that allows Windows Azure customers to more easily balance application performance across multiple geographies. To get the CTP, see the Windows Azure Traffic Manager section of Windows Azure Virtual Network.

- A preview of the Windows Azure Content Delivery Network (CDN) for Internet Information Services (IIS) Smooth Streaming capabilities, which allows developers to upload IIS Smooth Streaming-encoded video to a Windows Azure Storage account and deliver that video to Silverlight, iOS and Android Honeycomb clients. See Smooth Streaming section at Windows Azure Content Delivery Network (CDN).

AppFabric Offers Caching, Single Sign-On

Previously reported in this blog:

- Updates to the Windows Azure AppFabric Access Control service, which provides a single-sign-on experience to Windows Azure applications by integrating with enterprise directories and Web identities.

- Release of the Windows Azure AppFabric Caching service in the next 30 days, which will accelerate the performance of Windows Azure and SQL Azure applications.

See Windows Azure AppFabric Release of Caching, Single Sign-on for Access Control Service.

About MIX

In its sixth year, MIX was created to foster a sustained conversation between Microsoft and Web designers and developers. This year’s event offers two days of keynotes streamed live and approximately 130 sessions available for download, all at http://live.visitmix.com.

See The Web Takes Center Stage at Microsoft’s MIX11 Conference.

About Azure Traffic Manager

Windows Azure Traffic Manager is a new feature that allows customers to load balance traffic to multiple hosted services. Developers can choose from three load balancing methods: Performance, Failover, or Round Robin. Traffic Manager will monitor each collection of hosted services on any HTTP or HTTPS port. If it detects that a service is offline, Traffic Manager will send traffic to the next best available service. By using this new feature, businesses will see increased reliability, availability and performance in their applications.
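The three load balancing methods named above can be illustrated with a small selection sketch in Python. This is only an assumption-laden model of the decision logic, not Traffic Manager's implementation or API: the `HostedService` and `TrafficPolicy` names, the `latency_ms` field and the use of list order as failover priority are all hypothetical.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class HostedService:
    name: str
    online: bool        # result of the (hypothetical) HTTP/HTTPS health probe
    latency_ms: float   # hypothetical latency measured from the caller's region


class TrafficPolicy:
    """Pick a hosted service using one of three illustrative methods."""

    def __init__(self, services, method):
        self.services = list(services)  # list order doubles as failover priority
        self.method = method
        self._turn = count()            # round-robin position

    def choose(self):
        # Offline services are never candidates, whatever the method.
        healthy = [s for s in self.services if s.online]
        if not healthy:
            return None
        if self.method == "performance":
            return min(healthy, key=lambda s: s.latency_ms)
        if self.method == "failover":
            return healthy[0]
        if self.method == "round_robin":
            return healthy[next(self._turn) % len(healthy)]
        raise ValueError(f"unknown method: {self.method}")


if __name__ == "__main__":
    services = [
        HostedService("us-north", True, 40.0),
        HostedService("europe-west", True, 120.0),
        HostedService("asia-east", False, 15.0),
    ]
    for method in ("performance", "failover", "round_robin"):
        policy = TrafficPolicy(services, method)
        print(method, [policy.choose().name for _ in range(3)])
```

In each method the offline service is skipped, which mirrors the "next best available service" behavior described in the paragraph above.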

About Content Delivery Network

The Windows Azure Content Delivery Network (CDN) enhances end user performance and reliability by placing copies of data closer to users. It caches your application’s static objects at strategically placed locations around the world.

Windows Azure CDN provides the best experience for delivering your content to users. Windows Azure CDN can be used to ensure better performance and user experience for end users who are far from a content source, and are using applications where many ‘internet trips’ are required to load content.

Both Channel 9 and this blog are served to you through CDN.

Getting Started with Windows Azure

See the Getting Started with Windows Azure site for links to videos, developer training kit, software developer kit and more. Get free developer tools too.

For a free 30-day trial, see Windows Azure platform 30 day pass (US Developers) No Credit Card Required - Use Promo Code: DPWE01.

For free technical help in your Windows Azure applications, join Microsoft Platform Ready.

Learn What Other ISVs Are Doing on Windows Azure

For other videos about independent software vendors (ISVs):

- Accumulus Makes Subscription Billing Easy for Windows Azure

- Azure Email-Enables Lists, Low-Cost Storage for SharePoint

- Crowd-Sourcing Public Sector App for Windows Phone, Azure

- Food Buster Game Achieves Scalability with Windows Azure

- BI Solutions Join On-Premises To Windows Azure Using Star Analytics Command Center

The All-In-One Code Framework Team posted New Microsoft All-In-One Code Framework “Sample Browser” v3 Released on 4/13/2011:

Microsoft All-In-One Code Framework Sample Browser v3 was released today, aiming to provide a much better code sample download and management experience for developers.